Abstract

Objective

To improve breast radiographers’ individual performance by using automated software to assess the correctness of breast positioning and compression in tomosynthesis screening.

Materials and methods

In this retrospective longitudinal analysis of prospective cohorts, six breast radiographers with varying experience in the field were asked to use automated software to improve their performance in breast compression and positioning. The software tool automatically analyzes craniocaudal (CC) and mediolateral oblique (MLO) views for their positioning quality by scoring them according to PGMI classifications (perfect, good, moderate, inadequate) and checking whether the compression pressure is within the target range. The positioning and compression data from the studies acquired before the start of the project were used as individual baselines, while the data obtained after the training were used to test whether conscious use of the software could help the radiographers improve their performance. The percentage of views rated perfect or good and the percentage of views in target compression were used as overall metrics to assess changes in performance.

Results

Following the use of the software, all radiographers significantly increased the percentage of images rated as perfect or good in both CCs and MLOs. Individual improvements ranged from 7 to 14% for CC and 10 to 16% for MLO views. Moreover, most radiographers exhibited improved compression performance in CCs, with improvements up to 16%.

Conclusion

Active use of a software tool to automatically assess the correctness of breast compression and positioning in breast cancer screening can improve the performance of radiographers.

Clinical relevance statement

This study suggests that the use of a software tool for automatically evaluating correctness of breast compression and positioning in breast cancer screening can improve the performance of radiographers on these metrics, which may ultimately lead to improved screening outcomes.

Key Points

• Proper breast positioning and compression are critical in breast cancer screening to ensure accurate diagnosis.

• Active use of the software increased the quality of craniocaudal and mediolateral oblique views acquired by all radiographers.

• Improved performance of radiographers is expected to improve screening outcomes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Breast cancer is a major public health concern, and early detection through screening is crucial for improved outcomes [1]. Mammography is the most widely used imaging modality for breast cancer detection, but its accuracy can be impacted by factors such as breast positioning and compression [2,3,4,5]. Proper breast positioning in mammography is crucial for several reasons. It helps to ensure that all areas of the breast are properly captured in the image [3], leading to better visualization of any potential abnormalities, and reduces the amount of radiation [6] required to produce a high-quality image. In addition, proper positioning can make the mammography experience better for the patient, reducing discomfort. More importantly, correct breast positioning helps ensure that the radiologist is able to obtain accurate, detailed images that are essential for accurate diagnosis [7].

Digital breast tomosynthesis (DBT) is a newer form of mammography that uses multiple projection images to create a three-dimensional reconstruction of the breast, and has been shown to improve cancer detection rates compared to conventional mammography [8,9,10,11,12]. However, as requirements of breast positioning and compression are unchanged with DBT, the accuracy of DBT is also dependent on proper breast positioning and compression.

The quality of breast positioning during mammography or DBT can be assessed using visual methods such as the “PGMI Image Evaluation System” published in 1994 by the National Health System Breast Screening Program of the UK [13] or the criteria included in the Mammography Quality Control Manual published by the American College of Radiology in 1999 [14], or derived visual methods [15]. Visual assessment methods have two main limitations: one is the need for sampling, as visual assessment is time-consuming, and the other is inter-observer variability [16].

In recent years, automatic software tools have been developed to evaluate the correctness of breast positioning and compression during mammography or tomosynthesis [5, 17,18,19,20,21]. Systematic collection of positioning and compression data can be used as a quality assurance tool, which is much more powerful than other visual methods that require manual completion of a form and inevitably rely on small image samples [15, 22, 23].

The purpose of this study was to investigate whether the informed use of automated software to assess the quality of positioning and compression can improve the individual performance of breast radiographers by increasing the number of high-quality mammograms.

Materials and methods

Study population

This retrospective longitudinal analysis of prospective cohorts aimed to evaluate the performance of six breast radiographers in breast positioning and compression during tomosynthesis screening. The study data were obtained from the RIBBS (Risk-Based Breast Screening) study, a prospective screening trial aiming to assess the effectiveness and sustainability of a personalized screening model for young women based on individual breast density and breast cancer risk.

The RIBBS study invited women aged 45 residing in an area close to our Institution, willing and able to provide written informed consent and comply with scheduled visits, examinations, and other procedures. All accepting women underwent DBT, which was independently evaluated by two breast radiologists (double reading). Quantitative breast density was measured from the DBT images, and individual risk was assessed using the Tyrer-Cuzick risk model [24]. Imaging protocols and intervals of the subsequent screening rounds were defined based on the breast density and risk category (low, intermediate, high). Exclusion criteria comprised a personal history of breast cancer, known BRCA or PALB2 mutations, pregnancy or breastfeeding, and disorders incompatible with protocol requirements and follow-up. Further details about the RIBBS study can be found at ClinicalTrials.gov (NCT05675085). The study is currently ongoing for subsequent screening rounds, and enrolled women continue to be rescreened with personalized protocols determined by their breast density and individual risk until they reach the age of 50. The study received ethics committee approval, and all 10,269 recruited 45-year-old women signed an informed consent form, permitting the use of their data for any subsequent retrospective analysis. As a result, the current study was considered exempt from review by the Ethics Committee.

In this quality improvement project, a retrospective evaluation was conducted of breast positioning and compression parameters measured with an automated software tool from two DBT data sets belonging to the RIBBS study. All radiographers in service at the beginning of the project, with breast imaging experience ranging from 0 to more than 25 years, agreed to participate. Acceptance of this diversity of work experience was a key aspect of our project, which aimed to provide a flexible and personalized learning experience for each radiographer.

Two Hologic DBT units were used to acquire all screening examinations. Each machine captured 15 projection images over a 15° arc (± 7.5°) and reconstructed tomosynthesis planes that were sampled every 1 mm in the z direction. Bilateral DBT in two views (craniocaudal—CC, and mediolateral oblique—MLO) with synthetic mammography (SM) reconstructed from DBT was used for the screening protocol [8, 9, 11, 25].

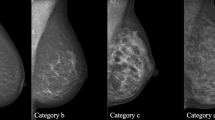

Automated assessment of breast positioning and compression

In this study, breast positioning and compression parameters were evaluated using Volpara Analytics (Volpara Health). The software systematically analyzes CC and MLO images acquired by different modalities (digital mammography, tomosynthesis, and contrast-enhanced mammography) and collects different types of data, including volumetric breast density, breast compression and positioning parameters, exposure data, and radiation dose in a single cloud-based database. The software employs the Volpara TruPGMI clinical function, which assesses breast positioning based on international best practices, such as the UK PGMI standard, and the adapted PGMI criteria from Australia, New Zealand, Norway, and the American College of Radiology [26]. This method establishes four positioning metrics for CC views and seven for MLOs, categorizing outcomes into four classes (perfect—P; good—G; moderate—M; inadequate—I), as reported in Table 1. Furthermore, the software calculates the percentage of images classified as perfect or good (% P + G), serving as a comprehensive measure of positioning accuracy.

In addition, the software determines the compression pressure applied to the breast by dividing the compression force reported in the DICOM header by the software-derived contact area of the breast with the compression plate. The target compression pressure range was set between 7 and 15 kPa [19, 27]; pressure values below or above that range are classified as low and high compressions, respectively. The percentage of images with compression in the target range is provided as a metric of compression quality.

The software presents breast positioning and compression performance metrics in interactive dashboards accessible through a web browser. Radiographers can review and monitor their performance, identifying areas for improvement by comparing their metrics against aggregate measures from their peers within the institution and other Volpara Analytics users globally (over 5200 radiographers with more than 85 million images from several countries) [27]. The software interface ensures that radiographers can exclusively access data generated from the images they acquired themselves, without access to data from other radiographers.

Quality improvement method

The software was available to the breast radiographers since 2019 via the exam room computer, but they were not actively encouraged to use it until 2022. In 2022, a quality improvement project was initiated to support the individual improvement of radiographers, using the software. The project was led by a medical physicist, the lead radiographer, and a thesis student (undergraduate radiographer).

A large dataset of DBT screening studies acquired by five of the six radiographers participating in the study served as the “baseline” for compression and positioning data (“BEFORE training” dataset, Sep–Dec 2021). Individual metrics were compared with the median of the global distribution of software users [27]. Results from this baseline were shared with the five radiographers who produced them and a newly graduated colleague who joined the team at the start of the project.

A training course was organized to familiarize all radiographers with software functionalities, and comprehensive assessment and open discussions of positioning and compression criteria were conducted, using real sample cases. Radiographers were encouraged to actively engage with the software interface individually to evaluate their data. This included a combination of individual use of the software, which could vary among radiographers, and regular participation in monthly face-to-face meetings with a thesis student. During these meetings, radiographers received comprehensive assistance in analyzing their data, identifying areas for improvement, and tracking progress.

To provide context, a 2-month training period was scheduled in January and February 2022 to allow radiographers to familiarize themselves with the software before the test period began in March 2022. The testing period was designed to facilitate improvements in individual metrics that were below the global median.

Regular face-to-face meetings with the thesis student played a key role in ensuring that all radiographers remained well informed about changes in their performance and were equipped to effectively address their areas for improvement. Throughout the study, the purpose of using the software was to promote a collaborative environment among radiographers, emphasizing teamwork over competition.

In essence, the “active use of the software” in this study represented a holistic approach, combining individual interactions with the software and personalized support provided during monthly meetings by the thesis student to facilitate performance analysis, improvement, and continuous evaluation of progress.

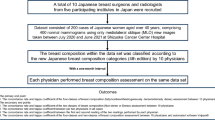

The dataset used to test the impact of using the software consisted of screening images acquired between March and December 2022 from the five radiographers who contributed to the baseline and the newly joined colleague (“AFTER training” dataset). Figure 1 illustrates the experiment’s schematics.

Schematic representation of the study design. The evaluation of the radiographer’s performance in breast compression and positioning was conducted using a set of screening data acquired before software training. Baseline performance was established and used as a reference point. Then, radiographers participated in a course to establish individual improvement goals and a training period to familiarize themselves with the automated software. Finally, post-training screening data were compared with baseline data to assess the improvement of the software aid

Statistical analysis

Individual changes in positioning metrics and compression performance were analyzed, and the differences in the proportions of images rated perfect or good before and after the training period for each radiographer were tested using a two-sample Z test of proportions. A p-value of less than 0.05 was considered statistically significant. Statistical analysis was performed using OriginPro 2020b (OriginLab Corporation) and MedCalc v. 20.216 (MedCalc Software Ltd).

The study was conducted in adherence to SQUIRE (Standards for QUality Improvement Reporting Excellence) guidelines v 2.0 [28].

Results

Study population

The study population included 2407 women in the pre-software training cohort and 3986 in the post-software cohort. The characteristics of the study population are given in Table 2 in terms of median values and their 95% confidence intervals (95% CI), and include the women’s age, breast thickness and compression force extracted from the DICOM image header, and breast volume, volumetric breast density (VBD), contact area, and compression pressure calculated by the Volpara software.

Among the six radiographers engaged in the project, three possessed more than 25 years of experience in mammography and tomosynthesis. Of the other three, two had experience of 2.5 years and 1.5 years, respectively. The last member started her role in breast radiology from January 2022. In total, 9609 views (4815 CCs and 4794 MLOs) were analyzed for the before training period, and 15,853 views (7942 CCs and 7911 MLOs) for the after training period. Table 3 provides details on the breast imaging experience of each radiographer at the beginning of the study and the total number of DBT screening studies and views. Each screening study included two CCs and two MLOs; however, some discrepancies between number of CCs and MLOs are caused by some software exclusions because of possible implant presence in the field of view, mosaic in case of extremely large breasts, medial–lateral or lateral-medial views.

Baseline performance

In Fig. 2, the before training performance of five radiographers who contributed to the study is presented in terms of (a) percentage of CCs and MLOs scored perfect or good, and (b) percentage of CCs and MLOs within the target compression. In addition, the median performance of all software users is shown as a benchmark. It is worth noting that already from baseline all five radiographers performed better in CC positioning than in MLOs, exceeding the median only for CC views. In contrast, all demonstrated better compression performance for MLOs than for CCs, with results well above the median value. Only R2 achieved a percentage of CCs in target compression above the overall median.

a Baseline positioning performance for five of the six radiographers involved in the study; positioning performance is represented by the percentage of images scored perfect or good according to the PGMI method. b Baseline compression performance for five of the six radiographers involved in the study; compression performance is represented by the percentage of images that fall within target compression. Results are shown separately for CC and MLO views. Global median values for both positioning and compression metrics, used as benchmarks, are also provided

In order to determine individual practical improvement goals, all baseline positioning and compression metrics were evaluated for the CC and MLO views, shown in Table 4, along with the global medians as reference values. The percentage of images (CCs and MLOs) that met each positioning and compression metric was reported for each radiographer. Values below the global median were highlighted in bold.

Performance improvement

After the training period, all six radiographers (the five for which baseline performance was reported, plus the new entry) started to actively use the software to improve their positioning and compression performance in screening.

Figure 3 displays individual changes in specific positioning metrics, along with the median and top 10% values from the global distribution of software users. The criterion “Pec shape” for MLO views was excluded from this representation because it was not part of the original PGMI method. Although it is a useful metric, it is not necessary to achieve a score of “Good” and is strongly correlated with two other critical metrics, “Pec to PNL Met” and “Adequate Pec.” In addition, this criterion was excluded for graphical purposes.

Comparison between baseline and final datasets after an active software use for each breast positioning metric and each breast radiographer. a “Nipple in profile” metric for CC views; b “Nipple midline” metric for CC views; c “PNL met” metric for CC views; d “No cutoff” metric for CC views; e “Nipple in profile” metric for MLO views; f “IMF visible” metric for MLO views; g “Pec to PNL” metric for MLO views; h “Adequate Pec” metric for MLO views; i “No Pec skinfolds” metric for MLO views; j “No cutoff” metric for MLO views

Considering the “No cutoff” metric as the most crucial factor (necessary to avoid rating the image inadequate), it is observed that only one radiographer (R1) improved this criterion in CC views, while three radiographers (R1, R3, R5) did so in MLO views. Nonetheless, the “No cutoff” metric for CC views fell between the median and top 10% of the global distribution for all radiographers, including the new one, as did the metric for MLO views, except for R4, who lowered the percentage of no-cutoff images below the global median compared to the baseline value. Three other important metrics were “PNL Met” for CC views and “Pec to PNL” and “Adequate Pec” for MLO views. Each radiographer improved all these metrics, surpassing the top 10% line for MLOs. The “Nipple in profile” metric showed improvement for MLO views by most radiographers, while for CC views, it already exceeded the top 10% mark. The “Nipple midline” metric improved for CC views by all radiographers, and MLO inframammary fold visibility was enhanced by the majority of them. Lastly, the “No Pec skinfold” metric remained relatively stable for all radiographers, falling between the global median and top 10% of software users.

It is noteworthy that the young radiographer who joined the breast radiology team after graduation and actively used the software from the beginning performed equivalent to more experienced colleagues and even exceeded the overall medians in some metrics.

Figure 4 shows that all changes in individual positioning metrics affected the proportion of images rated as perfect or good. The active use of the software tool significantly increased the proportion of images rated as perfect or good (in both CCs and MLOs) for each radiographer. Individual improvements ranged from 7 to 14% for CC and 10 to 16% for MLO views.

a Comparison between percentage of CC images rated perfect or good in the baseline (in blue) and in the final dataset (in red) after starting the active software use. b Comparison between percentage of MLO images rated perfect or good in the baseline and in the final dataset after starting the active software use

Radar plots in Fig. 5 illustrate the percentage of images within the target compression in periods before and after training for active use of the software.

Overall, there was a rise in the number of correctly compressed CC views (up to 16%), with the majority of radiographers performing equally or better than the global median. When it came to MLO compression, only R2 showed improvement compared to the baseline, but all radiographers except R3 demonstrated significantly better MLO compression than the global median of software users.

Discussion

The present study aimed to evaluate the impact of using automated software that assesses the correctness of breast compression and positioning based on the PGMI method on the performance of breast radiographers. Results indicated that after software training and active use, all five evaluated breast radiographers showed an increase in the percentage of images rated as perfect or good, as well as in the percentage of images achieving the target compression. The sixth radiographer, who began using the software from the start, performed above the median for CC views and nearly as well as more experienced colleagues for MLO views. These findings indicate that an active and informed use of automated software which can assess the correctness of breast compression and positioning based on the PGMI method can improve radiographer performance regardless of experience level in mammography or tomosynthesis screening.

Regular updates and interactions with the thesis student played a crucial role in helping radiographers identify areas for improvement and make informed adjustments to their positioning and compression techniques.

The project was designed to support individual improvements, focusing on each radiographer’s specific needs and areas for development, rather than aiming for a standardized level of performance. Taking a data-driven approach, radiographers were encouraged to concentrate on specific aspects of their practice most relevant to their professional growth. The flexibility of the project and the personalized learning experience proved useful in helping radiographers to effectively identify and address their weaknesses.

Other factors, such as breast size, breast density, and radiographers’ fatigue, were intentionally excluded from the study, as the primary objective was to verify the effectiveness of the software tool in improving individual radiographer performance, rather than engaging in a speculative analysis of factors associated with breast mispositioning or under/over-compression.

The study by Pal et al demonstrated that visual evaluation of positioning criteria can lead to an improvement in mammographic positioning skills among radiographers [23]. However, visual assessment has limitations, particularly in the time-consuming process of evaluating a limited number of mammography/tomosynthesis examinations, potentially reducing the strength of performance evaluation before and after training. Moreover, visual assessment often lacks consensus among radiographers and radiologists in interpreting positioning criteria, as highlighted by Spuur [29] and Taylor [30].

In a study by Waade et al, 156 screening mammography exams were visually evaluated using the PGMI method by two experienced radiographers, and the resulting positioning criteria were compared to those generated by the software used in our study [17]. The researchers observed substantial to almost perfect agreement between the software and radiographers for certain criteria, such as nipple in profile and PNL met in CC views, and slight to moderate agreement for others, such as adequate Pec and IMF visible in MLO views. Overall, they found that the agreement between radiographers was better than that between radiographers and the software [17]. Picard et al conducted a similar study in which they compared the paired visual assessment of positioning quality by a radiographer and a radiologist with that provided by automated software. They found that while some disparities existed between the readers’ subjective assessments and the objective assessments, considerable agreement existed between readers and software regarding the overall assessment of positioning [31].

It is important not to interpret the disagreement between visual evaluation and software as a failure of the software tool itself. In order to achieve “the full visualization of breast from the pectoral muscle to the nipple” described in the PGMI, software needs to perform precise geometrical measurements, such as measuring the Pec to PNL distance in each of the CC and MLO views for their comparison. In contrast, visual evaluation by human readers works differently and does not rely solely on geometric measurements. Visual evaluation can be quite subjective and influenced by image aesthetics or personal preferences. Poor agreement levels have been demonstrated between expert readers on evaluation of the depiction of various features of the normal tissue [32]. Therefore, differences in the evaluations between the software and visual assessments do not necessarily indicate a problem with the software tool, but rather may indicate a difference in the techniques utilized by humans and software to verify the same positioning criteria.

The use of an automated software to systematically score mammography images according to the PGMI criteria provides a more consistent, efficient, and effective approach to evaluating the quality of breast positioning in mammography and tomosynthesis screening.

Limitations of the study include the small number of radiographers involved, all from a single center, which may limit the generalizability of the results to other settings or populations. In addition, the study evaluated the short-term impact of software use on positioning and compression performance, leaving open questions about the sustainability of the observed improvements over an extended period. Another limitation concerns the specific screening population of the study, which included only women aged 46–47, a selection dictated by screening population available at our institution. However, we emphasize that the study design and methodology could be applicable to any screening population.

In conclusion, this study suggests that the use of a software tool for automatically evaluating correctness of breast compression and positioning in breast cancer screening can potentially improve radiographer performance, which may ultimately lead to improved patient outcomes. It also reiterates that the path to improvement does not depend on technology alone, but is a collective endeavor rooted in human awareness and proactive engagement. The active participation of radiographers in analyzing their performance emerged as a catalyst for the observed improvements, underscoring the synergy between technology and human action in achieving excellence in breast radiography.

Abbreviations

- CC:

-

Craniocaudal

- DBT:

-

Digital breast tomosynthesis

- IMF:

-

Inframammary fold

- MLO:

-

Mediolateral oblique

- Pec:

-

Pectoral muscle

- PGMI:

-

Perfect good moderate inadequate

- PNL:

-

Pectoral muscle to nipple line

References

Ginsburg O, Yip C-H, Brooks A et al (2020) Breast cancer early detection: a phased approach to implementation. Cancer 126(Suppl 10):2379–2393. https://doi.org/10.1002/cncr.32887

Taplin SH, Rutter CM, Finder C, Mandelson MT, Houn F, White E (2002) Screening mammography: clinical image quality and the risk of interval breast cancer. AJR Am J Roentgenol 178:797–803. https://doi.org/10.2214/ajr.178.4.1780797

Bassett LW, Hirbawi IA, DeBruhl N, Hayes MK (1993) Mammographic positioning: evaluation from the view box. Radiology 188:803–806. https://doi.org/10.1148/radiology.188.3.8351351

Eklund GW, Cardenosa G, Parsons W (1994) Assessing adequacy of mammographic image quality. Radiology 190:297–307. https://doi.org/10.1148/radiology.190.2.8284372

Holland K, Sechopoulos I, Mann RM, den Heeten GJ, van Gils CH, Karssemeijer N (2017) Influence of breast compression pressure on the performance of population-based mammography screening. Breast Cancer Res 19:126. https://doi.org/10.1186/s13058-017-0917-3

Gennaro G, Bernardi D, Houssami N (2018) Radiation dose with digital breast tomosynthesis compared to digital mammography: per-view analysis. Eur Radiol 28:573–581. https://doi.org/10.1007/s00330-017-5024-4

Henderson LM, Benefield T, Bowling JM et al (2015) Do mammographic technologists affect radiologists’ diagnostic mammography interpretative performance? AJR Am J Roentgenol 204:903–908. https://doi.org/10.2214/AJR.14.12903

Skaane P, Bandos AI, Eben EB et al (2014) Two-view digital breast tomosynthesis screening with synthetically reconstructed projection images: comparison with digital breast tomosynthesis with full-field digital mammographic images. Radiology 271:655–663. https://doi.org/10.1148/radiol.13131391

Bernardi D, Macaskill P, Pellegrini M et al (2016) Breast cancer screening with tomosynthesis (3D mammography) with acquired or synthetic 2D mammography compared with 2D mammography alone (STORM-2): a population-based prospective study. Lancet Oncol 17:1105–1113. https://doi.org/10.1016/S1470-2045(16)30101-2

Zackrisson S, Lång K, Rosso A et al (2018) One-view breast tomosynthesis versus two-view mammography in the Malmö Breast Tomosynthesis Screening Trial (MBTST): a prospective, population-based, diagnostic accuracy study. Lancet Oncol 19:1493–1503. https://doi.org/10.1016/S1470-2045(18)30521-7

Caumo F, Montemezzi S, Romanucci G et al (2021) Repeat screening outcomes with digital breast tomosynthesis plus synthetic mammography for breast cancer detection: results from the prospective Verona pilot study. Radiology 298:49–57. https://doi.org/10.1148/radiol.2020201246

Pattacini P, Nitrosi A, Giorgi Rossi P et al (2022) A randomized trial comparing breast cancer incidence and interval cancers after tomosynthesis plus mammography versus mammography alone. Radiology 303:256–266. https://doi.org/10.1148/radiol.211132

Guidance for breast screening mammographers. In: GOV.UK. https://www.gov.uk/government/publications/breast-screening-quality-assurance-for-mammography-and-radiography/guidance-for-breast-screening-mammographers. Accessed 7 Mar 2023

Hendrick RE, Bassett LO, Botsco MA et al (1999) Mammography quality control manual, 4th edn. American College of Radiology, Reston

Galli V, Pini M, De Metrio D, de Bianchi PS, Bucchi L (2021) An image quality review programme in a population-based mammography screening service. J Med Radiat Sci 68:253–259. https://doi.org/10.1002/jmrs.487

Boyce M, Gullien R, Parashar D, Taylor K (2015) Comparing the use and interpretation of PGMI scoring to assess the technical quality of screening mammograms in the UK and Norway. Radiography 21:342–347. https://doi.org/10.1016/j.radi.2015.05.006

Waade GG, Danielsen AS, Holen ÅS et al (2021) Assessment of breast positioning criteria in mammographic screening: agreement between artificial intelligence software and radiographers. J Med Screen 28:448–455. https://doi.org/10.1177/0969141321998718

Branderhorst W, de Groot JE, Highnam R et al (2015) Mammographic compression–a need for mechanical standardization. Eur J Radiol 84:596–602. https://doi.org/10.1016/j.ejrad.2014.12.012

García E, Martí R, Martí J et al (2022) Simultaneous pectoral muscle and nipple location in MLO mammograms, considering image quality assumptions. In: Bosmans H, Marshall N, Van Ongeval C (eds) 16th International Workshop on Breast Imaging (IWBI2022). SPIE, Leuven, Belgium, p 42

Bülow T, Meetz K, Kutra D et al (2013) Automatic assessment of the quality of patient positioning in mammography. In: Novak CL, Aylward S (eds) Lake Buena Vista (Orlando Area). Florida, USA, p 867024

Sze Man Kwok, Chandrasekhar R, Attikiouzel Y (2004) Automatic assessment of mammographic positioning on the mediolateral oblique view. In: 2004 International Conference on Image Processing, 2004. ICIP ’04. IEEE, Singapore, pp 151–154

Rouette J, Elfassy N, Bouganim N, Yin H, Lasry N, Azoulay L (2021) Evaluation of the quality of mammographic breast positioning: a quality improvement study. CMAJ Open 9:E607–E612. https://doi.org/10.9778/cmajo.20200211

Pal S, Ikeda DM, Jesinger RA, Mickelsen LJ, Chen CA, Larson DB (2018) Improving performance of mammographic breast positioning in an academic radiology practice. AJR Am J Roentgenol 210:807–815. https://doi.org/10.2214/AJR.17.18212

Tyrer J, Duffy SW, Cuzick J (2004) A breast cancer prediction model incorporating familial and personal risk factors. Stat Med 23:1111–1130. https://doi.org/10.1002/sim.1668

Hofvind S, Hovda T, Holen ÅS et al (2018) Digital breast tomosynthesis and synthetic 2D mammography versus digital mammography: evaluation in a population-based screening program. Radiology 287:787–794. https://doi.org/10.1148/radiol.2018171361

Johnston L, Highnam R (2022) TruePGMI: AI for mammography quality improvement. https://sites.google.com/d/1jhwhjwyp8PWPga1GLKZ4BVbi2OgWteqQ/p/1dcSnPIrDAStTMexKkJ2dMFtsISuJ5XGa/edit?pli=1. Accessed 2 May 2023

Mammography Analytics | Analytics. In: Volpara Health. https://www.volparahealth.com/breast-health-platform/products/analytics/. Accessed 2 May 2023

Ogrinc G, Davies L, Goodman D, Batalden P, Davidoff F, Stevens D (2016) SQUIRE 2.0 (Standards for QUality Improvement Reporting Excellence): revised publication guidelines from a detailed consensus process. BMJ Qual Saf 25:986–992. https://doi.org/10.1136/bmjqs-2015-004411

Spuur K, Webb J, Poulos A, Nielsen S, Robinson W (2018) Mammography image quality and evidence based practice: analysis of the demonstration of the inframammary angle in the digital setting. Eur J Radiol 100:76–84. https://doi.org/10.1016/j.ejrad.2018.01.004

Taylor K, Parashar D, Bouverat G et al (1995) (2017) Mammographic image quality in relation to positioning of the breast: a multicentre international evaluation of the assessment systems currently used, to provide an evidence base for establishing a standardised method of assessment. Radiography (Lond) 23:343–349. https://doi.org/10.1016/j.radi.2017.03.004

Picard M, Cockmartin L, Buelens K et al (2022) Objective and subjective assessment of mammographic positioning quality. In: Bosmans H, Marshall N, Van Ongeval C (eds) 16th International Workshop on Breast Imaging (IWBI2022). SPIE, Leuven, Belgium, p 22

Boita J, Van Engen RE, Mackenzie A et al (2021) Validation of a candidate instrument to assess image quality in digital mammography using ROC analysis. Eur J Radiol 139:109686. https://doi.org/10.1016/j.ejrad.2021.109686

Acknowledgements

The authors are users of Volpara Analytics software.

Funding

This research is financially supported by “5x1000 anno di riferimento 2016 – Approccio globale alle problematiche oncologiche (AGON)”, which provides funding for open access publication.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Guarantor

The scientific guarantor of this publication is Gisella Gennaro.

Conflict of interest

The authors of this manuscript declare relationships with the following companies: Volpara software users.

Statistics and biometry

One of the authors has significant statistical expertise.

Informed consent

This retrospective longitudinal analysis of prospective cohorts aimed to evaluate the performance of six breast radiographers in breast positioning and compression during tomosynthesis screening. The study data were obtained from the RIBBS (Risk-Based Breast Screening) study, a prospective screening trial aiming to assess the effectiveness and sustainability of a personalized screening model for young women based on individual breast density and breast cancer risk. The RIBBS study received ethics committee approval, and all 10,269 recruited 45-year-old women signed an informed consent form, permitting the use of their data for any subsequent retrospective analysis. Given the comprehensive informed consent obtained for the RIBBS study, specific additional consent for the current analysis was not required and the study was considered exempt from further review by the Ethics Committee.

Ethical approval

As all data used in the current analysis were derived from the existing pool of information obtained through the RIBBS study, and no additional interventions or interactions with participants occurred, there was no need for additional ethical approval.

Study subjects or cohorts overlap

There is no cohort overlap.

Methodology

• retrospective longitudinal analysis of prospective cohorts

• observational

• performed at one institution

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gennaro, G., Povolo, L., Del Genio, S. et al. Using automated software evaluation to improve the performance of breast radiographers in tomosynthesis screening. Eur Radiol (2023). https://doi.org/10.1007/s00330-023-10457-x

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00330-023-10457-x