Abstract

Objectives

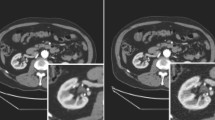

PET/CT is a first-line tool for the diagnosis of lung cancer. Quantitative accuracy can be degraded by various factors throughout the acquisition process. Dynamic PET parametric Ki imaging provides better quantification and improves specificity for cancer detection. However, parametric imaging is difficult to implement clinically because of the long acquisition time (~ 1 h). We propose a deep learning method that generates dynamic parametric images from conventional static PET.
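For context on where the reference Ki images come from: Ki is commonly estimated from dynamic frames with Patlak graphical analysis, which models late-phase uptake as C_T(t)/C_p(t) = Ki · ∫0^t C_p(τ)dτ / C_p(t) + V0, so Ki is the slope of a straight-line fit. The sketch below shows that fit for one time-activity curve. It assumes a Patlak model, a plasma input function sampled on the same frame times starting at t = 0, and a user-chosen linearity time `t_star`; these are assumptions for illustration, not details of this study's protocol.

```python
import numpy as np


def patlak_ki(t, cp, ct, t_star):
    """Estimate Ki (slope) and V0 (intercept) by Patlak graphical analysis.

    t      : frame mid-times in minutes, strictly increasing, with t[0] = 0
             (assumption of this sketch: cp contributes nothing before t[0])
    cp     : plasma input function sampled at t
    ct     : tissue time-activity curve sampled at t (same units as cp)
    t_star : time after which the Patlak plot is assumed linear (hypothetical choice)
    """
    t = np.asarray(t, dtype=float)
    cp = np.asarray(cp, dtype=float)
    ct = np.asarray(ct, dtype=float)

    # Running integral of cp from t[0] to each sample time (trapezoidal rule)
    steps = np.diff(t) * (cp[1:] + cp[:-1]) / 2.0
    int_cp = np.concatenate(([0.0], np.cumsum(steps)))

    # Keep only frames in the assumed linear (late) phase
    late = t >= t_star
    x = int_cp[late] / cp[late]  # Patlak "normalized time"
    y = ct[late] / cp[late]      # normalized tissue uptake

    # Least-squares line y = Ki * x + V0; np.polyfit returns [slope, intercept]
    ki, v0 = np.polyfit(x, y, 1)
    return float(ki), float(v0)


# Synthetic check with a known Ki = 0.03 and V0 = 0.5 (illustrative numbers only)
t = np.linspace(0.0, 60.0, 121)
cp = 10.0 * np.exp(-0.1 * t) + 1.0
int_cp_exact = 100.0 * (1.0 - np.exp(-0.1 * t)) + t  # analytic integral of cp
ct = 0.03 * int_cp_exact + 0.5 * cp
ki, v0 = patlak_ki(t, cp, ct, t_star=20.0)
```

Applied voxel by voxel, this fit needs the full dynamic acquisition, which is the ~1 h burden the proposed method aims to avoid.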

Methods

Based on imaging data from 203 participants, an improved cycle generative adversarial network (cycleGAN) incorporating a squeeze-and-excitation (SE) attention block was introduced to learn the mapping between static PET and Ki parametric images. The quality of the synthesized images was evaluated qualitatively and quantitatively using several physical and clinical metrics, and the synthetic images were also analyzed statistically for correlation and consistency.
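The two building blocks named above can be illustrated in a few lines. The sketch below gives a generic SE block in the form of Hu et al. (2018) (global average pooling, two fully connected layers with ReLU and sigmoid, channel rescaling) and the L1 cycle-consistency term of a cycleGAN in the form of Zhu et al. (2017). Layer sizes, the reduction ratio, the omission of biases, the (C, H, W) layout, and the generator names `g` and `f` are assumptions for illustration; this is not the authors' implementation, and the adversarial losses are omitted.

```python
import numpy as np


def se_block(u, w1, w2):
    """Squeeze-and-excitation channel recalibration (generic form).

    u  : feature map, shape (C, H, W)
    w1 : reduction weights, shape (C // r, C)
    w2 : expansion weights, shape (C, C // r)
    Biases are omitted for brevity (an assumption of this sketch).
    """
    z = u.mean(axis=(1, 2))              # squeeze: global average pooling -> (C,)
    h = np.maximum(w1 @ z, 0.0)          # excitation step 1: FC + ReLU -> (C // r,)
    s = 1.0 / (1.0 + np.exp(-(w2 @ h)))  # excitation step 2: FC + sigmoid -> (C,)
    return u * s[:, None, None]          # rescale each channel by its gate in (0, 1)


def cycle_consistency_loss(x, y, g, f):
    """L1 cycle-consistency term of a cycleGAN.

    g : hypothetical generator mapping static PET -> Ki image
    f : hypothetical generator mapping Ki image -> static PET
    """
    forward = np.mean(np.abs(f(g(x)) - x))   # x -> g(x) -> f(g(x)) should return x
    backward = np.mean(np.abs(g(f(y)) - y))  # y -> f(y) -> g(f(y)) should return y
    return float(forward + backward)


rng = np.random.default_rng(0)
u = rng.random((8, 4, 4))
out = se_block(u, np.zeros((2, 8)), np.zeros((8, 2)))  # zero weights -> every gate is 0.5
```

With trained weights, the SE gates let the generator emphasize feature channels that are informative for the static-to-Ki mapping, while the cycle term constrains the two generators to be approximately inverse to each other.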

Results

Compared with images from other networks, the images synthesized by our proposed network performed better in qualitative and quantitative evaluation, statistical analysis, and clinical scoring. Our synthesized Ki images showed significant correlation (Pearson correlation coefficient, 0.93) and consistency with the Ki images obtained in standard dynamic PET practice, as well as excellent quantitative evaluation results.
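The quantitative comparison between synthesized and reference Ki images rests on standard image-similarity and agreement metrics. The sketch below computes RMSE, PSNR, the Pearson correlation coefficient, and a Bland-Altman bias with 95% limits of agreement. PSNR conventions vary (the peak may be the reference maximum or a fixed dynamic range), and this section does not state which convention the authors used or whether consistency was assessed with Bland-Altman analysis, so both choices here are assumptions. SSIM is omitted; scikit-image provides `skimage.metrics.structural_similarity` for that purpose.

```python
import numpy as np


def rmse(ref, img):
    """Root mean square error between reference and synthesized images."""
    diff = np.asarray(ref, float) - np.asarray(img, float)
    return float(np.sqrt(np.mean(diff ** 2)))


def psnr(ref, img, peak=None):
    """PSNR in dB; peak defaults to max(ref), a convention choice (undefined if rmse is 0)."""
    peak = float(np.max(ref)) if peak is None else float(peak)
    return float(20.0 * np.log10(peak / rmse(ref, img)))


def pcc(ref, img):
    """Pearson correlation coefficient over all voxels (or ROI values)."""
    return float(np.corrcoef(np.ravel(ref), np.ravel(img))[0, 1])


def bland_altman(ref_vals, syn_vals):
    """Bland-Altman bias and 95% limits of agreement (bias +/- 1.96 SD)."""
    diff = np.asarray(syn_vals, float) - np.asarray(ref_vals, float)
    bias = diff.mean()
    sd = diff.std(ddof=1)
    return float(bias), float(bias - 1.96 * sd), float(bias + 1.96 * sd)


# Toy example: a synthesized image with a constant offset of 0.5
ref = np.array([[0.0, 1.0], [2.0, 3.0]])
syn = ref + 0.5
```

For this toy pair, RMSE is 0.5, PSNR is 20·log10(3 / 0.5) ≈ 15.56 dB, and the correlation is 1: a constant bias leaves correlation perfect, which is why agreement (consistency) is assessed separately from correlation.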

Conclusions

Our proposed deep learning method can be used to synthesize highly correlated and consistent dynamic parametric images from static lung PET.

Key Points

• Compared with conventional static PET, dynamic PET parametric Ki imaging has been shown to provide better quantification and improved specificity for cancer detection.

• The purpose of this work was to develop a dynamic parametric imaging method based on static PET images using deep learning.

• Our proposed network can synthesize highly correlated and consistent dynamic parametric images, providing an additional quantitative diagnostic reference for clinicians.

Abbreviations

- 2-[18F]FDG: 2-Deoxy-2-[18F]fluoro-D-glucose
- cycleGAN: Cycle generative adversarial network
- OSEM: Ordered subset expectation maximization
- PCC: Pearson correlation coefficient
- PET/CT: Positron emission tomography/computed tomography
- PSF: Point spread function
- PSNR: Peak signal-to-noise ratio
- RMSE: Root mean square error
- ROI: Region of interest
- SD: Standard deviation
- SE: Squeeze-and-Excitation
- sKi: Synthesized Ki
- SSIM: Structural similarity
- SUV: Standardized uptake value
- TOF: Time-of-flight

Funding

This work was supported by the National Natural Science Foundation of China (32022042, 81871441, and 62101540), the Shenzhen Excellent Technological Innovation Talent Training Project of China (RCJC20200714114436080), the Shenzhen Science and Technology Program (RCBS20210706092218043), the Guangdong Innovation Platform of Translational Research for Cerebrovascular Diseases of China, the China Postdoctoral Science Foundation (2022M713290), the Key Project of Henan Province Medical Science and Technology Project (LHGJ20210005), and the Henan Provincial Science and Technology Research Projects (222102310627).

Ethics declarations

Guarantor

The scientific guarantor of this publication is Zhanli Hu.

Conflict of interest

The authors of this manuscript declare no relationships with any companies whose products or services may be related to the subject matter of the article.

Statistics and biometry

No complex statistical methods were necessary for this paper.

Informed consent

Written informed consent was obtained from all subjects (patients) in this study.

Ethical approval

The Ethics Committee of Henan Provincial People’s Hospital & People’s Hospital of Zhengzhou University approved this study (approval number: IRB 2020-127).

Methodology

• prospective

• experimental

• performed at one institution

Supplementary Information

ESM 1

(DOCX 497 kb)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, H., Wu, Y., Huang, Z. et al. Deep learning–based dynamic PET parametric Ki image generation from lung static PET. Eur Radiol 33, 2676–2685 (2023). https://doi.org/10.1007/s00330-022-09237-w

DOI: https://doi.org/10.1007/s00330-022-09237-w