Abstract

Symptom checkers are increasingly used to assess new symptoms and navigate the health care system. The aim of this study was to compare the accuracy of an artificial intelligence (AI)-based symptom checker (Ada) and physicians regarding the presence/absence of an inflammatory rheumatic disease (IRD). In this survey study, German-speaking physicians with prior rheumatology working experience were asked to determine IRD presence/absence and suggest diagnoses for 20 different real-world patient vignettes, which included only basic health and symptom-related medical history. IRD detection rate and suggested diagnoses of participants and Ada were compared to the gold standard, the final rheumatologists’ diagnosis reported in the discharge summary. A total of 132 vignettes were completed by 33 physicians (mean rheumatology working experience 8.8 (SD 7.1) years). According to the top diagnosis, Ada’s diagnostic accuracy for IRD status was significantly higher than that of physicians (70 vs 53%, p = 0.002). Ada also listed the correct diagnosis more often than physicians, both as top diagnosis (54 vs 32%, p < 0.001) and among the top 3 diagnoses (59 vs 42%, p < 0.001). Work experience was not related to suggesting the correct diagnosis or IRD status. Confined to basic health and symptom-related medical history, the diagnostic accuracy of physicians was lower than that of an AI-based symptom checker. These results highlight the potential of using symptom checkers early during the patient journey and the importance of access to complete and sufficient patient information to establish a correct diagnosis.

Introduction

The arsenal of therapeutic options available to patients with inflammatory rheumatic diseases (IRD) has increased significantly in recent decades. The effectiveness of these therapeutics, however, largely depends on the time between symptom onset and initiation of therapy [1]. Despite various efforts [2], this diagnostic and resulting therapeutic delay could not be significantly reduced [2, 3]. On the contrary, due to a decreasing number of rheumatologists and an ageing population, this delay is expected to increase even further in the near future [6]. Up to 60% of new referrals to rheumatologists do not end up with a diagnosis of an inflammatory rheumatic disease [4, 5]. Additionally, illegible and incomplete paper-based referral forms further complicate non-standardized subjective triage decisions of rheumatology referrals.

Digital symptom assessment tools, such as symptom checkers (SC), are a major hope for shortening the time to a final diagnosis [7,8,9,10,11,12,13]. One of the most promising tools currently available is the artificial intelligence (AI)-based Ada, already used for more than 15 million health assessments in 130 countries [14]. In a case-vignette-based comparison to general practitioners (GPs) and other SC, Ada showed the greatest coverage of diagnoses (99%) and the highest diagnostic accuracy (71%), although it remained inferior to GP diagnostic accuracy (82%) [15]. The physician version of Ada could significantly reduce the time until diagnosis for rare rheumatic diseases [16] and, importantly, the majority of rheumatic patients would recommend it to other patients after having used it [5, 7]. Additionally, patients who had previously experienced diagnostic errors are more likely to use symptom checkers [17].

Regarding the diagnostic accuracy of SC, Powley et al. showed that only 4 out of 21 patients with immune-mediated arthritis were given a top diagnosis of rheumatoid arthritis or psoriatic arthritis [18]. In another study, 19.4% of individuals using an online self-referral screening system for axial spondyloarthritis were actually diagnosed with the disease by rheumatologists [19]. Recently, we reported the low diagnostic accuracy (sensitivity: 43%; specificity: 64%) of Ada regarding correct IRD detection [5] in a first randomized controlled trial in rheumatology. In this trial, the diagnostic accuracy of Ada, which is solely based on patient medical history, was compared to the final physician diagnosis based on medical history, laboratory results, imaging results and physical examination. Solely based on medical history, Ehrenstein et al. previously showed that even experienced rheumatologists could correctly detect IRD status only in 14% of newly presenting patients [20]. We hypothesized that the relatively low diagnostic accuracy of Ada and other SC is largely due to the information asymmetry in the previous trials (physicians having access to more information than SC) and that the diagnostic accuracy of SC would not be inferior to that of physicians if both were based on the same information input.

The objective of this study was hence to compare the diagnostic accuracy of an AI-based symptom checker app (Ada) and physicians regarding the presence/absence of an IRD, solely relying on basic health and symptom-related medical history.

Materials and methods

For this purpose, we used data from the interim analysis of the Evaluation of Triage Tools in Rheumatology (bETTeR) study [5].

The bETTeR dataset

bETTeR is an investigator-initiated, multi-center, randomized controlled trial (DRKS00017642) that recruited 600 patients newly presenting to three rheumatology outpatient clinics in Germany [5, 7]. Prior to seeing a rheumatologist, patients completed a structured symptom assessment using Ada and a second tool (Rheport). The final rheumatologists’ diagnosis, as reported in the discharge summary, was then used as the gold standard against which Ada’s and Rheport’s diagnostic suggestions were compared. Rheumatologists had no restrictions regarding medical history taking, ordering of laboratory markers, physical examination or usage of imaging to establish their diagnosis.

However, to enable a fairer comparison of the diagnostic performance of Ada and physicians, in the present study we reduced the information asymmetry by giving physicians access only to the information that was also available to Ada (basic health data and present, absent and unsure symptoms).

Description of AI-based symptom checker Ada

Ada (www.ada.com) is a free medical app, available in multiple languages, that has been used for more than 15 million health assessments in 130 countries [14]. Similar to a physician-based anamnesis, the chatbot starts by inquiring about basic health information and then continues to ask additional questions based on the symptoms entered. Once symptom assessment is finished, the user receives a structured summary report including basic health data and present, excluded and uncertain symptoms. Furthermore, a top disease suggestion (D1), up to 5 total disease suggestions (D5) and the respective likelihood and action advice are also presented to the user. The app is artificial-intelligence-based and constantly updated, and its disease coverage is not limited to rheumatology [15]. Median app completion time was 7 min [5].

Online survey

An anonymous survey was developed using Google Forms, and eligible rheumatologists in leadership positions were contacted to complete the survey and invite further eligible colleagues. Participants had to confirm that they were (1) physicians, (2) fluent in German with (3) previous work experience in rheumatology care. Participants not fulfilling these criteria were not eligible. Basic demographic information including age, sex, resident/consultant status, years of professional work experience and current workplace (University hospital/other hospital/rheumatology practice) was queried.

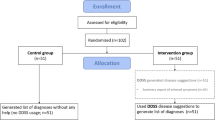

Participants then completed four patient vignettes. Based on the presented basic health data and the present, absent and unsure symptoms (see Fig. 1), participants were required to state whether an inflammatory rheumatic disease was present (yes/no), a top diagnosis (D1), up to two additional diagnostic suggestions (D3) and their perceived confidence in making a correct diagnosis.

Case vignettes

The sample size was based on the interim results from the bETTeR study [5]. Including all diagnostic suggestions (up to five), Ada correctly classified 89/164 (54%) patients as IRD or non-IRD and correctly detected 29/54 IRD patients, a sensitivity of 54%. In a study by Ehrenstein et al. [20], rheumatologists had a sensitivity of 73% for detection of an IRD (55/75 correctly detected). Based on these assumptions, we performed a sample size calculation using McNemar’s test for two dependent groups. With a power of 80% and a type 1 error of 5%, n = 113 completed case vignettes were needed to reject the null hypothesis that Ada and rheumatologists have equal diagnostic accuracy regarding IRD classification of the top diagnosis.
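The shape of such a calculation can be sketched with a common normal-approximation formula for paired proportions. This is only an illustration: the function name `mcnemar_sample_size` is hypothetical, and the assumption that the two raters are correct independently of each other (which fixes the discordant-pair probabilities) is ours, not the study's. Because the software and discordance assumptions used by the authors are not stated, the result need not reproduce n = 113.

```python
from math import ceil, sqrt
from statistics import NormalDist


def mcnemar_sample_size(p_a: float, p_b: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of pairs for a two-sided McNemar test.

    Assumption (not from the study): both raters are correct independently,
    so the discordant-pair probabilities follow from the marginals alone.
    """
    p10 = p_a * (1 - p_b)  # rater A correct, rater B wrong
    p01 = (1 - p_a) * p_b  # rater A wrong, rater B correct
    p_disc = p10 + p01     # probability that a pair is discordant
    delta = p10 - p01      # equals p_a - p_b
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    n = (z_alpha * sqrt(p_disc) + z_beta * sqrt(p_disc - delta ** 2)) ** 2 / delta ** 2
    return ceil(n)


# Sensitivities quoted in the text: 73% (rheumatologists) and 54% (Ada)
n_pairs = mcnemar_sample_size(0.73, 0.54)
print(n_pairs)
```

The required number of pairs grows quickly as the assumed share of discordant pairs increases, which is one reason different software packages can return different values for the same marginal proportions.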

To reflect a real-world IRD/non-IRD case mix, similar to the interim analysis [5] and a further observational study [4], we chose a mix of 40%/60% of IRD/non-IRD patient case vignettes. Additionally, 50% were “difficult” to diagnose cases. Difficult cases were defined as cases in which the referring physician suspected a different diagnosis than the gold standard diagnosis. The remaining 50% were “easy” to diagnose, with a final gold standard diagnosis matching the suspected diagnosis of the referring physician.

Based on these predefined requirements, a total of 20 clinical patient vignettes (Supplementary Material 1) were randomly chosen from the interim bETTeR dataset. This set of 20 clinical vignettes was divided into five sets of four clinical vignettes each, so that every participant completed four clinical vignettes.

Data analysis

Participant demographics were reported using descriptive statistics. All diagnostic suggestions were manually reviewed. If an IRD was among the top three (D3) or top five suggestions (Ada D5), respectively, D3 and D5 were summarized as IRD-positive (even if non-IRD diagnoses were also among the suggestions). Proportions of correctly classified patients were compared between rheumatologists and Ada using McNemar’s test for two dependent groups.
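McNemar’s test depends only on the discordant pairs, i.e. the vignette assessments for which exactly one of the two raters was correct. The following minimal sketch computes the exact two-sided variant; whether the study used the exact or the asymptotic version is not stated, the function name `mcnemar_exact` is hypothetical, and the discordant counts below are invented for illustration because they are not reported in the article.

```python
from math import comb


def mcnemar_exact(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value from the two discordant-pair counts."""
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, no evidence of a difference
    k = min(b, c)
    # Under H0 each discordant pair falls on either side with probability 0.5
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical discordant counts (NOT reported in the study)
only_ada_correct = 35
only_physician_correct = 12
p_value = mcnemar_exact(only_ada_correct, only_physician_correct)
print(f"p = {p_value:.4f}")
```

Concordant pairs (both correct or both wrong) do not enter the statistic, which is why the test suits paired designs such as comparing Ada and a physician on the same vignette.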

The relationship between years of work experience (general and in rheumatology) and correctly classifying a patient as having an IRD was assessed using generalized linear mixed models with a random intercept, a binary distribution and logit link function.
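One way to write down such a model is sketched below. The notation is ours, and the choice of the participant as the grouping unit for the random intercept is an assumption, since the text does not state it: $Y_{ij}$ indicates whether participant $i$ classified vignette $j$ correctly, $x_i$ is that participant's years of work experience, and $u_i$ is the random intercept.

```latex
\operatorname{logit}\,\Pr(Y_{ij} = 1) = \beta_0 + \beta_1 x_i + u_i,
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2),
\qquad \mathrm{OR}_{\text{per year}} = e^{\beta_1}
```

Under this parameterization, an odds ratio close to 1 with a 95% confidence interval that includes 1 indicates no detectable association between experience and a correct classification.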

Results

Participant demographics

A total of 132 vignettes were completed by 33 physicians between September 24, 2021, and October 14, 2021. Table 1 displays the participant demographics. Mean age was 39 years (27–57 years, standard deviation (SD) 8.2), 15 (46%) participants were female. 22 (67%) were board-certified specialists. An equal number of participants was working at a rheumatology practice or in a university hospital (both n = 16, 49%). Mean professional experience and experience in rheumatology care was 12 (SD 7.4) and 8.8 (SD 7.1) years, respectively.

Comparison of diagnostic accuracy

Correct classification as inflammatory rheumatic disease

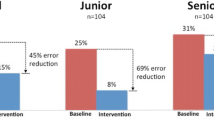

According to the top diagnosis, Ada classified IRD status (IRD/non-IRD) correctly significantly more often than physicians, 93/132 (70%) vs 70/132 (53%), p = 0.002, and numerically more often according to the top 3 diagnoses listed, 78/132 (59%) vs 66/132 (50%), p = 0.011. Regarding the top diagnosis, this resulted in a sensitivity and specificity of Ada and physicians of 71 and 60%, compared to 64 and 47%, see Table 2. Figure 2 depicts the proportion of correctly identified IRD status from Ada and physicians by number of included diagnoses and case difficulty according to IRD-status from the gold standard diagnosis.
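The reported percentages follow directly from the counts given above. As a small arithmetic check, using only the counts stated in the text (the helper name `percent_correct` is ours):

```python
TOTAL_VIGNETTES = 132

# Correctly classified IRD status, counts as reported in the text
correct_counts = {
    ("Ada", "top 1"): 93,
    ("Physicians", "top 1"): 70,
    ("Ada", "top 3"): 78,
    ("Physicians", "top 3"): 66,
}


def percent_correct(correct: int, total: int = TOTAL_VIGNETTES) -> int:
    """Share of correctly classified vignettes, rounded to a whole percent."""
    return round(100 * correct / total)


percentages = {key: percent_correct(n) for key, n in correct_counts.items()}
for (rater, scope), pct in percentages.items():
    print(f"{rater:<10} {scope}: {pct}%")
```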

Work experience was not related to correctly detecting IRD among the top 3 diagnoses for rheumatologists (Odds ratio (OR) per year of work experience 1.01; 95% CI 0.94; 1.06), neither were years of experience working in rheumatology (OR 0.99; 95% CI 0.93; 1.06). The mean self-perceived probability of a correct diagnosis was 60% for case vignettes in which the rheumatologists were able to detect the correct IRD status within the top 3 diagnoses and 55% for the case vignettes in which they were not.

Correct final diagnosis

Ada listed the correct diagnosis more often than physicians, both as top diagnosis, 71/132 (54%) vs 42/132 (32%), p < 0.001, and among the top 3 diagnoses, 78/132 (59%) vs 55/132 (42%), p < 0.001. Supplementary Fig. 1 lists the most common top diagnosis suggested by participants per case. Figure 3 depicts the percentage of correctly classified patients reported by Ada and physicians by number of considered diagnoses and case difficulty according to the final diagnosis as gold standard. Probabilities for correct top diagnoses of physicians and Ada were mostly meaningfully higher than those of incorrect diagnoses, although Ada reported a higher probability for incorrect diagnoses in difficult cases, see Fig. 4.

Work experience was not related to suggesting the correct diagnosis among the top 3 for rheumatologists (Odds ratio (OR) per year of work experience 0.98; 95% CI 0.93; 1.03), neither were years of experience working in rheumatology (OR 0.97; 95% CI 0.93; 1.03).

The mean self-perceived probability of a correct diagnosis was 61% for case vignettes in which the rheumatologists were able to detect the correct diagnosis among the top 3 diagnoses and 55% for the case vignettes in which they were not.

Discussion

In this study, we compared the diagnostic accuracy of physicians with clinical experience in rheumatology to Ada, an AI-based symptom checker, in situations of diagnostic uncertainty, i.e. solely relying on basic health and symptom-related medical history. This situation reflects the current onboarding process to rheumatology specialist care and the growing necessity to triage patients with IRD from those with non-inflammatory symptoms. Rheumatologists often have access to only limited information (no imaging results, no laboratory parameters), which hampers standardized, objective triage decisions on referrals and results in non-transparent and potentially wrong triage decisions. Digital referral forms are rarely used [2], so the handwritten information is often also poorly legible.

Our hypothesis was that Ada would not be inferior to physicians. Beyond this, and to the best of our knowledge for the first time, we showed a significant superiority of a symptom checker compared to physicians regarding correct IRD detection (70 vs 53%, p = 0.002) and actual diagnosis (54 vs 32%, p < 0.001). This superiority of Ada was independent of case difficulty and IRD status.

In line with the results by Ehrenstein et al. [20], we could show the high diagnostic uncertainty of physicians when deprived of information exceeding medical history, resulting in a low diagnostic accuracy. Additionally, we were able to show that physicians and Ada are mostly able to correctly assess the likelihood of a correct diagnosis (Fig. 4). Interestingly, Ada reported a higher probability for incorrect diagnoses in difficult cases.

Our results highlight the potential of supporting digital diagnostic tools and the need for a maximum of available patient information to inform adequate triaging of rheumatic patients. Electronically available patient information would reduce data redundancy and increase the readability and completeness of data.

We think that, similarly to increasing the diagnostic accuracy of rheumatologists [20], an essential step to improve the diagnostic accuracy of symptom checkers in rheumatology would be to include laboratory parameters (e.g. elevated CRP, presence of auto-antibodies) and imaging results (e.g. presence of sacroiliitis for axial spondyloarthritis). To improve triage decisions, a symptom-based checklist of mandatory additionally required information could be made available to referring physicians. Routine measurement of the level of diagnostic (un)certainty could help to standardize symptom-based test-ordering decisions and continuously improve the triage service [21].

Surprisingly, we could also show that the diagnostic accuracy of physicians did not increase with years of clinical experience (general or in rheumatology). In contrast, in a previous study with medical students, we could show that years of medical studies were the most important factor for a correct diagnosis and more helpful than using Ada for diagnostic support [22]. This could be due to the fact that rheumatologists only had access to Ada’s summary report and could not actively interact with the patient. Additionally, that previous study showed that the probability stated by Ada for an incorrect diagnostic suggestion is often higher than for a correct diagnostic suggestion, in line with the results for difficult cases in the present study.

This study has several limitations. Although vignettes were carefully selected to include cases of various difficulty and a representative sample of IRD cases, the sample size remains limited and further studies are needed. Importantly, previous studies indicated that the diagnostic accuracy of Ada is highly user- and disease-dependent [22, 23]. Furthermore, Ada had the advantage of interacting with patients, whereas physicians only had access to Ada’s summary reports and could not interact with patients or ask additional questions. To address these limitations, we are currently prospectively assessing the diagnostic accuracy of Ada when used by patients, compared to physicians limited to medical history taking (with no access to Ada’s results). The power calculation and the inclusion of physicians with varying levels of experience in rheumatology care and different working sites strengthen the results of this study.

Conclusion

Limited to basic health and symptom-related medical history, the diagnostic accuracy of physicians was lower than that of an AI-based symptom checker, highlighting the importance of access to complete and sufficient information and the potential of digital support to make accurate triage and diagnostic decisions in rheumatology.

Data availability

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

References

Burgers LE, Raza K, van der Helm-van Mil AH (2019) Window of opportunity in rheumatoid arthritis - definitions and supporting evidence: from old to new perspectives. RMD Open 5:e000870. https://doi.org/10.1136/rmdopen-2018-000870

Benesova K, Lorenz H-M, Lion V et al (2019) Early recognition and screening consultation: a necessary way to improve early detection and treatment in rheumatology? : Overview of the early recognition and screening consultation models for rheumatic and musculoskeletal diseases in Germany. Z Rheumatol 78:722–742. https://doi.org/10.1007/s00393-019-0683-y

Sørensen J, Hetland ML (2015) Diagnostic delay in patients with rheumatoid arthritis, psoriatic arthritis and ankylosing spondylitis: results from the Danish nationwide DANBIO registry. Ann Rheum Dis 74:e12–e12. https://doi.org/10.1136/annrheumdis-2013-204867

Feuchtenberger M, Nigg AP, Kraus MR, Schäfer A (2016) Rate of proven rheumatic diseases in a large collective of referrals to an outpatient rheumatology clinic under routine conditions. Clin Med Insights Arthritis Musculoskelet Disord 9:181–187. https://doi.org/10.4137/CMAMD.S40361

Knitza J, Mohn J, Bergmann C et al (2021) Accuracy, patient-perceived usability, and acceptance of two symptom checkers (Ada and Rheport) in rheumatology: interim results from a randomized controlled crossover trial. Arthritis Res Ther 23:112. https://doi.org/10.1186/s13075-021-02498-8

Krusche M, Sewerin P, Kleyer A et al (2019) Specialist training quo vadis? Z Rheumatol 78:692–697. https://doi.org/10.1007/s00393-019-00690-5

Knitza J, Muehlensiepen F, Ignatyev Y, et al (2022) Patient’s Perception of Digital Symptom Assessment Technologies in Rheumatology: Results From a Multicentre Study. Frontiers in Public Health 10:

Knevel R, Knitza J, Hensvold A, et al Rheumatic? - A Digital Diagnostic Decision Support Tool for Individuals Suspecting Rheumatic Diseases: A Multicenter Pilot Validation Study. Front Med (Lausanne) in press

Moens HJ, van der Korst JK (1991) Computer-assisted diagnosis of rheumatic disorders. Semin Arthritis Rheum 21:156–169. https://doi.org/10.1016/0049-0172(91)90004-j

Knitza J, Krusche M, Leipe J (2021) Digital diagnostic support in rheumatology. Z Rheumatol. https://doi.org/10.1007/s00393-021-01097-x

Alder H, Michel BA, Marx C et al (2014) Computer-based diagnostic expert systems in rheumatology: where do we stand in 2014? Int J Rheumatol 2014:672714. https://doi.org/10.1155/2014/672714

Knitza J, Knevel R, Raza K et al (2020) Toward earlier diagnosis using combined ehealth tools in rheumatology: the joint pain assessment scoring tool (JPAST) project. JMIR Mhealth Uhealth 8:e17507. https://doi.org/10.2196/17507

Semigran HL, Linder JA, Gidengil C, Mehrotra A (2015) Evaluation of symptom checkers for self diagnosis and triage: audit study. BMJ 351:h3480. https://doi.org/10.1136/bmj.h3480

Butcher M Ada Health built an AI-driven startup by moving slowly and not breaking things. In: TechCrunch. https://social.techcrunch.com/2020/03/05/move-slow-and-dont-break-things-how-to-build-an-ai-driven-startup/. Accessed 29 Jan 2021

Gilbert S, Mehl A, Baluch A et al (2020) How accurate are digital symptom assessment apps for suggesting conditions and urgency advice? A clinical vignettes comparison to GPs. BMJ Open 10:e040269. https://doi.org/10.1136/bmjopen-2020-040269

Ronicke S, Hirsch MC, Türk E et al (2019) Can a decision support system accelerate rare disease diagnosis? Evaluating the potential impact of Ada DX in a retrospective study. Orphanet J Rare Dis 14:69. https://doi.org/10.1186/s13023-019-1040-6

Meyer AND, Giardina TD, Spitzmueller C et al (2020) Patient perspectives on the usefulness of an artificial intelligence-assisted symptom checker: cross-sectional survey study. J Med Internet Res 22:e14679. https://doi.org/10.2196/14679

Powley L, McIlroy G, Simons G, Raza K (2016) Are online symptoms checkers useful for patients with inflammatory arthritis? BMC Musculoskelet Disord 17:362. https://doi.org/10.1186/s12891-016-1189-2

Proft F, Spiller L, Redeker I et al (2020) Comparison of an online self-referral tool with a physician-based referral strategy for early recognition of patients with a high probability of axial spa. Semin Arthritis Rheum 50:1015–1021. https://doi.org/10.1016/j.semarthrit.2020.07.018

Ehrenstein B, Pongratz G, Fleck M, Hartung W (2018) The ability of rheumatologists blinded to prior workup to diagnose rheumatoid arthritis only by clinical assessment: a cross-sectional study. Rheumatology (Oxford) 57:1592–1601. https://doi.org/10.1093/rheumatology/key127

Bhise V, Rajan SS, Sittig DF et al (2018) Defining and measuring diagnostic uncertainty in medicine: a systematic review. J Gen Intern Med 33:103–115. https://doi.org/10.1007/s11606-017-4164-1

Knitza J, Tascilar K, Gruber E et al (2021) Accuracy and usability of a diagnostic decision support system in the diagnosis of three representative rheumatic diseases: a randomized controlled trial among medical students. Arthritis Res Ther 23:233. https://doi.org/10.1186/s13075-021-02616-6

Jungmann SM, Klan T, Kuhn S, Jungmann F (2019) Accuracy of a Chatbot (Ada) in the diagnosis of mental disorders: comparative case study with lay and expert users. JMIR Form Res 3:e13863. https://doi.org/10.2196/13863

Acknowledgements

We thank all the patients and physicians who participated in this study. The present work was performed to fulfill the requirements for obtaining the degree “Dr. med.” for Markus Gräf and is part of the PhD thesis of JK (AGEIS, Université Grenoble Alpes, Grenoble, France).

Funding

Open Access funding enabled and organized by Projekt DEAL. This study was partially supported by Novartis Pharma GmbH, Nürnberg, Germany (Grant number: 33419272). Novartis employees were not involved in the design and conduct of the study. They did not contribute to the collection, analysis, and interpretation of data. They did not support the authors in the development of the manuscript. This work was supported by the Deutsche Forschungsgemeinschaft (DFG—FOR 2886 PANDORA—B01/A03/Z01 to GS and AK).

Author information

Contributions

Conceptualization: JK, MG, JC, and NV; methodology: JK, MG, JC, JL, MK, MW, SK, JM, AH, JH, PK, SK, PA, NV, and GS; formal analysis: JK, MG, and JC; data curation: JK, MG, and JC; writing – original draft preparation: JK, MG, and JC; writing – review and editing: JK, MG, DS, JC, JL, MK, MW, SK, JM, AH, JH, PK, SK, PA, NV, and GS; visualization: JC; supervision: GS and NV; funding acquisition: JK, MW, and AH. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest

JK has received research support from Novartis Pharma GmbH. SK and MW are members of RheumaDatenRhePort. JK is a member of the scientific board of RheumaDatenRhePort.

Ethical approval

The study was approved by the ethics committee of the medical faculty of the University of Erlangen-Nürnberg, Germany (106_19 Bc), on 23 July 2019. Informed consent was obtained from all subjects involved in the study. Written informed consent has been obtained from the patients to publish this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gräf, M., Knitza, J., Leipe, J. et al. Comparison of physician and artificial intelligence-based symptom checker diagnostic accuracy. Rheumatol Int 42, 2167–2176 (2022). https://doi.org/10.1007/s00296-022-05202-4

DOI: https://doi.org/10.1007/s00296-022-05202-4