Abstract

In economic applications, the behavior of objects (e.g., individuals, firms, or households) is often modeled as a function of microeconomic and/or macroeconomic conditions. While macroeconomic conditions are common to all objects and change only over time, microeconomic conditions are object-specific and thus vary both among objects and through time. The simultaneous modeling of microeconomic and macroeconomic conditions has proven to be extremely difficult for these applications due to the mismatch of dimensions, potential interactions, and the high number of parameters to estimate. By marrying recurrent neural networks with conditional factor models, we propose a new white-box machine learning method, the recurrent double-conditional factor model (RDCFM), which allows for the modeling of the simultaneous and combined influence of micro- and macroeconomic conditions while being parsimoniously parameterized. Due to the low degree of parameterization, the RDCFM generalizes well and estimation remains feasible even if the time-series and the cross-section are large. We demonstrate the suitability of our method using an application from the financial economics literature.

1 Introduction

Modeling how the behavior of objects, for example, individuals, households, or firms (\(\varvec{y}_t\)) depends on macroeconomic (\(\varvec{z}_t\)) and/or microeconomic (\(\varvec{C}_{t}\)) conditions is a common interest in business studies, economics, and other research areas. Macroeconomic conditions, in a broader sense, are environmental variables that are common to all objects and thus have only a time-series dimension, while microeconomic conditions are object-specific and have both a time-series and a cross-sectional dimension. While the former affect the time-series variability of all objects simultaneously—although to varying degrees—the latter drive the cross-sectional variation among the objects as well as their individual temporal evolution, provided that these object-specific conditions change. A well-known example of such applications in academic research and business practice is the prediction of customer shopping behavior (e.g., Scholdra et al. 2021). For example, Ma et al. (2011) analyze the role of macroeconomic variables \(\varvec{z}_t\) (e.g., gasoline price and gross domestic product (GDP) growth), and Kamakura and Yuxing Du (2012) examine how the microeconomic conditions (i.e., characteristics) of households \(\varvec{C}_t\) (e.g., household’s net income or demographics) explain dispersion in a household’s total spending (\(\varvec{y}_t\)). Another application that is frequently studied in the accounting, finance, or operations research area is the prediction of financial distress or bankruptcy (e.g., Liang et al. 2016; Abid et al. 2022). Hernandez Tinoco and Wilson (2013) study how the probability of a firm becoming financially distressed or bankrupt (\(\varvec{y}_t\)) depends on macroeconomic \(\varvec{z}_t\) (e.g., retail price index or treasury bill rates) and microeconomic \(\varvec{C}_t\) (e.g., liabilities-to-assets and equity price) variables. 
The list of further potential applications is long; therefore, we summarize further examples from various research fields in Table 1.

Table 1 shows that modeling the influences of both macroeconomic and microeconomic variables on response variables is a common problem in a wide variety of economic applications. The mismatch between the dimensions of the macroeconomic and microeconomic variables and the potential existence of interaction effects pose challenges in modeling. In general, there are two major approaches to modeling \(\varvec{y}_{t}\), namely cross-sectional and time-series approaches. A common methodological choice to model a large cross-section over time is to stack cross-sectional and time-series observations into panel matrices and estimate the relationships using methods such as pooled ordinary least squares (POLS) or feed-forward neural networks (FFNNs). Potential interactions of micro and macro variables are accounted for by creating interaction terms. However, this approach faces two drawbacks. First, by interacting macro- and microeconomic variables, the dimensionality increases, which requires the estimation of many parameters (see Footnote 1). Second, by stacking the data set, POLS or FFNNs treat the entire data set as a cross-section; thus, no temporal dynamics can be accounted for. In time-series analyses, methods such as vector autoregressive (VAR) models (Sims 1980) or recurrent neural networks (RNNs) (Rumelhart et al. 1986b; Elman 1990) are used to capture the temporal dependencies in the data. However, both approaches suffer considerably from the curse of dimensionality, meaning that only a few response variables and no microeconomic conditions can be considered (see Footnote 2). Accordingly, it is desirable to have a method that can extract the relevant time-series and cross-sectional information from a potentially large number of macroeconomic and microeconomic variables, respectively. Furthermore, the method should be able to model the simultaneous and combined influence of both types of information and should also be able to capture temporal dynamics of the macroeconomic variables.
At the same time, the dimensionality of the parameter space should not be inflated, so that interpretation and estimation remain feasible and the model generalizes better (i.e., achieves better out-of-sample performance).

In this paper, we present a novel approach that models the time-series and the cross-section simultaneously, satisfying the above-mentioned desired properties. Specifically, we assume that the response variables \(\varvec{y}_{t}\) follow a factor structure with factors \(\varvec{f}_{t}\) and factor loadings \(\varvec{B}_{t}\). The time-series factors represent common state variables that drive the common temporal variation in the response variables and the loadings explain the “reaction” of each response to changes in the factors. Accordingly, factor loadings explain the cross-sectional dispersion among the responses. Both factors and factor loadings are unobservable; however, we assume that macroeconomic variables \(\varvec{z}_{t}\) are informative for identifying the factors, which are also assumed to follow a VAR process. Furthermore, microeconomic variables \(\varvec{C}_{t}\) are assumed to be informative for explaining the differences in factor sensitivities. Thus, our model is based on three model features, each of which has been successfully applied in the literature on its own. Because we condition both factors and factor loadings on observable variables and we allow for an autonomous dynamic factor process, we call our model the recurrent double-conditional factor model (RDCFM).

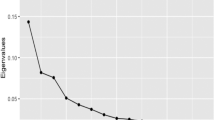

Each of the three model features can be motivated both economically and technically. We discuss the advantages of the two perspectives throughout the paper and, therefore, emphasize only the central perspectives at this point. The first model feature represents the basic element of our model and is inspired by conditional factor models, as, for example, studied in Ferson and Harvey (1991, 1993) or Maio (2013). That is, we condition unobservable factors \(\varvec{f}_{t}\), which are assumed to drive the common time-series variation in the response variables, on exogenous macroeconomic variables \(\varvec{z}_{t}\). This compresses the relevant information from a potentially large number of noisy macroeconomic conditions into a small number of common factors. In comparison with other dimensionality reduction approaches such as principal component analysis (PCA), the assumption that factors depend on exogenous variables drastically reduces the number of parameters to estimate and also makes the factors easier to interpret.

The second model feature accounts for a potential autonomous evolution of the conditional factors \(\varvec{f}_{t}\) through time by allowing them to follow a VAR process. This turns static conditional factor models into dynamic factor models, which are particularly popular in macroeconomic applications (e.g., Kose et al. 2003; Del Negro and Otrok 2008; Jungbacker et al. 2011; Bai and Wang 2015). The dynamics of the factors are achieved by marrying conditional factor models with RNNs, which have been proven to effectively capture the temporal evolution in dynamic systems (Rumelhart et al. 1986b; Elman 1990). As a result, the conditional factors have internal memory that makes it possible to maintain information on current macroeconomic conditions over several periods. From a technical perspective, the combination of a conditional factor model and an RNN allows for efficient parameter estimation using well-established methods from the machine learning literature.

The third model feature allows factor loadings \(\varvec{B}_{t}\) to be conditional on observed microeconomic conditions \(\varvec{C}_{t}\), as, for example, suggested in Vermeulen et al. (1993) and Avramov and Chordia (2006). More recently, Kelly et al. (2019a) proposed instrumented principal component analysis (IPCA), a PCA variant that also assumes conditional factor loadings. By conditioning factor loadings on microeconomic variables, we model a response’s “reaction” to changes in the state variables (i.e., factors) as a function of its microeconomic conditions. The estimation of conditional factor loadings has several advantages. First, in large cross-sections, modeling loadings as a function of responses’ microeconomic conditions reduces the number of parameters to estimate compared to unconditional loadings. This, on the one hand, makes model estimation feasible in high-dimensional datasets and, on the other hand, increases the generalizability of the model. Second, if microeconomic conditions change over time, the factor loadings also become time-varying, avoiding parameter-rich specifications of time-varying loadings (e.g., Bali et al. 2009, 2017; Su and Wang 2017). Third, as opposed to unconditional loadings, estimating conditional factor loadings does not require a large time-series, as only a response’s current microeconomic conditions need to be known. Last, conditional loadings make it possible to analyze why loadings differ across responses. While unconditional loadings are “black boxes”, modeling them instead as a function of microeconomic conditions allows one to analyze which variables account most for cross-sectional variation.

In a broader sense, the RDCFM can be seen as a framework that nests a family of conditional factor models, all of which are applicable to both regression and classification problems. Depending on the specific application at hand, the model features can be enabled and disabled to obtain another model that may be more suited to the respective research application. However, the only empirical requirement is that the response variables \(\varvec{y}_{t}\) and macroeconomic conditions \(\varvec{z}_{t}\) are observed over time. At the model’s lowest level, factors are assumed to be conditional on macroeconomic variables, and the additional model features (i.e., VAR process, conditional factor loadings) are deactivated. Accordingly, factor loadings are assumed to be unconditional. We refer to this model as conditional factor model (CFM). Additionally, one can allow the factors to follow a VAR process to obtain the recurrent conditional factor model (RCFM). When estimating conditional factor loadings, the CFM and RCFM become the double-conditional factor model (DCFM) and RDCFM, respectively. The model feature to estimate conditional loadings is particularly interesting when analyzing a large cross-section because this drastically reduces the number of parameters to estimate. None of these modifications leads to a substantial change in parameter estimation, making this a one-size-fits-all estimator. Depending on the specific application, a model feature may be relevant or irrelevant. Using the Clark and West (2007) test, we show how the relevant model features can be identified by researchers. In summary, no a priori considerations are required to identify the most suitable model for the specific application at hand. As a result, the RDCFM and its variants can be used in numerous regression and classification applications (see Table 1) and are thus of interest to researchers in various disciplines.

As a hybrid type of a conditional factor model and RNN, the RDCFM itself can also be considered a machine learning method; however, it contributes to the literature on “white-box” machine learning (Loyola-Gonzalez 2019; Nagel 2021) because it is characterized by a high degree of interpretability. We demonstrate this using an application from the financial economics literature, namely, the prediction of stock returns. We choose this application because the dataset (1) is publicly available, (2) has been widely used in the finance literature, allowing us to directly compare the performance of the RDCFM with that of existing factor models, and (3) is characterized by a large time-series and cross-section, which makes this an ideal application for the RDCFM. Specifically, we condition the factors on empirical asset pricing factors \(\varvec{z}_t\) (e.g., Fama and French 2015) and the factor loadings on stock characteristics \(\varvec{C}_t\), such as financial indicators of firms (e.g., Banz 1981; Rosenberg et al. 1985). First, using the Clark and West (2007) test, we show how to identify the relevant model features (i.e., conditional factors, VAR process, and conditional factor loadings). This not only provides transparency into the model’s inner workings but also allows for the identification of crucial information sources driving the predictions. For the application at hand, we find that all model features contribute to the prediction of stock returns. Second, having identified the most appropriate model for the respective application, we show how to identify the relevant drivers within a specific model feature. That is, we show how to assess the importance and statistical significance of the macroeconomic and microeconomic variables. Furthermore, using Granger (1969) causality and impulse-response analysis, we analyze how time-series information is processed in the model. 
Within our application, we find that only a few empirical asset pricing factors significantly contribute to model performance, while many asset characteristics explain loadings on conditional factors. Impulse-response analysis reveals that lagged information in empirical asset pricing factors captures relevant information for future returns that is neglected by previous models. Last, we also find that the RDCFM outperforms the prevailing models in the literature as a result of the large amount of information being processed while being parsimoniously parameterized. In comparison, the RDCFM estimates only 0.5% of the number of parameters estimated in an RNN or in PCA, and only 9% of the number of parameters required for estimating IPCA.

The remainder of this paper is organized as follows. Section 2 describes the fusion of an RNN and conditional factor models to form the RDCFM and discusses the estimation of the model parameters. In Sect. 3, we present our empirical application. Finally, Sect. 4 concludes the paper.

2 Methodology

2.1 Recurrent double-conditional factor model

Assume that the realizations of the response variables \(i = 1, \dots , N\) at time \(t = 1, \dots , T\) follow a low-dimensional factor structure with \(j = 1,\dots , K\) common factors:

\[ y_{i,t} = \sum _{j=1}^{K} \beta _{i,j,t} \, f_{j,t} + e_{i,t}, \tag{1} \]

where \(y_{i,t}\) denotes the outcome of the i-th response variable at time t, \(f_{j,t}\) denotes the realization of the j-th factor at time t, \(\beta _{i,j,t}\) is the individual sensitivity of the i-th response variable to the j-th factor at time t, and \(e_{i,t}\) represents the component of \(y_{i,t}\) that is not captured by the low-dimensional factor structure for which we assume that \(\text {COV}\left( e_{i,t},f_{j,t}\right) = 0 \; \; \forall i,j,t\). Note that, for simplicity and without loss of generality, we drop the intercept in Eq. (1) and in our subsequent derivations. However, the intercept can easily be included by adding a constant factor. In matrix notation, we can rewrite Eq. (1) as follows:

\[ \varvec{y}_t = \varvec{f}_t \varvec{B}_t^{'} + \varvec{e}_t, \tag{2} \]

with \(\varvec{y}_t\) denoting a \(1 \times N\) vector of the response variables, \(\varvec{f}_t\) is a \(1 \times K\) vector of latent factors, and \(\varvec{B}_t\) denotes the \(N \times K\) matrix of factor loadings at time t. \(\varvec{e}_t\) is a \(1 \times N\) vector of residuals. The factors \(\varvec{f}_{t}\) are common to all objects; however, the sensitivities to these factors \(\varvec{B}_{t}\) differ among them. Accordingly, the factors capture global shocks that drive the common time-series variations of all responses and the factor loadings explain the cross-sectional differences among them. The true factors and factor loadings are not observable in reality; however, we assume that we observe two sets of variables, i.e., macroeconomic \(\varvec{z}_t\) and microeconomic \(\varvec{C}_t\) variables, that are informative about either the factors or factor loadings, respectively. We first describe the estimation of the conditional factors and proceed with notes on modeling conditional factor loadings.

The basic building block of our model is the extraction of latent factors from a potentially large number of macroeconomic conditions (e.g., Ferson and Harvey 1991, 1993; Maio 2013). Denote \(\varvec{z}_t\) as a \(1 \times L\) dimensional vector of L macroeconomic variables. For the sake of clarity, we implicitly assume that \(\varvec{z}_t\) may contain a constant (i.e., constant environment) and variables of any lag polynomial, that is, \(\varvec{z}_t = [\varvec{z}_{t}^{*}, \varvec{z}_{t-1}^{*},\ldots , \varvec{z}_{t-p}^{*}]\), where p is the maximum lag order and \(\varvec{z}_{t}^{*}\) denotes raw, unlagged macroeconomic variables. We assume that \(\varvec{z}_t\) is informative about the factor realizations \(\varvec{f}_t\), i.e., the factors are conditional on \(\varvec{z}_t\). Accordingly, the factors are linear combinations of \(\varvec{z}_t\), as described in Eq. (3):

\[ f_{j,t} = \sum _{l=1}^{L} \omega _{l,j} \, z_{l,t}, \tag{3} \]

where \(f_{j,t}\) denotes the j-th factor at time t, conditional on factor instruments \(\varvec{z}_t\), and \(z_{l,t}\) is the l-th element of \(\varvec{z}_t\). The term \(\omega _{l,j}\) represents the time-invariant coefficient of the l-th factor instrument on the j-th factor. In matrix notation, we can write:

\[ \varvec{f}_t = \varvec{z}_t \varvec{\Omega }, \tag{4} \]

with \(\varvec{\Omega }\) denoting an \(L \times K\) matrix of coefficients of the factor instruments. The elements of \(\varvec{\Omega }\) are the only free parameters for latent factor extraction, and we choose them such that the factor structure in Eq. (2) best explains the response variables. For details on the estimation procedure, we refer to Sect. 2.3. Technically, the construction of the factors can be conceptualized as dimensionality reduction from a potentially large number of (noisy) macroeconomic predictors to a smaller number of (denoised) macroeconomic state variables that capture only the relevant information of the macroeconomic variables.
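The construction of the instrument vector and the static factor extraction can be illustrated with a minimal NumPy sketch. The helper names `build_instruments` and `static_factors` and the toy dimensions are assumptions made for illustration only; the coefficient matrix is drawn at random here, whereas in the model it is estimated.

```python
import numpy as np

def build_instruments(z_raw, p, add_constant=True):
    """Stack z*_t, z*_{t-1}, ..., z*_{t-p} side by side.

    z_raw has shape (T, L_raw). The first p periods are dropped because
    their lags are unavailable, so the result has T - p rows.
    """
    T = z_raw.shape[0]
    blocks = [z_raw[p - lag : T - lag] for lag in range(p + 1)]
    z = np.hstack(blocks)
    if add_constant:
        # Constant instrument ("constant environment") as the first column.
        z = np.hstack([np.ones((T - p, 1)), z])
    return z

def static_factors(z, Omega):
    """Static conditional factors f_t = z_t @ Omega, for all t at once."""
    return z @ Omega

# Toy example: T = 5 periods, 2 raw macro variables, one lag, K = 2 factors.
z_raw = np.arange(10.0).reshape(5, 2)
z = build_instruments(z_raw, p=1)            # shape (4, 5)
rng = np.random.default_rng(0)
Omega = rng.normal(size=(z.shape[1], 2))     # illustrative values, not estimates
f = static_factors(z, Omega)                 # shape (4, 2)
```

Each row of `f` is a linear combination of the instruments in the same row of `z`, so the only free parameters are the entries of `Omega`.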

In time-series analyses, e.g., macroeconomics, dynamic factor models have proven to be superior to static models; therefore, there have been many attempts to allow for VAR factors (e.g., Kose et al. 2003; Del Negro and Otrok 2008; Jungbacker et al. 2011; Bai and Wang 2015). By estimating dynamic factors, temporal dependencies in the data are captured and the model can easily be iterated into the future to obtain more accurate predictions. We therefore transform the static conditional factor representation in Eq. (4) into a dynamic representation by allowing the factors to follow an autonomous dynamic process. Inspired by research on RNNs (Rumelhart et al. 1986b; Elman 1990), we implement dynamic factors as described in Eq. (5):

\[ \varvec{f}_t = \varvec{z}_t \varvec{\Omega } + \tanh \left( \varvec{f}_{t-1}\right) \varvec{AR}, \tag{5} \]

with \(\varvec{AR}\) being a matrix of VAR(1) coefficients of dimension \(K \times K\). Accordingly, the factor realizations are conditional on exogenous time-series variables and on their own past realizations, enabling information to be persistent for multiple periods. The tanh transformation of past factor realizations ensures that the VAR process is stable (i.e., produces stationary factors). This avoids imposing constraints on the coefficients of the \(\varvec{AR}\) matrix, thus increasing computational efficiency, and allows the model to capture complex nonlinear temporal dependencies. The factor specification in Eq. (5) links the conditional factor model to RNNs (e.g., Rumelhart et al. 1986b; Elman 1990), which is a key property of the RDCFM for efficient parameter optimization. We discuss this relationship in Sect. 2.2.
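The role of the tanh transformation can be illustrated with a small simulation. The sketch below assumes the recursion applies tanh to the lagged factors before multiplying by the autoregressive matrix; the function name `iterate_factors`, the random inputs, and the deliberately explosive coefficient matrix are illustrative assumptions, not estimates from the paper.

```python
import numpy as np

def iterate_factors(static_part, AR, f0, squash=True):
    """Iterate f_t = static_part[t] + g(f_{t-1}) @ AR.

    g is tanh when squash=True and the identity otherwise (a plain linear VAR).
    static_part stands in for z_t @ Omega and has shape (T, K).
    """
    f_prev, path = f0, []
    for s in static_part:
        lagged = np.tanh(f_prev) if squash else f_prev
        f_prev = s + lagged @ AR
        path.append(f_prev)
    return np.array(path)

rng = np.random.default_rng(0)
T, K = 200, 2
static_part = rng.normal(size=(T, K))
AR = np.array([[1.5, 0.0], [0.0, 1.5]])   # eigenvalues outside the unit circle
f0 = np.zeros(K)

bounded = iterate_factors(static_part, AR, f0, squash=True)
explosive = iterate_factors(static_part, AR, f0, squash=False)
print(np.abs(bounded).max(), np.abs(explosive).max())
```

Because tanh maps into the interval (-1, 1), the contribution of the lagged factors is bounded by the absolute column sums of the coefficient matrix regardless of its eigenvalues, whereas the linear recursion with the same matrix diverges.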

Frequently, estimates for factor loadings are obtained via an ordinary least squares (OLS) regression of the response variable on the factors, which implicitly assumes that loadings do not change over time. However, when modeling dynamic systems, this assumption might not hold. Some attempts in the literature to dynamically model factor loadings are presented in Del Negro and Otrok (2008), Bali et al. (2009, 2017). However, these approaches require the estimation of many parameters, increasing the complexity of the model. We follow the approach proposed in Vermeulen et al. (1993), Kelly et al. (2019a, b) and condition factor loadings on microeconomic conditions (i.e., characteristics of the responses) and thus allow theoretical or empirical evidence to be incorporated into the modeling of factor loadings. For example, Kose et al. (2003) find that small and volatile countries are less sensitive to fluctuations in a global business cycle compared to large and stable countries. At the firm level, Fama and French (1993) find that small stocks with high book-to-market equity ratios have higher loadings on a distress factor than large stocks with low book-to-market equity ratios. By incorporating such information directly into the modeling of factor loadings, we can obtain more precise estimates and are also able to explain differences in factor loadings in a model-driven manner. Furthermore, we obtain time-varying loadings if the conditioning variables evolve over time.

Denote \(\varvec{C}_t\) as an \(N \times M\) matrix of characteristics of the responses at time t with M denoting the number of characteristics. As for the factor instruments, we implicitly assume that \(\varvec{C}_t\) may contain characteristics of any lag polynomial and a constant characteristic that explains a part of the factor loadings that is independent of any characteristic. In line with Vermeulen et al. (1993) and Kelly et al. (2019a, b), we obtain conditional factor loadings as linear combinations of these characteristics. Specifically, we estimate a matrix \(\varvec{\Gamma }_{\beta }\) of dimension \(M \times K\) that assigns for each factor loading a fixed coefficient to each characteristic. Accordingly, the conditional sensitivity \(\beta _{i,j,t}\) of a response i to a factor j at time t can be written as:

\[ \beta _{i,j,t} = \sum _{m=1}^{M} \gamma _{m,j} \, c_{i,m,t}, \tag{6} \]

where \(\gamma _{m,j}\) denotes the coefficient of the m-th characteristic for the loading on the j-th factor. The term \(c_{i,m,t}\) refers to the m-th characteristic of response i at time t. We rewrite Eq. (6) in matrix notation and obtain the matrix of conditional factor loadings \(\varvec{B}_t\) as follows:

\[ \varvec{B}_t = \varvec{C}_t \varvec{\Gamma }_{\beta }. \tag{7} \]

By conditioning the factor loadings on observable characteristics, we achieve a drastic parameter reduction compared to unconditional factor loadings if \(M < N\). Furthermore, we also allow for time variation in loadings, provided that the characteristics change over time. As a result, the factor loadings not only explain the cross-sectional dispersion among the responses but also model an individual temporal evolution due to changes in the response’s microeconomic conditions.
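A minimal numerical sketch of the conditional loadings follows. The dimensions and random inputs are hypothetical and serve only to show that one time-invariant coefficient matrix produces loadings that differ across responses and across periods.

```python
import numpy as np

rng = np.random.default_rng(1)
N, M, K = 500, 4, 2                      # hypothetical sizes with M < N

Gamma = rng.normal(size=(M, K))          # time-invariant coefficients (M x K)
C_t = rng.normal(size=(N, M))            # characteristics at time t
C_next = C_t + 0.1 * rng.normal(size=(N, M))   # characteristics drift by t + 1

B_t = C_t @ Gamma                        # loadings at t, shape (N, K)
B_next = C_next @ Gamma                  # loadings at t + 1 with the same Gamma

# Element-wise form: loading of response 0 on factor 0 at time t.
beta_00 = sum(Gamma[m, 0] * C_t[0, m] for m in range(M))

n_conditional = Gamma.size               # M * K free parameters
n_unconditional = N * K                  # one loading per response and factor
```

Here the conditional specification needs `M * K` coefficients instead of `N * K` loadings, and the loadings change from `B_t` to `B_next` solely because the characteristics changed.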

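After deriving the construction of both the dynamic conditional factors and the conditional factor loadings, we can write the RDCFM as an extended version of Eq. (2) as follows:

\[ \varvec{y}_t = \left( \varvec{z}_t \varvec{\Omega } + \tanh \left( \varvec{f}_{t-1}\right) \varvec{AR}\right) \left( \varvec{C}_t \varvec{\Gamma }_{\beta }\right) ^{'} + \varvec{e}_t. \tag{8} \]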

The RDCFM nests several model variants that can be obtained by enabling and disabling certain model features. For example, when estimating unconditional instead of conditional factor loadings, we obtain either a conditional factor model (CFM) in the spirit of Ferson and Harvey (1991, 1993) (\(\varvec{AR} = 0\) and unconditional loadings) or a recurrent conditional factor model (RCFM) (\(\varvec{AR} \ne 0\) and unconditional loadings). Additionally, we can also estimate a double-conditional factor model (DCFM) with static conditional factors and conditional factor loadings (\(\varvec{AR} = 0\) and conditional loadings).
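The nesting of the model variants can be sketched as a single function in which each feature is switched on or off through its arguments. The function name `fitted_values`, its signature, and the random inputs are assumptions for illustration; the sketch computes fitted values for given parameters and does not estimate anything.

```python
import numpy as np

def fitted_values(z, Omega, AR=None, f0=None, C=None, Gamma=None, B=None):
    """Fitted responses (T x N) for the CFM, RCFM, DCFM, or RDCFM.

    z: (T, L) instruments; Omega: (L, K).
    AR: (K, K) enables the recurrent factor process; None gives static factors.
    C: (T, N, M) with Gamma: (M, K) gives conditional loadings;
    otherwise B: (N, K) is used as unconditional loadings.
    """
    T, K = z.shape[0], Omega.shape[1]
    F = z @ Omega                                  # static conditional factors
    if AR is not None:
        f_prev = np.zeros(K) if f0 is None else f0
        for t in range(T):
            F[t] = F[t] + np.tanh(f_prev) @ AR     # add the recurrent part
            f_prev = F[t]
    if C is not None:
        loadings = C @ Gamma                       # (T, N, K), time-varying
        return np.einsum("tk,tnk->tn", F, loadings)
    return F @ B.T                                 # time-invariant loadings

rng = np.random.default_rng(2)
T, N, L, M, K = 8, 6, 3, 4, 2
z = rng.normal(size=(T, L))
C = rng.normal(size=(T, N, M))
Omega = rng.normal(size=(L, K))
AR = 0.5 * rng.normal(size=(K, K))
Gamma = rng.normal(size=(M, K))
B = rng.normal(size=(N, K))

y_cfm = fitted_values(z, Omega, B=B)
y_rcfm = fitted_values(z, Omega, AR=AR, B=B)
y_dcfm = fitted_values(z, Omega, C=C, Gamma=Gamma)
y_rdcfm = fitted_values(z, Omega, AR=AR, C=C, Gamma=Gamma)
```

Setting the autoregressive matrix to zero in the last call reproduces `y_dcfm`, which mirrors the nesting described in the text.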

The preceding derivations and the model formulation in Eq. (8) address the solution of regression problems. As discussed in “Appendix 1.2”, the concepts described here can be adapted to classification problems with only a few methodological changes.

2.2 Relation to recurrent neural networks

This section emphasizes the similarities of RDCFMs with RNNs and discusses the advantages of our model. We focus on “standard” RNNs as originally presented in Rumelhart et al. (1986a) and Elman (1990). An RNN identifies a current hidden system state \(\varvec{s}_{t}\) and estimates the output by mapping this state to the responses via an arbitrary activation function g(x). When solving regression problems, it is common practice to assume a linear activation function:

\[ \hat{\varvec{y}}_t = g\left( \varvec{s}_t \varvec{W_y}^{'}\right) = \varvec{s}_t \varvec{W_y}^{'}, \tag{9} \]

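where \(\varvec{W_y}\) is a weight matrix of dimension \(N \times K\), with K denoting the number of hidden states. The \(1 \times K\) vector of hidden states is obtained by:

\[ \varvec{s}_t = \tanh \left( \varvec{z}_t \varvec{W_z} + \varvec{s}_{t-1} \varvec{W_s} + \varvec{b}\right) , \tag{10} \]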

where \(\varvec{W_s}\) is a \(K \times K\) weight matrix that describes the transition of the previous state to the next state and \(\varvec{W_z}\) is an \(L \times K\) weight matrix to map the input variables \(\varvec{z}_t\) onto the hidden states. The \(1 \times K\) vector \(\varvec{b}\) denotes the bias of the hidden states (i.e., a constant state).

The weight matrices \(\varvec{W_z}\) and \(\varvec{W_s}\) are equivalent to the RDCFM parameter matrices \(\varvec{\Omega }\) and \(\varvec{AR}\), respectively. Assuming that the factor instruments include a constant factor instrument, the bias term \(\varvec{b}\) in Eq. (10) is equivalent to the \(\varvec{\Omega }\) coefficient on the constant factor instrument. Accordingly, the hidden states of an RNN are analogous to the latent factors of the RDCFM with the difference that we apply the tanh transformation in the RDCFM only to the past factor realizations. We do so to be more in line with linear conditional factor models; however, if one wishes to be more in line with RNNs, then conditional factors may also be extracted according to Eq. (10). As a result, the extraction of latent factors would be nonlinear. Interpreting the hidden states as latent factors implies that the weight matrix \(\varvec{W_y}\) can be interpreted as a matrix of (time-invariant) factor loadings.

Figure 1 illustrates the RDCFM in analogy to the graphical representation of RNNs as presented in, for example, Zimmermann et al. (2005). The RDCFM is unfolded in time for three time steps, with the first two time steps (\((t-1)\) and t) indicating in-sample periods and the last time step (\(t+1\)) denoting an out-of-sample time step. The dots indicate that there may be several previous and subsequent time steps \((t-r)\) and \((t+h)\), respectively. The extraction of conditional factors in the RDCFM is equivalent to the construction of the hidden states in an RNN. However, instead of estimating a parameter-rich output weight matrix \(\varvec{W_{y}}\), the RDCFM uses information in \(\varvec{C}_{t}\) to estimate a time-varying conditional output weight matrix (i.e., factor loadings). The advantage of conditioning the factor loadings increases as the difference between the number of response variables and the number of characteristics becomes larger. We will highlight this throughout our empirical analysis. To summarize, one may regard the RDCFM as an extension of conditional factor models (originating in econometrics), as an extension of RNNs (originating in machine learning), or as a unifying model connecting these two distinct strands of research. It is worth mentioning that the RNN is included as a special case of the RDCFM when estimating unconditional factor loadings, i.e., the RCFM.
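The parameter saving from conditional loadings follows directly from the matrix dimensions given above. The sketch below counts free parameters for a model with unconditional loadings (as in an RNN output layer or the RCFM) and for one with conditional loadings. The function name `n_params` and the dimensions are hypothetical and are not the figures of the empirical application.

```python
def n_params(L, K, N=None, M=None, recurrent=True):
    """Count free parameters from the matrix dimensions.

    Omega contributes L*K; the recurrent part adds AR (K*K) and the initial
    factors f_0 (K); loadings cost M*K if conditional, else N*K.
    """
    count = L * K
    if recurrent:
        count += K * K + K
    count += M * K if M is not None else N * K
    return count

# Hypothetical dimensions: 11 instruments, 5 factors,
# 10,000 responses, 30 characteristics.
unconditional = n_params(L=11, K=5, N=10_000)   # RNN-style output weights
conditional = n_params(L=11, K=5, M=30)         # loadings via Gamma
print(unconditional, conditional, conditional / unconditional)
```

With these illustrative sizes the loading block dominates the count under unconditional loadings, so the gap widens as the number of responses grows relative to the number of characteristics.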

Fig. 1 Recurrent double-conditional factor model. The figure shows the RDCFM unfolded in time for three periods. The three dots indicate that the model has previous time steps and may also have several “out-of-sample” time steps (i.e., periods after t, which denotes the current point in time). The terms \(\varvec{z}_{t}\) and \(\varvec{C}_{t}\) denote the vectors and matrices of macroeconomic and microeconomic variables, respectively. The latent factors are represented by \(\varvec{f}_t\), and the response is \(\varvec{y}_t\). The model is characterized by three matrices \(\varvec{\Omega }\), \(\varvec{AR}\), and \(\varvec{\Gamma }_{\beta }\).

2.3 Parameter estimation

This subsection describes the numerical estimation of the RDCFM parameters for solving regression problems. We discuss the methodological changes related to the solution of classification problems in “Appendix 1.2”. In addition to the model parameters \(\varvec{\Gamma }_{\beta }\), \(\varvec{\Omega }\), and \(\varvec{AR}\), we treat the initialization of the factors \(\varvec{f}_0\) for the VAR process as a set of free parameters. As long as the number of factors is small, this does not significantly increase the number of free parameters. We estimate the parameters according to a least squares criterion; that is, we minimize the sum of squared residuals:

\[ \min _{\varvec{\Gamma }_{\beta },\, \varvec{\Omega },\, \varvec{AR},\, \varvec{f}_0} \; \sum _{t=1}^{T} \sum _{i=1}^{N} e_{i,t}^{2}, \qquad \varvec{e}_t = \varvec{y}_t - \varvec{f}_t \varvec{B}_t^{'}. \tag{11} \]

Given a matrix of latent factors, we can analytically solve for \(\varvec{\Gamma }_{\beta }\) via linear least squares. However, due to the existence of dot products between factors and factor loadings and the (nonlinear) VAR process of the factors, we must solve for \(\varvec{\Omega }\), \(\varvec{AR}\), and \(\varvec{f}_{0}\) numerically via nonlinear least squares. We begin by describing the estimation of the factor loading coefficients \(\varvec{\Gamma }_{\beta }\) and continue with the more complex estimation of \(\varvec{\Omega }\), \(\varvec{AR}\), and \(\varvec{f}_0\) (see Footnote 3).

According to Vermeulen et al. (1993) and Kelly et al. (2019a, b), conditional factor loadings can be obtained via a POLS regression of the pooled response variables \(\varvec{\widetilde{Y}}\) on a matrix of pooled cross-sectional instruments \(\varvec{\widetilde{C}}\) interacted with latent factors \(\varvec{\widetilde{F}}\) (see Footnote 4):

We denote stacked variables with \((\widetilde{\bullet })\), i.e., \(\varvec{\widetilde{Y}}\) is an \((NT)\times 1\) vector, \(\varvec{\widetilde{C}}\) is an \((NT)\times M\) matrix, and \(\varvec{\widetilde{F}}\) is an \((NT)\times K\) matrix, where each observation of the factors is repeated N times. To be more precise, the least-squares estimate for \(\text {vec}\left( \varvec{\Gamma }_{\beta }^{'}\right)\) is:

The estimates of \(\varvec{\Gamma }_{\beta }\) are arranged as an \((MK) \times 1\) vector; therefore, the vector has to be reshaped into an \(M\times K\) matrix.
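The pooled regression and the final reshaping step can be sketched as follows, assuming a balanced panel and that "interacted" means forming all products of a characteristic and a factor for each observation. The function name `estimate_gamma` and the simulated data are assumptions for illustration; the ordering of the regressors is chosen so that the coefficient vector reshapes row by row into an \(M \times K\) matrix.

```python
import numpy as np

def estimate_gamma(Y, C, F):
    """Pooled least squares for the loading coefficients.

    Y: (T, N) responses, C: (T, N, M) characteristics, F: (T, K) factors.
    Returns Gamma with shape (M, K).
    """
    T, N, M = C.shape
    K = F.shape[1]
    # Regressors for observation (t, i): products c_{i,m,t} * f_{k,t},
    # ordered with the factor index k varying fastest.
    X = (C[:, :, :, None] * F[:, None, None, :]).reshape(T * N, M * K)
    y = Y.reshape(T * N)                     # stacked in the same (t, i) order
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef.reshape(M, K)                # (M*K,) vector back to M x K

# Noise-free simulated data, so the true coefficients should be recovered.
rng = np.random.default_rng(3)
T, N, M, K = 20, 15, 3, 2
C = rng.normal(size=(T, N, M))
F = rng.normal(size=(T, K))
Gamma_true = rng.normal(size=(M, K))
Y = np.einsum("tk,tnk->tn", F, C @ Gamma_true)

Gamma_hat = estimate_gamma(Y, C, F)
```

For an unbalanced panel, the rows of `X` and `y` belonging to missing observations would simply be dropped before the least squares step.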

Given any arbitrary estimate of \(\varvec{\Omega }\), \(\varvec{AR}\), and \(\varvec{f}_0\), we can construct the corresponding factors and thus provide the estimate for \(\varvec{\Gamma }_{\beta }\). Therefore, the objective function from Eq. (11) can be reduced to depend only on \(\varvec{\Omega }\), \(\varvec{AR}\), and \(\varvec{f}_0\). Because our objective still contains dot products between factors and factor loadings and further includes a (nonlinear) VAR factor process, we solve for \(\varvec{\Omega }\), \(\varvec{AR}\), and \(\varvec{f}_0\) numerically via a nonlinear least squares algorithm. The problem may be solved by any derivative-free solver or solver that uses first derivatives approximated by finite differences. However, as the sizes of the time-series and cross-section increase, the use of a derivative-free solver or finite difference approximation becomes computationally infeasible. One way to address this problem is to analytically calculate the gradient of the loss function with respect to the unknown parameters. However, as each factor depends on previous factor realizations, the gradient calculation becomes more complex. By taking advantage of the link between the RDCFM and RNNs, we address this problem by using backpropagation through time (BPTT) to analytically compute the gradient (Rumelhart et al. 1986b; Werbos 1988, 1990). BPTT accumulates the gradient over time, taking into account the temporal interconnections of the factors. To save space, we present the derivation of the gradient calculation in “Appendix 1.1”. Given the gradient with respect to the model parameters, we update the parameters using the well-established Broyden–Fletcher–Goldfarb–Shanno (BFGS) (Broyden 1970; Fletcher 1970; Goldfarb 1970; Shanno 1970) second-order optimization algorithm because it has been extensively researched in operations research over time and provides powerful, fast, and computationally efficient solutions.
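The idea of accumulating the gradient backward through the factor recursion can be sketched in a simplified setting. The sketch below is not the derivation from “Appendix 1.1”: it holds the loadings fixed in a matrix `B` (hypothetical) instead of concentrating out the loading coefficients, assumes the recursion applies tanh to the lagged factors, and uses half the sum of squared residuals as the loss.

```python
import numpy as np

def loss_and_grads(Y, z, B, Omega, AR, f0):
    """Loss 0.5 * sum of squared residuals and its gradients via BPTT.

    Y: (T, N), z: (T, L), B: (N, K) fixed loadings,
    Omega: (L, K), AR: (K, K), f0: (K,).
    """
    T, K = z.shape[0], Omega.shape[1]
    # Forward pass: F[0] = f_0, F[t] = z_t Omega + tanh(F[t-1]) AR.
    F = np.empty((T + 1, K))
    F[0] = f0
    for t in range(1, T + 1):
        F[t] = z[t - 1] @ Omega + np.tanh(F[t - 1]) @ AR
    resid = Y - F[1:] @ B.T
    loss = 0.5 * np.sum(resid ** 2)

    # Backward pass: carry holds the gradient reaching f_t from later periods.
    dOmega, dAR = np.zeros_like(Omega), np.zeros_like(AR)
    carry = np.zeros(K)
    for t in range(T, 0, -1):
        delta = -resid[t - 1] @ B + carry              # total gradient w.r.t. f_t
        dOmega += np.outer(z[t - 1], delta)
        dAR += np.outer(np.tanh(F[t - 1]), delta)
        carry = (delta @ AR.T) * (1.0 - np.tanh(F[t - 1]) ** 2)
    return loss, dOmega, dAR, carry                    # carry is now dL/df_0

rng = np.random.default_rng(4)
T, N, L, K = 6, 4, 3, 2
Y, z = rng.normal(size=(T, N)), rng.normal(size=(T, L))
B, Omega = rng.normal(size=(N, K)), rng.normal(size=(L, K))
AR, f0 = rng.normal(size=(K, K)), rng.normal(size=K)
loss, dOmega, dAR, df0 = loss_and_grads(Y, z, B, Omega, AR, f0)
```

One backward sweep yields all gradients at a cost comparable to one forward pass, which is what makes gradient-based optimization practical for long time series. The loss and the flattened gradients could then be handed to a quasi-Newton routine, for example `scipy.optimize.minimize` with `method="BFGS"` and `jac=True`.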

3 Empirical application

In Sect. 2.1, we show that the building blocks of the RDCFM architecture can be enabled and disabled to obtain a family of models, including the DCFM, RCFM, and CFM. This property makes the RDCFM a flexible model that can be adapted to a wide variety of applications.

For illustration purposes, we apply the RDCFM to the prediction of stock returns. We choose this application because the data set (1) is publicly available, (2) is characterized by a large time-series and cross-section, and (3) has been widely used in the finance literature, allowing us to directly compare the performance of the RDCFM with that of existing financial models. In Sect. 3.1, we introduce the asset pricing application by describing the data set. We proceed with the details on model estimation and validation in Sect. 3.2. The presentation of our empirical results in Sect. 3.3 is threefold. First, we demonstrate how researchers can identify which model features of the RDCFM (i.e., conditional factors, conditional loadings, and VAR process) are important in a specific application (Sect. 3.3.1). Second, we show how to identify the performance drivers within a model feature by using permutation importance, Granger causality, and impulse-response analysis (Sect. 3.3.2). Last, we compare the performance of the RDCFM with that of prevalent benchmark models in Sect. 3.3.3.

3.1 Data

For comparability with the previous results reported in the literature and because the data are publicly available, we use the same dataset as studied in Kelly et al. (2019a). The sample spans the period from July 1963 to May 2014, covering 599 months and 11,452 individual stocks. The total number of available firm-month return observations is 1.4 million.

The assumption that stock returns \(\varvec{y}_{t}\) follow a low-dimensional factor structure is in line with economic theory (Ross 1976). We condition the factors \(\varvec{f}_{t}\) on a constant to account for a permanent environment and on ten empirical asset pricing factors that are well known to explain the time-series variation in stock returns (e.g., Fama and French 1993, 2015). The literature suggests that these empirical factors may proxy for future GDP growth (Liew and Vassalou 2000) or innovations in state variables (Petkova 2006); therefore, we use these as conditioning variables for the factors. We provide a detailed description of the factor instruments in “Appendix 2.1”. To be consistent with the asset pricing research, we do not lag the factor instruments, such that factor realizations explain the common contemporaneous temporal variation in the returns. We also follow the asset pricing literature when imposing an orthogonality constraint on the estimated factors (Ross 1976) by requiring the variance inflation factors (VIFs) of the conditional factors to be less than 2 (Footnote 5). Note that this restriction is finance-specific and may not be relevant in other applications. We further restrict the Euclidean norm of the factors to control their magnitude, i.e., we require the maximum norm of the factors, scaled by the number of observations, to be less than one (Footnote 6).
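
The VIF condition can be checked with a few lines of NumPy. This is a minimal sketch using the textbook definition, VIF = 1/(1 − R²) from regressing each factor on the remaining ones; how the constraint enters the optimization is not shown here, and the function name `vifs` and the toy data are hypothetical.

```python
import numpy as np

def vifs(F):
    """Variance inflation factor of each column of a (T, K) factor matrix."""
    T, K = F.shape
    out = np.empty(K)
    for j in range(K):
        # Regress factor j on a constant and all other factors.
        X = np.column_stack([np.ones(T), np.delete(F, j, axis=1)])
        coef, *_ = np.linalg.lstsq(X, F[:, j], rcond=None)
        rss = np.sum((F[:, j] - X @ coef) ** 2)
        tss = np.sum((F[:, j] - F[:, j].mean()) ** 2)
        out[j] = tss / rss                  # equals 1 / (1 - R^2)
    return out

rng = np.random.default_rng(0)
A = rng.normal(size=(300, 2))               # two nearly uncorrelated series
F_bad = np.column_stack([A, A.sum(axis=1) + 0.1 * rng.normal(size=300)])
ok = bool((vifs(A) < 2).all())              # constraint satisfied
violated = bool((vifs(F_bad) >= 2).any())   # third column is nearly collinear
```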

A common assumption is that the sensitivities to risk factors \(\varvec{B}_{t}\) are static (e.g., Fama and French 1993). In an asset pricing context, these sensitivities to risk factors can be interpreted as asset-specific systematic risks (Footnote 7). Because a firm may evolve from a small to a large business, assuming that these risks are constant over the life cycle of a firm may be an oversimplification and may thus lead to model misspecification. The literature finds that stock returns vary with the spectrum of firm-specific characteristics \(\varvec{C}_{t}\), e.g., market value (Banz 1981) or the book-to-market equity ratio (Rosenberg et al. 1985). Based on these findings, Fama and French (1993) argue that small firms with high book-to-market equity ratios earn higher returns because they have higher loadings on a distress factor. Accordingly, the asset-specific risk may be conditional on the asset’s characteristics. The literature identifies hundreds of characteristics that appear to explain the cross-sectional dispersion in (stock) returns (Hou et al. 2020); thus, there is a large number of possible factor loading instruments. We condition the factor loadings on a constant characteristic and on the same 36 firm characteristics as studied in Kelly et al. (2019a). We provide a description in “Appendix 2.2”. We lag each characteristic by one month and apply a cross-sectional rank transformation to the characteristics to map them into the [\(-\)0.5, 0.5] interval. Note that this data transformation is finance-specific and, technically, any other (or no) form of data transformation can be used.
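
A cross-sectional rank transformation of this kind can be sketched as follows for a single month. The exact scaling is an assumption: the sketch maps the smallest value to −0.5 and the largest to 0.5, breaks ties by position, and does not handle missing values; the function name `rank_transform` is hypothetical.

```python
import numpy as np

def rank_transform(C):
    """Map each column of an (N, M) characteristic matrix into [-0.5, 0.5].

    Ranks are computed across the N objects within one period (N >= 2).
    """
    C = np.asarray(C, dtype=float)
    N = C.shape[0]
    ranks = C.argsort(axis=0).argsort(axis=0)   # 0 .. N-1 within each column
    return ranks / (N - 1) - 0.5

# Three firms, two characteristics in one month.
C_month = np.array([[10.0, 3.0],
                    [20.0, 1.0],
                    [15.0, 2.0]])
C_ranked = rank_transform(C_month)
```

In a panel, the function would be applied month by month, and the transformed characteristics of month t − 1 would be paired with the returns of month t to implement the one-month lag.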

3.2 Model estimation and validation

We use recursive model estimation to evaluate the out-of-sample model performance (Kelly et al. 2019a). Specifically, we start by using the first 120 months of observations for the estimation of the model parameters (i.e., in-sample period) and proceed to estimate the (out-of-sample) one-month ahead returns in \(t = 121\). Next, we expand the time horizon and re-estimate the parameters by using in-sample data up to \(t = 121\) and estimate the next month’s returns in \(t=122\). We continue until the sample ends in period \(t = 599\).
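
The expanding-window scheme described above can be written as a short generator. This is a sketch of the index bookkeeping only; the function name `expanding_windows` is hypothetical and periods are numbered from 1, as in the text.

```python
def expanding_windows(T=599, first_train=120):
    """Yield (in-sample periods, out-of-sample period) with 1-based indices."""
    for end in range(first_train, T):
        yield range(1, end + 1), end + 1

splits = list(expanding_windows())
first_train_periods, first_test = splits[0]    # periods 1..120, forecast for 121
last_train_periods, last_test = splits[-1]     # periods 1..598, forecast for 599
```

With 599 months and an initial window of 120 months, this produces 479 re-estimations, one per out-of-sample month.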

Our central performance metric is the out-of-sample mean squared prediction error (MSPE) because it is the most prevalent metric for regression problems in numerous research disciplines and is directly targeted by the objective function in Eq. (11) (Footnote 8). We define the MSPE as:

with \(N_{t+1}\) denoting the number of response variables in \(t+1\), and \(\varvec{\beta }_{i,t}\) denoting the object-specific factor loadings, with the subscript t indicating that the factor loadings are based on lagged characteristics, i.e., \(\varvec{\beta }_{i,t} = \varvec{C}_{t} \varvec{\Gamma }_{\beta }\). The term \(\hat{\varvec{\lambda }}_{t+1}\) denotes the ex ante factor estimate in \(t+1\). Because we do not lag the factor instruments, we use their in-sample mean to obtain the factor estimate, as described in Eq. (15):

with \(\bar{\varvec{z}}\) denoting the \(1 \times L\) vector of the (in-sample) means of the factor instruments.
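
The computation of an out-of-sample error of this type can be sketched as below. The sketch takes the ex ante factor estimates as given and pools all object-period observations with equal weight; this normalization, the function name `pooled_mspe`, and the toy inputs are assumptions for illustration, not a restatement of the displayed formula.

```python
import numpy as np

def pooled_mspe(y_next, C_lag, Gamma_beta, lam_hat):
    """Mean squared prediction error pooled over all periods.

    y_next[t]:  (N_t,) responses in the forecast period
    C_lag[t]:   (N_t, M) lagged loading instruments
    Gamma_beta: (M, K) loading coefficients
    lam_hat[t]: (K,) ex ante factor estimate for the forecast period
    """
    sq_err, n_obs = 0.0, 0
    for y, C, lam in zip(y_next, C_lag, lam_hat):
        beta = C @ Gamma_beta               # conditional loadings, (N_t, K)
        resid = y - beta @ lam
        sq_err += float(resid @ resid)
        n_obs += y.shape[0]                 # the cross-section size may vary over time
    return sq_err / n_obs

# Two forecast periods with two objects and one object, respectively.
y_next = [np.array([1.0, 2.0]), np.array([0.0])]
C_lag = [np.eye(2), np.array([[1.0, 1.0]])]
lam_hat = [np.array([1.0, 2.0]), np.array([1.0, 1.0])]
mspe = pooled_mspe(y_next, C_lag, np.eye(2), lam_hat)
```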

To evaluate the relevance of a certain model feature, we test whether the model’s out-of-sample prediction error significantly decreases when the respective feature is enabled. Similarly, we test whether estimating an additional factor improves model performance. For this purpose, we use the Clark and West (2007) test for nested models (Footnote 9). We do not report the details of the implementation here and instead refer to “Appendix 1.4”.
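
For intuition, the core of the Clark and West (2007) statistic can be sketched for a single series of forecasts. This is the textbook time-series form with a normal approximation and is not the panel implementation of “Appendix 1.4”; the function name `clark_west` and the toy numbers are hypothetical.

```python
import math

def clark_west(y, pred_small, pred_large):
    """One-sided Clark-West test that the larger model has the lower MSPE.

    Adjusted loss differential per observation:
        (y - small)^2 - [(y - large)^2 - (small - large)^2]
    """
    f = [(a - s) ** 2 - ((a - l) ** 2 - (s - l) ** 2)
         for a, s, l in zip(y, pred_small, pred_large)]
    n = len(f)
    mean = sum(f) / n
    var = sum((v - mean) ** 2 for v in f) / (n - 1)
    t_stat = mean / math.sqrt(var / n)
    p_value = 0.5 * math.erfc(t_stat / math.sqrt(2.0))   # upper-tail normal probability
    return t_stat, p_value

y = [1.0, -0.5, 0.8, -1.2, 0.3, 0.9]
pred_small = [0.0] * 6                        # nested (restricted) model
pred_large = [0.6, -0.2, 0.5, -0.9, 0.1, 0.4]  # model with the extra feature
t_stat, p_value = clark_west(y, pred_small, pred_large)
```

The adjustment term removes the noise that the larger model introduces by estimating parameters that are zero under the null, which is why a plain comparison of MSPEs would be biased against the larger model.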

3.3 Empirical results

3.3.1 Analyzing model feature importance

Our empirical analysis starts with a performance comparison of the RDCFM and its variants by reporting their out-of-sample MSPEs in Table 2. The MSPE of the one-factor RDCFM is 250.6 and monotonically decreases up to five factors, where the RDCFM achieves its minimum MSPE of 249.3. In comparison, the minimum MSPE of the static DCFM (i.e., VAR process disabled) is 250.1 when estimating six factors. In general, the RDCFM has lower prediction errors than the DCFM in any pairwise comparison, indicating that the VAR component is informative for capturing the variation in stock returns.

While the prediction errors of models with conditional loadings (i.e., RDCFM and DCFM) tend to decrease with additional factors, we find the opposite for models with unconditional loadings (i.e., RCFM and CFM). The MSPE for the RCFM increases from 251.0 for the one-factor model to 274.0 when six factors are included. Likewise, the error of the CFM increases with additional factors, even if the differences are smaller. This indicates an overfitting effect with respect to the factor loading estimates. Table 3 shows the number of parameters to estimate for the models. Although additional factors only slightly increase the number of additional model parameters for models with conditional factor loadings, the parameter space for the RCFM and CFM is massively inflated. The number of parameters required to estimate the factors increases at the same rate for all models, but the RCFM and CFM estimate NK parameters for the (unconditional) factor loadings, while the RDCFM and DCFM estimate only MK parameters for the (conditional) factor loadings. To quantify the advantage of conditioning factor loadings in this application, the RDCFM estimates less than 0.5% as many parameters as the RCFM while estimating the same number of factors. Accordingly, by conditioning the factor loadings on microeconomic variables, the RDCFM not only processes more information but also is parsimoniously parameterized, resulting in improved out-of-sample performance.

Next, we test which model features in fact lead to a statistically significant lower prediction error. Specifically, using the Clark and West (2007) test, we test whether (1) the RDCFM has significantly lower prediction errors than the CFM, RCFM, and DCFM, (2) the DCFM has lower errors compared to the CFM, and (3) the RCFM outperforms the CFM. Table 4 reports the differences in the MSPEs of the models. If the null hypothesis of equal MSPE values can be rejected at the 1%, 5%, or 10% levels, we denote this with asterisks. Panel A reports the differences in MSPE when testing the RDCFM against the DCFM, RCFM, and CFM. The RDCFM significantly outperforms all its variants in each specification, suggesting that both the VAR process of the factors and the conditional factor loadings are informative for predicting stock returns. The differences in the MSPE values monotonically increase with additional factors (except for the six-factor RDCFM and DCFM) and are statistically significant at the 1% level. The finding that conditioning factor loadings on observable variables significantly improves the prediction accuracy is also confirmed by comparing the DCFM with the CFM (Panel B). The differences monotonically increase with the number of factors, and again, all differences are statistically significant at the 1% level. The monotonicity in the MSPE differences of the conditional and unconditional models is due to the overfitting of the unconditional models when estimating many parameters for the factor loadings. The results in Panel C show that the RCFM cannot outperform the CFM when estimating at least three factors. This is due to the large number of free parameters in both models. In the RCFM, overfitting occurs because the VAR process captures random noise in the responses rather than systematic movements. The resulting model is too complex and fails to explain unseen data. 
Combined with the results from Panel A, this suggests that the VAR process of the factors is informative only in combination with the dimensionality reduction due to the estimation of conditional factor loadings.

After determining that all features of the RDCFM are relevant in this application, we ask how many factors are truly relevant. To address this question, we compare the MSPE values of the RDCFM with different numbers of factors and test for statistically significant differences by using the Clark and West (2007) test. Table 5 reports the results. The main interest is the diagonal, as this indicates whether the addition of an extra factor significantly reduces the prediction error. We find that the null hypothesis of equal predictive accuracy can be rejected at the 1% level if up to five factors are estimated. We therefore use this specification in our subsequent analyses.

3.3.2 Analyzing importance within model features

Having identified that all model features are relevant for our application, we next aim to identify the performance drivers within a specific feature. Specifically, we delve deeper into each model feature of the five-factor RDCFM by examining (1) which microeconomic variables mainly contribute to the conditional factor loadings, (2) which macroeconomic variables are most relevant for explaining the common time-series variation in the responses, and (3) whether the nonlinear VAR process contains valuable information.

Our analysis starts with an assessment of the relevance of microeconomic conditions. Specifically, we use permutation importance, an approach established in the machine learning literature, to assess how microeconomic variables contribute to the model performance (e.g., Breiman 2001). Permutation importance is easy to implement, highly interpretable, and independent of the underlying model or application; hence, it can also be used to identify the important variables in the DCFM, RCFM, and CFM. We define the importance of an instrument as the increase in the in-sample mean squared error (\(MSE^{IS}\); Footnote 10) that results from shuffling the order of its observations while leaving everything else unchanged. The larger the increase in model error, the more important the variable is for the model.

We first obtain the model parameters from our best model (i.e., five-factor RDCFM) found in Table 2, i.e., \(\varvec{\Omega }\), \(\varvec{AR}\), \(\varvec{f_0}\), and \(\varvec{\Gamma }_{\beta }\). For each of 100 permutations, we randomly shuffle the observations of an instrument, recalculate the conditional factor loadings as described in Eq. (7), and estimate the response variables (i.e., returns) according to Eq. (2). We rank the instruments according to the average change in \(MSE^{IS}\) compared to the original model. A large increase in \(MSE^{IS}\) indicates a high level of importance, whereas a small increase indicates low importance. We augment the permutation importance results with an F-test based on a double-bootstrap approach, described in “Appendix 1.3.1”, which tests whether an instrument contributes significantly to the overall model performance.
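
The permutation procedure can be sketched generically. The sketch below uses a toy linear predictor in place of the fitted RDCFM and a flat observation-by-instrument matrix in place of the panel; `permutation_importance`, `predict`, and the toy weights are hypothetical names and values.

```python
import numpy as np

def permutation_importance(C, y, predict, n_perm=100, seed=0):
    """Average increase in MSE when one column of C is shuffled.

    predict: callable mapping an instrument matrix to fitted responses,
             with all model parameters held fixed.
    """
    rng = np.random.default_rng(seed)
    base = np.mean((y - predict(C)) ** 2)
    scores = np.zeros(C.shape[1])
    for j in range(C.shape[1]):
        increases = []
        for _ in range(n_perm):
            Cp = C.copy()
            Cp[:, j] = rng.permutation(Cp[:, j])   # break the link to the response
            increases.append(np.mean((y - predict(Cp)) ** 2) - base)
        scores[j] = np.mean(increases)
    return scores

rng = np.random.default_rng(42)
C = rng.normal(size=(200, 3))
w = np.array([2.0, 0.5, 0.0])        # third instrument is irrelevant by construction
y = C @ w
scores = permutation_importance(C, y, predict=lambda X: X @ w)
ranking = np.argsort(-scores)        # most important instrument first
```

Because the parameters are held fixed, no re-estimation is needed, which keeps the procedure cheap even with many instruments and permutations.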

Table 6 reports the results, which generally support the findings in the previous literature. Of the 36 firm-level characteristics considered, 17 capture the variation in the cross-section of stock returns, supporting the view that asset returns are multidimensional (Cochrane 2011; Kelly et al. 2019a). By far, the most important firm characteristics are market value (mktcap: 11.4) and total assets (assets: 7.88), both acting as proxies for firm size (Banz 1981). Other important predictors are the market beta (beta: 2.17) (Sharpe 1964; Lintner 1965; Frazzini and Pedersen 2014), twelve-month momentum (mom: 2.04) as documented in Jegadeesh and Titman (1993) and Asness et al. (2013), and assets-to-market (a2me: 1.82) (Fama and French 1992). Interestingly, the ranking matches the importance ranking reported in Kelly et al. (2019a) for IPCA, which also suggests that the short-term reversal (strev: 1.49) and turnover (turn: 0.98) (Datar et al. 1998) characteristics are important. In summary, the findings reveal that many asset characteristics have predictive power for future returns. However, many characteristics frequently proposed for predicting future returns, such as accruals (oa) (Sloan 1996), free cash flow (freecf) (Lakonishok et al. 1994), earnings-to-price (e2p) (Basu 1983), and book-to-market (bm) (Rosenberg et al. 1985) do not account for the substantial variation in returns, after controlling for other characteristics.

Next, we inspect the conditional factors. Due to the memory effect of the latent factors, a factor instrument may affect factor construction and, thus, the model estimates for several periods. Our subsequent analysis is twofold. We start by using permutation importance to assess the overall relevance of the factor instruments. Because permutation importance breaks the temporal order of the instruments, we not only assess how current instruments contribute to the model performance but also measure the relevance of the instruments through \(\varvec{AR}\). However, permutation importance does not reveal how information flows through the RDCFM. We therefore use Granger (1969) causality and impulse-response analysis, two indispensable tools in time-series analyses, to reveal the short- and long-term memory effects of the latent factors.

Table 7 reports the permutation importance results for the factor instruments (Footnote 11). Statistical significance of a factor instrument is assessed using the test procedure described in “Appendix 1.3.2”. Among all factor instruments, only five significantly contribute to the model performance, with the most important factor instrument being the market return (Mkt). Shuffling its order yields an increase in the model’s \(MSE^{IS}\) of 21.3. This finding is consistent with economic theory and empirical findings that there is a priced market factor in stock returns (Sharpe 1964; Lintner 1965; Fama and French 1993, 2015). The next most important instruments are SMB, WML, HMLm, and ROE, which generally supports the findings in the literature that they capture a substantial fraction of variation in the cross-section of stock returns (Fama and French 1993; Carhart 1997; Barillas and Shanken 2018).

After having identified the relevant microeconomic and macroeconomic variables, we next inspect the temporal dynamics in the RDCFM. The previous performance comparison reveals that the VAR process of the factors captures relevant information for future returns; however, how does information flow through the RDCFM?

We begin the analysis of the temporal dynamics of the factors by using the Granger (1969) causality test. Granger causality is a statistical test that assesses whether there are significant autocorrelations across the time-series (Granger 1969, 1980, 1988). Specifically, Granger causality tests whether any lag of a series (with a prespecified lag length p) contributes to the prediction of another series. Therefore, the Granger causality test is based on an F-test of the null hypothesis that the restricted model, i.e., the model that drops a factor, has similar predictive power to the unrestricted model that includes all lagged factors. In our RDCFM specification, we allow for a lag of length one; hence, we restrict our attention to the first lag, which reduces the Granger causality test to a t-test of the regression coefficients.
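
With a single lag, the test amounts to inspecting t-statistics in an OLS regression of each factor on the lagged factors. A minimal sketch with simulated data follows; the function name `var1_tstats` and the simulated series are hypothetical, and the standard errors are the classical homoskedastic ones.

```python
import numpy as np

def var1_tstats(F):
    """OLS of F[t] on a constant and F[t-1]; returns coefficients and t-statistics.

    coef[1 + i, j] is the effect of lagged factor i on factor j.
    """
    Y = F[1:]
    X = np.column_stack([np.ones(len(F) - 1), F[:-1]])
    coef, *_ = np.linalg.lstsq(X, Y, rcond=None)
    resid = Y - X @ coef
    dof = X.shape[0] - X.shape[1]
    sigma2 = (resid ** 2).sum(axis=0) / dof          # residual variance per equation
    xtx_inv_diag = np.diag(np.linalg.inv(X.T @ X))
    se = np.sqrt(np.outer(xtx_inv_diag, sigma2))
    return coef, coef / se

# Simulated example: lagged factor 0 drives factor 1 by construction.
rng = np.random.default_rng(1)
T = 500
F = np.zeros((T, 2))
for t in range(1, T):
    F[t, 0] = rng.normal()
    F[t, 1] = 0.5 * F[t - 1, 0] + rng.normal()
coef, tstats = var1_tstats(F)
granger_0_to_1 = abs(tstats[1, 1]) > 1.96            # two-sided 5% normal critical value
```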

Table 8 reports the estimated coefficients of a VAR(1) model. The overall findings indicate that there are significant temporal dependencies between the conditional factors. Specifically, the first factor exhibits significant autocorrelation (0.07, p value = 0.14%) and also “Granger-causes” the third (0.11, p value = 0.00%) and fourth (\(-\) 0.09, p value = 0.00%) factors. Interestingly, lagged realizations of the third factor are informative for the first factor (\(-\) 0.42, p value = 2.50%), indicating that there is a direct feedback loop between them. Such direct feedback loops exist if causality is bidirectional, implying complex interdependence among conditional factors. A direct feedback loop can also be observed for the fourth and fifth factors: The fourth factor Granger-causes the fifth factor (\(-\) 0.20, p value = 1.92%), and the fifth factor Granger-causes the fourth factor (\(-\) 0.03, p value = 0.00%), indicating that information returns to the fourth factor in the next period through the fifth factor.

The results in Table 8 show that there are significant temporal dependencies between conditional factors; however, direct feedback loops make it difficult to follow the information flow. Moreover, indirect feedback loops may occur, making interpretation even more difficult. As opposed to direct feedback loops shown above, information returns to a conditional factor over several periods if there are indirect feedback loops. To illustrate this, assume a time-series A Granger-causes B, and B Granger-causes C. If C Granger-causes A then there is an indirect feedback loop between A and C through B. As a result, in a system with many temporally interconnected variables, it is challenging to follow the information flow. Furthermore, because only a fraction of past information is shared among the factors, it is questionable how long certain information is relevant for factor construction and, thus, for affecting the common time-series variation of the response variables. Therefore, we quantify the persistence of system shocks by using impulse-response analysis, a tool frequently applied in time-series analyses (e.g., Potter 2000; Lütkepohl and Schlaak 2019; Lee et al. 2021).

Impulse-response analysis quantifies the flow of information by comparing two sample paths for the variable(s) of interest. One path is subject to an exogenous shock in a system variable at time \(t_i\) (which denotes the impulse period) and the other is not. By comparing the evolution of these paths through time, we analyze how the shock in \(t_i\) affects all system variables in future periods \(t_{i + 1}, t_{i + 2} \dots , t_{i + R}\) with R denoting the length of the forecasting horizon.

In our last in-sample period (\(t = 599\)), we add a one-standard-deviation shock to the factor realizations of our five-factor RDCFM and estimate the factors for the following \(r = 1, \dots , 5\) periods. We use the generalized impulse-response function of Koop et al. (1996) to quantify the magnitude and persistence of exogenous shocks:

\[GI_{f_j}(t_{i}+r,\nu _{t_i},\Phi _{t_{i} - 1}) = E\left[ f_{j,t_{i}+r} \mid \nu _{t_{i}},\Phi _{t_{i} - 1}\right] - E\left[ f_{j,t_{i}+r} \mid \Phi _{t_{i} - 1}\right] \qquad (16)\]

where \(GI_{f_j}(t_{i}+r,\nu _{t_i},\Phi _{t_{i} - 1})\) denotes the impulse-response of the j-th factor in period \(t_{i}+r\), \(t_{i}\) refers to the shock period, \(\nu _{t_{i},j}\) is the one-standard-deviation exogenous shock to the j-th system variable (factor) at \(t_i\), and \(\Phi _{t_i-1}\) denotes the information available upon the shock period. The first term of Eq. (16) thus represents the factor process under an exogenous shock, and the second term denotes the baseline factor process. By analyzing how an isolated impulse in a factor at \(t_i\) affects the next period’s realizations, impulse-response analysis may provide a better picture of the factor dynamics.
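
The comparison of a shocked path with a baseline path can be sketched as follows. The sketch assumes a deterministic recursion f_t = act(z_t Ω + f_{t-1} AR) with future instruments fixed in advance, so that both conditional expectations collapse to single paths; the tanh default, the function names, and the parameter values are hypothetical.

```python
import numpy as np

def factor_path(f_start, Omega, AR, z_path, act=np.tanh):
    """Iterate the assumed factor recursion forward over the rows of z_path."""
    f, path = np.asarray(f_start, dtype=float), []
    for z in z_path:
        f = act(z @ Omega + f @ AR)
        path.append(f)
    return np.array(path)

def impulse_response(f_last, shock, Omega, AR, z_path, act=np.tanh):
    """Shocked path minus baseline path for horizons 1..R."""
    shocked = factor_path(f_last + shock, Omega, AR, z_path, act)
    baseline = factor_path(f_last, Omega, AR, z_path, act)
    return shocked - baseline

K, R = 2, 5
Omega = np.array([[0.1, -0.2]])            # one instrument (a constant)
AR = np.array([[0.5, 0.1],
               [0.0, 0.2]])
z_path = np.ones((R, 1))                   # instruments held fixed after the shock
f_last = np.array([0.3, -0.1])
shock = np.array([1.0, 0.0])               # shock to the first factor only
ir = impulse_response(f_last, shock, Omega, AR, z_path)
```

In the special case of a linear recursion, the response at horizon r reduces to the shock multiplied by the r-th power of the autoregressive matrix, which provides a simple sanity check for an implementation.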

Figure 2 presents the responses of each factor in our five-factor model to an isolated shock to each factor. Specifically, we add a one-standard-deviation shock to the j-th factor realization in the last sample period (\(t = 599\)) and “overshoot” our system for \(R = 5\) periods. That is, we calculate factors for periods \(t = 600,\ldots ,604\) according to Eq. (5), where \(\varvec{f}_{599}\) receives a shock. The x-axis in Fig. 2 denotes the index of the period following the impulse period. The first factor responds most strongly to impulses in the first factor itself, which supports the findings above that this factor exhibits a high degree of autocorrelation. The first factor also responds to impulses in the second and third factors. However, the responses to the impulse in factors one and three approach zero in the second period following the shock (i.e., \(t = 601\)). Interestingly, the response to impulses in the second factor lasts for two periods, with a change in sign after the first period. However, even the response to the second factor converges to zero at \(t = 602\), suggesting that the first factor has only short-term memory. The responses for most factors last at most two periods, with the fourth factor having the longest memory. The responses to impulses in the first and fourth factors last for at least three periods. The responses to the second factor approach zero after the fourth period. The large degree of autocorrelation with itself and feedback loops across the factors may cause the fourth factor to have the longest memory.

Fig. 2 Responses to impulses in factors. The figure presents the impulse-response functions of each factor to shocks in each other factor. The results are based on the RDCFM with \(K = 5\) factors. All parameters are estimated using the entire data set of \(T = 599\) periods. We shock the factor \(\varvec{f}_{599}\) and predict the latent factors for 5 periods ahead, that is, \(t = 600, \ldots , 604\). The x-axis denotes the index of the period following the impulse period.

The impulse-response analysis in Fig. 2 shows that the factors have memory, allowing system shocks to persist for several periods. Since our factors are latent variables constructed from observable variables, such system shocks have their origin in shocks to the factor instruments. Therefore, we perform impulse-response analysis to directly analyze how factor instruments contribute to the factor realizations, not only for the current factor composition but also for the subsequent periods due to the memory of the factors. Again, we estimate all model parameters using the entire data set. We estimate factors for the next \(R = 5\) periods and assume that a one-standard-deviation shock hits the system through the time-series instruments at time \(t=600\). Because we do not lag the factor instruments, we hold all other factor instruments at their mean (i.e., zero for the centered nonconstant instruments). Thus, the only nonzero elements in the instrument vector at time \(t = 600\) are the l-th factor instrument and the constant. The only nonzero element for the subsequent periods is the constant factor instrument.

The results are shown in Fig. 3. We also report the immediate responses of each factor to shocks in the factor instruments (\(r = 0\)), which quantifies the sensitivity of a factor to changes in the instruments through \(\varvec{\Omega }\). The responses with respect to past shocks in the instruments start in period \(r > 0\). In \(r = 0\), the first factor strongly reacts to shocks in the market (Mkt) and size (SMB) factors. However, the responses in \(r = 1\) are close to zero, indicating that these variables are less important for subsequent periods.

Fig. 3 Responses to impulses in factor instruments. The figure presents the impulse-response functions of each factor to shocks to each factor instrument. The results are based on the RDCFM with \(K = 5\) factors. The x-axis denotes the index of the period following the impulse period, with 0 denoting the impulse period.

As already indicated by Fig. 2, the fourth factor has the longest memory process. Again, its responses show an interesting pattern. In \(r=0\), the factor reacts to Mkt, SMB, HML, RMW, and ROE. Given the fourth factor’s high degree of autocorrelation, it is not surprising that shocks to these variables cause longer responses. The contemporaneous contribution of WML to the fourth factor is zero, as indicated by the response in \(r=0\). However, there is a response in \(r = 1\), suggesting that WML enters the fourth factor due to correlation with lagged factors. Interestingly, the response to WML in the first period after the impulse is larger than that to the market return, RMW, ROE, and HML (in absolute terms). A look at the other factors reveals that, with the only exception being the second factor, the responses of all factors with respect to innovations in WML at \(r = 1\) are distinguishable from zero, suggesting that lagged WML is relevant for explaining the time-series variation of stock returns.

3.3.3 Comparison with benchmark models

Within the model family, the RDCFM outperforms all other conditional factor models. However, how does it perform compared to benchmark models previously studied in the literature? In the forecasting literature, it is common to compare model forecasts with a naive benchmark model to analyze whether a model provides any valuable information. Therefore, we compare the predictions of the RDCFM with the prevailing (time-series) mean forecast. However, for the prediction of individual stock returns, a naive zero-return forecast often outperforms the mean model (Gu et al. 2020; Cakici et al. 2023a, b; Fieberg et al. 2023); therefore, we additionally compare the RDCFM with the zero return benchmark. To analyze whether the RDCFM can compete with other factor models from the finance literature, we compare the performance of the RDCFM relative to these models. We begin with an empirical benchmark, i.e., empirical factor models, including the capital asset pricing model (CAPM), the Fama-French 3-, 4-, 5-, and 6-factor models (Carhart 1997; Fama and French 1993, 2015, 2018). Given the observable factors, we estimate the unconditional factor loadings via OLS and the conditional factor loadings according to Eq. (13). The second set of benchmark models includes statistical factor models based on PCA (Connor and Korajczyk 1988) and IPCA (Kelly et al. 2019a). PCA extracts factors through an eigenvalue decomposition of the covariance matrix of stock returns. However, estimating the covariance matrix of individual stock returns requires a workaround because we have to address the missing data. We follow a large branch of the literature and extract latent factors from grouped data, i.e., portfolios (Kozak et al. 2018). Specifically, we use the same characteristic-managed portfolios as in Kelly et al. (2019a), extract the latent factors from the covariance matrix of portfolio returns, and estimate asset-specific loadings via OLS regression. 
A PCA equivalent with conditional loadings is IPCA (Kelly et al. 2019a, b), which finds latent factors and \(\varvec{\Gamma }_{\beta }\) coefficients by alternating least squares. That is, given an estimate for the factors, IPCA estimates \(\varvec{\Gamma }_{\beta }\) as described in Eq. (13) and updates the factor estimates via cross-sectional regressions of characteristic-managed portfolio returns on the estimated \(\varvec{\Gamma }_{\beta }\) coefficients.

Table 9 reports the differences in the MSPEs between the RDCFM and the benchmark models. Positive differences indicate that the benchmark models have higher prediction errors. Note that for the empirical factor models, \(K = 1,3,\ldots ,6\) refers to the CAPM and the Fama-French 3-, 4-, 5-, and 6-factor models. Because the benchmark models and the RDCFM are non-nested, we test the statistical significance of the MSPE differences by using the cross-sectional version of the Diebold and Mariano (1995) test (Gu et al. 2020; Fieberg et al. 2023). If the RDCFM significantly outperforms a benchmark model at the 1%, 5%, or 10% level, we denote this with asterisks. We find that in every specification, the RDCFM has a significantly lower prediction error than the prevailing mean and zero-return forecasts and outperforms all prevailing benchmark factor models. The differences are particularly large when the RDCFM is compared to models with unconditional loadings. This finding, once again, indicates that models with unconditional factor loadings suffer from overfitting and/or are misspecified in this application.

Table 10 reports the number of free parameters to estimate for the benchmark factor models. Since the empirical factor models use prespecified factors, the additional number of parameters for each additional factor is N or M when estimating unconditional or conditional factor loadings, respectively. In addition to the parameters for the factor loadings, PCA and IPCA estimate T additional parameters for each factor. For the six-factor model, IPCA estimates 3816 parameters, whereas the RDCFM estimates only 320 parameters. Accordingly, the RDCFM achieves parsimonious parametrization by incorporating information from macroeconomic variables, which results in its superior performance compared to IPCA.
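
The parameter counts quoted above can be reproduced with simple arithmetic. The sketch assumes M = 37 loading instruments (the 36 characteristics plus a constant) and uses the sample dimensions from Sect. 3.1; the RDCFM total is not recomputed because it depends on specification details not restated here.

```python
# Sample dimensions: stocks, loading instruments (36 + constant), months, factors.
N, M, T, K = 11_452, 37, 599, 6

ipca_params = K * (M + T)        # conditional loadings plus one factor value per month
uncond_loadings = N * K          # loadings estimated separately for every stock
cond_loadings = M * K            # loadings driven by characteristics

print(ipca_params, uncond_loadings, cond_loadings)
```

The IPCA count matches the 3816 parameters reported for the six-factor model, and the comparison of 68,712 unconditional with 222 conditional loading parameters shows where the reduction in dimensionality comes from.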

4 Conclusion

We propose the RDCFM, a new white-box machine learning method that allows for the simultaneous modeling of the time-series and the cross-section of response variables. By marrying RNNs with economic factor models, our model (1) uses conditioning information included in macroeconomic variables to create common latent factors that drive the common time-series variation in the response variables, (2) allows these factors to follow an internal (nonlinear) VAR process, and (3) uses microeconomic variables to estimate conditional, time-varying loadings on these dynamic conditional factors. Since both factors and factor loadings are linear combinations of observable instrumental variables, we provide a low-parameter specification of dynamic latent factors and time-varying factor loadings, which results in improved parameter stability and generalizability. We demonstrate the use of the RDCFM in an application from the financial economics literature, namely the prediction of stock returns, and find that it outperforms prevailing benchmark factor models.

The RDCFM is not restricted to the conditioning of factors and factor loadings on macroeconomic and microeconomic variables. In a broader sense, the factor instruments may be more accurately described as state variables and the factor loading instruments may be described as object-specific features. Thus, the RDCFM is a general-purpose method that can be applied to solve numerous regression or classification problems—not limited to applications from business studies and economics. The RDCFM’s only empirical restriction is that the response variables and macroeconomic conditions are observable over time. Then, the model features can be enabled and disabled to best suit the particular application.

Although the RDCFM is already a powerful tool for factor modeling, we see scope for several extensions. The memory of the factors is short-term because we restrict attention to a VAR process of lag order one. The concept of conditional factor loadings may be incorporated into modern neural network architectures that allow for longer memory processes, for example, long short-term memory networks or gated recurrent unit networks. Regarding factor loadings, the estimation of \(\varvec{\Gamma }_{\beta }\) may be modified to account for nonlinear relationships (a nonlinear extension to IPCA is proposed by Gu et al. 2021). Furthermore, one may also allow for VAR factor loadings to capture (short-term) autocorrelations across factor loadings.

Notes

For illustration purposes, we refer to Gu et al. (2020). They predict U.S. stock returns by using 94 firm characteristics, 74 indicator variables (i.e., microeconomic variables), and 8 macroeconomic variables. They stack the data and use all 94 characteristics, 74 indicators, and the interactions of the 94 characteristics with the 8 macroeconomic variables, which results in a total of \(94 \times (8 + 1) + 74 = 920\) variables.

Although there are numerous extensions to VAR models to address this problem [e.g., VAR models with exogenous variables (VARX), factor-augmented VAR (FA-VAR) (Bernanke et al. 2005), and panel VAR (PVAR) (Canova et al. 2012)], they are highly parameterized; therefore, data limitations remain a problem. For example, Canova et al. (2012) use a PVAR model to model six time-series of ten European countries. They further include four exogenous macroeconomic variables, which results in a total of 3840 parameters.

Note that, in Eq. (11), we use the sum of squared residuals as the objective function because it is the most commonly used objective function for solving regression problems in the literature. However, any differentiable objective function can be used. Without methodological changes, the objective function can be exchanged or augmented with regularization terms to allow for shrinkage (\(L^2\)) and sparsity (\(L^1\)) (Tibshirani 1996; Zou and Hastie 2005). If it is not possible to solve analytically for the \(\varvec{\Gamma }_{\beta }\) coefficients after changing the objective function, then one can solve for \(\varvec{\Gamma }_{\beta }\) using nonlinear least squares, as we demonstrate in “Appendix 1.2” for the binary cross-entropy objective function to solve classification problems. Regarding regularization terms, we do not include \(L^1\) or \(L^2\) penalties in our empirical application because our dimensionality reduction yields a parsimonious model that, as we show in the empirical part of this paper, does not suffer from overfitting.
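As a minimal sketch of such an augmented objective, the following Python function adds \(L^1\) and \(L^2\) penalties on the loading coefficients to the sum of squared residuals. The function name `penalized_sse` and the penalty weights `l1` and `l2` are hypothetical and not part of our estimation code.

```python
import numpy as np


def penalized_sse(residuals, gamma_beta, l1=0.0, l2=0.0):
    """Sum of squared residuals plus optional L1/L2 penalties (sketch).

    residuals: array of model residuals (any shape).
    gamma_beta: array of loading coefficients that the penalties act on.
    l1, l2: non-negative penalty weights (hypothetical tuning parameters).
    """
    residuals = np.asarray(residuals, dtype=float)
    gamma_beta = np.asarray(gamma_beta, dtype=float)
    sse = np.sum(residuals**2)
    sparsity = l1 * np.sum(np.abs(gamma_beta))  # L1 term: encourages zeros
    shrinkage = l2 * np.sum(gamma_beta**2)      # L2 term: shrinks toward zero
    return sse + sparsity + shrinkage
```

Setting both weights to zero recovers the unpenalized sum of squared residuals.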

Note that we dropped the intercept in Eq. (1). Technically, there is no difference between including or excluding the intercept; therefore, the intercept can easily be included by adding a constant factor to the matrix of latent factors.

Note that the actual choice of the maximal VIF does not qualitatively change the results. Setting the maximum VIF to 2 limits the multicollinearity among the factors: a VIF of 2 means that at most half of the variance of any factor is explained by the other factors. Stricter thresholds may further reduce the correlation among the factors but may also increase the computation time. We find that a VIF of 2 is a good tradeoff between orthogonality and computational effort.
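For readers who want to check such a threshold on their own factor estimates, the following Python sketch computes the VIF of each factor as the corresponding diagonal element of the inverse correlation matrix, which equals \(1/(1-R_j^2)\) from regressing factor j on the remaining factors. The function name and the \(T \times K\) layout are assumptions for illustration, and the sketch requires at least two factors.

```python
import numpy as np


def variance_inflation_factors(factors):
    """VIF of each column of a (T, K) factor matrix with K >= 2 (sketch)."""
    factors = np.asarray(factors, dtype=float)
    # Correlation matrix across the K factor columns.
    corr = np.corrcoef(factors, rowvar=False)
    # The j-th diagonal element of the inverse equals 1 / (1 - R_j^2).
    return np.diag(np.linalg.inv(corr))


# Two factors with a squared correlation of 0.5, i.e., a VIF of 2.
x = np.array([1.0, -1.0, 1.0, -1.0])
z = np.array([1.0, 1.0, -1.0, -1.0])
print(variance_inflation_factors(np.column_stack([x, x + z])))
```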

This is similar to the normalization of eigenvectors in a PCA, which are scaled to be unit vectors. Again, the actual choice of the maximum norm does not significantly affect the results.

Generally, factor models describe not only the first moment but also the second moment, i.e., the variance–covariance matrix of the responses. Instead of computing a full variance–covariance matrix, which requires the estimation of \(N(N+1)/2\) free parameters, the variance–covariance matrix can be obtained by the following decomposition (e.g., Moskowitz 2003):

$$\begin{aligned} \varvec{\Sigma }_{t}^{y} = \varvec{B}_{t} \varvec{\Sigma }^{f} \varvec{B}_{t}^{'} + \varvec{D} \end{aligned}$$

where \(\varvec{\Sigma }_{t}^{y}\) is the \(N \times N\) covariance matrix of the response variables in t, \(\varvec{\Sigma }^{f}\) is the \(K \times K\) covariance matrix of the factors, and \(\varvec{D}\) is an \(N \times N\) diagonal matrix of residual variances. For example, Jacobs et al. (2005) show that the assumption of a factor model improves the estimation of the variance–covariance matrix. Since the conditional factor loadings \(\varvec{B}_{t}\) are time-varying, the RDCFM models a time-varying covariance matrix without estimating additional parameters.
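The decomposition can be sketched in Python as follows. The function name `factor_covariance` and the argument names are hypothetical. The inputs stand for the period-t loadings \(\varvec{B}_{t}\), the factor covariance matrix \(\varvec{\Sigma }^{f}\), and the diagonal of \(\varvec{D}\).

```python
import numpy as np


def factor_covariance(B_t, Sigma_f, resid_var):
    """Covariance matrix implied by a factor model for one period (sketch).

    B_t: (N, K) factor loadings in period t.
    Sigma_f: (K, K) covariance matrix of the factors.
    resid_var: length-N vector of residual variances (diagonal of D).
    """
    B_t = np.asarray(B_t, dtype=float)
    Sigma_f = np.asarray(Sigma_f, dtype=float)
    D = np.diag(np.asarray(resid_var, dtype=float))
    # Systematic part B_t Sigma_f B_t' plus idiosyncratic part D.
    return B_t @ Sigma_f @ B_t.T + D


# Toy example with N = 3 objects and K = 2 factors.
B = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
print(factor_covariance(B, np.eye(2), np.ones(3)))
```

Recomputing the product with the loadings of another period yields the covariance matrix for that period, with \(\varvec{\Sigma }^{f}\) and \(\varvec{D}\) unchanged.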

Due to the way the conditional factors are specified (i.e., factors are conditional on non-lagged instruments), the RDCFM is not only a predictive model but also an explanatory one. For the interested (finance) reader, we also evaluate the explanatory power of the factor structure for contemporaneous returns in “Appendices 3.1 (in-sample) and 7.2 (out-of-sample)”. However, the analysis of the model’s explanatory power is very finance-specific. Since this paper aims to present a new general-purpose method and not its application to financial economics, we only present the results on the predictive performance of the models in the main analysis.

Clark and McCracken (2005) and Clark and West (2007) show that the commonly used Diebold and Mariano (1995) test is undersized in nested models. If we test the relevance of the VAR process, then the DCFM and CFM are nested in the RDCFM and RCFM, respectively. Similarly, if we test for the relevance of additional factors, then the higher-dimensional factor models nest lower-dimensional factor models. Technically, the models with unconditional factor loadings are not nested in models with conditional loadings. Accordingly, in this case, the Clark and West (2007) test may be unsuitable for comparisons. However, in unreported results, we find that the results are qualitatively unchanged when applying the Diebold and Mariano (1995) test.

“Appendix 3.1” reports the in-sample performance of the competing RDCFM variants and 7.2 reports the corresponding out-of-sample measures. The results suggest that the RDCFM generalizes well, indicating that the identified factor structure is stable. The \(MSE^{IS}\) of the five-factor RDCFM is 217.8.

Note that we re-estimate \(\varvec{\Gamma }_{\beta }\) after obtaining new factor estimates, using the shuffled factor instruments. In unreported results, we find larger declines in \(MSE^{IS}\) when we do not re-estimate \(\varvec{\Gamma }_{\beta }\). However, the overall ranking does not change.

Note that missing values in the vector of errors and in the matrix of factor loadings are replaced with zeros, i.e., they do not contribute to the overall error flow.
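The effect of this zero-filling can be illustrated with a short Python sketch. It assumes, for illustration only, that the error signal passed back to the factors in a given period is the product of the transposed loading matrix and the error vector. The function name is hypothetical.

```python
import numpy as np


def backpropagated_error(B_t, e_t):
    """Error signal for the factors with missing values zero-filled (sketch).

    B_t: (N, K) factor loadings, NaN rows for objects missing in period t.
    e_t: length-N vector of errors, NaN for missing objects.
    """
    B_filled = np.nan_to_num(np.asarray(B_t, dtype=float), nan=0.0)
    e_filled = np.nan_to_num(np.asarray(e_t, dtype=float), nan=0.0)
    # Zero rows and zero errors add nothing to the sum over objects.
    return B_filled.T @ e_filled


B = np.array([[1.0, 2.0], [np.nan, np.nan], [3.0, 4.0]])
e = np.array([1.0, np.nan, 2.0])
print(backpropagated_error(B, e))
```

The result is identical to the one obtained after dropping the missing object from both inputs.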

To ensure the equivalence of the recurrent and feedforward system, unfolding in time assumes that the weights for the feedforward system are the same for each layer, i.e., the “shared weights” property.

Note that the derivative of the sigmoid function is \(z \left( 1 - z \right)\).
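Here, z denotes the output of the sigmoid function. A quick numerical check of this identity in Python, with function names chosen for illustration, is:

```python
import numpy as np


def sigmoid(x):
    """Logistic sigmoid function."""
    return 1.0 / (1.0 + np.exp(-x))


def sigmoid_grad_from_output(z):
    """Derivative of the sigmoid expressed through its output z."""
    return z * (1.0 - z)


# Compare with a central finite-difference approximation.
x = np.linspace(-3.0, 3.0, 7)
h = 1e-5
numeric = (sigmoid(x + h) - sigmoid(x - h)) / (2.0 * h)
print(np.allclose(numeric, sigmoid_grad_from_output(sigmoid(x)), atol=1e-8))
```

Expressing the derivative through the output avoids recomputing the exponential during backpropagation.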

References

Abid I, Ayadi R, Guesmi K, Mkaouar F (2022) A new approach to deal with variable selection in neural networks: an application to bankruptcy prediction. Ann Oper Res 313(2):605–623

Agrawal VV, Yücel Ş (2022) Design of electricity demand-response programs. Manag Sci 68(10):7441–7456

Asness CS, Moskowitz TJ, Pedersen LH (2013) Value and momentum everywhere. J Finance 68(3):929–985

Avramov D, Chordia T (2006) Asset pricing models and financial market anomalies. Rev Financ Stud 19(3):1001–1040

Bai J, Wang P (2015) Identification and Bayesian estimation of dynamic factor models. J Bus Econ Stat 33(2):221–240

Bali TG, Cakici N, Tang Y (2009) The conditional beta and the cross-section of expected returns. Financ Manag 38(1):103–137

Bali TG, Engle RF, Tang Y (2017) Dynamic conditional beta is alive and well in the cross section of daily stock returns. Manag Sci 63(11):3760–3779

Banz RW (1981) The relationship between return and market value of common stocks. J Financ Econ 9(1):3–18

Barillas F, Shanken J (2018) Comparing asset pricing models. J Finance 73(2):715–754

Basu S (1983) The relationship between earnings’ yield, market value and return for NYSE common stocks. J Financ Econ 12(1):129–156

Beasley MS (1996) An empirical analysis of the relation between the board of director composition and financial statement fraud. Account Rev 71(4):443–465

Bernanke B, Boivin J, Eliasz P (2005) Measuring the effects of monetary policy: a factor-augmented vector autoregressive (FAVAR) approach. Q J Econ 120:387–422

Bertsimas D, Bjarnadóttir MV, Kane MA, Kryder JC, Pandey R, Vempala S, Wang G (2008) Algorithmic prediction of health-care costs. Oper Res 56(6):1382–1392

Blasques F, Hoogerkamp MH, Koopman SJ, van de Werve I (2021) Dynamic factor models with clustered loadings: forecasting education flows using unemployment data. Int J Forecast 37(4):1426–1441

Boone T, Ganeshan R, Hicks RL, Sanders NR (2018) Can Google Trends improve your sales forecast? Prod Oper Manag 27(10):1770–1774

Breiman L (2001) Random forests. Mach Learn 45(1):5–32

Broyden CG (1970) The convergence of a class of double-rank minimization algorithms. IMA J Appl Math 6(1):76–90

Cakici N, Fieberg C, Metko D, Zaremba A (2023a) Machine learning goes global: cross-sectional return predictability in international stock markets. J Econ Dyn Control 155:104725

Cakici N, Fieberg C, Metko D, Zaremba A (2023b) Predicting returns with machine learning across horizons, firm size, and time. J Financ Data Sci 5(4):119–144

Canova F, Ciccarelli M, Ortega E (2012) Do institutional changes affect business cycles? Evidence from Europe. J Econ Dyn Control 36(10):1520–1533

Carhart MM (1997) On persistence in mutual fund performance. J Finance 52(1):57–82

Cecchini M, Aytug H, Koehler GJ, Pathak P (2010) Detecting management fraud in public companies. Manag Sci 56(7):1146–1160

Chou P, Chuang HHC, Chou YC, Liang TP (2022) Predictive analytics for customer repurchase: interdisciplinary integration of buy till you die modeling and machine learning. Eur J Oper Res 296(2):635–651

Clark TE, McCracken MW (2005) Evaluating direct multistep forecasts. Econom Rev 24(4):369–404

Clark TE, West KD (2007) Approximately normal tests for equal predictive accuracy in nested models. J Econom 138(1):291–311

Cochrane JH (2011) Presidential address: discount rates. J Finance 66(4):1047–1108

Cohen MC, Zhang R, Jiao K (2022) Data aggregation and demand prediction. Oper Res 70:2597–2618

Connor G, Korajczyk RA (1988) Risk and return in an equilibrium APT: application of a new test methodology. J Financ Econ 21(2):255–289

Datar VT, Naik YN, Radcliffe R (1998) Liquidity and stock returns: an alternative test. J Financ Mark 1(2):203–219

Del Negro M, Otrok CM (2008) Dynamic factor models with time-varying parameters: measuring changes in international business cycles. Working paper

Diebold F, Mariano R (1995) Comparing predictive accuracy. J Bus Econ Stat 13(3):253–263

Elman J (1990) Finding structure in time. Cogn Sci 14(2):179–211

Erişen E, Iyigun C, Tanrısever F (2017) Short-term electricity load forecasting with special days: an analysis on parametric and non-parametric methods. Ann Oper Res. https://doi.org/10.1007/s10479-017-2726-6

Fama EF, French KR (1992) The cross-section of expected stock returns. J Finance 47(2):427–465

Fama EF, French KR (1993) Common risk factors in the returns on stocks and bonds. J Financ Econ 33(1):3–56

Fama EF, French KR (2015) A five-factor asset pricing model. J Financ Econ 116(1):1–22

Fama EF, French KR (2018) Choosing factors. J Financ Econ 128(2):234–252

Ferson WE, Harvey CR (1991) The variation of economic risk premiums. J Polit Econ 99(2):385–415

Ferson WE, Harvey CR (1993) The risk and predictability of international equity returns. Rev Financ Stud 6(3):527–566

Fieberg C, Metko D, Poddig T, Loy T (2023) Machine learning techniques for cross-sectional equity returns’ prediction. OR Spectrum 45(1):289–323

Fletcher R (1970) A new approach to variable metric algorithms. Comput J 13(3):317–322

Frazzini A, Pedersen LH (2014) Betting against beta. J Financ Econ 111(1):1–25

Goldfarb D (1970) A family of variable-metric methods derived by variational means. Math Comput 24(109):23–26

Granger CWJ (1969) Investigating causal relations by econometric models and cross-spectral methods. Econometrica 37(3):424–438

Granger CWJ (1980) Testing for causality. J Econ Dyn Control 2:329–352

Granger CWJ (1988) Causality, cointegration, and control. J Econ Dyn Control 12(2–3):551–559

Greene WH (2003) Econometric analysis, 5th edn. Prentice Hall, Upper Saddle River

Gross DB, Souleles NS (2002) An empirical analysis of personal bankruptcy and delinquency. Rev Financ Stud 15(1):319–347