Abstract

Innovation contests have recently become an important tool for companies to source ideas and solutions. In a homogenous innovation contest model, a contestant’s output is usually assumed to be the sum of her effort and a random shock. In this paper, we focus on the multiplicative output form, in which a contestant’s output is the product of her effort and a random shock. We address several contest design problems in the model with multiplicative output form, including the award scheme, the entry policy, and endogenous duration. We show that results obtained in the model with additive output form do not always hold in the model with multiplicative output form. Our findings can explain some practical observations and suggest that the model with multiplicative output form is a useful alternative, which enriches contest theory.

Data availability

My manuscript has no associated data or the data will not be deposited.

References

Ales L, Cho SH, Körpeoğlu E (2017) Optimal award scheme in innovation tournaments. Oper Res 65(3):693–702

Ales L, Cho SH, Körpeoğlu E (2020) Innovation tournaments with multiple contributors. Prod Oper Manag 30(6):1772–1784

Brabham DC (2013) Crowdsourcing. MIT Press, Cambridge

Boudreau KJ, Lacetera N, Lakhani KR (2011) Incentives and problem uncertainty in innovation contests: an empirical analysis. Manag Sci 57(5):843–863

Che YK, Gale I (2003) Optimal design of research contests. Am Econ Rev 93(3):646–671

Chen YJ, Dai T, Korpeoglu CG, Körpeoğlu E, Sahin O, Tang CS, Xiao S (2020) Innovative online platforms: research opportunities. Manuf Serv Oper Manag 22(3):430–445

Cason T, Masters W, Sheremeta R (2020) Winner-take-all and proportional-prize contests: theory and experimental results. J Econ Behav Organ 175:314–327

Daley B, Wang R (2018) When to release feedback in a dynamic tournament. Decis Anal 15(1):11–26

Fullerton RL, McAfee RP (1999) Auctioning entry into tournaments. J Polit Econ 107(3):573–605

Gerchak Y, He QM (2003) When will the range of prizes in tournaments increase in the noise or in the number of players? Int Game Theory Rev 5:151–166

Hu M, Wang L (2020) Joint vs. separate crowdsourcing contests. Manag Sci 67(5):2711–2728

Kalra A, Shi M (2001) Designing optimal sales contests: a theoretical perspective. Mark Sci 20(2):170–193

Körpeoğlu E, Cho SH (2017) Incentives in contests with heterogeneous solvers. Manag Sci 64(6):2709–2715

Körpeoğlu E, Korpeoglu CT, Hafalir IE (2021) Parallel innovation contests. Oper Res, Forthcoming

Korpeoglu C, Körpeoğlu E, Tunç S (2020) Optimal duration of innovation contests. Manuf Serv Oper Manag 23(3):657–675

Lang M, Seel C, Strack P (2014) Deadlines in stochastic contests. J Math Econ 52:134–142

Mihm J, Schlapp J (2019) Sourcing innovation: on feedback in contests. Manag Sci 65(2):559–576

Moldovanu B, Sela A (2001) The optimal allocation of prizes in contests. Am Econ Rev 91(3):542–558

Seel C (2018) Contests with endogenous deadlines. J Econ Manag Strategy 27(1):119–133

Seel C, Strack P (2016) Continuous time contests with private information. Math Oper Res 41(3):1093–1107

Shao B, Shi L, Xu B, Liu L (2012) Factors affecting participation of solvers in crowdsourcing: an empirical study from China. Electron Mark 22(2):73–82

Stouras KI, Hutchison-Krupat J, Chao RO (2021) The role of participation in innovation contests. Manag Sci, Published Online

Taylor CR (1995) Digging for golden carrots: an analysis of research tournaments. Am Econ Rev 85(4):872–890

Terwiesch C, Xu Y (2008) Innovation contests, open innovation, and multiagent problem solving. Manag Sci 54(9):1529–1543

Tian X (2021) Innovation contests with risk-averse participants. Nav Res Logist, Published Online

Tian X, Bi GB (2021) Award scheme in random trial contests. Ann Oper Res 302(1):313–325

Tian X, Bi GB, Shen XB, Liu LD (2021) Crowdsourcing contests with entry cost. Int Trans Oper Res 28(3):1371–1392

Acknowledgements

This work is partially supported by the National Natural Science Foundation of China [Grants 71731010, 71571174].

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Proof of Lemma 1

Solving the optimizing problem of contestants:

Using the first-order condition, at optimality, we have

Recall that

Taking the first-order derivative of \(Pr(q_{i} \text { ranks order j })\) with respect to \(e_{i}\) and by symmetry \(e_{i}=e^*\) at optimality in the first-order derivative \(\frac{\partial Pr(q_{i} \text { ranks order j })}{\partial e_i}\) (see, Ales et al. 2020), it is not hard to verify that \(\frac{\partial Pr(q_{i} \text { ranks order 1 })}{\partial e_i}=\frac{1}{e^*}\mathbb {E}\big [\epsilon _{1}^{n-1}f(\epsilon _{1}^{n-1})\big ]\), \(\frac{\partial Pr(q_{i} \text { ranks order j })}{\partial e_i}=\frac{1}{e^*}\left\{ \mathbb {E}\big [\epsilon _{j}^{n-1}f(\epsilon _{j}^{n-1})\big ]-\mathbb {E}\big [\epsilon _{j-1}^{n-1}f(\epsilon _{j-1}^{n-1})\big ]\right\}\) for \(2\le j\le n-1\), and \(\frac{\partial Pr(q_{i} \text { ranks order n })}{\partial e_i}=-\frac{1}{e^*}\mathbb {E}\big [\epsilon _{n-1}^{n-1}f(\epsilon _{n-1}^{n-1})\big ]\). Recall that \(C(e_i)=e_i^b\), via simple calculation, we can obtain this lemma. \(\square\)
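The "simple calculation" can be sketched as follows. This is a reconstruction under stated assumptions, not the original display equations: we assume the contestant maximizes \(\sum _{j}U(V_{j})Pr(q_{i} \text { ranks order j })-C(e_{i})\), that \(U(V_{j})=0\) for \(j>L\) with \(L\le n-1\), and we write \(E_{j}={\mathbb {E}}\big [\epsilon _{j}^{n-1}f(\epsilon _{j}^{n-1})\big ]\) as shorthand. The first-order condition at the symmetric point is

\[
\sum _{j=1}^{n}U(V_{j})\frac{\partial Pr(q_{i} \text { ranks order j })}{\partial e_{i}}\bigg |_{e_{i}=e^*}=C^{\prime }(e^*)=b(e^*)^{b-1}.
\]

Multiplying both sides by \(e^*\), inserting the three derivative expressions above, and regrouping the sum by \(E_{j}\) gives

\[
bC(e^*)=U(V_{1})E_{1}+\sum _{j=2}^{n-1}U(V_{j})\big (E_{j}-E_{j-1}\big )-U(V_{n})E_{n-1}=\sum _{j=1}^{L}\big [U(V_{j})-U(V_{j+1})\big ]E_{j},
\]

which agrees with the expression for \(bC(e^*)\) used in the proof of Proposition 5.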

Appendix 2: Proof of Lemma 2

In the model with endogenous duration, the optimizing problem of contestants is given by

Using the first-order condition, at optimality, we have

Similar to the proof of Lemma 1 and since \(C(e_i,T)=e_i^bT^{1-b}\), using simple calculation, we can obtain this lemma. \(\square\)

Appendix 3: Proof of Lemma 3

In the endogenous duration contest model, the optimal duration \(T^{*}\) can be obtained by the following maximization problem:

Let k be the Lagrange multiplier. The optimal contest duration \(T^{*}\) and the optimal Lagrange multiplier \(k^{*}(\ge 0)\) satisfy the following Kuhn–Tucker conditions:

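Then, the optimal contest duration is \(T^{*}=\Big [{\mathbb {E}}[\epsilon _{1}^{n}](\frac{A}{b})^{\frac{1}{b}}\frac{b-1}{b}\frac{1}{\delta }\Big ]^{b}\),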

where \(A=\sum _{j=1}^{L}\big [U(V_{j})-U(V_{j+1})\big ]{\mathbb {E}}\big [\epsilon _{j}^{n-1}f(\epsilon _{j}^{n-1})\big ]\). \(\square\)

Appendix 4: Proof of Proposition 1

Note that this proposition can be obtained along the lines of Tian (2021), which also analyzes the multiplicative utility function model; see the proof of Theorem 6 there. That paper assumes that the contestants are risk-averse and that the random shock follows an exponential distribution, whereas we assume that the random shock follows a log-normal distribution and that the contestants are risk-taking, risk-neutral, or risk-averse. The proof is given below.

By Lemma 1 and because \(C^{\prime }(e)e\) is an increasing function, maximizing the sponsor’s profit \(\pi\) reduces to maximizing \(\sum _{j=1}^{L}U(V_{j})l_{j}^{n}\),

where \(l_{j}^{n}={\mathbb {E}}\big [\epsilon _{j}^{n-1}f(\epsilon _{j}^{n-1})\big ]-{\mathbb {E}}\big [\epsilon _{j-1}^{n-1}f(\epsilon _{j-1}^{n-1})\big ]\) for \(j \in \{2,3,\ldots ,n-1\}\), \(l_{n}^{n}=-{\mathbb {E}}\big [\epsilon _{n-1}^{n-1}f(\epsilon _{n-1}^{n-1})\big ]<0\), and \(l_{1}^{n}={\mathbb {E}}\big [\epsilon _{1}^{n-1}f(\epsilon _{1}^{n-1})\big ]>0\).

Next, we will show that for log-normal distributions, given any L, there exists a critical value \(n_{L}\) such that

Using integration by parts, it is not hard to verify that

Therefore, we have \(l_{j}^{n}={\mathbb {E}}\big [\epsilon _{j}^{n-1}f(\epsilon _{j}^{n-1})\big ]-{\mathbb {E}}\big [\epsilon _{j-1}^{n-1}f(\epsilon _{j-1}^{n-1})\big ]=-\int _{0}^{\infty }\frac{(n-1)!}{(j-1)!(n-j)!}\left( 1-F(x)\right) ^{j-1}{F(x)}^{n-j}\left( xf(x)\right) ^{\prime }\mathrm{d}x\) for \(j \in \{2,3,\ldots ,n-1\}\). Similarly, we can obtain

Also, we have \(l_{2}^{n}=-\int _{0}^{\infty }{F(x)}^{n-1}\big (xf(x)\big )^{\prime }\mathrm{d}x+\int _{0}^{\infty }\big (1-F(x)\big ){F(x)}^{n-1}\left( \frac{\big (xf(x)\big )^{\prime }}{f(x)}\right) ^{\prime }\mathrm{d}x\). Using integration by parts, we have \(l_{1}^{n}={\mathbb {E}}\big [\epsilon _{1}^{n-1}f(\epsilon _{1}^{n-1})\big ]=-\int _{0}^{\infty }{F(x)}^{n-1}\big (xf(x)\big )^{\prime }\mathrm{d}x\). Recall that

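\(f(x)=\frac{1}{x\sigma \sqrt{2\pi }}\exp \left( -\frac{(\ln x-\mu )^2}{2\sigma ^{2}}\right)\) for the log-normal distribution, so we obtain \(\left( \frac{\big (xf(x)\big )^{\prime }}{f(x)}\right) ^{\prime }=-\frac{1}{\sigma ^{2}x}<0\). Therefore, \(l_{1}^{n}\ge l_{2}^{n}\ge \cdots \ge l_{L}^{n}\).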

By some calculation, we have \(-\big (xf(x)\big )^{\prime }=f(x)\frac{\ln x-\mu }{\sigma ^2}\) and

Therefore, \(\frac{n}{n-1}l_{j}^{n}\) can be viewed as the expectation of \(\frac{\ln x-\mu }{\sigma ^2}\) under the PDF of \(\epsilon _{j-1}^{n+1}\). It is not hard to verify that when \(n\rightarrow \infty\), \(\frac{n}{n-1}l_{j}^{n}\rightarrow \infty\), which implies that \(l_j^n>0\) if \(n\ge n^{j}\) for some critical value \(n^{j}\). Let \(n_{L}=\max \{n^{j},1\le j \le L\}\), then we can obtain if \(n>n_{L}\) for some critical value \(n_{L}\), we have \(l_j^n>0\) for any \(1\le j\le L\).
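The sign and ordering of the coefficients \(l_{j}^{n}\) can be checked numerically. The sketch below is illustrative only and not part of the original proof. It assumes log-normal shocks and uses the substitution \(z=(\ln x-\mu )/\sigma\), under which \(xf(x)=\phi (z)/\sigma\), so that \(\mu\) drops out. The function names `expected_xf` and `l_coeff`, the integration range, and the step count are hypothetical choices.

```python
from math import comb
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: pdf is phi, cdf is Phi


def expected_xf(j: int, m: int, sigma: float = 1.0,
                half_width: float = 8.0, steps: int = 4000) -> float:
    """Approximate E[eps_j^m f(eps_j^m)], eps_j^m being the j-th largest of m shocks.

    In z-space the j-th largest log-normal shock corresponds to the j-th
    largest of m standard normals, and x f(x) equals phi(z) / sigma.
    The integral is evaluated with the trapezoidal rule.
    """
    if not 1 <= j <= m:
        raise ValueError("need 1 <= j <= m")
    coef = j * comb(m, j)  # equals m! / ((j-1)! (m-j)!)
    h = 2.0 * half_width / steps
    total = 0.0
    for i in range(steps + 1):
        z = -half_width + i * h
        phi, Phi = STD_NORMAL.pdf(z), STD_NORMAL.cdf(z)
        density = coef * Phi ** (m - j) * (1.0 - Phi) ** (j - 1) * phi
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * (phi / sigma) * density
    return total * h


def l_coeff(j: int, n: int, sigma: float = 1.0) -> float:
    """l_j^n as defined in the proof of Proposition 1 (n contestants)."""
    if n < 2 or not 1 <= j <= n:
        raise ValueError("need n >= 2 and 1 <= j <= n")
    m = n - 1
    upper = expected_xf(j, m, sigma) if j <= m else 0.0
    lower = expected_xf(j - 1, m, sigma) if j >= 2 else 0.0
    return upper - lower


if __name__ == "__main__":
    for n in (4, 6, 10):
        print(n, [round(l_coeff(j, n), 4) for j in range(1, n + 1)])
```

Running the script prints the coefficients for a few contest sizes, which makes it easy to see for which ranks \(l_{j}^{n}\) is positive and that the sequence is non-increasing in j.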

Finally, we come to the sponsor’s optimization problem as follows:

When \(U(V)=V\), since \(l_{j}^{n}\) decreases in j, we have \(Vl_{1}^{n}>\sum _{j=1}^{L}V_{j}l_{j}^{n}\) for \(L\ge 2\), which implies that a single-winner award scheme is optimal. The case \(U(V)=V^{s}\) with \(s>1\) is similar. When \(0<s<1\), it is not hard to verify that, at optimality,

Then, we have \(V_{j}^*>0\) for any \(1\le j\le L\), which implies a multiple-winner award scheme is optimal. \(\square\)

Appendix 5: Proof of Proposition 2

Note that this proposition can be obtained along the lines of Tian (2021); see the proof of Theorem 6 there. The proof is given below.

By the proof of Proposition 1, if \(0<s<1\), it is not hard to verify that \(V_{i}^*=\frac{(l_{i}^{n})^{\frac{1}{1-s}}}{\sum _{j=1}^{L}(l_{j}^{n})^{\frac{1}{1-s}}}\). Next, we will show that \(l_{j-1}^n-l_{j}^{n}\ge l_{j}^{n}-l_{j+1}^{n}\) for any \(2\le j\le L-1\). Using integration by parts, we have

By some calculation, we have \(\left( \frac{\left( \frac{\big (xf(x)\big )^{\prime }}{f(x)}\right) ^{\prime }}{f(x)}\right) ^{\prime }<0\). Therefore, we can obtain \(l_{j-1}^n-l_{j}^{n}\ge l_{j}^{n}-l_{j+1}^{n}\). Also, by \(V_{j}^*=\frac{(l_{j}^{n})^{\frac{1}{1-s}}}{\sum _{j=1}^{L}(l_{j}^{n})^{\frac{1}{1-s}}}\) and \(r_{j}^*=V_{j}^*/V\), we can obtain \(r_{j-1}^*-r_{j}^*> r_{j}^*-r_{j+1}^*\) for any \(2\le j\le L-1\). \(\square\)
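The closed form for the award shares can be illustrated with a short computation. This is a minimal sketch, assuming \(U(V)=V^{s}\) with \(0<s<1\), strictly positive coefficients \(l_{j}^{n}\), and a total award normalized to one; the function name `prize_shares` and the input values in the usage line are hypothetical.

```python
from typing import List, Sequence


def prize_shares(l_values: Sequence[float], s: float) -> List[float]:
    """Award shares proportional to l_j ** (1 / (1 - s)), normalized to sum to one."""
    if not 0.0 < s < 1.0:
        raise ValueError("the closed form is stated for 0 < s < 1")
    if any(l <= 0.0 for l in l_values):
        raise ValueError("all coefficients must be strictly positive")
    exponent = 1.0 / (1.0 - s)
    weights = [l ** exponent for l in l_values]
    total = sum(weights)
    return [w / total for w in weights]


if __name__ == "__main__":
    # Hypothetical coefficients for three winners, ordered by rank.
    print(prize_shares([3.0, 2.0, 1.0], s=0.5))
```

Because the exponent \(\frac{1}{1-s}\) exceeds one, differences between the coefficients are amplified in the shares, so the shares fall faster in rank than the coefficients themselves.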

Appendix 6: Proof of Proposition 3

Using integration by parts, we have

Therefore, by the proof of Proposition 1, we have

if \(n\ge {\tilde{n}}_{L}\) for some critical value \({\tilde{n}}_{L}\). By Lemma 1, we have

Thus, the EAQ decreases in n if \(n\ge {\tilde{n}}_{L}\) for some critical value \({\tilde{n}}_{L}\). \(\square\)

Appendix 7: Proof of Proposition 4

Since \(\epsilon\) is log-normally distributed, \(F(x)=\int _{0}^{x}f(t)\mathrm{d}t=\int _{0}^{x}\frac{1}{t\sigma \sqrt{2\pi }}\exp \left( -\frac{(\ln t-\mu )^2}{2\sigma ^{2}}\right) \mathrm{d}t=\Phi \left( \frac{\ln x-\mu }{\sigma }\right)\), where \(\Phi (\cdot )\) is the CDF of the standard normal distribution. Thus, we have

Using the random shock transformation \({\hat{\epsilon }}=\gamma \epsilon ^{\alpha }\), we have

Since \(\Phi (x)\le 1\), then

On the other hand, \(\forall ~\epsilon >0\), \(\exists ~p<1\), such that \(np^{n-1}>n-\epsilon\), where \((1-\frac{\epsilon }{n})^{\frac{1}{n-1}}<p<1\). Then, \(\exists ~r\in {\mathcal {R}}^+\), \(\Phi (r)\ge p\).

Then, we have \(\lim _{\alpha \rightarrow \infty }{\mathbb {E}}[{{{\hat{\epsilon }}}}_{1}^{n}]\ge np^{n-1}>n-\epsilon\). Let \(\epsilon \rightarrow 0\), we obtain

In addition, \(\hbox {EMQ}=e^{*}\cdot {\mathbb {E}}[\epsilon _{1}^{n}]\). Then

By the above analysis, we have when \(\alpha \rightarrow \infty\), \(\frac{\frac{{\mathbb {E}}[\epsilon _{1}^{n+1}]}{n+1}}{\frac{{\mathbb {E}}[\epsilon _{1}^{n}]}{n}}\rightarrow 1\). Next, we will show that \(\frac{(n+1)e^{*}(n+1)}{ne^{*}(n)}>1\). Using integration by parts, we have

By simple calculation, we have \(\Big [\frac{xf(x)}{\big (1-F(x)\big )}\Big ]^{\prime }=\frac{f(x)}{1-F(x)}\left( \frac{\big (xf(x)\big )^{\prime }}{f(x)}+x\frac{f(x)}{1-F(x)}\right) =\frac{f(x)}{1-F(x)}(-\frac{\ln x-\mu }{\sigma ^{2}}+x\frac{f(x)}{1-F(x)})\).

If \(\ln x\le \mu\), then we can easily obtain that \(\Big [\frac{xf(x)}{\big (1-F(x)\big )}\Big ]^{\prime }>0\).

If \(\ln x>\mu\), we have

To proceed, we need the following property:

By this property, we have \(\frac{\ln x-\mu }{\sigma ^{2}}\le x\frac{f(x)}{1-F(x)}\le \frac{\ln x-\mu }{\sigma ^{2}}+\frac{1}{\ln x-\mu }\). Thus, \(\Big [\frac{xf(x)}{\big (1-F(x)\big )}\Big ]^{\prime }>0\). Then, \((n+1){\mathbb {E}}\big [\epsilon _{j}^{n}f(\epsilon _{j}^{n})\big ]\) increases in n for any \(1\le j\le L\). By Lemma 1, we have \(\frac{(n+1)e^{*}(n+1)}{ne^{*}(n)}>1\). Thus, there exists some critical value \(\alpha _1\) such that if \(\alpha >\alpha _1\), the EMQ increases in n. \(\square\)
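The two-sided bound on \(x\frac{f(x)}{1-F(x)}\) can be checked numerically. The sketch below is illustrative and assumes log-normal shocks; with \(z=(\ln x-\mu )/\sigma >0\), the bound is equivalent to the classical normal-tail inequality \(z\le \frac{\phi (z)}{1-\Phi (z)}\le z+\frac{1}{z}\). The function names are hypothetical, and `erfc` is used for the upper tail to avoid cancellation at large z.

```python
from math import erfc, exp, pi, sqrt


def phi(z: float) -> float:
    """Standard normal density."""
    return exp(-0.5 * z * z) / sqrt(2.0 * pi)


def upper_tail(z: float) -> float:
    """1 - Phi(z), computed via erfc for numerical accuracy in the tail."""
    return 0.5 * erfc(z / sqrt(2.0))


def scaled_hazard(z: float, sigma: float = 1.0) -> float:
    """x f(x) / (1 - F(x)) for a log-normal shock, evaluated at ln x = mu + sigma z."""
    return phi(z) / (sigma * upper_tail(z))


def bounds_hold(z: float, sigma: float = 1.0) -> bool:
    """Check (ln x - mu)/sigma^2 <= x f/(1-F) <= (ln x - mu)/sigma^2 + 1/(ln x - mu)."""
    if z <= 0.0:
        raise ValueError("the bound is stated for ln x > mu, i.e. z > 0")
    lower = z / sigma
    upper = z / sigma + 1.0 / (sigma * z)
    return lower <= scaled_hazard(z, sigma) <= upper


if __name__ == "__main__":
    grid = [0.1 * k for k in range(1, 81)]
    print(all(bounds_hold(z, sigma=0.7) for z in grid))
```

The same grid can be used to confirm that `scaled_hazard` is increasing in z on the sampled points, in line with the monotonicity of \(\frac{xf(x)}{1-F(x)}\) used in the proof.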

Appendix 8: Proof of Proposition 5

By the proof of Proposition 4, \(n{\mathbb {E}}\big [\epsilon _{j}^{n-1}f(\epsilon _{j}^{n-1})\big ]\) increases in n. In addition,

Then, this integral can be viewed as the expectation of \(\frac{xf(x)}{1-F(x)}\) under the PDF of \(\epsilon _{j+1}^{n}\). When \(n\rightarrow \infty\), \(\epsilon _{j+1}^{n}\rightarrow \infty\) with probability one. Since \(\epsilon\) is log-normally distributed, then we can verify that when \(x\rightarrow \infty\), \(\frac{xf(x)}{1-F(x)}\rightarrow \infty\), i.e., \(n{\mathbb {E}}\big [\epsilon _{j}^{n-1}f(\epsilon _{j}^{n-1})\big ]\rightarrow \infty\) when \(n\rightarrow \infty\).

Considering the participation constraint

and Lemma 1, \(bC(e^*)=\sum _{j=1}^{L}\big [U(V_{j})-U(V_{j+1})\big ]{\mathbb {E}}\big [\epsilon _{j}^{n-1}f(\epsilon _{j}^{n-1})\big ]\). When \(\frac{\sum _{j=1}^{L}U(V_j)}{n}=\frac{V}{n}\), it is easy to see that there exists an upper bound \({\overline{n}}\) on the number of contestants that guarantees that the participation constraint holds. \(\square\)

Appendix 9: Proof of Proposition 6

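By Lemma 3, the optimal duration is \(T^{*}=\Big [{\mathbb {E}}[\epsilon _{1}^{n}](\frac{A}{b})^{\frac{1}{b}}\frac{b-1}{b}\frac{1}{\delta }\Big ]^{b}\),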

where \(A=\sum _{j=1}^{L}\big [U(V_{j})-U(V_{j+1})\big ]{\mathbb {E}}\big [\epsilon _{j}^{n-1}f(\epsilon _{j}^{n-1})\big ]\). Then, we can obtain that the optimal duration \(T^*\) increases in the total award V and decreases in the urgency \(\delta\).
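These comparative statics can be illustrated with the closed form for \(T^{*}\) stated in the proof of Proposition 7 (Appendix 10). The sketch below is an assumption-laden illustration: the function name `optimal_duration` and its parameter names are hypothetical, `e_max` stands for \({\mathbb {E}}[\epsilon _{1}^{n}]\), and `A` is treated as a given positive input that grows with the total award V.

```python
def optimal_duration(e_max: float, A: float, b: float, delta: float) -> float:
    """T* = [ E[eps_1^n] * (A/b)^(1/b) * (b-1)/b * 1/delta ]^b."""
    if b <= 1.0:
        raise ValueError("the closed form requires b > 1")
    if e_max <= 0.0 or A <= 0.0 or delta <= 0.0:
        raise ValueError("e_max, A and delta must be positive")
    base = e_max * (A / b) ** (1.0 / b) * (b - 1.0) / b / delta
    return base ** b


if __name__ == "__main__":
    # Hypothetical inputs: a larger urgency delta shortens the contest.
    for delta in (0.5, 1.0, 2.0):
        print(delta, optimal_duration(e_max=1.5, A=2.0, b=2.0, delta=delta))
```

Since the bracketed term is increasing in `A` and decreasing in `delta`, and \(b>1\), the duration inherits both monotonicity properties.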

By the proof of Proposition 4, we have \({\mathbb {E}}[\epsilon _{1}^{n}]^b\cdot {\mathbb {E}}\big [\epsilon _{j}^{n-1}f(\epsilon _{j}^{n-1})\big ]\) increases in n for \(\alpha >\alpha _1\) where \(\alpha _1\) is the critical value. Then, the optimal duration \(T^*\) increases in the number of contestants n if \(\alpha >\alpha _1\). \(\square\)

Appendix 10: Proof of Proposition 7

First, by Lemma 3, the optimal duration is \(T^{*}=\Big [{\mathbb {E}}[\epsilon _{1}^{n}](\frac{A}{b})^{\frac{1}{b}}\frac{b-1}{b}\frac{1}{\delta }\Big ]^{b}\) where \(A=\sum _{j=1}^{L}\big [U(V_{j})-U(V_{j+1})\big ]{\mathbb {E}}\big [\epsilon _{j}^{n-1}f(\epsilon _{j}^{n-1})\big ]\). Then, the profit of the sponsor is given by

Next, we have

By simple calculation, we have \(\frac{\partial \pi _{T}}{\partial A}>0\). Therefore, by Propositions 1 and 2, we can verify this proposition. \(\square\)

Appendix 11: An example to show the rationality of the multiplicative output form

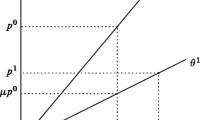

Below, we show that the multiplicative output form is also reasonable in an innovation contest. Suppose that there are two contestants in an innovation contest. In the additive output form, the performance quality of contestant i is \({\tilde{q}}_{i}={\tilde{e}}_{i}+{\tilde{\epsilon }}_{i}\),

where \({\tilde{e}}_{i}\) is contestant i’s effort and \({\tilde{\epsilon }}_{i}\) is the random shock, which is assumed to be normally distributed with zero mean and variance 1/2. Suppose that \({\tilde{e}}_{1}=0\) and \({\tilde{e}}_{2}=1\). Then, the winning probability of contestant 1 is \(Pr({\tilde{\epsilon }}_{1}-{\tilde{\epsilon }}_{2}>1)=1-\Phi (1)\approx 0.1587\), since the difference of the two independent shocks is normally distributed with zero mean and unit variance.

This implies that contestant 1 exerts zero effort and still wins with probability 0.1587, which is unreasonable.

However, in the multiplicative output form, let \(q_{i}=e_{i}\epsilon _{i}\),

where \(e_{i}\) is contestant i’s effort and \(\epsilon _{i}\) is the random shock, which is assumed to be log-normally distributed with mean 1 and variance 1/2. Suppose that \(e_{1}=0\) and \(e_{2}=1\). Then, in the model with multiplicative output form, the winning probability of contestant 1 is \(Pr(e_{1}\epsilon _{1}>e_{2}\epsilon _{2})=Pr(0>\epsilon _{2})=0\).

This example shows that the model with multiplicative output form is also reasonable.
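The two winning probabilities in this example can be reproduced with a few lines of code. The sketch below is illustrative: it assumes independent shocks, computes the additive case in closed form, and estimates the multiplicative case by simulation. The function names and the moment-matching helper `lognormal_params` are hypothetical.

```python
import random
from math import erfc, log, sqrt
from typing import Tuple


def additive_win_prob(e1: float, e2: float, var: float) -> float:
    """P(e1 + eps1 > e2 + eps2) for independent N(0, var) shocks."""
    # eps1 - eps2 is N(0, 2 * var), so the tail is 0.5 * erfc(d / (2 * sqrt(var))).
    return 0.5 * erfc((e2 - e1) / (2.0 * sqrt(var)))


def lognormal_params(mean: float, var: float) -> Tuple[float, float]:
    """Return (mu, sigma) of the underlying normal for a given mean and variance."""
    sigma2 = log(1.0 + var / mean ** 2)
    return log(mean) - 0.5 * sigma2, sqrt(sigma2)


def multiplicative_win_prob(e1: float, e2: float, mean: float, var: float,
                            draws: int = 20000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(e1 * eps1 > e2 * eps2) for log-normal shocks."""
    rng = random.Random(seed)
    mu, sigma = lognormal_params(mean, var)
    wins = 0
    for _ in range(draws):
        q1 = e1 * rng.lognormvariate(mu, sigma)
        q2 = e2 * rng.lognormvariate(mu, sigma)
        wins += q1 > q2
    return wins / draws


if __name__ == "__main__":
    print(additive_win_prob(0.0, 1.0, var=0.5))
    print(multiplicative_win_prob(0.0, 1.0, mean=1.0, var=0.5))
```

With zero effort, the multiplicative output is identically zero while the rival's output is strictly positive, so the simulated frequency is exactly zero regardless of the seed.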

Appendix 12: The explanation to Korpeoglu (2020)

If the sponsor faces a multiplicative discounting, the sponsor’s maximization problem is given as:

Korpeoglu (2020) consider this maximization problem in the model with additive output form; see their page 12. With a multiplicative discounting in the model with multiplicative output form, it is not hard to verify that the optimal duration is \(\delta \frac{b}{b-1}\). In this case, we can also verify that, for any urgency, the optimal award scheme is the same as in Propositions 1 and 2.

We now return to Korpeoglu (2020) and explain why the difference occurs. Since, in the model with multiplicative output form, the optimal effort satisfies

then the optimal duration \(T^*\) does not affect the participation constraint:

However, in Korpeoglu (2020), the optimal duration affects the participation constraint; see Equation (14) in the proof of their Lemma 2 (page 26). Their Lemma 2 gives two solutions for the optimal duration, and the solution for the case of low sponsor urgency can make a multiple-winner scheme optimal (see the proof of their Theorem 2), whereas in this paper there is only one solution for the optimal duration.

Below, we explain this phenomenon. First, at optimality, in the model with additive output form (Korpeoglu 2020), the unit effort \(\frac{e^*}{T}\) is constant as the duration T increases (see their page 12), whereas in the model with multiplicative output form (this paper), the unit effort \(\frac{e_T^*}{T}\) decreases as the duration T increases, which follows directly from our Lemma 2. Second, in the model with multiplicative output form, the contestants’ cost \(C(e_{T}^{*},T)=(e_{T}^{*})^{b}T^{1-b}=(\frac{e_T^*}{T})^{b}T\) can remain constant as the duration T increases, i.e., the duration does not affect the participation constraint. Third, in an innovation contest, a contestant trades off her cost against her award. A longer duration can raise the contestants’ cost without raising the award, so rational contestants reduce their unit cost over the whole duration, and the total cost can stay the same. As a result, the duration in the model with multiplicative output form does not affect the participation constraint. Finally, note that, in the endogenous duration contest model, the optimal duration \(T^*\) results from the sponsor’s trade-off: a longer duration provides higher-quality solutions but also incurs a larger duration cost.

Appendix 13: Some numerical studies

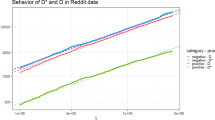

First, Fig. 3 reports the EAQ and EMQ in the model with additive output form: the EAQ decreases in n when \(n\ge 6\) and the EMQ increases in n when \(n\ge 5\). Note that in Fig. 1b, for the model with multiplicative output form, the EMQ does not increase in n (with \(\mathbb {VAR}[\epsilon ]\approx 1.718\)), whereas in Fig. 3b, for the model with additive output form, the EMQ increases in n (with \(\mathbb {VAR}[{\tilde{\epsilon }}]=1\)). These observations suggest that, if the EMQ is preferred, a restricted-entry policy is more likely to be adopted in the model with multiplicative output form than in the model with additive output form.

Second, numerical studies show that even three contestants can guarantee the optimality of a two-winner award scheme for different distributions of the random shock; the results are shown in Table 1.

Cite this article

Tian, X., Bi, G. Multiplicative output form and its applications to problems in the homogenous innovation contest model. OR Spectrum 44, 709–732 (2022). https://doi.org/10.1007/s00291-022-00667-y

DOI: https://doi.org/10.1007/s00291-022-00667-y