Abstract

We consider the possibility of free receptor (antigen/cytokine) levels rebounding to higher than the baseline level after the application of an antibody drug using a target-mediated drug disposition model. It is assumed that the receptor synthesis rate experiences homeostatic feedback from the receptor levels. It is shown for a very fast feedback response, that the occurrence of rebound is determined by the ratio of the elimination rates, in a very similar way as for no feedback. However, for a slow feedback response, there will always be rebound. This result is illustrated with an example involving the drug efalizumab for patients with psoriasis. It is shown that slow feedback can be a plausible explanation for the observed rebound in this example.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we continue our investigation into the phenomenon of receptor rebound, i.e., a post-dose rise in receptor levels to higher than pre-dose (baseline). In our previous paper (Aston et al. 2014), we showed that if no homeostatic feedback is present, rebound will occur if and only if the elimination rate of the target-drug complex is slower than both the elimination rate of the drug and the elimination rate of the target. Binding to cell-surface receptors typically results in accelerated turnover of the anti-body and hence complex, so it can be expected that rebound will occur in rare cases only. However, Ng et al. (2005) describe a treatment for psoriasis patients with efalizumab in which rebound is observed. They also derive a model that shows good agreement with the experimental observations. A main difference between this model and standard TMDD models is that the model includes receptor feedback for the synthesis rate. In the basic TMDD model, reduction of free target levels does not have any impact on the endogenous production or elimination rate of the free target, i.e., no endogenous feedback control exists to compensate for the antibody effect on target. The model in Ng et al. (2005) includes such feedback control via an additional differential equation for the synthesis rate. Another indicator of the importance of feedback in the synthesis rate is the recent paper by Kristensen et al. (2013), in which it is postulated that the protein synthesis rate is the predominant regulator of protein expression during differentiation.

In this paper we investigate how receptor feedback influences the occurrence of rebound in the TMDD model. The receptor feedback is usually a dynamical process via some moderators and we modify the TMDD model to include feedback by adding an additional differential equation. If the feedback is very fast, a quasi-equilibrium approach can be used and the feedback can be included in the synthesis term itself Hek (2010). This approach is similar to the one leading to the Michaelis–Menten approximation or the Quasi-Steady-State approximation. We will use the term “direct feedback” for the quasi-equilibrium approximation. The modification to include feedback is presented in full detail in Sect. 3, after a review of the main results of our first paper (Aston et al. 2014) on rebound in the basic TMDD model without feedback in Sect. 2. A wide class of feedback functions is considered with some natural assumptions on their action.

The TMDD model with the direct feedback approximation is analysed in Sect. 4. This analysis shows that the existence or non-existence of rebound is still linked to the elimination rates, though rebound can be expected in a larger region in the elimination parameter plane, see the left plot in Fig. 1. The general case of feedback via a moderator is analysed in Sect. 5. Now the response speed of the feedback moderator plays an important role as well as the elimination rates. It is shown that there is a similar region in the elimination rate plane as for the basic TMDD model for which rebound will occur for any response speed. Furthermore, if the feedback responds slowly to a change in the receptor levels, rebound will occur for any value of the elimination rates. A schematic overview of this result is in the right plot of Fig. 1.

A schematic overview of the elimination plane. On the horizontal axis is the elimination rate of the ligand (antibody/drug), denoted by \(k_\mathrm{e(L)}\), and on the vertical axis is the elimination rate of the receptor (antigen/target), denoted by \(k_\mathrm{out}\). The elimination rate of the antibody–antigen complex (drug–target product) is denoted by \(k_\mathrm{e(P)}\). On the left is an overview of the occurrence of rebound in the case of the direct feedback approximation. In the red region, rebound will occur and in the green region no rebound can occur. The symbols h, m and M are related to the feedback function and will be defined in Sect. 4. In the case where there is no feedback, \(m=h=M=0\) and the region in which there is rebound is the red region above the line \(k_\mathrm{out}=k_\mathrm{e(P)}\). On the right is an overview of the occurrence of feedback, depending on the speed of the feedback response. There is a red region in the elimination plane, that equals the direct feedback rebound region, for which rebound will occur for any speed of the feedback response. In addition, if the response is slow, rebound will occur for any elimination values. The precise response speed for which the rebound stops occurring will vary across the pink region (color figure online)

The TMDD model is a one-compartment model. In Sect. 6, we consider briefly two classes of more general models with feedback (one class is related to multiple compartments, the other to more general feedback mechanisms) and show that rebound will generically occur in such models for slow feedback moderator response. In this paper, the word generic refers to the assumptions that the linearisation about the baseline state has no degenerate eigenvalues and that trajectories approaching the attracting baseline state do so tangent to the eigenvector associated with the least attracting eigenvalue. Section 7 illustrates the theory for two examples. The first example is a standard TMDD model for the IgE mAb omalizumab. We discuss what the effect of the two types of feedback mechanisms would be in this case . The second example is the model in Ng et al. (2005) which describes the efficacy of efalizumab for patients with psoriasis. Simulations show that the model does not lead to rebound if the feedback is turned off, but a significant rebound (about 140% of baseline) will occur if feedback is included. These results can be predicted with our analysis and underpin the observations of rebound in some patients. Finally, Sect. 8 contains a discussion of the results obtained in this paper and poses some open questions. The proofs of some of the technical results in the paper are given in the Appendix.

2 Review of previous results

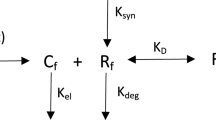

In our previous paper (Aston et al. 2014), we considered a one-compartment TMDD model based on the original work of Levy (1994) where the ligand L binds reversibly with the receptor R to form a receptor-ligand complex P as shown in Fig. 2. The TMDD model assumes a mechanism-based reaction to explain the ligand-receptor interaction. The parameters of the model are the binding rate constants \(k_\mathrm{on}\) and \(k_\mathrm{off}\), the receptor turnover and elimination rates \(k_\mathrm{in}\) and \(k_\mathrm{out}\), and the elimination rates of the ligand and complex \(k_\mathrm{e(L)}\) and \(k_\mathrm{e(P)}\). The system is assumed to be initially at steady state, into which a single bolus infusion \(L_0\) of the ligand into the central (plasma) compartment is made (represented in Fig. 2 by ‘In’). The differential equations that comprise the mathematical model for this system are given by

A steady state of this system is given by \(L=P=0\), \(R=k_\mathrm{in}/k_\mathrm{out}\). Adding the bolus injection \(L_0\) gives the initial conditions

After the ligand is added to the system in its baseline state, initially the receptor level decreases, but after a while it goes up again and returns to its baseline value, since the steady state is globally asymptotically stable (Aston et al. 2014). Rebound occurs if, in the return to the baseline value, the receptor level increases to values above the baseline \(R_0\). In Aston et al. (2014), we determined precise conditions for the existence and non-existence of rebound. Our main result was the following.

Theorem 2.1

Rebound occurs in Eqs. (1)–(3) if and only if

This result shows that rebound occurs if and only if the elimination rate of the product is slower than the elimination rates of both the ligand and the receptor. This is represented graphically by different regions in the \((k_\mathrm{e(L)},k_\mathrm{out})\) plane, as shown in Fig. 3. The above condition for rebound in terms of non-dimensional parameters is given by

where

The green region shows the part of the \((k_\mathrm{e(L)},k_\mathrm{out})\) parameter plane where there is no rebound, and the red region shows where there is rebound. The boundaries are given by the lines \(k_\mathrm{e(L)}=k_\mathrm{e(P)}\) and \(k_\mathrm{out}=k_\mathrm{e(P)}\). This figure is similar to Fig. 2 in Aston et al. (2014) (color figure online)

Intuitively, if the clearance of the complex is slow relative to the other two clearance rates, when there is a significant build-up of the complex due to the rapid binding of ligand and receptor, then this complex is only eliminated slowly. This results in an additional “production” of the receptor when the complex dissociates which is likely to lead to rebound. Our analysis quantifies this and we now show how these thresholds that we derived previously are affected if receptor feedback is added to the system.

3 Including feedback in the TMDD model

We now extend our previous work on rebound in the basic TMDD model by extending the model to include feedback on the synthesis rate. The feedback gives an increase in the production rate of the receptor if the receptor levels drop under the baseline. Similarly, if the receptor level exceeds the baseline value, then the feedback induces a decrease in the production rate. Thus, this mechanism can be classified as negative feedback.

We start with the TMDD equations (1)–(3) but now assume that the rate of production of R (\(k_\mathrm{in}\)) is not constant but varies as R changes. Thus, Eq. (2) is now modified to have the form

The new feedback variable F, which will be related to R, has the effect of varying the production rate of the receptor. The feedback is usually via some moderator and its dynamics is described with the differential equation

The constant \(\alpha \ge 0\) determines the strength of the feedback and determines the time scale on which the feedback moderator responds to changes in the receptor levels. Clearly, if \(\alpha =0\), then F is a constant and the model reduces to the basic TMDD model with no feedback. If \(\alpha \) is small, the feedback response is slow, while if \(\alpha \) is larger, there is a very fast feedback response. Formally, dividing (6) through by \(\alpha \) and taking the limit \(\alpha \rightarrow \infty \), we see that the derivative term vanishes and the fixed relation

is obtained. This motivates a quasi-equilibrium approximation in which the differential equation for F is replaced with the relation (7). We will call this the direct feedback approximation.

Next we consider the function H(R). The function must be such that the model has the following features:

-

the production rate of the receptor, denoted by \(k_\mathrm{in}F\), should be positive;

-

the receptor concentration should have the baseline \(R=R_0=k_\mathrm{in}/k_\mathrm{out}\);

-

the feedback mechanism should reduce the production rate of the receptor when the receptor level exceeds the baseline and should increase it when the receptor level is below the baseline.

This leads to the following general assumptions on the function H(R):

-

(H1)

\(H(R)>0\) for all \(R\ge 0\);

-

(H2)

\(H(R_0)=1\), where \(R_0=k_\mathrm{in}/k_\mathrm{out}\);

-

(H3)

\(H(R)>1\) when \(0\le R<R_0\) and \(0<H(R)<1\) when \(R>R_0\);

-

(H4)

\(H\in C^2([0,\infty ))\).

We note that assumption (H1) ensures that in case of the direct feedback approximation (i.e, F is a function of R and (7) holds) the feedback \(F>0\), so that the production rate of R is always positive. Alternatively, if the dynamics of F is described by the differential equation (6), then this condition ensures that

and so the line \(F=0\) cannot be crossed from above to below. This also ensures that \(F>0\) for all \(t>0\) and hence the production rate is always positive.

The basic TMMD model without feedback, Eqs. (1)–(3), has a steady state solution given by \(L=P=0\), \(R=R_0=k_\mathrm{in}/k_\mathrm{out}\). This will still be a steady state of our modified equations in both the full model and the direct feedback approximation, provided that (H2) holds. For the full model, we observe that the steady state value for F is \(F=1\). Finally, the assumption (H3) ensures that the production of the receptor slows down when there is excess receptor, and increases when the receptor level is below the baseline and (H4) is a technical assumption assuring sufficient smoothness. Combining (H2)–(H4) implies that \(H'(R_0)\le 0\). We note that if H is a positive, strictly decreasing, smooth function with \(H(R_0)=1\), then (H1)–(H4) are satisfied. However, the stated conditions are more general and do not require H to be strictly decreasing.

We now have two modified TMDD models that incorporate feedback. For the direct feedback approximation, we use (7) to replace F with H(R) which gives the differential equations

Alternatively, the full feedback model involves an extra differential equation for the moderator F and the modified TMDD model is given by

The initial conditions for both models are given by (4), with the extra condition \(F(0)=1\) for the feedback moderator.

We non-dimensionalise these equations in the same way as in Aston et al. (2014). We note that our new variable F is non-dimensional, and so we define the dimensionless variables

We also define a new function

The above assumptions on the function H(R) translate into the following assumptions on h(y):

-

(h1)

\(h(y)>0\) for all \(y\ge 0\);

-

(h2)

\(h(1)=1\);

-

(h3)

\(h(y)>1\) when \(0\le y<1\) and \(0<h(y)<1\) when \(y>1\);

-

(h4)

\(h\in C^2([0,\infty ))\).

For later use, we define

and note that the assumptions (h2)–(h4) imply that \(h_0\ge 0\).

In terms of the non-dimensional quantities, the direct feedback equations (8)–(10) become

with initial conditions

where dot denotes differentiation with respect to \(\tau \) and the dimensionless parameters are defined as in Aston et al. (2014) by

Clearly, this choice of non-dimensionalisation requires that \(k_\mathrm{on}\ne 0\) and \(k_\mathrm{in}\ne 0\) (so that \(R_0\ne 0\)). We note that all parameters must be non-negative due to their physical meaning, and additionally we will assume that they are in fact all strictly positive. The three variables x, y, and z are related to physical quantities and so must also be non-negative.

Similarly, the non-dimensional equations for the full feedback model (11)–(14) are given by

with initial conditions

Furthermore,

is a non-dimensional measure for the response speed of the feedback moderator.

4 Analysis of the TMDD model with direct feedback

In this section, we will analyse the TMDD model with the direct feedback approximation, i.e., the Eqs. (16)–(18), with initial conditions (19), and seek to determine conditions for the existence or non-existence of rebound in this model. However, we start by proving some basic results for the model equations.

4.1 Invariance, steady states and stability

For the basic TMDD model, we proved in Aston et al. (2014) that the positive octant \(x,y,z\ge 0\) is invariant; that there is a unique steady state in the positive octant; and that this steady state is a global attractor. In this section we show that the same properties hold for the TMDD model with direct feedback. The proofs of these results can be found in Appendix A. We start by stating invariance of the positive octant.

Lemma 4.1

The octant of \({\mathbb {R}}^3\) defined by \(x,y,z\ge 0\) is invariant under the flow of Eqs. (16)–(18).

This result shows that the equations are a good model in the sense that the concentrations of the ligand, complex and receptor can never go negative. Next we consider the steady states of Eqs. (16)–(18).

Lemma 4.2

In the region of \({\mathbb {R}}^3\) defined by \(x,y,z\ge 0\), the Eqs. (16)–(18) have a unique steady state given by

This steady state is globally asymptotically stable.

This result shows that for all non-negative initial conditions and for all positive parameter values, each of the variables will converge to their unique steady state value.

It is also straightforward to show that the eigenvalues of the Jacobian of Eqs. (16)–(18) evaluated at the steady state (26) are very similar to those given in Aston et al. (2014). In particular, the two eigenvalues \(\lambda _1\) and \(\lambda _2\) are the same as previously and are given by

while the third eigenvalue, which previously was \(\lambda _3=-k_3\), is now given by

where we recall that \(h_0= -h'(1)\). The corresponding eigenvectors are given by

4.2 Receptor dynamics

Before considering the full model, we first consider the effect of feedback on the dynamics of y, the non-dimensional form of the receptor, in the absence of the ligand or product. As with the basic TMDD model, we note that \(x=z=0\) satisfies (16) and (17). Substituting \(x=z=0\) into (18), we obtain the single differential equation

Assumption (h2) ensures that the previous steady state value \(y=1\) is also a steady state of this equation while assumption (h3) ensures that this is the only non-negative steady state solution and that \(\dot{y}<0\) if \(y>1\) and \(\dot{y}>0\) if \(0\le y<1\). The theory of scalar, autonomous differential equations (Jordan and Smith 2007) then tells us that if the initial condition \(y_0\) is less than the steady state (\(y_0<1\)) then \(y(\tau )\) will increase monotonically to the steady state, whereas if \(y_0\) is greater than the steady state (\(y_0>1\)), then \(y(\tau )\) will decrease monotonically to the steady state. As \(y(\tau )\) is monotonic, there is no possibility of rebound occurring in the dynamics of the receptor on its own.

Assumptions (h2) and (h3) together with definition (15) imply that

As \(h_0\ge 0\), the inclusion of the function h(y) ensures that the asymptotic rate of convergence to the steady state (proportional to \(e^{-k_3(h_0+1)t}\)) will be faster than or equal to the rate of convergence which occurs when \(h(y)=1\) (proportional to \(e^{-k_3 t}\)). Thus, qualitatively, the direct feedback has the effect of speeding up the monotonic convergence to the steady state in the absence of ligand and product, but there is no rebound in this case, i.e., if \(y_0<1\), then \(y(\tau )< 1\) for all \(\tau \ge 0\).

4.3 Conditions for rebound

Having established that feedback does not lead to rebound in the absence of the ligand, we now investigate the effect of adding the ligand. A local approximation of the feedback near the baseline will give a linear feedback function. So we first consider the special case that the feedback function h(y) is linear for \(y\le 1\). It turns out to be straightforward to extend our results for the TMDD model without feedback to this case. The general nonlinear case will be analysed next and builds on the ideas of the linear analysis.

4.3.1 A mainly linear feedback function

We consider a feedback function which is linear on a y-interval including [0, 1]:

where \(h_0>0\), \(0<\beta < 1\), and \(h_1\in C^2\left( \left( {1+\frac{\beta }{h_0}},\infty \right) \right) \), \(h_1(y)\in (0,1)\), with

This function satisfies all the conditions (h1)–(h4). For the linear part of the function h, we note that \(h(y)-y=(1+h_0)(1-y)\) and so Eq. (18) becomes

We then have the following result.

Theorem 4.3

Rebound occurs in Eqs. (16), (17), (33) if and only if

Proof

We note that for \(0\le y\le 1\), Eqs. (16), (17) and (33) are the same as the basic TMDD model that we studied in Aston et al. (2014) except that \(k_3\) has been replaced by \(k_3(1+h_0)\). We claim that the results of Theorem 3.1 of Aston et al. (2014) apply in this case also after making this parameter replacement. To see this, we note that when proving the absence of rebound the only relevant values of y are \(0\le y\le 1\) and so the matching point \(y=1+\beta /h_0\) in (33) is not reached and the feedback function for \(y>1\) is therefore not relevant. Moreover, the proof of rebound in Aston et al. (2014) involves only the asymptotic behaviour near to the globally stable steady state \((x,y,z)=(0,1,0)\) and the dynamics for \(0\le y\le 1+\frac{{{\beta }}}{h_0}\) will give all the necessary information to determine this asymptotic behaviour. Hence, we can conclude that Theorem 3.1 in Aston et al. (2014) can be applied in this situation with \(k_3\) replaced by \(k_3(1+h_0)\). \(\square \)

Converting back to the dimensional parameters gives the following result.

Corollary 4.4

Consider the mainly linear feedback function

where \(H_0>0\), \(0<\beta <1\), \(H_1(R)\in C^2((R_0+\frac{\beta }{H_0},\infty ))\), \(H_1(R)\in (0,1)\) and \(H_1\) and its first and second derivatives match the linear function at \(R=R_0+\beta /H_0\).

Rebound occurs in the model given by Eqs. (8)–(10) with this feedback function if and only if

The rebound region in this case is shown in Fig. 4. We note by comparison with Fig. 3 that this mainly linear feedback function has enlarged the region in which rebound occurs compared to the case with no feedback.

The green region shows the part of the \((k_\mathrm{e(L)},k_\mathrm{out})\) parameter plane where there is no rebound, and the red region shows where there is rebound for H(R) given by (34). The solid line \(k_\mathrm{out}=k_\mathrm{e(P)}\) denotes the boundary of the rebound region when no feedback is present and the dashed line \(k_\mathrm{out}=k_\mathrm{e(P)}/(1+R_0H_0)\) denotes the boundary of the rebound region when feedback is present (color figure online)

4.3.2 A general nonlinear feedback function

We now consider the more general case where the feedback function h is nonlinear. We express the function h(y) in the form

Differentiating (35), evaluating at \(y=1\) and using (15), we see that

Condition (h3) implies that

With this form of h, Eq. (18) becomes

Remark

We note that expanding h(y) in a Taylor series with remainder gives that \(\tilde{h}(y)=-h'(\xi (y))\) where \(\xi (y)\) lies between y and 1. However, the fact that \(\xi (y)\) is an unknown function is not helpful, which is why we use the form of h given in (35).

We now consider regions similar to the ones defined for the model without feedback in Aston et al. (2014). First we consider region II and show that no rebound occurs in this region.

Theorem 4.5

Rebound does not occur if \(k_1\le k_4\).

Proof

Following through the proof of Theorem 3.4 of Aston et al. (2014) for these modified equations, the only difference is that

Now \(\tilde{h}(0)>0\) by (37) and so \(\dot{y}|_{y=0}>0\) when \(z\ge 0\), which is the condition required on this plane in the proof of Theorem 3.4 of Aston et al. (2014). Thus, the result holds in this case also. \(\square \)

The next result considers a region similar to region III and we show that rebound does occur.

Theorem 4.6

Rebound occurs if \(k_1>k_4\) and \(k_3(1+h_0)>-\lambda _1(k_1,k_2,k_4)\), where \(\lambda _1\) is given by (27).

Proof

The Jacobian matrix for Eqs. (16), (17) and (38) evaluated at the steady state is the same as that for the simple TMDD model given in Aston et al. (2014) but with \(k_3\) replaced by \(k_3(1+h_0)\). The proof of Theorem 3.5 of Aston et al. (2014) (region III) is entirely based on the eigenvalues and eigenvectors of this matrix, and so the result holds in this case also, with the appropriate replacement of \(k_3\). Since \(\lambda _1\) does not involve \(k_3\), there is no change to this eigenvalue. \(\square \)

The proofs in regions I and IV of the \((k_1,k_3)\) parameter plane in Aston et al. (2014) both made use of the fact that for the model without feedback the plane \(v=y+z=1\) is invariant when \(k_3=k_4\). However, this is no longer the case for our modified equations which include feedback. The differential equation for the dimensionless variable associated with the total amount of receptor \(v=y+z\) is now given by

and so

Clearly no condition on the parameters will give this derivative to be zero (unless \(\tilde{h}\) is constant, the case considered in the previous section), and so we see, as already stated, that the plane \(v=1\) is no longer invariant. However, the function h(y) has a continuous derivative by (h4) and this implies that \(\tilde{h}(y)\) is continuous. Thus the Extreme Value Theorem for continuous functions gives that \(\tilde{h}(y)\) has a minimum and a maximum for \(y\in [0,1]\). So we define

Using (36), this immediately implies that

Also, since \(\tilde{h}(y)>0\) for all \(0\le y<1\) by (37), we get

Since m and M are the minimum and maximum of \(\tilde{h}(y)\), then clearly

and substituting for \(\tilde{h}(y)\) from (35) and rearranging gives

Graphically, this means that on the interval \(y\in [0,1]\), the function h(y) is bounded by two straight lines, both of which pass through the point (1, 1), and which have slopes \(-m\) and \(-M\). This situation is illustrated in Fig. 5. Note that the Mean Value Theorem gives that \(-M\ge \min \{h'(y)\mid 0\le y \le 1\}\) and \(-m \le \max \{h'(y)\mid 0\le y \le 1\}\).

With the bounds m and M, we can give a slightly weaker version of Lemma 2.3 in Aston et al. (2014) and get regions in the \((k_3,k_4)\)-plane for which the dimensionless variable v associated with the total amount of receptor stays on one side of the plane \(v=1\).

Lemma 4.7

For all \(\tau \ge 0\), the dimensionless variable v associated with the total amount of receptor satisfies

Proof

We consider the vector field at the plane \(v=1\). Equation (40) gives

Since we have restricted attention to the plane \(v=1\), this implies that \(y+z=1\) and so \(y\le 1\) as z must be non-negative. As \(1-y\) is non-negative, we can use the bounds on \(\tilde{h}(y)\) given in (41) to get

First we consider the case \(k_4 \ge k_3(1+M)\). In this case, (45) gives \(\dot{v}|_{v=1}\le (1-y)\left( k_3(1+M)-k_4\right) \le 0\), hence the vector field on the plane \(v=1\) is either tangent to \(v=1\) or points towards \(v<1\). This implies that the region of phase space defined by \(x,y,z\ge 0\), \(v=y+z\le 1\) is invariant in forward time since the vector field on the four boundary planes is either tangent to the plane or points into the region (Smith 1995). This was proved for the planes \(x=0\), \(y=0\) and \(z=0\) in Lemma 4.1 and for the plane \(v=1\) above. Since \(v(0)=1\) and this region is invariant, we conclude that \(v(\tau )\le 1\) for all \(\tau \ge 0\).

Next we consider the case \(k_4 \le k_3(1+m)\). Now the other inequality in (45) gives \(\dot{v}|_{v=1}\ge (1-y)\left( k_3(1+m)-k_4\right) \ge 0\), hence the vector field on the plane \(v=1\) is either tangent to \(v=1\) or points towards \(v>1\). This implies that the region of phase space defined by \(x,y,z\ge 0\), \(v=y+z\ge 1\) is invariant in forward time. Since \(v(0)=1\), this implies that \(v(\tau )\ge 1\) for all \(\tau \ge 0\). \(\square \)

With this observation, we can now derive a result on rebound for regions similar to regions I and IV in Aston et al. (2014).

Theorem 4.8

Define m and M as in (41), i.e., the function h(y) satisfies (44).

-

(i)

Rebound does not occur if

$$\begin{aligned} k_3\le \frac{k_4}{1+M} \end{aligned}$$(46) -

(ii)

Rebound does occur if

$$\begin{aligned} \frac{k_4}{1+m}<k_3<-\frac{\lambda _1}{1+h_0} \end{aligned}$$(47)

Using (42) and (43), we note that

This illustrates in particular that there is no overlap between the region for which we have shown the existence of rebound and the region for which we have shown that there is no rebound.

Proof of Theorem 4.8

-

(i)

The case when (46) holds is similar to region I in Aston et al. (2014) and has the property that \(v(\tau )\le 1\) for all \(\tau \ge 0\) by Lemma 4.7. Thus for all \(\tau \ge 0\), we have \(y(\tau )\le v(\tau )\le 1\) and so rebound cannot occur in this case.

-

(ii)

When (47) holds (a condition similar to the one for region IV in Aston et al. (2014)), Lemma 4.7 ensures that \(v(\tau )\ge 1\) for all \(\tau \ge 0\). The right hand inequality in (47) implies that \(\lambda _1<\lambda _3=-k_3(1+h_0)\) and so the eigenvalue closest to zero is \(\lambda _3\), as occurred in region IV previously. Thus, trajectories will generically approach the steady state tangent to the y-axis, since the eigenvector associated with the eigenvalue \(\lambda _3\) points along this axis. As the trajectory always satisfies \(v\ge 1\) for all \(\tau \ge 0\) then we again conclude that generically, almost all orbits will approach \(y=1\) from above and hence rebound occurs.

We must again eliminate the possibility that an orbit might approach the steady state tangent to one of the other eigenvectors for a particular choice of parameters. As previously, the linearised manifold in the direction of the eigenvector \(v_2\) is outside of the phase space and so an orbit cannot approach tangent to this one-dimensional manifold.

The one-dimensional linearised manifold in the direction of the eigenvector \(v_1\) given in (30) is

$$\begin{aligned} \left[ \begin{array}{c} x\\ z\\ y \end{array}\right] = \left[ \begin{array}{c} 0\\ 0\\ 1\end{array}\right] +a\, \left[ \begin{array}{c} \mu (k_2+k_4+\lambda _1)(\lambda _1+k_3(1+h_0))\\ ~~\,\lambda _1+k_3(1+h_0)\\ -(\lambda _1+k_4)\end{array}\right] . \end{aligned}$$We note that \(\lambda _1+k_3(1+h_0)<0\) from the right hand inequality in (47). Thus, we must take \(a<0\) to ensure that this linearised manifold gives positive values of x and z (since \(k_2+k_4+\lambda _1>0\) by Lemma 2.7 of Aston et al. 2014). The linearised manifold for \(v=y+z\) is then given by

$$\begin{aligned} v=1+a\,(k_3(1+h_0)-k_4). \end{aligned}$$Using (42) and the left hand inequality of (47) gives \(k_3(1+h_0)-k_4\ge k_3(1+m)-k_4>0\). Thus, this one-dimensional linearised manifold has a negative slope and so occurs for \(v<1\). Since the trajectory in this case must satisfy \(v\ge 1\), it is clearly not possible for it to approach the steady state tangent to this manifold. Thus, we conclude that all trajectories must approach the steady state tangent to the y-axis from above and hence rebound will occur. \(\square \)

In Theorems 4.6 and 4.8, we have proved that rebound occurs on both sides of the line \(\lambda _1=\lambda _3\) with \(k_3>k_4/(1+m)\). We now show that there is also rebound along this line.

Theorem 4.9

Rebound occurs if \(k_3=-\lambda _1/(1+h_0)\) and \(k_3>k_4/(1+m)\).

Proof

The proof uses exactly the same arguments as the proof of Theorem 3.7 in Aston et al. (2014). Along the curve \(k_3=-\lambda _1/(1+h_0)\), the eigenvalues \(\lambda _1\) and \(\lambda _3\) collide and have one eigenvector, which is along the y-axis, and one generalised eigenvector. Thus all solutions will asymptotically align with the y-axis. Since \(k_4/(1+m)<k_3\), Lemma 4.7 gives that \(v(\tau )\ge 1\) and the intersection of the y-axis and the region \(v(\tau )\ge 1\) is the part of the y-axis with \(y\ge 1\). Hence all orbits must approach the steady state \((x,y,z)=(0,1,0)\) from above and rebound will occur. \(\square \)

We summarise the results of Theorems 4.5, 4.6, 4.8 and 4.9 as follows.

Theorem 4.10

Define

For the model equations (16)–(18) and for any function h(y) satisfying the assumptions (h1)–(h4)

-

there is no rebound if \(k_1\le k_4\) or \(k_1>k_4\) and \(k_3\le k_4/(1+M)\);

-

rebound does occur if \(k_1>k_4\) and \(k_3>\min \left( k_4/(1+m), -\lambda _1/(1+h_0)\right) \).

We can also express these results in the terms of the dimensional parameters. Let H(R) be a function satisfying the assumptions (H1)–(H4) and define

For the model equations (8)–(10) with \(H_0=-H'(R_0)\)

-

there is no rebound if \(k_\mathrm{e(L)}\le k_\mathrm{e(P)}\) or \(k_\mathrm{e(L)}>k_\mathrm{e(P)}\) and \(k_\mathrm{out}<k_\mathrm{e(P)}/(1+M)\);

-

rebound does occur if \(k_\mathrm{e(L)}>k_\mathrm{e(P)}\) and \(k_\mathrm{out}>\min \left( k_\mathrm{e(P)}/(1+m), -\lambda _1k_\mathrm{on}R_0/\right. \left. (1+R_0H_0)\right) \).

These results are illustrated in Fig. 6.

Regions in the \((k_\mathrm{e(L)},k_\mathrm{out})\) plane where rebound does and does not occur for the nonlinear feedback function H(R). Red indicates regions where rebound will occur and green indicates regions where rebound does not occur. In the white region, rebound may or may not occur depending on the particular feedback function H (color figure online)

We now focus on a few aspects of the theorem and compare it with the case where there is no feedback (\(F=1\), and so \(h_0=0=H_0\)).

-

In the equations without feedback, rebound occurred in the region \(k_\mathrm{e(P)}<k_\mathrm{e(L)}\) and \(k_\mathrm{e(P)}<k_\mathrm{out}\) (regions III and IV) and with feedback, rebound also occurs in this region.

-

In the equations without feedback, there was no rebound in the region \(k_\mathrm{e(L)}\le k_\mathrm{e(P)}\) (region II) and with feedback, there is also no rebound in this region.

-

In the equations without feedback, there was no rebound in the region \(k_\mathrm{out}<k_\mathrm{e(P)}<k_\mathrm{e(L)}\) (the part of region I that lies outside region II), but with feedback, there is rebound in some parts of this region but not in others, the precise details depend on the function h.

-

In the region \(k_\mathrm{e(L)}>k_\mathrm{e(P)}\), \(k_\mathrm{e(P)}/(1+M)\le k_\mathrm{out}\le \min \left( k_\mathrm{e(P)}/(1+m), -\lambda _1/\right. \left. (1+h_0)\right) \), we have no general results, and the existence or otherwise of rebound will depend on the particular function h.

-

If H is mainly linear, i.e. \(F=H(R)=1+H_0(R_0-R)\), for \(0<R<R_0\), then \(m=M=R_0H_0\). In this case, the region of uncertainly disappears and we get that rebound will occur if and only if \(k_\mathrm{e(P)}<k_\mathrm{e(L)}\) and \(k_\mathrm{e(P)}<k_\mathrm{out}(1+H_0R_0)\).

-

If H is nonlinear but with \(m=R_0H_0\) (in which case the lower bounding curve for H(R) is the tangent to the curve at \(R=R_0\)), then the red region where there is rebound for \(k_\mathrm{out}>k_\mathrm{e(P)}/(1+R_0H_0)\) in Fig. 4 is preserved and the region of uncertainty is below this line. Conversely, if \(M=R_0H_0\) (so that the upper bounding curve for H(R) is the tangent at \(R=R_0\)), then the green region where there is no rebound for \(k_\mathrm{out}\le k_\mathrm{e(P)}/(1+R_0H_0)\) in Fig. 4 is preserved and the region of uncertainty is above this line.

-

We note that when \(k_\mathrm{e(L)}<k_\mathrm{e(P)}\) (the left green region in Fig. 6), then there is no rebound in the direct feedback model for any feedback function H(R). As discussed above, when \(k_\mathrm{e(L)}>k_\mathrm{e(P)}\), then the form of the feedback function determines whether or not rebound occurs.

In summary, the region where rebound occurs increases slightly to include some more values with \(k_\mathrm{out}<k_\mathrm{e(P)}<k_\mathrm{e(L)}\) when direct feedback is introduced into the basic TMDD model.

5 Analysis of the full TMDD with feedback model

In the full TMMD with feedback model, we have an extra differential equation that governs the dynamics of the moderator w and so our equations are now (20)–(23) with initial condition (24). As mentioned previously, the parameter \(\epsilon \) influences the time scale for the response dynamics. If \(\epsilon \) is small, the feedback responds slowly and we will show that this always leads to rebound. On the other hand, if \(\epsilon \) is very large, the feedback responds very fast and we will show that rebound will occur in the same region as found with the direct feedback approximation.

5.1 Dynamics of the receptor with feedback, without ligand

Before considering the full model with feedback, we first consider the effect of feedback on the dynamics of the receptor y (or R in dimensional form) in the absence of the ligand or product. We substitute \(x=z=0\) into (20)–(23) to give the two equations

Again, it is easily shown, using (h2) and (h3), that these equations have the unique steady state \(y=w=1\), i.e. the baseline values. Below we will prove the following rebound result.

Theorem 5.1

For any \(\epsilon \in (0,\infty )\), there are always some initial conditions with \(y(0)<1\) which give rise to trajectories with rebound.

First we consider the linear stability of the baseline state as this gives the local behaviour near to the baseline. The Jacobian matrix evaluated at this steady state is given by

(recalling that \(h_0=-h'(1)\ge 0\)). The eigenvalues of \(J_0\) can be found as solutions of the quadratic characteristic polynomial derived from this matrix. The discriminant of this quadratic equation is

and the eigenvalues are

while the eigenvectors are of the form

Note that in this section, the definition of the eigenvalue \(\lambda _3\) is different from that used in the previous section. The discriminant D is itself a quadratic function of \(\epsilon \) which has two positive roots given by

Clearly the discriminant is negative between these roots and positive elsewhere, and so we have the following result.

Lemma 5.2

The Eqs. (49)–(50) have a unique steady state at \(y=w=1\) and the eigenvalues \(\lambda _3\) and \(\lambda _4\) of the Jacobian matrix \(J_0\) are

-

both real and negative if \(0<\epsilon \le \epsilon _1^-\) or if \(\epsilon \ge \epsilon _1^+\);

-

a complex conjugate pair with negative real part if \(\epsilon _1^-<\epsilon <\epsilon _1^+\).

Furthermore, when \(\epsilon \rightarrow \infty \), \(\lambda _3\rightarrow -\infty \) and \(\lambda _4\rightarrow \lambda _\infty =-k_3(1+h_0)\).

For a proof of the last statement, see Appendix A. Lemma 5.2 is summarised in Fig. 7 with the direction of movement of the eigenvalues indicated corresponding to increasing \(\epsilon \).

When \(\epsilon =0\), we see that \(\lambda _3=-k_3\) and \(\lambda _4=0\). As \(\epsilon \) increases from zero, the two eigenvalues move towards each other and collide on the negative real axis when \(\epsilon =\epsilon _1^-\). After this collision, the eigenvalues become complex with \(\mathrm{Re}(\lambda _{3,4})=-(k_3+\epsilon )/2\), which decreases monotonically with \(\epsilon \). As \(\epsilon \) increases further, the two complex eigenvalues collide on the real axis when \(\epsilon =\epsilon _1^+\) and become real again. As \(\epsilon \) increases from \(\epsilon _1^+\) to \(\infty \), \(\lambda _4\) increases from the point of collision to \(-k_3(1+h_0)\) and \(\lambda _3\) decreases to \(-\infty \) from this point. At the collision values \(\epsilon =\epsilon _1^\pm \), the eigenvalues \(\lambda _3=\lambda _4\) are given by

Note that this implies that \(\lambda _*^+<\lambda _\infty <\lambda _*^-\), as \(\lambda _\infty =-k_3(1+h_0)\).

Lemma 5.2 implies linear stability, but a stronger stability result can be proved. The proof of this result uses a Lyapunov function and is given in Appendix A.

Lemma 5.3

In the region of \({\mathbb {R}}^2\) defined by \(y,w\ge 0\), the steady state \(y=w=1\) of Eqs. (49), (50) is globally asymptotically stable when \(\epsilon >0\).

Thus the trajectory for all non-negative initial conditions will eventually approach the steady state \((y,w)=(1,1)\) and Theorem 5.1 can be proved by analysing the solutions close to the steady states. The following lemma gives the detailed results, with the proof again in Appendix A.

Lemma 5.4

-

If \(0<\epsilon {{{}\le \epsilon _1^-}}\) and \(y(0)<1\), then rebound in Eqs. (49), (50) will occur for all \(w(0) {{{}>1}}\) and for some values of \(w(0) {{{}\le 1}}\).

-

If \(\epsilon _1^-<\epsilon _1<\epsilon _1^+\) then rebound occurs for any initial condition with \(y(0)<1\).

-

If \(\epsilon _1^+ {{{}\le \epsilon <\infty }}\) and \(y(0)<1\), then rebound in Eqs. (49), (50) will occur for some initial conditions which satisfy \(w(0)>1\).

Having established the effect of the feedback on the behaviour of the receptor without ligand and product, we next consider the effect of adding the ligand.

5.2 Invariance, steady states and stability

Before considering rebound, we first state invariance and stability results for the full TMDD system with feedback, similar to the results in Sect. 4.1 for the TMDD system with direct feedback. The proofs of the statements in this section can be found in Appendix A.

The first three of our variables are related to physical quantities and so must be non-negative and we have also assumed that the feedback variable w is non-negative. Therefore, we now establish the invariance of the region with \(x,y,z,w\ge 0\) for Eqs. (20)–(23).

Lemma 5.5

The region of \({\mathbb {R}}^4\) defined by \(x,y,z,w\ge 0\) is invariant under the flow of Eqs. (20)–(23) when \(\epsilon >0\).

As with our previous model, this again shows that none of the variables can go negative, which is an essential property of a good model. We now consider the steady states of Eqs. (20)–(23).

Lemma 5.6

In the region of \({\mathbb {R}}^4\) defined by \(x,y,z,w\ge 0\), there is a unique steady state of Eqs. (20)–(23), given by

The Jacobian matrix obtained from Eqs. (20)–(23) evaluated at the steady state solution (57) is given by

(recalling that \(h_0=-h'(1)\)). The eigenvalues of this matrix can be found from the top left \(2\times 2\) matrix and the bottom right \(2\times 2\) matrix. From the top left matrix, we obtain the eigenvalues \(\lambda _1\) and \(\lambda _2\) that we had previously, given by (27) and (28). The bottom right matrix is the same as \(J_0\), defined in (51), and so the eigenvalues of this matrix are \(\lambda _3\) and \(\lambda _4\) as defined in (52) and (53). The corresponding eigenvectors are given by

We now immediately get the linear stability of the steady state solution as all eigenvalues \(\lambda _i\) are strictly negative.

Lemma 5.7

The steady state (57) of Eqs. (20)–(23) is linearly stable when \(\epsilon >0\).

This result implies that all trajectories with initial conditions sufficiently close to the steady state will converge to it. On its own , it does not guarantee convergence for all initial conditions. However, for this model, a stronger stability result can again be proved.

Lemma 5.8

The steady state \((x,z,y,w)=(0,0,1,1)\) of Eqs. (20)–(23) is globally asymptotically stable when \(\epsilon >0\).

This result ensures that for any non-negative initial conditions and for all positive parameter values, each of the variables will converge to their unique steady state value, as with the previous model.

The proofs of these two results are again in Appendix A.

5.3 Rebound

In this section, we focus on the analysis of rebound in the latter stages of the evolution, i.e., the approach to the steady state. The linearised system will play an important role in this, but first we consider the limiting case \(\epsilon =0\) and derive some estimates on the initial behaviour of the moderator.

For \(\epsilon =0\), the full TMDD equations with feedback via a moderator, i.e., (20)–(23), reduce to

Hence w is constant. With the initial condition (24), this implies \(w=1\) and the system reduces to the TMDD equation without feedback as studied in our previous paper (Aston et al. 2014). However, if w has a different initial condition, say \(w=w_0\ne 1\), then the system will converge to the steady state \((x,z,y,w)=(0,0,w_0,w_0)\). Within the hyperplane \(w=w_0\), this equilibrium is an attractor. Thus if \(w_0>1\), then the receptor will converge to a value above the original baseline. Note that in this case, we have \(\lambda _4=0\), corresponding to the invariance of the moderator w and the line of steady states \((0,0,w_0,w_0)\).

For \(\epsilon >0\) and the initial condition (24), we can show that initially the moderator w will increase to values above its baseline and hence the production of the receptor will be stimulated.

Lemma 5.9

For \(\epsilon >0\) and \(h_0>0\), there exists a \(\tau ^*>0\) such that \(w(\tau )>1\) for all \(\tau \in (0,\tau ^*)\).

Proof

The initial condition is \(w(0)=1\) and since this is also the steady state value of w, we have \(\dot{w}(0)=0\). Differentiating (23) and evaluating at \(\tau =0\) gives

and the result follows immediately from this. \(\square \)

The initial conditions give that \(\dot{y} (0)= -\frac{1}{\mu }\), hence initially y will decrease and take values smaller than its baseline value 1. The following lemma implies that if there is no rebound, then \(w(\tau )\) must be larger than 1 for all time. This observation will be used several times later to derive a contradiction on the assumption that there is no rebound.

Lemma 5.10

If \(\epsilon >0\), \(h_0>0\) and \(0\le y(\tau )\le 1\) for all \(\tau \ge 0\), then \(w(\tau )>1\) for \(\tau >0\).

Proof

Assume that \(\epsilon >0\), \(h_0>0\) and \(0\le y(\tau )\le 1\) for all \(\tau \ge 0\). We evaluate \(\dot{w}\) along the hyperplane \(w=1\) giving

By hypothesis (h3), \(h(y)>1\) if \(y<1\) and so \(\dot{w}|_{w=1}>0\) in this case. Since \(w(\tau )>1\) for sufficiently small \(\tau \) by Lemma 5.9, this result shows that \(w(\tau )\) cannot touch or cross the line \(w=1\) when \(y(\tau )<1\).

Next we will show by contradiction that \(y(\tau )=1=w(\tau )\) is impossible too. We assume that there is some \(\tilde{\tau }>0\) such that \(w(\tilde{\tau })=1\) with \(w(\tau )>1\) for \(\tau \in (0,\tilde{\tau })\), i.e. \(\tilde{\tau }\) is the first moment that \(w(\tau )\) equals one, and that \(y(\tilde{\tau })=1\) also. Using the model equations (20)–(23), we obtain

Thus, using Taylor series, y and w near to \(\tilde{\tau }\) are given by

The assumption \(w(\tau )>1\) for \(\tau <\tilde{\tau }\) and \(y(\tau )\le 1\) for all \(\tau \) together with \(h_0>0\) imply that \(\dot{y}(\tilde{\tau })=0\). Looking at the expressions for the derivatives at \(\tilde{\tau }\), this gives \(x(\tilde{\tau })=\mu k_2z(\tilde{\tau })\), \(\ddot{w}(\tilde{\tau })=0\) and \(\dddot{w}(\tilde{\tau })=-\epsilon h_0 \ddot{y}(\tilde{\tau })\). Going to the next order in the Taylor series, since \(y(\tau )\le 1\), then we must have that \(\ddot{y}(\tilde{\tau })\le 0\). If \(\ddot{y}(\tilde{\tau })<0\) then this implies that \(w(\tilde{\tau }+\hat{\tau })<1\) for \(\hat{\tau }<0\) and \(|\hat{\tau }|\) sufficiently small, which contradicts our earlier assumption on w. Thus, the only possibility is that \(\ddot{y}(\tilde{\tau })=0\). Using the relation \(x(\tilde{\tau })= \mu k_2 z(\tilde{\tau })\) we then find that

and so this second derivative is zero if either \(z(\tilde{\tau })=0\) or \(k_1=k_4\). If \(z(\tilde{\tau })=0\), then \(x(\tilde{\tau })=\mu k_2 z(\tilde{\tau })=0\) too. As we also have \(y(\tilde{\tau })=1=w(\tilde{\tau })\), this would imply that the solution is at the equilibrium at \(t=\tilde{\tau }\). Uniqueness of solutions gives that this is not possible. With the alternative option \(k_1=k_4\), evaluating \(\dot{x}-\mu k_2 \dot{z}\), \(\dot{y}\) and \(\dot{w}\) on the line

shows that this line is invariant under the dynamics. At \(t=0\), \(x(0)-\mu k_2 z(0)=1\), hence initially the solution is not on this line and therefore it can never be on the line in finite time, which rules out the possibility that \(k_1=k_4\) and we have come to a contradiction. We therefore conclude that \(w(\tilde{\tau })\ne 1\) and so it follows that \(w(\tau )>1\) for all \(\tau >0\). \(\square \)

Corollary 5.11

If \(\epsilon >0\), \(h_0>0\) and \(w(t^*)\le 1\) for some \(t^*>0\), then rebound occurs.

Proof

This result is simply the converse of Lemma 5.10. \(\square \)

The observation that initially the moderator will be larger than 1, combined with the fact that for \(\epsilon =0\), the initial condition \(w(0)>1\) leads to rebound, makes it likely that rebound will happen for all small values of \(\epsilon \). This is indeed the case as will be shown below.

5.3.1 Relative position of the eigenvalues

To analyse rebound in the latter stages of the evolution, we focus on solutions near to the baseline state. We are especially interested in the eigenvalue of the Jacobian matrix (58) with real part closest to zero, since generically trajectories approach the steady state tangent to the eigenvector corresponding to this eigenvalue. We note that the eigenvalues \(\lambda _1\) and \(\lambda _2\) are always real, depend on \(k_1\), \(k_2\) and \(k_4\), do not depend on \(\epsilon \) and \(k_3\), and that \(\lambda _2<\lambda _1\). Furthermore, for \(k_2\) and \(k_4\) fixed, the eigenvalue \(\lambda _1(k_1,k_2,k_4)\) is monotonic decreasing in \(k_1\) and

On the other hand, the eigenvalues \(\lambda _3\) and \(\lambda _4\) depend on \(k_3\) and \(\epsilon \), do not depend on \(k_1\), \(k_2\) and \(k_4\), and \(\mathrm {Re}(\lambda _3)\le \mathrm {Re}(\lambda _4)\). Thus, the eigenvalue with real part closest to zero will be either \(\lambda _1\) or \(\lambda _4\). As observed before, \(\lambda _1\) does not depend on \(\epsilon \), and for small \(\epsilon \) we have

Thus, for sufficiently small \(\epsilon \), the eigenvalue closest to zero will be \(\lambda _4\). To describe how this changes when \(\epsilon \) increases, we consider four \(\lambda _1\) intervals that are defined in terms of \(\lambda _*^\pm \) (see (56)) and \(\lambda _\infty \) (see Lemma 5.2)—a summary is sketched in Fig. 8:

The relative positioning of the eigenvalues in the \(k_1\)–\(k_3\) plane with \(k_2\) and \(k_4\) fixed. Recall that \(\lambda _\infty (k_3) = -k_3(1+h_0)\) and \(\lambda _*^\pm (k_3) =-k_3(1+h_0\pm \sqrt{h_0(1+h_0)})\), thus the three curves are relations of the form \(k_3=\lambda _1(k_1,k_2,k_4)/ f(h_0)\), with \(f(h_0)\) the appropriate function

-

\(\lambda ^-_*(k_3)\le \lambda _1(k_1,k_2,k_4)<0\) (i.e., \(k_3\ge \frac{-\lambda _1}{1+h_0- \sqrt{h_0(1+h_0)}}\)):

Now \(\lambda _1\) is in the interval where \(\lambda _4\) takes real values, hence the eigenvalues \(\lambda _4\) and \(\lambda _1\) cross at the point where \(\lambda _4=\lambda _1\) and solving this equation for \(\epsilon \) gives

$$\begin{aligned} \epsilon= & {} \epsilon _2(\lambda _1,k_3):=-\lambda _1 \left( \frac{\lambda _1+k_3}{\lambda _1+k_3(1+h_0)}\right) = \epsilon _1^-(k_3) - \frac{(\lambda _1-\lambda _*^-)^2}{\lambda _1-\lambda _\infty }\nonumber \\= & {} \epsilon _1^+(k_3) +\frac{ (\lambda _1-\lambda _*^+)^2}{\lambda _\infty -\lambda _1} . \end{aligned}$$(61)Note that \(\lambda _1+k_3(1+h_0)=\lambda _1-\lambda _\infty \ne 0\) in this interval and so \(\epsilon _2(\lambda _1,k_3)\) is well defined. Thus \(\epsilon _2(\lambda _1,k_3)= \epsilon _1^-(k_3)\), when \(\lambda _1=\lambda _*^-\). Altogether, we conclude that in this \(\lambda _1\) interval, we have that \(\lambda _4>\lambda _1\) if \(0<\epsilon <\epsilon _2(\lambda _1,k_3).\)

-

\(\lambda _\infty (k_3)\le \lambda _1(k_1,k_2,k_4)\le \lambda ^-_*(k_3)\) (i.e., \(\frac{-\lambda _1}{1+h_0}\le k_3\le \frac{-\lambda _1}{1+h_0-\sqrt{h_0(1+h_0)}}\)):

Here \(\lambda _1\) is in the interval where \(\lambda _4\) takes complex values, hence for \(\epsilon <\epsilon _1^-(k_3)\), \(\lambda _4\) is real and will be the eigenvalue closest to zero. At \(\epsilon =\epsilon _1^-(k_3)\), \(\lambda _4\) and \(\lambda _3\) merge and become a complex conjugate pair. For \(\epsilon \) just larger than \(\epsilon _1^-\), the real part of the complex pair will be closest to zero and this will be the case until the real part of the complex eigenvalues crosses \(\lambda _1\). This occurs when \(\mathrm{Re}(\lambda _{3,4})=\lambda _1\) and since \(\mathrm{Re}(\lambda _{3,4})= -(k_3+\epsilon )/2\), this crossing occurs at \(\epsilon =\epsilon _3\), where

$$\begin{aligned} \epsilon _3(\lambda _1,k_3):=-(k_3+2\lambda _1) \end{aligned}$$Since \(\epsilon ^\pm _1 = -(k_3+2\lambda ^\pm _*)\), it follows immediately that

$$\begin{aligned} \epsilon _1^-(k_3)<\epsilon _3(\lambda _1,k_3)<\epsilon _1^+(k_3), \hbox { for } \lambda _*^+<\lambda _1<\lambda ^-_*. \end{aligned}$$(62)Note that \(\epsilon _3(\lambda _1,k_3)= \epsilon _1^-(k_3)=\epsilon _2(\lambda _1,k_3)\), when \(\lambda _1=\lambda _*^-\). Thus in this interval, we have that \(\mathrm {Re}(\lambda _4)>\lambda _1\) for \(0<\epsilon <\epsilon _3(\lambda _1,k_3)\).

-

\(\lambda ^+_*(k_3)\le \lambda _1(k_1,k_2,k_4)<\lambda _\infty (k_3)\) (i.e., \(\frac{-\lambda _1}{1+h_0+ \sqrt{h_0(1+h_0)}}\le k_3< \frac{-\lambda _1}{1+h_0}\)):

Now \(\lambda _1\) is in the interval where \(\lambda _4\) takes both real and complex values. If \(\epsilon >\epsilon _1^+(k_3)\), then \(\lambda _4\) is real and we can use the calculation in the first point to conclude that \(\lambda _4>\lambda _1\) if \(\epsilon >\epsilon _2(\lambda _1,k_3)\). If \(\epsilon _1^-(k_3)<\epsilon <\epsilon _1^+(k_3)\), then \(\lambda _4\) is complex and the calculation above gives that \(\mathrm {Re}(\lambda _4)>\lambda _1\) if \(0<\epsilon <\epsilon _3(\lambda _1,k_3)\). Note that \(\epsilon _2(\lambda _1,k_3)>\epsilon _1^+(k_3)>\epsilon _3(\lambda _1,k_3)\) in the open \(\lambda _1\) interval and that \(\epsilon _3(\lambda _1,k_3)\) and \(\epsilon _2(\lambda _1,k_3)\) collide with \(\epsilon _1^+(k_3)\) when \(\lambda _1=\lambda ^+_*(k_3)\). Furthermore, \(\epsilon _2(\lambda _1,k_3)\rightarrow \infty \) for \(\lambda _1\rightarrow \lambda _\infty \). So altogether we have that in this interval

$$\begin{aligned} \mathrm {Re}(\lambda _4)>\lambda _1 ~\hbox { if }~ 0<\epsilon <\epsilon _3(\lambda _1,k_3) ~\hbox { or }~ \epsilon >\epsilon _2(\lambda _1,k_3). \end{aligned}$$ -

\(\lambda _1(k_1,k_2,k_4)<\lambda ^+_*(k_3)\) (i.e., \(k_3< \frac{-\lambda _1}{1+h_0+ \sqrt{h_0(1+h_0)}}\)):

Since \(\mathrm {Re}(\lambda _4)\ge \lambda ^+_*(k_3)\), we see immediately that in this interval \(\mathrm {Re}(\lambda _4)> \lambda _1\) for all \(\epsilon >0\).

To visualise this information, we have sketched the \(\lambda _1\) intervals in the \(k_1\)-\(k_3\) plane in Fig. 8.

5.3.2 Rebound in the latter stages of the evolution

Now that we have determined the positioning of the eigenvalues, we can state when rebound occurs in the latter stages of the evolution.

Theorem 5.12

Define m as in Theorem 4.10, i.e., \(m=\inf \{\frac{h(y)-1}{y-1}\mid 0\le y <1 \}\) and define \(f_*^+(h_0) = 1+h_0+\sqrt{h_0(1+h_0)}\), hence \(\lambda _*^+=-k_3f_*^+(h_0)\).

-

(i)

If \(k_3<\min \left( \frac{-\lambda _1}{f_*^+(h_0)}, \frac{k_4}{1+m}\right) \), then rebound occurs generically for \(0<\epsilon <\epsilon _1^+{{(k_3)}}\).

-

(ii)

If \(k_1>k_4\) and

-

(a)

\(\frac{-\lambda _1}{f^+_*(h_0)}< k_3 <\min \left( \frac{-\lambda _1}{1+h_0},\frac{k_4}{1+m}\right) \), then rebound occurs generically for \(0<\epsilon <\epsilon _2{{(\lambda _1,k_3)}}\);

-

(b)

\(k_3> \min \left( \frac{-\lambda _1}{1+h_0},\frac{k_4}{1+m}\right) \), then rebound occurs generically for all \(\epsilon >0\).

-

(a)

-

(iii)

If \(k_1<k_4\) and

-

(a)

\(-\frac{\lambda _1}{f_*^+(h_0)}<k_3\le -\lambda _1\) then rebound occurs generically for \(0<\epsilon <\epsilon _3{{(\lambda _1,k_3)}}\);

-

(b)

\(k_3>-\lambda _1\) then rebound occurs generically for \(0<\epsilon <-\lambda _1\).

-

(a)

These results are summarised in Fig. 9.

The proof of this theorem will be given via two lemmas which determine when the eigenvalue closest to zero can be associated with rebound. First we consider the case that the eigenvalue \(\lambda _4\) (or its real part if complex) is closest to zero.

Lemma 5.13

If the eigenvalue \(\lambda _4\) (or its real part if complex) is closest to zero and

-

\(0<\epsilon <\epsilon _1^+\) or

-

\(\epsilon \ge \epsilon _1^+\), and \(k_3>\frac{k_4}{1+m}\),

then rebound occurs (generically only for \(\epsilon _1^-<\epsilon <\epsilon _1^+\)).

The proof of this lemma can be found in Appendix B.

Next we consider the case that the eigenvalue \(\lambda _1\) is closest to zero.

Lemma 5.14

If \(\lambda _1\) is the eigenvalue closest to zero, then rebound occurs generically if \(k_1>k_4\) or if \(\epsilon <-\lambda _1\).

Again, the proof of this lemma can be found in Appendix B.

With the lemmas above, we are ready to prove Theorem 5.12.

Proof of Theorem 5.12

The results in this Theorem are essentially obtained by applying Lemmas 5.13 and 5.14 in each of the four regions described in Sect. 5.3.1. The results from this process are summarised in Table 1. The observations about the relative position of \(\epsilon _2\), \(\epsilon _3\) and \(\epsilon ^+\) in the various regions follow from (61) and (62). In the regions \(\lambda _*^+<\lambda _1<\lambda _*^-\), the case \(\mathrm{Re}(\lambda _4)<\lambda _1\) leads to the range \(\epsilon _3<\epsilon <-\lambda _1\). This range is not empty only when \(\epsilon _3<-\lambda _1\). The definition of \(\epsilon _3\) shows that this condition holds if \(\lambda _1>-k_3\), which is why this condition is included in the second row. For the third row, we observe that \(\lambda _\infty =-k_3(1+h_0)<-k_3\), so the range \(\epsilon _3<\epsilon <-\lambda _1\) is always empty if \(\lambda _1<\lambda _\infty \).

The two columns containing conditions for rebound in Table 1 can be combined in some cases, as shown in Table 2, where we separate out two regions in the parameter plane defined by \(k_1\le k_4\) and \(k_1>k_4\). For the third row, we have used that \(\lambda _1(k_1,k_2,k_4)\) is monotonic decreasing in \(\lambda _1\). Hence if \(k_1\le k_4\), then \(\lambda _1(k_1,k_2,k_4)\ge \lambda _1(k_4,k_2,k_4)=-k_4\) (see (60)). Thus if \(\lambda _1<\lambda _\infty =-k_3(1+h_0)\) and \(k_1\le k_4\), then \(k_3<-\frac{\lambda _1}{1+h_0}\le \frac{k_4}{1+h_0}\le \frac{k_4}{1+m}\).

We note in Table 2 that in many cases, there are neighbouring intervals with only the boundary point between them missing. Thus, filling in these specific points (which are proved below) gives the results shown in Table 3. The results in Theorem 5.12 are obtained by converting the eigenvalue ranges in Table 3 into conditions involving the parameters, using that \(\lambda _\infty =-k_3(1+h_0)\) and \(\lambda _*^+=-k_3f_*^+(h_0)\).

We now consider a number of special cases that fill in the gaps that allow us to go from Tables 2 to 3.

-

\(\lambda _*^-<\lambda _1<0\), \(\epsilon =\epsilon _2\).

When \(\epsilon =\epsilon _2\), then \(\lambda _1=\lambda _4\) and so there are two repeated eigenvalues that are the least negative. With some calculations, it can be seen that the eigenvectors \(v_1\) and \(v_4\) collide (the denominator \((k_3+\lambda _1)(\epsilon _3+\lambda _1)+\epsilon _2k_3h_0\) in the third and fourth entry vanishes, so the eigenvector has to be rescaled first to see this), hence we are in the case of an eigenvector and a generalised eigenvector. The eigenvector is \(v_4=(0,0,k_3,k_3+\lambda _4)\) and generically solutions will align with this eigenvector. In the proof of Lemma B.1, it is shown that \(k_3+\lambda _4>0\) when \(\epsilon \le \epsilon _1^-\). Now \(\epsilon _2<\epsilon _1^-\) for \(\lambda _1>\lambda _*^-\) by (61) and this implies that the third and fourth components of \(v_4\) have the same sign. The proof in Lemma B.1 now applies to prove that generically rebound occurs in this case.

-

\(\lambda _1=\lambda _*^-\), \(\epsilon =\epsilon _2=\epsilon _3=\epsilon _1^-\).

In this case there is triple eigenvector with one eigenvector \(v_1=v_4=v_3 = (0,0,k_3,k_3+\lambda _4)\) together with two generalised eigenvectors. Again, generically solutions align with this eigenvector and, as above, the third and fourth entries have the same sign, thus and so rebound can be proved via a contradiction argument.

-

\(\lambda _*^+<\lambda _1<\lambda _\infty \), \(\epsilon =\epsilon _2\) with \(k_3>\frac{k_4}{1+m}\).

This is similar to the first case as the two eigenvalues \(\lambda _1\) and \(\lambda _4\) coincide when \(\epsilon =\epsilon _2\) with an eigenvector and a generalised eigenvector in this case. Generically solutions will align asymptotically with the eigenvector. The proof of Lemma B.2 can now be applied in this case.

-

\(\lambda _*^+<\lambda _1<\lambda _*^-\), \(\epsilon =\epsilon _3\) with \(k_1>k_4\) or \(k_3>-\lambda _1\).

In this case, the real part of the complex pair \(\lambda _{3,4}\) equals the eigenvalue \(\lambda _1\). Approaching the steady state via the complex pair leads to rebound. The proof of Lemma 5.14 also applies with \(\epsilon =\epsilon _3\) provided that \(\epsilon _3+\lambda _1<0\) and this occurs if \(k_3>-\lambda _1\) and so rebound occurs if the steady state is approached tangent to \(v_1\). If the approach occurs via a linear combination of the three eigenvectors, then clearly rebound will then occur in this case also. Hence we can conclude that rebound will occur generically in this case.

The rebound results that are summarised in Table 3 are shown in Fig. 9. There are two cases shown here, depending on whether or not the curve \(\lambda _1=\lambda _*^+\) intersects the line \(k_3=\frac{k_4}{1+m}\). Since \(\lim _{k_1\rightarrow \infty }=-(k_2+k_4)\) (see (60)), if \(k_2<k_4\frac{f_*^+-(1+m)}{1+m}\) then the curve \(\lambda _1=\lambda _*^+\) is always below \(k_3=\frac{k_4}{1+m}\). Also, as \(\lambda _1(k_4,k_2,k_4)=-k_4\) (see (60)), then it can be shown that the line \(k_3=\frac{k_4}{1+m}\) is above the line \(\lambda _*^+\) at this point, and so the line can never go below the curve as the curve is monotonically increasing with \(k_1\). \(\square \)

Remarks

-

Some of the rebound results that we proved in Lemmas 5.13 and 5.14 hold only generically, and some cases always give rebound. However, for the sake of simplicity, we have not distinguished between these in Theorem 5.12 but simply refer to the existence of generic rebound.

-

In Theorem 5.12, we have proved the existence of rebound in a variety of cases. However, it should be noted that we have not proved or disproved the existence of rebound in other parameter regions, and so we do not have results for all possible parameter values.

-

From Theorem 5.12, we can conclude that there exists \(\epsilon _0>0\) such that rebound occurs for all \(\epsilon \) satisfying \(0<\epsilon <\epsilon _0\) (see Fig. 9). Thus, for all parameter values, rebound occurs for sufficiently small \(\epsilon >0\). This might seem to contradict the result for the limit \(\epsilon =0\) (the no-feedback case) where there is a large region in the parameter plane for which there is no rebound. The explanation is that for those parameter values, the magnitude of the rebound decays to zero as \(\epsilon \) goes to zero.

6 Generalisations

Thus far, we have considered the basic TMDD model with feedback dynamics which is linear in the feedback moderator F. We now extend this model in two directions. First we extend the TMDD model to allow for more compartments and secondly we consider more general types of feedback dynamics. Below we show that some of the rebound results can be extended to these more general models.

6.1 Generalised TMDD model with feedback via a moderator

In this section we consider a generalisation of the basic TMDD model to more compartments. It is assumed that the receptor is still only in one compartment. In the basic TMDD model, the variable y represents the normalised receptor in this compartment. Now we will assign y to represent either the normalised free receptor (as before) or it could be the normalised total amount of receptor. The normalised ligand-product components (x and z) of the basic TMDD model are generalised to a vector \(\mathbf {x}\) that represents the ligand and product in the compartments. The generalised TMDD model with feedback becomes

where \(\mathbf {x}\in \mathbb {R}^n\), \(n\ge 1\) and \(y,w\in \mathbb {R}\). We note that if \(\mathbf{f}_1\) depends only on \(\mathbf{x}\) but not on y, or if \(f_2\) depends only on y but not on \(\mathbf{x}\), then the coupling between \(\mathbf{x}\) and y is in one direction only. In this case, the model could not be classed as a generalised TMDD model, although our results below still hold in these cases. We assume that the assumptions (h1)–(h4) on the feedback function h(y) hold and we make the following assumptions on the functions \(\mathbf {f}_1\) and \(f_2\):

-

There exists an \(\mathbf {x}_0\in \mathbb {R}^n\) such that the Eqs. (63)–(65) have the steady state solution \(\mathbf {x}=\mathbf {x}_0\), \(y=w=1\). This implies that \(\mathbf {f}_1(\mathbf {x}_0,1)=\mathbf {0}\) and \(f_2(\mathbf {x}_0,1)=0\). We also assume that this steady state is globally asymptotically stable.

-

In the basic TMMD model, a change in the receptor level while the ligand and product are at baseline does not move the ligand or product away from baseline and we make a similar assumption for this model, i.e., \(\mathbf {f}_1(\mathbf {x}_0,y)=0\) for all \(y\ge 0\) which implies that \(\frac{\partial \mathbf {f}_{1}}{\partial y}(\mathbf {x}_0,1)=0\). Similarly, the function \(f_2\) is not affected by the receptor dynamics if the ligand and product are at baseline, i.e., \(f_2(\mathbf {x}_0,y)=0\) for all \(y\ge 0\) which implies that \(\frac{\partial f_{2}}{\partial y}(\mathbf {x}_0,1)=0\). In fact, in both cases, all that we really require is the weaker derivative condition.

-

The initial conditions are given by

$$\begin{aligned} \mathbf {x}=\mathbf {x}_1,\quad y=w=1 \end{aligned}$$for some \(\mathbf {x}_1\ne \mathbf {x}_0\) with \(\mathbf {x}_1\) such that \(f_2(\mathbf {x}_1,1)<0\). Hence initially the amount of receptor will decrease.

-

The Jacobian matrix \(D_{\mathbf {x}}\mathbf {f}_1(\mathbf {x}_0,1)\) has no zero or purely imaginary eigenvalues.

With these assumptions, the Jacobian matrix evaluated at the steady state is given by

Note that the condition \(\frac{\partial \mathbf {f}_{1}}{\partial y}(\mathbf {x}_0,1)=0\) leads to a block lower triangular structure in this matrix. This implies that the eigenvalues of this matrix are the eigenvalues of the Jacobian matrix \(D_{\mathbf {x}}\mathbf {f}_{1}(\mathbf {x}_0,1)\) and the eigenvalues of the \(2\times 2\) bottom right matrix in \(J(\mathbf {x}_0,1,1)\). The global asymptotic stability of the steady state \((\mathbf {x}_0,1,1)\) implies that all eigenvalues have negative or zero real part. Note that the eigenvalues of \(D_{\mathbf {x}}\mathbf {f}_{1}(\mathbf {x}_0,1)\) do not depend on \(\epsilon \) and that our final assumption implies that the eigenvalue closest to zero will have strictly negative real part. The \(2\times 2\) bottom right matrix is the same as in the basic TMDD model with feedback. We denote its eigenvalues by \(\lambda _n\) and \(\lambda _{n-1}\), where the eigenvalue \(\lambda _n\) corresponds to \(\lambda _4\) earlier and \(\lambda _{n-1}\) to \(\lambda _3\). Thus \(\lambda _n\) is the closest eigenvalue to zero for sufficiently small \(\epsilon \).

We define

where \(\tilde{\epsilon }_2\) is the value of \(\epsilon \) at which the eigenvalue \(\lambda _n\) coincides with the eigenvalue closest to zero of the matrix \(D_{\mathbf {x}}f_{1}(\mathbf {x}_0,1)\). Recall that \(\epsilon _1^-\) is the value of \(\epsilon \) for which \(\lambda _n\) and \(\lambda _{n-1}\) become a complex pair of eigenvalues. We then have the following result.

Theorem 6.1

If the above assumptions on \(\mathbf {f}_1\) and \(f_2\) hold, then rebound occurs generically for \(0<\epsilon <\tilde{\epsilon }_0\).

Proof

The condition \(f_2(\mathbf {x}_1,1)<0\) ensures that \(\dot{y}(0)<0\), which is required in the proof of Lemma 5.9. The w equation is unchanged in this setting and so Lemma 5.10 also holds. The proof of Lemma B.1 also holds in this setting, although the exceptional case that the trajectory approaches the steady state tangent to a different eigenvector cannot be excluded outright in this more general case, and so we only have a generic result. \(\square \)

If the bottom right \(2\times 2\) matrix in \(J(\mathbf {x}_0,1,1)\) has complex eigenvalues with real part closest to zero of all the eigenvalues, then again there will be oscillation about the steady state in y as the steady state is approached and so rebound occurs infinitely often but with exponentially decreasing amplitude.

6.2 Generalisation of the dynamics of the feedback

In our considerations so far, the feedback dynamics has been linear in the feedback moderator F (or w in the non-dimensional equations), see (14) or (23). In this section we will consider a more general form of feedback, and focus on the case of a small value of the parameter \(\alpha \) (\(\epsilon \)). In particular, we again assume that the receptor equation is described by (13) and describe the dynamics of F by

Non-dimensionalising as before, we obtain a new equation for the feedback variable w (\(=F\)) given by

where

and \(\epsilon \) is defined by (25). We make a number of assumptions regarding the function g:

-

(h1’)

\(g(1,1)=0\)

-

(h2’)

\(-g_1:=\frac{\partial g}{\partial y}(1,1)<0\)

-

(h3’)

\(-g_2:=\frac{\partial g}{\partial w}(1,1)<0\)

The assumption (h1’) ensures that the equations retain the steady state with \(y=w=1\). Assumption (h2’) ensures that when the system is perturbed from the steady state by reducing y, which is what happens when the ligand is injected, then w will increase, resulting in greater production of the receptor. Finally, assumption (h3’) ensures that the steady state \(w=1\) of (67), assuming that \(y=1\) is held fixed, is stable.

We now show that some of the results obtained previously also hold in this more general setting. We again start by considering the Jacobian matrix of Eqs. (20)–(22), (67) evaluated at the steady state (57), which is given by

Theorem 6.2

If assumptions \((h1')\), \((h2')\) and \((h3')\) hold, and if \(g(y,1)>0\) for all \(y\in [0,1)\), then there exists \(\epsilon _0>0\) such that rebound occurs in the model given by Eqs. (20)–(22), (67) for all \(\epsilon \) satisfying \(0<\epsilon <\epsilon _0\).

Proof

We note that assumptions (h1\('\)) and (h2\('\)) imply that \(g(y,1)>0\) for \(y<1\) sufficiently close to 1. The extra assumption requires that this holds for all \(y\in [0,1)\).

The proofs of Lemmas 5.9, 5.10 and B.1 can easily be adapted to this modified model. In particular, for Lemma 5.9, \(\dot{w}(0)=0\) as before and in this case, we have

and so Lemma 5.9 holds.

For Lemma 5.10, we note that

using our stated assumption. Combined with Lemma 5.9, this implies that \(w(\tau )\) cannot touch or cross the line \(w=1\) when \(y(\tau )<1\). The second part of the proof of Lemma 5.10 shows by contradiction that \(y(\tau )=w(\tau )=1\) is impossible and the same proof holds in this case with \(h_0\) replaced by \(g_1\).

The Jacobian matrix associated with Eqs. (49) and (67) (analogous to (51)) is given by

which has eigenvalues \(\lambda _3=-k_3\), \(\lambda _4=0\) when \(\epsilon =0\) as previously. These two eigenvalues collide when the discriminant of the characteristic polynomial is zero, and this first occurs when

With this definition of \(\epsilon _1^-\), we claim that Lemma B.1 holds also. To see this, we note that the eigenvector \(v_4\) given by (59) is the same in this case, but with \(\lambda _4\) as the eigenvalue of (69) that is closest to zero. We now have

It is easily verified that \(k_3>\epsilon _1^-g_2\) and this implies that \(k_3+\lambda _4>0\) for \(0<\epsilon <\epsilon _1^-\). Thus, if \(0<\epsilon <\epsilon _1^-\), then the third and fourth components of the eigenvector \(v_4\) have the same sign and the proof of Lemma B.1 again implies that rebound occurs.

The second part of the proof of Lemma B.1 considered the non-generic cases of the trajectory approaching the steady state tangent to one of the other eigenvectors. For the eigenvector \(v_3\) in (59), we note that it is easily verified that \(k_3+\lambda _3>0\) and so the same arguments apply in this case. The argument for the eigenvector \(v_2\) is unchanged, while for \(v_1\), the requirement for the third and fourth components of the eigenvector to be the same is \(\epsilon g_2+\lambda _1<0\) and it is easily verified that this holds.

If \(\epsilon =\epsilon _2\) corresponds to the point at which the eigenvalues \(\lambda _1=-k_4\) and \(\lambda _2\) coincide, then the conditions of Lemma B.1 hold with \(\epsilon _0=\min (\epsilon _1^-,\epsilon _2)\) and so we conclude that rebound therefore occurs for all \(\epsilon \) satisfying \(0<\epsilon <\epsilon _0\). \(\square \)

This result shows that for this generalised model, rebound always occurs for sufficiently small \(\epsilon >0\) even if there is no rebound without feedback (\(\epsilon =0\)), as is the case for the original TMDD model with feedback moderator.

Clearly, if the bottom right \(2\times 2\) matrix in (68) has complex eigenvalues which have real part closest to zero of all the eigenvalues, then there will again be oscillation about the steady state in y and so rebound occurs infinitely often but with exponentially decreasing magnitude.

7 Applications

We now consider two applications of these results to two different models. The first is the standard TMDD model with feedback. The second is a more complicated model of the effect of efalizumab on patients with psoriasis. This model is an application of the generalizations in Sect. 6.

7.1 Example 1

We consider the TMDD equations including feedback with moderator given by (11)–(14). We use parameter values for the IgE mAb omalizumab (Sun 2001; Agoram et al. 2007), namely \(k_\mathrm{e(L)}=0.024~\mathrm{day}^{-1}\), \(k_\mathrm{e(P)}=0.201~\mathrm{day}^{-1}\), \(k_\mathrm{out}=0.823~\mathrm{day}^{-1}\), \(R_0=2.688~\mathrm{nM}\), \(k_\mathrm{off}=0.900~\mathrm{day}^{-1}\), \(k_\mathrm{on}=0.592~\mathrm{(nM\,day)}^{-1}\), \(k_\mathrm{in}=k_\mathrm{out}R_0=2.212224~\mathrm{nM day}^{-1}\), and initial drug dose \(L_0=14.8148\) nM. These parameter values have \(k_\mathrm{e(L)}<k_\mathrm{e(P)}\) and so no rebound will occur when there is no feedback or in the case of direct feedback for any feedback function H(R). This follows directly from Theorem 2.1, Corollary 4.4, and Theorem 4.10 since \(k_\mathrm{e(L)}<k_\mathrm{e(P)}\) implies that the parameter values are in the left green region in Figs. 3, 4, and 6.

However if there is feedback via a moderator then Theorem 5.12 implies that rebound occurs for all sufficiently small \(\alpha >0\), even though there is no rebound at \(\alpha =0\). The parameters for this example give

Thus Theorem 5.12 gives that the parameters are in the upper left region of Fig. 9 and that rebound will happen for \(\alpha <0.135\) (using \(\alpha =\epsilon /0.625\)). However, this is a lower bound on the region of rebound. To illustrate this, we have used the mainly linear feedback function H(R) in (34) with \(H_0=1\). The magnitude of the rebound is sufficiently small in this case that a nonlinear function \(H_1(R)\) is not required. The maximum rebound is plotted in Fig. 10 for a range of values of \(\alpha \) which does indeed show that there is rebound for all \(\alpha >0\) and sufficiently small. For this example, the rebound ends at approximately \(\alpha =0.98\). In this case, a local maximum for R persists for larger values of \(\alpha \), but with \(R_\mathrm{max}/R_0<1\). The value of time \(t_\mathrm{max}\) at which the maximum rebound occurs is also shown in Fig. 10. We note that as \(\alpha \rightarrow 0\) then \(t_\mathrm{max}\rightarrow \infty \) and so the time of maximum rebound moves to infinity as the magnitude decreases. The same mechanism does not apply when the rebound disappears around \(\alpha =1\) since a local maximum persists in this case but at values lower than baseline, as already discussed.

Left a plot of the relative magnitude of rebound \(R_\mathrm{max}/R_0\) for feedback with a moderator and the mainly linear feedback function (34) with \(H_0=1\) for a range of values of \(\alpha \). Right a plot of the time at which the maximum rebound occurs

We have numerically sampled larger values of \(\alpha \) and there is no indication of further rebound occurring. Of course we know that when \(\alpha \rightarrow \infty \), the feedback with moderator becomes the direct feedback case, for which there is no rebound in this example.

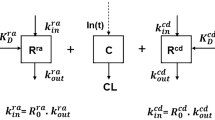

7.2 Example 2

In a study on the effect of efalizumab on patients with psoriasis (Ng et al. 2005), rebound was reported in some patients. The model equations proposed in Ng et al. (2005) are