Abstract

In uncertain environments, animals often face the challenge of deciding whether to stay with their current foraging option or leave to pursue the next opportunity. The voluntary decision to persist at a location or with one option is a critical cognitive ability in animal temporal decision-making. Little is known about whether foraging insects form temporal expectations of reward and how these expectations affect their learning and rapid, short-term foraging decisions. Here, we trained bumblebees on a simple colour discrimination task whereby they entered different opaque tunnels surrounded by coloured discs (artificial flowers) and received reinforcement (appetitive sugar water or aversive quinine solution depending on flower colour). One group received reinforcement immediately and the other after a variable delay (0–3 s). We then recorded how long bees were willing to wait/persist when reinforcement was delayed indefinitely. Bumblebees trained with delays voluntarily stayed in tunnels longer than bees trained without delays. Delay-trained bees also waited/persisted longer after choosing the reward-associated flower compared to the punishment-associated flower, suggesting stimulus-specific temporal associations. Strikingly, while training with delayed reinforcement did not affect colour discrimination, it appeared to facilitate the generalisation of temporal associations to ambiguous stimuli in bumblebees. Our findings suggest that bumblebees can be trained to form temporal expectations, and that these expectations can be incorporated into their decision-making processes, highlighting bumblebees’ cognitive flexibility in temporal information usage.

Significance statement

The willingness to voluntarily wait or persist for potential reward is a critical aspect of decision-making during foraging. Investigating the willingness to persist across various species can shed light on the evolutionary development of temporal decision-making and related processes. This study revealed that bumblebees trained with delays to reinforcement from individual flowers were able to form temporal expectations, which, in turn, generalised to ambiguous stimuli. These findings contribute to our understanding of temporal cognition in an insect and the potential effects of delayed rewards on foraging behaviour.

Introduction

The use of temporal information can be key to ensuring adaptability and resilience of animals in fluctuating environmental conditions. For example, in foraging, temporally accurate predictions of food availability can help animals maximise the efficiency of energy collection (Wainselboim et al. 2002; Henderson et al. 2006), while minimising exposure to risk (Metcalfe et al. 1999; Dunphy-Daly et al. 2010; Wang et al. 2018). Temporal information about food availability can also be used to approximate optimal foraging routes and thereby avoid re-visitation of depleted sites (Ohashi et al. 2008; Trapanese et al. 2019). In animal communication, temporal patterns and structures of communication signals can be modulated (Filippi et al. 2019), can be picked up by conspecific receivers (Seeley et al. 2000), and can also be essential for maintaining social cohesion in animal colonies (Brandl et al. 2021). In navigation, temporal information can also be utilised by animals to calculate distance travelled (Parent et al. 2016).

While the capacity for utilising temporal information is relatively well studied in mammals and birds, for many other taxa, particularly invertebrates, the ability to form and utilise temporal expectations, i.e. learning when events are likely to occur, remains less explored (Skorupski and Chittka 2006; Skorupski et al. 2006; Ng et al. 2021). In bumblebees, a sense of timing has been investigated in a few studies. For example, bumblebees have been suggested to be capable of anticipating and reacting to food schedules that recur at fixed intervals (Boisvert and Sherry 2006). Bumblebees also seemed to recall the timing of high-quality feeders with different fixed-interval schedules, and to time their visits to coincide with the availability of these rewards (Boisvert et al. 2007). However, it is still not clear how bumblebees’ temporal expectations, if they are formed, affect their learning and decision-making.

Here, we hypothesised that bumblebees could form temporal expectations, i.e. could learn that reinforcement follows a flower choice after a short time delay (0–3 s). We adopted a colour discrimination paradigm that allowed freely flying bees to voluntarily choose to stay/persist at a flower, or leave to find reward elsewhere. We set out to test our hypothesis and to determine how the formation of temporal expectations might affect colour discrimination learning and colour and temporal generalisation in bumblebees.

Methods

Experimental animals and setup

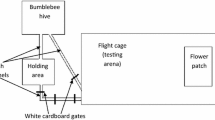

Bumblebees (Bombus terrestris audax) from commercially available colonies (Agralan Ltd, UK) were housed in a wooden nest-box connected to a flight arena (100-cm length × 71-cm width × 71-cm height). Seven colonies were used in total. Colonies were provided with ~7-g irradiated pollen (Koppert B.V., NL) every 2 days. Bees involved in the experiment were given access to sucrose solution during the experiment and sucrose was provided to the colony ad libitum outside of experimental work.

Experimental procedures

Pre-training

Bees were first allowed to fly and look for food in the arena. At this pre-training stage, all bees were allowed to access the arena freely. Three opaque white acrylic tunnels (20 mm Ø × 20-mm length) were placed on the far wall of the arena (Fig. 1A). The tunnels were held in place by a small piece of metal attached with hot glue to the tunnel and magnets hot-glued to the wall. Rarely, a bee would land on and enter a tunnel unaided; more often, the experimenter would reach carefully into the arena, gently guide a bee into a small plastic cup, and then place the cup around one of the tunnels. The bee would eventually find the droplet of 30% sucrose solution via a small hole in the wall at the back of the tunnel (Fig. 1A). Once a bee entered a tunnel and fed on sucrose solution of her own volition, she was subsequently number-tagged for individual identification. The bee was placed in a bee queen marking cage with a sponge plunger. While the bee was immobilised in the cage, a numbered tag (Bienen-Voigt & Warnholz GmbH & Co. KG, DE) was superglued (Loctite Power Flex Gel, Henkel Ltd., Hemel Hempstead, UK) to her thorax. The bee was placed back in the colony and allowed to return to foraging. Bees tended to return to foraging within 5–10 min. Once a bee came back again to forage on her own, she would be allowed to begin the training phase.

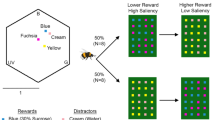

Fig. 1 Experimental setup and procedure. A The colour stimuli presented during training and the learning test. B The colour stimuli presented during the transfer test. C Aerial close-up view of setup. A servo connected to a microcontroller system was attached to the outer back wall. As soon as the bee entered the tunnel, the experimenter pressed a button, which operated a servo. The servo moved the outer back wall to make the holes on both the inner and outer back walls align which allowed the bee access to reinforcement. D The training procedure for the delayed group, where the bees experienced a random delay between 0 and 3 s before they received reinforcement. E The procedure conducted in all tests, where bees received no reinforcement after making choices and their waiting times were recorded. F Human visual depiction of each of the colours used in the experiments with bee colour hexagon loci. G Loci of stimuli colours in bee colour space, describing the range of colours a bee can see given their three photoreceptors maximally sensitive to UV, blue, and green light (Chittka 1992). Dots indicate each of the colour loci of the stimuli used in the experiments and are shown with colours as perceived by humans. The distance from the centre indicates spectral purity (or its perceptual analogue, saturation). The closer the dots are together, the more similar they look to a bee. H Relative spectral reflectance plot of each of the targets used

Training

Training was done in the same arena as pre-training. However, for training, six opaque white acrylic tunnels were placed on the wall and around each tunnel was placed a circular piece of laminated coloured paper, either blue or green (Fig. 1A). In each training bout, a bee was allowed to freely forage in the tunnels and collect a full crop of nectar before returning home. At the moment a bee entered a tunnel, the experimenter would press a button that was connected to a microcontroller system (Arduino Uno, Arduino, Somerville, MA, USA) which moved the outer back wall after 0 to 3 s (random uniform distribution) to align the hole in the inner wall at the back of the tunnel with a hole in the outer wall (Fig. 1C). This gave the bee access to reinforcement (Fig. 1D; 10 μL rewarding 50% w/w sucrose solution for a correct choice and 10 μL aversive saturated quinine hemisulfate solution for an incorrect choice) on a platform attached to the outer wall under the holes. Each solution was refilled after a bee visited, drank from it, and then left the tunnel. We used a variable delay so that only after a variable but relatively short amount of time could bees gain access to reinforcement, which allowed their voluntary persistence (or willingness to wait) for reward to be tested subsequently. The delays were determined using the Arduino code function “random (0,3)” which produced a uniform distribution of delays between 0 and 3 s. We chose 0–3 s because during pilot experiments (unpublished data), we found that when trained with longer delays (e.g. 10–20 s), bees sometimes took excessive amounts of time (e.g. minutes) to leave a tunnel during unreinforced tests, and when trained with shorter delays (all less than 1 s), bees usually left immediately upon entering the tunnel and failed to stay engaged with the task.
Because of natural variation in human response time to seeing the bee fully inside the tunnel and pressing the button, and variation in how long a bee took to get to the back of the tunnel, we assume some small (approximately 1 s) additional variation in the delay times experienced. Note that 3 s should be ecologically relevant for bumblebees, as previous work investigating duration in searching behaviour and handling time spans 3 s and beyond (e.g. Gegear and Laverty 1995; Krishna and Keasar 2019; Richter et al. 2023). Colours of stimuli were printed with a high-resolution ink jet printer. Tunnels and stimuli were removed from the arena, washed (70% ethanol), and air-dried in the lab between bouts to eliminate the use of olfactory cues. Platforms and walls were washed and dried similarly and reinforcement solutions were replaced before each bee’s visit to the arena. Tunnels and stimuli were re-arranged pseudo-randomly between training bouts to prevent bees from associating certain spatial locations with reward or punishment. One group of bees (delayed group; n = 21; four colonies) was trained individually until each bee had reached at least 60% performance based on correct choices during one bout (minimum 5 bouts). Another group of bees (control group; n = 19; three colonies) was trained similarly on the same blue and green coloured stimuli but without delay to reinforcement.
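
The delay schedule described above can be illustrated with a short simulation. The sketch below is written in Python rather than as the Arduino firmware used in the experiment, and it assumes a continuous uniform draw between 0 and 3 s; the function name `sample_delays` and the seed are hypothetical. Arduino's `random(min, max)` returns an integer and excludes the upper bound, and how the firmware converted that value into a delay is not specified in the text, so the continuous draw is an assumption.

```python
import random


def sample_delays(n_visits, low=0.0, high=3.0, seed=None):
    """Draw one reinforcement delay (in seconds) per tunnel visit.

    Assumes a continuous uniform distribution on [low, high]; this is an
    illustrative stand-in, not the firmware used in the experiment.
    """
    rng = random.Random(seed)
    return [rng.uniform(low, high) for _ in range(n_visits)]


# Simulate 1000 hypothetical tunnel visits.
delays = sample_delays(1000, seed=1)
mean_delay = sum(delays) / len(delays)
print(f"min={min(delays):.2f} s, max={max(delays):.2f} s, mean={mean_delay:.2f} s")
```

Under this assumption the expected delay is 1.5 s, so a bee in the delayed group would, on average, have waited about that long before gaining access to reinforcement, plus the additional human and travel-time variation noted above.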

Unrewarded tests

Each trained bee subsequently underwent two unrewarded tests, in a pseudorandom order. To assess the voluntary waiting/persistence of bumblebees on trained stimuli, we conducted a learning test using exactly the same stimuli as in training (Fig. 1A and F–H). To test whether such voluntary waiting/persistence behaviour could be transferred to similar but novel stimuli, we conducted a transfer test using three stimuli each with colours perceptually intermediate between the training stimuli, with one more similar to the correct colour in previous training (near correct), one more similar to the incorrect colour (near incorrect), and one in between the near correct and near incorrect colour (middle; Fig. 1B and F–H). Unlike during training, bees never gained access to reinforcement, i.e. the holes in the walls were never aligned (Fig. 1E). Once bees entered the arena, their behaviour was video-recorded (YI Action camera, 120fps, YI Technology, Bellevue, WA, USA). In this way, we were later able to measure how long bees remained in each tunnel, which we took as waiting/persisting time. Each test lasted 3 min, at which point the bee was gently collected in a plastic cup and transferred to the tunnel connecting the arena and hive. The stimuli and setup were then cleaned (70% ethanol), the stimuli rearranged on the wall, and reinforcement was placed as in training on the platforms of the back wall. The tested bee was then allowed into the arena for a refreshment training bout. Tested bees received three such bouts before the second unrewarded test. Once a bee experienced both unrewarded tests, she was placed back into the hive and prevented from further participation in this experiment.

Data and statistical analyses

Solomon coder (beta 19.08.02) was used to record the duration bees spent in each tunnel. For analyses of all videos, a blind protocol was employed, in that each video filename was coded so that the experimenter doing the analysis was blind to the training of each bee. We carried out the visualisations in Python (version 3.7.4; Python Software Foundation, Wilmington, DE, USA, "http://www.python.org/psf") and the statistical analysis in R (version 4.1.0), using the lme4 package (version 1.1-27.1) for generalised linear mixed modelling (GLMM) and the emmeans package (version 1.8.5) for post hoc pairwise comparisons. When analysing the test performances, the proportion of choices was used as the dependent variable, and the models were fitted assuming a binomial distribution with a logit link function. Group (delayed or control) or type of choices (e.g. correct or incorrect) was included as a fixed factor while individual and colony identities were considered as random factors. The number of choices each bee performed was included as “weight”. The persistence time was analysed with GLMMs, assuming a Gamma distribution with an inverse link function, with group or type of choices included as a fixed factor and individual identity as well as colony identity as random factors. Significance of the fixed factors was tested using a likelihood ratio test (LRT). Flower colour (i.e. blue colour or green colour being rewarded) was not significant in the LRT and thus was excluded. Post hoc analysis was performed using least-squares means (“lsmeans” function in “emmeans” package) with Tukey correction.

Ethical note

Although there are no current legal requirements regarding insect care and use in research in any country (Gibbons et al. 2022), experimental design and procedures were guided by the 3Rs principles (replacement, reduction, and refinement (Russell and Burch 1959; Fischer et al. 2023)). The behavioural tests were non-invasive, and the types of manipulations used are experienced by bumblebees during their natural foraging life. The bumblebees were cared for on a daily basis by trained and competent staff, which included routine monitoring of welfare and provision of correct and adequate food during the experimental period.

Results

In the learning test, where we used the same-coloured stimuli that were familiar to the bees from their training, bees from both groups chose the correct stimuli significantly more often than the incorrect stimuli (delayed: mean ± SEM = 73.210% ± 4.487%, GLMM: Z = 3.467, P = 5.260e−4, 95% confidence interval, CI [0.341, 1.942]; control: mean ± SEM = 74.400% ± 4.493%, GLMM: Z = 4.740, P = 2.140e−6, 95% CI [0.691, 1.666]; Fig. 2A). There was no significant difference in the proportions of correct choices by bees in the delayed group and control group (Fig. 2A; GLMM: Z = 0.348, P = 0.728, 95% CI [−0.965, 1.349]), suggesting that the effects on colour discrimination learning with and without a delay are similar. However, bees in the delayed group (mean ± SEM = 6.937 ± 0.653) spent significantly longer time in the tunnel than bees in the control group (mean ± SEM = 3.550 ± 0.208; GLMM: Z = 4.451, P = 8.550e−04, 95% CI [0.067, 0.173]; Fig. 2C).
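
The link functions named in the Methods determine the scale on which model estimates are expressed: logit for choice proportions and inverse for persistence times. As a rough check, the sketch below converts the raw group means reported above to those scales. It ignores the random effects, so the values only approximate the fitted estimates, and reading the reported confidence intervals as link-scale quantities is an assumption rather than something the text states.

```python
import math


def logit(p):
    """Log-odds of a proportion p (0 < p < 1)."""
    return math.log(p / (1.0 - p))


def inverse_link(mean):
    """Inverse link used with Gamma models: eta = 1 / mean."""
    return 1.0 / mean


# Raw means from the learning test (not model estimates).
delayed_correct = 0.73210   # proportion of correct choices, delayed group
control_correct = 0.74400   # proportion of correct choices, control group
delayed_time = 6.937        # mean time in tunnel, delayed group
control_time = 3.550        # mean time in tunnel, control group

logit_delayed = logit(delayed_correct)
logit_control = logit(control_correct)
# On the inverse scale a longer time gives a smaller value, so the
# control-minus-delayed difference is positive when delayed bees stay longer.
inverse_diff = inverse_link(control_time) - inverse_link(delayed_time)

print(f"logit(delayed) = {logit_delayed:.3f}")
print(f"logit(control) = {logit_control:.3f}")
print(f"1/control - 1/delayed = {inverse_diff:.3f}")
```

Under these assumptions the log-odds come out near 1.0 for both groups and the inverse-scale difference near 0.14. Each value falls inside the corresponding reported 95% CI, which is consistent with the intervals being expressed on the link scale.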

Fig. 2 Proportion of choices and time spent in a tunnel. A Proportion of correct choices in the learning test for delayed group and control group. B The proportions of choices for each option (near correct, middle, near incorrect) in transfer test for both groups. C Mean time that each bee spent in each tunnel that they entered in learning test. D Mean time that each bee spent in each tunnel that they entered in transfer test. In panels A and B, each grey dot represents proportion of choices by an individual bumblebee. In panels C and D, each grey dot represents the time a bee spent in a tunnel. Vertical lines indicate mean ± SEM

In the transfer test, bees from the control group significantly preferred the near correct option over middle option, and the middle option over the near incorrect option (near correct: mean ± SEM = 55.430% ± 6.165%, middle: mean ± SEM = 31.315% ± 6.016%, near incorrect: mean ± SEM = 13.254% ± 4.193%; LRT: χ2 = 117.49, P = 2.2e−16; post hoc analysis: near correct – middle: P = 6.862e−13, 95% CI [1.040, 2.020], near correct – near incorrect: P = 2.776e−14, 95% CI [1.680, 2.800], middle – near incorrect: P = 0.012, 95% CI [0.127, 1.290]). In contrast, the preference of the delayed group for the near correct option was not as clear (near correct: mean ± SEM = 37.404% ± 3.487%, middle: mean ± SEM = 37.178% ± 4.389%, near incorrect: mean ± SEM = 25.418% ± 3.620%; LRT: χ2 = 8.166, P = 0.017; post hoc analysis: near correct – middle: P = 0.880, 95% CI [−0.359, 0.544], near correct – near incorrect: P = 0.021, 95% CI [0.066, 1.010], middle – near incorrect: P = 0.071, 95% CI [−0.029, 0.920]; Fig. 2B). Interestingly, bees from the delayed group overall spent a significantly longer time in the tunnel than the control group (delayed: mean ± SEM = 7.742 ± 0.534, control: mean ± SEM = 3.888 ± 0.434; GLMM: Z = 3.649, P = 2.630e−04, 95% CI [0.054, 0.179]; Fig. 2D). Following this, we performed further analyses focusing on the variations in waiting/persisting times among different stimuli and groups.

In the learning test, bees from the delayed group spent significantly more time in the tunnels of the correct stimuli (mean ± SEM = 6.937 ± 0.653) than the incorrect ones (mean ± SEM = 5.102 ± 0.599; GLMM: T = 4.243, P = 2.210e−05, 95% CI [0.036, 0.097]; Fig. 3A). In contrast, bees from the control group spent less time in the correct stimuli tunnels (correct: mean ± SEM = 3.224 ± 0.240, incorrect: 3.895 ± 0.332; GLMM: T = −2.159, P = 0.031, 95% CI [−0.090, −0.004]; Fig. 3A). Cross-comparison between the two groups showed that the delayed group spent longer in the correct stimuli tunnels than the control group (Fig. 3A; GLMM: T = 6.829, P = 8.580e−12, 95% CI [0.151, 0.273]), but there was no significant difference in the amount of time spent in the incorrect stimuli tunnels between the two groups (Fig. 3A; GLMM: T = 1.686, P = 0.092, 95% CI [−0.013, 0.175]).

Fig. 3 Mean time spent in the tunnel for each of the options. A Mean time spent in correct and incorrect tunnels in learning test. B Mean time spent in near correct, middle, and near incorrect tunnels in transfer test. Each grey dot represents the average duration of time a bee spent in the tunnels. Vertical lines indicate mean ± SEM

Similar to the learning test, bees from the delayed group spent a significantly longer time in the near correct stimuli tunnels than the near incorrect stimuli tunnels (near correct: mean ± SEM = 8.942 ± 0.892, middle: mean ± SEM = 7.797 ± 1.016, near incorrect: mean ± SEM = 6.191 ± 0.785; LRT: χ2 = 7.594, P = 0.022; post hoc analysis: near correct – middle: P = 0.524, 95% CI [−0.045, 0.017], near correct – near incorrect: P = 0.016, 95% CI [−0.081, −0.007], middle – near incorrect: P = 0.187, 95% CI [−0.069, 0.010]; Fig. 3B). However, there was no significant difference in time spent among all options in the control group (near correct: mean ± SEM = 3.549 ± 0.633, middle: mean ± SEM = 4.161 ± 0.890, near incorrect: mean ± SEM = 4.021 ± 0.659; LRT: χ2 = 1.135, P = 0.567; Fig. 3B). The time spent in the near correct stimuli tunnels in the delayed group was longer than that in the control group (Fig. 3B; GLMM: T = 3.765, P = 1.66e−04, 95% CI [0.078, 0.248]). These results further support the idea that training with delayed reinforcement can facilitate a generalisation of the temporal expectations to ambiguous stimuli.

Discussion

In the current study, we have interpreted the time spent in a tunnel as a bumblebee’s voluntary willingness to wait/persist for reinforcement. The results from the learning test indicate that bees trained with delay to reinforcement subsequently exhibited a voluntary waiting/persistence response, supporting the assumption that bumblebees might be able to form temporal expectations. The results from the transfer test suggest that training with delays affects the generalisation of choices as well as the temporal expectations to ambiguous stimuli.

Waiting/persisting times for the delayed group were greater for the previously rewarded colour than the previously punished colour in the learning test, and increased in proportion to the similarity between the ambiguous flower’s colour and the trained rewarded colour in the transfer test. One possibility for how this behaviour could have manifested is if bees formed a temporal expectation only for choices associated with sugar reward, but not for those associated with punishment. In other words, sugar reward might encourage bees to spend a longer time (about 3 s on average) in the tunnel of certain coloured stimuli, while punishment simply had no such effect. This hypothesis might also explain why, in the learning test, the delayed group’s time spent in the incorrect tunnels was not significantly longer than that of the control group; that is, bees did not learn to wait/persist in the incorrect tunnels as there were no rewards. These observations suggest that bees’ voluntary persistence (or willingness to wait), while requiring training with delayed reinforcement, is stimulus specific and can generalise to ambiguous stimuli.

We also observed a clear difference in performance during the transfer test between the delayed group and the control group. For the control group, the proportion of choices for near correct, middle, and near incorrect stimuli exhibited a stepwise descent, whereas a similar gradient of behaviour was not observed for the delayed group. We argue that this might be a result of delayed reinforcement during training diminishing the strength of association between colour and reward. Indeed, studies have long shown that delayed reinforcement can result in a lower acquisition rate (Thorndike 1911). Our results indicate that delayed reinforcement can affect the generalisation of the learned colour-reward/punishment associations without necessarily affecting specifically learned colour-reward/punishment associations.

Another interesting behaviour worth discussing is that, in the learning test, bees entered the incorrect tunnels but did not spend much time inside. This cannot be simply accounted for by “mistakes”, because a bee that chose incorrectly by accident would have believed the choice to be correct and should therefore have waited as long as she did in the correct tunnels. Previous research has argued for the presence of “exploration” behaviour in bumblebees (Evans and Raine 2014), which suggests that foraging errors may sometimes be part of a foraging strategy that helps bees find food sources faster in a changing environment. In other words, bees might have known that the incorrect choices were incorrect, but still decided to check “just in case things have changed”. Here, in the learning test, correct choices provided no access to reward, and thus bees might have been more prone to turn to an exploration strategy. This idea is supported by the fact that there was no significant difference between the delayed group and the control group in the time spent in the incorrect tunnels, perhaps, as discussed below, because they did not wait at all.

Our results also provide some support for the idea that bumblebees might be able to estimate time intervals, which in our case was the time between the action of entering a tunnel and the presentation of reinforcement. Previous research has suggested that bumblebees have the ability for interval timing. For example, Boisvert and Sherry (2006) trained bees to extend their proboscis and find reward in a small hole only at certain time intervals following a light signal. After training, bumblebees were more likely to extend their proboscis near those times for which they had received reward following the light signal. In our paradigm, we might assume that the average time spent for the control group is the time that bees take simply to get to the back of the tunnel, check for access to reinforcement, turn around, and leave. If true, the difference between the delayed group’s time spent in correct tunnels and the control group’s average time spent in either tunnel could be taken as the time interval bees learned. This difference, 3.4 s, is very close to the upper limit of the waiting times bees experienced earlier in the training (max 3 s). Therefore, our findings may add some support to the idea that bumblebees have the capacity for interval timing (however, for a critical perspective on these types of measurements and their interpretation for interval timing, see Craig et al. (2014)).
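
The interval estimate above is a simple subtraction, sketched here with the learning-test means reported in the Results. Treating the control group's mean as pure travel-and-check time is the assumption stated in the text; the variable names are illustrative only.

```python
# Mean times (s) from the learning test, as reported in the Results.
delayed_correct_time = 6.937   # delayed group, correct tunnels
control_mean_time = 3.550      # control group, averaged over both tunnel types

# Assumption from the text: the control mean reflects only the time needed
# to reach the back of the tunnel, check for access, turn around, and leave.
estimated_wait = delayed_correct_time - control_mean_time

max_trained_delay = 3.0        # upper limit of the training delay (s)
excess = estimated_wait - max_trained_delay

print(f"estimated learned interval: {estimated_wait:.1f} s")
print(f"excess over longest trained delay: {excess:.1f} s")
```

The estimate exceeds the longest programmed delay by about 0.4 s, which is within the roughly 1 s of additional variation that the Methods attribute to the experimenter's response time and the bee's travel to the back of the tunnel.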

Note that our paradigm is similar to the willingness-to-wait task often used with humans (McGuire and Kable 2015). This task has typically been used to investigate self-control with respect to delayed gratification — i.e. how much time an animal is willing to invest in exchange for a delayed but greater reward (Rosati et al. 2007). Typically, subjects are presented with an option which if chosen would provide them with immediate reward, but they can instead wait/persist for a delayed higher reward. In our task, the immediate option was to leave the tunnel and search elsewhere. While rats typically wait for a few seconds in exchange for three times as much food, monkeys can wait a few minutes, suggesting a dramatic interspecies variation. Previous work has been argued to provide evidence for self-control in honeybees (Cheng et al. 2002). Our design was not intended to address whether bumblebees exert self-control and cannot speak directly to self-control. However, we speculate that because we could train bees to wait/persist in the tunnels for reinforcement, instead of simply leaving if not rewarded immediately, bumblebees also have the potential for some level of self-control.

We would also like to briefly discuss how our results speak to the vast literature investigating when animals should decide to leave a foraging site, i.e. a patch of flowers, as opposed to a single flower as in the present study. How long animals should spend in a particular patch is one of several key decisions that foragers must make, according to optimal foraging theory (Pyke et al. 1977). Optimal foraging theory predicts that foragers should work to maximise the net energy intake rate and minimise the net energy consumption rate. Based on this assumption, many hypotheses and theories have been proposed to address the question of when to leave a patch of flowers. For example, the “giving-up time” rule predicts that animals should leave the patch when they spend a certain period of time (the “giving-up time”) searching but receiving no rewards, and the “fixed time” rule predicts that foragers should spend a fixed amount of time searching for reward, regardless of the profitability, before leaving the current patch (Krebs et al. 1974). For both of these rules, decisions are heavily based on temporal information. In contrast, the assessment rule states that foragers’ decision to leave a patch should be based solely on how much reward they have collected in that patch (Green 1984). The prescient marginal value theorem (MVT; Charnov 1976) integrates both temporal information and reward history, stating that animals should leave the current patch when the rate of finding reward, reduced by patch depletion, falls to the average rate available across the environment. Here, we found that bumblebees decide when to leave a single flower based on their previous temporal experience with these flowers and can adjust the level of persistence on new flowers according to their similarity with familiar ones. This suggests that when deciding on when to leave a single flower, bees are utilising temporal information, previous rewarding experiences, and the cues provided by the flowers.
Although the persistence duration was tested on individual flowers instead of a flower patch, our results indicate the breadth of information bumblebees can utilise and integrate when making very rapid foraging decisions.
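
The two time-based leaving rules mentioned above can be written as simple decision functions. This is a minimal sketch, assuming a forager that tracks elapsed time in seconds; the function names, parameters, and threshold values are hypothetical and are not taken from the cited studies.

```python
def leave_by_giving_up_time(time_since_last_reward, giving_up_time):
    """Giving-up time rule: leave once the unrewarded search time
    reaches the giving-up time."""
    return time_since_last_reward >= giving_up_time


def leave_by_fixed_time(time_in_patch, fixed_time):
    """Fixed time rule: leave after a set residence time,
    regardless of how profitable the patch has been."""
    return time_in_patch >= fixed_time


# Hypothetical forager: 8 s in the patch, last reward found 2 s ago.
time_in_patch = 8.0
time_since_last_reward = 2.0

gut_decision = leave_by_giving_up_time(time_since_last_reward, giving_up_time=5.0)
fixed_decision = leave_by_fixed_time(time_in_patch, fixed_time=6.0)

print("giving-up time rule says leave:", gut_decision)
print("fixed time rule says leave:", fixed_decision)
```

In this example the two rules disagree: a recent reward keeps the forager in the patch under the giving-up time rule, whereas the fixed residence time has already expired. Both rules depend only on elapsed time, which is the sense in which they rely on temporal information.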

Data availability

All data generated or analysed during this study have been included as supplementary materials.

References

Boisvert MJ, Sherry DF (2006) Interval timing by an invertebrate, the bumble bee Bombus impatiens. Curr Biol 16:1636–1640

Boisvert MJ, Veal AJ, Sherry DF (2007) Floral reward production is timed by an insect pollinator. Proc R Soc B-Biol Sci 274:1831–1837

Brandl HB, Griffith SC, Farine DR, Schuett W (2021) Wild zebra finches that nest synchronously have long-term stable social ties. J Anim Ecol 90:76–86

Charnov EL (1976) Optimal foraging, the marginal value theorem. Theor Popul Biol 9:129–136

Cheng K, Peña J, Porter MA, Irwin JD (2002) Self-control in honeybees. Psychon Bull Rev 9:259–263

Chittka L (1992) The colour hexagon: a chromaticity diagram based on photoreceptor excitations as a generalized representation of colour opponency. J Comp Physiol A 170:533–543

Craig DPA, Varnon CA, Sokolowski MBC, Wells H, Abramson CI (2014) An assessment of fixed interval timing in free-flying honey bees (Apis mellifera ligustica): an analysis of individual performance. PLoS One 9:e101262

Dunphy-Daly MM, Heithaus MR, Wirsing AJ, Mardon JS, Burkholder DA (2010) Predation risk influences the diving behavior of a marine mesopredator. Open Ecol J 3:8–15

Evans LJ, Raine NE (2014) Foraging errors play a role in resource exploration by bumble bees (Bombus terrestris). J Comp Physiol A 200:475–484

Filippi P, Hoeschele M, Spierings M, Bowling DL (2019) Temporal modulation in speech, music, and animal vocal communication: evidence of conserved function. Ann N Y Acad Sci 1453:99–113

Fischer B, Barrett M, Adcock S, Barron A, Browning H, Chittka L, Drinkwater E, Gibbons M, Haverkamp A, Perl C (2023) Guidelines for protecting and promoting insect welfare in research. Insect Welfare Research Society. https://doi.org/10.13140/RG.2.2.19325.64484

Gegear RJ, Laverty TM (1995) Effect of flower complexity on relearning flower-handling skills in bumble bees. Can J Zool 73:2052–2058

Gibbons M, Crump A, Chittka L (2022) Insects may feel pain, says growing evidence – here’s what this means for animal welfare laws. In: The Conversation https://theconversation.com/insects-may-feel-pain-says-growing-evidence-heres-what-this-means-for-animal-welfare-laws-195328. Accessed 14 Dec 2022

Green RF (1984) Stopping rules for optimal foragers. Am Nat 123:30–43

Henderson J, Hurly TA, Bateson M, Healy SD (2006) Timing in free-living rufous hummingbirds, Selasphorus rufus. Curr Biol 16:512–515

Krebs JR, Ryan JC, Charnov EL (1974) Hunting by expectation or optimal foraging? A study of patch use by chickadees. Anim Behav 22:953–964

Krishna S, Keasar T (2019) Bumblebees forage on flowers of increasingly complex morphologies despite low success. Anim Behav 155:119–130

McGuire JT, Kable JW (2015) Medial prefrontal cortical activity reflects dynamic re-evaluation during voluntary persistence. Nat Neurosci 18:760–766

Metcalfe NB, Fraser NHC, Burns MD (1999) Food availability and the nocturnal vs. diurnal foraging trade-off in juvenile salmon. J Anim Ecol 68:371–381

Ng L, Garcia JE, Dyer AG, Stuart-Fox D (2021) The ecological significance of time sense in animals. Biol Rev 96:526–540

Ohashi K, Leslie A, Thomson JD (2008) Trapline foraging by bumble bees: V. Effects of experience and priority on competitive performance. Behav Ecol 19:936–948

Parent J-P, Brodeur J, Boivin G (2016) Use of time in a decision-making process by a parasitoid. Ecol Entomol 41:727–732

Pyke GH, Pulliam HR, Charnov EL (1977) Optimal foraging: a selective review of theory and tests. Q Rev Biol 52:137–154

Richter R, Dietz A, Foster J, Spaethe J, Stöckl A (2023) Flower patterns improve foraging efficiency in bumblebees by guiding approach flight and landing. Funct Ecol 37:763–777

Rosati AG, Stevens JR, Hare B, Hauser MD (2007) The evolutionary origins of human patience: temporal preferences in chimpanzees, bonobos, and human adults. Curr Biol 17:1663–1668

Russell WMS, Burch RL (1959) The principles of humane experimental technique. Methuen, London

Seeley TD, Mikheyev AS, Pagano GJ (2000) Dancing bees tune both duration and rate of waggle-run production in relation to nectar-source profitability. J Comp Physiol A 186:813–819

Skorupski P, Chittka L (2006) Animal cognition: an insect’s sense of time? Curr Biol 16:R851–R853

Skorupski P, Spaethe J, Chittka L (2006) Visual search and decision making in bees: time, speed, and accuracy. Int J Comp Psychol 19:342–357

Thorndike EL (1911) Animal intelligence: experimental studies. Macmillan Press, Lewiston, NY, US

Trapanese C, Meunier H, Masi S (2019) What, where and when: spatial foraging decisions in primates. Biol Rev 94:483–502

Wainselboim AJ, Roces F, Farina WM (2002) Honeybees assess changes in nectar flow within a single foraging bout. Anim Behav 63:1–6

Wang M-Y, Chittka L, Ings TC (2018) Bumblebees express consistent, but flexible, speed-accuracy tactics under different levels of predation threat. Front Psychol 9:1601

Acknowledgements

F.P. was funded by the Guangzhou Science and Technology Planning Project (2023A04J1967), the Guangdong Basic and Applied Basic Research Foundation (2023A1515012168), and the National Natural Science Foundation of China (32371135, 31970994). Y.Z. was supported by the China Scholarship Council (202008440515).

Author information

Contributions

Yonghe Zhou: Investigation, Formal analysis, Data Curation, Writing – Original Draft, Writing – Review & Editing, Visualisation; HaDi MaBouDi: Conceptualisation, Methodology, Investigation, Writing – Original Draft; Chaoyang Peng: Investigation; Hiruni Samadi Galpayage Dona: Methodology, Validation; Selene Gutierrez Al-Khudhairy: Investigation, Writing – Original Draft, Writing – Review & Editing; Lars Chittka: Writing – Original Draft, Writing – Review & Editing, Supervision; Cwyn Solvi: Conceptualisation, Methodology, Writing – Original Draft, Writing – Review & Editing, Supervision; Fei Peng: Conceptualisation, Writing – Original Draft, Writing – Review & Editing, Visualisation, Supervision, Funding Acquisition.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Communicated by A. Avarguès-Weber

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is a contribution to the Topical Collection “Toward a Cognitive Ecology of Invertebrates” - Guest Editors: Aurore Avarguès-Weber and Mathieu Lihoreau

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhou, Y., MaBouDi, H., Peng, C. et al. Bumblebees display stimulus-specific persistence behaviour after being trained on delayed reinforcement. Behav Ecol Sociobiol 78, 3 (2024). https://doi.org/10.1007/s00265-023-03414-7

DOI: https://doi.org/10.1007/s00265-023-03414-7