Abstract

Prey respond strategically to the risk of predation by varying their behavior while balancing the tradeoffs of food and safety. We present here an experiment that tests how bank voles, Myodes glareolus, interpret the same indirect cues of predation risk as the game changes through exposure to a caged weasel. Using the framework of optimal patch use, we asked wild-caught voles to rank the risk they perceived. We measured their response to olfactory cues in the form of weasel bedding, a sham control in the form of rabbit bedding, and an odor-free control. We repeated the interviews in chronological order to test the change in response, i.e., the change in the value of the information. We found that the voles did not differentiate strongly between treatments before exposure to the weasel. During the exposure, vole foraging activity was reduced in all treatments but increased proportionally in the vicinity of the rabbit odor. Post-exposure, the voles focused their foraging in the control, while exposure to the predator explained the majority of variation in response. Our data also suggested a sex bias in the interpretation of the cues. Given how the foragers changed their interpretation of the same cues based on external information, we suggest that applying predator olfactory cues as a simulation of predation risk needs further testing: for instance, which compounds are effective, and how do they change the “fear” response over time? The major conclusion is that however effective olfactory cues may be, the presence of live predators overwhelmingly affects the information voles gain from these cues.

Significance statement

In ecology, “fear” is the strategic response to cues of risk that an animal senses in its environment. An individual weighs cues suggesting a predator in the vicinity against the probability of encountering the predator and the perceived lethality of such an encounter. The best-documented such response is variation in foraging tenacity, as measured by a giving-up density. In this paper, we show that an olfactory predator cue and the smell of an interspecific competitor elicit different responses depending on experience with a live, caged predator. This work provides a cautionary example of the risk of making assumptions about olfactory cues devoid of environmental context.

Introduction

Perhaps one of the greatest questions occupying behavioral ecologists, as well as some neurobiologists, today is how animals interpret the information they gather in their environment (e.g., Dielenberg and McGregor 2001; Zimmer et al. 2006; Pakanen et al. 2014; Drakeley et al. 2015). In many instances, animals born in the lab, even in the first generation, exhibit weakened responses to predators they would encounter on a day-to-day basis in nature (e.g., Burns et al. 2009; Feenders et al. 2011; Troxell-Smith et al. 2015). Similarly, but on a larger scale, ecologists have been puzzling over the inability of prey to recognize risk from unfamiliar, invasive predators (Carthey and Banks 2014). Conversely, some wild species, such as Australian bush rats (Rattus fuscipes), have been shown to exhibit stress when exposed to the urine and fur of red foxes (Vulpes vulpes) despite sharing no evolutionary history with any foxes (Banks 1998; Spencer et al. 2014). These examples have intensified the debate on the value of information gained from cues of predation risk, especially in the absence of an actual predator.

To illustrate this debate, we return once more to the prey naiveté literature and to the Australian researchers who pioneered it. Banks and Dickman (2007) offer a framework that treats how prey interpret risk cues of novel predators as three levels of naiveté. They argue that multiple behavioral mechanisms act to prevent proper interpretation of, and responses to, those novel risks. The first is the lack of a neurological pathway to recognize the cue as a predator cue at all (e.g., Blumstein et al. 2000; Carthey et al. 2017). The second level is a mismatch between the interpretation of the cue and the behavioral response. The best example of this type of naiveté is New Zealand’s kakapo parrot (Strigops habroptilus), which recognizes cats (Felis catus) as predators but stares them down instead of fleeing (Karl and Best 1982). Last, they argue that simply being bad at using one’s defenses against the novel predator is a form of naiveté; in our opinion, that is still up for debate.

We began this introduction with the broad and applied implications of responding to predator cues because “fear” responses are among the strongest traits inherited from ancestors. The anti-predator traits of living species were refined over millions of years by the necessity of avoiding predation (Vincent and Brown 2005); otherwise, the current species would have gone extinct along their line of descent (Darwin 1859). Based on this simple Darwinian logic, we expect and assume that animals would recognize the odor of their evolutionarily familiar predators as a sign of risk. This logical assumption, however, is still controversial.

Some studies, both in the laboratory and in the field, have verified the expected responses of prey to a scent-mediated increase in predation risk from known predators (Ylönen and Ronkainen 1994; Koskela et al. 1997; Fuelling and Halle 2004; Ylönen et al. 2006). However, an equally large number of studies have found weak or no correlations between olfactory cues and anti-predator behaviors (Mappes et al. 1998; Trebatická et al. 2012). The ecological literature is filled with examples of predator odor affecting the behavior of prey in varying ways. For example, small mammal traps sprayed with odor, whether of a predator or a conspecific, yield better trapping success than odorless traps (Apfelbach et al. 2005). Passerines will nest in boxes sprayed with weasel scent when competition for odor-free boxes is high; however, in this case, the stress in the weasel-scented boxes resulted in an increased fledging rate (Mönkkönen et al. 2009). But perhaps one of the strongest examples of a mismatch comes from native Australian marsupials that fail to respond appropriately to dingo scents; for example, western gray kangaroos, instead of avoiding the predator odors, are drawn to them (Parsons et al. 2005; Mella et al. 2014).

So why do we find variation in the response to predator odor? A recent review by Parsons et al. (2018) described the pathways by which predator odors function and are recognized. They state that three complementary catalysts can generate a response to such cues (neurobiological, chemical, and contextual) and suggest that these interact to determine whether a scent is perceived as risky or attractive. Thus, we assume that the information gleaned from an olfactory cue can be interpreted differently in different environmental or temporal contexts, in different habitats, and under different social regimes. We speculate that human researchers underestimate the cognitive and neural abilities of prey individuals to interpret the information in a cue. In colloquial and anthropomorphic terms, we can offer a number of examples: (1) not “Danger: a predator is here!” but “A predator was here a while ago and I can do whatever I like until it comes back”; (2) not “I smell predator X, therefore I must run or hide” but “Predator X poses Y amount of risk to me; therefore, I can take some risk to get food my competitors may avoid.”

We can speculate that the responses are more complex because of a basic difference between the neurological approach to predation risk (and post-traumatic stress) and that of behavioral ecology. Neurology approaches “fear” as the activation of a pathway between the hippocampus and the amygdala following an unforeseen exposure to a risk cue (Gross and Canteras 2012), i.e., as the response to an “Act of God”. As ecologists, however, we argue that an anti-predator behavior is more than an instinctive freezing or fleeing response. Instead, it is a strategic response based on the innate and acquired information an animal processes in the cerebral cortex, which in turn influences and regulates stress-hormone production via the amygdala (Brown 2010).

In this paper, we set out to test how the interpretation of an olfactory cue may change based on the information a prey individual has about the acute danger from predators in close vicinity. Using an interview chamber approach (cf. Bleicher 2014; Bleicher and Dickman 2016), we aim to “ask” the animals how they perceive the difference between an olfactory cue of a predator, a cue of a competitor (herbivore) as a sham control, and a cue-less control. We ask sensitive readers to forgive the colloquialism, but we consider this anthropomorphism (an interview) appropriate in this context. We apply this method (a type of bioassay) to determine how an individual animal perceives a “question” we pose. We then follow up with repeated measures to address how the individual’s perception changes over time or with the experience we subject it to. In this case, we interview individuals to assess how they interpret the information conveyed by an olfactory cue of a predator. We are interested in the changes in an animal’s perceived risk in three scenarios: (1) after a few weeks in the lab and before exposure to a live predator, (2) while being exposed to a caged predator in an adjacent room, and (3) after that exposure. We chose to approach this question using a model system studied for decades: the bank vole (Myodes glareolus) and its predator, the least weasel (Mustela nivalis nivalis) (cf. Korpimäki et al. 1996; Sundell et al. 2008).

In this experiment, we expect the voles to use resources optimally, balancing the tradeoffs of food and safety (cf. Brown 1988). We hypothesize that the odor of a predator would cause the voles to increase their vigilance and thus reduce their foraging in patches (cf. Brown 1999). We also expect the interpretation of the predator’s cue to change with exposure to the predator; other empirical studies with small mammals suggest that exposure to a predator can have a lingering effect on the apprehension of the prey (Dall et al. 2001). Last, we hypothesize that the energy needs, and the corresponding behavioral responses, of the two sexes would differ. In the bank vole, females are territorial and bear the brunt of the energetic costs of reproduction, while males move through the landscape in search of copulations (Horne and Ylönen 1996; Trebatická et al. 2012). In early spring, at the start of the breeding season, this sex bias in energetic tradeoffs should be at its peak. Therefore, we expect males to show greater apprehension around the predator cues, as their transient habits expose them to more predation risk than females, who are more sessile in their territories and more resource-focused at this time of year.

Given these expectations, we aim to answer four questions in this three-stage experiment:

1. Do voles differentiate between the odor of a predator and that of a competitor? If so, do they forage less in the presence of the odor of a predator than that of a competitor?

2. Does recent exposure to the predator invigorate a response to an olfactory cue of that predator?

3. Does information about an active predator nearby affect the activity patterns of the prey species in relation to both the predator and the competitor cues?

4. Does the information about a live predator linger post-exposure? If so, for how long?

Methods

Study species

The bank vole is one of the most common small rodents in northern temperate and boreal forests (Stenseth 1985). It is granivorous-omnivorous (Hansson 1979) and can live in a wide range of forest habitats. In central Finland, bank voles are known to breed between three and five times within the breeding season, May through September. Their average litter size is 5–6 pups. Bank voles are prey for a diverse predator assemblage which includes the least weasel and the stoat (Mustela erminea) (Ylönen 1989).

The least weasel is a specialist predator on rodents and the major cause of mortality in boreal voles, especially during a population’s decline (Korpimäki et al. 1991; Norrdahl and Korpimäki 1995, 2000). Bank voles are able to detect the odor of mustelids and change their behavior accordingly (Ylönen 1989; Jędrzejewska and Jędrzejewski 1990; Jędrzejewski and Jędrzejewska 1990; Mappes and Ylönen 1997; Mappes et al. 1998; Bolbroe et al. 2000; Pusenius and Ostfeld 2000; Ylönen et al. 2003). The least weasel, like other small mustelids, has a very potent anal gland secretion (Apfelbach et al. 2005) which has been shown to be interpreted by voles as a cue of predation risk (e.g., Ylönen et al. 2006; Haapakoski et al. 2012).

The study was conducted in the laboratory at the Konnevesi Research Station of the University of Jyväskylä, 70 km north of Jyväskylä. The bank voles were trapped in the forests surrounding the research station (N 62° 41′ 18″, E 26° 17′ 12″) as well as in the forests near Oulainen (N 64° 17′ 56″, E 24° 49′ 35″). The voles were housed individually in standard laboratory rodent boxes (43 × 26 × 15 cm). Wood chips were used to keep the cages dry, hay was provided as bedding material, and rodent food pellets and fresh water were available ad libitum. The light:dark cycle in the animal rooms was set to 18:6 h, which corresponds roughly to the natural light-dark regime during the experimental period. Four days prior to the experiment, the animals were taken off ad libitum food and put on a diet of poor-quality food, 3 g of millet per day, which meets the basic energy needs of these animals (cf. Eccard and Ylönen 2006). The poor diet was intended as an incentive for the animals to keep foraging in the novel environment of our study systems and to counteract the neophobia expected in satiated animals (Amézquita et al. 2013; Näslund and Johnsson 2016). All applicable international, national, and/or institutional guidelines for the use of animals were followed, and the study was approved by the animal experimentation committee of the University of Jyväskylä, permit number: ESAVI/6370/04.10.07/2014.

Study system design

Six interview chamber systems were constructed following Bleicher (2014), Bleicher and Dickman (2016), and Bleicher et al. (2018). Each system consisted of a 30-cm-diameter bucket (serving as a nest box) connected by 5-cm-diameter, 30-cm-long PVC tubes to three gray plastic storage bins (hereafter rooms) measuring 40 × 30 × 23 cm high (Appendix S1). Each room was equipped with a square box (hereafter patch) measuring 19 × 19 × 10 cm high, with two 5-cm-diameter holes drilled in the side to allow the vole access (Appendix S2). Each patch was filled with 1 l of sand and set with 1.5 ± 0.02 g of millet. The total amount of food in the system equaled one and a half times the daily energy needs of a foraging vole (cf. Eccard and Ylönen 2006).

Each room was equipped with an 11 × 11 × 6 cm cue-box attached to its roof to create the different treatments: weasel bedding (wood shavings, urine, hair, and fecal matter), rabbit (Oryctolagus cuniculus) bedding, and a control (cf. Sundell et al. 2008). Each cue-box was replenished with fresh bedding daily. The cardinal directions in which the treatments were placed were randomized between systems.

On direct predator exposure nights, two of the three-room interview systems (Nos. 5 and 6) were converted to four-room systems by adding a room with a hardware-cloth screen directly adjacent to a cage (30 × 52 × 26 cm) holding a live weasel. The weasel was caged only during experiments and was released into a larger, 2-m-long holding pen when not in use (Appendix S3). To adjust for the larger systems, we decreased the amount of food per patch to 1.1 ± 0.02 g and, to maintain the same encounter rate with food items, decreased the amount of sand in each patch to 0.75 l. To prevent the sound of the caged weasel from traveling throughout the system, the rooms adjacent to the weasel cage were located in a different lab-room, with the PVC tubes passing through holes drilled in the wooden wall between the lab-rooms.
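As a rough check of the food and sand settings above, the sketch below recomputes the total millet per system and the millet density per liter of sand for the three-room and four-room configurations. It assumes, for illustration only, that the 3 g/day millet ration described under Study species stands in for a vole's daily energy needs; the names `SYSTEMS`, `DAILY_RATION_G`, and `system_summary` are hypothetical.

```python
# Patch settings reported in the Methods: grams of millet and liters of sand per patch.
SYSTEMS = {
    "three-room": {"patches": 3, "millet_g": 1.5, "sand_l": 1.0},
    "four-room": {"patches": 4, "millet_g": 1.1, "sand_l": 0.75},
}
# Assumption: the 3 g/day millet diet approximates a vole's daily energy needs.
DAILY_RATION_G = 3.0


def system_summary(patches, millet_g, sand_l):
    """Total food, food as a multiple of the daily ration, and food density in the sand."""
    total_g = patches * millet_g
    return {
        "total_millet_g": total_g,
        "multiple_of_ration": total_g / DAILY_RATION_G,
        "millet_g_per_l_sand": millet_g / sand_l,
    }


for name, cfg in SYSTEMS.items():
    s = system_summary(**cfg)
    print(f"{name}: {s['total_millet_g']:.1f} g total, "
          f"{s['multiple_of_ration']:.2f}x daily ration, "
          f"{s['millet_g_per_l_sand']:.2f} g millet per l sand")
```

Under these assumptions, both configurations offer roughly 1.5 times the daily ration, and the reduced sand volume keeps the millet density in the four-room patches within about 2% of the three-room value (about 1.47 vs. 1.50 g/l).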

We define an experimental round as the time an animal spent in a system on a given night. At the start of every round, a single vole was placed in the nest box, from which it had access to each of the patches via the PVC tubing. Each vole was allowed 2 h to forage, following the protocol of Bleicher (2012) and the expectation that this would allow sufficient time for voles to move between, and forage in, the different treatments without allowing enough time for habituation to the treatments. This short foraging period, a departure from the “normal” patch-use protocol (Bedoya-Perez et al. 2013), was intended to capture the animal’s initial response, i.e., its “gut feeling”, rather than its ability to learn, over the long run, how we were manipulating it. We did not control for conspecific odors but avoided cross-contamination by always keeping each olfactory treatment in the same rooms.

Brown (1988) stated that an animal foraging in a patch will quit harvesting when the costs of resource harvesting, combined with the costs of predation risk, equal the energetic value of the patch as perceived by the forager. As the vole depletes a patch, the diminishing returns render other patches more valuable (a missed opportunity cost). Differences in missed opportunity costs drive animals across the landscape (between rooms), examining and comparing patches (Smith and Brown 1991; Berger-Tal and Kotler 2014). At the end of each round, the amount of resources the forager left in the patch because of these costs is the giving-up density (GUD). We ran up to five rounds (of 2 h each) per “night”, starting at 17:00, in accordance with the activity patterns recorded by Ylönen (1988). Because the GUD is measured from the food left in the patch, data collection is independent of the observer, a blinded design that avoids sampler bias.
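Brown's (1988) quitting rule is often written as H = C + P + MOC, where H is the harvest rate at which the forager leaves, C the metabolic cost of foraging, P the predation cost, and MOC the missed opportunity cost. The sketch below turns that rule into a giving-up density under an assumed Holling type II harvest rate, H(N) = aN/(1 + ahN), with attack rate a and handling time h. This functional form and all parameter values are illustrative assumptions, not quantities estimated in this study; solving aN/(1 + ahN) = C + P + MOC for N gives the GUD.

```python
def harvest_rate(n, a, h):
    """Holling type II harvest rate at food density n (assumed functional form)."""
    return a * n / (1.0 + a * h * n)


def giving_up_density(c, p, moc, a, h):
    """Food density at which harvest_rate(n) equals C + P + MOC.

    Rearranging a*n / (1 + a*h*n) = q gives n = q / (a * (1 - h*q)).
    """
    q = c + p + moc
    if q * h >= 1.0:
        # The harvest rate never exceeds 1/h, so such costs mean the patch is never worth using.
        raise ValueError("total cost must be below the maximum harvest rate 1/h")
    return q / (a * (1.0 - h * q))


# Raising only the predation cost P raises the GUD, which is why GUDs index perceived risk.
low_risk = giving_up_density(c=0.1, p=0.2, moc=0.1, a=2.0, h=0.5)
high_risk = giving_up_density(c=0.1, p=0.4, moc=0.1, a=2.0, h=0.5)
print(f"GUD at low risk: {low_risk:.3f}, at high risk: {high_risk:.3f}")
```

The design point is that the GUD moves with P while C, MOC, a, and h are held fixed; in a real patch those other terms also vary, which is one reason the analyses in this study work with proportions harvested rather than raw GUDs.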

Forty field-caught bank voles (20 males and 20 females) were interviewed on nine consecutive nights each between 6 and 26 May 2018, with systems and interview hours randomized so as to minimize the effects of time and location. For each animal, the first two nights were without the live predator (hereafter pre-exposure). On the third and fourth nights, the animal was exposed to a live weasel in addition to the olfactory cues (hereafter exposure). On the remaining five nights, the animal was again exposed to olfactory cues only (hereafter post-exposure). At the end of each round, the animal was removed, returned to its holding container, and fed an extra 3 g of millet. Each patch was sieved and the weight of the remaining resources recorded to obtain the GUD. The systems were reset after each 2-h round with fresh patches, and the next round was run with a new vole.
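The nine-night sequence above maps each night to a phase, which can be written as a simple lookup. The scheduling function is a hypothetical sketch of the randomization of systems and interview hours; the exact procedure is not described here, and we assume, for illustration only, that exposure nights draw from the two four-room systems (Nos. 5 and 6), other nights from all six systems, and that each night offers up to five 2-h rounds.

```python
import random

# Nights 1-2: pre-exposure; 3-4: live weasel exposure; 5-9: post-exposure.
PHASES = ["pre-exposure"] * 2 + ["exposure"] * 2 + ["post-exposure"] * 5


def phase_of(night):
    """Return the experimental phase for night 1..9 of a vole's interview series."""
    if not 1 <= night <= len(PHASES):
        raise ValueError("night must be between 1 and 9")
    return PHASES[night - 1]


def interview_schedule(vole_id, seed=2018, n_systems=6, n_rounds=5):
    """Hypothetical randomization of system and round (2-h slot) for each night."""
    rng = random.Random(f"{seed}-{vole_id}")  # reproducible draws per vole
    schedule = []
    for night in range(1, len(PHASES) + 1):
        phase = phase_of(night)
        # Assumption: only systems 5 and 6 carry the caged-weasel room on exposure nights.
        systems = [5, 6] if phase == "exposure" else list(range(1, n_systems + 1))
        schedule.append((night, phase, rng.choice(systems), rng.randint(1, n_rounds)))
    return schedule


for row in interview_schedule("F01"):  # "F01" is a made-up vole identifier
    print(row)
```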

Data analyses

For the analyses in this paper, we did not use the GUD in the traditional way. To compare data from the three-room and four-room systems, we instead used the proportion of resources harvested, (initial density − GUD)/initial density. Because the runs were limited in time (2 h), the GUD reflects an initial response rather than a quitting density (had the runs been prolonged, we expected habituation and thus a loss of relevance). Therefore, the traditional approach to the analysis of GUD data (e.g., St Juliana et al. 2011; Shrader et al. 2012), a general linear model (GLM), was not meaningful in this instance and would have low explanatory power (we provide this weak analysis in Appendix S4). Importantly, we excluded the live weasel treatment from all analyses except the random-forest analysis to allow a fully crossed experimental design. We felt confident doing this because only two voles foraged in the live weasel patches, each harvesting less than 0.02 g of millet, within the margin of error. For the same reason, we used only the first two nights of the post-exposure rounds (variation across the five post-exposure nights was marginal; Appendix S4).
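The conversion from a GUD to the proportion of resources harvested can be sketched as follows. Clipping to the range 0 to 1 is our assumption for handling the ±0.02 g setting error, which can make the recovered amount slightly exceed the nominal initial amount; the function name is hypothetical.

```python
def proportion_harvested(initial_g, gud_g):
    """(initial density - GUD) / initial density, clipped to [0, 1].

    Clipping absorbs small weighing and setting errors (patches were set to
    within +/- 0.02 g), which could otherwise yield slightly negative values.
    """
    if initial_g <= 0:
        raise ValueError("initial amount must be positive")
    p = (initial_g - gud_g) / initial_g
    return min(1.0, max(0.0, p))


# A GUD of 1.2 g in a 1.5 g patch and 0.88 g in a 1.1 g patch differ in absolute
# terms, but both correspond to 20% of the food harvested.
print(f"{proportion_harvested(1.5, 1.2):.2f}")   # three-room patch
print(f"{proportion_harvested(1.1, 0.88):.2f}")  # four-room patch
```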

As an alternative, we used a combination of three statistical approaches. First, we ran log-linear analyses of foraging activity (foraged vs. unforaged patches) (cf. Bleicher et al. 2016), tabulating the data by chronology (pre-exposure, exposure, post-exposure) and treatment. We ran the analysis as a three-way contingency table using the VassarStats online calculator and used it to test the effect of each variable, the interaction of the two, and each nested within the other.
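Log-linear analysis compares observed cell counts with those expected under a given model using the likelihood-ratio statistic G². The sketch below computes G² for the simplest such model, mutual independence of all three factors, in a chronology × treatment × (foraged, unforaged) table. It is a stdlib-only illustration of the technique, not the VassarStats procedure, and the counts in the example are synthetic.

```python
import math


def g2_mutual_independence(table):
    """Likelihood-ratio statistic G^2 and degrees of freedom for the
    mutual-independence log-linear model of a three-way table.

    table[i][j][k] holds counts, e.g. i = chronology (pre, exposure, post),
    j = olfactory treatment, k = patch foraged vs. unforaged.
    """
    I, J, K = len(table), len(table[0]), len(table[0][0])
    cells = [(i, j, k) for i in range(I) for j in range(J) for k in range(K)]
    n = sum(table[i][j][k] for i, j, k in cells)
    # One-way margins: the only information the mutual-independence model uses.
    m_i = [sum(table[i][j][k] for j in range(J) for k in range(K)) for i in range(I)]
    m_j = [sum(table[i][j][k] for i in range(I) for k in range(K)) for j in range(J)]
    m_k = [sum(table[i][j][k] for i in range(I) for j in range(J)) for k in range(K)]
    g2 = 0.0
    for i, j, k in cells:
        observed = table[i][j][k]
        if observed > 0:  # empty cells contribute nothing to G^2
            expected = m_i[i] * m_j[j] * m_k[k] / n ** 2
            g2 += 2.0 * observed * math.log(observed / expected)
    df = I * J * K - I - J - K + 2
    return g2, df


# Synthetic counts built as a product of margins, so the model fits (almost) exactly.
toy = [[[a * b * c for c in (2, 3)] for b in (1, 1, 2)] for a in (1, 2, 3)]
print(g2_mutual_independence(toy))
```

G² is then compared with a chi-square distribution with df degrees of freedom (for example via `scipy.stats.chi2.sf(g2, df)`). Testing interactions or nested effects, as in the analysis described above, means comparing G² between nested log-linear models, which this sketch does not do.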

Second, we used Statistica© to run a series of Friedman tests of concordance as repeated measures of individual responses, with the proportion of resources harvested as the dependent variable. We first tested whether the voles’ foraging tenacity followed the same pattern across the chronology of the experiment. In the second test, we compared their responses to the olfactory cues. We also compared the foraging tenacity of individuals across the interaction of treatment and chronological order, as well as with treatment nested within chronological order.
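A Friedman test ranks each individual's responses across the k conditions and asks whether the rank sums differ; Kendall's coefficient of concordance, W, rescales the statistic to run from 0 (no agreement among individuals) to 1 (identical rankings). The sketch below is a stdlib-only illustration rather than Statistica's implementation, and it omits the tie correction to stay short. With lower proportions harvested ranked lower, a low mean rank marks a condition perceived as riskier; for k = 3 the mean ranks always sum to 6, as do the values reported in the Results (1.31 + 2.46 + 2.23). The input values are synthetic.

```python
def average_ranks(values):
    """Rank values from 1 (smallest) upward; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1  # mean of ranks pos+1 .. end+1
        for idx in order[pos:end + 1]:
            ranks[idx] = shared
        pos = end + 1
    return ranks


def friedman(data):
    """data[s][c] is subject s's response under condition c.

    Returns the Friedman chi-square statistic (no tie correction), Kendall's W,
    and the mean rank of each condition.
    """
    n, k = len(data), len(data[0])
    rank_sums = [0.0] * k
    for subject in data:
        for c, r in enumerate(average_ranks(subject)):
            rank_sums[c] += r
    q = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    w = q / (n * (k - 1))
    return q, w, [r / n for r in rank_sums]


# Synthetic proportions harvested by four voles: pre-, during, and post-exposure.
voles = [[0.30, 0.05, 0.25], [0.28, 0.10, 0.31], [0.35, 0.02, 0.20], [0.22, 0.08, 0.24]]
q, w, mean_ranks = friedman(voles)
print(f"Q = {q:.2f}, W = {w:.2f}, mean ranks = {mean_ranks}")
```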

Last, we ran our data through a random-forest regression analysis. This machine-learning method repeatedly resamples the data to build many decision trees and uses them to determine the importance of factors in the decision-making process of the voles. As the dependent variable, we used the proportion of resources harvested (within a patch), normalized with an arcsine square-root transformation. The predictors were chronology, treatment (including the live weasel), sex, and round (night within chronology). The analysis produces a decision tree ranked by importance, from the most important split at the top node to the least important in the final nodes. The final nodes of the tree suggest likely hypotheses for significant differences but do not constitute statistical pairwise comparisons.
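The arcsine square-root transformation used to normalize the proportions is y′ = arcsin(√p), applied to a proportion p; it spreads out values near 0 and 1 to stabilize their variance. A minimal sketch, with a hypothetical function name:

```python
import math


def arcsine_sqrt(p):
    """Arcsine square-root transform of a proportion p in [0, 1], returned in radians."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a proportion in [0, 1]")
    return math.asin(math.sqrt(p))


for p in (0.0, 0.2, 0.5, 1.0):
    print(p, round(arcsine_sqrt(p), 4))
```

The transformed values run from 0 to π/2, so a proportion of 0.5 maps to π/4.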

Data availability

All data generated or analyzed during this study are included in this published article and its supplementary information files.

Results

When we measured each individual’s response to risk using foraging tenacity and tested whether the voles agreed on the value of patches in the different treatments, we found no concordance between the voles in their response to the cues as a main effect (Table 1). However, we found concordance in the change in foraging tenacity between the three segments of the experiment (pre-, during-, and post-exposure). Interviews during exposure had a mean rank of 1.31 (the lower the rank, the greater the risk), compared with 2.46 and 2.23 for the pre- and post-exposure interviews, respectively. The interaction of treatment and chronology, ranking the nine subcategories, also showed concordance between individuals.

When we measured concordance between treatments nested within each chronological stage of the interviews, we found concordance in risk assessment only during and after exposure. During exposure, the voles ranked the weasel-cue treatment as the greatest risk, with the rabbit and control treatments ranked similarly. Post-exposure, the voles ranked the control as the least dangerous and avoided the rabbit and weasel cues to a similar degree.

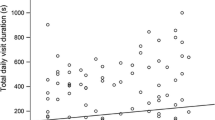

Both the voles’ foraging activity (number of patches visited) and tenacity (proportion of resources harvested) followed similar patterns in the absence of the predator, pre- and post-exposure (Fig. 1 and Fig. 2A, respectively). The voles’ activity was lower in all types of patches when the weasel was nearby (Table 2). In addition, they harvested less from patches than on nights when the predator was absent (Appendix S4).

Fig. 1 Cumulative proportion of patches harvested by voles in the systems. Each bar represents the foraging activity of 39 voles foraging on two consecutive nights (one female was removed after showing signs of pregnancy). The x-axis shows the olfactory treatments at the different chronological stages of the experiment: pre-, during-, and post-exposure to the live weasel. The value for the live weasel was excluded from the statistical analysis of activity patterns but is presented here for comparison.

Fig. 2 Mean proportion of resources harvested ± SE based on (A) the chronological order of interviews (x-axis) and (B) olfactory cue treatments nested under each chronological order. The value for the live weasel was excluded from the statistical analysis of activity patterns but is presented here for comparison. Note that these are the untransformed values, prior to the arcsine square-root transformation used for normalization.

In the three-way contingency table, the voles were, as expected, more active in control patches pre- and post-exposure. In the sham control (rabbit bedding), they were most active on nights of direct exposure to the weasel (Fig. 1). Proportionally, there was no difference in activity in weasel-cue patches between pre- and post-exposure. The major difference between the pre-exposure and post-exposure interviews was a decrease in activity in the sham treatment from 64 to 54%.

For foraging tenacity, the proportion of food harvested in the patches was better explained by the random-forest analysis (Table 3) than by the traditional GLM approach (Appendix S4). The decision-tree risk estimates were low: 0.0217 ± 0.001 (standard error) and 0.025 ± 0.002 for the training and test samples of the model, respectively. This analysis ranked the variables by importance, i.e., the proportion of the decisions each influenced in the model. Chronology was the most important, influencing 100% of decisions. Treatment influenced 85% of decisions, while the sex of the vole influenced 17% and the repetition (round 1 or 2) 16%.

The decision tree is read from left to right (Fig. 3). The higher (further left) a split in the tree, the more important its variable is in the decision-making process of the foraging animal. The major split was based on chronology, separating the exposure interviews from those with cues alone (Fig. 2B). We present the results first for the direct exposure interviews and then, in a separate paragraph, for the pre- and post-exposure interviews.

Fig. 3 Decision-tree visualization based on a random-forest analysis using the proportion of patches harvested (normalized using an arcsine square-root transformation). The further up the tree a split occurs, the more important that variable is to the decision-making process of the voles. The asterisk denotes a terminal node (bold frame) that produced further nodes in the original tree; however, the differences in foraging among those nodes were below 1%, so they were removed from the figure for clarity.

Under direct exposure, the patches in direct view of the weasel were foraged only twice. Thus, the fact that this category formed a terminal node this early suggests that the treatment was highly important. The analysis then revealed no difference in response among all the other treatments. Females were equally wary on the first and second nights of an interview, foraging 14.4% and 15.3% of patches, respectively. Males, meanwhile, decreased foraging from 12% on the first night to 8% on the second.

Pre- and post-exposure, voles gave greater attention to the cue treatments. The model analyzes the dataset remaining after each split in the tree and chooses the next split based on the variable and categories with the greatest variance between category means. We therefore present both the important splits revealed in the decision-making of the vole population (Table 3, Fig. 3) and the mean proportion of resources harvested within each split category. Pre-exposure, the control patches were foraged to a mean of 19.4%, but after exposure, foraging increased to 30.1% (Figs. 2 and 3). The sexes then diverged in their responses to the weasel and rabbit (sham) cues. Males foraged less, a mean of 16.7%, while females foraged a mean of 21.9% of resources. For the females, no significant difference appears between the weasel (lower, 23.7%) and rabbit (higher, 24.1%) treatments on nights when no live predator is present. Males were more attuned to the differences between the rabbit and weasel cues, foraging 17.8% and 15.8% of resources, respectively. Post-exposure, the males took more risk with the weasel cues, foraging 19.4% of resources compared with 11.1% pre-exposure.
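The split-selection step described above can be illustrated with a single greedy regression-tree split: for each categorical predictor, every binary grouping of its levels is tried, and the one that most reduces the within-node sum of squared errors (equivalently, that most separates the group means) is kept. This is a generic CART-style sketch, not Statistica's implementation; the function names are hypothetical and the records are synthetic values chosen only to show the mechanics.

```python
from itertools import combinations


def sse(values):
    """Sum of squared deviations from the mean (0 for an empty group)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(rows, features, target="y"):
    """Greedy search over binary groupings of categorical levels.

    Returns (feature, left_levels, sse_reduction) for the split that most reduces
    the within-node sum of squared errors of the target.
    """
    parent = sse([r[target] for r in rows])
    best = (None, None, 0.0)
    for feature in features:
        levels = sorted({r[feature] for r in rows})
        # Groupings of up to half the levels cover every binary partition at least once.
        for size in range(1, len(levels) // 2 + 1):
            for left in combinations(levels, size):
                left_set = set(left)
                in_left = [r[target] for r in rows if r[feature] in left_set]
                in_right = [r[target] for r in rows if r[feature] not in left_set]
                reduction = parent - sse(in_left) - sse(in_right)
                if reduction > best[2]:
                    best = (feature, left_set, reduction)
    return best


# Synthetic responses chosen only to illustrate the mechanics.
rows = [
    {"phase": "exposure", "sex": "F", "y": 0.10}, {"phase": "exposure", "sex": "M", "y": 0.12},
    {"phase": "pre", "sex": "F", "y": 0.50}, {"phase": "pre", "sex": "M", "y": 0.52},
    {"phase": "post", "sex": "F", "y": 0.55}, {"phase": "post", "sex": "M", "y": 0.50},
]
print(best_split(rows, ["phase", "sex"]))
```

A random forest repeats this search on many bootstrap samples, considering a random subset of predictors at each split, and aggregates the resulting trees; impurity-based variable importance summarizes how much each predictor's splits reduce error across the forest.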

Discussion

The voles clearly made decisions about the use of space when interacting with olfactory cues. The information a forager gains from olfactory cues varies with a number of environmental and temporal variables (Sih 1992; Lima and Bednekoff 1999; Gonzalo et al. 2009). The interpretation of the cues clearly affects the strategic behaviors of the foragers and the way the animals balance the tradeoffs of food and safety (Brown et al. 1999; Bytheway et al. 2013; Bleicher 2017). This experiment provides a clear example of how the information provided by the same cues varies over a short period of time.

We believe the strongest evidence that a cue changes its meaning is the lack of a main effect of the cue treatments in all three analyses. This immediately suggests that each cue is not interpreted in the same way at each of the chronological intervals, reflecting changes in the state of the animal. The animals in this experiment were wild caught; however, they entered the experiment after a few weeks in a laboratory setting with ad libitum resources available to them.

At the first encounter with our systems, the animal arrives with a certain naiveté towards the cues. This could suggest that the animals did not perceive a risk cue as relevant to their existence as lab animals. Previous laboratory studies show anti-predator behavioral responses to olfactory cues manifested on larger scales in both movement and foraging decisions (e.g., Ylönen et al. 2006; Haapakoski et al. 2015). However, other observations suggest that individuals approach and inspect any odor-scented trap or box, assessing its riskiness more carefully and adjusting their responses; that study also shows evidence for variation in personality traits within a population and for sex biases in risk taking (Korpela et al. 2011). Accordingly, the decreasing trend in foraging from the control to the weasel cue (with the sham in between) suggests that the weasel cue may be interpreted as suspicious and that the animals investigated those patches cautiously. The fact that the differences between treatments intensified in the repeated measures suggests that once the animals realize that the predator is a threat, all the other cues also change in value (Parsons et al. 2018).

This experiment provides other examples of the shifting value of information. The voles’ relative preference for foraging in the rabbit-cue (sham) treatment during exposure is another strong example. The simplest explanation of this result lies in the fact that prey leave cues for their predators to interpret (Ylönen et al. 2003). On exposure nights, the voles are aware of the imminent danger lurking in the system. Therefore, the optimal strategy available to them is to forage where competitor cues mask their presence. Similar to the idea of safety in numbers (e.g., Rosenzweig et al. 1997), a forager can hide behind the smell of a prey of higher caloric value. The rationale behind this strategy is that the predator would approach that environment in search of a different type of prey, giving the less valuable forager enough time to escape. Several modeling papers (Lima and Dill 1990; Brown 1992, 1999) suggest that evolution would drive prey to sacrifice resources to competitors because of the risk of predation. This is further supported by empirical evidence of species cloaking their own odor, for example, ground squirrels masking their odor from snakes with snake scent (Clucas et al. 2008).

Post-exposure, the voles’ foraging and visitation were low in both the weasel and rabbit-cue treatments, while they increased in the control. Two explanations, separately or combined, could account for this decision. The first is that the reduced foraging over the exposure nights left the animals with higher energetic needs, i.e., hungry individuals. Hungry individuals can be forced to move large distances to find resources (e.g., Haythornthwaite and Dickman 2006); however, they are also likely to exploit resources to a greater extent when they encounter a valuable patch (Raveh et al. 2011). The alternative explanation is that, after experiencing existential fear during the exposure nights, the voles are now more sensitive to the predator cue and fear being flushed out by the “competition” (the absent rabbit) into a risky situation. Although this second hypothesis contradicts our explanation for the exposure-night results, the amount foraged on those nights was significantly lower. We cannot tease apart the strategic reasoning the voles use in this instance; future experiments that manipulate the animals’ energetic state could address this.
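The two explanations can be framed with the quitting rule of the patch-use model that underlies giving-up densities (Brown 1988, 1992). The block below is offered only as an illustrative sketch in that framework, not as an analysis performed in this study. A forager should leave a patch when its harvest rate H falls to the sum of metabolic cost C, predation cost P, and missed opportunity cost MOC. Here the predation cost is predation risk \mu multiplied by fitness F and divided by the marginal fitness value of energy \partial F/\partial e:

```latex
H = C + P + MOC, \qquad P = \frac{\mu F}{\partial F / \partial e}
```

Under the hunger explanation, depleted reserves raise \partial F/\partial e, which lowers P and hence the giving-up density, so voles should exploit patches further. Under the heightened-fear explanation, a higher perceived \mu near the weasel and rabbit cues raises P and the giving-up density in those patches, which shifts foraging toward the control. Both explanations act on the same cost term, which helps explain why they are difficult to separate without manipulating energetic state.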

In addition to the overwhelming effect of the live predator (the caged weasel), the random-forest analysis suggested sex-dependent differences. Similar to energy state-dependent decision-making, sexual selection drives males and females to make different decisions related to mate finding and territoriality. In the bank vole, females are territorial (Koskela et al. 1997), while males move long distances in search of mates (Kozakiewicz et al. 2007). Because females lead a more sedentary life, the risks associated with local cues are more relevant to them. Accordingly, we did not observe habituation in females from one night to the next in the initial interview. However, when the cues persist and the level of risk is raised by exposure to the predator, the pattern changes: post-exposure, females decreased foraging on the second night of the interview.

Because males are transient, they encounter and assess the cues as novel each time. We therefore find no habituation to the treatments in the systems, but instead an increase in perceived risk from the first to the second night of each interview. During direct exposure to the weasel, the overall harvest rate of males dropped by 25% from the first to the second night of the interview. For males, which move over larger scales, repeated encounters with the weasel may indicate a greater chance of encountering weasels. Previous work shows that males respond to mammalian predators by decreasing their exploratory behavior (Norrdahl and Korpimäki 1998).

Our setup allowed us to examine how the interpretation of a cue changed as individual voles’ environment and state shifted from partial naiveté towards predators, through a period of heightened risk, to a gradually fading memory of danger in a post-predator environment. By examining responses to several cues simultaneously, we can begin to extract some of the building blocks of the strategic behaviors exhibited by a forager. While it is not surprising that responses to cues varied, the novelty here is that this variation calls the interpretation of predation cues into question. In particular, our findings question the way we administer predation stress using olfactory cues. We are approaching a time when we will be able to observe the neurobiological circuits that are stimulated as an individual responds to cues. We must reckon with the fact that an individual’s cognition and neural processes affect the interpretation of cues to a much greater extent than our simplified models acknowledge. We, as a scientific community, need to account for such state- and experience-dependent “fear” responses in future experimentation.

Further, this framework allows us to consider the value of the information prey gather from encountering novel predators in the invasive-species scenarios we started with. While studies have placed major emphasis on why prey are naïve to the cues left by a predator, we suggest that some effort be directed towards investigating what the animals actually interpret these cues to mean. Finally, because olfactory cues inherently depend on the predators that leave them behind, the movement of a predator generates a temporal and spatial pattern of cues through which prey navigate, forage, and mate. Here we show how prey respond in an evolutionary game in which they have evolved to interpret the subtle information in the odor their predator leaves behind in a complex environment.

References

Amézquita A, Castro L, Arias M, Gonzalez M, Esquival C (2013) Field but not lab paradigms support generalisation by predators of aposematic polymorphic prey: the Oophaga histrionica complex. Evol Ecol 27:769–782. https://doi.org/10.1007/s10682-013-9635-1

Apfelbach R, Blanchard CD, Blanchard RJ, Hays RA, McGregor IS (2005) The effects of predator odors in mammalian prey species: a review of field and laboratory studies. Neurosci Biobehav Rev 29:1123–1144. https://doi.org/10.1016/j.neubiorev.2005.05.005

Banks PB (1998) Responses of Australian bush rats, Rattus fuscipes, to the odor of introduced Vulpes vulpes. J Mammal 79:1260–1264

Banks PB, Dickman CR (2007) Alien predation and the effects on multiple levels of prey naiveté. Trends Ecol Evol 22:229–230. https://doi.org/10.1016/j.tree.2007.02.003

Bedoya-Perez MA, Carthey AJR, Mella VSA, Mcarthur C, Banks PB (2013) A practical guide to avoid giving up on giving-up densities. Behav Ecol Sociobiol 67:1541–1553. https://doi.org/10.1007/s00265-013-1609-3

Berger-Tal O, Kotler BP (2014) State of emergency: behavior of gerbils is affected by the hunger state of their predators. Ecology 91:593–600

Bleicher SS (2012) Prey response to predator scent cues; a manipulative experimental series of a changing climate. MSc Thesis, Ben Gurion University of the Negev

Bleicher SS (2014) Divergent behaviour amid convergent evolution: common garden experiments with desert rodents and vipers. PhD Dissertation, University of Illinois at Chicago

Bleicher SS (2017) The landscape of fear conceptual framework: definition and review of current applications and misuses. PeerJ 5:e3772. https://doi.org/10.7717/peerj.3772

Bleicher SS, Brown JS, Embar K, Kotler BP (2016) Novel predator recognition by Allenby’s gerbil (Gerbillus andersoni allenbyi): do gerbils learn to respond to a snake that can “see” in the dark? Isr J Ecol Evol 62:178–185. https://doi.org/10.1080/15659801.2016.1176614

Bleicher SS, Dickman CR (2016) Bust economics: foragers choose high quality habitats in lean times. PeerJ 4:e1609. https://doi.org/10.7717/peerj.1609

Bleicher SS, Kotler BP, Shalev O, Dixon A, Brown JS (2018) Divergent behavior amid convergent evolution: a case of four desert rodents learning to respond to known and novel vipers. PLoS One 13:e0200672

Blumstein DT, Daniel JC, Griffin AS, Evans CS (2000) Insular tammar wallabies (Macropus eugenii) respond to visual but not acoustic cues from predators. Behav Ecol 11:528–535. https://doi.org/10.1093/beheco/11.5.528

Bolbroe T, Jeppesen LL, Leirs H (2000) Behavioural response of field voles under mustelid predation risk in the laboratory: more than neophobia. Ann Zool Fenn 37:169–178

Brown JS (1988) Patch use as an indicator of habitat preference, predation risk, and competition. Behav Ecol Sociobiol 22:37–47. https://doi.org/10.1007/BF00395696

Brown JS (1992) Patch use under predation risk: I. Model and predictions. Ann Zool Fenn 29:301–309

Brown JS (1999) Vigilance, patch use and habitat selection: foraging under predation risk. Evol Ecol Res 1:49–71

Brown JS (2010) Ecology of fear. In: Breed MD, Moore J (eds) Encyclopedia of animal behaviour. Elsevier Ltd, Oxford, pp 581–587

Brown JS, Laundré JW, Gurung M (1999) The ecology of fear: optimal foraging, game theory, and trophic interactions. J Mammal 80:385–399

Burns JG, Saravanan A, Rodd FH (2009) Rearing environment affects the brain size of guppies: lab-reared guppies have smaller brains than wild-caught guppies. Ethology 115:122–133. https://doi.org/10.1111/j.1439-0310.2008.01585.x

Bytheway JP, Carthey AJR, Banks PB (2013) Risk vs. reward: how predators and prey respond to aging olfactory cues. Behav Ecol Sociobiol 67:715–725. https://doi.org/10.1007/s00265-013-1494-9

Carthey AJR, Banks PB (2014) Naïveté in novel ecological interactions: lessons from theory and experimental evidence. Biol Rev 89:932–949. https://doi.org/10.1111/brv.12087

Carthey AJR, Bucknall MP, Wierucka K, Banks PB (2017) Novel predators emit novel cues: a mechanism for prey naivety towards alien predators. Sci Rep 7:16377. https://doi.org/10.1038/s41598-017-16656-z

Clucas B, Rowe MP, Owings DH, Arrowood PC (2008) Snake scent application in ground squirrels, Spermophilus spp.: a novel form of antipredator behaviour? Anim Behav 75:299–307

Dall SRX, Kotler BP, Bouskila A (2001) Attention, “apprehension” and gerbils searching in patches. Ann Zool Fenn 38:15–23

Darwin C (1859) On the origin of species by means of natural selection, or the preservation of favoured races in the struggle for life. John Murray, London

Dielenberg RA, McGregor IS (2001) Defensive behavior in rats towards predatory odors: a review. Neurosci Biobehav Rev 25:597–609

Drakeley M, Lapiedra O, Kolbe JJ (2015) Predation risk perception, food density and conspecific cues shape foraging decisions in a tropical lizard. PLoS One 10:e0138016. https://doi.org/10.1371/journal.pone.0138016

Eccard JA, Ylönen H (2006) Adaptive food choice of bank voles in a novel environment: choices enhance reproductive status in winter and spring. Ann Zool Fenn 43:2–8

Feenders G, Klaus K, Bateson M (2011) Fear and exploration in European starlings (Sturnus vulgaris): a comparison of hand-reared and wild-caught birds. PLoS One 6:e19074. https://doi.org/10.1371/journal.pone.0019074

Fuelling O, Halle S (2004) Breeding suppression in free-ranging grey-sided voles under the influence of predator odour. Oecologia 138:151–159. https://doi.org/10.1007/s00442-003-1417-y

Gonzalo A, López P, Martín J (2009) Learning, memorizing and apparent forgetting of chemical cues from new predators by Iberian green frog tadpoles. Anim Cogn 12:745–750. https://doi.org/10.1007/s10071-009-0232-1

Gross CT, Canteras NS (2012) The many paths to fear. Nat Rev Neurosci 13:651–658. https://doi.org/10.1038/nrn3301

Haapakoski M, Sundell J, Ylönen H (2012) Predation risk and food: opposite effects on overwintering survival and onset of breeding in a boreal rodent. J Anim Ecol 81(6):1183–1192

Haapakoski M, Sundell J, Ylönen H (2015) Conservation implications of change in antipredator behavior in fragmented habitat: Boreal rodent, the bank vole, as an experimental model. Biol Conserv 184:11–17

Hansson L (1979) Condition and diet in relation to habitat in bank voles Clethrionomys glareolus: population or community approach? Oikos 30:55–63

Haythornthwaite AS, Dickman CR (2006) Long-distance movements by a small carnivorous marsupial: how Sminthopsis youngsoni (Marsupialia: Dasyuridae) uses habitat in an Australian sandridge desert. J Zool 270:543–549. https://doi.org/10.1111/j.1469-7998.2006.00186.x

Horne TJ, Ylönen H (1996) Female bank voles (Clethrionomys glareolus) prefer dominant males; but what if there is no choice? Behav Ecol Sociobiol 38:401–405. https://doi.org/10.1007/s002650050257

Jędrzejewska B, Jędrzejewski W (1990) Antipredatory behaviour of bank voles and prey choice of weasels - enclosure experiments. Ann Zool Fenn 27:321–328

Jędrzejewski W, Jędrzejewska B (1990) Effect of a predator’s visit on the spatial distribution of bank voles: experiments with weasels. Can J Zool 68:660–666. https://doi.org/10.1139/z90-096

Karl BJ, Best HA (1982) Feral cats on Stewart Island – their foods, and their effects on kakapo. N Z J Zool 9:287–293

Korpela K, Sundell J, Ylönen H (2011) Does personality in small rodents vary depending on population density? Oecologia 165(1):67–77

Korpimäki E, Koivunen V, Hakkarainen H (1996) Microhabitat use and behavior of voles under weasel and raptor predation risk: predator facilitation? Behav Ecol 7:30–34. https://doi.org/10.1093/beheco/7.1.30

Korpimäki E, Norrdahl K, Rinta-Jaskari T (1991) Responses of stoats and least weasels to fluctuating food abundances: is the low phase of the vole cycle due to mustelid predation? Oecologia 88:552–561. https://doi.org/10.1007/BF00317719

Koskela E, Mappes T, Ylönen H (1997) Territorial behaviour and reproductive success of bank vole Clethrionomys glareolus females. J Anim Ecol 66:341–349

Kozakiewicz M, Chołuj A, Kozakiewicz A (2007) Long-distance movements of individuals in a free-living bank vole population: an important element of male breeding strategy. Acta Theriol 52:339–348

Lima SL, Bednekoff PA (1999) Temporal variation in danger drives antipredator behavior: the predation risk allocation hypothesis. Am Nat 153:649–659

Lima SL, Dill LM (1990) Behavioral decisions made under the risk of predation: a review and prospectus. Can J Zool 68:619–640

Mappes T, Koskela E, Ylönen H (1998) Breeding suppression in voles under predation risk of small mustelids: laboratory or methodological artifact? Oikos 82:365–369. https://doi.org/10.2307/3546977

Mappes T, Ylönen H (1997) Reproductive effort of female bank voles in a risky environment. Evol Ecol 11:591–598. https://doi.org/10.1007/s10682-997-1514-1

Mella VSA, Cooper CE, Davies SJJF (2014) Behavioural responses of free-ranging western grey kangaroos (Macropus fuliginosus) to olfactory cues of historical and recently introduced predators. Austral Ecol 39:115–121. https://doi.org/10.1111/aec.12050

Mönkkönen M, Forsman JT, Kananoja T, Ylönen H (2009) Indirect cues of nest predation risk and avian reproductive decisions. Biol Lett 5:176–178. https://doi.org/10.1098/rsbl.2008.0631

Näslund J, Johnsson JI (2016) State-dependent behavior and alternative behavioral strategies in brown trout (Salmo trutta L.) fry. Behav Ecol Sociobiol 70:2111–2125

Norrdahl K, Korpimäki E (1995) Mortality factors in a cyclic vole population. Proc R Soc Lond B 261:49–53. https://doi.org/10.1098/rspb.1995.0116

Norrdahl K, Korpimäki E (2000) The impact of predation risk from small mustelids on prey populations. Mammal Rev 30:147–156. https://doi.org/10.1046/j.1365-2907.2000.00064.x

Norrdahl K, Korpimäki E (1998) Does mobility or sex of voles affect risk of predation by mammalian predators? Ecology 79:226–232. https://doi.org/10.1890/0012-9658(1998)079[0226:DMOSOV]2.0.CO;2

Pakanen VM, Rönkä N, Thomson RL, Koivula K (2014) Informed renesting decisions: the effect of nest predation risk. Oecologia 174:1159–1167. https://doi.org/10.1007/s00442-013-2847-9

Parsons MH, Apfelbach R, Banks PB, Cameron EZ, Dickman CR, Frank ASK, Jones ME, McGregor IS, McLean S, Müller-Schwarze D, Sparrow EE, Blumstein DT (2018) Biologically meaningful scents: a framework for understanding predator-prey research across disciplines. Biol Rev 93(1):98–114

Parsons MH, Lamont BB, Kovacs BR, Davies SJJF (2005) Effects of novel and historic predator urines on semi-wild Western grey kangaroos. J Wildl Manag 71:1225–1228. https://doi.org/10.2193/2006-096

Pusenius J, Ostfeld RS (2000) Effects of stoat’s presence and auditory cues indicating its presence on tree seedling predation by meadow voles. Oikos 91:123–130. https://doi.org/10.1034/j.1600-0706.2000.910111.x

Raveh A, Kotler BP, Abramsky Z, Krasnov BR (2011) Driven to distraction: detecting the hidden costs of flea parasitism through foraging behaviour in gerbils. Ecol Lett 14:47–51. https://doi.org/10.1111/j.1461-0248.2010.01549.x

Rosenzweig ML, Abramsky Z, Subach A (1997) Safety in numbers: sophisticated vigilance by Allenby’s gerbil. P Natl Acad Sci USA 94:5713–5715. https://doi.org/10.1073/pnas.94.11.5713

Shrader AM, Kerley GIH, Brown JS, Kotler BP (2012) Patch use in free-ranging goats: does a large mammalian herbivore forage like other central place foragers? Ethology 118:967–974. https://doi.org/10.1111/j.1439-0310.2012.02090.x

Sih A (1992) Prey uncertainty and the balancing of antipredator and feeding needs. Am Nat 139:1052–1069

Smith RJ, Brown JS (1991) A practical technique for measuring the behavior of foraging animals. Am Biol Teach 53:236–242

Spencer EE, Crowther MS, Dickman CR (2014) Risky business: do native rodents use habitat and odor cues to manage predation risk in Australian deserts? PLoS One 9:e90566. https://doi.org/10.1371/journal.pone.0090566

St Juliana J, Kotler BP, Brown JS, Mukherjee S, Bouskila A (2011) The foraging response of gerbils to a gradient of owl numbers. Evol Ecol Res 13:869–878

Stenseth N (1985) Geographic distribution of Clethrionomys species. Ann Zool Fenn 22:215–219

Sundell J, Trebatická L, Oksanen T, Ovaskainen O, Haapakoski M, Ylönen H (2008) Predation on two vole species by a shared predator: antipredatory response and prey preference. Popul Ecol 50:257–266. https://doi.org/10.1007/s10144-008-0086-4

Trebatická L, Suortti P, Sundell J, Ylönen H (2012) Predation risk and reproduction in the bank vole. Wildl Res 39:463–468

Troxell-Smith SM, Tutka MJ, Albergo JM, Balu D, Brown JS (2015) Foraging decisions in wild versus domestic Mus musculus: what does life in the lab select for? Behav Process 122:43–50

Vincent TL, Brown JS (2005) Evolutionary game theory, natural selection, and Darwinian dynamics. Cambridge University Press, Cambridge

Ylönen H (1988) Diel activity and demography in an enclosed population of the vole Clethrionomys glareolus (Schreb.). Ann Zool Fenn 25:221–228

Ylönen H (1989) Weasels Mustela nivalis suppress reproduction in cyclic bank voles Clethrionomys glareolus. Oikos 55:138–140. https://doi.org/10.2307/3565886

Ylönen H, Eccard JA, Jokinen I, Sundell J (2006) Is the antipredatory response in behaviour reflected in stress measured in faecal corticosteroids in a small rodent? Behav Ecol Sociobiol 60:350–358. https://doi.org/10.1007/s00265-006-0171-7

Ylönen H, Ronkainen H (1994) Breeding suppression in the bank vole as antipredatory adaptation in a predictable environment. Evol Ecol 8:658–666. https://doi.org/10.1007/BF01237848

Ylönen H, Sundell J, Tiilikainen R, Eccard JA, Horne T (2003) Weasels’ (Mustela nivalis nivalis) preference for olfactory cues of the vole (Clethrionomys glareolus). Ecology 84:1447–1452. https://doi.org/10.1890/0012-9658(2003)084[1447:WMNNPF]2.0.CO;2

Zimmer RK, Schar DW, Ferrer RP, Krug PJ, Kats LB, Michel WC (2006) The scent of danger: tetrodotoxin (TTX) as an olfactory cue of predation risk. Ecol Monogr 76:585–600

Acknowledgments

Open access funding provided by University of Jyväskylä (JYU). We thank the technical staff of the Konnevesi Research Station for building study systems. We also thank the reviewers, whose suggestions made this paper more meaningful and clear.

Funding

The experiment was financially supported by the Finnish Academy research grant 2015–2019 for HY, No. 288990, 11.5.2015.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

The study was conducted under permission for animal experimentation of the University of Jyväskylä, permit number: ESAVI/6370/04.10.07/2014.

Additional information

Communicated by C. Soulsbury

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bleicher, S.S., Ylönen, H., Käpylä, T. et al. Olfactory cues and the value of information: voles interpret cues based on recent predator encounters. Behav Ecol Sociobiol 72, 187 (2018). https://doi.org/10.1007/s00265-018-2600-9

DOI: https://doi.org/10.1007/s00265-018-2600-9