Abstract

Purpose

This study analyses the performance and proficiency of the three Artificial Intelligence (AI) generative chatbots (ChatGPT-3.5, ChatGPT-4.0, Bard Google AI®) and in answering the Multiple Choice Questions (MCQs) of postgraduate (PG) level orthopaedic qualifying examinations.

Methods

A series of 120 mock Single Best Answer’ (SBA) MCQs with four possible options named A, B, C and D as answers on various musculoskeletal (MSK) conditions covering Trauma and Orthopaedic curricula were compiled. A standardised text prompt was used to generate and feed ChatGPT (both 3.5 and 4.0 versions) and Google Bard programs, which were then statistically analysed.

Results

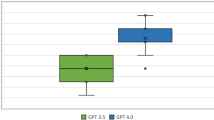

Significant differences were found between responses from Chat GPT 3.5 with Chat GPT 4.0 (Chi square = 27.2, P < 0.001) and on comparing both Chat GPT 3.5 (Chi square = 63.852, P < 0.001) with Chat GPT 4.0 (Chi square = 44.246, P < 0.001) with. Bard Google AI® had 100% efficiency and was significantly more efficient than both Chat GPT 3.5 with Chat GPT 4.0 (p < 0.0001).

Conclusion

The results demonstrate the variable potential of the different AI generative chatbots (Chat GPT 3.5, Chat GPT 4.0 and Bard Google) in their ability to answer the MCQ of PG-level orthopaedic qualifying examinations. Bard Google AI® has shown superior performance than both ChatGPT versions, underlining the potential of such large language processing models in processing and applying orthopaedic subspecialty knowledge at a PG level.

Similar content being viewed by others

Data Availability

The data is available with the corresponding author, if needed.

References

ChatGPT: Optimizing Language Models for Dialogue (2024). OpenAI. https://openai.com/blog/chatgpt/ (Accessed 15 March 2024)

Bard is now Gemini - Gemini, Google's new AI (2024). https://gemini.google.com/ (Accessed 15 March 2024)

The Lancet Digital Health (2023) ChatGPT: friend or foe? Lancet Digit Health 5(3):e102. https://doi.org/10.1016/S2589-7500(23)00023-7

Nune A, Iyengar KP, Manzo C, Barman B, Botchu R (2023) Chat generative pre-trained transformer (ChatGPT): potential implications for rheumatology practice. Rheumatol Int 43(7):1379–1380. https://doi.org/10.1007/s00296-023-05340-3

Saad A, Jenko N, Ariyaratne S, Birch N, Iyengar KP, Davies AM, Vaishya R, Botchu R (2024) Exploring the potential of ChatGPT in the peer review process: An observational study. Diabetes Metab Syndr 18(2):102946. https://doi.org/10.1016/j.dsx.2024.102946. (Advance online publication)

Fowler T, Pullen S, Birkett L (2023) Performance of ChatGPT and Bard on the official part 1 FRCOphth practice questions. British J Ophthalmol bjo-2023–324091. Advance online publication. https://doi.org/10.1136/bjo-2023-324091

Farhat F, Chaudhry BM, Nadeem M, Sohail SS, Madsen DØ (2024) Evaluating Large Language Models for the National Premedical Exam in India: Comparative Analysis of GPT-3.5, GPT-4, and Bard. JMIR Med Educ 10:e51523. https://doi.org/10.2196/51523

Iyengar KP, Jain VK, Vaishya R (2021) Virtual postgraduate orthopaedic practical examination: a pilot model. Postgrad Med J 97(1152):650–654. https://doi.org/10.1136/postgradmedj-2020-138726

Naidoo M (2023) The pearls and pitfalls of setting high-quality multiple choice questions for clinical medicine. S Afr Fam Pract 65(1):e1–e4. https://doi.org/10.4102/safp.v65i1.5726

Gilson A, Safranek CW, Huang T, Socrates V, Chi L, Taylor RA, Chartash D (2023) How Does ChatGPT Perform on the United States Medical Licensing Examination (USMLE)? The Implications of Large Language Models for Medical Education and Knowledge Assessment. JMIR Med Educ 9:e45312. https://doi.org/10.2196/45312

Saad A, Iyengar KP, Kurisunkal V, Botchu R (2023) Assessing ChatGPT’s ability to pass the FRCS orthopaedic part A exam: A critical analysis. Surgeon 21(5):263–266. https://doi.org/10.1016/j.surge.2023.07.001

Huang RS, Lu KJQ, Meaney C, Kemppainen J, Punnett A, Leung FH (2023) Assessment of Resident and AI Chatbot Performance on the University of Toronto Family Medicine Residency Progress Test: Comparative Study. JMIR Med Educ 9:e50514. https://doi.org/10.2196/5051

Vaishya R, Misra A, Vaish A (2023) ChatGPT: Is this version good for healthcare and research? Diabetes Metab Syndr 17(4):102744. https://doi.org/10.1016/j.dsx.2023.102744

Vaishya R, Kambhampati SBS, Iyengar KP, Vaish A (2023) ChatGPT in the current form is not ready for unaudited use in healthcare and scientific research. Cancer Res, Stat Treat 6(2):336–337. https://doi.org/10.4103/crst.crst_144_23

Ariyaratne S, Iyengar KP, Nischal N, Chitti Babu N, Botchu R (2023) A comparison of ChatGPT-generated articles with human-written articles. Skeletal radiology, 52(9), 1755–1758. https://doi.org/10.1007/s00256-023-04340-5

Ariyaratne S, Jenko N, Mark Davies A, Iyengar KP, Botchu R (2023) Could ChatGPT Pass the UK Radiology Fellowship Examinations? Acad Radiol S1076-6332(23):00661–X. https://doi.org/10.1016/j.acra.2023.11.026. (Advance online publication)

Lum ZC (2023) Can Artificial Intelligence Pass the American Board of Orthopaedic Surgery Examination? Orthopaedic Residents Versus ChatGPT. Clin Orthop Relat Res 481(8):1623–1630. https://doi.org/10.1097/CORR.0000000000002704

SICOT Diploma Examination (2023). https://www.sicot.org/diploma-examination (Accessed 17 March 2023)

Elnikety S (2023) (2015) Your guide to the SICOT diploma in trauma and orthopaedics. Bull Royal Coll Surg England 97(1):E12–E14. https://doi.org/10.1308/147363515X14134529299385(Accessed17March

National Board of Examinations in Medical Sciences (2023). URL available at: https://www.natboard.edu.in/ (Accessed 17 March 2023)

Sullivan GM, Artino AR Jr (2013) Analyzing and interpreting data from Likert-type scales. J Grad Med Educ 5(4):541–542. https://doi.org/10.4300/JGME-5-4-18

Vaishya R, Scarlat MM, Iyengar KP (2022) Will technology drive orthopaedic surgery in the future? Int Orthop 46(7):1443–1445. https://doi.org/10.1007/s00264-022-05454-6

Mavrogenis AF, Scarlat MM (2023) Thoughts on artificial intelligence use in medical practice and in scientific writing. Int Orthop 47(9):2139–2141. https://doi.org/10.1007/s00264-023-05936-1

Mavrogenis AF, Hernigou P, Scarlat MM (2024) Artificial intelligence, natural stupidity or artificial stupidity: who is today the winner in orthopaedics? What is true and what is fraud? What legal barriers exist for scientific writing? Int Orthop 48(3):617–623. https://doi.org/10.1007/s00264-024-06102-x

Mavrogenis AF, Scarlat MM (2023) Artificial intelligence publications: synthetic data, patients, and papers. Int Orthop 47(6):1395–1396. https://doi.org/10.1007/s00264-023-05830-w

Masalkhi M, Ong J, Waisberg E, Lee AG (2024) Google DeepMind’s gemini AI versus ChatGPT: a comparative analysis in ophthalmology. Eye (London, England), https://doi.org/10.1038/s41433-024-02958-w. Advance online publication

Extance A (2023) ChatGPT has entered the classroom: how LLMs could transform education. Nature 623(7987):474–477. https://doi.org/10.1038/d41586-023-03507-3

Chatterjee S, Bhattacharya M, Pal S, Lee SS, Chakraborty C (2023) ChatGPT and large language models in orthopedics: from education and surgery to research. J Exp Orthop 10(1):128. https://doi.org/10.1186/s40634-023-00700-1

Korneev A, Lipina M, Lychagin A, Timashev P, Kon E, Telyshev D et al (2023) Systematic review of artificial intelligence tack in preventive orthopaedics: is the land coming soon? Int Orthop 47(2):393–403. https://doi.org/10.1007/s00264-022-05628-2

Thibaut G, Dabbagh A, Liverneaux P (2024) Does Google’s Bard Chatbot perform better than ChatGPT on the European hand surgery exam? Int Orthop 48(1):151–158. https://doi.org/10.1007/s00264-023-06034-y

Acknowledgements

We are grateful to Ms. Anupama Rawat of Indraprastha Apollo Hospitals, New Delhi, for her help in compiling the result data of this study.

Author information

Authors and Affiliations

Contributions

RV, KPI-Conceptualization, data collection and analysis, literature search, manuscript writing, editing and final approval.

KPI, MP, RB, KS, VJ, AV- Literature search, data collection and analysis, manuscript writing, references, editing, supervision, and final approval.

MMS- Conceptualization, Manuscript editing and final approval.

Corresponding author

Ethics declarations

Ethics approval

None, since it is not a clinical article.

Informed consent

Not applicable.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Vaishya, R., Iyengar, K.P., Patralekh, M.K. et al. Effectiveness of AI-powered Chatbots in responding to orthopaedic postgraduate exam questions—an observational study. International Orthopaedics (SICOT) (2024). https://doi.org/10.1007/s00264-024-06182-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00264-024-06182-9