Abstract

We consider control strategies for large-scale interacting agent systems under uncertainty. The particular focus is on the design of robust controls that allow to bound the variance of the controlled system over time. To this end we consider \({\mathcal {H}}_\infty \) control strategies on the agent and mean field description of the system. We show a bound on the \({\mathcal {H}}_\infty \) norm for a stabilizing controller independent on the number of agents. Furthermore, we compare the new control with existing approaches to treat uncertainty by generalized polynomial chaos expansion. Numerical results are presented for one-dimensional and two-dimensional agent systems.

Similar content being viewed by others

1 Introduction

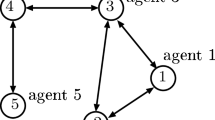

We consider the mathematical modelling and control of phenomena of collective dynamics under uncertainties. These phenomena have been studied in several fields such as socio-economy, biology, and robotics where systems of interacting particles are given by self-propelled particles, such as animals and robots, see e.g. [1, 7, 15, 24, 32]. Those particles interact according to a possibly nonlinear model, encoding various social rules as attraction, repulsion, and alignment. A particular feature of such models is their rich dynamical structure, which includes different types of emerging patterns, including consensus, flocking, and milling [17, 23, 29, 45, 50]. Understanding the impact of control inputs in such complex systems is of great relevance for applications. Results in this direction allow to design optimized actions such as collision-avoidance protocols for swarm robotics [14, 26, 46, 48], pedestrian evacuation in crowd dynamics [16, 22], supply chain policies [18, 34], the quantification of interventions in traffic management [31, 49, 51] or in opinion dynamics [27, 28]. Further, the introduction of uncertainty in the mathematical modelling of real-world phenomena seems to be unavoidable for applications, since often at most statistical information of the modelling parameters is available. The latter has typically been estimated from experiments or derived from heuristic observations [5, 8, 37]. To produce effective predictions and to describe and understand physical phenomena, we may incorporate parameters reflecting the uncertainty in the interaction rules, and/or external disturbances [13].

Here, we are concerned with the robustness of controls influencing the evolution of the collective motion of an interacting agent system. The controls we are considering are aimed to stabilize the system’s dynamics under external uncertainty. From a mathematical point of view, a description of self-organized models is provided by complex system theory, where the overall dynamics are depicted by a large-scale system of ordinary differential equations (ODEs).

More precisely, we consider the control of high-dimensional dynamics accounting N agents with state \(v_i(t,\theta ) \in {\mathbb {R}}^d,\, i=1,\ldots ,N\), evolving according to

where \(A=[a_{ij}]\in \mathbb {R}^{N\times N}\) defines the nature of pairwise interaction among agents, and \(\theta =(\theta _1,\ldots ,\theta _Z)^\top \in \mathbb {R}^{Z\times d}\) is an independent component random input vector with a given probability density distribution on \({\mathbb {R}}\) as \(\rho \equiv \rho _1\otimes \ldots \otimes \rho _Z\). The control signal \(u_i(t,\theta )\in \mathbb {R}^d\) is designed to stabilize the state toward a target state \({\bar{v}}\in \mathbb {R}^{N\times d}\), and its action is influenced by the random parameter \(\theta \). This is also due to the fact, that later we will be interested in closed–loop or feedback controls on the state \((v_1.\dots ,v_N)\) that in turn dependent on the unknown parameter \(\theta .\)

Of particular interest will be controls designed via minimization of linear quadratic (parametric) regulator functional such as

with Q positive semi-definite matrix of order N, R positive definite matrix of order N and r is a discount factor. In this case, the linear quadratic dynamics allow for an optimal control \(u^*\) stabilising the desired state \(v_d=0\), expressed in feedback form, and obtained by solving the associated matrix Riccati equations. Those aspects will be also addressed in more detail below.

In order to assess the performances of controls, and quantify their robustness we propose estimates using the concept of \({\mathcal {H}}_\infty \) control. In this setting different approaches have been studied in the context of \({\mathcal {H}}_\infty \) control and applied to first-order and higher-order multiagent systems, see e.g. [21, 40,41,42,43,44], in particular for an interpretation of \({\mathcal {H}}_\infty \) as dynamic games we refer to [6]. Here we will study an approach based on the derivation of sufficient conditions in terms of linear matrix inequalities (LMIs) for the \({\mathcal {H}}_\infty \) control problem. In this way, consensus robustness will be ensured for a general feedback formulation of the control action. Additionally, we consider the large–agent limit and show that the robustness is guaranteed independently of the number of agents.

Furthermore, we will discuss the numerical realization of system (1.1) employing uncertainty quantification techniques. In general, at the numerical level, techniques for uncertainty quantification can be classified into non-intrusive and intrusive methods. In a non-intrusive approach, the underlying model is solved for fixed samples with deterministic schemes, and statistics of interest are determined by numerical quadrature, typical examples are Monte-Carlo and stochastic collocation methods [19, 54]. While in the intrusive case, the dependency of the solution on the stochastic input is described as a truncated series expansion in terms of orthogonal functions. Then, a new system is deduced that describes the unknown coefficients in the expansion. One of the most popular techniques of this type is based on stochastic Galerkin (SG) methods. In particular, generalized polynomial chaos (gPC) gained increasing popularity in uncertainty quantification (UQ), for which spectral convergence on the random field is observed under suitable regularity assumptions [19, 35, 36, 52, 54]. The methods, here developed, make use of the stochastic Galerkin (SG) for the microscopic dynamics while in the mean-field case we combine SG in the random space with a Monte Carlo method in the physical variables.

The manuscript is organized as follows, in Sect. 2 we introduce the problem setting and propose different feedback control laws; in Sect. 3 we reformulate the problem in the setting of \({\mathcal {H}}_\infty \) control and provide conditions for the robustness of the controls in the microscopic and mean-field case. Section 4 is devoted to the description of numerical strategies for the simulation of the agent systems, and to different numerical experiments, which assess the performances and compare different methods.

2 Control of Interacting Agent System with Uncertainties

The following notation is introduced with the control of high-dimensional systems of interacting agents with random inputs. We consider the evolution of N agents with state \(v(t,\theta )\in {\mathbb {R}}^{ N\times d}\) as follows

with deterministic initial data \(v_i(0)=v_{i}^0\) for \(i=1,\ldots ,N\). For the rest of the article, we focus on the model (2.1), namely Eq. (1.1) where we chose that \(a_{ij} = \bar{p}\) is constant and equal for every agent, with \(\bar{p} \in {\mathbb {R}}\) bounded. \(\theta _k \in \Omega _k\subseteq {\mathbb {R}}^{d}\) for \(k=1,\ldots ,Z\) are random inputs, distributed according to a compactly supported probability density \(\rho \equiv \rho _1 \otimes \dots \otimes \rho _Z\), i.e., \(\rho _k(\theta )\ge 0\) a.e., \(\text {supp}(\rho _k)\subseteq \Omega _k \) and \(\int _{\Omega _k} \rho _k(\theta )\, d\theta =1\). For simplicity, we also assume that the random inputs have zero average \({\mathbb {E}}[\theta _k] =0\), (please refer to Remark 2.1 for the general case \({\mathbb {E}}[\theta _k] \ne 0\)). The control signal \(u(t,\theta )\in \mathbb {R}^{N\times d}\) is designed minimizing the (parameterized) objective

with \(\nu >0\) being a penalization parameter for the control energy, the norm \(\vert \cdot \vert \) being the usual Euclidean norm in \({\mathbb {R}}^d\). The discount factor \(\exp (-r \tau )\) is introduced to have a well-posed integral.

We assume that \(\bar{v}\) is a prescribed consensus point, namely, in the context of this work we are interested in reaching a consensus velocity \(\bar{v}\in \mathbb {R}^d\) such that \(v_{1}=\ldots =v_{N}=\bar{v}\), and w.l.o.g. we can assume \(\bar{v} = 0\). Note that \(\bar{v}=0\) is also the steady state of the dynamics in absence of disturbances. Hence, we may view \(u(\cdot ,\theta )\) as a stabilizing control of the zero steady state of the system. Furthermore, we will be interested in feedback controls u.

Recall that the (deterministic) linear model (2.1), without uncertainties, allows a feedback stabilization by solving the resulting optimal control problem through a Riccati equations [2, 3, 33]. The functional J in (2.2), in the absence of disturbances, reads as follows

where \(Q \equiv R = \frac{1}{N}\text {Id}_N\). In this case the controlled dynamics (2.1) is reformulated in a matrix–vector notation

with \(B=\text {Id}_N\) the identity matrix of order N, and

The matrix K associated to feedback form of the optimal control has to fulfilll the Riccati matrix-equation of the following form

For a general linear system, we need to solve the \(N\times N\) equations to find K, which can be costly for large-scale agent-based dynamics. However, we can use the same argument of [3] and exploit the symmetric structure of the Laplacian matrix A to reduce the algebraic Riccati equation. Unlike in [3], where they investigate the case with finite terminal time, here we state the following proposition for the infinite horizon case with discount factor r.

Proposition 2.1

(Properties of the Algebraic Riccati Equation (ARE)) For the linear dynamics (2.3), the solution of the Riccati equation (2.5) reduces to the solution of

where \(k_d, k_o\) are the entries of the matrix K in the algebraic Riccati equation (2.5). In particular, K is given by

\(\delta _{ij}\) is the Kronecker delta function.

In order to allow the limit of infinitely many agents \(N\rightarrow \infty \), we introduce the following scalings

and keeping the same notation also for the scaled variables \(k_d,k_o\), the system (2.6) reads

The previous considerations motivate to extend formula (2.3) to the parametric case (2.1). Hence, in the presence of parametric uncertainty the feedback control is written explicitly as follows

The question arises if the given feedback is robust with respect to the uncertainties \(\theta \). In the following, we will provide a measure for the robustness of control (2.8) in the framework of \({\mathcal {H}}_\infty \) control. Some additional remarks allow generalizing formula (2.8).

Remark 2.1

(Non-zero average) In the presence of general uncertainties with known expectations, we modify the control (2.8) for model (2.1) including a correction factor given by the expected values of the random inputs,

for \(\mu _k={\mathbb {E}}[\theta _k]\) for \(k=1,\ldots ,Z\).

Remark 2.2

(Averaged control) In the case of a deterministic feedback control, we may consider the expectation of the objective (2.2) subject to the noisy model (2.1)

where we introduce the matrices \(Q=R= \frac{1}{N} \text {Id}_N\). In this case, we have the following deterministic optimal feedback control is deduced

where \(\mu _k={\mathbb {E}}[\theta _k]\) for \(k=1,\ldots ,Z\), \({k}_d\), \({k}_o\) satisfy equations (2.7).

We refer to Appendix 1 for detail computations for the synthesis of (2.10).

Remark 2.3

(Time independent uncertainty) In this work we consider the uncertainty \(\theta \) constant in time since we want to use less information as possible about the system, we assume to not know the evolution of \(\theta \) in time. We can see the constant \(\theta \) as the maximum value over time extracted from the support of its distribution

The maximum value of \(\theta \) is equivalent to consider the extremal cases in the support where the uncertainty is maximal. Doing this we are aiming for a robust control in the worst case scenario. Nevertheless, the results we will derive in this article (in particular Theorem 3.2), hold true also for a time dependent uncertainty at the microscopic case, see e.g. [21, 42]. For the mean-field limit we consider in Sect. 3.1, a time-dependent noise would lead to a diffusion term, this case is not treated in the manuscript but it is planned as future work.

3 Robustness in the \({\mathcal {H}}_\infty \) Setting

In the context of \({\mathcal {H}}_\infty \) theory, controllers are synthesized to achieve stabilization with guaranteed performance. In this section, we exploit the theory of Linear Matrix Inequality (LMI) to show the robustness of the control. The introduction of LMI methods in control has dramatically expanded the types and complexity of the systems we can control. In particular, it is possible to use LMI solvers to synthesize optimal or suboptimal controllers and estimators for multiple classes of state-space systems and without giving a complete list of references, we refer to [9, 20, 38, 47].

Consider the linear system (2.1) with control (2.8) in the following reformulation

where we consider the random input vector \(\theta = (\theta _1,\ldots ,\theta _Z)^\top \in {\mathbb {R}}^{Z\times d}\), and the matrices

with \(\mathbbm {1}\) a matrix of ones of dimension \(N\times Z\). We introduce the frequency transfer function \(\hat{G}(s):= (s \text {Id}_N-{\widehat{A}})^{-1} {\widehat{B}}\), such that

\({\hat{G}} \in R{\mathcal {H}}_\infty \). The latter is the set of proper rational functions with no poles in the closed complex right half-plane, and the signal norm \(\Vert \cdot \Vert _{{\mathcal {H}}_\infty }\) measuring the size of the transfer function in the following sense

where for a given matrix P, \({{\bar{\sigma }}} (P)\) is the largest singular value of P. The general \({\mathcal {H}}_\infty \)-optimal control problem consists of finding a stabilizing feedback controller \(u = -\frac{1}{\nu }K\) which minimizes the cost function (3.3) and we refer to Appendix 1 and to [21] for more details.

However, the direct minimization of the cost \(\Vert \hat{G} \Vert _{{\mathcal {H}}_\infty }\) is in general a very hard task, and possibly unfeasible by direct methods. To reduce the complexity, a possibility consists in finding conditions for the stabilizing controller that achieves a norm bound for a given threshold \(\gamma >0\),

Hence, robustness of a given control \(u= -\frac{1}{\nu }K\) is measured in terms of the smallest \(\gamma \) satisfying (3.4). In order to provide a quantitative result, we can rely on the following result

Lemma 3.1

Given the frequency transfer function \(\hat{G}\), associated to (3.1), a necessary and sufficient condition to guarantee the \({\mathcal {H}}_\infty \) bound (3.4) for \(\gamma >0\), is to prove, that there exists a positive definite square matrix X of order N, \(X> 0\), such that the following algebraic Riccati equation holds

For detailed proof of this result, we refer to Appendix 1.

Theorem 3.2

Consider system (3.1) with structure induced by (2.1), and a square matrix X with the following structure

Then for N sufficiently large and finite, control \(u=-\frac{1}{\nu }K\) given by equation (2.8) is \({\mathcal {H}}_\infty \)-robust for any \(\gamma \) and \(c_N\) such that \(\gamma \ge \frac{1}{c_N}\), \(c_N>0\), where

Proof

Under the hypothesis of the theorem, (3.5) reduces to the following system of equations

We scale the off-diagonal elements \(x_o\) of X according to

which, as we will see later, is also the consistent scaling for a mean-field description of X.

Then, the previous system reads

From these two equations of system (3.9), and setting

we obtain two second order equations for \(x_d\) and \({\tilde{x}}_o\)

For N sufficiently large, their solutions is given by

Note that we are interested in the large particle limit and hence may allow that the previous quantities \({\mathcal {O}}\left( \frac{1}{N}\right) \) are not exactly zero, but tend to zero at a rate \(\frac{1}{N}.\) Hence, we write the matrix X as

and the eigenvalues of X are

If we subtract equations in (3.9) we find the following second order equation in the variable \(\lambda = \left( x_d - \frac{{\tilde{x}}_o}{\sqrt{N}}\right) \)

with its solutions \( \lambda ^{\pm } = x_d - \frac{{\tilde{x}}_o}{\sqrt{N}} = \gamma c_N \pm \sqrt{ \gamma ^2 c_N^2 - 1}, \) and for

Therefore, we have \( \lambda _i = \lambda ^{\pm } \quad \text {for} \quad i = 1,\dots , N-1\) and \(\lambda _N = \lambda ^{\pm } + N \frac{{\tilde{x}}_o}{\sqrt{N}}.\) Hence, there exists a matrix X satisfying (3.5), for

In this case, the eigenvalues are \(\lambda _i = \lambda _N= \lambda ^{\pm }\). In order to ensure the positivity of the eigenvalue \(\lambda ^{\pm } = \gamma c_N \pm \sqrt{\gamma ^2 c_N^2-1}\) needs to be non–negative. This can be expressed in terms of the choice of the parameters \(\gamma \) and \(c_N:\) First of all, we have \(\gamma \ge \frac{1}{c_N}\) to ensure the existence of the square root. In addition, provided that \(c_N>0\) we obtain

This finishes the proof. \(\square \)

We want to recall and highlight the fact that Theorem 3.2 offers a robustness condition in the case of uncertainty with zero mean. The result can be generalized in the presence of uncertainties with known expectations, adding a correction factor to the matrix \({\widehat{A}}\) in (3.2), given by the expected values of the random inputs.

Remark 3.1

We observe that Theorem 3.2 quantifies the robustness of the feedback control with a lower bound on the parameters of the model. In particular, we can achieve minimal value of \(\gamma \) for large values of \(c_N\) in (3.6), for example if we fix the values \({\bar{p}}, k_d,k_o\) and N for decreasing values of the penalization \(\nu \) the control is more robust.

3.1 Mean-Field Estimates for \({\mathcal {H}}_\infty \) Control

Large system of interacting agents can be efficiently represented at different scales to reduce the complexity of the microscopic description. Of particular interest are models able to describe the evolution of a density of agents and its moments [10, 12, 30].

In this section, we analyse robustness of controls in the case of a large number of agents, i.e. \(N\gg 1\), by means of the mean-field limit of the interacting system. Hence, we consider the density distribution of agents \(f=f(t,v,\theta )\) to describe the collective behaviour of the ensemble of agents. The empirical joint probability distribution of agents for the system (2.1), is given by

where \(\delta (\cdot )\) is a Dirac measure over the trajectories \(v_i(t,\theta )\) dependent on the stochastic variable \(\theta = (\theta _1,\ldots ,\theta _Z)\).

Hence, assuming enough regularity assuming that agents remain in a fixed compact domain for all N and in the whole time interval [0, T], the mean-field limit of dynamics (2.1) is obtained formally as

with initial data \( f(0,v,\theta ) = f^0(v,\theta )\).

The latter is obtained as the limit of \(f^N(0,v,\theta )\) in the Wasserstein distance given a sequence of initial agents, [10, 11]. The quantity \(m_1[f]\) denotes the first moment of f with respect to v

For the many-particle limit, we recover a mean-field estimate of the \({\mathcal {H}}_\infty \) condition similarly as in 3.2. Indeed, for \(N\rightarrow \infty \) the nonlinear system (3.9) yields

Hence, for any fixed finite N the matrix X is diagonal with the entry

To ensure that X is positive definite, we only have to assume that \(\gamma \ge \dfrac{1}{c},\) where \(\gamma \) is the bound of the \({\mathcal {H}}_\infty \) norm, and \(c = \bar{p}+k_d/\nu + O(\frac{1}{N})\) corresponds to the value defined by equation (3.12). This shows that for any N there exists a positive definite matrix that guarantees robust stabilization. Note that the condition for any N is the limit of the finite-dimensional conditions of the previous Lemma 3.1 and Theorem 3.2.

Remark 3.2

For explicit values of the Riccati coefficients we can characterize the previous estimates more precisely. In particular, for \(N\rightarrow \infty \) system (2.7) reduces to

with solutions

In this particular case, the condition of Theorem 3.2 becomes

In Fig. 1 we depict the lower bound of \(\gamma \) for \(r=0\) for different values of \(\nu \) and \(\bar{p}\). As expected, smaller values of \(\gamma \), hence more robustness, is obtained if the penalization factor \(\nu \) is small or when \(\bar{p}\) is large. The latter corresponds to a stronger attraction between agents.

Numerical computation of the lower bound value of \(\gamma \) in (3.14) as function of \(\nu \) and \(\bar{p}\) when \(r=0\), i.e. \(\gamma (\nu , \bar{p}) = \sqrt{{\nu }/(\bar{p}^2 \nu +1)}\)

4 Numerical Approximation of the Uncertain Dynamics

In this section, we present numerical tests based on linear microscopic and mean-field equations in presence of uncertainties. In particular, we give numerical evidence of the robustness of the feedback control (2.8) and illustrate a comparison with the averaged control (A.3). For the numerical approximation of the random space, we employ the Stochastic Galerkin (SG) method belonging to the class of generalized polynomial chaos (gPC) ( [39, 54]). In the mean-field setting, the evolution of the density distribution is approximated with a Monte Carlo (MC) method, in a similar spirt of particle based gPC techniques developed in [13].

4.1 SG Approximation for Robust Constrained Interacting Agent Systems

We approximate the dynamics using a stochastic Galerkin approach applied to the interacting particle system with uncertainties [4, 13]. Polynomial chaos expansion provides a way to represent a random variable with finite variance as a function of an M-dimensional random vector using a polynomial basis that is orthogonal to the distribution of this random vector. Depending on the distribution, different expansion types are distinguished, as shown in Table 1.

We recall first some basic notions on gPC approximation techniques and for the sake of simplicity we consider a one-dimensional setting for the dynamical state \(v_i,\) i.e., \(d=1\). Let \((\Omega ,{\mathcal {F}}, P)\) be a probability space where \(\Omega \) is an abstract sample space, \({\mathcal {F}}\) a \(\sigma -\)algebra of subsets of \(\Omega \) and P a probability measure on \({\mathcal {F}}\). Let us define a random variable

where \(I_\Theta \in {\mathbb {R}}^Z\) is the range of \(\theta \) and \({\mathcal {B}}({\mathbb {R}}^Z)\) is the Borel \(\sigma \)-algebra of subsets of \({\mathbb {R}}^Z\), we recall that Z is the dimension of the random input \(\theta =(\theta _1,\ldots ,\theta _Z)\) and where we assume that each component is independent.

We consider the linear spaces generated by orthogonal polynomials of \(\theta _j\) with degree up to M: \(\lbrace \Phi _{k_j}^{(j)} (\theta )\rbrace _{k_j=0}^M\), with \(j = 1,\dots , Z\). Assuming that the probability law for the function \(v_i(t,\theta )\) has a finite second order moment, the complete polynomial chaos expansion of \(v_i\) is given by

where the coefficients \(\hat{v}_{i,k_1\dots k_Z} (t)\) are defined as

where the expectation operator \({\mathbb {E}}_{\theta }\) is computed with respect to the joint distribution \(\rho =\rho _1\otimes \ldots \otimes \rho _Z\), and where \(\{\Phi ^{(j)}_{k}(\theta _j)\}_k\) is a set of polynomials which constitute the optimal basis with respect to the known distribution \(\rho (\theta _j)\) of the random variable \(\theta _j\), such that

with \(\delta _{hk}\) the Kronecker delta. From the numerical point of view, we may have an exponential order of convergence for the SG series expansion, unlike Monte Carlo techniques for which the order is \({\mathcal {O}}(1/\sqrt{M})\) where M is the number of samples. Considering the noisy model 2.1 with control \(u_i(t)\) in 2.8, we have

We apply the SG decomposition to the solution of the differential equation \(v_i(t,\theta )\) in (4.2) and to the stochastic variable \(\theta _k\), and for \(i=1,\dots , N, \ l=1,\dots ,Z\), we have

with

Then we obtain the following polynomial chaos expansion

Multiplying by \(\prod _{j=1}^Z\Phi _{k_j}^{(j)}(\theta _{j}) \) and integrating with respect to the distribution \(\rho (\theta )\), we end up with

For the numerical tests, we approximate the integrals using quadrature rules.

Remark 4.1

For model 2.10, where the control is averaged with respect to the random sources,

the SG approximation is given by

We recover the mean and the variance of the random variable \(v(\theta )\) as

4.2 Numerical Tests

In this section we present different numerical tests on microscopic and mean-field dynamics, to compare the robustness of controls described in Sect. 2. We analyze one- and two-dimensional dynamics, for every test we consider the attractive case with \(\bar{p}=1\). The initial distribution of agents \(v_0\) is chosen such that consensus to the target \({\bar{v}} = 0\) would not be reached without control action. We implement the SG approximations in (4.4) and (4.8) and we perform the time integration until the final time \(T=1\) of the resulting system through a 4th order Runge–Kutta method.

In Test 1 (4.2.1) and Test 2 (4.2.2) we take into account a dynamics with \(Z= 2\) additive uncertanties represented by two different uniform density distributions. While in Test 3 (4.2.3) we present the case of a random variable \(\theta _1\) with Gaussian distribution \({\mathcal {N}}(\mu , \sigma ^2)\), and \(\theta _2\) with uniform distribution \({\mathcal {U}}(a, b)\). Observe that even if the results of this work are theoretically proven for bounded uncertainties, we show in Test 3 that, numerically, they are also valid for Gaussian unbounded noise. This assumption of normal and uniform distributions for the stochastic parameter corresponds to the case of Hermite and Legendre polynomial chaos expansions, respectively, as shown in Table 1. For every test, we have \(M=10\) terms of the SG decomposition.

4.2.1 Test 1: One-Dimensional Microscopic Consensus Dynamics

In the one-dimensional microscopic case we take \(N=100\) agents, and a uniform initial distribution of agents, \(v_0\sim {\mathcal {U}}(10,20)\). Figure 2 shows means, as continuous and dashed lines, and confidence regions of the two noisy dynamics for different distributions \(\rho _1, \ \rho _2\). The shaded region is computed as the region between the values

Numerical results show that both introduced controls are capable to drive the agents to the desired state even in the case of a dynamics dependent on random inputs. Moreover, we can observe that, with the \({\mathcal {H}}_\infty \) control, the variance of the uncertain dynamics is stabilized over time, while in the case of a averaged control the variance keeps growing. This is because the averaged control has information only on the mean value of the state and uncertainty, while the \({\mathcal {H}}_\infty \) feedback control directly depends on the state, and as a consequence on the randomness of the dynamics. This is also expected given the robustness estimate on the feedback control.

4.2.2 Test 2: One-Dimensional Mean-Field Consensus Dynamics

In the mean-field limit, the Monte Carlo (MC) method is employed for the approximation of the distribution function \(f(t,v,\theta )\) in the phase space whereas the random space at the particle level is approximated through the SG technique.

Considering this MC-SG scheme, we work on an agent system using Monte Carlo sampling with \(N_s = 10^4\) agents, then we consider the SG scheme at the microscopic level. The probability density \(f(t,v,\theta )\) is then reconstructed as the histogram of \(v(t,\theta )\). The reconstruction step of the mean density has been done with 50 bins. The mean and the variance of the statistical quantity are computed as follows

where for \(l,h = 1,\dots , L\) and \(\hat{v}_{k,j} \in {\mathbb {R}}^{N_s}\), \(f(t,v,\theta ^{lh}) \) is reconstructed as the histogram of the data \(v(\theta ^{lh}) = \sum _{k,j=0}^M \hat{v}_{k,j} \Phi _k(\theta _1^l) \Psi _j(\theta _2^h).\)

Test 3. Mean-field one-dimensional case. a Mean and b standard deviation over time for the \({\mathcal {H}}_\infty \) control in (2.8), with parameters \(\bar{p}= 1,\ \nu = 0.01, \ \rho _1 \sim {\mathcal {U}}(-10,10), \ \rho _2\sim {\mathcal {U}}(-25,25)\)

Test 3. Mean-field one-dimensional case. a Mean and b standard deviation over time for the averaged control in (2.10), with parameters \(\bar{p}= 1, \ \nu = 0.01, \ \rho _1\sim {\mathcal {U}}(-10,10), \ \rho _2\sim {\mathcal {U}}(-25,25)\)

We consider the same parameters as in Test 1 for the one dimensional microscopic case, where \(\theta _1, \theta _2\) are uncertainties respectively uniform distributions \({\mathcal {U}}(-10,10)\) and \({\mathcal {U}}(-25,25)\). Hence we approximate the integrals in (4.9) using a Legendre-Gauss quadrature rules with \(L = 40\) quadrature points. Figures 3 and 4 show a consistent behavior with the microscopic case in the right plot of Fig. 2 where we used exactly the same parameters, in particular we observe that less dispersion of the density for control of type (2.8).

4.2.3 Test 3: Two-Dimensional Microscopic Consensus Dynamics

In this section we investigate the two-dimensional microscopic case, observe that unlike the previous tests, here we numerically present the case of an unbounded Gaussian distribution \(\rho _1,\) added to a uniform one \(\rho _2\). We take \(N=100\) agents, and an initial configuration uniformly distributed on a 2D disc, as shown in Fig. 5. 2D means and confidence regions of the two noisy dynamics can be seen in Fig. 6, for different values of the penalization factor \(\nu \) and different distributions \(\rho _1, \ \rho _2\).

We recall that, for the control u in Eq. (2.8), the size of the transfer function \(\hat{G}\) related to the state-space system (3.1) in terms of the \({\mathcal {H}}_\infty \) signal norm is

From Theorem 3.2 we know that the \({\mathcal {H}}_\infty \) control u is robust with a constant \(\gamma > \frac{1}{c_N}\), \(c_N>0\). We compute the value \(c_N\) for the two cases in Fig. 6, and we have \(c_N = 14.29\) for a penalization factor \(\nu =0.01\), while \(c_N = 4.55\) for \(\nu = 0.1\). As expected, we observe smaller regions for a smaller value of \(\nu \), interpreted as the control cost.

5 Conclusions

The introduction of uncertainties in multiagent systems is of paramount importance for the description of realistic phenomena. Here we focused on the mathematical modelling and control of collective dynamics with random inputs and we investigated the robustness of controls proposing estimates based on \({\mathcal {H}}_\infty \) theory in the linear setting. Reformulating the control problem as a robust \({\mathcal {H}}_\infty \) control problem, we derived sufficient conditions in terms of linear matrix inequalities (LMIs) to ensure the control performance, independently of the type of random inputs. Moreover, a robustness analysis is provided also in a mean-field framework, showing consistent results with the microscopic scale. Different numerical tests were proposed to compare the \({\mathcal {H}}_\infty \) control with control synthesized minimizing the expectation of a function with respect to the random inputs. The numerical methods here developed make use of the stochastic Galerkin (SG) expansion for the microscopic dynamics while in the mean-field case we combine an SG expansion in the random space with a Monte Carlo method in the physical variables. The numerical experiments show that both controls are capable to drive the average particle trajectories towards a consensus state considering multiple sources of randomness and in different dimensions. We further observe that, in the \({\mathcal {H}}_\infty \) setting, the variance is stabilized over time, this is not surprising since the \({\mathcal {H}}_\infty \) control accounts for the random state in a feedback form, whereas in the noiseless control setting the uncertainty is averaged out. Nonetheless, these results confirm the goodness of the estimates for the control robustness for the uncertain dynamics. Further analysis is needed to extend these results in the \({\mathcal {H}}_\infty \) setting to non-linear dynamics with uncertainities. This can be studied for example introducing the so-called Hamilton-Jacobi-Isaacs equation, whose solution can be extremely challenging due to the high-dimensionality of multi-agent systems.

References

Albi, G., Bellomo, N., Fermo, L., Ha, S.-Y., Kim, J., Pareschi, L., Poyato, D., Soler, J.: Vehicular traffic, crowds, and swarms: from kinetic theory and multiscale methods to applications and research perspectives. Math. Models Methods Appl. Sci. 29(10), 1901–2005 (2019)

Albi, G., Bicego, S., Kalise, D.: Gradient-augmented supervised learning of optimal feedback laws using state-dependent Riccati equations. IEEE Control Syst. Lett. 6, 836–841 (2021)

Albi, G., Herty, M., Kalise, D., Segala, C.: Moment-driven predictive control of mean-field collective dynamics. SIAM J. Control Optim. 60(2), 814–841 (2022)

Albi, G., Pareschi, L., Zanella, M.: Uncertainty quantification in control problems for flocking models. Math. Probl. Eng. 2015, 04 (2015)

Ballerini, M., Cabibbo, N., Candelier, R., Cavagna, A., Cisbani, E., Giardina, I., Orlandi, A., Parisi, G., Procaccini, A., Viale, M., et al.: Empirical investigation of starling flocks: a benchmark study in collective animal behaviour. Anim. Behav. 76(1), 201–215 (2008)

Başar, T., Bernhard, P.: H-Infinity Optimal Control and Related Minimax Design Problems: A Dynamic Game Approach. Springer, New York (2008)

Bellomo, N., Soler, J.: On the mathematical theory of the dynamics of swarms viewed as complex systems. Math. Models Methods Appl. Sci. 22(suppl. 1), 1140006, 29, (2012)

Bongini, M., Fornasier, M., Hansen, M., Maggioni, M.: Inferring interaction rules from observations of evolutive systems i: the variational approach. Math. Models Methods Appl. Sci. 27(05), 909–951 (2017)

Boyd, S., El Ghaoui, L., Feron, E., Balakrishnan, V.: Linear Matrix Inequalities in System and Control Theory. SIAM, Philadelphia (1994)

Carrillo, J.A., Choi, Y.P., Hauray, M.: The derivation of swarming models: mean-field limit and Wasserstein distances. In: Collective Dynamics from Bacteria to Crowds, pp. 1–46. Springer, New York (2014)

Carrillo, J.A., D’Orsogna, M.R., Panferov, V.: Double milling in self-propelled swarms from kinetic theory. Kinet. Relat. Models 2(2), 363–378 (2009)

Carrillo, J.A., Fornasier, M., Toscani, G., Vecil, F.: Particle, kinetic, and hydrodynamic models of swarming. In: Naldi, G., Pareschi, L., Toscani, G. (eds.) Mathematical Modeling of Collective Behavior in Socio-Economic and Life Sciences, pp. 297–336. Springer, New York (2010)

Carrillo, J.A., Pareschi, L., Zanella, M.: Particle based gPC methods for mean-field models of swarming with uncertainty. Commun. Comput. Phys. 25(2), 508–531 (2018)

Choi, Y.-P., Kalise, D., Peszek, J., Peters, A.A.: A collisionless singular Cucker-Smale model with decentralized formation control. SIAM J. Appl. Dyn. Syst. 18(4), 1954–1981 (2019)

Cordier, S., Pareschi, L., Toscani, G.: On a kinetic model for a simple market economy. J. Stat. Phys. 120(1–2), 253–277 (2005)

Cristiani, E., Piccoli, B., Tosin, A.: Multiscale modeling of pedestrian dynamics. MS &A, vol. 12. Model. Simul. Appl. Springer, Cham (2014)

Cucker, F., Smale, S.: Emergent behavior in flocks. IEEE Trans. Autom. Control 52(5), 852–862 (2007)

Degond, P., Göttlich, S., Herty, M., Klar, A.: A network model for supply chains with multiple policies. Multiscale Model. Simul. 6(3), 820–837 (2007)

Dimarco, G., Pareschi, L., Zanella, M.: Uncertainty quantification for kinetic models in socio–economic and life sciences. In: Uncertainty Quantification for Hyperbolic and Kinetic Equations, pp. 151–191. Springer (2017)

Duan, G.-R., Yu, H.-H.: LMIs in Control Systems: Analysis. Design and Applications. CRC Press, Boca Raton (2013)

Dullerud, G.E., Paganini, F.: A Course in Robust Control Theory: A Convex Approach, vol. 36. Springer, New York (2013)

Dyer, J.R., Johansson, A., Helbing, D., Couzin, I.D., Krause, J.: Leadership, consensus decision making and collective behaviour in humans. Philos. Trans. R. Soc. B 364(1518), 781–789 (2009)

D’Orsogna, M.R., Chuang, Y.-L., Bertozzi, A.L., Chayes, L.S.: Self-propelled particles with soft-core interactions: patterns, stability, and collapse. Phys. Rev. Lett. 96(10), 104302 (2006)

Estrada-Rodriguez, G., Gimperlein, H.: Interacting particles with Lévy strategies: limits of transport equations for swarm robotic systems. SIAM J. Appl. Math. 80(1), 476–498 (2020)

Franklin, G.F., Powell, J.D., Emami-Naeini, A., Powell, J.D.: Feedback Control of Dynamic Systems, vol. 4. Prentice Hall, Upper Saddle River (2002)

Freudenthaler, G., Meurer, T.: PDE-based multi-agent formation control using flatness and backstepping: analysis, design and robot experiments. Automatica 115, 108897, 13 (2020)

Garnier, J., Papanicolaou, G., Yang, T.-W.: Consensus convergence with stochastic effects. Vietnam J. Math. 45(1–2), 51–75 (2017)

Goddard, B.D., Gooding, B., Short, H., Pavliotis, G.: Noisy bounded confidence models for opinion dynamics: the effect of boundary conditions on phase transitions. IMA J. Appl. Math. 87(1), 80–110 (2022)

Gómez-Serrano, J., Graham, C., Le Boudec, J.-Y.: The bounded confidence model of opinion dynamics. Math. Models Methods Appl. Sci. 22(2), 1150007, 46 (2012)

Ha, S.-Y., Tadmor, E.: From particle to kinetic and hydrodynamic descriptions of flocking. Kinet. Relat. Models 1(3), 415–435 (2008)

Han, Y., Hegyi, A., Yuan, Y., Hoogendoorn, S., Papageorgiou, M., Roncoli, C.: Resolving freeway jam waves by discrete first-order model-based predictive control of variable speed limits. Transport. Res. Part C 77, 405–420 (2017)

Herty, M., Pareschi, L.: Fokker-Planck asymptotics for traffic flow models. Kinet. Relat. Models 3(1), 165–179 (2010)

Herty, M., Pareschi, L., Steffensen, S.: Mean-field control and Riccati equations. Netw. Heterog. Media 10(3), 699–715 (2015)

Herty, M., Ringhofer, C.: Feedback controls for continuous priority models in supply chain management. Comput. Methods Appl. Math. 11(2), 206–213 (2011)

Hu, J., Jin, S.: Uncertainty quantification for kinetic equations. In: Uncertainty Quantification for Hyperbolic and Kinetic Equations, pp. 93–229. Springer, New York (2017)

Hu, J., Jin, S., Xiu, D.: A stochastic Galerkin method for Hamilton-Jacobi equations with uncertainty. SIAM J. Sci. Comput. 37(5), A2246–A2269 (2015)

Katz, Y., Tunstrøm, K., Ioannou, C.C., Huepe, C., Couzin, I.D.: Inferring the structure and dynamics of interactions in schooling fish. Proc. Natl. Acad. Sci. U.S.A. 108(46), 18720–18725 (2011)

Khalil, I., Doyle, J., Glover, K.: Robust and Optimal Control. Prentice Hall, New Jersey (1996)

Le Maître, O., Knio, O.M.: Spectral Methods for Uncertainty Quantification: With Applications to Computational Fluid Dynamics. Springer, New York (2010)

Lin, P., Jia, Y.: Robust H-infinity consensus analysis of a class of second-order multi-agent systems with uncertainty. IET Control Theory Appl. 4(3), 487–498 (2010)

Liu, J., Zhang, Y., Liu, H., Yu, Y., Sun, C.: Robust event-triggered control of second-order disturbed leader-follower mass: a nonsingular finite-time consensus approach. Int. J. Robust Nonlinear Control 29(13), 4298–4314 (2019)

Liu, Y., Jia, Y.: Robust H-infinity consensus control of uncertain multi-agent systems with time delays. Int. J. Control Autom. Syst. 9, 12 (2011)

Luo, Y., Zhu, W.: Event-triggered h-infinity finite-time consensus control for nonlinear second-order multi-agent systems with disturbances. Adv. Differ. Equ. 2021(1), 1–19 (2021)

Mo, L.P., Zhang, H.Y., Hu, H.Y.: Finite-time H-infinity consensus of multi-agent systems with a leader. In: Applied Mechanics and Materials, Vol. 241, pp. 1608–1613. Trans Tech Publ (2013)

Motsch, S., Tadmor, E.: Heterophilious dynamics enhances consensus. SIAM Rev. 56(4), 577–621 (2014)

Oh, K.-K., Park, M.-C., Ahn, H.-S.: A survey of multi-agent formation control. Automatica 53, 424–440 (2015)

Peet, M.M.: Lecture Notes in LMI Methods in Optimal and Robust Control. Arizona State University, Tempe (2020)

Peters, A.A., Middleton, R.H., Mason, O.: Leader tracking in homogeneous vehicle platoons with broadcast delays. Automatica 50(1), 64–74 (2014)

Stern, R.E., Cui, S., Delle Monache, M.L., Bhadani, R., Bunting, M., Churchill, M., Hamilton, N., Pohlmann, H., Wu, F., Piccoli, B., et al.: Dissipation of stop-and-go waves via control of autonomous vehicles: field experiments. Transp. Res. Part C 89, 205–221 (2018)

Toscani, G.: Kinetic models of opinion formation. Commun. Math. Sci. 4(3), 481–496 (2006)

Tosin, A., Zanella, M.: Kinetic-controlled hydrodynamics for traffic models with driver-assist vehicles. Multiscale Model. Simul. 17(2), 716–749 (2019)

Tosin, A., Zanella, M.: Uncertainty damping in kinetic traffic models by driver-assist controls. arXiv preprint arXiv:1904.00257 (2019)

Willems, J.: Least squares stationary optimal control and the algebraic Riccati equation. IEEE Trans. Autom. Control 16(6), 621–634 (1971)

Xiu, D.: Numerical Methods for Stochastic Computations. Princeton University Press, Princeton (2010)

Yakubovich, V.A.: Solution of certain matrix inequalities encountered in non-linear control theory. In: Doklady Akademii Nauk, Vol. 156, pp. 278–281. Russian Academy of Sciences (1964)

Yakubovich, V.A.: The method of matrix inequalities in the stability theory of nonlinear control systems, i, ii, iii. Autom. Remote Control 25(4), 905–917 (1967)

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

MH and CS thank the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) for the financial support through 320021702/GRK2326, 333849990/IRTG-2379, HE5386/18-1,19-2,22-1,23-1 and under Germany’s Excellence Strategy EXC-2023 Internet of Production 390621612. GA thanks the Italian Ministry of Instruction, University and Research (MIUR) to support this research with funds coming from PRIN Project 2017 (No. 2017KKJP4X entitled “Innovative numerical methods for evolutionary partial differential equations and applications”).

Appendices

Appendix A: Averaged Control

In this section we consider a control by minimizing the expectation of the cost functional (2.2) subject to the noisy model (2.1). Hence, we consider the expected value of the quadratic cost

where we introduce the matrices \(Q=R= \frac{1}{N} \text {Id}_N\). We claim that in this case an optimal feedback control is obtained as follows

where \({K} \in {\mathbb {R}}^{N \times N}\) and \({S} \in {\mathbb {R}}^{N \times Z}\) fulfill the Riccati matrix-equations

Theorem A.1

Assume matrices K and S have the following structures

Matrices K and S are defined by 2 and 1 elements respectively.

Then the \(i-th\) component of the control u is given by

where \({k}_d\) and \({k}_o\), after a scaling, turn out to be the same as in equations (2.7), while s satisfies

with \(\alpha (N) = \frac{N-1}{N}\).

Proof

From Proposition 2.1 of [3], we can prove that \({k}_d\), \({k}_o\) satisfy equations (2.7). Given the structure of the matrices S, K and B, and solving the second equation in (A.2) componentwise leads to the following identities:

We can further simplify the Riccati-matrix system (A.2) using the dependency of coefficients \(a_d,a_o\). And since we are interested in the dynamics of large number of agents, we introduce the following scalings

For the sake of simplicity, we keep the same notation also for the scaled variables \(s, k_d, k_o\). Under this scaling, the second equation in (A.2) reads

and the Riccati feedback law (A.1) is given by Eq. (A.3) The coefficients \(s(t), k_d(t), k_o(t)\), have to be determined integrating backwards in time. \(\square \)

Remark A.1

In the infinite horizon case and without discount factor, (A.4) reduces to

hence s = \(\nu \).

Appendix B: \({\mathcal {H}}_\infty \) Control Setting

Define a state-space system \(G: L^2 \rightarrow L^2\) by \(y = G\theta \), with \(\theta \) a random input as defined in (4.1), if

where v(t) is the system state, and y(t) is the observed output. It is proved (see e.g. [9, 21]) that for any stable state-space system, G, there exists a frequency transfer function \(\hat{G} \in R{\mathcal {H}}_\infty \) such that

where s is a complex number and \(R{\mathcal {H}}_\infty \) is the set of proper rational functions with no poles in the closed right half-plane, in particular \(R{\mathcal {H}}_\infty = R \cap {\mathcal {H}}_\infty \), where R is the space of rational functions and \({\mathcal {H}}_\infty \) is a signal space of “transfer functions” for linear time-invariant systems, we refer to [9, 20, 25] for further theoretical details.

State space \({\widetilde{A}},{\widetilde{B}},C,D\) or the transfer function is a representation of a system and these formats uses matrices or complex-valued functions (a signal) to parameterize the representation. The signal norm \(\Vert \cdot \Vert _{{\mathcal {H}}_\infty }\) measures the size of the transfer function in a certain sense and the \({\mathcal {H}}_\infty \)-optimal control problem consists of finding a stabilizing controller \(u = K y\) which minimizes the cost function

The direct minimization of the cost \(\Vert \hat{G} \Vert _{{\mathcal {H}}_\infty }\) turns out to be a very hard problem, and it is therefore not feasible to tackle it directly. Instead, it is much easier to construct conditions that state whether there exists a stabilizing controller which achieves the norm bound

for a given \(\gamma > 0\).

The history of LMIs in the analysis of dynamical systems begins in about 1890, when Lyapunov published his seminal work introducing what we now call Lyapunov theory. One of the major next major contributions that we use in this work came in the early 1960s, when Yakubovich, Popov, Kalman, and other researchers succeeded in reducing the solution of the LMIs to what we now call the positive-real (PR) lemma, that shows how LMIs can be used to constrain the eigenvalues of a system [55, 56].

Lemma B.1

Given the frequency transfer function \(\hat{G}\), the following are equivalent:

-

\(\Vert \hat{G} \Vert _{{\mathcal {H}}_\infty } \le \gamma \).

-

\(\exists \) a positive definite square matrix of order N, \(X> 0\) s.t. (B.2) holds.

$$\begin{aligned} \begin{bmatrix} {\widetilde{A}}^\top X + X{\widetilde{A}} &{} X{\widetilde{B}} \\ {\widetilde{B}}^\top X &{} -\gamma I_Z \end{bmatrix} + \frac{1}{\gamma } \begin{bmatrix} C^\top \\ D^\top \end{bmatrix} \begin{bmatrix} C&D \end{bmatrix} < 0. \end{aligned}$$(B.2)

In [53] the following Lemma with equivalent characterization through a Riccati equation as been established:

Lemma B.2

The following are equivalent:

-

\(\exists X> 0\) s.t. (B.2) holds.

-

\(\exists X> 0\) s.t. (B.3) holds.

$$\begin{aligned}{} & {} {\widetilde{A}}^\top X + X{\widetilde{A}} - (X{\widetilde{B}} + C^\top D) (-\gamma I_Z + \frac{1}{\gamma } D^\top D)^{-1} ({\widetilde{B}}^\top X + D^\top C) + \frac{1}{\gamma } C^\top C \nonumber \\{} & {} \quad = 0. \end{aligned}$$(B.3)

Proof

The structure of of Eq. (B.2) is

this system can be solved using the Schur-complement theory. Provided that \({\widehat{D}}^{-1}\) exists, we have

Hence, provided that exists X such that (B.3) has a solution, then for all \( \xi \)

Further, (B.3) is the Schur-complement of

Hence for \(\eta = -\hat{D}\hat{B}^\top \xi \) and \(\forall \xi \), we have that

For a general vector \(\left[ \xi \quad \varrho \right] ^{\top }\), we compute

and then

for \(\gamma \) sufficiently large. \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Albi, G., Herty, M. & Segala, C. Robust Feedback Stabilization of Interacting Multi-agent Systems Under Uncertainty. Appl Math Optim 89, 16 (2024). https://doi.org/10.1007/s00245-023-10078-2

Accepted:

Published:

DOI: https://doi.org/10.1007/s00245-023-10078-2

Keywords

- Agent-based dynamics

- Mean-field equations

- Uncertainty quantification

- Stochastic Galerkin

- \({\mathcal {H}}_\infty \) control