Abstract

We study epidemic models in which the infectivity of each individual is a random function of the infection age (the elapsed time since infection). To describe the epidemic dynamics, we use a stochastic process that tracks, at each time, the number of individuals that have been infected for at most a given amount of time, together with the aggregate infectivity process. We establish the functional law of large numbers (FLLN) for the stochastic processes that describe the epidemic dynamics. The limits are described by a set of deterministic Volterra-type integral equations, which admit a further characterization by PDEs under some regularity conditions. The solutions of these PDEs are characterized by boundary conditions given by a system of Volterra equations. We also characterize the equilibrium points of the PDEs for the SIS model with infection-age dependent infectivity. To establish the FLLNs, we employ a new criterion for weak convergence of two-parameter processes, together with representations of the relevant processes via Poisson random measures.



1 Introduction

Kermack and McKendrick pioneered the use of PDE models to describe epidemic dynamics with infection-age dependent (variable) infectivity in 1932 [19]. Their model assumes that the infectious periods have a general distribution with a density, modeled through an infection-age dependent recovery rate, that infectious individuals have an infection-age dependent infectivity, and that recovered individuals have a recovery-age dependent susceptibility. In the present paper, we do not consider possible loss of immunity, and we defer the study of variable susceptibility to a work in preparation. We mainly consider the SIR model (which, as explained below, can include an exposed period) and the SIS model. This work continues our first work on non-Markov epidemic models [25] and our work on varying infectivity models [10]; see also [24]. In those papers, we showed that certain deterministic Volterra-type integral equations are Functional Law of Large Numbers (FLLN) limits of suitable individual-based stochastic models. An important feature of our stochastic models is that they are non-Markov (since the infectious duration need not be exponentially distributed); as a result, the limiting deterministic models are equations with memory. Note that as early as 1927, Kermack and McKendrick introduced in their seminal paper [18] an SIR model with both infection-age dependent infectivity and infection-age dependent recovery rate, the latter allowing the infectious period to have an arbitrary absolutely continuous distribution (the infection-age dependent recovery rate is the hazard rate function of the infectious period). 
One part of that paper is devoted to the simpler case of constant rates, and apparently most of the later literature on epidemic models has concentrated on this special case, which leads to simpler ODE models (the corresponding stochastic models being Markov), at the price of being less realistic. For example, recent studies of Covid-19 [11, 30] indicate that using ODE models can lead to an underestimation of the basic reproduction number \(R_0\).

In this paper, we go back to the original model of Kermack and McKendrick [18], with two new aspects. First, as in our previous publications, we obtain the deterministic model as a law of large numbers limit of stochastic models; second, we distribute the infected individuals at time t according to their infection age, and establish a PDE for the “density of individuals” infected at time t with infection age x.

In our stochastic epidemic model, each individual is associated with a random infectivity, which varies as a function of the age of infection (elapsed time since infection). The random infectivity functions, effective during the infected period, are assumed to be i.i.d. across individuals, and they also determine the infectious period. The infectivity function is assumed to be càdlàg with a given (finite) number of discontinuities, and bounded above by a deterministic constant. In particular, the law of the infectious period can be completely arbitrary. Our modeling approach allows the random infectivity functions to vanish during an initial period of time, corresponding to the exposed period. Thus our model generalizes both the classical SIR and SEIR models. To describe the epidemic dynamics, we use a (two-parameter or measure-valued) stochastic process that tracks, at each time t, the number of individuals that have been infected for a duration less than or equal to x, together with an associated aggregate infectivity process, which at each time t sums the infectivities of all currently infected individuals. From these processes, we can describe the cumulative infection process, the total number of infected individuals and the number of recovered individuals at each time. We use similar processes to describe the epidemic dynamics of the SIS model with infection-age dependent infectivity.

In the asymptotic regime of a large population (i.e., as the total population size N tends to infinity), we establish the FLLN for the epidemic dynamics. The limits are characterized by a set of deterministic Volterra-type integral equations (Theorem 2.1). Under certain regularity conditions, the density function of the two-parameter (calendar time and infection age) limit process is described by a one-dimensional PDE (Proposition 3.1, in the case where the distribution of the infectious period is absolutely continuous), whose solution is characterized by a boundary condition satisfying a one-dimensional Volterra-type integral equation. The aggregate infectivity limit process is given by an integral of the average infectivity function with respect to the limiting two-parameter infectious process (Corollary 3.1; see also Remark 3.4). For the classical SIR model, we recover the well-known linear PDE first proposed by Kermack and McKendrick [19]. We further derive the PDE model when the distribution of the infectious period need not be absolutely continuous (Proposition 3.2; see also Corollary 3.3, where the infectious periods are deterministic). These PDE models are new in the epidemiology literature. For the SIS model, we also describe the limiting epidemic dynamics and the PDE representations, and derive the equilibrium quantities associated with the PDE and the total count limit (assuming convergence to the equilibria).

1.1 Literature Review

Non-Markov stochastic epidemic models lead (via the FLLN) to deterministic models, which are either low-dimensional evolution equations with memory (i.e., Volterra-type integral equations) or coupled ODE/PDE models, where the two variables are time and age of infection (time since infection). The first paper of Kermack and McKendrick [18] adopts the first point of view, and the next two [19, 20] the second one. In our recent work on this topic [10, 25], we adopted the first description. The goal of the present paper is to show that, in the limit of a large population, our stochastic individual-based model with infection-age dependent infectivity and recovery rate also converges to a limiting system of PDE/ODEs.

While the general model from [18] was largely neglected until rather recently, with most of the literature concentrating on the particular case of constant rates, there have been papers since the 1970s considering infection-age dependent epidemic models; see in particular [14]. More recently, several papers have introduced coupled PDE/ODE models for studying infection-age dependent infectivity and recovery rate; see in particular [6, 16, 22, 28, 29] and Chapter 13 in [23]. In [8], the authors consider a stochastic epidemic model with contact tracing, tracking for each individual the infection duration since detection, and use a measure-valued Markov process to describe the epidemic dynamics. They prove an FLLN in a large population with a PDE limit, and also an FCLT with an SPDE limit process. Since the beginning of the Covid-19 pandemic, a huge number of papers have been produced, with various models of the propagation of this disease. Most of them use ODE models, but a few, notably [9, 12, 13, 17], consider infection-age dependent infectivity, and possibly recovery rate. The last two derive the ODE/PDE model as a law of large numbers limit of stochastic individual-based models. The article [12] considers a branching process approximation of the early phase of an epidemic, and its modeling of the dependence of the infection rate on the age of infection is less general than in our model. Recently, the authors of [9] studied contact tracing in an individual-based epidemic model via an “infection graph” of the population, and proved the local convergence of the random graph to a Poisson marked tree, as well as a Kermack and McKendrick type PDE limit for the dynamics obtained by tracking the infection age.

Note also that one way many authors have chosen to improve the realism of ODE models is to increase the number of compartments. For instance, dividing the infectious compartment into subcompartments, each one corresponding to a different infection rate, is a way to introduce a (piecewise constant) infection-age dependent infectivity. In a sense, this means approximating a non-Markov process of a given dimension by a higher-dimensional Markov process, or a system of differential equations with memory by a higher-dimensional system of ODEs. In the present paper, we show that the system of integral equations with memory introduced in our earlier work [25] can be replaced by an ODE/PDE system, i.e., an infinite-dimensional differential equation. At the level of the stochastic finite-population model, this means replacing a finite-dimensional non-Markov process by a high-dimensional Markov process (whose dimension is bounded by the total population size N, which tends to infinity in our asymptotic regime). See Remarks 3.2 and 3.7 below.

We also mention the relevant work on queueing systems in which the elapsed service times are tracked using two-parameter or measure-valued processes. The most relevant to us are the infinite-server (IS) queueing models studied in [1, 26, 27], where the FLLN and FCLT are established for two-parameter processes tracking elapsed and residual service times. However, the proof techniques we employ in this paper are very different from those of these papers. Here we exploit representations via Poisson random measures and use a new weak convergence criterion (Theorem 5.1). In addition, despite similarities with the IS queueing models, the stochastic epidemic models have an arrival (infection) process that depends on the state of the system. As a consequence, the FLLN limits here lead to PDEs, while those of the IS queueing models do not.

1.2 Organization of the Paper

The paper is organized as follows. In Sect. 2, we describe the stochastic epidemic model with infection-age dependent infectivity, and state the FLLN. In Sect. 3, we present the PDE models from the FLLN limits, and also characterize the solution properties of the PDEs. The limits and PDE for the SIS model are presented in Sect. 4, which also considers the equilibrium behavior. In Sect. 5, we prove the FLLN. The Appendix gives the proof of the convergence criterion in Theorem 5.1.

1.3 Notations

All random variables and processes are defined on a common complete probability space \((\Omega , {{\mathcal {F}}}, {\mathbb {P}})\). The notation \(\Rightarrow \) means convergence in distribution. We use \({{\textbf{1}}}_{\{\cdot \}}\) for the indicator function, and occasionally use \({{\textbf{1}}}\{\cdot \}\) for better readability. Throughout the paper, \({{\mathbb {N}}}\) denotes the set of natural numbers, and \({\mathbb {R}}^k ({\mathbb {R}}^k_+)\) denotes the space of k-dimensional vectors with real (nonnegative) coordinates, with \({\mathbb {R}}({\mathbb {R}}_+)\) for \(k=1\). For \(x,y \in {\mathbb {R}}\), we denote \(x\wedge y = \min \{x,y\}\) and \(x\vee y = \max \{x,y\}\). Let \(D=D({\mathbb {R}}_+;{\mathbb {R}})\) denote the space of \({\mathbb {R}}\)-valued càdlàg functions defined on \({\mathbb {R}}_+\). Throughout the paper, convergence in D means convergence in the Skorohod \(J_1\) topology; see Chapter 3 of [4]. Also, \(D^k\) stands for the k-fold product equipped with the product topology. Let C be the subset of D consisting of continuous functions. Let \(C^1\) consist of all differentiable functions whose derivative is continuous. Let \(D_\uparrow \) denote the set of increasing functions in D. Let \(D_D= D({\mathbb {R}}_+; D({\mathbb {R}}_+;{\mathbb {R}}))\) be the D-valued D space; convergence in \(D_D\) is understood with both D spaces endowed with the Skorohod \(J_1\) topology. The space \(C_C= C({\mathbb {R}}_+; C({\mathbb {R}}_+;{\mathbb {R}}))\) can be identified with \(C({\mathbb {R}}_+^2; {\mathbb {R}})\). Let \(C_\uparrow ({\mathbb {R}}_+^2;{\mathbb {R}}_+)\) denote the space of continuous functions from \({\mathbb {R}}_+^2\) into \({\mathbb {R}}_+\) which are increasing as a function of their second variable. For any increasing càdlàg function \(F(\cdot ): {\mathbb {R}}_+\rightarrow {\mathbb {R}}_+\), abusing notation, we write F(dx) for the positive (finite) measure on \({\mathbb {R}}_+\) whose distribution function is F. 
For any \({\mathbb {R}}\)-valued càdlàg function \(\phi (\cdot )\) on \({\mathbb {R}}_+\), the integral \(\int _{a}^b \phi (x)F(dx)\) represents \(\int _{(a,b]} \phi (x) F(dx)\) for \(a<b\).

2 Model and FLLN

2.1 Model Description

We consider an epidemic model in which the infectivity rate depends on the age of infection (that is, on how long the individual has been infected). Specifically, each individual i is associated with an infectivity process \(\lambda _i(\cdot )\), and we assume that these random functions are i.i.d. Let \(\eta _i= \inf \{t>0: \lambda _i(r) = 0, \, \forall r \ge t\}\) be the infectious period of the individual who gets infected at time \(\tau ^N_i\). The \(\eta _i\)’s are i.i.d., with cumulative distribution function (c.d.f.) F. Let \(F^c=1-F\).

Individuals are grouped into susceptible, infected and recovered ones. Let N be the population size, and let \(S^N(t)\), \(I^N(t)\) and \(R^N(t)\) denote the numbers of susceptible, infected and recovered individuals at time t. We have the balance equation \( N= S^N(t) + I^N(t) + R^N(t)\), \( t \ge 0. \) Assume that \(S^N(0)>0\), \(I^N(0)>0\) and \(R^N(0)=0\). Let \({\mathfrak {I}}^N(t,x)\) be the number of individuals infected at time t who have been infected for a duration less than or equal to x. Note that for each t, \(x\mapsto {\mathfrak {I}}^N(t,x)\) is nondecreasing; it describes the distribution of \(I^N(t)\) over the infection ages. Let \(A^N(t)\) be the cumulative number of newly infected individuals in (0, t], with infection times \(\{\tau ^N_i: i \in {{\mathbb {N}}}\}\).

Let \(\{\tau _{j,0}^N, j =1,\dots , I^N(0)\}\) be the times at which the individuals infected at time 0 became infected. Then \({\tilde{\tau }}_{j,0}^N = -\tau _{j,0}^N\), \( j =1,\dots , I^N(0)\), represent the amount of time that these initially infected individuals have been infected by time 0, that is, their ages of infection at time 0. Without loss of generality, we assume that \(0> \tau _{1,0}^N> \tau _{2,0}^N> \cdots >\tau _{I^N(0),0}^N\) (or equivalently \(0< {\tilde{\tau }}_{1,0}^N< {\tilde{\tau }}_{2,0}^N< \cdots < {\tilde{\tau }}_{I^N(0),0}^N\)). Set \({\tilde{\tau }}_{0,0}^N=0\). We define \({\mathfrak {I}}^N(0,x) = \max \{j \ge 0: {\tilde{\tau }}_{j,0}^N \le x\}\), the number of initially infected individuals that have been infected for a duration less than or equal to x at time 0. Assume that there exists \(0 \le {\bar{x}}< \infty \) such that \(I^N(0) = {\mathfrak {I}}^{N}(0, {\bar{x}})\) a.s.

Each initially infected individual \(j=1,\dots , I^N(0)\) is associated with an infectivity process \(\lambda _j^0(\cdot )\), and we assume that these processes are also i.i.d., with the same law as \(\lambda _i(\cdot )\). This is natural: the disease is the same, so the infectivities of initially and newly infected individuals with the same infection age should have the same law. The infectivity processes take effect at the epochs of infection. For each j, let \(\eta ^0_j =\inf \{t>0: \lambda _j^0({\tilde{\tau }}_{j,0}^N+r)=0,\, \forall r \ge t\}\) be the remaining infectious period, which depends on the elapsed infection time \({\tilde{\tau }}_{j,0}^N\), but is independent of the elapsed infection times of the other initially infected individuals. In particular, the conditional distribution of \(\eta ^0_j\) given that \({\tilde{\tau }}_{j,0}^N=s>0\) is given by

\[ {\mathbb {P}}\big (\eta ^0_j > t \,\big |\, {\tilde{\tau }}_{j,0}^N = s\big ) = \frac{F^c(t+s)}{F^c(s)}, \quad t \ge 0. \]

Note that the \(\eta ^0_j\)’s are independent but not identically distributed.
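As a quick numerical check of the conditional law \({\mathbb {P}}(\eta ^0_j>t\,|\,{\tilde{\tau }}^N_{j,0}=s)=F^c(t+s)/F^c(s)\), the following minimal Python sketch assumes, purely for illustration, a Weibull distribution for \(\eta \) with hypothetical shape and scale values. The helper `residual_survival` (our name) evaluates \(F^c(t+s)/F^c(s)\), and `sample_remaining_weibull` (also our name) draws a remaining infectious period for an individual of infection age s by inverting that conditional survival function.

```python
import math
import random


def residual_survival(Fc, t, s):
    """P(eta0 > t | elapsed infection time s) = Fc(t + s) / Fc(s); requires Fc(s) > 0."""
    return Fc(t + s) / Fc(s)


def sample_remaining_weibull(s, shape, scale, rng=random):
    """Draw the remaining infectious period of an individual of infection age s,
    assuming eta ~ Weibull(shape, scale), i.e. Fc(x) = exp(-(x / scale) ** shape).

    Solving Fc(s + t) / Fc(s) = U for t gives an exact conditional sample."""
    u = 1.0 - rng.random()  # uniform on (0, 1], avoids log(0)
    total = scale * ((s / scale) ** shape - math.log(u)) ** (1.0 / shape)
    return max(0.0, total - s)  # guard against tiny negative round-off


if __name__ == "__main__":
    Fc = lambda x: math.exp(-((x / 3.0) ** 2))
    rng = random.Random(0)
    draws = [sample_remaining_weibull(1.0, 2.0, 3.0, rng) for _ in range(20_000)]
    empirical = sum(d > 1.0 for d in draws) / len(draws)
    print(empirical, residual_survival(Fc, 1.0, 1.0))
```

For an exponential \(\eta \), `residual_survival` does not depend on s (memorylessness), which is why the elapsed infection times of the initially infected individuals only matter in the non-Markov case.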

For an initially infected individual \(j=1,\dots ,I^N(0)\), the infection age is given by \({\tilde{\tau }}^N_{j,0}+ t\) for \(0 \le t \le \eta ^0_j\), during the remaining infectious period. For a newly infected individual i, the infection age is given by \(t- \tau ^N_i\), for \( \tau ^N_i \le t \le \tau ^N_i +\eta _i\) during the infectious period. Note that \(\lambda _i(\cdot )\) and \(\lambda ^0_j(\cdot )\) are equal to zero on \({\mathbb {R}}_{-}\).

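The aggregate infectivity process at time t is given by

\[ {\mathcal {I}}^N(t) = \sum _{j=1}^{I^N(0)} \lambda ^0_j\big ({\tilde{\tau }}^N_{j,0}+t\big ) + \sum _{i=1}^{A^N(t)} \lambda _i\big (t-\tau ^N_i\big ). \]
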
(Note that the notation \({\mathfrak {I}}^N\) was used for the infectivity process in [10, 24].) The instantaneous infection rate at time t can be written as

\[ \Upsilon ^N(t) = \frac{S^N(t)}{N}\, {\mathcal {I}}^N(t). \]

The counting process of newly infected individuals \(A^N(t)\) can be written as

\[ A^N(t) = \int _0^t \int _0^\infty {{\textbf{1}}}_{\{u \le \Upsilon ^N(r^-)\}}\, Q(dr, du), \]

where Q is a standard Poisson random measure on \({\mathbb {R}}^2_+\) (see, e.g., [7, Chapter VI]).

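Among the initially infected individuals, the number of individuals who have been infected for a duration less than or equal to x at time t is equal to

\[ {\mathfrak {I}}^N_0(t,x) = \sum _{j=1}^{I^N(0)} {{\textbf{1}}}_{\{\eta ^0_j > t\}}\, {{\textbf{1}}}_{\{{\tilde{\tau }}^N_{j,0} + t \le x\}}. \]
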
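Recall the age limit \({\bar{x}}\) of the initially infected individuals at time zero. Thus, the number of initially infected individuals that remain infected at time t can be written as

\[ I^N_0(t) = {\mathfrak {I}}^N_0(t, t+{\bar{x}}) = \sum _{j=1}^{I^N(0)} {{\textbf{1}}}_{\{\eta ^0_j > t\}}. \]
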
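Among the newly infected individuals, the number of individuals who have been infected for a duration less than or equal to x at time t is equal to

\[ {\mathfrak {I}}^N_1(t,x) = \sum _{i=1}^{A^N(t)} {{\textbf{1}}}_{\{\tau ^N_i + \eta _i > t\}}\, {{\textbf{1}}}_{\{t - \tau ^N_i \le x\}}. \]
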
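Thus, the number of newly infected individuals that remain infected at time t can be written as

\[ I^N_1(t) = {\mathfrak {I}}^N_1(t,t) = \sum _{i=1}^{A^N(t)} {{\textbf{1}}}_{\{\tau ^N_i + \eta _i > t\}}. \]
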
We also have the total number of individuals infected at time t that have been infected for a duration which is less than or equal to x:

\[ {\mathfrak {I}}^N(t,x) = {\mathfrak {I}}^N_0(t,x) + {\mathfrak {I}}^N_1(t,x). \]

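Note that for each t, the support of the measure \({\mathfrak {I}}^N_0(t,dx)\) is included in \([0, t+{\bar{x}}]\) and the support of the measure \({\mathfrak {I}}^N_1(t, dx)\) is included in [0, t]. Thus

\[ I^N(t) = {\mathfrak {I}}^N(t,\infty ) = {\mathfrak {I}}^N_0(t,t+{\bar{x}}) + {\mathfrak {I}}^N_1(t,t). \]
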
Here we occasionally use \(\infty \) in the second component for convenience with the understanding that \({\mathfrak {I}}^N_0(t,x) = {\mathfrak {I}}^N_0(t,t+{\bar{x}})\) for \(x>t+{\bar{x}}\) and \({\mathfrak {I}}^N_1(t,x) ={\mathfrak {I}}^N_1(t,t) \) for \(x>t\).

We also have for \(t\ge 0\),

\[ S^N(t) = S^N(0) - A^N(t), \qquad R^N(t) = N - S^N(t) - I^N(t). \]

We remark that the sample paths of \({\mathfrak {I}}^N(t,x)\) belong to the space \(D_D\), denoting \( D({\mathbb {R}}_+;D({\mathbb {R}}_+;{\mathbb {R}}))\), the D-valued D space, but not in the space \(D({\mathbb {R}}_+^2; {\mathbb {R}})\). We prove the weak convergence in the space \(D_D\) where both D spaces are endowed with the Skorohod \(J_1\) topology. Note that the space \(D({\mathbb {R}}_+^2; {\mathbb {R}})\) is a strict subspace of \(D_D\), although they are equivalent in the continuous cases, that is, \(C({\mathbb {R}}_+^2; {\mathbb {R}}) = C_C\). See more discussions on these spaces in [2, 3, 26, 27].
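The dynamics above can be simulated directly. The following is a minimal Python sketch, not the authors' code: instead of sampling the Poisson random measure Q on all of \({\mathbb {R}}^2_+\), it uses the equivalent thinning construction (candidate points at a dominating rate, each accepted with probability \(\Upsilon ^N(t)/\text {bound}\)), which is valid here because every \(\lambda _i\le \lambda ^*\) and infectivities vanish after \(\eta _i\). The SEIR-type infectivity \(\lambda _i(a)=\lambda ^*{{\textbf{1}}}_{\{\xi _i\le a<\eta _i\}}\) with exponential exposed and infectious durations, the uniform initial ages on \([0,{\bar{x}}]\), the parameter values and all function names are hypothetical choices made for illustration.

```python
import bisect
import random


def make_infectivity(rng, lam_star, mean_exposed, mean_infectious):
    """Hypothetical SEIR-type infectivity: 0 on [0, xi), lam_star on [xi, eta), 0 after eta."""
    xi = rng.expovariate(1.0 / mean_exposed)
    eta = xi + rng.expovariate(1.0 / mean_infectious)
    return (lambda a: lam_star if xi <= a < eta else 0.0), eta


def simulate(N, I0, T, xbar=1.0, lam_star=0.6, mean_exposed=2.0,
             mean_infectious=4.0, seed=0):
    """One sample path of the SIR model with infection-age dependent infectivity."""
    rng = random.Random(seed)

    def draw():
        return make_infectivity(rng, lam_star, mean_exposed, mean_infectious)

    people = []  # (infection time tau, infectivity function, infectious period eta)
    for _ in range(I0):
        age = rng.uniform(0.0, xbar)  # initial infection age
        f, eta = draw()
        while eta <= age:  # condition on still being infected at time 0
            f, eta = draw()
        people.append((-age, f, eta))

    S, t = N - I0, 0.0
    while S > 0:
        n_active = sum(1 for tau, _, eta in people if tau + eta > t)
        # Upsilon^N(s) <= lam_star * S * n_active / N until the next infection,
        # since no one enters and infectivities vanish after eta.
        bound = lam_star * S * n_active / N
        if bound == 0.0:
            break
        t += rng.expovariate(bound)  # next candidate point
        if t > T:
            break
        upsilon = S / N * sum(f(t - tau) for tau, f, _ in people)
        if rng.random() * bound < upsilon:  # accept with probability upsilon / bound
            f, eta = draw()
            people.append((t, f, eta))
            S -= 1

    ages = sorted(T - tau for tau, _, eta in people if tau + eta > T)
    return {"S": S, "I": len(ages), "R": N - S - len(ages), "ages": ages}


def frak_I(ages, x):
    """frak I^N(T, x): number of individuals infected at T with infection age <= x."""
    return bisect.bisect_right(ages, x)
```

For example, `simulate(N=1000, I0=50, T=30.0)` returns the state of one sample path at time T; dividing the counts by N gives one realization of the LLN-scaled quantities whose limit is described in Sect. 2.2.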

Remark 2.1

The SEIR model. Suppose that \(\lambda _i(t)=0\) for \(t\in [0,\xi _i)\), where \(\xi _i<\eta _i\), and let I denote the compartment of infected (not necessarily infectious) individuals. An individual who gets infected at time \(\tau ^N_i\) is first exposed during the time interval \([\tau ^N_i,\tau ^N_i+\xi _i)\), and then infectious during the time interval \([\tau ^N_i+\xi _i,\tau ^N_i+\eta _i)\). One may say that the individual is infected during the time interval \([\tau ^N_i,\tau ^N_i+\eta _i)\), and recovers at time \(\tau ^N_i+\eta _i\). Everything that follows covers this situation. In other words, our model naturally accommodates an exposed period before the infectious period, which is important for many infectious diseases, including Covid-19. However, we distinguish only three compartments: S for susceptible, I for infected (either exposed or infectious), and R for recovered.

In the sequel, the time interval \([\tau ^N_i,\tau ^N_i+\eta _i)\) will be called the infectious period, although it might rather be the period during which the individual is infected (either exposed or infectious).

2.2 FLLN

Define the LLN-scaled processes \({\bar{X}}^N= N^{-1} X^N\) for any process \(X^N\). We make the following assumptions on the initial quantities.

Assumption 2.1

There exists a deterministic continuous nondecreasing function \({\bar{{\mathfrak {I}}}}(0,x)\) for \(x\ge 0\) with \({\bar{{\mathfrak {I}}}}(0,0)=0\) such that \({\bar{{\mathfrak {I}}}}^{N}(0,\cdot ) \rightarrow {\bar{{\mathfrak {I}}}}(0,\cdot )\) in D in probability as \(N\rightarrow \infty \). Let \({\bar{I}}(0) = {\bar{{\mathfrak {I}}}}(0, {\bar{x}})\). Then \(({\bar{I}}^N(0), {\bar{S}}^N(0)) \rightarrow ({\bar{I}}(0), {\bar{S}}(0)) \in (0,1)^2\) in probability as \(N\rightarrow \infty \) where \( {\bar{S}}(0) =1 - {\bar{I}}(0)\in (0,1)\).

Remark 2.2

Recall that \({\bar{{\mathfrak {I}}}}^{N}(0,\cdot )\) describes the distribution of the initially infected individuals over the ages of infection. The assumption means that there is a corresponding limiting continuous distribution as the population size goes to infinity.

Suppose now that the r.v.’s \(\{\tau _{j,0}^N\}_{1\le j\le I^N(0)}\) are not ordered, but rather i.i.d., with a common distribution function G, which we assume to be continuous. It then follows from the law of large numbers that Assumption 2.1 holds in this case.
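To illustrate this law of large numbers, here is a hedged Python sketch that draws i.i.d. infection ages for a hypothetical fraction (5%) of initially infected individuals, assuming for the example that the ages are uniform on \([0,{\bar{x}}]\), and measures on a grid the distance between \({\bar{{\mathfrak {I}}}}^{N}(0,\cdot )\) and \({\bar{I}}^N(0)G(\cdot )\), with G here the uniform c.d.f. of the ages. The function names are ours, and the grid maximum only approximates the supremum.

```python
import bisect
import random


def initial_profile(N, frac_infected, xbar, seed=0):
    """Return (x -> bar frak I^N(0, x), bar I^N(0)) for i.i.d. Uniform[0, xbar] ages."""
    rng = random.Random(seed)
    n_infected = int(frac_infected * N)
    ages = sorted(rng.uniform(0.0, xbar) for _ in range(n_infected))
    # bar frak I^N(0, x) = N^{-1} #{j : tilde tau_{j,0} <= x}
    return (lambda x: bisect.bisect_right(ages, x) / N), n_infected / N


def grid_sup_error(N, frac_infected=0.05, xbar=2.0, grid=400, seed=0):
    """Max over a grid of |bar frak I^N(0, x) - bar I^N(0) * G(x)|, G uniform on [0, xbar]."""
    profile, i0 = initial_profile(N, frac_infected, xbar, seed)
    return max(abs(profile(xbar * k / grid) - i0 * k / grid) for k in range(grid + 1))


if __name__ == "__main__":
    for N in (1_000, 10_000, 100_000):
        print(N, grid_sup_error(N))
```

The printed errors should shrink roughly like \(N^{-1/2}\) as N grows, in line with the Glivenko-Cantelli theorem for the empirical distribution of the ages.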

We make the following assumption on the random function \(\lambda \).

Assumption 2.2

Let \(\lambda (\cdot )\) be a process having the same law as \(\{\lambda _j^0(\cdot )\}_j\) and \(\{\lambda _i(\cdot )\}_i\). Assume that there exists a constant \(\lambda ^*\) such that for each \(0<T<\infty \), \(\sup _{t\in [0,T]} \lambda (t) \le \lambda ^*\) almost surely. Assume that there exist an integer k, a random sequence \(0=\zeta ^0 \le \zeta ^1 \le \cdots \le \zeta ^k \) and associated random functions \(\lambda ^\ell \in C({\mathbb {R}}_+;[0,\lambda ^*])\), \(1\le \ell \le k\), such that

\[ \lambda (t) = \sum _{\ell =1}^k \lambda ^\ell (t)\, {{\textbf{1}}}_{[\zeta ^{\ell -1}, \zeta ^\ell )}(t), \quad t \ge 0. \]

In addition, we assume that there exists a deterministic nondecreasing function \(\varphi \in C({\mathbb {R}}_+;{\mathbb {R}}_+)\) with \(\varphi (0)=0\) such that \(|\lambda ^\ell (t) - \lambda ^\ell (s)| \le \varphi (t-s)\) almost surely for all \(t,s \ge 0\) and for all \(\ell \ge 1\). Let \({\bar{\lambda }}(t) = {\mathbb {E}}[\lambda _i(t)] ={\mathbb {E}}[\lambda ^0_j(t)]\) and \(v(t) =\textrm{Var}(\lambda (t)) = {\mathbb {E}}\big [\big (\lambda (t) - {{\bar{\lambda }}}(t)\big )^2\big ]\) for \(t\ge 0\).
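For instance (an illustrative assumption, not an example taken from the paper), an SEIR-type infectivity fits Assumption 2.2 with k = 2, \(\zeta ^1=\xi \), \(\zeta ^2=\eta \), \(\lambda ^1\equiv 0\) and \(\lambda ^2\equiv \lambda ^*\), so that \(\lambda \) vanishes after \(\eta \). If \(\xi \) and \(\eta -\xi \) are independent exponential variables with rates \(\alpha \ne \beta \), then \({\bar{\lambda }}(t)=\lambda ^*p(t)\) and \(v(t)=(\lambda ^*)^2p(t)(1-p(t))\), where \(p(t)={\mathbb {P}}(\xi \le t<\eta )=\frac{\alpha }{\alpha -\beta }(e^{-\beta t}-e^{-\alpha t})\). The Python sketch below checks these two formulas by Monte Carlo; the rates and function names are hypothetical.

```python
import math
import random


def p_infectious(t, alpha, beta):
    """P(xi <= t < eta) for xi ~ Exp(alpha), eta - xi ~ Exp(beta), alpha != beta."""
    return alpha / (alpha - beta) * (math.exp(-beta * t) - math.exp(-alpha * t))


def mc_mean_var(t, lam_star=1.0, alpha=0.5, beta=0.25, n=50_000, seed=0):
    """Monte Carlo estimates of bar lambda(t) and v(t) = Var(lambda(t))."""
    rng = random.Random(seed)
    values = []
    for _ in range(n):
        xi = rng.expovariate(alpha)  # exposed period (rate alpha)
        eta = xi + rng.expovariate(beta)  # end of the infectious period
        values.append(lam_star if xi <= t < eta else 0.0)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    return mean, var


if __name__ == "__main__":
    t, lam_star = 3.0, 1.0
    p = p_infectious(t, 0.5, 0.25)
    print(mc_mean_var(t, lam_star), (lam_star * p, lam_star ** 2 * p * (1 - p)))
```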

Remark 2.3

Recall that the basic reproduction number \(R_0\) is the mean number of susceptible individuals whom an infectious individual infects in a large, otherwise fully susceptible population. In the present model, clearly

\[ R_0 = \int _0^\infty {\bar{\lambda }}(t)\,dt. \]

Suppose that \(\lambda _i(t)={\tilde{\lambda }}(t){{\textbf{1}}}_{t<\eta _i}\), where \({\tilde{\lambda }}(t)\) is a deterministic function. Then

\[ R_0 = \int _0^\infty {\tilde{\lambda }}(t)\, F^c(t)\,dt. \]

In the standard SIR model with \({\tilde{\lambda }}(t) \equiv \lambda \) and \({\mathbb {E}}[\eta ] = \int _0^\infty F^c(t)dt\), the formula above reduces to the well-known \(R_0 = \lambda {\mathbb {E}}[\eta ]\); see, e.g., [5]. We obtain the same formula if \(\lambda _i(t)={\tilde{\lambda }}_i(t){{\textbf{1}}}_{t<\eta _i}\), where \({\tilde{\lambda }}_i\) is a process independent of \(\eta _i\), with mean \({\tilde{\lambda }}(t)\). More precisely, in that case the sequence \(({\tilde{\lambda }}_i,\eta _i)_{i\ge 1}\) is assumed to be i.i.d., and for each i, \({\tilde{\lambda }}_i\) and \(\eta _i\) are independent.
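The integral \(R_0=\int _0^\infty {\tilde{\lambda }}(t)F^c(t)dt\) of this special case is straightforward to evaluate numerically. The following Python sketch uses a truncated trapezoidal rule (the truncation point `t_max`, the number of steps and the function name are arbitrary choices of ours) and can be checked on the standard SIR case with constant \({\tilde{\lambda }}\equiv \lambda \) and exponential \(\eta \), where \(R_0=\lambda {\mathbb {E}}[\eta ]\).

```python
import math


def basic_reproduction_number(lam_tilde, Fc, t_max=200.0, n=200_000):
    """Trapezoidal approximation of R_0 = int_0^t_max lam_tilde(t) * Fc(t) dt.

    Truncating at t_max assumes the integrand is negligible beyond it."""
    h = t_max / n
    total = 0.5 * (lam_tilde(0.0) * Fc(0.0) + lam_tilde(t_max) * Fc(t_max))
    for k in range(1, n):
        t = k * h
        total += lam_tilde(t) * Fc(t)
    return total * h


if __name__ == "__main__":
    lam, mean_eta = 0.5, 4.0
    r0 = basic_reproduction_number(lambda t: lam, lambda t: math.exp(-t / mean_eta))
    print(r0, lam * mean_eta)  # numerical value vs. lambda * E[eta]
```

Any other deterministic \({\tilde{\lambda }}\) and survival function \(F^c\) can be passed in the same way, for instance a deterministic infectious period, for which \(F^c\) is an indicator function.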

The proof of the following Theorem, which is the main result of this section, will be given in Sect. 5. For a function \(u(t,x) \in D_{D_\uparrow }\), we use the equivalent notations \(d_x u(t,x)\) and \(u_x(t,x)\) for the partial derivative w.r.t. x, while u(t, dx) denotes the measure whose distribution function is \(x\mapsto u(t,x)\), which coincides with \(u_x(t,x)dx\) if that last map is differentiable. In particular, \(u_x(t,0)\) indicates the partial derivative evaluated at \(x=0\).

Theorem 2.1

Under Assumptions 2.1 and 2.2, as \(N\rightarrow \infty \),

where the limits are the unique continuous solution to the following set of integral equations, for \(t, x\ge 0\),

with

The function \({\bar{{\mathfrak {I}}}}(t,x)\) is nondecreasing in x for each t. As a consequence, \({\bar{I}}^N\rightarrow {\bar{I}}\) in D in probability as \(N\rightarrow \infty \), where

\[ {\bar{I}}(t) = {\bar{{\mathfrak {I}}}}(t, t+{\bar{x}}), \quad t \ge 0. \]

3 PDE Models

One can regard \({\bar{{\mathfrak {I}}}}(t,x)\) as the ‘distribution function’ of \({\bar{I}}(t) = {\bar{{\mathfrak {I}}}}(t,t+{\bar{x}})\) over the ‘ages’ \(x \in [0,t+{\bar{x}})\) for each fixed t. If \(x\mapsto {\bar{{\mathfrak {I}}}}(t,x)\) is absolutely continuous, we denote by \({\bar{{\mathfrak {i}}}}(t,x) ={\bar{{\mathfrak {I}}}}_x(t,x)\) the density function of \({\bar{{\mathfrak {I}}}}(t,x)\) with respect to x. Note that \({\bar{S}}(t)=0\) for \(t<0\) and \({\bar{{\mathfrak {i}}}}(t,x)=0\) both for \(t<0\) and \(x < 0\).

3.1 The Case F Absolutely Continuous

In this subsection, we assume that F is absolutely continuous, \(F(dx)=f(x)dx\), and we denote by \(\mu (x)\) the hazard function of the r.v. \(\eta \), i.e., \(\mu (x):=f(x)/F^c(x)\) for \(x\ge 0\). If the density function \({\bar{{\mathfrak {i}}}}(t,x)\) exists, we obtain the following PDE representation.

Proposition 3.1

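Suppose that F is absolutely continuous, with the density f, and that \({\bar{{\mathfrak {I}}}}(0,x)\) is differentiable with respect to x, with the density function \({\bar{{\mathfrak {i}}}}(0,x)\). Then for \(t>0\), the increasing function \({\bar{{\mathfrak {I}}}}(t,\cdot )\) is absolutely continuous, and (t, x) a.e. in \((0,+\infty )^2\),

\[ \frac{\partial {\bar{{\mathfrak {i}}}}(t,x)}{\partial t} + \frac{\partial {\bar{{\mathfrak {i}}}}(t,x)}{\partial x} = -\mu (x)\, {\bar{{\mathfrak {i}}}}(t,x), \tag{3.1} \]
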
with the initial condition \({\bar{{\mathfrak {i}}}}(0,x)= {\bar{{\mathfrak {I}}}}_x(0,x)\) for \(x \in [0,{\bar{x}}]\), and the boundary condition

with the convention that \(F^c=1\) on \({\mathbb {R}}_-\), and that the integrand in (3.2) is zero when \(F^c(x)=0\).

In addition,

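Moreover, the PDE (3.1) has a unique solution, which is given as follows. For \(x\ge t\),

\[ {\bar{{\mathfrak {i}}}}(t,x) = {\bar{{\mathfrak {i}}}}(0,x-t)\, \frac{F^c(x)}{F^c(x-t)}, \tag{3.4} \]
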
while for \(t>x\),

\[ {\bar{{\mathfrak {i}}}}(t,x) = {\bar{{\mathfrak {i}}}}(t-x,0)\, F^c(x), \tag{3.5} \]

and the boundary function is the unique solution of the integral equation

Remark 3.1

The PDE (3.1) can be considered as a linear equation, with a nonlinear boundary condition which is the integral Eq. (3.6).

It follows from (3.4) and (3.5) that \(F^c(x)=0\) implies that \({\bar{{\mathfrak {i}}}}(t,x)=0\). This is why we can impose that the integrand in the right hand side of (3.2) is zero whenever \(F^c(x)=0\).

We remark that the PDE given in [19] (see Eqs. (28)–(29) there, and also Eq. (2.2) in [15]) resembles (3.1). In particular, the function \(\mu (x)\) is interpreted there as the recovery rate at infection age x; equivalently, it is the hazard function of the infectious duration.
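The explicit solution of Proposition 3.1 transports the density along the characteristics \(x-t=\text {const}\) and thins it by the survival ratio of the infectious period: \({\bar{{\mathfrak {i}}}}(t,x)={\bar{{\mathfrak {i}}}}(0,x-t)F^c(x)/F^c(x-t)\) for \(x\ge t\), and \({\bar{{\mathfrak {i}}}}(t,x)={\bar{{\mathfrak {i}}}}(t-x,0)F^c(x)\) for \(t>x\). A minimal Python sketch, assuming the initial density and the boundary function \(t\mapsto {\bar{{\mathfrak {i}}}}(t,0)\) are supplied as callables (the function name and example inputs are hypothetical), evaluates these formulas and checks the transport equation by a finite difference along a characteristic.

```python
import math


def density(t, x, initial_density, boundary, Fc):
    """bar i(t, x) from the explicit solution of the transport PDE.

    x >= t: initial cohort, bar i(0, x - t) * Fc(x) / Fc(x - t)
    x <  t: cohort infected at time t - x, bar i(t - x, 0) * Fc(x)"""
    if x >= t:
        y = x - t
        return 0.0 if Fc(y) == 0.0 else initial_density(y) * Fc(x) / Fc(y)
    return boundary(t - x) * Fc(x)


if __name__ == "__main__":
    mu = 0.5  # exponential infectious period, constant hazard
    Fc = lambda x: math.exp(-mu * x)
    i0 = lambda y: 1.0 if 0.0 <= y <= 1.0 else 0.0  # hypothetical initial density
    b = lambda s: 0.2  # hypothetical boundary function
    t, x, h = 2.0, 1.0, 1e-6
    # derivative along the characteristic (d/dt + d/dx) should equal -mu(x) * density
    lhs = (density(t + h, x + h, i0, b, Fc) - density(t, x, i0, b, Fc)) / h
    print(lhs, -mu * density(t, x, i0, b, Fc))
```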

Remark 3.2

In a sense, what we do in the present paper can be interpreted as follows: we replace the two-dimensional system of equations with memory (2.13)–(2.14) (with, see (2.17), \({\bar{\Upsilon }}(t)\) replaced by \({\bar{S}}(t) {\overline{{{\mathcal {I}}}}}(t)\)) by the infinite dimensional system of ODE-PDE (2.13)–(3.1)–(3.2) (with, see again (2.17), \({\bar{\Upsilon }}(t)\) replaced by \({\bar{{\mathfrak {i}}}}(t,0)\)).

At the level of our population of size N, we have a two-dimensional non-Markov process \((S^N(t),{\mathcal {I}}^N(t))\). For any \(t\ge 0\), let \({\bar{{\mathfrak {i}}}}^N(t)\) denote the measure whose distribution function is \(x\mapsto {\bar{{\mathfrak {I}}}}^N(t,x)\). Theorem 2.1 implies that, locally uniformly in t, \({\bar{{\mathfrak {i}}}}^N(t)\) converges weakly to the measure which has the density \({\bar{{\mathfrak {i}}}}(t,x)\) w.r.t. Lebesgue measure. \({\bar{{\mathfrak {i}}}}^N(t)\) is a point measure which assigns the mass \(N^{-1}\) to any x which is the infection age of one of the individuals who are infected at time t. Clearly, from the knowledge of \({\bar{{\mathfrak {i}}}}^N(t)\), we can deduce the values of both \(I^N(0)\) and \(A^N(t)\), hence of \(S^N(0)\) and of \(S^N(t)\) (see (2.9)). Note that the points of the measure \({\bar{{\mathfrak {i}}}}^N(t)\) which are larger than t are the \(\{{\tilde{\tau }}^N_{j,0}+t, 1\le j\le I^N(0)\}\), and those which are less than t are the \(\{t-\tau ^N_i, 1\le i\le A^N(t)\}\). Hence from (2.2), \({\mathcal {I}}^N(t)\) is a function of both \({\bar{{\mathfrak {i}}}}^N(t)\) and the \(\lambda _i\)’s. The same is true for \(\Upsilon ^N(t)\). Conditionally upon the \(\lambda _i\)’s, the process \({\bar{{\mathfrak {i}}}}^N(t)\) is a measure-valued Markov process, which evolves as follows: each point x which belongs to it increases at speed 1 and dies at rate \(\mu (x)\), and new points are added at rate \(\Upsilon ^N(t)\). \({\bar{{\mathfrak {i}}}}^N(t)\) is determined by a sequence of at most N positive numbers; it can be considered as an element of \(\cup _{k=1}^N{\mathbb {R}}^k\). We have thus “Markovianized” the two-dimensional non-Markov process \((S^N(t),{\mathcal {I}}^N(t))\), at the price of dramatically increasing the dimension.

Note that the pair composed of \({\bar{{\mathfrak {i}}}}^N(t)\) and the collection \(\{\lambda ^0_j, 1\le j\le I^N(0); \lambda _i, 1\le i\le A^N(t)\}\) is a Markov process with values in \(\cup _{k=1}^N({\mathbb {R}}\times D)^k\). \({\bar{{\mathfrak {i}}}}^N(t)\) evolves as above, and each new \(\lambda _i\) is a random element of D with the same law, independent of everything else.

We expect to write and study the equation for the measure-valued Markov process \({\bar{{\mathfrak {i}}}}^N(t)\) in a future work.

Remark 3.3

Recall the special case in Remark 2.3 with \(\lambda _i(t)={{\tilde{\lambda }}}(t){{\textbf{1}}}_{t<\eta _i}\), where \({{\tilde{\lambda }}}(t)\) is a deterministic function. Then \({{\bar{\lambda }}}(t)={{\tilde{\lambda }}}(t)F^c(t)\), and \({\mathbb {E}}\big [\lambda ^0(t)|{{\tilde{\tau }}}^N_{0}=y\big ]={{\tilde{\lambda }}}(t+y)\frac{F^c(t+y)}{F^c(y)}\). In that case, the boundary condition in (3.2) becomes

\[ {\bar{{\mathfrak {i}}}}(t,0) = {\bar{S}}(t) \int _0^\infty {\tilde{\lambda }}(x)\, {\bar{{\mathfrak {i}}}}(t,x)\,dx. \]

This is usually how the boundary condition is imposed in the literature on PDE epidemic models (see, e.g., [15, Eq. (2.5)], [22, Eq. (1.1)] and [12, Eq. (2)]). This expression has a clear intuitive interpretation: \({\bar{{\mathfrak {i}}}}(t,0)\) is the instantaneous rate at which individuals get infected at time t (each resulting in a newly infected individual with zero age of infection), while the right-hand side is the instantaneous infection rate generated by the existing infectious population at time t, which depends on all the infectious individuals, of all ages of infection. This of course includes time \(t=0\), which imposes a constraint on the initial condition \(\{\bar{\mathfrak I}(0,x)\}_{0\le x\le {\bar{x}}}\).
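In this special case, the PDE with the boundary condition \({\bar{{\mathfrak {i}}}}(t,0)={\bar{S}}(t)\int _0^\infty {\tilde{\lambda }}(x){\bar{{\mathfrak {i}}}}(t,x)dx\) and \({\bar{S}}(t)={\bar{S}}(0)-\int _0^t{\bar{{\mathfrak {i}}}}(s,0)ds\) can be solved numerically. The following Python sketch uses an illustrative scheme of our own (not taken from the paper): time and age share the step h, the density moves one cell along each characteristic per step while being multiplied by the survival ratio \(F^c(x+h)/F^c(x)\), and the new boundary value is computed from the discretized boundary condition (rectangle rule, omitting the age-0 cell). With \({\tilde{\lambda }}\equiv \lambda \) and exponential \(\eta \), the limit is the classical SIR ODE, so the final susceptible fraction should be close to the solution of the final-size relation \(S_\infty ={\bar{S}}(0)\exp (-R_0(1-S_\infty ))\). The parameter values are hypothetical.

```python
import math


def solve_pde(lam_tilde, Fc, initial_density, xbar, S0, T, h):
    """Characteristic scheme for bar i(t, x) with the boundary condition above.

    Time and age share the step h; dens[k] approximates bar i(t, k h).
    Returns (S(T), I(T)) with I(T) = int bar i(T, x) dx (rectangle rule)."""
    n_steps = int(round(T / h))
    n_age = n_steps + int(round(xbar / h)) + 1  # oldest possible age is T + xbar
    ages = [k * h for k in range(n_age)]
    # one-step survival along a characteristic, Fc(x + h) / Fc(x); 0 once Fc vanishes
    surv = [Fc(a + h) / Fc(a) if Fc(a) > 0.0 else 0.0 for a in ages]
    lam = [lam_tilde(a) for a in ages]
    dens = [initial_density(a) if 0.0 < a <= xbar else 0.0 for a in ages]
    S = S0
    # boundary value: S(t) * int lam_tilde(x) bar i(t, x) dx, age-0 cell omitted
    dens[0] = S * h * sum(l * d for l, d in zip(lam, dens))
    for _ in range(n_steps):
        S -= h * dens[0]  # S(t + h) = S(t) - h * bar i(t, 0)
        # transport: the cohort of age k h moves to age (k + 1) h and is thinned
        dens = [0.0] + [d * s for d, s in zip(dens[:-1], surv[:-1])]
        dens[0] = S * h * sum(l * d for l, d in zip(lam, dens))
    return S, h * sum(dens)


if __name__ == "__main__":
    lam, gamma = 0.5, 0.25  # constant infectivity, exponential infectious period
    S_T, I_T = solve_pde(lambda a: lam, lambda a: math.exp(-gamma * a),
                         lambda a: 0.01, xbar=1.0, S0=0.99, T=80.0, h=0.05)
    S_inf = 0.5
    for _ in range(200):  # final-size relation of the SIR ODE
        S_inf = 0.99 * math.exp(-(lam / gamma) * (1.0 - S_inf))
    print(S_T, S_inf, I_T)
```

The scheme is only first-order accurate in h; its appeal is that it follows the characteristics exactly, so non-exponential \(F^c\) and non-constant \({\tilde{\lambda }}\) are handled without any change.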

Proof

By the fact that F has a density, we see that the two partial derivatives of \({{\bar{{\mathfrak {I}}}}}\) exist (t, x) a.e. From (2.15), they satisfy

and

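Thus, summing up (3.7) and (3.8), we obtain for \(t>0\) and \(x>0\),

\[ {\bar{{\mathfrak {I}}}}_t(t,x) + {\bar{{\mathfrak {I}}}}_x(t,x) = {\bar{\Upsilon }}(t) - \int _0^x \mu (y)\, {\bar{{\mathfrak {I}}}}_x(t,y)\,dy. \tag{3.9} \]
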
Denote \( {\bar{{\mathfrak {I}}}}_{x,t}(t,x) = \frac{\partial ^2 {\bar{{\mathfrak {I}}}}(t,x)}{\partial x \partial t} = \frac{\partial }{\partial x} {\bar{{\mathfrak {I}}}}_t(t,x) \) and \( {\bar{{\mathfrak {I}}}}_{x,x}(t,x)= \frac{\partial ^2 {\bar{{\mathfrak {I}}}}(t,x)}{\partial x^2}\). By taking the derivative on both sides of (3.9) with respect to x (possibly in the distributional sense for each term on the left), we obtain for \(t>0\) and \(x>0\),

\[ {\bar{{\mathfrak {I}}}}_{x,t}(t,x) + {\bar{{\mathfrak {I}}}}_{x,x}(t,x) = -\mu (x)\, {\bar{{\mathfrak {I}}}}_x(t,x). \tag{3.10} \]

Since \( \frac{\partial ^2 {\bar{{\mathfrak {I}}}}(t,x)}{\partial x \partial t} = \frac{\partial ^2 {\bar{{\mathfrak {I}}}}(t,x)}{\partial t \partial x}\), we obtain the expression

As concerns the boundary condition, we note that, given (3.3), (3.4) and (3.5), (3.2) and (3.6) are equivalent. Hence we will establish (3.6), (3.3), (3.4) and (3.5).

For the boundary condition \({{\bar{{\mathfrak {i}}}}}(t,0)\), by (2.14) and (2.17), we have

where by (2.13),

Thus we obtain the expression in (3.6). We next prove that equation (3.6) has a unique non-negative solution. Observe that \(u(t) = {{\bar{{\mathfrak {i}}}}}(t,0)\) is also a solution to

and any non-negative solution of (3.6) solves (3.12).

First, note that since for any \(t\ge 0\), \(0\le {\bar{\lambda }}(t)\le \lambda ^*\),

from which we conclude that \(\int _0^tu(s) ds\le {\bar{S}}(0)\) for all \(t\ge 0\). Indeed, suppose this fails for some t. Then there would exist a time \(T_{{\bar{S}}(0)}<t\) such that \(\int _0^{T_{{\bar{S}}(0)}} u(s) ds={\bar{S}}(0)\), and hence \(\int _0^r u(s) ds\ge {\bar{S}}(0)\) for all \(r\ge T_{{\bar{S}}(0)}\). By (3.12), we would then have \(u(r)=0\) for all \(r\ge T_{{\bar{S}}(0)}\), so that \(\int _0^tu(s) ds={\bar{S}}(0)\), a contradiction.

Under Assumption 2.2, using (3.13), if \(u_1(t)\) and \(u_2(t)\) are two nonnegative integrable solutions, then

which, combined with Gronwall’s Lemma, implies that \(u_1 \equiv u_2\). Now existence is provided by the fact that the function \({{\bar{{\mathfrak {i}}}}}(t,0)\) is a non-negative solution of (3.12).

Note also that one could establish the following by combining an argument similar to that used for uniqueness with the classical estimate on Picard iterations for ODEs. Consider the sequence defined by \(u^{(0)}(t)\equiv 0\) and, for \(n\ge 0\),

given \( {\bar{{\mathfrak {i}}}}(0,\cdot )\). This sequence is a Cauchy sequence in \(C({\mathbb {R}}_+)\), which gives an alternative proof of existence.
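The Picard scheme just described can be tried numerically. What follows is a minimal sketch built on two assumptions of our own rather than statements from the paper.

- First, (3.12) is taken to have the form \(u(t) = (\bar S(0) - \int_0^t u(s)ds)^+\,(g(t) + \int_0^t \bar\lambda(t-s)u(s)ds)\), where g(t) is the infectivity still carried at time t by the initially infectious individuals.
- Second, integrals are replaced by left-endpoint Riemann sums on a uniform grid.

The parameter values are hypothetical and follow the special case of Remark 3.3 with constant \(\tilde\lambda\equiv\lambda\) and exponential F. In that case \(\bar\lambda(t)=\lambda e^{-\mu t}\) and \(g(t)=\lambda e^{-\mu t}\bar{\mathfrak I}(0,\bar x)\). The function names `boundary_map` and `picard` are illustrative.

```python
import numpy as np


def boundary_map(u, dt, s0, g, lam_bar_grid):
    """One application of the assumed right-hand side of (3.12) on a uniform grid t_k = k*dt.

    u            : current iterate u^{(n)} on the grid
    g            : hypothetical contribution g(t_k) of the initially infectious individuals
    lam_bar_grid : mean infectivity lam_bar evaluated at the lags t_k
    Left-endpoint sums are used, so the new value at t_k only uses u[0..k-1].
    """
    # int_0^{t_k} u(s) ds
    cum = np.concatenate(([0.0], np.cumsum(u[:-1]))) * dt
    # int_0^{t_k} lam_bar(t_k - s) u(s) ds: full convolution minus its j = k term
    conv = (np.convolve(lam_bar_grid, u)[: u.size] - lam_bar_grid[0] * u) * dt
    return np.maximum(s0 - cum, 0.0) * (g + conv)


def picard(s0, g, lam_bar_grid, dt, tol=1e-12, max_iter=10_000):
    """Iterate u^{(0)} = 0, u^{(n+1)} = Phi(u^{(n)}) until the sup-norm gap is below tol."""
    u = np.zeros_like(g)
    for it in range(1, max_iter + 1):
        u_next = boundary_map(u, dt, s0, g, lam_bar_grid)
        gap = np.max(np.abs(u_next - u))
        u = u_next
        if gap < tol:
            return u, it
    raise RuntimeError("Picard iterations did not converge")


# Hypothetical parameters: lam = 0.5, mu = 0.2, initially infectious fraction i0 = 0.01.
lam, mu, i0 = 0.5, 0.2, 0.01
s0 = 1.0 - i0
dt, T = 0.05, 60.0
t = np.arange(0.0, T + dt / 2, dt)
lam_bar_grid = lam * np.exp(-mu * t)   # lam_bar(t) = lam * F^c(t) for exponential F
g = lam * i0 * np.exp(-mu * t)         # remaining infectivity of the initially infectious
u, n_iter = picard(s0, g, lam_bar_grid, dt)
print("iterations:", n_iter, " int_0^T u(s) ds:", u.sum() * dt, "<= S(0) =", s0)
```

Each grid value depends only on earlier ones, so at the latest the discrete iteration becomes exact after as many steps as there are grid points. In practice the gap usually drops below the tolerance much earlier, in line with the factorial bound for Picard iterations.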

We next derive the explicit solution expressions in (3.4) and (3.5). It follows from (3.11) that for \(x\ge t\), \(0\le s\le t\),

while for \(t>x\), \(0\le s\le x\),

Integrating the first identity from \(s=0\) to \(s=t\), we deduce that for \(x\ge t\),

so that for \(x\ge t\),

Now for \(t>x\), we integrate the second identity from \(s=0\) to \(s=x\), and get

Hence for \(t>x\),

Clearly, (3.4) is equivalent to (3.14), (3.5) is equivalent to (3.15), and (3.1) follows from (3.11), (3.14) and (3.15). \(\square \)
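The explicit solution has a transport-along-characteristics structure that can be checked numerically. The proof of Corollary 3.1 below gives \(\bar{\mathfrak i}(t,x)=F^c(x)\bar\Upsilon(t-x)\) for \(t>x\), with \(\bar\Upsilon(t)=\bar{\mathfrak i}(t,0)\). It also gives \(\bar{\mathfrak i}(t,x)=\bar{\mathfrak i}(0,x-t)F^c(x)/F^c(x-t)\) for \(x\ge t\).

The minimal sketch below rests on the following assumptions:

- (3.1) is taken to be the transport equation \(\partial_t\bar{\mathfrak i}+\partial_x\bar{\mathfrak i}=-\mu(x)\bar{\mathfrak i}\) with hazard rate \(\mu=f/F^c\).
- The boundary values are a given hypothetical function, rather than the solution of (3.6).
- F is a hypothetical Weibull law.

The sketch evaluates the formulas above and checks the PDE residual by central differences along the characteristic direction (1, 1).

```python
import numpy as np

# Hypothetical ingredients: Weibull(shape 2, scale 1) infectious period,
# so F^c(x) = exp(-x^2) and mu(x) = f(x) / F^c(x) = 2x.
def F_c(x):
    return np.exp(-x ** 2)

def mu(x):
    return 2.0 * x

def i0(y):          # hypothetical initial age density i(0, y)
    return 0.02 * np.exp(-y)

def boundary(s):    # hypothetical boundary values i(s, 0); compatible with i0 at s = 0
    return 0.02 * (1.0 + 0.5 * np.sin(s))

def density(t, x):
    """i(t, x) along characteristics; both branches are evaluated, fine for moderate |x - t|."""
    t = np.asarray(t, dtype=float)
    x = np.asarray(x, dtype=float)
    from_initial = i0(x - t) * F_c(x) / F_c(x - t)   # already infectious at time 0 (x >= t)
    from_boundary = boundary(t - x) * F_c(x)         # infected at time t - x > 0 (x < t)
    return np.where(x >= t, from_initial, from_boundary)

def transport_residual(t, x, h=1e-5):
    """(d/dh) i(t+h, x+h) + mu(x) i(t, x), which should vanish for a solution of the PDE."""
    d = (density(t + h, x + h) - density(t - h, x - h)) / (2.0 * h)
    return d + mu(x) * density(t, x)

tt, xx = np.meshgrid(np.linspace(0.1, 4.0, 40), np.linspace(0.1, 4.0, 40))
print("max |residual|:", np.max(np.abs(transport_residual(tt, xx))))
```

Moving along (1, 1) keeps x - t fixed, so each residual evaluation stays within one branch of the formula.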

Corollary 3.1

The formula (2.14) for \( {\overline{{{\mathcal {I}}}}}(t) \) can be rewritten as

where \(F^c(z)=1\), for \(z\le 0\).

Proof

We first deduce from (2.15) that for \(t>x\), \(x\mapsto {{\bar{{\mathfrak {I}}}}}(t,x)\) is differentiable with \({{\bar{{\mathfrak {I}}}}}(t,dx)=F^c(x){{\bar{\Upsilon }}}(t-x)dx\). We also deduce that for fixed t, the function \(y\mapsto \bar{{\mathfrak {I}}}(t,t+y)\) is of finite total variation on \([0, {\bar{x}}]\) and satisfies \(\bar{{\mathfrak {I}}}(t,t+dy) = \frac{F^c(t+y)}{F^c(y)} \bar{{\mathfrak {I}}}(0,dy)\). Inserting the resulting formulas for \( \bar{{\mathfrak {I}}}(0,dy)\) and \({{\bar{\Upsilon }}}\) in the first and second integrals on the right-hand side of (2.14), we obtain

from which the result follows. \(\square \)

Remark 3.4

In the special case \(\lambda _i(t)={{\tilde{\lambda }}}(t){{\textbf{1}}}_{t<\eta _i}\) as discussed in Remark 3.3, (3.16) reduces to the very simple formula

A similar formula holds if we replace the deterministic function \({{\tilde{\lambda }}}(t)\) by a copy \(\lambda _i(t)\) of a random function, which is independent of \(\eta _i\), as discussed in Remark 2.3, and whose expectation is \({{\tilde{\lambda }}}(t)\). Then, we have

Since \({{\bar{{\mathfrak {i}}}}}(t,0) = {{\bar{\Upsilon }}}(t)\), the results above can be stated using this expression of \({{\bar{\Upsilon }}}\).

In the special case of exponentially distributed infectious periods, i.e. \(\mu (x)\equiv \mu \), we obtain the following well-known results, see, e.g., [16, 22, 28].

Corollary 3.2

If the c.d.f. \(F(t) = 1-e^{-\mu t}\), we have for \(t>0\) and \(x>0\),

with the initial condition \({\bar{{\mathfrak {i}}}}(0,x)\) given for \(x \in [0, {\bar{x}}]\) and the boundary condition for \({\bar{{\mathfrak {i}}}}(t,0)\) as given in (3.6).

Proof

In this case, the above proof simplifies. Indeed, we have for \(t\ge 0\) and \(x\ge 0\),

By taking the derivative with respect to x for \(t>0\) and \(x>0\), we see that equation (3.8) becomes

Taking derivatives of this equation with respect to t and x, we obtain for \(t>0\) and \(x>0\),

The boundary conditions follow in the same way as in the general model. \(\square \)

Remark 3.5

If the remaining infectious periods of the initially infectious individuals \(\{\eta ^0_j, j=1,\dots , I^N(0)\}\) are i.i.d. with c.d.f. \(F_0\) instead of depending on the infection age in (2.1), then we obtain the limits

(noting that they are not continuous unless \(F_0\) is continuous), and assuming the density functions exist, we obtain the PDE:

where \(\mu _0(t)=f_0(t)/F^c_0(t)\). As in the proof of Proposition 3.1, we obtain that the PDE (3.1) has a unique solution which is given as follows. For \(x\ge t\),

while for \(t>x\),

and the boundary function is the unique solution of the integral equation

3.2 The General Case

We now generalize the result of Proposition 3.1 to the case where the distribution F is not absolutely continuous. We denote below by \(\nu \) the law of \(\eta \), i.e. the measure whose distribution function is F. For reasons explained in Remark 3.6 below, in this subsection we use the left-continuous versions of F and \(F^c\). To simplify notation, we define

Proposition 3.2

Suppose that \({\bar{{\mathfrak {I}}}}(0,x)\) is differentiable with respect to x, with the density function \({\bar{{\mathfrak {i}}}}(0,x)\). Then for \(t>0\), the increasing function \({\bar{{\mathfrak {I}}}}(t,\cdot )\) is absolutely continuous, and the following identity holds:

(i.e., the distribution which appears on the left hand side of (3.20) equals the measure which has the density \(-\frac{{\bar{{\mathfrak {i}}}}(t,x)}{G^c(x)}\) with respect to the measure \(\nu \)) with the initial condition \({\bar{{\mathfrak {i}}}}(0,x)= {\bar{{\mathfrak {I}}}}_x(0,x)\) for \(x \in [0,{\bar{x}}]\), and the boundary condition

with the convention that \(G^c=1\) on \({\mathbb {R}}_-\), and that the integrand in (3.21) is zero whenever \(G^c(x)=0\).

In addition,

Moreover, the PDE (3.20) has a unique solution which is given as follows. For \(x\ge t\),

while for \(t>x\),

and the boundary function is the unique solution of the integral equation

Remark 3.6

The product \(\frac{{\bar{{\mathfrak {i}}}}(t,x)}{G^c(x)}\nu (dx)\) can also be rewritten as

where the second factor can be thought of as the “hazard measure”, i.e., the generalization of the hazard function, of the r.v. \(\eta \). We put \(G^c(x)\) rather than \(F^c(x)\) in the denominator for the following reason. Suppose the support of \(\nu \) is \([0,x_{max}]\) and \(\nu (\{x_{max}\})>0\). Then \(F^c(x_{max})=0\), while \(G^c(x_{max})>0\). Since \(\nu (\{x_{max}\})>0\), we need a positive denominator at the point \(x_{max}\).

For consistency, in the present subsection we always choose the left continuous version G (resp. \(G^c\)) of F (resp. \(F^c\)). Of course, in the case where F is absolutely continuous this makes no difference.
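The role of \(G^c\) in Remark 3.6 can be made concrete with a hypothetical purely atomic law \(\nu\) whose largest atom carries positive mass. The sketch uses exact rational arithmetic for three computations:

- it evaluates the right-continuous \(F^c(x)=\nu((x,\infty))\) and the left-continuous \(G^c(x)=\nu([x,\infty))\);
- it computes the masses \(\nu(\{a\})/G^c(a)\) of the hazard measure;
- it checks the product formula \(F^c(x)=\prod_{a\le x}(1-\nu(\{a\})/G^c(a))\), a standard identity for laws with finitely many atoms.

At \(x_{max}\) the hazard mass equals 1, while \(F^c(x_{max})=0\) could not serve as a denominator.

```python
import math
from fractions import Fraction

# Hypothetical discrete law nu of the infectious period eta: atom -> mass, with x_max = 3.
nu = {1: Fraction(1, 4), 2: Fraction(1, 4), 3: Fraction(1, 2)}


def F_c(x):
    """Right-continuous survival function F^c(x) = nu((x, inf))."""
    return sum(m for a, m in nu.items() if a > x)


def G_c(x):
    """Left-continuous version G^c(x) = nu([x, inf))."""
    return sum(m for a, m in nu.items() if a >= x)


def hazard_mass(a):
    """Mass nu({a}) / G^c(a) of the hazard measure at an atom a (G^c(a) > 0 at every atom)."""
    return nu[a] / G_c(a)


def survival_from_hazard(x):
    """Product formula prod_{a <= x} (1 - hazard_mass(a)) over the atoms of nu."""
    return math.prod(1 - hazard_mass(a) for a in nu if a <= x)


for x in (0, 1, 2, 3, 4):
    print(x, F_c(x), G_c(x), survival_from_hazard(x))
```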

Remark 3.7

Remark 3.2 can be extended to the present case of a general distribution function F, replacing the infinite-dimensional ODE-PDE system (2.13)–(3.1)–(3.2) by (2.13)–(3.20)–(3.21).

Proof

We first rewrite Eq. (2.15) as

Differentiating \({\bar{{\mathfrak {I}}}}(t,x)\) in x can be done exactly as in the proof of Proposition 3.1. Differentiation in t is now a bit more delicate, since it acts on the integrands \(G^c(t+y)\) and \(G^c(t-s)\), which have not been assumed to be differentiable. Their derivatives in the distributional sense are measures, whose pairing with a bounded measurable function makes sense, so that

As a consequence, with this modification, the argument of the proof of Proposition 3.1 yields

Differentiating with respect to x finally yields

We thus deduce that for \(x\ge t\), \(0\le s\le t\),

while for \(t>x\), \(0\le s\le x\),

Let us integrate the first identity on the interval [0, t). We get

We conclude that for \(x\ge t\),

We finally consider the case \(t>x\), and integrate the second identity on the interval [0, x), yielding:

so that, for \(t>x\),

and we have established (3.23), (3.24), as well as (3.20). The rest of the proof is the same as that of Proposition 3.1. \(\square \)

The case of a deterministic duration \(\eta \) is a particular case of the last Proposition.

Corollary 3.3

Suppose that the infectious periods are deterministic and equal to \(t_i\), i.e., \(F(t)={{\textbf{1}}}_{t\ge t_i}\), \(G(t)={{\textbf{1}}}_{t>t_i}\). Then we have

with \(\delta _{t_i}(x) \) being the Dirac measure at \(t_i\), with the initial condition \({\bar{{\mathfrak {i}}}}(0,x) =\partial _x{\bar{{\mathfrak {I}}}}(0,x)\) for \(x \in [0,t_i]\), and the boundary condition

Note also that the boundary function \({\bar{{\mathfrak {i}}}}(t,0)\) solves the following Volterra equation: if \(0<t<t_i\),

and if \(t\ge t_i\),

The PDE (3.26) has a unique solution \({\bar{{\mathfrak {i}}}}(t,x)\), which is given as follows. \({\bar{{\mathfrak {i}}}}(t,x)=0\) if \(x\ge t_i\). For \(t\le x<t_i\),

while for \(x<t\wedge t_i\),

Remark 3.8

The total fraction of the population infected during the epidemic is given by

where \({{\bar{{\mathfrak {i}}}}}(t,0)\) is the solution to (3.6). We also refer the reader to equation (12) in Kaplan [17], based on his constructed “Scratch” model.
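A quantity of this kind can be computed explicitly in the exponential setting of Corollary 3.2 with constant infectivity \(\tilde\lambda\equiv\lambda\) (Remark 3.3). There the aggregate infectivity equals \(\lambda\) times the infected fraction, so the limit reduces to the classical SIR ODE. We assume that (3.3) reads \(\bar S(t)=\bar S(0)-\int_0^t\bar{\mathfrak i}(s,0)ds\) and that there are no initially recovered individuals. Then \(\int_0^\infty\bar{\mathfrak i}(t,0)dt=\bar S(0)-\bar S(\infty)\), and \(\bar S(\infty)\) solves the classical final-size equation \(\log(\bar S(0)/\bar S(\infty))=(\lambda/\mu)(\bar S(0)+\bar I(0)-\bar S(\infty))\).

The sketch below uses hypothetical parameter values. It solves this equation by bisection and cross-checks the result against an RK4 integration of the ODE.

```python
import math


def final_size_sir(s0, i0, lam, mu, tol=1e-14):
    """S(inf) for the classical SIR ODE S' = -lam*S*I, I' = lam*S*I - mu*I (no initially recovered).

    Solves log(s0 / s) = (lam / mu) * (s0 + i0 - s) on (0, s0) by bisection. The left side minus
    the right side is convex in s, tends to +inf at 0+ and is negative at s0, so the root is unique.
    """
    r0 = lam / mu

    def h(s):
        return math.log(s0 / s) - r0 * (s0 + i0 - s)

    lo, hi = 1e-300, s0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if h(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def simulate_sir(s0, i0, lam, mu, T=300.0, dt=0.02):
    """Classical RK4 integration of the same SIR ODE up to time T; returns (S(T), I(T))."""
    def rhs(s, i):
        return -lam * s * i, lam * s * i - mu * i

    s, i = s0, i0
    for _ in range(int(round(T / dt))):
        k1 = rhs(s, i)
        k2 = rhs(s + 0.5 * dt * k1[0], i + 0.5 * dt * k1[1])
        k3 = rhs(s + 0.5 * dt * k2[0], i + 0.5 * dt * k2[1])
        k4 = rhs(s + dt * k3[0], i + dt * k3[1])
        s += dt / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0])
        i += dt / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1])
    return s, i


s0, i0, lam, mu = 0.99, 0.01, 0.5, 0.2   # hypothetical values, lam / mu = 2.5
s_inf = final_size_sir(s0, i0, lam, mu)
s_T, i_T = simulate_sir(s0, i0, lam, mu)
print("S(0) - S(inf):", s0 - s_inf, " ODE estimate:", s0 - s_T)
```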

4 On the SIS Model with Infection-Age Dependent Infectivity

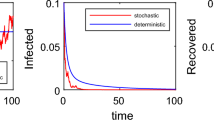

In the SIS model, the infectious individuals become susceptible once they recover. Since \(S^N(t) + I^N(t) = N\) for each \(t\ge 0\) with a population size N, the epidemic dynamics is determined by the process \(I^N(t)\) alone, and we have the same representations of the processes \({\mathfrak {I}}^N_0(t,x)\) and \({\mathfrak {I}}^N_1(t,x)\) in (2.5) and (2.7), respectively, while in the representations of \(A^N\) in (2.4) and \(\Upsilon ^N\) in (2.3), the process \(S^N(t)\) is replaced by \(S^N(t) = N - I^N(t)\). The aggregate infectivity process \({{\mathcal {I}}}^N(t)\) is still given by (2.2). The two processes \(({\mathfrak {I}}^N, {{\mathcal {I}}}^N)\) determine the dynamics of the SIS epidemic model. Under Assumptions 2.1 and 2.2,

where

for \(t,x \ge 0\). If \({\bar{{\mathfrak {I}}}}(0,x)\) is differentiable and F is absolutely continuous, then the density function \({\bar{{\mathfrak {i}}}}(t,x)= \frac{\partial {\bar{{\mathfrak {I}}}}(t,x)}{\partial x}\) exists and again satisfies (3.1). The same calculations as in the SIR model lead to (3.4), (3.5) and (3.2). However, the formula for \({\bar{S}}(t)\) is different in the SIS model, that is, (3.3) does not hold. Instead, we have

Thus, the Volterra equation on the boundary reads

whose form is similar to the one for the SIR model.

It is also clear that if the c.d.f. \( F(t) = 1- e^{-\beta t}\), we have the same PDE for \({\bar{{\mathfrak {i}}}}(t,x)\) as given in (3.19) with \(\mu (x) = \beta \) and the boundary condition:

If the c.d.f. F of the infectious period is not absolutely continuous, but \({\bar{{\mathfrak {I}}}}(0,x)\) is differentiable, then we obtain essentially the same result as in Proposition 3.2, except that (3.22) is replaced by (4.4), and (3.25) by (4.5).

Recall that the standard SIS model has a nontrivial equilibrium point \({\bar{I}}^*= 1-\beta /\lambda \) if \(\beta <\lambda \). Here \(\lambda \) is the infection rate (we drop the bar over \(\lambda \) for convenience), and \(1/\beta \) is the mean infectious period. See Sect. 4.3 in [25] for an account of the SIS model with general infectious periods. Here we consider the more general model with infection-age dependent infectivity. Note that below we give explicit expressions for the equilibria, assuming that they exist. We do not prove the existence of the limit of \( {\bar{{\mathfrak {I}}}}(t,x)\) as \(t\rightarrow \infty \), which we leave for future work.
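The classical statement just recalled can be checked numerically. This sketch integrates the standard constant-rate SIS ODE \(I'=\lambda I(1-I)-\beta I\) (our notation for the classical model, with hypothetical parameter values) by forward Euler. It then compares the long-time value with \(1-\beta/\lambda\) above threshold and with 0 below threshold.

```python
def simulate_sis(i0, lam, beta, T=200.0, dt=0.01):
    """Forward-Euler integration of the classical SIS ODE  I' = lam * I * (1 - I) - beta * I."""
    i = i0
    for _ in range(int(round(T / dt))):
        i += dt * (lam * i * (1.0 - i) - beta * i)
    return i


def sis_equilibrium(lam, beta):
    """Nontrivial equilibrium 1 - beta/lam when beta < lam, otherwise the disease-free state 0."""
    return 1.0 - beta / lam if beta < lam else 0.0


print(simulate_sis(0.01, lam=0.5, beta=0.2), sis_equilibrium(0.5, 0.2))  # above threshold
print(simulate_sis(0.01, lam=0.2, beta=0.5), sis_equilibrium(0.2, 0.5))  # below threshold
```

Forward Euler has the same fixed points as the ODE, and the step size here is far inside its stability range. The long-time iterate therefore approaches the equilibrium itself rather than a discretization artifact.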

Proposition 4.1

Suppose that the limit \({\bar{{\mathfrak {I}}}}^*(x) := \lim _{t\rightarrow \infty } {\bar{{\mathfrak {I}}}}(t,x)\) exists, and let \( {\bar{I}}^*={\bar{{\mathfrak {I}}}}^*(\infty )\). If \(R_0=\int _0^\infty {{\bar{\lambda }}}(y)dy\le 1\), then \({\bar{I}}^*=0\) (the disease-free equilibrium). In the complementary case \(R_0=\int _0^\infty {{\bar{\lambda }}}(y)dy>1\), if \({\bar{{\mathfrak {I}}}}(0,{\bar{x}})>0\),

The density function \({\bar{{\mathfrak {i}}}}(t,x)\) has an equilibrium \( {\bar{{\mathfrak {i}}}}^*(x)\) in the age of infection x, given by

where \(\beta ^{-1}=\int _0^\infty F^c(t)dt \in (0,\infty )\) is the expectation of the duration of the infectious period. If F has a density f, then the equilibrium density \( {\bar{{\mathfrak {i}}}}^*(x)\) satisfies

Proof

The fact that \( {\bar{I}}^*=0\) if \(R_0\le 1\) and \(>0\) if \(R_0>1\) follows from branching process arguments, and the fact that the start of the epidemic can be approximated by a branching process, see e.g. Sect. 1.3 in [5]. Assume that the equilibrium \({\bar{{\mathfrak {I}}}}^*(x) := {\bar{{\mathfrak {I}}}}(\infty ,x)\) exists. We deduce from (4.3), combined with (3.16), that \({\bar{{\mathfrak {I}}}}^*(x)\) must satisfy

where \(F_e(x)=\beta \int _0^x F^c(s) ds\), the equilibrium (stationary excess) distribution. Letting \(x\rightarrow \infty \) in this formula, we deduce

Combining the last two equations, we obtain

Plugging this formula in the previous identity, we deduce that

Then the formula (4.6) can be directly deduced from this equation. The formula (4.7) follows by taking the derivative with respect to x in (4.9). \(\square \)
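The equilibrium (stationary excess) distribution \(F_e(x)=\beta\int_0^xF^c(s)ds\) used in the proof is easy to tabulate. The sketch uses a cumulative trapezoidal rule and approximates \(1/\beta=\int_0^\infty F^c\) by truncating at a hypothetical cutoff `x_max`. This is an assumption that requires the tail beyond `x_max` to be negligible. Two sanity checks follow:

- for an exponential F, memorylessness gives \(F_e=F\);
- for F uniform on [0, 2], \(\beta=1\) and \(F_e(x)=x-x^2/4\) on [0, 2].

```python
import numpy as np


def stationary_excess_cdf(F_c, x_max, n=20_000):
    """Grid approximation of F_e(x) = beta * int_0^x F^c(s) ds with 1/beta = int_0^inf F^c(s) ds.

    The mean 1/beta is approximated by the integral up to x_max (the tail beyond x_max is
    assumed negligible). Returns the grid, F_e on the grid, and the estimate of beta.
    """
    x = np.linspace(0.0, x_max, n + 1)
    fc = F_c(x)
    # cumulative trapezoidal rule for int_0^x F^c(s) ds
    cum = np.concatenate(([0.0], np.cumsum(0.5 * (fc[1:] + fc[:-1]) * np.diff(x))))
    mean = cum[-1]
    return x, cum / mean, 1.0 / mean


# Exponential infectious period with rate 0.7: F_e should coincide with F.
x, Fe, beta = stationary_excess_cdf(lambda s: np.exp(-0.7 * s), x_max=60.0)

# Uniform infectious period on [0, 2]: beta = 1 and F_e(x) = x - x^2/4 on [0, 2].
x_u, Fe_u, beta_u = stationary_excess_cdf(lambda s: np.clip(1.0 - s / 2.0, 0.0, None), x_max=4.0)
print(beta, np.max(np.abs(Fe - (1.0 - np.exp(-0.7 * x)))), beta_u)
```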

Remark 4.1

If the distribution F is exponential, that is, \(F(x) = 1-e^{-\beta x}\), then we obtain

where \({\bar{I}}^*\) is given in (4.6).

Remark 4.2

Suppose that \(\lambda _i(t)=\lambda (t){{\textbf{1}}}_{t<\eta _i}\), where \(\lambda (t)\) is a deterministic function, as in Remark 3.4. Then \({{\bar{\lambda }}}(t) = \lambda (t) F^c(t)\). If \(\lambda (t) \equiv \lambda \) is constant and F has mean \(\beta ^{-1}\), then \(R_0=\lambda \int _0^\infty F^c(y)dy=\lambda /\beta \), and \({\bar{I}}^*\) in (4.6) becomes

which reduces to the well known result for the standard SIS model with constant rates, assuming \(\beta <\lambda \).

5 Proof of the FLLN

In this section, we prove Theorem 2.1. We will need the following theorem. A similar pre-tightness criterion can be found in Theorem 3.5.1 in Chapter 6 of [21], which extends that in the Corollary on page 83 of [4] to the space \(C([0,1]^k, {\mathbb {R}})\). Those proofs can be easily extended to the space \(D_D\). For the convenience of the reader, we give a proof of the following result in Sect. 6 below.

Theorem 5.1

Let \(\{X^N: N \ge 1\}\) be a sequence of random elements in \(D_D\). If the following two conditions are satisfied: for any \(T,S>0\),

(i) for any \(\epsilon >0\), \( \sup _{t \in [0,T]}\sup _{s\in [0,S]} {\mathbb {P}}\big ( |X^N(t, s)|> \epsilon \big ) \rightarrow 0\) as \(N\rightarrow \infty \), and

(ii) for any \(\epsilon >0\), as \(\delta \rightarrow 0\),

$$\begin{aligned}&\limsup _{N\rightarrow \infty } \sup _{t\in [0,T]} \frac{1}{\delta } {\mathbb {P}}\bigg ( \sup _{u \in [0,\delta ]}\sup _{s \in [0,S]} |X^N(t+u,s) - X^N(t,s)|> \epsilon \bigg ) \rightarrow 0, \\&\limsup _{N\rightarrow \infty } \sup _{s\in [0,S]} \frac{1}{\delta } {\mathbb {P}}\bigg ( \sup _{v \in [0,\delta ]}\sup _{t \in [0,T]} |X^N(t,s+v) - X^N(t,s)| > \epsilon \bigg ) \rightarrow 0, \end{aligned}$$

then \(X^N(t,s)\rightarrow 0 \) in probability, locally uniformly in t and s, as \(N\rightarrow \infty \).

We shall also use repeatedly the following Lemma.

Lemma 5.1

Let \(f\in D({\mathbb {R}}_+)\) and \(\{g_N\}_{N\ge 1}\) be a sequence of elements of \(D_\uparrow ({\mathbb {R}}_+)\) which is such that \(g_N\rightarrow g\) locally uniformly, where \(g\in C_\uparrow ({\mathbb {R}}_+)\). Then for any \(T>0\),

Proof

The assumption implies that the sequence of measures \(g_N(dt)\) converges weakly, as \(N\rightarrow \infty \), towards the measure g(dt). Since moreover f is bounded, and the set of discontinuities of f is of g(dt) measure 0, this is essentially a minor improvement of the Portmanteau theorem, see [4]. \(\square \)
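The convergence used in Lemma 5.1 is that of \(\int_0^T f\,dg_N\) to \(\int_0^T f\,dg\). It can be seen on a hypothetical toy example with \(g(t)=t\) and \(g_N(t)=\lfloor Nt\rfloor/N\), so that \(|g_N-g|\le 1/N\) uniformly. The test function \(f=\mathbf 1_{[1/2,\infty)}\) is càdlàg, and its single discontinuity has \(dg\)-measure zero.

Since \(g_N\) is a pure-jump nondecreasing function, the Stieltjes integral over (0, T] (the convention of Lemma 5.5) is just a sum of f at the jump times weighted by the jump sizes. In this example the error is at most 1/N.

```python
import numpy as np


def stieltjes_step(f, jump_times, jump_sizes, T):
    """int_(0,T] f(t) dg(t) for a nondecreasing pure-jump g: sum of f(jump time) * jump size."""
    mask = (jump_times > 0.0) & (jump_times <= T)
    return float(np.sum(f(jump_times[mask]) * jump_sizes[mask]))


def f(t):
    """Cadlag test function with one jump, at t = 1/2 (a point of zero dg-measure for g(t) = t)."""
    return (t >= 0.5).astype(float)


def integral_against_g_N(N, T=1.0):
    """int_(0,T] f dg_N for g_N(t) = floor(N t) / N, which jumps by 1/N at each t = k/N."""
    k = np.arange(1, int(np.floor(N * T)) + 1)
    return stieltjes_step(f, k / N, np.full(k.size, 1.0 / N), T)


for N in (10, 100, 1000, 10000):
    print(N, integral_against_g_N(N))   # approaches int_0^1 f(t) dt = 1/2
```

If g itself jumped at 1/2, the mass of that jump would be assigned to f(1/2) by g but not necessarily by the approximations. This is why the lemma requires the limit g to be continuous.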

5.1 Convergence of \({\mathfrak {I}}^{N}_0(t,x) \)

We first treat the process \({\mathfrak {I}}^{N}_0(t,x) \) in (2.5).

Lemma 5.2

Under Assumption 2.1,

in probability, where the limit \({\bar{{\mathfrak {I}}}}_0(t,x)\) is given by

Proof

Recall that

Note that the pair of variables \(({\tilde{\tau }}_{j,0}^N, \eta ^0_j)\) satisfies (2.1), and \({\mathfrak {I}}^N(0, (x-t)^+) = \max \{j\ge 1: {\tilde{\tau }}_{j,0}^N \le (x-t)^+\}\). Let

We will first show that \(\widetilde{{\mathfrak {I}}}^N_0(t,x) \rightarrow {\bar{{\mathfrak {I}}}}_0(t,x)\) (this will be step 1 of the proof), and then that \({\bar{{\mathfrak {I}}}}^{N}_0(t,x) - \widetilde{{\mathfrak {I}}}^N_0(t,x)\rightarrow 0\) (this will be step 2 of the proof), both in probability, locally uniformly in t and x, as \(N\rightarrow \infty \).

Step 1 We show that, as \(N\rightarrow \infty \),

From Lemma 5.1, Assumption 2.1 and the continuous mapping theorem, we deduce that for any \(t,x \ge 0\), \(\widetilde{{\mathfrak {I}}}^N_0(t,x) \rightarrow {\bar{{\mathfrak {I}}}}_0(t,x)\) in probability, as \(N\rightarrow \infty \). It thus remains to show that the sequence \(\{X^N:=\widetilde{{\mathfrak {I}}}^N_0-{\bar{{\mathfrak {I}}}}_0,\ N\ge 1\}\) satisfies condition (ii) in Theorem 5.1. In fact, it is easily seen that it is sufficient to verify condition (ii) with \(X^N=\widetilde{{\mathfrak {I}}}^N_0\). Indeed, both

tend to 0, as \(\delta \rightarrow 0\), which is an easy consequence of the computations which follow. Let us now consider \(X^N=\widetilde{{\mathfrak {I}}}^N_0\). We have

which gives

Consequently,

The limit in probability of the first term on the right of the last inequality equals

which tends to 0 as \(\delta \rightarrow 0\), since \(F^c\) is continuous on the right and the integrand is between 0 and 1. The second term on the right of the above inequality is nonnegative and upper bounded by

which converges in probability towards

and this last expression tends to 0 as \(\delta \rightarrow 0\). Combining the above arguments, we deduce that for \(\epsilon >0\), if \(\delta >0\) is small enough,

We next consider

Hence,

The term on the right of the last inequality converges in probability as \(N\rightarrow \infty \), towards

which tends to 0 as \(\delta \) tends to 0. Again we easily deduce from these computations that for any \(\epsilon >0\), if \(\delta >0\) is small enough,

We have established (5.4).

Step 2 We finally show that \(V^N(t,x):= {\bar{{\mathfrak {I}}}}^{N}_0(t,x) - \widetilde{{\mathfrak {I}}}^{N}_0(t,x)\) satisfies the two conditions of Theorem 5.1. We have

We first check condition (i) from Theorem 5.1. We have

where the second term in the first equality is equal to zero by the independence of \(\eta ^0_j\) and \(\eta ^0_{j'}\) given the times \({\tilde{\tau }}_{j,0}^N\) and \({\tilde{\tau }}_{j',0}^N\) and by using a conditioning argument. This implies that as \(N\rightarrow \infty \),

and thus condition (i) in Theorem 5.1 holds.

We next show condition (ii) from Theorem 5.1, that is, for any \(\epsilon >0\), as \(\delta \rightarrow 0\),

and

We first prove (5.5). We have

For the first term,

By the conditional independence of the \(\eta ^0_j\)’s, the first term on the right of (5.8) is bounded by

which converges to zero as \(N\rightarrow \infty \). Since \( {\bar{{\mathfrak {I}}}}(0,\cdot )\) is continuous by Assumption 2.1, Lemma 5.1 implies that the \(\limsup _N\) of the second term is upper bounded by

which is zero for \(\delta >0\) small enough (clearly uniformly over \(t \in [0,T]\)).

The second term on the right of (5.7) is treated exactly as the last term we have just analyzed. Finally for the third term, we note that

Thanks to Assumption 2.1, the \(\limsup _N\) of this probability is upper bounded by

which is zero for \(\delta >0\) small enough, since \( {\bar{{\mathfrak {I}}}}(0,\cdot )\) is continuous. The uniformity over \(t\in [0,T]\) is obvious. Thus we have shown (5.5).

We next prove (5.6). Observe that

from which we obtain

Then following the same argument as for the second term on the right of (5.7), we can conclude (5.6). \(\square \)

5.2 Convergence of \({\bar{{\mathfrak {I}}}}^{N}_1\)

We first write the process \(A^N\) as

where

and

where \({\overline{Q}}(ds,du) = Q(ds,du) - ds du\) is the compensated PRM.

Lemma 5.3

Under Assumption 2.2, the process \(\{M^N_A(t): t\ge 0\}\) is a square-integrable martingale with respect to the filtration \({{\mathcal {F}}}^N_A= \{{{\mathcal {F}}}^N_A(t): t\ge 0\}\) where

The quadratic variation of \(M^N_A(t)\) is given by

Proof

It is clear that \(M^N_A(t) \in {{\mathcal {F}}}^N_A(t)\), and \({\mathbb {E}}[|M_A^N(t)|] \le 2 {\mathbb {E}}[\Lambda ^N(t)] \le 2 \lambda ^* Nt <\infty \) for each \(t\ge 0\), under Assumption 2.2. It suffices to verify the martingale property: for \(t_2>t_1\ge 0\),

which can be checked using the above definition of the filtration. In addition, \({\mathbb {E}}[(M_A^N(t))^2] = {\mathbb {E}}[\Lambda ^N(t)] \le \lambda ^* N t<\infty \) for each \(t\ge 0\). The rest is standard. \(\square \)
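The identities \({\mathbb E}[M_A^N(t)]=0\) and \({\mathbb E}[(M_A^N(t))^2]={\mathbb E}[\Lambda^N(t)]\) come from representing the counting process as a thinning of a PRM. A small Monte Carlo sketch makes this visible. For simplicity it assumes a deterministic intensity bounded by \(\lambda^*\), a hypothetical stand-in for \(\Upsilon^N\), which in the paper is random and adapted.

The sketch draws a PRM Q on \([0,t]\times[0,\lambda^*]\) with Lebesgue mean measure and keeps the points (s, u) with u at most the intensity at s. It then checks that the compensated count M = A − Λ has mean close to 0 and variance close to Λ.

```python
import numpy as np


def thinned_counts(intensity, lam_star, t, n_samples, rng):
    """A(t) = Q({(s, u): s <= t, u <= intensity(s)}) for independent PRMs Q on [0, t] x [0, lam_star].

    Requires 0 <= intensity <= lam_star; returns one count per sample.
    """
    counts = rng.poisson(lam_star * t, size=n_samples)     # number of PRM points per sample
    total = counts.sum()
    s = rng.uniform(0.0, t, size=total)                    # time coordinates of the points
    u = rng.uniform(0.0, lam_star, size=total)             # "mark" coordinates of the points
    kept = (u <= intensity(s)).astype(float)               # thinning by the intensity
    owner = np.repeat(np.arange(n_samples), counts)        # sample index of each point
    return np.bincount(owner, weights=kept, minlength=n_samples)


lam_star, t = 2.0, 6.0
intensity = lambda s: lam_star * (1.0 - np.exp(-s))        # hypothetical deterministic intensity
compensator = lam_star * (t - 1.0 + np.exp(-t))            # Lambda(t) = int_0^t intensity(s) ds
rng = np.random.default_rng(0)
A = thinned_counts(intensity, lam_star, t, 20_000, rng)
M = A - compensator                                        # compensated counting process at time t
print("mean of M:", M.mean(), " var of M:", M.var(), " Lambda(t):", compensator)
```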

Recall that \(({\bar{A}}^N, {\bar{S}}^N, {\bar{\Upsilon }}^N) := N^{-1}(A^N, S^N, \Upsilon ^N) \).

Lemma 5.4

Under Assumptions 2.1 and 2.2, the sequence of processes \(\{({\bar{A}}^N, {\bar{S}}^N): N \in {{\mathbb {N}}}\}\) is tight in \(D^2\). The limit of each convergent subsequence of \(\{{\bar{A}}^N\}\), denoted by \({\bar{A}}\), satisfies

and

Proof

It is clear that under Assumption 2.2, if \({\bar{\Lambda }}^N(t):=\int _0^t {\bar{\Upsilon }}^N(u) du\), \({\bar{\Lambda }}^N(0)=0\) and

Since

it follows from Doob’s inequality that \({\bar{M}}^N_A(t)\) tends to 0 in probability, locally uniformly in t. The tightness of \(\{{\bar{A}}^N: N \in {{\mathbb {N}}}\}\) in D follows. Since \({\bar{S}}^N = {\bar{S}}^N(0) - {\bar{A}}^N\) and \({\bar{S}}^N(0) \Rightarrow {\bar{S}}(0)\) from Assumption 2.1, we obtain the tightness of \(\{{\bar{S}}^N: N \in {{\mathbb {N}}}\}\) in D, and thus the claim of the lemma. \(\square \)

In the remainder of this section, we consider a convergent subsequence of \({\bar{A}}^N\).

Recall that

Lemma 5.5

Under Assumptions 2.1 and 2.2, along a subsequence of \({\bar{A}}^N\) which converges weakly to \({\bar{A}}\),

where the limit \({\bar{{\mathfrak {I}}}}_1(t,x)\) is given by

Proof

Let

We can write (from now on, \(\int _a^b\) stands for \(\int _{(a,b]}\))

Then from Lemma 5.1, we deduce that for any \(t,x\ge 0\),

We will next show that for any \(\epsilon >0\), there exists \(\delta >0\) such that the following holds for any (t, x):

It is not hard to deduce from (5.19) and (5.20), by a two-dimensional extension of the argument of the Corollary on page 83 of [4], that as \(N\rightarrow \infty \), \(\breve{{\mathfrak {I}}}^N_1(t,x) \Rightarrow {\bar{{\mathfrak {I}}}}_1(t,x)\) locally uniformly in t and x. Whenever \(t\le t'\le t+\delta \) and \(x\le x'\le x+\delta \), we have

Since \({\bar{A}}^N(t)\Rightarrow \int _0^t{\bar{\Upsilon }}(s)ds\) locally uniformly in t, and \({\bar{\Upsilon }}(s)\le \lambda ^*\), the limit in law of the right hand side of the last inequality is bounded by

which is less than \(\epsilon \) for \(\delta >0\) small enough. Hence, (5.20) follows.

Let now

To prove (5.16), it remains to show that, as \(N\rightarrow \infty \),

We apply Theorem 5.1. By Markov’s inequality and the decomposition of \(A^N(t)\) in (5.9) with \({\mathbb {E}}[M_A^N(t)]=0\), we obtain

The result then follows from the next two lemmas. \(\square \)

Lemma 5.6

Under the assumptions of Lemma 5.5, for \(\epsilon >0\), as \(\delta \rightarrow 0\),

Proof

We have

Then we obtain

Let \(\breve{Q}(ds,dr,dz)\) denote a PRM on \({\mathbb {R}}_+^3\) with mean measure \(\nu (ds,dr,dz) = ds dr F(dz)\), and let \(\widetilde{Q}\) denote the associated compensated PRM. By Markov's inequality, the first term is bounded by \(9\epsilon ^{-2}\) times

where the last inequality follows from (5.14). The first term converges to zero as \(N\rightarrow \infty \), and we note that

since \(F(r)\le 1\). Hence

For the second term in (5.23), we have

where the first term converges to zero as \(N \rightarrow \infty \) by the convergence \({\bar{M}}^N_A (t) \rightarrow 0\) in probability, locally uniformly in t, while the second term is bounded as in (5.24).

For the last term in (5.23), we use the martingale decomposition of \({\bar{A}}^N\) and the bound for \({\bar{\Upsilon }}^N\) in (5.14), and obtain

which, since \({\bar{M}}_A^N(t)\rightarrow 0\) locally uniformly in t, implies that, provided \(\delta <\epsilon /\lambda ^*\),

Thus we have shown that (5.22) holds. \(\square \)

Lemma 5.7

Under the assumptions of Lemma 5.5, for \(\epsilon >0\), as \(\delta \rightarrow 0\),

Proof

Observe that

from which we obtain

Then the claim follows from the same argument as the one used to treat the last term in (5.23) in the end of the proof of the previous lemma. \(\square \)

5.3 Convergence of the Aggregate Infectivity Process

Recall \( {\mathcal {I}}^N\) in (2.2), and let \(\overline{{\mathcal {I}}}^N := N^{-1} {\mathcal {I}}^N\). Define

Lemma 5.8

Under Assumptions 2.1 and 2.2, along a subsequence of \({\bar{A}}^N\) which converges weakly to \({\bar{A}}\), we have in probability,

Proof

We write

where

We first consider \({{\overline{\Xi }}}^N_0(t)\). For each fixed t, by conditioning on \(\sigma \{I^N(0,y): 0\le y \le {\bar{x}}\} = \sigma \{{\tilde{\tau }}^N_{j,0}, j=1,\dots , I^N(0)\}\), we obtain

We then have for \(t,u>0\),

Then by Assumption 2.2, writing \(\lambda ^0_j(t) = \sum _{\ell =1}^k\lambda ^{0,\ell }_j(t) {{\textbf{1}}}_{[\zeta _j^{\ell -1}, \zeta _j^\ell )}(t)\), we have

Both terms on the right hand side are increasing in u, and thus, we have

Here for the second term, we have

hence

The first term on the right of (5.29) tends to 0 as \(N\rightarrow \infty \), since by conditioning on \(\sigma \{{\mathfrak {I}}^N(0,y): 0\le y \le {\bar{x}}\} = \sigma \{{\tilde{\tau }}^N_{j,0}, j=1,\dots , I^N(0)\}\), and since the \(\zeta _j^\ell \)’s are mutually independent and globally independent of the \({\tilde{\tau }}^N_{j,0}\)’s, we obtain

The second term on the right of (5.29) equals

whose limsup as \(N\rightarrow \infty \) is bounded from above by

Since for each \(1\le \ell \le k\),

is continuous and equals 0 at \(\delta =0\), for any \(\epsilon >0\), there exists \(\delta >0\) small enough such that the above quantity vanishes. Thus, we have shown that

Next, consider \(\Delta _0^{N,2}(t,u)\), which is \(\Delta _0^{N,1}(t,u)\), with the j-th term in the absolute value being replaced by its conditional expectation given \({\tilde{\tau }}^N_{j,0}\). The computations which led above to (5.28) give

So the same arguments as those used above yield that (5.30) holds with \(\Delta _0^{N,1}(t,u)\) replaced by \(\Delta _0^{N,2}(t,u)\).

Thus we have shown that in probability, \( {{\overline{\Xi }}}^N_0 \rightarrow 0\) in D as \( N \rightarrow \infty \). The convergence \({{\overline{\Xi }}}^N_1\rightarrow 0\) in D in probability follows from the proof of Lemma 4.6 in [10]. In fact, the above proof of \( {{\overline{\Xi }}}^N_0 \rightarrow 0\) can be adapted to that proof by observing the similar roles of \(A^N\) and \({\mathfrak {I}}^N(0,\cdot )\). This completes the proof. \(\square \)

Lemma 5.9

Under Assumptions 2.1 and 2.2, along a subsequence of \({\bar{A}}^N\) which converges weakly to \({\bar{A}}\),

where \( \widetilde{{{\mathcal {I}}}}(t)\) is given by

Proof

By the above lemma, it suffices to show that

The expression of \( \widetilde{{{\mathcal {I}}}}^N\) in (5.27) can be rewritten as

It follows from Lemma 5.1 that for any \(t>0\), as \(N\rightarrow \infty \), \(\widetilde{{{\mathcal {I}}}}^N(t) \Rightarrow \widetilde{{{\mathcal {I}}}}(t)\). It remains to show that the sequence \(\widetilde{{{\mathcal {I}}}}^N\) is tight in D. For that purpose, exploiting the Corollary on page 83 of [4], it suffices to show that for any \(\epsilon >0\),

(5.35) follows from the fact that, with \(G_\delta (s):=\sup _{0\le u\le \delta }|{{\bar{\lambda }}}(s+u)-{{\bar{\lambda }}}(s)|\),

Now, for \({\bar{{\mathfrak {I}}}}(0,dy)\)-a.e. y, \(G_\delta (y+t)\rightarrow 0\) as \(\delta \rightarrow 0\). Since also \(0\le G_\delta (y+t)\le \lambda ^*\), Lebesgue's dominated convergence theorem gives \(\int _0^{{\bar{x}}}G_\delta (y+t){\bar{{\mathfrak {I}}}}(0,dy)\rightarrow 0\) as \(\delta \rightarrow 0\). Hence for \(\delta >0\) small enough, this quantity is less than \(\epsilon \), and the indicator vanishes.

It remains to establish (5.36). We have

hence

The result follows since the sum of the first two terms on the right is less than \(\epsilon /2\) for \(\delta >0\) small enough, while the last two terms tend to 0 as \(N\rightarrow \infty \). \(\square \)

5.4 Completing the Proof of Theorem 2.1

By Lemmas 5.2 and 5.5, we have that, along a subsequence,

where \({{\bar{{\mathfrak {I}}}}}_0(t,x)\) and \({{\bar{{\mathfrak {I}}}}}_1(t,x)\) are given in (5.2) and (5.17), respectively. Also recall that \({\bar{S}}^N={\bar{S}}^N(0) - {\bar{A}}^N\) by (2.9). We need to show the joint convergence

or equivalently,

Indeed, first thanks to Lemma 5.8, we can replace \({\overline{{{\mathcal {I}}}}}^N\) by \(\widetilde{{{\mathcal {I}}}}^N\). Next we have the decompositions

where \(\widetilde{{{\mathcal {I}}}}_0^N\) and \(\widetilde{{{\mathcal {I}}}}_1^N\) are respectively the first and the second term on the right of the identity (5.34). By the independence of the quantities associated with initially and newly infected individuals, it suffices to prove the joint convergence of the processes \(({{\bar{{\mathfrak {I}}}}}_0^N, \widetilde{{{\mathcal {I}}}}_0^N)\) and that of the processes \(({\bar{A}}^N,{{\bar{{\mathfrak {I}}}}}_1^N,\widetilde{{{\mathcal {I}}}}_1^N)\) separately. We have proved in Lemma 5.2 that \({{\bar{{\mathfrak {I}}}}}_0^N\rightarrow {{\bar{{\mathfrak {I}}}}}_0\) in \(D_D\) in probability, and it follows from the arguments in the proof of Lemma 5.9 that \(\widetilde{{{\mathcal {I}}}}_0^N\rightarrow \widetilde{{{\mathcal {I}}}}_0\) in D in probability, where \(\widetilde{{{\mathcal {I}}}}_0\) is the first term on the right of the identity (5.32). Hence, the joint convergence \(({{\bar{{\mathfrak {I}}}}}_0^N, \widetilde{{{\mathcal {I}}}}_0^N) \rightarrow ({{\bar{{\mathfrak {I}}}}}_0,\widetilde{{{\mathcal {I}}}}_0)\) in \(D^2\) in probability is immediate.

Exploiting again (5.21), we see that the joint convergence \(({\bar{A}}^N,{{\bar{{\mathfrak {I}}}}}_1^N,\widetilde{{{\mathcal {I}}}}_1^N)\Rightarrow ({\bar{A}}, {{\bar{{\mathfrak {I}}}}}_1, \widetilde{{{\mathcal {I}}}}_1)\) will be a consequence of

where \(\widetilde{{{\mathcal {I}}}}_1\) denotes the second term on the right of the identity (5.32). Since \(\breve{{\mathfrak {I}}}^N_1(t,x) = \int _{(t-x)^+}^t F^c(t-s) d {\bar{A}}^N(s)\) and \(\widetilde{{{\mathcal {I}}}}_1^N(t)=\int _0^t {{\bar{\lambda }}}(t-s) d{\bar{A}}^N(s)\), the joint finite dimensional convergence is a consequence of the continuous mapping theorem and Lemma 5.1. Hence the result follows from tightness. We have proved the joint convergence property in (5.37).

Recall the expression of \({{\overline{\Upsilon }}}^N(t) = {\bar{S}}^N(t)\overline{{\mathcal {I}}} ^N(t)\). Applying the continuous mapping theorem again, we obtain that

Thus by (5.13), we conclude that

Therefore, the limit \(({\bar{S}}, \widetilde{{{\mathcal {I}}}})\) satisfies the set of integral equations in (2.13), (2.14) and the limit \(\widetilde{{{\mathcal {I}}}}\) coincides with \({\overline{{{\mathcal {I}}}}}\) defined by (2.14). Then, the limit \(\widetilde{{\mathfrak {I}}}\) coincides with \({\bar{{\mathfrak {I}}}}\) in (2.15). The limits \({\bar{I}}\) in (2.18) and \({\bar{R}}\) in (2.16) then follow immediately. The set of integral equations has a unique deterministic solution. Indeed, it is easy to see that the system of equations (2.13) and (2.14) (together with the first part of (2.17)) has a unique solution \(({\bar{S}}, {\overline{{{\mathcal {I}}}}})\), given the initial values \({{\bar{{\mathfrak {I}}}}}(0,\cdot )\). The other processes \({{\bar{{\mathfrak {I}}}}}, {\bar{I}}, {\bar{R}}\) are then uniquely determined. Hence the whole sequence converges in probability.

From (2.15), we deduce that for all \(t>0\),

This proves the second equality in (2.17).

It remains to prove continuity. The continuity of \(t\mapsto {\bar{S}}(t)\) is clear. Let us prove that \(t\mapsto {{\overline{{{\mathcal {I}}}}}}(t)\) is continuous. Since \(\lambda _i\) is càdlàg and bounded, it is easily checked that \(t\mapsto {{\bar{\lambda }}}(t)={\mathbb {E}}[\lambda (t)]\) is also càdlàg; in fact, it is continuous if all the \(F_\ell \)’s, \(1\le \ell \le k\), are continuous. The discontinuity points of \({{\bar{\lambda }}}\) are the points where at least one of the laws of the \(\zeta ^\ell \) has an atom, so the set of these points is at most countable. Consequently, if \(t_n\rightarrow t\), the set of y’s where \({{\bar{\lambda }}}(t_n+y)\) may fail to converge to \({{\bar{\lambda }}}(t+y)\) is at most countable, and this set has zero \({{\bar{{\mathfrak {I}}}}}(0,dy)\) measure. Since moreover \(0\le {{\bar{\lambda }}}(t_n+y)\le \lambda ^*\), the dominated convergence theorem shows that \(t\mapsto \int _0^{{\bar{x}}}{{\bar{\lambda }}}(y+t){{\bar{{\mathfrak {I}}}}}(0,dy)\) is continuous. Let us now consider the second term in (2.14). We first note that since \({{\bar{\lambda }}}(t-s)\le \lambda ^*\) and \({\bar{S}}(t)\le 1\), it follows from (2.14), (2.17) and Gronwall’s lemma that \({{\overline{{{\mathcal {I}}}}}}(t)\le \lambda ^*e^{\lambda ^*t}\). Let \(t_n\rightarrow t\). We have

Clearly the right-hand side above tends to 0 as \(n\rightarrow \infty \). A similar argument shows that \({\bar{R}}\) and \({\bar{I}}\) are continuous, and that \((t,x)\mapsto {{\bar{{\mathfrak {I}}}}}(t,x)\) is continuous. Finally, since the convergence holds in \(D\times D\times D_D\times D\) and the limits are continuous, the convergence is locally uniform in t and x. This completes the proof of Theorem 2.1.
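The a priori bound \({{\overline{{{\mathcal {I}}}}}}(t)\le \lambda ^*e^{\lambda ^*t}\) invoked in the continuity argument can be written out explicitly. This sketch assumes that (2.14) consists of the two terms discussed there, that \({{\overline{{{\mathcal {I}}}}}}\) is nonnegative and locally bounded, and that the initial proportion of infected individuals satisfies \({{\bar{{\mathfrak {I}}}}}(0,{\bar{x}})\le 1\). Using \({{\bar{\lambda }}}\le \lambda ^*\) and \({\bar{S}}\le 1\),

\[
\begin{aligned}
{{\overline{{{\mathcal {I}}}}}}(t) &= \int _0^{{\bar{x}}}{{\bar{\lambda }}}(y+t)\,{{\bar{{\mathfrak {I}}}}}(0,dy) + \int _0^t {{\bar{\lambda }}}(t-s)\,{\bar{S}}(s)\,{{\overline{{{\mathcal {I}}}}}}(s)\,ds \\
&\le \lambda ^*\,{{\bar{{\mathfrak {I}}}}}(0,{\bar{x}}) + \lambda ^*\int _0^t {{\overline{{{\mathcal {I}}}}}}(s)\,ds
\;\le\; \lambda ^* + \lambda ^*\int _0^t {{\overline{{{\mathcal {I}}}}}}(s)\,ds ,
\end{aligned}
\]

so Gronwall’s lemma yields \({{\overline{{{\mathcal {I}}}}}}(t)\le \lambda ^*e^{\lambda ^*t}\) for all \(t\ge 0\).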

References

Aras, A.K., Liu, Y., Whitt, W.: Heavy-traffic limit for the initial content process. Stoch. Syst. 7(1), 95–142 (2017)

Balan, R.M., Saidani, B.: Weak convergence and tightness of probability measures in an abstract Skorohod space. arXiv preprint arXiv:1907.10522 (2019)

Bickel, P.J., Wichura, M.J.: Convergence criteria for multiparameter stochastic processes and some applications. Ann. Math. Stat. 42(5), 1656–1670 (1971)

Billingsley, P.: Convergence of Probability Measures. Wiley, New York (1999)

Britton, T., Pardoux, É.: Stochastic epidemics in a homogeneous community. In: Britton, T., Pardoux, É. (eds.) Stochastic Epidemic Models with Inference. Part I. Lecture Notes in Mathematics, pp. 1–120. Springer, Cham (2019)

Chen, Y., Yang, J., Zhang, F.: The global stability of an SIRS model with infection age. Math. Biosci. Eng. 11(3), 449–469 (2014)

Çinlar, E.: Probability and Stochastics. Springer, Berlin (2011)

Clémençon, S., Chi Tran, V., De Arazoza, H.: A stochastic SIR model with contact-tracing: large population limits and statistical inference. J. Biol. Dyn. 2(4), 392–414 (2008)

Duchamps, J.-J., Foutel-Rodier, F., Schertzer, E.: General epidemiological models: Law of large numbers and contact tracing. arXiv preprint arXiv:2106.13135 (2021)

Forien, R., Pang, G., Pardoux, É.: Epidemic models with varying infectivity. SIAM J. Appl. Math. 81(5), 1893–1930 (2021)

Forien, R., Pang, G., Pardoux, É.: Estimating the state of the Covid-19 epidemic in France using a model with memory. R. Soc. Open Sci. 8, 2023273 (2021)

Foutel-Rodier, F., Blanquart, F., Courau, P., Czuppon, P., Duchamps, J.-J., Gamblin, J., Kerdoncuff, É., Kulathinal, R., Régnier, L., Vuduc, L., et al.: From individual-based epidemic models to McKendrick–von Foerster PDEs: a guide to modeling and inferring COVID-19 dynamics. J. Math. Biol. 85, 43 (2022). https://doi.org/10.1007/s00285-022-01794-4

Gaubert, S., Akian, M., Allamigeon, X., Boyet, M., Colin, B., Grohens, T., Massoulié, L., Parsons, D.P., Adnet, F., Chanzy, É., et al.: Understanding and monitoring the evolution of the Covid-19 epidemic from medical emergency calls: the example of the Paris area. Comptes Rendus. Math. 358(7), 843–875 (2020)

Hoppensteadt, F.: An age dependent epidemic model. J. Franklin Inst. 297(5), 325–333 (1974)

Inaba, H.: Kermack and McKendrick revisited: the variable susceptibility model for infectious diseases. Jpn. J. Ind. Appl. Math. 18(2), 273–292 (2001)

Inaba, H., Sekine, H.: A mathematical model for Chagas disease with infection-age-dependent infectivity. Math. Biosci. 190(1), 39–69 (2004)

Kaplan, E.H.: OM Forum-COVID-19 scratch models to support local decisions. Manuf. Serv. Oper. Manag. 22(4), 645–655 (2020)

Kermack, W.O., McKendrick, A.G.: A contribution to the mathematical theory of epidemics. Proc. R. Soc. Lond. Ser. A 115(772), 700–721 (1927)

Kermack, W.O., McKendrick, A.G.: Contributions to the mathematical theory of epidemics. II. The problem of endemicity. Proc. R. Soc. Lond. Ser. A 138(834), 55–83 (1932)

Kermack, W.O., McKendrick, A.G.: Contributions to the mathematical theory of epidemics. III. Further studies of the problem of endemicity. Proc. R. Soc. Lond. Ser. A 141(843), 94–122 (1933)

Khoshnevisan, D.: Multiparameter Processes: An Introduction to Random Fields. Springer Science & Business Media, Berlin (2002)

Magal, P., McCluskey, C.: Two-group infection age model including an application to nosocomial infection. SIAM J. Appl. Math. 73(2), 1058–1095 (2013)

Martcheva, M.: An Introduction to Mathematical Epidemiology, vol. 61. Springer, Berlin (2015)

Pang, G., Pardoux, É.: Functional central limit theorems for epidemic models with varying infectivity. Stochastics (2022). https://doi.org/10.1080/17442508.2022.2124870

Pang, G., Pardoux, É.: Functional limit theorems for non-Markovian epidemic models. Ann. Appl. Probab. 32(3), 1615–1665 (2022)

Pang, G., Whitt, W.: Two-parameter heavy-traffic limits for infinite-server queues. Queueing Syst. 65(4), 325–364 (2010)

Pang, G., Zhou, Y.: Two-parameter process limits for an infinite-server queue with arrival dependent service times. Stoch. Process. Appl. 127(5), 1375–1416 (2017)

Thieme, H.R., Castillo-Chavez, C.: How may infection-age-dependent infectivity affect the dynamics of HIV/AIDS? SIAM J. Appl. Math. 53(5), 1447–1479 (1993)

Zhang, Z., Peng, J.: A SIRS epidemic model with infection-age dependence. J. Math. Anal. Appl. 331, 1396–1414 (2007)

Fodor, Z., Katz, S.D., Kovacs, T.G.: Why integral equations should be used instead of differential equations to describe the dynamics of epidemics. arXiv preprint arXiv:2004.07208 (2020)

Acknowledgements

We thank the reviewers for their helpful comments, which have improved the exposition of our paper. Guodong Pang is partly supported by the NSF grant DMS-2216765.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


Appendix: Proof of Theorem 5.1


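Given \(\delta >0\), we define the two sets

\[\Gamma _{T,\delta }=\{k\delta :\ k=0,1,\ldots ,\lfloor T/\delta \rfloor \},\qquad \Gamma _{S,\delta }=\{k\delta :\ k=0,1,\ldots ,\lfloor S/\delta \rfloor \}.\]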

For any \(t\in [0,T]\), we define \(\gamma _{T,\delta }(t)\) to be the element of \(\Gamma _{T,\delta }\) such that \(\gamma _{T,\delta }(t)\le t<\gamma _{T,\delta }(t)+\delta \), and for any \(s\in [0,S]\), we define \(\gamma _{S,\delta }(s)\) to be the element of \(\Gamma _{S,\delta }\) such that \(\gamma _{S,\delta }(s)\le s<\gamma _{S,\delta }(s)+\delta \).
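The map \(\gamma _{T,\delta }\) is simply rounding down to the grid. Assuming the two sets are the regular grids \(\Gamma _{T,\delta }=\{k\delta :\ k=0,1,\ldots ,\lfloor T/\delta \rfloor \}\) and \(\Gamma _{S,\delta }\) defined likewise (which is what the defining property \(\gamma _{T,\delta }(t)\le t<\gamma _{T,\delta }(t)+\delta \) requires), one has \(\gamma _{T,\delta }(t)=\delta \lfloor t/\delta \rfloor \). The helper names `grid` and `gamma` below are hypothetical. Exact `Fraction` arithmetic is used because floating-point division can round \(t/\delta \) down at grid points (for instance `0.3 / 0.1` evaluates to slightly less than 3).

```python
import math
from fractions import Fraction
from typing import List


def grid(T: Fraction, delta: Fraction) -> List[Fraction]:
    """Gamma_{T,delta} = {k * delta : k = 0, 1, ..., floor(T / delta)} (hypothetical helper)."""
    return [k * delta for k in range(math.floor(T / delta) + 1)]


def gamma(t: Fraction, delta: Fraction) -> Fraction:
    """Unique grid point g with g <= t < g + delta, namely delta * floor(t / delta)."""
    return delta * math.floor(t / delta)


if __name__ == "__main__":
    d = Fraction(1, 10)
    print(grid(Fraction(1), d))
    print(gamma(Fraction(3, 10), d))
```

For example, with \(\delta =1/10\), `gamma(Fraction(3, 10), Fraction(1, 10))` returns `Fraction(3, 10)`, whereas the float computation `0.1 * math.floor(0.3 / 0.1)` lands on the previous grid point.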

Let \((t,s)\) and \((t',s')\) be two points in \([0,T]\times [0,S]\) such that \(|t-t'|\vee |s-s'|\le \delta \). We have

Hence

It then follows from (ii) that, as \(\delta \rightarrow 0\),

This, combined with (i), implies the result. \(\square \)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Pang, G., Pardoux, É. Functional Law of Large Numbers and PDEs for Epidemic Models with Infection-Age Dependent Infectivity. Appl Math Optim 87, 50 (2023). https://doi.org/10.1007/s00245-022-09963-z


DOI: https://doi.org/10.1007/s00245-022-09963-z