Abstract

Nonzero-sum stochastic differential games with impulse controls offer a realistic and far-reaching modelling framework for applications within finance, energy markets, and other areas, but the difficulty in solving such problems has hindered their proliferation. Semi-analytical approaches make strong assumptions pertaining to very particular cases. To the author’s best knowledge, the only numerical method in the literature is the heuristic one we put forward in Aïd et al (ESAIM Proc Surv 65:27–45, 2019) to solve an underlying system of quasi-variational inequalities. Focusing on symmetric games, this paper presents a simpler, more precise and efficient fixed-point policy-iteration-type algorithm which removes the strong dependence on the initial guess and the relaxation scheme of the previous method. A rigorous convergence analysis is undertaken with natural assumptions on the players’ strategies, which admit graph-theoretic interpretations in the context of weakly chained diagonally dominant matrices. A novel provably convergent single-player impulse control solver is also provided. The main algorithm is used to compute with high precision equilibrium payoffs and Nash equilibria of otherwise very challenging problems, and even some which go beyond the scope of the currently available theory.

1 Introduction

Stochastic differential games model the interaction between players whose objective functions depend on the evolution of a certain continuous-time stochastic process. The subclass of impulse games focuses on the case where the players only act at discrete (usually random) points in time by shifting the process. In doing so, each of them incurs costs and possibly generates “gains” for the others at the same time. They constitute a generalization of the well-known (single-player) optimal impulse control problems [33, Chpt.7-10], which have found a wide range of applications in finance, energy markets and insurance [5, 9, 13, 23, 31], among plenty of other fields.

From a deterministic numerical viewpoint, an impulse control problem entails the resolution of a differential quasi-variational inequality (QVI) to compute the value function and, when possible, retrieve an optimal strategy. Policy-iteration-type algorithms [4, 15, 16] undoubtedly occupy a ubiquitous place in this respect, especially in the infinite horizon case.

The presence of a second player makes matters much more challenging, as one needs to find two optimal (or equilibrium) payoffs dependent on one another, and the optimal strategies take the form of Nash equilibria (NEs). And while impulse controls give a more realistic setting than “continuous” controls in applications such as the aforementioned, they normally lead to less tractable and more technical models.

It is not surprising, then, that the literature on impulse games is limited and mainly focused on the zero-sum case [8, 17, 21]. The more general and versatile nonzero-sum instance has only recently received attention. The authors of [1] consider for the first time a general two-player game where both participants act through impulse controls,Footnote 1 and characterize a certain type of equilibrium payoffs and NEs via a system of QVIs by means of a verification theorem. Using this result, they provide the first example of an (almost) fully analytically solvable game, motivated by central banks competing over the exchange rate. The result is generalized to N players in [10], which also gives a semi-analytical solution (i.e., depending on several parameters found numerically) to a concrete cash management problem.Footnote 2 A different, more probabilistic, approach is taken in [24] to find a semi-analytical solution to a strategic pollution control problem and to prove another verification theorem.

The previous examples, and the lack of others,Footnote 3 testify to how difficult it is to explicitly solve nonzero-sum impulse games. The analytical approaches require an educated guess to start with and (with the exception of the linear game in [1]) several parameters need to be solved for in general from highly nonlinear systems of equations coupled with order conditions. All of this can be very difficult, if not prohibitive, when the structure of the game is not simple enough. Further, all of them (as well as the majority of concrete examples in the impulse control literature) assume linear costs. In general, for nonlinear costs, the state to which each player wants to shift the process when intervening is not unique and it depends on the starting point. This effectively means that infinitely many parameters may need to be solved for, drastically discouraging this methodology.

While the need for numerical schemes able to handle nonzero-sum impulse games is obvious, unlike in the single-player case, this is an utterly underdeveloped line of research. Focusing on the purely deterministic approach, solving the system of QVIs derived in [1] involves handling coupled free boundary problems, further complicated by the presence of nonlinear, nonlocal and noncontractive operators. Additionally, solutions will typically be irregular even in the simplest cases such as the linear game. Moreover, the absence of a viscosity solutions framework such as that of impulse control [37] means that it is not possible to know whether the system of QVIs has a solution (not to mention some form of uniqueness) unless one can explicitly solve it. This is further exacerbated by the fact that even defining such a system requires a priori assumptions on the solution (the unique impulse property). This is also the case in [24].

To the author’s best knowledge, the only numerical method available in the literature is our algorithm in [3], which tackles the system of QVIs by sequentially solving single-player impulse control problems combined with a relaxation scheme. Unfortunately, the choice of the relaxation scheme is not obvious in general and the convergence of the algorithm relies on a good initial guess. It was also observed that stagnation could put a cap on the accuracy of the results, without any simple solution to it. Lastly, while numerical validation was performed, no rigorous convergence analysis was provided.

Restricting attention to the one-dimensional infinite horizon two-player case, this paper puts the focus on certain nonzero-sum impulse games which display a symmetric structure between the players. This class is broad enough to include many interesting applications; no less than the competing central banks problem (whether in its linear form [1] or others considered in the single bank formulation [4, 19, 30, 32]), the cash management problem [10] (reducing its dimension by a simple change of variables) and the generalization of many impulse control problems to the two-player case.

For this class of games, an iterative algorithm is presented which substantially improves [3, Alg.2] by harnessing the symmetry of the problem, removing the strong dependence on the initial guess and dispensing with the relaxation scheme altogether. The result is a simpler and more intuitive, precise and efficient routine, for which a convergence analysis is provided. It is shown that the overall routine admits a representation that strongly resembles, both algorithmically and in its properties, that of the combined fixed-point policy-iteration methods [14, 26], albeit with nonexpansive operators. Nevertheless, a certain contraction property can still be established.

To perform the analysis, we impose assumptions on the discretization scheme used on the system of QVIs and the discrete admissible strategies. These naturally generalize those of the impulse control case [4] and admit graph-theoretic interpretations in terms of weakly chained diagonally dominant (WCDD) matrices and their recently introduced matrix sequences counterpart [7]. We establish a clear parallel between these discrete type assumptions, the behaviour of the players and the Verification Theorem.

Section 1 deals with the analytical problem. Starting with an overview of the model (Sect. 1.1), we recall the Verification Theorem of [1] and the system of QVIs we want to solve (Sect. 1.2). We then give a precise definition of the class of symmetric nonzero-sum impulse games and establish some preliminary results (Sect. 1.3).

Section 2 considers the analogous discrete problem. Section 2.1 specifies a general discrete version of the system of QVIs, such that any discretization scheme compliant with the assumptions to be imposed will enjoy the same properties. Section 2.2 presents the iterative algorithm, and shows how the impulse control problems that need to be sequentially solved have a unique solution that can be handled by policy iteration. Additionally, Sect. 2.3 provides an alternative general solver for impulse control problems. It consists of an instance of fixed-point policy-iteration that is noncompliant with the standard assumptions [26] and, as far as the author knows, was not used in the context of impulse control before, other than heuristically in [3]. We prove its convergence under the present framework.

Section 2.4 characterizes the overall iterative algorithm as a fixed-point policy-iteration-type method, allowing for reformulations of the original problem and results pertaining to the solutions. The necessary matrix and graph-theoretic definitions and results are collected in Appendix A for the reader’s convenience. Section 2.5 carries on with the overall convergence analysis and shows to what extent different sets of reasonable assumptions are enough to guarantee convergence to solutions, convergence of strategies and boundedness of iterates. Sufficient conditions for convergence are proved. Discretization schemes are provided in Sect. 2.6.

Section 3 presents the numerical results. In Sect. 3.1, a variety of symmetric nonzero-sum impulse games, many seemingly too complicated to be handled analytically, are explicitly solved for equilibrium payoffs and NE strategies with great precision. This is done on a fixed grid, while considering different performance metrics and addressing practical matters of implementation. In the absence of a viscosity solutions framework to establish convergence to analytical solutions as the grid is refined, Sect. 3.2 performs a numerical validation using the only examples of symmetric solvable games in the literature. Section 3.3 addresses the case of games without NEs. Section 3.4 tackles games beyond the scope of the currently available theory, displaying discontinuous impulses and very irregular payoffs. The latter give insight and motivate further research into this field.

2 Analytical Continuous-Space Problem

In this section we start by reviewing a general formulation of two-player nonzero-sum stochastic differential games with impulse controls, as considered in [1], together with the main theoretical result of the authors: a characterization of certain NEs via a deterministic system of QVIs. The indices of the players are denoted \(i=1,2\). We will generally use i to indicate a given player and j to indicate their opponent. Since no other type of games is considered in this paper, we will often speak simply of “games” for brevity. Afterwards we shall specialize the discussion to the (yet to be specified) symmetric instance.

Throughout the paper, we restrict our attention to the one-dimensional infinite-horizon case. (A similar review is carried out in [3, Sect.1].) Some of the most technical details concerning the well-posedness of the model are left out for brevity and can be found in [1, Sect.2].

2.1 General Two-Player Nonzero-Sum Impulse Games

Let \((\Omega , \mathcal {F}, (\mathcal {F}_t)_{t\ge 0}, \mathbb {P})\) be a filtered probability space satisfying the usual conditions and supporting a standard one-dimensional Wiener process W. We consider two players that observe the evolution of a state variable X, modifying it when convenient through controls of the form \(u_i=\{(\tau _i^k,\delta _i^k)\}_{k=1}^\infty \) for \(i=1,2\). The stopping times \((\tau _i^k)\) are their intervention times and the \(\mathcal {F}_{\tau _i^k}\)-measurable random variables \((\delta _i^k)\) are their intervention impulses. Given controls \((u_1,u_2)\) and a starting point \(X_{0^-}=x\in \mathbb {R}\), we assume \(X=X^{x;u_1,u_2}\) has dynamics

\[X_t = x + \int _0^t \mu (X_s)\,ds + \int _0^t \sigma (X_s)\,dW_s + \sum _{k:\ \tau _1^k\le t}\delta _1^k + \sum _{k:\ \tau _2^k\le t}\delta _2^k,\quad t\ge 0, \tag{1.1.1}\]

for some given drift and volatility functions \(\mu ,\sigma :\mathbb {R}\rightarrow \mathbb {R}\), locally Lipschitz with linear growth.Footnote 4

Equation (1.1.1) states that X evolves as an Itô diffusion in between the intervention times, and that each intervention consists in shifting X by applying an impulse. It is assumed that the players choose their controls by means of threshold-type strategies of the form \(\varphi _i=(\mathcal {I}_i,\delta _i)\), where \(\mathcal {I}_i\subseteq \mathbb {R}\) is a closed set called intervention (or action) region and \(\delta _i:\mathbb {R}\rightarrow \mathbb {R}\) is an impulse function assumed to be continuous. The complement \(\mathcal {C}_i=\mathcal {I}_i^c\) is called continuation (or waiting) region.Footnote 5 That is, player i intervenes if and only if the state variable reaches her intervention region, by applying an impulse \(\delta _i(X_{t^-})\) (or equivalently, shifting \(X_{t^-}\) to \(X_{t^-}+\delta _i(X_{t^-})\)). Further, we impose a priori constraints on the impulses: for each \(x\in \mathbb {R}\) there exists a set \(\emptyset \ne \mathcal {Z}_i(x)\subseteq \mathbb {R}\) (further specified in Sect. 1.3) such that \(\delta _i(x)\in \mathcal {Z}_i(x)\) if \(x\in \mathcal {I}_i\).Footnote 6 We also assume the game has no end and player 1 has the priority should they both want to intervene at the same time. (The latter will be excluded later on; see Definition 1.3.1 and the remarks that follow it.)
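To make the role of threshold-type strategies concrete, the following minimal sketch simulates one path of the state variable with an Euler–Maruyama step between interventions. It is only an illustration under stated assumptions: the function name `simulate_path`, the time discretization, the cap `max_jumps` and the example strategies (Brownian motion, with each player resetting the state to \(\pm 0.5\) once it leaves \((-1,1)\)) are hypothetical and not taken from the paper.

```python
import math
import random

def simulate_path(x0, mu, sigma, strategies, T=1.0, n_steps=1000, seed=0, max_jumps=10):
    """Euler-Maruyama sketch of X under two threshold-type strategies.

    strategies = [(in_region_1, delta_1), (in_region_2, delta_2)], where
    in_region_i(x) says whether x is in player i's intervention region and
    delta_i(x) is the impulse applied there. Player 1 is checked first,
    mimicking the priority rule.
    """
    rng = random.Random(seed)
    dt = T / n_steps
    x = float(x0)
    path, jumps = [], []
    for k in range(n_steps + 1):
        # Apply interventions at time k*dt (capped, so that inadmissible
        # strategies cannot loop forever).
        for _ in range(max_jumps):
            acting = next((i for i, (in_region, _d) in enumerate(strategies)
                           if in_region(x)), None)
            if acting is None:
                break
            impulse = strategies[acting][1](x)
            jumps.append((k * dt, acting + 1, impulse))
            x += impulse
        path.append(x)  # state right after any intervention
        if k < n_steps:
            # Diffusion step between interventions.
            x += mu(x) * dt + sigma(x) * math.sqrt(dt) * rng.gauss(0.0, 1.0)
    return path, jumps

# Hypothetical symmetric example (assumption, not from the paper).
strategies = [
    (lambda x: x <= -1.0, lambda x: -0.5 - x),  # player 1, impulses >= 0
    (lambda x: x >= 1.0, lambda x: 0.5 - x),    # player 2, impulses <= 0
]
path, jumps = simulate_path(-2.0, lambda x: 0.0, lambda x: 1.0, strategies, T=5.0)
```

Since the recorded states are taken right after the interventions, they all lie in the common continuation region \((-1,1)\) in this example, and the intervention regions of the two players never overlap.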

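Given a starting point and a pair of strategies, the (expected) payoff of player i is given by

\[J_i(x;\varphi _1,\varphi _2) {:}{=} \mathbb {E}\left[ \int _0^\infty e^{-\rho _i s}f_i(X_s)\,ds - \sum _{k=1}^\infty e^{-\rho _i\tau _i^k}c_i\big (X_{(\tau _i^k)^-},\delta _i^k\big ) + \sum _{k=1}^\infty e^{-\rho _i\tau _j^k}g_i\big (X_{(\tau _j^k)^-},\delta _j^k\big )\right] ,\]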

with \(X=X^{x;u_1,u_2}=X^{x;\varphi _1,\varphi _2}\). For player i, \(\rho _i>0\) represents her (subjective) discount rate, \(f_i:\mathbb {R}\rightarrow \mathbb {R}\) her running payoff, \(c_i:\mathbb {R}^2\rightarrow (0,+\infty )\) her cost of intervention and \(g_i:\mathbb {R}^2\rightarrow \mathbb {R}\) her gain due to her opponent’s intervention (not necessarily non-negative). The functions \(f_i,c_i,g_i\) are assumed to be continuous.

Throughout the paper, only admissible strategies are considered. Briefly, \((\varphi _1,\varphi _2)\) is admissible if it gives well-defined payoffs for all \(x\in \mathbb {R}\), \(\Vert X\Vert _\infty \) has finite moments and, although each player can intervene immediately after the other, infinite simultaneous interventions are precluded.Footnote 7 As an example, if the running payoffs have polynomial growth, the “never intervene strategies” \(\varphi _1=\varphi _2=(\emptyset ,\emptyset \hookrightarrow \mathbb {R})\) are admissible and the game can be played.

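Given a game, we want to know whether it admits some Nash equilibrium and how to compute it. Recall that a pair of strategies \((\varphi _1^*,\varphi _2^*)\) is a Nash equilibrium (NE) if for every admissible \((\varphi _1,\varphi _2)\),

\[J_1(x;\varphi _1^*,\varphi _2^*)\ge J_1(x;\varphi _1,\varphi _2^*)\quad \text {and}\quad J_2(x;\varphi _1^*,\varphi _2^*)\ge J_2(x;\varphi _1^*,\varphi _2)\quad \text {for all } x\in \mathbb {R},\]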

i.e., no player can gain from a unilateral change of strategy. If one such NE exists, we refer to \((V_1,V_2)\), with \(V_i(x)= J_i(x;\varphi _1^*,\varphi _2^*)\), as a pair of equilibrium payoffs.

2.2 General System of Quasi-Variational Inequalities

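To present the system of QVIs derived in [1], we need to define first the intervention operators. For any \(V_1, V_2:\mathbb {R}\rightarrow \mathbb {R}\) and \(x\in \mathbb {R}\), the loss operator of player i is defined asFootnote 8

\[\mathcal {M}_iV_i(x) {:}{=} \sup _{\delta \in \mathcal {Z}_i(x)}\big \{V_i(x+\delta )-c_i(x,\delta )\big \}. \tag{1.2.1}\]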

When applied to an equilibrium payoff, the loss operator \(\mathcal {M}_i\) gives a recomputed present value for player i due to the cost of her own intervention. Given the optimality of the NEs, one would intuitively expect that \(\mathcal {M}_i V_i\le V_i\) for equilibrium payoffs and that the equality is attained only when it is optimal for player i to intervene. Under this logic:

Definition 1.2.1

We say that the pair \((V_1,V_2)\) has the unique impulse property (UIP) if for each \(i=1,2\) and \(x\in \{\mathcal {M}_i V_i= V_i\}\), there exists a unique impulse, denoted \(\delta _i^*(x)=\delta _i^*(V_i)(x)\in \mathcal {Z}_i(x)\), that realizes the supremum in (1.2.1).Footnote 9

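If \((V_1, V_2)\) enjoys the UIP, we define the gain operator of player i as

\[\mathcal {H}_iV_i(x) {:}{=} V_i\big (x+\delta _j^*(x)\big )+g_i\big (x,\delta _j^*(x)\big ). \tag{1.2.2}\]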

When applied to equilibrium payoffs, the gain operator \(\mathcal {H}_i\) gives a recomputed present value for player i due to her opponent’s intervention.
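As a rough illustration of the two intervention operators, the sketch below evaluates them pointwise, assuming the loss operator takes the form \(\sup _{\delta }\{V(x+\delta )-c(x,\delta )\}\) (consistent with Remark 1.2.3) and the gain operator the form \(V(x+\delta ^*_j(x))+g(x,\delta ^*_j(x))\). The supremum is replaced by a maximum over a finite candidate set, and the function names, the test function \(V(x)=-x^2\), the cost and the gain are hypothetical choices made only for this example.

```python
def loss_operator(V, cost, impulses, x):
    """Return (M V(x), maximizing impulse) over a finite candidate set.

    If several impulses attain the maximum (i.e. the UIP fails at x),
    the first one in `impulses` is returned.
    """
    return max(((V(x + d) - cost(x, d), d) for d in impulses),
               key=lambda pair: pair[0])

def gain_operator(V, gain, opponent_impulse, x):
    """Return H V(x), given the impulse the opponent applies at state x."""
    return V(x + opponent_impulse) + gain(x, opponent_impulse)

# Hypothetical data (assumptions for illustration only).
V = lambda x: -x * x
cost = lambda x, d: 1 + 0.5 * abs(d)   # strictly positive cost
gain = lambda x, d: 0.5 * abs(d)

value, delta = loss_operator(V, cost, [0, 1, 2, 3, 4], -3)
opponent_value = gain_operator(V, gain, -3, 3)
```

Here the maximizer at \(x=-3\) is unique, so the UIP holds at that point for this particular choice of data. The impulse \(-3\) used for the opponent at \(x=3\) mirrors the player's impulse \(3\) at \(x=-3\).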

Finally, let us denote by \(\mathcal {A}\) the infinitesimal generator of X when uncontrolled, i.e.,

\[\mathcal {A}V(x) {:}{=} \frac{1}{2}\sigma ^2(x)V''(x)+\mu (x)V'(x),\]

for any \(V:\mathbb {R}\rightarrow \mathbb {R}\) which is \(C^2\) at some open neighborhood of a given \(x\in \mathbb {R}\). We assume this regularity holds whenever we compute \(\mathcal {AV}(x)\) for some V and x. The following Verification Theorem, due to [1, Thm.3.3], states that if a regular enough solution \((V_1,V_2)\) to a certain system of QVIs exists, then it must be a pair of equilibrium payoffs, and a corresponding NE can be retrieved. We state here a simplified version that applies to the one-dimensional infinite-horizon games at hand.Footnote 10

Theorem 1.2.2

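(General system of QVIs) Given a game as in Sect. 1.1, let \(V_1,V_2:\mathbb {R}\rightarrow \mathbb {R}\) be a pair of functions with the UIP, such that for any \(i,j \in \{1,2\}\), \(i \ne j\):

\[\begin{aligned} \mathcal {M}_jV_j-V_j&\le 0 &&\text {on } \mathbb {R},\\ \mathcal {H}_iV_i-V_i&=0 &&\text {on } \{\mathcal {M}_jV_j-V_j=0\}=:\mathcal {I}_j^*,\\ \max \big \{\mathcal {A}V_i-\rho _iV_i+f_i,\ \mathcal {M}_iV_i-V_i\big \}&=0 &&\text {on } \{\mathcal {M}_jV_j-V_j<0\}=:\mathcal {C}_j^*, \end{aligned} \tag{1.2.3}\]

and \(V_i\in C^2(\mathcal {C}^*_j\backslash \partial \mathcal {C}^*_i)\cap C^1(\mathcal {C}^*_j)\cap C(\mathbb {R})\) has polynomial growth and bounded second derivative on some reduced neighbourhood of \(\partial \mathcal {C}^*_i\). Suppose further \(\big ((\mathcal {I}^*_i,\delta _i^*)\big )_{i=1,2}\) are admissible strategies.Footnote 11 Then \((V_1,V_2)\) is a pair of equilibrium payoffs attained at the NE \(\big ((\mathcal {I}^*_i,\delta _i^*)\big )_{i=1,2}\).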

The first equation of system (1.2.3) states that at an equilibrium, a player cannot increase her own payoff by a unilateral intervention. One therefore expects that the equality \(\mathcal {M}_j V_j = V_j\) will only hold when player j intervenes, or in other words, when the value she gains can compensate the cost of her intervention. Consequently, the second equation says that a gain results from the opponent’s intervention. Finally, the last one means that when the opponent does not intervene, each player faces a single-player impulse control problem.

We conclude this section with some final observations that will be relevant in the sequel:

Remark 1.2.3

An immediate consequence of assuming strictly positive costs is that intervening at any state with a null impulse reduces the payoff of the acting player and is therefore suboptimal. This is also displayed in system (1.2.3): if at some state x, \(\mathcal {M}_jV_j(x)\) were realized for \(\delta =0\), then \(\mathcal {M}_jV_j(x)= V_j(x) - c_j(x,0)<V_j(x)\). At the same time, allowing for vanishing costs often leads to degenerate games in the current framework [1, Sect.4.4]. Hence, assuming \(c_j>0\) is quite reasonable.

Remark 1.2.4

Consider the case of nonnegative impulses and cost functions being strictly concave in the impulse as in [17, 21]. That is, \(c_i(x,\delta +\bar{\delta })<c_i(x,\delta )+c_i(x+\delta ,\bar{\delta })\) for all \(x\in \mathbb {R},\ \delta ,\bar{\delta }\ge 0\). This models the situation in which simultaneous interventions are more expensive than a single one to the same effect. In such cases, it is easy to see that in the context of Theorem 1.2.2, player i will only shift the state variable towards her continuation region.Footnote 12

2.3 Symmetric Two-Player Nonzero-Sum Impulse Games

We want to focus our study on games which present a certain type of symmetric structure between the players, generalising the linear game [1] and the cash management game [10].Footnote 13

Notation

The games presented in Sect. 1.1 are fully defined by setting the drift, volatility, impulse constraints, discount rates, running payoffs, costs and gains. In other words, any such game can be represented by a tuple \(\mathcal {G}=(\mu ,\sigma ,\mathcal {Z}_i,\rho _i,f_i,c_i,g_i)_{i=1,2}\).

Definitions 1.3.1

We say that a game \(\mathcal {G}=(\mu ,\sigma ,\mathcal {Z}_i,\rho _i,f_i,c_i,g_i)_{i=1,2}\) is symmetric (with respect to zero) if

(S1) \(\mu \) is odd and \(\sigma \) is even (i.e., \(\mu (x)=-\mu (-x)\) and \(\sigma (x)=\sigma (-x)\) for all \(x\in \mathbb {R}\)).

(S2) \(-\mathcal {Z}_2(-x)=\mathcal {Z}_1(x)\subseteq [0,+\infty )\) for all \(x\in \mathbb {R}\) and \(\mathcal {Z}_1(x)=\{0\}=\mathcal {Z}_2(-x)\) for all \(x\ge 0\).

(S3) \(\rho _1=\rho _2\), \(f_1(x)=f_2(-x)\), \(c_1(x,\delta )=c_2(-x,-\delta )\) and \(g_1(x,-\delta )=g_2(-x,\delta )\), for all \(\delta \in \mathcal {Z}_1(x),\ x\in \mathbb {R}\).

We say that the game is symmetric with respect to s (for some \(s\in \mathbb {R}\)), if the s-shifted game \((\mu (x+s),\sigma (x+s),\mathcal {Z}_i(x+s),\rho _i,f_i(x+s),c_i(x+s,\delta ),g_i(x+s,\delta ))_{i=1,2}\) is symmetric. We refer to \(x=s\) as a symmetry line of the game.

Condition (S1) is necessary for the state variable to have symmetric dynamics. In particular, together with (S3), it guarantees symmetry between solutions of the Hamilton–Jacobi–Bellman (HJB) equations of the players when there are no interventions, i.e., if \(V\) solves \(\mathcal {A}V-\rho _1V+f_1=0\), then \(x\mapsto V(-x)\) solves \(\mathcal {A}V-\rho _2V+f_2=0\).

Examples 1.3.2

The most common examples of Itô diffusions satisfying this assumption are the scaled Brownian motion (symmetric with respect to zero) and the Ornstein–Uhlenbeck (OU) process (symmetric with respect to its long term mean).
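Condition (S1) and the notion of a symmetry line can be checked numerically on sample points. The sketch below does this for an OU drift \(\mu (x)=\theta (m-x)\), which is not odd itself but becomes odd after shifting by its long term mean \(m\). The helper names and the parameter values \(\theta =0.7\), \(m=2\) are hypothetical, and a check on finitely many points is of course only a sanity test, not a proof.

```python
def is_odd(fn, points, tol=1e-12):
    """Check fn(x) = -fn(-x) on the given sample points."""
    return all(abs(fn(x) + fn(-x)) <= tol for x in points)

def is_even(fn, points, tol=1e-12):
    """Check fn(x) = fn(-x) on the given sample points."""
    return all(abs(fn(x) - fn(-x)) <= tol for x in points)

def shifted(fn, s):
    """Return the s-shifted function x -> fn(x + s)."""
    return lambda x: fn(x + s)

# Hypothetical OU parameters (assumptions for illustration only).
theta, m = 0.7, 2.0
ou_drift = lambda x: theta * (m - x)
ou_vol = lambda x: 1.0

points = [0.0, 0.5, 1.0, 2.0]
odd_before_shift = is_odd(ou_drift, points)
odd_after_shift = is_odd(shifted(ou_drift, m), points)
even_vol = is_even(shifted(ou_vol, m), points)
```

In this example the drift fails the oddness test before the shift and passes it afterwards, in line with the OU process being symmetric with respect to its long term mean rather than zero.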

Condition (S3) is self-explanatory, while (S2) is only partly so. Indeed, although symmetric constraints on the impulses \(\mathcal {Z}_1(x)=-\mathcal {Z}_2(-x)\) should clearly be a requirement, the rest of (S2) is in fact motivated by the numerical method to be presented and the type of problems it can handle. On the one hand, the third equation of the QVIs system (1.2.3) implies that a stochastic impulse control problem for player i needs to be solved on \(\mathcal {C}^*_j\). The unidirectional impulses assumption is a common one for the convergence of policy iteration algorithms in impulse control.Footnote 14 However, it is often too restrictive for many interesting applications,Footnote 15 such as when the controller would benefit the most from keeping the state variable within some bounded interval instead of simply keeping it “high” or “low” (see, e.g., [9] and [4, Sect.6.1]). Interestingly enough, assuming unidirectional impulses turns out to be less restrictive when there is a second player present, with an opposed objective. Indeed, it can happen that each player need not intervene in one of the two directions, and can instead rely on her opponent doing so, while capitalising on a gain rather than paying a cost. See examples in Sect. 3.1 with quadratic and degree four running payoffs.

On the other hand, \(\mathcal {Z}_1(x)=\{0\}=\mathcal {Z}_2(-x)\) for all \(x\ge 0\) means that we can assume without loss of generality that the admissible intervention regions do not cross over the symmetry line; i.e., \(\mathcal {I}_1\subseteq (-\infty ,0)\) and \(\mathcal {I}_2\subseteq (0,+\infty )\) for every pair of strategies. (See Remark 1.2.3.) This guarantees in particular that the players never want to intervene at the same time and the priority rule can be disregarded.

There are different reasons why the last mentioned condition is less restrictive than it first appears to be. It is not uncommon to assume connectedness of either intervention or continuation regions (or other conditions implying them) both in impulse control [22] and nonzero-sum games [20, Sect.1.2.1]. The same can be said for assumptions that prevent the players from intervening in unison [20, Sect.1.2.1],[17, Rmk.6.5].Footnote 16 In the context of symmetric games and payoffs (see Lemma 1.3.7) such assumptions would necessarily imply the intervention regions need to be on opposed sides of the symmetry line. Additionally, without any further requirements, strategies such that \(\mathcal {I}_1\supseteq (-\infty ,0]\) and \(\mathcal {I}_2\supseteq [0,+\infty )\) would be inadmissible in the present framework, since they would yield infinitely many simultaneous impulses.

Definitions 1.3.3

Given a symmetric game, we say that \(\big ((\mathcal {I}_i,\delta _i)\big )_{i=1,2}\) are symmetric strategies (with respect to zero) if \(\mathcal {I}_1=-\mathcal {I}_2\) and \(\delta _1(x)=-\delta _2(-x)\). Given a symmetric game with respect to some \(s\in \mathbb {R}\), we say that \(\big ((\mathcal {I}_i,\delta _i)\big )_{i=1,2}\) are symmetric strategies with respect to s if \(\big ((\mathcal {I}_i-s,\delta _i(x+s))\big )_{i=1,2}\) are symmetric, and we refer to \(x=s\) as a symmetry line of the strategies.

Definition 1.3.4

We say that \(V_1,V_2:\mathbb {R}\rightarrow \mathbb {R}\) are symmetric functions (with respect to zero) if \(V_1(x)=V_2(-x)\). We say that they are symmetric functions with respect to s (for some \(s\in \mathbb {R}\)) if \(V_1(x+s),V_2(x+s)\) are symmetric, and we refer to \(x=s\) as a symmetry line for \(V_1,V_2\).

Remark 1.3.5

Definition 1.3.3 singles out strategies that share the same symmetry line with the game. For the linear game, for example, the authors find infinitely many NEs [1, Prop.4.7], each presenting symmetry with respect to some point s, but only one for \(s=0\) (hence, symmetric in the sense of Definition 1.3.3). At the same time, the latter is the only one for which the corresponding equilibrium payoffs \(V_1,V_2\) have a symmetry line as per Definition 1.3.4. The same is true for the cash management game [10].

Remark 1.3.6

Throughout the paper we will work only with games symmetric with respect to zero, to simplify the notation. Working with any other symmetry line amounts simply to shifting the game and results back and forth.

Lemma 1.3.7

For any symmetric game, strategies \((\varphi _1,\varphi _2)\) and functions \(V_1,V_2:\mathbb {R}\rightarrow \mathbb {R}\):

(i) If \(V_1,V_2\) are symmetric, then \(\mathcal {M}_1V_1,\mathcal {M}_2V_2\) are symmetric.

(ii) If \(V_1,V_2\) are symmetric and have the UIP, then \(\delta _1^*(x)=-\delta _2^*(-x)\) and \(\mathcal {H}_1V_1,\mathcal {H}_2V_2\) are symmetric.

(iii) If \((\varphi _1,\varphi _2)\) are symmetric, then \(J_1(\cdot ;\varphi _1,\varphi _2),J_2(\cdot ;\varphi _1,\varphi _2)\) are symmetric.

(iv) If \(V_1,V_2\) are as in Theorem 1.2.2 and \((\varphi ^*_1,\varphi ^*_2)\) is the corresponding NE of the theorem, then \((\varphi ^*_1,\varphi ^*_2)\) are symmetric if and only if \(V_1,V_2\) are symmetric.

Proof

(i) and (ii) are straightforward from the definitions.

To see (iii), one can check with the recursive definition of the state variable [1, Def.2.2] that \(X^{-x;\varphi _1,\varphi _2}\) has the same law as \(-X^{x;\varphi _1,\varphi _2}\) (recall that the continuation regions are simply disjoint unions of open intervals). Noting also that intervention times and impulses are nothing but jump times and sizes of X, one concludes that \(J_1(x;\varphi _1,\varphi _2)=J_2(-x;\varphi _1,\varphi _2)\), as intended.

Finally, (iv) is a consequence of (i), (ii) and (iii). \(\square \)

Convention 1.3.8

In light of Lemma 1.3.7, for any symmetric game we will often lose the player index from the notations and refer always to quantities corresponding to player 1,Footnote 17 henceforth addressed simply as “the player”. Player 2 shall be referred to as “the opponent”. Statements like “V has the UIP” or “V is a symmetric equilibrium payoff” are understood to refer to \((V(x),V(-x))\). Likewise, “\((\mathcal {I},\delta )\) is admissible” or “\((\mathcal {I},\delta )\) is a NE” refer to the pair \((\mathcal {I},\delta (x)),(-\mathcal {I},-\delta (-x))\).

Due to their general lack of uniqueness, it is customary in game theory to restrict attention to specific types of NEs, depending on the problem at hand (see for instance [29] for a treatment within the classical theory). Motivated by Lemma 1.3.7(iii) and (iv), and by Remark 1.3.5, one can arguably state that symmetric NEs are the most meaningful for symmetric games. Furthermore, Lemma 1.3.7 implies that for symmetric games, one can considerably reduce the complexity of the full system of QVIs (1.2.3) provided the conjectured NE (or equivalently, the pair of payoffs) is symmetric. Using Convention 1.3.8, Theorem 1.2.2 and Lemma 1.3.7 give:

Corollary 1.3.9

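(Symmetric system of QVIs) Given a symmetric game as in Definition 1.3.1, let \(V:\mathbb {R}\rightarrow \mathbb {R}\) be a function with the UIP, such that:

\[\begin{aligned} \mathcal {H}V-V&=0 &&\text {on } -\mathcal {I}^*,\\ \max \big \{\mathcal {A}V-\rho V+f,\ \mathcal {M}V-V\big \}&=0 &&\text {on } -\mathcal {C}^*, \end{aligned} \tag{1.3.1}\]

where \(\mathcal {I}^*{:}{=}\{\mathcal {M}V-V=0\}\) and \(\mathcal {C}^*{:}{=}\{\mathcal {M}V-V<0\}\), and \(V\in C^2(-\mathcal {C}^*\backslash \partial \mathcal {C}^*)\cap C^1(-\mathcal {C}^*)\cap C(\mathbb {R})\) has polynomial growth and bounded second derivative on some reduced neighbourhood of \(\partial \mathcal {C}^*\). Suppose further that \((\mathcal {I}^*,\delta ^*)\) is an admissible strategy. Then \(V\) is a symmetric equilibrium payoff attained at the symmetric NE \((\mathcal {I}^*,\delta ^*)\).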

Note that system (1.3.1) also omits the equation \(\mathcal {M} V - V \le 0\), as it is redundant. Indeed, by Definition 1.3.1 and Remark 1.2.3, at a NE the player does not intervene above 0, nor the opponent below it. Thus, \(\mathcal {M} V - V \le \max \big \{\mathcal {A} V -\rho V + f, \mathcal {M} V- V \big \}=0\) on \(-\mathcal {C}^*\supset (-\infty ,0]\) and \(\mathcal {M} V- V < 0\) on \([0,+\infty )\).

System (1.3.1) simplifies a numerical problem which is very challenging even in cases of linear structure [3]. In light of the previous, we will focus our attention on symmetric NEs only and numerically solving the reduced system of QVIs (1.3.1).

3 Numerical Discrete-Space Problem

In this section we consider a discrete version of the symmetric system of QVIs (1.3.1) over a fixed grid, and propose and study an iterative method to solve it. As is often done in numerical analysis for stochastic control, for the sake of generality we proceed first in an abstract fashion without making reference to any particular discretization scheme. Instead, we give some general assumptions any such scheme should satisfy for the results presented to hold. Explicit discretization schemes within our framework are presented in Sect. 2.6 and used in Sect. 3.
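For orientation only, the sketch below builds one possible matrix approximating \(\mathcal {A}-\rho \) on a uniform grid, using central differences for the diffusion term and upwinding for the drift. This is a generic textbook-style choice assumed here for illustration and is not claimed to be the scheme of Sect. 2.6. The names `build_L` and `matvec`, the treatment of the boundary rows (discount term only) and the OU-type parameters are all hypothetical.

```python
def build_L(grid, mu, sigma, rho):
    """Dense matrix for a finite-difference approximation of A - rho*Id.

    Assumes a uniform grid. The drift is upwinded so that off-diagonal
    entries stay nonnegative. Boundary rows keep only the discount term
    (an artificial truncation, assumed here for simplicity).
    """
    n = len(grid)
    h = grid[1] - grid[0]
    L = [[0.0] * n for _ in range(n)]
    for i, x in enumerate(grid):
        L[i][i] = -rho
        if i == 0 or i == n - 1:
            continue
        diff = 0.5 * sigma(x) ** 2 / h ** 2
        up = diff + max(mu(x), 0.0) / h      # weight on v[i+1]
        down = diff + max(-mu(x), 0.0) / h   # weight on v[i-1]
        L[i][i - 1], L[i][i + 1] = down, up
        L[i][i] -= up + down
    return L

def matvec(A, v):
    """Plain matrix-vector product for lists of lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]

# Hypothetical example: OU-type drift -0.5*x, unit volatility, rho = 0.25.
grid = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
L = build_L(grid, lambda x: -0.5 * x, lambda x: 1.0, 0.25)
Lv = matvec(L, grid)  # apply L to the linear function v(x) = x
```

With a strictly positive discount rate, every row of \(-L\) built this way is strictly diagonally dominant with nonpositive off-diagonal entries, which is the kind of structure that assumptions phrased in terms of diagonal dominance are meant to capture.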

3.1 Discrete System of Quasi-variational Inequalities

From now on we work on a discrete symmetric grid

\(\mathbb {R}^G\) denotes the set of functions \(v:G\rightarrow \mathbb {R}\) and \(S:\mathbb {R}^G\rightarrow \mathbb {R}^G\) denotes the symmetry operator, \(Sv(x)=v(-x)\). In general, by an “operator” we simply mean some \(F:\mathbb {R}^G\rightarrow \mathbb {R}^G\), not necessarily linear nor affine unless explicitly stated. We shall identify grid points with indices, functions in \(\mathbb {R}^G\) with vectors and linear operators with matrices; e.g., \(S=(S_{ij})\) with \(S_{ij}=1\) if \(x_i=-x_j\) and 0 otherwise. The (partial) order considered in \(\mathbb {R}^G\) and \(\mathbb {R}^{G\times G}\) is the usual pointwise order for functions (elementwise for vectors and matrices), and the same is true for the supremum, maximum and arg-maximum induced by it.
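The identification of the symmetry operator with a matrix can be sketched as follows, assuming a grid that is symmetric about zero (every grid point has its mirror image on the grid). The function names and the small example grid are hypothetical.

```python
def symmetry_matrix(grid, tol=1e-12):
    """Matrix S with S[i][j] = 1 if x_i = -x_j and 0 otherwise."""
    n = len(grid)
    return [[1 if abs(grid[i] + grid[j]) <= tol else 0 for j in range(n)]
            for i in range(n)]

def apply(S, v):
    """Matrix-vector product, i.e. (S v)(x_i) = v(-x_i)."""
    return [sum(S[i][j] * v[j] for j in range(len(v))) for i in range(len(v))]

# Hypothetical symmetric grid and grid function.
grid = [-2.0, -1.0, 0.0, 1.0, 2.0]
v = [10, 20, 30, 40, 50]
S = symmetry_matrix(grid)
Sv = apply(S, v)
```

On an increasingly ordered symmetric grid, S is the exchange (anti-diagonal) matrix, so applying it simply reverses the vector and applying it twice returns the original one.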

We want to solve the following discrete nonlinear system of QVIs for \(v\in \mathbb {R}^G\):

where \(f\in \mathbb {R}^G\) and \(L:\mathbb {R}^G\rightarrow \mathbb {R}^G\) is a linear operator. The nonlinear operators \(M,H:\mathbb {R}^G\rightarrow \mathbb {R}^G\) are as follows: let \(\emptyset \ne Z(x)\subseteq \mathbb {R}\) be a finite set for each \(x\in G\), with \(Z(x)=\{0\}\) if \(x\ge 0\). Set \(Z{:}{=}\prod _{x\in G}Z(x)\) and for each \(\delta \in Z\) let \(B(\delta ):\mathbb {R}^G\rightarrow \mathbb {R}^G\) be a linear operator, \(c(\delta )\in (0,+\infty )^G\) and \(g(\delta )\in \mathbb {R}^G\), the three of them being row-decoupled in the sense of [4, 11] (i.e., row x of \(B(\delta ),c(\delta ),g(\delta )\) depends only on \(\delta (x)\in Z(x)\)). Then

Some remarks are in order. Firstly, in the same fashion as the continuous-space case, the sets \(I^*,C^*\) form a partition of the grid and represent the (discrete) intervention and continuation regions of the player, while \(-I^*,-C^*\) are such regions for the opponent.

Secondly, the general representation of M follows [4, 15]. For the standard choices of \(B(\delta )\), our definition of H is the only one for which a discrete version of Lemma 1.3.7 holds true (see Sect. 2.6). However, since B and g are row-decoupled, \(SB(\delta ^*)S\) and \(g(S\delta ^*)\) cannot be, as each row x depends on \(\delta ^*(-x)\). For this reason and the lack of maximization over \(-I^*\), there is no obvious way to reduce problem (2.1.1) to a classical Bellman problem:

as in the impulse control case [4], so as to apply Howard’s policy iteration [11, Ho-1]. Furthermore, unlike in the control case, even with unidirectional impulses and good properties for L and \(B(\delta )\), system (2.1.1) may have no solution, as in the analytical case [1].

Thirdly, we have defined \(\delta ^*\) in (2.1.3) by choosing one particular maximizing impulse for each \(x\in G\). The main motivation behind fixing one is to have a well defined discrete system of QVIs for every \(v\in \mathbb {R}^G\). (This is not the case for the analytical problem (1.3.1) where the gain operator \(\mathcal {H}\) is not well defined unless V has the UIP.) Being able to plug in any v in (2.1.1) and obtain a residual will be useful in practice, when assessing the convergence of the algorithm (see Sect. 3). Whether a numerical solution verifies, at least approximately, a discrete UIP (and the remaining technical conditions of the Verification Theorem) becomes something to be checked separately a posteriori.

Remark 2.1.1

Choosing the maximum arg-maximum in (2.1.3) is partly motivated by ensuring a discrete solution will inherit the property of Remark 1.2.4. (The proof remains the same, for the discretizations of Sect. 2.6.) We will also motivate it in terms of the proposed numerical algorithm in Remark 2.6.4. Note that in [3] the minimum arg-maximum is used instead for both players. Nevertheless, the replication of property (ii), Lemma 1.3.7, dictates that it is only possible to be consistent with [3] for one of the two players (in this case, the opponent).
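The row-by-row maximization defining M, together with the choice of the largest maximizing impulse, can be sketched as follows. This assumes the plain impulse operator “\(B(\delta )v(x)=v(x+\delta )\)” on an integer grid and a constant cost. The function names, the grid, the impulse sets and the cost are all hypothetical choices for illustration only.

```python
def intervention_operator(grid, v, Z, cost):
    """Return (Mv, delta_star) with Mv(x) = max over d in Z(x) of v(x + d) - cost(x, d).

    Ties are broken by keeping the largest maximizing impulse.
    """
    index = {x: i for i, x in enumerate(grid)}
    Mv, delta_star = [], []
    for x in grid:
        best_val, best_d = None, None
        for d in sorted(Z(x)):                       # increasing order of impulses
            val = v[index[x + d]] - cost(x, d)
            if best_val is None or val >= best_val:  # ">=" keeps the largest maximizer
                best_val, best_d = val, d
        Mv.append(best_val)
        delta_star.append(best_d)
    return Mv, delta_star

grid = [-3, -2, -1, 0, 1, 2, 3]
v = [0.0, 1.0, 4.0, 5.0, 5.0, 2.0, 0.0]

def Z(x):
    # Z(x) = {0} for x >= 0; otherwise positive shifts that stay on the grid.
    return [0] if x >= 0 else list(range(1, 3 - x + 1))

def cost(x, d):
    return 1.0   # constant positive cost (illustrative assumption)

Mv, delta_star = intervention_operator(grid, v, Z, cost)
```

In this toy example the payoff has two maximizers (the states 0 and 1), and the tie-breaking rule selects the impulse leading to the larger one.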

2.2 Iterative Algorithm for Symmetric Games

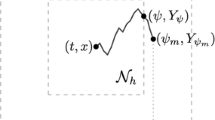

This section introduces the iterative algorithm developed to solve system (2.1.1), which builds on [3, Alg.2] by harnessing the symmetry of the problem and dispenses with the need for a relaxation scheme altogether. It is presented as pseudocode that highlights how it mimics system (2.1.1), together with the intuition behind the algorithm; namely:

- The player starts with some suboptimal strategy \(\varphi ^0=(I^0,\delta ^0)\) and payoff \(v^0\), to which the opponent responds symmetrically, resulting in a gain for the player (first equation of (2.1.1); lines 1, 2 and 4 of Algorithm 2.2.1).

- The player improves her strategy by choosing the optimal response, i.e., by solving a single-player impulse control problem through a policy-iteration-type algorithm (second equation of (2.1.1); line 5 of Algorithm 2.2.1).

- This procedure is iterated until reaching a stopping criterion (lines 6–8 of Algorithm 2.2.1).

Notation 2.2.1

In the following: \(G_{<0}\) and \(G_{\le 0}\) represent the sets of grid points which are negative and nonpositive respectively, and \(\Phi \) the set of (discrete) strategies

Set complements are taken with respect to the whole grid, \({\text {Id}}:\mathbb {R}^G\rightarrow \mathbb {R}^G\) is the identity operator; and given a linear operator \(O:\mathbb {R}^G\rightarrow \mathbb {R}^G\simeq \mathbb {R}^{G\times G}\), \(v\in \mathbb {R}^G\) and subsets \(I,J\subseteq G\), \(v_I\in \mathbb {R}^I\) denotes the restriction of v to I and \(O_{IJ}\in \mathbb {R}^{I\times J}\) the submatrix/operator with rows in I and columns in J.

The scale parameter in line 5 of Algorithm 2.2.1, used throughout the literature by Forsyth, Labahn and coauthors [4, 25,26,27], prevents the enforcement of unrealistic levels of accuracy for points x where \(v^{k+1}(x)\approx 0\). Additionally, note that having chosen the initial guess for the payoff \(v^0\), the initial guess for the strategy is induced by \(v^0\). (The alternative expression for the intervention region gives the same as \(\big \{M v^0- v^0=0\big \}\) for a solution of (2.1.1).)
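The effect of the scale parameter can be illustrated with a small sketch. The exact stopping criterion is the one given in Algorithm 2.2.1; the form below (largest componentwise change, relative to \(\max \{|v^{k+1}(x)|,scale\}\)) is the one commonly used in the cited literature and is assumed here. The function name `scaled_change` and the sample values are hypothetical.

```python
def scaled_change(v_new, v_old, scale=1.0):
    """Largest relative change between iterates, with |v_new| floored at `scale`."""
    return max(abs(a - b) / max(abs(a), scale) for a, b in zip(v_new, v_old))

v_old = [1000.0, 1e-9, -50.0]
v_new = [1000.5, 2e-9, -50.0]
tol = 1e-3

# With scale = 1, the tiny second component is measured in absolute terms.
converged = scaled_change(v_new, v_old, scale=1.0) < tol

# With a negligible scale, the same component shows a 50% relative change.
pure_relative = scaled_change(v_new, v_old, scale=1e-12)
```

Without the floor, the component close to zero would dominate the criterion even though its absolute change is negligible.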

Line 5 of Algorithm 2.2.1 assumes we have a subroutine \(\textsc {SolveImpulseControl}(w,D)\) that solves the constrained QVI problem:

for fixed \(G_{\le 0}\subseteq D\subseteq G\) (approximate continuation region of the opponent) and \(w\in \mathbb {R}^G\) (approximate payoff due to the opponent’s intervention). Although we only need to solve for \(\tilde{v}=v_D\), the value of \(v_{D^c}=w_{D^c}\) impacts the solution both when restricting the equations and when applying the nonlocal operator M. Hence, the approximate payoff \(v^{k+1/2}\) fed to the subroutine serves to pass on the gain that resulted from the opponent’s intervention and, if desired, as an initial guess (more on this in Remark 2.3.3).

The remainder of this section establishes an equivalence between problem (2.2.2) and a classical (unconstrained) QVI problem of impulse control. This allows us to prove the existence and uniqueness of its solution. In particular, we will see that SolveImpulseControl can be defined, if desired, by policy iteration (see the next section for an alternative method and remarks on other possible ones). Let us suppose from here onwards that the following assumptions hold true (see Appendix A for the relevant Definitions A.1.1):

- (A0) For each strategy \(\varphi =(I,\delta )\in \Phi \) and \(x\in I\), there exists a walk in graph\(B(\delta )\) from row x to some row \(y\in I^c\).

- (A1) \(-L\) is a strictly diagonally dominant (SDD) \(\text{ L }_0\)-matrix and, for each \(\delta \in Z\), \({\text {Id}}-B(\delta )\) is a weakly diagonally dominant (WDD) \(\text{ L }_0\)-matrix.

Remark 2.2.2

(Interpretation) Assumptions (A0), (A1) are (H2),(H3) in [4]. For an impulse operator (say, “\(B(\delta )v(x)=v(x+\delta )\)”), (A0) asserts that the player always wants to shift states in her intervention region to her continuation region through finitely many impulses. (This does not take into account the opponent’s response.) On the other hand, if problem (2.2.2) were rewritten as a fixed point problem, (A1) would essentially mean that the uncontrolled operator is contractive while the controlled ones are nonexpansive (see [15] and [4, Sect.4]).
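For a single given strategy, the walk condition in (A0) can be checked by a breadth-first search on the graph of \(B(\delta )\), where an edge \(x\rightarrow y\) is present whenever the entry in row x and column y is nonzero. The sketch below is a minimal dense-matrix illustration; the function name `satisfies_A0` and the two example matrices are hypothetical.

```python
from collections import deque

def satisfies_A0(B, I):
    """True if every row x in I has a walk in graph(B) to some row outside I."""
    n = len(B)
    # Reverse breadth-first search, started from the complement of I.
    reached = [i not in I for i in range(n)]
    queue = deque(i for i in range(n) if reached[i])
    while queue:
        y = queue.popleft()
        for x in range(n):
            if not reached[x] and B[x][y] != 0:   # edge x -> y in graph(B)
                reached[x] = True
                queue.append(x)
    return all(reached)

# Impulses 0 -> 1 -> 3, while states 2 and 3 are left where they are.
B_good = [[0, 1, 0, 0],
          [0, 0, 0, 1],
          [0, 0, 1, 0],
          [0, 0, 0, 1]]

# Impulses 0 -> 1 and 1 -> 0: the walk never leaves I = {0, 1}.
B_bad = [[0, 1, 0, 0],
         [1, 0, 0, 0],
         [0, 0, 1, 0],
         [0, 0, 0, 1]]

ok = satisfies_A0(B_good, I={0, 1})
cycle = satisfies_A0(B_bad, I={0, 1})
```

The second matrix represents the kind of infinite back-and-forth shifting that (A0) is meant to exclude.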

Theorem 2.2.3

Assume (A0), (A1). Then, for every \(G_{\le 0}\subseteq D\subseteq G\) and \(w\in \mathbb {R}^G\), there exists a unique \(v^*\in \mathbb {R}^G\) that solves the constrained QVI problem (2.2.2). Further, \(v^*_D\) is the unique solution of

where \(\tilde{L}{:}{=}L_{DD},\ \tilde{f}{:}{=}f_D+L_{DD^c}w_{D^c},\ \tilde{Z}{:}{=}\prod _{x\in D}Z(x),\ \tilde{c}(\tilde{\delta }){:}{=}c(\tilde{\delta })-B(\tilde{\delta })_{DD^c}w_{D^c}\text{ and } \tilde{B}(\tilde{\delta }){:}{=}B(\tilde{\delta })_{DD} \text{ for } \tilde{\delta }\in \tilde{Z}; \text{ and } \tilde{M}\tilde{v}{:}{=}\max _{\tilde{\delta }\in \tilde{Z}}\big \{\tilde{B}(\tilde{\delta })\tilde{v} -\tilde{c}(\tilde{\delta })\big \} \text{ for } \tilde{v}\in \mathbb {R}^D\).

Additionally, for any initial guess, the sequence \((\tilde{v}^k)\subseteq \mathbb {R}^D\) defined by policy iteration [4, Thm.4.3] applied to problem (2.2.3), converges exactly to \(v^*_D\) in at most \(|\tilde{\Phi }|\) iterations, with \(\tilde{\Phi }{:}{=}\{\tilde{\varphi }=(I,\tilde{\delta }):\ I\subseteq G_{<0} \text{ and } \tilde{\delta }\in \tilde{Z}\}\) the set of restricted admissible strategies.Footnote 18

Proof

The equivalence between problems (2.2.2) and (2.2.3) is due to simple algebraic manipulation and \(B(\delta ),c(\delta )\) being row-decoupled for every \(\delta \in Z\). \(B(\tilde{\delta }),c(\tilde{\delta })\) are defined in the obvious way for each \(\tilde{\delta }\in \tilde{Z}\).

The rest of the proof is mostly as in [4, Thm.4.3]. Let \(\tilde{{\text {Id}}}={\text {Id}}_{DD}\). Each intervention region I can be identified with its indicator \(\tilde{\psi }=\mathbbm {1}_I\in \{0,1\}^D\) since \(D\supseteq I\). In turn, each \(\tilde{\psi }\) can be identified with the diagonal matrix \(\tilde{\Psi }=\text{ diag }(\tilde{\psi })\in \mathbb {R}^{D\times D}\). Then, problem (2.2.3) takes the form of the classical Bellman problem

if we take

Note that \(\tilde{\Phi }\) can be identified with the Cartesian product

and \(A(\tilde{\varphi }),b(\tilde{\varphi })\) are row-decoupled for every \(\tilde{\varphi }\in \tilde{\Phi }\). Since \(\tilde{\Phi }\) is finite, all we need to show is that the matrices \(A(\tilde{\varphi })\) are monotone (Definitions A.1.1 and [11, Thm.2.1]). Let us check the stronger property (Theorem A.1.4 and Proposition A.1.3) of being weakly chained diagonally dominant (WCDD) \(\text{ L }_0\)-matrices (see Definitions A.1.1).

If (A0) and (A1) also held true for the restricted matrices and strategies, the conclusion would follow. While (A1) is clearly inherited, (A0) may fail to be, but only in non-problematic cases. To see this, let \(\tilde{\varphi }=(I,\tilde{\delta })\in \tilde{\Phi },\ x\in I\subseteq D\) and let \(\delta \in Z\) be some extension of \(\tilde{\delta }\). Note that row x of \(A(\tilde{\varphi })\) is WDD. We want to show that there is a walk in graph\(A(\tilde{\varphi })\) from x to an SDD row.

By (A0) there must exist some walk \(x=y_0\rightarrow y_1\rightarrow \dots \rightarrow y_n\in I^c\) in graph\(B(\delta )\). If this is in fact a walk from x to \(I^c\cap D\) in graph\(\tilde{B}(\tilde{\delta })\), then it verifies the desired property (just as in [4, Thm.4.3]). If not, then there must be a first \(0\le m< n\) such that the subwalk \(x\rightarrow \dots \rightarrow y_m\) is in graph\(\tilde{B}(\tilde{\delta })\) but \(y_{m+1}\notin D\). Since \(y_m\rightarrow y_{m+1}\) is an edge in graph\(B(\delta )\), we have \(B(\delta )_{y_m,y_{m+1}}\ne 0\) and the WDD row (by (A1)) \(y_m\) of \(\tilde{{\text {Id}}}-\tilde{B}(\tilde{\delta })\) is in fact SDD. This means that the subwalk \(x\rightarrow \dots \rightarrow y_m\) verifies the desired property instead. \(\square \)
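The “simple algebraic manipulation” behind the restriction in Theorem 2.2.3 can be checked numerically. The sketch below covers the linear part only, \(\tilde{L}=L_{DD}\) and \(\tilde{f}=f_D+L_{DD^c}w_{D^c}\), and verifies that \((Lv+f)_D=\tilde{L}v_D+\tilde{f}\) whenever \(v_{D^c}=w_{D^c}\). The function name `restrict` and the numerical values are illustrative assumptions.

```python
def restrict(L, f, w, D):
    """Return (L_tilde, f_tilde) for the index set D, given v = w outside D."""
    n = len(f)
    Dc = [j for j in range(n) if j not in D]
    L_tilde = [[L[i][j] for j in D] for i in D]
    f_tilde = [f[i] + sum(L[i][j] * w[j] for j in Dc) for i in D]
    return L_tilde, f_tilde

L = [[-3, 1, 0, 0],
     [1, -3, 1, 0],
     [0, 1, -3, 1],
     [0, 0, 1, -3]]
f = [1, 2, 3, 4]
w = [0, 0, 0, 5]          # only the value outside D matters
D = [0, 1, 2]

L_tilde, f_tilde = restrict(L, f, w, D)

v = [7, 8, 9, 5]          # arbitrary on D, equal to w outside D
full = [sum(L[i][j] * v[j] for j in range(4)) + f[i] for i in D]
v_D = [v[i] for i in D]
reduced = [sum(a * b for a, b in zip(row, v_D)) + ft
           for row, ft in zip(L_tilde, f_tilde)]
```

The same bookkeeping applies to \(\tilde{c}(\tilde{\delta })\) and \(\tilde{B}(\tilde{\delta })\), with the sign convention stated in the theorem.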

Remark 2.2.4

(Practical considerations) 1. While convergence is guaranteed to be exact, floating point arithmetic can bring about stagnation [27]. A stopping criterion like that of Algorithm 2.2.1 should be used in those cases, with a tolerance \(\ll tol\). 2. The solution of system (2.2.3) does not change if one introduces a scaling factor \(\lambda >0\): \(\max \big \{\tilde{L} \tilde{v} +\tilde{f}, \lambda \big (\tilde{M} \tilde{v} - \tilde{v}\big )\big \}=0\) [4, Lem.4.1]. This problem-specific parameter is typically added in the implementation to enhance performance [4, 26]. It can intuitively be thought of as a change of units.

2.3 Iterative Subroutine for Impulse Control

Due to Theorem 2.2.3, a sensible choice for SolveImpulseControl is the classical policy iteration algorithm [4, Thm.4.3] applied to (2.2.3) (i.e., [11, Ho-1] applied to (2.2.4)), adding an appropriately chosen scaling factor \(\lambda \) to improve efficiency (Remark 2.2.4, item 2). One possible drawback of such a choice is the following: at each iteration, one needs to solve the system \(-A(\tilde{\varphi }^k)v^{k+1}+b(\tilde{\varphi }^k)=0\) for some \(\tilde{\varphi }^k\in \tilde{\Phi }\). While the matrix \(\tilde{L}\) typically has a good sparsity pattern in applications (often tridiagonal), the presence of \(\tilde{B}(\tilde{\delta }^k)\) prevents \(A(\tilde{\varphi }^k)\) from inheriting the same structure in general, and makes solving the previous system more costly. (See [26] where this issue is addressed for HJB problems with jump diffusions and regime switching, among others.)

Motivated by the previous observation, this section considers an alternative choice for SolveImpulseControl: an instance of a very general class of algorithms known as fixed-point policy iteration [14, 26]. As far as the author knows, it has not been applied to impulse control before, other than heuristically in [3]. Instead of solving \(-A(\tilde{\varphi }^k)v^{k+1}+b(\tilde{\varphi }^k)=0\) at the k-th iteration, we will solve

(scaled by \(\lambda \)) where the previous iterate value \(v^k\) is given and \(\tilde{\Psi }^k\) is the diagonal matrix with \(\psi ^k\) as diagonal. In other words, we split the original policy matrix \(A(\tilde{\varphi })=\tilde{\mathbb {A}}(\tilde{\varphi })-\tilde{\mathbb {B}}(\tilde{\varphi })\) and we apply a one-step fixed-point approximation,

at each iteration of Howard’s algorithm. The resulting method can be expressed as follows (tol and scale are as in Algorithm 2.2.1):

Lines 1–3 of Sect. 2.3.1 deal with restricting the constrained problem, while the rest give a routine that can be applied to any QVI of the form (2.2.3). Starting from some suboptimal \(\tilde{v}^0\) and \(I^0\), one computes a new payoff \(\tilde{v}^1\) by solving the coupled equations \(\tilde{M}\tilde{v}^0-\tilde{v}^1=0\) on \(I^0\) and \(\tilde{L}\tilde{v}^1+\tilde{f}=0\) outside \(I^0\). A new intervention region \(I^1=\big \{\tilde{L}\tilde{v}^1+\tilde{f}\le \lambda \big (\tilde{M}\tilde{v}^1-\tilde{v}^1\big )\big \}\) is defined and the procedure is iterated.

Algorithmically, the difference with classical policy iteration is that \(\tilde{v}^{k+1}\) is computed in Line 7 with a fixed obstacle \(\tilde{M}\tilde{v}^k\), changing a quasivariational inequality for a variational one. The resulting method is intuitive and simple to implement, and the linear system (2.3.1) (Line 7) inherits the sparsity pattern of \(\tilde{L}\). For example, for an SDD tridiagonal \(\tilde{L}\), the system can be solved (exactly in exact arithmetic or stably in floating point arithmetic) in O(n) operations, with \(n=|D|\) [28, Sect.9.5]. The matrix-vector multiply \(\tilde{B}(\tilde{\delta }^k)\tilde{v}^k\) can take at most \(O(n^2)\) operations, but will reduce to O(n) for standard discretizations of impulse operators.

It is also worth mentioning that Sect. 2.3.1 differs from the so-called iterated optimal stopping [16, 33] in that the latter solves \(\max \big \{\tilde{L} \tilde{v}^{k+1} + \tilde{f}, \tilde{M} \tilde{v}^k - \tilde{v}^{k+1}\big \}=0\) exactly at the k-th iteration (by running a full subroutine of Howard’s algorithm with fixed obstacle), while the former only performs one approximation step.
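The iteration described above (fixed obstacle on the current intervention region, linear equation elsewhere, then an update of the region) can be sketched on a toy problem. Everything in the block is an illustrative assumption rather than the paper's subroutine: a truncated tridiagonal \(\tilde{L}\) with discount, running payoff \(f(i)=i\), impulses that only shift to the right with cost \(K+\kappa |\delta |\), no admissible impulse at the last state, \(\lambda =1\), \(I^0=\emptyset \), and an absolute stopping rule. The restriction step of the constrained problem is omitted.

```python
import math

def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal system in O(n); lower[0] and upper[-1] play no role."""
    n = len(rhs)
    c, d = [0.0] * n, [0.0] * n
    c[0], d[0] = upper[0] / diag[0], rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x

# Toy data (hypothetical): states 0..n-1, diffusion sigma, discount rho.
n, sigma, rho, K, kappa = 11, 1.0, 1.0, 1.0, 0.1
f = [float(i) for i in range(n)]

def apply_L(v):
    """Lv(i) = sigma (v(i-1) - 2 v(i) + v(i+1)) - rho v(i), with zero outside the grid."""
    out = []
    for i in range(n):
        left = v[i - 1] if i > 0 else 0.0
        right = v[i + 1] if i < n - 1 else 0.0
        out.append(sigma * (left - 2.0 * v[i] + right) - rho * v[i])
    return out

def apply_M(v):
    """Mv(i) = max over j > i of v(j) - K - kappa (j - i); -inf if no impulse exists."""
    return [max((v[j] - K - kappa * (j - i) for j in range(i + 1, n)),
                default=-math.inf) for i in range(n)]

def solve_impulse_control(tol=1e-12, max_iter=10_000):
    region = [False] * n          # I^0 is empty
    v = [0.0] * n                 # irrelevant when I^0 is empty
    for _ in range(max_iter):
        Mv = apply_M(v)
        # Rows in I: v_new = M v_old (identity row). Other rows: -L v_new = f.
        lower = [0.0 if region[i] else -sigma for i in range(n)]
        upper = [0.0 if region[i] else -sigma for i in range(n)]
        diag = [1.0 if region[i] else 2.0 * sigma + rho for i in range(n)]
        rhs = [Mv[i] if region[i] else f[i] for i in range(n)]
        v_new = thomas(lower, diag, upper, rhs)
        Lv, Mv_new = apply_L(v_new), apply_M(v_new)
        region = [Lv[i] + f[i] <= Mv_new[i] - v_new[i] for i in range(n)]
        change = max(abs(a - b) for a, b in zip(v_new, v))
        v = v_new
        if change < tol:
            break
    return v, region

v, region = solve_impulse_control()
Lv, Mv = apply_L(v), apply_M(v)
residual = [max(Lv[i] + f[i], Mv[i] - v[i]) for i in range(n)]
```

Because rows in the intervention region reduce to identity rows, the linear system stays tridiagonal and the O(n) solver applies at every iteration. The residual of the QVI at the returned payoff gives an a posteriori check of the result.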

To establish the convergence of Sect. 2.3.1 in the present framework, we add the following assumption:

- (A2) \(B(\delta )\) has nonnegative diagonal elements for all \(\delta \in Z\).

Remark 2.3.1

(A2) and the requirement of (A1) that \({\text {Id}}-B(\delta )\) be a WDD \(\text{ L }_0\)-matrix are equivalent to \(B(\delta )\) being substochastic (see Appendix A). This is standard for impulse operators (see Sect. 2.6) and other applications of fixed-point policy iteration [26, Sect.4-5].
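The equivalence stated in the remark can be checked entry by entry on small examples. The sketch below uses the usual definitions (a substochastic matrix has nonnegative entries and row sums at most one; an \(\text{ L }_0\)-matrix has nonnegative diagonal and nonpositive off-diagonal entries). The function names and the two matrices are illustrative; the first matrix mimics an impulse discretized with linear interpolation weights.

```python
def is_substochastic(B):
    return all(all(b >= 0 for b in row) and sum(row) <= 1 for row in B)

def identity_minus(B):
    n = len(B)
    return [[(1 if i == j else 0) - B[i][j] for j in range(n)] for i in range(n)]

def is_wdd_L0(A):
    """Weakly diagonally dominant with nonnegative diagonal, nonpositive off-diagonal."""
    n = len(A)
    for i in range(n):
        off = [A[i][j] for j in range(n) if j != i]
        if A[i][i] < 0 or any(a > 0 for a in off) or A[i][i] < sum(-a for a in off):
            return False
    return True

def has_nonnegative_diagonal(B):
    return all(B[i][i] >= 0 for i in range(len(B)))

# Row 0 interpolates between states 1 and 2; rows 1 and 2 point to state 2.
B1 = [[0.0, 0.5, 0.5],
      [0.0, 0.0, 1.0],
      [0.0, 0.0, 1.0]]

# Id - B2 is a WDD L0-matrix, but B2 has a negative diagonal entry.
B2 = [[-0.5, 0.5, 0.0],
      [0.0, 1.0, 0.0],
      [0.0, 0.0, 1.0]]

checks = [(is_wdd_L0(identity_minus(B)) and has_nonnegative_diagonal(B),
           is_substochastic(B)) for B in (B1, B2)]
```

The second matrix shows why (A2) is not implied by (A1) alone. Exact comparisons are used here because the entries are dyadic; with general floating point data a small tolerance would be needed.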

Theorem 2.3.2

Assume (A0)–(A2) and set \(I^0=\emptyset \). Then, for every \(G_{\le 0}\subseteq D\subseteq G\) and \(w\in \mathbb {R}^G\), the sequence \((\tilde{v}^k)\) defined by SolveImpulseControl(w, D) is monotone increasing for \(k\ge 1\) and converges to the unique solution of (2.2.3).

Proof

We can assume without loss of generality that \(\lambda =1\). Sect. 2.3.1 takes the form of a fixed-point policy iteration algorithm as per (2.3.2). Assumptions (A0), (A1) ensure (2.2.3) has a unique solution (Theorem 2.2.3) and that this scheme satisfies [26, Cond.3.1 (i),(ii)]. That is, \(\tilde{\mathbb {A}}(\tilde{\varphi })\) and \(\tilde{\mathbb {A}}(\tilde{\varphi })-\tilde{\mathbb {B}}(\tilde{\varphi })\) are nonsingular M-matrices (see proof of Theorem 2.2.3 and Appendix A) and all coefficients are bounded since \(\tilde{\Phi }\) is finite. In [26, Thm.3.4] convergence is proved under one additional assumption of \(\Vert \cdot \Vert _{\infty }\)-contractiveness [26, Cond.3.1 (iii)], which is not verified in our case. However, the same computations show that the scheme satisfies

Since \(I^0=\emptyset \), and due to (A1) and (A2), \(\tilde{\mathbb {B}}(\tilde{\varphi }^0)=0\) and \(\tilde{\mathbb {B}}(\tilde{\varphi }^k)\ge 0\) for all k. Thus, \((\tilde{v}^k)_{k\ge 1}\) is increasing by monotonicity of \(\tilde{\mathbb {A}}(\tilde{\varphi }^k)\). Furthermore, it must be bounded, since for all \(k\ge 1\):

which gives \(\tilde{v}^{k+1}\le (\tilde{\mathbb {A}}(\tilde{\varphi }^k)-\tilde{\mathbb {B}}(\tilde{\varphi }^k))^{-1}\tilde{\mathbb {C}}\big (\tilde{\varphi }^k\big )\le \max _{\tilde{\varphi }\in \tilde{\Phi }}(\tilde{\mathbb {A}}(\tilde{\varphi })-\tilde{\mathbb {B}}(\tilde{\varphi }))^{-1}\tilde{\mathbb {C}}\big (\tilde{\varphi }\big )\). Hence, \((\tilde{v}^k)\) converges. That the limit solves (2.2.3) is proved as in [26, Lem.3.3]. \(\square \)

Remark 2.3.3

In light of Theorem 2.3.2, moving forward we will set \(I^0=\emptyset \) in Sect. 2.3.1. (Note that the value of \(\tilde{v}^0\) is irrelevant in this case, since \(\tilde{\mathbb {B}}\big (\tilde{\varphi }^0\big )=0\).) It is natural, however, to choose \(\tilde{v}^0=w_D\) and \(I^0=\big \{\tilde{L}\tilde{v}^0+\tilde{f}\le \lambda \big (\tilde{M}\tilde{v}^0-\tilde{v}^0\big )\big \}\). The experiments performed with the latter choice displayed faster, although non-monotone, convergence; convergence for this choice is not proved here. Additionally, exact convergence was often observed.

Remark 2.3.4

For the experiments in Sect. 3, SolveImpulseControl was chosen as Sect. 2.3.1 instead of classical policy iteration, since the former displayed overall lower runtimes when compared to the latter. However, it should be noted that the games considered have relatively large costs, with many parameter values taken from previous works [1, 3, 10]. For the smaller costs that often occur in practice, and especially for large-scale problems, the convergence rate of Sect. 2.3.1 can become very slow, as happens with iterated optimal stopping [34, Rmk.3.1]. This is not so for policy iteration, which converges superlinearly (see [11, Thm.3.4] and [34, Sect.5]), making it a better suited choice in this case (in terms of runtime as well). Furthermore, it has been demonstrated in [35, Sect.7] that the number of steps required for penalized policy iteration to converge remains bounded as the grid is refined. While penalized methods are not considered in the present work, such mesh-independence properties are desirable and could prove paramount in further studies of Algorithm 2.2.1 (see end of Sect. 2.5 and Sect. 3).

2.4 Overall Routine as a Fixed-Point Policy-Iteration-Type Method

The system of QVIs (2.1.1) cannot be reduced in any apparent way to a Bellman formulation (2.1.4) (see comments preceding equation). Notwithstanding, we shall see that Algorithm 2.2.1 does take a very similar form to a fixed-point policy iteration algorithm as in (2.3.2) for some appropriate \(\mathbb {A},\mathbb {B},\mathbb {C}\). Further, assumptions resembling those of the classical case [26] will be either satisfied or imposed to study its convergence. The matrix and graph-theoretic definitions and properties used throughout this section can be found in Appendix A.

Notation 2.4.1

We identify each intervention region \(I\subseteq G_{<0}\) with its indicator function \(\psi =\mathbbm {1}_I\in \{0,1\}^G\) and each \(\psi \) with the diagonal matrix \(\Psi =\text{ diag }(\psi )\in \mathbb {R}^{G\times G}\). The sequences \((v^k)\) and \((\varphi ^k)\), with \(\varphi ^k=(\psi ^k,\delta ^k)\), are the ones generated by Algorithm 2.2.1. SolveImpulseControl is defined as either Sect. 2.3.1 or Howard’s algorithm (Theorem 2.2.3), setting the outputs \(I,\delta \) as done for the former subroutine. We consider \(v^*\in \mathbb {R}^G\) fixed and \(\varphi ^*=(\psi ^*,\delta ^*(v^*))\) the induced strategy with \(\psi ^*{:}{=}\{Lv^*+f\le Mv^*-v^*\}\cap G_{<0}\).

Proposition 2.4.2

Assume (A0)–(A2). Then,

- (i) \(\psi ^k=\mathbbm {1}_{\{Lv^k+f\le Mv^k-v^k\}\cap G_{<0}} \text{ and } \delta ^k\in {{\,\mathrm{arg\,max}\,}}_{\delta \in Z}\big \{B(\delta )v^k-c(\delta )\big \}\).

- (ii) \(\mathbb {A}\big (\varphi ,\overline{\varphi }\big ){:}{=}{\text {Id}} -\big ({\text {Id}} - \overline{\Psi }-S\Psi S\big )({\text {Id}}+L) - \overline{\Psi }B(\overline{\delta })\) is a WCDD \(\text{ L }_0\)-matrix, and thus a nonsingular M-matrix.

- (iii) \(\mathbb {B}\big (\varphi \big ){:}{=}S\Psi B(\delta )S=\text{ diag }(S\psi ) S B(\delta )S\) is substochastic.

- (iv) \(\mathbb {C}\big (\varphi ,\overline{\varphi }\big ){:}{=}\big ({\text {Id}}-\overline{\Psi }-S\Psi S\big )f - \overline{\Psi }c(\overline{\delta }) + S\Psi S g(S\delta )\).

Proof

Using that \((v^{k+1},I^{k+1},\delta ^{k+1})=\textsc {SolveImpulseControl}(v^{k+1/2},(-I^k)^c)\) solves the constrained QVI problem (2.2.2) for \(D=(-I^k)^c\) and \(w=v^{k+1/2}\) (Theorems 2.2.3 or 2.3.2), the recurrence relation (2.4.1) results from simple algebraic manipulation.

Given \(\varphi ,\overline{\varphi }\in \Phi \), (A0) and (A1) ensure \(\mathbb {A}\big (\varphi ,\overline{\varphi }\big )\) is a WCDD \(\text{ L }_0\)-matrix, while (A1) and (A2) imply \(\mathbb {B}\big (\varphi \big )\) is substochastic.

The following corollary is immediate by induction. It gives a representation of the sequence of payoffs in terms of the improving strategies throughout the algorithm.

Corollary 2.4.3

Assume (A0)– (A2). Then,Footnote 19

We now establish some properties of the strategy-dependent matrix coefficients that will be useful in the sequel. Given a WDD (resp. substochastic) matrix \(A\in \mathbb {R}^{G\times G}\), we define its set of “non-trouble states” (or rows) as

and its index of connectivity \(\text{ con }A\) (resp. index of contraction \(\widehat{\text{ con }}A\)) by computing for each state the least length that needs to be walked on graphA to reach a non-trouble one, and then taking the maximum over all states (more details in Appendix A). This recently introduced concept gives an equivalent characterization of the WCDD property for a WDD matrix as \(\text{ con }A<+\infty \), and can be efficiently checked for sparse matrices in \(O(|G|)\) operations [7]. On the other hand, if A is substochastic then \(\widehat{\text{ con }}A<\infty \) if and only if its spectral radius verifies \(\rho (A)<1\) (Theorem A.1.6). The proof of the following lemma can be found in Appendix A.

Lemma 2.4.4

Assume (A0)–(A2). Then for all \(\varphi ,\overline{\varphi }\in \Phi \), \(\mathbb {A}^{-1}\mathbb {B}\big (\varphi ,\overline{\varphi }\big )\) is substochastic, \((\mathbb {A}-\mathbb {B})\big (\varphi ,\overline{\varphi }\big )\) is a WDD \(\text{ L }_0\)-matrix and \(\widehat{\text{ con }}\big [\mathbb {A}^{-1}\mathbb {B}\big (\varphi ,\overline{\varphi }\big )\big ]\le \text{ con }\big [(\mathbb {A}-\mathbb {B})\big (\varphi ,\overline{\varphi }\big )\big ]\).
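The index of connectivity of a WDD matrix, as described verbally above, can be computed with a multi-source breadth-first search started from the SDD rows. The sketch below is a dense illustration that follows that verbal description only; the precise definition (including any offset conventions) is the one in Appendix A, and the function name and example matrices are hypothetical.

```python
from collections import deque
import math

def connectivity_index(A):
    """Maximum over rows of the shortest walk length in graph(A) to an SDD row.

    Returns math.inf if some row cannot reach an SDD row (A is then not WCDD).
    """
    n = len(A)

    def sdd_row(i):
        return abs(A[i][i]) > sum(abs(A[i][j]) for j in range(n) if j != i)

    dist = [0 if sdd_row(i) else math.inf for i in range(n)]
    queue = deque(i for i in range(n) if dist[i] == 0)
    while queue:
        y = queue.popleft()
        for x in range(n):
            # Edge x -> y in graph(A) whenever A[x][y] is nonzero.
            if dist[x] == math.inf and A[x][y] != 0:
                dist[x] = dist[y] + 1
                queue.append(x)
    return max(dist)

# Rows 0 and 1 are only WDD; row 2 is SDD and is reached via 0 -> 1 -> 2.
A_chain = [[1, -1, 0],
           [0, 1, -1],
           [0, 0, 1]]

# Rows 0 and 1 only point at each other and never reach the SDD row 2.
A_stuck = [[1, -1, 0],
           [-1, 1, 0],
           [0, 0, 1]]

con_chain = connectivity_index(A_chain)
con_stuck = connectivity_index(A_stuck)
```

The inner loop scans a full column, so this version costs \(O(|G|^2)\). The linear cost quoted from [7] relies on sparse adjacency lists instead.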

As previously mentioned, system (2.1.1) may have no solution. The matrix coefficients introduced in this section allow us to algebraically characterize the existence of such solutions through strategy-dependent linear systems of equations.

Proposition 2.4.5

Assume (A0)– (A2). Then the following statements are equivalent:

- (i) \(v^*\) solves the system of QVIs (2.1.1).

- (ii) \(\mathbb {A}\big (\varphi ^*,\varphi ^*\big )v^*=\mathbb {B}\big (\varphi ^*\big )v^* +\mathbb {C}\big (\varphi ^*,\varphi ^*\big )\).

As mentioned in Remark 2.2.2, Assumption (A0) constrains the type of strategies the player can use, but without taking into account the opponent’s response. This is enough for the single-player constrained problems to have a solution and, therefore, for Algorithm 2.2.1 to be well defined. But we cannot expect this restriction to be sufficient in the study of the two-player game and the convergence of the overall routine.

In order to improve the result of Proposition 2.4.5 let us consider the following stronger version of (A0) reflecting the interaction between the player and the opponent.

- (A0’) For each pair of strategies \(\varphi ,\overline{\varphi }\in \Phi \), and for each \(x\in \overline{I}\cup (-I)\), there exists a walk in graph\(\big (\overline{\Psi }B(\overline{\delta })+S\Psi B(\delta )S\big )\) from row x to some row \(y\in \overline{C}\cap C\), where \(\overline{C}={\overline{I}}^c,\ C=I^c\).

Remark 2.4.6

(Interpretation) If \(\overline{\varphi },-\varphi \) are the strategies used by the player and the opponent respectively,Footnote 20 then (A0’) asserts that states in their intervention regions will eventually be shifted to the common continuation region. This precludes infinite simultaneous interventions and emulates the admissibility condition of the continuous-state case. Fixing \(I=\emptyset \) we recover (A0). Additionally, (A0’) together with (A1) imply that \((\mathbb {A}-\mathbb {B})(\varphi ,\overline{\varphi })\) is a WCDD \(\text{ L }_0\)-matrix,Footnote 21 hence an M-matrix. This is another one of the assumptions of the classical fixed-point policy iteration [26].

Under this new assumption, the \(\varphi ^*=\varphi ^*(v^*)\)-dependent systems of Proposition 2.4.5 will admit a unique solution. Then solving the original problem (2.1.1) amounts to finding \(v^*\in \mathbb {R}^G\) that solves its induced linear system of equations.

Proposition 2.4.7

Assume (A0’), (A1), (A2). In the context of Proposition 2.4.5, the following statements are also equivalent:

- (iv) \(v^*=(\mathbb {A}-\mathbb {B})^{-1}\mathbb {C}\big (\varphi ^*,\varphi ^*\big )\).

- (v) \(v^*= ({\text {Id}}-\mathbb {A}^{-1}\mathbb {B})^{-1}\mathbb {A}^{-1}\mathbb {C}\big (\varphi ^*,\varphi ^*\big )=\sum _{n\ge 0}\big (\mathbb {A}^{-1}\mathbb {B}\big )^n\mathbb {A}^{-1}\mathbb {C}\big (\varphi ^*,\varphi ^*\big )\). (cf. equation (2.4.2).)

Proof

Both expressions result from rewriting and solving the systems of Proposition 2.4.5. Assumptions (A0’), (A1) guarantee that \((\mathbb {A}-\mathbb {B})\big (\varphi ^*,\varphi ^*\big )\) is WCDD (Remark 2.4.6) and, in particular, nonsingular. Then (v) is due to Lemma 2.4.4, Theorem A.1.6 and the matrix power series expansion \(({\text {Id}}-X)^{-1}=\sum _{n\ge 0}X^n\), when \(\rho (X)<1\). \(\square \)
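The power series expansion used for (v) can be illustrated on a small substochastic matrix X with one row summing to exactly one but spectral radius below one. The matrix, the vector b and the helper `matvec` are illustrative stand-ins for \(\mathbb {A}^{-1}\mathbb {B}\) and \(\mathbb {A}^{-1}\mathbb {C}\), not quantities from the paper.

```python
def matvec(X, v):
    return [sum(a * b for a, b in zip(row, v)) for row in X]

# Substochastic, first row sums to 1, spectral radius sqrt(0.5) < 1.
X = [[0.0, 1.0],
     [0.5, 0.0]]
b = [1.0, 1.0]

# Partial sums of sum_{n >= 0} X^n b.
term, series = b[:], [0.0, 0.0]
for _ in range(200):
    series = [s + t for s, t in zip(series, term)]
    term = matvec(X, term)

# Direct solution of (Id - X) v = b by Cramer's rule (2 x 2 case).
a11, a12 = 1.0 - X[0][0], -X[0][1]
a21, a22 = -X[1][0], 1.0 - X[1][1]
det = a11 * a22 - a12 * a21
direct = [(b[0] * a22 - a12 * b[1]) / det, (a11 * b[1] - a21 * b[0]) / det]
```

Both computations give the vector (4, 3) up to rounding, even though X is not a contraction in \(\Vert \cdot \Vert _\infty \).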

2.5 Convergence Analysis

We now study the convergence properties of Algorithm 2.2.1. Henceforth, the UIP refers to the obvious discrete analogue of Definition 1.2.1, where we replace the domain \(\mathbb {R}\), the impulse constraints \(\mathcal {Z}\) and the operator \(\mathcal {M}\) by their discretizations \(G,\ Z\) and M respectively.

The obvious first question to address is whether when Algorithm 2.2.1 converges, it does so to a solution of the system of QVIs (2.1.1). Unlike in the classical Bellman problem (2.1.4), problem (2.1.1) is intrinsically dependent on the particular strategy chosen by the player (see Propositions 2.4.5 and 2.4.7). Accordingly, we start with a lemma addressing what can be said about the convergence of the strategies \((\varphi ^k)\) when the payoffs \((v^k)\) converge.

Notation 2.5.1

\(\partial I^*{:}{=}\{Lv^*+f=Mv^*-v^*\}\cap G_{<0}\) denotes the “border” of the intervention region \(\{Lv^*+f\le Mv^*-v^*\}\cap G_{<0}\) defined by \(v^*\).

Lemma 2.5.2

Assume (A0)– (A2) and suppose \(v^k\rightarrow v^*\). Then:

- (i) \(\psi ^k\rightarrow \psi ^*\) in \((\partial I^*)^c\) and \(Mv^k\rightarrow Mv^*\).

- (ii) If \(\overline{\psi },\overline{\delta }\) are any two limit points of \((\psi ^k),(\delta ^k)\) resp.,Footnote 22 then

$$\begin{aligned} \overline{\delta }\in \mathop {{{\,\mathrm{arg\,max}\,}}}\limits _{\delta \in Z}\big \{B(\delta )v^*-c(\delta )\big \},\quad \overline{\psi }=0 \text{ on } G_{\ge 0}\quad \text{ and }\quad \overline{\psi }\in \mathop {{{\,\mathrm{arg\,max}\,}}}\limits _{i\in \{0,1\}}\big \{O_iv^*\big \} \text{ on } G_{<0}, \end{aligned}$$

with \(O_0v=Lv+f\) and \(O_1v=Mv-v\).

- (iii) If \(v^*\) has the UIP, then \(\delta ^k\rightarrow \delta ^*(v^*)\) and \(Hv^k\rightarrow Hv^*\).

Proof

That \(Mv^k\rightarrow Mv^*\) is clear by continuity of the operators \(B(\delta )\) and finiteness of Z.

Let \(x\in (\partial I^*)^c\) and suppose \(Lv^*(x)+f(x)<Mv^*(x)-v^*(x)\) (the other case being analogous). By continuity of L and M there must exist some \(k_0\) such that \(Lv^k(x)+f(x)<Mv^k(x)-v^k(x)\) for all \(k\ge k_0\), which implies \(\psi ^k(x)=1=\psi ^*(x)\) for \(k\ge k_0\).

The statement about \(\overline{\psi },\overline{\delta }\) is proved as before by considering appropriate subsequences. Consequently, if \(v^*\) has the UIP, then necessarily \(\delta ^k\rightarrow \delta ^*(v^*)\) and \(Hv^k\rightarrow Hv^*\). \(\square \)

As a corollary we can establish that, should the sequence \((v^k)\) converge, its limit must solve problem (2.1.1). If convergence is not exact (i.e., not attained in finitely many iterations), however, then we will ask that \(v^*\) verifies some of the properties of the Verification Theorem in Corollary 1.3.9; namely, the UIP and a discrete analogue of continuity on the border of the opponent’s intervention region. We emphasise that our main motivation in solving system (2.1.1) lies in Corollary 1.3.9 and its framework. Additionally, in most practical situations and for fine-enough grids, one can intuitively expect the discretization of an equilibrium payoff as in Corollary 1.3.9 to inherit the UIP. Lastly, we note that the exact equality \(Lv^*+f=Mv^*-v^*\) will typically not be verified for any point in the grid in practice, giving \(\partial I^*=\emptyset \).

Corollary 2.5.3

Assume (A0)– (A2) and suppose \(v^k\rightarrow v^*\). Then:

- (i) If the convergence is exact, then \(v^*\) solves the system of QVIs (2.1.1).

- (ii) If \(v^*\) has the UIP and \(Lv^*+f=Hv^*-v^*\) on \(-\partial I^*\), then \(v^*\) solves (2.1.1).

Proof

(i) is immediate from the definition of Algorithm 2.2.1.

In the general case, since \(\{0,1\}^G\) is finite, there is a subsequence of \(\big (\psi ^k,\psi ^{k+1}\big )\) that converges to some pair \((\psi ,\overline{\psi })\). Passing to such subsequence, by Lemma 2.5.2, the UIP of \(v^*\) and equation (2.4.1), we get that \(v^*\) solves the system \(\mathbb {A}\big (\varphi ,\overline{\varphi }\big )v^*=\mathbb {B}\big (\varphi \big )v^* +\mathbb {C}\big (\varphi ,\overline{\varphi }\big )\) for \(\varphi =\big (\psi ,\delta ^*(v^*)\big ),\overline{\varphi }=\big (\overline{\psi },\delta ^*(v^*)\big )\) and \(\psi ,\overline{\psi }\) coincide with \(\psi ^*\) except possibly on \(\partial I^*\). Thus, it only remains to show that \(v^*\) also solves the equations of the system (2.1.1) for any \(x\in \partial I^*\cup (-\partial I^*)\).

For \(x\in \partial I^*\), the previous is true by definition. Suppose now \(x\in -\partial I^*\subseteq {\overline{I}}^c\). We have \(\psi ^*(-x)=1\). If \(\psi (-x)=1\), there is nothing to prove. If \(\psi (-x)=0\), then \(x\in {\overline{I}}^c\cap (-I)^c\) and \(0=Lv^*(x)+f(x)=Hv^*(x)-v^*(x)\), where the last equality holds true by assumption. \(\square \)

Lemma 2.5.2 shows to what extent the convergence of the payoffs implies the convergence of the strategies. The following theorem, of theoretical interest, establishes a reciprocal under the stronger assumption (A0’). In general, since the set of strategies \(\Phi \) is finite, the sequence of strategy-dependent coefficients of the fixed-point equations (2.4.1) will always be bounded and have finitely many limit points. However, if the approximating strategies are such that these coefficients converge, then Algorithm 2.2.1 is guaranteed to converge. Further, instead of looking at the convergence of \(\big (\mathbb {A},\mathbb {B},\mathbb {C}\big )\big (\varphi ^k,\varphi ^{k+1}\big )\), we can consider the weaker condition of \(\big (\mathbb {A}^{-1}\mathbb {B},\mathbb {A}^{-1}\mathbb {C}\big )\big (\varphi ^k,\varphi ^{k+1}\big )\) converging.

Theorem 2.5.4

Assume (A0’), (A1), (A2). If \(\big (\mathbb {A}^{-1}\mathbb {B}\big (\varphi ^k,\varphi ^{k+1}\big )\big )\) and \(\big (\mathbb {A}^{-1}\mathbb {C}\big (\varphi ^k,\varphi ^{k+1}\big ) \big )\) converge, then \((v^k)\) converges.

Proof

Set \(b=\lim _k \mathbb {A}^{-1}\mathbb {C}\big (\varphi ^k,\varphi ^{k+1}\big )\). Since \(\Phi \) is finite, there must exist \(k_0\in \mathbb {N}\) and \(\varphi ,\overline{\varphi }\in \Phi \) such that \(\mathbb {A}^{-1}\mathbb {B}\big (\varphi ^k,\varphi ^{k+1}\big )=\mathbb {A}^{-1}\mathbb {B}\big (\varphi ,\overline{\varphi }\big )\) and \(\mathbb {A}^{-1}\mathbb {C}\big (\varphi ^k,\varphi ^{k+1}\big )=b\) for all \(k\ge k_0\). Moreover, under our assumptions, \((\mathbb {A}-\mathbb {B})\big (\varphi ,\overline{\varphi }\big )\) is a WCDD \(\text{ L }_0\)-matrix. Then Lemma 2.4.4 and Theorem A.1.6 imply that \(\mathbb {A}^{-1}\mathbb {B}\big (\varphi ,\overline{\varphi }\big )\) is contractive for some matrix norm. Lastly, note that the sequence of payoffs \((v^k)_{k\ge k_0}\) now satisfies the classical (constant-coefficients) contractive fixed-point recurrence \(v^{k+1}= \mathbb {A}^{-1}\mathbb {B}\big (\varphi ,\overline{\varphi }\big )v^k + b\), which converges to the unique fixed-point of the equation. \(\square \)
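The mechanism used in the proof can be illustrated numerically. The following Python sketch uses a hypothetical \(2\times 2\) substochastic matrix `M` as a stand-in for \(\mathbb {A}^{-1}\mathbb {B}\big (\varphi ,\overline{\varphi }\big )\) and a hypothetical vector `b`; neither comes from the discretization of this paper. The matrix is only \(\Vert \cdot \Vert _\infty \)-nonexpansive, yet its square is \(\Vert \cdot \Vert _\infty \)-contractive (its spectral radius is \(\sqrt{1/2}\)), so the constant-coefficient recurrence \(v^{k+1}=Mv^k+b\) still converges to the unique fixed point.

```python
def mat_vec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def mat_mul(P, Q):
    """Matrix-matrix product for matrices stored as lists of rows."""
    cols = list(zip(*Q))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in P]


def inf_norm(M):
    """Induced infinity norm: the largest absolute row sum."""
    return max(sum(abs(m) for m in row) for row in M)


def fixed_point(M, b, v0, tol=1e-12, max_iter=10_000):
    """Iterate v <- M v + b until successive iterates differ by less than tol."""
    v = list(v0)
    for k in range(1, max_iter + 1):
        v_new = [y + c for y, c in zip(mat_vec(M, v), b)]
        if max(abs(x - y) for x, y in zip(v_new, v)) < tol:
            return v_new, k
        v = v_new
    raise RuntimeError("no convergence within max_iter iterations")


# Hypothetical toy data (assumption): row sums are at most 1, with equality in row 0.
M = [[0.0, 1.0],
     [0.5, 0.0]]
b = [1.0, 1.0]

M2 = mat_mul(M, M)                                # equals 0.5 * identity
v_star, n_iter = fixed_point(M, b, [0.0, 0.0])    # exact fixed point is (4, 3)
print(inf_norm(M), inf_norm(M2), v_star, n_iter)
```

Here the infinity norm of `M` is exactly one, so the classical uniform-contraction argument does not apply directly, while the norm of `M2` is one half.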

The classical fixed-point policy-iteration framework [14, 26] assumes uniform contractiveness in \(\Vert \cdot \Vert _\infty \) of the sequence of operators. This is a natural norm to consider in a context where matrices have properties defined row by row, such as diagonal dominance.Footnote 23 However, the authors mention convergence in experiments where only \(\Vert \cdot \Vert _\infty \)-nonexpansiveness held true. The latter is the typical case in our context, for the matrices \(\mathbb {A}^{-1}\mathbb {B}\big (\varphi ^k,\varphi ^{k+1}\big )\), which is why Theorem 2.5.4 relies on the fact that a spectral radius strictly smaller than one guarantees contractiveness in some matrix norm.

It is natural to ask whether there is some contractiveness condition that may account for the observations in [14, 26] and that can be generalized to our context to further the study of Algorithm 2.2.1. Imposing a uniform bound on the spectral radii would not only be hard to check, but also difficult to manipulate, as the spectral radius is not sub-multiplicative.Footnote 24 Instead, we can consider the sequential indices of contraction and connectivity, which naturally generalize those of the previous section by means of walks in the graph of a sequence of matrices (see Appendix A for more details). As before, they can be identified with one another (see Lemma A.1.5) and, given substochastic matrices, the sequential index of contraction tells us how many we need to multiply before the result becomes \(\Vert \cdot \Vert _\infty \)-contractive (Theorem A.1.7). Thus, let us consider a uniform bound on the following sequential indices of connectivity:

(A0”) There exists \(m\in \mathbb {N}_0\) such that for any sequence of strategies \((\overline{\varphi }^k)\subseteq \Phi \),

$$\begin{aligned} \text{ con }\left[ \Big ((\mathbb {A}-\mathbb {B})\big (\overline{\varphi }^k,\overline{\varphi }^{k+1}\big )\Big )\right] \le m. \end{aligned}$$

Remark 2.5.5

Given \(\varphi ,\overline{\varphi }\in \Phi \), by considering the sequence \(\varphi ,\overline{\varphi },\varphi ,\overline{\varphi },\dots \), we see that (A0”) implies (A0’). In fact, (A0”) can be interpreted as precluding infinite simultaneous impulses even when the players can adapt their strategies (cf. Remark 2.4.6) and imposing that the number of shifts needed for any state to reach the common continuation region is bounded.

Under this stronger assumption, we have:

Proposition 2.5.6

Assume (A0”), (A1), (A2). Then \((v^k)\) is bounded.

Proof

In a similar way to Lemma 2.4.4, one can check that under (A0”), (A1), (A2) we have the following uniform bound for the sequential indices of contraction:

for any sequence of strategies \((\varphi ^k)\subseteq \Phi \). In other words, not only is any product of the previous substochastic matrices also substochastic (i.e., \(\Vert \cdot \Vert _\infty \)-nonexpansive), but it is also \(\Vert \cdot \Vert _\infty \)-contractive when there are at least \(m+1\) factors. Furthermore, since \(\Phi ^{m+1}\) is finite, there must be a uniform contraction constant \(C_1<1\). Let \(C_2>0\) be a uniform bound for \(\mathbb {A}^{-1}\mathbb {C}\). By the representation (2.4.2)Footnote 25

\(\square \)
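The role of the uniform constant \(C_1\) can be checked by brute force on a small example. The Python sketch below takes a hypothetical family of three \(3\times 3\) substochastic matrices (toy stand-ins for the matrices \(\mathbb {A}^{-1}\mathbb {B}\big (\varphi ^k,\varphi ^{k+1}\big )\), not derived from any actual discretization) and computes the largest \(\Vert \cdot \Vert _\infty \)-norm over all ordered products of a given length. In this toy family, state 2 may need two steps to reach state 0, the only row with sum below one, so three factors are needed before every product is contractive.

```python
from itertools import product


def mat_mul(P, Q):
    """Matrix-matrix product for matrices stored as lists of rows."""
    cols = list(zip(*Q))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in P]


def inf_norm(M):
    """Induced infinity norm: the largest absolute row sum."""
    return max(sum(abs(m) for m in row) for row in M)


def worst_product_norm(family, length):
    """Largest infinity norm over all ordered products with `length` factors."""
    worst = 0.0
    for factors in product(family, repeat=length):
        P = factors[0]
        for Q in factors[1:]:
            P = mat_mul(P, Q)
        worst = max(worst, inf_norm(P))
    return worst


# Hypothetical family (assumption): row 0 always has sum 0.5; rows 1 and 2
# put their mass on a state with a smaller index.
M1 = [[0.5, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
M2 = [[0.5, 0.0, 0.0], [1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
M3 = [[0.5, 0.0, 0.0], [0.5, 0.0, 0.0], [0.0, 1.0, 0.0]]
FAMILY = [M1, M2, M3]

norms = {n: worst_product_norm(FAMILY, n) for n in (1, 2, 3)}
print(norms)
```

Because the family is finite, the maximum over all products of three factors is attained and is strictly smaller than one, which is the finiteness argument used above for \(\Phi ^{m+1}\).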

Given \(n_0\in \mathbb {N}\) and \(k>(m+1)n_0\), the same argument as in the previous proof shows that one can decompose \((v^k)\) as \( v^{k+1}=u^k + F(\varphi ^{k-(m+1)n_0},\dots ,\varphi ^k) + w^k, \) for a fixed function F, \(\Vert u^k\Vert _\infty \le C_1^{[k/(m+1)]}\Vert v^0\Vert _\infty \rightarrow 0\) and \(\Vert w^k\Vert _\infty \le (m+1)C_2\sum _{n=n_0}^\infty C_1^n\). The latter is small if \(n_0\) is large. Hence, one could heuristically expect that the trailing strategies are often the ones dominating the convergence of the algorithm. In fact, in all the experiments carried out with a discretization satisfying (A0”), (A1), (A2), a dichotomous behaviour was observed: the algorithm either converged or at some point entered a cycle between a few payoffs. In the latter case, and restricting attention to instances in which one heuristically expects a solution to exist (more details in Sect. 3), it was possible to reduce both the residual to the QVIs and the distance between the iterates by refining the grid.

The previous observation motivates the study of Algorithm 2.2.1 when the grid is sequentially refined, instead of fixed. Such an analysis, however, would likely require a viscosity solutions framework as in [2, 12], which does not currently exist in the literature of nonzero-sum stochastic impulse games. Consequently, this analysis and the stronger convergence results that may come out of it are outside the scope of this paper.

2.6 Discretization Schemes

Let us conclude this section by showing how one can discretize the symmetric system of QVIs (1.3.1) to obtain (2.1.1) in a way that satisfies the assumptions present throughout the paper. Recall that we work on a given symmetric grid \(G:\ x_{-N}=-x_N<\dots<x_{-1}=-x_1<x_0=0<x_1<\dots <x_N\).

Firstly, we want a discretization L of the operator \(\mathcal {A}-\rho {\text {Id}}\) such that \(-L\) is an SDD \(L_0\)-matrix as per (A1). A standard way to do this is to approximate the first (resp. second) order derivatives with forward and backward (resp. central) differences in such a way that we approximate the ordinary differential equation (ODE) \(\frac{1}{2}\sigma ^2 V''+\mu V' - \rho V + f=0\) with an upwind (or positive-coefficients) scheme. More precisely, for each \(x=x_i\in G\) we approximate the first derivative with a forward (resp. backward) difference if its coefficient in the previous equation is nonnegative (resp. negative) at \(x_i\),

$$\begin{aligned} V'(x_i)\approx \frac{V(x_{i+1})-V(x_i)}{x_{i+1}-x_i}\qquad \left( \text{resp. } V'(x_i)\approx \frac{V(x_i)-V(x_{i-1})}{x_i-x_{i-1}}\right) , \end{aligned}$$

and the second derivative by

$$\begin{aligned} V''(x_i)\approx \frac{\dfrac{V(x_{i+1})-V(x_i)}{x_{i+1}-x_i}-\dfrac{V(x_i)-V(x_{i-1})}{x_i-x_{i-1}}}{\frac{1}{2}\big (x_{i+1}-x_{i-1}\big )}. \end{aligned}$$

In the case of an equispaced grid with step size h, this reduces toFootnote 26

$$\begin{aligned} V'(x)\approx \frac{V(x\pm h)-V(x)}{\pm h},\qquad V''(x)\approx \frac{V(x+h)-2V(x)+V(x-h)}{h^2}. \end{aligned}$$

For the previous stencils to be defined in the extreme points of the grid, we consider two additional points \(x_{-N-1},x_{N+1}\) and replace \(V(x_{-N-1}),V(x_{N+1})\) in the previous formulas by some values resulting from artificial boundary conditions. A common choice is to impose Neumann conditions to solve for \(V(x_{-N-1}),V(x_{N+1})\) using the first order differences from before. For example, in the equispaced grid case, given \(\text{ LBC },\text{ RBC }\in \mathbb {R}\) we solve for \(V(x_{-N}-h)\) (resp. \(V(x_N+h)\)) from the Neumann condition

$$\begin{aligned} \frac{V(x_{-N})-V(x_{-N}-h)}{h}=\text{ LBC }\qquad \left( \text{resp. } \frac{V(x_N+h)-V(x_N)}{h}=\text{ RBC }\right) , \end{aligned}$$

yielding \(V(x_{-N}-h)\approx V(x_{-N})-\text{ LBC }h\) (resp. \(V(x_N+h)\approx V(x_N)+ \text{ RBC } h\)). The choice of \(\text{ LBC },\text{ RBC }\) is problem-specific and intrinsically linked to that of \(x_N\), although it does not affect the properties of the discrete operators. See more details in Sect. 3.

The described procedure leads to a discretization of the ODE as \(Lv+f=0\), with L satisfying the properties we wanted (with strict diagonal dominance due to \(\rho >0\)). Note that the values of f at \(x_{-N},x_N\) need to be modified to account for the boundary conditions.
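As an illustration of the procedure just described, the following Python sketch assembles an upwind matrix on an equispaced grid and checks numerically that \(-L\) has a positive diagonal, non-positive off-diagonal entries and strictly dominant rows. The function names, the coefficient functions and the test problem are hypothetical choices made for this example; the Neumann ghost nodes are eliminated as in the text, which shifts part of the boundary stencils into the source term.

```python
def upwind_operator(n_half, h, mu, sigma, rho, f, lbc, rbc):
    """Discretize 0.5*sigma^2*V'' + mu*V' - rho*V + f = 0 on x_i = i*h, |i| <= n_half.

    Returns the grid, the matrix L (list of rows) and the source term adjusted
    for the Neumann ghost nodes.
    """
    xs = [i * h for i in range(-n_half, n_half + 1)]
    n = len(xs)
    L = [[0.0] * n for _ in range(n)]
    f_mod = [f(x) for x in xs]
    for i, x in enumerate(xs):
        diffusion = 0.5 * sigma(x) ** 2 / h ** 2
        lower = diffusion + max(-mu(x), 0.0) / h  # weight of V(x - h): backward difference
        upper = diffusion + max(mu(x), 0.0) / h   # weight of V(x + h): forward difference
        diag = -(lower + upper + rho)
        if i > 0:
            L[i][i - 1] = lower
        else:
            # Left ghost node: V(x - h) ~ V(x) - lbc * h.
            diag += lower
            f_mod[i] -= lower * lbc * h
        if i < n - 1:
            L[i][i + 1] = upper
        else:
            # Right ghost node: V(x + h) ~ V(x) + rbc * h.
            diag += upper
            f_mod[i] += upper * rbc * h
        L[i][i] = diag
    return xs, L, f_mod


def minus_L_is_sdd_l0(L):
    """Check that -L has positive diagonal, non-positive off-diagonals, strictly dominant rows."""
    for i, row in enumerate(L):
        off = [a for j, a in enumerate(row) if j != i]
        if row[i] >= 0.0 or any(a < 0.0 for a in off) or -row[i] <= sum(off):
            return False
    return True


# Hypothetical test problem (assumption): mean-reverting drift, unit volatility.
RHO = 0.1
xs, L, f_mod = upwind_operator(
    n_half=4, h=0.5,
    mu=lambda x: -x, sigma=lambda x: 1.0, rho=RHO,
    f=lambda x: (RHO + 1.0) * x,  # chosen so that V(x) = x solves the ODE
    lbc=1.0, rbc=1.0,             # V'(x) = 1 at both ends of the grid
)
# Residual of L v + f for the exact solution v(x_i) = x_i (should vanish).
residual = [sum(a * x for a, x in zip(row, xs)) + fi for row, fi in zip(L, f_mod)]
print(minus_L_is_sdd_l0(L), max(abs(r) for r in residual))
```

In this sketch every row of `L` sums to \(-\rho \), which is where the strict diagonal dominance comes from, and the linear test solution is reproduced up to rounding because first-order differences are exact on affine functions.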

Remark 2.6.1

One could increase the overall order of approximation by using central differences as much as possible for the first order derivatives, provided the scheme remains upwind (see [25, 38] for more details). This is not done here in order to simplify the presentation.

We now approximate the impulse constraint sets \(\mathcal {Z}(x)\) (\(x\in \mathbb {R}\)) by finite sets \(\emptyset \ne Z(x)\subseteq [0,+\infty )\) (\(x\in G\)), such that \(Z(x)=\{0\}\) if \(x\ge 0\), and define the impulse operators

where \(v[\![y]\!]\) denotes linear interpolation of v at y using the closest nodes on the grid, and \(v[\![y]\!]=v(x_{\pm N})\) if \(\pm y>\pm x_{\pm N}\) (i.e., “no extrapolation”). This uniquely defines the discrete loss and gain operators M and H as per (2.1.2), as well as the optimal impulse \(\delta ^*\) according to (2.1.3). The set of discrete strategies \(\Phi \) is defined as in (2.2.1).
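The interpolation rule \(v[\![y]\!]\) can be sketched in a few lines of Python. The function names below are hypothetical, and the map \(x\mapsto v[\![x+\delta (x)]\!]\) is an assumed form of the impulse operator, chosen to agree with the expression \(B(\delta )v(x)=v(x+\delta (x))\) of Example 2.6.2 when the shifted point lies on the grid.

```python
from bisect import bisect_right


def interp_no_extrapolation(xs, v, y):
    """Linear interpolation of the grid function v at y, constant outside the grid."""
    if y <= xs[0]:
        return v[0]
    if y >= xs[-1]:
        return v[-1]
    k = bisect_right(xs, y)                      # xs[k - 1] <= y < xs[k]
    alpha = (xs[k] - y) / (xs[k] - xs[k - 1])    # weight of the left node
    return alpha * v[k - 1] + (1.0 - alpha) * v[k]


def apply_impulse(xs, v, delta):
    """Evaluate x -> v[[x + delta(x)]] at every grid node; delta is a list of impulses."""
    return [interp_no_extrapolation(xs, v, x + d) for x, d in zip(xs, delta)]


# Hypothetical data (assumption): a five-node symmetric grid and a convex payoff.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
v = [4.0, 1.0, 0.0, 1.0, 4.0]
delta = [1.5, 0.25, 0.0, 0.0, 0.0]   # impulses only at negative nodes

shifted = apply_impulse(xs, v, delta)
print(shifted)
```

Each row of the resulting linear map has at most two nonzero weights, which are nonnegative and sum to one.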

This general discretization scheme satisfies assumptions (A0)–(A2) and one can impose some regularity conditions on the sets \(\mathcal {Z}(x)\) and Z(x) such that the solutions of the discrete QVI problems (2.2.2) converge locally uniformly to the unique viscosity solution of the analytical impulse control problem, as the grid is refined.Footnote 27 See [2, 6] for more details.

Example 2.6.2

In the case where \(\mathcal {Z}(x)=[0,+\infty )\) for \(x<0\), a natural and simple choice for Z(x) is \(Z(x_i)=\{0,x_{i+1}-x_i,\dots ,x_N-x_i\}\) for \(i<0\). In this case, \(B(\delta )v(x) = v(x+\delta (x))\) and \(Hv(x)=v(x-\delta ^*(-x))+g(x,-\delta ^*(-x))\). This choice, however, does not satisfy (A0’).

In order to preclude infinite simultaneous interventions it is enough to constrain the size of the impulses so that the symmetric point of the grid cannot be reached. That is, \(Z(x)\subseteq [0,-2x)\) for any \(x\in G_{<0}\). In this case, the scheme satisfies the stronger conditions (A0”), (A1), (A2) (and in particular, (A0’)). Intuitively, each positive impulse will lead to a state which is at least one node closer to \(x_0=0\), where no player intervenes. Practically, it makes sense to make this choice when one suspects (or wants to check whether) there is a symmetric NE with no “far-reaching impulses”, in the previous sense.

Proof

To check that (A0”) is indeed satisfied, consider some arbitrary \(\varphi =(I,\delta ),\ \overline{\varphi }=(\overline{I},\overline{\delta })\) and \(x_i\in G\). Note first that if \(x_i\) belongs to the common continuation region \({\overline{I}}^c\cap (-I)^c\) (as is the case for \(x_0=0\)), then the i-th row of \((\mathbb {A}-\mathbb {B})\big (\varphi ,\overline{\varphi }\big )\) is equal to the i-th row of \(-L\), which is SDD by (A1). We claim that, in general, either the i-th row of \((\mathbb {A}-\mathbb {B})\big (\varphi ,\overline{\varphi }\big )\) is SDD or there is an edge in its graph from \(x_i\) to some \(x_j\) with \(|j|<|i|\). This immediately implies that (in the notation of (A0”)) one can take \(m=N\), as there will always be a walk in graph\(\Big ((\mathbb {A}-\mathbb {B})\big (\overline{\varphi }^k,\overline{\varphi }^{k+1}\big )\Big )\) from \(x_i\) to some SDD row, with length at most N. (The longest possible walk goes all the way to the 0-th row, with the distance decreasing by one unit per step.)

To prove the claim, suppose first that \(x_i\in \overline{I}\) (i.e., the intervention region of the player). Hence, \(i<0\). If \(\overline{\delta }=0\), then the i-th row of \((\mathbb {A}-\mathbb {B})\big (\varphi ,\overline{\varphi }\big )\) is again equal to the i-th row of \({\text {Id}}\), thus SDD. If \(0<\overline{\delta }<-2x_i\), then there exist an index k and \(\alpha ,\beta \ge 0\) such that \(i< k\le |i|\), \(\alpha +\beta =1\) and \(x_i+\overline{\delta }=\alpha x_{k-1} +\beta x_k\). Accordingly,

If \(x_i<x_i+\overline{\delta }\le x_{|i|-1}\), then we can assume without loss of generality that \(\beta >0\) and take \(j=k\). (If \(\beta =0\), simply relabel \(k-1\) as k.) If \(x_{|i|-1}<x_i+\overline{\delta }< -x_i = x_{|i|}\), then \(\alpha >0\) and we can take \(j=k-1\).

The case of \(x_i\in -I\) is symmetric and can be proved in the same manner. \(\square \)

Example 2.6.3

If \(\mathcal {Z}(x)=[0,+\infty )\) for \(x<0\), the analogue of Example 2.6.2 is now \(Z(x_i)=\{0,x_{i+1}-x_i,\dots ,x_{-i-1}-x_i\}\) for \(i<0\).
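For concreteness, the sets of this example can be generated as follows. This is a minimal Python sketch with hypothetical function names; it stores \(Z(x_i)\) in a dictionary keyed by the signed index i and checks the constraint \(Z(x)\subseteq [0,-2x)\) for the negative nodes.

```python
def impulse_sets(xs):
    """Return {i: Z(x_i)} with Z(x_i) = {0, x_{i+1}-x_i, ..., x_{-i-1}-x_i} if i < 0, else {0}."""
    n_half = (len(xs) - 1) // 2

    def node(j):
        # Grid value x_j for a signed index j in -N..N.
        return xs[j + n_half]

    Z = {}
    for i in range(-n_half, n_half + 1):
        if i < 0:
            # Target nodes x_j with i <= j <= -i - 1: the symmetric point x_{-i} is excluded.
            Z[i] = [node(j) - node(i) for j in range(i, -i)]
        else:
            Z[i] = [0.0]
    return Z


def respects_bound(xs, Z):
    """Check that 0 <= z < -2 * x_i for every z in Z(x_i) with i < 0."""
    n_half = (len(xs) - 1) // 2
    return all(
        0.0 <= z < -2.0 * xs[i + n_half]
        for i in range(-n_half, 0)
        for z in Z[i]
    )


# Hypothetical grid (assumption): N = 3 and step size 1.
xs = [float(i) for i in range(-3, 4)]
Z = impulse_sets(xs)
print(Z[-3], Z[-1], respects_bound(xs, Z))
```

With this grid, the node \(x_{-3}=-3\) admits impulses up to 5, so the farthest reachable state is \(x_2=2\) and the symmetric point \(x_3=3\) is never attained.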

Remark 2.6.4

Consider Example 2.6.3 in the context of Remark 2.1.1. As in Proposition 2.5.6 and due to Theorem A.1.7, the fewer impulses needed between the two players to reach the common continuation region, the faster the composition of the fixed-point operators of Algorithm 2.2.1 becomes contractive. Hence, one could intuitively expect that when close enough to the solution, the choice of the maximum arg-maximum in (2.1.3) improves the performance of Algorithm 2.2.1. This is another motivation for such a choice.

3 Numerical Results

This section presents numerical results obtained on a series of experiments. See Introduction and Sect. 1.3 for the motivation and applications behind some of them. We do not assume additional constraints on the impulses in the analytical problem. All the results presented were obtained on equispaced grids with step size \(h>0\) (to be specified) and with a discretization scheme as in Sect. 2.6 and Example 2.6.3. The extreme points of the grid are displayed on each graph.

For the games with linear costs and gains of the form \(c(x,\delta )=c^0+c^1\delta \) and \(g(x,\delta )=g^0+g^1\delta \), with \(c^0,c^1,g^0,g^1\) constant, the artificial boundary conditions were taken as LBC \(=c^1\) and RBC \(=g^1\) for a sufficiently extensive grid. They result from the observation that on a hypothetical symmetric NE of the form \(\varphi ^*=\big ((-\infty , \overline{x}], \delta ^*(x)=y^*-x\big )\), with \(\overline{x}<0,\ \overline{x}< y^*\in \mathbb {R}\), the equilibrium payoff verifies \(V(x)=V(y^*)-c^0-c^1(y^*-x)\) for \(x<\overline{x}\) and \(V(x)=V(-y^*)+g^0+g^1(x+y^*)\) for \(x>-\overline{x}\). For other examples, LBC, RBC and the extent of the grid were chosen by heuristic guesses and/or trial and error. However, in all the examples presented the error propagation from poorly chosen LBC, RBC was minimal.
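To spell out the step behind these boundary values: both expressions for V are affine in x away from the origin, so differentiating them gives constant slopes,

$$\begin{aligned} V'(x)&=\frac{\textrm{d}}{\textrm{d}x}\Big (V(y^*)-c^0-c^1(y^*-x)\Big )=c^1 \quad \text{for } x<\overline{x},\\ V'(x)&=\frac{\textrm{d}}{\textrm{d}x}\Big (V(-y^*)+g^0+g^1(x+y^*)\Big )=g^1 \quad \text{for } x>-\overline{x}. \end{aligned}$$

Hence, provided the grid is wide enough that \(x_{-N}<\overline{x}\) and \(x_N>-\overline{x}\), the Neumann conditions of Sect. 2.6 hold with LBC \(=c^1\) and RBC \(=g^1\) for such a hypothetical equilibrium.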