Abstract

In this paper, we analyse some equity-linked contracts that are related to drawdown and drawup events based on assets governed by a geometric spectrally negative Lévy process. Drawdown and drawup refer, respectively, to the difference between the historical maximum of the asset price and its current value, and to the difference between the current value and the historical minimum. We consider four contracts. In the first contract, a protection buyer pays a premium with a constant intensity p until a drawdown of fixed size occurs. In return, he/she receives a certain insured amount at the drawdown epoch, which depends on the drawdown level at that moment. The second contract may expire earlier if a certain fixed drawup event occurs prior to the fixed drawdown. The last two contracts are extensions of the previous ones with an additional cancellable feature that allows the investor to terminate the contracts earlier. In these cases, a fee for early stopping depends on the drawdown level at the stopping epoch. In this work, we focus on two problems: calculating the fair premium p for the basic contracts and finding the optimal stopping rule for the policies with a cancellable feature. To do this, we use the fluctuation theory of Lévy processes and rely on the theory of optimal stopping.



1 Introduction

The recent financial crises have shown that drawdown events can directly affect the incomes of individual and institutional investors. This observation suggests that drawdown protection can be very useful in practice. For this reason, in this paper we consider several insurance contracts that protect the buyer against a large drawdown. By the drawdown of a price process, we mean the distance of its current value from the maximum value it has attained to date. In return for the protection, the investor pays a premium. More precisely, we consider the following insurance contracts. In the simplest contract, the protection buyer pays a premium with a constant intensity until a drawdown of fixed size occurs. In return, he/she receives a certain insured amount at the drawdown epoch, which depends on the level of the drawdown at this moment. Another insurance contract provides protection against a specified drawdown with a drawup contingency. The drawup is defined as the current rise of the asset value above its running minimum. This contract may expire earlier if a certain fixed drawup event occurs prior to the fixed drawdown. This is a highly desirable feature of an insurance contract from the investor’s perspective. Indeed, when a large drawup is realised, there is little need to insure against a drawdown. Therefore, the drawup contingency automatically stops the premium payments, which potentially reduces the cost of drawdown insurance.

Moreover, the buyer of the insurance contract might come to believe that a large drawdown is unlikely and might want to stop paying the premium at some other random time. Therefore, we extend the previous two contracts by adding a cancellable feature. In this case, the fee for early stopping depends on the level of the drawdown at the stopping epoch.

We focus on two problems: calculating the fair premium \(p^*\) for the basic contracts, and showing that the investor’s optimal cancellation time is the first passage time of the drawdown process below some level. This allows us to identify the fair prices of all of the contracts described above.

The shortcomings of diffusion models in representing the risk related to large market movements have led to the development of various option pricing models with jumps, in which large log-returns are represented as discontinuities in prices as a function of time. Therefore, in this paper we model the price of the asset underlying these contracts by a geometric spectrally negative Lévy process. In this model, the log-price \(\log S_t=X_t\) is described by a Lévy process without positive jumps. This is a natural generalisation of the Black-Scholes market (for which \(X_t=\mu t+\sigma B_t\) is a Brownian motion with drift), which allows for a more realistic representation of price dynamics and greater flexibility in calibrating the model to market prices. It also makes it possible to reproduce a wide variety of implied volatility skews and smiles (see e.g. [2]).

In this paper we follow Zhang et al. [21] and Palmowski and Tumilewicz [11]. Zhang et al. [21] considered the Black-Scholes model, in contrast to our more general, Lévy-type market. However, they did not consider an insurance contract with a drawup contingency and cancellable feature.

In Zhang et al. [21] and Palmowski and Tumilewicz [11], the insured amount and the penalty fee are fixed constants. In this paper, we allow these quantities to depend on the level of the drawdown at the maturity of the contract or at the stopping epoch. This new feature allows for more flexible insurance contracts. Analysing this case also requires a deeper understanding of the position of the Lévy process at these stopping times, which is of independent theoretical interest. This can be achieved using the fluctuation theory of spectrally negative Lévy processes, which also yields refinements of, and new results in, optimal stopping theory. The research conducted in this paper continues a line of papers analysing drawdown and drawup processes; see, for example, [1, 4, 9, 14, 15, 17,18,19,20].

In this paper, we also give an extensive numerical analysis, which shows that the suggested optimal stopping times and the fair premium are easy to find and that the implemented algorithm is very efficient. We mainly focus on the cases where the logarithm of the asset price is a linear Brownian motion (Black-Scholes model) or a drift minus a compound Poisson process (the so-called Cramér–Lundberg risk process). The dependence of the prices of the considered contracts on the model parameters exhibits some interesting phenomena.

The rest of this paper is organised as follows. In Sect. 2 we introduce the main definitions, notation and identities that will be used later. In Sect. 3, we analyse the insurance contracts that are based only on drawdown (with and without a cancellable feature). In Sect. 4, we add the possibility of stopping at the first drawup (with and without a cancellable feature). Some of our proofs are given in the Appendix.

2 Preliminaries

We work on a complete filtered probability space \((\Omega ,\mathcal {F},{\mathbb {P}})\) satisfying the usual conditions. We model the logarithm of the risky underlying asset price \(\log S_t\) by a spectrally negative Lévy process \(X_t\); that is, \(X_t\) is a stochastic process with stationary and independent increments and right-continuous paths with finite left limits, which has only negative jumps (or no jumps at all, in which case \(X_t\) is a Brownian motion with linear drift). Any Lévy process is characterised by a triple \((\mu ,\sigma ,\Pi )\) through its characteristic function:

$$\begin{aligned} \log {\mathbb {E}}\left[ e^{i\theta X_1}\right] =i\mu \theta -\frac{\sigma ^2\theta ^2}{2}+\int _{{\mathbb {R}}}\left( e^{i\theta y}-1-i\theta y{\mathbb {1}}_{(|y|<1)}\right) \Pi (\mathop {}\!\mathrm {d}y),\qquad \theta \in {\mathbb {R}}, \end{aligned}$$(1)

where \(\mu \in {\mathbb {R}}\), \(\sigma \ge 0\) and a Lévy measure \(\Pi \) satisfies \(\int _{{\mathbb {R}}}(1\wedge y^2)\Pi (\mathop {}\!\mathrm {d}y)<\infty \).

In this paper we focus on two examples of the Lévy process \(X_t\), for which we compute all of the quantities explicitly and carry out the full numerical analysis. The first concerns a Black-Scholes market, under which \(X_t\) is the linear Brownian motion given by

$$\begin{aligned} X_t=\mu t+\sigma B_t, \end{aligned}$$(2)

where \(B_t\) is a standard Brownian motion; if \({\mathbb {P}}\) is a martingale measure, then \(\mu =r-\sigma ^2/2\), where r is the risk-free interest rate and \(\sigma >0\) is the volatility. Clearly, \(X_t\) in (2) is a spectrally negative Lévy process because it has no jumps.

Our second classical example is a Cramér–Lundberg process with exponentially distributed jumps:

$$\begin{aligned} X_t={\hat{\mu }}t-\sum _{i=1}^{N_t}\eta _i, \end{aligned}$$(3)

where \({\hat{\mu }}=\mu + \int _{(0,1)}y\Pi (-\mathop {}\!\mathrm {d}y)\), the sequence \(\{\eta _i\}_{i\ge 1}\) consists of i.i.d. exponentially distributed random variables with parameter \(\rho >0\), and \(N_t\) is a Poisson process with intensity \(\beta >0\), independent of this sequence.

These two examples describe the most important features of Lévy-type log-prices: their diffusive nature and their possible jumps. As such, they may serve as core examples of the theory presented in this paper.

The main message of this paper is that fair premiums, prices of all contracts and all optimal stopping rules can be expressed solely in terms of two special functions, the so-called scale functions. To define them properly, we introduce the Laplace exponent of \(X_t\):

$$\begin{aligned} \psi (\phi ):=\log {\mathbb {E}}\left[ e^{\phi X_1}\right] , \end{aligned}$$(4)

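which is well defined for \(\phi \ge 0\) due to the absence of positive jumps. Recall that, for \(\mu \in {\mathbb {R}}\), \(\sigma \ge 0\) and a Lévy measure \(\Pi \), by the Lévy-Khintchine theorem:

$$\begin{aligned} \psi (\phi )=\mu \phi +\frac{\sigma ^2\phi ^2}{2}+\int _{(0,\infty )}\left( e^{-\phi z}-1+\phi z{\mathbb {1}}_{(z<1)}\right) \Pi (-\mathop {}\!\mathrm {d}z). \end{aligned}$$(5)
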
Note that \(\psi \) is zero at the origin, tends to infinity at infinity and is strictly convex. Therefore, the right-inverse \(\Phi :[0,\infty )\rightarrow [0,\infty )\) of the Laplace exponent \(\psi \) given in (4) is well defined:

$$\begin{aligned} \Phi (r):=\sup \{\phi \ge 0:\ \psi (\phi )=r\},\qquad \psi (\Phi (r))=r,\quad r\ge 0. \end{aligned}$$

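For \(r\ge 0\), we define a continuous and strictly increasing function \(W^{(r)}\) on \([0,\infty )\), with \(W^{(r)}(x)=0\) for \(x<0\), whose Laplace transform is given by:

$$\begin{aligned} \int _0^\infty e^{-\phi x}W^{(r)}(x)\mathop {}\!\mathrm {d}x=\frac{1}{\psi (\phi )-r},\qquad \phi >\Phi (r). \end{aligned}$$(6)
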
This is the so-called first scale function. From this definition, it also follows that \(W^{(r)}\) is a non-negative function. The second scale function is related to the first one via the following relationship:

$$\begin{aligned} Z^{(r)}(x)=1+r\int _0^x W^{(r)}(y)\mathop {}\!\mathrm {d}y. \end{aligned}$$(7)

In this paper, we assume that either the process \(X_t\) has a non-trivial Gaussian component, that is, \(\sigma >0\) (hence it is of unbounded variation), or it is of bounded variation and \(y\mapsto \Pi (-\infty ,-y)\) is continuous for \(y>0\). From [5, Lem 2.4], it follows that

$$\begin{aligned} W^{(r)}\in C^1({\mathbb {R}}_+) \end{aligned}$$(8)

for \({\mathbb {R}}_+=(0,\infty )\). Moreover, under these assumptions \(X_t\) has an absolutely continuous transition density; that is, for any fixed \(t>0\), the law of the random variable \(X_t\) is absolutely continuous.
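
In practice, \(\Phi (r)\) is easy to compute numerically even when no closed form is available. Since \(\psi \) is convex with \(\psi (0)=0\), for \(r>0\) the set \(\{\phi \ge 0:\psi (\phi )\le r\}\) is the interval \([0,\Phi (r)]\), so bisection applies. The Python sketch below assumes \(r>0\) and a user-supplied callable `psi`. The Brownian parameters are those used for Fig. 1 below, whereas the Cramér–Lundberg values `hat_mu`, `beta`, `rho` are hypothetical. The Brownian comparison value \(\Phi (r)=(-\mu +\sqrt{\mu ^2+2r\sigma ^2})/\sigma ^2\) is simply the positive root of \(\mu \phi +\sigma ^2\phi ^2/2=r\).

```python
import math


def right_inverse(psi, r, tol=1e-12):
    """Phi(r) = sup{phi >= 0 : psi(phi) = r} for r > 0, computed by bisection.

    Assumes psi is convex with psi(0) = 0 and psi(phi) -> infinity, so that
    {phi >= 0 : psi(phi) <= r} is the interval [0, Phi(r)].
    """
    if r <= 0:
        raise ValueError("this sketch only handles r > 0")
    lo, hi = 0.0, 1.0
    while psi(hi) <= r:              # enlarge the bracket until psi(hi) > r
        lo, hi = hi, 2.0 * hi
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if psi(mid) <= r:            # invariant: psi(lo) <= r < psi(hi)
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


r = 0.01

# Linear Brownian motion (2), parameters of Fig. 1.
mu, sigma = 0.03, 0.4
psi_bm = lambda phi: mu * phi + 0.5 * sigma**2 * phi**2
phi_bm = right_inverse(psi_bm, r)
phi_bm_exact = (-mu + math.sqrt(mu**2 + 2 * r * sigma**2)) / sigma**2

# Cramer-Lundberg process (3) with Exp(rho) jumps, hypothetical parameters.
hat_mu, beta, rho = 0.3, 1.0, 5.0
psi_cl = lambda phi: hat_mu * phi - beta * phi / (rho + phi)
phi_cl = right_inverse(psi_cl, r)

print(phi_bm, phi_bm_exact, phi_cl)
```

Bisection is used here only because it needs nothing beyond convexity; a Newton iteration would converge faster but requires \(\psi '\).
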

Example 1

For the linear Brownian motion (2), the Laplace exponent equals

$$\begin{aligned} \psi (\phi )=\mu \phi +\frac{\sigma ^2}{2}\phi ^2 \end{aligned}$$

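and, therefore, the scale functions are given, for \(x\ge 0\), by

$$\begin{aligned} W^{(r)}(x)=\frac{2}{\sigma ^2\delta }e^{-\frac{\mu }{\sigma ^2}x}\sinh (\delta x),\qquad Z^{(r)}(x)=e^{-\frac{\mu }{\sigma ^2}x}\left( \cosh (\delta x)+\frac{\mu }{\sigma ^2\delta }\sinh (\delta x)\right) , \end{aligned}$$

where

$$\begin{aligned} \delta :=\frac{\sqrt{\mu ^2+2r\sigma ^2}}{\sigma ^2}. \end{aligned}$$
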
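
The sketch below is a numerical sanity check, under the parameters used for Fig. 1, that the Brownian scale functions \(W^{(r)}(x)=\frac{2}{\sigma ^2\delta }e^{-\mu x/\sigma ^2}\sinh (\delta x)\) and \(Z^{(r)}(x)=e^{-\mu x/\sigma ^2}(\cosh (\delta x)+\frac{\mu }{\sigma ^2\delta }\sinh (\delta x))\), with \(\delta =\sqrt{\mu ^2+2r\sigma ^2}/\sigma ^2\), satisfy \((Z^{(r)})'=rW^{(r)}\), as required by (7), and have the Laplace transform (6) at a point \(\phi >\Phi (r)\). The truncation point and the grid of the trapezoidal rule are arbitrary choices.

```python
import math

# Parameters used for Fig. 1 (Black-Scholes market).
r, mu, sigma = 0.01, 0.03, 0.4
k = mu / sigma**2
delta = math.sqrt(mu**2 + 2 * r * sigma**2) / sigma**2


def W(x):
    """First scale function W^(r) of X_t = mu*t + sigma*B_t (zero for x < 0)."""
    if x < 0:
        return 0.0
    return 2.0 / (sigma**2 * delta) * math.exp(-k * x) * math.sinh(delta * x)


def Z(x):
    """Second scale function Z^(r)(x) = 1 + r * int_0^x W^(r)(y) dy (one for x < 0)."""
    if x < 0:
        return 1.0
    return math.exp(-k * x) * (math.cosh(delta * x) + k / delta * math.sinh(delta * x))


def psi(phi):
    return mu * phi + 0.5 * sigma**2 * phi**2


def laplace_W(phi, upper=60.0, n=60_000):
    """Trapezoidal approximation of int_0^upper exp(-phi*x) W(x) dx."""
    h = upper / n
    total = 0.5 * (W(0.0) + math.exp(-phi * upper) * W(upper))
    for i in range(1, n):
        x = i * h
        total += math.exp(-phi * x) * W(x)
    return total * h


# Check Z' = r W at x = 3 by a central difference.
h = 1e-5
dZ = (Z(3 + h) - Z(3 - h)) / (2 * h)
# Check the Laplace transform (6) at phi = 1, which exceeds Phi(r) here.
lt_num, lt_exact = laplace_W(1.0), 1.0 / (psi(1.0) - r)
print(dZ, r * W(3), lt_num, lt_exact)
```
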
Example 2

For the Cramér–Lundberg process (3) we have

$$\begin{aligned} \psi (\phi )={\hat{\mu }}\phi -\frac{\beta \phi }{\rho +\phi } \end{aligned}$$

and, hence, for \(\phi \ge 0\),

where

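
For the exponential-jump model, one way to obtain \(W^{(r)}\) (not necessarily in the form used in this paper) is by partial fractions. Multiplying \(\psi (\phi )=r\) by \(\rho +\phi \) gives a quadratic equation with roots \(\Phi (r)>0\) and \(\zeta (r)\in (-\rho ,0)\), and inverting \(1/(\psi (\phi )-r)\) term by term yields \(W^{(r)}(x)=\big ((\rho +\Phi (r))e^{\Phi (r)x}-(\rho +\zeta (r))e^{\zeta (r)x}\big )/\big ({\hat{\mu }}(\Phi (r)-\zeta (r))\big )\). The sketch below assumes \({\hat{\mu }}>0\), \(r>0\) and hypothetical parameter values, and checks \(W^{(r)}(0)=1/{\hat{\mu }}\) and the Laplace transform (6) numerically.

```python
import math

# Hypothetical parameters; hat_mu > 0 is the drift in (3).
r, hat_mu, beta, rho = 0.01, 0.3, 1.0, 5.0


def psi(phi):
    """Laplace exponent of X_t = hat_mu*t - sum_{i<=N_t} eta_i, eta_i ~ Exp(rho)."""
    return hat_mu * phi - beta * phi / (rho + phi)


# psi(phi) = r  <=>  hat_mu*phi^2 + (hat_mu*rho - beta - r)*phi - r*rho = 0
b = hat_mu * rho - beta - r
disc = math.sqrt(b**2 + 4 * hat_mu * r * rho)
Phi = (-b + disc) / (2 * hat_mu)     # Phi(r) > 0
zeta = (-b - disc) / (2 * hat_mu)    # second root, lies in (-rho, 0)


def W(x):
    """W^(r) obtained by partial fractions of 1/(psi(phi) - r)."""
    if x < 0:
        return 0.0
    num = (rho + Phi) * math.exp(Phi * x) - (rho + zeta) * math.exp(zeta * x)
    return num / (hat_mu * (Phi - zeta))


def laplace_W(phi, upper=60.0, n=60_000):
    """Trapezoidal approximation of int_0^upper exp(-phi*x) W(x) dx."""
    h = upper / n
    total = 0.5 * (W(0.0) + math.exp(-phi * upper) * W(upper))
    for i in range(1, n):
        x = i * h
        total += math.exp(-phi * x) * W(x)
    return total * h


lt_num, lt_exact = laplace_W(1.0), 1.0 / (psi(1.0) - r)
print(Phi, zeta, W(0.0), lt_num, lt_exact)
```

The jump of \(W^{(r)}\) at zero (from 0 to \(1/{\hat{\mu }}\)) reflects the bounded variation of this model, in contrast to the Brownian case where \(W^{(r)}(0)=0\).
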
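Let us denote the running supremum and infimum of X by

$$\begin{aligned} {\overline{X}}_t:=\sup _{0\le s\le t}X_s,\qquad {\underline{X}}_t:=\inf _{0\le s\le t}X_s. \end{aligned}$$
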
In this paper, we analyse insurance contracts related to the drawdown and drawup processes, whose classical definitions are as follows. The drawdown is the difference between the running maximum of the process and its current value, whereas the drawup is the difference between the current value of the process and its running minimum. Without loss of generality, let us assume that \(X_0=0\). Additionally, we allow the drawdown and drawup processes to start from some levels \(d\ge 0\) and \(u\ge 0\), respectively. That is,

$$\begin{aligned} D_t:=\Big ( d\vee \sup _{0\le s\le t}X_s\Big ) -X_t,\qquad U_t:=X_t-\Big ( (-u)\wedge \inf _{0\le s\le t}X_s\Big ) . \end{aligned}$$

Thus, the values d and \(-u\) may be interpreted as the historical maximum and the historical minimum of the process X. In practice, the level \(X_0=0\) can be regarded as the present position of the log-price of the asset under consideration, which makes this interpretation of d and \(-u\) even clearer.

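The following first passage times of the drawdown and drawup processes, respectively, are crucial for what follows:

$$\begin{aligned} \tau _{D}^+(a):=\inf \{t\ge 0: D_t\ge a\},\qquad \tau _{U}^+(b):=\inf \{t\ge 0: U_t\ge b\}, \end{aligned}$$
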
for some \(a,b>0\).
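
On a discretised path, \(D_t\), \(U_t\) and the grid analogues of \(\tau _D^+(a)\) and \(\tau _U^+(b)\) can be computed in one pass by tracking the running extrema, started from d and \(-u\). The sketch below simulates a linear Brownian motion with an Euler scheme; the seed, step size, horizon, initial levels and thresholds are hypothetical, and passage times on a grid only approximate the continuous-time ones.

```python
import math
import random


def drawdown_drawup(path, d=0.0, u=0.0):
    """Drawdown D and drawup U along a discretised path with path[0] = X_0 = 0.

    D_t = max(d, running max of X) - X_t and U_t = X_t - min(-u, running min of X).
    """
    D, U = [], []
    run_max, run_min = d, -u
    for x in path:
        run_max = max(run_max, x)
        run_min = min(run_min, x)
        D.append(run_max - x)
        U.append(x - run_min)
    return D, U


def first_passage(values, level):
    """Index of the first grid point with values[i] >= level, or None if never reached."""
    for i, v in enumerate(values):
        if v >= level:
            return i
    return None


# Euler scheme for X_t = mu t + sigma B_t (hypothetical seed, step size and horizon).
rng = random.Random(2024)
mu, sigma, dt, n_steps = 0.03, 0.4, 0.01, 10_000
path = [0.0]
for _ in range(n_steps):
    path.append(path[-1] + mu * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0))

D, U = drawdown_drawup(path, d=0.2, u=0.1)
tau_D = first_passage(D, 1.0)    # grid analogue of tau_D^+(a) with a = 1
tau_U = first_passage(U, 1.0)    # grid analogue of tau_U^+(b) with b = 1
print(tau_D, tau_U)
```
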

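In what follows, for fixed \(d,\ u\) we use the following notational convention:

$$\begin{aligned} {\mathbb {P}}_{|d}\left[ \cdot \right] :={\mathbb {P}}\left[ \cdot |D_0=d\right] ,\quad {\mathbb {P}}_{|d|u}\left[ \cdot \right] :={\mathbb {P}}\left[ \cdot |D_0=d,U_0=u\right] ,\quad {\mathbb {P}}_{x|d}\left[ \cdot \right] :={\mathbb {P}}\left[ \cdot |X_0=x,D_0=d\right] ,\quad {\mathbb {P}}_{x|d|u}\left[ \cdot \right] :={\mathbb {P}}\left[ \cdot |X_0=x,D_0=d,U_0=u\right] . \end{aligned}$$
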
Finally, we denote \({\mathbb {P}}_{x}\left[ \cdot \right] :={\mathbb {P}}\left[ \cdot |X_0=x\right] \), with \({\mathbb {P}}={\mathbb {P}}_{0}\), and \({\mathbb {E}}_{|d}, {\mathbb {E}}_{x|d},{\mathbb {E}}_{|d|u}, {\mathbb {E}}_{x|d|u}, {\mathbb {E}}_{x}, {\mathbb {E}}\) denote the expectations corresponding to the above measures. We also use the notational convention \({\mathbb {E}}[\cdot \ {\mathbb {1}}_{(A)}]={\mathbb {E}}[\cdot ;A]\).

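A seminal observation in the fluctuation theory of Lévy processes is that the scale functions (6) and (7) solve the so-called exit problems:

$$\begin{aligned} {\mathbb {E}}_{x}\left[ e^{-r\tau _a^+};\ \tau _a^+<\tau _0^-\right] =\frac{W^{(r)}(x)}{W^{(r)}(a)}, \end{aligned}$$(9)
$$\begin{aligned} {\mathbb {E}}_{x}\left[ e^{-r\tau _0^-};\ \tau _0^-<\tau _a^+\right] =Z^{(r)}(x)-Z^{(r)}(a)\frac{W^{(r)}(x)}{W^{(r)}(a)}, \end{aligned}$$(10)

where \(0\le x\le a\), \(r\ge 0\) and

$$\begin{aligned} \tau _a^+:=\inf \{t\ge 0: X_t>a\},\qquad \tau _0^-:=\inf \{t\ge 0: X_t<0\} \end{aligned}$$
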
are the first passage times of the process \(X_t\). We finish this section with the formula (given in Mijatović and Pistorius [10, Thm. 3]) that identifies the joint law of \(\{\tau _{U}^+(b), {\overline{X}}_{\tau _{U}^+(b)}, {\underline{X}}_{\tau ^+_{U}(b)}\}\), for \(r,u,v\ge 0\):

3 Drawdown Insurance Contract

3.1 Fair Premium

In this section we consider the insurance contract in which a protection buyer pays a constant premium \(p>0\) continuously until a drawdown of size \(a>0\) occurs. In return, he/she receives the reward \(\alpha (D_{\tau _{D}^+(a)})\), which depends on the value of the drawdown process at this moment. It is natural to assume that \(\alpha (\phi )=0\) for \(\phi <a\). Let \(r\ge 0\) be the risk-free interest rate. The price of the first, basic contract that we consider in this paper equals the discounted value of the future cash flows:

$$\begin{aligned} f(d,p):={\mathbb {E}}_{|d}\left[ -\int _0^{\tau _{D}^+(a)}e^{-rt}p\mathop {}\!\mathrm {d}t+e^{-r\tau _{D}^+(a)}\alpha \left( D_{\tau _{D}^+(a)}\right) \right] . \end{aligned}$$(12)

In this contract, the investor wants to protect herself/himself against the asset price \(S_t=e^{X_t}\) falling below its previous maximum by more than a factor \(e^a\), for some fixed \(a>0\). In other words, she/he believes that, even if the price goes up again after the first drawdown of size a (in log-price), this will not bring her/him sufficient profit. Therefore, she/he is ready to take this type of contract to reduce the loss by receiving \(\alpha (D_{\tau _{D}^+(a)})\) at the drawdown epoch.

Note that we define the drawdown contract value (12) from the investor’s perspective. It represents the investor’s average profit when it is positive and the average loss when it is negative. Thus, the contract is unprofitable for the insurance company in the first case and for the investor in the second case. The only situation that is fair to both sides is when the contract value equals zero. The premium p for which this holds is therefore the one at which the contract should be written; at the very least, it can serve as a basic economic reference premium.

Definition 1

The premium p is said to be fair when the contract value at its inception is equal to 0. We denote this fair premium by \(p^*\). We add the initial drawdown (and later the initial drawup) as an argument to emphasise its dependence on the initial conditions, e.g. writing \(p^*(d)\) or \(p^*(d,u)\).

The main goal of this section, and of the paper, is to identify the fair premium \(p^*\) for the first contract (12) in a spectrally negative Lévy-type market.

Let us start with the basic observation that

$$\begin{aligned} f(d,p)=\frac{p}{r}\xi (d)-\frac{p}{r}+\Xi (d), \end{aligned}$$(13)

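where

$$\begin{aligned} \xi (d):={\mathbb {E}}_{|d}\left[ e^{-r\tau _{D}^+(a)}\right] , \end{aligned}$$(14)
$$\begin{aligned} \Xi (d):={\mathbb {E}}_{|d}\left[ e^{-r\tau _{D}^+(a)}\alpha \left( D_{\tau _{D}^+(a)}\right) \right] \end{aligned}$$(15)

are the Laplace transform of \(\tau _{D}^+ (a)\) and the discounted reward function, respectively. Note that \(\xi \) is well defined and takes values in \([0,1]\).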

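Moreover, from now on we assume that for all \(d\ge 0\)

$$\begin{aligned} {\mathbb {E}}_{|d}\left[ e^{-r\tau _{D}^+(a)}\left| \alpha \left( D_{\tau _{D}^+(a)}\right) \right| \right] <\infty \end{aligned}$$(16)

for \(\Xi \) to be well defined. Observe that (16) holds, for example, for any bounded reward function \(\alpha \). To price the contract (12), we start by identifying the crucial functions \(\xi \) and \(\Xi \).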

Proposition 2

The value of the drawdown contract (12) equals (13) for \(\xi \) and \(\Xi \) given by:

and

where \(\Pi \) is the Lévy measure of underlying process \(X_t\) defined formally in (5).

Proof

See Appendix. \(\square \)

Note that \(\tau _D^+(a)<\infty \) a.s. which follows from Proposition 2 by taking \(r=0\) in \(\xi (d)\).
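
For the linear Brownian motion, one can evaluate \(\xi (d)\) through the classical identity \(\xi (d)=Z^{(r)}(a-d)-rW^{(r)}(a-d)W^{(r)}(a)/W^{(r)\prime }(a)\), which follows from the two-sided exit problems and the strong Markov property; we assume here that it coincides with the expression of Proposition 2. The sketch below, with the parameters of Fig. 1, checks that \(\xi (a)=1\), that \(\xi \) increases in d, and that \(\xi (0)\) is close to 1 for a tiny r, in line with \(\tau _D^+(a)<\infty \) a.s.

```python
import math


def bm_scale(r, mu, sigma):
    """Return (W, W', Z) for X_t = mu*t + sigma*B_t, to be evaluated at x >= 0."""
    k = mu / sigma**2
    delta = math.sqrt(mu**2 + 2 * r * sigma**2) / sigma**2
    c = 2.0 / (sigma**2 * delta)
    W = lambda x: c * math.exp(-k * x) * math.sinh(delta * x)
    dW = lambda x: c * math.exp(-k * x) * (delta * math.cosh(delta * x) - k * math.sinh(delta * x))
    Z = lambda x: math.exp(-k * x) * (math.cosh(delta * x) + k / delta * math.sinh(delta * x))
    return W, dW, Z


def xi(d, a, r, mu, sigma):
    """Laplace transform E_{|d}[exp(-r tau_D^+(a))] for 0 <= d <= a."""
    W, dW, Z = bm_scale(r, mu, sigma)
    return Z(a - d) - r * W(a - d) * W(a) / dW(a)


a, r, mu, sigma = 10.0, 0.01, 0.03, 0.4      # parameters of Fig. 1
values = [xi(d, a, r, mu, sigma) for d in (0.0, 5.0, 9.0)]
print(values, xi(a, a, r, mu, sigma), xi(0.0, a, 1e-10, mu, sigma))
```
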

From (13) and using Proposition 2, we can derive the following theorem.

Theorem 3

For the contract (12) the fair premium equals:

$$\begin{aligned} p^*(d)=\frac{r\,\Xi (d)}{1-\xi (d)}. \end{aligned}$$

Example 1

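(continued) The linear Brownian motion given in (2) is a continuous process and, therefore, \(D_{\tau _D^+(a)}=a\). The paid reward is always equal to \(\alpha (a):=\alpha \), which corresponds to the results for a constant reward function in [11]. The value function equals:

$$\begin{aligned} f(d,p)=\left( \frac{p}{r}+\alpha \right) \xi (d)-\frac{p}{r} \end{aligned}$$

and the fair premium \(p^*\) is given by:

$$\begin{aligned} p^*(d)=\frac{r\alpha \,\xi (d)}{1-\xi (d)}. \end{aligned}$$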
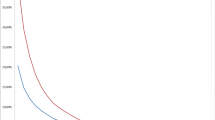

In Fig. 1, we demonstrate the \(p^*\) value for the drawdown insurance contract for the Black-Scholes market for various values of \(\alpha \). We choose the following parameters: \(r=0.01, \mu =0.03, \sigma =0.4, a=10\).

In Fig. 2, we present the contract value f for various premiums p, with the same parameters as above and \(\alpha =100\) fixed. As premium rates, we take the fair premiums corresponding to the initial drawdowns \(d=0\), \(d=5\), \(d=6\) and \(d=7\), respectively.
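
Since \(D_{\tau _D^+(a)}=a\) here, \(\Xi =\alpha \xi \), so Theorem 3 reduces to \(p^*(d)=r\alpha \xi (d)/(1-\xi (d))\) and the value function to \(f(d,p)=(p/r+\alpha )\xi (d)-p/r\). A minimal sketch for the setting of Fig. 2 follows. It evaluates \(\xi (d)\) by the classical Brownian identity \(\xi (d)=Z^{(r)}(a-d)-rW^{(r)}(a-d)W^{(r)}(a)/W^{(r)\prime }(a)\), assumed to agree with Proposition 2, and checks that each fair premium makes the contract value vanish at its own initial drawdown and that \(p^*(d)\) increases with d.

```python
import math

r, mu, sigma, a, alpha = 0.01, 0.03, 0.4, 10.0, 100.0   # Fig. 2 parameters
k = mu / sigma**2
delta = math.sqrt(mu**2 + 2 * r * sigma**2) / sigma**2
cW = 2.0 / (sigma**2 * delta)


def W(x):
    return cW * math.exp(-k * x) * math.sinh(delta * x)


def dW(x):
    return cW * math.exp(-k * x) * (delta * math.cosh(delta * x) - k * math.sinh(delta * x))


def Z(x):
    return math.exp(-k * x) * (math.cosh(delta * x) + k / delta * math.sinh(delta * x))


def xi(d):
    """E_{|d}[exp(-r tau_D^+(a))] for the linear Brownian motion."""
    return Z(a - d) - r * W(a - d) * W(a) / dW(a)


def value(d, p):
    """Drawdown contract value f(d, p) with the constant reward alpha."""
    return (p / r + alpha) * xi(d) - p / r


def fair_premium(d):
    """p*(d) = r * alpha * xi(d) / (1 - xi(d)), valid for 0 <= d < a."""
    x = xi(d)
    return r * alpha * x / (1.0 - x)


premiums = {d: fair_premium(d) for d in (0.0, 5.0, 6.0, 7.0)}
for d, p in premiums.items():
    print(f"d = {d}: p* = {p:.6f}, f(d, p*) = {value(d, p):.2e}")
```
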

Example 2

(continued) Let us now consider the Cramér–Lundberg process defined in (3). From [11] we have

where

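For the Cramér–Lundberg model we have \(D_{\tau _D^+(a)}>a\), because the drawdown increases only through the jumps of X. Let us consider a general reward function \(\alpha (d)\) for \(d\ge a\). Then

$$\begin{aligned} \Xi (d)={\mathbb {E}}\left[ \alpha \left( a+{\mathbf {e}}_{\rho }\right) \right] \xi (d), \end{aligned}$$

where \({\mathbf {e}}_{\rho }\) is an exponentially distributed random variable with parameter \(\rho \); indeed, by the memoryless property of the exponential jumps, the overshoot \(D_{\tau _D^+(a)}-a\) is exponentially distributed with parameter \(\rho \) and independent of \(\tau _D^+(a)\). Therefore, the value function and the fair premium are given by

$$\begin{aligned} f(d,p)=\left( \frac{p}{r}+{\mathbb {E}}\left[ \alpha \left( a+{\mathbf {e}}_{\rho }\right) \right] \right) \xi (d)-\frac{p}{r},\qquad p^*(d)=\frac{r\,{\mathbb {E}}\left[ \alpha \left( a+{\mathbf {e}}_{\rho }\right) \right] \xi (d)}{1-\xi (d)}. \end{aligned}$$
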
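From the above representation of \(\Xi \) we can easily check condition (16). For example, let \(\alpha (d)=\omega e^{\kappa d}\), where \(\omega \ne 0\) and \(\kappa \) are some constants. For this reward function, condition (16) is satisfied when

$$\begin{aligned} {\mathbb {E}}\left[ |\omega |e^{\kappa (a+{\mathbf {e}}_{\rho })}\right] =|\omega |e^{\kappa a}\int _0^\infty \rho e^{-(\rho -\kappa )y}\mathop {}\!\mathrm {d}y<\infty . \end{aligned}$$

This holds if and only if \(\kappa <\rho \), in which case \({\mathbb {E}}[\alpha (a+{\mathbf {e}}_{\rho })]=\omega e^{\kappa a}\rho /(\rho -\kappa )\). Another example is the linear reward function \(\alpha (d)=\alpha _1 +\alpha _2 d\) for some constants \(\alpha _1\) and \(\alpha _2.\) In this case

$$\begin{aligned} {\mathbb {E}}\left[ \alpha \left( a+{\mathbf {e}}_{\rho }\right) \right] =\alpha _1+\alpha _2\left( a+\frac{1}{\rho }\right) . \end{aligned}$$

Thus, the linear reward function always satisfies condition (16) for finite \(\alpha _1, \alpha _2\). In particular, for the linear reward function \(\alpha (\cdot )\) we get:

$$\begin{aligned} p^*(d)=\frac{r\left( \alpha _1+\alpha _2\left( a+\frac{1}{\rho }\right) \right) \xi (d)}{1-\xi (d)}. \end{aligned}$$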
For the Cramér–Lundberg model, Figs. 3 and 4 present, respectively, the fair premium \(p^*\) for various linear reward functions \(\alpha (d)\), and the contract value f(d, p) for two premiums: \(p=p^*(0)\) (which means that we start at the running maximum of the asset price) and \(p=p^*(7.5)\).
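
Since the overshoot over a is exponential in this model, \(\Xi (d)=\xi (d)\,{\mathbb {E}}[\alpha (a+{\mathbf {e}}_{\rho })]\), so the reward enters the fair premium only through \({\mathbb {E}}[\alpha (a+{\mathbf {e}}_{\rho })]\). The sketch below compares the closed forms \(\omega e^{\kappa a}\rho /(\rho -\kappa )\) (for \(\kappa <\rho \)) and \(\alpha _1+\alpha _2(a+1/\rho )\) with a direct trapezoidal integration against the exponential density; all numerical values are hypothetical and are not those behind Figs. 3 and 4.

```python
import math


def expected_reward_numeric(alpha, a, rho, upper=60.0, n=60_000):
    """E[alpha(a + e_rho)], e_rho ~ Exp(rho), via the trapezoidal rule on [0, upper]."""
    h = upper / n
    g = lambda y: alpha(a + y) * rho * math.exp(-rho * y)
    total = 0.5 * (g(0.0) + g(upper))
    for i in range(1, n):
        total += g(i * h)
    return total * h


def expected_exponential_reward(omega, kappa, a, rho):
    """Closed form of E[omega * exp(kappa * (a + e_rho))]; inf signals that (16) fails."""
    if kappa >= rho:
        return math.inf
    return omega * math.exp(kappa * a) * rho / (rho - kappa)


def expected_linear_reward(alpha1, alpha2, a, rho):
    """Closed form of E[alpha1 + alpha2 * (a + e_rho)]."""
    return alpha1 + alpha2 * (a + 1.0 / rho)


a, rho = 10.0, 5.0                  # hypothetical drawdown level and jump parameter
omega, kappa = 1.0, 0.5             # hypothetical exponential reward
alpha1, alpha2 = 100.0, 2.0         # hypothetical linear reward

exp_closed = expected_exponential_reward(omega, kappa, a, rho)
exp_num = expected_reward_numeric(lambda x: omega * math.exp(kappa * x), a, rho)
lin_closed = expected_linear_reward(alpha1, alpha2, a, rho)
lin_num = expected_reward_numeric(lambda x: alpha1 + alpha2 * x, a, rho)
print(exp_closed, exp_num, lin_closed, lin_num)
```
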

3.2 Cancellable Feature

We now extend the previous contract by adding a cancellable feature. In other words, we give the investor the right to terminate the contract at any time prior to a pre-specified drawdown of size \(a>0\). To terminate the contract, she/he pays a fee \(c(D_\tau )\) that depends on the level of the drawdown at the termination time. It is natural to assume that the penalty function \(c(\cdot )\) is non-increasing: the larger the drawdown, the more money the investor has already lost, so the fee should be smaller. To simplify later calculations, we also assume that \(c\in C^2({\mathbb {R}}_+)\). The value of the contract that we consider here equals:

$$\begin{aligned} F(d,p):=\sup _{\tau \in \mathcal {T}}{\mathbb {E}}_{|d}\left[ -\int _0^{\tau _{D}^+(a)\wedge \tau }e^{-rt}p\mathop {}\!\mathrm {d}t+e^{-r\tau _{D}^+(a)}\alpha \left( D_{\tau _{D}^+(a)}\right) {\mathbb {1}}_{(\tau _{D}^+(a)\le \tau )}-e^{-r\tau }c(D_\tau ){\mathbb {1}}_{(\tau <\tau _{D}^+(a))}\right] , \end{aligned}$$(18)

where \(\mathcal {T}\) is the family of all \(\mathcal {F}_t\)-stopping times.

One of the main goals of this paper is to identify the optimal stopping rule \(\tau ^*\) that realises the price F(d, p). We start with a simple observation.

Proposition 4

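The cancellable drawdown insurance value admits the following decomposition:

$$\begin{aligned} F(d,p)=f(d,p)+G(d,p), \end{aligned}$$(19)

where

$$\begin{aligned} G(d,p):=\sup _{\tau \in \mathcal {T}}g_\tau (d,p), \end{aligned}$$(20)
$$\begin{aligned} g_\tau (d,p):={\mathbb {E}}_{|d}\left[ e^{-r\tau }{\tilde{f}}(D_\tau ,p);\ \tau <\tau _{D}^+(a)\right] , \end{aligned}$$(21)
$$\begin{aligned} {\tilde{f}}(d,p):=-f(d,p)-c(d), \end{aligned}$$(22)

for f defined in (12).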

Proof

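Using \({\mathbb {1}}_{(\tau \ge \tau _{D}^+(a))}=1-{\mathbb {1}}_{(\tau <\tau _{D}^+(a))}\) in (18) we obtain:

$$\begin{aligned} F(d,p)=f(d,p)+\sup _{\tau \in \mathcal {T}}{\mathbb {E}}_{|d}\left[ \int _\tau ^{\tau _{D}^+(a)}e^{-rt}p\mathop {}\!\mathrm {d}t-e^{-r\tau _{D}^+(a)}\alpha \left( D_{\tau _{D}^+(a)}\right) -e^{-r\tau }c(D_\tau );\ \tau <\tau _{D}^+(a)\right] . \end{aligned}$$
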
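Note that the first term does not depend on \(\tau \). The second term depends on \(\tau \) only on the event \(\{\tau <\tau _D^+(a)\}\). Then, by the strong Markov property applied at \(\tau \), we get:

$$\begin{aligned} F(d,p)=f(d,p)+\sup _{\tau \in \mathcal {T}}{\mathbb {E}}_{|d}\left[ e^{-r\tau }\left( -f(D_\tau ,p)-c(D_\tau )\right) ;\ \tau <\tau _{D}^+(a)\right] =f(d,p)+G(d,p). \end{aligned}$$
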
This completes the proof. \(\square \)

To determine the optimal cancellation strategy for our contract, it suffices to solve the optimal stopping problem represented by the second term of (19), that is, to identify the function G(d, p). We use the “guess and verify” approach: we first guess a candidate stopping rule and then verify that it is indeed optimal using the Verification Lemma given below.

Lemma 5

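Let \(\Upsilon _t\) be a right-continuous process living in some Borel state space \({\mathbb {B}}\), killed at some \(\mathcal {F}^\Upsilon _t\)-stopping time \(\tau _0\), where \(\mathcal {F}^\Upsilon _t\) is the right-continuous natural filtration of \(\Upsilon \). Consider the following stopping problem:

$$\begin{aligned} v(\phi ):=\sup _{\tau \in \mathcal {T}^\Upsilon }{\mathbb {E}}_\phi \left[ e^{-r\tau }V(\Upsilon _\tau );\ \tau <\tau _0\right] \end{aligned}$$(23)
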
for some function V, where \(\mathcal {T}^\Upsilon \) is the family of \(\mathcal {F}^\Upsilon _t\)-stopping times. Assume that

The pair \((v^*,\tau ^*)\) is a solution of stopping problem (23) if for

we have

- (i)

\(v^*(\phi )\ge V(\phi )\) for all \(\phi \in {\mathbb {B}}\)

- (ii)

the process \(e^{-rt}v^*(\Upsilon _t)\) is a right-continuous supermartingale.

Proof

The proof follows the same arguments as the proof of [6, Lem. 9.1, p. 240]; see also [12, Th. 2.2, p. 29]. \(\square \)

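Using the Verification Lemma 5, we prove that the first passage time of the drawdown process below some level \(\theta \) is the optimal stopping time for (20) (and hence also for (19)); that is,

$$\begin{aligned} \tau ^*=\tau _D^-(\theta ):=\inf \{t\ge 0: D_t\le \theta \} \end{aligned}$$(25)
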
for some optimal \(\theta ^*\in (0,a)\).

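For the stopping rule (25), we consider two cases, \(d>\theta \) and \(d\le \theta \); that is, we decompose \(g_{\tau _D^-(\theta )}(d,p)\) as follows:

$$\begin{aligned} g_{\tau _D^-(\theta )}(d,p)=g_>(d,p,\theta ){\mathbb {1}}_{(d>\theta )}+g_<(d,p,\theta ){\mathbb {1}}_{(d\le \theta )}. \end{aligned}$$(26)
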
If \(d\le \theta \), the investor should stop the contract immediately. In this case,

$$\begin{aligned} g_<(d,p,\theta )={\tilde{f}}(d,p). \end{aligned}$$

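To analyse the complementary case \(d>\theta \), observe that the choice of the class of stopping times (25) implies that \(D_{\tau ^*}=D_{\tau _D^-(\theta )}=\theta \), since X is spectrally negative and hence moves upward continuously. This, together with the two-sided exit identity for X shifted by \(a-d\), implies that:

$$\begin{aligned} g_>(d,p,\theta )={\mathbb {E}}_{|d}\left[ e^{-r\tau _D^-(\theta )};\ \tau _D^-(\theta )<\tau _D^+(a)\right] {\tilde{f}}(\theta ,p)=\frac{W^{(r)}(a-d)}{W^{(r)}(a-\theta )}{\tilde{f}}(\theta ,p). \end{aligned}$$(27)
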
From this structure, it follows that it is never optimal to terminate the contract early if \({\tilde{f}}(\theta ,p)<0\) for all \(\theta \). To eliminate this trivial case, we assume from now on that there exists at least one \(\theta _0\ge 0\) satisfying

$$\begin{aligned} {\tilde{f}}(\theta _0,p)>0. \end{aligned}$$(28)

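Recall that by (19) the optimal level \(\theta ^*\) maximises the value function F(d, p) given in (18) and, hence, it also maximises the value function G(d, p) given in (20). Thus, we have to choose \(\theta ^*\) as follows:

$$\begin{aligned} \theta ^*:=\mathop {\arg \max }_{\theta \in [0,a)}g_>(d,p,\theta ). \end{aligned}$$(29)
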
Lemma 6

Assume that (28) holds. Then there exists \(\theta ^*\) given by (29); moreover, \(\theta ^*\) does not depend on the starting position d of the drawdown.

Proof

First, note that by (13), Proposition 2, assumption (8) and (22), the function \({\tilde{f}}\) is continuous. Moreover, \({\tilde{f}}(a,p)=-\alpha (a)<0\), since for the initial drawdown \(d=a\) the insured drawdown level is attained at the outset and the reward has to be paid immediately. Consider now two cases. If \({\tilde{f}}(0,p)<0\), then, by assumption (28), there exists \(\theta _0\) such that \({\tilde{f}}(\theta _0,p)>0\). Since \(\theta \mapsto g_>(d,p,\theta )\) is continuous, positive at \(\theta _0\) and negative at \(\theta =0\) and near \(\theta =a\), it attains its maximum at an interior point, which gives \(\theta ^*\) defined in (29).

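On the other hand, if \({\tilde{f}}(0,p)\ge 0\), then using (27) we have:

$$\begin{aligned} \frac{\partial }{\partial \theta }g_>(d,p,\theta )=\frac{W^{(r)}(a-d)}{W^{(r)}(a-\theta )^2}\left( \frac{\partial }{\partial \theta }{\tilde{f}}(\theta ,p)\,W^{(r)}(a-\theta )+{\tilde{f}}(\theta ,p)\,W^{(r)\prime }(a-\theta )\right) . \end{aligned}$$(30)
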
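Note that by (14)–(15) we have \(\xi ^\prime (0)=0\) and \(\Xi ^\prime (0)=0\). Thus

$$\begin{aligned} \frac{\partial }{\partial \theta }g_>(d,p,\theta )\Big |_{\theta =0}=\frac{W^{(r)}(a-d)}{W^{(r)}(a)^2}\left( -c^\prime (0)\,W^{(r)}(a)+{\tilde{f}}(0,p)\,W^{(r)\prime }(a)\right) . \end{aligned}$$
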
Recall that the scale function \(W^{(r)}\) given in (6) is non-negative and increasing, and that \(-c^\prime (0)\ge 0\) because c is non-increasing. Thus, \(\frac{\partial }{\partial \theta }g_>(d,p,\theta )|_{\theta =0}>0\), as each term in (30) is positive. We can now conclude that there exists a local maximum \(\theta ^*>0\). Note that to find \(\theta ^*\) it suffices to set the expression in the brackets of (30) equal to zero; since the factor \(W^{(r)}(a-d)\) in (30) is positive and does not involve \(\theta \), \(\theta ^*\) does not depend on d. \(\square \)
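
Lemma 6 can be illustrated numerically. By (27), \(g_>(d,p,\theta )=W^{(r)}(a-d){\tilde{f}}(\theta ,p)/W^{(r)}(a-\theta )\), and the positive factor \(W^{(r)}(a-d)\) does not change the maximiser in \(\theta \). The sketch below finds \(\theta ^*\) by grid search for the Brownian model with a constant reward \(\alpha \), where \({\tilde{f}}(\theta ,p)=(p/r)(1-\xi (\theta ))-\alpha \xi (\theta )-c(\theta )\) follows from our reading \({\tilde{f}}=-f-c\) of (22). The premium \(p=0.1\) and the linear penalty \(c(d)=c_0(1-d/a)\) with \(c_0=1\) are hypothetical choices, and \(\xi \) is computed with the classical Brownian drawdown identity, assumed to agree with Proposition 2.

```python
import math

r, mu, sigma, a, alpha = 0.01, 0.03, 0.4, 10.0, 100.0
p = 0.1          # hypothetical premium rate
c0 = 1.0         # hypothetical penalty level


def c(d):
    """Hypothetical non-increasing linear penalty c(d) = c0 * (1 - d / a)."""
    return c0 * (1.0 - d / a)


k = mu / sigma**2
delta = math.sqrt(mu**2 + 2 * r * sigma**2) / sigma**2
cW = 2.0 / (sigma**2 * delta)


def W(x):
    return cW * math.exp(-k * x) * math.sinh(delta * x)


def dW(x):
    return cW * math.exp(-k * x) * (delta * math.cosh(delta * x) - k * math.sinh(delta * x))


def Z(x):
    return math.exp(-k * x) * (math.cosh(delta * x) + k / delta * math.sinh(delta * x))


def xi(d):
    return Z(a - d) - r * W(a - d) * W(a) / dW(a)


def f_tilde(theta):
    """f~(theta, p) = -f(theta, p) - c(theta), with f = (p/r + alpha) xi - p/r."""
    return p / r * (1.0 - xi(theta)) - alpha * xi(theta) - c(theta)


def g_greater(d, theta):
    """W(a - d) / W(a - theta) * f~(theta, p); only the theta-dependent ratio matters."""
    return W(a - d) / W(a - theta) * f_tilde(theta)


grid = [a * i / 2000 for i in range(1, 2000)]            # theta in (0, a)
theta_d0 = max(grid, key=lambda th: g_greater(0.0, th))   # starting drawdown d = 0
theta_d9 = max(grid, key=lambda th: g_greater(9.0, th))   # starting drawdown d = 9
print(theta_d0, theta_d9, f_tilde(theta_d0))
```
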

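The choice of the stopping rule (25) ensures that the “continuous fit” condition holds:

$$\begin{aligned} g_>(d,p,\theta )\big |_{d\searrow \theta }={\tilde{f}}(\theta ,p)=g_<(\theta ,p,\theta ).\qquad (\textit{continuous fit}) \end{aligned}$$(31)
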
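Indeed, the continuous fit holds for any \(\theta \in (0,a)\) by the continuity of the scale function \(W^{(r)}\) and the definition of \(g_>(d,p,\theta )\) given in (27). Moreover, if we choose \(\theta =\theta ^*\) defined in (29), then the “smooth fit” property is also satisfied:

$$\begin{aligned} \frac{\partial }{\partial d}g_>(d,p,\theta ^*)\big |_{d\searrow \theta ^*}=\frac{\partial }{\partial d}g_<(d,p,\theta ^*)\big |_{d\nearrow \theta ^*}.\qquad (\textit{smooth fit}) \end{aligned}$$(32)
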
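The smooth fit for \(\theta ^*\) follows from its definition (29) and from equation (30). Indeed, since \(\theta ^*\) maximises \(g_>(d,p,\theta )\) (given in (27)) with respect to \(\theta \), the derivative (30) vanishes at \(\theta =\theta ^*\). Then, by (26) and (27), we have

$$\begin{aligned} \frac{\partial }{\partial d}g_>(d,p,\theta ^*)\big |_{d\searrow \theta ^*}=-\frac{W^{(r)\prime }(a-\theta ^*)}{W^{(r)}(a-\theta ^*)}{\tilde{f}}(\theta ^*,p)=\frac{\partial }{\partial \theta }{\tilde{f}}(\theta ,p)\big |_{\theta =\theta ^*}=\frac{\partial }{\partial d}g_<(d,p,\theta ^*)\big |_{d\nearrow \theta ^*}. \end{aligned}$$
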
Note from the above equalities that the smooth fit condition is satisfied whenever the scale function \(W^{(r)}\) has a continuous derivative. Thus, the smooth fit condition always holds in our framework, by assumption (8).
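
The smooth fit (32) can also be checked numerically. In the sketch below, a golden-section search (our choice of optimiser; it presumes that \(\theta \mapsto {\tilde{f}}(\theta ,p)/W^{(r)}(a-\theta )\) is unimodal on the search interval) locates \(\theta ^*\) for the Brownian model, and the right derivative \(-W^{(r)\prime }(a-\theta ^*){\tilde{f}}(\theta ^*,p)/W^{(r)}(a-\theta ^*)\) of \(g_>\) is compared with a central difference of \({\tilde{f}}\) at \(\theta ^*\). The premium \(p=0.1\), the penalty \(c(d)=c_0(1-d/a)\) with \(c_0=1\), the reading \({\tilde{f}}=-f-c\) of (22) and the classical Brownian identity for \(\xi \) are assumptions made for illustration.

```python
import math

r, mu, sigma, a, alpha = 0.01, 0.03, 0.4, 10.0, 100.0
p, c0 = 0.1, 1.0                          # hypothetical premium and penalty level

k = mu / sigma**2
delta = math.sqrt(mu**2 + 2 * r * sigma**2) / sigma**2
cW = 2.0 / (sigma**2 * delta)


def c(d):
    return c0 * (1.0 - d / a)             # hypothetical non-increasing penalty


def W(x):
    return cW * math.exp(-k * x) * math.sinh(delta * x)


def dW(x):
    return cW * math.exp(-k * x) * (delta * math.cosh(delta * x) - k * math.sinh(delta * x))


def Z(x):
    return math.exp(-k * x) * (math.cosh(delta * x) + k / delta * math.sinh(delta * x))


def f_tilde(theta):
    x = Z(a - theta) - r * W(a - theta) * W(a) / dW(a)      # xi(theta)
    return p / r * (1.0 - x) - alpha * x - c(theta)


def ratio(theta):
    return f_tilde(theta) / W(a - theta)                   # proportional to g_>(d, p, theta)


def golden_max(h, lo, hi, tol=1e-10):
    """Golden-section search for a maximiser of a unimodal function h on [lo, hi]."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    x1, x2 = hi - inv_phi * (hi - lo), lo + inv_phi * (hi - lo)
    h1, h2 = h(x1), h(x2)
    while hi - lo > tol:
        if h1 < h2:                       # maximiser lies in [x1, hi]
            lo, x1, h1 = x1, x2, h2
            x2 = lo + inv_phi * (hi - lo)
            h2 = h(x2)
        else:                             # maximiser lies in [lo, x2]
            hi, x2, h2 = x2, x1, h1
            x1 = hi - inv_phi * (hi - lo)
            h1 = h(x1)
    return 0.5 * (lo + hi)


theta_star = golden_max(ratio, 0.0, a - 1e-3)
eps = 1e-5
right = -dW(a - theta_star) * f_tilde(theta_star) / W(a - theta_star)    # slope of g_> at theta*
left = (f_tilde(theta_star + eps) - f_tilde(theta_star - eps)) / (2 * eps)   # slope of g_< = f~
print(theta_star, right, left)
```
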

We now verify that the stopping time \(\tau ^*\) given in (25) is indeed optimal. The proof is based on Verification Lemma 5, and we start with the following lemma.

Lemma 7

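By adding the superscript c to a process, we denote its continuous part. Let \(X_t\) be a spectrally negative Lévy process and define two disjoint regions:

$$\begin{aligned} C:=\{(t,d,u):\ t\ge 0,\ d>\theta ^*,\ u\ge 0\},\qquad S:=\{(t,d,u):\ t\ge 0,\ 0\le d<\theta ^*,\ u\ge 0\} \end{aligned}$$
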
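for some \(\theta ^*\in (0,a)\). Let p(t, d, u) be a \(C^{1,2,2}\)-function on \({\overline{C}}\) and \({\overline{S}}\). We denote

$$\begin{aligned} p_C(t,d,u):=p(t,d,u){\mathbb {1}}_{((t,d,u)\in C)},\qquad p_S(t,d,u):=p(t,d,u){\mathbb {1}}_{((t,d,u)\in S)}. \end{aligned}$$
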
For any function \({\hat{p}}\) we denote:

where \(\Delta D_s = D_s-D_{s-}=-(X_s-X_{s-})=-\Delta X_s\), \(\Delta U_s = U_s-U_{s-}\).

- (i)

Then, the process \(p(t,D_t, U_t)\) is a supermartingale if

\(I(p_C)(t,D_t, U_t)\) is a martingale,

\(I(p_S)(t,D_t, U_t)\) is a supermartingale

and if the following smooth fit condition holds:

$$\begin{aligned} \frac{\partial }{\partial d}p_S(t,d,u)\big |_{d\nearrow \theta ^*} =\frac{\partial }{\partial d}p_C(t,d,u)\big |_{d\searrow \theta ^*},\qquad {(\textit{smooth fit})} \end{aligned}$$(33)
for \(\sigma >0\), and if the following continuous fit condition holds:

$$\begin{aligned} p_S(t,\theta ^*,u)=p_C(t,\theta ^*,u)\qquad (\textit{continuous fit}) \end{aligned}$$(34)
for \(\sigma =0\) and \(X_t\) being a Lévy process of bounded variation.

- (ii)

If the stopped process \(p_C(t\wedge \tau _D^-(\theta ^*),D_{t\wedge \tau _D^-(\theta ^*)}, U_{t\wedge \tau _D^-(\theta ^*)})\) is a martingale then the process \(I(p_C)(t,D_t, U_t)\) is also a martingale and we have

$$\begin{aligned}&\frac{\partial }{\partial t}p_C(t,d,u)+\mathcal {A}^{(D,U)}p_C(t,d,u)=0,\,&\qquad {(\textit{martingale condition})} \end{aligned}$$(35)
$$\begin{aligned}&\frac{\partial }{\partial d}p_C(t,d,u)|_{d=0}=0,\ \frac{\partial }{\partial u}p_C(t,d,u)|_{u=0}= 0,&\qquad {(\textit{normal reflection})} \end{aligned}$$(36)
where \(\mathcal {A}^{(D,U)}\) is the full generator of the Markov process \((D_t,U_t)\), defined as follows:

$$\begin{aligned} \mathcal {A}^{(D,U)}{\hat{p}}(t,d,u)&:=-\mu \frac{\partial }{\partial d}{\hat{p}}(t,d,u)+\mu \frac{\partial }{\partial u}{\hat{p}}(t,d,u)+\frac{\sigma ^2}{2}\frac{\partial ^2}{\partial d^2}{\hat{p}}(t,d,u)\nonumber \\&\quad +\frac{\sigma ^2}{2}\frac{\partial ^2}{\partial u^2}{\hat{p}}(t,d,u)-\sigma ^2\frac{\partial ^2}{\partial u\partial d}{\hat{p}}(t,d,u)\nonumber \\&\quad +\int _{(0,\infty )}\Bigg ({\hat{p}}(t,d+z,(u-z)\vee 0)-{\hat{p}}(t,d,u)\nonumber \\&\quad -z\frac{\partial }{\partial d}{\hat{p}}(t,d,u){\mathbb {1}}_{(z<1)}\nonumber \\&\quad +z\frac{\partial }{\partial u}{\hat{p}}(t,d,u){\mathbb {1}}_{(z<1)}\Bigg )\Pi (-\mathop {}\!\mathrm {d}z), \end{aligned}$$(37)
for \(\sigma >0\) and

$$\begin{aligned} \mathcal {A}^{(D,U)}{\hat{p}}(t,d,u)&:=-{\hat{\mu }} \frac{\partial }{\partial d}{\hat{p}}(t,d,u)+{\hat{\mu }} \frac{\partial }{\partial u}{\hat{p}}(t,d,u)\nonumber \\&\quad +\int _{(0,\infty )}\left( {\hat{p}}(t,d+z,(u-z)\vee 0)-{\hat{p}}(t,d,u)\right) \Pi (-\mathop {}\!\mathrm {d}z), \end{aligned}$$(38)
for \(\sigma =0\) and \(X_t\) being a Lévy process of bounded variation.

- (iii)

The process \(I(p_S)(t,D_t, U_t)\) is a supermartingale if:

$$\begin{aligned}&\frac{\partial }{\partial t}p_S(t,d,u)+\mathcal {A}^{(D,U)}p_S(t,d,u)\le 0,&\qquad {(\textit{supermartingale condition})} \end{aligned}$$(39)
$$\begin{aligned}&\frac{\partial }{\partial d}p_S(t,d,u)|_{d=0}\ge 0,\ \frac{\partial }{\partial u}p_S(t,d,u)|_{u=0}\ge 0. \end{aligned}$$(40)

Proof

See Appendix. \(\square \)

Remark 8

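This lemma concerns a function \(p(t,D_t,U_t)\), which may depend on t, \(D_t\) and \(U_t\). In the framework of the drawdown contract, however, we take

$$\begin{aligned} p(t,d,u):=e^{-rt}g_{\tau _D^-(\theta ^*)}(d,p), \end{aligned}$$

which depends on t and d only. In this case, the lemma still holds with all derivatives with respect to u set equal to 0. Thus, the generator \(\mathcal {A}^{(D,U)}\) given in (37) reduces to the full generator of the Markov process \(D_t\) alone:

$$\begin{aligned} \mathcal {A}^{D}{\hat{p}}(t,d):=-\mu \frac{\partial }{\partial d}{\hat{p}}(t,d)+\frac{\sigma ^2}{2}\frac{\partial ^2}{\partial d^2}{\hat{p}}(t,d)+\int _{(0,\infty )}\left( {\hat{p}}(t,d+z)-{\hat{p}}(t,d)-z\frac{\partial }{\partial d}{\hat{p}}(t,d){\mathbb {1}}_{(z<1)}\right) \Pi (-\mathop {}\!\mathrm {d}z) \end{aligned}$$

for \(\sigma >0\) and

$$\begin{aligned} \mathcal {A}^{D}{\hat{p}}(t,d):=-{\hat{\mu }}\frac{\partial }{\partial d}{\hat{p}}(t,d)+\int _{(0,\infty )}\left( {\hat{p}}(t,d+z)-{\hat{p}}(t,d)\right) \Pi (-\mathop {}\!\mathrm {d}z) \end{aligned}$$

for \(\sigma =0\) and \(X_t\) being a Lévy process of bounded variation.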

The main message of this crucial lemma is that the continuation and stopping regions can be analysed separately. In other words, to check the supermartingale condition of Verification Lemma 5, it suffices to prove the martingale property in the so-called continuation region C and the supermartingale property in the so-called stopping region S. This is possible thanks to the smooth/continuous fit condition at the boundary between these two regions. We can now solve our optimisation problem.
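
For Brownian motion, the martingale condition (35) in the continuation region can be checked directly. By (27), \(p_C(t,d)\) is a constant multiple of \(e^{-rt}W^{(r)}(a-d)\) on \((\theta ^*,a)\), and with the generator \(-\mu \partial _d+\frac{\sigma ^2}{2}\partial _d^2\) (no jumps), condition (35) reduces to \(\mu W^{(r)\prime }(a-d)+\frac{\sigma ^2}{2}W^{(r)\prime \prime }(a-d)-rW^{(r)}(a-d)=0\). The sketch below evaluates this residual by finite differences at a few arbitrary points, with the parameters of Fig. 1.

```python
import math

r, mu, sigma, a = 0.01, 0.03, 0.4, 10.0      # parameters of Fig. 1
k = mu / sigma**2
delta = math.sqrt(mu**2 + 2 * r * sigma**2) / sigma**2
cW = 2.0 / (sigma**2 * delta)


def W(x):
    return cW * math.exp(-k * x) * math.sinh(delta * x)


def q(d):
    """Continuation-region profile d -> W^(r)(a - d), up to a constant factor."""
    return W(a - d)


def generator_residual(d, h=1e-3):
    """(A^D - r) q at d for X_t = mu t + sigma B_t: -mu q' + sigma^2/2 q'' - r q."""
    dq = (q(d + h) - q(d - h)) / (2 * h)
    d2q = (q(d + h) - 2 * q(d) + q(d - h)) / h**2
    return -mu * dq + 0.5 * sigma**2 * d2q - r * q(d)


residuals = {d: generator_residual(d) for d in (2.0, 5.0, 8.0)}
print(residuals)
```

The residual vanishes (up to discretisation error) because \(W^{(r)}\) is a combination of \(e^{\phi x}\) with \(\psi (\phi )=r\), which is exactly the r-harmonicity that the martingale condition expresses.
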

Theorem 9

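Assume that (28) holds. Let the non-increasing bounded penalty function \(c: {\mathbb {R}}_+\rightarrow {\mathbb {R}}_+\) belong to \(C^2([0,a))\) and satisfy:

$$\begin{aligned} rc(d)-p+\mu c^\prime (d)-\frac{\sigma ^2}{2}c^{\prime \prime }(d)-\int _{(0,\infty )}\left( c(d+z)-c(d)-zc^\prime (d){\mathbb {1}}_{(z<1)}\right) \Pi (-\mathop {}\!\mathrm {d}z)\le 0,\qquad d\in [0,a). \end{aligned}$$(41)
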
If

then the stopping time \(\tau _D^-(\theta ^*)\) for \(\theta ^*\) defined by (29) is the optimal stopping rule for the stopping problem (20) and hence also for the insurance contract (18).

Proof

We have to show that \(\tau _D^-(\theta ^*)\) and, hence, \(g_{\tau _D^-(\theta ^*)}(d,p)\) satisfy all of the conditions of Verification Lemma 5. We take the Markov process \(\Upsilon _t=D_t\), \({\mathbb {B}}={\mathbb {R}}_+\), \(\tau _0=\tau _D^+(a)\), the payoff \(V(\phi )={\tilde{f}}(\phi ,p)\) and the candidate value \(v^*(\phi )=g_{\tau _D^-(\theta ^*)}(\phi ,p)\), with \(\phi =d\). To prove the domination condition (i) of Verification Lemma 5, we have to show that \(g_{\tau _D^-(\theta ^*)}(d,p)\ge {\tilde{f}}(d,p)\). To this end, note that by the definition of \(\theta ^*\) we have \(g_>(d,p,\theta ^*)\ge g_>(d,p,\theta )\) for all \(\theta \in (0,a)\). Hence, for \(d>\theta ^*\) we have:

$$\begin{aligned} g_{\tau _D^-(\theta ^*)}(d,p)=g_>(d,p,\theta ^*)\ge g_>(d,p,d)={\tilde{f}}(d,p). \end{aligned}$$

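Similarly, for \(d\le \theta ^*\),

$$\begin{aligned} g_{\tau _D^-(\theta ^*)}(d,p)=g_<(d,p,\theta ^*)={\tilde{f}}(d,p), \end{aligned}$$

which completes the proof of condition (i) of Verification Lemma 5.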

To prove the second condition (ii) of Verification Lemma 5, we have to show that the process

is a supermartingale. To do this, we prove that \(\left\{ e^{-rt}g_{\tau _D^-(\theta ^*)}(D_{t},p)\right\} \) is a supermartingale by applying Lemma 7 (i) to the function \(p(t,d,u):=p(t,d)=e^{-rt}g_{\tau _D^-(\theta ^*)}(d,p)\), for \(d\ge 0\) and \(C=(\theta ^*,\infty )\), \(S=[0,\theta ^*)\). Given that \(\theta ^*\) satisfies the continuous fit condition (31), we can separately consider the functions \(p_C(t,d):=e^{-rt}g_>(d,p,\theta ^*){\mathbb {1}}_{(d\in (\theta ^*,\infty ))}\) and \(p_S(t,d):=e^{-rt}g_<(d,p,\theta ^*){\mathbb {1}}_{(d\in [0,\theta ^*))}\). Moreover, the smooth fit condition (32) is also fulfilled. Thus, according to Lemma 7(i), it is enough to show that \(I(p_C)(t,D_t)\) is a martingale and \(I(p_S)(t,D_t)\) is a supermartingale.

First, note that the function \(g_{\tau _D^-(\theta ^*)}(d,p)\) defined in (21) is bounded. Indeed, this follows from the boundedness of \({\tilde{f}}\), which is a consequence of the inequality \(\xi (\cdot )\le 1\), assumption (16) and the assumed conditions on the fee function \(c(\cdot )\). Thus, by Lemma 7(ii), the martingale property of \(I(p_C)(t,D_t)\) follows from the martingale property of the process:

This property follows from the strong Markov property:

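Now, by Lemma 7, the proof is complete once we show that the supermartingale condition for \(e^{-rt}g_<(d,p,\theta ^*){\mathbb {1}}_{(d\in [0,\theta ^*))}\) is satisfied. To do so, we first prove that \(e^{-rt}{\tilde{f}}(D_t,p)\) is a supermartingale. Using the definition of \({\tilde{f}}\) given in (22), we can write:

$$\begin{aligned} e^{-rt}{\tilde{f}}(D_t,p)=e^{-rt}\left( \frac{p}{r}-c(D_t)\right) -\frac{p}{r}e^{-rt}\xi (D_t)-e^{-rt}\Xi (D_t). \end{aligned}$$
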
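Moreover, the processes \(e^{-rt}\xi (D_t)\) and \(e^{-rt}\Xi (D_t)\) are both martingales by the strong Markov property. Thus, \(e^{-rt}{\tilde{f}}(D_t,p)\) is a supermartingale if the process \(e^{-rt}\left( \frac{p}{r}-c(D_t)\right) \) is a supermartingale. Using [16, Thm. 31.5], the latter statement is equivalent to the requirement that

$$\begin{aligned} \left( \frac{\partial }{\partial t}+\mathcal {A}^{(D,U)}\right) \left[ e^{-rt}\left( \frac{p}{r}-c(d)\right) \right] =e^{-rt}\left( rc(d)-p-\mathcal {A}^{(D,U)}c(d)\right) \le 0, \end{aligned}$$
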
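which holds true by assumption (41), and that

$$\begin{aligned} \frac{\partial }{\partial d}\left( \frac{p}{r}-c(d)\right) \Big |_{d=0}=-c^\prime (0)\ge 0, \end{aligned}$$
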
which follows from the fact that the function c is non-increasing.

To prove the supermartingale property of \(I(p_S)\) for \(p_S(t,d)=e^{-rt}g_<(d,p,\theta ^*){\mathbb {1}}_{(d\in [0,\theta ^*))}\), we apply Lemma 7(iii). That is, this property holds if for \(d\in [0,\theta ^*)\) we have:

Note that, by (26), \(g_<(d,p,\theta ^*)={\tilde{f}}(d,p)\) for \(d\in [0,\theta ^*)\). Moreover, the indicator appearing in the definition of \(p_S\) matters only in the first term of the integrand above. More precisely, since the process D only jumps upward, a jump may carry D from \([0,\theta ^*)\) into the continuation region, where this indicator vanishes. Taking this observation into account, we can rewrite the above inequality as follows:

The first inequality follows from (42), and the second follows from the supermartingale property of \(e^{-rt}{\tilde{f}}(D_t,p)\) proven above.

The condition (40) in Lemma 7 (iii) is satisfied since \(g_<(d,p,\theta ^*)={\tilde{f}}(d,p)\) on \(d\in [0,\theta ^*)\) and condition (40) was already proved for \({\tilde{f}}\). This completes the proof. \(\square \)

Remark 10

We now comment on assumptions (41) and (42).

Note that condition (41) is satisfied whenever \(-\mu +\int _{(1,\infty )}z\Pi (-\mathop {}\!\mathrm {d}z)\le 0\) and the penalty function c is convex, non-increasing and such that

Indeed, we can rewrite inequality (41) as follows

The expression under the integral sign is positive since

by convexity of c. Now, the right-hand side of inequality (47) is non-positive by (46) and all the terms on the left-hand side are non-negative. Thus, the condition (41) holds true.

Condition (42) holds for Brownian motion because it has continuous trajectories. Unfortunately, we are unable to give general sufficient conditions for assumption (42). Instead, we check it numerically and show that it holds in all of our examples. Note that this condition is trivially satisfied whenever the payoff function is non-negative (e.g. for classical American options). This is not the case in this paper: in view of the decomposition in Proposition 4, the payoff function \({\tilde{f}}\) can attain negative values.

Example 1

(continued) Let us consider two penalty functions: linear and quadratic:

We choose a constant reward function; that is, \(\alpha (a)=\alpha \). We also have to choose the premium intensity p such that condition (28) is satisfied. Moreover, note that for Brownian motion condition (42) always holds because of the absence of jumps.

Given that the function \({\tilde{f}}\) is decreasing with respect to the premium p for both \(c_1(\cdot )\) and \(c_2(\cdot )\), Fig. 5 shows that we can choose \(p\ge 0.1\) in both cases for our set of parameters. Recall that the optimal \(\theta ^*\) maximises the function \(g_>(d,p,\theta )\) given in (27). For Brownian motion we have:

By making a plot of this function, we can easily identify the optimal level \(\theta ^*\). This is done in Fig. 6.
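Reading \(\theta ^*\) off a plot amounts to maximising \(\theta \mapsto g_>(d,p,\theta )\) over a grid. The closed form of \(g_>\) for Brownian motion is not restated here, so the minimal Python sketch below uses a hypothetical stand-in objective (`toy_objective`, whose maximiser is \(\theta =1\)); in practice one would plug in formula (27). A plain grid search is adequate for a continuous objective on a bounded interval, but it does not certify a global maximum between grid points.

```python
import math
from typing import Callable, Tuple


def argmax_on_grid(f: Callable[[float], float], lo: float, hi: float,
                   n: int = 2001) -> Tuple[float, float]:
    """Return (theta, f(theta)) for the best of n equally spaced points in [lo, hi]."""
    if n < 2 or not hi > lo:
        raise ValueError("need n >= 2 and hi > lo")
    step = (hi - lo) / (n - 1)
    best_theta, best_val = lo, f(lo)
    for i in range(1, n):
        theta = lo + i * step
        val = f(theta)
        if val > best_val:  # keep the first maximiser on ties
            best_theta, best_val = theta, val
    return best_theta, best_val


def toy_objective(theta: float) -> float:
    """Hypothetical stand-in for theta -> g_>(d, p, theta); not formula (27)."""
    return theta * math.exp(-theta)


theta_star, value = argmax_on_grid(toy_objective, 0.0, 5.0, n=5001)
print(f"theta* ~ {theta_star:.3f}, objective ~ {value:.4f}")
```

A finer grid, or a local optimiser started from the best grid point, can refine the estimate once the region of the maximum is known.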

Example 2

(continued) We also analyse the Cramér–Lundberg model for the linear and quadratic penalty functions (48)–(49) and the linear reward function \(\alpha (\cdot )\). As the plot of \({\tilde{f}}\) in Fig. 7 shows, for our set of parameters we can take a premium intensity p greater than 0.1 for condition (28) to hold. Moreover, in this case we have:

In Fig. 8, we numerically check condition (42) and present the value of \(g_>(d,p,\theta )\) using the above formula. To find the optimal stopping level \(\theta ^*\) for the drawdown, we pick the \(\theta \) that maximises the value function.

Fig. 8. The condition (42) (left) and the function \(g_>(d,p,\theta )\) (right) for the Cramér–Lundberg model, linear \(c_1\) and quadratic \(c_2\) penalty functions, and various starting drawdown levels d. Parameters: \(p=0.1, r=0.01, {\hat{\mu }}=0.05, \beta =0.1, \rho =2.5, a=10, \alpha (d)=100+10d\)
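All transforms in the Cramér–Lundberg examples are expressed through the scale function \(W^{(r)}\). As a minimal sketch, assume that the model of Example 2 is \(X_t={\hat{\mu }}t-\sum _{i\le N_t}\xi _i\), with N a Poisson process of intensity \(\beta \) and \(\xi _i\sim \mathrm {Exp}(\rho )\); this parametrisation is our assumption, since the model is not restated in this section. Then \(\psi (\lambda )={\hat{\mu }}\lambda -\beta \lambda /(\rho +\lambda )\), the equation \(\psi (\lambda )=r\) reduces to a quadratic with roots \(\Phi (r)>0\) and \(\zeta \in (-\rho ,0)\), and partial fractions of \(1/(\psi (\lambda )-r)\) give \(W^{(r)}\) as a sum of two exponentials. The helper names are hypothetical.

```python
import math
from typing import Callable, Tuple


def cl_scale_function(r: float, mu_hat: float, beta: float,
                      rho: float) -> Tuple[Callable[[float], float], float, float]:
    """Return (W, Phi, zeta) for X_t = mu_hat*t - compound Poisson(beta, Exp(rho)).

    Assumed Laplace exponent: psi(l) = mu_hat*l - beta*l/(rho + l), so that
    psi(l) = r  <=>  mu_hat*l**2 + (mu_hat*rho - r - beta)*l - r*rho = 0.
    """
    if r <= 0 or mu_hat <= 0:
        raise ValueError("this sketch assumes r > 0 and mu_hat > 0")
    qa, qb, qc = mu_hat, mu_hat * rho - r - beta, -r * rho
    disc = math.sqrt(qb * qb - 4.0 * qa * qc)
    phi = (-qb + disc) / (2.0 * qa)   # Phi(r) > 0
    zeta = (-qb - disc) / (2.0 * qa)  # second root, lies in (-rho, 0)
    # 1/(psi(l) - r) = (rho + l) / (mu_hat (l - phi)(l - zeta)); split into
    # partial fractions and invert the Laplace transform term by term.
    c_phi = (rho + phi) / (mu_hat * (phi - zeta))
    c_zeta = (rho + zeta) / (mu_hat * (zeta - phi))

    def W(x: float) -> float:
        if x < 0:
            return 0.0  # scale functions vanish on the negative half-line
        return c_phi * math.exp(phi * x) + c_zeta * math.exp(zeta * x)

    return W, phi, zeta


def exit_above_first(W: Callable[[float], float], x: float, c: float) -> float:
    """E_x[e^{-r tau_c^+}; tau_c^+ < tau_0^-] = W(x)/W(c), for 0 <= x <= c."""
    return W(x) / W(c)


# Parameters as in the figure caption above: r=0.01, mu_hat=0.05, beta=0.1, rho=2.5.
W, phi, zeta = cl_scale_function(0.01, 0.05, 0.1, 2.5)
print(f"Phi(r) = {phi:.6f}, zeta = {zeta:.6f}, W(0) = {W(0.0):.4f}")
print(f"up-exit transform from (0, 2) at x = 1: {exit_above_first(W, 1.0, 2.0):.4f}")
```

For a bounded-variation model such as this one, \(W^{(r)}(0)=1/{\hat{\mu }}\), which gives a quick sanity check of the coefficients.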

4 Incorporating Drawup Contingency

4.1 Fair Premium

Investors might like to buy a contract with certain maturity conditions, that is, a contract that ends as soon as these conditions are fulfilled. We add this feature to the previous contracts by considering a drawup contingency. In particular, the next contracts may expire earlier if a fixed drawup event occurs prior to the drawdown epoch. Choosing the drawup event is natural since it corresponds to an upward market trend. In that case, the investor might stop believing that a substantial drawdown will happen in the near future and might want to stop paying the premium. Under a risk-neutral measure, the value of this contract equals:

where \(a\ge b\ge 0\). We first find the above value function and then identify the fair premium \(p^*\) under which

Note that

where

Note that all of the above functions are well defined since \(\nu ,\lambda \in [0,1]\) and \(N(d,\cdot )\le \Xi (d)<\infty \) by (16).
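The events in (50) are pathwise statements about the drawdown and drawup processes. As an illustration, the sketch below computes both along a sampled path started at \(X_0=0\) with initial levels \(D_0=d\) and \(U_0=u\), assuming the convention \(D_t=({\overline{X}}_t\vee d)-X_t\) and \(U_t=X_t-({\underline{X}}_t\wedge (-u))\), which matches the use of \({\overline{X}}\vee d\) and \({\underline{X}}\wedge (-u)\) elsewhere in this section. First-exceedance indices are only discrete-time proxies for \(\tau _D^+(a)\) and \(\tau _U^+(b)\) and can miss crossings between samples. Function names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def drawdown_drawup(path: Sequence[float], d: float,
                    u: float) -> Tuple[List[float], List[float]]:
    """Drawdown and drawup along a sampled path with X_0 = 0, D_0 = d, U_0 = u.

    Assumed convention: D_t = max(d, sup_{s<=t} X_s) - X_t and
    U_t = X_t - min(-u, inf_{s<=t} X_s).
    """
    if not path or path[0] != 0.0:
        raise ValueError("path must start at X_0 = 0")
    run_max, run_min = d, -u  # reference maximum and minimum before time 0
    dd: List[float] = []
    du: List[float] = []
    for x in path:
        run_max = max(run_max, x)
        run_min = min(run_min, x)
        dd.append(run_max - x)
        du.append(x - run_min)
    return dd, du


def first_exceedance(values: Sequence[float], level: float) -> Optional[int]:
    """Index of the first sample strictly above `level`, or None if never."""
    for i, v in enumerate(values):
        if v > level:
            return i
    return None


# Hypothetical path: the drawup exceeds b = 1 (index 1) before the drawdown
# exceeds a = 1.5 (index 2), so the contract in (50) would expire at the drawup.
dd, du = drawdown_drawup([0.0, 1.0, -1.0, 2.0], d=0.5, u=0.5)
print(dd, du, first_exceedance(dd, 1.5), first_exceedance(du, 1.0))
```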

In the next proposition, we identify all of the above quantities. We denote \(x^+=\max (x,0)\).

Proposition 11

Let \(b<a\). For any \(d\in [0,a]\) and \(u\in [0,b]\) we have:

where the joint distribution for \((\tau _U^+(b),{\overline{X}}_{\tau _U^+(b)},{\underline{X}}_{\tau _U^+(b)})\) is given via (11) and where \(\Xi (\cdot )\) is defined in (15).

Moreover, if \(a=b\) then, for \(d,u\in [0,a]\), we have:

Proof

See Appendix. \(\square \)

Identity (52) gives the following theorem.

Theorem 12

The price of the contract (50) is given in (52) and the fair premium defined in (51) equals:

where functions \(\lambda \), \(\nu \) and N are given in Proposition 11.

Example 1

(continued) We continue analysing the case of linear Brownian motion defined in (2). Assume that \(b<a\). To find the price of the contract (50), we use formula (52) and we calculate all of the functions \(\lambda \), \(\nu \) and N given in (53)–(55).

First, consider the case \(a\le d+u\). By Proposition 11, the expressions for the functions \(\nu \) and \(\lambda \) reduce to the two-sided exit formulas (9)–(10), which are given explicitly in terms of the scale functions:

Given that Brownian motion has continuous trajectories, we have \(D_{\tau _D^+(a)}=a\). Denoting \(\alpha (a)=\alpha \), from the definition (55) of the function N we conclude that

The case \(a>d+u\) is slightly more complex. First observe that the dual process \({\widehat{X}}_t:=-X_t\) is again a linear Brownian motion, now with drift \(-\mu \). Moreover, a geometric inspection of the trajectories of X and \({\widehat{X}}\) shows that \({\widehat{U}}_t=D_t\) and \({\widehat{D}}_t=U_t\), where \({\widehat{U}}_t\) and \({\widehat{D}}_t\) are the drawup and drawdown processes of \({\widehat{X}}_t\), respectively. Now, using [10], we can find explicit expressions for the functions \(\lambda \) and \(\nu \):

where \(\mathcal {W}^{(r)}\) and \(\mathcal {Z}^{(r)}\) are the scale functions defined for \({\widehat{X}}_t\) (see also [20] and [11] for all details of calculations).

Because \(N(d,u)=\alpha \nu (d,u)\), the contract value and the fair premium defined in (52) and (56) can be represented as follows:

Figures 9 and 10 show the contract value and the fair premium levels depending on the starting positions of the drawdown and drawup.
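For the case \(b<a\le d+u\) in Example 1, the reduction to two-sided exit can be sketched numerically. Assuming that (2) is \(X_t=\mu t+\sigma B_t\), the scale functions are \(W^{(r)}(x)=(e^{\Phi (r)x}-e^{\zeta x})/\sqrt{\mu ^2+2r\sigma ^2}\) and \(Z^{(r)}(x)=1+r\int _0^x W^{(r)}(y)\mathop {}\!\mathrm {d}y\), where \(\Phi (r)>0>\zeta \) solve \(\mu \lambda +\sigma ^2\lambda ^2/2=r\). Since the running maximum stays at d and the running minimum at \(-u\) until X leaves \((d-a,b-u)\), shifting by \(a-d\) reduces \(\nu \) and \(\lambda \) to exits from \((0,a-d+b-u)\) started at \(a-d\). We also assume that the contract value has the form \(k=-\frac{p}{r}(1-\nu -\lambda )+N\), obtained by discounting the premium stream up to \(\tau _D^+(a)\wedge \tau _U^+(b)\), so that \(p^*=rN/(1-\nu -\lambda )\); this is our reading of (52) and (56), not a transcription of them. Parameter values are illustrative only.

```python
import math
from typing import Callable, Tuple

Fn = Callable[[float], float]


def bm_scale_functions(r: float, mu: float, sigma: float) -> Tuple[Fn, Fn]:
    """W^(r), Z^(r) for X_t = mu*t + sigma*B_t (assumed form of (2)), r > 0."""
    if r <= 0 or sigma <= 0:
        raise ValueError("this sketch assumes r > 0 and sigma > 0")
    s = math.sqrt(mu * mu + 2.0 * r * sigma * sigma)
    phi = (-mu + s) / sigma ** 2   # positive root of mu*l + sigma^2 l^2 / 2 = r
    zeta = (-mu - s) / sigma ** 2  # negative root

    def W(x: float) -> float:
        if x < 0:
            return 0.0
        # expm1 keeps precision when phi*x and zeta*x are small
        return (math.expm1(phi * x) - math.expm1(zeta * x)) / s

    def Z(x: float) -> float:
        if x < 0:
            return 1.0
        # Z(x) = 1 + r * int_0^x W(y) dy, integrated in closed form
        return 1.0 + (r / s) * (math.expm1(phi * x) / phi
                                - math.expm1(zeta * x) / zeta)

    return W, Z


def two_sided(W: Fn, Z: Fn, x: float, c: float) -> Tuple[float, float]:
    """Exit transforms of (0, c) started at x in [0, c]:
    up   = E_x[e^{-r tau_c^+}; tau_c^+ < tau_0^-] = W(x)/W(c),
    down = E_x[e^{-r tau_0^-}; tau_0^- < tau_c^+] = Z(x) - Z(c) W(x)/W(c).
    """
    ratio = W(x) / W(c)
    return ratio, Z(x) - Z(c) * ratio


def fair_premium_bm(d: float, u: float, a: float, b: float, alpha: float,
                    r: float, mu: float, sigma: float) -> Tuple[float, float, float]:
    """Return (nu, lam, p_star) for b < a <= d + u and constant reward alpha.

    Assumed: nu = drawdown-first transform, lam = drawup-first transform,
    N = alpha*nu and k(p) = -(p/r)(1 - nu - lam) + N, so p* = r N/(1 - nu - lam).
    """
    if not (0 <= d < a and 0 <= u < b and b < a <= d + u):
        raise ValueError("sketch covers only 0 <= d < a, 0 <= u < b < a <= d + u")
    W, Z = bm_scale_functions(r, mu, sigma)
    # X stays in (d - a, b - u) until the first event; shift by a - d.
    lam, nu = two_sided(W, Z, a - d, a - d + b - u)
    N = alpha * nu
    p_star = r * N / (1.0 - nu - lam)
    return nu, lam, p_star


# Illustrative parameters only (not taken from the paper's figures).
nu, lam, p_star = fair_premium_bm(d=1.0, u=0.6, a=1.5, b=1.0, alpha=100.0,
                                  r=0.01, mu=0.05, sigma=0.3)
print(f"nu = {nu:.4f}, lambda = {lam:.4f}, p* = {p_star:.4f}")
```

The case \(a>d+u\) needs the dual-process formulas from [10] instead and is not covered by this sketch.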

Example 2

(continued) Now, assume that \(a=b\) and that the reward function is linear. To analyse the Cramér–Lundberg process we use Proposition 11, which identifies the contract value given in (52). The fair premium \(p^*\) can be derived from (56) of Theorem 12.

Figures 11 and 12 show the contract value and the fair premium levels depending on the starting positions of the drawdown and drawup.

4.2 Cancellable Feature

The last contract that we consider in this paper has a drawup contingency and also allows the investor to terminate it early. In other words, we add the cancellable feature to the previous contract k(d, u, p) given in (50). In this case, the protection buyer can terminate his or her position by paying a fee \(c(D_\tau )\ge 0\) at any time prior to the drawdown epoch. Note that this fee may depend on the value of the drawdown at the moment of termination. The value of this contract then equals:

To analyse the cancellable feature, we rewrite the contract value in a similar form as that given in Lemma 5. That is, we can represent the drawup contingency contract with cancellable feature as the sum of two parts: one without cancellable feature (it is then k(d, u, p)) and one that depends on a stopping time \(\tau \).

Proposition 13

The cancellable drawup insurance value (57) admits the following decomposition:

where

for k defined in (50) and identified in (52).

Proof

Using \({\mathbb {1}}_{(\tau _D^+(a)<\tau _U^+(b)\wedge \tau )}={\mathbb {1}}_{(\tau _D^+(a)<\tau _U^+(b))}-{\mathbb {1}}_{(\tau<\tau _D^+(a)<\tau _U^+(b))}\) we obtain:

Note that \({\mathbb {1}}_{(\tau<\tau _D^+(a)<\tau _U^+(b))}={\mathbb {1}}_{(\tau<\tau _D^+(a)\wedge \tau _U^+(b))}{\mathbb {1}}_{(\tau _D^+(a)<\tau _U^+(b))}\). Thus, the result follows from the Strong Markov Property applied at the stopping time \(\tau \):

\(\square \)
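The two indicator identities used in the proof of Proposition 13 are elementary. Assuming that \(\tau \), \(\tau _D^+(a)\) and \(\tau _U^+(b)\) are pairwise distinct (with \(+\infty \) allowed for an event that never happens), they can be checked by brute force over all orderings, as in this small sketch:

```python
from itertools import permutations


def ind(cond: bool) -> int:
    """Indicator of a boolean event."""
    return 1 if cond else 0


INF = float("inf")  # stands for "the event never happens"
checked = 0
for tau, tau_d, tau_u in permutations([1.0, 2.0, 3.0, INF], 3):
    # 1(tau_D < tau_U ^ tau) = 1(tau_D < tau_U) - 1(tau < tau_D < tau_U)
    assert ind(tau_d < min(tau_u, tau)) == ind(tau_d < tau_u) - ind(tau < tau_d < tau_u)
    # 1(tau < tau_D < tau_U) = 1(tau < tau_D ^ tau_U) * 1(tau_D < tau_U)
    assert ind(tau < tau_d < tau_u) == ind(tau < min(tau_d, tau_u)) * ind(tau_d < tau_u)
    checked += 1
print(f"both identities hold for all {checked} orderings")
```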

To identify the value of the contract K and, hence, to find the function H defined in (59) we use, as before, the “guess and verify” approach. The candidate for the optimal stopping strategy \(\tau ^*\) is the first passage time over level \(d-\theta ^*\) for some \(\theta ^* \in (d+u-b,a)\) that will be specified later; that is,

Remark 14

We allow here \(\theta ^*\) to be negative, which corresponds to a rise of the running supremum from d to \(d+|\theta ^*|\). Note that, if \(\theta ^*>0\), then \(\tau ^*\) becomes \(\tau _D^-(\theta ^*)\), as for the drawdown cancellable contract without drawup contingency. To simplify the exposition, when applying Verification Lemma 5 with \(\theta ^*<0\), we will later treat \(\tau _{d-\theta ^*}^+\) as the first drawdown passage time \(\tau _D^-(\theta ^*)\).

At this point it should be noted that if \({\tilde{k}}(D_{\tau _{d-\theta }^+},U_{\tau _{d-\theta }^+},p)<0\) for all \(\theta \), then it is optimal for the investor never to terminate the contract; hence \(\tau =\infty \) and \(h_{\tau }(d,u,p)=0\). To avoid this trivial case, from now on we assume that there exists at least one point \((d_0,u_0)\) with \(d_0\in [0,d)\) and \(u_0>u\) for which \({\tilde{k}}(d_0,u_0,p)>0\). This is equivalent to the following inequality:

We will now find function \(h_{\tau }(d,u,p)\) defined in (60) for the postulated stopping rule

Then, we will maximise \(h_{\tau }(d,u,p)\) over \(\theta \) to find \(\theta ^*\). In the last step, we will use the Verification Lemma (checking all of its conditions) to verify that the suggested stopping rule is indeed optimal.

We start with the first step, which is identifying the function \(h_{\tau }(d,u,p)\) for \(\tau \) given in (64). For \(\theta \in (d+u-b,a)\) we denote:

Recall that the underlying process X starts at 0. Note that if \(\theta \ge d\), then \(X_0\ge d-\theta \) and the investor should stop the contract immediately; that is,

Assume now that \(\theta <d\). We will calculate the function \(h_>(d,u,p,\theta )\). Given that the process \(X_t\) has no positive jumps and hence crosses all levels upward continuously, from the definitions of the drawup and drawdown processes we have:

Moreover, on the event \(\{\tau ^+_{d-\theta }<\tau _U^+(b)\wedge \tau _D^+(a)\}\) we observe that \({\overline{U}}_{\tau ^+_{d-\theta }}<b\) and \({\overline{D}}_{\tau ^+_{d-\theta }}<a\), where \({\overline{U}}_t:=\sup _{s\le t} U_s\) and \({\overline{D}}_t:=\sup _{s\le t} D_s\) denote the running suprema of the processes \(U_t\) and \(D_t\), respectively. In fact, from the definition of the drawup we have \({\overline{U}}_{\tau ^+_{d-\theta }}=U_{\tau ^+_{d-\theta }}\). Thus, the following inequality holds: \({\underline{X}}_{\tau ^+_{d-\theta }}\wedge (-u)>d-\theta -b\). When analysing the drawdown process, we consider two cases. First, if \(\theta >0\), then \({\overline{D}}_{\tau ^+_{d-\theta }}=d-{\underline{X}}_{\tau ^+_{d-\theta }}<a\). Second, if \(\theta \le 0\), then \({\overline{D}}_{\tau ^+_{d-\theta }}\le (d-\theta )-{\underline{X}}_{\tau ^+_{d-\theta }}\le U_{\tau ^+_{d-\theta }}<b<a\). These observations give an additional inequality: \({\underline{X}}_{\tau ^+_{d-\theta }}>((d-\theta )\vee d)-a\). We can now rewrite the function \(h_>\) as follows:

The joint law of \((\tau _{d-\theta }^+,{\underline{X}}_{\tau ^+_{d-\theta }})\) can be derived from the two-sided formula given in (9):

The final form of the function \(h_>(d,u,p,\theta )\) can then be expressed as follows:

Because \(h_<(d,u,p,\theta )\) does not depend on \(\theta \), as for the drawdown contract, we choose the optimal level \(\theta ^*\) to maximise the function \(h_>(d,u,p,\theta )\); that is, we define:

Lemma 15

Assume that (63) holds. Then \(\theta ^*\) defined in (68) exists.

Proof

First note that \(h_>(d,u,p,\cdot )\) is continuous on \([d+u-b,d]\) because \({\tilde{k}}\) and the scale function \(W^{(r)}\) are continuous. If \(\theta \downarrow d+u-b\), then \(D_{\tau ^+_{d-\theta }}\downarrow (d+u-b)^+\) and \(U_{\tau ^+_{d-\theta }}\uparrow b\). Thus,

Moreover, as \(\theta \uparrow d\) we get \(D_{\tau ^+_{d-\theta }}\uparrow d\) and then,

If \({\tilde{k}}(d,u,p)<0\), then by assumption (63) there exists \(\theta ^*\). On the other hand, when \({\tilde{k}}(d,u,p)\ge 0\), either \(\theta ^*\) exists inside the interval \((d+u-b,d)\) or the maximum is attained at the boundary; that is, \(\theta ^*=d\). The second scenario corresponds to the case where the contract is stopped immediately. \(\square \)

From this proof, we can see that \(h_>(d,u,p,\theta )\longrightarrow {\tilde{k}}(d,u,p)\) as \(\theta \uparrow d\). Thus, the continuous fit for this problem is always satisfied (see Note 1).

In the last step, using Verification Lemma 5, we will prove that the stopping rule (62) is indeed optimal.

Theorem 16

Let \(c: [d+u-b,a)\rightarrow {\mathbb {R}}_+\) be a non-increasing bounded function in \(C^2([d+u-b,a))\) that additionally satisfies (41). Assume that (63) holds. Let \(\theta ^*\) defined by (68) satisfy the smooth fit condition:

if \(\sigma >0\). If

then \(\tau ^*=\tau _{d-\theta ^*}^+\) given in (62) is the optimal stopping rule for the stopping problem (59) and, hence, for the insurance contract (57). In this case the value K(d, u, p) of the drawdown contract with drawup contingency and cancellable feature equals \(k(d,u,p)+h_{\tau _{d-\theta ^*}^+}(d,u,p)\), where \(h_{\tau _{d-\theta ^*}^+}\) is defined in (65), with \(h_<(d,u,p,\theta ^*)\) identified in (66) and \(h_>(d,u,p,\theta ^*)\) given in (67).

Proof

We again apply Verification Lemma 5 to the optimal stopping problem (59). This time we take the Markov process \(\Upsilon _t=(D_t, U_t)\), \({\mathbb {B}}={\mathbb {R}}_+\times {\mathbb {R}}_+\), \(\tau _0=\tau _U^+(b)\wedge \tau _D^+(a)\) and \(V(\phi )={\tilde{k}}(d,u,p)\) with \(\phi =(d,u)\). To prove the domination condition (i) of Verification Lemma 5, we have to show that \(h_{\tau ^+_{d-\theta ^*}}(d,u,p)\ge {\tilde{k}}(d,u,p)\). To do this, we take \(\theta =d\) and use the definition of \(\theta ^*\) in (68). In particular, if \(\theta ^*<d\) then

Otherwise, if \(\theta ^*\ge d\), then:

To prove the second condition of Verification Lemma 5, which states that process

is a supermartingale, we use the key Lemma 7. We take \(p(t,d,u):=e^{-rt}h(d,u,p)\) for \(d\in [0,\infty )\), \(u\in [0,b)\) and \(C=(\theta ^*,\infty )\), \(S=[d+u-b,\theta ^*)\), and then \(p_C(t,d,u)=e^{-rt}h_>(d,u,p,\theta ^*){\mathbb {1}}_{(d\in C)}\) and \(p_S(t,d,u)=e^{-rt}h_<(d,u,p,\theta ^*){\mathbb {1}}_{(d\in S)}\). Note that \(\theta ^*\) satisfies the continuous fit condition (always) and the smooth fit condition (by assumption). Also observe that the function \(h_{\tau _{d-\theta ^*}^+}(d,u,p)\) defined in (60) is bounded. Indeed, this follows from the inequalities \(0\le \nu (d,u)\le 1\), \(0\le \lambda (d,u)\le 1\) and \(N(d,u)\le \Xi (d)<\infty \), the last by (16), for any \(d\ge 0, u\ge 0\). Thus, from the Strong Markov Property, the process

is a martingale (with the interpretation as mentioned in Remark 14 for \(\theta ^*\le 0\)). Thus, the condition from Lemma 7 (ii) is satisfied and \(I(p_C)\) is a martingale.

To prove that \(I(p_S)\) is a supermartingale for region S, we first show that \(e^{-rt}{\tilde{k}}(D_t,U_t,p)\) is a supermartingale. Indeed, from the definition of \({\tilde{k}}\) given in (61) we have

Now, note that

are \(\mathcal {F}_t\)-martingales. Then, the supermartingale property of \(e^{-rt}{\tilde{k}}\) holds if \(e^{-rt}\left( \frac{p}{r}-c(D_t)\right) \) is a supermartingale. By [16, Thm. 31.5] this holds when:

which is a consequence of assumption (41); see also (44). Note that condition (40) also follows from the assumption that the function c is non-increasing. Indeed, observe that

and \(\frac{\partial }{\partial u}\left( \frac{p}{r}-c(d)\right) =0\). To prove the supermartingale property of \(I(p_S)\) for \(p_S(t,D_t,U_t)=e^{-rt}h_<(D_t,U_t,p){\mathbb {1}}_{(d\in S)}\), note that for \(d\in S\) we have \(p_S(t,d,u)=e^{-rt}{\tilde{k}}(d,u,p)\) from (66). Thus, the generator \(\mathcal {A}^{(D,U)}\) applied to \(p_S\), as defined in (37), equals:

By assumption (69), the integral in the above formula is positive. Thus, for \(d\in S\), we have

Hence, the supermartingale condition (39) from Lemma 7 (iii) for \(I(p_S)\) is satisfied. Given that \(p_S(t,d,u)=e^{-rt}{\tilde{k}}(d,u,p)\) for \(d\in S\), the condition (40) is the same for both functions because the derivative in (40) is checked at \(d+u-b\in S\). Thus, the condition (40) is satisfied for \(p_S\) because it is satisfied for \(e^{-rt}{\tilde{k}}(d,u,p)\), as we have shown earlier. This completes the proof of the supermartingale property of \(I(p_S)\) for \(p_S(t,d,u)=e^{-rt}h_<(d,u,p,\theta ^*){\mathbb {1}}_{(d\in S)}\). \(\square \)

Example 1

(continued) We continue the analysis of linear Brownian motion for the drawdown contract with drawup contingency and cancellable feature. We take \(b<a\). We can calculate the function \(h_>\) from (67) and then find the \(\theta ^*\) that maximises it. We can check numerically that, for the chosen parameters, this \(\theta ^*\) indeed satisfies the smooth fit condition. Note also that condition (69) is satisfied because Brownian motion has no jumps. This means that \(\tau ^+_{d-\theta ^*}\) is the optimal stopping rule.

Fig. 13 depicts the function h for constant, linear (48) and quadratic (49) fee functions. Note that condition (63) is satisfied in these cases. In Fig. 14, taking the fee function \(c_2\) given in (49) (yellow graph in Fig. 13), we show that \(\theta ^*\approx 1.8\), found above, indeed satisfies the smooth fit condition.
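The numerical smooth-fit check mentioned above can be sketched with one-sided finite differences: compare the slope of the value function on the continuation side of the boundary with the slope of the payoff on the stopping side. In this sketch the continuation side is assumed to lie to the left of the boundary (swap the arguments otherwise), and the test functions are hypothetical stand-ins rather than h and \({\tilde{k}}\) from this section. A small gap is numerical evidence only, not a proof of smooth fit.

```python
from typing import Callable

Fn = Callable[[float], float]


def continuous_fit_gap(value: Fn, payoff: Fn, x_star: float) -> float:
    """Value minus payoff at the boundary; zero under continuous fit."""
    return value(x_star) - payoff(x_star)


def smooth_fit_gap(value: Fn, payoff: Fn, x_star: float, h: float = 1e-5) -> float:
    """Left derivative of `value` minus right derivative of `payoff` at x_star,
    both estimated by first-order one-sided differences with step h."""
    left = (value(x_star) - value(x_star - h)) / h
    right = (payoff(x_star + h) - payoff(x_star)) / h
    return left - right


# Hypothetical examples with boundary x* = 1 and zero payoff beyond it.
def smooth_value(x: float) -> float:
    return (x - 1.0) ** 2


def kinked_value(x: float) -> float:
    return 1.0 - x


def zero_payoff(x: float) -> float:
    return 0.0


print(smooth_fit_gap(smooth_value, zero_payoff, 1.0))  # close to 0
print(smooth_fit_gap(kinked_value, zero_payoff, 1.0))  # close to -1
```

Both examples satisfy continuous fit at \(x^*=1\), but only the first one pastes smoothly, which is exactly the distinction the check is meant to detect.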

Fig. 15. The condition (69) (left) and the function h (right) for the Cramér–Lundberg model and linear fee function \(c_3\). Parameters: \(r=0.01,\ {\hat{\mu }}=0.04,\ \beta =0.1,\ \rho =2.5,\ a=10, \alpha (d)=100+20d, p=0.6\)

Example 2

(continued) For the Cramér–Lundberg model, we consider the case \(a=b\). First assume that \(d+u\ge a\); then the indicator in the last term equals zero and we have:

If \(d+u<a\), then using the integration-by-parts formula we get:

Using these formulas, we can find the function \(h_>\) for the linear fee function, defined as:

for \(c<\frac{p}{r}\). The graph of the function \(h_>\) is depicted in Fig. 15, which also shows that condition (69) is satisfied. We check condition (63) numerically and find that it is satisfied in all considered cases. In Fig. 16 we present the continuous pasting for the yellow graph with \(c_3(d,35)\), which also appears in Fig. 15.

Notes

1. Because of the complexity of the value function \(h_>(t,\theta ^*,u)\), we cannot prove the smooth pasting condition analytically. However, we believe that it always holds.

References

[1] Carr, P., Zhang, H., Hadjiliadis, O.: Maximum drawdown insurance. Int. J. Theor. App. Financ. 14(8), 1–36 (2011)

[2] Cont, R., Tankov, P.: Financial Modelling with Jump Processes. Chapman and Hall, CRC Press (2003)

[3] Eisenbaum, N., Kyprianou, A.E.: On the parabolic generator of a general one-dimensional Lévy process. Electron. Commun. Probab. 13, 198–208 (2008)

[4] Grossman, S.J., Zhou, Z.: Optimal investment strategies for controlling drawdowns. Math. Financ. 3(3), 241–276 (1993)

[5] Kyprianou, A.E., Kuznetsov, A., Rivero, V.: The theory of scale functions for spectrally negative Lévy processes. Lévy Matters II, Springer Lecture Notes in Mathematics (2013)

[6] Kyprianou, A.E.: Introductory Lectures on Fluctuations of Lévy Processes with Applications. Springer, Berlin (2006)

[7] Kyprianou, A.E.: Gerber–Shiu Risk Theory. Springer, Berlin (2013)

[8] Kyprianou, A.E., Surya, B.: A note on a change of variable formula with local time-space for Lévy processes of bounded variation. Séminaire de Probabilités XL, 97–104 (2006)

[9] Magdon-Ismail, M., Atiya, A.: Maximum drawdown. Risk 17(10), 99–102 (2004)

[10] Mijatović, A., Pistorius, M.R.: On the drawdown of completely asymmetric Lévy process. Stoch. Process. Appl. 122(11), 3812–3836 (2012)

[11] Palmowski, Z., Tumilewicz, J.: Pricing insurance drawdowns-type contracts with underlying Lévy assets. https://arxiv.org/abs/1701.01891 (2016) (Submitted for publication)

[12] Peskir, G.: On the American option problem. Math. Financ. 15(1), 169–181 (2005)

[13] Pistorius, M.R.: A potential-theoretical review of some exit problems of spectrally negative Lévy processes. Séminaire de Probabilités XXXVIII, 30–41 (2004)

[14] Pospisil, L., Vecer, J.: Portfolio sensitivities to the changes in the maximum and the maximum drawdown. Quant. Financ. 10(6), 617–627 (2010)

[15] Pospisil, L., Vecer, J., Hadjiliadis, O.: Formulas for stopped diffusion processes with stopping times based on drawdowns and drawups. Stoch. Process. Appl. 119(8), 2563–2578 (2009)

[16] Sato, K.: Lévy Processes and Infinitely Divisible Distributions. Cambridge University Press, Cambridge (1999)

[17] Sornette, D.: Why Stock Markets Crash: Critical Events in Complex Financial Systems. Princeton University Press, Princeton (2003)

[18] Vecer, J.: Maximum drawdown and directional trading. Risk 19(12), 88–92 (2006)

[19] Vecer, J.: Preventing portfolio losses by hedging maximum drawdown. Wilmott 5(4), 1–8 (2007)

[20] Zhang, H., Hadjiliadis, O.: Formulas for the Laplace transform of stopping times based on drawdowns and drawups. https://arxiv.org/pdf/0911.1575 (2009)

[21] Zhang, H., Leung, T., Hadjiliadis, O.: Stochastic modeling and fair valuation of drawdown insurance. Insur. Math. Econ. 53, 840–850 (2013)


Additional information

This work is partially supported by National Science Centre Grants No. 2015/17/B/ST1/01102 (2016-2019) and No. 2016/23/N/ST1/01189 (2017-2019).

Appendix


Proof of Proposition 2

The identity for \(\xi \) has already been proven in [11]. We focus on proving the identity for \(\Xi \). Note that \(\tau _D^+(a)\) may occur either before or after a new supremum \({\overline{X}}_t>d\) is attained. If X does not cross level d before \(\tau _D^+(a)\), then the drawdown epoch \(\tau _D^+(a)\) is the first time the process X goes below the level \(d-a\). On the other hand, when X crosses level d, a new supremum is attained and the drawdown process starts afresh from 0. We therefore split the function \(\Xi \) according to these two scenarios:

We then use the two-sided formula given in (9). We now identify the expectations appearing in the last line (71) of the above equation.

Let us rewrite the first expectation by considering the position of the process X at time \(\tau _0^-\):

The first term of the above identity corresponds to the case where the drawdown process \(D_t\) creeps over level a, and the second term to the case where the stopping time \(\tau _D^+(a)\) is attained by a jump of \(X_t\) that puts \(D_t\) strictly above level a. The case of creeping was analysed in [13, Cor. 3], producing:

where \(u^r(x,\cdot ,a)\) is the potential density of \(X_t\) killed on exiting [0, a], started at \(x\in [0,a]\). By [6, Th. 8.7] we have:

We recall that the spectrally negative process \(X_t\) creeps downward across 0 if and only if \(X_t\) has a non-zero Gaussian coefficient \(\sigma ^2\) (see [13, Cor. 2]). Thus,

The jump case can be solved by considering the joint law of \(\tau _X^-(0)\) and \(X_{\tau _X^-(0)}\), which is given in [7, Th. 5.5] (note that the result there, although presented only for a drift minus compound Poisson process, holds for a general spectrally negative Lévy process). Then,

for \(h>0\) and \(z\in (0,a)\). Because we only need the position of \(X_{\tau ^-_0}\), we integrate the above equation with respect to z. In summary,

In the last part of the proof, to find (71), we derive the formula for \({\mathbb {E}}\left[ e^{-r\tau _D^+(a)}\alpha (D_{\tau _D^+(a)})\right] \). Note that

The first term corresponds to the case where the drawdown process creeps over a and the second to the case where it strictly exceeds level a by a jump. These two expectations were calculated in [10] as follows:

and

Combining all of these terms completes the proof. \(\square \)

Proof of Lemma 7

Assume first that \(\sigma >0\). Using the same arguments as in the proof of Eisenbaum and Kyprianou [3, Thm. 3], together with the fact that, by our assumptions, the transition density of \(D_t\) exists and hence

(see also [12, Eq. (2.26)–(2.30)]), we can extend the Itô formula for the function \(p(t,D_t,U_t)\) to the following change-of-variables formula:

where \(L_t^\theta \) is the local time of \(D_t\) at \(\theta \), which can be defined formally as was done for the process \(X_t\) in [3, Thm. 3]. However, this construction relies on one crucial observation. The local time in [3] is defined along a continuous curve b(t). The local time \(L_t^\theta \) of \(D_t\) at the point \(\theta \) is the same as the local time of \(X_t\) at \(b(t)={\overline{X}}_t-\theta \), which is continuous because the process \({\overline{X}}_t\) is continuous. Moreover, the process \(X_t\) in [3] lives on the whole real line, whereas the drawdown process \(D_t\) lives on the non-negative half-line \([0,\infty )\). Therefore, \(I(p_C)\) and \(I(p_S)\) contain additional integrals with respect to the continuous parts of the supremum and infimum processes.

Now, the smooth fit reduces the change-of-variables formula to the following identity:

Thus, \(p(t,D_t, U_t)\) is a supermartingale if \(I(p_C)(t,D_t, U_t)\) is a martingale and \(I(p_S)(t,D_t, U_t)\) is a supermartingale. This completes the proof of the first part (i).

To prove parts (ii) and (iii), note that from Dynkin’s formula for \({\hat{p}}=p_C\) or \({\hat{p}}=p_S\) we have:

where \(\mathcal {M}_t\) is a martingale part and \(\mathcal {A}^{(D,U)}\) is the full generator of the Markov process \((D_t,U_t)\) defined in (37)–(38). Alternatively, this can be seen by identifying the drift as the compensator of \(X^c\), using the equality \(\mathop {}\!\mathrm {d}[X]^c_s=\sigma \mathop {}\!\mathrm {d}t\) and applying the compensation formula to the jump part (see e.g. [6, Thm. 4.4, p. 95]).

Moreover, to prove part (ii), observe that

which follows from the Itô formula applied to \(p_C\) in the open set C. Note also that the process \((t,D_t, U_t)\) passes from C to S continuously since \(X_t\) is spectrally negative. If \(p_C(t\wedge \tau _D^-(\theta ^*),D_{t\wedge \tau _D^-(\theta ^*)}, U_{t\wedge \tau _D^-(\theta ^*)})=p_C(t\wedge \tau _D^-(\theta ^*)-,D_{t\wedge \tau _D^-(\theta ^*)-}, U_{t\wedge \tau _D^-(\theta ^*)-})\) is a martingale, then from (73) applied to \({\hat{p}}=p_C\) and from (74), by taking small \(t>0\), we conclude that the martingale condition (35) and the normal reflection condition (36) hold. Thus, again by (73), the process \(I(p_C)(t,D_t, U_t)\) is a martingale for all \(t\ge 0\).

Similarly, from (73) we can conclude that the supermartingale condition (39) and normal reflection condition (40) give the supermartingale property of \(I(p_S)(t,D_t,U_t)\) (we also use the observation that \({\underline{X}}^c_s\) is a decreasing process).

All of these arguments remain valid, almost unchanged, for Lévy processes (3) of bounded variation (hence, for \(\sigma =0\)). The only change needed is to replace (72) by

see [8] for details. In the next step, the continuous pasting condition is applied instead of the smooth fit, and the rest of the proof is the same as before. This completes the proof. \(\square \)

Proof of Proposition 11

The identities for \(\lambda (\cdot ,\cdot )\) and \(\nu (\cdot ,\cdot )\) were proven in [11] for both cases \(b<a\) and \(b=a\). We focus on identifying \(N(\cdot ,\cdot )\). Note that \({\mathbb {1}}_{(\tau _D^+(a)<\tau _U^+(b))}=1-{\mathbb {1}}_{(\tau _U^+(b)<\tau _D^+(a))}\) because \(\tau _D^+(a)\) and \(\tau _U^+(b)\) cannot happen at the same time. Thus, for \(b\le a\), we have

which follows from the Strong Markov Property. We analyse the cases \(b<a\) and \(b=a\) separately. Assume first that \(b<a\). We extend the representation of the event \(\left\{ \tau _U^+(b)<\tau _D^+(a),\ D_0=y,\ U_0=z\right\} \) in terms of the running supremum and infimum of the underlying process \(X_t\), given in [11], by adding the position of the drawdown process \(D_t\) at the stopping time \(\tau _U^+(b)\), as follows:

This is a purely geometric and pathwise observation. Using this identity, we can derive the following equality:

Using the definition of \(\Xi (\cdot )\) given in (15), we obtain the result for \(b<a\).

For \(b=a\), note that when \(a\le d+u\) then \(\{\tau _U^+(a)<\tau _D^+(a)\} = \{\tau ^+_{a-u}<\tau ^-_{d-a}\}\). Moreover, since \(a-u\le d\) and the process \(X_t\) has no positive jumps, then \({\overline{X}}_{\tau ^+_U(a)}\vee d={\overline{X}}_0\vee d=d\) and \(X_{\tau _U^+(a)}=a-u\). This gives that in this case \(D_{\tau _U^+(a)}=d+u-a\). On the other hand, when \(a>u+d\) then \(X_{\tau _U^+(a)}={\overline{X}}_{\tau _U^+(a)}\in (d,a-u]\) (see [11] for details). Therefore, we get that \(D_{\tau _U^+(a)}=0\). We have just proven that \(D_{\tau _U^+(a)}=(d+u-a)^+\). Thus,

This completes the proof. \(\square \)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Palmowski, Z., Tumilewicz, J. Fair Valuation of Lévy-Type Drawdown-Drawup Contracts with General Insured and Penalty Functions. Appl Math Optim 81, 301–347 (2020). https://doi.org/10.1007/s00245-018-9492-y
