Abstract

Background

In vivo and ex vivo simulation training workshops can contribute to surgical skill acquisition but require validation before becoming incorporated within curricula. Ideally, that validation should include the following: face, content, construct, concurrent, and predictive validity.

Methods

During two in vivo porcine surgical training workshops, 27 participants completed questionnaires relating to face and content validity of porcine in vivo flap elevation. Six participants’ performances raising a pedicled myocutaneous latissimus dorsi (LD) flap in the pig (2 experts and 4 trainees) were sequentially and objectively assessed for construct validity with hand motion analysis (HMA), a performance checklist, a blinded randomized procedure-specific rating scale of standardized video recordings, and flap viability by fluorescence imaging.

Results

Face and content validity were demonstrated straightforwardly. Construct validity was demonstrated for average procedure time by HMA between trainees and experts (p = 0.036). Skill acquisition was demonstrated by trainees’ HMA average number of hand movements (p = 0.046) and fluorescence flap viability (p = 0.034).

Conclusion

Face and content validity for in vivo porcine flap elevation simulation training were established. Construct validity was established for an in vivo porcine latissimus dorsi flap elevation simulation specifically. Predictive validity will prove more challenging to establish.

Level of evidence: Not ratable.

Introduction

Trainee surgeons are increasingly expected to complete a variety of competency-based surgical courses during specialty training. Surgical courses on high fidelity simulation models provide students and junior trainees with key practical skills placing them at the center of the educational experience as opposed to the clinical setting [1]. Surgical simulation is increasingly presented as a solution to improving patient safety despite truncation of training schemes. It has particular relevance to technically challenging sub-specialty areas including microvascular flap reconstruction [2].

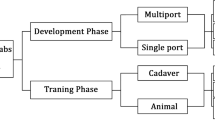

Microsurgical simulation training courses generally extend over a number of consecutive days aiming to provide a bundle of skills within a patient safe and non-threatening training environment for trainees [3, 4]. These educational and training interventions have been shown to lead to significant technical and non-technical skill acquisition both over the course period, as well as over a long term [5,6,7]. Usually, microsurgical simulation training progresses from ex vivo to in vivo animal simulations with a rising level of complexity [8, 9].

There is increasing clinical recognition that microvascular reconstructive flap outcomes are at least as dependent on flap elevation as on the subsequent microvascular anastomoses, particularly because successful flap design relies on maintaining the structure and physiological function of smaller perforator vessels [10].

The live pig is a favorable model for reconstructive microsurgery flap elevation over other models due to its large size and similarity to human anatomy [11,12,13,14]. In a step towards achieving validation of the live pig as a training model in reconstructive microsurgery training, the aim of this research was to investigate trainees’ perceptions (face and content validity) as well as skill acquisition (construct validity) of an in vivo porcine simulation training model for a classical flap, namely, the pedicled latissimus dorsi myocutaneous flap.

Methods

Study participants

Twenty-seven participants from two different surgical simulation courses were involved in the study: 18 participants from the “Advance Hands-on Course in Microsurgical Breast Reconstruction Workshop” that took place at the Experimental Research Center of ELPEN, Athens, Greece on the 12th–13th June 2015, and 9 participants from the “Free flap dissection workshop” that took place on the 15th–17th of March, 2015 at the Pius Branzeu Center, Timisoara, Romania (Fig. 1).

Face and content validity

We used questionnaires as the main assessment tool, as they have been the mainstay of subjective assessment of face and content validity [15,16,17,18]. All 27 participants completed a questionnaire of 15 questions, each requiring a response on a 5-point Likert scale. The questionnaire included general questions about the usefulness and overall experience of the courses, as well as questions related to the acquisition of skill and the similarity of the live pig model to the real-time setting. A further questionnaire of 20 questions, each requiring a response on a 5-point Likert scale, was completed by 6 participants from the Timisoara group; it included additional specific questions on the elevation of the latissimus dorsi myocutaneous flap on the live pig model.

Construct validity—objective assessments

Six participants from the “free flap dissection workshop” in Timisoara also completed objective assessments on an in vivo porcine simulation of the LD flap elevation. They were divided into two groups: 4 surgical trainees and an expert group comprising 2 plastic surgery consultants with at least 6 years of training in the UK (Fig. 1).

Objective assessments of skill and skill acquisition (first flap elevation = pre-test, second flap elevation = post-test) included the following: HMA using the Trackstar™ electromagnetic tracking system [19, 20]; video recording [21] using a GoPro™ camera (Fig. 2); and grading with a peer-reviewed procedure-specific rating scale [22] (Appendix B), a performance checklist [23], and an assessment of flap viability using a skin scratch test (Fig. 3) and fluorescence imaging [24] (Fig. 4). The assessment methods are listed in Table 1.

Analysis of data

Face and content validity were assessed by Likert scale questionnaires, with the answers to general questions relating to the courses taken as indicators of face validity and the answers to specific questions directly relating to the LD flap procedure taken as indicators of content validity. The mean Likert data for each question are presented as percentages. Construct validity was assessed by a Student's t-test (parametric values: time and path length) and a Mann-Whitney U test (non-parametric values: number of hand movements, checklist, PSRS, and flap viability). In evaluating the effect of training on skill acquisition, the differences between pre-test and post-test were compared between skill levels. A paired sample t-test was used for parametric values, and a Wilcoxon rank sum test was used for non-parametric values. For all tests, a p value of < 0.05 was considered statistically significant.
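The between-group comparison of non-parametric measures can be illustrated with a minimal sketch. The Python below computes an exact two-sided Mann-Whitney U p value by enumerating every way of assigning the pooled ranks to the two groups. The function names and the sample values are hypothetical, chosen only to mirror the group sizes of 2 experts and 4 trainees; they are not study data, and the statistical software used for the analysis is not stated in the text.

```python
from itertools import combinations


def midranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def mann_whitney_exact(x, y):
    """Exact two-sided Mann-Whitney U test for two small independent groups."""
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    n1, n2 = len(x), len(y)
    mean_u = n1 * n2 / 2

    def u_stat(indices):
        return sum(ranks[i] for i in indices) - n1 * (n1 + 1) / 2

    observed = u_stat(range(n1))
    # Every possible choice of n1 observations as "group x" is equally
    # likely under the null hypothesis.
    all_u = [u_stat(c) for c in combinations(range(len(pooled)), n1)]
    extreme = sum(
        1 for u in all_u
        if abs(u - mean_u) >= abs(observed - mean_u) - 1e-12
    )
    return observed, extreme / len(all_u)


# Hypothetical scores only (not study data): 2 experts, 4 trainees.
experts = [5, 6]
trainees = [9, 10, 11, 12]
u, p = mann_whitney_exact(experts, trainees)
print(f"U = {u}, exact two-sided p = {p:.3f}")  # U = 0.0, p = 0.133
```

With groups of 2 and 4 there are only 15 possible assignments, so an exact p value and one obtained from a large-sample approximation can differ noticeably.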

Results

Face and content validity

Of the total 27 participants canvassed for both courses, 100% agreed strongly or moderately that the live pig is a useful model for flap elevation training. Of the participants, 98.2% agreed strongly or moderately that the live pig is a useful model for skill acquisition and relevant clinical application, and 77.8% were interested in attending a similar course in the future. Only a very small minority dismissed inclusion at an undergraduate level (3.7%) and at CST level (7.4%) (Fig. 5).

Of the 6 participants who raised the latissimus dorsi flap, 100% agreed strongly or moderately that the live pig model is useful for both skill acquisition and potentially skill maintenance in raising the flap. One hundred percent agreed that the live pig training model adequately simulates the gross dissection and microvascular skills involved in raising and following the flap. All of the participants recommended porcine flap elevation simulation for trainees prior to LD flap elevation clinically. Half of the participants recommended the live porcine simulation training model for general plastic surgical skill acquisition, and 66.7% agreed that training using the live pig model adequately resembles operating on human tissue or the real-time setting. Further review of questionnaire results is shown in Fig. 6.

Construct validity

HMA

On the pre-test attempt, the expert group scored lower values on all three HMA parameters: time, number of movements, and path length. The average total time taken by the expert group was 37.75 min and that for the trainee group was 96.28 min, with a difference of 58.5 min (60.8%) (p = 0.036) (Fig. 7). The average total number of movements recorded for the expert group was 6185.75 movements and that for the trainee group was 12,285.75 movements, with a difference of 6100 movements (49.7%) (p = 0.165). The average total path length recorded for the expert group was 342.94 m and that for the trainee group was 837.64 m, with a difference of 494.71 m (59.1%) (p = 0.146) (Table 2).
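The percentages above can be reproduced from the reported group means. The short Python sketch below assumes that each percentage expresses the expert-trainee difference relative to the trainee mean; that denominator is inferred from the numbers rather than stated in the text, and the function name is hypothetical.

```python
def relative_difference(expert_mean, trainee_mean):
    """Return the absolute difference and its size as a percentage
    of the trainee mean (assumed denominator)."""
    difference = trainee_mean - expert_mean
    return difference, 100 * difference / trainee_mean


# Group means as reported for the pre-test attempt.
reported_means = {
    "time (min)": (37.75, 96.28),
    "movements": (6185.75, 12285.75),
    "path length (m)": (342.94, 837.64),
}

for name, (expert, trainee) in reported_means.items():
    diff, pct = relative_difference(expert, trainee)
    print(f"{name}: difference {diff:.2f} ({pct:.1f}%)")
```

Under this assumption the sketch yields 60.8%, 49.7%, and 59.1%, matching the figures in the paragraph above.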

Checklist

A difference of 20% was noted for the pre-test checklist between the control and trainee group (p = 0.140) (Appendix A).

Flap viability

Skin scratch test for flap viability

On the pre-test attempt, the expert group (n = 2) achieved 100% viability (2 viable flaps), compared with 25% (1 viable flap) in the trainee group (n = 4). This difference was not statistically significant (p = 0.114).

Fluoptics® imaging

Three flaps were assessed using the Fluoptics® system on the pre-test attempt, including those of both experts and one trainee. All three flaps were viable; however, this data was not sufficient for comparison between both groups but lends itself to further study.

PSRS

The average total score for the control group was 49.5 points (99%) and that of the trainee group was 36 points (72%). The control group score was higher than that of the trainee group by a mean difference of 13.5 points (27%). The difference, however, was not statistically significant at the level of p < 0.05 (p = 0.064) (Appendix B).

Effect of a single repetition on skill acquisition

HMA

In the trainee group, there was a decrease in average time to completion of 44.2%, average number of movements of 52.3%, and average path length of 68.1% compared with their initial attempt. For the control group, there was an increase in average time to completion of 12.9%, and a decrease in the average number of movements of 52.1% and average path length of 22.5%. A summary of differences between pre-test and post-test values in the control and trainee groups is shown in Table 2. Differences between attempts for all participants were not significant for average time (p = 0.206) or average path length (p = 0.099) but were statistically significant for number of hand movements (p = 0.046).

Checklist

The mean pre-test checklist completion for both groups was 86.7% against a mean post-test score of 87.5%. The trainees’ completion percentage was 11.3% higher post-test, while in the expert group it decreased by 20%; the change was not statistically significant (p = 0.917) (Appendix A).

Flap viability

In the expert control group, there were 2 viable flaps (100%) on the pre-test attempt and only 1 viable flap (50%) on the post-test attempt. The trainee group had only 1 viable flap on the pre-test attempt (25%) and 4 viable flaps (100%) on the post-test attempt (p = 0.034). Flap failure was presumably due to direct pedicle damage ± damage to the skin island perforator base. Table 2 shows the number of viable and non-viable flaps assessed in the pre-test and post-test attempts for both groups (by skin scratch test, including those also assessed by fluorescence imaging).

PSRS

The lowest achieved score of the PSRS was 30, and the highest achieved was 50 out of 50. On average the pre-test score of the control group was 49.5, and the trainee group 36; the post-test score for the control group was 40 and the trainee group 40.75 (Table 2). The differences were not statistically significant (p = 0.078) (Appendix B).

Discussion

Surgical training courses vary in the content they use to teach the necessary surgical skills. This variation in courses and models raises the question of how efficiently these models deliver the necessary surgical skills, and whether those skills can in turn be transferred to the clinical setting. Validation of surgical training models is an important stage in identifying, comparing, and selecting a reliable model whose use in surgical training is justified, ensuring proper skill acquisition with minimal loss of resources and maximal avoidance of risk to patients. In this project, an attempt was made to validate the live pig model for surgical training in the field of reconstructive microsurgery.

Face validity

In this study, the evaluation form responses (n = 27) also supported the live pig model: respondents agreed that it had improved their skills, that these skills would be used in the real setting, and that it is recommended for surgical trainees, with 100% of responses ranging between strong and moderate agreement. This approval underlines the value of the model for hands-on experience in both the trainee and control groups.

Despite the high approval rate of the flap model, there was only a 50% agreement on recommending courses with the live pig training model for trainees for learning of other flaps or general plastic surgery skills. These responses may be explained by varying trainees’ seniority and operative experience. Early exposure builds confidence and may develop a more profound clinical interest, a better learning experience, and a more enhanced learning curve [25].

Responses on the timing of introducing the model into the surgical curriculum varied for the undergraduate stage and early core surgical training. In contrast, most respondents agreed that it should be part of higher surgical training, an opinion warranted by the fact that these types of procedures are usually carried out at higher levels of training in any case, and therefore these skills may be unnecessary to acquire at earlier stages.

Content validity

Most participants agreed that the live pig model is excellent for preparation and maintenance of skills involved in the LD flap operation and that the live pig model adequately presents the tissue and pedicle dissection skills involved in raising the LD flap. The agreement however was understandably impeded by the anatomical factor; the difference in anatomy rendered the model less accepted for representation of flap marking and design skill.

Publications vary in their consideration of content validity through participants’ responses in regard to skill level: Some include both experts and trainees [26,27,28], while others only consider the opinions of experts [18, 29, 30]. In this validation, the discrimination between expert and trainee was only made for 6 participants by questionnaire. As the total number of participants (n = 27) including both experts and trainees provided responses in favor of the live pig model in terms of face and content validity, discrimination between levels of expertise was considered unnecessary. Those few fully assessed at the workshop in the “free flap dissection workshop” in Timisoara were supported by the opinions of attendees at the “Advanced Hands on Course in Microsurgical Breast Reconstruction” in Athens.

The relatively small number of participants (n = 6) who were fully assessed at the “free flap dissection workshop” in Timisoara was supplemented with face and content validity data collected with the same assessment tools at the “Advance Hands on Course in Microsurgical Breast Reconstruction” in Athens. The combined results strengthened the validation and helped to eliminate workshop-specific factors from the validation of the live pig training model. Overall, the model showed promising results for face and content validity.

Construct validity

Despite the small sample size of this study, the model allowed differential demonstration of competence between the expert and trainee groups as measured by procedure-specific checklist, flap physiological outcome (scratch test and fluorescence imaging), and objective hand motion analysis. However, this discrimination was not statistically significant on all parameters measured.

The procedure-specific checklist and global rating scales were developed by the expert panel in this study and are likely to prove most valuable as a training feedback tool. However, neither the check list nor the global rating scale was subjected to any validation research before their use in this procedure assessment. Many surgical assessment tools have been designed and introduced in the field of reconstructive microsurgery for the same procedure by different research groups [31]. The standardization of surgical assessment tools by means of comparative performance and consensus methodologies [32] may help limit variables when it comes to data collection, thus allowing an accurate compilation of skill acquisition data for future validation of training models.

Physiological flap perfusion parameters were used to evaluate performance between the two groups. Various factors may affect viability outcome in any flap surgery. The general condition of the animal and tissue handling during the procedure play an important part in the surgical outcome. Despite the high level of success of modern free flap surgery, reaching up to 95% success rate [33], free flap failure continues to be a serious complication that should be diagnosed and addressed early during and/or postoperatively. Of the two methods used to assess flap viability, fluorescence imaging provided direct visualization of flap perfusion and is therefore more objective than the scratch test. Although this assessment method was not of great value statistically, it provided very important feedback on individual and team performance.

Hand motion analysis (HMA) is an objective assessment tool of surgical skills that involves the tracking of one’s hand movements while performing a standardized task, using measures gained from this tracking, to assess competence and acquisition of microsurgical skill [34, 35].
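As an illustration of how such measures can be derived from tracking data, the Python sketch below computes path length and a movement count from a sequence of sensor positions. The sample coordinates, the sampling interval, and the speed threshold used to define a "movement" are all hypothetical assumptions made for this example; the text does not describe how the Trackstar™ software defines or counts movements.

```python
import math


def path_length(samples):
    """Sum of straight-line distances between consecutive (x, y, z) positions."""
    return sum(math.dist(a, b) for a, b in zip(samples, samples[1:]))


def count_movements(samples, dt, speed_threshold):
    """Count the number of times hand speed rises from below the threshold
    to at or above it (one assumed definition of a discrete movement)."""
    moving = False
    count = 0
    for a, b in zip(samples, samples[1:]):
        speed = math.dist(a, b) / dt
        if speed >= speed_threshold and not moving:
            count += 1
        moving = speed >= speed_threshold
    return count


# Hypothetical positions in arbitrary units, sampled once per time unit.
positions = [(0, 0, 0), (3, 4, 0), (3, 4, 0), (3, 4, 12)]

print(path_length(positions))                                   # 17.0
print(count_movements(positions, dt=1.0, speed_threshold=1.0))  # 2
```

Procedure time, the third HMA parameter reported in this study, would simply be the number of sampling intervals multiplied by `dt`.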

In this study, there was a notable difference between expert and trainee groups on the pre-test attempt in all three parameters of HMA measurements, namely, time, hand movements, and path length. Despite the fact that only average time was statistically significant, this objective discrimination shows promising results for the purpose of this training model’s construct validity for future research.

There is no consensus on the ideal placement for the digital sensors for HMA [36], and standardization is likely to provide more consistency. There was some limited electromagnetic interference with the HMA software when the transmitter was in close proximity to the electrocautery device.

Simultaneous filming of the procedures allows real-time or subsequent expert assessment with a rating score (PSRS). In this instance, the expert rater was present at the workshop, so ratings were not absolutely blinded. Nevertheless, the complexity of whole procedure simulation provided by this model presents an extremely rich opportunity for assessment of skills. The procedure length, instrument handling, tissue handling, and pedicle handling all provide a spectrum of competencies that are easy to discern by a blinded assessor despite the potential bias of procedure’s audio and video footage that might make it easy to identify a trainee from an expert.

The eagerness of the trainee group to improve their performance both established a promising level of construct validity of the model and exposed a relative fall in performance in the expert control group. This could be explained by an element of overconfidence. The expert group included experienced specialists who had performed the procedure numerous times in the clinical setting. Any lack of interest in a perfect post-test attempt is more striking following flawless pre-test attempts. Indeed, surgical training workshops also offer a platform for altering the confidence and attitudes of surgeons. Through training, overconfidence can be reduced [37, 38].

Conclusions

The in vivo porcine simulation model of pedicled latissimus dorsi flap elevation demonstrated face and content validity, and some evidence of construct validity, with trainee skill acquisition. This model can then be extended to an even more face valid free flap model, by dividing the pedicle and re-anastomosing at a distant site.

References

Borman KR, Fuhrman GM, Association Program Directors in Surgery (2009) "Resident duty hours: enhancing sleep, supervision, and safety": response of the Association of Program Directors in surgery to the December 2008 report of the Institute of Medicine. Surgery. 146(3):420–427

Britt LD, Sachdeva AK, Healy GB, Whalen TV, Blair PG, Members of ACS Task Force on Resident Duty Hours (2009) Resident duty hours in surgery for ensuring patient safety, providing optimum resident education and training, and promoting resident well-being: a response from the American College of Surgeons to the report of the Institute of Medicine, "resident duty hours: enhancing sleep, supervision, and safety". Surgery. 146(3):398–409

Chan WY, Matteucci P, Southern SJ (2007) Validation of microsurgical models in microsurgery training and competence: a review. Microsurgery. 27(5):494–499

Javid P, Aydın A, Mohanna PN, Dasgupta P, Ahmed K (2019) Current status of simulation and training models in microsurgery: a systematic review. Microsurgery 39(7):655–668

Starkes JL, Payk I, Hodges NJ (1998) Developing a standardized test for the assessment of suturing skill in novice microsurgeons. Microsurgery. 18(1):19–22

Atkins JL, Kalu PU, Lannon DA, Green CJ, Butler PEM (2005) Training in microsurgical skills: assessing microsurgery training. Microsurgery 25(6):481–485

Christensen TJ, Anding W, Shin AY, Bishop AT, Moran SL (2015) The influence of microsurgical training on the practice of hand surgeons. J Reconstr Microsurg 31(6):442–449

Ilie VG, Ilie VI, Dobreanu C, Ghetu N, Luchian S, Pieptu D (2008) Training of microsurgical skills on nonliving models. Microsurgery. 28(7):571–577

Ghanem A, Kearns M, Ballestín A, Froschauer S, Akelina Y, Shurey S, Legagneux J et al (2020) International microsurgery simulation society (IMSS) consensus statement on the minimum standards for a basic microsurgery course, requirements for a microsurgical anastomosis global rating scale and minimum thresholds for training. Injury S0020-1383(20)30078–4. https://doi.org/10.1016/j.injury.2020.02.004

Carey JN, Rommer E, Sheckter C, Minneti M, Talving P, Wong AK, Garner W et al (2014) Simulation of plastic surgery and microvascular procedures using perfused fresh human cadavers. J Plast Reconstr Aesthet Surg 67:2

Kerrigan CL, Zelt RG, Thomson JG, Diano E (1986) The pig as an experimental animal in plastic surgery research for the study of skin flaps, myocutaneous flaps and fasciocutaneous flaps. Lab Anim Sci 36(4):408–412

Bodin F, Diana M, Koutsomanis A, Robert E, Marescaux J, Bruant-Rodier C (2015) Porcine model for free-flap breast reconstruction training. J Plast Reconstr Aesthet Surg 68(10):1402–1409

Millican PG, Poole MD (1985) A pig model for investigation of muscle and myocutaneous flaps. Br J Plast Surg 38(3):364–368

Nebril BA (2019) Porcine model and oncoplastic training: results and reflections. Mastology 29(2):55–57

Oliveira MM, Araujo AB, Nicolato A, Prosdocimi A, Godinho JV, Valle ALM, Santos M et al (2016) Face, content, and construct validity of brain tumor microsurgery simulation using a human placenta model. Operative Neurosurg 12(4):61–67

Evgeniou E, Walker H, Gujral S (2018) The role of simulation in microsurgical training. J Surg Educ 75:1

Hung AJ, Zehnder P, Patil MB, Cai J, Ng CK, Aron M, Gill IS et al (2011) Face, content and construct validity of a novel robotic surgery simulator. J Urol 186(3):1019–1025

Kelly DC, Margules AC, Kundavaram CR, Narins H, Gomella LG, Trabulsi EJ, Lallas CD (2012) Face, content, and construct validation of the da Vinci skills simulator. Urology 79(5):1068–1072

Ghanem A, Podolsky DJ, Fisher DM, Wong RKW, Myers S, Drake JM, Forrest CR (2019) Economy of hand motion during cleft palate surgery using a high-fidelity cleft palate simulator. Cleft Palate Craniofac J 56(4):432–437

Applebaum MA, Doren EL, Ghanem AM, Myers SR, Harrington M, Smith DJ (2018) Microsurgery competency during plastic surgery residency: an objective skills assessment of an integrated residency training program. Eplasty 18:e25

Beard J, Jolly B, Newble D, Thomas WEG, Donnelly J, Southgate LJ (2005) Assessing the technical skills of surgical trainees. John Wiley & Sons Ltd.

Goderstad JM, Sandvik L, Fosse E, Lieng M (2016) Assessment of surgical competence: development and validation of rating scales used for laparoscopic supracervical hysterectomy. J Surg Educ 73:4

Balasundaram I, Aggarwal R, Darzi LA (2010) Development of a training curriculum for microsurgery. Br J Oral Maxillofac Surg 48(8):598–606

Bettega G, Ochala C, Hitier M, Hamou C, Guillermet S, Gayet P, Coll J-L (2015) Fluorescent angiography for flap planning and monitoring in reconstructive surgery. F.D. Dip et al. (eds.), Fluorescence Imaging for Surgeons: Concepts and Applications. Springer International Publishing Switzerland. https://doi.org/10.1007/978-3-319-15678-1_32

Hamaoui K, Saadeddin M, Sadideen H (2014) Surgical skills training: time to start early. Clinical Teacher. [online] 11(3):179–183

Hennessey IAM, Hewett P (2013) Construct, concurrent, and content validity of the eoSim laparoscopic simulator. J Laparoendosc Adv Surg Tech A 23(10):855

Gröne J, Lauscher J, Buhr H, Ritz J (2010) Face, content and construct validity of a new realistic trainer for conventional techniques in digestive surgery. Langenbeck's Arch Surg 395(5):581–588

Gavazzi A, Bahsoun AN, Van Haute W, Ahmed K, Elhage O, Jaye P, Khan MS, Dasgupta P (2011) Face, content and construct validity of a virtual reality simulator for robotic surgery (SEP robot). Ann R Coll Surg Engl 93(2):152–156

Seixas-Mikelus S, Stegemann AP, Kesavadas T, Srimathveeravalli G, Sathyaseelan G, Chandrasekhar R, Wilding GE, Peabody JO, Guru KA (2011) Content validation of a novel robotic surgical simulator. BJU Int 107(7):1130

Kang SG, Cho S, Kang SH, Haidar AM, Samavedi S, Palmer KJ, Patel VR, Cheon J (2014) The tube 3 module designed for practicing vesicourethral anastomosis in a virtual reality robotic simulator: determination of face, content, and construct validity. Urology 84:345–350

Ramachandran S, Ghanem AM, Myers SR (2013) Assessment of microsurgery competency-where are we now?: assessment of microsurgery competency. Microsurgery 33(5):406–415

Ghanem A, Pafitanis G, Myers S, Kearns M, Ballestin A, Froschauer S, Akelina Y et al (2020) International microsurgery simulation society (IMSS) consensus statement on the minimum standards for a basic microsurgery course, requirements for a microsurgical anastomosis global rating scale and minimum thresholds for training. Injury S0020-1383(20)30078-4. https://doi.org/10.1016/j.injury.2020.02.004

Varvares MA, Lin D, Hadlock T, Azzizadeh B, Gliklich R, Rounds M, Rocco J et al (2005) Success of multiple, sequential, Free Tissue Transfers to the Head and Neck. Laryngoscope 115(1):101–104

Grober ED, Hamstra SJ, Wanzel KR, Reznick RK, Matsumoto ED, Sidhu RS, Jarvi KA (2003) Validation of novel and objective measures of microsurgical skill: hand-motion analysis and stereoscopic visual acuity. Microsurgery 23(4):317–322

Moulton C-A E, Dubrowski A, MacRae H, Graham B, Grober E, Reznick R (2006) Teaching surgical skills: what kind of practice makes perfect?: a randomized, controlled trial. Ann Surg 244(3):400–409

Singh M (2012) The role of direct current high frequency electromagnetic hand motion tracking as an objective assessment tool in microsurgical training. MSc. Imperial College London

Arkes HR (1981) Impediments to accurate clinical judgment and possible ways to minimize their impact. J Consult Clin Psychol 49(3):323–330

Baumann AO, Deber RB, Thompson GG (1991) Overconfidence among physicians and nurses: the ‘micro-certainty, macro-uncertainty’ phenomenon. Soc Sci Med 32(2):167–174

Ethics declarations

Conflict of interest

Maha Wagdy Hamada, Giorgios Pafitanis, Alex Nistor, Youn Hwan Kim, Simon Myers, and Ali Ghanem declare that they have no conflict of interest.

Ethical approval

Romania and Greece are both members of the Federation of Laboratory Animal Science Associations (FELASA). The organization was established in 1978 and supports animal welfare through responsible clinical research (Felasa, 2015). All use of animals was in agreement with the policies described in the Guide for the Care and Use of Laboratory Animals.

Funding

None.

Additional information

Part of this data was included in M.Sc. Dissertation project 2015 at Imperial College London, London, UK, by Ms. Maha Wagdy Hamada, under supervision of Mr. Ali Ghanem.

Appendices

Appendix 1

Checklist (excerpt)

Appendix 2

Procedure-specific rating scale

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hamada, M.W., Pafitanis, G., Nistor, A. et al. Validation of an in vivo porcine simulation model of pedicled latissimus dorsi myocutaneous flap elevation. Eur J Plast Surg 44, 65–74 (2021). https://doi.org/10.1007/s00238-020-01734-9

DOI: https://doi.org/10.1007/s00238-020-01734-9