Abstract

This paper introduces the counterpart of strong bisimilarity for labelled transition systems extended with timeout transitions. It supports this concept through a modal characterisation, congruence results for a standard process algebra with recursion, and a complete axiomatisation.

1 Introduction

This is a contribution to classic untimed non-probabilistic process algebra, modelling systems that move from state to state by performing discrete, uninterpreted actions. A system is modelled as a process-algebraic expression, whose standard semantics is a state in a labelled transition system (LTS). An LTS consists of a set of states, with action-labelled transitions between them. The execution of an action is assumed to be instantaneous, so when any time elapses the system must be in one of its states. With “untimed” I mean that I will refrain from quantifying the passage of time; however, whether a system can pause in some state or not will be part of my model.

Following [33], I consider reactive systems that interact with their environments through the synchronous execution of visible actions \(a, b, c, \ldots \) taken from an alphabet A. At any time, the environment allows a set of actions \(X\subseteq A\), while blocking all other actions. At discrete moments, the environment can change the set of actions it allows. In a metaphor from [33], the environment of a system can be seen as a user interacting with it. This user has a button for each action \(a\in A\), on which it can exercise pressure. When the user exercises pressure and the system is in a state where it can perform action a, the action occurs. For the system, this involves taking an a-labelled transition to a following state; for the environment it entails the button going down, thus making the action occurrence observable. This can trigger the user to alter the set of buttons on which it exercises pressure.

The current paper considers two special actions that can occur as transition labels: the traditional hidden action \(\tau \) [33], modelling the occurrence of an instantaneous action from which we abstract, and the timeout action \(\mathrm{t}\), modelling the end of a time-consuming activity from which we abstract. The latter was introduced in [18] and constitutes the main novelty of the present paper with respect to [33] and forty years of research in process algebra. Both special actions are assumed to be unobservable, in the sense that their occurrence cannot trigger any state-change in the environment. Conversely, the environment cannot cause or block the occurrence of these actions.

Following [18], I model the passage of time in the following way. When a system arrives in a state P, and at that time X is the set of actions allowed by the environment, there are two possibilities. If P has an outgoing transition \(P \xrightarrow{\alpha } Q\) with \(\alpha \in X\cup \{\tau \}\), the system immediately takes one of the outgoing transitions \(P \xrightarrow{\alpha } Q\) with \(\alpha \in X\cup \{\tau \}\), without spending any time in state P. The choice between these actions is entirely nondeterministic. The system cannot immediately take a transition \(P \xrightarrow{b} Q\) with \(b\in A{\setminus }X\), because the action b is blocked by the environment. Neither can it immediately take a transition \(P \xrightarrow{\mathrm{t}} Q\), because such transitions model the end of an activity with a finite but positive duration that started when reaching state P.

In case P has no outgoing transition \(P \xrightarrow{\alpha } Q\) with \(\alpha \in X\cup \{\tau \}\), the system idles in state P for a positive amount of time. This idling can end in two possible ways. Either one of the timeout transitions \(P \xrightarrow{\mathrm{t}} Q\) occurs, or the environment spontaneously changes the set of actions it allows into a different set Y with the property that \(P \xrightarrow{a} Q\) for some \(a \in Y\). In the latter case, a transition \(P \xrightarrow{a} Q\) occurs, with \(a \in Y\). The choice between the various ways to end a period of idling is entirely nondeterministic. It is possible to stay forever in state P only if there are no outgoing timeout transitions \(P \xrightarrow{\mathrm{t}} Q\).
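
The two possibilities described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `possible_moves`, the string labels `"tau"` and `"t"` for the two special actions, and the representation of a state by its set of outgoing `(label, target)` pairs are all hypothetical choices, and visible actions are assumed not to be named `"tau"` or `"t"`.

```python
TAU = "tau"      # label assumed for the hidden action
TIMEOUT = "t"    # label assumed for the timeout action


def possible_moves(outgoing, allowed):
    """Classify what a state can do on arrival.

    outgoing: set of (label, target) pairs leaving the state
    allowed:  the set X of visible actions the environment allows
    """
    immediate = {(label, target) for (label, target) in outgoing
                 if label == TAU or (label != TIMEOUT and label in allowed)}
    if immediate:
        # An allowed visible action or a hidden action exists:
        # one of these is taken without spending time in the state.
        return "immediate", immediate
    # Otherwise the state idles; a timeout is one way the idling can end
    # (the other way, a change of environment, is not modelled here).
    timeouts = {(label, target) for (label, target) in outgoing
                if label == TIMEOUT}
    return "idle", timeouts


# A state with an a-transition and a timeout transition; the target
# names "P1" and "P2" are placeholders.
example = {("a", "P1"), (TIMEOUT, "P2")}
```

With this sketch, `possible_moves(example, {"a"})` classifies the state as taking the a-transition immediately, whereas `possible_moves(example, set())` classifies it as idling with the timeout as a possible way out. An idle state with an empty set of timeouts can stay put forever unless the environment changes.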

The addition of timeouts enhances the expressive power of LTSs and process algebras. The process \(a.P + \mathrm{t}.b.Q\), for instance, models a choice between a.P and b.Q where the former has priority. In an environment where a is allowed it will always choose a.P and never b.Q; but in an environment that blocks a the process will, after some delay, proceed with b.Q. Such a priority mechanism cannot be modelled in standard process algebras without timeouts, such as CCS [33], CSP [6, 28] and ACP [2, 10]. Additionally, mutual exclusion cannot be correctly modelled in any of these standard process algebras [20], but adding timeouts makes it possible—see Sect. 11 for a more precise statement.

In [18], I characterised the coarsest reasonable semantic equivalence on LTSs with timeouts, namely the one induced by may testing, as proposed by De Nicola and Hennessy [8]. In the absence of timeouts, may testing yields weak trace equivalence, where two processes are defined equivalent iff they have the same weak traces: sequences of actions the system can perform, while eliding hidden actions. In the presence of timeouts weak trace equivalence fails to be a congruence for common process algebraic operators, and may testing yields its congruence closure, characterised in [18] as (rooted) failure trace equivalence.

The present paper aims to characterise one of the finest reasonable semantic equivalences on LTSs with timeouts: the counterpart of strong bisimilarity for LTSs without timeouts. Naturally, strong bisimilarity can be applied verbatim to LTSs with timeouts (and has been in [18]) by treating \(\mathrm{t}\) exactly like any visible action. Here, however, I aim to take into account the essence of timeouts, and propose an equivalence that satisfies some natural laws discussed in [18], such as \(\tau .P + \mathrm{t}.Q = \tau .P\) and \(a.P + \mathrm{t}.(Q + \tau .R + a.S) = a.P + \mathrm{t}.(Q + \tau .R)\). To motivate the last law, note that the timeout transition \(a.P + \mathrm{t}.(Q + \tau .R + a.S) \xrightarrow{\mathrm{t}} Q + \tau .R + a.S\) can occur only in an environment that blocks the action a, for otherwise a would have taken place before the timeout went off. The occurrence of this transition is not observable by the environment, so right afterwards the state of the environment is unchanged, and the action a is still blocked. Therefore, the process \(Q + \tau .R + a.S\) will, without further ado, proceed with the \(\tau \)-transition to R, or any action from Q, just as if the a.S summand were not present.

Standard process algebras and LTSs without timeouts can model systems whose behaviour is triggered by input signals from the environment in which they operate. This is why they are called “reactive systems”. By means of timeouts, one can additionally model systems whose behaviour is triggered by the absence of input signals from the environment, during a sufficiently long period. This creates a greater symmetry between a system and its environment, as it has always been understood that the environment or user of a system can change its behaviour as a result of sustained inactivity of the system it is interacting with. Hence, one could say that process algebras and LTSs enriched with timeouts form a more faithful model of reactivity. It is for this reason that I use the name reactive bisimilarity for the appropriate form of bisimilarity on systems modelled in this fashion.

Section 2 introduces strong reactive bisimilarity as the proper counterpart of strong bisimilarity in the presence of timeout transitions. Naturally, it coincides with strong bisimilarity when there are no timeout transitions. Section 3 derives a modal characterisation; a reactive variant of the Hennessy–Milner logic. Section 4 offers an alternative characterisation of strong reactive bisimilarity that will be more convenient in proofs, although it lacks the intuitive appeal to be used as the initial definition. Appendix C, reporting on work by Max Pohlmann [37], offers yet another characterisation of strong reactive bisimilarity; one that reduces it to strong bisimilarity in a context that models a system together with its environment.

Section 5 recalls the process algebra CCSP, a common mix of CCS and CSP, and adds the timeout action, as well as two auxiliary operators that will be used in the forthcoming axiomatisation. Section 6 states that in this process algebra one can express all countably branching transition systems, and only those, or all and only the finitely branching ones when restricting to guarded recursion.

Section 7 recalls the concept of a congruence, focusing on the congruence property for the recursion operator, which is commonly the hardest to establish. It then shows that the simple initials equivalence, as well as Milner’s strong bisimilarity, are congruences. Due to the presence of negative premises in the operational rules for the auxiliary operators, these proofs are not entirely trivial. Using these results as a stepping stone, Sect. 8 shows that strong reactive bisimilarity is a congruence for my extension of CCSP. Here, the congruence property for one of the auxiliary operators with negative premises is needed in establishing the result for the common CCSP operators, such as parallel composition.

Section 9 shows that guarded recursive specifications have unique solutions up to strong reactive bisimilarity. Using this, Sect. 10 provides a sound and complete axiomatisation for processes with guarded recursion. My completeness proof combines three innovations in establishing completeness of process algebraic axiomatisations. First of all, following [22], it applies to all processes in a Turing powerful language like guarded CCSP, rather than the more common fragment merely employing finite sets of recursion equations featuring only choice and action prefixing. Secondly, instead of the classic technique of merging guarded recursive equations [11, 30,31,32, 40], which in essence proves two bisimilar systems P and Q equivalent by equating both to an intermediate variant that is essentially a product of P and Q, I employ the novel method of canonical solutions [24, 29], which equates both P and Q to a canonical representative within the bisimulation equivalence class of P and Q—one that has only one reachable state for each bisimulation equivalence class of states of P and Q. In fact I tried so hard, and in vain, to apply the traditional technique of merging guarded recursive equations, that I came to believe that it fundamentally does not work for this axiomatisation. The third innovation is the use of the axiom of choice [41] in defining the transition relation on my canonical representative, in order to keep this process finitely branching.

Section 11 describes a worthwhile gain in expressiveness caused by the addition of timeouts, and presents an agenda for future work.

2 Reactive bisimilarity

A labelled transition system (LTS) is a triple \((\mathbb {P}, Act, \rightarrow )\) with \(\mathbb {P}\) a set (of states or processes), Act a set (of actions) and \({\rightarrow } \subseteq \mathbb {P}\times Act \times \mathbb {P}\). In this paper, I consider LTSs with \(Act:= A\uplus \{\tau ,\mathrm{t}\}\), where A is a set of visible actions, \(\tau \) is the hidden action, and \(\mathrm{t}\) the timeout action. The set of initial actions of a process \(P\in \mathbb {P}\) is \(\mathcal {I}(P):=\{\alpha \in A\cup \{\tau \} \mid P \xrightarrow{\alpha }\}\). Here \(P \xrightarrow{\alpha }\) means that there is a Q with \(P \xrightarrow{\alpha } Q\).
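
As a small illustration, an LTS can be held as a set of `(source, label, target)` triples, from which the initial actions are read off directly. This is a sketch under assumptions: the names `initials` and `can_idle` and the labels `"tau"` and `"t"` are hypothetical, and the timeout label is assumed not to count as an initial action, which is how \(\mathcal {I}(P)\) is used in the remainder of this section.

```python
TAU = "tau"      # label assumed for the hidden action
TIMEOUT = "t"    # label assumed for the timeout action


def initials(transitions, p):
    """Initial actions of p, drawn from A and tau; timeout labels are skipped."""
    return {label for (source, label, _target) in transitions
            if source == p and label != TIMEOUT}


def can_idle(transitions, p, allowed):
    """True iff I(p) is disjoint from X united with {tau}, for X = allowed."""
    return not (initials(transitions, p) & (set(allowed) | {TAU}))


# A toy LTS: s can do a or time out to u; u can do b; v can do tau.
EXAMPLE = {("s", "a", "p"), ("s", TIMEOUT, "u"), ("u", "b", "q"), ("v", TAU, "p")}
```

Here `initials(EXAMPLE, "s")` contains only `"a"`, so `s` can idle in an environment that blocks `a` but not in one that allows it, while `v` can never idle because of its `tau`-transition.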

Definition 1

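A strong reactive bisimulation is a symmetric relation \(\mathscr {R} \subseteq (\mathbb {P}\times \mathscr {P}(A) \times \mathbb {P}) \cup (\mathbb {P}\times \mathbb {P})\) (meaning that \((P,X,Q)\in \mathscr {R} \Leftrightarrow (Q,X,P)\in \mathscr {R}\) and \((P,Q)\in \mathscr {R} \Leftrightarrow (Q,P)\in \mathscr {R}\)), such that,

- if \((P,Q)\in \mathscr {R}\) and \(P \xrightarrow{\tau } P'\), then there exists a \(Q'\) such that \(Q \xrightarrow{\tau } Q'\) and \((P',Q')\in \mathscr {R}\),

- if \((P,Q)\in \mathscr {R}\) then \((P,X,Q)\in \mathscr {R}\) for all \(X\subseteq A\),

and for all \((P,X,Q)\in \mathscr {R}\),

- if \(P \xrightarrow{a} P'\) with \(a\in X\), then there exists a \(Q'\) such that \(Q \xrightarrow{a} Q'\) and \((P',Q')\in \mathscr {R}\),

- if \(P \xrightarrow{\tau } P'\), then there exists a \(Q'\) such that \(Q \xrightarrow{\tau } Q'\) and \((P',X,Q')\in \mathscr {R}\),

- if \(\mathcal {I}(P)\cap (X\cup \{\tau \})=\emptyset \), then \((P,Q)\in \mathscr {R}\), and

- if \(\mathcal {I}(P)\cap (X\cup \{\tau \})=\emptyset \) and \(P \xrightarrow{\mathrm{t}} P'\), then \(\exists Q'\) such that \(Q \xrightarrow{\mathrm{t}} Q'\) and \((P',X,Q')\in \mathscr {R}\).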

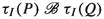

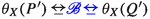

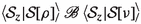

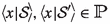

Processes \(P,Q\in \mathbb {P}\) are strongly X-bisimilar, denoted \(P \mathrel{\underline{\leftrightarrow }_r^X} Q\), if \((P,X,Q)\in \mathscr {R}\) for some strong reactive bisimulation \(\mathscr {R}\). They are strongly reactive bisimilar, denoted \(P \mathrel{\underline{\leftrightarrow }_r} Q\), if \((P,Q)\in \mathscr {R}\) for some strong reactive bisimulation \(\mathscr {R}\).

Intuitively, \((P,X,Q)\in \mathscr {R}\) says that processes P and Q behave the same way, as witnessed by the relation \(\mathscr {R}\), when placed in the environment X (meaning any environment that allows exactly the actions in X to occur), whereas \((P,Q)\in \mathscr {R}\) says they behave the same way in an environment that has just been triggered to change. An environment can be thought of as an unknown process placed in parallel with P and Q, using the operator \({\Vert ^{}_{A}}\), enforcing synchronisation on all visible actions. The environment X can be seen as a process \(\sum _{i\in I}a_i.R_i + \mathrm{t}.R\) where \(\{a_i \mid i\in I\} = X\). A triggered environment, on the other hand, can execute a sequence of instantaneous hidden actions before stabilising as an environment Y, for \(Y\subseteq A\). During this execution, actions can be blocked and allowed in rapid succession. Since the environment is unknown, the bisimulation should be robust under any such environment.

The first clause for \((P,X,Q)\in \mathscr {R}\) is like the common transfer property of strong bisimilarity [33]: a visible a-transition of P can be matched by one of Q, such that the resulting processes \(P'\) and \(Q'\) are related again. However, I require it only for actions \(a\in X\), because actions \(b\in A{\setminus }X\) cannot happen at all in the environment X, and thus need not be matched by Q. Since the occurrence of a is observable by the environment, this can trigger the environment to change the set of actions it allows, so \(P'\) and \(Q'\) ought to be related in a triggered environment.

The second clause is the transfer property for \(\tau \)-transitions. Since these are not observable by the environment, they cannot trigger a change in the set of actions allowed by it, so the resulting processes \(P'\) and \(Q'\) should be related only in the same environment X.

The first clause for \((P,Q)\in \mathscr {R}\) expresses the transfer property for \(\tau \)-transitions in a triggered environment. Here, it may happen that the \(\tau \)-transition occurs before the environment stabilises, and hence \(P'\) and \(Q'\) will still be related in a triggered environment. A similar transfer property for a-transitions is already implied by the next two clauses.

The second clause allows a triggered environment to stabilise into any environment X.

The first two clauses for \((P,X,Q)\in \mathscr {R}\) imply that if \((P,X,Q)\in \mathscr {R}\) then \(\mathcal {I}(P)\cap (X\cup \{\tau \}) = \mathcal {I}(Q) \cap (X\cup \{\tau \})\). So \((P,Q)\in \mathscr {R}\) implies \(\mathcal {I}(P)=\mathcal {I}(Q)\). The condition \(\mathcal {I}(P)\cap (X\cup \{\tau \})=\emptyset \) is necessary and sufficient for the system to remain a positive amount of time in state P when X is the set of allowed actions. The next clause says that during this time the environment may be triggered to change the set of actions it allows by an event outside our model, that is, by a timeout in the environment. So P and Q should be related in a triggered environment.

The last clause says that also a \(\mathrm{t}\)-transition of P should be matched by one of Q. Naturally, the \(\mathrm{t}\)-transition of P can be taken only when the system is idling in P, i.e. when \(\mathcal {I}(P)\cap (X\cup \{\tau \})=\emptyset \). The resulting processes \(P'\) and \(Q'\) should be related again, but only in the same environment allowing X.
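
For a finite LTS with a small alphabet, the six clauses as explained above can be checked mechanically. The following Python sketch is not taken from the paper; it is a naive greatest-fixpoint computation that starts from all pairs and all triples and deletes elements violating a clause until nothing changes. The function name `reactive_bisimilarity`, the labels `"tau"` and `"t"`, and the encoding of the LTS as a set of `(source, label, target)` triples are assumptions made for illustration; visible actions are assumed not to be named `"tau"` or `"t"`. The enumeration of all \(X\subseteq A\) makes the procedure exponential in the size of A.

```python
from itertools import combinations

TAU = "tau"      # label assumed for the hidden action
TIMEOUT = "t"    # label assumed for the timeout action


def reactive_bisimilarity(states, alphabet, transitions):
    """Return (pairs, triples): the largest symmetric relation closed under
    the six clauses, computed by deleting violating elements until stable."""
    def succ(p, label):
        return {q for (s, a, q) in transitions if s == p and a == label}

    def idle(p, X):
        init = {a for (s, a, _q) in transitions if s == p and a != TIMEOUT}
        return not (init & (X | {TAU}))

    letters = sorted(alphabet)
    envs = [frozenset(c) for n in range(len(letters) + 1)
            for c in combinations(letters, n)]
    pairs = {(p, q) for p in states for q in states}
    triples = {(p, X, q) for p in states for q in states for X in envs}

    def related(p, X, q):
        # X is None for a pair (triggered environment), else a triple.
        return (p, q) in pairs if X is None else (p, X, q) in triples

    def matched(p, q, label, X):
        # Every label-step of p is answered by a label-step of q.
        return all(any(related(p2, X, q2) for q2 in succ(q, label))
                   for p2 in succ(p, label))

    def pair_ok(p, q):
        return (matched(p, q, TAU, None)
                and all(related(p, X, q) for X in envs))

    def triple_ok(p, X, q):
        if not all(matched(p, q, a, None) for a in X):
            return False
        if not matched(p, q, TAU, X):
            return False
        if idle(p, X):
            return related(p, None, q) and matched(p, q, TIMEOUT, X)
        return True

    changed = True
    while changed:
        changed = False
        for (p, q) in list(pairs):
            if not (pair_ok(p, q) and pair_ok(q, p)):
                pairs.discard((p, q))
                changed = True
        for (p, X, q) in list(triples):
            if not (triple_ok(p, X, q) and triple_ok(q, X, p)):
                triples.discard((p, X, q))
                changed = True
    return pairs, triples


# Instance of the law tau.P + t.Q = tau.P with P = 0 and Q = b.0.
STATES = {"L", "R", "0", "b0"}
TRANS = {("L", TAU, "0"), ("L", TIMEOUT, "b0"), ("b0", "b", "0"), ("R", TAU, "0")}
PAIRS, TRIPLES = reactive_bisimilarity(STATES, {"b"}, TRANS)
```

In this instance the pair `("L", "R")` survives, reflecting that the timeout branch of `L` can never fire, whereas `("b0", "0")` is deleted because the environment `{"b"}` distinguishes the two states. Checking both directions of every element keeps the computed relation symmetric, as the definition requires.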

Proposition 2

Strong X-bisimilarity and strong reactive bisimilarity are equivalence relations.

Proof

\(\underline{\leftrightarrow }_r^X\) and \(\underline{\leftrightarrow }_r\) are reflexive, as \(\{(P,X,P),(P,P) \mid P\in \mathbb {P} \wedge X\subseteq A\}\) is a strong reactive bisimulation.

\(\underline{\leftrightarrow }_r^X\) and \(\underline{\leftrightarrow }_r\) are symmetric, since strong reactive bisimulations are symmetric by definition.

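\(\underline{\leftrightarrow }_r^X\) and \(\underline{\leftrightarrow }_r\) are transitive, for if \(\mathscr {R}\) and \(\mathscr {S}\) are strong reactive bisimulations, then so is \((\mathscr {R};\mathscr {S}) \cup (\mathscr {S};\mathscr {R})\), where

\[\mathscr {R};\mathscr {S} := \{(P,R) \mid \exists Q.~ (P,Q)\in \mathscr {R} \wedge (Q,R)\in \mathscr {S}\} \cup \{(P,X,R) \mid \exists Q.~ (P,X,Q)\in \mathscr {R} \wedge (Q,X,R)\in \mathscr {S}\}.\]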

\(\square \)

Note that the union of arbitrarily many strong reactive bisimulations is itself a strong reactive bisimulation. Therefore, the family of relations \(\underline{\leftrightarrow }_r\), \(\underline{\leftrightarrow }_r^X\) for \(X\subseteq A\) can be seen as a strong reactive bisimulation.

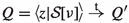

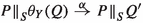

To get a firm grasp on strong reactive bisimilarity, the reader is invited to check the two laws mentioned in the Introduction, and then to construct a strong reactive bisimulation between the two systems depicted in Fig. 1. Here, P, Q, R and S are arbitrary subprocesses.

The four processes that are targets of \(\mathrm{t}\)-transitions always run in an environment that blocks b. In an environment that allows a, the branch b.R disappears, so that the left branch of the first process can be matched with the left branch of the second process, and similarly for the two right branches. In an environment that blocks a, this matching won’t fly, as the branch b.R now survives. However, the branches a.Q will disappear, so that the left branch of the first process can be matched with the right branch of the second, and vice versa.

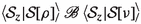

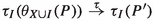

The processes U and V of Fig. 2 show that the pairs that occur in a strong reactive bisimulation are not completely determined by the triples. One has \(U \mathrel{\underline{\leftrightarrow }_r^X} V\) for any \(X\subseteq A\), yet U and V are not strongly reactive bisimilar. In particular, when \(a \in X\) the branch \(\mathrm{t}.R\) is redundant, and when \(a \notin X\) the branch a.Q is redundant.

Appendix C, reporting on work by Max Pohlmann [37], offers a context with the property that two processes are strongly reactive bisimilar iff they are strongly bisimilar when placed in that context, thereby reducing strong reactive bisimilarity to strong bisimilarity. The context places a system in a most general environment in which it could be running. As a result, any toolset for checking strong bisimilarity can also be used for checking strong reactive bisimilarity.

2.1 A more general form of reactive bisimulation

The following notion of a generalised strong reactive bisimulation (gsrb) generalises that of a strong reactive bisimulation; yet it induces the same concept of strong reactive bisimilarity. This makes the relation convenient to use for further analysis. I did not introduce it as the original definition, because it lacks a strong motivation.

Definition 3

A gsrb is a symmetric relation \(\mathscr {R} \subseteq (\mathbb {P}\times \mathscr {P}(A) \times \mathbb {P}) \cup (\mathbb {P}\times \mathbb {P})\) such that, for all \((P,Q)\in \mathscr {R}\),

- if \(P \xrightarrow{\alpha } P'\) with \(\alpha \in A\cup \{\tau \}\), then there exists a \(Q'\) such that \(Q \xrightarrow{\alpha } Q'\) and \((P',Q')\in \mathscr {R}\),

- if \(\mathcal {I}(P)\cap (X\cup \{\tau \})=\emptyset \) with \(X\subseteq A\) and \(P \xrightarrow{\mathrm{t}} P'\), then \(\exists Q'\) with \(Q \xrightarrow{\mathrm{t}} Q'\) and \((P',X,Q')\in \mathscr {R}\),

and for all \((P,Y,Q)\in \mathscr {R}\),

- if \(P \xrightarrow{a} P'\) with either \(a\in Y\) or \(\mathcal {I}(P)\cap (Y\cup \{\tau \})=\emptyset \), then \(\exists Q'\) with \(Q \xrightarrow{a} Q'\) and \((P',Q')\in \mathscr {R}\),

- if \(P \xrightarrow{\tau } P'\), then there exists a \(Q'\) such that \(Q \xrightarrow{\tau } Q'\) and \((P',Y,Q')\in \mathscr {R}\),

- if \(\mathcal {I}(P)\cap (X\cup Y \cup \{\tau \})=\emptyset \) with \(X\subseteq A\) and \(P \xrightarrow{\mathrm{t}} P'\), then \(\exists Q'\) with \(Q \xrightarrow{\mathrm{t}} Q'\) and \((P',X,Q')\in \mathscr {R}\).

Unlike Definition 1, a gsrb needs the triples (P, X, Q) only after encountering a \(\mathrm{t}\)-transition; two systems without \(\mathrm{t}\)-transitions can be related without using these triples at all.

Proposition 4

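\(P \mathrel{\underline{\leftrightarrow }_r} Q\) iff there exists a gsrb \(\mathscr {R}\) with \((P,Q)\in \mathscr {R}\).

Likewise, \(P \mathrel{\underline{\leftrightarrow }_r^X} Q\) iff there exists a gsrb \(\mathscr {R}\) with \((P,X,Q)\in \mathscr {R}\).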

Proof

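Clearly, each strong reactive bisimulation satisfies the five clauses of Definition 3 and thus is a gsrb. In the other direction, given a gsrb \(\mathscr {B}\), let

\[\mathscr {R} := \mathscr {B} \cup \{(P,X,Q) \mid (P,Q)\in \mathscr {B} \wedge X\subseteq A\} \cup \{(P,Q),(P,X,Q) \mid (P,Y,Q)\in \mathscr {B} \wedge \mathcal {I}(P)\cap (Y\cup \{\tau \})=\emptyset \wedge X\subseteq A\}.\]

It is straightforward to check that \(\mathscr {R}\) satisfies the six clauses of Definition 1. \(\square \)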

The above proof has been formalised in [37], using the interactive proof assistant Isabelle. The formalisation takes up around 250 lines of code.

3 A modal characterisation of strong reactive bisimilarity

The Hennessy–Milner logic [27] expresses properties of the behaviour of processes in an LTS.

Definition 5

The class \(\mathbb {O}\) of infinitary HML formulas is defined as follows, where I ranges over all index sets and \(\alpha \) over \(A\cup \{\tau \}\):

\[\varphi ~::=~ \bigwedge _{i\in I}\varphi _i ~\mid ~ \lnot \varphi ~\mid ~ \langle \alpha \rangle \varphi \]

\(\top \) abbreviates the empty conjunction, and \(\varphi _1\wedge \varphi _2\) stands for \(\bigwedge _{i\in \{1,2\}}\varphi _i\).

\(P\models \varphi \) denotes that process P satisfies formula \(\varphi \). The first two operators represent the standard Boolean operators conjunction and negation. By definition, \(P\models \langle \alpha \rangle \varphi \) iff \(P \xrightarrow{\alpha } P'\) for some \(P'\) with \(P'\models \varphi \).

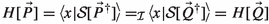

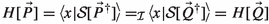

A famous result stemming from [27] states that

\[P \mathrel{\underline{\leftrightarrow }} Q ~~\Leftrightarrow ~~ \forall \varphi \in \mathbb {O}.~ (P \models \varphi \Leftrightarrow Q \models \varphi )\]

where \(\underline{\leftrightarrow }\) denotes strong bisimilarity [27, 33], formally defined in Sect. 7.2. In other words, the Hennessy–Milner logic yields a modal characterisation of strong bisimilarity. I will now adapt this result to obtain a modal characterisation of strong reactive bisimilarity.

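To this end, I extend the Hennessy–Milner logic with a new modality \(\langle X\rangle \), for \(X\subseteq A\), and auxiliary satisfaction relations \(\models _X\) for each \(X \subseteq A\). The formula \(P \models \langle X\rangle \varphi \) says that in an environment X, allowing exactly the actions in X, process P can perform a timeout transition to a process that satisfies \(\varphi \). \(P \models _X \varphi \) says that P satisfies \(\varphi \) when placed in environment X. The relations \(\models \) and \(\models _X\) are the smallest ones satisfying:

\[\begin{array}{lll}
P \models \bigwedge _{i\in I}\varphi _i & \text{if} & \forall i\in I.~ P \models \varphi _i\\
P \models \lnot \varphi & \text{if} & P \not \models \varphi \\
P \models \langle \alpha \rangle \varphi \text{ with } \alpha \in A\cup \{\tau \} & \text{if} & \exists P'.~ P \xrightarrow{\alpha } P' \wedge P' \models \varphi \\
P \models \langle X\rangle \varphi \text{ with } X\subseteq A & \text{if} & \mathcal {I}(P)\cap (X\cup \{\tau \})=\emptyset \wedge \exists P'.~ P \xrightarrow{\mathrm{t}} P' \wedge P' \models _X \varphi \\
P \models _X \bigwedge _{i\in I}\varphi _i & \text{if} & \forall i\in I.~ P \models _X \varphi _i\\
P \models _X \lnot \varphi & \text{if} & P \not \models _X \varphi \\
P \models _X \langle a\rangle \varphi \text{ with } a\in A & \text{if} & a\in X \wedge \exists P'.~ P \xrightarrow{a} P' \wedge P' \models \varphi \\
P \models _X \langle \tau \rangle \varphi & \text{if} & \exists P'.~ P \xrightarrow{\tau } P' \wedge P' \models _X \varphi \\
P \models _X \varphi & \text{if} & \mathcal {I}(P)\cap (X\cup \{\tau \})=\emptyset \wedge P \models \varphi
\end{array}\]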

Note that a formula \(\langle a\rangle \varphi \) is less often true under \(\models _X\) than under \(\models \), due to the side condition \(a \in X\). This reflects the fact that a cannot happen in an environment that blocks it. The last clause in the above definition reflects the fifth clause of Definition 1. If \(\mathcal {I}(P)\cap (X\cup \{\tau \}) = \emptyset \), then process P, operating in environment X, idles for a while, during which the environment can change. This ends the blocking of actions \(a \notin X\) and makes any formula valid under \(\models \) also valid under \(\models _X\).
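
The two satisfaction relations can be evaluated recursively on a finite LTS. The sketch below is only an illustration, not the paper's construction: it follows the clauses as they are used case by case in the proof of Theorem 7, restricted to finite conjunctions. Formulas are encoded as nested tuples, and the names `sat`, `sat_in`, the tags `"and"`, `"not"`, `"dia"`, `"env"` and the labels `"tau"` and `"t"` are all hypothetical.

```python
TAU = "tau"      # label assumed for the hidden action
TIMEOUT = "t"    # label assumed for the timeout action

TOP = ("and", ())  # the empty conjunction


def succ(trans, p, label):
    return {q for (s, a, q) in trans if s == p and a == label}


def idle(trans, p, X):
    init = {a for (s, a, _q) in trans if s == p and a != TIMEOUT}
    return not (init & (set(X) | {TAU}))


def sat(trans, p, phi):
    """P |= phi: satisfaction in a triggered environment."""
    kind = phi[0]
    if kind == "and":
        return all(sat(trans, p, f) for f in phi[1])
    if kind == "not":
        return not sat(trans, p, phi[1])
    if kind == "dia":                      # <alpha> psi, alpha in A or tau
        return any(sat(trans, q, phi[2]) for q in succ(trans, p, phi[1]))
    if kind == "env":                      # <X> psi
        X = phi[1]
        return idle(trans, p, X) and any(
            sat_in(trans, q, X, phi[2]) for q in succ(trans, p, TIMEOUT))
    raise ValueError(f"unknown formula: {phi!r}")


def sat_in(trans, p, X, phi):
    """P |=_X phi: satisfaction in an environment allowing exactly X."""
    if idle(trans, p, X) and sat(trans, p, phi):
        return True                        # idling lets the environment change
    kind = phi[0]
    if kind == "and":
        return all(sat_in(trans, p, X, f) for f in phi[1])
    if kind == "not":
        return not sat_in(trans, p, X, phi[1])
    if kind == "dia" and phi[1] == TAU:
        return any(sat_in(trans, q, X, phi[2]) for q in succ(trans, p, TAU))
    if kind == "dia":                      # visible action: must be allowed
        return phi[1] in X and any(
            sat(trans, q, phi[2]) for q in succ(trans, p, phi[1]))
    if kind == "env":
        return False                       # <Y> psi has no direct clause here
    raise ValueError(f"unknown formula: {phi!r}")


# s = a.0 + t.u with u = b.0
LTS = {("s", "a", "0"), ("s", TIMEOUT, "u"), ("u", "b", "0")}
AFTER_TIMEOUT_B = ("env", frozenset(), ("dia", "b", TOP))
```

For this toy system, `sat(LTS, "s", AFTER_TIMEOUT_B)` holds, because `s` idles when nothing is allowed and the timeout leads to a state that can do `b` once the environment changes. Replacing the empty set by `frozenset({"a"})` makes the formula fail, since the timeout cannot fire while `a` is allowed.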

Example 6

Both systems from Fig. 1 satisfy \(\langle \emptyset \rangle \langle \tau \rangle \langle b\rangle \top \wedge \langle \emptyset \rangle \langle \tau \rangle \lnot \langle b\rangle \top \wedge \langle \{a\}\rangle \langle a\rangle \top \wedge \langle \{a\}\rangle \lnot \langle a\rangle \top \) and neither satisfies  or  .

Theorem 7

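Let \(P,Q\in \mathbb {P}\) and \(X\subseteq A\). Then \(P \mathrel{\underline{\leftrightarrow }_r} Q ~\Leftrightarrow ~ \forall \varphi \in \mathbb {O}.~ (P \models \varphi \Leftrightarrow Q \models \varphi )\) and \(P \mathrel{\underline{\leftrightarrow }_r^X} Q ~\Leftrightarrow ~ \forall \varphi \in \mathbb {O}.~ (P \models _X \varphi \Leftrightarrow Q \models _X \varphi )\).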

Proof

“\(\Rightarrow \)”: I prove by simultaneous structural induction on \(\varphi \in \mathbb {O}\) that, for all \(P,Q\in \mathbb {P}\) and \(X\subseteq A\), \(P \mathrel{\underline{\leftrightarrow }_r} Q \wedge P \models \varphi ~\Rightarrow ~ Q \models \varphi \) and \(P \mathrel{\underline{\leftrightarrow }_r^X} Q \wedge P \models _X \varphi ~\Rightarrow ~ Q \models _X \varphi \). For each \(\varphi \), the converse implications (\(Q \models \varphi \Rightarrow P \models \varphi \) and \(Q \models _X \varphi \Rightarrow P \models _X \varphi \)) follow by symmetry. In particular, these converse directions may be used when invoking the induction hypothesis.

-

Let

.

.-

Let \(\varphi = \bigwedge _{i\in I}\varphi _i\). Then, \(P \models \varphi _i\) for all \(i\in I\). By induction \(Q \models \varphi _i\) for all i, so \(Q \models \bigwedge _{i\in I}\varphi _i\).

-

Let \(\varphi = \lnot \psi \). Then, \(P \not \models \psi \). By induction \(Q \not \models \psi \), so \(Q \models \lnot \psi \).

-

Let \(\varphi = \langle \alpha \rangle \psi \) with \(\alpha \in A\cup \{\tau \}\). Then,

for some \(P'\) with \(P' \models \psi \). By Definition 3,

for some \(P'\) with \(P' \models \psi \). By Definition 3,  for some \(Q'\) with

for some \(Q'\) with  . So by induction \(Q' \models \psi \), and thus \(Q \models \langle \alpha \rangle \psi \).

. So by induction \(Q' \models \psi \), and thus \(Q \models \langle \alpha \rangle \psi \). -

Let \(\varphi = \langle X\rangle \psi \) for some \(X\subseteq A\). Then, \(\mathcal {I}(P)\cap (X\cup \{\tau \}) = \emptyset \) and

for some \(P'\) with \(P' \models _X \psi \). By Definition 3,

for some \(P'\) with \(P' \models _X \psi \). By Definition 3,  for some \(Q'\) with

for some \(Q'\) with  . So by induction \(Q' \models _X \psi \). Moreover, \(\mathcal {I}(Q)=\mathcal {I}(P)\), as

. So by induction \(Q' \models _X \psi \). Moreover, \(\mathcal {I}(Q)=\mathcal {I}(P)\), as  , so \(\mathcal {I}(Q)\cap (X\cup \{\tau \}) = \emptyset \). Thus, \(Q \models \langle X\rangle \psi \).

, so \(\mathcal {I}(Q)\cap (X\cup \{\tau \}) = \emptyset \). Thus, \(Q \models \langle X\rangle \psi \).

-

-

Let

.

.-

Let \(\varphi = \bigwedge _{i\in I}\varphi _i\), and \(P \models _X \varphi _i\) for all \(i\in I\). By induction \(Q \models _X \varphi _i\) for all \(i\in I\), so \(q \models _X \bigwedge _{i\in I}\varphi _i\).

-

Let \(\varphi = \lnot \psi \), and \(P \not \models _X \psi \). By induction \(Q \not \models _X \psi \), so \(Q \models _X \lnot \psi \).

-

Let \(\varphi = \langle a\rangle \psi \) with \(a\in X\) and

for some \(P'\) with \(P' \models \psi \). By Definition 1,

for some \(P'\) with \(P' \models \psi \). By Definition 1,  for some \(Q'\) with

for some \(Q'\) with  . By induction \(Q' \models \psi \), so \(Q \models _X \langle a\rangle \psi \).

. By induction \(Q' \models \psi \), so \(Q \models _X \langle a\rangle \psi \). -

Let \(\varphi = \langle \tau \rangle \psi \), and \(P \xrightarrow{\tau} P'\) for some \(P'\) with \(P' \models _X \psi \). By Definition 1, \(Q \xrightarrow{\tau} Q'\) for some \(Q'\) with \(P' \mathrel{\underline{\leftrightarrow}_r^X} Q'\). By induction \(Q' \models _X \psi \), so \(Q \models _X \langle \tau \rangle \psi \).

-

Let \(\mathcal {I}(P)\cap (X\cup \{\tau \}) = \emptyset \) and \(P \models \varphi \). By the fifth clause of Definition 1, \(P \mathrel{\underline{\leftrightarrow}_r} Q\). Hence, by the previous case in this proof, \(Q \models \varphi \). Moreover, \(\mathcal {I}(Q)\cap (X\cup \{\tau \}) =\mathcal {I}(P) \cap (X\cup \{\tau \})\), since \(P \mathrel{\underline{\leftrightarrow}_r^X} Q\). Thus, \(Q \models _X \varphi \).

“\(\Leftarrow \)”: Write \(P \equiv Q\) for \(\forall \varphi .\, (P \models \varphi \Leftrightarrow Q \models \varphi )\), and \(P \equiv _X Q\) for \(\forall \varphi .\, (P \models _X \varphi \Leftrightarrow Q \models _X \varphi )\), where \(\varphi \) ranges over the formulas of the logic. I show that the family of relations \(\equiv \), \(\equiv _X\) for \(X\subseteq A\) constitutes a gsrb.

-

Suppose \(P \equiv Q\) and \(P \xrightarrow{\alpha} P'\) with \(\alpha \in A \cup \{\tau \}\). Let \(\mathcal {Q}^\dagger := \{Q^\dagger \mid Q \xrightarrow{\alpha} Q^\dagger \wedge P' \not\equiv Q^\dagger \}\). For each \(Q^\dagger \in \mathcal {Q}^\dagger \), let \(\varphi _{Q^\dagger }\) be a formula such that \(P'\models \varphi _{Q^\dagger }\) and \(Q^\dagger \not \models \varphi _{Q^\dagger }\). (Such a formula always exists because the logic is closed under negation.) Define \(\varphi := \bigwedge _{Q^\dagger \in \mathcal {Q}^\dagger } \varphi _{Q^\dagger }\). Then, \(P' \models \varphi \), so \(P \models \langle \alpha \rangle \varphi \). Consequently, also \(Q \models \langle \alpha \rangle \varphi \). Hence, there is a \(Q'\) with \(Q \xrightarrow{\alpha} Q'\) and \(Q' \models \varphi \). Since none of the \(Q^\dagger \in \mathcal {Q}^\dagger \) satisfies \(\varphi \), one obtains \(Q' \notin \mathcal {Q}^\dagger \) and thus \(P' \equiv Q'\).

-

Suppose \(P \equiv Q\), \(X\subseteq A\), \(\mathcal {I}(P)\cap (X\cup \{\tau \}) = \emptyset \) and \(P \xrightarrow{\mathrm{t}} P'\). Let

\[\mathcal {Q}^\dagger := \{Q^\dagger \mid Q \xrightarrow{\mathrm{t}} Q^\dagger \wedge P' \not\equiv _X Q^\dagger \}.\]

For each \(Q^\dagger \in \mathcal {Q}^\dagger \), let \(\varphi _{Q^\dagger }\) be a formula such that \(P'\models _X \varphi _{Q^\dagger }\) and \(Q^\dagger \not \models _X \varphi _{Q^\dagger }\). Define \(\varphi := \bigwedge _{Q^\dagger \in \mathcal {Q}^\dagger } \varphi _{Q^\dagger }\). Then, \(P' \models _X \varphi \), so \(P \models \langle X\rangle \varphi \). Consequently, also \(Q \models \langle X\rangle \varphi \). Hence, there is a \(Q'\) with \(Q \xrightarrow{\mathrm{t}} Q'\) and \(Q' \models _X \varphi \). Again, \(Q' \notin \mathcal {Q}^\dagger \) and thus \(P' \equiv _X Q'\).

-

Suppose \(P \equiv _Y Q\) and \(P \xrightarrow{a} P'\) with \(a\in A\) and either \(a\in Y\) or \(\mathcal {I}(P)\cap (Y\cup \{\tau \})=\emptyset \). Let \(\mathcal {Q}^\dagger := \{Q^\dagger \mid Q \xrightarrow{a} Q^\dagger \wedge P' \not\equiv Q^\dagger \}\). For each \(Q^\dagger \in \mathcal {Q}^\dagger \), let \(\varphi _{Q^\dagger }\) be a formula such that \(P'\models \varphi _{Q^\dagger }\) and \(Q^\dagger \not \models \varphi _{Q^\dagger }\). Define \(\varphi := \bigwedge _{Q^\dagger \in \mathcal {Q}^\dagger } \varphi _{Q^\dagger }\). Then, \(P' \models \varphi \), so \(P \models \langle a\rangle \varphi \), and also \(P \models _Y \langle a\rangle \varphi \), using either the third or last clause in the definition of \(\models _X\). Hence, also \(Q \models _Y \langle a\rangle \varphi \). Therefore, there is a \(Q'\) with \(Q \xrightarrow{a} Q'\) and \(Q' \models \varphi \), using the third clause of either \(\models _X\) or \(\models \). Since none of the \(Q^\dagger \in \mathcal {Q}^\dagger \) satisfies \(\varphi \), one obtains \(Q' \notin \mathcal {Q}^\dagger \) and thus \(P' \equiv Q'\).

-

The fourth clause of Definition 3 is obtained exactly like the first, but using \(\models _Y\) instead of \(\models \).

-

Suppose \(P \equiv _Y Q\), \(P \xrightarrow{\mathrm{t}} P'\) and \(\mathcal {I}(P)\cap (X \cup Y\cup \{\tau \}) = \emptyset \), with \(X \subseteq A\). Let

\[\mathcal {Q}^\dagger := \{Q^\dagger \mid Q \xrightarrow{\mathrm{t}} Q^\dagger \wedge P' \not\equiv _X Q^\dagger \}.\]

For each \(Q^\dagger \in \mathcal {Q}^\dagger \), let \(\varphi _{Q^\dagger }\) be a formula such that \(P'\models _X \varphi _{Q^\dagger }\) and \(Q^\dagger \not \models _X \varphi _{Q^\dagger }\). Define \(\varphi := \bigwedge _{Q^\dagger \in \mathcal {Q}^\dagger } \varphi _{Q^\dagger }\). Then, \(P' \models _X \varphi \), so \(P \models \langle X\rangle \varphi \), and thus \(P \models _Y \langle X\rangle \varphi \). Consequently, also \(Q \models _Y \langle X\rangle \varphi \) and therefore \(Q \models \langle X\rangle \varphi \). Hence, there is a \(Q'\) with \(Q \xrightarrow{\mathrm{t}} Q'\) and \(Q' \models _X \varphi \). Again \(Q' \notin \mathcal {Q}^\dagger \) and thus \(P' \equiv _X Q'\). \(\square \)

4 Timeout bisimulations

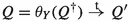

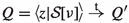

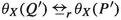

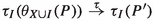

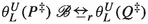

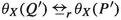

I will now present a characterisation of strong reactive bisimilarity in terms of a binary relation \(\mathscr{B}\) on processes, called a strong timeout bisimulation, that is not parametrised by the set of allowed actions X. To this end, I need a family of unary operators \(\theta _X\) on processes, for \(X\subseteq A\). These environment operators place a process in an environment that allows exactly the actions in X to occur. They are defined by the following structural operational rules.

The operator \(\theta _X\) modifies its argument by inhibiting all initial transitions (here including also those that occur after a \(\tau \)-transition) that cannot occur in the specified environment. When an observable transition does occur, the environment may be triggered to change, and the inhibiting effect of the \(\theta _X\)-operator comes to an end. The premises \(x \mathop {\nrightarrow }\limits ^{\beta }~\text{ for } \text{ all }~\beta \in X\cup \{\tau \}\) in the third rule guarantee that the process x will idle for a positive amount of time in its current state. During this time, the environment may be triggered to change, and again the inhibiting effect of the \(\theta _X\)-operator comes to an end.
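The behaviour of \(\theta _X\) described above can be sketched on a finite, explicitly given LTS. The three cases in the code are read off from the case analysis in the proof of Proposition 9 (a \(\tau \)-step keeps the operator; a visible step escapes it when \(a\in X\); any visible or timeout step escapes it when no action from \(X\cup \{\tau \}\) is enabled). The dictionary encoding, the names `initials` and `theta_steps`, and the tags `"theta"` and `"plain"` are assumptions made only for this illustration.

```python
from typing import Dict, FrozenSet, List, Tuple

TAU, T = "tau", "t"                      # hidden action and timeout action
LTS = Dict[str, List[Tuple[str, str]]]   # state -> [(label, successor)]

def initials(lts: LTS, p: str) -> FrozenSet[str]:
    """I(p): labels from A ∪ {tau} on transitions leaving p (timeouts excluded)."""
    return frozenset(label for label, _ in lts.get(p, []) if label != T)

def theta_steps(lts: LTS, X: FrozenSet[str], p: str) -> List[Tuple[str, tuple]]:
    """Transitions of theta_X(p). A target ("theta", X, q) is still guarded
    by the operator; a target ("plain", q) has left its scope."""
    idle = not (initials(lts, p) & (X | {TAU}))    # premise of the third rule
    steps = []
    for label, q in lts.get(p, []):
        if label == TAU:
            steps.append((TAU, ("theta", X, q)))   # rule 1: tau keeps theta_X
        elif label in X or idle:
            steps.append((label, ("plain", q)))    # rules 2 and 3
    return steps

# Hypothetical example: p can do a, or time out into a state offering b.
lts = {"p": [("a", "p1"), (T, "p2")], "p1": [], "p2": [("b", "p1")]}
only_a = theta_steps(lts, frozenset({"a"}), "p")   # a is allowed: timeout inhibited
only_b = theta_steps(lts, frozenset({"b"}), "p")   # a is blocked: p idles, both survive
```

In the environment \(\{a\}\) only the a-transition remains, whereas in the environment \(\{b\}\) the process idles, so both the timeout and the a-transition survive, the latter because the environment may have changed in the meantime.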

Below I assume that \(\mathbb{P}\) is closed under \(\theta \), that is, if \(P\in \mathbb{P}\) and \(X\subseteq A\) then \(\theta _X(P)\in \mathbb{P}\).

Definition 8

A strong timeout bisimulation is a symmetric relation \(\mathscr{B} \subseteq \mathbb{P}\times \mathbb{P}\), such that, for \(P \mathrel{\mathscr{B}} Q\),

-

if \(P \xrightarrow{\alpha} P'\) with \(\alpha \in A\cup \{\tau \}\), then \(\exists Q'\) such that \(Q \xrightarrow{\alpha} Q'\) and \(P' \mathrel{\mathscr{B}} Q'\),

-

if \(\mathcal {I}(P)\cap (X\cup \{\tau \})=\emptyset \) and \(P \xrightarrow{\mathrm{t}} P'\), then \(\exists Q'\) such that \(Q \xrightarrow{\mathrm{t}} Q'\) and \(\theta _X(P') \mathrel{\mathscr{B}} \theta _X(Q')\).
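The two clauses of Definition 8 can be checked mechanically for a finite candidate relation. The following is a minimal sketch under stated assumptions: the LTS is a finite dictionary, a state \(\theta _X(P)\) is encoded as the tuple `("theta", X, P)`, and the transitions of such a state follow the three cases used in the proof of Proposition 9. All function names are hypothetical. Since the second clause quantifies over every \(X\subseteq A\), the check is exponential in the size of A.

```python
from itertools import chain, combinations

TAU, T = "tau", "t"   # hidden action and timeout action

def steps(lts, p):
    """Outgoing (label, target) pairs; p is a state name or ("theta", X, q)."""
    if isinstance(p, str):
        return list(lts.get(p, []))
    _, X, q = p
    inner = steps(lts, q)
    idle = not ({l for l, _ in inner if l != T} & (X | {TAU}))
    out = []
    for l, r in inner:
        if l == TAU:
            out.append((TAU, ("theta", X, r)))   # tau keeps the operator
        elif l in X or idle:
            out.append((l, r))                   # visible or timeout step escapes it
    return out

def subsets(A):
    """All subsets of A as frozensets."""
    items = sorted(A)
    combos = chain.from_iterable(combinations(items, n) for n in range(len(items) + 1))
    return [frozenset(c) for c in combos]

def is_timeout_bisimulation(lts, A, B):
    """Check symmetry and both clauses of Definition 8 for the finite relation B."""
    B = set(B)
    if any((q, p) not in B for p, q in B):
        return False
    for p, q in B:
        p_steps, q_steps = steps(lts, p), steps(lts, q)
        init_p = {l for l, _ in p_steps if l != T}
        for l, p1 in p_steps:
            if l != T:   # first clause: match alpha in A ∪ {tau}
                if not any(m == l and (p1, q1) in B for m, q1 in q_steps):
                    return False
                continue
            for X in subsets(A):   # second clause: every X in which p idles
                if init_p & (X | {TAU}):
                    continue
                if not any(m == T and (("theta", X, p1), ("theta", X, q1)) in B
                           for m, q1 in q_steps):
                    return False
    return True

# Hypothetical example: two copies of t.a.0 with distinct state names.
A = {"a"}
lts = {"p": [(T, "p1")], "p1": [("a", "z")],
       "q": [(T, "q1")], "q1": [("a", "z")], "z": []}
pairs = [("p", "q"), ("z", "z")]
pairs += [(("theta", X, "p1"), ("theta", X, "q1")) for X in subsets(A)]
B = set(pairs) | {(q, p) for p, q in pairs}
```

Note that the pair `("p1", "q1")` itself need not be in `B`: after a timeout, Definition 8 only relates the successors under \(\theta _X\), for each environment X in which the source state idles.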

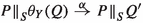

Proposition 9

\(P \mathrel{\underline{\leftrightarrow}_r} Q\) iff there exists a strong timeout bisimulation \(\mathscr{B}\) with \(P \mathrel{\mathscr{B}} Q\).

Proof

Let \(\mathscr{R}\) be a gsrb on \(\mathbb{P}\). Define \(\mathscr{B} \subseteq \mathbb{P}\times \mathbb{P}\) by \(P \mathrel{\mathscr{B}} Q\) iff either \(P \mathrel{\mathscr{R}} Q\) or \(P=\theta _{X}(P^\dagger )\), \(Q=\theta _{X}(Q^\dagger )\) and \(P^\dagger \mathrel{\mathscr{R}_X} Q^\dagger \). I show that \(\mathscr{B}\) is a strong timeout bisimulation.

-

Let \(P \mathrel{\mathscr{B}} Q\) and \(P \xrightarrow{a} P'\) with \(a\in A\). First suppose \(P \mathrel{\mathscr{R}} Q\). Then, by the first clause of Definition 3, there exists a \(Q'\) such that \(Q \xrightarrow{a} Q'\) and \(P' \mathrel{\mathscr{R}} Q'\). So \(P' \mathrel{\mathscr{B}} Q'\). Next suppose \(P=\theta _{X}(P^\dagger )\), \(Q=\theta _{X}(Q^\dagger )\) and \(P^\dagger \mathrel{\mathscr{R}_X} Q^\dagger \). Since \(\theta _X(P^\dagger ) \xrightarrow{a} P'\) it must be that \(P^\dagger \xrightarrow{a} P'\) and either \(a\in X\) or \(P^\dagger \mathop {\nrightarrow }\limits ^{\beta }\) for all \(\beta \in X\cup \{\tau \}\). Hence, there exists a \(Q'\) such that \(Q^\dagger \xrightarrow{a} Q'\) and \(P' \mathrel{\mathscr{R}} Q'\), using the third clause of Definition 3. Recall that \(P^\dagger \mathrel{\mathscr{R}_X} Q^\dagger \) implies \(\mathcal {I}(P^\dagger )\cap (X\cup \{\tau \}) = \mathcal {I}(Q^\dagger )\cap (X\cup \{\tau \})\), and thus either \(a\in X\) or \(Q^\dagger \mathop {\nrightarrow }\limits ^{\beta }\) for all \(\beta \in X\cup \{\tau \}\). It follows that \(Q = \theta _X(Q^\dagger ) \xrightarrow{a} Q'\) and \(P' \mathrel{\mathscr{B}} Q'\).

-

Let \(P \mathrel{\mathscr{B}} Q\) and \(P \xrightarrow{\tau} P'\). First suppose \(P \mathrel{\mathscr{R}} Q\). Then, using the first clause of Definition 3, there is a \(Q'\) with \(Q \xrightarrow{\tau} Q'\) and \(P' \mathrel{\mathscr{R}} Q'\). So \(P' \mathrel{\mathscr{B}} Q'\). Next suppose \(P=\theta _{X}(P^\dagger )\), \(Q=\theta _{X}(Q^\dagger )\) and \(P^\dagger \mathrel{\mathscr{R}_X} Q^\dagger \). Since \(\theta _X(P^\dagger ) \xrightarrow{\tau} P'\), it must be that \(P'\) has the form \(\theta _{X}(P^\ddagger )\), and \(P^\dagger \xrightarrow{\tau} P^\ddagger \). Thus, by the fourth clause of Definition 3, there is a \(Q^\ddagger \) with \(Q^\dagger \xrightarrow{\tau} Q^\ddagger \) and \(P^\ddagger \mathrel{\mathscr{R}_X} Q^\ddagger \). Now \(Q = \theta _X(Q^\dagger ) \xrightarrow{\tau} \theta _X(Q^\ddagger )\) and \(P' = \theta _X(P^\ddagger ) \mathrel{\mathscr{B}} \theta _X(Q^\ddagger )\).

-

Let \(P \mathrel{\mathscr{B}} Q\), \(\mathcal {I}(P)\cap (X\cup \{\tau \})=\emptyset \) and \(P \xrightarrow{\mathrm{t}} P'\). First suppose \(P \mathrel{\mathscr{R}} Q\). Then, by the second clause of Definition 3, there is a \(Q'\) with \(Q \xrightarrow{\mathrm{t}} Q'\) and \(P' \mathrel{\mathscr{R}_X} Q'\). So \(\theta _X(P') \mathrel{\mathscr{B}} \theta _X(Q')\). Next suppose \(P=\theta _{Y}(P^\dagger )\), \(Q=\theta _{Y}(Q^\dagger )\) and \(P^\dagger \mathrel{\mathscr{R}_Y} Q^\dagger \). Since \(\theta _Y(P^\dagger ) \xrightarrow{\mathrm{t}} P'\), it must be that \(P^\dagger \xrightarrow{\mathrm{t}} P'\) and \(P^\dagger \mathop {\nrightarrow }\limits ^{\beta }\) for all \(\beta \in Y\cup \{\tau \}\). Consequently, \(\mathcal {I}(P^\dagger )=\mathcal {I}(P)\) and thus \(\mathcal {I}(P^\dagger ) \cap (X\cup Y\cup \{\tau \}) = \emptyset \). By the last clause of Definition 3 there is a \(Q'\) such that \(Q^\dagger \xrightarrow{\mathrm{t}} Q'\) and \(P' \mathrel{\mathscr{R}_X} Q'\). So \(\theta _X(P') \mathrel{\mathscr{B}} \theta _X(Q')\). From \(P^\dagger \mathrel{\mathscr{R}_Y} Q^\dagger \) and \(\mathcal {I}(P^\dagger )\cap (Y\cup \{\tau \})=\emptyset \), I infer \(\mathcal {I}(Q^\dagger )\cap (Y\cup \{\tau \})=\emptyset \). So \(Q^\dagger \mathop {\nrightarrow }\limits ^{\beta }\) for all \(\beta \in Y\cup \{\tau \}\). This yields \(Q = \theta _Y(Q^\dagger ) \xrightarrow{\mathrm{t}} Q'\).

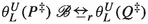

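Now let \(\mathscr{B}\) be a timeout bisimulation. Define \(\mathscr{R}\) by \(P \mathrel{\mathscr{R}} Q\) iff \(P \mathrel{\mathscr{B}} Q\), and \(P \mathrel{\mathscr{R}_X} Q\) iff \(\theta _X(P) \mathrel{\mathscr{B}} \theta _X(Q)\). I need to show that \(\mathscr{R}\) is a gsrb.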

-

Suppose \(P \mathrel{\mathscr{R}} Q\) and \(P \xrightarrow{\alpha} P'\) with \(\alpha \in A\cup \{\tau \}\). Then, \(P \mathrel{\mathscr{B}} Q\), so there is a \(Q'\) such that \(Q \xrightarrow{\alpha} Q'\) and \(P' \mathrel{\mathscr{B}} Q'\). Hence, \(P' \mathrel{\mathscr{R}} Q'\).

-

Suppose \(P \mathrel{\mathscr{R}} Q\), \(X\subseteq A\), \(\mathcal {I}(P)\cap (X\cup \{\tau \})=\emptyset \) and \(P \xrightarrow{\mathrm{t}} P'\). Then, \(P \mathrel{\mathscr{B}} Q\), so \(\exists Q'\) such that \(Q \xrightarrow{\mathrm{t}} Q'\) and \(\theta _X(P') \mathrel{\mathscr{B}} \theta _X(Q')\). Thus, \(P' \mathrel{\mathscr{R}_X} Q'\).

-

Suppose \(P \mathrel{\mathscr{R}_X} Q\) and \(P \xrightarrow{a} P'\) with either \(a\in X\) or \(\mathcal {I}(P)\cap (X\cup \{\tau \})=\emptyset \). Then, \(\theta _X(P) \mathrel{\mathscr{B}} \theta _X(Q)\). Moreover, \(\theta _X(P) \xrightarrow{a} P'\). Hence, there is a \(Q'\) such that \(\theta _X(Q) \xrightarrow{a} Q'\) and \(P' \mathrel{\mathscr{B}} Q'\). It must be that \(Q \xrightarrow{a} Q'\). Moreover, \(P' \mathrel{\mathscr{R}} Q'\).

-

Suppose \(P \mathrel{\mathscr{R}_X} Q\) and \(P \xrightarrow{\tau} P'\). Then, \(\theta _X(P) \mathrel{\mathscr{B}} \theta _X(Q)\). Since \(P \xrightarrow{\tau} P'\), one has \(\theta _X(P) \xrightarrow{\tau} \theta _X(P')\). Hence, there is an R such that \(\theta _X(Q) \xrightarrow{\tau} R\) and \(\theta _X(P') \mathrel{\mathscr{B}} R\). The process R must have the form \(\theta _X(Q')\) for some \(Q'\) with \(Q \xrightarrow{\tau} Q'\). It follows that \(P' \mathrel{\mathscr{R}_X} Q'\).

-

Suppose \(P \mathrel{\mathscr{R}_Y} Q\), \(X\subseteq A\), \(\mathcal {I}(P)\cap (X\cup Y \cup \{\tau \})=\emptyset \) and \(P \xrightarrow{\mathrm{t}} P'\). Then, \(\theta _Y(P) \mathrel{\mathscr{B}} \theta _Y(Q)\) and \(\theta _Y(P) \xrightarrow{\mathrm{t}} P'\). Moreover, \(\mathcal {I}(\theta _Y(P))=\mathcal {I}(P)\), so by the second clause of Definition 8 there exists a \(Q'\) such that \(\theta _Y(Q) \xrightarrow{\mathrm{t}} Q'\) and \(\theta _X(P') \mathrel{\mathscr{B}} \theta _X(Q')\). So \(Q \xrightarrow{\mathrm{t}} Q'\) and \(P' \mathrel{\mathscr{R}_X} Q'\). \(\square \)

Note that the union of arbitrarily many strong timeout bisimulations is itself a strong timeout bisimulation. Consequently, the relation \(\underline{\leftrightarrow}_r\) is a strong timeout bisimulation.

5 The process algebra \(\text{ CCSP}_\mathrm{t}^\theta \)

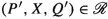

Let A be a set of visible actions and \({ Var}\) an infinite set of variables. The syntax of \(\text{ CCSP}_\mathrm{t}^\theta \) is given by

\[E ::= 0 \mid \alpha .E \mid E+E \mid E \Vert _S E \mid \tau _I(E) \mid \mathcal {R}(E) \mid \theta _L^U(E) \mid \psi _X(E) \mid x \mid \langle x|{{\mathcal {S}}}\rangle \quad (\text{with } x \in V_{{\mathcal {S}}})\]

with \(\alpha \in Act := A \uplus \{\tau ,\mathrm{t}\}\), \(S,I,U,L,X\subseteq A\), \(L \subseteq U\), \(\mathcal {R}\subseteq A \mathop \times A\), \(x \in { Var}\) and \({{\mathcal {S}}}\) a recursive specification: a set of equations \(\{y = {{\mathcal {S}}}_{y} \mid y \in V_{{\mathcal {S}}}\}\) with \(V_{{\mathcal {S}}}\subseteq { Var}\) (the bound variables of \({{\mathcal {S}}}\)) and each \({{\mathcal {S}}}_{y}\) a \(\text{ CCSP}_\mathrm{t}^\theta \) expression. I require that all sets \({\{b\mid (a,b)\in \mathcal {R}\}}\) are finite.

The constant 0 represents a process that is unable to perform any action. The process \(\alpha .E\) first performs the action \(\alpha \) and then proceeds as E. The process \(E+F\) behaves as either E or F. \({\Vert ^{}_{S}}\) is a partially synchronous parallel composition operator; actions \(a\in S\) must synchronise (they can occur only when both arguments are ready to perform them), whereas actions \(\alpha \notin S\) from both arguments are interleaved. \(\tau _I\) is an abstraction operator; it conceals the actions in I by renaming them into the hidden action \(\tau \). The operator \(\mathcal {R}\) is a relational renaming: it renames a given action \(a\in A\) into a choice between all actions b with \((a,b)\in \mathcal {R}\). The environment operators \(\theta _L^U\) and \(\psi _X\) are new in this paper and explained below. Finally, \(\langle x|{{\mathcal {S}}}\rangle \) represents the x-component of a solution of the system of recursive equations \({{\mathcal {S}}}\).

The language CCSP is a common mix of the process algebras CCS [33] and CSP [6, 28]. It first appeared in [34], where it was named following a suggestion by M. Nielsen. The family of parallel composition operators \(\Vert _S\) stems from [35], and incorporates the two CSP parallel composition operators from [6]. The relational renaming operators \(\mathcal {R}(\_\!\_)\) stem from [39]; they combine both the (functional) renaming operators that are common to CCS and CSP, and the inverse image operators of CSP. The choice operator \(+\) stems from CCS, and the abstraction operator from CSP, while the inaction constant 0, action prefixing operators \(a.\_\!\_\,\) for \(a\in A\), and the recursion construct are common to CCS and CSP. The timeout prefixing operator \(\mathrm{t}.\_\!\_\,\) was added by me in [18]. The syntactic form of inaction 0, action prefixing \(\alpha .E\) and choice \(E+F\) follows CCS, whereas the syntax of abstraction \(\tau _I(\_\!\_)\) and recursion \(\langle x|{{\mathcal {S}}}\rangle \) follows ACP [2, 10]. The fragment of \(\text{ CCSP}_\mathrm{t}^\theta \) without \(\theta _L^U\) and \(\psi _X\) is called \(\hbox {CCSP}_\mathrm{t}\) [18].

An occurrence of a variable x in a \(\text{ CCSP}_\mathrm{t}^\theta \) expression E is bound iff it occurs in a subexpression \(\langle y|{{\mathcal {S}}}\rangle \) of E with \(x \in V_{{\mathcal {S}}}\); otherwise it is free. Here, each \({{\mathcal {S}}}_y\) for \(y \in V_{{\mathcal {S}}}\) counts as a subexpression of \(\langle x|{{\mathcal {S}}}\rangle \). An expression E is invalid if it has a subexpression \(\theta _L^U(F)\) or \(\psi _X(F)\) such that a variable occurrence in F is free in F but bound in E. Let \(\mathbb{E}\) be the set of valid \(\text{ CCSP}_\mathrm{t}^\theta \) expressions. Furthermore, \(\mathbb{P} \subseteq \mathbb{E}\) is the set of closed valid \(\text{ CCSP}_\mathrm{t}^\theta \) expressions, or processes; those in which every variable occurrence is bound.

A substitution is a partial function \(\rho : { Var} \rightharpoonup \mathbb{E}\). The application \(E[\rho ]\) of a substitution \(\rho \) to an expression \(E \in \mathbb{E}\) is the result of simultaneous replacement, for all \(x\in \text {dom}(\rho )\), of each free occurrence of x in E by the expression \(\rho (x)\), while renaming bound variables in E if necessary to prevent name clashes.

The semantics of \(\text{ CCSP}_\mathrm{t}^\theta \) is given by the labelled transition relation \({\rightarrow } \subseteq \mathbb{P}\times Act \times \mathbb{P}\), where the transitions \(P \xrightarrow{\alpha} Q\) are derived from the rules of Table 1. Here, \(\langle E|{{\mathcal {S}}}\rangle \) for \(E \in \mathbb{E}\) and \({{\mathcal {S}}}\) a recursive specification denotes the result of substituting \(\langle y|{{\mathcal {S}}}\rangle \) for y in E, for all \(y \in V_{{\mathcal {S}}}\).

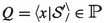

The auxiliary operators \(\theta _L^U\) and \(\psi _X\) are added here to facilitate complete axiomatisation, similar to the left merge and communication merge of ACP [2, 10]. The operator \(\theta _X^X\) is the same as what was called \(\theta _X\) in Sect. 4. It inhibits those transitions of its argument that are blocked in the environment X, allowing only the actions from \(X\subseteq A\). It stops inhibiting as soon as the system performs a visible action or takes a break, as this may trigger a change in the environment. The operator \(\theta _L^U\) preserves those transitions that are allowed in some environment X with \(L\subseteq X \subseteq U\). The letters L and U stand for lower and upper bound. The operator \(\psi _X\) places a process in the environment X when a timeout transition occurs; it is inert if any other transition occurs. If \(P \xrightarrow{\beta} Q\) for \(\beta \in A\cup \{\tau \}\), then a timeout transition \(P \xrightarrow{\mathrm{t}} P'\) cannot occur in an environment that allows \(\beta \). Thus, the transition \(P \xrightarrow{\mathrm{t}} P'\) survives only when considering an environment that blocks \(\beta \), meaning \(\beta \notin X\cup \{\tau \}\). Taking the contrapositive, \(\beta \in X\cup \{\tau \}\) implies \(P \mathop {\nrightarrow }\limits ^{\beta }\).

The operator \(\theta ^U_\emptyset \) features in the forthcoming law L3, which is a convenient addition to my axiomatisation, although only \(\psi _X\) and \(\theta _X\) (\(= \theta _X^X\)) are necessary for completeness.

Stratification.

Even though negative premises occur in Table 1, the meaning of this transition system specification is well-defined, for instance by the method of stratification explained in [15, 25]. Assign inductively to each expression \(E \in \mathbb{E}\) an ordinal \(\lambda _E\) that counts the nesting depth of recursive specifications: if \(E = \langle x|{{\mathcal {S}}}\rangle \) then \(\lambda _E\) is 1 more than the supremum of the \(\lambda _{{{\mathcal {S}}}_y}\) for \(y \in V_{{\mathcal {S}}}\); otherwise \(\lambda _E\) is the supremum of \(\lambda _{E'}\) for all subterms \(E'\) of E. Moreover \(\kappa _E\) is the nesting depth of \(\theta _L^U\) and \(\psi _X\) operators in E that remain after replacing any subterm F of E with \(\lambda _F < \lambda _E\) by 0. Now the ordered pair \((\lambda _P,\kappa _P)\) constitutes a valid stratification for closed literals \(P \xrightarrow{\alpha} P'\). Namely, whenever a transition \(P \xrightarrow{\alpha} P'\) depends on a transition \(Q \xrightarrow{\beta} Q'\), in the sense that there is a closed substitution instance \({\mathfrak {r}}\) of a rule from Table 1 with conclusion \(P \xrightarrow{\alpha} P'\), and \(Q \xrightarrow{\beta} Q'\) occurring in its premises, then either \(\lambda _Q < \lambda _P\), or \(\lambda _Q = \lambda _P\) and \(\kappa _Q \le \kappa _P\). Moreover, when \(P \xrightarrow{\alpha} P'\) depends on a negative literal \(Q \mathop {\nrightarrow }\limits ^{\beta }\), then \(\lambda _Q = \lambda _P\) and \(\kappa _Q < \kappa _P\).

The above argument hinges on the exclusion of invalid \(\text{ CCSP}_\mathrm{t}^\theta \) expressions. The invalid expression  for instance, with \(\mathcal {R}= \{(b,a)\}\), does not have a well-defined meaning, since the transition  is derivable iff one has the premise \(P{\mathop {\nrightarrow }\limits ^{b}}\):

However, the meaning of the valid expression  , for instance, is entirely unproblematic.

6 Guarded recursion and finitely branching processes

In many process algebraic specification approaches, only guarded recursive specifications are allowed.

Definition 10

An occurrence of a variable x in an expression E is guarded if x occurs in a subexpression \(\alpha .F\) of E, with \(\alpha \in Act\). An expression E is guarded if all free occurrences of variables in E are guarded. A recursive specification \({{\mathcal {S}}}\) is manifestly guarded if all expressions \({{\mathcal {S}}}_y\) for \(y\in V_{{\mathcal {S}}}\) are guarded. It is guarded if it can be converted into a manifestly guarded recursive specification by repeated substitution of expressions \({{\mathcal {S}}}_y\) for variables \(y\in V_{{\mathcal {S}}}\) occurring in the expressions \({{\mathcal {S}}}_z\) for \(z\in V_{{\mathcal {S}}}\). Let guarded \(\text{ CCSP}_\mathrm{t}^\theta \) be the fragment of \(\text{ CCSP}_\mathrm{t}^\theta \) allowing only guarded recursion.
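Definition 10 can be illustrated by a small check on recursive specifications. This is only a sketch under explicit assumptions: expressions are nested tuples such as `("prefix", "a", E)`, `("sum", E, F)`, `("var", "x")` and `("nil",)`; operator parameters such as S or I are not tuples; specifications are finite and contain no nested recursion. The function `guarded` does not perform the repeated substitution literally. It relies on the observation that, for such finite specifications, substitution succeeds exactly when following unguarded variable occurrences from equation to equation never loops and never reaches a variable outside \(V_{{\mathcal {S}}}\). All names are hypothetical.

```python
def unguarded_vars(e):
    """Variables with an occurrence in e that is not inside a prefix alpha.F."""
    tag = e[0]
    if tag == "var":
        return {e[1]}
    if tag in ("nil", "prefix"):
        return set()          # a prefix guards everything below it
    # any other operator: recurse into the arguments that are expressions
    subs = [sub for sub in e[1:] if isinstance(sub, tuple)]
    return set().union(*(unguarded_vars(sub) for sub in subs))

def manifestly_guarded(spec):
    """All right-hand sides S_y are guarded expressions."""
    return all(not unguarded_vars(body) for body in spec.values())

def guarded(spec):
    """Assumed criterion: no cycle of unguarded occurrences among the
    variables of the specification, and no unguarded variable outside it."""
    deps = {y: unguarded_vars(body) for y, body in spec.items()}
    state = {}                # variable -> "active" or "done"

    def ok(y):
        if y not in deps:
            return False      # unguarded free variable: substitution cannot help
        if state.get(y) == "done":
            return True
        if state.get(y) == "active":
            return False      # unguarded cycle
        state[y] = "active"
        result = all(ok(z) for z in deps[y])
        if result:
            state[y] = "done"
        return result

    return all(ok(y) for y in spec)

# Hypothetical specifications.
loop = {"x": ("prefix", "a", ("var", "x"))}                      # x = a.x
chain_spec = {"x": ("sum", ("var", "y"), ("prefix", "a", ("nil",))),
              "y": ("prefix", "b", ("var", "x"))}                # x = y + a.0, y = b.x
bad = {"x": ("sum", ("var", "x"), ("prefix", "a", ("nil",)))}    # x = x + a.0
```

Here `chain_spec` is guarded without being manifestly guarded: substituting \(b.x\) for y in the equation for x yields a manifestly guarded specification, whereas `bad` stays unguarded under any number of substitutions.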

Definition 11

The set of processes reachable from a given process \(P \in \mathbb{P}\) is inductively defined by

-

(i) P is reachable from P, and

-

(ii) if Q is reachable from P and \(Q \xrightarrow{\alpha} R\) for some \(\alpha \in Act\) then R is reachable from P.

A process P is finitely branching if for all \(Q \in \mathbb{P}\) reachable from P there are only finitely many pairs \((\alpha ,R)\) such that \(Q \xrightarrow{\alpha} R\). Likewise, P is countably branching if there are countably many such pairs. A process is finite iff it is finitely branching, has finitely many reachable states, and is loop-free, in the sense that there are no \(Q_0 \xrightarrow{\alpha _1} Q_1 \xrightarrow{\alpha _2} \cdots \xrightarrow{\alpha _n} Q_n\) with \(n>0\) and \(Q_0=Q_n\) reachable from P.
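For an LTS that is given explicitly as a finite dictionary, the notions of Definition 11 can be computed directly. The sketch below assumes that encoding (state name to list of `(label, successor)` pairs) and uses hypothetical function names. In this setting every process is trivially finitely branching with finitely many reachable states, so finiteness reduces to loop-freedom.

```python
def reachable(lts, p):
    """States reachable from p: clause (i) seeds the set, clause (ii) extends it."""
    seen, todo = {p}, [p]
    while todo:
        q = todo.pop()
        for _, r in lts.get(q, []):
            if r not in seen:
                seen.add(r)
                todo.append(r)
    return seen

def loop_free(lts, p):
    """True iff no cycle Q0 -> ... -> Qn = Q0 with n > 0 is reachable from p."""
    colour = {}               # state -> "grey" (on the current path) or "black"

    def visit(q):
        colour[q] = "grey"
        for _, r in lts.get(q, []):
            if colour.get(r) == "grey":
                return False  # r is on the current path: a loop
            if r not in colour and not visit(r):
                return False
        colour[q] = "black"
        return True

    return visit(p)

# Hypothetical example: s has a self-loop but is not reachable from p.
lts = {"p": [("a", "q"), ("t", "r")], "q": [("b", "r")],
       "r": [], "s": [("a", "s")]}
from_p = reachable(lts, "p")
```

The depth-first search visits exactly the states reachable from its starting point, so the unreachable self-loop at `s` does not affect whether `p` is finite.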

Proposition 12

Each \(\text{ CCSP}_\mathrm{t}^\theta \) process is countably branching.

Proof

I show that for each \(\text{ CCSP}_\mathrm{t}^\theta \) process Q there are only countably many transitions \(Q \xrightarrow{\alpha} R\). Each such transition must be derivable from the rules of Table 1. So it suffices to show that for each Q there are only countably many derivations of transitions \(Q \xrightarrow{\alpha} R\).

A derivation of a transition is a well-founded, upwardly branching tree, in which each node models an application of one of the rules of Table 1. Since each of these rules has finitely many positive premises, such a proof tree is finitely branching, and thus finite. Let \(d(\pi )\), the depth of \(\pi \), be the length of the longest branch in a derivation \(\pi \). If \(\pi \) derives a transition \(Q \xrightarrow{\alpha} R\), then I call Q the source of \(\pi \).

It suffices to show that for each \(n \in \mathbb{N}\) there are only finitely many derivations of depth n with a given source. This I do by induction on n.

In case \(Q=f(Q_1,\dots ,Q_k)\), with f a k-ary \(\text{ CCSP}_\mathrm{t}^\theta \) operator, a derivation \(\pi \) of depth n is completely determined by the concluding rule from Table 1, deriving a transition \(Q \xrightarrow{\beta} R\), the subderivations of \(\pi \) with source \(Q_i\) for some of the \(i\in \{1,\dots ,k\}\), and the transition label \(\beta \). (For the purposes of this proof, Table 1 is understood to have only 15 rules, even if each of them can be seen as a template, with an instance for each choice of \(\mathcal {R}\), S, I, \({{\mathcal {S}}}\) etc., and for each fitting choice of transition labels a, \(\alpha \) and/or \(\beta \).) The choice of the concluding rule depends on f, and for each f there are at most three choices. The subderivations of \(\pi \) with source \(Q_i\) have depth \(< n\), so by induction there are only finitely many. When f is not a renaming operator \(\mathcal {R}\), there is no further choice for the transition label \(\beta \), as it is completely determined by the premises of the rule, and thus by the subderivations of those premises. In case \(f=\mathcal {R}\), there are finitely many choices for \(\beta \) when faced with a given transition label \(\alpha \) contributed by the premise of the rule for renaming. Here, I use the requirement of Sect. 5 that all sets \({\{b\mid (a,b)\in \mathcal {R}\}}\) are finite. This shows there are only finitely many choices for \(\pi \).

In case \(Q = \langle x|{{\mathcal {S}}}\rangle \), the last step in \(\pi \) must be application of the rule for recursion, so \(\pi \) is completely determined by a subderivation \(\pi '\) of a transition with source \(\langle {{\mathcal {S}}}_x|{{\mathcal {S}}}\rangle \). By induction there are only finitely many choices for \(\pi '\), and hence also for \(\pi \). \(\square \)

Proposition 13

Each \(\text{ CCSP}_\mathrm{t}^\theta \) process with guarded recursion is finitely branching.

Proof

A trivial structural induction shows that if P is a \(\text{ CCSP}_\mathrm{t}^\theta \) process with guarded recursion and Q is reachable from P, then also Q has guarded recursion. Hence, it suffices to show that for each \(\text{ CCSP}_\mathrm{t}^\theta \) process Q with guarded recursion there are only finitely many derivations with source Q.

Let \(\rightsquigarrow \) be the smallest binary relation on  such that (i) \(f(P_1,\dots ,P_k) \rightsquigarrow P_i\) for each k-ary \(\text{ CCSP}_\mathrm{t}^\theta \) operator f except action prefixing, and each \(i\in \{1,\dots ,k\}\), and (ii)  . This relation is finitely branching. Moreover, on processes with guarded recursion, \(\rightsquigarrow \) has no forward infinite chains \(P_0 \rightsquigarrow P_1 \rightsquigarrow \dots \). In fact, this could have been used as an alternative definition of guarded recursion. Let, for any process Q with guarded recursion, e(Q) be the length of the longest forward chain \(Q \rightsquigarrow P_1 \rightsquigarrow \dots \rightsquigarrow P_{e(Q)}\). I show by induction on e(Q) that there are only finitely many derivations with source Q. In fact, this proceeds exactly as in the previous proof. \(\square \)

Proposition 14

[13] Each finitely branching process in an LTS can be denoted by a closed \(\hbox {CCSP}_\mathrm{t}\) expression with guarded recursion. Here, I only need the operations inaction (0), action prefixing (\(\alpha .\_\!\_\,\)) and choice (\(+\)), as well as recursion  .

Proof

Let P be a finitely branching process in an LTS  . Let \(V_{{\mathcal {S}}} := \{x_Q \mid Q \text{ is reachable from } P\}\).

For each Q reachable from P, let \(\textit{next}(Q)\) be the finite set of pairs \((\alpha ,R)\) such that there is a transition \(Q \xrightarrow{\alpha } R\). Define the recursive specification \({{\mathcal {S}}}\) as \(\{x_Q = \sum _{(\alpha ,R)\in \textit{next}(Q)} \alpha .x_R \mid x_Q \in V_{{\mathcal {S}}}\}\). Here, the finite choice operator \(\sum _{i\in I}\alpha _i.P_i\) can easily be expressed in terms of inaction, action prefixing and choice. Now the \(\hbox {CCSP}_\mathrm{t}\) process  denotes P. \(\square \)
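As an illustration only, the construction in this proof can be sketched in Python. The sketch assumes a hypothetical encoding that is not part of the paper: an LTS is a dictionary mapping each state to a list of (label, target) pairs, variables are written `x_Q`, and the right-hand sides are rendered as plain strings. It terminates only when finitely many states are reachable, whereas the proposition itself also covers finitely branching processes with infinitely many reachable states.

```python
from collections import deque


def reachable(lts, start):
    """Return the states reachable from `start` (hypothetical dict encoding)."""
    seen, todo = {start}, deque([start])
    while todo:
        q = todo.popleft()
        for _label, r in lts.get(q, []):
            if r not in seen:
                seen.add(r)
                todo.append(r)
    return seen


def specification(lts, start):
    """Build one equation x_Q = sum of alpha.x_R for each reachable state Q.

    The empty sum is rendered as the inaction constant 0.
    """
    spec = {}
    for q in reachable(lts, start):
        # next(Q): the pairs (alpha, R) with a transition from Q to R labelled alpha.
        summands = sorted({f"{a}.x_{r}" for a, r in lts.get(q, [])})
        spec[f"x_{q}"] = " + ".join(summands) if summands else "0"
    return spec


# A three-state example with one visible action a, one action b and a timeout t.
lts = {"P": [("a", "Q"), ("t", "R")], "Q": [("b", "P")], "R": []}
spec = specification(lts, "P")
```

Each equation has only summands of the form \(\alpha .x_R\), so every variable occurrence is behind an action prefix, which is why the resulting specification is guarded.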

In fact,  , where  denotes strong bisimilarity [33], formally defined in the next section.

Likewise, recursion-free \(\text{ CCSP}_\mathrm{t}^\theta \) processes are finite, and, up to strong bisimilarity, each finite process is denotable by a closed recursion-free \(\text{ CCSP}_\mathrm{t}^\theta \) expression, using only 0, \(\alpha .\_\!\_\,\) and \(+\).

Proposition 15

[13] Each countably branching process in an LTS can be denoted by a closed \(\hbox {CCSP}_\mathrm{t}\) expression. Again I only need the \(\hbox {CCSP}_\mathrm{t}\) operations inaction, action prefixing, choice and recursion.

Proof

The proof is the same as the previous one, except that \(\textit{next}(Q)\) now is a countable set, rather than a finite one, and consequently I need a countable choice operator  . The latter can be expressed in \(\hbox {CCSP}_\mathrm{t}\) with unguarded recursion by  . \(\square \)

7 Congruence

Given an arbitrary process algebra with a collection of operators f, each with an arity n, and a recursion construct  as in Sect. 5, let  and  be the sets of [closed] valid expressions, and let a substitution instance  for  and  be defined as in Sect. 5. Any semantic equivalence  extends to  by defining \(E \sim F\) iff \(E[\rho ] \sim F[\rho ]\) for each closed substitution  . It extends to substitutions  by \(\rho \sim \nu \) iff \(\text {dom}(\rho ) = \text {dom}(\nu )\) and \(\rho (x) \sim \nu (x)\) for each \(x\in \text {dom}(\rho )\).

Definition 16

[16] A semantic equivalence \({\sim }\) is a lean congruence if \(E[\rho ] \sim E[\nu ]\) for any expression  and any substitutions \(\rho \) and \(\nu \) with \(\rho \sim \nu \). It is a full congruence if it satisfies

for all functions f of arity n, processes  , and recursive specifications \({{\mathcal {S}}},{{\mathcal {S}}}'\) with \(x \in V_{{\mathcal {S}}}= V_{{{\mathcal {S}}}'}\) and  .

Clearly, each full congruence is also a lean congruence, and each lean congruence satisfies (1). Both implications are strict, as illustrated in [16].

A main result of the present paper will be that strong reactive bisimilarity is a full congruence for the process algebra \(\text{ CCSP}_\mathrm{t}^\theta \). To achieve it, I need to establish first that strong bisimilarity [33],  , and initials equivalence [14, Section 16], \(=_\mathcal {I}\), are full congruences for \(\text{ CCSP}_\mathrm{t}^\theta \).

7.1 Initials equivalence

Definition 17

Two \(\text{ CCSP}_\mathrm{t}^\theta \) processes P and Q are initials equivalent, denoted \(P =_\mathcal {I}Q\), if \(\mathcal {I}(P)=\mathcal {I}(Q)\).

Theorem 18

Initials equivalence is a full congruence for \(\text{ CCSP}_\mathrm{t}^\theta \).

Proof

In Appendix A. \(\square \)

7.2 Strong bisimilarity

Definition 19

A strong bisimulation is a symmetric relation  on  , such that, whenever  ,

- if  with \(\alpha \in Act\) then  for some \(Q'\) with  .

Two processes  are strongly bisimilar,  , if  for some strong bisimulation  .
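For a finite LTS, the largest relation satisfying the transfer condition of this definition can be computed by repeatedly discarding pairs that violate it. The following Python sketch is an illustration under assumptions that are not part of the paper: the LTS is a hypothetical dictionary from states to lists of (label, target) pairs, the function name is invented, and the naive refinement loop is chosen for readability rather than efficiency.

```python
def strong_bisimilarity(lts):
    """Largest strong bisimulation on a finite LTS, as a set of state pairs.

    `lts` maps each state to a list of (label, target) pairs; every label,
    including "t" and "tau", is matched like an ordinary action.
    """
    states = set(lts) | {r for moves in lts.values() for _a, r in moves}

    def simulates(p, q, rel):
        # Every move p --a--> p2 is answered by some q --a--> q2 with (p2, q2) in rel.
        return all(
            any(b == a and (p2, q2) in rel for b, q2 in lts.get(q, []))
            for a, p2 in lts.get(p, [])
        )

    rel = {(p, q) for p in states for q in states}
    while True:
        # Checking both directions keeps the relation symmetric in every round.
        refined = {(p, q) for (p, q) in rel
                   if simulates(p, q, rel) and simulates(q, p, rel)}
        if refined == rel:
            return rel
        rel = refined


example = {
    "p0": [("a", "p1")], "p1": [],                 # a.0
    "q0": [("a", "q1"), ("a", "q2")],              # a.0 + a.0
    "q1": [], "q2": [],
    "r0": [("t", "r1")], "r1": [],                 # t.0
    "s0": [("tau", "s1")], "s1": [],               # tau.0
}
bis = strong_bisimilarity(example)
```

In this example `p0` and `q0` are related, while `r0` and `s0` are not, because a timeout transition can only be matched by another timeout transition.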

Contrary to reactive bisimilarity, strong bisimilarity treats the timeout action \(\mathrm{t}\), as well as the hidden action \(\tau \), just like any visible action. In the absence of timeout actions, there is no difference between a strong bisimulation and a timeout bisimulation, so  and  coincide. In general, strong bisimilarity is a finer equivalence relation than strong reactive bisimilarity and initials equivalence:  , and both implications are strict.

Lemma 1

For each \(\text{ CCSP}_\mathrm{t}^\theta \) process P, there exists a \(\hbox {CCSP}_\mathrm{t}\) process Q only built using inaction, action prefixing, choice and recursion, such that  .

Proof

Immediately from Propositions 12 and 15. \(\square \)

Theorem 20

Strong bisimilarity is a full congruence for \(\text{ CCSP}_\mathrm{t}^\theta \).

Proof

The structural operational rules for \(\hbox {CCSP}_\mathrm{t}\) (that is, \(\text{ CCSP}_\mathrm{t}^\theta \) without the operators \(\theta _L^U\) and \(\psi _X\)) fit the tyft/tyxt format with recursion of [16]. By [16, Theorem 3] this implies that  is a full congruence for \(\hbox {CCSP}_\mathrm{t}\). (In fact, when omitting the recursion construct, the operational rules for \(\hbox {CCSP}_\mathrm{t}\) fit the tyft/tyxt format of [26], and by the main theorem of [26],  is a congruence for the operators of \(\hbox {CCSP}_\mathrm{t}\), that is, it satisfies (1) in Definition 16. The work of [16] extends this result of [26] with recursion.)

The structural operational rules for all of \(\text{ CCSP}_\mathrm{t}^\theta \) fit the ntyft/ntyxt format with recursion of [16]. By [16, Theorem 2] this implies that  is a lean congruence for \(\text{ CCSP}_\mathrm{t}^\theta \). (In fact, when omitting the recursion construct, the operational rules for \(\text{ CCSP}_\mathrm{t}^\theta \) fit the ntyft/ntyxt format of [25], and by the main theorem of [25],  is a congruence for the operators of \(\text{ CCSP}_\mathrm{t}^\theta \). The work of [16] extends this result of [25] with recursion.)

To verify (2) for the whole language \(\text{ CCSP}_\mathrm{t}^\theta \), let \({{\mathcal {S}}}\) and \({{\mathcal {S}}}'\) be recursive specifications with \(x \in V_{{\mathcal {S}}}= V_{{{\mathcal {S}}}'}\), such that  and  for all \(y\in V_{{\mathcal {S}}}\). Let \(\{P_i \mid i \in I\}\) be the collection of processes of the form  or  , for some L, U, X, that occur as a closed subexpression of \({{\mathcal {S}}}_y\) or \({{\mathcal {S}}}'_y\) for one of the \(y\in V_{{\mathcal {S}}}\), not counting strict subexpressions of a closed subexpression R of \({{\mathcal {S}}}_y\) or \({{\mathcal {S}}}'_y\) that is itself of the form  or  . Pick a fresh variable \(z_i\notin V_{{\mathcal {S}}}\) for each \(i\in I\), and let, for \(y\in V_{{\mathcal {S}}}\),  be the result of replacing each occurrence of \(P_i\) in \({{\mathcal {S}}}_y\) by \(z_i\). Then,  does not contain the operators \(\theta _L^U(Q)\) or \(\psi _X(Q)\). In deriving this conclusion, it is essential that  is a valid expression, for this implies that the term  , which may contain free occurrences of the variables \(y\in V_{{\mathcal {S}}}\), does not have a subterm of the form  or  that contains free occurrences of these variables. Let

; it is a recursive specification in the language \(\hbox {CCSP}_\mathrm{t}\). The recursive specification  is defined in the same way.

For each \(i\in I\) there is, by Lemma 1, a process \(Q_i\) in the language \(\hbox {CCSP}_\mathrm{t}\) such that  . Now let