Abstract

Session-based concurrency is a type-based approach to the analysis of message-passing programs. These programs may be specified in an operational or declarative style: the former defines how interactions are properly structured; the latter defines governing conditions for correct interactions. In this paper, we study rigorous relationships between operational and declarative models of session-based concurrency. We develop a correct encoding of session \(\pi \)-calculus processes into the linear concurrent constraint calculus (\(\texttt {lcc}\)), a declarative model of concurrency based on partial information (constraints). We exploit session types to ensure that our encoding satisfies precise correctness properties and that it offers a sound basis on which operational and declarative requirements can be jointly specified and reasoned about. We demonstrate the applicability of our results by using our encoding in the specification of realistic communication patterns with time and contextual information.


1 Introduction

This paper addresses the problem of relating two distinct models of concurrent processes: one of them, the session \(\pi \)-calculus [45] (\(\pi \), in the following), is operational; the other one, the linear concurrent constraint calculus [22, 27] (\(\texttt {lcc}\), in the following), is declarative. Our interest in these two models stems from the analysis of message-passing software systems, which are best specified by combining operational features (present in models such as \(\pi \)) and declarative features (present in models such as \(\texttt {lcc}\)). In this work, we aim at results of relative expressiveness, which explain how to faithfully encode programs in one model into programs in some other model [39, 40]. Concretely, we are interested in translations in which \(\pi \) and \(\texttt {lcc}\) are, respectively, source and target languages; a key common trait supporting expressiveness results between \(\pi \) and \(\texttt {lcc}\) is linearity, in the sense of Girard’s linear logic, the logic of consumable resources [24].

The process language \(\pi \) falls within session-based concurrency, a type-based approach to the analysis of message-passing programs. In this approach, protocols are organized into basic units called sessions; interaction patterns are abstracted as session types [29], against which specifications may be checked. A session connects exactly two partners; session types ensure that interactions always occur in matching pairs: when one partner sends, the other receives; when one partner offers a selection, the other chooses; when a partner closes the session, the other acknowledges. When specifications are given in the \(\pi \)-calculus [33, 34], we obtain processes interacting along channels to implement session protocols. Sessions thus involve concurrency, mobility, and resource awareness: a session is a sequence of deterministic interactions on linear channels, to be used exactly once.

In specifying message-passing programs, operational and declarative features are complementary: while the former describe how a message-passing program is implemented, the latter describe the (minimal) conditions that govern a program’s correct behavior. Although languages based on the \(\pi \)-calculus can conveniently specify mobile, point-to-point communications, they do not satisfactorily express other kinds of requirements that influence protocol interactions and/or communicating partners; in particular, partial and contextual information can be unnatural or difficult to express in them.

To address this shortcoming, several extensions of name-passing calculi have been developed (see, e.g., [5, 11, 14, 20, 21]): they typically add declarative features based on constraints (or assertions), i.e., logical conditions that specify and influence process behavior. Interestingly, several of these extensions are inspired by concurrent constraint programming [43] (\(\texttt {ccp}\), in the following), a model of concurrency in which constraints (but also other forms of partial information) are a primitive concept. Process languages based on \(\texttt {ccp}\) are appealing because they are simple, rest upon solid foundations, and are very expressive. Indeed, \(\texttt {ccp}\) languages such as \(\texttt {lcc}\) and \(\texttt {utcc}\) [37] can represent mobility as in the \(\pi \)-calculus; such representations, however, tend to be impractical for reasoning about message-passing programs.

In our view, this current state of affairs begs for a unifying account of operational and declarative approaches to session-based concurrency. We envision a declarative basis for session-based concurrency in which constructs from operational models (such as \(\pi \)) are given correct, low-level implementations in declarative models (such as \(\texttt {lcc}\)). Such implementations can then be freely used as “macros” in larger declarative specifications, in which requirements related to partial and contextual information can be cleanly expressed. In this way, existing operational and declarative languages (and their analysis techniques) can be articulated at appropriate abstraction levels. An indispensable step towards this vision is developing rigorous ways of compiling operational languages into declarative ones. This is the main technical challenge in this paper.

In line with this challenge, our previous work [31] formally related the session \(\pi \)-calculus in [29] and \(\texttt {utcc}\) using an encoding, i.e., a language translation that satisfies certain encodability criteria [26]. Although this encoding already enables us to reason about message-passing specifications from a declarative standpoint, it presents some important limitations. First, the key rôle of linearity and type-based correctness in session-based concurrency is not explicit when encoding session \(\pi \)-calculus processes in \(\texttt {utcc}\). Also, because \(\texttt {utcc}\) is a deterministic language, the encoding in [31] can only translate deterministic session processes, and so it rules out useful forms of non-determinism that naturally arise in session-based concurrency.

To address these limitations within a unified account for session-based concurrency, here we develop an encoding of \(\pi \) into \(\texttt {lcc}\). Unlike \(\texttt {utcc}\), \(\texttt {lcc}\) treats constraints as linear resources that can be used exactly once. Our main discovery is that \(\texttt {lcc}\) with its explicit treatment of linearity is a much better match for interactions in session-based concurrency than \(\texttt {utcc}\). This is made formal by the tight correspondences between source processes in \(\pi \) and target processes in \(\texttt {lcc}\). Unlike \(\texttt {utcc}\), \(\texttt {lcc}\) is a non-deterministic language. Hence, by using \(\texttt {lcc}\) as target language, our encoding can translate \(\pi \) processes that cannot be translated by the encoding in [31], such as a process specifying a session protocol in which a client can non-deterministically interact with multiple servers (cf. Ex. 3).

Summarizing, this paper develops the following contributions:

- A translation from \(\pi \) into \(\texttt {lcc}\) (Sect. 4). By using \(\texttt {lcc}\) as target language, our translation supports linearity and non-determinism, as essential in session-based concurrency.

- A study of the conditions under which the session types by Vasconcelos [45] enable us to correctly translate \(\pi \) processes into \(\texttt {lcc}\) (Sect. 3.1). We use these conditions to prove that our translation is a valid encoding: it satisfies Gorla’s encodability criteria [26], in particular operational correspondence.

- Extended examples that showcase how processes resulting from our encoding can be used as macros in declarative specifications (Sect. 5). By exploiting a general strategy that uses encoded processes as code snippets, we specify in \(\texttt {lcc}\) two of the communication patterns with time in [36].

The rest of this paper is structured as follows. Section 2 describes session-based concurrency and introduces the key ideas in our approach. Section 3 presents required background on relative expressiveness, \(\pi \), and \(\texttt {lcc}\). In particular, Sect. 3.1 presents a variant of the session types in [45] that is crucial to establish correctness for our translation. Section 4 presents the translation of \(\pi \) into \(\texttt {lcc}\) and establishes its correctness. Section 5 develops the extended examples. We close by discussing related work (Sect. 6) and giving some concluding remarks (Sect. 7). The appendices contain additional examples and omitted proofs.

This paper builds upon results first reported in the conference paper [17]. Such results include (i) an encoding of \(\pi \) into \(\texttt {lcc}\), as well as (ii) an encoding of an extension of \(\pi \) with session establishment into a variant of \(\texttt {lcc}\). Here, we offer a revised, extended presentation of the results on (i), for which we present stronger correspondences, full technical details, and additional examples. This focus allows us to keep the presentation compact; a detailed description of the results related to (ii) can be found in Cano’s PhD thesis [15].

2 Overview of key ideas

We informally illustrate our approach and main results. We use \(\pi \) and \(\texttt {lcc}\) processes, whose precise syntax and semantics will be introduced in the following section.

Session-Based Concurrency. Consider a simple protocol between a client and a shop:

1. The client sends a description of an item that she wishes to buy to the shop.

2. The shop replies with the price of the item and offers two options to the client: to buy the item or to close the transaction.

3. Depending on the price, the client may choose to purchase the item or to end the transaction.

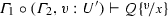

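Here is a session type that specifies this protocol from the client’s perspective:

\[ S = {!}\mathsf {item}.\,{?}\mathsf {price}.\,\oplus \{{\textit{buy}}: {!}\mathsf {ccard}.\,{?}\mathsf {invoice}.\,\texttt {end},\ {\textit{quit}}: {!}\mathsf {bye}.\,\texttt {end}\} \]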

Type S says that the output of a value of type \(\mathsf {item}\) (denoted \(!\mathsf {item}\)) should be followed by the input of a value of type \(\mathsf {price}\) (denoted \(?\mathsf {price}\)). These two actions should precede the selection (internal choice, denoted \(\oplus \)) between two different behaviors distinguished by labels \({\textit{buy}}\) and \({\textit{quit}}\): in the first behavior, the client sends a value of type \(\mathsf {ccard}\), then receives a value of type \(\mathsf {invoice}\), and then closes the protocol (\(\texttt {end}\) denotes the concluded protocol); in the second behavior, the client emits a value of type \(\mathsf {bye}\) and closes the session.

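From the shop’s perspective, we would expect a protocol that is complementary to S:

\[ T = {?}\mathsf {item}.\,{!}\mathsf {price}.\,\& \{{\textit{buy}}: {?}\mathsf {ccard}.\,{!}\mathsf {invoice}.\,\texttt {end},\ {\textit{quit}}: {?}\mathsf {bye}.\,\texttt {end}\} \]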

After receiving a value of type \(\mathsf {item}\), the shop sends a value of type \(\mathsf {price}\) back to the client. Using external choice (denoted \(\& \)), the shop then offers two behaviors to the client, identified by labels \({\textit{buy}}\) and \({\textit{quit}}\). The complementarity between types such as S and T is formalized by session-type duality (see, e.g., [23]). The intent is that implementations derived from dual session types will respect their (complementary) protocols at run-time, avoiding communication mismatches and other insidious errors.
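The complementarity just described can be checked mechanically. The following Python sketch is only an illustration: the class names (`Send`, `Recv`, `Select`, `Offer`, `End`) and the function `dual` are hypothetical, payload types are plain strings, and recursion and qualifiers are ignored. It transcribes the client type S from the prose and derives the shop's type by swapping each action with its complement.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class End:
    """The concluded protocol (end)."""

@dataclass(frozen=True)
class Send:
    payload: str      # name of the base type being sent, e.g. "item"
    cont: object      # continuation type

@dataclass(frozen=True)
class Recv:
    payload: str
    cont: object

@dataclass(frozen=True)
class Select:
    branches: tuple   # (label, type) pairs: internal choice

@dataclass(frozen=True)
class Offer:
    branches: tuple   # (label, type) pairs: external choice

def dual(t):
    """Swap sends with receives and selections with offers, structurally."""
    if isinstance(t, End):
        return End()
    if isinstance(t, Send):
        return Recv(t.payload, dual(t.cont))
    if isinstance(t, Recv):
        return Send(t.payload, dual(t.cont))
    if isinstance(t, Select):
        return Offer(tuple((lab, dual(b)) for lab, b in t.branches))
    if isinstance(t, Offer):
        return Select(tuple((lab, dual(b)) for lab, b in t.branches))
    raise TypeError(f"not a session type: {t!r}")

# Client type S, transcribed from the prose description.
S = Send("item", Recv("price", Select((
    ("buy", Send("ccard", Recv("invoice", End()))),
    ("quit", Send("bye", End())),
))))

T = dual(S)  # the shop's view of the same protocol
```

Applying `dual` twice returns the original type, which reflects the expectation that duality is an involution on such finite types.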

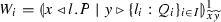

We illustrate the way in which session types relate to \(\pi \) processes. We write \({x}\langle v\rangle .P\) and x(y).P to denote output and input along name x, with continuation P. Also, given a finite set I, we write \(x \triangleright \{{\textit{lab}}_i:P_i\}_{i\in I} \) to denote the offer of labeled processes \(P_1, P_2, \ldots \) along name x; dually, \(x \triangleleft {\textit{lab}}.\,P\) denotes the selection of a label \({\textit{lab}}\) along x. Moreover, process \(b?\,P\!:\!Q\) denotes a conditional expression which executes P or Q depending on Boolean b. Process \(P_x\) below is a possible implementation of type S along x:

Process \(P_x\) uses a conditional to implement the decision of which option offered by the shop is chosen: the purchase will take place only if the item (a book) is within a $20 budget. We assume that \({\textit{end}}\) is a value of type \(\mathsf {bye}\). Similarly, \(P_y\) is a process that implements the shop’s intended protocol along y: it first expects a request for an item (w), and after that returns the item’s current price. Then, it offers the buyer a (labeled) choice: either to buy the item or to quit the transaction.

Sessions with Declarative Conditions. The session-based calculus \(\pi \) is a language with point-to-point, synchronous communication. Hence, \(\pi \) processes can appropriately describe protocol actions, but can be less adequate to express contextual conditions on partners and their interactions, which are usually hard to know and predict. Consider a variation of the above protocol, in which the last step is specified as follows:

3’. Depending on the item’s price and whether the purchase occurs in a given time interval (say, a discount period), the client may either purchase the item or end the transaction.

This kind of time constraint has been studied in [36], where a number of timed patterns in communication protocols are identified and analyzed. These patterns add flexibility to specifications by describing the protocol’s behavior with respect to external sources (e.g., non-interacting components like clocks and the communication infrastructure). For example, a protocol step such as 3’ dictates that communication actions will be executed only within a given time interval. Hence, even though timed requirements do not necessarily enact a communicating action, they may influence interactions between partners.

Timed patterns are instances of declarative requirements, which are difficult to express in the (session) \(\pi \)-calculus. Formalizing Step 3’ in \(\pi \) is not trivial, because one must necessarily represent time units by using synchronizations, a far-fetched relationship. The (session) \(\pi \)-calculus does not naturally lend itself to specifying the combination of operational descriptions of structured interactions (typical of sessions) and declarative requirements (as in, e.g., protocol and workflow specifications). Given this, our aim is to use \(\texttt {lcc}\) as a unified basis for both operational and declarative requirements in session-based concurrency.

\(\texttt {ccp}\) and \(\texttt {lcc}\). \(\texttt {lcc}\) is based on concurrent constraint programming (\(\texttt {ccp}\)) [43]. In \(\texttt {ccp}\), processes interact via a constraint store (store, in the sequel) by means of tell and ask operations. Processes may add constraints (pieces of partial information) to the store via tell operations; using ask operations processes may query the store about some constraint and, depending on the result of the query, execute a process or suspend. These queries are governed by a constraint system, a parametric structure that specifies the entailment relation between constraints. The constraint store thus defines an asynchronous synchronization mechanism; both communication-based and external events can be modeled as constraints in the store.

In \(\texttt {lcc}\), tell and ask operations work as follows. Let c and d denote constraints, and let \(\widetilde{x}\) denote a (possibly empty) vector of variables. While the tell process \(\overline{c}\) can be seen as the output of c to the store, the parametric ask operator \(\mathbf {\forall }{\widetilde{x}}(d \!\rightarrow \! P) \) may be read as: if d can be inferred from the store, then P will be executed; hence, P depends on the guard d. These parametric ask operators are called abstractions. Resource awareness in \(\texttt {lcc}\) is crucial: not only does the inference consume the abstraction (i.e., it is linear), but it may also involve the consumption of constraints in the store as well as the substitution of parameters \(\widetilde{x}\) in P.
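The resource-aware reading of tell and ask can be pictured with a toy store. The sketch below is a deliberate simplification and not the semantics of \(\texttt {lcc}\): the class `LinearStore` is hypothetical, constraints are opaque tokens, entailment is reduced to multiset inclusion, and abstractions carry no parameters \(\widetilde{x}\).

```python
from collections import Counter

class LinearStore:
    """A multiset of constraint tokens that are consumed when used."""

    def __init__(self):
        self.tokens = Counter()

    def tell(self, c):
        # Output constraint c to the store.
        self.tokens[c] += 1

    def ask(self, guard, body):
        """Run body() if every token in guard is available, consuming them.

        Returns None when the guard cannot be satisfied, which stands for
        the abstraction remaining suspended.
        """
        need = Counter(guard)
        if all(self.tokens[c] >= n for c, n in need.items()):
            self.tokens -= need          # linear consumption of the guard
            return body()
        return None

store = LinearStore()
store.tell("paid")
first = store.ask(["paid"], lambda: "ship")    # guard holds, token consumed
second = store.ask(["paid"], lambda: "ship")   # token is gone, ask suspends
```

In this run `first` is `"ship"` and `second` is `None`: once the token has been consumed it cannot trigger a second ask, which is the behavior that distinguishes a linear store from a monotonic one.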

In \(\texttt {lcc}\), parametric asks can express name mobility as in the \(\pi \)-calculus [27, 44]. That is, the key operational mechanisms of the (session) \(\pi \)-calculus (name communication, scope extrusion) admit (low-level) declarative representations as \(\texttt {lcc}\) processes.

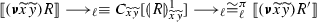

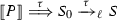

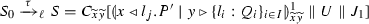

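Our Encoding. To illustrate our encoding, let us consider the most elementary computation step in \(\pi \), which is given by the following reduction rule:

\[ (\varvec{\nu }xy)\big ({x}\langle v\rangle .P \, {\mid }\,y(z).Q\big ) \longrightarrow (\varvec{\nu }xy)\big (P \, {\mid }\,Q\{v/z\}\big ) \]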

where v is a value or a variable. This rule specifies the synchronous communication between two complementary (session) endpoints, represented by the output process \({x}\langle v\rangle .P\) and the input process y(z).Q. In session-based concurrency, no races in communications between endpoints can occur. We write \((\varvec{\nu }xy) P\) to denote that (bound) variables x and y are reciprocal endpoints for the same session protocol in P.

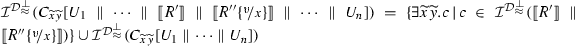

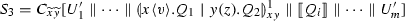

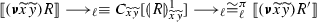

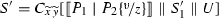

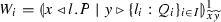

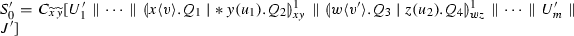

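Our encoding \(\llbracket \cdot \rrbracket \) translates \(\pi \) processes into \(\texttt {lcc}\) processes (cf. Fig. 8). The essence of this declarative interpretation is already manifest in the translation of output- and input-prefixed processes:

\[ \llbracket {x}\langle v\rangle .P \rrbracket = \overline{\mathsf {snd} (x,v)} \parallel \mathbf {\forall }{z}\big (\mathsf {rcv} (z,v)\otimes \{x{:}z\} \rightarrow \llbracket P \rrbracket \big ) \]

\[ \llbracket x(y).Q \rrbracket = \mathbf {\forall }{y,w}\big (\mathsf {snd} (w,y)\otimes \{w{:}x\} \rightarrow \overline{\mathsf {rcv} (x,y)} \parallel \llbracket Q \rrbracket \big ) \]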

where \(\otimes \) denotes multiplicative conjunction in linear logic. We use predicates \(\mathsf {snd} (x,v)\) and \(\mathsf {rcv} (x,y)\) to represent synchronous communication in \(\pi \) using the asynchronous communication model of \(\texttt {lcc}\); also, we use the constraint \(\{x{:}z\}\) to indicate that x and z are dual endpoints. These pieces of information are treated as linear resources by \(\texttt {lcc}\); this is key to ensure operational correspondence (cf. Theorems 11 and 12). As we will see, \( \llbracket {x}\langle v\rangle .P \rrbracket \) and \( \llbracket x(y).Q \rrbracket \) synchronize in two steps. First, constraint \(\mathsf {snd} (x,v)\) is consumed by the abstraction in \(\llbracket x(y).Q \rrbracket \), thus enabling \(\llbracket Q \rrbracket \) and adding \(\mathsf {rcv} (x,y)\) to the store. Then, constraint \(\mathsf {rcv} (x,y)\) is consumed by the abstraction \(\mathbf {\forall }{z}\big (\mathsf {rcv} (z,v)\otimes \{x{:}z\} \rightarrow \llbracket P \rrbracket \big ) \), thus enabling \(\llbracket P \rrbracket \).
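The two-step synchronization can be traced on a toy store. The Python sketch below is an illustration under stated assumptions, not the encoding itself: the function `synchronize` is hypothetical, constraints are tuples such as `("snd", x, v)`, the output is on endpoint x and the input on its covariable y, and the covariable constraint is modeled as a lookup set that is never consumed (an assumption made for simplicity).

```python
from collections import Counter

def synchronize(x, y, v):
    """Trace the two steps for an output of v on x and an input on y."""
    covariables = {(x, y)}                 # stands for {x:y}; assumed to stay available
    store = Counter({("snd", x, v): 1})    # told by the encoded output
    trace = []

    # Step 1: the encoded input on y looks for snd(w, z) with {w:y}.
    snd = next((c for c in store
                if c[0] == "snd" and (c[1], y) in covariables), None)
    if snd is None:
        return trace, +store
    _, w, z = snd
    store[snd] -= 1                        # linear consumption of snd
    store[("rcv", y, z)] += 1              # acknowledgement added to the store
    trace.append(f"[[Q]] enabled with z := {z}")

    # Step 2: the encoded output waits for rcv(z', v) with {x:z'}.
    rcv = ("rcv", y, v)
    if store[rcv] > 0 and (x, y) in covariables:
        store[rcv] -= 1                    # linear consumption of rcv
        trace.append("[[P]] enabled")
    return trace, +store                   # unary plus drops zero counts

trace, leftover = synchronize("x", "y", "book")
```

After the call, `trace` lists the continuation of the input first and the continuation of the output second, and `leftover` is empty: both `snd` and `rcv` have been used exactly once.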

Encoding Correctness using Session Types. To contrast the rôle of linearity in \(\pi \) and in \(\texttt {lcc}\), we focus on \(\pi \) processes which are well-typed in the type system by Vasconcelos [45]. Type soundness in [45] ensures that well-typed processes never reduce to ill-formed processes that do not respect their intended session protocols.

The type system in [45] offers flexible support for processes with infinite behavior, in the form of recursive session types that can be shared among multiple threads. Using recursive session types, the type system in [45] admits \(\pi \) processes with output races, i.e., processes in which two or more sub-processes in parallel have output actions on the same variable. Here is a simple example of a process with an output race (on x), which is typable in [45]:

\[ R_1 = (\varvec{\nu }xy)\big ({x}\langle v_1\rangle .Q_1 \, {\mid }\,{x}\langle v_2\rangle .Q_2 \, {\mid }\,\mathbf {*}\, y(z).Q_3\big ) \qquad (3) \]

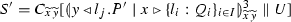

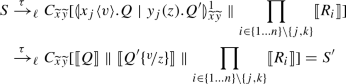

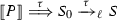

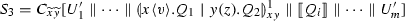

Even though our translation \(\llbracket \cdot \rrbracket \) works fine for the set of well-typed \(\pi \) processes as defined in [45], the class of typed processes with output races represents a challenge for proving that the translation \(\llbracket \cdot \rrbracket \) is correct. We aim at correctness in the sense of Gorla’s encodability criteria [26], which define a general and widely used framework for studying relative expressiveness. Roughly speaking, \(\pi \) processes with output races induce ambiguities in the \(\texttt {lcc}\) processes that are obtained via \(\llbracket \cdot \rrbracket \). To illustrate this, consider the translation of \(R_1\):

where context \(C[-]\) includes the constraints needed for interaction (i.e., \(\{x{:}y\}\)) and ‘\({{\,\mathrm{!}\,}}\)’ denotes replication. The ambiguities concern the values involved as objects in the output races. If we assume \(v_1 \ne v_2\), then there are no ambiguities and translation correctness as in [26] can be established. Now, if \(v_1 = v_2\) then \(\mathsf {snd} (x,v_1) = \mathsf {snd} (x,v_2)\), which is problematic for translation correctness (in particular, for proving operational correspondence): once process \({{\,\mathrm{!}\,}}\mathbf {\forall }{z,w_3}\big (\mathsf {snd} (w_3,z)\otimes \{w_3{:}y\} \rightarrow \overline{\mathsf {rcv} (y,z)} \parallel \llbracket Q_3 \rrbracket \big ) \) consumes either constraint, we cannot precisely determine which continuation should be enabled with constraint \(\mathsf {rcv} (x,v_i)\)—both \(\llbracket Q_1 \rrbracket \) and \(\llbracket Q_2 \rrbracket \) could be spawned at that point.
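The source of the ambiguity can be seen by counting constraints. In the sketch below, which is only an illustration with hypothetical helper names, the constraints told by parallel outputs on the same endpoint form a multiset; once one of them has been consumed, the helper `candidates` lists which output continuations could be matched by the resulting acknowledgement.

```python
from collections import Counter

def told_by_outputs(x, values):
    """Multiset of snd constraints told by parallel encoded outputs on x."""
    return Counter(("snd", x, v) for v in values)

def candidates(values, consumed_value):
    """Indices i such that the continuation Q_i waits for rcv(., consumed_value)."""
    return [i for i, v in enumerate(values, start=1) if v == consumed_value]

distinct = told_by_outputs("x", ["a", "b"])   # two different tokens
equal = told_by_outputs("x", ["a", "a"])      # one token with multiplicity 2

unambiguous = candidates(["a", "b"], "a")
ambiguous = candidates(["a", "a"], "a")
```

With distinct values exactly one continuation qualifies; with equal values the two `snd` tokens collapse into a single entry of multiplicity 2 and both continuations qualify.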

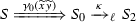

To establish translation correctness following the criteria in [26], we narrow down the class of typable \(\pi \) processes in [45] by disallowing processes with output races. To this end, we introduce a type system in which a recursive type involving an output behavior (a potential output race) can be associated to at most one thread. This is a conservative solution, which allows us to retain useful forms of infinite behavior. Although process \(R_1\) in (3) is not typable in our type system, the system does admit processes such as

in which the parallel server invocations exhibited by \(R_1\) have been sequentialized.

3 Preliminaries

We start by introducing the source and target languages (Sects. 3.1 and 3.2) and the encodability criteria we shall use as reference for establishing translation correctness (Sect. 3.3).

3.1 A session \(\pi \)-calculus without output races (\(\pi \))

We present the session \(\pi \)-calculus (\(\pi \)) and its associated type system, a specialization of that by Vasconcelos [45] that disallows output races.

3.1.1 Syntax and semantics

We assume a countably infinite set of variables \(\mathcal {V}_{\pi } \), ranged over by \(x, y, \ldots \). Channels are represented as pairs of variables, called covariables. Messages are represented by values, ranged over by \(v,v',u,u',\ldots \) and whose base set is called \(\mathcal {U}_{\pi }\). Values can be both variables and the Boolean constants \(\texttt {tt} , \texttt {ff} \). We also use \(l, l', \ldots \) to range over a countably infinite set of labels, denoted \(\mathcal {B}_\pi \). We write \(\widetilde{x}\) to denote a finite sequence of variables \(x_1,\ldots ,x_n\) with \(n\ge 0\) (and similarly for other elements).

Definition 1

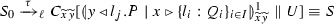

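(\(\pi \)) The set of \(\pi \) processes is defined by the grammar below:

\[ P, Q \;::=\; {x}\langle v\rangle .P \;\big |\; x(y).P \;\big |\; x \triangleleft l.P \;\big |\; x \triangleright \{l_i:P_i\}_{i\in I} \;\big |\; v?\,P\!:\!Q \;\big |\; \mathbf {*}\, x(y).P \;\big |\; (\varvec{\nu }xy)P \;\big |\; P \, {\mid }\,Q \;\big |\; \mathbf {0} \]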

Process \({x}\langle v\rangle .P\) sends value v over x and then continues as P; dually, process x(y).Q expects a value v on x that will replace all free occurrences of y in Q. Processes \(x \triangleleft l_j.P\) and \(x \triangleright \{l_i:Q_i\}_{i\in I}\) define a labeled choice mechanism, with labels indexed by the finite set I: given \(j \in I\), the selection process \(x \triangleleft l_j.P\) uses x to select \(l_j\) from the branching process \(x \triangleright \{l_i:Q_i\}_{i\in I}\), thus triggering process \(Q_j\). We assume pairwise distinct labels. The conditional process \(v?\,P\!:\!Q\) behaves as P if v evaluates to \(\texttt {tt} \); otherwise it behaves as Q. Process \(\mathbf {*}\, x(y).P\) denotes a replicated input process, which allows us to specify persistent servers. The restriction \((\varvec{\nu }xy)P\) binds together x and y in P, thus indicating that they are two endpoints of the same channel (i.e., the same session). Processes for parallel composition \(P \, {\mid }\,Q\) and inaction \(\mathbf {0} \) are standard.

We write \((\varvec{\nu }\widetilde{x}\widetilde{y})P\) to stand for \((\varvec{\nu }x_1,\ldots ,x_n \, y_1,\ldots ,y_n)P\), for some \(n\ge 1\). We often write \(\prod _{i = 1}^{n}P_i\) to stand for \(P_1 \, {\mid }\,\cdots \, {\mid }\,P_n\), and refer to the parallel sub-processes of \(P_1, \ldots , P_n\) as threads.

In x(y).P and \(\mathbf {*}\, x(y).P\) (resp. \((\varvec{\nu }yz)P\)) occurrences of y (resp. y, z) are bound with scope P. The set of free variables of P, denoted \(\mathsf {fv}_{\pi }(P)\), is standardly defined.
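The binding discipline above determines the free variables of a process. The following Python sketch spells out one way to compute them; the constructor names (`Out`, `In`, `Sel`, `Bra`, `Cond`, `Par`, `Res`, `Nil`) and the function `fv` are hypothetical and only mirror the process constructs described in the text.

```python
from dataclasses import dataclass

BOOLEANS = {"tt", "ff"}   # Boolean constants are values but not variables

@dataclass(frozen=True)
class Nil:
    """Inaction 0."""

@dataclass(frozen=True)
class Out:            # x<v>.P
    x: str
    v: str
    cont: object

@dataclass(frozen=True)
class In:             # x(y).P, or *x(y).P when replicated is True
    x: str
    y: str
    cont: object
    replicated: bool = False

@dataclass(frozen=True)
class Sel:            # selection of a label along x
    x: str
    label: str
    cont: object

@dataclass(frozen=True)
class Bra:            # branching along x
    x: str
    branches: tuple   # (label, process) pairs

@dataclass(frozen=True)
class Cond:           # v ? P : Q
    v: str
    then: object
    other: object

@dataclass(frozen=True)
class Par:            # P | Q
    left: object
    right: object

@dataclass(frozen=True)
class Res:            # (nu x y) P
    x: str
    y: str
    body: object

def fv_value(v):
    return set() if v in BOOLEANS else {v}

def fv(p):
    """Free variables: input binds y, restriction binds both covariables."""
    if isinstance(p, Nil):
        return set()
    if isinstance(p, Out):
        return {p.x} | fv_value(p.v) | fv(p.cont)
    if isinstance(p, In):
        return {p.x} | (fv(p.cont) - {p.y})
    if isinstance(p, Sel):
        return {p.x} | fv(p.cont)
    if isinstance(p, Bra):
        return {p.x}.union(*(fv(q) for _, q in p.branches))
    if isinstance(p, Cond):
        return fv_value(p.v) | fv(p.then) | fv(p.other)
    if isinstance(p, Par):
        return fv(p.left) | fv(p.right)
    if isinstance(p, Res):
        return fv(p.body) - {p.x, p.y}
    raise TypeError(f"not a process: {p!r}")

# (nu x y)( x<tt>.0 | y(z).(z ? a<z>.0 : 0) ): only a is free.
example = Res("x", "y", Par(
    Out("x", "tt", Nil()),
    In("y", "z", Cond("z", Out("a", "z", Nil()), Nil())),
))
free = fv(example)
```

Here `free` contains only `a`: the restriction binds x and y, and the input prefix binds z in its continuation.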

Remark 1

(Barendregt’s variable convention) Throughout the paper, in both \(\pi \) and \(\texttt {lcc}\), we shall work up to \(\alpha \)-equivalence, as usual; in definitions and proofs we assume that all bound variables are distinct from each other and from all free variables.

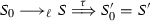

The operational semantics for \(\pi \) is given as a reduction relation \(\longrightarrow \), the smallest relation generated by the rules in Fig. 1. Reduction expresses the computation steps that a process performs on its own. It relies on a structural congruence on processes, given below.

Definition 2

(Structural Congruence) The structural congruence relation for \(\pi \) processes is the smallest congruence relation \(\equiv _{\pi } \) that satisfies the following axioms and identifies processes up to renaming of bound variables (i.e., \(\alpha \)-conversion, denoted \(\equiv _\alpha \)).

Intuitions on the rules in Fig. 1 follow. Reduction requires an enclosing restriction \((\varvec{\nu }xy)(\,\cdots )\); this represents that a session connecting endpoints x and y has already been established. Hence, communication cannot occur on free variables, as there is no way to tell which pair of covariables is interacting. In Rules \({\lfloor \textsc {Com}\rfloor }\), \({\lfloor \textsc {Sel}\rfloor }\), and \({\lfloor \textsc {Rep}\rfloor }\), the restriction is persistent after each reduction, to allow further synchronizations on x and y. In the same rules, process R stands for all the threads that may share x and y.

Rule \({\lfloor \textsc {Com}\rfloor }\) represents the synchronous communication of value v through endpoint x to endpoint y. Rule \({\lfloor \textsc {Sel}\rfloor }\) formalizes a labeled choice mechanism, in which communication of a label \(l_j\) is used to choose which of the \(Q_i\) will be executed. Rule \({\lfloor \textsc {Rep}\rfloor }\) is similar to Rule \({\lfloor \textsc {Com}\rfloor }\), and is used to spawn a new copy of Q, available as a replicated server. Rules \({\lfloor \textsc {IfT}\rfloor }\) and \({\lfloor \textsc {IfF}\rfloor }\) are self-explanatory. Rules for reduction within parallel and restriction contexts, together with reduction up to \(\equiv _{\pi } \), are standard.
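As a worked instance of these rules, consider a sender that emits \(\texttt {tt} \) and a receiver that branches on the received value. The derivation below is a sketch that assumes the usual shape of Rules \({\lfloor \textsc {Com}\rfloor }\) and \({\lfloor \textsc {IfT}\rfloor }\) (Fig. 1 is not reproduced in this text) and that z does not occur in \(P_1\) or \(P_2\):

\[
\begin{aligned}
(\varvec{\nu }xy)\big ({x}\langle \texttt {tt} \rangle .\mathbf {0} \, {\mid }\,y(z).(z?\,P_1\!:\!P_2)\big )
&\longrightarrow (\varvec{\nu }xy)\big (\mathbf {0} \, {\mid }\,\texttt {tt} ?\,P_1\!:\!P_2\big ) && \text{by } {\lfloor \textsc {Com}\rfloor } \\
&\longrightarrow (\varvec{\nu }xy)\big (\mathbf {0} \, {\mid }\,P_1\big ) && \text{by } {\lfloor \textsc {IfT}\rfloor }
\end{aligned}
\]

The restriction survives both steps, and the second step takes place inside the restriction and parallel contexts.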

To reason compositionally about the syntactic structure of processes, we introduce (evaluation) contexts. A context represents a process with a “hole”, denoted ‘\(- \)’, which may be filled by another process.

Definition 3

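(Contexts for \(\pi \)) The syntax of (evaluation) contexts in \(\pi \) is given by the following grammar:

\[ E \;::=\; - \;\big |\; E \, {\mid }\,P \;\big |\; P \, {\mid }\,E \;\big |\; (\varvec{\nu }xy)(E) \]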

where P is a \(\pi \) process. We write \(C[- ] \) to range over contexts of the form \((\varvec{\nu }\widetilde{x}\widetilde{y})(-)\). Also, we write \(E[P ] \) (resp. \(C[P ] \)) to denote the process obtained by filling ‘\(- \)’ with P.

3.1.2 Type system

We now present the type system for \(\pi \), a variant of the system in [45]. Rem. 2 discusses the differences of our type system with respect to the one in [45].

We use \(q, q',\dots \), to range over qualifiers; \(p, p' ,\dots \), to range over pre-types; \(T,U,\dots \) to range over types, and \(\varGamma , \varGamma ',\dots \) to range over the typing contexts which gather assignments of the form x : T, where x is a variable and T is a type. As usual, we treat contexts up to the exchange of entries; the variables that appear in a context are required to be pairwise distinct. The concatenation of typing contexts \(\varGamma _1\) and \(\varGamma _2\) is written \(\varGamma _1, \varGamma _2\).

Definition 4

(Syntax of Types) The syntax of types and typing contexts is in Fig. 2.

Intuitively, pre-types represent pure communication behavior (e.g., send, receive, selection, and branching). Pre-type \(!T_1.T_2\) represents a protocol that sends a value of type \(T_1\) and then continues according to type \(T_2\). Dually, pre-type \(?T_1.T_2\) represents a protocol that receives a value of type \(T_1\) and then proceeds according to type \(T_2\). Pre-types \(\oplus \{l_i:T_i\}_{i\in I}\) and \(\& \{l_i:T_i\}_{i\in I}\) denote labeled selection (internal choice) and branching (external choice), respectively.

Pre-types are given a qualifier q to indicate whether the communication behavior is unrestricted or linear. Linearly qualified pre-types can only be assigned to variables that do not appear shared among threads, whereas unrestricted pre-types may be assigned to variables shared among different threads.

Types can be one of the following: (1) \(\texttt {bool}\), used for constants and variables; (2) \(\texttt {end}\), which indicates a terminated behavior; (3) qualified pre-types; or (4) recursive types for disciplining potentially infinite communication patterns. Recursive types are considered equi-recursive (i.e., a recursive type and its unfolding are considered equal because they represent the same regular infinite tree) and contractive (i.e., containing no sub-expression of the form \(\mu \mathsf {a}_1.\cdots .\mu \mathsf {a}_n.\mathsf {a}_1\)) [42]. The qualifier of a recursive type \(T = \mu \mathsf {a}. T'\) is obtained via unfolding and by assigning the qualifier of the body \(T'\) to type T.

As in [45], we omit \(\texttt {end}\) at the end of types whenever it is not needed; we also write recursive types \(\mu \mathsf {a}. {{\,\mathrm{\texttt {un}}\,}}!T.\mathsf {a}\) and \(\mu \mathsf {a}. {{\,\mathrm{\texttt {un}}\,}}?T.\mathsf {a}\) as \(\mathbf {*}\,!T\) and \(\mathbf {*}\,?T\), respectively.

We use predicates over types to control which types can be shared among variables. While in [45] all unrestricted types can be shared, we proceed differently: to rule out output races, we enforce that only unrestricted input-like types can be shared. We start by presenting an auxiliary predicate that allows us to distinguish output-like types—even when the output behavior in the type is not immediate:

Definition 5

(Output-Like Unrestricted Types) We define the predicate \(\texttt {out}(p)\) on pre-types inductively:

The predicate is lifted to types as follows:

Using this predicate, we have the following definition, which specializes the one in [45]:

Definition 6

(Predicates for Types and Contexts) Let T be a session type (cf. Fig. 2). We define \(\texttt {un}^{\star }(T)\) as follows:

-

\(\texttt {un}^{\star }(T)\) if and only if \((T = \texttt {bool}) \vee (T = \texttt {end}) \vee (T = {{\,\mathrm{\texttt {un}}\,}}p \wedge \lnot \texttt {out}(p))\).

Also, we define \(\texttt {un}^{\star }(\varGamma )\) if and only if \(x:T \in \varGamma \) implies \(\texttt {un}^{\star }(T)\).

Above, predicate \(\texttt {un}^{\star }(T)\) modifies the \(\texttt {un}(T)\) predicate in [45] to rule out the sharing of output-like types: it requires that pre-types qualified with ‘\({{\,\mathrm{\texttt {un}}\,}}\)’ do not satisfy \(\texttt {out}(\cdot )\).

Session-type systems use duality to relate types with complementary (or opposite) behaviors: e.g., the dual of input is output (and vice versa); branching is the dual of selection (and vice versa). We define duality by induction on the structure of types.

Definition 7

(Duality of Session Types) For every type T except \(\texttt {bool}\), we define its dual \(\overline{T}\) inductively:

Duality in the presence of recursive types is delicate [7]. While intuitive, the inductive definition above is correct only for tail recursive types, in which all message types are closed. To account also for non-tail-recursive types (e.g., \(\mu \mathsf {a}. ?\mathsf {a}.\mathsf {a}\)) a more involved coinductive definition is required, cf. Definition 37 in “Appendix”. The reader is referred to [23] for a detailed treatment of duality, where Definition 7 is called “naive duality”. Notice that using naive duality does not undermine the correctness of our results.
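For reference, naive duality is usually stated by clauses of the following shape. This is a sketch based on the standard presentation for qualified session types, not a transcription of Definition 7:

\[
\begin{aligned}
\overline{\texttt {end}} &= \texttt {end} & \overline{\mathsf {a}} &= \mathsf {a} & \overline{\mu \mathsf {a}.T} &= \mu \mathsf {a}.\overline{T} \\
\overline{q\,?T.U} &= q\,!T.\overline{U} & \overline{q\,!T.U} &= q\,?T.\overline{U} \\
\overline{q\,\oplus \{l_i:T_i\}_{i\in I}} &= q\,\& \{l_i:\overline{T_i}\}_{i\in I} & \overline{q\,\& \{l_i:T_i\}_{i\in I}} &= q\,\oplus \{l_i:\overline{T_i}\}_{i\in I}
\end{aligned}
\]

Under these clauses, the non-tail-recursive type \(T = \mu \mathsf {a}. ?\mathsf {a}.\mathsf {a}\) is mapped to \(\mu \mathsf {a}. !\mathsf {a}.\mathsf {a}\), whose messages carry the dual of T rather than T itself; this is the kind of mismatch that the coinductive definition repairs.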

We shall use a splitting operator on typing contexts, denoted ‘\(\circ \)’, to maintain the linearity invariant for variables on typing derivations. Because of predicate \(\texttt {un}^{\star }(\cdot )\), the splitting operation does not allow unrestricted output-like types to be shared.

Definition 8

(Typing Context Splitting) Let \(\varGamma _1\) and \(\varGamma _2\) be two typing contexts. The (typing) context splitting of \(\varGamma _1\) and \(\varGamma _2\), written \(\varGamma _1 \circ \varGamma _2\), is defined as follows:

We also define a ‘\(+\)’ operation to correctly update typing contexts during derivations:

There are two typing judgments. We write \(\varGamma \vdash v:T\) to denote that value v has type T under \(\varGamma \). Also, we write \(\varGamma \vdash P\) to denote that process P is well-typed under \(\varGamma \).

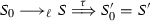

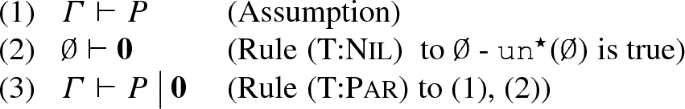

Figure 3 gives the typing rules for constants, variables, and processes; some intuitions follow. Rules \({(\textsc {T:Bool})}\) and \({(\textsc {T:Var})}\) type constants and variables, respectively; in both cases, we require \(\texttt {un}^{\star }(\varGamma )\) to ensure that all variables assigned to types that do not satisfy predicate \(\texttt {un}^{\star }(\cdot )\) are consumed. Rule \({(\textsc {T:In})}\) types an input process: it checks whether x has the right type and checks the continuation; it also adds variable y with type T and updates x in \(\varGamma \) with type U. To type-check a process \({x}\langle v\rangle .P\), Rule \({(\textsc {T:Out})}\) splits the typing context in three parts: the first checks the type of the subject x; the second checks the type of the object v; the third checks the continuation P. Rules \({(\textsc {T:Sel})}\) and \({(\textsc {T:Bra})}\) type-check selection and branching processes, and work similarly to Rules \({(\textsc {T:Out})}\) and \({(\textsc {T:In})}\), respectively.

Rule \({(\textsc {T:RIn})}\) types a replicated input \(\mathbf {*}\, x(y).P\) under the context \(\varGamma \); it presents several differences with respect to the rule in [45]. Our rule requires \(\varGamma \) to satisfy predicate \(\texttt {un}^{\star }(\cdot )\). Also, the type T of y must either satisfy \(\texttt {un}^{\star }(\cdot )\) or be linear. The rule also requires that \(\varGamma \) assigns x an input type qualified with \({{\,\mathrm{\texttt {un}}\,}}{}\), and that the continuation P is typed with a context that contains y : T and x : U.

Rule \({(\textsc {T:Par})}\) types parallel composition using the (context) splitting operation to divide resources among the two threads. Rule \({(\textsc {T:Res})}\) types the restriction operator by performing a duality check on the types of the covariables. Rule \({(\textsc {T:If})}\) type-checks the conditional process. Given the inactive process \(\mathbf {0} \), Rule \({(\textsc {T:Nil})}\) checks that the context satisfies \(\texttt {un}^{\star }(\cdot )\) and Rule \({(\textsc {T:WkNil})}\) ensures that unrestricted types that are output-like (cf. Definition 5) can be weakened when needed. The following example illustrates the need for this rule:

Example 1

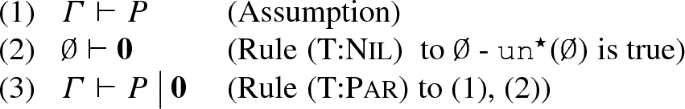

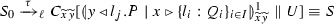

(Recursive Types and Rule \({(\textsc {T:WkNil})}\)) We show the kind of recursive processes typable in our system, and the most glaring differences with respect to [45]. Process \(P_1 = {x}\langle \texttt {tt} \rangle .{x}\langle \texttt {ff} \rangle .\mathbf {0} \) is typable both in our system and in the one in [45] under a context in which x is assigned the recursive type \(T = \mu \mathsf {a}. {{\,\mathrm{\texttt {un}}\,}}!\texttt {bool}.\mathsf {a}\). Let us consider the typing derivation for \(P_1\) in our system:

Notice that, by Definition 6, \(\texttt {un}^{\star }(T)\) does not hold because \(\texttt {out}(T)\) holds. This in turn influences the context splitting (Definition 8) required by Rule \({(\textsc {T:Out})}\): the assignment x : T can only appear in one of the branches of the split (the middle one). Let us consider U and D, which appear unspecified above. Because we use equi-recursive types (as in [45]), T is equivalent to \(U = {!}\texttt {bool}.\mu \mathsf {a}. {{\,\mathrm{\texttt {un}}\,}}!\texttt {bool}.\mathsf {a}\), which means that the judgment in the rightmost branch becomes \(x: T \vdash {x}\langle \texttt {ff} \rangle .\mathbf {0} \). To determine its derivation D, we use Rule \({(\textsc {T:WkNil})}\):

Indeed, before concluding the derivation for the rightmost branch, we are left with the judgment \(x: T \vdash \mathbf {0} \). Because \(\texttt {un}^{\star }(T)\) does not hold, we cannot apply Rule \({(\textsc {T:Nil})}\): to complete the derivation, we first apply Rule \({(\textsc {T:WkNil})}\) and then apply Rule \({(\textsc {T:Nil})}\). This way, Rule \({(\textsc {T:WkNil})}\) enforces a limited weakening principle, required in the specific case of process \(\mathbf {0} \) and an unrestricted type that is output-like.

Consider now process \(P_2 = {x}\langle \texttt {tt} \rangle .\mathbf {0} \mathord {\,\big |\,}{x}\langle \texttt {ff} \rangle .\mathbf {0} \), which is typable in [45] under the context x : T. This process is not typable in our system because it has an output race on x:

Because \(\texttt {un}^{\star }(T)\) does not hold, context splitting allows x : T to appear in \(\varGamma _1\) or \(\varGamma _2\) but not in both of them. As a result, either \(\varGamma _1\) or \(\varGamma _2\) should be empty, which in turn implies that the typing derivation will not be completed.

3.1.3 Type safety

Our type system enjoys type safety, which ensures that well-typed processes do not have communication errors. Type safety depends on the subject reduction property, stated next, which ensures that typing is preserved by the reduction relation given in Fig. 1. The proof follows by induction on the derivation of the reduction (cf. App. B.2).

Theorem 1

(Subject Reduction) If \(\varGamma \vdash P\) and \(P \longrightarrow Q \), then \(\varGamma \vdash Q\).

To establish type safety, we require auxiliary notions for pre-redexes and redexes, given below. We use the following notation:

Notation 2

We write \(P = \diamond ~y(z).P'\) to stand for either \(P = y(z).P'\) or \(P = \mathbf {*}\, y(z).P'\).

We now have:

Definition 9

(Pre-redexes and Redexes) We shall use the following terminology:

- We say \({x}\langle v\rangle .P\), x(y).P, \(x \triangleleft l.P\), \(x \triangleright \{l_i:P_i\}_{i\in I}\), and \(\mathbf {*}\, x(y).P\) are pre-redexes (at variable x).
- A redex is a process R such that \((\varvec{\nu }xy) R \longrightarrow \) and:
  1. \(R = v?\,P\!:\!Q\) with \(v\in \{\texttt {tt} ,\texttt {ff} \}\) (or)
  2. \(R = {x}\langle v\rangle .P \, {\mid }\,\diamond ~y(z).Q\) (or)
  3. \(R = x \triangleleft l_j.P \, {\mid }\,y \triangleright \{l_i:Q_i\}_{i\in I}\), with \(j \in I\).
- A redex R is either conditional (if \(R = v?\,P\!:\!Q\)) or communicating (otherwise).

We follow [45] in formalizing safety using a well-formedness property, which characterizes the set of processes that should be considered correct.

Definition 10

(Well-Formed Process) A process \(P_0\) is well-formed if for each of its structurally congruent processes \(P_0 \equiv _{\pi } (\varvec{\nu }x_1y_1)\dots (\varvec{\nu }x_ny_n)(P\mathord {\,\big |\,}Q\mathord {\,\big |\,}R)\), with \(n\ge 0\), the following conditions hold:

1. If \(P\equiv _{\pi } v?\,P'\!:\!P''\), then \(v=\texttt {tt} \) or \(v=\texttt {ff} \).
2. If P and Q are prefixed at the same variable, then they are of the same input-like nature (inputs, replicated inputs, or branchings).
3. If P is prefixed at \(x_i\) and Q is prefixed at \(y_i\), \(1\le i\le n\), then \(P \mathord {\,\big |\,}Q\) is a redex.
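Condition (2) lends itself to a direct check. The Python sketch below assumes that the parallel components of a process have already been summarized as pairs consisting of a prefix kind and the variable at which the component is prefixed; this summary format, the kind names, and the function name are hypothetical. It reads “of the same input-like nature” as “all input-like”, which is an interpretation.

```python
from collections import defaultdict

# Prefix kinds regarded as input-like in condition (2).
INPUT_LIKE = {"input", "replicated_input", "branching"}


def violates_condition_2(prefixes):
    """prefixes: list of (kind, variable) pairs, one per parallel component."""
    by_var = defaultdict(list)
    for kind, var in prefixes:
        by_var[var].append(kind)
    # Two or more components prefixed at the same variable must all be input-like.
    return any(
        len(kinds) > 1 and any(k not in INPUT_LIKE for k in kinds)
        for kinds in by_var.values()
    )


# Two parallel outputs on x, as in process P_2 of Example 1.
p2 = [("output", "x"), ("output", "x")]
# An input and a replicated input sharing x.
shared_inputs = [("input", "x"), ("replicated_input", "x")]
```

With these definitions, `violates_condition_2(p2)` holds while `violates_condition_2(shared_inputs)` does not.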

Unlike the definition in [45], Definition 10(2) excludes processes with output races: parallel processes can only be prefixed at the same variable if they are input-like. We now introduce a notation for programs:

Notation 3

((Typable) Programs) A process P such that \(\mathsf {fv}_{\pi }(P) = \emptyset \) is called a program. A program P is typable if it is well-typed under the empty context (\(\, \vdash P\)).

We can now state type safety, which ensures that every well-typed program is well-formed—hence, well-typed processes have no output races. The proof follows by contradiction (cf. App. B.2).

Theorem 4

(Type Safety) If \(\,\vdash P\), then P is well-formed.

Observe that because of Theorem 1, well-formedness is preserved by reduction. Hence:

Corollary 1

If \( \vdash P\) and \(P \longrightarrow ^* Q\), then Q is well-formed with respect to Definition 10.

Remark 2

(Differences with respect to [45]) There are three differences between our type system and the one in [45]. First, the modified predicates in Definition 6 enable us to rule out processes with output races, which are typable in [45]. This required adding Rule \({(\textsc {T:WkNil})}\) (cf. Ex. 1). Second, our notion of well-formed processes (Definition 10) excludes processes with output races, which are admitted as well-formed in [45]. Finally, as already discussed, our typing rule for replicated inputs (Rule \({(\textsc {T:RIn})}\)) is less permissive than in [45], also for the purpose of ruling out output races.

3.2 Linear concurrent constraint programming (\(\texttt {lcc}\))

We now introduce \(\texttt {lcc}\), following Haemmerlé [27].

3.2.1 Syntax and semantics

Variables, ranged over by \(x,y,\ldots \), belong to the countably infinite set \(\mathcal {V}_{l} \). We assume that \(\varSigma _c\) and \(\varSigma _f\) correspond to sets of predicate and function symbols, respectively. First-order terms, built from \(\mathcal {V}_{l} \) and \(\varSigma _f\), will be denoted by \(t, t', \ldots \). An arbitrary predicate in \(\varSigma _c\) is denoted \(\varphi (\widetilde{t})\).

Definition 11

(Syntax) The syntax for \(\texttt {lcc}\) is given by the grammar in Fig. 4.

Constraints represent the pieces of information that can be posted to and asked from the store. Constant \(\texttt {tt}\), the multiplicative identity, denotes truth; constant \(\texttt {ff}\) denotes falsehood. Logic connectives used as constructors include the multiplicative conjunction (\(\otimes \)), bang (\({{\,\mathrm{!}\,}}\)), and the existential quantifier (\(\exists \widetilde{x}\)). Notation  denotes the constraint obtained by the (capture-avoiding) substitution of the free occurrences of \(x_i\) by \(t_i\) in c, with \(| \widetilde{t}| = | \widetilde{x}|\) and pairwise distinct \(x_i\)’s. Process substitution  is defined analogously.

The syntax for guards includes non-deterministic choices, denoted \(G_1 + G_2\), and parametric asks (also called abstractions). A parametric ask \(\mathbf {\forall }{\widetilde{x}}(c \rightarrow P) \) spawns an instance of process P (obtained by substituting terms for \(\widetilde{x}\)) if the current store entails the corresponding instance of constraint c; the exact operational semantics for these ask operators (and its interplay with linear constraints) is detailed below. When \(\widetilde{x}\) is empty (a parameterless ask), \(\mathbf {\forall }{\widetilde{ x}}(c \rightarrow P) \) is written \(\mathbf {\forall }{\epsilon }(c \rightarrow P) \).

The syntax of processes includes guards and the tell operator \(\overline{c}\), which adds constraint c to the current store; hiding \(\exists \widetilde{ x}. \, P\), which declares \(\widetilde{x}\) as being local to P; parallel composition \(P \parallel Q\), which has the expected reading; and replication \({{\,\mathrm{!}\,}}P\), which provides infinitely many copies of P. Notation \(\prod _{1 \le i \le n} P_i\) (with \(n\ge 1\)) stands for process \(P_1 \parallel \dots \parallel P_n\). Universal quantifiers in parametric ask operators and existential quantifiers in hiding operators bind their respective variables. Given this, the set of free variables in constraints and processes is defined as expected, and denoted \(\mathsf {fv}(\cdot )\).

The semantics of processes is defined as a labeled transition system (LTS), which relies on a structural congruence on processes. The semantics is parametric in a constraint system, as defined next.

Definition 12

(Constraint System) A constraint system is a triplet \((\mathcal {C},\varSigma ,\vdash )\), where \(\varSigma \) contains \(\varSigma _c\) (i.e., the set of predicates) and \(\varSigma _f\) (i.e., the set of functions and constants). \(\mathcal {C}\) is the set of constraints obtained by using the grammar in Definition 11 and \(\varSigma \). Relation \(\Vdash \) is a subset of \(\mathcal {C}\times \mathcal {C}\) that defines the non-logical axioms of the constraint system. Relation \(\vdash \) is the least subset of \(\mathcal {C}^*\times \mathcal {C}\) containing \(\Vdash \) and closed by the deduction rules of intuitionistic linear logic (see Fig. 5). We write \( c \dashv \vdash d \) whenever both \( c \vdash d \) and \( d \vdash c \) hold.

Fig. 5 Intuitionistic sequent calculus for \(\texttt {lcc}\) (cf. Definition 12)

Definition 13

(Structural Congruence) The structural congruence relation is the smallest equivalence relation \(\equiv \) that satisfies \(\alpha \)-renaming of bound variables, commutativity and associativity for parallel composition and summation, together with the following identities:

As customary, a (strong) transition \(P \xrightarrow {\smash {\,\alpha \,}}_{\ell } P'\) denotes the evolution of process P to \(P'\) by performing the action denoted by the transition label \(\alpha \):

Label \(\tau \) denotes a silent (internal) action. Label \({c \in \mathcal {C}}\) denotes a constraint “received” as an input action (but see below) and \((\widetilde{x})\overline{c}\) denotes an output (tell) action in which \(\widetilde{x}\) are extruded variables and \(c \in \mathcal {C}\). We write \(ev(\alpha )\) to refer to these extruded variables.

Before discussing the transition rules (cf. Fig. 6), we introduce a key notion, the most general choice predicate:

Definition 14

(Most General Choice (\(\mathbf {mgc}\)) [27]) Let c, d, and e be constraints, \(\widetilde{x}, \widetilde{y}\) be vectors of variables, and \(\widetilde{t}\) be a vector of terms. We write

whenever for any constraint \(e'\), all terms \(\widetilde{t'}\) and all variables \(\widetilde{y'}\), if  and \(\exists \widetilde{y'}. e'\vdash \exists \widetilde{y}. e\) hold, then  and \(\exists \widetilde{y}. e\vdash \exists \widetilde{y'}.e'\).

Intuitively, the \(\mathbf {mgc}\) predicate allows us to refer formally to decompositions of a constraint c (seen as a linear resource) that do not “lose” or “forget” information in c. This is essential in the presence of linear constraints. For example, assuming that \(c \vdash d \otimes e\) holds, we can see that \(\mathbf {mgc} (c,d\otimes e)\) holds too, because c is the precise amount of information necessary to obtain \(d\otimes e\). However, \(\mathbf {mgc} (c\otimes f, d\otimes e)\) does not hold, assuming \(f\not = \texttt {tt}\), since \(c\otimes f\) produces more information than necessary to obtain \(d\otimes e\).

We briefly discuss the transition rules of Fig. 6. Rule \({\lfloor \textsc {C:In}\rfloor }\) asynchronously receives a constraint; it represents the separation between observing an output and its (asynchronous) reception, which is not directly observable.

Rule \({\lfloor \textsc {C:Out}\rfloor }\) formalizes asynchronous tells: using the \(\mathbf {mgc}\) predicate, the emitted constraint is decomposed in two parts: the first one is actually sent (as recorded in the label); the second part is kept as a continuation. (In the rule, these two parts are denoted as \(d'\) and e, respectively.) Rule \({\lfloor \textsc {C:Sync}\rfloor }\) formalizes the synchronization between a tell (i.e., an output) and a parametric ask. The constraint mentioned in the tell is decomposed using the \(\mathbf {mgc}\) predicate: in this case, the first part is used (consumed) to “trigger” the processes guarded by the ask, while the second part is the remaining continuation.

Rule \({\lfloor \textsc {C:Comp}\rfloor }\) enables the parallel composition of two processes P and Q, provided that the variables extruded in an action by P are disjoint from the free variables of Q. Rule \({\lfloor \textsc {C:Sum}\rfloor }\) enables non-deterministic choices at the level of guards.

Rules \({\lfloor \textsc {C:Ext}\rfloor }\) and \({\lfloor \textsc {C:Res}\rfloor }\) formalize hiding: the former rule makes local variables explicit in the transition label; the latter rule avoids the hiding of free variables in the label.

Finally, Rule \({\lfloor \textsc {C:Cong}\rfloor }\) closes transitions under structural congruence (cf. Definition 13).
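The consume-and-continue reading of Rule \({\lfloor \textsc {C:Sync}\rfloor }\) can be illustrated with a toy store. The Python sketch below assumes a drastic simplification: a store is a multiset of atomic predicates, an ask succeeds when its guard is contained in the store, and the guard is then removed while everything else is kept. Quantifiers, bang, and non-logical axioms are ignored. It is not an implementation of the \(\mathbf {mgc}\) predicate or of the rules in Fig. 6; the function names `tell` and `sync` and the atom names are hypothetical.

```python
from collections import Counter


def tell(store, constraint):
    """Add the atoms of `constraint` to `store` (both are multisets)."""
    return store + constraint


def sync(store, guard):
    """Consume `guard` from `store`.

    Returns the remaining store, or None when the guard is not available.
    Nothing besides the guard is dropped, mirroring the idea that the
    decomposition must not lose information.
    """
    if any(store[atom] < n for atom, n in guard.items()):
        return None
    return store - guard


# A store holding two linear atoms.
store = tell(Counter(), Counter({"p(x)": 1, "q(y)": 1}))
# Asking for p(x) consumes it and leaves q(y) as the continuation.
rest = sync(store, Counter({"p(x)": 1}))
```

Because the atom is consumed, asking for `p(x)` a second time on `rest` fails in this model, which is the linear behavior that distinguishes these stores from monotonic ones.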

Notation 5

(\(\tau \)-transitions) Some terminology and notation for \(\tau \)-transitions in \(\texttt {lcc}\):

- We shall write \(\xrightarrow {\smash {\,\tau \,}}_{\ell } ^*\) to denote a sequence of zero or more \(\tau \)-labeled transitions. Whenever the number \(k\ge 1\) of \(\tau \)-transitions is fixed, we write \(\xrightarrow {\smash {\,\tau \,}}_{\ell } ^k\).
- When \(\tau \)-labels are unimportant (or clear from the context) we shall write \(\longrightarrow _\ell \), \(\longrightarrow _\ell ^*\), and \(\longrightarrow _\ell ^k\) to stand for \(\xrightarrow {\smash {\,\tau \,}}_{\ell } \), \(\xrightarrow {\smash {\,\tau \,}}_{\ell } ^*\), and \(\xrightarrow {\smash {\,\tau \,}}_{\ell } ^k\), respectively.
- Weak transitions are standardly defined: we write  if and only if \((P \xrightarrow {\smash {\,\tau \,}}_{\ell } ^{*} Q)\); similarly, we write  if and only if \((P \xrightarrow {\smash {\,\tau \,}}_{\ell } ^{*}P'\xrightarrow {\smash {\,\alpha \,}}_{\ell } P''\xrightarrow {\smash {\,\tau \,}}_{\ell } ^{*} Q)\).

3.2.2 Observational equivalences

We require the following auxiliary definition from [27]:

Definition 15

(\(\mathcal {D}\)-Accessible Constraints) Let \(\mathcal {D} \subset \mathcal {C}\), where \(\mathcal {C}\) is the set of all constraints. The observables of a process P are the set of all \(\mathcal {D}\)-accessible constraints defined as follows:

Next, we introduce a notion of equivalence for \(\texttt {lcc}\) processes: weak barbed congruence, as in [27]. We first need a notation that parameterizes processes in terms of the constraints they can tell and ask (see below). Then, we introduce (evaluation) contexts for \(\texttt {lcc}\).

Notation 6

(\(\mathcal {DE}\)-Processes) Let \(\mathcal {D}\subseteq \mathcal {C}\) and \(\mathcal {E}\subseteq \mathcal {C}\). Also, let P be a process (cf. Definition 11).

- P is \(\mathcal {D}\)-ask restricted if for every sub-process \(\mathbf {\forall }{\widetilde{x}}(c \rightarrow P') \) in P, we have \(\exists \widetilde{z}.c \in \mathcal {D}\).
- P is \(\mathcal {E}\)-tell restricted if for every sub-process \(\overline{c}\) in P, we have \(\exists \widetilde{z}.c \in \mathcal {E}\).
- If P is both \(\mathcal {D}\)-ask restricted and \(\mathcal {E}\)-tell restricted, then we call P a \(\mathcal {DE}\)-process.

Definition 16

(Contexts in \(\texttt {lcc}\)) Let E be the evaluation contexts for \(\texttt {lcc}\) as given by the following grammar, where ‘\(- \)’ represents a hole and P is a process:

Given an evaluation context \(E[- ] \), we write \(E[P ] \) to denote the process that results from filling in the occurrences of the hole with process P.

Given \(\mathcal {D}\subseteq \mathcal {C}\) and \(\mathcal {E}\subseteq \mathcal {C}\), we will say that a context is a \(\mathcal {DE}\)-context, ranged over by \(C, C', \ldots \), if it is formed only by \(\mathcal {DE}\)-processes.

We may now define weak barbed bisimulation and weak barbed congruence:

Definition 17

(Weak \(\mathcal {DE}\)-Barbed Bisimulation) Let \(\mathcal {D}\subseteq \mathcal {C}\) and \(\mathcal {E}\subseteq \mathcal {C}\). A symmetric relation \(\mathcal {R}\) is a \(\mathcal {DE}\)-barbed bisimulation if, for \(\mathcal {DE}\)-processes P and Q, \((P, Q) \in \mathcal {R}\) implies:

- (1) \(\mathcal {O}^{\mathcal {D}}(P) = \mathcal {O}^{\mathcal {D}}(Q)\) (and),
- (2) whenever \(P\xrightarrow {\smash {\,\tau \,}}_{\ell } P'\) there exists \(Q'\) such that \(Q \xrightarrow {\smash {\,\tau \,}}_{\ell } ^{*} Q'\) and \(P'\mathcal {R}Q'\).

The largest weak barbed \(\mathcal {DE}\)-bisimulation is called \(\mathcal {DE}\)-bisimilarity and is denoted by \(\approx _{\mathcal {D E}}\).

Definition 18

(Weak \(\mathcal {DE}\)-Barbed Congruence) We say that two processes P, Q are weakly barbed \(\mathcal {DE}\)-congruent, denoted by \(P \cong _{\mathcal {D E}} Q\), if for every \(\mathcal {DE}\)-context \(E[- ] \) it holds that \(E[P ] \! \approx _{\mathcal {D E}} E[Q ] \). We define the weak barbed \(\mathcal {DE}\)-congruence \(\cong _{\mathcal {D E}} \) as the largest \(\mathcal {DE}\)-congruence that is a weak barbed \(\mathcal {DE}\)-bisimilarity.

3.3 Relative expressiveness

We shall work with (valid) encodings, i.e., language translations that satisfy some correctness (or encodability) criteria. We follow the encodability criteria defined by Gorla [26], which define a general and widely used framework for studying relative expressiveness.

3.3.1 Languages and translations

Definition 19

(Languages and Translations) We define:

- A language \(\mathcal {L}\) is a triplet \(\langle \mathsf {P}, \xrightarrow {\,~\,}, \approx \rangle \), where \(\mathsf {P}\) is a set of terms (i.e., expressions, processes), \(\xrightarrow {\,~\,}\) is a relation on \(\mathsf {P}\) defining its operational semantics, and \(\approx \) is an equivalence on \(\mathsf {P}\). We use \(\Longrightarrow \) to denote the reflexive-transitive closure of \(\xrightarrow {\,~\,}\).
- A translation from \(\mathcal {L}_s = \langle \mathsf {P}_s, \xrightarrow {\,~\,}_s, \approx _s \rangle \) into \(\mathcal {L}_t = \langle \mathsf {P}_t, \xrightarrow {\,~\,}_t, \approx _t \rangle \) (each with countably infinite sets of variables \(\mathsf {V}_s\) and \(\mathsf {V}_t\), respectively) is a pair \(\langle \llbracket \cdot \rrbracket ,\psi _{\llbracket \cdot \rrbracket } \rangle \), where \(\llbracket \cdot \rrbracket : \mathsf {P}_s \rightarrow \mathsf {P}_t\) is defined as a mapping from source terms to target terms, and \(\psi _{\llbracket \cdot \rrbracket }:\mathsf {V}_s \rightarrow \mathsf {V}_t\) is a renaming policy for \(\llbracket \cdot \rrbracket \), which maps source variables to target variables.

In a language \(\mathcal {L}\), the set \(\mathsf {P}\) of terms is defined as a formal grammar that gives the formation rules. The operational semantics \(\xrightarrow {\,~\,}\) is given as a relation on terms, finitely denoted by sets of rules. We write \(P \xrightarrow {\,~\,} P'\) to represent a pair \((P,P')\) that is included in the relation; we call each one of these pairs a step. Each step represents the fact that term P reduces to term \(P'\). For the rest of this section, we will refer to \(P \xrightarrow {\,~\,} P'\) as a reduction step. Moreover, we use \(P \Longrightarrow P'\) to say that P reduces to \(P'\) in zero or more steps (i.e., a “multi-step” reduction). Finally, \(\approx \) denotes an equivalence on terms of the language.

In \(\langle \llbracket \cdot \rrbracket ,\psi _{\llbracket \cdot \rrbracket } \rangle \), the mapping \(\llbracket \cdot \rrbracket \) assigns each source term a corresponding target term. It is usually defined inductively over the structure of source terms. The renaming policy \(\psi _{\llbracket \cdot \rrbracket }\) translates variables. In our translation, a variable is simply translated into itself; the general formulation of a renaming policy given in [26] is not needed. When referring to translations, we often use \(\llbracket \cdot \rrbracket \) instead of \(\langle \llbracket \cdot \rrbracket ,\psi _{\llbracket \cdot \rrbracket } \rangle \).

We now introduce some terminology regarding translations.

Notation 7

Let \(\langle \llbracket \cdot \rrbracket ,\psi _{\llbracket \cdot \rrbracket } \rangle \) be a translation from \(\mathcal {L}_s= \langle \mathsf {P}_s, \xrightarrow {\,~\,}_s, \approx _s\rangle \) into \(\mathcal {L}_t= \langle \mathsf {P}_t, \xrightarrow {\,~\,}_t, \approx _t\rangle \).

- We will refer to \(\mathcal {L}_s\) and \(\mathcal {L}_t\) as source and target languages of the translation, respectively. Whenever it does not create any confusion, we will only refer to source and target languages as source and target.
- We say that any process \(S\in \mathsf {P}_s\) is a source term. Similarly, given a source term S, any process \(T\in \mathsf {P}_t\) that is reachable from \(\llbracket S \rrbracket \) using \(\Longrightarrow _t\) is called a target term.

3.3.2 Correctness criteria

To focus on meaningful translations, we define correctness criteria: a set of properties that determine whether a translation is a valid encoding or not. Following [26], we shall be interested in name invariance, compositionality, operational completeness, operational soundness, and success sensitiveness.

Definition 20

(Valid Encoding) Let \(\mathcal {L}_s=\langle \mathsf {P}_s, \xrightarrow {\,~\,}_s, \approx _s\rangle \) and \(\mathcal {L}_t=\langle \mathsf {P}_t, \xrightarrow {\,~\,}_t, \approx _t\rangle \) be languages. Also, let \(\langle \llbracket \cdot \rrbracket , \psi _{\llbracket \cdot \rrbracket } \rangle \) be a translation between them (cf. Definition 19). Such a translation is a valid encoding if it satisfies the following criteria:

1. Name invariance: For all \(S \in \mathsf {P}_s\) and substitution \(\sigma \), there exists \(\sigma '\) such that \(\llbracket S\sigma \rrbracket = \llbracket S \rrbracket \sigma '\), with \(\psi _{\llbracket \cdot \rrbracket }(\sigma (x)) = \sigma '(\psi _{\llbracket \cdot \rrbracket }(x))\), for any \(x \in \mathsf {V}_s\).
2. Compositionality: For every k-ary operator \(\texttt {op}\) of \(\mathsf {P}_s\) there exists a k-ary context \(C_{\texttt {op}}\) in \(\mathsf {P}_t\) such that for all \(S_1, \ldots , S_k \in \mathsf {P}_s\), it holds that

   $$\begin{aligned}\llbracket \texttt {op}(S_1, \ldots , S_k) \rrbracket = C_{\texttt {op}}(\llbracket S_1 \rrbracket , \ldots , \llbracket S_k \rrbracket ).\end{aligned}$$

3. Operational Completeness: For every \(S,S'\in \mathsf {P}_s\) such that \(S \Longrightarrow _s S'\), it holds that \(\llbracket S \rrbracket \Longrightarrow _{t} T\) and \(T \approx _t \llbracket S' \rrbracket \), for some \(T \in \mathsf {P}_t\).
4. Operational Soundness: For every \(S \in \mathsf {P}_s\) and \(T\in \mathsf {P}_t\) such that \(\llbracket S \rrbracket \Longrightarrow _t T\), there exist \(S',T'\) such that \(S\Longrightarrow _s S'\) and \(T\Longrightarrow _t T'\) and \(T'\approx _t \llbracket S' \rrbracket \).
5. Success Sensitiveness: Given \(\Downarrow _s\) (resp. \(\Downarrow _t\)) the unary success predicate for \(\mathsf {P}_s\) (resp. \(\mathsf {P}_t\)), for every \(S \in \mathsf {P}_s\) it holds that \(S \!\Downarrow _s\) if and only if \(\llbracket S \rrbracket \!\Downarrow _t\).

Name invariance ensures that substitutions are well-behaved in translated terms. Condition \(\psi _{\llbracket \cdot \rrbracket }(\sigma (x)) = \sigma '(\psi _{\llbracket \cdot \rrbracket }(x))\) ensures that for every variable substituted in the source term (i.e., \(\sigma (x)\)), there exists a substitution \(\sigma '\) such that the translation of x (i.e., \(\psi _{\llbracket \cdot \rrbracket }(x)\)) is substituted by the translation of \(\sigma (x)\). The renaming policy \(\psi _{\llbracket \cdot \rrbracket }(x)\) is particularly important in translations that fix some variables to play a specific role or that translate a single variable into a vector of variables. This is not the case here: as already mentioned, we shall require a simple renaming policy that translates a variable into itself.

Compositionality ensures that the translation of a composite term depends on the translation of its sub-terms. These sub-terms should be combined in a unique target context that ensures that their interactions are preserved. Unlike Gorla’s definition of compositionality, we do not need the target context to be parametric on a set of free names.

Together, operational completeness and soundness form the operational correspondence criterion, which deals with preservation and reflection of process behavior. Intuitively, operational completeness is about preserving the behavior of the source semantics: it requires that for every multi-step reduction in the source language there exists a corresponding multi-step reduction in the target language. The equivalence \(\approx _t\) then ensures that the target term thus obtained is behaviorally equivalent to the translation of the reduced source term. Operational soundness, on the other hand, ensures that the target semantics does not introduce extraneous steps that do not correspond to any source behaviors: it requires that every reduction in the target language corresponds to a reduction in the source language, using \(\approx _t\) to ensure that the reduced target term is behaviorally equivalent to the reduced source term.
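For finite transition systems, operational completeness and soundness (Definition 20(3) and 20(4)) can be checked by exhaustive search. The Python sketch below assumes that each semantics is given as a dictionary from a term to its one-step successors, that the translation is a dictionary defined on every source term, and that \(\approx _t\) is supplied as a Boolean function. These representations and all names are hypothetical; they serve only to spell out the quantifier structure of the two criteria.

```python
def reachable(step, start):
    """All terms reachable from `start` in zero or more steps."""
    seen, todo = {start}, [start]
    while todo:
        for nxt in step.get(todo.pop(), ()):
            if nxt not in seen:
                seen.add(nxt)
                todo.append(nxt)
    return seen


def complete(src, tgt, enc, equiv):
    # For every S ==>_s S' there is T with [[S]] ==>_t T and T ~ [[S']].
    return all(
        any(equiv(t, enc[s2]) for t in reachable(tgt, enc[s]))
        for s in enc
        for s2 in reachable(src, s)
    )


def sound(src, tgt, enc, equiv):
    # For every [[S]] ==>_t T there are S', T' with
    # S ==>_s S', T ==>_t T', and T' ~ [[S']].
    return all(
        any(
            equiv(t2, enc[s2])
            for s2 in reachable(src, s)
            for t2 in reachable(tgt, t)
        )
        for s in enc
        for t in reachable(tgt, enc[s])
    )


# One source step is simulated by two target steps.
src = {"S0": ["S1"]}
tgt = {"T0": ["Tmid"], "Tmid": ["T1"]}
enc = {"S0": "T0", "S1": "T1"}
same = lambda a, b: a == b   # equality stands in for the target equivalence

# A target with an extra branch that no source behavior explains.
tgt_bad = {"T0": ["Tmid", "Tbad"], "Tmid": ["T1"]}
```

In this example the intermediate term `Tmid` is harmless for soundness because it can still reach `T1`, whereas the stuck term `Tbad` in `tgt_bad` breaks soundness while leaving completeness intact.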

Success sensitiveness assumes a “success” predicate, definable on source processes (denoted \(S\!\!\Downarrow \)) and on target processes (denoted \(T\!\!\Downarrow \)). In the name-passing calculi considered in [26], this predicate naturally corresponds to the notion of observable (or barb). In our setting, we will define a success predicate based on the potential that a process has of reducing to a process with an unguarded occurrence of the success process, denoted \(\checkmark \).

Having introduced the two languages and a framework for comparing their relative expressiveness, we now present our translation of \(\pi \) into \(\texttt {lcc}\) and establish its correctness.

4 Encoding \(\pi \) into \(\texttt {lcc}\)

We present the encoding from \(\pi \) into \(\texttt {lcc}\), the main contribution of our work. This section is structured as follows. In Sect. 4.1, we define the translation from \(\pi \) into \(\texttt {lcc}\) and illustrate it by means of examples. We prove that the translation is a valid encoding: first, in Sect. 4.2 we prove name invariance, compositionality, and operational completeness properties; then, operational soundness and success sensitiveness are proven in Sects. 4.3 and 4.4, respectively.

4.1 The translation

Our translation relies on the constraint system defined next.

Definition 21

(Session Constraint System) A session constraint system is represented by the tuple \(\langle \mathcal {C}, \varSigma , \vdash _{\mathcal {S} }\rangle \), where:

- \(\varSigma \) is the set of predicates given in Fig. 7;
- \(\mathcal {C}\) is the set of constraints obtained by using linear logic operators \({{\,\mathrm{!}\,}}\), \(\otimes \) and \(\exists \) over the predicates of \(\varSigma \);
- \(\vdash _{\mathcal {S} }\) is given by the rules in Fig. 5 (cf. Definition 12), extended with the syntactic equality ‘\(=\)’.

Fig. 7 Session constraint system: Predicates (cf. Definition 21)

The first four predicates in Fig. 7 serve as acknowledgments of actions in the source \(\pi \) process: predicate \(\mathsf {rcv} (x,y)\) signals an input action on x of a value denoted by y; conversely, predicate \(\mathsf {snd} (x,y)\) signals an output action on x of a value denoted by y. Predicates \(\mathsf {sel} (x,l)\) and \(\mathsf {bra} (x,l)\) signal selection and branching actions on x involving label l, respectively. Finally, predicate \(\{x{:}y\}\) indicates that x and y denote dual endpoints, as required to translate restriction in \(\pi \). To ensure alignment with the properties of restricted covariables in \(\pi \) (cf. Definition 2), we assume \( \{x{:}y\} \dashv \vdash _{\mathcal {S} } \{y{:}x\} \) for every pair of variables x, y.

Defining \(\texttt {lcc}\) as a language in the sense of Definition 24 requires setting up observational equivalences (cf. Definitions 17 and 18). To this end, we first define two sets of observables: the output and complete observables of \(\texttt {lcc}\) processes under the constraint system in Definition 21.

Definition 22

(Output and Complete Observables) Let \(\mathcal {C}\) be the constraint system in Definition 21. We define \(\mathcal {D}_{\pi }\), the set of output observables of \(\texttt {lcc}\), as follows:

We define \(\mathcal {D}^{\star }_{\pi }\), the set of complete observables of \(\texttt {lcc}\), as the following extension of \(\mathcal {D}_{\pi }\):

Notice that constraints such as \(\{x{:}y\}\) are not part of the observables. As we will see, covariable predicates will be persistent, and so the information on covariables can be derived by using other constraints. In particular, as we will show later, if \(\exists x,y. \mathsf {snd} (x,v)\) and \(\exists x,y. \mathsf {rcv} (y,v)\) are in the complete observables of a process, then constraint \({{\,\mathrm{!}\,}}\{x{:}y\}\) must be in the corresponding store too. This will become clear when analyzing the shape of translated processes (cf. Lemma 1).

We are now ready to instantiate sets \(\mathcal {D}\) and \(\mathcal {E}\) for the barbed bisimilarity (cf. Definition 17) and the barbed congruence for \(\texttt {lcc}\) (cf. Definition 18). To this end, we let \(\mathcal {D}= \mathcal {D}_{\pi }\), and \(\mathcal {E}=\mathcal {C}\) (cf. Definition 21).

Definition 23

(Weak o-barbed bisimilarity and congruence) We define weak o-barbed bisimilarity and weak o-barbed congruence as follows:

1. Weak o-barbed bisimilarity, denoted \(\approx ^{\pi }_\ell \), arises from Definition 17 as the weak \(\mathcal {D}_{\pi }\mathcal {C}\)-barbed bisimilarity.

2. Weak o-barbed congruence, denoted \(\cong ^{\pi }_\ell \), arises from Definition 18 as the weak \(\mathcal {D}_{\pi }\mathcal {C}\)-barbed congruence.

We define \(\pi \) and \(\texttt {lcc}\) as the source and target languages for our translation, respectively:

Definition 24

(Source and Target Language)

(1) The language \(\mathcal {L}_{\pi } \) is defined by the triplet \(\langle \pi ,\longrightarrow ,\equiv _{\pi } \rangle \), where \(\pi \) is as in Definition 3.1.1, \(\longrightarrow \) is as in Fig. 1, and \(\equiv _{\pi } \) is as in Definition 2.

(2) The language \(\mathcal {L}_{\texttt {lcc}} \) is given by the triplet \(\langle \texttt {lcc},\longrightarrow _\ell ,\cong ^{\pi }_\ell \rangle \), where \(\texttt {lcc} \) is as in Definition 11, \(\longrightarrow _\ell \) is the relation given only by \(\tau \)-transitions (cf. Fig. 6), and \(\cong ^{\pi }_\ell \) is the behavioral equivalence in Definition 23.

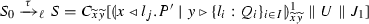

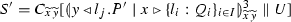

The translation of \(\mathcal {L}_{\pi } \) into \(\mathcal {L}_{\texttt {lcc}} \) is defined as follows:

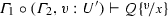

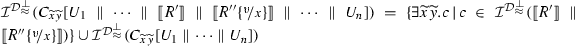

Fig. 8 Translation from \(\pi \) to \(\texttt {lcc}\) (cf. Definition 25)

Definition 25

(Translation of \(\pi \) into \(\texttt {lcc}\)) The translation from \(\mathcal {L}_{\pi } \) into \(\mathcal {L}_{\texttt {lcc}} \) (cf. Definition 24) is the pair \(\langle \llbracket \cdot \rrbracket , \varphi _{\llbracket \cdot \rrbracket } \rangle \), where \(\llbracket \cdot \rrbracket \) is the process mapping defined in Fig. 8 and \(\varphi _{\llbracket \cdot \rrbracket }(x) = x\).

Let us discuss some of the cases of the definition in Fig. 8:

- The output process \({x}\langle v\rangle .P\) is translated by using both tell and abstraction constructs:

$$\begin{aligned} \overline{\mathsf {snd} (x,v)} \parallel \mathbf {\forall }{z}\big (\mathsf {rcv} (z,v)\otimes \{x{:}z\} \rightarrow \llbracket P \rrbracket \big ) \end{aligned}$$

The translation posts predicate \(\mathsf {snd} (x,v)\) in the store, signaling that an output has taken place and can be received by the translation of an input process. The translation of the continuation is activated once a predicate \(\mathsf {rcv} (y,v)\) has been received, for some y such that \(\{x{:}y\}\) holds: this signals that the message has been correctly received by a translated process that owns the covariable of x. Therefore, input-output interactions are represented in \(\llbracket \cdot \rrbracket \) as a two-step synchronization. As we stick to the variable convention in Rem. 1, a proviso such as “\(z \not \in \mathsf {fv}_{\pi }(P)\)” is redundant here (and in the cases below).

- Accordingly, the translation of an input process x(y).P is defined as follows:

$$\begin{aligned} \mathbf {\forall }{y,w}\big (\mathsf {snd} (w,y)\otimes \{w{:}x\} \!\rightarrow \! \overline{\mathsf {rcv} (x,y)} \parallel \llbracket P \rrbracket \big ) \end{aligned}$$

Whenever a predicate \(\mathsf {snd} (w,y)\) is detected by the abstraction, it is consumed to obtain both the subject w and the object y. Then, the covariable constraint \(\{w{:}x\}\) is checked: this enforces synchronization between intended endpoints. Subsequently, the translation emits the acknowledgment \(\mathsf {rcv} (x,y)\) and spawns its continuation.

- The translation of branching-selection synchronizations is similar, using \(\mathsf {bra} (\cdot ,\cdot )\) and \(\mathsf {sel} (\cdot ,\cdot )\) as acknowledgment messages. In this case, the exchanged value is one of the pairwise distinct labels, say \(l_j\); depending on the received label, the translation of branching will spawn exactly one continuation. The continuations corresponding to labels different from \(l_j\) get blocked, as their equality guard can never be satisfied. Similarly, the translation of conditionals makes both branches available for execution; we use a parameterized ask as guard to ensure that only one of them will be executed.

- The translation of process \((\varvec{\nu }xy)P\) provides infinitely many copies of the covariable constraint \(\{x{:}y\}\), using hiding in \(\texttt {lcc}\) to appropriately regulate the scope of the involved endpoints.

- The translation of replicated processes simply corresponds to the replication of the translation of the given input-guarded process. Finally, the translations of parallel composition and inaction are self-explanatory.
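The cases discussed above can be sketched as a recursive function over a small syntax tree. This is an illustrative reading of the prose, not a transcription of Fig. 8: all class and constructor names are hypothetical, target terms are plain tuples, fresh variables `z0, z1, ...` are assumed not to clash with source names (in the spirit of the variable convention), and two cases rest on assumptions: inaction is mapped to a tell of the trivial constraint `tt`, and restriction to a hidden, replicated tell of the covariable constraint. Branching, selection, and conditionals are omitted.

```python
from dataclasses import dataclass
from itertools import count

# Source syntax: a fragment of the session pi-calculus (hypothetical names).
@dataclass(frozen=True)
class Nil:                      # inaction
    pass

@dataclass(frozen=True)
class Out:                      # x<v>.P
    x: str
    v: str
    cont: object

@dataclass(frozen=True)
class In:                       # x(y).P
    x: str
    y: str
    cont: object

@dataclass(frozen=True)
class Par:                      # P | Q
    left: object
    right: object

@dataclass(frozen=True)
class Res:                      # (nu xy)P
    x: str
    y: str
    body: object

@dataclass(frozen=True)
class Rep:                      # * x(y).P
    proc: In

def translate(p, fresh=None):
    """Map a source process to a tuple-encoded lcc term."""
    fresh = fresh or (f"z{i}" for i in count())
    if isinstance(p, Nil):
        return ("tell", ("tt",))                     # assumption: tell(tt)
    if isinstance(p, Out):
        z = next(fresh)
        # tell(snd(x,v)) || forall z.(rcv(z,v) (x) {x:z} -> [[P]])
        guard = (("rcv", z, p.v), ("cov", p.x, z))
        return ("par", ("tell", ("snd", p.x, p.v)),
                ("forall", (z,), guard, translate(p.cont, fresh)))
    if isinstance(p, In):
        w = next(fresh)
        # forall y,w.(snd(w,y) (x) {w:x} -> tell(rcv(x,y)) || [[P]])
        guard = (("snd", w, p.y), ("cov", w, p.x))
        body = ("par", ("tell", ("rcv", p.x, p.y)), translate(p.cont, fresh))
        return ("forall", (p.y, w), guard, body)
    if isinstance(p, Par):                           # homomorphic case
        return ("par", translate(p.left, fresh), translate(p.right, fresh))
    if isinstance(p, Res):
        # assumption: exists x,y.( !tell({x:y}) || [[P]] )
        cov = ("bang", ("tell", ("cov", p.x, p.y)))
        return ("exists", (p.x, p.y), ("par", cov, translate(p.body, fresh)))
    if isinstance(p, Rep):
        return ("bang", translate(p.proc, fresh))
    raise TypeError(f"unsupported process: {p!r}")

# A restricted output/input pair on covariables x and y.
example = Res("x", "y", Par(Out("x", "v", Nil()), In("y", "u", Nil())))
print(translate(example))
```

Representing target terms as tuples keeps the sketch short and makes the homomorphic treatment of parallel composition visible: the `Par` case only rebuilds the same constructor around the translated sub-processes.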

The following examples illustrate our translation.

Example 2

(Translating Session Delegation) We show how our translation captures session delegation. Consider the following \(\pi \) process:

Above, endpoint z is being sent over x, to be received by endpoint y, which then enables the communication between w and z. The translated process \(\llbracket P_1 \rrbracket \) is given below:

where, using the semantics in Fig. 6, it can be shown that:

which can then reduce as expected.

We now show how our translation handles non-determinism, in particular the kind induced by multiple replicated servers that can interact with a single client.

Example 3

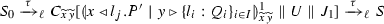

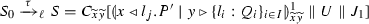

(Translating Non-Determinism) Let us consider the \(\pi \) program \(P_2\) below, which is not encodable in [31]:

The translation for \(P_2\) follows:

Note that  . Figure 9 shows how this reduction is mimicked in \(\texttt {lcc}\): observe that we use structural congruence twice to get a copy of process \(\overline{\{x{:}y\}}\) (cf. Axiom \({(\textsc {SC})}\)\(_{\ell }\):4 in Definition 13). The other reduction from \(P_2\) (involving \(\mathbf {*}\, y(z_2).Q_3\)) can be treated similarly.

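The source of non-determinism in this example is that a single linear \(\mathsf {snd} \) constraint can be consumed by either of two replicated abstractions. A minimal sketch of that competition follows, under simplifying assumptions: the store is split into a multiset of linear atoms and a set of persistent covariable atoms, each server is reduced to a hypothetical name paired with the endpoint it listens on, and `covariables`, `enabled`, and `fire` are illustrative helpers rather than rules of the \(\texttt {lcc}\) semantics.

```python
from collections import Counter

def covariables(persistent, a, b):
    """{a:b} holds if either orientation is in the persistent store."""
    return ("cov", a, b) in persistent or ("cov", b, a) in persistent

def enabled(servers, linear, persistent):
    """All (server, snd-atom) pairs that could fire next."""
    return [(name, atom)
            for name, endpoint in servers
            for atom in linear
            if atom[0] == "snd" and linear[atom] > 0
            and covariables(persistent, atom[1], endpoint)]

def fire(step, servers, linear):
    """Consume the linear snd atom and post the rcv acknowledgment."""
    name, (_, w, v) = step
    endpoint = dict(servers)[name]
    after = linear.copy()
    after[("snd", w, v)] -= 1
    after[("rcv", endpoint, v)] += 1
    return +after  # unary plus drops atoms whose count reached zero

# Two hypothetical replicated servers listen on y; one client sent v on x.
servers = [("server1", "y"), ("server2", "y")]
linear = Counter({("snd", "x", "v"): 1})
persistent = {("cov", "x", "y")}

choices = enabled(servers, linear, persistent)
print(choices)                               # both servers compete
after = fire(choices[0], servers, linear)
print(enabled(servers, after, persistent))   # the loser is now blocked
```

Because the `snd` atom is linear, firing one abstraction removes it from the store, so the other server cannot react to the same message; the persistent covariable atom, in contrast, remains available to both.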

Our next example considers a \(\pi \) process that implements a selection protocol, and shows how to obtain the observables of its translation. These observables can be used to showcase the behavioral equivalences in Definition 23 on translated processes (see App. A for details).

Example 4

(Translations and their observables) Let us consider process \(P_3\), which models a simple transaction between a client and a store.

\(P_{3}\) specifies a client sub-process (on the left) that wants to buy some item from a store sub-process (on the right). Intuitively, the client selects \(\textit{buy}\) and sends its credit card number, before receiving an invoice. Dually, the store waits for a selection to be made. If the \(\textit{buy}\) label is picked, the store waits for the credit card number, before emitting an invoice.

The translation of \(P_{3}\) is then given below:

Combining Definitions 15 and 22, we have the following observables:

Having introduced and illustrated our translation, we now move to establish its correctness in the sense of Definition 20.

4.2 Name invariance, compositionality, and operational completeness

First, we prove that the translation is name invariant with respect to the renaming policy in Definition 25. The proof follows by induction on the structure of process P.

Theorem 8

(Name Invariance for \(\llbracket \cdot \rrbracket \)) Let P be a well-typed \(\pi \) process. Also, let \(\sigma \) be a substitution satisfying the renaming policy for \(\llbracket \cdot \rrbracket \) (Definition 25(b)), and x be a variable. Then, \(\llbracket P\sigma \rrbracket = \llbracket P \rrbracket \sigma '\), with \(\varphi _{\llbracket \cdot \rrbracket }(\sigma (x)) = \sigma '(\varphi _{\llbracket \cdot \rrbracket }(x))\) and \(\sigma = \sigma '\).

Next, we establish that \(\llbracket \cdot \rrbracket \) is compositional, in the sense of Definition 20(2). The proof follows immediately from the translation definition in Fig. 8: each process in \(\pi \) is translated using a context in \(\texttt {lcc}\) that depends on the translation of its sub-processes. In particular, notice that parallel composition (denoted ‘\(\mathord {\,\big |\,}\)’ in \(\pi \)) is translated homomorphically: the associated \(\texttt {lcc}\) context in that case is \(C_{\mathord {\,\big |\,}}(- _1, - _2) = [- _1] \parallel [- _2]\).

Theorem 9

(Compositionality for \(\llbracket \cdot \rrbracket \)) The encoding \( \llbracket \cdot \rrbracket \) is compositional.

Related to compositionality, we have the following result, which says that the translation preserves the evaluation contexts of Definition 3, which involve restriction and parallel composition. Below, we use the extension of \(\llbracket \cdot \rrbracket \) to evaluation contexts, obtained by decreeing \(\llbracket - \rrbracket = - \). The proof is by induction on the structure of P and a case analysis on \(E[- ] \).

Theorem 10

(Evaluation Contexts and \(\llbracket \cdot \rrbracket \)) Let P and \(E[- ] \) be a well-typed \(\pi \) process and a \(\pi \) evaluation context as in Definition 3, respectively. Then, we have: \( \llbracket E[P] \rrbracket = \llbracket E \rrbracket \big [ \llbracket P \rrbracket \big ]\).

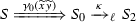

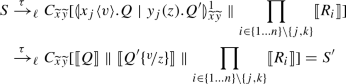

We close this section by stating operational completeness, which holds up to barbed congruence (cf. Definition 23):

Theorem 11

(Completeness for \(\llbracket \cdot \rrbracket \)) Let \(\llbracket \cdot \rrbracket \) be the translation in Definition 25. Also, let P be a well-typed \(\pi \) program. Then, if \(P \longrightarrow ^{*} Q\) then \(\llbracket P \rrbracket \longrightarrow _\ell ^{*} \cong ^{\pi }_\ell \llbracket Q \rrbracket \).

Proof

By induction on the length of the reduction \(\longrightarrow ^{*}\), with a case analysis on the last applied rule, relying on auxiliary results to be given in Sect. 4.3.2. For details see App. C.2.

\(\square \)

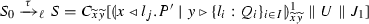

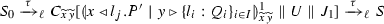

4.3 Operational soundness

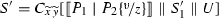

The most challenging part of our technical development is proving that our translation satisfies the operational soundness criterion (cf. Definition 20):

Theorem 12

(Soundness for \(\llbracket \cdot \rrbracket \)) Let \(\llbracket \cdot \rrbracket \) be the translation in Definition 25. Also, let P be a well-typed \(\pi \) program. For every S such that \(\llbracket P \rrbracket \longrightarrow _\ell ^{*} S\) there are Q, \(S'\) such that \(P \longrightarrow ^* Q\) and \(S \longrightarrow _\ell ^{*} S' \cong ^{\pi }_\ell \llbracket Q \rrbracket \).

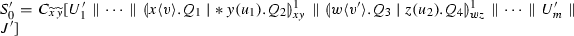

Our goal is to precisely identify which well-typed \(\pi \) program is being mimicked at any given point by a target term in \(\texttt {lcc}\), while ensuring that such a target term does not add undesired behaviors. This is a non-trivial task: programs may contain multiple redexes running at the same time, and redexes in \(\pi \) are mimicked in \(\texttt {lcc}\) using two-step synchronizations. Our proof draws inspiration from [41], where translated processes are characterized semantically, by defining pre-processing and post-processing reduction steps according to the effect they have on target terms and on the simulation of the behavior of the source language.

We first define target terms for \(\llbracket \cdot \rrbracket \) to set our focus on well-typed \(\pi \) programs:

Definition 26

(Target Terms) We define target terms as the set of \(\texttt {lcc}\) processes that is induced by the translation of well-typed \(\pi \) programs and is closed under \(\tau \)-transitions: \(\{S \mid \llbracket P \rrbracket \longrightarrow _\ell ^{*} S \text { and } P \text { is a well-typed } \pi \text { program}\}\). We shall use \(S,S', \ldots \) to range over target terms.

We start by giving a roadmap to the proof of Theorem 12 and its different ingredients. Then, these ingredients are spelled out in detail in Sect. 4.3.2. The full proof is given in Sect. 4.3.3.

4.3.1 Proof roadmap