Abstract

The Travelling Salesman Problem is one of the fundamental and intensively studied problems in approximation algorithms. For more than 30 years, the best algorithm known for general metrics has been Christofides’s algorithm with an approximation factor of \(\frac{3}{2}\), even though the so-called Held-Karp LP relaxation of the problem is conjectured to have the integrality gap of only \(\frac{4}{3}\). Very recently, significant progress has been made for the important special case of graphic metrics, first by Oveis Gharan et al. (FOCS, 550–559, 2011), and then by Mömke and Svensson (FOCS, 560–569, 2011). In this paper, we provide an improved analysis of the approach presented in Mömke and Svensson (FOCS, 560–569, 2011) yielding a bound of \(\frac{13}{9}\) on the approximation factor, as well as a bound of \(\frac{19}{12}+\varepsilon\) for any ε>0 for a more general Travelling Salesman Path Problem in graphic metrics.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction and Related Work

The Travelling Salesman Problem (TSP) is one the fundamental and intensively studied problems in combinatorial optimization, and approximation algorithms in particular. In the standard version of the problem, we are given a metric (V,d) and the goal is to find a closed tour that visits each point of V exactly once and has minimum total cost, as measured by d. This problem is APX-hard, even if all distances are one or two (Papadimitriou et al. [13]), and the best known approximation factor of \(\frac{3}{2}\) was obtained by Christofides [2] more than thirty years ago. However, the so-called Held-Karp LP relaxation of TSP is conjectured to have an integrality gap of \(\frac{4}{3}\). It is known to have a gap at least that big, however the best known upper bound [14] for the gap is equal to \(\frac{3}{2}\), and is given by Christofides’s algorithm.

In a more general version of the problem, called the Travelling Salesman Path Problem (TSPP), in addition to a metric (V,d) we are also given two points s,t∈V and the goal is to find a path from s to t visiting each point exactly once, except if s and t are the same point in which case it can be visited twice (this is when TSPP reduces to TSP). For this problem, the best approximation algorithm known is that of Hoogeveen [7] with an approximation factor of \(\frac{5}{3}\). However, the Held-Karp relaxation of TSPP is conjectured to have an integrality gap of \(\frac{3}{2}\).

One of the natural directions of attacking these problems is to consider special cases and several attempts of this nature has been made. Among the most interesting is the graphic TSP/TSPP, where we assume that the given metric is the shortest path metric of an undirected graph. Equivalently, in graphic TSP we are given an undirected graph G=(V,E) and we need to find a shortest tour that visits each vertex at least once. Yet another equivalent formulation asks for a minimum size Eulerian multigraph spanning V and only using edges of G. Similar equivalent formulations apply to the graphic TSPP case. The reason why these special cases are interesting is that they seem to include the difficult inputs of TSP/TSPP. Not only are they APX-hard (see [5]), but also the standard examples showing that the Held-Karp LP relaxation has a gap of at least \(\frac{4}{3}\) in the TSP case and \(\frac{3}{2}\) in the TSPP case, are in fact graphic metrics (see Figs. 1 and 2).

\(\operatorname{OPT}_{\mathrm{LP}}(G)\) for the graph above (so-called prism graph) is 3n—simply put x e =1 for all horizontal edges e, and \(x_{e}=\frac {1}{2}\) for the remaining edges. On the other hand, visiting all vertices requires going through 4n−2 edges, and so in this case the integrality gap of LP(G) can approach \(\frac{4}{3}\) with n→∞

If G is a 2n-cycle, then \(\operatorname {OPT}_{\mathrm{LP}}(G,s,t) \le2n\) for any choice of s,t—simply put x e =1 for all edges. On the other hand, if s and t lie opposite to each other on the cycle, then visiting all vertices starting with s and ending with t requires going through 3n−2 edges. Thus, the integrality gap of LP(G,s,t) can approach \(\frac{3}{2}\) with n→∞

Very recently, significant progress has been made in approximating the graphic TSP and TSPP. First, Oveis Gharan et al. [12] gave an algorithm with an approximation factor \(\frac{3}{2}-\varepsilon\) for graphic TSP. Despite ε being of the order of 10−12, this is considered a major breakthrough. Following that, Mömke and Svensson [8] obtained a significantly better approximation factor of \(\frac{14(\sqrt{2}-1)}{12\sqrt{2}-13} \approx 1.461\) for graphic TSP, as well as factor \(3-\sqrt{2}+\varepsilon \approx1.586+\varepsilon\) for graphic TSPP, for any ε>0. Their approach uses matchings in a truly ingenious way. Whereas earlier approaches (including that of Christofides [2] as well as Oveis Gharan et al. [12]) add edges of a matching to a spanning tree to make it Eulerian, the new approach is based on adding and removing the matching edges. This process is guided by a so-called removable pairing of edges which essentially encodes the information about which edges can be simultaneously removed from the graph without disconnecting it. A large removable pairing of edges is found by computing a minimum cost circulation in a certain auxiliary flow network, and the bounds on the cost of this circulation translate into bounds on the size of the resulting TSP tour/path.

Remark 1

Since the announcement of the preliminary version of this work, several improved approximation algorithms have been found. An et al. [1] gave a factor of \(\frac{1+\sqrt{5}}{2} \approx 1.618\) for the general metric TSPP, as well as a factor of ≈1.578 for the graphic TSPP. Sebő and Vygen [10] improved the ratio for graphic TSPP to \(\frac{3}{2}\), which is tight w.r.t. the Held-Karp LP relaxation. They also gave a \(\frac {7}{5}\)-approximation algorithm for graphic TSP. Finally, Sebő [9] announced an \(\frac{8}{5}\)-approximation algorithm for the general metric TSPP.

1.1 Our Results

In this paper we present an improved analysis of the cost of the circulation used by Mömke and Svensson [8] in the construction of the TSP tour/path. Our results imply a bound of \(\frac {13}{9} \approx1.444\) on the approximation factor for the graphic TSP, as well as a \(\frac{19}{12}+\varepsilon\approx1.583+\varepsilon\) bound for the graphic TSPP, for any ε>0. The circulation used in [8] consists of two parts: the “core” part based on an optimal extreme point solution to the Held-Karp LP relaxation of TSP, and the “correction” part that adds enough flow to the core part to make it feasible. We improve bounds on costs of both parts, in particular we show that the second part is, in a sense, free. In particular, we obtain the same upper-bounds for the total cost of both parts as for the first part alone. As for the first part, similarly to the original proof of Mömke and Svensson, our proof exploits its knapsack-like structure. However, we use the 2-dimensional knapsack problem in our analysis, instead of the standard knapsack problem. Not only does this lead to an improved bound, it is also in our opinion a cleaner one. In particular, we also provide an essentially matching lower bound on the cost of the core part, which means that any further progress on bounding that cost has to take into account more than just the knapsack-like structure of the circulation.

1.2 Organization of the Paper

In the next section we present previous results relevant to the contributions of this paper. In particular we recall key definitions and theorems of Mömke and Svensson [8]. In Sect. 3 we present the improved upper bound on the cost of the core part of the circulation, as well as an essentially matching lower bound. In Sect. 4 we prove that the correction part of the circulation is, in a sense, free. Finally, in Sect. 5 we apply the results of the previous sections to obtain improved approximation algorithms for graphic TSP and TSPP.

2 Preliminaries

In this section we review some standard results concerning TSP/TSPP approximation and recall the parts of the work of Mömke and Svensson [8] relevant to the contributions of this paper.

Held-Karp LP Relaxation and the Algorithm of Christofides

The Held-Karp LP relaxation (or subtour elimination LP) for graphic TSP on graph G=(V,E) can be formulated as follows (see [4, 6, 8] for details on equivalence between different formulations):

Here δ(S) denotes the set of all edges between S and V∖S for any S⊆V, and x(F) denotes ∑ e∈F x e for any F⊆E. We will refer to this LP as LP(G) and denote the value of any of its optimal solutions by \(\operatorname{OPT}_{\mathrm{LP}}(G)\).

The approximation ratio of the classic \(\frac{3}{2}\)-approximation algorithm for metric TSP due to Christofides [2] is related to \(\operatorname{OPT}_{\mathrm{LP}}(G)\) as follows:

Theorem 1

(Wolsey [15], Shmoys and Williamson [14])

The cost of the solution produced by the algorithm of Christofides on a graph G is bounded by \(n+\operatorname{OPT}_{\mathrm{LP}}(G)/2\), and so its approximation factor is at most

On the other hand, the graph in Fig. 1 shows that the integrality gap of LP(G) can be as large as \(\frac{4}{3}\).

The Held-Karp LP relaxation can be generalized to the graphic TSPP in a straightforward manner. Suppose we want to solve the problem for a graph G=(V,E) and endpoints s,t. Let Φ={S⊆V:|{s,t}∩S|=1}. Then the relaxation can be written as

We denote this generalized program by LP(G,s,t) and its optimum value by \(\operatorname{OPT}_{\mathrm{LP}}(G,s,t)\). It is clear that \(\operatorname{OPT}_{\mathrm{LP}}(G,v,v) = \operatorname{OPT}_{\mathrm{LP}}(G)\) for any v∈V. The example in Fig. 2 shows that the integrality gap of integrality gap of LP(G,s,t) can be as large as \(\frac{3}{2}\).

Let G′=(V,E∪{e′}), where e′={s,t}. From any feasible solution to LP(G,s,t) we can obtain a feasible solution to LP(G′) by adding 1 to x e′. Therefore

Fact 2

\(\operatorname{OPT}_{\mathrm{LP}}(G,s,t) \ge\operatorname {OPT}_{\mathrm{LP}}(G')-1\).

Reduction to Minimum Cost Circulation

The authors of [8] use the optimal solution of LP(G) to construct a low cost circulation in a certain auxiliary flow network. This circulation is then used to produce a small TSP tour for G. We will now describe the construction of the flow network and the relationship between the cost of the circulation and the size of the TSP tour.

Let us start with the following reduction

Lemma 1

(Lemma 2.1 and Lemma 2.1(generalized) of Mömke and Svensson [8])

If there exists a polynomial time algorithm that for any 2-vertex connected graph G returns a graphic TSP solution of cost at most \(r \cdot\operatorname{OPT}_{\mathrm{LP}}(G)\), then there exists an algorithm that does the same for any connected graph. Similarly, if there exists a polynomial time algorithm that for any 2-vertex connected graph G and its two vertices s,t returns a graphic TSPP solution of cost at most \(r \cdot \operatorname{OPT}_{\mathrm{LP}}(G,s,t)\), then there exists an algorithm that does the same for any connected graph.

We will henceforth assume that the graphs we work with are all 2-vertex-connected. Let G be such graph. We now construct a certain auxiliary flow network corresponding to G.

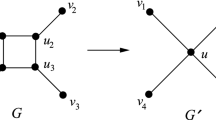

Let T be a depth first search spanning tree of G with an arbitrary root vertex r. Direct all edges of T (called tree-edges) away from the root, and all other edges (called back-edges) towards the root. Let G be the resulting directed graph, and let T be its subgraph corresponding to T. Where necessary to avoid confusion, we will use the name arcs (and tree-arcs and back-arcs) for the edges of this directed graph. The flow network is obtained from G by replacing some of its vertices with gadgets, as described below.

Let v be any non-root vertex of G having l children: w 1,…,w l in T. We introduce l new vertices v 1,…,v l and replace the tree-arc (v,w j ) by tree-arcs (v,v j ) and (v j ,w j ) for j=1,…,l. We also redirect to v j all the back-arcs leaving the subtree rooted at w j and entering v (see Fig. 3). We will call the new vertices and the root in-vertices and the remaining vertices out-vertices. We will also denote the set of all in-vertices by \(\mathcal{I}\), and the set of in-vertices in the gadget corresponding to v by \(\mathcal {I}_{v}\). Notice that all the back-arcs go from out-vertices to in-vertices, and that each in-vertex has exactly one outgoing arc (for the root vertex this follows from 2-vertex connectivity).

We assign lower bounds (demands) and upper bounds (capacities) as well as costs to arcs. The demands of the tree-arcs are 1 and the demands of the back-arcs are 0. The capacities of all arcs are ∞. Finally the cost of any circulation f is defined to be \(\sum_{v \in \mathcal{I}} \max(f(B(v))-1,0)\), where B(v) is the set of incoming back-arcs of v. This basically means that the cost is 0 for tree-arcs and 1 for back-arcs, except that for every in-vertex the first unit of circulation using a back-arc is free. The circulation network described above will be denoted C(G,T). For any circulation C, we will use |C| to denote its cost as described above.

It is worth noting that the cost function of C(G,T) can be simulated using the usual fixed-cost arcs by introducing an extra vertex v′ for each in-vertex v, redirecting all in-arcs of v to v′ and putting two arcs from v′ to v: one with capacity of 1 and cost 0, and the other with capacity ∞ and cost 1. Note, that this is the only place where we use arc capacities. For simplicity of presentation we will use the simpler network with a slightly unusual cost function and infinite arc capacities.

Also note that the edges of C(G,T) minus the incoming tree edges of the in-vertices are in 1-to-1 correspondence with the edges of G. Similarly, all vertices of C(G,T) except for the new in-vertices correspond to the vertices of the original graph. We will often use the same symbol to denote both edges or both vertices.

The main technical tool of [8] is given by the following theorem:

Theorem 3

(Lemma 4.1 of [8])

Let G be a 2-vertex connected graph, let T be a depth first search tree of G, and let C ∗ be a circulation in C(G,T) of cost |C ∗|. Then there exists a spanning Eulerian multigraph H in G with at most \(\frac{4}{3}n + \frac{2}{3} |C^{*}| - \frac{2}{3}\) edges. In particular, this means that there exists a TSP tour in the shortest path metric of G with the same cost.

And its generalized version

Theorem 4

(Lemma 4.1(generalized) of [8])

Let G=(V,E) be a 2-vertex connected graph and s,t its two vertices, and let G′=(V,E∪{e′}) where e′={s,t}. Let T be a depth first search tree of G′ and let C ∗ be a circulation in C(G′,T) of cost |C ∗|. Then there exists a spanning multigraph H in G, that has an Eulerian path between s and t with at most \(\frac {4}{3}n + \frac{2}{3} |C^{*}| - \frac{2}{3} + \mathrm{dist}_{G}(s,t)\) edges. In particular, this means that there exists a TSP path between s and t in the shortest path metric of G with the same cost.

Remark 2

The above theorem is not just a rewording of the generalized version of Lemma 4.1 from [8]. In our version C ∗ is a circulation in C(G′,T) and not C(G,T). Note however, that in the proof of Theorem 1.2 of [8] the authors are in fact using the version above, and provide arguments for why it is correct.

In order to be able to apply Theorems 3 and 4, the authors of [8] use the optimal solution of LP(G) to define a circulation f in C(G,T) as follows. Let G=(V,E) be a graph and let x ∗ be an optimal extreme point solution of LP(G). Let \(E_{+} = \{ e \in E: x_{e}^{*} > 0\}\), i.e. E + is the support of x ∗, and let G +=(V,E +). It is clear that x ∗ is also an optimal solution for LP(G +), so an r-approximate TSP tour with respect to \(\operatorname{OPT}_{\mathrm{LP}}(G_{+})\) is also r-approximate with respect to \(\operatorname{OPT}_{\mathrm{LP}}(G)\). Therefore, we can always assume that E +=E. The reason why this assumption is useful is given by the following theorem.

Theorem 5

(Cornuejols, Fonlupt, Naddef [3])

For any graph G, the support of any optimal extreme point solution to LP(G) has size at most 2n−1.

Thus, we can assume that |E|≤2n−1. Moreover, we can assume that G is 2-vertex connected because of Lemma 1.

Let T used in the construction of C(G,T) be the tree resulting from always following the edge e with the highest value of \(x_{e}^{*}\). We construct a circulation f in C(G,T) as a sum of two circulations: f′ and f″. The circulation f′ corresponds to sending, for each back-arc a, flow of size \(\min(x_{a}^{*},1)\) along the unique cycle formed by a and some tree-arcs. The circulation f″ is defined as follows, to guarantee that f=f′+f″ satisfies all the lower bounds. Let v be an out-vertex and w an in-vertex, such that there is an arc (v,w) in C(G,T), and the flow on (v,w) is smaller than 1. Also let a be any back-arc going from a descendant of w to an ancestor of v (in T). Such an arc always exists since G is 2-vertex connected. We push flow along all edges of the unique cycle formed by a and tree-arcs until the flow on (v,w) reaches 1.

The total cost of f can be bounded by

We will denote the terms in the above expression as |f|, |f′| and |f″|, respectively. Note in particular, that |f″| denotes the sum \(\sum_{v \in\mathcal{I}} f''(B(v))\) which is not equal to the cost of f″. We thus have |f|≤|f′|+|f″|.

The authors of [8] provide the following bounds for the two terms of the above expression:

Lemma 2

(Claim 5.3 in [8])

\(|f''| \le\operatorname{OPT}_{\mathrm{LP}}(G)-n\).

Lemma 3

(Claim 5.4 in [8])

\(|f'| \le(7-6\sqrt{2})n + 4(\sqrt{2}-1)\operatorname{OPT}_{\mathrm {LP}}(G)\).

The main theorem of [8] follows from these two bounds

Theorem 6

(Theorem 1.1 in [8])

There exists a polynomial time approximation algorithm for graphic TSP with approximation ratio \(\frac{14(\sqrt{2}-1)}{12\sqrt{2}-13} < 1.461\).

3 New Upper Bound for |f′|

In this section we describe an improved bound on |f′|.

Lemma 4

\(|f'| \le\frac{5}{3}\operatorname{OPT}_{\mathrm{LP}} - \frac{3}{2} n\).

Before presenting our analysis of the cost of f′ let us recall some notation and basic observations introduced in [8]. For any \(v \in\mathcal{I}\) let t v be the (unique) outgoing arc of v.

Fact 7

For every in-vertex v, we have \(|B(v)| \ge \lceil\frac {f'(B(v))}{\min(x_{t_{v}}^{*},1)} \rceil\).

Proof

Since T was constructed by always following the arc a with the highest value of \(x_{a}^{*}\), we have that \(x_{t_{v}}^{*} \ge x_{a}\) for any a∈B(v) and the claim follows. □

Decompose f′(B(v)) into two parts: \(l_{v} = \min(2-x_{t_{v}}^{*}, f'(B(v)))\) and u v =f′(B(v))−l v . Notice, that we have

The intuition behind this decomposition is that the higher u v is, the larger \(\operatorname{OPT}_{\mathrm{LP}}(G)\) is. In particular, if we let \(u^{*} = \sum_{v \in\mathcal{I}} u_{v}\), then

Fact 8

(Stated in the Proof of Claim 5.3 in [8])

\(u^{*} \le2(\operatorname{OPT}_{\mathrm{LP}}(G)-n)\).

Proof

Consider a vertex v of G which (in the construction of C(G,T)) is replaced by a gadget with a set \(\mathcal{I}_{v}\) of in-vertices, and let x ∗(v) be the fractional degree of v in x ∗. Since for any \(w \in\mathcal {I}_{v}\), the tree-arc t w and all the back-arcs entering w correspond to edges of G incident to v, each such w contributes at least 2+u w to x ∗(v), provided that u w >0 (if u w =0 we cannot bound w’s contribution in any way). Since we also know that x ∗(v)≥2 (this is one of the inequalities of the Held-Karp LP relaxation), we get the following bound

Summing this over all vertices we get \(2\operatorname{OPT}_{\mathrm{LP}}(G) \ge2n + u^{*}\), and the claim follows. □

Because of Theorem 5 we have \(\sum_{v \in\mathcal {I}} |B(v)| + n-1 \le2n-1\), and so by Fact 7

Note that in terms of l v and u v the total cost of f′ is given by the following formula

Our goal is to upper-bound this cost as a function of n and u ∗. Instead of working directly with G and the solution x ∗ to the corresponding LP(G), we abstract out the key properties of \(x_{t_{v}}^{*}\), l v and u v and work in this restricted setting.

Definition 1

A configuration of size n is a triple (x,l,u), where x,l,u:{1,…,n}→ℝ≥0 such that for all i=1,…,n

-

1.

0<x i ≤1,

-

2.

l i ≤2−x i ,

-

3.

\(u_{i} > 0 \implies l_{i} = 2-x_{i}\), and

-

4.

\(\sum_{i=1}^{n} \lceil\frac{l_{i} + u_{i}}{x_{i}} \rceil\le n\).

Let C=(x,l,u) be a configuration. We call the triple (x i ,l i ,u i ) the i-th element of C. We say that the i-th element of C uses \(\lceil\frac{l_{i}+u_{i}}{x_{i}} \rceil\) edges and denote this number by e i (C), or e i if it is clear what C is. We also say that C uses \(\sum_{i=1}^{n} e_{i}\) edges. Note that by the definition of a configuration, the number of edges used by C is at most n.

The value of the i-th element of C is defined as \(\operatorname{val}_{i} \hspace{-1pt}=\hspace{-1pt} \operatorname{val}_{i}(C) \hspace{-1pt}=\hspace{-1pt} \max (0,l_{i}\hspace{-1pt}+\hspace{-1pt}u_{i}\hspace{-1pt}-\hspace{-1pt}1)\) and the value of C as \(\operatorname{val}(C) = \sum_{i=1}^{n} \operatorname{val}_{i}(C)\).

Remark 3

The values x i , l i and u i correspond to \(x_{t_{v}}^{*}\), l v and u v , respectively. The properties enforced on the former are clearly satisfied by the latter with the exception of the inequalities x i ≤1. The reason for introducing these inequalities is the following. Without them, the natural definition of the number of edges used by the i-th element of C would be \(\lceil\frac{l_{i}+u_{i}}{\min(x_{i},1)} \rceil\). However, in that case, for any configuration C there would exists a configuration C′ with \(\operatorname{val}(C') = \operatorname {val}(C)\) and x i ≤1 for all i=1,…,n. In order to construct C′ simply replace all x i >1 with ones. If as a result we get l i <2−x i and u i >0 for some i, simultaneously decrease u i and increase l i at the same rate until one of these inequalities becomes an equality.

For that reason, we prefer to simply assume x i ≤1 and be able to use a (slightly) simpler definition of e i . As we will see, the inequalities x i ≤1 turn out to be quite useful as well.

We denote by \(\operatorname{CONF}(n,u^{*})\) the set of all configurations (x,l,u) of size n such that \(\sum_{i=1}^{n} u_{i} = u^{*}\). We also use \(\operatorname{OPT}(n,u^{*})\) to denote any maximum value element of \(\operatorname{CONF} (n,u^{*})\), and \(\operatorname{VAL}(n,u^{*})\) to denote its value. We clearly have

Fact 9

\(|f'| \le\operatorname{VAL}(n,u^{*})\).

Notice that determining \(\operatorname{VAL}(n,u^{*})\) for given n and u ∗ is a 2-dimensional knapsack problem. Here, items are the possible elements (x i ,l i ,u i ) satisfying the configuration definition. The value of element (x i ,l i ,u i ) is equal to max(0,l i +u i −1), i.e. its contribution to the configuration value, if used in one. Also, the “mass” of (x i ,l i ,u i ) is u i and its “volume” is e i . We want to maximize the total item value, while keeping the total mass ≤u ∗ and total volume ≤n.

Lemma 5

For any n∈ℕ,u ∗∈ℝ≥0, there exists an optimal configuration in \(\operatorname{CONF}(n,u^{*})\) such that:

-

1.

\(e_{i} = \frac{l_{i}+u_{i}}{x_{i}}\) for all i=1,…,n (in particular, all e i are integral),

-

2.

(l i =0)∨(l i =2−x i ) for all i=1,…,n.

Proof

We prove each property by showing a way to transform any \(C \in \operatorname{CONF} (n,u^{*})\) into \(C' \in\operatorname{CONF}(n,u^{*})\) such that \(\operatorname{val}(C') \ge\operatorname{val}(C)\) and C′ satisfies the property.

Let us start with the first property, which basically says that all edges are fully saturated. Assume we have \(e_{i} > \frac{l_{i}+u_{i}}{x_{i}}\) for some i∈{1,…,n}. If l i <2−x i , we increase l i until either \(e_{i} = \frac {l_{i}+u_{i}}{x_{i}}\), in which case we are done, or l i =2−x i . In the second case we start decreasing x i while increasing l i at the same rate, until \(e_{i} = \frac{l_{i}+u_{i}}{x_{i}}\). Clearly, both transformations increase the value of the configuration and keep both u i and e i unchanged.

To prove the second property, let us assume that for some i∈{1,…,n} we have 0<l i <2−x i . We also assume that our configuration already satisfies the first property, in particular we have \(e_{i} = \frac{l_{i}}{x_{i}}\) (u i =0 since l i <2−x i ). We increase x i and keep l i =e i x i until l i +x i =2. This increases the value of the configuration and keeps u i and e i unchanged. To see that x i ≤1, note that x i =l i /e i ≤l i and x i +l i =2. □

Theorem 10

For any n∈ℕ,u ∗∈ℝ≥0, and any \(C \in \operatorname{CONF}(n,u^{*})\) we have \(\operatorname{val}(C) \le u^{*} + \frac{1}{6}(n-u^{*})\).

Proof

It is enough to prove the bound for optimal configurations satisfying the properties in Lemma 5. Let C be such a configuration. We will prove that for all i=1,…,n we have:

Summing this bound over all i gives the desired claim.

If u i =l i =e i =0, then the bound clearly holds. It follows from Lemma 5 that the only other case to consider is when l i =2−x i and \(e_{i} = \frac{l_{i}+u_{i}}{x_{i}}\) (notice that since we only consider x i ≤1, we have l i +u i −1≥0 in this case, and so \(\operatorname {val}_{i} = l_{i}+u_{i}-1\)). It follows from these two equalities that e i x i =l i +u i =2−x i +u i and so

Using this expression to bound \(\operatorname{val}_{i}\) we get

We need to prove that

or equivalently

Since u i ≤e i (this follows from property 1 in Lemma 5 and the fact that x i ≤1), we have two cases to consider.

- Case 1::

-

\(\frac{1}{6} - \frac{1}{1+e_{i}} \ge0\). In this case the whole expression is clearly nonnegative.

- Case 2::

-

\(\frac{1}{6} - \frac{1}{1+e_{i}} < 0\), meaning that e i ∈{1,2,3,4}. In this case we proceed as follows:

$$(e_i-u_i) \biggl(\frac{1}{6} - \frac{1}{1+e_i} \biggr) + \frac {1}{1+e_i} = u_i \biggl( \frac{1}{1+e_i}-\frac{1}{6} \biggr) + \biggl(\frac{e_i}{6} - \frac {e_i-1}{e_i+1}\biggr). $$The first term is clearly nonnegative and the second one can be checked to be nonnegative for e i ∈{1,2,3,4}. Note that integrality of e i plays a key role here, as the second term is negative for e i ∈(2,3).

□

We can show that the above bound is essentially tight

Theorem 11

For any n∈ℕ,u ∗∈ℝ≥0, there exists \(C \in\operatorname{CONF}(n,u^{*})\) such that \(\operatorname{val}(C) = u^{*} + \frac{1}{6}(n-u^{*}) - O(1)\).

Proof

It is quite easy to construct such C by looking at the proof of Theorem 10. We get the first tight example when, in Case 2 of the analysis, we have u i =0 and e i ∈{2,3}. This corresponds to configurations consisting of elements of the form:

-

\(x_{i} = \frac{2}{3}, l_{i} = \frac{4}{3}, u_{i} = 0\), in which case we have e i =2 and so \(u_{i}+\frac{1}{6}(e_{i} - u_{i}) = \frac{1}{3}\) and \(\operatorname{val}_{i} = l_{i} + u_{i} - 1 = \frac{1}{3}\), or

-

\(x_{i} = \frac{1}{2}, l_{i} = \frac{3}{2}, u_{i} = 0\), in which case we have e i =3 and so \(u_{i}+\frac{1}{6}(e_{i} - u_{i}) = \frac{1}{2}\) and \(\operatorname{val}_{i} = l_{i} + u_{i} - 1 = \frac{1}{2}\).

Using these two items we can construct tight examples for u ∗=0 and arbitrary n≥2.

To handle the case of u ∗>0 we need another (almost) tight case in the proof of Theorem 10 which occurs when u i is close to e i and e i is relatively large. In this case the value of the expression \((e_{i}-u_{i}) (\frac{1}{6} - \frac{1}{1+e_{i}} ) + \frac {1}{1+e_{i}}\) is clearly close to 0. This corresponds to using items of the form x i =1,l i =1 and arbitrary u i . For such elements we have e i =⌈u i +1⌉ and so

and

so the difference between the two is at most \(\frac{1}{3}\). By combining the three types of items described, we can clearly construct C as required for any n and u ∗. Figure 4 illustrates the three tight cases directly in terms of the corresponding solutions of LP(G).

□

We are now ready to prove the Lemma 4.

Proof of Lemma 4

It follows from Theorem 10 and Fact 9 that

Using Fact 8 we get:

□

4 New Upper Bound for |f″|

In this section we give a new bound for |f″|. We do not bound it directly, as in Lemma 2. Instead, we show the following.

Lemma 6

What this says is basically that f″ can be fully paid for by (\(\frac {5}{6}\) of) the slack we get in Fact 8. To better understand this bound, and in particular the constant \(\frac{5}{6}\), before we proceed to prove it, let us first show how it can be used.

Corollary 1

\(|f| \le\frac{5}{3} \operatorname{OPT}_{\mathrm{LP}} - \frac{3}{2} n\).

Proof

We have \(|f| \le|f'| + |f''| \le (\frac{5}{6} u^{*} + \frac {1}{6}n ) + \frac{5}{6} (2\operatorname{OPT}_{\mathrm{LP}} - 2n - u^{*} ) = \frac{5}{3} \operatorname{OPT}_{\mathrm{LP}} - \frac{3}{2} n\). □

There are several interesting things to note here. First of all, we got the exact same bound as in Lemma 4, which means that |f″| can be fully paid for by the slack in Fact 8, as suggested earlier. In particular, this means that improving the constant \(\frac{5}{6}\) in Lemma 6 is pointless, since we would still be getting the same bound on |f| when |f″|=0. Therefore, we do not try to optimize this constant, but instead make the proof of the Lemma as straightforward as possible.

Let us now proceed to prove Lemma 6. For any non-root in-vertex w let \(z_{w} = x^{*}_{t_{w}} + x^{*}(B(w))\). Basically, if v is the parent of w in T, then z w is the total value of x ∗ over all edges connecting v with vertices in the subtree T w of T determined by w. By equality (1) we have

Also, let γ w be the total of x ∗ over all edges connecting vertices in T w with vertices above v. Note that max(0,1−γ v ) is essentially by how much f′ falls short of reaching the lower-bound of 1 on arc (v,w). The definitions of z w and γ w are illustrated in Fig. 5.

We can formulate the following local version of Lemma 6.

Lemma 7

For every non-root vertex v of G we have

Notice that Lemma 6 follows from Lemma 7 by summing over all non-root vertices.

Proof of Lemma 7

Let v be a non-root vertex of G. We define 3 types of vertices in \(\mathcal{I}_{v}\):

-

\(w \in\mathcal{I}_{v}\) is heavy if γ w <1 and z w >2,

-

\(w \in\mathcal{I}_{v}\) is light if γ w <1 and z w ≤2,

-

\(w \in\mathcal{I}_{v}\) is trivial otherwise (i.e. γ w ≥1).

We denote by H v and L v the sets of heavy and light vertices in \(\mathcal{I}_{v}\), respectively. Intuitively, heavy vertices are the ones that contribute to both u ∗ and |f″|, light vertices contribute only to |f″|, and the remaining (i.e. trivial) vertices do not contribute to |f″|.

The following lemma contains two key observations:

Lemma 8

-

1.

z w ≥2−γ w for all w∈H v ∪L v ,

-

2.

\(x^{*}(v) \ge\sum_{w \in H_{v} \cup L_{v}} z_{w} + \max(0,2-\sum_{H_{v} \cup L_{v}} \gamma_{w})\).

Proof

Using the definition of z w we have \(z_{w} = x^{*}_{t_{w}} + x^{*}(B(w)) = x^{*}(\{v\},T_{w})\), where v is the parent of w in T and T w is the subtree of T rooted at w. Moreover, using the definition of γ w we get γ w =x ∗(P v ∖{v},T w ), where P v is the unique path in T connecting the root of T with v. Therefore z w +γ w =x ∗(P v ,T w )=x ∗(V−T w ,T v )≥2. The last two steps follow from the fact that T is a depth first search tree, and the fact that x ∗ is a solution of the Held-Karp LP, respectively.

For the second inequality consider the set \(W=\bigcup_{w \in H_{v} \cup L_{v}} T_{w} \cup\{v\}\). We have x ∗(W,V∖W)≥2 since x ∗ is the solution to the Held-Karp LP. Therefore

and so

We now have

which ends the proof. □

Note that the trivial vertices might have z w >2 and so they might contribute to u ∗. However in that case the proof is quite simple and it will be advantageous for us to get it out of our way. Let w 0 be a trivial vertex with \(z_{w_{0}} > 2\). We then have \(u_{w_{0}} = z_{w_{0}}-2\) by equality (2). What we do is to use w 0 to cancel out the lone 2 in the second factor of the RHS of (3).

Since w 0∉H v ∪L v we thus have

The last inequality holds because we have z w −u w =2 for heavy w and z w −u w =z w ≥2−γ w for light w, where the second step follows from the first observation of Lemma 8. This proves Lemma 7 for the case where there is at least one trivial vertex w with z w >2. Hence it remains to prove the lemma for the case where all trivial vertices have z w ≤2 (and hence u w =0 using equality (2)).

Note that using the second observation of Lemma 8, and the fact that for trivial vertices we have γ w ≥1 and hence max(0,1−γ w )=0, it suffices to prove the following inequality:

and since we now assume that all trivial vertices have z w ≤2, it is enough to prove:

(since z w =2+u w for w∈H v ).

Clearly, if all \(w \in\mathcal{I}_{v}\) are trivial, both sides of the bound are 0 and so it trivially holds. Otherwise, we consider the following two cases:

- Case 1::

-

\(\sum_{w \in H_{v} \cup L_{v}} \gamma_{w} > 2\). Notice that this implies |H v |+|L v |≥3. In this case the RHS of (3) becomes

$$\frac{5}{6} \biggl( \sum_{w \in L_v} z_w + 2\bigl(|H_v|-1\bigr) \biggr) \ge \frac {5}{6} \biggl( \sum_{w \in L_v} (2-\gamma_w) + 2\bigl(|H_v|-1\bigr) \biggr). $$The ratio of the above expression and the LHS of (3) is lower-bounded by the ratio of these same expressions with all γ w =0, i.e. \(\frac{5}{6} \cdot\frac{2(|L_{v}|+|H_{v}|-1)}{|L_{v}|+|H_{v}|}\), which is definitely at least 1, since |L v |+|H v |≥3.

- Case 2::

-

\(\sum_{w \in H_{v} \cup L_{v}} \gamma_{w} \le2\). In this case the RHS of (3) becomes

$$\frac{5}{6} \biggl( \sum_{w \in L_v} z_w + 2-\sum_{w \in H_v \cup L_v} \gamma_w + 2\bigl(|H_v|-1\bigr) \biggr). $$By rearranging the terms and using the inequality z w ≥2−γ w we lower-bound this expression by

$$\frac{5}{6} \biggl( \sum_{w \in L_v} (2-2 \gamma_w) + \sum_{w \in H_v} (2- \gamma_w) \biggr). $$The claim now follows by observing that (2−2γ w )=2(1−γ w ) and 2−γ w ≥2(1−γ w ).

□

5 Applications to Graphic TSP and TSPP

As a consequence of Corollary 1, we get improved approximation factors for graphic TSP and graphic TSPP.

Theorem 12

There is a \(\frac{13}{9}\)-approximation algorithm for graphic TSP.

Proof

By Corollary 1 we get

The TSP tour guaranteed by Theorem 3 has size at most

Notice that the approximation ratio of the resulting algorithm is getting better with \(\operatorname{OPT}_{\mathrm{LP}}\) increasing (with fixed n). Therefore the worst case bound is the one we get for \(\operatorname {OPT}_{\mathrm{LP}}=n\), i.e. \(\frac {10}{9}+\frac{1}{3} = \frac{13}{9}\). □

Remark 4

This analysis is significantly simpler than the one in [8]. Balancing with Christofides’s algorithm is no longer necessary since bounds on approximation ratios for both algorithms are decreasing in \(\operatorname{OPT}_{\mathrm{LP}}\).

Theorem 13

There is a \(\frac{19}{12}+\varepsilon\)-approximation algorithm for graphic TSPP, for any ε>0.

Proof

This proof is very similar to the proof of Theorem 1.2 in [8]. However, the reasoning is slightly simpler, in our opinion. Suppose we want to approximate the graphic TSPP in G=(V,E) with end-vertices s and t. Let G′=(V,E∪{e′}), where e′={s,t}, and let \(\operatorname{OPT}_{\mathrm{LP}}\) denote \(\operatorname{OPT}_{\mathrm{LP}}(G')\). Also, let d be the distance between s and t in G. By Corollary 1 we get

The TSP path guaranteed by Theorem 4 has size at most

It is clear that the quality of this algorithm deteriorates as d increases. We are going to balance it with another algorithm that displays the opposite behaviour. The following approach is folklore: Find a spanning tree T in G and double all edges of T except those that lie on the unique shortest path connecting s and t. The resulting graph has a spanning Eulerian path connecting s and t with at most 2(n−1)−d edges.

Since \(\operatorname{OPT}_{\mathrm{LP}}-1 \le\operatorname {OPT}_{\mathrm{LP}}(G,s,t)\) by Fact 2, which is a lower bound for the optimal solution , the two approximation algorithms have approximation ratios bounded by

and

For a fixed value of \(\operatorname{OPT}_{\mathrm{LP}}\) the first of these expressions is increasing and the second is decreasing in d. Therefore the worst case bound we get for an algorithm that picks the best of the two solutions occurs when

which leads to

For this value of d the approximation ratio is at most

Since \(\operatorname{OPT}_{\mathrm{LP}} \ge n\) this is at most

which proves the claim. □

Remark 5

One might ask why the improvement for the graphic TSP is much bigger than the one for graphic TSPP. The reason for this is that while for large values of \(\operatorname{OPT}/n\) our bound on |f| is significantly better than the one in [8], it is only slightly better when \(\operatorname{OPT}=n\). As it turns out, this is exactly the worst case for TSPP, both in our analysis and in the one in [8]. For TSP however, the worst case value of \(\operatorname{OPT}\) for the analysis in [8] is larger than n.

References

An, H.-C., Kleinberg, R., Shmoys, D.B.: Improving Christofides’ algorithm for the s–t path TSP. In: STOC’12, pp. 875–886 (2012)

Christofides, N.: Worst-case analysis of a new heuristic for the travelling salesman problem. Tech. Rep. 388, Graduate School of Industrial Administration, CMU (1976)

Cornuejols, G., Naddef, D., Fonlupt, J.: The traveling salesman problem on a graph and some related integer polyhedra. Math. Program. 33, 1–27 (1985)

Goemans, M.X., Bertsimas, D.J.: On the parsimonious property of connectivity problems. In: SODA’90, pp. 388–396 (1990)

Grigni, M., Koutsoupias, E., Papadimitriou, C.H.: An approximation scheme for planar graph TSP. In: FOCS’95, pp. 640–645 (1995)

Held, M., Karp, R.M.: The traveling-salesman problem and minimum spanning trees. Oper. Res. 18(6), 1138–1162 (1970)

Hoogeveen, J.A.: Analysis of Christofides’ heuristic: some paths are more difficult than cycles. Oper. Res. Lett. 10(5), 291–295 (1991)

Mömke, T., Svensson, O.: Approximating graphic TSP by matchings. In: FOCS, pp. 560–569 (2011)

Sebő, A.: Eight fifth approximation for TSP paths (2012). arXiv:1209.3523

Sebő, A., Vygen, J.: Shorter tours by nicer ears (2012). arXiv:1201.1870

Mucha, M.: 13/9-approximation for graphic TSP. In: STACS, pp. 30–41 (2012)

Oveis Gharan, S., Saberi, A., Singh, M.: A randomized rounding approach to the travelling salesman problem. In: FOCS, pp. 550–559 (2011)

Papadimitriou, C.H., Yannakakis, M.: The traveling salesman problem with distances one and two. Math. Oper. Res. 18, 1–12 (1993)

Shmoys, D.B., Williamson, D.P.: Analyzing the Held-Karp TSP bound: a monotonicity property with application. Inf. Process. Lett. 35(6), 281–285 (1990)

Wolsey, K.: Heuristic analysis, linear programming and branch and bound. Math. Program. Stud. 13, 121–134 (1980)

Acknowledgements

The author would like to thank Marcin Pilipczuk for pointing out some problems in an early version of this work, and an anonymous referee for his comments, which greatly improved the presentation.

Open Access

This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Author information

Authors and Affiliations

Corresponding author

Additional information

This work was partially supported by the Polish Ministry of Science grant N206 355636 and by the ERC StG project PAAl no. 259515. Preliminary version of this paper was announced at STACS 2012 [11].

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Mucha, M. \(\frac{13}{9}\)-Approximation for Graphic TSP. Theory Comput Syst 55, 640–657 (2014). https://doi.org/10.1007/s00224-012-9439-7

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00224-012-9439-7