Abstract

By elaborating a two-dimensional Selberg sieve with asymptotics and equidistributions of Kloosterman sums from \(\ell \)-adic cohomology, as well as a Bombieri–Vinogradov type mean value theorem for Kloosterman sums in arithmetic progressions, it is proved that for any given primitive Hecke–Maass cusp form of trivial nebentypus, the eigenvalue of the n-th Hecke operator does not coincide with the Kloosterman sum \(\mathrm {Kl}(1,n)\) for infinitely many squarefree n with at most 100 prime factors. This provides a partial negative answer to a problem of Katz on modular structures of Kloosterman sums.

References

Barnet-Lamb, T., Geraghty, D., Harris, M., Taylor, R.: A family of Calabi–Yau varieties and potential automorphy II. Publ. Res. Inst. Math. Sci. 47, 29–98 (2011)

Bombieri, E., Friedlander, J.B., Iwaniec, H.: Primes in arithmetic progressions to large moduli. Acta Math. 156, 203–251 (1986)

Booker, A.: A test for identifying Fourier coefficients of automorphic forms and application to Kloosterman sums. Exp. Math. 9, 571–581 (2000)

Chai, C.-L., Li, W.-C.: Character sums, automorphic forms, equidistribution, and Ramanujan graphs. I. The Kloosterman sum conjecture over function fields. Forum Math. 15, 679–699 (2003)

Clozel, L., Harris, M., Taylor, R.: Automorphy for some \(\ell \)-adic lifts of automorphic mod \(\ell \) Galois representations. Publ. Math. IHÉS 108, 1–181 (2008)

Conrey, J.B., Duke, W., Farmer, D.W.: The distribution of the eigenvalues of Hecke operators. Acta Arith. 78, 405–409 (1997)

Davis, P.J.: Interpolation and Approximation. Blaisdell, New York (1961)

Deligne, P.: La conjecture de Weil II. Publ. Math. IHÉS 52, 137–252 (1980)

Drappeau, S., Maynard, J.: Sign changes of Kloosterman sums and exceptional zeros. Proc. Am. Math. Soc. 147, 61–75 (2019)

Fouvry, É.: Sur le problème des diviseurs de Titchmarsh. J. Reine Angew. Math. 357, 51–76 (1985)

Fouvry, É., Kowalski, E., Michel, Ph.: Algebraic trace functions over the primes. Duke Math. J. 163, 1683–1736 (2014)

Fouvry, É., Kowalski, E., Michel, Ph.: Trace functions over finite fields and their applications. Colloquium De Giorgi 2013 and 2014, vol. 5, pp. 7–35 (2015)

Fouvry, É., Michel, Ph.: Crible asymptotique et sommes de Kloosterman. In: Proceedings of the Session in Analytic Number Theory and Diophantine Equations, Bonner Mathematische Schriften, vol. 360 (2003)

Fouvry, É., Michel, Ph.: Sur le changement de signe des sommes de Kloosterman. Ann. Math. 165, 675–715 (2007)

Friedlander, J.B., Iwaniec, H.: Opera de Cribro. Amer. Math. Soc. Colloq. Publ., vol. 57. AMS, Providence (2010)

Gelbart, S., Jacquet, H.: A relation between automorphic representations of \(GL(2)\) and \(GL(3)\). Ann. Sci. École Norm. Sup. 11, 471–552 (1978)

Halberstam, H., Richert, H.-E.: Sieve Methods. London Mathematical Society Monographs, vol. 4. Academic Press, New York (1974)

Holowinsky, R.: A sieve method for shifted convolution sums. Duke Math. J. 146, 401–448 (2009)

Iwaniec, H.: Spectral Methods of Automorphic Forms, 2nd edn. Grad. Stud. Math., vol. 53. Amer. Math. Soc., Providence, RI; Revista Matemática Iberoamericana, Madrid (2002)

Iwaniec, H., Kowalski, E.: Analytic Number Theory. Amer. Math. Soc. Colloq. Publ., vol. 53. AMS, Providence (2004)

Katz, N.M.: Sommes Exponentielles. Astérisque, vol. 79. Société Mathématique de France (1980)

Katz, N.M.: Gauss Sums, Kloosterman Sums, and Monodromy Groups, Annals of Mathematics Studies, vol. 116. Princeton University Press, Princeton (1988)

Katz, N.M.: Exponential Sums and Differential Equations, Annals of Mathematics Studies, vol. 124. Princeton University Press, Princeton (1990)

Kim, H., Sarnak, P.: Appendix: refined estimates towards the Ramanujan and Selberg conjectures. J. Am. Math. Soc. 16, 175–181 (2003)

Kim, H., Shahidi, F.: Functorial products for \(GL_2\times GL_3\) and functorial symmetric cube for \(GL_2\). C. R. Acad. Sci. Paris Sér. I Math. 331, 599–604 (2000)

Kim, H., Shahidi, F.: Cuspidality of symmetric powers with applications. Duke Math. J. 112, 177–197 (2002)

Louvel, B.: On the distribution of cubic exponential sums. Forum Math. 26, 987–1028 (2014)

Mason, J.C., Handscomb, D.: Chebyshev Polynomials. Chapman & Hall, New York (2003)

Matomäki, K.: A note on signs of Kloosterman sums. Bull. Soc. Math. Fr. 139, 287–295 (2011)

Michel, Ph.: Autour de la conjecture de Sato–Tate pour les sommes de Kloosterman. I. Invent. Math. 121, 61–78 (1995)

Michel, Ph.: Autour de la conjecture de Sato–Tate pour les sommes de Kloosterman. II. Duke Math. J. 92, 221–254 (1998)

Michel, Ph.: Minorations de sommes d’exponentielles. Duke Math. J. 95, 227–240 (1998)

Rudnick, Z., Sarnak, P.: Zeros of principal L-functions and random matrix theory. Duke Math. J. 81, 269–322 (1996)

Sarnak, P.: Statistical properties of eigenvalues of the Hecke operators. In: Analytic Number Theory and Diophantine Problems, Progr. Math., vol. 70. Birkhäuser, Basel (1987)

Selberg, A.: Sieve methods. In: Proc. Sympos. Pure Math., vol. XX, pp. 311–351. Amer. Math. Soc., Providence (1971)

Serre, J.-P.: Abelian \(l\)-Adic Representations and Elliptic Curves. Benjamin, New York (1968)

Serre, J.-P.: Répartition asymptotique des valeurs propres de l’opérateur de Hecke \(T_p\). J. Am. Math. Soc. 10, 75–102 (1997)

Shahidi, F.: Third symmetric power \(L\)-functions for \(GL(2)\). Compos. Math. 70, 245–273 (1989)

Sivak-Fischler, J.: Crible asymptotique et sommes de Kloosterman. Bull. Soc. Math. Fr. 137, 1–62 (2009)

Soundararajan, K.: An inequality for multiplicative functions. J. Number Theory 41, 225–230 (1992)

Xi, P.: Sign changes of Kloosterman sums with almost prime moduli. Monatsh. Math. 177, 141–163 (2015)

Xi, P.: Sign changes of Kloosterman sums with almost prime moduli. II. Int. Math. Res. Not. 4, 1200–1227 (2018)

Acknowledgements

I am very grateful to Professors Étienne Fouvry, Nicholas Katz and Philippe Michel for their valuable suggestions, comments and encouragement. Sincere thanks are also due to the referee for his/her patient comments and corrections, which led to a much more polished version of this article. This work is supported in part by NSFC (Nos. 11971370, 11601413, 11771349, 11801427).

Additional information

Dedicated to Professor Étienne Fouvry on the occasion of his sixty-fifth birthday.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Multiplicative functions against Möbius

We evaluate a weighted average of a general multiplicative function twisted by the Möbius function. This will be used to evaluate the Selberg sieve weights, which are essentially given by (2.2).

Let g be a non-negative multiplicative function with \(0\leqslant g(p)<1\) for each \(p\in {\mathcal {P}}\). Suppose the Dirichlet series

converges absolutely for \(\mathfrak {R}s>1.\) Assume there exist a positive integer \(\kappa \) and some constants \(L,c_0>0\) such that

where \({\mathcal {F}}(s)\) is holomorphic for \(\mathfrak {R}s\geqslant -c_0\) and does not vanish in the region

and \(|1/{\mathcal {F}}(s)|\leqslant L\) for all \(s\in {\mathcal {D}}.\) We also assume

holds for all \(x\geqslant 3\) and

We are interested in the asymptotic behaviour of the sum

where q is a positive integer and \(x,z\geqslant 3\).

Lemma A.1

Let \(q\geqslant 1.\) Under the above assumptions, we have

for all \(A>0,x\geqslant 2,z\geqslant 2\) with \(x\leqslant z^{O(1)}\), where \(s=\log x/\log z,\)

and \(m_\kappa (s)\) is a continuous solution to the differential-difference equation

The implied constant depends on \(A,\kappa ,L\) and \(c_0.\)

Proof

Our argument is inspired by [15, Appendix A.3]. Write \({\mathcal {M}}_\kappa (x;q)\) for \({\mathcal {M}}_\kappa (x,x;q)\). By Mellin inversion, we have

where

Note that

where

which is absolutely convergent and holomorphic for \(t\in {\mathcal {C}}\) by (A.2), (A.4) and (A.5). Hence we find

Shifting the t-contour to the left boundary of \({\mathcal {C}}\) and picking up the residue at the simple pole \(t=0\), we get

for any fixed \(A>0\).

For \(s=\log x/\log z,\) we expect that

for all \(A>0,x\geqslant 2,z\geqslant 2\) and \(q\geqslant 1\). Here c(q) is a constant depending on g and q, and \(m_\kappa (s)\) is a suitable continuous function of \(s>0.\) As shown above, this asymptotic formula holds for \(0<s\leqslant 1,\) in which case we may take

We now move to the case \(s>1\) and prove the asymptotic formula (A.7) by induction. Since \(x\leqslant z^{O(1)},\) the induction has a bounded number of steps. We first consider the difference \({\mathcal {M}}_\kappa (x,z;q)-{\mathcal {M}}_\kappa (x;q)\). Each n contributing to this difference has a prime factor at least z. Hence we may decompose \(n=mp\) uniquely, with p the largest prime factor of n, subject to \(z\leqslant p<x\) and \(m\mid P(p).\) Hence

Substituting (A.7) into (A.8), we get

By partial summation, we find

Hence, by (A.7), \(m_\kappa (s)\) should satisfy the equation

for \(s>1\). Taking the derivative with respect to s gives (A.6). \(\square \)
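The induction step rests on the unique factorization \(n=mp\) of every n counted in \({\mathcal {M}}_\kappa (x,z;q)-{\mathcal {M}}_\kappa (x;q)\). The Python sketch below illustrates this bijection on small ranges. It makes assumptions, because the defining display of \({\mathcal {M}}_\kappa \) is not reproduced here:

- \({\mathcal {M}}_\kappa (x,z;q)\) is taken to run over \(n\leqslant x\) with \(n\mid P(z)\).
- \(P(y)\) is taken to be the product of the primes \(p<y\).
- The weights \(g(n)\) and the dependence on q play no role in the counting, so they are omitted.

The helper names are hypothetical.

```python
def prime_factors(n: int) -> list:
    """Prime factors of n with multiplicity, by trial division (prime_factors(1) == [])."""
    out, d = [], 2
    while d * d <= n:
        while n % d == 0:
            out.append(d)
            n //= d
        d += 1
    if n > 1:
        out.append(n)
    return out


def divides_P(m: int, y: int) -> bool:
    """Assumed convention P(y) = prod_{p < y} p: m | P(y) iff m is squarefree with all primes < y."""
    ps = prime_factors(m)
    return len(ps) == len(set(ps)) and all(p < y for p in ps)


def difference_support(x: int, z: int) -> list:
    """n <= x with n | P(x) but not n | P(z), i.e. n has a prime factor >= z."""
    return [n for n in range(2, x + 1) if divides_P(n, x) and not divides_P(n, z)]


def decomposed(x: int, z: int) -> list:
    """All products m*p with p prime, z <= p < x, m | P(p) and m*p <= x."""
    out = []
    for p in range(max(z, 2), x):
        if prime_factors(p) != [p]:  # keep only primes p
            continue
        out.extend(m * p for m in range(1, x // p + 1) if divides_P(m, p))
    return sorted(out)


print(difference_support(30, 5) == decomposed(30, 5))
```

Equality of the two sorted lists shows two things. Every such n arises from some pair \((m,p)\). Because `difference_support` has no repeated entries, no n arises twice, which is the uniqueness used to pass to (A.8).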

Remark 6

To extend \(m_\kappa (s)\) to all of \({\mathbf {R}},\) we put \(m_\kappa (s)=0\) for \(s\leqslant 0\).

Appendix B: A two-dimensional Selberg sieve with asymptotics

This appendix is devoted to a two-dimensional Selberg sieve that plays an essential role in the proof of Proposition 2.2.

Let h be a non-negative multiplicative function. Suppose the Dirichlet series

converges absolutely for \(\mathfrak {R}s>1.\) Assume there exist some constants \(L,c_0>0\) such that

where \({\mathcal {H}}^*(s)\) is holomorphic for \(\mathfrak {R}s\geqslant 1-c_0\), does not vanish in the region \({\mathcal {D}}\) given by (A.3), and satisfies \(|1/{\mathcal {H}}^*(s)|\leqslant L\) for all \(s\in {\mathcal {D}}\). We also assume

holds for all \(x\geqslant 3\) and

Define

where \(\varvec{\varrho }=(\varrho _d)\) is given as in (2.2) and \(\varPsi \) is a fixed non-negative smooth function supported in [1, 2] with normalization (2.3).

Theorem B.1

Let \(X,D,z\geqslant 3\) with \(X\leqslant D^{O(1)}\) and \(X\leqslant z^{O(1)}.\) Put \(\tau =\log D/\log z\) and \(\sqrt{D}=X^\vartheta \exp (-\sqrt{{\mathcal {L}}}),\vartheta \in ~]0,\frac{1}{2}[.\) Under the above assumptions, we have

where \({\mathfrak {S}}(\vartheta ,\tau )\) is defined by

where

Here \(\sigma (s)\) is the continuous solution to the differential-difference equation

and \({\mathfrak {f}}(s)=m_2(s/2)\) as given by (A.6), i.e., \({\mathfrak {f}}(s)\) is the continuous solution to the differential-difference equation

Remark 7

Theorem B.1 generalizes the case \(k=2\) of [42, Proposition 4.1], allowing a general multiplicative function h and imposing the extra restriction \(d\mid P(z)\). It would be interesting to extend it to general \(k\in {\mathbf {Z}}^+\); we hope to return to this problem in the near future.

Choosing \(z=\sqrt{D}\) makes the restriction \(d\mid P(z)\) redundant, and then \(\tau =2.\) Note that

For \(\vartheta =1/4,\) we find \({\mathfrak {S}}(\vartheta ,\tau )={\mathfrak {S}}(1/4,2)=112/3\), which coincides with \(4{\mathfrak {c}}(2,F)\) in [42, Proposition 4.1] by taking \(F(x)=x^2\) therein.

We now give the proof of Theorem B.1. To begin with, by (8.4) we write

By Mellin inversion,

where, for \(\mathfrak {R}s>1,\)

For \(\mathfrak {R}s>1,\) we first write

Note that

By (B.2), \({\mathcal {H}}^\flat (s,d)\) admits a meromorphic continuation to \(\mathfrak {R}s\geqslant 1-c_0.\) Shifting the s-contour to the left beyond \(\mathfrak {R}s=1,\) we obtain

We compute the residue as

where c is some constant independent of d.

Define \(\beta \) and \(\beta ^*\) to be multiplicative functions supported on squarefree numbers via

Define L to be an additive function supported on squarefree numbers via

Therefore, for each squarefree number d, we have

In this way, we obtain

where

Note that

Hence we may diagonalize \(S_1(X)\) by

where, for each \(l\mid P(z)\) and \(l\leqslant \sqrt{D},\)

From the definition (2.2) of the sieve weights, we find

Applying Lemma A.1 with \(g(p)=1/\beta (p)\) and \(q=l\), we have

Inserting this expression into (B.8), we have

Following [17, Lemma 6.1], we have

with

from which, by partial summation, we find

with \(\tau =\log D/\log z\) and

We now turn to \(S_2(X)\). Since L(d) is an additive function supported on squarefree numbers, we have

where \(d,d_1,d_2\) are implicitly restricted to be pairwise coprime. By the Möbius formula, we have

with

where for each \(l\mid P(z),l\leqslant \sqrt{D},\)

and

Moreover, we have

It then follows that

say.

From (B.9), it follows, by partial summation, that

Up to a negligible contribution, the inner sum over p can be extended to all primes \(p\leqslant z.\) Indeed, the terms with \(p\mid \ell \) contribute at most

We then derive that

where

In a similar manner, we can also show that

where

In conclusion, we obtain

We now evaluate \(S_{22}(X)\). For each squarefree \(l\geqslant 1\), we have

Hence

by (B.9). By partial summation, it follows that

Combining all the above evaluations, we find

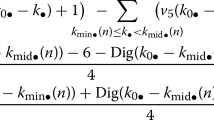

Hence Theorem B.1 follows by observing that \(c_2(\tau )=2c_{21}'(\tau )-c_{21}''(\tau )\) and

by Mertens’ formula.

Appendix C: Chebyshev approximation

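Much of the statistical analysis of \(GL_2\) objects relies on properties of the Chebyshev polynomials of the second kind \(\{U_k(x)\}_{k\geqslant 0}\) with \(x\in [-\,1,1],\) which can be defined recursively by \(U_0(x)=1\), \(U_1(x)=2x\) and \(U_{k+1}(x)=2xU_k(x)-U_{k-1}(x)\) for \(k\geqslant 1\).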

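It is well known that the Chebyshev polynomials \(U_k\) form an orthonormal basis of \(L^2([-\,1, 1])\) with respect to the measure \(\frac{2}{\pi }\sqrt{1-x^2}\mathrm {d}x\). In particular, for any \(f\in {\mathcal {C}}([-\,1,1])\), the expansion \(f(x)=\sum _{k\geqslant 0}\beta _k(f)U_k(x)\) holds in \(L^2\) with \(\beta _k(f)=\frac{2}{\pi }\int _{-1}^{1}f(t)U_k(t)\sqrt{1-t^2}\,\mathrm {d}t\).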
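The recursion and the orthonormality relation are easy to check numerically. The Python sketch below is illustrative only and is not taken from the paper:

- `chebyshev_u` evaluates \(U_k\) by the three-term recursion \(U_0=1\), \(U_1=2x\), \(U_{k+1}=2xU_k-U_{k-1}\).
- `beta` approximates the coefficient \(\beta _k(f)\). We assume \(\beta _k(f)=\frac{2}{\pi }\int _{-1}^{1}f(t)U_k(t)\sqrt{1-t^2}\,\mathrm {d}t\), the standard coefficient for this orthonormal basis and consistent with the value \(\beta _0(f)=\frac{4}{3\pi }+O(\varDelta )\) used in the proof of Lemma C.2.
- The integral is computed after the substitution \(t=\cos \phi \), using the composite midpoint rule.

```python
import math


def chebyshev_u(k: int, x: float) -> float:
    """U_k(x) via U_0 = 1, U_1 = 2x, U_{k+1} = 2x U_k - U_{k-1}."""
    if k == 0:
        return 1.0
    prev, cur = 1.0, 2.0 * x  # U_0(x), U_1(x)
    for _ in range(k - 1):
        prev, cur = cur, 2.0 * x * cur - prev
    return cur


def beta(f, k: int, n: int = 4000) -> float:
    """Approximate beta_k(f) = (2/pi) int_{-1}^{1} f(t) U_k(t) sqrt(1 - t^2) dt.

    With t = cos(phi) the integral becomes
    (2/pi) int_0^pi f(cos phi) U_k(cos phi) sin(phi)^2 dphi,
    which is evaluated by the composite midpoint rule on n cells.
    """
    h = math.pi / n
    total = 0.0
    for i in range(n):
        phi = (i + 0.5) * h
        t = math.cos(phi)
        total += f(t) * chebyshev_u(k, t) * math.sin(phi) ** 2
    return 2.0 / math.pi * total * h


# beta_0(|x|) should be close to 4/(3 pi), the constant in the proof of Lemma C.2.
print(beta(abs, 0), 4 / (3 * math.pi))
```

Orthonormality can be checked the same way: `beta(lambda t: chebyshev_u(j, t), k)` is close to 1 when `j == k` and close to 0 otherwise.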

In practice, the following truncated approximation, whose prototype is [28, Theorem 5.14], is often more effective and useful.

Lemma C.1

Suppose \(f:[-\,1,1]\rightarrow {\mathbf {R}}\) has \(C+1\) continuous derivatives on \([-\,1,1]\) with \(C\geqslant 2\). Then for each positive integer \(K>C,\) there holds the approximation

uniformly in \(x\in [-\,1,1]\), where the implied constant depends only on C.

Proof

For each \(K>C\), we introduce the operator \(\vartheta _{K}\) acting on \(f \in {\mathcal {C}}^{C+1}([-\,1,1])\) by

This gives the remainder in the approximation of f by Chebyshev polynomials of degree at most K. Clearly \(\vartheta _{K}f\in {\mathcal {C}}^{C+1}([-\,1,1])\). Moreover, for each fixed \(x\in [-\,1,1]\), the map \(f\mapsto (\vartheta _{K}f)(x)\) is a bounded linear functional on \({\mathcal {C}}^{C+1}([-\,1,1])\) that vanishes on polynomials of degree \(\leqslant K\).

Using a theorem of Peano ([7, Theorem 3.7.1]), we find that

where

with

Put \(x=\cos \theta ,t=\cos \phi \), so that

We deduce from integration by parts that

where the implied constant is absolute. For any \(x,t\in [-\,1,1]\), Stirling’s formula \(\log \Gamma (k) = (k-1/2)\log k - k +\log \sqrt{2\pi } + O(1/k)\) gives

from which, together with (C.2), we conclude that

This completes the proof of the lemma. \(\square \)
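The explicit bound of Lemma C.1 is not reproduced in the surviving text. The Python sketch below illustrates only its qualitative content, using a test function of our own choosing: \(f(x)=|x|^5\), which has four continuous derivatives on \([-\,1,1]\) (so \(C=3\)). It measures the grid sup-norm error of the truncated expansion \(\sum _{k\leqslant K}\beta _k(f)U_k(x)\) and shows that the error shrinks as K grows.

The coefficients use the assumed formula \(\beta _k(f)=\frac{2}{\pi }\int _{-1}^{1}f(t)U_k(t)\sqrt{1-t^2}\,\mathrm {d}t\) after the substitution \(t=\cos \phi \), together with the identity \(U_k(\cos \phi )\sin \phi =\sin ((k+1)\phi )\). The helper names are hypothetical.

```python
import math


def chebyshev_u_all(K: int, x: float) -> list:
    """Return [U_0(x), ..., U_K(x)] using U_{k+1} = 2x U_k - U_{k-1}."""
    vals = [1.0, 2.0 * x]
    for _ in range(K - 1):
        vals.append(2.0 * x * vals[-1] - vals[-2])
    return vals[: K + 1]


def beta(f, k: int, n: int = 4000) -> float:
    """beta_k(f) = (2/pi) int_0^pi f(cos phi) sin((k+1) phi) sin(phi) dphi, midpoint rule."""
    h = math.pi / n
    s = 0.0
    for i in range(n):
        phi = (i + 0.5) * h
        s += f(math.cos(phi)) * math.sin((k + 1) * phi) * math.sin(phi)
    return 2.0 / math.pi * s * h


def sup_error(f, K: int, grid: int = 401) -> float:
    """Max over an equispaced grid of |f(x) - sum_{k <= K} beta_k(f) U_k(x)|."""
    coeffs = [beta(f, k) for k in range(K + 1)]
    worst = 0.0
    for j in range(grid):
        x = -1.0 + 2.0 * j / (grid - 1)
        approx = sum(c * u for c, u in zip(coeffs, chebyshev_u_all(K, x)))
        worst = max(worst, abs(f(x) - approx))
    return worst


def f(x: float) -> float:
    # |x|^5 has four continuous derivatives on [-1, 1] (f'''' = 120|x|).
    return abs(x) ** 5


for K in (4, 8, 16, 32):
    print(K, sup_error(f, K))
```

The observed decay rate is a property of this particular f and should not be read as the bound stated in Lemma C.1.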

We now derive a truncated approximation to |x| on average.

Lemma C.2

Let k, J be two positive integers and \(K>1.\) Suppose \(\{x_j\}_{1\leqslant j\leqslant J}\subset [-\,1,1]\) and \({\mathbf {y}}:=\{y_j\}_{1\leqslant j\leqslant J}\subset {\mathbf {C}}\) are two sequences satisfying

with some \(B\geqslant 1\) and \(U>0\). Then we have

where \(\delta (B)=1\) if \(B=1\) and \(\delta (B)=0\) otherwise, and the implied constant depends only on B.

Proof

To apply Lemma C.1, we introduce an even smooth function \(R:[-\,1,1]\rightarrow [0,1]\), i.e. \(R(x)=R(-\,x)\), such that

where \(\varDelta \in ~]0,1[\) is a parameter to be chosen later. We also assume that the derivatives satisfy

for each \(j\geqslant 0\) with an implied constant depending only on j.
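The defining properties of R sit in a display that is not reproduced here. The Python sketch below therefore assumes that R vanishes on \([-\varDelta ,\varDelta ]\) and equals 1 for \(|x|\geqslant 2\varDelta \). This is consistent with the later statements that \(f'''\) and \(f(x)-|x|\) are supported in \([-\,2\varDelta ,2\varDelta ]\).

The sketch shows one standard way to build such a cutoff, by rescaling a \(C^\infty \) step function. It is an illustration, not the author's construction, and the names `smooth_step`, `cutoff_R` and `f` are hypothetical.

```python
import math


def smooth_step(t: float) -> float:
    """C^infinity step: 0 for t <= 0, 1 for t >= 1, built from exp(-1/s)."""
    def g(s: float) -> float:
        return math.exp(-1.0 / s) if s > 0 else 0.0
    a, b = g(t), g(1.0 - t)
    return a / (a + b)  # a + b > 0 for every real t


def cutoff_R(x: float, delta: float) -> float:
    """Hypothetical even cutoff: 0 on [-delta, delta], 1 for |x| >= 2*delta.

    Each x-derivative of smooth_step((|x| - delta) / delta) picks up a factor
    1/delta, which is where bounds of the shape R^{(j)}(x) << delta^{-j} come from.
    """
    return smooth_step((abs(x) - delta) / delta)


def f(x: float, delta: float) -> float:
    """The smoothed absolute value f(x) = R(x)|x|."""
    return cutoff_R(x, delta) * abs(x)


print(f(0.05, 0.1), f(0.15, 0.1), f(0.5, 0.1))
```

Since R vanishes identically near 0, the kink of |x| at the origin disappears, and \(|f(x)-|x||\leqslant |x|<2\varDelta \) wherever the two differ.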

Put \(f(x):=R(x)|x|.\) Since R vanishes in a neighbourhood of \(x=0,\) the function f is smooth, and we may apply Lemma C.1 to f with \(C=2\), getting

Note that \(f'''(x)\) vanishes unless \(x\in [-\,2\varDelta ,-\varDelta ]\cup [\varDelta ,2\varDelta ]\), in which case we have \(f'''(x)\ll \varDelta ^{-2}.\) It then follows that

Moreover, \(f(x)-|x|\) vanishes unless \(x\in [-\,2\varDelta ,2\varDelta ]\), so that \(f(x)=|x|+O(\varDelta )\) and \(\beta _0(f)=\frac{4}{3\pi }+O(\varDelta )\). Therefore,

We claim that

for all \(k\geqslant 1\) with an absolute implied constant. It then follows that

where the implied constant depends only on B. To balance the first and last terms, we take \(\varDelta =\Vert {\mathbf {y}}\Vert _1/(UK^B)\), which yields

as expected.
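The main term \(\beta _0(f)=\frac{4}{3\pi }+O(\varDelta )\) used above follows from a short computation, recorded here for convenience. It assumes, as the text implies but does not display, that \(\beta _k(f)=\frac{2}{\pi }\int _{-1}^{1}f(t)U_k(t)\sqrt{1-t^2}\,\mathrm {d}t\). It uses only three facts: \(U_0=1\), \(0\leqslant R\leqslant 1\), and \(f(t)-|t|\) vanishes outside \([-\,2\varDelta ,2\varDelta ]\).

```latex
\beta_0(|x|)=\frac{2}{\pi}\int_{-1}^{1}|t|\sqrt{1-t^2}\,\mathrm{d}t
=\frac{4}{\pi}\int_{0}^{1}t\sqrt{1-t^2}\,\mathrm{d}t
=\frac{4}{\pi}\Big[-\frac{1}{3}(1-t^2)^{3/2}\Big]_{0}^{1}
=\frac{4}{3\pi},
\qquad
|\beta_0(f)-\beta_0(|x|)|\leqslant\frac{2}{\pi}\int_{-2\varDelta}^{2\varDelta}|t|\,\mathrm{d}t
=\frac{8\varDelta^2}{\pi}.
```

Since \(\varDelta <1\), the error \(8\varDelta ^2/\pi \) is in particular \(O(\varDelta )\).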

It remains to prove the upper bound (C.4). Since \(U_k(\cos \theta )=\sin ((k+1)\theta )/\sin \theta \), it suffices to show that

for all \(k\geqslant 3\) with an absolute implied constant. From the elementary identity \(2\sin \alpha \sin \beta =\cos (\alpha -\beta )-\cos (\alpha +\beta )\), it follows that

where, for \(\ell \geqslant 2\) and a function \(g\in {\mathcal {C}}^2([-\,1,1])\),

Integrating by parts, we derive that

and also

It then follows that

We further have

with

Note that

and

Hence

The first term can be evaluated as

Integrating by parts again, we see that the second term is

Hence \(\beta _{k,1}\ll k^{-2}\), and similarly \(\beta _{k,2}\ll k^{-2}.\) These yield (C.5), and thus (C.4), which completes the proof of the lemma. \(\square \)

Note that \(U_k(\cos \theta )=\mathrm {sym}_k(\theta ).\) Taking \(x_j=\cos \theta _j\) in Lemma C.2, we obtain the following truncated approximation for \(|\cos |\).

Lemma C.3

Let k, J be two positive integers and \(K>1.\) Suppose \(\{\theta _j\}_{1\leqslant j\leqslant J}\subset [0,\pi ]\) and \({\mathbf {y}}:=\{y_j\}_{1\leqslant j\leqslant J}\subset {\mathbf {C}}\) are two sequences satisfying

with some \(B\geqslant 1\) and \(U>0.\) Then we have

where \(\delta (B)\) is defined as in Lemma C.2.
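Lemma C.3 rests on the identity \(U_k(\cos \theta )=\mathrm {sym}_k(\theta )\). Under \(x=\cos \theta \), the measure \(\frac{2}{\pi }\sqrt{1-x^2}\,\mathrm {d}x\) becomes \(\frac{2}{\pi }\sin ^2\theta \,\mathrm {d}\theta \) on \([0,\pi ]\). The definition of \(\mathrm {sym}_k\) is not part of this section. The Python sketch below assumes the usual one, the trace of the k-th symmetric power of \(\mathrm {diag}(e^{i\theta },e^{-i\theta })\), namely \(\sum _{j=0}^{k}e^{i(k-2j)\theta }\), and checks the identity numerically. The function names are hypothetical.

```python
import cmath
import math


def sym_k(k: int, theta: float) -> float:
    """Assumed definition: trace of Sym^k of diag(e^{i theta}, e^{-i theta})."""
    return sum(cmath.exp(1j * (k - 2 * j) * theta) for j in range(k + 1)).real


def chebyshev_u(k: int, x: float) -> float:
    """U_k(x) via U_0 = 1, U_1 = 2x, U_{k+1} = 2x U_k - U_{k-1}."""
    if k == 0:
        return 1.0
    prev, cur = 1.0, 2.0 * x
    for _ in range(k - 1):
        prev, cur = cur, 2.0 * x * cur - prev
    return cur


theta = 1.1
for k in range(6):
    # The two columns should agree up to rounding error.
    print(k, sym_k(k, theta), chebyshev_u(k, math.cos(theta)))
```

The sum over \(j\) is a geometric series. Summing it gives \(\sin ((k+1)\theta )/\sin \theta \), which is the same closed form used for \(U_k(\cos \theta )\) in the proof of Lemma C.2.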