Abstract

Previous studies investigating the anterior cingulate cortex (ACC) have relied on a number of tasks that impose cognitive control and attentional demands. In this fMRI study, we tested the model that ACC functions as an attentional network in the processing of language. We employed a paradigm that requires the processing of concurrent linguistic information, predicting that the cognitive costs imposed by competing trials would engage ACC. Subjects were presented with sentences in which the semantic content conflicted with the prosodic intonation (CONF condition), randomly interspersed with sentences that conveyed coherent discourse components (NOCONF condition). We observed activation of the rostral ACC and the middle frontal gyrus when the NOCONF condition was subtracted from the CONF condition. Our findings provide evidence for the involvement of the rostral ACC in the processing of complex competing linguistic stimuli, supporting theories that claim its relevance as part of the cortical attentional circuit. The processing of emotional prosody involved a bilateral network encompassing the superior and middle temporal cortices. This evidence agrees with previous research on the neuronal network that supports the processing of emotional information.


Introduction

Recently, great effort has been invested to clarify the functions of the anterior cingulate cortex (ACC) and to provide a unifying concept for its significance. ACC occupies the medial wall of the cerebral hemispheres and includes Brodmann’s areas (BA) 24, 25, and 32 (Koski and Paus 2000). Given its limbic location, and its extensive connections with motor, parietal, and prefrontal cortices (Koski and Paus 2000; Vogt et al. 1995), ACC seems to play a key role in the regulation of affective, cognitive, motor and autonomic functions, interfacing ancient and more recent brain structures (Bush et al. 2000; Critchley et al. 2003).

Neuroimaging evidence has attributed a key role to this brain structure, conceptualised as a crucial part of the attentional system (Kondo et al. 2004a, b; Luks et al. 2002; Benedict et al. 2002; Bush et al. 2000; Davis et al. 2000; Devinsky et al. 1995). ACC has been claimed to be implicated in a form of attention known as attention for action, thought to be allocated when routine functions become inadequate or when new or stronger environmental demands force ongoing processing or behaviour to be adjusted (Posner and DiGirolamo 1998; Posner and Dehaene 1994).

This model predicts the engagement of ACC whenever greater attentional costs are imposed by highly demanding cognitive states, such as response competition induced by competing response tendencies, and in situations characterised by a high incidence of errors.

The significance of ACC for cognitive processing has been assessed with tasks that elicit conflict, or cognitive interference, for example by employing stimuli characterised by conflicting features (Grachev et al. 2001; Leung et al. 2000). A well-known interference task is the Stroop Color-Word task (Stroop 1935), which requires subjects to name the colour in which words are visually presented under congruent, neutral and incongruent conditions (in the incongruent condition, the colour named by the word conflicts with the colour in which the word is printed). A number of variants of this paradigm have been explored, such as the “Counting Stroop” and the “Emotional Counting Stroop” tasks, introduced by Bush and colleagues (1998; 1999; 2000) as instruments for imaging the functions of the rostral and ventral subdivisions of ACC.

However, research on the role of ACC in the processing of conflicting information has so far employed low-complexity stimuli, such as the single words used in the Stroop task. The recruitment of ACC during complex verbal interaction remains a challenging topic, and investigating it might refine our understanding of the impact of attentional resources on language processing.

In the present study, we explored the relevance of ACC for language processing by investigating its engagement in the processing of conflicting semantic and prosodic information. We focused on sentences because complex linguistic stimuli can be regarded as the basic constituent of ordinary speech communication.

In human verbal communication, the concordance between affective tone and propositional content cannot be considered a conditio sine qua non for the achievement of satisfactory interaction. Instead, discrepancies between the messages conveyed by these two channels of communication can be used to engender a subtle and intriguing interplay of interpretations, creating forms of expressiveness rich in complexity, which might be regarded as appreciable in human verbal interaction. We took advantage of this inherent feature of verbal communication to investigate the functions of ACC.

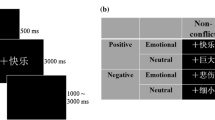

In the present study, sets of incoherent and sets of coherent sentences were employed. Incongruence (CONF condition) was obtained by pairing prosodic and semantic information of incompatible emotional valence (e.g., sad prosody with happy semantics). Subjects were instructed to concentrate on the emotional prosodic intonation of the sentences, ignoring their meaning, and were required to carry out a prosody identification task. Although no attention was supposed to be allocated to the propositional content, we assumed that the CONF condition would strongly affect the amount of cognitive resources recruited in order to perform correctly. We assumed that the semantic content would elicit a dominant but inappropriate response tendency, which would conflict with the prosodic intonation and give rise to response competition once the subject realised that he or she needed to overcome this tendency in order to perform correctly. We predicted that this condition would impose increased processing costs, met by allocating attentional resources supplied by ACC.

Behaviourally, we hypothesized that the cognitive demands imposed by the conflicting information would be mirrored by significantly poorer performance, reflected in lower accuracy rates.

Methods

Participants

Ten male native speakers of German (aged 24–38 years, mean age 25), right-handed according to the Edinburgh Handedness Inventory (Oldfield 1971), participated in the study. None of the recruited subjects had a neurological or psychiatric history or was on medication. All had normal hearing (audiometric thresholds ≤20 dB HL at 500, 1,000, 2,000, 4,000, and 6,000 Hz), gave written informed consent and were paid for participation. The protocol was approved by the Ethics Committee of the Medical Faculty of the University of Tübingen, Germany.

Task and stimuli

The stimuli consisted of four sets of German sentences from the Tübingen Affect Battery (Breitenstein et al. 1996), whose semantic content described happy, sad, angry or neutral scenarios, for a total of 16 sentences. Each of the 16 sentences was read by a professional actress with sad, happy, angry and neutral emotional intonation, producing a total of 64 samples.

| Example sentences | |
|---|---|
| Sad semantics | Der Mann hilft dem sterbenden Sohn. |
| | The man helps the dying son. |
| Happy semantics | Das Fest war nett und lustig. |
| | The party was nice and funny. |
| Angry semantics | Diese Schufte stahlen mein ganzes Geld. |
| | These rascals stole all my money. |
| Neutral semantics | Das Kind geht in den Zoo. |
| | The child goes to the zoo. |

The stimuli were digitally recorded in a sound-treated booth (IAC 1604) with a 16-bit resolution at a 22,050-Hz sampling rate. All stimulus material was scaled to the same overall root mean square level and presented binaurally. The sentences employed in the present study lasted for 2 s and were randomly presented.

The NOCONF condition comprised the sentences that conveyed coherent semantic-prosodic information (i.e., with the same emotional valence) and the sentences with a neutral semantics or prosody, where no conflict was present.

The aim of the present study was to uncover the neural network that sustains the processing of different types of semantic-prosodic incongruence. For this reason, the different types of conflict (i.e., sad–happy, sad–angry, and angry–happy) were pooled into a single CONF condition.
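The stimulus design described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function and field names are hypothetical, the full crossing of 16 sentences with 4 intonations is taken from the text, and a trial is labelled CONF only when semantics and prosody carry two different non-neutral emotions, which is how the text describes the pooled conflict types.

```python
from itertools import product

# The four emotional categories named in the text.
EMOTIONS = ["happy", "sad", "angry", "neutral"]
# 16 sentences spread over 4 semantic sets gives 4 sentences per set.
SENTENCES_PER_SET = 4


def condition(semantics, prosody):
    """Label a trial as CONF or NOCONF.

    Assumption: conflict requires two different, non-neutral emotions.
    Matching valences and any trial with neutral semantics or neutral
    prosody are treated as NOCONF, following the description in the text.
    """
    if "neutral" not in (semantics, prosody) and semantics != prosody:
        return "CONF"
    return "NOCONF"


def build_stimuli():
    """Cross every sentence with every intonation (16 x 4 = 64 samples)."""
    stimuli = []
    for semantics, idx, prosody in product(
        EMOTIONS, range(SENTENCES_PER_SET), EMOTIONS
    ):
        stimuli.append(
            {
                "semantics": semantics,
                "sentence": idx,
                "prosody": prosody,
                "condition": condition(semantics, prosody),
            }
        )
    return stimuli


def count_conditions(stimuli):
    """Count how many samples fall into each condition."""
    counts = {"CONF": 0, "NOCONF": 0}
    for item in stimuli:
        counts[item["condition"]] += 1
    return counts


if __name__ == "__main__":
    print(count_conditions(build_stimuli()))
```

Under these assumptions the sketch yields 24 CONF and 40 NOCONF samples; the split itself is not reported in the text and follows only from the labelling rule assumed here.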

Subjects listened to each sentence in full and were instructed to identify the emotional intonation as quickly as possible, within a 4-s limit. Prosody judgements were given by button press with both hands (i.e., by selecting, from four buttons, the key corresponding to the intended emotional intonation). No feedback about the subjects’ performance was provided. A fixed inter-stimulus interval (ISI) of 12 s was chosen. Error rates were collected as a behavioural measure; incorrect or failed responses were counted as errors. Stimulus presentation and response collection were carried out using the E-prime 1.1 software (Schneider et al. 2002). At the end of the session, subjects were debriefed and their ability to reliably hear and understand the auditory stimuli was verified.

Imaging parameters

Participants lay supine in a 1.5 tesla whole-body scanner (Siemens Vision, Erlangen, Germany). To minimise movement, the head was fixed within the head coil by means of rubber foam. Subjects were provided with fMRI-compatible earphones (Baumgart et al. 1988), which allowed them to listen to the auditory stimuli presented during the experiment and attenuated the scanner noise.

Echo-planar images (EPIs) sensitive to the blood oxygen level-dependent (BOLD) effect, covering 27 axial slices (4 mm thickness, 1 mm gap), were acquired (FOV 192 × 192 mm; TE 40 ms; TR 2 s; flip angle 90°; matrix 64 × 64).

High-resolution T1-weighted images served as anatomical reference (MPRAGE, 176 sagittal slices, voxel size 1 × 1 × 1 mm³).

The first four functional images acquired at the beginning of every session were excluded in order to avoid measurements preceding T1 equilibrium.

fMRI and behavioural data analysis

Image pre-processing and statistical analysis were conducted using SPM2 (Wellcome Trust Centre for Neuroimaging, London, UK; http://www.fil.ion.ucl.ac.uk/spm/). Movement parameters were estimated and functional images were realigned to correct for movement artefacts. The mean functional images obtained from each subject were coregistered with the corresponding anatomical image.

The anatomical images were normalised using the Montreal Neurological Institute (MNI) templates implemented in SPM2. The estimated parameters obtained for each subject were used for normalisation of the functional EPI images. Functional images were smoothed using a Gaussian kernel with a full width at half maximum (FWHM) of 12 mm.

Event-related responses were modelled by convolving the event onsets with a synthetic hemodynamic response function, and the fMRI data were analysed within the framework of the General Linear Model as implemented in SPM2 (Friston et al. 1995).
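To make the modelling step concrete, the following sketch builds a single regressor by convolving event onsets with a hemodynamic response function sampled at the TR of 2 s reported above. It is not the SPM2 implementation: the double-gamma shape (response peaking near 5 to 6 s, undershoot around 16 s, amplitude ratio 1/6) is a commonly used approximation assumed here, and all function names are hypothetical.

```python
import math

TR = 2.0  # repetition time in seconds, as reported in the text


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def hrf(t):
    """Assumed double-gamma response: early peak minus a late undershoot."""
    return gamma_pdf(t, 6) - gamma_pdf(t, 16) / 6.0


def build_regressor(onsets_s, n_scans, tr=TR, hrf_len_s=32.0):
    """Convolve a stick function at the event onsets with the HRF.

    onsets_s: event onsets in seconds, here the start of each sentence.
    n_scans:  number of volumes in the modelled time series.
    """
    # Stick function: one impulse at the scan closest to each onset.
    sticks = [0.0] * n_scans
    for onset in onsets_s:
        idx = int(round(onset / tr))
        if 0 <= idx < n_scans:
            sticks[idx] += 1.0

    # HRF kernel sampled once per TR.
    kernel = [hrf(k * tr) for k in range(int(hrf_len_s / tr) + 1)]

    # Discrete convolution, truncated to the length of the time series.
    regressor = [0.0] * n_scans
    for i, amplitude in enumerate(sticks):
        if amplitude == 0.0:
            continue
        for k, h in enumerate(kernel):
            if i + k < n_scans:
                regressor[i + k] += amplitude * h
    return regressor


if __name__ == "__main__":
    # Hypothetical onsets; the real trial timing depends on how the
    # 2-s sentences and the 12-s ISI were scheduled.
    print(build_regressor([0.0, 14.0, 28.0], n_scans=30))
```

In a General Linear Model, a regressor of this kind forms one column of the design matrix, and the fitted weight for that column estimates the response amplitude for the corresponding condition.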

First-level fixed effects analyses were carried out for every subject. Event onsets were set at the beginning of each sentence presentation and ISIs were modelled as the baseline. The computed contrast images were then entered into second-level random effects analyses and four different contrasts were calculated:

- (a) “main effect” of identification of prosody, where all sets of sentences were contrasted to the baseline (i.e., sentence judgements minus ISIs) to determine the main activation effect (i.e., the brain activation pattern recruited by the detection of emotional intonations).

- (b) “emotional prosody effect”, to determine the brain activation pattern recruited by the processing of selective emotional intonations. To compute this comparison, sentences with neutral intonations were subtracted from sentences with emotional intonations (i.e., “happy minus neutral”, “sad minus neutral”, and “angry minus neutral”).

- (c) “coherent emotional prosody”, with the aim of isolating the neuronal network supporting the processing of coherent prosody, obtained by subtracting the CONF condition from the NOCONF condition, and

- (d) “incoherent emotional prosody”, with the aim of highlighting the brain network recruited by the increased cognitive costs imposed by incongruent speech. This contrast was investigated by subtracting the NOCONF condition from the CONF condition.
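The four contrasts listed above can be written as weight vectors over condition regressors. The sketch below is illustrative only: the regressor names are hypothetical labels rather than the names used in the original SPM2 design, and the beta values in the usage example are invented to show the arithmetic.

```python
# Contrast weights keyed by hypothetical regressor names.
# Contrast (a) compares all sentences with the implicit baseline (the ISIs),
# so it carries a single positive weight; (b) to (d) are differences
# between two conditions and their weights sum to zero.
CONTRASTS = {
    "a_main_effect": {"all_sentences": 1.0},
    "b_happy_minus_neutral": {"happy_prosody": 1.0, "neutral_prosody": -1.0},
    "b_sad_minus_neutral": {"sad_prosody": 1.0, "neutral_prosody": -1.0},
    "b_angry_minus_neutral": {"angry_prosody": 1.0, "neutral_prosody": -1.0},
    "c_coherent": {"NOCONF": 1.0, "CONF": -1.0},
    "d_incoherent": {"CONF": 1.0, "NOCONF": -1.0},
}


def contrast_value(weights, betas):
    """Weighted sum of parameter estimates for one voxel."""
    return sum(w * betas[name] for name, w in weights.items())


if __name__ == "__main__":
    # Invented parameter estimates for a single voxel.
    betas = {"CONF": 2.0, "NOCONF": 0.5}
    print(contrast_value(CONTRASTS["d_incoherent"], betas))
```

Contrasts (c) and (d) are mirror images of each other, so a voxel with a positive value in one has a negative value of the same size in the other.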

Statistical inference was based on the resulting t statistics for each voxel and was corrected for multiple comparisons across the whole brain by controlling the false discovery rate (Genovese et al. 2002). A sphericity correction was applied to take into account between-subjects variance.
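The thresholding approach of Genovese et al. (2002) controls the false discovery rate. A minimal sketch of the standard Benjamini-Hochberg step-up procedure is given below, assuming independent or positively dependent tests; the function name and the example P values are hypothetical, and this is not the SPM2 code.

```python
def fdr_threshold(p_values, q=0.05):
    """Return the largest P value declared significant at FDR level q.

    Benjamini-Hochberg step-up rule: sort the m P values in ascending
    order and find the largest rank i such that p_(i) <= q * i / m.
    Returns None when no test survives.
    """
    ordered = sorted(p_values)
    m = len(ordered)
    cutoff = None
    for rank, p in enumerate(ordered, start=1):
        if p <= q * rank / m:
            cutoff = p
    return cutoff


if __name__ == "__main__":
    # Invented voxel-wise P values, for illustration only.
    example = [0.001, 0.008, 0.039, 0.041, 0.042,
               0.060, 0.074, 0.205, 0.212, 0.216]
    print(fdr_threshold(example, q=0.05))
```

Every voxel whose P value is at or below the returned cutoff is declared active; compared with a Bonferroni correction, the cutoff adapts to how much signal is present in the data.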

Statistical analyses of the behavioural data (i.e., levels of accuracy) were computed using the statistical package SPSS 11.1 (SPSS Inc., Chicago, IL, USA). A paired t test between the CONF and the NOCONF conditions was conducted, and P values below 0.05 were considered significant.
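For readers who wish to reproduce this kind of comparison without SPSS, the sketch below computes a paired t statistic and a two-sided P value using only the Python standard library. The P value is obtained by numerically integrating the Student t density with Simpson's rule, which is an assumption of this sketch rather than the method used by SPSS; function names are hypothetical.

```python
import math


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for two matched samples."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return mean / math.sqrt(var / n), n - 1


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    norm = math.gamma((df + 1) / 2) / (
        math.sqrt(df * math.pi) * math.gamma(df / 2)
    )
    return norm * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t, df, steps=20000):
    """Two-sided P value via Simpson's rule on [0, |t|] (steps must be even)."""
    upper = abs(t)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central = total * h / 3  # probability mass between 0 and |t|
    return max(0.0, 1 - 2 * central)


if __name__ == "__main__":
    # With ten subjects, a paired test has nine degrees of freedom.
    print(two_sided_p(5.05, 9))
```

With ten subjects the test has nine degrees of freedom, and a t value of 5.05 lies beyond the two-sided critical value for P = 0.001, so the sketch returns a P value below 0.001 for that input.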

Results

The task of identifying emotional intonations [contrast (a)] elicited the activation of a bilateral brain network (see Table 1 and Fig. 1) comprising the middle and superior temporal gyri (BA 21, BA 41, and BA 42), the triangular part of the inferior frontal gyrus (BA 45), and the supplementary motor areas (BA 6).

Fig. 1 Significant activations elicited by the identification of emotional prosody. Statistical parametric maps are based on random effects analyses. All sets of sentences were contrasted with the rest condition (baseline). Parametric images were corrected for multiple comparisons and thresholded at P < 0.001. At the bottom of the figure, significant differential activations resulting from the comparison between sentences characterised by incoherence between prosody and semantics and sentences characterised by semantics-prosody coherence are shown. Second-level statistical parametric maps are based on random effects analyses. All images were thresholded at P < 0.05 (corrected for multiple comparisons)

The investigation of the cortical network underlying the identification of different emotional intonations (i.e., happy, sad, angry vs. neutral) and coherent prosody (i.e., the NOCONF minus the CONF condition) failed to yield any significant results.

The processing of incoherent versus coherent sentences [contrast (d)] elicited the activation of the rostral part of the ACC (BA 32) and of the middle frontal gyrus (BA 10) (see Table 1 and Fig. 1).

Behaviourally, subjects succeeded in correctly identifying the emotional intonation of 73 ± 9% (mean ± SD) of all stimuli. Moreover, subjects identified 83 ± 12% (mean ± SD) of the sentences characterised by congruency (NOCONF condition) and 71 ± 9% (mean ± SD) of the stimuli belonging to the CONF condition (see Fig. 2).

Fig. 2 Levels of accuracy for coherence and incoherence between semantics and affective prosody. The figure depicts mean levels of accuracy for the detection of emotional intonations, for the NOCONF (trials conveying coherent prosodic and semantic valences) and the CONF conditions (trials conveying incoherent prosodic and semantic valences). Error bars show mean ± 1 SE. Statistical analyses on accuracy levels revealed a significant difference between the two conditions (paired t test, P < 0.05, df = 9, t = 5.05)

Paired t test analysis on behavioural data showed significant differences in the accuracy levels between CONF and NOCONF conditions (P < 0.001, df = 9, t = 5.05).

Discussion

In the main contrast of interest, the NOCONF condition was subtracted from the CONF condition in order to investigate the brain network involved in assessing conflicting linguistic information. Consistent with our assumptions, the comparison between the two conditions revealed the activation of the rostral ACC (BA 32). Further activation was observed in the middle frontal gyrus (BA 10).

The aim of the present study was to test the hypothesis that attributes a functional role in cognitive control and attentional workload to ACC, identifying this brain site as an important area for the allocation of attentional resources during the processing of competing information and the mediation of response selection (Kondo et al. 2004a, b; Bush et al. 2000; Barch et al. 2000; Devinsky et al. 1995; Posner and Dehaene 1994).

With respect to the CONF condition, we assumed that the valenced propositional content of the sentences would trigger a potent emotional response tendency, which would cause the subjects to fail in correctly identifying the intended intonation. Furthermore, we assumed that the allocation of attentional resources, which was required in order to override this tendency and perform correctly, would engender the recruitment of ACC. This response pattern was not expected in response to the NOCONF condition.

Our result supports a key role for the rostral ACC in attentional control. As expected, identifying emotional intonation in the discordant condition proved to be more difficult, as indicated by a significantly lower level of accuracy (see Fig. 2). Moreover, the selective activation of the rostral ACC during processing of prosodic intonations supports its functional specialisation for the assessment of emotional salience (Bush et al. 2000). Bush and colleagues (2000) devised two Stroop-like tasks to investigate the role of the rostral/ventral and dorsal subdivisions of ACC in the processing of emotional and cognitive interference. To generate cognitive interference, Bush and colleagues presented sets of number words and instructed the subjects to report how many words each set contained. The incongruence between the meaning of the words and the number of words contained in each set elicited the activation of the dorsal ACC. To elicit emotional interference, the number words were replaced with emotionally valenced words such as “murder”. As reported by these authors, the Emotional Counting Stroop engendered the selective activation of the rostral and ventral areas of ACC. In line with those studies, our result supports a role of this brain site in the processing of conflicting emotional information.

Further activation was observed in the middle frontal gyrus (BA 10). The coactivation of ACC and prefrontal regions is often observed in studies that investigate conflict (for a review, refer to Duncan and Owen 2000).

BA 10 occupies the anterior part of the frontal lobe and has been implicated in a wide range of cognitive, emotional and motivational processes (for a review, refer to Ramnani and Owen 2004). Recent findings suggest an important role of this brain region in decision-making processes during response selection (Fleck et al. 2006; Rowe et al. 2000).

With respect to the task investigated in the present study, we assume that selecting the correct responses to incoherent items imposes additional cognitive workload: during the processing of incongruent sentences, selection of task-relevant information (i.e., the emotional intonation of the sentence) is more difficult and must be carried out despite cognitive interference engendered by task-irrelevant information. Such a process is likely to have increased the difficulty of response selection, thus recruiting BA 10.

The detection of emotional intonations elicited BOLD responses in a bilateral brain network encompassing the superior (BA 41–42) and middle (BA 21) temporal gyri, the pars triangularis of the inferior frontal gyrus (BA 45), and the supplementary motor area (BA 6).

As mentioned, the task required the subjects to identify affective prosody expressed by emotional (happy, sad, and angry) or neutral intonations, ignoring the semantics conveyed by the propositional meaning picturing happy, sad, angry, or neutral scenarios.

Although subjects were not required to pay attention to the content of the sentences, and although the structure of the stimuli was kept as simple as possible, we assume that encoding of syntactic and semantic information must have taken place to some extent. The behavioural data showing the impact of the CONF condition on the accuracy levels confirm this hypothesis, leading us to assume that the conflict created by the semantic valence strongly affected the task. Therefore, the activation pattern revealed by this contrast presumably mirrors the processing of prosodic and semantic information together, and in order to account for these results both components must be taken into consideration.

The investigation of the cognitive processing of emotional prosody has gained considerable interest in recent years. A conceptualisation that first emerged from studies of patients with right-hemisphere lesions, and that has also been supported by neuroimaging evidence, highlights the dominant engagement of the right hemisphere in assessing emotional prosody regardless of valence or processing mode (Wildgruber et al. 2005; Dogil 2003; Adolphs et al. 2000; Borod 2000; Buchanan et al. 2000; Baum and Pell 1999; Blonder et al. 1991).

Specifically, activation of the right middle temporal gyrus has been observed in tasks where detection of emotional prosody was investigated. This brain site has been hypothesized to be a prime processor for prosody and its significance as a conveying area of auditory and emotional information processing has been sustained by recent imaging evidence (Mitchell et al. 2003).

On the other hand, processing of propositional semantics has been consistently attributed to a left-lateralised fronto-temporal network (Friederici and Alter 2004; Wildgruber et al. 2004; Mitchell et al. 2003; Vikingstad et al. 2000). Right-hemisphere recruitment for prosody and left-hemisphere recruitment for semantics were documented by Mitchell and colleagues (2003), who described activation of the right superior temporal gyrus in response to simple congruent emotional prosody, and activation of the contralateral left area for semantic encoding of the same stimuli.

In consideration of these accounts we are led to consider the activation pattern elicited by the main effect [contrast (a)] as a result of combined and indissociable demands imposed by the processing of semantic and prosodic information. This explanation accounts for the bilateral involvement of the superior temporal gyri and the left inferior frontal gyrus.

Moreover, this explanation appears to be consistent with imaging evidence proposed by Buchanan and co-workers (2000), who found bilateral temporal activation in response to both emotional and verbal aspects of language.

The engagement of the right inferior frontal gyrus seems to be ascribable to processing of prosodic features of the auditorily presented stimuli, as reported by previous imaging findings (Friederici and Alter 2004; Kotz et al. 2003).

Contrast (a) also revealed bilateral activation of the supplementary motor areas (BA 6), which can presumably be attributed to the preparation of the motor responses (button presses) required by the task.

Investigations of brain networks underlying the processing of pure emotional (i.e., happy, sad, and angry vs. neutral) and coherent (NOCONF vs. CONF) prosody failed to yield any significant results. This finding supports previous neuroimaging evidence (Wildgruber et al. 2005) indicating that processing of affective intonations is sustained by a common cortical network, and leads us to suggest that comprehension of coherent speech imposes less of a workload than processing of incoherent information.

Conclusions

In our study, we aimed to test the “attentional” hypothesis proposed to explain the cognitive relevance of the rostral ACC with respect to the processing of incoherent and demanding verbal information. In line with the results of previous imaging research, the current study emphasises the key role of the rostral ACC and the middle frontal gyrus in allocating the attentional resources required when processing conflicting linguistic information.

References

Adolphs R, Damasio H, Tranel D, Cooper G, Damasio AR (2000) A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. J Neurosci 20:2683–2690

Barch DM, Braver TS, Sabb FW, Noll DC (2000) Anterior cingulate and the monitoring of response conflict: evidence from an fMRI study of overt verb generation. J Cogn Neurosci 12:298–309

Baum S, Pell M (1999) The neural bases of prosody: insights from lesion studies and neuroimaging. Aphasiology 13:581–608

Baumgart F, Kaulisch T, Tempelmann C, Gaschler-Markefski B, Tegeler C, Schindler F, Stiller D, Scheich H (1988) Electrodynamic headphones and woofers for application in magnetic resonance imaging scanners. Med Phys 25:2068–2070

Benedict RH, Shucard DW, Santa Maria MP, Shucard JL, Abara JP, Coad ML, Wack D, Sawusch J, Lockwood A (2002) Covert auditory attention generates activation in the rostral/dorsal anterior cingulate cortex. J Cogn Neurosci 14:637–645

Blonder LX, Bowers D, Heilman KM (1991) The role of the right hemisphere in emotional communication. Brain 114:1115–1127

Borod JC (2000) The neuropsychology of emotion. Oxford University Press, New York

Breitenstein C, Daum I, Ackermann H, Lütgehetmann R, Müller E (1996) Erfassung der Emotionswahrnehmung bei zentralnervösen Läsionen und Erkrankungen: Psychometrische Gütekriterien der “Tübinger Affekt Batterie.” Neur Rehab 2:93–101

Buchanan TW, Lutz K, Mirzazade S, Specht K, Shah NJ, Zilles K, Jancke L (2000) Recognition of emotional prosody and verbal components of spoken language: an fMRI study. Brain Res Cogn Brain Res 9:227–238

Bush G, Frazier JA, Rauch SL, Seidman LJ, Whalen PJ, Jenike MA, Rosen BR, Biederman J (1999) Anterior cingulate cortex dysfunction in attention-deficit/hyperactivity disorder revealed by fMRI and the counting Stroop. Biol Psychiatry 45:1542–1552

Bush G, Whalen PJ, Rosen BR, Jenike MA, McInerney SC, Rauch SL (1998) The counting Stroop: an interference task specialized for functional neuroimaging-validation study with functional MRI. Hum Brain Mapp 6:270–282

Bush G, Luu P, Posner MI (2000) Cognitive and emotional influences in anterior cingulate cortex. Trends Cogn Sci 4:215–222

Collins DL, Neelin P, Peters TM, Evans AC (1994) Automatic 3D intersubject registration of MR volumetric data in standardized Talairach space. J Comput Assist Tomogr 18:192–205

Critchley HD, Mathias CJ, O’Doherty JO, Zanini SJ, Dewar BK, Cipolotti L, Shallice T, Dolan RJ (2003) Human cingulate cortex and autonomic control: converging neuroimaging and clinical evidence. Brain 126:2139–2152

Davis KD, Hutchison WD, Lozano AM, Tasker RR, Dostrovsky JO (2000) Human anterior cingulate cortex neurons modulated by attention-demanding tasks. J Neurophysiol 83:3575–3577

Devinsky O, Morrell MJ, Vogt BA (1995) Contributions of anterior cingulate cortex to behaviour. Brain 118:279–306

Dogil G (2003) Understanding Prosody. In: Rickheit G, Herrmann T, Deutsch W (eds) Psycholinguistics: an international handbook. Mouton de Gruyter, Berlin, pp 544–566

Duncan J, Owen AM (2000) Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends Neurosci 23:475–483

Fleck MS, Daselaar SM, Dobbins IG, Cabeza R (2006) Role of prefrontal and anterior cingulate regions in decision-making processes shared by memory and nonmemory tasks. Cereb Cortex 16:1623–1630

Friederici AD, Alter K (2004) Lateralization of auditory language functions: a dynamic dual pathway model. Brain Lang 89:267–276

Friston KJ, Holmes AP, Worsley KJ, Poline JB, Frith CD, Frackowiak RS (1995) Statistical parametric maps in functional imaging: a general linear approach. Hum Brain Mapp 2:189–210

Genovese CR, Lazar NA, Nichols T (2002) Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage 15:870–878

Grachev ID, Kumar R, Ramachandran TS, Szeverenyi NM (2001) Cognitive interference is associated with neuronal marker N-acetyl aspartate in the anterior cingulate cortex: an in vivo (1)H-MRS study of the Stroop Color-Word task. Mol Psychiatry 6:529–539

Kondo H, Morishita M, Osaka N, Osaka M, Fukuyama H, Shibasaki H (2004a) Functional roles of the cingulo-frontal network in performance on working memory. Neuroimage 21:2–14

Kondo H, Osaka N, Osaka M (2004b) Cooperation of the anterior cingulate cortex and dorsolateral prefrontal cortex for attention shifting. Neuroimage 23:670–679

Koski L, Paus T (2000) Functional connectivity of the anterior cingulate cortex within the human frontal lobe: a brain-mapping meta-analysis. Exp Brain Res 133:55–65

Kotz SA, Meyer M, Alter K, Besson M, von Cramon DY, Friederici AD (2003) On the lateralization of emotional prosody: an event-related functional MR investigation. Brain Lang 86:366–376

Leung HC, Skudlarski P, Gatenby JC, Peterson BS, Gore JC (2000) An event-related functional MRI study of the stroop color word interference task. Cereb Cortex 10:552–560

Luks TL, Simpson GV, Feiwell RJ, Miller WL (2002) Evidence for anterior cingulate cortex involvement in monitoring preparatory attentional set. Neuroimage 17:792–802

Mitchell RL, Elliott R, Barry M, Cruttenden A, Woodruff PW (2003) The neural response to emotional prosody as revealed by functional magnetic resonance imaging. Neuropsychologia 41:1410–1421

Oldfield RC (1971) The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9:97–113

Posner MI, DiGirolamo G (1998) Executive attention: conflict, target detection and cognitive control. In: Parasuraman R (ed) The attentive brain. MIT Press, Cambridge, pp 401–423

Posner MI, Dehaene S (1994) Attentional networks. Trends Neurosci 17:75–79

Ramnani N, Owen AM (2004) Anterior prefrontal cortex: insights into function from anatomy and neuroimaging. Nat Rev Neurosci 5:184–194

Rowe JB, Toni I, Josephs O, Frackowiak RS, Passingham RE (2000) The prefrontal cortex: response selection or maintenance within working memory? Science 288:1656–1660

Schneider W, Eschman A, Zuccolotto A (2002) E-Prime user’s guide. Psychology Software Tools Inc, Pittsburgh

Stroop JR (1935) Studies of interference in serial verbal reactions. J Exp Psychol 18:643–661

Vikingstad EM, George KP, Johnson AF, Cao Y (2000) Cortical language lateralization in right handed normal subjects using functional magnetic resonance imaging. J Neurol Sci 175:17–27

Vogt BA, Nimchinsky EA, Vogt LJ, Hof PR (1995) Human cingulate cortex: surface features flat maps and cytoarchitecture. J Comp Neurol 359:490–506

Wildgruber D, Hertrich I, Riecker A, Erb M, Anders S, Grodd W, Ackermann H (2004) Distinct frontal regions subserve evaluation of linguistic and emotional aspects of speech intonation. Cereb Cortex 14:1384–1389

Wildgruber D, Riecker A, Hertrich I, Erb M, Grodd W, Ethofer T, Ackermann H (2005) Identification of emotional intonation evaluated by fMRI. Neuroimage 24:1233–1241

Acknowledgments

The authors thank Michael Erb for his assistance given during fMRI data acquisition and Ingo Hertrich for providing the sound files employed in the present experiment. Supported by the Graduiertenkolleg “Sprachliche Repräsentationen und ihre Interpretation” (University of Stuttgart), and by the Deutsche Forschungsgemeinschaft (DFG), Germany, and the National Institutes of Health (NIH, NINDS), USA.


Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License ( https://creativecommons.org/licenses/by-nc/2.0 ), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Rota, G., Veit, R., Nardo, D. et al. Processing of inconsistent emotional information: an fMRI study. Exp Brain Res 186, 401–407 (2008). https://doi.org/10.1007/s00221-007-1242-3


DOI: https://doi.org/10.1007/s00221-007-1242-3