Abstract

We study q-pushTASEP, a discrete time interacting particle system whose distribution is related to the q-Whittaker measure. We prove a uniform in N lower tail bound on the fluctuation scale for the location \(x_N(N)\) of the right-most particle at time N when started from step initial condition. Our argument relies on a map from the q-Whittaker measure to a model of periodic last passage percolation (LPP) with geometric weights in an infinite strip that was recently established in Imamura et al. (Skew RSK dynamics: Greene invariants, affine crystals and applications to q-Whittaker polynomials. arXiv:2106.11922, 2021). By a path routing argument we bound the passage time in the periodic environment in terms of an infinite sum of independent passage times for standard LPP on \(N\times N\) squares with geometric weights whose parameters decay geometrically. To prove our tail bound result we combine this reduction with a concentration inequality, and a crucial new technical result—lower tail bounds on \(N\times N\) last passage times uniformly over all \(N \in {\mathbb {N}}\) and all the geometric parameters in (0, 1). This technical result uses Widom’s trick (Widom in Int Math Res Notices 2002(9):455–464, 2002) and an adaptation of an idea of Ledoux introduced for the GUE (Ledoux, in: GAFA seminar notes, 2005) to reduce the uniform lower tail bound to uniform asymptotics for very high moments, up to order N, of the Meixner ensemble. This we accomplish by first obtaining sharp uniform estimates for factorial moments of the Meixner ensemble from an explicit combinatorial formula of Ledoux (Electron J Probab 10:1116–1146, 2005), and translating them to polynomial bounds via a further careful analysis and delicate cancellation.

Notes

Let \(\Delta _{k-1}(T+1)=x_{k-1}(T+1) - x_{k-1}(T)\) and j be the value the probability mass function in the RHS of the previous display is evaluated at. When \(\Delta _{k-1}(T+1)\ge \textrm{gap}_k(T)\), the probability mass function is zero unless \(j\ge \Delta _{k-1}(T+1) - \textrm{gap}_k(T)\), as otherwise the factor \((q^{\textrm{gap}_k(T)}; q^{-1})_{\Delta _{k-1}(T+1) - j}\) is zero. It is immediate from the definition that this implies \(x_k(T)+ j\ge x_{k-1}(T+1) + 1\), thus ordering and exclusion are maintained.

References

Aggarwal, A., Corwin, I., Ghosal, P.: The ASEP speed process. Adv. Math. 422, 109004 (2023)

Amir, G., Corwin, I., Quastel, J.: Probability distribution of the free energy of the continuum directed random polymer in 1+1 dimensions. Commun. Pure Appl. Math. 64(4), 466–537 (2011)

Auffinger, A., Damron, M., Hanson, J.: 50 Years of First-Passage Percolation, vol. 68. American Mathematical Society (2017)

Aggarwal, A.: Universality for lozenge tiling local statistics. Ann. Math. (2023) (To appear)

Augeri, F., Guionnet, A., Husson, J.: Large deviations for the largest eigenvalue of sub-Gaussian matrices. Commun. Math. Phys. 383, 997–1050 (2021)

Aggarwal, A., Huang, J.: Edge statistics for lozenge tilings of polygons, II: Airy line ensemble. arXiv:2108.12874 (2021)

Barraquand, G., Borodin, A., Corwin, I.: Half-space Macdonald processes. In: Forum of Mathematics, Pi, vol. 8, p. e11. Cambridge University Press (2020)

Borodin, A., Corwin, I.: Macdonald processes. Probab. Theory Relat. Fields 158(1), 225–400 (2014)

Barraquand, G., Corwin, I., Dimitrov, E.: Fluctuations of the log-gamma polymer free energy with general parameters and slopes. Probab. Theory Relat. Fields 181(1), 113–195 (2021)

Borodin, A., Corwin, I., Ferrari, P., Vető, B.: Height fluctuations for the stationary KPZ equation. Math. Phys. Anal. Geom. 18(1), 1–95 (2015)

Baik, J., Deift, P., McLaughlin, K.D.T., Miller, P., Zhou, X.: Optimal tail estimates for directed last passage site percolation with geometric random variables. Adv. Theor. Math. Phys. 5(6), 1–41 (2001)

Borodin, A., Ferrari, P.: Large time asymptotics of growth models on space-like paths I: PushASEP. Electron. J. Probab. 13, 1380–1418 (2008)

Borodin, A., Gorin, V.: Moments match between the KPZ equation and the Airy point process. SIGMA. Symmetry Integrability Geom. Methods Appl. 12, 102 (2016)

Basu, R., Ganguly, S.: Time correlation exponents in last passage percolation. In: In and Out of Equilibrium 3: Celebrating Vladas Sidoravicius, pp. 101–123 (2021)

Basu, R., Ganguly, S., Hammond, A., Hegde, M.: Interlacing and scaling exponents for the geodesic watermelon in last passage percolation. Commun. Math. Phys. 393(3), 1241–1309 (2022)

Basu, R., Ganguly, S., Hegde, M., Krishnapur, M.: Lower deviations in \(\beta \)-ensembles and law of iterated logarithm in last passage percolation. Isr. J. Math. 242(1), 291–324 (2021)

Basu, R., Ganguly, S., Sly, A.: Upper tail large deviations in first passage percolation. Commun. Pure Appl. Math. 74(8), 1577–1640 (2021)

Basu, R., Ganguly, S., Zhang, L.: Temporal correlation in last passage percolation with flat initial condition via Brownian comparison. Commun. Math. Phys. 383, 1805–1888 (2021)

Basu, R., Hoffman, C., Sly, A.: Nonexistence of bigeodesics in integrable models of last passage percolation. arXiv:1811.04908 (2018)

Baik, J., Liu, Z.: Fluctuations of TASEP on a ring in relaxation time scale. Commun. Pure Appl. Math. 71(4), 747–813 (2018)

Baik, J., Liu, Z.: Multipoint distribution of periodic TASEP. J. Am. Math. Soc. 32(3), 609–674 (2019)

Baik, J., Liu, Z.: Periodic TASEP with general initial conditions. Probab. Theory Relat. Fields 179(3), 1047–1144 (2021)

Borodin, A., Olshanski, G.: The ASEP and determinantal point processes. Commun. Math. Phys. 353(2), 853–903 (2017)

Betea, D., Occelli, A.: Peaks of cylindric plane partitions. arXiv:2111.15538 (2021)

Borodin, A.: Periodic Schur process and cylindric partitions. Duke Math. J. 140(3), 391–468 (2007)

Borodin, A.: Stochastic higher spin six vertex model and Macdonald measures. J. Math. Phys. 59(2), 023301 (2018)

Borodin, A., Petrov, L.: Nearest neighbor Markov dynamics on Macdonald processes. Adv. Math. 300, 71–155 (2016)

Basu, R., Sidoravicius, V., Sly, A.: Last passage percolation with a defect line and the solution of the slow bond problem. arXiv:1408.3464 (2014)

Basu, R., Sarkar, S., Sly, A.: Coalescence of geodesics in exactly solvable models of last passage percolation. J. Math. Phys. 60(9), 093301 (2019)

Cafasso, M., Claeys, T.: A Riemann–Hilbert approach to the lower tail of the Kardar–Parisi–Zhang equation. Commun. Pure Appl. Math. 75(3), 493–540 (2022)

Cohen, P., Cunden, F.D., O’Connell, N.: Moments of discrete orthogonal polynomial ensembles. Electron. J. Probab. 25, 1–19 (2020)

Cook, N.A., Ducatez, R., Guionnet, A.: Full large deviation principles for the largest eigenvalue of sub-Gaussian Wigner matrices. arXiv:2302.14823 (2023)

Corwin, I., Ghosal, P.: KPZ equation tails for general initial data. Electron. J. Probab. 25 (2020)

Corwin, I., Ghosal, P.: Lower tail of the KPZ equation. Duke Math. J. 169(7), 1329–1395 (2020)

Corwin, I., Hammond, A.: Brownian Gibbs property for Airy line ensembles. Invent. Math. 195(2), 441–508 (2014)

Corwin, I., Hammond, A.: KPZ line ensemble. Probab. Theory Relat. Fields 166(1), 67–185 (2016)

Calvert, J., Hammond, A., Hegde, M.: Brownian structure in the KPZ fixed point. Astérisque (2023) (To appear)

Dauvergne, D.: Wiener densities for the Airy line ensemble. arXiv:2302.00097 (2023)

Dauvergne, D., Ortmann, J., Virág, B.: The directed landscape. Acta Math. (2022) (To appear)

Dauvergne, D., Virág, B.: Bulk properties of the Airy line ensemble. Ann. Probab. 49(4), 1738–1777 (2021)

Dauvergne, D., Virág, B.: The scaling limit of the longest increasing subsequence. arXiv:2104.08210 (2021)

Emrah, E., Georgiou, N., Ortmann, J.: Coupling derivation of optimal-order central moment bounds in exponential last-passage percolation. arXiv:2204.06613 (2022)

Emrah, E., Janjigian, C., Seppäläinen, T.: Right-tail moderate deviations in the exponential last-passage percolation. arXiv:2004.04285 (2020)

Emrah, E., Janjigian, C., Seppäläinen, T.: Optimal-order exit point bounds in exponential last-passage percolation via the coupling technique. arXiv:2105.09402 (2021)

Flores, G., Seppäläinen, T., Valkó, B.: Fluctuation exponents for directed polymers in the intermediate disorder regime. Electron. J. Probab. 19, 1–28 (2014)

Guionnet, A., Husson, J.: Asymptotics of \(k\) dimensional spherical integrals and applications. arXiv:2101.01983 (2021)

Ganguly, S., Hegde, M.: Sharp upper tail estimates and limit shapes for the KPZ equation via the tangent method. arXiv:2208.08922 (2022)

Ganguly, S., Hegde, M.: Optimal tail exponents in general last passage percolation via bootstrapping and geodesic geometry. Probab. Theory Relat. Fields 186(1), 221–284 (2023)

Hammond, A.: A patchwork quilt sewn from Brownian fabric: regularity of polymer weight profiles in Brownian last passage percolation. In: Forum of Mathematics, Pi, vol. 7. Cambridge University Press (2019)

Hammond, A.: Brownian regularity for the Airy line ensemble, and multi-polymer watermelons in Brownian last passage percolation. Mem. Am. Math. Soc. 277(1363) (2022)

Huang, J.: Edge statistics for lozenge tilings of polygons, I: concentration of height function on strip domains. arXiv:2108.12872 (2021)

Huang, J., Yang, F., Zhang, L.: Pearcey universality at cusps of polygonal lozenge tiling. arXiv:2306.01178 (2023)

Imamura, T., Mucciconi, M., Sasamoto, T.: Skew RSK dynamics: Greene invariants, affine crystals and applications to \(q\)-Whittaker polynomials. arXiv:2106.11922 (2021)

Imamura, T., Mucciconi, M., Sasamoto, T.: Solvable models in the KPZ class: approach through periodic and free boundary Schur measures. arXiv:2204.08420 (2022)

Imamura, T., Sasamoto, T.: Determinantal structures in the O’Connell–Yor directed random polymer model. J. Stat. Phys. 163(4), 675–713 (2016)

Johansson, K.: Shape fluctuations and random matrices. Commun. Math. Phys. 209(2), 437–476 (2000)

Johansson, K.: Transversal fluctuations for increasing subsequences on the plane. Probab. Theory Relat. Fields 116(4), 445–456 (2000)

Johansson, K.: Discrete orthogonal polynomial ensembles and the Plancherel measure. Ann. Math. 153(1), 259–296 (2001)

Kuchibhotla, A.K., Chakrabortty, A.: Moving beyond sub-Gaussianity in high-dimensional statistics: applications in covariance estimation and linear regression. arXiv:1804.02605 (2018)

Ledoux, M.: Deviation inequalities on largest eigenvalues. In: GAFA Seminar Notes (2005)

Ledoux, M.: Distributions of invariant ensembles from the classical orthogonal polynomials: the discrete case. Electron. J. Probab. 10, 1116–1146 (2005)

Ledoux, M., Rider, B.: Small deviations for beta ensembles. Electron. J. Probab. 15, 1319–1343 (2010)

Landon, B., Sosoe, P.: Tail bounds for the O’Connell–Yor polymer. arXiv:2209.12704 (2022)

Landon, B., Sosoe, P.: Upper tail bounds for stationary KPZ models. arXiv:2208.01507 (2022)

Macdonald, I.G.: Symmetric Functions and Hall Polynomials. Oxford University Press, Oxford (1998)

Masoero, D.: A Laplace’s method for series and the semiclassical analysis of epidemiological models. arXiv:1403.5532 (2014)

Matveev, K., Petrov, L.: \(q\)-randomized Robinson–Schensted–Knuth correspondences and random polymers. Ann. Inst. Henri Poincaré D 4(1), 1–123 (2016)

Mansour, T., Shabani, A.S.: Some inequalities for the \(q\)-digamma function. J. Inequal. Pure Appl. Math. 10(1) (2009)

O’Connell, N., Yor, M.: Brownian analogues of Burke’s theorem. Stoch. Process. Appl. 96(2), 285–304 (2001)

Ramirez, J., Rider, B., Virág, B.: Beta ensembles, stochastic Airy spectrum, and a diffusion. J. Am. Math. Soc. 24(4), 919–944 (2011)

Seppäläinen, T.: Scaling for a one-dimensional directed polymer with boundary conditions. Ann. Probab. 40(1), 19–73 (2012)

Sagan, B.E., Stanley, R.P.: Robinson–Schensted algorithms for skew tableaux. J. Comb. Theory Ser. A 55(2), 161–193 (1990)

Stein, E.M., Shakarchi, R.: Fourier Analysis: An Introduction, vol. 1. Princeton University Press, Princeton (2011)

Schmid, D., Sly, A.: Mixing times for the TASEP on the circle. arXiv:2203.11896 (2022)

Sarkar, S., Sly, A., Zhang, L.: Infinite order phase transition in the slow bond TASEP. arXiv:2109.04563 (2021)

Sarkar, S., Virág, B.: Brownian absolute continuity of the KPZ fixed point with arbitrary initial condition. Ann. Probab. (2021) (To appear)

Vető, B.: Asymptotic fluctuations of geometric \(q\)-TASEP, geometric \(q\)-PushTASEP and \(q\)-PushASEP. Stoch. Process. Appl. 148, 227–266 (2022)

Widom, H.: On convergence of moments for random Young tableaux and a random growth model. Int. Math. Res. Notices 2002(9), 455–464 (2002)

Acknowledgements

The authors thank Matteo Mucciconi for explaining the proof of Theorem 1.3, as well as Philippe Sosoe and Benjamin Landon for sharing their preprint [LS22a] with us in advance. We also thank the anonymous referees for their thorough reading of the paper and helpful comments. I.C. was partially supported by the NSF through grants DMS:1937254, DMS:1811143, DMS:1664650, as well as through a Packard Fellowship in Science and Engineering, a Simons Fellowship, and a W.M. Keck Foundation Science and Engineering Grant. I.C. also thanks the Pacific Institute for the Mathematical Sciences (PIMS) and Centre de Recherches Mathématiques (CRM), where some materials were developed in conjunction with the lectures he gave at the PIMS-CRM summer school in probability (which is partially supported by NSF grant DMS:1952466). M.H. was partially supported by NSF grant DMS:1937254.

Additional information

Communicated by K. Johansson.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Proof of the LPP-q-Whittaker Connection

In this appendix we give the proof of Theorem 1.3 relating the observable \(x_N(T)\) to an infinite last passage problem in a periodic and inhomogeneous environment. As mentioned, the proof goes through an equivalence to the q-Whittaker measure, and we start by introducing it.

A.1 q-Whittaker polynomials and measure

Definition A.1

(q-Whittaker polynomial). For a skew partition \(\mu /\lambda \), the skew q-Whittaker polynomial in n variables is defined recursively by the branching rule

where, for a single variable \(z\in {\mathbb {C}}\) (recalling the q-binomial coefficient defined in (1)),

For a partition \(\mu \), the q-Whittaker polynomial is given by the skew q-Whittaker polynomial with \(\lambda \) taken to be the empty partition. The q-Whittaker polynomial is a special case (\(t=0\)) of the Macdonald polynomials, for which a comprehensive reference is [Mac98, Section VI].

For a partition \(\mu \), we also define  by

Definition A.2

(q-Whittaker measure). The q-Whittaker measure \({\mathbb {W}}_{a;b}^{(q)}\), first introduced in [BC14], is the measure on the set of all partitions given by

where \(a = (a_1, \ldots , a_n)\) and \(b = (b_1, \ldots , b_t)\) satisfy \(a_i, b_j\in (0,1)\), and \(\Pi (a;b)\) is a normalization constant given explicitly by

We may now record the important connection between \(x_N(T)\) and the q-Whittaker measure which holds under general parameter choices and general times, when started from the narrow-wedge initial condition:

Theorem A.3

(Section 3.1 of [MP16]). Let a, b be specializations of parameters respectively \((a_1, \ldots , a_N) \in (0, 1)^N\) and \((b_1, \ldots , b_T ) \in (0, 1)^T\). Let \(\mu \sim {\mathbb {W}}^{(q)}_{a;b}\) and let x(T) be a q-pushTASEP under initial conditions \(x_k(0) = k\) for \(k = 1, \ldots , N\). Then,

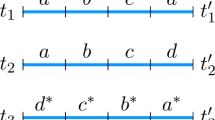

A.2 q-Whittaker to LPP

Next we give the proof of Theorem 1.3, which was explained to us by Matteo Mucciconi. As indicated in Sect. 1.5, the proof we give relies heavily on the work [IMS21]. Before proceeding we introduce some terms that will be needed. First, a tableau is a filling of a Young diagram with non-negative integers. It is called semi-standard if the entries in the rows and columns are non-decreasing, from left to right and top to bottom respectively. A vertically strict tableau is a tableau of non-negative integers in which the columns are strictly increasing from top to bottom, but there is no constraint on the row entries. A skew tableau has its shape given by a pair of partitions \((\lambda , \mu )\) such that the Young diagram of \(\lambda \) contains that of \(\mu \), and should be thought of as a filling of the boxes corresponding to \(\lambda \setminus \mu \). A semi-standard skew tableau is defined analogously to a semi-standard tableau.
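The definitions above can be made concrete with a short sketch. It represents a (non-skew) tableau as a list of rows, which is an assumption of this illustration, and all function names are hypothetical. The `strict_columns` flag is included because many references require strictly increasing columns for semi-standard tableaux; the default follows the wording used here.

```python
def is_young_shape(rows):
    """Row lengths must be weakly decreasing from top to bottom."""
    return all(len(rows[i]) >= len(rows[i + 1]) for i in range(len(rows) - 1))


def columns(rows):
    """Columns of the filling, read from top to bottom."""
    width = len(rows[0]) if rows else 0
    return [[r[j] for r in rows if j < len(r)] for j in range(width)]


def weakly_increasing(seq):
    return all(x <= y for x, y in zip(seq, seq[1:]))


def strictly_increasing(seq):
    return all(x < y for x, y in zip(seq, seq[1:]))


def is_vertically_strict(rows):
    """Columns strictly increase downwards; rows are unconstrained."""
    return is_young_shape(rows) and all(strictly_increasing(c) for c in columns(rows))


def is_semistandard(rows, strict_columns=False):
    """Rows weakly increase; columns weakly (default) or strictly increase."""
    col_ok = strictly_increasing if strict_columns else weakly_increasing
    return (
        is_young_shape(rows)
        and all(weakly_increasing(r) for r in rows)
        and all(col_ok(c) for c in columns(rows))
    )
```

For instance, the filling with rows (2, 0, 1) and (3, 1) is vertically strict but not semi-standard, since its first row is not non-decreasing.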

As we saw, Theorem A.3 on the relation between \(x_N(T)\) and the q-Whittaker measure reduces the proof of Theorem 1.3 to proving the equality in distribution of the LPP value L and the length of the top row of a Young diagram \(\lambda \) sampled from the q-Whittaker measure. In fact, we will prove a stronger statement which relates all the row lengths of \(\lambda \) to appropriate last passage percolation observables. For this, we let \(L^{(j)}\) be the maximum weight over all collections of j disjoint paths, one path starting from (i, 1) for each \(1\le i\le j\) and all going to \(\infty \) downwards, where the weight of a collection of paths is the sum of the weights of the individual paths.

Theorem A.4

For \(1\le j\le \min (N,T)\), let \(L^{(j)}\) be as defined above in the environment defined in Sect. 1.4 and let \(\mu \sim {\mathbb {W}}^{(q)}_{a;b}\) with \(a_i, b_j \in (0,1)\) for all \((i,j)\in \{1, \ldots , N\}\times \{1, \ldots , T\}\). Then, jointly across \(1\le j\le \min (N,T)\),

Theorem 1.3 follows immediately from combining the \(j=1\) case of Theorem A.4 with Theorem A.3.

Proof of Theorem A.4

We prove this in the case \(T=N\). It is easy to see that the same proof applies to the \(T< N\) case by setting \(m_{(i,j);k} = 0\) (in the same notation as below) for \(j=T+1, \ldots , N\), and similarly for \(N<T\).

We will make use of two bijections. The first, known as the Sagan–Stanley correspondence and denoted by \(\textsf{SS}\), is a bijection between the set of \((M, \nu )\) and the collection of pairs (P, Q) of semi-standard tableaux of general skew shape, where \(M=({m_{(i,j);k}})_{1\le i,j\le N, k=0,1, \ldots }\) is a filling of the infinite strip by non-negative integers which are eventually all zero and \(\nu \) is a partition. The second is a bijection \(\Upsilon \) introduced in [IMS21] between the collection of such (P, Q) and tuples of the form \((V,W; \kappa , \nu )\), where V, W are vertically strict tableaux of shape \(\mu \) (which is a function of P, Q) and \(\kappa \in {\mathcal {K}}(\mu )\) is an ordered tuple of non-negative integers with certain constraints on the entries depending on \(\mu \), encoded by the set \({\mathcal {K}}(\mu )\). We will not need the definition of \({\mathcal {K}}(\mu )\) for our arguments, but the interested reader is referred to [IMS21, Eq. (1.22)] for it.

If one applies \(\textsf{SS}\) to \((M, \nu )\) and then \(\Upsilon \) to the result, the \(\nu \) in the resulting output \((V,W; \kappa , \nu )\) is the same as the starting one (see the line following [IMS21, Theorem 1.4]). Thus the \(\nu \) can be factored out, yielding a bijection between the set of M and the set of \((V,W,\kappa )\); we call this \(\tilde{\Upsilon }\), as in [IMS21].

Now, [IMS21, Theorem 1.2] asserts that \(L^{(j)}(M)\) is equal to \(\mu _1+ \cdots +\mu _j\), the sum of the lengths of the first j rows in the partition \(\mu \) from the previous paragraph, for all \(1\le j\le N\) (this is a deterministic statement for L defined with respect to any fixed entries of the environment). So we need to understand the distribution of \(\mu \) under the map \(\tilde{\Upsilon }\) when the entries \({m_{(i,j);k}}\) of M are distributed as independent \(\textrm{Geo}(q^ka_ib_j)\), and in particular to show that it is \({{\mathbb {W}}^{(q)}_{a;b}}\).
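To make the random environment concrete, the following sketch samples a truncated version of M. It assumes the convention that \(\textrm{Geo}(p)\) has mass \((1-p)p^m\) at \(m = 0, 1, 2, \ldots \), which is consistent with the entries being eventually zero as \(q^k \rightarrow 0\) but is not restated in this appendix. The truncation depth `levels` and the function names are hypothetical choices made only for illustration.

```python
import math
import random


def sample_geo(p, rng):
    """Sample X with P(X = m) = (1 - p) * p**m for m >= 0 (assumed convention)."""
    if p <= 0.0:
        return 0
    u = 1.0 - rng.random()  # uniform on (0, 1], so log(u) is finite
    return int(math.floor(math.log(u) / math.log(p)))


def sample_strip(a, b, q, levels, rng):
    """Truncated environment: M[k][i][j] ~ Geo(q**k * a[i] * b[j]) for k < levels.

    The true environment is infinite in k; cutting it off at `levels` is an
    approximation, reasonable only once q**levels is negligible.
    """
    return [
        [[sample_geo(q ** k * ai * bj, rng) for bj in b] for ai in a]
        for k in range(levels)
    ]
```

A call such as `sample_strip([0.5] * 3, [0.5] * 3, 0.3, 40, random.Random(0))` returns 40 layers of 3 by 3 non-negative integer matrices, with deep layers almost surely zero.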

For this task we will need certain weight preservation properties of \(\tilde{\Upsilon }\) which we record in the next lemma.

Lemma A.5

For an infinite matrix M and \((V,W; \kappa )\) as above, define weight functions

and, with \(\#(U,i)\) being the number of times the entry i appears in the vertically strict tableaux U,

where \({\mathcal {H}}\) is the “intrinsic energy function”. Its definition is complicated and not strictly needed for our purposes, so the interested reader is referred to [IMS21, Definition 7.4] for a precise definition.

Then, if M and \((V,W,\kappa )\) are in bijection via \(\tilde{\Upsilon }\), it holds that, for \(i=1,2,3\),

We will prove this after completing the proof of Theorem 1.3. We wish to calculate \({\mathbb {P}}(L^{(k)}(M) =\sum _{i=1}^k\mu _i \text { for all } 1\le k\le N)\), where the entries of M are independent geometric random variables as above, and show that this is equal to the marginal of the q-Whittaker measure on the first k row lengths. We will instead show the stronger statement that the law of the shape of V (or W) obtained by applying \(\tilde{\Upsilon }\) to M with \({m_{(i,j);k}} \sim \textrm{Geo}(q^ka_ib_j)\) is the q-Whittaker measure. Then marginalizing to the lengths of the first k rows will complete the proof. Denoting the V obtained by applying \(\tilde{\Upsilon }\) to M by V(M) and by \(\textrm{vst}(\mu )\) the set of vertically strict tableaux of shape \(\mu \),

By applying the bijection \(\tilde{\Upsilon }\) and Lemma A.5, and recalling the definitions of \(\widetilde{W}_i\), we see that the previous line equals (letting \(x^V = \prod _{i} x_i^{\#(V,i)}\))

Now it is known that the first factor is  (see for example [IMS21, Eq. (10.5)]), while the second and third factors are  and  (see [IMS21, Proposition 10.1]). Thus the RHS is the unnormalized probability mass function of \({{\mathbb {W}}^{(q)}_{a;b}}\) at \(\mu \), as desired. \(\square \)

Proof of Lemma A.5

That \(W_3(M) = \widetilde{W}_3(V,W, \kappa )\) follows by combining Eq. (1.23) in [IMS21, Theorem 1.4] (on the conservation of the quantity under \(\Upsilon \)) with Eq. (4.15) in [IMS21, Theorem 4.11] (on its conservation under \(\textsf{SS}\)).

For \(W_i(M)\) for \(i=1,2\) we will similarly quote separate statements for its conservation under \(\textsf{SS}\) and \(\Upsilon \). Under \(\textsf{SS}\), this is a consequence of [SS90, Theorem 6.6] (which is also the source of [IMS21, Theorem 4.11] mentioned in the previous paragraph), i.e., it holds that \(W_1(M) = (\#(P, i))_{1\le i\le N}\) and \(W_2(M) = (\#(Q, j))_{1\le j\le N}\). For \(\Upsilon \) this preservation property is not recorded explicitly in [IMS21], but it is easy to see it from its definition. Indeed, as described in [IMS21, Sections 1.2 and 3.3], the output (V, W) of \(\Upsilon \) is obtained as the asymptotic result of iteratively applying a map known as the skew RSK map to (P, Q), and it is immediate from the definition of this map that it does not change the number of times any entry i appears in P or Q (only possibly the location of the entries and/or the shape of the tableaux). Thus this property carries over to \(\Upsilon \). \(\square \)

Appendix B: Asymptotics for the Sum in the Factorial Moments Formula

Here we obtain upper and lower bounds (with the correct dependencies on q and k) on the sum in (20), which we label S, i.e., (recall \(H(x) = -x\log x-(1-x)\log (1-x)\))

As indicated, the idea behind the analysis is simply Laplace’s method, but it must be done carefully and explicitly here since we need to obtain the estimates uniformly in q. We start with the upper bound, and turn to the lower bound in Sect. B.2.

B.1 The upper bound

Proposition B.1

There exist positive constants C, \(k_0\), and \(N_0\) such that for all \(N\ge N_0\), \(k_0\le k\le N\), and \(q\in (0,1)\), there exists \(x_0 = 1-\Theta (q^{1/2})\) such that

Proof

Recall that \(\alpha \) is defined by \(k=\alpha N\). Also recall the definition of \(f_\alpha , g:[0,1]\rightarrow {\mathbb {R}}\) from (19) by

Then the sum S is

the O(1) term is to account for the stray \(\pm 1\) we have ignored when going from the definition of S to its form in terms of \(f_\alpha \).

The first step is to identify the location \(x_0\) where \(f_\alpha \) is maximized.

Therefore we see that \(f_\alpha '(x) = 0\) is equivalent to

Observe that the LHS tends to \(\infty \) as \(x \rightarrow 0\) and equals 0 when \(x=1\). Further the LHS is decreasing while the RHS is increasing in x, and both are continuous in x. Thus there is a unique \(x_0\in (0,1)\) satisfying (38).

Further, the same observations yield (by evaluating the RHS of (38) at \(x=0\) and \(x=1\) and solving for x on the LHS) that

We further see that the size of \(I_{q,\alpha }\) is

As a result, if \(x\in I_{q,\alpha }\), and since \(|f_\alpha '(x)| = O(\alpha )\) for such x, the mean value theorem implies that \(|f_\alpha (x) - f_\alpha (x_0)| = O(q^{1/2}\alpha ^3)\).

Evaluating \(f_\alpha (x_0)\)

Using these estimates we may obtain an estimate of \(f_\alpha (x_0)\). Let us first evaluate the first three terms from (37) at

(Note that since \(1+x\le \exp (x) \le (1-x)^{-1}\), \(x^*\in I_{q,\alpha }\).) We see that the three terms evaluated at \(x^*\) equal

We recognize \((1+q^{1/2}\exp (\alpha /2))^{-1}\) to be \(x^*\), which results in a cancellation with the \(x^*\log x^*\) term. With this, and writing \(\log (1+\alpha (1-x^*)) = \alpha (1-x^*) \pm O(\alpha ^2(1-x^*)^2)\), we see that the previous display equals

where we performed a Taylor expansion of \(\exp (\alpha /2)\) as well as of the logarithm in the last step.

Next we must move from this calculation done at \(x^*\) to \(x_0\). Observe that the derivative with respect to x of each of the first three terms in (37) of \(f_\alpha \) (whose sum we will refer to as \({f_\alpha ^{(1)}}\) below) gives an expression with a common factor of \(\alpha \). By the mean value theorem, we therefore have that, for some \(y\in [x_0, x^*]\) (or \(y\in [x^*, x_0]\) depending on which is greater),

the last equality using that \(|(f^{(1)}_\alpha )'(y)| = O(\alpha )\) and \(|x_0-x^*| = O(\alpha ^2q^{1/2})\) from (40) and since \(x_0, x^*\in I_{q,\alpha }\).

Putting back in the remaining two terms of \(f_\alpha \) not included in \(f_\alpha ^{(1)}\), overall we have shown that

This can be simplified further using the following cancellation, which we prove in Sect. B.3.

Lemma B.2

Let \(x_0 = x_0(\alpha )\) be the maximizer of \(f_\alpha \) over [0, 1]. For \(\alpha \in (0,\frac{1}{2}]\) (and adopting the convention that O(1) refers to a quantity whose value is upper bounded by an absolute constant)

This yields that

Performing Laplace’s method

For future reference we also record that

since \(x_0 = 1-\Theta (q^{1/2})\). To apply Laplace’s method, we need to have bounds on \(f_\alpha \) over its domain, which we will obtain by Taylor approximations. We will expand to third order as we need to include the just calculated second order term precisely. So next we bound the third derivative of \(f_\alpha \).

Fix \(c>0\). We observe that there exists C such that for \(x\in [\frac{1}{4}, 1-cq^{1/2}]\) and \(\alpha \in (0,\frac{1}{2}]\),

So by Taylor’s theorem, we see that, if \(\varepsilon >0\) is such that \(x_0-\varepsilon q^{1/2}\in [\frac{1}{4}, 1-cq^{1/2}]\), then for some \(y\in [x_0-\varepsilon q^{1/2}, x_0]\),

since \(f_\alpha '(x_0) = 0\). We may pick \(\varepsilon _0\) a constant depending only on C such that, if \(0<\varepsilon <\varepsilon _0\), then (recalling that \(f_\alpha ''(x_0)<0\))

that \(\varepsilon _0\) can be taken to not depend on q or \(\alpha \) follows from the fact that \(|f_\alpha ''(x_0)|\) can be lower bounded by an absolute constant times \(\alpha q^{-1/2}\), due to (43). Similarly, since \(f_\alpha '''(x) < C\alpha \) for \(x>\frac{1}{4}\), it holds for \(\varepsilon >0\) that (since \(x_0>\frac{1}{4}\) always)

the last inequality for \(\varepsilon < \varepsilon _0\) for some absolute constant \(\varepsilon _0\) by similar reasoning as above.

So, for \(-\varepsilon _0< \varepsilon < \varepsilon _0\),

The above controls \(f_\alpha \) inside \([x_0-\varepsilon q^{1/2}, x_0+\varepsilon q^{1/2}]\), where \(0<\varepsilon < \varepsilon _0\). We will also need control outside this interval, which we turn to next. We observe that, since \(f_\alpha \) is concave on (0, 1) and \(f_\alpha '(x_0) = 0\), it holds for \(0<\varepsilon <\varepsilon _0\) and \(x\in (0,x_0-\varepsilon q^{1/2}]\) that

the last inequality using again that \(|f_\alpha ''(x_0)| =\Theta (\alpha q^{-1/2})\) and using Taylor’s theorem for \(f_\alpha '\) around \(x_0\) to obtain \(f_\alpha '(x_0-\varepsilon q^{1/2}) =f_\alpha '(x_0) - \varepsilon q^{1/2} f_\alpha ''(y)\) for some \(y\in [x_0-\varepsilon q^{1/2}, x_0]\) and using (43) to then obtain that \(f_\alpha '(x_0-\varepsilon q^{1/2}) \ge C\varepsilon \alpha \).

Similarly, since \(f_\alpha \) is concave and \(f_\alpha '(x_0) = 0\), it follows from (45) that, for \(x\in [x_0+\varepsilon q^{1/2}, 1]\),

We next analyze the actual sum S. We break up S into three subsums \(S_1\), \(S_2\), and \(S_3\). Let \(0< \varepsilon < \varepsilon _0\) be fixed. \(S_1\) corresponds to \(i = 1\) to \(i=\lceil k(x_0-\varepsilon q^{1/2})\rceil \), \(S_2\) to \(i=\lceil k(x_0-\varepsilon q^{1/2})\rceil +1\) to \(\lceil k(x_0+\varepsilon q^{1/2})\rceil \), and \(S_3\) to \(\lceil k(x_0+\varepsilon q^{1/2})\rceil +1\) to \(k-1\).
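Three elementary facts enter the bounds on \(S_1\) and \(S_3\) in what follows. As a worked check (a sketch of routine calculus, with \(c>0\) an arbitrary constant), they can be verified directly: the first uses that \(i(k-i)\ge k-1\) for \(1\le i\le k-1\), the second is a matter of collecting exponents, and the third follows by maximizing at \(x = 3/(2c)\).

```latex
\begin{align*}
g(i/k) &= \frac{k^2}{i(k-i)} \le \frac{k^2}{k-1} \le 2k
  && (k\ge 2,\ 1\le i\le k-1),\\
q^{-1/4}k^{1/2}\cdot (kq^{1/2})^{3/2} &= q^{-1/4+3/4}\,k^{1/2+3/2} = k^2 q^{1/2},\\
\sup_{x\ge 0} x^{3/2}e^{-cx} &= \Bigl(\frac{3}{2c}\Bigr)^{3/2} e^{-3/2}
  && (c>0).
\end{align*}
```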

We start by bounding \(S_1\) using the above groundwork. Recall that \(g(x) = (x(1-x))^{-1}\), so that \(g(i/k) = k^2/(i(k-i))\). We drop the \(\lceil \cdot \rceil \) in the notation for convenience. Using (46), that \(q\le 1\), and that \(\alpha N=k\),

It is easy to see that the sum is bounded by \(Cq^{-1/2}\) for a constant C depending only on \(\varepsilon \), as this is the behaviour near \(j=k\varepsilon q^{1/2}\) (using that \(x_0 = 1-\Theta (q^{1/2})\)). At the same time, it is easy to check that for \(k\ge 2\) and \(1\le i\le k-1\), \(g(i/k)\le 2k\), so that \(S_1\) is also upper bounded by \(Ck\exp (Nf_\alpha (x_0))\). Thus

Next we bound \(S_3\). We apply (47) and use again that \(g(i/k) \le 2k\) and \(x_0=1-\Theta (q^{1/2})\) to see that

Now \(k^2q^{1/2} = q^{-1/4}k^{1/2}\cdot (kq^{1/2})^{3/2}\) and, since \(x\mapsto x^{3/2}\exp (-cx)\) is uniformly bounded over \(x\ge 0\), this implies from the previous display that

Finally we turn to the main sum, \(S_2\), which consists of the range \(\frac{i}{k}\in [x_0-\varepsilon q^{1/2}, x_0+\varepsilon q^{1/2}]\). We first want to say that, for x in the same range, \(g(x) \le Cg(x_0)\) for some absolute constant C. Observe that g blows up near 1 (and \(x_0\) can be arbitrarily close to 1), and it is to avoid this and thereby be able to control g on the mentioned interval that its upper boundary is of order \(q^{1/2}\) above \(x_0\).

Lemma B.3

There exists an absolute constant C such that for \(x\in [x_0-\varepsilon q^{1/2}, x_0+\varepsilon q^{1/2}]\), \(g(x) \le Cg(x_0)\).

Proof

We have to upper bound \(g(x)/g(x_0) = {\frac{x_0(1-x_0)}{x(1-x)}}\). When \(x\in [x_0-\varepsilon q^{1/2}, x_0]\), this ratio is upper bounded by \(x_0/x\); since \(x\ge x_0-\varepsilon q^{1/2}\), and \(x_0\ge \frac{1}{4}\) always, if \(\varepsilon <\frac{1}{8}\) say, the ratio is uniformly upper bounded. When \(x\in [x_0, x_0+\varepsilon q^{1/2}]\), this ratio is upper bounded by \((1-x_0)/(1-x)\); since \(1-x\ge 1-x_0-\varepsilon q^{1/2} \ge \frac{1}{2}(1-x_0)\) (using that \(\varepsilon q^{1/2} \le \frac{1}{2}(1-x_0) = cq^{1/2}\) whenever \(\varepsilon \) is small enough), the ratio is again upper bounded by a constant. \(\square \)
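The uniformity claimed in Lemma B.3 can be sanity-checked numerically. The sketch below is only an illustration under stated assumptions: it uses the explicit point \(x^* = (1+q^{1/2}\exp (\alpha /2))^{-1}\) as a stand-in for \(x_0\) (the two differ by \(O(q^{1/2}\alpha ^2)\)), fixes \(\varepsilon = 0.1\), and scans a grid of the window; the function names and grid size are hypothetical.

```python
import math


def g(x):
    """g(x) = 1 / (x (1 - x))."""
    return 1.0 / (x * (1.0 - x))


def x_star(q, alpha):
    """Explicit proxy for the maximizer x_0 (an assumption of this sketch)."""
    return 1.0 / (1.0 + math.sqrt(q) * math.exp(alpha / 2.0))


def worst_ratio(q, alpha, eps, steps=200):
    """Largest value of g(x) / g(x0) over a grid of the window around x0."""
    x0 = x_star(q, alpha)
    half = eps * math.sqrt(q)
    worst = 0.0
    for s in range(steps + 1):
        x = x0 - half + 2.0 * half * s / steps
        worst = max(worst, g(x) / g(x0))
    return worst
```

Over a range of q from \(10^{-8}\) to 0.99 and \(\alpha \in (0, \frac{1}{2}]\), the ratio stays below 2 for this choice of \(\varepsilon \), in line with the lemma, even though \(g(x_0)\) itself grows like \(q^{-1/2}\).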

So we see, from Lemma B.3 and (45) that \(S_2\) equals

Recall that \(f_\alpha ''(x_0)=-\Theta (\alpha q^{-1/2})\), and so the coefficient of \(i^2\) is of order \(c(q^{1/2}k)^{-1}\). We bound the above series using Proposition B.4 ahead, which says, with \(\gamma = c\alpha ^{-1}k^{-1}|f_\alpha ''(x_0)| =\Theta ((q^{1/2}k)^{-1})\), and using that \(g(x_0) =\Theta (q^{-1/2})\),

Note that this estimate holds for all \(q>0\) and does not require \(q\ge k^{-2}\). Thus overall we have shown that

Using the expression for \(f_\alpha (x_0)\) from (42) and recalling \(\alpha N = k\) completes the proof.

\(\square \)
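Two elementary facts used in the proof above, namely \(g(i/k)\le 2k\) for \(1\le i\le k-1\) and the uniform boundedness of \(x\mapsto x^{3/2}\exp (-cx)\), can be checked numerically. The Python sketch below is only a sanity check; the function names and the sample values of c, k and q are illustrative assumptions.

```python
import math


def g(x):
    """g(x) = 1 / (x (1 - x)), as in the text."""
    return 1.0 / (x * (1.0 - x))


def max_g_on_lattice(k):
    """Maximum of g(i/k) over 1 <= i <= k-1; equals k^2/(k-1), attained at i = 1 and i = k-1."""
    return max(g(i / k) for i in range(1, k))


def sup_power_exp(c):
    """Supremum over x >= 0 of x^{3/2} exp(-c x), attained at x = 3/(2c)."""
    return (1.5 / c) ** 1.5 * math.exp(-1.5)


if __name__ == "__main__":
    for k in (2, 3, 10, 100):
        print(f"k={k}: max g(i/k) = {max_g_on_lattice(k):.4f}, 2k = {2 * k}")
    print(f"sup of x^1.5 exp(-0.7 x) = {sup_power_exp(0.7):.4f}")
    # The algebraic identity k^2 q^{1/2} = q^{-1/4} k^{1/2} (k q^{1/2})^{3/2}.
    k, q = 50, 1e-3
    print(k**2 * q**0.5, q**-0.25 * k**0.5 * (k * q**0.5) ** 1.5)
```

The supremum scales like \(c^{-3/2}\), so it is finite for each fixed \(c>0\), which is all the argument requires.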

The following is the bound on the discrete Gaussian sum, more precisely a Jacobi theta function, which we used in the proof. It can be proved using the Poisson summation formula and straightforward bounds.
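To illustrate the role of Poisson summation here, recall the classical identity \(\sum _{i\in {\mathbb {Z}}} e^{-\gamma i^2} = \sqrt{\pi /\gamma }\sum _{n\in {\mathbb {Z}}} e^{-\pi ^2 n^2/\gamma }\), which shows that the sum behaves like \(\gamma ^{-1/2}\) for small \(\gamma \). The following Python sketch compares the two sides numerically; the truncation cutoff and function names are assumptions made for illustration.

```python
import math


def theta_direct(gamma, cutoff=2000):
    """Truncated sum of exp(-gamma i^2) over integers |i| <= cutoff."""
    return sum(math.exp(-gamma * i * i) for i in range(-cutoff, cutoff + 1))


def theta_poisson(gamma, cutoff=2000):
    """The same quantity via Poisson summation: sqrt(pi/gamma) * sum exp(-pi^2 n^2 / gamma)."""
    dual = sum(math.exp(-(math.pi ** 2) * n * n / gamma) for n in range(-cutoff, cutoff + 1))
    return math.sqrt(math.pi / gamma) * dual


if __name__ == "__main__":
    for gamma in (0.01, 0.1, 1.0, 5.0, 20.0):
        direct = theta_direct(gamma)
        dual = theta_poisson(gamma)
        scaled = direct * math.sqrt(gamma / math.pi)
        print(f"gamma={gamma:g}: direct={direct:.10f}, poisson={dual:.10f}, scaled={scaled:.6f}")
```

For small \(\gamma \) the rescaled value is close to 1, while for large \(\gamma \) the direct sum is close to 1 (only the \(i=0\) term survives), which is why two-sided bounds of order \(\gamma ^{-1/2}\) need \(\gamma \) to be bounded above.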

Proposition B.4

(page 157 of [SS11]). There exist a constant C and, for any \(M>0\), a constant \(c_M>0\) such that for \(0<\gamma \le M\) (for the first inequality) and \(\gamma >0\) (for the second),

B.2 The lower bound

Recall the definition of S from (36).

Proposition B.5

There exist positive constants C and \(k_0\) such that for all \(q\in [k^{-2},1)\) and \(k_0\le k\le N\),

Proof

As in the proof of Proposition B.1, we focus around the point \(Nx_0\), where \(x_0 =(1+q^{1/2}\exp (\frac{1}{2}\alpha ))^{-1} \pm O(q^{1/2}\alpha ^2)\) satisfies (38). So, for \(\varepsilon ,\varepsilon '>0\) to be chosen,

where \(g(x) = (x(1-x))^{-1}\) and \(f_\alpha \) is as defined in (37). We break into two cases depending on whether \(x_0>\frac{1}{2}\) or \(x_0\le \frac{1}{2}\). We start with the first case.

In this case the basic issue is that \(x_0\) can be arbitrarily close to 1. Thus, since the contribution of the sum from \(i=kx_0\) to \(i=k-1\) will be small anyway, we will ignore it and set \(\varepsilon '=0\). We will also set \(\varepsilon \) to be an absolute constant. With these values, we want to show that, for some \(c>0\) and all \(x\in [x_0-\varepsilon , x_0]\), it holds that \(g(x) \ge cg(x_0)\). This is easy to verify by upper bounding \(g(x)/g(x_0)\) for x in the same range using the expression for g(x), the value of \(\varepsilon \), and that \(x_0\in [\frac{1}{4}, 1]\). So, for some absolute constant \(c>0\),

Now as in the proof of Proposition B.1, we Taylor expand \(f_\alpha \) around \(x_0\) to obtain a lower bound on \(f_\alpha (x)\) for \(x\in [x_0-\varepsilon , x_0]\):

the second equality by noting that, since \(x-x_0 < 0\), the infimum is equivalent to maximizing \(f_\alpha '''(y)\) over \(y\in [x,x_0]\). Now, if \(x\ge \frac{1}{2}\), then \(y\ge \frac{1}{2}\) in the previous display and \(f_\alpha '''(y)<0\) by the explicit formula (44), and so the last term in the previous display is non-negative, i.e., can be lower bounded by zero. If \(x<\frac{1}{2}\), we can ensure that \(\varepsilon <\frac{1}{4}\), so that \(x_0 < \frac{3}{4}\). Then we see that each of \(|f_\alpha ''(x_0)|\) and \(|f_\alpha '''(x)|\) is uniformly bounded by \(C\alpha \) where C is independent of q, so, by picking \(\varepsilon \) small enough also independent of q, we can ensure that \(|x-x_0|f_\alpha '''(x) < Cf_\alpha ''(x_0)\) for some absolute constant C.

Thus overall, we have shown that, in the case that \(x_0\ge \frac{1}{2}\), there exist \(\varepsilon >0\) and \(C>0\) independent of q such that, for \(x\in [x_0-\varepsilon , x_0]\),

Using this we see that

Now it is easy to see that \(\sum _{i:|i-kx_0| > k\varepsilon } \exp \left( -CNk^{-2}|f_\alpha ''(x_0)|(i-kx_0)^2\right) \le C\exp (-cN|f_\alpha ''(x_0)|\varepsilon ^2)\), so the previous display is lower bounded by (using Proposition B.4 in the third line)

using that \(|f_\alpha ''(x_0)| = \Theta (\alpha q^{-1/2})\) and \(\alpha N=k\). This is in turn lower bounded by \(cq^{-1/4}k^{1/2}e^{Nf_\alpha (x_0)}\), because \(x_0 = 1-\Theta (q^{1/2})\) gives \(g(x_0) =\Theta (q^{-1/2})\).

In the case that \(x_0\le \frac{1}{2}\), the same proof works after noting that, since also \(x_0\ge \frac{1}{4}\) for all \(q, \alpha \) (as can be observed from (39)), quantities like \(|f_\alpha ''(x_0)|\), \(f_\alpha '''(x)\), and g(x) are all bounded above and below by absolute constants for \(x\in [x_0-\varepsilon , x_0+\varepsilon ]\) (assuming \(\varepsilon <\frac{1}{8}\) say) and \(\alpha \in (0,\frac{1}{2}]\). \(\square \)
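The tail estimate for the Gaussian sum used in the proof above can be illustrated numerically. A minimal sketch, assuming an integer cutoff m and writing \(\gamma \) for the coefficient of the square: since \((m+j)^2\ge m^2+2mj\), one has \(\sum _{i>m} e^{-\gamma i^2} \le e^{-\gamma m^2}/(e^{2\gamma m}-1)\). This is one elementary route to a bound of that form and is not claimed to be the authors' argument; the function names and sample parameters are hypothetical.

```python
import math


def gaussian_tail(gamma, m, extra_terms=20000):
    """Truncated one-sided tail: sum of exp(-gamma i^2) over m < i <= m + extra_terms."""
    return sum(math.exp(-gamma * i * i) for i in range(m + 1, m + 1 + extra_terms))


def geometric_bound(gamma, m):
    """Upper bound exp(-gamma m^2) / (exp(2 gamma m) - 1), from (m+j)^2 >= m^2 + 2 m j."""
    return math.exp(-gamma * m * m) / math.expm1(2.0 * gamma * m)


if __name__ == "__main__":
    for gamma in (0.001, 0.05, 1.0):
        for m in (1, 5, 20):
            tail = gaussian_tail(gamma, m)
            bound = geometric_bound(gamma, m)
            print(f"gamma={gamma:g}, m={m}: tail={tail:.6e}, bound={bound:.6e}")
```

The bound is of the form \(C\exp (-\gamma m^2)\) as soon as \(\gamma m\) is bounded below, which is the regime relevant when \(\gamma m^2\) plays the role of \(cN|f_\alpha ''(x_0)|\varepsilon ^2\).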

B.3 The factorial to polynomial moment cancellation

Here we give the proof of Lemma B.2. The proof proceeds by a full series expansion of the expression in \(\alpha \) around 0. An alternative is to Taylor expand the expression to second order in \(\alpha \) for fixed x and bound the third derivative error term by \(Cq^{1/2}\), uniformly over \(\alpha \in (0,\frac{1}{2})\) and \(x=x_0=1-\Theta (q^{1/2})\); this is also possible but somewhat messier.

Proof of Lemma B.2

To establish this we will utilize the series expansion for the logarithm as well as for \((1-x)^{-k}\). We start with the first term: for any x,

Collecting the \(\alpha ^\ell \) terms together, the previous line equals

Invoking Lemma B.6 ahead, the sums in the previous display equal

Now turning to the third term in (41),

Thus we see overall that the LHS of (41) equals (where \(x_0\) is the solution of (38))

We focus on the coefficient of \(\alpha ^2\) first, which, using that \(\mu _q = (1+q^{1/2})/(1-q^{1/2})\), simplifies to 0.

Now we turn to the sum in (48). We observe that \(\mu _q= 1+\Theta (q^{1/2})\) and recall that \(x_0 = 1-\Theta (q^{1/2})\) (from (39)), so that the expression in the square brackets is equal to

implying that the sum in (48) is \(\pm O(1)\sum _{\ell =3}^\infty \frac{q^{1/2}\alpha ^\ell }{(\ell -1)} =\pm O(1)q^{1/2}\alpha ^3.\) This completes the proof. \(\square \)

Lemma B.6

It holds for any \(x\in {\mathbb {R}}\) and \(\ell \ge 2\) that

Proof

We do the change of variable \(i\mapsto \ell -i-2\) and use \(\left( {\begin{array}{c}n\\ k\end{array}}\right) = \frac{n}{k}\left( {\begin{array}{c}n-1\\ k-1\end{array}}\right) \) twice to write the LHS as

Now we do a change of variable (\(i\mapsto i-2\)) and add and subtract the terms corresponding to \(i=0\) and \(i=1\) in the new indexing to obtain

Multiplying out over the \((i-1)\) factor and using the binomial theorem on each of the resulting terms yields the claim. \(\square \)
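The absorption identity used twice in the proof above, \(\left( {\begin{array}{c}n\\ k\end{array}}\right) = \frac{n(n-1)}{k(k-1)}\left( {\begin{array}{c}n-2\\ k-2\end{array}}\right) \) for \(2\le k\le n\), can be checked in exact arithmetic. The Python snippet below is only a sanity check of that step (not of the full statement of Lemma B.6), and the function name is hypothetical.

```python
from fractions import Fraction
from math import comb


def absorb_twice(n, k):
    """Right-hand side n(n-1)/(k(k-1)) * C(n-2, k-2), computed exactly with rationals."""
    return Fraction(n * (n - 1), k * (k - 1)) * comb(n - 2, k - 2)


if __name__ == "__main__":
    for n, k in ((5, 2), (10, 4), (30, 17)):
        print(n, k, comb(n, k), absorb_twice(n, k))
```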

Appendix C: Concentration Inequality Proofs

Here we prove the concentration inequality Theorem 5.1. As is typical for such inequalities, the main step is to obtain a bound on the moment generating function.

Proposition C.1

Suppose X is such that

for some \(\rho >0\), and all \(t\ge 0\). Then, there exist positive absolute constants C and \(c >0\) such that, for \(\lambda >0\),

Proof

By rescaling X as \(\rho ^{2/3}X\) it is enough to prove the proposition with \(\rho =1\). First, we see that

Next, we break up the integral into two at 0 and use the hypothesis (49) for the second term:

We focus on the second integral now, and make the change of variables \(y = \lambda ^{-2} x \iff x=\lambda ^{2} y\), to obtain

We upper bound this essentially using Laplace’s method. We first note that, for all \(y>0\), it holds that \(y-y^{3/2}\le 1-\frac{1}{2}y^{3/2}\). So

for positive absolute constants C and c. Putting all the above together yields that

Now we break into two cases depending on whether \(\lambda \) is less than or greater than 1: if \(\lambda \le 1\), then, since \(1+x\le \exp (x)\) and \(C\cdot C_1 \exp (c\lambda ) \le C\cdot C_1\exp (c)\), we obtain, for an absolute constant \(\tilde{C}\),

On the other hand if \(\lambda \ge 1\), we observe that, since \(x\le \exp (x)\), and by increasing the coefficient in the exponent,

It is easy to see that we may take \(c'\) to depend linearly on \(C_1\). Thus overall, since \(\max (a,b)\le a+b\) when \(a,b\ge 0\), we obtain, for some universal constant C,

\(\square \)
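The elementary inequality \(y-y^{3/2}\le 1-\frac{1}{2}y^{3/2}\) for \(y>0\), used in the proof of Proposition C.1, is equivalent to \(1+\frac{1}{2}y^{3/2}-y\ge 0\). The left side is minimized at \(y=16/9\), where it equals \(11/27\). The Python sketch below checks this numerically; the function name and grid are illustrative assumptions.

```python
import math


def gap(y):
    """Slack in the inequality: (1 - y^{3/2}/2) - (y - y^{3/2}) = 1 + y^{3/2}/2 - y."""
    return 1.0 + 0.5 * y ** 1.5 - y


if __name__ == "__main__":
    y_star = 16.0 / 9.0  # critical point: (3/4) sqrt(y) = 1
    print(f"gap at minimizer y = 16/9: {gap(y_star):.6f}")
    print(f"smallest gap on grid: {min(gap(0.001 * n) for n in range(1, 20001)):.6f}")
```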

Proof of Theorem 5.1

Following the proof of the Chernoff bound, we exponentiate inside the probability (with \(\lambda >0\) to be chosen shortly) and apply Markov’s inequality:

Using Proposition C.1, this is bounded by

the penultimate line using Proposition C.1. Optimizing over \(\lambda \) by setting it to \(c't^{1/2}\sigma _2^{-1/2}\) for some small constant \(c'>0\) now yields that

\(\square \)


Corwin, I., Hegde, M. The Lower Tail of q-pushTASEP. Commun. Math. Phys. 405, 64 (2024). https://doi.org/10.1007/s00220-024-04944-5
