Abstract

Quantum simulation is a promising application of future quantum computers. Product formulas, or Trotterization, are the oldest method for simulating quantum systems and remain an appealing one. For an accurate product formula approximation, the state-of-the-art gate complexity depends on the number of terms in the Hamiltonian and a local energy estimate. In this work, we give evidence that product formulas, in practice, may work much better than expected. We prove that the Trotter error exhibits a qualitatively better scaling for the vast majority of input states, while the existing estimate is for the worst states. For general k-local Hamiltonians and higher-order product formulas, we obtain gate count estimates for input states drawn from any orthogonal basis. The gate complexity significantly improves over the worst case for systems with large connectivity. Our typical-case results generalize to Hamiltonians with Fermionic terms, with input states drawn from a fixed-particle number subspace, and with Gaussian coefficients (e.g., the SYK models). Technically, we employ a family of simple but versatile inequalities from non-commutative martingales called uniform smoothness, which leads to Hypercontractivity, namely p-norm estimates for k-local operators. This delivers concentration bounds via Markov’s inequality. For optimality, we give analytic and numerical examples that simultaneously match our typical-case estimates and the existing worst-case estimates. Therefore, our improvement is due to asking a qualitatively different question, and our results open doors to the study of quantum algorithms in the average case.


1 Introduction

A promising application of future quantum computers is to simulate properties of physical systems [3, 12, 16, 20, 29, 33, 39, 59]. As a fundamental quantum algorithm subroutine, Hamiltonian simulation seeks to efficiently approximate the time evolution operator \( \textrm{e}^{\textrm{i}\varvec{H}t} \) using elementary building blocks, such as a universal gate set or whatever operations are experimentally available. Despite the simplicity of the problem statement, developing quantum algorithms that minimize the required resources (e.g., the gate complexity) has drawn tremendous effort [7, 9, 18, 19, 35], especially given the currently limited experimental capabilities of quantum simulators.

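The main Hamiltonian simulation method we study is product formulas, or Trotterization. An old idea, it simply approximates the exponential of a sum by a product of individual exponentials; for two terms,

\[ \textrm{e}^{\textrm{i}(\varvec{A}+\varvec{B})t} = \textrm{e}^{\textrm{i}\varvec{A}t}\, \textrm{e}^{\textrm{i}\varvec{B}t} + \mathcal {O}(t^2). \]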

Constructions such as the Lie-Trotter-Suzuki [33, 53] formulas generalize to Hamiltonians with many terms and to a higher-order approximation \(\mathcal {O}( t^{\ell +1} )\). However, the Trotter error, as hidden in \(\mathcal {O}(t^2)\), had been challenging to analyze, and for a while product formulas were under the shadow of more advanced quantum algorithms based on quantum walks and quantum signal processing [34, 35].

Nevertheless, product formulas have recently resurfaced as a strong candidate for Hamiltonian simulation for experimental, numerical, and theoretical reasons. In the near-term or early-fault-tolerant regime with severe restrictions on the number of qubits, depth, and connectivity, their simple prescription without controlled ancillas appears attractive. Despite this simplicity, numerical case studies [16] suggest that product formulas may outperform more advanced methods. These reasons have further fueled the theoretical analysis of Trotter errors, where increasingly sharp guarantees continue to reduce the cost by exploiting the structure of the problem, such as initial-state knowledge [48, 56] and spatial locality of the model [22].

In particular, the seminal work [18] puts together an analytic framework that exploits commutation relations. Consider the general class of k-local Hamiltonians \(\varvec{H}= \sum _{\gamma =1}^{\Gamma } \varvec{H}_{\gamma }\) (i.e., sums over few-body Pauli strings \(\varvec{\sigma }^x_1, \varvec{\sigma }^y_1 \varvec{\sigma }^y_2,\ldots \)). It was shown that, using higher-order Suzuki formulas, the gate complexity

suffices to approximate the unitary evolution for any input state. The bound depends on the number of terms \(\Gamma \) in the Hamiltonian and a local energy estimate \(\left\| {\varvec{H}} \right\| _{(local),1}\) (Fig. 2). This local quantity sums over terms \(\varvec{H}_\gamma \) overlapping with a site i and takes the maximum over sites; it tends to be much smaller than the global sum \(\sum _{\gamma } \Vert {\varvec{H}_{\gamma }} \Vert \). This theoretical guarantee renders product formulas among the strongest candidates for simulating physical systems (Table 1).

In light of these developments, we may ask: what remains to be known about the Trotter error? In other contexts, folklore [59] suggests that errors in quantum computing might, in practice, add up incoherently, in which case the total can be significantly smaller than for coherent noise [8, 23, 54]. Intuitively, the scaling depends on whether the noise contributions are “pointing in the same direction”. For a minimal example, consider a sum of m numbers taking values \(\pm 1\). In the worst possible scenario, they all share the same sign and add up coherently to m. However, if the numbers have random signs independent of each other, the total strength is usually much smaller, of order \(\sqrt{m}\).
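The contrast between coherent and incoherent addition can be checked numerically. The following is a minimal sketch and not part of the original analysis; the function name `rms_random_sign_sum`, the number of trials, and the seed are illustrative assumptions. It estimates the root-mean-square of a sum of m independent random signs and compares it with the coherent value m.

```python
import math
import random


def rms_random_sign_sum(m, trials=2000, seed=0):
    """Estimate sqrt(E[(x_1 + ... + x_m)^2]) for independent signs x_i = +/-1."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    total = 0.0
    for _ in range(trials):
        s = sum(rng.choice((-1, 1)) for _ in range(m))
        total += s * s
    return math.sqrt(total / trials)


m = 400
coherent = m                         # all signs equal: |sum| = m
incoherent = rms_random_sign_sum(m)  # random signs: close to sqrt(m)
print(coherent, incoherent, math.sqrt(m))
```

The estimate fluctuates with the seed, but it stays close to \(\sqrt{m}\) and far below m, which is the scaling gap the text refers to.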

Curiously, the existing gate complexity, as a manifestation of the Trotter error, exhibits the coherent scaling where terms are added up linearly (1.1). Could Trotter error and the gate complexity, in practice, enjoy the much milder incoherent scaling?

Fig. 1 Concentration of gate complexity distribution for product formula for states drawn from any fixed orthogonal basis. The vast majority of states are controlled by our typical case results (Theorem I.1), while extremal states may require the worst case guarantees (1.1) [18]. The two gate complexities coexist and differ because the Trotter error is a high-dimensional object.

This work presents the incoherent aspects of Trotter error that exhibit qualitatively different scaling from the state-of-the-art estimates [18]. Pictorially, the Trotter error is a high-dimensional object that cannot be summarized in a single bound. Instead, there is a distribution of Trotter error over input states (Fig. 1). The existing estimate (1.1) accounts for the worst-case inputs that may not be practically relevant; the vast majority of inputs enjoy a much better scaling. More precisely, we show that, with high probability, the gate complexity exhibits a root-mean-square, or 2-norm scaling for inputs drawn from any orthogonal basis

The local quantity \(\left\| {\varvec{H}} \right\| _{(local),2}\) is now a sum-of-squares over sets S overlapping with a site i (the scalar \(b_S\) sums over all terms \(\varvec{H}_{\gamma }\) with support being the set S). Our estimate yields substantial improvements over (1.1) when the Hamiltonian has large connectivity (such as with long-range interactions, see Table 1), which directly leads to resource reduction for various quantum simulation tasks. Further, motivated by quantum chaos and the SYK models [38, 47], we show that when the Hamiltonian itself has random coefficients, even the worst input states enjoy a 2-norm scaling for Trotter error.

To reiterate, our results give evidence that, in practice, product formulas may generically work even better than expected. This improvement is due to framing a qualitatively different question from the existing worst-case results. Indeed, we provide analytic and numerical evidence that the average-case (1.2) and worst-case (1.1) estimates can be simultaneously tight in their respective contexts. More broadly, our findings open doors to the average-case study of quantum algorithms, which is relatively unexplored yet could greatly improve the feasibility of quantum computing applications.

To derive our average-case results, we combine matrix concentration inequalities (uniform smoothness and hypercontractivity) with the commutator expansion of exponential products [18]. The matrix analysis framework is simple and robust, and we expect further applications in quantum information (See, e.g., [13,14,15]).

When this work was completed, we became aware of the work [61], which also studies Hamiltonian simulation for random inputs, and we briefly highlight the differences. First, [61] studies only the variance of Trotter error, while we show a stronger sense of typicality where the 2-norm scaling holds for all but exponentially rare inputs. This utilizes matrix concentration inequalities for the higher moments. Second, our gate complexity is asymptotically tighter for non-spatially local models and is accompanied by analytic and numerical evidence for optimality. This stems from a detailed analysis of the combinatorics of nested commutators. Third, in addition to random inputs, we also study random Hamiltonians and show the corresponding typical-case results.

The main text is organized as follows: we summarize results for arbitrary k-local Hamiltonians in Sect. 1.1.1 and random Hamiltonians in Sect. 1.1.2. The gate complexities are compared in Table 1. We then introduce the proof ingredients in Sect. 1.2.

1.1 Summary of results

In this section, we present our main results regarding the performance of product formulas. In particular, consider the first-order Lie-Trotter formula and the second-order Suzuki formula

and the higher-order (\(\ell = 4, 6,\dots , 2p,\dots \)) Suzuki [53] formulas constructed recursively
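The product formulas named above can be illustrated on a toy example. The sketch below is not the paper's construction verbatim: it assumes the standard first-order Lie-Trotter product, the symmetric second-order formula, and the usual Suzuki recursion \(\varvec{S}_\ell (t) = \varvec{S}_{\ell -2}(q t)^2\, \varvec{S}_{\ell -2}((1-4q)t)\, \varvec{S}_{\ell -2}(q t)^2\) with \(q = 1/(4-4^{1/(\ell -1)})\). The ordering of the exponentials, the single-qubit Hamiltonian \(a\varvec{\sigma }^x + b\varvec{\sigma }^z\), and all names and coefficients are hypothetical choices made so that every exponential has a closed form.

```python
import math

I2 = ((1, 0), (0, 1))
X = ((0, 1), (1, 0))
Z = ((1, 0), (0, -1))
A_COEF, B_COEF = 0.7, 1.3  # hypothetical coefficients of H = a*X + b*Z


def matmul(A, B):
    return tuple(tuple(sum(A[r][k] * B[k][c] for k in range(2)) for c in range(2))
                 for r in range(2))


def pauli_exp(P, theta):
    """exp(i*theta*P) = cos(theta) I + i sin(theta) P, valid because P*P = I."""
    co, si = math.cos(theta), math.sin(theta)
    return tuple(tuple(co * I2[r][c] + 1j * si * P[r][c] for c in range(2))
                 for r in range(2))


def exact(t):
    """exp(i*H*t); H*H = (a^2 + b^2) I gives the same closed form with H/omega."""
    omega = math.hypot(A_COEF, B_COEF)
    co, si = math.cos(omega * t), math.sin(omega * t)
    return tuple(tuple(co * I2[r][c]
                       + 1j * si * (A_COEF * X[r][c] + B_COEF * Z[r][c]) / omega
                       for c in range(2)) for r in range(2))


def suzuki(order, t):
    """Product formula of the given order (1, 2, or an even number >= 4)."""
    if order == 1:  # Lie-Trotter: one pass through the terms
        return matmul(pauli_exp(Z, B_COEF * t), pauli_exp(X, A_COEF * t))
    if order == 2:  # symmetric second-order formula
        half = pauli_exp(X, A_COEF * t / 2)
        return matmul(half, matmul(pauli_exp(Z, B_COEF * t), half))
    if order % 2:
        raise ValueError("higher-order Suzuki formulas are defined for even orders")
    q = 1 / (4 - 4 ** (1 / (order - 1)))
    outer = suzuki(order - 2, q * t)
    outer_sq = matmul(outer, outer)
    middle = suzuki(order - 2, (1 - 4 * q) * t)
    return matmul(outer_sq, matmul(middle, outer_sq))


def frobenius_error(order, t):
    S, U = suzuki(order, t), exact(t)
    return math.sqrt(sum(abs(S[r][c] - U[r][c]) ** 2
                         for r in range(2) for c in range(2)))


def empirical_order(order, t=0.05):
    """Exponent b in error ~ t^b, estimated by halving the time step."""
    return math.log2(frobenius_error(order, t) / frobenius_error(order, t / 2))


for order in (1, 2, 4):
    print(order, empirical_order(order))
```

Halving the step should reduce the single-step error by roughly \(2^{\ell +1}\), so the printed exponents are expected to sit near \(\ell +1\), consistent with the \(\mathcal {O}(t^{\ell +1})\) approximation order quoted in the introduction.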

1.1.1 Non-random Hamiltonians

Here, we consider a k-local (i.e., a sum of Pauli strings of length k) Hamiltonian on n-qubits with \(\Gamma \) terms \(\varvec{H}= \sum _{\gamma =1}^\Gamma \varvec{H}_\gamma .\) To present our main results, define the normalized Schatten p-norms \( \left\| {\varvec{O}} \right\| _{\bar{p}}:= \frac{\left\| {\varvec{O}} \right\| _{p}}{\left\| {\varvec{I}} \right\| _{p}} \), the vector 2-norm, and a global energy estimate in 2-norm

Theorem I.1

(Trotter error in k-local models). To simulate a k-local Hamiltonian using \(\ell \)-th order Suzuki formula, the gate count

The p-norm estimate implies concentration for typical input states via Markov’s inequality.

Corollary I.1.1

Draw the input state from a state 1-design ensemble, i.e., one whose average state is the maximally mixed state (e.g., an orthonormal basis); then, with high probability, the gate count

See Table 1 for the gate counts in various models and Sect. 3 for the explicit dependence on the failure probability hidden in (1.5). When the Hamiltonian contains Fermionic terms or the input is restricted to a low-particle number subspace, see Proposition II.5.1 and Proposition II.5.2 for analogous results.

Regarding optimality (Sect. 4), we construct a Hamiltonian that demonstrates a separation between the worst case and the typical case bounds: its Schatten p-norm saturates our estimates, while the operator norm saturates the state-of-the-art bound [18]. Namely, our 1-norm to 2-norm improvement is due to asking a qualitatively different question (Fig. 1).

Proposition I.1.1

(A model with different p-norms and spectral norm). Consider a 2-local Hamiltonian on three subsystems of qubits \(\mathcal {H}=\mathcal {H}_{S_1}\otimes \mathcal {H}_{S_2}\otimes \mathcal {H}_{S_3}\)

Then, at large subsystem sizes \(\left| {S_1} \right| =\left| {S_2} \right| =\left| {S_3} \right| \rightarrow \infty \), the first and second-order Trotter at short enough times match the p-norm estimates in Theorem II.1 and also the spectral norm estimates [18] (up to constant factors).

Note that the dependence on the number of terms \(\Gamma \) is not optimal when the terms in the Hamiltonian have non-uniform strengths; we can use a truncation argument [18] to improve the gate complexity at early times (Appendix A). Interestingly, the error due to truncation also enjoys concentration (using Hypercontractivity directly).

Lastly, we present numerics complementing our Trotter error bounds. In particular, we study Trotter error for 2-body Hamiltonians with on-site disorder, with all-to-all connectivity (Figs. 3, 4, 5), or nearest-neighbor interactions (Fig. 6). These models may capture many-body localization and glassy physics. The Trotter error is averaged over realizations of disorder to extract a smooth curve. The disorder also illustrates the robustness of our bounds. Our numerics appear to match the theoretical predictions regarding the dependence on the system size n (Figs. 3, 6), the evolution time t (Fig. 4), and the product formula order \(\ell \) (Fig. 5).

Fig. 3 Trotter error for the all-to-all interacting Heisenberg model for second-order Suzuki formulas \(\varvec{S}_2(t/r)\). We fix time \(t = 10\), repeats \(r = 20{,}000\), and vary the system size \(n = 5,\ldots , 13\). Each Trotter error is estimated by median-of-means: take the median over 27 bins, where each bin is an average over 32 independent disorder realizations. The fit \(a(n+c)^b\) gives the system size dependence b. For average inputs (2-norm), the empirical exponent reads \(b=2.03\pm 0.03\), which matches the theoretical bound (Theorem I.1, \(b = 2\)). For worst inputs (operator norm), the empirical exponent is much larger, \(b=4.07\pm 0.13\), which matches the theoretical bound ([18], \(b = 4\)).

Fig. 4 Time dependence of the 2-norm Trotter error in Fig. 3. We fix repeats \(r = 20{,}000\) and the system size \(n = 12\), and vary the time \(t = 0, \ldots , 17.5\). The fit \(a t^b+c\) gives the time dependence exponent \(b= 2.74 \pm 0.04\) (variance calculated by independent runs), which deviates slightly from the theoretical upper bound (Theorem I.1, \(b = 3\)).

Fig. 5 Different orders of Suzuki formulas for the all-to-all interacting Heisenberg model. For the first-order Lie-Trotter-Suzuki formula, the parameters are \(t = 5, r = 200{,}000, n = 5,\ldots , 14\). We take the median over 8 bins, each averaging over 12 runs. The fit \(a(n+c)^b\) gives the empirical system size dependence \(b=1.46\pm 0.03\), matching the theoretical bound (Theorem I.1, \(b = 1.5\)). For the 4-th order formula, the parameters are \(t=10, r =1000, n = 5,\ldots , 13\). We take the median over 32 bins, each averaging over 15 runs. The empirical exponent reads \(b=2.98\pm 0.03\), matching the theoretical bound (Theorem I.1, \(b = 3\)).

Fig. 6 Trotter error for the spatially-local Heisenberg model for second-order Suzuki formulas \(\varvec{S}_2(t/r)\). We fix time \(t = 50\), repeats \(r = 40{,}000\), and vary the system size \(n = 5,\ldots , 13\). We take the median over 15 bins, where each bin is an average over 32 independent disorder realizations. The fit \(a(n+c)^b\) gives the system size dependence b. For average inputs (2-norm), the empirical exponent reads \(b=0.46\pm 0.01\), which matches the theoretical bound (Theorem I.1, \(b = 0.5\)). For worst inputs (operator norm), the empirical exponent is much larger, \(b=1.18\pm 0.02\), which is consistent with the theoretical bound ([18], \(b = 1\)).

Fig. 7 Trotter error for the random all-to-all Heisenberg model for second-order Suzuki formulas \(\varvec{S}_2(t/r)\). We fix time \(t = 10\), repeats \(r = 20{,}000\), and vary the system size \(n = 5,\ldots , 13\). We take the median over 15 bins, each averaging over 32 independent disorder realizations. The fit \(a(n+c)^b\) gives the system size dependence b. For worst inputs (operator norm), the empirical exponent is \(b=2.56\pm 0.1\), which is smaller than the theoretical bounds for random Hamiltonians (Theorem VIII.1, \(b = 3.5\); the \(b=5\) part should be suppressed at this value of repeats \(r=20{,}000\)) and for non-random Hamiltonians ([18], \(b = 4\)). We are unable to numerically optimize the fixed input state for the norm \(\Vert {\cdot } \Vert _{fix,2}\); we only present the numerics for average inputs (2-norm) for comparison.

1.1.2 Random Hamiltonians

Sometimes, we are interested in an ensemble of Hamiltonians, most notably the Sachdev-Ye-Kitaev [38, 47] models with random coefficients. The intrinsic randomness of the Hamiltonian allows us to obtain similar but stronger results. More precisely, we consider random Hamiltonians \( \varvec{H}= \sum _{\gamma =1}^\Gamma \varvec{H}_\gamma = \sum _{\gamma =1}^\Gamma g_\gamma \varvec{K}_\gamma , \) where the coefficients \(g_\gamma \) are i.i.d. standard Gaussian \(\mathbb {E}[g_\gamma ^2]=1\), and the matrices \(\varvec{K}_\gamma \) are deterministic. The local quantities here are defined by dropping Gaussians

Theorem I.2

(Informal; Trotter error in random models). Simulating random k-local models with Gaussian coefficients via higher-order (\(\ell \rightarrow \infty \)) Suzuki formulas, the asymptotic gate count

with high probability over the random Hamiltonian ensemble. The fixed input state can be arbitrary.

See Theorem VIII.1 for the complete dependence on the finite order \(\ell \ne \infty \) and the failure probability and Theorem VI.1 for a precise gate count for the first-order Trotter formula. In other words, when the Hamiltonian is random, an arbitrary fixed input state exhibits 2-norm scaling of Trotter error. A slightly higher gate count (by a factor of the system size \(\sqrt{n}\)) would control the performance for the worst inputs that may correlate with the Hamiltonian (e.g., the Gibbs state or the ground state of the model).

Proposition I.2.1

(Distinct Hamiltonians). There exists a set of k-local Hamiltonians \(\{\varvec{H}^{(i)}\}\) with cardinality \(\textrm{e}^{\Omega (\Gamma )}\) such that each of them satisfies

but for early times \(t=\Omega (1)\) they are pairwise distinct

If we further assume the matrix exponentials are also distinct (which is believable but harder to prove), \( \left\| {\textrm{e}^{\varvec{H}^{(i)}t}- \textrm{e}^{\varvec{H}^{(j)}t}} \right\| _{\infty } {\mathop {\ge }\limits ^{?}} \Omega (1), \) this implies a counting circuit complexity lower bound \(G=\Omega (\Gamma )=\Omega (n^k)\), which matches our gate complexity for fixed inputs (1.6) and typical inputs (1.4) at early times \(t=\Theta (1)\). See Sect. 9.2 for the proof.

The general optimality of our bounds for random Hamiltonians is less understood numerically. We present qualitative evidence (Fig. 7) suggesting that the Trotter error for random Hamiltonians, in the operator norm, could be much smaller than that of non-random Hamiltonians [18]. At the scale of our numerics, the error seems even smaller than our theoretical estimates. Unfortunately, we are not able to numerically estimate the norm \(\Vert {\cdot } \Vert _{fix,2}\) for fixed inputs.

1.2 Proof ingredients

The Trotter error is a complicated function of matrices. The leading order Trotter error is a commutator; for example, in the first-order product formula

Analogously, the \(\ell \)-th order product formulas have leading order errors as degree \(\ell +1\) polynomials of commutators [18].
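For concreteness, the leading-order error of a two-term first-order formula can be obtained by expanding both sides to second order in t. The derivation below is a standard computation written for generic matrices \(\varvec{A}, \varvec{B}\); it is included as an illustration under that assumption and is not a transcription of the displayed equation of the original text.

```latex
\begin{aligned}
\textrm{e}^{\textrm{i}\varvec{A}t}\,\textrm{e}^{\textrm{i}\varvec{B}t}
  &= \varvec{I} + \textrm{i}t(\varvec{A}+\varvec{B})
     - \tfrac{t^2}{2}\left(\varvec{A}^2 + 2\varvec{A}\varvec{B} + \varvec{B}^2\right)
     + \mathcal{O}(t^3),\\
\textrm{e}^{\textrm{i}(\varvec{A}+\varvec{B})t}
  &= \varvec{I} + \textrm{i}t(\varvec{A}+\varvec{B})
     - \tfrac{t^2}{2}\left(\varvec{A}^2 + \varvec{A}\varvec{B} + \varvec{B}\varvec{A} + \varvec{B}^2\right)
     + \mathcal{O}(t^3),\\
\textrm{e}^{\textrm{i}\varvec{A}t}\,\textrm{e}^{\textrm{i}\varvec{B}t}
  - \textrm{e}^{\textrm{i}(\varvec{A}+\varvec{B})t}
  &= -\tfrac{t^2}{2}\,[\varvec{A},\varvec{B}] + \mathcal{O}(t^3).
\end{aligned}
```

The zeroth- and first-order terms cancel, and the second-order terms differ only in the ordering of the cross term, which is why the commutator is all that survives at order \(t^2\).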

There are two main technical steps: First, how to take care of the infinite series of higher-order terms? Second, how to deliver concentration bounds for the commutator?

1.2.1 A good presentation of error

The Trotter error has a rather nasty higher-order dependence on time, and a good expansion simplifies the proof. Here we build upon the framework from [18]. Denote the target Hamiltonian \( \varvec{H}= \sum _{\gamma =1}^\Gamma \varvec{H}_\gamma , \) with some labels \(\gamma \) for the summand. We specify a product formula \(\textrm{e}^{\textrm{i}a_J\varvec{H}_{\gamma (J)} t}\cdots \textrm{e}^{\textrm{i}a_1 \varvec{H}_{\gamma (1)} t}\) with J exponentials by choosing an ordering \(\gamma (j)\) and weights \(a_j\). In particular, we will focus on the Suzuki formulas (1.3), which can be rewritten as

For the first-order Lie-Trotter formula, each term appears once, so there is only one stage \(\Upsilon =1\); the higher-order Suzuki formula has a total of \(J = \Gamma \cdot \Upsilon \) exponentials and decomposes into \(\Upsilon \) stages, where each stage goes through each Hamiltonian term \(\varvec{H}_{\gamma }\) exactly once.

Following [18], the Trotter error can be captured in the time-ordered exponential form

The error is now represented as a sum of nested commutators

In our proof, we will “beat the nested commutator \(\varvec{\mathcal {E}}\) to death”; do a Taylor expansion on time t for the nested commutators, and each order will be a polynomial of matrices. (Fortunately, we will not need the details of the particular orderings of product formulas.) We then apply our matrix concentration tools and go through a complicated combinatorial bound (which is much more involved than obtaining the 1-norm quantity \(\Vert {\varvec{H}} \Vert _{(1),1}\) in [18]).

1.2.2 Uniform smoothness, matrix martingales, and hypercontractivity

To obtain quantitative control of complicated matrix functions, let us begin with an instructive example that captures the different perspectives. Consider a Hamiltonian as a sum of 1-local Pauli-Zs,

\[ \varvec{H} = \sum _{i=1}^{n} \varvec{\sigma }^z_i, \]

where each Pauli \(\varvec{\sigma }^z_i\) is supported on qubit i. How “big” is the sum?

Fig. 8 For two scalar random variables satisfying the martingale condition, the variable b has zero mean conditioned on variable a. In the non-commutative generalization, the matrix \(\varvec{B}\) is partially traceless (“zero-mean”) on a subsystem where the matrix \(\varvec{A}\) is trivial (“conditioned on \(\varvec{A}\)”).

(1) Take the spectral norm for the largest eigenvalue in magnitude

(2) Interpret the trace as an expectation, then its eigenvalue distribution is equivalent to a sum of independent random variables \( S_n:= x_1+\cdots +x_n\) each drawn from the Rademacher distribution \(\Pr (x_i=1)=\Pr (x_i=-1)=1/2\). Now, we can use a concentration inequality to describe how rarely the random variable deviates from its expectation

In other words, the typical magnitude of eigenvalues \( \left| {\lambda } \right| = \mathcal {O}(\sqrt{n}) \ll n\) is much smaller than the largest eigenvalue. This simple example captures the overarching theme of this work: Concentration is ubiquitous but often unspoken in the high dimensional setting.
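The two perspectives on this example can be compared exactly, since the spectrum of a sum of n commuting Pauli-Zs is known in closed form. The sketch below is illustrative: the function names and the choice \(n = 100\) are assumptions, and the tail bound used is the standard Hoeffding inequality \(\Pr (|S_n| \ge \epsilon \sqrt{n}) \le 2\textrm{e}^{-\epsilon ^2/2}\) for Rademacher sums, which may differ in constants from the inequality displayed in the original text.

```python
import math


def pauli_z_sum_spectrum(n):
    """Eigenvalues of sigma^z_1 + ... + sigma^z_n with their multiplicities.

    A computational basis state with k spins down has eigenvalue n - 2k,
    and there are C(n, k) such states.
    """
    return [(n - 2 * k, math.comb(n, k)) for k in range(n + 1)]


def tail_fraction(n, eps):
    """Fraction of eigenvalues with |lambda| >= eps * sqrt(n)."""
    threshold = eps * math.sqrt(n)
    heavy = sum(mult for lam, mult in pauli_z_sum_spectrum(n) if abs(lam) >= threshold)
    return heavy / 2 ** n


n = 100
spectrum = pauli_z_sum_spectrum(n)
spectral_norm = max(abs(lam) for lam, _ in spectrum)                   # worst case: n
rms = math.sqrt(sum(mult * lam ** 2 for lam, mult in spectrum) / 2 ** n)  # typical: sqrt(n)
print(spectral_norm, rms)
for eps in (1.0, 2.0, 3.0):
    hoeffding = 2 * math.exp(-eps ** 2 / 2)
    print(eps, tail_fraction(n, eps), hoeffding)
```

The exact tail fractions lie below the Hoeffding bound for each threshold, and only an exponentially small fraction of basis states comes anywhere near the spectral norm.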

To go beyond the above example, we rely on a family of recursive inequalities for p-norms, which lead to concentration by Markov’s inequality. We begin by reviewing the ancestral scalar version, often called the two-point inequality or Bonami’s inequality (See, e.g., [21]).

Fact I.3

(The two-point inequality). For real numbers a, b,

This can be seen by expanding the binomial. This seemingly trivial inequality turns out to have far-reaching consequences, and its simplicity becomes its strength (See, e.g., Boolean analysis [43]). The same form of inequality has an exact matrix analog, often called uniform smoothness.
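As a sanity check, the two-point inequality can be tested on a grid of real numbers. The sketch assumes the standard form of the inequality for \(p \ge 2\), namely \(\big (\tfrac{|a+b|^p + |a-b|^p}{2}\big )^{2/p} \le a^2 + (p-1) b^2\); the displayed statement is not recoverable from the text here, so this form, the grid, and the function names are assumptions.

```python
import itertools


def two_point_lhs(a, b, p):
    """((|a+b|^p + |a-b|^p) / 2)^(2/p): the averaged p-th moment, squared scale."""
    return ((abs(a + b) ** p + abs(a - b) ** p) / 2) ** (2 / p)


def two_point_rhs(a, b, p):
    """a^2 + (p-1) b^2: a sum of squares with the constant C_p = p - 1."""
    return a * a + (p - 1) * b * b


grid = [x / 4 for x in range(-12, 13)]  # a, b in [-3, 3] in steps of 0.25
exponents = (2, 3, 4, 6, 10)
worst_gap = min(
    two_point_rhs(a, b, p) - two_point_lhs(a, b, p)
    for a, b in itertools.product(grid, grid)
    for p in exponents
)
print(worst_gap)
```

The gap is non-negative up to rounding; it vanishes at \(p = 2\), where the inequality reduces to the parallelogram identity.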

Fact I.4

(Uniform smoothness for Schatten Classes [55]). For matrices \(\varvec{X}\) and \(\varvec{Y}\),

The above form is not directly applicable, but its alternative forms with a martingale flavor streamline most of our proofs. For k-local operators (which are, in fact, closely related to non-commutative martingales; see Fig. 8), we derive and make heavy use of the following:

Proposition I.4.1

(Uniform smoothness for subsystems). Consider matrices \(\varvec{X}, \varvec{Y}\in \mathcal {B}(\mathcal {H}_i\otimes \mathcal {H}_j)\) that satisfy the non-commutative martingale condition \(\textrm{Tr}_i(\varvec{Y}) = 0\) and \(\varvec{X}= \varvec{X}_j\otimes \varvec{I}_i\). For \(p \ge 2\),

In other words, uniform smoothness delivers sum-of-squares behavior that contrasts with the triangle inequality, which is linear

This difference highlights the qualitative distinction between the worst case and the typical case, which is the starting point of all arguments in this work.

To illustrate its power, we apply it to the 2-local operator (Fig. 9)

and more generally this gives concentration of k-local operators, or Hypercontractivity (Sect. 2).

For random Hamiltonians, the flavor of the problem changes slightly; we can think of adding Gaussian coefficients in our guiding example

The Gaussian coefficient (i.e., external randomness) requires the following version of uniform smoothness regarding the expected p-norm \({\left| \hspace{-1.0625pt}\left| \hspace{-1.0625pt}\left| \varvec{X} \right| \hspace{-1.0625pt}\right| \hspace{-1.0625pt}\right| }_{p}:= (\mathbb {E}[ \Vert {\varvec{X}} \Vert _p^p ] )^{1/p}\) that will allow us to control the spectral norm, i.e., the worst input states. Initially, this featured in simple derivations of matrix concentration for martingales [24, 42].

Fact I.5

(Uniform smoothness for expected p-norm [24, Proposition 4.3]). Consider random matrices \(\varvec{X}, \varvec{Y}\) of the same size that satisfy \(\mathbb {E}[\varvec{Y}|\varvec{X}] = 0\). When \(2 \le p\),

See Sect. 5 for the relevant background and an alternative norm for arbitrary fixed input states. Beyond the scope of this work, we emphasize that these robust and straightforward martingale inequalities should find applications in diverse quantum information settings, whether by exploiting the tensor product structure of the Hilbert space or the randomness in matrix summands. See, e.g., [13, 15] for applications in operator growth and [14] in randomized quantum simulation.

1.3 Discussion

For many physical systems of interest (i.e., non-random k-local Hamiltonians), we present an average-case gate complexity that is qualitatively better than the worst-case. Without even changing the product formula, our analysis leads to a direct reduction of resources for quantum simulation applications. It is natural to hope that states appearing in practice (such as the ground state in quantum chemistry applications) behave like the typical states rather than the worst states, which would make product formulas very appealing for quantum simulation. It would be very interesting to carry out small-scale numerics. Our result holds with high probability for inputs drawn from any orthonormal basis. Unfortunately, our current argument is probabilistic and does not label the exceptional states. Heuristically, the Trotter error is another k-local Hamiltonian that does not resemble the original Hamiltonian, so the states at low energy need not be “aligned” with the extremal states maximizing the Trotter error. We leave this as an open problem.

Another natural question is whether other quantum simulation methods (such as qubitization) or even other quantum algorithms enjoy an average-case speed-up. If true, it would greatly improve the feasibility of many quantum computing applications.

2 Notations

This section recapitulates the notation; the reader may skim it and return as needed. We write scalars, functions, and vectors in normal font, matrices in bold font \(\varvec{O}\), and superoperators in curly font \(\mathcal {L}\). Natural constants \(\textrm{e}, \textrm{i}, \pi \) are denoted in Roman font.

Hamiltonian:

Random Hamiltonian ensemble:

Product formula:

Norms:

3 Preliminary: k-Locality, Uniform Smoothness, and Hypercontractivity

In quantum information, we often encounter a k-local operator: its Pauli strings \(\varvec{F}_{S}\) have lengths at most k. This is the quantum analog of a low-degree Boolean function [41].

Given such a k-local operator, let us quantify its “strength” acting on states. One cautious choice is to worry about the worst possible state via the operator norm

which maximizes the vector \(\ell _2\)-norm.

Nevertheless, the input state is often unstructured (as opposed to adversarially chosen to extremize the operator). A simple and common case is to draw the inputs randomly from the computational basis. Conveniently, this input state ensemble can be efficiently generated and, at the same time, corresponds to the maximally mixed state and infinite-temperature physics. Motivated by this, we model the “typical” states in this work as an ensemble of states

which includes any orthonormal basis states, any t-design ensemble, and Haar random states. Of course, adding more structure to the problem would more directly address the particular question at hand, but the statement would become case-dependent and lose its simplicity. In fact, our argument below also applies to other ensembles, but applying it requires additional knowledge of the ensemble.

Now that we have specified the ensemble, how large is the typical strength? This question can be succinctly phrased in terms of a concentration inequality that controls the probability of an undesirably large strength.

Proposition II.0.1

(Typical states and Schatten p-norms). For a pure state drawn randomly from an ensemble, we have

In particular, for the maximally mixed state, we recover the normalized Schatten p-norm

Proof of Proposition II.0.1

For illustration, we start with Chebyshev’s inequality and the variance \(p=2\)

The last equality evaluates the expectation over states. To obtain sharper tail bounds via Markov’s inequality, consider the p-th moment

The inequality applies a certain form of concavity (Fact II.7). \(\quad \square \)

In other words, the (weighted) 2-norm coincides with the variance of the typical strength; the (weighted) p-norm governs the tail bounds. Indeed, the p-norms can be expressed in terms of the eigenvalues of the operator \(\varvec{F}\); (2.1) will be an equality if we draw the state from the eigenbasis of the operator \(\varvec{F}\). Conveniently, with other choices of basis, the concavity tells us we still retain an inequality in (2.1). The rest of our discussion boils down to estimating the p-norm of k-local operators. This is the content of Hypercontractivity.

Fact II.1

(Hypercontractivity for Paulis [41, Theorem 46]). For an operator acting on qubits \(\varvec{F}\in \mathcal {B}(\mathcal {H}(2^n))\), \(p \ge 2\), and \(C_p:=p-1\),

Indeed, for \(p=2\), it holds with equality, and the 2-norm gives an incoherent sum over subsets S. (The Pauli strings are orthogonal in the Hilbert-Schmidt inner product.) We take squares to emphasize this sum-of-square nature. For general \(p\ge 2\), Hypercontractivity gives an analogous incoherent sum over subsets but with enlarged coefficients \(C_p^{\left| {S} \right| }\). In other words, so long as the sets S are small (e.g., the operator is k-local for a fixed k), we obtain all p-norm estimates from a 2-norm calculation. See also [40] for applications of Hypercontractivity, and note the equivalent inverted form also appears
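The sum-of-squares bound with enlarged coefficients can be checked on a diagonal 2-local operator whose spectrum is computable by counting. The sketch below assumes the inequality takes the form \(\left\| {\varvec{F}} \right\| _{\bar{p}}^2 \le \sum _S C_p^{\left| {S} \right| } \Vert {\varvec{F}_S} \Vert _{\bar{2}}^2\) described in the text, and applies it to the hypothetical test operator \(\varvec{F} = \sum _{i<j} \varvec{\sigma }^z_i \varvec{\sigma }^z_j\); the function names, \(n = 20\), and the threshold are illustrative. It also shows how a p-norm turns into a tail bound through Markov’s inequality.

```python
import math


def zz_spectrum(n):
    """Eigenvalues of F = sum_{i<j} sigma^z_i sigma^z_j with multiplicities.

    For a basis state with k spins down, s = n - 2k and the eigenvalue
    is (s^2 - n) / 2; there are C(n, k) such states.
    """
    return [(((n - 2 * k) ** 2 - n) / 2, math.comb(n, k)) for k in range(n + 1)]


def normalized_p_norm(n, p):
    """Normalized Schatten p-norm (Tr|F|^p / 2^n)^(1/p)."""
    total = sum(mult * abs(lam) ** p for lam, mult in zz_spectrum(n))
    return (total / 2 ** n) ** (1 / p)


def hypercontractive_bound(n, p):
    """sqrt(sum_S (p-1)^|S|): C(n,2) Pauli strings, each with |S| = 2 and unit 2-norm."""
    return math.sqrt((p - 1) ** 2 * math.comb(n, 2))


def tail_fraction(n, eps):
    """Fraction of basis states whose eigenvalue has magnitude at least eps."""
    heavy = sum(mult for lam, mult in zz_spectrum(n) if abs(lam) >= eps)
    return heavy / 2 ** n


n = 20
for p in (2, 4, 6, 8):
    print(p, normalized_p_norm(n, p), hypercontractive_bound(n, p))

eps = 60.0
markov = min((normalized_p_norm(n, p) / eps) ** p for p in (2, 4, 6, 8))
print(tail_fraction(n, eps), markov)
```

At \(p = 2\) the two columns agree, as expected from the orthogonality of Pauli strings; for larger p the bound holds with room to spare, and the best Markov bound over the tested moments controls the exact tail fraction.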

Historically, this zoo of closely-related ideas starts from the Boolean cases (see, e.g., [43] and Sect. 7.1) and extends to the non-commutative cases, including Paulis [41], Fermions [10], and abstract von Neumann algebras [46]. In various contexts, Hypercontractivity has been repeatedly revisited and has found applications in classical [1, 6, 43] and quantum computing [40]. The goal of our discussion here is to put together a coherent and accessible review that illustrates this rather common phenomenon with some problem-driven adaptations. We begin with the intuitive qudit case (with the maximally mixed state as the ensemble) and later swiftly generalize to several settings arising from quantum simulation.

To understand Hypercontractivity, our main approach is the following recursive inequality called uniform smoothness.

Proposition II.1.1

(Uniform smoothness for subsystems). Consider matrices \(\varvec{X}, \varvec{Y}\in \mathcal {B}(\mathcal {H}_i\otimes \mathcal {H}_j)\) that satisfy \(\textrm{Tr}_i(\varvec{Y}) = 0\) and \(\varvec{X}= \varvec{X}_j\otimes \varvec{I}_i\). For \(p \ge 2\), \(C_p=p-1\),

Technically, the partially traceless assumption \(\textrm{Tr}_i(\varvec{Y}) = 0\) makes it a non-commutative martingale where taking partial trace is a conditional expectation

We can rewrite Proposition II.1.1 more formally (Footnote 5). For any matrix \(\varvec{K}\),

This martingale condition naturally appears in subroutines of quantum information applications, while Hypercontractivity as a black box is more “rigid”. Although these two ideas are intimately related, we emphasize that uniform smoothness is a versatile (Footnote 6) and transparent workhorse, which implies, among other consequences, Hypercontractivity (Corollary II.4.1).
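To make the recursive inequality concrete, the sketch below checks it numerically in the simplest commuting instance. We assume the standard form of uniform smoothness, \(\left\| {\varvec{X}+\varvec{Y}} \right\| _{p}^2 \le \left\| {\varvec{X}} \right\| _{p}^2 + C_p \left\| {\varvec{Y}} \right\| _{p}^2\), and take \(\varvec{X}= \textrm{diag}(x)\otimes \varvec{I}\) and \(\varvec{Y}= \textrm{diag}(y)\otimes \varvec{\sigma }^z\), so that \(\textrm{Tr}_i(\varvec{Y})=0\) holds by construction. The diagonal choice and all names are illustrative assumptions; the non-commutative statement is of course stronger.

```python
import random

def schatten_p(eigs, p):
    """Schatten p-norm of a Hermitian matrix given its eigenvalues."""
    return sum(abs(e) ** p for e in eigs) ** (1.0 / p)

def uniform_smoothness_sides(x, y, p):
    """Return (lhs, rhs) of ||X+Y||_p^2 <= ||X||_p^2 + (p-1) ||Y||_p^2."""
    # X = diag(x) (x) I has every x_j twice; Y = diag(y) (x) Z has eigenvalues +y_j and -y_j.
    eig_x = [v for v in x for _ in range(2)]
    eig_y = [s * v for v in y for s in (1, -1)]
    eig_sum = [a + b for a, b in zip(eig_x, eig_y)]
    lhs = schatten_p(eig_sum, p) ** 2
    rhs = schatten_p(eig_x, p) ** 2 + (p - 1) * schatten_p(eig_y, p) ** 2
    return lhs, rhs

random.seed(0)
x = [random.gauss(0, 1) for _ in range(3)]
y = [random.gauss(0, 1) for _ in range(3)]
for p in (2, 3, 4.5, 8):
    lhs, rhs = uniform_smoothness_sides(x, y, p)
    print(p, lhs, rhs)
```

At \(p=2\) the two sides agree, which reflects the orthogonality of \(\varvec{X}\) and \(\varvec{Y}\) in the Hilbert-Schmidt inner product.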

3.1 Uniform smoothness for subsystems

Proposition II.1.1 is a special case of [46]. Here, we present an elementary proof by adapting the argument in [24, Prop 4.3] (Footnote 7). We start with the primitive form of uniform smoothness as a black box.

Fact II.2

(Uniform smoothness for Schatten Classes, recap [55]). For matrices \(\varvec{X}\) and \(\varvec{Y}\),

We also need the following fact.

Fact II.3

(Monotonicity of p-norm w.r.t partial trace). For matrices \(\varvec{X}\) and \(\varvec{Y}\) satisfying \(\textrm{Tr}_i(\varvec{Y}) = 0\) and \(\varvec{X}= \varvec{X}_j\otimes \varvec{I}_i\), \(p \ge 2\),

This can be understood as the non-commutative analog of convexity \( \left\| {\varvec{X}+\mathbb {E}_{\varvec{Y}}\varvec{Y}} \right\| _{p} \le \mathbb {E}_{\varvec{Y}}\left\| {\varvec{X}+\varvec{Y}} \right\| _{p}. \)

Proof of Fact II.3

Recall the variational expression [60, Sec 12.2.1] for Schatten p-norms

Then,

The last equality uses the partially traceless condition \(\textrm{Tr}_i \varvec{Y}=0\) and that

An alternative proof is by averaging over a Haar-random unitary on subsystem i

The first equality is Schur’s lemma, then convexity, and lastly, we used unitary invariance of p-norms. \(\quad \square \)
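The monotonicity can be illustrated with a small numerical experiment. The sketch assumes the statement takes the form \(\left\| {\varvec{X}} \right\| _{p} \le \left\| {\varvec{X}+\varvec{Y}} \right\| _{p}\), as suggested by the convexity analogy above, and again restricts to diagonal matrices for simplicity. Subtracting the row mean plays the role of enforcing \(\textrm{Tr}_i(\varvec{Y})=0\); the helper names are hypothetical.

```python
import random

def p_norm(eigs, p):
    """Schatten p-norm from a list of eigenvalues."""
    return sum(abs(e) ** p for e in eigs) ** (1.0 / p)

def random_instance(dim_j, dim_i):
    """Diagonal X = diag(x) (x) I_i and diagonal Y with Tr_i(Y) = 0."""
    x = [random.gauss(0, 1) for _ in range(dim_j)]
    y = []
    for _ in range(dim_j):
        row = [random.gauss(0, 1) for _ in range(dim_i)]
        mean = sum(row) / dim_i
        y.append([v - mean for v in row])  # each block of Y over subsystem i is traceless
    return x, y

def norms(x, y, p):
    """Return (||X||_p, ||X+Y||_p)."""
    eig_x = [xj for xj, row in zip(x, y) for _ in row]
    eig_sum = [xj + v for xj, row in zip(x, y) for v in row]
    return p_norm(eig_x, p), p_norm(eig_sum, p)

random.seed(0)
x, y = random_instance(dim_j=2, dim_i=3)
for p in (2, 4, 7):
    print(p, norms(x, y, p))
```

In this commuting setting the inequality is just convexity of \(t\mapsto \left| {t} \right| ^p\) applied block by block, which is the classical picture behind the non-commutative proof.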

We can almost prove Proposition II.1.1.

The last inequality is Lyapunov’s and then Fact I.4. Rearranging terms yields a slightly worse constant \(2C_p\). The advertised constant can be obtained via another elementary but insightful trick [24, Lemma A.1], which we reproduce as follows.

Proof of Proposition II.1.1

The proof considers a rescaling argument. Let \(\varvec{Z}:=\frac{1}{n}\varvec{Y}\). We have just obtained

Rearranging Fact I.4,

The last inequality recursively applies the first line for \(n\ge k\ge 2\) and (2.2) at the base case (Footnote 8) \(k=1\). Therefore,

Take \(n\rightarrow \infty \) to obtain the sharp constant. \(\square \)

3.1.1 Subalgebras

Let us work out the analogous elementary derivation for a subalgebra \(\mathcal {N}\subset \mathcal {M}\), which captures non-commutative martingales in full generality. This also provides a unifying perspective for the manipulations we are doing. For subalgebras \(\mathcal {N}\subset \mathcal {M}\subset B(\mathcal {H})\), let \(E:\mathcal {M}\rightarrow \mathcal {N}\) be the projection to subalgebra \(\mathcal {N}\) (or the trace-preserving conditional expectation), with the defining properties:

Intuitively, E is the analog of normalized partial trace \(\varvec{I}_j \frac{\textrm{Tr}_j[\cdot ]}{\textrm{Tr}[\varvec{I}_j]}\). Using the notation natural in this setting, we reproduce the monotonicity.

Fact II.4

(Monotonicity of p-norm w.r.t projection to subalgebra). Consider finite dimensional subalgebras \(\mathcal {N}\subset \mathcal {M}\subset B(\mathcal {H})\) and the corresponding projection to subalgebra \(E:\mathcal {M}\rightarrow \mathcal {N}\). Then, for any \(\varvec{Z}\in \mathcal {M}\) and \(p \ge 2\),

Proof of Fact II.4

Again, consider the variational expression

Note that the maximum is attained in the same algebra, \(\varvec{B}\in \mathcal {N}\). (This can be seen from the structure theorem of finite-dimensional von Neumann algebras: \(\mathcal {N}\) is a direct sum of subsystems.) Then

which is the advertised result. \(\quad \square \)

Through the same arguments, we conclude the discussion for subalgebras by the following.

Proposition II.4.1

(Uniform smoothness for subalgebras). Consider finite dimensional subalgebras \(\mathcal {N}\subset \mathcal {M}\subset \mathcal {B}(\mathcal {H})\) and the corresponding projection to subalgebra \(E:\mathcal {M}\rightarrow \mathcal {N}\). Then, for any \(\varvec{Z}\in \mathcal {M}\),

This result was first obtained in [46] in a more technical setting. We hope the discussion here provides a simple interpretation.

3.2 Deriving hypercontractivity

Uniform smoothness, through a recursion, implies Hypercontractivity-like global formulas (Footnote 9).

Proposition II.4.2

(Moment estimates for local operator). For an operator \(\varvec{F}\in \mathcal {B}(\mathbb {C}^{d\otimes n})\) on n-qudits, and \(p\ge 2\), \(C_p:= p-1\),

The super-operator \(E_s[\cdot ]:=\varvec{I}_{s}\otimes \bar{\textrm{Tr}}_s[\cdot ] \) is the conditional expectation associated with the partial trace, and the set \(S^c\) is the complement of set S.

Intuitively, for each subset S, the product of conditional expectations selects the component \(\varvec{F}_S\) that is non-trivial on set S and trivial on the complement \(S^c\). Indeed, for qubits, the summand \(\varvec{F}_S\) corresponds to the Pauli strings non-trivial on set S.
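The selection of the component \(\varvec{F}_S\) by products of conditional expectations can be sketched for diagonal n-qubit operators, which we represent as functions on \(\{\pm 1\}^n\). Under this assumption, \(E_s\) averages over the value at site s, and \(\varvec{F}_S = \prod _{s\in S}(1-E_s)\prod _{s\in S^c}E_s[\varvec{F}]\). The representation and the function names are illustrative choices only.

```python
import itertools

def cond_exp(f, s):
    """E_s: average over site s (normalized partial trace on site s, tensored with identity)."""
    out = {}
    for bits, val in f.items():
        flipped = bits[:s] + (-bits[s],) + bits[s + 1:]
        out[bits] = 0.5 * (val + f[flipped])
    return out

def component(f, subset, n):
    """F_S = prod_{s in S} (1 - E_s) prod_{s not in S} E_s applied to F."""
    g = dict(f)
    for s in range(n):
        e = cond_exp(g, s)
        g = {b: g[b] - e[b] for b in g} if s in subset else e
    return g

n = 3
all_bits = list(itertools.product((1, -1), repeat=n))
# Diagonal of F = 3*I + 0.5*Z_0 + 2*Z_0 Z_1 - Z_1 Z_2.
f = {b: 3 + 0.5 * b[0] + 2 * b[0] * b[1] - b[1] * b[2] for b in all_bits}

subsets = [frozenset(c) for r in range(n + 1) for c in itertools.combinations(range(n), r)]
parts = {S: component(f, S, n) for S in subsets}
print(parts[frozenset({0, 1})])  # the values of 2*Z_0 Z_1 on each bit string
```

The \(2^n\) components sum back to \(\varvec{F}\), and each one retains exactly the Pauli-Z string supported on its subset.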

Let us grasp this formula with some examples. For single-site Paulis, this resembles the usual concentration inequality for bounded independent summands (e.g., Hoeffding’s inequality).

Example II.4.1

(1-local Paulis). For \(\varvec{F}:=\sum _i \alpha _i\varvec{\sigma }^z_i\),

By Markov’s inequality, we obtain concentration for pure states drawn from a fixed orthonormal basis.

In other words, the strength is typically bounded by the variance \(\sum _i \left| {\alpha _i} \right| ^2\). If we take the states to be the computational basis, the tail bound applies to its eigenvalue distribution.
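The following sketch compares the empirical eigenvalue tail of a 1-local operator with the Markov bound obtained from its p-th moments. We assume the moment estimate \(\left\| {\varvec{F}} \right\| _{\bar{p}}^2 \le C_p\sum _i \left| {\alpha _i} \right| ^2\) and optimize \(\big (\sqrt{C_p\sum _i \left| {\alpha _i} \right| ^2}/\epsilon \big )^p\) over a grid of p. The grid, the random coefficients, and the names are assumptions made for illustration.

```python
import itertools
import math
import random

random.seed(1)
n = 10
alpha = [random.uniform(-1, 1) for _ in range(n)]
sigma = math.sqrt(sum(a * a for a in alpha))

# Eigenvalues of F = sum_i alpha_i Z_i on every computational basis state.
eigs = [sum(a * z for a, z in zip(alpha, signs))
        for signs in itertools.product((1, -1), repeat=n)]

def empirical_tail(eps):
    """Fraction of basis states whose eigenvalue has magnitude at least eps."""
    return sum(1 for lam in eigs if abs(lam) >= eps) / len(eigs)

def markov_bound(eps):
    """min over a grid of p >= 2 of (sqrt(p-1) * sigma / eps)^p, capped at 1."""
    best_log = 0.0
    for i in range(200):
        p = 2 + 0.25 * i
        log_val = p * (0.5 * math.log(p - 1) + math.log(sigma / eps))
        best_log = min(best_log, log_val)
    return math.exp(best_log)

for ratio in (1.0, 2.0, 3.0):
    eps = ratio * sigma
    print(ratio, empirical_tail(eps), markov_bound(eps))
```

The bound is loose at small deviations but decays quickly once \(\epsilon \) exceeds a few multiples of \(\sqrt{\sum _i \left| {\alpha _i} \right| ^2}\).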

Moreover, we obtain a similar sum-of-squares behavior for 4-local Paulis, albeit with heavier tails.

Example II.4.2

(4-local Paulis). For \(\varvec{F}:=\sum _{i>j>k>\ell } \alpha _{ijk\ell }\varvec{\sigma }^x_i\varvec{\sigma }^z_j \varvec{\sigma }^y_k\varvec{\sigma }^x_\ell \),

By Markov’s inequality, we obtain

which does not have a Gaussian tail anymore but still decays super-polynomially.
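To see how locality affects the tail, one can optimize the Markov bound over p numerically. The sketch assumes a moment estimate of the form \(\left\| {\varvec{F}} \right\| _{\bar{p}} \le C_p^{k/2}\left\| {\varvec{F}} \right\| _{\bar{2}}\) for a homogeneous k-local operator (the constants in the examples above may differ), and works with logarithms to avoid overflow. The function name and the search grid are hypothetical.

```python
import math

def tail_bound(k, ratio, p_max=200.0, step=0.05):
    """min over p in [2, p_max] of ((p-1)^(k/2) / ratio)^p, with ratio = eps / ||F||_2."""
    best_log = 0.0  # log of the trivial bound 1
    p = 2.0
    while p <= p_max:
        log_val = p * (0.5 * k * math.log(p - 1) - math.log(ratio))
        best_log = min(best_log, log_val)
        p += step
    return math.exp(best_log)

for ratio in (5, 20, 100):
    print(ratio, tail_bound(1, ratio), tail_bound(4, ratio))
```

For the same ratio, the \(k=4\) bound is much larger than the \(k=1\) bound, reflecting the heavier tail, yet it still decreases faster than any fixed power of the ratio as the optimal p grows.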

Let us now present the elementary proof.

Proof of Proposition II.4.2

Apply uniform smoothness (Proposition II.1.1) for \(s=1,\ldots , n\) recursively.

Each application produces two branches, and the total \(2^n\) branches are labeled by the subsets \(S\subset \{n,\ldots , 1\}\). We may regroup the conditional expectations since they are just taking partial traces of disjoint subsystems. The power \((C_p)^{|S|}\) comes from the times the branch \((1-E_s)\) appears. \(\quad \square \)

To compare with the existing Hypercontractivity for qubits, it is worth bringing Proposition II.4.2 to the following form.

Corollary II.4.1

(Non-commutative hypercontractivity). In the setting of Proposition II.4.2,

This is equivalent to the existing bound (Fact II.1) up to slightly worse constants. However, the martingale formulation streamlines a simple proof (Proposition II.1.1) and, more importantly, allows us to adapt to different settings in the subsequent sections.

Proof of Corollary II.4.1

Bound the normalized p-norm \(\left\| {\varvec{F}} \right\| _{\bar{p}}:=\frac{\left\| {\varvec{F}} \right\| _{p}}{\left\| {\varvec{I}} \right\| _{p}}\) by Pauli expansion \(\sigma _S:=\{\varvec{\sigma }^x,\varvec{\sigma }^y,\varvec{\sigma }^z\}^{\left| {S} \right| }\)

which is the advertised result. Intuitively, the extra factor we pay is the number of distinct Pauli strings \(3^{\left| {S} \right| }\). \(\quad \square \)

3.3 Product background states

Our previous discussion focused on the unweighted p-norm. In this section, we discuss the weighted p-norms. For \(0\le s\le 1\), define

where \(s=1/2\) [5] and \(s=1\) are the notable cases

The latter feeds into the concentration for typical input states drawn from an ensemble whose average is \(\varvec{\rho }\) (Proposition II.0.1). Even though not applied elsewhere in this paper, we keep the general \(0\le s\le 1\) expression in the following arguments. Uniform smoothness generalizes to the \(\varvec{\rho }\)-weighted p-norm for factorized state \(\varvec{\rho }=\otimes _i \varvec{\rho }_i\). The martingale condition now depends on the state \(\varvec{\rho }_i\).

Proposition II.4.3

(Uniform smoothness for subsystem, weighted). Consider product state \(\varvec{\rho }= \varvec{\rho }_j\otimes \varvec{\rho }_i\) and matrices \(\varvec{X}, \varvec{Y}\in \mathcal {B}(\mathcal {H}_i\otimes \mathcal {H}_j)\) that satisfy the martingale condition \(\textrm{Tr}_i(\varvec{\rho }_i\varvec{Y}) = 0; \varvec{X}= \varvec{X}_j\otimes \varvec{I}_i\). For \(p \ge 2\), \(C_p=p-1\),

In a similar proof, all we need is to modify monotonicity.

Fact II.5

(Monotonicity w.r.t partial trace). For matrices \(\varvec{X}\) and \(\varvec{Y}\) satisfying \(\textrm{Tr}_i(\varvec{\rho }_i\varvec{Y}) = 0\) and \(\varvec{X}= \varvec{X}_j\otimes \varvec{I}_i\), \(p \ge 2\),

Proof

Once again, plug in the variational expression

Suppose the maximum is attained at some \(\varvec{B}_j\). Then by Proposition II.3,

In the last inequality, we used the partially traceless assumption \(\textrm{Tr}_i [\varvec{\rho }_i\varvec{Y}] =0\) and that

\(\square \)

Combining the monotonicity with Fact I.4, we obtain uniform smoothness (Proposition II.4.3). Moreover, we automatically get a weighted version of a Hypercontractivity-like formula. Let us first define the appropriate operator re-centered w.r.t. the background

as a “shifted” Pauli \(\varvec{\sigma }^{z}\). Accordingly, we shift the conditional expectation

Proposition II.5.1

(Moment estimates for local operator, \(\varvec{\rho }\)-weighted). For an operator \(\varvec{F}\in \mathcal {B}(\mathbb {C}^{d\otimes n})\) on n-qudits, product state \(\varvec{\rho }= \otimes _i \varvec{\rho }_i\), and \(p\ge 2\), \(C_p:= p-1\),

The set \(S^c\) is the complement of set S.

3.3.1 Low-particle number subspace

Why did we study product states as the background? Interestingly, they tell us about concentration when restricting to low particle number subspaces. Consider the following two operators: the projector \(\varvec{P}_m\) to the m-particle subspace of n-qubit Hilbert space

and the product state

We can control the p-norm weighted by the low-particle subspace, which we care about, by the p-norm weighted by the product state, which we can calculate.

Proposition II.5.2

For any operator \(\varvec{F}\),

Note the factor \(\textrm{Poly}(n,m)\) is mild since it is suppressed as long as \(p\gtrsim \log (\textrm{Poly}(n,m))\).

Proof of Proposition II.5.2

By Stirling’s approximation, the operators obey positive semi-definite order

This gives the advertised result by Fact II.6. \(\square \)
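The polynomial overhead can be checked directly. The sketch assumes the product state is the n-fold tensor power of a single-qubit diagonal state with occupation probability \(\eta = m/n\). Every m-particle basis state then carries weight \(\eta ^m(1-\eta )^{n-m}\), and the normalized projector carries weight \(1/\binom{n}{m}\), so the overhead is the inverse of a binomial probability at its mode. The function name is hypothetical.

```python
import math

def overhead(n, m):
    """Smallest c with P_m / Tr[P_m] <= c * rho_eta^{(x) n}, for eta = m / n (assumed form)."""
    eta = m / n
    # Binomial probability of exactly m particles; 0.0 ** 0 evaluates to 1.0 in Python.
    prob_m = math.comb(n, m) * eta ** m * (1 - eta) ** (n - m)
    return 1.0 / prob_m

for n, m in ((10, 1), (50, 5), (200, 20)):
    print(n, m, overhead(n, m), math.sqrt(2 * math.pi * m * (n - m) / n))
```

Since m is a mode of the binomial distribution with mean m, the overhead is at most \(n+1\); the second printed column is the Stirling estimate \(\sqrt{2\pi m(n-m)/n}\) for comparison.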

Fact II.6, proved below, is that weighted norms are monotone w.r.t the state. In our application for Trotter error, the Hamiltonian is often particle number preserving, and the following becomes trivial. But for potential applications in other contexts, we include a quick proof when the operator \(\varvec{F}\) and state \(\varvec{\rho }\) are not commuting.

Fact II.6

(Monotonicity of weight). For positive semi-definite operators \(\varvec{\rho }\ge \varvec{\sigma }\ge 0\) (presumably not normalized),

This is closely related to a polynomial version of Lieb’s concavity.

Fact II.7

([11, Theorem 1.1]). For operator \(\varvec{A}\ge 0\), and \(q\ge 1\), \(r\le 1\), the function

is concave (and hence monotone) in \(\varvec{A}\).

We can now quickly adapt to our settings to present a proof.

Proof of Fact II.6

Both inequalities use Fact II.7 for \(q=\frac{p}{1-s}\ge 1\) and \(r = \frac{1-s}{2} \le 1\) and for \(q=\frac{p}{s}\ge 1\) and \(r = \frac{s}{2} \le 1\). The second equality is \(\left\| {\varvec{X}^\dagger \varvec{X}} \right\| _{\frac{p}{2}}= \left\| {\varvec{X}\varvec{X}^\dagger } \right\| _{\frac{p}{2}}\). This is the advertised result. \(\quad \square \)

3.4 Fermionic operators

Uniform smoothness and Hypercontractivity apply to Fermions. Consider the Jordan-Wigner transform

These operators also linearly span the full algebra on n-qubits \(\mathcal {B}(\mathcal {H}(2^n))\) by products \(\prod _s(\varvec{a}_s,\varvec{a}^\dagger _s,\varvec{a}_s\varvec{a}^\dagger _s,\varvec{I}_s)\). In this form, Fermions are not local operators due to the Pauli-Z strings. Fortunately, all we need for uniform smoothness is the martingale property (conditionally zero-mean). We derive an analogous 2-norm-like bound with a minor tweak due to Jordan-Wigner strings. The following result was known in [10, Theorem 4] (Footnote 10), but we hope the presented derivation is more transparent. We will also extend it in Corollary II.7.2.
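The algebraic content of the Jordan-Wigner transform can be verified symbolically. The sketch assumes the convention \(\varvec{a}_j = \varvec{\sigma }^z_1\cdots \varvec{\sigma }^z_{j-1}(\varvec{\sigma }^x_j-\textrm{i}\varvec{\sigma }^y_j)/2\), chosen so that \(\varvec{a}^\dagger _j\varvec{a}_j=(\varvec{I}+\varvec{\sigma }^z_j)/2\); other sign conventions are equally valid. Operators are stored as dictionaries from Pauli strings to coefficients, and all helper names are hypothetical.

```python
from itertools import product

# Single-qubit Pauli products: SINGLE[(a, b)] = (phase, c) means a * b = phase * c.
SINGLE = {("I", "I"): (1, "I")}
for a in "XYZ":
    SINGLE[("I", a)] = (1, a)
    SINGLE[(a, "I")] = (1, a)
    SINGLE[(a, a)] = (1, "I")
for a, b, c in (("X", "Y", "Z"), ("Y", "Z", "X"), ("Z", "X", "Y")):
    SINGLE[(a, b)] = (1j, c)
    SINGLE[(b, a)] = (-1j, c)

def multiply(A, B):
    """Product of two operators stored as {Pauli string: coefficient}."""
    out = {}
    for (sa, ca), (sb, cb) in product(A.items(), B.items()):
        phase, letters = 1, []
        for x, y in zip(sa, sb):
            ph, z = SINGLE[(x, y)]
            phase *= ph
            letters.append(z)
        key = "".join(letters)
        out[key] = out.get(key, 0) + phase * ca * cb
    return {k: v for k, v in out.items() if abs(v) > 1e-12}

def anticommutator(A, B):
    """{A, B} = AB + BA, dropping terms that cancel."""
    out = multiply(A, B)
    for k, v in multiply(B, A).items():
        out[k] = out.get(k, 0) + v
    return {k: v for k, v in out.items() if abs(v) > 1e-12}

def annihilation(j, n):
    """a_j with a Z string on sites before j (assumed convention, sites 0-indexed)."""
    prefix, suffix = "Z" * j, "I" * (n - j - 1)
    return {prefix + "X" + suffix: 0.5, prefix + "Y" + suffix: -0.5j}

def creation(j, n):
    """Hermitian conjugate of annihilation(j, n)."""
    prefix, suffix = "Z" * j, "I" * (n - j - 1)
    return {prefix + "X" + suffix: 0.5, prefix + "Y" + suffix: 0.5j}

n = 3
print(multiply(creation(1, n), annihilation(1, n)))        # number operator on site 1
print(anticommutator(annihilation(0, n), creation(2, n)))  # {} : distinct modes anticommute
```

The Z strings are what make distinct modes anticommute, and they cancel in even powers such as \(\varvec{a}^\dagger _j\varvec{a}_j\).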

Corollary II.7.1

(Hypercontractivity for Fermions). On n-qubits, consider an operator without terms \(\varvec{a}_i\varvec{a}^\dagger _i\). Expand it \(\varvec{A}= \sum _{S\subset \{n,\cdots ,1\}} \varvec{A}_{S}\) by subsets S indicated by Fermionic operators \(\{\varvec{a}^\dagger ,\varvec{a}\}\). Then, for \(p\ge 2\), \(C_p=p-1\),

Proof

Without loss of generality, assume the Fermionic operators are ordered such that the larger index appears on the right (e.g., \(\varvec{a}_1\varvec{a}_3\varvec{a}_n\)).

To complete the induction as in Proposition II.4.2, apply a gauge transformation to change the Jordan-Wigner string such that only \(a_2\) is nontrivial on site 2. Then we can repeat the above inequality. Note that the background \(\varvec{\rho }_{\eta }\) is invariant under gauge transformations, and the Pauli strings of \(\varvec{\sigma }^z\) do not blow up the weighted p-norm. \(\square \)

Example II.7.1

(2-local Fermionic operators).

However, when multiplying Fermion operators we may get even powers \(\varvec{a}^\dagger _i \varvec{a}_i= (\varvec{I}+\varvec{\sigma }^z_i)/2, \varvec{a}_i\varvec{a}^\dagger _i = (\varvec{I}-\varvec{\sigma }^z_i)/2\) where the Pauli string \(\varvec{\sigma }^z\) cancels. Let us quickly extend to the cases with the presence of \(\varvec{\sigma }^z_i\) terms (perhaps with weighted background). Let us formally define the conditional expectation

The conditional expectation maps the full algebra to the subalgebra generated by all but one fermion. Intuitively, it removes terms that have non-trivial terms \(\varvec{a}_s,\varvec{a}_s^\dagger , \varvec{O}^{\eta }\) on site s.

Corollary II.7.2

(Hypercontractivity for Fermions and \(\varvec{O}^{\eta }\)). On n qubits, consider a product state diagonal in the computational basis. Then, for \(p\ge 2\), \(C_p =p-1\),

The proof is also elementary.

Proof

The rest of the gauge transformation argument follows as in Corollary II.7.1. Note that \(\varvec{O}^{\eta }\) is invariant under gauge transformations. Alternatively, we can take the formal route by manipulating the conditional expectations as in Proposition II.4.2. \(\quad \square \)

4 Non-Random k-Local Hamiltonians

This section presents the main result of this work. We evaluate Hypercontractivity (Sect. 2) for Trotter error of non-random Hamiltonians.

Theorem II.1

(Trotter error in k-local models). To simulate a k-local Hamiltonian using \(\ell \)-th order Suzuki formula, the gate complexity

The p-norm estimate and Proposition II.0.1 imply concentration for typical input states via Markov’s inequality.

Corollary II.1.1

Draw a pure state from an orthonormal basis, then

This quickly converts to the trace distance between the pure states

We begin with an instructive example that illustrates the combinatorics (Sect. 3.1). We sketch the proof in Sect. 3.2. In Sects. 3.3 and 3.4, we combine the estimates and conclude the proof with explicit constants in Sect. 3.5. See Sect. 3.7 for the analogous result for Fermions.

4.1 An instructive example

Consider a 2-local Hamiltonian on three subsystems of qubits \(\mathcal {H}= \mathcal {H}_{I_1}\otimes \mathcal {H}_{I_2}\otimes \mathcal {H}_{I_3}\) of equal subsystem sizes n/3.

Let us play around with the first-order product formula. Recall

The leading order \(\mathcal {O}(t^2)\) Trotter error will be a sum of 3-local terms and 1-local terms

The 3-local terms are the “greediest” way to produce long Pauli strings

No cancellation nor collision occurs, and each term is supported on distinct subsets \(\{i_1,i_2,i_3\}\) or \(\{i_1,i_1',i_2\}\). These operators add incoherently (in the Hilbert-Schmidt norm for simplicity). The 1-local terms are more peculiar but turn out equally important. They come from terms that overlap on both sites

The collision of the same Pauli \(\varvec{\sigma }^x_{i_1}\) leads to a “constructive interference” over site \(i_1\). Consequently, it gives a comparable contribution to the Trotter error, although it has a single sum over \(i_2\). This is not a coincidence; both terms are formally controlled by the advertised quantity

From this example, we can anticipate a formal proof would require (1) extracting the local quantities \(\Vert {\varvec{H}} \Vert _{(1),2}\) and \(\Vert {\varvec{H}} \Vert _{(0),2}\) from the nested commutators and Hypercontractivity and (2) dealing with the higher-order time dependence.

4.2 Proof outline

With the above example in mind, we sketch the proof strategy as follows. Recall that for any product formula with ordering \(\gamma (j)\), weights \(a_j\), and number J of exponentials, the general Trotter error can be represented as a time-ordered exponential

The error \(\mathcal {E}\) is time-dependent and takes the commutator form

The particular form depends on the choice of ordering and weights, but fortunately, the precise values of the coefficients \(a_j\) will not matter. For the \(\ell \)-th order Suzuki formulas that we focus on, all we need is a crude uniform bound \(\left| {a_j} \right| \le 1\) and that the total formula consists of \(\Upsilon =2\cdot 5^{\ell /2-1}\) stages for \(J= \Upsilon \cdot \Gamma \). Our combinatorial argument takes norms everywhere and does not rely on delicate cancellations. Our proof will “beat the error \(\varvec{\mathcal {E}}\) to death” by Taylor expansion (from right to left).
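The bookkeeping of stages and exponentials is simple enough to tabulate. The sketch below evaluates \(\Upsilon =2\cdot 5^{\ell /2-1}\), the number of exponentials per step \(J=\Upsilon \cdot \Gamma \), and the total count \(r\cdot \Upsilon \cdot \Gamma \) for r steps. The function names and the sample values of \(\Gamma \) and r are illustrative assumptions.

```python
def suzuki_stages(order):
    """Upsilon = 2 * 5**(order/2 - 1) for an even-order Suzuki formula."""
    if order < 2 or order % 2:
        raise ValueError("order must be an even integer >= 2")
    return 2 * 5 ** (order // 2 - 1)

def exponentials_per_step(order, num_terms):
    """J = Upsilon * Gamma, where Gamma is the number of Hamiltonian terms."""
    return suzuki_stages(order) * num_terms

def total_exponentials(order, num_terms, steps):
    """r * Upsilon * Gamma for r repetitions of the product formula."""
    return steps * exponentials_per_step(order, num_terms)

for order in (2, 4, 6):
    print(order, suzuki_stages(order), exponentials_per_step(order, num_terms=7))
```

The number of stages grows exponentially with the order \(\ell \), which is why functions of \(\ell \) are kept explicit in the gate counts.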

Fact III.2

(Taylor expansion [18, Theorem 10]). For any order \(g'\),

The \(g-1\) exponent will be used consistently in the following. Setting \(\mathcal {L}_j:=a_j\mathcal {L}_{\gamma (j)}\), Taylor expansion gives the formal expansion for the error in powers of time t

Each g-th order term \(\varvec{\mathcal {E}}_g\) is a sum of nested commutators, and we control its p-norm (Sect. 3.3). We will evaluate Hypercontractivity through a rather involved combinatorics to extract the local quantities \(\Vert {\varvec{H}} \Vert _{(1),2}\) and \(\Vert {\varvec{H}} \Vert _{(0),2}\). Note that we will use the version we derived (Proposition II.4.2)

This will straightforwardly generalize to the case of Fermions (Sect. 3.7) and is not restricted to the case of qubits. See Sect. 3.5 for comments on how much constant overhead improvement is possible using the other Hypercontractivity \(\left\| {\varvec{F}} \right\| _{\bar{p}}^2 \le \sum _{S} C_p^{\left| {S} \right| } \left\| {\varvec{F}_S} \right\| _{\bar{2}}^2\) (Proposition II.1).

We handle the edge case \(g'\)-th order term \(\varvec{\mathcal {E}}_{g'}\) in Sect. 3.4. Indeed, bounding the infinite series will give divergent results, so we must halt the expansion at an appropriate order \(g'\). We combine the estimates and apply Markov’s inequality in Sect. 3.5.

4.3 Bounds on the g-th order

We proceed by controlling each g-th order (3.1) polynomial by Hypercontractivity (Proposition II.1.1). We begin with \(\mathcal {L}_j:=a_j\mathcal {L}_{\gamma (j)}\) to ease notation

The second inequality uses a uniform bound on locality \(\left| {S} \right| \le g(k-1)+1\) and applies a brutal triangle inequality. The last inequality expresses \(\mathcal {L}_j\) by \(a_j\mathcal {L}_{\gamma (j)}\) and \(\varvec{H}_j\) by \(a_j\varvec{H}_{\gamma (j)}\) and uses that \(\left| {a_j} \right| \le 1\). We also symmetrize the sum over terms \(\mathcal {L}_{\gamma }\) by throwing in extra terms. This costs an extra factor of \((g-1)!\) (which cancels the factor \(1/(g-1)!\) in the exponential) due to possible permutation of a \((g-1)\)-th order term. For example, consider a particular term

The number of stages \(\Upsilon \) arises as each term \(\mathcal {L}_{\gamma }\) or \(\varvec{H}_{\gamma }\) appears \(\Upsilon \) times.

The main lemma of this section is the following recursive estimate for one layer of commutators \(\sum _{\gamma } \mathcal {L}_\gamma \). This is effectively calculating certain “2–2 norm” for the commutator \(\sum _{S_2} \mathcal {L}_{S_2}\), where the “2-norm” is \( \sum _{S_1} \left( \sum _\alpha \left\| {[\varvec{O}]^{\alpha }_{S_1}} \right\| _{p}\right)^2. \) We will keep this at an analogy level to avoid introducing extra notations.

Lemma II.3

(Effective 2–2 norm of the commutator). For any set of operators \(\{\varvec{O}^{\alpha }\}_{\alpha }\),

where \(\mathcal {L}_{\gamma }[\varvec{O}^\alpha ]\) is at most \(\left| {S_{max}} \right| \)-local and

Assuming Lemma II.3, iterating it \((g-1)\) times gives the estimate

The first inequality also evaluates the last sum over the Hamiltonian terms \(\varvec{H}_{\gamma _0}\) by

The last inequality uses \(g(k-1)+1\le g k\) and hides constants depending only on k in the value c(k)

The expression (3.3) yields the desired estimate for the g-th order error term. Unfortunately, the power series is not summable due to the super-exponential factor \(g^{2gk}\). We will later truncate the expansion at some properly chosen order \(g'\) (Sect. 3.4).

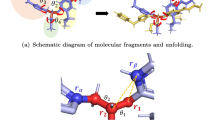

What remains in this section is to show Lemma II.3. As hinted in the example (Sect. 3.1), we need to systematically handle the cases that grow greedily and those with collisions. Let us identify how taking commutators may produce other sets S (Fig. 10).

Let \(S_2(\gamma )\) be the support of \(\gamma \).

- If the sets \(S_1\) and \(S_2(\gamma )\) are disjoint, the commutator vanishes.
- (I) If they overlap on a single site, there is no cancellation. The resulting set is the union \(S=S_2(\gamma )\cup S_1\). This was the “greedy” term in the example.
- (II) If they overlap on more than 1 site, we may lose all but 1 site. The resulting set S is a subset of the union \(S\subset S_2(\gamma )\cup S_1\) (Footnote 11).

To account for the above, we rewrite the sets \(S,S_2,S_1\) in terms of the components

where

- \(S_0:= S_1/S_2\) are the “untouched” sites.
- \(S_-\subset S_1\cap S_2\) are the sites that got canceled due to collision.
- \(S_f\) are the sites that stayed in all sets \(S, S_1,S_2\). We must have \(\left| {S_f} \right| \ge 1\).
- \(S_+:=S_2/S_1\) are the new sites.

We will constantly use this decomposition back and forth in the proof.
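The case analysis and the decomposition can be reproduced for individual Pauli strings. In the sketch below, `p1` plays the role of \(\varvec{O}_{S_1}\) and `p2` the role of a Hamiltonian term supported on \(S_2\); the function returns the support S of the commutator together with the sets \(S_0, S_+, S_f, S_-\). Prefactors are ignored, and the names and dictionary keys are hypothetical.

```python
THIRD = {frozenset("XY"): "Z", frozenset("YZ"): "X", frozenset("XZ"): "Y"}

def support(pauli):
    """Sites on which a Pauli string is non-trivial."""
    return {i for i, c in enumerate(pauli) if c != "I"}

def commutator_string(p1, p2):
    """Pauli string proportional to [p2, p1], or None if the two strings commute."""
    differing = sum(1 for a, b in zip(p1, p2) if "I" not in (a, b) and a != b)
    if differing % 2 == 0:
        return None  # an even number of anticommuting sites means the strings commute
    out = []
    for a, b in zip(p1, p2):
        if a == "I":
            out.append(b)
        elif b == "I":
            out.append(a)
        elif a == b:
            out.append("I")  # collision: the site cancels
        else:
            out.append(THIRD[frozenset((a, b))])
    return "".join(out)

def decompose(p1, p2):
    """Sets S, S_0, S_plus, S_f, S_minus for the commutator of p2 with p1."""
    result = commutator_string(p1, p2)
    if result is None:
        return None
    s, s1, s2 = support(result), support(p1), support(p2)
    return {"S": s, "S_0": s1 - s2, "S_plus": s2 - s1,
            "S_f": s & s1 & s2, "S_minus": (s1 & s2) - s}

print(decompose("XZI", "IXX"))  # case (I): greedy growth to three sites
print(decompose("XZ", "XY"))    # case (II): collision on site 0, one site survives
print(decompose("XI", "IZ"))    # disjoint supports: None
```

Whenever the commutator is non-zero, the fixed set \(S_f\) is non-empty and the resulting support is the disjoint union of \(S_0\), \(S_f\), and \(S_+\).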

4.3.1 “Greedy growth”: overlapping at 1 site

To get familiar with the manipulations and notations, we work out the simpler case when the sets overlap on a single site

We will see that the growth due to the commutator \(\sum _{\gamma } \mathcal {L}_{\gamma }\) is controlled by the succinct norm \(\left\| {\varvec{H}} \right\| _{(local),2}^2\), multiplied by some function of the locality \(\left| {S} \right| \). To ease the notation, we will also overload the set \(S_2(\gamma )\) by \(\gamma \).

The first inequality is Cauchy-Schwarz. The second inequality rearranges the sum over \(S_1,S_2\), uses Hölder’s inequality, and then evaluates the combinations in which the two sets \(S_1,S_2\) can give rise to the set S. In the last inequality, we make the 2-norm \(\left\| {\varvec{H}} \right\| _{(local),2}\) explicit by

We also use a uniform upper-bound for the combinatorial function of the set sizes \(\left| {S} \right| ,\left| {S_1} \right| \).

It is instructive to compare with the 1–1 norm calculation without invoking Hypercontractivity.

This is the 1-norm local quantity that featured in the worst-case Trotter error [18].

Fig. 10 (Left) Intuitively, commuting with another operator produces new occupancy. (Middle) Unfortunately, occasional cancellations complicate the calculation. (Right) For bookkeeping, we label the possible sets that can be produced by acting with the commutator \(\mathcal {L}_{S_2}\) on some operator \(\varvec{O}_{S_1}\). In their intersection, some subset \(S_-\) becomes the identity, and some subset \(S_{f}\) remains occupied. For Pauli strings, the fixed subset \(S_{f}\) must be non-empty; in the Fermionic case, the fixed subset \(S_{f}\) may be empty

4.3.2 Cancellation and collision due to larger overlap

The case with cancellation requires delicate notations to handle. Suppose we lose some set in the overlap \( S_- \subset S_1\cap S_2\) due to collision and gain a new set \(S_2/S_1=:S_+\) (Fig. 10). The combinatorics will be organized by the size of the fixed set \(k_{f}:=\left| {S_{f}} \right| = 1,\ldots , k\).

Proposition II.3.1

(Fixed \(k_f\)). For a value of \(\left| {S_{f}} \right| =k_{f} \in \{ 1,\ldots , k\}\),

where the indicator \(\mathbb {1}(S_2\sim \gamma )\) checks if the set \(S_2\) coincides with the support of \(\gamma \) and

To connect to our notation in the main text, for \(k_f= 0\), this is what we defined as the global norm \(\left\| {\varvec{H}} \right\| _{(0),2} = \left\| {\varvec{H}} \right\| _{(global),2}\); for \(k_f= 1\), this is what we defined as the local norm \(\left\| {\varvec{H}} \right\| _{(1),2} = \left\| {\varvec{H}} \right\| _{(local),2}\). The norms for \(k_f\ge 2\) are more of a proof artifact. To be careful with the distinction between an operator \(\varvec{O}\) and its local component \(\varvec{O}_{S}\), we first note the following bound.

Fact II.4

For any set S and operator \(\varvec{O}\), we have

Proof of Fact II.4

Use monotonicity of partial trace (Fact II.3), i.e., the conditional expectation \(E_s\) is norm non-increasing. The last factor \(2^{\left| {S} \right| }\) is due to a brutal triangle inequality. \(\quad \square \)

Proof of Proposition II.3.1

The first inequality uses that \(\Vert {\left[ \mathcal {L}_{\gamma }[\varvec{O}_{S_1}]\right] _S} \Vert _{p}= \Vert {\prod _{s \in S_f} (1-E_s) \mathcal {L}_{\gamma }[\varvec{O}_{S_1}]} \Vert _{p}\le 2^{\left| {S_f} \right| }\Vert {\mathcal {L}_{\gamma }[\varvec{O}_{S_1}]} \Vert _{p}\) via Fact II.4. The second inequality evaluates \(\sum _{\gamma \sim S} \Vert {\varvec{H}_{\gamma }} \Vert = b_{S}\) and uses Cauchy-Schwarz w.r.t. the sum over sets \(S_{f}, S_+, S_0\) associated with a given set S. The third inequality uses Cauchy-Schwarz w.r.t. the sum over sets \(S_-\). We also evaluate the elementary sum (the last inequality here uses that the largest term is attained at \(\left| {S_+} \right| = k- \left| {S_f} \right| \)).

The equality rearranges the sum. The last inequality uses the following estimates

and

These, together with the hidden constants \((\cdot )(\cdot )\), give the ultimate prefactors. \(\square \)

We can now prove the main lemma by summing over the set sizes \(k_f = 1,\ldots , k\).

Proof of Lemma II.3

The second equality presents the sets \(S_1,S_2\) by the decomposition \(S_{f},S_-,S_+,S_0\) and isolates the sum over \(k_f\). The last inequality might look intimidating, but it is actually a triangle inequality (over values \(\left| {S_{f}} \right| \) ) for certain 2-norm

We may now use a variant of Proposition II.3.1 with an additional sum over an abstract set \(\sum _\alpha \). The derivation is analogous by keeping the sum at the innermost layer (sticking to the operator \(\varvec{O}^\alpha \)), with the replacement

This is the advertised result. \(\quad \square \)

4.4 Bounds for \(g'\)-th order and beyond

The previous section evaluates the g-th order terms \(\mathcal {E}_g\). This section takes care of the last term in the Taylor expansion \(\mathcal {E}_{\ge g'}\). To ease notation, we set the dummy variable to be \(g'\rightarrow g\). It has infinite-order dependence on time, so we have to tweak the calculations. Recall (3.1),

The first inequality exchanges the summation order, applies the triangle inequality, integrates over time, and removes the unitary conjugations by unitary invariance of p-norms. The second inequality is a similar calculation to (3.2). We use Hypercontractivity, pull the p-norm inside the sum, and symmetrize the sum by completing the exponential for \(\gamma _{g-2}\cdots \gamma _0\).

The only difference from (3.2) is the outer-most sum outside the square root.

Lemma II.5

(Sum outside the square-root).

where

We can evaluate the bound using Lemma II.5 for the outer-most sum \(\sum _{\gamma _{g-1}}^{\Gamma }\) and Lemma II.3 for \(\gamma _{g-2},\cdots \gamma _1\)

The last inequality absorbs constants into \(c'(k) \)

In other words, the higher-order time dependence forces us to apply triangle inequality for the outer layer sum; fortunately, we can still use Lemma II.3 for the inner sums. These give the different prefactor \(c'(k)\).

Proof of Lemma II.5

The calculation is analogous to Lemma II.3. We define a slightly different quantity

that will organize the combinatorics (the analog of the number \(k_f\) in Lemma II.3). We first rearrange the expression in terms of the subsets \(S_+,S_-,S_0,S_f\).

The first inequality parameterizes the sets \(S_1 = S_{f}S_-S_0\) that could give rise to S after taking the commutator \(\mathcal {L}_{\gamma }\). The factor \(2^{\left| {S_{f}} \right| }\) is due to Fact II.4. The second inequality is a triangle inequality to postpone the sum over \(k' = \left| {S_{f}} \right| +\left| {S_-} \right| \).

Next, we use Cauchy-Schwarz to break the non-linear expression into individual pieces. This costs multiplicative constant overheads that depend only on k.

The first inequality is Cauchy-Schwarz, where the sum evaluates to

The second inequality is a triangle inequality for the sum over subsets \(S_-,S_{f}\subset S_2\), which then combines with the sum over \(S_2\). The fifth inequality is Cauchy-Schwarz. Lastly, we evaluate the combinatorial factors for each term

and

These give the advertised result. \(\quad \square \)

4.5 Proof of Theorem II.1

Proof

For a short time \(\tau \), we arrange and perform the last integral using estimate \(\int (\tau ')^{g-1} d\tau '\le \tau '^{g}\)

In the second inequality we call the bounds for each g-th order (3.3) and the \(g'\)-th order (3.4) for a good value of

This is possible as long as the following holds.

Constraint II.5.1

\( (\frac{1}{b_p\tau })^{1/k}\ge \textrm{e}(\ell +3). \)

Then, the total Trotter error at a long time \(t = r \cdot \tau \) is bounded by a telescoping sum

At the second line we restrict to sufficiently large values of r that the first term dominates (Footnote 12).

Constraint II.5.2

\((\frac{1}{b_p\tau })^{1/k} \ge \textrm{e}\ln \left( \frac{c_2}{c_1} (\frac{1}{b_p\tau })^{\ell +1} \right)\).

The last inequality isolates the p-dependence and we use \(C_p = p-1\le p\).

Next, for each value of r,

Via Markov’s inequality, this gives concentration for its singular values (or over any 1-design inputs)

Choose

which explicitly evaluates to

We also need to comply with both Constraint II.5.1 and Constraint II.5.2, which summarize (Footnote 13) to

The constant x is the unique solution to the transcendental equation

Rearrange to obtain

And recall the explicit values

and

The above expressions for gate count are for numerical evaluation; for comprehension, use \(\Omega (\cdot )\) to suppress functions of \(k,\ell \) (such as the number of stages \(\Upsilon \)) and note the local norms are decreasing with \(k'\).

The first term dominates for large time, system size, and error (fixing the value of failure probability \(\delta \)). The gate complexity is given by \(G = r\cdot \Upsilon \cdot \Gamma \). This is the advertised result. \(\quad \square \)

4.5.1 Constant overhead improvement from another hypercontractivity

One may consider directly applying the existing Hypercontractivity \(\left\| {\varvec{F}} \right\| _{\bar{p}}^2 \le \sum _{S} C_p^{\left| {S} \right| } \left\| {\varvec{F}_S} \right\| _{\bar{2}}^2\) (Proposition II.1). However, one needs to go through the same combinatorial estimates, with minor constant-overhead improvements from replacing \(\left\| {\varvec{O}_{S_1}} \right\| _{p}^2\rightarrow \left\| {\varvec{O}_{S_1}} \right\| _{2}^2\) and discarding Fact II.4. Unfortunately, what comes into the ultimate quantity \(\left\| {\varvec{H}} \right\| _{(local),2}\) is the spectral norm \(\Vert {\varvec{H}_{\gamma }} \Vert \) coming from Hölder’s inequality

and it requires more accounting to get better estimates.

4.6 Spin models at a low particle number

In many Hamiltonians, each term \(\varvec{H}_{\gamma }\) preserves the particle number and the total Hilbert space decomposes into a direct sum of subspaces labeled by their particle number. The input state may have a known particle number.
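To make the block structure concrete, the following sketch builds a small spin system and checks that a hopping term commutes with the projector onto a fixed particle number sector, whereas a lone raising operator does not. This is an illustration under our own conventions (occupied site \(= |1\rangle \), the names `embed`, `hop`, and `raise_only` are hypothetical), not the basis expansion used in this section.

```python
import numpy as np
from functools import reduce
from math import comb

I2 = np.eye(2)
SP = np.array([[0.0, 0.0], [1.0, 0.0]])  # raising operator |1><0|
SM = SP.T                                # lowering operator |0><1|
NUM = np.diag([0.0, 1.0])                # single-site occupation |1><1|

def embed(op, site, n):
    """Place a single-site operator at `site` in an n-site tensor product."""
    return reduce(np.kron, [op if j == site else I2 for j in range(n)])

n, m = 4, 2
N_total = sum(embed(NUM, j, n) for j in range(n))

# Projector onto the m-particle subspace (N_total is diagonal in this basis).
P_m = np.diag(np.isclose(np.diag(N_total), m).astype(float))

# Hopping between sites 0 and 2: one raising and one lowering operator.
hop = embed(SP, 0, n) @ embed(SM, 2, n)
hop = hop + hop.T  # add the Hermitian conjugate (matrices are real)

# A lone raising operator changes the particle number.
raise_only = embed(SP, 0, n)

commutes = bool(np.allclose(hop @ P_m, P_m @ hop))
raise_commutes = bool(np.allclose(raise_only @ P_m, P_m @ raise_only))
dim_m = int(round(np.trace(P_m)))
print(commutes, raise_commutes, dim_m, comb(n, m))
```

The sector dimension equals the binomial coefficient \(\binom{n}{m}\), which is the normalization \(\textrm{Tr}[\varvec{P}_m]\) appearing in the normalized projector.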

In this section, we will present an appropriate notion of concentration for input states drawn randomly from a fixed particle number subspace. Formally, denote the m-particle subspace by the orthogonal projector

then particle number preserving means

We need to first define the appropriate k-locality in this case by expanding the Hamiltonian in the basis

k-locality in this basis is defined by

Note that particle number preservation forces the numbers of raising and lowering operators to match, \(\left| {S_+} \right| =\left| {S_-} \right| \). This expansion is motivated by an auxiliary product state \(\varvec{\rho }_{\frac{m}{n}}\) that closely relates to the normalized subspace projector \(\bar{\varvec{P}}_m = \varvec{P}_m/\textrm{Tr}[\varvec{P}_m]\). Intuitively, the operator \(\varvec{O}^{\eta }\) is the analog of Pauli \(\varvec{\sigma }^z\) in a biased background

See Sect. 2.3 for the details on the construction. Here, we present the concentration result for Trotter error.

Proposition II.5.1

(Trotter error in k-local models). To simulate a number preserving k-local Hamiltonian using the \(\ell \)-th order Suzuki formula on the m-particle subspace \(\varvec{P}_m\), the gate complexity

where the quantities \(\left\| {\varvec{H}} \right\| _{(global),2}\) and \(\left\| {\varvec{H}} \right\| _{(local),2} \) are defined w.r.t. (3.5).

Note that we have dropped the parameter s in \(\left\| {\cdot } \right\| _{p,\bar{\varvec{P}}_m,s}\) since every term commutes with \(\varvec{P}_m\) (and the auxiliary state \(\varvec{\rho }_{\frac{m}{n}}\)).

Proof

The result quickly follows by converting to the p-norm w.r.t. the auxiliary product state \(\varvec{\rho }_{\eta }\) defined by the filling ratio \(\eta = \frac{m}{n}\). For \(\varvec{F}= [\varvec{\mathcal {E}} (\varvec{H}_1,\ldots ,\varvec{H}_\Gamma , t)]_{g}\),

Some technical notes: Hölder’s inequality still works for the \(\varvec{\rho }_{\eta }\)-weighted norms (see Footnote 14), \(\left\| {\varvec{H}_{\gamma } \varvec{O}} \right\| _{p,\varvec{\rho }_{\eta }}\le \left\| {\varvec{O}} \right\| _{p,\varvec{\rho }_{\eta }} \Vert {\varvec{H}_{\gamma }} \Vert \) (which need not be true for general \(\varvec{\rho }\)); if \(\varvec{O}\) is particle number preserving, then \([\varvec{O}]_{S}\) is also particle number preserving (see Footnote 15). \(\quad \square \)

Via Markov’s inequality (plug \(\varvec{\rho }=\bar{\varvec{P}}_m \) into Proposition II.0.1), we obtain concentration.

Corollary II.5.1

Draw the input state from the \(m = \eta n\)-particle subspace; then

4.7 k-locality for fermions

Analogously, we generalize to Hamiltonians with Fermionic terms. We begin with defining k-locality for Fermionic systems. Suppose the particle-number preserving Fermionic Hamiltonian can be written as

Again, particle number preservation enforces \(\left| {S_+} \right| =\left| {S_-} \right| \) (see Footnote 16). Recall the second quantization commutation relations (following [18])

and

Compared with k-local Paulis, the only difference for the Fermionic case is (3.7): commuting two Fermionic operators on the same site \(\ell \) can produce an identity \(\varvec{I}_{\ell }\). This would add an extra term in our effective 2–2 norm calculation (Corollary II.3)

where the “global” 2-norm \(\left\| {\cdot } \right\| _{(0),2}\) only contains Fermionic operators

Intuitively, when identity is produced at the overlapping site, more terms may collide, i.e., add coherently. See Sect. 4.2 for an example where this term is necessary. Otherwise, the rest of the calculation is identical (\(\lambda '(k)\) remains the same). Note that we would use a Fermionic version of Fact II.4, which can be shown by a gauge transformation argument.

Proposition II.5.2

(k-local Fermionic Hamiltonians). To simulate a k-local, particle number preserving Fermionic Hamiltonian using the \(\ell \)-th order Suzuki formula on the m-particle subspace \(\varvec{P}_m\), the gate complexity

where \(\left\| {\varvec{H}} \right\| _{(global),2}\) and \(\left\| {\varvec{H}} \right\| _{(local),2} \) are defined w.r.t. (3.6).

Corollary II.5.2

Draw the input state from the \(m = \eta n\)-particle subspace; then

5 Optimality for First-order and Second-order Formulas

We demonstrate the optimality of our p-norm estimates for a particular 2-local Hamiltonian, at short times, for the first- and second-order Lie-Trotter-Suzuki formulas. The \(k\ge 2\) cases can also be constructed analogously. Consider the Hamiltonian

for the first-order Trotter formula

We can exactly compute its 2-norm due to the orthogonality of Paulis

For our upper bounds (3.3),

which means that, when all couplings have equal strength \(\alpha _{ij}=1\),

It is less obvious how to calculate its p-norm or operator norm.

To obtain tight p-norm and spectral norm estimates, we construct another Hamiltonian on three sets of qubits \(\mathcal {H}= \mathcal {H}_{S_1}\otimes \mathcal {H}_{S_2}\otimes \mathcal {H}_{S_3}\)

The commutator evaluates to a factorized commuting sum

Its p-norms can be obtained via the central limit theorem at large \(\left| {S_1} \right| , \left| {S_2} \right| , \left| {S_3} \right| \)

where we recall that the p-norm of a standard Gaussian (the p-th root of its p-th absolute moment) scales as \(\left| {g} \right| _p=\theta (\sqrt{ p } )\). Now, let \(\left| { S_1} \right| =\left| { S_2} \right| =\left| { S_3} \right| =\theta (n)\); then it saturates our first-order p-norm upper bound (3.3).

At the same time, its spectral norm

matches the triangle inequality bound in [18].
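The gap between sum-of-squares and triangle-inequality scaling already appears in the simplest commuting example. The sketch below is not the Hamiltonian constructed above; it takes \(\varvec{A} = \sum _i \varvec{\sigma }^z_i\) on n qubits as an assumed toy model, for which the normalized p-norm reduces to a binomial average. It compares that norm with the \(\sqrt{(p-1)n}\) scaling expected from hypercontractivity and with the spectral norm n.

```python
import numpy as np
from math import comb

def normalized_p_norm_of_sum_z(n, p):
    """Normalized Schatten p-norm of A = sum_i Z_i on n qubits.

    A is diagonal with eigenvalue n - 2w on every bit string of Hamming
    weight w, so the norm is an average over the binomial distribution.
    """
    weights = np.array([float(comb(n, w)) for w in range(n + 1)]) / 2.0 ** n
    eigs = np.array([float(n - 2 * w) for w in range(n + 1)])
    return float(np.sum(weights * np.abs(eigs) ** p) ** (1.0 / p))

n = 100
two_norm = normalized_p_norm_of_sum_z(n, 2)  # sum of squares: sqrt(n)
spectral_norm = float(n)                     # triangle inequality is tight

orders = (2, 4, 8, 16)
p_norms = [normalized_p_norm_of_sum_z(n, p) for p in orders]
for p, val in zip(orders, p_norms):
    print(f"p={p:2d}  ||A||_p={val:7.2f}  sqrt((p-1) n)={np.sqrt((p - 1) * n):7.2f}")
print("spectral norm:", spectral_norm)
```

For moderate p, the p-norm stays at the \(\sqrt{n}\) scale, while the spectral norm, attained only on the all-up and all-down strings, grows linearly in n.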

5.1 Second-order Suzuki formulas

For the second-order Trotter error, recall the expansion [18, Appendix L],

with the same Hamiltonian (4.1). Due to the symmetry, we know \([\varvec{B},[\varvec{B},\varvec{A}]]\) has the same p-norm as \([\varvec{A},[\varvec{A},\varvec{B}]]\). Conveniently, the factor \(\frac{1}{2}\) allows us to consider only one term (at most losing a constant overhead \(\frac{1}{2}\))

This converges to a function of three independent Gaussians (note that \(s_3\) and \(s'_3\) are two dummy indices in the same set \(S_3\))

matching our p-norm bound. The spectral norm

again matches the triangle inequality bounds in [18].

5.2 Fermionic Hamiltonians

To demonstrate the need for the extra term for Fermionic Hamiltonians \(\left\| {\varvec{H}_{ferm}} \right\| _{(0),2}\), consider a Hamiltonian of the form

The commutator evaluates to

And for the second-order Suzuki,

6 Preliminary: Matrix-Valued Martingales

Concentration inequalities are well known for i.i.d. sums of random numbers. Unfortunately, phenomena in the wild are rarely sums, identically distributed, or independent, yet they nonetheless concentrate around the mean. Among the zoo of extensions that attempt to capture realistic randomness, a (scalar-valued) martingale describes a random process whose future increments have zero mean conditioned on the past. Martingales constitute a class more flexible than i.i.d. sums that will serve our purpose.

For a minimal technical introduction (following Tropp [57] and Huang et al. [24]), consider a filtration of the master sigma algebra \(\mathcal {F}_0\subset \mathcal {F}_1 \subset \mathcal {F}_2 \cdots \subset \mathcal {F}_t \subset \cdots \subset \mathcal {F}\), where for each sigma algebra \(\mathcal {F}_j\) in the filtration we denote the associated conditional expectation by \(\mathbb {E}_j\). Intuitively, we can think of the index t as the “time”, where the sigma algebra \(\mathcal {F}_t\) hosts the possible events happening up to time t. More precisely, a martingale is a sequence of random variables \(Y_t\) adapted to the filtration \(\mathcal {F}_t\) such that

In other words, the present depends on the past (“causality”), and tomorrow has the same expectation as today (“status quo”). For simplicity, we often subtract the mean to obtain a martingale difference sequence
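In standard notation, the two conditions and the difference sequence can be written as follows. This is a sketch of the textbook definition in our own symbols; in particular, \(D_t\) is a name introduced here for the difference sequence, and \(\sigma (Y_t)\) denotes the sigma algebra generated by \(Y_t\).

```latex
% Adaptedness ("causality") and the martingale property ("status quo")
\sigma(Y_t) \subset \mathcal{F}_t ,
\qquad
\mathbb{E}_{t-1} Y_t = Y_{t-1} .

% Martingale difference sequence: conditionally zero-mean increments
D_t := Y_t - Y_{t-1} ,
\qquad
\mathbb{E}_{t-1} D_t = 0 .
```

An i.i.d. sum of zero-mean variables is the special case in which the increments \(D_t\) are independent and identically distributed.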

6.1 Useful norms and recursive bounds for matrices

In our case, our goal is to quantify the error between the ideal unitary \(\varvec{U}=\textrm{e}^{\textrm{i}\varvec{H}t}\) and the product formula \(\varvec{S}\) where the Hamiltonian is drawn randomly. This can be framed as a matrix-valued martingale

where the conditional expectation \(\mathbb {E}_{t-1}\) acts entrywise. In other words, the randomness here has both classical (the expectation \(\mathbb {E}\)) and quantum (the trace \(\textrm{Tr}[\cdot ]\)) sources. In comparison, our previous discussion on Pauli strings does not have this extra layer of classical randomness, which gives the results a slightly different flavor.

Historically, the earliest general results on matrix-valued martingales were established in [36, 37, 45], and more recent works and applications include [13, 14, 24, 26, 44, 57]. Throughout this work, our main workhorse is again uniform smoothness (in a slightly different format from uniform smoothness for subsystems (Proposition II.1.1)). It is not the tightest kind of martingale inequality but arguably the simplest and most robust when matrices are bounded (or with Gaussian coefficients via the central limit theorem). Analogously to Proposition II.1.1, these inequalities deliver sum-of-square (“incoherent”) estimates sharper than the triangle inequality, which is linear (“coherent”).

To study concentration of matrices, we first pick a suitable norm. The error between the ideal unitary and the product formula can be quantified in two ways with different operational meanings. For both norms, uniform smoothness streamlines our concentration results (Sects. 6, 7).

6.1.1 The operator norm

The operator norm quantifies the error for the worst input state

If we are interested in concentration of the operator norm, it suffices to control its moments by the expected Schatten p-norm
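The reason a Schatten p-norm can stand in for the operator norm is the deterministic sandwich \(\Vert \varvec{X}\Vert \le \Vert \varvec{X}\Vert _p \le d^{1/p}\Vert \varvec{X}\Vert \), so that \(p \sim \log d\) loses only a constant factor. The sketch below checks this on an arbitrary random matrix; the test matrix and helper name are assumptions for illustration, and the expectation over randomness is not modeled here.

```python
import numpy as np

def schatten_norm(X, p):
    """Unnormalized Schatten p-norm: (sum of singular values^p)^(1/p)."""
    s = np.linalg.svd(X, compute_uv=False)
    return float(np.sum(s ** p) ** (1.0 / p))

rng = np.random.default_rng(1)
d = 128
X = rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))
op_norm = float(np.linalg.norm(X, 2))  # largest singular value

tol = 1 + 1e-12  # slack for floating-point rounding
checks = []
for p in (2, 4, 2 * int(np.ceil(np.log(d)))):
    sp = schatten_norm(X, p)
    # Sandwich: ||X|| <= ||X||_p <= d^(1/p) ||X||
    checks.append(bool(op_norm <= sp * tol and sp <= d ** (1.0 / p) * op_norm * tol))
    print(f"p={p:2d}  ||X||_p / ||X|| = {sp / op_norm:.3f}  (at most {d ** (1.0 / p):.3f})")
```

With \(p = 2\lceil \ln d\rceil \), the factor \(d^{1/p}\) is at most \(\sqrt{\textrm{e}}\), independent of the dimension.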

To bound the RHS, the workhorse is the following bound with only a martingale requirement (“conditionally zero-mean”).

Fact V.1

(Uniform smoothness for Schatten classes [24, Proposition 4.3]). Consider random matrices \(\varvec{X}, \varvec{Y}\) of the same size that satisfy \(\mathbb {E}[\varvec{Y}|\varvec{X}] = 0\). When \(2 \le p\),

The constant \(C_p = p - 1\) is the best possible.

Uniform smoothness for Schatten classes in another form (Fact I.4) was proven by [55] with optimal constants determined by [4]. The above martingale form is due to [42, 46] and [24, Proposition 4.3]. This can be alternatively seen as a special case of Proposition II.1.1 by interpreting the classical expectation as a trace (see Footnote 17).
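A quick numerical sanity check of uniform smoothness is possible in the simplest conditionally zero-mean setting. The sketch below assumes the inequality takes its standard form \((\mathbb {E}\Vert \varvec{X}+\varvec{Y}\Vert _p^p)^{2/p} \le (\mathbb {E}\Vert \varvec{X}\Vert _p^p)^{2/p} + C_p (\mathbb {E}\Vert \varvec{Y}\Vert _p^p)^{2/p}\) and takes \(\varvec{X}\) fixed and \(\varvec{Y} = \varepsilon \varvec{Z}\) with a fair random sign \(\varepsilon \), so the expectation is an exact two-term average. The matrices and helper names are illustrative assumptions.

```python
import numpy as np

def schatten_norm(X, p):
    """Unnormalized Schatten p-norm: (sum of singular values^p)^(1/p)."""
    s = np.linalg.svd(X, compute_uv=False)
    return float(np.sum(s ** p) ** (1.0 / p))

def smoothness_gap(X, Z, p):
    """RHS minus LHS of uniform smoothness for Y = eps * Z, eps a fair sign.

    With X and Z fixed, E[Y | X] = 0 and the average over eps is exact:
    LHS = (E ||X + Y||_p^p)^(2/p), RHS = ||X||_p^2 + (p - 1) ||Z||_p^2.
    """
    avg = 0.5 * schatten_norm(X + Z, p) ** p + 0.5 * schatten_norm(X - Z, p) ** p
    lhs = avg ** (2.0 / p)
    rhs = schatten_norm(X, p) ** 2 + (p - 1) * schatten_norm(Z, p) ** 2
    return rhs - lhs

rng = np.random.default_rng(2)
gaps = []
for p in (2, 3, 4, 6, 10):
    for _ in range(20):
        X = rng.normal(size=(8, 8))
        Z = rng.normal(size=(8, 8))
        gaps.append(smoothness_gap(X, Z, p))
print("smallest gap:", min(gaps))
```

At \(p=2\) the gap vanishes up to rounding (the parallelogram identity), and for larger p it is nonnegative, consistent with the sum-of-squares structure of the bound.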

6.1.2 Fixed input state

Sometimes we only care about a fixed but arbitrary input state. This deserves another error metric (following [14]) that differs from the spectral norm by the order of quantifiers

Uniform smoothness for this norm follows.

Corollary V.1.1

(Uniform smoothness, fixed input [13]). Consider random matrices \(\varvec{X}, \varvec{Y}\) of the same size that satisfy \(\mathbb {E}[\varvec{Y}|\varvec{X}] = 0\). When \(2 \le p\),

with constant \(C_p = p - 1\).

Proof

This can be seen by rewriting the \(\ell _2\)-norm as a p-norm

\(\square \)

Note that pure inputs capture general mixed inputs \(\varvec{\rho }\) by convexity

The second inequality is a telescoping sum. The third equality uses that the operator norm equals the 1-norm, \(\Vert {\cdot } \Vert =\left\| {\cdot } \right\| _{1}\), for rank 1 matrices.

6.2 Reminders of useful facts

Before we turn to the proof, let us remind ourselves of the useful properties for the underlying norms \({\left| \hspace{-1.0625pt}\left| \hspace{-1.0625pt}\left| \cdot \right| \hspace{-1.0625pt}\right| \hspace{-1.0625pt}\right| }_{*}:={\left| \hspace{-1.0625pt}\left| \hspace{-1.0625pt}\left| \cdot \right| \hspace{-1.0625pt}\right| \hspace{-1.0625pt}\right| }_{p,q}, {\left| \hspace{-1.0625pt}\left| \hspace{-1.0625pt}\left| \cdot \right| \hspace{-1.0625pt}\right| \hspace{-1.0625pt}\right| }_{\text {fix},p}\) for \(p,q\ge 2\). They are largely inherited from the (non-random) Schatten p-norm. Following [13],

Fact V.2

(Non-commutative Minkowski). Each of the expected moments satisfies the triangle inequality and thus is a valid norm. For any random matrices \(\varvec{X}, \varvec{Y}\)

Fact V.3

(Operator ideal norms). For a deterministic operator \(\varvec{A}\) and a random operator \(\varvec{X}\)

Fact V.4