Abstract

We characterise the multiplicative chaos measure \({\mathcal {M}}\) associated to planar Brownian motion introduced in Bass et al. (Ann Probab 22(2):566–625, 1994), Aïdékon et al. (Ann. Probab. 48(4), 1785–1825, 2020) and Jego (Ann Probab 48(4):1597–1643, 2020) by showing that it is the only random Borel measure satisfying a list of natural properties. These properties only serve to fix the average value of the measure and to express a spatial Markov property. As a consequence of our characterisation, we establish the scaling limit of the set of thick points of planar simple random walk, stopped at the first exit time of a domain, by showing the weak convergence towards \({\mathcal {M}}\) of the point measure associated to the thick points. In particular, we obtain the convergence of the appropriately normalised number of thick points of random walk to a nondegenerate random variable. The normalising constant is different from that of the Gaussian free field, as conjectured in Jego (Electron J Probab 25:39, 2020). These results cover the entire subcritical regime. A key new idea for this characterisation is to introduce measures describing the intersection between different independent Brownian trajectories and how they interact to create thick points.



1 Introduction and Main Results

The study of exceptional points of planar random walk has a long history. In 1960, Erdős and Taylor [ET60] showed that the number of visits of the most visited site of a planar simple random walk after n steps is asymptotically between \((\log n)^2/(4\pi )\) and \((\log n)^2/\pi \) and conjectured that the upper bound is sharp. This conjecture was proven forty years later by Dembo, Peres, Rosen and Zeitouni in the landmark paper [DPRZ01]. These authors also considered the set of thick points of the walk, where the walk has spent a time at least a given fraction of \((\log n)^2\), and computed its asymptotic size at the level of exponents. Their proof is based on planar Brownian motion and uses KMT-type approximations to transfer the results to random walks with increments having finite moments of all orders. [Ros05] provided another proof of these results without the use of Brownian motion and [BR07] extended them to planar random walks with increments having a finite moment of order \(3+\varepsilon \). [Jeg20b] streamlined the arguments by exploiting the links between the local times and the Gaussian free field (GFF) and extended the above results to walks with increments of finite variance and to more general graphs. [AHS20] and [Jeg20a] simultaneously constructed a random measure supported on the set of thick points of Brownian motion, extending results of [BBK94]. Finally, [Oka16] studied the most visited points of the inner boundary of the random walk range.

A closely related (but in fact distinct, as we will argue below) area of research is the study of planar random walk run until a time close to the cover time. It has become very active since Dembo, Peres, Rosen and Zeitouni [DPRZ04] found the leading order term of the cover time for both planar Brownian motion and random walk, settling a conjecture of Aldous [Ald89]. Since then, the understanding of the behaviour of the walk in this regime has considerably improved. We mention a few works. On the torus, the multifractal structure of the set of thin/thick/late points has been studied [DPRZ06, CPV16, Abe15], the subleading order of the cover time has been established [Abe21, BK17] and even the tightness of the cover time associated to Brownian motion on the 2D sphere is known [BRZ19]. For a walk resampled every time it hits the boundary of a planar domain, the scaling limit of the set of thin/thick/late points has been established [AB22]. The picture is even more complete on binary trees, where the scaling limit of the cover time [CLS21, DRZ21] as well as the scaling limit of the set of extreme points having maximal local times [Abe18] have been derived.

The current paper is closer to the setup of the first series of articles, where the walk is stopped at the first exit time of a planar domain. Its aim is to establish the scaling limit of the thick points of planar simple random walk stopped at the first exit time of a domain, by showing that the point measure associated to the thick points converges to a nondegenerate random measure \({\mathcal {M}}\). This gives much finer information on the set of thick points and, as a corollary, we obtain the convergence of the appropriately normalised number of thick points of random walk to a nondegenerate random variable, considerably improving the previously known results mentioned above. In that sense, it provides the final answer to the question raised by Erdős and Taylor.

In this regime, a comparison to the GFF is too rough, in contrast with the regime corresponding to times closer to the cover time; indeed, in the latter case the limiting measure is related to the so-called Liouville measure of the GFF (see [AB22] and see [RV10, DS11, RV11, Sha16, Ber17] for subcritical Liouville measures and Gaussian multiplicative measures). In our delicate setting of a limited time horizon, the limiting measure \({\mathcal {M}}\), which we call “Brownian multiplicative chaos” by analogy with Gaussian multiplicative chaos measures, was introduced in [BBK94, AHS20, Jeg20a] and has so far remained fairly mysterious. On the one hand, it shares many similarities with the Liouville measure, such as its carrying dimension and conformal invariance. On the other hand, the measure \({\mathcal {M}}\) is very different in the sense that it is carried and entirely determined by the random fractal composed of a Brownian trace. One of the main results of this paper is a characterisation of the law of the measure \({\mathcal {M}}\). We show that it is the only random Borel measure satisfying a list of natural properties which fix its average value and express a spatial Markov property. This demystifies the measure \({\mathcal {M}}\) and shows its universal nature.

We start by presenting our results on random walk. We then discuss our characterisation of Brownian multiplicative chaos.

In this paper, we will consider simply connected domains with a boundary composed of a finite number of analytic curves. Such a continuous domain will be called a “nice domain” and a boundary point where the boundary is locally analytic will be called a “nice point”.

1.1 Scaling limit of thick points of planar random walk

We will extend the definition of the integer part function by setting for \(x=(x_1,x_2) \in {\mathbb {R}}^2\), \(\left\lfloor x \right\rfloor = (\left\lfloor x_1 \right\rfloor ,\left\lfloor x_2 \right\rfloor )\). For a nice domain U, a reference point \(x_0 \in U\) and a large integer N, let \(U_N\) and \(\partial U_N\) be discrete approximations of U and \(\partial U\) defined as follows:

and

This intricate definition of \(U_N\) is just to avoid issues with “thin” boundary pieces (see Footnote 1). For \(z \in \partial U\), we will abusively write \(\left\lfloor Nz \right\rfloor \) for any point of \(\partial U_N\) closest to z. Let \((X_t)_{t \ge 0}\) be a continuous time simple random walk on \({\mathbb {Z}}^2\) with jump rate one (at every vertex, it waits an exponential time with parameter one before jumping) and define its hitting time of \(\partial U_N\) and local times:

For \(x, z \in {\mathbb {C}}\), we will denote by \({\mathbb {P}}^{U_N}_x\) the probability measure associated to the walk \((X_t,t \le \tau _{\partial U_N})\) starting at \(X_0 = \left\lfloor x \right\rfloor \) and \({\mathbb {P}}_{x,z}^{U_N} := {\mathbb {P}}_x^{U_N} \left( \cdot \left| X_{\tau _{\partial U_N} } = \left\lfloor z \right\rfloor \right. \right) \).
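To make the discrete objects concrete, here is a minimal Python sketch of the rate-one walk and its local times up to the exit time. It assumes, purely for illustration, that U is the unit disc and \(x_0 = 0\), and it replaces \(U_N\) by the lattice points strictly inside the disc of radius N, a simplification of the more careful definition of \(U_N\) above. The names `simulate_local_times` and `thick_points` are hypothetical, and the thickness threshold is left to the caller, since its precise form in terms of a, g and \(\log N\) is part of the definition of \(\mu ^{U,a}_{x_0;N}\).

```python
import random
from collections import defaultdict


def simulate_local_times(N, start=(0, 0), rng=None):
    """Run a rate-one continuous-time simple random walk on Z^2 from `start`
    until it first leaves the hypothetical lattice disc {x : |x| < N}.

    Returns (local_times, exit_point), where local_times maps each visited
    vertex to the total time spent there before the exit time.
    """
    rng = rng or random.Random()
    steps = [(1, 0), (-1, 0), (0, 1), (0, -1)]
    x, y = start
    local = defaultdict(float)
    while x * x + y * y < N * N:
        # wait an Exp(1) holding time at the current vertex ...
        local[(x, y)] += rng.expovariate(1.0)
        # ... then jump to a uniformly chosen nearest neighbour
        dx, dy = rng.choice(steps)
        x, y = x + dx, y + dy
    return dict(local), (x, y)


def thick_points(local, level):
    """Vertices whose local time is at least `level` (threshold chosen by the caller)."""
    return [v for v, t in local.items() if t >= level]
```

For instance, `simulate_local_times(50, rng=random.Random(0))` returns the local times of one excursion from the origin to the boundary of the disc of radius 50; feeding them to `thick_points` with a threshold of order \((\log N)^2\) isolates the candidate thick points.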

Let \(x_0 \in U\) and let \(z \in \partial U\) be a nice point. Let \(a \in (0,2)\) be a parameter measuring the thickness level,

be universal constants appearing in the asymptotic behaviour of the discrete Green function (see Lemma 3.1); here \(\gamma _{\textrm{EM}}\) stands for the Euler–Mascheroni constant. We define a random Borel measure \(\mu ^{U,a}_{x_0;N}\) on \({\mathbb {C}}\) by setting for all Borel sets \(A \subset {\mathbb {C}}\),

We also define the conditioned version \(\mu ^{U,a}_{x_0,z;N}\) of \(\mu ^{U,a}_{x_0;N}\) by replacing \({\mathbb {P}}^{U_N}_{Nx_0}\) by \({\mathbb {P}}^{U_N}_{Nx_0,Nz}\).

One of our main theorems is the following.

Theorem 1.1

For all \(a \in (0,2)\), the sequence \(\mu ^{U,a}_{x_0;N}, N \ge 1,\) (resp. \(\mu ^{U,a}_{x_0,z;N}, N \ge 1\)) converges weakly relative to the topology of weak convergence (resp. vague convergence) on U. Moreover, the limiting measure has the same distribution as \(e^{c_0a/g} {\mathcal {M}}^{U,a}_{x_0}\) (resp. \(e^{c_0a/g} {\mathcal {M}}^{U,a}_{x_0,z}\)) built in [BBK94, AHS20, Jeg20a].

In Sect. 1.2, we recall a precise definition of the above-mentioned Brownian multiplicative chaos measures \({\mathcal {M}}^{U,a}_{x_0}\) and \({\mathcal {M}}^{U,a}_{x_0,z}\).

We now emphasise the difficulties inherent to the random walk setting that are not present in the Brownian motion case considered in [BBK94, Jeg20a]. Theorem 1.1 looks very similar to [Jeg20a, Theorem 1.1] (see also [BBK94] for partial results), which studies flat measures \({\mathcal {M}}_\varepsilon , \varepsilon >0,\) supported on the set of thick points of planar Brownian motion. See Sect. 1.2 for more details about this. But let us emphasise that the approach of [Jeg20a] cannot be adapted to prove Theorem 1.1 and that a new strategy is needed. Indeed, the proof of [Jeg20a, Theorem 1.1] is based on the \(L^1\)-convergence of \(({\mathcal {M}}_\varepsilon (A), \varepsilon >0)\) for all Borel sets \(A \subset {\mathbb {C}}\). This strong form of convergence is crucial to the strategy in [Jeg20a]. Here, it is not even a priori clear how to build the random measures \(\mu ^{U,a}_{x_0,z;N}, N \ge 1\), on the same probability space so that \((\mu ^{U,a}_{x_0,z;N}(A), N\ge 1)\) converges in \(L^1\). For instance, coupling the random walks via the same Brownian motion through KMT-type couplings does not seem to be tractable, or is at least too rough. As announced above, our proof of Theorem 1.1 will rely on a characterisation of the law of Brownian multiplicative chaos, which we describe below.

We first mention however that Abe and Biskup [AB22] have recently established a result with a similar flavour but important differences. Indeed, they consider a random walk on a box with wired boundary conditions (so it is uniformly resampled on the boundary every time it touches the boundary) and run the walk up to a time proportional to the cover time. In this regime, the local times of the walk are very closely related to the Gaussian free field and indeed their limiting measure is the Liouville measure (in contrast to here).

A direct consequence of Theorem 1.1 is the convergence of the appropriately scaled number of thick points of the random walk. This answers a question raised in [Jeg20b] and considerably improves the previously known estimates on the fractal dimension [DPRZ01, Ros05, BR07, Jeg20b] of the set of thick points. For \(a \in (0,2)\), we denote

Recalling the definition (1.1) of g and \(c_0\), we have:

Corollary 1.1

For all \(a \in (0,2)\), the following convergence holds in distribution: under \({\mathbb {P}}^{U_N}_{Nx_0}\),

Moreover, the limit is nondegenerate, i.e. \({\mathcal {M}}^{U,a}_{x_0}(U) \in (0,\infty )\) a.s.

As mentioned in [Jeg20b], despite the strong link between the local times and the GFF, this shows a subtle difference in the structure of thick points of random walk compared to those of the GFF which cannot be observed through rougher estimates such as the fractal dimension. Indeed, the analogue of Corollary 1.1 with the local times replaced by half of the GFF squared uses a normalisation factor with \(\sqrt{\log N}\) instead of \(\log N\). See [BL19].

Remark 1.1

To ease the exposition we decided to focus on the measures \(\mu ^{U,a}_{x_0;N}\) defined above, but one can consider random measures on \({\mathbb {C}}\times {\mathbb {R}}\) defined by: for \(A \in {\mathcal {B}}({\bar{U}})\) and \(T \in {\mathcal {B}}({\mathbb {R}}\cup \{+\infty \})\),

Once the convergence of \(\mu ^{U,a}_{x_0;N}\) is established, it can be shown that \({\tilde{\mu }}^{U,a}_{x_0;N}, N \ge 1\), converges, relative to the topology of vague convergence on \({\bar{U}} \times ({\mathbb {R}}\cup \{+\infty \})\), to a product measure: \({\mathcal {M}}^{U,a}_{x_0}\) times an exponential measure. See [Jeg20a] for the case of local times of Brownian motion.

Finally, the convergence of thick points of random walk to Brownian multiplicative chaos opens the door to other scaling limit results. We mention the paper [ABJL21], which builds and studies a multiplicative chaos associated to the so-called Brownian loop soup. When the intensity of the loop soup is critical, [ABJL21] shows that the resulting chaos is closely related to the Liouville measure, elucidating connections between Brownian multiplicative chaos, the Gaussian free field and the Liouville measure. This identification of measures relies heavily on the scaling limit results of the current paper. A stronger form of convergence than what is stated in Theorem 1.1 is actually needed in [ABJL21]. This convergence is stated in Theorem 5.1 and is a by-product of our approach to Theorem 1.1. We preferred to defer the exposition of this result to Section 5 because it requires much more notation.

1.2 Brownian multiplicative chaos: background and extension

Background This section recalls the definition of the Brownian multiplicative chaos measure \({\mathcal {M}}^{U,a}_{x_0,z}\) and provides the extension of the results of [BBK94, AHS20, Jeg20a] that we need. We follow the construction of [Jeg20a] (see also [BBK94] for partial results and [AHS20] for a different construction). For a nice domain \(U \subset {\mathbb {C}}\) and \(x_0 \in U\), let \({\mathbb {P}}^U_{x_0}\) be the law under which \((B_t, t \le \tau _{\partial U})\) is a Brownian motion starting at \(x_0\) and stopped at the first exit time of U:

For \(x_0 \in U\) and a nice point \(z \in \partial U\), we will also consider the conditional law \({\mathbb {P}}_{x_0,z}^U := {\mathbb {P}}_{x_0}^U \left( \cdot \left| B_{\tau _{\partial U}} = z \right. \right) \) which is rigorously defined for instance in [AHS20, Notation 2.1]. For all \(x \in U\) and \(\varepsilon >0\), define the local time \(L_{x,\varepsilon }\) of the circle \(\partial D(x,\varepsilon )\) up to time \(\tau _{\partial U}\):

with the convention that \(L_{x,\varepsilon }=0\) if the disc \(D(x,\varepsilon )\) is not fully included in U. [Jeg20a, Proposition 1.1] shows that these local times are well-defined for all \(x \in U\) and \(\varepsilon >0\) simultaneously. For all parameter values \(a \in (0,2)\) measuring the thickness level, we can thus define the random measure

[Jeg20a] shows that for all \(a \in (0,2)\) and under \({\mathbb {P}}_{x_0,z}^U\), the previous measure converges in probability (relative to the topology of weak convergence) as \(\varepsilon \rightarrow 0\) to a nondegenerate random measure \({\mathcal {M}}^{U,a}_{x_0,z}\), our object of interest. Let us point out that this measure can also be constructed by exponentiating the square root of the local times \(L_{x,\varepsilon }\), justifying the name “Brownian multiplicative chaos”. This random measure is conformally covariant and, almost surely, it is nondegenerate, supported on the set of thick points of Brownian motion and its carrying dimension equals \(2-a\) (see e.g. [Jeg20a, Corollary 1.4]).
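The objects of this paragraph can be approximated numerically from a discretised path, which may help fix ideas. The Python sketch below makes several assumptions, all for illustration only: U is the unit disc, the Brownian path is an Euler discretisation with step `dt`, the circle local time is estimated by the occupation-density formula (time spent in the annulus \(\{\varepsilon - r \le |B_t - x| \le \varepsilon + r\}\) divided by 2r, with \(\sqrt{dt} \ll r \ll \varepsilon \) implicitly required), and the approximating measure is taken to have density \(\left| \log \varepsilon \right| \varepsilon ^{-a} {\textbf{1}}_{\{ \frac{1}{\varepsilon } L_{x,\varepsilon } \ge 2a \left| \log \varepsilon \right| ^2 \}}\), the normalisation that appears in (1.8). All function names are hypothetical; at accessible values of \(\varepsilon \) the output is far from the limit \(\varepsilon \rightarrow 0\) and only illustrates the definitions.

```python
import numpy as np


def brownian_path_in_disc(dt, rng, start=(0.0, 0.0)):
    """Euler discretisation of planar Brownian motion from `start` (inside the
    unit disc, our hypothetical choice of U), stopped at the first step outside."""
    pts = [np.array(start, dtype=float)]
    while pts[-1] @ pts[-1] < 1.0:
        pts.append(pts[-1] + np.sqrt(dt) * rng.standard_normal(2))
    return np.array(pts)


def circle_local_time(path, dt, x, eps, r):
    """Occupation-density estimate of L_{x,eps}: time spent within distance r
    of the circle of radius eps around x, divided by the annulus width 2r."""
    x = np.asarray(x, dtype=float)
    if np.hypot(x[0], x[1]) + eps >= 1.0:
        return 0.0  # convention: L_{x,eps} = 0 if D(x, eps) is not inside U
    d = np.linalg.norm(path[:-1] - x, axis=1)  # positions before the exit step
    return dt * np.count_nonzero(np.abs(d - eps) <= r) / (2 * r)


def flat_measure(path, dt, eps, r, a, grid_h, box):
    """Riemann-sum approximation, over grid cells of side grid_h in `box`, of
    |log eps| eps^{-a} * Leb{x : L_{x,eps} / eps >= 2a |log eps|^2}."""
    (x0, x1), (y0, y1) = box
    thr = 2 * a * np.log(eps) ** 2
    mass = 0.0
    for gx in np.arange(x0 + grid_h / 2, x1, grid_h):
        for gy in np.arange(y0 + grid_h / 2, y1, grid_h):
            if circle_local_time(path, dt, (gx, gy), eps, r) / eps >= thr:
                mass += grid_h ** 2  # area of the thick cell
    return abs(np.log(eps)) * eps ** (-a) * mass
```

A typical call is `flat_measure(brownian_path_in_disc(1e-4, np.random.default_rng(0)), 1e-4, 0.05, 0.005, 0.5, 0.05, ((-0.5, 0.5), (-0.5, 0.5)))`; the double loop is kept deliberately simple rather than vectorised.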

Extension In this paper, a crucial new idea will be to consider the “multipoint” analogue of this measure. We will denote by \({\mathcal {S}}\) the collection of sets

where \(r \ge 1\), for all \(i=1 \dots r\), \(D_i\) is a nice domain, \(x_i \in D_i\), \(z_i \in \partial D_i\) is a nice boundary point, and the \(z_i\)’s are pairwise distinct points (i.e. \(z_i \ne z_j\) for all \(i \ne j\)). If \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\), we will (with some abuse of notation) see the set \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\) as a triplet \({\mathcal {D}}, {\mathcal {X}}, {\mathcal {Z}}\) of domains, starting points and exit points. We will for instance write “\(D \in {\mathcal {D}}\)” when we mean that we pick a domain that occurs in \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\). Similarly, we will write \({\mathcal {D}}{\mathcal {X}}\) when we forget about the exit points.

We now define the multipoint analogue of \({\mathcal {M}}^{U,a}_{x_0,z}\). Let \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}= \{ (D_i,x_i,z_i), i = 1 \dots r\} \in {\mathcal {S}}\). For all \(i=1 \dots r\), we consider independent Brownian motions distributed according to \({\mathbb {P}}_{x_i,z_i}^{D_i}\) and we denote by \(L_{x,\varepsilon }^{(i)}\) their associated local times. For every thickness level \(a \in (0,2)\) and every Borel set \(A \subset {\mathbb {C}}\), we define

We emphasise that, in this definition, the thick points arise from the interaction of the different trajectories. In particular, the individual trajectories are not required to be a-thick. In fact, as we will see in Proposition 1.3, a single trajectory will typically be \(\alpha \)-thick where \(\alpha \) is uniformly distributed in [0, a]. Note also that the normalisation is the same as that of the individual measures (1.3), indicating that the different trajectories contribute in the same manner to the occurrence of thick points.

A rather simple modification of [Jeg20a, Theorem 1.1] shows:

Proposition 1.1

For all \(a \in (0,2)\), relative to the topology of weak convergence, the sequence of random measures \({\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}};\varepsilon }\) converges as \(\varepsilon \rightarrow 0\) to some random measure \({\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}\) in probability.

The proof of this result is contained in “Appendix A”. Let us note that \({\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}\) clearly vanishes almost surely if \(\bigcap _{i=1}^r D_i = \varnothing \). Section 1.4 investigates some further properties of this multipoint version of Brownian multiplicative chaos. In particular, we explain that we can express \({\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}\) in terms of the integral of the “intersection” of one-point Brownian multiplicative chaos measures

This “intersection measure” is a natural measure supported on the intersection of the set of thick points associated to each single Brownian motion with suitable thickness level. Further surprising properties of these measures are discussed in Section 1.4. See in particular Proposition 1.3.

Finally, we will consider the process of measures \(\left( {\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\right) \). We have already defined the one-dimensional marginals of this process. The definition of the finite-dimensional marginals is done in the following way: if \({\mathcal {D}}_j{\mathcal {X}}_j{\mathcal {Z}}_j \in {\mathcal {S}}, j =1 \dots J\), for all \((D,x_0,z)\) appearing in one of the \({\mathcal {D}}_j{\mathcal {X}}_j{\mathcal {Z}}_j\), we always use the same Brownian motion from \(x_0\) to z to define the measures \({\mathcal {M}}_{{\mathcal {X}}_j,{\mathcal {Z}}_j}^{{\mathcal {D}}_j,a}\). As before, for different triplets \((D,x_0,z)\), we use independent Brownian motions. In particular, if \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\cap {\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'} = \varnothing \), the measures \({\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}\) and \({\mathcal {M}}_{{\mathcal {X}}{'},{\mathcal {Z}}{'}}^{{\mathcal {D}}{'},a}\) are independent. This definition is consistent and thus uniquely defines the process \(\left( {\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\right) \). We mention that we will sometimes write \({\mathcal {M}}_{{\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}}^a\) instead of \({\mathcal {M}}_{{\mathcal {X}},{\mathcal {Z}}}^{{\mathcal {D}},a}\) to clarify the situation.

1.3 Characterisation of Brownian multiplicative chaos

We can now state our characterisation of the law of Brownian multiplicative chaos. We start off by introducing some complex analysis notations. Let \(a \in (0,2)\) be a thickness level. For any nice domain \(D \subset {\mathbb {C}}\), \(x \in D\) and a nice point \(z \in \partial D\), we will denote by \({{\,\textrm{CR}\,}}(x,D)\) the conformal radius of D seen from x, \(G^D\) the Green function of D with zero boundary conditions and \(H^D(x,z)dz = {\mathbb {P}}_{x} \left( B_{\tau _{\partial D}} \in dz \right) \) the Poisson kernel or harmonic measure of D. See Section 1.6 for precise definitions. We set

By convention, we will set \(\psi ^{D,a}_{x_0,z}(x) = 0\) if \(x \notin D\). We also introduce, for any \(r \ge 1\), a notation for the \((r-1)\)-dimensional simplex

The Lebesgue measure on E(a, r) will be denoted by \(d {\textsf{a}} = da_1 \dots da_{r-1}\).
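For concreteness, the Python sketch below reads E(a, r) as \(\{ (a_1, \dots , a_r) \in [0,a]^r : a_1 + \dots + a_r = a \}\), parametrised by \((a_1, \dots , a_{r-1})\); this reading is an assumption consistent with the measure \(d{\textsf{a}} = da_1 \dots da_{r-1}\), and the function names are hypothetical. Scaling a Dirichlet(1, …, 1) vector by a gives a uniform point of E(a, r), and the Lebesgue measure of E(a, r) in these coordinates is \(a^{r-1}/(r-1)!\). For r = 2, the first coordinate is then uniform on [0, a], which is consistent with the heuristic recalled in Sect. 1.2 that a single trajectory is typically \(\alpha \)-thick with \(\alpha \) uniform in [0, a].

```python
import math

import numpy as np


def sample_simplex(a, r, n, rng):
    """Draw n points uniformly from E(a, r) (assumed form, see above)."""
    if r == 1:
        return np.full((n, 1), float(a))  # E(a, 1) is the single point {a}
    # a * Dirichlet(1, ..., 1) is uniform on {a_i >= 0, sum_i a_i = a}
    return a * rng.dirichlet(np.ones(r), size=n)


def simplex_volume(a, r):
    """Lebesgue measure of E(a, r) in the coordinates da_1 ... da_{r-1}."""
    return a ** (r - 1) / math.factorial(r - 1)
```

Integrating a function f over E(a, r) against \(d{\textsf{a}}\) then amounts, up to Monte Carlo error, to multiplying `simplex_volume(a, r)` by the average of f over `sample_simplex(a, r, n, rng)`.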

We are about to consider properties characterising the law of the process \(\left( {\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\right) \) defined in Sect. 1.2. The most important one will be the spatial Markov property (Property \((P_2)\)). Because it will be notationally heavy, we first present a simple particular case of it which explains the main idea. Let \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\) be of the form \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}=\{(D,x_0,z)\}\). Let \(D'\) be a nice subset of D containing \(x_0\). Then Property \((P_2)\) amounts to saying that:

have the same law, where Y has the law of \(B_{\tau _{\partial D'}}\) under \({\mathbb {P}}_{x_0,z}^D\). Conditionally on \(\{ Y=y \}\), the joint law of the measures \({\mathcal {M}}_{x_0,Y}^{D',a}\), \({\mathcal {M}}_{Y,z}^{D,a}\) and \({\mathcal {M}}_{(D',x_0,Y),(D,Y,z)}^{a}\) is by definition that of \({\mathcal {M}}_{x_0,y}^{D',a}\), \({\mathcal {M}}_{y,z}^{D,a}\) and \({\mathcal {M}}_{(D',x_0,y),(D,y,z)}^{a}\). This property comes from the following simple observation. Let \((B_t, t \le \tau _{\partial D})\) be a Brownian motion in D starting at \(x_0\) and conditioned to exit D through z. We divide \((B_t, t \le \tau _{\partial D})\) into \((B_t, t\le \tau _{\partial D'})\) and \((B_t, \tau _{\partial D'} \le t \le \tau _{\partial D})\). An a-thick point for the overall trajectory is either entirely generated by one of the two small trajectories and missed by the other one, or comes from the intersection of both.
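The observation above has an exact counterpart at a fixed scale \(\varepsilon \). Splitting the path at \(\tau _{\partial D'}\) splits each circle local time as a sum \(L_{x,\varepsilon } = L^{(1)}_{x,\varepsilon } + L^{(2)}_{x,\varepsilon }\) of the local times of the two pieces, since local times add over disjoint time intervals. For any threshold t > 0, the event \(\{L_{x,\varepsilon } \ge t\}\) is then the disjoint union of three events: thick for the first piece and missed by the second, thick for the second piece and missed by the first, or visited by both pieces with enough total time. The Python sketch below checks this partition on arbitrary nonnegative samples; it assumes that the indicator in the multipoint measures requires every trajectory to visit the circle and their total local time to reach the threshold, which is our reading of the definition in Sect. 1.2, and the function names are hypothetical.

```python
import numpy as np


def multipoint_indicator(local_times, level):
    """Assumed multipoint indicator: every trajectory visits the circle
    (local time > 0) and the total local time reaches `level`."""
    L = np.asarray(local_times, dtype=float)  # shape (r, n_points)
    return np.all(L > 0, axis=0) & (L.sum(axis=0) >= level)


def markov_split(L1, L2, level):
    """Three disjoint events whose union is {L1 + L2 >= level} when level > 0."""
    L1, L2 = np.asarray(L1, dtype=float), np.asarray(L2, dtype=float)
    only_first = multipoint_indicator([L1], level) & (L2 == 0)   # missed by piece 2
    only_second = multipoint_indicator([L2], level) & (L1 == 0)  # missed by piece 1
    both = multipoint_indicator([L1, L2], level)                 # intersection term
    return only_first, only_second, both
```

The three returned masks mirror the three measures on the right-hand side of the Markov property; the identity is purely combinatorial and does not by itself say anything about the limit \(\varepsilon \rightarrow 0\).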

We now explain our characterisation. Let \(\left( \mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\right) \) be a stochastic process taking values in the set of finite Borel measures. We consider the following properties:

- \((P_1)\) (Average value): For all \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}= \left\{ (D_i,x_i,z_i), i=1 \dots r \right\} \in {\mathcal {S}}\) and for all Borel sets \(A \subset {\mathbb {C}}\),

$$\begin{aligned}&{\mathbb {E}} \left[ \mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}(A) \right] = \int _A dx \int _{{\textsf{a}} \in E(a,r)} d {\textsf{a}} \prod _{k=1}^r \psi _{x_k,z_k}^{D_k,a_k}(x). \end{aligned}$$

- \((P_2)\) (Markov property): Let \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\), \((D,x_0,z) \in {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\) and let \(D'\) be a nice subset of D containing \(x_0\). Let Y be distributed according to \(B_{\tau _{\partial D'}}\) under \({\mathbb {P}}_{x_0,z}^D\). The joint law of \((\mu _{{\mathcal {X}}{'},{\mathcal {Z}}{'}}^{{\mathcal {D}}{'},a}, {\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'} \subset {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}})\) is the same as the joint law of the family given, for all \({\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'} \subset {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\), by

$$\begin{aligned} \left\{ \begin{array}{l} \mu _{{\mathcal {X}}{'},{\mathcal {Z}}{'}}^{{\mathcal {D}}{'},a} \mathrm {~if~} (D,x_0,z) \notin {\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'}, \\ \mu _{{\bar{{\mathcal {D}}}}{\bar{{\mathcal {X}}}}{\bar{{\mathcal {Z}}}} \cup \{(D',x_0,Y)\} }^a + \mu _{{\bar{{\mathcal {D}}}}{\bar{{\mathcal {X}}}}{\bar{{\mathcal {Z}}}} \cup \{(D,Y,z)\} }^a + \mu _{{\bar{{\mathcal {D}}}}{\bar{{\mathcal {X}}}}{\bar{{\mathcal {Z}}}} \cup \{(D',x_0,Y), (D,Y,z)\} }^a \mathrm {~otherwise}, \end{array} \right. \end{aligned}$$

where in the second line we denote \({\bar{{\mathcal {D}}}}{\bar{{\mathcal {X}}}}{\bar{{\mathcal {Z}}}} = {\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'} \backslash \{(D,x_0,z)\}\).

- \((P_3)\) (Independence): For all disjoint sets \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}, {\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'} \in {\mathcal {S}}\), the measures \(\mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}\) and \(\mu _{{\mathcal {X}}{'},{\mathcal {Z}}{'}}^{{\mathcal {D}}{'},a}\) are independent.

- \((P_4)\) (Non-atomicity): For all \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\), with probability one, simultaneously for all \(x \in {\mathbb {C}}\), \(\mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}(\{x\}) = 0\).
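Property \((P_1)\) only involves deterministic quantities, so its right-hand side can be evaluated numerically. The Python sketch below does so by plain Monte Carlo, sampling x uniformly in a bounding box of A and \({\textsf{a}}\) uniformly in E(a, r), read as \(\{ a_i \ge 0, \sum _i a_i = a \}\) with volume \(a^{r-1}/(r-1)!\) in the coordinates \(da_1 \dots da_{r-1}\). The functions \(\psi ^{D_k,a_k}_{x_k,z_k}\), built from \({{\,\textrm{CR}\,}}\), \(G^D\) and \(H^D\) in Sect. 1.3, are supplied by the caller as vectorised callables `psi(alpha, x)`; the name `p1_mean` is hypothetical, and the constant and linear functions used for checking are toy placeholders, not the actual \(\psi \).

```python
import math

import numpy as np


def p1_mean(psis, a, in_A, box, n=200_000, seed=0):
    """Monte Carlo estimate of  int_A dx int_{E(a,r)} da prod_k psi_k(a_k, x).

    psis : list of r vectorised callables psi_k(alpha, x), alpha of shape (n,), x of shape (n, 2)
    in_A : vectorised indicator of the Borel set A, x of shape (n, 2) -> bool array (n,)
    box  : ((x0, x1), (y0, y1)), a rectangle containing A
    """
    rng = np.random.default_rng(seed)
    r = len(psis)
    (x0, x1), (y0, y1) = box
    x = np.column_stack([rng.uniform(x0, x1, n), rng.uniform(y0, y1, n)])
    # uniform points of E(a, r); E(a, 1) reduces to the single point {a}
    if r == 1:
        alphas = np.full((n, 1), float(a))
    else:
        alphas = a * rng.dirichlet(np.ones(r), size=n)
    vals = in_A(x).astype(float)
    for k, psi in enumerate(psis):
        vals *= psi(alphas[:, k], x)
    box_area = (x1 - x0) * (y1 - y0)
    simplex_vol = a ** (r - 1) / math.factorial(r - 1)
    return box_area * simplex_vol * vals.mean()
```

For r = 1 the inner integral disappears and the estimate reduces to \(\int _A \psi ^{D,a}_{x_0,z}(x) dx\), in agreement with Proposition 1.2, Point (iv), for a single measure.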

Theorem 1.2

Let \(a \in (0,2)\). The process \(\left( {\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\right) \) from Section 1.2 satisfies Properties \((P_1)\)-\((P_4)\). Moreover, if \(\left( \mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\right) \) is another process taking values in the set of finite Borel measures satisfying Properties \((P_1)\)-\((P_4)\), then it has the same law as \(\left( {\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\right) \).

Biskup and Louidor [BL19] provide a somewhat similar characterisation of the Liouville measure. The main difference is that Properties \((P_2)\) and \((P_3)\) are replaced by how the spatial Markov property of the Gaussian free field translates to the Liouville measure.

Other characterisations have been formulated before: let D be a fixed nice domain, \(x_0 \in D\), \(z \in \partial D\) nice and consider the pair given by the measure \({\mathcal {M}}^{D,a}_{x_0,z}\) together with the Brownian motion \((B_t, t \le \tau _{\partial D})\) from which it has been built. Then the pair \(({\mathcal {M}}^{D,a}_{x_0,z},B)\) is uniquely characterised by

\(\bullet \) the measurability of \({\mathcal {M}}^{D,a}_{x_0,z}\) with respect to the Brownian path B,

\(\bullet \) the way the law of the path B is changed given a sample of \({\mathcal {M}}^{D,a}_{x_0,z}\).

See Theorem 5.2 of [BBK94]. See also Proposition 1.4 for an extension of this characterisation to finitely many trajectories. The advantage of this characterisation is that it considers only one domain, with given starting and ending points, and does not need to rely on the multipoint version of Brownian multiplicative chaos. Its drawback, however, is that it refers explicitly to the underlying Brownian motion, which seems to make it less applicable in practice. For instance, in the context of our application to random walk, it does not seem easy to apply this characterisation (even measurability is not a priori clear).

Let us also mention that the proof of Theorem 1.2 provides a construction of \({\mathcal {M}}^{D,a}_{x_0,z}\) through a martingale approximation (see Lemma 2.2). This is very similar to some aspects of the construction of [AHS20], except that they divide the domain into small dyadic squares rather than long narrow rectangles. This might seem to be a cosmetic difference, but it is in fact significant since it leads to a decomposition of the Brownian path into excursions from interior to boundary points rather than from boundary to boundary. This is at the heart of the recursive decomposition used in the proof, and in turn of the theorem, since the measure \({\mathcal {M}}^{D,a}_{x_0,z}\) is itself of this type.

Finally, it is possible that Properties \((P_1)\)-\((P_3)\) are enough to characterise the law, but Property \((P_4)\) is necessary for our current proof; see especially Lemma 2.1. In practice, in our context of uniform measure on thick points of random walk, Property \((P_4)\) is a consequence of uniform-integrability-type estimates that are needed in order to verify Property \((P_1)\).

1.4 Further results on multipoint Brownian multiplicative chaos

In this section, we study in greater detail the multipoint version of Brownian multiplicative chaos measures. We start by introducing the “intersection” of Brownian multiplicative chaos measures: a measure whose support is included in the intersection of the supports of the intersected measures. Let \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}= \{(D_i,x_i,z_i), i=1 \dots r \} \in {\mathcal {S}}\) and consider independent Brownian motions \(B_{x_i,z_i}^{D_i}\) distributed according to \({\mathbb {P}}_{x_i,z_i}^{D_i}\) for all \(i=1 \dots r\). Denote by \(L_{x,\varepsilon }^{(i)}\) their associated local times. Let \(a_i >0, i =1 \dots r,\) be thickness levels such that \(a := \sum a_i <2\). We now consider the measure defined by: for all Borel sets \(A \subset {\mathbb {C}}\),

Proposition 1.2 below studies the limit of these measures and Proposition 1.3 studies the link between this limiting measure and \({\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}\) introduced in Sect. 1.2. These results are proven in “Appendix A”.

Proposition 1.2

- (i) Relative to the topology of weak convergence, the measure \(\bigcap _{i=1}^r {\mathcal {M}}_{x_i,z_i;\varepsilon }^{D_i,a_i}\) converges as \(\varepsilon \rightarrow 0\) towards a random finite Borel measure \(\bigcap _{i=1}^r {\mathcal {M}}_{x_i,z_i}^{D_i,a_i}\) in probability.

- (ii) Inductive decomposition. If \(r \ge 2\), the sequence of random Borel measures

$$\begin{aligned} A \in {\mathcal {B}}({\mathbb {C}}) \mapsto \left| \log \varepsilon \right| \varepsilon ^{-a_r} \int _A {\textbf{1}}_{ \left\{ \frac{1}{\varepsilon } L^{(r)}_{x,\varepsilon } \ge 2 a_r \left| \log \varepsilon \right| ^2 \right\} } \bigcap _{i=1}^{r-1} {\mathcal {M}}_{x_i,z_i}^{D_i,a_i}(dx) \end{aligned} \tag{1.8}$$

converges as \(\varepsilon \rightarrow 0\) to \(\bigcap _{i=1}^r {\mathcal {M}}_{x_i,z_i}^{D_i,a_i}\) in probability, relative to the topology of weak convergence.

- (iii) The measure \(\bigcap _{i=1}^r {\mathcal {M}}_{x_i,z_i}^{D_i,a_i}\) is measurable with respect to \(\sigma \left( {\mathcal {M}}_{x_i,z_i}^{D_i,a_i}, i =1 \dots r \right) \), the underlying sigma-algebra being the one associated to the topology of weak convergence.

- (iv) For all \(A \in {\mathcal {B}}({\mathbb {C}})\),

$$\begin{aligned} {\mathbb {E}} \left[ \bigcap _{i=1}^r {\mathcal {M}}_{x_i,z_i}^{D_i,a_i}(A) \right] = \int _A \prod _{i=1}^r \psi _{x_i,z_i}^{D_i,a_i}(x) dx. \end{aligned}$$

- (v) With probability one, simultaneously for all Borel sets A of Hausdorff dimension strictly smaller than \(2-\sum _{i=1}^r a_i\), \(\bigcap _{i=1}^r {\mathcal {M}}_{x_i,z_i}^{D_i,a_i} (A) = 0\).

- (vi) The stochastic process

$$\begin{aligned} (a_i)_{i = 1 \dots r} \in \{ (\alpha _i)_{i =1 \dots r} \in (0,2)^r: \sum \alpha _i < 2 \} \mapsto \bigcap _{i=1}^r {\mathcal {M}}_{x_i,z_i}^{D_i,a_i} \end{aligned}$$

taking values in the set of finite Borel measures, equipped with the topology of weak convergence, possesses a measurable modification.
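The inductive decomposition (1.8) has a simple discrete counterpart: starting from the intersection measure of the first r − 1 trajectories, keep only the points that are \(a_r\)-thick at scale \(\varepsilon \) for the r-th trajectory and multiply by \(\left| \log \varepsilon \right| \varepsilon ^{-a_r}\). The Python sketch below applies this step to a measure represented by its masses on grid cells, assuming that the local times \(L^{(r)}_{x,\varepsilon }\) at the cell centres have already been computed (for instance from a discretised path); the name `inductive_step` and the grid representation are hypothetical choices for illustration.

```python
import numpy as np


def inductive_step(prev_weights, local_times_r, eps, a_r):
    """Discrete analogue of (1.8).

    prev_weights[j]  : mass that the intersection measure of trajectories 1..r-1 gives to cell j
    local_times_r[j] : local time L^{(r)}_{x_j, eps} of the r-th trajectory at the centre x_j
    Returns the masses of the approximation of the r-fold intersection measure.
    """
    w = np.asarray(prev_weights, dtype=float)
    L = np.asarray(local_times_r, dtype=float)
    thick = L / eps >= 2 * a_r * np.log(eps) ** 2  # a_r-thick at scale eps
    return abs(np.log(eps)) * eps ** (-a_r) * np.where(thick, w, 0.0)
```

Keeping the measure as an array of cell masses makes the step a pointwise reweighting, which mirrors the fact that (1.8) integrates an indicator against the previously constructed measure.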

For the following proposition, we consider the measure \({\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}\) built from the same Brownian motions as the ones used to define the previous intersection measures.

Proposition 1.3

(Disintegration). Let \(a \in (0,2)\). If \(r \ge 2\), then

Note that the integral of intersection measures above is well-defined thanks to Proposition 1.2, Point (vi).

This result can be compared to the disintegration theorem in measure theory. In words, this proposition shows that the measure \({\mathcal {M}}^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}\) “restricted to the event” that, for all \(k=1 \dots r\), the contribution of the k-th trajectory to the overall thickness a is exactly \(a_k\), agrees with the intersection measure \(\bigcap _{k=1}^r {\mathcal {M}}_{x_k,z_k}^{D_k,a_k}\). With the standard disintegration theorem, one is able to make sense of the disintegrated measure for almost every \({\textsf{a}} \in E(a,r)\). Here, the randomness of the measures helps us and we are able to make sense of these measures almost surely, simultaneously for all \({\textsf{a}} \in E(a,r)\).

In view of Proposition 1.3, we can rewrite Property \((P_2)\) in the following way. Let \(D' \subset D\) be two nice domains, \(x_0 \in D'\) and \(z \in \partial D\) be a nice point, then

with \(Y = B_{\tau _{\partial D'}}\). A surprising consequence of Proposition 1.3 is the following.

For all \(x \in D'\), if we condition x to be an a-thick point for the overall trajectory \((B_t, t \le \tau _{\partial D})\) and if we condition the two small trajectories \((B_t, t \le \tau _{\partial D'})\) and \((B_t, \tau _{\partial D'} \le t \le \tau _{\partial D})\) to visit x, then the thickness level of x for one of the two small trajectories will be uniformly distributed in (0, a). Proposition 1.3 makes this statement formal. Indeed, by definition, this type of thick points is described by the measure

where the equality follows from Proposition 1.3. On the right hand side, the thickness associated to each subtrajectory is fixed to \(a-\alpha \) and \(\alpha \) respectively where \(\alpha \) is sampled uniformly in [0, a].

We finish this section by giving an intrinsic characterisation of the intersection measure \(\bigcap _{i=1}^r {\mathcal {M}}_{x_i,z_i}^{D_i,a_i}\). The characterisation below is a simple extension of the characterisation of the multiplicative chaos associated to one Brownian trajectory, but it is nevertheless an important result since it allows one to quickly identify the measure.

The next result uses the notations introduced above Proposition 1.2. In particular, recall that \(B_{x_i,z_i}^{D_i}\) denotes the Brownian motion distributed according to \({\mathbb {P}}_{x_i,z_i}^{D_i}\) associated to \(\bigcap _{i=1}^r {\mathcal {M}}_{x_i,z_i}^{D_i,a_i}\). For all \(i = 1\dots r\), we view \(B_{x_i,z_i}^{D_i}\) as a random element of the set \({\mathcal {P}}\) of càdlàg paths in \({\mathbb {R}}^2\) with finite durations. See Sect. 5 for details, in particular concerning the topology associated to \({\mathcal {P}}\). The following proposition describes the law of the Brownian paths after reweighting the probability measure by \(\bigcap _{i=1}^r {\mathcal {M}}_{x_i,z_i}^{D_i,a_i}(dx)\) (the so-called rooted measure). As we will see, the resulting trajectories can be written as the concatenation of three independent pieces \(B_{x_i,x}^{D_i} \wedge \Xi _x^{D_i,a_i} \wedge B_{x,z_i}^{D_i}\). The first one is a trajectory \(B_{x_i,x}^{D_i}\) with law \({\mathbb {P}}_{x_i,x}^{D_i}\), i.e. a Brownian path conditioned to visit x before exiting \(D_i\). The second part \(\Xi _x^{D_i,a_i}\) consists of the concatenation of infinitely many loops rooted at x that are distributed according to a Poisson point process with intensity \(a_i \nu _{D_i}(x,x)\). Here \(\nu _{D_i}(x,x)\) is a measure on Brownian loops that stay in \(D_i\) (see e.g. (2.12) in [AHS20]). Finally, the last part of the trajectory is a Brownian motion \(B_{x,z_i}^{D_i}\) distributed according to \({\mathbb {P}}_{x,z_i}^{D_i}\), that is, a trajectory which starts at x and which is conditioned to exit \(D_i\) through \(z_i\).

Proposition 1.4

Let \(F : {\mathbb {C}}\times {\mathcal {P}}^r \rightarrow {\mathbb {R}}\) be a bounded measurable function. Then

Moreover, if \(\mu \) is another random Borel measure which is measurable w.r.t. \(B_{x_i,z_i}^{D_i}, i =1 \dots r\), and which satisfies (1.9) for all bounded measurable functions F, then \(\mu = \bigcap _{i=1}^r {\mathcal {M}}_{x_i,z_i}^{D_i,a_i}\) almost surely.

As already alluded to, this type of characterisation is of little help when one wants to establish scaling limit results since it relies on the measurability of the underlying Brownian trajectories.

Finally, we mention that, using Propositions 1.3 and 1.4 above, one can also compute the left hand side of (1.9) where the intersection measure has been replaced by the multipoint measure \({\mathcal {M}}_{{\mathcal {X}},{\mathcal {Z}}}^{{\mathcal {D}},a}\). Therefore, a similar characterisation concerning the multipoint measure could also be stated.

1.5 Outline of proofs

We now present the organisation of the paper and explain the main ideas behind the proofs of Theorems 1.1 and 1.2.

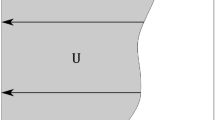

Section 2 is devoted to the proof of Theorem 1.2. It will start by proving that Brownian multiplicative chaos satisfies Properties \((P_1)\)-\((P_4)\) assuming Propositions 1.1, 1.2 and 1.3 on the multipoint version of Brownian multiplicative chaos. These propositions will be proven in “Appendix A”. The rest of Section 2 will deal with the uniqueness part of Theorem 1.2 and we now sketch its proof. Let \(\left( \mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\right) \) be a process of Borel measures satisfying Properties \((P_1)\)-\((P_4)\). Let D be a nice domain, \(x_0 \in D\) and a nice point \(z \in \partial D\). We are going to explain the characterisation of the law of \(\mu ^{D,a}_{x_0,z}\). The characterisation of the law of more general marginals follows along the same lines. The only extra difficulty lies in the notations. We will start by noticing that Property \((P_4)\) implies that we can find a deterministic direction such that almost surely all the lines parallel to this direction are not seen by the measure \(\mu ^{D,a}_{x_0,z}\). Without loss of generality, assume that this direction is the vertical one (straightforward adaptations would need to be made in the case of a general direction). We will slice the domain D into many narrow rectangle-type domains \(D \cap (q2^{-p}, (q+2)2^{-p}) \times {\mathbb {R}}\), \(q \in {\mathbb {Z}}\). By iterating Property \((P_2)\), we will be able to decompose

\(D_i^p\) will be a narrow rectangle as above centred at \(x_i^p\) and \(x_i^p,i \ge 1,\) will correspond to the successive hitting points of \(2^{-p}{\mathbb {Z}}\times {\mathbb {R}}\) of a Brownian trajectory. See (2.4) for precise definitions and Figure 1 for an illustration of these successive hitting points. The idea is then that most of the randomness comes from the points \(x_i^p, i \ge 1\), and we do not change the measure so much by replacing each term

This latter expression is entirely determined by Property \((P_1)\) and no longer depends on the process \(\left( \mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\right) \). This conditional expectation encodes a lot of information. For instance, it ensures that the measure is concentrated around the Brownian trajectory. In fact, it provides a martingale approximation of the measure \(\mu ^{D,a}_{x_0,z}\), as we will see in Lemma 2.2. The proof will then consist in showing that the error in the above approximation tends to zero as \(p \rightarrow \infty \). The fact that \(\mu ^{D,a}_{x_0,z}\) almost surely gives zero mass to every vertical line will be useful for this purpose: it guarantees that the initial measure has been decomposed into many small pieces.

We now turn to the random walk part. We will first show the convergence of \(\mu ^{U,a}_{x_0,z;N}\). The convergence of the unconditioned measures \(\mu ^{U,a}_{x_0;N}\) will then follow fairly quickly thanks to the weak convergence of the discrete Poisson kernel. To show the convergence of \(\mu ^{U,a}_{x_0,z;N}\), the overall strategy is simple: we will prove that this sequence is tight and we will then identify the subsequential limits. The tightness is the easy part and relies on a first moment computation. Section 3.1 is devoted to it. The identification of the subsequential limits uses Theorem 1.2 and is done in Sect. 3.2. We sketch the main steps of this identification. Let \(x_* \in U\) and \(z_* \in \partial U\) be a nice point. Let \((N_k,k \ge 1)\) be an increasing sequence of integers so that \((\mu ^{U,a}_{x_*,z_*;N_k},k \ge 1)\) converges. In Lemma 3.4, we will show that we can extract a further subsequence \((N_k',k \ge 1)\) of \((N_k,k \ge 1)\) such that for all \({\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'} \in {\mathcal {S}}\),

converges. The above measures are the discrete analogue of the multipoint versions of Brownian multiplicative chaos and are defined in (3.1). We denote by \( (\mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}) \) the limiting process of finite Borel measures. Showing that we can extract such a subsequence requires some work since we consider an uncountable number of sequences. Thanks to Theorem 1.2, to conclude the identification of the limiting measure \(\mu ^{U,a}_{x_*,z_*}\), it is then enough to show that the process \( (\mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}) \) satisfies Properties \((P_1)\)-\((P_4)\). This will roughly follow along the same lines as in the Brownian case. In particular, the uniform integrability of \(\mu _{{\mathcal {X}},{\mathcal {Z}};N}^{{\mathcal {D}},a}({\mathbb {Z}}^2)\), \(N \ge 1\), which is the content of Proposition 3.2, is key. It comes from a careful truncated second moment estimate, similar to the one carried out in [Jeg20a]. The proof of Proposition 3.2 is written in Section 4.

1.6 Some notations

We finish this introduction with some notations that will be used throughout the paper. Let \(D \subset {\mathbb {C}}\) be a nice domain. For \(x \in D\) and a nice point \(z \in \partial D\), we will denote by \({{\,\textrm{CR}\,}}(x,D)\) the conformal radius of D seen from x, by \(G^D\) the Green function of D with zero boundary conditions, normalised so that \(G^D(x,y) \sim - \log \left| x-y \right| \) as \(\left| x-y \right| \rightarrow 0\), and by \(H^D(x,z)\) the Poisson kernel (or harmonic measure) of D, defined by \(H^D(x,z)dz = {\mathbb {P}}_{x} \left( B_{\tau _{\partial D}} \in dz \right) \). These three quantities can be expressed in terms of a conformal map \(f_D : D \rightarrow {\mathbb {D}}\) onto the unit disc (see e.g. [Law05, Chapter 2]): for all \(x, y \in D\) and every nice point \(z \in \partial D\),

With the notations of Sect. 1.1, we will similarly denote by \(G^{D_N}\) and \(H^{D_N}\) the discrete Green’s function and Poisson kernel defined by: for all \(x,y \in {\mathbb {Z}}^2\),

In the rest of the paper, \(a \in (0,2)\) will always denote the thickness level under consideration.
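As a quick numerical sanity check of these normalisations, the sketch below evaluates the conformal radius, the Green function and the Poisson kernel of a disc, using the standard unit-disc formulas transported by a conformal map: \({{\,\textrm{CR}\,}}(x,D) = (1-|f_D(x)|^2)/|f_D'(x)|\), \(G^D(x,y) = \log |1-\overline{f_D(x)} f_D(y)| - \log |f_D(x)-f_D(y)|\) and \(H^D(x,z) = \frac{1}{2\pi } \frac{1-|f_D(x)|^2}{|f_D(z)-f_D(x)|^2} |f_D'(z)|\), the latter being a density with respect to arc length on \(\partial D\). The example domain (a disc of radius R centred at c, with \(f_D(x) = (x-c)/R\)) and all helper names are illustrative assumptions, not part of the paper.

```python
import cmath
import math

# Hypothetical example domain: the disc of radius R centred at c,
# mapped onto the unit disc by f_D(x) = (x - c) / R.
R = 2.0
c = 1.0 + 0.5j


def f(x):
    return (x - c) / R


def f_prime(x):
    return 1.0 / R


def conformal_radius(x):
    """CR(x, D) = (1 - |f(x)|^2) / |f'(x)|."""
    w = f(x)
    return (1.0 - abs(w) ** 2) / abs(f_prime(x))


def green(x, y):
    """Green function with zero boundary values and G(x, y) ~ -log|x - y|."""
    w, u = f(x), f(y)
    return math.log(abs(1.0 - w.conjugate() * u)) - math.log(abs(w - u))


def poisson_kernel(x, z):
    """Density of the exit point B_{tau} w.r.t. arc length at boundary point z."""
    w = f(x)
    return (1.0 - abs(w) ** 2) / (2.0 * math.pi * abs(f(z) - w) ** 2) * abs(f_prime(z))


def total_harmonic_mass(x, n=4000):
    """Riemann sum of the Poisson kernel over the boundary circle (should be 1)."""
    ds = 2.0 * math.pi * R / n
    return sum(poisson_kernel(x, c + R * cmath.exp(2j * math.pi * k / n)) * ds
               for k in range(n))
```

For instance, `green(x, y) + log|x - y|` approaches `log(conformal_radius(x))` as y tends to x, which makes explicit the constant term behind the normalisation \(G^D(x,y) \sim -\log |x-y|\).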

2 Characterisation: Proof of Theorem 1.2

We start by proving that Brownian multiplicative chaos satisfies Properties \((P_1)\)-\((P_4)\).

Proof of Theorem 1.2, existence

Property \((P_1)\), resp. \((P_4)\), is a direct consequence of Proposition 1.2(iv), resp. (v), and Proposition 1.3. Property \((P_3)\) follows from the fact that we consider independent Brownian motions.

We now prove Property \((P_2)\). To ease notations, we will only prove this in the simplest case \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}=\{(D,x_0,z)\}\). The general case follows along the same lines. Let \(D'\) be a nice subset of D containing \(x_0\). Let B be a Brownian motion under \({\mathbb {P}}_{x_0,z}^D\), \(L_{x,\varepsilon }\) its associated local times and let \(L_{x,\varepsilon }^{(0)}\) be the local times of B stopped at the first exit time of \(D'\) and \(L^{(1)}_{x,\varepsilon } := L_{x,\varepsilon } - L_{x,\varepsilon }^{(0)}\). We can write

If we denote by Y the point at which the Brownian trajectory B first hits \(\partial D'\), Proposition 1.1 shows that the last term on the right hand side converges in probability towards \({\mathcal {M}}_{(D',x_0,Y),(D,Y,z)}^a\). We are now going to argue that the first right hand side term converges in probability towards \({\mathcal {M}}_{x_0,Y}^{D',a}\). Indeed, for every Borel set \(A \subset {\mathbb {C}}\),

We can dominate

which is integrable (see (A.2)). Moreover, for all \(x \notin \partial D'\),

tends to zero as \(\varepsilon \rightarrow 0\) (which is again a consequence of (A.2)). By the dominated convergence theorem, this implies that

tends to zero as \(\varepsilon \rightarrow 0\). Since \({\mathcal {M}}_{x_0,Y;\varepsilon }^{D',a}\) converges in probability towards \({\mathcal {M}}_{x_0,Y}^{D',a}\) (Proposition 1.1), this shows that

converges in probability to the same limiting measure. Similarly, the second right hand side term of (2.1) converges in probability towards \({\mathcal {M}}_{Y,z}^{D,a}\) which overall yields

This is Property \((P_2)\) and it completes the proof.\(\square \)

The rest of this section is devoted to the uniqueness part of Theorem 1.2.

Proof of Theorem 1.2, uniqueness

Let \(\left( \mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\right) \) be a process satisfying Properties \((P_1)\)-\((P_4)\). Let D be a nice domain, \(x_0 \in D\) and \(z \in \partial D\) be a nice point. We are going to identify the law of \(\mu ^{D,a}_{x_0,z}\). As mentioned in Sect. 1.5, the identification of more general marginals follows along the same lines; the only extra difficulty lies in the notation. We start this proof by noticing that we can find a deterministic angle \(\theta \in {\mathbb {R}}\) such that the measure \(\mu ^{D,a}_{x_0,z}\) gives no mass to any line with angle \(\theta \). Here and in the following, we say that the angle of a line L is \(\theta \) if we can write \(L = x + e^{i\theta } (\{0\} \times {\mathbb {R}})\) for some \(x \in {\mathbb {C}}\).

Lemma 2.1

There exists an angle \(\theta \in {\mathbb {R}}\) such that for all \(\varepsilon >0\),

Proof

We proceed by contradiction. Assume that for all \(\theta \in {\mathbb {R}}\), there exists \(\varepsilon _\theta >0\) such that the event \(E_\theta \) that

holds with positive probability \(p_\theta \). We first argue that on the event \(E_\theta \), there exists a line \(L_\theta \) with angle \(\theta \) such that \(\mu ^{D,a}_{x_0,z}(L_\theta ) \ge \varepsilon _\theta \). Indeed, on the event \(E_\theta \), there exists an increasing sequence of integers \((p_n)_{n \ge 1}\) and a sequence \((q_n)_{n \ge 1} \subset {\mathbb {Z}}\) such that \(\mu ^{D,a}_{x_0,z} \left( e^{i \theta } \left( 2^{-p_n} (q_n + (0,1]) \times {\mathbb {R}}\right) \right) \ge \varepsilon _\theta \) for all \(n \ge 1\). Moreover, because the total mass of \(\mu ^{D,a}_{x_0,z}\) is almost surely finite, we can extract a subsequence to ensure that \(e^{i \theta } \left( 2^{-p_n} (q_n + (0,1]) \times {\mathbb {R}}\right) , n \ge 1,\) is a decreasing sequence of sets. The intersection of those sets is a line \(L_\theta \) with angle \(\theta \) satisfying the desired property that \(\mu ^{D,a}_{x_0,z}(L_\theta ) \ge \varepsilon _\theta \).

Now, since \([0,\pi )\) is uncountable, there exists \(\eta >0\) such that \(\{ \theta \in [0,\pi ): p_\theta> \eta , \varepsilon _\theta > \eta \}\) is infinite. Let \(\{\theta _k, k \ge 1\}\) be a countably infinite subset of this set. For all \(k \ge 1\), we have by the Paley-Zygmund inequality

Hence the probability that an infinite number of events \(E_{\theta _k}, k \ge 1\), occur is positive. On this event, we have

But because \(\mu ^{D,a}_{x_0,z}\) is non-atomic (Property \((P_4)\)), we almost surely have

which is almost surely finite (Property \((P_1)\) implies that it has a finite first moment). This is a contradiction, which concludes the proof. \(\square \)
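For reference, the Paley-Zygmund inequality states that for a non-negative random variable Z with finite second moment and \(\vartheta \in [0,1)\), \({\mathbb {P}}(Z > \vartheta {\mathbb {E}}[Z]) \ge (1-\vartheta )^2 {\mathbb {E}}[Z]^2/{\mathbb {E}}[Z^2]\). One natural way to use it in the argument above (our reading, not a transcription of the omitted display) is with \(Z_n = \sum _{k \le n} {\textbf{1}}_{E_{\theta _k}}\): since each \(E_{\theta _k}\) has probability larger than \(\eta \), we have \({\mathbb {E}}[Z_n] \ge n\eta \) and \({\mathbb {E}}[Z_n^2] \le n^2\), so \({\mathbb {P}}(Z_n > n\eta /2) \ge \eta ^2/4\) uniformly in n. The snippet checks the inequality exactly, with rational arithmetic, for the number of events that occur on a toy finite probability space; the events and the helper name are hypothetical.

```python
from fractions import Fraction


def paley_zygmund_check(events, weights, level):
    """Exact Paley-Zygmund check for Z = number of events that occur.

    events:  list of sets of outcomes (each set is one event)
    weights: dict mapping each outcome to its probability (Fractions summing to 1)
    level:   Fraction in [0, 1), the parameter called vartheta in the text
    Returns (P(Z > level * E[Z]), Paley-Zygmund lower bound).
    """
    def count(outcome):
        return sum(1 for event in events if outcome in event)

    mean = sum(w * count(o) for o, w in weights.items())
    second_moment = sum(w * count(o) ** 2 for o, w in weights.items())
    lhs = sum(w for o, w in weights.items() if count(o) > level * mean)
    bound = (1 - level) ** 2 * mean ** 2 / second_moment
    return lhs, bound


if __name__ == "__main__":
    # Ten equally likely outcomes; four correlated events of probability 3/10.
    toy_events = [{0, 1, 2}, {0, 1, 3}, {0, 2, 4}, {1, 5, 6}]
    toy_weights = {o: Fraction(1, 10) for o in range(10)}
    print(*paley_zygmund_check(toy_events, toy_weights, Fraction(1, 2)))  # prints 7/10 9/65
```

The lower bound only involves the first two moments of Z, which is why no independence between the events is needed.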

This result will be used at the very end of the proof; see (2.9). Roughly speaking, in the course of the proof we will decompose the measure into small pieces and Lemma 2.1 ensures that these pieces are indeed small.

Without loss of generality, we will assume that the specific angle \(\theta \) provided by Lemma 2.1 is equal to 0. In other words, the measure \(\mu ^{D,a}_{x_0,z}\) almost surely vanishes on all vertical lines. We will also assume for convenience that \(D \subset (0,1) \times {\mathbb {R}}\).

Let us introduce some notations. We will need to consider small portions of the domain which are well-separated from one another. For this reason, we introduce a Cantor-type set \(K^\infty \) which we define now. Let \(p_0 \ge 1\) (to be thought of as large) and for all \(n \ge 1\), let \({\mathfrak {D}}_n\) be the set of dyadic points of generation exactly n, i.e.

For instance, \({\mathfrak {D}}_1 = \{1/2\}\), \({\mathfrak {D}}_2 = \{1/4, 3/4\}\), \({\mathfrak {D}}_3 = \{1/8, 3/8, 5/8, 7/8\}\), etc. We now define \(K^0 = [0,1]\) and for all \(n \ge 1\),

We then define

Later in the proof, we will restrict some measures to the set \(D \cap (K^\infty \times {\mathbb {R}})\). This restriction retains almost all of our measures, since the Lebesgue measure of \(D \backslash \left( K^\infty \times {\mathbb {R}}\right) \) is at most \(C 2^{-p_0}\). Note also that, as \(p_0 \rightarrow \infty \), \(K^\infty \) increases to \([0,1] \setminus \bigcup _{n \ge 1} {\mathfrak {D}}_n\).
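The sets \({\mathfrak {D}}_n\) listed above can be enumerated with exact rational arithmetic, which may help when experimenting with the construction. The sketch below encodes only the description "dyadic points of generation exactly n" together with the listed examples; it does not implement the definition (2.2) of \(K^n\), and the function names are hypothetical.

```python
from fractions import Fraction


def dyadic_generation(n):
    """Dyadic points of generation exactly n in (0, 1): (2k + 1) / 2**n."""
    if n < 1:
        raise ValueError("generation n must be at least 1")
    return [Fraction(2 * k + 1, 2 ** n) for k in range(2 ** (n - 1))]


def dyadic_points_up_to(n):
    """All dyadic points of generations 1, ..., n, in increasing order."""
    return sorted(q for m in range(1, n + 1) for q in dyadic_generation(m))
```

Generation-n points are exactly the reduced fractions with denominator \(2^n\), so different generations are disjoint and their union up to generation n is the grid \(\{k 2^{-n}, 1 \le k \le 2^n - 1\}\).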

We now start more concretely the proof of Theorem 1.2. Let \(p \ge 1\) and \((B_t, t \le \tau _{\partial D})\) be a Brownian motion distributed according to \({\mathbb {P}}^D_{x_0,z}\). We are going to keep track of the successive Brownian hitting points of \(2^{-p} {\mathbb {Z}}\times {\mathbb {R}}\): define \(\sigma _0^p := 0\), \(x^p_0 := x_0\) and \(D_0^p := D \cap \left( 2^{-p} \left\lfloor 2^p x_0 \right\rfloor + \left( -2^{-p}, 2^{-p} \right) \times {\mathbb {R}}\right) \) and for all \(i \ge 1\),

Let \(I_p := \sup \{i \ge 1: \sigma _i^p \le \tau _{\partial D} \}\). Note that \(\sigma _{I_p}^p = \tau _{\partial D}\) and \(x^p_{I_p} = z\). See Figure 1 for an illustration of these notations. Let

and let

be the process such that, conditionally on \(x_i^p, i = 1 \dots I_p-1\), it has the same law as

Note that with the definition (2.4), \(D_i^p\) may consist of several connected components. To be precise, we define \(D_i^p\) as the connected component containing \(x_i^p\), which is a nice domain belonging to \({\mathcal {D}}\). Since \(x_{i+1}^p\) is almost surely a nice boundary point of \(D_i^p\) and the points \(x_i^p, i \ge 1\), are almost surely pairwise distinct, the above random measures are well defined. An elementary iteration of Property \((P_2)\) shows that

has the same law as \(\mu ^{D,a}_{x_0,z}\). These definitions are consistent and by Kolmogorov’s extension theorem, we can define \(x^p_i, i =0 \dots I_p\), \(p \ge 1\), \({\bar{\mu }}_{{\mathcal {X}},{\mathcal {Z}}}^{{\mathcal {D}},a}\), \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\subset \cup _{p \ge 1} {\mathcal {D}}^p{\mathcal {X}}^p{\mathcal {Z}}^p\) on the same probability space.
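A rough simulation sketch of the bookkeeping behind the points \(x_i^p\). It assumes, as suggested by the description of \(D_i^p\) as a strip of width \(2 \times 2^{-p}\) centred at \(x_i^p\), that \(\sigma _{i+1}^p\) is the exit time of that strip, i.e. the first time after \(\sigma _i^p\) at which the real part has moved by \(2^{-p}\); the first strip is centred at \(2^{-p}\lfloor 2^p x_0 \rfloor \) as in the definition of \(D_0^p\). The sketch ignores the domain D (it never stops at \(\tau _{\partial D}\)), replaces Brownian motion by a Gaussian random walk with a small time step, and snaps each recorded point onto the vertical line that was crossed. The function name and parameters are hypothetical; this is not a transcription of (2.4).

```python
import numpy as np


def successive_line_hits(x0, p, n_steps=200_000, dt=1e-6, seed=0):
    """Approximate successive hitting points of the lines 2^{-p} Z x R.

    Planar Brownian motion started at x0 is replaced by a Gaussian random walk
    with time step dt. The current strip is centred on the line Re = centre
    (initially 2^{-p} * floor(2^p * Re x0)) and has half-width h = 2^{-p}.
    Each time the walk leaves it, the crossed line becomes the new centre and
    a hit (time, point snapped onto that line) is recorded.
    """
    rng = np.random.default_rng(seed)
    h = 2.0 ** (-p)
    steps = rng.normal(scale=np.sqrt(dt), size=(n_steps, 2))
    path = x0 + np.cumsum(steps[:, 0] + 1j * steps[:, 1])
    centre = h * np.floor(x0.real / h)
    hits = [(0.0, complex(x0))]
    for k, b in enumerate(path):
        # A single step may in principle cross several lines.
        while abs(b.real - centre) >= h:
            centre = centre + h if b.real > centre else centre - h
            hits.append(((k + 1) * dt, complex(centre, b.imag)))
    return hits
```

Consecutive recorded points then lie on neighbouring lines of \(2^{-p}{\mathbb {Z}}\times {\mathbb {R}}\), which is what makes the strips \(D_i^p\) narrow and the pieces of the decomposition local.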

In the rest of the proof, we will work on the specific probability space given by Kolmogorov’s extension theorem as above. We will drop the bar and simply write

In the following, we will denote by \({\mathcal {F}}_p\) (resp. \({\mathcal {F}}_\infty \)) the \(\sigma \)-algebra generated by \(x_i^p, i=1 \dots I_p-1\) (resp. \(x_i^p, i=1 \dots I_p-1, p \ge 1\)) and

By (2.5) and Property \((P_1)\), \(\mu _p(dx)\) does not depend on the process \((\mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}})\) any more since it is equal to

The following lemma is a key feature of the proof:

Lemma 2.2

There exists an a.s. finite random Borel measure \(\mu _\infty \) such that for all bounded measurable functions \(f: D \rightarrow {\mathbb {R}}\), \((\left\langle \mu _p,f \right\rangle , p \ge 1)\) is a martingale with respect to \(({\mathcal {F}}_p)_{p \ge 1}\) and converges a.s. to \(\left\langle \mu _\infty ,f \right\rangle \).

Proof

Let \({\mathcal {P}}\) be a countable \(\pi \)-system generating the Borel sets of D. For all \(A \in {\mathcal {P}}\), \((\mu _p(A), {\mathcal {F}}_p)_{p \ge 1}\) is a non-negative martingale thanks to (2.6). Hence, almost surely for all \(A \in {\mathcal {P}}\), \(\mu _p(A)\) converges towards some limit L(A). By standard arguments (see Section 6 of [Ber17] for instance), this implies that there exists an a.s. finite random Borel measure \(\mu _\infty \) such that almost surely for all \(A \in {\mathcal {P}}\), \(L(A) = \mu _\infty (A)\). It moreover implies that almost surely, for all bounded measurable functions f, \(\left\langle \mu _p,f \right\rangle \) converges towards \(\left\langle \mu _\infty ,f \right\rangle \). \(\square \)

Since \(\mu _\infty \) is entirely characterised by Properties \((P_1)\)-\((P_4)\), it is enough to show that \(\mu ^{D,a}_{x_0,z} = \mu _\infty \) a.s. to conclude the proof of Theorem 1.2. Since two finite measures which coincide on a (countable) \(\pi \)-system generating the Borel sets of \({\mathbb {C}}\) are equal, it is further enough to show that for every Borel set \(A \subset {\mathbb {C}}\), \(\mu ^{D,a}_{x_0,z}(A) = \mu _\infty (A)\) a.s. We then notice that it is enough to show that for all \(t >0\) and all Borel sets A,

Indeed, this shows that, conditionally on \({\mathcal {F}}_\infty \), the Laplace transform of \(\mu ^{D,a}_{x_0,z}(A)\) almost surely coincides with the Laplace transform of the constant \(\mu _\infty (A)\) at every positive rational number, which in turn proves that \(\mu ^{D,a}_{x_0,z}(A) = \mu _\infty (A)\) a.s. Until the end of the proof, we fix such a Borel set A. We reduce the problem one last time: recall the definition (2.3) of the Cantor-type set \(K^\infty \) (which depends on the integer \(p_0\)) that we introduced at the beginning of the proof, and recall that \(K^\infty \) increases with \(p_0\) towards \([0,1] \setminus \bigcup _{n \ge 1} {\mathfrak {D}}_n\) (see the discussion below (2.3)). By computing the first moment of the variables below, we see that

Therefore, as \(p_0 \rightarrow \infty \),

In other words, we can safely assume that \(A \subset K^\infty \times {\mathbb {R}}\). This assumption will be made for the rest of the proof.

Our objective is to show (2.8). Without loss of generality, we can assume that \(t=1\). One direction is easy: by (2.6), we have

so by Jensen’s inequality,

By Lemma 2.2, \(\mu _p(A) \rightarrow \mu _\infty (A)\) a.s. So by letting \(p \rightarrow \infty \) we get

For the reverse direction, we use Lemma 3.12 of [BL19] which provides a “reverse Jensen” inequality that we recall.

Lemma A ([BL19], Lemma 3.12). If \(X_1, \dots , X_n\) are non-negative independent random variables, then for each \(\varepsilon >0\),

Let \(p \ge 1\) be much larger than \(p_0\) and let \(n \ge 1\) be such that \(p_0 + 2n = p\) (or such that \(p_0 + 2n = p-1\), depending on the parity). Recall the definition (2.2) of \(K^n\). We will denote by \(K^{n,m}, m =1, \dots , 2^n\), the connected components of \(K^n\). We notice that, conditionally on \({\mathcal {F}}_p\), the restrictions of \(\mu ^{D,a}_{x_0,z}\) to the strips \(K^{n,m} \times {\mathbb {R}}\), \(m =1 \dots 2^n\), are independent. Indeed, looking at (2.5), we see that Property \((P_3)\) implies that, conditionally on \({\mathcal {F}}_p\), the restrictions of \(\mu ^{D,a}_{x_0,z}\) to two Borel sets \(A_1\) and \(A_2\) are independent as soon as the projections of \(A_1\) and \(A_2\) on the real axis are at distance at least \(2\times 2^{-p}\) from each other. The whole introduction of the set \(K^\infty \) is motivated by this fact. Now, because \(A \subset K^\infty \times {\mathbb {R}}\) and by Lemma A, we deduce that for each \(\varepsilon >0\),

To conclude that

it is thus enough to show that a.s.

We have

But by Lemma 2.1 and the dominated convergence theorem,

tends to zero as \(p \rightarrow \infty \) (recall that \(n \rightarrow \infty \) as \(p \rightarrow \infty \)). Hence, by extracting a subsequence if necessary, we have

which concludes the proof of Theorem 1.2. \(\square \)
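Both directions of the above argument rest on convexity of \(x \mapsto e^{-x}\). Jensen's inequality gives \({\mathbb {E}}[e^{-X}] \ge e^{-{\mathbb {E}}[X]}\). For the reverse direction, the following bound has the same flavour as Lemma A but is an elementary derivation of ours, not necessarily the precise statement of [BL19, Lemma 3.12]. Since \(e^{-x} \le 1 - c_\varepsilon x\) on \([0,\varepsilon ]\) with \(c_\varepsilon = (1-e^{-\varepsilon })/\varepsilon \) (the chord lies above the convex function) and \(e^{-x} \le 1\) elsewhere, we get \({\mathbb {E}}[e^{-X_i}] \le 1 - c_\varepsilon {\mathbb {E}}[X_i {\textbf{1}}_{\{X_i \le \varepsilon \}}] \le \exp (-c_\varepsilon {\mathbb {E}}[X_i {\textbf{1}}_{\{X_i \le \varepsilon \}}])\), hence, for independent non-negative \(X_1, \dots , X_n\), \({\mathbb {E}}[e^{-\sum _i X_i}] \le \exp (-c_\varepsilon \sum _i {\mathbb {E}}[X_i {\textbf{1}}_{\{X_i \le \varepsilon \}}])\). The snippet checks both bounds exactly for hypothetical discrete distributions.

```python
import math
from itertools import product


def laplace_of_sum(dists):
    """Exact E[exp(-(X_1 + ... + X_n))] for independent discrete X_i.

    Each element of dists is a list of (value, probability) pairs.
    """
    total = 0.0
    for combo in product(*dists):
        prob = math.prod(pr for _, pr in combo)
        total += prob * math.exp(-sum(v for v, _ in combo))
    return total


def jensen_lower_bound(dists):
    """exp(-E[X_1 + ... + X_n]), a lower bound by Jensen's inequality."""
    return math.exp(-sum(v * pr for d in dists for v, pr in d))


def truncated_upper_bound(dists, eps):
    """exp(-c_eps * sum_i E[X_i 1{X_i <= eps}]) with c_eps = (1 - e^{-eps}) / eps."""
    c_eps = (1.0 - math.exp(-eps)) / eps
    return math.exp(-c_eps * sum(v * pr for d in dists for v, pr in d if v <= eps))


# Hypothetical example: five i.i.d. variables, mostly small, occasionally large.
example = [[(0.0, 0.5), (0.05, 0.4), (3.0, 0.1)]] * 5
```

When every \(X_i\) is at most \(\varepsilon \) and \(\varepsilon \) is small, \(c_\varepsilon \) is close to 1 and the two bounds nearly match; this is one way to see why the proof needs the pieces of the decomposition to be small, which is where Lemma 2.1 enters.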

3 Application to Random Walk: Proof of Theorem 1.1

We start off by defining the multipoint analogue of \(\mu _{x_0,z;N}^{U,a}\). Let \(r \ge 1\) and \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}= \{(D^i,x_i,z_i), i =1 \dots r \} \in {\mathcal {S}}\). Let \(X^{(i)}\), \(i=1 \dots r\), be r independent random walks distributed according to \({\mathbb {P}}_{Nx_i,Nz_i}^{D^i_N}\) or according to \({\mathbb {P}}_{Nx_i}^{D^i_N}\) and let \(\ell _x^{(i)}\) be their associated local times. We define simultaneously for all \({\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'} = \{(D^i,x_i,z_i), i \in I\} \subset {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\) the measures given by: for all Borel sets A,

under the probability \(\bigotimes _{i=1}^r {\mathbb {P}}_{Nx_i,Nz_i}^{D^i_N}\). We define similarly the unconditioned measures \(\mu _{{\mathcal {X}}{'};N}^{{\mathcal {D}}{'},a}\), \({\mathcal {D}}{'}{\mathcal {X}}{'} \subset {\mathcal {D}}{\mathcal {X}}\), under \(\bigotimes _{i=1}^r {\mathbb {P}}_{Nx_i}^{D^i_N}\).

3.1 Tightness and first moment estimates

In this section we fix a nice domain D. We start by recalling the asymptotic behaviour of Green’s function and of the Poisson kernel. Recall the notations of Sect. 1.6.

Lemma 3.1

(Green’s function). Let \(K \Subset D\). There exist \(C,C_K>0\) such that for all \(x,y \in {\mathbb {Z}}^2\),

Moreover, for all \(x\ne y \in D\), we have

where \(c_0\) is the universal constant defined in (1.1).

Proof

(3.2) and (3.3) are direct consequences of [Law96] Theorem 1.6.2 and Proposition 1.6.3. (3.4) and (3.5) are contained in Theorem 1.17 of [Bis20]. \(\square \)
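To see the logarithmic behaviour numerically, the sketch below computes the Green function of a discrete-time simple random walk killed upon leaving a square box, as the expected number of visits, by solving \((I-P)G = I\) on the interior sites. This normalisation (discrete time, visits counted including time 0) is an assumption and may differ by a constant factor from the one used for \(G^{D_N}\) in this paper. With it, the value at the centre grows like \((2/\pi ) \log n\), so doubling the box should add roughly \((2/\pi ) \log 2 \approx 0.44\). The box shape and helper names are hypothetical.

```python
import numpy as np


def box_green_function(n):
    """Green function of discrete-time SRW killed on exiting {1, ..., n-1}^2.

    G[k, l] = expected number of visits to site l before exit, starting from k
    (the visit at time 0 is counted). Returns G and a dict site -> index.
    """
    sites = [(i, j) for i in range(1, n) for j in range(1, n)]
    index = {s: k for k, s in enumerate(sites)}
    P = np.zeros((len(sites), len(sites)))
    for (i, j), k in index.items():
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            neighbour = (i + di, j + dj)
            if neighbour in index:  # steps onto the boundary kill the walk
                P[k, index[neighbour]] = 0.25
    G = np.linalg.solve(np.eye(len(sites)) - P, np.eye(len(sites)))
    return G, index


def centre_value(n):
    """G(x, x) at the centre site of the box."""
    G, index = box_green_function(n)
    k = index[(n // 2, n // 2)]
    return G[k, k]
```

The matrix G is symmetric because the transition matrix of the simple random walk is, which mirrors the symmetry of the continuum Green function.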

Lemma 3.2

(Poisson kernel). Let \(K \Subset D\) and \(\alpha >0\). For all N large enough, \(x,y \in K\) and \(z \in \partial D\) a nice point, we have

Moreover, for all \(x \in D\), the following weak convergence holds:

Proof

Statements of the flavour of (3.6) have been extensively studied to show the convergence of loop-erased random walk towards \(\textrm{SLE}_2\). (3.6) is a direct consequence of [YY11, Lemma 1.2] for instance. (3.7) is the content of [Bis20, Lemma 1.23]. \(\square \)
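To illustrate the kind of statement in (3.7) on an example where the limit is explicit, the sketch below computes the exit distribution of a discrete-time simple random walk (an assumption about the normalisation) from the lattice disc \(\{ y \in {\mathbb {Z}}^2: |y| < N\}\), started at the origin, through the identity \(H(x,z) = \sum _y G(x,y) P(y,z)\). The continuum harmonic measure of a disc seen from its centre is uniform on the circle, so the angular distribution of the exit point should be close to uniform for large N. The domain, the value of N and the helper name are hypothetical.

```python
import numpy as np


def disc_exit_distribution(N):
    """Exit distribution of discrete-time SRW from {|y| < N} started at 0.

    Returns a dict mapping each boundary site (a site with |y| >= N adjacent
    to the interior) to the probability that the walk first exits there.
    """
    interior = [(i, j) for i in range(-N, N + 1) for j in range(-N, N + 1)
                if i * i + j * j < N * N]
    index = {s: k for k, s in enumerate(interior)}
    m = len(interior)
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    P = np.zeros((m, m))
    for (i, j), k in index.items():
        for di, dj in steps:
            if (i + di, j + dj) in index:
                P[k, index[(i + di, j + dj)]] = 0.25
    # Row of the Green function started from 0: g^T = e_0^T (I - P)^{-1}.
    e0 = np.zeros(m)
    e0[index[(0, 0)]] = 1.0
    g = np.linalg.solve((np.eye(m) - P).T, e0)
    exit_prob = {}
    for (i, j), k in index.items():
        for di, dj in steps:
            z = (i + di, j + dj)
            if z not in index:
                exit_prob[z] = exit_prob.get(z, 0.0) + 0.25 * g[k]
    return exit_prob
```

By the rotation symmetry of the lattice disc, each quadrant receives mass exactly 1/4; finer arcs can be compared with their length divided by \(2\pi \).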

These two lemmas allow us to derive the first moment estimates that we need. In the following we let \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}= \{ (D^i,x_i,z_i), i=1 \dots r\} \in {\mathcal {S}}\) and \({\mathcal {D}}{\mathcal {X}}= \{(D^i,x_i), i = 1 \dots r\}\), and we denote by \(\ell _x^{(i)}\) the local times associated to the i-th random walk as at the very beginning of Sect. 3. For every nice domain D and \(x_0 \in D\), we will also denote for all \(x \in {\mathbb {C}}\),

Lemma 3.3

There exists \(C>0\) such that for all \(N \ge 1\) and \(x \in {\mathbb {C}}\),

Let \(K \Subset \cap _{i=1}^{r} D^i\). There exists \(C>0\) depending on K such that for all N large enough and \(x \in K\),

Moreover, for all \(x \in {\mathbb {C}}\),

and

where E(a, r) is the \((r-1)\)-dimensional simplex defined in (1.6).

Proof of Lemma 3.3

We start by proving (3.9) and (3.11). To ease notations, we will write

Let \(x \in {\mathbb {Z}}^2\). We have

The Markov property gives that for all \(i=1 \dots r\),

Moreover, under \({\mathbb {P}}^{D^i_N}_x\), \(\ell _x^{\tau _{\partial D^i_N}}\) is an exponential variable with mean \(G^{D^i_N}(x,x)\) which is independent of \(X^{(i)}_{\tau _{\partial D^i_N}}\) (see Lemma 4.4). Therefore, conditioning on \(X^{(i)}_{\tau _{\partial D^i_N}}\) does not change the law of \(\ell _x^{\tau _{\partial D^i_N}}\) and

To bound this term from above, we use (3.2) which allows us to bound

which yields

(3.2), (3.3) and (3.6) then conclude the proof of (3.9). To get (3.11), we come back to (3.13) which gives

(3.14) shows that the first right hand side term is at most \(C(\log N)^{r-2} N^{-a}\) which is going to be of smaller order than the second term. Using (3.4) and performing the change of variable \(s_i = t_i/ \log N\) shows that when \(x = \left\lfloor Ny \right\rfloor \) the second right hand side term is asymptotically equivalent to

Using (3.6), this shows that

which proves (3.11).

We omit the proofs of (3.8) and (3.10) which are very similar and even slightly easier since there is no conditioning to deal with. We nevertheless mention that in (3.8), we do not need to restrict ourselves to the bulk of the domains (compared to (3.9)) because the probability increases with the domains. We can thus assume that all the points we consider are deep inside the domains. This finishes the proof.\(\square \)
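The fact used in the proof of Lemma 3.3, that the local time at the starting point is exponential and independent of the exit point, has a simple discrete-time analogue that can be checked by simulation: for a discrete-time walk, the number of visits to the starting site before exit is geometric with mean \(G(x,x)\), because each excursion from x independently either returns or exits, and the exit location only depends on the last excursion. The Monte Carlo sketch below (discrete-time walk instead of the paper's local times; box size, sample size, seed and helper names are hypothetical) checks the geometric identity \({\mathbb {P}}(V = 1) {\mathbb {E}}[V] = 1\) and compares the mean number of visits for exits near the middle of a side with that for exits near a corner.

```python
import random

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def run_walk(n, rng):
    """SRW from the centre of {1, ..., n-1}^2 until it first leaves the box.

    Returns (number of visits to the starting site, including time 0,
             distance of the exit site from the midpoint of its side).
    """
    c = n // 2
    x, y = c, c
    visits = 1
    while True:
        dx, dy = rng.choice(STEPS)
        x, y = x + dx, y + dy
        if x <= 0 or x >= n:
            return visits, abs(y - c)
        if y <= 0 or y >= n:
            return visits, abs(x - c)
        if x == c and y == c:
            visits += 1


def simulate(n=10, samples=20000, seed=1):
    rng = random.Random(seed)
    return [run_walk(n, rng) for _ in range(samples)]


def mean(values):
    return sum(values) / len(values)
```

If the number of visits were not independent of the exit location, the two conditional means computed from `simulate()` would differ systematically rather than only by Monte Carlo noise.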

We are now ready to prove:

Proposition 3.1

(Tightness). The sequences

are tight for the product topology of, respectively, weak and vague convergence on \(\bigcap _{D \in {\mathcal {D}}{'}} D, {\mathcal {D}}{'} \subset {\mathcal {D}}\). Moreover, for any Borel set \(A \subset {\mathbb {C}}\),

and if A is compactly included in \(\cap _{i=1}^r D^i\),

where E(a, r) is the \((r-1)\)-dimensional simplex defined in (1.6).

Proof of Proposition 3.1

To prove the desired tightness, it is enough to show that for all \({\mathcal {D}}{'}{\mathcal {X}}{'} \subset {\mathcal {D}}{\mathcal {X}}\) and \({\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'} \subset {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\) and \(K \Subset \bigcap _{D \in {\mathcal {D}}{'}} D\), the sequences of real-valued random variables

are tight. This is a direct consequence of Lemma 3.3: (3.8) and (3.9) show that

are uniformly bounded in N. (3.15) and (3.16) follow from the dominated convergence theorem together with (3.10) and (3.11) respectively.\(\square \)

3.2 Study of the subsequential limits

As described in Sect. 1.5, we start by showing that we can extract a subsequence such that the convergence holds for all domains and starting/stopping points at the same time. The difficulty lies in the fact that we consider uncountably many sequences.

Lemma 3.4

Let \((N_k, k \ge 1)\) be an increasing sequence of integers. There exists a subsequence \((N'_k, k \ge 1)\) of \((N_k, k \ge 1)\) such that for all \({\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'} \in {\mathcal {S}}\),

converges as \(k \rightarrow \infty \) in distribution, relative to the product topology of vague convergence on \(\bigcap _{D \in {\mathcal {D}}} D\), \({\mathcal {D}}\subset {\mathcal {D}}{'}\).

Before proving this result, we state an elementary lemma for ease of reference:

Lemma 3.5

Let \((X_k, k \ge 1)\) be a sequence of random variables. Assume that for all \(k \ge 1\) and \(p \ge 1\), \(X_k\) can be written as \(X_k = Y_{k,p} + Z_{k,p}\) where \(Y_{k,p}\) and \(Z_{k,p}\) are two non-negative random variables defined on the same probability space. Assume further that for all \(\lambda >0\),

exist and that for all \(p \ge 1\), \(\sup _{k \ge 1} {\mathbb {E}} \left[ Y_{k,p} \right] < \infty \) and \(\sup _{k \ge 1} {\mathbb {E}} \left[ Z_{k,p} \right] \rightarrow 0\) when \(p \rightarrow \infty \). Then \((X_k,k \ge 1)\) converges in distribution.

Proof of Lemma 3.5

As \(\sup _{k \ge 1} {\mathbb {E}} \left[ X_k \right] < \infty \), \((X_k, k \ge 1)\) is tight. To show that it converges, it is thus enough to show the pointwise convergence of the Laplace transform. Take \(\lambda >0\). Since \(Z_{k,p}\) is non-negative,

and

On the other hand,

and

We have shown that \({\mathbb {E}} \left[ e^{-\lambda X_k} \right] , k \ge 1,\) converges to \(\lim _{p \rightarrow \infty } \lim _{k \rightarrow \infty } {\mathbb {E}} \left[ e^{-\lambda Y_{k,p}} \right] \) which concludes the proof.\(\square \)
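One way to fill in the omitted inequalities is the following sandwich argument, written with the shorthand \(\ell _p := \lim _{k \rightarrow \infty } {\mathbb {E}} [ e^{-\lambda Y_{k,p}} ]\) and \(\varepsilon _p := \sup _{k \ge 1} {\mathbb {E}} [ Z_{k,p} ]\) (our notation, not the paper's). It only uses \(Z_{k,p} \ge 0\) and \(0 \le 1-e^{-u} \le u\) for \(u \ge 0\):

```latex
\begin{gathered}
0 \le e^{-\lambda Y_{k,p}} - e^{-\lambda X_k}
   = e^{-\lambda Y_{k,p}}\bigl(1 - e^{-\lambda Z_{k,p}}\bigr) \le \lambda Z_{k,p},
\\
\ell_p - \lambda \varepsilon_p
  \le \liminf_{k\to\infty} \mathbb{E}\bigl[e^{-\lambda X_k}\bigr]
  \le \limsup_{k\to\infty} \mathbb{E}\bigl[e^{-\lambda X_k}\bigr]
  \le \ell_p .
\end{gathered}
```

Since \(\varepsilon _p \rightarrow 0\), the liminf and the limsup differ by at most \(\lambda \varepsilon _p\) for every p, hence coincide, and \(\ell _p\) converges to their common value as \(p \rightarrow \infty \).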

Proof of Lemma 3.4

In this proof, the topologies associated to the unconditioned (resp. conditioned) measures will be the topology of weak convergence (resp. vague convergence) on the underlying domain. We will denote by \({\mathfrak {D}}\) the collection of simply connected domains that can be written as a finite union of discs with rational centres and radii and

Notice that \({\mathcal {S}}'\) is countable.

Let \({\mathcal {D}}{\mathcal {X}}\in {\mathcal {S}}\). By Proposition 3.1, the sequence \((\mu ^{{\mathcal {D}},a}_{{\mathcal {X}};N_k}, k \ge 1)\) is tight. Denote by \((X^{D}_{x;N_k}(t), 0 \le t \le \tau ^{N_k}_{D,x}), (D,x) \in {\mathcal {D}}{\mathcal {X}}\), the associated random walks, i.e. independent trajectories sampled according to \({\mathbb {P}}^{D_{N_k}}_x\). The sequence \(\Bigg ( N_k^{-1} X^D_{x;N_k}(N_k^2 t), t \le N_k^{-2} \tau ^{N_k}_{D,x} \Bigg )_{(D,x) \in {\mathcal {D}}{\mathcal {X}}}, k \ge 1,\) is also tight since it converges in distribution to independent Brownian motions. Hence, since \({\mathcal {S}}'\) is countable, Cantor’s diagonal argument allows us to extract a subsequence of \((N_k, k \ge 1)\) (which we still denote by \((N_k, k \ge 1)\)) such that for all \({\mathcal {D}}{'}{\mathcal {X}}{'} \in {\mathcal {S}}'\), the joint distribution

converges as \(k \rightarrow \infty \).
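Cantor's diagonal argument can be illustrated on a toy problem. This is a minimal sketch under assumptions of our own: the countable family is replaced by finitely-valued integer sequences (hypothetical stand-ins for the laws indexed by \({\mathcal {S}}'\)), so that "extract a convergent subsequence" can be done by keeping a most frequent value (pigeonhole), and the infinite index set is truncated to \(\{0, \dots , n_{\max }-1\}\). The function names `refine` and `diagonal_subsequence` are hypothetical.

```python
from collections import Counter
from typing import Callable, List, Sequence


def refine(indices: Sequence[int], seq: Callable[[int], int]) -> List[int]:
    """Keep the indices on which the finitely-valued sequence `seq` takes its most
    frequent value (ties broken by the smallest value). Along the returned indices
    `seq` is constant, hence convergent: a finite stand-in for compactness."""
    counts = Counter(seq(n) for n in indices)
    value = max(sorted(counts), key=counts.__getitem__)
    return [n for n in indices if seq(n) == value]


def diagonal_subsequence(seqs: Sequence[Callable[[int], int]], n_max: int) -> List[int]:
    """Build nested index sets S_0 ⊃ S_1 ⊃ ... (S_k makes seqs[0..k] converge) and
    return the diagonal: the k-th element of S_k. It is strictly increasing, and
    for each j its tail from position j onwards lies in S_j."""
    current = list(range(n_max))
    diagonal: List[int] = []
    for k, seq in enumerate(seqs):
        current = refine(current, seq)
        if len(current) <= k:
            break  # the finite truncation is exhausted
        diagonal.append(current[k])
    return diagonal


# Toy family: the j-th sequence is the j-th binary digit of n.
toy_sequences = [lambda n, j=j: (n >> j) & 1 for j in range(12)]
print(diagonal_subsequence(toy_sequences, 2 ** 16)[:4])
```

With the j-th binary digit as the j-th sequence, stage k keeps the multiples of \(2^{k+1}\) and the diagonal starts 0, 4, 16, 48; every digit is eventually constant along it, which is exactly what the diagonal argument guarantees for each member of the family.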

We will conclude the proof with the following two steps.

(i) We first fix \(D_i \in {\mathfrak {D}}, i =1 \dots r\), and show that if (3.17) converges with \({\mathcal {D}}{'}{\mathcal {X}}{'} = \{(D_i,x_i)\}\) for all \(x_i \in D_i \cap {\mathbb {Q}}^2, i=1 \dots r\), then the same holds for all \(x_i \in D_i, i=1 \dots r\).

(ii) We then fix nice domains \(D_i\) and initial points \(x_i \in D_i, i=1 \dots r\), and show the following: if (3.17) converges with \({\mathcal {D}}{'}{\mathcal {X}}{'} = \{(D_i',x_i)\}\) for all \(D_i' \in {\mathfrak {D}}\) containing \(x_i, i=1 \dots r\), then for all pairwise distinct nice points \(z_i \in \partial D_i\), setting \({\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'} = \{ (D_i,x_i,z_i) \}\), the sequence \(\left( \mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}};N_k}, {\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\subset {\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'} \right) \) converges as \(k \rightarrow \infty \).

We only prove (ii), since (i) is very similar; see the end of the proof for a few comments on step (i). To ease notation, we moreover only prove (ii) for \(r=1\). The general case \(r \ge 1\) follows along the same lines by considering multivariate Laplace transforms.

Let D be a nice domain, \(x_0 \in D\) and \(z \in \partial D\) a nice point. Let \((X_t)_{t \ge 0}\) be the associated random walk. We assume that for all \(D' \in {\mathfrak {D}}\) containing \(x_0\), the joint distribution of

converges as \(k \rightarrow \infty \) and we want to show the convergence of \(\mu ^{D,a}_{x_0,z;N_k}, k \ge 1\). Let \(f \in C_c(D, [0,\infty ))\). Our objective is to show that \(\left\langle \mu ^{D,a}_{x_0,z;N_k},f \right\rangle , k \ge 1,\) converges in law. Let \(p \ge 1\) and consider \(D^p \in {\mathfrak {D}}\) such that

In the following, we consider the measure \(\mu _{x_0,z;N}^{D^p,a}\), which is defined as \(\mu _{x_0;N}^{D^p,a}\) but under the conditional probability \({\mathbb {P}}_{Nx_0,Nz}^{D_N}\) instead of \({\mathbb {P}}_{Nx_0}^{D^p_N}\). The processes \((B_t, t \le \tau _{\partial D^p})\) under \({\mathbb {P}}^D_{x_0}\) and \((B_t, t \le \tau _{\partial D^p})\) under \({\mathbb {P}}^D_{x_0,z}\) are mutually absolutely continuous: if \({\mathcal {F}}_{\tau _{\partial D^p}}\) denotes the \(\sigma \)-algebra generated by \((B_t, t \le \tau _{\partial D^p})\), we have (see [AHS20] (2.7) for instance)

Similarly, as a direct consequence of the Markov property,

Hence the convergence of \( \left( \left\langle \mu ^{D^p,a}_{x_0;N_k},f \right\rangle , X_{\tau _{D^p}^{N_k}}/N_k \right) , k \ge 1\), implies the convergence of \(\left\langle \mu ^{D^p,a}_{x_0,z;N_k},f \right\rangle \), \(k \ge 1\): by Lemma 3.2, for all \(\alpha >0\) and k large enough,

and

Similarly, the liminf is bounded from below by the same right-hand side, which implies that \({\mathbb {E}} \left[ \exp \left( - \left\langle \mu ^{D^p,a}_{x_0,z;N_k},f \right\rangle \right) \right] \) converges as \(k \rightarrow \infty \). Since \(D^p, p \ge 1,\) is an increasing sequence of domains and f is non-negative, for all \(k \ge 1\), \({\mathbb {E}} \left[ \exp \left( - \left\langle \mu ^{D^p,a}_{x_0,z;N_k},f \right\rangle \right) \right] \) is non-increasing in p. Hence

converges as \(p \rightarrow \infty \). By Lemma 3.3, we also have, for all \(N \ge 1\) and \(p \ge 1\),

By Lemma 3.5, this implies that \( \left\langle \mu _{x_0,z;N_k}^{D,a},f \right\rangle , k \ge 1,\) converges in distribution. This concludes the proof of step (ii).
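The absolute continuity used in step (ii) is a Doob h-transform. In its standard form (an assumption about the display that is not reproduced here), with \(H_D(\cdot ,z)\) the Poisson kernel of D, the density of \({\mathbb {P}}^D_{x_0,z}\) with respect to \({\mathbb {P}}^D_{x_0}\) on \({\mathcal {F}}_{\tau _{\partial D^p}}\) is \(H_D(B_{\tau _{\partial D^p}},z)/H_D(x_0,z)\). The sketch below checks numerically, for the hypothetical choice D = unit disc and \(D^p\) = the concentric disc of radius \(\rho \), that this density has expectation one under \({\mathbb {P}}^D_{x_0}\); this is the mean-value property of the harmonic function \(H_D(\cdot ,z)\). All function names are ours.

```python
import cmath
import math


def poisson_kernel_unit_disc(x: complex, z: complex) -> float:
    """H_D(x, z) for D the unit disc: density (w.r.t. arc length) at z of the exit
    point of Brownian motion started at x. Harmonic in x."""
    return (1 - abs(x) ** 2) / (2 * math.pi * abs(z - x) ** 2)


def exit_density_small_disc(x0: complex, w: complex, rho: float) -> float:
    """Exit density at w (|w| = rho, w.r.t. arc length) of Brownian motion started
    at x0 (|x0| < rho) for the disc of radius rho centred at 0, a toy D^p."""
    return (rho ** 2 - abs(x0) ** 2) / (2 * math.pi * rho * abs(w - x0) ** 2)


def expected_density(x0: complex, z: complex, rho: float, n: int = 2000) -> float:
    """E_{x0}[H_D(B_tau, z) / H_D(x0, z)], tau = exit time of the small disc.
    Computed with the trapezoidal rule on |w| = rho, which is very accurate here
    because the integrand is smooth and periodic in the angle."""
    h0 = poisson_kernel_unit_disc(x0, z)
    arc = rho * 2 * math.pi / n  # arc-length element of each node
    total = 0.0
    for k in range(n):
        w = rho * cmath.exp(2j * math.pi * k / n)
        total += exit_density_small_disc(x0, w, rho) * poisson_kernel_unit_disc(w, z) * arc
    return total / h0


print(expected_density(0.2 + 0.1j, cmath.exp(0.7j), rho=0.6))  # close to 1
```

The same weighting, with the discrete exit point \(X_{\tau _{D^p}^{N_k}}/N_k\) in place of \(B_{\tau _{\partial D^p}}\), is what allows the joint convergence of the measure and the exit point to be turned into convergence of the conditioned measures.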

We finish with a comment on step (i), whose proof is very similar. One first stops the walks at the first hitting times of the boundaries of small discs centred at the starting points \(x_i\). One then needs to argue that the main contribution comes from the remaining parts of the trajectories, which converge by an h-transform-type argument as above. We leave the details to the reader.\(\square \)

As mentioned in Sect. 1.5, to prove that the subsequential limits satisfy Properties \((P_1)\) and \((P_4)\), we need the following result which is proven in Sect. 4:

Proposition 3.2

(Uniform integrability). For all \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}}\) and \(K \Subset \bigcap _{D \in {\mathcal {D}}} D\),

are uniformly integrable. Moreover, any subsequential limit \(\mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}\) of \(\left( \mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}};N}, N \ge 1 \right) \) satisfies, almost surely, \(\mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}(A) = 0\) for every Borel set A of Hausdorff dimension less than \(2-a\).

Before turning to the proof of Theorem 1.1, we state the following result, which is a quick consequence of (3.7).

Lemma 3.6

Let \(x_0 \in U\) and let \(\phi _N : {\mathbb {C}}\rightarrow [0,1]\) be a sequence of functions converging pointwise towards \(\phi \). Let \(\{z_i, i =1 \dots p \} \subset \partial U\) be the points where the boundary \(\partial U\) is not analytic. Assume that for all \(\alpha >0\) and for any compact subset K of \({\mathbb {C}}\backslash \{z_i,i=1 \dots p\}\), there exists \(C_{\alpha ,K} >0\) such that for all N large enough and for all \(z,z' \in K\),

Then,

Here, dz denotes the one-dimensional Hausdorff measure on \(\partial U\).

Proof

In this proof, when we say that a set \(K \subset {\mathbb {C}}\) is smooth, we mean that each connected component of the boundary of K is analytic. Let \(\alpha ,\varepsilon >0\). Since \(0 \le \phi \le 1\), there exists a smooth compact subset K of \({\mathbb {C}}\backslash \{z_i,i=1 \dots p\}\) such that

Using the weak convergence (3.7), this upper bound in particular implies

We now decompose \(K = \cup _{i=1}^I K_i\) into smooth compact sets of diameter at most \(\varepsilon \) such that for all \(i \ne j\), \(K_i \cap K_j \cap \partial U\) consists of at most one point. For all \(i = 1 \dots I\), let \(y_i\) be any point of \(K_i\). By the weak convergence (3.7), we now have

We have obtained

We obtain the desired upper bound by letting \(\varepsilon \rightarrow 0\) and then \(\alpha \rightarrow 0\). The lower bound is similar. \(\square \)
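The discretisation step of the proof (freezing \(\phi \) at a point \(y_i\) of each small piece \(K_i\)) can be checked on a toy case chosen by us, not taken from the paper: U is the unit disc and \(x_0=0\), so that harmonic measure is uniform on \(\partial U\); \(\phi _N=\phi \) with \(\phi (z)=|z-1|/2\), which takes values in [0,1] and is 1/2-Lipschitz; the \(K_i\) are the I arcs of angle \(2\pi /I\), whose diameters are at most \(\varepsilon =2\pi /I\). The freezing error is then at most \(\varepsilon /2=\pi /I\). The function names are hypothetical.

```python
import cmath
import math


def phi(z: complex) -> float:
    """Toy test function: values in [0, 1], Lipschitz with constant 1/2."""
    return abs(z - 1) / 2


def exact_integral() -> float:
    """Integral of phi against harmonic measure from 0 (uniform on the circle):
    (1/2π) ∫_0^{2π} sin(θ/2) dθ = 2/π, since |e^{iθ} - 1| = 2 sin(θ/2)."""
    return 2 / math.pi


def frozen_sum(n_pieces: int) -> float:
    """Replace phi on each arc K_i by its value at y_i (the left endpoint) and
    weight by the harmonic measure of K_i, which is 1 / n_pieces."""
    total = 0.0
    for i in range(n_pieces):
        y_i = cmath.exp(2j * math.pi * i / n_pieces)
        total += phi(y_i) / n_pieces
    return total


for n in (4, 16, 64, 256):
    # Observed error versus the a priori bound (Lipschitz constant) x (diameter).
    print(n, abs(frozen_sum(n) - exact_integral()), math.pi / n)
```

The a priori bound only uses the Lipschitz control of \(\phi \) on K and the diameter of the pieces, which is the role played by the assumption of Lemma 3.6 and by the choice of pieces of diameter at most \(\varepsilon \).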

We are now ready to prove Theorem 1.1.

Proof of Theorem 1.1

Let \(x_0 \in U\). We first assume the convergence of \((\mu _{x_0,z;N}^{U,a}, N \ge 1)\) for all nice points \(z \in \partial U\) and explain how to deduce the convergence of \((\mu _{x_0;N}^{U,a}, N \ge 1)\). Let \(f \in C(D,[0,\infty ))\). It is enough to prove that

converges. By Lemma 3.3 (3.8),

We can thus assume that f has compact support included in U (see Lemma 3.5). We have

To obtain the convergence of the above sum, we show that our situation fits the framework of Lemma 3.6. Let \(\alpha , r>0\) and define

By Lemma 3.3, if r is small enough (possibly depending on \(U, x_0\) and f), we have for all \(z \in \partial D\),

By Lemma 3.2 (3.6), for all N large enough and \(z,z' \in \partial D\),

Using (1.12), we see that for every compact subset K of an analytic portion of \(\partial U\), the above supremum is at most \(C_{\alpha ,K} \left| z-z' \right| \) for all \(z,z' \in K\). We have proven that for all N large enough, all such compact subsets K and all \(z,z' \in K\),

We can thus conclude with Lemma 3.6 that

This finishes the transfer of the convergence of conditioned measures to unconditioned measures.
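The sum appearing at the start of this proof comes from conditioning on the exit point. In notation of our own (\(\tau _N\) for the exit time of \(U_N\) and \(\phi _N\) for the conditioned Laplace transform; neither is the paper's notation), a sketch reads:

```latex
\begin{aligned}
\mathbb{E}\Bigl[e^{-\langle \mu^{U,a}_{x_0;N}, f\rangle}\Bigr]
 &= \sum_{w \in \partial U_N}
    \mathbb{P}^{U_N}_{\lfloor N x_0\rfloor}\bigl(X_{\tau_N} = w\bigr)\,\phi_N(w/N),\\
\phi_N(w/N)
 &:= \mathbb{E}^{U_N}_{\lfloor N x_0\rfloor}\Bigl[e^{-\langle \mu^{U,a}_{x_0;N}, f\rangle}
    \,\Bigm|\, X_{\tau_N} = w\Bigr] \in [0,1].
\end{aligned}
```

Since f is non-negative, each \(\phi _N\) takes values in [0,1], as required in Lemma 3.6. The weights form the discrete harmonic measure, whose weak convergence is (3.7), and the assumed convergence of the conditioned measures provides the pointwise convergence of \(\phi _N\). This is presumably the structure that Lemma 3.6 is tailored to, with the truncation parameters \(\alpha \) and r handling the regularity assumption.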

We now turn to the proof of the convergence of \((\mu _{x_*,z_*;N}^{U,a}, N \ge 1)\), where \(x_* \in U\) and \(z_* \in \partial U\) is a nice point. Let \((N_k, k \ge 1)\) be an increasing sequence of integers such that \((\mu _{x_*,z_*;N_k}^{U,a}, k \ge 1)\) converges. By Lemma 3.4, after extracting a further subsequence if necessary, we can assume that for all \({\mathcal {D}}{'}{\mathcal {X}}{'}{\mathcal {Z}}{'} \in {\mathcal {S}}\),

converges as \(k \rightarrow \infty \) towards some

By Theorem 1.2, to show that \(\mu _{x_*,z_*}^{U,a} \overset{\mathrm {(d)}}{=} e^{c_0a/g} {\mathcal {M}}_{x_*,z_*}^{U,a}\), it is enough to prove that \((\mu ^{{\mathcal {D}},a}_{{\mathcal {X}},{\mathcal {Z}}}\), \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}\in {\mathcal {S}})\) satisfies Properties \((P_1)\)-\((P_4)\).

Property \((P_1)\) is a direct consequence of what we have already done. For instance, for \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}= \{(D,x_0,z)\} \in {\mathcal {S}}\), the arguments are as follows. In order to identify the two finite Borel measures

we only need to check that for any continuous bounded nonnegative function \(f : {\mathbb {C}}\rightarrow {\mathbb {R}}\), the integrals of f against these two measures agree. For \(r>0\), let \(f_r\) be a continuous function with support compactly included in D which agrees with f on \(\{x \in D: d(x,\partial D) \ge r \}\) and such that \(0 \le f_r \le f\). By Proposition 3.1, for all \(r>0\),

Since Proposition 3.2 shows that \((\left\langle \mu ^{D,a}_{x_0,z;N_k}, f_r \right\rangle , k \ge 1)\) is uniformly integrable, we can interchange the limit and the expectation, which gives

We then obtain Property \((P_1)\) by letting \(r \rightarrow 0\) and using the monotone convergence theorem.

The proof of Property \((P_2)\) is very similar to that of the Brownian case. For instance, in the case \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}= \{(D,x_0,z)\} \in {\mathcal {S}}\) and \(D'\) a nice subset of D containing \(x_0\), we can similarly show that for every continuous function \(f : {\mathbb {C}}\rightarrow [0,\infty )\) with compact support included in \(D \backslash \partial D'\), and all \(y \in \partial D'\), \(\left\langle \mu _{x_0,z;N}^{D,a},f \right\rangle \) under \({\mathbb {P}}^{D_N}_{\left\lfloor Nx_0 \right\rfloor ,\left\lfloor Nz \right\rfloor } \left( \cdot \left| X_{\tau _{\partial D'_N}} = \left\lfloor Ny \right\rfloor \right. \right) \) has the same law as

plus lower-order terms which converge to zero in \(L^1\). This shows the conditional version of Property \((P_2)\). To obtain Property \((P_2)\) without conditioning on the hitting point of \(\partial D'\), we integrate over \(y \in \partial D'\), using the same argument as at the very beginning of the proof to transfer results from the conditioned to the unconditioned measures.

Finally, Property \((P_3)\) follows from the fact that we consider independent random walks and Property \((P_4)\) is a direct consequence of the carrying dimension estimate of Proposition 3.2. This concludes the proof.\(\square \)

4 Uniform Integrability: Proof of Proposition 3.2

To ease notation, we prove Proposition 3.2 for \({\mathcal {D}}{\mathcal {X}}{\mathcal {Z}}= \{(D,x_0,z)\}\). Our approach is very close to that of [Jeg20a]. We have simplified some minor aspects since we only need to show the uniform integrability of the sequence and not its convergence in \(L^1\). For instance, our definition of “good events” limits the number of certain excursions rather than certain local times.

If \(x \in {\mathbb {Z}}^2\) and \(R \ge 1\), we will denote by \(C_R(x)\) the contour \({\mathbb {Z}}^2 \cap \partial (x + [-R,R]^2)\), by \(A_N(x \rightarrow R)\) the number of excursions from x to \(C_R(x)\) before \(\tau _{\partial D_N}\) and

For \(b \in (a,2)\) and \(\varepsilon >0\), we introduce

the good event at x

and the modified version of \(\mu _{x_0;N}^{D,a}({\mathbb {C}})\),

We will see that adding these good events does not change the behaviour of the first moment and makes the second moment finite.
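The excursion counts \(A_N(x \rightarrow R)\) can be made concrete with a small simulation. This is a sketch under assumptions of our own: the domain \(D_N\) is replaced by a square box, and an excursion from x to \(C_R(x)\) is taken to start at a visit to x and to end at the next visit to \(C_R(x)\) (the usual convention, assumed here). The helper names are hypothetical.

```python
import random
from typing import List, Tuple

Point = Tuple[int, int]


def on_contour(p: Point, x: Point, R: int) -> bool:
    """True if p lies on C_R(x) = Z^2 ∩ ∂(x + [-R, R]^2), i.e. at sup-norm distance R."""
    return max(abs(p[0] - x[0]), abs(p[1] - x[1])) == R


def count_excursions(path: List[Point], x: Point, R: int) -> int:
    """Number of excursions from x to C_R(x) along `path` (R >= 1): an excursion
    starts at a visit to x and is completed at the next visit to C_R(x)."""
    count, armed = 0, False
    for p in path:
        if p == x:
            armed = True
        elif armed and on_contour(p, x, R):
            count += 1
            armed = False
    return count


def walk_until_exit(start: Point, half_width: int, rng: random.Random) -> List[Point]:
    """Simple random walk on Z^2 from `start`, stopped at its first exit from the
    open box (-half_width, half_width)^2, a hypothetical stand-in for D_N."""
    path = [start]
    x, y = start
    while max(abs(x), abs(y)) < half_width:
        dx, dy = rng.choice(((1, 0), (-1, 0), (0, 1), (0, -1)))
        x, y = x + dx, y + dy
        path.append((x, y))
    return path


walk = walk_until_exit((0, 0), 20, random.Random(0))
print([count_excursions(walk, (0, 0), R) for R in range(1, 10)])
```

Because the walk is nearest-neighbour, an excursion reaching \(C_{R'}(x)\) must cross \(C_R(x)\) for every \(R<R'\), so these counts are non-increasing in R.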

Lemma 4.1

For all \(b >a\),

Lemma 4.2

If \(b>a\) is close enough to a,

Moreover, if b is close enough to a, for all \(\eta >0\),

We now explain how these two lemmas imply Proposition 3.2.

Proof of Proposition 3.2

Lemma 4.1 and (4.2) imply that \((\mu _{x_0;N}^{D,a}({\mathbb {C}}), N \ge 1)\) is uniformly integrable. Moreover, by Frostman’s lemma, Lemma 4.1 and the energy estimate (4.3) imply that any subsequential limit \(\mu _{x_0}^{D,a}\) of \(\mu _{x_0;N}^{D,a}, N \ge 1\), satisfies, almost surely, \(\mu _{x_0}^{D,a}(A) = 0\) for every Borel set A with Hausdorff dimension smaller than \(2-a\).
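The deterministic fact behind this step is the energy method (the easy direction accompanying Frostman's lemma). For a finite Borel measure \(\nu \) on \({\mathbb {C}}\) and \(s>0\), a sketch of the argument, with \(x_j \in E_j \cap A \cap A_M\):

```latex
\begin{aligned}
&I_s(\nu) := \iint |x-y|^{-s}\,\nu(dx)\,\nu(dy) < \infty
 \;\Longrightarrow\; \nu(A \setminus A_M) \xrightarrow[M\to\infty]{} 0,
 \qquad A_M := \Bigl\{x : \int |x-y|^{-s}\,\nu(dy) \le M\Bigr\},\\
&x \in A_M,\ r>0:\quad
 \nu\bigl(\overline{B}(x,r)\bigr) \le r^{s}\int_{\overline{B}(x,r)} |x-y|^{-s}\,\nu(dy) \le M r^{s},\\
&A \cap A_M \subset \bigcup_j E_j,\ E_j \cap A \cap A_M \neq \emptyset
 \;\Longrightarrow\;
 \nu(A\cap A_M) \le \sum_j \nu\bigl(\overline{B}(x_j,\operatorname{diam}E_j)\bigr)
 \le M \sum_j (\operatorname{diam}E_j)^{s}.
\end{aligned}
```

Hence \({\mathcal {H}}^s(A\cap A_M) \ge \nu (A\cap A_M)/M\). If \(\dim _H A < s\), then \({\mathcal {H}}^s(A)=0\), so \(\nu (A\cap A_M)=0\) for every M and \(\nu (A)=0\). Applied with \(s=2-a-\eta \) for every \(\eta >0\), this yields the claim, assuming (as we read (4.3)) that the limiting measure has finite \((2-a-\eta )\)-energy almost surely on the relevant good events; the passage to the limit in the energy (truncated kernels and Fatou's lemma) is not reproduced here.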

To finish the proof, we now explain how to transfer these results to the conditioned measures \(\mu _{x_0,z;N}^{D,a}\), \(N \ge 1\). Let \(K \Subset D\), \(r >0\) and define \(D^r := \{ x \in D: d(x, \partial D) > r \}\). We denote by \(\mu _{x_0,z;N}^{D^r,a}(K)\) the random variable

under \({\mathbb {P}}_{x_0,z}^{D_N}\). A similar reasoning as in the proof of Lemma 3.4 shows that