Abstract

We establish a surface order large deviation estimate for the magnetisation of low temperature \(\phi ^4_3\). As a byproduct, we obtain decay of the spectral gap for its Glauber dynamics given by the \(\phi ^4_3\) singular stochastic PDE. Our main technical contributions are contour bounds for \(\phi ^4_3\), which extend 2D results by Glimm et al. (Commun Math Phys 45(3):203–216, 1975). We adapt an argument by Bodineau et al. (J Math Phys 41(3):1033–1098, 2000) to use these contour bounds to study phase segregation. The main challenge in obtaining the contour bounds is to handle the ultraviolet divergences of \(\phi ^4_3\) whilst preserving the structure of the low temperature potential. To do this, we build on the variational approach to ultraviolet stability for \(\phi ^4_3\) developed recently by Barashkov and Gubinelli (Duke Math. J. 169(17):3339–3415, 2020).

1 Introduction

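We study the behaviour of the average magnetisation

\[ {\mathfrak {m}}_N(\phi ) = \frac{1}{N^3} \int _{{\mathbb {T}}_N}\phi (x) dx \]

for fields \(\phi \) distributed according to the measure \({\nu _{\beta ,N}}\) with formal density

\[ d{\nu _{\beta ,N}}(\phi ) \propto \exp \Big ( - \int _{{\mathbb {T}}_N}{\mathcal {V}}_\beta (\phi (x)) + \frac{1}{2} |\nabla \phi (x)|^2 dx \Big ) \prod _{x\in {\mathbb {T}}_N}d\phi (x) \qquad (1.1) \]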

in the infinite volume limit \(N \rightarrow \infty \). Above, \({\mathbb {T}}_N= ({\mathbb {R}}/N{\mathbb {Z}})^3\) is the 3D torus of sidelength \(N \in {\mathbb {N}}\), \(\prod _{x\in {\mathbb {T}}_N}d\phi (x)\) is the (non-existent) Lebesgue measure on fields \(\phi :{\mathbb {T}}_N\rightarrow {\mathbb {R}}\), \(\beta > 0\) is the inverse temperature, and \({\mathcal {V}}_\beta : {\mathbb {R}}\rightarrow {\mathbb {R}}\) is the symmetric double-well potential given by \({\mathcal {V}}_\beta (a) = \frac{1}{\beta }(a^2-\beta )^2\) for \(a \in {\mathbb {R}}\).

\({\nu _{\beta ,N}}\) is a finite volume approximation of a \(\phi ^4_3\) Euclidean quantum field theory [Gli68, GJ73, FO76]. Its construction, first in finite volumes and later in infinite volume, was a major achievement of the constructive field theory programme in the ’60s-’70s: Glimm and Jaffe made the first breakthrough in [GJ73] and many results followed [Fel74, MS77, BCG+80, BFS83, BDH95, MW17, GH18, BG19]. The model in 2D was constructed earlier by Nelson [Nel66]. In higher dimensions there are triviality results: in dimensions \(\geqslant 5\) these are due to Aizenman and Fröhlich [Aiz82, Frö82], whereas the 4D case was only recently done by Aizenman and Duminil-Copin [ADC20]. By now it is also well-known that the \(\phi ^4_3\) model has significance in statistical mechanics since it arises as a continuum limit of Ising-type models near criticality [SG73, CMP95, HI18].

It is natural to define \({\nu _{\beta ,N}}\) using a density with respect to the centred Gaussian measure \(\mu _N\) with covariance \((-\Delta )^{-1}\), where \(\Delta \) is the Laplacian on \({\mathbb {T}}_N\) (see Remark 1.1 for how we deal with the issue of constant fields/the zeroth Fourier mode). However, in 2D and higher \(\mu _N\) is not supported on a space of functions and samples need to be interpreted as Schwartz distributions. This is a serious problem because there is no canonical interpretation of products of distributions, meaning that the nonlinearity \(\int _{{\mathbb {T}}_N}{\mathcal {V}}_\beta (\phi (x)) dx\) is not well-defined on the support of \(\mu _N\). If one introduces an ultraviolet (small-scale) cutoff \(K>0\) on the field to regularise it, then one sees that the nonlinearities \({\mathcal {V}}_\beta (\phi _K)\) fail to converge as the cutoff is removed: there are divergences. The strength of these divergences grows with the dimension: they are only logarithmic in the cutoff in 2D, whereas they are polynomial in the cutoff in 3D. In addition, \({\nu _{\beta ,N}}\) and \(\mu _N\) are mutually singular [BG20] in 3D, which produces technical difficulties that are not present in 2D.

Renormalisation is required in order to kill these divergences. This is done by looking at the cutoff measures and subtracting the corresponding counter-term \(\int _{{\mathbb {T}}_N}\delta m^2(K) \phi ^2_K\) where \(\phi _K\) is the field cutoff at spatial scales less than \(\frac{1}{K}\) and the renormalisation constant \(\delta m^2(K) = \frac{C_1}{\beta } K - \frac{C_2}{\beta ^2} \log K\) for specific constants \(C_1, C_2 > 0\) (see Sect. 2). If these constants are appropriately chosen (i.e. by perturbation theory), then a non-Gaussian limiting measure is obtained as \(K \rightarrow \infty \). This construction yields a one-parameter family of measures \({\nu _{\beta ,N}}={\nu _{\beta ,N}}(\delta m^2)\) corresponding to bounded shifts of \(\delta m^2(K)\).

Remark 1.1

For technical reasons, we work with a massive Gaussian free field as our reference measure. We do this by introducing a mass \(\eta > 0\) into the covariance. This resolves the issue of the constant fields/zeroth Fourier mode degeneracy. In order to stay consistent with (1.1), we subtract \(\int _{{\mathbb {T}}_N}\frac{\eta }{2} \phi ^2 dx\) from \({\mathcal {V}}_\beta (\phi )\).

Once we have chosen \(\eta \), it is convenient to fix \(\delta m^2\) by writing the renormalisation constants in terms of expectations with respect to \(\mu _N(\eta )\). The particular choice of \(\eta \) is inessential since one can show that changing \(\eta \) corresponds to a bounded shift of \(\delta m^2\) that is \(O\Big (\frac{1}{\beta }\Big )\) as \(\beta \rightarrow \infty \).

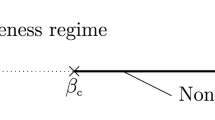

The large-scale behaviour of \({\nu _{\beta ,N}}\) depends heavily on \(\beta \) as \(N \rightarrow \infty \). To see why, note that \(a \mapsto {\mathcal {V}}_\beta (a)\) has minima at \(a = \pm {\sqrt{\beta }}\) with a potential barrier at \(a=0\) of height \(\beta \), so the minima become widely separated by a steep barrier as \(\beta \rightarrow \infty \). Consequently, \({\nu _{\beta ,N}}\) resembles an Ising model on \({\mathbb {T}}_N\) with spins at \(\pm {\sqrt{\beta }}\) (i.e. at inverse temperature \(\beta > 0\)) for large \(\beta \). Glimm et al. [GJS75] exploited this similarity and proved phase transition for \(\nu _\beta \), the infinite volume analogue of \({\nu _{\beta ,N}}\), in 2D using a sophisticated modification of the classical Peierls’ argument for the low temperature Ising model [Pei36, Gri64, Dob65]. See also [GJS76a, GJS76b]. Their proof relies on contour bounds for \({\nu _{\beta ,N}}\) in 2D that hold in the limit \(N \rightarrow \infty \). Their techniques fail in the significantly harder case of 3D. However, phase transition for \(\nu _\beta \) in 3D was established by Fröhlich, Simon, and Spencer [FSS76] using a different argument based heavily on reflection positivity. Whilst this argument is more general (it applies, for example, to some models with continuous symmetry), it is less quantitative than the Peierls’ theory of [GJS75]. Specifically, it is not clear how to use it to control large deviations of the (finite volume) average magnetisation \({\mathfrak {m}}_N\).

Although phase coexistence for \(\nu _\beta \) has been established, little is known of this regime in comparison to the low temperature Ising model. In the latter model, the study of phase segregation at low temperatures in large but finite volumes was initiated by Minlos and Sinai [MS67, MS68], culminating in the famous Wulff constructions: due to Dobrushin, Kotecký, and Shlosman in 2D [DKS89, DKS92], with simplifications due to Pfister [Pfi91] and results up to the critical point by Ioffe and Schonmann [IS98]; and Bodineau [Bod99] in 3D, see also results up to the critical point by Cerf and Pisztora [CP00] and the bibliographical review in [BIV00, Section 1.3.4]. We are interested in a weaker form of phase segregation: surface order large deviation estimates for the average magnetisation \({\mathfrak {m}}_N\). For the Ising model, this was first established in 2D by Schonmann [Sch87] and later extended up to the critical point by Chayes, Chayes, and Schonmann [CCS87]; in 3D this was first established by Pisztora [Pis96]. These results should be contrasted with the volume order large deviations established for \({\mathfrak {m}}_N\) in the high temperature regime where there is no phase coexistence [CF86, Ell85, FO88, Oll88].

Our main result is a surface order upper bound on large deviations for the average magnetisation under \({\nu _{\beta ,N}}\).

Theorem 1.2

Let \(\eta > 0\) and \({\nu _{\beta ,N}}= {\nu _{\beta ,N}}(\eta )\) as in Remark 1.1. For any \(\zeta \in (0,1)\), there exists \(\beta _0 = \beta _0(\zeta ,\eta ) > 0\), \(C=C(\zeta , \eta )>0\), and \(N_0 = N_0(\zeta ) \geqslant 4\) such that the following estimate holds: for any \(\beta > \beta _0\) and any \(N > N_0\) dyadic,

Proof

See Sect. 3.5. \(\quad \square \)

The condition that N is a sufficiently large dyadic in Theorem 1.2 comes from Proposition 3.8 (we also need that N is divisible by 4 to apply the chessboard estimates of Proposition 6.5). Our analysis can be simplified to prove Theorem 1.2 in 2D with \(N^2\) replaced by N in (1.2).

Our main technical contributions are contour bounds for \({\nu _{\beta ,N}}\). As a result, the Peierls’ argument of [GJS75] is extended to 3D, thereby giving a second proof of phase transition for \(\phi ^4_3\). The main difficulty is to handle the ultraviolet divergences of \({\nu _{\beta ,N}}\) whilst preserving the structure of the low temperature potential. We do this by building on the variational approach to showing ultraviolet stability for \(\phi ^4_3\) recently developed by Barashkov and Gubinelli [BG19]. Our insight is to separate scales within the corresponding stochastic control problem through a coarse-graining into an effective Hamiltonian and remainder. The effective Hamiltonian captures the macroscopic description of the system and is treated using techniques adapted from [GJS76b]. The remainder contains the ultraviolet divergences and these are killed using the renormalisation techniques of [BG19].

Our next contribution is to adapt arguments used by Bodineau, Velenik, and Ioffe [BIV00], in the context of equilibrium crystal shapes of discrete spin models, to study phase segregation for \(\phi ^4_3\). In particular, we adapt them to handle a block-averaged model with unbounded spins. Technically, this requires control over large fields.

1.1 Application to the dynamical \(\phi ^4_3\) model

The Glauber dynamics of \({\nu _{\beta ,N}}\) is given by the singular stochastic PDE

where \(\Phi \in S'({\mathbb {R}}_+\times {\mathbb {T}}_N)\) is a space-time Schwartz distribution, \(\phi _0 \in {\mathcal {C}}^{-\frac{1}{2} -\kappa }({\mathbb {T}}_N)\), the infinite constant indicates renormalisation (see Remark 6.16), and \(\xi \) is space-time white noise on \({\mathbb {T}}_N\). The well-posedness of this equation, known as the dynamical \(\phi ^4_3\) model, has been a major breakthrough in stochastic analysis in recent years [Hai14, Hai16, GIP15, CC18, Kup16, MW17, GH19, MW18].

In finite volumes the solution is a Markov process and its associated semigroup \(({\mathcal {P}}_t^{\beta ,N})_{t \geqslant 0}\) is reversible and exponentially ergodic with respect to its unique invariant measure \({\nu _{\beta ,N}}\) [HM18a, HS19, ZZ18a]. As a consequence, there exists a spectral gap \(\lambda _{\beta ,N}>0\) given by the optimal constant in the inequality:

for suitable \(F \in L^2({\nu _{\beta ,N}})\). \(\lambda _{\beta ,N}^{-1}\) is called the relaxation time and measures the rate of convergence of variances to equilibrium. An implication of Theorem 1.2 is the exponential explosion of relaxation times in the infinite volume limit provided \(\beta \) is sufficiently large.

Corollary 1.3

Let \(\eta > 0\) and \({\nu _{\beta ,N}}= {\nu _{\beta ,N}}(\eta )\) as in Remark 1.1. Then, there exists \(\beta _0=\beta _0(\eta )>0\), \(C=C(\beta _0,\eta )\), and \(N_0 \geqslant 4\) such that, for any \(\beta > \beta _0\) and \(N > N_0\) dyadic,

Proof

See Sect. 7. \(\quad \square \)

Corollary 1.3 is the first step towards establishing phase transition for the relaxation times of the Glauber dynamics of \(\phi ^4\) in 2D and 3D. This phenomenon has been well-studied for the Glauber dynamics of the 2D Ising model, where a relatively complete picture has been established (in higher dimensions it is less complete). The relaxation times for the Ising dynamics on the 2D torus of sidelength N undergo the following trichotomy as \(N \rightarrow \infty \): in the high temperature regime, they are uniformly bounded in N [AH87, MO94]; in the low temperature regime, they are exponential in N [Sch87, CCS87, Tho89, MO94, CGMS96]; at criticality, they are polynomial in N [Hol91, LS12]. It would be interesting to see whether the relaxation times for the dynamical \(\phi ^4\) model undergo such a trichotomy.

1.2 Paper organisation

In Sect. 2 we introduce the renormalised, ultraviolet cutoff measures \(\nu _{\beta ,N,K}\) that converge weakly to \({\nu _{\beta ,N}}\) as the cutoff is removed. In Sect. 3 we carry out the statistical mechanics part of the proof of Theorem 1.2. In particular, conditional on the moment bounds in Proposition 3.6, we develop contour bounds for \({\nu _{\beta ,N}}\). These contour bounds allow us to adapt techniques in [BIV00], which were developed in the context of discrete spin systems, to deal with \({\nu _{\beta ,N}}\).

In Sect. 4 we lay the foundation to proving Proposition 3.6 by introducing the Boué–Dupuis formalism for analysing the free energy of \({\nu _{\beta ,N}}\) as in [BG19]. We then use a low temperature expansion and coarse-graining argument within the Boué–Dupuis formalism in Sect. 5 to establish Proposition 5.1 which contains the key analytic input to proving Proposition 3.6.

In Sect. 6, we use the chessboard estimates of Proposition 6.5 to upgrade the bounds of Proposition 5.1 to those of Proposition 3.6. Chessboard estimates follow from the well-known fact that \({\nu _{\beta ,N}}\) is reflection positive. We give an independent proof of this fact by using stability results for the dynamics (1.3) to show that lattice and Fourier regularisations of \({\nu _{\beta ,N}}\) converge to the same limit. Then, in Sect. 7, we prove Corollary 1.3 showing that the spectral gaps for the dynamics decay in the infinite volume limit provided \(\beta \) is sufficiently large.

We collect basic notations and analytic tools that we use throughout the paper in “Appendix A”.

2 The Model

In the following, we use notation and standard tools introduced in “Appendix A”.

Let \(\eta > 0\). Denote by \(\mu _N = \mu _N(\eta )\) the centred Gaussian measure with covariance \((-\Delta + \eta )^{-1}\) and expectation \({\mathbb {E}}_N\). Above, \(\Delta \) is the Laplacian on \({\mathbb {T}}_N\). As pointed out in Remark 1.1, the choice of \(\eta \) is inessential. We consider it fixed unless stated otherwise and we do not make \(\eta \)-dependence explicit in the notation.

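Fix \(\beta > 0\). Let \({\mathcal {V}}_\beta :{\mathbb {R}}\rightarrow {\mathbb {R}}\) be given by

\[ {\mathcal {V}}_\beta (a) = \frac{1}{\beta }(a^2-\beta )^2 = \frac{1}{\beta } a^4 - 2a^2 + \beta . \]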

\({\mathcal {V}}_\beta \) is a symmetric double well potential with minima at \(a = \pm {\sqrt{\beta }}\) and a potential barrier at \(a=0\) of height \(\beta \).
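The stated properties of \({\mathcal {V}}_\beta \) can be checked numerically. The following is a minimal Python sketch, assuming only the formula \({\mathcal {V}}_\beta (a) = \frac{1}{\beta }(a^2-\beta )^2\); the function names and the value \(\beta = 16\) are illustrative choices and not part of the paper.

```python
import math


def double_well(a: float, beta: float) -> float:
    """Double-well potential V_beta(a) = (a^2 - beta)^2 / beta from the text."""
    return (a * a - beta) ** 2 / beta


def double_well_slope(a: float, beta: float) -> float:
    """Derivative of V_beta, namely 4 a (a^2 - beta) / beta (chain rule)."""
    return 4.0 * a * (a * a - beta) / beta


if __name__ == "__main__":
    beta = 16.0  # illustrative value only
    well = math.sqrt(beta)
    # The critical points are a = -sqrt(beta), 0, +sqrt(beta).
    for a in (-well, 0.0, well):
        print(a, double_well(a, beta), double_well_slope(a, beta))
```

The slope vanishes at all three points, the potential vanishes at \(\pm {\sqrt{\beta }}\), and its value at \(a=0\) equals \(\beta \), which is the barrier height quoted above.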

Fix \(\rho \in C^\infty _c({\mathbb {R}}^3;[0,1])\) rotationally symmetric; decreasing; and satisfying \(\rho (x)=1\) for \(|x| \in [0,c_\rho )\), where \(c_\rho >0\). See Lemma 4.6 for why the last condition is important. Note that many of our estimates rely on the choice of \(\rho \), but we omit explicit reference to this.

For every \(K>0\), let \(\rho _K\) be the Fourier multiplier on \({\mathbb {T}}_N\) with symbol \(\rho _K(\cdot ) = \rho (\frac{\cdot }{K})\). For \(\phi \sim \mu _N\), we denote \(\phi _K = \rho _K \phi \). Note that \(\phi _K\) is smooth. Let

where \(\langle \cdot \rangle = \sqrt{\eta + 4\pi ^2|\cdot |^2}\). Note that this constant grows linearly in \(K\) as \(K \rightarrow \infty \). The first four Wick powers of \(\phi _K\) are given by the generalised Hermite polynomials:
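For orientation, the standard generalised Hermite polynomials with variance parameter \(c\) are \(x\), \(x^2 - c\), \(x^3 - 3cx\) and \(x^4 - 6cx^2 + 3c^2\). The Python sketch below tabulates them and checks that each has mean zero under a centred Gaussian of variance \(c\), which is the defining feature of Wick ordering. It assumes that \(c\) plays the role of the Wick constant of the text; all function names are hypothetical.

```python
from math import prod


def wick_coefficients(n: int, c: float) -> list:
    """Coefficients [a_0, ..., a_n] of the generalised Hermite polynomial H_n(x; c)."""
    table = {
        1: [0.0, 1.0],
        2: [-c, 0.0, 1.0],
        3: [0.0, -3.0 * c, 0.0, 1.0],
        4: [3.0 * c * c, 0.0, -6.0 * c, 0.0, 1.0],
    }
    if n not in table:
        raise ValueError("only the first four Wick powers are tabulated")
    return table[n]


def evaluate(coeffs: list, x: float) -> float:
    """Evaluate the polynomial with the given coefficients at x."""
    return sum(a * x**k for k, a in enumerate(coeffs))


def gaussian_moment(k: int, c: float) -> float:
    """E[X^k] for X ~ N(0, c): zero for odd k, (k-1)!! c^(k/2) for even k."""
    if k % 2 == 1:
        return 0.0
    return prod(range(k - 1, 0, -2)) * c ** (k // 2)


def gaussian_mean(coeffs: list, c: float) -> float:
    """Expectation of the polynomial under N(0, c), computed by linearity."""
    return sum(a * gaussian_moment(k, c) for k, a in enumerate(coeffs))


if __name__ == "__main__":
    c = 2.5  # illustrative variance
    for n in range(1, 5):
        print(n, gaussian_mean(wick_coefficients(n, c), c))
```

Working with exact Gaussian moments rather than sampling keeps the check deterministic.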

We define the Wick renormalised potential by linearity:

Let \(\nu _{\beta ,N,K}\) be the probability measure with density

Above, \({\mathcal {H}}_{\beta ,N,K}\) is the renormalised Hamiltonian

where \(\gamma _K\) and \(\delta _K\) are additional renormalisation constants given by (5.25) and (5.26), respectively, and \({\mathscr {Z}}_{\beta ,N,K} = {\mathbb {E}}_N e^{-{\mathcal {H}}_{\beta ,N,K}(\phi _K)}\) is the partition function.

Proposition 2.1

For every \(\beta > 0\) and \(N \in {\mathbb {N}}\), the measures \(\nu _{\beta ,N,K}\) converge weakly to a non-Gaussian measure \({\nu _{\beta ,N}}\) on \(S'({\mathbb {T}}_N)\) as \(K \rightarrow \infty \). In addition, \({\mathscr {Z}}_{\beta ,N,K} \rightarrow {\mathscr {Z}}_{\beta ,N}\) as \(K \rightarrow \infty \) and satisfies the following estimate: there exists \(C=C(\beta ,\eta ) > 0\) such that

Proof

Proposition 2.1 is a variant of the classical ultraviolet stability for \(\phi ^4_3\) first established in [GJ73]. Our precise formulation, i.e. the choice of \(\gamma _\bullet \) and \(\delta _\bullet \), is taken from [BG19, Theorem 1]. \(\quad \square \)

We write \(\langle \cdot \rangle _{\beta ,N}\) and \(\langle \cdot \rangle _{\beta ,N,K}\) for expectations with respect to \({\nu _{\beta ,N}}\) and \(\nu _{\beta ,N,K}\), respectively.

Remark 2.2

The three constants entering the renormalised Hamiltonian, namely the Wick constant defined above together with \(\gamma _K\) and \(\delta _K\), are, respectively, Wick renormalisation, (second order) mass renormalisation, and energy renormalisation constants. They all depend on \(\eta \) and N. \(\delta _K\) additionally depends on \(\beta \) and is needed for the convergence of \({\mathscr {Z}}_{\beta ,N,K}\) as \(K \rightarrow \infty \), but drops out of the definition of the cutoff measures (2.2).

Remark 2.3

In 2D a scaling argument [GJS76c] allows one to work with the measure with density proportional to

where \({\tilde{\mu }}_N\) is the Gaussian measure with covariance \((-\Delta + {\sqrt{\beta }}^{-1})^{-1}\), i.e. a \(\beta \)-dependent mass. This measure is significantly easier to work with due to the degenerate mass when \(\beta \) is large. In particular, it is easier to obtain contour bounds which, although suboptimal from the point of view of \(\beta \)-dependence, are sufficient for the Peierls’ argument in [GJS75] and for the analogue of our argument in Sect. 3 carried out in 2D. In 3D one cannot work with such a measure.

3 Surface Order Large Deviation Estimate

In this section we carry out the statistical mechanics part of the proof of Theorem 1.2. Recall that for large \(\beta \), the minima of the potential \({\mathcal {V}}_\beta \) at \(\pm {\sqrt{\beta }}\) are widely separated by a steep potential barrier of height \(\beta \), so formally \({\nu _{\beta ,N}}\) resembles an Ising model at inverse temperature \(\beta \). We use this intuition to prove contour bounds for \({\nu _{\beta ,N}}\) (see Proposition 3.2) conditional on certain moment bounds (see Proposition 3.6). The contour bounds are then used to adapt arguments from [BIV00] to prove Theorem 1.2.

3.1 Block averaging

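Let \(e_1, e_2, e_3\) be the standard basis for \({\mathbb {R}}^3\). We identify \({\mathbb {T}}_N\) with the set

\[ \{ a_1 e_1 + a_2 e_2 + a_3 e_3 : a_1, a_2, a_3 \in [0,N) \} . \]

Define

\[ {{\mathbb {B}}_N}= \Big \{ \prod _{i=1}^3 [a_i, a_i+1) \subset {\mathbb {T}}_N: a_1, a_2, a_3 \in \{0, \dots , N-1\} \Big \} . \]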

We call elements of \({{\mathbb {B}}_N}\) blocks. For any \(B \subset {{\mathbb {B}}_N}\), we overload notation and write \(B = \bigcup _{\square \in B} \square \subset {\mathbb {T}}_N\). Hence, \(|B| = \int _B 1 dx\) is the number of blocks in B. In addition, we identify any \(f \in {\mathbb {R}}^{{\mathbb {B}}_N}\) with the piecewise constant function on \({\mathbb {T}}_N\) given by \(f(x) = f(\square )\) for \(x \in \square \).

Let \(\phi \sim {\nu _{\beta ,N}}\). For any \(\square \in {{\mathbb {B}}_N}\), let \({\phi }(\square ) = \int _\square \phi dx\). Here, the integral is interpreted as the duality pairing between \(\phi \) (a distribution) and the indicator function \(\mathbf {1}_\square \) (a test function); we use this convention throughout. We let \({\phi }= ({\phi }(\square ))_{\square \in {{\mathbb {B}}_N}}\) denote the block averaged field obtained from \(\phi \).
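Block averaging can be illustrated on a discretised field. The following Python sketch assumes a smooth stand-in for \(\phi \) sampled on a regular grid with `m` points per unit length (the actual field is a distribution, so this is only a caricature), and approximates the integral over each unit block by a Riemann sum. The names `block_average` and `magnetisation` are illustrative.

```python
from itertools import product


def block_average(field, n_blocks: int, m: int) -> dict:
    """Riemann-sum approximation of the integral of the field over each unit block.

    field[i][j][k] holds samples on a grid of spacing 1/m covering [0, n_blocks)^3,
    so each unit block contains m^3 samples, each weighted by the cell volume m^-3.
    """
    averages = {}
    for block in product(range(n_blocks), repeat=3):
        total = 0.0
        for offset in product(range(m), repeat=3):
            i, j, k = (block[d] * m + offset[d] for d in range(3))
            total += field[i][j][k]
        averages[block] = total / m**3
    return averages


def magnetisation(averages: dict, n_blocks: int) -> float:
    """Average magnetisation: the sum of the block integrals divided by the volume N^3."""
    return sum(averages.values()) / n_blocks**3


if __name__ == "__main__":
    n_blocks, m = 2, 2
    side = n_blocks * m
    # Illustrative field depending only on the first coordinate.
    field = [[[float(i) for _ in range(side)] for _ in range(side)] for i in range(side)]
    averages = block_average(field, n_blocks, m)
    print(averages[(0, 0, 0)], averages[(1, 0, 0)], magnetisation(averages, n_blocks))
```

Since every block has unit volume, the block integral and the block average coincide, and the magnetisation is the mean of the block values.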

Remark 3.1

Testing \(\phi \) against \(\mathbf {1}_\square \), which is not smooth, yields a well-defined random variable on the support of \({\nu _{\beta ,N}}\). Indeed, \(\phi \) belongs almost surely to \(L^\infty \)-based Besov spaces of regularity s for every \(s < -\frac{1}{2}\) (see Appendix A for a review of Besov spaces and see Sect. 4 for the almost sure regularity of \(\phi \)). On the other hand, indicator functions of blocks belong to \(L^1\)-based Besov spaces of regularity s for every \(s < 1\) or, more generally, \(L^p\)-based Besov spaces of regularity s for every \(s < \frac{1}{p}\) (see, for example, Lemma 1.1 in [FR12]). This is sufficient to test \(\phi \) against indicator functions of blocks (using e.g. Proposition A.1). We also give an alternative proof using a type of Itô isometry in Proposition 5.23.

3.2 Phase labels

We define a map \({\phi }\in {\mathbb {R}}^{{\mathbb {B}}_N}\mapsto \sigma \in \{-{\sqrt{\beta }}, 0, {\sqrt{\beta }}\}^{{\mathbb {B}}_N}\) called a phase label. A basic function of \(\sigma \) is to identify whether the averages \({\phi }(\square )\) take values around the well at \(+{\sqrt{\beta }}\), the well at \(-{\sqrt{\beta }}\), or neither. We quantify this to a given precision \(\delta \in (0,1)\), which is taken to be fixed in what follows.

- We say that \(\square \in {{\mathbb {B}}_N}\) is plus (resp. minus) valued if \(|{\phi }(\square ) \mp {\sqrt{\beta }}| < \delta {\sqrt{\beta }}\). The set of plus (resp. minus) valued blocks is denoted \({\mathcal {P}}\) (resp. \({\mathcal {M}}\)).

- The set of neutral blocks is defined as \({\mathcal {N}}= {{\mathbb {B}}_N}{\setminus } ({\mathcal {P}}\cup {\mathcal {M}})\).

Each block in \({{\mathbb {B}}_N}\) contains a midpoint. Given two distinct blocks in \({{\mathbb {B}}_N}\), we say that they are nearest-neighbours if their midpoints are of distance 1. They are \(*\)-neighbours if their midpoints are of distance at most \(\sqrt{3}\). For any \(\square \in {{\mathbb {B}}_N}\), the \(*\)-connected ball centred at \(\square \) is the set \(\mathrm {B}^*(\square ) \subset {{\mathbb {B}}_N}\) consisting of \(\square \) and its \(*\)-neighbours. It contains exactly 27 blocks.

- We say that \(\square \in {{\mathbb {B}}_N}\) is plus good if every \(\square ' \in \mathrm {B}^*(\square )\) is plus valued. The set of plus good blocks is denoted \({\mathcal {P}}_G\).

- We say that \(\square \in {{\mathbb {B}}_N}\) is minus good if every \(\square ' \in \mathrm {B}^*(\square )\) is minus valued. The set of minus good blocks is denoted \({\mathcal {M}}_G\).

- The set of bad blocks is defined as \({\mathcal {B}}= {{\mathbb {B}}_N}{\setminus } ({\mathcal {P}}_G \cup {\mathcal {M}}_G)\).

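Define the phase label \(\sigma \) associated to \({\phi }\) of precision \(\delta > 0\) by

\[ \sigma (\square ) = \begin{cases} +{\sqrt{\beta }}, & \square \in {\mathcal {P}}_G, \\ -{\sqrt{\beta }}, & \square \in {\mathcal {M}}_G, \\ 0, & \square \in {\mathcal {B}}. \end{cases} \]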
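The construction of the phase label can be sketched in a few lines of Python. The sketch makes two assumptions that should be read as such: a block is taken to be plus (resp. minus) valued when its average lies within \(\delta {\sqrt{\beta }}\) of \(+{\sqrt{\beta }}\) (resp. \(-{\sqrt{\beta }}\)), and the label equals \(\pm {\sqrt{\beta }}\) on plus/minus good blocks and \(0\) on bad blocks. Blocks are indexed by points of \(({\mathbb {Z}}/N{\mathbb {Z}})^3\), and the function name is hypothetical.

```python
from itertools import product
from math import sqrt


def phase_label(averages: dict, n: int, beta: float, delta: float) -> dict:
    """Phase label on the periodic grid (Z/nZ)^3, given block averages keyed by (i, j, k)."""
    root = sqrt(beta)

    def value(block) -> int:
        # +1 for plus valued, -1 for minus valued, 0 for neutral (assumed thresholds).
        if abs(averages[block] - root) < delta * root:
            return 1
        if abs(averages[block] + root) < delta * root:
            return -1
        return 0

    offsets = list(product((-1, 0, 1), repeat=3))
    sigma = {}
    for block in averages:
        # The *-connected ball: the block together with its 26 *-neighbours.
        ball = [tuple((block[d] + o[d]) % n for d in range(3)) for o in offsets]
        signs = {value(b) for b in ball}
        if signs == {1}:
            sigma[block] = root      # plus good
        elif signs == {-1}:
            sigma[block] = -root     # minus good
        else:
            sigma[block] = 0.0       # bad
    return sigma


if __name__ == "__main__":
    n, beta, delta = 4, 4.0, 0.25
    averages = {b: 2.0 for b in product(range(n), repeat=3)}
    averages[(0, 0, 0)] = -2.0  # a single minus valued block
    sigma = phase_label(averages, n, beta, delta)
    print(sum(1 for v in sigma.values() if v == 0.0))
```

A single block of the opposite sign makes every block of its \(*\)-connected ball bad, which is why bad blocks come in clusters of at least 27.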

The following proposition can be thought of as an extension of the contour bounds developed for \(\phi ^4\) in 2D [GJS75, Theorem 1.2] to 3D.

Proposition 3.2

Let \(\sigma \) be a phase label of precision \(\delta \in (0,1)\). Then, there exists \(\beta _0=\beta _0(\delta , \eta ) > 0\) and \(C_P=C_P(\delta , \eta ) > 0\) such that, for \(\beta > \beta _0\), the following holds for any \(N \in 4{\mathbb {N}}\): for any set of blocks \(B\subset {{\mathbb {B}}_N}\),

Proof

See Sect. 3.3.1. The main estimates required in the proof are given in Proposition 3.6, which extends [GJS75, Theorem 1.3] to 3D and improves the \(\beta \)-dependence. Assuming this, we then prove Proposition 3.2 in the spirit of the proof of [GJS75, Theorem 1.2]. \(\quad \square \)

3.3 Penalising bad blocks

Given a phase label, we partition the set of bad blocks \({\mathcal {B}}\) into two types.

- Frustrated blocks are blocks \(\square \in {\mathcal {B}}\) such that \(\mathrm {B}^*(\square )\) contains a neutral block. We denote the set of frustrated blocks \({\mathcal {B}}_F\).

- Interface blocks are blocks \(\square \in {\mathcal {B}}\) such that \(\mathrm {B}^*(\square )\) contains no neutral blocks, but there exists at least one pair of nearest-neighbours \(\square ', \square '' \in \mathrm {B}^*(\square )\) such that \(\square ' \in {\mathcal {P}}\) but \(\square '' \in {\mathcal {M}}\). We denote the set of interface blocks \({\mathcal {B}}_I\).

For any \(\square \in {{\mathbb {B}}_N}\) and any nearest-neighbours \(\square ', \square '' \in {{\mathbb {B}}_N}\), define:

Remark 3.3

Note that testing \(:\phi ^2:\) against \(\mathbf {1}_\square \) yields a well-defined random variable on the support of \({\nu _{\beta ,N}}\). We give a proof of this fact in Proposition 5.24.

We write \(\mathrm {B}^*_{\mathrm {nn}}(\square )\) for the set of unordered pairs of nearest-neighbour blocks \(\{\square ', \square ''\}\) in \({{\mathbb {B}}_N}\) such that \(\square ', \square '' \in \mathrm {B}^*(\square )\). There are 54 elements in this set.
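Both counts, 27 blocks in a \(*\)-connected ball and 54 nearest-neighbour pairs inside it, can be confirmed by brute force. The Python sketch below identifies block midpoints with points of \({\mathbb {Z}}^3\) and assumes that \(*\)-neighbours are distinct blocks whose midpoints lie within distance \(\sqrt{3}\); the variable names are illustrative.

```python
from itertools import combinations, product


def squared_distance(p, q) -> int:
    """Squared Euclidean distance between two integer points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


# Midpoints (relative to the centre) of all blocks in the 3 x 3 x 3 cube around the origin.
cube = list(product((-1, 0, 1), repeat=3))
origin = (0, 0, 0)

# *-neighbours of the centre: distinct blocks within distance sqrt(3), i.e. squared distance <= 3.
star_neighbours = [p for p in cube if p != origin and squared_distance(p, origin) <= 3]

# Unordered nearest-neighbour pairs with both blocks inside the ball.
nn_pairs = [(p, q) for p, q in combinations(cube, 2) if squared_distance(p, q) == 1]

if __name__ == "__main__":
    print(len(star_neighbours) + 1, len(nn_pairs))
```

The pair count agrees with the direct computation: along each of the three axes there are \(2 \cdot 9 = 18\) adjacent pairs in a \(3 \times 3 \times 3\) cube.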

Lemma 3.4

Let \(N \in {\mathbb {N}}\) and fix a phase label of precision \(\delta \in (0,1)\). Then, for every \(\square \in {{\mathbb {B}}_N}\),

where \(C_\delta = \min \Big ( \frac{\delta }{2}, 2-2\delta \Big ) > 0\).

Frustrated blocks are penalised by the potential \({\mathcal {V}}_\beta \) whereas interface blocks are penalised by the gradient term in the Gaussian measure. Lemma 3.4 formalises this through use of the random variables \(Q_1, Q_2\) and \(Q_3\), which (up to trivial modifications) were introduced in [GJS75]. \(Q_1\) penalises frustrated blocks. \(Q_2\) is an error term coming from the fact that the potential is written in terms of \(\phi \) rather than \({\phi }\). \(Q_3\) penalises interface blocks.

Proof of Lemma 3.4

For any neutral block \(\square \in {\mathcal {N}}\),

where in the penultimate line we have used that \(\delta ^2 \leqslant \delta \).

By the definition of \({\mathcal {B}}_F\),

Using (3.5) applied to the neutral blocks \(\square ' \in \mathrm {B}^*(\square )\) in (3.6) yields (3.3).

(3.4) is established by the following estimates: by the definition of \({\mathcal {B}}_I\),

\(\square \)

In order to use Lemma 3.4 to prove Proposition 3.2, we want to control expectations of \(\cosh Q_1, \cosh Q_2\) and \(\cosh Q_3\) by the exponentially small (in \({\sqrt{\beta }}\)) prefactor in (3.3) and (3.4). Moreover, we want to control these expectations over a set of blocks as opposed to just single blocks.

Let \(B_1, B_2 \subset {{\mathbb {B}}_N}\) and let \(B_3\) be any set of unordered pairs of nearest-neighbours in \({{\mathbb {B}}_N}\). Define

Remark 3.5

Although the random variable \(Q_3(\square ', \square '')\) does depend on the ordering of \(\square '\) and \(\square ''\), \(\cosh Q_3(\square ', \square '')\) does not.

Proposition 3.6

For every \(a_0 > 0\), there exist \(\beta _0 = \beta _0(a_0,\eta )>0\) and \(C_Q = C_Q(a_0,\beta _0,\eta )>0\) such that the following holds uniformly for all \(\beta > \beta _0\), \(a_1,a_2,a_3 \in {\mathbb {R}}\) such that \(|a_i| \leqslant a_0\), and \(N \in 4{\mathbb {N}}\): let \(B_1, B_2 \subset {{\mathbb {B}}_N}\) and \(B_3\) a set of unordered pairs of nearest-neighbour blocks in \({{\mathbb {B}}_N}\). Then,

where \(|B_3|\) is given by the number of pairs in \(B_3\).

Proof

Proposition 3.6 is established in Sect. 6.3, but its proof takes up most of this article. The overall strategy is as follows: the crucial first step is to obtain upper and lower bounds on the free energy \(-\log {\mathscr {Z}}_{\beta ,N}\) that are uniform in \(\beta \) and extensive in the volume, \(N^3\). We then build on this analysis to obtain upper bounds on expectations of the form \(\langle \exp Q \rangle _{\beta ,N}\) that are uniform in \(\beta \) and extensive in \(N^3\). Here, Q is a placeholder for random variables that are derived from the \(Q_i\)’s, but that are supported on the whole of \({\mathbb {T}}_N\) rather than arbitrary unions of blocks. This is all done in Sect. 5, where the key results are Propositions 5.3 and 5.1, within the framework developed in Sect. 4.

The next step in the proof is to use the chessboard estimates of Proposition 6.5 (which requires \(N \in 4{\mathbb {N}}\)) to bound the left-hand side of (3.8) by a product of \(|B_1|+|B_2|+|B_3|\) expectations of the form \(\langle \exp Q \rangle _{\beta ,N}^\frac{1}{N^3}\). Applying the results of Sect. 5 then completes the proof. \(\quad \square \)

Key features of the estimate (3.8) used in the proof of Proposition 3.2 are that it is uniform in \(\beta \) and extensive in the support of the \(Q_i\)’s.

3.3.1 Proof of Proposition 3.2 assuming Proposition 3.6

We first show that we can reduce to the case where B contains no \(*\)-neighbours, which simplifies the combinatorics later on. Identify \({{\mathbb {B}}_N}\) with a subset of \({\mathbb {Z}}^3\). For every \(e_l \in \{-1,0,1\}^3\), let \({\mathbb {Z}}^3_l = e_l + (3 {\mathbb {Z}})^3\). There are 27 such sub-lattices which we order according to \(l \in \{1,\dots ,27\}\). Note that \({\mathbb {Z}}^3 = \bigcup _{l=1}^{27} {\mathbb {Z}}^3_l\). Let \({\mathbb {B}}_N^l = {{\mathbb {B}}_N}\cap {\mathbb {Z}}^3_l\). Each \(*\)-connected ball in \({{\mathbb {B}}_N}\) contains at most one block from each of these \({\mathbb {B}}_N^l\).
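The sub-lattice decomposition can be checked by brute force on \({\mathbb {Z}}^3\). In the Python sketch below a sub-lattice is identified by the residues of a point modulo 3 (equivalent to a shift \(e_l \in \{-1,0,1\}^3\)); this indexing and the function names are illustrative assumptions. The checks are that every \(*\)-connected ball meets each of the 27 classes exactly once, and that two distinct points of the same class are at sup-norm distance at least 3, so they are never \(*\)-neighbours.

```python
from itertools import combinations, product


def sublattice_index(point) -> tuple:
    """Residues modulo 3, labelling the sub-lattice e_l + (3Z)^3 that contains the point."""
    return tuple(c % 3 for c in point)


def star_ball(centre) -> list:
    """The 27 points of Z^3 within sup-norm distance 1 of the centre."""
    return [tuple(c + o for c, o in zip(centre, off)) for off in product((-1, 0, 1), repeat=3)]


def min_same_class_distance(side: int) -> int:
    """Smallest sup-norm distance between distinct same-class points of {0,...,side-1}^3."""
    points = list(product(range(side), repeat=3))
    best = None
    for p, q in combinations(points, 2):
        if sublattice_index(p) == sublattice_index(q):
            d = max(abs(a - b) for a, b in zip(p, q))
            best = d if best is None else min(best, d)
    return best


if __name__ == "__main__":
    classes = {sublattice_index(p) for p in star_ball((5, -2, 7))}
    print(len(classes), min_same_class_distance(6))
```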

Assume that (3.1) has been established for sets with no \(*\)-neighbours with constant \(C_P'\). Then, by Hölder’s inequality,

thereby establishing (3.1) with \(C_P = \frac{C_P'}{27}\).
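The Hölder step can be spelled out as follows. This is a sketch under the assumption that (3.1) has the form \({\nu _{\beta ,N}}(\sigma = 0 \text { on } B) \leqslant e^{-C_P {\sqrt{\beta }}|B|}\); if the actual statement differs, the argument should be adjusted accordingly. It uses that the sets \(B \cap {\mathbb {B}}_N^l\) partition B and contain no \(*\)-neighbours.

```latex
\begin{align*}
\nu_{\beta,N}\big(\sigma = 0 \text{ on } B\big)
  &= \Big\langle \prod_{l=1}^{27} \mathbf{1}\big\{\sigma = 0 \text{ on } B \cap \mathbb{B}_N^l\big\} \Big\rangle_{\beta,N} \\
  &\leqslant \prod_{l=1}^{27} \nu_{\beta,N}\big(\sigma = 0 \text{ on } B \cap \mathbb{B}_N^l\big)^{\frac{1}{27}}
     && \text{(H\"older with 27 equal exponents)} \\
  &\leqslant \prod_{l=1}^{27} e^{-\frac{C_P'}{27}\sqrt{\beta}\,|B \cap \mathbb{B}_N^l|}
   = e^{-\frac{C_P'}{27}\sqrt{\beta}\,|B|} .
\end{align*}
```

The last equality uses \(\sum _{l=1}^{27} |B \cap {\mathbb {B}}_N^l| = |B|\).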

Now assume that B contains no \(*\)-neighbours. Fix any \(A \subset B\). Let \(\mathrm {B}^*(A) = \bigcup _{\square \in A} \mathrm {B}^*(\square )\) and let \(\mathrm {B}^*_{\mathrm {nn}}(A) = \bigcup _{\square \in A} \mathrm {B}^*_{\mathrm {nn}}(\square )\). By our assumption, A contains no \(*\)-neighbours. Hence, for any \(\square ' \in \mathrm {B}^*(A)\) there exists a unique \(\square \in A\) such that \(\square ' \in \mathrm {B}^*(\square )\); we define the root of \(\square '\) to be \(\square \). Similarly, for any \(\{\square ', \square ''\} \in \mathrm {B}^*_{\mathrm {nn}}(A)\) there exists a unique \(\square \in A\) such that \(\{\square ', \square ''\} \in \mathrm {B}^*_{\mathrm {nn}}(\square )\); we define the root of \(\{\square ', \square ''\}\) to be \(\square \). Note that the definition of root is A-dependent in both cases.

By Lemma 3.4, there exists \(C_\delta \) such that

where the last sum is over all \(A_1, A_2 \subset \mathrm {B}^*(A)\) and \(A_3 \subset \mathrm {B}^*_{\mathrm {nn}}(B {\setminus } A)\) such that: no two blocks in \(A_1 \cup A_2\) share a root, and no two pairs of blocks in \(A_3\) share a root; and, \(|A_1| + |A_2| = |A|\) and \(|A_3| = |B {\setminus } A|\). We note that there are \((2 \cdot 27)^{|A|}=54^{|A|}\) possible \(A_1\) and \(A_2\), and \(54^{|B {\setminus } A|}\) possible \(A_3\).

By Proposition 3.6, there exists \(C_Q\) such that, after taking expectations in (3.10) and using that \(|A| + |B {\setminus } A| = |B|\), we obtain

Thus, choosing

yields (3.1) with \(C_P= \frac{C_\delta }{2}\). This completes the proof.

3.4 Exchanging the block averaged field for the phase label

We now show that Propositions 3.2 and 3.6 allow one to reduce the problem of analysing the block averaged field to that of analysing the phase label. The main difficulty here is dealing with large fields, i.e. those \({\phi }\) for which \(\int _{{\mathcal {B}}} |{\phi }|\) is large.

Proposition 3.7

Let \(\delta , \delta ' \in (0,1)\) satisfy \(\delta ' \leqslant \frac{\delta }{2}\). Then, there exists \(\beta _0 = \beta _0(\delta ,\eta )>0\), \(C=C(\delta , \beta _0, \eta )>0\) and \(N_0=N_0(\delta )>0\) such that, for all \(\beta > \beta _0\) and \(N \in 4{\mathbb {N}}\) with \(N>N_0\),

where \(\sigma \) is the phase label of precision \(\delta ' \leqslant \frac{\delta }{2}\).

Proof

Observe that

By Proposition 3.2, there exists \(\beta _0>0\) and \(C_P>0\) such that, for \({\sqrt{\beta }}> \max \Big (\sqrt{\beta _0}, \frac{16 \log 2}{C_P\delta } \Big ) \),

Now consider the first term on the right hand side of (3.12). We decompose one step further:

where

We show that \(T_1 = \emptyset \) and that

for some constant \(C=C(\delta )>0\) and for \(\beta \) sufficiently large. Combining these estimates with (3.13) completes the proof.

First, we treat \(T_1\). On good blocks \(|\phi _i - \sigma |\) is bounded by \({\sqrt{\beta }}\) multiplied by the precision of the phase label (\(\delta ' \leqslant \frac{\delta }{2}\) in this instance) and \(\sigma = 0\) on bad blocks. Therefore, on the set \(\Big \{ \int _{{\mathcal {B}}} |{\phi }| dx \leqslant \frac{\delta }{2} {\sqrt{\beta }}N^3\Big \}\), we have:

which shows that the first condition in \(T_1\) is inconsistent with the second, so \(T_1 = \emptyset \).

We turn our attention to \(T_2\). Fix \(B \subset {{\mathbb {B}}_N}\). By Chebyshev’s inequality, Young’s inequality, and Proposition 3.6, there exists \(\beta _0>0\) and \(C_Q>0\) such that, for \(\beta > \beta _0\),

Therefore,

Taking

yields (3.14) with \(C=\frac{3\delta }{16}\). \(\quad \square \)

3.5 Proof of the main result

Adapting an argument from [Bod02], we reduce the proof of Theorem 1.2 to bounding the probability that \({\phi }\) is far from \(\pm {\sqrt{\beta }}\)-valued functions on \({{\mathbb {B}}_N}\) whose boundary (between regions of opposite spins) is of a certain fixed area. Proposition 3.7 then allows us to go from analysing \({\phi }\) to analysing the phase label, for which we use existing results from [BIV00].

For any \(B \subset {{\mathbb {B}}_N}\), let \(\partial B\) denote its boundary, which is given by the union of faces of blocks in B. Let \(|\partial B| = \int _{\partial B} 1 ds(x)\), where ds(x) is the 2D Hausdorff measure (normalised so that faces have unit area). Thus, \(|\partial B|\) is the number of faces in \(\partial B\).
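As an illustration of this definition, the following Python sketch counts \(|\partial B|\) for a set of unit blocks on the torus. It assumes that a face belongs to \(\partial B\) exactly when it separates a block in B from a block outside B; the function name `boundary_faces` and the encoding of blocks as integer triples are ours.

```python
def boundary_faces(blocks, n):
    """Number of unit faces separating `blocks` from its complement
    on the torus made of n x n x n unit blocks."""
    blocks = set(blocks)
    directions = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))
    count = 0
    for x, y, z in blocks:
        for dx, dy, dz in directions:
            # nearest neighbour across this face, with periodic boundary conditions
            neighbour = ((x + dx) % n, (y + dy) % n, (z + dz) % n)
            if neighbour not in blocks:
                count += 1
    return count
```

For example, a single block has 6 boundary faces, while a slab of half the torus has only \(2n^2\), which is the surface-order scale \(aN^2\) appearing in the definition of \(C_a\).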

For any \(a > 0\), let \(C_a\) be the set of functions \(f \in \{ \pm 1\}^{{\mathbb {B}}_N}\) such that \(|\partial \{ f = +1\}|\leqslant aN^2\). For any \(\delta > 0\), let \({\mathfrak {B}}(C_a,\delta )\) be the set of integrable functions g on \({\mathbb {T}}_N\) such that there exists \(f \in C_a\) that satisfies \(\int _{{\mathbb {T}}_N}|g-f| dx \leqslant \delta N^3\).

Proposition 3.8

Let \(\delta , \delta ' \in (0,1)\) satisfy \(\delta ' \leqslant \delta \). Then, there exists \(\beta _0 = \beta _0(\delta ,\eta )>0\) and \(C=C(\delta ,\beta _0,\eta )>0\) such that, for all \(\beta > \beta _0\), the following estimate holds: for all \(a>0\), there exists \(N_0 = N_0(a,\delta ) \geqslant 4\) such that, for all \(N > N_0\) dyadic,

where \(\sigma \) is the phase label of precision \(\delta '\).

Proof

See [BIV00, Theorem 2.2.1] where Proposition 3.8 is proven for a more general class of phase labels that satisfy a Peierls’ type estimate such as the one in Proposition 3.2. We give a self-contained proof for our setting in Sect. 3.6. \(\quad \square \)

The following lemma is our main geometric tool. It is a weak form of the isoperimetric inequality on \({\mathbb {T}}_N\), although it can be reformulated in arbitrary dimension. Its proof is a standard application of Sobolev’s inequality and we include it for the reader’s convenience.

Lemma 3.9

There exists \(C_I>0\) such that the following estimate holds for every \(N \in {\mathbb {N}}\):

for every \(f \in \{\pm 1 \}^{{\mathbb {B}}_N}\).

Proof

Let \(\theta \in C^\infty _c({\mathbb {R}}^3)\) be rotationally symmetric with \(\int _{{\mathbb {R}}^3} \theta dx = 1\). By Sobolev’s inequality, there exists C such that, for every \(\varepsilon \),

where \(f_\varepsilon = f *\varepsilon ^{-3} \theta ( \varepsilon ^{-1} \cdot )\) and \(c_\varepsilon = \frac{1}{N^3} \int _{{\mathbb {T}}_N}f_\varepsilon dx\). Note that C is independent of N by scaling.

Letting \(\varepsilon \rightarrow 0\) in the left hand side of (3.16), we obtain

where \(c = \frac{ |\{ f = 1 \}| - |\{ f = -1 \}|}{N^3}\). Note that \(c\in [-1,1]\).

Without loss of generality, assume \(c\geqslant 0\). This implies that \(|\{ f = 1 \}| \geqslant |\{ f = -1 \}|\). Then, evaluating the integral on the right hand side of (3.17), we find that

where we have used that the function

has minimum at \(c=0\) on the interval [0, 1].
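The function in question is not reproduced in this text, so the following is an illustration under assumptions rather than a restatement. Assume the Sobolev exponent is \(\frac{3}{2}\), so that the integral is \(\int _{{\mathbb {T}}_N}|f-c|^{\frac{3}{2}} dx\). For \(c < 1\), the definition of c gives \(|\{f=1\}| = \frac{1+c}{1-c}|\{f=-1\}|\), and the integral equals \(|\{f=-1\}| \, g(c)\) with the candidate function \(g(c) = (1+c)(1-c)^{\frac{1}{2}} + (1+c)^{\frac{3}{2}}\). The Python sketch below checks numerically that this g is minimised at \(c=0\) on [0, 1]; the name `g` and the grid size are ours.

```python
def g(c):
    """Candidate function (an assumption): (1+c) sqrt(1-c) + (1+c)^(3/2) on [0, 1]."""
    return (1.0 + c) * (1.0 - c) ** 0.5 + (1.0 + c) ** 1.5

def grid_minimum(points=10001):
    """Evaluate g on a uniform grid of [0, 1] and return (argmin, min value)."""
    grid = [i / (points - 1) for i in range(points)]
    values = [g(c) for c in grid]
    best = min(range(points), key=values.__getitem__)
    return grid[best], values[best]
```

Under these assumptions the minimum value is \(g(0) = 2\), so the integral is bounded below by twice the volume of the smaller phase.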

For the term on the right hand side of (3.16), using duality we obtain

where \(|\cdot |_\infty \) denotes the supremum norm on \(C^\infty ({\mathbb {T}}_N,{\mathbb {R}}^3)\).

For any such \({\mathbf {g}}\), using integration by parts and commuting the convolution with differentiation,

where \({\mathbf {g}}_\varepsilon \) is defined by convolving each component of \({\mathbf {g}}\) with \(\varepsilon ^{-3} \theta (\varepsilon ^{-1}\cdot )\) separately.

Hence, by the divergence theorem, Young’s inequality for convolutions, and using the supremum norm bound on \({\mathbf {g}}\),

where \({\hat{n}}\) denotes the unit normal to \(\partial \{ f = 1 \}\) pointing into \(\{ f = -1\}\).

Inserting (3.21) in (3.19) implies that, for any \(\varepsilon \),

Thus, by inserting (3.22), (3.17) and (3.18) into (3.16), we obtain

\(\square \)

Proof of Theorem 1.2

Let \(\zeta \in (0,1)\). Choose \(a>0\) and \(\delta \in (0,1)\) such that

where \(C_I\) is the same constant as in Lemma 3.9. We first show that

Assume \(\frac{1}{{\sqrt{\beta }}} {\phi }\in {\mathfrak {B}}(C_a,\delta ).\) Then, there exists \(f \in C_a\) such that

This implies

from which we deduce, together with Lemma 3.9,

Since \(f \in C_a\), we obtain

by (3.23).

Hence,

Taking complements establishes (3.24).

Now let \(\sigma \) be the phase label of precision \(\frac{\delta }{2}\). Note that

Applying Proposition 3.7, Proposition 3.8, and using (3.24) finishes the proof. \(\quad \square \)

3.6 Proof of Proposition 3.8

For any \(B \subset {{\mathbb {B}}_N}\), let \(\partial ^* B\) be the set of blocks in B with \(*\)-neighbours in \({\mathbb {T}}_N{\setminus } B\). Note that this is not the same as \(\partial B\), which was defined earlier. Let \({\mathcal {D}}\) be the set of \(*\)-connected components of \(\partial ^* ({\mathbb {T}}_N{\setminus } {\mathcal {M}}_G)\). We call this the set of defects. Necessarily, any \(\Gamma \in {\mathcal {D}}\) satisfies \(\Gamma \subset {\mathcal {B}}\).

Fix \(\gamma \in (0, 1)\). Let \({\mathcal {D}}^\gamma \subset {\mathcal {D}}\) be the set of \(\Gamma \in {\mathcal {D}}\) such that \(|\Gamma | \leqslant 6 N^\gamma \). The elements of \({\mathcal {D}}^\gamma \) are called \(\gamma \)-small defects and the elements of \({\mathcal {D}}{\setminus } {\mathcal {D}}^\gamma \) are called \(\gamma \)-large defects.

Take any \(\Gamma \in {\mathcal {D}}^\gamma \). Recall that we identify \(\Gamma \) with the subset of \({\mathbb {T}}_N\) given by the union of blocks in \(\Gamma \). Write \(\mathrm {Cl}(\Gamma )\) for its closure in \({\mathbb {T}}_N\). The condition \(\gamma < 1\) ensures that, provided N is taken sufficiently large depending on \(\gamma \), any \(\Gamma \in {\mathcal {D}}^\gamma \) is contained in a (translate of a) sphere of radius \(\frac{N}{4}\) in \({\mathbb {T}}_N\). Let \(\mathrm {Ext}(\Gamma )\) be the unique connected component of \({\mathbb {T}}_N{\setminus } \mathrm {Cl}(\Gamma )\) that intersects with the complement of this sphere. Let \(\mathrm {Int}(\Gamma ) = {\mathbb {T}}_N{\setminus } \mathrm {Ext}(\Gamma )\). We identify \(\mathrm {Ext}(\Gamma )\) and \(\mathrm {Int}(\Gamma )\) with their representations as subsets of \({{\mathbb {B}}_N}\). Note that \(\Gamma \subset \mathrm {Int}(\Gamma )\) and generically the inclusion is strict, e.g. when \(\Gamma \) encloses a region.

Let \({\mathcal {D}}^{\gamma ,\max }\) be the set of \(\Gamma \in {\mathcal {D}}^{\gamma }\) such that \(\Gamma \bigcap \mathrm {Int}({\tilde{\Gamma }}) = \emptyset \) for any \({\tilde{\Gamma }} \in {\mathcal {D}}^\gamma {\setminus } \{ \Gamma \}\). In other words, \({\mathcal {D}}^{\gamma ,\max }\) is the set of \(\gamma \)-small defects that are not contained in the interior of any other \(\gamma \)-small defects, and we call these maximal \(\gamma \)-small defects.

We define two events, one corresponding to the total surface area of \(\gamma \)-large defects being small and the other to the total volume contained within maximal \(\gamma \)-small defects being small. Let

We now show that for \(\phi \in S_1 \cap S_2 \cap \{|{\mathcal {B}}| < \frac{\delta }{2} N^3\}\), we have \(\frac{1}{{\sqrt{\beta }}}\sigma \in {\mathfrak {B}}(C_a,\delta )\).

We obtain a \(\pm {\sqrt{\beta }}\)-valued spin configuration from \(\sigma \) by erasing all \(\gamma \)-small defects in two steps: First, we reset the values on bad blocks to \({\sqrt{\beta }}\). Define \(\sigma _1 \in \{ \pm {\sqrt{\beta }}\}^{{\mathbb {B}}_N}\) by  if  , otherwise  . Second, define \(\sigma _2\in \{\pm {\sqrt{\beta }}\}^{{\mathbb {B}}_N}\) as follows: Given  for some \(\Gamma \in {\mathcal {D}}^{\gamma ,\max }\), let  , where  is any block in \(\mathrm {Ext}(\Gamma )\) that is \(*\)-neighbours with a block in \(\Gamma \). Note that the second step is well-defined since the first step ensures that every block in \(\mathrm {Ext}(\Gamma )\) that is \(*\)-neighbours with \(\Gamma \) has the same value. See Figure 1 for an example of this procedure.

From the definition of \(S_1\) and using that the factor 6 in the definition of \(\gamma \)-small defects accounts for the discrepancy between \(|\partial \cdot |\) and \(|\partial ^*\cdot |\),

yielding \(\frac{1}{{\sqrt{\beta }}}\sigma _2 \in C_a\). Then, from the definition of \(S_2\) and using the smallness assumption on the number of bad blocks,

which establishes that \(\frac{1}{{\sqrt{\beta }}}\sigma \in {\mathfrak {B}}(C_a,\delta )\).

We deduce that the event \(\Big \{ \frac{1}{{\sqrt{\beta }}}\sigma \not \in {\mathfrak {B}}(C_a,\delta ) \Big \}\) necessarily implies one of three things: either there are many bad blocks; or, the total surface area of \(\gamma \)-large defects is large; or, the density of \(\gamma \)-small defects is high. That is,

Proposition 3.2 gives control on the first event. The other two are controlled by the following lemmas.

Lemma 3.10

Let \(\gamma ,\delta \in (0,1)\). Then, there exists \(\beta _0=\beta _0(\gamma ,\delta ,\eta )>0\) and \(C=C(\gamma ,\delta ,\beta _0,\eta )>0\) such that, for all \(\beta > \beta _0\), the following holds: for any \(a > 0\), there exists \(N_0 = N_0(\gamma ,a)>0\) such that, for any \(N \in 4{\mathbb {N}}\) with \(N > N_0\),

where the underlying phase label is of precision \(\delta \).

Proof

We give a proof based on arguments from [DKS92, Theorem 6.1] in Sect. 3.6.1. \(\quad \square \)

Lemma 3.11

Let \(\gamma ,\delta ,\delta ' \in (0,1)\). Then, there exists \(\beta _0=\beta _0(\gamma ,\delta ,\delta ',\eta )>0\), \(C=C(\gamma ,\delta , \delta ',\beta _0,\eta )>0\) and \(N_0=N_0(\gamma , \delta ) \geqslant 4\) such that, for all \(\beta > \beta _0\) and \(N > N_0\) dyadic,

where the underlying phase label is of precision \(\delta '\).

Proof

See [BIV00, Section 5.1.3] for a proof in a more general setting. We give an alternative proof in Sect. 3.6.2 that avoids the use of techniques from percolation theory. \(\quad \square \)

As in (3.13), by Proposition 3.2 there exists \(C_P > 0\) such that

provided \({\sqrt{\beta }}> \frac{4\log 2}{\delta C_P}\).

Therefore, from (3.25), (3.26), Lemma 3.10 and Lemma 3.11, there exists \(C>0\) such that

Taking \(\gamma < \frac{1}{3}\) and N sufficiently large completes the proof. All that remains is to show Lemmas 3.10 and 3.11.

3.6.1 Proof of Lemma 3.10

By a union bound

where \(\{ \Gamma _i \}\) refers to a non-empty set of distinct \(*\)-connected subsets of \({{\mathbb {B}}_N}\).

By Proposition 3.2 there exists \(C_P\) such that, for any \(\{ \Gamma _i \}\),

Inserting this into (3.27) and using the trivial estimate \(\sum |\Gamma _i| \geqslant \frac{1}{2} aN^2 + \frac{1}{2} \sum |\Gamma _i|\),

Summing first over the number of elements in \(\{ \Gamma _i \}\) and then the number of \(*\)-connected regions containing a fixed number of blocks,

provided \({\sqrt{\beta }}> \max \Big ( \frac{4\log 27}{C_P}, \frac{4\log 2}{C_P} \Big ) = \frac{4 \log 27}{C_P} \) (note that the condition arises so that \(e^{-\frac{C_P}{4} {\sqrt{\beta }}} < \frac{1}{2}\), so that the geometric series with this rate is bounded by 1).
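The arithmetic behind this threshold can be checked numerically. The sketch below is illustrative only: the value of `c_p` is hypothetical, and reading the factor 27 as a per-block counting factor for \(*\)-connected regions is our assumption about where \(\log 27\) comes from.

```python
import math

def beta_threshold(c_p):
    """Lower bound on sqrt(beta) quoted in the text: max(4 log 27, 4 log 2) / C_P."""
    return max(4 * math.log(27) / c_p, 4 * math.log(2) / c_p)

def rates(c_p, sqrt_beta):
    """Return (r, 27 r) where r = exp(-C_P sqrt(beta) / 4)."""
    r = math.exp(-c_p * sqrt_beta / 4)
    return r, 27 * r

c_p = 0.5                                # hypothetical value of C_P
sqrt_beta = 1.01 * beta_threshold(c_p)   # slightly above the threshold
r, counting_rate = rates(c_p, sqrt_beta)
geometric_sum = r / (1 - r)              # sum of r**n over n >= 1
```

Above the threshold, both `counting_rate < 1` and `r < 1/2` hold, and the latter gives `geometric_sum < 1`, as claimed in the parenthetical remark.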

For any \(\gamma > 0\), the final series in (3.29) is summable provided \(N^\gamma > \log N\) and \({\sqrt{\beta }}> \frac{24}{C_P}\), thereby finishing the proof.

3.6.2 Proof of Lemma 3.11

Choose \( 2 N^\gamma \leqslant K \leqslant 4 N^\gamma \) such that K divides N. Such a choice is possible since we take N to be a sufficiently large dyadic. Let

Elements of \({\mathbb {B}}_N^K\) are called K-blocks.
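One way to make the choice of K concrete for dyadic N is to take the smallest power of two that is at least \(2N^\gamma \). The Python sketch below is an illustration with hypothetical names, not the authors' construction; it relies on the fact that the smallest power of two above a number \(x > 1\) is less than 2x, and that a power of two \(K \leqslant N\) divides a dyadic N.

```python
def choose_K(N, gamma):
    """Smallest power of two K with K >= 2 * N**gamma.
    Then K < 4 * N**gamma, and K divides N whenever N is a power of two with K <= N."""
    lower = 2 * N ** gamma
    K = 1
    while K < lower:
        K *= 2
    return K
```

For instance, with \(N = 2^{10}\) and \(\gamma = \frac{1}{3}\) one has \(2N^\gamma \approx 20.2\), so the sketch returns \(K = 32\), which divides N and lies below \(4N^\gamma \approx 40.3\).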

We say that two distinct K-blocks are \(*_K\)-neighbours if their corresponding midpoints are of distance at most \(K\sqrt{3}\). We define the \(*_K\)-connected ball around  to be the set containing itself and its \(*_K\)-neighbours. As in the proof of Proposition 3.2, we can decompose \({\mathbb {B}}_N^K = \bigcup _{l=1}^{27} {\mathbb {B}}_N^{K,l}\) such that any \(*_K\)-connected ball in \({\mathbb {B}}_N^K\) contains exactly one K-block from each element of the decomposition.

For each  , distinguish the unit block  . For every \(h \in \{0,\dots ,K-1\}^3\), let \(\tau _h\) be the translation map on \({{\mathbb {B}}_N}\) induced from the translation map on \({\mathbb {T}}_N\). We identify  . Denote the set of distinguished unit blocks in \({\mathbb {B}}_N^K\) (respectively, \({\mathbb {B}}_N^{K,l}\)) as \({\mathbb {U}}{\mathbb {B}}_N^K\) (respectively, \({\mathbb {U}}{\mathbb {B}}_N^{K,l}\)).

By our choice of K, \(\mathrm {Int}(\Gamma )\) is entirely contained in a translation of a K-block for any \(\Gamma \in {\mathcal {D}}^\gamma \). As a result, \(\mathrm {Int}(\Gamma )\) intersects at most one K-block in \({\mathbb {B}}_N^{K,l}\) for any fixed l.

Using the correspondence between K-blocks and unit blocks described above, we have

Hence,

where the maximum is over \(h \in \{0,\dots ,K-1\}^3\) and \(1\leqslant l \leqslant 27\).

Let \(E_k\) be the event that precisely k indicator functions appearing on the right hand side of (3.30) are nonzero. In other words, \(E_k\) is the event that there are k distinct defects of size at most \(N^\gamma \) such that the k distinct  , where  , are contained in their interiors.

Given a block there are \(27 \cdot 26^{n-1}\) possible defects of size n that contain this block. Thus, by Proposition 3.2, there exists \(C_P\) such that

provided e.g. \({\sqrt{\beta }}> \max \Big ( \frac{4\log 27}{C_P}, \frac{2\log 2}{C_P} \Big ) = \frac{4\log 27}{C_P}\). This estimate is uniform over the choice of h and l.

By a union bound on (3.30), using (3.31), and that \(2N^\gamma \leqslant K \leqslant 4N^\gamma \),

provided \(\gamma \log N < N^{3-3\gamma }\) and \({\sqrt{\beta }}> \frac{81 \cdot 32 + 4\log 2}{\delta C_P}\). Taking logarithms and dividing by \(N^2\) completes the proof.

4 Boué–Dupuis Formalism for \(\phi ^4_3\)

In this section we introduce the underlying framework that we build on to analyse expectations of certain random variables under \({\nu _{\beta ,N}}\), as required in the proof of Proposition 3.6. This framework was originally developed in [BG19] to show ultraviolet stability for \(\phi ^4_3\) and identify its Laplace transform.

In particular, we want to obtain estimates on expectations of the form \(\langle e^{Q_K} \rangle _{\beta ,N,K}\), where \(Q_K\) are random variables that converge (in an appropriate sense) to some random variable Q of interest. We always work with a fixed ultraviolet cutoff K and establish estimates on \(\langle e^{Q_K} \rangle _{\beta ,N,K}\) that are uniform in K: this requires handling of ultraviolet divergences. The first observation is that we can represent such expectations as a ratio of Gaussian expectations:

where we recall \({\mathbb {E}}_N\) denotes expectation with respect to \(\mu _N\) and \({\mathscr {Z}}_{\beta ,N,K} = {\mathbb {E}}_N e^{-{\mathcal {H}}_{\beta ,N,K}(\phi _K)}\) is the partition function.

We then introduce an auxiliary time variable that continuously varies the ultraviolet cutoff between 0 and K, and use it to represent these Gaussian expectations in terms of expectations of functionals of finite dimensional Brownian motions. This allows us to use the Boué–Dupuis variational formula given in Proposition 4.7 to write these expectations in terms of a stochastic control problem. Hence, the problem of obtaining bounds is translated into choosing appropriate controls. An insight made in [BG19] is that one can use methods developed in the context of singular stochastic PDEs, specifically the paracontrolled calculus approach of [GIP15], within the control problem to kill ultraviolet divergences.

Remark 4.1

In the following, we make use of tools in Appendix A concerning Besov spaces and paracontrolled calculus. In addition, for the rest of Sects. 4 and 5, we consider \(N \in {\mathbb {N}}\) fixed and drop it from notation when clear.

4.1 Construction of the stochastic objects

Fix \(\kappa _0 > 0\) sufficiently small. We equip \(\Omega = C({\mathbb {R}}_+; {\mathcal {C}}^{-\frac{3}{2} -\kappa _0})\) with its Borel \(\sigma \)-algebra. Denote by \({\mathbb {P}}\) the probability measure on \(\Omega \) under which the coordinate process \(X_{\bullet }=(X_k)_{k \geqslant 0}\) is an \(L^2\) cylindrical Brownian motion. We write \({\mathbb {E}}\) to denote expectation with respect to \({\mathbb {P}}\). We consider the filtered probability space \((\Omega , {\mathcal {A}}, ({\mathcal {A}}_k)_{k \geqslant 0},{\mathbb {P}})\), where \({\mathcal {A}}\) is the \({\mathbb {P}}\)-completion of the Borel \(\sigma \)-algebra on \(\Omega \), and \(({\mathcal {A}}_k)_{k \geqslant 0}\) is the natural filtration induced by X and augmented with \({\mathbb {P}}\)-null sets of \({\mathcal {A}}\).

Given \(n \in (N^{-1}{\mathbb {Z}})^3\), define the process \(B^n_\bullet \) by \(B^n_k = \frac{1}{N^\frac{3}{2}} \int _{{\mathbb {T}}_N}X_k e_{-n} dx\), where \(e_n(x) = e^{2\pi i n \cdot x}\) and we recall that the integral denotes duality pairing between distributions and test functions. Then, \(\{B^n_\bullet : n \in (N^{-1}{\mathbb {Z}})^3\}\) is a set of complex Brownian motions defined on \((\Omega , {\mathcal {A}}, ({\mathcal {A}}_k)_{k \geqslant 0},{\mathbb {P}})\), independent except for the constraint \(\overline{B_k^n} = B_k^{-n}\). Moreover,

where \({\mathbb {P}}\)-almost surely the sum converges in \({\mathcal {C}}^{-\frac{3}{2} - \kappa _0}\).
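For orientation, the expansion referred to here can be recovered from the definition of \(B^n_k\) and the orthogonality of the exponentials on \({\mathbb {T}}_N\). The following is our reconstruction from those two ingredients, not a verbatim copy of the original display:

```latex
\begin{align*}
\int_{\mathbb{T}_N} e_n e_{-m} \, dx = N^3 \mathbf{1}_{\{n = m\}}
\quad \Longrightarrow \quad
X_k = \sum_{n \in (N^{-1}\mathbb{Z})^3} \Big( \frac{1}{N^3} \int_{\mathbb{T}_N} X_k e_{-n} \, dx \Big) e_n
    = \frac{1}{N^{\frac{3}{2}}} \sum_{n \in (N^{-1}\mathbb{Z})^3} B^n_k e_n .
\end{align*}
```

The normalisation \(N^{-\frac{3}{2}}\) is what makes each \(B^n_\bullet \) a standard complex Brownian motion independently of the volume.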

Let \({\mathcal {J}}_k\) be the Fourier multiplier with symbol

where \(\rho _k\) is the ultraviolet cutoff defined in Sect. 2 and we recall \(\langle \cdot \rangle = \sqrt{\eta + 4\pi ^2|\cdot |^2}\). \({\mathcal {J}}_k\) arises from a continuous decomposition of the covariance of the pushforward measure \(\mu _N\) under \(\rho _k\):

where \({\mathcal {F}}\) denotes the Fourier transform and \({\mathcal {F}}^{-1}\) denotes its inverse (see “Appendix A”). Note that the function \(\partial _k \rho _k^2\) has decay of order \(\langle k \rangle ^{-\frac{1}{2}}\) and the corresponding multiplier is supported on frequencies satisfying \(|n| \in (c_\rho k, C_\rho k)\) for some \(c_\rho < C_\rho \). Thus, we may think of \({\mathcal {J}}_k\) as having the same regularising properties as the multiplier  ; precise statements are given in Proposition A.9.
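The phrase "continuous decomposition of the covariance" can be made concrete by a one-line computation. The sketch below rests on two assumptions that are not stated explicitly in the surrounding text: that the symbol of \({\mathcal {J}}_k\) is \(\sqrt{\partial _k \rho _k^2}/\langle \cdot \rangle \), as in the framework of [BG19], and that \(\rho _0 = 0\).

```latex
\begin{align*}
\int_0^k \mathcal{J}_{k'}(n)^2 \, dk'
  = \frac{1}{\langle n \rangle^2} \int_0^k \partial_{k'} \rho_{k'}^2(n) \, dk'
  = \frac{\rho_k^2(n)}{\langle n \rangle^2} ,
\end{align*}
```

Under these assumptions the right hand side is the Fourier symbol of the covariance of \(\rho _k \phi \) for \(\phi \sim \mu _N\), since \(\langle n \rangle ^{-2} = (\eta + 4\pi ^2 |n|^2)^{-1}\).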

Define the process  by

is a centred Gaussian process with covariance:

for any \(f,g \in L^2\). Thus, the law of  is the law of \(\rho _k \phi \) where \(\phi \sim \mu _N\). As with other processes in the following, we simply write  .

4.1.1 Renormalised multilinear functions of the free field

The second, third, and fourth Wick powers of  are the space-stationary stochastic processes  defined by:

where we recall from Sect. 2 that  . Note that  , and  are equal in law to \(:\phi _k^2:, :\phi _k^3:\), and \(:\phi _k^4:\), respectively.
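To illustrate what Wick ordering achieves at a single point, the following Python sketch uses the standard Hermite-type formulas \(:\phi ^2: = \phi ^2 - c\), \(:\phi ^3: = \phi ^3 - 3c\phi \) and \(:\phi ^4: = \phi ^4 - 6c\phi ^2 + 3c^2\) for a centred Gaussian \(\phi \) of variance c, and checks with exact Gaussian moments that these polynomials are centred and mutually orthogonal. The scalar c stands in for the variance of the cut-off field at a point; all function names are ours.

```python
def gaussian_moment(n, c):
    """E[phi^n] for phi ~ N(0, c): zero for odd n, (n-1)!! * c^(n/2) for even n."""
    if n % 2 == 1:
        return 0.0
    value = 1.0
    for j in range(1, n, 2):
        value *= j * c
    return value

def wick_power(n, c):
    """Coefficients (lowest degree first) of the n-th Wick power, n in {2, 3, 4}."""
    table = {
        2: [-c, 0.0, 1.0],
        3: [0.0, -3.0 * c, 0.0, 1.0],
        4: [3.0 * c * c, 0.0, -6.0 * c, 0.0, 1.0],
    }
    return table[n]

def multiply(p, q):
    """Product of two polynomials given as coefficient lists."""
    out = [0.0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def expectation(poly, c):
    """E[poly(phi)] for phi ~ N(0, c), computed from exact moments."""
    return sum(a * gaussian_moment(k, c) for k, a in enumerate(poly))
```

The second moment of the n-th Wick power is \(n! \, c^n\), so the divergence of c as the cutoff is removed is still visible in the variance even though the mean has been subtracted.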

The Wick powers of  can be expressed as iterated integrals using Itô’s formula (see [Nua06, Section 1.1.2]). We only need the iterated integral representation  :

where we have used the convention that sums over frequencies \(n_i\) range over \((N^{-1}{\mathbb {Z}})^3\).

We define additional space-stationary stochastic processes  by

We make two observations: first, a straightforward calculation shows that  diverges in variance as \(k \rightarrow \infty \). However, due to the presence of \({\mathcal {J}}_k\),  can be made sense of as \(k \rightarrow \infty \). See Lemma 4.6.

Second,  ,  , and  are renormalised resonant products of  ,  , and  , respectively. The latter products are classically divergent in the limit \(k \rightarrow \infty \). We refer to Remark 4.2 for an explanation of why the resonant product is used.

Remark 4.2

Let \(f \in {\mathcal {C}}^{s_1}\) and \(g \in {\mathcal {C}}^{s_2}\) for \(s_1< 0 < s_2\). Bony’s decomposition states that, if the product exists,  and is of regularity \(s_1\) (see Appendix A). Since paraproducts are always well-defined (see Proposition A.5), the resonant product contains all of the difficulty in defining the product. However, the resonant product gives regularity information of order \(s_1 + s_2\) (see Proposition A.6), which is strictly stronger than the regularity information of the product: i.e. the bound on  is strictly stronger than the bound on \(\Vert fg \Vert _{{\mathcal {C}}^{s_1}}\). This is the key property that makes paracontrolled calculus useful in this context [GIP15].

The required renormalisations of  and  are related to the usual “sunset” diagram appearing in the perturbation theory for \(\phi ^4_3\),

See [Fel74, Theorem 1]. We emphasise that  depends on \(\eta , N\) and k.

By the fundamental theorem of calculus, the Leibniz rule, and symmetry,

Thus, the renormalisations of  and  are given by  and  , respectively.

Remark 4.3

It is straightforward to verify that there exists \(C=C(\eta )>0\) such that

Let  . We refer to the coordinates of \(\Xi \) as diagrams. The following proposition gives control over arbitrarily high moments of diagrams in Besov spaces.

Proposition 4.4

For any \(p,p' \in [1,\infty )\), \(q \in [1,\infty ]\), and \(\kappa > 0\) sufficiently small, there exists \(C=C(p,p',q,\kappa ,\eta )>0\) such that

Proof

See [BG19, Lemma 24]. \(\quad \square \)

Remark 4.5

The constant on the right hand side of (4.5) is independent of N because our Besov spaces are defined with respect to normalised Lebesgue measure  (see Appendix A). For \(p=\infty \), bounds that are uniform in N do not hold. Indeed, for \(L^\infty \)-based norms, there is in general no chance of controlling space-stationary processes uniformly in the volume. Thus, we cannot work in Besov-Hölder spaces.

We prove the bound in (4.5) for  since it illustrates the role of \({\mathcal {J}}_k\), is used later in the proof of Proposition 5.23, and gives the reader a flavour of how to prove the bounds on the other diagrams.

Lemma 4.6

There exists \(C=C(\eta )>0\) such that, for any \(n \in (N^{-1}{\mathbb {Z}})^3\),

As a consequence, for every \(p \in [1,\infty )\) and \(s < \frac{1}{2}\), there exists \(C=C(p,s,\eta )>0\) such that

Proof

Inserting (4.3) in the definition of  and switching the order of integration,

Therefore, by Itô’s formula,

where we have performed the \(k_2\) and \(k_3\) integrations, and used that \(|\rho _k| \leqslant 1\).

Recall that \(\partial _{k'} \rho _{k'}^2\) is supported on frequencies \(| n | \in (c_\rho k', C_\rho k')\). Hence, for any \(\kappa > 0\),

where \(\lesssim \) means \(\leqslant \) up to a constant depending only on \(\eta \), \(c_\rho \) and \(C_\rho \); the last inequality uses standard bounds on discrete convolutions contained in Lemma A.12; and we have used that the double convolution produces a volume factor of \(N^6\). Note that, as said in Sect. 2, we omit the dependence on \(c_\rho \) and \(C_\rho \) in the final bound.

By Fubini’s theorem, Nelson’s hypercontractivity estimate [Nel73] (or the related Burkholder-Davis-Gundy inequality [RY13, Theorem 4.1]), and space-stationarity,

where \(\Delta _j\) is the j-th Littlewood-Paley block defined in Appendix A and we recall  .

We overload notation and also write \(\Delta _j\) to mean its corresponding Fourier multiplier. Then, by space-stationarity, for any \(j \geqslant -1\),

Inserting (4.10) into (4.9) we obtain

which converges provided \(s < \frac{1}{2}\), thus finishing the proof. \(\quad \square \)

4.2 The Boué–Dupuis formula

Fix an ultraviolet cutoff K. Recall that we are interested in Gaussian expectations of the form

where \({\mathcal {H}}(\phi _K) = {\mathcal {H}}_{\beta ,N,K}(\phi _K) + Q_K(\phi _K)\).

We may represent such expectations on \((\Omega , {\mathcal {A}}, ({\mathcal {A}}_k)_{k \geqslant 0}, {\mathbb {P}})\):

The key point is that the right hand side of (4.11) is written in terms of a measurable functional of Brownian motions. This allows us to exploit continuous time martingale techniques, crucially Girsanov’s theorem [RY13, Theorems 1.4 and 1.7 in Chapter VIII], to reformulate (4.11) as a stochastic control problem.

Let \({\mathbb {H}}\) be the set of processes \(v_\bullet \) that are \({\mathbb {P}}\)-almost surely in \(L^2({\mathbb {R}}_+;L^2({\mathbb {T}}_N))\) and progressively measurable with respect to \(({\mathcal {A}}_k)_{k \geqslant 0}\). We call this the space of drifts. For any \(v \in {\mathbb {H}}\), let \(V_\bullet \) be the process defined by

For our purposes, it is sufficient to consider the subspace of drifts \({\mathbb {H}}_K \subset {\mathbb {H}}\) consisting of \(v \in {\mathbb {H}}\) such that \(v_k = 0\) for \(k > K\).

We also work with the subset of bounded drifts \({\mathbb {H}}_{b,K} \subset {\mathbb {H}}_K\), defined as follows: for every \(M \in {\mathbb {N}}\), let \({\mathbb {H}}_{b,M,K}\) be the set of \(v \in {\mathbb {H}}_K\) such that

\({\mathbb {P}}\)-almost surely. Set \({\mathbb {H}}_{b,K} = \bigcup _{M \in {\mathbb {N}}} {\mathbb {H}}_{b,M,K}\).

The following proposition is the main tool of this section.

Proposition 4.7

Let \(N \in {\mathbb {N}}\) and \({\mathcal {H}}:C^\infty ({\mathbb {T}}_N) \rightarrow {\mathbb {R}}\) be measurable and bounded. Then, for any \(K > 0\),

where the infimum can be taken over v in \({\mathbb {H}}_K\) or \({\mathbb {H}}_{b,K}\).

Proof

(4.13) was first established by Boué and Dupuis [BD98], but we use the version in [BD19, Theorem 8.3], adapted to our setting. \(\quad \square \)
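A finite-dimensional analogue may help to fix ideas. On a finite state space, the Gibbs variational principle states that \(-\log {\mathbb {E}}_p e^{-H} = \inf _q \big ( {\mathbb {E}}_q H + R(q \,|\, p) \big )\), where R denotes relative entropy and the infimum is attained at \(q \propto p e^{-H}\). In the Boué–Dupuis setting the quadratic cost of the drift plays the role of the relative entropy. The Python sketch below checks the finite-dimensional identity numerically; it is an illustration with hypothetical names and data, not an implementation of Proposition 4.7.

```python
import math

def free_energy(p, H):
    """-log E_p[exp(-H)] for a probability vector p and energies H on a finite set."""
    return -math.log(sum(pi * math.exp(-h) for pi, h in zip(p, H)))

def gibbs_functional(q, p, H):
    """E_q[H] + R(q | p), with the convention 0 log 0 = 0."""
    energy = sum(qi * h for qi, h in zip(q, H))
    entropy = sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)
    return energy + entropy

def gibbs_measure(p, H):
    """The minimiser q proportional to p * exp(-H)."""
    weights = [pi * math.exp(-h) for pi, h in zip(p, H)]
    Z = sum(weights)
    return [w / Z for w in weights]
```

Any choice of q gives an upper bound on the free energy, which mirrors how a specific drift is used for upper bounds, whereas lower bounds require estimates that are uniform over all competitors.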

We cannot directly apply Proposition 4.7 for the case \({\mathcal {H}}= {\mathcal {H}}_{\beta ,N,K} + Q_K\) because it is not bounded. To circumvent this technicality, we introduce a total energy cutoff \(E \in {\mathbb {N}}\). Since K is taken fixed, \({\mathcal {H}}_{\beta ,N,K} + Q_K\) is bounded from below. Hence, by dominated convergence

We apply Proposition 4.7 to \({\mathcal {H}}= \big ({\mathcal {H}}_{\beta ,N,K} + Q_K\big ) \wedge E\). For the lower bound on the corresponding variational problem, we establish estimates that are uniform over \(v \in {\mathbb {H}}_{b,K}\). For the upper bound, we establish estimates for a specific choice of \(v \in {\mathbb {H}}_K\) which is constructed via a fixed point argument. All estimates that we establish are independent of E. Hence, using (4.14) and the representation (4.11), they carry over to \({\mathbb {E}}_N e^{-{\mathcal {H}}_{\beta ,N,K}(\phi _K) + Q_K(\phi _K)}\). We suppress mention of E unless absolutely necessary.

Remark 4.8

The assumption that \({\mathcal {H}}\) is bounded allows the infimum in (4.13) to be interchanged between \({\mathbb {H}}_K\) and \({\mathbb {H}}_{b,K}\). The use of \({\mathbb {H}}_{b,K}\) allows one to overcome subtle stochastic analysis issues that arise later on: specifically, justifying that certain stochastic integrals appearing in Lemmas 5.15 and 5.17 are martingales and not just local martingales. See Lemma 5.14. The additional boundedness condition is important in the lower bound on the variational problem, as the only other a priori information that we have on v there is that \({\mathbb {E}}\int _0^K\int _{{\mathbb {T}}_N}v_k^2 dx dk < \infty \), which alone is insufficient. On the other hand, the candidate optimiser for the upper bound is constructed in \({\mathbb {H}}_K\), but it has sufficient moments to guarantee that the aforementioned stochastic integrals in Lemma 5.14 are martingales. See Lemma 5.22.

Remark 4.9

A version of the Boué–Dupuis formula for \({\mathcal {H}}\) measurable and satisfying certain integrability conditions is given in [Üst14, Theorem 7]. These integrability conditions are broad enough to cover the cases that we are interested in, and this version is required in [BG19] to identify the Laplace transform of \(\phi ^4_3\). However, it is not clear to us that the infimum in the corresponding variational formula can be taken over \({\mathbb {H}}_{b,K}\). Therefore, it seems that the stochastic analysis issues discussed in Remark 4.8 cannot be resolved directly using this version without some post-processing (e.g. via a dominated convergence argument with a total energy cutoff as above).

4.2.1 Relationship with the Gibbs variational principle

Given a drift \(v \in {\mathbb {H}}_K\), we define the measure \({\mathbb {Q}}\) whose Radon-Nikodym derivative with respect to \({\mathbb {P}}\) is given by the following stochastic exponential:

Let \({\mathbb {H}}_{c,K}\) be the set of \(v \in {\mathbb {H}}_K\) such that its associated measure defined in (4.15) is a probability measure, i.e. the expectation of the stochastic exponential is 1. Then, by Girsanov’s theorem [RY13, Theorems 1.4 and 1.7 in Chapter VIII] it follows that the process \(X_\bullet \) is a semi-martingale under \({\mathbb {Q}}\) with decomposition:

where \(X^v_\bullet \) is an \(L^2\) cylindrical Brownian motion with respect to \({\mathbb {Q}}\). This induces the decomposition

where  .


Lemma 4.10

Let \(N \in {\mathbb {N}}\) and \({\mathcal {H}}:C^\infty ({\mathbb {T}}_N) \rightarrow {\mathbb {R}}\) be measurable and bounded from below. Then, for any \(K > 0\),

where \({\mathbb {E}}_{\mathbb {Q}}\) denotes expectation with respect to \({\mathbb {Q}}\).

Proof

(4.17) is a well-known representation of the classical Gibbs variational principle [DE11, Proposition 4.5.1]. Indeed, one can verify that \(R({\mathbb {Q}}\Vert {\mathbb {P}}) = {\mathbb {E}}_{{\mathbb {Q}}} \Big [ \int _0^\infty \int _{{\mathbb {T}}_N}v_k^2 dx dk\Big ]\), where \(R({\mathbb {Q}}\Vert {\mathbb {P}}) = {\mathbb {E}}_{{\mathbb {Q}}} \log \frac{d{\mathbb {Q}}}{d{\mathbb {P}}}\) is the relative entropy of \({\mathbb {Q}}\) with respect to \({\mathbb {P}}\). A full proof in our setting is given in [GOTW18, Proposition 4.4]. \(\quad \square \)
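
The mechanism behind the Gibbs variational principle is easiest to see on a finite state space. The following is a sketch under that simplifying assumption, with notation introduced here for illustration: \(p\) is a probability vector with positive entries, \(h\) is a real vector, \(Z = \sum _i p_i e^{-h_i}\), and \(q^*_i = p_i e^{-h_i}/Z\). For any probability vector \(q\), with the convention \(0 \log 0 = 0\),

\[
\sum _i q_i h_i + \sum _i q_i \log \frac{q_i}{p_i}
= \sum _i q_i \log \frac{q_i}{q^*_i} - \log Z
\geqslant -\log Z,
\]

with equality if and only if \(q = q^*\). The first equality uses \(\log q^*_i = \log p_i - h_i - \log Z\), and the inequality is the non-negativity of relative entropy. In particular, the minimum is attained at the tilted measure \(q^*\).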

Proposition 4.7 has several advantages over Lemma 4.10. The most important for us is that drifts can be taken over a Banach space, thus allowing candidate optimisers to be constructed using fixed point arguments via contraction mapping. In addition, the underlying probability space is fixed (i.e. with respect to the canonical measure \({\mathbb {P}}\)), although this is a purely aesthetic advantage in our case. The cost of these advantages is that the minimum in (4.17) is replaced by an infimum in (4.13), and more rigid conditions on \({\mathcal {H}}\) are required. We refer to [BD19, Section 8.1.1] or [BG19, Remark 1] for further discussion.

With the connection with the Gibbs variational principle in mind, we call \({\mathcal {H}}(V_K)\) the drift (potential) energy and we call \(\int _0^K\int _{{\mathbb {T}}_N}v_k^2 dx dk\) the drift entropy.

4.2.2 Regularity of the drift

In our analysis we use intermediate scales between 0 and K. As we explain in Sect. 5.1, this means that we require control over the process \(V_\bullet \) in terms of the drift energy and drift entropy terms in (4.13).

The drift entropy allows a control of \(V_\bullet \) in \(L^2\)-based topologies.

Lemma 4.11

For every \(v \in L^2({\mathbb {R}}_+;L^2({\mathbb {T}}_N))\) and \(K > 0\),

Proof

(4.18) is a straightforward consequence of the definition of \({\mathcal {J}}_k\), see [BG19, Lemma 2]. \(\quad \square \)

To control the homogeneity in our estimates, we also require bounds on \(\Vert V_\bullet \Vert _{L^4}^4\). This is a problem: for our specific choices of \({\mathcal {H}}\), the drift energy allows a control in \(L^4\)-based topologies at the endpoint \(V_K\). It is in general impossible to control the history of the path by the endpoint (for example, consider an oscillating process \(V_\bullet \) with \(V_K = 0\)). We follow [BG19] to sidestep this issue.

Let \({\tilde{\rho }} \in C^\infty _c({\mathbb {R}}_+;{\mathbb {R}}_+)\) be non-increasing such that

and let \({\tilde{\rho }}_k(\cdot ) = {\tilde{\rho }}(\frac{\cdot }{k})\) for every \(k>0\).

Define the process \(V^\flat _\bullet \) by

Note that \({\mathcal {F}}(V_k^\flat )(n) = {\mathcal {F}}(V_k)(n)\) if \(|n| \leqslant \frac{c_\rho }{2}\). Thus, \(V^\flat _\bullet \) and \(V_\bullet \) have the same low frequency/large-scale behaviour (hence the notation).

The two processes differ on higher frequencies/small-scales. Indeed, as a Fourier multiplier, \({\tilde{\rho }}_k {\mathcal {J}}_{k'} = 0\) for \(k' > k\). Hence, for any \(k \leqslant K\),

This is sufficient for our purposes because \({\tilde{\rho }}_k\) is an \(L^p\) multiplier for \(p \in (1,\infty )\), that is, the associated operator is bounded on \(L^p\) for every such \(p\).

Lemma 4.12

For any \(p \in (1,\infty )\), there exists \(C=C(p,\eta )>0\) such that, for every \(v \in L^2({\mathbb {R}}_+;L^2({\mathbb {T}}_N))\),

Moreover, for any \(s,s' \in {\mathbb {R}}\), \(p \in (1,\infty )\), \(q \in [1,\infty ]\), there exists \(C=C(s,s',p,q,\eta )\) such that, for every \(v \in L^2({\mathbb {R}}_+;L^2({\mathbb {T}}_N))\),

Proof

(4.19) and (4.20) are a consequence of the preceding discussion together with the observation that \(\partial _k V_k^\flat \) is supported on an annulus in Fourier space and, subsequently, applying Bernstein’s inequality (A.6). See [BG19, Lemma 20]. \(\quad \square \)

5 Estimates on Q-Random Variables

The main results of this section are upper bounds on expectations of certain random variables, derived from \(Q_1,Q_2,\) and \(Q_3\) defined in (3.2), that are uniform in \(\beta \) and extensive in \(N^3\).

Proposition 5.1

For every \(a_0 > 0\), there exist \(\beta _0 = \beta _0(a_0,\eta ) \geqslant 1\) and \(C_Q = C_Q(a_0, \beta _0, \eta )>0\) such that the following estimates hold: for all \(\beta > \beta _0\) and \(a \in {\mathbb {R}}\) satisfying \(|a| \leqslant a_0\),

In addition,

where B is any set of unordered pairs of nearest-neighbour blocks that partitions \({{\mathbb {B}}_N}\).

Proof

See Sect. 5.9. \(\quad \square \)

Proposition 5.1 is used in Sect. 6.3, together with the chessboard estimates of Proposition 6.5, to prove Proposition 3.6. Indeed, chessboard estimates allow us to obtain estimates on expectations of random variables, derived from the \(Q_i\), that are extensive in their support from estimates that are extensive in \(N^3\). Note that the latter are significantly easier to obtain than the former since these random variables may be supported on arbitrary unions of blocks.

Remark 5.2

For the remainder of this section, we assume \(\eta < \frac{1}{392 C_P}\) where \(C_P\) is the Poincaré constant on unit blocks (see Proposition A.11). This is for convenience in the analysis of Sects. 5.8.1 and 5.9 (see also Lemma 5.21). Whilst this may appear to fix the specific choice of renormalisation constants \(\delta m^2\), we can always shift into this regime by absorbing a finite part of \(\delta m^2\) into \({\mathcal {V}}_\beta \).

Most of the difficulties in the proof of Proposition 5.1 are contained in obtaining the following upper and lower bounds on the free energy \(-\log {\mathscr {Z}}_{\beta ,N}\) that are uniform in \(\beta \).

Proposition 5.3

There exists \(C=C(\eta )>0\) such that, for all \(\beta \geqslant 1\),

and

Proof

See Sects. 5.8.1 and 5.8.2 for a proof of (5.1) and (5.2), respectively. These proofs rely on Sects. 5.2–5.7, and the overall strategy is sketched in Sect. 5.1. \(\quad \square \)

Remark 5.4

In [BG19] estimates on \(-\log {\mathscr {Z}}_{\beta ,N,K}\) are obtained that are uniform in \(K > 0\) and extensive in \(N^3\). However, one can show that these estimates are \(O(\beta )\) as \(\beta \rightarrow \infty \). This is insufficient for our purposes (compare with the uniform in \(\beta \) estimates required to prove Proposition 3.2).

5.1 Strategy to prove Proposition 5.3

The lower bound on \(-\log {\mathscr {Z}}_{\beta ,N,K}\), given by (5.1), is the harder bound to establish in Proposition 5.3. Our approach builds on the analysis of [BG19] by incorporating a low temperature expansion inspired by [GJS76a, GJS76b]. This is explained in more detail in Sect. 5.1.1.

On the other hand, we establish the upper bound on \(-\log {\mathscr {Z}}_{\beta ,N,K}\), given by (5.2), by a more straightforward modification of the analysis in [BG19]. See Sect. 5.8.2.

We now motivate our approach to establishing (5.1) by first isolating the difficulty in obtaining \(\beta \)-independent bounds when using [BG19] straight out of the box. The starting point is to apply Proposition 4.7 with \({\mathcal {H}}= {\mathcal {H}}_{\beta ,N,K}\), together with a total energy cutoff that we refrain from making explicit (see Remark 4.8 and the discussion that precedes it), to represent \(-\log {\mathscr {Z}}_{\beta ,N,K}\) as a stochastic control problem.

For every \(v \in {\mathbb {H}}_{b,K}\), define

Ultraviolet divergences occur in the expansion of this functional since two integrals, one pairing the Wick cube of the cutoff Gaussian field with \(V_K\) and one pairing its Wick square with \(V_K^2\), appear and cannot be bounded uniformly in K:

- For the first integral, there are difficulties in even interpreting the Wick cube as a random distribution in the limit \(K \rightarrow \infty \). Indeed, its variance when tested against a smooth function diverges as the cutoff is removed.

- On the other hand, one can show that the Wick square does converge as \(K \rightarrow \infty \) to a random distribution of Besov-Hölder regularity \(-1-\kappa \) for any \(\kappa > 0\) (see Proposition 4.4). However, this regularity is insufficient to obtain bounds on the second integral that are uniform in K. Indeed, \(V_K\) can be bounded in at most \(H^1\) uniformly in K (see Lemma 4.11), and hence we cannot test the Wick square against \(V_K\) (or \(V_K^2\)) in the limit \(K \rightarrow \infty \).
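
The dichotomy between the two integrals can be seen from a heuristic variance computation. The sketch below assumes that the two distributions in question are the Wick cube and Wick square of the Gaussian field, writes \(\varphi \) for that field with the cutoff removed (notation introduced here for illustration), and uses the standard short-distance behaviour \(C(x,y) \sim \frac{1}{4\pi |x-y|}\) of its covariance in three dimensions. For a smooth test function \(f\), Wick's theorem gives formally

\[
{\mathbb {E}}\big [ \langle {:}\varphi ^n{:}, f \rangle ^2 \big ]
= n! \int \!\!\int f(x) f(y)\, C(x,y)^n \,dx\,dy .
\]

For \(n=2\) the singularity \(|x-y|^{-2}\) is locally integrable in three dimensions, whereas for \(n=3\) the singularity \(|x-y|^{-3}\) is not, which produces a logarithmic divergence as the cutoff is removed. For the second integral, \(V_K \in H^1\) only yields \(\nabla (V_K^2) = 2 V_K \nabla V_K \in L^1\) by the Cauchy–Schwarz inequality, that is, one derivative of \(V_K^2\) in \(L^1\), while pairing with a distribution of regularity \(-1-\kappa \) requires slightly more than one derivative.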

This is where the need for renormalisation beyond Wick ordering appears.

To implement this, we follow [BG19] and postulate that the small-scale behaviour of the drift v is governed by explicit renormalised polynomials of the underlying Gaussian field through the change of variables:

where the remainder term \(r=r(v)\) is defined by (5.3). Since \(v \in {\mathbb {H}}_{b,K} \subset {\mathbb {H}}_K\), we have that \(r \in {\mathbb {H}}_K\) and hence r has finite drift entropy; note, however, that \(r \not \in {\mathbb {H}}_{b,K}\). The optimisation problem is then changed from optimising over \(v \in {\mathbb {H}}_{b,K}\) to optimising over \(r(v) \in {\mathbb {H}}_K\).

The change of variables (5.3) means that the drift entropy of any v now contains terms that are divergent as \(K \rightarrow \infty \). One uses Itô’s formula to decompose the divergent integrals identified above into intermediate scales, and then uses these divergent terms in the drift entropy to mostly cancel them. Using the renormalisation counterterms beyond Wick ordering (i.e. the terms involving \(\gamma _K\) and \(\delta _K\)), the remaining divergences can be written in terms of well-defined integrals involving the diagrams defined in Sect. 4.1.1.

One can then establish that, for every \(\varepsilon >0\), there exists \(C=C(\varepsilon ,\beta ,\eta )>0\) such that, for every \(v \in {\mathbb {H}}_{b,K}\),

where