Abstract

Consider a nonuniformly hyperbolic map \( T:M\rightarrow M \) modelled by a Young tower with tails of the form \( O(n^{-\beta }) \), \( \beta >2 \). We prove optimal moment bounds for Birkhoff sums \( \sum _{i=0}^{n-1}v\circ T^i \) and iterated sums \( \sum _{0\le i<j<n}v\circ T^i\, w\circ T^j \), where \( v,w:M\rightarrow {{\mathbb {R}}} \) are (dynamically) Hölder observables. Previously, iterated moment bounds were known only for \( \beta >5\). Our method of proof is as follows: (i) we prove that \( T\) satisfies an abstract functional correlation bound; (ii) we use a weak dependence argument to show that the functional correlation bound implies moment estimates. Such iterated moment bounds arise when using rough path theory to prove deterministic homogenisation results. Indeed, by a recent result of Chevyrev, Friz, Korepanov, Melbourne & Zhang, we have convergence to an Itô diffusion for fast-slow systems of the form
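$$\begin{aligned} x^{(n)}_{k+1}&=x^{(n)}_k+n^{-1}a(x^{(n)}_k,y_k)+n^{-1/2}b(x^{(n)}_k,y_k),\\ y_{k+1}&=Ty_k \end{aligned}$$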
in the optimal range \( \beta >2\).


1 Introduction

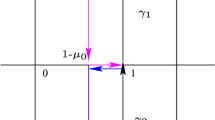

Let \( T:M\rightarrow M \) be an ergodic, measure-preserving transformation defined on a bounded metric space \( (M,d) \) with Borel probability measure \( \mu \). Consider a fast-slow system on \({{\mathbb {R}}}^d\times M\) of the form
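$$\begin{aligned} x^{(n)}_{k+1}&=x^{(n)}_k+n^{-1}a(x^{(n)}_k,y_k)+n^{-1/2}b(x^{(n)}_k,y_k),\\ y_{k+1}&=Ty_k, \end{aligned}$$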
where the initial condition \(x^{(n)}_0\equiv \xi \) is fixed and \( y_0 \) is picked randomly from \( (M,\mu ). \) When the fast dynamics \( T:M\rightarrow M \) is chaotic enough, it is expected that the stochastic process \( X_n \) defined by \( X_n(t)=x^{(n)}_{[nt]} \) will weakly converge to the solution of a stochastic differential equation driven by Brownian motion. This is referred to as deterministic homogenisation and has been of great interest recently [8,9,10, 12, 13, 18, 21, 27]. See [7] for a survey of the topic.

In [18], Kelly and Melbourne considered the special case where \(a(x,y)\equiv a(x)\) and \( b(x,y)=h(x)v(y) \). By using rough path theory, they showed that deterministic homogenisation reduces to proving two statistical properties for \( T:M\rightarrow M \). In [8] this result was extended to general a, b satisfying mild regularity assumptions.

One of the assumed statistical properties is an “iterated weak invariance principle”. In [18, 29] it was shown that this property is satisfied by nonuniformly expanding/hyperbolic maps modelled by Young towers, provided that the tails of the return time decay at rate \( O(n^{-\beta }) \) for some \( \beta >2 \) (which is the optimal range for such results).

The second assumed statistical property is control of “iterated moments”, which gives tightness in the rough path topology used for proving convergence. This condition has proved much more problematic. Advances in rough path theory [7, 8] significantly weakened the moment requirements from [18] and these weakened moment requirements were eventually proved for nonuniformly expanding maps in the optimal range (i.e. \( \beta >2 \)) in [21].

However, for nonuniformly hyperbolic maps modelled by Young towers, iterated moment bounds were previously known only for \( \beta >5 \) [11]. In this article, we extend iterated moment bounds to the optimal range \( \beta >2. \)

1.1 Illustrative examples

Many examples of invertible dynamical systems are modelled by Young towers [33, 34]. For example, Axiom A (uniformly hyperbolic) diffeomorphisms, Hénon attractors and the finite-horizon Sinai billiard are modelled by Young towers with exponential tails, so for such systems deterministic homogenisation results follow from [18, 19]. We now give some examples of slowly-mixing nonuniformly hyperbolic dynamical systems for which it was not previously possible to show deterministic homogenisation, due to a lack of control of iterated moments. We start with an example which is easy to write down:

- Intermittent Baker’s maps. Let \( \alpha \in (0,1). \) Define \( g:[0,1/2]\rightarrow [0,1] \) by \( g(x)=x(1+2^{\alpha }x^\alpha ) \). The Liverani-Saussol-Vaienti map \( {\bar{T}}:[0,1]\rightarrow [0,1]\),

$$\begin{aligned} {{\bar{T}}} x={\left\{ \begin{array}{ll} g(x),&{} \quad x\le 1/2,\\ 2x-1,&{} \quad x>1/2 \end{array}\right. } \end{aligned}$$is a prototypical example of a slowly-mixing nonuniformly expanding map [25]. As in [29, Exa. 4.1], consider an intermittent Baker’s map \( T:M\rightarrow M \), \( M=[0,1]^2 \) defined by

$$\begin{aligned} T(x_1,x_2)={\left\{ \begin{array}{ll} ({\bar{T}}x_1,g^{-1}(x_2)),&{}\quad x_1\in [0,\frac{1}{2}],\ x_2\in [0,1],\\ ({\bar{T}}x_1,(x_2+1)/2),&{}\quad x_1\in (\frac{1}{2},1],\ x_2\in [0,1].\\ \end{array}\right. } \end{aligned}$$There is a unique absolutely continuous invariant probability measure \( \mu \). The map \( T\) is nonuniformly hyperbolic and has a neutral fixed point at (0, 0) whose influence increases with \( \alpha \). In particular, \( T\) is modelled by a two-sided Young tower with tails of the form \( \sim n^{-\beta } \) where \( \beta =1/\alpha \).

For \( \beta >2 \) the central limit theorem (CLT) holds for all Hölder observables. For \( \beta \le 2 \) the CLT fails for typical Hölder observables [14], so it is natural to restrict to \( \beta >2 \) when considering deterministic homogenisation. By [11] it is possible to show iterated moment bounds for \( \beta >5 \). Our results yield iterated moment bounds and hence deterministic homogenisation in the full range \( \beta >2 .\)
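The two maps just defined are easy to experiment with. Below is a minimal Python sketch, assuming plain floating-point arithmetic is adequate for illustration (it is not a reliable way to follow long orbits near the neutral fixed point). The helper names are hypothetical, and computing \( g^{-1} \) by bisection is our own choice; it works because \( g \) is continuous and strictly increasing with \( g(0)=0 \) and \( g(1/2)=1 \).

```python
def g(x: float, alpha: float) -> float:
    """Left branch g(x) = x(1 + 2^alpha x^alpha) of the LSV map, on [0, 1/2]."""
    return x * (1.0 + (2.0 ** alpha) * x ** alpha)


def g_inv(u: float, alpha: float, tol: float = 1e-14) -> float:
    """Invert the increasing bijection g: [0, 1/2] -> [0, 1] by bisection."""
    lo, hi = 0.0, 0.5
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid, alpha) < u:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def lsv(x: float, alpha: float) -> float:
    """Liverani-Saussol-Vaienti map on [0, 1]."""
    return g(x, alpha) if x <= 0.5 else 2.0 * x - 1.0


def baker(x1: float, x2: float, alpha: float) -> tuple:
    """Intermittent Baker's map T on [0, 1]^2, as displayed above."""
    if x1 <= 0.5:
        return lsv(x1, alpha), g_inv(x2, alpha)
    return lsv(x1, alpha), (x2 + 1.0) / 2.0
```

For example, `baker(0.75, 0.5, 0.3)` returns `(0.5, 0.75)`, and \( (0,0) \) is (numerically) fixed.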

Dispersing billiards provide many examples of slowly-mixing nonuniformly hyperbolic maps. Markarian [26] and Chernov and Zhang [5] showed how to model many examples of dispersing billiards by Young towers with polynomial tails.

We give two classes of dispersing billiards for which it is now possible to show deterministic homogenisation:

- Bunimovich flowers [2]. By [5], the billiard map is modelled by a Young tower with tails of the form \( O(n^{-3}(\log n)^{3}). \)

- Dispersing billiards with vanishing curvature. In [6], Chernov and Zhang introduced a class of billiards modelled by Young towers with tails of the form \( O((\log n)^{\beta }n^{-\beta }) \) for any prescribed value of \( \beta \in (2,\infty ) \).

Notation. We endow \( {{\mathbb {R}}}^k \) with the norm \( |y|=\sum _{i=1}^k |y_i|\).

Let \( \eta \in (0,1] \). We say that an observable \( v:M\rightarrow {{\mathbb {R}}} \) on a metric space \( (M,d) \) is \( \eta \)-Hölder, and write \( v\in {{\mathscr {C}}}^{\eta }(M) \), if \( \left\Vert v\right\Vert _\eta =\left| v\right| _\infty +[v]_\eta <\infty , \) where \( \left| v\right| _\infty =\sup _M|v|\) and \([v]_\eta =\sup _{x\ne y}|v(x)-v(y)|/d(x,y)^\eta . \) If \( \eta =1 \) we call v Lipschitz and write \( \mathrm {Lip}(v)=[v]_{1}. \) For \( 1\le p\le \infty \) we use \(|\cdot |_p\) to denote the \( L^p \) norm.

The rest of this article is structured as follows. In Sect. 2 we state our main results. Our first main result, Theorem 2.3, is that mixing nonuniformly hyperbolic maps modelled by Young towers with polynomial tails satisfy a functional correlation bound. Our second main result, Theorem 2.4, is that this functional correlation bound implies control of iterated moments.

In Sect. 3 we recall background material on Young towers and prove Theorem 2.3. In Sect. 4 we prove that our functional correlation bound implies an elementary weak dependence condition. Finally in Sect. 5 we use this condition to prove Theorem 2.4.

2 Main Results

Let \( T:M\rightarrow M \) be a nonuniformly hyperbolic map modelled by a Young tower. We state our results for the class of dynamically Hölder observables, noting that this includes Hölder observables. We delay the definitions of Young tower and dynamically Hölder until Sect. 3.1. Let \( {{\mathscr {H}}}(M) \) denote the class of dynamically Hölder observables on \( M\) and let \( [\cdot ]_{{{\mathscr {H}}}} \) denote the dynamically Hölder seminorm.

Definition 2.1

Fix an integer \( q\ge 1. \) Given a function \( G:M^{q}\rightarrow {{\mathbb {R}}} \) and \( 0\le i< q\) we denote
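$$\begin{aligned} [G]_{{{\mathscr {H}}},i}=\sup _{x_0,\dots ,x_{q-1}\in M}\bigl [G(x_0,\dots ,x_{i-1},\cdot ,x_{i+1},\dots ,x_{q-1})\bigr ]_{{{\mathscr {H}}}}. \end{aligned}$$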
We call G separately dynamically Hölder, and write \( G\in \mathscr {SH}_{q}(M)\), if \( \left| G\right| _\infty +\sum _{i=0}^{q-1} [G]_{{{\mathscr {H}}},i}<\infty . \)

Fix \(\gamma >0\). We consider dynamical systems which satisfy the following property:

Definition 2.2

Suppose that there exists a constant \(C>0\) such that for all integers \( 0\le p< q,\ 0\le n_0\le \cdots \le n_{q-1} \),

for all \(G\in \mathscr {SH}_{q}(M)\). Then we say that \(T\) satisfies the Functional Correlation Bound with rate \(n^{-\gamma }\).

A similar condition was introduced by Leppänen in [22] and further studied by Leppänen and Stenlund in [23, 24]. In particular, [22] showed that functional correlation decay implies a multivariate CLT with bounds on the rate of convergence. We are now ready to state the main results which we prove in this paper.

The rate of decay of correlations of a dynamical system modelled by a Young tower is determined by the tails of the return time to the base of the tower. Indeed, let \( T\) be a mixing transformation modelled by a two-sided Young tower with tails of the form \(O(n^{-\beta })\) for some \(\beta >1\). In [28], using a method due to S. Gouëzel (communicated privately and based on ideas from [3]), it was shown that there exists \( C>0 \) such that
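$$\begin{aligned} \biggl |\int _M v\; w\circ T^n\,d\mu -\int _M v\,d\mu \int _M w\,d\mu \biggr |\le Cn^{-(\beta -1)}\left\Vert v\right\Vert _{{{\mathscr {H}}}}\left\Vert w\right\Vert _{{{\mathscr {H}}}} \end{aligned}$$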
for all \(n\ge 1, v,w \in {{\mathscr {H}}}(M)\). Our first main result is that the Functional Correlation Bound holds with the same rate:

Theorem 2.3

Let \(\beta >1\). Let \(T\) be a mixing transformation modelled by a two-sided Young tower whose return time has tails of the form \(O(n^{-\beta })\). Then \(T\) satisfies the Functional Correlation Bound with rate \(n^{-(\beta -1)}\).

Given \(v,w\in {{\mathscr {H}}}(M)\) mean zero define
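$$\begin{aligned} S_v(n)=\sum _{i=0}^{n-1}v\circ T^i,\qquad {{\mathbb {S}}}_{v,w}(n)=\sum _{0\le i<j<n}v\circ T^i\, w\circ T^j. \end{aligned}$$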
Our second main result is that the Functional Correlation Bound implies moment estimates for \( S_v(n) \) and \( {{\mathbb {S}}}_{v,w}(n) \). Let \( \left\Vert \cdot \right\Vert _{{{\mathscr {H}}}}=\left| \cdot \right| _\infty +[\cdot ]_{{{\mathscr {H}}}} \) denote the dynamically Hölder norm.
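As a concrete check on the definitions of \( S_v(n) \) and \( {{\mathbb {S}}}_{v,w}(n) \), the following Python sketch computes both from the values \( v(T^iy) \), \( w(T^jy) \), \( 0\le i,j<n \), along a single orbit (how the orbit is generated is left open, and the function name is hypothetical). It uses the identity \( {{\mathbb {S}}}_{v,w}(n)=\sum _{j=0}^{n-1}S_v(j)\,w\circ T^j \), so a single pass of length \( n \) replaces the double sum.

```python
def birkhoff_and_iterated(vs, ws):
    """Return (S_v(n), SS_{v,w}(n)) from vs[i] = v(T^i y), ws[j] = w(T^j y), 0 <= i, j < n.

    SS_{v,w}(n) = sum_{0 <= i < j < n} vs[i] * ws[j] is accumulated as
    sum_j S_v(j) * ws[j], where S_v(j) = vs[0] + ... + vs[j-1].
    """
    if len(vs) != len(ws):
        raise ValueError("vs and ws must have the same length n")
    partial = 0.0   # S_v(j): sum of vs[i] over i < j
    iterated = 0.0
    for v_j, w_j in zip(vs, ws):
        iterated += partial * w_j  # adds sum_{i<j} vs[i] * ws[j]
        partial += v_j
    return partial, iterated
```

For instance, `birkhoff_and_iterated([1, 2, 3], [4, 5, 6])` gives `(6.0, 23.0)`, since \( 1\cdot 5+1\cdot 6+2\cdot 6=23 \).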

Theorem 2.4

Let \(\gamma >1\). Suppose that \(T\) satisfies the Functional Correlation Bound with rate \(n^{-\gamma }\). Then there exists a constant \( C>0 \) such that for all \(n\ge 1\), for any mean zero \( v,w\in {{\mathscr {H}}}(M) \),

(a) \(\left| S_v(n)\right| _{2\gamma }\le Cn^{1/2}\left\Vert v\right\Vert _{{{\mathscr {H}}}}\).

(b) \(\left| {{\mathbb {S}}}_{v,w}(n)\right| _\gamma \le Cn\left\Vert v\right\Vert _{{{\mathscr {H}}}}\left\Vert w\right\Vert _{{{\mathscr {H}}}}\).

Remark 2.5

As mentioned above, by [8, Theorem 2.10], to obtain deterministic homogenisation results it suffices to prove the iterated WIP and iterated moment bounds. Let \( T\) be a mixing transformation modelled by a two-sided Young tower with tails of the form \( O(n^{-\beta })\) for some \(\beta >2\). By [29], the iterated WIP holds for all Hölder observables. Together, Theorems 2.3 and 2.4 imply that for all \( \eta \in (0,1] \) there exists \( C>0 \) such that

(a) \(\left| S_v(n)\right| _{2(\beta -1)}\le Cn^{1/2}\left\Vert v\right\Vert _\eta \).

(b) \(\left| {{\mathbb {S}}}_{v,w}(n)\right| _{\beta -1}\le Cn\left\Vert v\right\Vert _\eta \left\Vert w\right\Vert _\eta \).

for all mean zero \( v,w\in {{\mathscr {C}}}^{\eta }(M)\), giving the required control of iterated moments.
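The exponents in Remark 2.5 come from substituting the rate of Theorem 2.3 into Theorem 2.4:

$$\begin{aligned} \gamma =\beta -1,\qquad \gamma >1\iff \beta >2,\qquad 2\gamma =2(\beta -1). \end{aligned}$$

Replacing \( \left\Vert \cdot \right\Vert _{{{\mathscr {H}}}} \) by \( \left\Vert \cdot \right\Vert _\eta \) then relies on a continuous embedding \( {{\mathscr {C}}}^{\eta }(M)\hookrightarrow {{\mathscr {H}}}(M) \) of the type given in Proposition 3.5.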

3 Young Towers

3.1 Prerequisites

Young towers were first introduced by Young in [33, 34] as a broad framework for proving decay of correlations for nonuniformly hyperbolic maps. We follow the presentation of [1]; in particular, this framework does not assume uniform contraction along stable manifolds and hence covers examples such as billiards.

Gibbs–Markov maps. Let \(({{\bar{Y}}},{{\bar{\mu }}}_Y)\) be a probability space and let \({{\bar{F}}}:{{\bar{Y}}}\rightarrow {{\bar{Y}}}\) be ergodic and measure-preserving. Let \(\alpha \) be an at most countable, measurable partition of \({{\bar{Y}}}\). We assume that there exist constants \(K>0,\ \theta \in (0,1)\) such that for all elements \(a\in \alpha \):

- (Full-branch condition) The map \({{\bar{F}}}|_{a}:a\rightarrow {{\bar{Y}}}\) is a measurable bijection.

- For all distinct \(y,y'\in {{\bar{Y}}}\) the separation time is finite:

$$\begin{aligned} s(y,y')=\inf \{n\ge 0: {{\bar{F}}}^n y,\, {{\bar{F}}}^n y' \text { lie in distinct elements of }\alpha \}<\infty . \end{aligned}$$

- Define \(\zeta :a\rightarrow {{\mathbb {R}}}^+\) by \(\zeta =d{{\bar{\mu }}}_Y/(d\, ({{\bar{F}}}|_a^{-1})_* {{\bar{\mu }}}_Y)\). We have \( |\log \zeta (y)-\log \zeta (y')|\le K\theta ^{s(y,y')} \) for all \( y,y'\in a \).

Then we call \({\bar{F}}:{{\bar{Y}}}\rightarrow {{\bar{Y}}}\) a full-branch Gibbs–Markov map.

Two-sided Gibbs–Markov maps. Let (Y, d) be a bounded metric space with Borel probability measure \(\mu _Y\) and let \(F:Y\rightarrow Y\) be ergodic and measure-preserving. Let \({\bar{F}}:{\bar{Y}}\rightarrow {\bar{Y}}\) be a full-branch Gibbs–Markov map with associated measure \({{\bar{\mu }}}_Y\).

We suppose that there exists a measure-preserving semi-conjugacy \({\bar{\pi }}:Y\rightarrow {\bar{Y}}\), so \({\bar{\pi }}\circ F={\bar{F}}\circ {\bar{\pi }}\) and \({\bar{\pi }}_{*}\mu _Y={\bar{\mu }}_Y.\) The separation time \(s(\cdot ,\cdot )\) on \({{\bar{Y}}}\) lifts to a separation time on Y given by \(s(y,y')=s({{\bar{\pi }}} y,{{\bar{\pi }}} y')\). Suppose that there exist constants \(K>0,\theta \in (0,1)\) such that

Then we call \(F:Y\rightarrow Y\) a two-sided Gibbs–Markov map.

One-sided Young towers. Let \({{\bar{\phi }}}:{{\bar{Y}}}\rightarrow {{\mathbb {Z}}}^+\) be integrable and constant on partition elements of \(\alpha \). We define the one-sided Young tower \({{\bar{\varDelta }}}={{\bar{Y}}}^{{{\bar{\phi }}}}\) and tower map \({{\bar{f}}}:{{\bar{\varDelta }}}\rightarrow {{\bar{\varDelta }}}\) by
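$$\begin{aligned} {{\bar{\varDelta }}}=\{({\bar{y}},\ell )\in {{\bar{Y}}}\times {{\mathbb {Z}}}:0\le \ell <{{\bar{\phi }}}({\bar{y}})\},\qquad {{\bar{f}}}({\bar{y}},\ell )={\left\{ \begin{array}{ll} ({\bar{y}},\ell +1),&{}\quad \ell \le {{\bar{\phi }}}({\bar{y}})-2,\\ ({{\bar{F}}}{\bar{y}},0),&{}\quad \ell ={{\bar{\phi }}}({\bar{y}})-1. \end{array}\right. } \end{aligned}$$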
We extend the separation time \(s(\cdot ,\cdot )\) to \({{\bar{\varDelta }}}\) by defining
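$$\begin{aligned} s(({\bar{y}},\ell ),({\bar{y}}',\ell '))={\left\{ \begin{array}{ll} s({\bar{y}},{\bar{y}}'),&{}\quad \ell =\ell ',\\ 0,&{}\quad \ell \ne \ell '. \end{array}\right. } \end{aligned}$$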
Note that for \(\theta \in (0,1)\) we can define a metric by \(d_\theta ({\bar{p}},{\bar{q}})=\theta ^{s({\bar{p}},{\bar{q}})}\).
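The separation time and \( d_\theta \) are purely combinatorial, which the following Python sketch makes explicit. It assumes each point is represented by a finite list of partition labels, its itinerary \( a_0,a_1,\dots \) with \( {{\bar{F}}}^n y\in a_n \); this representation and the function names are illustrative assumptions. Because only finitely many labels are available, an infinite separation time here only means that the itineraries agree up to the truncation.

```python
import math


def separation_time(itin_a, itin_b):
    """First n >= 0 at which the itineraries differ; math.inf if the
    (truncated) itineraries agree at every available index."""
    for n, (label_a, label_b) in enumerate(zip(itin_a, itin_b)):
        if label_a != label_b:
            return n
    return math.inf


def d_theta(itin_a, itin_b, theta):
    """d_theta = theta ** s; equals 1 exactly when the points start in
    different partition elements (s = 0)."""
    s = separation_time(itin_a, itin_b)
    return 0.0 if s == math.inf else theta ** s
```

For example, the itineraries `[0, 1, 1, 0]` and `[0, 1, 0, 0]` separate at \( n=2 \), so with \( \theta =1/2 \) their distance is \( 1/4 \).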

Now, \({\bar{\mu }}_\varDelta =({\bar{\mu }}_Y \times \text {counting})/\int _{{\bar{Y}}}{\bar{\phi }} d{\bar{\mu }}_Y\) is an ergodic \({\bar{f}}\)-invariant probability measure on \({\bar{\varDelta }}\).

Two-sided Young towers. Let \(F:Y\rightarrow Y\) be a two-sided Gibbs–Markov map and let \(\phi :Y\rightarrow {{\mathbb {Z}}}^+\) be an integrable function that is constant on \({\bar{\pi }}^{-1}a\) for each \(a\in \alpha \). In particular, \(\phi \) projects to a function \({\bar{\phi }}:{\bar{Y}}\rightarrow {{\mathbb {Z}}}^+\) that is constant on partition elements of \(\alpha \).

Define the one-sided Young tower \({\bar{\varDelta }}={\bar{Y}}^{{\bar{\phi }}}\) as in (3.2). Using \(\phi \) in place of \({\bar{\phi }}\) and \(F:Y\rightarrow Y\) in place of \({\bar{F}}:{\bar{Y}}\rightarrow {\bar{Y}}\), we define the two-sided Young tower \(\varDelta =Y^{\phi }\) and tower map \(f:\varDelta \rightarrow \varDelta \) in the same way. Likewise, we define an ergodic f-invariant probability measure on \(\varDelta \) by \(\mu _\varDelta =(\mu _Y \times \text {counting})/\int _{Y}\phi \, d\mu _Y\).
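The tower map and tower measure can be illustrated on a finite toy example. The Python sketch below assumes a finite base \( Y \) with a bijection \( F \) and a roof \( \phi \ge 1 \); such a base is of course not a Gibbs–Markov map, so the example only illustrates the bookkeeping \( f(y,\ell )=(y,\ell +1) \) for \( \ell +1<\phi (y) \), \( f(y,\ell )=(Fy,0) \) otherwise, and the normalisation of \( \mu _\varDelta \). All names are hypothetical.

```python
def tower_map(F, phi):
    """Tower map f on {(y, l) : 0 <= l < phi(y)}: climb one level, or
    return to the base via F from the top level l = phi(y) - 1."""
    def f(state):
        y, level = state
        if level + 1 < phi(y):
            return (y, level + 1)
        return (F(y), 0)
    return f


def tower_measure(mu_Y, phi):
    """mu_Delta = (mu_Y x counting) / integral of phi, for a finite base
    given as a dict {y: mu_Y({y})}."""
    mean_phi = sum(mass * phi(y) for y, mass in mu_Y.items())
    return {(y, level): mass / mean_phi
            for y, mass in mu_Y.items() for level in range(phi(y))}
```

With base \( \{0,1,2\} \), \( F(y)=y+1 \bmod 3 \), \( \phi (y)=y+1 \) and uniform \( \mu _Y \), the tower has six states, each of mass \( 1/6 \), and \( f \) permutes them cyclically.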

We extend \({\bar{\pi }}:Y\rightarrow {\bar{Y}}\) to a map \({\bar{\pi }}:\varDelta \rightarrow {\bar{\varDelta }}\) by setting \({\bar{\pi }}(y,\ell )=({\bar{\pi }}y,\ell )\) for all \((y,\ell )\in \varDelta \). Note that \({\bar{\pi }}\) is a measure-preserving semi-conjugacy; \({\bar{\pi }}\circ f={\bar{f}}\circ {\bar{\pi }}\) and \({\bar{\pi }}_{*}\mu _\varDelta ={\bar{\mu }}_\varDelta \). The separation time s on \({\bar{\varDelta }}\) lifts to \(\varDelta \) by defining \(s(y,y)=s({\bar{\pi }}y,{\bar{\pi }}y').\)

We are now finally ready to say what it means for a map to be modelled by a Young tower:

Let \( T:M\rightarrow M \) be a measure-preserving transformation on a probability space \( (M,\mu ) \). Suppose that there exists \(Y\subset M\) measurable with \(\mu (Y)>0\) such that:

- \(F=T^{\phi }:Y\rightarrow Y\) is a two-sided Gibbs–Markov map with respect to some probability measure \(\mu _Y\).

- \(\phi \) is constant on partition elements of \({\bar{\pi }}^{-1}\alpha \), so we can define Young towers \(\varDelta =Y^\phi \) and \({{\bar{\varDelta }}}={\bar{Y}}^{{\bar{\phi }}}\).

- The map \(\pi _M:\varDelta \rightarrow M\), \(\pi _M(y,\ell )=T^\ell y\) is a measure-preserving semiconjugacy.

Then we say that \(T:M\rightarrow M\) is modelled by a (two-sided) Young tower.

From now on we fix \( \beta >1 \) and suppose that \( T:M\rightarrow M \) is a mixing transformation modelled by a Young tower \( \varDelta \) with tails of the form \( \mu _Y(\phi \ge n)=O(n^{-\beta }). \)

Remark 3.1

Here we have not assumed that the tower map \( f:\varDelta \rightarrow \varDelta \) is mixing. However, as in [4, Theorem 2.1, Proposition 10.1] and [1], the a priori knowledge that \( \mu \) is mixing means that this causes no loss of generality (see the reduction in Sect. 3.2).

Let \( \psi _n(x)=\#\{j=1,\dots ,n:f^j x\in \varDelta _0\} \) denote the number of returns to \( \varDelta _0=\{(y,\ell )\in \varDelta :\ell =0\} \) by time n. The following bound is standard, see for example [20, Lemma 5.5].

Lemma 3.2

Let \(\theta \in (0,1)\). Then there exists a constant \(D_1>0\) such that
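$$\begin{aligned} \int _\varDelta \theta ^{\psi _n}\,d\mu _\varDelta \le D_1n^{-(\beta -1)}\quad \text {for all }n\ge 1. \end{aligned}$$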
\(\square \)

The transfer operator L corresponding to \({\bar{f}}:{{\bar{\varDelta }}}\rightarrow {{\bar{\varDelta }}}\) and \({\bar{\mu }}_\varDelta \) is given pointwise by

It follows that for \(n\ge 1\), the operator \(L^n\) is of the form \((L^n v)(x)=\sum _{{\bar{f}}^n z=x}g_n(z)v(z)\), where \(g_n=\prod _{i=0}^{n-1}g\circ {{\bar{f}}}^i.\)

We say that \(z,z'\in {{\bar{\varDelta }}}\) are in the same cylinder set of length n if \({{\bar{f}}}^k z\) and \({{\bar{f}}}^k z'\) lie in the same partition element of \({{\bar{\varDelta }}}\) for \(0\le k\le n-1\). We use the following distortion bound (see e.g. [20, Proposition 5.2]):

Proposition 3.3

There exists a constant \(K_1>0\) such that for all \(n\ge 1\), for all points \(z,z'\in {{\bar{\varDelta }}}\) which belong to the same cylinder set of length n,

\(\square \)

Let \( \theta \in (0,1). \) We say that \( v:{{\bar{\varDelta }}}\rightarrow {{\mathbb {R}}} \) is \( d_\theta \)-Lipschitz if \( \left\Vert v\right\Vert _\theta =\left| v\right| _\infty +\sup _{x\ne y}|v(x)-v(y)|/d_\theta (x,y)<\infty \). If \( f:\varDelta \rightarrow \varDelta \) is mixing then by [34],

The same bound holds pointwise on \( {\bar{\varDelta }}_0 \):

Lemma 3.4

Suppose that \( f:\varDelta \rightarrow \varDelta \) is mixing. Then there exists \( D_2>0 \) such that for all \( d_\theta \)-Lipschitz \( v:{{\bar{\varDelta }}}\rightarrow {{\mathbb {R}}} \), for any \( n\ge 1, \)

This is a straightforward application of operator renewal theory developed by Sarig [31] and Gouëzel [15, 16]. However, we could not find a reference to this result in the literature so we provide a proof.

Proof

Define partial transfer operators \( T_n \) and \( B_n \) as in [17, Section 4]. Then

Define an operator \( \varPi \) by \( \varPi v=\int _{{{\bar{\varDelta }}}_0}v\, d{{\bar{\mu }}}_\varDelta \). Then as in the proof of [17, Theorem 4.6] we can write \( T_k=\varPi +E_k \) where \( \left\Vert E_k\right\Vert =O(k^{-(\beta -1)}). \) Moreover, by [17, Theorem 4.6], \( \left\Vert B_b\right\Vert =O(b^{-\beta }) \) and

It follows that

The conclusion of the lemma follows by noting that the expressions \( \sum ^\infty _{b= n+1}b^{-\beta }\) and

are both \( O(n^{-(\beta -1)})\). \(\square \)
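For the first of the two expressions, the bound is the integral test: for \( \beta >1 \) and \( n\ge 1 \), \( \int _{n+1}^\infty x^{-\beta }dx\le \sum _{b=n+1}^\infty b^{-\beta }\le \int _n^\infty x^{-\beta }dx \), that is, \( (n+1)^{1-\beta }/(\beta -1)\le \sum _{b>n}b^{-\beta }\le n^{1-\beta }/(\beta -1) \). The short Python sketch below checks these bounds numerically, approximating the infinite sum by a truncated one; the function names and the cutoff are illustrative choices.

```python
def tail_bounds(n, beta):
    """Integral-test bounds on sum_{b > n} b^(-beta), valid for beta > 1, n >= 1."""
    lower = (n + 1) ** (1 - beta) / (beta - 1)
    upper = n ** (1 - beta) / (beta - 1)
    return lower, upper


def truncated_tail(n, beta, cutoff):
    """sum_{b=n+1}^{cutoff} b^(-beta); slightly underestimates the full tail."""
    return sum(b ** -beta for b in range(n + 1, cutoff + 1))
```

With \( n=10 \), \( \beta =3 \) the truncated tail lies between \( 1/242 \) and \( 1/200 \), and multiplying by \( n^{\beta -1} \) keeps it below \( 1/(\beta -1) \), which is the \( O(n^{-(\beta -1)}) \) claim.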

Finally we recall the class of observables on \( M\) that are of interest to us:

Dynamically Hölder observables. Fix \( \theta \in (0,1) \). For \( v:M\rightarrow {{\mathbb {R}}}\), define

We say that v is dynamically Hölder if \( \left\Vert v\right\Vert _{{{\mathscr {H}}}}<\infty \) and denote by \( {{\mathscr {H}}}(M) \) the space of all such observables.

It is standard (see e.g. [1, Proposition 7.3]) that Hölder observables are also dynamically Hölder for the classes of dynamical systems that we are interested in:

Proposition 3.5

Let \( \eta \in (0,1] \) and let \( d_0 \) be a bounded metric on \( M\). Let \( {{\mathscr {C}}}^{\eta }(M) \) be the space of observables that are \( \eta \)-Hölder with respect to \( d_0 \). Suppose that there exist \( K>0 \) and \( \gamma _0\in (0,1) \) such that \( d_0(T^\ell y,T^\ell y')\le K(d_0(y,y')+\gamma _0^{s(y,y')}) \) for all \( y,y'\in Y,0\le \ell <\phi (y). \)

Then \( {{\mathscr {C}}}^{\eta }(M) \) is continuously embedded in \( {{\mathscr {H}}}(M) \) where we may choose any \( \theta \in [\gamma _0^\eta ,1) \) and \( d=d_0^{\eta '} \) for any \( \eta '\in (0,\eta ]. \) \(\square \)
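The restriction \( \theta \ge \gamma _0^\eta \) can be traced to an elementary estimate, recorded here without reproducing the definition of \( \left\Vert \cdot \right\Vert _{{{\mathscr {H}}}} \) (so this only sketches the mechanism, not the full proof of [1, Proposition 7.3]). Using \( (a+b)^\eta \le a^\eta +b^\eta \) for \( a,b\ge 0 \), \( \eta \in (0,1] \), we get for \( v\in {{\mathscr {C}}}^{\eta }(M) \), \( y,y'\in Y \) and \( 0\le \ell <\phi (y) \),

$$\begin{aligned} |v(T^\ell y)-v(T^\ell y')| &\le [v]_\eta \,d_0(T^\ell y,T^\ell y')^\eta \le [v]_\eta K^\eta \bigl (d_0(y,y')+\gamma _0^{s(y,y')}\bigr )^\eta \\ &\le [v]_\eta K^\eta \bigl (d_0(y,y')^\eta +(\gamma _0^\eta )^{s(y,y')}\bigr ) \le [v]_\eta K^\eta \bigl (d_0(y,y')^\eta +\theta ^{s(y,y')}\bigr ), \end{aligned}$$

where \( [v]_\eta \) is taken with respect to \( d_0 \) and the last step uses \( \theta \in [\gamma _0^\eta ,1) \).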

3.2 Reduction to the case of a mixing Young tower

In proofs involving Young towers it is often useful to assume that the Young tower is mixing, i.e. \(\gcd \{\phi (y):y\in Y\}=1. \) Hence in the subsequent subsections we focus on proving the Functional Correlation Bound under this assumption:

Lemma 3.6

Suppose that \( T\) is modelled by a mixing two-sided Young tower whose return time has tails of the form \( O(n^{-\beta }) \). Then \( T\) satisfies the Functional Correlation Bound with rate \( n^{-(\beta -1)} \).

Proof of Theorem 2.3

Let \( d=\gcd \{\phi (y):y\in Y\} \). Set \( T'=T^d \) and \( \phi '=\phi /d. \) Construct a mixing two-sided Young tower \( \varDelta '=Y^{\phi '} \), with tower measure \( \mu '_\varDelta . \) Define \( \pi '_{M}:\varDelta '\rightarrow M \) by \( \pi '_{M}(y,\ell )=(T')^\ell y.\) Then \( T' \) is modelled by \( \varDelta ' \) with ergodic, \( T' \)-invariant measure \( (\pi '_{M})_{*}\mu '_\varDelta .\) Now by assumption the measure \( \mu \) is mixing so by the same argument as in [1, Section 4.1] we must have \( \mu =(\pi '_M)_* \mu '_{\varDelta } \).

Let \( G\in \mathscr {SH}_{q}(M) \) and fix integers \( 0\le n_0\le \cdots \le n_{q-1} \). Define \( n'_i=[n_i/d], r_i=n_i \mod d \). We need to bound

Define \( G':M^q\rightarrow {{\mathbb {R}}} \) by \( G'(x_0,\dots ,x_{q-1})=G(T^{r_0}x_0,\dots ,T^{r_{q-1}}x_{q-1}). \) Then

Let \( [\cdot ]_{{{\mathscr {H}}}'} \) denote the dynamically Hölder seminorm as defined with \( T',\phi ' \) in place of \( T,\phi \). Then by Lemma 3.6,

Now fix \( 0\le i<q.\) Let \( x_0,\dots ,x_{q-1}\in M\) and write

Let \( y,y'\in Y \) and \( 0\le \ell <\phi '(y). \) Then

so \([G']_{{{\mathscr {H}}}',i} \le [G]_{{{\mathscr {H}}},i}. \) \(\square \)
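The index bookkeeping in the proof above (\( d=\gcd \), \( n_i=dn'_i+r_i \), \( T^{n_i}=(T')^{n'_i}\circ T^{r_i} \)) is easy to sanity-check numerically. The Python sketch below does so with a hypothetical finite sample of return times and an arbitrary toy map on \( \{0,\dots ,16\} \); it checks only the arithmetic and the composition of iterates, nothing dynamical.

```python
from functools import reduce
from math import gcd


def iterate(T, n, x):
    """Apply T to x n times."""
    for _ in range(n):
        x = T(x)
    return x


def split_times(return_times, times):
    """d = gcd of the (sampled) return times, and (n'_i, r_i) = divmod(n_i, d)."""
    d = reduce(gcd, return_times)
    return d, [divmod(n, d) for n in times]


def toy_T(x):
    """An arbitrary map on {0, ..., 16}, used only to test composition."""
    return (3 * x + 1) % 17
```

For return times \( \{4,6,10\} \) one gets \( d=2 \), and the times \( 0,3,7 \) split as \( (0,0),(1,1),(3,1) \).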

3.3 Approximation by one-sided functions

Let \( 0\le p<q \) and \( 0\le n_0\le \cdots \le n_{q-1} \) be integers and consider a function \( G\in \mathscr {SH}_{q}(M) \). We wish to bound

Now since \(\pi _M:\varDelta \rightarrow M\) is a measure-preserving semiconjugacy

where \( {{\widetilde{H}}} :\varDelta ^2\rightarrow {{\mathbb {R}}}\) is given by

where \( \smash [t]{\widetilde{G}}=G\circ \pi _M\) and \( k_i=n_i-n_p \).

Let \( R\ge 1 \). We approximate \({\widetilde{H}}(f^R \cdot ,f^R \cdot )\) by a function \({\widetilde{H}}_R\) that projects down onto \({\bar{\varDelta }}\). Our approach is based on ideas from Appendix B of [28].

Recall that \( \psi _R(x)=\#\{j=1,\dots ,R: f^j x\in \varDelta _0\} \) denotes the number of returns to \(\varDelta _0=\{(y,\ell )\in \varDelta : \ell =0\}\) by time R. Let \({{\mathscr {Q}}}_R\) denote the at most countable, measurable partition of \(\varDelta \) with elements of the form \(\{x'\in \varDelta : s(x,x')>2\psi _R(x)\}\), \(x\in \varDelta \). Choose a reference point in each partition element of \({{\mathscr {Q}}}_R\). For \( x\in \varDelta \) let \( {\hat{x}} \) denote the reference point of the element that x belongs to. Define \( {\widetilde{H}}_R:\varDelta ^2\rightarrow {{\mathbb {R}}} \) by

Proposition 3.7

The function \( {\widetilde{H}}_R \) lies in \( L^\infty (\varDelta ^2) \) and projects down to a function \( {\bar{H}}_R\in L^\infty ({{\bar{\varDelta }}}^2) \). Moreover, there exists a constant \(K_2>0\) depending only on \( T:M\rightarrow M \) such that,

(i) \( \left| {\bar{H}}_R\right| _\infty =\left| \smash [t]{\widetilde{H}}_R\right| _\infty \le \left| G\right| _\infty . \)

(ii) For all \( x,y\in \varDelta \),

$$\begin{aligned} |\smash [t]{\widetilde{H}}(f^R x,f^R y)-\smash [t]{\widetilde{H}}_R(x,y)|\le K_2\biggl (\sum _{i=0}^{p-1} [G]_{{{\mathscr {H}}},i}\,\theta ^{\psi _R(f^{n_i}x)}+\sum _{i=p}^{q-1} [G]_{{{\mathscr {H}}},i}\,\theta ^{\psi _R(f^{k_i}y)}\biggr ). \end{aligned}$$

(iii) For all \( {{\bar{y}}}\in {{\bar{\varDelta }}}\),

$$\begin{aligned} \left\Vert L^{R+n_{p-1}}{\bar{H}}_R(\cdot ,{{\bar{y}}})\right\Vert _\theta \le K_2\biggl (\left| G\right| _\infty +\sum _{i=0}^{p-1} [G]_{{{\mathscr {H}}},i}\biggr ). \end{aligned}$$

Here we recall that \( \left\Vert \cdot \right\Vert _\theta \) denotes the \( d_\theta \)-Lipschitz norm, which is given by \( \left\Vert v\right\Vert _\theta =\left| v\right| _\infty +\sup _{x\ne y}|v(x)-v(y)|/d_\theta (x,y)\) for \( v:{{\bar{\varDelta }}}\rightarrow {{\mathbb {R}}} \).

Proof

We follow the proof of Proposition 7.9 in [1].

By definition \( {\widetilde{H}}_R \) is piecewise constant on a measurable partition of \( \varDelta ^2 \). Moreover, this partition projects down to a measurable partition of \( {{\bar{\varDelta }}}^2 \), since it is defined in terms of s and \( \psi _R \), which both project down to \( {{\bar{\varDelta }}} \). It follows that \( {\bar{H}}_R \) is well-defined and measurable. Part (i) is immediate.

Let \( x,y\in \varDelta \). Write \(\smash [t]{\widetilde{H}}(f^R x,f^R y)-\smash [t]{\widetilde{H}}_R(x,y) =I_1+I_2 \) where

Let \( a_i=f^{n_i}x\) and \( b_i=f^R f^{k_i}y\). By successively substituting \( a_i \) by \( {\hat{a}}_i \),

where \({{\tilde{v}}}_i(x)={{\tilde{G}}}(f^R a_0,\dots ,f^R a_{i-1},x,f^R {{\hat{a}}}_{i+1},\dots ,f^R {{\hat{a}}}_{p-1},b_p,\dots ,b_{q-1}). \)

Fix \( 0\le i<p \). Since \( a_i \) and \( {\hat{a}}_i \) are in the same partition element, \( s(a_i,{\hat{a}}_i)>2\psi _R(a_i) \). Write \( a_i=(y,\ell ), {{\hat{a}}}_i=({{\hat{y}}},\ell ). \) Then \( f^R a_i=(F^{\psi _R(a_i)}y,\ell _1)\) and similarly \(f^R {{\hat{a}}}_i=(F^{\psi _R(a_i)}{{\hat{y}}},\ell _1)\), where \( \ell _1=\ell +R-\varPhi _{\psi _R(a_i)}(y) \). (Here, \( \varPhi _k=\sum _{j=0}^{k-1}\phi \circ F^j \).) Now by the definition of \( [G]_{{{\mathscr {H}}},i} \) and (3.1),

Thus

By a similar argument,

completing the proof of (ii).

Let \({{\bar{x}}},{{\bar{x}}}',{{\bar{y}}}\in {{\bar{\varDelta }}}. \) Recall that

It follows that \( \left| L^{R+n_{p-1}}{\bar{H}}_R(\cdot ,{\bar{y}})\right| _\infty \le \left| {\bar{H}}_R\right| _\infty \le \left| G\right| _\infty .\) If \( d_\theta ({{\bar{x}}},{{\bar{x}}}')=1 \), then

Otherwise, we can write \( L^{n_{p-1}+R}{\bar{H}}_R(\cdot ,{\bar{y}})({{\bar{x}}})-L^{n_{p-1}+R}{\bar{H}}_R(\cdot ,{\bar{y}})({{\bar{x}}}')=J_1+J_2 \) where

Here, as usual we have paired preimages \( {{\bar{z}}},{{\bar{z}}}' \) that lie in the same cylinder set of length \( n_{p-1}+R \). By bounded distortion (Proposition 3.3), \( |J_1|\le C\left| G\right| _\infty d_\theta ({{\bar{x}}},{{\bar{x}}}'). \) We claim that \( |{\bar{H}}_R({{\bar{z}}},{{\bar{y}}})-{\bar{H}}_R({{\bar{z}}}',{{\bar{y}}})|\le K_2\sum _{i=0}^{p-1} [G]_{{{\mathscr {H}}},i}d_\theta ({{\bar{x}}},{{\bar{x}}}') \). It follows that \( |J_2|\le K_2\sum _{i=0}^{p-1} [G]_{{{\mathscr {H}}},i}d_\theta ({{\bar{x}}},{{\bar{x}}}'). \)

It remains to prove the claim. Choose points \( z,z',y\in \varDelta \) that project to \({{\bar{z}}},{{\bar{z}}}',{{\bar{y}}} \). Let \( a_i=f^{n_i}z,a_i'=f^{n_i}z',b_i=f^{R+n_i}y. \) As in part (ii),

where \( {{\tilde{w}}}_i(x)={{\tilde{G}}}(f^R {{\hat{a}}}_0,\dots ,{{\hat{a}}}_{i-1}, x,f^R {{\hat{a}}}'_{i+1},\dots ,{{\hat{a}}}'_{p-1},{{\hat{b}}}_p,\dots ,{{\hat{b}}}_{q-1})\).

Let \( 0\le i< p \). We bound \( E_i={{\tilde{w}}}_i(f^R {{\hat{a}}}_i)-{{\tilde{w}}}_i(f^R {{\hat{a}}}'_i) \). Without loss suppose that

for otherwise \({{\hat{a}}}_i \) and \({{\hat{a}}}'_i \) are reference points of the same partition element so \( {{\hat{a}}}_i={{\hat{a}}}'_i \) and \( E_i=0 \). Now as in part (ii),

Note that

Since \( {{\bar{z}}},{{\bar{z}}}' \) lie in the same cylinder set of length \( R+n_{p-1}\), we have \( \psi _R(a_i)=\psi _R(a'_i) \) and

Now \( a_i \) and \( {\hat{a}}_i \) are contained in the same partition element so \( s({{\hat{a}}}_i,a_i)-\psi _R({{\hat{a}}}_i) \ge \psi _R({{\hat{a}}}_i)\) and

Hence \( s({{\hat{a}}}_i,{{\hat{a}}}_i')-\psi _R({{\hat{a}}}_i)\ge \min \{s({{\bar{x}}},{{\bar{x}}}'),\psi _R(a_i)\}\). It follows that \( |E_i|\le 2(K+1)[G]_{{{\mathscr {H}}},i}\,\theta ^{s({{\bar{x}}},{{\bar{x}}}')} \), completing the proof of the claim. \(\square \)
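The formula \( f^Ra_i=(F^{\psi _R(a_i)}y,\ \ell +R-\varPhi _{\psi _R(a_i)}(y)) \) for \( a_i=(y,\ell ) \), used in the proof of part (ii), can be checked by brute force on a finite toy tower. The Python sketch below assumes a bijection \( F \) of \( \{0,\dots ,4\} \) and roof \( \phi (y)=y+1 \) (hypothetical choices, not a Gibbs–Markov map); it iterates the tower map, counts returns to level 0 at times \( 1,\dots ,R \), and compares with the formula, where \( \varPhi _k=\sum _{j=0}^{k-1}\phi \circ F^j \).

```python
def tower_step(y, level, F, phi):
    """One step of the tower map: climb a level, or jump to (F(y), 0) from the top."""
    if level + 1 < phi(y):
        return y, level + 1
    return F(y), 0


def iterate_counting_returns(y, level, R, F, phi):
    """Return f^R(y, level) and psi_R = #{1 <= j <= R : f^j(y, level) lies in level 0}."""
    returns = 0
    for _ in range(R):
        y, level = tower_step(y, level, F, phi)
        if level == 0:
            returns += 1
    return (y, level), returns


def F_power(k, y, F):
    """F^k(y)."""
    for _ in range(k):
        y = F(y)
    return y


def Phi(k, y, F, phi):
    """Phi_k(y) = sum_{j=0}^{k-1} phi(F^j y)."""
    return sum(phi(F_power(j, y, F)) for j in range(k))


def check_level_formula(F, phi, base, max_R):
    """True iff f^R(y, l) = (F^k y, l + R - Phi_k(y)) with k = psi_R(y, l) in all tested cases."""
    for y in base:
        for level in range(phi(y)):
            for R in range(1, max_R + 1):
                (y_R, level_R), k = iterate_counting_returns(y, level, R, F, phi)
                if y_R != F_power(k, y, F) or level_R != level + R - Phi(k, y, F, phi):
                    return False
    return True
```

With \( F(y)=2y+1 \bmod 5 \), starting at \( (0,0) \) with \( R=4 \) there are two returns and \( f^4(0,0)=(3,1)=(F^20,\ 0+4-\varPhi _2(0)) \).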

3.4 Proof of Lemma 3.6

We continue to assume that \( \beta >1 \) and that \( \mu _Y(\phi \ge n)=O(n^{-\beta }).\) We also assume that \( \gcd \{\phi (y):y\in Y\}=1 \) so that \( f:\varDelta \rightarrow \varDelta \) is mixing.

Lemma 3.8

Let \( \theta \in (0,1). \) There exists \( D_3>0 \) such that for any \( V\in L^{\infty }({{\bar{\varDelta }}}^2) \),

for all \( n\ge 1. \)

Remark 3.9

Let \( V(x,y)=v(x)w(y) \) where v is \( d_\theta \)-Lipschitz and \( w\in L^\infty ({{\bar{\varDelta }}}) \). Then we obtain that

so Lemma 3.8 can be seen as a generalisation of the usual upper bound on decay of correlations for observables on the one-sided tower \( {{\bar{\varDelta }}} \).

Remark 3.10

Our proof of Lemma 3.8 is based on ideas from [3, Section 4]. However, we have chosen to present the proof in full because (i) our assumptions are weaker: in particular, we only require \( \beta >1 \) instead of \( \beta >2 \), and V need not be separately \(d_\theta \)-Lipschitz; and (ii) we avoid introducing Markov chains.

Proof of Lemma 3.8

Write \( v(x)=V(x,{{\bar{f}}}^n x) \) so

where \( u_x(z)=V(z,x)\). Let \({{\bar{\varDelta }}}_\ell =\{(y,j)\in {{\bar{\varDelta }}}: j=\ell \} \) denote the \( \ell \)-th level of \( {{\bar{\varDelta }}} \). It follows that we can decompose

where

For all \( \ell \ge 0 \),

Hence,

Let \( x\in {{\bar{\varDelta }}}_\ell \), \( \ell \le n \). Then \( (L^{n} u_x)(x)=(L^{n-\ell }u_x)(x_0) \) where \( x_0\in {{\bar{\varDelta }}}_0 \) is the unique preimage of x under \( {{\bar{f}}}^\ell \). Thus by Lemma 3.4,

Hence,

completing the proof. \(\square \)

Proof of Lemma 3.6

Recall that we wish to bound

Without loss take \( n_p-n_{p-1}\ge 2 \). Let \( R=[(n_p-n_{p-1})/2] \). Write \( \nabla {\widetilde{H}}=I_1+I_2+\nabla {{\bar{H}}}_R \) where

Now by Proposition 3.7(ii) and Lemma 3.2,

Similarly,

Now let \( u_y(z)={\bar{H}}_R(z,y) \) and \( V(x,y)=(L^{n_{p-1}+R}u_y)(x) \). Then

and

where \( {{\hat{u}}}(z)={\bar{H}}_R(z,{{\bar{f}}}^{n_{p}}z) \). Hence

Now by Proposition 3.7(iii), \( \sup _{y\in {{\bar{\varDelta }}}}\left\Vert V(\cdot ,y)\right\Vert _{\theta }\le K_2(\left| G\right| _\infty +\sum _{i=0}^{p-1} [G]_{{{\mathscr {H}}},i}). \) By Lemma 3.8, (3.7) and (3.8) it follows that

Recall that \( R=[(n_{p}-n_{p-1})/2] \). Hence \( n_{p}-n_{p-1}-R\ge R\). By combining (3.5), (3.6) and (3.9) it follows that

as required. \(\square \)
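For the record, here is the elementary arithmetic behind the choice of \( R \), writing \( m=n_p-n_{p-1}\ge 2 \) and \( R=[m/2] \):

$$\begin{aligned} R\le \tfrac{m}{2}\ \Longrightarrow \ m-R\ge \tfrac{m}{2}\ge R,\qquad R\ge \tfrac{m-1}{2}\ge \tfrac{m}{4}\ \Longrightarrow \ R^{-(\beta -1)}\le 4^{\beta -1}m^{-(\beta -1)}. \end{aligned}$$

So error terms of order \( R^{-(\beta -1)} \) are also of order \( (n_p-n_{p-1})^{-(\beta -1)} \), the rate claimed in Lemma 3.6.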

4 An Abstract Weak Dependence Condition

The Functional Correlation Bound can be seen as a weak dependence condition. Let \(k\ge 1\) and consider k disjoint blocks of integers \(\{\ell _i,\ell _i+1,\dots ,u_i\}\), \(0\le i< k\) with \( \ell _i\le u_i<\ell _{i+1}. \) Consider random variables \( X_i \) on \( (M,\mu ) \) of the form
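$$\begin{aligned} X_i(x)=\varPhi _i(T^{\ell _i}x,T^{\ell _i+1}x,\dots ,T^{u_i}x),\qquad x\in M, \end{aligned}$$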
where \( \varPhi _i\in \mathscr {SH}_{u_i-\ell _i+1}(M) \), \( 0\le i< k. \)

When the gaps \(\ell _{i+1}-u_i\) between blocks are large, the random variables \( X_0,\dots ,X_{k-1} \) are weakly dependent. Let \({\widehat{X}}_0,\dots ,{\widehat{X}}_{k-1}\) be independent random variables with \({\widehat{X}}_i{=_dX_i}\).
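The block structure above is simple to express in code. The Python sketch below (hypothetical names; the orbit is assumed precomputed as a list with `orbit[j]` standing for \( T^jx \)) forms \( X_i=\varPhi _i(T^{\ell _i}x,\dots ,T^{u_i}x) \), checks that the blocks satisfy \( \ell _i\le u_i<\ell _{i+1} \), and reports the gaps \( \ell _{i+1}-u_i \), which are the quantities that must be large for the \( X_i \) to be weakly dependent.

```python
def check_blocks(blocks):
    """Require l_i <= u_i < l_{i+1} for consecutive blocks (l_i, u_i)."""
    for i, (lo, hi) in enumerate(blocks):
        if lo > hi:
            raise ValueError(f"block {i} is empty: l={lo} > u={hi}")
        if i + 1 < len(blocks) and hi >= blocks[i + 1][0]:
            raise ValueError(f"blocks {i} and {i + 1} overlap or are out of order")


def block_variables(orbit, blocks, Phis):
    """X_i = Phi_i(orbit[l_i], ..., orbit[u_i]) for each block (l_i, u_i)."""
    check_blocks(blocks)
    return [Phi(*orbit[lo:hi + 1]) for (lo, hi), Phi in zip(blocks, Phis)]


def gaps(blocks):
    """Gaps l_{i+1} - u_i between consecutive blocks."""
    check_blocks(blocks)
    return [blocks[i + 1][0] - blocks[i][1] for i in range(len(blocks) - 1)]
```

Each \( \varPhi _i \) takes exactly \( u_i-\ell _i+1 \) arguments, matching \( \varPhi _i\in \mathscr {SH}_{u_i-\ell _i+1}(M) \).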

Lemma 4.1

Suppose that \(T\) satisfies the Functional Correlation Bound with rate \(n^{-\gamma }\) for some \( \gamma >0 \). Let \(R=\max _i \left| \varPhi _i\right| _\infty \). Then for all Lipschitz \( F:[-R,R]^k\rightarrow {{\mathbb {R}}}\),

where \(C>0\) only depends on \(T:M\rightarrow M\).

Proof

We proceed by induction on k. For \( k=1 \) the inequality is trivial. Assume that the lemma holds for some \( k\ge 1 \); we prove it for \( k+1 \) blocks.

Consider an enriched probability space which contains independent copies of \( \{X_i\} \) and \( \{\smash [t]{\widehat{X}}_i\} \). Write

where

Since \( \smash [t]{\widehat{X}}_{k}=_dX_{k} \) and \( \smash [t]{\widehat{X}}_{k}\) is independent of \( X_0,\dots ,X_{k-1} \) and \( \smash [t]{\widehat{X}}_0,\dots ,\smash [t]{\widehat{X}}_{k-1} \),

Let \( y\in M. \) The function \( F_y=F(\cdot ,\dots ,\cdot ,X_{k}(y)):[-R,R]^{k}\rightarrow {{\mathbb {R}}} \) satisfies \( \mathrm {Lip}(F_y)\le \mathrm {Lip}(F) \). Hence by the inductive hypothesis,

Now

Write

and

where \( G:M^s\rightarrow {{\mathbb {R}}} \), \( s=\sum _{i=0}^k (u_i-\ell _i+1)\). By a straightforward calculation, \( G\in \mathscr {SH}_{s}(M) \) and

Hence by the Functional Correlation Bound,

This completes the proof. \(\square \)

5 Moment Bounds

In this section we prove Theorem 2.4. Throughout this section we fix \( \gamma >1\) and assume that \( T:M\rightarrow M \) satisfies the Functional Correlation Bound with rate \( n^{-\gamma }. \)

In both parts of Theorem 2.4 we use the following moment bounds for independent, mean zero random variables, which are due to von Bahr and Esseen [32] and to Rosenthal [30], respectively:

Lemma 5.1

Fix \(p\ge 1\). There exists a constant \(C>0\) such that for all \(k\ge 1\), for all independent, mean zero random variables \({\widehat{X}}_0,\dots ,{\widehat{X}}_{k-1}\in L^p\):

(i) If \(1\le p\le 2\), then

$$\begin{aligned} {{\mathbb {E}}}_{}\left[ \biggl |\sum _{i=0}^{k-1} {\widehat{X}}_i \biggr |^p\right] \le C \sum _{i=0}^{k-1} {{\mathbb {E}}}_{}\left[ |{\widehat{X}}_i|^p\right] . \end{aligned}$$

(ii) If \(p>2\), then

$$\begin{aligned} {{\mathbb {E}}}_{}\left[ \biggl |\sum _{i=0}^{k-1} {\widehat{X}}_i \biggr |^p\right] \le C\left( \biggl (\sum _{i=0}^{k-1} {{\mathbb {E}}}_{}\left[ {\widehat{X}}_i^2\right] \biggr )^{p/2}+ \sum _{i=0}^{k-1} {{\mathbb {E}}}_{}\left[ |{\widehat{X}}_i|^p\right] \right) . \end{aligned}$$

\(\square \)
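To see the two regimes of Lemma 5.1 in the simplest possible case, the sketch below assumes i.i.d. fair signs \({\widehat{X}}_i=\pm 1\) (an illustrative choice, not one made in the text) and computes \({{\mathbb {E}}}|\sum _{i<k}{\widehat{X}}_i|^p\) exactly from the binomial distribution. Dividing by the right-hand side of (i) or (ii) with \(C=1\) gives ratios that stay bounded as k grows, which is what the lemma asserts; nothing here identifies the optimal constant.

```python
from math import comb

def abs_moment_of_sign_sum(k, p):
    """Exact E|X_0 + ... + X_{k-1}|^p for i.i.d. fair signs X_i = +/-1."""
    # The sum equals 2B - k with B ~ Binomial(k, 1/2).
    return sum(comb(k, b) * abs(2 * b - k) ** p for b in range(k + 1)) / 2 ** k

def lemma_5_1_rhs(k, p):
    """Right-hand side of Lemma 5.1 with C = 1, using E X_i^2 = E|X_i|^p = 1."""
    if 1 <= p <= 2:
        return k                    # (i): sum of the p-th moments
    return k ** (p / 2) + k         # (ii): (sum of variances)^(p/2) + sum of p-th moments

for p in (1.5, 4):
    ratios = [abs_moment_of_sign_sum(k, p) / lemma_5_1_rhs(k, p) for k in (1, 10, 100)]
    print(p, [round(r, 3) for r in ratios])
```

For \(p=4\) one has the closed form \({{\mathbb {E}}}[(\sum _{i<k}{\widehat{X}}_i)^4]=3k^2-2k\), so the ratio in (ii) tends to 3; for \(1\le p\le 2\) the ratio in (i) is at most \(k^{p/2-1}\le 1\) by Jensen's inequality.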

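Let \(v,w\in {{\mathscr {H}}}(M)\) be mean zero. For \( b\ge a\ge 0 \) we denote

$$\begin{aligned} S_v(a,b)=\sum _{a\le i<b}v\circ T^i,\qquad {{\mathbb {S}}}_{v,w}(a,b)=\sum _{a\le i<j<b}v\circ T^i\, w\circ T^j . \end{aligned}$$

Note that \(S_v(n)=S_v(0,n)\) and \( {{\mathbb {S}}}_{v,w}(n)={{\mathbb {S}}}_{v,w}(0,n)\). Some straightforward algebra yields the following proposition.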

Proposition 5.2

Fix \( \ell \ge 1 \) and \( 0=a_0\le a_1\le \dots \le a_\ell .\) Then,

(i) \(\displaystyle S_v(a_\ell )=\sum _{i=0}^{\ell -1}S_v(a_i,a_{i+1})\).

(ii) \( \displaystyle {{\mathbb {S}}}_{v,w}(a_\ell )=\sum _{i=0}^{\ell -1} {{\mathbb {S}}}_{v,w}(a_i,a_{i+1})+\sum _{0\le i<j<\ell }S_v(a_i,a_{i+1})S_w(a_j,a_{j+1}).\)

\(\square \)
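Proposition 5.2 is pure algebra: in (ii), pairs \(i<j\) lying in the same block give the first sum and pairs lying in different blocks give the products of block sums. The sketch below checks both identities mechanically, with integer sequences `x[i]`, `y[i]` standing in for \(v\circ T^i\) and \(w\circ T^i\) evaluated at a fixed point (integers keep the comparison exact). The helper names are illustrative.

```python
import random

def S(x, a, b):
    """S(a, b) = sum of x[i] over a <= i < b."""
    return sum(x[a:b])

def SS(x, y, a, b):
    """Iterated sum over a <= i < j < b of x[i] * y[j], in a single pass."""
    total, running = 0, 0              # running = x[a] + ... + x[j-1]
    for j in range(a, b):
        total += running * y[j]
        running += x[j]
    return total

def SS_brute(x, y, a, b):
    return sum(x[i] * y[j] for i in range(a, b) for j in range(i + 1, b))

def rhs_prop_5_2(x, y, cuts):
    """Right-hand side of Proposition 5.2(ii) for cut points 0 = a_0 <= ... <= a_l."""
    blocks = list(zip(cuts, cuts[1:]))
    within = sum(SS(x, y, a, b) for a, b in blocks)
    across = sum(S(x, *blocks[i]) * S(y, *blocks[j])
                 for i in range(len(blocks)) for j in range(i + 1, len(blocks)))
    return within + across

rng = random.Random(1)
n = 40
x = [rng.randint(-5, 5) for _ in range(n)]   # stand-in for v(T^i y0), i < n
y = [rng.randint(-5, 5) for _ in range(n)]   # stand-in for w(T^i y0), i < n
cuts = [0, 6, 6, 19, 27, 40]                 # a_0 = 0 <= ... <= a_l = n
print(SS(x, y, 0, n) == rhs_prop_5_2(x, y, cuts) == SS_brute(x, y, 0, n))   # True
print(S(x, 0, n) == sum(S(x, a, b) for a, b in zip(cuts, cuts[1:])))       # True
```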

We also need the following elementary proposition:

Proposition 5.3

Fix \(R>0\), \(p\ge 1\) and an integer \(k\ge 1\). Define \(F:[-R,R]^k\rightarrow {{\mathbb {R}}}\) by \(F(y_0,\dots ,y_{k-1})=|y_0+\dots +y_{k-1}|^p.\) Then \( \left| F\right| _\infty \le (kR)^p\) and \(\mathrm {Lip}(F)\le p(kR)^{p-1}.\)

Proof

Note that \( \left| F\right| _\infty \le (kR)^p\). Fix \(y=(y_0,\dots ,y_{k-1}),y'=(y'_0,\dots ,y'_{k-1})\in [-R,R]^k\) and set \(a=|y_0+\dots +y_{k-1}|, b=|y'_0+\dots +y'_{k-1}|\). By the Mean Value Theorem,

so \(\mathrm {Lip}(F)\le p(kR)^{p-1}\). \(\square \)
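The constant \(p(kR)^{p-1}\) in Proposition 5.3 is what the Mean Value Theorem gives when distances on \([-R,R]^k\) are measured in the \(\ell ^1\) norm \(|y-y'|=\sum _i|y_i-y'_i|\); that choice of metric is an assumption of the sketch below. It samples random pairs to check the bound numerically and then evaluates the difference quotient next to the corner \((R,\dots ,R)\), where \(|y_0+\dots +y_{k-1}|\) is maximal and the bound is essentially attained.

```python
import random

def F(y, p):
    """F(y_0, ..., y_{k-1}) = |y_0 + ... + y_{k-1}|^p."""
    return abs(sum(y)) ** p

def l1(y, z):
    return sum(abs(a - b) for a, b in zip(y, z))

def worst_ratio(k, R, p, trials=20000, seed=0):
    """Largest sampled |F(y) - F(z)| / |y - z|_1 over random y, z in [-R, R]^k."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        y = [rng.uniform(-R, R) for _ in range(k)]
        z = [rng.uniform(-R, R) for _ in range(k)]
        d = l1(y, z)
        if d > 0:
            worst = max(worst, abs(F(y, p) - F(z, p)) / d)
    return worst

k, R, p = 4, 1.5, 3.0
bound = p * (k * R) ** (p - 1)          # Lip(F) <= p (kR)^(p-1)
h = 1e-6
corner, near = [R] * k, [R - h] * k     # the sum is maximal at the corner
print(worst_ratio(k, R, p), bound, abs(F(corner, p) - F(near, p)) / l1(corner, near))
```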

Let \(k\ge 1,n\ge 2k \) and define \( a_i=[\tfrac{in}{2k}]\) for \( 0\le i\le 2k\), where \([x]\) denotes the integer part of \(x\). Note that

For \( 0\le i< k \) let \( X_i=S_v(a_{2i},a_{2i+1}). \) Let \({\widehat{X}}_0,\dots ,{\widehat{X}}_{k-1}\) be independent random variables with \({\widehat{X}}_i=_dX_i\).
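The proofs of Lemmas 5.4 and 5.5 use only a few integer facts about the points \(a_i\): consecutive points are at most \(n/k\) apart, each block \(\{a_{2i},\dots ,a_{2i+1}-1\}\) is nonempty, and the gap \(\ell _{r+1}-u_r=a_{2r+2}-(a_{2r+1}-1)\) is at least \(n/(2k)\). Display (5.1) itself is not reproduced in this text; the sketch below simply checks these consequences for a range of \(n\ge 2k\), multiplying through by k or 2k so that everything stays in exact integer arithmetic.

```python
def block_points(n, k):
    """a_i = [i n / (2k)], 0 <= i <= 2k, where [x] is the integer part of x >= 0."""
    return [(i * n) // (2 * k) for i in range(2 * k + 1)]

def even_blocks(n, k):
    """(l_i, u_i) = (a_{2i}, a_{2i+1} - 1): the blocks whose sums are the X_i."""
    a = block_points(n, k)
    return [(a[2 * i], a[2 * i + 1] - 1) for i in range(k)]

def block_facts_hold(n, k):
    a = block_points(n, k)
    short = all(k * (a[i + 1] - a[i]) <= n for i in range(2 * k))   # a_{i+1} - a_i <= n/k
    blocks = even_blocks(n, k)
    nonempty = all(l <= u for l, u in blocks)                        # l_i <= u_i
    gaps = all(2 * k * (blocks[r + 1][0] - blocks[r][1]) >= n        # l_{r+1} - u_r >= n/(2k)
               for r in range(k - 1))
    return short and nonempty and gaps

print(even_blocks(20, 3))   # [(0, 2), (6, 9), (13, 15)]
```

The odd-indexed blocks \(\{a_{2i+1},\dots ,a_{2i+2}-1\}\), which make up the second sum in the proofs below, satisfy the same bounds by the same argument.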

Lemma 5.4

There exists a constant \( C>0 \) such that

for all \(n\ge 2k,k\ge 1\), for any \(v\in {{\mathscr {H}}}(M)\).

Proof

Note that

where \(\ell _i=a_{2i}, u_i=a_{2i+1}-1\) and

Let \( R=\max _i \left| \varPhi _i\right| _\infty . \) Then

where \(F:[-R,R]^k\rightarrow {{\mathbb {R}}}\) is given by \( F(y_0,\dots ,y_{k-1})=|y_0+\dots +y_{k-1}|^{2\gamma }.\) Hence by Lemma 4.1,

where

It remains to bound A. First we bound the expressions \( [\varPhi _i]_{{{\mathscr {H}}},j} \). Fix \( 0\le i< k \) and \(0\le j\le u_i-\ell _i\). For \(x_0,\dots ,x_{u_i-\ell _i}, x'_j\in M\),

so \( [\varPhi _i]_{{{\mathscr {H}}},j}\le [v]_{{{\mathscr {H}}}}.\) Note that by (5.1), \( \left| \varPhi _i\right| _\infty \le (a_{2i+1}-a_{2i})\left| v\right| _\infty \le \tfrac{n}{k}\left| v\right| _\infty . \) Hence by Proposition 5.3,

and \(\mathrm {Lip}(F)\le 2\gamma (n\left| v\right| _\infty )^{2\gamma -1}.\)

Thus

Now by (5.1), \(\ell _{r+1}-u_r=a_{2r+2}-(a_{2r+1}-1)\ge \tfrac{n}{2k}\) for each \(0\le r\le k-2\). Hence

Substituting (5.3), (5.4) and (5.5) into (5.2) gives

as required. \(\square \)

We are now ready to prove the moment bound for \(S_v(n)\) (Theorem 2.4(a)).

Proof of Theorem 2.4(a)

We prove by induction that there exists \( D> 0 \) such that

for all \( m\ge 1\), for any mean zero \(v\in {{\mathscr {H}}}(M)\).

Claim. There exists \( C>0 \) such that for all mean zero \( v\in {{\mathscr {H}}}(M) \), for any \(D>0\), for any \( k\ge 1 \) and any \( n\ge 2k \) such that (5.6) holds for all \(m<n\), we have

Now fix \( k\ge 1 \) such that \( Ck^{1-\gamma }\le \frac{1}{2} \); this is possible since \(\gamma >1\). Fix \( D>0 \) such that \( Ck^{1+\gamma }\le \frac{1}{2}D^{2\gamma } \) and (5.6) holds for all \( m<2k \) and any mean zero \( v\in {{\mathscr {H}}}(M) \). Then the claim shows that for any \( n\ge 2k \) such that (5.6) holds for all \( m<n \), we have \( \left| S_v(n)\right| _{2\gamma }^{2\gamma }\le D^{2\gamma }n^\gamma \left\Vert v\right\Vert _{{{\mathscr {H}}}}^{2\gamma }.\) Hence by induction, (5.6) holds for all \( m\ge 1. \)
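The induction closes because the claim bounds the new moment by a small multiple of \(D^{2\gamma }\) plus a term that D can absorb. The displayed claim is not reproduced in this text, so the sketch below assumes it has the shape \(\left| S_v(n)\right| _{2\gamma }^{2\gamma }\le C(k^{1-\gamma }D^{2\gamma }+k^{1+\gamma })n^\gamma \left\Vert v\right\Vert _{{{\mathscr {H}}}}^{2\gamma }\), which is the shape the choices of k and D above are designed for. Given hypothetical values of C and \(\gamma >1\), it finds the least admissible k, chooses D, and checks that the assumed right-hand side is at most \(D^{2\gamma }\). The same helpers cover the analogous choice in the proof of Theorem 2.4(b), under the assumed shape \(C\bigl ((k^{1-\gamma }+k^{-\gamma /2})D^{\gamma }+k^{\gamma }\bigr )(n\left\Vert v\right\Vert _{{{\mathscr {H}}}}\left\Vert w\right\Vert _{{{\mathscr {H}}}})^\gamma \).

```python
def least_k(C, exponents):
    """Least integer k >= 1 with C * sum(k**e for e in exponents) <= 1/2.

    All exponents must be negative, so the left-hand side decreases to 0 in k.
    """
    if any(e >= 0 for e in exponents):
        raise ValueError("need negative exponents, e.g. 1 - gamma with gamma > 1")

    def ok(k):
        return C * sum(k ** e for e in exponents) <= 0.5

    if ok(1):
        return 1
    hi = 2
    while not ok(hi):          # doubling search for some admissible k
        hi *= 2
    lo = hi // 2               # invariant: ok(lo) is False, ok(hi) is True
    while hi - lo > 1:         # binary search for the least admissible k
        mid = (lo + hi) // 2
        if ok(mid):
            hi = mid
        else:
            lo = mid
    return hi

def choose_D(C, k, a, q):
    """D with C * k**a <= D**q / 2 (a tiny factor guards against rounding)."""
    return (2 * C * k ** a) ** (1 / q) * (1 + 1e-9)

def induction_closes(C, k, D, exponents, a, q):
    """Assumed claim: new moment <= C * (sum(k**e) * D**q + k**a); closes if <= D**q."""
    return C * (sum(k ** e for e in exponents) * D ** q + k ** a) <= D ** q * (1 + 1e-12)

C, gamma = 3.0, 1.5
# Theorem 2.4(a): exponent 1 - gamma; absorb k^(1 + gamma) into D^(2 gamma)
k_a = least_k(C, [1 - gamma]); D_a = choose_D(C, k_a, 1 + gamma, 2 * gamma)
# Theorem 2.4(b): exponents 1 - gamma and -gamma/2; absorb k^gamma into D^gamma
k_b = least_k(C, [1 - gamma, -gamma / 2]); D_b = choose_D(C, k_b, gamma, gamma)
print(k_a, D_a, induction_closes(C, k_a, D_a, [1 - gamma], 1 + gamma, 2 * gamma))
print(k_b, D_b, induction_closes(C, k_b, D_b, [1 - gamma, -gamma / 2], gamma, gamma))
```

The requirement \(\gamma >1\) is exactly what makes every exponent passed to `least_k` negative; for \(\gamma \le 1\) no choice of k would make \(Ck^{1-\gamma }\) small.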

It remains to prove the claim. Note that in the following the constant \( C>0 \) may vary from line to line.

Fix \( n\ge 2k \) and assume that (5.6) holds for all \( m<n \). By Proposition 5.2(i),

where

We first bound \( \left| I_1\right| _{2\gamma }\). Write \(X_i=S_v(a_{2i},a_{2i+1})\) so that \(I_1=\sum _{i=0}^{k-1} X_i\). By Lemma 5.4,

We now bound \( {{\mathbb {E}}}_{}\left[ |\sum _{i=0}^{k-1}\smash [t]{\widehat{X}}_i|^{2\gamma }\right] \) by using Lemma 5.1 and the inductive hypothesis.

Fix \(0\le i<k\). By stationarity, \(X_i=S_v(a_{2i},a_{2i+1})=_dS_v(a_{2i+1}-a_{2i}).\) Thus by the inductive hypothesis (5.6), \( {{\mathbb {E}}}_{\mu }\left[ |X_i|^{2\gamma }\right] \le D^{2\gamma }(a_{2i+1}-a_{2i})^{\gamma }\left\Vert v\right\Vert _{{{\mathscr {H}}}}^{2\gamma }\). Hence by (5.1),

Now by the Functional Correlation Bound, \( |{{\mathbb {E}}}_{\mu }\left[ v\, v\circ T^n\right] |\le Cn^{-\gamma }\left\Vert v\right\Vert _{{{\mathscr {H}}}}^2\). By a standard calculation, it follows that \( {{\mathbb {E}}}_{\mu }\left[ S_v(n)^2\right] \le Cn\left\Vert v\right\Vert _{{{\mathscr {H}}}}^2\). Thus

By Lemma 5.1(ii), it follows that

Hence by (5.7), overall

Exactly the same argument applies to \(|I_2|_{2\gamma }^{2\gamma }. \) The conclusion of the claim follows by noting that

\(\square \)
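The "standard calculation" behind \( {{\mathbb {E}}}_{\mu }\left[ S_v(n)^2\right] \le Cn\left\Vert v\right\Vert _{{{\mathscr {H}}}}^2 \) in the proof above can be spelled out as follows. It uses the \(T\)-invariance of \(\mu \), so that \({{\mathbb {E}}}_{\mu }\left[ v\circ T^i\, v\circ T^j\right] ={{\mathbb {E}}}_{\mu }\left[ v\, v\circ T^{j-i}\right] \) for \(i\le j\), together with the correlation bound \( |{{\mathbb {E}}}_{\mu }\left[ v\, v\circ T^m\right] |\le C_0m^{-\gamma }\left\Vert v\right\Vert _{{{\mathscr {H}}}}^2 \) quoted there (its constant renamed \(C_0\)). We also assume, as for the usual choice of Hölder norm, that \( \left| v\right| _\infty \le \left\Vert v\right\Vert _{{{\mathscr {H}}}} \). Then

$$\begin{aligned} {{\mathbb {E}}}_{\mu }\left[ S_v(n)^2\right]&=\sum _{i,j=0}^{n-1}{{\mathbb {E}}}_{\mu }\left[ v\circ T^i\, v\circ T^j\right] =n\,{{\mathbb {E}}}_{\mu }\left[ v^2\right] +2\sum _{m=1}^{n-1}(n-m)\,{{\mathbb {E}}}_{\mu }\left[ v\, v\circ T^m\right] \\&\le n\left| v\right| _\infty ^2+2nC_0\sum _{m=1}^{\infty }m^{-\gamma }\left\Vert v\right\Vert _{{{\mathscr {H}}}}^2 \le \Bigl (1+2C_0\sum _{m=1}^{\infty }m^{-\gamma }\Bigr )\,n\left\Vert v\right\Vert _{{{\mathscr {H}}}}^2 , \end{aligned}$$

and the series converges because \(\gamma >1\).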

We now prove Theorem 2.4(b). Our proof follows the same lines as that of part (a).

Let \(n, k\ge 1. \) Recall that \( a_i=\left[ \tfrac{in}{2k}\right] \). For \( 0\le i< k \) define mean zero random variables \( X_i \) on \( (M,\mu ) \) by

Let \({\widehat{X}}_0,\dots ,{\widehat{X}}_{k-1}\) be independent random variables with \({\widehat{X}}_i=_dX_i\).

The following lemma plays the same role that Lemma 5.4 played in the proof of Theorem 2.4(a).

Lemma 5.5

There exists a constant \( C>0 \) such that for any \(v,w\in {{\mathscr {H}}}(M)\),

for all \(n\ge 2k,k\ge 1\).

Proof

Note that

where \( \ell _i=a_{2i}, u_i=a_{2i+1}-1 \) and

Let \( R=\max _i \left| \varPhi _i\right| _\infty \). Observe that

where \(F:[-R,R]^k\rightarrow {{\mathbb {R}}}\) is given by \( F(y_0,\dots ,y_{k-1})=|y_0+\dots +y_{k-1}|^{\gamma }.\) Hence by Lemma 4.1,

where

It remains to bound A. The first step is to bound the expressions \( [\varPhi _i]_{{{\mathscr {H}}},j}\). Fix \( 0\le i<k, 0\le j\le u_i-\ell _i.\) Let \( x_0,\dots ,x_{u_i-\ell _i},x_j'\in M.\) Note that

where

Now,

and similarly \( |J_2|\le \left| v\right| _\infty \sum _{0\le q<j}|w(x_j)-w(x'_j)|\), so

Now recall from (5.1) that \(u_i-\ell _i+1=a_{2i+1}-a_{2i}\le n/k \) so

Next note that

so by Proposition 5.3, \(\left| F\right| _\infty \le \bigl (\tfrac{2n^2}{k}\left| v\right| _\infty \left| w\right| _\infty \bigr )^\gamma \) and \( \mathrm {Lip}(F)\le \gamma \big (\tfrac{2n^2}{k} \left| v\right| _\infty \left| w\right| _\infty \big )^{\gamma -1}. \) Combining these bounds with (5.5), (5.8) and (5.9) yields that

as required. \(\square \)

We are now ready to prove Theorem 2.4(b).

Proof of Theorem 2.4(b)

We prove by induction that there exists \( D> 0 \) such that

for all \( m\ge 1\), for all mean zero \(v,w \in {{\mathscr {H}}}(M)\).

Claim. There exists \( C>0 \) such that for all mean zero \( v,w\in {{\mathscr {H}}}(M) \), for any \( D>0\), any \(k\ge 1\) and any \( n\ge 2k \) such that (5.10) holds for all \(m<n\), we have

Now fix \( k\ge 1 \) such that \( C(k^{1-\gamma }+k^{-\gamma /2})\le \frac{1}{2} \); this is possible since \(\gamma >1\). Fix \( D>0 \) such that \( Ck^\gamma \le \frac{1}{2}D^{\gamma } \) and (5.10) holds for all \( m<2k \) and any mean zero \( v,w\in {{\mathscr {H}}}(M)\). Then the claim shows that if \( n\ge 2k \) and (5.10) holds for all \( m<n \), then \( \left| {{\mathbb {S}}}_{v,w}(n)\right| _{\gamma }^\gamma \le D^\gamma (n\left\Vert v\right\Vert _{{{\mathscr {H}}}}\left\Vert w\right\Vert _{{{\mathscr {H}}}})^\gamma \). Hence by induction, (5.10) holds for all \( m\ge 1. \)

It remains to prove the claim. Note that in the following the constant \( C>0 \) may vary from line to line.

Fix \( n\ge 2k \) and assume that (5.10) holds for all \(m<n\). Recall that \( a_i= \big [\tfrac{in}{2k}\big ]\) for \( 0\le i\le 2k. \) By Proposition 5.2(ii),

where

Recall from (5.1) that \( a_{i+1}-a_i\le n/k \). Hence by Theorem 2.4(a),

Now by the Functional Correlation Bound, \( |{{\mathbb {E}}}_{\mu }\left[ v\, w\circ T^n\right] |\le Cn^{-\gamma }\left\Vert v\right\Vert _{{{\mathscr {H}}}}\left\Vert w\right\Vert _{{{\mathscr {H}}}}\). By a standard calculation, it follows that \( |{{\mathbb {E}}}_{\mu }\left[ {{\mathbb {S}}}_{v,w}(n)\right] |\le Cn\left\Vert v\right\Vert _{{{\mathscr {H}}}}\left\Vert w\right\Vert _{{{\mathscr {H}}}}\). Thus

We now bound \( \left| I_3\right| _\gamma ^\gamma . \) Note that \( I_3=\sum _{i=0}^{k-1}X_i, \) where \( X_i={{\mathbb {S}}}_{v,w}(a_{2i},a_{2i+1})-{{\mathbb {E}}}_{\mu }\left[ {{\mathbb {S}}}_{v,w}(a_{2i},a_{2i+1})\right] .\) Hence by Lemma 5.5,

Fix \( 0\le i<k. \) By stationarity, \( X_i=_d{{\mathbb {S}}}_{v,w}(a_{2i+1}-a_{2i})-{{\mathbb {E}}}_{\mu }\left[ {{\mathbb {S}}}_{v,w}(a_{2i+1}-a_{2i})\right] . \) Now by the inductive hypothesis (5.10), \( \left| {{\mathbb {S}}}_{v,w}(a_{2i+1}-a_{2i})\right| _\gamma \le D(a_{2i+1}-a_{2i})\left\Vert v\right\Vert _{{{\mathscr {H}}}}\left\Vert w\right\Vert _{{{\mathscr {H}}}}, \) so

It follows that

If \( 1< \gamma \le 2 \), then by Lemma 5.1(i),

Suppose on the other hand that \( \gamma >2. \) Note that

so

Hence by Lemma 5.1(ii),

Hence for any \( \gamma >1 \),

By (5.11), it follows that

Exactly the same argument applies to \( \left| I_4\right| ^\gamma _\gamma .\) The conclusion of the claim follows by noting that

as required. \(\square \)
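The bound \( |{{\mathbb {E}}}_{\mu }\left[ {{\mathbb {S}}}_{v,w}(n)\right] |\le Cn\left\Vert v\right\Vert _{{{\mathscr {H}}}}\left\Vert w\right\Vert _{{{\mathscr {H}}}}\), obtained in the proof above "by a standard calculation", can be derived from \({{\mathbb {S}}}_{v,w}(n)=\sum _{0\le i<j<n}v\circ T^i\, w\circ T^j\), the \(T\)-invariance of \(\mu \), and the correlation bound \( |{{\mathbb {E}}}_{\mu }\left[ v\, w\circ T^m\right] |\le C_0m^{-\gamma }\left\Vert v\right\Vert _{{{\mathscr {H}}}}\left\Vert w\right\Vert _{{{\mathscr {H}}}} \) quoted there (its constant renamed \(C_0\)). Grouping the pairs \((i,j)\) according to \(m=j-i\), of which there are \(n-m\),

$$\begin{aligned} \bigl |{{\mathbb {E}}}_{\mu }\left[ {{\mathbb {S}}}_{v,w}(n)\right] \bigr |&=\Bigl |\sum _{0\le i<j<n}{{\mathbb {E}}}_{\mu }\left[ v\, w\circ T^{j-i}\right] \Bigr | =\Bigl |\sum _{m=1}^{n-1}(n-m)\,{{\mathbb {E}}}_{\mu }\left[ v\, w\circ T^{m}\right] \Bigr | \\&\le nC_0\sum _{m=1}^{\infty }m^{-\gamma }\left\Vert v\right\Vert _{{{\mathscr {H}}}}\left\Vert w\right\Vert _{{{\mathscr {H}}}}, \end{aligned}$$

where again the series is finite because \(\gamma >1\).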

References

Bruin, H., Melbourne, I., Terhesiu, D.: Sharp polynomial bounds on decay of correlations for multidimensional nonuniformly hyperbolic systems and billiards. Ann. H. Lebesgue 4, 407–451 (2021)

Bunimovič, L.A.: The ergodic properties of billiards that are nearly scattering. Dokl. Akad. Nauk SSSR 211, 1024–1026 (1973)

Chazottes, J.-R., Gouëzel, S.: Optimal concentration inequalities for dynamical systems. Commun. Math. Phys. 316(3), 843–889 (2012)

Chernov, N.: Decay of correlations and dispersing billiards. J. Stat. Phys. 94(3–4), 513–556 (1999)

Chernov, N., Zhang, H.-K.: Billiards with polynomial mixing rates. Nonlinearity 18(4), 1527–1553 (2005)

Chernov, N., Zhang, H.-K.: A family of chaotic billiards with variable mixing rates. Stoch. Dyn. 5(4), 535–553 (2005)

Chevyrev, I., Friz, P.K., Korepanov, A., Melbourne, I., Zhang, H.: Multiscale systems, homogenization, and rough paths. In: Probability and Analysis in Interacting Physical Systems, Springer Proceedings in Mathematics & Statistics, vol. 283, pp. 17–48. Springer, Cham (2019)

Chevyrev, I., Friz, P.K., Korepanov, A., Melbourne, I., Zhang, H.: Deterministic homogenization under optimal moment assumptions for fast-slow systems. Part 2. Ann. Inst. H. Poincaré Probab. Statist., to appear

De Simoi, J., Liverani, C.: Statistical properties of mostly contracting fast-slow partially hyperbolic systems. Invent. Math. 206(1), 147–227 (2016)

De Simoi, J., Liverani, C.: Limit theorems for fast-slow partially hyperbolic systems. Invent. Math. 213(3), 811–1016 (2018)

Demers, M., Melbourne, I., Nicol, M.: Martingale approximations and anisotropic Banach spaces with an application to the time-one map of a Lorentz gas. Nonlinearity 33(8), 4095–4113 (2020)

Dolgopyat, D.: Limit theorems for partially hyperbolic systems. Trans. Am. Math. Soc. 356(4), 1637–1689 (2004)

Gottwald, G.A., Melbourne, I.: Homogenization for deterministic maps and multiplicative noise. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 469(2156), 20130201 (2013)

Gouëzel, S.: Central limit theorem and stable laws for intermittent maps. Probab. Theory Relat. Fields 128(1), 82–122 (2004)

Gouëzel, S.: Sharp polynomial estimates for the decay of correlations. Israel J. Math. 139, 29–65 (2004)

Gouëzel, S.: Vitesse de décorrélation et théorèmes limites pour les applications non uniformément dilatantes. PhD thesis, École Normale Supérieure (2004)

Gouëzel, S.: Berry–Esseen theorem and local limit theorem for non uniformly expanding maps. Ann. Inst. H. Poincaré Probab. Statist. 41(6), 997–1024 (2005)

Kelly, D., Melbourne, I.: Smooth approximation of stochastic differential equations. Ann. Probab. 44(1), 479–520 (2016)

Kelly, D., Melbourne, I.: Deterministic homogenization for fast–slow systems with chaotic noise. J. Funct. Anal. 272(10), 4063–4102 (2017)

Korepanov, A., Kosloff, Z., Melbourne, I.: Explicit coupling argument for non-uniformly hyperbolic transformations. Proc. Roy. Soc. Edinb. Sect. A 149(1), 101–130 (2019)

Korepanov, A., Kosloff, Z., Melbourne, I.: Deterministic homogenization under optimal moment assumptions for fast–slow systems. Part 1. Ann. Inst. H. Poincaré Probab. Statist., to appear

Leppänen, J.: Functional correlation decay and multivariate normal approximation for non-uniformly expanding maps. Nonlinearity 30(11), 4239–4259 (2017)

Leppänen, J., Stenlund, M.: A note on the finite-dimensional distributions of dispersing billiard processes. J. Stat. Phys. 168(1), 128–145 (2017)

Leppänen, J., Stenlund, M.: Sunklodas’ approach to normal approximation for time-dependent dynamical systems. J. Stat. Phys. 181(5), 1523–1564 (2020)

Liverani, C., Saussol, B., Vaienti, S.: A probabilistic approach to intermittency. Ergod. Theory Dyn. Syst. 19(3), 671–685 (1999)

Markarian, R.: Billiards with polynomial decay of correlations. Ergod. Theory Dyn. Syst. 24(1), 177–197 (2004)

Melbourne, I., Stuart, A.M.: A note on diffusion limits of chaotic skew-product flows. Nonlinearity 24(4), 1361–1367 (2011)

Melbourne, I., Terhesiu, D.: Decay of correlations for non-uniformly expanding systems with general return times. Ergod. Theory Dyn. Syst. 34(3), 893–918 (2014)

Melbourne, I., Varandas, P.: A note on statistical properties for nonuniformly hyperbolic systems with slow contraction and expansion. Stoch. Dyn. 16(3), 1660012 (2016)

Rosenthal, H.P.: On the subspaces of \(L^{p}\) (\(p>2\)) spanned by sequences of independent random variables. Israel J. Math. 8, 273–303 (1970)

Sarig, O.: Subexponential decay of correlations. Invent. Math. 150(3), 629–653 (2002)

von Bahr, B., Esseen, C.-G.: Inequalities for the \(r\)th absolute moment of a sum of random variables, \(1\le r\le 2\). Ann. Math. Stat. 36, 299–303 (1965)

Young, L.-S.: Statistical properties of dynamical systems with some hyperbolicity. Ann. Math. (2) 147(3), 585–650 (1998)

Young, L.-S.: Recurrence times and rates of mixing. Israel J. Math. 110, 153–188 (1999)

Acknowledgements

The author would like to thank his supervisor Ian Melbourne for suggesting the problem considered in this paper, providing constant feedback and participating in many helpful discussions. He is also grateful to the anonymous referee for their comments, which improved the presentation of this paper.

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funding

The author is funded by a departmental award.


Additional information

Communicated by C. Liverani.


About this article

Cite this article

Fleming-Vázquez, N. Functional Correlation Bounds and Optimal Iterated Moment Bounds for Slowly-Mixing Nonuniformly Hyperbolic Maps. Commun. Math. Phys. 391, 173–198 (2022). https://doi.org/10.1007/s00220-022-04325-w
