Abstract

We consider spin systems in the d-dimensional lattice \({\mathbb Z} ^d\) satisfying the so-called strong spatial mixing condition. We show that the relative entropy functional of the corresponding Gibbs measure satisfies a family of inequalities which control the entropy on a given region \(V\subset {\mathbb Z} ^d\) in terms of a weighted sum of the entropies on blocks \(A\subset V\) when each A is given an arbitrary nonnegative weight \(\alpha _A\). These inequalities generalize the well known logarithmic Sobolev inequality for the Glauber dynamics. Moreover, they provide a natural extension of the classical Shearer inequality satisfied by the Shannon entropy. Finally, they imply a family of modified logarithmic Sobolev inequalities which give quantitative control on the convergence to equilibrium of arbitrary weighted block dynamics of heat bath type.

1 Introduction

Functional inequalities such as the Poincaré and the logarithmic Sobolev inequalities have long played a key role in the analysis of convergence to equilibrium for spin systems. For the Glauber dynamics associated with lattice Gibbs measures in the high temperature regime, rather conclusive results were obtained around thirty years ago in a series of influential papers [18, 21, 26, 32, 33, 35]. Broadly speaking, the main results of these works can be summarized as follows: for finite or compact spin space, if the spin system satisfies a spatial mixing condition, then the relative entropy functional of the Gibbs measure \(\mu _V\) describing the system on any region \(V\subset {\mathbb Z} ^d\) satisfies an approximate tensorization of the form
\[ \mathrm{Ent} _V f\le C\sum _{x\in V}\mu _V\left[ \mathrm{Ent} _x f\right] , \tag{1.1} \]
where \(C\ge 1\) is a constant, f is a nonnegative function, and \(\mathrm{Ent} _V f\) is the relative entropy
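\[ \mathrm{Ent} _V f=\mu _V\left[ f\log f\right] -\mu _V\left[ f\right] \log \mu _V\left[ f\right] , \]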
with \(\mathrm{Ent} _x f\) denoting the local entropy at x, a function of all spins except for the spin at vertex x. The key feature of this inequality is its dimensionless character, namely the fact that the constant \(C\ge 1\) is independent of both the region V and the boundary condition fixed in \({\mathbb Z} ^d{\setminus } V\), which we have omitted from our notation for simplicity. The value \(C=1\) is attained in the trivial case of independent spins. The papers mentioned above formulate their results in terms of logarithmic Sobolev inequalities, but we find it natural to restate them in terms of the tensorization inequality (1.1), which seems to have a more fundamental character in our setting. In any case, if the spin space is finite, the statement (1.1) is equivalent to the standard logarithmic Sobolev inequality for the single site heat bath Markov chain, see e.g. [9, 29].

The proof of these results was obtained through refined recursive techniques, which exploit the spatial mixing assumption to establish some form of factorization of the entropy functional. We refer to the surveys [17, 24] for systematic expositions of these techniques. A particularly simple and effective approach was later developed independently in [10] and [12], where it was shown that the spatial mixing condition implies a factorization estimate of the form
\[ \mathrm{Ent} _V f\le (1+\varepsilon )\,\mu _V\left[ \mathrm{Ent} _A f+\mathrm{Ent} _B f\right] , \tag{1.2} \]
where A, B are e.g. two overlapping rectangular regions in \({\mathbb Z} ^d\), with \(V=A\cup B\), and \(\varepsilon >0\) is a constant that can be made suitably small provided the overlap between A and B is sufficiently thick. Here \(\mathrm{Ent} _Af\) denotes the relative entropy of f with respect to the Gibbs measure \(\mu _A\) and it is thus a function of all spins outside of the region A. If the inequality (1.2) is available, then a relatively simple recursion leads to the desired conclusion (1.1).

The spatial mixing assumed for all these results is a condition of the Dobrushin-Shlosman type [14], that can be formulated in terms of exponential decay of correlations. In the literature one finds various degrees of generality of the mixing condition, often loosely referred to as strong spatial mixing. We refer to the original papers for the precise notions of spatial mixing involved; see also Sect. 2.3 below for more on this matter. We point out that the discussion here is mostly concerned with the case of finite or compact spin space, in which case one can actually show that (1.1) is equivalent to a strong mixing condition [26, 31]. In the case of unbounded spins the techniques and the results are somewhat different; we refer the interested reader to [8, 20, 28, 30, 34, 36].

While the inequality (1.1) is well suited for the analysis of the single site heat bath Markov chain, it is not very helpful in the analysis of more general block dynamics, that is, Markov chains where an entire region \(A\subset V\) can be resampled at once by a single heat bath move. With that motivation in mind, in this work we address the validity of a version of the inequality (1.1) in which single sites \(x\in V\) are replaced by arbitrary blocks \(A\subset V\). More precisely, we look for a constant C such that for all nonnegative functions f,
\[ \gamma (\alpha )\,\mathrm{Ent} _V f\le C\sum _{A\subset V}\alpha _A\,\mu _V\left[ \mathrm{Ent} _A f\right] , \tag{1.3} \]
where \(\alpha =\{\alpha _A,\,A\subset V\}\) is an arbitrary collection of nonnegative weights, and we define
\[ \gamma (\alpha )=\min _{x\in V}\sum _{A:\,A\ni x}\alpha _A . \]
If (1.3) holds with the same constant C for all finite regions \(V\subset {\mathbb Z} ^d\), for all given boundary conditions on \({\mathbb Z} ^d{\setminus } V\), and for all choices of weights \(\alpha \), we say that the spin system satisfies the block factorization of entropy (with constant C).

This definition is inspired by the fact that in the case of infinite temperature, that is if \(\mu _V\) is a product measure, then (1.3) holds with \(C=1\). Indeed, in this special case it is a consequence of the well known Shearer inequality satisfied by the Shannon entropy, see [9]. These inequalities have far reaching applications in several different settings, see e.g. [2, 11, 22], and it is thus very natural to investigate their validity beyond the product case.

However, as far as we know there are no significant results in the literature concerning the validity of (1.3) when \(\mu _V\) is not a product measure. Notice that the tensorization statement (1.1) corresponds to the special case where \(\alpha _A=1\) or 0 according to whether A is a single site or not. In this case, the right hand side of (1.3) has a simple additive structure, a feature that is crucially used in all existing proofs of (1.1).

Important progress was obtained recently in [6] concerning the linearized version of (1.3). Namely, if we replace the entropy functional \(\mathrm{Ent} _V f\) by the variance functional
\[ {{\,\mathrm{Var}\,}}_V f=\mu _V\left[ f^2\right] -\mu _V\left[ f\right] ^2, \]
then (1.3) becomes the Poincaré inequality
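\[ \gamma (\alpha )\,{{\,\mathrm{Var}\,}}_V f\le C\sum _{A\subset V}\alpha _A\,\mu _V\left[ {{\,\mathrm{Var}\,}}_A f\right] , \tag{1.4} \]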
which we may refer to as the block factorization of variance. Notice that the inequality (1.4) provides the lower bound \(\gamma (\alpha )/C\) on the spectral gap of the \(\alpha \)-weighted block dynamics, that is the Markov chain with Dirichlet form defined by
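\[ {\mathcal {E}}_{V,\alpha }(f,g)=\sum _{A\subset V}\alpha _A\,\mu _V\left[ {{\,\mathrm{Cov}\,}}_A(f,g)\right] , \tag{1.5} \]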
where \({{\,\mathrm{Cov}\,}}_A(f,g)= \mu _A\left[ fg\right] - \mu _A\left[ f\right] \mu _A\left[ g\right] \) denotes the covariance of two functions f, g with respect to \(\mu _A\). This is the continuous time Markov chain where each block A independently undergoes full heat bath resamplings at the arrival times of a Poisson process with rate \(\alpha _A\ge 0\), see e.g. [24].
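To make the Dirichlet form (1.5) concrete, here is a minimal Python sketch on a toy state space. It assumes a generic strictly positive measure `mu` on \(\{0,1\}^3\) in place of \(\mu _V\) (so that every conditional law \(\mu _A\) is well defined), arbitrary illustrative weights `alpha`, and hypothetical helper names; it is not code from the paper. It checks numerically that \(-\mu [f\,Lg]\), with \(L=\sum _A\alpha _A(P_A-I)\) and \(P_A\) the heat bath resampling of the block A, agrees with \(\sum _A\alpha _A\mu [{{\,\mathrm{Cov}\,}}_A(f,g)]\).

```python
import itertools
import random

N = 3
CONFIGS = list(itertools.product((0, 1), repeat=N))


def agree_outside(s, t, A):
    """True if configurations s and t coincide on every site outside the block A."""
    return all(s[i] == t[i] for i in range(N) if i not in A)


def cond_exp(mu, f, A, s):
    """(mu_A f)(s): average of f over the block A, given the spins of s outside A."""
    same = [t for t in CONFIGS if agree_outside(s, t, A)]
    z = sum(mu[t] for t in same)
    return sum(mu[t] * f[t] for t in same) / z


def generator_form(mu, alpha, f, g):
    """-mu[f L g] for L = sum_A alpha_A (P_A - I), where P_A g = mu_A g (heat bath on A)."""
    total = 0.0
    for A, a in alpha.items():
        for s in CONFIGS:
            Lg = cond_exp(mu, g, A, s) - g[s]          # (P_A - I) g evaluated at s
            total -= a * mu[s] * f[s] * Lg
    return total


def covariance_form(mu, alpha, f, g):
    """sum_A alpha_A mu[Cov_A(f, g)], the right-hand side of (1.5)."""
    fg = {s: f[s] * g[s] for s in CONFIGS}
    total = 0.0
    for A, a in alpha.items():
        for s in CONFIGS:
            cov = cond_exp(mu, fg, A, s) - cond_exp(mu, f, A, s) * cond_exp(mu, g, A, s)
            total += a * mu[s] * cov
    return total


random.seed(0)
w = {s: random.uniform(0.5, 2.0) for s in CONFIGS}   # generic, non-product weights
Z = sum(w.values())
mu = {s: w[s] / Z for s in CONFIGS}
alpha = {frozenset({0, 2}): 1.0, frozenset({1}): 0.7, frozenset({0, 1, 2}): 0.2}
f = {s: random.random() for s in CONFIGS}
g = {s: random.random() for s in CONFIGS}

e_gen = generator_form(mu, alpha, f, g)
e_cov = covariance_form(mu, alpha, f, g)
print(e_gen, e_cov)   # the two numbers agree up to rounding
```

The agreement reflects the identity \(\mu [f\,\mu _A g]=\mu [\mu _A f\,\mu _A g]\), which holds because \(\mu _A g\) does not depend on the spins in A.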

One of the main results of [6] shows that, if the system satisfies the strong spatial mixing assumption, then it must satisfy the special case of (1.4) where the weights \(\alpha \) are all either zero or one, but otherwise arbitrary, and where \(\gamma (\alpha )\) is replaced by the indicator \(\mathbf {1}_{\gamma (\alpha )>0}\), see [6, Theorem 1.2]. The proofs in [6] however rely crucially on coupling arguments as in [15], which do not seem to apply directly to prove the stronger statement (1.3).

In this paper we establish the block factorization of entropy, namely the full statement (1.3), for nearest neighbor spin systems satisfying the strong spatial mixing assumption. For instance, it will follow that the block factorization of entropy holds throughout the whole one phase region for the ferromagnetic Ising/Potts models in two dimensions, provided V in (1.3) is a sufficiently regular set in the sense of [25], see Sect. 2.3.

As a corollary, we obtain estimates on the speed of convergence to equilibrium of any block dynamics. Indeed, Jensen’s inequality shows that, for any \(A\subset V\subset {\mathbb Z} ^d\),
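\[ \mu _V\left[ \mathrm{Ent} _A f\right] \le \mu _V\left[ {{\,\mathrm{Cov}\,}}_A(f,\log f)\right] , \]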
and therefore (1.3) implies the following modified logarithmic Sobolev inequality for any \(\alpha \)-weighted block dynamics:
\[ \gamma (\alpha )\,\mathrm{Ent} _V f\le C\,{\mathcal {E}}_{V,\alpha }(f,\log f). \tag{1.6} \]
In particular, the block factorization of entropy implies the exponential decay in time of the relative entropy, with rate at least \(\gamma (\alpha )/C\), for any \(\alpha \)-weighted block dynamics. Moreover, if the spin state is finite the bound (1.6) implies the upper bound

where |V| is the cardinality of the set V, D is some new absolute constant and \(T_\mathrm{mix}(V,\alpha )\) denotes the total variation mixing time of the \(\alpha \)-weighted block dynamics. We remark that (1.7) provides tight bounds on the mixing time for a large class of non-local Markov chains, for which previously known estimates were only polynomial in the size of V. A particularly interesting example, often used in Markov chain Monte Carlo practice, is the even/odd chain, which corresponds to \(\alpha _E=\alpha _O=1\) and \(\alpha _A=0\) for all \(A\ne E,O\), where E (respectively O) denotes the set of all even (resp. odd) sites in V. In this case (1.7) gives a tight \(O(\log |V|)\) bound, whereas the best previously known estimate was of order |V|, see [6]. Let us mention that the methods and the results introduced here can also be adapted to study the Swendsen-Wang dynamics for the ferromagnetic Potts model [5].
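The Jensen step behind (1.6) can be checked pointwise: \({{\,\mathrm{Cov}\,}}_A(f,\log f)-\mathrm{Ent} _A f=\mu _A f\,(\log \mu _A f-\mu _A[\log f])\), which is nonnegative by concavity of the logarithm. The Python sketch below verifies this on every block of a toy three-site system; the measure `mu`, the function `f`, and the helper names are illustrative assumptions, not taken from the paper.

```python
import itertools
import math
import random

N = 3
CONFIGS = list(itertools.product((0, 1), repeat=N))
BLOCKS = [frozenset(c) for r in range(1, N + 1) for c in itertools.combinations(range(N), r)]


def cond_exp(mu, f, A, s):
    """(mu_A f)(s): conditional expectation of f given the spins of s outside A."""
    same = [t for t in CONFIGS if all(t[i] == s[i] for i in range(N) if i not in A)]
    z = sum(mu[t] for t in same)
    return sum(mu[t] * f[t] for t in same) / z


def jensen_gap(mu, f, A, s):
    """Cov_A(f, log f) - Ent_A f at s, which Jensen's inequality makes nonnegative."""
    logf = {t: math.log(f[t]) for t in CONFIGS}
    flogf = {t: f[t] * logf[t] for t in CONFIGS}
    m = cond_exp(mu, f, A, s)
    ent = cond_exp(mu, flogf, A, s) - m * math.log(m)
    cov = cond_exp(mu, flogf, A, s) - m * cond_exp(mu, logf, A, s)
    return cov - ent   # equals m * (log m - mu_A[log f]) >= 0


random.seed(1)
w = {s: random.uniform(0.5, 2.0) for s in CONFIGS}
Z = sum(w.values())
mu = {s: w[s] / Z for s in CONFIGS}
f = {s: random.uniform(0.1, 5.0) for s in CONFIGS}   # strictly positive, non-constant
gaps = [jensen_gap(mu, f, A, s) for A in BLOCKS for s in CONFIGS]
print(min(gaps), max(gaps))
```

Summing these pointwise gaps against \(\mu _V\) with weights \(\alpha _A\) is what turns (1.3) into the modified logarithmic Sobolev inequality (1.6).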

If the spin state is finite it is also possible to use (1.3) to derive a standard logarithmic Sobolev inequality for the \(\alpha \)-weighted block dynamics in the form

with the constant

where D is an absolute constant and \(\mu _{A,*}\) is the minimum value attained by the probability measure \(\mu _A\), minimized over the choice of the implicit boundary condition in \({\mathbb Z} ^d{\setminus } A\). We note that (1.8) contains as a special case the well known logarithmic Sobolev inequality for the single site heat bath Markov chain, which corresponds to the choice of weights \(\alpha _A=1\) or 0 according to whether A is a single site or not.

We conclude this introduction with a brief discussion of the main ideas involved in the proof of our main result (1.3). The proof starts with an observation already put forward in [6] for the case of the spectral gap, which allows us to reduce the general factorization problem to the problem of factorization with two special blocks only: the even sites and the odd sites. The latter is then analyzed via a recursion similar to that employed in Cesi’s proof of (1.1), see [10]. As mentioned above, the main obstacle in implementing the recursion here is the lack of an additive structure, which generates potentially large error terms when trying to restore a block from smaller components. To overcome this difficulty we develop a two-stage recursion, which combines a version of the two-block factorization estimate (1.2) together with a decomposition of the entropy which allows us to smear out the errors coming from the restoration of large blocks, see Theorem 4.7. A further crucial ingredient in the proof is a new tensorization lemma which we believe to be of independent interest, see Lemma 3.2 below.

The plan of the paper is as follows. In Sect. 2 we describe the setup and the main results. In Sect. 3 we develop some key tools needed for the proof. In Sect. 4 we prove the block factorization estimate.

2 Setup and Main Results

2.1 The spin system

The underlying graph is the d-dimensional integer lattice \({\mathbb Z} ^d\), with vertices \(x=(x_1,\dots ,x_d)\), and edges \({\mathcal {E}}\) defined as unordered pairs xy of vertices x and y such that \(\sum _{i=1}^d|x_i-y_i| =1\). We call \(d(\cdot ,\cdot )\) the resulting graph distance. For any set of vertices \(\Lambda \subset {\mathbb Z} ^d\), the exterior boundary is \(\partial \Lambda =\{y\in \Lambda ^c:\, d(y,\Lambda )=1\}\), where \(\Lambda ^c={\mathbb Z} ^d{\setminus } \Lambda \). We write \({\mathbb F} \) for the set of finite subsets \(\Lambda \subset {\mathbb Z} ^d\).

We take the single spin state to be an arbitrary probability space \((S,\mathscr {S},\nu )\). Given any region \(\Lambda \subset {\mathbb Z} ^d\), the associated configuration space is the product space \((\Omega _\Lambda ,{\mathcal {F}}_\Lambda ) = (S^\Lambda ,\mathscr {S}^\Lambda )\), whose elements are denoted by \(\sigma _\Lambda =\{\sigma _x,\,x\in \Lambda \}\) with \(\sigma _x\in S\) for all x. The a priori measure on \(\Omega _\Lambda \) is the product measure \(\nu _\Lambda =\otimes _{x\in \Lambda }\nu \).

Given a bounded measurable symmetric function \(U:S\times S\mapsto {\mathbb R} \), the pair potential, and a bounded measurable function \(W:S\mapsto {\mathbb R} \), the single site potential, for any \(\Lambda \in {\mathbb F} \), and \(\tau \in \Omega _{\Lambda ^c}\), the Hamiltonian \(H_\Lambda ^\tau :\Omega _\Lambda \mapsto {\mathbb R} \) is defined by
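\[ H_\Lambda ^\tau (\sigma _\Lambda )=\sum _{xy\in {\mathcal {E}}:\,x,y\in \Lambda }U(\sigma _x,\sigma _y)+\sum _{xy\in {\mathcal {E}}:\,x\in \Lambda ,\,y\in \Lambda ^c}U(\sigma _x,\tau _y)+\sum _{x\in \Lambda }W(\sigma _x). \]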
The Gibbs measure in the region \(\Lambda \in {\mathbb F} \) with boundary condition \(\tau \in \Omega _{\Lambda ^c}\) is the probability measure \(\mu _\Lambda ^\tau \) on \( (\Omega _\Lambda ,{\mathcal {F}}_\Lambda )\) defined by
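\[ \mu _\Lambda ^\tau (d\sigma _\Lambda )=\frac{1}{Z_\Lambda ^\tau }\,e^{H_\Lambda ^\tau (\sigma _\Lambda )}\,\nu _\Lambda (d\sigma _\Lambda ), \]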
where \(Z_\Lambda ^\tau \) is the normalizing constant.

For any measurable function \(f:\Omega _\Lambda \mapsto {\mathbb R} \) we write \(\mu _\Lambda ^\tau f \) for the expectation of f under \(\mu _\Lambda ^\tau \), and write \(\mu _\Lambda f\) for the measurable function \(\Omega _{\Lambda ^c}\ni \tau \mapsto \mu _\Lambda ^\tau f\). A fundamental feature of the family of measures \(\{\mu _\Lambda ^\tau ,\,\Lambda \in {\mathbb F} \,,\tau \in \Omega _{\Lambda ^c}\}\) is the so-called DLR property:
\[ \mu _V\left[ \mu _\Lambda f\right] =\mu _V\left[ f\right] , \tag{2.1} \]
valid for all \(\Lambda \subset V\in {\mathbb F} \) and for all bounded measurable functions \(f:\Omega _V\mapsto {\mathbb R} \).
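As a hedged sanity check of the DLR property (2.1), the sketch below takes d = 1, Ising-type potentials \(U(s,t)=\beta st\) and \(W(s)=hs\) with \(\nu \) the counting measure on \(\{-1,+1\}\), and the Gibbs weight \(e^{+H}\) (our reading of the sign convention, consistent with \(U=-\infty \) forbidding configurations). All parameter values and helper names are our own. The inner measure \(\mu _\Lambda \) is computed directly from the Hamiltonian with boundary condition \(\sigma _{V\setminus \Lambda }\tau \), not by conditioning \(\mu _V\), so the check is not circular.

```python
import itertools
import math
import random

BETA, H = 0.8, 0.3   # illustrative Ising parameters (our choice)


def U(s, t):
    return BETA * s * t   # pair potential


def W(s):
    return H * s          # single site potential


def gibbs_1d(region, outside):
    """mu_region^outside on {-1,+1}^region for the nearest neighbour chain on Z.

    `region` is a sorted list of sites and `outside` maps (at least) every
    neighbour of the region to its spin. Weights are exp(+H), nu is counting measure.
    """
    weights = {}
    for sig in itertools.product((-1, 1), repeat=len(region)):
        conf = dict(zip(region, sig))
        energy = sum(W(conf[x]) for x in region)
        for x in region:
            if x + 1 in conf:                  # internal edge {x, x+1}, counted once
                energy += U(conf[x], conf[x + 1])
            for y in (x - 1, x + 1):           # edges to the exterior boundary
                if y not in conf:
                    energy += U(conf[x], outside[y])
        weights[sig] = math.exp(energy)
    Z = sum(weights.values())
    return {sig: wt / Z for sig, wt in weights.items()}


V = [0, 1, 2, 3, 4]
tau = {-1: 1, 5: -1}          # boundary condition on the exterior boundary of V
Lam = [1, 2]
mu_V = gibbs_1d(V, tau)

random.seed(2)
f = {sig: random.random() for sig in mu_V}


def mu_Lam_f(sig):
    """(mu_Lam f)(sig): average f over Lam using mu_Lam with boundary sig outside Lam."""
    conf = dict(zip(V, sig))
    outside = {y: conf[y] for y in V if y not in Lam}
    outside.update(tau)
    total = 0.0
    for eta, p in gibbs_1d(Lam, outside).items():
        new = dict(conf)
        new.update(zip(Lam, eta))
        total += p * f[tuple(new[x] for x in V)]
    return total


lhs = sum(p * mu_Lam_f(sig) for sig, p in mu_V.items())   # mu_V(mu_Lam f)
rhs = sum(p * f[sig] for sig, p in mu_V.items())          # mu_V(f)
print(lhs, rhs)
```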

2.2 Examples and remarks

Below we list some standard examples which fit the general framework defined above and discuss possible extensions. We refer the reader to [16] for an introduction to the statistical mechanics of lattice spin systems.

2.2.1 Finite spins

When the space S is finite we take \(\nu \) as the counting measure on S. The Potts model corresponds to \(S=\{1,\dots ,q\}\), with \(q\ge 2\) a fixed integer,
\[ U(s,s')=\beta \,\mathbf {1}_{s=s'},\qquad W(s)=\sum _{i=1}^q h_i\,\mathbf {1}_{s=i}, \]
where the parameter \(\beta \in {\mathbb R} \) is related to the inverse temperature of the system and the fixed vector \((h_1,\dots ,h_q)\in {\mathbb R} ^q\) to an external magnetic field. When \(\beta \ge 0\) the model is called ferromagnetic. When \(q=2\) the Potts model is called the Ising model. In the case of finite spin space, in order to include spin systems with hard constraints, we shall also allow the function U to take the value \(-\infty \). The spin system is called permissive if for every \(\Lambda \in {\mathbb F} \), for every \(\tau \in \Omega _{\Lambda ^c}\), there exists \(\sigma _\Lambda \in \Omega _\Lambda \) with positive mass under \(\mu _\Lambda ^\tau \), that is such that \(\mu _\Lambda ^\tau (\sigma _\Lambda ) >0\). Well known examples of permissive spin systems include the hard-core model with parameter \(\lambda \), for any \(\lambda >0\), and the uniform distribution over proper q-colorings, for any integer \(q\ge 2d+1\). The hard-core model with parameter \(\lambda \) corresponds to \(S=\{0,1\}\), \(U(1,1)=-\infty \), \(U(1,0)=U(0,1)=U(0,0)=0\), \(W(s)=s\log (\lambda )\), while the uniform distribution over proper q-colorings corresponds to the limit \(\beta \rightarrow -\infty \) in the Potts model. A permissive spin system is called irreducible if the single site heat bath Markov chain on \(\Lambda \) with boundary condition \(\tau \) is irreducible for any choice of \(\Lambda \in {\mathbb F} \) and \(\tau \in \Omega _{\Lambda ^c}\), see [6, Section 2]. Our main results below will apply to permissive irreducible spin systems.
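The following Python sketch builds \(\mu _\Lambda ^\tau \) on a small box of \({\mathbb Z} ^2\) directly from a pair potential U and a single site potential W, allowing \(U=-\infty \) for hard constraints. It is an illustration under stated assumptions (counting measure \(\nu \), Gibbs weight \(e^{+H}\), hypothetical helper names), applied to the hard-core model with \(\lambda =2\) and the empty boundary condition on a \(2\times 2\) box. There the support consists of the 7 independent sets of the 4-cycle, and the partition function is \(1+4\lambda +2\lambda ^2=17\).

```python
import itertools
import math


def neighbours(x):
    """Nearest neighbours of the vertex x in Z^d."""
    for i in range(len(x)):
        for step in (-1, 1):
            y = list(x)
            y[i] += step
            yield tuple(y)


def gibbs(sites, spins, U, W, tau):
    """mu_Lambda^tau for a finite spin space with counting measure nu.

    sites: list of vertices of Lambda; tau: dict giving the spin at every vertex
    of the exterior boundary. U may return -math.inf to encode a hard constraint.
    """
    index = {x: k for k, x in enumerate(sites)}
    weights = {}
    for sig in itertools.product(spins, repeat=len(sites)):
        energy = sum(W(s) for s in sig)
        for x in sites:
            for y in neighbours(x):
                if y in index:
                    if index[x] < index[y]:          # count each internal edge once
                        energy += U(sig[index[x]], sig[index[y]])
                else:
                    energy += U(sig[index[x]], tau[y])
        weights[sig] = math.exp(energy)               # exp(-inf) == 0.0
    Z = sum(weights.values())
    if Z == 0:
        raise ValueError("no configuration has positive mass (not permissive)")
    return {sig: w / Z for sig, w in weights.items()}


LAM = 2.0   # hard-core activity lambda (illustrative)


def U_hc(s, t):
    return -math.inf if (s == 1 and t == 1) else 0.0


def W_hc(s):
    return s * math.log(LAM)


box = [(0, 0), (0, 1), (1, 0), (1, 1)]
boundary = {y: 0 for x in box for y in neighbours(x) if y not in box}   # empty boundary
mu = gibbs(box, (0, 1), U_hc, W_hc, boundary)
support = sorted(sig for sig, p in mu.items() if p > 0)
print(len(support))
```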

2.2.2 Continuous compact spins

Other classical examples are obtained when S is a compact subset of \({\mathbb R} ^n\) and \(\nu \) is the uniform distribution over S. The O(n) model, for \(n\ge 2\), corresponds to the case where S is the unit sphere in \({\mathbb R} ^n\), \(\beta \in {\mathbb R} \),

for some fixed vector \(v\in S\), with \(\langle \cdot , \cdot \rangle \) denoting the standard inner product in \({\mathbb R} ^n\).

2.2.3 Unbounded spins

The setup introduced above includes unbounded (continuous or discrete) spins. When \(S={\mathbb Z} _+\), for instance, it covers the particle systems considered in [12]. It should however be clear that the boundedness assumption on the interaction U rules out many interesting models in the unbounded setting.

2.2.4 Extensions

Concerning possible extensions of our main results to more general settings, we remark that the definitions given above can be extended to include spatially non-homogeneous models, with pair potentials U and site potentials W replaced by edge dependent functions \(U_{xy}\) and site dependent functions \(W_x\) respectively. It is not difficult to check that all results in this paper can be extended to include these cases provided that all the estimates involved in our assumptions are uniform with respect to the new potentials. Finally, we remark that our setup is restricted to the case of nearest neighbor interactions, and the extension of our main results to more general finite range spin systems is not immediate. Indeed, our proof makes explicit use of the nearest neighbor structure at various places. We believe however that a similar approach can be used, provided the decomposition into even and odd sites used in our proof is replaced by more general tilings such as the ones used in [6].

2.3 Spatial mixing

The notion of spatial mixing to be considered belongs to the family of strong spatial mixing conditions. In the case of finite spins it is one of many equivalent conditions introduced by Dobrushin and Shlosman [14] to characterize the so-called complete analyticity regime.

The precise formulation we give here coincides with the one adopted in Cesi’s paper [10]. For any \(\Delta \subset \Lambda \in {\mathbb F} \) we call \(\mu _{\Lambda ,\Delta }^\tau \) the marginal of \(\mu _{\Lambda }^\tau \) on \(\Omega _\Delta \). A version of the Radon–Nikodym density of \(\mu _{\Lambda ,\Delta }^\tau \) with respect to \(\nu _\Delta \) is given by the function

where \(\eta _{\Lambda {\setminus }\Delta }\sigma _\Delta \) denotes the configuration \(\xi \in \Omega _\Lambda \) such that \(\xi _x=\eta _x\) if \(x\in \Lambda {\setminus }\Delta \) and \(\xi _x=\sigma _x\) if \(x\in \Delta \).

Definition 2.1

Given constants \(K,a>0\), and \(\Lambda \in {\mathbb F} \) we say that condition \({\mathcal {C}}(\Lambda ,K,a)\) holds if for any \(\Delta \subset \Lambda \), for all \(x\in \partial \Lambda \):

where \(\tau ,\tau '\in \Omega _{\Lambda ^c}\) are such that \(\tau _y=\tau '_y\) for all \(y\ne x\), and \(\Vert \cdot \Vert _\infty \) denotes the \(L^\infty \) norm. We say that the spin system satisfies SM(K, a) if \({\mathcal {C}}(\Lambda ,K,a)\) holds for all \(\Lambda \in {\mathbb F} \).

As emphasized in [25] it is often important to consider a relaxed spatial mixing condition that requires \({\mathcal {C}}(\Lambda ,K,a)\) to hold only for all sufficiently “fat” sets \(\Lambda \). The latter is defined as follows.

Definition 2.2

Given \(L\in {\mathbb N} \), let \(Q_L=[0,L-1]^d\cap {\mathbb Z} ^d\) be the lattice cube of side L located at the origin. For any \(y\in {\mathbb Z} ^d\), define the translated cube \(Q_L(y)=Ly + Q_L\). Let \({\mathbb F} ^{(L)}\) be the set of all \(\Lambda \in {\mathbb F} \) of the form
\[ \Lambda =\bigcup _{y\in \Lambda '}Q_L(y), \]
for some \(\Lambda '\in {\mathbb F} \). The spin system satisfies \(SM_L(K,a)\) if \({\mathcal {C}}(\Lambda ,K,a)\) holds for all \(\Lambda \in {\mathbb F} ^{(L)}\).
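For readers who prefer an explicit construction, the sketch below builds a set in \({\mathbb F} ^{(L)}\) as a union of translated cubes \(Q_L(y)=Ly+Q_L\). The particular \(\Lambda '\) and the helper names are hypothetical. Since distinct y give disjoint cubes, \(|\Lambda |=|\Lambda '|\,L^d\).

```python
import itertools


def translated_cube(L, y):
    """Q_L(y) = L*y + Q_L, where Q_L = [0, L-1]^d intersected with Z^d."""
    d = len(y)
    return {tuple(L * yi + ci for yi, ci in zip(y, c))
            for c in itertools.product(range(L), repeat=d)}


def fat_set(L, lam_prime):
    """The union of Q_L(y) over y in Lambda', i.e. an element of F^(L)."""
    out = set()
    for y in lam_prime:
        out |= translated_cube(L, y)
    return out


lam_prime = {(0, 0), (1, 0), (1, 1), (3, 2)}
Lam = fat_set(3, lam_prime)
print(len(Lam))   # 4 cubes of side 3 in d = 2
```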

For systems without hard constraints it is well known that SM(K, a), for some K, a, is always satisfied in dimension one, and that for any dimension \(d>1\) it holds under the assumption of suitably high temperature, see e.g. [24]. It is important to note that the validity of both SM(K, a) and \(SM_L(K,a)\) can be ensured by checking finite size conditions only [23].

We recall that SM(K, a) can be strictly stronger than \(SM_L(K,a)\), that is there are spin systems that do not satisfy the condition SM(K, a) but for which the relaxed condition \(SM_L(K,a)\) holds if L is a suitably large constant. We refer to [25] for a thorough discussion of this subtle point. As a consequence of results in [1, 3, 27] it is also known that the two-dimensional ferromagnetic Potts model satisfies \(SM_L(K,a)\), for some \(K,a>0\) and \(L\in {\mathbb N} \), throughout the whole one phase region, that is for all values of temperature and external field within the uniqueness region, there exist constants \(K,a>0\) and L such that \(SM_L(K,a)\) holds.

Finally, we note that \({\mathcal {C}}(\Lambda ,K,a)\) is too strong a requirement in the case of systems with hard constraints, since \(\mu _{\Lambda ,\Delta }^{\tau '}\) may not be absolutely continuous with respect to \(\mu _{\Lambda ,\Delta }^\tau \). However, since (2.2) will only be relevant if \(d(x,\Delta )\) is sufficiently large, in order to have a meaningful assumption for permissive spin systems with hard constraints, we may rephrase the condition \(SM_L(K,a)\) by requiring, for all \(\Lambda \in {\mathbb F} ^{(L)}\), that (2.2) holds for all \(\Delta \subset \Lambda \) and \(x\in \partial \Lambda \) such that \(d(x,\Delta )\ge L/2\).

2.4 Main results

We first recall some standard notation. For any \(V\in {\mathbb F} \), \(\tau \in \Omega _{V^c}\), and \(f:\Omega _V\mapsto {\mathbb R} _+\) with \(f\log ^+\!f\in L^1(\mu _V^\tau )\), we write \(\mathrm{Ent} _V^\tau f\) for the entropy
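\[ \mathrm{Ent} _V^\tau f=\mu _V^\tau \left[ f\log f\right] -\mu _V^\tau \left[ f\right] \log \mu _V^\tau \left[ f\right] , \]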
and use the notation \(\mathrm{Ent} _V f\) for the function \(\tau \mapsto \mathrm{Ent} _V^\tau f\).

Theorem 2.3

Suppose that the spin system satisfies SM(K, a) for some constants \(K,a>0\). Then there exists a constant \(C>0\) such that for all \(V\in {\mathbb F} \), \(\tau \in \Omega _{V^c}\), for all nonnegative weights \(\alpha =\{\alpha _A,\,A\subset V\}\), for all \(f:\Omega _V\mapsto {\mathbb R} _+\) with \(f\log ^+\!f\in L^1(\mu _V^\tau )\),
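\[ \gamma (\alpha )\,\mathrm{Ent} _V^\tau f\le C\sum _{A\subset V}\alpha _A\,\mu _V^\tau \left[ \mathrm{Ent} _A f\right] , \tag{2.3} \]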
where \(\gamma (\alpha ) =\min _{x\in V} \sum _{A:\,A\ni x}\alpha _A\). If instead the spin system satisfies \(SM_L(K,a)\) for some constants \(K,a>0\), \(L\in {\mathbb N} \), then the conclusion (2.3) continues to hold, provided we require that \(V\in {\mathbb F} ^{(L)}\).

Theorem 2.3 has the following corollary for the \(\alpha \)-weighted block dynamics defined by (1.5). Below, \({\mathcal {E}}^\tau _{V,\alpha }(f,g)\) denotes the Dirichlet form (1.5) evaluated at a given boundary condition \(\tau \in \Omega _{V^c}\).

Corollary 2.4

If the spin system satisfies SM(K, a) for some constants \(K,a>0\), then the following modified logarithmic Sobolev inequalities hold: for all \(V\in {\mathbb F} \), all \(\tau \in \Omega _{V^c}\), for all weights \(\alpha \), for all \(f:\Omega _V\mapsto {\mathbb R} _+\) with \(f\log ^+\!f\in L^1(\mu _V^\tau )\),
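\[ \gamma (\alpha )\,\mathrm{Ent} _V^\tau f\le C\,{\mathcal {E}}^\tau _{V,\alpha }(f,\log f), \tag{2.4} \]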
where \(\gamma (\alpha )\) and C are the same constants appearing in (2.3). In particular, if the spin state S is finite, then there exists a constant \(D>0\) such that for all \(V\in {\mathbb F} \), \(\tau \in \Omega _{V^c}\), for all weights \(\alpha \), the mixing time \(T_\mathrm{mix}^\tau (V,\alpha )\) of the Markov chain with Dirichlet form \( {\mathcal {E}}^\tau _{V,\alpha }\) satisfies

Moreover, if the spin state is finite, then SM(K, a) implies the following logarithmic Sobolev inequalities: there exists a constant \(D>0\) such that for all \(V\in {\mathbb F} \), all \(\tau \in \Omega _{V^c}\), for all weights \(\alpha \), all \(f\ge 0\),

where

Finally, all statements above continue to hold if we only assume \(SM_L(K,a)\) for some constants \(K,a>0\) and \(L\in {\mathbb N} \), provided we restrict to \(V\in {\mathbb F} ^{(L)}\).

Corollary 2.4 is a straightforward consequence of Theorem 2.3. Indeed, as we mentioned in Sect. 1, the modified log-Sobolev inequality (2.4) follows from the block factorization (2.3) via Jensen’s inequality. Moreover, the bound (2.5) is a standard consequence of (2.4), see e.g. [7, 13]. Finally, (2.6) follows immediately from (2.3) and a well known bound comparing \(\mathrm{Ent} _A f\) to \({{\,\mathrm{Var}\,}}_A \!\sqrt{f} \), see [13, Corollary A.4].

3 Some Key Tools

In this section we collect some key general facts that do not depend on the spatial mixing assumption. We start by recalling some standard decompositions of the entropy. Next, we prove a new general tensorization lemma. Finally, we revisit the two-block factorization (1.2).

Some remarks on the notation are in order. We fix a region \(V\in {\mathbb F} \) and a boundary condition \(\tau \in \Omega _{V^c}\). To avoid heavy notation, we often omit explicit reference to \(V,\tau \). In particular, whenever possible we shall use the following shorthand notation
\[ \mu =\mu _V^\tau ,\qquad \mathrm{Ent} \,f=\mathrm{Ent} _V^\tau f. \tag{3.1} \]
Moreover, whenever we write \(\mu _\Lambda \) or \(\mathrm{Ent} _\Lambda \) for some \(\Lambda \subset V\), we assume that the implicit boundary condition outside \(\Lambda \) has been fixed, and it agrees with \(\tau \) outside of V. Unless otherwise stated, f will always denote a nonnegative measurable function such that \(f\log ^+ f\in L^1(\mu )\). To avoid repetitions, we simply write \(f\ge 0\) throughout. As a convention, we set \(\mu _\emptyset f = f\) and \(\mathrm{Ent} _\emptyset f=0\).

3.1 Preliminaries

We first recall a standard lemma that will be repeatedly used.

Lemma 3.1

For any \(\Lambda \subset V\), for any \(f\ge 0\):
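\[ \mathrm{Ent} \,f=\mu \left[ \mathrm{Ent} _\Lambda f\right] +\mathrm{Ent} \left( \mu _\Lambda f\right) . \tag{3.2} \]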
More generally, for any \(\Lambda _0\subset \Lambda _1\subset \cdots \subset \Lambda _k\subset V\), for any \(f\ge 0\):
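\[ \mu \left[ \mathrm{Ent} _{\Lambda _k} f\right] =\mu \left[ \mathrm{Ent} _{\Lambda _0} f\right] +\sum _{i=1}^k\mu \left[ \mathrm{Ent} _{\Lambda _i}\left( \mu _{\Lambda _{i-1}}f\right) \right] . \tag{3.3} \]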
Proof

The identity (3.2) follows from (3.3) in the case \(k=2\) with \(\Lambda _0=\emptyset \), \(\Lambda _1=\Lambda \), \(\Lambda _2=V\). To prove (3.3), set \(g_i=\mu _{\Lambda _i}f\), and note that \(g_{i}=\mu _{\Lambda _{i}}g_{i-1}\) by (2.1). Therefore,
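\[ \sum _{i=1}^k\mu \left[ \mathrm{Ent} _{\Lambda _i}g_{i-1}\right] =\sum _{i=1}^k\left( \mu \left[ g_{i-1}\log g_{i-1}\right] -\mu \left[ g_i\log g_i\right] \right) =\mu \left[ g_0\log g_0\right] -\mu \left[ g_k\log g_k\right] , \]
where we used \(\mathrm{Ent} _{\Lambda _i}g_{i-1}=\mu _{\Lambda _i}\left[ g_{i-1}\log g_{i-1}\right] -g_i\log g_i\) together with \(\mu \left[ \mu _{\Lambda _i}h\right] =\mu \left[ h\right] \). Since \(\mu \left[ \mathrm{Ent} _{\Lambda _i}f\right] =\mu \left[ f\log f\right] -\mu \left[ g_i\log g_i\right] \) for every i, the right-hand side equals \(\mu \left[ \mathrm{Ent} _{\Lambda _k}f\right] -\mu \left[ \mathrm{Ent} _{\Lambda _0}f\right] \), which is (3.3).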
\(\square \)
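Lemma 3.1 holds for any measure with well-defined conditional laws, so it can be checked numerically. The sketch below assumes a generic strictly positive measure on \(\{0,1\}^4\) (not a Gibbs measure from the paper), an arbitrary increasing chain \(\Lambda _0=\emptyset \subset \Lambda _1\subset \Lambda _2\subset \Lambda _3=V\), and hypothetical helper names; it compares both sides of (3.3) and also checks that the left side equals \(\mathrm{Ent} \,f\).

```python
import itertools
import math
import random

N = 4
CONFIGS = list(itertools.product((0, 1), repeat=N))


def cond_exp(mu, f, A):
    """The function mu_A f, stored as a dict over configurations (mu_emptyset f = f)."""
    out = {}
    for s in CONFIGS:
        same = [t for t in CONFIGS if all(t[i] == s[i] for i in range(N) if i not in A)]
        z = sum(mu[t] for t in same)
        out[s] = sum(mu[t] * f[t] for t in same) / z
    return out


def mean_block_entropy(mu, f, A):
    """mu[Ent_A f], with Ent_A f = mu_A[f log f] - mu_A f log mu_A f."""
    flogf = {s: f[s] * math.log(f[s]) for s in CONFIGS}
    m = cond_exp(mu, f, A)
    ml = cond_exp(mu, flogf, A)
    return sum(mu[s] * (ml[s] - m[s] * math.log(m[s])) for s in CONFIGS)


random.seed(3)
w = {s: random.uniform(0.2, 3.0) for s in CONFIGS}   # generic non-product measure
Z = sum(w.values())
mu = {s: w[s] / Z for s in CONFIGS}
f = {s: random.uniform(0.1, 4.0) for s in CONFIGS}

# Increasing chain Lambda_0 = {} < Lambda_1 < Lambda_2 < Lambda_3 = V.
chain = [frozenset(), frozenset({0}), frozenset({0, 2}), frozenset({0, 1, 2, 3})]
lhs = mean_block_entropy(mu, f, chain[-1]) - mean_block_entropy(mu, f, chain[0])
rhs = sum(mean_block_entropy(mu, cond_exp(mu, f, prev), cur)
          for prev, cur in zip(chain, chain[1:]))
mean_f = sum(mu[s] * f[s] for s in CONFIGS)
ent_f = sum(mu[s] * f[s] * math.log(f[s]) for s in CONFIGS) - mean_f * math.log(mean_f)
print(lhs, rhs, ent_f)
```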

3.2 A new tensorization lemma

Consider subsets
\[ A_{i,j}\subset V,\qquad i=1,\dots ,n,\quad j=1,\dots ,m, \]
such that \(\cup _{i,j}A_{i,j}=\Lambda \subset V\), and define “row” subsets and “column” subsets:
\[ R_i=\bigcup _{j=1}^mA_{i,j},\qquad C_j=\bigcup _{i=1}^nA_{i,j}. \]
Assume that \(\mu _\Lambda \) is a product measure along the partition \(\{R_i,\,i=1\dots ,n\}\) of \(\Lambda \):
\[ \mu _\Lambda =\bigotimes _{i=1}^n\mu _{R_i}. \]
Notice that this is the case if \(\{R_1,\dots ,R_n\}\) are such that \(d(R_i,R_j)>1\) for all \(i\ne j\).

Lemma 3.2

Let \(s_i>0\) be constants such that for each \(i=1,\dots ,n\), for all \(f\ge 0\),
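\[ \mathrm{Ent} _{R_i}f\le s_i\sum _{j=1}^m\mu _{R_i}\left[ \mathrm{Ent} _{A_{i,j}}f\right] . \tag{3.4} \]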
Then
\[ \mathrm{Ent} _\Lambda f\le s\sum _{j=1}^m\mu _\Lambda \left[ \mathrm{Ent} _{C_j}f\right] , \]
where \(s=\max _is_i\).

Proof

To simplify the notation, we write \(\mu =\mu _\Lambda \) and \(\mathrm{Ent} _\Lambda f=\mathrm{Ent} f\). Setting \(\Lambda _k = \cup _{i=1}^k R_i\), with \(\Lambda _0=\emptyset \), from Lemma 3.1 we have
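\[ \mathrm{Ent} \,f=\sum _{k=1}^n\mu \left[ \mathrm{Ent} _{\Lambda _k}\left( \mu _{\Lambda _{k-1}}f\right) \right] . \]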
Since \(\mu _{\Lambda _k}\) is a product of \(\mu _{R_i}\), \(i=1,\dots ,k\), we have
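\[ \mu \left[ \mathrm{Ent} _{\Lambda _k}\left( \mu _{\Lambda _{k-1}}f\right) \right] =\mu \left[ \mathrm{Ent} _{R_k}\left( \mu _{\Lambda _{k-1}}f\right) \right] . \]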
From the assumption (3.4) we estimate
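\[ \mathrm{Ent} \,f\le \sum _{k=1}^ns_k\sum _{j=1}^m\mu \left[ \mathrm{Ent} _{A_{k,j}}\left( \mu _{\Lambda _{k-1}}f\right) \right] \le s\sum _{j=1}^m\sum _{k=1}^n\mu \left[ \mathrm{Ent} _{A_{k,j}}\left( \mu _{\Lambda _{k-1}}f\right) \right] . \]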
The proof is complete once we show that for each j,
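\[ \sum _{k=1}^n\mu \left[ \mathrm{Ent} _{A_{k,j}}\left( \mu _{\Lambda _{k-1}}f\right) \right] \le \mu \left[ \mathrm{Ent} _{C_j}f\right] . \tag{3.5} \]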
Define \(\Lambda _{k,j}=\Lambda _k \cap C_j\). From Lemma 3.1 we have
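\[ \mu \left[ \mathrm{Ent} _{C_j}f\right] =\sum _{k=1}^n\mu \left[ \mathrm{Ent} _{\Lambda _{k,j}}\left( \mu _{\Lambda _{k-1,j}}f\right) \right] . \]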
For each j, k fixed, \(\mu _{\Lambda _{k,j}}\) is a product of \(\mu _{A_{i,j}}\), \(i=1,\dots ,k\). Hence,
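\[ \mu \left[ \mathrm{Ent} _{\Lambda _{k,j}}\left( \mu _{\Lambda _{k-1,j}}f\right) \right] =\mu \left[ \mathrm{Ent} _{A_{k,j}}\left( \mu _{\Lambda _{k-1,j}}f\right) \right] . \]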
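Therefore, (3.5) follows if we show that, for all fixed j, k,
\[ \mu \left[ \mathrm{Ent} _{A_{k,j}}\left( \mu _{\Lambda _{k-1}}f\right) \right] \le \mu \left[ \mathrm{Ent} _{A_{k,j}}\left( \mu _{\Lambda _{k-1,j}}f\right) \right] . \tag{3.6} \]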
To prove (3.6), notice that

where the second identity follows from the product structure \(\mu _{\Lambda _k}=\otimes _{i=1}^k\mu _{R_i}\). Therefore,

where the inequality follows from the variational principle
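\[ \mathrm{Ent} _U g=\sup \left\{ \mu _U\left[ g\,h\right] :\ \mu _U\left[ e^h\right] \le 1\right\} , \tag{3.7} \]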
valid for any region U, any boundary condition on \(U^c\), and any function \(g\ge 0\). \(\quad \square \)
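The variational principle (3.7) is attained at \(h=\log (g/\mu _Ug)\). As a minimal numerical sketch (a random finite probability vector stands in for \(\mu _U\); all names are ours), the code below checks that this choice reproduces \(\mathrm{Ent} \,g\), while randomly drawn admissible h never exceed it.

```python
import math
import random


def entropy(p, g):
    """Ent g = mu[g log g] - mu[g] log mu[g] for a probability vector p."""
    m = sum(pi * gi for pi, gi in zip(p, g))
    return sum(pi * gi * math.log(gi) for pi, gi in zip(p, g)) - m * math.log(m)


def objective(p, g, h):
    """mu[g h'] where h' = h - log mu[e^h], so that mu[e^h'] = 1."""
    shift = math.log(sum(pi * math.exp(hi) for pi, hi in zip(p, h)))
    return sum(pi * gi * (hi - shift) for pi, gi, hi in zip(p, g, h))


random.seed(4)
k = 6
w = [random.random() + 0.1 for _ in range(k)]
p = [wi / sum(w) for wi in w]
g = [random.uniform(0.1, 3.0) for _ in range(k)]

ent = entropy(p, g)
mean_g = sum(pi * gi for pi, gi in zip(p, g))
best = objective(p, g, [math.log(gi / mean_g) for gi in g])   # the maximiser
trials = [objective(p, g, [random.gauss(0.0, 1.0) for _ in range(k)]) for _ in range(1000)]
print(ent, best, max(trials))
```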

Here is an example to keep in mind, with n arbitrary and \(m=2\). Let \(\{R_1,\dots ,R_n\}\) denote a collection of subsets \(R_i\in {\mathbb F} \) with \(d(R_i,R_j)>1\) for all \(i\ne j\). Let \(A_{i,1}=ER_i\) be the even sites in \(R_i\) and \(A_{i,2}=OR_i\) be the odd sites in \(R_i\), where a vertex \(x\in {\mathbb Z} ^d\) is even or odd according to the parity of \(\sum _{i=1}^dx_i\). Lemma 3.2 says that if we can factorize the even and odd sites on each \(R_i\) with some constant \(s_i\), then we can also factorize, with the constant \(\max _i s_i\), the even and odd sites on all \(\Lambda =\cup _i R_i\). In this example, one has \(A_{i,j}\cap A_{i,k}=\emptyset \) if \(k\ne j\), so in particular \(C_j\cap C_k=\emptyset \) for \(k\ne j\), but it is interesting to note that this need not be the case in Lemma 3.2, that is each “row” \(R_i\) is allowed to be decomposed into arbitrary, possibly overlapping subsets \(A_{i,j}\), \(j=1,\dots ,m\). We refer to Remark 3.5 for useful applications of the latter situation.

3.3 Two block factorizations

We shall need the following versions of an inequality of Cesi [10].

Lemma 3.3

Take \(A,B\in {\mathbb F} \) and \(V=A\cup B\). Suppose that for some \(\varepsilon \in (0,1)\):
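\[ \left\| \mu _B\mu _A g-\mu g\right\| _\infty \le \varepsilon \,\mu \left( |g|\right) \tag{3.8} \]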
for all functions \(g\in L^1(\mu )\). Then, for all functions \(f\ge 0\),
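\[ \mathrm{Ent} \,f\le (1+\theta (\varepsilon ))\,\mu \left[ \mathrm{Ent} _A f+\mathrm{Ent} _B f\right] , \tag{3.9} \]
\[ \mathrm{Ent} \,f\le \mu \left[ \mathrm{Ent} _A f\right] +(1+\theta (\varepsilon ))\,\mu \left[ \mathrm{Ent} _B\left( \mu _A f\right) \right] , \tag{3.10} \]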
where \(\theta (\varepsilon )=84\varepsilon (1-\varepsilon )^{-2}\).

Proof

The inequality (3.9) coincides with [10, Eq. (2.10)]. To prove (3.10) we use essentially the same argument. As in the proof of (3.9) we may restrict to the case where f is bounded, and bounded away from zero. Then
\[ \mathrm{Ent} \,f=\mu \left[ \mathrm{Ent} _A f\right] +\mathrm{Ent} \left( \mu _A f\right) . \]
Cesi’s inequality [10, Eq. (3.2)] says that the assumption (3.8) implies
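\[ \mathrm{Ent} \left( \mu _A f\right) \le (1+\theta (\varepsilon ))\,\mu \left[ \mathrm{Ent} _B f\right] \tag{3.11} \]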
for all \(f\ge 0\), where \(\theta (\varepsilon )=84\varepsilon (1-\varepsilon )^{-2}\). Therefore, the claim (3.10) follows from (3.11) applied with \(\mu _Af\) in place of f. \(\quad \square \)

Remark 3.4

If \(\mu \) is a product measure over A, B, that is \(\mu =\mu _B\mu _A\), then one can take \(\varepsilon =0\) in Lemma 3.3. In this case (3.10) is actually an identity. In this sense (3.10) might be considered to be tighter than (3.9), although it is not true that \( \mu [\mathrm{Ent} _{B}\mu _A f]\le \mu [\mathrm{Ent} _{B} f]\) in the general non-product case: think for instance of some f which depends only on \(A{\setminus }B\); in this case \( \mu [\mathrm{Ent} _{B} f]=0\) while it is possible that \(\mu [\mathrm{Ent} _{B}\mu _A f]>0\). For our purposes below it will be crucial to use both (3.9) and (3.10).

Remark 3.5

To appreciate the strength of the tensorization Lemma 3.2, consider a case where \(V=\cup _{i=1}^nR_i\) with \(R_i=A_i\cup B_i\) and suppose that \(\mu _V\) is a product measure over the \(R_i\)’s. If the condition (3.8) holds for every pair \(A_i,B_i\), \(i=1,\dots ,n\), with the same constant \(\varepsilon \in (0,1)\), the combination of Lemmas 3.3 and 3.2 shows that (3.9) holds uniformly in n, with \(A=\cup _{i=1}^nA_i\) and \(B=\cup _{i=1}^n B_i\). On the other hand, Lemma 3.3 alone seems unable to yield such a uniform estimate. Indeed, the assumption (3.8) does not tensorize: it is not hard to construct examples where (3.8) holds for every pair \(A_i,B_i\), \(i=1,\dots ,n\), with the same error \(\varepsilon \in (0,1)\), but one has to take the error proportional to n in order to have (3.8) for \(A=\cup _{i=1}^nA_i\) and \(B=\cup _{i=1}^n B_i\). Here is a toy example of this phenomenon. Suppose \(V=A\cup B\) with \(A=\{1,\dots ,n\}\) and \(B=\{n+1,\dots ,2n\}\) and for all \(i\in \{1,\dots ,n\}\), let \(A_i=\{i\}\), \(B_i=\{n+i\}\), and write \(\sigma _i\) and \(\eta _i=\sigma _{n+i}\) for the spin at i and at \(n+i\) respectively. Suppose that each spin takes values in \(\{-,+\}\) and that the probability measure \(\mu \) on \(\{-,+\}^{2n}\) is defined by \(\mu (\sigma ,\eta )= \prod _{i=1}^n\mu _i(\sigma _i,\eta _i)\), where \(\mu _i(\sigma _i,\eta _i)=\frac{1}{4}(1+\varepsilon \sigma _i\eta _i)\), for some fixed \(\varepsilon \in (0,1)\). For any function \(g:\{-,+\}^2\mapsto {\mathbb R} \), for all \(i\in \{1,\dots ,n\}\) one has
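\[ \mu _{B_i}\mu _{A_i}g\,(\sigma _i)=\mu _i g+\varepsilon \,\sigma _i\,\mu _i\left( \eta _i\,g\right) . \]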
Therefore \(\Vert \mu _{B_i}\mu _{A_i} g - \mu _i g\Vert _\infty \le \varepsilon \mu _i (|g|)\). On the other hand, the marginal on \(\eta \) of \(\mu \) is the uniform distribution over \(\{-,+\}^{n}\), so that if we choose \(f(\sigma ,\eta )=f(\eta )=\mathbf{1}_{\eta \,\equiv \,+}\) one has \(\mu (|f|)=\mu (f)=2^{-n}\), \(\mu _A f = f\), and \(\mu _B \mu _A f = 2^{-n}\prod _{i=1}^n(1+\varepsilon \sigma _i)\). In particular, taking \(\sigma \equiv +\) one finds

4 Proof of the Main Results

We first reduce the general block factorization problem to the factorization into even and odd sites only.

4.1 Reduction to even and odd blocks

We partition the vertices of \({\mathbb Z} ^d\) into even sites and odd sites, where x is even if \(\sum _{i=1}^dx_i\) is an even integer, while x is odd if \(\sum _{i=1}^dx_i\) is an odd integer. Given a set of vertices \(V\in {\mathbb F} \) we write EV for the set of even vertices \(x\in V\) and OV for the set of odd vertices \(x\in V\). Whenever possible we simply write E for EV and O for OV. Notice that both \(\mu _E\) and \(\mu _O\) are product measures. Since the interaction is between nearest neighbors and no two even (respectively odd) sites are nearest neighbors, the even spins are conditionally independent once the odd spins are fixed, and vice versa.
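Assuming nearest-neighbor interactions, the product structure of \(\mu _E\) and \(\mu _O\) rests on a purely combinatorial fact: no two sites of the same parity are adjacent in \({\mathbb Z} ^d\). The minimal Python check below verifies this on a box. The function names are illustrative and not taken from the paper.

```python
import itertools

def split_even_odd(sides):
    """Split the sites of the box {0..sides[0]-1} x ... x {0..sides[-1]-1} by parity."""
    even, odd = [], []
    for x in itertools.product(*(range(n) for n in sides)):
        (even if sum(x) % 2 == 0 else odd).append(x)
    return even, odd

def nearest_neighbours(x):
    """The 2d nearest neighbours of x in Z^d."""
    for i in range(len(x)):
        for step in (-1, 1):
            y = list(x)
            y[i] += step
            yield tuple(y)

def adjacent_pairs(sites):
    """All ordered pairs of nearest-neighbour sites that both lie in `sites`."""
    s = set(sites)
    return [(x, y) for x in sites for y in nearest_neighbours(x) if y in s]

even, odd = split_even_odd((4, 3, 5))
print(len(even), len(odd), adjacent_pairs(even), adjacent_pairs(odd))
```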

The reduction to even and odd blocks can be stated as follows. As usual, we assume that a region \(V\in {\mathbb F} \) and a boundary condition \(\tau \in \Omega _{V^c}\) have been fixed, and we use the shorthand notation (3.1).

Proposition 4.1

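Suppose that for some constant \(C>0\) and some function \(f\ge 0\),

$$\begin{aligned} \mathrm{Ent} f\le C\,\mu \left[ \mathrm{Ent} _E f+\mathrm{Ent} _O f\right] . \end{aligned}$$

Then, for the same C and f, for all nonnegative weights \(\alpha =\{\alpha _A,\,A\subset V\}\),

$$\begin{aligned} \gamma (\alpha )\,\mathrm{Ent} f\le 2C\sum _{A\subset V}\alpha _A\,\mu \left[ \mathrm{Ent} _A f\right] , \qquad (4.1) \end{aligned}$$

where \(\gamma (\alpha )=\min _{x\in V}\sum _{A: A\ni x}\alpha _A\).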

Proposition 4.1 is a direct consequence of the following version of Shearer’s inequality satisfied by the relative entropy functional of any product measure.

Lemma 4.2

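Fix \(\Lambda \subset V\in {\mathbb F} \) and suppose that \(\mu _\Lambda \) is a product measure on \(\Omega _\Lambda \). Then, for any choice of nonnegative weights \( \alpha =\{ \alpha _A, A\subset \Lambda \}\) and any function \(f\ge 0\):

$$\begin{aligned} \gamma (\alpha )\,\mathrm{Ent} _\Lambda f\le \sum _{A\subset \Lambda }\alpha _A\,\mu _\Lambda \left[ \mathrm{Ent} _A f\right] , \qquad (4.2) \end{aligned}$$

where \(\gamma ( \alpha )=\min _{x\in \Lambda }\sum _{A: A\ni x} \alpha _A\).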

Proof

As in [9, Proposition 2.6], the inequality (4.2) follows from a weighted version of Shearer’s inequality for Shannon entropy. For a proof of the latter we refer e.g. to [11, Theorem 6.2]. \(\quad \square \)
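Lemma 4.2 can be sanity-checked numerically on a tiny product measure. The sketch below makes several choices of its own:

- binary spins on three sites, with Bernoulli marginals;
- a positive test function f;
- a few arbitrary block weights.

It computes both sides of (4.2). Here \(\mu _\Lambda [\mathrm{Ent} _A f]\) is the entropy with respect to the conditional law of the spins in A, averaged over the spins outside A. This is a check of the inequality on examples, not a proof, and all names are hypothetical.

```python
import itertools
import math
import random

def xlogx(v):
    return v * math.log(v) if v > 0 else 0.0

def entropy(weights, values):
    """Ent(f) = mu(f log f) - mu(f) log mu(f) for a probability vector `weights`."""
    m = sum(w * v for w, v in zip(weights, values))
    return sum(w * xlogx(v) for w, v in zip(weights, values)) - xlogx(m)

def product_measure(p):
    """Product of Bernoulli(p_j) marginals on {0,1}^n, as a dict config -> probability."""
    return {x: math.prod(pj if xj else 1 - pj for pj, xj in zip(p, x))
            for x in itertools.product((0, 1), repeat=len(p))}

def mean_block_entropy(mu, f, A):
    """mu[Ent_A f]: entropy over the spins in A, averaged over the spins outside A."""
    groups = {}
    for x, w in mu.items():
        key = tuple(xj for j, xj in enumerate(x) if j not in A)
        groups.setdefault(key, []).append((w, f[x]))
    total = 0.0
    for members in groups.values():
        z = sum(w for w, _ in members)
        total += z * entropy([w / z for w, _ in members], [v for _, v in members])
    return total

def shearer_sides(p, f, alpha):
    """Return (gamma(alpha) * Ent f, sum_A alpha_A * mu[Ent_A f]) for the product measure."""
    mu = product_measure(p)
    gamma = min(sum(a for A, a in alpha.items() if j in A) for j in range(len(p)))
    lhs = gamma * entropy(list(mu.values()), [f[x] for x in mu])
    rhs = sum(a * mean_block_entropy(mu, f, A) for A, a in alpha.items())
    return lhs, rhs

random.seed(1)
f = {x: random.uniform(0.05, 2.0) for x in itertools.product((0, 1), repeat=3)}
alpha = {frozenset({0, 1}): 1.0, frozenset({1, 2}): 0.5, frozenset({0, 2}): 0.7}
print(shearer_sides([0.3, 0.6, 0.8], f, alpha))   # first entry <= second entry
```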

Proof of Proposition 4.1

For any \(A\subset V\), call EA and OA the even and odd sites in A respectively. Fix a choice of weights \(\alpha =\{\alpha _A, A\subset V\}\). Since \(\mu _E\) is a product measure on \(\Omega _E\), we may apply Lemma 4.2 with \(\Lambda =E\) and weights \(\alpha \) replaced by \(\hat{\alpha }=\{{\hat{\alpha }}_U,\,U\subset E\}\), with \({\hat{\alpha }}_U = \sum _{A\subset V}\alpha _A\mathbf {1}_{EA=U}\). It follows that

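$$\begin{aligned} \gamma _E(\alpha )\,\mathrm{Ent} _E f\le \sum _{A\subset V}\alpha _A\,\mu _E\left[ \mathrm{Ent} _{EA} f\right] , \qquad (4.3) \end{aligned}$$

where \(\gamma _E(\alpha )=\min _{x\in E}\sum _{A: A\ni x}\alpha _A\). Similarly,

$$\begin{aligned} \gamma _O(\alpha )\,\mathrm{Ent} _O f\le \sum _{A\subset V}\alpha _A\,\mu _O\left[ \mathrm{Ent} _{OA} f\right] , \qquad (4.4) \end{aligned}$$

with \(\gamma _O(\alpha )=\min _{x\in O}\sum _{A: A\ni x}\alpha _A\). Since \(\gamma _E(\alpha )\) and \(\gamma _O(\alpha )\) are both at least as large as \(\gamma (\alpha )\), the inequality (4.1) follows in four steps: sum (4.3) and (4.4), take the expectation with respect to \(\mu \), note that both \(\mu [\mathrm{Ent} _{EA} f]\) and \(\mu [\mathrm{Ent} _{OA} f]\) are at most \(\mu [\mathrm{Ent} _{A} f]\), and finally use the assumed bound on \(\mathrm{Ent} f\).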

\(\square \)
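The weights \(\hat{\alpha }\) in the proof above move each \(\alpha _A\) to the trace \(EA=A\cap E\). The short Python sketch below checks this transfer on a small box. It confirms that the covering number on E is preserved, \(\gamma (\hat{\alpha })=\gamma _E(\alpha )\), and that \(\gamma _E(\alpha )\ge \gamma (\alpha )\). The helper names and the chosen weights are illustrative only.

```python
from collections import defaultdict

def pushforward_weights(alpha, keep):
    """hat_alpha_U = sum_A alpha_A * 1{A & keep == U}: move each weight to the trace of A on `keep`."""
    hat = defaultdict(float)
    for A, a in alpha.items():
        hat[frozenset(A) & keep] += a
    return dict(hat)

def gamma(weights, sites):
    """gamma = min over x in `sites` of the total weight of the blocks containing x."""
    return min(sum(a for A, a in weights.items() if x in A) for x in sites)

V = [(i, j) for i in range(4) for j in range(4)]
E = frozenset(x for x in V if sum(x) % 2 == 0)
# illustrative weights: all 2x2 sub-squares of the 4x4 box, plus the whole box
alpha = {frozenset((i + a, j + c) for a in (0, 1) for c in (0, 1)): 1.0
         for i in range(3) for j in range(3)}
alpha[frozenset(V)] = 0.5
hat = pushforward_weights(alpha, E)
print(gamma(hat, E), gamma(alpha, E), gamma(alpha, V))
```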

The rest of this section is concerned with the proof of the factorization into even and odd blocks. Namely, we prove the following theorem, which together with Proposition 4.1 establishes the main result Theorem 2.3.

Theorem 4.3

Suppose that the spin system satisfies SM(K, a) for some constants \(K,a>0\). Then there exists a constant \(C\ge 1\) such that for all \(V\in {\mathbb F} \), \(\tau \in \Omega _{V^c}\), for all \(f\ge 0\),

$$\begin{aligned} \mathrm{Ent} _V^\tau f\le C\,\mu _V^\tau \left[ \mathrm{Ent} _E f+\mathrm{Ent} _O f\right] . \qquad (4.5) \end{aligned}$$

If instead the spin system satisfies \(SM_L(K,a)\) for some constants \(K,a>0\), \(L\in {\mathbb N} \), then the same conclusion (4.5) holds, provided we require that \(V\in {\mathbb F} ^{(L)}\).

Remark 4.4

The constant C in (4.5) must satisfy \(C\ge 1\). To see this, let \(f=f(\sigma _E)\) be a function depending only on the spins at even sites. Then \(\mathrm{Ent} _O f=0\), and the expectation on the right hand side of (4.5) reduces to \(\mu _V^\tau \left[ \mathrm{Ent} _E f\right] \le \mathrm{Ent} _V^\tau f\). From our proof one can in principle extract an explicit dependence of C on the various parameters defining the spin system and on the constants K, a in the strong mixing assumption. This dependence is not optimal. One can nevertheless check, using a high temperature expansion, that in the limit of infinite temperature one recovers the constant \(C=1\) corresponding to non-interacting spins.

4.2 Proof of Theorem 4.3

The overall idea is to follow a recursive strategy based on a geometric construction introduced in [4], see also [10]. However, unlike in the problems studied in [4, 10], the error terms produced at each step of the iteration are too large in our setting to yield the desired conclusion directly (see Theorem 4.7). We therefore need an additional recursive argument to finish the proof (see Theorem 4.8). We first carry out the proof under the spatial mixing assumption SM(K, a), and then consider the relaxed assumption \(SM_L(K,a)\).

Definition 4.5

Set \(\ell _k=(3/2)^{k/d}\) and let \({\mathbb F} _k\) denote the set of all subsets \(V\in {\mathbb F} \) such that, up to translation and permutation of the coordinates, V is contained in the rectangle

$$\begin{aligned} [0,\ell _{k+1}]\times [0,\ell _{k+2}]\times \cdots \times [0,\ell _{k+d}]. \end{aligned}$$

Let \(\delta (k)\) denote the largest constant \(\delta >0\) such that

$$\begin{aligned} \delta \,\mathrm{Ent} _V^\tau f\le \mu _V^\tau \left[ \mathrm{Ent} _E f+\mathrm{Ent} _O f\right] \end{aligned}$$

holds for all \(V\in {\mathbb F} _k\), \(\tau \in \Omega _{V^c}\), and all \(f:\Omega _V\mapsto {\mathbb R} _+\).

Note that \(\delta (k)\le 1\) for any \(k\in {\mathbb N} \), see Remark 4.4. On the other hand, the next lemma guarantees that \(\delta (k)\) is positive for all \(k\in {\mathbb N} \).

Lemma 4.6

For every \(k\in {\mathbb N} \), \(\delta (k)>0\).

Proof

If the spin system has no hard constraints one can use a perturbation argument from [18], see e.g. [9, Lemma 2.2] for the application to our setting. In particular, one obtains that there exists a constant \(C>0\) such that for all \(k\in {\mathbb N} \):

In the presence of hard constraints, in the case of irreducible permissive systems one can argue as follows. It is known that any probability measure \(\mu \) satisfies

with \(\mu _* = \min _\sigma \mu (\sigma )\), where the minimum is restricted to \(\sigma \) such that \(\mu (\sigma )>0\), and \(C_0\) is an absolute constant, see [13, Corollary A.4]. Here \({{\,\mathrm{Var}\,}}\) denotes the variance functional of \(\mu \). For a finite permissive system in a region V one has \(\mu _*\ge e^{-C|V|}\) for some \(C>0\) independent of V. Moreover, using the irreducibility assumption, a crude coupling argument shows that the spectral gap of the even/odd Markov chain is bounded away from zero in any fixed region \(V\in {\mathbb F} \), see [6, Lemma 5.1]. In other words, for some constant \(C_1=C_1(k)\) one has

for any function g. Taking \(g=\sqrt{f}\), the desired conclusion now follows from (4.6) and (4.7) using, for both \(\mu _E\) and \(\mu _O\), the well known inequality \({{\,\mathrm{Var}\,}}(\sqrt{f})\le \mathrm{Ent} f\), which holds for any probability measure, see e.g. [19, Lemma 1]. \(\quad \square \)
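The elementary inequality \({{\,\mathrm{Var}\,}}(\sqrt{f})\le \mathrm{Ent} f\) used at the end of this proof can be spot-checked on random finite probability vectors. The minimal Python sketch below does this. The test data are random and the names are illustrative.

```python
import math
import random

def ent(p, f):
    """Ent f = mu(f log f) - mu(f) log mu(f) for a probability vector p and f > 0."""
    m = sum(pi * fi for pi, fi in zip(p, f))
    return sum(pi * fi * math.log(fi) for pi, fi in zip(p, f)) - m * math.log(m)

def var_sqrt(p, f):
    """Var(sqrt f) = mu(f) - mu(sqrt f)^2."""
    m = sum(pi * fi for pi, fi in zip(p, f))
    s = sum(pi * math.sqrt(fi) for pi, fi in zip(p, f))
    return m - s * s

random.seed(3)
for _ in range(5):
    n = random.randint(2, 6)
    w = [random.random() + 1e-9 for _ in range(n)]
    p = [wi / sum(w) for wi in w]
    f = [random.uniform(0.01, 5.0) for _ in range(n)]
    print(round(var_sqrt(p, f), 6), "<=", round(ent(p, f), 6))
```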

Lemma 4.6 will be used as the base case for our induction.

Theorem 4.7

Assume SM(K, a). There exists a constant \(k_0\in {\mathbb N} \) depending on K, a, d such that

Theorem 4.7 is useful only if we know that \(\delta (k)\) is much larger than \(1/\ell _k\) for k large enough. By itself it is therefore not sufficient to prove Theorem 4.3. The next result provides independent control on \(\delta (k)\), which, together with Theorem 4.7, implies the desired uniform bound of Theorem 4.3.

Theorem 4.8

Assume SM(K, a). For any \(\varepsilon >0\), there exists a constant \(k_0\in {\mathbb N} \) depending on \(K,a,d,\varepsilon \), such that

Theorems 4.7 and 4.8 are more than sufficient for our purpose. Indeed, using (4.9) and (4.8), taking for instance \(\varepsilon =1/2\), we see that

if k is large enough, and therefore

Lemma 4.6 and (4.10) imply \(\inf _{k\in {\mathbb N} } \delta (k) >0\), which concludes the proof of Theorem 4.3 under the assumption SM(K, a).
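The way Theorems 4.7 and 4.8 combine can be illustrated numerically. Since (4.8)–(4.10) are not reproduced above, we assume for illustration that the combined bound takes the form \(\delta (k)\ge (1-c\,\ell _k^{-1/2})\,\delta (k-1)\) for \(k>k_0\), with a hypothetical constant c and threshold \(k_0\). Then \(\delta (k)\ge \delta (k_0)\prod _{j=k_0+1}^k(1-c\,\ell _j^{-1/2})\). The product stays bounded away from zero because \(\sum _j\ell _j^{-1/2}\) is a convergent geometric series. The Python sketch below evaluates the partial products.

```python
import math

def ell(k, d):
    """ell_k = (3/2)^(k/d), the scale of Definition 4.5."""
    return 1.5 ** (k / d)

def product_lower_bound(c, d, k0, k_max):
    """prod_{k=k0+1}^{k_max} (1 - c * ell_k^(-1/2)) for the assumed recursion, in log form."""
    log_p = 0.0
    for k in range(k0 + 1, k_max + 1):
        x = c * ell(k, d) ** -0.5
        if x >= 1:
            raise ValueError("k0 too small: some factor is not positive")
        log_p += math.log1p(-x)           # log1p keeps small factors accurate
    return math.exp(log_p)

for k_max in (50, 100, 200, 400):
    print(k_max, product_lower_bound(c=1.0, d=2, k0=10, k_max=k_max))
```

The partial products decrease but stabilize quickly, which mirrors the conclusion \(\inf _k\delta (k)>0\).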

4.3 Proof of Theorem 4.7

We start with a simple decomposition that will be used in the inductive step. Recall that EA and OA stand for the even and odd sites respectively in the region \(A\subset V\), and we use the shorthand notation \(E=EV\) and \(O=OV\) for the whole region V.

Lemma 4.9

For any \(A,B\in {\mathbb F} \) such that \(V=A\cup B\), for any \(f\ge 0\):

Proof

The decomposition in Lemma 3.1 shows that

Another application of that decomposition shows that

However, the product property of \(\mu _E\) implies that \(\mu _{EB}\mu _{EA}f=\mu _E f\), and therefore

The same argument applies to the case of odd sites. \(\quad \square \)
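Lemma 3.1 is not reproduced in this section. We assume it contains the standard martingale-type identity \(\mathrm{Ent} f=\mu [\mathrm{Ent} _A f]+\mathrm{Ent} (\mu _A f)\). The Python sketch below checks two things:

- that identity, for a generic law on \(\{0,1\}^4\);
- the product-measure fact used in the last step of the proof: if \(\mu \) is a product measure and \(A\cup B\) covers all sites, then \(\mu _B\mu _A f=\mu (f)\), even when A and B overlap.

The names and test data are illustrative.

```python
import itertools
import math
import random

def xlogx(v):
    return v * math.log(v) if v > 0 else 0.0

def ent(mu, f):
    """Ent f = mu(f log f) - mu(f) log mu(f); mu and f are dicts over configurations."""
    m = sum(mu[x] * f[x] for x in mu)
    return sum(mu[x] * xlogx(f[x]) for x in mu) - xlogx(m)

def outside(x, A):
    return tuple(v for j, v in enumerate(x) if j not in A)

def cond_exp(mu, f, A):
    """mu_A f: average over the spins in A, conditionally on the spins outside A."""
    num, den = {}, {}
    for x in mu:
        k = outside(x, A)
        num[k] = num.get(k, 0.0) + mu[x] * f[x]
        den[k] = den.get(k, 0.0) + mu[x]
    return {x: num[outside(x, A)] / den[outside(x, A)] for x in mu}

def mean_block_ent(mu, f, A):
    """mu[Ent_A f], computed group by group from the conditional laws."""
    groups = {}
    for x in mu:
        groups.setdefault(outside(x, A), []).append(x)
    total = 0.0
    for xs in groups.values():
        z = sum(mu[x] for x in xs)
        total += z * ent({x: mu[x] / z for x in xs}, {x: f[x] for x in xs})
    return total

random.seed(2)
configs = list(itertools.product((0, 1), repeat=4))
raw = {x: random.uniform(0.1, 1.0) for x in configs}
mu_gen = {x: w / sum(raw.values()) for x, w in raw.items()}      # generic (non-product) law
p = [0.2, 0.5, 0.7, 0.9]
mu_prod = {x: math.prod(q if v else 1 - q for q, v in zip(p, x)) for x in configs}
f = {x: random.uniform(0.05, 2.0) for x in configs}
A, B = {0, 1, 2}, {2, 3}                                          # overlapping cover of all sites

# (i) assumed form of Lemma 3.1: Ent f = mu[Ent_A f] + Ent(mu_A f), for any mu
print(ent(mu_gen, f), mean_block_ent(mu_gen, f, A) + ent(mu_gen, cond_exp(mu_gen, f, A)))
# (ii) product case: mu_B mu_A f is the constant mu(f)
two_step = cond_exp(mu_prod, cond_exp(mu_prod, f, A), B)
print(min(two_step.values()), max(two_step.values()))
```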

Let us give a sketch of the main steps of the proof before entering the details. Suppose that \(V=A\cup B\in {\mathbb F} _k\), and suppose that the assumption of Lemma 3.3 is satisfied. Then

where we use the fact that \(\mathrm{Ent} \mu _A f\le \mathrm{Ent} f\). Now suppose furthermore that \(A,B\in {\mathbb F} _{k-1}\). By definition of \(\delta (k-1)\) we then have

Therefore, using Lemma 4.9,

Disregarding the second line in (4.11) would allow us to obtain a bound of the form

provided that an arbitrary set \(V\in {\mathbb F} _k\) can be decomposed into sets \(A,B\in {\mathbb F} _{k-1}\) as above. We remark that if \(\mu \) were a product over A, B then by convexity one would have

and the same bound for odd sites. Thus in the product case the second line in (4.11) may be neglected, and we recover a factorization statement which is already contained in Lemma 3.2. In the case we are interested in, however, one has \(A\cap B\ne \emptyset \), and we cannot hope for a bound like (4.12). To illustrate the problem, consider the 1D case with \(V=\{1,\dots ,n\}\), \(A=\{1,\dots ,m\}\) and \(B=\{m-\ell ,\dots ,n\}\) for some integers \(0< \ell<m<n\). Suppose that \(m+1\) is even, so that m is odd, and suppose that f only depends on \(\sigma _m\), the spin at site m. Then, once all odd sites have been frozen, \(\mu _{EA}f\) is a constant, and therefore \(\mathrm{Ent} _{EB}\mu _{EA} f=0\). On the other hand, \(\mu _{A}f\) depends on \(\sigma _{m+1}\), since the conditional expectation \(\mu _A\) depends non-trivially on the boundary spin \(\sigma _{m+1}\). Since \(m+1\) is an even site of B, we may well have \(\mathrm{Ent} _{EB}\mu _{A} f\ne 0\).
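The one-dimensional obstruction can be made concrete. As an example only (the section does not fix a model), take the nearest-neighbor Ising chain with inverse temperature \(\beta \). For \(f=\mathbf{1}_{\sigma _m=+}\) and \(A=\{1,\dots ,m\}\), the function \(\mu _A f\) is the conditional probability that \(\sigma _m=+\) given the boundary spin \(\sigma _0\) and the spin \(\sigma _{m+1}\). The Python sketch below computes this probability by enumeration.

```python
import itertools
import math

def prob_plus_at_m(m, beta, left, right):
    """P(sigma_m = +1 | sigma_0 = left, sigma_{m+1} = right) on A = {1, ..., m}.

    Hypothetical concrete model: nearest-neighbour Ising chain with weight
    exp(beta * sum_{i=0}^{m} sigma_i * sigma_{i+1}).
    """
    num = den = 0.0
    for inner in itertools.product((-1, 1), repeat=m):
        spins = (left,) + inner + (right,)
        w = math.exp(beta * sum(a * b for a, b in zip(spins, spins[1:])))
        den += w
        if inner[-1] == 1:            # inner[-1] is sigma_m
            num += w
    return num / den

m, beta = 3, 0.5                      # m is odd, so m + 1 = 4 is an even site of B
p_plus = prob_plus_at_m(m, beta, left=1, right=1)
p_minus = prob_plus_at_m(m, beta, left=1, right=-1)
print(p_plus, p_minus)                # mu_A f as a function of sigma_{m+1}
```

Since m is odd, \(\mu _{EA}f=f\) is frozen together with the odd spins. The two printed values differ, however, so \(\mu _A f\) varies with the even spin \(\sigma _{m+1}\) whenever \(\beta \ne 0\).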

Therefore, the second line of (4.11) does produce a nontrivial error term. At this point a fruitful idea from [24] comes to the rescue: one can average over many possible choices of the decomposition \(V=A\cup B\), in the hope that the averaging reduces the size of the overall error. This strategy works very well if the error terms have an additive structure, as in [10]. Here there is no simple additive structure to exploit. We instead use the martingale-type decompositions from Lemma 3.1 to control the average error term by means of the global entropy \(\mathrm{Ent} f\), see Lemma 4.12. This will be sufficient to obtain the recursive estimate (4.8). To implement this argument, we use a slightly different averaging procedure from the one in [10].

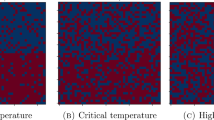

We turn to the actual proof. We start with some geometric considerations, see Fig. 1 for a two-dimensional representation. Set \(r:=\lfloor \tfrac{1}{6}\,\ell _{k+d}\rfloor \), and define the rectangular sets

Suppose that \(V\subset [0,\ell _{k+1}]\times \dots \times [0,\ell _{k+d}]\), and define, for \(i=1,\dots ,r+1\):

where, as usual \(E=EV\) and \(O=OV\) denote the even and the odd sites of V respectively. Define also

Lemma 4.10

Suppose that \(V\subset [0,\ell _{k+1}]\times \dots \times [0,\ell _{k+d}]\), and that \(V\notin {\mathbb F} _{k-1}\). Referring to the above setting, for all \(i=1,\dots ,r\):

(1) \(V{\setminus }B\ne \emptyset \) and \(V{\setminus }A_i\ne \emptyset \);

(2) \(d(V{\setminus }B,V{\setminus }A_i)\ge \frac{1}{4}\,\ell _k\);

(3) \(B\in {\mathbb F} _{k-1}\) and \(A_i\in {\mathbb F} _{k-1}\);

(4) \(\Gamma _{i+1}\subset E\) if i is odd, and \(\Gamma _{i+1}\subset O\) if i is even. Moreover \(A_{i}\) and \(V{\setminus }A_{i+1}\) become independent if we condition on the spins in \(\Gamma _{i+1}\), that is

$$\begin{aligned} \mu _V\left( \cdot |\sigma _{\,\Gamma _{i+1}}\right) =\mu _{V{\setminus } \Gamma _{i+1}}=\mu _{A_{i}}\mu _{V{\setminus }A_{i+1}}= \mu _{V{\setminus } A_{i+1}}\mu _{A_{i}}. \end{aligned}$$

Proof

1. Suppose that \(V{\setminus }B\) is empty. Then \(V=B\) and therefore, up to translation, it is contained in \([0,\ell _{k+1}]\times \dots \times [0,\tfrac{2}{3}\ell _{k+d}]\). Since \(\tfrac{2}{3}\ell _{k+d}= \ell _k\), this would imply that, up to permutation of the coordinates, \(V\subset [0,\ell _{k}]\times [0,\ell _{k+1}]\times \dots \times [0,\ell _{k+d-1}]\), which violates the assumption \(V\notin {\mathbb F} _{k-1}\). The same argument shows that \(R_{i-1}\cap V\ne \emptyset \) for all i and \(A_i\ne \emptyset \) follows from \(A_i\supset R_{i-1}\cap V\).

2. If \(x\in V{\setminus }B\) and \(y\in V{\setminus }A_i\) then \(y_d-x_d\ge \frac{1}{2}\ell _{k+d}-\frac{1}{3}\ell _{k+d}=\frac{1}{6}\ell _{k+d}=\frac{1}{4}\ell _k\).

3. The maximal stretch of B along the d-th coordinate is at most \(\frac{2}{3} \ell _{k+d}=\ell _k\). Therefore, up to translations and permutation of the coordinates, \(B\subset [0,\ell _{k}]\times [0,\ell _{k+1}]\times \dots \times [0,\ell _{k+d-1}]\), which says that \(B\in {\mathbb F} _{k-1}\). The same argument shows that \(A_i\subset R_i\cap V\in {\mathbb F} _{k-1}\) for all i.

4. If \(i\ge 1\) is odd, then

$$\begin{aligned} \Gamma _{i+1}&= \left[ (R_{i+1}\cap E)\cup (R_{i}\cap O)\right] {\setminus } \left[ (R_{i}\cap O)\cup (R_{i-1}\cap E)\right] \\&= (R_{i+1}\cap E){\setminus }(R_{i-1}\cap E), \end{aligned}$$and therefore \(\Gamma _{i+1}\subset E\). Similarly, one has \(\Gamma _{i+1}\subset O\) if i is even. Moreover, any \({\mathbb Z} ^d\)-path inside V connecting \(A_{i}\) with \(V{\setminus }A_{i+1}\) must go through \(\Gamma _{i+1}\), and therefore \(A_{i}\) and \(V{\setminus }A_{i+1}\) become independent if we condition on the spins in \(\Gamma _{i+1}\). \(\quad \square \)
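Items 1–3 of the proof use only the scaling \(\ell _{k+d}=\frac{3}{2}\ell _k\). The quick Python check below verifies the identities \(\frac{2}{3}\ell _{k+d}=\ell _k\) and \(\frac{1}{6}\ell _{k+d}=\frac{1}{4}\ell _k\), together with the definition \(r=\lfloor \frac{1}{6}\ell _{k+d}\rfloor \). The helper name is illustrative.

```python
import math

def ell(k, d):
    """ell_k = (3/2)^(k/d)."""
    return 1.5 ** (k / d)

for d in (1, 2, 3):
    for k in (5, 20, 40):
        top = ell(k + d, d)                  # ell_{k+d} = (3/2) * ell_k
        r = math.floor(top / 6)              # r = floor(ell_{k+d} / 6), roughly ell_k / 4
        print(d, k, math.isclose(2 * top / 3, ell(k, d)),
              math.isclose(top / 6, ell(k, d) / 4), r)
```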

Lemma 4.11

Let V, B and \(A_i\) be as in Lemma 4.10. If SM(K, a) holds, then

for all \(i=1,\dots ,r\), all functions \(g\in L^1(\mu )\), and for all \(k\ge k_0=k_0(K,a,d)\).

Proof

Since i is fixed, for simplicity we write A instead of \(A_i\). Set \(h=\mu _A g\). Then h depends only on \(\sigma _\Delta \), where \(\Delta =V{\setminus }A\subset B\). We are going to use (2.2) with \(\Lambda =B\). Let \(\Omega _{B,\tau }\) denote the set of all spin configurations \(\eta \in \Omega _{B^c}\) which agree on the set \(V^c\) with the overall boundary condition \(\tau \in \Omega _{V^c}\). For any \(\eta \in \Omega _{B,\tau }\) one has

Therefore,

where

Since \(\psi _{B,\Delta }^{\eta }\) depends on \(\eta \) only through the spins in \(\partial B\), the configurations \(\eta ,\eta '\in \Omega _{B,\tau }\) in (4.14) can be assumed to differ only in the set \(N_B=(\partial B)\cap (V{\setminus }B)\). Notice that \(N_B\) has at most \((\ell _{k+d-1}+1)^{d-1}\) elements, and that

by Lemma 4.10(2). Let \(\eta (0)=\eta ,\dots ,\eta (m)=\eta '\) be a sequence of configurations interpolating between \(\eta \) and \(\eta '\) such that, for all \(j\in \{0,\dots ,m-1\}\), \(\eta (j)\) and \(\eta (j+1)\) differ only at one site \(x_j\in N_B\), with \(m\le (\ell _{k+d-1}+1)^{d-1}\). Then we have

The definition of SM(K, a) implies that

Expanding the products in (4.14), and assuming \(m\varepsilon _0\le 1\), we obtain

where we use the inequality \((1+x)^m\le 1+emx\) for \(x>0\) and \(m>0\) such that \(mx\le 1\). Thus, if \(k\ge k_0\) for some constant \(k_0\) depending only on K, a, d, we have obtained (4.13) with \(\varepsilon =K'\ell _{k}^{d-1}e^{-a \ell _{k}/4}\), where \(K'=(3/2)^{d-1}eK\le 5^dK\). \(\quad \square \)
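The bound \((1+x)^m\le 1+emx\) for \(mx\le 1\) follows from \((1+x)^m\le e^{mx}\) together with the convexity estimate \(e^y\le 1+(e-1)y\) for \(y\in [0,1]\). The small Python grid check below is a sanity check, not a proof.

```python
import math

def slack(x, m):
    """(1 + e*m*x) - (1 + x)**m, expected >= 0 whenever x > 0, m > 0 and m*x <= 1."""
    return 1 + math.e * m * x - (1 + x) ** m

worst = min(slack(y / m, m)
            for m in range(1, 200)
            for y in (j / 100 for j in range(1, 101)))   # y = m*x ranges over (0, 1]
print(worst)
```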

Lemma 4.12

Let V, B and \(A_i\), \(i=1,\dots ,r\), be as in Lemma 4.10. Then

Proof

We prove the first inequality. The same argument proves the second one, with the roles of even and odd sites exchanged. Fix \(i\in \{1,\dots ,r\}\). Notice that \(\mu _{A_i} f = \mu _{A_i}\mu _{EA_i}f\). Let us first observe that if i is even then

Indeed, in this case \(i+1\) is odd and Lemma 4.10(4) implies

Therefore,

where the inequality follows from the variational principle (3.7). This settles the case when i is even.

Next, suppose that i is odd. Here the commutation relation (4.16) does not hold, since the average \(\mu _{A_i}\) depends on the spins in the even sites \(\Gamma _{i+1}\subset B{\setminus }A_i\). Moreover, (4.15) is in general false since if e.g. f depends only on \(\sigma _{\,\Gamma _i}\), then \(\mathrm{Ent} _{EB}\mu _{EA_i} f=0\) while one can have \(\mathrm{Ent} _{EB}\mu _{A_i} f>0\).

Define \(g=\mu _{EA_i}f\). From the decomposition in Lemma 3.1 we see that

where we use the shorthand notation \(\sigma _{i+1}\) for \(\sigma _{\,\Gamma _{i+1}}\), and \(\mathrm{Ent} _E\left( g|\sigma _{i+1}\right) \) denotes the entropy of g with respect to the conditional measure \(\mu _E(\cdot |\sigma _{i+1})=\mu _{E{\setminus }\Gamma _{i+1}}\). Since \(\mu _E\) is a product measure,

where \(\mathrm{Ent} _{\, i+1}=\mathrm{Ent} _{\Gamma _{i+1}}\) denotes the entropy with respect to the probability measure \(\mu _{\Gamma _{i+1}}\). Similarly,

Let us show that

Indeed, Lemma 4.10(4) implies that

where \(E(V{\setminus }A_{i+1})\) are the even sites in \(V{\setminus } A_{i+1}\), and we have used the fact that \(A_i\) and \(E(V{\setminus } A_{i+1})\) are conditionally independent given the spins \(\sigma _{i+1}\). Therefore, reasoning as in (4.17):

From (4.18)–(4.21) we conclude that, when i is odd:

As in (4.22), we may write

Therefore

where the first inequality follows from convexity of entropy and the second from the monotonicity of \(A\mapsto \mu [\mathrm{Ent} _A f]\). Neglecting the last term in (4.23), we have arrived at

for all i odd. In view of the estimate (4.15) we may use the bound (4.24) for all i. Therefore, an application of Lemma 3.1 shows that

\(\square \)

We are now able to conclude the proof of Theorem 4.7. To prove the recursive bound (4.8) we suppose \(V\in {\mathbb F} _k{\setminus }{\mathbb F} _{k-1}\). Then, by translation invariance and by the invariance under coordinate permutation, we may assume that V is as in Lemma 4.10. Combining Lemma 3.3 with Lemma 4.11 we obtain, for each \(i=1,\dots , r\),

Since \(A_i,B\in {\mathbb F} _{k-1}\), by definition of \(\delta (k-1)\) we obtain

From Lemma 4.9 we find that the right hand side of (4.25) equals

Averaging over i in (4.26) and using Lemma 4.12,

In conclusion, \(\delta (k)\ge (1-\theta (\varepsilon _k))\delta (k-1) - \frac{2}{r}\), or equivalently

Since \(r\sim \frac{1}{4}\ell _k\) and \(\delta (k-1)\le 1\), it follows that \(\frac{1}{r\delta (k-1)}\gg \theta (\varepsilon _k)\) for all k large enough, and therefore

for all \(k\ge k_0(K,a,d)\).

4.4 Proof of Theorem 4.8

The idea is to divide the set V into two sets \(A=\cup _i A_i\) and \(B=\cup _i B_i\), each being the union of a large number of well-separated subsets. We then use the factorization from Lemma 3.3 to reduce the problem in the set V to the problem in either A or B. Finally, we use Lemma 3.2 to tensorize within A and within B, which reduces the problem to a single region \(A_i\) or \(B_i\).

Fix a large integer \(b>1\), define \(u_k = b^{k/d}\), and call \({\mathbb G} _k\) the set of all subsets \(V\subset {\mathbb Z} ^d\) which, up to translations and permutation of the coordinates, are included in the rectangle \([0,u_{k+1}]\times \cdots \times [0,u_{k+d}]\). We partition the interval \(I=[0,u_{k+d}]\) into 2b consecutive non-overlapping intervals \(I_1,\dots ,I_{2b}\), each of length \(t_k:=\frac{1}{2b}u_{k+d}\), that is

Define also the enlarged intervals \({\bar{I}}_j = \{s\in I: d(s,I_j)\le t_k/4\}\), and consider the collections of intervals

We remark that both \(\Delta _A\) and \(\Delta _B \) are collections of non-overlapping intervals, with

for all \(i\ne j\). On the other hand, \(\Delta _A\cap \Delta _B\ne \emptyset \). We define the rectangular sets in \({\mathbb R} ^d\):

and define the \({\mathbb Z} ^d\) subsets

We refer to Fig. 2 for a two-dimensional representation.
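The interval construction can be sketched in a few lines. The definition of \(\Delta _A\) and \(\Delta _B\) is not reproduced above. As a hypothesis consistent with the stated properties, the Python sketch takes \(\Delta _A\) to be the enlarged intervals \({\bar{I}}_j\) with odd j and \(\Delta _B\) those with even j. It then checks three things:

- intervals within each collection are at distance at least \(t_k/2\);
- each interval has length at most \(\frac{3}{2}t_k\le 2t_k=u_k\), using \(u_{k+d}=b\,u_k\);
- consecutive intervals from the two collections overlap.

```python
def enlarged_intervals(u_k, b):
    """Split I = [0, u_{k+d}], with u_{k+d} = b * u_k, into 2b intervals of length t_k
    and enlarge each by t_k / 4 on both sides, clipped to I."""
    top = b * u_k
    t = top / (2 * b)                                    # t_k = u_k / 2
    raw = [(j * t, (j + 1) * t) for j in range(2 * b)]   # I_1, ..., I_{2b}
    bars = [(max(0.0, lo - t / 4), min(top, hi + t / 4)) for lo, hi in raw]
    return t, bars

def min_gap(intervals):
    """Smallest distance between consecutive intervals of a sorted collection."""
    return min(nxt[0] - cur[1] for cur, nxt in zip(intervals, intervals[1:]))

t, bars = enlarged_intervals(u_k=7.3, b=5)
delta_A, delta_B = bars[0::2], bars[1::2]    # assumption: odd-indexed vs even-indexed I_j
print(t, min_gap(delta_A), min_gap(delta_B), max(hi - lo for lo, hi in bars))
```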

We observe that \(A_i \in {\mathbb G} _{k-1}\) and \(B_i \in {\mathbb G} _{k-1}\) for all \(i=1,\dots ,b\). Indeed, since \(u_{k+d}=b\,u_k\) we have \(t_k=u_k/2\), so the stretch of \(A_i\) along the d-th coordinate is at most \(t_k + 2t_k/4 \le 2t_k= u_k\). Together with \(u_{k,i}=u_{k-1,i+1}\), \(i=1,\dots ,d-1\), this implies that \(A_i \in {\mathbb G} _{k-1}\). The same applies to \(B_i\). Observe that with these definitions one has the product property

Moreover, the geometric construction shows that

Thus, a repetition of the argument in Lemma 4.11 shows that the assumption of Lemma 3.3 is satisfied with \(\varepsilon \) given by

Therefore, by Lemma 3.3,

Next, let \(\varrho (k)\) be defined as the largest constant \(\varrho > 0\) such that the inequality

holds for all \(V\in {\mathbb G} _k\), \(\tau \in \Omega _{V^c}\), and all \(f\ge 0\). The key observation is that thanks to the product property (4.27), and using the fact that \(A_i \in {\mathbb G} _{k-1}\) for all i, Lemma 3.2 allows us to estimate

Similarly,

Thus, (4.28) implies

where we use the monotonicity of \(\Lambda \mapsto \mu \left[ \mathrm{Ent} _{\Lambda } f\right] \). Estimating \(1-\theta (\varepsilon _k)\ge 1/2\) we have proved that

Iterating, we conclude \(\varrho (k)\ge 4^{-k}\varrho (k_0)\). To finish the proof, observe that \((3/2)^k=b^{k\varepsilon }\) where \(\varepsilon =\log (3/2)/\log (b)\), which can be made small by taking b large. Therefore,

where \(c_0\) is a constant depending on K, a, d, b, while \(\varepsilon '=d\log (4)/\log (b)\) can be as small as we wish provided b is suitably large. This ends the proof of Theorem 4.8.
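The change of variables at the end of this proof is elementary. With \(u_k=b^{k/d}\) and \(\varepsilon '=d\log (4)/\log (b)\) one has \(4^{-k}=u_k^{-\varepsilon '}\), so that \(\varrho (k)\ge 4^{-k}\varrho (k_0)=\varrho (k_0)\,u_k^{-\varepsilon '}\). The Python check below verifies this identity and the analogous one for \((3/2)^k\). It also shows how \(\varepsilon '\) shrinks as b grows. The names are illustrative.

```python
import math

def exponents(b, d):
    """eps = log(3/2)/log b and eps' = d log 4 / log b, as in the proof of Theorem 4.8."""
    return math.log(1.5) / math.log(b), d * math.log(4) / math.log(b)

b, d = 1000, 2
eps, eps_prime = exponents(b, d)
for k in (1, 10, 30):
    u_k = b ** (k / d)
    print(k, math.isclose(1.5 ** k, b ** (k * eps)), math.isclose(4.0 ** -k, u_k ** -eps_prime))
for big_b in (10, 10**3, 10**6):
    print(big_b, exponents(big_b, d)[1])     # eps' decreases as b grows
```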

Remark 4.13

We point out that the argument given in the proof of Theorem 4.8 can be improved if one replaces the parameter \(t_k\) which is linear in \(u_k\) by \(t'_k=C_1\log (u_k)\), with \(C_1\) a suitably large constant. Since \(t'_k\) is logarithmic in \(u_k\), one can modify the recursion to obtain a bound of the form \(\delta (k)\ge \delta (C_2\log (k))/C_2\) for some new constant \(C_2\), which provides a much better lower bound on \(\delta (k)\) than the one stated in Theorem 4.8. However, without the companion recursive estimate from Theorem 4.7, this argument alone would not provide the uniform estimate \(\inf _k\delta (k)>0\).

4.5 Proof of Theorem 4.3 assuming \(SM_L(K,a)\)

Theorems 4.7 and 4.8 allowed us to establish Theorem 4.3 under the assumption SM(K, a). We now prove it assuming only \(SM_L(K,a)\). To this end we observe that any set \(V\in {\mathbb F} ^{(L)}\) is uniquely identified by the set \(V'\in {\mathbb F} \) such that

$$\begin{aligned} V=\bigcup _{x\in V'}Q_L(x). \qquad (4.29) \end{aligned}$$

A careful check of the previous proofs then shows that we may work on the rescaled lattice, that is, replace vertices x with blocks \(Q_L(x)\). Repeating all steps in Theorems 4.7 and 4.8 on this lattice gives the following coarse-grained version of Theorem 4.3, assuming only \(SM_L(K,a)\): for any \(V\in {\mathbb F} ^{(L)}\), for all \(f\ge 0\),

$$\begin{aligned} \mathrm{Ent} f\le C\,\mu \left[ \mathrm{Ent} _{E_L} f+\mathrm{Ent} _{O_L} f\right] , \qquad (4.30) \end{aligned}$$

where, if V is given by (4.29), then \(E_L=\cup _{x\in EV'}Q_L(x)\), and \( O_L=\cup _{x\in OV'}Q_L(x)\).

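Consider now a single cube \(Q_L(x)\). By Lemma 4.6 we know that

$$\begin{aligned} \mathrm{Ent} _{Q_L(x)} f\le C_1\,\mu _{Q_L(x)}\left[ \mathrm{Ent} _{EQ_L(x)} f+\mathrm{Ent} _{OQ_L(x)} f\right] \end{aligned}$$

for some constant \(C_1=C_1(L)\). Observe that by construction \(d(Q_L(x),Q_L(y))>1\) for all distinct \(x,y\in EV'\). Similarly, \(d(Q_L(x),Q_L(y))>1\) for all distinct \(x,y\in OV'\). Therefore, Lemma 3.2 implies

$$\begin{aligned} \mathrm{Ent} _{E_L} f&\le C_1\,\mu _{E_L}\left[ \mathrm{Ent} _{EE_L} f+\mathrm{Ent} _{OE_L} f\right] ,\\ \mathrm{Ent} _{O_L} f&\le C_1\,\mu _{O_L}\left[ \mathrm{Ent} _{EO_L} f+\mathrm{Ent} _{OO_L} f\right] , \end{aligned}$$

where \(EE_L\) denotes the even sites in \(E_L\), \(EO_L\) the even sites in \(O_L\), and so on. Plugging these estimates in (4.30) and using the monotonicity of \(A\mapsto \mu [\mathrm{Ent} _A f]\) one arrives at

$$\begin{aligned} \mathrm{Ent} f\le D\,\mu \left[ \mathrm{Ent} _E f+\mathrm{Ent} _O f\right] , \end{aligned}$$

with \(D=2C\times C_1\). This ends the proof of Theorem 4.3.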

References

[1] Alexander, K.S.: On weak mixing in lattice models. Probab. Theory Relat. Fields 110(4), 441–471 (1998)

[2] Balister, P., Bollobás, B.: Projections, entropy and sumsets. Combinatorica 32(2), 125–141 (2012)

[3] Beffara, V., Duminil-Copin, H.: The self-dual point of the two-dimensional random-cluster model is critical for \(q\ge 1\). Probab. Theory Relat. Fields 153(3–4), 511–542 (2012)

[4] Bertini, L., Cancrini, N., Cesi, F.: The spectral gap for a Glauber-type dynamics in a continuous gas. Annales de l’IHP Probabilités et statistiques 38, 91–108 (2002)

[5] Blanca, A., Caputo, P., Parisi, D., Sinclair, A., Vigoda, E.: Entropy decay in the Swendsen–Wang dynamics on \({\mathbb Z}^d\). In: Proceedings of the 53rd Annual ACM SIGACT Symposium on Theory of Computing, pp. 1551–1564 (2021)

[6] Blanca, A., Caputo, P., Sinclair, A., Vigoda, E.: Spatial mixing and nonlocal Markov chains. Random Struct. Algorithms 55(3), 584–614 (2019)

[7] Bobkov, S.G., Tetali, P.: Modified logarithmic Sobolev inequalities in discrete settings. J. Theor. Probab. 19(2), 289–336 (2006)

[8] Bodineau, T., Helffer, B.: The log-Sobolev inequality for unbounded spin systems. J. Funct. Anal. 166(1), 168–178 (1999)

[9] Caputo, P., Menz, G., Tetali, P.: Approximate tensorization of entropy at high temperature. Annales de la Faculté des sciences de Toulouse: Mathématiques 24, 691–716 (2015)

[10] Cesi, F.: Quasi-factorization of the entropy and logarithmic Sobolev inequalities for Gibbs random fields. Probab. Theory Relat. Fields 120(4), 569–584 (2001)

[11] Csóka, E., Harangi, V., Virág, B.: Entropy and expansion. Annales de l’Institut Henri Poincaré, Probabilités et Statistiques 56, 2428–2444 (2020)

[12] Dai Pra, P., Paganoni, A.M., Posta, G.: Entropy inequalities for unbounded spin systems. Ann. Probab. 30(4), 1959–1976 (2002)

[13] Diaconis, P., Saloff-Coste, L.: Logarithmic Sobolev inequalities for finite Markov chains. Ann. Appl. Probab. 6(3), 695–750 (1996)

[14] Dobrushin, R.L., Shlosman, S.B.: Completely analytical interactions: constructive description. J. Stat. Phys. 46(5–6), 983–1014 (1987)

[15] Dyer, M., Sinclair, A., Vigoda, E., Weitz, D.: Mixing in time and space for lattice spin systems: a combinatorial view. Random Struct. Algorithms 24(4), 461–479 (2004)

[16] Friedli, S., Velenik, Y.: Statistical Mechanics of Lattice Systems: A Concrete Mathematical Introduction. Cambridge University Press, Cambridge (2017)

[17] Guionnet, A., Zegarlinski, B.: Lectures on logarithmic Sobolev inequalities. In: Séminaire de Probabilités XXXVI. Lecture Notes in Mathematics, vol. 1801, pp. 1–134. Springer, Berlin (2003)

[18] Holley, R., Stroock, D.: Logarithmic Sobolev inequalities and stochastic Ising models. J. Stat. Phys. 46(5–6), 1159–1194 (1987)

[19] Latala, R., Oleszkiewicz, K.: Between Sobolev and Poincaré. In: Milman, V.D., Schechtman, G. (eds.) Geometric Aspects of Functional Analysis, pp. 147–168. Springer, Berlin (2000)

[20] Ledoux, M.: Logarithmic Sobolev inequalities for unbounded spin systems revisited. In: Azéma, J., Émery, M., Ledoux, M. (eds.) Séminaire de Probabilités XXXV, pp. 167–194. Springer, Berlin (2001)

[21] Lu, S.L., Yau, H.-T.: Spectral gap and logarithmic Sobolev inequality for Kawasaki and Glauber dynamics. Commun. Math. Phys. 156(2), 399–433 (1993)

[22] Madiman, M., Tetali, P.: Information inequalities for joint distributions, with interpretations and applications. IEEE Trans. Inform. Theory 56(6), 2699–2713 (2010)

[23] Martinelli, F.: An elementary approach to finite size conditions for the exponential decay of covariances in lattice spin models. In: Dobrushin, R.L., Minlos, R.A., Shlosman, S., Suhov, Yu.M. (eds.) On Dobrushin’s Way: From Probability Theory to Statistical Physics, pp. 169–181. American Mathematical Society, Providence (2000)

[24] Martinelli, F.: Lectures on Glauber dynamics for discrete spin models. In: Bernard, P. (ed.) Lectures on Probability Theory and Statistics (Saint-Flour, 1997). Lecture Notes in Mathematics, vol. 1717, pp. 93–191. Springer, Berlin (1999)

[25] Martinelli, F., Olivieri, E.: Approach to equilibrium of Glauber dynamics in the one phase region. I. Commun. Math. Phys. 161(3), 447–486 (1994)

[26] Martinelli, F., Olivieri, E.: Approach to equilibrium of Glauber dynamics in the one phase region. II. The general case. Commun. Math. Phys. 161(3), 487–514 (1994)

[27] Martinelli, F., Olivieri, E., Schonmann, R.H.: For 2-D lattice spin systems weak mixing implies strong mixing. Commun. Math. Phys. 165(1), 33–47 (1994)

[28] Marton, K.: An inequality for relative entropy and logarithmic Sobolev inequalities in Euclidean spaces. J. Funct. Anal. 264(1), 34–61 (2013)

[29] Marton, K.: Logarithmic Sobolev inequalities in discrete product spaces: a proof by a transportation cost distance. arXiv preprint arXiv:1507.02803 (2015)

[30] Otto, F., Reznikoff, M.G.: A new criterion for the logarithmic Sobolev inequality and two applications. J. Funct. Anal. 243(1), 121–157 (2007)

[31] Stroock, D.W., Zegarlinski, B.: The equivalence of the logarithmic Sobolev inequality and the Dobrushin–Shlosman mixing condition. Commun. Math. Phys. 144(2), 303–323 (1992)

[32] Stroock, D.W., Zegarliński, B.: The logarithmic Sobolev inequality for continuous spin systems on a lattice. J. Funct. Anal. 104(2), 299–326 (1992)

[33] Stroock, D.W., Zegarliński, B.: The logarithmic Sobolev inequality for discrete spin systems on a lattice. Commun. Math. Phys. 149(1), 175–193 (1992)

[34] Yoshida, N.: The log-Sobolev inequality for weakly coupled lattice fields. Probab. Theory Relat. Fields 115(1), 1–40 (1999)

[35] Zegarlinski, B.: Dobrushin uniqueness theorem and logarithmic Sobolev inequalities. J. Funct. Anal. 105(1), 77–111 (1992)

[36] Zegarlinski, B.: The strong decay to equilibrium for the stochastic dynamics of unbounded spin systems on a lattice. Commun. Math. Phys. 175(2), 401–432 (1996)

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funding

Open access funding provided by Università degli Studi Roma Tre within the CRUI-CARE Agreement.


Additional information

Communicated by H. Spohn.


About this article

Cite this article

Caputo, P., Parisi, D. Block Factorization of the Relative Entropy via Spatial Mixing. Commun. Math. Phys. 388, 793–818 (2021). https://doi.org/10.1007/s00220-021-04237-1
