Abstract

We prove an asymptotic crystallization result in two dimensions for a class of nonlocal particle systems. To be precise, we consider the best approximation with respect to the 2-Wasserstein metric of a given absolutely continuous probability measure \(f \mathrm {d}x\) by a discrete probability measure \(\sum _i m_i \delta _{z_i}\), subject to a constraint on the particle sizes \(m_i\). The locations \(z_i\) of the particles, their sizes \(m_i\), and the number of particles are all unknowns of the problem. We study a one-parameter family of constraints. This is an example of an optimal location problem (or an optimal sampling or quantization problem) and it has applications in economics, signal compression, and numerical integration. We establish the asymptotic minimum value of the (rescaled) approximation error as the number of particles goes to infinity. In particular, we show that for the constrained best approximation of the Lebesgue measure by a discrete measure, the discrete measure whose support is a triangular lattice is asymptotically optimal. In addition, we prove an analogous result for a problem where the constraint is replaced by a penalization. These results can also be viewed as the asymptotic optimality of the hexagonal tiling for an optimal partitioning problem. They generalise the crystallization result of Bourne et al. (Commun Math Phys, 329: 117–140, 2014) from a single particle system to a class of particle systems, and prove a case of a conjecture by Bouchitté et al. (J Math Pures Appl, 95:382–419, 2011). Finally, we prove a crystallization result which states that optimal configurations with energy close to that of a triangular lattice are geometrically close to a triangular lattice.

1 Introduction

Consider the problem of approximating an absolutely continuous probability measure by a discrete probability measure. To quantify the quality of the approximation, we measure the approximation error in the 2-Wasserstein metric. Let \(\Omega \subset {\mathbb {R}}^d\) be the closure of an open and bounded set, and let

be the density of the absolutely continuous probability measure. We approximate \(f \mathrm {d}x\) by a discrete measure from the set

For \(\mu \in {\mathcal {P}}_{\mathrm {d}}(\Omega )\), we define \(N_\mu := \# \mathrm {supp}(\mu )\), which is not fixed a priori. For \(p\ge 1\), the p-Wasserstein distance (see [65, 71]) between \(f \mathrm {d}x\) and \(\mu \in {\mathcal {P}}_{\mathrm {d}}(\Omega )\) is

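Observe that

$$\begin{aligned} \inf \left\{ W_p(f,\mu ) : \mu \in {\mathcal {P}}_{\mathrm {d}}(\Omega ) \right\} = 0 \end{aligned}$$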

since there exists a sequence of discrete measures \(\mu _n\) converging weakly\(^*\) to \(f \mathrm {d}x\), with \(N_{\mu _n} \rightarrow \infty \) as \(n \rightarrow \infty \). On the other hand, for each \(N\in {\mathbb {N}}\), \(\inf \{ W_p(f,\mu ): \mu \in {\mathcal {P}}_{\mathrm {d}}(\Omega ), \, N_\mu \le N \} > 0\). Therefore the problem \(\inf \{ W_p(f,\mu ): \mu \in {\mathcal {P}}_{\mathrm {d}}(\Omega )\}\) has no solution. To obtain a minimizer we must constrain the number of atoms \(N_\mu \), either explicitly (with a constraint) or implicitly (with a penalization). Given an entropy \(H:{\mathcal {P}}_{\mathrm {d}}(\Omega )\rightarrow [0,\infty ]\) (defined below) we consider the constrained optimal location problem

where \(L>0\), and the penalized optimal location problem

where \(\delta >0\). If H satisfies \(H(\mu )\rightarrow \infty \) as \(N_\mu \rightarrow \infty \), then minimising sequences for problems (3) and (4) have a uniformly bounded number of atoms. If in addition H is lower semi-continuous with respect to the weak\(^*\) convergence of measures, then problems (3) and (4) admit a solution.

When L or \(\delta \) is fixed, the geometry of the set \(\Omega \) has a strong effect on optimal particle arrangements, and it is very difficult to characterise minimising configurations. As L increases, or \(\delta \) decreases, the optimal number of particles \(N_\mu \) increases, and it is believed that optimal configurations locally form regular, periodic patterns; see the numerical evidence in Figs. 1 and 2. This phenomenon is known as crystallization (see Sect. 1.3 for more on this). The specific geometry of these patterns depends on the choice of p in the Wasserstein distance, the choice of H, and the dimension d. In this paper we will study the crystallization problem by taking the limits \(L\rightarrow \infty \) and \(\delta \rightarrow 0\).

Fig. 1. Approximate local minimizers for the penalized problem (4) for the case \(p=d=2\), \(\Omega =[0,1]^2\), \(f={\mathbbm {1}}_\Omega \), \(H_\alpha (\mu )=\sum _i m_i^\alpha \), for several values of \(\alpha \) and \(\delta \). The value of \(\alpha \) is constant in each row, and the value of \(\delta \) decreases from left to right in each row. The black dots are the particles \(z_i\), where \(\mu = \sum _{i=1}^{N_\mu } m_i \delta _{z_i}\) is an approximate local minimizer of (4). The polygons are the sets \(T^{-1}(\{z_i\})\), where T is the optimal transport map in (2). The particles \(z_i\) are located at the centroids of the polygons. The masses \(m_i\) are the areas of the polygons. The colours correspond to the number of sides: squares are yellow, pentagons are orange, hexagons are blue, and heptagons are red. For each value of \(\alpha \), a hexagonal tiling (with defects) starts to emerge as \(\delta \) is decreased. This figure, Fig. 2 and Table 1 were made by Steven Roper using the generalized Lloyd algorithm from [16]. To search for a global minimizer in the highly non-convex energy landscape, the algorithm was run many times using different, randomly generated initial conditions. The values of \(\delta \) were chosen by first choosing a target value of \(N_\mu \) and then using the heuristic (16) to generate the corresponding \(\delta \). Better results, without defects, can be achieved by taking the initial particle locations to be a perturbation of a triangular lattice; see Fig. 2.

Fig. 2. Approximate global minimizers for the penalized problem (4) for the case \(p=d=2\), \(\Omega =[0,1]^2\), \(f={\mathbbm {1}}_\Omega \), \(H_\alpha (\mu )=\sum _i m_i^\alpha \), \(\alpha =0.583\) for the values of \(\delta \) used in Fig. 1 (middle row, middle and right columns). See the caption to Fig. 1 for a description of the polygons and the colour scheme. These configurations have lower energy (\(W_2^2(f,\mu )+\delta H_\alpha (\mu )\)) than the corresponding configurations shown in Fig. 1, and they do not have defects. This figure was generated by Steven Roper using the generalized Lloyd algorithm from [16] and by taking the initial conditions to be perturbations of a triangular lattice. In Fig. 1 (middle row, right column) there are \(N_\mu =200\) particles whereas in this figure (right) there are \(N_\mu =202\) particles; the algorithm from [16] attempts to find the optimum number of particles.

For the entropy

$$\begin{aligned} H(\mu ) := N_\mu = \# \mathrm {supp}(\mu ), \end{aligned} \tag{5}$$

Zador’s Theorem for the asymptotic quantization error states that

for some positive constant \(C_{p,d}\) that is independent of the density f. See for example [20, 41, 45, 74] and see [44, 52, 53] for the more general case where \(\Omega \) is a Riemannian manifold. The constant \(C_{p,d}\) is known in two dimensions:

where \(P_6\) is a regular hexagon of unit area centred at the origin. This follows from Fejes Tóth’s Theorem on Sums of Moments (see [36, 43]), which has also been proved in various levels of generality by several other authors including [13, 37, 59, 61].
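The second moment of a regular polygon can be evaluated in closed form, which gives a quick numerical handle on the constant \(c_6\). The sketch below is an illustration, not part of the paper: the function name is hypothetical, and the closed form \(c_n = \frac{1}{2n}\big (\cot (\pi /n) + \tfrac{1}{3}\tan (\pi /n)\big )\) for a regular n-gon of unit area is a standard computation (integrate \(|x|^2\) over one of the 2n right triangles that make up the polygon) rather than a formula quoted from the text above.

```python
import math


def regular_polygon_second_moment(n: int) -> float:
    """Second moment about the centre of a regular n-gon of unit area.

    Closed form (assumed here, obtained by integrating |x|^2 in polar
    coordinates over one right triangle with angle pi/n at the centre):
        c_n = (cot(pi/n) + tan(pi/n) / 3) / (2 n)
    """
    t = math.tan(math.pi / n)
    return (1.0 / t + t / 3.0) / (2.0 * n)


c6 = regular_polygon_second_moment(6)
c6_closed_form = 5.0 * math.sqrt(3.0) / 54.0   # value of the formula at n = 6
disk_moment = 1.0 / (2.0 * math.pi)            # unit-area disk, the limit n -> infinity

for n in (3, 4, 5, 6, 7, 12):
    print(n, regular_polygon_second_moment(n))
print("c6 =", c6, " disk =", disk_moment)
```

The values decrease with n towards the second moment of the disk, which is consistent with the hexagon being the best cell shape that can still tile the plane.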

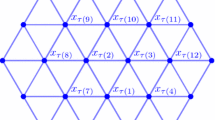

The geometric interpretation of (6) and (7) is the following: In two dimensions it is asymptotically optimal to arrange the atoms of the discrete measure at the centres of regular hexagons, i.e., on a regular triangular lattice, where the areas of the hexagons depend on the density f. Locally, where f is approximately constant, these hexagons form a regular honeycomb. By the regular triangular lattice we mean the set \({\mathbb {Z}}(1,0)\oplus {\mathbb {Z}}(1/2,\sqrt{3}/2)\) up to dilation and isometry. See Remark 1.3 below for more on this geometric interpretation.
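To make the definition of the regular triangular lattice concrete, the following sketch generates a finite patch of \({\mathbb {Z}}(1,0)\oplus {\mathbb {Z}}(1/2,\sqrt{3}/2)\) and checks two elementary facts: every point has six nearest neighbours at distance 1, and the area per lattice point (hence the area of each hexagonal Voronoi cell) is \(\sqrt{3}/2\). The helper name and the patch size are illustrative choices, not taken from the paper.

```python
import math

# Basis vectors of the regular triangular lattice named in the text.
A1 = (1.0, 0.0)
A2 = (0.5, math.sqrt(3.0) / 2.0)


def lattice_points(radius: int) -> list[tuple[float, float]]:
    """Points i*A1 + j*A2 with |i|, |j| <= radius (hypothetical helper)."""
    return [
        (i * A1[0] + j * A2[0], i * A1[1] + j * A2[1])
        for i in range(-radius, radius + 1)
        for j in range(-radius, radius + 1)
    ]


points = lattice_points(3)

# Distances from the origin to every other lattice point, sorted.
dists = sorted(d for d in (math.hypot(x, y) for x, y in points) if d > 1e-12)
nearest = [d for d in dists if abs(d - dists[0]) < 1e-9]

# Area of the fundamental cell = area of each hexagonal Voronoi cell.
cell_area = abs(A1[0] * A2[1] - A1[1] * A2[0])

# Dilation factor that turns the Voronoi cells into hexagons of unit area.
unit_area_scale = 1.0 / math.sqrt(cell_area)

print(len(nearest), dists[0], cell_area, unit_area_scale)
```

Multiplying every lattice point by `unit_area_scale` gives the dilation of the lattice whose honeycomb consists of regular hexagons of unit area.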

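Formula (6) was extended to more general entropies by Bouchitté, Jimenez and Mahadevan in [14]. Their class of entropies includes the case

$$\begin{aligned} H_\alpha (\mu ) := \sum _{i=1}^{N_\mu } m_i^\alpha \quad \text {for } \mu = \sum _{i=1}^{N_\mu } m_i \delta _{z_i}, \end{aligned}$$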

where \(\alpha \in (-\infty ,1)\). This reduces to the entropy (5) when \(\alpha =0\). Bouchitté, Jimenez and Mahadevan [14, Proposition 3.11(i)] proved that

for some positive constant \(C_{p,d}(\alpha )\). Moreover, they conjectured [14, Sect. 3.6 (ii)] that

If this conjecture is true, then by (7)

In particular, the conjecture for the case \(p=2\), \(d=2\) is

for all \(\alpha \in (-\infty ,1)\). It is known that \(C_{2,2}(\alpha )=c_6\) for all \(\alpha \in (-\infty ,0]\) (see [14, Sect. 3.6]) and so it remains to establish the conjecture for the case \(\alpha \in (0,1)\). The conjecture would mean that in two dimensions a discrete measure supported on a regular triangular lattice gives asymptotically the best constrained approximation of the Lebesgue measure (again, see Remark 1.3 below for this geometric interpretation).

1.1 Main results

In this paper we prove conjecture (10) for all \(\alpha \in (-\infty ,{\overline{\alpha }}]\), where \({\overline{\alpha }}=0.583\); see Theorem 1.2. The conjecture for \(\alpha \in ({\overline{\alpha }},1)\) remains open, although we suggest a direction for proving it in Theorem 6.1, where we prove it under an additional assumption. In Theorem 1.1 we prove an analogous asymptotic quantization formula for the penalized optimal location problem (4) for all \(\alpha \in (-\infty ,{\overline{\alpha }}]\). This generalises the crystallization result of [18], where Theorem 1.1 was proved for the special case \(\alpha =0.5\), \(f=1\). Moreover, for the case \(f= 1\), we prove that minimal configurations are ‘asymptotically approximately’ a triangular lattice; see Theorem 1.4. To be more precise, we prove that, as \(\delta \rightarrow 0\), rescaled minimal configurations for the penalized quantization problem are quantitatively close to a triangular lattice. This result will be proved for the case \(\alpha ={\overline{\alpha }}\). The proof can be easily modified for any \(\alpha \le {\overline{\alpha }}\).

Define the constrained optimal quantization error by

and the penalized optimal quantization error by

Since the Wasserstein distance on the compact set \(\Omega \) metrizes the tight convergence of probability measures, and the map \(\mu \mapsto \sum _i m_i^\alpha \) is lower semi-continuous with respect to this convergence [65, Lemma 7.11], both infima above are attained. Our main results are the following.

Theorem 1.1

(Asymptotic crystallization for the penalized optimal location problem). Let \(\alpha \in (-\infty ,{\overline{\alpha }}]\), where \({\overline{\alpha }}:=0.583\). Let \(\Omega \subset {\mathbb {R}}^2\) be the closure of an open and bounded set. Assume that \(f:\Omega \rightarrow [0,\infty )\) is lower semi-continuous with \(f\ge c>0\) and \(\int _\Omega f \, \mathrm {d}x = 1\). Then

Taking the special case \(f=1\), \(|\Omega |=1\), \(\alpha =0.5\) in Theorem 1.1 gives [18, Theorem 2]. We illustrate Theorem 1.1 in Table 1 and Figs. 1 and 2.

Theorem 1.2

(Asymptotic crystallization for the constrained optimal location problem). Let \(\alpha \in (-\infty ,{\overline{\alpha }}]\), where \({\overline{\alpha }}:=0.583\). Let \(\Omega \subset {\mathbb {R}}^2\) be the closure of an open and bounded set. Assume that \(f:\Omega \rightarrow [0,\infty )\) is lower semi-continuous with \(f\ge c>0\) and \(\int _\Omega f \, \mathrm {d}x = 1\). Then

By comparing equation (9) to equation (14) with \(p=d=2\), we read off that \(C_{2,2}(\alpha )=c_6\) for all \(\alpha \in (-\infty ,{\overline{\alpha }}]\), which proves conjecture (10) for this range of \(\alpha \). We believe that Theorem 1.1 and Theorem 1.2 hold for all \(\alpha \in (-\infty ,1)\), not just for \(\alpha \in (-\infty ,{\overline{\alpha }}]\), but we are only able to prove them for the whole range of \(\alpha \) if we make an ansatz about minimal configurations; see Theorem 6.1.

Remark 1.3

(Energy scaling and the geometric interpretation of Theorems 1.1 & 1.2). To motivate the rescaling on the left-hand side of (13) we reason as follows. Let

$$\begin{aligned} \Omega = \bigcup _{i=1}^N H_i \end{aligned}$$

be the union of N disjoint regular hexagons of equal area \(|\Omega |/N\). Let \(z_i\) be the centroid of \(H_i\) and let \(f = \tfrac{1}{|\Omega |}{\mathbbm {1}}_\Omega \) be the uniform probability distribution on \(\Omega \). Here \({\mathbbm {1}}_\Omega \) denotes the characteristic function of the set \(\Omega \). By definition of \(c_6\) (equation (10)) and a change of variables,

for all i. Therefore the penalized quantization error of approximating \(f \mathrm {d}x\) by \(\mu =\sum _{i=1}^N \tfrac{1}{N} \delta _{z_i}\) is

The right-hand side of (15) is minimized when

Substituting this value of N into (15) (assuming for a moment that it is an integer) gives

which motivates the rescaling used in (13). This heuristic computation suggests an upper bound for the left-hand side of (13), for the case where f is the uniform distribution. Theorem 1.1 says that this upper bound is in fact asymptotically optimal. In this sense we can say that the honeycomb structure gives asymptotically the best approximation of the uniform distribution.
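The heuristic computation above can be reproduced numerically. The sketch below assumes that the honeycomb energy has the form \(E(N) = c_6 |\Omega |/N + \delta N^{1-\alpha }\): each of the N hexagons has area \(|\Omega |/N\) and second moment \(c_6 (|\Omega |/N)^2\), the uniform density is \(1/|\Omega |\), and the entropy of N equal masses is \(N \cdot N^{-\alpha }\). This form is reconstructed from the description in the remark, and the function names are hypothetical. Setting \(E'(N)=0\) gives \(N = \big (c_6 |\Omega | / (\delta (1-\alpha ))\big )^{1/(2-\alpha )}\), at which point \(E(N) = \frac{2-\alpha }{1-\alpha } \, c_6 |\Omega | / N\).

```python
import math

C6 = 5.0 * math.sqrt(3.0) / 54.0  # second moment of the unit-area regular hexagon


def honeycomb_energy(n: float, delta: float, alpha: float, area: float = 1.0) -> float:
    """Assumed heuristic energy c6*|Omega|/N + delta*N^(1-alpha) of N equal hexagons."""
    return C6 * area / n + delta * n ** (1.0 - alpha)


def optimal_count(delta: float, alpha: float, area: float = 1.0) -> float:
    """Stationary point of honeycomb_energy in N, with N treated as a real number."""
    return (C6 * area / (delta * (1.0 - alpha))) ** (1.0 / (2.0 - alpha))


delta, alpha = 1e-4, 0.583
n_star = optimal_count(delta, alpha)
e_star = honeycomb_energy(n_star, delta, alpha)

# Value predicted by substituting the stationarity condition back into E.
predicted = (2.0 - alpha) / (1.0 - alpha) * C6 / n_star

print("optimal N ~", n_star)
print("energy at optimum:", e_star, " predicted:", predicted)
```

The factor \(\frac{2-\alpha }{1-\alpha }\) that appears here is the same one that multiplies \(c_6\) in the optimal constant for the penalized problem.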

The rescaling used in (14) can be derived in a similar way. Indeed, fix \(L>0\) and consider the constraint

If all the masses are the same, \(m_i = 1/N_\mu \) for all i, then the biggest number \(N_\mu \) for which this constraint is satisfied is

Assuming that \(N_\mu \) is an integer, take as above

Then

which motivates the rescaling used in (14). Combining this formal calculation with Theorem 1.2 again suggests the asymptotic optimality of the honeycomb.
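The same formal calculation for the constrained problem fits in a few lines. The sketch assumes equal masses \(m_i = 1/N\), so that the constraint \(\sum _i m_i^\alpha \le L\) reads \(N^{1-\alpha } \le L\), and it reuses the honeycomb error \(c_6 |\Omega | / N\) from the penalized heuristic. The function names and the sample values of L and \(\alpha \) are illustrative only.

```python
import math

C6 = 5.0 * math.sqrt(3.0) / 54.0  # second moment of the unit-area regular hexagon


def max_equal_mass_count(L: float, alpha: float) -> float:
    """Largest N with N * (1/N)^alpha <= L, i.e. N = L^(1/(1-alpha))."""
    return L ** (1.0 / (1.0 - alpha))


def honeycomb_error(n: float, area: float = 1.0) -> float:
    """Assumed squared Wasserstein error c6*|Omega|/N of N equal regular hexagons."""
    return C6 * area / n


L, alpha = 20.0, 0.5
n = max_equal_mass_count(L, alpha)
entropy = n * (1.0 / n) ** alpha          # equals L when the constraint is saturated
rescaled_error = L ** (1.0 / (1.0 - alpha)) * honeycomb_error(n)

print(n, entropy, rescaled_error, C6)
```

Multiplying the error by \(L^{1/(1-\alpha )}\) removes the dependence on L and leaves \(c_6 |\Omega |\), which is the scaling the remark refers to.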

Theorem 1.1 gives the asymptotic minimum value of the penalized quantization error but says nothing about the configuration of the particles; it says that the triangular lattice is asymptotically optimal, but it does not say that asymptotically optimal configurations are close to a triangular lattice. We prove this in the following theorem.

Theorem 1.4

(Asymptotically optimal configurations are close to a regular triangular lattice). Let \(\Omega \subset {\mathbb {R}}^2\) be a convex polygon with at most six sides, \(|\Omega |=1\), \(f={\mathbbm {1}}_\Omega \), and \(\alpha = {\overline{\alpha }}\). There exist constants \(\varepsilon _0, c, \beta _1, \beta _2 >0\) with the following property. Let \(\delta >0\) and \(\mu _\delta =\sum _{i=1}^{N_\delta } {\widetilde{m}}_i\delta _{{\widetilde{z}}_i}\in {\mathcal {P}}_{\mathrm {d}}(\Omega )\) be a solution of the penalized quantization problem defining \(\mathrm {m}_{\mathrm {p}}(\alpha ,\delta )\). Define the defect of \(\mu _\delta \) by

Note that \(\lim _{\delta \rightarrow 0} \mathrm {d}(\mu _\delta ) = 0\) by Theorem 1.1. Define

Define rescaled particle positions \(z_i = V_{\delta ,\alpha }^{1/2} {\widetilde{z}}_i\), \(i \in \{1,\ldots ,N_\delta \}\). Let \(\{ V_i \}_{i=1}^{N_\delta }\) be the Voronoi tessellation of \(\Omega \) generated by \(\{z_i\}_{i=1}^{N_\delta }\), i.e.,

(a) The optimal number of particles \(N_\delta \) is asymptotically equal to \(V_{\delta ,\alpha }\):

$$\begin{aligned} \lim _{\delta \rightarrow 0} \frac{V_{\delta ,\alpha }}{N_\delta }=1. \end{aligned}$$

(b) If \(\delta >0\) is sufficiently small, and if \(\varepsilon \in (0,\varepsilon _0)\) and \(\mu _\delta \) satisfy

$$\begin{aligned} \beta _1 \mathrm {d}(\mu _\delta ) + \beta _2 V_{\delta ,\alpha }^{-1/2} \le \varepsilon , \end{aligned} \tag{17}$$

then, with the possible exception of at most \(N_\delta c \varepsilon ^{1/3}\) indices \(i\in \{1,\dots ,N_\delta \}\), the following hold:

(i) \(V_i\) is a hexagon;

(ii) the distance between \(z_i\) and each vertex of \(V_i\) is between \((1 \pm \varepsilon ^{1/3}) \sqrt{\frac{V_{\delta ,\alpha }}{N_\delta }} \sqrt{\frac{2}{3 \sqrt{3}}}\);

(iii) the distance between \(z_i\) and each edge of \(V_i\) is between \((1 \pm \varepsilon ^{1/3}) \sqrt{\frac{V_{\delta ,\alpha }}{N_\delta }} \sqrt{\frac{1}{2 \sqrt{3}}}\).

Even though Theorem 1.4 is stated only for the case \(\alpha ={\overline{\alpha }}\), the same proof holds for any \(\alpha \le {\overline{\alpha }}\), up to proving the convexity inequality (25) for that specific value of \(\alpha \) (by using the same strategy we used for the case \(\alpha ={\overline{\alpha }}\)). A similar result can be proved for the constrained quantization problem.

Remark 1.5

(Geometric interpretation of Theorem 1.4). Note that the term \( \beta _2 V_{\delta ,\alpha }^{-1/2}\) in (17) converges to 0 as \(\delta \rightarrow 0\). Theorem 1.4 essentially states that if the defect \(\mathrm {d}(\mu _\delta )\) is small, then the support of \(\mu _\delta \) is close to a regular triangular lattice, and it quantifies how close. Note that the Voronoi tessellation generated by the regular triangular lattice is a regular hexagonal tessellation. The theorem states that the Voronoi tessellation of \( V_{\delta ,\alpha }^{1/2} \Omega \) generated by the rescaled particles \(z_i\) is close to a regular hexagonal tessellation in the sense that, except for at most \(N_\delta c \varepsilon ^{1/3}\) Voronoi cells, the Voronoi cells are hexagons, and it quantifies how far the hexagons are from being regular. For a regular hexagon of area \(\frac{V_{\delta ,\alpha }}{N_\delta }\), the distance between the centre of the hexagon and each vertex is \(\sqrt{\frac{V_{\delta ,\alpha }}{N_\delta }} \sqrt{\frac{2}{3 \sqrt{3}}}\), and the distance between the centre of the hexagon and each edge is \(\sqrt{\frac{V_{\delta ,\alpha }}{N_\delta }} \sqrt{\frac{1}{2 \sqrt{3}}}\). Since \(\lim _{\delta \rightarrow 0} V_{\delta ,\alpha } /N_\delta = 1\), ‘most’ of the rescaled Voronoi cells \(V_i\) are ‘close’ to a regular hexagon of area 1.
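The two reference distances for a regular hexagon can be checked directly. The sketch below builds a regular hexagon from the centre-to-vertex distance \(\sqrt{A}\sqrt{2/(3\sqrt{3})}\), confirms with the shoelace formula that its area is A, and confirms that the centre-to-edge distance \(\sqrt{A}\sqrt{1/(2\sqrt{3})}\) equals the circumradius times \(\cos (\pi /6)\). The helper `within_band` is a hypothetical illustration of the \((1 \pm \varepsilon ^{1/3})\) tolerance in the theorem; it is not a routine from the paper.

```python
import math


def hexagon_radii(area: float) -> tuple[float, float]:
    """Centre-to-vertex and centre-to-edge distances of a regular hexagon."""
    circumradius = math.sqrt(area) * math.sqrt(2.0 / (3.0 * math.sqrt(3.0)))
    inradius = math.sqrt(area) * math.sqrt(1.0 / (2.0 * math.sqrt(3.0)))
    return circumradius, inradius


def shoelace_area(vertices: list[tuple[float, float]]) -> float:
    """Area of a simple polygon from its vertices listed in order."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(vertices, vertices[1:] + vertices[:1]):
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0


def within_band(distance: float, reference: float, eps: float) -> bool:
    """True if (1 - eps^(1/3)) * reference <= distance <= (1 + eps^(1/3)) * reference."""
    tol = eps ** (1.0 / 3.0)
    return (1.0 - tol) * reference <= distance <= (1.0 + tol) * reference


area = 1.0
R, r = hexagon_radii(area)
hexagon = [(R * math.cos(k * math.pi / 3.0), R * math.sin(k * math.pi / 3.0)) for k in range(6)]

print("circumradius", R, "inradius", r, "area", shoelace_area(hexagon))
```

For instance, with \(\varepsilon = 10^{-3}\) the tolerance \(\varepsilon ^{1/3}\) is about 10%, so a vertex at distance 1.05 R from the centre passes `within_band` while one at 1.2 R does not.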

Remark 1.6

(Locality and weaker assumptions on f). Theorems 1.1 and 1.2 say that the quantization problems are essentially independent of f, in the sense that the optimal constants \(\frac{2-\alpha }{1-\alpha } c_6\) and \(c_6\) are independent of f and are determined by the corresponding quantization problems with \(f=1\); see Remarks 3.5 and 3.11. The locality of the quantization problems is independent of the crystallization and is easier to prove. The locality for the constrained problem was proved by [14] and the locality for the penalized problem follows easily from this, as we shall see in Sect. 3.2. Locality results for the classical quantization problem were proved among others by [20, 45, 53, 74]. We believe that the assumption of lower semi-continuity on f in Theorems 1.1 and 1.2 could be relaxed by using the approach in [60], where a locality result is proved for the related irrigation problem, which concerns the best approximation of an absolutely continuous probability measure by a one-dimensional Hausdorff measure supported on a curve.

Remark 1.7

(\(\alpha \ge 1\)). For \(\alpha \ge 1\), the constrained and penalized quantization problems \(\mathrm {m_c}(1,L)\) and \(\mathrm {m_p}(1,\delta )\) do not have a minimizer. The infimum is zero since both the Wasserstein distance and the entropy can be sent to zero by sending the number of particles to infinity. In [14] the authors considered the constraint

for \(\alpha > 1\). For \(f \in L^\infty (\Omega )\), \(\alpha \in (1,2) \cup (2,\infty )\) they proved that there exists a constant \(C_{2,2}(\alpha )\) such that

See [14, Proposition 3.11(iii), Remark 3.13(iii)]. For \(\alpha >2\), \(C_{2,2}(\alpha )=0\). For \(\alpha \in (1,2)\), \(C_{2,2}(\alpha )\) is not known, but it satisfies the bounds

where B is the ball of unit area centred at the origin [14, Lemma 3.10].

Remark 1.8

(Motivation for the choice of entropy \(H_\alpha \)). There are several reasons why we chose to study the entropy \(H_\alpha (\mu )=\sum _i m_i^\alpha \), both mathematical and from a modelling point of view.

(i) The functional \(\mu \mapsto W_2^2(f,\mu ) + \delta \sum _i h(m_i)\) is lower semi-continuous if \(h(0)=0\), \(h(t) \ge 0\), h is lower semi-continuous, subadditive and \(\lim _{t\rightarrow 0+}h(t)/t=+\infty \); see [65, Lemma 7.11]. This includes our entropy \(h(m)=m^\alpha \). There is evidence, however, that crystallization does not hold for all entropies in this class, or at least that optimal configurations consist of particles of different sizes; see [14, Sect. 3.4]. In this paper we have found a subclass for which crystallization holds. It is an open problem to find the largest class of such entropies.

(ii) Functionals of the form \(\mu \mapsto W_2^2(f,\mu ) + \delta \sum _i h(m_i)\) arise in models of economic planning; see [24]. For example, consider the problem of the optimal location of warehouses in a county \(\Omega \) with population density f. The measure \(\mu =\sum _i m_i \delta _{z_i}\) represents the locations \(z_i\) and sizes \(m_i\) of the warehouses. The Wasserstein term in the functional above penalizes the average distance between the population and the warehouses, and the entropy term penalizes the building or running costs of the warehouses. The subadditive nature of the entropy \(H_\alpha \) corresponds to an economy of scale, where it is cheaper to build one warehouse of size m than two of size m/2.

(iii) The special case \(\alpha =0.5\) arises in a simplified model of a two-phase fluid, namely a diblock copolymer melt, in two dimensions; see [17]. Here the entropy \(\sqrt{m}\) corresponds to the interfacial length between a droplet of one phase of area m and the surrounding, dominant phase.

(iv) Finally, from a mathematical perspective, we were inspired to study the entropy \(H_\alpha \) by the conjecture of Bouchitté et al. [14, Sect. 3.6 (ii)].
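The economy of scale mentioned in item (ii) is a one-line computation: for \(h(m)=m^\alpha \) with \(\alpha <1\), two warehouses of size m/2 cost \(2 (m/2)^\alpha = 2^{1-\alpha } m^\alpha \), which exceeds the cost \(m^\alpha \) of one warehouse of size m. The sketch below tabulates this ratio for a few values of \(\alpha \); the function name and the sample values are illustrative.

```python
def entropy_term(m: float, alpha: float) -> float:
    """Cost h(m) = m^alpha of a single particle (warehouse) of size m."""
    return m ** alpha


m = 1.0
rows = []
for alpha in (0.0, 0.25, 0.5, 0.583, 0.9):
    one_big = entropy_term(m, alpha)
    two_small = 2.0 * entropy_term(m / 2.0, alpha)
    # The ratio equals 2^(1 - alpha), which is > 1 exactly when alpha < 1.
    rows.append((alpha, one_big, two_small, two_small / one_big))

for row in rows:
    print(row)
```

As \(\alpha \) approaches 1 the ratio tends to 1, so the penalty for splitting a particle disappears; this is consistent with the non-existence of minimizers for \(\alpha \ge 1\) noted in Remark 1.7.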

1.2 Sketch of the proofs of Theorems 1.1 & 1.2

We briefly present the main ideas of the paper. We will see that Theorem 1.2 is an easy consequence of Theorem 1.1 (see Sect. 5), and so here we just focus on the ideas behind the proof of Theorem 1.1. The strategy for proving Theorem 1.4 is discussed in Sect. 7.

First we identify the scaling of the penalized quantization error \(\mathrm {m_p}(\alpha ,\delta )\) as \(\delta \rightarrow 0\) using the \(\Gamma \)-convergence result of [14]. This gives

where

and \(Q=[-1/2,1/2]^2\); see Corollary 3.10 and Remark 3.11. The main challenge in this paper is to show that the optimal constant is \(C_{\mathrm {p}}(\alpha )= c_6 (2-\alpha )/(1-\alpha )\) for all \(\alpha \in (-\infty ,{\overline{\alpha }}]\). Thanks to equations (18) and (19), to prove Theorem 1.1 it is sufficient to prove it for the case where \(\Omega = Q\) and \(f={\mathbbm {1}}_\Omega \).

Next we prove a monotonicity result (Lemma 3.12), analogous to the one proved in [14] for the constrained quantization problem. It asserts that if Theorem 1.1 holds for some \({\widetilde{\alpha }}\in (-\infty ,1)\), then it holds for all \(\alpha \in (-\infty ,{\widetilde{\alpha }}]\). Therefore we only need to prove Theorem 1.1 for the single value \(\alpha ={\overline{\alpha }}=0.583\), and for the rest of the paper we can take \(\Omega =Q\), \(f={\mathbbm {1}}_\Omega \), \(\alpha ={\overline{\alpha }}\) without loss of generality.

From the definition of the Wasserstein distance, equation (2), if \(\mu =\sum _{i=1}^{N_\mu } m_i \delta _{z_i}\), then

where T is the optimal transport map. Since Q is a polygonal set, it is well known (see Lemma 2.1) that the sets \(T^{-1}(\{z_i\})\) are convex polygons, called Laguerre cells.

A classical result by Fejes Tóth (see Lemma 2.3) states that the second moment of a polygon about any point in the plane is greater than or equal to the second moment of a regular polygon (with the same area and same number of edges) about its centre of mass:

where P(m, n) is a polygon with area m and n edges, R(m, n) is a regular polygon centred at the origin with area m and n edges, and \(z \in {\mathbb {R}}^2\). Combining (20) and (21) gives

for all \(\mu \in {\mathcal {P}}_{\mathrm {d}}(\Omega )\), where \(n_i\) denotes the number of edges of the polygon \(T^{-1}(\{z_i\})\) and \(m_i\) denotes its area. Our proofs are limited to the p-Wasserstein metric with \(p=2\) since, for \(p \ne 2\), the transport regions \(T^{-1}(\{ z_i \})\) are not convex polygons. Moreover, our proofs are limited to two dimensions since there is no equivalent statement of Fejes Tóth’s Moment Lemma in higher dimensions (due to the lack of regular polytopes in higher dimensions).

Next we recall the proof of Theorem 1.2 due to Gruber [43] for the case \(\alpha =0\), which we will adapt to prove Theorem 1.1 (and consequently Theorem 1.2) for general \(\alpha \in (-\infty ,{\overline{\alpha }}]\). It can be shown that the function

is convex. (Note that \(n \mapsto c_n\) can be extended from a function on \({\mathbb {N}} \cap [3,\infty )\) to a function on \([3,\infty )\); see Lemma 2.3.) If \(\mu \) is a minimizer of \(W_2^2({\mathbbm {1}}_\Omega ,\cdot )\) subject to the constraint \(N_\mu \le L\), then clearly \(N_\mu =L\) (assuming that L is an integer) since we get the best constrained approximation of \({\mathbbm {1}}_\Omega \) by taking as many Dirac masses as possible. By convexity,

where \(\kappa :=g_n(1,6)=\partial _n c_n |_{n=6}<0\). Combining equations (22) and (23) gives

since \(\sum _i m_i = |\Omega | = 1\). Euler’s formula for planar graphs implies that the average number of edges in any partition of the unit square \(\Omega \) by convex polygons is less than or equal to 6: \(\frac{1}{L} \sum _{i} n_i \le 6\); see Lemma 2.6. Therefore, by equation (24) and since \(\kappa < 0\),

This is the lower bound in Theorem 1.2 for the case \(\alpha =0\). A matching upper bound can be obtained in the limit \(L \rightarrow \infty \) by taking \(\mu =\sum _{i=1}^L \tfrac{1}{L} \delta _{z_i}\) where \(z_i\) lie on a regular triangular lattice.
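The two ingredients of Gruber's argument, convexity and the sign of \(\kappa \), can be checked numerically. The sketch below assumes that the convex function in question is \(g(m,n) = c_n m^2\) (this matches the definition \(\kappa = g_n(1,6) = \partial _n c_n|_{n=6}\) given above) and uses the closed form \(c_n = \frac{1}{2n}\big (\cot (\pi /n) + \tfrac{1}{3}\tan (\pi /n)\big )\) extended to real \(n \ge 3\). It then tests the tangent-plane inequality at \((m,n)=(1,6)\) on a grid. This is the generic consequence of convexity, not necessarily the exact form of inequality (23), and a grid check is of course not a proof.

```python
import math


def c(n: float) -> float:
    """Second moment of a regular n-gon of unit area, extended to real n >= 3."""
    t = math.tan(math.pi / n)
    return (1.0 / t + t / 3.0) / (2.0 * n)


def g(m: float, n: float) -> float:
    """Assumed form of the convex function: second moment of a regular n-gon of area m."""
    return c(n) * m * m


# kappa = d/dn c_n at n = 6, approximated by a central finite difference.
h = 1e-5
kappa = (c(6.0 + h) - c(6.0 - h)) / (2.0 * h)


def tangent_plane(m: float, n: float) -> float:
    """Tangent plane of g at (1, 6): g + g_m * (m - 1) + g_n * (n - 6)."""
    return c(6.0) + 2.0 * c(6.0) * (m - 1.0) + kappa * (n - 6.0)


masses = [0.05 * i for i in range(1, 61)]      # m in (0, 3]
edges = [3.0 + 0.25 * j for j in range(0, 69)]  # n in [3, 20]
worst_slack = min(g(m, n) - tangent_plane(m, n) for m in masses for n in edges)

print("kappa =", kappa)
print("smallest value of g - tangent plane on the grid:", worst_slack)
```

Because \(\kappa <0\) and the average number of edges is at most 6, the term \(\kappa \sum _i (n_i - 6)\) is non-negative and can be dropped from the lower bound, which is how the edge counts disappear from the final estimate.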

In [17] Gruber’s strategy was generalized to prove Theorem 1.1 for the case \(\alpha =0.5\) and \(f=1\). Thanks to the results of [14] and our results in Sect. 3.2, it follows that Theorem 1.1 holds for all \(\alpha \in (-\infty ,0.5]\) and all lower semi-continuous f satisfying (1). In this paper we extend these ideas further to prove Theorem 1.1 for the case \(\alpha =0.583\), and hence all \(\alpha \in (-\infty ,0.583]\). First of all, we rescale the square Q as follows (see Remark 1.3):

The rescaling factor is chosen in such a way that a discrete measure supported at the centres of regular hexagons of unit area is asymptotically optimal. Up to a multiplicative factor, the rescaled energy is

where \({\mathbbm {1}}_{Q_{\delta ,\alpha }}\) denotes the characteristic function of the square \(Q_{\delta ,\alpha }\). Here \(\mu \) is a Borel measure on \(Q_{\delta ,\alpha }\) of the form \(\mu =\sum _{i=1}^{N_\mu } m_i \delta _{z_i}\) with \(\sum _{i=1}^{N_\mu } m_i = V_{\delta ,\alpha }\). By (22) we have

where

Unfortunately, for \(\alpha \in (0,1)\), \(g_\alpha \) is not convex. Our first main technical result is to show that for \(\alpha ={\overline{\alpha }}\) there exists \(m_0>0\) such that the following ‘convexity inequality’ holds for all \(m\ge m_0\), \(n \in {\mathbb {N}} \cap [3,\infty )\):

See Lemma 4.11, Corollaries 4.12 and 4.16. Our second main technical result (Lemma 4.15) is to show that if \(\mu =\sum _{i=1}^{N_\mu } m_i \delta _{z_i}\) minimizes \({\mathcal {F}}_{\delta ,\alpha }\), then

Therefore minimizers satisfy the convexity inequality (25), and the proof of Theorem 1.1 now follows using Gruber’s strategy.

To be precise, we are only able to prove the inequality (26) for particles \(z_i\) that are not too close to the boundary (Lemma 4.15(i)). Nevertheless, we are able to prove a worse lower bound on the mass \(m_i\) of particles near the boundary (Lemma 4.15(ii)), which is still sufficient to show that the number of particles near the boundary is asymptotically negligible. This fixes what appears to be a gap in the proof in [18], where it was tacitly assumed that all of the particles were sufficiently far from the boundary of the rescaled domain (at least distance 3.2143; see the proof of [18, Lemma 7]).

The idea of the proof of (26) is to compare the energy of a minimizer \(\mu \) with that of a competitor \({\widetilde{\mu }}\) that is obtained by gluing the smallest particle of \(\mu \) with one of its neighbours. The proofs of (25) and (26) require some delicate positivity estimates. As in the proof of [18], we also use computer evaluation at several points in the proof to check the sign of some explicit numerical constants (that are much larger than machine precision).

1.3 Literature on crystallization, optimal partitions and quantization

Our work belongs to the very active research programme of establishing crystallization results for nonlocal interacting particle systems. This problem is known as the crystallization conjecture [12]. Despite experimental evidence that many particle systems, such as atoms in metals, have periodic ground states, until recently there were few rigorous mathematical results. Results in one dimension include [11, 39] and results in two dimensions include [3, 7,8,9,10, 18, 30, 35, 50, 63, 64, 69]. Let us recall that a central open problem in mathematical physics is to establish the optimality of the Abrikosov (triangular) lattice for the Ginzburg-Landau energy [68]. In three dimensions there are few rigorous results. Even establishing the optimal configuration of just five charges on a sphere was only achieved in 2013 via a computer-assisted proof [66]. The proof of the Kepler conjecture about optimal sphere packing was also computer-assisted [48, 49], while the optimal sphere covering remains to this day unknown. In even higher dimensions (in particular 8 and 24), there start to be more rigorous results again, e.g., [26, 27, 70]. For a thorough survey of recent crystallization results for nonlocal particle systems see [12] and [67].

Our result also falls into the field of optimal partitions (see Remark 4.10). The optimality of hexagonal tilings has been proved for several problems of this type (Honeycomb conjectures); see, for example, [21,22,23, 47]. Kelvin’s problem of finding the optimal foam in 3D (the ‘three-dimensional Honeycomb conjecture’) remains to this day unsolved; for over 100 years it was believed that truncated octahedra gave the optimal tessellation, until the remarkable discovery of a better tessellation by Weaire and Phelan [72].

Finally, our result also belongs to the field of optimal quantization or optimal sampling [41, 45, 46, Sect. 33], which concerns the best approximation of a probability measure by a discrete probability measure. The most commonly used notion of best approximation is the Wasserstein distance. This problem has been studied by a wide range of communities including applied analysis [14, 24, 51], computational geometry [33], discrete geometry [28, 45], and probability [41]. Applications include optimal location of resources [13], signal and image compression [34, 42], numerical integration [62, Sect. 2.3], mesh generation [32, 58], finance [62], materials science [19, Sect. 3.2], and particle methods for PDEs (sampling the initial distribution) [15, Example 7.1].

It is well known that if \(\mu =\sum _{i=1}^N m_i \delta _{z_i}\) is a minimizer of \(W_2(f \mathrm {d}x,\cdot )\), then the particles \(z_i\) generate a centroidal Voronoi tessellation (CVT) [33, 55], which means the particles \(z_i\) lie at the centroids of their Voronoi cells. Numerical methods for computing CVTs include Lloyd’s algorithm [33] and quasi-Newton methods [55]. More generally, minimizers of the penalized energy \(\mu \mapsto \delta \sum _i m_i^\alpha + W_2^2(f \mathrm {d}x,\mu )\) generate centroidal Laguerre tessellations (see Remark 4.6). Numerical methods for solving the constrained and penalized quantization problems include [16] (which was used to produce Figs. 1 and 2) and [73].
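As an illustration of how a centroidal Voronoi tessellation can be approximated, here is a minimal sketch of the classical Lloyd iteration on the unit square with uniform density. It is not the generalized Lloyd algorithm of [16], which works with Laguerre cells and the penalized energy. The sketch makes several simplifying assumptions that are not in the paper: the domain is replaced by a fixed grid of sample points, Voronoi cells are computed by brute-force nearest-generator assignment, and the number of generators is fixed in advance. All names are hypothetical.

```python
import random


def lloyd(generators, samples, iterations):
    """Plain Lloyd iteration on a fixed sample of the domain.

    Each step assigns every sample point to its nearest generator (a discrete
    Voronoi cell) and then moves each generator to the centroid of its cell.
    Returns the final generators and the mean squared distance recorded
    before each update.
    """
    energies = []
    for _ in range(iterations):
        cells = [[] for _ in generators]
        energy = 0.0
        for (x, y) in samples:
            d2, k = min(
                ((x - gx) ** 2 + (y - gy) ** 2, k)
                for k, (gx, gy) in enumerate(generators)
            )
            cells[k].append((x, y))
            energy += d2
        energies.append(energy / len(samples))
        # Move each generator to the centroid of its cell; keep it if the cell is empty.
        generators = [
            (sum(p[0] for p in cell) / len(cell), sum(p[1] for p in cell) / len(cell))
            if cell else old
            for cell, old in zip(cells, generators)
        ]
    return generators, energies


random.seed(0)
side = 40
samples = [((i + 0.5) / side, (j + 0.5) / side) for i in range(side) for j in range(side)]
start = [(random.random(), random.random()) for _ in range(8)]
final, energies = lloyd(start, samples, 30)

print("energy before first step:", energies[0])
print("energy before last step: ", energies[-1])
```

The recorded energy is a discrete approximation of \(W_2^2({\mathbbm {1}}_\Omega ,\mu )\) with masses equal to the cell areas, and it cannot increase from one step to the next. Like the runs described in the caption of Fig. 1, the iteration only finds a local minimizer that depends on the random initial condition.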

There is a large literature on optimal CVTs of N points (global minima of \(\mu \mapsto W_2({\mathbbm {1}}_\Omega ,\mu )\) subject to \(\# \mathrm {supp}(\mu )=N\)). According to Gersho’s conjecture (see [40]), minimizers correspond to regular tessellations consisting of the repetition of a single polytope whose shape depends only on the spatial dimension. In two dimensions the polytope is a hexagon [13, 36, 37, 43, 59, 61] and moreover the result holds for any p-Wasserstein metric, \(p \in [1,\infty )\). Gersho’s conjecture is open in three dimensions, although it is believed that the optimal CVT is a tessellation by truncated octahedra, which is generated by the body-centred cubic (BCC) lattice. Some numerical evidence for this is given in [31], and in [6] it was proved that the BCC lattice is optimal among lattices (but we do not know whether the optimal configuration is in fact a lattice). Geometric properties of optimal CVTs in 3D were recently proved in [25], who also suggested a strategy for a computer-assisted proof of Gersho’s conjecture.

1.4 Organization of the paper

In Sect. 2 we recall some basic notions from optimal transport theory and convex geometry. In Sect. 3.1 we recall from [14] the result (9) for the case \(d=p=2\), namely the scaling of the minimum value of the energy for the constrained problem (11). In Sect. 3.2 we derive the scaling of the minimum value of the energy for the penalized problem (12). These results give the optimal scaling of the minimum values of the constrained and penalized energies, but they do not give the optimal constants. In Sect. 4 we identify the optimal constant for the penalized problem (which proves Theorem 1.1) and in Sect. 5 we identify the optimal constant for the constrained problem (which proves Theorem 1.2). In Sect. 6 we prove the asymptotic crystallization result for all \(\alpha \in (-\infty ,1)\) under an additional assumption. Finally, Sect. 7 is devoted to the proof of Theorem 1.4.

2 Preliminaries

2.1 Main assumptions

We assume that \(\Omega \subset {\mathbb {R}}^2\) is the closure of an open and bounded set, and \(f\in L^1(\Omega )\) is a lower semi-continuous function satisfying \(f\ge c>0\) and

2.2 Notation

Define \({\mathbb {R}}^+:=(0,\infty )\). For a Lebesgue-measurable set \(A\subset {\mathbb {R}}^2\), we denote by |A| its area and by \({\mathbbm {1}}_A\) its characteristic function. We let \({\mathcal {M}}(X)\) denote the set of non-negative finite Borel measures on \(X\subset {\mathbb {R}}^d\) and \({\mathcal {P}}(X)\subset {\mathcal {M}}(X)\) denote the set of probability measures on X. Moreover, we let \({\mathcal {M}}_{\mathrm {d}}(X)\subset {\mathcal {M}}(X)\) be the following set of discrete measures:

Recall that \({\mathcal {P}}_{\mathrm {d}}(X)\) denotes the set of discrete probability measures, \({\mathcal {P}}_{\mathrm {d}}(X)={\mathcal {M}}_{\mathrm {d}}(X)\cap {\mathcal {P}}(X)\), and that, for \(\mu \in {\mathcal {P}}_{\mathrm {d}}(\Omega )\), \(N_\mu := \# \mathrm {supp}(\mu )\). For brevity, in an abuse of notation, we denote the preimage of a singleton set \(\{ z\} \subset X\) under a map \(T:X \rightarrow X\) by \(T^{-1}(z)\) instead of \(T^{-1}(\{z\})\).

2.3 Facts from optimal transport theory and convex geometry

We start by recalling the characterization of solutions of the semi-discrete transport problem (2) for the case \(p=2\). The following result goes back to [4] and is now well-known in the optimal transport community; see for example [54, 56, 57].

Lemma 2.1

(Characterization of the optimal transport map). Let \(U\subset {\mathbb {R}}^2\) be a convex polygon, \(\mu = \sum _{i=1}^{N_\mu } m_i \delta _{z_i} \in {\mathcal {P}}_{\mathrm {d}}(U)\), \(g \in L^1(U;{\mathbb {R}}^+)\), \(\int _U g \, \mathrm {d}x =1\), and \(W_2(g,\mu )\) be the Wasserstein metric

Then the infimum is attained and the minimizer \(T:U\rightarrow \{z_i\}_{i=1}^{N_\mu }\) is unique (up to a set of measure zero). Moreover, by possibly modifying T on a set of measure zero, there exists \((w_1,\dots , w_{N_\mu })\in {\mathbb {R}}^{N_\mu }\) such that

Remark 2.2

(Laguerre cells). The previous lemma implies that the partition \(\{\overline{T^{-1}(z_i)} \}_{i=1}^{N_\mu }\) is the Laguerre tessellation or power diagram generated by the weighted points \(\{ (z_i, w_i) \}_{i=1}^{N_\mu }\); see [5, 56]. The sets \(\overline{T^{-1}(z_i)}\) are convex polygons, known as Laguerre cells or power cells.
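As a small illustration of a power diagram, the sketch below assigns sample points to Laguerre cells. The sign convention is an assumption of the sketch (a point \(x\) is assigned to the index \(i\) minimizing \(|x-z_i|^2-w_i\)) and may differ from the convention used in Lemma 2.1; the name `laguerre_labels` and the test data are hypothetical.

```python
import numpy as np

def laguerre_labels(points, sites, weights):
    """Index of the Laguerre (power) cell containing each point.

    Assumed convention: x lies in cell i when |x - z_i|^2 - w_i is minimal.
    """
    d2 = ((points[:, None, :] - sites[None, :, :]) ** 2).sum(axis=2)
    return (d2 - weights[None, :]).argmin(axis=1)

rng = np.random.default_rng(1)
points = rng.random((5000, 2))                  # samples of the unit square
sites = np.array([[0.25, 0.5], [0.75, 0.5]])    # two weighted points

# Equal weights: the power diagram reduces to the Voronoi diagram,
# whose cell boundary here is the line x = 0.5.
voronoi = laguerre_labels(points, sites, np.zeros(2))

# Raising the weight of site 0 enlarges its cell: the boundary moves to x = 0.6.
shifted = laguerre_labels(points, sites, np.array([0.1, 0.0]))

# Fraction of sample points in each cell, a proxy for the masses m_i.
masses = np.bincount(shifted, minlength=2) / len(points)
```

The cell boundaries stay straight lines for any choice of weights, which is why the cells are convex polygons.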

We now recall a classical result by L. Fejes Tóth (see [36, p. 198]), which says that the minimal second moment of an n-gon is greater than or equal to the minimal second moment of a regular n-gon of the same area:

Lemma 2.3

(Fejes Tóth’s Moment Lemma). For \(n\in {\mathbb {N}}\), \(n\ge 3\), define

Then the infimum is attained by a regular n-gon. Consequently a direct calculation gives

Remark 2.4

Note that a change of variables gives

for all \(m>0\).
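For orientation, the following sketch evaluates the second moment of a regular n-gon of unit area about its centroid. The closed form in `c_n` comes from an elementary computation (splitting the polygon into 2n right triangles) and is an assumption of this sketch rather than a formula quoted from the references; the quadratic scaling in `second_moment` is the one suggested by the change of variables in Remark 2.4. Both function names are hypothetical.

```python
import math

def c_n(n):
    """Second moment about the centroid of a regular n-gon of unit area.

    Assumed closed form: (cot(pi/n) + tan(pi/n)/3) / (2n).
    """
    t = math.tan(math.pi / n)
    return (1.0 / t + t / 3.0) / (2.0 * n)

def second_moment(n, m):
    """Second moment of a regular n-gon of area m (quadratic scaling in m)."""
    return c_n(n) * m ** 2

# Values for n = 3, ..., 12 and the corresponding value for a unit-area disk.
values = [c_n(n) for n in range(3, 13)]
c_disk = 1.0 / (2.0 * math.pi)
```

For example, `c_n(4)` is the second moment of the unit square, 1/6, and `c_n(6)` equals \(5\sqrt{3}/54\). The values decrease towards the disk value as n grows.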

We extend the definition of \(c_n\) to all \(n\in [3,\infty )\) using equation (27). Its main properties are stated in the next result, whose proof is a direct computation (see [43]).

Lemma 2.5

(Properties of \(c_n\)). The function \(n \mapsto c_n\), \(n \in [3,\infty )\), is convex and decreasing. Moreover

Finally, we recall one more result from convex geometry, which follows from Euler’s polytope formula. It is proved for example in [18, Lemma 4] or [59, Lemma 3.3].

Lemma 2.6

(Partitions by convex polygons). Let \(U \subset {\mathbb {R}}^2\) be a convex polygon with at most 6 sides. In any partition of U by convex polygons, the average number of edges per polygon is less than or equal to 6.

3 Scaling of the Asymptotic Quantization Error

3.1 The constrained optimal location problem

We report here a result about the asymptotic quantization error from [14].

Definition 3.1

(Young measures). Given \(\varepsilon >0\) and a measure \(\mu \in {\mathcal {P}}_{\mathrm {d}}(\Omega )\) of the form

define the measures \(\rho (\mu )\in {\mathcal {P}}_{\mathrm {d}}({\mathbb {R}}^+)\) and \(\lambda _\varepsilon (\mu )\in {\mathcal {M}}_{\mathrm {d}}(\Omega \times {\mathbb {R}}^+)\) by

Observe that the first marginal of \(\lambda _\varepsilon (\mu )\) is \(\mu \) and that the second marginal of \(\lambda _1(\mu )\) is \(\rho (\mu )\).

In order to define the cell formula for the asymptotic quantization error, we need to introduce the following metric on the space of probability measures. Given \(\rho _1, \rho _2\in {\mathcal {P}}({\mathbb {R}}^+)\), we define

where \(\mathrm {Lip}({\mathbb {R}}^+)\) is the space of Lipschitz continuous functions on \({\mathbb {R}}^+\) and \( \mathrm {Lip}(\varphi )\) denotes the Lipschitz constant of \(\varphi \). It is well known that \(\mathrm {d}_{\mathrm {BL}}\) metrizes tight convergence (see [29, Theorem 11.3.3]).

The energy density of the asymptotic quantization error is introduced as follows.

Definition 3.2

(Cell formula). Given \(t>0\) and \(\rho \in {\mathcal {P}}({\mathbb {R}}^+)\), define

where \(Q_k:=[-k/2,k/2]^2\subset {\mathbb {R}}^2\) and

Define \(G:{\mathcal {P}}({\mathbb {R}}^+)\rightarrow {\mathbb {R}}\) by

Given \(\lambda \in {\mathcal {M}}(\Omega \times {\mathbb {R}}^+)\), let \(\pi _1 \# \lambda \) denote its first marginal, where \(\pi _1 : \Omega \times {\mathbb {R}}^+ \rightarrow \Omega \) is the projection \(\pi _1(x,t)=x\). One of the main results of Bouchitté, Jimenez and Mahadevan [14, Theorem 3.1] is the following:

Theorem 3.3

(Gamma-limit of the quantization error). For \(\varepsilon >0\), let

Then \({\mathcal {E}}_{\varepsilon }{\mathop {\rightarrow }\limits ^{\Gamma }}{\mathcal {E}}_{0}\) with respect to tight convergence on \({\mathcal {M}}(\Omega \times {\mathbb {R}}^+)\) where

where \(f \mathrm {d}x\otimes \lambda ^x\) denotes the disintegration of \(\lambda \) with respect to \(f \mathrm {d}x\); see [2, Theorem 2.28].

Bouchitté, Jimenez and Mahadevan used Theorem 3.3 to prove the following result about the scaling of the asymptotic quantization error for the constrained optimal location problem; see [14, Lemma 3.10, Proposition 3.11(i)].

Corollary 3.4

(Asymptotic quantization error for the constrained problem). For all \(\alpha \in (-\infty ,1)\),

where

Moreover, the function \(\alpha \mapsto C_{\mathrm {c}}(\alpha )\) is non-increasing.

Note that the result proved in [14] holds more generally: in any dimension, for any p-Wasserstein metric, and for more general entropies.

Remark 3.5

Let \(Q \subset {\mathbb {R}}^2\) be a unit square. Taking \(\Omega =Q\) and \(f={\mathbbm {1}}_Q\) in Corollary 3.4 yields

Remark 3.6

(Optimal constant). The constant \(C_{\mathrm {c}}(\alpha )\) in Corollary 3.4 was known explicitly for the case \(\alpha \in (-\infty ,0]\), where \(C_{\mathrm {c}}(\alpha ) = C_{\mathrm {c}}(0)=G(\delta _1)=c_6\) for all \(\alpha \le 0\). We briefly recall the proof: By Fejes Tóth’s Theorem on Sums of Moments [43],

where the final inequality follows from [14, Prop. 3.2(iv)]. Therefore \(C_{\mathrm {c}}(0) =c_6\). In addition, \( C_{\mathrm {c}}(\alpha ) \ge C_{\mathrm {c}}(0) = c_6\) for all \(\alpha \le 0\) by the monotonicity of the map \(\alpha \mapsto C_{\mathrm {c}}(\alpha )\) (see Corollary 3.4). On the other hand, \( C_{\mathrm {c}}(\alpha ) \le c_6\) by [14, Prop. 3.2(iv)]. We conclude that \( C_{\mathrm {c}}(\alpha ) = c_6\) for all \(\alpha \le 0\), as required. One of our contributions is to prove that \(C_{\mathrm {c}}(\alpha )=c_6\) for all \(\alpha \in (-\infty ,0.583]\); see Sect. 5.

3.2 The penalized optimal location problem

Here we prove analogous results to those presented in the previous section.

Definition 3.7

(Penalized energy). Let \(\delta >0\) and \(\alpha \in (-\infty ,1)\). Define \({\mathcal {E}}_{\delta ,\alpha } : {\mathcal {P}}_{\mathrm {d}}(\Omega ) \rightarrow [0,\infty )\) by

where \(\mu = \sum _{i=1}^{N_\mu } m_i \delta _{z_i}\).

Proposition 3.8

(Gamma-limit of the penalized energy). Let \(\delta >0\), \(\alpha \in (-\infty ,1)\) and

Define the rescaled penalized energy \({\widetilde{{\mathcal {E}}}}_{\delta ,\alpha }:{\mathcal {M}}(\Omega \times {\mathbb {R}}^+)\rightarrow [0,\infty ]\) by

Then \({\widetilde{{\mathcal {E}}}}_{\delta ,\alpha }{\mathop {\rightarrow }\limits ^{\Gamma }}{\mathcal {G}}_\alpha \) as \(\delta \rightarrow 0\) with respect to tight convergence on \({\mathcal {M}}(\Omega \times {\mathbb {R}}^+)\) where

To prove Proposition 3.8 we need the following technical result from [14, Lemma 6.3], which says that we can modify \(\mu \) to remove asymptotically small Dirac masses (as \(\delta \rightarrow 0\)) without increasing the energy \({\widetilde{{\mathcal {E}}}}_{\delta ,\alpha }(\mu )\) too much.

Lemma 3.9

Let \(\lambda =f \mathrm {d}x \otimes \lambda ^x \in {\mathcal {M}}(\Omega \times {\mathbb {R}}^+)\) satisfy \( {\mathcal {E}}_0(\lambda )<\infty \). Then, for every \(\gamma >1\), there exists a decreasing sequence \((t_k)_{k\in {\mathbb {N}}} \subset (0,\infty )\), \(t_k \rightarrow 0\), and a doubly-indexed sequence \(( \lambda _ \varepsilon ^k )_{\varepsilon >0, k\in {\mathbb {N}}}\subset {\mathcal {M}}(\Omega \times {\mathbb {R}}^+)\) satisfying the following:

(i) \(\lambda _\varepsilon ^k\) is supported in \(\Omega \times [t_k,\infty )\);

(ii) \(\limsup _{k \rightarrow \infty } \limsup _{\varepsilon \rightarrow 0} \Vert \lambda ^k_ \varepsilon - \lambda \Vert = 0\), where \(\Vert \cdot \Vert \) denotes the total variation norm on the space of signed measures on \(\Omega \times {\mathbb {R}}^+\);

(iii) for all \(\alpha \in (-\infty ,1)\), \(k \in {\mathbb {N}}\),

$$\begin{aligned} \limsup _{\varepsilon \rightarrow 0} \int _{\Omega \times (0,\infty )} t^{\alpha -1} \, \mathrm {d}\lambda _\varepsilon ^k(x,t) \le \int _{\Omega \times (0,\infty )} t^{\alpha -1} \, \mathrm {d}\lambda (x,t); \end{aligned}$$

(iv) there exists \(\mu _\varepsilon ^k \in {\mathcal {P}}_{\mathrm {d}}(\Omega )\) such that \(\lambda _\varepsilon ^k=\lambda _\varepsilon (\mu _\varepsilon ^k)\) and

$$\begin{aligned} \limsup _{k\rightarrow \infty } \, \limsup _{\varepsilon \rightarrow 0} \, \varepsilon ^{-2} \, W_2^2(f, \mu ^k_\varepsilon ) \le \gamma \int _\Omega G(\lambda ^x) \, \mathrm {d}x. \end{aligned}$$

Proof of Proposition 3.8

For \(\mu \in {\mathcal {P}}_{\mathrm {d}}(\Omega )\), \(\lambda =\lambda _{\varepsilon _{\delta ,\alpha }}(\mu )\), we can write

Since the function \(t\mapsto t^{\alpha -1}\) is unbounded, and thus the first term of \({\widetilde{{\mathcal {E}}}}_{\delta ,\alpha }(\lambda )\) is not continuous in \(\lambda \), the \(\Gamma \)-convergence result does not follow directly from Theorem 3.3 and the stability of \(\Gamma \)-limits under continuous perturbations. We therefore reason as follows.

Step 1: liminf inequality. Fix \((\delta _n)_{n\in {\mathbb {N}}}\) with \(\delta _n\rightarrow 0\) as \(n\rightarrow \infty \). Let \(\lambda \in {\mathcal {M}}(\Omega \times {\mathbb {R}}^+)\) and \((\lambda _n)_{n\in {\mathbb {N}}}\subset {\mathcal {M}}(\Omega \times {\mathbb {R}}^+)\) satisfy \(\lambda _n\rightarrow \lambda \) tightly. Without loss of generality we can assume that

Therefore there exists \((\mu _n)_{n\in {\mathbb {N}}}\subset {\mathcal {M}}_{\mathrm {d}}(\Omega )\) such that \(\lambda _n=\lambda _{\varepsilon _{\delta _n,\alpha }}(\mu _n)\). Observe that \(\pi _1 \# \lambda _n = \mu _n\). By (31), and since \(W_2\) metrizes weak convergence of measures [65, Theorem 5.9], we have \(\mu _n \rightarrow f \mathrm {d}x\) as \(n \rightarrow \infty \). Therefore \(\pi _1 \# \lambda = \lim _{n \rightarrow \infty } \pi _1 \# \lambda _n = f \mathrm {d}x\). By the Disintegration Theorem [2, Theorem 2.28] there exists \(\lambda ^x \in {\mathcal {M}}({\mathbb {R}}^+)\) satisfying \(\lambda =f \mathrm {d}x\otimes \lambda ^x\).

For \(M>0\) define the continuous bounded function \(g_M:(0,\infty ) \rightarrow {\mathbb {R}}\) by

Then, by using the liminf inequality of Theorem 3.3, we get

Since the function \(g_M\) is non-negative and pointwise non-decreasing in M, we obtain the liminf inequality by passing to the limit \(M \rightarrow \infty \) using the Monotone Convergence Theorem.

Step 2: limsup inequality. Let \(\lambda =f \mathrm {d}x \otimes \lambda ^x \in {\mathcal {M}}(\Omega \times {\mathbb {R}}^+)\) satisfy \({\mathcal {G}}_\alpha (\lambda ) < \infty \), which implies that \({\mathcal {E}}_0(\lambda )<\infty \). Let \(\gamma >1\). By Lemma 3.9(iii),(iv), there exists a decreasing sequence \((t_k)_{k\in {\mathbb {N}}} \subset (0,\infty )\), a sequence \(\delta _n \rightarrow 0\), and a doubly-indexed sequence \(( \lambda _{\varepsilon _{\delta _n,\alpha }}^k )_{n, k\in {\mathbb {N}}}\subset {\mathcal {M}}(\Omega \times {\mathbb {R}}^+)\) such that

By a diagonalization argument and Lemma 3.9(ii), we can find a subsequence \(\delta _n\) (not relabelled) such that \(\lambda _{\varepsilon _{\delta _{n},\alpha }}^{k_n} \rightarrow \lambda \) tightly as \(n \rightarrow \infty \) and

Since \(\gamma >1\) is arbitrary, the limsup inequality follows. \(\square \)

Corollary 3.10

(Asymptotic quantization error for the penalized problem). For all \(\alpha \in (-\infty ,1)\),

where

Proof

Step 1. The functional \({\widetilde{{\mathcal {E}}}}_{\delta ,\alpha }\) has at least one minimizer (by [24, Theorem 2.1]), sequences \((\lambda _\delta )\) with bounded energy have tightly convergent subsequences (by [14, Theorem 3.1(i)]), and \({\widetilde{{\mathcal {E}}}}_{\delta ,\alpha }\) \(\Gamma \)-converges to \({\mathcal {G}}_\alpha \) (by Proposition 3.8). Therefore a standard result in the theory of \(\Gamma \)-convergence implies that the minimum value of \({\widetilde{{\mathcal {E}}}}_{\delta ,\alpha }\) converges to the minimum value of \({\mathcal {G}}_\alpha \):

We are thus left with proving that

where \(C_{\mathrm {p}}(\alpha )\) is defined in (33).

Step 2. For each \(x \in \Omega \), define \({\mathcal {G}}_\alpha ^x:{\mathcal {P}}({\mathbb {R}}^+) \rightarrow {\mathbb {R}}\) by

By definition, if \(\lambda =f \mathrm {d}x\otimes \lambda ^x\),

For each \(x \in \Omega \), \({\mathcal {G}}^x_\alpha \) is lower semi-continuous since G is lower semi-continuous [14, Prop. 3.2(i)] and since \(\rho \mapsto \int _0^\infty t^{\alpha -1} \, \mathrm {d}\rho (t)\) is lower semi-continuous [65, Lemma 1.6]. By [14, Prop. 3.2(iv)],

where \(\gamma _{2,2} = \int _{B_1(0)} |x|^2 \, \mathrm {d}x\). Therefore, for each \(x \in \Omega \), minimising sequences for \({\mathcal {G}}^x_\alpha \) are tight. Consequently \({\mathcal {G}}^x_\alpha \) has at least one minimizer.

We claim that there exists a Borel measurable function \(x \mapsto \rho ^x \in {\mathcal {P}}({\mathbb {R}}^+)\), \(x \in \Omega \), such that

This will follow from Aumann’s Selection Theorem (see [38, Theorem 6.10]) once we prove that the graph of the multifunction \(\Gamma : \Omega \rightarrow 2^{{\mathcal {P}}({\mathbb {R}}^+)} \setminus \{\emptyset \}\), defined by \(\Gamma (x):=\mathrm {argmin} \, {\mathcal {G}}^x_\alpha \), belongs to \({\mathcal {B}}(\Omega )\otimes {\mathcal {B}}({\mathcal {P}}({\mathbb {R}}^+))\), the product \(\sigma \)-algebra of the Borel sets of \(\Omega \) and the Borel sets of \({\mathcal {P}}({\mathbb {R}}^+)\). To prove this, we define the function \(\Psi :{\mathbb {R}}^+ \times {\mathcal {P}}({\mathbb {R}}^+) \rightarrow {\mathbb {R}}\) by

In the following, the target space \({\mathbb {R}}\) will always be equipped with the Borel \(\sigma \)-algebra. For each \(\rho \in {\mathcal {P}}({\mathbb {R}}^+)\), the function \(s \mapsto \Psi (s,\rho )\) is continuous. For each \(s \in {\mathbb {R}}^+\), the function \(\rho \mapsto \Psi (s,\rho )\) is lower semi-continuous and hence \({\mathcal {B}}({\mathcal {P}}({\mathbb {R}}^+))\)-measurable. Therefore \(\Psi \) is a Carathéodory function and hence \({\mathcal {B}}({\mathbb {R}}^+) \otimes {\mathcal {B}}({\mathcal {P}}({\mathbb {R}}^+))\)-measurable (see, e.g., [1, Lemma 4.51]). Define the composite function \(\Phi :\Omega \times {\mathcal {P}}({\mathbb {R}}^+) \rightarrow {\mathbb {R}}\) by

This is \({\mathcal {B}}(\Omega ) \otimes {\mathcal {B}}({\mathcal {P}}({\mathbb {R}}^+))\)-measurable since f and \(\Psi \) are Borel measurable.

We claim that the map \(x \mapsto \min _{\rho \in {\mathcal {P}}({\mathbb {R}}^+)}\Phi (x,\rho )\) is \({\mathcal {B}}(\Omega )\)-measurable. Then \({\overline{\Phi }}:\Omega \times {\mathcal {P}}({\mathbb {R}}^+) \rightarrow {\mathbb {R}}\) defined by \({\overline{\Phi }}(x,\nu ):=\min _{\rho \in {\mathcal {P}}({\mathbb {R}}^+)}\Phi (x,\rho )\) is \({\mathcal {B}}(\Omega )\otimes {\mathcal {B}}({\mathcal {P}}({\mathbb {R}}^+))\)-measurable (since \({\overline{\Phi }}\) is constant in its second argument). The required \({\mathcal {B}}(\Omega )\otimes {\mathcal {B}}({\mathcal {P}}({\mathbb {R}}^+))\)-measurability of the graph of the multifunction \(\Gamma \) then follows by noticing that

To show that \(x \mapsto \min _{\rho \in {\mathcal {P}}({\mathbb {R}}^+)}\Phi (x,\rho )\) is \({\mathcal {B}}(\Omega )\)-measurable, we write it as the composite function \(x \mapsto f(x) \mapsto \min _{\rho \in {\mathcal {P}}({\mathbb {R}}^+)}\Psi (f(x),\rho )\). This is \({\mathcal {B}}(\Omega )\)-measurable since \(x \mapsto f(x)\) is \({\mathcal {B}}(\Omega )\)-measurable and \(s \mapsto \min _{\rho \in {\mathcal {P}}({\mathbb {R}}^+)}\Psi (s,\rho )\) is the pointwise infimum of a family of continuous functions, hence upper semi-continuous and \({\mathcal {B}}({\mathbb {R}}^+)\)-measurable. This completes the proof that there exists a Borel measurable function \(x \mapsto \rho ^x \in {\mathcal {P}}({\mathbb {R}}^+)\) satisfying (36).

Step 3. Define \(\lambda :=f\mathrm {d}x \otimes \rho ^x \in {\mathcal {M}}(\Omega \times {\mathbb {R}}^+)\), where \(\rho ^x\) is the minimizer of \({\mathcal {G}}^x_\alpha \) constructed in Step 2 (note that \(\lambda \) is well-defined by [2, Definition 2.27] since \(x \mapsto \rho ^x\) is Borel measurable and hence Lebesgue measurable). By equations (35), (36), \(\lambda \) is a minimizer of \({\mathcal {G}}_\alpha \) and

We now rewrite

as follows. For \(a>0\), define the dilation \(L^a:{\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) by \(L^a(t):=at\). Let \(\rho \in {\mathcal {P}}({\mathbb {R}}^+)\) and consider the push-forward \(\rho _a:=L^a\#\rho \in {\mathcal {P}}({\mathbb {R}}^+)\). It was proved in [14, Prop. 3.2(ii)] that

Note that

Fix \(x\in \Omega \) and let \(a:=f(x)^{-\frac{1}{2-\alpha }}\). By (38) and (39) we can write

Therefore, by using (40) and the definition of \(C_{\mathrm {p}}(\alpha )\) (see (33)), we have that

for all \(x\in \Omega \). By combining (37) and (41) we prove (34) and conclude the proof. \(\square \)

Remark 3.11

Let \(Q \subset {\mathbb {R}}^2\) be a unit square. Taking \(\Omega =Q\) and \(f={\mathbbm {1}}_Q\) in Corollary 3.10 yields

By Corollary 3.10, in order to prove Theorem 1.1 it is sufficient to prove that

for all \(\alpha \in (-\infty ,{\overline{\alpha }}]\). The next result, which is analogous to the monotonicity of the map \(\alpha \mapsto C_{\mathrm {c}}(\alpha )\), means that in order to prove Theorem 1.1 for all \(\alpha \in (-\infty ,{\overline{\alpha }})\), it is sufficient to prove it for the single value \(\alpha ={\overline{\alpha }}\).

Lemma 3.12

(Monotonicity of the constant \(C_{\mathrm {p}}\)). Assume that for some \({\tilde{\alpha }}\in (-\infty ,1)\),

Then

for every \(\alpha <{\tilde{\alpha }}\).

Proof

Recall from Remark 3.6 that \(c_6 = G(\delta _1)\). By (33), for all \(\alpha \in (-\infty ,1)\),

Write \(C_{\mathrm {p}}(\alpha )=\min \{ F(\alpha ,\rho ) : \rho \in {\mathcal {P}}({\mathbb {R}}^+)\}\), where

For all \( \rho \in {\mathcal {P}}({\mathbb {R}}^+)\), \(\alpha \in (-\infty ,1)\), we have

Let \(\phi (t,\alpha )=(t^{\alpha -1}-1)/(1-\alpha )\) denote the integrand on the right-hand side. Then

Since \(\partial _\alpha \psi (t,\alpha )=(1-\alpha )(\ln t)^2 t^{\alpha -1}\ge 0\) and \(\psi (t,1)=0\), we obtain that \(\psi (t,\alpha )\le 0\) for all \(t\in (0,\infty )\), \(\alpha \in (-\infty ,1)\). Therefore \(\partial _\alpha \phi \le 0\) and \(\phi \) is non-increasing in \(\alpha \). Consequently the map \(\alpha \mapsto F(\alpha ,\rho ) - {F(\alpha ,\delta _1)}\) is non-increasing.
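The monotonicity of \(\phi \) in \(\alpha \) can be sanity-checked numerically. In the sketch below, `psi` is a reconstruction: it is taken to be the numerator of \(\partial _\alpha \phi = \psi /(1-\alpha )^2\), which is consistent with the stated derivative \(\partial _\alpha \psi \) and with \(\psi (t,1)=0\), but the defining formula is an assumption of the sketch. The grids `ts` and `alphas` are arbitrary choices.

```python
import math

def phi(t, alpha):
    """Integrand phi(t, alpha) = (t^(alpha-1) - 1) / (1 - alpha)."""
    return (t ** (alpha - 1.0) - 1.0) / (1.0 - alpha)

def psi(t, alpha):
    """Assumed numerator of d(phi)/d(alpha), so that
    d(phi)/d(alpha) = psi / (1 - alpha)^2."""
    p = t ** (alpha - 1.0)
    return (1.0 - alpha) * p * math.log(t) + p - 1.0

ts = [0.05, 0.3, 0.9, 1.0, 1.5, 4.0, 50.0]
alphas = [-3.0 + 0.1 * k for k in range(40)]  # grid in [-3, 0.9]

# Largest value of psi on the grid; the argument in the text predicts <= 0.
max_psi = max(psi(t, a) for t in ts for a in alphas)

# For each t, check that phi does not increase along the alpha grid.
phi_monotone = all(
    phi(t, alphas[k + 1]) <= phi(t, alphas[k]) + 1e-9
    for t in ts
    for k in range(len(alphas) - 1)
)
```

A grid check of this kind is only a plausibility test and does not replace the calculus argument.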

Let \({\tilde{\alpha }}\in (-\infty ,1)\) be such that

For all \(\rho \in {\mathcal {P}}({\mathbb {R}}^+)\) and all \(\alpha \in (-\infty ,{\tilde{\alpha }}]\),

Taking the infimum over \(\rho \) gives

4 The penalized optimal location problem: Proof of Theorem 1.1

This section is devoted to the proof of Theorem 1.1. In particular, we prove that

The upper bound is easy to prove:

Lemma 4.1

(Upper bound on \(C_{\mathrm {p}}(\alpha )\)). For all \(\alpha \in (-\infty ,1)\),

Proof

Recall from Remark 3.6 that \(G(\delta _1)=c_6\). Therefore

\(\square \)

Remark 4.2

(Direct proof of the upper bound). Lemma 4.1 can also be proved without using the result from [14] that \(G(\delta _1)=c_6\). Instead we can start from equation (42) and directly build a sequence of asymptotically optimal competitors \(\mu _\delta \) supported on a subset of a triangular lattice. This is done by covering the square Q with regular hexagons of a suitable size and making the heuristic calculation from Remark 1.3 rigorous; cf. [18, Lemma 8].

The matching lower bound

requires much more work. Owing to Corollary 3.10 and Remark 3.11 we can assume without loss of generality that

We will do this throughout the rest of the paper.

4.1 Rescaling of the energy and the energy of a partition

To prove (45) it is convenient to rescale the domain Q. As \(\delta \rightarrow 0\), the optimal masses \(m_i\) in (42) go to 0. Following [18], instead of keeping the domain Q fixed as \(\delta \rightarrow 0\), we blow up Q in such a way that the optimal masses \(m_i\) tend to 1. The following definition is motivated by the heuristic calculation given in Remark 1.3.

Definition 4.3

(Rescaled domain and energy). For \(\alpha \in (-\infty ,1)\) and \(\delta >0\), define

and define the rescaled square domain \(Q_{\delta ,\alpha }\) by

Moreover, define the set of admissible discrete measures \({\mathcal {A}}_{\delta ,\alpha }\) by

and define the rescaled energy \({\mathcal {F}}_{\delta ,\alpha }:{\mathcal {A}}_{\delta ,\alpha } \rightarrow {\mathbb {R}}\) by

Remark 4.4

(Restating \(C_{\mathrm {p}}(\alpha )\) in terms of \({\mathcal {F}}_{\delta ,\alpha }\)). Let \(\mu = \sum _i m_i \delta _{z_i}\in {\mathcal {P}}_{\mathrm {d}}(Q)\). For \(\alpha \in (-\infty ,1)\) and \(\delta >0\), define \({\widetilde{z}}_i:=V_{\delta ,\alpha }^{1/2} z_i\), \({\widetilde{m}}_i:=V_{\delta ,\alpha } m_i\), and \({\widetilde{\mu }}_{\delta ,\alpha }:=\sum _i {\widetilde{m}}_i \delta _{{\widetilde{z}}_i} \in {\mathcal {A}}_{\delta ,\alpha }\). Then

We now state two first-order necessary conditions for minimizers of \({\mathcal {F}}_{\delta ,\alpha }\). For a proof see, for instance, [17, Theorem 4.5].

Lemma 4.5

(Properties of minimizers). Let \(\mu = \sum _{i=1}^{N_\mu } m_i \delta _{z_i}\in {\mathcal {A}}_{\delta ,\alpha }\) be a minimizer of \({\mathcal {F}}_{\delta ,\alpha }\). Let T be the optimal transport map defining \(W_2({\mathbbm {1}}_{Q_{\delta ,\alpha }},\mu )\) and let \((w_1,\ldots ,w_{N_\mu })\) be the weights of the corresponding Laguerre tessellation (see Lemma 2.1).

(i) For all \(i \in \{1,\dots ,N_\mu \}\), we have

$$\begin{aligned} w_i=-\frac{\alpha }{1-\alpha } c_6 m_i^{\alpha -1}. \end{aligned}$$

(ii) The point \(z_i\) is the centroid of the Laguerre cell \(\overline{T^{-1}(z_i)}\), namely

$$\begin{aligned} z_i = \frac{1}{m_i}\int _{T^{-1}(z_i)} x\,\mathrm {d}x. \end{aligned}$$

In particular, \(z_i\in T^{-1}(z_i)\).

Remark 4.6

(Centroidal Laguerre tessellations). Lemma 4.5(ii) implies that minimizers of \({\mathcal {F}}_{\delta ,\alpha }\) generate centroidal Laguerre tessellations, which means that the particles \(z_i\) lie at the centroids of their Laguerre cells \(T^{-1}(z_i)\) [16, 73].

In the following it will also be convenient to reason from a geometrical point of view. Each \(\mu \in {\mathcal {A}}_{\delta ,\alpha }\) induces a partition of \(Q_{\delta ,\alpha }\) by the Laguerre cells \(\overline{T^{-1}(z_i)}\), where T is the optimal transport map defining \(W_2({\mathbbm {1}}_{Q_{\delta ,\alpha }},\mu )\). We define a wider class of partitions as follows:

Definition 4.7

(Admissible partitions). Let \({\mathcal {S}}_{\delta ,\alpha }\) denote the family of partitions of \(Q_{\delta ,\alpha }\) of the form \({\mathcal {C}}=(C_1,\dots , C_k)\) where \(k \in {\mathbb {N}}\), \(C_i \subset Q_{\delta ,\alpha }\) is measurable, and \(\sum _{i=1}^k {\mathbbm {1}}_{C_i} = 1\) a.e. in \(Q_{\delta ,\alpha }\).

The advantage of working with partitions instead of measures is that it allows us to localise the nonlocal energy \({\mathcal {F}}_{\delta ,\alpha }\).

Definition 4.8

(Optimal partitions). Define the partition energy \(F:{\mathcal {S}}_{\delta ,\alpha } \rightarrow {\mathbb {R}}\) by

where \(\xi _{C_i}:=\frac{1}{|C_i|} \int _{C_i} x\, \mathrm {d}x\) is the centroid of \(C_i\), \(i\in \{1,\dots ,k\}\). We say that \({\mathcal {C}}\in {\mathcal {S}}_{\delta ,\alpha }\) is an optimal partition if it minimizes F.
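For a polygonal cell, the area, the centroid \(\xi _{C}\) and the second moment about the centroid can be computed from the vertices alone. The sketch below uses the standard shoelace-type formulas; it computes only these three quantities and is not an implementation of the partition energy F. The function name `polygon_moments` and the test polygons are hypothetical.

```python
import math

def polygon_moments(vertices):
    """Area, centroid, and second moment about the centroid of a simple polygon.

    vertices: list of (x, y) pairs in counter-clockwise order.
    """
    area2 = cx = cy = j0 = 0.0
    n = len(vertices)
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        area2 += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
        j0 += cross * (x0 * x0 + x0 * x1 + x1 * x1 + y0 * y0 + y0 * y1 + y1 * y1)
    area = 0.5 * area2
    cx /= 6.0 * area
    cy /= 6.0 * area
    j0 /= 12.0  # integral of |x|^2 over the polygon, about the origin
    # Parallel-axis shift from the origin to the centroid.
    return area, (cx, cy), j0 - area * (cx * cx + cy * cy)

# Regular hexagon of unit area, translated away from the origin.
R = math.sqrt(2.0 / (3.0 * math.sqrt(3.0)))  # circumradius giving area 1
hexagon = [(2.0 + R * math.cos(k * math.pi / 3), 3.0 + R * math.sin(k * math.pi / 3))
           for k in range(6)]
hex_area, hex_centroid, hex_moment = polygon_moments(hexagon)
```

For the unit-area regular hexagon the computed moment agrees with \(5\sqrt{3}/54\), the value of a regular hexagon's second moment about its centre.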

To each \(\mu \in {\mathcal {A}}_{\delta ,\alpha }\) it is possible to associate an element of \({\mathcal {S}}_{\delta ,\alpha }\) as follows:

Definition 4.9

(Partition associated to a discrete measure). Let \(\mu \in {\mathcal {A}}_{\delta ,\alpha }\) be of the form \(\mu =\sum _{i=1}^{N_\mu } m_i \delta _{z_i}\). Define \({\mathcal {C}}^\mu = (C^\mu _1,\dots ,C^\mu _{N_\mu })\in {\mathcal {S}}_{\delta ,\alpha }\) by

for all \(i\in \{1,\dots ,N_\mu \}\), where T is the optimal transport map defining \(W_2({\mathbbm {1}}_{Q_{\delta ,\alpha }},\mu )\).

Remark 4.10

(Equivalence of the partition formulation). It was proved in [18, p. 125] that

Let \(\mu =\sum _{i=1}^{N_\mu } m_i \delta _{z_i}\) be a minimizer of \({\mathcal {F}}_{\delta ,\alpha }\). For \(i\in \{1,\dots ,N_\mu \}\), let \(n_i\) denote the number of edges of \(C_i^\mu \). Then we can bound the energy from below as follows:

by Lemma 2.3 and Remark 2.4. For \(\alpha \in (-\infty ,1)\), define \(g_\alpha :[0,\infty ) \times [3,\infty ) \rightarrow {\mathbb {R}}\) by

In this notation the lower bound above becomes

In the following section we study the function \(g_\alpha \).

4.2 The convexity inequality

We start by proving a technical result that plays the role of a convexity inequality for \(g_\alpha \). We want to show that, for large enough values of m,

Writing this out explicitly gives

where

For \(\alpha \in (0,1)\), define the function \(h_\alpha :[0,\infty )\times [3,\infty )\rightarrow {\mathbb {R}}\) to be the difference between \(g_\alpha \) and its tangent plane approximation at (1, 6):

Note that in this section we restrict our attention to \(\alpha >0\) without loss of generality since by the monotonicity result (Lemma 3.12) in the end we only need to consider \(\alpha = {\overline{\alpha }}=0.583\). The typical behaviour of the function \(m\mapsto h_\alpha (m,n)\) is depicted in Fig. 3. Our aim is to prove that \(h_\alpha (m,n)\) is non-negative for all integers \(n \ge 3\) for large enough values of m, as suggested by the figure.

Lemma 4.11

(Positivity of \(h_{{\overline{\alpha }}}\)). Let \(n\ge 3\) be an integer. If \(h_{{\overline{\alpha }}}(m_1,n)\ge 0\) for some \(m_1\ge 0\), then \(h_{{\overline{\alpha }}}(m,n)\ge 0\) for all \(m\ge m_1\).

Before proving this, we prove the following easy but important corollary, which allows us to reduce the proof of the convexity inequality \(h_{{\overline{\alpha }}}(\cdot ,n)\ge 0\) for all integers \(n \ge 3\) to the finite number of cases \(n \in \{3,4,5\}\):

Corollary 4.12

(Reduction to \(n \in \{3,4,5\}\)). Let \(n \ge 6\) be an integer. Then \(h_{{\overline{\alpha }}}(m,n)\ge 0\) for all \(m \ge 0\).

Proof

Observe that \(h_{{\overline{\alpha }}}(0,n)=-\kappa (n-6) \ge 0\) for all \(n \ge 6\). Therefore the result follows immediately from Lemma 4.11. \(\square \)

On the other hand, \(h_{{\overline{\alpha }}}(0,n)<0\) for \(n \in \{3,4,5\}\). This is why in the next section we will need to prove a lower bound on the masses \(m_i\) of minimizers of \({\mathcal {F}}_{\delta ,{\overline{\alpha }}}\) to ensure the validity of the convexity inequality (50).
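The sign pattern of \(h_{{\overline{\alpha }}}(0,n)\) can be checked numerically under explicit assumptions. The sketch below assumes \(g_\alpha (m,n)=\frac{c_6}{1-\alpha }m^\alpha +c_n m^2\), the closed form \(c_n=\frac{1}{2n}\big (\cot \frac{\pi }{n}+\frac{1}{3}\tan \frac{\pi }{n}\big )\), and \(\kappa =\partial _n c_n|_{n=6}\). These formulas are reconstructions chosen to be consistent with the identity \(h_{{\overline{\alpha }}}(0,n)=-\kappa (n-6)\) and with Lemma 4.5(i), not definitions quoted from the text, and all function names are hypothetical.

```python
import math

ALPHA_BAR = 0.583

def c_n(n):
    """Assumed closed form for the second moment of a unit-area regular n-gon."""
    t = math.tan(math.pi / n)
    return (1.0 / t + t / 3.0) / (2.0 * n)

def dc_n(n):
    """Derivative of c_n with respect to n (chain rule with u = pi / n)."""
    u = math.pi / n
    dc_du = ((1.0 / math.tan(u) + math.tan(u) / 3.0)
             + u * (-1.0 / math.sin(u) ** 2 + 1.0 / (3.0 * math.cos(u) ** 2))) / (2.0 * math.pi)
    return dc_du * (-math.pi / n ** 2)

C6 = c_n(6)
KAPPA = dc_n(6)  # assumed meaning of kappa: n-derivative of g_alpha at (1, 6)

def g(m, n, alpha):
    """Assumed form of g_alpha(m, n)."""
    return C6 / (1.0 - alpha) * m ** alpha + c_n(n) * m ** 2

def h(m, n, alpha=ALPHA_BAR):
    """g_alpha minus its tangent plane approximation at (m, n) = (1, 6)."""
    dg_dm = alpha * C6 / (1.0 - alpha) + 2.0 * C6  # m-derivative of g at (1, 6)
    return g(m, n, alpha) - g(1.0, 6, alpha) - dg_dm * (m - 1.0) - KAPPA * (n - 6)

h_at_zero = {n: h(0.0, n) for n in range(3, 13)}
```

Under these assumptions \(\kappa <0\), so `h_at_zero[n]` is negative for n in {3, 4, 5} and non-negative for n ≥ 6, matching the dichotomy described above.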

Proof of Lemma 4.11

Step 1. First we study the shape of the function \(m\mapsto h_\alpha (m,n)\). In particular, we show that it has exactly one local minimum point. Its derivative is

It is easy to see that \(\partial _m h_\alpha (m,n)\) is strictly convex in m (since \(\alpha > 0\)). Therefore \(m \mapsto \partial ^2_{mm} h_\alpha (m,n)\) is increasing and so \(m\mapsto \partial _m h_\alpha (m,n)\) has at most one critical point. On the other hand, \(\lim _{m \rightarrow 0}\partial _m h_\alpha (m,n)=+\infty \), \(\lim _{m \rightarrow \infty }\partial _m h_\alpha (m,n)=+\infty \). Therefore \(m\mapsto \partial _m h_\alpha (m,n)\) has exactly one critical point and \(m\mapsto h_\alpha (m,n)\) has at most two critical points.

Next we prove that \(m\mapsto h_\alpha (m,n)\) has exactly two critical points. It is sufficient to prove that

for all \(n \ge 3\) (since \(\lim _{m \rightarrow 0}\partial _m h_\alpha (m,n) >0\) and \(\lim _{m \rightarrow \infty }\partial _m h_\alpha (m,n) > 0\)). We have

It is straightforward to check that

with

Differentiating again gives

The concave quadratic polynomial q has roots \(\alpha =1\) and \(\alpha = 2/\ln 2 > 2\). Therefore, for all \(\alpha \in (0,1)\), \(q(\alpha )<0\), \(\psi '(\alpha )<0\), and

From equations (52)–(54) we conclude that \(\alpha \mapsto \partial _m h_\alpha (1/2,n)\) is increasing. Therefore

Since \(n \mapsto c_n\) is decreasing (see Lemma 2.5),

as required.

We have shown that \(m\mapsto h_\alpha (m,n)\) has exactly two critical points. The smaller critical point is a local maximum point and the larger critical point is a local minimum point (since \(m\mapsto \partial ^2_{mm} h_\alpha (m,n)\) is increasing). Let \({\widetilde{m}}(\alpha ,n)\) denote the local minimum point. To prove the lemma it is sufficient to prove that

for \(\alpha ={\overline{\alpha }}\) and for all \(n \in {\mathbb {N}} \cap [3,\infty )\).

Step 2. Next we prove (55) for the case \(n=6\). A direct computation shows that 1 is a local minimum point of \(m\mapsto h_\alpha (m,6)\) for all \(\alpha \in (0,1)\). Therefore \({\widetilde{m}}(\alpha ,6)=1\) and \(\varphi (\alpha ,6)= h_{\alpha }(1,6) =0\).

Step 3. Next we prove (55) for the case \(n \in \{3,4,5,7\}\). Let

Then numerically evaluating \(\partial _m h_{{\overline{\alpha }}}\) gives

Let \(n \in \{3,4,5,7\}\). By the Intermediate Value Theorem, the map \(m \mapsto \partial _m h_{{\overline{\alpha }}}(m,n)\) has a root between \(m_1(n)\) and \(m_2(n)\). Moreover, since \(\partial _m h_{{\overline{\alpha }}}(m_1(n),n) < 0\), we have bracketed the largest root \({\widetilde{m}}({\overline{\alpha }},n)\): \(m_1(n)<{\widetilde{m}}({\overline{\alpha }},n)<m_2(n)\). Therefore

which proves (55) for the case \(n \in \{3,4,5,7\}\).

Step 4. Finally, we prove (55) for the case \(n \in {\mathbb {N}} \cap [8,\infty )\). To do this we prove that \(\partial _n \varphi ({\overline{\alpha }},n)>0\) for \(n\ge 7\). Then the result follows from the case \(n=7\) proved in Step 3. By definition of \({\widetilde{m}}(\alpha ,n)\), for all \(\alpha \in (0,1)\), we have \(\partial _m h_\alpha ({\widetilde{m}}(\alpha ,n),n)=0\). Therefore

Since \(n\mapsto c_n\) is convex (Lemma 2.5), \(\partial _n c_n\) is increasing in n, and we get the lower bound

for all \(n \ge 7\). We will prove below that

Observe that

From (56), (57), (58) we obtain that

as required.

To prove (57) we reason as follows. Using (51) and the fact that \(n\mapsto c_n\) is decreasing (Lemma 2.5), we deduce that \(n \mapsto \partial _m h_\alpha (m,n)\) is decreasing for all m, and hence \(n\mapsto {\widetilde{m}}(\alpha ,n)\) is increasing. Therefore \({\widetilde{m}}(\alpha ,n)\le {\widetilde{m}}(\alpha ,\infty )\), where \({\widetilde{m}}(\alpha ,\infty )\) is defined to be the largest root of

where \(c_\infty \) was defined in Lemma 2.5. We want to show that \({\widetilde{m}}({\overline{\alpha }},\infty )\le 3/2\). We have

Therefore \({\widetilde{m}}({\overline{\alpha }},\infty ) \in (1,3/2)\) by the Intermediate Value Theorem. This proves (57) and completes the proof. \(\square \)

Remark 4.13

Despite the fact that we used the specific value of \({{\overline{\alpha }}}\) in several places in the proof of Lemma 4.11, we expect Lemma 4.11 to hold for all \(\alpha \in (0,1)\).

4.3 Lower bound on the area of optimal cells

Let \(\mu =\sum _{i=1}^{N_\mu }m_i\delta _{z_i} \in {\mathcal {A}}_{\delta ,{\overline{\alpha }}}\) be a minimizer of \({\mathcal {F}}_{\delta ,{\overline{\alpha }}}\). We will prove the convexity inequality \(h_{{\overline{\alpha }}}(m_i,n)\ge 0\) for all \(i \in \{1,\ldots ,N_\mu \}\), \(n \in {\mathbb {N}} \cap [3,\infty )\). The idea is to prove a lower bound \(m_i\ge {\overline{m}}\) such that \(h_{{\overline{\alpha }}}({\overline{m}},n)\ge 0\) for all \(n \in {\mathbb {N}} \cap [3,\infty )\). Then the convexity inequality follows from Lemma 4.11.

We prove the lower bound \(m_i\ge {\overline{m}}\) following the strategy of the proof of [18, Lemma 7], which was developed for the case \(\alpha =0.5\). The main differences are that we have to deal with the more difficult case of \(\alpha ={\overline{\alpha }}>0.5\) and that we optimise some of the estimates. Our proof can be used to give a lower bound \({\overline{m}}\) on the areas \(m_i\) for all \(\alpha \in (0,1)\), but this lower bound does not satisfy the convexity inequality \(h_{\alpha }({\overline{m}},n)\ge 0\) if \(\alpha > {\overline{\alpha }}\). Our lower bound on the area of the cells holds for cells with arbitrarily many sides, although by Corollary 4.12 we only need the lower bound for cells with 3, 4 or 5 sides. We saw no advantage in restricting the number of sides in the proof.

The following result gives the difference in energy of a partition and the one obtained by merging two of its cells. Recall that \({\mathcal {S}}_{\delta ,\alpha }\) and F were defined in Definitions 4.7 and 4.8.

Lemma 4.14

(Merging). Let \({\mathcal {C}}=(C_1,\dots ,C_k)\in {\mathcal {S}}_{\delta ,\alpha }\), \(k\ge 2\). For \(i\in \{1,\dots ,k\}\) let \(m_i=|C_i|\) and let \(z_i\in C_i\) be the centroid of \(C_i\). Define \({\mathcal {D}}\in {\mathcal {S}}_{\delta ,\alpha }\) by \({\mathcal {D}}:=(C_1\cup C_2, C_3,\dots , C_k)\). For all \(\alpha \in (-\infty ,1)\),

Proof

By definition

Let \({\overline{z}}\in C_1\cup C_2\) be the centroid of \(C_1\cup C_2\):

A direct computation gives

The result now follows immediately from the definition of F. \(\square \)
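The direct computation in the proof of Lemma 4.14 is presumably of parallel-axis type: the second moment of a union about its own centroid equals the sum of the two individual second moments plus \(\frac{m_1 m_2}{m_1+m_2}|z_1-z_2|^2\). The following minimal sketch checks this identity numerically on a discrete analogue, in which clouds of equally weighted points stand in for the cells \(C_1\) and \(C_2\). The function names `centroid`, `second_moment` and `merge_gap`, and the choice of point clouds, are illustrative assumptions and do not appear in the paper.

```python
import random

def centroid(points):
    """Centroid of a list of equally weighted 2D points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)

def second_moment(points, weight):
    """Weighted sum of squared distances from the points to their centroid."""
    cx, cy = centroid(points)
    return weight * sum((x - cx) ** 2 + (y - cy) ** 2 for x, y in points)

def merge_gap(cell1, cell2, weight):
    """Return both sides of the parallel-axis identity for two point clouds."""
    m1, m2 = weight * len(cell1), weight * len(cell2)
    z1, z2 = centroid(cell1), centroid(cell2)
    dist2 = (z1[0] - z2[0]) ** 2 + (z1[1] - z2[1]) ** 2
    lhs = second_moment(cell1 + cell2, weight)
    rhs = (second_moment(cell1, weight) + second_moment(cell2, weight)
           + m1 * m2 / (m1 + m2) * dist2)
    return lhs, rhs

# Hypothetical test data: two disjoint clouds standing in for C_1 and C_2.
random.seed(0)
cell1 = [(random.random(), random.random()) for _ in range(500)]
cell2 = [(2 + random.random(), random.random()) for _ in range(300)]
lhs, rhs = merge_gap(cell1, cell2, weight=1e-3)
print(abs(lhs - rhs))  # difference should be at the level of rounding error
```

The identity is exact for any pair of measures with finite second moments, so the discrete check only illustrates the mechanism. It does not replace the computation for the cells themselves.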

We now prove a lower bound on the area of optimal cells, as well as an upper bound on the diameter of the cells and the maximum distance between the centroids. The latter two estimates will be used later to deal with the fact that the lower bound on the area of cells close to the boundary of \(Q_{\delta ,{\overline{\alpha }}}\) is not good enough to ensure the validity of the convexity inequality (50).

Lemma 4.15

(Lower bound on the area of optimal cells). Let \(\mu =\sum _{i=1}^{N_\mu }m_i\delta _{z_i} \in {\mathcal {A}}_{\delta ,{\overline{\alpha }}}\) be a minimizer of \({\mathcal {F}}_{\delta ,{\overline{\alpha }}}\). If \(\delta >0\) is sufficiently small, then the following hold:

(i) If \(\mathrm {dist}(z_i,\partial Q_{\delta ,{\overline{\alpha }}}) \ge 4\), then

$$\begin{aligned} m_i > {\overline{m}} := 2.0620 \times 10^{-4}. \end{aligned}$$

(ii) If \(\mathrm {dist}(z_i,\partial Q_{\delta ,{\overline{\alpha }}}) < 4\), then

$$\begin{aligned} m_i > m_{\mathrm {b}} := 1.5212 \times 10^{-5}. \end{aligned}$$

(iii) Let \(B \subset Q_{\delta ,{\overline{\alpha }}}\) be a ball of radius R. If \(B \cap \mathrm {supp}(\mu ) = \emptyset \), then \(R<R_0 := 3.3644\).

(iv) Let T be the optimal transport map defining \(W_2({\mathbbm {1}}_{Q_{\delta ,{\overline{\alpha }}}},\mu )\). For all \(i \in \{1,\ldots ,N_\mu \}\),

$$\begin{aligned} \mathrm {diam}(T^{-1}(z_i)) \le D_0 := 2 \left( 8 R_0^2 + \frac{{\overline{\alpha }}}{1-{\overline{\alpha }}} c_6 m_{\mathrm {b}}^{{\overline{\alpha }}-1} \right) ^{\frac{1}{2}}. \end{aligned}$$
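To get a feeling for the size of the diameter bound \(D_0\) in part (iv), the formula can be evaluated numerically. The sketch below takes \(m_{\mathrm {b}}\) and \(R_0\) from the statement of the lemma. The values of \({\overline{\alpha }}\) and \(c_6\) are not given in this section, so the ones used here are assumptions: \({\overline{\alpha }}=0.583\), and \(c_6 = 5\sqrt{3}/54\), the second moment of a regular hexagon of unit area about its centroid. The function name `diameter_bound` is hypothetical.

```python
import math

def diameter_bound(alpha, c6, m_b, r0):
    """Evaluate D_0 = 2 * sqrt(8 R_0^2 + alpha/(1-alpha) * c_6 * m_b^(alpha-1))."""
    if not 0 < alpha < 1:
        raise ValueError("alpha must lie in (0, 1)")
    penalty = alpha / (1.0 - alpha) * c6 * m_b ** (alpha - 1.0)
    return 2.0 * math.sqrt(8.0 * r0 ** 2 + penalty)

# Values stated in Lemma 4.15.
M_B = 1.5212e-5
R_0 = 3.3644

# ASSUMPTIONS: these two values are not stated in this section.
ALPHA_BAR = 0.583                   # assumed value of the threshold exponent
C_6 = 5.0 * math.sqrt(3.0) / 54.0   # second moment of a unit-area regular hexagon

d0 = diameter_bound(ALPHA_BAR, C_6, M_B, R_0)
print(f"D_0 is approximately {d0:.2f}")
```

Under these assumptions both terms under the square root contribute noticeably, with the \(8R_0^2\) term the larger of the two.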

Fig. 4 The construction inside the ball \(B_R({\bar{z}})\) (see the proof of Lemma 4.15)

Proof

Step 1: Upper bound on the distance between Dirac masses. Let \({\bar{z}}\in \mathrm{supp}(\mu )\) satisfy \(\mathrm {dist}({\bar{z}},\partial Q_{\delta ,{\overline{\alpha }}}) \ge 4\). Take \(R \in (0,4)\) such that \(B_{R}({\bar{z}})\cap \mathrm{supp}(\mu )=\{{\bar{z}}\}\). We want to get an upper bound on R. Let \(S:B_{R}({\bar{z}}) \rightarrow \mathrm{supp}(\mu )\) be any Borel map. Then

It is now convenient to use the partition energy F. Let \({\mathcal {C}}^\mu \) be the partition associated to the minimizer \(\mu \) (see Definition 4.9). Consider the partition \(\widetilde{{\mathcal {C}}} \in {\mathcal {S}}_{\delta ,\alpha }\) obtained by modifying \({\mathcal {C}}^\mu \) in the ball \(B_R({\bar{z}})\) as follows. Write \({\mathcal {C}}^\mu =(C_1,\ldots ,C_{N_\mu })\) and define \({\widetilde{C}}_i = C_i \setminus B_R({\bar{z}})\) for \(i \in \{ 1 ,\ldots , N_\mu \}\). Let \(\{H_j\}\) be a tiling of the plane by regular hexagons of area A, where \(A>0\) will be defined below, and let \(\widetilde{H_j}=H_j \cap B_R({\bar{z}})\). Define \(\widetilde{{\mathcal {C}}}\) to be the partition consisting of the sets \({\widetilde{C}}_i\) for all \(i \in \{ 1 ,\ldots , N_\mu \}\) and \({\widetilde{H}}_j\) for all j such that \(\widetilde{H_j} \ne \emptyset \); see Fig. 4.

Let \(d_A:=2^{\frac{3}{2}}3^{-\frac{3}{4}}A^{\frac{1}{2}}\) be the diameter of a regular hexagon of area A. The number of hexagons \(N_A\) needed to cover a ball of radius R is bounded above by

If \(H \in \widetilde{{\mathcal {C}}}\) is a (whole) regular hexagon of area A with centroid \(\xi _H\), then

Since \(\mu \) is a minimizer of \({\mathcal {F}}_{\delta ,{\overline{\alpha }}}\), the associated partition \({\mathcal {C}}^\mu \) is a minimizer of F. Therefore

where \(S:B_R({\bar{z}}) \rightarrow \mathrm {supp}(\mu )\) is defined by \(S(x)=\xi _{C_i}\) if \(x \in C_i\), and where in the final inequality we used the property of the centroid that

Combining estimates (63) and (60) gives

Define

Then (64) implies that \(p(R,A)\le 0\). The quadratic polynomial \(R \mapsto p(R,A)\) has one positive root and one negative root. Let \({\widetilde{R}}(A)\) denote the positive root:

Since \(p(R,A)\le 0\), we have \(R \in [0,{\widetilde{R}}(A)]\) for all A, and so

where the final inequality was obtained by numerically evaluating \({\widetilde{R}}(0.52)\). The choice \(A=0.52\) was motivated by numerically minimising \({\widetilde{R}}(A)\).
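The formula \(d_A=2^{\frac{3}{2}}3^{-\frac{3}{4}}A^{\frac{1}{2}}\) used in Step 1 can be recovered from elementary geometry: a regular hexagon with side length s has area \(\frac{3\sqrt{3}}{2}s^2\) and diameter 2s. The short sketch below compares the closed form with this side-length computation at the value \(A=0.52\) chosen above. The function names are illustrative and not taken from the paper.

```python
import math

def hexagon_diameter(area):
    """Closed form d_A = 2^(3/2) * 3^(-3/4) * A^(1/2) from the text."""
    return 2 ** 1.5 * 3 ** (-0.75) * math.sqrt(area)

def hexagon_diameter_from_side(area):
    """Same quantity via the side length s: area = (3*sqrt(3)/2) * s^2, diameter = 2*s."""
    side = math.sqrt(2.0 * area / (3.0 * math.sqrt(3.0)))
    return 2.0 * side

area = 0.52  # the value of A chosen in Step 1
print(hexagon_diameter(area), hexagon_diameter_from_side(area))
```

The two values agree up to rounding, which confirms the constant in the definition of \(d_A\).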

Step 2: Proof of (i). Let \({\bar{z}}\in \mathrm {supp}(\mu )\) satisfy \(\mathrm {dist}({\bar{z}},\partial Q_{\delta ,{\overline{\alpha }}}) \ge 4\). Let \(R_0=3.3644\). By Step 1, there exists at least one point \(z\in B_{R_0}({\bar{z}}) \cap \mathrm {supp}(\mu )\). In particular,

Let \(m=\mu (\{{\bar{z}}\})\), \(M=\mu (\{z\})\). We can assume without loss of generality that \(m \le M\) (otherwise simply interchange the roles of \({\bar{z}}\) and z).

Let \({\mathcal {C}}^\mu \) be the partition associated to the minimizer \(\mu \). We can define a new partition \({\mathcal {D}}\) by replacing the cells \(\overline{T^{-1}({\bar{z}})}\) and \(\overline{T^{-1}(z)}\) with their union. Then Lemma 4.14 yields

Define \(\lambda :=\frac{m}{M} \in (0,1]\). By dividing the previous inequality by \(M^{{\overline{\alpha }}}\) and rearranging we obtain

For \(\alpha \in (0,1)\), let

We can restrict our attention to \(\alpha >0\) since eventually we will apply this result to \(\alpha ={\overline{\alpha }}>0\). We want to bound \(\Theta _{\alpha }\) from below for the case \(\alpha ={\overline{\alpha }}\). The idea is the following: We first prove in Step 2a that the function \(\lambda \mapsto \Theta _{\alpha }(\lambda )\) has one minimum point for all \(\alpha \in (0,1)\). In Step 2b we estimate this minimum point for the case \(\alpha ={\overline{\alpha }}\).

Step 2a. In this substep we prove that, for all \(\alpha \in (0,1)\), the function \(\lambda \mapsto \Theta '_\alpha (\lambda )\) vanishes at only one point \(\lambda \in [0,1)\). Fix \(\alpha \in (0,1)\). We have

The strategy we use to prove that there exists a unique \(\lambda \in (0,1)\) such that \(\Lambda (\lambda )=0\) is the following: Using the fact that

the desired result is proved once we show that \(\Lambda '\) vanishes at only one point \(\lambda \in (0,1)\). A direct computation gives

where

We claim that

and that

for all \(\lambda \in [0,1)\). This will show that \(\Phi \) vanishes at only one point \(\lambda \in (0,1)\) and, in turn, that the same holds for \(\Lambda '\).

We start by proving (68). Note that \(\Phi (0)=-\alpha + \alpha ^2 < 0\) since \(\alpha \in (0,1)\). Let

We want to prove that \(\psi (\alpha )>0\) for all \(\alpha \in (0,1)\). Since \(\psi (1)=0\), it is sufficient to prove that \(\psi '(\alpha )<0\) for all \(\alpha \in (0,1)\). Note that \(\psi '(\alpha ) = -2^{2-\alpha }\ln 2 - 1 +2\alpha \), \(\psi ''(\alpha )=2^{2-\alpha }(\ln 2)^2 + 2 >0\), and \(\psi '(1)=-2\ln 2 + 1 <0\). Since \(\psi '\) is increasing, \(\psi '(\alpha )<\psi '(1)<0\) for all \(\alpha \in (0,1)\). Therefore \(\psi (\alpha )>0\) for all \(\alpha \in (0,1)\), which completes the proof of (68).
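The sign claims for \(\psi \) can be sanity-checked numerically. The sketch below uses the formula \(\psi (\alpha )=2^{2-\alpha }-2-\alpha +\alpha ^2\), which is reconstructed here by integrating the stated derivative and imposing \(\psi (1)=0\). Treat this formula as an assumption of the sketch and not as a quotation of the definition. A grid check of this kind illustrates the argument but proves nothing.

```python
import math

def psi(a):
    # Reconstructed from the stated psi' and psi(1) = 0 (assumption of this sketch).
    return 2 ** (2 - a) - 2 - a + a * a

def psi_prime(a):
    # First derivative as stated in the text.
    return -(2 ** (2 - a)) * math.log(2) - 1 + 2 * a

def psi_second(a):
    # Second derivative as stated in the text.
    return 2 ** (2 - a) * math.log(2) ** 2 + 2

grid = [k / 1000 for k in range(1, 1000)]  # sample points in the open interval (0, 1)
checks = {
    "psi_positive": all(psi(a) > 0 for a in grid),
    "psi_prime_negative": all(psi_prime(a) < 0 for a in grid),
    "psi_second_positive": all(psi_second(a) > 0 for a in grid),
}
print(checks, psi(1.0), psi_prime(1.0))
```

All three checks hold on the grid, consistent with \(\psi \) decreasing to its zero at \(\alpha =1\).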

Finally, we prove (69). We have

Since \(\Phi ''(\lambda )<0\) for all \(\lambda \in [0,1)\), we have that

We have

Therefore \(\varphi ''(\alpha )=0\) if and only if \(\alpha \in \{\alpha _-,\alpha _+\}\), where

Since \(1<\alpha _-<\alpha _+\), \(\varphi \) is strictly convex in \((0,\alpha _-)\). Therefore, for all \(\alpha \in (0,1)\),

Therefore \(\Phi '(\lambda ) \ge \varphi (\alpha )>0\), which completes the proof of (69).

Step 2b. Let \({\bar{\lambda }} \in (0,1)\) denote the unique root of \(\Theta '_{{\overline{\alpha }}}\). We now estimate \(\Theta _{{\overline{\alpha }}}({\bar{\lambda }})=\inf _{\lambda \in (0,1]}\Theta _{{\overline{\alpha }}}(\lambda )\). Let \(\lambda _1 = 0.160764\) and \(\lambda _2 = 0.160767\). Numerically we see that

Therefore \({\bar{\lambda }}\in (\lambda _1,\lambda _2)\) by the Intermediate Value Theorem. Recall that

Therefore, for all \(\lambda \in (\lambda _1,\lambda _2)\),

Therefore \(|\Theta '_{{\overline{\alpha }}}(\lambda )|\le 6.3 \times 10^{-5}\) for all \(\lambda \in (\lambda _1,\lambda _2)\). It follows that

From (66), (67) and (70) we conclude that

which proves (i).

Step 3: Proof of (iii). This is very similar to Step 1. Let \(B=B_R(x_0) \subset Q_{\delta ,{\overline{\alpha }}}\) satisfy \(B \cap \mathrm {supp}(\mu ) = \emptyset \). Let \(S:B \rightarrow \mathrm{supp}(\mu )\) be any Borel map. We have

The second inequality is clearly suboptimal, but it is sufficient for our purposes. Repeating exactly the same argument used in Step 1 (with \(B_R({\bar{z}})\) replaced by B) gives \(R<3.3644\), as required.

Step 4: Proof of (ii). Let \(z_i \in \mathrm {supp}(\mu )\) satisfy \(\mathrm {dist}(z_i,\partial Q_{\delta ,{\overline{\alpha }}}) < 4\). Let U be the square \(U = \{ x \in Q_{\delta ,{\overline{\alpha }}} : \mathrm {dist}(x,\partial Q_{\delta ,{\overline{\alpha }}}) \ge 4 \}\). Take \(x \in \partial U\) satisfying \(|x-z_i| = \mathrm {dist}(z_i,\partial U)\). Let K be a closed square of side-length \(2R_0\) such that \(x \in \partial K\) and \(K \subset U\) (such a square exists if \(\delta \) is sufficiently small). By Step 3, there exists \(z_j \in \mathrm {supp}(\mu ) \cap K\), \(z_j \ne z_i\). Therefore