Abstract

We study (unrooted) random forests on a graph where the probability of a forest is multiplicatively weighted by a parameter \(\beta >0\) per edge. This is called the arboreal gas model, and the special case when \(\beta =1\) is the uniform forest model. The arboreal gas can equivalently be defined to be Bernoulli bond percolation with parameter \(p=\beta /(1+\beta )\) conditioned to be acyclic, or as the limit \(q\rightarrow 0\) with \(p=\beta q\) of the random cluster model. It is known that on the complete graph \(K_{N}\) with \(\beta =\alpha /N\) there is a phase transition similar to that of the Erdős–Rényi random graph: a giant tree percolates for \(\alpha > 1\) and all trees have bounded size for \(\alpha <1\). In contrast to this, by exploiting an exact relationship between the arboreal gas and a supersymmetric sigma model with hyperbolic target space, we show that the forest constraint is significant in two dimensions: trees do not percolate on \({\mathbb {Z}}^2\) for any finite \(\beta >0\). This result is a consequence of a Mermin–Wagner theorem associated to the hyperbolic symmetry of the sigma model. Our proof makes use of two main ingredients: techniques previously developed for hyperbolic sigma models related to linearly reinforced random walks and a version of the principle of dimensional reduction.


1 The Arboreal Gas and Uniform Forest Model

1.1 Definition and main results

Let \({{\mathbb {G}}} = (\Lambda ,E)\) be a finite (undirected) graph. A forest is a subgraph \(F=(\Lambda , E')\) that does not contain any cycles. We write \(\mathcal {F}\) for the set of all forests. For \(\beta >0\) the arboreal gas (or weighted uniform forest model) is the measure on forests F defined by

\[ {\mathbb {P}}_{\beta }[F] \equiv \frac{1}{Z_{\beta }}\, \beta ^{|F|}, \qquad Z_{\beta } \equiv \sum _{F\in \mathcal {F}} \beta ^{|F|}, \tag{1.1} \]

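where |F| denotes the number of edges in F. It is an elementary observation that the arboreal gas with parameter \(\beta \) is precisely Bernoulli bond percolation with parameter \(p_{\beta }=\beta /(1+\beta )\) conditioned to be acyclic:

\[ {\mathbb {P}}^{\mathrm {perc}}_{p_{\beta }}\left[ F \mid \text {acyclic}\right] = \frac{p_{\beta }^{|F|}(1-p_{\beta })^{|E|-|F|}}{\sum _{F'\in \mathcal {F}} p_{\beta }^{|F'|}(1-p_{\beta })^{|E|-|F'|}} = \frac{\beta ^{|F|}}{\sum _{F'\in \mathcal {F}} \beta ^{|F'|}} = {\mathbb {P}}_{\beta }[F]. \tag{1.2} \]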
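The identity between the arboreal gas and acyclic percolation can be checked by brute force on a small graph. The following is a minimal sketch, not part of the original argument: the helper names (`is_forest`, `forests`, `arboreal_gas`, `acyclic_percolation`) are illustrative, and the graph \(K_4\) and the value \(\beta = 3/2\) are arbitrary choices. Exact rational arithmetic is used so that the two measures can be compared with `==`.

```python
from fractions import Fraction
from itertools import combinations


def is_forest(n, edges):
    """Return True if `edges` contains no cycle (union-find on vertices 0..n-1)."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for i, j in edges:
        ri, rj = find(i), find(j)
        if ri == rj:
            return False  # this edge would close a cycle
        parent[ri] = rj
    return True


def forests(n, edges):
    """Yield every acyclic edge subset as a tuple of edges."""
    for k in range(len(edges) + 1):
        for subset in combinations(edges, k):
            if is_forest(n, subset):
                yield subset


def normalise(weights):
    total = sum(weights.values())
    return {key: w / total for key, w in weights.items()}


def arboreal_gas(n, edges, beta):
    """Probability proportional to beta^{|F|} on forests F."""
    return normalise({F: beta ** len(F) for F in forests(n, edges)})


def acyclic_percolation(n, edges, p):
    """Bernoulli(p) bond percolation conditioned on having no cycle."""
    m = len(edges)
    return normalise(
        {F: p ** len(F) * (1 - p) ** (m - len(F)) for F in forests(n, edges)}
    )


n = 4
edges = list(combinations(range(n), 2))  # complete graph K_4
beta = Fraction(3, 2)
gas = arboreal_gas(n, edges, beta)
perc = acyclic_percolation(n, edges, beta / (1 + beta))
print(len(gas), gas == perc)
```

The common factor \((1-p_{\beta })^{|E|}\) cancels between numerator and denominator, which is why the two dictionaries agree exactly.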

The arboreal gas model is also the limit, as \(q\rightarrow 0\) with \(p=\beta q\), of the q-state random cluster model, see [40]. The particular case \(\beta =1\) is the uniform forest model mentioned in, e.g., [25, 26, 31, 40]. We emphasize that the uniform forest model is not the weak limit of a uniformly chosen spanning tree; emphasis is needed since the latter model is called the ‘uniform spanning forest’ (USF) in the probability literature. We will shortly see that the arboreal gas has a richer phenomenology than the USF. In fact, in finite volume, the uniform spanning tree is the \(\beta \rightarrow \infty \) limit of the arboreal gas.

Given that the arboreal gas arises from bond percolation, it is natural to ask about the percolative properties of the arboreal gas. It is straightforward to rule out the occurrence of percolation for small values of \(\beta \) via the following proposition, see Appendix A.

Proposition 1.1

On any finite graph, the arboreal gas with parameter \(\beta \) is stochastically dominated by Bernoulli bond percolation with parameter \(p_{\beta }\).

In particular, on all subgraphs of \({\mathbb {Z}}^{d}\), all trees have uniformly bounded expected size if \(p_{\beta }<p_{c}(d)\), where \(p_{c}(d)\) is the critical parameter for Bernoulli bond percolation on \({\mathbb {Z}}^{d}\).

In the infinite-volume limit, the arboreal gas is a singular conditioning of bond percolation, and hence the existence of a percolation transition as \(\beta \) varies is non-obvious. However, on the complete graph it is known that there is a phase transition, see [8, 34, 36]. To illustrate some of our methods we will give a new proof of the existence of a transition.

Proposition 1.2

Let \({\mathbb {E}}_{N,\alpha }\) denote the expectation of the arboreal gas on the complete graph \(K_{N}\) with \(\beta = \alpha /N\), and let \(T_{0}\) be the tree containing a fixed vertex 0. Then

where \(c=3^{2/3}\Gamma (4/3)/\Gamma (2/3)\) and \(\Gamma \) denotes the Euler Gamma function.

Thus there is a transition for the arboreal gas exactly as for the Erdős–Rényi random graph with edge probability \(\alpha /N\). To compare the arboreal gas directly with the Erdős–Rényi graph, recall that Proposition 1.1 shows the arboreal gas is stochastically dominated by the Erdős–Rényi graph with edge probability \(p_{\beta } = \beta - \beta ^{2}/(1+\beta )\). The fact that the Erdős–Rényi graph asymptotically has all components trees in the subcritical regime \(\alpha <1\) makes the behaviour of the arboreal gas when \(\alpha <1\) unsurprising. On the other hand, the conditioning plays a role when \(\alpha >1\), as can be seen at the level of the expected tree size. For the supercritical Erdős–Rényi graph the expected size is \(4(\alpha -1)^{2}N\) as \(\alpha \downarrow 1\) — this follows from the fact that the largest component for the Erdős–Rényi graph with \(\alpha >1\) has size yN where y solves \(e^{-\alpha y}=1-y\), see, e.g., [3]. For further discussion, see Sect. 1.3.
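The Erdős–Rényi asymptotics quoted above can be reproduced numerically. The sketch below is an illustration under stated assumptions: the function name `giant_fraction` and the bisection bracket are choices made here. It solves \(e^{-\alpha y}=1-y\) for the positive root y and compares \(y^{2}\) with \(4(\alpha -1)^{2}\). The heuristic behind \(y^{2}N\) is that a fixed vertex lies in the giant component with probability about y, and that component has size about yN.

```python
import math


def giant_fraction(alpha, tol=1e-14):
    """Positive root y of exp(-alpha * y) = 1 - y, for alpha > 1, by bisection."""

    def f(y):
        # Equals 1 - y - exp(-alpha * y); expm1 keeps precision for small y.
        return -y - math.expm1(-alpha * y)

    lo, hi = 1e-9, 1.0  # f(lo) > 0 > f(hi) provided alpha - 1 is well above 1e-9
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


for alpha in (1.001, 1.01, 1.1):
    y = giant_fraction(alpha)
    # The ratio approaches 1 as alpha decreases to 1.
    print(alpha, y, y * y / (4 * (alpha - 1) ** 2))
```

The ratio in the last column tends to 1 as \(\alpha \downarrow 1\), consistent with the expansion \(y = 2(\alpha -1) + O((\alpha -1)^{2})\).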

On \({\mathbb {Z}}^2\), the singular conditioning that defines the arboreal gas has a profound effect. In the next theorem statement and henceforth, for finite subgraphs \(\Lambda \) of \({\mathbb {Z}}^{2}\) we write \({\mathbb {P}}_{\Lambda ,\beta }\) for the arboreal gas on \(\Lambda \).

Theorem 1.3

For all \(\beta >0\) there is a universal constant \(c_\beta >0\) such that the connection probabilities satisfy

for all \(\Lambda \subset {\mathbb {Z}}^2\), where ‘\(i\leftrightarrow j\)’ denotes the event that the vertices i and j are in the same tree.

This theorem, together with classical techniques from percolation theory, implies the following corollary for the infinite-volume limit, see Appendix A.

Corollary 1.4

Suppose \({\mathbb {P}}_{\beta }\) is a translation-invariant weak limit of \({\mathbb {P}}_{\Lambda _{n},\beta }\) for an increasing exhaustion of finite volumes \(\Lambda _{n}\uparrow {\mathbb {Z}}^{2}\). Then all trees are finite \({\mathbb {P}}_{\beta }\)-almost surely.

Thus on \({\mathbb {Z}}^{2}\) the behaviour of the arboreal gas is completely different from that of Bernoulli percolation. The absence of a phase transition can be non-rigorously predicted from the representation of the arboreal gas as the \(q\rightarrow 0\) limit (with \(p=\beta q\) fixed) of the random cluster model with \(q>0\) [19]. We briefly describe how this prediction can be made. The critical point of the random cluster model for \(q\geqslant 1\) on \({\mathbb {Z}}^{2}\) is known to be \(p_{c}(q)=\sqrt{q}/(1+\sqrt{q})\) [9]. Conjecturally, this formula holds for \(q>0\). Thus \(p_{c}(q)\sim \sqrt{q}\) as \(q\downarrow 0\), and by assuming continuity in q one obtains \(\beta _{c}=\infty \) for the arboreal gas. This heuristic applies also to the triangular and hexagonal lattices. Our proof is in fact quite robust, and applies to much more general recurrent two-dimensional graphs. We have focused on \({\mathbb {Z}}^{2}\) for the sake of concreteness.
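The heuristic for \(\beta _{c}=\infty \) amounts to a one-line computation: with \(p=\beta q\), the conjectured critical point translates into \(\beta _{c}(q) = p_{c}(q)/q = 1/(\sqrt{q}(1+\sqrt{q}))\), which diverges as \(q\downarrow 0\). The following sketch simply tabulates this quantity. It assumes, as the heuristic does, that the formula for \(p_{c}(q)\) extends to all \(q>0\); the function name `beta_c` is illustrative.

```python
import math


def beta_c(q):
    """Heuristic critical beta obtained from p_c(q) = sqrt(q) / (1 + sqrt(q)) and p = beta * q."""
    p_c = math.sqrt(q) / (1 + math.sqrt(q))
    return p_c / q


for q in (1.0, 1e-2, 1e-4, 1e-6):
    # beta_c(q) grows like q^{-1/2} as q decreases to 0.
    print(q, beta_c(q))
```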

This absence of percolation is not believed to persist in dimensions \(d\geqslant 3\): we expect that there is a percolative transition on \({\mathbb {Z}}^{d}\) with \(d\geqslant 3\). In the next section we will discuss the conjectural behaviour of the arboreal gas on \({\mathbb {Z}}^{d}\) for all \(d\geqslant 2\). Before this, we outline how we obtain the above results. Our starting point is an alternate formulation of the arboreal gas. Namely, in [13, 14, 16] it was noticed that the arboreal gas can be represented in terms of a model of fermions, and that this fermionic model can be extended to a sigma model with values in the superhemisphere. We also use this fermionic representation, but our results rely in an essential way on the new observation that this model is most naturally connected to a sigma model taking values in a hyperbolic superspace. Similar sigma models have recently received a great deal of attention due to their relationship with random band matrices and reinforced random walks [6, 21, 44, 45]. We will discuss the connection between our techniques and these papers after introducing the sigma models relevant to the present paper. A key step in our proof is the following integral formula for connection probabilities in the arboreal gas (see Corollary 2.14 for a version with general edge weights):

where \(\Delta _{\beta (t)}\) is the graph Laplacian with edge weights \(\beta e^{t_i+t_j}\), understood as acting on \(\Lambda \setminus 0\). This formula is a consequence of the hyperbolic sigma model representation of the arboreal gas.

Surprisingly, if the exponent 3/2 in (1.5) is replaced by 1/2, then the integrand on the right-hand side is the mixing measure of the vertex-reinforced jump process found by Sabot and Tarrès [45]. The Sabot–Tarrès formula (along with a closely related version for the edge-reinforced random walk) is known as the magic formula [32]. It seems even more magical to us that the same formula, with only a change of exponent, describes the arboreal gas. We will explain in Sect. 2 that there are in fact three ingredients to this magic: a ‘non-linear’ version of the matrix-tree theorem, supersymmetric localisation, and horospherical coordinates for (super-)hyperbolic space.

We remark that the whole family of sigma models taking values in hyperbolic superspaces has interesting behaviour, but for the present paper we restrict our attention to those related to the arboreal gas. A more general discussion of such models can be found in [17] by the second author.

1.2 Context and conjectured behaviour

Recall that ‘\(i\leftrightarrow j\)’ denotes the event that the vertices i and j are in the same tree. We also write \({\mathbb {P}}_{\beta }\left[ ij\right] \) for the probability an edge ij is in the forest.

The following conjecture asserts that the arboreal gas has a phase transition in dimensions \(d\geqslant 3\), just as in mean-field theory (Proposition 1.2). Numerical evidence for this transition can be found in [19].

Conjecture 1.5

For \(d\geqslant 3\) there exists \(\beta _c > 0\) such that

where \(T_0\) is the tree containing 0 and \(B_{n}\) is the ball of radius n centred at 0. Moreover, when \(\beta <\beta _c\) there is a universal constant \(c_\beta >0\) such that

When \(\beta > \beta _c\) there is a universal constant \(c_{\beta }'> 0\) such that

As indicated in the previous section, it is straightforward to prove the first equality of (1.6) when \(\beta \) is sufficiently small. The existence of a transition, i.e., a percolating phase for \(\beta \) large, is open. However, a promising approach to proving the existence of a percolation transition when \(d\geqslant 3\) and \(\beta \gg 1\) is to adapt the methods of [21]; we are currently pursuing this direction. Obviously, the existence of a sharp transition, i.e., a precise \(\beta _{c}\) separating the two behaviours in (1.6) is also open. The next conjecture distinguishes the supercritical behaviour of the arboreal gas from that of percolation for which the (centered) connection probabilities have exponential decay.

Conjecture 1.6

For \(d\geqslant 3\), when \(\beta >\beta _{c}\)

where \(c_{\beta }'\) is the optimal constant for which (1.8) holds.

Assuming the existence of a phase transition, one can also ask about the critical behaviour of the arboreal gas. One intriguing aspect of this question is that the upper critical dimension is not clear, even heuristically. There is some evidence that the critical dimension of the arboreal gas should be \(d=6\), as for percolation, and opposed to \(d=4\) for the Heisenberg model. For further details, and for other related conjectures, see [16, Section 12].

Theorem 1.3 shows that the behaviour of the arboreal gas in two dimensions is different from that of percolation. This difference would be considerably strengthened by the following conjecture, which first appeared in [13].

Conjecture 1.7

For \(\Lambda \subset {\mathbb {Z}}^{2}\), for any \(\beta >0\) there exists a universal constant \(c_\beta >0\) such that

As \(\beta \rightarrow \infty \), the constant \(c_{\beta }\) is exponentially small in \(\beta \):

In particular, \({\mathbb {E}}_{\beta }|T_0| \approx e^{c\beta } < \infty \) (with a different c) where \(T_0\) is the tree containing 0.

This conjecture is much stronger than the main result of the present paper, Theorem 1.3, which establishes only that all trees are finite almost surely, a significantly weaker property than having finite expectation.

Conjecture 1.7 is a version of the mass gap conjecture for ultraviolet asymptotically free field theories. The conjecture is based on the field theory representation discussed in Sect. 2, and supporting heuristics can be found in, e.g., [13]. Other models with the same conjectural feature include the two-dimensional Heisenberg model [41], the two-dimensional vertex-reinforced jump process [21] (and other \({\mathbb {H}}^{n|2m}\) models with \(2m-n\leqslant 0\), see [17]), the two-dimensional Anderson model [1], and most prominently four-dimensional Yang–Mills Theories [29, 41].

Let us briefly indicate why Conjecture 1.7 seems challenging. Note that in finite volume the (properly normalized) arboreal gas converges weakly to the uniform spanning tree as \(1/\beta \rightarrow 0\), see Appendix B. For the uniform spanning tree it is a triviality that \(c_{\beta }=0\), and this is consistent with the conjecture \(c_\beta \approx e^{-c\beta }\) as \(\beta \rightarrow \infty \). On the other hand \(c_\beta \approx e^{-c\beta }\) suggests a subtle effect, not approachable via perturbative methods such as using \(1/\beta >0\) as a small parameter for a low-temperature expansion as can be done for, e.g., the Ising model. Indeed, since \(t \mapsto e^{-c/t}\) has an essential singularity at \(t=0\), its behaviour as \(t=1/\beta \rightarrow 0\) cannot be detected at any finite order in \(t=1/\beta \). The same difficulty applies to the other models mentioned above for which analogous behaviour is conjectured.

The last conjecture we mention is the negative correlation conjecture stated in [26, 31, 40] and recently in [10, 27]. This conjecture is also expected to hold true for general (positive) edge weights, see Sect. 2.1.

Conjecture 1.8

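For any finite graph and any \(\beta >0\) negative correlation holds: for distinct edges ij and kl,

\[ {\mathbb {P}}_{\beta }[ij,kl] \leqslant {\mathbb {P}}_{\beta }[ij]\, {\mathbb {P}}_{\beta }[kl]. \tag{1.12} \]

More generally, for all distinct edges \(i_1j_1, \dots , i_nj_n\) and \(m<n\),

\[ {\mathbb {P}}_{\beta }[i_1j_1,\dots ,i_nj_n] \leqslant {\mathbb {P}}_{\beta }[i_1j_1,\dots ,i_mj_m]\, {\mathbb {P}}_{\beta }[i_{m+1}j_{m+1},\dots ,i_nj_n]. \tag{1.13} \]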

The weaker inequality \({\mathbb {P}}_\beta [ij,kl] \leqslant 2{\mathbb {P}}_\beta [ij] {\mathbb {P}}_\beta [kl]\) was recently proved in [10]. It is intriguing that the Lorentzian signature plays an important role in both [10] and the present work, but we are not aware of a direct relation. An important consequence of the full conjecture (with factor 1) is the existence of translation invariant arboreal gas measures on \({\mathbb {Z}}^d\); we prove this in Appendix A.
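On very small graphs the pairwise form of the conjecture (with factor 1) can be tested exhaustively. The sketch below does this for \(K_4\) with \(\beta =1\), using exact rationals. It is only a sanity check on one graph, not evidence beyond it, and the helper names (`is_forest`, `edge_probability`) are illustrative.

```python
from fractions import Fraction
from itertools import combinations


def is_forest(n, edges):
    """Return True if `edges` contains no cycle (union-find on vertices 0..n-1)."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for i, j in edges:
        ri, rj = find(i), find(j)
        if ri == rj:
            return False
        parent[ri] = rj
    return True


def edge_probability(n, edges, beta, required):
    """P_beta[every edge in `required` belongs to the forest], by enumeration."""
    Z = Fraction(0)
    numerator = Fraction(0)
    for k in range(len(edges) + 1):
        for subset in combinations(edges, k):
            if is_forest(n, subset):
                w = Fraction(beta) ** k
                Z += w
                if set(required) <= set(subset):
                    numerator += w
    return numerator / Z


n = 4
edges = list(combinations(range(n), 2))  # complete graph K_4
beta = 1
single = {e: edge_probability(n, edges, beta, [e]) for e in edges}
violations = [
    (e, f)
    for e, f in combinations(edges, 2)
    if edge_probability(n, edges, beta, [e, f]) > single[e] * single[f]
]
print(violations)  # an empty list means no pair of edges is positively correlated
```

The enumeration is exponential in the number of edges, so this approach is limited to graphs with a handful of edges.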

Proposition 1.9

Assume Conjecture 1.8 is true. Suppose \(\Lambda _{n}\) is an increasing family of subgraphs such that \(\Lambda _{n}\uparrow {\mathbb {Z}}^{d}\), and let \({\mathbb {P}}_{\beta ,n}\) be the arboreal gas on the finite graph \(\Lambda _{n}\). Then the weak limit \(\lim _{n}{\mathbb {P}}_{\beta ,n}\) exists and is translation invariant.

Remark 1

The conjectured inequality (1.12) can be recast as a reversed second Griffiths inequality. More precisely, (1.12) can be rewritten in terms of the \({\mathbb {H}}^{0|2}\) spin model introduced below in Sect. 2 as

This equivalence follows immediately from the results in Sect. 2.

1.3 Related literature

The arboreal gas has received attention under various names. An important reference for our work is [13], along with subsequent works by subsets of these authors and collaborators [7, 8, 14, 15, 16, 28]. These authors considered the connection of the arboreal gas with the antiferromagnetic \({{\mathbb {S}}} ^{0|2}\) model.

Our results are in part based on a re-interpretation of the \({{\mathbb {S}}} ^{0|2}\) formulation in terms of the hyperbolic \({\mathbb {H}}^{0|2}\) model. At the level of infinitesimal symmetries these models are equivalent. The power behind the hyperbolic language is that it allows for a further reformulation in terms of the \({\mathbb {H}}^{2|4}\) model, which is analytically useful. The \({\mathbb {H}}^{2|4}\) representation arises from a dimensional reduction formula, which in turn is a consequence of supersymmetric localization [2, 11, 39]. Much of Sect. 2 is devoted to explaining this. The upshot is that this representation allows us to make use of techniques originally developed for the non-linear \({\mathbb {H}}^{2|2}\) sigma model [20, 21, 49, 50, 51] and the vertex-reinforced jump process [4, 45]. In particular, our proof of Theorem 1.3 makes use of an adaptation of a Mermin–Wagner argument for the \({\mathbb {H}}^{2|2}\) model [6, 33, 44]; the particular argument we adapt is due to Sabot [44]. For more on the connections between these models, see [6, 45].

Conjecture 1.8 seems to have first appeared in print in [30]. Subsequent related works, including proofs for some special subclasses of graphs, include [10, 26, 46, 48].

As mentioned before, considerably stronger results are known for the arboreal gas on the complete graph. The first result in this direction concerned forests with a fixed number of edges [34], and later a fixed number of trees was considered [8]. Later in [36] the arboreal gas itself was considered, in the guise of the Erdős–Rényi graph conditioned to be acyclic. In [34] it was understood that the scaling window is of size \(N^{-1/3}\), and results on the behaviour of the ordered component sizes when \(\alpha = 1 +\lambda N^{-1/3}\) were obtained. In particular, the large components in the scaling window are of size \(N^{2/3}\). A very complete description of the component sizes in the critical window was obtained in [36].

We remark on an interesting aspect of the arboreal gas that was first observed in [34] and is consistent with Conjecture 1.6. Namely, in the supercritical regime, the component sizes of the k largest non-giant components are of order \(N^{2/3}\) [34, Theorem 5.2]. This is in contrast to the Erdős–Rényi graph, where the non-giant components are of logarithmic size. The critical size of the non-giant components is reminiscent of self-organised criticality, see [42] for example. A clearer understanding of the mechanism behind this behaviour for the arboreal gas would be interesting.

1.4 Outline

In the next section we introduce the \({\mathbb {H}}^{0|2}\) and \({\mathbb {H}}^{2|4}\) sigma models, relate them to the arboreal gas, and derive several useful facts. In Sect. 3 we use the \({\mathbb {H}}^{0|2}\) representation and Hubbard–Stratonovich type transformations to prove Theorem 3.1 by a stationary phase argument. In Sect. 4 we prove the quantitative part of Theorem 1.3, i.e., (1.4). The deduction that all trees are finite almost surely follows from adaptions of well-known arguments and is given in Appendix A. For the convenience of readers, we briefly discuss the fermionic representation of rooted spanning forests and spanning trees in Appendix B.

2 Hyperbolic Sigma Model Representation

In [13], it was noticed that the arboreal gas has a formulation in terms of fermionic variables, which in turn can be related to a supersymmetric spin model with values in the superhemisphere and negative (i.e., antiferromagnetic) spin couplings. In Sect. 2.1, we reinterpret this fermionic model as the \({\mathbb {H}}^{0|2}\) model (defined there) with positive (i.e., ferromagnetic) spin couplings. This reinterpretation has important consequences: in Sect. 2.4, we relate the \({\mathbb {H}}^{0|2}\) model to the \({\mathbb {H}}^{2|4}\) model (defined there) by a form of dimensional reduction applied to the target space. Technically this amounts to exploiting supersymmetric localisation associated to an additional set of fields. The \({\mathbb {H}}^{2|4}\) model allows the introduction of horospherical coordinates, which leads to an analytically useful probabilistic representation of the model as a gradient model with a non-local and non-convex potential. This gradient model is very similar to gradient models that arise in the study of linearly-reinforced random walks. In fact, up to the power of a determinant, this representation is in terms of a measure that is identical to the magic formula describing the mixing measure of the vertex-reinforced jump process, see (1.5).

2.1 \({\mathbb {H}}^{0|2}\) model and arboreal gas

Let \(\Lambda \) be a finite set, let \(\varvec{\beta } = (\beta _{ij})_{i,j\in \Lambda }\) be real-valued symmetric edge weights, and let \(\varvec{h} = (h_i)_{i\in \Lambda }\) be real-valued vertex weights. Throughout we will use this bold notation to denote tuples indexed by vertices or edges. For \(f:\Lambda \rightarrow {\mathbb {R}}\), we define the Laplacian associated with the edge weights by

\[ \Delta _{\beta } f(i) \equiv \sum _{j\in \Lambda } \beta _{ij}\,(f(j)-f(i)). \tag{2.1} \]

The non-zero edge weights induce a graph \({{\mathbb {G}}} = (\Lambda , E)\), i.e., \(ij\in E\) if and only if \(\beta _{ij}\ne 0\).
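As a concrete illustration of the weighted Laplacian, the sketch below builds its matrix for given edge weights and checks two standard facts: constants lie in the kernel, and (by the classical matrix-tree theorem) the determinant of \(-\Delta _{\beta }\) restricted to \(\Lambda \setminus \{0\}\) is the weighted count of spanning trees. The sign convention \(\Delta _{\beta }f(i)=\sum _{j}\beta _{ij}(f(j)-f(i))\) is an assumption here, chosen so that \(-\Delta _{\beta }\) is positive semi-definite for non-negative weights; the function names are illustrative.

```python
from fractions import Fraction
from itertools import combinations


def laplacian(n, beta):
    """Matrix of f -> sum_j beta_ij (f(j) - f(i)); `beta` maps pairs (i, j) to weights."""
    L = [[Fraction(0)] * n for _ in range(n)]
    for (i, j), b in beta.items():
        L[i][j] += b
        L[j][i] += b
        L[i][i] -= b
        L[j][j] -= b
    return L


def det(M):
    """Determinant by Gaussian elimination over the rationals."""
    M = [row[:] for row in M]
    size = len(M)
    d = Fraction(1)
    for c in range(size):
        pivot = next((r for r in range(c, size) if M[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            M[c], M[pivot] = M[pivot], M[c]
            d = -d  # a row swap flips the sign
        d *= M[c][c]
        for r in range(c + 1, size):
            factor = M[r][c] / M[c][c]
            for k in range(c, size):
                M[r][k] -= factor * M[c][k]
    return d


n = 4
beta = {e: Fraction(1) for e in combinations(range(n), 2)}  # K_4, unit weights
L = laplacian(n, beta)
row_sums = [sum(row) for row in L]
# Minus the Laplacian with the row and column of vertex 0 removed.
reduced = [[-L[i][j] for j in range(1, n)] for i in range(1, n)]
print(row_sums, det(reduced))
```

For \(K_4\) with unit weights the reduced determinant counts the spanning trees of \(K_4\), in agreement with Cayley's formula \(4^{2}\).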

Let \(\Omega ^{2\Lambda }\) be a (real) Grassmann algebra (or exterior algebra) with generators \((\xi _i,\eta _i)_{i\in \Lambda }\), i.e., all of the \(\xi _i\) and \(\eta _i\) anticommute with each other. For \(i,j\in \Lambda \), define the even elements

\[ z_i \equiv 1-\xi _i\eta _i, \tag{2.2} \]

\[ u_i\cdot u_j \equiv -\xi _i\eta _j - \xi _j\eta _i - z_iz_j. \tag{2.3} \]

Note that \(u_i \cdot u_i = -1\), which we formally interpret as meaning that \(u_i=(\xi ,\eta ,z) \in {\mathbb {H}}^{0|2}\) by analogy with the hyperboloid model for hyperbolic space. However, we emphasize that ‘\(\in {\mathbb {H}}^{0|2}\)’ does not have any literal sense. Similarly we write \(\varvec{u} = (u_{i})_{i\in \Lambda }\in ({\mathbb {H}}^{0|2})^{\Lambda }\). The fermionic derivative \(\partial _{\xi _i}\) is defined in the natural way, i.e., as the odd derivation that acts on \(\Omega ^{2\Lambda }\) by

\[ \partial _{\xi _i}(\xi _i F) \equiv F, \qquad \partial _{\xi _i} F \equiv 0, \tag{2.4} \]

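for any form F that does not contain \(\xi _{i}\). An analogous definition applies to \(\partial _{\eta _i}\). The hyperbolic fermionic integral is defined in terms of the fermionic derivative by

\[ \int _{({\mathbb {H}}^{0|2})^{\Lambda }} \equiv \partial _{\eta _N}\partial _{\xi _N}\cdots \partial _{\eta _1}\partial _{\xi _1}\, \frac{1}{z_1\cdots z_N} \tag{2.5} \]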

if \(\Lambda =\{1,\dots ,N\}\). It is well-known that while the fermionic integral is formally equivalent to a fermionic derivative, it behaves in many ways like an ordinary integral. The factors of 1/z make the hyperbolic fermionic integral invariant under a fermionic version of the Lorentz group; see (2.18).

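The \({\mathbb {H}}^{0|2}\) sigma model action is the even form \(H_{\beta ,h}(\varvec{u})\) in \(\Omega ^{2\Lambda }\) given by

\[ H_{\beta ,h}(\varvec{u}) \equiv \frac{1}{2}(\varvec{u},-\Delta _{\beta }\varvec{u}) + (\varvec{h},\varvec{z}-1), \tag{2.6} \]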

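where \((a,b) \equiv \sum _{i}a_{i}\cdot b_{i}\), with \(a_i\cdot b_i\) interpreted as the \({\mathbb {H}}^{0|2}\) inner product defined by (2.3). The corresponding unnormalised expectation \([\cdot ]_{\beta ,h}\) and normalised expectation \(\langle \cdot \rangle _{\beta ,h}\) are defined by

\[ [F]_{\beta ,h} \equiv \int _{({\mathbb {H}}^{0|2})^{\Lambda }} F\, e^{-H_{\beta ,h}(\varvec{u})}, \qquad \langle F \rangle _{\beta ,h} \equiv \frac{[F]_{\beta ,h}}{[1]_{\beta ,h}}, \tag{2.7} \]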

the latter definition holding when \([1]_{\beta ,h}\ne 0\). In (2.7) the exponential of the even form \(H_{\beta ,h}\) is defined by the formal power series expansion, which truncates at finite order since \(\Lambda \) is finite. For an introduction to Grassmann algebras and integration as used in this paper, see [5, Appendix A].

Note that the unnormalised expectation \([\cdot ]_{\beta ,h}\) is well-defined for all real values of the \(\beta _{ij}\) and \(h_i\), including negative values, and in particular \(\varvec{h}=\varvec{0}\), \(\varvec{\beta }=\varvec{0}\), or both, are permitted. We will use the abbreviations \([\cdot ]_\beta \equiv [\cdot ]_{\beta ,0}\) and \(\langle \cdot \rangle _\beta \equiv \langle \cdot \rangle _{\beta ,0}\).

The following theorem shows that the partition function \([1]_{\beta ,h}\) of the \({\mathbb {H}}^{0|2}\) model is exactly the partition function of the arboreal gas \(Z_\beta \) defined in (1.1) when \(\varvec{h}=\varvec{0}\), and that it is a generalization of the partition function when \(\varvec{h} \ne \varvec{0}\), which we will subsequently denote by \(Z_{\beta ,h}\). This connection between spanning forests and the antiferromagnetic \({{\mathbb {S}}} ^{0|2}\) model, which is equivalent to our ferromagnetic \({\mathbb {H}}^{0|2}\) model, was previously observed in [13]. As mentioned earlier, our hyperbolic interpretation will have important consequences in what follows.

Theorem 2.1

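For any real-valued weights \(\varvec{\beta }\) and \(\varvec{h}\),

\[ [1]_{\beta ,h} = \sum _{F\in \mathcal {F}}\ \prod _{ij\in F}\beta _{ij}\ \prod _{T\in F}\Bigl (1+\sum _{i\in T}h_i\Bigr ), \tag{2.8} \]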

where the inner product runs over the trees T that make up the forest F.
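The \(\varvec{h}=\varvec{0}\) case of the theorem can be verified mechanically on small graphs. The sketch below implements a tiny Grassmann algebra and compares the fermionic integral with a direct sum over forests. It rests on several assumptions that should be read as such: \(z_i = 1-\xi _i\eta _i\), \(u_i\cdot u_j = -\xi _i\eta _j-\xi _j\eta _i-z_iz_j\), the integral \(\partial _{\eta _N}\partial _{\xi _N}\cdots \partial _{\eta _1}\partial _{\xi _1}\) applied after multiplying by \(\prod _i 1/z_i\), and the Boltzmann weight written as \(\prod _{ij}(1+\beta (u_i\cdot u_j+1))\), which uses the nilpotency \((u_i\cdot u_j+1)^2=0\). All function names are illustrative.

```python
from itertools import combinations

# A Grassmann element is a dict {sorted tuple of generator ids: coefficient}.
# Generator ids: xi_i -> 2*i, eta_i -> 2*i + 1.
ONE = {(): 1}

def add(*terms):
    out = {}
    for t in terms:
        for m, c in t.items():
            out[m] = out.get(m, 0) + c
    return {m: c for m, c in out.items() if c != 0}

def scale(s, a):
    return {m: s * c for m, c in a.items() if s * c != 0}

def mul(a, b):
    out = {}
    for ma, ca in a.items():
        for mb, cb in b.items():
            if set(ma) & set(mb):
                continue  # any generator squares to zero
            # Sign of the permutation that sorts the concatenated monomial.
            inversions = sum(1 for x in ma for y in mb if x > y)
            m = tuple(sorted(ma + mb))
            out[m] = out.get(m, 0) + (-1) ** inversions * ca * cb
    return {m: c for m, c in out.items() if c != 0}

def xi(i): return {(2 * i,): 1}
def eta(i): return {(2 * i + 1,): 1}
def z(i): return add(ONE, scale(-1, mul(xi(i), eta(i))))  # z_i = 1 - xi_i eta_i
def z_inv(i): return add(ONE, mul(xi(i), eta(i)))         # 1/z_i = 1 + xi_i eta_i

def dot(i, j):
    """u_i . u_j = -xi_i eta_j - xi_j eta_i - z_i z_j."""
    return scale(-1, add(mul(xi(i), eta(j)), mul(xi(j), eta(i)), mul(z(i), z(j))))

def deriv(g, a):
    """Left derivative with respect to generator g."""
    out = {}
    for m, c in a.items():
        if g in m:
            pos = m.index(g)  # anticommute g to the front, then drop it
            rest = m[:pos] + m[pos + 1:]
            out[rest] = out.get(rest, 0) + (-1) ** pos * c
    return out

def integral(n, a):
    """Hyperbolic fermionic integral over sites 0..n-1."""
    for i in range(n):
        a = mul(a, z_inv(i))
    for i in range(n):
        a = deriv(2 * i + 1, deriv(2 * i, a))  # d/d eta_i applied after d/d xi_i
    return a.get((), 0)

def partition_function(n, edges, beta):
    weight = ONE
    for i, j in edges:
        weight = mul(weight, add(ONE, scale(beta, add(dot(i, j), ONE))))
    return integral(n, weight)

def forest_sum(n, edges, beta):
    """Sum of beta^{|F|} over acyclic edge subsets F, by enumeration."""
    total = 0
    for k in range(len(edges) + 1):
        for subset in combinations(edges, k):
            parent = list(range(n))
            def find(x):
                while parent[x] != x:
                    x = parent[x]
                return x
            acyclic = True
            for i, j in subset:
                ri, rj = find(i), find(j)
                if ri == rj:
                    acyclic = False
                    break
                parent[ri] = rj
            if acyclic:
                total += beta ** k
    return total

k4 = list(combinations(range(4), 2))
print(partition_function(4, k4, 1), forest_sum(4, k4, 1))
```

Integer weights keep the comparison exact. The representation is exponential in the number of sites, so this is only suitable for graphs with a few vertices.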

For the reader’s convenience and to keep our exposition self-contained, we provide a concise proof of Theorem 2.1 below. The interested reader may consult the original paper [13], where they can also find generalizations to hyperforests. The \(\varvec{h}=\varvec{0}\) case of Theorem 2.1 also implies the following useful representations of probabilities for the arboreal gas.

Corollary 2.2

Let \(\varvec{h}=\varvec{0}\) and assume the edge weights \(\varvec{\beta }\) are non-negative. Then for all edges ab,

and more generally, for all sets of edges S,

Moreover, for all vertices \(a,b \in \Lambda \),

and also

We will prove Theorem 2.1 and Corollary 2.2 in Sect. 2.3, but first we establish some integration identities associated with the symmetries of \({\mathbb {H}}^{0|2}\).

2.2 Ward Identities for \({\mathbb {H}}^{0|2}\)

Define the operators

Using (2.2), one computes that these act on coordinates as

The operator S is an even derivation on \(\Omega ^{2\Lambda }\), meaning that it obeys the usual Leibniz rule \(S(FG) = S(F)G + FS(G)\) for any forms F, G. On the other hand, the operators T and \({{\bar{T}}}\) are odd derivations on \(\Omega ^{2\Lambda }\), also called supersymmetries. This means that if F is an even or odd form, then \(T(FG) = (TF)G \pm F(TG)\), with ‘\(+\)’ for F even and ‘−’ for F odd. We remark that T and \({{\bar{T}}}\) can be regarded as analogues of the infinitesimal Lorentz boost symmetries of \({\mathbb {H}}^{n}\), while S is an infinitesimal symplectic symmetry. In particular, the inner product (2.3) is invariant with respect to these symmetries, in the sense that

For T, this follows from \(T (u_a\cdot u_b) = T(-\xi _a\eta _b-\xi _b\eta _a-z_az_b) = -z_a\eta _b-z_b\eta _a+\eta _az_b+\eta _bz_a=0\) since the \(z_{i}\) are even. Analogous computations apply to \({{\bar{T}}}\) and S.

A complete description of the infinitesimal symmetries of \({\mathbb {H}}^{0|2}\) is given by the orthosymplectic Lie superalgebra \(\mathfrak {osp}(1|2)\), which is spanned by the three operators described above, together with a further two symplectic symmetries; see [13, Section 7] for details.

Lemma 2.3

For any \(a \in \Lambda \), the operators \(T_a\), \({{\bar{T}}}_a\) and S are symmetries of the non-interacting expectation \([\cdot ]_0\) in the sense that, for any form F,

Moreover, for any \(\varvec{\beta }=(\beta _{ij})\) and \(\varvec{h} = \varvec{0}\), also \(T = \sum _{i\in \Lambda } T_i\) and \({{\bar{T}}} = \sum _{i\in \Lambda } \bar{T}_i\) are symmetries of the interacting expectation \([\cdot ]_\beta \):

and similarly \(S = \sum _{i\in \Lambda } S_i\) is a symmetry of \([\cdot ]_{\beta ,h}\) for any \(\varvec{\beta }\) and \(\varvec{h}\).

Proof

First assume that \(\varvec{\beta }=\varvec{0}\). Then by (2.13),

where we used that \((\partial _{\xi _a})^2\) acts as 0, because any form can contain at most one factor of \(\xi _a\). The same argument applies to \({{\bar{T}}}\), and a similar argument applies to S.

We now show that this implies T and \({{\bar{T}}}\) are also symmetries of \([\cdot ]_\beta \). Indeed, for any form F that is even (respectively odd), the fact that T is an odd derivation and the fact that \([\cdot ]_0\) is invariant implies the integration by parts formula

For any \(\varvec{\beta }\) the right-hand side vanishes since \(TH_{\beta } = 0\) by (2.17). A similar argument applies for \({{\bar{T}}}\). Since every form F can be written as a sum of an even and an odd form, (2.19) follows.

The argument for S being a symmetry of \(\left[ \cdot \right] _{\beta ,h}\) is similar. \(\quad \square \)

To illustrate the use of these operators, we give a proof of the identities on the right-hand side of (2.11) and a proof of (2.12). Define

and note \(T\lambda _{ab} = \xi _a\eta _b + z_az_b \) and \({{\bar{T}}} {{\bar{\lambda }}}_{ab}= \xi _{b}\eta _{a}+z_{a}z_{b}\). Hence

where the final equality is by linearity and Lemma 2.3. In particular, \(\langle z_{a}^{2} \rangle _{\beta }=-1\). Reasoning similarly, we obtain

which proves (2.12), and implies \(\langle \xi _{a}\eta _{a} \rangle _{\beta }=1\). Since \(z_az_b = (1-\xi _a\eta _a)(1-\xi _b\eta _b) = 1 - \xi _a\eta _a - \xi _b\eta _b + \xi _a\eta _a \xi _b\eta _b\) this also gives

Finally, we note that the symplectic symmetry and \(S(\xi _a\xi _b) = \xi _a\eta _b - \xi _b\eta _a\) imply

2.3 Proofs of Theorem 2.1 and Corollary 2.2

Our first lemma relies on the identities of the previous section.

Lemma 2.4

For any forest F,

\[ \Bigl [\prod _{ij\in F}(u_i\cdot u_j+1)\Bigr ]_{0} = 1. \tag{2.28} \]

Proof

By factorization for fermionic integrals, it suffices to prove (2.28) when F is in fact a tree. We recall the definition

Hence, if T contains no edges then we have \([1]_{0}=1\). We complete the proof by induction, with the inductive assumption that the claim holds for all trees on k or fewer vertices. To advance the induction, let T be a tree on \(k+1\geqslant 2\) vertices and choose a leaf edge \(\{a,b\}\) of T. We will advance the induction by considering the sum of the integrals that result from expanding \((u_{a}\cdot u_{b}+1)\) in (2.28).

Note that by Lemma 2.3, if \(G_{1}\) is even (resp. odd) and \(TG=0\), then

and similarly if \({{\bar{T}}} G = 0\). Thus for such a G, recalling the definition (2.22) of \(\lambda _{ab}\) and \({{\bar{\lambda }}}_{ab}\),

where we have used (2.30) in the second and final equalities. Applying this identity with \(G=\prod _{ij\in T\setminus \{a,b\}}(u_{i}\cdot u_{j}+1)\), the right-hand side is 0 since the product does not contain the missing generator at a to give a non-vanishing expectation. The inductive assumption and factorization for fermionic integrals implies \([G]_0=1\), and thus

advancing the induction. \(\quad \square \)

Lemma 2.5

For any \(i,j\in \Lambda \) we have \((u_i\cdot u_j+1)^2=0\), and for any graph C that contains a cycle,

Proof

It suffices to consider when C is a cycle or doubled edge. Orienting C, the oriented edges of C are \((1,2),\dots , (k-1,k),(k,1)\) for some \(k\geqslant 2\). Then, with the convention \(k+1=1\),

the second equality by nilpotency of the generators and \(k\geqslant 2\). To complete the proof of the claim we consider which terms are non-zero in the expansion of this product. First consider the term that arises when choosing \(\xi _{1}\eta _{1}\) in the first term in the product: then for the second term any choice other than \(\xi _{2}\eta _{2}\) results in zero. Continuing in this manner, the only non-zero contribution is \(\prod _{i=1}^{k}\xi _{i}\eta _{i}\). Similar arguments apply to the other three choices possible in the first product, leading to

which is zero for all k. The signs arise from re-ordering the generators. We have used that C is a cycle for the third and fourth terms. \(\quad \square \)

Proof of Theorem 2.1 when \(\varvec{h}=\varvec{0}\). By Lemma 2.5,

where the sum runs over sets S of edges and that over F is over forests. By taking the unnormalised expectation \([\cdot ]_0\) we conclude from Lemma 2.4 that

\(\square \)

To establish the theorem for \(\varvec{h} \ne \varvec{0}\) requires one further preliminary, which uses the idea of pinning the spin \(u_{0}\) at a chosen vertex \(0 \in \Lambda \). Informally, this means that \(u_0\) always evaluates to \((\xi ,\eta ,z) = (0,0,1)\). Formally, this means the following. To compute the pinned expectation of a function F of the forms \((u_{i}\cdot u_{j})_{i,j\in \Lambda }\), we replace \(\Lambda \) by \(\Lambda _{0} = \Lambda \setminus \{0\}\), set

\[ h_j = \beta _{0j} \tag{2.38} \]

in \(H_{\beta }\), and replace all instances of \(u_0 \cdot u_j\) by \(-z_j\) in both F and \(e^{-H_{\beta }}\). The pinned expectation of F is the hyperbolic fermionic integral (2.5) of this form with respect to the generators \((\xi _{i},\eta _{i})_{i\in \Lambda _{0}}\). We denote this expectation by

This procedure gives a way to identify any function of the forms \((u_{i}\cdot u_{j})_{i,j\in \Lambda }\) with a function of the forms \((u_{i}\cdot u_{j})_{i,j\in \Lambda _{0}}\) and \((z_{i})_{i\in \Lambda _{0}}\). To minimize the notation, we will implicitly identify \(u_{0}\cdot u_{j}\) with \(-z_{j}\) when taking pinned expectations of functions F of the \((u_{i}\cdot u_{j})\).

The following proposition relates the pinned and unpinned models.

Proposition 2.6

For any polynomial F in \((u_i\cdot u_j)_{i,j\in \Lambda }\),

Proof

It suffices to prove the first equation of (2.40), as this implies \([1]_{\beta }^{0}=[1-z_{0}]_{\beta }=[1]_{\beta }\) since \([z_{0}]_{\beta }=0\) by (2.24).

Since \(1-z_0 = \xi _0\eta _0\), for any form F that contains a factor of \(\xi _0\) or \(\eta _0\), we have \((1-z_0)F=0\). Thus the expectation \([(1-z_0)F]_\beta \) amounts to the expectation with respect to \([\cdot ]_{0}\) of \(F e^{-H_\beta }\) with all terms containing factors \(\xi _0\) and \(\eta _0\) removed. The claim thus follows by computing the right-hand side using the observations that (i) removing all terms with factors of \(\xi _0\) and \(\eta _0\) from \(u_0\cdot u_i\) yields \(-z_i\), and (ii) \(\partial _{\eta _{0}}\partial _{\xi _{0}}\xi _{0}\eta _{0}z_{0}^{-1}=1\).

\(\square \)

There is a correspondence between pinning and external fields. If one first chooses \(\Lambda \) and then pins at \(0\in \Lambda \), the result is that there is an external field \(h_j\) for all \(j\in \Lambda \setminus \{0\}\). One can also view this the other way around, by beginning with \(\Lambda \) and an external field \(h_j\) for all \(j\in \Lambda \), and then realizing this as due to pinning at an ‘external’ vertex \(\delta \notin \Lambda \). This idea shows that Theorem 2.1 with \(\varvec{h}\ne \varvec{0}\) follows from the case \(\varvec{h}=\varvec{0}\); for the reader who is not familiar with arguments of this type, we provide the details below.

Proof of Theorem 2.1 when \(\varvec{h}\ne \varvec{0}\). The partition function of the arboreal gas with \(\varvec{h} \ne \varvec{0}\) can be interpreted as that of the arboreal gas with \(\varvec{h} \equiv \varvec{0}\) on a graph \({{\tilde{G}}}\) augmented by an additional vertex \(\delta \) and with weights \({\tilde{\beta }}\) given by \({\tilde{\beta }}_{ij} = \beta _{ij}\) for all \(i,j\in G\) and \({\tilde{\beta }}_{i\delta } = {\tilde{\beta }}_{\delta i} = h_i\). Each \(F'\in \mathcal {F}({{\tilde{G}}})\) is a union of \(F\in \mathcal {F}(G)\) with a collection of edges \(\{i_{r}\delta \}_{r\in R}\) for some \(R\subset V(G)\). Since \(F'\) is a forest, \(|V(T)\cap R |\leqslant 1\) for each tree T in F. Moreover, for any \(F\in \mathcal {F}(G)\) and any \(R\subset V(G)\) satisfying \(|V(T)\cap R |\leqslant 1\) for each T in F, \(F\cup \{i_{r}\delta \}_{r\in R}\in \mathcal {F}({{\tilde{G}}})\). Thus

To conclude, note that \([(1-z_\delta )F]_{{\tilde{\beta }}} = [F]_{{\tilde{\beta }}}\) for any function F with \(TF=0\); this follows from \([z_aF] = [(T\xi _a)F] = -[\xi _a(TF)] = 0\). The conclusion now follows from Proposition 2.6 (where \(\delta \) takes the role of 0 in that proposition), which shows \([(1-z_\delta )F]_{{\tilde{\beta }}} = [F]_{\beta ,h}\). \(\quad \square \)
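The combinatorial step of this proof can be illustrated by brute force on a small graph. The sketch below assumes, as the argument above indicates, that each tree T of a forest on G acquires the factor \(1+\sum _{i\in T}h_i\) (at most one edge to \(\delta \) per tree), and compares this with the plain forest sum on the augmented graph. The function names and the example weights are hypothetical and chosen only for illustration.

```python
from itertools import combinations

def components(n, edges):
    """Vertex sets of the connected components, or None if `edges` contains a cycle."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for i, j in edges:
        ri, rj = find(i), find(j)
        if ri == rj:                     # edge closes a cycle
            return None
        parent[ri] = rj
    comps = {}
    for v in range(n):
        comps.setdefault(find(v), []).append(v)
    return list(comps.values())

def forests(n, weights):
    """Yield (edge subset, components) for every forest of the weighted graph."""
    edges = list(weights)
    for r in range(len(edges) + 1):
        for subset in combinations(edges, r):
            comps = components(n, subset)
            if comps is not None:
                yield subset, comps

def z_with_field(n, beta, h):
    """Forest sum on G where each tree T carries the factor 1 + sum_{i in T} h_i."""
    total = 0
    for subset, comps in forests(n, beta):
        w = 1
        for e in subset:
            w *= beta[e]
        for tree in comps:
            w *= 1 + sum(h[i] for i in tree)
        total += w
    return total

def z_augmented(n, beta, h):
    """Plain forest sum on the graph augmented by a ghost vertex (labelled n)."""
    tilde = dict(beta)
    tilde.update({(i, n): h[i] for i in range(n)})
    total = 0
    for subset, _ in forests(n + 1, tilde):
        w = 1
        for e in subset:
            w *= tilde[e]
        total += w
    return total

beta = {(0, 1): 2, (1, 2): 3, (0, 2): 5, (2, 3): 1}   # illustrative integer weights
h = [1, 0, 2, 3]
same = z_with_field(4, beta, h) == z_augmented(4, beta, h)
```

With integer weights the comparison is exact, so `same` tests the identity itself on this example rather than a numerical approximation of it.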

Proof of Corollary 2.2

Since \({\mathbb {P}}_\beta \left[ ab\right] = \beta _{ab}\frac{d}{d\beta _{ab}} \log Z\), we have

and expanding the right-hand side yields (2.9). Alternatively, multiplying (2.36) by \(\beta _{ij}(1+u_i\cdot u_j)\), using Lemma 2.5, and then applying Lemma 2.4 yields the result. Similar considerations yield (2.10), and also show that

Therefore \({\mathbb {P}}_{\beta }[i\leftrightarrow j] = -\langle u_{i}\cdot u_{j} \rangle _{\beta }\). Together with the identities (2.23)–(2.26), this proves (2.11). We already established (2.12) in Sect. 2.2. \(\quad \square \)
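The identity \({\mathbb {P}}_\beta \left[ ab\right] = \beta _{ab}\frac{d}{d\beta _{ab}} \log Z\) used at the start of this proof can be verified exactly on small graphs. The following sketch relies only on the forest definition of Z and on the observation that Z is affine in each \(\beta _{ab}\), so that the derivative equals a difference quotient with step 1. The helper names and the weights on \(K_4\) are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

def is_forest(n, edges):
    """Union-find cycle check on the vertices 0..n-1."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for i, j in edges:
        ri, rj = find(i), find(j)
        if ri == rj:
            return False
        parent[ri] = rj
    return True

def forest_sum(n, beta, must_contain=None):
    """Sum of prod_{e in F} beta_e over forests F, optionally only those containing one edge."""
    total = Fraction(0)
    edges = list(beta)
    for r in range(len(edges) + 1):
        for subset in combinations(edges, r):
            if must_contain is not None and must_contain not in subset:
                continue
            if is_forest(n, subset):
                w = Fraction(1)
                for e in subset:
                    w *= beta[e]
                total += w
    return total

def edge_prob_direct(n, beta, e):
    """Probability that edge e is present, straight from the definition."""
    return forest_sum(n, beta, must_contain=e) / forest_sum(n, beta)

def edge_prob_log_derivative(n, beta, e):
    """beta_e * d/d(beta_e) log Z; Z is affine in beta_e, so a unit step is exact."""
    bumped = dict(beta)
    bumped[e] = beta[e] + 1
    dZ = forest_sum(n, bumped) - forest_sum(n, beta)
    return beta[e] * dZ / forest_sum(n, beta)

k4 = {(0, 1): Fraction(1, 2), (0, 2): Fraction(2), (0, 3): Fraction(1, 3),
      (1, 2): Fraction(3), (1, 3): Fraction(1), (2, 3): Fraction(5, 4)}
agree = all(edge_prob_direct(4, k4, e) == edge_prob_log_derivative(4, k4, e) for e in k4)
```

Rational arithmetic is used so that the two expressions can be compared with `==`.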

2.4 \({\mathbb {H}}^{2|4}\) model and dimensional reduction

In this section we define the \({\mathbb {H}}^{2|4}\) model, and show that for a class of ‘supersymmetric observables’ expectations with respect to the \({\mathbb {H}}^{2|4}\) model can be reduced to expectations with respect to the \({\mathbb {H}}^{0|2}\) model. To study the arboreal gas we will use this reduction in reverse: first we express arboreal gas quantities as \({\mathbb {H}}^{0|2}\) expectations, and in turn as \({\mathbb {H}}^{2|4}\) expectations. The utility of this rewriting will be explained in the next section, but in short, \({\mathbb {H}}^{2|4}\) expectations can be rewritten as ordinary integrals, and this carries analytic advantages.

The \({\mathbb {H}}^{2|4}\) model is a special case of the following more general \({\mathbb {H}}^{n|2m}\) model. These models originate with Zirnbauer’s \({\mathbb {H}}^{2|2}\) model [21, 51], but they make sense for all \(n ,m \in {\mathbb {N}}\). For fixed n and m with \(n+m>0\), the \({\mathbb {H}}^{n|2m}\) model is defined as follows.

Let \(\phi ^1,\dots , \phi ^n\) be n real variables, and let \(\xi ^1,\eta ^1,\dots ,\xi ^m,\eta ^m\) be 2m generators of a Grassmann algebra (i.e., they anticommute pairwise and are nilpotent of order 2). Note that we are using superscripts to distinguish variables. Forms, sometimes called superfunctions, are elements of \(\Omega ^{2m}({\mathbb {R}}^n)\), where \(\Omega ^{2m}({\mathbb {R}}^{n})\) is the Grassmann algebra generated by \((\xi ^k,\eta ^k)_{k=1}^m\) over \(C^\infty ({\mathbb {R}}^n)\). See [5, Appendix A] for details. We define a distinguished even element z of \(\Omega ^{2m}({\mathbb {R}}^n)\) by

and let \(u = (\phi ,\xi , \eta ,z)\). Given a finite set \(\Lambda \), we write \(\varvec{u} = (u_i)_{i\in \Lambda }\), where \(u_i=(\phi _i, \xi _i, \eta _i, z_i)\) with \(\phi _i\in {\mathbb {R}}^{n}\) and \(\xi _i=(\xi _{i}^{1},\dots ,\xi _{i}^{m})\) and \(\eta _i=(\eta _{i}^{1},\dots ,\eta _{i}^{m})\), each \(\xi _{i}^{j}\) (resp. \(\eta _{i}^{j}\)) a generator of \(\Omega ^{2m\Lambda }({\mathbb {R}}^{n\Lambda })\). We define the ‘inner product’

Note that these definitions imply \(u_i\cdot u_i = -1\). If \(m=0\), the constraint \(u_i\cdot u_i=-1\) defines the hyperboloid model for hyperbolic space \({\mathbb {H}}^n\), as in this case \(u_i \cdot u_j\) reduces to the Minkowski inner product on \({\mathbb {R}}^{n+1}\). For this reason we write \(u_i\in {\mathbb {H}}^{n|2m}\) and \(\varvec{u}\in ({\mathbb {H}}^{n|2m})^\Lambda \) and think of \({\mathbb {H}}^{n|2m}\) as a hyperbolic supermanifold. As we do not need to enter into the details of this mathematical object, we shall not discuss it further (see [51] for further details). We remark, however, that the expression \(\sum _{\ell =1}^{m} (-\xi _i^\ell \eta _j^\ell +\eta _i^\ell \xi _j^\ell )\) is the natural fermionic analogue of the Euclidean inner product \(\sum _{\ell =1}^{n}\phi _i^\ell \phi _j^\ell \) and motivates the supermanifold terminology.
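As a consistency check on the normalisation \(u_i\cdot u_i=-1\), suppose that (2.44) and (2.45) take the forms suggested by the surrounding text, namely \(z=\sqrt{1+\sum _{\ell =1}^{n}(\phi ^{\ell })^{2}-2\sum _{\ell =1}^{m}\xi ^{\ell }\eta ^{\ell }}\) and \(u_i\cdot u_j=\sum _{\ell =1}^{n}\phi _i^\ell \phi _j^\ell +\sum _{\ell =1}^{m}(-\xi _i^\ell \eta _j^\ell +\eta _i^\ell \xi _j^\ell )-z_iz_j\). These explicit forms are our reading of the text and should be treated as an assumption. Using \(\eta _i^\ell \xi _i^\ell =-\xi _i^\ell \eta _i^\ell \), one then finds

\[
u_i\cdot u_i
= \sum _{\ell =1}^{n}(\phi _i^\ell )^{2} - 2\sum _{\ell =1}^{m}\xi _i^\ell \eta _i^\ell - z_i^{2}
= \sum _{\ell =1}^{n}(\phi _i^\ell )^{2} - 2\sum _{\ell =1}^{m}\xi _i^\ell \eta _i^\ell - \Big (1+\sum _{\ell =1}^{n}(\phi _i^\ell )^{2}-2\sum _{\ell =1}^{m}\xi _i^\ell \eta _i^\ell \Big )
= -1 .
\]

For \(n=0\) and \(m=1\) the same assumed formula gives \(z=\sqrt{1-2\xi \eta }=1-\xi \eta \), since \((\xi \eta )^2=0\).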

The general class of models of interest are defined analogously to the \({\mathbb {H}}^{0|2}\) model by the action

where we now require \(\varvec{\beta } \geqslant 0\) and \(\varvec{h}\geqslant 0\), i.e., \(\varvec{\beta }=(\beta _{ij})_{i,j\in \Lambda }\) and \(\varvec{h}=(h_i)_{i\in \Lambda }\) satisfy \(\beta _{ij} \geqslant 0\) and \(h_i\geqslant 0\) for all \(i,j\in \Lambda \). We have again used the notation \((a,b) = \sum _{i\in \Lambda }a_{i}\cdot b_{i}\) but where \(\cdot \) now refers to (2.45). For a form \(F \in \Omega ^{2m\Lambda }({\mathbb {H}}^{n})\), the corresponding unnormalised expectation is

where the superintegral of a form G is

where the \(z_{i}\) are defined by (2.44).

Henceforth we will only consider the \({\mathbb {H}}^{0|2}\) and \({\mathbb {H}}^{2|4}\) models, and hence we will write \(x_{i}=\phi _{i}^{1}\) and \(y_{i}=\phi ^{2}_{i}\) for notational convenience. We will also assume \(\varvec{\beta }\geqslant 0\) and \(\varvec{h}\geqslant 0\) to ensure both models are well-defined.

2.4.1 Dimensional reduction

The following proposition shows that, due to an internal supersymmetry, all observables F that are functions of \(u_i\cdot u_j\) have the same expectations under the \({\mathbb {H}}^{0|2}\) and the \({\mathbb {H}}^{2|4}\) expectation. Here \(u_i\cdot u_j\) is defined as in (2.3) for \({\mathbb {H}}^{0|2}\), respectively as in (2.45) for \({\mathbb {H}}^{2|4}\). In this section and henceforth we work under the convention that \(z_i = u_\delta \cdot u_i\) with \(u_\delta = (0,\dots , 0,1)\), and that \((u_i\cdot u_j)_{i,j}\) refers to the collection of forms indexed by \(i,j \in {\tilde{\Lambda }} \equiv \Lambda \cup \{\delta \}\). In other words, functions of \((u_i\cdot u_j)_{i,j}\) are also permitted to depend on \((z_i)_{i}\).

Proposition 2.7

For any \(F:{\mathbb {R}}^{{\tilde{\Lambda }}\times {\tilde{\Lambda }}}\rightarrow {\mathbb {R}}\) smooth with enough decay that the integrals exist,

In view of this proposition we will subsequently drop the superscript \({\mathbb {H}}^{n|2m}\) for expectations of observables F that are functions of \((u_i\cdot u_j)_{i,j}\). That is, we will simply write \(\left[ F\right] _{\beta ,h}\) for

We will similarly write \(\langle F \rangle _{\beta ,h}=\langle F \rangle _{\beta ,h}^{{\mathbb {H}}^{0|2}}=\langle F \rangle _{\beta ,h}^{{\mathbb {H}}^{2|4}}\) whenever \(\left[ 1\right] _{\beta ,h}^{{\mathbb {H}}^{2|4}}\) is positive and finite.

The proof of Proposition 2.7 uses the following fundamental localisation theorem. To state the theorem, consider forms in \(\Omega ^{2N}({\mathbb {R}}^{2N})\) and denote the even generators of this algebra by \((x_i,y_i)\) and the odd generators by \((\xi _i,\eta _i)\). Then we define

Theorem 2.8

Suppose \(F \in \Omega ^{2N}({\mathbb {R}}^{2N})\) is integrable and satisfies \(QF=0\). Then

where the right-hand side is the degree-0 part of F evaluated at 0.

A proof of this theorem can be found, for example, in [5, Appendix B].

Proof of Proposition 2.7

To distinguish \({\mathbb {H}}^{0|2}\) and \({\mathbb {H}}^{2|4}\) variables, we write the latter as \(u_i'\), i.e.,

We begin by considering the case \(N=1\), i.e., a graph with a single vertex. Since \(e^{-H_{\beta ,h}(\varvec{u})}\) is a function of \((u_i\cdot u_j)_{i,j}\), we will absorb the factor of \(e^{-H_{\beta ,h}(\varvec{u})}\) into the observable F to ease the notation. The \({\mathbb {H}}^{2|4}\) integral can be written as

where

and \(\int _{{\mathbb {R}}^{2}}dx \, dy \, \partial _{\eta ^{2}}\partial _{\xi ^{2}} \frac{1}{z'} F\) is the form in \((\xi ^{1},\eta ^{1})\) obtained by integrating the coefficient functions term-by-term. Applying the localisation theorem (Theorem 2.8) to the variables \((x,y,\xi ^{2},\eta ^{2})\) gives, after noting \(z'\) localises to \(z = \sqrt{1-2 \xi ^{1}\eta ^{1}}\),

Therefore

which is the claim. The argument for the case of general N is exactly analogous. \(\quad \square \)

2.5 Horospherical coordinates

Proposition 2.7 showed that ‘supersymmetric observables’ have the same expectations in the \({\mathbb {H}}^{0|2}\) and the \({\mathbb {H}}^{2|4}\) model. This is useful because the richer structure of the \({\mathbb {H}}^{2|4}\) model allows the introduction of horospherical coordinates, whose importance was recognised in [21, 47]. We will shortly define horospherical coordinates, but before doing this we state the result that we will deduce using them.

For the statement of the proposition, we require the following definitions. Let \(\Delta _{\beta (t),h(t)}\) be the matrix with (i, j)th element \(\beta _{ij}e^{t_{i}+t_{j}}\) for \(i\ne j\) and ith diagonal element \(-\sum _{j\in \Lambda }\beta _{ij}e^{t_{i}+t_{j}}-h_{i}e^{t_{i}}\). Let

where we abuse notation by using the symbol \({{\tilde{H}}}_{\beta ,h}\) both for the function \({{\tilde{H}}}_{\beta ,h}(t,s)\) and \({{\tilde{H}}}_{\beta ,h}(t)\). Below we will assume that \(\varvec{\beta }\) is irreducible, by which we mean that \(\varvec{\beta }\) induces a connected graph.

Proposition 2.9

Assume \(\varvec{\beta } \geqslant 0\) and \(\varvec{h} \geqslant 0\) with \(\varvec{\beta }\) irreducible and \(h_i>0\) for at least one \(i\in \Lambda \). For all smooth functions \(F:{\mathbb {R}}^{2\Lambda } \rightarrow {\mathbb {R}}\), respectively \(F:{\mathbb {R}}^\Lambda \rightarrow {\mathbb {R}}\), such that the integrals on the left- and right-hand sides converge absolutely,

In particular, the normalising constant \(\left[ 1\right] _{\beta ,h}^{{\mathbb {H}}^{2|4}}\) is the partition function \(Z_{\beta ,h}\) of the arboreal gas.

Abusing notation further, we will denote either of the expectations on the right-hand sides of (2.61) and (2.62) by \([{\cdot }]_{\beta ,h}\), and we will write \(\langle \cdot \rangle _{\beta ,h}\) for the normalised versions. Before giving the proof of the proposition, which is essentially standard, we collect some resulting identities that will be used later.

Corollary 2.10

For all \(\varvec{\beta }\) and \(\varvec{h}\) as in Proposition 2.9,

and

where the left-hand sides are evaluated as on the right-hand side of (2.61), and the right-hand sides are given by the \({\mathbb {H}}^{0|2}\) expectation (2.7).

Proof

To lighten notation, we write \(\langle \cdot \rangle \equiv \langle \cdot \rangle _{\beta ,h}\). For the \({\mathbb {H}}^{2|4}\) expectation (2.47), we have \(\langle x_i^qz_i^p \rangle = 0\) whenever \(q>0\) is an odd integer by the symmetry \(x\mapsto -x\) (recall that \(x=\phi ^1\)). Also note that

where we emphasize that the superscript of \(x_i^2\) denotes the square and the superscript of \(\xi _i^2\) denotes the second component. These identities follow from the \(x\leftrightarrow y\) and \(\xi _{i}^{1}\eta _{i}^{1}\leftrightarrow \xi _{i}^{2}\eta _{i}^{2}\) symmetries of the \({\mathbb {H}}^{2|4}\) model and \(\langle x_i^2+y_i^2-2\xi _i^1\eta _i^1 \rangle =0\) by supersymmetric localisation, i.e., Theorem 2.8. Since

and since the left-hand sides are equal by Proposition 2.7, we further see that the \({\mathbb {H}}^{2|4}\) expectation (2.65) equals the \({\mathbb {H}}^{0|2}\) expectation \(\langle \xi _i\eta _i \rangle \). Similarly, \(\langle x_{i}^{2}z_{i} \rangle = \langle y_{i}^{2}z_{i} \rangle = \langle \xi _{i}^{1}\eta _{i}^{1}z_{i} \rangle = \langle \xi _{i}^{2}\eta _{i}^{2}z_{i} \rangle \). By using the preceding equalities and by expanding \(\langle (-1+z_{i}^{2})z_{i} \rangle =\langle (u_{i}\cdot u_{i}+z_{i}^{2})z_{i} \rangle \) in both \({\mathbb {H}}^{0|2}\) and \({\mathbb {H}}^{2|4}\), one obtains

where the first expectation is with respect to \({\mathbb {H}}^{2|4}\) and the others are with respect to \({\mathbb {H}}^{0|2}\). Using these identities and (2.61), we then find

The identity (2.64) follows analogously:

where we used the generalisation of (2.65) for the mixed expectation \(\langle x_ix_j \rangle \) and that \(\langle \xi _i\eta _j \rangle = \langle \xi _j\eta _i \rangle \), see (2.27). \(\quad \square \)

To describe the proof of Proposition 2.9 we now define horospherical coordinates for \({\mathbb {H}}^{2|4}\). These are a change of generators from the variables \((x,y,\xi ^{\gamma },\eta ^{\gamma })\) with \(\gamma =1,2\) to \((t,s,\psi ^{\gamma },{{\bar{\psi }}}^{\gamma })\), where

We note that \({{\bar{\psi }}}_{i}\) is simply notation to indicate a generator distinct from \(\psi _{i}\), i.e., the bar does not denote complex conjugation, which would not make sense. In these coordinates the action is quadratic in \(s, {{\bar{\psi }}}^{1},\psi ^{1},{{\bar{\psi }}}^{2},\psi ^{2}\). This leads to a proof of Proposition 2.9 by explicitly integrating out these variables when t is fixed via the following standard lemma, whose proof we omit.

Lemma 2.11

For any \(N \times N\) matrix A,

and, for a positive definite \(N\times N\) matrix A,

Proof of Proposition 2.9

The first step is to compute the Berezinian for the horospherical change of coordinates. This can be done as in [6, Appendix A]. There is an \(e^{t}\) for the s-variables and an \(e^{-t}\) for each fermionic variable, leading to a Berezinian \(z e^{-3t}\), i.e.,

where \({{\bar{H}}}_{\beta ,h}(s,t,\psi ,{{\bar{\psi }}})\) is \(H_{\beta ,h}\) expressed in horospherical coordinates.

The second step is to apply Lemma 2.11 repeatedly. To prove (2.61), we apply it twice, once for \(({{\bar{\psi }}}^{1},\psi ^{1})\) and once for \(({{\bar{\psi }}}^{2},\psi ^{2})\). The lemma applies since F does not depend on \(\psi ^{1},{{\bar{\psi }}}^{1},\psi ^{2},{{\bar{\psi }}}^{2}\) by assumption. To prove (2.62), we apply it three times, once for \(({{\bar{\psi }}}^{1},\psi ^{1})\), once for \(({{\bar{\psi }}}^{2},\psi ^{2})\), and once for s. Each integral contributes a power of \(\det (-\Delta _{\beta (t),h(t)})\), namely \(-1/2\) for the Gaussian integral over s and \(+1\) for each fermionic Gaussian. This explains the coefficient 2 in (2.61) and the coefficient \(3/2=2-1/2\) in (2.62).

The final claim follows as the conditions that \(\varvec{\beta }\) induces a connected graph and some \(h_{i}>0\) imply that \(\left[ 1\right] _{\beta ,h}^{{\mathbb {H}}^{2|4}}\) is finite. The claim thus follows from Proposition 2.7 and Theorem 2.1. \(\quad \square \)

2.6 Pinned measure for the \({\mathbb {H}}^{2|4}\) model

This section introduces a pinned version of the \({\mathbb {H}}^{2|4}\) model and relates it to the pinned \({\mathbb {H}}^{0|2}\) model that was introduced in Sect. 2.2. For the \({\mathbb {H}}^{2|4}\) pinning means \(u_{0}\) always evaluates to \((x,y,\xi ^{1},\eta ^{1},\xi ^{2},\eta ^{2},z) = (0,0,0,0,0,0,1)\). As before, we implement this by replacing \(\Lambda \) by \(\Lambda _0 = \Lambda \setminus \{0\}\) and setting

and replacing \(u_0 \cdot u_j\) by \(-z_j\). We denote the corresponding expectations by

We can relate the pinned and unpinned measures exactly as for the \({\mathbb {H}}^{0|2}\) model.

Proposition 2.12

For any polynomial F in \((u_{i}\cdot u_{j})_{i,j\in \Lambda }\),

Moreover, \([1]^0_\beta = [1]_\beta \) and hence for any pairs of vertices \(i_kj_k\),

Proof

The first equality in (2.79) follows by reducing the \({\mathbb {H}}^{2|4}\) expectation to a \({\mathbb {H}}^{0|2}\) expectation by Proposition 2.7 (recall the convention that \(z_0 = u_\delta \cdot u_0\)), then applying Proposition 2.6 for the \({\mathbb {H}}^{0|2}\) expectation, and finally applying Proposition 2.7 again (in reverse). The second equality in (2.79) then follows by normalising using that \([1]_\beta ^0 = [1-z_0]_\beta = [1]_\beta \) (as in Proposition 2.6). The equalities (2.80) follow from \([1]_\beta ^0 = [1]_\beta \) by differentiating with respect to the \(\beta _{i_kj_k}\). \(\quad \square \)

The next corollary expresses the pinned model in horospherical coordinates. For \(i,j \in \Lambda \), set

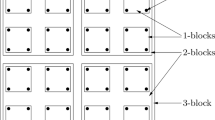

and let \({{\tilde{D}}}_\beta (t)\) be the determinant of \(-\Delta _{\beta (t)}\) restricted to \(\Lambda _0 = \Lambda \setminus \{0\}\), i.e., the determinant of the submatrix of \(-\Delta _{\beta (t)}\) indexed by \(\Lambda _{0}\). When \(\varvec{\beta }\) induces a connected graph, this determinant is non-zero, and by the matrix-tree theorem it can be written as

where the sum is over all spanning trees on \(\Lambda \). For \(t\in {\mathbb {R}}^\Lambda \), then define

By combining Proposition 2.12 with Proposition 2.9, we have the following representation of the pinned measure in horospherical coordinates.

Corollary 2.13

For any smooth function \(F:{\mathbb {R}}^\Lambda \rightarrow {\mathbb {R}}\) with sufficient decay,

Proof

We recall the definition of the left-hand side, i.e., that the expectation \([{\cdot }]_\beta ^0\) is defined in (2.77)–(2.78) as the expectation on \(\Lambda _{0}\) given by \([{\cdot }]^{0}_\beta = [{\cdot }]_{{\tilde{\beta }},{{\tilde{h}}}}\) with \({\tilde{\beta }}_{ij}=\beta _{ij}\) and \({{\tilde{h}}}_i = \beta _{0i}\) for \(i,j\in \Lambda _0\). The equality now follows from (2.62), together with the observation that \(\Delta _{\beta (t)}|_{\Lambda _0}\) is \(\Delta _{{\tilde{\beta }}(t),{{\tilde{h}}}(t)}\) if \(t_{0}=0\). \(\quad \square \)
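The matrix-tree representation of \({{\tilde{D}}}_\beta (t)\) that enters this corollary can be checked numerically on a small graph. The sketch below assumes the standard weighted form of the theorem, with edge weights \(\beta _{ij}e^{t_i+t_j}\), and the sign convention in which \(-\Delta _{\beta (t)}\) has positive diagonal entries. The helper names, the weights on \(K_4\), and the choice of t (with \(t_0=0\) at the pinned vertex) are hypothetical.

```python
from itertools import combinations
from math import exp, isclose

def det(m):
    """Determinant by Laplace expansion along the first row (fine for tiny matrices)."""
    if not m:
        return 1.0
    if len(m) == 1:
        return m[0][0]
    total = 0.0
    for c, entry in enumerate(m[0]):
        minor = [row[:c] + row[c + 1:] for row in m[1:]]
        total += (-1) ** c * entry * det(minor)
    return total

def is_spanning_tree(n, edges):
    """True if `edges` has n-1 edges and no cycle, i.e. is a spanning tree on 0..n-1."""
    if len(edges) != n - 1:
        return False
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for i, j in edges:
        ri, rj = find(i), find(j)
        if ri == rj:
            return False
        parent[ri] = rj
    return True

def reduced_laplacian(n, beta, t):
    """-Delta_{beta(t)} with row and column of the pinned vertex 0 removed."""
    lap = [[0.0] * n for _ in range(n)]
    for (i, j), b in beta.items():
        w = b * exp(t[i] + t[j])
        lap[i][i] += w
        lap[j][j] += w
        lap[i][j] -= w
        lap[j][i] -= w
    return [row[1:] for row in lap[1:]]

def tree_sum(n, beta, t):
    """Sum over spanning trees T of prod_{ij in T} beta_ij * exp(t_i + t_j)."""
    total = 0.0
    for subset in combinations(beta, n - 1):
        if is_spanning_tree(n, subset):
            p = 1.0
            for i, j in subset:
                p *= beta[(i, j)] * exp(t[i] + t[j])
            total += p
    return total

beta = {(0, 1): 0.5, (0, 2): 2.0, (0, 3): 1.0, (1, 2): 3.0, (1, 3): 0.7, (2, 3): 1.25}
t = [0.0, 0.3, -0.5, 1.1]
lhs = det(reduced_laplacian(4, beta, t))
rhs = tree_sum(4, beta, t)
```

Here `lhs` and `rhs` agree up to floating-point rounding. With unit weights and \(t=0\) on \(K_4\) both reduce to Cayley's count of 16 spanning trees.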

In view of (2.84) and since \([1]_{\beta }^{0}=Z_{\beta }\) by Proposition 2.12, we again abuse notation somewhat and write the normalised expectation of a function of \(t=(t_i)_{i\in \Lambda }\) as

Corollary 2.14

The connection probabilities can be written in terms of the pinned \({\mathbb {H}}^{2|4}\) measure:

Moreover, for any vertex i,

Proof

(2.86) follows by applying first (2.11), then (2.80), then using the fact that \(u_0\cdot u_i=-z_i\) under \(\langle \cdot \rangle _\beta ^0\), then using that \(\langle x_i \rangle _\beta =0\) by symmetry, and finally applying (2.84):

The argument that \(\langle e^{3t_{i}} \rangle _{\beta }^{0}=1\) is identical to (2.71) with \(\langle \cdot \rangle _\beta \) replaced by \(\langle \cdot \rangle _\beta ^0\). \(\quad \square \)

3 Phase Transition on the Complete Graph

The following theorem shows that on the complete graph the arboreal gas undergoes a transition very similar to the percolation transition, i.e., the Erdős–Rényi graph. As mentioned in the introduction, this result has been obtained previously [8, 34, 36]. We have included a proof only to illustrate the utility of the \({\mathbb {H}}^{0|2}\) representation. The study of spanning forests of the complete graph goes back to (at least) Rényi [43] who obtained a formula which can be seen to imply that their asymptotic number grows like \(\sqrt{e}n^{n-2}\), see [37].
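The growth rate \(\sqrt{e}n^{n-2}\) quoted above can be observed numerically. The sketch below counts labelled forests on n vertices with a standard recurrence (condition on the size k of the tree containing a distinguished vertex and use Cayley's formula \(k^{k-2}\) for the number of trees on k labelled vertices); the recurrence and the function names are our choice for illustration and are not taken from [43] or [37].

```python
from math import comb, e, sqrt

def tree_count(k):
    """Number of labelled trees on k vertices (Cayley's formula, with 1 for k = 1, 2)."""
    return 1 if k <= 2 else k ** (k - 2)

def forest_counts(n_max):
    """f[n] = number of forests on n labelled vertices, for n = 0..n_max."""
    f = [1]
    for n in range(1, n_max + 1):
        # choose the other k-1 vertices of the tree containing a fixed vertex
        f.append(sum(comb(n - 1, k - 1) * tree_count(k) * f[n - k]
                     for k in range(1, n + 1)))
    return f

counts = forest_counts(200)
ratio = counts[200] / 200 ** 198          # compare with sqrt(e) = 1.6487...
relative_gap = abs(ratio / sqrt(e) - 1)
```

The first values `counts[:6]` are 1, 1, 2, 7, 38, 291. The convergence of `ratio` to \(\sqrt{e}\) is slow, with a relative gap that decays roughly like 1/n, so a moderately large n is needed to see the limit.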

Throughout this section we consider \({{\mathbb {G}}} = K_{N}\), the complete graph on N vertices with vertex set \(\{0,1,2,\dots , N-1\}\), and we choose \(\beta _{ij} = \alpha /N\) with \(\alpha >0\) fixed for all edges ij. For notational simplicity we write \(Z_{\beta }\) and \({\mathbb {P}}_{\beta }\), i.e., we leave the dependence on N implicit.

Theorem 3.1

In the high temperature phase \(\alpha < 1\),

In the low temperature phase \(\alpha > 1\),

In the critical case \(\alpha = 1\),
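For moderate N the connection probability on \(K_N\) can be computed exactly, which gives a useful sanity check on the dichotomy between the two phases. The sketch below is a direct combinatorial computation and does not use the \({\mathbb {H}}^{0|2}\) representation: it conditions on the size k of the tree containing vertex 0, uses Cayley's formula for the weight \(k^{k-2}\beta ^{k-1}\) of the trees on k vertices, and uses that, by symmetry, vertex 1 lies in that tree with probability \((k-1)/(N-1)\). The function names are hypothetical, and \(N\geqslant 2\) is assumed.

```python
from fractions import Fraction
from math import comb

def tree_weight(k, beta):
    """Total weight of the spanning trees of K_k: k^(k-2) * beta^(k-1)."""
    return (1 if k <= 2 else k ** (k - 2)) * beta ** (k - 1)

def connection_probability(N, alpha):
    """Exact P[0 <-> 1] for the arboreal gas on K_N with beta = alpha / N (N >= 2)."""
    beta = Fraction(alpha) / N
    w = [None] + [tree_weight(k, beta) for k in range(1, N + 1)]
    # Z[n] = weighted number of forests on n labelled vertices (beta stays alpha / N)
    Z = [Fraction(1)]
    for n in range(1, N + 1):
        Z.append(sum(comb(n - 1, k - 1) * w[k] * Z[n - k] for k in range(1, n + 1)))
    # law of the size k of the tree containing vertex 0
    size_law = [comb(N - 1, k - 1) * w[k] * Z[N - k] / Z[N] for k in range(1, N + 1)]
    return float(sum(p * Fraction(k - 1, N - 1) for k, p in enumerate(size_law, start=1)))

p_sub = connection_probability(100, Fraction(1, 2))   # alpha < 1
p_sup = connection_probability(100, 2)                # alpha > 1
```

In this example `100 * p_sub` stays of order one, whereas `p_sup` is of order one itself, as one expects from bounded tree sizes for \(\alpha <1\) and a giant tree for \(\alpha >1\).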

3.1 Integral representation

The first step in the proof of the theorem is the following integral representation that follows from a transformation of the fermionic field theory representation from Sect. 2.1. We introduce the effective potential

and set

Proposition 3.2

For all \(\alpha >0\) and all positive integers N,

where \(Z_{\beta }[0\leftrightarrow 1] \equiv {\mathbb {P}}_{\beta }[0\leftrightarrow 1]Z_{\beta }\).

Proof

We start from the representations of the partition functions in terms of the \({\mathbb {H}}^{0|2}\) model, i.e., Theorem 2.1 and Corollary 2.2, which we simplify using the assumption that the graph is the complete graph. Let \((\Delta _\beta f)_i = \frac{\alpha }{N}\sum _{j=0}^{N-1} (f_i-f_j)\) be the mean-field Laplacian and \(\varvec{h}= (h_i)_i\). Then

In the sequel we will omit the range of sums and products when there is no risk of ambiguity.

To decouple the two terms that are not diagonal sums we use the following Hubbard–Stratonovich-type transforms in terms of auxiliary variables \({\tilde{\xi }},{\tilde{\eta }}\) (fermionic) and \({{\tilde{z}}}\) (real). Let \({\mathbf {1}}\) be the vector such that \({\mathbf {1}}_i=1\) for all \(0\leqslant i\leqslant N-1\).

The second formula is the formula for the Fourier transform of a Gaussian measure. The first formula can be seen by making use of the following identity. Write \(Af \equiv \frac{1}{N}\sum _i f_i\) for the average of f, so that

Using this identity the first equality in (3.10) is readily obtained by computing the fermionic derivatives, while the second equality follows by expanding the exponent. In the second line of (3.12) we used the orthogonality of constant functions with the mean 0 function \(\varvec{\xi }-(A\varvec{\xi }){\mathbf {1}}\). Finally, on the last line of (3.12), we used that \([{\tilde{\eta }}-A\varvec{\eta }]{\mathbf {1}}\) is a constant to write the \(\ell ^2\) inner product as a product multiplied by a factor N, and the factor \(\alpha \) in the second term was absorbed into \(\Delta _{\beta }\).

Substituting (3.10)–(3.11) into (2.8) gives

Simplifying the term inside the exponential gives

Since \(({\tilde{\xi }}{\tilde{\eta }})^2=0\) and \(({\tilde{\xi }}\eta _i+\xi _i{\tilde{\eta }})^3=0\), the exponential can be replaced by its third-order Taylor expansion, giving

Using again nilpotency of \({\tilde{\xi }}{\tilde{\eta }}\) this may be rewritten as

Evaluating the fermionic derivatives gives the identity

To show (3.6)–(3.7) we now take \(\varvec{h}=0\). By definition the last bracket in (3.17) is then \(F(i\alpha {{\tilde{z}}})\) and the remaining integrand defines \(e^{-NV({{\tilde{z}}})}\), proving (3.6). For (3.7) we use that \(z_i = e^{z_i-1}\), and hence that \([z_0z_1]_{\beta } = Z_{\beta ,-1_0-1_1}\). Therefore (3.17) implies

By definition, the integrand equals \(-F_{01}(i\alpha {{\tilde{z}}})\), so together with the relation \(Z_\beta [0\leftrightarrow 1] = -[z_0z_1]_{\beta }\), which holds by (2.11), the claim (3.7) follows. \(\quad \square \)

3.2 Asymptotic analysis

To apply the method of stationary phase to evaluate the asymptotics of the integrals, we need the stationary points of V, and asymptotic expansions for V and F. The first two derivatives of P are

The stationary points are those \(w=i\alpha {{\tilde{z}}}\) such that \(P'(w)=0\). This equation can be rewritten as

which has solutions \(w=0\) and \(w=1-\alpha \). We call a root \(w_0\) stable if \(P''(w_0) >0\) and unstable if \(P''(w_0)<0\). For \(\alpha <1\) the root 0 is stable whereas \(1-\alpha \) is unstable; for \(\alpha >1\) the root \(1-\alpha \) is stable whereas 0 is unstable; for \(\alpha =1\) the two roots collide at 0 and \(P''(0)=0\).

For the asymptotic analysis, we start with the nondegenerate case \(\alpha \ne 1\). First observe that we can view the right-hand sides of (3.6)–(3.7) as contour integrals and can, due to analyticity of the integrand and the decay of \(e^{-N\alpha {{\tilde{z}}}^2/2}\) when \({{\,\mathrm{Re}\,}}{{\tilde{z}}}\) is large, shift this contour to the horizontal line \({\mathbb {R}}+iw\) for any \(w\in {\mathbb {R}}\). We will then apply Laplace’s method in the version given by the next theorem, which is a simplified formulation of [38, Theorem 7, p.127].

Theorem 3.3

Let I be a horizontal line in \({\mathbb {C}}\). Suppose that \(V,G:U \rightarrow {\mathbb {R}}\) are analytic in a neighbourhood U of the contour I, that \(t_0 \in I\) is such that \(V'\) has a simple root at \(t_0\), and that \({{\,\mathrm{Re}\,}}(V(t)-V(t_0))\) is positive and bounded away from 0 for t away from \(t_0\). Then

where the notation \(\sim \) means that the right-hand side is an asymptotic expansion for the left-hand side, and the coefficients are given by (with all functions evaluated at \(t_0\)):

and with \(b_{s}\) as given in [38] for \(s \ge 2\). (Also recall that \(\Gamma (1/2) = \sqrt{\pi }\) and that \(\Gamma (s+1)=s\Gamma (s)\).)
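To make the leading term of such an expansion concrete, the following sketch compares a numerically evaluated integral \(\int e^{-NV(t)}G(t)\,dt\) with the classical leading-order Laplace approximation \(e^{-NV(t_0)}G(t_0)\sqrt{2\pi /(NV''(t_0))}\). It treats only a real integrand on the real line, which is a simplification of the contour setting of the theorem, and the functions V and G below are hypothetical test functions unrelated to the effective potential of Sect. 3.1.

```python
from math import cosh, exp, pi, sqrt

def laplace_leading(N, G0, V2):
    """Leading-order Laplace approximation when V(t0) = 0: G(t0) * sqrt(2*pi / (N * V''(t0)))."""
    return G0 * sqrt(2 * pi / (N * V2))

def integral(N, V, G, a=-2.0, b=2.0, steps=40000):
    """Trapezoidal rule for the integral of exp(-N V(t)) G(t) over [a, b]."""
    step = (b - a) / steps
    total = 0.5 * (exp(-N * V(a)) * G(a) + exp(-N * V(b)) * G(b))
    for k in range(1, steps):
        t = a + k * step
        total += exp(-N * V(t)) * G(t)
    return total * step

def V(t):
    return cosh(t) - 1.0            # minimum at t0 = 0 with V(0) = 0, V''(0) = 1

def G(t):
    return 1.0 / (1.0 + t * t)      # smooth amplitude with G(0) = 1

N = 200
numeric = integral(N, V, G)
approx = laplace_leading(N, G(0.0), 1.0)
relative_error = abs(numeric / approx - 1)
```

The relative error is of order 1/N, which is the size of the first correction term in the expansion; truncating the integral to \([-2,2]\) is harmless because the integrand is negligible outside a neighbourhood of width about \(N^{-1/2}\) around the minimum.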

For \(\alpha \ne 1\), denote by \(w_0\) the unique stable root. As discussed in the previous paragraph, we can shift the contour to the line \({\mathbb {R}}-i \frac{w_0}{\alpha }\), and the previous theorem implies that

where all functions on the right-hand side are evaluated at \(w_0\). From this the proof of Theorem 3.1 for \(\alpha \ne 1\) is an elementary (albeit somewhat tedious) computation of the derivatives of P and F and \(F_{01}\) at \(w_0\).

Proof of Theorem 3.1, \(\alpha <1\). The stable root is \(w_0=0\). By (3.23) and elementary computations for the derivatives of P and F and \(F_{01}\), we find

Recalling the definitions (3.6)–(3.7), this implies the claims. \(\quad \square \)

Proof of Theorem 3.1, \(\alpha >1\). The stable root is \(w_0=1-\alpha \). Again (3.23) and elementary computations for the derivatives of P and F and \(F_{01}\) lead to

and \(P = P(w_0)=P(1-\alpha )\). Again the claims follow from (3.6)–(3.7). \(\quad \square \)

At the critical point \(\alpha =1\), the two roots collide at 0 and \(P''(0)=0\). We analyse the integral as follows.

Proof of Theorem 3.1, \(\alpha =1\). We begin by using the conjugate flip symmetry to write

Using analyticity of the integrand, we then deform the contour from \([0, \infty )\) to \([0, e^{i\pi /6}\infty )\); the contribution of the boundary arc vanishes due to the decay of \(e^{-N\alpha {{\tilde{z}}}^2/2}\) on this arc. We now split the contour into two intervals \(I_1 = [0, e^{i\pi /6}N^{-3/10})\) and \( I_2 = [e^{i\pi /6}N^{-3/10},e^{i\pi /6} \infty )\), and denote the integrals over these regions as \(J_1\) and \(J_2\) respectively.

Over the first interval \(I_1\), we introduce the new real variable \(s = N^{\frac{1}{3}}e^{-i\pi /6}{\tilde{z}}\), in terms of which

We then approximate the arguments as

where the last error bounds hold uniformly for \(s\in [0,N^{1/30}]\). This gives

The second term \(J_2\) is asymptotically negligible. To see this, we bound \(|F(i{\tilde{z}})| \le 1\), introduce the real variable \(s = e^{-\frac{i\pi }{6}}{\tilde{z}}\), and split the resulting domain as \( [N^{-3/10},2) \cup [2,\infty ) = I_2' \cup I_2''\):

Over \(I_2'\), we use that \(|I_2'| \le 2\) and bound the integral in terms of the supremum of the integrand:

and as \({{\,\mathrm{Re}\,}}NV(e^{\frac{i\pi }{6}} s)\) is decreasing, this supremum is attained on the boundary \(s = N^{-3/10}\). Taylor expanding as before gives us

Over \(I_2''\), we use that \({{\,\mathrm{Re}\,}}[NV(e^{\frac{i\pi }{6}} s)] \ge \frac{Ns^2}{4}\) for all \(s \ge 2\) to bound the second term as

Putting together the estimates for \(J_1\) and \(J_2\), we therefore find

and hence the first asymptotic relation in (3.3) follows from (3.6), i.e.,

Using the same procedure, we can compute \({\mathbb {P}}_\beta [0\leftrightarrow 1]\). We again split the (conveniently scaled) integral into two terms as

As before \(J_2\) is asymptotically negligible. For \(J_1\), we approximate the \(F_{01}\) term as

uniformly for \(s\in [0,N^{1/30}]\), to obtain the asymptotic relation

From (3.7), we therefore find

which after dividing by \(Z_\beta \) shows the second asymptotic relation in (3.3). \(\quad \square \)

4 No Percolation in Two Dimensions

In this section, we consider the arboreal gas on (finite approximations of) \({\mathbb {Z}}^2\) with constant nearest neighbour weights, i.e., with \(\beta _{ij}=\beta >0\) for all edges ij and vertex weights \(h_i=h\) for all vertices i. As such we write \(\beta \) instead of \(\varvec{\beta }\) in this section. Constant weights are merely a convenient choice; everything in this section also applies to translation-invariant finite range weights, for example. In contrast with the case of the complete graph, we show that on \({\mathbb {Z}}^2\) the tree containing a fixed vertex always has finite density. Our arguments are closely based on estimates developed for the vertex-reinforced jump process [6, 33, 44]. The main new idea is to use these bounds in combination with dimensional reduction from Sect. 2.4.

4.1 Two-point function decay in two dimensions

The proof of Theorem 1.3 makes use of the representation from Sect. 2.6, and closely follows [44]; an alternative proof could likely be obtained by adapting instead [33].

To lighten the notation, for a finite subgraph \(\Lambda \subset {\mathbb {Z}}^{2}\) we write \({\mathbb {P}}_{\beta }\) in place of \({\mathbb {P}}_{\Lambda ,\beta }\). By (2.86), the connection probability can be written in the horospherical coordinates of the \({\mathbb {H}}^{2|4}\) model as

where \(\langle \cdot \rangle ^0_\beta \) denotes the expectation with pinning at vertex 0. Explicitly, by (2.85), the measure \(\langle \cdot \rangle _\beta ^0\) on the right-hand side can be written as the \(a=3/2\) case of

where

and where \({{\tilde{D}}}_{\beta }(t)\) was given explicitly in (2.82) and \(Z_{\beta ,a}\) is a normalising constant. We have made the parameter a explicit as our argument adapts that of [44], which concerned the case \(a=1/2\). When \(a=1/2\) supersymmetry implies that \(Z_{\beta ,1/2} =1\) and \({\mathbb {E}}_{Q_{\beta ,1/2}}(e^{t_k})=1\) for all \(\varvec{\beta }=(\beta _{ij})\) and all \(k\in \Lambda \). These identities require the following replacement when \(a\ne 1/2\):

When \(a=3/2\) the first of these facts follows from the forest representation for the partition function, see Proposition 2.9, and the second is (2.87) of Corollary 2.13. A proof that (4.4) holds for general half-integer \(a \geqslant 0\) appears in [17], and we conjecture that (4.4) holds for any \(a\geqslant 0\).

With (4.4) given, it is straightforward to adapt [44, Lemma 1] to obtain the following lemma. In the next lemma we assume \(0,j\in \Lambda \), but we make no further assumptions beyond that \(\varvec{\beta }\) induces a connected graph.

Lemma 4.1

(Sabot [44, Lemma 1] for \(a=1/2\)). Let \(a\geqslant 0\), \(s\in (0,1)\), and \(\gamma > 0\). Assume (4.4) holds. Then for any \(v \in {\mathbb {R}}^\Lambda \) with \(v_j=1\), \(v_{0}=0\), and

one has, with \(q=1/(1-s)\),

Proof

As mentioned, our proof is an adaptation of [44, Lemma 1], and hence we indicate the main steps but will be somewhat brief. In this reference \(a=1/2\), \(Q_{\beta ,a}\) is denoted Q, \(\beta _{ij}\) is denoted \(W_{ij}\), and t is denoted by u. Let \(Q_{\beta ,a}^\gamma \) denote the distribution of \(t-\gamma v\). Since the partition function does not change under translation of the underlying measure, by following [44, Prop. 1] we obtain,

With \(e^t\) replaced by \(e^{2at}\) but otherwise exactly as in the argument leading to [44, (2)], by using that \(s^{-1}\) and q are Hölder conjugate and using the second part of (4.4),

The expectation on the right-hand side is estimated as in [44], with the only change that \(\sqrt{D(\beta ,t)}\) is replaced by \(D(\beta ,t)^a\) in all expressions, and that the change of measure from \(Q_{\beta ,a}\) to \(Q_{{\tilde{\beta }},a}\) involves the normalisation constants, i.e., a factor \(Z_{{\tilde{\beta }},a}/Z_{\beta ,a}\). Setting \(\gamma '= \gamma (q-1)\), we obtain

where

The ratio of determinants is bounded using the matrix-tree theorem as done in [44, p. 7], and we use that \(Z_{{\tilde{\beta }},a} \leqslant Z_{\beta ,a}\), by (4.4). The result is (4.6). \(\quad \square \)

Proof of Theorem 1.3

We may choose \(s=1/(2a) = 1/3 \in (0,1)\) in Lemma 4.1. We then combine (4.1) and (4.6) and choose v as a difference of Green functions (exactly as in [44, Section 2.2]) to find that,

as needed. \(\quad \square \)

4.2 Mermin–Wagner theorem

We now show that the vanishing of the density of the cluster containing a fixed vertex on the torus also follows from a version of the classical Mermin–Wagner theorem. We first derive an expression for a quantity closely related to the mean tree size. For constant h, Theorem 2.1 implies that

which leads to

where \(T_i\) is the (random) tree containing the vertex i.

Let \(\Lambda \) be a d-dimensional discrete torus, and let \(\lambda (p)\) be the Fourier multiplier of the corresponding discrete Laplacian:

where \(\cdot \) is the Euclidean inner product on \({\mathbb {R}}^d\) and \(\Lambda ^{\star }\) is the Fourier dual of the discrete torus \(\Lambda \).
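As a hedged numerical illustration, the sketch below assumes the standard convention \(\lambda (p)=\sum _{j=1}^d (2-2\cos p_j)\) with \(p\in \Lambda ^{\star }=(2\pi /L)\{0,\dots ,L-1\}^d\) and the unweighted nearest-neighbour Laplacian. The sign and normalisation used in the displayed formula may differ from this assumption. The code checks that each plane wave \(x\mapsto e^{ip\cdot x}\) is an eigenfunction of \(-\Delta \) with eigenvalue \(\lambda (p)\); all function names are hypothetical.

```python
import cmath
import math
from itertools import product


def fourier_multiplier(p):
    """Assumed convention: lambda(p) = sum_j (2 - 2 cos p_j)."""
    return sum(2.0 - 2.0 * math.cos(pj) for pj in p)


def dual_torus(L, d):
    """Fourier dual of (Z/LZ)^d, represented as (2 pi / L) * {0, ..., L-1}^d."""
    return [tuple(2.0 * math.pi * k / L for k in ks)
            for ks in product(range(L), repeat=d)]


def apply_minus_laplacian(f, L, d):
    """(-Delta f)(x) = sum over the 2d neighbours y of x of (f(x) - f(y))."""
    out = {}
    for x in product(range(L), repeat=d):
        total = 0.0
        for axis in range(d):
            for step in (1, -1):
                y = list(x)
                y[axis] = (y[axis] + step) % L
                total += f[x] - f[tuple(y)]
        out[x] = total
    return out


def max_eigen_error(L, d):
    """Largest deviation of -Delta e^{ip.x} from lambda(p) e^{ip.x} over all p and x."""
    worst = 0.0
    for p in dual_torus(L, d):
        wave = {x: cmath.exp(1j * sum(pj * xj for pj, xj in zip(p, x)))
                for x in product(range(L), repeat=d)}
        image = apply_minus_laplacian(wave, L, d)
        lam = fourier_multiplier(p)
        for x in wave:
            worst = max(worst, abs(image[x] - lam * wave[x]))
    return worst
```

Calling `max_eigen_error(4, 2)` should return a value at the level of floating-point rounding, which confirms the diagonalisation under the stated convention.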

Theorem 4.2

Let \(d \geqslant 1\), and let \(\Lambda \) be a d-dimensional discrete torus of side length L. Then

Proof

The proof is analogous to [6, Theorem 1.5]. We write the \({\mathbb {H}}^{0|2}\) expectations \(\langle \xi _i\eta _j \rangle _{\beta ,h}\) and \(\langle z_i \rangle _{\beta ,h}\) in horospherical coordinates using Corollary 2.10:

Set

Since the expectation of functions depending only on (s, t) in horospherical coordinates is an expectation with respect to a probability measure, denoted \(\langle \cdot \rangle \) from here on, the Cauchy–Schwarz inequality implies

Since the density in horospherical coordinates is \(e^{-{\tilde{H}}(s,t)}\), the probability measure \(\langle \cdot \rangle \) obeys the integration by parts identity \(\langle FD{{\tilde{H}}} \rangle = \langle DF \rangle \) for any function \(F=F(s,t)\) that does not grow too fast. Therefore by translation invariance, with \(y_i = s_ie^{t_i}\),

By Cauchy–Schwarz, translation invariance, and (4.16) we also have

Using (4.21) and the integration by parts identity it follows that

In summary, we have proved

Summing over \(p \in \Lambda ^{\star }\) in the Fourier dual of \(\Lambda \) (with the sum correctly normalized), the left-hand side becomes \(\langle \xi _0\eta _0 \rangle \). Using \(\langle z_0 \rangle = 1-\langle \xi _0\eta _0 \rangle \) this then gives the claim:

\(\square \)

From the Mermin–Wagner theorem we obtain that on a finite torus of side length L the density of the tree containing 0 tends to 0 as \(L\rightarrow \infty \). We write \(\lesssim \) for inequalities that hold up to universal constants.

Corollary 4.3

Let \(\Lambda \) be the 2-dimensional discrete torus of side length L. Then

Proof

For any \(h \leqslant 1/|\Lambda |\) we have \(h|T_0| \leqslant 1\). Thus, by Theorem 4.2 with \(d=2\),

where we used that, for all \(h \geqslant 0\), the Green’s function of the discrete torus satisfies

Directly following the conclusion of the present proof, we shall show that if X is a random variable with \(|X|\leqslant 1\), and if \(h \ll 1/|\Lambda |\),

Applying this estimate with \(X=|T_0|/|\Lambda |\), for \(h \ll 1/|\Lambda |\) we have

With \(h =L^{-2}(\log L)^{-1/2}\), combining both estimates gives

\(\square \)
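The Green's function estimate used in the proof above can be explored numerically. The sketch below assumes the convention \(\lambda (p)=4-2\cos p_1-2\cos p_2\) on the two-dimensional torus and assumes that the bound has the form \(|\Lambda |^{-1}\sum _{p}(\lambda (p)+h)^{-1}\lesssim \log L + 1/(hL^2)\); both are assumptions, since the displayed formulas are not reproduced here. It separates the zero mode, which contributes exactly \(1/(hL^2)\), from the remaining modes, and evaluates both at \(h=L^{-2}(\log L)^{-1/2}\).

```python
import math


def green_sum(L, h):
    """Return (zero_mode, nonzero_modes) of (1/L^2) * sum_p 1 / (lambda(p) + h).

    Assumes lambda(p) = 4 - 2 cos(p1) - 2 cos(p2), p in (2 pi / L) * {0..L-1}^2.
    """
    zero_mode = 1.0 / (h * L * L)  # lambda(0) = 0, so this term is 1 / (h |Lambda|)
    nonzero = 0.0
    for k1 in range(L):
        for k2 in range(L):
            if k1 == 0 and k2 == 0:
                continue
            lam = (4.0
                   - 2.0 * math.cos(2.0 * math.pi * k1 / L)
                   - 2.0 * math.cos(2.0 * math.pi * k2 / L))
            nonzero += 1.0 / (lam + h)
    return zero_mode, nonzero / (L * L)


if __name__ == "__main__":
    for L in (8, 16, 32, 64):
        h = L ** -2 * math.log(L) ** -0.5  # the choice of h made in the proof
        zero_mode, nonzero = green_sum(L, h)
        print(L, zero_mode, nonzero, math.log(L))
```

With this choice of \(h\) the zero mode equals \((\log L)^{1/2}\), so under the assumed form of the bound the whole sum is of order at most \(\log L\).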

Lemma 4.4

Let \(\Lambda \) be any finite graph with \(|\Lambda |\) vertices. Let X be a random variable with \(|X|\leqslant 1\). Then for \(h \ll 1/|\Lambda |\),

Proof

By definition,

With \(A'/(1+\varepsilon )-A = (A'-A) - A' (\varepsilon /(1+\varepsilon )) = (A'-A) - (A'/(1+\varepsilon )) \varepsilon \) we get

Since \(|X|\leqslant 1\) it suffices to bound

where the sum runs over subforests \(F'\) of F, i.e., unions of the disjoint trees in F. Since \(\sum _i |T_i| \leqslant |\Lambda |\),

whenever \(h|\Lambda |\ll 1\). \(\quad \square \)
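The combinatorial bound behind the last step can be checked on examples. The sketch below assumes that the \(h\)-dependent weight of a forest factorises as \(\prod _{T}(1+h|T|)\) over its trees, as in the forest representation of Sect. 2, and that the sum over non-empty subforests \(F'\) is \(\sum _{F'} h^{k(F')}\prod _{T\in F'}|T|\), where \(k(F')\) is the number of trees in \(F'\). Both forms are assumptions, since the displayed formulas are not reproduced here. It verifies that this sum equals \(\prod _T(1+h|T|)-1\) and is at most \(e^{h|\Lambda |}-1\), which is \(O(h|\Lambda |)\) when \(h|\Lambda |\leqslant 1\).

```python
import math
from itertools import combinations


def subforest_sum(tree_sizes, h):
    """Sum over non-empty sets S of trees of h^{|S|} * prod_{T in S} |T|."""
    total = 0.0
    for r in range(1, len(tree_sizes) + 1):
        for chosen in combinations(tree_sizes, r):
            total += h ** r * math.prod(chosen)
    return total


def product_minus_one(tree_sizes, h):
    """prod_T (1 + h |T|) - 1, which should agree with subforest_sum."""
    return math.prod(1.0 + h * size for size in tree_sizes) - 1.0


def exponential_bound(tree_sizes, h):
    """e^{h * sum_T |T|} - 1, an upper bound because 1 + x <= e^x."""
    return math.exp(h * sum(tree_sizes)) - 1.0
```

For example, with tree sizes `[3, 1, 5, 2]` (so \(|\Lambda |=11\)) and `h = 0.01`, the first two functions agree and both are dominated by `exponential_bound`, which in turn is at most \(2h|\Lambda |\) because \(e^x-1\leqslant 2x\) on \([0,1]\).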

References

Abrahams, E., Anderson, P.W., Licciardello, D.C., Ramakrishnan, T.V.: Scaling theory of localization: absence of quantum diffusion in two dimensions. Phys. Rev. Lett. 42, 673–676 (1979)

Albeverio, S., Vecchi, F.C.D., Gubinelli, M.: Elliptic stochastic quantization. Preprint, arXiv:1812.04422

Alon, N., Spencer, J.H.: The Probabilistic Method. Wiley Series in Discrete Mathematics and Optimization, 4th edn. Wiley, Hoboken (2016)

Angel, O., Crawford, N., Kozma, G.: Localization for linearly edge reinforced random walks. Duke Math. J. 163(5), 889–921 (2014)

Bauerschmidt, R., Helmuth, T., Swan, A.: The geometry of random walk isomorphism theorems. Ann. Inst. Henri Poincaré Probab. Stat. (to appear)

Bauerschmidt, R., Helmuth, T., Swan, A.: Dynkin isomorphism and Mermin-Wagner theorems for hyperbolic sigma models and recurrence of the two-dimensional vertex-reinforced jump process. Ann. Probab. 47(5), 3375–3396 (2019)

Bedini, A., Caracciolo, S., Sportiello, A.: Hyperforests on the complete hypergraph by Grassmann integral representation. J. Phys. A 41(20), 205003, 28 (2008)

Bedini, A., Caracciolo, S., Sportiello, A.: Phase transition in the spanning-hyperforest model on complete hypergraphs. Nucl. Phys. B 822(3), 493–516 (2009)

Beffara, V., Duminil-Copin, H.: The self-dual point of the two-dimensional random-cluster model is critical for \(q{\geqslant } 1\). Probab. Theory Relat. Fields 153(3–4), 511–542 (2012)

Brändén, P., Huh, J.: Lorentzian polynomials. Preprint, arXiv:1902.03719

Brydges, D.C., Imbrie, J.Z.: Branched polymers and dimensional reduction. Ann. Math. 158(3), 1019–1039 (2003)

Burton, R.M., Keane, M.: Density and uniqueness in percolation. Commun. Math. Phys. 121(3), 501–505 (1989)

Caracciolo, S., Jacobsen, J.L., Saleur, H., Sokal, A.D., Sportiello, A.: Fermionic field theory for trees and forests. Phys. Rev. Lett. 93(8), 080601, 4 (2004)

Caracciolo, S., Sokal, A.D., Sportiello, A.: Grassmann integral representation for spanning hyperforests. J. Phys. A 40(46), 13799–13835 (2007)

Caracciolo, S., Sokal, A.D., Sportiello, A.: Noncommutative determinants, Cauchy-Binet formulae, and Capelli-type identities. I. Generalizations of the Capelli and Turnbull identities. Electron. J. Combin. 16(1), Research Paper 103, 43 (2009)

Caracciolo, S., Sokal, A.D., Sportiello, A.: Spanning forests and \(OSP(N|2M)\)-invariant \(\sigma \)-models. J. Phys. A 50(11), 114001, 52 (2017)

Crawford, N.: Supersymmetric hyperbolic \(\sigma \)-models and decay of correlations in two dimensions. Preprint, arXiv:1912.05817

den Hollander, W.T.F., Keane, M.: Inequalities of FKG type. Phys. A 138(1–2), 167–182 (1986)

Deng, Y., Garoni, T.M., Sokal, A.D.: Ferromagnetic phase transition for the spanning-forest model (\(q\rightarrow 0\) limit of the Potts model) in three or more dimensions. Phys. Rev. Lett. 98, 030602 (2007)

Disertori, M., Spencer, T.: Anderson localization for a supersymmetric sigma model. Commun. Math. Phys. 300(3), 659–671 (2010)

Disertori, M., Spencer, T., Zirnbauer, M.R.: Quasi-diffusion in a 3D supersymmetric hyperbolic sigma model. Commun. Math. Phys. 300(2), 435–486 (2010)

Duminil-Copin, H.: Lectures on the Ising and Potts models on the hypercubic lattice. In: Random graphs, phase transitions, and the Gaussian free field, volume 304 of Springer Proc. Math. Stat., pp. 35–161. Springer, Cham (2020)

Feder, T., Mihail, M.: Balanced matroids. In: Proceedings of the Twenty Fourth Annual ACM Symposium on the Theory of Computing, pp. 26–38, (1992)

Ginibre, J.: General formulation of Griffiths’ inequalities. Commun. Math. Phys. 16, 310–328 (1970)

Grimmett, G.: The random-cluster model, volume 333 of Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences]. Springer, Berlin (2006)

Grimmett, G.R., Winkler, S.N.: Negative association in uniform forests and connected graphs. Random Struct. Algorith. 24(4), 444–460 (2004)

Huh, J., Schröter, B., Wang, B.: Correlation bounds for fields and matroids. Preprint, arXiv:1806.02675

Jacobsen, J.L., Saleur, H.: The arboreal gas and the supersphere sigma model. Nuclear Phys. B 716(3), 439–461 (2005)

Jaffe, A., Witten, E.: Quantum Yang-Mills theory. In: The millennium prize problems, pages 129–152. Clay Math. Inst., Cambridge, MA (2006)

Kahn, J.: A normal law for matchings. Combinatorica 20(3), 339–391 (2000)

Kahn, J., Neiman, M.: Negative correlation and log-concavity. Random Struct. Algorith. 37(3), 367–388 (2010)

Kozma, G.: Reinforced random walk. In: European Congress of Mathematics, pp. 429–443. Eur. Math. Soc., Zürich, (2013)

Kozma, G., Peled, R.: Power-law decay of weights and recurrence of the two-dimensional VRJP. Preprint, arXiv:1911.08579

Łuczak, T., Pittel, B.: Components of random forests. Combin. Probab. Comput. 1(1), 35–52 (1992)

Lyons, R., Peres, Y.: Probability on trees and networks, volume 42 of Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press, New York (2016)

Martin, J.B., Yeo, D.: Critical random forests. ALEA Lat. Am. J. Probab. Math. Stat. 15(2), 913–960 (2018)

Moon, J.W.: Counting labelled trees. From lectures delivered to the Twelfth Biennial Seminar of the Canadian Mathematical Congress (Vancouver, 1969). Canadian Mathematical Congress, Montreal, Que. (1970)

Olver, F.W.J.: Asymptotics and special functions. Academic Press, 1974. Computer Science and Applied Mathematics

Parisi, G., Sourlas, N.: Random magnetic fields, supersymmetry, and negative dimensions. Phys. Rev. Lett. 43, 744–745 (1979)

Pemantle, R.: Towards a theory of negative dependence. volume 41, pp. 1371–1390. 2000. Probabilistic techniques in equilibrium and nonequilibrium statistical physics

Polyakov, A.M.: Interaction of goldstone particles in two dimensions. Applications to ferromagnets and massive Yang-Mills fields. Phys. Lett. B 59, 79–81 (1975)

Ráth, B.: Mean field frozen percolation. J. Stat. Phys. 137(3), 459–499 (2009)

Rényi, A.: Some remarks on the theory of trees. Magyar Tud. Akad. Mat. Kutató Int. Közl. 4, 73–85 (1959)

Sabot, C.: Polynomial localization of the 2D-Vertex Reinforced Jump Process

Sabot, C., Tarrès, P.: Edge-reinforced random walk, vertex-reinforced jump process and the supersymmetric hyperbolic sigma model. J. Eur. Math. Soc. 17(9), 2353–2378 (2015)

Semple, C., Welsh, D.: Negative correlation in graphs and matroids. Combin. Probab. Comput. 17(3), 423–435 (2008)

Spencer, T., Zirnbauer, M.R.: Spontaneous symmetry breaking of a hyperbolic sigma model in three dimensions. Commun. Math. Phys. 252(1–3), 167–187 (2004)

Stark, D.: The edge correlation of random forests. Ann. Comb. 15(3), 529–539 (2011)

Zirnbauer, M.R.: Localization transition on the Bethe lattice. Phys. Rev. B 34(9), 6394–6408 (1986)

Zirnbauer, M.R.: Fourier analysis on a hyperbolic supermanifold with constant curvature. Commun. Math. Phys. 141(3), 503–522 (1991)

Zirnbauer, M.R.: Riemannian symmetric superspaces and their origin in random-matrix theory. J. Math. Phys. 37(10), 4986–5018 (1996)

Acknowledgements

We thank G. Grimmett for helpful discussions and for pointing out Proposition 1.1, G. Slade for helpful references concerning Laplace’s method, and B. Tóth and A. Celsus for helpful conversations. We thank the anonymous referee for their helpful comments and criticisms, and we especially thank T. Hutchcroft for pointing out an error in an earlier version of the appendix, and for proposing the proof used in Appendix A.3. T.H. was supported by EPSRC Grant No. EP/P003656/1 and was at the University of Bristol when this work was carried out. A.S. is supported by EPSRC Grant No. 1648831. N.C. was supported by Israel Science Foundation Grant Number 1692/17.

Additional information

Communicated by H. Duminil-Copin
