Abstract

Quantum teleportation is one of the fundamental building blocks of quantum Shannon theory. While ordinary teleportation is simple and efficient, port-based teleportation (PBT) enables applications such as universal programmable quantum processors, instantaneous non-local quantum computation and attacks on position-based quantum cryptography. In this work, we determine the fundamental limit on the performance of PBT: for arbitrary fixed input dimension and a large number N of ports, the error of the optimal protocol is proportional to the inverse square of N. We prove this by deriving an achievability bound, obtained by relating the corresponding optimization problem to the lowest Dirichlet eigenvalue of the Laplacian on the ordered simplex. We also give an improved converse bound of matching order in the number of ports. In addition, we determine the leading-order asymptotics of PBT variants defined in terms of maximally entangled resource states. The proofs of these results rely on connecting recently-derived representation-theoretic formulas to random matrix theory. Along the way, we refine a convergence result for the fluctuations of the Schur–Weyl distribution by Johansson, which might be of independent interest.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Quantum teleportation protocols

Quantum teleportation [1] is a fundamental quantum information-processing task, and one of the hallmark features of quantum information theory: Two parties Alice and Bob may use a shared entangled quantum state together with classical communication to “teleport” an unknown quantum state from Alice to Bob. The original protocol in [1] consists of Alice measuring the unknown state together with her half of the shared entangled state and letting Bob know about the outcome of her measurement. Based on this information Bob can then manipulate his half of the shared state by applying a suitable correction operation, thus recovering the unknown state in his lab.

From an information-theoretic point of view, quantum teleportation implements a quantum channel between Alice and Bob. If the shared entangled state is a noiseless, maximally entangled state (a so-called EPR state, named after a famous paper by Einstein, Podolski, and Rosen [2]), then this quantum channel is in fact a perfect, noiseless channel. On the other hand, using a noisy entangled state as the shared resource in the teleportation protocol renders the effective quantum channel imperfect or noisy. A common way to measure the noise in a quantum channel is by means of the entanglement fidelity, which quantifies how well the channel preserves generic correlations with an inaccessible environment system (see Sect. 2.1 for a definition).

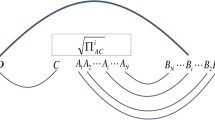

Schematic representation of port-based teleportation (PBT). Like in ordinary teleportation, the sender applies a joint measurement to her input system A and her parts of the entangled resource, \(A_i, i=1,\ldots ,N\), and sends the outcome to the receiver, who applies a correction operation. In PBT, however, this correction operation merely consists of choosing one of the subsystems \(B_i\), the ports, of the entangled resource. A PBT protocol cannot implement a perfect quantum channel with a finite number of ports. There are different variants of PBT. The four commonly studied ones are characterized by whether failures are announced, or heralded (probabilistic PBT) or go unnoticed (deterministic PBT), and whether simplifying constraints on the resource state and the sender’s measurement are enforced

Port-based teleportation (PBT) [3, 4] is a variant of the original quantum teleportation protocol [1], where the receiver’s correction operation consists of merely picking the right subsystem, called port, of their part of the entangled resource state. Figure 1 provides a schematic description of the protocol (see Sect. 3 for a more detailed explanation). While being far less efficient than the ordinary teleportation protocol, the simple correction operation allows the receiver to apply a quantum operation to the output of the protocol before receiving the classical message. This simultaneous unitary covariance property enables all known applications that require PBT instead of just ordinary quantum teleportation, including the construction of universal programmable quantum processors [3], quantum channel discrimination [5] and instantaneous non-local quantum computation (INQC) [6].

In the INQC protocol, which was devised by Beigi and König [6], two spatially separated parties share an input state and wish to perform a joint unitary on it. To do so, they are only allowed a single simultaneous round of communication. INQC provides a generic attack on any quantum position-verification scheme [7], a protocol in the field of position-based cryptography [6, 8,9,10]. It is therefore of great interest for cryptography to characterize the resource requirements of INQC: it is still open whether a computationally secure quantum position-verification scheme exists, as all known generic attacks require an exponential amount of entanglement. Efficient protocols for INQC are only known for special cases [11,12,13,14]. The best lower bounds for the entanglement requirements of INQC are, however, linear in the input size [6, 15, 16], making the hardness of PBT, the corner stone of the best known protocol, the only indication for a possible hardness of INQC.

PBT comes in two variants, deterministic and probabilistic, the latter being distinguished from the former by the fact that the protocol implements a perfect quantum channel whenever it does not fail (errors are “heralded”). In addition, two classes of protocols have been considered in the literature, one using maximally entangled resource states and the other using more complex resources optimized for the protocol. The appeal of the former type of protocol is mostly due to the fact that maximally entangled states are a standard resource in quantum information processing and can be prepared efficiently. Using a protocol based on maximally entangled resources thus removes one parameter from the total complexity of the protocol, the complexity of preparing the resource, leaving the amount of resources as well as the complexity of the involved quantum measurement as the remaining two complexity contributions.

In their seminal work [3, 4], Ishizaka and Hiroshima completely characterize the problem of PBT for qubits. They calculate the performance of the standardFootnote 1 and optimized protocols for deterministic and the EPR and optimized protocols for probabilistic PBT, and prove the optimality of the ‘pretty good’ measurement in the standard deterministic case. They also show a lower bound on the performance of the standard protocol for deterministic PBT, which was later reproven in [6]. Further properties of PBT were explored in [17], in particular with respect to recycling part of the resource state. Converse bounds for the probabilistic and deterministic versions of PBT have been proven in [18] and [19], respectively. In [20], exact formulas for the fidelity of the standard protocol for deterministic PBT with \(N=3\) or 4 in arbitrary dimension are derived using a graphical algebra approach. Recently, exact formulas for arbitrary input dimension in terms of representation-theoretic data have been found for all four protocols, and the asymptotics of the optimized probabilistic case have been derived [21, 22].

Note that, in contrast to ordinary teleportation, a protocol obtained from executing several PBT protocols is not again a PBT protocol. This is due to the fact that the whole input system has to be teleported to the same output port for the protocol to have the mentioned simultaneous unitary covariance property. Therefore, the characterization of protocols for any dimension d is of particular interest. The mentioned representation-theoretic formulas derived in [21, 22] provide such a characterization. It is, however, not known how to evaluate these formulas efficiently for large input dimension.

1.2 Summary of main results

In this paper we provide several characterization results for port-based teleportation. As our main contributions, we characterize the leading-order asymptotic performance of fully optimized deterministic port-based teleportation (PBT), as well as the standard protocol for deterministic PBT and the EPR protocol for probabilistic PBT. In the following, we provide a detailed summary of our results. These results concern asymptotic characterizations of the entanglement fidelity of deterministic PBT, defined in Sect. 3.1, and the success probability of probabilistic PBT, defined in Sect. 3.2.

Our first, and most fundamental, result concerns deterministic PBT and characterizes the leading-order asymptotics of the optimal fidelity for a large number of ports.

Theorem 1.1

For arbitrary but fixed local dimension d, the optimal entanglement fidelity for deterministic port-based teleportation behaves asymptotically as

Theorem 1.1 is a direct consequence of Theorem 1.5 below. Prior to our work, it was only known that \(F_d^*(N) = 1 - \Omega (N^{-2})\) as a consequence of an explicit converse bound [19]. We prove that this asymptotic scaling is in fact achievable, and we also provide a converse with improved dependency on the local dimension, see Corollary 1.6.

For deterministic port-based teleportation using a maximally entangled resource and the pretty good measurement, a closed expression for the entanglement fidelity was derived in [21], but its asymptotics for fixed \(d>2\) and large N remained undetermined. As our second result, we derive the asymptotics of deterministic port-based teleportation using a maximally entangled resource and the pretty good measurement, which we call the standard protocol.

Theorem 1.2

For arbitrary but fixed d and any \(\delta >0\), the entanglement fidelity of the standard protocol of PBT is given by

Previously, the asymptotic behavior given in the above theorem was only known for \(d=2\) in terms of an exact formula for finite N; for \(d>2\), it was merely known that \(F^{\mathrm {std}}_d(N)=1-O\left( N^{-1}\right) \) [4]. In Fig. 2 we compare the asymptotic formula of Theorem 1.2 to a numerical evaluation of the exact formula derived in [21] for \(d\le 5\).

For probabilistic port-based teleportation, Mozrzymas et al. [22] obtained the following expression for the success probability \(p^*_d\) optimized over arbitrary entangled resources:

valid for all values of d and N (see the detailed discussion in Sect. 3). In the case of using N maximally entangled states as the entangled resource, an exact expression for the success probability in terms of representation-theoretic quantities was also derived in [21]. We state this expression in (3.9) in Sect. 3. However, its asymptotics for fixed \(d>2\) and large N have remained undetermined to date. As our third result, we derive the following expression for the asymptotics of the success probability of the optimal protocol among the ones that use a maximally entangled resource, which we call the EPR protocol.

Theorem 1.3

For probabilistic port-based teleportation in arbitrary but fixed dimension d with EPR pairs as resource states,

where \(\mathbf {G}\sim {\text {GUE}}^0_d\).

The famous Wigner semicircle law [24] provides an asymptotic expression for the expected maximal eigenvalue, \( \mathbb E[\lambda _{\max }(\mathbf {G})]\sim 2\sqrt{d}\) for \(d\rightarrow \infty \). Additionally, there exist explicit upper and lower bounds for all d, see the discussion in Sect. 5.

Success probability of the EPR protocol for probabilistic port-based teleporation in local dimension \(d=2,3,4,5\) using N ports [23]. We compare the exact formula (3.9) for \(p_d^{\mathrm {EPR}}\) (blue dots) with the first-order asymptotic formula obtained from Theorem 1.3 (orange curve). The first-order coefficient \(c_d \equiv \mathbb {E}[\lambda _{\max }(\mathbf {G})]\) appearing in the formula in Theorem 1.3 was obtained by numerical integration from the eigenvalue distribution of \({\text {GUE}}_d\)

To establish Theorems 1.2 and 1.3, we analyze the asymptotics of the Schur–Weyl distribution, which also features in other fundamental problems of quantum information theory including spectrum estimation, tomography, and the quantum marginal problem [25,26,27,28,29,30,31,32,33,34,35]. Our main technical contribution is a new convergence result for its fluctuations that strengthens a previous result by Johansson [36]. This result, which may be of independent interest, is stated as Theorem 4.1 in Sect. 4.

Theorem 1.1 is proved by giving an asymptotic lower bound for the optimal fidelity of deterministic PBT, as well as an upper bound that is valid for any number of ports and matches the lower bound asymptotically. For the lower bound, we again use an expression for the entanglement fidelity of the optimal deterministic PBT protocol derived in [22]. The asymptotics of this formula for fixed d and large N have remained undetermined so far. We prove an asymptotic lower bound for this entanglement fidelity in terms of the lowest Dirichlet eigenvalue of the Laplacian on the ordered \((d-1)\)-dimensional simplex.

Theorem 1.4

The optimal fidelity for deterministic port-based teleportation is bounded from below by

where

is the \((d-1)\)-dimensional simplex of ordered probability distributions with d outcomes and \(\lambda _1(\Omega )\) is the first eigenvalue of the Dirichlet Laplacian on a domain \(\Omega \).

Using a bound from [37] for \(\lambda _1(\mathrm {OS}_d)\), we obtain the following explicit lower bound.

Theorem 1.5

For the optimal fidelity of port-based teleportation with arbitrary but fixed input dimension d and N ports, the following bound holds,

As a complementary result, we give a strong upper bound for the entanglement fidelity of any deterministic port-based teleportation protocol. While valid for any finite number N of ports, its asymptotics for large N are given by \(1-O(N^{-2})\), matching Theorem 1.5.

Corollary 1.6

For a general port-based teleportation scheme with input dimension d and N ports, the entanglement fidelity \(F_d^*\) and the diamond norm error \(\varepsilon _d^*\) can be bounded as

Previously, the best known upper bound on the fidelity [19] had the same dependence on N, but was increasing in d, thus failing to reflect the fact that the task becomes harder with increasing d. Interestingly, a lower bound from [38] on the program register size of a universal programmable quantum processor also yields a converse bound for PBT that is incomparable to the one from [19] and weaker than our bound.

Finally we provide a proof of the following ‘folklore’ fact that had been used in previous works on port-based teleportation. The unitary and permutation symmetries of port-based teleportation imply that the entangled resource state and Alice’s measurement can be chosen to have these symmetries as well. Apart from simplifying the optimization over resource states and POVMs, this implies that characterizing the entanglement fidelity is sufficient to give worst-case error guarantees. Importantly, this retrospectively justifies the use of the entanglement fidelity F in the literature about deterministic port-based teleportation in the sense that any bound on F implies a bound on the diamond norm error without losing dimension factors. This is also used to show the diamond norm statement of Corollary 1.6.

Proposition 1.7

(Proposition 3.4 and 3.3 and Corollary 3.5, informal). There is an explicit transformation between port-based teleportation protocols that preserves any unitarily invariant distance measure on quantum channels, and maps an arbitrary port-based teleportation protocol with input dimension d and N ports to a protocol that

-

(i)

has a resource state and a POVM with \(U(d)\times S_N\) symmetry, and

-

(ii)

implements a unitarily covariant channel.

In particular, the transformation maps an arbitrary port-based teleportation protocol to one with the symmetries (i) and (ii) above, and entanglement fidelity no worse than the original protocol. Point (ii) implies that

where \(F_d^*\) and \(\varepsilon _d^*\) denote the optimal entanglement fidelity and optimal diamond norm error for deterministic port-based teleportation.

1.3 Structure of this paper

In Sect. 2 we fix our notation and conventions and recall some basic facts about the representation theory of the symmetric and unitary groups. In Sect. 3 we define the task of port-based teleportation (PBT) in its two main variants, the probabilistic and deterministic setting. Moreover, we identify the inherent symmetries of PBT, and describe a representation-theoretic characterization of the task. In Sect. 4 we discuss the Schur–Weyl distribution and prove a convergence result that will be needed to establish our results for PBT with maximally entangled resources. Our first main result is proved in Sect. 5, where we discuss the probabilistic setting in arbitrary dimension using EPR pairs as ports, and determine the asymptotics of the success probability \(p^{\mathrm {EPR}}_d\) (Theorem 1.3). Our second main result, derived in Sect. 6.1, concerns the deterministic setting in arbitrary dimension using EPR pairs, for which we compute the asymptotics of the optimal entanglement fidelity \(F^{\mathrm {std}}_d\) (Theorem 1.2). Our third result, an asymptotic lower bound on the entanglement fidelity \(F_d^*\) of the optimal protocol in the deterministic setting (Theorem 1.5), is proved in Sect. 6.2. Finally, in Sect. 7 we derive a general non-asymptotic converse bound on deterministic port-based teleportation protocols using a non-signaling argument (Theorem 7.5). We also present a lower bound on the communication requirements for approximate quantum teleportation (Corollary 7.4). We make some concluding remarks in Sect. 8. The appendices contain technical proofs.

2 Preliminaries

2.1 Notation and definitions

We denote by A, B, ...quantum systems with associated Hilbert spaces \(\mathcal {H}_A\), \(\mathcal {H}_B\), ..., which we always take to be finite-dimensional, and we associate to a multipartite quantum system \(A_1\ldots A_n\) the Hilbert space \(\mathcal {H}_{A_1\ldots {}A_n}=\mathcal {H}_{A_1}\otimes \ldots \otimes \mathcal {H}_{A_n}\). When the \(A_i\) are identical, we also write \(A^n=A_1\ldots {}A_n\). The set of linear operators on a Hilbert space \(\mathcal {H}\) is denoted by \(\mathcal {B}(\mathcal {H})\). A quantum state \(\rho _A\) on quantum system A is a positive semidefinite linear operator \(\rho _A\in \mathcal {B}(\mathcal {H}_A)\) with unit trace, i.e., \(\rho _A\ge 0\) and \({{\,\mathrm{tr}\,}}(\rho _A)=1\). We denote by \(I_A\) or \(1_A\) the identity operator on \(\mathcal {H}_A\), and by \(\tau _A=I_A/|A|\) the corresponding maximally mixed quantum state, where \(|A|\,{:}{=}\,\dim \mathcal {H}_A\). A pure quantum state \(\psi _A\) is a quantum state of rank one. We can write \(\psi _A=|\psi \rangle \langle \psi |_A\) for a unit vector \(|\psi \rangle _A\in \mathcal {H}_A\). For quantum systems \(A,A'\) of dimension \(\dim \mathcal {H}_A=\dim \mathcal {H}_{A'}=d\) with bases \(\lbrace |i\rangle _A\rbrace _{i=1}^d\) and \(\lbrace |i\rangle _{A'}\rbrace _{i=1}^d\), the vector \(|\phi ^+\rangle _{A'A} = \frac{1}{\sqrt{d}} \sum _{i=1}^d |i\rangle _{A'}\otimes |i\rangle _A\) defines the maximally entangled state of Schmidt rank d. The fidelity \(F(\rho ,\sigma )\) between two quantum states is defined by \(F(\rho ,\sigma )\,{:}{=}\, \Vert \sqrt{\rho }\sqrt{\sigma }\Vert _1^2\), where \(\Vert X\Vert _1={{\,\mathrm{tr}\,}}(\sqrt{X^\dagger X})\) denotes the trace norm of an operator. For two pure states \(|\psi \rangle \) and \(|\phi \rangle \), the fidelity is equal to \(F(\psi ,\phi )=|\langle \psi |\phi \rangle |^2\). A quantum channel is a completely positive, trace-preserving linear map \(\Lambda :\mathcal {B}(\mathcal {H}_A)\rightarrow \mathcal {B}(\mathcal {H}_B)\). We also use the notation \(\Lambda :A\rightarrow B\) or \(\Lambda _{A\rightarrow B}\), and we denote by \({{\,\mathrm{id}\,}}_A\) the identity channel on A. Given two quantum channels \(\Lambda _1,\Lambda _2:A\rightarrow B\), the entanglement fidelity \(F(\Lambda _1,\Lambda _2)\) is defined as

and we abbreviate \(F(\Lambda )\,{:}{=}\, F(\Lambda ,{{\,\mathrm{id}\,}})\). The diamond norm of a linear map \(\Lambda :\mathcal {B}(\mathcal {H}_A)\rightarrow \mathcal {B}(\mathcal {H}_B)\) is defined by

The induced distance on quantum channels is called the diamond distance. A positive operator-valued measure (POVM) \(E=\lbrace E_x\rbrace \) on a quantum system A is a collection of positive semidefinite operators \(E_x\ge 0\) satisfying \(\sum _x E_x = I_A\).

We denote random variables by bold letters (\(\mathbf {X}\), \(\mathbf {Y}\), \(\mathbf {Z}\), ...) and the valued they take by the non-bold versions (\(X,Y,Z,\ldots \)). We denote by \(\mathbf {X} \sim \mathbb {P}\) that \(\mathbf {X}\) is a random variable with probability distribution \(\mathbb {P}\). We write \(\Pr (\ldots )\) for the probability of an event and \(\mathbb {E}\left[ \ldots \right] \) for expectation values. The notation \(\mathbf {X}_n\overset{P}{\rightarrow }\mathbf {X} \ (n\rightarrow \infty )\) denotes convergence in probability and \(\mathbf {X}_n\overset{D}{\rightarrow }\mathbf {X} \ (n\rightarrow \infty )\) denotes convergence in distribution. The latter can be defined, e.g., by demanding that \(\mathbb {E}\left[ f(\mathbf {X}_n)\right] \rightarrow \mathbb {E}\left[ f(\mathbf {X})\right] \ (n\rightarrow \infty )\) for every continuous, bounded function f. The Gaussian unitary ensemble \({\text {GUE}}_d\) is the probability distribution on the set of Hermitian \(d\times d\)-matrices H with density \(Z_d^{-1} \exp (-\frac{1}{2}{{\,\mathrm{tr}\,}}H^2)\), where \(Z_d\) is the appropriate normalization constant. Alternatively, for \(\mathbf {X}\sim {\text {GUE}}_d\), the entries \(\mathbf {X}_{ii}\) for \(1\le i\le d\) are independently distributed as \(\mathbf {X}_{ii}\sim N(0,1)\), whereas the elements \(\mathbf {X}_{ij}\) for \(1\le i<j\le d\) are independently distributed as \(\mathbf {X}_{ij}\sim N(0,\frac{1}{2})+iN(0,\frac{1}{2})\). Here, \(N(0,\sigma ^2)\) denotes the centered normal distribution with variance \(\sigma ^2\). The traceless Gaussian unitary ensemble \({\text {GUE}}^0_d\) can be defined as the distribution of the random variable \(\mathbf {Y} \,{:}{=}\, \mathbf {X} - \tfrac{{{\,\mathrm{tr}\,}}\mathbf {X}}{d} I\), where \(\mathbf {X}\sim {\text {GUE}}_d\).

For a complex number \(z\in \mathbb {C}\), we denote by \(\mathfrak {R}( z)\) and \(\mathfrak {I}( z)\) its real and imaginary part, respectively. We denote by \(\mu \vdash _d n\) a partition \((\mu _1,\ldots ,\mu _d)\) of n into d parts. That is, \(\mu \in \mathbb {Z}^d\) with \(\mu _1\ge \mu _2\ge \cdots \ge \mu _d\ge 0\) and \(\sum _i\mu _i=n\). We also call \(\mu \) a Young diagram and visualize it as an arrangement of boxes, with \(\mu _i\) boxes in the ith row. For example, \(\mu =(3,1)\) can be visualized as  . We use the notation \((i,j)\in \mu \) to mean that (i, j) is a box in the Young diagram \(\mu \), that is, \(1\le i\le d\) and \(1\le j\le \mu _i\). We denote by \({{\,\mathrm{GL}\,}}(\mathcal {H})\) the general linear group and by \(U(\mathcal {H})\) the unitary group acting on a Hilbert space \(\mathcal {H}\). When \(\mathcal {H}=\mathbb {C}^d\), we write \({{\,\mathrm{GL}\,}}(d)\) and U(d). Furthermore, we denote by \(S_n\) the symmetric group on n symbols. A representation \(\varphi \) of a group G on a vector space \(\mathcal {H}\) is a map \(G\ni g\mapsto \varphi (g)\in {{\,\mathrm{GL}\,}}(\mathcal {H})\) satisfying \(\varphi (gh)=\varphi (g)\varphi (h)\) for all \(g,h\in G\). In this paper all representations are unitary, which means that \(\mathcal {H}\) is a Hilbert space and \(\varphi (g) \in U(\mathcal {H})\) for every \(g\in G\). A representation is irreducible (or an irrep) if \(\mathcal {H}\) contains no nontrivial invariant subspace.

. We use the notation \((i,j)\in \mu \) to mean that (i, j) is a box in the Young diagram \(\mu \), that is, \(1\le i\le d\) and \(1\le j\le \mu _i\). We denote by \({{\,\mathrm{GL}\,}}(\mathcal {H})\) the general linear group and by \(U(\mathcal {H})\) the unitary group acting on a Hilbert space \(\mathcal {H}\). When \(\mathcal {H}=\mathbb {C}^d\), we write \({{\,\mathrm{GL}\,}}(d)\) and U(d). Furthermore, we denote by \(S_n\) the symmetric group on n symbols. A representation \(\varphi \) of a group G on a vector space \(\mathcal {H}\) is a map \(G\ni g\mapsto \varphi (g)\in {{\,\mathrm{GL}\,}}(\mathcal {H})\) satisfying \(\varphi (gh)=\varphi (g)\varphi (h)\) for all \(g,h\in G\). In this paper all representations are unitary, which means that \(\mathcal {H}\) is a Hilbert space and \(\varphi (g) \in U(\mathcal {H})\) for every \(g\in G\). A representation is irreducible (or an irrep) if \(\mathcal {H}\) contains no nontrivial invariant subspace.

2.2 Representation theory of the symmetric and unitary group

Our results rely on the representation theory of the symmetric and unitary groups and Schur–Weyl duality (as well as their semiclassical asymptotics which we discuss in Sect. 4). In this section we introduce the relevant concepts and results (see e.g., [39, 40].

The irreducible representations of \(S_n\) are known as Specht modules and labeled by Young diagrams with n boxes. We denote the Specht module of \(S_n\) corresponding to a Young diagram \(\mu \vdash _d n\) by \([\mu ]\) (d is arbitrary). Its dimension is given by the hook length formula [39, pp. 53–54],

where \(h_\mu (i,j)\) is the hook length of the hook with corner at the box (i, j), i.e., the number of boxes below (i, j) plus the number of boxes to the right of (i, j) plus one (the box itself).

The polynomial irreducible representations of U(d) are known as Weyl modules and labeled by Young diagrams with no more than d rows. We denote the Weyl module of U(d) corresponding to a Young diagram \(\mu \vdash _d n\) by \(V^d_\mu \) (n is arbitrary). Its dimension can be computed using Stanley’s hook length formula [39, p. 55],

where \(c(i,j) = j-i\) is the so-called content of the box (i, j). This is an alternative to the Weyl dimension formula, which states that

We stress that \(m_{d,\mu }\) depends on the dimension d.

Consider the representations of \(S_n\) and U(d) on \({\left( \mathbb {C}^d\right) }^{\otimes n}\) given by permuting the tensor factors, and multiplication by \(U^{\otimes n}\), respectively. Clearly the two actions commute. Schur–Weyl duality asserts that the decomposition of \({\left( \mathbb {C}^d\right) }^{\otimes n}\) into irreps takes the form (see, e.g., [40])

3 Port-Based Teleportation

The original quantum teleportation protocol for qubits (henceforth referred to as ordinary teleportation protocol) is broadly described as follows [1]: Alice (the sender) and Bob (the receiver) share an EPR pair (a maximally entangled state on two qubits), and their goal is to transfer or ‘teleport’ another qubit in Alice’s possession to Bob by sending only classical information. Alice first performs a joint Bell measurement on the quantum system to be teleported and her share of the EPR pair, and communicates the classical measurement outcome to Bob using two bits of classical communication. Conditioned on this classical message, Bob then executes a correction operation consisting of one of the Pauli operators on his share of the EPR pair. After the correction operation, he has successfully received Alice’s state. The ordinary teleportation protocol can readily be generalized to qudits, i.e., d-dimensional quantum systems. Note that while the term ‘EPR pair’ is usually reserved for a maximally entangled state on two qubits (\(d=2\)), we use the term more freely for maximally entangled states of Schmidt rank d on two qudits, as defined in Sect. 2.

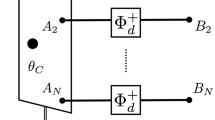

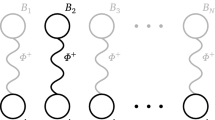

Port-based teleportation, introduced by Ishizaka and Hiroshima [3, 4], is a variant of quantum teleportation where Bob’s correction operation solely consists of picking one of a number of quantum subsystems upon receiving the classical message from Alice. In more detail, Alice and Bob initially share an entangled resource quantum state \(\psi _{A^N B^N}\), where \(\mathcal {H}_{A_i}\cong \mathcal {H}_{B_i}\cong \mathbb {C}^d\) for \(i=1,\ldots ,N\). We may always assume that the resource state is pure, for we can give a purification to Alice and she can choose not to use it.Footnote 2 Bob’s quantum systems \(B_i\) are called ports. Just like in ordinary teleportation, the goal is for Alice to teleport a d-dimensional quantum system \(A_0\) to Bob. To achieve this, Alice performs a joint POVM \(\{(E_i)_{A_0A^N}\}_{i=1}^N\) on the input and her part of the resource state and sends the outcome i to Bob. Based on the index i he receives, Bob selects the ith port, i.e. the system \(B_i\), as being the output register (renaming it to \(B_0\)), and discards the rest. That is, in contrast to ordinary teleportation, Bob’s decoding operation solely consists of selecting the correct port \(B_i\). The quality of the teleportation protocol is measured by how well it simulates the identity channel from Alice’s input register \(A_0\) to Bob’s output register \(B_0\).

Port-based teleportation is impossible to achieve perfectly with finite resources [3], a fact first deduced from the application to universal programmable quantum processors [41]. There are two ways to deal with this fact: either one can just accept an imperfect protocol, or one can insist on simulating a perfect identity channel, with the caveat that the protocol will fail from time to time. This leads to two variants of PBT, which are called deterministic and probabilistic PBT in the literature [3].Footnote 3

3.1 Deterministic PBT

A protocol for deterministic PBT proceeds as described above, implementing an imperfect simulation of the identity channel whose merit is quantified by the entanglement fidelity \(F_d\) or the diamond norm error \(\varepsilon _d\). We denote by \(F_d^*(N)\) and \(\varepsilon _d^*(N)\) the maximal entanglement fidelity and the minimal diamond norm error for deterministic PBT, respectively, where both the resource state and the POVM are optimized. We will often refer to this as the fully optimized case.

Let \(\psi _{{A}^N{B}^N}\) be the entangled resource state used for a PBT protocol. When using the entanglement fidelity as a figure of merit, it is shown in [4] that the problem of PBT for the fixed resource state \(\psi _{{A}^N{B}^N}\) is equivalent to the state discrimination problem given by the collection of states

with uniform prior (here we trace over all B systems but \(B_i\), which is relabeled to \(B_0\)). More precisely, the success probability q for state discrimination with some fixed POVM \(\{E_i\}_{i=1}^N\) and the entanglement fidelity \(F_d\) of the PBT protocol with Alice’s POVM equal to \(\{E_i\}_{i=1}^N\), but acting on \(A^NA_0\), are related by the equation \(q=\frac{d^2}{N}F_d\). This link with state discrimination provides us with the machinery developed for state discrimination to optimize the POVM. In particular, it suggests the use of the pretty good measurement [43, 44].

As in ordinary teleportation, it is natural to consider PBT protocols where the resource state is fixed to be N maximally entangled states (or EPR pairs) of local dimension d. This is because EPR pairs are a standard resource in quantum information theory that can easily be produced in a laboratory. We will denote by \(F_d^{\mathrm {EPR}}(N)\) the optimal entanglement fidelity for any protocol for deterministic PBT that uses maximally entangled resource states. A particular protocol is given by combining maximally entangled resource states with the pretty good measurement (PGM) POVM [43, 44]. We call this the standard protocol for deterministic PBT and denote the corresponding entanglement fidelity by \(F^{\mathrm {std}}_d(N)\). For qubits (\(d=2\)), the pretty good measurement was shown to be optimal for maximally entangled resource states [4]:

According to [22], the PGM is optimal in this situation for \(d>2\) as well.

In [3] it is shown that the entanglement fidelity \(F^{\mathrm {std}}_d\) for the standard protocol is at least

Beigi and König [6] rederived the same bound with different techniques. In [19], a converse bound is provided in the fully optimized setting:

Note that the dimension d is part of the denominator instead of the numerator as one might expect in the asymptotic setting. Thus, the bound lacks the right qualitative behavior for large values of d. A different, incomparable, bound can be obtained from a recent lower bound on the program register dimension of a universal programmable quantum processor obtained by Kubicki et al. [38],

where c is a constant. By Proposition 1.7, this bound is equivalent to

Earlier works on programmable quantum processors [45, 46] also yield (weaker) converse bounds for PBT.

Interestingly, and of direct relevance to our work, exact formulas for the entanglement fidelity have been derived both for the standard protocol and in the fully optimized case. In [21], the authors showed that

Here, the inner sum is taken over all Young diagrams \(\mu \) that can be obtained by adding one box to a Young diagram \(\alpha \vdash _d N-1\), i.e., a Young diagram with \(N-1\) boxes and at most d rows. Equation (3.5) generalizes the result of [4] for \(d=2\), whose asymptotic behavior is stated in Eq. (3.2).

In the fully optimized case, Mozrzymas et al. [22] obtained a formula similar to Eq. (3.5) in which the dimension \(d_\mu m_{d,\mu }\) of the \(\mu \)-isotypic component in the Schur–Weyl decomposition is weighted by a coefficient \(c_\mu \) that is optimized over all probability densities with respect to the Schur–Weyl distribution (defined in Sect. 4). More precisely,

where the optimization is over all nonnegative coefficients \(\{c_\mu \}\) such that \(\sum _{\mu \vdash _d N} c_\mu \frac{d_\mu m_{d,\mu }}{d^N}=1\).

3.2 Probabilistic PBT

In the task of probabilistic PBT, Alice’s POVM has an additional outcome that indicates the failure of the protocol and occurs with probability \(1-p_d\). For all other outcomes, the protocol is required to simulate the identity channel perfectly. We call \(p_d\) the probability of success of the protocol. As before, we denote by \(p_d^*(N)\) the maximal probability of success for probabilistic PBT using N ports of local dimension d, where the resource state as well as the POVM are optimized.

Based on the no-signaling principle and a version of the no-cloning theorem, Pitalúa-García [18] showed that the success probability \(p^*_{2^n}(N)\) of teleporting an n-qubit input state using a general probabilistic PBT protocol is at most

Subsequently, Mozrzymas et al. [22] showed for a general d-dimensional input state that the converse bound in (3.7) is also achievable, establishing that

This fully resolves the problem of determining the optimal probability of success for probabilistic PBT in the fully optimized setting.

As discussed above, it is natural to also consider the scenario where the resource state is fixed to be N maximally entangled states of rank d and consider the optimal POVM given that resource state. We denote by \(p^{\mathrm {EPR}}_d\) the corresponding probability of success. We use the superscript \(\mathrm {EPR}\) to keep the analogy with the case of deterministic PBT, as the measurement is optimized for the given resource state and no simplified measurement like the PGM is used. In [4], it was shown for qubits (\(d=2\)) that

For arbitrary input dimension d, Studziński et al. [21] proved the exact formula

where \(\mu ^*\) is the Young diagram obtained from \(\alpha \) by adding one box in such a way that

is maximized (as a function of \(\mu \)).

Finally, we note that any protocol for probabilistic PBT with success probability \(p_d\) can be converted into a protocol for deterministic PBT by sending over a random port index to Bob whenever Alice’s measurement outcome indicates an error. The entanglement fidelity of the resulting protocol can be bounded as \(F_d\ge p_d+\frac{1-p_d}{d^2}\). When applied to the fully optimized protocol corresponding to Eq. (3.8), this yields a protocol for deterministic PBT with better entanglement fidelity than the standard protocol for deterministic PBT. It uses, however, an optimized resource state that might be difficult to produce, while the standard protocol uses N maximally entangled states.

3.3 Symmetries

The problem of port-based teleportation has several natural symmetries that can be exploited. Intuitively, we might expect a U(d)-symmetry and a permutation symmetry, since our figures of merit are unitarily invariant and insensitive to the choice of port that Bob has to select. For the resource state, we might expect an \(S_N\)-symmetry, while the POVM elements have a marked port, leaving a possible \(S_{N-1}\)-symmetry among the non-marked ports. This section is dedicated to making these intuitions precise.

The implications of the symmetries have been known for some time in the community and used in other works on port-based teleportation (e.g. in [22]). We provide a formal treatment here for the convenience of the interested reader as well as to highlight the fact that the unitary symmetry allows us to directly relate the entanglement fidelity (which a priori quantifies an average error) to the diamond norm error (a worst case figure of merit). This relation is proved in Corollary 3.5.

While the sub-structure of the resource state on Alice’s side in terms of N subsystems is natural from a mathematical point of view, it does not correspond to an operational feature of the task of PBT. This is in contrast to the port sub-structure on Bob’s side, in terms of which the port-based condition on the teleportation protocol is defined. In the following, it will be convenient to allow resource states for PBT to have an arbitrary sub-structure on Alice’s side.

We begin with a lemma on purifications of quantum states with a given group symmetry (see [47, 48] and [49, Lemma 5.5]):

Lemma 3.1

Let \(\rho _A\) be a quantum state invariant under a unitary representation \(\varphi \) of a group G, i.e., \([\rho _A,\varphi (g)]=0\) for all \(g\in G\). Then there exists a purification \(|\rho \rangle _{AA'}\) such that \((\varphi (g)\otimes \varphi ^*(g)) |\rho \rangle _{AA'}=|\rho \rangle _{AA'}\) for all \(g\in G\). Here, \(\varphi ^*\) is the dual representation of \(\varphi \), which can be written as \(\varphi ^*(g)=\overline{\varphi (g)}\).

Starting from an arbitrary port-based teleportation protocol, it is easy to construct a modified protocol that uses a resource state such that Bob’s marginal is invariant under the natural action of \(S_N\) as well as the diagonal action of U(d). In slight abuse of notation, we denote by \(\zeta _{B^N}\) the unitary representation of \(\zeta \in S_N\) that permutes the tensor factors of \(\mathcal {H}_B^{\otimes N}\).

Lemma 3.2

Let \(\rho _{A^NB^N}\) be the resource state of a protocol for deterministic PBT with input dimension d. Then there exists another protocol for deterministic PBT with resource state \( \rho '_{{A}^N{B}^NIJ}\), where I and J are additional registers held by Alice, such that \( \rho '_{{B}^N}\) is invariant under the above-mentioned group actions,

and such that the new protocol has diamond norm error and entanglement fidelity no worse than the original one.

In fact, Lemma 3.2 applies not only to the diamond norm distance and the entanglement fidelity, but any convex functions on quantum channels that is invariant under conjugation with a unitary channel.

Proof of Lemma 3.2

Define the resource state

where \(\zeta _{B^N}\) is the action of \(S_N\) on \(\mathcal {H}_B^{\otimes N}\) that permutes the tensor factors, and I is a classical ‘flag’ register with orthonormal basis \(\lbrace |\zeta \rangle \rbrace _{\zeta \in S_N}\). The following protocol achieves the same performance as the preexisting one: Alice and Bob start sharing \(\tilde{\rho }_{A^NB^NI}\) as an entangled resource, with Bob holding \(B^N\) as usual and Alice holding registers \(A^NI\). Alice begins by reading the classical register I. Suppose that its content is a permutation \(\zeta \). She then continues to execute the original protocol, except that she applies \(\zeta \) to the index she is supposed to send to Bob after her measurement, which obviously yields the same result as the original protocol.

A similar argument can be made for the case of U(d). Let \(D\subset U(d)\), \(|D|<\infty \) be an exact unitary N-design, i.e., a subset of the full unitary group such that taking the expectation value of any polynomial P of degree at most N in both U and \(U^\dagger \) over the uniform distribution on D yields the same result as taking the expectation of P over the normalized Haar measure on U(d). Such exact N-designs exist for all N ([50]; see [51] for a bound on the size of exact N-designs). We now define a further modified resource state \(\rho '_{A^NB^NIJ}\) from \(\tilde{\rho }_{A^NB^NI}\) in analogy to (3.12):

where \(\lbrace |U\rangle \rbrace _{U\in D}\) is an orthonormal basis for the flag register J. Again, there exists a modified protocol, in which Bob holds the registers \(B^N\) as usual, but Alice holds registers \(A^NIJ\). Alice starts by reading the register J which records the unitary \(U\in D\) that has been applied to Bob’s side. She then proceeds with the rest of the protocol after applying \(U^\dagger \) to her input state. Note that \(\rho '_{B^N}\) clearly satisfies the symmetries in (3.11), and furthermore the new PBT protocol using \(\rho '_{A^NB^NIJ}\) has the same performance as the original one using \(\rho _{A^NB^N}\), concluding the proof. \(\quad \square \)

Denote by \({{\,\mathrm{Sym}\,}}^N(\mathcal {H})\) the symmetric subspace of a Hilbert space \(\mathcal {H}^{\otimes N}\), defined by

Using the above two lemmas we arrive at the following result.

Proposition 3.3

Let \(\rho _{A^NB^N}\) be the resource state of a PBT protocol with input dimension d. Then there exists another protocol with properties as in Lemma 3.2 except that it has a resource state \(|\psi \rangle \langle \psi |_{{A}^N{B}^N}\) with \(|\psi \rangle _{{A}^N{B}^N}\in {{\,\mathrm{Sym}\,}}^{N}(\mathcal {H}_A\otimes \mathcal {H}_B)\) that is a purification of a symmetric Werner state, i.e., it is invariant under the action of U(d) on \(\mathcal {H}_{A}^{\otimes N}\otimes \mathcal {H}_{B}^{\otimes N}\) given by \(U^{\otimes N}\otimes \overline{U}^{\otimes N}\).

Proof

We begin by transforming the protocol according to Lemma 3.2, resulting in a protocol with resource state \(\rho '_{A^NB^N I J}\) such that Bob’s part is invariant under the \(S_N\) and U(d) actions. By Lemma 3.1, there exists a purification \(|\psi \rangle _{{A}^N{B}^N}\in {{\,\mathrm{Sym}\,}}^n\left( \mathbb {C}^d\otimes \mathbb {C}^d\right) \) of \(\rho '_{B^N}\) that is invariant under \(U^{\otimes N}\otimes \overline{U}^{\otimes N}\) (note that the \(S_n\)-representation is self-dual, so the representation \(\phi \otimes \phi ^*\) referred to in Lemma 3.1 just permutes the pairs of systems \(A_iB_i\)). But Uhlmann’s Theorem ensures that there exists an isometry \(V_{A^N\rightarrow A^N I J E}\) for some Hilbert space \(\mathcal {H}_E\) such that \(V_{A^N\rightarrow A^N I J E}|\psi \rangle _{{A}^N{B}^N}\) is a purification of \(\rho '_{A^NB^N I J}\). The following is a protocol using the resource state \(|\psi \rangle \): Alice applies V and discards E. Then the transformed protocol from Lemma 3.2 is performed. \(\quad \square \)

Using the symmetries of the resource state, we can show that the POVM can be chosen to be symmetric as well. In the proposition below, we omit identity operators.

Proposition 3.4

Let \(\{\left( E_i\right) _{A_0A^N}\}_{i=1}^N\) be Alice’s POVM for a PBT protocol with a resource state \(|\psi \rangle \) with the symmetries from Proposition 3.3. Then there exists another POVM \(\{\left( E'_i\right) _{A_0A^N}\}_{i=1}^N\) such that the following properties hold:

-

(i)

\( \zeta _{A^N}\left( E'_i\right) _{A_0A^N}\zeta _{A^N}^\dagger =\left( E'_{\zeta (i)}\right) _{A_0A^N}\) for all \(\zeta \in S_N\);

-

(ii)

\(\left( U_{A_0}\otimes \overline{U}_{A}^{\otimes N}\right) \left( E'_i\right) _{A_0A^N} \left( U_{A_0}\otimes \overline{U}_{A}^{\otimes N}\right) ^\dagger =\left( E'_i\right) _{A_0A^N}\) for all \(U\in U(d)\);

-

(iii)

the channel \(\Lambda '\) implemented by the PBT protocol is unitarily covariant, i.e.,

$$\begin{aligned} \Lambda '_{A_0\rightarrow B_0}(X)=U_{B_0} \Lambda '_{A_0\rightarrow B_0}(U_{A_0}^\dagger X U_{A_0})U_{B_0}^\dagger \quad \text {for all } U\in U(d); \end{aligned}$$ -

(iv)

the resulting protocol has diamond norm distance (to the identity channel) and entanglement fidelity no worse than the original one.

Proof

Define an averaged POVM with elements

which clearly has the symmetries (i) and (ii). The corresponding channel can be written as

where

where we suppressed \({{\,\mathrm{id}\,}}_{B_i\rightarrow B_0}\). Here we used the cyclicity property

of the partial trace and the symmetries of the resource state, and \(\Lambda _{A_0\rightarrow B_0}\) denotes the channel corresponding to the original protocol. It follows at once that \(\Lambda '_{A_0\rightarrow B_0}\) is covariant in the sense of (iii). Finally, since the identity channel is itself covariant, property (iv) follows from the concavity (convexity) and unitary covariance of the entanglement fidelity and the diamond norm distance, respectively. \(\quad \square \)

Similarly as mentioned below Lemma 3.2, the statement in Proposition 3.4(iv) can be generalized to any convex function on the set of quantum channels that is invariant under conjugation with unitary channels.

The unitary covariance allows us to apply a lemma from [5] (stated as Lemma D.3 in “Appendix D”) to relate the optimal diamond norm error and entanglement fidelity of port-based teleportation. This shows that the achievability results Eqs. (3.5) to (3.4) for the entanglement fidelity of deterministic PBT, as well as the ones mentioned in the introduction, imply similar results for the diamond norm error without losing a dimension factor.

Corollary 3.5

Let \(F_d^*\) and \(\varepsilon _d^*\) be the optimal entanglement fidelity and optimal diamond norm error for deterministic PBT with input dimension d. Then, \(\varepsilon _d^*=2\left( 1-F_d^*\right) \).

Note that the same formula was proven for the standard protocol in [5].

3.4 Representation-theoretic characterization

The symmetries of PBT enable the use of representation-theoretic results, in particular Schur–Weyl duality. This was extensively done in [21, 22] in order to derive the formulas Eqs. (3.5)–(3.9). The main ingredient used in [21] to derive Eqs. (3.5) and (3.9) was the following technical lemma. For the reader’s convenience, we give an elementary proof in “Appendix A” using only Schur–Weyl duality and the classical Pieri rule. In the statement below, \(B_i^c\) denotes the quantum system consisting of all B-systems except the ith one.

Lemma 3.6

[21]. The eigenvalues of the operator

on \((\mathbb {C}^d)^{\otimes (1+N)}\) are given by the numbers

where \(\alpha \vdash _{d}N-1\), the Young diagram \(\mu \vdash _d N\) is obtained from \(\alpha \) by adding a single box, and \(\gamma _\mu (\alpha )\) is defined in Eq. (3.10).

Note that the formula in Lemma 3.6 above gives all eigenvalues of \(T(N)_{AB^N}\), i.e., including multiplicities.

The connection to deterministic PBT is made via the equivalence with state discrimination. In particular, when using a maximally entangled resource, T(N) is a rescaled version of the density operator corresponding to the ensemble of quantum states \(\eta _i\) from Eq. (3.1),

Using the hook length formulas Eqs. (2.1) and (2.2), we readily obtain the following simple expression for the ratio \(\gamma _\mu (\alpha )\) defined in Eq. (3.10):

Lemma 3.7

[52] Let \(\mu =\alpha +e_i\). Then,

i.e.,

Proof

Using Eqs. (2.1) and (2.2), we find

which concludes the proof. \(\quad \square \)

Remark 3.8

It is clear that \(\gamma _\mu (\alpha )\) is maximized for \(\alpha =(N-1,0,\ldots ,0)\) and \(i=1\). Therefore,

This result can be readily used to characterize the extendibility of isotropic states, providing an alternative proof of the result by Johnson and Viola [53].

4 The Schur–Weyl Distribution

Our results rely on the asymptotics of the Schur–Weyl distribution, a probability distribution defined below in (4.1) in terms of the representation-theoretic quantities that appear in the Schur–Weyl duality (2.4). These asymptotics can be related to the random matrix ensemble \({\text {GUE}}^0_d\). In this section we explain this connection and provide a refinement of a convergence result (stated in (4.4)) by Johansson [36] that is tailored to our applications. While representation-theoretic techniques have been extensively used in previous analyses, the connection between the Schur–Weyl distribution and random matrix theory has, to the best of our knowledge, not been previously recognized in the context of PBT (see however [31] for applications in the the context of quantum state tomography).

Recalling the Schur–Weyl duality \({\left( \mathbb {C}^d\right) }^{\otimes n} \cong \bigoplus _{\alpha \vdash _d n} [\alpha ] \otimes V^d_\alpha \), we denote by \(P_\alpha \) the orthogonal projector onto the summand labeled by the Young diagram \(\alpha \vdash _d n\). The collection of these projectors defines a projective measurement, and hence

with \(\tau _d=\frac{1}{d}1_{\mathbb {C}^d}\) defines a probability distribution on Young diagrams \(\alpha \vdash _d n\), known as the Schur–Weyl distribution. Now suppose that \(\varvec{\alpha }^{(n)} \sim p_{d,n}\) for \(n\in \mathbb {N}\). By spectrum estimation [25,26,27, 54, 55], it is known that

This can be understood as a law of large numbers. Johansson [36] proved a corresponding central limit theorem: Let \(\mathbf {A}^{(n)}\) be the centered and renormalized random variable defined by

Then Johansson [36] proved that

for \(n\rightarrow \infty \), where \(\mathbf {G} \sim {\text {GUE}}^0_d\). The result for the first row is by Tracy and Widom [56] (cf. [36, 57]; see [31] for further discussion).

In the following sections, we would like to use this convergence of random variables stated in Eqs. (4.2) and (4.4) to determine the asymptotics of Eqs. (3.9) and (3.5). To this end, we rewrite the latter as expectation values of some functions of Young diagrams drawn according to the Schur–Weyl distribution. However, in order to conclude that these expectation values converge to the corresponding expectation values of functions on the spectrum of \({\text {GUE}}^0_d\)-matrices, we need a stronger sense of convergence than what is provided by the former results. Indeed, we need to establish convergence for functions that diverge polynomially as \(n\rightarrow \infty \) when \(A_{j}=\omega (1)\) or when \(A_{j}=O(n^{-1/2})\).Footnote 4 The former are easily handled using the bounds from spectrum estimation [27], but for the latter a refined bound on \(p_{d,n}\) corresponding to small A is needed. To this end, we prove the following result, which shows convergence of expectation values of a large class of functions that includes all polynomials in the variables \(\mathbf {A}_i\).

In the following, we will need the cone of sum-free non-increasing vectors in \(\mathbb {R}^d\),

and its interior \(\mathrm {int}(C^d)=\lbrace x\in C^d:x_i \ne 0 \text { for }i=1,\ldots ,d\rbrace \).

Theorem 4.1

Let \(g:\mathrm {int}(C^d)\rightarrow \mathbb {R}\) be a continuous function satisfying the following: There exist constants \(\eta _{ij}\) satisfying \(\eta _{ij}> -2-\frac{1}{d-1}\) such that for

there exists a polynomial q with

For every n, let \(\mathbf \alpha ^{(n)} \sim p_{d,n}\) be drawn from the Schur–Weyl distribution, \(\mathbf{A}^{(n)} \,{:}{=}\, \sqrt{d/n}(\mathbf \alpha ^{(n)}-n/d)\) the corresponding centered and renormalized random variable, and \(\tilde{\mathbf{A}}^{(n)}=\mathbf{A}^{(n)}+\frac{d-i}{\sqrt{\frac{n}{d}}}\). Then the family of random variables \(\left\{ g\left( \tilde{\mathbf{A}}^{(n)}\right) \right\} _{n\in \mathbb {N}}\) is uniformly integrable and

where \(\mathbf{A}=\mathrm {spec}(\mathbf {G})\) and \(\mathbf {G} \sim {\text {GUE}}^0_d\).

As a special case we recover the uniform integrability of the moments of \(\mathbf {A}\) (Corollary 4.5), which implies convergence in distribution in the case of an absolutely continuous limiting distribution. Therefore, Theorem 4.1 is a refinement of the result (4.4) by Johansson. The remainder of this section is dedicated to proving Theorem 4.1.

The starting point for what follows is Stirling’s approximation, which states that

It will be convenient to instead use the following variant,

where the upper bound is unchanged and the lower bound follows using \(n!=\frac{(n+1)!}{n+1}\). The dimension \(d_\alpha \) is equal to the multinomial coefficient up to inverse polynomial factors [27]. Defining the normalized Young diagram \(\bar{\alpha }=\frac{\alpha }{n}\) for \(\alpha \vdash n\), the multinomial coefficient \(\left( {\begin{array}{c}n\\ \alpha \end{array}}\right) \) can be bounded from above using Eq. (4.5) as

where \(C_d \,{:}{=}\, \frac{e^{d+1}}{(2\pi )^{d/2}}\). Hence,

Here, \(D(p\Vert q)\,{:}{=}\,\sum _i p_i \log {p_i}/\!{q_i}\) is the Kullback-Leibler divergence defined in terms of the natural logarithm, \(\tau =(1/d,\ldots ,1/d)\) is the uniform distribution, and we used Pinsker’s inequality [58] in the second step.

We go on to derive an upper bound on the probability of Young diagrams that are close to the boundary of the set of Young diagrams under the Schur–Weyl distribution. More precisely, the following lemma can be used to bound the probability of Young diagrams that have two rows that differ by less than the generic \(O(\sqrt{n})\) in length.

Lemma 4.2

Let \(d\in \mathbb {N}\) and \(c_1,\ldots ,c_{d-1}\ge 0\), \(\gamma _1,\ldots ,\gamma _{d-1}\ge 0\). Let \(\alpha \vdash _dn\) be a Young diagram with (a) \(\alpha _i-\alpha _{i+1}\le c_i n^{\gamma _i}\) for all i. Finally, set \(A \,{:}{=}\, \sqrt{d/n}(\alpha -n/d)\). Then,

where \(\gamma _{ij}\,{:}{=}\,\max \{\gamma _i,\gamma _{i+1},\ldots ,\gamma _{j-1}\}\) and \(C=C(c_1,\ldots ,c_{d-1},d)\) is a suitable constant.

Proof

We need to bound \(p_{d,n}(\alpha )=m_{d,\alpha } d_\alpha / d^n\) and begin with \(m_{d,\alpha }\). By assumption (a), there exist constants \(C_{ij}>0\) (depending on \(c_i,\ldots ,c_{j-1}\) as well as on d) such that the inequality \(\alpha _i-\alpha _j+j-i\le C_{ij}n^{\gamma _{ij}}\) holds for all \(i<j\). Using the Weyl dimension formula (2.3) and assumption (a), it follows that

for a suitable constant \(C_1=C_1(c_1,\ldots ,c_{d-1},d)>0\). Next, consider \(d_\alpha \). By comparing the hook-length formulas (2.1) and (2.2), we have

where \(C_2=C_2(d)>0\), and \(\bar{\alpha }_i = \alpha _i/n\). In the inequality, we used that \(\alpha _i + d - i \ge \alpha _i + 1\) for \(1\le i\le d-1\), and for \(i=d\), the exponent of \(\alpha _i + 1\) on the right hand side is zero.

Combining Eqs. (4.7)–(4.6) and setting \(C_3=C_1^2 C_2C_d\), we obtain

Substituting \(\bar{\alpha }_i = \frac{1}{d} + \frac{A_i}{\sqrt{nd}}\) we obtain the desired bound. \(\quad \square \)

In order to derive the asymptotics of entanglement fidelities for port-based teleportation, we need to compute limits of certain expectation values. As a first step, the following lemma ensures that the corresponding sequences of random variables are uniformly integrable. We recall that a family of random variables \(\{\mathbf{X}^{(n)}\}_{n\in \mathbb {N}}\) is called uniformly integrable if, for every \(\varepsilon >0\), there exists \(K<\infty \) such that \(\sup _n \mathbb {E}\left[ |\mathbf{X}^{(n)}|\cdot \mathbb {1}_{|\mathbf{X}^{(n)}|\ge K}\right] \le \varepsilon \).

Lemma 4.3

Under the same conditions as for Theorem 4.1, the family of random variables \(\left\{ g\left( \tilde{\mathbf{A}}^{(n)}\right) \right\} _{n\in \mathbb {N}}\) is uniformly integrable.

Proof

Let \(\mathbf{X}^{(n)} \,{:}{=}\, g\left( \tilde{\mathbf{A}}^{(n)}\right) \). The claimed uniform integrability follows if we can show that

for every choice of the \(\eta _{ij}\). Indeed, to show that \(\{ \mathbf{X}^{(n)} \}\) is uniformly integrable it suffices to show that \(\sup _n \mathbb {E}\left[ |\mathbf{X}^{(n)} |^{1+\delta }\right] <\infty \) for some \(\delta >0\) [59, Ex. 5.5.1]. If we choose \(\delta >0\) such that \(\eta '_{ij} \,{:}{=}\, (1+\delta )\eta _{ij} > -2-\frac{1}{d-1}\) for all \(1\le i < j \le d\), then it is clear that Eq. (4.9) for \(\eta '_{ij}\) implies uniform integrability of the original family.

Moreover, we may also assume that \(h_{\eta }\equiv g/\varphi _{\eta }=1\), since the general case then follows from the fact that \(p_{d,n}(\alpha )\) decays exponentially in \(\Vert A\Vert _1\) (see Lemma 4.2). More precisely, for any polynomial r and any constant \(\theta _1>0\) there exist constants \(\theta _2, \theta _3>0\) such that

In particular, this holds for the polynomial q bounding h from above by assumption. When proving the statement \(\sup _n \mathbb {E}\left[ |\mathbf{X}^{(n)} |\right] <\infty \), the argument above allows us to reduce the general case \(h_{\eta } = g/\varphi _{\eta }\ne 1\) to the case \(h_{\eta }=1\), or equivalently, to

Thus, it remains to be shown that

where

for some constants \(\eta _{ij}\) satisfying the assumption of Theorem 4.1 that we fix for the rest of this proof. Define \(\Gamma _{ij}\,{:}{=}\, A_i-A_j+\frac{j-i}{\sqrt{n/d}}\). Then we have \(f^{(n)}(A) = \prod _{i<j} \Gamma _{ij}^{\eta _{ij}}\), while the Weyl dimension formula (2.3) becomes

Hence, together with Eqs. (4.8) and (4.6) we can bound \(p_{d,n}(\alpha ) = m_{d,\alpha } d_{\alpha } / d^n\) as

where \(C=C(d)\) is some constant, and we used \(\bar{\alpha }_i + \frac{1}{n} = \frac{1}{d}\Big (\sqrt{\frac{d}{n}} A_i + \frac{d}{n} + 1 \Big )\) and \(\tau =(1/d,\ldots ,1/d)\) in the equality. Using \(f^{(n)}(A) = \prod _{i<j} \Gamma _{ij}^{\eta _{ij}}\), this yields the bound

We now want to bound the expectation value in Eq. (4.10) and begin by splitting the sum over Young diagrams according to whether \(\exists i: |A_i|>n^\varepsilon \) for some \(\varepsilon \in (0,\frac{1}{2})\) to be determined later, or \(|A_i|\le n^\varepsilon \) for all i. We denote the former event by \(\mathcal {E}\) and obtain

We treat the two expectation values in (4.13) separately and begin with the first one. If \(|A_i|>n^\varepsilon \) for some i, then \(\Vert A\Vert _1^2 \ge n^{2\varepsilon }\), so it follows by Eq. (4.12) that

Here, \({{\,\mathrm{poly}\,}}(n)\) denotes some polynomial in n and we also used that, for fixed d, the number of Young diagrams is polynomial in n. This shows that the first expectation value in (4.13) vanishes for \(n\rightarrow \infty \).

For the second expectation value, note that \(|A_i|\le n^{\varepsilon }=o(\sqrt{n})\) for all i, and hence there exists a constant \(K>0\) such that we have

Using Eqs. (4.12) and (4.14), we can therefore bound

where we have introduced \(\mathcal {D}_n \,{:}{=}\, \{ A:\alpha \vdash _d n \}\). The summands are nonnegative, even when evaluated on any point in the larger set \(\hat{\mathcal {D}}_n \,{:}{=}\, \left\{ A \in \sqrt{\frac{d}{n}}\left( \mathbb {Z}-\frac{n}{d}\right) ^d : \sum _i A_i = 0, A_i \ge A_{i+1} \forall i \right\} \supset \mathcal {D}_n\), so that we have the upper bound

Let \(x_i=A_i-A_{i+1}, \ i=1,\ldots ,d-1\). Next, we will upper bound the exponential in Eq. (4.15). For this, define \(\tilde{x}_i=\max (\frac{1}{d-1},x_i)\) and let \(S=\{ i\in \{1,\ldots ,d-1\} \;|\; x_i\le \frac{1}{d-1} \}\). Then, assuming \(S^c\ne \emptyset \),

since \(\sum _{i\in S^c} x_i \ge \frac{|S^c|}{d-1}\). This bounds also holds when \(S^c=\emptyset \). Hence,

where \(\gamma \,{:}{=}\, \frac{1}{2d(2d-1)}\) and \(R \,{:}{=}\, e^{\gamma }\). The first inequality follows from \(\sum _{i=1}^{d-1}x_i=A_1-A_d=|A_1|+|A_d| \le \Vert A\Vert _1\). If we use Eq. (4.16) in Eq. (4.15) we obtain the upper bound

where \(C' \,{:}{=}\, CKR\).

Let us first assume that all \(\eta _{ij} \le -2\), so that \(2+\eta _{ij}\in (-\frac{1}{d-1},0]\). Since

and \(\eta _{ij}+2\le 0\), we have that

as power functions with non-positive exponent are non-increasing. We can then upper-bound Eq. (4.17) as follows,

where the first inequality is Eq. (4.17) and in the second inequality we used Eq. (4.18). Since \(\eta _{ij} > -2-\frac{1}{d-1}\) by assumption, it follows that \(\sum _{j=i+1}^d (2+\eta _{ij}) > -\frac{d-i}{d-1} \ge -1\). Thus, each term in the product is a Riemann sum for an improper Riemann integral, as in Lemma D.4, which then shows that the expression converges for \(n\rightarrow \infty \).

The case where some \(\eta _{ij}>-2\) is treated by observing that

for suitable constants \(c_1,c_2>0\). We can use this bound in Eq. (4.17) to replace each \(\eta _{ij}>-2\) by \(\eta _{ij}=-2\), at the expense of modifying the constants \(C'\) and \(\gamma \), and then proceed as we did before. This concludes the proof of Eq. (4.10). \(\quad \square \)

The uniform integrability result of Lemma 4.3 implies that the corresponding expectation values converge. To determine their limit in terms of the expectation value of a function of the spectrum of a \({\text {GUE}}^0_d\)-matrix, however, we need to show that we can take the limit of the dependencies on n of the function and the random variable \(\mathbf {A}^{(n)}\) separately. This is proved in the following lemma, where we denote the interior of a set E by int(E).

Lemma 4.4

Let \(\lbrace \mathbf {A}^{(n)}\rbrace _{n\in \mathbb {N}}\) and \(\mathbf {A}\) be random variables on a Borel measure space E such that \(\mathbf {A}^{(n)}\overset{D}{\rightarrow }\mathbf {A}\) for \(n\rightarrow \infty \) and \(\mathbf {A}\) is absolutely continuous. Let \(f:\mathrm {int}(E)\rightarrow \mathbb {R}\). Let further \(f_n: E\rightarrow \mathbb {R}\), \(n\in \mathbb {N}\), be a sequence of continuous bounded functions such that \(f_n\rightarrow f\) pointwise on \(\mathrm {int}(E)\) and, for any compact \(S\subset \mathrm {int}(E)\), \(\{f_n|_S\}_{n\in \mathbb {N}}\) is uniformly equicontinuous and \(f_n|_S\rightarrow f|_S\) uniformly. Then for any such compact \(S\subset \mathrm {int}(E)\), the expectation value \(\mathbb {E}\left[ f(\mathbf {A})\mathbb {1}_S(\mathbf {A})\right] \) exists and

Proof

For \(n,m\in \mathbb {N}\cup \{\infty \}\), define

with \(f_\infty \,{:}{=}\, f\), \(\mathbf {A}^{(\infty )}\,{:}{=}\, \mathbf {A}\) and \(S\subset \mathrm {int}(E)\) compact. These expectation values readily exist as \(f_n\) is bounded for all n, and the uniform convergence of \(f_n|_S \) implies that \(f|_S\) is continuous and bounded as well. The uniform convergence \(f_n|_S\rightarrow f|_S\) implies that \(f_n|_S\) is uniformly bounded, so by Lebesgue’s theorem of dominated convergence \(b_{\infty m}(S)\) exists for all \(m\in \mathbb {N}\) and

This convergence is even uniform in m which follows directly from the uniform convergence of \(f_n|_S\). The sequence \(\lbrace \mathbf {A}^{(n)}\rbrace _{n\in \mathbb {N}}\) of random variables converges in distribution to the absolutely continuous \(\mathbf {A}\), so the expectation value of any continuous bounded function converges. Therefore,

An inspection of the proof of Theorem 1, Chapter VIII in [60] reveals the following: The fact that the uniform continuity and boundedness of \(f_n|_S\) hold uniformly in n implies the uniformity of the above limit. Moreover, since both limits exist and are uniform, this implies that they are equal to each other, and any limit of the form

for \(m(n)\xrightarrow {n\rightarrow \infty }\infty \) exists and is equal to the limits in Eqs. (4.19) and (4.20). \(\quad \square \)

Finally, we obtain the desired convergence theorem. For our applications, \(\eta _{ij}\equiv -2\) suffices. The range of \(\eta _{ij}\)’s for which the lemma is proven is naturally given by the proof technique.

Proof of Theorem 4.1

The uniform integrability of \(\mathbf {X}^{(n)}\,{:}{=}\, g\left( \tilde{\mathbf {A}}^{(n)}\right) \) is the content of Lemma 4.3. Recall that uniform integrability means that

where \(\mathcal {E}_K\,{:}{=}\, \lbrace x\in \mathbb {R}^d:\Vert x\Vert _\infty \le K\rbrace \). Let now \(\varepsilon >0\) be arbitrary, and \(K<\infty \) be such that the following conditions are true:

where \(\mathbf {A}\) is distributed as the spectrum of a \({\text {GUE}}^0_d\) matrix. For the bound on the second expectation value, recall that the density of the eigenvalues \((\mu ,\ldots ,\mu _d)\) of a \({\text {GUE}}^0_d\) matrix is proportional to \(\exp (-\sum _{i=1}^d \mu _i^2) \prod _{i<j}(\mu _i-\mu _j)^2\), and hence decays exponentially in \(\Vert \mu \Vert _\infty \). By Lemma 4.4, \(\lim _{n\rightarrow \infty }\mathbb {E}\left[ \mathbf {X}^{(n)}\mathbb {1}_{\mathcal {E}_K}\left( \mathbf {A}^{(n)}\right) \right] = \mathbb {E}\left[ g(\mathbf{A})\mathbb {1}_{\mathcal {E}_K}\left( \mathbf {A}\right) \right] \). Thus, we can choose \(n_0\in \mathbb {N}\) such that for all \(n\ge n_0\),

Using the above choices, we then have

for all \(n\ge n_0\), proving the desired convergence of the expectation values. \(\square \)

From Theorem 4.1 we immediately obtain the following corollary about uniform integrability of the moments of \(\mathbf {A}\).

Corollary 4.5

Let \(k\in \mathbb {N}\), let \(j\in \{1,\ldots ,d\}\), and, for every n, let \(\mathbf{A}^{(n)}\) be the random vector defined in (4.3). Then, the sequence of kth moments \(\big \lbrace ( \mathbf{A}^{(n)}_j)^k \big \rbrace _{n\in \mathbb {N}}\) is uniformly integrable and \(\lim _{n\rightarrow \infty } \mathbb {E}\bigl [(\mathbf{A}^{(n)}_j)^k\bigr ] = \mathbb {E}[\mathbf{A}_j^k]\), where \(\mathbf{A} \sim {\text {GUE}}^0_d\).

5 Probabilistic PBT

Our goal in this section is to determine the asymptotics of \(p^{\mathrm {EPR}}_d\) using the formula (3.9) and exploiting our convergence theorem, Theorem 4.1. The main result is the following theorem stated in Sect. 1.2, which we restate here for convenience.

Theorem 1.3

(Restated). For probabilistic port-based teleportation in arbitrary but fixed dimension d with EPR pairs as resource states,

where \(\mathbf {G}\sim {\text {GUE}}^0_d\).

Previously, such a result was only known for \(d=2\) following from an exact formula for \(p^{\mathrm {EPR}}_2(N)\) derived in [4]. We show in Lemma C.1 in “Appendix C” that, for \(d=2\), \(\mathbb E[\lambda _{\max }(\mathbf {G})] = \frac{2}{\sqrt{\pi }}\), hence rederiving the asymptotics from [4].

While Theorem 1.3 characterizes the limiting behavior of \(p^{\mathrm {EPR}}\) for large N, it contains the constant \(\mathbb {E}[\lambda _{\max }(\mathbf {G})]\), which depends on d. As \(\mathbb {E}[\mathbf {M}]=0\) for \(\mathbf {M}\sim {\text {GUE}}_d\), it suffices to analyze the expected largest eigenvalue for \({\text {GUE}}_d\). The famous Wigner semicircle law [24] implies immediately that

but meanwhile the distribution of the maximal eigenvalue has been characterized in a much more fine-grained manner. In particular, according to [61], there exist constants C and \(C'\) such that the expectation value of the maximal eigenvalue satisfies the inequalities

This also manifestly reconciles Theorem 1.3 with the fact that teleportation needs at least \(2 \log d\) bits of classical communication (see Sect. 7), since the amount of classical communication in a port-based teleportation protocol consists of \(\log N\) bits.

Proof of Theorem 1.3

We start with Eq. (3.9), which was derived in [21], and which we restate here for convenience:

where \(\mu ^*\) is the Young diagram obtained from \(\alpha \vdash N-1\) by adding one box such that \(\gamma _\mu (\alpha ) = N\frac{m_{d,\mu } d_\alpha }{m_{d,\alpha },d_\mu }\) is maximal. By Lemma 3.7, we have \(\gamma _\mu (\alpha ) = \alpha _i - i + d + 1\) for \(\mu =\alpha +e_i\). This is maximal if we choose \(i=1\), resulting in \(\gamma _{\mu ^*}(\alpha ) = \alpha _1+d\). We therefore obtain:

Recall that

is a random vector corresponding to Young diagrams with \(N-1\) boxes and at most d rows, where \(p_{d,N-1}\) is the Schur–Weyl distribution defined in (4.1). We continue by abbreviating \(n=N-1\) and changing to the centered and renormalized random variable \(\mathbf {A}^{(n)}\) from Eq. (4.3). Corollary 4.5 implies that

Using the \(\mathbf {A}^{(n)}\) variables from (4.3), linearity of the expectation value and suitable rearranging, one finds that

where we set

Note that, for \(x\ge 0\),

for some constants \(K_i\), where the first inequality follows from the fact that the denominator in the first line is greater than 1 for \(x\ge 0\). Since both \(\mathbf {A}^{(n)}_1\ge 0\) and \(\lambda _{\max }(\mathbf {G})\ge 0\), and using (5.1), it follows that

Thus we have shown that, for fixed d and large N,

which is what we set out to prove. \(\square \)

Remark 5.1

For the probabilistic protocol with optimized resource state, recall from Eq. (3.8) that

For fixed d, this converges to unity as O(1/N), i.e., much faster than the \(O(1/\sqrt{N})\) convergence in the EPR case proved in Theorem 1.3 above.

6 Deterministic PBT

The following section is divided into two parts. First, in Sect. 6.1 we derive the leading order of the standard protocol for deterministic port-based teleportation (see Sect. 3, where this terminology is explained). Second, in Sect. 6.2 we derive a lower bound on the leading order of the optimal deterministic protocol. As in the case of probabilistic PBT, the optimal deterministic protocol converges quadratically faster than the standard deterministic protocol, this time displaying an \(N^{-2}\) versus \(N^{-1}\) behavior (as opposed to \(N^{-1}\) versus \(N^{-1/2}\) in the probabilistic case).

6.1 Asymptotics of the standard protocol

Our goal in this section is to determine the leading order in the asymptotics of \(F^{\mathrm {std}}_d\). We do so by deriving an expression for the quantity \(\lim _{N\rightarrow \infty }N(1 - F^{\mathrm {std}}_d(N))\), that is, we determine the coefficient \(c_1=c_1(d)\) in the expansion

We need the following lemma that states that we can restrict a sequence of expectation values in the Schur–Weyl distribution to a suitably chosen neighborhood of the expectation value and remove degenerate Young diagrams without changing the limit. Let

be the Heaviside step function. Recall the definition of the centered and normalized variables

such that \(\alpha _i = \sqrt{\frac{n}{d}} A_i + \frac{n}{d}\). In the following it will be advantageous to use both variables, so we use the notation \(A(\alpha )\) and \(\alpha (A)\) to move back and forth between them.

Lemma 6.1

Let \(C>0\) be a constant and \(0<\varepsilon <\frac{1}{2}(d-2)^{-1}\) (for \(d=2\), \(\varepsilon >0\) can be chosen arbitrary). Let \(f_N\) be a function on the set of centered and rescaled Young diagrams (see Eq. (4.3)) that that grows at most polynomially in N, and for N large enough and all arguments A such that \(\Vert A\Vert _1\le n^\varepsilon \) fulfills the bound

Then the limit of its expectation values does not change when removing degenerate and large deviation diagrams,

where \(\mathbb {1}_{\mathrm {ND}}\) is the indicator function that is 0 if two or more entries of its argument are equal, and 1 else. Moreover we have the stronger statement

Proof

The number of all Young diagrams is bounded from above by a polynomial in N. But \(p_{d, n}(\alpha (A))=O(\exp (-\gamma \Vert A\Vert _1^2))\) for some \(\gamma >0\) according to Lemma 4.2, which implies that

Let us now look at the case of degenerate diagrams. Define the set of degenerate diagrams that are also in the support of the above expectation value,

Here, \(\mathbf {1}=(1,\ldots ,1)^T\in \mathbb {R}^d\) is the all-one vector. We write

with

It suffices to show that

for all \(k=1,\ldots ,d-1\). We can now apply Lemma 4.2 to \(\Xi _k\) and choose the constants \(\gamma _k=0\) and \(\gamma _i=\frac{1}{2}+\varepsilon \) for \(i\ne k\). Using (4.11), the 1-norm condition on A and bounding the exponential function by a constant we therefore get the bound

for some constant \(C_1>0\). The cardinality of \(\Xi _k\) is not greater than the number of integer vectors whose entries are between \(n/d-n^{1/2+\varepsilon }\) and \(n/d+n^{1/2+\varepsilon }\) and sum to n, and for which the kth and \((k+1)\)st entries are equal. It therefore holds that

By assumption,

Finally, we conclude that

This implies that we have indeed that

In fact, we obtain the stronger statement

The statement follows now using Eq. (6.1). \(\quad \square \)

With Lemma 6.1 in hand, we can now prove the main result of this section, which we stated in Sect. 1.2 and restate here for convenience.

Theorem 1.2

(Restated). For arbitrary but fixed d and any \(\delta >0\), the entanglement fidelity of the standard protocol of PBT is given by

Proof

We first define \(n=N-1\) and recall (3.5), which we can rewrite as follows:

In the third step, we used Lemma 3.7 for the term \(\frac{d_\mu m_{d,\alpha }}{m_{d,\mu } d_\alpha }\) and the Weyl dimension formula (2.3) for the term \(\frac{m_{d,\mu }}{m_{d,\alpha }}\). The expectation value refers to a random choice of \(\alpha \vdash _{d}n\) according to the Schur–Weyl distribution \(p_{d,n}\). The sum over \(\mu =\alpha +e_i\) is restricted to only those \(\mu \) that are valid Young diagrams, i.e., where \(\alpha _{i-1}>\alpha _i\), which we indicate by writing ‘YD’. Hence, we have

In the following, we suppress the superscript indicating \(n=N-1\) for the sake of readability. The random variables \(\varvec{\alpha }\), \(\mathbf {A}\), and \(\varvec{\Gamma }_{ij}\), as well as their particular values \(\alpha \), A, and \(\Gamma _{ij}\), are all understood to be functions of \(n=N-1\).

The function

satisfies the requirements of Lemma 6.1. Indeed we have that

for all \(i\ne j\), and clearly

Therefore we get

for some constant C. If \(\Vert A\Vert _1\le n^\varepsilon \), we have that

and hence

for N large enough. We therefore define, using an \(\varepsilon \) in the range given by Lemma 6.1, the modified expectation value

and note that an application of Lemma 6.1 shows that the limit that we are striving to calculate does not change when replacing the expectation value with the above modified expectation value, and the difference between the members of the two sequences is \(O(n^{-\frac{1}{2}+\varepsilon (d-2)})\).

For a non-degenerate \(\alpha \), adding a box to any row yields a valid Young diagram \(\mu \). Hence, the sum \(\sum _{\mu =\alpha +e_i\text { YD}}\) in (6.2) can be replaced by \(\sum _{i=1}^d\), at the same time replacing \(\mu _i\) with \(\alpha _i+1\). The expression in (6.2) therefore simplifies to

Let us look at the square root term, using the variables \(\mathbf {A}_i\). For sufficiently large n, we write

In the second line we have defined

and in the third line we have written the inverse square root in terms of its power series around 1. This is possible as we have \(\Vert \mathbf {A}\Vert _1\le n^\varepsilon \) on the domain of \(\tilde{\mathbb {E}}\), so \(\gamma _{i,d,n}\sqrt{\frac{d}{n}}\mathbf {A}_i=O(n^{-1/2+\varepsilon })\), i.e., it is in particular in the convergence radius of the power series, which is equal to 1. This implies also that the series converges absolutely in that range. Defining

as in Sect. 4, we can write

Here we have defined \(\tilde{ \mathbf {A}}\) by \(\tilde{ \mathbf {A}}_i=\gamma _{i,d,n}\mathbf {A}_i\) and the polynomials \(P_{i,j}^{(1,s)}\), \(P_{i,j}^{(2,r)}\), for \(s=0,\ldots ,2(d-1)\), \(r\in \mathbb {N}\), \(i,j=1,\ldots ,d\), which are homogeneous of degree r, and s, respectively. In the last equality we have used the absolute convergence of the power series. We have also abbreviated \(\varvec{\Gamma }\,{:}{=}\,(\varvec{\Gamma }_{ij})_{i<j}\), \(\varvec{\Gamma }^{-1}\) is to be understood elementwise, and \(P_{i,j}^{(1,s)}\) has the additional property that for all \(k,l\in \{1,\ldots ,d\}\) it has degree at most 2 in each variable \(\varvec{\Gamma }_{k,l}\).

By the Fubini-Tonelli Theorem, we can now exchange the infinite sum and the expectation value if the expectation value

exists, where the polynomials \(\tilde{P}^{(1,s)}_{i,j}\) and \(\tilde{P}^{(2,r)}_{i,j}\) are obtained from \(P^{(1,s)}_{i,j}\) and \(P^{(2,r)}_{i,j}\), respectively, by replacing the coefficients with their absolute value, and the absolute values \(|\varvec{\Gamma }^{-1}|\) and \(|\tilde{\mathbf {A}}|\) are to be understood element-wise. But the power series of the square root we have used converges absolutely on the range of \(\mathbf {A}\) restricted by \(\tilde{\mathbb {E}}\) (see Eq. (6.3)), yielding a continuous function on an appropriately chosen compact interval. Moreover, if A is in the range of \(\mathbf {A}\) restricted by \(\tilde{\mathbb {E}}\), then so is |A|. The function is therefore bounded, as is \(\tilde{ \mathbf {A}}\) for fixed N, and the expectation value above exists. We therefore get

Now note that the expectation values above have the right form to apply Theorem 4.1, so we can start calculating expectation values provided that we can exchange the limit \(N\rightarrow \infty \) with the infinite sum. We can then split up the quantity \(\lim _{N\rightarrow \infty }R_N\) as follows,

provided that all the limits on the right hand side exist. We continue by determining these limits and begin with Eq. (6.6). First observe that, for fixed r and s such that \(r+s\ge 3\),

This is because the expectation value in Eq. (6.7) converges according to Theorem 4.1 and Lemma 6.1, which in turn implies that the whole expression is \(O(N^{-1/2})\). In particular, there exists a constant \(K>0\) such that, for the finitely many values of r and s such that \(r\le r_0\,{:}{=}\, \lceil (\frac{1}{2}-\varepsilon )^{-1}\rceil \),