Abstract

We test M. Berry’s ansatz on nodal deficiency in the presence of a boundary. The square billiard is studied, where the high spectral degeneracies allow for the introduction of a Gaussian ensemble of random Laplace eigenfunctions (“boundary-adapted arithmetic random waves”). As a result of a precise asymptotic analysis, two terms in the asymptotic expansion of the expected nodal length are derived, in the high energy limit along a generic sequence of energy levels. It is found that the precise nodal deficiency or surplus of the nodal length depends on arithmetic properties of the energy levels, in an explicit way. To obtain the said results we apply the Kac–Rice method for computing the expected nodal length of a Gaussian random field. Such an application uncovers major obstacles, e.g. the occurrence of “bad” subdomains, that, one hopes, contribute insignificantly to the nodal length. Fortunately, we were able to reduce this contribution to a number theoretic question of counting the “spectral semi-correlations”, a concept joining the likes of “spectral correlations” and “spectral quasi-correlations” in having impact on the nodal length for arithmetic dynamical systems. This work rests on several breakthrough techniques of J. Bourgain, whose interest in the subject helped shape it to a great extent, and whose fundamental work on spectral correlations, joint with E. Bombieri, has had a crucial impact on the field.


1 Introduction

1.1 Nodal length of Laplace eigenfunctions

Let \(f:\mathcal {M}\rightarrow {\mathbb {R}}\) be a smooth function on a compact smooth Riemannian surface \((\mathcal {M},g)\), with or without boundary, with no critical zeros. The zero set of f, called the nodal line, is a smooth curve with no self-intersections; it is an important qualitative descriptor of f. We are interested in the geometry of the nodal lines of Laplace eigenfunctions on \(\mathcal {M}\), in the high energy limit, i.e. the solutions \(\{(\varphi _{j},\lambda _{j})\}_{j\ge 1}\) of the Schrödinger equation

$$\begin{aligned} \Delta \varphi _{j}+\lambda _{j}\varphi _{j}=0, \end{aligned}$$(1.1)

satisfying either Dirichlet or Neumann boundary conditions, where \(\Delta ={\text {div}}\circ \nabla \) is the Laplace–Beltrami (Laplacian) operator on \(\mathcal {M}\), and \(\lambda _{j}\ge 0\) are the energy levels (or simply the energies). It is well-known that the spectrum of \(\Delta \) is purely discrete, i.e. there exists a complete orthonormal system \(\{\varphi _{j}\}_{j\ge 1}\) (orthonormal basis), spanning the whole of \(L^{2}(\mathcal {M})\), so that all the spectral multiplicities are finite, and \(\lambda _{j}\rightarrow \infty \) as \(j\rightarrow \infty \) being the high energy limit.

Much of the focus in the study of the nodal lines of Laplace eigenfunctions has been turned to the study of the nodal length, i.e. the total length \({\mathcal {L}}(\varphi _{j})\) of the curve \(\varphi _{j}^{-1}(0)\) on \(\mathcal {M}\), as \(j\rightarrow \infty \). Yau’s conjecture [30] asserts that the nodal length is commensurable with \(\sqrt{\lambda _{j}}\), i.e.

$$\begin{aligned} c_{\mathcal {M}}\cdot \sqrt{\lambda _{j}}\le {\mathcal {L}}(\varphi _{j})\le C_{\mathcal {M}}\cdot \sqrt{\lambda _{j}}, \end{aligned}$$(1.2)

for some positive constants \(c_{\mathcal {M}},C_{\mathcal {M}}>0\). Yau’s conjecture was proven [6, 7, 11] for \(\mathcal {M}\) analytic, and more recently the optimal lower bound [18] and polynomial upper bound [19] were established for the more general, smooth, case (see also [17]).

1.2 Nodal length for random fields

One way to obtain stronger (or more precise) results than (1.2) is to study the nodal length \({\mathcal {L}}(f)\) of random functions f, an approach that has been actively pursued, in particular in recent years. As a concrete direction of research within the indicated scope, one may take a Gaussian random field \(f:{\mathbb {R}}^{2}\rightarrow {\mathbb {R}}\) (or \(f:{\mathbb {R}}^{d}\rightarrow {\mathbb {R}}\), \(d\ge 2\)) and study the distribution of the nodal length \({\mathcal {L}}(f;R)\) of f restricted to \(B(R)\subseteq {\mathbb {R}}^{2}\), the radius-R centred ball, as \(R\rightarrow \infty \). For \(f:{\mathbb {R}}^{2}\rightarrow {\mathbb {R}}\) stationary (see Footnote 1), a straightforward application of the standard Kac–Rice formula yields a precise expression

whereas a significantly heavier machinery involving perturbation theory (and asymptotic analysis of the 2-point correlation function) yields an asymptotic expression for the variance

as \(R\rightarrow \infty \). One may go further by applying the Wiener chaos decomposition on \({\mathcal {L}}(f;R)\) to obtain [15] a limit law for the distribution of

Alternatively to working with a fixed random field restricted to expanding balls, one may fix a compact surface \(\mathcal {M}\), consider a Gaussian ensemble of random functions on \(\mathcal {M}\), i.e. a sequence \(f_{n}:\mathcal {M}\rightarrow {\mathbb {R}}\) of Gaussian random fields indexed by \(\mathcal {M}\), and study the asymptotic distribution of the nodal length of \(f_{n}\), that is the total length \({\mathcal {L}}(f_{n})\) of \(f_{n}^{-1}(0)\), as \(n\rightarrow \infty \); in some natural examples (to be discussed below) \(f_{n}\) possesses a natural scaling with n.

Berry [2] suggested that for \(\mathcal {M}\) generic chaotic, there exists a (non-rigorous) link between the (deterministic) eigenfunctions \(\varphi _{j}\) as in (1.1), and the restriction of the monochromatic isotropic random wave g (a particular random field on \({\mathbb {R}}^{2}\) to be defined immediately below) to B(R) with \(R\approx \sqrt{\lambda _{j}}\); this vague relation, usually referred to as “Berry’s Random Wave Model” (RWM), is widely accepted in the community and has been subjected to many numerical tests with overwhelmingly positive outcomes. In particular, the study of the nodal structures of g restricted to B(R) as \(R\rightarrow \infty \) facilitates our understanding of the nodal structures of \(\varphi _{j}\) in the high energy limit. Berry’s monochromatic isotropic random wave g is uniquely defined as the centred Gaussian random field on \({\mathbb {R}}^{2}\) with covariance function

$$\begin{aligned} r_{g}(x,y):={\mathbb {E}}[g(x)\cdot g(y)]=J_{0}(|x-y|), \end{aligned}$$

\(x,y\in {\mathbb {R}}^{2}\), whose Fourier transform on \({\mathbb {R}}^{2}\) is the arc length of the unit circle (meaning that the monochromatic waves are propagating uniformly in all directions). Since \(r_{g}\) depends only on the Euclidean distance \(|x-y|\), the law of g is invariant under all translations \(g(\cdot )\mapsto g(\cdot +z)\), \(z\in {\mathbb {R}}^{2}\), and rotations \(g(\cdot )\mapsto g(o\,\cdot )\), \(o\in O(2)\) (i.e. g is stationary isotropic); it has applications in the study of ocean waves propagating [20, 21].

Consistent with (1.3) above, the expected nodal length for this stationary model is easily found to be

with \(c_{0}>0\) explicitly evaluated, via a straightforward application of the Kac–Rice formula. Berry [3, Formula (28)] further found that the variance is logarithmic, i.e. satisfying the asymptotic law

much smaller than one would expect, due to an “obscure cancellation” (see also [29]). A Central Limit Theorem was asserted [22] for the nodal length of the Gaussian ensemble of random spherical harmonics, scaling as Berry’s RWM around every point, also serving as a clear indication [28] that the result for the RWM should also hold, made rigorous subsequently [24].

The central objective of this manuscript is investigating the effect of nontrivial boundary on the nodal structures of Laplace eigenfunctions, first and foremost on the nodal length, either in the vicinity of the boundary, or globally. Berry argued that, since the nodal line is perpendicular to the boundary [9] (except for intersection points with higher degree vanishing), the presence of the boundary should impact the nodal length negatively compared to (1.4), an effect he referred to as “nodal deficiency”. He backed this ansatz by a precise evaluation of the secondary term around the boundary for the “boundary-adapted random waves”, a Gaussian random field constrained to satisfy the boundary conditions, Dirichlet or Neumann, on an infinite straight line.

The primary term in his asymptotic expansion of the nodal length for this boundary-adapted case is consistent with (1.4), whereas the secondary term is negative a large number of wavelengths away from the boundary (and identical for Dirichlet and Neumann boundary conditions), with total contribution larger in absolute value than the length fluctuations in (1.5) (which possibly extend to the boundary-adapted case). It was therefore concluded that, based on one sample only, one should be able to detect the deficiency of the total nodal length of Laplace eigenfunctions for surfaces with boundary compared to the boundary-less case. Gnutzmann and Lois [12] supported Berry’s deficiency ansatz by performing a mean nodal volume calculation for \(\mathcal {M}\) a cuboid of arbitrarily high dimension, a dynamical system with separation of variables, while averaging w.r.t. energy levels (rather than w.r.t. a Gaussian ensemble).

1.3 Arithmetic Random Waves

The “usual” Arithmetic Random Waves are random toral Laplace eigenfunctions. Let

$$\begin{aligned} S=\{a^{2}+b^{2}:\, a,b\in {\mathbb {Z}}\} \end{aligned}$$(1.6)

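be the set of all integers expressible as a sum of two squares, \(n\in S\), and

$$\begin{aligned} \mathcal {E}_{n}=\{\mu \in {\mathbb {Z}}^{2}:\, \Vert \mu \Vert ^{2}=n\} \end{aligned}$$(1.7)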

the lattice points set lying on the centred radius-\(\sqrt{n}\) circle. It is well-known that \(n\in S\), if and only if the prime decomposition of n is of the form

$$\begin{aligned} n=2^{a}\cdot \prod \limits _{j=1}^{s}p_{j}^{e_{j}}\cdot \prod \limits _{k=1}^{r}q_{k}^{2h_{k}}, \end{aligned}$$(1.8)

for some nonnegative integers a, \(\{e_{j}\}_{j\le s}\), \(\{h_{k}\}_{k\le r}\), and \(p_{j}\equiv 1\mod 4\) and \(q_{k}\equiv 3 \mod 4\) primes.
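The criterion above can be checked against a direct enumeration of the lattice points. The following is a minimal sketch, not part of the original argument: the helper names `lattice_points` and `satisfies_two_squares_criterion` are hypothetical, and both functions use brute force, assuming \(n\ge 1\).

```python
from math import isqrt


def lattice_points(n):
    """All integer points (m1, m2) with m1^2 + m2^2 = n (the set E_n)."""
    points = []
    r = isqrt(n)
    for m1 in range(-r, r + 1):
        rest = n - m1 * m1
        m2 = isqrt(rest)
        if m2 * m2 == rest:
            points.append((m1, m2))
            if m2 != 0:
                points.append((m1, -m2))
    return points


def satisfies_two_squares_criterion(n):
    """True if every prime q = 3 (mod 4) divides n >= 1 to an even power."""
    p = 2
    while p * p <= n:
        exponent = 0
        while n % p == 0:
            n //= p
            exponent += 1
        if p % 4 == 3 and exponent % 2 == 1:
            return False
        p += 1
    # What is left is either 1 or a single prime with exponent 1.
    return n % 4 != 3


if __name__ == "__main__":
    for n in (5, 21, 25, 45):
        print(n, len(lattice_points(n)), satisfies_two_squares_criterion(n))
```

The size of the list returned by `lattice_points(n)` is the number of representations of n as a sum of two squares, and it is nonempty exactly when the criterion holds.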

By a classical result due to E. Landau [16] the sequence \(S\subseteq {\mathbb {Z}}\) is thin, i.e. of asymptotic density 0, and, what is stronger,

$$\begin{aligned} |\{n\in S:\, n\le X\}| \sim c_{0}\cdot \frac{X}{\sqrt{\log {X}}}, \quad X\rightarrow \infty , \end{aligned}$$

with some semi-explicit constant \(c_{0}>0\). Any function \(g_{n}:{\mathbb {T}}^{2}\rightarrow {\mathbb {R}}\) on the torus \({\mathbb {T}}^{2}={\mathbb {R}}^{2}/{\mathbb {Z}}^{2}\) of the form

$$\begin{aligned} g_{n}(x)=\frac{1}{\sqrt{N_{n}}}\sum \limits _{\mu \in \mathcal {E}_{n}}a_{\mu }e^{2\pi i\langle \mu ,x\rangle }, \end{aligned}$$(1.9)

where \(a_{\mu }\in {\mathbb {C}}\) are some constants satisfying the condition

$$\begin{aligned} a_{-\mu }=\overline{a_{\mu }}, \end{aligned}$$(1.10)

and \(N_{n}=|\mathcal {E}_{n}|\) is the size of the lattice points set \(\mathcal {E}_{n}\) (equivalently, \(N_{n}=r_{2}(n)\), the number of ways to express n as a sum of two squares), is a real-valued Laplace eigenfunction with eigenvalue

$$\begin{aligned} \lambda _{n}=4\pi ^{2}n. \end{aligned}$$(1.11)

The convenience pre-factor \(\frac{1}{\sqrt{N_{n}}}\) on the r.h.s. of (1.9) has no bearing on the nodal set of \(g_{n}\), and will be understood below. Conversely, any real-valued Laplace eigenfunction on \({\mathbb {T}}^{2}\) is necessarily of the form (1.9) for some \(n\in S\).

The linear space of functions (1.9) may be endowed with a probability measure by making the coefficients \(\{a_{\mu }\}_{\mu \in \mathcal {E}_{n}}\) i.i.d. standard complex valued Gaussian random variables, save for the condition (1.10) to ensure the \(g_{n}\) are real-valued; this model is called “Arithmetic Random Waves”. Alternatively and equivalently, Arithmetic Random Waves is the Gaussian ensemble of centred stationary random fields with the covariance functions

$$\begin{aligned} r_{n}(x,y)={\mathbb {E}}[g_{n}(x)\cdot g_{n}(y)]=\frac{1}{N_{n}}\sum \limits _{\mu \in \mathcal {E}_{n}}\cos \left( 2\pi \langle \mu ,x-y\rangle \right) ; \end{aligned}$$(1.12)

its (random) nodal length \({\mathcal {Z}}_{n}={\mathcal {L}}(g_{n})\) on \({\mathbb {T}}^{2}\) is our benchmark, representing the boundary-less case for comparison against the appearance of nontrivial boundary. As is the case with stationary random fields, it is easy to evaluate its expected nodal length to be

$$\begin{aligned} {\mathbb {E}}[{\mathcal {Z}}_{n}]=\frac{1}{2\sqrt{2}}\sqrt{\lambda _{n}}=\frac{\pi }{\sqrt{2}}\sqrt{n}. \end{aligned}$$(1.13)

Rudnick and Wigman [25] gave the useful upper bound

$$\begin{aligned} {\text {Var}}({\mathcal {Z}}_{n})\ll \frac{n}{\sqrt{N_{n}}}, \end{aligned}$$(1.14)

showing, in particular, that the distribution of \(\frac{{\mathcal {Z}}_{n}}{\sqrt{n}}\) concentrates around the constant \(\frac{\pi }{\sqrt{2}}\).

Krishnapur–Kurlberg–Wigman [13] further resolved the question of the true asymptotic behaviour of the variance on the l.h.s. of (1.14), requiring the following background in the two squares problem. For every n we define the atomic probability measure

$$\begin{aligned} \nu _{n}=\frac{1}{N_{n}}\sum \limits _{\mu \in \mathcal {E}_{n}}\delta _{\mu /\sqrt{n}} \end{aligned}$$(1.15)

on the unit circle \(\mathcal {S}^{1}\), supported on the angles of \(\mathcal {S}^{1}\) corresponding to points of \(\mathcal {E}_{n}\). It is known that for a “generic” sequence \(\{n\}\subseteq S\) the angles \(\{\mu /\sqrt{n}\}_{\mu \in \mathcal {E}_{n}}\) equidistribute on \(\mathcal {S}^{1}\), i.e. there exists a relative density 1 sequence \(\{n\}\subseteq S\), so that

$$\begin{aligned} \nu _{n}\Rightarrow \frac{d\theta }{2\pi }, \end{aligned}$$(1.16)

with \(`\Rightarrow '\) standing for the weak-\(*\) convergence of probability measures on \(\mathcal {S}^{1}\), and in particular \(N_{n}\rightarrow \infty \). However, there exist [10, 13, 14] other attainable measures, i.e. weak-\(*\) partial limits of the sequence \(\{\nu _{n}\}_{n\in S}\), and even under the (generic) constraint \(N_{n}\rightarrow \infty \), the accumulation set of the sequence \(\{\widehat{\nu _n}(4)\}\) of the 4th Fourier coefficients of \(\nu _{n}\) (being the first nontrivial Fourier coefficient) is the whole of \([-1,1]\). The said work [13] established the precise asymptotic relation

$$\begin{aligned} {\text {Var}}({\mathcal {Z}}_{n})\sim \frac{\pi ^{2}}{128}\cdot \left( 1+\widehat{\nu _{n}}(4)^{2}\right) \cdot \frac{n}{N_{n}^{2}}, \end{aligned}$$(1.17)

and, bearing in mind that \(1+\widehat{\nu _{n}}(4)^{2}\) is bounded away from both 0 and infinity, in particular, it shows that the fluctuations around the mean of \({\mathcal {Z}}_{n}\) are of the order of magnitude

$$\begin{aligned} \approx \frac{\sqrt{n}}{N_{n}}, \end{aligned}$$(1.18)

important below, just like (1.5), due to an unexpected cancellation (“arithmetic Berry’s cancellation”). Later a non-universal limit theorem for \({\mathcal {Z}}_{n}\) was obtained [23].
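The quantity \(\widehat{\nu _{n}}(4)\) that governs the asymptotics above is easy to compute for small n. The sketch below is illustrative only and rests on the assumption that \(\nu _{n}\) assigns equal mass \(1/N_{n}\) to each point \(\mu /\sqrt{n}\), \(\mu \in \mathcal {E}_{n}\); by the symmetries of \(\mathcal {E}_{n}\) the coefficient is then real and equals the average of \(\cos (4\theta _{\mu })\). The function names are hypothetical, and n is assumed to be a sum of two squares.

```python
from fractions import Fraction
from math import isqrt


def lattice_points(n):
    """All integer points (m1, m2) with m1^2 + m2^2 = n."""
    points = []
    r = isqrt(n)
    for m1 in range(-r, r + 1):
        rest = n - m1 * m1
        m2 = isqrt(rest)
        if m2 * m2 == rest:
            points.append((m1, m2))
            if m2 != 0:
                points.append((m1, -m2))
    return points


def nu_hat_4(n):
    """Exact 4th Fourier coefficient of nu_n (n must be a sum of two squares).

    With mu = a + ib one has cos(4 * theta) = Re(mu^4) / |mu|^4, where
    Re(mu^4) = a^4 - 6 a^2 b^2 + b^4 and |mu|^4 = n^2.
    """
    pts = lattice_points(n)
    total = sum(a**4 - 6 * a * a * b * b + b**4 for a, b in pts)
    return Fraction(total, len(pts) * n * n)


if __name__ == "__main__":
    for n in (1, 2, 5, 25, 65):
        print(n, len(lattice_points(n)), nu_hat_4(n))
```

For n = 1 the four lattice points lie on the coordinate axes and the coefficient equals 1, whereas for n = 2 they lie on the diagonals and the coefficient equals -1; these are the two extreme values of the interval \([-1,1]\).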

1.4 Boundary-adapted Arithmetic Random Waves

The boundary-adapted Arithmetic Random Waves are random Laplace eigenfunctions on the unit square \(\mathcal {Q}=[0,1]^{2}\), subject to Dirichlet boundary conditions. Let S be as above (1.6), \(n\in S\), and \(\mu =(\mu _{1},\mu _{2})\in \mathcal {E}_{n}\) a lattice point with \(\mathcal {E}_{n}\) given by (1.7). Any function \(\mathcal {Q}\rightarrow {\mathbb {R}}\) of the form

$$\begin{aligned} x=(x_{1},x_{2})\mapsto \sin (\pi \mu _{1}x_{1})\cdot \sin (\pi \mu _{2}x_{2}) \end{aligned}$$(1.19)

is a Laplace eigenfunction with eigenvalue

$$\begin{aligned} \lambda _{n}=\pi ^{2}n, \end{aligned}$$(1.20)

satisfying the Dirichlet boundary conditions on \(\mathcal {Q}\) (cf. (1.11)). However, given \(\mu =(\mu _{1},\mu _{2})\in \mathcal {E}_{n}\) and \(\mu '=(\pm \mu _{1},\pm \mu _{2})\in \mathcal {E}_{n}\), the resulting maps as in (1.19) differ at most by a sign. Therefore, to avoid redundancies, we introduce the equivalence relation on \(\mathcal {E}_{n}\): \((\mu _{1},\mu _{2})\sim (\mu _{1}',\mu _{2}')\) if \(\mu _{1}=\pm \mu _{1}'\) and \(\mu _{2}=\pm \mu _{2}'\).

A general Laplace eigenfunction on \(\mathcal {Q}\) satisfying Dirichlet boundary conditions is of the form

for some \(n\in S\), and we endow this linear space with a Gaussian probability measure by making the \(\{a_{\mu }\}_{\mu \in \mathcal {E}_{n}/\sim }\) i.i.d. standard (real) Gaussian random variables. If either \(\mu _{1}=0\) or \(\mu _{2}=0\), then the corresponding summand in (1.21) vanishes, so that we are allowed to assume that both \(\mu _{1}\ne 0\) and \(\mu _{2}\ne 0\). We then call the random function (1.21) equipped with the said Gaussian probability measure “boundary-adapted Arithmetic Random Waves”, much like the Arithmetic Random Waves (1.9). Alternatively (and equivalently), the boundary-adapted Arithmetic Random Waves is the ensemble of Gaussian centred random fields indexed by \(x\in \mathcal {Q}\), with covariance functions

\(n\in S\), \(x=(x_{1},x_{2}),y=(y_{1},y_{2})\in \mathcal {Q}\). The \(f_{n}\) are not stationary, even though, around generic points in \(\mathcal {Q}\), far away from the boundary, \(f_{n}\) asymptotically tends to stationarity [8], understood in a suitable regime, after a suitable re-scaling. The main interest of this manuscript is the expected nodal length of \(f_{n}\), and its comparison to (1.13), from this point on tacitly assuming \(N_{n}\rightarrow \infty \).
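To make the construction concrete, one sample of a boundary-adapted wave can be drawn as follows. This is a minimal sketch under stated assumptions: one representative with both coordinates positive is chosen per equivalence class, the coefficients are i.i.d. standard real Gaussians, and the overall normalising constant is omitted since it does not affect the nodal set. The names `positive_representatives` and `sample_wave` are hypothetical.

```python
import math
import random
from math import isqrt


def positive_representatives(n):
    """One representative (mu1, mu2) with mu1, mu2 > 0 per class of E_n / ~."""
    reps = []
    for m1 in range(1, isqrt(n) + 1):
        rest = n - m1 * m1
        m2 = isqrt(rest)
        if m2 > 0 and m2 * m2 == rest:
            reps.append((m1, m2))
    return reps


def sample_wave(n, rng):
    """Draw one random Dirichlet eigenfunction on the unit square.

    The normalising prefactor is left out (an assumption of this sketch):
    a constant factor does not move the zero set.
    """
    reps = positive_representatives(n)
    coeffs = [rng.gauss(0.0, 1.0) for _ in reps]

    def f(x1, x2):
        return sum(
            a * math.sin(math.pi * m1 * x1) * math.sin(math.pi * m2 * x2)
            for a, (m1, m2) in zip(coeffs, reps)
        )

    return f


if __name__ == "__main__":
    f = sample_wave(65, random.Random(0))
    print(positive_representatives(65))
    # Values on the boundary vanish up to floating-point rounding.
    print(f(0.0, 0.3), f(1.0, 0.7), f(0.4, 1.0))
    print(f(0.31, 0.47))
```

Each summand vanishes on the four sides of the square, so the Dirichlet condition holds for every choice of the coefficients.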

Theorem 1.1

Let

be the total nodal length of \(f_{n}\) on \(\mathcal {Q}\), and recall the notation (1.15) and (1.20). There exists a subsequence of energy levels \(S'\subseteq S\) satisfying the following properties:

a. The sequence \(S'\) is of relative asymptotic density 1 within S.

b. The set of accumulation points of the sequence of numbers \(\{\widehat{\nu _{n}}(4)\}_{n\in S'}\) is \([-1,1]\).

c. Along \(n\in S'\) we have \(N_{n}\rightarrow \infty \), and

$$\begin{aligned} {\mathbb {E}}[{\mathcal {L}}_{n}] = \frac{\sqrt{\lambda _{n}}}{2\sqrt{2}}\cdot \left( 1- \frac{1+4\widehat{\nu _{n}}(4)}{16}\cdot \frac{1}{N_{n}} + o_{N_{n}\rightarrow \infty }\left( \frac{1}{N_{n}} \right) \right) . \end{aligned}$$(1.23)

The asymptotics (1.23) is expressed in terms of \(\lambda _{n}\) rather than in terms of n in a way that the leading term on the r.h.s. of (1.23) agrees with (1.13) explicitly, for there is a discrepancy factor of 2 otherwise, due to the discrepancy between (1.11) and (1.20). The boundary effect is then encapsulated within the second, correction, term

On one hand the asymptotics (1.23) shows that, since, outside a thin set of \(n\in S\), we have the convergence (1.16) of \(\nu _{n}\) to the uniform measure on \(\mathcal {S}^{1}\), for such a sequence of n the correction term is asymptotic to

confirming Berry’s ansatz on the nodal deficiency. On the other hand, bearing in mind that, by property (b) of the sequence in Theorem 1.1, the accumulation set of \(\{\widehat{\nu _{n}}(4)\}_{n\in S'}\) is the whole of \([-1,1]\), without exclusions from \(S'\),

fluctuates infinitely in the asymmetric interval

The maximal nodal deficiency (resp. maximal nodal surplus) in (1.24) is uniquely attained by the Cilleruelo measure

(resp. its tilt by \(\pi /4\)), consistent with our interpretation of the amplified horizontal and vertical wave propagation for Cilleruelo sequences (resp. their \(\pi /4\)-tilt for the tilted Cilleruelo), in light of Berry’s rationale of nodal deficiency occurring as a result of nodal lines perpendicular to the boundary. Finally, we notice that, judging by the analogous quantity for the Arithmetic Random Waves (1.18), and applying M. Berry’s reasoning, explained in Sect. 1.2, we expect the fluctuations of \({\mathcal {L}}_{n}\) to be of the same order of magnitude \(\approx \frac{\sqrt{n}}{N_{n}}\) as \(\mathcal {C}_{n}\) (a by-product of the aforementioned “miraculous” cancellation). That means that, unlike the situation in [3], one cannot detect the nodal surplus or deficiency judging by the total nodal length based on one sample only. However, this could be mended by taking more samples, or, likely, by restricting the sample to the vicinity of the boundary.
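The dependence of the sign of the correction on \(\widehat{\nu _{n}}(4)\) can be read off directly from the bracket in (1.23). The short sketch below evaluates the relative second term \(-\frac{1+4\widehat{\nu _{n}}(4)}{16N_{n}}\) in exact arithmetic; the function name is hypothetical, and the overall factor \(\frac{\sqrt{\lambda _{n}}}{2\sqrt{2}}\) is deliberately left out.

```python
from fractions import Fraction


def relative_correction(nu4, N):
    """Second term inside the bracket of (1.23): -(1 + 4*nu4) / (16*N)."""
    return -(1 + 4 * Fraction(nu4)) / (16 * N)


if __name__ == "__main__":
    N = 8
    for nu4 in (1, 0, Fraction(-1, 4), -1):
        c = relative_correction(nu4, N)
        kind = "deficiency" if c < 0 else "surplus" if c > 0 else "neutral"
        print(f"nu4 = {nu4}: correction = {c} ({kind})")
```

At \(\widehat{\nu _{n}}(4)=1\), \(0\) and \(-1\) the relative term equals \(-\frac{5}{16N_{n}}\), \(-\frac{1}{16N_{n}}\) and \(+\frac{3}{16N_{n}}\) respectively, and it changes sign at \(\widehat{\nu _{n}}(4)=-\frac{1}{4}\), which accounts for the asymmetry of the attainable range.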

The main conclusion (1.23) of Theorem 1.1 is valid for “generic” \(n\in S'\subseteq S\) only, rather than for the whole sequence \(n\in S\), though, importantly, this generic family is sufficiently rich to exhibit a variety of different asymptotic biases of the correction term (1.24). Below Theorem 1.4 will be stated, a version of Theorem 1.1 with an explicit control over the error term in (1.23), valid for the whole sequence \(n\in S\) of energy levels, expressed in terms of the so-called “spectral semi-correlations”, defined in Sect. 1.5 (see Definition 1.2). Our inability to extend the statement of Theorem 1.1 to the whole sequence \(n\in S\) is then a by-product of Theorem 1.3 below, which asserts a bound for the semi-correlations for a generic sequence of energy levels only. We do believe that (1.23) holds for \(n\in S\), with no further restriction.

1.5 Spectral semi-correlations

Let

$$\begin{aligned} l=2k \end{aligned}$$(1.25)

be an even number, with \(k\ge 1\) an integer. The length-l spectral correlation set [13] is the set

$$\begin{aligned} {\mathcal {R}}_{l}(n)=\left\{ (\mu ^{1},\ldots ,\mu ^{l})\in \mathcal {E}_{n}^{l}:\, \sum \limits _{j=1}^{l}\mu ^{j}=0\right\} \end{aligned}$$

of all l-tuples of lattice points in \(\mathcal {E}_{n}\) whose sum vanishes; by an elementary congruence obstruction modulo 2, for l odd the corresponding correlation sets are all empty. The size of \({\mathcal {R}}_{l}(n)\) is directly related to the l-th moment of the covariance function (1.12) corresponding to the Arithmetic Random Waves:

and bounding \(|{\mathcal {R}}_{6}(n)|\) was a key ingredient for bounding the remainder while proving (1.17) in [13]. Since, for \(k\ge 2\), fixing \(\mu ^{1},\ldots , \mu ^{l-2}\) so that

the relation

determines the remaining two lattice points \(\mu ^{l-1}\) and \(\mu ^{l}\) up to permutation, it is readily seen that for every \(l\ge 4\),

Let

be the set of all l-tuples cancelling out in pairs, where \(S_{l}\) is the symmetric group permuting the l-tuples, of size

Evidently, for every l and \(n\in S\), we have the inclusion

Hence, in particular

recalling (1.25). Bombieri and Bourgain [4] showed that

and established the striking inequality

for some \(\gamma >0\), valid for density-1 sequence of \(n\in S\), or, alternatively, conditionally for the full sequence S, so that, in particular, for these n, the optimal inequality

holds (recall (1.25)).
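For small n the correlation sets can be enumerated by brute force, which gives a quick sanity check of the statements above. The sketch below is illustrative only: it counts the l-tuples of lattice points in \(\mathcal {E}_{n}\) whose vector sum vanishes, the function names are hypothetical, and the running time grows like \(N_{n}^{l}\), so it is only usable for very small n and l.

```python
from itertools import product
from math import isqrt


def lattice_points(n):
    """All integer points (m1, m2) with m1^2 + m2^2 = n."""
    points = []
    r = isqrt(n)
    for m1 in range(-r, r + 1):
        rest = n - m1 * m1
        m2 = isqrt(rest)
        if m2 * m2 == rest:
            points.append((m1, m2))
            if m2 != 0:
                points.append((m1, -m2))
    return points


def count_correlations(n, l):
    """Number of l-tuples of points of E_n whose vector sum is zero."""
    pts = lattice_points(n)
    return sum(
        1
        for tup in product(pts, repeat=l)
        if sum(p[0] for p in tup) == 0 and sum(p[1] for p in tup) == 0
    )


if __name__ == "__main__":
    for l in (2, 3, 4):
        print(l, count_correlations(5, l))
```

For n = 5 (so \(N_{n}=8\)) the counts are 8 for l = 2, 0 for l = 3, and 168 = 3·8·7 for l = 4; the last number coincides with the number of 4-tuples cancelling out in pairs, so in this example every length-4 correlation is of that type.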

In [5], the notion of spectral quasi-correlations was instrumental for studying the analogue of \({\mathcal {Z}}_{n}\) for the Arithmetic Random Waves (1.9), restricted to domains decreasing with n above Planck scale, e.g. discs with radius \(n^{-1/2+\delta }\) (“Shrinking balls”). For l as above and \(\epsilon >0\), a length-l quasi-correlation is an l-tuple \((\mu ^{1},\ldots ,\mu ^{l})\) of points in \(\mathcal {E}_{n}\) so that

It was shown [5, Theorem 1.4] that for n generic and l arbitrary even number, the quasi-correlation set is empty.

In this manuscript we introduce a new concept, that of semi-correlations, instrumental within the proof of Theorem 1.1, as it will allow us to control the problematic singular set in \(\mathcal {Q}\), see Corollary 2.6, and, we believe, of independent interest in its own right.

Definition 1.2

(Semi-correlations). For \(l=2k\), \(n\in S\), the length-l semi-correlation set is the collection

$$\begin{aligned} {\mathcal {M}}_{l}(n)=\left\{ (\mu ^{1},\ldots ,\mu ^{l})\in \mathcal {E}_{n}^{l}:\, \sum \limits _{j=1}^{l}\mu ^{j}_{1}=0\right\} , \quad \mu ^{j}=(\mu ^{j}_{1},\mu ^{j}_{2}), \end{aligned}$$(1.30)

of all l-tuples of lattice points in \(\mathcal {E}_{n}\) with the first coordinate summing up to 0.

From the above definition, it is evident that

$$\begin{aligned} {\mathcal {R}}_{l}(n)\subseteq {\mathcal {M}}_{l}(n), \end{aligned}$$

so that, in particular,

cf. (1.27). Quite remarkably, the following optimal upper bound holds for the semi-correlation set size, albeit for a generic sequence only.

Theorem 1.3

(Bound for the number of semi-correlations). For every \(l=2k\ge 4\) even integer, there exists a sequence \(S'=S'(l)\subseteq S\) satisfying the following properties.

a. The sequence \(S'\subseteq S\) is of relative asymptotic density 1.

b. The set of accumulation points of the sequence of numbers \(\{\widehat{\nu _{n}}(4)\}_{n\in S'}\) is the whole of \([-1,1]\).

c. Along \(n\in S'\) we have \(N_{n}\rightarrow \infty \) and

$$\begin{aligned} |{\mathcal {M}}_{l}(n)| = O\left( N_{n}^{k}\right) . \end{aligned}$$(1.31)

By using a standard diagonal argument it is possible to choose a density 1 sequence \(S'\subseteq S\), satisfying (1.31) for all \(l\ge 4\) even (with the constant involved in the ‘O’-notation depending on l). Theorem 1.3 is stronger than the upper bound (1.29) for the spectral correlations, due to Bombieri-Bourgain, also valid for a density one sequence of \(n\in S\). In addition to claiming the upper bound for the semi-correlations, a condition significantly weaker than that of correlations, it also asserts the richness of the postulated sequence in terms of the angular distribution of \(\mathcal {E}_{n}\), expressed in terms of the Fourier coefficients \(\{\widehat{\nu _{n}}(4)\}_{n\in S'}\). The following result is a version of Theorem 1.1, with an explicit control over the error term in (1.23), expressed in terms of the spectral semi-correlations. Despite a significant amount of effort, we still do not know whether (1.31) holds for all l even, along \(n\in S\) with no further restriction, and believe this question to be of sufficiently high interest, both for applications of the type of Theorem 1.1, and intrinsically, to be addressed in the future.

Theorem 1.4

(Explicit unrestricted version of Theorem 1.1). For every \(l\ge 4\) even we have

Theorem 1.4 implies Theorem 1.1 at once, by working with the sequence resulting from an application of Theorem 1.3 on \(l=8\) (say), and from this point on we will only care to prove Theorem 1.4 (and Theorem 1.3).

2 Outline of the Paper

2.1 Outline of the proof of Theorem 1.4

2.1.1 The Kac–Rice formula.

The Kac–Rice formula is a meta-theorem allowing one to evaluate the \((d-1)\)-volume of the zero set of a random field \(F:{\mathbb {R}}^{d}\rightarrow {\mathbb {R}}\), for F satisfying some smoothness and non-degeneracy conditions. For \(F:{\mathbb {R}}^{d}\rightarrow {\mathbb {R}}\), a sufficiently smooth centred Gaussian random field, we define

$$\begin{aligned} K_{1}(x)=\frac{1}{\sqrt{2\pi {\text {Var}}(F(x))}}\cdot {\mathbb {E}}\big [\Vert \nabla F(x)\Vert \,\big |\, F(x)=0\big ], \end{aligned}$$

the zero density (first intensity) of F. Then the Kac–Rice formula asserts that for some suitable class of random fields F and \({\overline{\mathcal {D}}}\subseteq {\mathbb {R}}^{d}\) a compact closed subdomain of \({\mathbb {R}}^{d}\), one has the equality

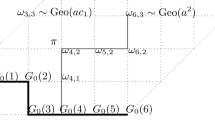

We would like to apply (2.1) on the random fields \(f_{n}\) in (1.21) to evaluate the expectation on the l.h.s. of (1.23). Unfortunately, for some n, the aforementioned non-degeneracy conditions fail decisively for some points of \(\mathcal {Q}\). For these cases, an approximate version of Kac–Rice was developed [26, Proposition 1.3] (in a slightly different context of evaluating the variance), so that rather than holding precisely, (2.1) would hold approximately, still yielding the asymptotic law for the evaluated expectation. Nevertheless, for this particular case, by excising some neighbourhoods of the problematic degenerate set, consisting of a union of a grid and finitely many isolated points, and by applying the Monotone Convergence Theorem, we will be able to deduce that (2.1) holds precisely, save for the length of the said deterministic grid contained in the nodal set of \(f_{n}\) for some \(n\in S\) of a particular form.

Let \(n\in S\) be of the form (1.8), and denote the associated number

so that, in particular, \(Q_{n}^{2} | n\). We will establish later (cf. Lemma 3.1 below) that in such a scenario, all of the \(\mu _{1}\) and \(\mu _{2}\) on the r.h.s. of (1.21) are divisible by \(Q_{n}\), so that if \(Q_{n}>1\), then necessarily the (deterministic) grid

is a.s. contained inside the nodal line \(f_{n}^{-1}(0)\) (see Figs. 1, 2); \({\mathcal {G}}_{n}\) is of length

Lemma 3.1 will also assert that such a situation is only possible in this scenario, i.e. all the components \(\{\mu _{1}\}_{\mu \in \mathcal {E}_{n}/\sim }\) are divisible by a maximal number \(d>1\), if and only if \(d=Q_{n}\) in (2.2). The following proposition is the announced Kac–Rice formula, with the said caveat (namely, the length of \({\mathcal {G}}_{n}\), manifested on the r.h.s. of (2.6)).
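The divisibility phenomenon behind the deterministic grid can be observed numerically. The sketch below does not use the formula (2.2) for \(Q_{n}\); instead it computes, by brute force, the greatest common divisor d of all coordinates of the points of \(\mathcal {E}_{n}\), which is the number the text identifies with \(Q_{n}\) via Lemma 3.1. The helper names are hypothetical, and `interior_grid_length` is this sketch's own count of the interior lines \(x_{1}=k/d\), \(x_{2}=k/d\), \(0<k<d\), on which every summand \(\sin (\pi \mu _{1}x_{1})\sin (\pi \mu _{2}x_{2})\) vanishes.

```python
from functools import reduce
from math import gcd, isqrt


def lattice_points(n):
    """All integer points (m1, m2) with m1^2 + m2^2 = n."""
    points = []
    r = isqrt(n)
    for m1 in range(-r, r + 1):
        rest = n - m1 * m1
        m2 = isqrt(rest)
        if m2 * m2 == rest:
            points.append((m1, m2))
            if m2 != 0:
                points.append((m1, -m2))
    return points


def coordinate_gcd(n):
    """Largest d dividing every coordinate of every point of E_n."""
    coords = (abs(c) for mu in lattice_points(n) for c in mu)
    return reduce(gcd, coords, 0)


def interior_grid_length(d):
    """Total length of the lines x1 = k/d and x2 = k/d, 0 < k < d, in [0,1]^2."""
    return 2 * (d - 1)


if __name__ == "__main__":
    for n in (5, 18, 45, 65):
        d = coordinate_gcd(n)
        print(n, d, n % (d * d) == 0, interior_grid_length(d))
```

For n = 45 all lattice points are of the form \((\pm 3,\pm 6)\) or \((\pm 6,\pm 3)\), so d = 3 and the lines \(x_{i}=1/3\), \(x_{i}=2/3\) lie in the zero set of every sample, whereas for n = 5 or n = 65 one gets d = 1 and no interior grid.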

Proposition 2.1

Let \(f_{n}\) be as in (1.21), \({\mathcal {L}}_{n}\) the nodal length of \(f_{n}\), and

be the zero density of \(f_{n}\). Then, for every \(n\in S\), we have \(K_{1}\in L^{1}(\mathcal {Q})\), and moreover, we have

where \(Q_{n}\) is as in (2.2).

For example, by comparing (2.6) to (2.1), we may deduce as a particular by-product of Proposition 2.1, that (2.1) holds precisely in our case, if and only if \(Q_{n}=1\), i.e. the grid is empty. Below it will be demonstrated that \(Q_{n}\) on the r.h.s. of (2.6) does not contribute to the Kac–Rice integral (nor to the correction term \(\sqrt{n}\mathcal {C}_{n}\) in (1.23), with \(\mathcal {C}_{n}\) given by (1.24)), e.g., it is easily dominated by \(\frac{\sqrt{n}}{N_{n}^{A}}\), for every \(A>0\) (see the proof of Theorem 1.4 in Sect. 2.1.5 below).

2.1.2 The joint distribution of \((f_{n}(x),\nabla f_{n}(x))\).

By the definition (2.5) of the zero density function of \(f_{n}\), to investigate \(K_{1}(x)\) we naturally encounter the value distribution of both \(f_{n}(x)\), determined by \({\text {Var}}(f_{n}(x))\), and \(\nabla f_{n}(x)\) conditioned on \(f_{n}(x)=0\), determined by its \(2\times 2\) (conditional) covariance matrix. On recalling that the covariance function \(r_{n}\) of \(f_{n}\) is given by (1.22), so that on the diagonal

with an elementary manipulation and well-known trigonometric identities yielding

where

Further, we need to evaluate the covariance matrix of \(\nabla f_{n}(x)\) conditioned on \(f_{n}(x)=0\). A scrupulous direct computation, carried out in “Appendix A” shows that the corresponding (normalised) covariance matrix is given by the following:

Lemma 2.2

The \(2\times 2\) covariance matrix of \(\nabla f_{n}(x)\), conditioned on \(f_{n}(x)=0\), and appropriately normalised, is the following \(2\times 2\) real symmetric matrix:

where

where \(v_{n}\) is given by (2.7) and (2.8);

and

2.1.3 Singular set.

Next, we aim at analysing the asymptotic behaviour of the r.h.s. of (2.6). Towards this goal we will separate the domain \(\mathcal {Q}\) of the integration on the r.h.s. of (2.6) into the “good” or nonsingular set, where \(K_{1}\) is “tame”, i.e. admits precise asymptotics (Proposition 2.7), and the “bad” or singular set, which itself consists of small singular squares, so as to be able to control the integral of \(K_{1}\). First, we will bound the total contribution of the singular set (Corollary 2.6) from above, by separately bounding the number of small singular squares (Proposition 2.4), appealing to the bound for spectral semi-correlations in Theorem 1.3, and the contribution of a single small singular square (Proposition 2.5).

Below it will be asserted that for most of the points \(x\in \mathcal {Q}\), both the value of \(v_{n}(x)\) is close to unity (equivalently, \(s_{n}(x)\) is small), and \(\Omega _{n}\) in (2.9) is close to the unit matrix (equivalently, \(\Gamma _{n}\) is small); we will designate the other points as “singular”, and excise them while performing an asymptotic analysis on \(K_{1}\). To quantify it, we take \(\epsilon _{0}>0\) and \(c_{0}>0\), and keep them fixed but sufficiently small throughout. We will endow the singular set with a structure of a union of small squares (cf. [25, 26]).

Definition 2.3

(Singular set). Let \(\epsilon _{0}>0\) and \(c_{0}>0\) be two parameters. Take

and \(K:=\left\lfloor \frac{1}{\delta _{0}}\right\rfloor + 1\). For \(1\le i\le K\) define the interval

and for \(1\le i,j \le K\) denote the small square

We have the partition

of the square into a union of small squares, disjoint save for boundary overlaps.

a. Recall the notation in (2.8) and (2.9). A small square \(Q_{ij}\) in (2.13) is “singular” if it contains a point \(x_{0}\in Q_{ij}\) satisfying any of the three inequalities:

$$\begin{aligned} |s_{n}(x_{0})|> \epsilon _{0}, \end{aligned}$$

or

$$\begin{aligned} |{\text {tr}}(\Gamma _{n}(x_{0}))|>\epsilon _{0}, \end{aligned}$$

or

$$\begin{aligned} |\det (\Gamma _{n}(x_{0}))|>\epsilon _{0}. \end{aligned}$$

b. The singular set is the union

$$\begin{aligned} \mathcal {Q}_{s}:= \bigcup \limits _{Q_{ij} \text { singular}} Q_{ij} \end{aligned}$$

of all small singular squares.

c. The complement \(\mathcal {Q}{\setminus } \mathcal {Q}_{s}\) of the singular set is called the “nonsingular set”.

The following couple of propositions assert a bound for the total measure of the singular set, and for the contribution of a single singular square respectively. Combining these two will yield an upper bound for the total contribution of the singular set \(\mathcal {Q}_{s}\) to the integral on the r.h.s. of (2.6).

Proposition 2.4

(Bound for the measure of the singular set). For every \(l\ge 4\) even integer we have the following bound for the measure of the singular set in terms of the length-l spectral semi-correlation set (1.30):

where the constant involved in the \(`O'\)-notation depends only on l.

Proposition 2.5

(Bound for a single small square). Let \(Q\subseteq \mathcal {Q}\) be an arbitrary square of side length \(\frac{c_{0}}{\sqrt{n}}\) with \(c_{0}>0\) sufficiently small. Then

with the constant involved in the ‘O’-notation in (2.15) absolute.

It is worth mentioning that the doubling exponent method due to Donnelly-Fefferman [11], with relation to Yau’s conjecture (1.2), yields the deterministic bound of \(O\left( 1\right) \) for the nodal length of \(f_{n}\) restricted to Q as in Proposition 2.5, that, being better than the global bound of \(\sqrt{n}\), falls short of being sufficient for our needs (see Footnote 2), by a significant margin. We believe that the optimal upper bound on the r.h.s. of (2.15) should be \(O\left( \frac{1}{\sqrt{n}} \right) \); however, after some effort, we were not able to prove that. Instead, we sacrifice a power of \(N_{n}\) by virtually not exploiting the summation in (1.22) (except the invariance of \(\mathcal {E}_{n}\) w.r.t. \(\mu =(\mu _{1},\mu _{2})\mapsto (\mu _{2},\mu _{1})\)), in the hope to gain the lost power of \(N_{n}\) while bounding the number of singular squares (equivalently, the measure of \(\mathcal {Q}_{s}\)), which is precisely what is achieved in Proposition 2.4, with the help of Theorem 1.3. In particular, Proposition 2.5 applies to all singular squares \(Q_{ij}\subseteq \mathcal {Q}_{s}\), leading to the following, possibly sub-optimal, result.

Corollary 2.6

For every \(l\ge 4\) even integer we have the following bound for the contribution of the singular set to the integral on the r.h.s. of (2.6):

The upshot of Corollary 2.6 is that, thanks to Theorem 1.3, by choosing l sufficiently big, we can make the r.h.s. of (2.16) smaller than \(\sqrt{n}\cdot N_{n}^{-A}\), with \(A>0\) arbitrarily large. That is crucial if we are to majorise it by the second term in the claimed asymptotic expansion (1.23), of order of magnitude \(\approx \frac{\sqrt{n}}{N_{n}}\). The proof of Corollary 2.6 is immediate given Propositions 2.4 and 2.5, and, thereupon, conveniently omitted.

2.1.4 Perturbative analysis on the non-singular set.

Outside the singular set, the precise analysis for the density function is feasible.

Proposition 2.7

(Asymptotics for \(K_{1}\) outside \(\mathcal {Q}_{s}\)). Let \(\epsilon _{0}>0\) be a sufficiently small number, and recall that \(s_{n}(\cdot )\) and \(\Gamma _{n}(\cdot )\) are given by (2.8) and (2.10) respectively. Then \(K_{1}\) admits the following asymptotics, uniformly for \(x\in \mathcal {Q}{\setminus }\mathcal {Q}_{s}\),

where the leading term is given by

and the error term is bounded by

with constant involved in the ‘O’-notation absolute.

We observe that, by the definition (2.10) with (2.11, 2.12), the diagonal entries of \(\Gamma _{n}(x)\) are bounded by an absolute constant (using the nonnegativity of the \(d_{\cdot ;n}(x)^{2}\)), and therefore so are the diagonal entries of \(\Omega _{n}(x)\) in (2.9); further, all the entries of \(\Omega _{n}\) are bounded by an absolute constant, by the Cauchy-Schwarz inequality (see Footnote 3). Taking also into account (2.8), and the definition (2.17) of \(L(x)=L_{n}(x)\), we conclude that L(x) is uniformly bounded

with the involved constant absolute; this will prove useful later, while restricting (or, rather, un-restricting) the range of the integration in (2.6) to \(\mathcal {Q}{\setminus } \mathcal {Q}_{s}\).

2.1.5 Proof of Theorem 1.4.

The following lemma will be proved in Sect. 7 below.

Lemma 2.8

a. Let \(L(x)=L_{n}(x)\) be as in (2.17), and \(l\ge 4\) an even number. Then

$$\begin{aligned} \int \limits _{\mathcal {Q}}L(x)dx = - \frac{\pi (1+4 {\hat{\nu }}_n)}{32\sqrt{2} }\cdot \frac{\sqrt{n}}{N_n} +O\left( \frac{\sqrt{n} }{N^2_n} \right) + O\left( n^{1/2} N_{n}^{-l-1} |{\mathcal {M}}_{l}(n)|\right) . \end{aligned}$$

b. Let \(\Upsilon (x)=\Upsilon _{n}(x)\) be a function defined on \(\mathcal {Q}\), satisfying (2.18). Then

$$\begin{aligned} \int \limits _{\mathcal {Q}}|\Upsilon (x)|dx = O\left( \frac{\sqrt{n}}{N_{n}^{2}} \right) . \end{aligned}$$

Given the above results, the proof of Theorem 1.4 is rather straightforward.

Proof of Theorem 1.4 assuming Corollary 2.6, Proposition 2.7 and Lemma 2.8

Let l be given. We invoke Proposition 2.1, and separate the contribution of the nonsingular and the singular sets in the integral on the r.h.s. of (2.6) to write, with the help of Corollary 2.6,

with \(Q_{n}\) given by (2.2). First, we claim that for every \(A>0\),

so that the contribution of the grid length is majorised by the error term on the r.h.s. of (1.32). To this end, we recall the prime decomposition (1.8) of n, and write

so that

Since, as it is well-known,

and \(N_{n}=O(n^{\epsilon })\) for every \(\epsilon >0\), we may easily write (taking \(A:=1/(2\epsilon )\))

which is (2.21).

Next, we use the asymptotics of \(K_{1}\) on the nonsingular set claimed in Proposition 2.7 to write

with a bound (2.18) for the error term, thanks to the uniform bound (2.19) on \(L_{n}(x)\) together with Proposition 2.4.

Upon substituting (2.22) into (2.20), and exploiting (2.21), we may deduce (with the error term of \(\sqrt{n} \cdot N_{n}^{-l}|{\mathcal {M}}_{l}(n)|\) being majorized by \(N_{n}^{2-l}\sqrt{n} \cdot |{\mathcal {M}}_{l}(n)|\)):

The statement (1.32) of Theorem 1.4 now follows upon employing Lemma 2.8a for evaluating the integral \(\int \limits _{\mathcal {Q}}L_{n}(x)dx\) on the r.h.s. of (2.23), and Lemma 2.8b for bounding the error term \(\int \limits _{\mathcal {Q}{\setminus }\mathcal {Q}_{s}}|\Upsilon _{n}(x)|dx\).

\(\square \)
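The slow growth of \(N_{n}\) invoked in the proof above can be probed numerically. The following is a minimal sketch, assuming that \(N_{n}\) is comparable to the number \(r_{2}(n)\) of integer points on the circle of radius \(\sqrt{n}\) (the precise normalisation of \(N_{n}\) is not restated here); the function names are illustrative only. It compares a brute-force count with the classical formula \(r_{2}(n)=4\prod _{j}(e_{j}+1)\), the product running over the primes \(p_{j}\equiv 1 \bmod 4\) dividing n, valid when every prime \(\equiv 3 \bmod 4\) occurs to an even power.

```python
from math import isqrt


def lattice_points(n):
    """All integer points (a, b) with a^2 + b^2 = n (brute force)."""
    pts = []
    r = isqrt(n)
    for a in range(-r, r + 1):
        b2 = n - a * a
        b = isqrt(b2)
        if b * b == b2:
            pts.append((a, b))
            if b != 0:
                pts.append((a, -b))
    return pts


def factorize(n):
    """Prime factorization of n as a dict {prime: exponent} (trial division)."""
    factors = {}
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors[p] = factors.get(p, 0) + 1
            n //= p
        p += 1
    if n > 1:
        factors[n] = factors.get(n, 0) + 1
    return factors


def r2_from_factorization(n):
    """Classical count of representations of n as an ordered sum of two squares."""
    count = 4
    for p, e in factorize(n).items():
        if p % 4 == 3 and e % 2 == 1:
            return 0  # a prime = 3 mod 4 to an odd power: no representation
        if p % 4 == 1:
            count *= e + 1
    return count
```

For instance, `r2_from_factorization(1105)` depends only on the exponents in \(1105=5\cdot 13\cdot 17\), which illustrates why the count is of divisor-function size rather than of polynomial size in n.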

2.2 Outline of the proof of Theorem 1.3

To prove Theorem 1.3, our first step, as in [4], is to restrict ourselves to the set of integers \(n\in S\) with “typical” factorization type. This is accomplished (for square-free n) with the help of Lemma 8.1. Fixing a “typical” \(n=p_1p_2\dots p_r\) with \(p_1<p_2<\dots <p_r,\) the key observation then is that any non-trivial relation of the form

can be rewritten as a non-degenerate quasi-linear equation with respect to the Gaussian primes \(p_j=\pi _j{\bar{\pi }}_j,\)

where each \(\pi _{j,s}^*\in \{\pi _j,{\bar{\pi }}_j\}\) with rotation factor \(\alpha _s\in {\mathbb {Z}}.\) Thus, with the primes \(p_1,p_2\dots p_{r-1}\) fixed, Eq. (2.24) determines \(\arg {\pi }_r,\) and therefore the prime \(p_r,\) in a unique fashion (see the proof of Proposition 8.2 for the details). After conditioning on this value of \(p_r\) and taking into account Lemma 8.1, we deduce that the equality (2.24) can occur only for a small proportion of numbers \(n\in S.\) This is accomplished in Proposition 8.2 for those \(n\in S\) which are free of small prime factors and in Proposition 8.3 for general \(n\in S.\)

In order to show that the set of accumulation points of the sequence of numbers \(\{\widehat{\nu _{n}}(4)\}_{n\in S'}\) is the whole of \([-1,1],\) we choose \(n\in S\) of the form \(n=p_n^mp,\) where \(p_n\) and p are appropriately chosen primes and \(m\in S,\) using a classical result due to Kubilius (Lemma 8.4). This is the content of Proposition 8.5.

2.3 Outline of the paper

The rest of the paper is organised as follows. The proof of a version of the Kac–Rice formula in Proposition 2.1 will be given in Sect. 3, and the asymptotics for the Kac–Rice integral on the r.h.s. of (2.6) will be analysed throughout Sects. 4–7, as follows. The upper bound of Proposition 2.4 for the measure of the singular set \(\mathcal {Q}_{s}\) as in Definition 2.3, in terms of the semi-correlation set, will be established in Sect. 4, whereas the contribution of a single small square \(Q_{ij}\subseteq \mathcal {Q}_{s}\), as in Proposition 2.5, will be controlled in Sect. 5.

The perturbative analysis of Proposition 2.7 for the zero density on the nonsingular set will be carried out in Sect. 6, whose contribution to the expected nodal length of \(f_{n}\) will be evaluated in Sect. 7. A proof of Theorem 1.3, bounding the semi-correlation set, will be given in Sect. 8, whereas some more technically demanding computations, required as part of proofs for the said results, will be performed in the appendix.

3 Proof of Proposition 2.1: Kac–Rice Formula for Expected Nodal Length

In view of [1, Theorem 6.3] (see also [1, Proposition 1.2]), the equality (2.6) holds provided that the Gaussian distribution of \(f_n(x)\) is non-degenerate for every \(x \in \mathcal {Q}\). It is easy to construct examples of numbers n, so that this non-degeneracy condition fails decisively for some points in \(\mathcal {Q}\). Let

be the degenerate set.

Lemma 3.1

Let n be of the form (1.8), recall that \(Q_{n}\) is given by (2.2), and the grid \({\mathcal {G}}_{n}\) as in (2.3). Then we have the decomposition

where \(\mathcal {A}_{n}\subseteq \mathcal {Q}\) is a finite set of isolated points in \(\mathcal {Q}\).

Proof

First we aim at proving the announced decomposition (3.2). Let \(x=(x_{1},x_{2})\in {\mathcal {H}}_{n}\), whence, by the definition (3.1) of \({\mathcal {H}}_{n}\), for all \(\mu \in \mathcal {E}_{n}/\sim \) either \(\mu _{1}x_{1}\in {\mathbb {Z}}\) or \(\mu _{2}x_{2}\in {\mathbb {Z}}\) holds, and recall that we assumed that \(\mu _{1},\mu _{2}\ne 0\) for all \(\mu \in \mathcal {E}_{n}/\sim \) (as otherwise the corresponding summand in (1.21) necessarily vanishes). Assume that for some \(\mu \in \mathcal {E}_{n}\) we have \(\mu _{1}x_{1}\in {\mathbb {Z}}\), and denote \(l:=\mu _{1}x_{1}\in {\mathbb {Z}}\). Then, since \(x_{1}\in [0,1]\) and \(\mu _{1}^{2}+\mu _{2}^{2}=n\), necessarily \(|l|\le \sqrt{n}\), and \(x_{1}=\frac{l}{\mu _{1}}\). Hence, if for some \(\mu =(\mu _{1},\mu _{2})\in \mathcal {E}_{n}/\sim \) we have \(\mu _{1}x_{1}\in {\mathbb {Z}}\), and for some \({\widetilde{\mu }}=({\widetilde{\mu }}_{1},{\widetilde{\mu }}_{2})\in \mathcal {E}_{n}/\sim \) we have \({\widetilde{\mu }}_{2}x_{2}\in {\mathbb {Z}}\), then both coordinates \((x_{1},x_{2})\) belong to the finite set

so that, by prescribing \(\mathcal {A}_{n}\subseteq \mathcal {A}'_{n}\), the \(\mathcal {A}_{n}\) in the decomposition (3.2), is finite, provided that we prove that the rest of \({\mathcal {H}}_{n}\) is indeed the grid \({\mathcal {G}}_{n}\).

By the above, we may assume that \(x\in {\mathcal {H}}_{n}\) satisfies

and claim that in this case necessarily \(x_{1}\) is of the form

for some \(1\le k\le Q_{n}-1\), taking care of the symmetric case (\(\mu _{2}x_{2}\in {\mathbb {Z}}\) for all \(\mu \in \mathcal {E}_{n}/\sim \)) along identical lines. Once (3.4) is established, it yields that x lies on the grid \({\mathcal {G}}_{n}\) (see (2.3)), and we are left only with the burden of proving the converse inclusion \({\mathcal {G}}_{n}\subseteq {\mathcal {H}}_{n}\) (which is easy).

To the end of proving (3.4), we let

be the greatest common divisor of the abscissas of all the lattice points in \(\mathcal {E}_{n}\). Then, since the set of integers d so that \(d\cdot x_{1}\in {\mathbb {Z}}\) is an ideal in \({\mathbb {Z}}\), we have \(Q'_{n}x_{1}\in {\mathbb {Z}}\) (equivalently, since we can express \(Q'_{n}\) as a linear combination of \(\{\mu _{1}:\, \mu \in \mathcal {E}_{n}/\sim \})\). The above shows that (3.3) is equivalent to the single condition \(Q'_{n}x_{1}\in {\mathbb {Z}}\). That is, \(x_{1}=\frac{k}{Q'_{n}}\), and, since \(x_{1}\in (0,1)\), we also get \(1\le k\le Q'_{n}-1\), yielding (3.4) (that, as mentioned above, in turn implies \(x\in {\mathcal {G}}_{n}\)), once we prove that \(Q_{n}=Q'_{n}\), to be shown next.

To this end we recall the prime decomposition (1.8) of n, and work in the ring of Gaussian integers \({\mathbb {Z}}[i]\) (which is a unique factorization domain, or, simply, UFD), where we think of \(\mathcal {E}_{n}\subseteq {\mathbb {R}}^{2}\) as embedded into \({\mathbb {C}}\), via the map \(\mu =(\mu _{1},\mu _{2})\mapsto \mu _{1}+i\mu _{2}\). For every prime \(p_{j}\) in the decomposition (1.8) we associate a prime element \(\pi _{j}\in {\mathbb {Z}}[i]\) with norm \(\Vert \pi _{j}\Vert ^{2}=p_{j}\), and take

With this notation, and (2.2), one may express every \(\mu \in \mathcal {E}_{n}/\sim \) (i.e., as an element of \({\mathbb {C}}\), up to a sign or complex conjugation) as

for some \(0\le k_{j}\le e_{j}\), \(j=1,\ldots ,s\). This implies that \(Q_{n}|Q_{n}'\) at once. To see that also \(Q_{n}'|Q_{n}\), we further exploit the UFD property of \({\mathbb {Z}}[i]\), implying, in particular, that the gcd in \({\mathbb {Z}}[i]\) is well-defined.

By the definition (3.5) of \(Q_{n}'\), we have that \(Q_{n}'|\mu _{1}, \mu _{2}\) for all \(\mu \in \mathcal {E}_{n}/\sim \), and so \(Q_{n}'|\mu \) in \({\mathbb {Z}}[i]\), valid for all \(\mu \in \mathcal {E}_{n}\), i.e.

However, the two choices \(k_{j}:=e_{j}\) and \(k_{j}:=0\) have only \(Q_{n}\cdot (1+i)^{a_{0}}\) as a common factor in (3.6), which shows that \(Q_{n}''=Q_{n}\cdot (1+i)^{a_{0}}\). Recalling that either \(a_{0}=0\) or \(a_{0}=1\), and combining the already established \(Q_{n}|Q_{n}'\) with \(Q_{n}'|Q_{n}''\) (so that \(Q_{n}'\) could be either \(Q_{n}\) or \(Q_{n}\cdot (1+i)\), the latter not being an integer), we conclude that \(Q_{n}'=Q_{n}\), which, as mentioned above, yields \(x\in {\mathcal {G}}_{n}\). To finish the proof of Lemma 3.1 it is sufficient to observe that if \(x_{1}\) is of the form (3.4), then, in light of (3.5) and \(Q_{n}=Q_{n}'\) above, (3.3) is satisfied, so that the inclusion \({\mathcal {G}}_{n}\subseteq {\mathcal {H}}_{n}\) holds. \(\square \)
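The reduction of the family of conditions \(\mu _{1}x_{1}\in {\mathbb {Z}}\) to a single condition involving the gcd of the abscissas can be checked on small examples. The sketch below is illustrative only: it assumes that \(\mathcal {E}_{n}/\sim \) may be represented by the lattice points with both coordinates positive, the function names are hypothetical, and it computes the gcd of the abscissas directly rather than the quantity \(Q_{n}\) of (2.2), which is not restated here.

```python
from fractions import Fraction
from functools import reduce
from math import gcd, isqrt


def first_quadrant_points(n):
    """Points (m1, m2) with m1, m2 > 0 and m1^2 + m2^2 = n."""
    pts = []
    for m1 in range(1, isqrt(n) + 1):
        m2_sq = n - m1 * m1
        m2 = isqrt(m2_sq)
        if m2 > 0 and m2 * m2 == m2_sq:
            pts.append((m1, m2))
    return pts


def abscissa_gcd(n):
    """gcd of the first coordinates of all representatives (0 if there are none)."""
    return reduce(gcd, (m1 for m1, _ in first_quadrant_points(n)), 0)


def on_vertical_bar(n, x1):
    """True if m1 * x1 is an integer for every representative (m1, m2)."""
    return all((m1 * x1).denominator == 1 for m1, _ in first_quadrant_points(n))
```

For \(n=45\) the representatives are (3, 6) and (6, 3), the gcd of the abscissas is 3, and `on_vertical_bar(45, Fraction(1, 3))` holds while `on_vertical_bar(45, Fraction(1, 6))` does not, in line with the ideal argument: the rational \(x_{1}\) satisfying all the conditions are exactly the multiples of the reciprocal of the gcd.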

With the above preparatory result we are now in a position to conclude the proof of Proposition 2.1.

Proof of Proposition 2.1

Around each point \(x_{i}\in {\mathcal {H}}_n\) we excise a small ball \(\mathcal {B}(x_i,\varepsilon )\), and denote

with the intention to apply the Kac–Rice method to evaluate the expected nodal length of the restriction \(f_n |_{\mathcal {Q}_{\varepsilon }}\) of \(f_{n}\) to the remaining set. That is, we excised the radius-\(\varepsilon \) balls centred at each of the finitely many points \(\mathcal {A}_{n}\), and, possibly, finitely many rectangles of the form \((x_{1}-\varepsilon ,x_{1}+\varepsilon ) \times (0,1)\) and \((0,1)\times (x_{2}-\varepsilon ,x_{2}+\varepsilon )\), centred at the horizontal and vertical bars of the grid \({\mathcal {G}}_{n}\), in case it is non-empty. Since outside \({\mathcal {H}}_{n}\), the random field \(f_{n}\) satisfies the non-degeneracy hypothesis of [1, Theorem 6.3], the Kac–Rice formula (2.1) holds for \(f_{n}\) restricted to \(\mathcal {Q}_{\varepsilon }\), asserting that the restricted expected nodal length is given by

with \(K_{1}\) as in (2.5).

On one hand, since, on recalling (2.4), the restricted nodal length \(\{{\mathcal {L}}(f_n |_{\mathcal {Q}_{\varepsilon }})\}_{\varepsilon >0}\) is an increasing sequence of nonnegative random variables with the a.s. limit

the Monotone Convergence Theorem, applied as \(\varepsilon \rightarrow 0\), yields

On the other hand, by the definition,

with the r.h.s. of (3.8) finite or infinite. The equality of the limits in (3.7) and (3.8) shows that the main statement (2.6) of Proposition 2.1 holds, whether both the l.h.s. and r.h.s. of (2.6) are finite or infinite. That \(K_{1}\in L^{1}(\mathcal {Q})\) also follows, since the l.h.s. of (2.6) is finite by the deterministic bound (1.2) (alternatively, from the asymptotic analysis within Theorem 1.4 of the integral on the r.h.s. of (2.6), with no circular logic). \(\square \)

4 Proof of Proposition 2.4: Controlling the Measure of the Singular Set

4.1 Proof of Proposition 2.4

We will need the following auxiliary lemmas, whose proofs will be given in Sect. 4.2 below.

Lemma 4.1

Let \(Q_{ij} \subset \mathcal {Q}_s\) be a singular small square. Then necessarily one of the following holds:

a. For every \(y \in Q_{ij}\),

$$\begin{aligned} |s_{n}(y)|> {\epsilon _{0}}/{2}. \end{aligned}$$

b. For every \(y \in Q_{ij}\),

$$\begin{aligned} |{\text {tr}}(\Gamma _{n}(y))|>{\epsilon _{0}}/{2}. \end{aligned}$$

c. For every \(y \in Q_{ij}\),

$$\begin{aligned} |\det (\Gamma _{n}(y))|>{\epsilon _{0}}/{2}. \end{aligned}$$

Lemma 4.1 allows for the following decomposition of \(\mathcal {Q}_{s}\).

Definition 4.2

(Singular decomposition).

a. The set \(\mathcal {Q}_{s,1} \subset \mathcal {Q}_s\) is the union of all small squares \(Q_{ij} \subset \mathcal {Q}_s\) so that for all \(x\in Q_{ij}\) the inequality

$$\begin{aligned} |s_n(x)| >\epsilon _0/2 \end{aligned} \tag{4.1}$$

is satisfied.

b. The set \(\mathcal {Q}_{s,2}\) is the union of all small squares \(Q_{ij}\subseteq \mathcal {Q}_{s}{\setminus } \mathcal {Q}_{s,1}\), so that either for all \(x\in Q_{ij}\) the inequality

$$\begin{aligned} |{\text {tr}}(\Gamma _{n}(x))|>\epsilon _{0}/2 \end{aligned}$$

holds, or for all \(x\in Q_{ij}\), the inequality

$$\begin{aligned} |\det (\Gamma _{n}(x))|>\epsilon _{0}/2 \end{aligned}$$

holds.

c. By Lemma 4.1,

$$\begin{aligned} \mathcal {Q}_{s} = \mathcal {Q}_{s,1} \cup \mathcal {Q}_{s,2}, \end{aligned} \tag{4.2}$$

(“singular decomposition”), with \(\mathcal {Q}_{s,1}\) and \(\mathcal {Q}_{s,2}\) disjoint save for boundary overlaps.

The respective measures of \(\mathcal {Q}_{s,1}\) and \(\mathcal {Q}_{s,2}\) will be bounded in the following lemma. Recall that \({\mathcal {M}}_{l}(n)\) is the length-l spectral semi-correlation set (1.30).

Lemma 4.3

For every \(l\ge 4\) even integer we have the following bounds for the measures of the singular sets \(\mathcal {Q}_{s,1}\), \(\mathcal {Q}_{s,2}\):

with the constant involved in the ‘O’-notation depending only on l (also \(\epsilon _{0}\) and \(c_{0}\)).

Proof of Proposition 2.4 assuming Lemmas 4.1–4.3

In light of the singular decomposition (4.2), the statement (2.14) of Proposition 2.4 follows at once from Lemma 4.3.

\(\square \)

4.2 Proofs of the auxiliary Lemmas 4.1–4.3

Proof of Lemma 4.1

We assume that for some i, j, there exists \(x_{0} \in Q_{ij}\) with

and claim that for all \(x\in Q_{ij}\), the inequality

holds (assuming \(c_{0}\) is sufficiently small), i.e. scenario (a) of Lemma 4.1 prevails. On recalling the definition (2.8) of \(s_{n}\), and differentiating (2.8) explicitly, it is easy to obtain the uniform bound

with some absolute constant \(c_{1}>0\). This readily implies that \(s_{n}(\cdot /\sqrt{n})\) is a Lipschitz function with associated constant absolute, i.e.

Hence, if \(x\in Q_{ij}\), we have that \(\Vert x-x_{0}\Vert \le \sqrt{2}c_{0}/\sqrt{n}\), since \(Q_{ij}\) has side length \(c_{0}/\sqrt{n}\), and together with (4.5) and (4.7) this implies (4.6), so long as we choose \(c_{0}>0\) sufficiently small, depending on \(\epsilon _{0}\) (and \(c_{1}\)).

Essentially the same argument works for the other two scenarios (b) and (c) of Lemma 4.1, on recalling the definition (2.10) of \(\Gamma _{n}(\cdot )\), and exploiting the Lipschitz property of both \({\text {tr}}(\Gamma _{n}(\cdot ))\) and \(\det (\Gamma _{n}(\cdot ))\), in place of \(s_{n}(\cdot )\). These are easy to establish to be with Lipschitz constant of order of magnitude at most \(\sqrt{n}\), by differentiating the individual entries of \(\Gamma _{n}(\cdot )\). \(\square \)

Proof of Lemma 4.3

We first aim to prove the first statement (4.3) of Lemma 4.3, the proof of the second statement (4.4) being quite similar, as explained in the last paragraph of this proof. By the defining inequality (4.1) of \(\mathcal {Q}_{s,1}\), holding for all \(x\in \mathcal {Q}_{s,1}\), we have that

so that we may apply the Chebyshev inequality to yield for every \(l\ge 4\) even integer the bound

By the definition (2.8) of \(s_{n}(x)\), we have \(s_n(x)=A_n(x)+B_n(x)+C_{n}(x)\), where

Since l is even, we may bound

Recalling the definition of the semi-correlation set \({\mathcal {M}}_{l}(n)\) in (1.30), we observe that, for \(i=1,2\), we easily evaluate:

and the same

whereas for the other integral in (4.8), we recall the correlation set (1.26) to bound

as, obviously, \({\mathcal {R}}_{l}(n)\subseteq {\mathcal {M}}_{l}(n)\).

The first statement (4.3) of Lemma 4.3 follows directly from (4.8), (4.9), (4.10) and (4.11), via Chebyshev’s inequality. Finally, the same argument as above also yields the second statement (4.4) of Lemma 4.3, upon observing that for every \(y \in \mathcal {Q}_{s,2}\) we have \(|s_n(y)|\le \epsilon _0\) with \(\epsilon _0\) small; so on \(\mathcal {Q}_{s,2}\) we can Taylor expand the function

that appears in the entries of the conditional covariance matrix \(\Gamma _n(x)\). \(\square \)
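The mechanism behind the proof above is that the l-th moment of a trigonometric sum counts vanishing signed sums of its frequencies, after which Chebyshev's inequality converts the moment bound into a bound on the measure. The following sketch checks the underlying one-dimensional identity \(\int _{0}^{1}\prod _{j=1}^{l}\cos (2\pi m_{j}t)\,dt = 2^{-l}\cdot \#\{\varepsilon \in \{\pm 1\}^{l}:\, \sum _{j}\varepsilon _{j}m_{j}=0\}\) for integer frequencies. It is an illustration under simplifying assumptions (a single variable, generic integer frequencies \(m_{j}\) rather than the specific sums \(A_{n}\), \(B_{n}\), \(C_{n}\)), and the function names are hypothetical.

```python
from itertools import product
from math import cos, pi


def sign_relations(freqs):
    """Number of sign vectors eps in {-1, 1}^l with sum(eps_j * m_j) == 0."""
    return sum(
        1
        for eps in product((-1, 1), repeat=len(freqs))
        if sum(e * m for e, m in zip(eps, freqs)) == 0
    )


def cosine_product_integral(freqs):
    """Integral over [0, 1] of prod_j cos(2*pi*m_j*t).

    An equispaced rule with more nodes than the largest possible frequency
    sum integrates the trigonometric polynomial exactly, up to rounding.
    """
    nodes = sum(abs(m) for m in freqs) + 1
    total = 0.0
    for k in range(nodes):
        t = k / nodes
        term = 1.0
        for m in freqs:
            term *= cos(2 * pi * m * t)
        total += term
    return total / nodes
```

With the frequencies (1, 2, 2, 1) there are four vanishing signed sums, so the integral equals 4/16; with (1, 2, 4, 8) there are none and the integral vanishes.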

5 Proof of Proposition 2.5: Controlling the Contribution of a Small Square

5.1 Proof of Proposition 2.5

We will first state the following lemma, whose proof will be given in Sect. 5.2 immediately below.

Lemma 5.1

Let \(Q\subseteq \mathcal {Q}\) be an arbitrary square of side length \(\frac{c_{0}}{\sqrt{n}}\) with \(c_{0}>0\) sufficiently small. We have the following uniform bound, holding for all \(\eta ,\mu \in \mathcal {E}_n\) with \(\eta _{1}\ne 0\) and \(\mu _{1}\ne 0\):

with constant involved in the ‘O’-notation depending only on the constant \(c_{0}>0\).

Proof of Proposition 2.5 assuming Lemma 5.1

Let X and Y be the conditional random variables

and recall that the joint distribution of (X, Y) is centred Gaussian, with covariance matrix equal to \(\Omega _{n}(x)\) in (2.9) (and (2.10)–(2.12)), up to the normalising constant read from (2.9). Then, with the aid of the Cauchy-Schwarz inequality, we obtain the inequality

so that we may bound the zero density (2.5) as

where

and

In what follows we are going to prove that

and the same proof (with all coordinates switched) yields the other estimate

together these imply (2.15). By (2.9), and on recalling that

we express the variance of the conditional derivative as

since

it follows that

where

It follows that we have the following explicit expression:

Next, by grouping \((\eta ,\mu )\) together with \((\mu ,\eta )\) on the r.h.s. of (5.4), this is equivalent to

Hence

where we used the positivity of all the summands in the denominator, as well as the easy inequality

valid for any sequence of real numbers \(\{a_{k}\}_{k=1}^{K}\). The bound (5.2) (and similarly (5.3)), implying, as it was mentioned above, the statement of Proposition 2.5, finally follows upon integrating the individual summands on the r.h.s. of (5.5), and using Lemma 5.1 to bound the contribution of each one of them (recall that we are allowed to assume that \(\eta _{1},\mu _{1}\ne 0\)). \(\square \)
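The splitting of the zero density into two one-coordinate contributions can be illustrated numerically. The sketch below assumes that the Cauchy-Schwarz step takes the form \({\mathbb {E}}\big [\sqrt{X^{2}+Y^{2}}\big ]\le \sqrt{{\mathbb {E}}[X^{2}]}+\sqrt{{\mathbb {E}}[Y^{2}]}\) for the centred Gaussian pair (X, Y); this is an assumption about the displayed inequality, and the covariance entries and function names are chosen purely for illustration.

```python
import random
from math import sqrt


def sample_gaussian_pairs(var_x, var_y, cov_xy, size, seed=0):
    """Centred Gaussian pairs with the given 2x2 covariance (hand-rolled Cholesky)."""
    rng = random.Random(seed)
    a = sqrt(var_x)
    b = cov_xy / a
    c = sqrt(var_y - b * b)  # requires a positive definite covariance
    pairs = []
    for _ in range(size):
        u, v = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        pairs.append((a * u, b * u + c * v))
    return pairs


def mean_norm_and_bound(pairs):
    """Empirical E[sqrt(X^2 + Y^2)] and the bound sqrt(E[X^2]) + sqrt(E[Y^2])."""
    n = len(pairs)
    mean_norm = sum(sqrt(x * x + y * y) for x, y in pairs) / n
    rms_x = sqrt(sum(x * x for x, _ in pairs) / n)
    rms_y = sqrt(sum(y * y for _, y in pairs) / n)
    return mean_norm, rms_x + rms_y
```

The inequality holds for the empirical distribution of any sample, so the comparison does not depend on the random seed; the point of the bound is that each term on the right involves the variance of a single conditional derivative.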

5.2 Proof of Lemma 5.1

Proof

Let \(\eta , \mu \in \mathcal {E}_n/\sim \), and \(Q\subseteq \mathcal {Q}\) be an arbitrary square of side length \(\frac{c_{0}}{\sqrt{n}}\) with \(c_{0}>0\) sufficiently small. Now we write

where \(x_1 \in [0,1]\), \(k_{\eta }= \left[ \frac{\eta _{1}x_{1}}{\pi } \right] \in {\mathbb {Z}}\) is the integer value of \(\eta _{1}x_{1}/\pi \), independent of \(x_{1}\) by the above, and \(h_{\eta }=h_{\eta }(x_{1})\in (-\pi /2,\pi /2]\). We also denote

the numbers \(k_{\mu }\), \(x_{1}^{\mu }\) and \(h_{\mu }=h_{\mu }(x_{1})\) are defined analogously, with \(\eta _{1}\) replaced by \(\mu _{1}\). Note that, \(h_{\eta }\) and \(h_{\mu }\), both being linear functions of \(x_{1}\), satisfy the relation

that will be exploited below. Finally, we denote

so that (5.8) reads

crucially depending on \(\eta ,\mu \) and Q only (but independent of \(x_{1}\)).

First, we assume, that \(x_{1}^{\eta }\ne x_{1}^{\mu }\), i.e.

and by switching between \(\eta \) and \(\mu \) if necessary, we may assume w.l.o.g., that

We expand the numerator of the integrand on l.h.s. of (5.1) (first, without the absolute value):

so that

by (5.10), where \(d(\eta ,\mu )\) is as in (5.9), and the error term is bounded by

For the denominator of the integrand on l.h.s. of (5.1), we have

and also

and we plan to use the former inequality whenever we are going to bound the contribution of the main term of (5.13), and both inequalities for the error term in (5.13).

We denote \(s_{\eta }=s_{\eta }(x)=\sin (\eta _{2} \pi x_{2})\) and \(s_{\mu }=s_{\mu }(x) =\sin (\mu _{2} \pi x_{2})\). With the newly introduced notation, the denominator (5.15) is

as we will keep \(x_{2}\) fixed, and aim at first to integrate w.r.t. \(x_{1}\), we will treat \(s_{\eta }\) and \(s_{\mu }\) as parameters. The estimates (5.13) and (5.16) imply that the integral in (5.1) is bounded by

and, in what follows, we are going to bound the contribution of the main and the error terms by separate arguments.

First, we bound the contribution of the main term in the integrand on the r.h.s. of (5.17). To this end we notice that \(h_{\eta }\) and \(h_{\mu }\) are both linear functions of \(x_{1}\), so we are going to exploit their inter-dependence (5.6) (and its analogue for \(h_{\mu }\)) to write:

where we denoted

a linear transformation of the variable \(x_{1}\), and used (5.12). We complete the expression on the r.h.s. of (5.18) to a square:

note that we may assume that \(s_{\mu }\ne 0\) and \(s_{\eta } \ne 0\) (holding outside a discrete set of \(x_{2}\)). Substituting the identity (5.20) into (5.18), we may bound the contribution of the main term of the integral on the r.h.s. of (5.17) as

where we have transformed the coordinates (5.19), and \({\tilde{I}}_{i}\) is some shift of the interval \(I_{i}\).

Another transformation of coordinates w.r.t. \(\triangle x_{1}\) shows that, denoting \(\widetilde{{\widetilde{I}}}_{i}\) the new range of integration, (5.21) is equal to

since the integral w.r.t. t is bounded by an absolute constant.

Next, we turn to evaluating the contribution of the error term

to the integral (5.17). By (5.14), we have

we will bound the 1st integral on the r.h.s. of (5.23), with the last one being bounded along similar lines, and the 2nd and the 3rd ones are easier, as the corresponding numerator is divisible by both \(h_{\eta }\) and \(h_{\mu }\) (see the argument below).

We might bound the 1st integral on the r.h.s. of (5.23) (or the integral w.r.t. \(x_{1}\)), using the Cauchy-Schwarz inequality

to bound the denominator from below, so that

which has the unfortunate burden of having to deal with \(h_{\mu }\) in the denominator, that could potentially be much smaller than \(h_{\eta }\). To deal with this obstacle we exploit the relation (5.10) between \(h_{\eta }\) and \(h_{\mu }\) once again, both being linear functions of \(x_{1}\). We write

so that

For the former integral on the r.h.s. of (5.25) we use the aforementioned idea (5.24) to bound the denominator from below

since \(|h_{\eta }| \le \frac{\pi }{2}\) is bounded, so that, after the integration w.r.t. \(x_{2}\), we obtain

Concerning the latter integral on the r.h.s. of (5.25) (or the double integral on Q), since, as above, \(h_{\eta }\) is bounded, we have

readily evaluated above as the main term (see (5.22)).

Combining the estimates (5.27) and (5.26), and substituting them into (5.25) (integrated w.r.t. \(x_{2}\) on \({ I}_{j}\)) yield the bound

for the 1st integral on the r.h.s. of (5.23), and its analogues for the other integrals on the r.h.s. of (5.23) also follow (the 4th is symmetric, whereas the 2nd and the 3rd are easier, with no need to deal with the denominator). Substituting (5.28) and its analogues for the other three integrals in (5.23) into (5.23), we finally obtain a bound for the contribution of the error term

This, together with (5.22), and (5.17), implies the statement (5.1) of Lemma 5.1 in this non-degenerate case (5.11).

Finally, we treat the degenerate case \(x_{1}^{\eta }=x_{1}^{\mu }\), or, equivalently,

In this case the situation becomes easier to analyse, and the main term of the expansion (5.13) vanishes via (5.30), so that here (5.13) reads

with the same bound (5.14) for the error term. The argument leading to (5.26) above works unimpaired, with no need to bound the extra term (5.27) here, as the precise identity (5.30) reduces bounding the integral (5.25) (after integrating w.r.t. \(x_{2}\) on \(I_{j}\)) to bounding (5.26) with no remainder term (5.27). This shows that in this degenerate case, (5.29) holds, and, by (5.31), it also shows that the statement (5.1) of Lemma 5.1 holds here. \(\square \)

6 Proof of Proposition 2.7: Perturbative Analysis on the Non-singular Set

Proof

The proof of Proposition 2.7 rests on a precise Taylor analysis for the density function \(K_1\). We exploit the fact that the Gaussian expectation (2.5) is an analytic function with respect to the parameters of the corresponding covariance matrix outside its singularities. It is then possible to Taylor expand \(K_1\) explicitly, in the domain \(\mathcal {Q}{\setminus } \mathcal {Q}_s\), where both \(s_n(x)\) and all the entries of \(\Gamma _n(x)\) are small.

We first expand the factor

that appears in (2.5). Next, we consider the Gaussian integral

Observing that

we expand the exponential in (6.2) as:

so that the Gaussian integral (6.2) is such that

We introduce the following notation:

and

In Lemma 6.1, postponed to the end of this section, we evaluate the terms \({\mathcal {I}}_i(\Gamma _n(x))\); we use Lemma 6.1 to write

where

Finally we expand the factor

Since \(\Gamma _n(x)\) is symmetric, it is diagonalizable; we denote its eigenvalues by \(g_{1;n}(x)\) and \(g_{2;n}(x)\). The eigenvalues of \(I_2+\Gamma _n(x)\) are then \(1+g_{1;n}(x)\) and \(1+g_{2;n}(x)\), and we have

This implies that we can write

We rewrite the third term on the right hand side of the last equation as follows

and we have that

Observing that \(g^2_{1;n}(x)\) and \(g^2_{2;n}(x)\) are eigenvalues of \(\Gamma ^2_n(x)\), we rewrite \(\det (\Gamma _n(x))\) as follows

and we arrive at the following expansion

In conclusion, in view of (6.1), (6.5) and (6.6), we obtain the Taylor expansion of the density function \(K_1\):

\(\square \)
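The determinant manipulations in the proof above rest on two elementary identities for a symmetric \(2\times 2\) matrix \(\Gamma \): \(\det (I_{2}+\Gamma )=1+{\text {tr}}(\Gamma )+\det (\Gamma )\) and \(\det (\Gamma )=\frac{1}{2}\left( [{\text {tr}}(\Gamma )]^{2}-{\text {tr}}(\Gamma ^{2})\right) \). The sketch below checks them numerically, together with the resulting second-order expansion \(1-\frac{1}{2}{\text {tr}}(\Gamma )+\frac{1}{8}[{\text {tr}}(\Gamma )]^{2}+\frac{1}{4}{\text {tr}}(\Gamma ^{2})\). It assumes that the factor being expanded is \(\det (I_{2}+\Gamma )^{-1/2}\) (an assumption, since the display is not restated here); the helper names are illustrative.

```python
from math import sqrt


def trace(g):
    """Trace of a 2x2 matrix given as nested lists."""
    return g[0][0] + g[1][1]


def det(g):
    """Determinant of a 2x2 matrix."""
    return g[0][0] * g[1][1] - g[0][1] * g[1][0]


def square(g):
    """Matrix product g * g for a 2x2 matrix."""
    return [
        [sum(g[i][k] * g[k][j] for k in range(2)) for j in range(2)]
        for i in range(2)
    ]


def inv_sqrt_det_exact(g):
    """det(I + g)^(-1/2), using det(I + g) = 1 + tr(g) + det(g)."""
    return 1.0 / sqrt(1.0 + trace(g) + det(g))


def inv_sqrt_det_second_order(g):
    """Second-order Taylor approximation in the entries of g."""
    t = trace(g)
    return 1.0 - t / 2.0 + t * t / 8.0 + trace(square(g)) / 4.0
```

For a matrix with entries of size about \(10^{-2}\) the two functions agree up to an error of cubic order, which is the regime relevant on the non-singular set, where all the entries of \(\Gamma _{n}(x)\) are small.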

We now state Lemma 6.1; its proof is relegated to “Appendix B”.

Lemma 6.1

We have

7 Proof of Lemma 2.8: Boundary Effect and Error Term

We first prove Lemma 2.8a. By the definition (2.17) of \(L_{n}(x)\), we have

The following technical lemma evaluates the individual integrals encountered within (7.1).

Lemma 7.1

The integrals of the individual terms on the r.h.s. of (7.1) admit the following asymptotics.

a.

$$\begin{aligned} \int \limits _{\mathcal {Q}} s_n(x) d x=0. \end{aligned}$$

b.

$$\begin{aligned} \int \limits _{\mathcal {Q}}{\text {tr}}(\Gamma _n(x)) d x = - \frac{6}{ N_n } + O \left( \frac{1}{N^2_n} \right) + O\left( N_{n}^{-l-1} |{\mathcal {M}}_{l}(n)|\right) . \end{aligned}$$

c.

$$\begin{aligned} \int \limits _{\mathcal {Q}} s^2_n(x) d x=\frac{5}{N_n}. \end{aligned}$$

d.

$$\begin{aligned} \int \limits _{\mathcal {Q}} s_n(x) {\text {tr}}(\Gamma _n(x)) d x = \frac{2}{ N_n} + O \left( \frac{1}{N^2_n} \right) + O\left( N_{n}^{-l-1} |{\mathcal {M}}_{l}(n)|\right) . \end{aligned}$$

e.

$$\begin{aligned} \int \limits _{\mathcal {Q}} {\text {tr}}(\Gamma ^2_n(x)) dx =\frac{2^2}{N_n} \left[ 1+2^5 M_4(n) \right] +O\left( \frac{1}{N^2_n} \right) + O\left( n^{-1-1/2} N_n^{-l-3} |{\mathcal {M}}_{l}(n)|\right) . \end{aligned}$$

f.

$$\begin{aligned} \int \limits _{\mathcal {Q}} [{\text {tr}}(\Gamma _n(x))]^2 d x=\frac{2^2}{N_n} \left[ 2^6 M_4(n)-3 \right] +O\left( \frac{1}{N^2_n} \right) + O\left( N_{n}^{-l-1} |{\mathcal {M}}_{l}(n)|\right) . \end{aligned}$$

g.

$$\begin{aligned} \int \limits _{\mathcal {Q}} s^3_n(x) d x=O \left( \frac{1}{N^2_n} \right) . \end{aligned}$$

h.

$$\begin{aligned} \int \limits _{\mathcal {Q}} [ {\text {tr}}( \Gamma _n(x)) ]^3 d x=O \left( \frac{1}{N^2_n} \right) . \end{aligned}$$

The proof of Lemma 7.1 is postponed to Appendix C.

Proof of Lemma 2.8 assuming Lemma 7.1

We substitute the various asymptotic statements of Lemma 7.1 into (7.1) to obtain

The latter formula, expressed in terms of the sequence \(\{\widehat{\nu _n}(4)\}\) of the 4th Fourier coefficients of \(\nu _n\) gives the statement of Lemma 2.8a. The proof of Lemma 2.8b follows immediately from (2.18) and Lemma 7.1 parts g and h. \(\square \)

8 Proof of Theorem 1.3: Length-l Spectral Semi-correlations

We begin by proving Theorem 1.3 in the case of square-free numbers. To this end, for any fixed \(K\ge 1\) we introduce the set

and let \(\Omega _M:=\Omega _{M,1}=S\cap [1,M].\) The following lemma is borrowed from [4].

Lemma 8.1

For \(m\in \Omega _{M,K},\) let \(m=p_1p_2\dots p_r\) be its factorization with \(K< p_1<p_2\dots <p_r.\) Then, as \(M\rightarrow \infty ,\) the inequality \(p_s>2^{s\Phi (s)}\) holds for \(1\le s\le r\) and for all \(m\in \Omega _{M,K}{\setminus } \Omega _{M,K}^{(1)},\) where the exceptional set \(\Omega _{M,K}^{(1)}\) has cardinality

with \(\eta (K, \Phi )\rightarrow 0\) as \(K\rightarrow \infty .\) If \(\Phi (x)=o(\log x),\) then we can choose \(\eta (K,\Phi )=K^{-1+\delta }\) for every fixed \(\delta >0.\)

The next proposition shows that the number of solutions of (1.30) is small for almost all \(m\in \Omega _{M,K}.\)

Proposition 8.2

Let \(\delta >0\) be fixed. If \(K\ge K(\delta )\) and \(M\rightarrow \infty ,\) then for all but \(K^{-1+\delta }|\Omega _{M,K}|\) elements \(m\in \Omega _{M,K}\) the Eq. (1.30) has \(O(N_m^k)\) solutions.

Proof

Let \({\tilde{S}}\subseteq S\) be the subset for which (1.30) has a nontrivial solution. For any prime p we write \(p=\pi \cdot {\bar{\pi }}\) where \(\pi \) is the corresponding Gaussian prime with \(\text {arg}(\pi )\in [0,\pi /2].\) For any integer \(s\ge 1\) we introduce the set

Fix \(s\ge 1\) and consider \(n\in {\mathcal {F}}_s\) with a given factorization \(n=p_1\cdot p_2\dots p_s,\) \(K<p_1<p_2<\dots <p_s.\) Then there exist integer points \(\{\xi _j\}_{j=1}^{2k}\) with \(\Vert \xi _j\Vert =\sqrt{n}\) and \(\varepsilon _j\in \{-1,0,1\},\) \(1\le j\le 2k,\) with vanishing linear combination

Each point \(\xi _r\) can be uniquely written as a product \(\xi _r=i^{k_{\xi _r}}\prod _{j\le s }\pi _{j,r}^*\) where each \(\pi _{j,r}^*\in \{\pi _j,{\bar{\pi }}_j\}\) and \(k_{\xi _r}\in \{0,1,2,3\}.\) We now regroup the terms in the last expression by collecting \(\pi _s\) and \(\bar{\pi _s}\) into different summands to end up with an equivalent form

where each of \(A_{s-1},B_{s-1}\) consists of the sum of at most \(2k-1\) terms composed of the first \((s-1)\) Gaussian primes. Setting \(\pi _s=|\pi _s|e^{i\phi _s},\) \(A_{s-1}=|A_{s-1}|e^{ia_{s-1}}\) and \(B_{s-1}=|B_{s-1}|e^{ib_{s-1}},\) Eq. (8.1) can be rewritten as

Using elementary trigonometric identities we get

Consequently,

and so \(\tan (\phi _s)\) is determined uniquely unless

In the latter case we must have

Since \(n\in {\mathcal {F}}_s\) we have \(p_1p_2\dots p_{s-1}={\tilde{n}}\vert n\) and so by definition \({\tilde{n}}\in S{\setminus }{\tilde{S}}.\) This implies that (8.2) and consequently (8.1) must be trivial with \(A_{s-1}=B_{s-1}=0.\) This contradicts the definition of \(n\in {\mathcal {F}}_s.\)

Hence \(\tan (\phi _s)\) is determined uniquely and so is the corresponding prime \(p_s.\) Indeed, if \(p^{(1)}_s=x^2+y^2\) and \(p^{(2)}_s=a^2+b^2\) are two primes corresponding to the same angle \(\phi _s,\) then

Since \((a,b)=(x,y)=1,\) we have that \(|a|=|x|\) and \(|b|=|y|\) and therefore \(a^2+b^2=x^2+y^2=p^{(1)}_s=p^{(2)}_s:=p_s.\)
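The uniqueness claim can be checked numerically for small primes. The sketch below is an illustration only, under the assumption that each prime \(p\equiv 1 \pmod 4\) is represented as \(p=a^2+b^2\) with \(a>b>0\); the helper names `is_prime`, `tangent_of_prime` and `tangents_up_to` are hypothetical. Since a and b are coprime, the reduced fraction b/a recovers the pair (a, b), hence the prime.

```python
from fractions import Fraction
from math import isqrt


def is_prime(n):
    """Trial-division primality test, adequate for small n."""
    if n < 2:
        return False
    for d in range(2, isqrt(n) + 1):
        if n % d == 0:
            return False
    return True


def tangent_of_prime(p):
    """tan(arg(pi)) as an exact fraction b/a, where p = a^2 + b^2 and a > b > 0."""
    for b in range(1, isqrt(p) + 1):
        a2 = p - b * b
        a = isqrt(a2)
        if a * a == a2 and a > b:
            return Fraction(b, a)
    raise ValueError("p is not a sum of two squares")


def tangents_up_to(limit):
    """Map each prime p = 1 (mod 4) with p <= limit to its tangent."""
    return {p: tangent_of_prime(p)
            for p in range(5, limit + 1, 4) if is_prime(p)}


if __name__ == "__main__":
    table = tangents_up_to(2000)
    # Distinct primes give distinct tangents, as the argument above predicts.
    print(len(table), len(set(table.values())))
```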

We are now ready to estimate the number of \(m\in \Omega _{M,K}\) which give rise to a nontrivial solution of (1.30). By Lemma 8.1, we can restrict ourselves to \(m=p_1p_2\dots p_r\in \Omega _{M,K},\) with \(K<p_1<p_2<\dots <p_r\) and \(p_j\ge 2^{j\Phi (j)}\) for any \(1\le j\le r\) and some slowly growing function \(\Phi (x)\) to be determined later. Clearly for each such m, there exists a unique \(1\le s\le r\) such that the product \(p_1p_2\dots p_s\in {\mathcal {F}}_s.\) Given \(K<p_1<p_2<\dots <p_{s-1}\) we can form at most \(2^{2k(s-1)}\) sums \(A_{s-1}\) and \(B_{s-1}\) and thus produce at most \(2^{2k(s-1)}\) distinct \(n=p_1p_2\dots p_{s-1}p_s\in \Omega _{M,K}.\) By Lemma 8.1, \(p_s\ge \max \{2^{s\Phi (s)},K\}\) and therefore the total number of elements in \({\tilde{S}}\cap [1,M]\) induced by the elements in \({\mathcal {F}}_s\) is at most

where the last bound comes from “conditioning” on the value of \(p_s\) and noting that \(m/p_s\in \Omega _{M,K}.\) Summing this over different ranges for s and choosing \(\Phi (x)=o(\log x)\) in the same way as in [4] yields the desired conclusion. \(\square \)
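The proof above relies on writing every lattice point on the circle of radius \(\sqrt{n}\) as a unit \(i^{k}\) times a product of Gaussian primes \(\pi _j\) or \({\bar{\pi }}_j.\) A small sketch of this parametrization for square-free n with all prime factors congruent to 1 modulo 4 follows; Gaussian integers are stored as integer pairs, and all function names are hypothetical.

```python
from itertools import product
from math import isqrt


def gaussian_prime(p):
    """Return (a, b) with a^2 + b^2 = p and a > b > 0, for a prime p = 1 (mod 4)."""
    for b in range(1, isqrt(p) + 1):
        a2 = p - b * b
        a = isqrt(a2)
        if a * a == a2 and a > b:
            return (a, b)
    raise ValueError("p is not a sum of two squares")


def gmul(z, w):
    """Product of two Gaussian integers given as (real, imag) pairs."""
    return (z[0] * w[0] - z[1] * w[1], z[0] * w[1] + z[1] * w[0])


def points_from_factorization(primes):
    """All products i^k * prod_j pi_j^*, with pi_j^* equal to pi_j or its conjugate."""
    gps = [gaussian_prime(p) for p in primes]
    pts = set()
    for choice in product((1, -1), repeat=len(gps)):
        z = (1, 0)
        for (a, b), sign in zip(gps, choice):
            z = gmul(z, (a, sign * b))  # sign = -1 picks the conjugate
        for _ in range(4):              # multiply by the units 1, i, -1, -i
            pts.add(z)
            z = gmul(z, (0, 1))
    return pts


def points_brute_force(n):
    """All integer points on x^2 + y^2 = n, by direct search."""
    r = isqrt(n)
    return {(x, y) for x in range(-r, r + 1) for y in range(-r, r + 1)
            if x * x + y * y == n}


if __name__ == "__main__":
    print(points_from_factorization([5, 13]) == points_brute_force(65))
```

For s distinct primes the construction yields \(4\cdot 2^{s}\) points, matching the direct search.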

We are now ready to handle the general case.

Proposition 8.3

For all but \(o\left( \frac{M}{\sqrt{\log M}}\right) \) elements \(m\in S\cap [1,M],\) the Eq. (1.30) has \(O(N_m^k)\) solutions.

Proof

Fix large \(K>0\) and consider \({\mathcal {P}}_K=\prod _{p\le K}p.\) We decompose \(n=n_{K}n_b\) where \((n_b,{\mathcal {P}}_K)=1\) and \(\text {rad}(n_K)\vert {\mathcal {P}}_K.\) For any fixed part \(n_K=\xi {\bar{\xi }}\) we count the number of \(n\in {\tilde{S}}\cap [1,M]\) with fixed \(n_K|n\) and \((\frac{n}{n_K},{\mathcal {P}}_K)=1.\) In this way (1.30) reduces to

with \(\alpha _i\vert n_K\) and \(\Vert \xi _i\Vert =\sqrt{n_b}\) for \(1\le i\le 2k.\) We now follow the proof of Proposition 8.2 regarding \(\alpha _i\) as fixed coefficients. Let \(\Omega _{4,1}(n)\) denote the number of prime divisors \(p\equiv 1\pmod 4\) of n counting multiplicity. Given \(n_K,\) we have at most \(2^{2k\Omega _{4,1}(n_K)}\) choices for the coefficients \(\alpha _i.\) Note that the number of integers \(n\in \Omega _M\) for which \(p^2|n\) for some prime \(p\ge K\) is bounded above by

and thus gives a negligible contribution. Consequently, we can restrict ourselves to the set of integers with \(\text {rad}(n_b)=n_b.\) The number of \(n\in {\tilde{S}}\cap [1,M]\) induced in this way, after appealing to Proposition 8.2, is bounded above by

The result now follows by letting \(K\rightarrow \infty .\)\(\square \)
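The decomposition \(n=n_{K}n_b\) and the counting function \(\Omega _{4,1}\) used in the proof above are elementary to compute. The sketch below is illustrative only: the function names are hypothetical, and `coefficient_choices_bound` simply evaluates the bound \(2^{2k\Omega _{4,1}(n_K)}\) stated in the proof.

```python
def split_smooth_rough(n, K):
    """Return (n_K, n_b): n_K collects the prime factors <= K, n_b the rest."""
    n_K, n_b = 1, n
    for p in range(2, K + 1):
        # Composite p never divide here: their prime factors were removed earlier.
        while n_b % p == 0:
            n_b //= p
            n_K *= p
    return n_K, n_b


def omega_4_1(n):
    """Number of prime divisors p = 1 (mod 4) of n, counted with multiplicity."""
    count, p = 0, 2
    while p * p <= n:
        while n % p == 0:
            if p % 4 == 1:
                count += 1
            n //= p
        p += 1
    if n > 1 and n % 4 == 1:  # leftover prime factor
        count += 1
    return count


def coefficient_choices_bound(n_K, k):
    """The bound 2^(2k * Omega_{4,1}(n_K)) on the choices of coefficients."""
    return 2 ** (2 * k * omega_4_1(n_K))


if __name__ == "__main__":
    n = 2 * 5 * 5 * 13 * 29
    n_K, n_b = split_smooth_rough(n, 10)
    print(n_K, n_b, omega_4_1(n_K), coefficient_choices_bound(n_K, 1))
```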

Proposition 8.3 now implies that \(|{\tilde{S}}\cap [1,M]|=o\left( \frac{M}{\sqrt{\log M}}\right) \) and we may take \(S'=S{\setminus } {\tilde{S}}.\) In order to prove the last part of Theorem 1.3, we require the following classical result of Kubilius.

Lemma 8.4

The number of Gaussian primes \(\omega \) in the sector \(0\le \alpha \le \arg \omega \le \beta \le 2\pi ,\) \(|\omega |^2\le u\) is equal to

for some positive constant \(b\in {\mathbb {R}}.\)
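Lemma 8.4 guarantees Gaussian primes in every sector; for small parameters such a prime can be located by direct search. The sketch below is a numerical illustration, not part of the argument. It assumes the normalization \(p=x^2+y^2\) with \(x>y>0\), and the function names are hypothetical.

```python
from math import atan2, isqrt


def is_prime(n):
    """Trial-division primality test, adequate for small n."""
    if n < 2:
        return False
    for d in range(2, isqrt(n) + 1):
        if n % d == 0:
            return False
    return True


def first_prime_in_sector(eps, limit):
    """Smallest prime p = x^2 + y^2 <= limit (x > y > 0) with arg(x + iy) <= eps.

    Returns (p, x, y), or None if no such prime exists up to `limit`.
    """
    for p in range(5, limit + 1, 4):  # candidates congruent to 1 mod 4
        if not is_prime(p):
            continue
        for y in range(1, isqrt(p) + 1):
            x2 = p - y * y
            x = isqrt(x2)
            if x * x == x2 and x > y:
                if atan2(y, x) <= eps:
                    return p, x, y
                break  # the representation with x > y > 0 is unique
    return None


if __name__ == "__main__":
    print(first_prime_in_sector(0.2, 1000))
    print(first_prime_in_sector(0.11, 1000))
```

Narrower sectors force larger primes, which is why the constructions that follow select the primes only after the angular tolerance has been fixed.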

We now choose a particular “thin” subset of S, to guarantee the desired limiting behaviour of \(\{\widehat{\nu _{n}}(4)\}_{n\in S'}.\)

Proposition 8.5

For any \(s\in [-1,1],\) there exists a sequence \(\{n_i\}_{i\ge 1},\) with \(N_{n_i}\rightarrow \infty \) as \(i\rightarrow \infty ,\) such that \(\widehat{\nu _{n_i}}(4)\rightarrow s\) and Eq. (1.30) has \(O(N_{n_i}^k)\) solutions for any \(i\ge 1.\)

Proof

Fix large \(m\ge 1\) and small \(\varepsilon >0.\) By Lemma 8.4, we can select a prime \(p_{n}=\pi _{n}\bar{\pi _n},\) \(p_n\equiv 1\pmod 4\) and \(|\text {arg}(\pi _n)|\le \frac{\varepsilon }{100m}.\) We further select a prime p such that

Consider a number of the form \(n=p_n^{m}p.\) It is easy to see that

and \(N_n> 2^m.\) Using an elementary inequality

valid for \(|x_j|,|y_j|\le 1\) and the fact that \(\widehat{\nu _{n}}(4)=(\widehat{\nu _{p_n}}(4))^m\widehat{\nu _{p}}(4),\) we can estimate

It remains to show that Eq. (1.30) has only trivial solutions for appropriately chosen values of \(p_n,p,\) which satisfy (8.3) and (8.4). Let \(\pi _n=r_ne^{i\phi }\) and \(p=\pi \cdot {\bar{\pi }}\) with \(\arg {\pi }=\alpha .\) Clearly each integer point on the circle of radius \(\sqrt{n}\) can be written as \(\xi _j=\sqrt{n}e^{i(j\phi \pm \alpha +r\frac{\pi }{2})}\) for some \(|j|\le m\) and \(r\in \{0,1,2,3\}.\) For n defined in this way, upon taking real parts, Eq. (1.30) can be rewritten in the form

where \(\varepsilon _j\in \{+1,-1\}\) and \(|l_j|\le m\) for \(1\le j\le 2k.\) By collecting terms with equal phases \(l_j\phi \) and using elementary trigonometric identities, we can rewrite (8.5) in the form

where \(1\le r\le 2k\) and \(0\le m_1<m_2<\dots <m_r\) with \(-2k\le \alpha _j,\alpha _j^{(1)},\beta _j,\beta _j^{(1)}\le 2k.\) Since k is fixed, there are only finitely many choices for the coefficients \(\alpha _j,\alpha _j^{(1)},\beta _j,\beta _j^{(1)}\) and therefore we can select an angle \(\alpha \) for which the corresponding prime p satisfies (8.3) and such that \(a\sin (\alpha )+b\cos (\alpha )\ne 0\) for all \(a,b\in {\mathbb {Z}}\) with \(|a|+|b|\ne 0\) and \(|a|,|b|\le 2k.\) Now since each \(F_r(\phi )\) is a trigonometric polynomial of total degree at most 4k, each non-degenerate Eq. (8.6) has at most 4k solutions. Since there are only finitely many such equations, we can select \(p_n\) sufficiently large so that it satisfies (8.4) and the corresponding Eq. (8.5) has only trivial solutions. This concludes the proof. \(\square \)

Combining Proposition 8.3 and Proposition 8.5 completes the proof of Theorem 1.3.

Remark 8.6

It is possible to give a construction of n in Proposition 8.5 which is square-free. The idea is to use Lemma 8.4 and choose inductively a sequence of primes \(p_1<p_2<\dots <p_m\) such that \(p_j=\pi _j\bar{\pi _j}\) and \(|\text {arg}(\pi _j)|\le \frac{1}{m^2}\) with the property that for any \(1\le r\le m\) we have \(\cos (r\cdot \text {arg}(\pi _m))\notin \text {span}_{{\mathbb {N}}}\{\cos (\sum _{i\le m-1}a_i\text {arg}(\pi _i))\}\) where \(-m\le a_i\le m,\) \(a_i\in {\mathbb {Z}}.\) The latter can be ensured by taking a sufficiently sparse sequence of primes \(p_1,p_2,\dots \) Now select \(n=p\cdot p_1\dots p_m\) with \(|\widehat{\nu _p}(4)-s|\le \delta \) and \(\delta \) sufficiently small. We leave the details to the interested reader.

Notes

From this point we will tacitly assume that all the involved random fields are sufficiently smooth and are satisfying some non-degeneracy assumptions.

This argument recovers the Gaussian Correlation Inequality in this particular case, see e.g. [27].

References

Azaïs, J.-M., Wschebor, W.: Level Sets and Extrema of Random Processes and Fields. Wiley, Hoboken (2009)

Berry, M.V.: Regular and irregular semiclassical wavefunctions. J. Phys. A 10(12), 2083–2091 (1977)

Berry, M.V.: Statistics of nodal lines and points in chaotic quantum billiards: perimeter corrections, fluctuations, curvature. J. Phys. A 35, 3025–3038 (2002)

Bombieri, E., Bourgain, J.: A problem on sums of two squares. Int. Math. Res. Not. 11, 3343–3407 (2015)

Benatar, J., Marinucci, D., Wigman, I.: Planck-scale distribution of nodal length of arithmetic random waves. J. d’Anal. Math. (to appear). arXiv:1710.06153

Brüning, J.: Über Knoten Eigenfunktionen des Laplace–Beltrami operators. Math. Z. 158, 15–21 (1978)

Brüning, J., Gromes, D.: Über die Länge der Knotenlinien schwingender Membranen. Math. Z. 124, 79–82 (1972)

Cann, J.: Counting nodal components of boundary-adapted arithmetic random waves. PhD thesis submitted at King’s College London (2019)

Cheng, S.Y.: Eigenfunctions and nodal sets. Comment. Math. Helv. 51, 43–55 (1976)

Cilleruelo, J.: The distribution of the lattice points on circles. J. Number Theory 43(2), 198–202 (1993)

Donnelly, H., Fefferman, C.: Nodal sets of eigenfunctions on Riemannian manifolds. Invent. Math. 93, 161–183 (1988)

Gnutzmann, S., Lois, S.: Remarks on nodal volume statistics for regular and chaotic wave functions in various dimensions. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. (2014). https://doi.org/10.1098/rsta.2012.0521

Krishnapur, M., Kurlberg, P., Wigman, I.: Nodal length fluctuations for arithmetic random waves. Ann. Math. 177(2), 699–737 (2013)

Kurlberg, P., Wigman, I.: On probability measures arising from lattice points on circles. Math. Ann. 367(3–4), 1057–1098 (2017)

Kratz, M.F., León, J.R.: Central limit theorems for level functionals of stationary Gaussian processes and fields. J. Theoret. Probab. 14(3), 639–672 (2001)

Landau, E.: Über die Einteilung der positiven Zahlen nach vier Klassen nach der Mindestzahl der zu ihrer addition Zusammensetzung erforderlichen Quadrate. Arch. Math. und Phys. III (1908)

Logunov, A., Malinnikova, E.: Nodal sets of Laplace eigenfunctions: estimates of the Hausdorff measure in dimensions two and three. 50 Years with Hardy spaces. Oper. Theory Adv. Appl. 261, 333–344 (2018)

Logunov, A.: Nodal sets of Laplace eigenfunctions: proof of Nadirashvili’s conjecture and of the lower bound in Yau’s conjecture. Ann. Math. (2) 187(1), 241–262 (2018)

Logunov, A.: Nodal sets of Laplace eigenfunctions: polynomial upper estimates of the Hausdorff measure. Ann. Math. (2) 187(1), 221–239 (2018)

Longuet-Higgins, M.S.: The statistical analysis of a random, moving surface. Philos. Trans. R. Soc. Lond. Ser. A 249, 321–387 (1957)

Longuet-Higgins, M.S.: Statistical properties of an isotropic random surface. Philos. Trans. R. Soc. Lond. Ser. A 250, 157–174 (1957)

Marinucci, D., Rossi, M., Wigman, I.: The asymptotic equivalence of the sample trispectrum and the nodal length for random spherical harmonics. Annales de l’Institut Henri Poincaré, Probabilités et Statistiques 56(1), 374–390 (2020)

Marinucci, D., Peccati, G., Rossi, M., Wigman, I.: Non-universality of nodal length distribution for arithmetic random waves. Geom. Funct. Anal. (GAFA) 26(3), 926–960 (2016)

Nourdin, I., Peccati, G., Rossi, M.: Nodal statistics of planar random waves. Commun. Math. Phys. 369(1), 99–151 (2019)

Rudnick, Z., Wigman, I.: On the volume of nodal sets for eigenfunctions of the Laplacian on the torus. Ann. Henri Poincaré 9(1), 109–130 (2008)

Rudnick, Z., Wigman, I.: Nodal intersections for random eigenfunctions on the torus. Am. J. Math. 138(6), 1605–1644 (2016)

Royen, T.: A simple proof of the Gaussian correlation conjecture extended to multivariate gamma distributions. arXiv preprint arXiv:1408.1028 (2014)

Todino, A.P.: Nodal lengths in shrinking domains for random Eigenfunctions on \({\mathbb{S}}^ 2\), arXiv preprint arXiv:1807.11787 (2018)

Wigman, I.: Fluctuations of the nodal length of random spherical harmonics. Commun. Math. Phys. 298(3), 787–831 (2010). Erratum published Commun. Math. Phys. 309(1), 293–294 (2012)

Yau, S.T.: Survey on partial differential equations in differential geometry. Seminar on Differential Geometry. Ann. Math. Stud. 102, 3–71 (1982)

Acknowledgements