Abstract

We introduce a family of loop soup models on the hypercubic lattice. The models involve links on the edges, and random pairings of the link endpoints on the sites. We conjecture that loop correlations of distant points are given by Poisson–Dirichlet correlations in dimensions three and higher. We prove that, in a specific random wire model that is related to the classical XY spin system, the probability that distant sites form an even partition is given by the Poisson–Dirichlet counterpart.



1 Introduction

Loop soups are models in statistical mechanics that involve sets of one-dimensional loops living in a higher-dimensional space. These models are representations of particle or spin systems of statistical physics. It was recently conjectured that, in most cases and in dimensions three and higher, these models have phases with long, macroscopic loops (loops whose lengths scale like the volume of the system), and that the joint distribution of the macroscopic loops is always Poisson–Dirichlet [23].

This conjecture has been rigorously established in a model of spatial permutations related to the quantum Bose gas [10, 14, 18]. This model has a peculiar structure that makes it possible to integrate out the spatial variables and to use tools from asymptotic analysis, so one might suspect that the Poisson–Dirichlet property is an accident of this structure. But the conjecture has also been verified numerically in several other models, namely in lattice permutations [25]; in loop O(N) models [29]; and in the random interchange and a closely related loop model [2]. Unfortunately, these findings are not supported by rigorous results.

There exist limited results for some models with genuine spatial structure. The method of reflection positivity and infrared bounds [17, 21] allows one to prove the occurrence of macroscopic loops [36]. More precisely, it is shown that the expected length of a loop attached to a given site, divided by the volume, is bounded away from 0 uniformly in the size of the system. While encouraging, this result gives no information about the possible presence of several macroscopic loops, let alone their joint distribution.

The goal of this article is to propose a genuinely spatial loop model where much of the conjecture can be rigorously established. We refer to it as the “random wire model”. It is defined for arbitrary finite graphs; in the most relevant case, the set of vertices is a large box in \({\mathbb Z}^d\) and the set of edges consists of the pairs of nearest neighbours. On each edge there is a random number of “links”, subject to the constraint that the number of links touching each site is even. These links are paired at each site, resulting in closed trajectories (an illustration can be found in Fig. 2). Our main result is a rigorous proof that even loop correlations are given by Poisson–Dirichlet, at least for a specific choice of the parameters of the model.

There is a lot of background for this study. Our random wire model is an extension of the random current representation of the Ising model that was introduced by Griffiths, Hurst, and Sherman [24], and popularised by Aizenman [1]. It is also related to the Brydges-Fröhlich-Spencer representation of spin O(N) models [15, 19], and to loop O(N) models [9, 30]. The Poisson–Dirichlet distribution of random partitions was introduced by Kingman [27]; it is the invariant measure for the split-merge (coagulation-fragmentation) process [7, 16, 35]. Its relevance for mean-field loop soup models was first suggested by Aldous for the random interchange model on the complete graph, see [5]; Schramm succeeded in making this rigorous [34] (see also [6, 11,12,13]). The relevance of these ideas for systems with spatial structure was pointed out in [23].

The connections between the Poisson–Dirichlet distribution and symmetry breaking were noticed and exploited in [29, 36]. Our method of proof combines these ideas and rests on two major results about the classical XY model: the proof of Fröhlich, Simon, and Spencer that a phase transition occurs in dimensions three and higher [21]; and Pfister’s characterisation of all translation-invariant extremal infinite-volume Gibbs states [32]. We should point out that the precise relations between Poisson–Dirichlet and symmetry breaking are far from elucidated. The heuristics of Sect. 4.2 show that the loops that represent the classical XY model are characterised by the distribution PD(1), as are the loops of the quantum XY model [36]. However, these heuristics also show that the loops representing the classical Heisenberg model are characterised by PD(\(\frac{3}{2}\)), while the loops of the quantum Heisenberg model are characterised by PD(2) [23, 36]. At present, this discrepancy is puzzling.

It is perhaps worth emphasising that the Poisson–Dirichlet distribution is expected to characterise loop soups only in dimensions three and higher. The behaviour in dimension two is also interesting and partially understood, see [4, 8, 18]. There may be a Berezinskii–Kosterlitz–Thouless phase where loop correlations have power-law decay instead of exponential decay. A separate topic is the critical behaviour of two-dimensional loop soups, which is characterised by conformal invariance and Schramm–Löwner evolution; there have been many impressive results in recent years, but we do not discuss them here.

The article is organised as follows. The notation is summarised in Sect. 2 for the reader’s convenience. The random wire model is introduced in Sect. 3, where its basic properties are established. The Poisson–Dirichlet conjecture is explained in Sect. 4. Our main results, Theorems 5.1 and 5.2, are stated in Sect. 5. The first theorem deals with the density of points in long loops, and the second shows that even loop correlations are given by Poisson–Dirichlet. Section 6 discusses classical spin systems and their relations to the random wire model. In Sect. 7 we gather the necessary properties by summarising and completing the results of [21, 32], and we prove Theorems 5.1 and 5.2.

2 Notation

We list here the main notation used in this article; the precise definitions can be found in subsequent sections.

\({\mathcal G}= ({\mathcal V},{\mathcal E})\) the graph; \({\mathcal V}\) is the set of vertices and \({\mathcal E}\) is the set of edges. \({\mathcal G}^{\mathrm{b}} = ({\mathcal V}\cup {\bar{{\mathcal V}}}, {\mathcal E}\cup {\bar{{\mathcal E}}})\) denotes the graph with a boundary; \({\bar{{\mathcal V}}}\) is the set of boundary sites and \({\bar{{\mathcal E}}}\) is the set of edges between \({\mathcal V}\) and \({\bar{{\mathcal V}}}\).

\({\mathcal G}_L = (\Lambda _L,{\mathcal E}_L)\) with \(\Lambda _L = \{-L,\dots ,L\}^d \subset {\mathbb Z}^d\) and \({\mathcal E}_L\) the set of nearest-neighbours. \({\mathcal G}_L^{\mathrm{b}}\) is the graph with boundary \(\partial \Lambda _L\), given by sites of \({\mathbb Z}^d\) at distance 1 from \(\Lambda _L\).

\({\mathcal W}_{\mathcal G}= \{ {{\varvec{w}}}= ({{\varvec{m}}},{\varvec{\pi }}) \}\) is the set of wire configurations on \({\mathcal G}\), that consists of a link configuration \({{\varvec{m}}}\in {\mathcal M}_{\mathcal G}\subset {\mathbb N}_0^{\mathcal E}\) (with an even number of links touching each site) and a pairing configuration \({\varvec{\pi }}\in {\mathcal P}_{\mathcal G}({{\varvec{m}}})\).

\(n_x({{\varvec{m}}})\) is the local occupancy (or “local time”); it is equal to the number of times that loops pass by the site \(x \in {\mathcal V}\).

\(\lambda ({{\varvec{w}}})\) is the number of loops in the wire configuration \({{\varvec{w}}}\).

\(\alpha \) is the positive “loop parameter”.

\({{\varvec{J}}}= (J_e)_{e \in {\mathcal E}}\) are “edge constants”, or “coupling parameters”.

\(U : {\mathbb N}_0 \rightarrow {\mathbb R}\cup \{+\infty \}\) is a potential function; \(U(n_x)\) gives the energy of the \(n_x\) wires that cross the site \(x\in {\mathcal V}\).

\({\mathbb P}_{\mathcal G}^{\alpha ,{{\varvec{J}}}}, {\mathbb E}_{\mathcal G}^{\alpha ,{{\varvec{J}}}}\) denote the probability and expectation with respect to wire configurations.

\(Z_{\mathcal G}(\alpha ,{{\varvec{J}}})\) is the partition function and \(p_{\mathcal G}(\alpha ,{{\varvec{J}}})\) is the pressure.

\({\tilde{n}}_x\) is the number of pairs at the site x that belong to long or open loops.

\(E_X({{\varvec{x}}},{{\varvec{q}}})\) is the set of configurations \({{\varvec{w}}}\) where \((x_i,q_i)\) and \((x_j,q_j)\) belong to the same loop iff i, j belong to the same partition element of X.

\({\mathcal X}_{2k}^{\mathrm{even}}\) is the family of set partitions of \(\{1,\dots ,2k\}\) whose elements have even cardinality.

\(M_\theta (X)\) is the probability that k independent uniform points in [0, 1], placed in a random partition with Poisson–Dirichlet distribution PD(\(\theta \)), yield the set partition X.

\(M_\theta ^{\mathrm{even}}(2k) = \sum _{X \in {\mathcal X}_{2k}^{\mathrm{even}}} M_\theta (X)\) is the probability that 2k independent uniform points in [0, 1], placed in a random partition with distribution PD(\(\theta \)), yield an even set partition.

3 Setting

3.1 Links, pairings, wires, and loops

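We consider a generalisation of the model of random currents of the Ising model. Let \({\mathcal G}= ({\mathcal V},{\mathcal E})\) be a graph. Given a collection \({{\varvec{m}}}= (m_e)_{e\in {\mathcal E}}\) of nonnegative integers, we define the local occupancy (or local time) to be

$$\begin{aligned} n_x({{\varvec{m}}}) = \frac{1}{2} \sum _{e \in {\mathcal E}:\, x \in e} m_e, \qquad x \in {\mathcal V}. \qquad (3.1) \end{aligned}$$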

A link configuration is a collection \({{\varvec{m}}}\) such that an even number of links touches each site; in other words, the local occupancy \(n_x({{\varvec{m}}})\) is an integer at every site \(x \in {\mathcal V}\). We let \({\mathcal M}_{\mathcal G}\) denote the set of link configurations. A link configuration can be represented by a multigraph with labeled edges, see Fig. 1.

For a given link configuration \({{\varvec{m}}}\), a pairing configuration \({\varvec{\pi }}= (\pi _x)_{x \in {\mathcal V}}\) is a collection of pairings such that \(\pi _x\) pairs the links that touch the site \(x \in {\mathcal V}\). This is illustrated in Fig. 2. We let \({\mathcal P}_{\mathcal G}({{\varvec{m}}})\) denote the set of pairing configurations that are compatible with \({{\varvec{m}}}\); notice that the number of pairing configurations is equal to

$$\begin{aligned} |{\mathcal P}_{\mathcal G}({{\varvec{m}}})| = \prod _{x \in {\mathcal V}} \bigl ( 2n_x({{\varvec{m}}})-1 \bigr )!! \end{aligned}$$

(with the convention that \((-1)!! = 1\)). We call the pair \({{\varvec{w}}}= ({{\varvec{m}}},{\varvec{\pi }})\) a wire configuration; the set of wire configurations on the graph \({\mathcal G}\) is denoted \({\mathcal W}_{\mathcal G}\).
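Both definitions are easy to check mechanically. The Python sketch below assumes, for illustration only, that a link configuration is stored as a dictionary mapping each edge (a pair of vertex labels) to its number of links \(m_e\); it computes \(n_x({{\varvec{m}}})\) as half the number of links touching x, tests the parity constraint, and counts the pairing configurations as \(\prod _x (2n_x-1)!!\). The function names are hypothetical and not taken from the article.

```python
from collections import defaultdict
from fractions import Fraction
from math import prod


def local_occupancy(m):
    """n_x(m) = (1/2) * sum of m_e over the edges e that touch x."""
    n = defaultdict(Fraction)
    for (x, y), m_e in m.items():
        n[x] += Fraction(m_e, 2)
        n[y] += Fraction(m_e, 2)
    return dict(n)


def is_link_configuration(m):
    """True iff an even number of links touches every site, i.e. every n_x is an integer."""
    return all(n_x.denominator == 1 for n_x in local_occupancy(m).values())


def odd_double_factorial(n):
    """(2n - 1)!! = 1 * 3 * ... * (2n - 1), with (-1)!! = 1 when n = 0."""
    return prod(range(1, 2 * n, 2))


def number_of_pairings(m):
    """|P_G(m)| = product over sites of (2 n_x - 1)!!, for a valid link configuration m."""
    if not is_link_configuration(m):
        raise ValueError("an odd number of links touches some site")
    return prod(odd_double_factorial(int(n_x)) for n_x in local_occupancy(m).values())


# Square with two links on every edge: n_x = 2 everywhere, hence 3**4 pairings.
square = {(0, 1): 2, (1, 2): 2, (2, 3): 2, (3, 0): 2}
print(number_of_pairings(square))  # 81
```

Each site of the square is touched by four links, so \(n_x = 2\) and there are \(3!! = 3\) pairings per site.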

We now define the loops of a wire configuration. This notion is intuitive and is illustrated in Fig. 2b, even though the proper definition is a bit cumbersome. We consider the set of finite sequences of labeled links \(\bigl ( (e_1,p_1), \dots , (e_\ell ,p_\ell ) \bigr )\) where \(e_i \in {\mathcal E}\) and \(p_i \in \{1,\dots ,m_{e_i}\}\), and such that \(e_i \cap e_{i+1} \ne \emptyset \) for \(i = 1,\dots ,\ell \), with the cyclic convention \(e_{\ell +1} = e_1\). We identify sequences that are related by cyclicity and inversion; that is, we identify \(\bigl ( (e_2,p_2),\dots ,(e_\ell ,p_\ell ), (e_1,p_1) \bigr )\) and \(\bigl ( (e_\ell ,p_\ell ),\dots ,(e_1,p_1) \bigr )\) with \(\bigl ( (e_1,p_1),\dots ,(e_\ell ,p_\ell ) \bigr )\). An equivalence class of such sequences is a loop of length \(\ell \). In order to define the set of loops of a given wire configuration \({{\varvec{w}}}\), we can start at any link \((e_1,p_1)\); we choose one of its endpoints x and take as the next link the one that is paired with it by \(\pi _x\); we continue in this way, always leaving a link through its other endpoint, until we get back to \((e_1,p_1)\). For the next loop we choose a link that has not been selected yet, and we proceed in the same way until all links have been exhausted.

The number of loops of a wire configuration \({{\varvec{w}}}\) is denoted \(\lambda ({{\varvec{w}}})\). We also define the length of a loop as the number of links in the loop.
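The loop-tracing procedure can be sketched in Python as follows. We assume, hypothetically, that a labeled link is stored as `((x, y), p)` with \(x \ne y\), and that the pairing configuration is a dictionary sending each link endpoint `(link, site)` to the endpoint paired with it by \(\pi _{\text {site}}\). The function follows the pairings as in the text and returns each loop as the list of its links, so that \(\lambda ({{\varvec{w}}})\) is the number of lists returned and the length of a loop is the length of its list.

```python
def make_pairing(pairs):
    """Turn a list of paired endpoints into the involution endpoint -> partner."""
    pairing = {}
    for u, v in pairs:
        pairing[u] = v
        pairing[v] = u
    return pairing


def trace_loops(links, pairing):
    """
    links:   labeled links ((x, y), p), each joining the sites x != y.
    pairing: dict sending each endpoint (link, site) to its partner under pi_site.
    Returns the loops of the wire configuration, each as the list of its links.
    """
    def other_end(link, site):
        (x, y), _ = link
        return y if site == x else x

    unvisited = set(links)
    loops = []
    for start in links:
        if start not in unvisited:
            continue
        loop, link, site = [], start, start[0][1]  # leave `start` through its endpoint y
        while True:
            loop.append(link)
            unvisited.discard(link)
            link, site = pairing[(link, site)]  # the link paired with ours at `site`
            if link == start:  # back at the first link: the loop is closed
                break
            site = other_end(link, site)  # leave the new link through its other endpoint
        loops.append(loop)
    return loops


# Triangle with two links per edge; a_p, b_p, c_p are the links on the three edges.
a1, a2 = ((0, 1), 1), ((0, 1), 2)
b1, b2 = ((1, 2), 1), ((1, 2), 2)
c1, c2 = ((2, 0), 1), ((2, 0), 2)
links = [a1, a2, b1, b2, c1, c2]
parallel = make_pairing([((a1, 1), (b1, 1)), ((a2, 1), (b2, 1)),
                         ((b1, 2), (c1, 2)), ((b2, 2), (c2, 2)),
                         ((c1, 0), (a1, 0)), ((c2, 0), (a2, 0))])
print([len(loop) for loop in trace_loops(links, parallel)])  # [3, 3]
```

Pairing links with equal labels at every site gives two loops of length 3; exchanging the partners of \(c_1\) and \(c_2\) at the site 0 merges them into a single loop of length 6.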

3.2 The model of random wires

We now introduce the probability distribution on wire configurations. Let \({{\varvec{J}}}= (J_e)_{e \in {\mathcal E}}\) be a collection of nonnegative parameters indexed by the edges of \({\mathcal G}\), and let \(\alpha >0\) be another parameter. We consider an “interaction potential” function \(U : {\mathbb N}_0 \rightarrow {\mathbb R}\cup \{+\infty \}\) and define the probability of the wire configuration \({{\varvec{w}}}= ({{\varvec{m}}},{\varvec{\pi }})\) to be

Here, the normalisation \(Z_{\mathcal G}(\alpha ,{{\varvec{J}}})\) is the partition function defined by

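Notice that the exponent of \(\alpha \) is \(\lambda ({{\varvec{w}}})\), the number of loops. For \(\alpha \ne 1\), the loops therefore affect the probability distribution: configurations with many loops are favoured when \(\alpha >1\) and penalised when \(\alpha <1\).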

The interaction potential typically becomes infinite as the local occupancy diverges. It is natural to consider models where the partition function is finite for all choices of \(\alpha \) and \({{\varvec{J}}}\). The first claim of the next proposition gives a sufficient condition.

Proposition 3.1

Let \({\bar{\alpha }} = \max (\sqrt{\alpha },1)\) and assume that the potential function satisfies

for some positive constant C independent of n. Then

- (a)

The partition function is bounded by

$$\begin{aligned} Z_{\mathcal G}(\alpha , {{\varvec{J}}}) \le \exp \biggl \{ {\bar{\alpha }} C \sum _{e\in {\mathcal E}} J_e \biggr \}. \end{aligned}$$

- (b)

Let \(\ell ^{\mathrm{max}}_{x_0}({{\varvec{w}}})\) be the length of the longest loop that passes through the site \(x_0\). For all \(n\in {\mathbb N}\) and all \(\eta \ge 0\), we have

$$\begin{aligned} {\mathbb P}_{\mathcal G}^{\alpha ,{{\varvec{J}}}}(\ell ^{\max }_{x_0} \ge n) \le \,\mathrm{e}^{-\eta n}\, \sum _{k\ge 0} \sum _{\begin{array}{c} x_1,\dots ,x_k \in {\mathcal V} \\ \{x_{i-1},x_i\} \in {\mathcal E}\text { for } i = 1,\dots ,k \end{array}} \prod _{i=1}^k \bigl ( \,\mathrm{e}^{\,\mathrm{e}^{\eta }\,{\bar{\alpha }} C J_{\{x_{i-1},x_i\}}}\, - 1 \bigr )^{1/2}. \end{aligned}$$

The upper bound in (b) involves a sum over walks of arbitrary lengths that start at \(x_0\). In many situations, such as graphs with bounded degrees and \(J_e\) bounded uniformly, this sum converges for fixed \(\eta >0\) when \({{\varvec{J}}}\) is small enough. Then all loops passing through the site \(x_0\) are small: the probability that \(x_0\) lies on a loop of length at least n decays exponentially in n, uniformly in the size of the graph.
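To see when the walk sum converges, consider a box \(\Lambda _L \subset {\mathbb Z}^d\) with \(J_e \equiv J\). There are at most \((2d)^k\) nearest-neighbour walks of length k from \(x_0\), so the right-hand side of (b) is at most the geometric series \(\mathrm{e}^{-\eta n} \sum _{k \ge 0} (2d\,r)^k\) with \(r = (\mathrm{e}^{\mathrm{e}^{\eta } {\bar{\alpha }} C J} - 1)^{1/2}\). It converges when \(2d\,r < 1\), that is when \(\mathrm{e}^{\eta } {\bar{\alpha }} C J < \log (1 + (2d)^{-2})\). The Python sketch below evaluates this crude bound; the constant C is the one from the hypothesis of Proposition 3.1 and must be supplied by the user, and counting walks by \((2d)^k\) is our own simplification.

```python
import math


def walk_ratio(d, J, alpha, C, eta):
    """2d * (exp(e^eta * abar * C * J) - 1)^(1/2): growth factor of the walk sum per step,
    using the crude count of at most (2d)^k nearest-neighbour walks of length k."""
    abar = max(math.sqrt(alpha), 1.0)
    return 2 * d * math.sqrt(math.expm1(math.exp(eta) * abar * C * J))


def long_loop_bound(n, d, J, alpha, C, eta):
    """Geometric-series bound e^(-eta n) / (1 - ratio) on P(l_max >= n); inf if it diverges."""
    r = walk_ratio(d, J, alpha, C, eta)
    if r >= 1:
        return math.inf
    return math.exp(-eta * n) / (1 - r)


def coupling_threshold(d, alpha, C, eta):
    """Supremum of the J with walk_ratio < 1, i.e. e^eta * abar * C * J < log(1 + (2d)^-2)."""
    abar = max(math.sqrt(alpha), 1.0)
    return math.log1p(1 / (2 * d) ** 2) / (math.exp(eta) * abar * C)


# Hypothetical values: d = 3, alpha = 2, C = 2, eta = 0.1, J at half the threshold.
J = 0.5 * coupling_threshold(3, 2.0, 2.0, 0.1)
print(long_loop_bound(50, 3, J, 2.0, 2.0, 0.1))
```

The bound is informative only when \(\eta >0\) and J lies below `coupling_threshold(d, alpha, C, eta)`; it then decays like \(\mathrm{e}^{-\eta n}\).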

Proof

The number of loops is at most \(\frac{1}{2} \sum _e m_e\), since every loop contains at least two links, so that \(\alpha ^{\lambda ({{\varvec{w}}})} \le {\bar{\alpha }}^{\sum m_e}\). The number of pairing configurations is \(\prod _x (2n_x-1)!!\). Neglecting the constraints on link numbers, we get

We used \(\sum _{x \in {\mathcal V}} n_x({{\varvec{m}}}) = \sum _{e \in {\mathcal E}} m_e\), which follows from Eq. (3.1). This proves (a).

For the claim (b), given a configuration \({{\varvec{m}}}\), we consider the graph with set of vertices \({\mathcal V}\) and set of edges \(\{ e \in {\mathcal E}: m_e \ge 1 \}\). Further, let \({\mathcal G}' = ({\mathcal V}',{\mathcal E}') \subset {\mathcal G}\) be the connected component of this graph that contains the vertex \(x_0\). We have

The last bound follows from Markov’s inequality. In the equation below we condition on the graph \({\mathcal G}'\); the sum over \(({{\varvec{m}}},{\varvec{\pi }}) : {\mathcal G}'\) is a sum over link configurations on \({\mathcal E}'\) such that the graph with edges \(\{ e \in {\mathcal E}' : m_e \ge 1 \}\) is connected, and over pairing configurations on \({\mathcal V}'\). Thanks to factorisation properties, we have

Assuming that U is normalised so that \(U(0)=0\), which we can do without loss of generality, we have \(Z_{{\mathcal G}\setminus {\mathcal G}'}(\alpha ,{{\varvec{J}}}) \le Z_{\mathcal G}(\alpha ,{{\varvec{J}}})\). Using estimates similar to those in (a), we get

For any connected graph, there exists a closed walk from \(x_0\) that crosses each edge exactly twice. This is easily seen by induction on the number of edges: given such a walk for a connected graph, and adding an edge that touches it, we get a new walk by inserting a back-and-forth crossing of the new edge at a visit to one of its endpoints. The sum over connected graphs \({\mathcal G}'\) can then be estimated by a sum over walks starting at the vertex \(x_0\). The sum over \({{\varvec{m}}}\) can be estimated by \(\,\mathrm{e}^{\,\mathrm{e}^{\eta }\,{\bar{\alpha }} C J_e}\,-1\) at every edge, and we get the claim (b). \(\square \)
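The walk used in the proof can also be built constructively. The Python sketch below assumes a connected simple graph given as an edge list; a depth-first search from the root crosses each edge leading to a new vertex down and back around the exploration of that vertex, and crosses every other edge out and back the first time it meets it. Every edge is therefore crossed exactly twice and the walk returns to the root. This depth-first construction is one standard way of producing such a walk, not necessarily the induction used in the text.

```python
from collections import defaultdict


def double_traversal_walk(edges, root):
    """
    Closed walk from `root` crossing every edge of a connected simple graph exactly
    twice, built by depth-first search: an edge leading to a new vertex is crossed
    down and back around the exploration of that vertex; any other edge is crossed
    out and back the first time it is met.
    """
    adj = defaultdict(list)
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)

    visited, used, walk = {root}, set(), [root]

    def explore(u):
        for v in adj[u]:
            e = frozenset((u, v))
            if e in used:
                continue
            used.add(e)
            walk.append(v)  # cross e from u to v
            if v not in visited:
                visited.add(v)
                explore(v)
            walk.append(u)  # cross e back from v to u

    explore(root)
    return walk


print(double_traversal_walk([(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)], 0))
```

For the square with one diagonal (five edges), the walk has \(2 \cdot 5 = 10\) steps and starts and ends at the root.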

We now introduce a random wire model with “open” boundary conditions. The idea is to allow loops that end at the boundary; we refer to them as open loops, although they are not genuine loops. The new graph is

Here, \({\bar{{\mathcal V}}}\) is an extra set of vertices (the boundary), and \({\bar{{\mathcal E}}}\) is a set of edges between \({\mathcal V}\) and \({\bar{{\mathcal V}}}\), i.e. \({\bar{{\mathcal E}}} \subset {\mathcal V}\times {\bar{{\mathcal V}}}\).

The set of link configurations is \({\mathcal M}_{{\mathcal G}^{\mathrm{b}}} \subset {\mathbb N}_0^{{\mathcal E}\cup {\bar{{\mathcal E}}}}\) and it satisfies the constraints that each site of \({\mathcal V}\) is touched by an even number of links; there are no constraints at the sites of \({\bar{{\mathcal V}}}\). The set of pairing configurations is \({\mathcal P}_{{\mathcal G}^{\mathrm{b}}}({{\varvec{m}}})\); pairings are defined at the sites of \({\mathcal V}\) only, not at \({\bar{{\mathcal V}}}\). Loops are defined as before, except for open loops, which start and end at the boundary: each open loop contains exactly two links on edges of \({\bar{{\mathcal E}}}\), and closed loops do not pass through the boundary. Given a wire configuration \({{\varvec{w}}}= ({{\varvec{m}}},{\varvec{\pi }}) \in {\mathcal W}_{{\mathcal G}^{\mathrm{b}}}\), we let \(\lambda ({{\varvec{w}}})\) denote the number of all loops, counting closed and open loops. The probability of a wire configuration with open boundary conditions is

The partition function \(Z_{{\mathcal G}^{\mathrm{b}}}(\alpha ,{{\varvec{J}}})\) is defined as expected, so that \({\mathbb P}_{{\mathcal G}^{\mathrm{b}}}^{\alpha ,{{\varvec{J}}}}\) is a probability distribution.

The main advantage of open boundary conditions is that they allow us to define the event that a site belongs to long loops, namely that it is connected to the boundary by an open loop. This is discussed in Sect. 4.

4 Loop Correlations and Poisson–Dirichlet Distribution

We now fix the graph to be a large box in \({\mathbb Z}^d\) with edges given by nearest-neighbours. We write \(\Lambda _L = \{-L,\dots ,L\}^d\) for the set of vertices, \({\mathcal E}_L\) for the set of nearest-neighbours, and \({\mathcal G}_L = (\Lambda _L,{\mathcal E}_L)\) for this graph. We also assume that \(J_e \equiv J\) is constant. In dimensions \(d\ge 3\), and if J is large enough, we expect that macroscopic loops are present and that they are described by a Poisson–Dirichlet distribution.

4.1 Joint distribution of the lengths of macroscopic loops

These properties can be formulated in various ways. The most direct way is to look at the lengths of the loops in a large box. Recall that the length of a loop is the number of its links. Let \(\bigl ( \ell _1({{\varvec{w}}}), \ell _2({{\varvec{w}}}), \dots , \ell _k({{\varvec{w}}}) \bigr )\) be the sequence of the lengths of the loops of \({{\varvec{w}}}\) in decreasing order, repeated with multiplicities; the number of loops \(k = k({{\varvec{w}}})\) is also random. The “volume” occupied by the loops is defined as

$$\begin{aligned} V({{\varvec{w}}}) = \sum _{j=1}^{k({{\varvec{w}}})} \ell _j({{\varvec{w}}}). \end{aligned}$$

We consider the following sequence, which is a random partition of the interval [0, 1]:

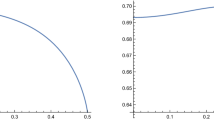

It is rather obvious that the number of microscopic loops (those whose lengths are bounded uniformly in L) scales like the volume \(|\Lambda _L|\) of the system. Consequently, the tail of the random partition consists of many tiny elements (“dust”) whose total size does not vanish as \(L\rightarrow \infty \). On the other hand, the lengths of the longer loops are expected to be of the order of the volume and to be described by a Poisson–Dirichlet distribution. A typical random partition is illustrated in Fig. 3.

One can formulate the Poisson–Dirichlet conjecture as follows. There exists \(m \in [0,1]\) (and \(m>0\) when \(d\ge 3\) and J is large enough) such that:

- For every \({\varepsilon }>0\), we have

$$\begin{aligned} \lim _{n\rightarrow \infty } \lim _{L\rightarrow \infty } {\mathbb P}_{{\mathcal G}_L}^{\alpha ,J} \Bigl ( \sum _{j=1}^n \frac{\ell _j({{\varvec{w}}})}{V({{\varvec{w}}})} \in [m-{\varepsilon },m+{\varepsilon }] \Bigr ) = 1. \qquad (4.3) \end{aligned}$$

- For every \(n\in {\mathbb N}\) and as \(L \rightarrow \infty \), the distribution of the vector \(\bigl ( \frac{\ell _1({{\varvec{w}}})}{mV({{\varvec{w}}})}, \dots , \frac{\ell _n({{\varvec{w}}})}{mV({{\varvec{w}}})} \bigr )\) converges to the Poisson–Dirichlet distribution PD(\(\frac{\alpha }{2}\)) restricted to the first n elements.

Let us recall that Poisson–Dirichlet is a one-parameter family of distributions on partitions of [0, 1]. It is most easily defined using the random allocation (or “stick breaking”) construction. Namely, let \(Y_1,Y_2,\dots \) be i.i.d. Beta\((1,\theta )\) random variables (that is, their probability density function is equal to \(\theta (1-t)^{\theta -1}\) for \(t \in [0,1]\) and is zero otherwise); we construct the sequence

$$\begin{aligned} \bigl ( Y_1,\; (1-Y_1) Y_2,\; (1-Y_1)(1-Y_2) Y_3,\; \dots \bigr ). \end{aligned}$$

It is not hard to check that these numbers sum to 1 almost surely. Rearranging them in decreasing order, we get a random partition with Poisson–Dirichlet distribution PD(\(\theta \)). See [27, 33] for more information.
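The stick-breaking construction translates directly into a sampler. The Python sketch below draws the \(Y_i\) with the standard library's `random.betavariate`, stops once the unallocated stick is shorter than a tolerance `tol` (a truncation we add for computation, so the output misses a mass below `tol`), and sorts the pieces in decreasing order.

```python
import random


def stick_breaking(theta, tol=1e-12, rng=random):
    """Pieces Y_1, (1-Y_1) Y_2, ... with Y_i ~ Beta(1, theta), stopped once the
    unallocated stick is shorter than tol (a truncation we add for computation)."""
    pieces, remaining = [], 1.0
    while remaining >= tol:
        y = rng.betavariate(1.0, theta)
        pieces.append(remaining * y)
        remaining *= 1.0 - y
    return pieces


def sample_pd(theta, tol=1e-12, rng=random):
    """Approximate PD(theta) sample: the pieces sorted in decreasing order
    (the total mass missing from the list is below tol)."""
    return sorted(stick_breaking(theta, tol, rng), reverse=True)


rng = random.Random(1)
print(sample_pd(1.0, rng=rng)[:5])  # the five largest elements of one PD(1) sample
```

A quick sanity check is the identity \({\mathbb E} \sum _i Z_i^2 = 1/(1+\theta )\), the probability that two independent uniform points fall in the same element; averaging `sum(z * z for z in sample_pd(theta))` over many samples should approach it.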

The heuristic argument behind this conjecture is explained in the next subsection; it also yields the value of the Poisson–Dirichlet parameter, \(\theta = \frac{\alpha }{2}\).

4.2 Heuristics and calculation of the Poisson–Dirichlet parameter

An important property of Poisson–Dirichlet is that it is the stationary distribution of split-merge processes (also called coagulation-fragmentation) [7, 16, 35]. The following heuristic has already been explained in [23, 25, 36] for other loop soups; notice that the article [25] contains numerical verifications of some of the steps. It is worth sketching the heuristic in some detail, since it allows one to calculate, non-rigorously but exactly, the Poisson–Dirichlet parameter. For a fixed link configuration \({{\varvec{m}}}\), we introduce a discrete-time Markov process on pairing configurations, i.e. on \({\mathcal P}_{{\mathcal G}_L}({{\varvec{m}}})\). Let \(T_{{\varvec{m}}}({\varvec{\pi }},{\varvec{\pi }}')\) be the probability that, if the system is at \({\varvec{\pi }}\) at time t, it moves to \({\varvec{\pi }}'\) at time \(t+1\). Assuming the process to be irreducible (that is, every configuration of \({\mathcal P}_{\Lambda _L}({{\varvec{m}}})\) can be reached from every other one), a sufficient condition for a measure to be stationary is that it satisfies the detailed balance condition

$$\begin{aligned} {\mathbb P}_{{\mathcal G}_L}^{\alpha ,J}({{\varvec{m}}},{\varvec{\pi }})\, T_{{\varvec{m}}}({\varvec{\pi }},{\varvec{\pi }}') = {\mathbb P}_{{\mathcal G}_L}^{\alpha ,J}({{\varvec{m}}},{\varvec{\pi }}')\, T_{{\varvec{m}}}({\varvec{\pi }}',{\varvec{\pi }}) \qquad \text {for all } {\varvec{\pi }},{\varvec{\pi }}'. \end{aligned}$$

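We only consider changes that involve rewiring two pairs at a single site. This is illustrated in Fig. 4. The number of loops then changes by at most one. Since the pairing configuration enters the weight only through the factor \(\alpha ^{\lambda ({{\varvec{w}}})}\), we have

$$\begin{aligned} \frac{{\mathbb P}_{{\mathcal G}_L}^{\alpha ,J}({{\varvec{m}}},{\varvec{\pi }}')}{{\mathbb P}_{{\mathcal G}_L}^{\alpha ,J}({{\varvec{m}}},{\varvec{\pi }})} = \alpha ^{\lambda ({{\varvec{m}}},{\varvec{\pi }}') - \lambda ({{\varvec{m}}},{\varvec{\pi }})} \in \{ \alpha ^{-1}, 1, \alpha \}. \end{aligned}$$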

We need to choose the transition probabilities so that the ratio \(\frac{T_{{\varvec{m}}}({\varvec{\pi }},{\varvec{\pi }}')}{T_{{\varvec{m}}}({\varvec{\pi }}',{\varvec{\pi }})}\) is equal to the above equation when \({\varvec{\pi }}\) and \({\varvec{\pi }}'\) differ by just one rewiring. There are many possibilities; we can take

Here, C is a constant small enough that \(\sum _{{\varvec{\pi }}' \ne {\varvec{\pi }}} T_{{\varvec{m}}}({\varvec{\pi }},{\varvec{\pi }}') \le 1\) (it affects the speed of the process but not its stationary distribution). Notice that \(\binom{2n_x}{2}\) is the number of pairs of endpoints at x, and that there are exactly two choices whose rewiring gives \({\varvec{\pi }}'\).

In words, we choose a site uniformly at random, then pick two endpoints uniformly at random, and accept the rewiring with probability \(C \alpha ^{1/2}, C, C \alpha ^{-1/2}\) according to whether the number of loops increases by 1, stays constant, or decreases by 1. It is clear that \(T_{{\varvec{m}}}\) satisfies the detailed balance condition, and also that the process is irreducible on \({\mathcal P}_{\Lambda _L}({{\varvec{m}}})\) for a fixed \({{\varvec{m}}}\).
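The rewiring chain can be sketched in a few lines of Python. Several choices are assumptions made for illustration: a link endpoint is encoded as `(link_id, end)` with `end` in {0, 1}; the hypothetical dictionary `endpoints_at` maps each site to the endpoints located there; `pairing` sends each endpoint to its partner; and after picking endpoints a and c, the pairs {a, b}, {c, d} are rewired into {a, c}, {b, d}. Loops are counted as connected components of the graph whose vertices are links and whose edges are the pairs; this graph is a union of cycles because every link has exactly one partner at each of its two endpoints. C should satisfy \(C \max (\alpha ^{1/2}, \alpha ^{-1/2}) \le 1\) so that acceptance probabilities do not exceed 1.

```python
import math
import random


def count_loops(n_links, pairing):
    """Number of loops = connected components of the graph on links whose edges are
    the pairs (every link has one partner at each end, so components are cycles)."""
    parent = list(range(n_links))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for (a, _), (b, _) in pairing.items():
        parent[find(a)] = find(b)
    return sum(1 for i in range(n_links) if find(i) == i)


def acceptance(delta, alpha, C):
    """Acceptance probability C * alpha**(delta / 2) for a change delta of the loop number."""
    return C * alpha ** (delta / 2)


def rewiring_step(endpoints_at, pairing, n_links, alpha, C, rng=random):
    """One step: uniform site, two uniform endpoints a, c there; the pairs {a, b}, {c, d}
    become {a, c}, {b, d}, accepted with probability C * alpha**(delta / 2).
    Updates `pairing` in place."""
    ends = endpoints_at[rng.choice(list(endpoints_at))]
    if len(ends) < 2:
        return
    a, c = rng.sample(ends, 2)
    b, d = pairing[a], pairing[c]
    if b == c:  # a and c already form a pair: nothing to rewire
        return
    new = dict(pairing)
    new[a], new[c], new[b], new[d] = c, a, d, b
    delta = count_loops(n_links, new) - count_loops(n_links, pairing)
    if rng.random() < acceptance(delta, alpha, C):
        pairing.update(new)


# Toy example: triangle with two links per edge; links 0-1 on (0,1), 2-3 on (1,2),
# 4-5 on (2,0); endpoint (i, 0) sits at the first site of the edge, (i, 1) at the second.
endpoints_at = {0: [(0, 0), (1, 0), (4, 1), (5, 1)],
                1: [(0, 1), (1, 1), (2, 0), (3, 0)],
                2: [(2, 1), (3, 1), (4, 0), (5, 0)]}
pairing = {}
for u, v in [((0, 0), (4, 1)), ((1, 0), (5, 1)), ((0, 1), (2, 0)),
             ((1, 1), (3, 0)), ((2, 1), (4, 0)), ((3, 1), (5, 0))]:
    pairing[u], pairing[v] = v, u
rng = random.Random(3)
for _ in range(1000):
    rewiring_step(endpoints_at, pairing, 6, alpha=2.0, C=2 ** -0.5, rng=rng)
print(count_loops(6, pairing))
```

The proposal is symmetric (two choices of endpoints lead from \({\varvec{\pi }}\) to \({\varvec{\pi }}'\), and two lead back), so the ratio of acceptance probabilities, \(\alpha ^{\Delta \lambda }\), is exactly what detailed balance requires.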

Next, we look at the resulting process on partitions. We get a split-merge process with a priori complicated rates. But we can discard all rewirings that involve microscopic loops, as they have negligible effect in the infinite-volume limit. Much more interesting are changes that affect macroscopic loops. If we select endpoints belonging to different loops, the rewiring always merges them. If we select endpoints belonging to the same loop, the rewiring may split it, or just rearrange it (this is analogous to \(0 \leftrightarrow 8\)). The essence of the conjecture is that macroscopic loops merge well, and the number of pairs of endpoints that allow two macroscopic loops \(\gamma ,\gamma '\) to merge is approximately equal to \(c \ell _\gamma \ell _{\gamma '}\) for a constant c that is independent of \(\gamma ,\gamma '\). Further, the number of pairs of endpoints that allow a macroscopic loop \(\gamma \) to split is approximately equal to \(\frac{1}{4} c \ell _\gamma ^2\), with the same constant c as before. The factor \(\frac{1}{4} = \frac{1}{2} \cdot \frac{1}{2}\) is there because pairs within a loop should be counted once, and only half the pairs cause a split and not a rearrangement.

The conclusion of this heuristic is that, as the volume becomes large, the effective split-merge process on partitions behaves like the standard, mean-field process where two partition elements \(\eta ,\eta '\) merge at rate \(\frac{2c}{\sqrt{\alpha }} \eta \eta '\) and an element \(\eta \) splits at rate \(\frac{c\sqrt{\alpha }}{2} \eta ^2\); moreover, the element is split uniformly. It is known that the Poisson–Dirichlet distribution with parameter \(\theta = \frac{\alpha }{2}\) is the invariant measure for this process [7, 35, 37] (partial results about uniqueness can be found in [16]).
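The mean-field limit can be simulated directly. The Python sketch below runs a standard discrete-time split-merge chain on partitions of [0, 1]: two independent uniform points are drawn; if they fall in different elements these are merged with probability \(\beta _m\), and if they fall in the same element it is split at a uniform position with probability \(\beta _s\). An unordered pair \(\eta ,\eta '\) is selected with probability \(2\eta \eta '\) and a single element \(\eta \) twice with probability \(\eta ^2\), so the rates above correspond to \(\beta _s/\beta _m = \frac{c\sqrt{\alpha }/2}{c/\sqrt{\alpha }} = \frac{\alpha }{2}\). The normalisation \(\beta _m = \min (1, 1/\theta )\), \(\beta _s = \theta \beta _m\) and the choice of observable are ours: the time average of \(\sum _i z_i^2\) should approach \(1/(1+\theta )\), its expectation under PD(\(\theta \)).

```python
import random


def locate(blocks, u):
    """Index of the element containing u when the elements are laid end to end in [0, 1]."""
    acc = 0.0
    for i, z in enumerate(blocks):
        acc += z
        if u < acc:
            return i
    return len(blocks) - 1  # guard against rounding when u is close to 1


def split_merge_average(theta, steps, burn_in, rng=random):
    """Run the split-merge chain with beta_s / beta_m = theta, starting from the
    trivial partition (1,), and return the time average of sum_i z_i**2 after burn-in."""
    beta_m = min(1.0, 1.0 / theta)
    beta_s = theta * beta_m
    blocks = [1.0]
    total, count = 0.0, 0
    for t in range(steps):
        i, j = locate(blocks, rng.random()), locate(blocks, rng.random())
        if i != j and rng.random() < beta_m:  # merge the two elements
            merged = blocks[i] + blocks[j]
            for k in sorted((i, j), reverse=True):
                blocks.pop(k)
            blocks.append(merged)
        elif i == j and rng.random() < beta_s:  # split the element uniformly
            u, z = rng.random(), blocks.pop(i)
            blocks.extend((u * z, (1.0 - u) * z))
        if t >= burn_in:
            total += sum(z * z for z in blocks)
            count += 1
    return total / count


theta = 1.0  # alpha = 2, the value used in Sect. 5
print(split_merge_average(theta, 30000, 2000, random.Random(0)), 1 / (1 + theta))
```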

This rather long heuristic argument was needed in order to identify the correct parameter \(\theta = \frac{\alpha }{2}\); it supports the conjecture above.

4.3 Poisson–Dirichlet correlations

As we argue below in Sect. 4.4, the probability that points belong to the same loop, given that they belong to long loops, is equal to the probability that random points in the interval [0, 1] belong to the same element of a partition with Poisson–Dirichlet distribution. We now collect the relevant formulæ.

Let \(u_1,\dots ,u_k \in [0,1]\) and let \((z_1,z_2,\dots )\) be a partition of [0, 1]. We denote by \(X_{u_1,\dots ,u_k}(z_1,z_2,\dots )\) the set partition of \(\{1,\dots ,k\}\) where i, j belong to the same subset if and only if \(u_i, u_j\) belong to the same partition element. Further, if X is a set partition of \(\{1,\dots ,k\}\), let

Finally, let

where the latter expectation is taken over k i.i.d. random variables \(U_1,\dots ,U_k\) with uniform distribution on [0, 1].

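The number \(M_\theta (X)\) depends only on the sizes of the partition elements of X. If \(X = \cup _{i=1}^\ell X_i\) with \(|X_i|=n_i\) and \(n_1+\dots +n_\ell = k\), then, with \(Z_i\) denoting the ith element of a Poisson–Dirichlet random partition, we have

$$\begin{aligned} M_\theta (X) = {\mathbb E}\Bigl [ \sum _{\begin{array}{c} i_1,\dots ,i_\ell \ge 1 \\ \text {distinct} \end{array}} Z_{i_1}^{n_1} \cdots Z_{i_\ell }^{n_\ell } \Bigr ] = \frac{\theta ^\ell \, \Gamma (\theta )}{\Gamma (\theta +k)} \prod _{i=1}^\ell \Gamma (n_i). \end{aligned}$$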

The latter formula seems to be well known to experts, but it rarely appears in the literature. It can be found in [29], where it is derived using “supersymmetry” calculations in a loop O(N) model. A calculation within Poisson–Dirichlet can be found in [37].
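The explicit value of \(M_\theta (X)\) is the Ewens sampling formula, \(M_\theta (X) = \theta ^\ell \Gamma (\theta ) \prod _i \Gamma (n_i) / \Gamma (\theta +k)\), for a set partition X with block sizes \(n_1,\dots ,n_\ell \) summing to k. The Python sketch below evaluates it with log-gamma functions for numerical stability and, as a consistency check, enumerates all set partitions of a k-element set; the probabilities must add up to 1. The helper names are illustrative.

```python
import math


def pd_set_partition_prob(block_sizes, theta):
    """Ewens formula: M_theta(X) = theta**l * Gamma(theta) * prod Gamma(n_i) / Gamma(theta + k)."""
    k, l = sum(block_sizes), len(block_sizes)
    log_m = (l * math.log(theta) + math.lgamma(theta) - math.lgamma(theta + k)
             + sum(math.lgamma(n) for n in block_sizes))
    return math.exp(log_m)


def set_partitions(elements):
    """Generate all set partitions of the list `elements`, as lists of blocks."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for part in set_partitions(rest):
        for i in range(len(part)):  # put `first` into an existing block ...
            yield part[:i] + [[first] + part[i]] + part[i + 1:]
        yield [[first]] + part  # ... or into a new block of its own


theta, k = 0.7, 5
total = sum(pd_set_partition_prob([len(b) for b in X], theta)
            for X in set_partitions(list(range(k))))
print(total)  # should equal 1 up to rounding
```

For example, two points fall in the same element with probability `pd_set_partition_prob([2], theta)` \(= 1/(1+\theta )\), and in different elements with probability \(\theta /(1+\theta )\).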

In the present article we need the probability that the random set partition is even, that is, all its subsets have an even number of elements. Let \({\mathcal X}_{2k}^{\mathrm{even}}\) denote the set of even partitions of \(\{1,\dots ,2k\}\), and let

Proposition 4.1

For all \(\theta >0\) and all \(k\in {\mathbb N}\), we have

In the case \(\theta =1\), the formula above reduces to

Proof

We use the following trick (see Footnote 1). Consider random partitions of [0, 1] and a random sequence of signs \(({\varepsilon }_1,{\varepsilon }_2,\dots )\), where the \({\varepsilon }_i\) are i.i.d. and take the values \(\pm 1\) with probability \(\frac{1}{2}\). Let

Here, the \(Z_i\) are the elements of the random partition with PD(\(\theta \)) distribution and \(h \in {\mathbb R}\). The function \(\Phi (h)\) is even and

The function \(\Phi (h)\) was calculated in [37, Eq. (4.18)]; it is equal to

Differentiating 2k times and looking at the coefficient of \(h^0\), we get the claim of the proposition. \(\square \)
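Proposition 4.1 can be checked numerically by brute force: enumerate the even set partitions of \(\{1,\dots ,2k\}\) and add up their Ewens weights. The Python sketch below compares this sum with a closed form that we derived independently, not copied from the article. If S is a Gamma(\(\theta \)) variable independent of the PD(\(\theta \)) partition, the points \(S Z_i\) form a Poisson process with intensity \(\theta x^{-1}\mathrm{e}^{-x}\), so that \({\mathbb E}\prod _i \cosh (h S Z_i) = (1-h^2)^{-\theta /2}\) for \(|h|<1\); since \({\mathbb E} S^{2k} = \Gamma (\theta +2k)/\Gamma (\theta )\), comparing the coefficients of \(h^{2k}\) gives \(M_\theta ^{\mathrm{even}}(2k) = \frac{(2k)!}{k!} \frac{\Gamma (\theta )\, \Gamma (\frac{\theta }{2}+k)}{\Gamma (\frac{\theta }{2})\, \Gamma (\theta +2k)}\).

```python
import math


def ewens_weight(sizes, theta):
    """M_theta(X) for a set partition X with block sizes `sizes` (Ewens formula)."""
    k, l = sum(sizes), len(sizes)
    return math.exp(l * math.log(theta) + math.lgamma(theta) - math.lgamma(theta + k)
                    + sum(math.lgamma(n) for n in sizes))


def set_partitions(elements):
    """Generate all set partitions of the list `elements`, as lists of blocks."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for part in set_partitions(rest):
        for i in range(len(part)):
            yield part[:i] + [[first] + part[i]] + part[i + 1:]
        yield [[first]] + part


def even_prob_bruteforce(k, theta):
    """M_theta^even(2k): sum of M_theta(X) over set partitions of 2k points with even blocks."""
    return sum(ewens_weight([len(b) for b in X], theta)
               for X in set_partitions(list(range(2 * k)))
               if all(len(b) % 2 == 0 for b in X))


def even_prob_closed_form(k, theta):
    """(2k)!/k! * Gamma(theta) Gamma(theta/2 + k) / (Gamma(theta/2) Gamma(theta + 2k))."""
    return math.exp(math.lgamma(2 * k + 1) - math.lgamma(k + 1) + math.lgamma(theta)
                    + math.lgamma(theta / 2 + k) - math.lgamma(theta / 2)
                    - math.lgamma(theta + 2 * k))


for k in range(1, 5):
    print(k, even_prob_bruteforce(k, 1.0), even_prob_closed_form(k, 1.0))
```

For \(\theta = 1\) this closed form equals \(\binom{2k}{k} 4^{-k}\), that is 1/2, 3/8 and 5/16 for k = 1, 2, 3.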

4.4 Loop correlations—Conjectures

We now formulate the Poisson–Dirichlet conjecture in terms of loop correlations. This is more natural in the context of statistical mechanics, and this is the form that we can prove in a special case.

The idea behind loop connectivity is to consider k points and to look at how these points are connected by the loops. A complication is that many loops may pass through a given site, and also that the same loop may pass through it many times. We therefore label the pairs at each site. Namely, we assign the labels \(1,\dots ,n_x({{\varvec{m}}})\) to the pairs of the pairing \(\pi _x\). Given distinct sites \({{\varvec{x}}}= (x_1,\dots ,x_k)\), pair labels \({{\varvec{q}}}= (q_1,\dots ,q_k)\), and a set partition \(X = \{X_1,\dots ,X_\ell \}\) of \(\{1,\dots ,k\}\), we introduce the event

In other words, we look at the partition of the points \((x_j,q_j)_{j=1}^k\) given by the loops, and \(E_X({{\varvec{x}}},{{\varvec{q}}})\) is the event where this partition is equal to X; see Fig. 5. We also use the event \(E_\infty ({{\varvec{x}}},{{\varvec{q}}})\), the set of wire configurations where all \((x_i,q_i)\) belong to long loops: With \(\ell (x,q)\) denoting the length of the loop passing through the qth pair at the site \(x \in \Lambda _L\),

where the cutoff \({{\tilde{\ell }}}_L\) is chosen so that \(\lim _{L\rightarrow \infty } {\tilde{\ell }}_L = \infty \) and \(\lim _{L\rightarrow \infty } {\tilde{\ell }}_L/L^d = 0\).
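The event \(E_X({{\varvec{x}}},{{\varvec{q}}})\) is a simple function of the loop structure. As an illustration only, the Python sketch below assumes that each marked pair \((x_j,q_j)\) has already been assigned the identifier of the loop through it (for instance by tracing the loops as in Sect. 3.1), stored in a hypothetical dictionary `loop_of`; it returns the induced set partition of the indices, tests \(E_X\), and checks whether the partition is even. Indices are 0-based here, unlike \(\{1,\dots ,k\}\) in the text.

```python
def induced_partition(loop_of, points):
    """Set partition of the indices 0..k-1 of `points`: i and j share a block iff the
    marked pairs points[i] and points[j] lie on the same loop (loop_of: pair -> loop id)."""
    blocks = {}
    for i, p in enumerate(points):
        blocks.setdefault(loop_of[p], set()).add(i)
    return frozenset(frozenset(b) for b in blocks.values())


def in_event_E_X(loop_of, points, X):
    """Indicator of E_X(x, q): the partition induced by the loops equals X (a list of blocks)."""
    return induced_partition(loop_of, points) == frozenset(frozenset(b) for b in X)


def is_even(partition):
    """True iff every block of the set partition has even cardinality."""
    return all(len(b) % 2 == 0 for b in partition)


# Hypothetical marked pairs (site, pair label) and the loop ids attached to them.
loop_of = {((0, 0, 0), 1): "A", ((5, 0, 0), 2): "A", ((0, 7, 0), 1): "B", ((0, 0, 9), 1): "B"}
points = list(loop_of)
print(in_event_E_X(loop_of, points, [[0, 1], [2, 3]]), is_even(induced_partition(loop_of, points)))
```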

We consider a “splashing sequence” \({{\varvec{x}}}^{(n)} = (x_1^{(n)}, \dots , x_k^{(n)})\), that is, a sequence of sites in \({\mathbb Z}^d\) that satisfies

The Poisson–Dirichlet conjecture can be formulated as follows. Let X be a set partition without singletons; in the limits \(L\rightarrow \infty \) then \(n\rightarrow \infty \), the probability of \(E_X({{\varvec{x}}}^{(n)},{{\varvec{q}}})\) involves the probabilities \({\mathbb P}_{{\mathbb Z}^d}^{\alpha ,J} \bigl ( E_\infty (x_i^{(n)},q_i) \bigr )\) that the \(q_i\)th pair at \(x_i^{(n)}\) belongs to a macroscopic loop, and the probability \(M_\theta (X)\) that k random numbers placed in a random partition yield the set partition X. More precisely, we expect that for all set partitions X without singletons, we have

The first identity should not hold for X with singletons, since the probability that the corresponding points belong to small loops is positive. Letting \(m(d,J) = \sum _{q\ge 1} {\mathbb P}_{{\mathbb Z}^d}^{\alpha ,J}\bigl ( E_\infty (0,q) \bigr )\), we can also formulate the conjecture as

\(M_{\frac{\alpha }{2}}(X)\) can be found in Eq. (4.10).

We now formulate a revised conjecture; it is less appealing but it is closer to what is proved in Theorem 5.2 below. For all splashing sequences \({{\varvec{x}}}^{(n)} = (x_1^{(n)}, \dots , x_k^{(n)})\) and all set partitions X of \(\{1,\dots ,k\}\) (without singletons), we can repeat the steps of (4.19) and we obtain

with \({\tilde{m}}_q(d,J)\) given by

Letting \({\tilde{m}}(d,J) = \sum _{q\ge 1} {\tilde{m}}_q(d,J)\), the Poisson–Dirichlet conjecture states that for any k and any set partition X of \(\{1,\dots ,k\}\) without singletons, we have

As in the version (4.20) of the conjecture, \(\tilde{m}(d,J)\) is related to the density of points in long loops and is model-dependent; \(M_{\frac{\alpha }{2}}(X)\) is the term that signals the presence of the Poisson–Dirichlet distribution PD(\(\frac{\alpha }{2}\)) for the lengths of the long loops.

Replacing k by 2k, summing over even set partitions, and using (4.11), we get

This is a weaker conjecture than (4.23). We prove it in a specific random wire model, see Theorem 5.2 in the next section.

5 Main Results—Long Loops and Their Joint Distribution

We can now formulate the main result of this article, which strongly suggests the presence of the Poisson–Dirichlet distribution in a class of models of random wires. We restrict to the random wire model with loop parameter \(\alpha =2\) and potential function defined by

The graph is \({\mathcal G}_L = (\Lambda _L,{\mathcal E}_L)\) with \(\Lambda _L = \{-L,\dots ,L\}^d\), and \({\mathcal E}_L\) is the set of nearest-neighbour edges. We choose \(J_e = J\) for all \(e \in {\mathcal E}_L\). We also consider the graph \({\mathcal G}_L^{\mathrm{b}}\) with boundary conditions; its set of vertices is \(\Lambda _L\) together with the external boundary

The edges of \({\mathcal G}_L^{\mathrm{b}}\) are the nearest-neighbour edges of \(\Lambda _L\), together with the edges between \(\Lambda _L\) and \(\partial \Lambda _L\). On this graph some loops are open, with endpoints on \(\partial \Lambda _L\). Let \(\tilde{n}_x({{\varvec{w}}})\) be the random variable for the number of times that open loops pass through the site \(x\in \Lambda _L\). In other words, for \({{\varvec{w}}}=({{\varvec{m}}},{\varvec{\pi }})\), we let

where \(\tilde{L}({{\varvec{w}}})\) is the set of links that are connected to the boundary. We then define

Ignoring the denominator in the expectation, \(\tilde{m}(d,J)\) gives the average number of pairs at the origin that belong to long loops. The denominator is included because, with it, \(\tilde{m}(d,J)\) can be written in terms of spin correlations; this allows us to establish the following properties, our first main result.

Theorem 5.1

Let \(\alpha =2\) and let U be defined by Eq. (5.1). Then the limit \(L\rightarrow \infty \) of \(\tilde{m}(d,J)\) in Eq. (5.4) exists. Further,

- (a)

\(\tilde{m}(d,J)\) is nondecreasing with respect to d and J.

- (b)

\(\tilde{m}(d,J) = 0\) when \(J < 2^{-3/2} \log ( 1 + \frac{1}{(2d)^2})\), for arbitrary dimension d.

- (c)

\(\tilde{m}(d,J) = 0\) when \(d=1,2\), for all \(J\ge 0\).

- (d)

For \(d\ge 3\), there exists \(J_{\mathrm{c}}(d)<\infty \) such that \(\tilde{m}(d,J) > 0\) if \(J > J_{\mathrm{c}}(d)\) and \(\tilde{m}(d,J) = 0\) if \(J < J_{\mathrm{c}}(d)\).

The proof of this theorem can be found in Sect. 7.

Theorem 5.1 establishes that a positive fraction of sites are crossed by long loops when \(d\ge 3\) and J is large enough. It is remarkable that \(\tilde{m}(d,J)\) can be proved to be monotone nondecreasing in d and in J. This property is expected to hold for fairly general random wire models. However, the present proof relies on the equivalent XY spin model and its correlation inequalities, and it cannot easily be extended.

The claim (b) follows from Proposition 3.1 and it holds for more general \(\alpha \) and U. When \(\alpha = 3,4,5,\dots \), and U(n) is defined by Eq. (6.6) with \(N=\alpha \), the claim (c) also holds (its proof uses the continuous symmetry of the corresponding spin system).
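For orientation, the bound in (b) is easy to tabulate. The Python sketch below (the name `j0` is ours) evaluates \(2^{-3/2} \log (1 + \frac{1}{(2d)^2})\), which behaves like \(2^{-3/2} (2d)^{-2}\) for large d. Combined with (d), the bound implies \(J_{\mathrm{c}}(d) \ge 2^{-3/2} \log (1 + \frac{1}{(2d)^2})\) for \(d \ge 3\).

```python
import math


def j0(d):
    """Bound of Theorem 5.1 (b): m~(d, J) = 0 whenever J < j0(d)."""
    return 2 ** -1.5 * math.log1p(1 / (2 * d) ** 2)


for d in range(1, 6):
    print(f"d = {d}:  J < {j0(d):.4g}  implies  m~(d, J) = 0")
```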

Next we consider loop correlations between distant points. In order to formulate the result, we need to introduce the pressure \(p(\alpha ,J)\):

The infinite-volume limit exists by a standard subadditivity argument: \(Z_{{\mathcal G}_L}\) is submultiplicative, and Proposition 3.1 (a) guarantees that the pressure is finite. It is easy to verify that \(p(\alpha ,\,\mathrm{e}^{s}\,)\) is convex in s. In finite volume, its second derivative with respect to s is proportional to the variance of \(\sum _e m_e\) and is therefore nonnegative, and convexity is preserved in the limit \(L\rightarrow \infty \). It follows that \(p(\alpha ,J)\) is differentiable with respect to J at all points, except possibly on a countable set.
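The convexity argument can be checked on a toy example. The sketch below assumes, as in the model with \(J_e = J\), that the weight of a link configuration depends on J only through a factor \(J^{\sum _e m_e}\). Then, with \(J = \mathrm{e}^{s}\), the first and second s-derivatives of \(\log Z\) are the mean and the variance of \(\sum _e m_e\). The two-edge weights `CONFIGS` are hypothetical and serve only as an illustration; the derivatives are compared with central finite differences.

```python
import math
from itertools import product

# Hypothetical toy weights: two edges with m_e in {0, ..., 6} links and weight
# 1 / (m_1! m_2!); J = e^s enters only through the factor J^(m_1 + m_2).
CONFIGS = [((m1, m2), 1 / (math.factorial(m1) * math.factorial(m2)))
           for m1, m2 in product(range(7), repeat=2)]


def log_Z(s):
    return math.log(sum(w * math.exp(s * sum(m)) for m, w in CONFIGS))


def mean_var_links(s):
    """Mean and variance of the total number of links at J = e^s."""
    weights = [(sum(m), w * math.exp(s * sum(m))) for m, w in CONFIGS]
    Z = sum(x for _, x in weights)
    mean = sum(n * x for n, x in weights) / Z
    var = sum((n - mean) ** 2 * x for n, x in weights) / Z
    return mean, var


def first_derivative(f, s, h=1e-4):
    return (f(s + h) - f(s - h)) / (2 * h)


def second_derivative(f, s, h=1e-3):
    return (f(s + h) - 2 * f(s) + f(s - h)) / h ** 2
```

Since the variance is nonnegative at every s, \(\log Z\) is convex in s for any weights of this form; only the toy weights above are invented.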

Recall the notion of splashing sequences of sites in (4.18). Our second main result is the weaker form of the Poisson–Dirichlet conjecture, see Eq. (4.24).

Theorem 5.2

Let \(\alpha =2\), let U be defined by Eq. (5.1), and let \(\tilde{m}(d,J)\) be defined by Eq. (5.4). We assume that J is such that the pressure p(2, J) is differentiable with respect to J. Then for all \(k \in {\mathbb N}\) and all splashing sequences of 2k sites, we have

The proof of this theorem uses the connection with the classical XY model; it can be found in Sect. 7.

Theorem 5.2 gives substantial information on the structure of long loops. They are present when \(\tilde{m}(d,J)>0\), arbitrary sites belong to them with positive probability, and multiple long loops occur with positive probability. An important aspect of Theorem 5.2 is that it holds for all k with the same constant \(\tilde{m}(d,J)\). This is compatible with the Poisson–Dirichlet distribution PD(\(\theta \)) with \(\theta =1\). It is incompatible with PD(\(\theta \)) for \(\theta \ne 1\) and with most other distributions on partitions. Theorem 5.2 is therefore a significant step towards proving that the correlations due to long loops are given by PD(1).
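The incompatibility with \(\theta \ne 1\) can be made concrete using k = 1 and k = 2 alone. Suppose the correlations were \(m^{2k} M^{\mathrm{even}}_\theta (2k)\) for some constant m. Then the ratio of the k = 2 quantity to the square of the k = 1 quantity is \(M^{\mathrm{even}}_\theta (4)/M^{\mathrm{even}}_\theta (2)^2\), independent of m, and Theorem 5.2 fixes this ratio at its PD(1) value. The sketch below assumes the PD(\(\theta \)) sampling formula for \(M_\theta \). Under that assumption, \(M^{\mathrm{even}}_\theta (2) = 1/(\theta +1)\) and \(M^{\mathrm{even}}_\theta (4) = 3/((\theta +1)(\theta +3))\). The ratio is therefore \(3(\theta +1)/(\theta +3)\), which is strictly increasing in \(\theta \) and equals 3/2 only at \(\theta = 1\).

```python
from fractions import Fraction


def rising(x, n):
    """Rising factorial x (x + 1) ... (x + n - 1), exact."""
    out = Fraction(1)
    for i in range(n):
        out *= x + i
    return out


def m_even_2(theta):
    """Even partitions of {1, 2}: only {{1, 2}}, with weight theta * 1!."""
    return theta / rising(theta, 2)


def m_even_4(theta):
    """Even partitions of {1, ..., 4}: one block of size 4 (weight 3! theta)
    or one of three splittings into two pairs (weight theta^2 each)."""
    return (6 * theta + 3 * theta ** 2) / rising(theta, 4)


def ratio(theta):
    """m-independent ratio M_even(4) / M_even(2)^2 under PD(theta)."""
    theta = Fraction(theta)
    return m_even_4(theta) / m_even_2(theta) ** 2


TARGET = ratio(1)  # the PD(1) value fixed by Theorem 5.2, equal to 3/2
```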

6 Random Wire Representation of Classical O(N) Spin Systems

We show now that the random wire model can be derived as a representation of classical O(N) spin systems. In fact, the case \(N=1\) is close to the random current representation of the Ising model [1, 20, 24]. The general case \(N\in {\mathbb N}\) can be seen as a reformulation of the Brydges–Fröhlich–Spencer loop model [15, 19]; explicit relations between BFS loops and wire configurations can be found in [3].

We consider an arbitrary finite graph \({\mathcal G}= ({\mathcal V},{\mathcal E})\). Let \({{\varvec{J}}}= (J_e)_{e\in {\mathcal E}}\) be fixed parameters. We denote by \({\varvec{\varphi }}\in ({\mathbb S}^{N-1})^{\mathcal V}\) the spin configurations. The hamiltonian of the O(N) spin system is defined as

where \(\varphi _x \cdot \varphi _y\) denotes the usual inner product of two N-component vectors. The partition function is

Here, \(\mathrm{d}\varphi _x\) denotes the uniform probability measure on \({\mathbb S}^{N-1}\), that is, \(\int _{{\mathbb S}^{N-1}} \mathrm{d}\varphi _x = 1\). The relevant Gibbs state can be defined as the linear functional \(\langle \cdot \rangle _{\mathcal G}^{{\varvec{J}}}\) on functions \(({\mathbb S}^{N-1})^{\mathcal V}\rightarrow {\mathbb R}\) that assigns the value

We introduce a class of spin correlation functions that are of particular relevance to loop models. Let \(k\in {\mathbb N}\) and \(x_1, \dots , x_{2k} \in {\mathcal V}\) be distinct sites. We assume that \(N\ge 2\) and we write \(\varphi _x^{(i)}\) for the ith component of the vector \(\varphi _x \in {\mathbb R}^N\). The corresponding correlation function is

with

Let us define the potential function U of the random wire model by the equation

We then have a relation between the O(N) spin system and the random wire model with \(\alpha =N\). Recall the event \(E_X({{\varvec{x}}},{{\varvec{q}}})\) defined in (4.16).

Proposition 6.1

Let U(n) be defined by (6.6). Then

- (a)

If \(N \in {\mathbb N}\), we have

$$\begin{aligned} Z_{\mathcal G}^{\mathrm{spin}}({{\varvec{J}}}) = Z_{\mathcal G}(N,{{\varvec{J}}}). \end{aligned}$$

- (b)

If \(N=2,3,4,\dots \), we have

$$\begin{aligned} \langle \varphi _{x_1}^{(1)} \varphi _{x_1}^{(2)} \dots \varphi _{x_{2k}}^{(1)} \varphi _{x_{2k}}^{(2)} \rangle _{\mathcal G}^{{\varvec{J}}}= \sum _{X \in {\mathcal X}^{\mathrm{even}}_{2k}} \Bigl ( \frac{2}{N} \Bigr )^{|X|} \frac{1}{2^{2k}} \sum _{{{\varvec{q}}}\in {\mathbb N}^{2k}} {\mathbb E}_{\mathcal G}^{N,{{\varvec{J}}}} \biggl [ 1_{E_X({{\varvec{x}}},{{\varvec{q}}})} \prod _{j=1}^{2k} \frac{1}{n_{x_j}+\frac{N}{2}} \biggr ]. \end{aligned}$$

Recall that \({\mathcal X}^{\mathrm{even}}_{2k}\) is the set of even set partitions of \(\{1,\dots ,2k\}\). It is possible to consider other correlation functions, for instance \(\langle \varphi _x^{(1)} \varphi _y^{(1)} \rangle _{\mathcal G}^{{\varvec{J}}}\). They can be written as ratios of loop partition functions, with the numerator involving “open” configurations of links where \(2n_x\) and \(2n_y\) are odd. See [1, 20] for the Ising random currents and [15, 28] for the related loop model for O(N) spin systems. However, these correlations do not have a direct probabilistic interpretation, and we ignore them in this article.

In the case \(N=1\) we have \(\,\mathrm{e}^{-U(n)}\, = 2^n/(2n-1)!!\); the denominator is equal to the number of pairings of 2n elements.
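The double factorials here can be checked by machine. The Python sketch below (function names are illustrative) enumerates pairings to confirm that 2n points admit \((2n-1)!!\) pairings. It also checks a standard moment formula for the uniform probability measure on \({\mathbb S}^{N-1}\): \(\int \prod _{i=1}^N (\varphi ^{(i)})^{2n^{(i)}} \mathrm{d}\varphi = \frac{\Gamma (N/2)}{2^n\, \Gamma (n+N/2)} \prod _{i=1}^N (2n^{(i)}-1)!!\), with \(n=\sum _i n^{(i)}\). We quote this formula from general knowledge as the kind of angular integral used in the proof below; each factor \((2n^{(i)}-1)!!\) counts the pairings of the \(2n^{(i)}\) factors of colour i. The check is exact for N = 1 and N = 2 and uses Monte Carlo for N = 3.

```python
import math
import random


def pairings(points):
    """Yield every perfect matching of the list `points` as a list of pairs."""
    if not points:
        yield []
        return
    first, rest = points[0], points[1:]
    for i, partner in enumerate(rest):
        for p in pairings(rest[:i] + rest[i + 1:]):
            yield [(first, partner)] + p


def double_factorial(n):
    """n!! with the convention (-1)!! = 1."""
    out = 1
    while n > 1:
        out *= n
        n -= 2
    return out


def sphere_moment(exponents):
    """Closed form for the average of prod_i (phi^(i))^(2 n_i) over the
    uniform probability measure on S^(N-1), with N = len(exponents)."""
    N, n = len(exponents), sum(exponents)
    prefactor = math.gamma(N / 2) / (2 ** n * math.gamma(n + N / 2))
    return prefactor * math.prod(double_factorial(2 * m - 1) for m in exponents)


def circle_average(a, b, points=64):
    """Equispaced rule on S^1, exact up to round-off for trig degree < points."""
    return sum(math.cos(t) ** (2 * a) * math.sin(t) ** (2 * b)
               for t in (2 * math.pi * j / points for j in range(points))) / points


def sphere_average_mc(exponents, samples=100_000, seed=1):
    """Monte Carlo on S^(N-1): normalised Gaussian vectors are uniform."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        g = [rng.gauss(0.0, 1.0) for _ in exponents]
        r = math.sqrt(sum(x * x for x in g))
        total += math.prod((x / r) ** (2 * m) for x, m in zip(g, exponents))
    return total / samples
```

For N = 3, for example, the formula gives 1/15 for \(\int (\varphi ^{(1)})^2 (\varphi ^{(2)})^2 \mathrm{d}\varphi \).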

Proof

Let \({{\varvec{x}}}= (x_1,\dots ,x_{2k})\). We get an expansion for \(Z_{\mathcal G}^{\mathrm{spin}}({{\varvec{J}}}; {{\varvec{x}}})\) that also applies to the case \(k=0\), i.e. \({{\varvec{x}}}= \emptyset \). Let \({\mathcal M}_{\mathcal G}({{\varvec{x}}}) \subset {\mathbb N}_0^{\mathcal E}\) be the set of link configurations with odd numbers of links touching \(x_1,\dots ,x_{2k}\), and even numbers touching all other sites. We write

and we expand the exponential in Taylor series. Recalling the definition (3.1), we find

We set \(m_e = \sum _{i=1}^N m_e^{(i)}\). We have restricted the link configurations to the sets \({\mathcal M}_{\mathcal G}({{\varvec{x}}})\) or \({\mathcal M}_{\mathcal G}\) since otherwise the angular integrals vanish by symmetry. Recall that \(\mathrm{d}\varphi \) denotes the normalised uniform measure on \({\mathbb S}^{N-1}\); we now use that

where \(n = n^{(1)} + \dots + n^{(N)}\) and with the convention that \((-1)!! = 1\). We rewrite the expansion by first summing over \({{\varvec{m}}}\in {\mathcal M}_{\mathcal G}\). We then sum over \((m_e^{(1)})\), ..., \((m_e^{(N)})\) such that \(m_e^{(1)} + \dots + m_e^{(N)} = m_e\) for all \(e \in {\mathcal E}\). We get

We now replace the sums over \((m_e^{(i)})\) by a sum over N possible “colours” for each link. The constraint is that each site is intersected by an even number of links of each colour, except for the sites \(x_1,\dots ,x_{2k}\), which are intersected by an odd number of 1-links and 2-links and an even number of links of other colours. Further, we replace \((2n_x^{(i)}-1)!!\) by a sum over pairings of the i-links that intersect the site x. At the sites \(x_1,\dots ,x_{2k}\), we sum over pairings such that one 1-link is paired with a 2-link, and all other pairs are between links of the same colour. Here \(2n_{x_j}^{(1)}\) is odd. The number of choices for the 1-link that is paired with a 2-link is \(2n_{x_j}^{(1)}\), and this is multiplied by \((2 n_{x_j}^{(1)}-2)!!\), the number of pairings of the remaining \(2n_{x_j}^{(1)}-1\) points. The number of such pairings is then

Let \(C({{\varvec{w}}},{{\varvec{x}}})\) be the set of colour configurations that are compatible with the wire configuration \({{\varvec{w}}}= ({{\varvec{m}}},{\varvec{\pi }})\) and the sites \({{\varvec{x}}}\). We obtain

The number of colourings for given \({{\varvec{m}}},{\varvec{\pi }},{{\varvec{x}}}\) can be expressed in terms of loops. If \(k=0\), i.e. without the complications due to \({{\varvec{x}}}\), the constraint is that links belonging to the same loop must have the same colour. Then \(|C({{\varvec{w}}},\emptyset )| = N^{\lambda ({{\varvec{w}}})}\), and claim (a) of the proposition is proved.
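The pairing count used at the sites \(x_j\) can be checked by brute force. Let \(a = 2n_{x_j}^{(1)}\) be the number of links of colour 1 and \(b = 2n_{x_j}^{(2)}\) the number of links of colour 2, both odd. The number of pairings in which exactly one 1-link is paired with a 2-link and all other pairs are monochromatic should then be \(a(a-2)!!\, b(b-2)!! = a!!\, b!!\). A minimal Python sketch, with illustrative names:

```python
def pairings(points):
    """Yield every perfect matching of the list `points` as a list of pairs."""
    if not points:
        yield []
        return
    first, rest = points[0], points[1:]
    for i, partner in enumerate(rest):
        for p in pairings(rest[:i] + rest[i + 1:]):
            yield [(first, partner)] + p


def double_factorial(n):
    """n!! with the convention (-1)!! = 1."""
    out = 1
    while n > 1:
        out *= n
        n -= 2
    return out


def constrained_pairings(a, b):
    """Count pairings of a colour-1 and b colour-2 link endpoints (a, b odd)
    with exactly one mixed pair and all other pairs monochromatic."""
    colour = [1] * a + [2] * b
    count = 0
    for p in pairings(list(range(a + b))):
        mixed = sum(colour[i] != colour[j] for i, j in p)
        count += mixed == 1
    return count
```

For example, `constrained_pairings(3, 3)` is 9 = 3!! · 3!!. The remaining 6 of the 15 pairings of six points use three mixed pairs and are excluded.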

For \(k\ge 1\) the constraints from \({{\varvec{x}}}\) are that there must be loops crossing these sites, whose colours change from 1 to 2 (or 2 to 1). We first sum over the pairs \(q_1, \dots , q_{2k}\) where the changes occur. Then the wire configuration \({{\varvec{w}}}\) must belong to a set \(E_X({{\varvec{x}}},{{\varvec{q}}})\) defined in (4.16) for some even partition X of \(\{1,\dots ,2k\}\). In that case there are N possible colours for ordinary loops, and 2 colours for loops with changes \(1 \leftrightarrow 2\). The number of colourings is then \(N^{\lambda ({{\varvec{w}}})} (\frac{2}{N})^{|X|}\) with |X| the number of partition elements. Thus

This gives claim (b) of the proposition. \(\square \)
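The colour count can be checked loop by loop. The marked pairs on a loop cut it into arcs, each carrying a single colour. At every marked pair, one adjacent arc must have colour 1 and the other colour 2. The Python sketch below encodes a loop, hypothetically, by its number c of marked pairs. It confirms by brute force that a loop without marked pairs has N colourings, a loop with an even number \(c \ge 2\) of marked pairs has 2, and a loop with an odd number has none. This last case is why only even set partitions appear. Multiplying over the loops gives \(N^{\lambda ({{\varvec{w}}})} (\frac{2}{N})^{|X|}\).

```python
from fractions import Fraction
from itertools import product
from math import prod


def loop_colourings(c, N):
    """Colourings of one loop carrying c marked pairs, with N >= 2 colours."""
    if c == 0:
        return N  # ordinary loop: one colour, freely chosen
    count = 0
    # arcs[j] is the colour of the arc between marked pairs j - 1 and j (cyclic)
    for arcs in product(range(1, N + 1), repeat=c):
        if all({arcs[j - 1], arcs[j]} == {1, 2} for j in range(c)):
            count += 1
    return count


def configuration_colourings(marks_per_loop, N):
    """Product over loops; marks_per_loop[l] = number of marked pairs on loop l."""
    return prod(loop_colourings(c, N) for c in marks_per_loop)
```

For instance, with four loops carrying 0, 0, 2, and 4 marked pairs (so \(\lambda = 4\) and \(|X| = 2\)), the count is \(N^4 (2/N)^2 = 4N^2\).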

We now consider the graph \({\mathcal G}^{\mathrm{b}}\) with boundary. The hamiltonian is

where \({{\varvec{1}}}\) is the N-component vector \((1,\dots ,1)\). The partition function is

We write \(\langle \cdot \rangle _{{\mathcal G}^{\mathrm{b}}}^{J,{{\varvec{1}}}}\) for the Gibbs state with boundary condition \({{\varvec{1}}}\).

Proposition 6.2

We have

- (a)

\(\displaystyle Z_{{\mathcal G}^{\mathrm{b}}}^{\mathrm{spin},{{\varvec{1}}}}(J) = Z_{{\mathcal G}^{\mathrm{b}}}(N,J)\).

- (b)

If \(N\ge 2\), \(\displaystyle \langle \varphi _x^{(1)} \varphi _x^{(2)} \rangle _{{\mathcal G}^{\mathrm{b}}}^{J,{{\varvec{1}}}} = \tfrac{1}{N} \, {\mathbb E}_{{\mathcal G}^{\mathrm{b}}}^{N,J} \biggl [ \frac{\tilde{n}_x}{n_x+\frac{N}{2}} \biggr ]\).

Proof

Claim (a) can be proved in the same way as Proposition 6.1 (a). The relation between \((m_e)\) and \((n_x)\) is

Thus the factor \(2^{-\sum n_x}\) from (6.9) cancels the factors 2 and \(\sqrt{2}\) in front of the coupling parameters. The number of colourings of a wire configuration \({{\varvec{w}}}\in {\mathcal W}_{{\mathcal G}^\mathrm{b}}\) is equal to \(N^{\lambda ({{\varvec{w}}})}\), where \(\lambda ({{\varvec{w}}})\) is the total number of closed and open loops.

The proof of claim (b) is also similar to that of Proposition 6.1 (b). Let

Proceeding as before, we get the analogue of (6.12). With \({\mathcal M}_{{\mathcal G}^{\mathrm{b}}}(x)\) the set of link configurations with an odd number of links touching x and an even number touching all other sites of \({\mathcal V}\), we have

With \(\tilde{n}_x\) the number of pairs at x that belong to open loops, the number of colours is

We get Proposition 6.2 (b). \(\square \)

7 Correlations of O(2) Spin Systems

We now calculate the correlation function (6.4). The idea is to use Pfister’s theorem on the characterisation of translation-invariant Gibbs states for the O(2) spin model [32]. In this section the graph is \({\mathcal G}_L^{\mathrm{b}}\), that is, a box in \({\mathbb Z}^d\) with boundary conditions.

It is convenient to introduce the angles \({\varvec{\phi }}= (\phi _x)_{x \in \Lambda _L} \in [0,2\pi )^{\Lambda _L}\) such that \(\varphi _x = (\cos \phi _x, \sin \phi _x)\). In these variables, the hamiltonians (6.1) and (6.14) are

The boundary condition \({{\varvec{1}}}\) in the variables \(\{\varphi _x\}\) corresponds to \({\overline{{\varvec{\phi }}}} = (\frac{\pi }{4})_{x \in \partial \Lambda _L}\). The Gibbs state with free boundary conditions is the linear functional that assigns the value

to a function \(f : [0,2\pi )^{\Lambda _L} \rightarrow {\mathbb R}\). For boundary conditions \({\overline{{\varvec{\phi }}}}\), the definition of \(\langle \cdot \rangle _{{\mathcal G}_L^{\mathrm{b}}}^{J,{\overline{{\varvec{\phi }}}}}\) is the same but with hamiltonian \(H_{{\mathcal G}_L^{\mathrm{b}}}^{J,{\overline{{\varvec{\phi }}}}}\).

We have \(\varphi _x^{(1)} \varphi _x^{(2)} = \cos \phi _x \sin \phi _x = \frac{1}{2} \sin (2\phi _x)\). We rotate all spins by \(-\frac{\pi }{4}\) so as to get the more traditional \({\overline{{\varvec{\phi }}}} \equiv 0\) boundary conditions. Since \(\sin (2(\phi _x+\frac{\pi }{4})) = \cos (2\phi _x)\), we obtain

We are going to use a major result of Pfister about the set of extremal states of the classical XY model [32]. In order to state this result, let \(\langle \cdot \rangle _{{\mathbb Z}^d}^J\) and \(\langle \cdot \rangle _{{\mathbb Z}^d}^{J,0}\) denote the infinite-volume Gibbs states

Existence of the limits \(L\rightarrow \infty \) follows from Ginibre’s inequalities [22] by standard arguments, see e.g. [20]. In fact, the infinite-volume limits can be taken along any “van Hove sequence” of increasing domains, which implies in particular that the limiting states are translation-invariant. Pfister’s theorem then states that the limiting symmetric Gibbs state \(\langle \cdot \rangle _{{\mathbb Z}^d}^J\) is equal to the following convex combination of extremal states:

Notice that the state \(\langle \cdot \rangle _{{\mathbb Z}^d}^{J,\psi }\) is obtained from \(\langle \cdot \rangle _{{\mathbb Z}^d}^{J,0}\) by a global spin rotation of angle \(-\psi \), with \(\psi \in [0,2\pi )\). The above decomposition holds for all J such that the pressure p(2, J) is differentiable.

We can now prove Theorems 5.1 and 5.2.

Proof of Theorem 5.1

From its definition (5.4), Proposition 6.2 (b), and Eq. (7.3), we have that

The monotonicity properties of Theorem 5.1 (a) follow from standard arguments based on Ginibre’s inequalities, see [20].

For Theorem 5.1 (b), we use Proposition 3.1 (b), or more precisely its straightforward extension to the case of open boundary conditions. We take \({\bar{\alpha }} = \sqrt{2}\) and \(C=2\). The number of random walks of length k with fixed initial point is equal to \((2d)^k\). This immediately gives the result.

The absence of long loops when \(d=1\) is an easy exercise, and when \(d=2\) it follows from the works of Pfister [31] and Ioffe, Shlosman, and Velenik [26]; see [20, Theorem 9.2] for a clear exposition. Their result is that the infinite-volume Gibbs state is invariant under spin rotations, so \(\tilde{m}(2,J) = \langle \cos (2\phi _0) \rangle _{{\mathbb Z}^2}^{J,0} = 0\). This proves (c).

For (d), it can be shown that \(\bigl \langle \cos \phi _0 \bigr \rangle _{{\mathbb Z}^d}^{J,0} > 0\) implies \(\bigl \langle \cos (2\phi _0) \bigr \rangle _{{\mathbb Z}^d}^{J,0} > 0\), see [31, Corollary 3.6]. We now use the fundamental result of Fröhlich, Simon, and Spencer on the occurrence of long-range order in O(N) models. Let \(\langle \cdot \rangle _{{\mathbb Z}^d}^{J,\mathrm{per}}\) denote the infinite-volume Gibbs state obtained as the limit \(L\rightarrow \infty \) of the state \(\langle \cdot \rangle _{{\mathcal G}_L}^{J,\mathrm{per}}\) with even L and periodic boundary conditions. The claim [21, Theorem 3.1] is that

with \(c(d,J)>0\) for \(d\ge 3\) and J large enough; the theorem actually holds for all \(N \in {\mathbb N}\), not only \(N=2\). Since the state \(\bigl \langle \cdot \bigr \rangle _{{\mathcal G}_L}^{J,\mathrm{per}}\) is translation and rotation invariant, its infinite-volume limit is equal to the state in (7.5). Then

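We used \(\langle \cos \phi _0 \rangle _{{\mathbb Z}^d}^{J,\psi } = \langle \cos (\phi _0+\psi ) \rangle _{{\mathbb Z}^d}^{J,0} = \cos \psi \; \langle \cos \phi _0 \rangle _{{\mathbb Z}^d}^{J,0}\), which holds because \(\langle \sin \phi _0 \rangle _{{\mathbb Z}^d}^{J,0} = 0\) by the symmetry \(\phi _x \mapsto -\phi _x\). We also computed the angular integral \(\int _0^{2\pi } \cos ^2\psi \, \frac{\mathrm{d}\psi }{2\pi } = \frac{1}{2}\). It follows that \(\langle \cos \phi _0 \rangle _{{\mathbb Z}^d}^{J,0} = \sqrt{2c(d,J)}\) is positive for J large enough, and so is \(\tilde{m}(d,J)\).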
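The angular step can be illustrated with a caricature of Pfister's decomposition; this is not the XY Gibbs state. In the Python sketch below, the extremal state with angle \(\psi \) is replaced by independent von Mises spins centred at \(\psi \), where independence mimics clustering at large distances. The concentration `KAPPA` is a hypothetical parameter. Averaging over a uniform \(\psi \), the one-point function vanishes. The two-point function, however, tends to \(\frac{1}{2} \langle \cos \phi _0 \rangle ^2\) (with the expectation taken in the \(\psi = 0\) state), because \(\int _0^{2\pi } \cos ^2\psi \, \frac{\mathrm{d}\psi }{2\pi } = \frac{1}{2}\).

```python
import math
import random

KAPPA = 2.0  # hypothetical concentration of the toy one-site law


def m_toy(kappa=KAPPA, points=2048):
    """<cos phi> under the von Mises(0, kappa) law, by the equispaced rule."""
    num = den = 0.0
    for j in range(points):
        t = 2 * math.pi * j / points
        w = math.exp(kappa * math.cos(t))
        num += math.cos(t) * w
        den += w
    return num / den


def mixture_moments(samples=100_000, seed=2, kappa=KAPPA):
    """Return (<cos phi_0>, <cos phi_0 cos phi_x>) in the uniform mixture."""
    rng = random.Random(seed)
    one = two = 0.0
    for _ in range(samples):
        psi = rng.uniform(0.0, 2 * math.pi)     # choose an extremal state
        phi0 = rng.vonmisesvariate(psi, kappa)  # two distant spins, taken
        phix = rng.vonmisesvariate(psi, kappa)  # independent given psi
        one += math.cos(phi0)
        two += math.cos(phi0) * math.cos(phix)
    return one / samples, two / samples
```

With \(\psi \) held fixed instead, the correlation would be \(m^2 \cos ^2\psi \). The factor 1/2 comes entirely from the uniform average over \(\psi \).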

Notice that the extremal state decomposition (7.5) is only proved for almost all J; but using the claim (a) about monotonicity in J, we get the existence of \(J_{\mathrm{c}}\) as stated in (d). \(\square \)

Proof of Theorem 5.2

By Proposition 6.1 (b), the left side of the equation of Theorem 5.2 is equal to the limits \(L\rightarrow \infty \) then \(n\rightarrow \infty \) of the correlation function \(2^{2k} \bigl \langle \varphi _{x_1^{(n)}}^{(1)} \varphi _{x_1^{(n)}}^{(2)} \dots \varphi _{x_{2k}^{(n)}}^{(1)} \varphi _{x_{2k}^{(n)}}^{(2)} \bigr \rangle _{{\mathcal G}_L}^J \).

We use Pfister’s theorem (7.5) and the fact that extremal states are clustering; we get

The expectation in the rotated Gibbs state can be expressed in terms of \(\tilde{m}(d,J)\), namely,

We have \(\bigl \langle \sin (2\phi _0) \bigr \rangle _{{\mathbb Z}^d}^{J,0} = 0\) by the symmetry \(\phi _x \mapsto -\phi _x\). We recognise \(\tilde{m}(d,J)\) in the last term. We obtain

This is precisely the formula (4.12) for \(M^\mathrm{even}_{\theta =1}(2k)\). This completes the proof of Theorem 5.2. \(\square \)
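The final identification can be checked numerically. Following the steps described in the proof, the limit is \(\tilde{m}(d,J)^{2k}\) times the angular average \(\int _0^{2\pi } \cos ^{2k}(2\psi )\, \frac{\mathrm{d}\psi }{2\pi } = \binom{2k}{k} 4^{-k}\). Summing the PD(1) sampling probabilities over even set partitions gives the same numbers. For instance, k = 1 gives \(M_1(\{1,2\}) = \frac{1}{2}\), and k = 2 gives \(\frac{1}{4} + 3\cdot \frac{1}{24} = \frac{3}{8}\). A minimal Python sketch compares an equispaced quadrature of the angular average with \(\binom{2k}{k} 4^{-k}\):

```python
import math
from fractions import Fraction


def angular_average(k, points=256):
    """(1/2pi) * integral of cos(2 psi)^(2k) over [0, 2pi) by the equispaced
    rule, exact up to round-off since the trig degree 4k is below `points`."""
    return sum(math.cos(4 * math.pi * j / points) ** (2 * k)
               for j in range(points)) / points


def m_even_pd1(k):
    """Closed form binom(2k, k) / 4^k."""
    return Fraction(math.comb(2 * k, k), 4 ** k)
```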

Notes

We are grateful to Peter Mörters for the suggestion.

References

Aizenman, M.: Geometric analysis of \(\varphi ^4\) fields and Ising models. Commun. Math. Phys. 86, 1–48 (1982)

Barp, A., Barp, E.G., Briol, F.-X., Ueltschi, D.: A numerical study of the 3D random interchange and random loop models. J. Phys. A 48, 345002 (2015)

Benassi, C.: On classical and quantum lattice spin systems. Ph.D. thesis, University of Warwick (2018)

Benassi, C., Fröhlich, J., Ueltschi, D.: Decay of correlations in 2D quantum systems with continuous symmetry. Ann. Henri Poincaré 18, 2831–2847 (2017)

Berestycki, N., Durrett, R.: A phase transition in the random transposition random walk. Probab. Theory Relat. Fields 136, 203–233 (2006)

Berestycki, N., Kozma, G.: Cycle structure of the interchange process and representation theory. Bull. Soc. Math. France 143, 265–281 (2015)

Bertoin, J.: Random Fragmentation and Coagulation Processes. Cambridge Studies in Advanced Mathematics 102. Cambridge University Press, Cambridge (2006)

Betz, V.: Random permutations of a regular lattice. J. Stat. Phys. 155, 1222–1248 (2014)

Betz, V., Schäfer, H., Taggi, L.: Interacting self-avoiding polygons. arXiv:1805.08517 (2018)

Betz, V., Ueltschi, D.: Spatial random permutations and Poisson–Dirichlet law of cycle lengths. Electr. J. Probab. 16, 1173–1192 (2011)

Björnberg, J.E.: Large cycles in random permutations related to the Heisenberg model. Electr. Commun. Probab. 20, 1–11 (2015)

Björnberg, J.E.: The free energy in a class of quantum spin systems and interchange processes. J. Math. Phys. 57, 073303 (2016)

Björnberg, J.E., Kotowski, M., Lees, B., Miłoś, P.: The interchange process with reversals on the complete graph. arXiv:1812.03301 (2018)

Bogachev, L.V., Zeindler, D.: Asymptotic statistics of cycles in surrogate-spatial permutations. Commun. Math. Phys. 334, 39–116 (2015)

Brydges, D., Fröhlich, J., Spencer, T.: The random walk representation of classical spin systems and correlation inequalities. Commun. Math. Phys. 83, 123–150 (1982)

Diaconis, P., Mayer-Wolf, E., Zeitouni, O., Zerner, M.P.W.: The Poisson-Dirichlet law is the unique invariant distribution for uniform split-merge transformations. Ann. Probab. 32, 915–938 (2004)

Dyson, F.J., Lieb, E.H., Simon, B.: Phase transitions in quantum spin systems with isotropic and nonisotropic interactions. J. Stat. Phys. 18, 335–383 (1978)

Elboim, D., Peled, R.: Limit distributions for Euclidean random permutations. Commun. Math. Phys. (2019). arXiv:1712.03809

Fernández, R., Fröhlich, J., Sokal, A.D.: Random Walks, Critical Phenomena, and Triviality in Quantum Field Theory. Texts and Monographs in Physics. Springer, Berlin (1992)

Friedli, S., Velenik, Y.: Statistical Mechanics of Lattice Systems: A Concrete Mathematical Introduction. Cambridge University Press, Cambridge (2017)

Fröhlich, J., Simon, B., Spencer, T.: Infrared bounds, phase transitions and continuous symmetry breaking. Commun. Math. Phys. 50, 79–95 (1976)

Ginibre, J.: General formulation of Griffiths’ inequalities. Commun. Math. Phys. 16, 310–328 (1970)

Goldschmidt, C., Ueltschi, D., Windridge, P.: Quantum Heisenberg models and their probabilistic representations. In: Entropy and the Quantum II. Contemporary Mathematics vol. 552, pp. 177–224. arXiv:1104.0983 (2011)

Griffiths, R.B., Hurst, C.A., Sherman, S.: Concavity of the magnetization of an Ising ferromagnet in a positive external magnetic field. J. Math. Phys. 11, 790–795 (1970)

Grosskinsky, S., Lovisolo, A.A., Ueltschi, D.: Lattice permutations and Poisson–Dirichlet distribution of cycle lengths. J. Stat. Phys. 146, 1105–1121 (2012)

Ioffe, D., Shlosman, S., Velenik, Y.: 2D models of statistical physics with continuous symmetry: the case of singular interactions. Commun. Math. Phys. 226, 433–454 (2002)

Kingman, J.F.C.: Random discrete distributions. J. R. Stat. Soc. B 37, 1–22 (1975)

Lees, B., Taggi, L.: Site monotonicity and uniform positivity for interacting random walks and the spin \(O(N)\) model with arbitrary \(N\). arXiv:1902.07252

Nahum, A., Chalker, J.T., Serna, P., Ortuño, M., Somoza, A.M.: Length distributions in loop soups. Phys. Rev. Lett. 111, 100601 (2013)

Peled, R., Spinka, Y.: Lectures on the spin and loop \(O(n)\) models. arXiv:1708.00058 (2017)

Pfister, C.-É.: On the symmetry of the Gibbs states in two-dimensional lattice systems. Commun. Math. Phys. 79, 181–188 (1981)

Pfister, C.-É.: Translation invariant equilibrium states of ferromagnetic abelian lattice systems. Commun. Math. Phys. 86, 375–390 (1982)

Pitman, J.: Poisson-Dirichlet and GEM invariant distributions for split-and-merge transformations of an interval partition. Comb. Probab. Comput. 11, 501–514 (2002)

Schramm, O.: Compositions of random transpositions. Isr. J. Math. 147, 221–243 (2005)

Tsilevich, N.V.: Stationary random partitions of a natural series. Teor. Veroyatnost. i Primenen. 44, 55–73 (1999)

Ueltschi, D.: Random loop representations for quantum spin systems. J. Math. Phys. 54(083301), 1–40 (2013)

Ueltschi, D.: Uniform behaviour of 3D loop soup models. In: 6th Warsaw School of Statistical Physics, pp. 65–100. arXiv:1703.09503 (2017)

Acknowledgements

We are grateful to Jakob Björnberg, Jürg Fröhlich, Peter Mörters, Charles-Édouard Pfister, Vedran Sohinger, Akinori Tanaka, and Yvan Velenik, for useful discussions. We thank the referee for valuable comments. CB is supported by the Leverhulme Trust Research Project Grant RPG-2017-228.


Additional information

Communicated by H. Duminil-Copin


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Benassi, C., Ueltschi, D. Loop Correlations in Random Wire Models. Commun. Math. Phys. 374, 525–547 (2020). https://doi.org/10.1007/s00220-019-03474-9
