Abstract

This paper introduces Finite Elements with Switch Detection (FESD), a numerical discretization method for nonsmooth differential equations. We consider the Filippov convexification of these systems and a transformation into dynamic complementarity systems introduced by Stewart (Numer Math 58(1):299–328, 1990). FESD is based on solving nonlinear complementarity problems and can automatically detect nonsmooth events in time. If standard time-stepping Runge–Kutta (RK) methods are naively applied to a nonsmooth ODE, the accuracy is at best of order one. In FESD, we let the integrator step size be a degree of freedom. Additional complementarity conditions, which we call cross complementarities, enable exact switch detection, hence FESD can recover the high order accuracy that the RK methods enjoy for smooth ODE. Additional conditions called step equilibration allow the step size to change only when switches occur and thus avoid spurious degrees of freedom. Convergence results for the FESD method are derived, and local uniqueness of the solution and convergence of the numerical sensitivities are proven. The efficacy of FESD is demonstrated in several simulation and optimal control examples. In an optimal control benchmark, FESD achieves solutions that are up to five orders of magnitude more accurate than those of a standard time-stepping approach for the same computational time.

1 Introduction

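The goal of this paper is to develop high-accuracy numerical simulation and optimal control methods for Ordinary Differential Equations (ODE) with a discontinuous vector field. We assume the following structure

\[ {\dot{x}}(t) = f_i(x(t),u(t)), \quad \text{if } x(t) \in R_i \subset {{\mathbb {R}}}^{n_x}, \quad i \in \mathcal {J} :=\{1,\ldots ,{n_{f}}\}, \tag{1} \]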

where \(R_i\) are disjoint open sets and \(f_i(\cdot )\) are smooth functions on an open neighborhood of \({\overline{R}}_i\), \({n_{f}}\) is a positive integer and u(t) is an externally chosen control function.

This formulation of piecewise smooth ODE falls into the class of hybrid systems [13, 50]. Many practical problems give rise to such ODE with a discontinuous right hand side (r.h.s.), e.g., in sliding mode control [3], mechanics problems with Coulomb friction [49], state constrained ODE derived from Pontryagin’s maximum principle [41], electronic circuits [1], biological systems [4], vaccination strategies [14], transportation systems and traffic flow networks [8], constrained optimization algorithms viewed as dynamic systems [27] and many more. Systems with state jumps, including impact mechanics, robotics and hybrid systems with hysteresis can be transformed into systems matching the form of (1) via the time-freezing reformulation [34, 37, 40]. Consequently, efficient and accurate numerical optimal control algorithms for this class of systems are of great interest.

Related work: High-accuracy simulation of ODE with a discontinuous r.h.s. is numerically difficult since an accurate location of the nonsmooth events in time is needed. Standard time-stepping methods with a fixed step size applied to this class of ODE have at best first-order accuracy as they do not detect the switches [2]. Additionally, in the case of sliding modes the numerical solution obtained by explicit time-stepping methods tends to chatter around the discontinuity. Convergence of standard time-stepping discretization methods with order one is studied in [17, 28, 52]. Most high-accuracy methods include a root-finding procedure for accurate switch location and they usually assume that the trajectory crosses the discontinuity or only passes through two regions at a time [2]. An exception is Stewart’s high accuracy method which can deal with almost all switching cases [46, 48]. However, it also has an external switch location routine and is thus difficult to apply in direct optimal control, i.e., in first discretize, then optimize approaches to optimal control [44].
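The first-order behavior of fixed-step time-stepping can be checked on a scalar test problem. The following minimal sketch assumes the ODE \({\dot{x}} = 2 - \textrm{sign}(x)\) with \(x(0) = -1\) on \([0,2]\), whose exact solution switches at \(t = 1/3\) and ends at \(x(2) = 5/3\). It uses the explicit Euler method; the function names are illustrative and not taken from any toolbox.

```python
def f(x):
    """Vector field of x' = 2 - sign(x): slope 3 for x < 0, slope 1 otherwise."""
    return 3.0 if x < 0 else 1.0


def euler_endpoint(h, x0=-1.0, T=2.0):
    """Integrate with explicit Euler and a fixed step size h, return x(T)."""
    n_steps = round(T / h)
    x = x0
    for _ in range(n_steps):
        x += h * f(x)
    return x


# Exact terminal value: x(t) = -1 + 3t until t = 1/3, then x(t) = t - 1/3.
X_EXACT = 5.0 / 3.0


def endpoint_error(h):
    """Absolute error of the Euler approximation at t = T."""
    return abs(euler_endpoint(h) - X_EXACT)


if __name__ == "__main__":
    for h in (0.1, 0.01, 0.001):
        print(f"h = {h:<6} error = {endpoint_error(h):.6e}")
```

In this sketch both smooth pieces have a constant slope, so the error comes entirely from the single step that contains the switch. Reducing the step size by a factor of ten reduces the error by the same factor, which is the order-one behavior described above.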

The parametric sensitivities of discontinuous ODE (1) have jump discontinuities [22, 51] when the trajectory passes through or enters a surface of discontinuity, cf. Sect. 2.4. Therefore, the application of high-accuracy integrators even in a direct multiple shooting setting [12], which hides the external switch detection procedure from the optimizer, will be notoriously difficult, since derivative-based optimization algorithms will likely fail due to the non-Lipschitz sensitivities. Other fundamental difficulties within direct optimal control of non-smooth ODE are illuminated in the seminal paper by Stewart and Anitescu [51]. They show that direct methods based on time-stepping integration schemes with fixed step sizes are doomed to fail since the numerical sensitivities obtained by differentiating the results of a simulation are wrong no matter how small the step size is. We refer to this class of methods as standard methods because they are commonly used in optimal control of smooth dynamical systems. It is also shown that the numerical sensitivities of a smooth approximation of an ODE with a discontinuous r.h.s. are only correct if the step size approaches zero faster than the smoothing parameter, which makes accurate approximations computationally expensive. The same effects carry over to many Dynamic Complementarity Systems (DCS) [36].

On the theoretical side, necessary and/or sufficient conditions for optimality are provided in [15, 23, 45, 53]. Guo and Ye [23] and Vieira et al. [53] study optimal control of a DCS with absolutely continuous solutions. The problems from this paper fall into this class. A broader overview can be found in [13].

On the practical side, many authors have developed methods to numerically treat discontinuous ODE in optimal control [9, 10, 11, 29, 30, 36, 42, 51]. Kirches [30] develops a direct multiple shooting-based approach with a switch detecting integrator and sensitivity update formulae. Similarly, in [42] a method is developed that can treat sliding modes on a single switching surface. Katayama et al. [29] fix the switching sequence and optimize the lengths of the phases in a model predictive control loop, where at every sample the switching sequence is updated. Bemporad et al. [10] assign integer variables to every mode and solve mixed-integer optimization problems. In [9, 11, 36] the ODE is transformed into a DCS, resulting in a Mathematical Program with Complementarity Constraints (MPCC) to be solved after discretization. In [36] the authors use a standard discretization approach and suggest using a homotopy to avoid spurious local minima due to wrong sensitivities [51]. Baumrucker and Biegler [9] consider systems with a single switching surface (or with multiple independent switching surfaces, cf. Sect. 2.3) and allow variable step sizes. This method yields exact switch detection, higher order integration accuracy, and correct numerical sensitivities. The step sizes are left to the optimizer as a degree of freedom, hence it can play with the discretization accuracy, possibly in an undesired way. Unfortunately, a formal proof of the appealing properties of the method is not provided in [9].

As can be seen from the discussion above, most of the practical methods use only first-order accurate methods with possibly incorrect sensitivities or treat the discontinuity in the integrator, which complicates the use of derivative-based optimization algorithms (except [9]). They do not treat sliding modes appropriately or handle only systems with a single switching surface, i.e., only two regions. The goal of this paper is to develop a method that resolves these issues and to provide a proper convergence theory. Note that the (easier) case of ODE with a continuous but nonsmooth r.h.s. fits into the structure of (1). Conversely, the time-freezing reformulation [34, 37, 40] transforms many systems from the (more difficult) case of systems with state jumps into the form of (1). This enables us to treat many classes of nonsmooth systems with the same numerical method in a unified way. FESD uses the DCS representation introduced by Stewart [46, 48] and is motivated by the variable step size ideas of Baumrucker and Biegler [9].

Contributions: In this paper, we develop the FESD method, which can be used both in simulation and optimal control problems. We start with a reformulation of (1) into dynamic complementarity systems introduced by Stewart [46, 48] and provide a constructive way to pass from more natural definitions of discontinuous ODE to Stewart’s form. Discretization of the DCS results in a nonlinear complementarity problem. We build on the ideas of varying the step size and allowing switches only to take place at the boundaries of the finite elements introduced in [9]. The FESD method can efficiently deal with multiple and simultaneous switches including sliding modes on higher co-dimension surfaces, and thus is more general than [9]. Moreover, in contrast to [9], where only Radau-IIA Implicit Runge–Kutta (IRK) methods were considered, in FESD one can use any Runge–Kutta method.

Additionally, we prove that FESD detects the switches exactly in time, recovers the high-order accuracy that RK methods enjoy for smooth ODE, and obtains the correct numerical sensitivities even when the solution crosses or stays on a discontinuity. To allow switching on the boundaries of the finite elements we introduce the cross complementarity formulation. Using FESD to discretize Optimal Control Problems (OCP) with DCS results in mathematical programs with complementarity constraints, which can be solved efficiently with smooth optimization techniques by solving a few Nonlinear Programming (NLP) problems [7, 26, 31, 32, 43]. Thus, we avoid the nonsmooth difficulty encountered within direct multiple shooting with switch-detecting integrators.

Since the step sizes \(h_n\) are allowed to vary, if no switches occur, we would encounter spurious degrees of freedom which let the optimizer play with the integrator accuracy in a possibly undesired way. To avoid this problem we propose additional conditions called step equilibration which are based on an indicator function. This decouples the integrator accuracy from the optimizer and results in piecewise equidistant discretization grids between the switches. We illustrate the practicability of FESD on several simulation and optimal control examples and verify the theoretical findings.

The FESD method with its many variations and the remainder of the tool-chain for numerically solving optimal control problems with nonsmooth systems are implemented in the open source MATLAB toolbox NOSNOC [35, 38].

Organization of the paper: Sect. 2 gives an introduction to Filippov systems and Stewart’s reformulation. It provides a practical procedure to construct Stewart’s indicator functions and discusses issues with discontinuous sensitivities. Section 3 introduces the FESD method and discusses the main ideas that lead to it. In Sect. 4 several relevant theoretical properties of FESD are studied. The sections contain numerical examples that illustrate the theoretical and algorithmic developments. Section 5 shows how to use FESD in numerical optimal control. The section finishes with an optimal control example and a benchmark comparison of FESD to the standard approach. Finally, Sect. 6 summarizes the paper and outlines future research.

Notation: The complementarity conditions for two vectors \(a,b \in {{\mathbb {R}}}^{n}\) read as \({0\le a \perp b\ge 0}\), where \(a \perp b\) means \(a^{\top }b =0\).

For two scalar variables a, b the so-called C-functions [19, Section 1.5.1] have the property \(\phi (a,b) = 0 \iff a\ge 0, b\ge 0, ab = 0\). Examples are the natural residual functions \(\phi _{\textrm{NR}}(a,b)=\min (a,b)\) or the Fischer-Burmeister function \(\phi _{\textrm{FB}}(a,b) = a+b-\sqrt{a^2+b^2}\). If \(a,b \in {{\mathbb {R}}}^{n}\), we use \(\phi (\cdot )\) component-wise and define \(\Phi (a,b) = (\phi (a_1,b_1),\dots ,\phi (a_{n},b_{n}))\).
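The two C-functions named above can be written down directly. The following is a minimal sketch using only the Python standard library; the function names `phi_nr`, `phi_fb` and `Phi` are chosen here for illustration.

```python
import math


def phi_nr(a, b):
    """Natural residual C-function: min(a, b)."""
    return min(a, b)


def phi_fb(a, b):
    """Fischer-Burmeister C-function: a + b - sqrt(a^2 + b^2)."""
    return a + b - math.sqrt(a * a + b * b)


def Phi(phi, a, b):
    """Apply a scalar C-function component-wise to two vectors of equal length."""
    return [phi(a_i, b_i) for a_i, b_i in zip(a, b)]


if __name__ == "__main__":
    # Complementary pairs give zero, all other pairs give a nonzero residual.
    for a, b in [(0.0, 3.0), (2.0, 0.0), (1.0, 1.0), (-1.0, 0.0)]:
        print(a, b, phi_nr(a, b), phi_fb(a, b))
```

Both functions vanish exactly on pairs with \(a\ge 0\), \(b\ge 0\), \(ab=0\). They differ in smoothness: the natural residual is nondifferentiable along \(a=b\), whereas the Fischer-Burmeister function is nondifferentiable only at the origin.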

All vector inequalities are to be understood element-wise, \(\textrm{diag}(x)\in {{\mathbb {R}}}^{n\times n}\) returns a diagonal matrix with \(x \in {{\mathbb {R}}}^n\) containing the diagonal entries. The concatenation of two column vectors \(a\in {{\mathbb {R}}}^{n_a}\), \(b\in {{\mathbb {R}}}^{n_b}\) is denoted by \((a,b):=[a^\top ,b^\top ]^\top \), the concatenation of several column vectors is defined analogously. The identity matrix is denoted by \(I \in {{\mathbb {R}}}^{n \times n}\) and a column vector with all ones is denoted by \(e=(1,1,\dots ,1) \in {{\mathbb {R}}}^n\), their dimension is clear from the context. The closure of a set C is denoted by \({\overline{C}}\), its boundary by \( \partial C\) and its convex hull by \(\textrm{conv}(C)\). Given a matrix \(M \in {{\mathbb {R}}}^{n \times m}\), its i-th row is denoted by \(M_{i,\bullet }\) and its j-th column is denoted by \(M_{\bullet ,j}\). For a function \(f:{{\mathbb {R}}}^{n} \rightarrow {{\mathbb {R}}}^{m}\) we denote by \(\textrm{D}f(x) = \frac{\partial {f}}{\partial {x}}(x)\in {{\mathbb {R}}}^{m\times n}\) the Jacobian matrix and by \(\nabla f(x) :=\frac{\partial {f}}{\partial {x}}(x)^\top \) its transpose.

For the right and the left limits, we use the notation \({x({t_{\textrm{s}}}^+) = \lim \limits _{t\rightarrow {t_{\textrm{s}}},\ t>{t_{\textrm{s}}}} x(t)}\) and \({x({t_{\textrm{s}}}^-) = \lim \limits _{t\rightarrow {t_{\textrm{s}}},\ t<{t_{\textrm{s}}}}x(t)}\), respectively.

When clear from context, we often drop the dependency on time t.

2 Piecewise smooth differential equations

This section introduces some necessary assumptions on the piecewise smooth system (PSS) (1), its Filippov convexification [21], and Stewart’s reformulation into Dynamic Complementarity Systems (DCS) [46, 48] to prepare the ground for the novel method presented in Sect. 3. We discuss some properties of the DCS for a fixed active set and active-set changes.

2.1 Filippov convexification

Initial value problems arising from the nonsmooth ODE (1) usually fail to have classic Carathéodory solutions, for a counterexample, see e.g., [46, Section 1]. To have a meaningful solution concept for this class of ODE, the main idea of Filippov was to replace the r.h.s. of (1) with a convex set and to obtain the following Differential Inclusion (DI):

where \(f(x,u) = f_i(x,u)\) if \(x \in R_i\), B(x) is the Euclidean unit ball at x in \({{\mathbb {R}}}^{n_x}\), \(\mu (\cdot )\) is the Lebesgue measure on \({{\mathbb {R}}}^{n_x}\) and \(\overline{\textrm{conv}}(\cdot )\) maps a subset of \({\mathbb {R}}^{n_x}\) to its closed convex hull. Throughout the paper we assume that the regions \(R_i\) are disjoint, connected and open. They are assumed to be nonempty and to have piecewise-smooth boundaries \(\partial R_i\). We assume that \(\overline{\bigcup \limits _{i\in \mathcal {J}} R_i} = {{\mathbb {R}}}^{n_x}\) and that \({{\mathbb {R}}}^{n_x} \setminus \bigcup \limits _{i\in \mathcal {J}} R_i\) is a set of measure zero.

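Let \(\mathcal {I}(x) :=\{ i \mid x \in {\overline{R}}_i\} \subseteq \mathcal {J}\) be the active set at \(x \in {{\mathbb {R}}}^{n_x}\). Due to the special structure of (1) the Filippov DI (2) can be written as

\[ {\dot{x}} \in F_{\textrm{F}}(x,u) = \textrm{conv}\big ( \{ f_i(x,u) \mid i \in \mathcal {I}(x) \} \big ). \]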

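This means that in the interior of the regions \(R_i\), the Filippov set \(F_\textrm{F}(x,u)\) is equal to \(\{f_i(x,u)\}\) and on the boundary between regions we have a convex combination of the neighboring vector fields. If \({\dot{x}}\) exists, functions \(\theta _i(\cdot )\) which serve as convex multipliers can be introduced and the Filippov DI can be written as

\[ {\dot{x}} \in F_{\textrm{F}}(x,u) = \Big \{ \sum _{i\in \mathcal {J}} f_i(x,u)\, \theta _i \ \Big |\ \sum _{i\in \mathcal {J}} \theta _i = 1,\ \theta _i \ge 0,\ \theta _i = 0 \ \text{if } x \notin {\overline{R}}_i,\ \forall i \in \mathcal {J} \Big \}. \tag{3} \]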

We call the functions \(\theta _i(\cdot )\) Filippov multipliers. As will be seen later, the functions \(\theta _i(\cdot )\) lack any continuity properties, but it can be shown that they are at least measurable [20, 46]. Given (3), we will compute piecewise active solutions [46], which are defined as follows.

Definition 1

(Piecewise active solution [46]) For an initial value \(x(0) = x_0\), a given measurable control function u(t) and a compact interval [0, T], a function \(x:[0,T] \rightarrow {{\mathbb {R}}}^{n_x}\) is said to be a solution of (2), if \({\dot{x}}(t) \in F_{\textrm{F}}(x(t),u(t))\) almost everywhere on [0, T]. This function is called a piecewise active solution if the active set \(\mathcal {I}(x(t))\) is a piecewise constant function of time and it changes its value only finitely many times on [0, T]. A time point \({t_{\textrm{s}}}\in [0,T]\) is called a switching point if \(\mathcal {I}(x(t))\) is not constant in any sufficiently small neighborhood of \({t_{\textrm{s}}}\).

Note that this definition assumes a finite number of switches and excludes so-called Zeno solutions, where infinitely many switches can occur in a finite time interval. Zeno solutions cannot be treated with event-detecting methods.

For a constant active set \(\mathcal {I}(x)\) one can derive an ODE or Differential Algebraic Equation (DAE) (for sliding modes) from (3) and apply standard integration methods. The overall ODE solution x(t) is continuous and consists of smooth pieces connected by nondifferentiable points ("kinks") at the switching times \({t_{\textrm{s}}}\).

2.2 Stewart’s reformulation

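We regard a specific representation of the sets \(R_i\) which was introduced by Stewart [46]. The main assumption is that the regions \(R_i\) are given as

\[ R_i = \{ x \in {{\mathbb {R}}}^{n_x} \mid g_i(x) < \min _{j\in \mathcal {J},\, j \ne i} g_j(x) \}. \tag{4} \]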

It is assumed that the indicator functions \(g_i(\cdot ),\ i \in \mathcal {J}\), are smooth functions. Moreover, throughout the paper we assume additionally that \(g_i(\cdot ), f_i(\cdot )\) and \(\nabla g_i(\cdot )\) are Lipschitz continuous.

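Note that due to the definition of the sets \(R_i\) in (4), the active set can be defined as

\[ \mathcal {I}(x) = \{ i \in \mathcal {J} \mid g_i(x) = \min _{j\in \mathcal {J}} g_j(x) \}. \tag{5} \]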

We define the vectors \(\theta = (\theta _1,\ldots ,\theta _{{n_{f}}}) \in {{\mathbb {R}}}^{{n_{f}}}\), \(g(x) = (g_1(x),\ldots ,g_{{n_{f}}}(x)) \in {{\mathbb {R}}}^{{n_{f}}}\) and the matrix \(F(x,u) = \begin{bmatrix}f_1(x,u), \ldots , f_{{n_{f}}}(x,u)\end{bmatrix}\in {{\mathbb {R}}}^{n_x \times {{n_{f}}}}\). Using the specific representation (4) from [46] and Eq. (3), one can deduce that the Filippov DI can be written as

\[ {\dot{x}} = F(x,u)\, \theta (x), \tag{6} \]

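where the algebraic variables \(\theta (x(t))\) are a solution of the parametric Linear Program (LP)

\[ \textrm{LP}(x): \quad \theta (x) \in \arg \min _{{\tilde{\theta }} \in {{\mathbb {R}}}^{{n_{f}}}} \ g(x)^\top {\tilde{\theta }} \quad \text{s.t.} \quad e^\top {\tilde{\theta }} = 1,\ {\tilde{\theta }} \ge 0. \tag{7} \]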

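Using the Karush-Kuhn-Tucker (KKT) conditions of LP(x) and (6), we obtain the dynamic complementarity system

\[ \begin{aligned} {\dot{x}}&= F(x,u)\, \theta , &\quad&(8\text{a})\\ 0&= g(x) - \lambda - \mu e, &&(8\text{b})\\ 1&= e^\top \theta , &&(8\text{c})\\ 0&\le \theta \perp \lambda \ge 0, &&(8\text{d}) \end{aligned} \]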

where the algebraic variables \(\lambda \in {{\mathbb {R}}}^{{n_{f}}}\) and \(\mu \in {{\mathbb {R}}}\) are the Lagrange multipliers of the parametric LP (7). To have an even more compact representation we use a C-function \(\Psi \) for the complementarity conditions and rewrite the KKT conditions of the LP (7) as the nonsmooth equation:

\[ G(x,\theta ,\lambda ,\mu ) :=\begin{bmatrix} g(x) - \lambda - \mu e\\ 1 - e^\top \theta \\ \Psi (\theta ,\lambda ) \end{bmatrix} = 0. \tag{9} \]

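It provides \(2 {n_{f}}+1\) conditions for the \(2 {n_{f}}+1\) algebraic variables \(\theta ,\,\lambda \) and \(\mu \). The DCS reads in compact form as a nonsmooth differential algebraic equation:

\[ \begin{aligned} {\dot{x}}&= F(x,u)\, \theta , &\quad&(10\text{a})\\ 0&= G(x,\theta ,\lambda ,\mu ). &&(10\text{b}) \end{aligned} \]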

Example 1

We illustrate this formulation on the simple example of \({\dot{x}} \in 2 - \textrm{sign}(x)\). This ODE is characterized by the regions \(R_1 = \{x \in {{\mathbb {R}}}\mid x<0 \}\) and \(R_2 = \{x \in {{\mathbb {R}}}\mid x>0 \}\), with \(f_1(x) = 3\), \(f_2(x) = 1\) and \(F(x) = [3 \quad 1]\). It can be verified that with the functions \(g_1(x) = x \) and \( g_2(x) = -x\) we have a representation of the regions as in (4). Moreover, we have the multipliers \(\theta ,\lambda \in {{\mathbb {R}}}^2\) and \(\mu \in {{\mathbb {R}}}\). Thus, the corresponding DCS reads as:

\[ \begin{aligned} {\dot{x}}&= 3\theta _1 + \theta _2,\\ 0&= x - \lambda _1 - \mu ,\\ 0&= -x - \lambda _2 - \mu ,\\ 1&= \theta _1 + \theta _2,\\ 0&\le \theta \perp \lambda \ge 0. \end{aligned} \tag{11} \]

2.2.1 Remark on how to treat switching functions

Definition (4) might not be the most intuitive way to represent the sets \(R_i\). In many practical examples some smooth scalar functions \(c_i(\cdot )\), called switching functions, are given. Their zero-level sets define the boundaries of the regions \(R_i\). For example, \(R'_1 = \{ x\in {{\mathbb {R}}}^{n_x} \mid c_1(x)>0,\ldots ,c_m(x)>0\}\), \(R'_2 = \{ x\in {{\mathbb {R}}}^{n_x} \mid c_1(x)>0,\ldots ,c_{m-1}(x)>0,c_m(x)<0\}\) and so on. Let \(c(x) = (c_1(x),\ldots ,c_m(x)) \in {{\mathbb {R}}}^m\) and assume that \(\nabla c(x) \in {{\mathbb {R}}}^{n_x\times m}\) has rank m. Thus, we can locally define up to \({n_{f}}= 2^m\) regions and encode them via a sign matrix \(S \in {{\mathbb {R}}}^{2^m \times m}\) defined as

\[ S = \begin{bmatrix} 1 & 1 & \dots & 1 & 1\\ 1 & 1 & \dots & 1 & -1\\ \vdots & \vdots & & \vdots & \vdots \\ -1 & -1 & \dots & -1 & -1 \end{bmatrix}. \]

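Note that the matrix S has no repeating rows. Moreover, we assume that this matrix has no zero entries. The sets \(R'_i\) can be compactly represented using the rows \(S_{i,\bullet }\) as

\[ R'_i = \{ x \in {{\mathbb {R}}}^{n_x} \mid \textrm{diag}(S_{i,\bullet })\, c(x) > 0 \}. \tag{12} \]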

The next proposition provides a constructive way to find the functions \(g(\cdot )\) from the more intuitive representation of the regions via \(c(\cdot )\).

Proposition 2

Let the function \(g: {{\mathbb {R}}}^{n_x} \rightarrow {{\mathbb {R}}}^{{n_{f}}}\) be defined as

\[ g(x) = -S c(x), \tag{13} \]

then for all \( x \in R'_i\) the following statements are true:

(i) \(g_i(x) < g_j(x)\) for all \(j \ne i\),

(ii) the Definitions (4) and (12) define the same set, i.e., \(R_i = R'_i\).

Proof

For (i), note that for \(x \in R'_i\) all terms \(S_{i,k} c_k(x)\) in the sum \(g_i(x) = -S_{i,\bullet }c(x) = -\sum _k S_{i,k} c_k(x)\) are strictly positive. On the other hand, for any \(g_j(x) = -S_{j,\bullet }c(x) =- \sum _k S_{j,k} c_k(x),\ j \ne i\) and \(x \in R'_i\), due to (12), all terms \(S_{j,k} c_k(x)\) with \(S_{j,k} \ne S_{i,k}\) are strictly negative. Therefore \(S_{i,\bullet }c(x) > S_{j,\bullet }c(x)\), i.e., \(g_i(x) < g_j(x)\), thus (i) holds.

For (ii), first regard the rows \(S_{j,\bullet }\) that differ from \(S_{i,\bullet }\) only in the k-th column. Then \(g_i(x) - g_j(x) = -(S_{i,k}-S_{j,k}) c_k(x) < 0\). If \(S_{i,k} = 1\), then \(g_i(x) - g_j(x) = -2c_k(x) < 0\). Likewise, for \(S_{i,k} = -1\), then \(g_i(x) - g_j(x) = 2c_k(x) < 0\). Therefore, from (4) we recover the definition of (12) by looking at the rows where \(S_{i,k}\) and \(S_{j,k}\) differ by one element. For all rows j that differ from \(S_{i,\bullet }\) by more than one column, by similar reasoning, we obtain inequalities that do not tighten (12), since \(g_i(x) - g_j(x)\) consists of a sum of the terms from the inequalities where only one component of c(x) is left. Therefore, statement (ii) holds and this completes the proof. \(\square \)

Example 2

For our tutorial example \({\dot{x}} \in 2-\textrm{sign}(x)\) and the corresponding DCS (11) we have \(c(x) = x\) and \(S = \begin{bmatrix} -1&1 \end{bmatrix}^\top \) and we obtain \(g(x) = -Sc(x) = (x,-x)\) as used in Example 1.
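The construction of Proposition 2 is easy to try out numerically. The sketch below enumerates all sign patterns for m switching functions, evaluates \(g(x) = -Sc(x)\) and reads off the region as the index of the smallest entry of g. The helper names and the row ordering produced by `itertools.product` are choices made here for illustration; rows and returned indices are counted from zero, so region \(R_1\) of the text corresponds to index 0.

```python
from itertools import product


def sign_matrix(m):
    """All 2^m sign patterns as rows, starting with the all-ones row."""
    return [list(row) for row in product((1, -1), repeat=m)]


def stewart_g(S, c):
    """Indicator functions g = -S c for given values c = (c_1, ..., c_m)."""
    return [-sum(s_k * c_k for s_k, c_k in zip(row, c)) for row in S]


def region_index(S, c):
    """Zero-based index i with g_i minimal, i.e. the region containing the point."""
    g = stewart_g(S, c)
    return min(range(len(g)), key=g.__getitem__)


if __name__ == "__main__":
    S = sign_matrix(2)
    c = [0.3, -0.7]                      # c_1 > 0, c_2 < 0
    print(stewart_g(S, c))               # smallest entry belongs to row [1, -1]
    print(S[region_index(S, c)])

    # Tutorial example: c(x) = x and S = [-1, 1]^T give g(x) = (x, -x).
    print(stewart_g([[-1], [1]], [-0.5]))
```

For a point strictly inside a region, the selected row of S reproduces the signs of the switching functions, in line with statement (i) of the proposition.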

2.2.2 Fixed active set

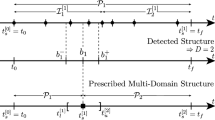

Fig. 1 Illustration of active sets at different points. It can be seen that \({\mathcal {I}}(x(t_1)) = {\mathcal {I}}_0 = \{1\}\). At \(x(t_{\textrm{s},1})\) the trajectory crosses the surface of discontinuity between \(R_1\) and \(R_2\), hence \({\mathcal {I}}(x(t_{\textrm{s},1})) = {\mathcal {I}}_1^0 = \{1,2\}\) and later \({\mathcal {I}}_1 = \{2\}\). The segment between \(x(t_{\textrm{s},2})\) and \(x(t_{\textrm{s},3})\) is a sliding mode and we have \({\mathcal {I}}_2^0 = \{2,3\}\) and \({\mathcal {I}}_2 = \{2,3\}\). Finally, at \(x(t_{\textrm{s},3})\) we have \({\mathcal {I}}_3^0 = \{1,2,3,4\}\).

For a given solution \(x(\cdot )\) let us denote all switching points by \(0= t_{\textrm{s},0}<t_{\textrm{s},1}< \dots < t_{\textrm{s},{N_\textrm{sw}}}=T\). The fixed active set between two switches is denoted by \({\mathcal {I}}_n:={\mathcal {I}}(x(t)), \ t\in (t_{\textrm{s},n},t_{\textrm{s},n+1})=:I_n\) and at a switching point \(t_{\textrm{s},n}\) by \({\mathcal {I}}^0_n:={\mathcal {I}}(x(t_{\textrm{s},n}))\). Note that \({\mathcal {I}}_n^0 = {\mathcal {I}}_n \cup {\mathcal {I}}_{n-1}\). These definitions are illustrated in Fig. 1. In this subsection, we regard the DCS (8) for a single fixed \({\mathcal {I}}_n\). For ease of notation, we drop the subscripts in this subsection and denote the fixed active set by \({\mathcal {I}}\). Depending on the active set, the DCS (8) reduces either to an ODE or to a DAE.

To simplify our exposition we introduce the following notation. For a given vector \(a\in {{\mathbb {R}}}^n\) and set \({\mathcal {I}}\subseteq \{1,\ldots ,n\}\), we define the projection matrix \(P_{\mathcal {I}}\in {{\mathbb {R}}}^{|{\mathcal {I}}| \times n}\) which has zeros or ones as entries. It selects all components \(a_i, i\in {\mathcal {I}}\) from the vector a, i.e., \(a_{\mathcal {I}}= P_{\mathcal {I}}a \in {{\mathbb {R}}}^{|{\mathcal {I}}|}\) and \(a_{\mathcal {I}}= [a_i \mid i\in {\mathcal {I}}]\).

In the DAE case, x is on the boundary of one or more regions \(R_i\), i.e., \(|{\mathcal {I}}|>1\). We then speak of sliding modes [22] and typically obtain an index 2 differential algebraic equation. In this case, two or more equal entries of g(x) are the smallest components of this vector, and the solution \(\theta \) of the LP(x) is not unique and lies on a facet of the unit simplex. To compute the values of \(\theta \), we must treat the DCS as a DAE. We define \(F_{{\mathcal {I}}}(x,u) :=F(x,u) P_{{\mathcal {I}}}^\top \), which selects the appropriate columns of F(x, u). For \(t \in I\), we have \(\theta _i =0, i \notin {\mathcal {I}}\) and \(\lambda _i = 0, i\in {\mathcal {I}}\), thus the DCS (8) reduces to the DAE

\[ \begin{aligned} {\dot{x}}&= F_{{\mathcal {I}}}(x,u)\, \theta _{{\mathcal {I}}}, &\quad&(14\text{a})\\ 0&= g_{{\mathcal {I}}}(x) - \mu e, &&(14\text{b})\\ 1&= e^\top \theta _{{\mathcal {I}}}. &&(14\text{c}) \end{aligned} \]

There are \(|{\mathcal {I}}|+1\) nontrivial algebraic equations and \(|{\mathcal {I}}|+1\) unknown algebraic variables, namely \(\mu \) and \(\theta _i\) for \(i \in {\mathcal {I}}\), since we consider \(\theta _i(t)=0,\ i \notin {\mathcal {I}}\) as fixed.

In the ODE case, x is in the interior of some region \(R_i\), we have \(|{\mathcal {I}}|=1\). The algebraic variables \(\mu \) and \(\theta _i\) can be computed explicitly from (14) and we have \(\theta _i = 1\) and \(\mu = g_i(x)\). Thus, the DCS reduces to the ODE \({\dot{x}} = f_i(x)\).

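Next, we provide sufficient conditions for solution uniqueness of the DAE (14) for a given \(|{\mathcal {I}}|\ge 1\). We define the matrix

\[ M_{{\mathcal {I}}}(x) = \nabla g_{{\mathcal {I}}}(x)^\top F_{{\mathcal {I}}}(x,u) \in {{\mathbb {R}}}^{|{\mathcal {I}}| \times |{\mathcal {I}}|}. \tag{15} \]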

Note that entries of this matrix arise by taking the total time derivative of (14b).

Assumption 3

Given a fixed active set \({\mathcal {I}}(x(t)) = {\mathcal {I}}\) for \(t \in I\), it holds that the matrix \(M_{{\mathcal {I}}}(x(t))\) is invertible and \(e^\top M_{{\mathcal {I}}}(x(t))^{-1} e \ne 0\) for all \(t\in I\).

Proposition 4

Suppose that Assumption 3 holds. Given the initial value \(x(t_{\textrm{s},n})\), then the DAE (14) has a unique solution for all \(t\in I\).

Proof

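For a given \(x(\cdot )\) we can differentiate equation (14b) w.r.t. t and obtain the following index 1 DAE

\[ \begin{aligned} {\dot{x}}&= F_{{\mathcal {I}}}(x,u)\, \theta _{{\mathcal {I}}}, &\quad&(16\text{a})\\ \begin{bmatrix} M_{{\mathcal {I}}}(x) & -e\\ e^\top & 0 \end{bmatrix} \begin{bmatrix} \theta _{{\mathcal {I}}}\\ v \end{bmatrix}&= \begin{bmatrix} 0\\ 1 \end{bmatrix}, &&(16\text{b}) \end{aligned} \]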

with the algebraic variables \(\theta _{{\mathcal {I}}}\) and \(v\in {{\mathbb {R}}}\). For a given initial condition \(x(t_{\textrm{s},n})\), \(\mu (t_{\textrm{s},n})\) can be directly computed from any component of (14b). Using the Schur complement and Assumption 3, we conclude that we can find unique \(\theta _{{\mathcal {I}}}\) and v by solving the linear system (16b). Therefore, the DAE (14) can be reduced to an ODE. Since the functions \(f_i\) are assumed to be Lipschitz, the resulting ODE has a unique solution \(x(t), t\in I\). \(\square \)
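The Schur-complement argument of the proof can be followed on a small sliding-mode example. The sketch below is an illustration under stated assumptions, not the paper's implementation: it assumes a two-region system in \({{\mathbb {R}}}^2\) with \(g(x) = (x_1, x_2)\), \(f_1 = (2,0)\) and \(f_2 = (0,1)\), which slides along \(x_1 = x_2\). It further assumes that differentiating \(g_{{\mathcal {I}}}(x) - \mu e = 0\) in time yields \(M_{{\mathcal {I}}}\theta _{{\mathcal {I}}} - v e = 0\) with \(v = {\dot{\mu }}\), so that together with \(e^\top \theta _{{\mathcal {I}}} = 1\) one obtains \(\theta _{{\mathcal {I}}} = v\, M_{{\mathcal {I}}}^{-1} e\) and \(v = 1/(e^\top M_{{\mathcal {I}}}^{-1} e)\). All function names are hypothetical.

```python
def coupling_matrix(grad_g, F_cols):
    """M[i][j] = grad g_i^T f_j for gradients grad_g[i] and vector fields F_cols[j]."""
    return [[sum(gk * fk for gk, fk in zip(g_i, f_j)) for f_j in F_cols]
            for g_i in grad_g]


def sliding_multipliers(M):
    """Solve M theta - v e = 0, e^T theta = 1 for a 2x2 matrix M."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0.0:
        raise ValueError("M is singular, the invertibility assumption is violated")
    # z = M^{-1} e via the closed-form inverse of a 2x2 matrix
    z = [(d - b) / det, (a - c) / det]
    s = z[0] + z[1]                      # e^T M^{-1} e
    if s == 0.0:
        raise ValueError("e^T M^{-1} e = 0, the assumption is violated")
    v = 1.0 / s
    return [v * z[0], v * z[1]], v


if __name__ == "__main__":
    grad_g = [[1.0, 0.0], [0.0, 1.0]]    # gradients of g_1 = x_1 and g_2 = x_2
    F_cols = [[2.0, 0.0], [0.0, 1.0]]    # vector fields f_1 and f_2
    M = coupling_matrix(grad_g, F_cols)
    theta, v = sliding_multipliers(M)
    xdot = [sum(F_cols[j][k] * theta[j] for j in range(2)) for k in range(2)]
    print(theta, v, xdot)
```

For this data the multipliers are \(\theta = (1/3, 2/3)\) and the resulting velocity is \((2/3, 2/3)\), which is tangential to the surface \(x_1 = x_2\), as expected for a sliding mode.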

A similar result, with a more complicated proof but different assumptions, can be found in [46, Section 2].

Note that even though the DAE has a unique solution for a given active set \({\mathcal {I}}\), there might be multiple \({\mathcal {I}}\) that give a well-defined ODE, as we discuss in the subsequent sections. We do not know a priori whether we need to treat an ODE or a DAE, but for both cases, we will use Runge–Kutta methods within FESD to provide high-accuracy solutions. The crucial part of FESD is the automatic active set and switching time detection so that sliding modes and crossings of region boundaries can be treated in a unified way.

2.2.3 Active-set changes and continuity of \(\lambda \) and \(\mu \)

Every active-set change in (8d) corresponds to crossing a discontinuity, entering or leaving a sliding mode, or a spontaneous leaving of a surface of discontinuity. These events in time are called switches.

From (3), Eq. (8b) and the complementarity conditions (8d) for \(i \in {\mathcal {I}}(x)\) it follows that \(\theta _i \ge 0\) and \(\lambda _i =0\). Likewise, for \(i \notin {\mathcal {I}}(x)\) it follows that \(\theta _i = 0\) and \(\lambda _i \ge 0\). Hence, for \(i \in {\mathcal {I}}(x)\) from (8b) and (5) we conclude that \(\mu = \min _{j\in \mathcal {J}} g_j(x)\).
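The relations above give a direct recipe for the multipliers once g(x) is known. A minimal sketch follows, assuming \(\mu = \min _j g_j(x)\) and \(\lambda = g(x) - \mu e\) as stated in the text; the function name and the tolerance used to decide which \(\lambda _i\) count as zero are assumptions made for this illustration. Indices in the returned active set are one-based to match the notation of the paper.

```python
def stewart_multipliers(g, tol=1e-12):
    """Return (mu, lam, active_set) for given indicator values g = (g_1, ..., g_nf)."""
    mu = min(g)                                   # mu = min_j g_j(x)
    lam = [g_i - mu for g_i in g]                 # lambda = g(x) - mu * e >= 0
    active = {i + 1 for i, l_i in enumerate(lam) if l_i <= tol}
    return mu, lam, active


def g_tutorial(x):
    """Indicator functions g(x) = (x, -x) of the example x' in 2 - sign(x)."""
    return [x, -x]


if __name__ == "__main__":
    for x in (-1.0, 0.0, 0.5):
        print(x, stewart_multipliers(g_tutorial(x)))
```

Inside a region exactly one \(\lambda _i\) vanishes, whereas on the surface \(x = 0\) both do, so the active set grows from one index to two.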

Lemma 5

The functions \(\lambda (t)\) and \(\mu (t)\) in (8) are continuous in time.

Proof

The function \(\mu (t)\) is a minimum of continuous functions and is thus continuous. Therefore, continuity of \(\lambda (t) = g(x(t)) - \mu (t)e\) follows from the continuity of x(t) and g(x) and Eq. (8b). \(\square \)

Remark 6

Continuity of \(\lambda (t)\) implies that at an active-set change of a component i at \(t_{\textrm{s},n+1}\) some \(\lambda _i(t_{\textrm{s},n+1})\) must be zero. Moreover, for some \(i \notin {\mathcal {I}}_n\), in the case of crossing a discontinuity or entering a sliding mode with \(i\in {\mathcal {I}}_{n+1}\), it holds that the left time derivative of \(\lambda _i\) is negative, i.e., \({\dot{\lambda }}_i(t_{\textrm{s},n+1}^-) < 0\). Likewise, in the case of leaving a sliding mode or a spontaneous switch, with \(i \in {\mathcal {I}}_n\) and \(i \notin {\mathcal {I}}_{n+1}\), it follows that the right time derivative of \(\lambda _i\) is positive, i.e., \({\dot{\lambda }}_i(t_{\textrm{s},n+1}^+) > 0\). If some of the first-order one-sided derivatives of \({\lambda }(\cdot )\) are zero at a switching point \(t_{\textrm{s},n+1}\), then one must look at higher-order derivatives to determine if it stays active or not.

We exploit the continuity of \(\lambda (\cdot )\) and \(\mu (\cdot )\) later in the derivation of the FESD method.

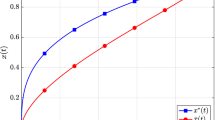

Fig. 2 Illustration of the arguments of Lemma 5 and Remark 6 on Example 3 (a) and its corresponding DCS (11) for \(t\in [0,2]\) and \(x(0) = -1\) with \({t_{\textrm{s}}}= \frac{1}{3}\). The functions \(\mu = \min (-x,x)\), \(\lambda _1 = x-\mu \) and \(\lambda _2 =- x-\mu \) are continuous. At the switching point \({t_{\textrm{s}}}\) we have \({\dot{\lambda }}_1({t_{\textrm{s}}}^-)=0\), \({\dot{\lambda }}_1({t_{\textrm{s}}}^+)>0\) and \({\dot{\lambda }}_2({t_{\textrm{s}}}^-)<0\), \({\dot{\lambda }}_2({t_{\textrm{s}}}^+)=0\).

The next example discusses the difference between the possible switching cases.

Example 3

There are four possible switching cases which we illustrate with the following examples:

(a) crossing a surface of discontinuity, \({\dot{x}}(t) \in 2-\textrm{sign}(x(t))\),

(b) sliding mode, \({\dot{x}}(t) \in -\textrm{sign}(x(t))\),

(c) leaving a sliding mode, \({\dot{x}}(t) \in -\textrm{sign}(x(t))+t\),

(d) spontaneous switch, \({\dot{x}}(t) \in \textrm{sign}(x(t))\).

In case (a), for \(x(0) < 0\) the trajectory reaches \(x = 0\) and crosses it. In example (b), for any finite x(0), the trajectory reaches \(x=0\) and stays there. On the other hand, in example (c), for \(x(0) =0\) the DI has a unique solution and leaves \(x=0\) at \(t=1\). In the last example, for \(x(0) =0\), the DI has infinitely many solutions, and x(t) can spontaneously leave \(x = 0\) at any \(t\ge 0\). Note that there is a qualitative difference between leaving a sliding mode (c) and spontaneous switch (d). The arguments of Lemma 5 and Remark 6 are illustrated in Fig. 2 for the Example 3 (a).
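Case (c) can be worked out by hand, and the following sketch encodes that calculation. It assumes the region numbering of the tutorial example, i.e., \(f_1(t) = 1 + t\) for \(x<0\) and \(f_2(t) = -1 + t\) for \(x>0\). While the solution slides on \(x = 0\), the convex multipliers must satisfy \(\theta _1 f_1 + \theta _2 f_2 = 0\) and \(\theta _1 + \theta _2 = 1\), which gives \(\theta _1 = (1-t)/2\) and \(\theta _2 = (1+t)/2\). The function names are illustrative.

```python
def f1(t):
    """Vector field for x < 0 in example (c)."""
    return 1.0 + t


def f2(t):
    """Vector field for x > 0 in example (c)."""
    return -1.0 + t


def sliding_theta(t):
    """Filippov multipliers that keep x = 0 invariant, valid for 0 <= t <= 1."""
    theta1 = 0.5 * (1.0 - t)
    return theta1, 1.0 - theta1


def x_exact(t):
    """Solution for x(0) = 0: sliding until t = 1, then x' = t - 1 in the region x > 0."""
    return 0.0 if t <= 1.0 else 0.5 * (t - 1.0) ** 2


if __name__ == "__main__":
    for t in (0.0, 0.4, 1.0, 2.0):
        print(t, sliding_theta(min(t, 1.0)), x_exact(t))
```

The multiplier \(\theta _1\) decreases linearly and reaches zero at \(t = 1\). At that moment the sliding mode can no longer be maintained with nonnegative multipliers and the trajectory leaves the surface, which is the qualitative difference to the spontaneous switch in case (d).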

2.2.4 Predicting the new active set

In this subsection, we restate a more technical result from [46] which is later needed in the convergence proof. The reader not interested in the proofs may skip this part.

As already noted in Remark 6, switches are characterized by the time derivative of \(\lambda (\cdot )\). Note that, e.g., for crossing a discontinuity or entering a sliding mode at a switching point and for the subsequent interval it holds that \({\mathcal {I}}_n \subseteq {\mathcal {I}}_n^0\). Moreover, one can construct a Linear Complementarity Problem (LCP) with the data at \(x(t_{\textrm{s},n})\) and predict \({\mathcal {I}}_{n+1}\).

We define the vector \(w_{{\mathcal {I}}}(t) :=\frac{{\textrm{d}}}{{\textrm{d}}t}\lambda _{{\mathcal {I}}}(t) = M_{{\mathcal {I}}}(x(t)) \theta _{{\mathcal {I}}}(t) - {\dot{\mu }}(t) e\). One can construct the following mixed LCP between \({\dot{\lambda }}_{{\mathcal {I}}_n^0}\) and \(\theta _{{\mathcal {I}}_n^0}\) at \(t_{\textrm{s},n}\):

For a sufficiently large \(\alpha >0\) all entries of the matrix \(M_{{\mathcal {I}},\alpha }(x) = M_{{\mathcal {I}}}(x)+ \alpha e e^\top \) are strictly positive. This means the matrix \(M_{{\mathcal {I}},\alpha }(x)\) is strictly copositive, i.e., for any \(a\ge 0, a\ne 0\) it holds that \(a^\top M_{{\mathcal {I}},\alpha }(x) a >0\) [19]. One can derive an LCP equivalent to (17) [46, Lemma 3.3]:

The motivation for rewriting (17) as (18) is twofold. For an LCP with a strictly copositive matrix it is easier both to prove solution existence and to compute a solution than for the initial mixed LCP [46]. The solution of the initial LCP \((w_{{\mathcal {I}}_n^0},\theta _{{\mathcal {I}}_n^0})\) can be reconstructed via \(\theta _{{\mathcal {I}}_n^0} = {{\tilde{\theta }}_{{\mathcal {I}}_n^0}}/{e^\top {\tilde{\theta }}_{{\mathcal {I}}_n^0}}\) and \(w_{{\mathcal {I}}_n^0} = {{\tilde{w}}_{{\mathcal {I}}_n^0}}/{e^\top {\tilde{\theta }}_{{\mathcal {I}}_n^0}}\); for further details, cf. [46, Lemma 3.3]. There is a one-to-one correspondence between the active set in a neighborhood of a switching point \(t_{\textrm{s},n}\) and the solutions of the LCP (18). This is summarized in the next theorem proved by Stewart [46].

Theorem 7

(Theorem 3.2 [46]) Let x(t) be a solution in the sense of Definition 1 for \(t \in [t_a,t_b]\), with \({\mathcal {I}}^0 = {\mathcal {I}}(x(t_a))\) and \({\mathcal {I}}= {\mathcal {I}}(x(t))\) for all \(t\in (t_a,t_b)\). Suppose Assumption 3 holds for all \(t\in (t_a,t_b)\). Then for each \(t \in (t_a,t_b)\) there is a solution of the LCP (18) such that

Conversely, let \(x_0\in {{\mathbb {R}}}^{n_x}\) and \(t_a\) be given with \({\mathcal {I}}^0 = {\mathcal {I}}(x_0)\). Then if \(({\tilde{w}}_{{\mathcal {I}}^0},{\tilde{\theta }}_{{\mathcal {I}}^0})\) is a solution of the LCP (18) such that

and the conditions of Assumption 3 are satisfied for \(\nabla g_i(x)\) and \(f_i(x,u)\), \(i \in {\mathcal {I}}\), then there is a \(t_b > t_a\) and a solution \(x(\cdot )\) in the sense of Definition 1 on \([t_a,t_b]\) such that \(x(t_a) = x_0\) and \({\mathcal {I}}(x(t)) = {\mathcal {I}}\) for all \(t\in (t_a,t_b)\).

Regard an LCP

with \(M \in {{\mathbb {R}}}^{l \times l}\) and \(q\in {{\mathbb {R}}}^{l}\). The given LCP (19) is compactly denoted by \(\textrm{LCP}(M,q)\) and its set of solutions is denoted by \(\textrm{SOL}(M,q)\subseteq {{\mathbb {R}}}^l\). If a solution satisfies \((M \theta + q) +\theta >0\), we say that strict complementarity holds.

To show convergence we will require the solutions of the LCP to be strongly stable [19, 46]. A solution \((w^*,\theta ^*)\in \textrm{SOL}(M,q)\) of a given \(\textrm{LCP}(M,q)\) is said to be strongly stable if there is a neighborhood U of \(\theta ^*\) and a neighborhood V of the problem data \(M\in {{\mathbb {R}}}^{l\times l}\) and \(q\in {{\mathbb {R}}}^{l}\), such that the intersection of U with the solution set of an LCP constructed from the data from one point in V is a singleton. We state a regularity assumption about the LCP (18).

Assumption 8

Consider a solution x(t) in the sense of Definition 1 for \(t\in [0, T]\), and let \(\mathcal {S}=\{ t_{\textrm{s},0},\ldots , t_{\textrm{s},{N_\textrm{sw}}}\}\) be the set of switching points. The solutions of the LCP (18) are strongly stable and satisfy strict complementarity for all \(t\in [{t_{\textrm{s}}}-\epsilon , {t_{\textrm{s}}}+\epsilon ]\cap [0,T]\), \({t_{\textrm{s}}}\in \mathcal {S}\), for a sufficiently small \(\epsilon >0\).

The strict complementarity assumption is needed to obtain a tight prediction of the next active set \({\mathcal {I}}\), cf. first part of Theorem 7. From the proof of [46, Theorem 3.2] it follows that the strict complementarity condition implies that the one-sided time derivatives of \(\lambda _i(t), i \notin {\mathcal {I}}(x(t))\) are nonzero, see also Remark 6. Without this assumption, one can obtain only an over-approximation of \({\mathcal {I}}\). However, it can be relaxed at the cost of looking at higher-order time derivatives of \(\lambda _i(t_{\textrm{s},n}), i \in {\mathcal {I}}_{n}^0\) and constructing an appropriate LCP for determining the active sets past some switching point, cf. [47, Section 4.2] for derivations. Note that the strict complementarity is needed only in a neighborhood of the switching points. Strong stability is assumed in order to apply some results on parametric LCPs. In our case, we will use it to draw the same conclusions from LCPs constructed at t and \(t'\), where t and \(t'\) are sufficiently close.

Example 4

We briefly illustrate Theorem 7 on our example \({\dot{x}} = 2-\textrm{sign}(x)\) with \(x(0) = -1\), cf. Fig. 2. Since \({\dot{x}} = 3\) for \(x<0\), it is easy to see that \(t_{\textrm{s},1} = \frac{1}{3}\) and that the relevant active sets are \({\mathcal {I}}_0 = \{1\}\), \({\mathcal {I}}_1 =\{2\}\) and \({\mathcal {I}}^0_1(t_{\textrm{s},1}) = \{1,2\}\). The LCP (18) for our example at \(t_{\textrm{s},1}\) reads as

With \(\alpha = 5\) this LCP has the unique solution \({\tilde{\theta }}_{{\mathcal {I}}_1^0} = (0,\frac{1}{4})\) and \({\tilde{w}}_{{\mathcal {I}}_1^0} = (\frac{1}{2},0)\) and according to the last theorem it correctly predicts \({\mathcal {I}}_1 = \{2\}\).
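The numbers in this example can be reproduced with a small sketch. It rests on assumptions that are not spelled out in the text above: the indicator functions are taken as \(g(x) = (x,-x)\), the modes as \(f_1 = 3\), \(f_2 = 1\), the matrix as \(M = \nabla g(x)^\top F(x)\), and the LCP (18) is assumed to have the form \({\tilde{w}} = M_{\alpha }{\tilde{\theta }} - e\), \(0\le {\tilde{w}} \perp {\tilde{\theta }}\ge 0\). Under these assumptions the quoted solution is recovered. The brute-force solver `solve_lcp_by_enumeration` is a hypothetical helper for tiny, nondegenerate problems, not a method proposed in the paper.

```python
import itertools
import numpy as np


def solve_lcp_by_enumeration(M, q, tol=1e-12):
    """All (w, theta) with w = M theta + q, w >= 0, theta >= 0, w_i * theta_i = 0.

    Enumerates every candidate support of theta; only sensible for very small l.
    """
    l = len(q)
    solutions = []
    for support in itertools.product([False, True], repeat=l):
        idx = [i for i in range(l) if support[i]]
        theta = np.zeros(l)
        if idx:
            try:
                # On the support, w must vanish: M[idx, idx] theta[idx] = -q[idx].
                theta[idx] = np.linalg.solve(M[np.ix_(idx, idx)], -q[idx])
            except np.linalg.LinAlgError:
                continue
        w = M @ theta + q
        if np.all(theta >= -tol) and np.all(w >= -tol):
            solutions.append((w, theta))
    return solutions


# Assumed problem data at the switching point x = 0 (see lead-in).
grad_g = np.array([[1.0], [-1.0]])   # rows: gradients of g_1(x) = x, g_2(x) = -x
F = np.array([[3.0, 1.0]])           # columns: f_1 = 3 (x < 0), f_2 = 1 (x > 0)
M = grad_g @ F                       # [[3, 1], [-3, -1]]
alpha = 5.0
e = np.ones(2)
M_alpha = M + alpha * np.outer(e, e)  # all entries strictly positive

sols = solve_lcp_by_enumeration(M_alpha, -e)
w_tilde, theta_tilde = sols[0]

# Rescale to the solution of the mixed LCP, as described before Theorem 7.
theta = theta_tilde / (e @ theta_tilde)
w = w_tilde / (e @ theta_tilde)

predicted_active_set = {i + 1 for i in range(2) if theta_tilde[i] > 0}  # 1-based
strict_complementarity = bool(np.all(w_tilde + theta_tilde > 0))

print("number of solutions:", len(sols))
print("theta_tilde:", theta_tilde, "w_tilde:", w_tilde)
print("theta:", theta, "w:", w)
print("predicted active set:", predicted_active_set)
print("strict complementarity:", strict_complementarity)
```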

2.3 Remark on Cartesian products of Filippov systems

The reformulation from the last subsection given by the DCS (8) fails on some simple examples such as: \({\dot{x}}_1 \in -{\textrm{sign}}(x_1), \ {\dot{x}}_2\in -{\textrm{sign}}(x_2)\), \(x \in {{\mathbb {R}}}^2\). This example satisfies the one-sided Lipschitz condition and has a unique Filippov solution [22, 48]. However, as shown in [48], at (0, 0) the DAE arising from (8) fails to have a unique solution. One can see that \({\dot{x}}_1 \in -{\textrm{sign}}(x_1)\) and \({\dot{x}}_2 \in -{\textrm{sign}}(x_2)\) are completely independent and should therefore be treated as independent subsystems.

Stewart introduced a generalization of his reformulation for such cases in [48]. One should identify the \({n_\textrm{sys}}\) independent subsystems with index \(k = 1,\ldots ,{n_\textrm{sys}}\), where each subsystem has \(n_f^k\) modes. We equip all variables related to the \(k\)-th subsystem with the superscript k. Instead of (3) one can write

Finding the functions \({g}^{k}(\cdot ) \in {{\mathbb {R}}}^{n_f^k}\) from \({c}^{k}(\cdot ) \in {{\mathbb {R}}}^{{n_c^k}}\) for every subsystem works the same way as in Sect. 2.2.1. Thereby, the regions of every subsystem are defined via the matrix \(S^k\) and the switching functions \(c^k(x) \in {{\mathbb {R}}}^{n_c^k}\). Every mode’s convex combination is encoded by its parametric linear program (7), constructed with the k-th modes’ switching functions \(g^k(x) \in {{\mathbb {R}}}^{n_f^k}\). Thus, we can derive the DCS

where \(F^k(x,u) = [f_{1}^{k}(x,u),\ldots ,f_{n_f^k}^{k}(x,u)] \in {{\mathbb {R}}}^{n_x \times n_f^k}\) and \({g}^{k}(x) \in {{\mathbb {R}}}^{n_f^k}\), \({\theta }^k\in {{\mathbb {R}}}^{n_f^k}\), \({\lambda }^k \in {{\mathbb {R}}}^{n_f^k}\) and \({\mu }^k \in {{\mathbb {R}}}\), for all \(k \in \{ 1,\dots , {n_\textrm{sys}}\}\). For ease of notation, in the remainder of the paper we treat the case with \({n_\textrm{sys}}= 1\), as all extensions are straightforward.

To the best of the authors’ knowledge, there are no general conditions known which identify when the r.h.s. of (1) is partially separable as in (20) and there might even be multiple ways to write it in this form. However, in practice, it is usually easy to identify the structure of (20) by inspection. For example, this occurs if we have multiple surfaces with friction, or multiple objects touching the same frictional surface [48].

2.4 Sensitivities with respect to parameters and initial values

Correct calculation of derivatives of solutions w.r.t. parameters (e.g., discretized control functions) and initial values is crucial for efficient numerical optimal control algorithms and verifying the optimality of a solution. This is not straightforward for ODE with a discontinuous r.h.s., as the sensitivity usually exhibits jumps when switches occur. As any constant parameter \({\hat{p}}\) can be modeled via adding the state \({\dot{p}} = 0\) and \(p(0) = {\hat{p}}\), we restrict our attention to sensitivities w.r.t. initial values.

Regard the DCS given by Eq. (8) on a time interval [0, T] with the initial condition \(x(0) = x_0\). Assume that the surface \(\partial R_j\) is reached at \({t_{\textrm{s}}}(x_0)\in (0,T)\) and that \(x_0 \in R_i\). We consider the case where the solution crosses a co-dimension one surface of discontinuity \(\partial R_j\). Other cases are where the trajectory: (a) slides on the surface of discontinuity after reaching it, (b) starts on a surface of discontinuity and stays on it or leaves it, or (c) goes from one surface to another. They can be analyzed with the same arguments as below, but we omit these cases here for brevity, cf. [22, Section 2.11].

In the case of crossing, we have for \(t \in \left[ 0,{t_{\textrm{s}}}\right) \) that \({\mathcal {I}}(x(t)) = \{i\}\) and from (8) it follows that \({\dot{x}} = f_i(x)\). After crossing \(\partial R_j\) at \({t_{\textrm{s}}}\) we have \({\mathcal {I}}(x(t)) = \{ j\}\) for \(t\in \left( {t_{\textrm{s}}},T\right] \) and \({\dot{x}} = f_j(x)\). At \({t_{\textrm{s}}}\) it holds that \(\psi _{i,j}(x({t_{\textrm{s}}}))=0\) with

Thus, the system can be compactly represented by

We are interested in the exact sensitivity matrix \(X(t,0;x_0) = \frac{\partial x(t;x_0)}{\partial x_0} \in {{\mathbb {R}}}^{n_x \times n_x}\) of a solution \(x(t;x_0)\) of the system (23). The function \(X(t,0;x_0)\) obeys smooth linear variational differential equations on both sides of \({t_{\textrm{s}}}\), but exhibits a jump at \({t_{\textrm{s}}}\) [22]. The statement of the next proposition is adapted from [51, Section 3.3].

Proposition 9

Regard the system (23) with \(x(0) = x_0 \in R_i\) on an interval [0, T] with a switch at \({t_{\textrm{s}}}\in (0,T)\). Assume that the functions \(f_i(x),\; f_j(x),\psi _{i,j}(x)\) are continuously differentiable along \(x(t), t\in [0,T]\). Assume the solution x(t) reaches the surface of discontinuity transversally, i.e., \(\nabla \psi _{i,j} (x({t_{\textrm{s}}}))^\top f_i (x({t_{\textrm{s}}}))>0\). Then the sensitivity \(X(T,0;x_0)\) of a solution \(x(t;x_0)\) of the system described by the ODE (23) is given by

This proposition can also be adapted to the case of sliding modes. We obtain similar expressions for the sensitivity jump formula as in (24). The only change needed to be made is to replace \(f_j(x)\) with \(f^*(x)\), where \(f^*(x)\) defines the sliding vector field [21].

Since numerical sensitivities obtained via standard time-stepping methods fail to converge to their correct values (24) [40, 51], artificial local minima arbitrarily close to the initialization point may arise in the context of optimization and impair the progress of the optimizer. This is resolved within FESD, where the convergence of the discrete-time sensitivities is recovered, cf. Sect. 4.5.
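As a sanity check of the jump behavior, the following sketch compares a finite-difference sensitivity with the standard sensitivity jump (saltation) matrix \(J = I + \frac{(f_j - f_i)\nabla \psi _{i,j}^\top }{\nabla \psi _{i,j}^\top f_i}\) on the scalar example \({\dot{x}} = 2-\textrm{sign}(x)\). That this textbook formula coincides with the jump term in (24) is an assumption here, as is the choice \(\psi _{i,j}(x) = x\); the function `flow` is a hypothetical closed-form helper valid only for \(x_0<0\) and \(T > -x_0/3\).

```python
import numpy as np

f_i = np.array([3.0])       # vector field for x < 0
f_j = np.array([1.0])       # vector field for x > 0
grad_psi = np.array([1.0])  # gradient of the assumed switching function psi(x) = x


def flow(x0, T):
    """Exact x(T; x0) for xdot = 2 - sign(x), x0 < 0 and T > -x0 / 3."""
    t_s = -x0 / f_i[0]           # switching time depends on x0
    return f_j[0] * (T - t_s)    # x(t_s) = 0, then slope f_j


x0, T, eps = -1.0, 1.0, 1e-6

# Central finite difference of the exact flow w.r.t. the initial value.
sens_fd = (flow(x0 + eps, T) - flow(x0 - eps, T)) / (2.0 * eps)

# Jump matrix at the switch; transversality requires grad_psi @ f_i > 0.
jump = np.eye(1) + np.outer(f_j - f_i, grad_psi) / (grad_psi @ f_i)

# Both vector fields are constant, so the smooth sensitivities on either
# side of t_s are identity matrices and only the jump remains.
sens_formula = (np.eye(1) @ jump @ np.eye(1))[0, 0]

print("finite difference:", sens_fd)
print("jump formula:     ", sens_formula)
```

Both values agree with \(\partial x(T;x_0)/\partial x_0 = 1/3\), which follows from \(x(T;x_0) = T + x_0/3\).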

3 Finite Elements with Switch Detection

This section introduces the main algorithmic ingredients of the FESD method. The goal of the method is to: (a) detect exactly the time of reaching or leaving the region boundaries which is necessary for high accuracy of integration methods, (b) exactly compute the sensitivities across regions in order to correctly treat the nonsmoothness and (c) appropriately treat the possible evolution on the boundary that is present in sliding modes.

In this section, we regard a single control interval [0, T] with a constant externally chosen control input \(q \in {{\mathbb {R}}}^{n_u}\), i.e., we set \(u(t) = q\) for \( t\in [0,T]\). Extensions with more complex smooth parametrizations of the control function are straightforward.

3.1 Standard Runge–Kutta discretization

As a starting point in our analysis, we regard a standard Runge–Kutta (RK) discretization of the DCS (8). In the nonsmooth ODE community, these schemes are known as time-stepping methods. In contrast to event-based/switch-detection methods, they assume fixed step sizes \(h_n\) and do not try to detect the switches. As a consequence, they have in general only first-order accuracy [2]. The theoretical properties of RK methods for DI and DCS have been studied by many authors, e.g., [17, 28, 50, 52].

Suppose the initial value \(x(0) = s_0\) is given. We divide the control interval into \({N_\textrm{FE}}\) finite elements (i.e., integration intervals) \([t_{n},t_{n+1}]\) via the grid points \(0= t_0< t_1< \ldots <t_{{N_\textrm{FE}}} = T\). On each of the finite elements we consider an \({n_\textrm{s}}\)-stage Runge–Kutta method which is characterized by the Butcher tableau entries \(a_{i,j},b_i\) and \(c_i\) with \(i,j\in \{1,\ldots ,{n_\textrm{s}}\}\) [25]. The fixed step size reads as \(h_{n} = t_{n+1} - t_{n},\; n = 0, \ldots ,{N_\textrm{FE}}-1\). The approximation of the differential state at the grid points \(t_n\) is denoted by \(x_n \approx x(t_n)\). We regard a differential representation of the Runge–Kutta method where the derivatives of states at the stage points \(t_{n,i} :=t_n + c_i h_n,\; i = 1,\ldots , {n_\textrm{s}}\), are degrees of freedom. For a single finite element, they are summarized in the vector \(V_n :=(v_{n,1}, \ldots , v_{n,{n_\textrm{s}}}) \in {{\mathbb {R}}}^{{n_\textrm{s}}n_x}\). The stage values for the algebraic variables are collected in the vectors: \(\Theta _n :=(\theta _{n,1}, \ldots , \theta _{n,{n_\textrm{s}}} )\in {{\mathbb {R}}}^{{n_\textrm{s}}\cdot {n_{f}}}\), \(\Lambda _n :=(\lambda _{n,1}, \ldots , \lambda _{n,{n_\textrm{s}}} )\in {{\mathbb {R}}}^{{n_\textrm{s}}\cdot {n_{f}}}\) and \(M_n :=(\mu _{n,1}, \ldots , \mu _{n,{n_\textrm{s}}} )\in {{\mathbb {R}}}^{{n_\textrm{s}}}\). We also define the vector \(Z_n =(x_n,\Theta _n,\Lambda _n,M_n,V_n)\) which collects all internal variables. With \(x_n^{\textrm{next}}\) we denote the value at \(t_{n+1}\), which is obtained after a single integration step. Finally, the RK equations for a single finite element for the DCS (8) are given by:

Next, we summarize the equations for all \({N_\textrm{FE}}\) finite elements over the whole interval [0, T] in a discrete-time system manner. For this purpose, we introduce some additional shorthands. All variables of all finite elements for a single control interval are collected in the vectors \({\textbf{x}}= (x_0,\ldots ,x_{{N_\textrm{FE}}}) \in {{\mathbb {R}}}^{({N_\textrm{FE}}+1)n_x}\), \({\textbf{V}} = (V_0,\ldots ,V_{{N_\textrm{FE}}-1}) \in {{\mathbb {R}}}^{{N_\textrm{FE}}{n_\textrm{s}}n_x}\) and \({\textbf{h}}:=(h_0,\ldots ,h_{{N_\textrm{FE}}-1})\in {{\mathbb {R}}}^{{N_\textrm{FE}}}\). Note that the simple continuity condition \(x_{n+1} = x_{n}^{\textrm{next}}\) holds. We collect all stage values of the Filippov multipliers in the vector \(\mathbf {\Theta } = ({\Theta }_0,\ldots ,\Theta _{{N_\textrm{FE}}-1})\in {{\mathbb {R}}}^{n_{{\theta }}}\) and \(n_{{\theta }}= {N_\textrm{FE}}{n_\textrm{s}}{n_{f}}\). The vectors \(\mathbf {\Lambda }\in {{\mathbb {R}}}^{n_{\theta }},\ {\textbf{M}}\in {{\mathbb {R}}}^{n_{\mu }}\) for the stage values of the Lagrange multipliers are defined accordingly, with \(n_{\mu } = \frac{n_{{\theta }}}{{n_{f}}}\). The vector \({\textbf{Z}} = ({{\textbf {x}}},{\textbf{V}},\mathbf {\Theta },\mathbf {\Lambda },{\textbf{M}})\in {{\mathbb {R}}}^{n_{{\textbf{Z}}}}\) collects all internal variables and \(n_{{\textbf{Z}}} = ({N_\textrm{FE}}+1)n_x + {N_\textrm{FE}}{n_\textrm{s}}n_x + 2n_{\theta }+n_{\mu }\).
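To make the differential representation of a single RK step concrete, here is a minimal sketch for the smooth scalar test equation \({\dot{x}} = a x\), where the stage derivatives \(v_{n,i}\) are the unknowns. The two-stage Radau IIA tableau used below is the standard one (order 3, \(c_{{n_\textrm{s}}}=1\)); the test equation, the step size and the function name `rk_step_linear` are illustrative choices and not part of the paper. The algebraic variables of the DCS are deliberately left out.

```python
import numpy as np

# Two-stage Radau IIA Butcher tableau (order 3, stiffly accurate).
A = np.array([[5.0 / 12.0, -1.0 / 12.0],
              [3.0 / 4.0, 1.0 / 4.0]])
b = np.array([3.0 / 4.0, 1.0 / 4.0])
c = np.array([1.0 / 3.0, 1.0])


def rk_step_linear(x_n, h, a=-1.0):
    """One RK step for xdot = a * x with the stage derivatives v as unknowns.

    Stage equations: v_i = a * (x_n + h * sum_j A[i, j] * v_j),
    i.e. (I - h a A) v = a x_n 1, followed by x_next = x_n + h * b^T v.
    """
    n_s = len(b)
    v = np.linalg.solve(np.eye(n_s) - h * a * A, a * x_n * np.ones(n_s))
    x_next = x_n + h * (b @ v)
    return x_next, v


h = 0.1
x_next, v = rk_step_linear(1.0, h)
err = abs(x_next - np.exp(-h))
print("x_next:", x_next, "exact:", np.exp(-h), "error:", err)

# Stiff accuracy: the last row of A equals b, so x_next is the last stage value.
last_stage_value = 1.0 + h * (A[-1] @ v)
print("last stage value:", last_stage_value)
```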

All computations over a single control interval which we call here the standard discretization are summarized in the following equations which resemble a discrete-time system:

where \(s_1\in {{\mathbb {R}}}^{n_x}\) is the approximation of x(T) and

Note that \({\textbf{h}}\) are given parameters, implicitly fixed by the chosen discretization grid. It is usually impossible to obtain high-accuracy solutions with this method, as this can only happen if active-set changes occur coincidentally at \(t_n\). Despite the high accuracy in this unlikely case, the numerical sensitivities would still be wrong [36, 51]. When active-set changes happen within a finite element, the IRK method tries to approximate a nonsmooth trajectory by a smooth polynomial, cf. the left plot in Fig. 3, which results in a poor approximation.
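The loss of accuracy of fixed-step methods can be observed on the example \({\dot{x}} = 2-\textrm{sign}(x)\), \(x(0)=-1\). The sketch below is a simplified illustration: it applies the classical explicit fourth-order RK method directly to the discontinuous ODE (with the convention \(\textrm{sign}(0)=0\)) and not an implicit RK method to the DCS, and the grid sizes are chosen such that the switching time \(1/3\) is not a grid point. All names are illustrative.

```python
def f(x):
    """Right-hand side 2 - sign(x), with sign(0) = 0."""
    if x > 0:
        return 1.0
    if x < 0:
        return 3.0
    return 2.0


def rk4_fixed(x0, T, N):
    """Classical RK4 with N equidistant steps and no switch detection."""
    h = T / N
    x = x0
    for _ in range(N):
        k1 = f(x)
        k2 = f(x + 0.5 * h * k1)
        k3 = f(x + 0.5 * h * k2)
        k4 = f(x + h * k3)
        x += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return x


X0, T = -1.0, 1.0
X_EXACT = T - 1.0 / 3.0  # slope 3 until t = 1/3, slope 1 afterwards

results = []
for N in (10, 20, 40, 80, 160):
    h = T / N
    err = abs(rk4_fixed(X0, T, N) - X_EXACT)
    results.append((h, err))
    print(f"h = {h:.5f}  error = {err:.3e}  error / h = {err / h:.3f}")
```

On both sides of the switch the vector field is constant and every step is exact, so the whole error is produced in the few steps that straddle \(x=0\). The error is therefore expected to shrink only proportionally to h, despite the fourth-order method.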

3.2 Algorithmic ingredients of the FESD method

To ensure high-accuracy solutions of FESD, we allow the optimization routine to vary the lengths \(h_n\) of the finite elements such that all switching points coincide with grid points \(t_n\). Consequently, active-set changes cannot happen in the interior of each finite element, and smooth functions are approximated by smooth polynomials within a finite element, cf. the right plot in Fig. 3. Thus, the active set \(\mathcal {I}(x(t))\) changes its value only at some grid point \(t_n\) and is constant in the interior of all intervals \((t_{n},t_{n+1})\). A key assumption in any event-based method is that there are finitely many switches in finite time. We also assume that there are enough finite elements to capture every switch that occurs in the time interval [0, T].

3.2.1 The step sizes as degrees of freedom

To capture the switches with the discretization grid points \(t_n\), the step sizes \(h_n\) are left to be degrees of freedom in the RK method in the remainder of this paper. Additionally, the condition \(\sum _{n=0}^{{N_\textrm{FE}}-1}h_n = T\) ensures that we regard a time interval of unaltered length.

Fig. 3 Illustration of the analytic solution and a polynomial solution approximation to a PSS via an IRK Radau-IIA method of order 7. The left plot shows an approximation with a fixed step size where an active-set change happens on a stage point. The right plot shows an approximation obtained with FESD (based on the same IRK method) where the switch happens on the boundary. The circles represent the stage values, the vertical dotted lines the finite elements boundaries, and the vertical dashed line the switching time \({t_{\textrm{s}}}\)

3.2.2 Cross complementarity

We want to prohibit active-set changes on stage points inside a finite element. To achieve this, next to the complementarity conditions for every stage point \(0=\Psi (\theta _{n,m},\lambda _{n,m})\) we include additional conditions on the variables \(\mathbf {\Theta }\) and \(\mathbf {\Lambda }\). These conditions ensure that the variable step size \(h_n\) adapts so that the switching times are indeed captured, as shown below.

For ease of exposition, we assume that the underlying RK scheme satisfies \(c_{{n_\textrm{s}}} =1\) (e.g., Radau and Lobatto methods [25]). This means that the right boundary point of a finite element is a stage point, since \(t_{n+1} = t_n+c_{{n_\textrm{s}}} h_n\) for \(c_{{n_\textrm{s}}}=1\). At the end of the section, we detail how to treat the case with \(c_{{n_\textrm{s}}} \ne 1\) (e.g., Gauss-Legendre methods).

Continuity of \(\lambda (\cdot )\) and \(\mu (\cdot )\).

The boundary values of the approximation of \(\lambda (\cdot )\) and \(\mu (\cdot )\) on an interval \([t_n,t_{n+1}]\) play a crucial role in FESD. Therefore, we regard their values at \(t_n\) and \(t_{n+1}\) which are denoted by \(\lambda _{n,0},\; \mu _{n,0}\) and \(\lambda _{n,{n_\textrm{s}}},\; \mu _{n,{n_\textrm{s}}}\), respectively. We exploit the continuity of \(\lambda (\cdot )\) and \(\mu (\cdot )\) (cf. Lemma 5) and impose for their discrete-time counterparts for \(n = 0,\ldots , {N_\textrm{FE}}-1\):

Therefore, in the sequel we use only the right boundary points \(\lambda _{n,{n_\textrm{s}}}\) and \(\mu _{n,{n_\textrm{s}}}\) which are degrees of freedom in the RK equations (26).

Moving the switching points to the boundary. On an interval \((t_n,t_{n+1})\) with a fixed active set \({\mathcal {I}}_n\), each component of the continuous function \(\lambda (\cdot )\) is either zero or positive on the whole interval. The stage values \(\lambda _{n,i}\) of the discrete-time counterpart should satisfy this property as well. This is achieved by the cross complementarity conditions, which read for all \(n \in \{0,\ldots ,{N_\textrm{FE}}\!-\!1\}\) as

Some of the appealing properties of the constraints (28) are given by the next lemma. In our notation \(\theta _{n,m,i}\) is the i-th component of the vector \(\theta _{n,m}\).

Lemma 10

Regard a fixed \(n \in \{0,\ldots ,{N_\textrm{FE}}\!-\!1\}\) and a fixed \(i \in \mathcal {J}\). If any \(\theta _{n,m,i}\) with \(m \in \{1,\ldots , {n_\textrm{s}}\}\) is positive, then all \(\lambda _{n,m',i}\) with \(m'\in \{0,\ldots , {n_\textrm{s}}\}\) must be zero. Conversely, if any \(\lambda _{n,m',i}\) is positive, then all \(\theta _{n,m,i}\) are zero.

Proof

Let \(\theta _{n,m,i}\) be positive, and suppose \(\lambda _{n,j,i} = 0 \) and \(\lambda _{n,k,i} >0\) for some \(k,j\in \{0,\ldots , {n_\textrm{s}}\}, k\ne j\), then \(\theta _{n,m,i}\lambda _{n,k,i} >0\) which violates (28), thus all \(\lambda _{n,m',i}=0,\ m'\in \{0,\ldots , {n_\textrm{s}}\}\). The converse is proven similarly. \(\square \)

A consequence of this lemma is that at the boundary points \(t_{n+1}\) for active-set changes we have \(\lambda _{n,{n_\textrm{s}},i} = \lambda _{n+1,0,i} = 0\). This is important for the switch detection as we discuss below. The result of the last lemma is illustrated in Fig. 4. Note that in contrast to the left plot illustrating the standard complementarity conditions, in the right plot, all stage points inside a finite element have the same active set and on the finite element boundary we have \(\lambda _{n,{n_\textrm{s}},i} = 0\).

Note that \(\lambda _{0,0}\) and \(\mu _{0,0}\) are not defined via Eq. (27), as we do not have a preceding finite element. However, they are crucial for the statement of the last lemma, especially if the boundary point is the only stage point, as is the case for the implicit Euler method. This can be resolved by pre-computing \(\lambda _{0,0}\) explicitly and using it in (28). Note that \(\lambda _{0,0}\) is not a degree of freedom. Since \(x_0\) is known, we obtain \(\mu _{0,0} = \min _i g_i(x_0)\) and thus we have \(\lambda _{0,0} = g(x_0) - \mu _{0,0}\).
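The pre-computation of \(\lambda _{0,0}\) and \(\mu _{0,0}\) amounts to two lines of code. The sketch below uses the illustrative data \(g(x) = (x,-x)\) and \(x_0=-1\) from the running scalar example; the function name `initial_multipliers` is hypothetical, and \(\mu _{0,0}\) is treated as a scalar so that the subtraction is applied to every component.

```python
import numpy as np


def initial_multipliers(g_x0):
    """Return (lambda_00, mu_00) from the values g(x_0)."""
    g_x0 = np.asarray(g_x0, dtype=float)
    mu_00 = g_x0.min()          # mu_00 = min_i g_i(x_0)
    lambda_00 = g_x0 - mu_00    # lambda_00 = g(x_0) - mu_00 * e, nonnegative
    return lambda_00, mu_00


x0 = -1.0
g_x0 = np.array([x0, -x0])     # assumed indicator functions g(x) = (x, -x)
lambda_00, mu_00 = initial_multipliers(g_x0)

# Components with lambda_00 = 0 form the active set at x_0 (1-based indices).
active_set = {i + 1 for i, lam in enumerate(lambda_00) if lam == 0.0}
print("mu_00 =", mu_00, "lambda_00 =", lambda_00, "active set =", active_set)
```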

Fig. 4 An illustration of the standard complementarity conditions \(\Psi (\mathbf {\Theta },\mathbf {\Lambda }) =0\) (left plot) and the standard complementarity conditions augmented by \(0=G_{\textrm{cross}}(\mathbf {\Theta },\mathbf {\Lambda })\) (right plot). The dots represent the stage values. The vertical dotted line marks the finite element boundaries, and the vertical dashed line marks the switching time \({t_{\textrm{s}}}\). In the standard case (left plot), an active-set change can happen at any complementarity pair. With the cross complementarities (29) (right plot) an active-set change can only happen on the boundaries of a finite element

The conditions (28) are given in their sparsest form. Due to the non-negativity of \(\Lambda _{n}\) and \(\Theta _{n}\) there are many equivalent formulations of this condition, e.g., all conditions above can be summed up for a single finite element or even for all finite elements on the regarded control interval. Moreover, instead of the component-wise products in \(\theta _{n,m}\) and \(\lambda _{n, m'}\) we can use also inner products of these vectors. Thus, we use a more compact form of (28) where we combine the conditions for two neighboring finite elements. The motivation for this form is that we end up with the same number of new conditions as we have new degrees of freedom by varying \(h_n\). The conditions read as:
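The equivalence of the sparse and the aggregated formulations can be illustrated with a small sketch. It assumes that (28) consists of the component-wise products \(\theta _{n,m,i}\lambda _{n,m',i}=0\) for \(m\in \{1,\ldots ,{n_\textrm{s}}\}\), \(m'\in \{0,\ldots ,{n_\textrm{s}}\}\), as suggested by Lemma 10, and that the compact form sums these products over two neighboring finite elements. The array layout and all numerical values are made up for illustration.

```python
import numpy as np


def cross_products(theta, lam):
    """All products theta[n, m, i] * lam[n, m', i].

    theta has shape (N_FE, n_s, n_f) for the stages m = 1..n_s,
    lam has shape (N_FE, n_s + 1, n_f) for m' = 0..n_s (index 0 = left boundary).
    """
    return theta[:, :, None, :] * lam[:, None, :, :]


def cross_per_element(theta, lam):
    """One scalar per finite element: sum of all cross products."""
    return cross_products(theta, lam).sum(axis=(1, 2, 3))


def cross_per_pair(theta, lam):
    """One scalar per pair of neighboring elements: N_FE - 1 conditions."""
    per_element = cross_per_element(theta, lam)
    return per_element[:-1] + per_element[1:]


def standard_complementarity(theta, lam):
    """Stage-wise inner products theta_{n,m}^T lambda_{n,m}, summed up."""
    return float((theta * lam[:, 1:, :]).sum())


# Switch exactly on the boundary between the two elements (mode 1 -> mode 2).
theta_ok = np.array([[[1.0, 0.0], [1.0, 0.0]],
                     [[0.0, 1.0], [0.0, 1.0]]])
lam_ok = np.array([[[0.0, 2.0], [0.0, 1.0], [0.0, 0.0]],
                   [[0.0, 0.0], [0.3, 0.0], [0.6, 0.0]]])

# Switch between the two stages inside the first element.
theta_bad = theta_ok.copy()
theta_bad[0, 1] = [0.0, 1.0]
lam_bad = lam_ok.copy()
lam_bad[0, 2] = [0.5, 0.0]
lam_bad[1, 0] = [0.5, 0.0]  # continuity of lambda across the boundary

for name, th, la in (("boundary switch", theta_ok, lam_ok),
                     ("interior switch", theta_bad, lam_bad)):
    print(name,
          "| standard:", standard_complementarity(th, la),
          "| cross per pair:", cross_per_pair(th, la))
```

In the second data set the stage-wise complementarity conditions hold, yet the cross complementarity residual is positive, so this configuration is excluded. Because all factors are nonnegative, a sum of products is zero exactly when every product is zero, which is why the aggregated forms carry the same information as the sparse one.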

Implicit switch detection. We briefly explain how the switch detection for the solution approximation is realized and formalize it later in Sect. 4. Note that for \(x_n^{\textrm{next}} = x_{n+1}\) we have from the KKT conditions of the \(\textrm{LP}(x_{n+1})\) [cf. Eq. (9)] that \(\mu _{n,{n_\textrm{s}}} = \min _j g_j(x_{n+1})\). Moreover, if the active set changes between the n-th and \((n+1)\)-st finite element in the i-th component, then from Lemma 10 it follows that \(\lambda _{n,{n_\textrm{s}},i}=0\). Therefore, we obtain from (25) implicitly the condition

which is equal to

where \(\psi _{i,j}(x_{n+1}) = 0\) defines the switching surface between \(R_i\) and \(R_j\). This condition forces \(h_n\) to adapt such that the switch is detected exactly. Note that the condition (30) appears only if active-set changes happen, hence the whole switch detection procedure is implicit.
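The effect of this condition on the step sizes can be seen in a deliberately simplified sketch for \({\dot{x}} = 2-\textrm{sign}(x)\), \(x(0)=-1\), \(T=1\). It uses the implicit Euler method with two finite elements and hard-codes the active sets (mode 1 on the first element, mode 2 on the second), whereas FESD obtains them from the complementarity conditions. The switching function \(\psi _{1,2}(x) = g_1(x)-g_2(x) = 2x\) assumes \(g(x) = (x,-x)\). Since both vector fields are constant, the resulting equations are linear in the unknowns \((x_1,x_2,h_0,h_1)\).

```python
import numpy as np

x0, T = -1.0, 1.0
f1, f2 = 3.0, 1.0   # vector fields for x < 0 and x > 0

# Unknowns z = (x_1, x_2, h_0, h_1).
A_sys = np.array([
    [1.0, 0.0, -f1, 0.0],    # x_1 - h_0 * f1 = x_0          (Euler step, mode 1)
    [-1.0, 1.0, 0.0, -f2],   # x_2 - x_1 - h_1 * f2 = 0      (Euler step, mode 2)
    [2.0, 0.0, 0.0, 0.0],    # psi_{1,2}(x_1) = 2 * x_1 = 0  (switch at t_1)
    [0.0, 0.0, 1.0, 1.0],    # h_0 + h_1 = T
])
rhs = np.array([x0, 0.0, 0.0, T])

z = np.linalg.solve(A_sys, rhs)
x1, x2, h0, h1 = z
print("x_1 =", x1, " x_2 =", x2, " h_0 =", h0, " h_1 =", h1)
print("exact switching time 1/3, exact x(T) =", T - 1.0 / 3.0)
```

The switching condition fixes \(h_0\) to the exact switching time, and the terminal value is reproduced exactly because each element then contains a single smooth mode.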

3.2.3 Step equilibration

If no switches occur, i.e., the active sets \({\mathcal {I}}_n\) do not change between two neighboring finite elements, then the cross complementarity conditions in (29) are trivially satisfied. This yields spurious degrees of freedom in the step sizes \(h_{n}\) and the optimizer can adapt the grid in an undesirable way and harm the discretization accuracy. Also, the path-constraint discretization can be exploited unfavorably, just to decrease the objective value. To resolve this problem we introduce step equilibration conditions.

The step size should only change if a switch occurs and otherwise be constant. This results in a piecewise uniform discretization grid for the differential and algebraic states on the regarded control interval. To accomplish this, we derive an indicator function that is zero only if a switch occurs; otherwise, its value is strictly positive.

If some \(\lambda _i(t_n)\) is equal to zero and its left or right time derivative is nonzero, then an active-set change has occurred. Instead of looking at the time derivatives, in the discrete-time case, we exploit the non-negativity of \(\lambda _{n,m}\) and the fact that the active set is fixed for the whole finite element (due to cross complementarity, cf. Lemma 10). For \(n \in \{1,\ldots ,{N_\textrm{FE}}-1\}\), we define the following backward and forward sums of the stage values over the neighboring finite elements \([t_{n-1},t_n]\) and \([t_{n},t_{n+1}]\):

The components of \(\sigma _{n}^{\lambda ,\textrm{B}}\) and \(\sigma _{n}^{\lambda ,\textrm{F}}\) are zero if the left and right time derivatives of the corresponding components of \(\lambda _{n,m}\) are zero.

Likewise, they are positive when the left and right time derivatives are nonzero. Analogously, the sums for \(\theta _{n,m}\) are defined as:

Additionally, we define the following vectors for all \(n \in \{ 1,\dots ,{N_\textrm{FE}}-1\}\):

If there is an active-set change in the i-th complementarity pair, then at most one of the i-th components of \(\sigma _{n}^{\lambda ,\textrm{B}}\) and \(\sigma _{n}^{\lambda ,\textrm{F}}\) is nonzero, hence their product, i.e., the i-th component of \(\pi _{n}^{\lambda }\), is zero. Due to complementarity, the same holds for \(\pi _{n}^{\theta }\). For sliding modes the corresponding components of \(\pi _{n}^{\lambda }\) are zero and of \(\pi _{n}^{\theta }\) they are positive (due to complementarity). Thus, the i-th component of

is only zero if there is an active-set change in the i-th complementarity pair at \(t_n\). A function that has the desired properties is defined as:

This scalar function summarizes the effects of all components. It is zero only if an active-set change happens at the boundary point \(t_{n}\), otherwise, it is strictly positive. Finally, the constraints that remove possible spurious degrees of freedom in \(h_n\) read as:

Since many products are involved in \(\eta _n(\mathbf {\Theta },\mathbf {\Lambda })\), one can replace it by \({\tilde{\eta }}_n(\mathbf {\Theta },\mathbf {\Lambda }) :=\tanh (\eta _n(\mathbf {\Theta },\mathbf {\Lambda }))\) to have a better scaling. An example of step equilibration is studied numerically in Sect. 5.3.
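The construction of the indicator can be sketched in a few lines, following the verbal description in this subsection. The exact index ranges of the sums and the final form of the constraint are assumptions here: the backward and forward sums are taken over all stage values of the left and right neighboring element (including the left boundary value of \(\lambda \)), the i-th component of the intermediate vector is taken as \(\pi _{n,i}^{\lambda }+\pi _{n,i}^{\theta }\), \(\eta _n\) as the product of these components, and the constraint as \(\eta _n\,(h_n-h_{n-1})=0\). Array layout and data are illustrative.

```python
import numpy as np


def eta(theta, lam):
    """Switch indicator eta_n for n = 1..N_FE-1 (assumed form, see lead-in).

    theta: shape (N_FE, n_s, n_f), lam: shape (N_FE, n_s + 1, n_f).
    """
    sigma_lam = lam.sum(axis=1)       # per-element sums of lambda stage values
    sigma_theta = theta.sum(axis=1)   # per-element sums of theta stage values
    pi_lam = sigma_lam[:-1] * sigma_lam[1:]        # backward times forward
    pi_theta = sigma_theta[:-1] * sigma_theta[1:]
    upsilon = pi_lam + pi_theta       # zero in component i only at a switch
    return upsilon.prod(axis=1)       # zero if any component signals a switch


def step_equilibration_residual(theta, lam, h, scaled=False):
    """Residual eta_n * (h_n - h_{n-1}); optionally with tanh scaling."""
    indicator = eta(theta, lam)
    if scaled:
        indicator = np.tanh(indicator)
    return indicator * (h[1:] - h[:-1])


# Three elements, one stage, two modes: no switch at t_1, switch at t_2.
theta = np.array([[[1.0, 0.0]], [[1.0, 0.0]], [[0.0, 1.0]]])
lam = np.array([[[0.0, 3.0], [0.0, 2.0]],
                [[0.0, 2.0], [0.0, 0.0]],
                [[0.0, 0.0], [1.0, 0.0]]])

print("eta:", eta(theta, lam))
h_equal = np.array([0.2, 0.2, 0.6])     # h_0 = h_1, h_2 free
h_unequal = np.array([0.1, 0.3, 0.6])   # h_0 != h_1 without a switch
print("residual (equal steps):  ", step_equilibration_residual(theta, lam, h_equal))
print("residual (unequal steps):", step_equilibration_residual(theta, lam, h_unequal))
```

Where no switch occurs the indicator is positive and forces equal neighboring step sizes, while at the switch it vanishes and leaves the step size free.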

3.2.4 The FESD discretization

We have now all the ingredients to extend the standard RK discretization (26) to the FESD discretization. We use again the same discrete-time representation

where \(F_{{\textrm{fesd}}}({\textbf{x}})\!=x_{{N_\textrm{FE}}}\) is the state transition map and \(G_{{\textrm{fesd}}}({\textbf{x}},{\textbf{h}},{\textbf{Z}},q, T)\) collects all other internal computations including all RK steps within the regarded control interval:

For a fixed control function q, horizon length T and initial value \(s_0\), the formulation (32) can be used as an integrator with exact switch detection for PSS (1). Since Filippov DIs do not always have unique solutions, one cannot expect uniqueness of solutions for their discrete-time counterparts (32) in all cases. In simulation methods, a common approach is to either pick one local solution obtained by the solver for the nonlinear complementarity problem (32) or to enumerate all possible solutions at an active-set change [4, 46]. In this paper, we consider only the first option. Note that in sliding modes, we implicitly obtain differential algebraic equations of index 2, cf. Sect. 2.2.2. To achieve good accuracy in practice it is usually required to use stiffly accurate methods, e.g., Radau-IIA methods [25].

3.2.5 Remark on RK methods with \(c_{{n_\textrm{s}}}\ne 1\)

We outline how to extend the FESD method when an RK scheme with \(c_{{n_\textrm{s}}}\ne 1\) is regarded. In contrast to the developments so far, with \(c_{{n_\textrm{s}}} \ne 1\) the variables \(\lambda _{n,{n_\textrm{s}}},\; \mu _{n,{n_\textrm{s}}}\) do not correspond to the boundary values \(\lambda (t_{n+1})\) and \(\mu (t_{n+1})\) anymore (since \(t_n+c_{{n_\textrm{s}}} h_n < t_{n+1}\)). We denote the boundary points in this case by \(\lambda _{n,{n_\textrm{s}}+1},\; \mu _{n,{n_\textrm{s}}+1}\). They are computed by solving \({\textrm{LP}}(x_{n+1})\) for \(n=0,\ldots ,{N_\textrm{FE}}-2\):

We still exploit the continuity of \(\lambda (\cdot )\) and \(\mu (\cdot )\) (cf. Lemma 5), by replacing (27) with the following continuity conditions for their discrete-time counterparts for \(n = 0,\ldots , {N_\textrm{FE}}-1\):

With slight abuse of notation, we add the new variables \(\theta _{n,{n_\textrm{s}}+1},\lambda _{n,{n_\textrm{s}}+1}\) and \(\mu _{n,{n_\textrm{s}}+1}\) to the vectors \(\mathbf {\Theta }\), \(\mathbf {\Lambda }\) and \({\textbf{M}}\), respectively. The vector \({\textbf{Z}}\) is redefined accordingly. The cross complementarity conditions are now modified such that next to the stage points we include the boundary points with the index \({n_\textrm{s}}+1\):

For the whole control interval we have in total \(({N_\textrm{FE}}-1)(2{n_{f}}+1)\) new variables.

4 Convergence theory

In this section we present the main convergence result of the FESD method. First, we prove that even though the FESD system (32) is always over-determined it still has a locally isolated solution. Second, we show that the numerical solution approximation \({\hat{x}}_h(\cdot )\) generated by FESD converges to a solution \(x(\cdot )\) in the sense of Definition 1, with the same order that the underlying RK method has for smooth ODE. Additionally, we prove that the numerical sensitivities converge to their correct values with high accuracy.

4.1 Main assumptions

We start by introducing some notation and stating some assumptions related to the FESD formulation (32), which are important for our theoretical study in this section.

Assumption 11

(Runge–Kutta method) A Butcher tableau with the entries \(a_{i,j},b_i\) and \(c_i\), \(i,j\in \{1,\ldots ,{n_\textrm{s}}\}\) related to an \({n_\textrm{s}}\)-stage Runge–Kutta (RK) method is used in the FESD (32). Moreover, we assume that:

(a) If the same RK method is applied to the differential algebraic equation (14) on an interval \([t_a,t_b]\), it has a global accuracy of \(O(h^p)\) for the differential states.

(b) The RK equations applied to (14) have a locally isolated solution for a sufficiently small \(h_n>0\).

This assumption aims to cover a broad class of RK methods, and both of its conditions are standard [25].

Assumption 12

(Solution existence) For given parameters \(s_0,q\) and T, there exists a solution to the FESD problem (32), such that for all \(n \in \{0,\ldots ,{N_\textrm{FE}}-1\}\) it holds that \({h}_n\ge 0\).

This assumption means that there exists a solution and that we can compute it. If the FESD method is used in direct optimal control, non-negativity of the step sizes can easily be achieved by adding box constraints on \(h_n\). This is the strongest assumption we make in this paper. Ideally, one would prove the existence of solutions. Since the system is over-determined this cannot be done straightforwardly by applying standard existence results [19]. As we will show below, in practice numerical solvers have no trouble computing such solutions.

We state a technical assumption that ensures regularity of the FESD problem (32).

Assumption 13

(Regularity) Given the complementarity pairs \(\Psi (\theta _{n,m},\lambda _{n,m})=0\), for all \(n = 0,\ldots {N_\textrm{FE}}-1\) there exists an \(m\in \{1,\dots ,{n_\textrm{s}}\}\) and \(i \in \{1,\ldots ,{n_{f}}\}\), such that the strict complementarity property holds, i.e., \(\theta _{n,m,i}+\lambda _{n,m,i}>0\). Moreover, for the RK equations (25) it holds for all \(n = 0,\ldots {N_\textrm{FE}}-1\), that at least one entry of the vector \(\nabla _{h_n} G_{{\textrm{rk}}}(x_{n+1},Z_n,h_n,q)\) is nonzero.

Once all stage values are computed by solving (32), we can use an interpolation method to construct a candidate solution approximation in continuous time, cf. Assumption 11. For example, if we use a collocation-based IRK method, a continuous-time approximation \({\hat{x}}_n(t;h_{n})\) on every finite element is easily obtained via Lagrange polynomials [25]. We concatenate the approximations of all finite elements and write

where \(h = \max _{n\in \{0,\ldots {N_\textrm{FE}}-1\}} h_n\). Similarly, continuous-time representations can be found for the algebraic variables, and we denote them compactly as \({\hat{\lambda }}_h(t)\), \({\hat{\theta }}_h(t)\) and \({\hat{\mu }}_h(t)\). Similar to the definitions in Sect. 2.2.3, the fixed active set in this case is denoted by \({\mathcal {I}}({\hat{x}}_h(t)) = {\hat{{\mathcal {I}}}}_n,\ t\in ({\hat{t}}_{\textrm{s},n},{\hat{t}}_{\textrm{s},n+1})\) and the active set at switching point \({\hat{t}}_{\textrm{s},n}\) by \({\mathcal {I}}({\hat{x}}_h({\hat{t}}_{\textrm{s},n})) = {\hat{{\mathcal {I}}}}_n^0\).
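The Lagrange-polynomial construction on a single finite element can be sketched as follows. This is a minimal illustration for a scalar state, not the implementation used in the paper: the function names `lagrange_interpolate` and `element_approximation` are hypothetical, and the interpolation nodes are assumed to be the left boundary point \(t_n\) together with the stage points \(t_n + c_i h_n\).

```python
from typing import Callable, Sequence


def lagrange_interpolate(nodes: Sequence[float], values: Sequence[float], t: float) -> float:
    """Evaluate the Lagrange polynomial through (nodes[i], values[i]) at time t."""
    total = 0.0
    for i, (t_i, x_i) in enumerate(zip(nodes, values)):
        basis = 1.0
        for j, t_j in enumerate(nodes):
            if j != i:
                basis *= (t - t_j) / (t_i - t_j)
        total += x_i * basis
    return total


def element_approximation(
    t_n: float, h_n: float, c: Sequence[float], x_n: float, stage_values: Sequence[float]
) -> Callable[[float], float]:
    """Return t -> x_hat_n(t; h_n) on [t_n, t_n + h_n] (assumed node choice, see text)."""
    nodes = [t_n] + [t_n + c_i * h_n for c_i in c]
    values = [x_n] + list(stage_values)
    return lambda t: lagrange_interpolate(nodes, values, t)


# Illustration with the nodes of the two-stage Radau IIA method (c = 1/3, 1).
c = (1.0 / 3.0, 1.0)
t_n, h_n = 0.5, 0.2


def exact(t: float) -> float:
    # A quadratic test trajectory; three nodes reproduce it exactly.
    return 1.0 + 2.0 * t - 3.0 * t**2


x_hat = element_approximation(
    t_n, h_n, c, exact(t_n), [exact(t_n + c_i * h_n) for c_i in c]
)
```

Because the polynomial depends on \(h_n\) only through the node locations, the same routine applies unchanged when the step sizes are degrees of freedom.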

4.2 Solutions of the FESD problem are locally isolated

In this subsection, we analyze some properties of solutions of the FESD problem (32). For the convenience of the reader, we restate the problem but discard the trivial state transition map \(s_1 = F_{{\textrm{fesd}}}({\textbf{Z}}) = x_{{N_\textrm{FE}}}\):

Recall that \({\textbf{Z}} = ({{\textbf {x}}},{\textbf{V}},\mathbf {\Theta },\mathbf {\Lambda },{\textbf{M}})\in {{\mathbb {R}}}^{n_{{\textbf{Z}}}}\). Additionally, we have that \({G}_{\textrm{std}}: {{\mathbb {R}}}^{n_{{\textbf{Z}}}} \times {{\mathbb {R}}}^{{N_\textrm{FE}}} \times {{\mathbb {R}}}^{n_x} \times {{\mathbb {R}}}^{n_u} \times {{\mathbb {R}}}\rightarrow {{\mathbb {R}}}^{n_{{\textbf{Z}}}}\), \(G_{\textrm{cross}}: {{\mathbb {R}}}^{n_{\theta }} \times {{\mathbb {R}}}^{n_{\theta }} \rightarrow {{\mathbb {R}}}^{{N_\textrm{FE}}-1}\) and \(G_{\textrm{eq}}: {{\mathbb {R}}}^{{N_\textrm{FE}}} \times {{\mathbb {R}}}^{n_{\theta }} \times {{\mathbb {R}}}^{n_{\theta }} \rightarrow {{\mathbb {R}}}^{{N_\textrm{FE}}-1}\). Finally, we have that \(G_{{\textrm{fesd}}}: {{\mathbb {R}}}^{n_{{\textbf{Z}}}} \times {{\mathbb {R}}}^{{N_\textrm{FE}}} \times {{\mathbb {R}}}^{n_x} \times {{\mathbb {R}}}^{n_u} \times {{\mathbb {R}}}\rightarrow {{\mathbb {R}}}^{n_{{\textbf{Z}}} + 2{N_\textrm{FE}}-1}\). Again, for ease of exposition, we consider the case \(c_{{n_\textrm{s}}} = 1\), for which \(n_{\theta }= {N_\textrm{FE}}{n_\textrm{s}}{n_{f}}\) and \(n_{\mu } = {N_\textrm{FE}}{n_\textrm{s}}\), and give the extension to \(c_{{n_\textrm{s}}} \ne 1\) later.

The quantities \(s_0 \in {{\mathbb {R}}}^{n_x}\), \(q \in {{\mathbb {R}}}^{n_u}\) and \(T\in {{\mathbb {R}}}\) are given parameters, hence we have \(n_{{\textbf{Z}}} + {N_\textrm{FE}}\) unknowns and \(n_{{\textbf{Z}}} + 2{N_\textrm{FE}}-1\) equations. Consequently, for \({N_\textrm{FE}}>1\), which we always assume in FESD, the system (36) is over-determined. However, we show in the next theorem that, for a given active set, \({N_\textrm{FE}}-1\) equations in (36) are implicitly satisfied, so that we always end up with a square system. As a consequence, Eq. (36) has, under reasonable assumptions, a locally unique solution. Nevertheless, since we do not know the active set a priori, we also cannot know in advance which equations are binding and which are implicitly satisfied.

Lemma 14

(Corollary 6.1 in [33]) Let \(A_1 \in {{\mathbb {R}}}^{k \times m}\) and \(A_2 \in {{\mathbb {R}}}^{m \times q}\), then
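A standard rank bound for matrix products with these dimensions is Sylvester's rank inequality. Assuming this is the statement meant here, it reads

\[
\textrm{rank}(A_1) + \textrm{rank}(A_2) - m \;\le\; \textrm{rank}(A_1 A_2) \;\le\; \min \{\textrm{rank}(A_1), \textrm{rank}(A_2)\}.
\]

In particular, if \(A_1\) is square and invertible, i.e., \(k = m = \textrm{rank}(A_1)\), the lower and upper bounds coincide and \(\textrm{rank}(A_1 A_2) = \textrm{rank}(A_2)\).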

Theorem 15

Suppose that Assumptions 11, 12 and 13 hold. Let \({s}_0\), \({q}\) and \({T}>0\) be some fixed parameters such that \(G_{\textrm{fesd}}({\textbf{Z}}^*,{\textbf{h}}^*,{s}_0,{q},{T}) =0\). Let \(P^*\subseteq {{\mathbb {R}}}^{n_x}\times {{\mathbb {R}}}^{n_u} \times {{\mathbb {R}}}\) be the set of all parameters \(({\hat{s}}_0,{\hat{q}},{\hat{T}})\) for which the solution \({\textbf{Z}} \in {{\mathbb {R}}}^{n_{\textbf{Z}}}\) of \(G_{\textrm{fesd}}({\textbf{Z}},{\textbf{h}},{\hat{s}}_0,{\hat{q}},{\hat{T}})=0\) has the same active set as \({\textbf{Z}}^*\). Additionally, suppose that \(G_{\textrm{fesd}}(\cdot )\) is continuously differentiable in \(s_0,q\) and \(T\) for all \((s_0,q,T) \in P^*\). Then there exists a neighborhood \({P} \subseteq P^*\) of \(({s}_0,{q},{T})\) such that there exist continuously differentiable single-valued functions \({\textbf{Z}}^{*}: {P} \rightarrow {{\mathbb {R}}}^{n_{{\textbf{Z}}}}\) and \({\textbf{h}}^*: {P} \rightarrow {{\mathbb {R}}}^{{N_\textrm{FE}}}\).

Proof

We consider the active sets \({\hat{{\mathcal {I}}}}_n\) of all finite elements, \(n \in \{0,\ldots ,{N_\textrm{FE}}-1\}\), that correspond to the solution \(({\textbf{Z}}^*,{\textbf{h}}^*)\). First, we look closer at the equations \(G_{\textrm{cross}}(\mathbf {\Theta }^*,\mathbf {\Lambda }^*)=0\) and \(G_{\textrm{eq}}({\textbf{h}}^*,\mathbf {\Theta }^*,\mathbf {\Lambda }^*)=0\). If two neighboring finite elements have the same active set, i.e., \({\hat{{\mathcal {I}}}}_{n} = {\hat{{\mathcal {I}}}}_{n+1}\), then the \((n+1)\)-th entry of \(G_{\textrm{cross}}(\mathbf {\Theta }^*,\mathbf {\Lambda }^*)\) is implicitly satisfied due to the point-wise complementarity conditions \(\Psi (\Theta _n,\Lambda _n)=0\) and \(\Psi (\Theta _{n+1},\Lambda _{n+1})=0\). Moreover, by construction we have \(\eta _{n+1}(\mathbf {\Theta }^*,\mathbf {\Lambda }^*)>0\) and the \((n+1)\)-th entry of \(G_{\textrm{eq}}({\textbf{h}}^*,\mathbf {\Theta }^*,\mathbf {\Lambda }^*,{T})=0\) is binding, i.e., it implies \(h^*_{n+1}=h^{*}_{n}\). On the other hand, if \({\hat{{\mathcal {I}}}}_n \ne {\hat{{\mathcal {I}}}}_{n+1}\), we have by construction that \(\eta _{n+1}(\mathbf {\Theta }^*,\mathbf {\Lambda }^*)=0\) and the \((n+1)\)-th entry of \(G_{\textrm{eq}}({\textbf{h}}^*,\mathbf {\Theta }^*,\mathbf {\Lambda }^*,{T})=0\) vanishes, i.e., it is satisfied for any \(h^{*}_{n}\) and \(h^{*}_{n+1}\). However, the \((n+1)\)-th entry of \(G_{\textrm{cross}}(\mathbf {\Theta }^*,\mathbf {\Lambda }^*)=0\) is now binding, cf. Lemma 10.

We collect the binding \(n_1\) cross complementarity conditions, with \(0\le n_1 \le {N_\textrm{FE}}-1\), in the equation \(G^*_{\textrm{cross}}(\mathbf {\Theta }^*,\mathbf {\Lambda }^*)=0\), and the \({N_\textrm{FE}}-1-n_1\) implicitly satisfied into \(G^{\textrm{res}}_{\textrm{cross}}(\mathbf {\Theta }^*,\mathbf {\Lambda }^*) = 0\). Likewise, we collect the binding \(n_2\) step equilibration conditions, with \(1\le n_2 \le {N_\textrm{FE}}-1\), in \(G^*_{\textrm{eq}}({\textbf{h}}^*,\mathbf {\Theta }^*,\mathbf {\Lambda }^{*}) = 0\). The remaining \({N_\textrm{FE}}-1-n_2\) conditions are implicitly satisfied and are collected in \(G^{\textrm{res}}_{\textrm{eq}}({\textbf{h}}^*,\mathbf {\Theta }^*,\mathbf {\Lambda }^*)=0\). Note that \(n_1+n_2 = {N_\textrm{FE}}-1\). We highlight that \(\sum _{n=0}^{{N_\textrm{FE}}-1} h_n - T\) is always binding.

We can further simplify our system of equations by eliminating some degrees of freedom using \(G^*_{\textrm{eq}}({\textbf{h}}^*,\mathbf {\Theta }^*,\mathbf {\Lambda }^*) = 0\). All components of this vector are of the form \(\eta _n (h_{n}-h_{n+1})\) with \(\eta _n >0\). Therefore, we have \(n_2\) equations of the form \(h_{n} = h_{n+1}\) and can remove \(n_2\) degrees of freedom. Furthermore, we can express any \(h_{j} = T- \sum _{i=0, i\ne j}^{{N_\textrm{FE}}-1} h_i\) and remove another degree of freedom. In total, we have removed \(n_2+1\) degrees of freedom and can regard a reduced vector of unknown step sizes, which we denote by \(\pmb {{\tilde{h}}}^{*} \in {{\mathbb {R}}}^{n_1}\), with \(n_1 = {N_\textrm{FE}}-n_2-1\). With a slight abuse of notation, we redefine the standard RK equations accordingly and obtain \({G}_{\textrm{std}}({\textbf{Z}}^*,\pmb {{\tilde{h}}}^*,{s}_0,{q},T) = 0\) with \({G}_{\textrm{std}}: {{\mathbb {R}}}^{n_{{\textbf{Z}}}} \times {{\mathbb {R}}}^{n_1} \times {{\mathbb {R}}}^{n_x} \times {{\mathbb {R}}}^{n_u} \times {{\mathbb {R}}}\rightarrow {{\mathbb {R}}}^{n_{{\textbf{Z}}}}\).
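The bookkeeping in this part of the proof can be illustrated with a small sketch. It assumes that the active set of every finite element is already known and is represented as a `frozenset` of region indices; the function name `classify_conditions` and the returned dictionary layout are hypothetical and chosen only for this illustration.

```python
from typing import Dict, FrozenSet, List, Sequence


def classify_conditions(active_sets: Sequence[FrozenSet[int]]) -> Dict[str, object]:
    """Classify the N_FE - 1 interface conditions for a given sequence of active sets.

    Equal neighboring active sets: the step equilibration entry is binding
    (h_{n+1} = h_n) and the cross complementarity entry is implied.
    Different neighboring active sets: the cross complementarity entry is binding
    and the step equilibration entry vanishes.
    """
    n_fe = len(active_sets)
    binding_cross: List[int] = []
    binding_eq: List[int] = []
    groups: List[List[int]] = [[0]]  # elements that share one step size
    for n in range(n_fe - 1):
        if active_sets[n] == active_sets[n + 1]:
            binding_eq.append(n + 1)
            groups[-1].append(n + 1)
        else:
            binding_cross.append(n + 1)
            groups.append([n + 1])
    return {
        "n_1": len(binding_cross),
        "n_2": len(binding_eq),
        "binding_cross": binding_cross,
        "binding_eq": binding_eq,
        "equal_step_groups": groups,
    }


# Five finite elements with a single switch between elements 1 and 2.
result = classify_conditions(
    [frozenset({1}), frozenset({1}), frozenset({2}), frozenset({2}), frozenset({2})]
)
```

In this instance there are two groups of equal step sizes. The constraint that the step sizes sum to \(T\) removes one further unknown, which leaves \(n_1 = 1\) free step size, in line with \(n_1 = {N_\textrm{FE}}-n_2-1\).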

To summarize, for a fixed active set we can rewrite (36) in a reduced form as

with \(G_{{\textrm{fesd}}}^*({\textbf{Z}}^*,{\textbf{h}}^*,{s}_0,{q},{T}) \in {{\mathbb {R}}}^{n_{{\textbf{Z}}}+n_1}\). These conditions imply

with \(G_{{\textrm{fesd}}}^{\textrm{res}}({\textbf{h}}^*,\mathbf {\Theta }^*,\mathbf {\Lambda }^*) \in {{\mathbb {R}}}^{{N_\textrm{FE}}-1}\). Thus, for a given active set we can discard (38) and regard only the equivalent reduced problem (37), which is a square system of equations.

Next, we show that the Jacobian matrix \(\nabla _{({\textbf{Z}},\pmb {{\tilde{h}}})} G_{{\textrm{fesd}}}^*({\textbf{Z}}^*,\pmb {{\tilde{h}}}^*,{s}_0,{q},{T})^\top \) has full rank. This enables us to apply the implicit function theorem (cf. [18, Theorem 1B.1]) and establish the result of this theorem. We take a closer look at the matrix:

Under Assumption 12, for a fixed active set and a fixed \(h_n^{*}\) the equation \({G}_{\textrm{std}}({\textbf{Z}}^*,\pmb {{\tilde{h}}}^*,{s}_0,{q},{T}) = 0\) boils down to the RK equations for the differential algebraic equation (14). Due to Assumption 11 the RK system \({G}_{\textrm{std}}({\textbf{Z}}^*,\pmb {{\tilde{h}}}^*,{s}_0,{q},{T}) = 0\) has a locally isolated solution. A necessary and sufficient condition for this property is the invertibility of the Jacobian \( \nabla _{{\textbf{Z}}} G_{\textrm{std}}({\textbf{Z}}^*,\pmb {{\tilde{h}}}^*,{s}_0,{q},{T})^\top \) [18, Theorem 1B.8]. Thus, we have that \(\textrm{rank}( \nabla _{{\textbf{Z}}} G_{{\textrm{fesd}}}^*({\textbf{Z}}^*,\pmb {{\tilde{h}}}^*,{s}_0,{q},{T})^\top ) = n_{{\textbf{Z}}}\). Second, due to the block diagonal structure of \( \nabla _{\pmb {{\tilde{h}}}} G_{\textrm{std}}^*({\textbf{Z}}^*,\pmb {{\tilde{h}}}^*,{s}_0,{q},{T})\) and Assumption 13 we can deduce that \(\textrm{rank}( \nabla _{\pmb {{\tilde{h}}}} G_{{\textrm{fesd}}}^*({\textbf{Z}}^*,\pmb {{\tilde{h}}}^*,{s}_0,{q},{T})^\top ) = n_1\). Third, due to the nonnegativity of \((\mathbf {\Theta },\mathbf {\Lambda })\) and Assumption 13 by direct computation it can be verified that \(\textrm{rank}(\nabla _{{\textbf{Z}}} G^*_{\textrm{cross}}(\mathbf {\Theta },\mathbf {\Lambda })^\top ) = n_1\) and \(\nabla _{\pmb {{\tilde{h}}}} G^*_{\textrm{cross}}(\mathbf {\Theta },\mathbf {\Lambda })^\top = 0\).

We introduce more compact notation and summarize the results so far with:

- \(M_1 = \nabla _{{\textbf{Z}}} G_{\textrm{std}}({\textbf{Z}}^*,\pmb {{\tilde{h}}}^*,{s}_0,{q},{T})^\top \in {{\mathbb {R}}}^{n_{{\textbf{Z}}} \times n_{{\textbf{Z}}}}\) with \(\textrm{rank}(M_1) = n_{{\textbf{Z}}}\),

- \(M_2 = \nabla _{\pmb {{\tilde{h}}}} G_{\textrm{std}}({\textbf{Z}}^*,\pmb {{\tilde{h}}}^*,{s}_0,{q},{T})^\top \in {{\mathbb {R}}}^{n_{{\textbf{Z}}} \times n_1 }\) with \(\textrm{rank}(M_2) = n_{1}\), and

- \(M_3 = \nabla _{{\textbf{Z}}} G^*_{\textrm{cross}}(\mathbf {\Theta },\mathbf {\Lambda })^\top \in {{\mathbb {R}}}^{n_1 \times n_{{\textbf{Z}}}}\) with \(\textrm{rank}(M_3) = n_{1}\).

To show that \(\nabla _{({\textbf{Z}},\pmb {{\tilde{h}}})} G_{{\textrm{fesd}}}^*({\textbf{Z}}^*,\pmb {{\tilde{h}}}^*,{s}_0,{q},{T})^\top \) has rank \(n_{{\textbf{Z}}}+n_1\), we show that the linear system

\[
\begin{bmatrix} M_1 & M_2 \\ M_3 & 0 \end{bmatrix} \begin{bmatrix} v \\ w \end{bmatrix} = 0
\]

with \(v \in {{\mathbb {R}}}^{n_{\textbf{Z}}}\) and \(w \in {{\mathbb {R}}}^{n_1}\) has zero as the only solution.

From the first block row of this linear system, we have that \(v = -M_1^{-1} M_2 w\). Since \( n_{{\textbf{Z}}} > n_1\), from Lemma 14, we conclude that \(\textrm{rank}(M_1^{-1} M_2 ) = n_1\). Next, substituting \(v\) into the second block row, we obtain \( - M_3 M_1^{-1} M_2 w = 0\). Again, using Lemma 14, we conclude that \(\textrm{rank}(M_3 M_1^{-1} M_2 ) = n_1\). Since \(M_3 M_1^{-1} M_2 \in {{\mathbb {R}}}^{n_1 \times n_1}\) has full rank, it follows that \(w = 0\) and consequently \(v=0\), i.e., zero is the only solution of the regarded linear system. This completes the proof.\(\square \)
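The rank argument can be checked on a small instance. The sketch below uses exact rational arithmetic and made-up matrices with \(n_{{\textbf{Z}}} = 3\) and \(n_1 = 2\); they are purely illustrative and are not derived from an actual FESD problem. The helper names `rank` and `block_matrix` are hypothetical.

```python
from fractions import Fraction
from typing import List, Sequence

Matrix = List[List[Fraction]]


def rank(matrix: Sequence[Sequence[int]]) -> int:
    """Rank via Gauss-Jordan elimination in exact rational arithmetic."""
    rows: Matrix = [[Fraction(x) for x in row] for row in matrix]
    n_cols = len(rows[0]) if rows else 0
    r = 0  # number of pivots found so far
    for col in range(n_cols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r


def block_matrix(m1, m2, m3):
    """Assemble [[M1, M2], [M3, 0]] with a square zero block of size n_1."""
    n_1 = len(m3)
    top = [list(a) + list(b) for a, b in zip(m1, m2)]
    bottom = [list(row) + [0] * n_1 for row in m3]
    return top + bottom


# Illustrative data only (assumption): M1 invertible, M2 and M3 of full rank n_1 = 2.
M1 = [[2, 0, 0], [1, 1, 0], [0, 0, 3]]
M2 = [[1, 0], [0, 1], [0, 0]]
M3 = [[1, 0, 0], [0, 1, 0]]
K = block_matrix(M1, M2, M3)

# Contrast: a rank-deficient M3 makes the block matrix singular.
M3_deficient = [[1, 0, 0], [2, 0, 0]]
K_deficient = block_matrix(M1, M2, M3_deficient)
```

For these matrices the block matrix `K` has rank \(n_{{\textbf{Z}}}+n_1 = 5\), so the only solution of the homogeneous system is \(v = 0\), \(w = 0\), whereas `K_deficient` loses rank.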

Remark 16

We note that one cannot apply more general implicit function theorems for generalized and nonsmooth equations [18]. These usually require Lipschitz continuity of the solution map to reason about local uniqueness, whereas the solution map for FESD is in general only piecewise continuous.

Example 5

To illustrate the discontinuity of the solution map, we look at the example of \({\dot{x}} \in 2-\textrm{sign}(x) +x^2\), with \({N_\textrm{FE}}= 2\), \(T = 0.2\) and vary \(x_0\in [-0.7,0.1]\). A solution approximation is obtained via FESD based on the Radau-IIA method of order 3. Consider an initial value \(x_0\) such that no switch occurs and a perturbed initial value \(x_0+\epsilon \) where a single switch occurs on the time interval of interest. Clearly, in the first case, we have an equidistant grid with \(h_0=h_1\), and in the second case \(h_0\) jumps to \({\hat{t}}_{\textrm{s},1}\). We conclude that \(h_0(x_0)\) is not a Lipschitz function, see Fig. 5 for an illustration.

Fig. 5 Illustration of the discontinuity of the solution map of (36) for the PSS \({\dot{x}} \in 2-\textrm{sign}(x) +x^2\) for \(T = 0.2\) and \({N_\textrm{FE}}=2\)
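The jump in \(h_0(x_0)\) can be reproduced without running FESD by using the closed-form solution of the example. The sketch below is an idealization under stated assumptions: it assumes that FESD detects the switch exactly, so that \(h_0\) equals the exact switching time whenever a switch occurs inside \((0,T)\), and that the grid is equidistant otherwise. For \(x<0\) the dynamics are \({\dot{x}} = 3 + x^2\), which gives the switching time \(\arctan (-x_0/\sqrt{3})/\sqrt{3}\); for \(x>0\) the state keeps increasing and no switch occurs. The function names are hypothetical.

```python
import math
from typing import Optional


def switching_time(x0: float) -> Optional[float]:
    """Exact time at which the solution starting at x0 < 0 reaches x = 0.

    Obtained by integrating x' = 3 + x^2; returns None if x0 >= 0 (no switch).
    """
    if x0 >= 0.0:
        return None
    return math.atan(-x0 / math.sqrt(3.0)) / math.sqrt(3.0)


def ideal_h0(x0: float, T: float = 0.2) -> float:
    """Idealized first step size for N_FE = 2, assuming exact switch detection."""
    t_s = switching_time(x0)
    if t_s is None or t_s >= T:
        return T / 2.0  # no switch inside (0, T): equidistant grid, h_0 = h_1
    return t_s  # switch inside (0, T): h_0 is the switching time


# Sample the map on the interval considered in the example.
samples = [(-0.7 + 0.01 * k, ideal_h0(-0.7 + 0.01 * k)) for k in range(81)]
```

Under these assumptions, \(h_0\) equals \(0.1\) for initial values slightly below \(x_0 \approx -0.625\) and is close to \(0.2\) slightly above it, which is the kind of jump described in the example.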

4.2.1 Extension for the case of \(c_{{n_\textrm{s}}}\ne 1\)