Abstract

The present article is devoted to wavelet matrix compression for boundary integral equations when using anisotropic wavelet bases for the discretization. We provide a compression scheme for boundary integral operators of order \(2q\) on patchwise smooth and globally Lipschitz continuous mainfolds which amounts to only \(\mathcal {O}(N)\) relevant matrix coefficients in the system matrix without deteriorating the accuracy offered by the underlying Galerkin scheme. Here, \(N\) denotes the degrees of freedom in the related trial spaces. By numerical results we validate our theoretical findings.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Many problems from engineering result in partial differential equations, which can often be solved efficiently by using the finite element method [3, 4]. However, if the loading of the equation is zero, under some circumstances, one may transform the partial differential equation in a domain into an integral equation on its boundary. Such an integral equation can then be solved using the boundary element method [28, 32]. There are many practical problems which can be treated with the boundary element method, such as for example the Laplacian or linear elasticity problems [26, 32], scattering problems [7], and homogenization problems [1, 6, 23].

A huge advantage of the boundary element method is that the integral domain under consideration is reduced from \(n\) spatial dimensions to an \( (n-1) \)-dimensional surface. This brings us a significant reduction in the number of degrees of freedom, but since the integral kernels are, in general, nonlocal, also densely populated matrices.

To overcome the dense matrices, fast boundary element methods have been developed, such as adaptive cross adaptation [2], the fast multipole method [16], or the wavelet matrix compression [9, 30]. A comparison of these methods with respect to their advantages and disadvantages can be found in [18] for example. The computational cost of all these methods scale linearly or loglinearly in the number of degrees of freedom. Indeed, the wavelet matrix compression has been shown to have linear cost complexity, compare [9]. Moreover, the boundary integral operator is \(s^\star \)-compressible [33], with the consequence of a quasi-optimal convergence for the adaptive wavelet boundary element method [10, 15, 21, 34].

However, all these works consider isotropic wavelets, meaning that the mesh of the underlying multiscale hierarchy consists of isotropic elements. Therefore, only anisotropic singularities cannot be resolved properly. This leads to a loss in the convergence rate if the solution of the boundary integral equation exhibits such singularites. Anisotropic singularities, however, appear if the boundary under under consideration contains edges as this is the case, for example, for Fichera’s corner [21]. This gives rise to considering anisotropic tensor product wavelets, which are allowed to refine in one coordinate direction whilst staying coarse in the other coordinate direction. With such wavelet functions, the disadvantage of the isotropic wavelets might be overcome.

Anisotropic tensor wavelets for boundary integral equations have been considered first in [17] in the context of sparse tensor product approximations. In [24], for both isotropic and nonisotropic boundary integral operators which are discretized with respect to sparse tensor product spaces, a compression scheme has been developed. This scheme leads to an essentially linear number of the degrees of freedom therein, provided the underlying integro-differential operator is of the order \(2q> \frac{1}{2}(\sqrt{5}-1) > 0\). We, on the other hand, will construct in the present article a linearly scaling compression scheme for integral operators of arbitrary order which are discretized with respect to the full tensor product space. In particular, our compression estimates improve the results from [24]. Note, however, that the computation of the matrix entries of the compressed system matrix is not a topic of the present article. This can be done by using the techniques and results of [19, 36], exploiting the exponentially convergent quadrature scheme proposed in [27].

The rest of the article is structured as follows. In Sect. 2, we introduce the boundary integral equation to be solved. Then, in Sect. 3, we define the anisotropic wavelet basis we shall use for the discretization on the unit square. Estimates on the size of the entries of the respective Galerkin matrix with respect to the unit square are derived in Sect. 4. The wavelet matrix compression is proposed in Sect. 5. The number of the remaining nonzero matrix entries is counted in Sect. 6. In Sect. 7, we generalize the wavelet matrix compression to the boundary of a Lipschitz domain. Consistency and convergence of the wavelet matrix compression is proven in Sect. 8. In Sect. 9, we provide numerical experiments to validate the results derived. Finally, in Sect. 10, we state concluding remarks.

Throughout the article, let us replace generic constants by the notation \(A \lesssim B\), which means that \(A\) is bounded by a constant multiple of \(B\), and, similarly we define \(A \gtrsim B\) if and only if \(B \lesssim A\). If \(A \lesssim B\) and \(B \lesssim A\), we write \(A \sim B\). Moreover, if \({\textbf {j}} , {\textbf {j}} '\in \mathbb {N}_0^2\) are given multiindices, the inequality \({\textbf {j}} \le {\textbf {j}} '\) is understood componentwise. Especially, the notion \({\textbf {j}} <{\textbf {j}} '\) means that \({\textbf {j}} \le {\textbf {j}} '\) and \({\textbf {j}} \not ={\textbf {j}} '\). Finally, we set \(\textbf{1} \mathrel {\mathrel {\mathop :}=}(1,1)\).

2 Problem formulation

2.1 Parametrization

Throughout this article, we consider a bounded, piecewise smooth domain \(\varOmega \subset \mathbb {R}^3\) with Lipschitz boundary \(\varGamma \mathrel {\mathrel {\mathop :}=}\partial \varOmega \). We assume that \(\varGamma \) can be decomposed into \(r\) four-sided, smooth patches \(\varGamma _i\), \(i = 1, \ldots , r\), such that

This decomposition needs to be admissible, meaning that the intersection \(\varGamma _i \cap \varGamma _j\) is for \(i \ne j\) either empty, a common vertex, or a common edge of both \(\varGamma _i\) and \(\varGamma _j\), cf. Fig. 1. We next choose smooth diffeomorphisms \(\varvec{\gamma }_i: \square \mathrel {\mathrel {\mathop :}=}[0,1]^2\rightarrow \varGamma _i\) such that there exist constants \(c_i\) and \(C_i\) with

This parametrization should fulfil the matching condition that \(\varvec{\gamma }_i\) and \(\varvec{\gamma }_j\) coincide up to orientation at a common edge of two neighbouring patches \(\varGamma _i\) and \(\varGamma _j\).

For the current type of surfaces, Sobolev spaces \(H^s(\varGamma )\) can be introduced along the lines of [28, 32] for all \(-s_\varGamma \le s\le s_\varGamma \), where \(s_\varGamma \) depends on the global smoothness of the surface. For example, we have \(s_\varGamma = 1\) in the case of a Lipschitz continuous domain \(\varOmega \). Moreover, the inner product

can be continuously extended to the duality pairing \(H^{-s}(\varGamma )\times H^s(\varGamma )\).

2.2 Boundary integral equation

Let \(\mathcal {A}: H^q(\varGamma )\rightarrow H^{-q}(\varGamma )\) be a boundary integral operator which is for sufficiently smooth u pointwise defined by

In the following, we intend to calculate the solution \(u\in H^q(\varGamma )\) of the boundary integral equation

Typically, the kernel \(K\) is asymptotically smooth of the order \(2q\), that is, \(K\) is singular only on the diagonal \( \{ (\varvec{x}, \varvec{y}) \in \varGamma \times \varGamma : \varvec{x}= \varvec{y}\}\) and smooth apart from it in terms of

provided that \(2 + 2q + |\varvec{\upalpha }| + |\varvec{\upbeta }| > 0\). We assume that \(\mathcal {A}\) is bounded and also strongly elliptic on \(H^q(\varGamma )\), meaning that there exists a uniform constant \(c > 0\), such that for any \(u \in H^q(\varGamma )\), we have

where \(\mathcal {A}':H^q(\varGamma )\rightarrow H^{-q}(\varGamma )\) is the dual operator of \(\mathcal {A}\). Furthermore, for the sake of convenience, the operator \(\mathcal {A}\) is assumed to be injective. If this is not the case, but if its kernel is finite-dimensional and known in advance, then one can consider \(\mathcal {A}\) as an operator

and the presented approach is still valid, which for example is the case for any interior Neumann problem, where the kernel consists of all constant functions.

A practical example, which can be written as a boundary integral equation, is the Laplace problem with homogeneous Dirichlet boundary data in three spatial dimensions for given boundary data \(g \in H^{\frac{1}{2}}(\varGamma )\), i.e.,

It is well known that this problem is uniquely solvable. As described in detail in [28, 32], for example, we may write \(v\in H^1(\varOmega )\) as a layer potential of an unknown density \(u \in H^q(\varGamma )\), that is \(v = \mathcal {P}u\), where \(\mathcal {P}\) is a linear and continuous boundary potential operator from \(H^{q}(\varGamma )\) to \(H^1(\varOmega )\). By applying the trace operator to both sides of the equation \(v = \mathcal {P}u\), we arrive at the boundary integral equation

Especially, in the case of the single layer and the double layer potential, the kernels are given by

where \(\varvec{n}_{\varvec{y}}\) denotes the outward-directed normal at \(\varvec{y}\). It can easily be seen that \(K_s\) and \(K_d\) are asymptotically smooth kernels of the orders \(2q=-1\) and \(2q=0\), respectively. Operators of positive order arise for example from the hypersingular integral equation or in case of nonlocal diffusion, compare [14].

2.3 Galerkin scheme

By testing (2) with \(\phi \in H^{q}(\varGamma )\) with respect to the duality pairing on \(H^{-q}(\varGamma )\times H^q(\varGamma )\), we derive the variational formulation of the boundary integral equation under consideration:

Similar to [9], we are considering a sequence of nested trial spaces \(V_{{\textbf {J}} } \subset V_{{\textbf {J}} +{\textbf {1}} } \subset \dots \subset H^q(\varGamma )\), which is asymptotically dense in \(H^q(\varGamma )\). Here and in the remainder of this article, for \({\textbf {J}} \mathrel {\mathrel {\mathop :}=}(J,J)\), the space \(V_{{\textbf {J}} }\) denotes a trial space of piecewise polynomials on an isotropic mesh with mesh width of size \(\sim 2^{-J}\). For any fixed level J, we then restrict the variational formulation (6) to \(V_{{\textbf {J}} }\) to obtain the Galerkin problem

If \(V_{{\textbf {J}} } = {{\,\textrm{span}\,}}\{ \phi _1, \ldots , \phi _{N_J} \} \subset H^{q}(\varGamma )\), the Galerkin problem (7) is equivalent to the linear system of equations

where \(u_J = \sum _{k=1}^{N_J} c_k \phi _k\). Especially, by means of Cea’s Lemma, the solution \(u_J\in V_{{\textbf {J}} }\) satisfies an estimate of the form

Herein, the right-hand side can be estimated further by imposing more knowledge on the trial spaces \(V_{{\textbf {J}} }\).

3 Discretization

3.1 Single-scale bases

A natural choice of trial functions are piecewise polynomial functions, defined on the unit interval, tensorized, and then transported onto a surface patch \(\varGamma _i\). We postpone this transportation to Sect. 7 and consider the unit square first. To this end, we first have to consider the unit interval \(I = [0, 1]\). Given a level \(j\), we want to construct a space \(V_j\) with \(\dim V_j\sim 2^j\), which consists of piecewise polynomial functions on the dyadic intervals \([2^{-j}k, 2^{-j}(k+1)]\), \(k = 0, 1, \ldots , 2^{j}-1\). This is possible by choosing a suitable function \(\phi \) and then rescaling it according to

where \(\varDelta _j\) is a suitable index set. Note that this scaling implies \(\Vert \phi _{j, k}\Vert _{L^2([0, 1])}\sim 1\).

Outgoing from this construction, we can define any ansiotropic tensor product function and the corresponding tensor product spaces. In particular, for \({\textbf {j}} = (j_1, j_2) \in \mathbb {N}_0^2\), and \({\textbf {k}} = (k_1, k_2) \in \varDelta _{{\textbf {j}} } \mathrel {\mathrel {\mathop :}=}\varDelta _{j_1} \times \varDelta _{j_2}\), we define the tensor product function

With these functions, we define for \({\textbf {J}} =(J,J)\) the trial space

The spaces \(V_{{\textbf {J}} }\) are said to have the approximation order \(d \in \mathbb {N}\) given by

and the regularity \(\gamma \) given by

In the simplest case, we take piecewise constant scaling functions which are defined by the indicator function \(\phi \mathrel {\mathrel {\mathop :}=}\mathbb {1}_{[0, 1]}\). Then, for any fixed \(j \in \mathbb {N}\), we define the local trial functions

This yields the well-known approximation spaces \(V_j \mathrel {\mathrel {\mathop :}=}{{\,\textrm{span}\,}}\{ \phi _{j, k}: k \in \varDelta _j\}\), having the parameters \(\gamma = \frac{1}{2}\) and \(d = 1\).

In general, piecewise polynomial functions of the order \(r\) result in an approximation order \(d = r\). The regularity \(\gamma \) is, however, limited by the global smoothness of the trial functions. There holds \(\gamma = \frac{1}{2}\) if they are discontinuous while there is \(\gamma = \frac{3}{2}\) if they are continuous.

We note that in general, we could also define trial spaces \(V_{{\textbf {j}} }\) for any anisotropic level \({\textbf {j}} =(j_1, j_2)\), where in general, \(j_1\ne j_2\). This would, however, also require adaptations in the definitions of \(d\) and \(\gamma \).

3.2 Wavelet bases

Although the above method is very intuitive, we have a lot of difficulties to deal with. As the boundary integral operators under consideration are not local, the Galerkin problem results in fully populated matrices. This drawback can, up to logarithmic terms, be overcome with fast boundary element methods like the fast multipole method [16]. An alternative approach is to consider specific, linear combinations of piecewise polynomial trial functions, which are called wavelets. For a full introduction into this topic, see for example [13, 20, 30].

The general idea is to discretize the complement of \(V_{j-1}\) in \(V_j\). Roughly speaking, given a function \(u_j\) in \(V_j\), the projection \(Q_{j-1}u_j \in V_{j-1}\) is a good estimation on \(u_j\), and the difference \(u_j - Q_{j-1} u_j\) can be expressed in terms of complementary basis functions. To this end, we fix a minimal level \(j_0 \in \mathbb {N}_0\) and introduce complement spaces \(W_j\) for all \(j > j_0\), satisfying

Similar as before, \(W_j\) is spanned by basis functions of the form

where \(\psi \) is a so-called mother wavelet. Note that by construction, we have \(|\nabla _j|\sim 2^{j-1}\). Also here, there holds \(\Vert \psi _{j, k}\Vert _{L^2(\varGamma )} \sim 1\). We remark that the identity (9) implies that

For the sake of notational convenience, we set \(W_{j_0} \mathrel {\mathrel {\mathop :}=}V_{j_0}\) and denote \(\psi _{j_0, k} \mathrel {\mathrel {\mathop :}=}\phi _{j_0, k}\) for all \(k \in \nabla _{j_0}\mathrel {\mathrel {\mathop :}=}\varDelta _{j_0}\).

By tensorizing (11) with itself, for \({\textbf {J}} =(J,J)\), we arrive at

Note that the space \(V_{{\textbf {J}} }\) coincides with \(V_{{\textbf {J}} }\) from (8), especially it is the full trial space which is used to discretize the energy space.

In view of (10), we can write

with the tensor product wavelets \(\psi _{{\textbf {j}} , {\textbf {k}} } =\psi _{j_1,k_1}\otimes \psi _{j_2,k_2}\) and \(\nabla _{{\textbf {j}} } \mathrel {\mathrel {\mathop :}=}\nabla _{j_1} \times \nabla _{j_2}\).

3.3 Notation

Let us define some notation which we will use throughout the remainder of this article. First, for the sake of simplicity, with \({\textbf {j}} _0\) we always mean the multiindex \({\textbf {j}} _0 \mathrel {\mathrel {\mathop :}=}(j_0,j_0)\), and with a captial \({\textbf {J}} \), we always mean a multiindex \({\textbf {J}} = (J,J)\). Of course, \(J\) is variable, but \(j_0\) depends only on the chosen wavelet basis.

Next, we define the support of a wavelet as

and, accordingly,

Similarly, we define the singular support, i.e., the points at which a wavelet is not smooth, as

For a pair of wavelets \(\psi _{{\textbf {j}} , {\textbf {k}} }\) and \(\psi _{{\textbf {j}} ', {\textbf {k}} '}\), we let

Moreover, we also define

Finally, given a wavelet \(\psi _{j, k}\), we say that \(\psi _{j', k'}\) is located in the far-field of \(\psi _{j, k}\) if there holds \({{\,\textrm{dist}\,}}( \varOmega _{j, k}, \varOmega _{j', k'} ) \gtrsim 2^{- \min \{j, j' \}}\), otherwise, we say that \(\psi _{j', k'}\) is located in the near-field of \(\psi _{j, k}\). For the tensorized wavelets, this threshold is the maximal support length, which amounts to \(2^{- \min \{j_1, j_2, j_1', j_2'\}}\).

3.4 Some important wavelet properties

Wavelet functions have some very nice properties, see e.g. [8, 13, 30] for the full range of expressions. In this section, we will restrict ourselves to the most important ones, which are needed in the remainder of this article.

First, as already stated in [17], the set \(\varPsi \mathrel {\mathrel {\mathop :}=}\{ \psi _{{\textbf {j}} , {\textbf {k}} } :{\textbf {j}} \ge {\textbf {j}} _0, \ {\textbf {k}} \in \nabla _{{\textbf {j}} } \}\) forms a Riesz basis of \(L^2(\square )\), meaning that

provided that the one-dimensional wavelet basis forms a Riesz basis of \(L^2([0,1])\). Moreover, it is well-known (see e.g. [8, 13]) that a one-dimensional Riesz basis of \(L^2([0, 1])\) possesses a unique, dual basis which is biorthogonal to the primal one. By tensorizing this dual basis with itself, we get the dual basis of \(\varPsi \) in \(L^2(\square )\), which we denote by \(\widetilde{\varPsi }\). This dual basis then provides the approximation order \(\widetilde{d}\) and the regularity \(\widetilde{\gamma }>0\).

The primal and the dual basis satisfy the following norm equivalences:

Theorem 1

Let us denote \(H^s_{\circ } \mathrel {\mathrel {\mathop :}=}H^s\) for \(s \ge 0\), and \(H^s_{\circ } = \widetilde{H}^s\) for \(s < 0\), which is the dual space of \(H^{-s}\). Then, there holds

Proof

In accordance with e.g. [9, 30], the univariate wavelet basis satisfies

Thus, when \(0 \le s < \gamma \), we can use the identity \(H^s(\square ) = H^s([0,1])\otimes L^2([0,1])\cap L^2([0,1])\otimes H^s([0,1])\) and standard tensor product arguments to obtain

and likewise

Therefore, as \(2^{2sj_1}+2^{2sj_2} \sim 2^{2\,s|{\textbf {j}} |_\infty }\), we arrive at

The same arguments also allow us to show (13) for \(0 \le t< \widetilde{\gamma }\).

In case of \(-\widetilde{\gamma }< s < 0\), we use a duality argument. We find that

where we have used (13) for \(0 \le -s < \widetilde{\gamma }\). This shows the lower bound in (12). For the upper bound, we remark that

and

This proves (12). With the same arguments, we can also show (13) for \(- \gamma< t < 0\).\(\square \)

Remark 1

For a smaller range of parameters, this has already been shown in [17], while similar norm equivalences have been derived in [5, 29] for wavelets which are at least continuous. Moreover, we should mention that the upper bounds in (12) and (13) can be extended to \(-\widetilde{d}< s < \gamma \), and \(- d< t < \widetilde{\gamma }\), respectively, whereas the lower bounds can be extended to \(- \widetilde{\gamma }< s < d\), and \(-\gamma< t < \widetilde{d}\), respectively, see e.g. [30] for the details.

For a multiindex \({\textbf {j}} \), let us define the (non-orthogonal) projections

provided that \(u \in H^s_ {\circ }(\square )\) for some \(s > -\widetilde{\gamma }\). By using a tensor product argument, the duality and the biorthogonality, the univariate approximation property, which is derived e.g. in [30], generalizes to

Moreover, there holds Bernstein’s inequality

and, regarding \(Q_J u \in V_{\textbf {J}} \), also

Perhaps the most important property of wavelets for the present article is that they have vanishing moments, also called cancellation property, which is induced from the approximation order of the dual basis. Namely, in accordance with [8], there holds

By explicitly enrolling the tensor product structure of the wavelet \(\psi _{{\textbf {j}} , {\textbf {k}} }\), we can immediately deduce that

Herein, for \(k\in \mathbb {N}_0\), the seminorm \(|u|_{W^{k,\infty }(\varXi )} = \max _{|\varvec{\upalpha }|= k} \Vert \partial ^{\varvec{\upalpha }}u\Vert _{L^{\infty }(\varXi )}\) denotes the \(W^{k,\infty }\)-seminorm on \(\varXi \).

Remark 2

Due to the tensor product structure of the wavelets, we must tensorize scaling functions on the coarsest level with wavelets on a finer level. This means that we cannot use \(\widetilde{d}\) vanishing moments in both directions. However, if \(\mathcal {I}\subset \{j_1, j_2\}\) denotes the subset of indices corresponding to univariate wavelets with \(\widetilde{d}\) vanishing moments, we have the estimate

4 Matrix entry estimates

In order to develop a compression scheme for the operator \(\mathcal {A}\) with respect to the wavelet basis \(\varPsi \), we need to estimate the matrix entries in the Galerkin matrix. For now, let us consider the situation \(r = 1\), where the only patch present is the unit square \(\square \), in which case we can assume that \(\varvec{\gamma }_i = \text {id}\). The discussion of the situation on a Lipschitz manifold is postponed to Sect. 7.

4.1 Far-field estimates

For the remainder of Sect. 4.1, we assume that \(\delta _ {{\text {tot}}} > 0\), which means that the first compression [9, 20, 30] applies. There exist estimations for the entries by Reich [24, 25], which make use of the vanishing moments in the one-dimensional wavelets with the smallest corresponding support lengths. This is especially useful when considering thin, long wavelets with a small distance. We quote the following result.

Theorem 2

([24, Theorem 2.1.9]) For \({\textbf {j}} , {\textbf {j}} '\ge {\textbf {j}} _0\), there holds

Here, \(\big \{j^{(1)}, j^{(2)}\big \}\subset \{ j_1, j_1', j_2, j_2'\} \cap [j_0+1, \, \infty )\) can be any two distinct indices, the best behaviour is obtained by choosing the two largest indices.

Let us next derive an estimate which makes use of the vanishing moments in every one-dimensional wavelet, which is beneficial if the supports of the wavelets \(\psi _{{\textbf {j}} , {\textbf {k}} }\) and \(\psi _{{\textbf {j}} ', {\textbf {k}} '}\) are small.

Theorem 3

For \({\textbf {j}} , {\textbf {j}} ' \ge {\textbf {j}} _0+\textbf{1}\), there holds

Proof

By explicitly enrolling the tensor product structure of the wavelets, we can write

We can use the vanishing moments of \(\psi _{j_2', k_2'}\) to deduce that

Note that we can differentiate under the integral because the kernel \(K\) is smooth and bounded on \(\varOmega _{{\textbf {j}} , {\textbf {k}} } \times \varOmega _{{\textbf {j}} ', {\textbf {k}} '}\). The vanishing moments of \(\psi _{j_1', k_1'}\) then allow us to proceed with the estimate to

By subsequently using the vanishing moments of \(\psi _{j_2, k_2}\), and \(\psi _{j_1, k_1}\) as well, we finally arrive at

If we remember the fact that the kernel \(K\) is asymptotically smooth of order \(2q\), compare (3), we can deduce (17).\(\square \)

4.2 Near-field estimates

As we will see in Sect. 5, we may use the previous estimates only if a wavelet pair is in the far-field, meaning that the supports are sufficiently far away. For the near-field, we need to derive different estimates. In this case, we explicitly enrol the tensor product structure of the wavelets again. We will use an approach which is similar to the one created in [24].

To this end, we define the dimensionally reduced kernel

and the operator \( \mathcal {A}_1\) as the integral operator with the kernel \(K_1\). By definition, the kernel \(K_1\) depends on the wavelets \(\psi _{j_2, k_2}\) and \(\psi _{j_2', k_2'}\), but the context will always clarify this relation.

Due to the tensor product structure, the dimensionally reduced operator obviously satisfies \(\langle \mathcal {A}\psi _{{\textbf {j}} ', {\textbf {k}} '}, \psi _{{\textbf {j}} , {\textbf {k}} } \rangle _{\square } = \langle \mathcal {A}_1 \psi _{j_1', k_1'}, \psi _{j_1, k_1} \rangle _{[0,1]}\). Moreover, due to [24, Lemma 2.1.5], the estimate

holds for any \(x,x' \in [0, 1]\) with \(x\ne x'\). However, there are also vanishing moments of the wavelets hidden in the kernel \(K_1\), which can be used to improve the estimate (19) and hence also Theorem 2.1.7 in [24]:

Theorem 4

Assume that \(0 < \sigma _{x_1} \lesssim 2^{- \min \{j_1, j_1'\}}\), and \(\max \{j_1, j_1'\}, \max \{j_2, j_2'\} > j_0\). Then, we have

Proof

We will simply derive the appropriate estimate for the kernel \(K_1\) similar to (19). Then, the rest of the proof may be completed by simply following the arguments of [24].

If \(x \ne x'\), then the function under the integral in (18) is bounded, so we may directly differentiate under the integral. Moreover, let us without loss of generality assume that \(j_2 > j_2'\). Then, for \(x,x' \in [0, 1]\), with \(x\ne x'\),

where for the last line, we have used the property \(|\varOmega _{j_2', k_2'}| \lesssim 2^{-j_2'}\).

As the remainder of the proof is based on the ideas of [9, Section 6], we just sketch it. Without loss of generality, we may assume that \(j_1' \le j_1\). In this case, \(\psi _{j_1, k_1}\) is located on a smooth part of the wavelet \(\psi _{j_1', k_1'}\), so we may decompose \(\psi _{j_1', k_1'} = \tilde{f} + \bar{f}\) such that \(\tilde{f}\) is a smooth function satisfying

This can be realized by Calderón’s extension theorem [31] with \(\Vert \tilde{f}\Vert _{H^s([0, 1])}\lesssim 2^{s j_1'}\). Hence, we have

The estimate for \(\bar{f}\) follows directly from [24, Lemma 2.1.1].

For the function \(\tilde{f}\), we define the operator

where \(\chi \in C^{\infty }_c(\mathbb {R})\) is a smooth cutoff function \(\chi \) satisfying \(\chi |_{[0,1]} = 1\). Then, \(\mathcal {A}_1^\sharp \) is a pseudo-differential operator of the order \(m = 1 + 2q + \widetilde{d}\), cf. [22]. Remarking that in our case, we have \(c(j_2, j_2') = 2^{- \frac{1}{2}(j_2 + j_2')} 2^{-\widetilde{d}j_2}\), we may apply [24, Lemma 2.1.4] and the fact that \(\sigma _{x_1} \lesssim 2^{- j_1'}\) to conclude.\(\square \)

Remark 3

Up to now, we have just considered a reduction to the first coordinate direction. Nevertheless, as also done in [24], a reduction to the second coordinate is possible by using a similar definition for the operator \(\mathcal {A}_2\), and the same estimates hold with exchanged indices.

5 Matrix compression scheme

To keep the number of the degrees of freedom small, we need to introduce a compression scheme, according to which many matrix entries do not have to be calculated, whilst obtaining convergence with the full rate offered by the underlying Galerkin scheme. We differ between the first compression and the second compression, but for either case, we require a matrix block error which is controlled by a level dependent parameter \(\sigma _{{\textbf {j}} , {\textbf {j}} '}\) given by

Here, \(d' > d\) and \(\kappa > 0\) are sufficiently small, but fixed real numbers, which are introduced in order to avoid logarithmic terms in the consistency estimates.

5.1 Far-field: first compression

In the case of the first compression, we consider a pair of wavelets \(\psi _{{\textbf {j}} , {\textbf {k}} }\) and \(\psi _{{\textbf {j}} ', {\textbf {k}} '}\), whose supports are located sufficiently far away from each other. As we will see, we need to estimate a sum of matrix coefficients by an integral, which requires that the minimal distance between the respective wavelets’ supports is large enough. In two dimensions, we must have a minimal distance, which is at least as wide the largest face of the included supports, namely \(2^{- \min \{j_1, j_2, j_1', j_2'\}}\).

If this is not the case, however, we can make use of the tensor product structure and estimate the sum only in the coordinate direction of \(x_i\), which results in a minimal distance of \(2^{- \min \{j_i, j_i'\}}\) in this direction. This procedure basically follows [24], but is adapted here to the setting on the full tensor product space.

5.1.1 Compression in the \(\varvec{x}\)- and \(\varvec{y}\)-coordinate

For a fixed maximal level \(J\), we define the compressed matrix for the first compression \({\textbf {A}} _J^{c_1,1}\) as

for \({\textbf {k}} \in \nabla _{{\textbf {j}} }\), \({\textbf {k}} ' \in \nabla _{{\textbf {j}} '}\), and \(|{\textbf {j}} |_\infty , |{\textbf {j}} '|_\infty \le J\). Herein, the cutoff parameter \(\mathcal {B}_{{\textbf {j}} , {\textbf {j}} '}\) is given as

where \(a > 0\) is a fixed real number.

Theorem 5

Let \({\textbf {R}} _J \mathrel {\mathrel {\mathop :}=}{\textbf {A}} _J - {\textbf {A}} _J^{c_1,1}\). Then, for the matrix block \({\textbf {R}} _{{\textbf {j}} , {\textbf {j}} '}\), we have the estimate

with a generic constant that is independent of the refinement level \(J\).

Proof

We advance similar as in [9] and recall that \({\textbf {j}} , {\textbf {j}} ' \ge {\textbf {j}} _0 + {\textbf {1}} \). First, we define the set

Then, we estimate the column sum of the block \({\textbf {R}} _{{\textbf {j}} , {\textbf {j}} '}\) by

where the last inequality is due to Theorem 3. By the compression rule (21), we have the relation \(\delta _{{\text {tot}}} \ge \mathcal {B}_{{\textbf {j}} , {\textbf {j}} '}\), and, since also \(\mathcal {B}_{{\textbf {j}} , {\textbf {j}} '} \gtrsim 2^{- \min \{j_1, j_2\}}\), we can estimate the sum by an integral, yielding

As we also have \(\mathcal {B}_{{\textbf {j}} , {\textbf {j}} '} \ge a 2^{\frac{\sigma _{{\textbf {j}} , {\textbf {j}} '} - \widetilde{d}(|{\textbf {j}} |_1 + |{\textbf {j}} '|_1)}{2q + 4 \widetilde{d}}}\), we obtain

Using exactly the same arguments, we can likewise derive the estimate for the row sums

Similar to [9], we now use the estimate for the operator norm of a matrix

which gives us, together with (23), (24), the desired result

Remark 4

Similar to [24], using Theorem 2 and the cutoff parameter

where \(\big \{j^{(1)},j^{(2)}\big \}\subset \{j_1,j_2,j_1',j_2'\}\), we have a compression scheme

The requirement \(j^{(1)}, j^{(2)} > j_0\) is necessary to ensure the validity of Theorem 2.

By modifying the appropriate calculations, we get that the corresponding difference matrix \({\textbf {R}} _J = {\textbf {A}} _J - {\textbf {A}} _J^{c_1, 2}\) satisfies

This will be important when we consider the complexity since we can also compress matrix blocks where scaling functions are involved in at most two coordinate directions.

5.1.2 Compression in only one coordinate direction

As remarked earlier, we need at least that \(\delta _{{\text {tot}}} \gtrsim 2^{- \min \{j_1, j_2, j_1', j_2'\}}\) in the proof of Theorem 5 to estimate the row and column sums of the matrix blocks by an integral. If this is not the case, we may estimate the sum by an integral in just one coordinate direction \(x_i\). This leads to restrictions on the distance in only this coordinate direction. Especially when the term \(2^{-\min \{j_1, j_2, j_1', j_2'\}}\) in (22) is too large, this approach is beneficial. As all the derivations can be found in [24], we just quote the results. We also remark that we have exchanged the \(|\cdot |_1\)-norms from [24] with \(|\cdot |_\infty \)-norms in (20), since we are not working on a sparse tensor product space but on the full tensor product space.

Let us define the parameters

We can then define the compressed value

and then the compressed matrix by the rule

The latter definition is just a restriction of the compression to the matrix blocks \({\textbf {A}} _{{\textbf {j}} , {\textbf {j}} '}\), for which \(j^{(1)}, j^{(2)} > 0\), meaning that we can use Theorem 2 to estimate the corresponding matrix entries.

With these definitions, out of the proof of Theorem 2.3.1 in [24], one immediately obtains the following result:

Theorem 6

Let \({\textbf {R}} _J \mathrel {\mathrel {\mathop :}=}{\textbf {A}} _J - {\textbf {A}} _J^{c_1,3}\). Then, the compressed matrix blocks satisfy the estimate

with a generic constant that is independent of the refinement level \(J\).

By combining (21), (25), and (26), we can define the first compression of the matrix by

This compression affects the far-field of the system matrix in wavelet coordinates.

5.2 Near-field: second compression

Up to now, we have considered wavelets with disjoint and distant supports. As we will see, we can also discard many entries if the supports of the wavelet pairs are close or even if they overlap, where a strict requirement is that the distance of the support of the smaller wavelet to the singular support of the bigger wavelet is sufficiently large.

We will only use one direction for the second compression as done by Reich in [24, 25], but with improved parameters. We define

Then, the compressed values are given by

Similar as in the first compression, we define the corresponding compressed matrices as

Combining these two compression schemes leads to the second compressed matrix

Remark 5

It suffices to compress only the entries with \(a2^{- \min \{j_1, j_2, j_1', j_2' \}} \ge \delta _{x_1}, \delta _{x_2}\). Otherwise, we have

and the first compression applies, meaning that either the entries are zero, or that, as we will see, there are only \(\mathcal {O}(2^{2J})\) such entries.

For the remainder of this section, let us without loss of generality assume that \(j_1' \le j_1\). The following estimate holds:

Theorem 7

The matrix blocks of the perturbed matrix \({\textbf {R}} _J \mathrel {\mathrel {\mathop :}=}{\textbf {A}} _J - {\textbf {A}} _J^{c_2}\) satisfy the estimate

with a generic constant that is independent of the refinement level \(J\).

Proof

It suffices to consider the two coordinate directions separately. For \({\textbf {A}} _J^{c_2, 1}\), we first consider the case where \(\delta _{x_1} \le a2^{- \min \{j_1, j_1' \}}\) and \(\delta _{x_2} > a2^{- \min \{j_2, j_2' \}}\).

First, assume that \(j_1' \ne \min \{ j_1, j_2, j_1', j_2'\}\). Since we assumed that \(j_1' \le j_1\), this means that either \(j_2 < j_1'\), or \(j_2' < j_1'\), so we have \( \min \{j_2, j_2'\} = \min \{ j_1, j_2, j_1', j_2'\}, \) resulting in

Hence, according to the definition of \(w_{({\textbf {j}} ,{\textbf {k}} ),({\textbf {j}} ',{\textbf {k}} ')}^{(1)}\), we do not compress such entries here and thus they do not contribute to the block error.

Let us therefore consider the case where \(j_1' = \min \{j_1, j_2, j_1', j_2' \}\). For the sake of comfortability, we define the index sets

and likewise,

As one readily verifies, the cardinality of these sets is bounded by

Next, we recall that, according to Theorem 4, we have the estimate

This allows us to estimate the column sums by

Similarly, we may estimate the row sums by

Hence, we can argue in complete analogy to the proof of Theorem 5.

By using exactly the same arguments, but with interchanging the coordinate directions, we may also control the compression error of the entries, for which \(\delta _{x_1} > a2^{- \min \{j_1, j_1'\}}\) and \(\delta _{x_2} \le a2^{- \min \{j_2, j_2'\}}\). This implies the control of the error for the whole matrix \({\textbf {A}} _J^{c_2, 1}\).

For the matrix \({\textbf {A}} _J^{c_2, 2}\), we may use exactly the same arguments as in the proof of Theorem 2.3.2 in [24], with the only adaptation being that we have to use Theorem 4 to estimate the matrix entries instead of Theorem 2.1.7 in [24].\(\square \)

Finally, by using an additive argument, we can pose the main theorem of this section.

Theorem 8

Consider the compressed matrix

Then, the block error is controlled by

with

Remark 6

We have improved both the parameters \(\mathcal {E}_{{\textbf {j}} , {\textbf {j}} '}\) and \(\mathcal {F}_{{\textbf {j}} , {\textbf {j}} '}^{x_i}\) in contrast to [24]: For both \(\mathcal {E}_{{\textbf {j}} , {\textbf {j}} '}\) and \(\mathcal {F}_{{\textbf {j}} , {\textbf {j}} '}^{x_i}\), we gain an additional factor

in the above error estimates, but we have to pay another \(\widetilde{d}\) in the denominator. This is not mandatory to ensure linear complexity, but it reduces the number of required vanishing moments, for example in the case of piecewise constant wavelets for the single layer operator to as few as three, as it is the case if an isotropic wavelet basis is used.

Much more important, we win the additional factor

for \(\mathcal {E}_{{\textbf {j}} , {\textbf {j}} '}\) in the above error estimates. This is strictly necessary to ensure a linear complexity.

6 Complexity

We are now going to count the number of nonzero matrix coefficients of the compressed matrix \({\textbf {A}} _J^c\) and show that this number is asymptotically bounded by \(N_J = 2^{2J}\). In the arguments below, it is crucial that the exponents are positive to estimate the sum asymptotically by the largest term. To this end, for the sake of simplicity, we will for the remainder of this section assume that \(\kappa > 0\) is sufficiently small. This is not problematic since we only have a bounded number of restrictions on \(\kappa \), depending only on the uniform constants \(d'\), \(\widetilde{d}\), and the order of the operator \(2q\). Moreover, as we will see, we need to require the inequalities

Since this inequality is strict, there will especially always be space for a small \(\kappa > 0\), which can be inserted between \(d'\) and the minimum over both these terms.

First of all, we note that the restriction of the compression to the appropriate matrix blocks in (25), (28), and (29) never causes a problem. Indeed, if we cannot compress a matrix block, then at least two indices in the set \(\{j_1, j_2, j_1', j_2'\}\) are equal to \(j_0\). In particular, as \(\dim V_{{\textbf {j}} _0} \sim 2^{2 j_0}\), there are only \(\mathcal {O}(2^{2j_0}) = \mathcal {O}(1)\) rows and columns corresponding to such situations. As every row and column contains at most \(\mathcal {O}(N_J)\) entries, these are at most \(\mathcal {O}(N_J)\) such entries in total.

For the compressed blocks, we organize the proof in the following steps. First, we split up the unit square into at most nine regions, compare Fig. 2, corresponding to the distance in each coordinate direction being either big or small. These nine regions correspond to the four possible cases

In Sect. 6.1, we will show that already the first compression gives a linear complexity when there holds

Then, in Sect. 6.2, we consider the wavelet pairs whose supports are closer together than \(a 2^{- \min \{j_1, j_2, j_1', j_2' \}}\) and we will show the linear complexity for those regions as well.

Graphical illustration of the regions described in (32). Note that there are at most nine different regions

6.1 Complexity of the first compression

We advance by the type of the compression which is performed on the matrix entries. First, we count all the nontrivial entries remaining from the compression scheme (21) in the case when

Theorem 9

Assume that we set all matrix entries to zero where the underlying wavelets satisfy \(\delta _{{\text {tot}}} > \mathcal {B}_{{\textbf {j}} , {\textbf {j}} '}\) with \(\mathcal {B}_{{\textbf {j}} , {\textbf {j}} '}\) given by (33). Then, only \(\mathcal {O}(2^{2J})\) nontrivial entries remain.

Proof

In any column of a block \({\textbf {A}} _{{\textbf {j}} , {\textbf {j}} '}\), we find \(\mathcal {O}(2^{|{\textbf {j}} |_1} \mathcal {B}_{{\textbf {j}} , {\textbf {j}} '}^2)\) entries, for which the distance of the supports is bounded by \(\mathcal {B}_{{\textbf {j}} , {\textbf {j}} '}\). Since there are \(\mathcal {O}(2^{|{\textbf {j}} '|_1})\) columns in such a block, there are at most \(\mathcal {O}(2^{|{\textbf {j}} |_1 + |{\textbf {j}} '|_1} \mathcal {B}_{{\textbf {j}} , {\textbf {j}} '}^2)\) nonzero entries per block. Hence, the total complexity for the whole matrix is given by

To calculate the sum, we explicitly enrol the indices \(j_1\) and \(j_2\). Then, after calculating the two sums over \(j_2\), and shifting the index in the second sum, we obtain

Inserting this result back into (34), we obtain

which is what we wanted to show.\(\square \)

Remark 7

If we tensorize scaling functions on the coarsest level with wavelets, we must use the cutoff parameter from Remark 4. However, by using very similar arguments as in the proof above, one concludes that there are only \(\mathcal {O}(2^{2J})\) nontrivial entries in this case. If we enrol the expression explicitly, we may assume that \(j^{(1)} = |{\textbf {j}} |_\infty \) and \(j^{(2)} = |{\textbf {j}} '|_\infty \). Since \(q< d < d'\), the exponent in the second sum in (35) will then be negative, but thus the sum can be estimated by the lower limit in the exponent, which is \(j_1\).

Remark 8

Theorem 9 and Remark 7 impliy that there are only \(\mathcal {O}(2^{2J})\) entries in the region \(\text {(I)}\). Indeed, in view of (22), if \(\mathcal {B}_{{\textbf {j}} , {\textbf {j}} '}= a 2^{- \min \{j_1, j_2, j_1', j_2'\}}\), then the entries in the region \(\text {(I)}\) are all set to zero. Otherwise, there are at most \(\mathcal {O}(2^{2J})\) nontrivial entries by Theorem 9 or Remark 7, respectively.

6.2 Complexity of the second compression

In the next step, we want to cover the regions \(\text {(I}\!\text {I})\) and \((\text {I}\!\text {I}\!\text {I})\) in (32). Due to the symmetry in the problem, these two regions yield the same complexity, so we may only consider the region \((\text {I}\!\text {I})\), that is, \(\delta _{x_1} \lesssim 2^{- \min \{j_1, j_1' \}}\) and \(\delta _{x_2} \gtrsim 2^{- \min \{j_2, j_2' \}}\). For the sake of simplicity, let us for the remainder of this section assume that \(j_1' \le j_1\), as the case \(j_1' > j_1\) follows directly by exchanging \({\textbf {j}} \) and \({\textbf {j}} '\).

Lemma 1

Consider all matrix entries in \({\textbf {A}} _{J}^{c}\) such that the underlying wavelet pairs \(\psi _{{\textbf {j}} , {\textbf {k}} }\) and \(\psi _{{\textbf {j}} ', {\textbf {k}} '}\) satisfy

Then, after the combination of the compression schemes (27) and (28), these are at most \(\mathcal {O}(2^{2J})\) nontrivial entries.

Proof

We may without loss of generality assume that \(j_1' = \min \{j_1, j_2, j_1', j_2'\}\). If not, then we must have either \(j_1' > j_2\) or \(j_1' > j_2'\) since we assumed \(j_1' \le j_1\). Therefore, we would have

Hence, these entries are either trivial or there are only \(\mathcal {O}(2^{2J})\) of them due to Sect. 6.1. As a consequence, we may especially assume in the following \(j_1' \le \min \{j_2, j_2'\}\).

We remark that we only have to consider the situation

since if \(\mathcal {D}_{{\textbf {j}} , {\textbf {j}} '}^{x_2} = a 2^{- \min \{j_2, j_2'\}}\), in view of (36), all entries are compressed.

In order to estimate the nontrivial entries, we need to consider four different cases: First, if \(j_2 \le j_2'\) and \(|{\textbf {j}} |_\infty = j_1\), we have \(j_1'\le j_2 \le j_1, j_2'\). Hence, by using \(j^{(1)} \mathrel {\mathrel {\mathop :}=}j_1\) and \(j^{(2)} \mathrel {\mathrel {\mathop :}=}j_2'\), we conclude

Second, if \(j_2 \le j_2'\) and \(|{\textbf {j}} |_\infty = j_2\), then we have the order \(j_1' \le j_1 \le j_2 \le j_2'\), so with \(j^{(1)} \mathrel {\mathrel {\mathop :}=}j_2'\), \(j^{(2)} \mathrel {\mathrel {\mathop :}=}j_2\), we may directly sum up

Third, if \(j_2' \le j_2\) and \(|{\textbf {j}} |_\infty = j_1\), we have \(j_1' \le j_2' \le j_2 \le j_1\), therefore the choice \(j^{(1)} \mathrel {\mathrel {\mathop :}=}j_1\), \(j^{(2)} \mathrel {\mathrel {\mathop :}=}j_2\) yields

Finally, if \(j_2' \le j_2\) and \(|{\textbf {j}} |_\infty = j_2\), we also have \(j_1' \le j_1 \le j_2\). If we choose \(j^{(1)}\mathrel {\mathrel {\mathop :}=}j_2\) and \(j^{(2)}\mathrel {\mathrel {\mathop :}=}j_1\), then the complexity reads as

\(\square \)

Let us now consider the last possible situation, that is, we suppose that

which describes the region \((\text {I}\!\text {V})\) in (32), or, the near-field region in Fig. 2. In this case, the compression scheme (29) applies. Remember that we still assume that \(j_1' \le j_1\).

Lemma 2

Consider all matrix entries in \({\textbf {A}} _{J}^{c}\) such that the underlying wavelet pairs \(\psi _{{\textbf {j}} , {\textbf {k}} }\) and \(\psi _{{\textbf {j}} ', {\textbf {k}} '}\) satisfy

Then, after the compression scheme (29), these are at most \(\mathcal {O}(2^{2J})\) nontrivial entries.

Proof

It suffices to count the respective matrix entries of \({\textbf {A}} _J^{c_2, 2}\). Suppose first that \(j_2' \ge j_2\). In this case, the number of nontrivial entries in the matrix block \([{\textbf {A}} _J^{c_2, 2}]_{{\textbf {j}} , {\textbf {j}} '}\) can be estimated by

Remarking that for \(\kappa > 0\) sufficiently small, we have \(d' - 2 \kappa > 0\), so the properties \(|{\textbf {j}} |_\infty \ge j_1\) and \(|{\textbf {j}} '|_\infty \ge j_2'\) imply that the number of entries can be estimated by

As the indices can be exchanged, the two sums are equal and can be estimated by

Putting everything together, we obtain that

In the case where \(j_2 \ge j_2'\), we need to argue in a slightly different way. By the assumption \(j_1' \le j_1\), we have at least \(\mathcal {O}(2^{|{\textbf {j}} '|_1})\) nontrivial entries in the matrix block \([{\textbf {A}} _J^{c_2, 2}]_{{\textbf {j}} , {\textbf {j}} '}\), as at every point at which the singular supports \(\varOmega _{j_1', k_1'}^\sigma \) and \(\varOmega _{j_2', k_2'}^\sigma \) intersect, there is at least one smaller wavelet \(\psi _{{\textbf {j}} , {\textbf {k}} }\) touching it. On the other hand, every nontrivial entry satisfies \(\sigma _{x_1}\le a \mathcal {F}_{{\textbf {j}} , {\textbf {j}} '}^{x_1}\) and \(\sigma _{x_2} \le a\mathcal {F}_{{\textbf {j}} , {\textbf {j}} '}^{x_2}\), so the number of nontrivial entries in a block \([{\textbf {A}} _J^{c_2, 2} ]_{{\textbf {j}} , {\textbf {j}} '}\) can be estimated by

If the maximum in (38) is equal to \(1\), then it is easy to see that

If, however, the maximum in (38) is not equal to \(1\), we have

Using again that \(d' - 2 \kappa > 0\), \(|{\textbf {j}} |_\infty \ge j_1\), \(|{\textbf {j}} '|_\infty \ge j_2'\), one obtains

The first sum can be treated as in the previous case, whereas for the second sum, there holds

Hence, we can conclude that also \(\mathcal {C}^{(2)} \lesssim 2^{2J}\).\(\square \)

With the preceeding three lemmata and Theorem 9 at hand, we conclude the main result of this section.

Theorem 10

Assume that (31) holds. Then, the compressed matrix \({\textbf {A}} _J^c\) arising from (30) contains at most \(\mathcal {O}(2^{2J})\) nontrivial entries.

Remark 9

For the piecewise constant (\(d=1\)) and piecewise bilinear (\(d=2\)) wavelets, used most often in practice, one needs at least the following number of vanishing moments:

If \(2q = 1\), then using piecewise constant wavelets is mathematically not meaningful because the energy space \(H^{\frac{1}{2}}(\varGamma )\) cannot be discretized by discontinuous trial functions. Note that these are the same values as in the setting of isotropic wavelet bases, compare [9].

7 The situation on a Lipschitz manifold

Up to now, we have only considered the situation on the unit square. As stated in Sect. 2, we are particularly interested in the solution of a boundary integral equation posed on the boundary \(\varGamma \) of a Lipschitz domain \(\varOmega \subset \mathbb {R}^3\). Recall that the boundary \(\varGamma \) is given as the union of the patches \(\varGamma _i\), which can be smoothly parametrized by \(\varvec{\gamma }_i: \square \rightarrow \varGamma _i\). Moreover, we assume that the parametrizations \(\varvec{\gamma }_i\) and \(\varvec{\gamma }_j\) coincide up to the orientation on a common edge of \(\varGamma _i\) and \(\varGamma _j\).

We define the Sobolev spaces \(H^s(\varGamma )\) according to [32]. We remark that the norm \(\Vert \cdot \Vert _{H^s(\varGamma )}\) depends on the parametrizations \(\varvec{\gamma }_i\). However, there can be shown that these norms are equivalent, regardless which parametrizations are chosen [32, 37]. Recall that \(|s| \le 1\) would hold on a Lipschitz smooth surface. For a \(C^{k, \alpha }\)-surface, it is possible to define these spaces up to \(|s| \le k + \alpha \), compare [37] for example.

7.1 Globally discontinuous wavelets

Similar to the existing literature, see e.g. [9, 30], we discretize the energy space \(H^q(\varGamma )\) with \(2q<1\) by transporting the wavelet functions from the unit square onto \(\varGamma \). Precisely, we define a basis function as

and then consider the basis set

To construct a trial space on the level \(J\), we proceed as on the unit square, meaning that we cut the basis off at the level \(J\), obtaining

Note that the above wavelets are supported on a single patch \(\varGamma _i\) only. In general, they are not continuous over the patch boundaries, so they only attain a regularity of \(\gamma = \frac{1}{2}\), regardless how smooth the wavelets are piecewise. It is possible to construct wavelets which are continuous over the patch boundaries, see e.g. [12], but \(\gamma = \frac{1}{2}\) is sufficient if we want to discretize an operator of nonpositive order.

Because the wavelets are supported on a single patch, and each parametrization and cutoff function is smooth, one can generalize all the wavelet properties stated in Sect. 3.4. For the same reason, the Lipschitz continuity of the surface is no restriction in using \(\widetilde{d} > 1\) vanishing moments for the cancellation property (16), since we do not have to consider the behaviour of the test function over the patch boundaries, and the expressions \(|\cdot |_{W^{\widetilde{d}, \infty }(\varOmega _{i, {\textbf {j}} , {\textbf {k}} })}\) are well-defined.

Finally, as the spaces \(V_{{\textbf {J}} }\) coincide with the space spanned by all lifted, isotropic scaling functions on the level \(J\), we can directly quote the following lemma, compare [35].

Lemma 3

For a continuous, strongly elliptic and injective operator \(\mathcal {A}: H^q(\varGamma ) \rightarrow H^{-q}(\varGamma )\), the Galerkin discretization is stable, meaning that

for any sufficiently large \(J\), and

Furthermore, let \(u\) be the solution of (5), and \(u_J\) the solution of (7). Then, we have the convergence

provided that \(u \in H^s(\varGamma )\) and that \(\varGamma \) is sufficiently regular to ensure that the involved Sobolev spaces are well-defined.

Remark 10

The condition that \(\mathcal {A}\) is injective is, as already stated, not strictly necessary. It suffices if the kernel is finite-dimensional and known in advance.

7.2 Matrix estimates for discontinuous wavelets

As we will see, all the matrix estimates on a given surface can be concluded from the matrix estimates on the unit square. Depending on the situation of the two patches on which the wavelets are supported, we need to differ between several cases. To this end, for \(\hat{\varvec{x}}, \hat{\varvec{x}}' \in \square \), let

with \(1\le i,i'\le r\) denote the transported kernel function. With the transported kernel function at hand, we find that

where we define \({\hat{\mathcal {A}}}_{i,i'}\) as the integral operator with the transported kernel \({\hat{K}}_{i,i'}\). Since the local parametrizations \(\varvec{\gamma }_i\) and \(\varvec{\gamma }_{i'}\) are smooth, the transported kernel functions also satisfy the decay property

for almost all \( (\hat{\varvec{x}}, \hat{\varvec{x}'}) \in \square \times \square \), provided that \(2 + 2q + |\varvec{\upalpha }| + |\varvec{\upalpha }'| > 0\). Therefore, in view of (40) and (41), the far-field estimates of Sect. 4.1 hold true also in case of piecewise smooth Lipschitz manifolds.

7.2.1 Wavelets supported on the same patch

Let us look at the easiest situation first. If we consider the interaction between \(\psi _{i,{\textbf {j}} ,{\textbf {k}} }\) and \(\psi _{i,{\textbf {j}} ',{\textbf {k}} '}\), we can use the relations (40) and (41) together with

to conclude that the situation on a single patch is equivalent to that of the unit square. Therefore, also the near-field estimates are valid one-to-one and we find at most \(\mathcal {O}(2^{2J})\) nontrivial entries in the matrix block associated with \(\varGamma _i \times \varGamma _i\). Especially, the compression error in each matrix block satisfies the same estimates as in Sect. 5.

7.2.2 Patches with a common edge

Let us now assume that \(\varGamma _i\) and \(\varGamma _{i'}\) share a common edge. For the sake of simplicity, we assume that the common edge \(\varSigma \) satisfies

especially that there holds \(\varvec{\gamma }_i(1,x_2) = \varvec{\gamma }_{i'}(0,x_2)\) for all \(x_2\in [0,1]\). Otherwise, we can apply suitable rotations such that this assumption holds.

By glueing the two parametrizations together, we obtain a Lipschitz continuous parametrization \(\varvec{\gamma }: [0,2]\times [0,1]\rightarrow \varGamma _i \cup \varGamma _{i'}\) such that

compare Fig. 3 for a graphical illustration. For the near-field estimates, we need to interpret the coordinate directions in a meaningful way. This is quite intuitive in Fig. 3, since we can simply define the \(x\)-direction as the direction across the edge, while the \(y\)-direction can be interpreted as the direction parallel to the edge. Especially, we find

where \(\Vert \cdot \Vert \) denotes the Euclidean norm on \(\mathbb {R}^3\) and \(\mathbb {R}^2\), respectively.

For Theorem 4, we followed the arguments in [24], where the smooth part of the bigger wavelet \(\psi _{j_1', k_1'}\) is extended to a smooth, compactly supported function. Then, also the operator \(\mathcal {A}_1\) is extended to a classical, pseudo-differential operator \(\mathcal {A}_1^\sharp \), compare [22]. This is not so easy to do in the current situation, as the kernel \(\hat{K}_{i, i'}\) is no longer asymptotically smooth of the order \(2q\), since it is only continuous over the edge \(\varSigma \). Nevertheless, this difficulty can be overcome. For the sake of simplicity, let us define

Then, there holds \(\hat{\varOmega }_{j_1, k_1} \subset [0, 1]\) and \(\hat{\varOmega }_{j_1', k_1'} \subset [1, 2]\) in (40). Hence, we either have \(\sigma _{x_1} = 0\), in which case we cannot compress in the direction of \(x_1\) at all, or \(\sigma _{x_1} > 0\).

If we are considering discontinuous wavelets, the smooth extension function \(\tilde{f}\) from the proof of Theorem 4 is equal to 0, since the two wavelets are located on different patches. Hence, we can ignore everything about the pseudo-differential operator and only consider the complement function.

In the second coordinate direction, the situation looks more complicated, as \(\hat{\varOmega }_{j_2, k_2}\) and \(\hat{\varOmega }_{j_2', k_2'}\) may not be disjoint. In this case, we still need to argue with \(\hat{\mathcal {A}}_2^\sharp \), which corresponds to the kernel

Although \(\varvec{\gamma }\) is overall only Lipschitz continuous, the kernel \(\hat{K}_{i, i'}^{(2)}\) is smooth. Indeed, by (1) and the smoothness of \(\varvec{\gamma }_i\) and \(\varvec{\gamma }_{i'}\), for any fixed \(\hat{x}_2,\hat{x}_2' \in [0, 1]\) with \(\hat{x}_2 \ne \hat{x}_2'\), it is not hard to see that all derivatives \(\partial _{x_2}^{\beta } \partial _{x_2'}^{\beta '} \hat{K}_{i, i'}\) of the kernel from (39) exist and are bounded independently of \(x_1, x_1' \in (0, 1)\). Therefore, we may differentiate \(\hat{K}_{i, i'}^{(2)}\) under the integral to obtain that also \(\hat{K}_{i, i'}^{(2)}\) is smooth apart from the diagonal. By arguing as in Sect. 4, additionally using the bi-Lipschitz continuity of the parametrization \(\varvec{\gamma }\), we may derive a Calderón-Zygmund estimate for \(\hat{K}_{i, i'}^{(2)}\), i.e.,

Therefore, the arguments of [24] work in this case as well.

In the proof of Theorem 4, we have also made use of vanishing moments hidden in the kernel. This is possible to do here as well. Indeed, if e.g. \(j_1' \ge j_1\), then we only have to consider a term of the form

where the asymptotic estimate for \({\hat{K}}_{i, i'}\) holds since \(\varvec{\gamma }_{i'}\) is smooth on \(\varOmega _{j_1', k_1'} \times [0, 1]\) in view of (39). Hence, the same arguments as in the proof of Theorem 4 apply.

7.2.3 Patches with a common vertex

If the patches \(\varGamma _i\) and \(\varGamma _{i'}\) have a common vertex, we can use a similar argument as before. We assume that the common vertex \(\varvec{v}\) satisfies, possibly after applications of suitable rotations and translations,

Hence, we may find a Lipschitz continuous parametrization \(\varvec{\gamma }: [0, 2]^2 \rightarrow \varGamma \) such that

Concerning the first compression, in view of (40), (41), and

we can obviously use the estimates of Sect. 4.1 here, too.

To proceed with the second compression, we need an estimate like the one in Theorem 4, for which we define

In view of (42) and the fact that the restrictions of \(\varvec{\gamma }\) to \([0, 1]^2\) and \([1, 2]^2\) are smooth, we deduce for \(\varvec{x}=\varvec{\gamma }_i({\hat{\varvec{x}}})\) and \(\varvec{x}'=\varvec{\gamma }_i({\hat{\varvec{x}}}')\) that

This is exactly the estimate needed for Theorem 4 when considered on the interval \([0,2]\) with \({\hat{x}}_1\in [0,1]\) and \({\widetilde{x}}_1 \mathrel {\mathrel {\mathop :}=}{\hat{x}}_1'+1\in [1,2]\). Similarly, we can derive such an estimate for the second coordinate direction. As there holds

it is enough if one realization of the entry can be compressed.

Moreover, on the parameter domain \([0, 2]^2\), cf. Fig. 4, the preimages of \(\varOmega _{i, {\textbf {j}} , {\textbf {k}} }\) and \(\varOmega _{i', {\textbf {j}} ', {\textbf {k}} '}\) either touch each other, in which case we cannot compress, or they are well-seperated in at least one coordinate direction. Hence, in this direction, the smooth extension \(\tilde{f}\) of the larger wavelet is 0 as well, so we do only have to consider the complement function. This estimate depends only on the distance between the supports, which is, at least in this coordinate direction, equivalent to the distance in \(\mathbb {R}^3\), since

for an \(\ell \in \{1, 2\}\).

7.2.4 Well-separated patches

If \(\varGamma _i\) and \(\varGamma _{i'}\) do neither share a common vertex nor a common edge, then, since the domain under consideration has at least a Lipschitz boundary, there holds \({{\,\textrm{dist}\,}}( \varGamma _i,\varGamma _{i'} ) \gtrsim 1\ge 2^{- \min \{j_1, j_2, j_1', j_2' \}}\). Therefore, the first compression is possible for all such entries. We also note that in this case, we only need the spatial distance, so we do not have to think about appropriate coordinate directions here.

In the first compression, there are two different possibilities: First, if

and \(h \mathrel {\mathrel {\mathop :}=}\min \{ 1,{{\,\textrm{dist}\,}}(\varGamma _i,\varGamma _j) \} > 0\), then we can compress all entries, for which the maximal support length is smaller than \(h\), meaning if

leaving us at

entries. Note that this is entirely theoretical – it is clear that this constant deteriorates if \(h > 0\) is small. If not, then there are only \(\mathcal {O}(2^{2J})\) entries on each patch-patch interaction, which is implied by Sect. 6.1.

Summarized, we arrive at the following theorem:

Theorem 11

Consider a patchwise smooth and globally Lipschitz continuous manifold, and a boundary integral operator \(\mathcal {A}\) of the order \(2q < 1\). By using the liftings of wavelet functions which satisfy the conditions on \(\tilde{d}\) in (31), we can compress the Galerkin matrix \({\textbf {A}} _J\) associated with the operator \(\mathcal {A}\) into matrix \({\textbf {A}} _J^c\), which contains only \(\mathcal {O}(2^{2J})\) nontrivial entries. Moreover, each block satisfies the error estimate

7.3 Globally continuous wavelets

In order to discretize \(H^q(\varGamma )\) for \(q\ge \frac{1}{2}\), we need to construct a wavelet basis of globally continuous functions. To this end, let us consider the wavelet bases introduced in [11]. Because \(\widetilde{d}=2\) vanishing moments is enough to optimally compress the hypersingular operator, cf. Remark 9, we restrict ourselves to this specific case. However, the subsequent construction works also in case of more vanishing moments. We shall first briefly illustrate how the associated piecewise linear wavelet basis on the unit interval looks like.

For a fixed \(j \ge j_0 \mathrel {\mathrel {\mathop :}=}2\), we consider the space \(V_j = {{\,\textrm{span}\,}}\{\phi _{j,0}, \ldots , \phi _{j,2^{j}}\}\), where each \(\phi _{j,k}\) is a properly scaled and piecewise linear hat function. In particular, for \(k=1,\ldots ,2^{j}-1\), we have \(\phi _{j,k}(2^{-j}\ell ) = 2^{j/2}\delta _{k,\ell }\), for \(\ell =0,\ldots ,2^{j}\). We note that \(\phi _{j,0}\) and \(\phi _{j,2^{j}}\) are boundary functions, satisfying \(\phi _{j,0}(0)=2^{j/2}\), and \(\phi _{j,2^j}(1)=2^{j/2}\), respectively.

As wavelet spaces, we define \(W_j\mathrel {\mathrel {\mathop :}=}{{\,\textrm{span}\,}}\{\psi _{j,0},\ldots ,\psi _{j,2^{j-1}-1}\}\), where

and for \(k=1,\ldots ,2^{j-1}-2\)

compare Fig. 5. By construction, all wavelets satisfy homogeneous Dirichlet boundary conditions. Therefore, the tensor product basis on the unit square satisfies also homogeneous Dirichlet boundary conditions except for the tensor products which involve the coarse grid scaling functions at the interval’s boundary. More precisely, the only functions associated with an edge are tensor products of the respective coarse grid scaling function perpendicular to the edge with wavelets parallel to the edge, while the only functions associated with a vertex consist of the tensor product of the respective coarse grid scaling functions. Hence, in order to glue wavelets across an edge together, we only need to glue scaling functions, which is not hard. Likewise, the only functions, which must be glued together across a vertex, are tensor products of scaling functions, which is an easy task, too.

7.4 Matrix estimates for continuous wavelets

Out of the arguments in Sect. 7.2, we can directly conclude the same properties for all wavelets, which are supported on a single patch. Hence, the only remaining wavelets are those which are supported across an edge or a vertex.

We first note that there is only a single function which is supported on a particular vertex. It is composed of scaling functions. Therefore, there are at most \(\mathcal {O}(1)\) such matrix rows and columns, by which this case is trivial.

Next, we consider wavelets which are supported across an edge. Let us use the notation of Sect. 7.2, i.e., we call the edge \(\varSigma \) and assume without loss of generality that it belongs to the patches \(\varGamma _i\) and \(\varGamma _{i'}\) in the direction \(x_1\) as in Fig. 3. Let the wavelet \(\psi _{\varSigma ,{\textbf {j}} ,{\textbf {k}} }\) on the level \({\textbf {j}} \) with \(j_1=j_0\) be supported on \(\varSigma \), while the test wavelet on level \({\textbf {j}} '\) is arbitrary. We differ between two cases:

First, if \(j_1'=j_0\), then the test wavelet is supported on an edge, too, and we do not need to compress such entries, because in total, there are at most \(\mathcal {O}(2^{2J})\) such entries.

On the other hand, if \(j_1'>j_0\), we know that the test wavelet is supported either on \(\varGamma _i\) or on \(\varGamma _{i'}\), but not on both of them. We thus assume without loss of generality that \(\psi _{i',{\textbf {j}} ',{\textbf {k}} '}\) is supported on \(\varGamma _{i'}\). Hence, the corresponding matrix entry is given by

We shall consider these two integrals separately.

For the first one, we remark that \(\psi _{\varSigma ,{\textbf {j}} ,{\textbf {k}} }\big |_{\varGamma _{i'}}\) is nothing but the tensor product of a scaling function on the coarsest level with a wavelet. As we integrate over the same patch \(\varGamma _{i'}\) only, this is equivalent to the situation on the unit square.

The second integral, on the other hand, can be treated as in Sect. 7.2. Indeed, in this case, we can consider \(\psi _{\varSigma ,{\textbf {j}} ,{\textbf {k}} }\big |_{\varGamma _i}\) and \(\psi _{i',{\textbf {j}} ',{\textbf {k}} '}\) as a pair of globally discontinuous, patchwise supported wavelets.

Therefore, we can state the following theorem:

Theorem 12

Consider a patchwise smooth, globally Lipschitz continuous domain and a continuous wavelet basis which satisfies the following conditions:

-

1.

There are no more than \(\mathcal {O}(1)\) wavelets supported on each vertex.

-

2.

On each fixed level \({\textbf {j}} \) and each edge \(\varSigma \), there are only \(\mathcal {O}(2^{|{\textbf {j}} |_\infty })\) wavelets \(\psi _{\varSigma ,{\textbf {j}} ,{\textbf {k}} }\) supported on \(\varSigma \).

-

3.

All wavelets, except for the scaling functions, have patchwise vanishing moments.

-

4.

The number of vanishing moments fulfils the requirements of (31).

Then, we can compress the Galerkin matrix \({\textbf {A}} _J\) of a boundary integral operator \(\mathcal {A}\) of order \(2q < 3\) into a matrix \({\textbf {A}} _J^c\) which contains at most \(\mathcal {O}(2^{2J})\) nontrivial entries. Moreover, each matrix block satisfies the error estimate

8 Consistency and convergence

In this section, we are going to show that the Galerkin scheme for the compressed operator converges as well as the Galerkin scheme for the uncompressed operator. This means that the wavelet matrix compression under consideration realizes the discretization error accuracy offered by the underlying Galerkin scheme, provided that the wavelet basis under consideration satisfies the requirements of Sect. 7.

Similar to [9], we define the compressed boundary integral operator \(\mathcal {A}_J^c: H^{s}(\varGamma ) \rightarrow H^{s - 2q}(\varGamma )\) in accordance with

which defines a continuous operator for all \(- \widetilde{\gamma }< s < \widetilde{\gamma } + 2q\). Especially, this operator represents the compressed matrix \({\textbf {A}} _J^c\) in terms of

Theorem 13

(Consistency) Let \(\varepsilon > 0\) and let \({\textbf {A}} _J^c\) denote the compressed matrix on the level \(J\) with a parameter \(a\), such that

Then, for \(q \le s, t \le d\), the associated compressed operator \(\mathcal {A}_J^c\) satisfies the estimate

uniformly in \(J\), provided that \(\widetilde{\gamma }> -q\), \(u \in H^s(\varGamma )\) and \(v \in H^t(\varGamma )\).

Proof

We note that, since \(s\ge q > -\widetilde{\gamma }\), we have the representation formula

By the definition of the operators \(\mathcal {A}\), \(\mathcal {A}_J^c\) and the biorthogonality, together with all the block error estimations and the definition of \(\sigma _{{\textbf {j}} , {\textbf {j}} '}\) in (20), we obtain that

For each the two sums, we may apply the inequality of Cauchy-Schwarz to obtain that

Herein, the first sum can be estimated by

Moreover, if \(s \ge 0\), the second sum can be treated by the approximation property (14) of the spaces \(V_n\), and we obtain that

Whereas, if \(s < 0\), we can use the Bernstein inequality (15) to get

After applying the same procedure to \(v\), we finally arrive at

since \(q\le s, t \le d < d'\).\(\square \)

Our next goal is to show that the compressed wavelet scheme converges to the original solution. First, similar to [9], we keep in mind that Theorem 13 implies that

In view of the strong ellipticity (4), we can conclude that

so the operator \(\mathcal {A}_J^c\) is strongly elliptic for \(\varepsilon \) sufficiently small, too. These two properties then imply that the operator \(\mathcal {A}_J^c\) is stable in the sense that

With these results at hand, we may deduce the following two theorems by applying the arguments of [9]. We remark that the proofs of these theorems involve only the consistency, the ellipticity, the stability, and the approximation property, compare [9].

Theorem 14

(Convergence) Let \(\varepsilon \) be sufficiently small such that \(\mathcal {A}_J^c\) is strongly elliptic. Then, the solution of the compressed matrix equation

where the coefficient vector \({\textbf {u}} _J\) satisfies

converges to the solution \(u\) of (5) in \(H^q(\varGamma )\) and we have the estimate

provided that \(u \in H^d(\varGamma )\) and \(-\widetilde{\gamma }<q<\gamma \).

Theorem 15

(Aubin-Nitsche) Let all the assumptions of Theorem 14 hold and moreover assume that \(\mathcal {A}': H^{t+q}(\varGamma ) \rightarrow H^{t-q}(\varGamma )\) is an isomorphism for any \(0 \le t \le d-q\). Then, we have the error estimate

provided that \(u \in H^d(\varGamma )\) and \(-\widetilde{\gamma }<q<\gamma \).

9 Numerical computations

In this section, we present numerical experiments to validate the theoretical findings. We use piecewise constant wavelets with three vanishing moments and consider the single layer operator on the unit square. We compute first the full wavelet Galerkin matrix

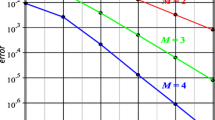

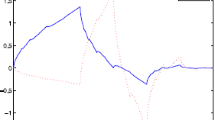

with \(\mathcal {A}\) being the single layer operator. Then, the obsolete entries are removed according to the compression scheme from Sect. 5. The number of nonzero entries are calculated and plotted (blue line) in on the left-hand side of Fig. 8. To this end, we have chosen the parameters \(\tilde{d} = 3\), \(d' = 1.1\), and \(\kappa = 10^{-3}\), while for the bandwidth parameter \(a\) the different values \(a = 0.5, 1.0, 2.0\) have been considered. The system matrix for the anisotropic tensor product wavelet basis and its compressed counterpart with \(2^{2 \cdot 7} = 16\,384\) rows and columns and \(a=1.0\) can be found in Fig. 7. Note that the matrix blocks are lexicographically ordered as illustrated in Fig. 6.

From a theoretical point of view, the number of nontrivial entries in each block can be bounded by

Moreover, in accordance with (37) and (38), an additional summand \(2^{\min \{|{\textbf {j}} |_1,|{\textbf {j}} '|_1\}}\) is added if \({\textbf {j}} \le {\textbf {j}} '\) or \({\textbf {j}} ' \le {\textbf {j}} \). The number of nontrivial entries in the whole compressed matrix can therefore also be bounded by

This estimate is also found (red line) in the plot on the left-hand side of Fig. 8.

On the right-hand side of Fig. 8, we have computed the consistency errors arising from the compression scheme. Therein, we have generated random vectors \({\textbf {u}} , {\textbf {v}} \) and scaled them such that they correspond to coefficient vectors of functions \(u,v \in H^1(\varGamma )\) with respect to the anisotropic tensor product wavelet basis. We have used 100 random samples to calculate the quantity \(\big | {\textbf {v}} ^\intercal ({\textbf {A}} _J - {\textbf {A}} _J^{c} ){\textbf {u}} \big |\) which is the discrete version of (43). We see that the calculations match the expected behaviour.

10 Conclusion

We have developed a matrix compression scheme for the boundary element method using anisotropic tensor product wavelets. In the end, we get a quasi-sparse matrix containing only \(\mathcal {O}(N)\) nontrivial entries whilst the approximate solution converges to the exact solution at the rate of the discretization error. This applies for every integral operator of order \(2q<3\) on the unit square and a smooth boundary. On a Lipschitz geometry, however, the order of the integral operator is bounded by \(2|q| \le 2\) since the underlying Sobolev spaces \(H^s(\varGamma )\) are only defined for \(|s|\le 1\).

Likewise to [24, 25], our compression scheme may be generalized to an arbitrary spatial dimension on the unit cube. One would have to choose the compression parameters according to the location of the two tensor product wavelets with respect to each other. Then, one should combine the first compression in all directions, in which the two wavelets are in the far-field with a second compression for all directions, in which the wavelets are in the near-field. Especially, our compression estimates considerably improve previous results for the compression of nonlocal operators in sparse tensor product spaces.

On the contrary, it is not known yet whether the boundary integral operator is \(s^\star \)-compressible with respect to the anisotropic tensor product wavelet basis, which was established for the isotropic wavelet basis in [33]. With the \(s^\star \)-compressibility at hand, it was shown in [10, 15] that adaptive wavelet compression schemes can approximate the solution at the rate of the best \(N\)-term approximation at a linear complexity.

References

Barnett, A., Greengard, L.: A new integral representation for quasi-periodic fields and its application to two-dimensional band structure calculations. J. Comput. Phys. 229(19), 6898–6914 (2010)

Bebendorf, M., Rjasanow, S.: Adaptive low-rank approximation of collocation matrices. Computing 70(1), 1–24 (2003)

Braess, D.: Finite Elements. Theory, Fast Solvers, and Applications in Solid Mechanics, 2nd edn. Cambridge University Press, Cambridge (2001)

Brenner, S.C., Scott, L.R.: The Mathematical Theory of Finite Element Methods, 3rd edn. Springer, Berlin (2008)

Byrenheid, G., Hübner, J., Weimar, M.: Rate-optimal sparse approximation of compact break-of-scale embeddings. Appl. Comput. Harmon. Anal. 65, 40–66 (2023)

Cazeaux, P., Zahm, O.: A fast boundary element method for the solution of periodic many-inclusion problems via hierarchical matrix techniques. ESAIM Proc. Surv. 48, 156–168 (2015)

Colton, D., Kress, R.: Inverse Acoustic and Electromagnetic Scattering Theory, 2nd edn. Springer, Berlin (1998)

Dahmen, W.: Wavelet and multiscale methods for operator equations. Acta Numer. 6, 55–228 (1997)

Dahmen, W., Harbrecht, H., Schneider, R.: Compression techniques for boundary integral equations: asymptotically optimal complexity estimates. SIAM J. Numer. Anal. 43(6), 2251–2271 (2006)

Dahmen, W., Harbrecht, H., Schneider, R.: Adaptive methods for boundary integral equations: complexity and convergence estimates. Math. Comput. 76(259), 1243–1274 (2007)

Dahmen, W., Schneider, R.: Wavelets with complementary boundary conditions—function spaces on the cube. RM 34, 255–293 (1998)

Dahmen, W., Schneider, R.: Composite wavelet bases for operator equations. Math. Comput. 68, 1533–1567 (1999)

Daubechies, I: Ten lectures on wavelets. In: CBMS-NSF Regional Conference Series in Applied Mathematics. Society for Industrial and Applied Mathematics, Philadelphia (1992)

Du, Q., Gunzburger, M., Lehoucq, R.B., Zhou, K.: Analysis and approximation of nonlocal diffusion problems with volume constraints. SIAM Rev. 54(4), 667–696 (2012)

Gantumur, T., Stevenson, R.: Computation of singular integral operators in wavelet coordinates. Computing 76, 77–107 (2006)

Greengard, L., Rokhlin, V.: A new version of the fast multipole method for the Laplace equation in three dimensions. Acta Numer. 6, 229–269 (1997)

Griebel, M., Oswald, P., Schiekofer, T.: Sparse grids for boundary integral equations. Numer. Math. 83(2), 279–312 (1999)

Harbrecht, H., Peters, M.: Comparison of fast boundary element methods on parametric surfaces. Comput. Methods Appl. Mech. Eng. 261–262, 39–55 (2013)

Harbrecht, H., Schneider, R.: Wavelet Galerkin schemes for boundary integral equations. Implementation and quadrature. SIAM J. Sci. Comput. 27(4), 1347–1370 (2006)

Harbrecht, H., Schneider, R.: Rapid solution of boundary integral equations by wavelet Galerkin schemes. In: DeVore, R., Kunoth, A. (Eds.) Multiscale, Nonlinear and Adaptive Approximation, pp. 249–294. Springer, Berlin (2009)

Harbrecht, H., Utzinger, M.: On adaptive wavelet boundary element methods. J. Comput. Math. 36(1), 90–109 (2018)

Hsiao, G., Wendland, W.L.: Boundary Integral Equations. Applied Mathematical Sciences, vol. 164. Springer, Berlin (2008)

Lukáš, D., Of, G., Zapletal, J., Bouchala, J.: A boundary element method for homogenization of periodic structures. Math. Methods Appl. Sci. 43(3), 1035–1052 (2020)

Reich, N.: Wavelet Compression of Anisotropic Integrodifferential Operators on Sparse Tensor Product Spaces. PhD thesis, ETH Zürich (2008). ETH Diss Nr. 17661

Reich, N.: Wavelet compression of integral operators on sparse tensor spaces. Construction, consistency and asymptotically optimal complexity. Research Report No. 2008-24, Seminar for Applied Mathematics, ETH Zürich (2008)

Rjasanow, S., Steinbach, O.: The Fast Solution of Boundary Integral Equations. Springer, Boston (2003)

Sauter, S., Schwab, C.: Quadrature for hp-Galerkin BEM in \(\mathbb{R} ^3\). Numer. Math. 78, 211–258 (1997)

Sauter, S., Schwab, C.: Boundary Element Methods. Springer Series in Computational Mathematics, vol. 39. Springer, Berlin (2011)

Schäfer, M., Ullrich, T., Vedel, B.: Hyperbolic wavelet analysis of classical isotropic and anisotropic Besov–Sobolev spaces. J. Fourier Anal. Appl. 27, 51 (2021)

Schneider, R.: Multiskalen- und Wavelet-Matrixkompression: analysisbasierte Methoden zur effizien-ten Lösung großer vollbesetzter Gleichungssysteme. B.G. Teubner, Stuttgart (1998)

Stein, E.M.: Harmonic Analysis. Princeton University Press, Princeton (2002)

Steinbach, O.: Numerical Approximation Methods for Elliptic Boundary Value Problems. Springer, New York (2008)

Stevenson, R.: On the compressibility of operators in wavelet coordinates. SIAM J. Math. Anal. 35(5), 1110–1132 (2004)

Utzinger, M.: An Adaptive Wavelet Method for the Solution of Boundary Integral Equations in Three Dimensions. PhD thesis, Universität Basel (2016)