Abstract

Inspired by the successes of stochastic algorithms in the training of deep neural networks and the simulation of interacting particle systems, we propose and analyze a framework for randomized time-splitting in linear-quadratic optimal control. In our proposed framework, the linear dynamics of the original problem is replaced by a randomized dynamics. To obtain the randomized dynamics, the system matrix is split into simpler submatrices and the time interval of interest is split into subintervals. The randomized dynamics is then found by selecting randomly one or more submatrices in each subinterval. We show that the dynamics, the minimal values of the cost functional, and the optimal control obtained with the proposed randomized time-splitting method converge in expectation to their analogues in the original problem when the time grid is refined. The derived convergence rates are validated in several numerical experiments. Our numerical results also indicate that the proposed method can lead to a reduction in computational cost for the simulation and optimal control of large-scale linear dynamical systems.

1 Introduction

Solving an optimal control problem for a large-scale dynamical system can be computationally demanding. This problem appears in numerous applications. One example is Model Predictive Control (MPC), which requires the solution of several optimal control problems on a receding time horizon [11, 18]. Another example is the training of Deep Neural Networks (DNNs), which can be approached as an optimal control problem for a large-scale nonlinear dynamical system, see, e.g., [4, 9, 10, 27, 29]. Because the computational cost for gradient-based deterministic optimization algorithms explodes on large training data sets, neural networks (NNs) are typically trained using stochastic optimization algorithms such as stochastic gradient descent or stochastic (mini-)batch methods, see, e.g., [6]. In such methods, the update direction for the parameters of the NN is not computed based on the complete training data set, but on a subset of the available training data that is chosen randomly in each iteration. It can be shown that such methods converge in expectation to a (local) minimum of the considered cost functional, see, e.g., [6].

These successes inspired the development of Random Batch Methods (RBMs) for the simulation of interacting particle systems [14, 15, 21]. Because the number of interactions between N particles is of order \(N^2\), the forward simulation of a system with a large number of particles is computationally demanding. An RBM reduces the required computational cost by reducing the number of considered interactions as follows. First, the considered time interval is divided into a number of subintervals of length \(\le h\). In each subinterval, particles are grouped in randomly chosen batches (of at least two particles) and only the interactions between particles in the same batch are considered. The number of considered interactions now grows as PN, where P is the size of the considered batches, and a significant reduction in computational time can be achieved when \(P \ll N\). It can be shown that the expected error introduced by this process is proportional to \(\sqrt{h}\), where h denotes (an upper bound on) the length of the considered time intervals, see [14].

The computation of optimal controls for interacting particle systems is even more computationally demanding than the forward simulation because it requires several simulations of the forward dynamics and the associated adjoint problem, see, e.g., [20]. Because the optimal control for the RBM-approximated dynamics can be computed significantly faster than the control for the original dynamics, it has been proposed in [18] to control the original system with the controls optimized for the RBM dynamics. The numerical experiments in [18] indeed indicate that this approach can lead to a reasonably good approximation of the control for the original system. In [18], the control of the original dynamics with the RBM-optimal controls is combined with an MPC strategy, which creates additional robustness against the errors introduced by the RBM-approximation. However, even for the simplest case that does not consider the combination with MPC, a formal proof that the optimal control computed for the RBM-approximated dynamics indeed converges to the optimal control for the original system for \(h \rightarrow 0\) was not given.

In this paper, we study, motivated by the ideas from [18], the classical linear-quadratic (LQ) optimal control problem constrained by randomized dynamics. Extensions of these results to a nonlinear setting are not only of interest for the control of interacting particle systems as considered in [18], but also have applications in the training of certain DNNs, which can be viewed as (the time discretization of) an optimal control problem, see, e.g., [4, 9, 10, 27, 29]. The results for the LQ problem in this paper form a starting point for the study of these more involved problem settings.

In this paper, we propose a framework for the simulation and optimal control of large-scale linear dynamical systems. In our proposed framework, the system matrix is split into submatrices and the time interval of interest is split into subintervals of length \(\le h\). The randomized dynamics is then found based on the randomly selected submatrices in each subinterval. Similarly as in [14, 15, 21], we show that the randomized dynamics converges to the dynamics of the original system at a rate \(\sqrt{h}\). The main contributions of this paper concern the LQ optimal control problem in which the original dynamics is replaced by these randomized dynamics. In particular, we show that the minimal values of the cost functional and the corresponding optimal controls for the RBM-dynamics converge (in \(L^2\) and in expectation) to their analogues for the original dynamics when \(h \rightarrow 0\). The found convergence rates are validated by several numerical examples. Numerical results also indicate that the proposed method can lead to a reduction in computational cost.

The remainder of this paper is structured as follows. Section 2 contains a precise description of our proposed stochastic simulation method and a summary of the main results of the paper. Section 3 contains the detailed proofs of the convergence of the proposed method. The proposed method and the obtained convergence results are illustrated by several numerical examples in Sect. 4. The conclusions and discussions are presented in Sect. 5.

2 Proposed method and main results

2.1 Proposed method

We consider the evolution of a large-scale Linear Time Invariant (LTI) dynamical system of the form

\[ \dot{x}(t) = A x(t) + B u(t), \qquad x(0) = x_0, \tag{1} \]

where the state x(t) evolves in \({\mathbb {R}}^N\), the control u(t) evolves in \({\mathbb {R}}^q\), \(A \in {\mathbb {R}}^{N \times N}\) is the system matrix, \(B \in {\mathbb {R}}^{N \times q}\) is the input matrix, and \(x_0 \in {\mathbb {R}}^N\) is the initial condition.

A typical problem associated to the dynamics (1) is to find the optimal control \(u^*(t)\) that minimizes the quadratic cost functional

where the given target trajectory \(x_d(t)\) evolves in \({\mathbb {R}}^N\), the weighting matrix \(Q \in {\mathbb {R}}^{N \times N}\) is symmetric and positive semi-definite, and the weighting matrix \(R\in {\mathbb {R}}^{q \times q}\) is symmetric and positive definite. It is well known that the optimal control \(u^*(t)\) exists and that it is unique, see, e.g., [17, 22].

Remark 1

When the state-dimension N is large, the optimal control \(u^*(t)\) is typically computed using a gradient-based algorithm in which the gradient of J(u) is computed from the adjoint state \(\varphi (t)\) that satisfies (see, e.g., [17])

where x(t) is the solution of (1). Note that the adjoint state \(\varphi (t)\) is computed by integrating (3) backward in time starting from the final condition \(\varphi (T) = 0\). The gradient of the cost functional J(u) is then obtained as

In our proposed randomized time-splitting method, the matrix A is written as the sum of M submatrices \(A_m\)

\[ A = \sum_{m=1}^{M} A_m. \tag{5} \]

Typically, the submatrices \(A_m\) will be more sparse than the original matrix A. For ease of presentation, the results in this paper are presented under the following assumption.

Assumption 1

The submatrices \(A_m\) in (5) are dissipative, i.e. \(\langle x, A_m x \rangle \le 0\) for all \(x \in {\mathbb {R}}^N\) and all \(m \in \{1,2,\ldots ,M \}\).

Remark 2

Note that there always exists a constant \(a > 0\) such that the matrices \(A_m - aI\) are dissipative for \(m \in \{1,2,\ldots ,M \}\). Assumption 1 is therefore not essential for the convergence of the proposed method, but without Assumption 1 the error estimates are less clean and grow exponentially in time. This idea is made more precise in Remark 9 in Sect. 3.2.

We then choose a temporal grid in the time interval [0, T]

\[ 0 = t_0 < t_1 < \cdots < t_K = T, \tag{6} \]

and denote

\[ h_k := t_k - t_{k-1}, \qquad h := \max_{k \in \{1,2,\ldots ,K\}} h_k. \tag{7} \]

In each of the K subintervals \([t_{k-1}, t_k)\), we randomly select a subset of indices in \(\{1,2,\ldots , M \}\). The idea of the proposed method is to consider a linear combination of the submatrices \(A_m\) with the indices that have been selected for each time interval. This can lead to a significant reduction in computational time when the submatrices \(A_m\) are well-chosen and only a small number of submatrices \(A_m\) are selected in each time interval.

To make this idea more precise, we enumerate all of the \(2^M\) subsets of \(\{1,2, \ldots , M \}\) as \(S_1, S_2, \ldots S_{2^M}\). Note that one of the subsets \(S_{\omega }\) will be the empty set. To every subset \(S_{\omega }\) (\(\omega \in \Omega := \{1, 2, \ldots , 2^M \}\)) we then assign a probability \(p_{\omega }\) with which this subset is selected. This probability is the same in each of the time intervals \([t_{k-1},t_k)\). Because we select only one subset \(S_{\omega }\) in each time interval, the probabilities \(p_{\omega }\) should satisfy

\[ \sum_{\omega = 1}^{2^M} p_{\omega } = 1. \tag{8} \]

From the chosen probabilities \(p_{\omega }\), we then compute the probability \(\pi _m\) that an index \(m \in \{1,2, \ldots , M \}\) is an element of the selected subset

\[ \pi _m := \sum_{\omega \in \Omega _m} p_{\omega }, \qquad \Omega _m := \{ \omega \in \Omega \mid m \in S_{\omega } \}. \tag{9} \]

Observe that \(\Omega _m\) is the set of the indices \(\omega \) of the sets \(S_{\omega }\) that contain the index m. We need the following (weak) assumption on the selected probabilities \(p_{\omega }\).

Assumption 2

The probabilities \(p_{\omega }\) (\(\omega \in \{1,2, \ldots ,2^M \}\)) are assigned such that

- Equation (8) is satisfied and
- the probabilities \(\pi _m\) defined in (9) are positive for all \(m \in \{1,2, \ldots , M \}\).

In each of the K time intervals \([t_{k-1},t_k)\), we then randomly select an index \(\omega _k \in \{1,2, \ldots , 2^M \}\) according to the chosen probabilities \(p_\omega \) (and independently of the other indices \(\omega _1, \omega _2, \ldots , \omega _{k-1}, \omega _{k+1}, \omega _{k+2}, \ldots , \omega _K\)). The selected indices form a vector

\[ {\varvec{\omega }} := (\omega _1, \omega _2, \ldots , \omega _K) \in \Omega ^K. \tag{10} \]

For the selected \({\varvec{\omega }}\in \Omega ^K\), we then define a piece-wise constant matrix \(t \mapsto {\mathcal {A}}_h({\varvec{\omega }},t)\)

\[ {\mathcal {A}}_h({\varvec{\omega }},t) := \sum_{m \in S_{\omega _k}} \frac{A_m}{\pi _m}, \qquad t \in [t_{k-1}, t_k), \quad k \in \{1,2,\ldots ,K\}. \tag{11} \]

The scaling by \(1/\pi _m\) assures that the expected value of \({\mathcal {A}}_h\) is A because

\[ {\mathbb {E}}[{\mathcal {A}}_h] := \sum_{\omega = 1}^{2^M} p_{\omega } \sum_{m \in S_{\omega }} \frac{A_m}{\pi _m} = \sum_{m=1}^{M} \frac{A_m}{\pi _m} \sum_{\omega \in \Omega _m} p_{\omega } = \sum_{m=1}^{M} A_m = A, \tag{12} \]

where the first identity follows after interchanging the two summations using the definition of \(\Omega _m\) in (9), the second from the definition of \(\pi _m\) in (9), and the last identity from the decomposition of A in (5).

Example 1

In the simplest situation, we decompose the original matrix A into \(M = 2\) matrices as \(A = A_1 + A_2\). We then need to assign \(2^M = 4\) probabilities \(p_\ell \) to the subsets \(S_1 = \{ 1 \}\), \(S_2 = \{ 2 \}\), \(S_3 = \{ 1,2 \}\), and \(S_4 = \emptyset \). In this example, we choose \(p_1 = p_2 = \tfrac{1}{2}\) and \(p_3 = p_4 = 0\). This choice indeed satisfies Assumption 2 because \(\pi _1 = p_1 + p_3 = \tfrac{1}{2} > 0\) and \(\pi _2 = p_2 + p_3 = \tfrac{1}{2} > 0\). The matrix \({\mathcal {A}}_h({\varvec{\omega }},t)\) is thus either equal to \(2A_1\) with probability \(p_1 = \tfrac{1}{2}\) or equal to \(2A_2\) with probability \(p_2 = \tfrac{1}{2}\). The expected value of \({\mathcal {A}}_h\) is then indeed \(\tfrac{1}{2} 2 A_1 + \tfrac{1}{2} 2 A_2 = A_1 + A_2 = A\).
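The construction of \(\pi _m\) and \({\mathcal {A}}_h\) can be sketched numerically. The following Python snippet is a minimal illustration under the definitions above; the helper names `inclusion_probabilities` and `randomized_matrix` and the small random matrices are assumptions made for illustration and do not come from the paper. It checks that the probability-weighted average of the possible values of \({\mathcal {A}}_h\) reproduces A for the choice of Example 1.

```python
import numpy as np

def inclusion_probabilities(subsets, probs, M):
    """pi_m = sum of p_omega over all subsets S_omega that contain m."""
    return np.array([sum(p for S, p in zip(subsets, probs) if m in S)
                     for m in range(M)])

def randomized_matrix(A_parts, S, pi):
    """Value of the randomized matrix when the subset S is selected."""
    out = np.zeros_like(A_parts[0])
    for m in S:
        out = out + A_parts[m] / pi[m]
    return out

# Example 1 with 0-based indices: S_1 = {0}, S_2 = {1}, S_3 = {0, 1}, S_4 = {}.
rng = np.random.default_rng(0)
A_parts = [rng.standard_normal((3, 3)) for _ in range(2)]  # illustrative data
A = sum(A_parts)
subsets = [{0}, {1}, {0, 1}, set()]
probs = [0.5, 0.5, 0.0, 0.0]

pi = inclusion_probabilities(subsets, probs, M=2)
# Probability-weighted average over all possible values of the randomized matrix.
expected = sum(p * randomized_matrix(A_parts, S, pi)
               for S, p in zip(subsets, probs))

# One index per subinterval, drawn independently with the same probabilities.
K = 8
omega = rng.choice(len(subsets), size=K, p=probs)
```

Here `expected` agrees with `A` up to rounding, and `omega` only contains the indices of the two subsets with positive probability.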

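To reduce the computational cost for solving (1), the matrix A is replaced by \({\mathcal {A}}_h({\varvec{\omega }},t)\) in the RBM. For the selected vector of indices \({\varvec{\omega }} \in \Omega ^K\), we thus obtain a solution \(t \mapsto x_h({\varvec{\omega }},t)\) that satisfies

\[ \dot{x}_h({\varvec{\omega }},t) = {\mathcal {A}}_h({\varvec{\omega }},t) x_h({\varvec{\omega }},t) + B u(t), \qquad x_h({\varvec{\omega }},0) = x_0. \tag{13} \]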

The main contribution of this paper concerns the optimal controls computed based on the RBM-dynamics (13). In particular, we consider the minimization of the functional

over all \(u \in L^2(0,T ; {\mathbb {R}}^q)\) subject to the dynamics (13). The minimizer of \(J_h({\varvec{\omega }}, \cdot )\) depends on the selected indices \({\varvec{\omega }} \in \Omega ^K\) and is denoted by \(u^*_h({\varvec{\omega }},t)\). Because R is positive definite, the minimizer \(u^*_h({\varvec{\omega }},t)\) exists and is unique. As we will show in (52)–(54) in Sect. 3.1, the minimizers \(u^*_h({\varvec{\omega }},t)\) are uniformly bounded because R is positive definite.

Remark 3

As for the original cost functional J(u) in (2), we can compute the optimal control \(u_h^*({\varvec{\omega }},t)\) that minimizes \(J_h({\varvec{\omega }},u)\) by a gradient-based algorithm. We can again compute the gradient of \(J_h({\varvec{\omega }},u)\) from the adjoint state \(\varphi _h({\varvec{\omega }},t)\) which satisfies

The gradient of \(J_h({\varvec{\omega }},u)\) is then obtained as

Note that when the randomized dynamics for \(x_h({\varvec{\omega }},t)\) in (13) can be solved faster than the original dynamics for x(t) in (1), the same reduction in computational cost is typically also obtained for the randomized adjoint Eq. (15) compared to the original adjoint Eq. (3). Because the computation of the optimal control \(u^*(t)\) [resp. \(u_h^*({\varvec{\omega }},t)\)] requires several evaluations of the forward dynamics (1) [resp. (13)] and the adjoint Eq. (3) [resp. (15)], it is natural to expect the same relative speed-up for \(u_h^*({\varvec{\omega }},t)\) (compared to \(u^*(t)\)) as for \(x_h({\varvec{\omega }},t)\) (compared to x(t)). This idea is confirmed by the numerical experiments in Sect. 4.

To conclude this subsection, we summarize the proposed approach to approximate the solution x(t) of (1) for a given control u(t) and/or the optimal control \(u^*(t)\) that minimizes \(J(\cdot )\) in (2) subject to (1) in Algorithm 1. The accuracy of the obtained solutions \(x_h({\varvec{\omega }},t)\) and/or \(u^*_h({\varvec{\omega }},t)\) depends on the chosen submatrices \(A_m\) in Step 1, the chosen probabilities \(p_\omega \) in Step 2, and the chosen time grid \(t_0, t_1, \ldots , t_K\) in Step 3. This dependence is captured by the error estimates in the next subsection.
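The forward-simulation part of this approach can be sketched as follows. This is a minimal illustration, not the paper's Algorithm 1: the function name `randomized_forward`, the implicit Euler time stepping with `substeps` steps per subinterval, and the small path-graph example are all assumptions chosen for illustration.

```python
import numpy as np

def randomized_forward(A_parts, subsets, probs, B, u, x0, t_grid, rng, substeps=10):
    """Sample omega and integrate x_h' = A_h x_h + B u(t) with implicit Euler."""
    M, N = len(A_parts), x0.size
    pi = [sum(p for S, p in zip(subsets, probs) if m in S) for m in range(M)]
    # One independently drawn subset index per subinterval [t_{k-1}, t_k).
    omega = rng.choice(len(subsets), size=len(t_grid) - 1, p=probs)
    x, I = x0.astype(float), np.eye(N)
    for k, w in enumerate(omega):
        # Randomized matrix on this subinterval: selected parts scaled by 1/pi_m.
        A_h = sum((A_parts[m] / pi[m] for m in subsets[w]), np.zeros((N, N)))
        dt = (t_grid[k + 1] - t_grid[k]) / substeps
        for j in range(substeps):
            t_next = t_grid[k] + (j + 1) * dt
            x = np.linalg.solve(I - dt * A_h, x + dt * (B @ u(t_next)))
    return omega, x

# Illustrative dissipative split: one negative semi-definite block per edge
# of a path graph with 4 nodes (hypothetical data).
edge = np.array([[1.0, -1.0], [-1.0, 1.0]])
A_parts = []
for i in range(3):
    Am = np.zeros((4, 4))
    Am[i:i + 2, i:i + 2] = -edge
    A_parts.append(Am)

rng = np.random.default_rng(0)
x0 = np.array([1.0, 0.0, 0.0, 0.0])
B = np.eye(4)[:, :1]
omega, xT = randomized_forward(A_parts, [{0}, {1}, {2}], [1/3, 1/3, 1/3],
                               B, lambda t: np.zeros(1), x0,
                               np.linspace(0.0, 1.0, 21), rng)
```

With zero input, the dissipativity of the submatrices (Assumption 1) means that the implicit Euler iterates cannot grow in norm, which gives a simple sanity check for such a sketch.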

It should be emphasized that we do not have that \({\mathbb {E}}[x_h(t)] = x(t)\) (for a fixed control u(t)) or that \({\mathbb {E}}[u^*_h(t)] = u^*(t)\). Repeating Step 4 in Algorithm 1 for different realizations of \({\varvec{\omega }}\) and averaging the obtained results leads to better approximations of \({\mathbb {E}}[x_h(t)]\) and/or \({\mathbb {E}}[u^*_h(t)]\) and can therefore only improve the approximation of x(t) and \(u^*(t)\) to some extent. A better way to increase the accuracy of the proposed method is to repeat Algorithm 1 for a choice of submatrices \(A_m\), probabilities \(p_{\omega }\), and a time grid \(t_0, t_1, \ldots , t_K\) that reduce the error estimates presented in the next subsection.

Remark 4

The presented framework is somewhat different from the problem setting considered in previous publications on RBMs for interacting particle systems, see, e.g., [14, 15, 18, 21]. Appendix A shows how these RBMs can be accommodated in our proposed framework.

2.2 Main results

The main results of this paper concern the effect of replacing the system matrix A in the original LQ optimal control problem (1)–(2) by the randomized matrix \({\mathcal {A}}_h({\varvec{\omega }},t)\) defined in (11). Clearly, the deviation of the randomized matrix \({\mathcal {A}}_h({\varvec{\omega }},t)\) from the original matrix A will influence the accuracy of the obtained results. The deviation of \({\mathcal {A}}_h({\varvec{\omega }},t)\) from A is measured by

\[ \mathrm {Var}[{\mathcal {A}}] := \sum_{\omega = 1}^{2^M} p_{\omega } \Big \Vert \sum_{m \in S_{\omega }} \frac{A_m}{\pi _m} - A \Big \Vert ^2, \tag{17} \]

where \(\Vert \cdot \Vert \) denotes the operator norm. The quantity \(\mathrm {Var}[{\mathcal {A}}]\) is thus the average squared distance of \({\mathcal {A}}_h({\varvec{\omega }},t)\) from A, weighted with the probabilities \(p_1, p_2, \ldots , p_{2^M}\) with which different values of \({\mathcal {A}}_h({\varvec{\omega }},t)\) occur. Naturally, the error estimates below show that reducing \(\mathrm {Var}[{\mathcal {A}}]\) will also reduce the errors introduced by the proposed randomized time-splitting method.

Example 1 (continued) We again consider the situation from Example 1 in which A is decomposed into \(M=2\) submatrices as \(A = A_1 + A_2\) and \({\mathcal {A}}_h({\varvec{\omega }},t)\) is either \(2A_1\) or \(2A_2\), both with probability \(\tfrac{1}{2}\). We now compute the variance \(\mathrm {Var}[{\mathcal {A}}]\) according to (17) and find

\[ \mathrm {Var}[{\mathcal {A}}] = \tfrac{1}{2} \Vert 2A_1 - A \Vert ^2 + \tfrac{1}{2} \Vert 2A_2 - A \Vert ^2 = \Vert A_1 - A_2 \Vert ^2. \tag{18} \]

Examples 2 and 3 in the following subsection further illustrate how \(\mathrm {Var}[{\mathcal {A}}]\) depends on the decomposition of A into submatrices \(A_m\) and the selected probabilities \(p_{\omega }\).

Remark 5

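When A is an approximation of an unbounded operator, as in the examples in Sect. 4, it is natural to introduce an additional (invertible) weighting matrix W and compute

\[ \mathrm {Var}_W[{\mathcal {A}}] := \sum_{\omega = 1}^{2^M} p_{\omega } \Big \Vert \Big ( \sum_{m \in S_{\omega }} \frac{A_m}{\pi _m} - A \Big ) W \Big \Vert ^2. \tag{19} \]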

Clearly, we want to choose W such that AW and the matrices \(A_mW\) can be considered as approximations of bounded operators. In that case, \(\mathrm {Var}_W[{\mathcal {A}}]\) is also an approximation of a finite quantity. A natural choice is \(W = (A - \lambda I)^{-1}\) for some \(\lambda \) in the resolvent set of A.

The first main result of this paper is an estimate for the difference

\[ e_h({\varvec{\omega }},t) := x_h({\varvec{\omega }},t) - x(t) \tag{20} \]

between the RBM-dynamics (13) and the original dynamics (1).

Main result 1

Assume that Assumptions 1 and 2 hold and that the input u(t) in (1) is the same as the input u(t) in (13), then

\[ {\mathbb {E}}[|e_h(t) |^2] \le C_{[A,B,x_0,T,u]} \, h \mathrm {Var}[{\mathcal {A}}], \tag{21} \]

for all \(t \in [0,T]\).

The first main result follows directly from Theorem 1 in Sect. 3.2.

The expectation operator \({\mathbb {E}}\) is taken with respect to all possible outcomes \({\varvec{\omega }} \in \Omega ^K\). A precise definition will be given in Sect. 3.1. The constant \(C_{[A,B,x_0,T,u]}\) can be taken as \((\Vert A\Vert T^2 + 2T)( |x_0 |+|Bu |_{L^ 1(0,T;{\mathbb {R}}^N)})^2\). The estimate thus only depends on the used submatrices \(A_m\), the probabilities \(p_{\omega }\), and the used temporal grid \(t_0,t_1,\ldots , t_K\) through \(h\mathrm {Var}[{\mathcal {A}}]\) defined in (17). The proof of Main result 1 is inspired by the proofs of convergence of the RBM in [14, 15].

The estimate (21) shows that the expected squared error is proportional to the temporal grid spacing h. We can thus make the expected squared error in the forward dynamics arbitrarily small by reducing the grid spacing. Note that Markov’s inequality, see, e.g., [26], shows that

\[ {\mathbb {P}}[|e_h(t) |> \varepsilon ] \le \frac{{\mathbb {E}}[|e_h(t) |^2]}{\varepsilon ^2}. \tag{22} \]

The probability that we select an \({\varvec{\omega }} \in \Omega ^K\) for which \(|e_h({\varvec{\omega }},t) |\) exceeds any given threshold \(\varepsilon > 0\) is thus controlled by \({\mathbb {E}}[|e_h(t) |^2]\). According to (21), we can make this probability as small as desired by choosing the temporal grid spacing h small enough. However, one should also keep in mind that decreasing h will increase the computational cost for the RBM-dynamics (13) and that the computational advantage of the RBM is lost when the required grid spacing is too small.

Example 1 (continued) To illustrate why Main result 1 could be true, we again consider the situation from Example 1 in which A is decomposed as \(A = A_1 + A_2\) and \({\mathcal {A}}_h({\varvec{\omega }},t)\) is equal to \(2A_1\) or \(2A_2\), both with probability \(\tfrac{1}{2}\). We additionally assume that \(u(t) \equiv 0\), that the time grid \(t_k = k T / K\) (\(k \in \{0,1,2, \ldots , K \}\)) is uniform with grid spacing \(h = T/ K\), and that \(A_1\) and \(A_2\) commute. Because \(u(t) = 0\), the solution of (1) is \(x(t) = e^{At}x_0\) and the solution of (13) is

\[ x_h({\varvec{\omega }},T) = e^{2hA_{\omega _K}} e^{2hA_{\omega _{K-1}}} \cdots e^{2hA_{\omega _1}} x_0 = e^{2A_1T_1({\varvec{\omega }}) + 2A_2T_2({\varvec{\omega }})} x_0. \tag{23} \]

Here, \(T_1({\varvec{\omega }})\) and \(T_2({\varvec{\omega }})\) denote the times during which \(A_1\) and \(A_2\) are used, i.e.

\[ T_i({\varvec{\omega }}) := h \sum_{k=1}^{K} \chi _i(\omega _k), \qquad i \in \{1,2\}, \tag{24} \]

where the characteristic functions \(\chi _1(\omega )\) and \(\chi _2(\omega )\) are defined by the property that \(\chi _i(\omega ) = 1\) when \(\omega = i\) and \(\chi _i(\omega ) = 0\) otherwise (\(i \in \{ 1,2\}\)). Note that the second identity in (23) uses that \(A_1\) and \(A_2\) commute. Because \({\mathbb {E}}[\chi _1] = {\mathbb {E}}[\chi _2] = \tfrac{1}{2}\), it follows that \({\mathbb {E}}[T_1] = {\mathbb {E}}[T_2] = T/2\). When we now consider the limit \(K \rightarrow \infty \) (so \(h \rightarrow 0\)), the law of large numbers states that \(T_1\) and \(T_2\) converge to T/2 (in probability). The RHS of (23) thus converges (in probability) to \(e^{AT}x_0 = x(T)\) for \(K \rightarrow \infty \). Note that the convergence in Main result 1 is in expectation, which is stronger than convergence in probability.
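The behaviour described in this example can be checked with a small Monte Carlo experiment. The sketch below is only an illustration under the assumptions of the example (commuting \(A_1\), \(A_2\), zero input, uniform grid); the diagonal matrices, the initial condition, the sample size, and the function name `mean_squared_error` are hypothetical choices that are not taken from the paper.

```python
import numpy as np

def mean_squared_error(K, T=1.0, samples=2000, seed=0):
    """Monte Carlo estimate of E|x_h(T) - x(T)|^2 for Example 1 with
    commuting (diagonal) A_1, A_2 and u = 0 on a uniform grid."""
    rng = np.random.default_rng(seed)
    a1 = np.array([-1.0, 0.0])   # diagonal of A_1 (illustrative, dissipative)
    a2 = np.array([0.0, -2.0])   # diagonal of A_2 (illustrative, dissipative)
    x0 = np.array([1.0, 1.0])
    h = T / K
    # Number of subintervals in which A_1 is selected (probability 1/2 each).
    n1 = rng.binomial(K, 0.5, size=samples)
    T1 = h * n1
    T2 = T - T1
    # x_h(T) = exp(2 A_1 T_1 + 2 A_2 T_2) x_0, evaluated entrywise for diagonals.
    xh = np.exp(2.0 * np.outer(T1, a1) + 2.0 * np.outer(T2, a2)) * x0
    x = np.exp((a1 + a2) * T) * x0
    return np.mean(np.sum((xh - x) ** 2, axis=1))

errors = {K: mean_squared_error(K) for K in (10, 100, 1000)}
```

Since \(T_1\) has variance \(T^2/(4K)\) in this setting, the estimated mean squared error is expected to decrease roughly in proportion to \(h = T/K\), in line with Main result 1.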

We now present the main results aimed at the LQ optimal control problem constrained by randomized dynamics. Because the optimal control \(u^*_h({\varvec{\omega }},t)\) depends on the selected indices \({\varvec{\omega }}\), we need the following result. The key difference with the first main result is that the input \(u_h({\varvec{\omega }},t)\) may now depend on the randomly selected indices \({\varvec{\omega }}\). As will be explained at the start of Sect. 3, this makes the arguments for the convergence of the RBM in [14, 15] break down.

Note that replacing u(t) in (1) and (13) by \(u_h({\varvec{\omega }},t)\) results in solutions \(x({\varvec{\omega }},t)\) and \(x_h({\varvec{\omega }},t)\) that now both depend on the selected indices \({\varvec{\omega }}\). The second main result now gives a bound for the expected value of the difference

\[ e_h({\varvec{\omega }},t) := x_h({\varvec{\omega }},t) - x({\varvec{\omega }},t). \tag{25} \]

Main result 2

Consider any control \(u_h : \Omega ^K \rightarrow L^2(0,T ; {\mathbb {R}}^q)\). Assume that Assumptions 1 and 2 are satisfied and let U be such that \(|u_h({\varvec{\omega }}) |_{L^2(0,T; {\mathbb {R}}^q)} \le U\) for all \({\varvec{\omega }} \in \Omega ^K\), then

The second result follows directly from Theorem 2 in Sect. 3.3.

Just as in the first main result, the expectation is taken over all possible values of \({\varvec{\omega }} \in \Omega ^K\) and the constant \(C_{[A,B,x_0,T,U]}\) does not depend on the chosen submatrices \(A_m\) in (5), the chosen probabilities \(p_{\omega }\), and the used temporal grid.

Using this result, we can now obtain a no-gap result which shows that the minimal value of the cost functional \(J_h({\varvec{\omega }}, u_h^*({\varvec{\omega }}))\) is (in expectation) close to the minimal value \(J(u^*)\) in the original problem when \(h \mathrm {Var}[{\mathcal {A}}]\) is small enough.

Main result 3

Let \(u^*(t)\) be the control that minimizes the cost functional J(u) in (2) and let \(u_h^*({\varvec{\omega }},t)\) be the control that minimizes the cost functional \(J_h({\varvec{\omega }}, u)\) in (14). Then

The third main result is identical to Theorem 3 in Sect. 3.4.

For \(h\mathrm {Var}[{\mathcal {A}}]\) small enough, Main result 3 clearly implies that \({\mathbb {E}}[|J_h(u^*_h) - J(u^*) |] \le C_{[A,B,x_0,Q,R,x_d,T]} \sqrt{h \mathrm {Var}[{\mathcal {A}}]}\), which is also the rate that is observed in numerical experiments. We keep the second term on the RHS of (27) to assure that the estimate is valid for all values of \(h\mathrm {Var}[{\mathcal {A}}]\), and not just for sufficiently small values of \(h\mathrm {Var}[{\mathcal {A}}]\).

By Markov’s inequality, this result thus implies that, for any \(\varepsilon > 0\), the probability that \(|J(u^*_h({\varvec{\omega }})) - J(u^*) |> \varepsilon \) can be made arbitrarily small by reducing the temporal grid spacing h.

The next main result shows that the optimal control for the RBM-problem \(u_h^*({\varvec{\omega }})\) also converges (in expectation) to the optimal control of the original problem \(u^*\) when \(h \rightarrow 0\).

Main result 4

Let \(u_h^*({\varvec{\omega }},t)\) be the minimizer of \(J_h({\varvec{\omega }},\cdot )\) in (14) and \(u^*(t)\) be the minimizer of J in (2), then

The fourth main result follows directly from Theorem 4 in Sect. 3.5.

The fourth main result justifies the use of the optimal control \(u^*_h({\varvec{\omega }})\), that is optimized for the RBM-dynamics to control the original dynamics, as proposed in [18]. An almost immediate corollary of Main result 4 is that the trajectories of the original dynamics (1) resulting from the controls \(u^*_h({\varvec{\omega }},t)\) and \(u^*(t)\) will also be close to each other, see Corollary 2 in Sect. 3.5. This further justifies the strategy in [18].

When the control \(u^*_h({\varvec{\omega }})\) is close to the control \(u^*\) that is optimal for the original dynamics, the performance \(J(u^*_h({\varvec{\omega }}))\) should also be close to the optimal performance \(J(u^*)\). This idea is formalized by the fifth and last main result.

Main result 5

Let \(u^*(t)\) be the control that minimizes the cost functional J(u) in (2) and let \(u_h^*({\varvec{\omega }},t)\) be the control that minimizes the cost functional \(J_h({\varvec{\omega }}, u)\) in (14). Then

The fifth main result is identical to Corollary 3 in Sect. 3.5. Main result 5 is proven as a corollary of Main result 4/Theorem 4.

The fifth main result is particularly important because it shows that the performance \(J(u^*_h({\varvec{\omega }}))\) obtained with control \(u^*_h({\varvec{\omega }})\) optimized for the randomized dynamics is close to the optimal performance \(J(u^*)\) when \(h\mathrm {Var}[{\mathcal {A}}]\) is sufficiently small. This further motivates strategies in which the original system is controlled by a control \(u^*_h({\varvec{\omega }})\) that is optimized for the randomized dynamics, as was proposed in [18].

2.3 Further examples for \(\mathrm {Var}[{\mathcal {A}}]\) and computational cost

The quantity \(\mathrm {Var}[{\mathcal {A}}]\) describes how the derived estimates depend on the decomposition of A into submatrices and the selected probabilities \(p_1, p_2, \ldots , p_{2^M}\). We therefore present two other examples that illustrate how \(\mathrm {Var}[{\mathcal {A}}]\) depends on the decomposition of A into submatrices \(A_m\) and the selected probabilities \(p_{\omega }\).

Example 2

We decompose the matrix A into \(M = 3\) parts \(A = A_1 + A_2 + A_3\) and consider two choices for the probabilities \(p_\omega \). In the first case, we only use one of the submatrices \(A_m\) simultaneously. We thus assign probabilities \(p_1 = p_2 = p_3 = \tfrac{1}{3}\) to the subsets \(S_1 = \{ 1\}\), \(S_2 = \{ 2 \}\), and \(S_3 = \{ 3 \}\) and zero probability to the other 5 subsets of \(\{1,2,3 \}\). We then have that \(\pi _1 = \pi _2 = \pi _3 = \tfrac{1}{3}\) and the variance \(\mathrm {Var}[{\mathcal {A}}]\) in (17) becomes

\[ \mathrm {Var}[{\mathcal {A}}] = \tfrac{1}{3} \left( \Vert 2A_1 - A_2 - A_3 \Vert ^2 + \Vert 2A_2 - A_1 - A_3 \Vert ^2 + \Vert 2A_3 - A_1 - A_2 \Vert ^2 \right) . \tag{30} \]

In the second case, we always use two of the three submatrices \(A_m\) simultaneously. We thus assign probabilities \(p_4 = p_5 = p_6 = \tfrac{1}{3}\) to the subsets \(S_4 = \{ 1,2\}\), \(S_5 = \{ 2,3 \}\), and \(S_6 = \{ 1,3 \}\) and zero probability to the other 5 subsets of \(\{1,2,3 \}\). We then have that \(\pi _1 = p_4 + p_6\), \(\pi _2 = p_4 + p_5\), and \(\pi _3 = p_5 + p_6\), so that \(\pi _1 = \pi _2 = \pi _3 = \tfrac{2}{3}\). The variance \(\mathrm {Var}[{\mathcal {A}}]\) in (17) becomes

\[ \mathrm {Var}[{\mathcal {A}}] = \tfrac{1}{3} \left( \Vert \tfrac{1}{2}(A_1 + A_2) - A_3 \Vert ^2 + \Vert \tfrac{1}{2}(A_2 + A_3) - A_1 \Vert ^2 + \Vert \tfrac{1}{2}(A_1 + A_3) - A_2 \Vert ^2 \right) . \tag{31} \]

Observe that \(\Vert \tfrac{1}{2} (A_1 + A_2) - A_3 \Vert ^2 = \tfrac{1}{4} \Vert 2 A_3 - A_1 - A_2 \Vert ^2\) and that similar expressions relate the other terms in (30) and (31). The variance for the first case in (30) is thus four times larger than the variance for the second case in (31). Increasing the overlap between the possible values of \({\mathcal {A}}_h({\varvec{\omega }},t)\) thus reduces \(\mathrm {Var}[{\mathcal {A}}]\) and will improve the accuracy of the proposed method. It is worth noting that similar observations have been made for domain decomposition methods, for which it is well-known that increasing the overlap between subdomains increases the convergence rate (see, e.g., [8, Section 1.5]). Note however that increasing the overlap will also reduce the sparsity of \({\mathcal {A}}_h(t)\) and thus also increase the computational cost. This will be illustrated further in Example 4 and the numerical examples in Sect. 4.
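The factor four between the two cases can be verified numerically. The snippet below is a minimal sketch that assumes \(\mathrm {Var}[{\mathcal {A}}]\) is the probability-weighted squared operator-norm distance between the possible values of \({\mathcal {A}}_h\) and A, as described in Sect. 2.2; the function name `variance` and the random test matrices are illustrative assumptions.

```python
import numpy as np

def variance(A_parts, subsets, probs):
    """Probability-weighted squared spectral-norm distance of A_h from A."""
    M = len(A_parts)
    A = sum(A_parts)
    pi = [sum(p for S, p in zip(subsets, probs) if m in S) for m in range(M)]
    var = 0.0
    for S, p in zip(subsets, probs):
        if p == 0.0:
            continue  # subsets that are never selected do not contribute
        A_h = sum((A_parts[m] / pi[m] for m in S), np.zeros_like(A))
        var += p * np.linalg.norm(A_h - A, 2) ** 2
    return var

rng = np.random.default_rng(1)
A_parts = [rng.standard_normal((4, 4)) for _ in range(3)]  # illustrative data

# First case: one submatrix at a time; second case: two submatrices at a time.
var_single = variance(A_parts, [{0}, {1}, {2}], [1/3, 1/3, 1/3])
var_pairs = variance(A_parts, [{0, 1}, {1, 2}, {0, 2}], [1/3, 1/3, 1/3])
ratio = var_single / var_pairs
```

The ratio equals four for any choice of the three submatrices, because each term in the first case is exactly twice the corresponding term in the second case before squaring.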

Example 3

It is not always optimal to choose the probabilities uniform. To illustrate this, we assume \(A=A_1+A_2\) has a block-diagonal decomposition

\[ A_1 = \begin{bmatrix} A_{11} & 0 \\ 0 & 0 \end{bmatrix}, \qquad A_2 = \begin{bmatrix} 0 & 0 \\ 0 & A_{22} \end{bmatrix}. \tag{32} \]

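It is easy to verify that \(\Vert \alpha A_1 + \beta A_2 \Vert = \max \{ |\alpha |\Vert A_1\Vert , |\beta |\Vert A_2\Vert \}\) for any \(\alpha ,\beta \in {\mathbb {R}}\). We assign the (at this point undetermined) probability \(p_1 = p\) to the subset \(S_1 = \{ 1\}\), the probability \(p_2 = 1-p\) to the subset \(S_2 = \{ 2\}\), and probabilities \(p_3 = p_4 = 0\) to the subsets \(S_3 = \emptyset \) and \(S_4 = \{1,2 \}\). It follows that \(\pi _1 = p\) and \(\pi _2 = 1-p\) and that

\[ \mathrm {Var}[{\mathcal {A}}] = p \left\Vert \tfrac{1-p}{p} A_1 - A_2 \right\Vert ^2 + (1-p) \left\Vert \tfrac{p}{1-p} A_2 - A_1 \right\Vert ^2 = \max \left\{ \tfrac{1-p}{p} \Vert A_1 \Vert ^2, \tfrac{p}{1-p} \Vert A_2 \Vert ^2 \right\} . \tag{33} \]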

It is now easy to see that \(\mathrm {Var}[{\mathcal {A}}]\) is minimal when \(\sqrt{\tfrac{1-p}{p}}\Vert A_1\Vert = \sqrt{\tfrac{p}{1-p}}\Vert A_2 \Vert \). Solving this equation for p, we find the optimal probability

\[ p^* = \frac{\Vert A_1 \Vert }{\Vert A_1 \Vert + \Vert A_2 \Vert }. \tag{34} \]

We observe that the larger the submatrix \(A_1\) is compared to \(A_2\), the larger the probability p with which the submatrix \(A_1\) is selected should be. Inserting the optimal probability \(p^*\) in (34) into the expression for \(\mathrm {Var}[{\mathcal {A}}]\), we find that

\[ \mathrm {Var}[{\mathcal {A}}] = \Vert A_1 \Vert \Vert A_2 \Vert . \tag{35} \]

With uniform probabilities, i.e., with \(p = 1/2\), \(\mathrm {Var}[{\mathcal {A}}] = \max \{ \Vert A_1 \Vert ^2, \Vert A_2 \Vert ^2 \}\), see (33). When \(\Vert A_1 \Vert / \Vert A_2 \Vert \gg 1\) or \(\Vert A_1 \Vert / \Vert A_2 \Vert \ll 1\), using the optimal probability \(p^*\) in (34) can thus reduce \(\mathrm {Var}[{\mathcal {A}}]\) significantly.
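The effect of the choice of p can be illustrated with a few lines of Python. This is a sketch under stated assumptions: the variance is evaluated from the probability-weighted squared distance described in Sect. 2.2 together with the block-diagonal norm identity above, the candidate optimum \(\Vert A_1\Vert /(\Vert A_1\Vert + \Vert A_2\Vert )\) is obtained by solving the balance condition above for p, and the norms 4 and 1 and the function name `var_block_diagonal` are hypothetical values chosen for illustration.

```python
import numpy as np

def var_block_diagonal(p, norm_A1, norm_A2):
    """Var[A] for the block-diagonal split with P(S={1}) = p, P(S={2}) = 1 - p."""
    # With probability p the randomized matrix is A_1 / p, so the deviation is
    # ((1 - p) / p) A_1 - A_2; the norm of a block-diagonal matrix is a maximum.
    first = p * max(((1 - p) / p) * norm_A1, norm_A2) ** 2
    # With probability 1 - p the deviation is (p / (1 - p)) A_2 - A_1.
    second = (1 - p) * max(norm_A1, (p / (1 - p)) * norm_A2) ** 2
    return first + second

norm_A1, norm_A2 = 4.0, 1.0                      # illustrative norms
p_star = norm_A1 / (norm_A1 + norm_A2)           # candidate optimal probability
var_uniform = var_block_diagonal(0.5, norm_A1, norm_A2)
var_optimal = var_block_diagonal(p_star, norm_A1, norm_A2)

# Brute-force search over a grid of probabilities as an independent check.
grid = np.linspace(0.01, 0.99, 981)
values = [var_block_diagonal(p, norm_A1, norm_A2) for p in grid]
p_best = grid[int(np.argmin(values))]
```

For these values the uniform choice gives a variance of 16, whereas the balanced choice gives 4, and the grid search returns a minimizer close to `p_star`.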

We conclude this section with two examples that illustrate the potential reduction in computational cost offered by the proposed randomized time-splitting method.

Example 4

Let \(A \in {\mathbb {R}}^{N\times N}\) be a sparse symmetric negative semi-definite matrix with a bandwidth b, i.e. \([A]_{ij} = 0\) when \(|i-j |> b\). Select \(n_1,n_2,n_3 \in \{1,2,\ldots ,N \}\) such that \(n_1 + n_2 + n_3 = N + 2b\). It is then possible to split A as \(A = A_1 + A_2 + A_3\) with

where \(A_{11} \in {\mathbb {R}}^{n_1 \times n_1}\), \(A_{22} \in {\mathbb {R}}^{n_2 \times n_2}\), \(A_{33} \in {\mathbb {R}}^{n_3 \times n_3}\), \(0_n\) denotes an \(n \times n\) zero matrix, and the 0’s denote zero matrices of appropriate size. We assign probabilities \(p_1 = p_2 = p_3 = \tfrac{1}{3}\) to the subsets \(S_1 = \{ 1\}\), \(S_2 = \{ 2 \}\), and \(S_3 = \{ 3 \}\) and zero probability to the other 5 subsets of \(\{1,2,3 \}\). The computational cost for one time step with the matrix \(A_1\) is \(O(n_1^r)\), where \(r \in [1,3]\) is a certain power that depends on b, the time discretization scheme, and the method used to solve the resulting linear systems. In particular, \(r = 1\) when A is tridiagonal (i.e. when \(b = 1\)), \(r = 3\) for an implicit time discretization scheme in which the resulting linear systems are solved by Gaussian elimination, and \(r = 2\) for an implicit time discretization scheme in which the resulting linear systems are solved based on a precomputed Lower-Upper (LU) factorization. Similarly, the computational cost for one time step with the matrices \(A_2\) or \(A_3\) or with the full matrix A is \(O(n_2^r)\) or \(O(n_3^r)\) or \(O(N^r)\), respectively. The proposed randomized time-splitting scheme is therefore expected to reduce the computational cost for one forward simulation (on the same temporal grid) by a factor

\[ \frac{n_1^r + n_2^r + n_3^r}{3 N^r}. \tag{37} \]

When \(b \ll N\), it is possible to choose \(n_1 \approx n_2 \approx n_3 \approx N/3\), and the reduction in computational cost is then \(\approx 1/3^r\). Note that the expected reduction in computational cost can only be observed when \(n_1\), \(n_2\), and \(n_3\) are sufficiently large. As explained in Sect. 1, we expect that the computation of optimal controls is sped up by the same factor as the forward simulation.

As in the second case of Example 2, we also consider the situation in which the overlap is increased. We thus assign probabilities \(p_4 = p_5 = p_6 = \tfrac{1}{3}\) to the subsets \(S_4 = \{ 1,2\}\), \(S_5 = \{ 2,3 \}\), and \(S_6 = \{ 1,3 \}\) and zero probability to the other 5 subsets of \(\{1,2,3 \}\). The cost of doing one time step with the matrices \(A_1 + A_2\), \(A_2 + A_3\), or \(A_1 + A_3\) is then proportional to \((n_1 + n_2 -b)^r\), \((n_2 + n_3 - b)^r\), or \((n_1 + n_3)^r\), respectively. When \(b \ll N\) and \(n_1 \approx n_2 \approx n_3 \approx N/3\), the proposed randomized time-splitting scheme thus reduces the expected computational cost by a factor \(2^r / 3^r\). Increasing the overlap thus increases the expected computational cost of the randomized time-splitting method by a factor \(2^r\), but it also reduces \(\mathrm {Var}[{\mathcal {A}}_h]\) by a factor 4, see Example 2. Choosing the level of overlap is thus a trade-off between accuracy and computational cost.

Example 5

When \(A \in {\mathbb {R}}^{N \times N}\) is symmetric but not sparse, we can select \(n_1, n_2, n_3 \in \{1,2, \ldots , N \}\) such that \(n_1 + n_2 + n_3 = N\), and split A as \(A = A_1 + A_2 + \cdots + A_6\) with

where \(A_{11} \in {\mathbb {R}}^{n_1 \times n_1}\), \(A_{22} \in {\mathbb {R}}^{n_2 \times n_2}\), and \(A_{33} \in {\mathbb {R}}^{n_3 \times n_3}\). The cost for doing one time step with \(A_1\), \(A_2\), or \(A_3\) is \(O(n_1^r)\), \(O(n_2^r)\), or \(O(n_3^r)\), respectively, with r as in Example 4. Similarly, the cost for doing one time step with \(A_4\), \(A_5\), or \(A_6\) is \(O((n_1+n_2)^r)\), \(O((n_2+n_3)^r)\), or \(O((n_1+n_3)^r)\), respectively. When we assign probabilities \(\tfrac{1}{6}\) to the six singleton subsets of \(\{1,2,\ldots , 6 \}\) and zero probability to all other subsets, the proposed randomized time-splitting scheme is expected to reduce the computational cost for one forward simulation (on the same temporal grid) by a factor

where the latter approximation holds when \(n_1 \approx n_2 \approx n_3 \approx N/3\).
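The cost estimates in Examples 4 and 5 can be checked with a few lines of arithmetic. The sketch below is only an illustration under a simplifying assumption that is not part of the original text: a time step with an \(n \times n\) block is taken to cost exactly \(n^r\), so the constants hidden in the \(O(\cdot )\) notation cancel. The function name `expected_cost_ratio` and the values of `N` and `r` are hypothetical, and the overlap b is neglected (\(b \ll N\)).

```python
def expected_cost_ratio(block_sizes, probabilities, full_size, r):
    """Expected cost of one randomized step divided by the cost of one full step.

    Assumption of this sketch: a step with an n-by-n block costs exactly n**r,
    i.e. the constant hidden in O(n**r) is the same for every block.
    """
    if abs(sum(probabilities) - 1.0) > 1e-12:
        raise ValueError("probabilities must sum to one")
    expected = sum(p * n**r for p, n in zip(probabilities, block_sizes))
    return expected / full_size**r


N, r = 3000, 2
n = N // 3  # three equal blocks, overlap b neglected (b << N)

# Example 4, singletons S_1, S_2, S_3 with probability 1/3 each
singletons = expected_cost_ratio([n, n, n], [1 / 3] * 3, N, r)

# Example 4, pairs S_4, S_5, S_6 with probability 1/3 each
pairs = expected_cost_ratio([2 * n, 2 * n, 2 * n], [1 / 3] * 3, N, r)

# Example 5, six submatrices A_1, ..., A_6 with probability 1/6 each
dense = expected_cost_ratio([n, n, n, 2 * n, 2 * n, 2 * n], [1 / 6] * 6, N, r)

print(singletons, pairs, dense)
```

Under this cost model, the first two ratios agree with the factors \(1/3^r\) and \(2^r/3^r\) quoted in Example 4, and the third evaluates to \((1 + 2^r)/(2 \cdot 3^r)\) for equal block sizes.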

3 Convergence analysis

The proof of convergence for the RBM optimal control problem is divided into several stages.

In the first stage, we consider a control \(u \in L^2(0,T; {\mathbb {R}}^q)\) that does not depend on the selected indices \({\varvec{\omega }}\). We then show that the expected difference between the RBM-dynamics (13) and the original dynamics (1) can be bounded in terms of \(h \mathrm {Var}[{\mathcal {A}}]\) as in Main result 1. The proof of this statement is inspired by the results for interacting particle systems in [14, 15].

Because we will also need to deal with the optimal control \(u^*_h({\varvec{\omega }},t)\) that minimizes \(J_h({\varvec{\omega }}, \cdot )\), we consider a general family of controls \(u_h({\varvec{\omega }},t)\) (with \({\varvec{\omega }} \in \Omega ^K\)) in the second stage. This is a nontrivial extension of the results in the previous stage because the crucial idea in the proof for the first stage and in [14, 15] is that the solutions \(x(t_{k-1})\) and \(x_h({\varvec{\omega }},t_{k-1})\) do not depend on \(\omega _k\) (the index that is used in the time interval \([t_{k-1}, t_k)\)). This is clearly no longer the case when we insert an input \(u_h({\varvec{\omega }},t)\) that depends on \({\varvec{\omega }}\), so also on \(\omega _k\), into the dynamics (1) and (13). This problem is particularly clear when we consider the family of optimal controls \(u_h^*({\varvec{\omega }})\) for which \(u_h^*({\varvec{\omega }},t_{k-1})\) will depend on the choices for the ‘future’ indices \(\omega _k, \omega _{k+1}, \ldots \omega _K\).

In the third stage, we prove the no-gap condition presented in Main result 3. A crucial result for the proof is an auxiliary lemma (Lemma 1) that bounds the differences \(J_h({\varvec{\omega }},u) - J(u)\) and \(J_h({\varvec{\omega }},u_h({\varvec{\omega }})) - J(u_h({\varvec{\omega }}))\) (in expectation). For controls u that do not depend on \({\varvec{\omega }}\), a bound on \(J_h({\varvec{\omega }},u) - J(u)\) can be obtained directly from Main result 1. For controls \(u_h({\varvec{\omega }})\) that do depend on \({\varvec{\omega }}\), we need to use Main result 2 to find the bound on the expected difference \(J_h({\varvec{\omega }},u_h({\varvec{\omega }})) - J(u_h({\varvec{\omega }}))\). For brevity, Lemma 1 considers controls \(u_h({\varvec{\omega }})\) that depend on \({\varvec{\omega }}\) (which of course also covers the case in which the control does not depend on \({\varvec{\omega }}\)). The no-gap condition (i.e., a bound on \(J_h(u_h^*({\varvec{\omega }})) - J(u^*)\)) can then be obtained using classical arguments from the calculus of variations and Lemma 1 applied to the optimal controls \(u^*\) and \(u_h^*({\varvec{\omega }})\).

In the fourth stage, we bound the difference between the RBM-optimal control \(u^*_h({\varvec{\omega }})\) and the control \(u^*\) optimized for the original dynamics. To this end, we first bound the expected difference between the gradients of \(J_h({\varvec{\omega }}, \cdot )\) and J. The bound on the difference in the optimal controls then follows from classical arguments based on the \(\alpha \)-convexity of the functional \(J_h({\varvec{\omega }},\cdot )\). Finally, the bound for the difference \(J(u^*_h({\varvec{\omega }})) - J(u^*)\) follows easily from the previously derived bound on the difference between the optimal controls \(u^*_h({\varvec{\omega }})\) and \(u^*\).

The four stages discussed above will be proved in detail in Sects. 3.2–3.5. We first present some preliminaries in Sect. 3.1.

3.1 Preliminaries

We will use the following notation. The transpose of a real column vector x is denoted by \(x^\top \). Similarly, the transpose of a real matrix A is denoted by \(A^\top \). The entry in the i-th row and j-th column of A is denoted by \([A]_{ij}\). The standard Euclidean inner product of two vectors \(x,y \in {\mathbb {R}}^N\) is denoted by \(\langle x, y \rangle := x^\top y\). The corresponding norm is denoted by \(|x |:= \sqrt{x^\top x}\). The (operator) norm of a matrix \(A \in {\mathbb {R}}^{N \times N}\) is denoted by

We use \(C_{[a,b, \ldots , d]}\) to denote a constant that only depends on the parameters \(a,b, \ldots , d\). The value of \(C_{[a,b, \ldots , d]}\) may vary from line to line. The \(L^p\)-norm of a function \(u \in L^p(0,T; {\mathbb {R}}^q)\) (for \(1 \le p < \infty \) and \(p = \infty \)) is defined as

We now set up the precise probabilistic setting for our problem. The set \(\Omega ^K\) defined in (10) is the natural sample space for the considered problem. To turn \(\Omega ^K\) into a probability space, we assign a probability \(p({\varvec{\omega }})\) to each \({\varvec{\omega }} \in \Omega ^K\) according to

Note that we use here that each index \(\omega _k\) is chosen independently from the other indices \(\omega _1, \omega _2, \ldots , \omega _{k-1}, \omega _{k+1}, \omega _{k+2}, \ldots , \omega _K\).

A random element on the sample space \(\Omega ^K\) is a function \(X : \Omega ^K \rightarrow V\) from the sample space \(\Omega ^K\) to a vector space V. When \(V = {\mathbb {R}}\), \(X : \Omega ^K \rightarrow {\mathbb {R}}\) is also called a random variable. Note that we can embed V into \(V^{\Omega ^K}\) by associating to each element \(x \in V\) the constant function \(X({\varvec{\omega }}) = x\) for all \({\varvec{\omega }} \in {\Omega ^K}\). Constant functions \(X({\varvec{\omega }}) = x\) are called deterministic. Functions \(X({\varvec{\omega }})\) that are not deterministic are called stochastic. The expectation operator \({\mathbb {E}}\) assigns to a random element \(X \in V^{\Omega ^K}\) an element of the vector space V

It is immediate from this definition that \({\mathbb {E}}\) is linear. When \(V = {\mathbb {R}}\), we also see that \({\mathbb {E}}[X] \ge 0\) when \(X({\varvec{\omega }}) \ge 0\) for all \({\varvec{\omega }} \in \Omega ^K\).
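The finite probability space described above can be built explicitly for small K. The following sketch enumerates \(\Omega ^K\), assigns each \({\varvec{\omega }}\) the product of the probabilities of its entries (which is what independence of the indices amounts to), and evaluates the expectation as a weighted sum. The names `sample_space` and `expectation`, the zero-based indexing of the subsets, and the numerical probabilities are assumptions made for illustration only.

```python
import itertools
import math


def sample_space(index_probs, K):
    """Return {omega: p(omega)} for all omega in Omega^K.

    index_probs[w] is the probability of picking index w in one subinterval;
    independence across subintervals gives the product rule for p(omega).
    """
    indices = range(len(index_probs))
    return {
        omega: math.prod(index_probs[w] for w in omega)
        for omega in itertools.product(indices, repeat=K)
    }


def expectation(X, space):
    """E[X] = sum over omega of X(omega) * p(omega), for real-valued X."""
    return sum(X(omega) * p for omega, p in space.items())


# Hypothetical example: three admissible indices, K = 4 subintervals.
space = sample_space([0.5, 0.3, 0.2], K=4)
total = sum(space.values())  # the probabilities should sum to one
# Expected number of subintervals in which index 0 is selected.
count_zero = expectation(lambda omega: omega.count(0), space)
print(len(space), total, count_zero)
```

Because the sample space grows like \(|\Omega |^K\), such an enumeration is only practical for very coarse grids; it is meant as a check of the definitions, not as a computational method.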

Several random elements appear in the randomized splitting method outlined in Sect. 2.1. One example is the matrix \({\mathcal {A}}_h({\varvec{\omega }},t)\) defined in (11). When \(t \in [t_{k-1}, t_k)\), \({\mathcal {A}}_h({\varvec{\omega }}, t)\) only depends on \(\omega _k\). Therefore, the definitions in (43) and (11) show that (for \(t \in [t_{k-1}, t_k)\))

where the second to last identity follows from (8) and the last identity from (12). Again using that \({\mathcal {A}}_h({\varvec{\omega }},t)\) only depends on \(\omega _k\) for \(t \in [t_{k-1}, t_k)\), we also find that

where the last identity follows from the definition of \(\mathrm {Var}[{\mathcal {A}}]\) in (17). Note that (45) holds for every time instant t and that \({\mathbb {E}}[\Vert {\mathcal {A}}_h(t) - A \Vert ^2]\) therefore does not depend on the considered time instant t.

Another random element is the solution \(x_h : \Omega ^K \rightarrow L^2(0,T; {\mathbb {R}}^N)\) in (13). We will frequently use that \(|x_h({\varvec{\omega }},t) |\) can be bounded as follows. First of all, observe that

where it was used that \(\langle x, {\mathcal {A}}_h({\varvec{\omega }},t) x \rangle \le 0\) for all \(x\in {\mathbb {R}}^N\) and \({\varvec{\omega }} \in \Omega ^K\) because of Assumption 1. Now observe that

from which we conclude that

For x(t), a similar derivation shows that

We will also consider situations in which we apply an input \(u_h({\varvec{\omega }},t)\) to the dynamics (1) and (13) that depends on \({\varvec{\omega }}\). The resulting solutions are then both random elements \(x({\varvec{\omega }},t)\) and \(x_h({\varvec{\omega }},t)\) which satisfy

In this case we can obtain estimates similar to (48) and (49) with u and x replaced by \(u_h({\varvec{\omega }})\) and \(x({\varvec{\omega }})\), respectively.
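For orientation, the energy argument behind these a priori bounds can be sketched as follows. This is a reconstruction under the assumption that the RBM-dynamics (13) have the form \(\dot{x}_h = {\mathcal {A}}_h x_h + Bu\) with \(x_h(0) = x_0\); the numbering and exact form of the displayed estimates in the original may differ, and \(\boldsymbol{\omega }\) is written here in place of the bold symbol used in the text.

```latex
\begin{align*}
\frac{d}{dt} |x_h(\boldsymbol{\omega},t)|^2
  &= 2 \langle x_h(\boldsymbol{\omega},t),
       \mathcal{A}_h(\boldsymbol{\omega},t) x_h(\boldsymbol{\omega},t) \rangle
   + 2 \langle x_h(\boldsymbol{\omega},t), B u(t) \rangle
   \le 2 |x_h(\boldsymbol{\omega},t)| \, |B u(t)|, \\
\frac{d}{dt} |x_h(\boldsymbol{\omega},t)|^2
  &= 2 |x_h(\boldsymbol{\omega},t)| \, \frac{d}{dt} |x_h(\boldsymbol{\omega},t)|, \\
|x_h(\boldsymbol{\omega},t)|
  &\le |x_0| + \int_0^t |B u(s)| \, ds .
\end{align*}
```

The first line uses the dissipativity from Assumption 1 and the Cauchy–Schwarz inequality in \({\mathbb {R}}^N\); combining it with the second line (where \(x_h \ne 0\)) gives \(\tfrac{d}{dt}|x_h |\le |Bu |\), and integrating from 0 to t yields the third line.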

The third important random element in this paper is the optimal control \(u^*_h({\varvec{\omega }}, \cdot )\) that minimizes \(J_h({\varvec{\omega }}, \cdot )\) in (14). The coercivity of the functional \(J_h({\varvec{\omega }},\cdot )\) allows us to bound \(|u^*_h({\varvec{\omega }}) |_{L^2(0,T;{\mathbb {R}}^q)}\) as follows. Denote the smallest eigenvalue of the matrix R by \(\alpha > 0\), then

where the last inequality follows because \(u^*_h({\varvec{\omega }})\) is the minimizer of \(J_h({\varvec{\omega }},\cdot )\). Next, observe that

where \(x_h({\varvec{\omega }},t)\) denotes the solution of (13) with \(u(t) = 0\) and the last inequality follows from (48). Looking back at (52), we find

Finally, we repeat some standard definitions from the theory of convex optimization, see, e.g., [22]. A functional \(J : V \rightarrow {\mathbb {R}}\) on a normed vector space V is \(\alpha \)-convex if there exists an \(\alpha \ge 0\) such that for all \(u, v \in V\) and \(\theta \in [0, 1]\)

One can easily verify that the functional \(J_h({\varvec{\omega }}, \cdot )\) is \(\alpha \)-convex (for all \({\varvec{\omega }} \in \Omega ^K\)) when we take \(\alpha \) as the smallest eigenvalue of the positive definite matrix R. The Gâteaux-derivative of J at the point u in the direction v is denoted by \(\delta J(u; v)\), i.e.

By subtracting J(u) from both sides of (55), dividing the resulting inequality by \(\theta \), and then taking the limit \(\theta \rightarrow 0\), we find the well-known inequality

3.2 The forward dynamics with a deterministic input

In this subsection, we consider a deterministic u(t) and derive a bound for the error

where \(x_h({\varvec{\omega }},t)\) and x(t) are the solutions of (13) and (1) resulting from the same input u(t), respectively.

Remark 6

It is important to stress that \(x_h(t)\) is not an unbiased estimator for x(t), i.e., we do not have \({\mathbb {E}}[e_h(t)] = {\mathbb {E}}[x_h(t)] - x(t) = 0\). This can for example be observed when we write the error dynamics as

where we have substituted \(x({\varvec{\omega }},t) =x_h({\varvec{\omega }},t) - e_h({\varvec{\omega }},t)\). Taking the expected value in (59) we find

However, (60) does not imply that \({\mathbb {E}}[e_h(t)] = 0\) for all t because generally

where the equality follows because \({\mathbb {E}}[{\mathcal {A}}_h(t)] = A\), see (44). This would be the case when \({\mathcal {A}}_h({\varvec{\omega }},t)\) and \(x_h({\varvec{\omega }},t)\) are independent, but they are correlated by the dynamics (13). Note, however, that at the beginning of each time interval \([t_{k-1}, t_k)\), the value of \({\mathcal {A}}_h({\varvec{\omega }},t)\) changes and that \({\mathcal {A}}_h({\varvec{\omega }},t_{k-1})\) is independent of the values of \({\mathcal {A}}_h({\varvec{\omega }},t)\) for \(t < t_{k-1}\) so that

where the second identity again follows because \({\mathbb {E}}[{\mathcal {A}}_h(t)] = A\), see (44). This observation is crucial to obtain the main result of this subsection.
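The bias discussed in Remark 6 can be made concrete in a scalar toy problem. The sketch below is an illustration only and rests on assumptions that are not taken from the text: the system is \(\dot{x} = (a_1 + a_2)x\) with \(u = 0\), and on each subinterval the randomized coefficient is \(2a_1\) or \(2a_2\) with probability \(\tfrac{1}{2}\) each, so that its expectation equals \(a_1 + a_2\). Because the choices are independent, \({\mathbb {E}}[x_h(T)]\) is available in closed form. The function name and all parameter values are hypothetical.

```python
import math


def expected_rbm_state(a1, a2, x0, T, K):
    """E[x_h(T)] for the scalar system x' = (a1 + a2) x with u = 0.

    Assumption of this sketch: on each of the K subintervals of length
    h = T / K the randomized coefficient is 2*a1 or 2*a2, each with
    probability 1/2, so that its expectation is a1 + a2.
    """
    h = T / K
    one_step = 0.5 * (math.exp(2 * a1 * h) + math.exp(2 * a2 * h))
    return x0 * one_step**K  # the K choices are independent


a1, a2, x0, T = -1.0, -3.0, 1.0, 1.0
exact = x0 * math.exp((a1 + a2) * T)
bias_coarse = expected_rbm_state(a1, a2, x0, T, K=10) - exact
bias_fine = expected_rbm_state(a1, a2, x0, T, K=100) - exact
print(bias_coarse, bias_fine)
```

By Jensen's inequality the one-step factor exceeds \(e^{(a_1+a_2)h}\) whenever \(a_1 \ne a_2\), so in this toy problem the expected error is strictly positive for every h, although it shrinks as the grid is refined.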

The main result in this subsection is the following.

Theorem 1

Assume that the input u(t) in (13) is deterministic and equal to the input u(t) in (1) and that Assumptions 1 and 2 hold, then

Proof

Observe that

where the last equation follows after substituting \(x_h({\varvec{\omega }},t) = x({\varvec{\omega }},t) + e_h({\varvec{\omega }},t)\).

Fix \(t \in [0, T]\) and let \(k \le K\) be such that \(t \in [t_{k-1}, t_k)\).

Consider an arbitrary time instant \(s \in [0,t)\) and let \(\ell \in \{1,2, \ldots , k \}\) be such that \(s \in [t_{\ell -1}, t_\ell )\). Then (64) shows that

where, in the second equality, we have introduced

The first term on the RHS of (65) is nonpositive due to Assumption 1. We thus find after taking the expected value in (65) that

For the first term on the RHS of (67), observe that \(e_h({\varvec{\omega }},t_{\ell -1}) = x_h({\varvec{\omega }},t_{\ell -1}) - x(t_{\ell -1})\) only depends on \(\omega _1, \ldots \omega _{\ell -1}\), so that

where the second identity uses (8), the third identity follows from (8) and the fact that \(e_h({\varvec{\omega }}, t_{\ell -1})\) does not depend on \(\omega _\ell \), and the last identity follows because (12) shows that the factor between round brackets vanishes.

For the second term on the RHS of (67), we use that

where the first inequality follows from the Cauchy–Schwarz inequality in \({\mathbb {R}}^N\), the second inequality from the Cauchy–Schwarz inequality in the probability space, and the last inequality follows from (49).

We now claim that

We will prove (70) at the end of the proof. Inserting the claim (70) into (69), we find

Inserting (68) and (71) into (67) shows that

Integrating (72) from \(s = 0\) to \(s = t\) using that \(e_h({\varvec{\omega }},0) = 0\) now shows that

which is the desired estimate (63).

It thus remains to show that (70) holds. Recall that, for \(\tau \in [t_{\ell -1}, s)\), (66) shows that \(\Delta e_h({\varvec{\omega }},\tau ) = e_h({\varvec{\omega }},\tau ) - e_h({\varvec{\omega }},t_{\ell -1})\). Using (59), we thus see that \(\Delta e_h({\varvec{\omega }},\tau )\) is the solution of the ODE

with initial condition \(\Delta e_h({\varvec{\omega }},t_{\ell -1}) = 0\). We therefore also have that

Using that \(\Delta e_h({\varvec{\omega }},t_{\ell -1}) = 0\), integrating (75) from \(\tau = t_{\ell -1}\) to \(\tau = s\) yields

To bound \(e_h({\varvec{\omega }},\tau )\), we apply the variation of constants formula to the error dynamics in (59) and obtain

where we have used the bound for \(x_h({\varvec{\omega }},\sigma )\) in (48) and that \(\Vert e^{A(\tau -\sigma )}\Vert \le 1\) because Assumption 1 implies that A is dissipative. Using this result in (76), we find

where we have again used the bound on \(x_h({\varvec{\omega }},t)\) in (48) for the second term in (76) and introduced

Squaring both sides in (78) and taking the expectation, we find

where the second inequality follows from the Cauchy–Schwarz inequality in \(L^2(t_{\ell -1},s)\). Now observe that (79) shows that

Because \({\mathbb {E}}[\Vert {\mathcal {A}}_h(t) - A \Vert ^2] = \mathrm {Var}[{\mathcal {A}}]\) for all t, we also have that

Using this result in (81), we obtain

Using this result again in (80), also using that \(s-t_{\ell -1} \le h\) and \(\tau \le s\), we find the claimed inequality (70). \(\square \)
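The first-order dependence on h in Theorem 1 can be observed in a scalar toy problem where all expectations are available in closed form. The sketch below is not the setting of the theorem in full generality; it assumes \(\dot{x} = (a_1 + a_2)x\) with \(u = 0\) and a randomized coefficient equal to \(2a_1\) or \(2a_2\) with probability \(\tfrac{1}{2}\) each, for which \({\mathbb {E}}[|{\mathcal {A}}_h - A |^2] = (a_1 - a_2)^2\). The function name and parameter values are hypothetical.

```python
import math


def mean_square_error(a1, a2, x0, T, K):
    """E[|x_h(T) - x(T)|^2] for x' = (a1 + a2) x, u = 0, in closed form."""
    h = T / K
    # First and second moments of the random one-step factor exp(h * A_omega).
    first = 0.5 * (math.exp(2 * a1 * h) + math.exp(2 * a2 * h))
    second = 0.5 * (math.exp(4 * a1 * h) + math.exp(4 * a2 * h))
    x = x0 * math.exp((a1 + a2) * T)
    # E[(x_h - x)^2] = E[x_h^2] - 2 x E[x_h] + x^2, using independence of steps.
    return x0**2 * second**K - 2 * x * x0 * first**K + x**2


a1, a2, x0, T = -1.0, -3.0, 1.0, 1.0
variance = (a1 - a2) ** 2  # E[|A_h - A|^2] for the coefficients 2*a1 and 2*a2
e100 = mean_square_error(a1, a2, x0, T, 100)
e200 = mean_square_error(a1, a2, x0, T, 200)
for K, err in ((100, e100), (200, e200)):
    h = T / K
    print(K, err, err / (h * variance))
```

If the mean-square error scales like \(h \mathrm {Var}[{\mathcal {A}}]\), halving h should roughly halve `err` while the normalized quantity in the last column stays of the same order.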

Some remarks regarding Theorem 1 are in order.

Remark 7

The error estimate in Theorem 1 involves the operator norm of the matrix A. This suggests that the expected error \({\mathbb {E}}[ |e_h(t) |^2]\) grows when we are considering better approximations A of an unbounded operator, which for example happens when we consider a discretization of a PDE and refine the spatial grid. However, Fig. 4a in Sect. 4 indicates that \({\mathbb {E}}[|e_h(t) |] \le C \sqrt{h \mathrm {Var}[{\mathcal {A}}]}\) for a constant C that does not increase (but even seems to decrease) when the spatial grid is refined.

A first step in understanding the infinite-dimensional case better is taken in Appendix B, where we prove that

under the additional assumptions that \(u(t) \equiv 0\) and that all matrices \(A_m\) commute pairwise. Here, W is any invertible matrix and \(\mathrm {Var}_W[{\mathcal {A}}]\) is the weighted variance introduced in Remark 5. Observe that the operator norm \(\Vert A \Vert \) does not appear in this estimate. The result from Appendix B extends naturally to an infinite dimensional setting in which all operators \(A_m\) have the same domain \(D(A_m) = D(A)\).

Recall from Remark 5 that a typical choice for W is \(W = (A - \lambda I)^{-1}\) for some \(\lambda \) in the resolvent set of A. For \(|W^{-1}x_0 |\) to be bounded, we thus require that \(x_0 \in D(A)\), where D(A) denotes the domain of the operator A. In an infinite dimensional setting we thus need an additional smoothness assumption on the initial condition \(x_0\). Such conditions are typical for (deterministic) splitting algorithms, see e.g. [12, 13]. Further details can be found in Appendix B.

Remark 8

The error estimate in Theorem 1 is derived based on the error dynamics (64). Considering the error dynamics (59) leads to a less clean proof because instead of the 3 terms on the RHS of (65), we then get 4 terms

where \(\Delta e_h({\varvec{\omega }},s) := e_h({\varvec{\omega }},s) - e_h({\varvec{\omega }},t_{\ell -1})\) and \(\Delta x_h({\varvec{\omega }},s) := x_h({\varvec{\omega }},s) - x_h({\varvec{\omega }},t_{\ell -1})\). This approach is closer to proofs for interacting particle systems in [14].

Note that the fourth term in (85) is needed because \(x_h({\varvec{\omega }},s)\) is correlated to \({\mathcal {A}}_h({\varvec{\omega }},s)\) for \(s\in (t_{\ell -1},t_\ell )\). Because x(s) is not correlated to \({\mathcal {A}}_h({\varvec{\omega }},s)\), it was not necessary to introduce such a term in (65). The proof of Theorem 1 based on the error dynamics (64) presented above is thus simpler than a proof based on (59).

Remark 9

When we look back at the proof of Theorem 1, we see that Assumption 1 is only used to assure that the matrices A and \({\mathcal {A}}_h({\varvec{\omega }},t)\) are dissipative (for all \({\varvec{\omega }}\) with \(p({\varvec{\omega }}) > 0\) and all \(t \in [0,T]\)). When Assumption 1 is not satisfied, there must exist a constant \(a > 0\) such that \({\hat{A}} = A-aI\) and \(\hat{{\mathcal {A}}}_h({\varvec{\omega }},t) = {\mathcal {A}}_h({\varvec{\omega }},t) - a I\) are dissipative (for all \({\varvec{\omega }}\) with \(p({\varvec{\omega }}) > 0\) and all \(t \in [0,T]\)). Because \({\mathbb {E}}[{\mathcal {A}}_h(t)] = A\), it follows that \({\mathbb {E}}[\hat{{\mathcal {A}}}_h(t)] = {\mathbb {E}}[{\mathcal {A}}_h(t)] - aI = A - aI = {\hat{A}}\) and \({\mathbb {E}}[\Vert \hat{{\mathcal {A}}}_h(t) - {\hat{A}} \Vert ^2] = \mathrm {Var}[{\mathcal {A}}]\). When we let \({\hat{x}}(t)\) and \({\hat{x}}_h({\varvec{\omega }},t)\) denote the solutions generated by \({\hat{A}}\) and \(\hat{{\mathcal {A}}}_h({\varvec{\omega }},t)\), respectively, we can now prove in a similar way as in Theorem 1 that the error \({\hat{e}}_h({\varvec{\omega }},t) = {\hat{x}}_h({\varvec{\omega }},t) - {\hat{x}}(t)\) can be bounded as

Because \(x(t) = e^{at}{\hat{x}}(t)\) and \(x_h({\varvec{\omega }},t) = e^{at}{\hat{x}}_h({\varvec{\omega }},t)\), also

Taking the expectation and using (86), we find

The error estimate now grows exponentially in time.

3.3 The forward dynamics with a stochastic input

In this subsection, we prove a result similar to Theorem 1 for inputs \(u_h({\varvec{\omega }},t)\) that are stochastic, i.e., which depend on \({\varvec{\omega }}\). We thus want to bound the error

where \(x_h({\varvec{\omega }},t)\) and \(x({\varvec{\omega }},t)\) are the solutions of (51) and (50), respectively.

To this end, we consider the semi-group \(e^{At}\) generated by the matrix A and the evolution operator \(S_h({\varvec{\omega }},t,s)\) associated to \({\mathcal {A}}_h({\varvec{\omega }},t)\). The evolution operator \(S_h({\varvec{\omega }},t,s)\) is defined by the property that for all vectors \(x_s \in {\mathbb {R}}^N\) (and all \(t \ge s\)), \(S_h({\varvec{\omega }},t,s)x_s\) is equal to the solution \(y_h({\varvec{\omega }},t)\) of

Remark 10

An explicit formula for the evolution operator \(S_h({\varvec{\omega }}, t, s)\) can be obtained as follows. Let \(0 \le s \le t \le T\) and let \(\ell , k \in \{1,2, \ldots , K \}\) be selected such that

By restricting the given time grid \(0 = t_0< t_1< t_2< \cdots< t_{K-1} < t_K = T\) to the interval [s, t], we obtain a grid with \({\tilde{K}} = k-\ell +1\) grid points

Fig. 1 The relation between the chosen time grid \(t_0, t_1, \ldots , t_K\) and the time grid \({\tilde{t}}_0, {\tilde{t}}_1, \ldots , {\tilde{t}}_{{\tilde{K}}}\) used in Remark 10. In the displayed example, \(\ell = 2\), \(k = 4\), and \({\tilde{K}} = 3\).

The construction of the time grid \({\tilde{t}}_0, {\tilde{t}}_1, \ldots {\tilde{t}}_{{\tilde{K}}}\) is illustrated in Fig. 1. We also denote \({\tilde{h}}_p := {\tilde{t}}_p - {\tilde{t}}_{p-1}\) (for \(p \in \{1,2, \ldots , {\tilde{K}} \}\)) and introduce (for each \(\omega \in \{1,2, \ldots , 2^M \}\))

Because \({\mathcal {A}}_h({\varvec{\omega }},\tau ) = {\mathcal {A}}_{\omega _p}\) is constant for \(\tau \in [{\tilde{t}}_{p-1}, {\tilde{t}}_p)\), it is now easy to see that

Under Assumption 1, all matrices \({\mathcal {A}}_{\omega _p}\) are dissipative and (94) shows that

Using the variation of constants formula, the solutions \(x_h({\varvec{\omega }},t)\) and \(x({\varvec{\omega }},t)\) can be expressed as

Subtracting (97) from (96) we find the following expression for the error \(e_h({\varvec{\omega }},t)\)

where \(E_h({\varvec{\omega }},t,s) = S_h({\varvec{\omega }},t,s) - e^{A(t-s)}\). The following corollary of Theorem 1 shows that we can bound \(E_h({\varvec{\omega }},t,s)\).
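The piecewise-constant structure of the evolution operator described in Remark 10 can be illustrated in the scalar case \(N = 1\). For matrices, \(S_h\) is an ordered product of matrix exponentials over the restricted grid; for scalars these factors commute, so the exponents simply add. The sketch below uses this simplification, which is an assumption of the example and not of the analysis. The function name `evolution_operator`, the grid, and the coefficient values are hypothetical.

```python
import math


def evolution_operator(grid, coeffs, s, t):
    """Scalar S_h(omega, t, s) for y' = A_h(omega, tau) y on [s, t].

    grid   : [t_0, t_1, ..., t_K], strictly increasing
    coeffs : coeffs[k-1] is the scalar value of A_h on [t_{k-1}, t_k)
    """
    if not grid[0] <= s <= t <= grid[-1]:
        raise ValueError("need t_0 <= s <= t <= t_K")
    exponent = 0.0
    for k in range(1, len(grid)):
        # Length of the overlap of [t_{k-1}, t_k) with [s, t]; these are the
        # step sizes of the grid restricted to [s, t].
        overlap = min(t, grid[k]) - max(s, grid[k - 1])
        if overlap > 0:
            exponent += overlap * coeffs[k - 1]
    return math.exp(exponent)


grid = [0.0, 0.25, 0.5, 0.75, 1.0]
coeffs = [-2.0, -6.0, -2.0, -6.0]  # one realization omega, all nonpositive
# s lies in [t_1, t_2) and t in (t_3, t_4], as in the example of Fig. 1.
S_ts = evolution_operator(grid, coeffs, 0.3, 0.9)
S_tr = evolution_operator(grid, coeffs, 0.6, 0.9)
S_rs = evolution_operator(grid, coeffs, 0.3, 0.6)
print(S_ts, S_tr * S_rs)
```

The two printed values should coincide up to rounding, which reflects the composition property \(S_h(t,s) = S_h(t,r)S_h(r,s)\) for \(s \le r \le t\); with nonpositive coefficients the operator is also bounded by 1 in modulus.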

Corollary 1

Under Assumptions 1 and 2, we have that

for all \(0 \le s \le t \le T\).

Proof

Fix \(s \in [0,T]\) and an initial condition \(x_s \in {\mathbb {R}}^N\).

Define \(y(t) = e^{A(t-s)}x_s\) and let \(y_h({\varvec{\omega }},t)\) be the solution of (90), both for \(t \in [s,T]\). We then apply Theorem 1 with \(u(t) \equiv 0\) to the time-shifted solutions \({\tilde{y}}({\tilde{t}}) = y({\tilde{t}}+s)\) and \({\tilde{y}}_h({\varvec{\omega }}, {\tilde{t}}) = y_h({\varvec{\omega }},{\tilde{t}}+s)\) and the time-shifted matrix \(\tilde{{\mathcal {A}}}_h({\varvec{\omega }},{\tilde{t}}) = {\mathcal {A}}_h({\varvec{\omega }},{\tilde{t}}+s)\) defined on \({\tilde{t}} \in [0, T-s]\). We thus conclude that (writing \({\tilde{t}} = t-s\))

Noting that, by definition, \(y(t) = e^{A(t-s)}x_s\) and \(y_h({\varvec{\omega }},t) = S_h({\varvec{\omega }},t,s)x_s\), we find that (for \(x_s \ne 0\))

where it was used that \({\tilde{t}} = t-s \le T\). The result now follows from the definition of the operator norm. \(\square \)

Remark 11

In Appendix B, we prove a result similar to Corollary 1 under the additional assumption that all matrices \(A_m\) commute pairwise. The result in Appendix B extends naturally to an infinite dimensional setting under the additional assumption that the domains of the operators \(A_m\) are the same. This is not the case for Corollary 1 because the operator norm \(\Vert A \Vert \) appears in (99).

We are now ready for the main result of this subsection.

Theorem 2

Consider any control \(u_h : \Omega ^K \rightarrow L^2(0,T;{\mathbb {R}}^q)\). Assume that Assumptions 1 and 2 are satisfied and let U be such that

for all \({\varvec{\omega }} \in \Omega ^K\), then

Proof

Using the triangle inequality in (98), we find

where the second inequality follows from the Cauchy–Schwarz inequality in \(L^2(0,t)\). Squaring both sides and using the bound (102), we find

In order to use the bound from Corollary 1 to estimate the last term, note that we can use the Cauchy–Schwarz inequality in the probability space to find

Taking the expected value in (105) and using that the bound on \({\mathbb {E}}[\Vert E_h(t,s) \Vert ^2]\) from Corollary 1 does not depend on t and s, we find

which gives the desired estimate. \(\square \)

Remark 12

Because \(\Omega ^K\) is finite, we can always find a constant U such that (102) is satisfied for a given \(u_h: \Omega ^K \rightarrow L^2(0,T;{\mathbb {R}}^q)\). However, when we consider a family of temporal grids for which \(h \rightarrow 0\), the constant U may depend on h (depending on the considered family of controls \(u_h({\varvec{\omega }},t)\)). Fortunately, we only need to apply Theorem 2 with \(u_h({\varvec{\omega }},t) = u_h^*({\varvec{\omega }},t)\), where \(u^*_h({\varvec{\omega }},t)\) is the control that minimizes the cost functional \(J_h({\varvec{\omega }},\cdot )\) in (14). For this control, the coercivity of the cost functional \(J_h({\varvec{\omega }}, \cdot )\) implies that the constant U can be chosen independent of the considered temporal grid, see (54).

Remark 13

Note that the estimate in Theorem 1 depends on the \(L^1\)-norm of the control but that the estimate in Theorem 2 depends through (102) on the \(L^2\)-norm. Setting \(u_h({\varvec{\omega }},t) = u(t)\) in Theorem 2 therefore does not give the estimate in Theorem 1. This underlines the additional difficulty posed by stochastic controls.

3.4 A no-gap condition

With the results regarding forward dynamics from the previous two subsections, we are now ready to address the optimal control problem. The main result of this subsection is the no-gap condition in Theorem 3. To prove this result, we need the following technical lemma.

Lemma 1

Consider any control \(u_h : \Omega ^K \rightarrow L^2(0,T;{\mathbb {R}}^q)\). Assume that Assumptions 1 and 2 hold and let \(U > 0\) be such that (102) is satisfied. Then

Proof

Let \(x({\varvec{\omega }},t)\) and \(x_h({\varvec{\omega }},t)\) be the solutions of (50) and (51) for the considered control \(u_h({\varvec{\omega }},t)\). For brevity, we write \({\tilde{x}}({\varvec{\omega }},t) = x({\varvec{\omega }},t) - x_d(t)\) and \({\tilde{x}}_h({\varvec{\omega }},t) = x_h({\varvec{\omega }},t) - x_d(t)\). By definition of the cost functionals \(J(\cdot )\) and \(J_h({\varvec{\omega }},\cdot )\) in (2) and (14), we have

where the last identity follows because \(e_h({\varvec{\omega }},t) = x_h({\varvec{\omega }},t) - x(t)= {\tilde{x}}_h({\varvec{\omega }},t) - {\tilde{x}}(t)\). Taking the absolute value and estimating the RHS, we find

Taking the expectation and using the Cauchy–Schwarz inequality, we find that

Using the estimate from Theorem 2, we find

Because \({\tilde{x}}({\varvec{\omega }},t) = x({\varvec{\omega }}, t) - x_d(t)\), (49) shows that

Because \(|Bu_h({\varvec{\omega }}) |_{L^1(0,T;{\mathbb {R}}^N)} \le \sqrt{T} |Bu_h({\varvec{\omega }}) |_{L^2(0,T;{\mathbb {R}}^N)} \le \sqrt{T}U\), we see from (113) that \({\mathbb {E}}[|{\tilde{x}} |_{L^2(0,T;{\mathbb {R}}^N)}^2] \le C_{[x_0, x_d, T, U]}\). The result now follows by inserting this estimate and (112) into (111). \(\square \)

We are now ready to prove the main result of this section which can be considered as a no-gap condition for the RBM optimal control problem.

Theorem 3

Let \(u^*(t)\) be the (deterministic) control that minimizes the cost functional J(u) in (2) and let \(u_h^*({\varvec{\omega }},t)\) be the control that minimizes the cost functional \(J_h({\varvec{\omega }}, u)\) in (14). Then

Proof

We have that

where \(\delta ({\varvec{\omega }}) = J(u^*_h({\varvec{\omega }})) - J_h({\varvec{\omega }}, u^*_h({\varvec{\omega }}))\) and \(\varepsilon ({\varvec{\omega }}) = J_h({\varvec{\omega }},u^*) - J(u^*)\). Note that the first inequality follows because \(u^*\) is the minimizer of J and the second inequality because \(u_h^*({\varvec{\omega }})\) is the minimizer of \(J_h({\varvec{\omega }}, \cdot )\). Subtracting \(J(u^*) + \delta ({\varvec{\omega }})\) from the first, third, and fifth expressions in (115), shows that

Taking the absolute value, we find

Therefore also

Lemma 1 can now be used to find bounds for \({\mathbb {E}}[|\delta |] = {\mathbb {E}}[|J_h(u_h^*) - J(u_h^*) |]\) and \({\mathbb {E}}[|\varepsilon |] = {\mathbb {E}}[|J_h(u^*)-J(u^*) |]\).

For the bound on \({\mathbb {E}}[|\delta |]\), we use that (54) shows that there exists a constant such that \(|Bu^*_h({\varvec{\omega }})|_{L^2(0,T;{\mathbb {R}}^N)} \le C_{[B,x_0,Q,R,x_d,T]}\) so that (102) is satisfied with a constant U that does not depend on the used temporal grid \(t_0, t_1, \ldots , t_K\). Lemma 1 thus implies that

For the bound on \({\mathbb {E}}[|\varepsilon |]\), we can simply take \(U = |Bu^*(t) |_{L^2(0,T;{\mathbb {R}}^N)}\), which is a constant that only depends on the parameters \(A,B,x_0,Q,R,x_d,T\) that define the deterministic problem (1)–(2). Lemma 1 thus also shows that

Inserting (119) and (120) into (118) we find (114). \(\square \)
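The sandwich argument used in this proof can be written out compactly. The following is a sketch reconstructed from the definitions of \(\delta ({\varvec{\omega }})\) and \(\varepsilon ({\varvec{\omega }})\) given in the proof; the layout and numbering of the displayed equations in the original may differ, and \(\boldsymbol{\omega }\) is written here in place of the bold symbol used in the text.

```latex
\begin{align*}
J(u^*) &\le J(u_h^*(\boldsymbol{\omega}))
        = J_h(\boldsymbol{\omega}, u_h^*(\boldsymbol{\omega}))
          + \delta(\boldsymbol{\omega}) \\
       &\le J_h(\boldsymbol{\omega}, u^*) + \delta(\boldsymbol{\omega})
        = J(u^*) + \varepsilon(\boldsymbol{\omega})
          + \delta(\boldsymbol{\omega}), \\
-\delta(\boldsymbol{\omega})
       &\le J_h(\boldsymbol{\omega}, u_h^*(\boldsymbol{\omega})) - J(u^*)
        \le \varepsilon(\boldsymbol{\omega}), \\
|J_h(\boldsymbol{\omega}, u_h^*(\boldsymbol{\omega})) - J(u^*)|
       &\le |\delta(\boldsymbol{\omega})| + |\varepsilon(\boldsymbol{\omega})| .
\end{align*}
```

The two inequalities in the chain use only the optimality of \(u^*\) for J and of \(u_h^*({\varvec{\omega }})\) for \(J_h({\varvec{\omega }},\cdot )\); the convergence rate then enters solely through the bounds on \({\mathbb {E}}[|\delta |]\) and \({\mathbb {E}}[|\varepsilon |]\) supplied by Lemma 1.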

3.5 Convergence in the controls

In the last stage of our analysis of the RBM-optimal control problem, we bound the expected difference between the optimal control \(u^*_h\) that minimizes \(J_h\) in (14) and the optimal control \(u^*\) for the original problem. The proof is based on the strong convexity of the functional \(J_h\) in (14).

To prove the main result, we need the following lemma which bounds the difference between the Gâteaux derivative of \(J_h\) and the Gâteaux derivative of J in expectation.

Lemma 2

For any deterministic control \(u \in L^2(0,T;{\mathbb {R}}^q)\) and any stochastic perturbation \(v_h : \Omega ^K \rightarrow L^2(0,T;{\mathbb {R}}^q)\),

Proof

Let x(t) and \(x_h({\varvec{\omega }},t)\) be the solutions of (1) and (13), respectively. Furthermore, denote

Directly from the definition of the Gâteaux derivative, we find that

where we write \({\tilde{x}}(t) = x(t) - x_d(t)\) and \({\tilde{x}}_h({\varvec{\omega }},t) = x_h({\varvec{\omega }},t) - x_d(t)\).

Subtracting (123) from (124), we find

where \(e_h({\varvec{\omega }},t) = x_h({\varvec{\omega }},t) - x(t) = {\tilde{x}}_h({\varvec{\omega }},t) - {\tilde{x}}(t)\) and \(f_h({\varvec{\omega }},t) = y_h({\varvec{\omega }},t) - y({\varvec{\omega }},t)\). Taking the absolute value, we find

Using (48), we find the following bound for \({\tilde{x}}_h({\varvec{\omega }},t) = x_h({\varvec{\omega }}, t) - x_d(t)\)

We thus have \(|{\tilde{x}}_h({\varvec{\omega }},t) |\le C_{[B,x_0,x_d,T,u]}\) for all \({\varvec{\omega }} \in \Omega ^K\).

Taking the expectation in (126) using this result shows that

where the second term on the RHS follows from the Cauchy–Schwarz inequality.

Again using the notation \(E_h({\varvec{\omega }},t,s) := S_h({\varvec{\omega }},t,s) - e^{A(t-s)}\), (122) shows that

Therefore,

where the second inequality follows from the Cauchy–Schwarz inequality in the probability space, the third inequality from Corollary 1, and the last inequality from the Cauchy–Schwarz inequality in \(L^2(0,t)\).

Because the control u(t) is deterministic, Theorem 1 shows that

Finally, note

Therefore, also

Inserting (130), (131), and (133) into (128) completes the proof. \(\square \)

We are now ready to prove the convergence result for the optimal controls.

Theorem 4

Suppose that the functional \(J_h({\varvec{\omega }},\cdot )\) in (14) is \(\alpha \)-convex for all \({\varvec{\omega }} \in \Omega ^K\). Let \(u_h^*({\varvec{\omega }},t)\) be the minimizer of \(J_h({\varvec{\omega }},\cdot )\) in (14) and \(u^*(t)\) be the minimizer of J in (2), then

Proof

We apply (57) with \(J(\cdot ) = J_h({\varvec{\omega }}, \cdot )\), \(v = u^*_h({\varvec{\omega }})\), and \(u = u^*\) to find

Because \(u^*_h({\varvec{\omega }})\) is the minimizer of \(J_h({\varvec{\omega }}, \cdot )\), \(J_h({\varvec{\omega }},u_h^*({\varvec{\omega }})) \le J_h({\varvec{\omega }},u^*)\) and

Bringing \(\delta J_h\) to the other side, taking the absolute value and then the expectation, yields

Since \(u^*\) is the minimizer of J, \(\delta J(u^*, v) = 0\) for all perturbations \(v \in L^2(0,T;{\mathbb {R}}^q)\). In particular, we have that \(\delta J(u^*, u_h^*({\varvec{\omega }}) - u^*) = 0\) for all \({\varvec{\omega }} \in \Omega ^K\) so that also

We now apply Lemma 2 to the RHS with \(u = u^*\) and \(v_h({\varvec{\omega }}) = u^*_h({\varvec{\omega }}) - u^*\), which shows that

Next, we divide (139) by \(\tfrac{1}{2} \sqrt{{\mathbb {E}}[|u_h^*-u^* |^2_{L^2(0,T;{\mathbb {R}}^q)}]}\) to find

Squaring both sides we arrive at

The result follows because the optimal control \(u^*(t)\) only depends on the parameters \(A,B,x_0,Q,R,x_d\), and T that define the original problem (1)–(2). \(\square \)

We now point out two corollaries of Theorem 4 that are important when we use the control \(u_h^*({\varvec{\omega }} ,t)\) (optimized for the RBM-dynamics) to control the original dynamics. For the first corollary, we introduce the notation

i.e., \(x^*_h({\varvec{\omega }}, t)\) is the solution of the original dynamics (1) resulting from the control \(u_h^*({\varvec{\omega }}, t)\) optimized for the RBM-dynamics and \(x^*(t)\) is the solution of the original dynamics (1) resulting from the optimal control \(u^*(t)\).

Corollary 2

Suppose that the functional \(J_h({\varvec{\omega }},\cdot )\) in (14) is \(\alpha \)-convex for all \({\varvec{\omega }} \in \Omega ^K\) and let \(x_h^*({\varvec{\omega }}, t)\) and \(x^*(t)\) be as in (142) and (143), respectively. Then

for all \(t \in [0,T]\).

Proof

Note that

Therefore also

where the second inequality uses that \(\Vert e^{At}\Vert \le 1\) in view of Assumption 1. The result now follows after squaring this inequality, taking the expectation, and using (134). \(\square \)
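The displayed steps of this proof are not reproduced in this text. As a hedged sketch of how the argument can run (not necessarily the authors' exact formulation), note that both states solve (1) from the same initial condition, so the variation-of-constants formula leaves only the control difference:

```latex
\begin{align*}
x_h^*({\varvec{\omega}},t) - x^*(t)
  &= \int_0^t e^{A(t-s)} B \bigl( u_h^*({\varvec{\omega}},s) - u^*(s) \bigr) \, \mathrm{d}s, \\
|x_h^*({\varvec{\omega}},t) - x^*(t)|
  &\le \int_0^t \Vert e^{A(t-s)} \Vert \, \bigl| B \bigl( u_h^*({\varvec{\omega}},s) - u^*(s) \bigr) \bigr| \, \mathrm{d}s \\
  &\le \Vert B \Vert \int_0^t | u_h^*({\varvec{\omega}},s) - u^*(s) | \, \mathrm{d}s \\
  &\le \Vert B \Vert \sqrt{t} \, | u_h^*({\varvec{\omega}}) - u^* |_{L^2(0,T;{\mathbb{R}}^q)}.
\end{align*}
```

Here the second inequality uses \(\Vert e^{A(t-s)}\Vert \le 1\) and the last one is the Cauchy–Schwarz inequality in \(L^2(0,t)\). The constant \(\Vert B \Vert \sqrt{t}\) is what this sketch produces and may differ from the constant in the original statement.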

Corollary 3

Suppose that the cost functional \(J_h({\varvec{\omega }},\cdot )\) is \(\alpha \)-convex for all \({\varvec{\omega }} \in \Omega ^K\). Let \(u^*(t)\) be the (deterministic) control that minimizes the cost functional J(u) in (2) and let \(u_h^*({\varvec{\omega }},t)\) be the control that minimizes the cost functional \(J_h({\varvec{\omega }}, u)\) in (14). Then

Proof

Denote \(v_h({\varvec{\omega }},t) := u_h^*({\varvec{\omega }},t) - u^*(t)\) and \(y({\varvec{\omega }},t) := \int _0^t e^{A(t-s)} Bv_h({\varvec{\omega }},s) \, \mathrm {d}s\). Because the considered functional is quadratic,

where the Hessian \(\delta ^2 J(v_h({\varvec{\omega }}), v_h({\varvec{\omega }}))\) is given by

Because \(u^*\) is the minimizer of \(J(\cdot )\), \(\delta J(u^*, v) = 0\) for all \(v \in L^2(0,T; {\mathbb {R}}^q)\). The first term on the RHS of (148) thus vanishes. Also observe that

A similar estimate as (132) shows that \(|y({\varvec{\omega }})|_{L^2(0,T;{\mathbb {R}}^N)}^2 \le C_{[B,T]}|v_h({\varvec{\omega }})|_{L^2(0,T;{\mathbb {R}}^q)}^2\). Combining these results in (148), we conclude

The result now follows after taking the expectation and using the result from Theorem 4 to bound \({\mathbb {E}}[|v_h |_{L^2(0,T;{\mathbb {R}}^q)}^2] = {\mathbb {E}}[|u^*_h - u^* |_{L^2(0,T;{\mathbb {R}}^q)}^2]\). \(\square \)

4 Numerical results

In this section, we apply our proposed method to three medium- to large-scale linear dynamical systems that are obtained after spatial discretization of a linear PDE.

4.1 A discretized 1D heat equation

We consider a controlled heat equation on the 1-D spatial domain \([-L, L]\),

where \(\chi _{[-L/3,0]}(\xi )\) denotes the characteristic function for the interval \([-L/3,0]\). We want to compute the optimal control \(u^*(t)\) that minimizes

The spatial discretization of the dynamics (152)–(153) is made by finite differences and the cost functional in (154) is discretized by the trapezoid rule. We choose a uniform spatial grid with \(N = 61\) grid points \(\xi _i = (i-1)\Delta \xi - L\) (\(i \in \{ 1,2, \ldots , N \}\)), where \(\Delta \xi = 2L/(N-1)\) is the grid spacing, and obtain a system of the form (1).

The resulting A-matrix is of the form

Observe that A can be written as

where the \(n := N-1 = 60\) matrices \({\tilde{A}}_i \in {\mathbb {R}}^{N \times N}\) are zero except for the entries

One can easily verify that the matrices \({\tilde{A}}_i\) are dissipative. We now define the M submatrices \(A_m\) (for \(M = 1,2,3,4\)) as

where \(i_m = nm/M\). Because of (156), it is easy to see that the submatrices \(A_m\) satisfy (5). Because the submatrices \({\tilde{A}}_i\) are dissipative, the submatrices \(A_m\) in (157) are dissipative and Assumption 1 is satisfied.
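The construction above can be sketched in a few lines of NumPy. The exact entries of \({\tilde{A}}_i\) are not reproduced in this text, so the \(2 \times 2\) block \(\tfrac{1}{\Delta \xi ^2}\bigl[{\begin{smallmatrix} -1 & 1 \\ 1 & -1 \end{smallmatrix}}\bigr]\) per grid edge used below is an assumption, chosen because it yields symmetric negative semidefinite (hence dissipative) matrices and the \(31 \times 31\) block sizes of Example 6. The function names `edge_matrices` and `split` are illustrative only.

```python
import numpy as np

def edge_matrices(N, dxi):
    """Return n = N - 1 matrices, one per grid edge (assumed form of the A~_i)."""
    block = np.array([[-1.0, 1.0], [1.0, -1.0]]) / dxi**2
    mats = []
    for i in range(N - 1):
        Ai = np.zeros((N, N))
        Ai[i:i + 2, i:i + 2] = block  # couples grid points i and i+1 (0-based)
        mats.append(Ai)
    return mats

def split(N, L, M):
    """Group the edge matrices into M consecutive submatrices with i_m = n*m/M."""
    n = N - 1
    if n % M != 0:
        raise ValueError("M must divide n = N - 1")
    dxi = 2 * L / (N - 1)
    tilde = edge_matrices(N, dxi)
    idx = [n * m // M for m in range(M + 1)]  # i_0 = 0, ..., i_M = n
    subs = [sum(tilde[idx[m]:idx[m + 1]]) for m in range(M)]
    return sum(tilde), subs

# Parameters of the heat-equation example: N = 61, L = 3/2, M = 2.
A, subs = split(N=61, L=1.5, M=2)
```

With these assumptions, `subs[0] + subs[1]` reproduces `A`, and each submatrix is nonzero only on a \(31 \times 31\) diagonal block.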

Example 6

For \(M = 2\) and \(N = 61\), we obtain the splitting of the A-matrix in (155) as \(A = A_1 + A_2\), with

where \(A_{11}\) and \(A_{22}\) are the \(31 \times 31\)-matrices

We will present numerical results for four cases:

- Case i: We decompose A into \(M = 2\) submatrices and assign a probability \(\tfrac{1}{2}\) to the subsets \(\{ 1 \}\) and \( \{ 2 \}\) and a probability 0 to the subsets \(\emptyset \) and \(\{1,2 \}\).

- Case ii: We decompose A into \(M = 3\) submatrices and assign a probability \(\tfrac{1}{3}\) to the subsets \(\{ 1 \}\), \( \{ 2 \}\), and \(\{ 3 \}\) and a probability 0 to the other subsets of \(\{1,2,3 \}\).

- Case iii: We decompose A into \(M = 4\) submatrices and assign a probability \(\tfrac{1}{4}\) to the subsets \(\{ 1 \}\), \( \{ 2 \}\), \(\{ 3 \}\), and \(\{ 4 \}\) and a probability 0 to the other subsets of \(\{1,2,3,4\}\).

- Case iv: We decompose A into \(M = 4\) submatrices and assign a probability \(\tfrac{1}{2}\) to the subsets \(\{ 1,3 \}\) and \( \{ 2,4 \}\) and a probability 0 to the other subsets of \(\{1,2,3,4\}\).
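As a quick sanity check on the four cases, the following sketch computes the inclusion probabilities and verifies that the scaled random matrix is unbiased. It assumes that \(\pi _m\) denotes the probability that index m belongs to the selected subset, i.e. the sum of \(p_\omega \) over all subsets \(S_\omega \) containing m (the definition appears earlier in the paper and is not repeated here). The helper names are hypothetical.

```python
from fractions import Fraction

def inclusion_probabilities(M, subset_probs):
    """pi_m = sum of p_omega over all subsets S_omega that contain m (assumed definition)."""
    return {m: sum(p for S, p in subset_probs.items() if m in S)
            for m in range(1, M + 1)}

def expected_weights(M, subset_probs):
    """Coefficient of A_m in E[sum_{m in S_omega} A_m / pi_m]; equals 1 if unbiased."""
    pi = inclusion_probabilities(M, subset_probs)
    return {m: sum(p / pi[m] for S, p in subset_probs.items() if m in S)
            for m in range(1, M + 1)}

half, third, quarter = Fraction(1, 2), Fraction(1, 3), Fraction(1, 4)
cases = {
    "i": (2, {frozenset({1}): half, frozenset({2}): half}),
    "ii": (3, {frozenset({m}): third for m in (1, 2, 3)}),
    "iii": (4, {frozenset({m}): quarter for m in (1, 2, 3, 4)}),
    "iv": (4, {frozenset({1, 3}): half, frozenset({2, 4}): half}),
}

for name, (M, probs) in cases.items():
    print(name, inclusion_probabilities(M, probs), expected_weights(M, probs))
```

Under this definition every case gives weight 1 to each \(A_m\), so the expected value of the randomized system matrix is A. Cases i and iv both have \(\pi _m = \tfrac{1}{2}\), but Case iv activates two non-adjacent blocks at a time.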

In all 4 cases, we fix \(N = 61\), \(L = \tfrac{3}{2}\), and \(T = \tfrac{1}{2}\).

We use a uniform grid \(0=t_0< t_1< \ldots< t_{K-1} < t_K = T\) with a uniform grid spacing h. We will present results for \(h = 2^{-5}\), \(2^{-7}\), \(2^{-9}\), \(2^{-11}\), \(2^{-13}\), and \(2^{-15}\). For each of the \(K = T/h\) time intervals \([t_{k-1},t_k)\), we select an index \(\omega _k\) according to the probabilities specified in Cases i–iv above. The state \(x_h({\varvec{\omega }},t)\) that satisfies (13) is computed using a single Crank–Nicolson step in each time interval \([t_{k-1}, t_k)\). We use precomputed LU-factorizations of the matrices \(I - \tfrac{h}{2}\sum _{m\in S_{\omega }}\tfrac{A_m}{\pi _m}\) (for subsets \(S_{\omega }\) with a nonzero probability \(p_{\omega }\)) that need to be inverted frequently.
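The time stepping described above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the precomputed inverse stands in for the LU factorization to keep the sketch NumPy-only, the control is sampled at the grid points and averaged over each interval, and the small test system (5 grid points, unit grid spacing) is purely illustrative. The function name `rbm_crank_nicolson` and its argument layout are hypothetical.

```python
import numpy as np

def rbm_crank_nicolson(subs, pi, subsets, probs, B, u, x0, h, K, rng):
    """One Crank-Nicolson step per interval with a randomly selected system matrix."""
    N = x0.size
    I = np.eye(N)
    # Precompute the step matrices for every subset with nonzero probability.
    # (An explicit inverse replaces the LU factorization mentioned in the text.)
    steps = []
    for S in subsets:
        A_S = sum(subs[m] / pi[m] for m in S)
        steps.append((np.linalg.inv(I - 0.5 * h * A_S), I + 0.5 * h * A_S))
    omega = rng.choice(len(subsets), size=K, p=probs)  # one index per interval
    x = np.empty((K + 1, N))
    x[0] = x0
    for k in range(K):
        inv_left, right = steps[omega[k]]
        forcing = 0.5 * h * B @ (u[k] + u[k + 1])  # trapezoidal control term
        x[k + 1] = inv_left @ (right @ x[k] + forcing)
    return omega, x

# Tiny illustration: N = 5 grid points, M = 2, Case i probabilities, u = 0.
N, h, K = 5, 2.0**-5, 16
tilde = []
for i in range(N - 1):
    Ai = np.zeros((N, N))
    Ai[i:i + 2, i:i + 2] = [[-1.0, 1.0], [1.0, -1.0]]
    tilde.append(Ai)
subs = [tilde[0] + tilde[1], tilde[2] + tilde[3]]
B = np.zeros((N, 1))
B[1, 0] = 1.0
u = np.zeros((K + 1, 1))
x0 = np.linspace(0.0, 1.0, N)
omega, x = rbm_crank_nicolson(subs, [0.5, 0.5], [(0,), (1,)], [0.5, 0.5],
                              B, u, x0, h, K, np.random.default_rng(0))
```

Because only as many distinct matrices occur as there are subsets with nonzero probability, the factorization cost is paid once per subset and reused across all K intervals.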

The optimal control \(u_h^*({\varvec{\omega }},t)\) that minimizes \(J_h({\varvec{\omega }},u)\) in (14) is computed with a gradient-descent algorithm. The gradient is computed using the adjoint state \(\varphi _h({\varvec{\omega }},t)\), see Remark 3. The time discretization for the adjoint state equation (15) is done using the scheme proposed in [1] that leads to discretely consistent gradients. The iterates \(u^k\) are computed as \(u^{k+1} = u^k-\beta \nabla J_h({\varvec{\omega }},u^k)\). The step size \(\beta \) is chosen such that \(J_h({\varvec{\omega }}, u^k - \beta \nabla J_h({\varvec{\omega }},u^k))\) is minimal. The algorithm is terminated when the relative change in \(J_h({\varvec{\omega }},u)\) is below \(10^{-6}\).
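For a quadratic functional, the step size that minimizes the functional along the negative gradient has a closed form, which the sketch below uses. It works with a generic finite-dimensional quadratic \(J(u) = \tfrac{1}{2}u^\top H u - b^\top u\); the matrix `H` and vector `b` are hypothetical stand-ins for the discretized functional, and the gradient is formed directly instead of through the adjoint state. How the relative change is normalized is also an assumption.

```python
import numpy as np

def steepest_descent(H, b, u0, rtol=1e-6, max_iter=10_000):
    """Gradient descent with exact line search for J(u) = 0.5 u^T H u - b^T u."""
    def J(u):
        return 0.5 * u @ H @ u - b @ u

    u = np.array(u0, dtype=float)
    J_old = J(u)
    iterations = 0
    for _ in range(max_iter):
        g = H @ u - b                 # gradient of J at u
        gHg = g @ H @ g
        if gHg == 0.0:                # zero gradient: already at the minimizer
            break
        beta = (g @ g) / gHg          # minimizes J(u - beta * g) over beta
        u = u - beta * g
        iterations += 1
        J_new = J(u)
        # Stop when the relative change in J drops below the tolerance.
        if abs(J_new - J_old) <= rtol * abs(J_new):
            break
        J_old = J_new
    return u, iterations

# Small symmetric positive definite example (illustrative values only).
H = np.array([[4.0, 1.0], [1.0, 3.0]])
b = np.array([1.0, 2.0])
u_opt, n_iter = steepest_descent(H, b, u0=np.zeros(2))
```

The stopping rule bounds the change in the functional value, not the distance to the minimizer, so the accuracy of the computed control is generally lower than the tolerance suggests.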

The results for the four considered cases are displayed in Fig. 2. Because the obtained results depend on the randomly selected indices stored in \({\varvec{\omega }}\), each marker in the subfigures in Fig. 2 represents the average error or duration over 25 random realizations of \({\varvec{\omega }}\). The error bars represent the \(2\sigma \)-confidence interval estimated from these 25 realizations. The errors are computed w.r.t. the solutions x(t) and \(u^*(t)\) that are computed on the same time grid as the corresponding solutions \(x_h({\varvec{\omega }},t)\) and \(u^*_h({\varvec{\omega }},t)\). The displayed errors therefore do not reflect the errors due to the temporal (or spatial) discretization but capture only the error introduced by the proposed randomized splitting method.
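The averaging over realizations can be sketched as below. The text does not state whether \(\sigma \) is the sample standard deviation or the standard error of the mean; this sketch assumes the sample standard deviation. The error values are synthetic placeholders generated for illustration and are not results from the experiments.

```python
import random
import statistics

def summarize(errors):
    """Return the mean and a 2-sigma band from per-realization errors."""
    mean = statistics.fmean(errors)
    sigma = statistics.stdev(errors)  # sample standard deviation (n - 1 denominator)
    return mean, (mean - 2 * sigma, mean + 2 * sigma)

# Synthetic stand-in for 25 measured errors; NOT data from the paper.
rng = random.Random(0)
errors = [abs(rng.gauss(1e-2, 2e-3)) for _ in range(25)]
mean, (lower, upper) = summarize(errors)
print(f"mean = {mean:.3e}, 2-sigma band = [{lower:.3e}, {upper:.3e}]")
```

If the standard error of the mean were intended instead, `sigma` would be divided by the square root of the number of realizations, which narrows the band by a factor of 5 for 25 realizations.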

Because the matrices A and \(A_m\) represent approximations of unbounded operators, the variance \(\mathrm {Var}[{\mathcal {A}}]\) defined in (17) will grow without bound when the mesh is refined. This is also reflected by the large values of \(\mathrm {Var}[{\mathcal {A}}]\) given in Table 1. It is therefore more natural to consider the variance \(\mathrm {Var}_W[{\mathcal {A}}]\) in (19) weighted by a matrix of the form \(W = (A - \lambda I)^{-1}\). The values of \(\mathrm {Var}_W[{\mathcal {A}}]\) are indeed much smaller than the values of \(\mathrm {Var}[{\mathcal {A}}]\) in Table 1. The results at the end of this subsection (in Fig. 4) also indicate that the weighted variance \(\mathrm {Var}_W[{\mathcal {A}}]\) reflects the behavior of the error better when the mesh is refined.

The error estimates in Theorems 1, 3, and 4 and in Corollary 3 are proportional to \(h\mathrm {Var}[{\mathcal {A}}]\). We therefore plot the errors in Fig. 2a–d against \(\sqrt{h\mathrm {Var}_W[{\mathcal {A}}]}\) (with \(W = (A - 0.1 I)^{-1}\)) and expect that the errors for the different cases will be (approximately) on one line.

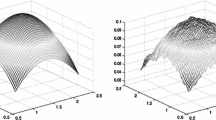

Figure 2a shows the difference \(|x_h({\varvec{\omega }},t) - x(t)|\) between the solutions x(t) and \(x_h({\varvec{\omega }},t)\) of (1) and (13) with \(u(t) = 0\). Recall that the markers in this figure indicate the average error observed over 25 realizations of \({\varvec{\omega }}\), and are thus estimates for \({\mathbb {E}}[\max _{t \in [0,T]}|x_h(t) - x(t) |]\). Because \({\mathbb {E}}[|x_h(t) - x(t) |] \le \sqrt{{\mathbb {E}}[ |x_h(t) - x(t)|^2]}\), we expect (based on the bound in Theorem 1) that the errors in Fig. 2a are proportional to \(\sqrt{h\mathrm {Var}_W[{\mathcal {A}}]}\). This is indeed confirmed by Fig. 2a.

Fig. 3 The optimal controls computed for the 1D heat equation for different time steps h. The controls \(u^*_h({\varvec{\omega }},t)\) computed with the proposed randomized time-splitting method are shown for 25 realizations of \({\varvec{\omega }}\) and compared to the optimal control \(u^*(t)\) for the original system

Figure 2b shows the difference \(|u_h^* - u^* |_{L^2(0,T)}\) between the optimal controls \(u^*(t)\) and \(u_h^*({\varvec{\omega }},t)\) that minimize (2) and (14), respectively. Based on the estimate in Theorem 4, we again expect that the observed errors are proportional to \(\sqrt{h\mathrm {Var}_W[{\mathcal {A}}]}\). This is indeed the case and the proportionality constants for the different cases are again (approximately) equal, which is also expected based on the error estimate in Theorem 4.

The convergence in the optimal controls in Fig. 2b is also illustrated in Fig. 3. This figure shows the optimal controls \(u_h^*({\varvec{\omega }},t)\) obtained for 25 randomly selected realizations of \({\varvec{\omega }} \in \Omega ^K\) (light red) for the six considered grid spacings h of the temporal grid. The figure also shows the average of the 25 optimal controls \(u^*_h({\varvec{\omega }},t)\) (dark red) and the optimal control \(u^*(t)\) for the original system (black). Figure 3 indeed shows that the optimal controls \(u_h^*({\varvec{\omega }},t)\) get closer to the optimal control \(u^*(t)\) when the spacing of the temporal grid h is reduced. Especially in Fig. 3a, b, it is also clear that the average of the 25 optimal controls \(u^*_h({\varvec{\omega }},t)\) (dark red) is not equal to the optimal control \(u^*(t)\) for the original system (black). This indicates that \({\mathbb {E}}[u_h^*] \ne u^*\), see also Remark 6. This means that \(u^*_h\) is a biased estimator for \(u^*\) and averaging several realizations of \(u^*_h({\varvec{\omega }},t)\) can only improve the approximation of \(u^*(t)\) to a limited extent. Note, however, that

so that Theorem 4 shows that \({\mathbb {E}}[u_h^*] \rightarrow u^*\) at a rate of \(\sqrt{h \mathrm {Var}[{\mathcal {A}}]}\). An analysis of the numerical results (that is not presented in Fig. 2) also indicates that the average of the 25 realizations of \(u_h^*({\varvec{\omega }},t)\) converges to \(u^*(t)\) at this rate.
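The inequality invoked in this step is not reproduced in this text. One standard route to such a statement, offered here as a hedged sketch and not necessarily the authors' exact formulation, combines the fact that \(u^*\) is deterministic with Jensen's inequality for the convex map \(v \mapsto |v|^2_{L^2(0,T;{\mathbb {R}}^q)}\):

```latex
\[
\bigl| {\mathbb{E}}[u_h^*] - u^* \bigr|^2_{L^2(0,T;{\mathbb{R}}^q)}
  = \bigl| {\mathbb{E}}[u_h^* - u^*] \bigr|^2_{L^2(0,T;{\mathbb{R}}^q)}
  \le {\mathbb{E}}\Bigl[ | u_h^* - u^* |^2_{L^2(0,T;{\mathbb{R}}^q)} \Bigr].
\]
```

Taking square roots, a bound of order \(h \mathrm {Var}[{\mathcal {A}}]\) on the right-hand side translates into a bound of order \(\sqrt{h \mathrm {Var}[{\mathcal {A}}]}\) on the bias \(|{\mathbb {E}}[u_h^*] - u^*|\).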

Figure 2c, d illustrates the convergence of \(J_h({\varvec{\omega }},u^*_h({\varvec{\omega }}))\) and \(J(u^*_h({\varvec{\omega }}))\) to \(J(u^*)\). Figure 2c illustrates the error estimate in Theorem 3 and shows that the optimality gap \(|J_h({\varvec{\omega }},u^*_h({\varvec{\omega }})) - J(u^*) |\) is indeed proportional to \(\sqrt{h\mathrm {Var}_W[{\mathcal {A}}]}\). The differences between the cases are more visible than in Fig. 2a, b. Figure 2d illustrates the error estimate in Corollary 3, which shows that the suboptimality of the RBM-control \(|J(u_h^*({\varvec{\omega }})) - J(u^*) |\) is proportional to \(h\mathrm {Var}_W[{\mathcal {A}}]\). The convergence rate is now twice as high as in the previous cases and the relative error stabilizes around \(10^{-5}\), which seems to be related to the tolerance of \(10^{-6}\) used in the computation of the optimal controls.