Abstract

This paper deals with the kernel-based approximation of a multivariate periodic function by interpolation at the points of an integration lattice—a setting that, as pointed out by Zeng et al. (Monte Carlo and Quasi-Monte Carlo Methods 2004, Springer, New York, 2006) and Zeng et al. (Constr. Approx. 30: 529–555, 2009), allows fast evaluation by fast Fourier transform, so avoiding the need for a linear solver. The main contribution of the paper is the application to the approximation problem for uncertainty quantification of elliptic partial differential equations, with the diffusion coefficient given by a random field that is periodic in the stochastic variables, in the model proposed recently by Kaarnioja et al. (SIAM J Numer Anal 58(2): 1068–1091, 2020). The paper gives a full error analysis, and full details of the construction of lattices needed to ensure a good (but inevitably not optimal) rate of convergence and an error bound independent of dimension. Numerical experiments support the theory.

1 Introduction

We consider a kernel-based approximation for a multivariate periodic function by interpolation at a quasi-Monte Carlo lattice point set. Kernel-based interpolation methods are by now well established (see, e.g., [26] and more discussion below). It is the combination of a periodic kernel with a lattice point set that delivers a significant advantage in computational efficiency. As already advocated by Hickernell and colleagues in [28, 29], the combination of a periodic reproducing kernel with the group structure of lattice points means that the linear system for constructing the kernel interpolant involves a circulant matrix, and can therefore be solved very efficiently using the fast Fourier transform. Our kernel method can thus be fast even when the dimensionality is high.

As also advocated in [28, 29], a kernel interpolant is in many settings optimal among all approximation algorithms that use the same function values (see also known results on optimal recovery, e.g., [20, 21]). We can therefore analyze the worst case approximation error of our kernel method by using, as an upper bound, the worst case error of an auxiliary algorithm based on a Fourier series truncated at a hyperbolic cross index set. Building on the recent works [3, 4], we construct a lattice generating vector with a guaranteed good error bound for our kernel interpolant. Note, importantly, that neither the construction of our lattice generating vector, nor the implementation of our kernel method, requires explicit knowledge or evaluation of the auxiliary hyperbolic cross index set. In short, we know how to find a good lattice point set so that our kernel method has a small error in addition to being of low cost.

In this paper, the main contribution is to apply this periodic-kernel-plus-lattice method to uncertainty quantification of elliptic partial differential equations (PDEs), where the diffusion coefficient is given by a random field that is periodic in the stochastic variables, as in the model proposed recently by Kaarnioja et al. [12], and to analyze its error in that setting. We tailor our lattice generating vector to the regularity of the PDE solution with respect to the stochastic variables. In our numerical experiments the observed convergence is faster than the theoretical predictions, indicating that the theory based on worst case analysis may not be sharp.

The kernel approximation developed here may have a role as a surrogate model for complicated forward problems. One popular use for surrogate models is to allow efficient sampling of the original system. If the solution of some particularly difficult PDE problem with high accuracy takes a week for a given parameter choice \(\varvec{y}\), then having a kernel interpolant that can be evaluated in hours or minutes could be very useful. A second possible use for the kernel interpolant is in the easy generation of derivatives, needed for example in gradient-based optimization algorithms. The surrogate might be even more useful for Bayesian inverse problems.

We now elaborate key points.

Periodic-kernel-plus-lattice method. Let \(f(\varvec{y})=f(y_1,\ldots ,y_s)\) be a real-valued function on the s-dimensional unit cube \([0,1]^s\), with a somewhat smooth 1-periodic extension to \({{\mathbb {R}}}^s\). Our main interest is in problems where the dimension s is large. Following [25], we assume that f has an absolutely convergent Fourier series, and belongs to a weighted mixed Sobolev space \(H:=H_{s,\alpha ,{\varvec{\gamma }}}\) which is characterized by a smoothness parameter \(\alpha >1\) and a family of positive numbers \({\varvec{\gamma }}=(\gamma _{{\mathfrak {u}}})_{{{\mathfrak {u}}}\subset {{\mathbb {N}}}}\) called weights; the details are given in Sect. 2.1.

Our ultimate application, to be analyzed in Sect. 4, concerns a class of elliptic PDEs parameterized by a very high (or possibly countably infinite) number of stochastic parameters, for which the solution, as a function of the parameters, is periodic and belongs to the weighted space H for a suitable choice of \(\alpha \) and \({\varvec{\gamma }}\).

The important feature of the space H is that it is a reproducing kernel Hilbert space (RKHS), with a simple reproducing kernel \(K(\varvec{y},\varvec{y}')\). This opens the way to the use of kernel methods to approximate functions in H from given point values. In particular, in this paper we focus on kernel interpolation: given \(f\in H\) and a suitable set of points \({\varvec{t}}_1,\ldots ,{\varvec{t}}_n\in [0,1]^s\), we seek an approximation \(f_n\in H\) of the form

$$\begin{aligned} f_n(\varvec{y}) \,:=\, \sum _{k=1}^n a_k\, K({\varvec{t}}_k,\varvec{y}), \qquad \varvec{y}\in [0,1]^s, \end{aligned} \tag{1}$$

which satisfies the interpolation condition \(f_n({\varvec{t}}_{k}) = f({\varvec{t}}_{k})\), \(k=1,\ldots ,n\). We refer to \(f_n\) as the kernel interpolant of f.

We will interpolate the function at a set of n lattice points specified by a generating vector \({\varvec{z}}\in {{\mathbb {Z}}}^s\). The points are then given by the formula \({\varvec{t}}_k = {(k{\varvec{z}}\bmod n)/{n}}\), \(k=1,\ldots ,n\), with \({\varvec{t}}_n={\varvec{t}}_0 = \varvec{0}\). A lattice point set has an additive group structure, implying that the difference of two lattice points is another lattice point (after taking into account periodicity).
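As a minimal sketch of the point set just described, the following Python snippet generates the points \({\varvec{t}}_k\) from a generating vector. The function name `lattice_points` and the values of `z` and `n` are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def lattice_points(z, n):
    """Return the points t_k = (k z mod n) / n, k = 1, ..., n, as an (n, s) array."""
    z = np.asarray(z, dtype=np.int64)
    k = np.arange(1, n + 1, dtype=np.int64).reshape(-1, 1)
    return ((k * z) % n) / n

# Hypothetical generating vector and point count, chosen only for illustration
z, n = [1, 3], 7
pts = lattice_points(z, n)

# The last point t_n coincides with t_0 = 0
last_is_origin = bool(np.all(pts[-1] == 0.0))
```

Working with the integers `k * z % n` before dividing by `n` keeps the modular arithmetic exact, which is convenient when the group structure is used to index into precomputed kernel values.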

A key property of our reproducing kernel is that it depends only on the difference of the two arguments, thus \(K(\varvec{y},\varvec{y}') = K(\varvec{y}-\varvec{y}',\varvec{0})\), and \(K(\cdot ,\varvec{0})\) is a periodic function with an easily computable expression when \(\alpha \) is an even integer. Combining this with the group structure of lattice points means that the matrix \([K({\varvec{t}}_k-{\varvec{t}}_{k'},\varvec{0})]_{k,k'=1,\ldots ,n}\) contains only n distinct values and indeed is a circulant matrix. Therefore the linear system arising from collocating (1) at the points \({\varvec{t}}_{k'}, k' = 1,\ldots ,n\), can be solved using the fast Fourier transform with a cost of \({{\mathcal {O}}}(n\log (n))\).

Once we have the coefficients \(a_k\), we can use (1) to evaluate the interpolant \(f_n\) at L arbitrary points \(\varvec{y}_\ell \), \(\ell =1,\ldots ,L\), with a cost of \({{\mathcal {O}}}(Ln)\). Remarkably, with almost the same cost we can evaluate \(f_n\) at all the Ln points of the union of shifted lattices \(\varvec{y}_\ell + {\varvec{t}}_{k'}\), \(\ell =1,\ldots ,L\), \(k'=1,\ldots ,n\). Indeed, since \(K({\varvec{t}}_k,\varvec{y}_\ell + {\varvec{t}}_{k'}) = K({\varvec{t}}_k-{\varvec{t}}_{k'},\varvec{y}_\ell )\) and the matrix \([K({\varvec{t}}_k-{\varvec{t}}_{k'},\varvec{y}_\ell )]_{k,k'=1,\ldots ,n}\) is circulant, we have

$$\begin{aligned} f_n(\varvec{y}_\ell + {\varvec{t}}_{k'}) \,=\, \sum _{k=1}^n a_k\, K({\varvec{t}}_k-{\varvec{t}}_{k'},\varvec{y}_\ell ), \qquad k'=1,\ldots ,n, \end{aligned}$$

which can be evaluated for each \(\varvec{y}_\ell \) for all \({\varvec{t}}_{k'}\) together by fast Fourier transform with a cost of \({{\mathcal {O}}}(n\log (n))\), leading to the total cost of \({{\mathcal {O}}}(Ln\log (n))\). Comprehensive cost analysis taking into account also the evaluations of f and K is given in Sect. 5.
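The shifted-lattice evaluation can be sketched as a circular correlation computed by FFT. The snippet below is an illustration under stated assumptions: it uses \(\alpha =2\), a product-weight kernel of the form \(\prod _j (1+\gamma _j\,\eta _2(y_j-y_j'))\), an arbitrary lattice, and random stand-in coefficients `a`; none of these choices come from the paper, and the names `eta2` and `kernel` are hypothetical.

```python
import numpy as np

def eta2(x):
    """eta_2 as a function of the difference x, via B_2(t) = t^2 - t + 1/6."""
    t = np.mod(x, 1.0)
    return 2.0 * np.pi**2 * (t * t - t + 1.0 / 6.0)

def kernel(y, yp, gamma):
    """Assumed product-weight kernel prod_j (1 + gamma_j * eta_2(y_j - y'_j))."""
    return np.prod(1.0 + gamma * eta2(y - yp), axis=-1)

n, z = 31, np.array([1, 12, 7])            # illustrative lattice
gamma = np.array([1.0, 0.5, 0.25])         # illustrative product weights
pts = ((np.arange(n).reshape(-1, 1) * z) % n) / n   # row k is t_k, with t_0 = t_n
rng = np.random.default_rng(0)
a = rng.standard_normal(n)                 # stand-in coefficients a_k
y = rng.random(3)                          # one arbitrary point y_l

# d[m] = K(t_m, y); then f_n(y + t_k') = sum_k a_k d[(k - k') mod n],
# a circular correlation that the FFT evaluates for all k' at once.
d = kernel(pts, y, gamma)
fast = np.fft.ifft(np.fft.fft(a) * np.conj(np.fft.fft(d))).real

# Direct O(n^2) evaluation of the same n values, for comparison
direct = np.array([np.sum(a * kernel(pts, y + pts[kp], gamma)) for kp in range(n)])
```

The identity holds for any coefficient vector, so the comparison between `fast` and `direct` checks only the circulant structure, not the quality of the interpolant.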

Brief survey on kernel methods in high dimensions. Griebel and Rieger [10] considered a (non-interpolatory) kernel approximation based on a regularized reconstruction technique from machine learning for a class of parameterized elliptic PDEs similar to the one considered in this work, yet with non-periodic dependence on the parameters. They used an anisotropic kernel, behaving differently in different variables, to address the high dimensionality of the problem. However, their error estimate was in terms of the mesh norm or fill distance of the point set, which is the Euclidean radius of the largest Euclidean ball that contains no points in its interior. Since the fill distance behaves at best like \(n^{-1/s}\), where n is the number of sampling points, their estimates inevitably suffer the curse of dimensionality.

Kempf et al. [15] considered the same PDE problem and anisotropic kernel as [10]. However, they considered a penalized least-squares approach for kernel approximation and an isotropic sparse grid as point set, which allowed them to obtain error estimates with a mitigated (but still present) curse of dimensionality.

As noted above, lattice points have already been used in a kernel interpolation method. Zeng et al. [28] seem to be the first to work in this direction; however, the question of dependence on dimension was not considered in their analysis. Zeng et al. [29] established dimension-independent error estimates in weighted spaces in the case of product weights (i.e., weights that have the form \(\gamma _{{{\mathfrak {u}}}}=\prod _{j\in {{\mathfrak {u}}}} \gamma _j\)). We note, however, that the assumption of product weights is rather limiting. For instance, for integration problems involving parameterized PDEs, the best convergence rates known up to now are obtained by considering weighted spaces for the parameter-to-solution map with (S)POD weights [9, 12, 17], whereas weighted spaces with product weights lead to the best known rates only for special models [7, 11, 14]. In this paper we extend these results to the case of kernel approximation (as opposed to integration) of the parameter-to-solution map, and we are able to show dimension-independent convergence rates using (S)POD weights in the general case. To the best of our knowledge, this is the first paper to use non-product weights for approximation in parameterized PDE problems.

PDEs with periodic dependence on random variables. Our motivating application is a class of parameterized elliptic PDEs with periodic dependence on the parameters, for which we will establish dimension-independent error estimates for the kernel interpolant, by deriving suitable choices of smoothness parameter and weights for the problem at hand. To the best of our knowledge, this is the first paper presenting dimension-independent kernel approximation methods using lattice points for this class of problems.

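We consider uncertainty quantification for an elliptic PDE (see details in Sect. 4) on a physical domain \(D\subset {\mathbb {R}}^d\), \(d= 1, 2\) or 3, in a probability space \((\varOmega , {\mathscr {A}}, {\mathbb {P}})\), with an input random field of the form

$$\begin{aligned} a({\varvec{x}},\omega ) \,=\, a_0({\varvec{x}}) + \sum _{j=1}^\infty \varTheta _j(\omega )\,\psi _j({\varvec{x}}), \qquad {\varvec{x}}\in D,\quad \omega \in \varOmega , \end{aligned}$$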

where \(a_0\) and \(\psi _j\) are uniformly bounded in D, and \(\varTheta _j(\omega )\) are i.i.d. random variables following a prescribed distribution. In the popular affine model, \(\varTheta _j\) are i.i.d. random variables uniformly distributed on \([-\frac{1}{2},\frac{1}{2}]\). In the periodic model [12], \(\varTheta _j\) are i.i.d. random variables distributed according to the arcsine distribution and can be parameterized as

$$\begin{aligned} \varTheta _j \,=\, \frac{1}{\sqrt{6}}\,\sin (2\pi y_j), \qquad j\ge 1, \end{aligned}$$

with \(y_j\) uniformly distributed on \([-\frac{1}{2},\frac{1}{2}]\). The mean of the random field is \(a_0\), and the scaling \({{1}/{\sqrt{6}}}\) is chosen here so that the covariance of the random field is also exactly the same as in the affine case. Higher moments are of course somewhat different, but as argued in [12], there seems to be no clear reason for preferring one over the other.
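The claim about matching second moments can be checked numerically. The sketch below assumes the periodic parameterization \(\varTheta _j = \sin (2\pi y_j)/\sqrt{6}\) and compares its variance with that of a uniform variable on \([-\frac{1}{2},\frac{1}{2}]\) using a midpoint rule; both should be close to \(1/12\). The variable names are illustrative.

```python
import numpy as np

m = 100_000
# Midpoints of a uniform partition of [-1/2, 1/2]
y = (np.arange(m) + 0.5) / m - 0.5

# Affine model: Theta = y, uniform on [-1/2, 1/2]
mean_affine = np.mean(y)
var_affine = np.mean(y**2) - mean_affine**2

# Periodic model (assumed form): Theta = sin(2*pi*y) / sqrt(6)
theta = np.sin(2.0 * np.pi * y) / np.sqrt(6.0)
mean_periodic = np.mean(theta)
var_periodic = np.mean(theta**2) - mean_periodic**2
```

Analytically, \(\int _{-1/2}^{1/2} \sin ^2(2\pi y)\,\mathrm {d}y = \frac{1}{2}\), so the scaling \(1/\sqrt{6}\) gives variance \(\frac{1}{6}\cdot \frac{1}{2} = \frac{1}{12}\), the same as for the uniform distribution on an interval of length one.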

Due to periodicity, it is equivalent to work with \(y_j\) uniformly distributed in the interval [0, 1] instead of \([-\frac{1}{2},\frac{1}{2}]\), thus from now on we consider the parameter space

In the earlier paper [12] the aim was to develop and analyze a method for computing the expected value of a given quantity of interest, expressed as a linear functional of the PDE solution, hence facing a high dimensional integration problem. Here, in contrast, the aim is to develop and analyze a fast method for approximating the solution \(u({\varvec{x}},\varvec{y})\), or some quantity of interest \(Q(\varvec{y})\) derived from \(u({\varvec{x}},\varvec{y})\), as an explicit function of \(\varvec{y}\). To that end we will develop a kernel-based approximation, using the kernel of a reproducing kernel Hilbert space of periodic functions, and interpolation at a lattice point set.

Structure of the paper. In Sect. 2 we define the function space setting and the kernel interpolant, and establish its principal properties, while giving a simple proof of a known optimality result, namely that in the sense of worst case error the kernel interpolant is an optimal \(L_{p}\) approximation among all approximations that use the same information about the target function \(f\in H\). Then in Sect. 3 we establish upper and lower bounds on the error. For the upper bound we use the optimality result together with the error analysis for a trigonometric polynomial method established by two of the current authors together with Cools and Nuyens [3, 4]. For the lower bound we provide another proof of a recent result by Byrenheid et al. [1], namely that a method that draws information only from function values at lattice points inevitably has a rate of convergence that is at best only half of the best possible rate, thereby obtaining matching upper and lower bounds up to logarithmic factors. In Sect. 4, we apply the error analysis developed in Sect. 3 to a parameterized PDE problem, thereby obtaining rigorous upper error bounds that are independent of dimension and have explicit rates of convergence. Section 5 is concerned with the cost analysis of our proposed method. In Sect. 6 we give the results of some numerical experiments.

2 The kernel interpolant

2.1 The function space setting

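Let \(f(\varvec{y})=f(y_1,\ldots ,y_s)\) be a real-valued function on \([0,1]^s\) with a somewhat smooth 1-periodic extension to \({\mathbb {R}}^s\) with respect to each variable \(y_j\). Our main interest is in problems where the dimension s is large. Following [25], we assume that f has an absolutely convergent Fourier series (and so is continuous),

$$\begin{aligned} f(\varvec{y}) \,=\, \sum _{{\varvec{h}}\in {\mathbb {Z}}^s} {\widehat{f}}({\varvec{h}})\, \mathrm {e}^{2\pi \mathrm {i}\,{\varvec{h}}\cdot \varvec{y}}, \qquad \text{with}\quad {\widehat{f}}({\varvec{h}}) \,:=\, \int _{[0,1]^s} f(\varvec{y})\, \mathrm {e}^{-2\pi \mathrm {i}\,{\varvec{h}}\cdot \varvec{y}}\,\mathrm {d}\varvec{y}, \end{aligned}$$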

and moreover belongs to a weighted mixed Sobolev space \(H:=H_{s,\alpha ,{\varvec{\gamma }}}\), a Hilbert space with inner product and norm

where

with \({\mathrm {supp}}({\varvec{h}}) := \left\{ j\in \{1:s\} :h_j\ne 0\right\} \) and \(\{1:s\}:=\{1, 2, \ldots , s\}\), and with the \({\varvec{h}}=\varvec{0}\) term in the sum to be interpreted as \(\gamma _\emptyset ^{-1} |{\widehat{f}}(\varvec{0})|^2\). The weighted space \(H_{s,\alpha ,{\varvec{\gamma }}}\) is characterized by the smoothness parameter \(\alpha >1\) and a family of positive numbers \({\varvec{\gamma }}=(\gamma _{{\mathfrak {u}}})_{{{\mathfrak {u}}}\subset {{\mathbb {N}}}}\) called weights, where a positive weight \(\gamma _{{\mathfrak {u}}}\) is associated with each subset \({{\mathfrak {u}}}\subseteq \{1:s\}\). We fix the scaling of the weights by setting \(\gamma _\emptyset := 1\), so that the norm of a constant function in H matches its \(L_2\) norm.

It can easily be verified that if \(\alpha \) is an even integer then the norm can be rewritten as the norm in an “unanchored” weighted Sobolev space of dominating mixed smoothness of order \({{\alpha }/{2}}\),

where \(\varvec{y}_{{\mathfrak {u}}}\) denotes the components of \(\varvec{y}\) with indices that belong to the subset \({{\mathfrak {u}}}\), and \(\varvec{y}_{-{{\mathfrak {u}}}}\) denotes the components that do not belong to \({{\mathfrak {u}}}\), and \(|{{\mathfrak {u}}}|\) denotes the cardinality of \({{\mathfrak {u}}}\).

The important feature of the space H is that it is an RKHS, with an explicitly known and analytically simple reproducing kernel, namely

where

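Note that the reproducing property

$$\begin{aligned} \langle f, K(\cdot ,\varvec{y})\rangle _H \,=\, f(\varvec{y}) \qquad \text{for all } f\in H \text{ and all } \varvec{y}\in [0,1]^s \end{aligned} \tag{3}$$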

is easily verified.

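Of special interest are even integer values of \(\alpha \), because, when \(\alpha \) is even, \(\eta _{\alpha }\) can be expressed in the especially simple closed form

$$\begin{aligned} \eta _\alpha (y,y') \,=\, \frac{(2\pi )^\alpha }{(-1)^{\alpha /2+1}\,\alpha !}\, B_\alpha (\{y-y'\}), \qquad y,y'\in [0,1], \end{aligned}$$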

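where the braces indicate that \(y-y'\) is to be replaced by its fractional part in [0, 1), and \(B_{\alpha }(y)\) is the Bernoulli polynomial of degree \(\alpha \). For example, for \(\alpha =2\) and \(\alpha =4\) we have

$$\begin{aligned} B_2(y) \,=\, y^2 - y + \tfrac{1}{6}, \qquad B_4(y) \,=\, y^4 - 2y^3 + y^2 - \tfrac{1}{30}. \end{aligned}$$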
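The closed form for even \(\alpha \) can be sanity-checked against a truncated Fourier series. The sketch below assumes that \(\eta _\alpha \) is the one-dimensional series \(\sum _{h\ne 0} \mathrm {e}^{2\pi \mathrm {i}h(y-y')}/|h|^\alpha \), which the standard Fourier expansion of Bernoulli polynomials turns into \(2\pi ^2 B_2\) for \(\alpha =2\) and \(-\tfrac{2\pi ^4}{3} B_4\) for \(\alpha =4\). The function names are hypothetical.

```python
import numpy as np

def eta2(x):
    """eta_2 for the difference x = y - y': 2*pi^2 * B_2({x})."""
    t = np.mod(x, 1.0)
    return 2.0 * np.pi**2 * (t**2 - t + 1.0 / 6.0)

def eta4(x):
    """eta_4 for the difference x = y - y': -(2*pi^4/3) * B_4({x})."""
    t = np.mod(x, 1.0)
    return -(2.0 * np.pi**4 / 3.0) * (t**4 - 2.0 * t**3 + t**2 - 1.0 / 30.0)

def eta_series(x, alpha, n_terms=20_000):
    """Truncated series sum_{0 < |h| <= n_terms} exp(2*pi*i*h*x) / |h|^alpha."""
    h = np.arange(1, n_terms + 1, dtype=float)
    return 2.0 * np.sum(np.cos(2.0 * np.pi * h * x) / h**alpha)

x = 0.3
gap2 = abs(eta2(x) - eta_series(x, 2))   # limited by the slowly decaying tail for alpha = 2
gap4 = abs(eta4(x) - eta_series(x, 4))   # tail decays much faster for alpha = 4
```

Evaluating the polynomial costs a handful of floating-point operations per point, whereas the series needs thousands of terms for comparable accuracy when \(\alpha =2\); this is why the even-integer case is convenient in practice.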

2.2 The kernel interpolant

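We are interested in approximating a given function \(f\in H\) by an approximation of the form

$$\begin{aligned} \sum _{k=1}^n a_k\, K({\varvec{t}}_k,\varvec{y}) \,=\, \sum _{k=1}^n a_k\, K\Big (\Big \{\frac{k{\varvec{z}}}{n}\Big \},\varvec{y}\Big ), \qquad \varvec{y}\in [0,1]^s, \end{aligned} \tag{4}$$

where \({\varvec{z}}\in \{1,\ldots ,n-1\}^{s}\), and the braces around the vector of length s indicate that each component of the vector is to be replaced by its fractional part. The points

$$\begin{aligned} {\varvec{t}}_k \,:=\, \Big \{\frac{k{\varvec{z}}}{n}\Big \}, \qquad k=1,\ldots ,n, \end{aligned} \tag{5}$$

are the points of a lattice cubature rule of rank 1, see [24]. In what follows, we omit these braces because the functions we consider are, unless otherwise stated, periodic.

In particular, we define \(f_n \in H\) to be the function of the form (4) that interpolates f at the lattice points,

$$\begin{aligned} f_n({\varvec{t}}_{k'}) \,=\, f({\varvec{t}}_{k'}) \qquad \text{for all } k'=1,\ldots ,n, \end{aligned} \tag{6}$$

and refer to \(f_n\) as the kernel interpolant of f.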

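The coefficients \(a_{k}\) in (4) are determined by the linear system obtained from (6),

$$\begin{aligned} \sum _{k=1}^n {{\mathcal {K}}}_{k',k}\, a_k \,=\, f({\varvec{t}}_{k'}), \qquad k'=1,\ldots ,n, \end{aligned}$$

where \( {{\mathcal {K}}}_{k',k} \,=\, {{\mathcal {K}}}_{k,k'} \,:=\, K({\varvec{t}}_{k},{\varvec{t}}_{k'})\), \( k,k'=1,\ldots ,n\). Note that the matrix elements can be expressed, using periodicity, as

$$\begin{aligned} {{\mathcal {K}}}_{k,k'} \,=\, K({\varvec{t}}_k-{\varvec{t}}_{k'},\varvec{0}) \,=\, K\Big (\frac{(k-k'){\varvec{z}} \bmod n}{n},\varvec{0}\Big ), \end{aligned}$$

where \(\varvec{0}\) is the s-vector of all zeroes. It follows that the \(n\times n\) matrix \({{\mathcal {K}}}\) is a circulant matrix, which contains only n distinct elements, and can be diagonalised in a time of order \(n\log n\) by fast Fourier transform. This is a major motivation for using lattice points.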
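To make the circulant solve concrete, here is a minimal Python sketch of the interpolation step. It is an illustration under assumptions not fixed by the text: \(\alpha =2\), product weights so that the kernel factorizes as \(\prod _j (1+\gamma _j\,\eta _2(y_j-y_j'))\), and an arbitrary generating vector and test function. The names `fit`, `evaluate`, `kernel` and `f` are hypothetical.

```python
import numpy as np

def eta2(x):
    """eta_2 as a function of the difference x, via B_2(t) = t^2 - t + 1/6."""
    t = np.mod(x, 1.0)
    return 2.0 * np.pi**2 * (t * t - t + 1.0 / 6.0)

def kernel(y, yp, gamma):
    """Assumed product-weight kernel prod_j (1 + gamma_j * eta_2(y_j - y'_j))."""
    return np.prod(1.0 + gamma * eta2(y - yp), axis=-1)

def f(y):
    """Smooth 1-periodic test function, chosen only for illustration."""
    return np.prod(1.0 + np.sin(2.0 * np.pi * y) / np.arange(2, y.shape[-1] + 2), axis=-1)

def fit(f, z, n, gamma):
    """Solve the circulant system for the coefficients a_k with two FFTs and one inverse FFT."""
    pts = ((np.arange(n).reshape(-1, 1) * z) % n) / n   # row k is t_k, with t_0 = t_n
    c = kernel(pts, 0.0, gamma)                          # first column K(t_k, 0)
    a = np.fft.ifft(np.fft.fft(f(pts)) / np.fft.fft(c)).real
    return pts, a

def evaluate(y, pts, a, gamma):
    """Evaluate f_n(y) = sum_k a_k K(t_k, y) at a single point y."""
    return np.sum(a * kernel(pts, y, gamma))

n, z = 31, np.array([1, 12, 7])       # illustrative lattice, not a CBC-constructed vector
gamma = np.array([1.0, 0.5, 0.25])    # illustrative product weights
pts, a = fit(f, z, n, gamma)
```

The division by `np.fft.fft(c)` relies on the kernel matrix being positive definite, which holds here because the lattice points are distinct. A dense solve with `np.linalg.solve` on the full matrix gives the same coefficients at \({{\mathcal {O}}}(n^3)\) cost and is a useful cross-check for small n.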

2.3 The kernel interpolant is the minimal norm interpolant

The following property is a well known result for interpolation in a reproducing kernel Hilbert space; for completeness we give a proof.

Theorem 2.1

The kernel interpolant \(f_n\) defined by (4), (5) and (6) is the minimal norm interpolant in H.

Proof

Denoting the linear span of the kernels with one leg at \({\varvec{t}}_{k},k=1,\ldots ,n\) by

we observe the well known fact (see, e.g., [5, 8]), that \(f_n\) is the orthogonal projection of f on \(P_n\) with respect to the inner product \(\langle \cdot ,\cdot \rangle _H\), since from the reproducing property (3) and the interpolation property (6) we have

In turn, there follows the Pythagoras theorem,

and the minimal norm property of \(f_n\),

since if g is any other interpolant of f at the lattice points then

and hence \( \Vert g\Vert _H^{2} \,=\, \Vert g-f_n\Vert _H^{2}+\Vert f_n\Vert _H^{2}\), from which the uniqueness of the minimal norm interpolant also follows. \(\square \)

2.4 The kernel interpolant is optimal for given function values

In this subsection we show that the kernel interpolant \(f_n\) defined by (4), (5) and (6) is optimal among all approximations that use only the same function values of f, in the sense of giving the least possible worst case error measured in any given norm \(\Vert \cdot \Vert _{W}\) such that \(H\subset W\) for functions in H. This is a special case of a general result for optimal recovery problems in Hilbert spaces (see for example [20, Example 1.1] and [21, Section 3]), but for completeness we give a short proof here. Our proof follows the exposition of [26, Proof of Theorem 13.5], but suitably adapted to our setting.

Let \(A_n:H\rightarrow H\) be an algorithm (linear or non-linear) that uses as information about the argument only its values at the points (5), i.e., it is a mapping of the form \(A_n(f)={\mathcal {I}}_n (f({\varvec{t}}_{1}),\ldots ,f({\varvec{t}}_{n}))\) for a mapping \({\mathcal {I}}_{{n}} :{\mathbb {R}}^n\rightarrow H\). The worst case W-error for this algorithm is defined by

Theorem 2.2

Let \(A_n:H\rightarrow H\) be an algorithm (linear or non-linear) such that \(A_n(f)\) uses as information about f only its values \(f({\varvec{t}}_{1}),\ldots ,f({\varvec{t}}_{n})\) at the points (5). For \(f\in H\), let \(A^*_n(f) := f_n\) be the kernel interpolant defined by (4), (5) and (6). Then, for any normed space \(W\supset H\) we have

Proof

Define \( {\mathcal {C}}:=\{g\in H \;:\; \Vert g\Vert _H\le 1\text { and }g({\varvec{t}}_{k})=0 \text{ for } \text{ all } k=1,\ldots ,n\}\). For any \(g\in {\mathcal {C}}\) we have

where in the penultimate step we used \(g({\varvec{t}}_{k})=0\) for all \(k=1,\ldots ,n\), from which it follows that \(A_n(0)= A_n(g) = A_n(-g)\). For any \(f\in H\) such that \(\Vert f\Vert _H\le 1\), since \(f_n\) is interpolatory, the Pythagoras theorem (8) implies \(\Vert f-f_n\Vert _{H}\le 1\), and hence \(f-f_n\in {\mathcal {C}}\). Thus it follows from (9) that

The theorem now follows. \(\square \)

In the above result, we may, for example, take \(W=L_{p}\) for any \(1\le p\le \infty \).

3 Lower and upper error bounds

3.1 Lower bound on the worst case \(L_{p}\) error (\(1\le p \le \infty \))

A recent paper [1] showed (with a different definition of the parameter \(\alpha \)) that the worst case \(L_2\) error for an approximation that uses the points of a rank-1 lattice cannot have an order of convergence better than \(n^{-\alpha /4}\) (with our definition of \(\alpha \)). Bearing in mind that H is a (Hilbert) space of functions of dominating mixed smoothness of order \({{\alpha }/{2}}\), this is just half the rate \(n^{-\alpha /2}\) of the best approximation. Since the function space setting in that paper is rather different from ours (here we use a Fourier description and a so-called unanchored space, and have introduced weights), we briefly reprove the main result here, obtaining a sharp lower bound expressed in terms of the weights. Furthermore, in our setting we make the result stronger by showing that the same lower bound holds for the worst case \(L_1\) error.

Theorem 3.1

Let \(s\ge 2\). Assume that the weights for the subsets of \(\{1:s\}\) containing a single element satisfy \({\gamma _{\{j\}} } >0\) for all \(j\in \{1:s\}\), and that \({\varvec{z}}\in \{0,\ldots ,n-1\}^s\) is given. Let \(A_n:H\rightarrow L_p\) be an algorithm (linear or non-linear) that uses information only at the lattice points (5) and satisfies \(A_n(0)=0\). Then for \(1\le p\le \infty \) the worst case \(L_{{p}}\) error for algorithm \(A_n\) satisfies

In particular, if \(\gamma _{\{1\}}\ge \gamma _{\{2\}}\ge \cdots >0\) then

Proof

Without loss of generality we assume \(\gamma _{\{1\}}\ge \gamma _{\{2\}}\ge \cdots >0\). The heart of the matter is that there exists a non-zero integer vector \({\varvec{h}}^*\) of length s in the 2-dimensional set

such that

(In the language of dual lattices, see [24], there exists a point of the dual lattice in \(D_n\setminus \{\varvec{0}\}\).) To prove this fact, we define \({\widetilde{D}}_{n}\), the positive quadrant of \(D_{n}\), by

noting that if \({\varvec{h}},{\varvec{h}}'\in {\widetilde{D}}_{n}\) then \({\varvec{h}}-{\varvec{h}}'\in D_{n}\). Now define

Since \(|E_{n}({\varvec{z}})|\le n\) and \(|{\widetilde{D}}_{n}|=(1+\lfloor \sqrt{n}\rfloor )^{2}>n\), it follows from the pigeonhole principle that two distinct elements of \({\widetilde{D}}_{n}\), say \({\varvec{h}}\) and \({\varvec{h}}'\), yield the same element of \(E_{n}({\varvec{z}})\); from this it follows that \({\varvec{h}}^{*}:={\varvec{h}}-{\varvec{h}}'\) satisfies (10). A “fooling function” is then defined by

where \({\varvec{e}}_1\) and \({\varvec{e}}_2\) are the unit vectors corresponding to variables 1 and 2. By construction, q vanishes at all the lattice points (5). For this function, since the two terms in q are orthogonal with respect to the inner product in \(H = H_{s,\alpha ,{\varvec{\gamma }}}\), the squared H norm satisfies

On the other hand, the \(L_{p}\) norm is bounded from below by

This integrand is even with respect to \(h_{1}^*\) and \(h_{2}^*\) separately, so both \(h_{1}^*\) and \(h_{2}^*\) can be considered as non-negative. First assume that both \(h_{1}^*\) and \(h_{2}^*\) are positive, and partition the square into boxes of size \(1/h_{1}^*\times 1/h_{2}^*\). It is easy to see that each box gives the same contribution to the integral, and hence

(For the last step it may be useful to note that the integrand in the inner integral is 1-periodic, making the inner integral independent of \(z_2\).) If we have \(h_{1}^*>0\) and \(h_{2}^*=0\) or vice versa, we again have \(\Vert q\Vert _{L_{1}}=4/\pi \). Since \(\varvec{h}^*\) is non-zero, we obtain

If we now define \(g:= q/\Vert q\Vert _H\), then g belongs to the unit ball in H and vanishes at all the points of the lattice (5), and \(\Vert g\Vert _{L_{p}}\) is bounded below by the right-hand side of (11). Since \(A_n(g)\) depends on g only through its values at the lattice points, and g vanishes at all those points, it follows that \(A_n(g) = A_n(0) = 0\), with the last step following from the assumption on \(A_n\). From the definition of worst case error we conclude that \(e^{\mathrm {wor}} (A_n,L_p)\ge \Vert g-A_n(g)\Vert _{L_p} = \Vert g\Vert _{L_p}\), which is bounded below by the right-hand side of (11), completing the proof. \(\square \)
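The pigeonhole step of the proof is constructive and can be sketched in a few lines of Python. The snippet assumes, consistently with the stated cardinality \((1+\lfloor \sqrt{n}\rfloor )^2\), that \({\widetilde{D}}_{n}\) consists of the integer vectors with \(h_1,h_2\in \{0,\ldots ,\lfloor \sqrt{n}\rfloor \}\) and all other components zero, and that the required property of \({\varvec{h}}^*\) is \({\varvec{h}}^*\cdot {\varvec{z}}\equiv 0 \pmod n\). The function name and the example values are hypothetical.

```python
from math import isqrt

def find_dual_vector(z1, z2, n):
    """Return a non-zero (h1, h2) with |h1|, |h2| <= floor(sqrt(n)) and h1*z1 + h2*z2 = 0 mod n."""
    m = isqrt(n)
    seen = {}  # residue -> first (h1, h2) in the positive quadrant that produced it
    for h1 in range(m + 1):
        for h2 in range(m + 1):
            r = (h1 * z1 + h2 * z2) % n
            if r in seen:
                g1, g2 = seen[r]
                # Difference of two distinct vectors with the same residue
                return (h1 - g1, h2 - g2)
            seen[r] = (h1, h2)
    # Not reachable: (m + 1)^2 > n vectors cannot have distinct residues mod n
    raise AssertionError("pigeonhole principle violated")

# Illustrative values for the first two components of z and for n
z1, z2, n = 1, 12, 31
h_star = find_dual_vector(z1, z2, n)
```

Only the first two components of the generating vector enter, which is why the lower bound depends on the two largest single-variable weights rather than on the dimension s.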

3.2 Upper bound on the worst case \(L_2\) error

In this section, we obtain explicit \(L_{2}\) error bounds for the kernel interpolant by using Theorem 2.2 combined with error bounds given for an explicit trigonometric polynomial approximation in [3, 4] which extends the construction from [18, 19] to general weights. (An alternative approach to obtain an upper bound would be to use a “reconstruction lattice”, see, e.g., [1, 13, 16].)

The lattice algorithm \(A^\dagger _{n,M}\) applied to a target function \(f\in H\) takes the form

which is obtained by applying a lattice integration rule to the Fourier coefficients in the orthogonal projection onto a finite index set defined for some parameter \(M>0\) by

The error for this algorithm consists of the error from truncation to the index set \({{\mathcal {A}}}_s(M)\) together with the quadrature error from approximating those Fourier coefficients with indices \({\varvec{h}}\in {{\mathcal {A}}}_s(M)\), leading to a worst case \(L_2\) approximation error bound of the form

The quantity \({{\mathcal {S}}}_s({\varvec{z}})\) (see [3] for details) can be used as a search criterion in a component-by-component (CBC) construction for finding suitable lattice generating vectors \({\varvec{z}}\), and has the key advantage that it does not depend on the index set \({{\mathcal {A}}}_s(M)\). The analysis in [3] together with the optimality of the kernel interpolant (see Theorem 2.2) leads to the following theorem.

Theorem 3.2

Given \(s\ge 1\), \(\alpha >1\), weights \((\gamma _{{\mathfrak {u}}})_{{{\mathfrak {u}}}\subset {{\mathbb {N}}}}\) with \(\gamma _\emptyset := 1\), and prime n, the worst case \(L_2\) approximation error of the kernel interpolant \(A^*_n(f) = f_n\) defined by (4), (5) and (6), using the generating vector \({\varvec{z}}\) obtained from the CBC construction with search criterion \({{\mathcal {S}}}_s({\varvec{z}})\) in [3, 4], satisfies for all \(\lambda \in (\frac{1}{\alpha },1]\),

with \(\kappa := \sqrt{2}\,[\max (6,2.5+2^{2\alpha \lambda +1})]^{{{1}/{({4\lambda })}}}\) and \(\zeta (x):=\sum _{k=1}^\infty k^{-x}\) denoting the Riemann zeta function for \(x>1\). Hence

where the implied constant depends on \(\delta \) but is independent of s provided that

Proof

The optimality of the kernel interpolant established in Theorem 2.2 means that \(e^{\mathrm {wor}}(A^*_n;L_2) \le e^{\mathrm {wor}}(A^\dagger _{n,M};L_2)\) for all M, and therefore the upper bound in (14) also serves as an upper bound for the kernel interpolant. It is easy to verify that the bound in (14) can be minimized by setting \(M = \sqrt{1/{{\mathcal {S}}}_s({\varvec{z}})}\), leading to (15). The subsequent bound follows from [3, Theorem 3.5]. The big-\({{\mathcal {O}}}\) bound is then obtained by taking \(\lambda = 1/(\alpha -4\delta )\). \(\square \)

From this result (which by Theorem 3.1 is almost best possible with respect to the order of convergence) we immediately obtain an error bound for the kernel interpolant.

Theorem 3.3

Under the conditions of Theorem 3.2, and with lattice generating vector \({\varvec{z}}\) obtained by the CBC construction in [3, 4], for any \(f\in H\), we have for the kernel interpolant \(f_n\) defined by (4), (5) and (6),

We stress again that the CBC construction in [3, 4] does not require the explicit construction of the index set \({{\mathcal {A}}}_s(M)\) in order to determine an appropriate generating vector \({\varvec{z}}\). However, the expression \({{\mathcal {S}}}_s({\varvec{z}})\) (see [3] for details) used as the search criterion does depend in a complicated way on the weights \(\gamma _{{\mathfrak {u}}}\), and therefore the target dimension s needs to be fixed at the start of the CBC construction (except for the case of product weights). For weights with no special structure, the computational cost will be exponentially large in s. We consider some special forms of weights:

- Product weights: \(\gamma _{{\mathfrak {u}}}\,=\, \prod _{j\in {{\mathfrak {u}}}} \gamma _j\), specified by one sequence \({(\gamma _j)}_{j\ge 1}\).

- POD weights (product and order dependent): \(\gamma _{{\mathfrak {u}}}\,=\, \varGamma _{|{{\mathfrak {u}}}|} \prod _{j\in {{\mathfrak {u}}}} \gamma _j\), specified by two sequences \({(\varGamma _\ell )}_{\ell \ge 0}\) and \({(\gamma _j)}_{j\ge 1}\).

- SPOD weights (smoothness-driven product and order dependent) with degree \(\sigma \ge 1\):

$$\begin{aligned} \gamma _{{\mathfrak {u}}}\,=\, \sum _{{\varvec{\nu }}_{{\mathfrak {u}}}\in \{1:\sigma \}^{|{{\mathfrak {u}}}|}} \varGamma _{|{\varvec{\nu }}_{{\mathfrak {u}}}|} \prod _{j\in {{\mathfrak {u}}}} \gamma _{j,\nu _j}, \end{aligned}$$

specified by the sequences \({(\varGamma _\ell )}_{\ell \ge 0}\) and \({(\gamma _{j,\nu })}_{j\ge 1}\) for each \(\nu =1,\ldots ,\sigma \), where \(|{\varvec{\nu }}_{{\mathfrak {u}}}| :=\sum _{j\in {{\mathfrak {u}}}} \nu _j\).
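The three weight structures above can be written out directly. The following Python sketch evaluates a single weight \(\gamma _{{\mathfrak {u}}}\) from its defining sequences; the function names and the example sequences (factorials for \(\varGamma _\ell \), inverse squares for \(\gamma _j\)) are illustrative assumptions, not the weights derived in the paper.

```python
from itertools import product
from math import factorial, prod

def pod_weight(u, Gamma, gamma):
    """POD weight: Gamma_{|u|} * prod_{j in u} gamma_j (product weights when Gamma is all ones)."""
    return Gamma[len(u)] * prod(gamma[j] for j in u)

def spod_weight(u, Gamma, gamma, sigma):
    """SPOD weight: sum over nu in {1..sigma}^{|u|} of Gamma_{|nu|} * prod_{j in u} gamma_{j, nu_j}."""
    u = sorted(u)
    total = 0.0
    for nu in product(range(1, sigma + 1), repeat=len(u)):
        total += Gamma[sum(nu)] * prod(gamma[j][v] for j, v in zip(u, nu))
    return total

# Hypothetical sequences, for illustration only
Gamma = [float(factorial(l)) for l in range(9)]
gamma = {j: 1.0 / j**2 for j in range(1, 5)}
gamma_spod = {j: {nu: 1.0 / (j**2 * nu) for nu in (1, 2)} for j in range(1, 5)}

u = (1, 3)
w_pod = pod_weight(u, Gamma, gamma)
w_spod_sigma1 = spod_weight(u, Gamma, gamma_spod, sigma=1)
w_spod_sigma2 = spod_weight(u, Gamma, gamma_spod, sigma=2)
```

With \(\sigma =1\) the SPOD sum has a single term and reduces to the POD form. The loop over \(\{1:\sigma \}^{|{{\mathfrak {u}}}|}\) grows exponentially in \(|{{\mathfrak {u}}}|\), so this direct evaluation is only meant to clarify the definition and is not how the fast CBC construction handles these weights.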

Fast CBC construction of lattice generating vector for \(L_2\) approximation has the cost of

plus storage cost and pre-computation cost for POD and SPOD weights, see [4].

4 Application to PDEs with random coefficients

As an application, we apply our kernel interpolation scheme to a forward uncertainty quantification problem, namely, a PDE problem with an uncertain, periodically parameterized diffusion coefficient, fitting the theoretical framework considered in the preceding sections. The kernel interpolant can be postprocessed with low computational cost to obtain statistics of the PDE solution itself or functionals of the solution for uncertainty quantification.

Letting \(D\subset {\mathbb {R}}^d\), \(d\in \{1,2,3\}\), be a bounded domain with Lipschitz boundary, we consider the problem of finding \(u:D\times \varOmega \rightarrow {\mathbb {R}}\) that satisfies

for almost all events \(\omega \in \varOmega \) in the probability space \((\varOmega ,{\mathscr {A}},{\mathbb {P}})\) with

where \(a_0\in L_\infty (D)\), \(\psi _j\in L_\infty (D)\) for all \(j\ge 1\) are such that \(\sum _{j\ge 1}|\psi _j({\varvec{x}})| < \infty \) for any \({\varvec{x}}\in D\), and \(Y_1,Y_2,\ldots \) are i.i.d. random variables uniformly distributed on \([-\frac{1}{2},\frac{1}{2}]\). This type of random field is not new in the context of uncertainty quantification. Indeed, the random variable \(\sin (2\pi Y_j(\omega ))\) induces the arcsine measure as its distribution: if \(Y(\omega )\) is uniformly distributed on \([-\tfrac{1}{2}, \tfrac{1}{2}]\), then \(Z(\omega ):=\sin (2\pi Y(\omega ))\) has the probability density \(\tfrac{1}{\pi }\tfrac{1}{\sqrt{1-z^{2}}}\) on \([-1,1]\). Thus, a is identical in law to the random field

with \(Z_j\) i.i.d. random variables with arcsine distribution on \([-1,1]\). Expression (19) would be the starting point for deriving a polynomial chaos approximation [27] of the solution in terms of Chebyshev polynomials of the first kind [22]. In this paper, however, we want to exploit periodicity, hence we consider rather the formulation (18) and a different approximation method based on kernel interpolation.
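The arcsine claim can be checked numerically. The following is a minimal sketch, not part of the original analysis: it samples \(Y\) uniformly on \([-\frac{1}{2},\frac{1}{2}]\), forms \(Z=\sin (2\pi Y)\), and compares the empirical distribution function of \(Z\) with the arcsine distribution function \(\frac{1}{2}+\frac{1}{\pi }\arcsin (z)\), which is the antiderivative of the density stated above. The function names, sample size, seed and test grid are illustrative assumptions.

```python
import math
import random


def arcsine_cdf(z: float) -> float:
    """CDF of the arcsine law on [-1, 1]: integral of 1/(pi*sqrt(1-t^2)) from -1 to z."""
    return 0.5 + math.asin(z) / math.pi


def max_cdf_gap(num_samples: int = 100_000, seed: int = 0) -> float:
    """Largest gap, over a fixed grid, between the empirical CDF of
    Z = sin(2*pi*Y) with Y ~ U[-1/2, 1/2] and the arcsine CDF."""
    rng = random.Random(seed)
    samples = sorted(
        math.sin(2.0 * math.pi * rng.uniform(-0.5, 0.5)) for _ in range(num_samples)
    )
    grid = [-0.9 + 0.1 * i for i in range(19)]  # test points -0.9, ..., 0.9
    gap = 0.0
    idx = 0  # number of samples <= current grid point
    for z in grid:
        while idx < num_samples and samples[idx] <= z:
            idx += 1
        gap = max(gap, abs(idx / num_samples - arcsine_cdf(z)))
    return gap
```

Calling `max_cdf_gap()` returns a small number that shrinks roughly like the inverse square root of the sample size, as one would expect if the two distributions agree.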

Since the expression (18) is periodic in the random variable \(Y_j\), we can shift those random variables so that their range is [0, 1] instead of \([-\frac{1}{2},\frac{1}{2}]\), i.e., we consider the equivalent parametric space \( U \,:=\, [0,1]^{{\mathbb {N}}}. \) Let \({\mathcal {B}}(U)\) be the Borel \(\sigma \)-algebra corresponding to the product topology on \(U=[0,1]^{{\mathbb {N}}}\), and equip \((U,{\mathcal {B}}(U))\) with the product uniform measure; see, for example, [23] for details. The weak formulation of (16)–(17) can then be stated parametrically as: for \(\varvec{y}\in U\), find \(u(\cdot ,\varvec{y})\in H_0^1(D)\) such that

$$\begin{aligned} \int _D a({\varvec{x}},\varvec{y})\,\nabla u({\varvec{x}},\varvec{y})\cdot \nabla \phi ({\varvec{x}})\,\mathrm{d}{\varvec{x}} \,=\, \langle q,\phi \rangle _{H^{-1}(D),H^1_0(D)} \quad \text {for all } \phi \in H_0^1(D), \end{aligned} \tag{20}$$

where the datum \(q\in H^{-1}(D)\) is fixed and the diffusion coefficient is given by

$$\begin{aligned} a({\varvec{x}},\varvec{y}) \,=\, a_0({\varvec{x}}) + \frac{1}{\sqrt{6}}\sum _{j\ge 1} \sin (2\pi y_j)\,\psi _j({\varvec{x}}), \quad {\varvec{x}}\in D,\; \varvec{y}\in U. \end{aligned} \tag{21}$$

Here \(H^1_0(D)\) denotes the subspace of the \(L_2\)-Sobolev space \(H^1(D)\) with vanishing trace on \(\partial D\), and \(H^{-1}(D)\) denotes the topological dual of \(H^1_0(D)\), and \(\langle \cdot ,\cdot \rangle _{H^{-1}(D),H^1_0(D)}\) denotes the duality pairing between \(H^{-1}(D)\) and \(H_0^1(D)\). We endow the Sobolev space \(H_0^1(D)\) with the norm \(\Vert v\Vert _{H_0^1(D)}:=\Vert \nabla v\Vert _{L_2(D)}\).

Since we now have two sets of variables \({\varvec{x}}\in D\) and \(\varvec{y}\in U\), from here on we will make the domains D and U explicit in our notation. We state the following assumptions and refer to them as they become needed:

(A1) \(a_0\in L_\infty (D)\), \(\psi _j\in L_\infty (D)\) for all \(j\ge 1\), and \(\sum _{j\ge 1} \Vert \psi _j\Vert _{L_\infty (D)}< \infty \);

(A2) there exist positive constants \(a_{\min }\) and \(a_{\max }\) such that \(0<a_{\min }\le a({\varvec{x}},\varvec{y})\le a_{\max }<\infty \) for all \({\varvec{x}}\in D\) and \(\varvec{y}\in U\);

(A3) \(\sum _{j\ge 1}\Vert \psi _j\Vert _{L_\infty (D)}^p<\infty \) for some \(0<p<1\);

(A4) \(a_0\in W^{1,\infty }(D)\) and \(\sum _{j\ge 1}\Vert \psi _j\Vert _{W^{1,\infty }(D)}<\infty \), where

$$\begin{aligned} \Vert v\Vert _{W^{1,\infty }(D)}:=\max \{\Vert v\Vert _{L_\infty (D)},\Vert \nabla v\Vert _{L_\infty (D)}\}; \end{aligned}$$

(A5) \(\Vert \psi _1\Vert _{L_\infty (D)}\ge \Vert \psi _2\Vert _{L_\infty (D)}\ge \cdots \);

(A6) the physical domain \(D\subset {\mathbb {R}}^d\), \(d\in \{1,2,3\}\), is a convex and bounded polyhedron with plane faces.

Let assumptions (A1) and (A2) be in effect. Then the Lax–Milgram lemma [2] implies unique solvability of the problem (20) for all \(\varvec{y}\in U\), with the solution satisfying the a priori bound

$$\begin{aligned} \Vert u(\cdot ,\varvec{y})\Vert _{H_0^1(D)} \,\le \, \frac{\Vert q\Vert _{H^{-1}(D)}}{a_{\min }}. \end{aligned} \tag{22}$$

Moreover, from the recent paper [12, Theorem 2.3] we know, after differentiating the PDE (20), that the mixed derivatives of the PDE solution are 1-periodic and bounded by

for all \(\varvec{y}\in U\) and all multi-indices \({\varvec{\nu }}\in {\mathbb {N}}_0^\infty \) with finite order \(|{\varvec{\nu }}|:= \sum _{j\ge 1} \nu _j <\infty \), and we define

$$\begin{aligned} b_j \,:=\, \frac{\Vert \psi _j\Vert _{L_\infty (D)}}{\sqrt{6}\,a_{\min }}, \quad j\ge 1. \end{aligned} \tag{24}$$

Furthermore, \(S(\sigma ,m)\) denotes the Stirling number of the second kind for integers \(\sigma \ge m\ge 0\), with the convention that \(S(\sigma ,0)=\delta _{\sigma ,0}\). In [12] we considered a function space with respect to \(\varvec{y}\) with a supremum norm rather than an \(L_2\)-based norm, so here we need to write down the relevant \(L_2\)-based norm bound instead. Moreover, we want to approximate the solution u directly, rather than a bounded linear functional G(u) of the PDE solution.

For our proposed approximation scheme, we require the target function to be pointwise well-defined with respect to both the physical variable and the parametric variable. In terms of our PDE application, this can be achieved either by assuming additional regularity of both the diffusion coefficient a and the source term q or, alternatively, by analyzing instead the construction of the kernel interpolant for the finite element approximation of u (which is naturally pointwise well-defined everywhere). Here we focus on the latter case, in which the kernel interpolant is crafted for the finite element approximation of u. This is also the setting that arises in practical computations, where one only ever has access to a numerical approximation of the solution to (20), with the diffusion coefficient (21) truncated to a finite number of terms. To this end, we split our analysis into three parts: dimension truncation error, finite element error, and kernel interpolation error.

4.1 Dimension truncation error

In anticipation of the forthcoming discussion we define the dimensionally truncated solution of (20) as

$$\begin{aligned} u_s(\cdot ,\varvec{y}) \,:=\, u(\cdot ,(y_1,\ldots ,y_s,0,0,\ldots )), \quad \varvec{y}\in U. \end{aligned}$$

Moreover, let us introduce the shorthand notations \(U_s:=U_{\le s}:=[0,1]^s\), \(U_{>s}:=\{(y_j)_{j\ge s+1}: y_j\in [0,1]\}\), and \(\varvec{y}_{>s}:=(y_{s+1},y_{s+2},\ldots )\).

For an \({{\mathbb {R}}}\)-valued function F on U that is Lebesgue integrable with respect to the uniform measure on \({\mathcal {B}}(U)\), we use the notation \(\int _{U}F(\varvec{y})\,\mathrm{d}\varvec{y}\) for the integral of F over U. Similarly, for an integrable function \({\tilde{F}}\) on \(U_{> s}\), we denote the integral over \(U_{> s}\) with respect to the uniform measure by \(\int _{U_{> s}}{\tilde{F}}(\varvec{y}_{>s})\,\mathrm{d}\varvec{y}_{>s}\).

Arguing as in [17, Theorem 5.1], it is not difficult to see that

holds under assumptions (A1)–(A3) and (A5). In what follows, we consider the dimension truncation error in the \(L_2\)-norm in the stochastic parameter, and establish the rate \({\mathcal {O}}(s^{-{1}/{p}+{1}/{2}})\), which is one half order better. This case does not appear to have been considered in the existing literature. Notably, this rate is only half of the rate proved in [12] for the integration problem with respect to \(\varvec{y}\):

We will establish a dimension truncation error for a general class of parametrized random fields that includes (21), without the periodicity assumption. Our proof adapts the argument by Gantner [6] to the \(L^2(U;H^1_0(D))\)-norm estimate.

Theorem 4.1

Suppose that (A1), (A3) and (A5) hold. Let \(\xi :[0,1]\rightarrow {\mathbb {R}}\) be an \(L_\infty ([0,1])\)-function such that

Suppose further that the function

satisfies (A2). Then for any \(s\in {\mathbb {N}}\), there exists a constant \(C>0\) such that

where \(u\in H_{0}^{1}(D)\) denotes the solution of the equation (20) but with \(a({\varvec{x}}, \varvec{y})\) given by (26), \(u_{s}\in H_{0}^{1}(D)\) denotes the corresponding dimensionally truncated solution, \(c_D>0\) is the Poincaré constant of the embedding \(H_0^1(D)\hookrightarrow L_2(D)\), and the constant \(C>0\) is independent of s and q.

Proof

We begin by introducing some helpful notations. For \(\varvec{y}\in U\), let us define the operators \(B,B^{s}:H_{0}^{1}(D)\rightarrow H^{-1}(D)\) by

where the operators \(B_{k}:H_{0}^{1}(D)\rightarrow H^{-1}(D)\) are defined by

and \(\langle B_{k}v,w\rangle _{H^{-1}(D),H_{0}^{1}(D)}:=\langle \psi _{k}\nabla v,\nabla w\rangle _{L_{2}(D)}\) for \(v,w\in H_{0}^{1}(D)\) and \(k\ge 1\). This allows the equation (20) with the coefficient a given by (26) to be written as \(Bu=q\). It is easy to see that the assumptions (A1) and (A2) ensure that both \(B(\varvec{y})\) and \(B^{s}(\varvec{y})\) are boundedly invertible linear maps for all \(\varvec{y}\in U\), with the norms of B and \({B^{s}}\) both bounded by \(a_{{\max }}\), and the norms of both \(B^{-1}\) and \({(B^{s})^{-1}}\) bounded by \(a_{{\min }}^{-1}\). Thus we can write \(u:=u(\varvec{y}):=B^{-1}q\) and \(u_{s}:=u_{s}(\varvec{y}):=(B^{s})^{-1}q\) for all \(\varvec{y}\in U\).

Only in this proof, we redefine (24) by \(b_{j}:=\Vert \xi \Vert _{\infty }\Vert \psi _{j}\Vert _{L_{\infty }(D)}/a_{\min }\), with \(\Vert \xi \Vert _{\infty }:=\Vert \xi \Vert _{L_\infty ([0,1])}\). Notice that with \(\xi =\frac{1}{\sqrt{6}}\sin (2\pi \cdot )\) we recover (24). Let \(s'\in {\mathbb {Z}}_{+}\) be such that

Without loss of generality, we can assume that \(s\ge s'\) since the assertion in the theorem can subsequently be extended to all values of s by making a simple adjustment of the constant \(C>0\) (see the end of the proof). Then for all \(j\ge s'+1\) and all \(s\ge s'\) we have

The bound (29) permits the use of a Neumann series expansion

where it is assumed that the product symbol respects the non-commutative nature of the operators \((B^{s})^{-1}B_{j}\), \(j\ge 1\).

Our strategy is to estimate first

and then deduce by the Poincaré inequality \(\Vert u\Vert _{L_{2}(D)}\le c_{D}\Vert u\Vert _{H_{0}^{1}(D)}\), with \(c_{D}>0\) depending only on the domain D, together with uniform coercivity, that

Let \({\mathscr {B}}_s: H_0^1(D) \rightarrow H_0^1(D)\) be defined by

and observe that \({{\mathscr {B}}_{s}}\) is self-adjoint with respect to the inner product

Indeed, for any \(v,w\in H_{0}^{1}(D)\) we have

Hence, from (30) we have

where we used the notation \(\sum _{\varvec{\eta }\in \{s+1:\infty \}^{m}}:=\lim _{{\tilde{s}}\rightarrow \infty }\sum _{\varvec{\eta }\in \{s+1:{\tilde{s}}\}^{m}}\), and the latter product is assumed to respect the non-commutative nature of the operators. Introducing

for each \(\varvec{\eta }\in \{s+1,s+2,\ldots \}^{m}\), we have \(\nu _{i}(\varvec{\eta })=0\), \(i=1,\ldots ,s\), \(|{\varvec{\nu }}(\varvec{\eta })|:=\sum _{i=1}^{\infty }\nu _{i}(\varvec{\eta })=m\), and

Define

and note from (25) that \(c_{\varvec{\nu }}= 0\) if for some \(i \in {\mathrm {supp}}({\varvec{\nu }})\) we have \(\nu _i = 1\). Then we have, using (31),

which can be further bounded by

where the sum of \({\varvec{\eta }}\) simplifies to

The dimension truncation error is estimated by splitting the upper bound into two parts. Let \(m^{*}\ge 3\) be an as yet undetermined index. Then

We can estimate the sum in the first term of (32) by

where \({\varvec{b}}^{{\varvec{\nu }}}:=\prod _{i\in \mathrm {supp}({\varvec{\nu }})}b_{i}^{\nu _{i}}\). Furthermore, we obtain

where we used (28), (29), and the inequality \(\mathrm{e}^{x}\le 1+(\mathrm{e}-1)x\) for all \(x\in [0,1]\).

Recalling (27), we find the following upper bound for the sum in the second term of (32):

where we used the estimates \(m-1\le 2^{m}\) and \(2\sum _{j=s+1}^{\infty }b_{j}<1\).

By [17, Theorem 5.1] it holds that

and (with \(b_j\) replaced by \(b_j^2\) and p replaced by p/2)

so we see that the terms (33)–(34) can be balanced by choosing \(m^{*}=\lceil \frac{2-p}{1-p}\rceil \). One arrives at the dimension truncation bound

where the constant \(C>0\) is independent of s and q. This proves the theorem for \(s\ge s'\). The result can be extended to all \(s\ge 1\) by noting that

for all \(1\le s<s'\), where we used the a priori bound identical to (22). \(\square \)

Remark 4.2

Theorem 4.1 can be generalised further to include a more complex model

where the function \(\xi \) in (26) is now replaced by an \(L_\infty ([0,1])\) function \(\xi _j\) depending on j. Then, assuming that we have \(\int _{0}^1\xi _j(y)\,{\mathrm {d}}y=0\), \(j\ge 1\), and that \({\tilde{b}}_{j}:=\Vert \xi _j\Vert _{\infty }\Vert \psi _{j}\Vert _{L_{\infty }(D)}/a_{\min }\) is non-increasing in j, and moreover that \({\tilde{a}}\) satisfies (A2), the same argument as above establishes the same estimate as in Theorem 4.1.

4.2 Finite element error

Let assumption (A6) be in effect. Let \(\{V_h\}_h\) be a family of conforming finite element subspaces \(V_h\subset H_0^1(D)\), parameterized by the one-dimensional mesh size \(h>0\), which are spanned by continuous, piecewise linear finite element basis functions. It is assumed that the triangulation corresponding to each \(V_h\) is obtained from an initial, regular triangulation of D by recursive, uniform partition of simplices.

For each \(\varvec{y}\in U\), we denote by \(u_{h}(\cdot ,\varvec{y})\in V_h\) the finite element solution to the system

$$\begin{aligned} \int _D a({\varvec{x}},\varvec{y})\,\nabla u_h({\varvec{x}},\varvec{y})\cdot \nabla \phi _h({\varvec{x}})\,\mathrm{d}{\varvec{x}} \,=\, \langle q,\phi _h\rangle _{H^{-1}(D),H^1_0(D)} \quad \text {for all } \phi _h\in V_h, \end{aligned} \tag{35}$$

where \(q\in H^{-1}(D)\) and a is defined by (21). Under assumptions (A1)–(A2), this system is uniquely solvable and the finite element solution \(u_h\) satisfies both the a priori bound (22) as well as the partial derivative bounds (23). In analogy to the previous subsection, we also define the dimensionally truncated finite element solution by setting

$$\begin{aligned} u_{s,h}(\cdot ,\varvec{y}) \,:=\, u_h(\cdot ,(y_1,\ldots ,y_s,0,0,\ldots )), \quad \varvec{y}\in U, \end{aligned} \tag{36}$$

where \(u_h(\cdot ,\varvec{y})\in V_h\) is the solution of (35) for \(\varvec{y}\in U\).

Theorem 4.3

Under the assumptions (A1), (A2), (A4) and (A6), for every \(\varvec{y}\in U\) and \(q\in H^{-1+t}(D)\) with \(t\in [0,1]\), there holds the asymptotic convergence estimate

where the constant \(C>0\) is independent of h and \(\varvec{y}\).

Proof

Let \(\varvec{y}\in U\). From [17, Theorem 7.2], under the assumptions (A1), (A2), (A4), and (A6), we have for every \(g\in L_2(D)\) the following asymptotic convergence estimate as \(h\rightarrow 0\)

where the constant \(C>0\) is independent of h and \(\varvec{y}\). Therefore

and this concludes the proof. \(\square \)

4.3 Kernel interpolation error

We focus on approximating the finite element solution of the problem (20) in the following discussion, since it is essential for our approximation scheme that the function being approximated is pointwise well-defined in the physical domain D.

Let \(H(U_s)=H\) denote the RKHS of functions with respect to the stochastic parameter \(\varvec{y}\in U_s\), defined in Sect. 2.1. For every \({\varvec{x}}\in D\), let

be the kernel interpolant of the dimensionally truncated finite element solution (36) at \({\varvec{x}}\) as a function of \(\varvec{y}\). We measure the \(L_2\) approximation error \(\Vert u_{s,h}({\varvec{x}},\cdot )-u_{s,h,n}({\varvec{x}},\cdot )\Vert _{L_2(U_s)}\) in \(\varvec{y}\) and then take the \(L_2\) norm over \({\varvec{x}}\), to arrive at the error criterion

where, observing that \(u_{s,h} - u_{s,h,n}\) is jointly measurable, we interchanged the order of integration by appeal to Fubini’s theorem.

Theorem 4.4

Under the assumptions (A1), (A2) and (A6), let \(u_{s,h}(\cdot ,\varvec{y})\in H_0^1(D)\) denote the dimensionally truncated finite element solution of (35) for \(\varvec{y}\in U_s\) and let \(q\in H^{-1}(D)\) be the corresponding source term. Moreover, for every \({\varvec{x}}\in D\) let \(u_{s,h,n}({\varvec{x}},\cdot ) := A^*_n(u_{s,h}({\varvec{x}},\cdot ))\) be the kernel interpolant at \({\varvec{x}}\) based on a lattice rule satisfying the assumptions of Theorem 3.2. Suppose that \(\alpha \in 2 {{\mathbb {N}}}\) and \(\sigma := \frac{\alpha }{2}\). Then we have for all \(\lambda \in (\frac{1}{\alpha },1]\) that

where \(c_D>0\) is the Poincaré constant of the embedding \(H_0^1(D)\hookrightarrow L_2(D)\), \(\kappa >0\) is the constant defined in Theorem 3.2, and

Proof

We can express the squared \(L_2\) error as

The first factor is the squared worst case \(L_2\) approximation error, which can be bounded using Theorem 3.2. The second factor can be estimated using (2) by

where we used the Cauchy–Schwarz inequality, Fubini’s theorem, the Poincaré constant \(c_D>0\) for the embedding \(H_0^1(D)\hookrightarrow L_2(D)\), together with the PDE derivative bound (23) applied with \({\varvec{\nu }}= (\sigma ,\ldots ,\sigma ) = (\frac{\alpha }{2}, \ldots ,\frac{\alpha }{2})\). The theorem is proved by combining the above expressions with Theorem 3.2. \(\square \)

Next, we proceed to choose the weights \(\gamma _{{\mathfrak {u}}}\) and the parameters \(\lambda \) and \(\alpha \) to ensure that the constant \(C_s(\lambda )\) can be bounded independently of s, with \(\lambda \) as small as possible to yield the best possible convergence rate.

4.3.1 Choosing SPOD weights

One way to choose the weights is to equate the terms inside the two sums over \({{\mathfrak {u}}}\) in the formula (38) for \(C_s(\lambda )\). (The value of \(C_s(\lambda )\) so obtained minimizes (38) with respect to \(\gamma _{{\mathfrak {u}}}\) for \({{\mathfrak {u}}}\subseteq \{1:s\}\).) It will be shown that this yields the convergence rate \({{\mathcal {O}}}(n^{-(\frac{1}{2p}-\frac{1}{4})})\) with an implied constant independent of the dimension s. The rate is precisely the rate of convergence that we expect to get. However, this choice of weights is too complicated to allow for efficient CBC construction of the lattice generating vector. So in the theorem below we propose a choice of SPOD weights that achieves the same error bound.

Theorem 4.5

Assume that (A1)–(A3) and (A6) hold, and that p is as in (A3). Take \(\alpha := 2\lfloor \frac{1}{p}+\frac{1}{2}\rfloor \), \(\sigma := \frac{\alpha }{{2}}\), \(\lambda := \frac{p}{2-p}\), and define the weights to be

for \(\emptyset \ne {{\mathfrak {u}}}\subset {{\mathbb {N}}},\; |{{\mathfrak {u}}}|<\infty \), or SPOD weights

for \(\emptyset \ne {{\mathfrak {u}}}\subset {{\mathbb {N}}},\; |{{\mathfrak {u}}}|<\infty \), with \(\gamma _{\emptyset }:= 1\). Then the kernel interpolant of the finite element solution in Theorem 4.4 satisfies

where the constant \(C>0\) is independent of the dimension s.

Proof

We will proceed to justify the two choices of weights (39) and (40), and show that in both cases the term \(C_s(\lambda )\) appearing in Theorem 4.4 can be bounded independently of s, by specifying \(\lambda \) and \(\alpha \) as in the theorem.

The first choice of weights (39) is obtained by equating the terms inside the two sums over \({{\mathfrak {u}}}\) in the formula (38). Substituting (39) into (38) yields

where we used \(\max (|{{\mathfrak {u}}}|,1)\le [\mathrm {e}^{1/\mathrm {e}}]^{|{{\mathfrak {u}}}|} = (1.4446\cdots )^{|{{\mathfrak {u}}}|}\), and defined \(S_{\max }(\sigma ) := \max _{1\le m\le \sigma } S(\sigma ,m)\), and \( \beta _j \,:=\, S_{\max }(\sigma )\,[2\mathrm{e}^{1/\mathrm{e}}\zeta (\alpha \lambda )]^{\frac{1}{2\lambda }} b_j\) for all \({j\ge 1}\), while applying Jensen’s inequality (see Footnote 1) with \(0<\frac{2\lambda }{1+\lambda }\le 1\).

The second choice of weights (40) is inspired by the weights (39) but takes the SPOD form

with \(\tau >0\) to be specified below. (The \(\tau ^{|{{\mathfrak {u}}}|}\) factor can be merged into the product over \({{\mathfrak {u}}}\), thus giving SPOD weights.) Estimating \(\max (|{{\mathfrak {u}}}|,1)\le [\mathrm {e}^{1/\mathrm {e}}]^{|{{\mathfrak {u}}}|}\) in (38), plugging in the weights (42), applying the Cauchy–Schwarz inequality with \(\frac{1}{1+\lambda } + \frac{\lambda }{1+\lambda }=1\), and applying Jensen’s inequality with \(0<\lambda \le 1\), we obtain from (38)

and further

where equality holds provided that we now choose \(\tau := [2\mathrm {e}^{1/\mathrm {e}}\zeta (\alpha \lambda )]^{\frac{1}{1+\lambda }}\). This leads to the same upper bound (41) as for the first choice of weights.

It remains to show that the upper bound (41) can be bounded independently of s. We define the sequence \(d_j :=\beta _{\lceil j/\sigma \rceil }\) for \(j\ge 1\), so that \(d_1=\cdots =d_{\sigma }=\beta _1\), \(d_{\sigma +1}=\cdots =d_{2\sigma }=\beta _2\), and so on. Then for \({\varvec{m}}\in \{0:\sigma \}^s\) we can write

where \({{\mathfrak {v}}}:=\{1,2,\ldots ,m_1,\sigma +1,\sigma +2,\ldots ,\sigma +m_2,\ldots ,(s-1)\sigma +1,\ldots ,(s-1)\sigma +m_s\}\). Clearly, the set \({{\mathfrak {v}}}\) is of cardinality \(|{{\mathfrak {v}}}|=m_1+\cdots +m_s=|{\varvec{m}}|\). It follows that

The final inequality holds because \((\sum _{j\ge 1}d_j^{\frac{2\lambda }{1+\lambda }} )^\ell \) includes all the products of the form \(\prod _{j\in {\mathfrak {v}}}d_j^{\frac{2\lambda }{1+\lambda }} \) with \(|{\mathfrak {v}}|=\ell \), and moreover includes each such term \(\ell !\) times.
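The counting argument behind the final inequality can be illustrated on a finite sequence. The sketch below is only an illustration under stated assumptions: the helper names and the sample sequence are hypothetical, and the finite list `values` stands in for the terms \(d_j^{\frac{2\lambda }{1+\lambda }}\). It compares the sum over all index sets \({\mathfrak {v}}\) of size \(\ell \) of the products \(\prod _{j\in {\mathfrak {v}}}\) with the bound \((\sum _j \cdot )^\ell /\ell !\).

```python
import math
from itertools import combinations


def subset_product_sum(values, ell):
    """Sum, over all index sets v with |v| = ell, of the product of values[j] for j in v."""
    return sum(math.prod(subset) for subset in combinations(values, ell))


def power_sum_bound(values, ell):
    """The bound (sum of values)^ell / ell! from the counting argument."""
    return sum(values) ** ell / math.factorial(ell)


# Hypothetical nonnegative, decaying sequence used purely for illustration.
example_values = [j ** -1.5 for j in range(1, 13)]
```

For nonnegative entries the bound holds for every \(\ell \), since expanding the \(\ell \)-th power produces each product over distinct indices exactly \(\ell !\) times, plus further nonnegative terms with repeated indices.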

Recall from (24) and the assumption (A3) that \(\sum _{j\ge 1} b_j^p < \infty \). We now choose

For the inner sum in (43) we now have

provided that \(\alpha \lambda > 1\), which is equivalent to \(\alpha > \frac{2}{p}-1\). This latter condition as well as the requirement that \(\alpha \) be even can be satisfied by taking \(\alpha \) such that \(\frac{\alpha }{2} = \lfloor (\frac{1}{p} - \frac{1}{2}) +1\rfloor \), so we take \(\alpha := {2\lfloor \frac{1}{p}+\frac{1}{2}\rfloor }\). Finally, the ratio test implies convergence of the outer sum in (43), and consequently \(C_s(\lambda )\) is bounded independently of s. Theorem 4.4 now ensures an error bound independent of s, and the convergence rate is \({{\mathcal {O}}}(n^{-(\frac{1}{2p}-\frac{1}{4})})\). This completes the proof. \(\square \)
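The parameter choices of Theorem 4.5 are easy to tabulate. The following sketch, with a hypothetical function name and exact rational arithmetic chosen for convenience, computes \(\alpha \), \(\sigma \), \(\lambda \) and the rate exponent \(\frac{1}{2p}-\frac{1}{4}\) for a given \(p\), so that the condition \(\alpha \lambda >1\) can be verified directly.

```python
from fractions import Fraction
import math


def spod_parameters(p: Fraction):
    """Return (alpha, sigma, lam, rate) as chosen in Theorem 4.5 for 0 < p < 1.

    rate is the exponent in the error bound O(n^{-rate}).
    """
    if not 0 < p < 1:
        raise ValueError("p must lie in (0, 1)")
    sigma = math.floor(1 / p + Fraction(1, 2))  # sigma = alpha / 2
    alpha = 2 * sigma
    lam = p / (2 - p)
    rate = 1 / (2 * p) - Fraction(1, 4)  # algebraically equal to 1 / (4 * lam)
    return alpha, sigma, lam, rate
```

For instance, `spod_parameters(Fraction(1, 2))` gives \(\alpha =4\), \(\sigma =2\), \(\lambda =\frac{1}{3}\) and the rate exponent \(\frac{3}{4}\).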

4.3.2 Choosing POD weights

In the next theorem we prove that if the assumption (A3) holds for some \(p\in \bigcup _{k=1}^\infty \big (\frac{2}{2k+1},\frac{1}{k}\big )\), then it is possible to use POD weights to obtain the same rate of convergence as in Theorem 4.5. For this and the next subsection we need the sequence of Bell polynomials (more precisely, Touchard polynomials), which we denote by

where \(S(\sigma ,m)\) denotes the Stirling number of the second kind as before.
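For concreteness, the Stirling numbers and the polynomials can be computed as follows. This is a minimal sketch assuming the standard definition of the Touchard polynomial, \(\mathrm{Bell}_\sigma (x)=\sum _{m=0}^{\sigma }S(\sigma ,m)\,x^m\); the function names are hypothetical, and the recurrence \(S(n,m)=m\,S(n-1,m)+S(n-1,m-1)\) is the usual one.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def stirling2(n: int, m: int) -> int:
    """Stirling number of the second kind S(n, m), with S(n, 0) = 1 if n == 0 else 0."""
    if m < 0 or m > n:
        return 0
    if n == 0:
        return 1  # only reached with m == 0
    if m == 0:
        return 0
    return m * stirling2(n - 1, m) + stirling2(n - 1, m - 1)


def touchard(sigma: int, x: float) -> float:
    """Touchard polynomial: sum over m = 0..sigma of S(sigma, m) * x**m (assumed definition)."""
    return sum(stirling2(sigma, m) * x ** m for m in range(sigma + 1))
```

Evaluating `touchard(sigma, 1)` returns the Bell numbers, which gives a quick consistency check of the recurrence.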

Theorem 4.6

Assume that (A1)–(A3), (A5) and (A6) hold, and further assume that \(p\in \bigcup _{k=1}^\infty \big (\frac{2}{2k+1},\frac{1}{k}\big )\) in (A3). We take \(\alpha := 2\lfloor \frac{1}{p}\rfloor \), \(\sigma := \frac{\alpha }{2}\), \(\lambda := \frac{p}{2-p}\), and define POD weights

with \(\gamma _{\emptyset }:= 1\). Then the kernel interpolant of the PDE solution in Theorem 4.4 satisfies

where the constant \(C>0\) is independent of the truncation dimension s.

Proof

In (38) we can apply the crude upper bound

which leads to

We equate the terms in the two sums in (45) to obtain the weights (44). Let us again define \(S_{\max }(\sigma ):=\max _{1\le m\le \sigma }S(\sigma ,m)\), so that \(\mathrm{Bell}_{\sigma }(b_j) \le S_{\max }(\sigma ) \sum _{m=1}^\sigma b_j^m\). Plugging the weights back into (45) then yields

To estimate \(V_\ell \), we have

where we estimated the sum over m by the geometric series formula and used \(1+b_1-b_j\ge 1\) as a consequence of the assumption (A5).

In consequence, we have \([C_s(\lambda )]^{\frac{2\lambda }{1+\lambda }} \le \sum _{\ell =0}^\infty a_\ell \), with

We can use the ratio test to determine sufficient conditions for the convergence of the infinite sum over \(\ell \). Letting \(\ell >0\), we find that

provided that \(\frac{2\sigma \lambda }{1+\lambda } = \frac{\alpha \lambda }{1+\lambda }<1\) and \(\alpha \lambda >1\). In conclusion, by choosing \(\frac{2\lambda }{1+\lambda }=p\) \(\Longleftrightarrow \) \(\lambda =\frac{p}{2-p}\), it follows from Theorem 4.4 that the convergence is independent of s with rate \({\mathcal {O}}(n^{-(\frac{1}{2p}-\frac{1}{4})})\), provided that

Unfortunately this condition cannot be fulfilled for all values of p, since \(\alpha =2\sigma \) needs to be an even integer. Indeed, the condition is equivalent to

Hence this condition is met if \(p\in \bigcup _{k=1}^\infty \big (\frac{2}{2k+1},\frac{1}{k}\big )\) by choosing \(\alpha = 2\lfloor \frac{1}{p}\rfloor \). \(\square \)
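The admissibility of a given \(p\) can be tested directly from the two requirements stated in the proof, \(\frac{\alpha \lambda }{1+\lambda }<1\) and \(\alpha \lambda >1\), with \(\lambda =\frac{p}{2-p}\) and \(\alpha =2\lfloor \frac{1}{p}\rfloor \). The sketch below uses a hypothetical function name and exact rational arithmetic; it returns the value of \(\alpha \) when both requirements hold and `None` otherwise.

```python
from fractions import Fraction
import math


def pod_alpha(p: Fraction):
    """Return alpha = 2*floor(1/p) if it satisfies both conditions of the proof, else None."""
    if not 0 < p < 1:
        raise ValueError("p must lie in (0, 1)")
    lam = p / (2 - p)
    alpha = 2 * math.floor(1 / p)
    admissible = alpha * lam > 1 and alpha * lam / (1 + lam) < 1
    return alpha if admissible else None
```

With \(\lambda =\frac{p}{2-p}\) the two requirements reduce to \(\frac{1}{p}-\frac{1}{2}<\frac{\alpha }{2}<\frac{1}{p}\), an interval of length \(\frac{1}{2}\) that contains at most one integer, so no other even \(\alpha \) can succeed when this one fails.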

The Lebesgue measure of the set of admissible values for p is precisely \(\mu \bigl (\bigcup _{k=1}^\infty (\frac{2}{2k+1},\frac{1}{k})\bigr )=2-\log (4)\approx 0.61\). Nevertheless, even if \(p\not \in \bigcup _{k=1}^\infty \bigl (\frac{2}{2k+1},\frac{1}{k}\bigr )\) we can always choose \({\tilde{p}}>p\) such that \({\tilde{p}}\in \bigcup _{k=1}^\infty \big (\frac{2}{2k+1},\frac{1}{k}\big )\) and a correspondingly larger value of \(\lambda \). The theorem then holds but with some loss in the rate of convergence.
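The stated measure can be confirmed by summing the interval lengths \(\frac{1}{k}-\frac{2}{2k+1}=\frac{1}{k(2k+1)}\); the intervals are pairwise disjoint because \(\frac{1}{k+1}<\frac{2}{2k+1}\). The following sketch (the function name is hypothetical) evaluates a partial sum and can be compared with \(2-\log (4)\).

```python
import math


def admissible_measure(num_intervals: int) -> float:
    """Total length of the first num_intervals intervals (2/(2k+1), 1/k), k = 1, 2, ..."""
    return math.fsum(1.0 / k - 2.0 / (2 * k + 1) for k in range(1, num_intervals + 1))
```

The neglected tail is at most \(\sum _{k>K}\frac{1}{2k^2}\), so the partial sum over \(K\) intervals differs from the limit by roughly \(\frac{1}{2K}\) at most.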

4.3.3 Choosing product weights

In the next theorem we increase our error bounds to obtain product weights, which have the benefit of a lower computational cost (see Sect. 5), but with the disadvantage of a compromised theoretical convergence rate.

Theorem 4.7

Assume that (A1)–(A3), (A5) and (A6) hold, and further assume that \(p < \frac{1}{2}\) in (A3). If \(p\in \bigcup _{k=1}^\infty [\frac{2}{4k+3},\frac{2}{4k+1}]\) we take \(\alpha := 2\lfloor \tfrac{1}{2p}-\tfrac{1}{4}\rfloor \), \(\sigma := \frac{\alpha }{2}\), and \(\lambda := \frac{1}{2\sigma -4\delta }\) for arbitrary \(\delta \in (0,\frac{\sigma }{2}-\frac{1}{4})\). If \(p\in (\frac{2}{5},\frac{1}{2}) \cup \bigcup _{k=1}^\infty (\frac{2}{4k+5},\frac{2}{4k+3})\) we take \(\alpha :=2\lceil \frac{1}{2p} - \frac{1}{4}\rceil \), \(\sigma :=\frac{\alpha }{2}\), and \(\lambda := \frac{1}{2/p-1-2\sigma -4\delta }\) for arbitrary \(\delta \in (0,\frac{1}{2p}-\frac{1}{2}-\frac{\sigma }{2})\). We define product weights

with \(\gamma _{\emptyset }:= 1\). Then the kernel interpolant of the PDE solution in Theorem 4.4 satisfies

where the constant \(C>0\) is independent of the truncation dimension s.

Proof

Starting again from the equation (45), we apply further crude upper bounds \(\max (|{{\mathfrak {u}}}|,1)\le [\mathrm {e}^{1/\mathrm {e}}]^{|{{\mathfrak {u}}}|}\) and

to arrive at

We equate the terms in the two sums in (47) to obtain the product weights (46). Plugging the weights back into (47) and following the argument in the proof of Theorem 4.6, we obtain

with

Now one can easily check using the ratio test that the term \(C_s(\lambda )\) can be bounded independently of s as long as the series \(\sum _{j=1}^\infty (j^\sigma b_j)^{\frac{2\lambda }{1+\lambda }}\) is convergent.

From the monotonicity of \((b_j)_{j\ge 1}\) in the assumption (A5) it follows that \(b_j\le j^{-1/p}(\sum _{k=1}^\infty b_k^p)^{1/p}\) for all \(j\ge 1\), implying

which is finite provided that

Taking into account also the requirement that \(\frac{1}{\alpha } < \lambda \le 1\) and that \(\alpha = 2\sigma \) be an even integer, we have the constraint

We consider two scenarios below depending on the value of the maximum.

Scenario A. If \(2\sigma \le \frac{2}{p}-1-2\sigma \) then \(p\le \frac{2}{4\sigma +1}\) and \(\sigma \le \frac{1}{2p} - \frac{1}{4}\), while the condition (48) simplifies to \(\frac{1}{2\sigma }< \lambda \le 1\). Since \(\sigma \) must be an integer and at least 1, this scenario applies only when \(p\in (0,\frac{2}{5}]\). In this case the best convergence rate is obtained by taking \(\lambda \) as close to \(\frac{1}{2\sigma }\) as possible and \(\sigma \) as large as possible. Hence we take \(\sigma := \lfloor \frac{1}{2p} - \frac{1}{4}\rfloor \) and \(\lambda := \frac{1}{2\sigma -4\delta }\) for arbitrary \(\delta \in (0,\frac{\sigma }{2}-\frac{1}{4})\). By Theorem 4.4 this yields the convergence rate \({\mathcal {O}}(n^{-(\frac{1}{2} \lfloor \frac{1}{2p} - \frac{1}{4}\rfloor - \delta )})\) with the implied constant independent of the dimension s, but approaching \(\infty \) as \(\delta \rightarrow 0\).

Scenario B. On the other hand, if \(2\sigma > \frac{2}{p}-1-2\sigma \) then \(p > \frac{2}{4\sigma +1}\) and \(\sigma > \frac{1}{2p} - \frac{1}{4}\), while the condition (48) becomes \(\frac{1}{2/p-1-2\sigma }< \lambda \le 1\). Additionally, for the latter condition on \(\lambda \) to hold we require that \(\frac{2}{p} -1 - 2\sigma > 1\), which means \(p < \frac{1}{\sigma +1}\) and \(\sigma < \frac{1}{p}-1\). Combining all constraints we have

Since \(\sigma \) must be an integer and at least 1, this scenario applies only when \(p\in \bigcup _{k=1}^\infty (\frac{2}{4k+1},\frac{1}{k+1}) = (0,\tfrac{1}{3}) \cup (\tfrac{2}{5},\tfrac{1}{2})\). In this case the best convergence rate is obtained by taking \(\lambda \) as close to \(\frac{1}{2/p-1-2\sigma }\) as possible but now with \(\sigma \) as small as possible. Hence we take \(\sigma := \lceil \frac{1}{2p} - \frac{1}{4}\rceil \) and \(\lambda := \frac{1}{2/p-1-2\sigma -4\delta }\) for arbitrary \(\delta \in (0,\frac{1}{2p}-\frac{1}{2}-\frac{\sigma }{2})\). This yields the convergence rate \({\mathcal {O}}(n^{- (\frac{1}{2p} - \frac{1}{4} - \frac{1}{2} \lceil \frac{1}{2p} - \frac{1}{4}\rceil - \delta )})\), with the implied constant independent of the dimension s.

- If \(p \in (\frac{2}{5},\frac{1}{2})\) then only Scenario B applies.

- If \(p\in [\frac{1}{3},\frac{2}{5}]\) then only Scenario A applies.

- If \(p\in (0,\tfrac{1}{3})\) then both scenarios apply, and it remains to resolve which scenario to use in order to obtain the better convergence rate.

For convenience we abbreviate \(x := \frac{1}{2p}-\frac{1}{4}\) and \(m := \lfloor \frac{1}{2p}-\frac{1}{4}\rfloor \), noting that \(m\ge 1\) since \(p < \frac{1}{3}\). Scenario B has a better convergence rate than Scenario A if and only if \(\frac{1}{2} \lfloor x\rfloor < x - \frac{1}{2}\lceil x\rceil \). The latter condition is not satisfied if \(x\in {\mathbb {Z}}\), while for \(x\notin {\mathbb {Z}}\) the condition is equivalent to \(\lfloor x \rfloor + \tfrac{1}{2}< x < \lceil x \rceil \). Hence the condition is equivalent to

We conclude that for the case \(p<\tfrac{1}{3}\) we should use Scenario B when \(p\in \bigcup _{k=1}^\infty \Bigl (\frac{2}{4k+5},\frac{2}{4k+3}\Bigr )\) and use Scenario A when \(p\in [\frac{2}{7},\frac{1}{3}) \cup \bigcup _{k=2}^\infty [\frac{2}{4k+3},\frac{2}{4k+1}]\).

Combining the above analysis, we should apply Scenario B when \(p\in (\frac{2}{5},\frac{1}{2}) \cup \bigcup _{k=1}^{\infty } (\frac{2}{4k+5},\frac{2}{4k+3})\) and apply Scenario A when \(p \in [\frac{2}{7},\frac{2}{5}] \cup \bigcup _{k=2}^{\infty } [\frac{2}{4k+3},\frac{2}{4k+1}] = \bigcup _{k=1}^{\infty } [\frac{2}{4k+3},\frac{2}{4k+1}]\). \(\square \)
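The case distinction in the proof can be summarized in a few lines of code. The sketch below is illustrative only: the function name is hypothetical, and the rates are the limiting exponents as \(\delta \rightarrow 0\), namely \(\frac{1}{2}\lfloor x\rfloor \) for Scenario A and \(x-\frac{1}{2}\lceil x\rceil \) for Scenario B with \(x=\frac{1}{2p}-\frac{1}{4}\). Exact rational arithmetic avoids rounding issues at the interval endpoints.

```python
from fractions import Fraction
import math


def product_weight_choice(p: Fraction):
    """Return (scenario, sigma, limiting_rate) for 0 < p < 1/2, taking delta -> 0."""
    if not 0 < p < Fraction(1, 2):
        raise ValueError("Theorem 4.7 assumes 0 < p < 1/2")
    x = 1 / (2 * p) - Fraction(1, 4)
    candidates = []
    if p <= Fraction(2, 5):  # Scenario A is available
        sigma_a = math.floor(x)
        candidates.append(("A", sigma_a, Fraction(sigma_a, 2)))
    if p < Fraction(1, 3) or p > Fraction(2, 5):  # Scenario B is available
        sigma_b = math.ceil(x)
        candidates.append(("B", sigma_b, x - Fraction(sigma_b, 2)))
    # max() keeps the first maximal entry, so Scenario A wins ties,
    # in line with the strict inequality used in the proof.
    return max(candidates, key=lambda c: c[2])
```

For example, `product_weight_choice(Fraction(1, 4))` selects Scenario B with \(\sigma =2\) and limiting rate \(\frac{3}{4}\), whereas `product_weight_choice(Fraction(3, 10))` selects Scenario A with \(\sigma =1\) and limiting rate \(\frac{1}{2}\).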

4.4 Combined approximation error

The combined approximation error of the PDE problem (20) can be decomposed as

where the first term is the dimension truncation error, the second term is the finite element error, and the final term is the kernel interpolation error. Combining the results developed in Sects. 4.1–4.3, we arrive at the following result.

Theorem 4.8

Assume that (A1)–(A6) hold. For any \(\varvec{y}\in U\), let \(u(\cdot ,\varvec{y})\in H_0^1(D)\) denote the solution to (20) with the source term \(q\in H^{-1+t}(D)\) for some \(0\le t\le 1\). Let \(u_{s,h}(\cdot ,\varvec{y})\in V_h\) be the corresponding dimensionally truncated finite element solution and let \(u_{s,h,n}({\varvec{x}},\cdot )=A^*_n(u_{s,h}({\varvec{x}},\cdot ))\) be its kernel interpolant constructed using the weights described in Theorems 4.5, 4.6, or 4.7. Then we have the combined error estimate

where \(0\le t\le 1\), h denotes the mesh size of the piecewise linear finite element mesh, \(C>0\) is a constant independent of s, h, n, q, and

and \(\delta >0\) is sufficiently small in each case.

5 Cost analysis

5.1 What is the point set at which values are wanted?

In this section we consider the cost of evaluating the kernel interpolant

as an approximation to the periodic function f, with lattice points \({\varvec{t}}_k = \{\frac{k{\varvec{z}}}{n}\}\), \(k=1,\ldots ,n\), and \({\varvec{t}}_n={\varvec{t}}_0=\varvec{0}\). Recall that all our functions including the kernel are 1-periodic with respect to \(\varvec{y}\). For the linear system (7), as observed already, the matrix \({{\mathcal {K}}}= [K({\varvec{t}}_k-{\varvec{t}}_{k'},\varvec{0})]_{k,k'=1,\ldots ,n}\) is circulant, thus we need to compute only its first column (see the cost for evaluating the kernel in the next subsection) and then solve for the coefficients \(a_k\) with a cost of \({{\mathcal {O}}}(n\log (n))\).
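The group structure that makes \({{\mathcal {K}}}\) circulant can be seen on a small example. The sketch below is an illustration only: the generating vector and the value of n in the usage note are arbitrary choices (not produced by a CBC construction) and the function names are hypothetical. It generates the lattice points \({\varvec{t}}_k=\{\frac{k{\varvec{z}}}{n}\}\) exactly and provides the componentwise fractional difference, so that one can verify \(\{{\varvec{t}}_k-{\varvec{t}}_{k'}\}={\varvec{t}}_{(k-k') \bmod n}\), with index 0 identified with n.

```python
from fractions import Fraction


def lattice_points(z, n):
    """Rank-1 lattice points t_k = frac(k*z/n) for k = 1, ..., n (so t_n = 0), as exact fractions."""
    return [tuple(Fraction((k * zj) % n, n) for zj in z) for k in range(1, n + 1)]


def frac_diff(t, u):
    """Componentwise fractional part of t - u."""
    return tuple((a - b) % 1 for a, b in zip(t, u))
```

With `pts = lattice_points((1, 3), 7)`, the difference `frac_diff(pts[k - 1], pts[kp - 1])` equals `pts[((k - kp) % 7) - 1]` for all `k`, `kp` in 1..7, so the entries of the kernel matrix depend only on \((k-k') \bmod n\).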

First, however, it turns out to be useful to ask: what is the set of points, say \(\{\varvec{y}_1, \varvec{y}_2,\ldots \}\), at which the values of the interpolant are desired? If L such points \(\varvec{y}_\ell \), \(\ell = 1,\ldots , L\), are chosen arbitrarily then the cost, naturally, is L times the cost of a single evaluation. On the other hand, for a set of Ln points formed by the union of shifted lattices \(\varvec{y}_\ell + {\varvec{t}}_{k'}\), \(\ell = 1,\ldots ,L\), \(k' = 1,\ldots ,n\), it turns out that the cost for Ln evaluations is little more than the cost of the L evaluations at arbitrary points.

The reason for the low cost lies in the shift invariance of the kernel and the group nature of the lattice. For a single given \(\varvec{y}\) the principal costs for evaluating the kernel interpolant come from four steps: evaluating \(K({\varvec{t}}_k,\varvec{0})\) and \(f({\varvec{t}}_k)\) at the n lattice points; solving the circulant linear system (7) for the n values of \(a_k\); evaluating \(K({\varvec{t}}_k,\varvec{y})\) at the n lattice points; and assembling \(f_n(\varvec{y})\) with a cost of \({{\mathcal {O}}}(n)\). (The precise cost breakdown is given in Table 1 below after we discuss the cost for evaluating the kernel in the next subsection.)

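But for evaluation of \(K({\varvec{t}}_k,\varvec{y}+ {\varvec{t}}_{k'})\) for all n values \(k'= 1,\ldots , n\) we observe that \(K({\varvec{t}}_k,\varvec{y}+ {\varvec{t}}_{k'}) = K({\varvec{t}}_k -{\varvec{t}}_{k'},\varvec{y})\), and hence

$$\begin{aligned} f_n(\varvec{y}+ {\varvec{t}}_{k'}) \,=\, \sum _{k=1}^n a_k\, K({\varvec{t}}_k -{\varvec{t}}_{k'},\varvec{y}), \qquad k'=1,\ldots ,n. \end{aligned}$$
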
Since the right-hand side has the form of a circulant \(n\times n\) matrix multiplying a vector of length n, the n values \(f_n(\varvec{y}+ {\varvec{t}}_{k'})\) for \(k'=1,\ldots ,n\) can be assembled with a cost of \({{\mathcal {O}}}(n \log (n))\), compared with the \({{\mathcal {O}}}(n)\) cost of assembling \(f_n\) at a single value of \(\varvec{y}\).
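A minimal sketch of this shifted-lattice evaluation is given below, under the same illustrative assumptions as before (product weights, \(\eta \) expressed through \(B_2\), lattice indexed by \(k=0,\ldots ,n-1\)). The coefficients are random placeholders rather than the solution of an interpolation problem, since only the assembly step is being illustrated; all names are hypothetical.

```python
import numpy as np

def eta2(x):
    """2 pi^2 B_2({x}), the closed form of sum_{h != 0} exp(2 pi i h x) / h^2."""
    x = np.mod(x, 1.0)
    return 2.0 * np.pi**2 * (x * x - x + 1.0 / 6.0)

def kappa(x, gamma):
    """Product-weight kernel as a function of the difference x = t - y."""
    return np.prod(1.0 + gamma * eta2(x), axis=-1)

def evaluate_direct(a, t, y, gamma):
    """f_n(y) = sum_k a_k K(t_k, y): n kernel evaluations and O(n) assembly for one y."""
    return np.sum(a * kappa(t - y, gamma))

def evaluate_on_shifted_lattice(a, t, y, gamma):
    """All n values f_n(y + t_k'), k' = 0, ..., n-1, from n kernel evaluations plus FFTs."""
    g = kappa(t - y, gamma)  # g[j] = K(t_j, y)
    # f_n(y + t_k') = sum_k a_k g[(k - k') mod n] is a circular cross-correlation,
    # so it can be assembled in O(n log n) with the FFT.
    return np.real(np.fft.ifft(np.fft.fft(a) * np.conj(np.fft.fft(g))))

# Hypothetical toy data (assumed values, for illustration only)
n, z = 13, np.array([1, 5])
gamma = np.array([0.5, 0.25])
t = np.mod(np.arange(n)[:, None] * z[None, :], n) / n
a = np.random.default_rng(0).standard_normal(n)  # placeholder coefficients
y = np.array([0.3, 0.7])

fast = evaluate_on_shifted_lattice(a, t, y, gamma)
slow = np.array([evaluate_direct(a, t, y + t[kp], gamma) for kp in range(n)])
```

The two arrays agree up to rounding, but `slow` needs \(n^2\) kernel evaluations whereas `fast` needs only n.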

5.2 Cost for evaluating the kernel for a single \(\varvec{y}\)

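Now consider the cost of computing \(K({\varvec{t}},\varvec{y})\) for a single arbitrary value of \(\varvec{y}\) and arbitrary \({\varvec{t}}\),

$$\begin{aligned} K({\varvec{t}},\varvec{y}) \,=\, \sum _{{{\mathfrak {u}}}\subseteq \{1:s\}} \gamma _{{\mathfrak {u}}}\prod _{j\in {{\mathfrak {u}}}} \eta _\alpha (t_j,y_j). \end{aligned}$$
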
In the following, we assume that evaluating \(\eta _\alpha \) can be treated as having constant cost. For example, when \(\alpha \) is even we have an analytic formula for \(\eta _\alpha \) in terms of the Bernoulli polynomial.

If the weights have no special structure then the cost to evaluate \(K({\varvec{t}},\varvec{y})\) would be exponential in s because of the sum over subsets of \(\{1:s\}\), but the cost is much reduced in special cases:

- With product weights we have \(K({\varvec{t}},\varvec{y}) = \prod _{j=1}^s (1 + \gamma _j\eta _\alpha (t_j,y_j))\), which can be evaluated for a pair \(({\varvec{t}},\varvec{y})\) at the cost of \({{\mathcal {O}}}(s)\).

- With POD weights we have

$$\begin{aligned} K({\varvec{t}},\varvec{y}) =\!\!\!\; \sum _{{{\mathfrak {u}}}\subseteq \{1:s\}}\!\!\!\; \varGamma _{|{{\mathfrak {u}}}|}\!\!\; \prod _{j\in {{\mathfrak {u}}}} \Big (\gamma _j\, \eta _\alpha (t_j,y_j)\Big ) = \sum _{\ell =0}^s \varGamma _\ell \,\underbrace{\sum _{\begin{array}{c} {{\mathfrak {u}}}\subseteq \{1:s\}\\ |{{\mathfrak {u}}}|=\ell \end{array}}\; \prod _{j\in {{\mathfrak {u}}}} \Big (\gamma _j\, \eta _\alpha (t_j,y_j)\Big )}_{=:\, P_{s,\ell }}, \end{aligned}$$where \(P_{s,\ell }\) is defined for \(\ell =0,\ldots ,s\), and can be computed recursively using

$$\begin{aligned} P_{s,\ell } \,=\, P_{s-1,\ell } + \gamma _s\,\eta _\alpha (t_s,y_s)\, P_{s-1,\ell -1}, \end{aligned}$$together with \(P_{s,0}:=1\) for all s and \(P_{s,\ell }:=0\) for all \(\ell >s\). The cost to evaluate this for a pair \(({\varvec{t}},\varvec{y})\) is \({{\mathcal {O}}}(s^2)\).

- With SPOD weights we have

$$\begin{aligned} K({\varvec{t}},\varvec{y})&\,=\, \sum _{{{\mathfrak {u}}}\subseteq \{1:s\}} \sum _{{\varvec{\nu }}_{{\mathfrak {u}}}\in \{1:\sigma \}^{|{{\mathfrak {u}}}|}} \varGamma _{|{\varvec{\nu }}_{{\mathfrak {u}}}|} \prod _{j\in {{\mathfrak {u}}}} \Bigl (\gamma _{j,\nu _j}\, \eta _\alpha (t_j,y_j)\Bigr ) \\&\,=\, \sum _{{\varvec{\nu }}\in \{0:\sigma \}^s} \varGamma _{|{\varvec{\nu }}|} \prod _{j:\,\nu _j>0} \Bigl (\gamma _{j,\nu _j}\, \eta _\alpha (t_j,y_j)\Bigr )\\&\,=\, \sum _{\ell =0}^{s\sigma } \varGamma _\ell \underbrace{\sum _{\begin{array}{c} {\varvec{\nu }}\in \{0:\sigma \}^s\\ |{\varvec{\nu }}|=\ell \end{array}} \; \prod _{j:\,\nu _j>0} \Bigl (\gamma _{j,\nu _j}\, \eta _\alpha (t_j,y_j)\Bigr )}_{=:\,P_{s,\ell }}, \end{aligned}$$where \(P_{s,\ell }\) is now defined for \(\ell =0,\ldots ,s\sigma \), and can be computed recursively using

$$\begin{aligned} P_{s,\ell } \,=\, P_{s-1,\ell } + \eta _\alpha (t_s,y_s)\sum _{\nu =1}^{\min (\sigma ,\ell )} \gamma _{s,\nu }\, P_{s-1,\ell -\nu }, \end{aligned}$$together with \(P_{s,0}:=1\) for all s and \(P_{s,\ell }:=0\) for all \(\ell >s\sigma \). The cost to evaluate this for a pair \(({\varvec{t}},\varvec{y})\) is now \({{\mathcal {O}}}(s^2\,\sigma ^2)\).
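The three cases above can be sketched in a few lines. The code below is an illustration under stated assumptions: the values \(\eta _\alpha (t_j,y_j)\) are passed in as a precomputed array `eta`, the SPOD weights \(\gamma _{j,\nu }\) are stored as `gamma[j, nu - 1]`, and the numerical values of the weights are random placeholders. A brute-force sum over all \({\varvec{\nu }}\in \{0:\sigma \}^s\) is included only as a reference for small s.

```python
import itertools
import numpy as np

def kernel_product(eta, gamma):
    """Product weights: prod_j (1 + gamma_j eta_j), cost O(s)."""
    return float(np.prod(1.0 + gamma * eta))

def kernel_pod(eta, gamma, Gamma):
    """POD weights via P_{j,l} = P_{j-1,l} + gamma_j eta_j P_{j-1,l-1}, cost O(s^2)."""
    s = len(eta)
    P = np.zeros(s + 1)
    P[0] = 1.0
    for j in range(s):
        # The right-hand side is evaluated into a temporary before the in-place add.
        P[1:j + 2] += gamma[j] * eta[j] * P[0:j + 1]
    return float(Gamma[:s + 1] @ P)

def kernel_spod(eta, gamma, Gamma):
    """SPOD weights via the recursion over l = 0, ..., s*sigma, cost O(s^2 sigma^2)."""
    s, sigma = gamma.shape
    P = np.zeros(s * sigma + 1)
    P[0] = 1.0
    for j in range(s):
        new = P.copy()
        for nu in range(1, sigma + 1):
            new[nu:] += eta[j] * gamma[j, nu - 1] * P[:-nu]
        P = new
    return float(Gamma[:s * sigma + 1] @ P)

def kernel_spod_bruteforce(eta, gamma, Gamma):
    """Reference: direct sum over all nu in {0:sigma}^s (exponential cost)."""
    s, sigma = gamma.shape
    total = 0.0
    for nu in itertools.product(range(sigma + 1), repeat=s):
        term = Gamma[sum(nu)]
        for j, v in enumerate(nu):
            if v > 0:
                term *= gamma[j, v - 1] * eta[j]
        total += term
    return total

# Placeholder inputs (assumed values, for illustration only)
rng = np.random.default_rng(1)
s, sigma = 4, 2
eta = rng.uniform(-1.0, 2.0, size=s)
gamma_spod = rng.uniform(0.1, 1.0, size=(s, sigma))
Gamma = np.array([1.0, 1.0, 2.0, 6.0, 24.0, 120.0, 720.0, 5040.0, 40320.0])  # Gamma_l = l!
```

Two consistency checks follow directly from the formulas: with \(\varGamma _\ell =1\) the POD kernel reduces to the product kernel, and with \(\sigma =1\) the SPOD kernel reduces to the POD kernel.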

5.3 Cost for the kernel interpolant

We now summarize the cost for the kernel interpolant and different weights using the results of the preceding two subsections. Let X denote the cost for one evaluation of f. The cost breakdown is shown in Table 1. The first four rows are considered to be pre-computation cost while the last three rows are the running cost for sampling. The cost for the fast CBC construction based on the criterion \({{\mathcal {S}}}_s({\varvec{z}})\) with different weight parameters is analyzed in [4].

For the PDE application, our kernel method is

where \(\{{\varvec{x}}_i : i=1,\ldots ,M\} \subset D\) is the set of finite element nodes in the physical domain, and \(a_k({\varvec{x}}_i)\) for \(k = 1,\ldots ,n\) is the solution for fixed \({\varvec{x}}_i\) of the linear system

Let \(M^a\) for some \(a\,\ge \,1\) denote the cost of the finite element solve to obtain the solution at all nodes \({\varvec{x}}_i\) for one \(\varvec{y}\). The cost breakdown for obtaining the kernel interpolant at all M nodes for all L samples is shown in Table 2. Note in this case that the coefficients \(a_k({\varvec{x}}_i)\) need to be computed for every finite element node \({\varvec{x}}_i\), hence the scaling of the cost in line 4 of Table 2 by M. If the quantity of interest is a linear functional of the PDE finite element solution (so that the solution is not needed at every node), then the cost is reduced to be as in Table 1 with \(X=M^a\).

6 Numerical experiments

We consider the parametric PDE problem (16)–(17) in the physical domain \(D=(0,1)^2\) with the source term \(q({\varvec{x}})=x_2\) and the diffusion coefficient periodic in the parameters \(\varvec{y}\) given by (21), with \(a_0({\varvec{x}})=1 \text {~for~} {\varvec{x}}\in D\).

For each fixed \(\varvec{y}\in U_s\) (i.e. with the sum in (21) truncated to s terms), we solve the PDE using a piecewise linear finite element method with \(h=2^{-5}\) as the finite element mesh size. As the stochastic fluctuations, we consider the functions

where \(c>0\) is a constant, \(\theta >1\) is the decay rate of the stochastic fluctuations, and \(s\in {\mathbb {N}}\) is the truncation dimension. Following (24), the sequence \((b_j)_{j\ge 1}\) is taken to be

and \(c<\frac{\sqrt{6}}{\zeta (\theta )}\), ensuring that the assumption (A2) is satisfied.

We approximate the dimensionally truncated finite element solution \(u_{s,h}\) of the PDE (16)–(17) by constructing a kernel interpolant \(u_{{s,h,n}}({\varvec{x}},\varvec{y}):=A^*_n(u_{s,h}({\varvec{x}},\varvec{y}))\), \({\varvec{x}}\in D\) and \(\varvec{y}\in U_s\) using SPOD weights, POD weights, and product weights chosen according to Theorems 4.5, 4.6, and 4.7, respectively. The same weights appear in the formula for the kernel as well as the search criterion for finding good lattice generating vectors. The kernel interpolant is constructed over a lattice point set \({\varvec{t}}_k:=\{k{\varvec{z}}/n\}\), \(k\in \{1,\ldots ,n\}\), where the generating vector \({\varvec{z}}\in \{1,\ldots ,n-1\}^s\) has been obtained separately for each weight type using the fast CBC algorithm detailed in [4]. We assess the kernel interpolation error by computing

where \(\varvec{y}_\ell \) for \(\ell =1,\ldots ,L\) is a sequence of Sobol\('\) nodes in \([0,1]^s\), with \(L=100\), and we recall that all our functions including \(u_{s,h}({\varvec{x}},\varvec{y})\) and \(u_{s,h,n}({\varvec{x}},\varvec{y})\) are 1-periodic with respect to \(\varvec{y}\). The kernel interpolant in the formula above can be evaluated efficiently over the union of shifted lattices \(\varvec{y}_\ell +{\varvec{t}}_k\), \(\ell =1,\ldots ,L\), \(k=1,\ldots ,n\), by making use of formula (49) in conjunction with the fast Fourier transform, requiring only the evaluation of the values \(K({\varvec{t}}_k,\varvec{y}_\ell )\).

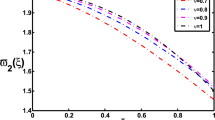

Fig. 4 The kernel interpolation errors of the PDE problem (16)–(17) for kernel interpolants constructed using SPOD weights and varying parameters. Top: fixed \(s=100\) and \(c=0.2\) and different values of \(\theta \). Middle: fixed \(s=100\) and \(\theta =3.6\), different values of p, and corresponding \(\sigma =\sigma (p)\). Theoretical error-decay rate is \(-\frac{1}{2p}+\frac{1}{4}=-0.3,-0.85,-1.4\) for \(p=\frac{1}{1.1},\frac{1}{2.2},\frac{1}{3.3}\). Bottom: fixed \(\theta =1.2\), \(c=0.2\), \(p=1/1.1\), and \(\sigma =1\) with different values of \(s\in \{10,20,40,80,160\}\)

We compute the approximation error when \(\theta \in \{1.2,2.4,3.6\}\), choosing \(p\in \{\frac{1}{1.1},\frac{1}{2.2},\frac{1}{3.3}\}\), respectively, which are all p values ensuring that (A3) is satisfied. We also use several values of the parameter \(c\in \{0.2, 0.4, 1.5\}\) to control the difficulty of the problem. We set \(\delta =0.1\) in the product weights (46). The numerical experiments have been carried out by using both \(s=10\) and \(s=100\) as the truncation dimensions. Selected results are displayed in Figures 1, 2, 3, where the corresponding values of \(a_{\min }\) and \(a_{\max }\) are listed to give insight into the difficulty of the problem in each case, as well as the parameter \(\sigma \) which shows the “order” of the lattice rule. Note that as s increases the problem does not change, but the computation becomes harder because the diffusion coefficient takes a wider range of values, with small values of \(a({\varvec{x}},\varvec{y})\) being especially challenging.

The empirically obtained convergence rates appear to exceed the theoretically expected rates once the kernel interpolant enters the asymptotic regime of convergence. The convergence behavior of the kernel interpolant with SPOD weights is good across all experiments, except for the most difficult PDE problem considered, corresponding to parameters \(\theta =1.2\) and \(c=0.4\), illustrated in the bottom row of Fig. 1. On the other hand, the POD weights and, to a lesser extent, the product weights appear to be somewhat sensitive to the effective dimension of the PDE problem, either leading to a longer pre-asymptotic regime compared to SPOD weights (see “PROD” in the bottom row of Fig. 2) or no apparent convergence (see “POD” in the bottom rows of Figs. 2 and 3).

In the top graph of Fig. 4 we compare the results in Figs. 1, 2, 3 from SPOD weights with truncation dimension \(s=100\) for the same damping parameter \(c=0.2\) and different \(\theta \in \{1.2,2.4,3.6\}\), listing the estimated convergence rate in each case. In the middle graph of Fig. 4 we show the results of an additional experiment, namely, that for \(s=100\) where we fix the decay rate \(\theta =3.6\) of the stochastic fluctuations and solve the parametric PDE problem using different \(\sigma \in \{1,2,3\}\) in the formula for SPOD weights, which correspond to \(p\in \{\frac{1}{1.1},\frac{1}{2.2},\frac{1}{3.3}\}\), see Theorem 4.5. Finally, in the bottom graph of Fig. 4, we return to the experimental setup illustrated in Fig. 1 except this time we carry out the experiment using the truncation dimensions \(s\in \{10,20,40,80,160\}\).

In all cases displayed in Fig. 4, the observed error decays faster than the rate implied by Theorem 4.5. We also see that increasing \(\sigma \) improves the error and mildly improves the rate of convergence. Moreover, we observe from the graph in the middle that the parameter \(\theta \) that governs the decay of \(\Vert \psi _j\Vert _{L_{\infty }(D)}\) is more important in determining the rate than the choice of \(\sigma \). This observation suggests that kernel interpolation with rank-1 lattice points is robust in \(\sigma \). Notice that \(\sigma \) appears in the definition (40) of the SPOD weights, and that the weights are an input of the CBC construction and are also used to define the kernel \(K(\cdot ,\cdot )\). These observed error decay rates and the robustness are encouraging, but also suggest that the worst-case error estimates may be pessimistic in practical situations. The bottom graph in Fig. 4 illustrates the effect that the truncation dimension has on the obtained convergence rates: we see that the observed convergence rate remains reasonable even when \(s=160\).
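For readers who wish to reproduce such a comparison, one common way to estimate an empirical rate is a least-squares fit of \(\log (\text {error})\) against \(\log (n)\). The sketch below is an assumption about procedure, not a description of how the rates reported here were obtained. The error values are synthetic placeholders, while the theoretical rate \(-\frac{1}{2p}+\frac{1}{4}\) is the one quoted in the caption of Fig. 4.

```python
import numpy as np

def theoretical_rate(p):
    """Theoretical error-decay exponent -1/(2p) + 1/4 quoted for SPOD weights."""
    return -1.0 / (2.0 * p) + 0.25

def empirical_rate(n_values, errors):
    """Slope of the least-squares line through (log n, log error)."""
    slope, _intercept = np.polyfit(np.log(n_values), np.log(errors), 1)
    return slope

# Synthetic placeholder data (NOT results from the experiments): error = 3 n^{-0.9}
n_values = 2.0 ** np.arange(7, 15)
synthetic_errors = 3.0 * n_values ** (-0.9)

rate = empirical_rate(n_values, synthetic_errors)
expected = [theoretical_rate(p) for p in (1 / 1.1, 1 / 2.2, 1 / 3.3)]
```

In practice the fit would be restricted to the values of n in the asymptotic regime, since the pre-asymptotic points bias the slope.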

Finally, we present numerical experiments that assess the dimension truncation error rate given in Theorem 4.1. We consider the same PDE and stochastic fluctuations \((\psi _j)_{j\ge 1}\) which were stated at the beginning of this section. We choose the parameters \(c=0.4\) with \(\theta \in \{1.2, 2.4,3.6\}\) and \(c=1.5\) with \(\theta \in \{2.4,3.6\}\). The PDE is discretized using a piecewise linear finite element method with mesh size \(h=2^{-6}\) and the integral over the computational domain D is computed exactly for the finite element solutions. As the reference solution, we use the finite element solution with truncation dimension \(s'=2^{11}\). The dimension truncation error is then estimated by computing

for \(s=2^k\), \(k=2,\ldots ,10\), where the value of the parametric integral is computed approximately by means of a rank-1 lattice rule based on the off-the-shelf generating vector lattice-39101-1024-1048576.3600 downloaded from https://web.maths.unsw.edu.au/~fkuo/lattice/ with \(n=2^{17}\) nodes. The results are displayed in Fig. 5. The theoretically expected rate, which is essentially \(\mathcal O(s^{-\theta +1/2})\), is clearly observed in all cases.
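The rank-1 lattice rule used for the parametric integral is simply an equal-weight average over the lattice points. The sketch below illustrates the rule on a toy problem; it does not load the generating vector file mentioned above. Instead it assumes a small two-dimensional Fibonacci lattice (\(n=89\), \({\varvec{z}}=(1,55)\)) and a hypothetical periodic integrand whose exact integral is 1.

```python
import numpy as np

def lattice_rule(f, z, n):
    """Rank-1 lattice rule: (1/n) * sum_{k=0}^{n-1} f({k z / n})."""
    k = np.arange(n)[:, None]
    points = np.mod(k * z[None, :], n) / n
    return float(np.mean(f(points)))

# Hypothetical toy lattice and integrand (assumed values, for illustration only)
z_demo = np.array([1, 55])
n_demo = 89

def f_demo(y):
    """Periodic trigonometric polynomial with exact integral 1 over [0,1]^2."""
    return np.prod(1.0 + np.sin(2 * np.pi * y), axis=-1)

approx = lattice_rule(f_demo, z_demo, n_demo)
```

For this integrand the rule is exact up to rounding, because none of its nonzero frequencies \({\varvec{h}}\in \{-1,0,1\}^2\) satisfies \({\varvec{h}}\cdot {\varvec{z}}\equiv 0 \pmod {n}\).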

7 Conclusions

In this paper we have developed an approximation scheme for periodic multivariate functions based on kernel approximation at lattice points, in the setting of weighted Hilbert spaces of dominating mixed smoothness. We have developed \(L_2\) error estimates that are independent of dimension, for three classes of weights: product weights, POD (product and order dependent) weights and SPOD (smoothness driven product and order dependent) weights. Numerical experiments for 10 and 100 dimensions give results that (with the possible exception of POD weights) are generally satisfactory, and that exhibit better than predicted rates of convergence.