Abstract

An implicit Euler discontinuous Galerkin scheme for the Fisher–Kolmogorov–Petrovsky–Piscounov (Fisher–KPP) equation for population densities with no-flux boundary conditions is proposed and analyzed. Using an exponential variable transformation, the numerical scheme automatically preserves the positivity of the discrete solution. A discrete entropy inequality is derived, and the exponential time decay of the discrete density to the stable steady state in the \(L^1\) norm is proved if the initial entropy is smaller than the measure of the domain. The discrete solution is proved to converge in the \(L^2\) norm to the unique strong solution to the time-discrete Fisher–KPP equation as the mesh size tends to zero. Numerical experiments in one space dimension illustrate the theoretical results.

1 Introduction

The preservation of the structure of nonlinear diffusion equations on the discrete level is of paramount importance in applications. While there has been enormous progress on structure-preserving schemes for ordinary differential equations (see, e.g., [16]), the development of structure-preserving numerical techniques for nonlinear diffusion equations is still an ongoing quest, in particular for higher-order methods. In this paper, we analyze a toy problem, the Fisher–Kolmogorov–Petrovsky–Piscounov (Fisher–KPP) equation with no-flux boundary conditions, to devise an implicit Euler discontinuous Galerkin scheme which preserves the positivity of the solution, the entropy structure, and the exponential equilibration on the discrete level. In future work, we aim to extend the scheme to diffusion systems.

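The Fisher–KPP equation [12] is the reaction–diffusion equation

$$\begin{aligned} \partial _t u = D\Delta u + u(1-u)\quad \text{in } \Omega ,\ t>0, \end{aligned}\tag{1}$$

$$\begin{aligned} \nabla u\cdot n = 0\quad \text{on } \partial \Omega ,\quad u(\cdot ,0)=u^0\quad \text{in } \Omega , \end{aligned}\tag{2}$$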

where \(D>0\) is the diffusion coefficient, \(\Omega \subset {{\mathbb {R}}}^d\) a bounded domain, and n the exterior unit normal vector on the boundary \(\partial \Omega \). The variable u(x, t) models a population density or chemical concentration, influenced by diffusion and logistic growth. The Fisher–KPP equation admits traveling-wave solutions \(u(x,t)=\phi (x-ct)\), which switch between the unstable steady state \(u^*=0\) and the stable steady state \(u^*=1\). By the maximum principle, the density stays nonnegative if it does so initially, and it satisfies the entropy inequality

If there are no reaction terms, we have conservation of the total mass, and the logarithmic Sobolev inequality implies the exponential decay of the (mathematical) entropy \(S(t)=\int _\Omega (u(t)(\log u(t)-1)+1)dx\) (see, e.g., [20, Chapter 2]). When reaction terms are present, the situation is more delicate, since there are two steady states, \(u^*=0\) and \(u^*=1\). If the initial entropy S(0) is smaller than the measure of \(\Omega \), then u(t) converges exponentially fast to \(u^*=1\) in the \(L^1(\Omega )\) norm. Our objective is to preserve the aforementioned properties on the discrete level.
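For orientation, the entropy production can be checked by a formal computation. This is only a sketch, assuming a smooth and strictly positive solution of \(\partial _t u=D\Delta u+u(1-u)\) with no-flux boundary conditions; it is not the rigorous argument. Differentiating S, inserting the equation, and integrating by parts gives

$$\begin{aligned} \frac{dS}{dt}&= \int _\Omega \log u\,\partial _t u\,dx = -D\int _\Omega \frac{|\nabla u|^2}{u}dx + \int _\Omega u(1-u)\log u\,dx \\&= -4D\int _\Omega |\nabla \sqrt{u}|^2dx - \int _\Omega u(u-1)\log u\,dx \le 0, \end{aligned}$$

since \((u-1)\log u\ge 0\) for all \(u>0\). The first term is the diffusive entropy production, the second one the contribution of the logistic reaction.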

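It is well known that the preservation of the positivity or nonnegativity of discrete solutions for (1) may fail in standard (finite element) schemes, in particular when the solution vanishes in some region; see Sect. 5 for an example. Our key idea to preserve the positivity is to employ the exponential transformation \(u=e^\lambda \). Such a transformation or a variant is used, for instance, in the Il’in scheme [19] and in the existence analysis of drift-diffusion equations [13]. Moreover, it allows for the preservation of \(L^\infty (\Omega )\) bounds in volume-filling cross-diffusion systems [8, 20]. The implicit Euler scheme for (1)–(2) in the exponential variable then reads as

$$\begin{aligned} \frac{1}{\triangle t}\left( e^{\lambda ^k}-e^{\lambda ^{k-1}}\right) = {\text {div}}\left( e^{\lambda ^k}\nabla \lambda ^k\right) + e^{\lambda ^k}\left( 1-e^{\lambda ^k}\right) \quad \text{in } \Omega , \end{aligned}\tag{4}$$

$$\begin{aligned} \nabla \lambda ^k\cdot n = 0\quad \text{on } \partial \Omega , \end{aligned}\tag{5}$$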

where here and in the following, we set \(D=1\) for simplicity and we choose \(0<\triangle t<1\). Note that the condition \(\nabla \lambda ^k\cdot n=0\) is equivalent to \(\nabla e^{\lambda ^k}\cdot n=0\), since \(\nabla e^{\lambda ^k}=e^{\lambda ^k}\nabla \lambda ^k\) and \(e^{\lambda ^k}\ne 0\). At first glance, one may think that this formulation unnecessarily complicates the problem, but we will show that it enjoys some useful properties.
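To illustrate why the exponential variable gives positivity for free, the following minimal sketch applies one implicit Euler step to the spatially homogeneous problem \(u'=u(1-u)\), written in the variable \(\lambda =\log u\) and solved by Newton's method. The reduction to an ODE (no diffusion, no DG discretization), the function name `implicit_euler_step`, and the starting guess are assumptions made for illustration only; this is not the scheme analyzed in this paper.

```python
import math

def implicit_euler_step(lam_old, dt, tol=1e-14, max_iter=100):
    """One implicit Euler step for u' = u(1 - u) in the variable lam = log(u).

    Solves exp(lam) - exp(lam_old) - dt * exp(lam) * (1 - exp(lam)) = 0 for lam.
    Assumes 0 < dt < 1, so that the residual is increasing and convex in lam.
    """
    u_old = math.exp(lam_old)
    # Start to the right of the root; Newton then decreases monotonically.
    lam = max(lam_old, 0.0)
    for _ in range(max_iter):
        u = math.exp(lam)
        residual = u - u_old - dt * u * (1.0 - u)
        slope = u * (1.0 - dt + 2.0 * dt * u)  # derivative w.r.t. lam, positive for dt < 1
        step = residual / slope
        lam -= step
        if abs(step) < tol:
            break
    return lam

# The density exp(lam) is positive by construction, whatever Newton returns.
lam = math.log(0.01)
for _ in range(20):
    lam = implicit_euler_step(lam, dt=0.5)
u_final = math.exp(lam)
```

Whatever value of \(\lambda \) the nonlinear solver returns, the density \(e^{\lambda }\) is positive, so no limiter or projection is needed in this toy setting.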

We propose a discontinuous Galerkin (DG) discretization for problem (4)–(5) with variable \(\lambda _h^k\), where \(h>0\) is the maximal diameter of the mesh elements. The nonlinear diffusion term is discretized by an interior penalty DG method. By construction, the discrete densities \(\exp (\lambda _h^k)\) are positive, and the scheme also preserves the entropy structure and large-time asymptotics. Our main results can be sketched as follows:

- Existence of a solution \(\lambda _h^k\) to the implicit Euler DG scheme (8), given a function \(\lambda _h^{k-1}\) (Proposition 6). This result is based on the Leray–Schauder fixed-point theorem and a coercivity estimate.

- Discrete entropy inequality (Lemma 7). The inequality follows from scheme (8) using the test function \(\lambda _h^k\) and the convexity of \(u\mapsto u(\log u-1)+1\).

- Exponential decay of the discrete entropy

$$\begin{aligned} S_h^k := \int _\Omega \left( e^{\lambda _h^k}\left( \lambda _h^k-1\right) +1\right) dx \le S_h^0 e^{-\kappa k\triangle t} \end{aligned}$$

for some \(\kappa >0\) (Proposition 9) and of the \(L^1\) norm of \(e^{\lambda _h^k}-1\) (Theorem 11). The result holds if \(S_h^0<|\Omega |\). This condition implies a positive lower bound for the total mass \(\int _\Omega e^{\lambda _h^k}dx\), which is needed to guarantee that the discrete solution converges to the stable steady state \(u^*=1\) and not to the steady state \(u^*=0\). The case \(S_h^0\ge |\Omega |\) is discussed in Remark 12.

- Convergence of the scheme (Theorem 14): There exists a unique strong solution \(u^k\in H^2_n(\Omega )\) to the implicit Euler discretization associated to (1)–(2) such that

$$\begin{aligned} e^{\lambda _h^k}\rightarrow u^k\quad \text{ strongly } \text{ in } L^2(\Omega ) \text{ as } h\rightarrow 0. \end{aligned}$$

The result is based on a compactness property, which is a consequence of the gradient estimate from the entropy inequality and a coercivity estimate. This yields a very weak semi-discrete solution, which turns out to be a strong solution thanks to a duality argument.

Compared to conforming finite-element methods, DG methods allow for a more flexible mesh design and polynomial degree distribution, are easier to parallelize, cope better with data discontinuities (e.g. of the material coefficients or initial conditions), and are able to locally reproduce conservation properties. Moreover, they directly produce block-diagonal (or even diagonal) mass matrices, which is an advantage in time-dependent problems. Finally, as observed in Sect. 5, DG discretizations of problem (4)–(5) seem to result in more stable Newton iterations for the nonlinear discrete problem than continuous finite elements.

Let us put our results into context and review the state of the art of structure preservation in DG methods. The DG scheme was introduced in the early 1970s for first-order hyperbolic problems in [22, 31]. The development of discontinuous finite element schemes for second-order elliptic problems can be traced back to [27] with similar approaches in, for instance, [2, 5, 29, 34]; see also [3].

The design of structure-preserving DG methods is a rather recent topic. Positivity-preserving DG schemes for parabolic equations were developed in, e.g., [9, 15, 23, 33, 36]. The positivity preservation is ensured by using a special slope limiter (as in [9, 15]), together with a strong stability preserving Runge–Kutta time discretization (as in [33, 36]), while in [23], the positivity of the discrete solution is enforced through a reconstruction algorithm, based on positive cell averages. As far as we know, the use of an exponential transformation to ensure the positivity of the discrete solutions within a DG scheme is new. Positivity-preserving schemes for the Fisher–KPP equation were already studied in the literature, but only for finite-difference approximations [17, 24], without a convergence analysis, and for continuous finite element discretizations [35].

Other important properties are entropy stability (the entropy is bounded for all times) and entropy monotonicity (the entropy is nonincreasing). Entropy-stable DG schemes for the compressible Euler and Navier–Stokes equations were studied in [14, 28], while a discrete version of the entropy inequality (and hence entropy monotonicity) was proved in [33] for Fokker–Planck-type equations and aggregation models. We are not aware of results in the literature regarding the preservation of the entropy structure of the Fisher–KPP equation on the discrete level.

The paper is organized as follows. We state our notation and some auxiliary results related to the DG method in Sect. 2. The DG scheme is introduced and studied in Sect. 3: The existence of a solution to the DG scheme, the discrete entropy inequality, and the exponential decay of the entropy are proved. The convergence of the numerical scheme is proved in Sect. 4. Finally, Sect. 5 is devoted to some numerical experiments in one space dimension.

2 Notation and auxiliary results

We start with some notation. Let \({\mathcal {T}}_h=\{K_i:i=1,\ldots ,N_h\}\) be a family of simplicial partitions of the bounded domain \(\Omega \subset {{\mathbb {R}}}^d\) for \(d=1,2,3\). The mesh parameter h is defined by \(h=\max _{K\in {\mathcal {T}}_h}h_K\), where \(h_K={\text {diam}}(K)\). The elements may be tetrahedra in three space dimensions, triangles in two dimensions, and intervals in one dimension. In two and three dimensions, we suppose that \({\mathcal {T}}_h\) is shape regular (see, e.g., [30, Section 2.1]) and, for simplicity, without hanging nodes. Our analysis also extends to k-irregular meshes [18]. We denote by \({\mathcal {E}}_h\) the set of interior faces or edges of the elements in \({\mathcal {T}}_h\).
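In one space dimension these quantities are easy to compute. The sketch below, with the hypothetical helper name `mesh_sizes`, takes a sorted list of nodes and returns the element diameters \(h_K\), the mesh parameter \(h=\max _K h_K\), and, for each interior node, the minimum of the two neighboring diameters, which is the local mesh size \(\min \{h_{K_+},h_{K_-}\}\) used on interior faces later in the analysis.

```python
def mesh_sizes(nodes):
    """Element diameters, global mesh parameter, and interior-face sizes of a 1D mesh.

    `nodes` is a sorted list of at least two points; element K_i = (nodes[i], nodes[i+1]).
    """
    h_K = [right - left for left, right in zip(nodes[:-1], nodes[1:])]
    h = max(h_K)  # mesh parameter h = max_K h_K
    # Local mesh size on the interior face shared by two neighboring elements.
    h_face = [min(a, b) for a, b in zip(h_K[:-1], h_K[1:])]
    return h_K, h, h_face

h_K, h, h_face = mesh_sizes([0.0, 0.125, 0.5, 1.0])
```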

On the partition \({\mathcal {T}}_h\), we define the broken Sobolev space

The traces of functions in \(H^1(\Omega ,{\mathcal {T}}_h)\) belong to the space \(T(\Gamma _h)=\prod _{K\in {\mathcal {T}}_h}L^2(\partial K)\), where \(\Gamma _h\) is the union of all boundaries \(\partial K\) for all \(K\in {\mathcal {T}}_h\). The functions in \(T(\Gamma _h)\) are single-valued on \(\partial \Omega \) and double-valued on \(\Gamma _h{\setminus }\partial \Omega \).

Let q be a piecewise smooth function and \(\phi \) be a piecewise smooth vector field on \({\mathcal {T}}_h\). We write \(K_-\) and \(K_+\) for the two elements sharing the face f, i.e. \(f=\partial K_-\cap \partial K_+\), and \(n_\pm \) for the unit normal vector pointing to the exterior of \(K_\pm \). Furthermore, we set \(q_\pm = q|_{K_\pm }\) and \(\phi _\pm = \phi |_{K_\pm }\). Then we define

Note that the jump of a scalar function is a vector which is normal to f, and the jump of a vector-valued function is a scalar.
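A common interior-penalty convention that is consistent with this remark reads as follows. It is stated here as an assumption, since normalizations differ between references:

$$\begin{aligned} \{q\} = \tfrac{1}{2}(q_++q_-),\quad \llbracket q\rrbracket = q_+n_+ + q_-n_-,\quad \{\phi \} = \tfrac{1}{2}(\phi _++\phi _-),\quad \llbracket \phi \rrbracket = \phi _+\cdot n_+ + \phi _-\cdot n_-. \end{aligned}$$

Since \(n_-=-n_+\), the scalar jump equals \((q_+-q_-)n_+\), which is indeed a vector normal to f, while \(\llbracket \phi \rrbracket =(\phi _+-\phi _-)\cdot n_+\) is a scalar.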

The mesh size function \(\mathtt{h}\in L^\infty (\Gamma _h)\) is defined by

Furthermore, we introduce the finite element space of degree \(p\in {{\mathbb {N}}}\) associated to the partition \({\mathcal {T}}_h\):

where \(P_p(K)\) is the set of polynomials on K with degree at most p, and the space of test functions

Next, we recall some auxiliary results.

Lemma 1

(Inverse trace inequality; Lemma 2.1 in [32]) Let \(K\subset {{\mathbb {R}}}^d\) (\(d=2,3\)) be an element with diameter \(h_K\), let f be an edge or face of K, and let \(n_f\) be a unit normal vector to f. Then there exists a constant \(C_{\mathrm{inv}}>0\), independent of \(h_K\) and p, such that for all polynomials \(\xi \in P_p(K)\) of degree at most p,

Lemma 2

(Multiplicative trace inequality; Lemma A.2 in [30]) Let K be a shape-regular element. Then there exists a constant \(C>0\) such that for all \(\xi \in H^1(K)\),

Lemma 3

(Discrete Poincaré–Wirtinger inequality; Theorem 4.1 in [7]) There exists a constant \(C_{\mathrm{PW}}>0\) such that for all \(\xi \in H^1(\Omega ,{\mathcal {T}}_h)\),

We also need a compactness result for functions \(\xi \in H^1(\Omega ,{\mathcal {T}}_h)\). For this, we define the DG norm

Lemma 4

(DG compact embedding; Lemma 8 in [7]) Let \((\xi _h)\subset H^1(\Omega ,{\mathcal {T}}_h)\) be a sequence such that \(\Vert \xi _h\Vert _{\mathrm{DG}}\le C\) for all \(h\in (0,1)\) and some \(C>0\). Then there exists a subsequence \((h_i)\) with \(h_i\rightarrow 0\) as \(i\rightarrow \infty \) and a function \(\xi \in H^1(\Omega )\) such that

where \(1\le q<q^*\) and \(q^*=4\) for \(d=3\), \(q^*=\infty \) for \(d=1,2\).

3 Analysis of the DG scheme: existence and structure preservation

We assume the bounded domain \(\Omega \subset {{\mathbb {R}}}^d\) to be Lipschitz and, in view of the duality method used in the proof of Theorem 14 below, convex. Recall that we suppose that \(d\le 3\).

The weak formulation of (4)–(5) reads as follows: find \(\lambda ^k\in H^1(\Omega )\cap L^\infty (\Omega )\) such that

for all \(\phi \in H^1(\Omega )\).

Our DG discretization of the above formulation reads as follows. Let \(\varepsilon \ge 0\) and \(\lambda _h^0\in V_h\). Given \(\lambda _h^{k-1}\in V_h\), we wish to find \(\lambda _h^k\in V_h\) such that for all \(\phi _h\in V_h\),

The form \(B:{V_h^3}\rightarrow {{\mathbb {R}}}\) represents the interior penalty DG discretization of the nonlinear diffusion term. It is linear in the second and third argument and is defined by

where \(\alpha (u)\) is a stabilization function, given by

We recall that the constant \(C_{\mathrm{inv}}\) is defined in Lemma 1. The third term on the left-hand side of (8) is a regularization term, needed only for the existence analysis to derive a uniform (but \(\varepsilon \)-dependent) bound for the fixed-point argument. For linear elements \(p=1\), we may allow for \(\varepsilon =0\); see “Appendix A”.

3.1 Existence of a discrete solution

We show that problem (8) possesses a solution. First, we prove a coercivity property for the form B.

Lemma 5

(Coercivity of B) The form B, defined in (9), satisfies for all \(v\in {V_h^3}\),

Proof

Definition (9) gives for \(v\in {V_h^3}\):

We estimate the second integral by using Young’s inequality:

where \(\beta _f>0\) is a parameter which will be defined below. The first integral on the right-hand side is estimated according to

To proceed, we set

Taking into account the inverse trace inequality (6), we infer that

Consequently, we obtain

Inserting this estimate into (11), it follows that

With the definitions of \(\alpha (v)\) (see (10)) and \(\beta _f\) as well as the property \(\mathtt{h}\le h_{K_\pm }\), the difference in the bracket can be computed as

This shows that

By the definition of the jumps and the mean-value theorem for \(x\in f\),

We use Definition (10) and insert the previous estimate into (12):

Since \((e^{v})_\pm \ge \exp (-\Vert v\Vert _{L^\infty (K_\pm )})\), we have

This finishes the proof. \(\square \)

Proposition 6

(Existence) Let \(\varepsilon >0\). Given \(\lambda _h^{k-1}\in V_h\), the DG scheme (8) admits a solution \(\lambda _h^k\in V_h\).

Proof

The idea is to apply the Leray–Schauder fixed-point theorem. We define the fixed-point operator \(\Phi :V_h\times [0,1]\rightarrow V_h\) by \(\Phi (w,\sigma ) = v\), where \(v\in V_h\) is the unique solution to the linear problem

for \(\phi \in V_h\). The left-hand side defines the bilinear form \(a(v,\phi )\), which is coercive, \(a(v,v)=\varepsilon \Vert v\Vert _{L^2(\Omega )}^2\). The right-hand side defines a linear form which is continuous on \(L^2(\Omega )\) (using the fact that in finite dimensions, all norms are equivalent). Thus, \(\Phi \) is well defined by the Lax–Milgram lemma. As the right-hand side of (13) is continuous with respect to w, standard arguments show that \(\Phi \) is continuous. Furthermore, \(\Phi (w,0)=0\). It remains to prove that there exists a uniform bound for all fixed points of \(\Phi \). To this end, let \(v\in V_h\) and \(\sigma \in [0,1]\) such that \(\Phi (v,\sigma )=v\).

Let \({ s(x):=x(\log x-1)+1}\ge 0\). The convexity of s implies that

Then, using the test function \(\phi =v\) in (13) gives, because of the properties \(B(v;v,v)\ge 0\) (Lemma 5) and \(e^v(1-e^v)v\le 0\),

This is the desired uniform bound. We infer the existence of a solution to (8) by the Leray–Schauder fixed-point theorem. \(\square \)
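The convexity inequality invoked in this proof can be sanity-checked numerically. The sketch below assumes that it takes the form \(s(u)-s(v)\le (u-v)\log u\) for u, \(v>0\), which is the form quoted in Remark 12; the sampling grid is an arbitrary choice.

```python
import math

def s(x):
    """Entropy density s(x) = x(log x - 1) + 1, extended continuously by s(0) = 1."""
    return 1.0 if x == 0.0 else x * (math.log(x) - 1.0) + 1.0

def convexity_gap(u, v):
    """(u - v) log u - (s(u) - s(v)); equals v log(v/u) + u - v, hence nonnegative."""
    return (u - v) * math.log(u) - (s(u) - s(v))

grid = [0.05 * i for i in range(1, 101)]  # sample points in (0, 5]
worst_gap = min(convexity_gap(u, v) for u in grid for v in grid)
```

Up to rounding, `worst_gap` is nonnegative and vanishes only on the diagonal \(u=v\), as expected for a strictly convex function.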

3.2 Discrete entropy inequality and exponential decay

Let \(\lambda _h^k\in V_h\) be a solution to (8). We show that the entropy

is nonincreasing with respect to \(k\in {{\mathbb {N}}}\).

Lemma 7

(Discrete entropy inequality) Let \(\varepsilon \ge 0\) and let \(\lambda _h^k\in V_h\) be a solution to (8). Then

where the constant \(C_0>0\) only depends on \(C_{\mathrm{inv}}\) and \(C_{\mathrm{PW}}\) from Lemmas 1 and 3.

Proof

We take \(\phi _h=\lambda _h^k\) as a test function in (8) and use inequality (14) to find that

It remains to estimate the first term on the right-hand side. For this, we use the coercivity estimate of Lemma 5 and the discrete Poincaré–Wirtinger inequality from Lemma 3:

Setting \(C_0=2\min \{1,C_{\mathrm{inv}}^2\}C_{\mathrm{PW}}^{-2}\) finishes the proof. \(\square \)

We wish to bound the total mass \(\int _\Omega \exp (\lambda _h^k)dx\) from below and above. Since \(s(u)=u(\log u-1)+1\) is invertible only on [0, 1] and on \([1,\infty )\) but not globally on \([0,\infty )\), we introduce the following functions:

In particular, \(\sigma _-\circ s=\text{ id }\) on [0, 1] and \(\sigma _+\circ s=\text{ id }\) on \([1,\infty )\).

Lemma 8

(Bounds for the total mass) Let \(\varepsilon \ge 0\) and let \(\lambda _h^k\) be a solution to (8). Then

Observe that if \(S_h^0<|\Omega |\), the lower bound \(\sigma _-(S_h^0/|\Omega |)\) is positive. Thus, the total mass can never vanish, which excludes the case of solutions converging for \(k\rightarrow \infty \) to the zero solution. The reason for the difference between \(S_h^0<|\Omega |\) and \(S_h^0\ge |\Omega |\) lies in the fact that (4)–(5) admits two steady states, \(\lambda ^k_h=0\) (corresponding to \(u^k=e^{\lambda ^k_h}=1\)) and \(\lambda ^k_h=-\infty \) (corresponding to \(u^k=0\)). The assumption \(S_h^0<|\Omega |\) will be crucial to prove the decay estimate for the entropy; see Proposition 9. We discuss the case \(S_h^0\ge |\Omega |\) in Remark 12.

Proof of Lemma 8

First, we show the lower bound. If \(S_h^0\ge |\Omega |\), we have \(\sigma _-(S_h^0/|\Omega |)=0\), and there is nothing to prove. Thus, let \(S_h^0<|\Omega |\). Set \(\beta _k=\min \{1,\exp (\lambda _h^k)\}\le 1\). As s is convex, we infer from Jensen’s inequality and \(s(\beta _k)=0\) for \(\lambda _h^k>0\) that

where in the last step we have used the monotonicity of \(k\mapsto S_h^k\). With this preparation, we are able to verify the lower bound. As \(\sigma _-\) is decreasing, we find that

For the upper bound, we can assume that \(\int _\Omega \exp (\lambda _h^k)dx\ge |\Omega |\), since otherwise, the inequality is trivially satisfied in view of \(\sigma _+(v)\ge 1\). By the concavity of \(\sigma _+\), we can again apply the Jensen inequality:

proving the claim. \(\square \)

Proposition 9

(Discrete entropy decay) Let \(\varepsilon \ge 0\) and let \(\lambda _h^k\) be a solution to (8). We assume that \(S_h^0<|\Omega |\). Then there exists a constant \(C_1>0\), only depending on \(S_h^0\), such that for all \(k\in {{\mathbb {N}}}\),

In particular, with \(\eta = \log (1+C_1\triangle t)/(C_1\triangle t)<1\), we have the exponential decay

The proof is based on two properties: The diffusion drives the solution towards a constant, while the reaction term guarantees that there is only one (positive) steady state. In order to cope with the interplay of diffusion and reaction, we prove first the following lemma.

Lemma 10

Introduce for \(\theta >0\) the functions

Then

Proof

The function

can be continuously extended to \(v=0\) (with value \(g(0)=1/2\)) and it is decreasing with limits \(\lim _{v\rightarrow \infty }g(v)=0\) and \(\lim _{v\rightarrow -\infty }g(v)=+\infty \). Therefore, \(g(v)\le g(\log \theta ) = M_1(\theta )\) for all \(v\ge \log \theta \), showing the first inequality. For the second one, let \(v\le \log \theta \). Then \(s(e^v)<1\) for \(v\le 0\) and the monotonicity of \(v\mapsto s(e^v)\) for \(v\ge 0\) implies that \(s(e^v)\le s(\theta )\). Thus, for any \(v\le \log \theta \), \(s(e^v)\le \max \{1,s(\theta )\}=M_2(\theta )\), completing the proof. \(\square \)

Proof of Proposition 9

The idea of the proof is to split \(S^k_h\) into two integrals,

for some suitably chosen \(\alpha >0\) and to estimate these integrals by the second and third terms on the left-hand side of the discrete entropy inequality (16).

Since \(S_h^0/|\Omega |<1\), there exists \(\theta \in (0,1)\) such that \(s(\theta )>S_h^0/|\Omega |\). Let \(0<\varepsilon _0<[1-S_h^0/(|\Omega |s(\theta ))]^2\) and set \(\alpha =\varepsilon _0\theta \in (0,1)\).

We turn to the first integral on the right-hand side of (19). We claim that there exists a constant \(C_{\varepsilon _0 \theta }>0\) such that

To prove this inequality, we begin by showing that \(\int _\Omega \exp (\lambda _h^k/2)dx\) is bounded from below. Indeed, using the monotonicity of \(s\circ \exp \) in [0, 1] and of \(k\mapsto S^k\),

This yields the lower bound

Therefore, as long as \(\lambda _h^k\le \log (\varepsilon _0 \theta )\), the difference

is positive. Squaring this expression and integrating over \(\{\lambda _h^k\le \log (\varepsilon _0 \theta )\}\) thus does not change the inequality sign:

Combining the estimate of Lemma 10 and the previous estimate, we arrive at

This proves claim (20) with

recalling that \(\alpha =\varepsilon _0 \theta \).

Next, we estimate the second integral on the right-hand side of (19). It follows from Lemma 10 that

Therefore, (19) gives

for \(C_1 = 1/\max \{C_{\varepsilon _0 \theta }/C_0,M_1(\varepsilon _0 \theta )\}\). Finally, by Lemma 7,

and solving this recursion shows the proposition. \(\square \)
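Solving the recursion can be made explicit. The sketch below assumes that the recursion has the one-step form \(S_h^k\le S_h^{k-1}/(1+C_1\triangle t)\), which is our reading of the last display, and iterates its equality case. The numerical values of \(C_1\), \(\triangle t\), and \(S_h^0\) used with it are placeholders.

```python
import math

def eta(c1, dt):
    """eta = log(1 + c1*dt) / (c1*dt), which lies in (0, 1) whenever c1*dt > 0."""
    x = c1 * dt
    return math.log1p(x) / x

def entropy_bounds(s0, c1, dt, steps):
    """Iterate the equality case S^k = S^{k-1} / (1 + c1*dt) of the assumed recursion."""
    bounds = [s0]
    for _ in range(steps):
        bounds.append(bounds[-1] / (1.0 + c1 * dt))
    return bounds

# Placeholder values, chosen only for illustration.
bounds = entropy_bounds(s0=0.5, c1=2.0, dt=0.1, steps=50)
```

Because \((1+C_1\triangle t)^{-k}=\exp (-\eta C_1k\triangle t)\), each entry `bounds[k]` coincides with \(S_h^0e^{-\eta C_1k\triangle t}\), which is the exponential decay rate stated in the proposition.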

Theorem 11

(Decay in the \(L^1(\Omega )\) norm) Let the assumptions of Proposition 9 hold. Then there exists a constant \(C_2>0\), only depending on \(S_h^0\) and \(|\Omega |\), such that

where \(\eta \in (0,1)\) and \(C_1>0\) are as in Proposition 9.

Proof

To simplify the notation, we set \(u=e^{\lambda ^k_h}\) and \({\bar{u}}=|\Omega |^{-1}\int _\Omega e^{\lambda _h^k}dx\). Then the Csiszár–Kullback inequality (see, e.g., [4, (2.8)]) gives

using the property \(s(u)\ge 0\) for all \(u\ge 0\). We know from Lemma 8 that \({\bar{u}}\) is bounded from above by \(\sigma _+(S_h^0/|\Omega |)\). Hence,

It remains to show that a similar estimate holds for \(|{\bar{u}}-1|\). Since the entropy density s is convex, Jensen’s inequality shows that

It holds \(s(v)<1\) if and only if \(v<e\). Consequently, we have \({\bar{u}}<e\). Applying the elementary inequality

to \(u={\bar{u}}\) and using (22) gives

Thus, combining (21) and the previous inequality, we conclude that

and the proof follows after applying Proposition 9. \(\square \)

Remark 12

We discuss the case \(S_h^0\ge |\Omega |\). Fix \(\triangle t\in (0,1)\) and \(L\in {{\mathbb {N}}}\) with \(L>1\). Define \(\lambda _h^k = (L-k)^+\log (1-\triangle t)\), where \(z^+=\max \{0,z\}\) denotes the positive part of \(z\in {{\mathbb {R}}}\). Then \(e^{\lambda _h^k}=(1-\triangle t)^{L-k}<1\) for \(k<L\) and \(e^{\lambda _h^k}=1\) for \(k\ge L\). Consider the case \(L>k=1\). Then, setting \(\delta :=(1-\triangle t)^{L-k}\), we estimate

If \(1<k\le L\), we deduce from \(e^{\lambda _h^k}\le 1\) that

By the convexity of s, it follows that \(s(u)-s(v)\le (u-v)s'(u) = (u-v)\log u\) for all u, \(v>0\). Since \(\lambda _h^k\le 0\) for \(k\le L\), (23) yields

which directly implies the entropy inequality (16). This inequality is trivially satisfied for \(k\ge L\). However, it holds for \(L=2k\) that

This means that if \(S_h^0\ge |\Omega |\), there exists no constant \(C>0\) depending only on \(S_h^0\) such that (18) holds for all \((\lambda _h^k)\subset L^2(\Omega )\) satisfying the entropy inequality (16). Note that the constructed function \(e^{\lambda _h^k}\) does not possess a uniform positive lower bound. \(\square \)
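The construction in this remark can be explored numerically for spatially constant states. The sketch below evaluates the entropy per unit volume of \(e^{\lambda _h^k}=(1-\triangle t)^{(L-k)^+}\) and checks a reaction-only entropy step, \(s(u^k)+\triangle t\,u^k(u^k-1)\log u^k\le s(u^{k-1})\). That inequality is our reading of (16) for constant states with \(\varepsilon =0\) (all gradient and jump terms vanish) and should be treated as an assumption.

```python
import math

def s(x):
    """Entropy density s(x) = x(log x - 1) + 1 with s(0) = 1."""
    return 1.0 if x == 0.0 else x * (math.log(x) - 1.0) + 1.0

def density(k, L, dt):
    """Spatially constant state exp(lambda^k) = (1 - dt)^((L - k)^+)."""
    return (1.0 - dt) ** max(L - k, 0)

def satisfies_entropy_step(k, L, dt):
    """Check s(u^k) + dt * u^k (u^k - 1) log u^k <= s(u^{k-1}) for constant states."""
    u_new, u_old = density(k, L, dt), density(k - 1, L, dt)
    reaction = dt * u_new * (u_new - 1.0) * math.log(u_new)
    return s(u_new) + reaction <= s(u_old) + 1e-15

dt = 0.5
# Entropy per unit volume at step k when L = 2k: it approaches 1 instead of decaying.
entropy_at_k = [s(density(k, 2 * k, dt)) for k in range(1, 21)]
```

With \(L=2k\), the entropy at step k increases towards the value 1 per unit volume as k grows, so no decay rate depending only on the initial entropy can hold for this family.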

4 Analysis of the DG scheme: numerical convergence

We show first that the solutions to (8) are uniformly bounded in the DG norm (7) if the initial entropy \(S_h^0\) is bounded uniformly in h.

Lemma 13

(Uniform bound in DG norm) Let \(\varepsilon \ge 0\) and let \(\lambda _h^k\) be a solution to (8). Then there exists a constant \(C>0\) such that

Proof

We have shown in the proof of Lemma 7 that

Then, by definition of the DG norm,

Using the inequality \(u\le 2+s(u)\) for \(u\ge 0\), applied to \(u=e^{\lambda _h^k}\), and the monotonicity of \(k\mapsto S_h^k\), we find that

If \(2\min \{1,C_{\mathrm{inv}}^2\}\triangle t\le 1\) then

On the other hand, if \(2\min \{1,C_{\mathrm{inv}}^2\}\triangle t > 1\), we have, again by the monotonicity of \(k\mapsto S_h^k\),

such that in either case,

proving the lemma. \(\square \)

Theorem 14

(Convergence) Let \(\varepsilon \ge 0\), \(\triangle t\in (0,1)\), and let \(\lambda _h^k\) be a solution to (8). Assume that \(\lambda _h^{k-1}\in V_h\) satisfies \(e^{\lambda _h^{k-1}}\rightarrow u^{k-1}\) strongly in \(L^2(\Omega )\) as \((\varepsilon ,h)\rightarrow 0\). Then there exists a unique strong solution \(u^k\in H^2_n(\Omega )\) to

such that

Proof

Let \(\lambda _h^k\in V_h\) be a solution to (8).

Step 1 We claim that there exists a subsequence \((\varepsilon _i,h_i)\rightarrow 0\) such that

Indeed, by assumption, the initial entropy \((S_{h_i}^0)_{i\in {{\mathbb {N}}}}\) is bounded. Then Lemma 13 implies that \(e^{\lambda _{h}^k/2}\) is bounded in the DG norm uniformly in \(\varepsilon \) and h. By the compactness Lemma 4, there exists a subsequence \((\varepsilon _i,h_i)\rightarrow 0\) and a function \(v^k\in H^1(\Omega )\) satisfying

Consequently, \(e^{\lambda _{h_i}^k}\rightarrow (v^k)^2=:u^k\) strongly in \(L^1(\Omega )\). The discrete entropy inequality (16) shows that

is bounded uniformly in \((\varepsilon ,h)\), where \(g(u)=\sqrt{u}(\sqrt{u}-1)\log u\) for \(u\ge 0\). As the function \(g:[0,\infty )\rightarrow [0,\infty )\) is continuous and satisfies \(g(u)/u\rightarrow \infty \) as \(u\rightarrow \infty \), we can apply the Theorem of de la Vallée-Poussin [11, Theorem 1.3, p. 239] (for a proof, see [26, Section II.2]) to conclude that there exists a subsequence \(e^{2\lambda _{h_i}^k}\) such that \(e^{2\lambda _{h_i}^k}\rightarrow w^k\) weakly in \(L^1(\Omega )\) as \(i\rightarrow \infty \), for some function \(w^k\). We deduce from the strong \(L^1\) convergence of \(e^{\lambda _{h_i}^k}\), possibly for another subsequence, that \(e^{2\lambda _{h_i}^k}\rightarrow (u^k)^2=w^k\) a.e. in \(\Omega \). This implies that

thus proving the desired \(L^2\) convergence.

Step 2 We claim that for any \(\phi \in H_n^2(\Omega )\cap C^1({{\overline{\Omega }}})\), it holds that

Since \(\phi \) does not necessarily belong to \(V_h\), we cannot use it as a test function in the weak formulation (8). Therefore, let \(P_h:C^0({{\overline{\Omega }}})\rightarrow C^0({{\overline{\Omega }}})\cap V_h\) be the interpolation operator, defined, e.g., in [10, Section 2.3]. It possesses the following property [10, Section 3.1.6]: There exists a constant \(C_I>0\) such that for all \(K\in {\mathcal {T}}_h\) and \(\phi \in H^2(K)\),

for \(m\le 2\le q\) such that \(m-d/q\le 2-d/2\). In particular, for \(\phi \in H^2(\Omega )\) and \(d\le 3\),

For given \(\phi \in H_n^2(\Omega )\cap C^1({{\overline{\Omega }}})\), we choose the test function \(\phi _{h_i}:=P_{h_i}\phi \) in (8):

Here, we have used the fact that \(\llbracket \phi _{h_i}\rrbracket =0\) since \(\phi _{h_i}\) is continuous. Note that (28) implies that \(\phi _{h_i}\rightarrow \phi \) strongly in \(L^\infty (\Omega )\) as \(i\rightarrow \infty \). As \(e^{\lambda _{h_i}^{k-1}}\rightarrow u^{k-1}\) strongly in \(L^2(\Omega )\), by assumption, we have for the left-hand side of (29):

Similarly, as \(e^{\lambda _{h_i}^k}\rightarrow u^k\) strongly in \(L^2(\Omega )\), we infer for the first and last integrals on the right-hand side of (29) that

Inequality (15) shows that

Thus, \((\varepsilon _i^{1/2}\lambda _{h_i}^k)\) is bounded in \(L^2(\Omega )\) from which we have \(\varepsilon _i\lambda _{h_i}^k\rightarrow 0\) strongly in \(L^2(\Omega )\) as \((\varepsilon _i,h_i)\rightarrow 0\). This implies that the second integral on the right-hand side of (29) converges to zero.
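Spelled out, if \(C>0\) denotes the uniform \(L^2(\Omega )\) bound of \(\varepsilon _i^{1/2}\lambda _{h_i}^k\), the strong convergence follows from the one-line estimate

$$\begin{aligned} \Vert \varepsilon _i\lambda _{h_i}^k\Vert _{L^2(\Omega )} = \varepsilon _i^{1/2}\Vert \varepsilon _i^{1/2}\lambda _{h_i}^k\Vert _{L^2(\Omega )} \le C\varepsilon _i^{1/2}\rightarrow 0\quad \text{as } \varepsilon _i\rightarrow 0. \end{aligned}$$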

Next, we prove for the third integral on the right-hand side of (29) that

Indeed, by the Hölder inequality, the interpolation property (27), and the discrete entropy inequality (16), we obtain

It remains to prove for the fourth integral on the right-hand side of (29) that

To this end, we use the elementary inequality \(|\{u\nabla v\}|\le 2\{u\}\{|\nabla v|\}\) for functions u, v with nonnegative u and the Cauchy–Schwarz inequality:

We estimate both integrals separately. First, the multiplicative trace inequality in Lemma 2 shows that, for some constant \(C>0\) and for faces or edges \(f=\partial K_+\cap \partial K_-\),

We deduce from (27), i.e.

that

Therefore, also using \(\mathtt{h}(x)\le h_i\), we deduce from (30) that

where \(\mathtt{h}_i(x)=\min \{h_{i,K_+},h_{i,K_-}\}\) for \(x\in \partial K_+\cap \partial K_-\). We claim that the sum on the right-hand side is bounded uniformly in \(h_i\). By Definition (10),

such that we can estimate

where we used (12) in the last step. The proof of Lemma 7 shows that \(B(\lambda _{h_i}^k;\lambda _{h_i}^k,\lambda _{h_i}^k)\le C/\triangle t\) since \(e^{\lambda _{h_i}^k}\) is uniformly bounded in \(L^2(\Omega )\). We conclude that

We put together all the previous convergence results to infer that

Thus, inserting (29), all integrals involving \(\phi _{h_i}\) cancel, and we end up with (26).

Step 3 We prove that the limit \(u^k\), derived in Step 1, is a solution to the very weak formulation

for all \(\phi \in H_n^2(\Omega )\cap C^1({{\overline{\Omega }}})\). For the proof, we pass to the limit \(h_i\rightarrow 0\) in each term of (26). Because of (25), we have

The limit \(i\rightarrow \infty \) in the remaining expression

is more delicate. Consider the first term in the definition of \(I_i\). Integrating by parts elementwise gives

where we have used the fact that \(\nabla \phi \) has a continuous normal component across interelement boundaries. From the previous identity and the \(L^2\) convergence of \(e^{\lambda ^k_{h_i}}\), we obtain

We claim that

since this implies that

and thus shows (32).

For the proof of (33), let \(x\in \partial K_+\cap \partial K_-\) for two neighboring elements \(K_+\), \(K_-\in {\mathcal {T}}_{h_i}\) and set \(\lambda _\pm :=\lambda _{h_i}^k|_{K_\pm }\). We assume without loss of generality that \(\lambda _+\ge \lambda _-\) since otherwise, we may exchange \(K_+\) and \(K_-\). The definitions of the jump \(\llbracket \cdot \rrbracket \) and average \(\{\cdot \}\) imply that

where \(g(s)=(e^s-1)-s(e^s+1)/2\) for \(s\ge 0\). Since \(g(0)=g'(0)=0\), a Taylor expansion shows that \(g(s)=g''(\xi )s^2/2=-\xi e^\xi s^2/4\) for some \(0\le \xi \le s\). Therefore \(|g(s)|\le s^2 e^{2s}/4\) for \(s\ge 0\), and we obtain

The difference can be identified with the jump of \(\lambda _{h_i}^k\) across \(f=\partial K_+\cap \partial K_-\), while the sum corresponds to the average of \(e^{2\lambda _{h_i}^k}\) in f. Thus, it follows from (34) that

By Definition (10) of the stabilization factor, it holds that

Using this estimate and the coercivity estimate (12) for the form B, we can write

We know from the proof of Lemma 7 that \(B(\lambda _{h_i}^k;\lambda _{h_i}^k,\lambda _{h_i}^k)\le C/\triangle t\) is uniformly bounded. This proves our claim (33).
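The Taylor bound used in the proof of (33) can be checked numerically. The sketch below takes \(g(s)=(e^s-1)-s(e^s+1)/2\), the sign for which \(g(0)=g'(0)=0\) and \(g''(s)=-se^s/2\) as used in the Taylor expansion, and samples the interval (0, 5]. The sampling range is an arbitrary choice.

```python
import math

def g(s):
    """g(s) = (e^s - 1) - s (e^s + 1) / 2, with g(0) = g'(0) = 0 and g''(s) = -s e^s / 2."""
    return math.expm1(s) - 0.5 * s * (math.exp(s) + 1.0)

def taylor_bound(s):
    """Right-hand side s^2 e^{2s} / 4 of the bound |g(s)| <= s^2 e^{2s} / 4."""
    return 0.25 * s * s * math.exp(2.0 * s)

samples = [0.01 * i for i in range(1, 501)]  # s in (0, 5]
bound_holds = all(abs(g(s)) <= taylor_bound(s) for s in samples)
g_nonpositive = all(g(s) <= 0.0 for s in samples)
```

The bound is far from sharp for small s, where \(g(s)\approx -s^3/12\), but it is all that the argument requires.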

Now, we can pass to the limit \(i\rightarrow \infty \) in (26), which yields (32).

Step 4 We claim that the solution \(u^k\in L^2(\Omega )\) to (26) satisfies the regularity \(u^k\in H^1(\Omega )\) and hence is a weak solution to (4)–(5). To this end, we use the duality method as in [6, p. 318]. Let \({\mathcal {T}}:L^2(\Omega )\rightarrow H_n^2(\Omega )\) be defined by \({\mathcal {T}}v=u\), where u solves the elliptic problem \(u-\triangle t\Delta u=v\) in \(\Omega \), \(\nabla u\cdot n=0\) on \(\partial \Omega \). By [25, Theorem 8.3.10], for \(v\in C_0^\infty (\Omega )\), it holds that \({\mathcal {T}}v\in H^2_n(\Omega )\cap C^1({{\overline{\Omega }}})\). Then, introducing \(g:=u^{k-1}+\triangle t u^k(1-u^k)\), the very weak formulation (32) can be equivalently written as

for all \(\phi \in H^2_n(\Omega )\cap C^1({{\overline{\Omega }}})\). Given \(v\in C_0^\infty (\Omega )\), we set \(\phi ={\mathcal {T}}v\), and the previous equation becomes

As \(C_0^\infty (\Omega )\) is dense in \(L^2(\Omega )\) and \({\mathcal {T}}\) is continuous, (35) remains valid for all \(v\in L^2(\Omega )\).

Next, we denote by \({\mathcal {T}}':H_n^2(\Omega )' \rightarrow L^2(\Omega )\) the dual operator of \({\mathcal {T}}\). According to [25, Theorem 8.3.10], the operator \({\mathcal {T}}\) can be extended to an operator \({\mathcal {T}}:L^p(\Omega )\cap H^1(\Omega )'\rightarrow W^{2,p}(\Omega )\) for \(1<p\le 2\). (This is basically a regularity statement for the elliptic problem.) We deduce from the Sobolev embedding theorem that \(W^{2,p}(\Omega )\hookrightarrow C^0({{\overline{\Omega }}})\) for \(p>3/2\) since \(d\le 3\). Therefore, there exists an extension \({\mathcal {T}}^*:C^0({{\overline{\Omega }}})'\rightarrow L^{p'}(\Omega )\) of \({\mathcal {T}}'\), where \(p'=p/(p-1)<3\).

Now, \(g\in L^1(\Omega )\subset C^0({{\overline{\Omega }}})'\). Then (35) implies that \(u^k={\mathcal {T}}^*(g)\in L^{p'}(\Omega )\) for \(p'<3\) and consequently, \(g\in L^q(\Omega )\) for \(q<3/2\).

It remains to show that \(u^k\in H^1(\Omega )\). Since \(u^k\in L^2(\Omega )\), the elliptic problem

possesses a unique solution \(v_m\in H^2_n(\Omega )\) [25, Theorem 8.3.10]. Multiplying the elliptic equation by \(v_m\) and applying the Cauchy–Schwarz inequality, we have

Thus, \((v_m)\) is bounded in \(L^2(\Omega )\), and there exists a subsequence (not relabeled) such that \(v_m\rightharpoonup u^k\) weakly in \(L^2(\Omega )\) as \(m\rightarrow \infty \). Using \(v=v_m-\triangle t\Delta v_m\) in (35), it follows that

We apply the Hölder inequality and use the Sobolev embedding \(H^1(\Omega ) \hookrightarrow L^{q'}(\Omega )\) for \(q'\le 6\), knowing that \(g\in L^q(\Omega )\) for \(q<3/2\):

where \(3<q'\le 6\) and \(1/q+1/q'=1\). The \(H^1(\Omega )\) norm can be absorbed by the corresponding term on the right-hand side of (36), and we end up with

This shows that \((v_m)\) is bounded in \(H^1(\Omega )\). Thus, there exists a subsequence (not relabeled) which converges weakly in \(H^1(\Omega )\) to some function \(w\in H^1(\Omega )\). Since \(v_m\rightharpoonup u^k\) weakly in \(L^2(\Omega )\), we conclude that \(w=u^k\in H^1(\Omega )\).

Knowing that \(u^k\in H^1(\Omega )\) solves (32), we can integrate by parts in the first term of the right-hand side of (32), leading to

for all \(\phi \in H^2_n(\Omega )\) and, by density, for all \(\phi \in H^1(\Omega )\).

Moreover, since \(u^k\in L^4(\Omega )\) and consequently, \(u^k(1-u^k)\in L^2(\Omega )\), elliptic regularity implies that \(u^k\in H^2(\Omega )\) and \(\nabla u^k\cdot n=0\) on \(\partial \Omega \), i.e. \(u^k\in H_n^2(\Omega )\). We conclude that \(u^k\) solves (24).

Step 5 We prove the uniqueness of weak solutions to (4)–(5) to conclude the convergence of the whole sequence \(e^{\lambda _{h}^k}\) to \(u^k\). Let \(u^k\), \(v^k\in H^1(\Omega )\) be two weak solutions to (4)–(5). Taking the difference of the corresponding weak formulations with the test function \(u^k-v^k\), we obtain

Thus, choosing \(\triangle t<1\), we infer that \(u^k-v^k=0\) in \(\Omega \). \(\square \)

5 Numerical results

We present some numerical results for the Fisher–KPP equation in one space dimension,

5.1 One group of species

Let \(D=10^{-4}\) and \(u_0(x)=0.8\) for \(0<x<1/2\), \(u_0(x)=0\) elsewhere. Problem (37)–(38) models the evolution of one species initially concentrated in the domain (0, 1/2). We solve this problem by using an implicit Euler scheme in time and a continuous \(P_1\) finite element discretization, both on a uniform mesh. The reaction term is treated implicitly. The Newton method with relaxation is used at each time step, up to convergence. The integrals are computed by using a Gauß–Legendre quadrature formula of order 8. Figure 1 shows the density u(x, t) at various time instances. We observe that the finite element solution \(u_h^k\) becomes negative even on the finer mesh, and it is pushed towards \(-\infty \) in some region since \(u^*=0\) is a repulsive steady state.

Continuous \(P_1\) finite element discretization of problem (37)–(38) in the variable u, using \(N_{\mathrm{el}}=20\) elements (left) and \(N_{\mathrm{el}}=40\) elements (right). The time step size is in both cases \(\triangle t=1/6\), the end time is \(T=10\), and the solutions move from left to right

These results motivate the introduction of the exponential transformation \(u=\exp (\lambda )\). We choose the same initial datum as before but set \(u_0(x)=10^{-16}\) instead of \(u_0(x)=0\) to allow for the exponential transformation. The regularization term seems not to be necessary in practice, and we set \(\varepsilon =0\) in (8) in all our simulations (see also Sect. 5.3 below; for linear elements, we prove in the “Appendix” that the regularization term is actually not needed). In all the numerical experiments, the constant \(\frac{3}{2}C_{\mathrm{{inv}}}^2\) in the stabilization function \(\alpha (u)\) defined in (10) is replaced by 1. Figure 2 shows the solutions to the continuous \(P_1\) finite element approximation associated to problem (4)–(5) in the variable \(\lambda _h^k\). The implicit nonlinear scheme is again solved by Newton’s method with relaxation at each time step. The integrals are again computed by a Gauß–Legendre quadrature formula of order 8. Note that if \(p=1\), the integrals are of the type \(\int _K e^{ax+b}(cx+d)dx\) and thus can be integrated exactly. The discrete densities \(\exp (\lambda _h^k)\) are positive by construction.
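The remark on exact integration for \(p=1\) can be illustrated as follows. This is a minimal sketch under our own assumptions: the closed-form primitive \(e^{ax+b}\big((cx+d)/a-c/a^2\big)\) (for \(a\ne 0\)) is compared with a composite Simpson rule, which stands in here for the Gauß–Legendre formula used in the experiments. The function names and the sample coefficients are hypothetical.

```python
import math


def exact_integral(a, b, c, d, x0, x1):
    """Closed form of the integral of e^{a x + b} (c x + d) over [x0, x1]."""
    if a == 0.0:
        # Degenerate case: the exponential factor is the constant e^b.
        prim = lambda x: math.exp(b) * (0.5 * c * x * x + d * x)
    else:
        # Primitive obtained by one integration by parts.
        # Note: for very small nonzero |a| this expression suffers from cancellation.
        prim = lambda x: math.exp(a * x + b) * ((c * x + d) / a - c / (a * a))
    return prim(x1) - prim(x0)


def simpson(f, x0, x1, n=200):
    """Composite Simpson rule with n (even) subintervals, used only as a cross-check."""
    h = (x1 - x0) / n
    total = f(x0) + f(x1)
    for j in range(1, n):
        total += (4 if j % 2 else 2) * f(x0 + j * h)
    return total * h / 3.0


# Hypothetical coefficients on one element K = [0.5, 0.525] of a 40-element mesh.
a, b, c, d = -30.0, 2.0, 1.5, -0.2
integrand = lambda x: math.exp(a * x + b) * (c * x + d)
print(exact_integral(a, b, c, d, 0.5, 0.525), simpson(integrand, 0.5, 0.525))
```

Both values should agree to many digits; the closed form avoids quadrature error altogether, which is the point of the remark for linear elements.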

For a spatial mesh with \(N_{\text {el}}=40\) elements, \(N_t=80\) time steps, and final time \(T=20\), we have compared the number of Newton iterations from the continuous FE method with those from the DG method, with polynomial degrees \(p=1,2,3\). In this example, the continuous FE method requires many more Newton iterations than the DG method (see Table 1). Therefore, we choose the discontinuous Galerkin method with polynomial order \(p\ge 1\) for problem (4)–(5) in the variable \(\lambda _h^k\); see scheme (8).

Figure 3 illustrates the discrete solutions for polynomial orders \(p=1,2,3\), indicating that the method is stable with respect to the order. The jumps are due to the discontinuous Galerkin method.

Reference solution computed from the \(P_1\) finite element scheme (formulation in u) with \(N_{\mathrm{el}}=400\) elements in the space grid and \(N_{t}=80\) elements in the time grid, with final time \(T=20\) (left top), and solutions computed from the DG scheme (formulation in \(\lambda \)) with \(N_{\mathrm{el}}=40\), \(N_t=80\), and polynomial order \(p=1\) (right top), \(p=2\) (left bottom), and \(p=3\) (right bottom). The initial datum is \(u_0(x)=0.8\) for \(0<x<1/2\) and \(u_0(x)=10^{-16}\) else

Figure 4 represents the discrete solutions with the same numerical parameters as in Fig. 3 but with the initial datum \(u_0(x)=1\) for \(0<x<1/2\) and \(u_0(x)=10^{-16}\) else. Also in this example, the lower and upper bounds \(0\le \exp (\lambda _h^k)\le 1\) are always satisfied.

Reference solution computed from the \(P_1\) finite element scheme (formulation in u) with \(N_{\mathrm{el}}=400\) elements in the space grid, and \(N_{\mathrm{t}}=80\) elements in the time grid, with final time \(T=20\) (left top), and solutions computed from the DG scheme (formulation in \(\lambda \)) with \(N_{\mathrm{el}}=40\), \(N_t=80\), and polynomial order \(p=1\) (right top), \(p=2\) (left bottom), and \(p=3\) (right bottom). The initial datum is \(u_0(x)=1\) for \(0<x<1/2\) and \(u_0(x)=10^{-16}\) elsewhere

5.2 Entropy decay

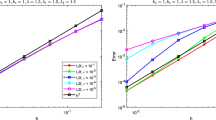

Proposition 9 shows that the discrete entropy \(S_h^k\) decays exponentially fast if \(S_h^0<|\Omega |=1\). To illustrate this behavior numerically, we consider the one-group model with initial condition \(u_0(x)=1\) for \(0<x<1/2\) and \(u_0(x)=10^{-16}\) else. Figure 5 (left) shows that there are two different slopes. For small times, the entropy decay is rather slow. When the time step k is sufficiently large such that \(\exp (\lambda _h^k)>\varepsilon \) for some \(\varepsilon >0\), the reaction dominates, and the entropy decay becomes faster. This behavior becomes even more apparent in the case of pure diffusion (i.e. without reaction terms), illustrated in Fig. 5 (right). We remark that in this situation, the total mass is conserved numerically.

Left: entropy decay for the one-group model (see Fig. 4) with final time \(T=40\), \(N_{\mathrm{el}}=80\) elements in the space grid and \(N_t=160\) in the time grid. The two reference lines correspond to the exponential functions \(t\mapsto 0.95^t\) (indicated as “slope 1”) and \(t\mapsto 0.2^t\) (indicated as “slope 2”). Right: entropy decay for the pure diffusion equation. The computation is done with \(N_{\mathrm{el}}=80\), \(N_{\mathrm{t}}=160\) and \(T=40\). Both figures are in semi-log scale

Figure 6 shows the entropy decay in semi-log scale for the initial data \(u_0(x)=n\) for \(0<x<1/n\) and \(u_0(x)=10^{-16}\) else for \(n=3,6,12\). Then

is larger than \(|\Omega |=1\) if \(n>e\). We observe a region in which the decay rate is very small and it becomes smaller when n (and \(S_h^0\)) increases. This indicates that the assumption \(S_h^0<|\Omega |\) is not merely a technical requirement for deriving exponential time decay.
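The threshold \(n>e\) can be reproduced with a short computation. The sketch below assumes the entropy density \(u(\log u-1)+1\) on \(\Omega =(0,1)\) and evaluates the continuous entropy of the piecewise constant initial data; the discrete entropy \(S_h^0\) of the projected datum may differ slightly. The function names are hypothetical.

```python
import math


def entropy_density(u: float) -> float:
    """Assumed entropy density u (log u - 1) + 1, which vanishes at u = 1."""
    return u * (math.log(u) - 1.0) + 1.0


def step_entropy(height: float, width: float, floor: float = 1e-16) -> float:
    """Entropy on (0, 1) of u0 = height on (0, width) and u0 = floor elsewhere."""
    return width * entropy_density(height) + (1.0 - width) * entropy_density(floor)


# Initial data u0 = n on (0, 1/n): the entropy is log(n) up to terms of order 1e-15.
for n in (3, 6, 12):
    print(n, step_entropy(float(n), 1.0 / n), math.log(n))

# Datum of Fig. 4 (u0 = 1 on (0, 1/2)): entropy close to 1/2, below |Omega| = 1.
print(step_entropy(1.0, 0.5))
```

Under these assumptions the initial entropy equals \(\log n\) up to rounding, so it exceeds \(|\Omega |=1\) exactly when \(n>e\), which holds for \(n=3,6,12\).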

5.3 Traveling waves

We are looking for traveling-wave solutions to (37) with \(D=10^{-4}\). Setting \(u(x,t)=\psi (s)\) with \(s=x-ct\), the Fisher–KPP equation can be rewritten as a system of first-order differential equations:

We choose the initial data \(\psi (0)=1\) and \(\phi (0)=-10^{-10}\), speed \(c=2.5\), and space domain \(\Omega =[0,150]\). As in the one-species model, the constant \(\frac{3}{2}C_{\mathrm{{inv}}}^2\) in the stabilization function \(\alpha (u)\) in (10) is replaced by 1 in all the computations.

Left: comparison of the traveling-wave solution \(\psi (s)\), the DG solution \(\exp (\lambda _h^k)\), and the FE solution \(u_h\). Both the DG and the FE approximations are computed using the polynomial degree \(p=1\), \(N_{\mathrm{el}}=40\) and \(\triangle t=T/80\) (\(T=20\)). Right: comparison of the traveling-wave solution \(\psi \) and the DG solutions \(\exp (\lambda _h^k)\) obtained by varying the polynomial degree. All the DG approximations are computed using \(N_{\mathrm{el}}=40\) and \(\triangle t=T/80\) (\(T=20\))

The (reference) traveling-wave solution \(\psi (s)\), computed from (39) using the Matlab command ode45, is compared in Fig. 7 (left) with the DG solution \(\exp (\lambda _h^k)\), computed from the DG scheme (8), and with the continuous finite element solution \(u_h\), both with polynomials of degree \(p=1\). Both approximations are equally diffusive, the traveling-wave speed being overestimated. In Fig. 7 (right), the reference solution is compared with the DG solution computed for different values of the polynomial degree. It turns out that the polynomial order does not affect the diffusivity of the method. All the solutions in Fig. 7 are shown at the time instances \(t=0\), \(t=T/4\), \(t=T/2\), \(t=3T/4\), and \(t=T\) with \(T=20\) and are computed on a space grid with \(N_{\mathrm{el}}=40\) elements and on a time grid with \(\triangle t=T/80\).
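The structure of the first-order system for the traveling wave can be sketched as follows. We assume that (37) reads \(\partial _t u=D\partial _{xx}u+u(1-u)\), so that \(u(x,t)=\psi (x-ct)\) leads to \(\psi '=\phi \), \(\phi '=-(c\phi +\psi (1-\psi ))/D\); this form, the function names, and the default parameters are assumptions for illustration. The sketch only evaluates the right-hand side and the linearized growth rates at the steady states; it does not reproduce the ode45 computation.

```python
import math


def travelling_wave_rhs(psi, phi, c=2.5, D=1e-4):
    """Right-hand side of psi' = phi, phi' = -(c phi + psi (1 - psi)) / D (assumed form)."""
    return phi, -(c * phi + psi * (1.0 - psi)) / D


def linearised_rates(psi_star, c=2.5, D=1e-4):
    """Real roots of r^2 + (c/D) r + (1 - 2 psi*)/D = 0, sorted increasingly.

    This is the characteristic polynomial of the Jacobian at the steady
    state (psi*, 0) with psi* = 0 or psi* = 1.
    """
    b = c / D
    c0 = (1.0 - 2.0 * psi_star) / D
    disc = b * b - 4.0 * c0
    if disc < 0.0:
        raise ValueError("complex rates: oscillatory profile")
    # Cancellation-free evaluation of the two roots.
    q = -0.5 * (b + math.copysign(math.sqrt(disc), b))
    return tuple(sorted((q, c0 / q)))


print(travelling_wave_rhs(1.0, -1e-10))
print(linearised_rates(1.0))  # one negative and one positive rate (saddle)
print(linearised_rates(0.0))  # two negative rates (stable node)
```

Under these assumptions, the state \(\psi =1\) is a saddle with a slow unstable rate close to \(1/c\), which explains why a tiny perturbation \(\phi (0)=-10^{-10}\) suffices to leave it, while the second rate is close to \(-c/D\), so the system has widely separated scales for small \(D\).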

Comparison of the traveling-wave solution \(\psi \) and the DG solution \(\exp (\lambda _h^k)\) computed on different space and time meshes (with \(\triangle t=\frac{T}{N_{\mathrm{el}}}\)), with polynomial degree \(p=1\) (top left), \(p=2\) (top right), and \(p=3\) (bottom left). All functions are shown at the time instances \(t=0\) and \(t=T=5\). Bottom right: \(H^1\) seminorm of the error of the DG scheme at time \(t=5\) computed for \(p=1,2,3\)

In Fig. 8, the reference solution \(\psi \) is compared with the DG solution computed by simultaneously varying the space and the time mesh sizes, with polynomial degrees \(p=1,2,3\), respectively. The \(H^1\) seminorms of the errors at final time \(t=5\), namely \(|\psi (\cdot -cT)-e^{\lambda _h(\cdot ,T)}|_{H^1(\Omega )}\), for \(p=1,2,3\) are shown in Fig. 8 (bottom right). In all cases, the observed convergence of the scheme is linear, as expected, since we are employing a first-order method (Euler) in time. Higher polynomial degrees result in a smaller multiplicative constant. Higher convergence rates for higher polynomial degrees in space would be expected when using a higher-order time discretization. Structure preservation of higher-order temporal approximations is a delicate topic (see, e.g., [21]) and will be studied in a future work. An error analysis is also postponed to a future work.

Comparison of the traveling-wave solution \(\psi \) and the DG solution \(\exp (\lambda _h^k)\) (with \(N_{\mathrm{el}}=50\) and \(p=1\)) for various choices of \(\varepsilon \) (see (8))

All the numerical solutions that we have shown so far are computed with the choice \(\varepsilon =0\) (see (8)). We conclude the section by studying the dependence of the method on \(\varepsilon \). In Fig. 9, we compare the reference traveling-wave solution \(\psi \) with the DG solution computed on a uniform spatial mesh with \(N_{\mathrm{el}}=40\), time mesh size \(\triangle t=T/80\) (with \(T=20\)), and polynomial degree \(p=1\), for the values \(\varepsilon =10^{-9},10^{-12},10^{-13}\) (the choice \(\varepsilon =10^{-14}\) has given the same results as \(\varepsilon =0\)). The term \(\varepsilon \int _\Omega \lambda ^k_h\phi _h\,dx\), which was introduced in the DG formulation (8) in order to prove the existence of the DG solution (see Proposition 6), produces a deterioration of the numerical solution that increases with time.

References

Ait Hammou Oulhaj, A., Cancès, C., Chainais-Hillairet, C.: Numerical analysis of a nonlinearly stable and positive control volume finite element scheme for Richards equation with anisotropy. ESAIM Math. Model. Numer. Anal. 52, 1533–1567 (2018)

Arnold, D.: An interior penalty finite element method for discontinuous elements. SIAM J. Numer. Anal. 19, 742–760 (1982)

Arnold, D., Brezzi, F., Cockburn, B., Marini, L.: Unified analysis of discontinuous Galerkin methods for elliptic problems. SIAM J. Numer. Anal. 39, 1749–1779 (2002)

Arnold, A., Markowich, P., Toscani, G., Unterreiter, A.: On generalized Csiszár–Kullback inequalities. Monatsh. Math. 131, 235–253 (2000)

Baker, G.A.: Finite element methods for elliptic equations using nonconforming elements. Math. Comput. 31, 45–59 (1977)

Brézis, H.: Functional Analysis, Sobolev Spaces and Partial Differential Equations. Springer, New York (2011)

Buffa, A., Ortner, C.: Compact embeddings of broken Sobolev spaces and applications. IMA J. Numer. Anal. 29, 827–855 (2009)

Burger, M., Di Francesco, M., Pietschmann, J.-F., Schlake, B.: Nonlinear cross-diffusion with size exclusion. SIAM J. Math. Anal. 42, 2842–2871 (2010)

Cavalli, F., Naldi, G., Perugia, I.: Discontinuous Galerkin approximation of relaxation models for linear and nonlinear diffusion equations. SIAM J. Sci. Comput. 34, A105–A136 (2012)

Ciarlet, P. (ed.): The Finite Element Method for Elliptic Problems, Studies in Mathematics and Its Applications, vol. 4, pp. 1–530. North-Holland, Amsterdam (1978)

Ekeland, I., Temam, R.: Convex Analysis and Variational Problems. North-Holland, Amsterdam (1972)

Fisher, R.A.: The wave of advance of advantageous genes. Ann. Eugen. 7, 353–369 (1937)

Gajewski, H.: On existence, uniqueness and asymptotic behavior of solutions of the basic equations for carrier transport in semiconductors. Z. Angew. Math. Mech. 65, 101–108 (1985)

Gassner, G., Winters, A., Kopriva, D.: Split form nodal discontinuous Galerkin schemes with summation-by-parts property for the compressible Euler equations. J. Comput. Phys. 327, 39–66 (2016)

Guo, L., Yang, Y.: Positivity preserving high-order local discontinuous Galerkin method for parabolic equations with blow-up solutions. J. Comput. Phys. 289, 181–195 (2015)

Hairer, E., Lubich, C., Wanner, G.: Geometric Numerical Integration. Springer, Berlin (2006)

Hasnain, S., Saqib, M., Mashat, D.S.: Numerical study of one dimensional Fishers KPP equation with finite difference schemes. Am. J. Comput. Math. 7, 70–83 (2017)

Houston, P., Schwab, C., Süli, E.: Discontinuous \(hp\)-finite element methods for advection–diffusion–reaction problems. SIAM J. Numer. Anal. 39, 2133–2163 (2002)

Il’in, A.M.: A difference scheme for a differential equation with a small parameter multiplying the highest derivative. Mat. Zametki 6, 237–248 (1969)

Jüngel, A.: Entropy Methods for Diffusive Partial Differential Equations. BCAM Springer Briefs. Springer, Berlin (2016)

Jüngel, A., Schuchnigg, S.: Entropy-dissipating semi-discrete Runge–Kutta schemes for nonlinear diffusion equations. Commun. Math. Sci. 15, 27–53 (2017)

Lesaint, P., Raviart, P.A.: On a finite element method for solving the neutron transport equation. In: de Boor, C. (ed.) Mathematical Aspects of Finite Elements in Partial Differential Equations, pp. 89–123. Academic Press, New York (1974)

Liu, H.-L., Wang, Z.: An entropy satisfying discontinuous Galerkin method for nonlinear Fokker–Planck equations. J. Sci. Comput. 68, 1217–1240 (2016)

Macías-Díaz, J., Jerez-Galiano, S., Puri, A.: An explicit positivity-preserving finite-difference scheme for the classical Fisher–Kolmogorov–Petrovsky–Piscounov equation. Appl. Math. Comput. 9, 5829–5839 (2012)

Maz’ya, V., Rossmann, J.: Elliptic Equations in Polyhedral Domains. American Mathematical Society, Providence (2010)

Meyer, P.-A.: Probability and Potentials. Blaisdell Publishing, Toronto (1966)

Nitsche, J.: Über ein Variationsprinzip zur Lösung von Dirichlet-Problemen bei Verwendung von Teilräumen, die keinen Randbedingungen unterworfen sind. Abh. Math. Sem. Univ. Hambg. 36, 9–15 (1971)

Parsani, M., Carpenter, M., Fisher, T., Nielsen, E.: Entropy stable staggered grid discontinuous spectral collocation methods of any order for the compressible Navier–Stokes equations. SIAM J. Sci. Comput. 38, A3129–A3162 (2016)

Percell, P., Wheeler, M.: A local residual finite element procedure for elliptic problems. SIAM J. Numer. Anal. 15, 705–714 (1978)

Prudhomme, S., Pascal, F., Oden, J.T., Romkes, A.: Review of a priori error estimation for discontinuous Galerkin methods. TICAM REPORT 00-27, Texas Institute for Computational and Applied Mathematics, Austin, USA (2000)

Reed, W., Hill, T.: Triangular mesh methods for the neutron transport equation. In: Proceedings of the American Nuclear Society for the Conference “National Topical Meeting on Mathematical Models and Computational Techniques for Analysis of Nuclear Systems”, Ann Arbor, Michigan, USA (1973)

Rivière, B., Wheeler, M., Girault, V.: A priori error estimates for finite element methods based on discontinuous approximation spaces for elliptic problems. SIAM J. Numer. Anal. 39, 902–931 (2001)

Sun, Z., Carrillo, J.A., Shu, C.-W.: A discontinuous Galerkin method for nonlinear parabolic equations and gradient flow problems with interaction potentials. J. Comput. Phys. 352, 76–104 (2018)

Wheeler, M.: An elliptic collocation-finite element method with interior penalties. SIAM J. Numer. Anal. 15, 152–161 (1978)

Yadav, O.P., Jiwari, R.: Finite element analysis and approximation of Burgers–Fisher equation. Numer. Methods Partial Differ. Equ. 33, 1652–1677 (2017)

Zhang, R., Zhu, J., Loula, A.F.D., Yu, X.: Operator splitting combined with positivity-preserving discontinuous Galerkin method for the chemotaxis model. J. Comput. Appl. Math. 302, 312–326 (2016)

Acknowledgements

Open access funding provided by TU Wien (TUW).

The authors acknowledge partial support from the Austrian Science Fund (FWF), Grant F65. F. Bonizzoni has been supported by the FWF Firnberg-Program, Grant T998. M. Braukhoff and A. Jüngel acknowledge support from the FWF, Grants P30000 and W1245. I. Perugia acknowledges support from the FWF, Grant P29197-N32. The authors thank Clément Cancès for pointing out how to prove Proposition 15.

Appendix A: Linear elements: existence of solutions for \(\varepsilon =0\)

We show that the regularization term \(\varepsilon \int _\Omega \lambda _h^k\phi _h dx\) in the DG scheme (8) is not needed if we consider linear elements.

Proposition 15

(Existence for \(p=1\)) Let \(p=1\), \(\lambda _h^{k-1}\in V_h\), and \(S_h^0<|\Omega |\). Then there exists a solution \(\lambda _h^k\in V_h\) to (8) with \(\varepsilon =0\).

Proof

The proof is based on the idea of the proof of [1, Lemma 3.10]. Let \(\varepsilon >0\) and let \(\lambda _h^k\in V_h\) be a solution to (8) given by Proposition 6. In order to emphasize the dependency on \(\varepsilon \), we write \(\lambda _\varepsilon :=\lambda _h^k\). Our goal is to derive an \(\varepsilon \)-uniform \(L^\infty (\Omega )\) bound for \(\lambda _\varepsilon \).

Step 1 We derive first some estimates for \(e^{\lambda _\varepsilon }\). Lemma 8 shows that

where \(\delta =\sigma _-(S_h^0/|\Omega |)\) and \(M=\sigma _+(S_h^0/|\Omega |)\). Since we assumed that \(S_h^0<|\Omega |\), we have \(\delta >0\). Using the coercivity estimate (12), the inequality (17), and \(e^{\lambda _\varepsilon }(e^{\lambda _\varepsilon }-1)\lambda _\varepsilon \ge 0\), we find that

where we used in the last step the monotonicity of \(k\mapsto S_h^k\), guaranteed by Lemma 7. Consequently,

Step 2 We claim that for any \(\varepsilon >0\), there exists an element \(K_\varepsilon \in {\mathcal {T}}_h\) and a constant \(\mu >0\), independent of \(\varepsilon \), such that

For the proof, let \(N\in {{\mathbb {N}}}\) be the number of elements in \({\mathcal {T}}_h\). The lower and upper bounds (40) imply the existence of an element \(K_\varepsilon \in {\mathcal {T}}_h\) such that

By the mean-value theorem, there exists \(x_\varepsilon \in K_\varepsilon \) such that

Then (43) gives

Since \(\lambda _\varepsilon \) is a polynomial of degree one on \(K_\varepsilon \), by assumption, its gradient is constant on \(K_\varepsilon \), and we deduce from (41) and (43) that

Combining the last two estimates, it follows for \(x\in K_\varepsilon \) that

which shows the claim.

Step 3 We wish to prove a uniform \(L^\infty \) bound for \(\lambda _\varepsilon \) on the faces or edges of \(K_\varepsilon \). Let \(\mu >0\) and \(K_\varepsilon \in {\mathcal {T}}_h\) such that \(\Vert \lambda _\varepsilon \Vert _{L^\infty (K_\varepsilon )}\le \mu \). Set \(K_-:=K_\varepsilon \) and consider neighboring elements \(K_+\in {\mathcal {T}}_h\) satisfying \(f:=\partial K_-\cap \partial K_+\ne \emptyset \). Furthermore, let \(\lambda _\pm =\lambda _\varepsilon |_{K_\pm }\). We claim that there exists \(C_\mu >0\), independent of \(\varepsilon \), such that

The idea is to prove an \(L^2(f)\) estimate for \(\lambda _\varepsilon \), as the equivalence of all norms in the finite-dimensional setting then implies the desired \(L^\infty (f)\) bound.

Observe that

Then we can estimate the stabilization function \(\alpha \) according to

To estimate the \(L^2(f)\) norm of \(\lambda _+|_f\), we use the inequality \(|\lambda _+|\le |\lambda _+ - \lambda _-| + |\lambda _-| = |\llbracket \lambda _\varepsilon \rrbracket | + |\lambda _-|\) on f. Then (42) yields

and \(\beta _\mu \) is uniform in \(\varepsilon \) (but not in h) in view of (41). We conclude that

Step 4 Denote by \((\phi _0,\ldots ,\phi _d)\) the basis of \(P_1(K_+)\) such that \(\phi _i(e_j)=\delta _{ij}\) for i, \(j=0,\ldots ,d\), where the vertices \(e_i\) of \(K_+\) are ordered in such a way that \(e_0\not \in f\). Then we can formulate \(\lambda _+\) on \(K_+\) as

where \(a_i^\varepsilon =\lambda _+(e_i)\). Estimate (44) shows that \(\lambda _+\) is uniformly bounded at the vertices \(e_1,\ldots ,e_d\) of \(K_+\), i.e. \(|a_i^\varepsilon |\le C_\mu \) for all \(i=1,\ldots ,d\).

Step 5 We wish to estimate the value \(a_0^\varepsilon \) at the remaining vertex \(e_0\), which does not belong to \(K_-=K_\varepsilon \). We claim that there exist constants \(L_\mu \le U_\mu \), independent of \(\varepsilon \), such that

We first prove the upper bound. Using the bound for \(a_i^\varepsilon \) for \(i=1,\ldots ,d\), we have

If \(a_0^\varepsilon \le 0\), there is nothing to show. Otherwise, it follows from (43) that

Then, setting \(c_0=\int _{K_+}\phi _0dx\), we infer that \(a_0^\varepsilon \le Me^{C_\mu d}/c_0 =: U_\mu \).

The proof of the lower bound is more involved. Let \(f_0\) be the face or edge opposite to the vertex \(e_0\), and let \(f_1,\ldots ,f_d\) be the remaining faces or edges. For later use, we note that the integrals

converge to zero as \(b\rightarrow -\infty \), so there exists \(L'_\mu \in {{\mathbb {R}}}\) such that

We estimate

As we assumed that \(p=1\), the expression \(|\nabla \lambda _\varepsilon \cdot n|\) is constant. Thus, since \(|\nabla \phi _0\cdot n|>0\) and \(\lambda _\varepsilon \ge -C_\mu \) on \(f_0\), by (44), the previous inequality gives

An integration by parts leads to

since \(\lambda _\varepsilon \) is linear on \(K_+\), so the Laplacian vanishes. Hence, using

and (45), we have

if we choose \(a_0^\varepsilon \le L_\mu '\). Inserting this information into (46), it follows for all \(a_0^\varepsilon \le L_\mu '\) that

Thus, setting \(L_\mu =\min \{L_\mu ',L_\mu ''\}\), we conclude that \(a_0^\varepsilon \ge L_\mu \).

Step 6 Combining the previous steps, we infer that there exists a constant \(g(\mu )>0\) such that

This estimate means that if \(\lambda _\varepsilon \) is bounded in some element with constant \(\mu \), then \(\lambda _\varepsilon \) is bounded in the neighboring elements with constant \(g(\mu )\). Now, take an arbitrary element \(K\in {\mathcal {T}}_h\). Then there exists a finite sequence \(K^0,K^1,\ldots ,K^m\) of elements with \(K^0=K_\varepsilon \) and \(K^m=K\) such that \(K^{j-1}\) and \(K^j\) are neighboring elements. Repeating the arguments of Steps 3–5, the bound \(\Vert \lambda _\varepsilon \Vert _{L^\infty (K^1)}\le \mu ':=g(\mu )\) implies that \(\Vert \lambda _\varepsilon \Vert _{L^\infty (K^2)}\le g(\mu ')=g(g(\mu ))\). Thus, by iteration,

The upper bound is independent of \(\varepsilon \) and holds for all elements \(K\in {\mathcal {T}}_h\). Consequently, \((\lambda _\varepsilon )\) is bounded in \(L^\infty (\Omega )\).

We deduce that there exists a subsequence (not relabeled) such that \(\lambda _\varepsilon \rightarrow \lambda \) strongly in \(L^\infty (\Omega )\), recalling that \(V_h\) is finite-dimensional. In fact, the convergence holds in any norm. Thus, we can pass to the limit \(\varepsilon \rightarrow 0\) in (8), and the limit equation is the same as (8) with \(\varepsilon =0\). \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bonizzoni, F., Braukhoff, M., Jüngel, A. et al. A structure-preserving discontinuous Galerkin scheme for the Fisher–KPP equation. Numer. Math. 146, 119–157 (2020). https://doi.org/10.1007/s00211-020-01136-w

DOI: https://doi.org/10.1007/s00211-020-01136-w