Abstract

We propose two variants of the overlapping additive Schwarz method for the finite element discretization of scalar elliptic problems in 3D with highly heterogeneous coefficients. The methods are efficient and simple to construct using the abstract framework of the additive Schwarz method and the idea of adaptive coarse spaces. In one variant, the coarse space consists of finite element functions associated with the wire basket nodes and functions based on solving generalized eigenvalue problems on the subdomain faces. In the other variant, it consists of functions associated with the vertex nodes together with functions based on solving generalized eigenvalue problems on subdomain faces and subdomain edges. The functions that constitute the coarse spaces are chosen adaptively, and they correspond to the eigenvalues that are smaller than a given threshold. The convergence rate of the preconditioned conjugate gradient method is shown in both cases to be independent of the variations in the coefficients, provided that sufficiently many eigenfunctions are included in the coarse space. Numerical results are given to support the theory.

1 Introduction

Additive Schwarz methods are among the most popular domain decomposition methods for solving partial differential equations because of their simplicity and inherent parallelism, cf. [8, 25, 33, 36]. In this paper we consider variants of the overlapping additive Schwarz method as preconditioners for solving scalar elliptic equations with highly varying coefficients in \(\mathbb {R}^3\). Based on the idea of adaptively constructing the coarse space through solving generalized eigenvalue problems in lower dimensions, we propose two variants of the algorithm in three dimensions. The resulting preconditioned system has a condition number which is inversely proportional to \(\lambda ^{*}\), where \(\lambda ^{*}\) is the threshold used for selecting the coarse basis: the eigenfunctions corresponding to eigenvalues below \(\lambda ^{*}\) are included in the coarse space. The threshold is thus a parameter of the coarse space in the algorithm. The term “lower dimensions” refers to the fact that the eigenvalue problems are solved either on subdomain faces or on subdomain edges. The methods are effective, inherently parallel, and simple to construct. An additive Schwarz method with an adaptive coarse space was recently considered using a different idea, based on solving generalized eigenvalue problems in the overlap, cf. [8, 9, 26].

To see the motivation behind such an approach, we take a brief look at the steps of deriving convergence estimates for two-level overlapping Schwarz methods in the abstract Schwarz framework, cf. [25, 33, 36]. This framework is based on the assumption that any function u in the finite element space can be split into a coarse component \(u_0\) and local components associated with the subdomains, and that this splitting is stable with respect to the energy norm. The condition number bound of the preconditioned system then depends explicitly on the constant \(C_0^2\) which enters into the stability estimate, cf. [25, 33, 36]. Consequently, how robust the method is with respect to the mesh parameters and the varying coefficients depends on how robust \(C_0^2\) is with respect to them. It is already known, cf. e.g. [15], that even for highly varying coefficients this constant does not depend on the variation, as long as the variations are strictly inside the subdomains. The difficulty arises when the coefficients vary along subdomain boundaries. In that case, the crucial term which needs to be bounded in the stability estimate is the boundary term \(\sum _i C/h^2\Vert \sqrt{\alpha }(u-u_0)\Vert ^2_{L^2(\varOmega ^h_i)}\). Here \(\varOmega ^h_i\) denotes the layer of all elements along the subdomain boundary \(\partial \varOmega _i\), \(\alpha \) the varying coefficient, and C a constant. Because \(\alpha \) is varying, estimating the \(L^2\) term using, say, the Poincaré or a weighted Poincaré inequality introduces the contrast, i.e., the ratio between the largest and the smallest values of \(\alpha \), into the estimate, unless strong assumptions on the distribution of the coefficient are made, cf. [31]. A standard multiscale coarse space alone, cf. [15, 29], cannot make the method robust with respect to the contrast; some form of enrichment of the coarse space is needed that can capture the strong variations along the subdomain boundaries and improve the approximation. Including selected eigenfunctions of properly defined eigenvalue problems over the subdomain boundaries in the coarse spaces enables us to capture those variations and provides estimates with constants independent of the contrast, which is the primary motivation behind the methods presented here.

The idea of adaptively constructing coarse spaces using eigenfunctions of certain eigenvalue problems has attracted much interest in recent years. Some earlier works in this direction are found in [1, 2] around the Neumann-Neumann type substructuring domain decomposition method, and in [6] around the algebraic multigrid method, although they were not aimed at multiscale problems. In the context of multiscale problems this idea has only recently started to emerge, with its first appearance in [12, 13], as well as in [26], and later on in [7, 9, 10, 11, 14, 35] in the additive Schwarz framework. The idea of adaptively constructing the coarse space, in other words the primal constraints, for the FETI-DP and BDDC substructuring domain decomposition methods has also been developed and extensively analyzed, cf. [17, 19, 20, 24, 34] in 2D and [5, 16, 18, 27, 30] in 3D.

The methods above are preconditioners to iterative methods, designed to obtain fine-scale solutions to elliptic equations. In this recent development of coarse space enrichment, it is important to take note of the parallel development in methods designed to solve elliptic equations directly by a coarse approximation, such as the Reduced Basis method, see, e.g., [4, 28], cf. also [32], and the Localized Orthogonal Decomposition method, see, e.g., [21, 23]. The constructions of these coarse approximations and the constructions of the coarse spaces in the iterative methods show many similarities.

The rest of this paper is organized as follows: In Sect. 2 we define our problem and its discrete formulation. In Sect. 3 we describe the overlapping Schwarz preconditioner, in Sect. 4 we introduce the two new coarse spaces, and in Sect. 5 we establish the convergence estimate for the preconditioned system. Finally, in Sect. 6, we present some numerical results of our method.

2 Differential problem and discrete formulation

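Here we present our continuous test problem, the scalar elliptic equation with coefficients piecewise constant on each fine-scale element, and its discrete representation. Find \(u \in H^1_0(\varOmega )\) such that

\[ a(u,v) = f(v) \quad \forall v\in H^1_0(\varOmega ), \tag{1} \]

where

\[ a(u,v) = (\alpha \nabla u, \nabla v)_{L^2(\varOmega )} \quad \text{and} \quad f(v) = \int _\varOmega f v\,dx. \tag{2} \]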

We assume that \(\alpha \in L^\infty (\varOmega )\), \(\alpha (x)\ge \alpha _0 > 0\), and \(f \in L^2(\varOmega )\) with \(\varOmega \) being a polyhedral region in the space \(\mathbb {R}^3\).

Let \(\mathcal {T}_h(\varOmega )\) be a quasi-uniform triangulation of \(\varOmega \) into fine tetrahedral elements \(\tau \), where \(h=\max _{\tau \in \mathcal {T}_h(\varOmega )}\mathrm {diam}(\tau )\) is the mesh parameter of \(\mathcal {T}_h(\varOmega )\), cf. e.g. [3]. Keeping in mind that a tetrahedral element has four triangular faces and six edges, we denote a fine element face by \(\tau _t\) and a fine element edge by \(\tau _e\). Let \(V_h=V_h^0(\varOmega )\) be the finite element solution space of piecewise linear continuous functions:

\[ V_h^0(\varOmega ) := \{ v \in C(\overline{\varOmega }) : v_{|\tau } \in P_1(x) \ \text{ for all } \tau \in \mathcal {T}_h(\varOmega ), \ v = 0 \text{ on } \partial \varOmega \}, \]

where \(v_{|\tau }\) is the function restricted to an element \(\tau \in \mathcal {T}_h(\varOmega )\) and \(P_1(x)\) is the set of linear polynomials. Note that since the gradient of \(u\in V_h\) is piecewise constant, we have \((\alpha \nabla u, \nabla v)_{L^2(\tau )}=\nabla u \cdot \nabla v \int _\tau \alpha \,dx\) on each element. Thus, without loss of generality, we assume that \(\alpha (x)=\alpha _\tau \) for \(x\in \tau \), where \(\alpha _\tau \) is a positive constant.
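The identity above means that, on each element, the coefficient enters the local stiffness matrix only through the constant \(\alpha _\tau \). As a minimal sketch (the function name `p1_tet_stiffness` and the NumPy-based layout are illustrative assumptions, not taken from the paper), the local matrix of a single tetrahedron could be computed as follows:

```python
import numpy as np

def p1_tet_stiffness(vertices, alpha_tau):
    """Local P1 stiffness matrix of one tetrahedron with constant coefficient alpha_tau.

    Hypothetical helper for illustration: entry (i, j) equals
    alpha_tau * |tau| * grad(phi_i) . grad(phi_j).
    """
    X = np.asarray(vertices, dtype=float)        # shape (4, 3), one vertex per row
    M = np.hstack([np.ones((4, 1)), X])          # rows [1, x, y, z]
    C = np.linalg.inv(M)                         # column i holds the coefficients of phi_i
    grads = C[1:, :].T                           # row i is the (constant) gradient of phi_i
    volume = abs(np.linalg.det(M)) / 6.0
    return alpha_tau * volume * (grads @ grads.T)

# Reference tetrahedron with coefficient alpha_tau = 2
K = p1_tet_stiffness([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], 2.0)
```

Each row of `K` sums to zero because constants have zero gradient, which is a quick sanity check for any such routine.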

The discrete problem is then defined as: Find \(u_h \in V^0_h(\varOmega )\) such that

\[ a(u_h,v) = f(v) \quad \forall v\in V^0_h(\varOmega ). \tag{3} \]

This problem has a unique solution by the Lax-Milgram theorem. By using the standard nodal basis functions \(\phi _i\) with \(i=1,\ldots ,N_\eta \), where \(N_\eta \) is the number of individual vertex nodes of the tetrahedra in \(\varOmega \), the above equation may be stated as a system of algebraic equations

\[ A u_h = f_h, \tag{4} \]

where \((A)_{i,j}=a(\phi _j,\phi _i)\), \({(f_{h})}_i=f(\phi _i)\) and \({(u_h)}_j=u_h(x_{j})\). Here \(x_j\) denotes the coordinates of node j. The resulting symmetric system is in general very ill-conditioned, so any standard iterative method, such as the conjugate gradient method, cf. e.g. [22], may perform poorly. The aim is to introduce an additive Schwarz preconditioner for the original problem (3) to obtain a well-conditioned system for which the convergence of the conjugate gradient method is independent of any variations in the coefficient, thereby improving the overall performance.

3 Additive Schwarz method

The two-level additive Schwarz method is well known and well understood in the literature, and we refer to [36, Chapter 3] for an overview of the method.

3.1 Geometric structures

Let \(\varOmega \) be partitioned into a set of N nonoverlapping subdomains (or generalized subdomains), \(\{\varOmega _i\}_{i=1}^N\), such that each \(\overline{\varOmega }_i\) (the closure of \({\varOmega }_i\)) is a sum of elements (fine elements) from \(\mathcal {T}_h\), \({\varOmega }_i\cap {\varOmega }_j = \emptyset \) for \(i\ne j\), and \(\overline{\varOmega } = \cup _{i\in I}\overline{\varOmega }_i\). The intersection between two closed subdomains is either an empty set, a closed face (or a closed generalized face which is a sum of closures of fine element faces), a closed edge (or a closed generalized edge which is a sum of closures of fine element edges), or a vertex. Thus, the triangulation \(\mathcal {T}^h(\varOmega )\) is aligned with the subdomains \(\varOmega _i\). Each subdomain \(\varOmega _i\) inherits its own local triangulation \(\mathcal {T}^h(\varOmega _i)=\{\tau \in \mathcal {T}^h(\varOmega ):\tau \subset \overline{\varOmega }_i\}\) such that \(\bigcup _i\mathcal {T}^h(\varOmega _i)=\mathcal {T}^h(\varOmega )\). The corresponding set of overlapping subdomains \(\{\varOmega _i'\}_{i\in I}\) is then defined as follows: extend each subdomain \(\varOmega _i\) to \(\varOmega _i'\), by adding to \(\varOmega _i\) a layer of elements, i.e. sum of \(\overline{\tau }_k\in \mathcal {T}^h(\varOmega )\) such that \(\overline{\tau }_k\cap \partial \varOmega _{i}\ne \emptyset \).

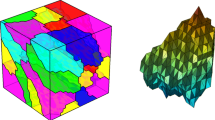

Since the subdomains inherit their triangulation from the global triangulation \(\mathcal {T}^h(\varOmega )\), the nodes, the edges, and the faces of the tetrahedral elements match across the interface \(\varGamma =\bigcup _i\partial \varOmega _i{\setminus }\partial \varOmega \). The interface is composed of three basic structures: open (generalized) faces, open (generalized) edges, and subdomain vertices, cf. Fig. 1. The set of all open edges and subdomain vertices constitutes the structure which we call the wire basket, and we denote it by \(\mathcal {W}\). The set of all faces is given by \(\mathcal {F}=\{\mathcal {F}_{kl} : \ \mathcal {\overline{F}}_{kl}=\partial \varOmega _k\cap \partial \varOmega _l, \ |\mathcal {F}_{kl}|>0, \ k\in I, \ l\in I, \ \text{ and } \ k>l\}\), where \(|\cdot |\) is a natural measure of a surface. Note that since the subdomain vertices match across the interface, we have \(\varGamma =\bigcup _{kl} \mathcal {\overline{F}}_{kl}{\setminus }\partial \varOmega \). A closed edge is an intersection between two closed faces, consisting of closed fine element edges. The set of all edges is denoted by \(\mathcal {E}\), and the set of subdomain vertices is denoted by \(\mathcal {V}\). The wire basket is defined as \(\mathcal {W}=\bigcup _k\mathcal {\overline{E}}_k{\setminus }\partial \varOmega \).

Fig. 1 The unit cube domain decomposed into 8 subdomains. The subdomain in the back at the top is meshed into a fine mesh. The faces that the subdomain \(\varOmega _1\) shares with its neighbours \(\varOmega _2\) and \(\varOmega _3\) have been meshed. On the face \(\mathcal {F}_{I_{13}}\), only the internal nodes have been meshed, while on the face \(\mathcal {F}_{12}\) all the nodes have been meshed. Also, an edge \(\mathcal {E}_1\) is indicated where the edge nodes have been dotted. The center red node is a vertex node (color figure online).

In the same way as each subdomain inherits a 3D triangulation, each face \(\mathcal {F}_{k l}\) inherits a 2D triangulation which we denote by \(\mathcal {T}_h(\mathcal {F}_{k l})\), and each edge \(\mathcal {E}_k\) inherits a 1D triangulation which we denote by \(\mathcal {T}_h(\mathcal {E}_k)\). For each of the structures, \(\varOmega \), \(\overline{\varOmega }\), \(\varOmega _k\), \(\overline{\varOmega }_k\), \(\mathcal {F}\), \(\mathcal {E}\), and \(\mathcal {W}\), we use \(\varOmega _h\), \(\overline{\varOmega }_h\), \(\varOmega _{k,h}\), \(\overline{\varOmega }_{k,h}\), \(\mathcal {F}_h\), \(\mathcal {E}_h\), and \(\mathcal {W}_h\), respectively, to denote the corresponding set of nodal points (vertices of the elements of \(\mathcal {T}_h\)) which are on the structure.

3.2 Space decomposition, subproblems, and preconditioned system

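Let the two local subspaces on \(\varOmega _k\) be defined as

\[ V_h(\varOmega _k) := \{ v_{|\overline{\varOmega }_k} : v\in V_h \} \quad \text{and} \quad V^0_h(\varOmega _k) := V_h(\varOmega _k)\cap H^1_0(\varOmega _k). \tag{5} \]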

Functions of \(V^0_h(\varOmega _k)\) are extended by zero to the rest of the domain \({\overline{\varOmega }}{\setminus }{\overline{\varOmega }}_k\). For ease of presentation, we denote this extended space by the same symbol \(V^0_h(\varOmega _k)\). We can repeat these definitions for the extended domains \(\varOmega _i'\). Let us decompose the finite element solution space into a coarse space and N local subspaces,

\[ V_h = V_0^{(k)} + \sum _{i=1}^N V_i. \]

Here \(V_i=V^0_h(\varOmega _i')\) are the local function spaces associated with the overlapping subdomains \(\varOmega _i'\) for \(i=1, \ldots , N\) extended by zero to the rest of \(\varOmega \), cf. (5). Further, \(V_0^{(k)},k=1,2\) is the coarse space. In the next section, we introduce the two coarse spaces, the wire basket based coarse space \(V_0^{(1)}=V_{\mathcal W}\), cf. (14), and the vertex based coarse space \(V_0^{(2)}=V_{{\mathcal {V}}}\), cf. (21). In both cases, these are relatively much smaller subspaces of the finite element space \(V_h\).

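We define the projection-like operators \(P_0^{(k)}:V_h\rightarrow V_0^{(k)}\) for \(k=1, 2\), and \(P_i:V_h\rightarrow V_i\) for \(i=1,\ldots ,N\), as follows,

\[ \begin{aligned} a(P_0^{(k)}u, v) &= a(u,v) \quad \forall v\in V_0^{(k)}, \\ a(P_i u, v) &= a(u,v) \quad \forall v\in V_i. \end{aligned} \]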

Defining the additive operator \(P^{(k)}=P_0^{(k)} + \sum _i P_i\), we get the following system of equations, equivalent to the original problem (3), in operator form,

\[ P^{(k)}u_h = g^{(k)}, \]

where \(g^{(k)}=g_0^{(k)}+\sum _i g_i\) and \(g_0^{(k)}=P_0^{(k)}u_h, g_i=P_iu_h\) for \(u_h\) the solution of (3). The right-hand side functions \(g_0^{(k)},g_i\) can be computed in parallel without knowing \(u_h\), cf. e.g. [33, 36].
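To illustrate the structure of the additive operator, the following is a minimal sketch on a 1D model problem. It is not the method of this paper: the coarse space here is a standard piecewise linear one on the subdomain partition rather than the adaptive spaces of Sect. 4, and the names `laplacian_1d`, `additive_schwarz_inverse`, and `spectral_condition` are hypothetical. In matrix form the preconditioner is assumed to be \(M^{-1}=R_0^TA_0^{-1}R_0+\sum _i R_i^TA_i^{-1}R_i\), so that \(M^{-1}A\) represents the additive operator.

```python
import numpy as np

def laplacian_1d(n):
    """P1 stiffness matrix of -u'' with zero boundary values, n interior nodes (mesh scaling dropped)."""
    return 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)

def additive_schwarz_inverse(A, index_sets, R0):
    """Assemble M^{-1} = R0^T A0^{-1} R0 + sum_i Ri^T Ai^{-1} Ri as a dense matrix."""
    A0 = R0 @ A @ R0.T                       # coarse matrix
    M_inv = R0.T @ np.linalg.solve(A0, R0)   # coarse correction
    for idx in index_sets:                   # exact local solves on overlapping subdomains
        block = np.ix_(idx, idx)
        M_inv[block] += np.linalg.inv(A[block])
    return M_inv

def spectral_condition(B):
    """Ratio of extreme eigenvalues; B is similar to an SPD matrix, so they are real."""
    ev = np.linalg.eigvals(B).real
    return ev.max() / ev.min()

n, n_sub, overlap = 63, 4, 2
A = laplacian_1d(n)

# Overlapping index sets: each nonoverlapping block is extended by `overlap` nodes
size = (n + 1) // n_sub
index_sets = [np.arange(max(0, i * size - overlap), min(n, (i + 1) * size + overlap))
              for i in range(n_sub)]

# Coarse restriction: hat functions centered at the interior subdomain end points
x = np.arange(1, n + 1) / (n + 1)
x_coarse = np.arange(1, n_sub) / n_sub
R0 = np.maximum(0.0, 1.0 - n_sub * np.abs(x[None, :] - x_coarse[:, None]))

M_inv = additive_schwarz_inverse(A, index_sets, R0)
cond_A = spectral_condition(A)
cond_P = spectral_condition(M_inv @ A)
print(f"cond(A) = {cond_A:.1f}, cond(M^-1 A) = {cond_P:.1f}")
```

Dense matrices are used only to keep the sketch short; in practice the local and coarse solves are applied as operators and run in parallel.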

4 Coarse spaces with enrichment

For our additive Schwarz method we introduce two alternative coarse spaces. The first one is based on enriching a wire basket coarse space, and the second one is based on enriching a vertex based coarse space.

Discrete harmonic extensions are used to extend our functions from subdomain boundaries into subdomains. We define our discrete harmonic extension operator below.

Definition 1

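Let \(u|_{\partial \varOmega _k}\) denote u restricted to \(\partial \varOmega _k\). We define the discrete harmonic extension operator \(\mathcal {H}_k:V_h(\varOmega _k)\rightarrow V_h(\varOmega _k)\) in \(\varOmega _k\) as follows,

\[ \begin{aligned} a_{|\varOmega _k}(\mathcal {H}_k u, v) &= 0 \quad \forall v\in V^0_h(\varOmega _k), \\ \mathcal {H}_k u &= u \quad \text{on } \partial \varOmega _k, \end{aligned} \]

where \(a_{|\varOmega _k}(\cdot ,\cdot )\) denotes the restriction of \(a(\cdot ,\cdot )\) to \(\varOmega _k\).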

A function \(u\in V_h(\varOmega _k)\) is locally discrete harmonic in \(\varOmega _k\) if \(\mathcal {H}_ku=u\). If for any \(u\in V_h\), we have that all its restrictions to the subdomains are locally discrete harmonic then this function is (piecewise) discrete harmonic in \(\varOmega \).
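In matrix terms, a discrete harmonic extension amounts to solving one local Dirichlet problem: with the local stiffness matrix split into interior (I) and boundary (B) blocks, the interior values satisfy \(A_{II}u_I=-A_{IB}u_B\). The sketch below illustrates this on a 1D stand-in for a subdomain; the helper names and the toy coefficient are assumptions made for illustration only.

```python
import numpy as np

def stiffness_1d(alpha):
    """Neumann P1 stiffness matrix on a 1D mesh, one coefficient per element (unit mesh size)."""
    n_el = len(alpha)
    A = np.zeros((n_el + 1, n_el + 1))
    for e, a in enumerate(alpha):
        A[e:e + 2, e:e + 2] += a * np.array([[1.0, -1.0], [-1.0, 1.0]])
    return A

def discrete_harmonic_extension(A, interior, boundary, u_boundary):
    """Extend boundary values into the interior by solving A_II u_I = -A_IB u_B."""
    u_boundary = np.asarray(u_boundary, dtype=float)
    u = np.zeros(A.shape[0])
    u[boundary] = u_boundary
    A_II = A[np.ix_(interior, interior)]
    A_IB = A[np.ix_(interior, boundary)]
    u[interior] = np.linalg.solve(A_II, -A_IB @ u_boundary)
    return u

# Toy "subdomain": 4 elements, one of them with a large coefficient
alpha = np.array([1.0, 1000.0, 1.0, 1.0])
A = stiffness_1d(alpha)
boundary, interior = np.array([0, 4]), np.array([1, 2, 3])

u = discrete_harmonic_extension(A, interior, boundary, [0.0, 1.0])

# Compare with the extension that is zero at all interior nodes
v = u.copy()
v[interior] = 0.0
energy_harmonic = u @ A @ u
energy_zero_ext = v @ A @ v
```

Here `energy_harmonic` does not exceed `energy_zero_ext`, in line with the minimum energy property of discrete harmonic functions.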

Functions that are locally discrete harmonic have the minimum energy property locally. This property is well known, but for completeness we restate it in the following lemma, cf. [36] for further details on discrete harmonic extensions.

Lemma 1

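Let \(u\in V_h(\varOmega _k)\) be given such that \(u=\mathcal {H}_k u\) in the sense of Definition 1. Then

\[ a_{|\varOmega _k}(u,u) = \min \{ a_{|\varOmega _k}(v,v) : v\in V_h(\varOmega _k), \ v = u \text{ on } \partial \varOmega _k \}. \]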

4.1 Wire basket based coarse space

The wire basket based coarse space consists of basis functions, one for each node in the wire basket \(\mathcal {W}\), plus eigenfunctions corresponding to the first few eigenvalues of generalized eigenvalue problems associated with the faces, cf. Definition 3.

For any face \(\mathcal {F}_{k l}\in \mathcal {F}\), let \(V_h(\mathcal {F}_{k l})\) be the space of piecewise linear continuous functions on \(\mathcal {F}_{k l}\), and \(V_h^0(\mathcal {F}_{k l})\) the corresponding subspace of functions with zero boundary values, that is

\[ V_h^0(\mathcal {F}_{k l}) := \{ v\in V_h(\mathcal {F}_{k l}) : v(x)=0 \ \text{ for all } x\in \partial \mathcal {F}_{k l} \}. \]

We need the following interpolation operator \(I_\mathcal {W}:V_h\rightarrow V_h\).

Definition 2

For any \(u\in V_h\), let \(I_\mathcal {W}u\) be discrete harmonic (cf. Definition 1) such that

where

and \(\overline{\alpha }_{\tau _t}=\max \{\alpha _{\tau _-},\alpha _{\tau _+}\}\) for a triangle \(\tau _t\) which is an element of the 2D triangulation of the face \(\mathcal {F}_{k l}\), such that \(\overline{\tau }_t=\partial \tau _-\cap \partial \tau _+\) for \(\tau _+\in \mathcal {T}_h(\varOmega _k)\) and \(\tau _-\in \mathcal {T}_h(\varOmega _l)\).

For each face \(\mathcal {F}_{k l}\), we define the following generalized eigenvalue problem.

Definition 3

For \(i=1,\ldots ,{\hat{M}}\), where \({\hat{M}}=\dim (V_h^0(\mathcal {F}_{k l}))\), find \((\lambda ^i_{\mathcal {F}_{k l}}, \xi ^i_{\mathcal {F}_{k l}})\in \left( \mathbb {R}\times V^0_h(\mathcal {F}_{k l})\right) \) such that \(\lambda _{\mathcal {F}_{k l}}^1 \le \cdots \le \lambda _{\mathcal {F}_{k l}}^{\hat{M}}\) and

where \(a_{\mathcal {F}_{k l}}(\cdot ,\cdot )\) is defined in (10), and \(b_{\mathcal {F}_{k l}}(\cdot ,\cdot )\) as follows,

where \(\overline{\alpha }_x=\max \{\alpha _\tau : x\in \partial \tau , \tau \in \mathcal {T}_h\}\). Here \(V^0_h(\mathcal {F}_{k l})\) is the space of piecewise linear continuous functions on \(\mathcal {T}_h(\mathcal {F}_{k l})\) which are equal to zero on \( \partial \mathcal {F}_{k l}\).

Note that the bilinear forms \(a_{\mathcal {F}_{k l}}(\cdot ,\cdot )\) and \(b_{\mathcal {F}_{k l}}(\cdot ,\cdot )\) in (11) are symmetric and positive definite on \(V^0_h(\mathcal {F}_{k l})\). We extend \(\xi ^i_{\mathcal {F}_{k l}}\) by zero to the whole interface \(\varGamma \), and then as a discrete harmonic function inside each subdomain, denoting the extended function by the same symbol. Now, let

be the space of eigenfunctions, where \(m_{k l}\) is a nonnegative integer smaller than or equal to \({\hat{M}}\), a number which is either prescribed or decided adaptively.
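The adaptive choice can be sketched as follows: solve the generalized eigenvalue problem, sort the eigenvalues, and keep the eigenfunctions whose eigenvalues lie below a threshold. The matrices below are toy 1D stand-ins, not the face forms of Definition 3; in particular the diagonal weight matrix, the dropped mesh scaling, and the names `generalized_eigh` and `select_below_threshold` are assumptions made for illustration.

```python
import numpy as np

def generalized_eigh(A, B):
    """Solve A xi = lambda B xi for symmetric A and SPD B via a Cholesky reduction.

    Returns eigenvalues in ascending order and B-orthonormal eigenvectors (columns).
    """
    L = np.linalg.cholesky(B)                 # B = L L^T
    L_inv = np.linalg.inv(L)
    C = L_inv @ A @ L_inv.T                   # standard symmetric problem C y = lambda y
    lam, Y = np.linalg.eigh(0.5 * (C + C.T))
    return lam, L_inv.T @ Y

def select_below_threshold(lam, Xi, threshold):
    """Keep the eigenpairs whose eigenvalue is strictly below the threshold."""
    m = int(np.searchsorted(lam, threshold, side="left"))
    return lam[:m], Xi[:, :m]

# Toy data: a high-coefficient "island" that does not touch the boundary
alpha = np.array([1.0, 1e4, 1e4, 1e4, 1e4, 1.0])          # one value per element
K = np.zeros((len(alpha) + 1, len(alpha) + 1))
for e, a in enumerate(alpha):
    K[e:e + 2, e:e + 2] += a * np.array([[1.0, -1.0], [-1.0, 1.0]])
A = K[1:-1, 1:-1]                                          # zero boundary values
B = np.diag(np.maximum(alpha[:-1], alpha[1:]))             # nodal weights: max over neighbours

lam, Xi = generalized_eigh(A, B)
lam_sel, Xi_sel = select_below_threshold(lam, Xi, threshold=0.1)
print("eigenvalues:", lam)
print("number selected:", lam_sel.size)
```

In this toy setting a single eigenvalue falls far below the rest, so one eigenfunction is enough to capture the island; a larger threshold would enlarge the coarse space.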

The wire basket based coarse space is then defined as

The space \(I_{\mathcal {W}}V_h\) is a natural extension of the 2D multiscale coarse space introduced in [15] to 3D, and the face enrichment spaces \(V_{\mathcal {F}_{k l}}^{en}\) are natural extensions of the edge enrichment spaces introduced in [14] to 3D.

4.2 Vertex based coarse space

The vertex based coarse space consists of basis functions, one for each node in \(\mathcal {V}\), plus eigenfunctions corresponding to the first few eigenvalues of generalized eigenvalue problems associated with the edges, cf. Definition 5, and the faces, cf. Definition 6.

For any edge \(\mathcal {E}_i\in \mathcal {E}\), let \(V_h(\mathcal {E}_i)\) be the space of piecewise linear continuous functions on \(\mathcal {E}_i\), and \(V_h^0(\mathcal {E}_i)\) the corresponding subspace of functions with zero boundary values, that is

\[ V_h^0(\mathcal {E}_i) := \{ v\in V_h(\mathcal {E}_i) : v(x)=0 \ \text{ for all } x\in \partial \mathcal {E}_i \}. \]

We need the following interpolation operator \(I_{\mathcal V}:V_h\rightarrow V_h\).

Definition 4

For any \(u\in V_h\), let \(I_{{\mathcal {V}}}u\) be discrete harmonic (cf. Definition 1) such that

where

and \(\overline{\alpha }_e=\max \{\alpha _\tau : \tau \in \mathcal {T}_h, \ e\subset \partial \tau \}\).

For each edge \(\mathcal {E}_k\), we define the following generalized eigenvalue problem.

Definition 5

For \(i=1,\ldots ,M_{{\mathcal {E}}_k}\), where \(M_{\mathcal E_k}=\dim (V_h^0(\mathcal {E}_k))\), find \((\lambda ^i_{\mathcal {E}_k}, \xi ^i_{\mathcal {E}_k})\in \left( \mathbb {R}\times V^0_h(\mathcal {E}_k)\right) \) such that \(\lambda _{\mathcal {E}_k}^1 \le \cdots \le \lambda _{\mathcal {E}_k}^{M_{{\mathcal {E}}_k}} \) and

where \(a_{\mathcal {E}_k}(\cdot ,\cdot )\) is defined in (15), and

with \(\overline{\alpha }_x\) from Definition 3.

Note that, by definition, the bilinear forms \(a_{\mathcal {E}_{k}}(\cdot ,\cdot )\) and \(b_{\mathcal {E}_k}(\cdot ,\cdot )\) in (16) are both symmetric and positive definite on \(V^0_h(\mathcal {E}_k)\).

For each face \(\mathcal {F}_{k l}\), let

be the sum of closed fine triangles on the face \(\mathcal {F}_{k l}\) that touch the wire basket, and \(\mathcal {F}_{k l}^I=\mathcal {F}_{k l} {\setminus } \overline{\mathcal {F}}_{k l}^B\) the interior of the sum of closed triangles that are lying strictly inside the face. Obviously, \(\overline{\mathcal {F}}_{k l} = \overline{\mathcal {F}}_{k l}^B \cup \mathcal {F}_{k l}^I\).

For each face \(\mathcal {F}_{k l}\), we now define the following generalized eigenvalue problem.

Definition 6

For \(i=1,\ldots ,\hat{M}\), where \({\hat{M}} =\dim (V_h^0(\mathcal {F}_{k l}))\), find \((\lambda ^i_{\mathcal {F}_{k l,I}}, \xi ^i_{\mathcal {F}_{k l,I}}) \in \left( \mathbb {R}\times V_h^0(\mathcal {F}_{k l})\right) \) such that \(\lambda _{\mathcal {F}_{k l, I}}^1\le \cdots \le \lambda _{\mathcal {F}_{k l, I}}^{\hat{M}}\) and

where

where \(\overline{\alpha }_{\tau _t}\) is defined in Definition 2.

Note that, by definition, the bilinear form \(b_{\mathcal {F}_{k l}}(\cdot ,\cdot )\) is symmetric and positive definite on \(V_h^0(\mathcal {F}_{k l})\), while the bilinear form \(a_{\mathcal {F}_{k l,I}}(\cdot ,\cdot )\) in (18) is symmetric and positive semidefinite on this space. Its kernel is known: it is the one-dimensional space of functions that are constant over \(\mathcal {F}_{k l}^I\). Consequently, we have \(0=\lambda _{\mathcal {F}_{k l, I}}^1< \lambda _{\mathcal {F}_{k l, I}}^2 \le \cdots \le \lambda _{\mathcal {F}_{k l, I}}^{\hat{M}}\).

Analogously, as in the wire basket based coarse space, we extend \(\xi ^i_{\mathcal {E}_k}\) and \(\xi ^i_{\mathcal {F}_{k l,I}}\) by zero to the whole \(\varGamma \), and then as discrete harmonic functions inside each subdomain, denoting the extended function by the same symbols. Now, define the two spaces of eigenfunctions, one associated with the edges and one associated with the faces, as

respectively. Both \(n_{k l}\) and \(m_k\) are integers such that \(1\le n_{k l}\le {\hat{M}}\) and \(0 \le m_k \le M_{{\mathcal {E}}_k}\), and are either prescribed or decided adaptively.

The vertex based coarse space is then defined as

Remark 1

It should be pointed out here that many of the calculations indicated by the use of piecewise discrete harmonic extensions in the definitions above are redundant since, in practice, they are often trivial extensions of zero on subdomain boundaries.

5 Convergence estimate for the preconditioned system

In this section, we prove that the condition number of our preconditioned system can be kept low, and independent of the contrast if our coarse space enrichments are appropriately chosen. The main result is stated in Theorem 1.

Theorem 1

Let \(k=1\) and \(k=2\) in the superscript refer to the two coarse spaces: the wire basket based coarse space and the vertex based coarse space, respectively. Then, for \(P^{(k)}\), cf. (7), we have

with

Here \(\lambda _{\mathcal {F}_{k l}}^{i}\) is defined in (11), \(\lambda _{\mathcal {E}_k}^i\) is from (16), and \(\lambda _{\mathcal {F}_{k l,I}}^i\) is from (18), and the integer parameters \(m_{k l},n_{k l}, m_k\) are defined in (13) or (20), respectively.

The proof is based on the abstract Schwarz framework, cf. e.g. [25, 33, 36] and is given at the end of the section. The following lemmas are required for the proof.

Remark 2

In the case of constant \(\alpha \) and a regular mesh, the minimal eigenvalue of each of these problems is of order \(\mathcal {O}((\frac{h}{H})^2)\) and the maximal eigenvalue is of order \(\mathcal {O}(1)\).

Lemma 2

Let \(u\in V_h\) be either discrete harmonic in \(\varOmega _k\), i.e. \(u_k := u_{|\overline{\varOmega }_k}=\mathcal {H}_k u\) with respect to \(a_{|\varOmega _k}(\cdot ,\cdot )\), or equal to zero at all interior nodes in \(\varOmega _{k,h}\). Then it follows that

In particular if u is zero at \(\mathcal {W}_h\), then

and if u is zero at all vertices of \(\partial \varOmega _k\) then

Proof

The first part of the proof follows from the fact that a discrete harmonic function has the minimal energy among all functions taking the same values on the boundary. Hence, \(a_{|\varOmega _k}(u,u)\le a_{|\varOmega _k}(\hat{u},\hat{u})\) for any \(\hat{u}\in V_h(\varOmega _k)\) which is equal to u on \(\partial \varOmega _k\) and zero at the interior nodes \(\varOmega _{k,h}\). The other case means that \(u=\hat{u}\). Consequently,

where \(\varOmega _k^h\) is the h boundary layer, that is, the sum of the elements of \(\mathcal {T}_h(\varOmega _k)\) that touch (have a vertex on) the boundary \(\partial \varOmega _k\). We used a local inverse inequality and the discrete equivalence of the \(L^2\) norm on each \(\tau \). Finally, utilizing the fact that \(\hat{u}\) is zero at the interior nodal points and taking the maximum over \(\alpha _\tau \) such that \(x\in \partial \tau \) we get

The last two statements of the lemma follow directly from the first statement of the lemma plus the definitions of the bilinear forms defined in (12) and (17).

Remark 3

The constant in the estimate of Lemma 2 equals \(C_1\,C_2\,C_3\), where \(C_1\) is the constant of the inverse inequality \(|u|_{H^1(\tau )}^2\le C_1 h^{-2} \Vert u\Vert _{L^2(\tau )}^2, \; u\in V^h, \;\tau \in T_h\), \(C_2\) is the squared constant of the inequality stating local equivalence of the \(L^2\) norm to the discrete local nodal \(l_2\) norm, i.e. \(\Vert u\Vert _{L^2(\tau )}^2 \le C_2 h^3 \sum _{x_k \in \partial \tau } |u(x_k)|^2, \; u\in V^h,\; \tau \in T_h\), and \(C_3\) is the maximum over all \(x\in \partial \varOmega _{k,h}\) of the number of \(\tau \in T_h(\varOmega _k)\) such that x is a vertex of \(\tau \).

We see from the proof that the coefficient \(\overline{\alpha }_x\) could alternatively be defined as the sum of the \(\alpha _\tau \) over the elements \(\tau \) having x as a vertex, instead of their maximum.

We restate [14, Lemma 2.2] which contains important estimates for the eigenfunctions found in (11) and (16).

Lemma 3

Let V be a finite dimensional real space and consider the generalized eigenvalue problem: Find the eigenpair \((\lambda _k,\xi _k) \in \mathbb {R}\times V\) such that \(b(\xi _k,\xi _k)=1\) and

where the bilinear form \(b(\cdot ,\cdot )\) is symmetric positive definite, and the bilinear form \(a(\cdot ,\cdot )\) is symmetric positive semi-definite. Then there exist \(M=dim(V)\) eigenpairs with real eigenvalues ordered as follows \(0\le \lambda _1\le \cdots \le \lambda _M\). If \(\lambda _k\) is the smallest positive eigenvalue then the operator \(\varPi _m:V\rightarrow V\), which is defined for \(k-1\le m < M\) as

is the \(b(\cdot ,\cdot )\)-orthogonal projection such that

and

where \(|v|_a^2=a(v,v)\) and \(\Vert v\Vert _b^2=b(v,v)\).
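The lemma can be checked numerically on small random matrices. The sketch below assumes the usual form of such statements, which is also how the lemma is used later in this section: \(\varPi _m v=\sum _{i=1}^m b(v,\xi _i)\xi _i\), \(|v-\varPi _m v|_a\le |v|_a\), and \(\Vert v-\varPi _m v\Vert _b^2\le \frac{1}{\lambda _{m+1}}|v-\varPi _m v|_a^2\). The matrices and helper names are hypothetical test data, not objects from the paper.

```python
import numpy as np

def generalized_eigh(A, B):
    """Eigenpairs of A xi = lambda B xi (A symmetric, B SPD), B-orthonormal eigenvectors."""
    L = np.linalg.cholesky(B)
    L_inv = np.linalg.inv(L)
    C = L_inv @ A @ L_inv.T
    lam, Y = np.linalg.eigh(0.5 * (C + C.T))
    return lam, L_inv.T @ Y

def a_norm2(z):
    """Squared a-seminorm a(z, z)."""
    return float(z @ A @ z)

def b_norm2(z):
    """Squared b-norm b(z, z)."""
    return float(z @ B @ z)

rng = np.random.default_rng(0)
n, m = 8, 3
G = rng.standard_normal((n, n - 1))
A = G @ G.T                          # symmetric positive semidefinite, one zero eigenvalue
H = rng.standard_normal((n, n))
B = H @ H.T + n * np.eye(n)          # symmetric positive definite

lam, Xi = generalized_eigh(A, B)

v = rng.standard_normal(n)
Pi_v = Xi[:, :m] @ (Xi[:, :m].T @ (B @ v))   # sum_i b(v, xi_i) xi_i over the first m eigenvectors
r = v - Pi_v

print("a-stability:", a_norm2(r) <= a_norm2(v) + 1e-9)
print("b-estimate :", b_norm2(r) <= a_norm2(r) / lam[m] + 1e-9)   # lam[m] is lambda_{m+1}
```

Both checks follow from expanding `v` in the B-orthonormal eigenbasis: removing the first `m` components cannot increase the a-seminorm, and the remaining components all carry eigenvalues at least \(\lambda _{m+1}\).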

We introduce the wire basket based coarse space interpolator or the interpolation operator \(I_0^{{\mathcal {W}}}:V_h \rightarrow V_{{\mathcal {W}}} \subset V_h\) as

where \(\varPi ^{\mathcal {F}_{k l}}_{m_{k l}}:V_h \rightarrow V_{\mathcal {F}_{k l}}^{en} \subset V_h\) is defined as follows,

We have the following lemma estimating the coarse space interpolant.

Lemma 4

Let the wire basket based coarse space interpolator \(I_0^{\mathcal W}\) be defined in (28). Then for \(u\in V_h\)

where \(\lambda _{\mathcal {F}_{k l}}^{m_{kl}+1}\) is the \((m_{kl}+1)\)th smallest eigenvalue of the generalized eigenvalue problem in Definition 3.

Proof

Note that if we restrict \(w=u - I_0^{{\mathcal {W}}} u\) to \(\overline{\varOmega }_k\) then \(\mathcal {H}_k w= \mathcal {H}_k u - I_0^{{\mathcal {W}}} u\), as \(I_0^{\mathcal {W}}u\) is discrete harmonic. Thus, by the fact that \(\mathcal {H}_k\) and \(I-\mathcal {H}_k\) are \(a_{|\varOmega _k}\)-orthogonal projections in \(V_h(\varOmega _k)\), cf. Definition 1, we have

Thus it remains to estimate \(a_{|\varOmega _k}(\mathcal {H}_kw,\mathcal {H}_kw) \). By Lemma 2 and the fact that \(\mathcal {H}_k w=w\) on \(\partial \varOmega _k\) we get

Let us now consider one such face \(\mathcal {F}_{k l}\subset \partial \varOmega _k\). We have \(w=u-I_{\mathcal {W}}u - \varPi ^{\mathcal {F}_{k l}}_{m_{k l}}(u-I_{\mathcal {W}}u)\) on the face, cf. (28). Using Lemma 3 it follows that

Here \(\Vert u\Vert _{b_{\mathcal {F}_{k l}}}^2:=b_{\mathcal {F}_{k l}}(u,u)\) and \(|u|_{a_{\mathcal {F}_{k l}}}^2:=a_{\mathcal {F}_{k l}}(u,u)\). Since, by Definition 2, \((I_{\mathcal {W}}u)_{|\mathcal {F}_{k l}}\) is orthogonal to \((u-I_{\mathcal {W}}u)_{|\mathcal {F}_{k l}} \in V_h^0(\mathcal {F}_{k l})\) with respect to the bilinear form \(a_{\mathcal {F}_{k l}}(\cdot ,\cdot )\), we have

From the last two estimates, it follows that

Here the last sum is over all pairs of vertices of a 2D face element \(\tau _t\). Using the definition of \(\overline{\alpha }_{\tau _t}\) and the fact that \(|u|_{H^1(\tau )}^2\asymp \mathrm {diam}(\tau )\sum _{x,y \in \partial \tau }|u(x)-u(y)|^2\) (here x, y are vertices of the 3D element \(\tau \)) we get

Finally, summing over the faces yields that

and then summing the subdomains ends the proof. \(\square \)

For the next lemma we need a discrete version of a partition of unity, i.e. we define \(\theta _i\) as a continuous function which is piecewise linear on \({{\mathcal {T}}}_h\) such that:

where \(N_x\) is the number of subdomains \(\varOmega _j\) such that \(x\in \overline{\varOmega }_{j,h}\). As an example, \(N_x=2\) for any nodal point x on a subdomain face.

Lemma 5

Let the wire basket based coarse space interpolator \(I_0^{\mathcal W}\) be defined in (28), and let \(v_k=I_h(\theta _k (u - I_0^{{\mathcal {W}}} u))\) for any \(u\in V_h\), where \(I_h:C(\overline{\varOmega })\rightarrow V^h\) is the standard nodal piecewise linear interpolant. Then

The last sum is taken over all subdomains that share a face with \(\varOmega _k\).

Proof

Let \(w=u - I_0^{{\mathcal {W}}} u\). Note that

Thus

We first estimate the second term, that is the sum of the face terms. Note that \(v_{k|\varOmega _l}\) is zero at the interior nodes \(\varOmega _{l,h}\) and the boundary nodes \(\partial \varOmega _{l,h}{\setminus } \mathcal {F}_{k l,h}\). Thus by Lemma 2 and (35) we have

This term has already been estimated in the proof of Lemma 4, cf. (31), that is

We now estimate the first term in (36), that is the restriction of the bilinear form to the \(\varOmega _k\). By a triangle inequality, we can write

The first term has already been estimated in the proof of Lemma 4, cf. (32). Also, note that \((w-v_k)(x)\) equals either \(\frac{1}{2} w(x)\) when x is a face node, or zero when \(x\in \partial \varOmega _k\cap \mathcal {W}_h\) or \(x\in \varOmega _{k,h}\). By Lemma 2 and (35) we thus get

Again, this term has been estimated in the proof of Lemma 4, cf. (31). Summing all those estimates ends the proof. \(\square \)

Analogous to the wire basket case, we now define the vertex based coarse space interpolator \(I_0^{{\mathcal {V}}}:V_h \rightarrow V_{{\mathcal {V}}}\subset V_h\) as

where \(\varPi ^{\mathcal {F}_{k l,I}}_{n_{k l}}:V_h \rightarrow V_{\mathcal {F}_{k l},I}^{en} \subset V_h\) and \(\varPi ^{\mathcal {E}_k}_{m_k}:V_h \rightarrow V_{\mathcal {E}_k}^{en} \subset V_h\), are defined as follows,

We have the following lemma.

Lemma 6

Let the vertex based coarse space interpolator \(I_0^{{\mathcal {V}}}\) be defined in (37), then for any \(u \in V_h\)

where \(\lambda _{\mathcal {F}_{k l}}^{n_{kl}+1}\) and \(\lambda _{\mathcal {E}_k}^{m_k+1}\) are respectively the \((n_{kl}+1)\)th and the \((m_k+1)\)th smallest eigenvalues of the generalized eigenvalue problems in Definitions 5 and 6.

Proof

Let \(w=u - I_0^{{\mathcal {V}}} u\). In the same way as in the proof of Lemma 4, we get

Next we bound \(a_{|\varOmega _k}(\mathcal {H}_kw,\mathcal {H}_kw) \). By Lemma 2, cf. (23), and by (12) and (17), we get

Note that by (21) and Definition 4 we have \(w_{|\mathcal {E}_j}=u- I_{{\mathcal {V}}} u - \varPi _{m_j}^{\mathcal {E}_j}(u - I_{{\mathcal {V}}} u)\) for any edge \(\mathcal {E}_j \subset \mathcal {W}\cap \partial \varOmega _k\), and \(w_{|\mathcal {F}_{k l}}=u- \varPi _{n_{k l}}^{\mathcal {F}_{k l,I}}(u)\) for any face \(\mathcal {F}_{k l} \subset \partial \varOmega _k\).

Now, consider the term \(b_{\mathcal {E}_{j}}(w,w)\) related to an edge \(\mathcal {E}_j\). By Lemma 3 we get

Here \(\Vert u\Vert _{b_{\mathcal {E}_j}}^2:=b_{\mathcal {E}_j}(u,u)\) and \(|u|_{a_{\mathcal {E}_j}}^2:=a_{\mathcal {E}_j}(u,u)\). We also used the fact that \((I_{\mathcal {V}}u)_{|\mathcal {E}_j}\) is orthogonal to \((u-I_{\mathcal {V}}u)_{|\mathcal {E}_j} \in V_h^0(\mathcal {E}_{j}^0)\) with respect to the bilinear form \(a_{\mathcal {E}_j}(\cdot ,\cdot )\), cf. Definition 4.

Further, using the fact that, for u linear, \(|u|_{H^1(\tau _e)}^2\) is equivalent to \(h^{-1}|u(x)-u(y)|^2\) (x, y are the ends of the 1D element \(\tau _e\)), we get

Here the last sum is over the ends x, y of a 1D edge element \(\tau _e\). Note that \(h|u(x)-u(y)|^2 \preceq \int _\tau |\nabla u|^2\, dx \) if x, y are vertices of \(\tau \in \mathcal {T}_h\). Thus we get

where the sum is over all 3D fine elements such that one of its edges is contained in \(\mathcal {E}_j\).
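The two scaling relations used in this step can be checked by a direct elementwise computation. The following is a short sketch, assuming a shape-regular and quasi-uniform mesh, u linear on each element, and \(|\tau _e|\simeq h\), \(|\tau |\simeq h^3\) for a 1D edge element \(\tau _e\) with ends x, y and a 3D element \(\tau \) having \(\tau _e\) as an edge:

\[ |u|_{H^1(\tau _e)}^2=\int _{\tau _e}\left( \frac{u(y)-u(x)}{|\tau _e|}\right) ^2 ds=\frac{|u(x)-u(y)|^2}{|\tau _e|}\simeq h^{-1}|u(x)-u(y)|^2, \]

\[ h\,|u(x)-u(y)|^2=h\,|\nabla u\cdot (x-y)|^2\le h^3|\nabla u|^2\simeq |\tau |\,|\nabla u|^2=\int _\tau |\nabla u|^2\, dx . \]

The first relation uses that the derivative of a linear function along the edge is constant, and the second uses that \(\nabla u\) is constant on \(\tau \) and \(|x-y|\le h\).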

Now, consider the term \(b_{\mathcal {F}_{k l}}(w,w)\) related to a face \(\mathcal {F}_{k l}\). Note that \(u_{|\partial \mathcal {F}_{k l}}\) does not have to be equal to zero in general, but if we define a function \(\hat{u}\) such that \(\hat{u}(x)=u(x)\) for \(x\in \mathcal {F}_{kl,h}\) and \(\hat{u}(x)=0\) for \(x\in \partial \mathcal {F}_{k l,h}\), then we have

cf. (12) and (19). Thus we see that \(\varPi ^{\mathcal {F}_{k l,I}}_{n_{k l}}u=\varPi ^{\mathcal {F}_{k l,I}}_{n_{k l}}\hat{u}\) and we can apply Lemma 3 replacing u with \(\hat{u}\) and get

Here \(|u|_{a_{\mathcal {F}_{k l,I}}}^2:=a_{\mathcal {F}_{k l,I}}(u,u)\). The last inequality follows from the fact that

cf. (10) and (19). Further, analogously as in the proof of Lemma 4, cf. (30) and (31), we get

Finally, by summing over the edges and faces, and then over the subdomains we end the proof. \(\square \)

Lemma 7

Let the vertex based coarse space interpolator \(I_0^{{\mathcal {V}}}\) be defined in (37), and \(v_k=I_h(\theta _k (u - I_0^{{\mathcal {V}}}u))\) for any \(u\in V_h\). Then

The last sum is taken over all subdomains which share an edge with \(\varOmega _k\).

Proof

Let \(w=u-I_0^{{\mathcal {V}}}u\), then we have \(I_h\theta _k w\) equal to w at interior nodes \(\varOmega _{k,h}\), \(\frac{1}{2} w\) at the nodes \(\mathcal {F}_{k l,h}\) on each face \(\mathcal {F}_{k l}\) of \(\varOmega _k\), \(\frac{1}{n(\mathcal {E}_i)}w\) at the nodes \(\mathcal {E}_{i,h}\) on each edge \(\mathcal {E}_i\) of \(\varOmega _k\), and zero at all remaining nodal points of \(\varOmega _h\). Here \(n(\mathcal {E}_i)\) is the number of subdomains that share the edge \(\mathcal {E}_i\). As in the proof of Lemma 5, we can write

The face and the edge terms can be estimated following the lines in the proof of Lemma 6.

The first term, on the other hand, can be estimated following the lines in the proof of Lemma 5, that is using Lemma 2, as follows:

Again, these terms can be estimated in the same way as in the proof of Lemma 6.

Combining those estimates, we get the proof. \(\square \)

The next and final lemma provides estimates for the stability of decomposition for the two preconditioners presented in this paper, which are required in the proof of Theorem 1.

Lemma 8

(Stable Decomposition) Let \(k=1\) and \(k=2\) in the superscript refer to the two coarse spaces: the wire basket based coarse space and the vertex based coarse space, respectively. Then, for all \(u\in V_h\) there exists a representation \(u=u_0^{(k)}+\sum ^N_{i=1} u_i^{(k)}\) such that \(u_0^{(k)}\in V_0^{(k)}\), and \(u_i^{(k)}\in V_i\), \(i=1,\ldots ,N\), and

for \(k=1, 2\), with

Proof

For the wire basket based coarse space, let \(u_0^{(1)}=I_0^{\mathcal W}u\) and \(u_i^{(1)}=I_h(\theta _i(u-I_0^{{\mathcal {W}}}u))\). The corresponding statement of the lemma then follows immediately from Lemmas 4 and 5.

For the vertex based coarse space, let \(u_0^{(2)}=I_0^{{\mathcal {V}}}u\) and \(u_i^{(2)}=I_h(\theta _i(u-I_0^{{\mathcal {V}}}u))\). The corresponding statement of the lemma then follows immediately from Lemmas 6 and 7. \(\square \)
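That these choices indeed give a representation of u can be verified in one line. The following sketch assumes only that the functions \(\theta _i\) sum to one at every nodal point and that \(I_h\) depends on nodal values alone; here \(u_0\) stands for either \(u_0^{(1)}\) or \(u_0^{(2)}\):

\[ u_0+\sum _{i=1}^N I_h\bigl (\theta _i(u-u_0)\bigr )=u_0+I_h\Bigl (\Bigl (\sum _{i=1}^N\theta _i\Bigr )(u-u_0)\Bigr )=u_0+(u-u_0)=u. \]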

Proof of Theorem 1

The convergence theory of the abstract Schwarz framework indicates that, under three assumptions, the condition number of our method can be bounded as follows.
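For reference, in the abstract theory as presented in [36, Sect. 2.3], a bound of this kind takes the following form, where P denotes the additive Schwarz operator (the symbol P is a notational assumption made here):

\[ \kappa (P)\le C_0^2\,\omega \,(\rho +1). \]

Here \(C_0^2\) comes from the stable decomposition, \(\omega \) from the local stability of the subspace solvers, and \(\rho \) from the strengthened Cauchy-Schwarz inequalities between the local subspaces.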

The three assumptions are concerned with estimating the three constants \(\omega \), \(\rho \) and \(C^2_0\). It is easy to see that \(\omega =1\) here, as we have nested subspaces and are using exact solvers for the subproblems, and \(\rho \le N_c\), where \(N_c\) is the maximum number of subspaces that cover any \(x\in \varOmega \). We refer to [33, Sect. 5.2] or [36, Sect. 2.3] for details. An estimate of the last parameter \((C_0^{(k)})^2\), for \(k=1\) or \(k=2\), is given in Lemma 8. The proof of the theorem now follows. \(\square \)

6 Numerical results

In this section, we present four numerical tests. The test problems are defined on a unit cube domain, with a Dirichlet boundary condition and a constant right-hand side \(f(x) = 100\). For all the test problems, we use coefficient distributions with inclusions across subdomain boundaries (faces and edges), as illustrated in Figs. 2 and 3, and channels, as illustrated in Fig. 4. The coefficient takes either the background value 1 or the high value \(10^6\). For any given distribution, the region with the high-value coefficient is independent of the mesh parameters. We use the PCG method as a solver, stopping when the residual norm has been reduced by a factor of \(10^{-6}\).
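The stopping rule can be made concrete with a small preconditioned conjugate gradient loop. This is a minimal sketch, not the solver used for the experiments: it assumes the Euclidean norm of the residual for the stopping test and a zero initial guess, and the helper names `pcg`, `laplace_1d` and `jacobi` as well as the 1D toy problem are illustrative only.

```python
def dot(u, v):
    """Euclidean inner product of two equally long lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


def pcg(apply_A, b, apply_Minv, rtol=1e-6, max_iter=1000):
    """Preconditioned CG with zero initial guess.

    Stops once ||r_k|| <= rtol * ||r_0||, i.e. when the residual norm
    has been reduced by the factor rtol. Returns (x, iterations).
    """
    x = [0.0] * len(b)
    r = list(b)                          # r_0 = b - A*0
    r0_norm = dot(r, r) ** 0.5
    if r0_norm == 0.0:
        return x, 0
    z = apply_Minv(r)
    p = list(z)
    rz = dot(r, z)
    for it in range(1, max_iter + 1):
        Ap = apply_A(p)
        alpha = rz / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        if dot(r, r) ** 0.5 <= rtol * r0_norm:
            return x, it
        z = apply_Minv(r)
        rz_new = dot(r, z)
        beta = rz_new / rz
        p = [zi + beta * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iter


def laplace_1d(v):
    """Toy SPD operator: tridiagonal (-1, 2, -1) matrix applied to v."""
    n = len(v)
    return [2.0 * v[i]
            - (v[i - 1] if i > 0 else 0.0)
            - (v[i + 1] if i < n - 1 else 0.0) for i in range(n)]


def jacobi(r):
    """Toy preconditioner: inverse of the diagonal of laplace_1d."""
    return [ri / 2.0 for ri in r]


b = [100.0] * 20                         # constant right-hand side
x, iterations = pcg(laplace_1d, b, jacobi)
print(iterations)
```

In the setting of the paper, `apply_Minv` would be the action of one of the two additive Schwarz preconditioners and `apply_A` the finite element stiffness matrix.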

The algorithms have been implemented in MATLAB, using the functions meshgrid and delaunayTriangulation for discretization, and routines from PDEToolbox for assembling the stiffness and mass matrices. For the iterative solver, we used pcgeig, an extension of MATLAB’s pcg routine, available at m2matlabdb.ma.tum.de. The pcgeig solver returns an estimate of the condition number for the preconditioned system. We use the built-in function eigs for solving the eigenvalue problems.

The spectral components of our coarse spaces consist of local eigenvectors with corresponding eigenvalues below a given threshold \(\lambda ^*\) (cf. Theorem 1). This threshold parameter makes the coarse spaces adaptive, determining the size of the coarse spaces and the performance of the methods. In our experiments, we choose the thresholds as \(c\frac{h}{H}\), for some constant c. Another reasonable choice of threshold is the smallest nonzero eigenvalue of the eigenvalue problems with the uniform background-value distribution. We refer to [18] for other threshold choices.
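The selection rule itself is a simple filter on the computed local eigenvalues. The following sketch assumes the eigenvalues of one local problem are already available as a list; the function name `select_below_threshold`, the constant `c = 0.25` and the sample eigenvalues are made up for illustration and are not values reported in the paper.

```python
def select_below_threshold(eigenvalues, c, h, H):
    """Return (threshold, indices) for eigenvalues strictly below c*h/H."""
    threshold = c * h / H
    chosen = [i for i, lam in enumerate(eigenvalues) if lam < threshold]
    return threshold, chosen


# Illustrative numbers only: two tiny eigenvalues and two moderate ones.
local_eigenvalues = [2.1e-7, 3.4e-7, 0.04, 0.09]
threshold, chosen = select_below_threshold(local_eigenvalues, c=0.25, h=1 / 64, H=1 / 2)
print(threshold, chosen)
```

The eigenfunctions with the returned indices would be the ones added to the coarse space for that face or edge, so a larger constant c gives a larger coarse space.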

Fig. 2 The distributions of Test 1. Regions with coefficient value \(\alpha =10^{6}\) are shown in red. The inclusions are in the faces in the \(xz\)-plane. From left to right in the first row, the distributions are called Face 1, Face 2, Face 3, and Face 4. The distribution called Face 1–4 is shown from two different angles in the second row (color figure online)

In Test 1 the coefficient distributions are inclusions on faces, as shown in Fig. 2. The figure indicates five variants where the high conductivity regions (cells) are shown in red. The distributions vary both in geometrical shape and in the number of separate inclusions. The condition number estimate and the number of iterations are presented in Table 1. Each row corresponds to a particular distribution. The first column lists the condition number estimates when the coarse spaces have no enrichment, while the next three columns have estimates corresponding to solutions with adaptive coarse spaces for varying h. We observe in the first column that the jump in coefficient is reflected in the condition numbers. From the last three columns, we observe that both our suggested preconditioners improve the performance of the PCG method.

To give an idea of the number of eigenfunctions added to the coarse spaces in Test 1, we have listed the lowest eigenvalues of the generalized eigenvalue problems for the case \(\frac{H}{h} = 32\) in Table 2. Each distribution in the first row of Fig. 2 represents inclusions on one face. The eigenvalues in each column of Table 2 are from the eigenvalue problems on each of these faces, respectively. Any eigenvalue printed in boldface is an eigenvalue above the threshold. The first few eigenvalues in each column are several orders of magnitude lower than the threshold. In all observed cases, the number of eigenvalues that are several orders of magnitude lower than the threshold is equal to the number of separate inclusions on that particular face. The number of eigenfunctions included in the coarse space in each case is low.
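This observation suggests a cheap way to anticipate how many eigenfunctions a face contributes: count the separate inclusions on it. The following is a minimal sketch, assuming the face is represented as a 2D grid of cells flagged as high-coefficient or not, and assuming that two cells belong to the same inclusion when they share a side; the function `count_inclusions` and the sample mask are hypothetical and only illustrate the empirical rule stated above.

```python
def count_inclusions(mask):
    """Count side-connected groups of truthy cells in a 2D grid (list of rows)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            count += 1                   # found a new, unvisited inclusion
            seen[r0][c0] = True
            stack = [(r0, c0)]
            while stack:                 # flood fill over side neighbours
                r, c = stack.pop()
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if (0 <= rr < rows and 0 <= cc < cols
                            and mask[rr][cc] and not seen[rr][cc]):
                        seen[rr][cc] = True
                        stack.append((rr, cc))
    return count


# Hypothetical face with two separate high-coefficient patches.
face_mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
print(count_inclusions(face_mask))
```

Such a count is only a heuristic predictor of the number of very small eigenvalues; the coarse space itself is still determined by the threshold test on the computed eigenvalues.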

In Test 2 the distributions in Fig. 2 are slightly modified. The distribution Face 1 is modified by changing the value of the coefficient in the region \(1/16<x<7/16\), \(4/16<y<5/16\) and \(1/16<z<7/16\) from 1 to \(10^6\). This new distribution, Face \(1^*\), connects the separate inclusions of Face 1 inside a subdomain. Similarly, the distributions Face 2, Face 3 and Face 4 are modified such that the separate inclusions in each distribution are connected inside a subdomain. These modifications do not change the local eigenvalue problems, so the eigenvalues in Table 2 also apply to Test 2. In Test 2 the mesh parameters are fixed at \(H=1/2\) and \(H/h=32\). The threshold is the same as in Test 1. Additionally, we place a cap on the number of basis functions selected from each local eigenvalue problem.
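The modification that turns Face 1 into Face \(1^*\) amounts to overriding the coefficient inside one axis-aligned box. The following sketch shows this as a wrapper around an existing coefficient function; the helper `with_box_inclusion` is hypothetical, and `face1_placeholder` returns only the background value because the exact geometry of the Face 1 inclusions is not reproduced here.

```python
def with_box_inclusion(base_alpha, lo, hi, value=1e6):
    """Return a coefficient function equal to `value` strictly inside the
    box lo < (x, y, z) < hi and equal to base_alpha elsewhere."""
    def alpha(x, y, z):
        inside = all(a < p < b for a, p, b in zip(lo, (x, y, z), hi))
        return value if inside else base_alpha(x, y, z)
    return alpha


def face1_placeholder(x, y, z):
    """Placeholder for Face 1: background value only (inclusions omitted)."""
    return 1.0


# Box from the text: 1/16 < x < 7/16, 4/16 < y < 5/16, 1/16 < z < 7/16.
alpha_star = with_box_inclusion(
    face1_placeholder,
    lo=(1 / 16, 4 / 16, 1 / 16),
    hi=(7 / 16, 5 / 16, 7 / 16),
)
print(alpha_star(0.25, 0.28, 0.25), alpha_star(0.5, 0.28, 0.25))
```

With \(H=1/2\) the box lies strictly inside one subdomain and does not touch any subdomain face, which is consistent with the statement that the local eigenvalue problems are unchanged.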

The numerical results of Test 2 are presented in Table 3. Each row corresponds to a specific distribution. The first column lists the results for the methods with no enrichment. The second and third columns list the results for the methods with capped adaptive coarse spaces. The last column lists the results for the methods with adaptive coarse spaces without a cap beyond the threshold. The condition numbers in the first column of Table 3 are notably lower than the condition numbers in the first column of Table 1. From the last column of Table 3 we see that our method improves the performance of PCG.

In Test 3 the distribution has inclusions on edges. The inclusions are two horizontally placed rectangular slabs, as illustrated in Fig. 3. The slabs trigger the solution of generalized eigenvalue problems both on edges and faces. The corresponding eigenvalues from the generalized eigenvalue problems with \(\frac{H}{h} = 32\) are presented in Table 4. Due to the symmetry of the distribution, the eigenvalue problems on the edges are identical. Moreover, there are only two unique eigenvalue problems on the faces.

The results of Test 3 are given in Table 5. The first row lists results for the edge distribution shown in Fig. 3. The second row lists results for the distribution where Edge and Face 1–4 are combined. The first column in the table lists the condition number estimates for the coarse space with no enrichment, while the next three columns list the results from solving the test problems with an adaptive coarse space for different mesh sizes. From the results in the last three columns, we see that our preconditioner improves the performance of PCG.

Up to now, we have seen that our method can handle various challenging distributions on a small number of subdomains. In Test 4, we divide the unit cube into 64 subdomains. This decomposition leads to 27 vertex nodes, 108 edges, and 144 faces, excluding all vertices, edges, and faces that are entirely part of the boundary of the domain. The distribution in this test, see Fig. 4, consists of channels that are parallel to the y-axis and run through the entire domain, touching the boundary on both sides. A third of all the faces have 4 inclusions each. For this test problem, we use a heterogeneous right-hand side \(f(x,y,z)=10^5e^{-5\sqrt{(x-0.25)^2+(y-0.25)^2+(z-0.25)^2}}\).
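The entity counts can be reproduced by a short calculation. The following sketch assumes that the 64 subdomains form a uniform \(4\times 4\times 4\) arrangement of boxes, which is not stated explicitly but matches the reported numbers; the function names `interior_entities` and `rhs` are illustrative.

```python
import math


def interior_entities(n):
    """Interior vertices, edges and faces of an n x n x n box decomposition
    of a cube, excluding entities lying entirely on the cube boundary."""
    vertices = (n - 1) ** 3              # interior grid points
    edges = 3 * n * (n - 1) ** 2         # per direction: (n-1)^2 lines, n segments each
    faces = 3 * n * n * (n - 1)          # per direction: (n-1) planes, n*n patches each
    return vertices, edges, faces


def rhs(x, y, z):
    """Heterogeneous right-hand side of Test 4, peaked at (0.25, 0.25, 0.25)."""
    dist = math.sqrt((x - 0.25) ** 2 + (y - 0.25) ** 2 + (z - 0.25) ** 2)
    return 1e5 * math.exp(-5.0 * dist)


print(interior_entities(4))
print(rhs(0.25, 0.25, 0.25))
```

For n = 4 the formulas give 27 vertices, 108 edges and 144 faces, in agreement with the counts stated for Test 4.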

The results of Test 4 are provided in Table 6. The two rows present results for \(\frac{H}{h}=8\) and \(\frac{H}{h}=16\). The columns list the thresholds, the number of coarse functions in the non-spectral part, the number of coarse functions in the spectral part, and the condition number estimates for the adaptive coarse spaces. The first four columns are for the wire basket based preconditioner, and the last four columns are for the vertex based preconditioner. Both methods show condition numbers that are independent of the coefficient value in the test problem.

Fig. 4 The distribution in Test 4 shown from two angles. The domain is divided into 64 subdomains. Through all the subdomains there are long channels along the y-axis touching the boundary on both sides. The elements indicated in red have a coefficient of \(10^6\); in the rest of the domain the coefficient is 1 (color figure online)

References

Bjørstad, P.E., Koster, J., Krzyżanowski, P.: Domain decomposition solvers for large scale industrial finite element problems. In: Applied Parallel Computing. New Paradigms for HPC in Industry and Academia, Lecture Notes in Computer Science, vol. 1947, pp. 373–383. Springer (2001)

Bjørstad, P.E., Krzyżanowski, P.: Flexible 2-level Neumann–Neumann method for structural analysis problems. In: Proceedings of the 4th International Conference on Parallel Processing and Applied Mathematics, PPAM2001 Naleczow, Poland, 9–12 Sept 2001, Lecture Notes in Computer Science, vol. 2328, pp. 387–394. Springer (2002)

Brenner, S.C., Scott, L.R.: The Mathematical Theory of Finite Element Methods. Texts in Applied Mathematics, vol. 15, 3rd edn. Springer, New York (2008)

Buhr, A., Engwer, C., Ohlberger, M., Rave, S.: Arbilomod, a simulation technique designed for arbitrary local modifications (2015). Eprint arXiv:1512.07840

Calvo, J.G., Widlund, O.B.: An adaptive choice of primal constraints for BDDC domain decomposition algorithms. Electron. Trans. Numer. Anal. 45, 524–544 (2016)

Chartier, T., Falgout, R.D., Henson, V.E., Jones, J., Manteuffel, T., McCormick, S., Ruge, J., Vassilevski, P.S.: Spectral AMGe (\(\rho \)AMGe). SIAM J. Sci. Comput. 25(1), 1–26 (2003). https://doi.org/10.1137/S106482750139892X

Chung, E., Efendiev, Y., Hou, T.Y.: Adaptive multiscale model reduction with generalized multiscale finite element methods. J. Comput. Phys. 320, 69–95 (2016)

Dolean, V., Jolivet, P., Nataf, F.: An Introduction to Domain Decomposition Methods. Society for Industrial and Applied Mathematics, Philadelphia (2015)

Dolean, V., Nataf, F., Scheichl, R., Spillane, N.: Analysis of a two-level Schwarz method with coarse spaces based on local Dirichlet-to-Neumann maps. Comput. Methods Appl. Math. 12, 391–414 (2012)

Efendiev, Y., Galvis, J., Lazarov, R., Margenov, S., Ren, J.: Robust two-level domain decomposition preconditioners for high-contrast anisotropic flows in multiscale media. Comput. Methods Appl. Math. 12(4), 415–436 (2012). https://doi.org/10.2478/cmam-2012-0031

Efendiev, Y., Galvis, J., Lazarov, R., Willems, J.: Robust domain decomposition preconditioners for abstract symmetric positive definite bilinear forms. ESAIM Math. Model. Numer. Anal. 46, 1175–1199 (2012)

Galvis, J., Efendiev, Y.: Domain decomposition preconditioners for multiscale flows in high-contrast media. Multiscale Model. Simul. 8(4), 1461–1483 (2010). https://doi.org/10.1137/090751190

Galvis, J., Efendiev, Y.: Domain decomposition preconditioners for multiscale flows in high-contrast media: reduced dimension coarse spaces. Multiscale Model. Simul. 8, 1621–1644 (2010)

Gander, M.J., Loneland, A., Rahman, T.: Analysis of a new harmonically enriched multiscale coarse space for domain decomposition methods (2015). Eprint arXiv:1512.05285

Graham, I.G., Lechner, P.O., Scheichl, R.: Domain decomposition for multiscale PDEs. Numer. Math. 106(4), 589–626 (2007)

Kim, H.H., Chung, E., Wang, J.: BDDC and FETI-DP preconditioners with adaptive coarse spaces for three-dimensional elliptic problems with oscillatory and high contrast coefficients. J. Comput. Phys. 349, 191–214 (2017). https://doi.org/10.1016/j.jcp.2017.08.003

Kim, H.H., Chung, E.T.: A BDDC algorithm with enriched coarse spaces for two-dimensional elliptic problems with oscillatory and high contrast coefficients. Multiscale Model. Simul. 13(2), 571–593 (2015)

Klawonn, A., Kuhn, M., Rheinbach, O.: Adaptive coarse spaces for FETI-DP in three dimensions. SIAM J. Sci. Comput. 38(5), A2880–A2911 (2016)

Klawonn, A., Radtke, P., Rheinbach, O.: FETI-DP methods with an adaptive coarse space. SIAM J. Numer. Anal. 53(1), 297–320 (2015)

Klawonn, A., Radtke, P., Rheinbach, O.: A comparison of adaptive coarse spaces for iterative substructuring in two dimensions. Electron. Trans. Numer. Anal. 45, 75–106 (2016)

Kornhuber, R., Peterseim, D., Yserentant, H.: An analysis of a class of variational multiscale methods based on subspace decomposition (2016). Eprint arXiv:1608.04081

Málek, J., Strakoš, Z.: Preconditioning and the Conjugate Gradient Method in the Context of Solving PDEs. SIAM Spotlights, vol. 1. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (2015)

Målqvist, A., Peterseim, D.: Localization of elliptic multiscale problems. Math. Comput. 83(290), 2583–2603 (2014). https://doi.org/10.1090/S0025-5718-2014-02868-8

Mandel, J., Sousedík, B.: Adaptive selection of face coarse degrees of freedom in the BDDC and the FETI-DP iterative substructuring methods. Comput. Methods Appl. Mech. Eng. 196(8), 1389–1399 (2007)

Mathew, T.P.A.: Domain Decomposition Methods for the Numerical Solution of Partial Differential Equations. Lecture Notes in Computational Science and Engineering, vol. 61. Springer, Berlin (2008)

Nataf, F., Xiang, H., Dolean, V., Spillane, N.: A coarse space construction based on local Dirichlet-to-Neumann maps. SIAM J. Sci. Comput. 33(4), 1623–1642 (2011)

Oh, D.S., Widlund, O.B., Zampini, S., Dohrmann, C.R.: BDDC algorithms with deluxe scaling and adaptive selection of primal constraints for Raviart–Thomas vector fields. Math. Comput. (2017). https://doi.org/10.1090/mcom/3254. Published electronically: June 21, 2017

Ohlberger, M., Schindler, F.: Error control for the localized reduced basis multiscale method with adaptive on-line enrichment. SIAM J. Sci. Comput. 37(6), A2865–A2895 (2015). https://doi.org/10.1137/151003660

Pechstein, C.: Finite and Boundary Element Tearing and Interconnecting Solvers for Multiscale Problems. Lecture Notes in Computational Science and Engineering, vol. 90. Springer, Berlin (2013)

Pechstein, C., Dohrmann, C.R.: A unified framework for adaptive BDDC. Electron. Trans. Numer. Anal. 46, 273–336 (2017)

Pechstein, C., Scheichl, R.: Weighted Poincaré inequalities. IMA J. Numer. Anal. 32, 652–686 (2013)

Smetana, K., Patera, A.T.: Optimal local approximation spaces for component-based static condensation procedures. SIAM J. Sci. Comput. 38(5), A3318–A3356 (2016). https://doi.org/10.1137/15M1009603

Smith, B.F., Bjørstad, P.E., Gropp, W.D.: Domain decomposition: parallel multilevel methods for elliptic partial differential equations. Cambridge University Press, Cambridge (1996). ISBN:0-521-49589-X

Sousedík, B., Šístek, J., Mandel, J.: Adaptive-multilevel BDDC and its parallel implementation. Computing 95(12), 1087–1119 (2013)

Spillane, N., Dolean, V., Hauret, P., Nataf, F., Pechstein, C., Scheichl, R.: Abstract robust coarse spaces for systems of PDEs via generalized eigenproblems in the overlaps. Numer. Math. 126, 741–770 (2014). https://doi.org/10.1007/s00211-013-0576-y

Toselli, A., Widlund, O.B.: Domain Decomposition Methods: Algorithms and Theory, vol. 34. Springer, Berlin (2005)

Additional information

L. Marcinkowski: This work was partially supported by Polish National Science Center Grant: 2016/21/B/ST1/00350.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Eikeland, E., Marcinkowski, L. & Rahman, T. Overlapping Schwarz methods with adaptive coarse spaces for multiscale problems in 3D. Numer. Math. 142, 103–128 (2019). https://doi.org/10.1007/s00211-018-1008-9

DOI: https://doi.org/10.1007/s00211-018-1008-9