Abstract

We present a novel theoretical approach to the analysis of adaptive quadratures and adaptive Simpson quadratures in particular which leads to the construction of a new algorithm for automatic integration. For a given function \(f\in C^4\) with \(f^{(4)}\ge 0\) and possible endpoint singularities the algorithm produces an approximation to \(\int _a^bf(x)\,{\mathrm d}x\) within a given \(\varepsilon \) asymptotically as \(\varepsilon \rightarrow 0\). Moreover, it is optimal among all adaptive Simpson quadratures, i.e., needs the minimal number \(n(f,\varepsilon )\) of function evaluations to obtain an \(\varepsilon \)-approximation and runs in time proportional to \(n(f,\varepsilon )\).

1 Introduction

Consider a numerical approximation of the integral

for a function \(f:[a,b]\rightarrow {\mathbb R}\). Ideally we would like to have an automatic routine that for given \(f\) and error tolerance \(\varepsilon \) produces an approximation \(Q(f)\) to \(I(f)\) such that it uses as few function evaluations as possible and its error

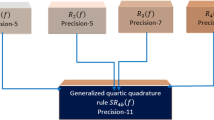

This is usually realized with the help of adaption. Recall the general principle. On a given interval two simple quadrature rules are applied, one more accurate than the other. If the difference between them is sufficiently small, the integral over this interval is approximated by the more accurate quadrature. Otherwise the interval is divided into smaller subintervals and the above rule is applied recursively to each of the subintervals. The oldest and probably best known examples of automatic integration are adaptive Simpson quadratures [8–11]; see also [4] for an account of adaptive numerical integration.

An unquestionable advantage of adaptive quadratures is that they try to maintain the error on a prescribed level \(\varepsilon \) and simultaneously adjust the length of the successive subintervals to the underlying function. This often results in a much more efficient final subdivision of \([a,b]\) than the nonadaptive uniform subdivision. For those reasons adaptive quadratures are now frequently used in computational practice, and those using higher order Gauss-Kronrod rules [1, 5, 15] are standard components of numerical packages and libraries such as MATLAB, NAG or QUADPACK [13]. Nevertheless, to the author’s knowledge, there is no satisfactory and rigorous analysis that would explain good behavior of adaptive quadratures in a quantitative way or identify classes of functions for which they are superior to nonadaptive quadratures. This paper is an attempt to partially fill in this gap.

At this point we have to admit that there are theoretical results showing that adaptive quadratures are not better than nonadaptive quadratures. This holds in the worst case setting over convex and symmetric classes of functions. There are also corresponding adaption-does-not-help results in other settings, see, e.g., [12, 14, 17, 18]. On the other hand, if the class is not convex and/or an error criterion other than the worst case error is used to compare algorithms, then adaption can help significantly, see [2] or [16].

In this paper we present a novel theoretical approach to the analysis of adaptive Simpson quadratures. We want to stress that the restriction to the Simpson rule as a basic component of composite rules is only for simplicity and we could equally well use higher order quadratures. The Simpson rule is a relatively simple quadrature and therefore better enables clear development of our ideas. To be more specific, we analyze the adaptive Simpson quadratures from the point of view of computational complexity. Allowing all possible subdivision strategies our goal is to find an optimal strategy for which the corresponding algorithm returns an \(\varepsilon \)-approximation to the integral (1) using the minimal number of integrand evaluations or, equivalently, the minimal number of subintervals. The main analysis is asymptotic and done assuming that \(f\) is four times continuously differentiable and its \(4\)th derivative is positive.

To reach our goal we first derive formulas for the asymptotic error of adaptive Simpson quadratures. Following [7] we find that the optimal subdivision strategy produces the partition \(a=x_0^*<\cdots <x_m^*=b\) such that

\[ \int _{x_{i-1}^*}^{x_i^*}\left( f^{(4)}(x)\right) ^{1/5}{\mathrm d}x=\frac{1}{m}\int _a^b\left( f^{(4)}(x)\right) ^{1/5}{\mathrm d}x,\qquad 1\le i\le m. \]

This partition is practically realized by maintaining the error on successive subintervals on the same level. The optimal error corresponding to the subdivision into \(m\) subintervals is then proportional to \(L^{\mathrm{opt}}(f)\,m^{-4}\) where

\[ L^{\mathrm{opt}}(f)=\left( \int _a^b\left( f^{(4)}(x)\right) ^{1/5}{\mathrm d}x\right) ^5. \]

For comparison, the errors for the standard adaptive (local) and for nonadaptive (using uniform subdivision) quadratures are respectively proportional to \(L^{\mathrm{std}}(f)\,m^{-4}\) and \(L^{\mathrm{non}}(f)\,m^{-4}\) where

\[ L^{\mathrm{std}}(f)=(b-a)\left( \int _a^b\left( f^{(4)}(x)\right) ^{1/4}{\mathrm d}x\right) ^4,\qquad L^{\mathrm{non}}(f)=(b-a)^4\int _a^b f^{(4)}(x)\,{\mathrm d}x. \]

Obviously, \(L^{\mathrm{opt}}(f)\le L^{\mathrm{std}}(f)\le L^{\mathrm{non}}(f)\). Hence the optimal Simpson quadrature is especially effective when \(L^{\mathrm{opt}}(f)\ll L^{\mathrm{std}}(f)\). An example is \(\int _\delta ^1 x^{-1/2}\,{\mathrm d}x\) with ‘small’ \(\delta \). If \(\delta =10^{-8}\) then \(L^{\mathrm{opt}}(f)\), \(L^{\mathrm{std}}(f)\), \(L^{\mathrm{non}}(f)\) are correspondingly about \(10^5\), \(10^8\), \(10^{28}\).
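The orders of magnitude quoted for \(\delta =10^{-8}\) can be checked in closed form. The sketch below assumes that the multipliers are \(L^{\mathrm{opt}}(f)=(\int _a^b (f^{(4)})^{1/5})^5\), \(L^{\mathrm{std}}(f)=(b-a)(\int _a^b (f^{(4)})^{1/4})^4\) and \(L^{\mathrm{non}}(f)=(b-a)^4\int _a^b f^{(4)}\), which is consistent with the ordering above, and uses \(f^{(4)}(x)=\tfrac{105}{16}x^{-9/2}\) for \(f(x)=x^{-1/2}\). The function name `multipliers` is illustrative only.

```python
def multipliers(delta):
    """Closed-form L^non, L^std, L^opt for f(x) = x**(-1/2) on [delta, 1].

    Assumes the integral forms of the multipliers stated in the lead-in.
    """
    c = 105.0 / 16.0                 # f''''(x) = c * x**(-9/2)
    width = 1.0 - delta              # b - a
    # integral of x**(-9/2) over [delta, 1] is (2/7) * (delta**(-7/2) - 1)
    l_non = width**4 * c * (2.0 / 7.0) * (delta**-3.5 - 1.0)
    # integral of x**(-9/8) over [delta, 1] is 8 * (delta**(-1/8) - 1)
    l_std = width * c * (8.0 * (delta**-0.125 - 1.0)) ** 4
    # integral of x**(-9/10) over [delta, 1] is 10 * (1 - delta**(1/10))
    l_opt = c * (10.0 * (1.0 - delta**0.1)) ** 5
    return l_non, l_std, l_opt


l_non, l_std, l_opt = multipliers(1e-8)
print(f"L_opt = {l_opt:.2e}, L_std = {l_std:.2e}, L_non = {l_non:.2e}")
```

Under these assumptions the three values differ by many orders of magnitude, which is the regime where the choice of subdivision strategy matters most.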

Even though the optimal strategy is global, it can be efficiently harnessed for automatic integration and implemented in time proportional to \(m\). The only serious problem, namely how to choose the acceptable error \(\varepsilon _1\) for subintervals to obtain the final error \(\varepsilon \), is resolved by splitting the recursive subdivision process into two phases. In the first phase the process is run with the acceptable error set to a ‘test’ level \(\varepsilon _2=\varepsilon \). Then the acceptable error is updated to

where \(m_2\) is the number of subintervals obtained from the first phase. In the second phase, the recursive subdivision is continued with the ‘target’ error \(\varepsilon _1\).

As noted earlier, the main analysis is provided assuming that \(f\in C^4([a,b])\) and \(f^{(4)}>0\). It turns out that using additional arguments the obtained results can be extended to functions with \(f^{(4)}\ge 0\) and/or possible endpoint singularities, i.e., when \(f^{(4)}(x)\) goes to \(+\infty \) as \(x\rightarrow a,b\). For such integrals the optimal strategy works perfectly well while the other quadratures may even lose the convergence rate \(m^{-4}\).

The paper is organized as follows. In Sect. 2 we recall the standard (local) Simpson quadrature for automatic integration. In Sect. 3 we derive a formula for the asymptotic error of Simpson quadratures and find the optimal subdivision strategy. In Sect. 4 we show how the optimal strategy can be used to construct an optimal algorithm for automatic integration. The final Sect. 5 is devoted to extensions of the main results. The paper is enriched with numerical tests where the optimal adaptive quadrature is compared with the standard adaptive and nonadaptive quadratures.

We use the following asymptotic notation. For two positive functions of \(m\), we write

A corresponding notation applies for functions of \(\varepsilon \) as \(\varepsilon \rightarrow 0\).

2 The standard adaptive Simpson quadrature

In its basic formulation the local adaptive Simpson quadrature for automatic integration, which will be called standard, can be written recursively as follows. Let Simpson \((u,v,f)\) be the procedure returning the value of the simple three-point Simpson rule on \([u,v]\) for the function \(f\), and let \(\varepsilon >0\) be the error demand.
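The recursive scheme just described can be sketched as follows. This is a minimal Python sketch and not the original listing: the acceptance test \(|S_1-S_2|\le 15\varepsilon \) and the recursive calls with \(\varepsilon /2\) are assumptions, chosen to agree with the justification given in this section and with the later description of OPT as STD called with \(\varepsilon \) instead of \(\varepsilon /2\). The names `simpson` and `std` are illustrative.

```python
import math


def simpson(u, v, f):
    """Three-point Simpson rule on [u, v]."""
    return (v - u) * (f(u) + 4.0 * f(0.5 * (u + v)) + f(v)) / 6.0


def std(a, b, f, eps):
    """Local adaptive Simpson quadrature (sketch, assumed acceptance test)."""
    c = 0.5 * (a + b)
    s1 = simpson(a, b, f)                     # one panel on [a, b]
    s2 = simpson(a, c, f) + simpson(c, b, f)  # two panels on the halves
    if abs(s1 - s2) <= 15.0 * eps:            # accept the finer value
        return s2
    # otherwise split the error budget evenly between the two halves
    return std(a, c, f, eps / 2.0) + std(c, b, f, eps / 2.0)


approx = std(0.0, 1.0, math.exp, 1e-8)
print(abs(approx - (math.e - 1.0)))
```

For clarity the sketch re-evaluates \(f\) at points shared between recursion levels; a practical implementation would pass the known function values down the recursion.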

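A justification of STD that can be found in textbooks, e.g., [3, 6], is as follows. Denote by \(S_1(u,v;f)\) the three-point Simpson rule,

\[ S_1(u,v;f)=\frac{v-u}{6}\left( f(u)+4f\left( \frac{u+v}{2}\right) +f(v)\right) , \]

and by \(S_2(u,v;f)\) the composite Simpson rule that is based on subdivision of \([u,v]\) into two equal subintervals,

\[ S_2(u,v;f)=S_1\left( u,\frac{u+v}{2};f\right) +S_1\left( \frac{u+v}{2},v;f\right) . \]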

We also denote \(I(u,v;f)=\int _u^v f(x)\,{\mathrm d}x\). Suppose that

If the interval \([u,v]\subseteq [a,b]\) is small enough so that \(f^{(4)}\) is ‘almost’ a constant, \(f^{(4)}\approx C\) and \(C\ne 0\), then

Now let \(a=x_0<\cdots <x_m=b\) be the final subdivision produced by STD and

be the result returned by STD. Then, provided the estimate (2) holds for any \([x_{i-1},x_i]\), we have

This reasoning has a serious defect; namely, the approximate equality (2) can be applied only when the interval \([u,v]\) is sufficiently small. Hence STD can terminate too early and return a completely false result. In an extreme case of \([a,b]=[0,4]\) and \(f(x)=\prod _{i=0}^4 (x-i)^2\) we have \(I(f)>0\) but STD returns zero independently of how small \(\varepsilon \) is. Of course, concrete implementations of STD can be equipped with additional mechanisms to avoid or at least to reduce the probability of such unwanted occurrences. To radically cut the possibility of premature terminations we assume, in addition to \(f\in C^4([a,b])\), that the fourth derivative is of constant sign, say,
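The extreme case mentioned above is easy to reproduce. The sketch below evaluates the three-point and the two-panel Simpson values on \([0,4]\) for \(f(x)=\prod _{i=0}^4(x-i)^2\); all five sample points are roots of \(f\), so both values vanish and any test based on their difference accepts the interval immediately. The helper names are illustrative.

```python
def simpson(u, v, f):
    """Three-point Simpson rule on [u, v]."""
    return (v - u) * (f(u) + 4.0 * f(0.5 * (u + v)) + f(v)) / 6.0


def f(x):
    """f(x) = prod_{i=0}^{4} (x - i)^2, nonnegative with roots 0, 1, 2, 3, 4."""
    p = 1.0
    for i in range(5):
        p *= (x - i) ** 2
    return p


a, b = 0.0, 4.0
c = 0.5 * (a + b)
s1 = simpson(a, b, f)                      # samples f at 0, 2, 4
s2 = simpson(a, c, f) + simpson(c, b, f)   # samples f at 0, 1, 2, 3, 4
print(s1, s2, f(0.5))                      # both rules see only zeros
```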

Equivalently, this obviously means that \(f^{(4)}(x)\ge c\) for some \(c>0\) that depends on \(f\). Assumption (3) assures that the maximum length of the subintervals produced by STD decreases to zero as \(\varepsilon \rightarrow 0\) and the asymptotic equality (2) holds. Indeed, denote by \(D(u,v;f)\) the divided difference of \(f\) corresponding to \(5\) equispaced points \(z_j=u+jh/4\), \(0\le j\le 4\), where \(h=v-u\), i.e.,

Since

the termination criterion

that is checked in line 3 of STD for the current subinterval \([u,v]\), is equivalent to

Our conclusion about applicability of (2) follows from the inequality \(D(u,v;f)\ge c/4!\).

Observe that each splitting of a subinterval \([u,v]\) results in the (asymptotic) decrease of the controlled value in (4) by the factor of \(2^4\). Thus the algorithm asymptotically returns the approximation of the integral within \(\varepsilon \), as desired. Specifically, we have

Remark 1

The inequality (5) explains why numerical tests often show better performance of STD than expected, i.e., the actual error is often noticeably smaller than \(\varepsilon \). To avoid the resulting excess work it is suggested to run STD with a larger input parameter, say \(2\varepsilon \) instead of \(\varepsilon \).

3 Optimizing the process of interval subdivision

The error formula (5) for the standard adaptive Simpson quadrature does not say anything about how the number \(m\) of subintervals depends on \(\varepsilon \), or what is the actual error after producing \(m\) subintervals. We now study this question for different subdivision strategies. In order to be consistent with STD we assume that for a given subdivision \(a=x_0<x_1<\cdots <x_m=b\) we apply \(S_2(x_{i-1},x_i;f)\) for each of the subintervals \([x_{i-1},x_i]\), so that the final approximation

uses \(n=4m+1\) function evaluations.

The goal is to find the optimal strategy, i.e., the one that for any function \(f\in C^4([a,b])\) satisfying (3) produces a subdivision for which the error of the corresponding Simpson quadrature \(S_m(f)\) is asymptotically minimal (as \(m\rightarrow \infty \)).

We first analyze two particular strategies, nonadaptive and standard adaptive, and then derive the optimal strategy. In what follows, the constant

In the nonadaptive strategy, the interval \([a,b]\) is divided into \(m\) equal subintervals \([x_{i-1},x_i]\) with \(x_i=a+ih\), \(h=(b-a)/m\). Let the corresponding Simpson quadrature be denoted by \(S_m^{\mathrm{non}}\). Then

as \(m\rightarrow \infty \).

Observe that for the asymptotic equality (6) to hold we do not need to assume (3).
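The rate \(m^{-4}\) of the nonadaptive strategy is easy to observe numerically. The following sketch applies \(S_2\) on each of \(m\) equal subintervals, which amounts to the composite Simpson rule on \(4m+1\) equispaced points, and compares the errors for \(m\) and \(2m\). The function name `simpson_non` and the test integrand \(e^x\) on \([0,1]\) are choices made here for illustration.

```python
import math


def simpson_non(f, a, b, m):
    """S_2 on each of m equal subintervals of [a, b] (4m + 1 evaluations)."""
    q = (b - a) / (4 * m)          # spacing between consecutive sample points
    total = f(a) + f(b)
    for j in range(1, 4 * m):
        # Simpson weights 4, 2, 4, ..., 4 at the interior points
        total += (4.0 if j % 2 else 2.0) * f(a + j * q)
    return q * total / 3.0


exact = math.e - 1.0
e10 = abs(simpson_non(math.exp, 0.0, 1.0, 10) - exact)
e20 = abs(simpson_non(math.exp, 0.0, 1.0, 20) - exact)
print(e10, e20, e10 / e20)         # the ratio should be close to 2**4
```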

We now analyze the standard adaptive strategy used by STD. To do this, we first need to rewrite STD in an equivalent way, where the input parameter is \(m\) instead of \(\varepsilon \). We have the following greedy algorithm.

The algorithm starts with the initial subdivision \(a=x_0^{(1)}<x_1^{(1)}=b\). In the \((k+1)\)st step, from the current subdivision \(a=x_0^{(k)}<\cdots <x_k^{(k)}=b\) a subinterval \([x_{i^*-1}^{(k)},x_{i^*}^{(k)}]\) is selected with the highest value

and the midpoint \((x_{i^*-1}^{(k)}+x_{i^*}^{(k)})/2\) is added to the subdivision.

Denote by \(S_m^{\mathrm{std}}(f)\) the result returned by the corresponding Simpson quadrature when applied to \(m\) subintervals. Then, in view of (4), the values \(S_m^{\mathrm{std}}(f)\) and \(S^{\mathrm{std}}(f;\varepsilon )\) are related as follows. Let \(m=m(\varepsilon )\) be the minimal number of steps after which (4) is satisfied by each of the subintervals \([x^{(m)}_{i-1},x^{(m)}_i]\). Then

We are ready to show the error formula for \(S_m^{\mathrm{std}}\) corresponding to (6).

Theorem 1

Let \(f\in C^4([a,b])\) and \(f^{(4)}(x)>0\) for all \(x\in [a,b]\). Then

Proof

We fix \(\ell \) and divide the interval \([a,b]\) into \(2^\ell \) equal subintervals \([z_{i-1},z_i]\) of length \((b-a)/2^\ell \). Call this partition a coarse grid, in contrast to the fine grid produced by \(S_m^\mathrm{std}\). Let

Let \(m\) be sufficiently large, so that the fine grid contains all the points of the coarse grid. Denote by \(z_{i-1}=x_{i,0}<x_{i,1}<\cdots <x_{i,m_i}=z_i\) the points of the fine grid contained in the \(i\)th interval of the coarse grid, and \(h_{i,j}=x_{i,j}-x_{i,j-1}\). Then the error can be bounded from below as

Suppose for a moment that for all \(i,j\) we have \(h_{i,j}^4c_i=A\) for some \(A\). Then \((b-a)/2^\ell =\sum _{j=1}^{m_i}h_{i,j}=m_i(A/c_i)^{1/4}\). Using \(\sum _{i=1}^{2^\ell }m_i=m\) we get

Observe now that any splitting of a subinterval decreases \(h_{i,j}^4c_i\) by the factor of \(16\). Hence

and consequently \(h_{i,j}^4c_i\ge A/16\) for all \(i,j\). Thus

To obtain the upper bound, we proceed similarly. Replacing \(c_i\) with \(C_i\) and using the equation \(h_{i,j}^4C_i\le 16 A\) we get that

To complete the proof we notice that both

are Riemann sums that converge to the integral \(\int _a^b\left( f^{(4)}(x)\right) ^{1/4}\!\,{\mathrm d}x\) as \(\ell \rightarrow \infty \). \(\square \)

Remark 2

From the proof it follows that the constants in the ‘\(\asymp \)’ notation in Theorem 1 are asymptotically between \(1/16\) and \(16\). The gap between the upper and lower constants is certainly much overestimated, see also Remark 4.

The two strategies, nonadaptive and standard adaptive, will be used as reference points for comparison with the optimal strategy that we now derive. We first allow all possible subdivisions of \([a,b]\) regardless of the possibility of their practical realization.

Proposition 1

The subdivision determined by points

where \(x_i^*\) satisfy

is optimal. For the corresponding quadrature \(S_m^*\) we have

Proof

We first show the lower bound. Let \(S_m\) be the Simpson quadrature that is based on an arbitrary subdivision. Proceeding as in the beginning of the proof of Theorem 1 we get that for sufficiently large \(m\) the error of \(S_m\) is lower bounded by

where \(m_i\) is the number of subintervals of the fine grid in the \(i\)th subinterval of the coarse grid. (We assume without loss of generality that the coarse grid is contained in the fine grid.) Minimizing this with respect to \(m_i\) such that \(\sum _{i=1}^{2^\ell }m_i=m\) we obtain the optimal values

After substituting \(m_i\) with \(m_i^*\) in the error formula we finally get

Since for the optimal \(m_i^*\) we have

the lower bound (9) is attained by the subdivision determined by \(\{x_i^*\}\). \(\square \)
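For a concrete integrand the optimal points can be written explicitly. The sketch below assumes that the condition defining \(x_i^*\) is the equidistribution \(\int _a^{x_i^*}(f^{(4)})^{1/5}=\frac{i}{m}\int _a^b(f^{(4)})^{1/5}\), and takes \(f(x)=x^{-1/2}\) on \([\delta ,1]\). Since \((f^{(4)}(x))^{1/5}\) is proportional to \(x^{-9/10}\), whose antiderivative is \(10x^{1/10}\), the points are \(x_i^*=\bigl(\delta ^{1/10}+\tfrac{i}{m}(1-\delta ^{1/10})\bigr)^{10}\). The function name `optimal_partition` is illustrative.

```python
def optimal_partition(delta, m):
    """Points x_0 < ... < x_m on [delta, 1] equidistributing x**(-9/10).

    Assumes the equidistribution form of the optimality condition and
    f(x) = x**(-1/2), for which the condition can be solved in closed form.
    """
    r = delta ** 0.1
    return [(r + (i / m) * (1.0 - r)) ** 10 for i in range(m + 1)]


pts = optimal_partition(1e-8, 50)
# Each subinterval carries the same share of the integral of x**(-9/10).
shares = [10.0 * (y ** 0.1 - x ** 0.1) for x, y in zip(pts, pts[1:])]
print(pts[:3], pts[-3:])
```

The points cluster strongly near \(\delta \), where \(f^{(4)}\) is large, and spread out towards \(1\).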

Now the question is whether the optimal subdivision into \(m\) subintervals of Proposition 1 can be practically realized, i.e., using \(4m+1\) function evaluations. The answer is positive, at least up to an absolute constant. The corresponding algorithm \(S_m^\mathrm{opt}\) runs as \(S_m^\mathrm{std}\) with the only difference that in each step it halves the subinterval with the highest value

[instead of (7)].
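Both greedy strategies can be sketched with a priority queue, which gives the \(m\log m\) running time. The selection values used below are assumptions, since they are inferred rather than copied from (7) and (11): the sketch takes \(|S_1-S_2|/(x_i-x_{i-1})\) for the standard strategy (a quantity that decreases by about \(2^4\) on halving) and \(|S_1-S_2|\) for the optimal one (decreasing by about \(2^5\)). The names `s1_s2` and `greedy` are illustrative.

```python
import heapq
import math


def s1_s2(f, u, v):
    """Return the pair (S_1, S_2) on [u, v] using five equispaced samples."""
    c = 0.5 * (u + v)
    fu, fc, fv = f(u), f(c), f(v)
    fl, fr = f(0.5 * (u + c)), f(0.5 * (c + v))
    h = v - u
    s1 = h * (fu + 4.0 * fc + fv) / 6.0
    s2 = h * (fu + 4.0 * fl + 2.0 * fc + 4.0 * fr + fv) / 12.0
    return s1, s2


def greedy(f, a, b, m, strategy="opt"):
    """Halve the subinterval with the largest selection value until m remain."""
    def entry(u, v):
        s1, s2 = s1_s2(f, u, v)
        key = abs(s1 - s2)
        if strategy == "std":
            key /= (v - u)            # assumed form of the value in (7)
        return (-key, u, v, s2)       # negated key: heapq is a min-heap

    heap = [entry(a, b)]
    while len(heap) < m:
        _, u, v, _ = heapq.heappop(heap)
        c = 0.5 * (u + v)
        heapq.heappush(heap, entry(u, c))
        heapq.heappush(heap, entry(c, v))
    return sum(item[3] for item in heap)   # sum of S_2 over the m subintervals


f = lambda x: x ** -0.5
exact = 2.0 * (1.0 - math.sqrt(1e-2))
err_opt = abs(greedy(f, 1e-2, 1.0, 200, "opt") - exact)
err_std = abs(greedy(f, 1e-2, 1.0, 200, "std") - exact)
print(err_opt, err_std)
```

For simplicity the sketch recomputes function values when a subinterval is halved; storing the five samples with each heap entry would bring the cost down to \(4m+1\) evaluations.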

Theorem 2

Let \(f\in C^4([a,b])\) and \(f^{(4)}(x)>0\) for all \(x\in [a,b]\). Then

where \(K\le 32\).

Proof

The proof goes as the proof of the upper bound of Theorem 1 with obvious changes related to the facts that now the algorithm tries to balance (11) [instead of (7)], and that

\(\square \)

Remark 3

The best constant \(K\) of Theorem 2 is certainly much less than 32, see also Remark 4.

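We summarize the results of this section. All three quadratures \(S_m^{\mathrm{non}}\), \(S_m^{\mathrm{std}}\), \(S_m^{\mathrm{opt}}\) converge at rate \(m^{-4}\) but the asymptotic constants depend on the integrand \(f\) through the multipliers

\[ L^{\mathrm{non}}(f)=(b-a)^4\int _a^b f^{(4)}(x)\,{\mathrm d}x,\quad L^{\mathrm{std}}(f)=(b-a)\left( \int _a^b\left( f^{(4)}(x)\right) ^{1/4}{\mathrm d}x\right) ^4,\quad L^{\mathrm{opt}}(f)=\left( \int _a^b\left( f^{(4)}(x)\right) ^{1/5}{\mathrm d}x\right) ^5. \]

These multipliers indicate how difficult a function is to integrate using a given quadrature. By Hölder’s inequality we have

\[ L^{\mathrm{opt}}(f)\le L^{\mathrm{std}}(f)\le L^{\mathrm{non}}(f). \]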

Example 1

Consider the integral

In this case \(L^{\mathrm{non}}\), \(L^{\mathrm{std}}\), \(L^{\mathrm{opt}}\) rapidly increase as \(\delta \) decreases, as shown in Table 1.

Numerical computations confirm the theory very well. We tested all three quadratures (the adaptive quadratures being implemented in \(m\log m\) running time using a heap data structure) and ran them for different values of \(\delta \). Specific results are as follows.

For \(\delta =0.5\) the quadratures \(S_m^{\mathrm{non}}\), \(S_m^{\mathrm{std}}\), and \(S_m^{\mathrm{opt}}\) give almost identical results independently of \(m\). For instance, for \(m=10^2\) the errors are respectively \(1.31\times 10^{-13}\), \(1.46\times 10^{-13}\), \(1.46\times 10^{-13}\), and for \(m=10^3\) we have \(1.28\times 10^{-17}\), \(1.43\times 10^{-17}\), \(1.35\times 10^{-17}\). Note that the smallest error for the nonadaptive quadrature is caused by the fact that \(S_m^{\mathrm{non}}\) has a little better absolute constant in the error formula (6) than the adaptive quadratures.

However, the smaller \(\delta \), the larger the differences between the results. A characteristic behavior of the errors for \(\delta =10^{-2}\) and \(\delta =10^{-8}\) is illustrated by Figs. 1 and 2. Observe that in the case \(\delta =10^{-8}\) the nonadaptive quadrature needs more than \(10^4\) subintervals to reach the right convergence rate \(m^{-4}\).

Remark 4

It is interesting to see the behavior of

By (6) we have that \(\lim _{m\rightarrow \infty }K_m^{\mathrm{non}}(f)=1\). The corresponding limits for the adaptive quadratures are unknown; however, we ran some numerical tests and we never obtained more than \(1.5\). This would mean, in particular, that \(S_m^{\mathrm{opt}}\) is at most \(50~\%\) worse than \(S_m^*\). Figure 3 shows the behavior of \(K_m^\mathrm{qad}(f)\) for the integral \(I_\delta \) of Example 1 with \(\delta =10^{-2}\).

4 Automatic integration using optimal subdivision strategy

We want to have an algorithm that automatically computes an integral within a given error tolerance \(\varepsilon \). An example of such an algorithm is the recursive STD. Recall that the recursive nature of STD allows it to be implemented in time proportional to the number \(m\) of subintervals using a stack data structure. However, it does not use the optimal subdivision strategy. On the other hand, the algorithm \(S_m^{\mathrm{opt}}\) uses the optimal strategy, but one does not know in advance how large \(m\) should be to have the error \(|S_m^{\mathrm{opt}}(f)-I(f)|\le \varepsilon \). In addition, the best implementation of \(S_m^{\mathrm{opt}}\) (that uses a heap data structure) runs in time proportional to \(m\log m\). Thus the question now is whether there exists an algorithm that runs in time linear in \(m\) and produces an approximation to the integral within \(\varepsilon \) using the optimal subdivision strategy.

Since the optimal subdivision is such that the errors on subintervals are roughly equal, the suggestion is that one should run STD with the only difference that it is recursively called with parameter \(\varepsilon \) instead of \(\varepsilon /2\). Denote such modification by OPT.

Let

be the result returned by OPT. Analogously to (8) we have

if \(m\) is the minimal number of steps after which (11) is satisfied by all subintervals.

It is clear that OPT does not return an \(\varepsilon \)-approximation when \(\varepsilon \) is the input parameter. However, we are able to estimate the error a posteriori. Indeed, let \(m_1\) be the number of subintervals produced by OPT for an \(\varepsilon _1\). Then

We need to find \(\varepsilon _1\) such that \(m_1\varepsilon _1\le \varepsilon \). Since \(m_1\) depends not only on \(\varepsilon _1\) but also on \(L^{\mathrm{opt}}(f)\), it seems hopeless to predict \(\varepsilon _1\) in advance. Surprisingly this is not true.

The idea of the algorithm is as follows. We first run OPT with some \(\varepsilon _2\le \varepsilon \) obtaining a subdivision consisting of \(m_2\) subintervals. Next, using (12) and Theorem 2 we estimate \(L^{\mathrm{opt}}(f)\), and using again Theorem 2 we find the ‘right’ \(\varepsilon _1\). Finally OPT is resumed with the input \(\varepsilon _1\) and with subdivision obtained in the preliminary run of OPT. As we shall see later, this idea can be implemented in time proportional to \(m_1\).

Concrete calculations are as follows. From the equality

where \(\alpha _2\) and \(K_2\) depend on \(\varepsilon _2\), we have

We need \(\varepsilon _1\) such that for the corresponding \(m_1\) the error of \(S^{\mathrm{opt}}_{m_1}(f)\) is at most \(\varepsilon \), i.e.,

where \(\alpha _1\) and \(K_1\) depend on \(\varepsilon _1\). Substituting \(L^{\mathrm{opt}}(f)\) with the right hand side of (13) we obtain

and solving the inequality \(\alpha _1m_1\varepsilon _1\le \varepsilon \) with \(m_1\) given by (14) we get

Recall that, asymptotically, \(\alpha _1\) and \(\alpha _2\) are in \([1/32,1]\) which means that \(\beta \) can be asymptotically bounded from below by \(1\). Hence, taking

we have

The choice of \(\varepsilon _1\) given by (15) is rather conservative. In practice, we observe that the error of \(S^{\mathrm{opt}}(f;\varepsilon _1)\) is ‘on average’ even \(6\) or more times smaller than \(\varepsilon \). Hence we encounter the same phenomenon as for the standard Simpson quadrature, see Remark 1. Yet, in the latter case, the error is usually not so much smaller than \(\varepsilon \). As a consequence, for integrands \(f\) with \(L^{\mathrm{opt}}(f)\cong L^{\mathrm{std}}(f)\) the approximation \(S^{\mathrm{std}}(f;\varepsilon )\) may use fewer subintervals than \(S^{\mathrm{opt}}(f;\varepsilon _1)\).

To avoid excessive work, we propose to run the optimal algorithm with the input \(B\,\varepsilon _1\) instead of \(\varepsilon _1\) where, say,

\[ B=4\sqrt{2}. \]

(This corresponds to \(\alpha _1,\alpha _2=1/4\).) We stress that such a choice of \(B\) is based on heuristics and is not justified by any rigorous arguments.

Example 2

We present test results for the integral \(I_\delta =\int _\delta ^1 x^{-1/2}/2\,{\mathrm d}x\) of Example 1 with \(\delta =10^{-2}\) and \(\delta =10^{-8}\), for the standard and optimal Simpson quadratures. In Tables 2 and 3 the results are given correspondingly for \(S^{\mathrm{std}}(f;\varepsilon )\) and \(S^{\mathrm{opt}}(f;\varepsilon _1)\), while in Tables 4 and 5 for \(S^{\mathrm{std}}(f;2\varepsilon )\) and \(S^{\mathrm{opt}}(f;4\sqrt{2}\varepsilon _1)\).

We end this section by presenting a rather detailed description of the optimal algorithm for automatic integration that runs in time proportional to \(m_1\). It uses two stacks, \(\mathrm {Stack1}\) and \(\mathrm {Stack2}\), corresponding to the two phases of the algorithm. The elements of the stacks, \(\mathrm {elt}\), \(\mathrm {elt1}\), \(\mathrm {elt2}\), represent subintervals. Each such element consists of \(6\) fields containing information about: the endpoints of the subinterval, function values at the endpoints and at the midpoint, and the value of the three-point Simpson quadrature for this subinterval. Such a structure enables evaluation of \(f\) only once at each sample point. \(\mathrm {Push}\) and \(\mathrm {Pop}\) are the usual stack commands for inserting and removing elements.
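A possible realization of the two-phase idea is sketched below in Python. It is not the original listing and rests on several assumptions: the acceptance test is taken to be \(|S_1-S_2|\le 15\,\mathrm{tol}\); the first phase uses \(\varepsilon _2=\varepsilon \); and the update is taken as \(\varepsilon _1=B\,\varepsilon \,m_2^{-5/4}\), which is what the relations between \(m_j\), \(\varepsilon _j\) and \(L^{\mathrm{opt}}(f)\) suggest when the unknown constants are dropped. The default \(B=1\) is the conservative choice; \(B=4\sqrt{2}\) is the heuristic value. A stack and a list play the roles of the two stacks, and all function names are illustrative.

```python
import math


def make(f, u, v, fu, fv):
    """Element with 6 fields: u, v, f(u), f(mid), f(v), three-point Simpson."""
    fm = f(0.5 * (u + v))
    return (u, v, fu, fm, fv, (v - u) * (fu + 4.0 * fm + fv) / 6.0)


def halves(f, elt):
    """Split an element into its two halves, reusing known function values."""
    u, v, fu, fm, fv, _ = elt
    c = 0.5 * (u + v)
    return make(f, u, c, fu, fm), make(f, c, v, fm, fv)


def subdivide(f, stack, tol):
    """Halve until |S1 - S2| <= 15*tol; return accepted (elt, left, right)."""
    accepted = []
    while stack:
        elt = stack.pop()
        left, right = halves(f, elt)
        if abs(elt[5] - left[5] - right[5]) <= 15.0 * tol:
            accepted.append((elt, left, right))
        else:
            stack.append(right)
            stack.append(left)
    return accepted


def optimal(f, a, b, eps, B=1.0):
    """Two-phase sketch; returns (approximation, m1, m2). No depth guard."""
    # Phase 1: test run with eps_2 = eps.
    first = subdivide(f, [make(f, a, b, f(a), f(b))], eps)
    m2 = len(first)
    eps1 = B * eps * m2 ** (-1.25)        # assumed update rule for eps_1
    # Phase 2: resume from the phase-1 subdivision with the target eps_1.
    total, m1 = 0.0, 0
    for elt, left, right in first:
        if abs(elt[5] - left[5] - right[5]) <= 15.0 * eps1:
            final = [(elt, left, right)]
        else:
            final = subdivide(f, [right, left], eps1)
        for _, lo, hi in final:
            total += lo[5] + hi[5]        # S_2 on each final subinterval
            m1 += 1
    return total, m1, m2


value, m1, m2 = optimal(math.exp, 0.0, 1.0, 1e-8)
print(value, m1, m2, abs(value - (math.e - 1.0)))
```

Every subinterval is popped and tested a bounded number of times, so the work is proportional to the final number \(m_1\) of subintervals, and each sample point is evaluated once because the halves of accepted subintervals are kept for the second phase.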

5 Extensions: \(f^{(4)}\ge 0\) and endpoint singularities

We have analyzed adaptive Simpson quadratures assuming that \(f\in C^4([a,b])\) and \(f^{(4)}>0\). It turns out that the obtained results hold, and automatic integration can be successfully applied, also for functions with \(f^{(4)}\ge 0\) and functions with endpoint singularities. The observed good behavior of adaptive quadratures for such functions cannot be explained directly with the previous tools. What we need is a non-asymptotic error bound for \(S_2(u,v;f)\). Such a bound, together with the corresponding result for \(S_1(u,v;f)\), is provided by the following lemma.

Lemma 1

Suppose that \(f\in C([u,v])\) and \(f\in C^4([u_1,v_1])\) for all \(u<u_1<v_1<v\). If, in addition, \(f^{(4)}(x)\ge 0\) for all \(x\in (u,v)\), then

and

(with convention that \(0/0=1\)).

Proof

Given \(c\in (u,v)\), we have that for any \(x\in [u,v]\)

where \(T_c\) is the Taylor polynomial of degree \(3\) for \(f\) at \(c\). (The formula is obvious for \(x\in (u,v)\) and by continuity of \(f\) it extends to \(x=u,v\).) Furthermore, integrating (16) with respect to \(x\) we get that

Using (16) for \(z_j=u+jh/4\), \(0\le j\le 4\), \(h=v-u\), we then obtain

with the Peano kernel \(\psi _0(u,v;t)=h^4\Psi _0((t-u)/h)\) where

For the error of \(S_1\) we similarly find that

where \(\psi _1(u,v;t)=h^4\Psi _1((t-u)/h)\),

Since

(and both bounds are sharp), we get the desired bounds.

For the error of \(S_2(u,v;f)\) we analogously find that

where the kernel \(\psi _2(u,v;t)=h^4\Psi _2((t-u)/h)\),

The remaining bound follows from the inequality

The Peano kernels \(\Psi _0\), \(\Psi _1\), and \(\Psi _2\) are presented in Fig. 4. \(\square \)

In what follows we concentrate on generalizing Theorem 2 about \(S_m^\mathrm{opt}\) since the other results (Theorem 1 and Proposition 1) can be treated in a similar fashion.

First we prove that the assumption \(f^{(4)}>0\) in Theorem 2 can be replaced by

Proof

Suppose without loss of generality that \(f^{(4)}\) is not everywhere zero in \([a,b]\). We first produce a coarse grid \(\{z_i\}_{i=1}^{2^\ell }\) of length \((b-a)/2^\ell \) and remove from it all the points \(z_i\) (\(1\le i\le 2^\ell -1\)) such that

Denote the successive points of the modified grid by \(\{\hat{z}_i\}_{i=1}^k\), \(k\le 2^\ell \). Let

From (18) it follows that a subinterval is further subdivided if and only if \(f^{(4)}\not \equiv 0\) in this subinterval. Hence for sufficiently large \(m\) the coarse grid is contained in the fine grid produced by \(S_m^\mathrm{opt}\) and the subintervals \([\hat{z}_{i-1},\hat{z}_i]\) with \(C_i>0\) have been subdivided at least once.

Let \(\hat{z}_{i-1}=x_{i,0}<\cdots <x_{i,k_i}=\hat{z}_i\) be the points of the fine grid contained in \([\hat{z}_{i-1},\hat{z}_i]\), and \(h_{i,j}=x_{i,j}-x_{i,j-1}\). Define

We now make an important observation that for any \(i\in {\mathcal J_0}\) with \(C_i>0\) and any \(1\le j\le k_i\)

Indeed, if this were not satisfied by a subinterval \([x_{i^*,j^*-1},x_{i^*,j^*}]\) then its predecessor, whose length is \(2h_{i^*,j^*}\) and belongs to the \(i^*\)th subinterval of the coarse grid, would not be subdivided.

Hence, denoting by \(m_0\) the number of subintervals of the fine grid in \(P_0\), we have

This implies \(m_0\le 2\,(15\gamma )^{1/5}\,M_0\,\beta ^{-1/5}.\) Denoting by \(m_1\) the number of subintervals of the fine grid in \(P_1\), we have

which implies \(m_1\,\ge \,(15\gamma )^{1/5}\,M_1\,\beta ^{1/5}.\) Hence \(m_0/m_1\le 2M_0/M_1\) and this bound is independent of \(m\). However it depends on \(\ell \). Taking \(\ell \) large enough we can make \(m_0/m_1\) arbitrarily small.

From Lemma 1 it follows that the integration error in \(P_0\) is upper bounded by \(m_0\beta \). Since \(f^{(4)}\) is positive in \(P_1\), the error in \(P_1\) is asymptotically (as \(m\rightarrow \infty \)) lower bounded by \(m_1\beta /(15\cdot 32)\). Hence for \(\ell \) large enough the error in \(P_0\) is arbitrarily small compared to that in \(P_1\). In addition, the error in \(P_1\) follows the upper bound of Theorem 2. The proof is complete. \(\square \)

We now pass to functions with endpoint singularities. To fix the setting, we assume that \(f\) is continuous in the closed interval \([a,b]\) and \(f\in C^4([a_1,b])\) for all \(a<a_1<b\). Moreover,

and this divergence is asymptotically monotonic, i.e., there is \(\delta >0\) such that

As before, we prove that for such functions the upper error bound for \(S_m^\mathrm{opt}\) in Theorem 2 is still valid.

Proof

First, we observe that the difference \(S_1(a,a+h;f)-S_2(a,a+h;f)\) converges to zero faster than \(h\). Indeed, in view of (18) we have

This assures that the partition is denser and denser in the whole \([a,b]\) and the integration error goes to zero.

Second, we have that \(L^\mathrm{opt}(f)<\infty \). Indeed, by Hölder’s inequality

which is finite due to (16).

Now, let \(\ell \) be such that \((b-a)2^{-\ell }\le \delta \), and let \(\{z_i\}_{i=-\infty }^k\) with \(k=2^\ell -1\) be the (infinite) coarse grid defined as

Denote, as before, \(C_i=\max _{z_{i-1}\le x\le z_i}f^{(4)}(x)\). We obviously have \(C_i=f^{(4)}(z_{i-1})>0\) for all \(i\le 0\). For simplicity, we also assume \(C_i>0\) for \(1\le i\le k\).

Let \(m\) be sufficiently large so that the fine grid produced by \(S_m^\mathrm{opt}\) contains all the points \(z_i\) for \(i\ge 0\). Moreover, we can assume that each subinterval \([z_{i-1},z_i]\) with \(i\ge 1\) has been subdivided at least once. Let \([a,z_{-s}]\) be the first subinterval of the fine grid.

Let us further denote \(P_0=[a,z_0]\) and \(P_1=[z_0,b]\). Then \(P_0=P_{0,0}\cup P_{0,1}\) where \(P_{0,0}\) consists of \([a,z_{-s}]\) and all subintervals of the coarse grid that have not been subdivided by \(S_m^\mathrm{opt}\). Let \(m_{0,0}\), \(m_{0,1}\), \(m_1\) be the numbers of subintervals of the fine grid in \(P_{0,0}\), \(P_{0,1}\), \(P_1\), respectively.

Define \(\beta \) as in (20). In view of (24), the distance \((z_{-s}-a)\) decreases slower than \(\beta \) as \(m\rightarrow \infty \), and therefore \(m_{0,0}\) is at most proportional to \(\log _2(1/\beta )\). Since (21) holds for the subintervals in \(P_{0,1}\), the number \(m_{0,1}\) can be estimated as \(m_0\) in (22) with

where the last inequality follows from monotonicity of \(f^{(4)}\). Since \(m_1\) can be estimated as in (23) we obtain, analogously to the previous proof, that the number of subintervals in \(P_1\) and the error in \(P_1\) dominate the scene. The proof is complete. \(\square \)

We stress that for continuous functions with endpoint singularities we always have \(L^\mathrm{opt}(f)<\infty \), which is not true for \(L^\mathrm{std}(f)\). An example is provided by

with \(f^{(4)}(x)=(x\ln x)^{-4}.\) Indeed, since \(f(0)=\int _0^1(3!\,t\ln ^4t)^{-1}\,{\mathrm d}t<\infty \), the function is well defined and \(L^\mathrm{opt}(f)<\infty \), but

For such functions, the subdivision process of \(S_m^\mathrm{std}\) will not collapse [which follows from (24)] and the error will converge to zero; however, the convergence rate \(m^{-4}\) will be lost. On the other hand, if \(L^\mathrm{std}(f)<\infty \) then the error bounds of Theorem 1 hold true.

Example 3

Consider the integral

The integrand is continuous at \(0\) only if \(p\ge 0\). Then both \(L^\mathrm{opt}(f)\) and \(L^\mathrm{std}(f)\) are finite. However, \(L^\mathrm{non}(f)<\infty \) only if \(p\) is a non-negative integer or \(p>3\). Figures 5 and 6, where the results for \(p=1/2\) and \(p=1/20\) are presented, show that, indeed, the adaptive quadratures \(S_m^\mathrm{std}\) and \(S_m^\mathrm{opt}\) converge as \(m^{-4}\), while \(S_m^\mathrm{non}\) converges at a very poor rate.

Fig. 7 Error \(e\) versus \(m\) for function \(f\) of Example 4

We end this paper by showing the importance of continuity of \(f\).

Example 4

Consider the integral \(\int _a^b f(x)\,{\mathrm d}x\) with \(a=-1/2\), \(b=1\),

In this case \(L^\mathrm{opt}(f)<\infty \) but \(L^\mathrm{std}(f)=\infty \). Figure 7 shows that \(S_m^\mathrm{opt}\) enjoys the ‘right’ convergence \(m^{-4}\) but \(S_m^\mathrm{std}\) completely fails. This is because the critical value

does not converge faster than \(h\); the algorithm keeps dividing the subinterval containing \(0\). As a result, the standard adaptive algorithm is asymptotically even worse than the nonadaptive algorithm.

Equally striking is the difference between OPTIMAL and STD. While OPTIMAL works perfectly well, see Table 6, STD will never reach the stopping criterion for \(\varepsilon \le 10^{-3}\), and will loop forever.
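The non-termination can be illustrated with a small experiment. The sketch below rests on several assumptions: it uses a common textbook form of the local stopping test, \(|S_1-S_2|/15\le \varepsilon h/(b-a)\), which may differ from the exact criterion of STD; it replaces the integrand of this example with a hypothetical unit step at \(0\); and it adds a recursion cap `max_depth`, which is not part of the algorithm, only so that the run terminates. On any subinterval of length \(h\) containing the jump, \(|S_1-S_2|\ge h/12\) for this step function, so the assumed test cannot be passed for \(\varepsilon <1/120\).

```python
def simpson(f, a, b):
    """Three-point Simpson rule on [a, b]."""
    return (b - a) / 6.0 * (f(a) + 4.0 * f((a + b) / 2.0) + f(b))


def adaptive_std(f, a, b, eps, max_depth):
    """Recursive adaptive Simpson with the assumed local test
    |S1 - S2| / 15 <= eps * h / (b - a).
    Returns (estimate, hit_cap); hit_cap is True if the depth cap was reached."""
    length = b - a

    def recurse(lo, hi, depth):
        mid = (lo + hi) / 2.0
        s1 = simpson(f, lo, hi)
        s2 = simpson(f, lo, mid) + simpson(f, mid, hi)
        if abs(s1 - s2) / 15.0 <= eps * (hi - lo) / length:
            return s2, False
        if depth == max_depth:  # safety cap, not part of the algorithm
            return s2, True
        left, cap_left = recurse(lo, mid, depth + 1)
        right, cap_right = recurse(mid, hi, depth + 1)
        return left + right, cap_left or cap_right

    return recurse(a, b, 0)


step = lambda x: 0.0 if x <= 0.0 else 1.0  # hypothetical discontinuous integrand
smooth = lambda x: x ** 4                  # smooth comparison integrand

step_estimate, step_hit_cap = adaptive_std(step, -0.5, 1.0, 1e-3, 30)
smooth_estimate, smooth_hit_cap = adaptive_std(smooth, -0.5, 1.0, 1e-3, 30)
print("step function reached the depth cap:", step_hit_cap)
print("smooth function reached the depth cap:", smooth_hit_cap)
```

With the cap removed, the recursion on the subinterval containing \(0\) would continue indefinitely for the step function, whereas the smooth integrand is accepted after a single subdivision.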

Unfortunately, this example is misleading. The very good behavior of \(S_m^\mathrm{opt}\) is a consequence of our “lack of bad luck” rather than a rule. Indeed, it is enough to change the value of \(f\) in \([-1/2,0]\) from \(0\) to \(7/3\) to see that then \(S_1(a,(a+b)/2;f)-S_2(a,(a+b)/2;f)=0\) although \(\int _a^{(a+b)/2}f(x)\,{\mathrm d}x=19/12>0\). As a consequence, \(\lim _{m\rightarrow \infty }S_m^\mathrm{opt}(f)=13/6\) while the integral equals \(25/12\).

References

[1] Calvetti, D., Golub, G.H., Gragg, W.B., Reichel, L.: Computation of Gauss-Kronrod quadrature rules. Math. Comput. 69, 1035–1052 (2000)

[2] Clancy, N., Ding, Y., Hamilton, C., Hickernell, F.J., Zhang, Y.: The cost of deterministic, adaptive, automatic algorithms: cones, not balls. J. Complex. 30, 21–45 (2014)

[3] Conte, S.D., de Boor, C.: Elementary numerical analysis—an algorithmic approach, 3rd edn. McGraw-Hill, New York (1980)

[4] Davis, P.J., Rabinowitz, P.: Methods of numerical integration, 2nd edn. Academic Press, Orlando (1984)

[5] Gander, W., Gautschi, W.: Adaptive quadrature—revisited. BIT 40, 84–101 (2000)

[6] Kincaid, D., Cheney, W.: Numerical analysis: mathematics of scientific computing, 3rd edn. AMS, Providence, RI (2002)

[7] Kruk, A.: Is the adaptive Simpson quadrature optimal? Faculty of Mathematics, Informatics and Mechanics, University of Warsaw, Master Thesis (in Polish) (2012)

[8] Lyness, J.N.: Notes on the adaptive Simpson quadrature routine. J. Assoc. Comput. Mach. 16, 483–495 (1969)

[9] Lyness, J.N.: When not to use an automatic quadrature routine? SIAM Rev. 25, 63–87 (1983)

[10] McKeeman, W.M.: Algorithm 145: adaptive numerical integration by Simpson’s rule. Commun. ACM 5, 604 (1962)

[11] Malcolm, M.A., Simpson, R.B.: Local versus global strategies for adaptive quadrature. ACM Trans. Math. Softw. 1, 129–146 (1975)

[12] Novak, E.: On the power of adaption. J. Complex. 12, 199–238 (1996)

[13] Piessens, R., de Doncker-Kapenga, E., Überhuber, C.W., Kahaner, D.K.: QUADPACK. A subroutine package for automatic integration. Springer, Berlin (1983)

[14] Plaskota, L.: Noisy information and computational complexity. Cambridge University Press, Cambridge (1996)

[15] Press, W.H., Teukolsky, S.A., Vetterling, W.T., Flannery, B.P.: Numerical recipes: the art of scientific computing, 3rd edn. Cambridge University Press, New York (2007)

[16] Plaskota, L., Wasilkowski, G.W.: Adaption allows efficient integration of functions with unknown singularities. Numerische Mathematik 102, 123–144 (2005)

[17] Traub, J.F., Wasilkowski, G.W., Woźniakowski, H.: Information-based complexity. Academic Press, New York (1988)

[18] Wasilkowski, G.W.: Information of varying cardinality. J. Complex. 1, 107–117 (1986)

Acknowledgments

The author would like to thank Grzegorz Wasilkowski and Henryk Woźniakowski for discussions on the results of this paper, and an anonymous referee for constructive comments. This research was partially supported by the National Science Centre, Poland, based on the decision DEC-2013/09/B/ST1/04275.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Cite this article

Plaskota, L. Automatic integration using asymptotically optimal adaptive Simpson quadrature. Numer. Math. 131, 173–198 (2015). https://doi.org/10.1007/s00211-014-0684-3

DOI: https://doi.org/10.1007/s00211-014-0684-3