Abstract

We study the \(\ell ^1\)-summability of functions on the d-dimensional torus \({{\mathbb {T}}}^d\) and so-called \(\ell ^1\)-invariant functions, that is, functions on the torus whose Fourier coefficients depend only on the \(\ell ^1\)-norm of their indices. Such functions are characterized as divided differences that have \(\cos {\theta }_1,\ldots ,\cos {\theta }_d\) as knots for \(({\theta }_1,\ldots , {\theta }_d) \in {{\mathbb {T}}}^d\). This leads us to consider the d-dimensional Fourier series of univariate B-splines with respect to their knots, which turns out to enjoy a simple biorthogonality that can be used to obtain an orthogonal series of the B-spline function.

1 Introduction

We consider a problem originating from the \(\ell ^1\)-summability of multivariate Fourier series. Let f be a \(2\pi \)-periodic function in \(L^2({{\mathbb {T}}}^d)\) and let \({{\hat{f}}}_{\alpha }\) be the Fourier coefficient of f with the multi-index \({\alpha }= ({\alpha }_1,\ldots , {\alpha }_d) \in {{\mathbb {Z}}}^d\). Let

\[ S_n^{(1)}(f)(x) := \sum _{|{\alpha }| \le n} {{\hat{f}}}_{\alpha }\, \textrm{e}^{\textrm{i} {\alpha }\cdot x} \]

be the n-th \(\ell ^1\)-partial sum of its Fourier series, where \(|{\alpha }|=|{\alpha }|_1 = |{\alpha }_1|+ \cdots + |{\alpha }_d|\), so that the summation is over indices in the \(\ell ^1\)-ball of radius n. The \(\ell ^1\)-summability has been studied in [2, 5, 8, 9, 10, 11], and it is closely related to the summability of Fourier series of orthogonal polynomials on the cube [12]. The Dirichlet kernel of \(S_n^{(1)}(f)\) turns out to be a divided difference, defined in the next section, of the form

where \(G_{n,d}\) is a function of one variable as shown in [2, 12] (see (2.2) in the next section). The divided difference can be written as an integral with a Peano kernel; in particular, for a \((d-1)\)-times differentiable function \(F: [-1,1]\rightarrow {{\mathbb {C}}}\),

where \(u \mapsto M_{d-1}(u| \cos {\theta }_1,\ldots ,\cos {\theta }_d)\) is the B-spline function, which is a piecewise polynomial function in \(C^{d-2}([-1,1])\) with \(\cos {\theta }_1,\ldots , \cos {\theta }_d\) as its knots (see the next section for its definition). Motivated by the \(\ell ^1\)-summability and functions defined by the above divided difference, we call a function \(\ell ^1\)-invariant if \({{{\hat{f}}}_{\alpha }} = {{{\hat{f}}}_{\beta }}\) whenever \(|{\alpha }| = |{\beta }|\) and study properties of such functions.

Our analysis is partially motivated by the study in [2], where the \(\ell ^1\)-summability of the Fourier transform in \({{\mathbb {R}}}^d\), defined by

is treated and its associated Dirichlet kernel is shown to be given as a divided difference, namely

where \({{\mathfrak {G}}}_{\rho ,d}\) is a function of one variable. The Fourier transform of the B-spline function \(x \mapsto M_{d-1}(u| x_1^2,\ldots , x_d^2)\), considered as a function of its knots, is analyzed in [2]; it turns out to enjoy a rich structure and provides the necessary tools for studying the class of \(\ell ^1\)-invariant functions \(f(\Vert \cdot \Vert _1)\) defined on \({{\mathbb {R}}}^d\). In particular, it leads to a characterization of those \(f: {{\mathbb {R}}}_+ \rightarrow {{\mathbb {R}}}\) for which \(x \mapsto f(\Vert x \Vert _1)\) is a positive definite function on \({{\mathbb {R}}}^d\).

We will show that the \(\ell ^1\)-invariant functions on the torus are all given by divided differences with knots \(\cos \theta _1, \ldots , \cos \theta _d\), and we will study the Fourier series of the B-spline \({\theta }\mapsto M_{d-1}(u| \cos {\theta }_1,\ldots , \cos {\theta }_d)\), which is \(\ell ^1\)-invariant. While the Fourier transform of the B-spline \(x \mapsto M_{d-1}(u| x_1^2,\ldots , x_d^2)\) on \({{\mathbb {R}}}^d\) satisfies an integral recursive relation in dimension d, the Fourier coefficients of the B-spline \({\theta }\mapsto M_{d-1}(u| \cos {\theta }_1,\ldots , \cos {\theta }_d)\) on \({{\mathbb {T}}}^d\) satisfy a somewhat surprising biorthogonal relation with a family of polynomials. Let \(m_{n,d}\) denote the Fourier coefficients of the B-spline function with the index \(|{\alpha }| = n\). Then there is a sequence of polynomials \(h_{n,d}\), given in terms of the Gegenbauer polynomials, such that \(\{m_{n,d}: n \in {{\mathbb {N}}}_0\}\) and \(\{h_{n,d}: n \in {{\mathbb {N}}}_0\}\) are biorthogonal in the sense that

This biorthogonal relation can be used to derive the Fourier orthogonal series of the B-spline function in the Gegenbauer polynomials explicitly; the first term of the series gives, in particular, that

an identity that appears to be new. We will also give a characterization of \(\ell ^1\)-invariant functions that are either positive definite or strictly positive definite on \({{\mathbb {T}}}^d\).

The paper is organized as follows. We recall the definition and basic properties of \(\ell ^1\)-summability in the next section and establish several necessary identities. The Fourier orthogonal series of the B-spline with respect to its knots is given in the third section. The positive definite functions among \(\ell ^1\)-invariant functions are discussed in the fourth section.

2 \(\ell ^1\)-summability on \({{\mathbb {T}}}^d\)

Let f be a \(2\pi \)-periodic function defined on \({{\mathbb {T}}}^d\). If \(f\in L^2({{\mathbb {T}}}^d)\), then the Fourier series of f is defined by

We study the class of periodic functions that we call \(\ell ^1\)-invariant.

Definition 2.1

A function \(f: {{\mathbb {T}}}^d \rightarrow {{\mathbb {R}}}\) is called \(\ell ^1\)-invariant if

\[ {{\hat{f}}}_{\alpha } = {{\hat{f}}}_{\beta } \quad \text{whenever} \quad |{\alpha }| = |{\beta }|, \qquad {\alpha }, {\beta }\in {{\mathbb {Z}}}^d. \]

We denote the Fourier coefficient \({{\hat{f}}}_{\alpha }\) of such a function by \({{\hat{f}}}_{|{\alpha }|}\).

If f is \(\ell ^1\)-invariant, then its Fourier series is of the form

where \(|{\alpha }|\) is the \(\ell ^1\)-norm, that is \(|{\alpha }|:= |{\alpha }_1| + \cdots + |{\alpha }_d|\), of \({\alpha }\in {{\mathbb {Z}}}^d\).
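
As a numerical illustration, the following sketch evaluates the block \(E_n({\theta })\) by brute force, assuming \(E_n({\theta }) = \sum _{|{\alpha }| = n} \textrm{e}^{\textrm{i}{\alpha }\cdot {\theta }}\) with \({\alpha }\in {{\mathbb {Z}}}^d\) (the form that results from differencing consecutive \(\ell ^1\)-partial sums). The helper names `l1_sphere` and `E` are ours and purely illustrative. The check confirms that \(E_n\) is real valued, even in each variable, and symmetric under permutations of the variables, which is what one expects of a function of the knots \(\cos {\theta }_1,\ldots ,\cos {\theta }_d\).

```python
import cmath
import itertools
import math

def l1_sphere(n, d):
    """All alpha in Z^d with |alpha_1| + ... + |alpha_d| == n (brute force)."""
    return [a for a in itertools.product(range(-n, n + 1), repeat=d)
            if sum(abs(x) for x in a) == n]

def E(n, theta):
    """Assumed form E_n(theta) = sum over |alpha|_1 = n of exp(i alpha . theta)."""
    d = len(theta)
    return sum(cmath.exp(1j * sum(a * t for a, t in zip(alpha, theta)))
               for alpha in l1_sphere(n, d))

theta = (0.3, 1.1, 2.0)
value = E(4, theta)
permuted = E(4, (2.0, 0.3, 1.1))      # same angles, different order
reflected = E(4, (-0.3, 1.1, -2.0))   # sign changes in two variables

print("E_4(theta) =", value.real, " imaginary part:", abs(value.imag))
print("permutation defect:", abs(value - permuted))
print("reflection defect:", abs(value - reflected))
```

In one variable the same function reduces to \(2\cos n{\theta }\) for \(n \ge 1\), which gives a quick sanity check of the enumeration.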

A function f on \({{\mathbb {T}}}^d\) is called \(\ell ^1\)-summable if its partial sum \(S_n^{(1)} f\) over the expanding \(\ell ^1\)-ball, defined by

converges to f. The partial sum can be written as an integral operator

where the kernel \(D_{n,d}\) is the analog of the Dirichlet kernel defined by

It is shown in [2, 12] that the kernel \(D_{n,d}\) can be written as a divided difference

where \(G_{n,d}\) is a univariate function defined by

We briefly recall the notion of a divided difference of a function that is at least continuous. Let f be a real or complex function on \({{\mathbb {R}}}\), and let \(m \in {{\mathbb {N}}}_0\). The m-th divided difference of f at the pairwise distinct knots \(x_0, x_1, \ldots , x_m\) in \({{\mathbb {R}}}\) is defined inductively as

\[ [x_0] f := f(x_0), \qquad [x_0,\ldots , x_m] f := \frac{[x_0,\ldots , x_{m-1}] f - [x_1,\ldots , x_m] f}{x_0 - x_m}. \]

The divided difference is a symmetric function of the knots. The knots of the divided difference may coalesce. In particular, if all knots coalesce and if the function is sufficiently differentiable, then the divided difference collapses to

\[ [x_0,\ldots , x_0] f = \frac{f^{(m)}(x_0)}{m!}. \]

Our analysis depends heavily on an integral representation of the divided difference, for which we need the definition of B-spline. For \(x_0< \cdots < x_m\), the B-spline of order m with knots \(x_0, \ldots , x_m\) is defined by

The B-spline vanishes outside the interval \((x_0,x_m)\) and it is strictly positive on the interval itself, and

For better reference, we state the integral representation of the divided difference as a lemma.
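
To make the objects in the lemma concrete, here is a minimal numerical sketch. It uses the standard recursive definition of the divided difference and, as an assumption on our part, the B-spline normalized to have integral one, \(M_m(u| x_0,\ldots ,x_m) = m\,[x_0,\ldots ,x_m](\cdot - u)_+^{m-1}\); under that normalization the Peano (Hermite-Genocchi) representation reads \([x_0,\ldots ,x_m] f = \frac{1}{m!}\int f^{(m)}(u) M_m(u| x_0,\ldots ,x_m)\,\textrm{d}u\). The normalization used in the paper may differ by a constant factor, and all function names below are illustrative.

```python
import math

def divided_difference(f, knots):
    """[x_0, ..., x_m] f for pairwise distinct knots, by the usual recursion."""
    if len(knots) == 1:
        return f(knots[0])
    left = divided_difference(f, knots[:-1])
    right = divided_difference(f, knots[1:])
    return (left - right) / (knots[0] - knots[-1])

def bspline(u, knots):
    """Assumed normalization: M(u | knots) = m [knots] (. - u)_+^(m-1), m >= 2."""
    m = len(knots) - 1
    return m * divided_difference(lambda x: max(x - u, 0.0) ** (m - 1), knots)

def peano_integral(f_m, knots, steps=20000):
    """(1/m!) * integral of f^(m)(u) M(u | knots) du, midpoint rule."""
    m = len(knots) - 1
    a, b = min(knots), max(knots)
    h = (b - a) / steps
    total = 0.0
    for i in range(steps):
        u = a + (i + 0.5) * h
        total += f_m(u) * bspline(u, knots)
    return total * h / math.factorial(m)

# Knots of the form cos(theta_j), as in the text; f = exp so every derivative is exp.
knots = [math.cos(t) for t in (0.4, 1.3, 2.5)]
lhs = divided_difference(math.exp, knots)
rhs = peano_integral(math.exp, knots)
print("divided difference:", lhs)
print("Peano integral:    ", rhs)
```

The two printed numbers should agree up to quadrature error; reordering the knots leaves the divided difference unchanged, in line with its symmetry.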

Lemma 2.2

Let \(f:{{\mathbb {R}}}\rightarrow {{\mathbb {C}}}\) be m-times continuously differentiable. Then

We shall also need the B-splines’ recurrence relation (Powell, 1982) [6]

We first write the function \(E_n\) as an integral against the B-spline. We will need the Gegenbauer polynomials, which are orthogonal polynomials with respect to the weight function

\[ w_{\lambda }(t) := (1-t^2)^{{\lambda }-\frac{1}{2}}, \qquad {\lambda }> -\tfrac{1}{2}, \]

on the interval \([-1,1]\). Let \((a)_n = a(a+1)\cdots (a+n-1)\) denote the Pochhammer symbol. The Gegenbauer polynomial of degree n is denoted by \(C_n^{\lambda }\) and normalized by \(C_n^{\lambda }(1) = \frac{(2{\lambda })_n}{n!}\). The Gegenbauer polynomials satisfy the orthogonality

where \(c_{\lambda }\) is a constant so that \(c_{\lambda }\int _{-1}^1 w_{\lambda }(t) \,\textrm{d}t = 1\). For convenience, we also define

The generating function of the Gegenbauer polynomials is given by

\[ \frac{1}{(1 - 2 r t + r^2)^{{\lambda }}} = \sum _{n=0}^\infty C_n^{\lambda }(t)\, r^n, \qquad 0 \le r < 1. \]

Throughout this paper we define \(C_n^{\lambda }(t) =0\) whenever \(n < 0\).
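
The conventions above can be checked numerically. The sketch below evaluates \(C_n^{\lambda }\) through the classical three-term recurrence \(n C_n^{\lambda }(t) = 2(n+{\lambda }-1)\, t\, C_{n-1}^{\lambda }(t) - (n+2{\lambda }-2) C_{n-2}^{\lambda }(t)\), then compares the value at \(t = 1\) with \((2{\lambda })_n/n!\) and a truncated power series with the standard generating function \((1-2rt+r^2)^{-{\lambda }}\). The function names and the sample values of \({\lambda }\), t, r are our own choices for illustration.

```python
import math

def pochhammer(a, n):
    """(a)_n = a (a+1) ... (a+n-1), with (a)_0 = 1."""
    result = 1.0
    for k in range(n):
        result *= a + k
    return result

def gegenbauer(n, lam, t):
    """C_n^lam(t) via the three-term recurrence; zero for n < 0 as in the text."""
    if n < 0:
        return 0.0
    prev, cur = 0.0, 1.0  # C_{-1} = 0, C_0 = 1
    for k in range(1, n + 1):
        nxt = (2.0 * (k + lam - 1.0) * t * cur - (k + 2.0 * lam - 2.0) * prev) / k
        prev, cur = cur, nxt
    return cur

lam, t, r = 2.0, 0.4, 0.3   # lam = d - 1 with d = 3, an arbitrary sample
series = sum(gegenbauer(n, lam, t) * r ** n for n in range(80))
closed = (1.0 - 2.0 * r * t + r * r) ** (-lam)
print("truncated series:", series)
print("generating fn:   ", closed)
print("C_5(1):", gegenbauer(5, lam, 1.0), " (2 lam)_5 / 5!:", pochhammer(2 * lam, 5) / math.factorial(5))
```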

Lemma 2.3

For \(\theta = ({\theta }_1,\ldots ,{\theta }_d)\in {{\mathbb {T}}}^d\), the function \(E_n\) satisfies

where \(H_{n,d}\) and \(h_{n,d}\) are defined by \(H_{0,d} = G_{0,d}\) and, for \(n \ge 1\),

and \(h_{n,d}\) is a polynomial of degree n given by \(h_{0,d} =1\) and, for \(n \ge 1\),

Proof

By its definition, \(E_n({\theta }) = D_n({\theta }) - D_{n-1}({\theta })\), so that \(E_n({\theta })\) is a divided difference of \(H_{n,d} = G_{n,d} - G_{n-1,d}\), from which (2.4) follows readily with \(h_{n,d} = H_{n,d}^{(d-1)}\) and the identity (2.5) follows as a consequence of (2.2) and the trigonometric identities \(\cos (n+\frac{1}{2}){\theta }- \cos (n-\frac{1}{2}){\theta }= - 2 \sin n{\theta }\sin \frac{{\theta }}{2}\) and \(\sin (n+\frac{1}{2}){\theta }- \sin (n-\frac{1}{2}){\theta }= 2 \cos n{\theta }\sin \frac{{\theta }}{2}\). Now, it is shown in [2] that

where \(f_{n,d}\) is given in terms of the Gegenbauer polynomials by

Using the relation [7, (4.7.29)]

with \({\lambda }= d-1\), we then obtain

which is the second expression of \(h_{n,d}\) in (2.6) by recursion with \(Z_n^d\). Furthermore, the first identity in (2.6) follows from \(\left( {\begin{array}{c}d-1\\ j\end{array}}\right) + \left( {\begin{array}{c}d-1\\ j-1\end{array}}\right) = \left( {\begin{array}{c}d\\ j\end{array}}\right) \) and

where we define for convenience \(\left( {\begin{array}{c}d-1\\ m\end{array}}\right) = 0\) if \(m = -1\) or \(m = d\). \(\square \)

Let \(N_d(n) = \# \{{\alpha }\in {{\mathbb {Z}}}^d: |{\alpha }| =n\}\) be the cardinality of the set \(\{{\alpha }\in {{\mathbb {Z}}}^d: |{\alpha }| =n\}\). Then \(N_{d}(n) = E_n(0)\). As a consequence of the identities (2.4) and (2.6), we obtain

where \((a)_n = a(a+1)\cdots (a+n-1)\) is the Pochhammer symbol. The last sum can be written as a hypergeometric \({}_3F_2\) function evaluated at 1,

but the series is not balanced, so the standard summation formulas do not yield a closed form. The first values of \(N_d(n)\) are given below
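
The first values can also be generated directly. The sketch below counts the lattice points \({\alpha }\in {{\mathbb {Z}}}^d\) with \(|{\alpha }| = n\) (our reading of \(N_d(n)\), consistent with \(N_d(n) = E_n(0)\)) by recursion on the last coordinate: that coordinate is either 0 or \(\pm k\) with \(1 \le k \le n\). The function name `count_l1_sphere` is illustrative, and the table printed here is computed, not copied from the paper.

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def count_l1_sphere(n, d):
    """Number of alpha in Z^d with |alpha_1| + ... + |alpha_d| == n."""
    if d == 1:
        return 1 if n == 0 else 2
    # Last coordinate is 0, or +/- k for k = 1..n.
    total = count_l1_sphere(n, d - 1)
    for k in range(1, n + 1):
        total += 2 * count_l1_sphere(n - k, d - 1)
    return total

for d in range(1, 5):
    row = [count_l1_sphere(n, d) for n in range(7)]
    print(f"d = {d}:", row)
```

For \(d = 2\) the recursion gives \(N_2(n) = 4n\) for \(n \ge 1\), the number of lattice points on the boundary of the diamond of radius n.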

The function \(h_{n,d}\) satisfies a generating function identity which we state as the following result.

Lemma 2.4

Let \(0 \le r < 1\). Then

Proof

By the explicit formula of \(h_{n,d}\), we obtain

where we have used the generating function of the Gegenbauer polynomials. \(\square \)

Our next result, which is of interest in itself, gives an explicit formula for the divided difference of the function

Proposition 2.5

For \(0 \le r < 1\),

In particular,

Proof

We start with the elementary identity

Reorganizing the d-fold product of this identity and setting \({\theta }= ({\theta }_1,\ldots , {\theta }_d)\) as above, we obtain the equalities

from which the identity (2.8) follows from the generating function of \(h_{n,d}\). Now, taking derivatives of \(P_r\), we obtain readily that

so that the left-hand side of (2.8) can be identified with the divided difference of \(P_r\), which gives (2.9). \(\square \)

3 Fourier series of B-splines with respect to its knots

As a function of its knots, the B-spline function is a periodic function on \({{\mathbb {T}}}^d\),

for each u, and we also define for convenience

Studying this case is sufficient since for \(|u| \ge 1\), \( M_{d-1}(u| \cos {\theta }_1,\ldots ,\cos {\theta }_d) = 0\) by definition, for all \({\theta }\in {{\mathbb {T}}}^d\). We first show that it is an integrable function on \({{\mathbb {T}}}^d\).

Proposition 3.1

For \(u \in (-1,1)\), the function \({\theta }\mapsto M_{d-1}(u| \cos {\theta }_1,\ldots ,\cos {\theta }_d)\) is in \(L^1({{\mathbb {T}}}^d)\).

Proof

Let \(u = \cos {\alpha }\) for \(0< {\alpha }< \pi \) be fixed. Since the function \({{\mathcal {M}}}_d({\alpha };\cdot )\) is obviously even in each of its variables, we only need to consider \({\theta }\in [0, \pi ]^d\). Furthermore, since the divided difference is a symmetric function of its knots, the function \({{\mathcal {M}}}_{d}({\alpha };\cdot )\) is symmetric; it is also nonnegative, so we only need to show that it is an \(L^1\) function on the domain

Indeed, the above consideration leads readily to

We start with the case \(d=2\); the univariate case is trivial by continuity and compact support. On the domain \(\triangle _2\), the function is given by

Hence, it follows readily that

where we have used the inequality \(\sin \frac{{\theta }_1-{\theta }_2}{2} \ge \frac{{\theta }_1-{\theta }_2}{\pi }\) and, if \(\frac{{\theta }_1+{\theta }_2}{2} \le \frac{\pi }{2}\), \(\sin \frac{{\theta }_1+{\theta }_2}{2} \ge \sin \frac{{\theta }_2}{2} \ge \sin \frac{{\alpha }}{2}\), whereas if \(\frac{{\theta }_1+{\theta }_2}{2} > \frac{\pi }{2}\), \(\sin \frac{{\theta }_1+{\theta }_2}{2} = \sin (\frac{\pi - {\theta }_1}{2} + \frac{\pi - {\theta }_2}{2}) \ge \sin \frac{\pi - {\alpha }}{2} = \cos \frac{{\alpha }}{2}\). The last integral is equal to \(-{\alpha }\ln {\alpha }+ \pi \ln \pi + ({\alpha }- \pi ) \ln (\pi -{\alpha })\), which is bounded for \(0< {\alpha }< \pi \), so that \({{\mathcal {M}}}_{2}({\alpha }; {\theta }) \in L^1({{\mathbb {T}}}^2)\) for \(0< {\alpha }< \pi \).
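
The closed form quoted above can be sanity checked numerically. The display containing the integral is not reproduced in this copy, so the sketch below rests on an assumption: that the integral in question is \(\int _0^{{\alpha }}\int _{{\alpha }}^{\pi } \frac{\textrm{d}{\theta }_1 \textrm{d}{\theta }_2}{{\theta }_1 - {\theta }_2}\), which is the double integral that reproduces the stated expression. The inner integral is done exactly and the outer one by the midpoint rule; the function names are illustrative.

```python
import math

def closed_form(alpha):
    """-a ln a + pi ln pi + (a - pi) ln(pi - a), the expression quoted in the text."""
    return (-alpha * math.log(alpha) + math.pi * math.log(math.pi)
            + (alpha - math.pi) * math.log(math.pi - alpha))

def double_integral(alpha, steps=100000):
    """Assumed integral: theta_2 over (0, alpha), theta_1 over (alpha, pi), of 1/(theta_1 - theta_2).

    The inner integral equals ln(pi - theta_2) - ln(alpha - theta_2); the outer
    integral has an integrable logarithmic singularity at theta_2 = alpha.
    """
    h = alpha / steps
    total = 0.0
    for i in range(steps):
        t2 = (i + 0.5) * h
        total += math.log(math.pi - t2) - math.log(alpha - t2)
    return total * h

for alpha in (0.5, 1.0, 2.5):
    print(alpha, double_integral(alpha), closed_form(alpha))
```

The agreement is only up to the first-order error that the midpoint rule incurs at the logarithmic endpoint singularity.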

For \(d > 2\), we use induction on d and the recurrence relation for B-splines stated above. Since

and \({{\mathcal {M}}}_{d+1}( \alpha | {\theta }) =0\) if \(\alpha \not \in ({\theta }_1,{\theta }_{d+1})\), it follows that for \(\alpha \in [0,\pi ]\) and \(\theta \in \triangle _d\),

Consequently, the integrability of \({{\mathcal {M}}}_{d+1}({\alpha }| {\theta })\) follows from the integrability of \({{\mathcal {M}}}_d({\alpha }| {\theta })\) by induction. \(\square \)

Since \({{\mathcal {M}}}_d({\alpha };\cdot )\) is a nonnegative integrable function, we can expand it into multiple Fourier series, which leads us to consider the Fourier coefficients of the B-spline function as a function of its knots. More interestingly, we consider the \(\ell ^1\)-sum of its Fourier coefficients.

Definition 3.2

For \(d \ge 2\) and \(n \in {{\mathbb {N}}}_0\), we define

By the definition of \(E_n({\theta })\), \(m_{n,d}\) is the \(\ell ^1\) mean of the Fourier coefficients of the B-spline function \({\theta }\mapsto M_{d-1} (u | \cos {\theta }_1,\ldots ,\cos {\theta }_d)\) with respect to its knots.

Theorem 3.3

The family of functions \(\{m_{n,d}: n \in {{\mathbb {N}}}_0\}\) and the family of functions \(\{h_{n,d}: n \in {{\mathbb {N}}}_0\}\) are biorthogonal; more precisely,

Proof

Multiplying the first identity of (2.10) by \(E_n({\theta })\) and integrating over \({\theta }\in {{\mathbb {T}}}^d\), we obtain

Using (2.8) and exchanging the order of integrals on the right-hand side, we obtain

where the last step follows from (2.7). Since the above identity holds for \(|r| <1\), comparing the coefficients of \(r^n\) proves (3.1) by linear independence. \(\square \)

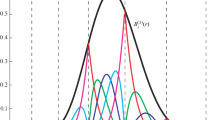

Using the biorthogonality, we can now derive a series expansion of \(m_{n,d}\). Let us start with \(d =2\).

Proposition 3.4

For \(n =0, 1,2,\ldots \), \(m_{n,2}(u) = 0\) if \(|u| \ge 1\), and furthermore

Proof

Let \(n \ge 0\) be fixed. Since \(m_{n,2}(\pm 1) =0\), we assume that \(m_{n,2}(u)\) contains a factor \(\sqrt{1-u^2}\) and takes the form

where the coefficients \(a_k\) are real numbers that will be determined by the biorthogonality of (3.1) and \(U_n\) are as usual the Chebyshev polynomials of the second kind, satisfying \(U_n=C^1_n\). Using the orthogonality of \(U_n\),

and the second explicit formula, in (2.6),

we see that (3.1) becomes, for \(\ell =0,1,\ldots \),

If \(\ell \) and n have different parity, then both the right-hand and the left-hand side are zero. Assume now that \(\ell \) and n have the same parity. If \(\ell < n\), then the right-hand side is trivially zero by the orthogonality of \(U_n\). Thus, we only need to consider \(\ell = n + 2j\) for \(j = 0,1,\ldots \), for which the identity becomes

where \(a_{-1} =0\), so that \(a_0 =1\) and \(a_j =a_{j-1}\) for \(j \ge 1\). Hence, \(a_j = 1\) for \(j=0,1,\ldots \). Setting \(u = \cos {\alpha }\), we get \(\sqrt{1-u^2}\, U_{n+2k}(u) = \sin ((n+2k+1) {\alpha })\), which gives the expression of \(m_{n,2}\) in (3.2). \(\square \)
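
The last step of the proof rests on the identity \(\sqrt{1-u^2}\, U_m(\cos {\alpha }) = \sin ((m+1){\alpha })\) for \(u = \cos {\alpha }\), \(0< {\alpha }< \pi \). A short numerical check, with \(U_m\) computed from the recurrence \(U_{k+1}(u) = 2u\,U_k(u) - U_{k-1}(u)\), is sketched below; the function name `chebyshev_u` and the sample angle are ours.

```python
import math

def chebyshev_u(m, u):
    """Chebyshev polynomial of the second kind U_m(u), via the three-term recurrence."""
    prev, cur = 0.0, 1.0  # U_{-1} = 0, U_0 = 1
    for _ in range(m):
        prev, cur = cur, 2.0 * u * cur - prev
    return cur

alpha = 0.9
u = math.cos(alpha)
max_defect = max(
    abs(math.sqrt(1.0 - u * u) * chebyshev_u(m, u) - math.sin((m + 1) * alpha))
    for m in range(30)
)
print("largest defect over m = 0..29:", max_defect)
```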

It turns out, surprisingly, that the expression (3.2) can be written, for each n, as a finite sum.

Theorem 3.5

For \(n =0, 1,2,\ldots \), and \(0< {\alpha }< \pi \),

In particular, we obtain

Proof

Let \(f_0\) and \(f_1\) be odd \(2\pi \)-periodic functions whose restrictions to \([0,\pi ]\) are defined by

A quick computation shows that the Fourier series of \(f_0\) is given by

where the convergence is pointwise. This gives immediately (3.5). Furthermore, for \(m_{2n,2}\), we obtain from (3.2)

which is (3.3). Another quick computation shows that the Fourier series of \(f_1(\theta )\) is

which shows in particular, together with the Fourier series of \(f_0\),

Thus, for \(m_{2n+1,2}\), we obtain from (3.2) that

which is (3.4). \(\square \)

Proposition 3.6

For \(d >2\), the function \(m_{n,d}\) is of the form

where \(c_{d-1}\) is the normalization constant defined by its reciprocal

Proof

For \(d > 2\), the function \(u\mapsto M_{d-1}(u | \cos \{\cdot \}_1, \ldots , \cos \{\cdot \}_d)\) has support in \((-1,1)\) and has \(d-2\) continuous derivatives; it follows that \(m_{n,d}\) is continuous and that \(m_{n,d}^{(j)}(\pm 1) = 0\) for \(j = 0,1,\ldots , d-2\). We assume that \(m_{n,d}\) has the series expansion

where the coefficients \(a_k^n\) are to be determined by the biorthogonality, and the choice of index \(n+2k\) comes from (3.1) and the parity of \(h_{n,d}\). Now, the orthogonality of the Gegenbauer polynomials is equivalent to

Using the second explicit formula of \(h_{n,d}\) in (2.6), which shows that the term of the highest degree in \(h_{n,d}\) is \((d-1)!Z_n^{d-1}\), whereas the term of the lowest degree in \(m_{n,d}\) contains \(C_n^{d-1}\), or the term \(k = 0\) in the sum, the orthogonality (3.7) implies that the identity (3.1) for \(n' = n\) becomes

moreover, for \(n'=n+2\ell \) and \(\ell = 1,2,3,\ldots \), (3.1) becomes

Thus, using \(a_j^n = 0\) for \(j < 0\) and recalling that \(a_0^n = 1\), we see that \(a_j^n\) satisfy

It shows, in particular, that the \(a_j^n\) are independent of n and that they can be determined recursively so that the solution is unique. It turns out that the solution of (3.8) is given explicitly by \(a_j = (d-1)_j / j!\). To verify that this is indeed the case, we write

where the second identity follows from \((-x)_{\ell -j} = (-1)^j (-x)_\ell / (1-\ell +x)_j\), so that the right-hand side of (3.8) can be written as a hypergeometric function, namely

where the last step follows from the Chu-Vandermonde identity [1, p. 67]. Since \((-\ell +1)_\ell = 0\) for \(\ell \in {{\mathbb {N}}}\), this verifies that \(a_j = (d-1)_j / j!\) is the solution of (3.8). \(\square \)
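
The Chu-Vandermonde identity used in the last step states that \({}_2F_1(-n, b; c; 1) = (c-b)_n/(c)_n\) for a terminating series. The following sketch verifies it in exact rational arithmetic and also illustrates the vanishing mechanism invoked in the proof: when \(c - b = 1 - \ell \) and \(n = \ell \ge 1\), the factor \((1-\ell )_\ell \) contains a zero. The parameter values are arbitrary samples of ours, not the ones appearing in (3.8).

```python
from fractions import Fraction

def pochhammer(a, n):
    """(a)_n in exact arithmetic."""
    result = Fraction(1)
    for k in range(n):
        result *= a + k
    return result

def hyp2f1_at_one(n, b, c):
    """Terminating 2F1(-n, b; c; 1) = sum_j (-n)_j (b)_j / ((c)_j j!)."""
    return sum(
        pochhammer(Fraction(-n), j) * pochhammer(b, j)
        / (pochhammer(c, j) * pochhammer(Fraction(1), j))
        for j in range(n + 1)
    )

def chu_vandermonde_rhs(n, b, c):
    """(c - b)_n / (c)_n."""
    return pochhammer(c - b, n) / pochhammer(c, n)

b, c = Fraction(3, 2), Fraction(7, 3)
for n in range(6):
    print(n, hyp2f1_at_one(n, b, c), chu_vandermonde_rhs(n, b, c))

# Vanishing case: c - b = 1 - ell with n = ell, here ell = 3.
ell = 3
c0 = Fraction(5, 2)
print("vanishing case:", hyp2f1_at_one(ell, c0 - (1 - ell), c0))
```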

Theorem 3.7

For \(d > 2\) and \(-1 \le u \le 1\),

Proof

Let \(g(u) = (1-u^2)^{- \frac{d-1}{2}}\). We compute the Fourier-Gegenbauer coefficients \({{\hat{g}}}^{d-1}_n\) defined by

By the parity of \(C_n^{d-1}\), \({{\hat{g}}}^{d-1}_n = 0\) if n is odd. To compute \({{\hat{g}}}_{2n}^{d-1}\), we use the connection coefficients of Gegenbauer polynomials [1, Theorem 7.1.4’, p. 360], which gives

Using the orthogonality of \(Z_m^{\frac{d-1}{2}}\), we then conclude that

(Note that \(c_\cdot \) is also defined for a non-integer index.) Consequently, the Fourier-Gegenbauer expansion of g is given by

where \(h_n^{\lambda }\) denotes the \(L^2([-1,1], (1-t^2)^{{\lambda }-\frac{1}{2}})\) norm of \(C_n^{\lambda }(t)\) and it is equal to

Comparing with (3.6) with \(n =0\), we see that

which is the stated result. \(\square \)

In the case of \(d =2\), it is easy to see that the explicit formula (3.2) implies the relation

The following corollary gives a d-dimensional version of this recursive relation for \(m_{n,d}\).

Corollary 3.8

For \(d \ge 2\), \(n=0,1,2,\ldots \),

Proof

Using the explicit formula of \(m_{n,d}\) in (3.6), we obtain

where we have used the convention that \((d-1)_{k-j} = 0\) if \(j > k\) and \(\left( {\begin{array}{c}d-1\\ j\end{array}}\right) =0\) if \(j > d-1\). The stated result then follows from (3.8). \(\square \)

In particular, the identity (3.10) shows that the finite combination of \(m_{n,d}\) on the left-hand side is a polynomial of degree n multiplied by \((1-t^2)^{d-\frac{3}{2}}\). The recursive formula can be used to determine \(m_{n,d}\) if we know the first \(d-1\) elements \(m_{0,d}, \ldots , m_{d-1,d}\). However, since the explicit expression of \(m_{0,d}\) in (3.9) contains the factor \((1-u^2)^{\frac{d-2}{2}}\), whose power differs from the power \(d - \frac{3}{2}\) on the right-hand side of (3.10), an analog of (3.3) is unlikely to hold for \(d>2\); in particular, \(m_{n,d}\) will not be a polynomial when n is even for \(d >2\).

4 Positive definite \(\ell ^1\)-invariant functions

A function \(f\in C({{\mathbb {T}}}^d)\) is a positive definite function (PDF) if, for every \(N \in {{\mathbb {N}}}\) and every set \(\Xi _N = \{\Theta _1,\ldots , \Theta _N\}\) of pairwise distinct points in \({{\mathbb {T}}}^d\), the matrix

is nonnegative definite; it is called a strictly positive definite function (SPDF) if the matrix is always positive definite. Let \(\Phi ({{\mathbb {T}}}^d)\) denote the set of PDFs on \({{\mathbb {T}}}^d\). By the definition of PDF, the set \(\Phi ({{\mathbb {T}}}^d)\) is closed under linear combinations with nonnegative coefficients; that is, if \(f, g \in \Phi ({{\mathbb {T}}}^d)\) and \(c_1, c_2\) are nonnegative constants, then \(c_1 f + c_2 g \in \Phi ({{\mathbb {T}}}^d)\). The PDFs on \({{\mathbb {T}}}^d\) are characterized by the following theorem:

Theorem 4.1

A function \(f \in C({{\mathbb {T}}}^d)\) is a PDF on \({{\mathbb {T}}}^d\) if and only if the Fourier coefficients \({{\hat{f}}}_{\alpha }\) are nonnegative for all \({\alpha }\in {{\mathbb {Z}}}^d\).
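
The easy direction of the theorem can be illustrated numerically. Assuming the matrix in the definition is \([f(\Theta _j - \Theta _k)]_{j,k=1}^N\) (the display is not reproduced in this copy), a trigonometric polynomial \(f = \sum _{\alpha } c_{\alpha }\textrm{e}^{\textrm{i}{\alpha }\cdot {\theta }}\) satisfies \(\sum _{j,k} z_j \overline{z_k} f(\Theta _j - \Theta _k) = \sum _{\alpha } c_{\alpha } |\sum _j z_j \textrm{e}^{\textrm{i}{\alpha }\cdot \Theta _j}|^2\), which is nonnegative when all \(c_{\alpha } \ge 0\). The sketch compares both sides for an \(\ell ^1\)-invariant choice \(c_{\alpha } = 2^{-|{\alpha }|}\), \(|{\alpha }| \le 3\), in \(d = 2\); the coefficients, points, and function names are all illustrative assumptions.

```python
import cmath
import itertools
import random

def invariant_coeffs(g, max_norm, d):
    """c_alpha = g(|alpha|_1) for alpha in Z^d with |alpha|_1 <= max_norm."""
    box = itertools.product(range(-max_norm, max_norm + 1), repeat=d)
    return {a: g(sum(abs(x) for x in a)) for a in box
            if sum(abs(x) for x in a) <= max_norm}

def f_value(coeffs, theta):
    """f(theta) = sum_alpha c_alpha exp(i alpha . theta)."""
    return sum(c * cmath.exp(1j * sum(a * t for a, t in zip(alpha, theta)))
               for alpha, c in coeffs.items())

def quadratic_form(coeffs, points, z):
    """sum_{j,k} z_j conj(z_k) f(Theta_j - Theta_k)."""
    total = 0j
    for zj, pj in zip(z, points):
        for zk, pk in zip(z, points):
            diff = tuple(s - t for s, t in zip(pj, pk))
            total += zj * zk.conjugate() * f_value(coeffs, diff)
    return total

def sum_of_squares(coeffs, points, z):
    """sum_alpha c_alpha |sum_j z_j exp(i alpha . Theta_j)|^2."""
    total = 0.0
    for alpha, c in coeffs.items():
        s = sum(zj * cmath.exp(1j * sum(a * t for a, t in zip(alpha, pj)))
                for zj, pj in zip(z, points))
        total += c * abs(s) ** 2
    return total

random.seed(0)
coeffs = invariant_coeffs(lambda n: 2.0 ** (-n), max_norm=3, d=2)
points = [(random.uniform(0, 6.28), random.uniform(0, 6.28)) for _ in range(5)]
z = [complex(random.gauss(0, 1), random.gauss(0, 1)) for _ in range(5)]
q = quadratic_form(coeffs, points, z)
s = sum_of_squares(coeffs, points, z)
print("quadratic form:", q)
print("sum of squares:", s)
```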

One direction of the theorem follows readily from the closedness of \(\Phi ({{\mathbb {T}}}^d)\) under nonnegative linear combinations and from the fact that the exponential functions \(\textrm{e}^{\textrm{i}{\alpha }\cdot x} \in \Phi ({{\mathbb {T}}}^d)\) for all \({\alpha }\in {{\mathbb {Z}}}^d\), since

In the other direction, if f is PDF on \({{\mathbb {T}}}^d\) then by the periodicity of f,

The right-hand side is nonnegative if f is a trigonometric polynomial, as can be seen by applying a positive cubature rule for the integral over \({{\mathbb {T}}}^d\) and using the positive definiteness of f. In particular, this shows that the left-hand side integral is nonnegative. Since \(\textrm{e}^{\textrm{i}{\alpha }\cdot {\theta }}\) is PDF, it follows from Schur’s theorem that

Recall that a function \(f: {{\mathbb {T}}}^d \rightarrow {{\mathbb {R}}}\) is \(\ell ^1\)-invariant if \({{\hat{f}}}_{\alpha }= {{\hat{f}}}_{\beta }\) for all \({\alpha },{\beta }\in {{\mathbb {Z}}}^d\) with \(|{\alpha }| = |{\beta }|\). These functions are given as follows.

Theorem 4.2

A function \(f \in L^1({{\mathbb {T}}}^d)\) is \(\ell ^1\)-invariant if and only if

where \(F_d: [-1,1]\rightarrow {{\mathbb {R}}}\) is a \((d-1)\)-times differentiable function and satisfies

with a sequence of real numbers \(\{a_n\}_{n\ge 0}\).

Proof

If f is the given divided difference of \(F_d\) provided in (4.2), then

By the definition of \(E_n({\theta })\), it follows readily that \({{\hat{f}}}_{\alpha }= {{\hat{f}}}_{\beta }\) if \(|{\alpha }| = |{\beta }|\). Moreover, when its knots coalesce, the divided difference becomes a derivative as we have mentioned before. By (2.3) and the \((d-1)\)-times differentiability of F, f is continuous.

In the other direction, if f is \(\ell ^1\)-invariant, then its Fourier series is given by (2.1). By (2.4), we obtain that \(f ({\theta }_1,\ldots ,{\theta }_d) = [\cos {\theta }_1, \ldots , \cos {\theta }_d] F\) with F given by

so that F is of the form (4.2) with \(a_n = \widehat{f}_n\) by (2.5). The continuity of f requires that F has continuous derivatives up to order \(d-1\) by (2.3). \(\square \)

If f is \(\ell ^1\)-invariant, then \({{\hat{f}}}_{\alpha }= {{\hat{f}}}_{|{\alpha }|}\), so that \({{\hat{f}}}_{\alpha }\ge 0\) if and only if \({{\hat{f}}}_n \ge 0\) in (2.1). Consequently, the following characterization of PDFs holds.

Theorem 4.3

Let \(f \in C({{\mathbb {T}}}^d)\) be \(\ell ^1\)-invariant. Then f is a PDF on \({{\mathbb {T}}}^d\) if and only if f is given by (4.1) and (4.2) with \({{\hat{f}}}_n \ge 0\) for all \(n \in {{\mathbb {N}}}_0\).

The SPDFs have been characterized in [4, Theorem 1], where it is proved that a PDF function is also SPDF if and only if the set of indices \({\mathcal {G}}=\lbrace \alpha \in {{\mathbb {Z}}}^{d} \mid {\hat{f}}_{\alpha }>0 \rbrace \) intersects all the translations of each subgroup of \({{\mathbb {Z}}}^{d}\) that has the form

More precisely, for every pair of vectors \(\gamma \in {{\mathbb {Z}}}^{d},\beta \in {{\mathbb {N}}}^{d},\) there exists a \(z\in {{\mathbb {Z}}}^{d}\) with \({\hat{f}}_{\alpha }>0\) and \(\alpha _j=\gamma _j+z_j \beta _j\), \(j=1,\ldots ,d\). For \(\ell ^1\)-invariant functions, the SPDFs are characterized below.

Theorem 4.4

Let \(f \in C({{\mathbb {T}}}^d)\) be \(\ell ^1\)-invariant. Then f is an SPDF on \({{\mathbb {T}}}^d\) if and only if f is given by (4.1) and (4.2), f is a PDF, and for any pair \(n<\ell \in {{\mathbb {N}}}\) there is an \(m\in {{\mathbb {N}}}\) with \({\hat{f}}_{n+m\ell }>0\) or \({\hat{f}}_{(\ell -n)+m\ell }>0\).

Proof

We prove that the given condition is equivalent to the condition given in [4], if we assume the function to be \(\ell ^1\)-invariant.

We start with sufficiency. Suppose f is a PDF and satisfies the condition of the theorem. For any two vectors \(\gamma \in {{\mathbb {Z}}}^{d},\beta \in {{\mathbb {N}}}^{d},\) we can assume without loss of generality that \(0\le \gamma _j\le \beta _j\), \(j=1,\ldots ,d\). We define \(n=\vert \gamma \vert \) and \(\ell =\vert \beta \vert \). Then there exists an \(m\in {{\mathbb {N}}}_0\) with \({\hat{f}}_{n+\ell m}>0\) or \({\hat{f}}_{(\ell -n)+\ell m}>0\). If \({\hat{f}}_{n+\ell m}>0\), then

since \(\vert \gamma +m \beta \vert = n+ m\ell \). If instead \({\hat{f}}_{(\ell -n)+\ell m}>0\), we can choose \(m'=m+1\). Now \(\vert \gamma -m' \beta \vert =\vert \gamma -\beta - m \beta \vert =(\ell -n) +m \ell \) implies

For the necessity, suppose the assumption of the theorem does not hold, then there exists \(\ell >n \in {{\mathbb {N}}}\) such that \({\hat{f}}_{n+m\ell }=0\) and \({\hat{f}}_{(\ell -n)+\ell m}=0\) for all \(m\in {{\mathbb {N}}}\). Set

Then for any \(z\in {{\mathbb {Z}}}^{d}\) define \(\alpha \in {{\mathbb {Z}}}^{d}\), \(\alpha _j=\gamma _j+z_j \beta _j\), so that with \(m=\vert z\vert \),

Hence there would be no coefficients \({\hat{f}}_{\alpha }>0\) with \(\alpha _j=\gamma _j+z_j \beta _j\) for this choice of \(\gamma \) and \(\beta \), contradicting strict positive definiteness. \(\square \)

References

Andrews, G.E., Askey, R., Roy, R.: Special Functions. Encyclopedia of Mathematics and its Applications 71. Cambridge University Press, Cambridge (1999)

Berens, H., Xu, Y.: Fejér means for multivariate Fourier series. Math. Z. 221, 449–465 (1996)

Berens, H., Xu, Y.: \(\ell \)-1 summability for multivariate Fourier integrals and positivity. Math. Proc. Cambridge Phil. Soc. 122, 149–172 (1997)

Guella, J., Menegatto, V.A.: Strictly positive definite kernels on the torus. Constr. Approx. 46, 271–284 (2017)

Németh, Z.: On multivariate de la Vallée Poussin-type projection operators. J. Approx. Theory 186, 12–27 (2014)

Powell, M.J.D.: Approximation theory and methods. Cambridge University Press (1982)

Szegő, G.: Orthogonal polynomials, 4th edn. Amer. Math. Soc, Providence, RI (1975)

Szili, L., Vértesi, P.: On multivariate projection operators. J. Approx. Theory 159, 154–164 (2009)

Weisz, F.: \(\ell ^1\)-summability of higher-dimensional Fourier series. J. Approx. Theory 163, 99–116 (2011)

Weisz, F.: Summability of multi-dimensional trigonometric Fourier series. Surv. Approx. Theory 7, 1–179 (2012)

Weisz, F.: Lebesgue points of \(\ell ^1\)-Cesàro summability of \(d\)-dimensional Fourier series. Adv. Oper. Theory 6:48(3), 24 (2021)

Xu, Y.: Christoffel functions and Fourier Series for multivariate orthogonal polynomials. J. Approx. Theory 82, 205–239 (1995)

Funding

Open Access funding enabled and organized by Projekt DEAL.

The second author was funded by the Deutsche Forschungsgemeinschaft (DFG-German research foundation) Projektnummer: 461449252. The third author thanks the Alexander von Humboldt Foundation for an AvH award that supports his visit to Justus-Liebig University, during which the work was carried out; he was partially supported by Simons Foundation Grant #849676.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Buhmann, M., Jäger, J. & Xu, Y. \(\ell ^1\)-summability and Fourier series of B-splines with respect to their knots. Math. Z. 306, 53 (2024). https://doi.org/10.1007/s00209-024-03440-9
