Abstract

In 1936, Margarete C. Wolf showed that the ring of symmetric free polynomials in two or more variables is isomorphic to the ring of free polynomials in infinitely many variables. We show that Wolf’s theorem is a special case of a general theory of the ring of invariant free polynomials: every ring of invariant free polynomials is isomorphic to a free polynomial ring. Furthermore, we show that this isomorphism extends to the free functional calculus as a norm-preserving isomorphism of function spaces on a domain known as the row ball. We give explicit constructions of the ring of invariant free polynomials in terms of representation theory and develop a rudimentary theory of their structures. Specifically, we obtain a generating function for the number of basis elements of a given degree and explicit formulas for good bases in the abelian case.

1 Introduction

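A symmetric free polynomial in the letters \(x_1, \ldots , x_d\) is a free polynomial p over \({\mathbb {C}}\) which satisfies

$$\begin{aligned} p(x_1, \ldots , x_d) = p(x_{\sigma (1)}, \ldots , x_{\sigma (d)}) \end{aligned}$$

for all permutations \(\sigma \) of \(\{1,\ldots , d\}.\) For example,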

is a symmetric free polynomial in two variables. Classically, Wolf proved the following theorem about the structure of the ring of symmetric free polynomials [22].

Theorem 1.1

(Wolf) The ring of symmetric free polynomials over \({\mathbb {C}}\) in \(d \ge 2\) variables is isomorphic to the ring of free polynomials in infinitely many variables.

More recently, free symmetric polynomials have been investigated by numerous authors [5, 7, 11], and some deep connections with representation theory are now known.

We are concerned with the ring of invariant free polynomials. Let G be a group. Let \(\pi : G \rightarrow {\mathcal {U}}_d\) be a unitary representation. That is, \(\pi \) is a homomorphism from the group G to the group of \(d \times d\) unitary matrices over \({\mathbb {C}}.\) An invariant free polynomial with respect to \(\pi \) is a free polynomial \(p \in {\mathbb {C}}\langle x_1, \ldots , x_d\rangle \) which satisfies

$$\begin{aligned} p(\pi (\sigma )x) = p(x) \end{aligned}$$

for all \(\sigma \) in G. (Here, the action of the \(d \times d\) matrices \(\pi (\sigma )\) on \((x_1,\ldots , x_d)\) is given by matrix multiplication. That is, for example, if

$$\begin{aligned} U = \begin{pmatrix} a & b \\ c & d \end{pmatrix}, \end{aligned}$$

we define the action of U on \((x_1,x_2)\) to be given by the formula \(U(x_1, x_2) = (ax_1+bx_2, cx_1 + dx_2).\)) Notably, the invariant free polynomials form a ring (also, in fact, an algebra over \({\mathbb {C}}\)) called the ring of invariant free polynomials. We note that the ring of invariant free polynomials is best understood as an algebra, but for historical reasons, we use the term ring in analogy with the classical nomenclature of the ring of invariants (also ring of invariant polynomials), which dates back to Hilbert.

For example, the symmetric free polynomials in d variables are obtained in the special case where \(G = S_d\) is the symmetric group, and we define \(\pi : S_d \rightarrow {\mathcal {U}}_d\) to be the representation satisfying

$$\begin{aligned} \pi (\sigma )e_i = e_{\sigma (i)}, \end{aligned}$$

where \(e_i\) is the ith elementary basis vector for \({\mathbb {C}}^d.\)
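As a minimal sketch of this definition, assume a free polynomial is stored as a Python dictionary from words (tuples of letter indices, starting at 0) to coefficients. Invariance under a finite list of matrices can then be checked by direct substitution. The names `substitute` and `is_invariant`, and the test polynomial \(x_1x_2 + x_2x_1\), are illustrative choices and are not taken from the text.

```python
from itertools import product


def substitute(poly, U):
    """Return p(Ux): each letter x_i is replaced by sum_j U[i][j] * x_j."""
    d = len(U)
    out = {}
    for word, coeff in poly.items():
        # Expand the product of linear forms one letter at a time.
        for choice in product(range(d), repeat=len(word)):
            c = coeff
            for i, j in zip(word, choice):
                c *= U[i][j]
            if c != 0:
                out[choice] = out.get(choice, 0) + c
    # Drop words whose coefficients cancelled.
    return {w: c for w, c in out.items() if c != 0}


def is_invariant(poly, matrices):
    """True if p(Ux) = p(x) for every matrix U in the list."""
    return all(substitute(poly, U) == poly for U in matrices)


# Permutation representation of S_2 on two letters (exact integer entries).
identity = [[1, 0], [0, 1]]
swap = [[0, 1], [1, 0]]

p = {(0, 1): 1, (1, 0): 1}  # x1 x2 + x2 x1
q = {(0, 1): 1}             # x1 x2 alone

print(is_invariant(p, [identity, swap]))  # symmetric
print(is_invariant(q, [identity, swap]))  # not symmetric
```

Exact comparison of dictionaries is only reliable for integer or rational entries; for a general unitary one would compare coefficients up to a tolerance.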

We now define the domains on which we intend to execute the function theory of invariant free polynomials. Fix an infinite dimensional separable Hilbert space \(\mathcal {H}\). There is only one infinite dimensional separable Hilbert space up to isomorphism [9], so what follows will be independent of the exact choice. Let \(\Lambda \) be an index set. Let \({\mathcal {C}}^\Lambda \) be the set of \((X_\lambda )_{\lambda \in \Lambda },\) sequences of elements in \({\mathcal {B}}(\mathcal {H})\) indexed by \(\Lambda ,\) such that

$$\begin{aligned} \sum _{\lambda \in \Lambda } X_\lambda X_\lambda ^* < 1, \end{aligned}$$

where \(A < B\) means that \(B-A\) is strictly positive definite (that is, \(B-A\) is positive semidefinite and has spectrum disjoint from 0) and \(A \le B\) means that \(B-A\) is positive semidefinite. Here the sum \(\sum _{\lambda \in \Lambda } X_\lambda X_\lambda ^*\) is required to be absolutely convergent in the weak operator topology and have \(\sum _{\lambda \in \Lambda } X_\lambda X_\lambda ^*< 1,\) or equivalently, there is an \(\varepsilon >0\) such that for every finite \(\Lambda '\subset \Lambda ,\)

$$\begin{aligned} \sum _{\lambda \in \Lambda '} X_\lambda X_\lambda ^* \le 1 - \varepsilon . \end{aligned}$$

If \({{\mathrm{card\,}}}\Lambda = d\) is a natural number, we identify \({\mathcal {C}}^\Lambda \) as \({\mathcal {C}}^d\). That is, \({\mathcal {C}}^d\) is the set of d-tuples of operators in \({\mathcal {B}}(\mathcal {H})\) such that

$$\begin{aligned} \sum _{i=1}^{d} X_i X_i^* < 1. \end{aligned}$$

Some authors refer to \({\mathcal {C}}^\Lambda \) as the row ball, or the set of row contractions [14, 18]. Given a free polynomial p in the letters \((x_\lambda )_{\lambda \in \Lambda }\) and a point \(X = (X_\lambda )_{\lambda \in \Lambda } \in {\mathcal {C}}^\Lambda \), we form p(X) via the formula

which when \({{\mathrm{card\,}}}\Lambda = d\) reduces to \(p(X) = p(X_1, \ldots , X_d).\)
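The following sketch illustrates membership in the row ball and the evaluation p(X) numerically. It rests on a simplifying assumption that is not part of the text: finite \(n \times n\) complex matrices stand in for operators on the infinite dimensional space \(\mathcal {H}\). The helper names (`in_row_ball`, `evaluate`, `random_row_contraction`) are hypothetical.

```python
import numpy as np


def in_row_ball(X):
    """True if sum_i X_i X_i^* < 1, tested via the largest eigenvalue."""
    S = sum(Xi @ Xi.conj().T for Xi in X)
    return np.linalg.eigvalsh(S).max() < 1


def evaluate(poly, X):
    """Evaluate a free polynomial {word: coeff} at a tuple of matrices X."""
    n = X[0].shape[0]
    total = np.zeros((n, n), dtype=complex)
    for word, coeff in poly.items():
        term = np.eye(n, dtype=complex)
        for i in word:           # letters are multiplied in word order
            term = term @ X[i]
        total += coeff * term
    return total


def random_row_contraction(d, n, radius=0.9, seed=0):
    """Random d-tuple whose row operator [X_1 ... X_d] has norm `radius`."""
    rng = np.random.default_rng(seed)
    X = [rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
         for _ in range(d)]
    # The squared norm of the row operator equals the norm of sum X_i X_i^*.
    scale = radius / np.linalg.norm(np.hstack(X), 2)
    return [scale * Xi for Xi in X]


X = random_row_contraction(d=2, n=4)
p = {(0, 1): 1, (1, 0): 1}       # x1 x2 + x2 x1
print(in_row_ball(X))
print(np.linalg.norm(evaluate(p, X), 2))
```

Scaling through the row operator norm is a convenience for generating test points; any tuple with \(\sum _i X_iX_i^* < 1\) would do.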

Let \({\mathcal {R}}\) be a subalgebra of \({\mathbb {C}}\langle x_1, \ldots , x_d\rangle .\) We define a basis for \({\mathcal {R}}\) to be an indexed set \((u_{\lambda })_{\lambda \in \Lambda }\) of free polynomials which generate \({\mathcal {R}}\) as an algebra, which is minimal in the sense that there is no \(\Lambda ' \subsetneq \Lambda \) such that the set \((u_{\lambda })_{\lambda \in \Lambda '}\) generates \({\mathcal {R}}\) as an algebra. We note that any basis for \({\mathcal {R}}\) is finite or countably infinite, since \({\mathcal {R}}\) has finite or countable dimension as a vector space as it is a subspace of \({\mathbb {C}}\langle x_1, \ldots , x_d\rangle ,\) and the minimality condition implies that the \((u_{\lambda })_{\lambda \in \Lambda }\) are linearly independent.

We prove the following theorem.

Theorem 1.2

Let G be a group. Let \(\pi :G\rightarrow \mathcal {U}_{d}\) be a unitary representation. There exists a basis for the ring of invariant free polynomials \((u_{\lambda })_{\lambda \in \Lambda }\) such that the map \(\Phi \) on \({\mathcal {C}}^d\) defined by the formula

$$\begin{aligned} \Phi (X) = (u_{\lambda }(X))_{\lambda \in \Lambda } \end{aligned}$$

satisfies the following properties:

-

The map \(\Phi \) takes \({\mathcal {C}}^{d}\) to \({\mathcal {C}}^{\Lambda }.\)

-

Furthermore, for p in the ring of invariant free polynomials for \(\pi \), there exists a unique free polynomial \(\hat{p}\) such that \(p=\hat{p}\circ \Phi .\)

-

Moreover,

$$\begin{aligned} \sup _{X \in {\mathcal {C}}^{d}} \Vert p(X)\Vert = \sup _{U \in {\mathcal {C}}^{\Lambda }} \Vert \hat{p}(U)\Vert . \end{aligned}$$

Namely, the map taking \(\hat{p}\) to p is an isomorphism of rings from the free algebra \({\mathbb {C}}\langle x_{\lambda }\rangle _{\lambda \in \Lambda }\) to the ring of invariant free polynomials.

Theorem 1.2 follows from Theorems 4.2 and 6.5. We note that a similar result was obtained for free functions on the domain

for symmetric free functions in two variables by Agler and Young [4].

In general, the basis in Theorem 1.2 is hard to compute. However, the number of elements of a certain degree is computed in Sect. 7.1. An explicit basis can be obtained if G is abelian, which we discuss in Sect. 7.2.

1.1 Some examples

We now give some concrete examples of what our main result says. First, we give an analogous theorem to that obtained in Agler and Young for symmetric free functions in two variables [4].

Proposition 1.3

Let

where

The map \(\Phi \) satisfies the following properties:

-

\(\Phi \) takes \({{\mathcal {C}}}^2\) to \({{\mathcal {C}}}^{\mathbb {N}}.\)

-

For any free polynomial p such that

$$\begin{aligned} p(X_1,X_2) = p(X_2,X_1), \end{aligned}$$there exists a unique free polynomial \(\hat{p}\) such that \(p=\hat{p}\circ \Phi .\)

-

Moreover,

$$\begin{aligned} \sup _{X \in {\mathcal {C}}^{2}} \Vert p(X)\Vert = \sup _{U \in {\mathcal {C}}^{{\mathbb {N}}}} \Vert \hat{p}(U)\Vert . \end{aligned}$$

Another simple example concerns the ring of even functions in two variables, that is, the ring of free polynomials in two variables f satisfying the identity

$$\begin{aligned} f(x_1,x_2) = f(-x_1,-x_2). \end{aligned}$$

Here the group G in Theorem 1.2 is the cyclic group with two elements, \({\mathbb {Z}}_2 = \{0,1\},\) and the representation \(\pi \) is given by

Proposition 1.4

Let

$$\begin{aligned} \Phi (X_1,X_2) = (X_1^2, X_1X_2, X_2X_1, X_2^2). \end{aligned}$$

The map \(\Phi \) satisfies the following properties:

-

\(\Phi \) takes \({{\mathcal {C}}}^2\) to \({{\mathcal {C}}}^4.\)

-

For any free polynomial p such that

$$\begin{aligned} p(X_1,X_2) = p(-X_1,-X_2), \end{aligned}$$there exists a unique free polynomial \(\hat{p}\) such that \(p=\hat{p}\circ \Phi .\)

-

Moreover,

$$\begin{aligned} \sup _{X \in {\mathcal {C}}^{2}} \Vert p(X)\Vert = \sup _{U \in {\mathcal {C}}^{4}} \Vert \hat{p}(U)\Vert . \end{aligned}$$

We discuss Proposition 1.4 in detail in Sect. 2. Here we see a concrete trade-off between the number of variables and degree in the optimization of a free polynomial: finding the supremum norm of a polynomial in 4 variables of degree d on \({{\mathcal {C}}}^4\) is the same as finding the supremum norm of an even polynomial in 2 variables of degree 2d on \({{\mathcal {C}}}^2.\)

Both Proposition 1.3 and Proposition 1.4 follow from our basis construction in the abelian case given in Theorem 7.4.

1.2 Geometry

Although the image of the map \(\Phi \) in Theorem 1.2 may have high codimension, in the sense that it is far from being literally surjective, the function \(\hat{p}\) is completely determined by p and has the same norm. We view this as an analogue of the celebrated work of Agler and McCarthy on norm preserving extensions of functions on varieties to whole domains [1, 2]. Additionally, since p totally determines \(\hat{p}\), the dimension of the Zariski closure of the image of a free polynomial map can apparently go up, in contrast with the commutative case [12]. Exploiting the aforementioned phenomenon is a critical step in the theory of change of variables for free polynomials and their generalizations, the free functions, which had been thought to be extremely rigid [13].

To prove Theorem 1.2, we develop a Hilbert space geometric theory of the ring of invariant free functions. As a consequence of the geometric structure, the ring of invariant free polynomials is always free (but perhaps infinitely generated), in contrast with the Chevalley–Shephard–Todd Theorem in the commutative case, in which freeness depends on the structure of G [8, 21].

1.3 Free analysis

A free function on \({\mathcal {C}}^{\Lambda }\) is a function from \({\mathcal {C}}^{\Lambda }\) to \({\mathcal {B}}(\mathcal H)\) which is the uniform limit of free polynomials on the sets \(r\overline{{\mathcal {C}}^{\Lambda }}.\) We let \(H({\mathcal {C}}^{\Lambda })\) denote the algebra of free functions on \({{\mathcal {C}}}^\Lambda \). The Banach algebra of bounded free functions \(H^\infty ({\mathcal {C}}^{\Lambda }) \subset H({\mathcal {C}}^{\Lambda })\) is the space of all bounded functions in \(H({\mathcal {C}}^{\Lambda })\) equipped with the norm

$$\begin{aligned} \Vert f\Vert _{H^\infty ({\mathcal {C}}^{\Lambda })} = \sup _{X \in {\mathcal {C}}^{\Lambda }} \Vert f(X)\Vert . \end{aligned}$$

We note that there are many equivalent characterizations of a free function; see, for example, [3, 14, 15, 18].

We can reinterpret Theorem 1.2 as an isomorphism of function algebras analogous to Wolf’s theorem. Let \(H^\infty _{\pi }({{\mathcal {C}}}^d)\) be the Banach algebra of bounded invariant free functions for \(\pi \) on \({{\mathcal {C}}}^d\).

Corollary 1.5

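There is a countable set \(\Lambda \) such that

$$\begin{aligned} H^\infty _{\pi }({{\mathcal {C}}}^d) \cong H^\infty ({{\mathcal {C}}}^\Lambda ) \end{aligned}$$

as Banach algebras.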

Corollary 1.5 follows from Theorem 1.2 directly. That is, the map taking \(\hat{f} \in H^\infty ({{\mathcal {C}}}^\Lambda )\) to \(\hat{f}\circ \Phi \in H^\infty _\pi ({{\mathcal {C}}}^d)\) is such an isomorphism. The map is injective and surjective since it is already an isomorphism on the level of formal power series, which uniquely determine a free function [15].

2 Example: an even free function in two variables

To begin, we discuss a simple nontrivial example of a free ring of invariants. We will now explain what our main result, Theorem 1.2, says about a specific even free polynomial in two variables.

We say a free polynomial \(p \in {\mathbb {C}}\langle x_1,x_2\rangle \) is even if

$$\begin{aligned} p(x_1,x_2) = p(-x_1,-x_2). \end{aligned}$$

We note that the even free functions form an algebra.

Consider the even free polynomial

as a map on the domain \({\mathcal {C}}^2.\)

We first note that it is clearly not a coincidence that p has no odd degree terms. Furthermore, if we let

we get that

Let

and

Thus,

We are interested in the analytical properties of \(\hat{p}\) and \(\Phi .\)

We will show the remarkable fact that

Let \(X \in {\mathcal {C}}^2.\) That is,

$$\begin{aligned} X_1X_1^* + X_2X_2^* < 1. \end{aligned}$$

Since

we get that

Thus, \(\Phi ({\mathcal {C}}^2) \subset {\mathcal {C}}^4.\)
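The inclusion can be sanity-checked numerically. The sketch below assumes that \(\Phi (X_1,X_2) = (X_1^2, X_1X_2, X_2X_1, X_2^2)\), which is what the abelian basis construction of Sect. 7.2 suggests for even polynomials, and it uses finite matrices in place of operators on \(\mathcal {H}\). Under that assumption, \(\sum _{i,j} X_iX_jX_j^*X_i^* = \sum _i X_i \big (\sum _j X_jX_j^*\big ) X_i^*\), so the row norm of \(\Phi (X)\) is at most the square of the row norm of X. The function names are hypothetical.

```python
import numpy as np


def row_norm(X):
    """Operator norm of the row [X_1 ... X_k]; its square is ||sum X_i X_i^*||."""
    return np.linalg.norm(np.hstack(X), 2)


def phi_even(X1, X2):
    """Assumed basis map for even polynomials: all words of length two."""
    return [X1 @ X1, X1 @ X2, X2 @ X1, X2 @ X2]


rng = np.random.default_rng(1)
n = 5
A = [rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
     for _ in range(2)]
scale = 0.95 / row_norm(A)          # place the pair inside the row ball
X1, X2 = (scale * M for M in A)

r = row_norm([X1, X2])
r_phi = row_norm(phi_even(X1, X2))
print(r, r_phi, r_phi <= r ** 2 + 1e-12)
```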

We will now show a curious equality:

First we show that

Since p is a free polynomial and is thus continuous on the closure \(\overline{{\mathcal {C}}^2} \subset {\mathcal {B}}(\mathcal {H})^2,\) it is enough to show that

Let \((U_1,U_2,U_3,U_4) \in {\mathcal {C}}^4.\) Define operators \(X_1\) and \(X_2\) with the following block structure

First,

That is, \((X_1, X_2) \in \overline{{\mathcal {C}}^2}.\) Note

That is,

where \(\tilde{J}\) denotes some matrix tuple which is irrelevant to our aims. So,

Namely,

Thus,

To see that

note that since \(\Phi (X) \in {\mathcal {C}}^4,\)

3 The space of free polynomials as an inner product space

We now seek to understand the geometry of free polynomials as a Hilbert space.

Definition 3.1

We define \(H^2_d\) to be the Hilbert space of free formal power series in d variables whose sequence of coefficients is an element of \(\ell ^2\) with an inner product given by the inner product of the coefficients. We define \([H^2_d]_n\) to be the subspace of homogeneous free polynomials of degree n. For a free polynomial f in d variables, we define \(M_f\) to be the operator on \(H^2_d\) satisfying \(M_f g = fg.\)

The following lemma describes a grading structure on \(H^2_d.\)

Lemma 3.2

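(Grading lemma) Let p, q be homogeneous free polynomials of degree n and r, s be homogeneous free polynomials of degree m. Then,

$$\begin{aligned} \left\langle pr, qs \right\rangle = \left\langle p, q \right\rangle \left\langle r, s \right\rangle . \end{aligned}$$

Thus, we identify

$$\begin{aligned} {[}H^2_d]_{n+m} = [H^2_d]_n \otimes [H^2_d]_m. \end{aligned}$$

Proof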

It suffices to show that the claim holds for monomials

of suitable degree. Note

Now,

\(\square \)
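The grading identity \(\left\langle pr, qs \right\rangle = \left\langle p, q \right\rangle \left\langle r, s \right\rangle \) can be checked on small examples. This is a sketch under the assumption that polynomials are stored as dictionaries from words to coefficients and that the inner product is the coefficientwise one of Definition 3.1, taken linear in the first argument. The particular polynomials are arbitrary illustrations.

```python
def multiply(p, q):
    """Product of free polynomials: words concatenate, coefficients multiply."""
    out = {}
    for w1, c1 in p.items():
        for w2, c2 in q.items():
            out[w1 + w2] = out.get(w1 + w2, 0) + c1 * c2
    return out


def inner(p, q):
    """Coefficientwise inner product, conjugate-linear in the second slot."""
    return sum(c * complex(q[w]).conjugate() for w, c in p.items() if w in q)


# p, q homogeneous of degree 2; r, s homogeneous of degree 1.
p = {(0, 1): 1, (1, 0): 2}
q = {(0, 1): 3, (1, 1): 1}
r = {(0,): 1, (1,): -1}
s = {(0,): 2, (1,): 1j}

lhs = inner(multiply(p, r), multiply(q, s))
rhs = inner(p, q) * inner(r, s)
print(lhs, rhs)
```

Homogeneity matters here: because p and q have the same degree, a word of the product splits in only one way into a degree n prefix and a degree m suffix, which is why the inner product factors.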

Lemma 3.3

Let V be a subspace of \([H^2_d]_n.\) Let \(u_1, \ldots , u_k\) be an orthonormal basis for V and \(v_1, \ldots , v_k\) be another orthonormal basis for V. Then,

Proof

It is sufficient to note that the equality of

as outer products, since \(u_i\) and \(v_i\) give orthonormal bases for the same space, and we are done. \(\square \)

4 Superorthogonality

We can now define superorthogonality. We will later see that the ring of invariant free polynomials is itself superorthogonal.

Definition 4.1

An algebra \({\mathcal {A}} \subseteq {\mathbb {C}}\langle x_1, \ldots , x_d \rangle \) is called superorthogonal if there exists a superorthonormal basis for \({\mathcal {A}}\), i.e. a basis \((u_\lambda )_{\lambda \in \Lambda }\) for \({\mathcal {A}}\) such that \(u_\lambda \) are homogeneous polynomials, \(\left\| u_\lambda \right\| _{H^2_d} = 1\), and for all \(\lambda , \mu \in \Lambda \) with \(\lambda \ne \mu \),

$$\begin{aligned} u_\lambda H^2_d \perp u_\mu H^2_d. \end{aligned}$$

The goal of this section is to prove that a general version of Theorem 1.2 is true for superorthogonal algebras.

Theorem 4.2

Let \(\mathcal {A}\) be a superorthogonal algebra. Let \((u_{\lambda })_{\lambda \in \Lambda }\) be a superorthonormal basis. The map \(\Phi \) on \({\mathcal {C}}^d\) defined by the formula

$$\begin{aligned} \Phi (X) = (u_{\lambda }(X))_{\lambda \in \Lambda } \end{aligned}$$

satisfies the following properties:

-

The map \(\Phi \) takes \({\mathcal {C}}^{d}\) to \({\mathcal {C}}^{\Lambda }.\)

-

Furthermore, for \(p \in {\mathcal {A}}\) , there exists a unique free polynomial \(\hat{p}\) such that \(p=\hat{p}\circ \Phi .\)

-

Moreover,

$$\begin{aligned} \sup _{X \in {\mathcal {C}}^{d}} \Vert p(X)\Vert = \sup _{U \in {\mathcal {C}}^{\Lambda }} \Vert \hat{p}(U)\Vert . \end{aligned}$$

Namely, the map taking \(\hat{p}\) to p is an isomorphism of rings from the free algebra \({\mathbb {C}}\langle x_{\lambda }\rangle _{\lambda \in \Lambda }\) to \(\mathcal {A}\).

Theorem 4.2 follows from Lemma 4.3 and Lemma 4.5.

We now show that superorthogonal algebras are necessarily free.

Lemma 4.3

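A superorthogonal algebra \(\mathcal {A}\) is isomorphic to a free algebra in perhaps infinitely many variables. Specifically, the map

$$\begin{aligned} \varphi : {\mathbb {C}}\langle x_{\lambda }\rangle _{\lambda \in \Lambda } \rightarrow {\mathcal {A}} \end{aligned}$$

satisfying \(\varphi (x_{\lambda }) = u_{\lambda }\) is an isomorphism.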

Proof

It is enough to show that for any two distinct products \(\prod ^{n}_{i=1} u_{\lambda _i}\) and \(\prod ^{m}_{j=1} u_{\kappa _j}\) of the same degree as free polynomials in \({\mathcal {A}} \subset {\mathbb {C}}\langle x_1, \ldots , x_d \rangle \),

$$\begin{aligned} \left\langle \prod ^{n}_{i=1} u_{\lambda _i}, \prod ^{m}_{j=1} u_{\kappa _j} \right\rangle = 0, \end{aligned}$$

since then the words in \(u_\lambda \) are linearly independent. Since the words are distinct, there is a p such that \(\lambda _i = \kappa _i\) for all \(i<p\) and \(\lambda _p \ne \kappa _p.\) So,

Note that \(\prod ^{n}_{i=p} u_{\lambda _i} \in u_{\lambda _p}H^2_d\) and \(\prod ^{m}_{j=p} u_{\kappa _j} \in u_{\kappa _p}H^2_d\) which implies that the two products are orthogonal since \(u_{\lambda _p}\) and \(u_{\kappa _p}\) are superorthogonal and thus the desired inner product is 0. \(\square \)

We now show that the superorthogonal basis maps \({{\mathcal {C}}}^{d}\) into \({{\mathcal {C}}}^{\Lambda }\).

Lemma 4.4

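Let \(\mathcal {A}\) be a superorthogonal algebra. For a superorthonormal basis \((u_\lambda )\), the map \(\Phi :{\mathcal {C}}^d \rightarrow {\mathcal {B}}(\mathcal {H})^{\Lambda }\) given by

$$\begin{aligned} \Phi (X) = (u_{\lambda }(X))_{\lambda \in \Lambda } \end{aligned}$$

has \({\text {ran}}\,\Phi \subseteq {{\mathcal {C}}}^{\Lambda }\), that is

$$\begin{aligned} \sum _{\lambda \in \Lambda } u_\lambda (X) u_\lambda (X)^* < 1. \end{aligned}$$

We call \(\Phi \) the superorthogonal basis map.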

Proof

Let \(X \in {{\mathcal {C}}}^d.\) That is,

Let \({\mathcal {I}}\) be the left ideal generated by \({\mathcal {A}} \backslash {\mathbb {C}}1\) in \({\mathbb {C}}\langle x_1, \ldots , x_d\rangle ,\) that is, the span of the elements of the form ab where \(a\in \mathcal {A} {\setminus } \{1\}\) and \(b \in {\mathbb {C}}\langle x_1, \ldots , x_d\rangle .\)

To show that

we will show by induction that

where \(w_{i,n}\) form an orthonormal basis for \(({\mathcal {I}} \cap [H^2_d]_n)^{\perp }.\)

Note that for \(n=1,\) Inequality (4.1) becomes

which holds by Lemma 3.3 since the set of \(u_\lambda \) of degree one combined with the set of \(w_{i,1}\) must form a basis for \([H^2_d]_1.\)

Now suppose that Inequality (4.1) holds for n. That is,

We will now show that it holds for \(n+1.\) Since

we get that

So

Note that any \(u_\lambda \) of degree \(n+1\) must be in the subspace \(({\mathcal {I}} \cap [H^2_d]_n)^{\perp }\otimes [H^2_d]_1\) by the definition of superorthogonality. Furthermore, the combination of the \(u_\lambda \) of degree \(n+1\) and \(w_{i,n+1}\) form an orthonormal basis for \(({\mathcal {I}} \cap [H^2_d]_n)^{\perp }\otimes [H^2_d]_1.\) On the other hand, the \(w_{i,n}x_j\) form an orthonormal basis for \(({\mathcal {I}} \cap [H^2_d]_n)^{\perp }\otimes [H^2_d]_1.\) So, by Lemma 3.3, we get that

which immediately implies that

\(\square \)

Lemma 4.5

Let \({\mathcal {A}}\) be a superorthogonal algebra. Let \(p \in \mathcal {A}\). Then there exists a unique free polynomial \(\hat{p}\) such that

where \(\Phi \) is a superorthonormal basis map for \({\mathcal {A}}\) as in Lemma 4.4. Furthermore,

Proof

The existence of \(\hat{p}\) follows from the fact that the coordinates of \(\Phi \) form a basis for \(\mathcal {A}.\) The uniqueness of \(\hat{p}\) follows from the fact that \(\mathcal {A}\) is free by Lemma 4.3.

So it remains to show the equality

To show that

we show the equivalent inequality

Similarly to our example for even functions (Sect. 2), given a \(U \in {\mathcal {C}}^{\Lambda }\) we would like to find an \(X\in \overline{{\mathcal {C}}^{d}}\) such that there is some projection P so that

and thus that

Let

as in Definition 3.1. Note that \(M_x \in \overline{{\mathcal {C}}^d}.\) So, \(\Phi (M_x) = (M_{u_\lambda })_{\lambda \in \Lambda }.\) Decompose \(H^2_d = \overline{{\mathcal {A}}}^{H^2_d} \oplus {\mathcal {J}}.\) Since the algebra \({\mathcal {A}}\) is a joint invariant subspace for all the \(M_{u_\lambda },\) we get that

with respect to the decomposition, where \(J_\lambda \) and \(K_\lambda \) are some operators which will be irrelevant to this discussion. The Frazho–Popescu dilation theorem [10, 17] states that for any \(U\in {\mathcal {C}}^{\Lambda }\), there is a projection \(\tilde{P}\) such that for any free polynomial q,

Thus, there is indeed an X and a projection as desired in (4.2), namely, taking \(X = (M_{x_1}\otimes I,\ldots ,M_{x_d}\otimes I)\) and the projection as constructed above.

Thus, for every \(U \in {\mathcal {C}}^{\Lambda }\) there exists an \(X \in \overline{{\mathcal {C}}^d}\) such that

and so

To see that

we note that \(\Phi (X) \in {\mathcal {C}}^\Lambda \) by Lemma 4.4. \(\square \)

Now Theorem 4.2 follows immediately.

5 Example: free functions in three variables invariant under the natural action of the cyclic group with three elements

We now show how to construct a superorthonormal basis for the ring of free polynomials in three variables which are invariant under the natural action of the cyclic group with three elements. That is, we want to understand free functions which satisfy the identity

$$\begin{aligned} f(x_1,x_2,x_3) = f(x_2,x_3,x_1) \end{aligned}$$

and show that they form a superorthogonal algebra.

Let \(\sigma \) denote a generator of the cyclic group with three elements. Define the action of \(\sigma \) on free functions in three variables by

With this notation we are trying to understand functions such that

$$\begin{aligned} \sigma \cdot f = f. \end{aligned}$$

Let \(\omega \) be a nontrivial third root of unity. Consider the following three linear polynomials:

Clearly, the function \(u_0\) is fixed by the natural action of the cyclic group with three elements. However,

and

That is, they are eigenfunctions of the action \(\sigma \) on free polynomials in three variables.

In fact, any product of the \(u_i\) will be an eigenfunction of the action of \(\sigma .\) For example,

Thus, it can be observed that \(\prod _j u_{i_j}\) is in the ring of invariant free polynomials under the action of the cyclic group with three elements if and only if \(\sum _j i_j \equiv _3 0,\) and furthermore that products satisfying this condition span the algebra of free polynomials in three variables which are fixed by the natural action of the cyclic group with three elements.

So, if we choose products \(\prod ^N_{j=1} u_{i_j}\) such that \(\sum _j i_j \equiv _3 0,\) which are primitive in that no proper partial product \(\prod ^n_{j=1} u_{i_j}\) with \(1 \le n < N\) is in the ring of invariant free polynomials, we will obtain a basis for our algebra. To show that this basis is superorthogonal, it is enough to show that any two distinct products \(\prod ^N_{j=1} u_{i_j},\) \(\prod ^N_{j=1} u_{k_j}\) are orthogonal. However, by the grading lemma,

$$\begin{aligned} \left\langle \prod ^N_{j=1} u_{i_j}, \prod ^N_{j=1} u_{k_j} \right\rangle = \prod ^N_{j=1} \left\langle u_{i_j}, u_{k_j} \right\rangle . \end{aligned}$$

Since the two products were assumed to be not equal, the orthogonality of the \(u_i\) implies that at least one of the factors \(\left\langle u_{i_j}, u_{k_j} \right\rangle \) is 0, so we are done.
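The primitive products described above can be enumerated directly. The sketch below assumes the three eigenfunctions are labelled by indices 0, 1, -1 so that a product is invariant exactly when its indices sum to 0 modulo 3; it lists the index words whose total is 0 while no proper nonempty prefix has total 0. The function name and the default arguments are hypothetical.

```python
from itertools import product


def primitive_invariant_words(degree, weights=(0, 1, -1), modulus=3):
    """Index words of the given degree (>= 1) that are invariant and primitive."""
    words = []
    for word in product(weights, repeat=degree):
        prefix_sums = []
        total = 0
        for i in word:
            total = (total + i) % modulus
            prefix_sums.append(total)
        invariant = prefix_sums[-1] == 0
        # Primitive: no proper nonempty prefix is itself invariant.
        primitive = all(s != 0 for s in prefix_sums[:-1])
        if invariant and primitive:
            words.append(word)
    return words


for n in range(1, 5):
    print(n, len(primitive_invariant_words(n)))
```

In degree 2 the enumeration returns the index words (1, -1) and (-1, 1), that is, the products \(u_1u_{-1}\) and \(u_{-1}u_1\).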

For the action of a general group, an explicit construction of a superorthogonal basis for the ring of invariants is more difficult. However, the existence of such a basis can be established using some basic representation theory which we do in the next section. Later, we will return to the issue of an explicit construction for specific classes of groups for which the problem is tractable.

6 The ring of invariant free polynomials is superorthogonal

In order to derive Theorem 1.2 from Theorem 4.2, it is sufficient to show that the ring of invariant free polynomials is superorthogonal.

Definition 6.1

Let \(\pi \) be a unitary representation of a group G. Let \(\pi _n\) denote the induced action of \(\pi \) on \([H^2_d]_n.\) We make the identification that \(\pi _1 = \pi .\)

Let \(p \in [H^2_d]_n\) and \(q \in [H^2_d]_m\). Note that

which translates to the following formal observation by the grading lemma.

Observation 6.2

Let \(\pi \) be a unitary representation of a group G. Then,

$$\begin{aligned} \pi _{n+m} = \pi _n \otimes \pi _m \end{aligned}$$

with the identification made in the grading lemma.

Observation 6.2 implies that the action on homogeneous polynomials of degree n is determined by the action on homogeneous polynomials of degree 1.

Corollary 6.3

Let \(\pi \) be a unitary representation of a group G. Then,

$$\begin{aligned} \pi _n = \pi ^{\otimes n} \end{aligned}$$

with the identification made in the grading lemma.

The following technical lemma gives key information about projections of invariant polynomials onto ideals.

Lemma 6.4

Let \(\pi \) be a unitary representation of a group G. Let \(h \in [H^2_d]_n\) be invariant. Let \(P_h\) be the orthogonal projection onto \(hH^2_d.\) If \(q\in H^2_d\) is an invariant, then so is \(P_h q.\)

Proof

Without loss of generality, we can assume q is homogeneous and of degree greater than or equal to n. Let \(w_k\) be a basis for \(h^\perp \) in \([H^2_d]_n.\) Decompose \(q = h \otimes v + \sum w_k \otimes v_k.\) So,

Now,

Simplifying,

Since \(\pi \) is unitary each \((\pi \cdot w_k)\) is still orthogonal to h. Thus, we conclude that \(v = \pi \cdot v,\) and we are done. \(\square \)

We can now prove that the ring of invariant free polynomials is superorthogonal by constructing a basis for it.

Theorem 6.5

Let G be a group. Let \(\pi : G \rightarrow {\mathcal {U}}_d\) be a unitary representation. The ring of invariant free polynomials for \(\pi \) is superorthogonal.

Proof

For each positive integer i, let \(V_i \subseteq [H^2_d]_i\) denote the set of polynomials in the ring of invariants of degree i which are orthogonal to all polynomials generated by the polynomials in the ring of invariants of lower positive degree. For each i, let \(u_{i,k}\) be an orthonormal basis for \(V_i.\) We claim that these \(u_{i,k}\) form a superorthonormal basis.

Let \(P_h\) be as in Lemma 6.4. To show superorthogonality, it is enough, by definition, to show that, for any free polynomial v,

which simplifies via the grading lemma to

Furthermore, if \(i \ge j,\) there is nothing to show.

So, suppose \(i<j.\) Let

By Lemma 6.4, we get that \(u_{i,k}v\) is invariant, so v is invariant. Since \(u_{i,k}v\) is a product of invariants of strictly lower degree, we get that v must be 0 by the definition of \(V_i.\) So, we are done. \(\square \)
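The construction of the spaces \(V_i\) can be carried out numerically in small cases. The following sketch makes several assumptions that are not in the text: it takes the permutation representation of \(S_3\) on \({\mathbb {C}}^3\), identifies homogeneous polynomials of degree n with vectors in \(({\mathbb {C}}^3)^{\otimes n}\) via the grading lemma, obtains the invariants of degree n as the range of the group average of \(\pi ^{\otimes n}\), and takes the products of invariants of lower positive degree to be spanned by the tensors \(p \otimes q\) with p, q invariant. The dimension of \(V_n\) is then a rank difference. All function names are hypothetical.

```python
import itertools
import numpy as np


def perm_matrix(perm):
    """Matrix of pi(sigma) with pi(sigma) e_i = e_{sigma(i)}."""
    d = len(perm)
    M = np.zeros((d, d))
    for i, j in enumerate(perm):
        M[j, i] = 1.0
    return M


def tensor_power(M, n):
    out = np.eye(1)
    for _ in range(n):
        out = np.kron(out, M)
    return out


def invariant_basis(mats, n):
    """Orthonormal basis (as columns) of the degree n invariants."""
    P = sum(tensor_power(M, n) for M in mats) / len(mats)
    # P is an orthogonal projection, so its singular values are 0 or 1.
    U, s, _ = np.linalg.svd(P)
    return U[:, s > 0.5]


def generator_counts(mats, max_degree):
    """dim V_n for n = 1..max_degree."""
    inv = {n: invariant_basis(mats, n) for n in range(1, max_degree + 1)}
    counts = []
    for n in range(1, max_degree + 1):
        # Products of lower-degree invariants, as tensors of basis columns.
        blocks = [np.kron(inv[k], inv[n - k]) for k in range(1, n)]
        rank = np.linalg.matrix_rank(np.hstack(blocks)) if blocks else 0
        counts.append(int(inv[n].shape[1] - rank))
    return counts


s3 = [perm_matrix(p) for p in itertools.permutations(range(3))]
print(generator_counts(s3, 4))
```

The cost grows like \(d^n\), so this is only practical for small degree; it is meant as a check on the construction rather than a method for computing bases.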

7 Structure of the superorthogonal basis for the ring of invariants

7.1 Counting

We now calculate the number of elements in any superorthonormal basis for the ring of invariant free polynomials of a given degree in terms of generating functions. The main result of this section is as follows.

Theorem 7.1

Let \(\pi \) be a unitary representation of a compact topological group G and \(\chi = {\text {tr}}{\pi }\) be the character corresponding to \(\pi .\) Let \({\mathcal {C}}_G\) be a set of representatives for the conjugacy classes of G. Let \(f_{n}\) be the dimension of the space of invariant homogeneous free polynomials of degree n. Let \(g_{n}\) be the number of free polynomials of degree n in some superorthogonal basis for the ring of invariant free polynomials of \(\pi .\) Then,

where \(\mu \) denotes the normalized Haar measure on G, and

If G is finite,

where \(\#C_\tau \) denotes the number of elements in the conjugacy class of \(\tau \) and

Namely, the number of generators of a given degree is independent of the choice of superorthogonal basis.

We prove Theorem 7.1 in Sect. 7.1.1.

For example, consider symmetric functions in three variables. That is, take the group \(S_3\) acting on \({\mathbb {C}}^3\) via the representation \(\pi (\sigma )e_i = e_{\sigma (i)}.\) According to Theorem 7.1, the necessary information can be conveniently compiled in the following table.

So,

where \(F_n\) denotes the nth Fibonacci number.

Thus, in general, with the help of computer algebra software, it is a simple exercise to calculate the number of free polynomials of degree n in some superorthogonal basis for the ring of invariant free polynomials of \(\pi .\)
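A short script suffices for a finite group. The sketch below assumes two standard facts rather than quoting the theorem: that \(f_n\) is the average of \(\chi ^n\) over the group, computed class by class, and that freeness of the ring on \(g_n\) generators in each degree gives the recursion \(f_n = \sum _{k=1}^{n} g_k f_{n-k}\) with \(f_0 = 1\). The class data for \(S_3\) (class sizes 1, 3, 2 with character values 3, 1, 0) are those of the permutation representation, and exact rational arithmetic is used, so the code as written only handles integer or rational character values. The function names are hypothetical.

```python
from fractions import Fraction


def invariant_dimensions(classes, max_degree):
    """classes: list of (class size, character value). Returns [f_0..f_N]."""
    order = sum(size for size, _ in classes)
    return [sum(Fraction(size) * chi ** n for size, chi in classes) / order
            for n in range(max_degree + 1)]


def generator_counts_from_dims(f):
    """Solve f_n = sum_{k=1}^{n} g_k f_{n-k} for g_1, ..., g_N (g_0 = 0)."""
    g = [Fraction(0)] * len(f)
    for n in range(1, len(f)):
        g[n] = f[n] - sum(g[k] * f[n - k] for k in range(1, n))
    return g


# S_3 acting on C^3 by permuting coordinates:
# identity, transpositions, 3-cycles.
s3_classes = [(1, 3), (3, 1), (2, 0)]

f = invariant_dimensions(s3_classes, 5)
g = generator_counts_from_dims(f)
print([int(x) for x in f])      # dimensions f_0, ..., f_5
print([int(x) for x in g[1:]])  # generator counts g_1, ..., g_5
```

For these data the script gives \(g_5 = 13\), in agreement with the count of degree 5 basis elements mentioned in Sect. 7.3.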

7.1.1 The proof of Theorem 7.1

Let \(f_{n}\) be the dimension of invariant homogeneous free polynomials of degree n. Let \(g_{n}\) be the number of free polynomials of degree n in some superorthogonal basis for the ring of invariant free polynomials of \(\pi .\) Let

Lemma 7.2

Proof

It can be shown using enumerative combinatorics that

Thus,

Calculating g from f gives that

\(\square \)

In order to calculate \(f_n\), we use character theory (see Serre [20, Chapter 2]).

Let \(\rho \) be a representation for G. For each \(\sigma \in G\), put \(\chi _{\rho }(\sigma )={\text {tr}}(\rho (\sigma )).\) The function \(\chi _{\rho }\) is the character of \(\rho .\) Let \(\phi _{1}, \phi _{2}\) be functions from G into \({\mathbb {C}}.\) The scalar product \(\left\langle \cdot , \cdot \right\rangle \) is defined by

Let \(\tau :G\rightarrow \mathcal {U}_{1}\) be the trivial representation. The dimension of the space of fixed vectors of the action of \(\rho \) is given by \(\left\langle \chi _{\rho }, \tau \right\rangle .\) (See Serre [20, Chapter 2])

We make the identification \(\chi = \chi _{\pi }.\)

Lemma 7.3

Proof

By Corollary 6.3, the action of \(\pi \) on homogeneous polynomials of degree n is given by \(\pi ^{\otimes n}\).

\(\square \)

Now Theorem 7.1 follows as an immediate corollary of Lemmas 7.2 and 7.3.

7.2 The ring of invariants of an abelian group

Theorem 7.4

Let G be an abelian group, and let \(\pi :G \rightarrow {\mathcal {U}}_d\) be a unitary representation of G. Let \(v_1, \ldots , v_d\) be an orthonormal set of vectors of \([H^2_d]_1\) with corresponding characters \(\chi _1, \ldots , \chi _d\) such that \(\pi (g)v_i = \chi _i(g) v_i\). A basis for the free ring of invariants as a vector space is given by

Furthermore,

forms a superorthonormal basis.

Proof

Note that \(\pi ^n v^J = (\chi v)^J = \chi ^J v^J.\) So, \(v^J\) is invariant if and only if \(\chi ^J = \tau .\) Otherwise, it must be orthogonal to the ring of invariant free polynomials.

So, any invariant free polynomial is in the span of the \(v^J\in B,\) which are themselves invariant homogeneous free polynomials.

To show that \(\tilde{B}\) is superorthogonal, note for any two distinct words,

by the grading lemma, which implies the claim. \(\square \)

So, the calculation of a superorthogonal basis for the ring of invariants of a finite abelian group is tractable in general.

The superorthogonal basis given in Theorem 7.4 also implies that, away from some variety, the ring of invariant functions is finitely generated. So, there are finitely many invariant free rational functions such that any invariant free polynomial can be written in terms of them, in spite of the fact that the ring of invariant free polynomials is not itself finitely generated. Similar phenomena occur in real algebraic geometry, such as the fact that positive polynomials cannot in general be written as sums of squares of polynomials [16], but can be written as sums of squares of rational functions, i.e. Artin’s resolution of Hilbert’s seventeenth problem [6].

Theorem 7.5

Let G be a finite abelian group. With the notation of Theorem 7.4 the ring of invariant functions in the algebra generated by \( v_1,\ldots , v_d, v_1^{-1}, \ldots , v_d^{-1}\) is finitely generated.

Proof

We note that by the Pontryagin duality theorem [19, Theorem 1.7.2], the characters of a finite abelian group G form a group \(\hat{G}\) under multiplication which is noncanonically isomorphic to G. Let \(F_d\) be the free group with d generators. Let \(H = \{I \in F_d | \chi ^I =\tau \},\) where \(\tau \) is the trivial character. Consider the short exact sequence

So, H is of finite index in \(F_d\) and is thus finitely generated, which then implies that the ring is finitely generated. \(\square \)

7.3 Example: ad hoc methods for symmetric functions in three variables

We now turn our attention to symmetric functions in three variables.

Let \(u_{0},u_{1},u_{-1}\) be as in Sect. 5 and let \((b_{n})_{n\in {\mathbb {N}}}\) be the constructed superorthogonal basis for cyclic free polynomials in 3 variables, where we fix \(b_0 = u_0.\)

Let \(\tau \) be the following action on a free function f:

Note that

We recall that each \(b_n\) is of the form

such that \(\sum _k i_k \equiv _3 0.\) So, \(\tau \cdot b_n = b_{\tilde{n}}\) for some \(\tilde{n},\) since \(\tau \cdot \prod _k u_{i_k} = \prod _k u_{-i_k},\) and so \(\sum _k -i_k \equiv _3 0.\)
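The closure of the index set under \(\tau \) can be checked mechanically in low degree. The following is a minimal sketch only. It assumes, as a working model that is not taken from the text, that the \(b_n\) are indexed by words \((i_1, \ldots , i_k)\) in \(\{0, 1, -1\}\) whose sum is \(0\) modulo 3 and which have no proper nonempty prefix with that property. The function names are hypothetical.

```python
from itertools import product


def is_irreducible_zero_sum(word):
    """True if the indices sum to 0 mod 3 and no proper nonempty prefix does.

    This prefix condition is an assumed model of the index words of b_n.
    """
    total = 0
    for position, index in enumerate(word, start=1):
        total = (total + index) % 3
        if total == 0:
            # The first zero-sum prefix must be the whole word.
            return position == len(word)
    return False


def tau(word):
    """Index-level action of tau: u_i is sent to u_{-i}."""
    return tuple(-index for index in word)


def tau_permutes_words(max_degree=5):
    """Check that tau maps the candidate index words of each degree to themselves.

    Returns a dict mapping each degree to the number of candidate words.
    """
    counts = {}
    for degree in range(1, max_degree + 1):
        words = {w for w in product((0, 1, -1), repeat=degree)
                 if is_irreducible_zero_sum(w)}
        # tau preserves the candidate set and is an involution on it.
        assert all(tau(w) in words for w in words)
        assert all(tau(tau(w)) == w for w in words)
        counts[degree] = len(words)
    return counts
```

Under this model the only word fixed by \(\tau \) is the one indexing \(u_0,\) since every longer word begins with a nonzero index, so the remaining words are exchanged in pairs.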

For \(n >0\) define

For \(n =0,\) let \(c^0_0 = u_0.\)

Note that each \(c_{n}^{0}\) is a symmetric free polynomial and that the product of an even number of \(c_{n}^{1}\) is also symmetric. In fact,

and

Set

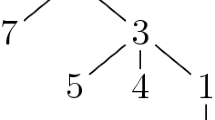

Now, B is a superorthogonal basis for the symmetric free polynomials in three variables. The elements of B of degree 4 and less are given in the following table.

Note that the table agrees with the generating function obtained earlier. As there are 13 basis elements of degree 5, we stop here.
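The count of 13 can be cross-checked independently of the construction. The sketch below assumes only that the ring of symmetric free polynomials is freely generated by a homogeneous basis, so that its Hilbert series \(H(t)\) and the generating function \(G(t)\) of the basis satisfy \(H = 1/(1-G).\) The dimension of the degree n part is computed by brute force as the number of orbits of words of length n under permutation of the letters. The names `orbit_count` and `generator_counts` are ours and purely illustrative.

```python
from itertools import product


def orbit_count(n, d=3):
    """Number of orbits of length-n words over d letters under permuting letters."""
    seen = set()
    for word in product(range(d), repeat=n):
        # Relabel letters in order of first appearance; this picks one
        # canonical representative per orbit.
        relabel = {}
        canon = tuple(relabel.setdefault(a, len(relabel)) for a in word)
        seen.add(canon)
    return len(seen)


def generator_counts(max_degree, d=3):
    """Coefficients of G(t) = 1 - 1/H(t) in degrees 1..max_degree."""
    h = [orbit_count(n, d) for n in range(max_degree + 1)]
    # Invert the power series H term by term: inv = 1/H.
    inv = [1]
    for n in range(1, max_degree + 1):
        inv.append(-sum(h[k] * inv[n - k] for k in range(1, n + 1)))
    return [-inv[n] for n in range(1, max_degree + 1)]


if __name__ == "__main__":
    print(generator_counts(5))  # [1, 1, 2, 5, 13]
```

The brute-force enumeration visits \(3^n\) words, which is adequate for the low degrees considered here.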

We remark that the above method of iteratively constructing a basis can be applied, in principle, to any solvable group, since we exploited the fact that \({\mathbb {Z}}_2\cong S_{3}/{\mathbb {Z}}_{3}\) acts on the ring of free polynomials invariant under \({\mathbb {Z}}_3.\) Since the ring of free polynomials invariant under \({\mathbb {Z}}_3\) is itself isomorphic to a free algebra in infinitely many variables, we are essentially repeating the construction done for any abelian group.

References

1. Agler, J., McCarthy, J.E.: Norm preserving extensions of holomorphic functions from subvarieties of the bidisk. Ann. Math. 157(1), 289–312 (2003)

2. Agler, J., McCarthy, J.E.: Distinguished varieties. Acta Math. 194, 133–153 (2005)

3. Agler, J., McCarthy, J.E.: Global holomorphic functions in several non-commuting variables. Can. J. Math. (2014). arXiv:1305.1636 (to appear)

4. Agler, J., Young, N.J.: Symmetric functions of two noncommuting variables. J. Funct. Anal. 266(9), 5709–5732 (2014)

5. Aguiar, M., André, C., Benedetti, C., Bergeron, N., Chen, Z., Diaconis, P., Hendrickson, A., Hsiao, S., Isaacs, I.M., Jedwab, A., Johnson, K., Karaali, G., Lauve, A., Le, T., Lewis, S., Li, H., Magaard, K., Marberg, E., Novelli, J.-C., Pang, A., Saliola, F., Tevlin, L., Thibon, J.-Y., Thiem, N., Venkateswaran, V., Vinroot, C.R., Yan, N., Zabrocki, M.: Supercharacters, symmetric functions in noncommuting variables, and related Hopf algebras. Adv. Math. 229(4), 2310–2337 (2012)

6. Artin, E.: Über die Zerlegung definiter Funktionen in Quadrate. Abh. Math. Sem. Univ. Hambg. 5, 85–99 (1927)

7. Bergeron, N., Reutenauer, C., Rosas, M., Zabrocki, M.: Invariants and coinvariants of the symmetric group in noncommuting variables. Can. J. Math. 60, 266–296 (2008)

8. Chevalley, C.: Invariants of finite groups generated by reflections. Am. J. Math. 77, 778–782 (1955)

9. Conway, J.B.: A Course in Functional Analysis. Springer, New York (1985)

10. Frazho, A.E.: Complements to models for noncommuting operators. J. Funct. Anal. 59(3), 445–461 (1984)

11. Gelfand, I.M., Krob, D., Leclerc, B., Lascoux, A., Retakh, V.S., Thibon, J.-Y.: Noncommutative symmetric functions. Adv. Math. 112, 218–348 (1995)

12. Hartshorne, R.: Algebraic Geometry. Springer, New York (1997)

13. Helton, J.W., Klep, I., McCullough, S.: Proper free analytic maps. J. Funct. Anal. 260(5), 1476–1490 (2011)

14. Helton, J.W., Klep, I., McCullough, S., Slinglend, N.: Noncommutative ball maps. J. Funct. Anal. 257, 47–87 (2009)

15. Kaliuzhnyi-Verbovetskyi, D.S., Vinnikov, V.: Foundations of Free Noncommutative Function Theory. Mathematical Surveys and Monographs. American Mathematical Society, New York (2014)

16. Motzkin, T.S.: The arithmetic-geometric inequality. In: Inequalities. Academic Press, New York (1967)

17. Popescu, G.: Isometric dilations for infinite sequences of noncommuting operators. Trans. Am. Math. Soc. 316, 523–536 (1989)

18. Popescu, G.: Free holomorphic functions on the unit ball of \(B({\cal{H}})^n\). J. Funct. Anal. 241, 268–333 (2006)

19. Rudin, W.: Fourier Analysis on Groups. Wiley, New York (1962)

20. Serre, J.-P.: Linear Representations of Finite Groups. Springer, New York (1977)

21. Shephard, G.C., Todd, J.A.: Finite unitary reflection groups. Can. J. Math. 6, 274–304 (1954)

22. Wolf, M.C.: Symmetric functions of noncommuting elements. Duke Math. J. 2, 626–637 (1936)

Additional information

D. Cushing is partially supported by an EPSRC DTA grant and a Capstaff award. J. E. Pascoe is partially supported by National Science Foundation grant DMS 1361720.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Cushing, D., Pascoe, J.E. & Tully-Doyle, R. Free functions with symmetry. Math. Z. 289, 837–857 (2018). https://doi.org/10.1007/s00209-017-1977-x

Keywords

- Invariant theory

- Symmetric functions in noncommuting variables

- Noncommutative invariant theory

- Free analysis