Abstract

Following our previous work on complementary boundary conditions, we write the Cartesian product of two copies of a space of continuous functions on the real line as the direct sum of two subspaces that are invariant under a cosine family of operators underlying Brownian motion. Both of these subspaces are formed by pairs of extensions of continuous functions. In the first subspace, the form of these extensions is shaped unequivocally by the transmission conditions describing snapping out Brownian motion; in the second, it is shaped by the transmission conditions of skew Brownian motion with a certain degree of stickiness. In this sense, the above transmission conditions are complementary to each other.


1 Introduction

1.1 Boundary conditions and invariant subspaces

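There is an intimate connection between boundary conditions for the one-dimensional Laplace operator \(f\mapsto f''\) and invariant subspaces for the basic cosine family \( \{ C(t),\, t \in \mathbb {R}\}\) defined by

$$\begin{aligned} C(t)f(x) = \tfrac{1}{2}\bigl[f(x+t) + f(x-t)\bigr], \qquad t,x\in \mathbb {R}. \end{aligned}\tag{1}$$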

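Elaborating on this succinct statement, let \(\mathfrak C[-\infty ,\infty ]\) be the space of continuous functions on the real line that have finite limits at plus and minus infinity; this space is equipped, as customary, with the supremum norm. The subspaces

$$\begin{aligned} \mathfrak C_{\textrm{odd}}[-\infty ,\infty ] := \{ f\in \mathfrak C[-\infty ,\infty ]: f(-x) = -f(x),\ x\in \mathbb {R}\} \quad \text {and}\quad \mathfrak C_{\textrm{even}}[-\infty ,\infty ] := \{ f\in \mathfrak C[-\infty ,\infty ]: f(-x) = f(x),\ x\in \mathbb {R}\} \end{aligned}$$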

of odd and even functions, respectively, are invariant under \( \{ C(t),\, t \in \mathbb {R}\}\), seen as a family of operators in \(\mathfrak C[-\infty ,\infty ]\). This has an immediate bearing on generation theorems. Since the space of even functions is isometrically isomorphic to the space \(\mathfrak C[0,\infty ]\) of continuous functions on the non-negative half-line that have limits at infinity, and since the even extension of a twice continuously differentiable function f on \([0,\infty )\) is twice continuously differentiable on the entire line iff \(f'(0)=0\), invariance of the space of even functions allows proving that the Laplace operator in \(\mathfrak C[0,\infty ]\) with domain described by the Neumann boundary condition \(f'(0)=0\) is a cosine family generator. To wit, the cosine family generated by the latter operator can be given explicitly:

$$\begin{aligned} R\,C(t)\,E, \qquad t\in \mathbb {R}, \end{aligned}\tag{2}$$

where \(E:\mathfrak C[0,\infty ]\rightarrow \mathfrak C[-\infty ,\infty ]\) maps a function to its even extension, and \(R:\) \(\mathfrak C[-\infty ,\infty ]\rightarrow \mathfrak C[0,\infty ]\) maps a function to its restriction. The same analysis allows linking invariance of the subspace of odd functions with the Dirichlet boundary condition \(f(0)=0\)—this method of proving generation theorems is referred to as Lord Kelvin’s method of images (see e.g. [1,2,3,4]; in [5, pp. 340-343] or [6, Sect. 8.1] this method is used to prove generation theorems for the related semigroups, not the cosine families, but the analysis is analogous).
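The method of images described above can be illustrated numerically. The minimal Python sketch below evaluates \(R\,C(t)\,E f\) with the even extension. It also evaluates the analogous formula with the odd extension. In each case it checks the corresponding boundary condition at 0: a vanishing one-sided slope for Neumann, and a vanishing value for Dirichlet. The helper names (`cosine`, `even_ext`, `odd_ext`, `neumann_cos`, `dirichlet_cos`) and the sample functions are our own hypothetical choices. The sketch is an illustration only, not part of the cited proofs.

```python
import math

def cosine(g, t, x):
    """Basic cosine family (1): C(t)g(x) = (g(x + t) + g(x - t)) / 2."""
    return 0.5 * (g(x + t) + g(x - t))

def even_ext(f):
    """Even extension E f of a function f given on [0, inf) (hypothetical helper)."""
    return lambda x: f(abs(x))

def odd_ext(f):
    """Odd extension of f given on [0, inf); continuous at 0 only if f(0) = 0."""
    return lambda x: math.copysign(1.0, x) * f(abs(x))

def neumann_cos(f, t, x):
    """R C(t) E f evaluated at x >= 0 (the restriction R is implicit in x >= 0)."""
    return cosine(even_ext(f), t, x)

def dirichlet_cos(f, t, x):
    """The same Kelvin-type formula, with the odd extension in place of E."""
    return cosine(odd_ext(f), t, x)

# Neumann: the one-sided slope at 0 of x -> R C(t) E f(x) vanishes up to O(h).
f = lambda x: math.exp(-x)
h = 1e-6
slope = (neumann_cos(f, 1.0, h) - neumann_cos(f, 1.0, 0.0)) / h
print(abs(slope) < 1e-4)  # True

# Dirichlet: the value at 0 vanishes for every t.
g = lambda x: x * math.exp(-x)
print(dirichlet_cos(g, 0.7, 0.0))  # 0.0
```

The finite-difference check is only indicative. Its error is of order h, and the true slope is exactly zero.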

This example is just the tip of the iceberg. As established in [7], with nearly all Feller–Wentzel boundary conditions at \(x=0\) one can unequivocally associate extensions of functions in \(\mathfrak C[0,\infty ]\), in such a way that these extensions form a subspace that is invariant under the basic cosine family. Consequently, the cosine families (and semigroups) characterized by these boundary conditions are given by an abstract Kelvin formula like (2), except that the extension operator E is of a different form. For future reference, we note the following specific instance of this association: the classical Robin boundary condition

$$\begin{aligned} f'(0) = \alpha f(0) \end{aligned}\tag{3}$$

(where \(\alpha \ge 0\) is a constant) is linked with the invariant subspace of \(g\in \mathfrak C[-\infty ,\infty ]\) that satisfy \( g(-x) = g(x) - 2\alpha \int _0^x \textrm{e}^{-\alpha (x-y)} g(y) \, \textrm{d}y, x\ge 0. \) Further examples of extensions shaped by boundary conditions can be found in the already cited [7] and [5, pp. 340–343], in [8, p. 125], and in what follows.
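The Robin extension above can be evaluated numerically. Differentiating the defining formula at \(x=0\) gives the left derivative of the extension: it equals \(-f'(0)+2\alpha f(0)\). The two one-sided derivatives therefore agree exactly when \(f'(0)=\alpha f(0)\). The minimal sketch below checks this with difference quotients. The integral is approximated by a composite Simpson rule, which is our choice. The helper names `simpson`, `robin_extension` and `derivative_jump` are hypothetical.

```python
import math

def simpson(fun, lo, hi, n=200):
    """Composite Simpson rule for the (signed) integral of fun over [lo, hi] (hypothetical helper)."""
    n += n % 2  # Simpson's rule needs an even number of subintervals
    h = (hi - lo) / n
    total = fun(lo) + fun(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * fun(lo + k * h)
    return total * h / 3

def robin_extension(f, alpha):
    """Extension g of f (given on [0, inf)) with
    g(-x) = g(x) - 2 alpha * int_0^x exp(-alpha (x - y)) g(y) dy, x >= 0."""
    def g(x):
        if x >= 0:
            return f(x)
        s = -x
        return f(s) - 2 * alpha * simpson(lambda y: math.exp(-alpha * (s - y)) * f(y), 0.0, s)
    return g

def derivative_jump(f, alpha, h=1e-5):
    """Right minus left difference quotient of the extension at 0."""
    g = robin_extension(f, alpha)
    right = (g(h) - g(0.0)) / h
    left = (g(0.0) - g(-h)) / h
    return right - left

alpha = 0.7
robin_ok = lambda x: math.cos(x) + alpha * math.sin(x)  # f'(0) = alpha f(0)
neumann = math.cos                                     # f'(0) = 0 != alpha f(0)
print(derivative_jump(robin_ok, alpha))  # O(h): the extension is C^1 at 0
print(derivative_jump(neumann, alpha))   # about 2 (f'(0) - alpha f(0)) = -1.4
```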

1.2 Complementary boundary conditions

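The formula

$$\begin{aligned} \mathfrak C[-\infty ,\infty ] = \mathfrak C_{\textrm{odd}}[-\infty ,\infty ] \oplus \mathfrak C_{\textrm{even}}[-\infty ,\infty ] \end{aligned}$$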

says that \(\mathfrak C[-\infty ,\infty ]\) can be decomposed into two subspaces that are not only invariant but also complementary, and this leads to the conclusion that the related Neumann and Dirichlet boundary conditions are in a sense complementary as well. In fact, these conditions are nearly ‘perpendicular’, because projections on \(\mathfrak C_{\textrm{odd}}[-\infty ,\infty ]\) and \(\mathfrak C_{\textrm{even}}[-\infty ,\infty ]\), mapping a function to its odd and even parts, respectively, are inherited from the space of square integrable functions where they are orthogonal projections.
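The remark on orthogonality can be checked numerically. Write \(f^{\text T}(x)=f(-x)\). The maps \(f\mapsto \frac12(f+f^{\text T})\) and \(f\mapsto \frac12(f-f^{\text T})\) are complementary projections, and the even and odd parts are orthogonal in \(L^2(-L,L)\) for every \(L>0\). The minimal sketch below verifies this for one sample function. The helper names are hypothetical, and the Simpson quadrature is our choice.

```python
import math

def even_part(f):
    """Projection onto even functions: f -> (f + f^T) / 2."""
    return lambda x: 0.5 * (f(x) + f(-x))

def odd_part(f):
    """Complementary projection onto odd functions: f -> (f - f^T) / 2."""
    return lambda x: 0.5 * (f(x) - f(-x))

def l2_inner(u, v, half_width=20.0, n=4000):
    """Simpson approximation of int_{-L}^{L} u v dx with L = half_width (hypothetical helper)."""
    h = 2 * half_width / n
    s = 0.0
    for k in range(n + 1):
        x = -half_width + k * h
        w = 1 if k in (0, n) else (4 if k % 2 else 2)
        s += w * u(x) * v(x)
    return s * h / 3

f = lambda x: math.atan(x) + 1.0 / (1.0 + (x - 1.0) ** 2)  # a member of C[-inf, inf]
fe, fo = even_part(f), odd_part(f)
print(fe(1.7) + fo(1.7) - f(1.7))   # f = f^e + f^o
print(odd_part(fe)(0.4))            # the projections are complementary
print(l2_inner(fe, fo))             # numerically zero: orthogonal in L^2(-L, L)
```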

In this context, a natural question arises: are there any other decompositions of \(\mathfrak C[-\infty ,\infty ]\) into complementary invariant subspaces, preferably subspaces of extensions shaped by boundary conditions? In other words, are there any complementary boundary conditions besides those of Neumann and Dirichlet? The answer, given in [9], is in the affirmative: for \(\alpha >0\), the Robin boundary condition (3) is complementary to the boundary condition (see Fig. 1)

describing the slowly reflecting boundary [10, p. 421], also known as the sticky boundary [8, p. 127], a particular case of Feller boundary conditions. To repeat, this means that (a) the related subspaces of extensions shaped by the boundary conditions, say, \(\mathfrak C_R^\alpha \) and \(\mathfrak C_F^\alpha \) (‘R’ for ‘Robin’, ‘F’ for ‘Feller’), are invariant under the basic cosine family (and thus, by the Weierstrass formula, see e.g. [11, p. 219], under the heat semigroup as well), and (b) \(\mathfrak C[-\infty ,\infty ]\) is decomposed into these subspaces as follows

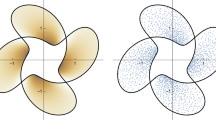

and (c) the corresponding projections \(P_\alpha :\mathfrak C[-\infty ,\infty ]\rightarrow \mathfrak C_R^\alpha \) and \(Q_\alpha =I-P_\alpha :\mathfrak C[-\infty ,\infty ]\rightarrow \mathfrak C_F^\alpha \) have a lot in common with orthogonal projections in Hilbert spaces of square integrable functions. As a side remark we note that, interestingly, \(P_\alpha ,\alpha \ge 0\) is a continuous family, leading from the projection on the subspace of even functions to the projection on the subspace of the odd functions, whereas \(Q_\alpha ,\alpha \ge 0\) leads in the other direction, and this via a completely different route (see Fig. 2; consult [9] for details).

Dependence of Robin and sticky boundary extensions on the parameter \(\alpha \). In the case of the Robin boundary, the smaller the \(\alpha \), the more the related extension resembles the even one, and the larger the \(\alpha \), the more it resembles the odd one. With the sticky boundary it is the opposite: small \(\alpha \)s correspond to nearly odd extensions, whereas large \(\alpha \)s correspond to nearly even extensions.

1.3 Invariant subspaces and transmission conditions; snapping out Brownian motion

The theory is not restricted to boundary conditions: a number of transmission conditions also unequivocally shape the form of invariant subspaces (see e.g. [12, 13]). For instance, as proved in [12], the method of images can be used in the case of the transmission conditions describing snapping out Brownian motion.

The snapping out Brownian motion is a Feller process on the union

of two half-lines separated by a semi-permeable membrane located at 0; \(0-\) and \(0+\) are two distinct points, representing positions to the immediate left and to the immediate right of the membrane. The membrane separating the half-lines is characterized by two non-negative parameters, say, \(\alpha \) and \(\beta \), interpreted as permeability coefficients for a particle diffusing from the left to the right and from the right to the left, respectively. More precisely, the generator \(A_{\alpha ,\beta }\) of the related semigroup of operators in the space

of continuous functions on \(\mathbb {R}_\sharp \) is defined as follows: it is given by

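on the domain composed of twice continuously differentiable \(f \in \mathfrak C(\mathbb {R}_\sharp )\) such that \(f', f''\in \mathfrak C(\mathbb {R}_\sharp )\) and

$$\begin{aligned} f'(0-) = \alpha \bigl(f(0+) - f(0-)\bigr), \qquad f'(0+) = \beta \bigl(f(0+) - f(0-)\bigr). \end{aligned}\tag{5}$$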

See [14, 15] or [16, Chapters 4 and 11] for a more detailed description of the stochastic mechanism of filtering through the membrane, as governed by (5), in terms of the celebrated Lévy local time for Brownian motion. The cited works also provide further literature on transmission conditions (5), including [17,18,19,20].

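As proved in [12] using the method of images, the operator \(A_{\alpha ,\beta }\) generates not only a Feller semigroup but also a strongly continuous cosine family \(\{Cos_{\alpha ,\beta }(t),\, t \in \mathbb {R}\} \) of operators in \(\mathfrak C(\mathbb {R}_\sharp )\). In fact (see also our Theorem 6), this cosine family can be written in a quite explicit form, analogous to (2), in terms of the basic Cartesian product cosine family

$$\begin{aligned} \{C_{\text {D}}(t),\, t\in \mathbb {R}\} \end{aligned}$$

(‘D’ for ‘Descartes’) defined in the space

$$\begin{aligned} \mathcal C := \mathfrak C[-\infty ,\infty ]\times \mathfrak C[-\infty ,\infty ] \end{aligned}\tag{6}$$

by the formula

$$\begin{aligned} C_{\text {D}}(t)(f_1,f_2) := \bigl(C(t)f_1, C(t)f_2\bigr), \qquad t\in \mathbb {R},\ (f_1,f_2)\in \mathcal C, \end{aligned}\tag{7}$$

where, to recall, the operators C(t) are introduced in (1). To wit,

$$\begin{aligned} Cos_{\alpha ,\beta }(t) = R\, C_{\text {D}}(t)\, E_{\alpha ,\beta }, \qquad t\in \mathbb {R}, \end{aligned}\tag{8}$$

where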

-

(a)

\( E_{\alpha ,\beta }:\mathfrak C(\mathbb {R}_\sharp )\rightarrow \mathcal C\) maps an \(f \in \mathfrak C(\mathbb {R}_\sharp )\) to a pair \((\widetilde{f_{\ell }},\widetilde{f_{\text {r}}})\) of extensions of the left and right parts of \(f\in \mathfrak C(\mathbb {R}_\sharp )\) unequivocally shaped by transmission conditions (5)—see Fig. 3,

-

(b)

\(R:\mathcal C \rightarrow \mathfrak C(\mathbb {R}_\sharp )\) is the operator that to a pair \((f_1,f_2)\in \mathcal C\) assigns the member f of \(\mathfrak C(\mathbb {R}_\sharp )\) determined by

$$\begin{aligned} f(x) = f_1(x), \text { for } x<0 \quad \text { and }\quad f(x) = f_2(x), \text { for } x >0. \end{aligned}$$(We note that elements of \(\mathfrak C(\mathbb {R}_\sharp )\) can be identified with continuous functions on \((-\infty , 0)\cup (0,\infty )\) that have finite limits at \(\pm \infty \) and one-sided, finite limits at 0.)

We stress that the proof of formula (8) hinges on the fact that the image of the operator \(E_{\alpha ,\beta }\), that is, the space of extensions, is invariant under \(\{C_{\text {D}}(t),\, t\in \mathbb {R}\}\), and that these extensions are unequivocally shaped by transmission conditions (5).

1.4 Complementary transmission conditions: our main result

Our main goal in this paper is to find an analogue of decomposition (4) for the Cartesian product (6) and the basic Cartesian product cosine family of (7). More precisely, we search for two subspaces of \(\mathcal C\) such that

-

they are complementary to each other, and

-

each of them is shaped by a transmission condition to be invariant under the cosine family.

We discover that for this purpose transmission conditions (5) can be used and that they form a complementary pair with

as long as \({\alpha +\beta }>0\). In other words we prove that

where \(\mathcal {C}_{\alpha ,\beta }\) is the subspace composed of extensions shaped by transmission conditions (5) and \(\mathcal {D}_{\alpha ,\beta }\) is the subspace of extensions shaped by transmission conditions (9).

1.5 Interpretation of transmission conditions (9); skew Brownian motion

Transmission conditions (9) are not easy to interpret, and the main difficulty lies in the first of them. Even though its counterparts can be found in the literature (see e.g. [21, 22]), their physical and particularly stochastic meaning is unclear. The fundamental problem is that, because of the first condition in (9), the related semigroup of operators is defined in the rather unusual space \(\mathfrak C_{\text {ov}}(\mathbb {R}_\sharp )\subset \mathfrak C(\mathbb {R}_\sharp )\) composed of functions satisfying \(f(0+)=-f(0-)\) (‘\(\text {ov}\)’ stands for ‘opposite values’)—see Sect. 4.2; it is thus impossible to think of this semigroup as describing a stochastic process.

In order to circumvent this difficulty, we note that \(\mathfrak C_{\text {ov}}(\mathbb {R}_\sharp )\) is isometrically isomorphic to \(\mathfrak C[-\infty ,\infty ]\): the isomorphism \(I:\mathfrak C_{\text {ov}}(\mathbb {R}_\sharp )\rightarrow \mathfrak C[-\infty ,\infty ]\) we have in mind is given by \(If(x)= f(x), x \ge 0\) and \(If(x)=-f(x), x < 0\). In the space \(\mathfrak C[-\infty ,\infty ]\) the first of transmission conditions (9) becomes redundant, and the second takes the form

where \(p_1 = \frac{1}{\alpha +\beta + 1}, p_2 = \frac{\beta }{\alpha +\beta + 1}\) and \(p_3=\frac{\alpha }{\alpha + \beta + 1} \) are three non-negative numbers summing up to 1.

Relation (10) is best known in the case \(p_1=0\), when it describes skew Brownian motion. As noted in [23], ‘The skew Brownian Motion appeared in the ’70 in [24, 25] as a natural generalization of the Brownian motion: it is a process that behaves like a Brownian motion except that the sign of each excursion is chosen using an independent Bernoulli random variable’. The paper by A. Lejay cited above also discusses a number of constructions of skew Brownian motion that have appeared in the literature since that time, and various contexts in which this process and its generalizations are studied; see also [26, pp. 115–117] and [27, p. 107]. It is well known, for example, that the process can be obtained in the limit procedure of Freidlin and Wentzell’s averaging principle [28]; see [29, Thm. 5.1], comp. [13, Eq. (3.1)]. Moreover, in [12] it has been shown that skew Brownian motion is a limit of snapping out Brownian motions as the permeability coefficients tend to infinity while their ratio remains constant. Much more recently, in [30], a link has been provided between skew Brownian motion and kinetic models of motion of a phonon involving an interface, of the type studied in [31,32,33,34], and the telegraph process with elastic boundary at the origin [35, 36].

The case \(p_1\not =0\) is a modification of skew Brownian motion that can be intuitively described as follows. In skew Brownian motion, a particle reaching \(x=0\) immediately continues its motion: it evolves in the right half-axis with probability \(p_2\) and in the left half-axis with probability \(p_3=1-p_2\). However, when \(p_1\) is non-zero, the point \(x=0\) is more ‘sticky’, and so the process stays at this point longer. In its more general form (i.e., with \(p_1\not =0\)), condition (10) appears e.g. in [37, Eq. (2.5)], with yet one more term describing loss of probability mass, and in a more general context of diffusions on graphs (see also [38, Sect. 6.3]).

Summarizing this section, one can thus say that transmission conditions (9), when translated to a proper space, characterize the Feller semigroup of skew Brownian motion with some degree of stickiness at \(x=0\).

1.6 Organization of the paper

In Sect. 2, we provide a step by step construction of the invariant subspace \(\mathcal {C}_{\alpha ,\beta }\) of \(\mathcal C\) that is unequivocally shaped by transmission conditions (5). Section 3 is devoted to a natural projection of \(\mathcal C\) on \(\mathcal {C}_{\alpha ,\beta }\). In Sect. 4 we show that the complement of \(\mathcal {C}_{\alpha ,\beta }\) is also invariant under the basic Cartesian product cosine family, and that this space is shaped by transmission conditions (9).

1.7 Commonly used notation

1.7.1 Constants \(\alpha \) and \(\beta \)

Throughout the paper we assume that \(\alpha \) and \(\beta \) are fixed non-negative constants. Also, to shorten formulae, we write

Since the case of \(\alpha =\beta =0\) is not interesting, in what follows we assume that \({\alpha +\beta }>0\), implying that \(\gamma >0\) as well.

1.7.2 Limits at infinities, and transformations of functions

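For \(f\in \mathfrak C(\mathbb {R}_\sharp )\), we write

$$\begin{aligned} f(\infty ) := \lim _{x\rightarrow \infty } f(x), \qquad f(-\infty ) := \lim _{x\rightarrow -\infty } f(x). \end{aligned}$$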

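Moreover, we define \( f^\text {T}, f^e \) and \(f^o\), also belonging to \(\mathfrak C(\mathbb {R}_\sharp )\), by

$$\begin{aligned} f^\text {T}(x) := f(-x), \quad x\in \mathbb {R}_\sharp \quad (\text {with } f^\text {T}(0\pm ) := f(0\mp )) \end{aligned}$$

and

$$\begin{aligned} f^e := \tfrac{1}{2}\bigl(f + f^\text {T}\bigr), \qquad f^o := \tfrac{1}{2}\bigl(f - f^\text {T}\bigr), \end{aligned}$$

so that

$$\begin{aligned} f = f^e + f^o \quad \text {and}\quad f^\text {T} = f^e - f^o. \end{aligned}$$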

For f in the linear space \(\mathfrak C(\mathbb R)\) of all real continuous functions on \(\mathbb R\), these definitions naturally extend to \(x=0\) as well. In particular, for \(e_a, a \in \mathbb R\), defined by

$$\begin{aligned} e_a(x) := \textrm{e}^{-ax}, \qquad x\in \mathbb {R}, \end{aligned}$$

we have \(e_a^\text {T}= e_{-a}\).

We note that the basic cosine family, treated as a family of operators in \(\mathfrak C(\mathbb {R})\), commutes with the operations described above, that is,

The following lemma will be used to establish existence of a number of limits encountered in the paper.

Lemma 1

Let \(f \in \mathfrak C[-\infty ,\infty ]\). Then, as long as \(a>0\),

Proof

This follows by l’Hospital’s Rule. In fact, the first and the third relations here are consequences of the second.
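The displayed relations of Lemma 1 are not reproduced here. In the proof of Proposition 5, the lemma is applied to limits of the form \(\lim _{x\rightarrow \infty } a\int _0^x \textrm{e}^{-a(x-y)}g(y)\,\textrm{d}y = g(\infty )\). This follows from l’Hospital’s Rule applied to the quotient \(\int _0^x \textrm{e}^{ay}g(y)\,\textrm{d}y \big / (\textrm{e}^{ax}/a)\). The minimal sketch below shows this convergence numerically for the arbitrary choice \(g=\arctan \) and \(a=2\). The helpers `simpson` and `scaled_conv` are hypothetical. Convergence is slow here because \(\arctan x\) approaches \(\pi /2\) only like \(1/x\).

```python
import math

def simpson(fun, lo, hi, n=200):
    """Composite Simpson rule for the (signed) integral of fun over [lo, hi] (hypothetical helper)."""
    n += n % 2
    h = (hi - lo) / n
    total = fun(lo) + fun(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * fun(lo + k * h)
    return total * h / 3

def scaled_conv(g, a, x, n=4000):
    """a * int_0^x exp(-a (x - y)) g(y) dy, i.e. a (e_a * g)(x) in the notation of Sect. 1.7."""
    return a * simpson(lambda y: math.exp(-a * (x - y)) * g(y), 0.0, x, n)

for x, n in ((10.0, 2000), (40.0, 4000), (400.0, 20000)):
    # The difference from g(inf) = pi/2 shrinks as x grows.
    print(x, scaled_conv(math.atan, 2.0, x, n) - math.pi / 2)
```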

1.7.3 Laplace transform

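By a slight abuse of notation, for \(f\in \mathfrak C(\mathbb {R})\), we write

$$\begin{aligned} \widehat{f}(\lambda ) := \int _0^\infty \textrm{e}^{-\lambda x} f(x)\,\textrm{d}x, \end{aligned}$$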

even though, in fact, the right-hand side here is not the Laplace transform of f but of its restriction to the right half-axis. The integral featured above makes sense as long as, for example, there are M and \(\omega \ge 0 \) such that \(\vert f(x)\vert \le M\textrm{e}^{\omega x}, x\ge 0\); then, \(\widehat{f}(\lambda )\) is well-defined for \(\lambda >\omega \).
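As an illustration of the growth condition, consider \(e_a(x)=\textrm{e}^{-ax}\). It satisfies \(\vert e_a(x)\vert \le \textrm{e}^{\omega x}\) with \(\omega =\max (0,-a)\), and \(\widehat{e_a}(\lambda ) = 1/(\lambda +a)\) for \(\lambda >\omega \). The minimal sketch below approximates the integral by truncating it at a finite upper limit and applying the Simpson rule. Truncation is an assumption that is harmless only when the integrand is negligible beyond that limit. The helper names are hypothetical.

```python
import math

def simpson(fun, lo, hi, n=200):
    """Composite Simpson rule for the (signed) integral of fun over [lo, hi] (hypothetical helper)."""
    n += n % 2
    h = (hi - lo) / n
    total = fun(lo) + fun(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * fun(lo + k * h)
    return total * h / 3

def e(a):
    """e_a(x) = exp(-a x)."""
    return lambda x: math.exp(-a * x)

def laplace(f, lam, upper=60.0, n=6000):
    """Truncated approximation of int_0^inf exp(-lam x) f(x) dx (valid only for lam > omega)."""
    return simpson(lambda x: math.exp(-lam * x) * f(x), 0.0, upper, n)

print(laplace(e(0.5), 1.0), 1 / (1.0 + 0.5))   # decaying e_a
print(laplace(e(-0.5), 1.0), 1 / (1.0 - 0.5))  # growing e_a, here omega = 0.5 < lambda = 1
```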

1.7.4 Convolution

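For \(f,g\in \mathfrak C(\mathbb R)\), we write

$$\begin{aligned} (f*g)(x) := \int _0^x f(x-y)\,g(y)\,\textrm{d}y, \qquad x\in \mathbb {R}; \end{aligned}$$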

we stress that, somewhat differently than in customary notation, this formula is valid for all \(x\in \mathbb {R}.\) We have then \(f*g=g*f\) and

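Furthermore, \(e_a, a \in \mathbb R\), introduced above, satisfy the Hilbert equation

$$\begin{aligned} (b-a)\, e_a * e_b = e_a - e_b, \qquad a,b\in \mathbb {R}. \end{aligned}\tag{14}$$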

As a consequence, for

we obtain
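The convolution and the Hilbert equation can be checked numerically, including for negative x, where \(\int _0^x\) is a signed integral. The Hilbert equation follows from the elementary integral \(\int _0^x \textrm{e}^{-a(x-y)}\textrm{e}^{-by}\,\textrm{d}y = (\textrm{e}^{-bx}-\textrm{e}^{-ax})/(a-b)\), valid for \(a\not =b\). The sketch below also checks commutativity \(f*g=g*f\) and the transposition rule \((f*g)^{\text T} = -f^{\text T}*g^{\text T}\). The latter is obtained by the substitution \(y\mapsto -y\). We believe it is among the relations collected in (13), whose display is not reproduced here, but that identification is our assumption. All helper names are hypothetical.

```python
import math

def simpson(fun, lo, hi, n=400):
    """Composite Simpson rule for the (signed) integral of fun over [lo, hi] (hypothetical helper)."""
    n += n % 2
    h = (hi - lo) / n
    total = fun(lo) + fun(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * fun(lo + k * h)
    return total * h / 3

def e(a):
    """e_a(x) = exp(-a x); note that e_a^T = e_{-a}."""
    return lambda x: math.exp(-a * x)

def transpose(f):
    """f^T(x) = f(-x)."""
    return lambda x: f(-x)

def conv(f, g, x, n=400):
    """(f * g)(x) = int_0^x f(x - y) g(y) dy, a signed integral when x < 0."""
    return simpson(lambda y: f(x - y) * g(y), 0.0, x, n)

a, b = 1.5, 0.4
for x in (-2.0, -0.5, 0.7, 3.0):
    print(x, (b - a) * conv(e(a), e(b), x), e(a)(x) - e(b)(x))  # Hilbert equation (14)

f = lambda x: math.cos(x) + x
g = lambda x: math.exp(-x * x)
print(conv(f, g, 0.9), conv(g, f, 0.9))                          # f * g = g * f
print(conv(f, g, -1.3), -conv(transpose(f), transpose(g), 1.3))  # (f * g)^T = -f^T * g^T at x = 1.3
```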

2 Invariant subspaces related to transmission conditions (5)

We begin our analysis by connecting transmission conditions (5) with invariant subspaces of \(\mathcal C\). To this end, in Sect. 2.1, we first find a family of subspaces of \(\mathfrak C[-\infty ,\infty ]\) that are invariant under the basic cosine family (1) (see Lemma 2). We then use them to construct subspaces \(\mathcal {C}_{\alpha ,\beta }\) of \(\mathcal C\) that are invariant under the basic Cartesian product cosine family (7). In the course of the analysis, it turns out that \(\mathcal {C}_{\alpha ,\beta }\) is composed of pairs of extensions of members of \(\mathfrak C(\mathbb {R}_\sharp )\). Then, in Sect. 2.2 (see Thm. 6 in particular), we prove the following: the cosine family in \(\mathfrak C(\mathbb {R}_\sharp )\) generated by the operator \(A_{\alpha ,\beta }\), introduced in Sect. 1.3, is an isomorphic image of the cosine family obtained by restricting the basic Cartesian product cosine family to the subspace \(\mathcal {C}_{\alpha ,\beta }\) (i.e., it is given by (8)). The latter property uniquely characterizes the space of extensions shaped by transmission conditions. Hence \(\mathcal {C}_{\alpha ,\beta }\) is proved to be the invariant subspace of extensions corresponding to transmission conditions (5).

2.1 Definition of an invariant space \(\mathcal {C}_{\alpha ,\beta }\)

Let \(a>0\) be fixed, and let us think of the basic cosine family defined in (1) as composed of operators acting in \(\mathfrak C(\mathbb R)\) (the linear space of real continuous functions on \(\mathbb R\)). We start by noting the following formula, which can be proved by direct calculation, and holds for all \(t,x\in \mathbb {R}\) and \(\phi \in \mathfrak C(\mathbb {R})\):

This formula reveals that functions \(\phi \) that satisfy \((e_a*\phi )^e=0\) (i.e., functions \(\phi \) such that \(e_a*\phi \) is odd) play a special role in \(\mathfrak C(\mathbb {R})\). In our first lemma, we characterize such functions in more detail.

Lemma 2

For \(\phi \in \mathfrak C(\mathbb {R})\) the following conditions are equivalent.

-

(a)

\(\phi ^o= a e_a *\phi \) on \(\mathbb {R}^+:=[0,\infty )\);

-

(b)

\(\phi ^o= a e_a *\phi \) on \(\mathbb {R}\);

-

(c)

\(e_a * \phi \) is odd, that is, \((e_a*\phi )^e = 0\) on \(\mathbb {R}\);

-

(d)

For all \(t\in \mathbb {R}\), \( C(t) (e_a * \phi ) = e_a * C(t)\phi \) on \(\mathbb {R}\).

Proof

We note that two members, say, \(\phi _1\) and \(\phi _2\), of \(\mathfrak C(\mathbb {R})\) are equal iff simultaneously \(\phi _1=\phi _2\) on \(\mathbb {R}^+\) and \(\phi _1^\text {T}= \phi _2^\text {T}\) on \(\mathbb {R}^+\).

Suppose now that (a) is true. To see that (b) holds also, we need to check, by (13) and the remark made above, that \(\phi ^o= a e_a^\text {T}*\phi ^\text {T}\) on \(\mathbb {R}^+\), that is, by (a), that \(e_a^\text {T}* \phi ^\text {T}= e_a * \phi \) on \(\mathbb {R}^+.\) On the other hand, using assumption (a) again, we see that

and the last expression equals \(e_a*\phi \) by the Hilbert equation (14). This completes the proof of (a)\(\implies \)(b); the converse is obvious.

If (b) holds, \(e_a * \phi \) must be odd because so is \(\phi ^o\); this shows (b)\(\implies \)(c). Conversely, (c) means that \(-e_a * \phi =(e_a*\phi )^\text {T}\) and this, by (13), can be expanded as \( \int _0^x \textrm{e}^{-a(x-y)}\phi (y) \, \textrm{d}y = \int _0^x \textrm{e}^{a(x-y)}\phi (-y)\, \textrm{d}y, x \in \mathbb {R}. \) Differentiating this relation with respect to x yields (b). Finally, (c) is equivalent to (d) by (16).
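Lemma 2 can be illustrated with a concrete function. Let u be odd and continuously differentiable, and put \(\phi := u' + a u\). Then \(w := e_a*\phi \) solves \(w' + a w = \phi \) with \(w(0)=0\), so \(w=u\) and (c) holds. The minimal sketch below checks (c), (b) and (d) numerically. It also shows that adding a constant to \(\phi \) destroys (c). The value \(a=1.3\) and the choice \(u(x)=x\textrm{e}^{-x^2}\) are arbitrary, and the helper names are hypothetical.

```python
import math

A = 1.3  # the constant a > 0 of Lemma 2 (arbitrary illustrative value)

def simpson(fun, lo, hi, n=400):
    """Composite Simpson rule for the (signed) integral of fun over [lo, hi] (hypothetical helper)."""
    n += n % 2
    h = (hi - lo) / n
    total = fun(lo) + fun(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * fun(lo + k * h)
    return total * h / 3

def conv_ea(phi, x, a=A, n=400):
    """(e_a * phi)(x) = int_0^x exp(-a (x - y)) phi(y) dy, valid for every real x."""
    return simpson(lambda y: math.exp(-a * (x - y)) * phi(y), 0.0, x, n)

def cosine(g, t):
    """C(t)g as a function of y: (g(y + t) + g(y - t)) / 2."""
    return lambda y: 0.5 * (g(y + t) + g(y - t))

u = lambda x: x * math.exp(-x * x)                              # odd, so u(0) = 0
phi = lambda x: (1 - 2 * x * x) * math.exp(-x * x) + A * u(x)  # phi = u' + a u

print(conv_ea(phi, 1.1), u(1.1))                              # (c): e_a * phi = u
print(conv_ea(phi, -1.1), -conv_ea(phi, 1.1))                 # ... which is odd
print(0.5 * (phi(0.8) - phi(-0.8)), A * conv_ea(phi, 0.8))    # (b): phi^o = a e_a * phi
lhs = cosine(lambda y: conv_ea(phi, y), 0.6)(0.9)             # C(t)(e_a * phi)
rhs = conv_ea(cosine(phi, 0.6), 0.9)                          # e_a * C(t) phi
print(lhs, rhs)                                               # (d)
psi = lambda x: phi(x) + 1.0                                  # adding a constant breaks (c)
print(conv_ea(psi, 1.1) + conv_ea(psi, -1.1))                 # clearly non-zero
```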

Our lemma has an immediate bearing on the following subspace of \(\mathcal C\):

where \(b>0\) is another constant. Namely, we have the following corollary.

Corollary 3

The subspace \(\mathsf C_{a,b}\) is invariant under the basic Cartesian product cosine family.

Since the proof of this result comes down to a straightforward calculation (based on points (b) and (d) of the lemma), we omit it. We note merely that this calculation shows also that the elements of \(\mathfrak C[-\infty ,\infty ]\) that satisfy the equivalent conditions of Lemma 2 form a subspace of \(\mathfrak C[-\infty ,\infty ]\) which is invariant under the basic cosine family. This subspace is in fact shaped by the boundary condition (3) (with \(\alpha =a\)).

To continue, we note that any real matrix \(M=\begin{pmatrix} m_{1,1} &{} m_{1,2} \\ m_{2,1} &{} m_{2,2} \end{pmatrix}\) induces a bounded linear operator in \(\mathcal C\), also denoted M, by the formula

By the third relation in (12), this operator commutes with the basic Cartesian product cosine family. It follows that the image of \(\mathsf C_{a,b}\) via M is also an invariant subspace of \(\mathcal C\) (under \( \{C_{\text {D}}(t),\, t\in \mathbb {R}\}\)). Specializing to (see Sect. 1.7) \(a:={\alpha +\beta }, b:={\textstyle \frac{1}{2}}\gamma ^2\) and

we obtain the invariant subspace

This definition is, of course, meaningless if \(\alpha =\beta \), so in this case we proceed differently. Namely, we introduce

and note that this subspace is invariant under \(\{C_{\text {D}}(t),\, t\in \mathbb {R}\}\), because of Lemma 2 and the first relation in (12). Then, we define \(\mathcal C_{\alpha ,\alpha } \) as the image of this subspace via the operator \(M^\sharp \) of the form (17):

where

Lemma 4

For \((\psi _1,\psi _2)\in \mathcal C\) the following conditions are equivalent.

-

(a)

\((\psi _1,\psi _2)\) belongs to \(\mathcal {C}_{\alpha ,\beta }\);

-

(b)

\(\psi _1^o=\alpha e_{\alpha +\beta } *(\psi _2 - \psi _1^\text {T})\) and \(\psi _2^o=\beta e_{\alpha +\beta } *(\psi _2 - \psi _1^\text {T})\) on \(\mathbb {R}\);

-

(c)

\(\psi _1^o=\alpha e_{\alpha +\beta } *(\psi _2 - \psi _1^\text {T})\) and \(\psi _2^o=\beta e_{\alpha +\beta } *(\psi _2 - \psi _1^\text {T})\) on \(\mathbb {R}^+\).

Proof

(a) \(\iff \) (b). Case \(\alpha \not =\beta \). By definition, \((\psi _1,\psi _2)\) belongs to \(\mathcal {C}_{\alpha ,\beta }\) iff there are \(\phi _1,\phi _2\) such that

and

Hence, (a) implies

which is (b). The converse is proved analogously.

(a)\(\iff \) (b). Case \(\alpha =\beta \). We proceed similarly: \((\psi _1,\psi _2) \) belongs to \(\mathcal C_{\alpha ,\alpha }\) iff there is \((\phi _1,\phi _2) \in \mathcal C\) such that

and

We omit the details.

(b)\(\iff \) (c). Since (b) obviously implies (c), we are left with showing (c)\(\implies \)(b). To this end, we note that \(\psi ^o_1\) and \(\psi _2^o\) are odd, and thus it suffices to show that so is \(e_{\alpha + \beta } * (\psi _2 - \psi _1^\text {T})\) if (c) holds. On the other hand, for \(\phi :=\psi _2 - \psi _1^\text {T},\) (c) implies \(\phi ^o = (\alpha +\beta ) e_{\alpha +\beta }*\phi \) on \(\mathbb {R}^+,\) and this, by Lemma 2, proves that \(e_{\alpha +\beta } * \phi \) is odd.

Extensions \(E_{\alpha ,\beta }f\) of a single function \(f \in \mathfrak C(\mathbb {R}_\sharp )\). A single continuous function defined on \([-\infty ,0-] \cup [0+,\infty ] \) is extended to a pair of continuous functions on \(\mathbb R\): an extension of its left part and an extension of its right part. These extensions vary with parameters \(\alpha \) and \(\beta \)

2.2 Relation of \(\mathcal {C}_{\alpha ,\beta }\) to transmission conditions (5)

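We are now ready to exhibit the connection between \(\mathcal {C}_{\alpha ,\beta }\) and transmission conditions (5). To this end, given \(f\in \mathfrak C(\mathbb {R}_\sharp )\), we define extensions \(\widetilde{f_{\ell }},\widetilde{f_{\text {r}}}\in \mathfrak C(\mathbb {R})\) of its left and right parts (see Figs. 3 and 4) by \(\widetilde{f_{\ell }}(x)=f(x)\) for \(x<0\), \(\widetilde{f_{\text {r}}}(x)=f(x)\) for \(x>0\), and

$$\begin{aligned} \widetilde{f_{\ell }}(x)&= f(-x) + 2\alpha \int _0^x \textrm{e}^{-(\alpha +\beta )(x-y)}\bigl[f(y)-f(-y)\bigr]\,\textrm{d}y, \\ \widetilde{f_{\text {r}}}(-x)&= f(x) - 2\beta \int _0^x \textrm{e}^{-(\alpha +\beta )(x-y)}\bigl[f(y)-f(-y)\bigr]\,\textrm{d}y, \qquad x>0, \end{aligned}\tag{22}$$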

and \( \widetilde{f_{\text {r}}}(0)=f(0+), \widetilde{f_{\ell }}(0)=f(0-).\)

Proposition 5

The transformation \(E_{\alpha ,\beta }\): \( \mathfrak C(\mathbb {R}_\sharp )\ni f \mapsto (\widetilde{f_{\ell }},\widetilde{f_{\text {r}}}) \in [\mathfrak C(\mathbb {R})]^2 \) is an isomorphism of \(\mathfrak C(\mathbb {R}_\sharp )\) and \(\mathcal {C}_{\alpha ,\beta }\).

Proof

Our first claim is that \(\widetilde{f_{\ell }}\) and \(\widetilde{f_{\text {r}}}\) are members of \(\mathfrak C[-\infty ,\infty ]\). Since, by definition of \(\mathfrak C(\mathbb {R}_\sharp )\), existence of \(\lim _{x\rightarrow \infty } \widetilde{f_{\text {r}}}(x)\) and \(\lim _{x\rightarrow -\infty } \widetilde{f_{\ell }}(x)\) is assumed, this follows by Lemma 1: in view of (22), the lemma renders \(\lim _{x\rightarrow \infty }\widetilde{f_{\ell }}(x) = \frac{2\alpha }{\alpha +\beta }f(\infty ) +\frac{\beta -\alpha }{\alpha +\beta } f(-\infty ) \) and \(\lim _{x\rightarrow -\infty } \widetilde{f_{\text {r}}}(x)= \frac{\alpha -\beta }{\alpha +\beta } f(\infty )+ \frac{2\beta }{\alpha +\beta } f(-\infty ).\)

Next, relations (22) say that on \(\mathbb {R}^+\) we have

and this, when combined with the fact established above, by Lemma 4 proves that the pair \((\widetilde{f_{\ell }},\widetilde{f_{\text {r}}})\) belongs to \(\mathcal {C}_{\alpha ,\beta }\).

The extension operator \(E_{\alpha ,\beta }:\mathfrak C(\mathbb {R}_\sharp )\rightarrow \mathcal {C}_{\alpha ,\beta }\) is linear and bounded with

It is also surjective, because it has a right inverse, namely the operator R (introduced in Sect. 1.3) restricted to \(\mathcal {C}_{\alpha ,\beta }\). (This is just a restatement of the fact that, by (c) in Lemma 4, a pair \((\psi _1,\psi _2)\) in \(\mathcal {C}_{\alpha ,\beta }\) is determined by the values of \(\psi _1\) on the negative half-axis together with the values of \(\psi _2\) on the positive half-axis. For instance, \(\psi _1(x) = \psi _1(-x) + 2 \alpha \int _0^x \textrm{e}^{-(\alpha +\beta )(x-y)}[\psi _2(y)- \psi _1(-y)]\, \textrm{d}y\), \(x>0\).) Since injectivity of \(E_{\alpha ,\beta }\) is beyond doubt, we are done.
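The limits computed at the beginning of this proof can be checked numerically. We use the formula for \(\psi _1\) displayed above and, symmetrically, Lemma 4 (c) for \(\psi _2\). For \(x>0\) this gives \(\widetilde{f_{\ell }}(x) = f(-x) + 2\alpha \int _0^x \textrm{e}^{-(\alpha +\beta )(x-y)}[f(y)-f(-y)]\,\textrm{d}y\) and \(\widetilde{f_{\text {r}}}(-x) = f(x) - 2\beta \int _0^x \textrm{e}^{-(\alpha +\beta )(x-y)}[f(y)-f(-y)]\,\textrm{d}y\). This is our reading of (22), not a quotation of it. In the minimal sketch below, a member of \(\mathfrak C(\mathbb {R}_\sharp )\) is stored as two functions, one for each half-line, so that \(f(0-)\) and \(f(0+)\) can differ. The values \(\alpha =1\), \(\beta =2\) and the sample f are arbitrary, and all helper names are hypothetical.

```python
import math

ALPHA, BETA = 1.0, 2.0  # illustrative permeability coefficients

def simpson(fun, lo, hi, n=3000):
    """Composite Simpson rule for the (signed) integral of fun over [lo, hi] (hypothetical helper)."""
    n += n % 2
    h = (hi - lo) / n
    total = fun(lo) + fun(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * fun(lo + k * h)
    return total * h / 3

# A sample f in C(R_sharp): f(-inf) = -1, f(0-) = 0, f(0+) = 1, f(inf) = 3.
f_left = lambda x: -1.0 + math.exp(x)         # used for x <= 0 (value at 0 is f(0-))
f_right = lambda x: 3.0 - 2.0 * math.exp(-x)  # used for x >= 0 (value at 0 is f(0+))

def memory(x):
    """int_0^x exp(-(alpha + beta)(x - y)) [f(y) - f(-y)] dy for x >= 0."""
    return simpson(lambda y: math.exp(-(ALPHA + BETA) * (x - y)) * (f_right(y) - f_left(-y)), 0.0, x)

def ext_left(x):
    """Extension of the left part of f."""
    return f_left(x) if x <= 0 else f_left(-x) + 2 * ALPHA * memory(x)

def ext_right(x):
    """Extension of the right part of f."""
    return f_right(x) if x >= 0 else f_right(-x) - 2 * BETA * memory(-x)

def predicted_limits(f_inf, f_minus_inf, alpha=ALPHA, beta=BETA):
    """Limits of ext_left at +inf and of ext_right at -inf, as stated in the proof of Proposition 5."""
    s = alpha + beta
    return ((2 * alpha * f_inf + (beta - alpha) * f_minus_inf) / s,
            ((alpha - beta) * f_inf + 2 * beta * f_minus_inf) / s)

print(ext_left(30.0), ext_right(-30.0))  # numerical values far from the origin
print(predicted_limits(3.0, -1.0))       # equal to (5/3, -7/3)
```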

Invariance of \(\mathcal {C}_{\alpha ,\beta }\) (established in Corollary 3), together with the fact that \(E_{\alpha ,\beta }\) is an isomorphism, allows defining the following family of operators in \(\mathfrak C(\mathbb {R}_\sharp )\):

$$\begin{aligned} Cos_{\alpha ,\beta }(t) := R\, C_{\text {D}}(t)\, E_{\alpha ,\beta }, \qquad t\in \mathbb {R}, \end{aligned}\tag{23}$$

where R was defined in Sect. 1.3. It is easy to see that \(\{Cos_{\alpha ,\beta } (t), \,t \in \mathbb {R}\}\) is a strongly continuous cosine family; it is in fact an isomorphic image of the basic Cartesian product cosine family restricted to the invariant subspace \(\mathcal {C}_{\alpha ,\beta }\). Our last result in this section says that the generator of this family is a Laplace operator with domain characterized by transmission conditions (5).

Theorem 6

The generator \(A_{\alpha ,\beta }\) of the snapping out Brownian motion introduced in Sect. 1.3 is the generator of the cosine family defined by the abstract Kelvin formula (23).

Proof

The proof proceeds in two steps.

Step 1. Let G be the generator of \(\{Cos_{\alpha ,\beta }(t), \,t \in \mathbb {R}\}\); we claim that \(D(A_{\alpha ,\beta })=D(G)\). To this end, we note that, by definition, an \(f\in \mathfrak C(\mathbb {R}_\sharp )\) belongs to \(D(G)\) iff the pair \((\widetilde{f_{\ell }},\widetilde{f_{\text {r}}})\) belongs to the domain of the generator of \(\{C_{\text {D}}(t)\vert _{\mathcal {C}_{\alpha ,\beta }}, t \in \mathbb {R}\},\) that is, iff \(\widetilde{f_{\ell }}\) and \(\widetilde{f_{\text {r}}}\) are twice continuously differentiable on the entire \(\mathbb {R}\) with \(\widetilde{f_{\ell }}'',\widetilde{f_{\text {r}}}''\in \mathfrak C[-\infty ,\infty ].\) This is the case if f is twice continuously differentiable with \(f',f'' \in \mathfrak C(\mathbb {R}_\sharp )\) and both extensions have derivatives of second order at 0. Hence, the crux of the matter is to show that under such circumstances these latter derivatives exist iff f satisfies transmission conditions (5).

Assume thus that \(f\in \mathfrak C(\mathbb {R}_\sharp )\) is such that \(\widetilde{f_{\ell }}\) and \(\widetilde{f_{\text {r}}}\) are continuously differentiable on the entire \(\mathbb {R}\). Using (22), we can write out the formula for the difference quotient. It shows that the right-hand derivative of \(\widetilde{f_{\ell }}\) at 0 equals minus the left-hand derivative of \(\widetilde{f_{\ell }}\) at 0 plus \(2\alpha (f(0+) - f(0-))\). Since the one-sided derivatives coincide by assumption, we obtain \((\widetilde{f_{\ell }})'(0)= \alpha (f(0+) - f(0-)).\) The assumed continuity of \((\widetilde{f_{\ell }})'\), together with the fact that the derivatives of \(\widetilde{f_{\ell }}\) and f coincide on \((-\infty ,0)\), leads to the conclusion that \(f'(0-)=\alpha (f(0+) - f(0-))\). The other condition in (5) is checked similarly.

To prove the converse, assume that f belongs to \(D(A_{\alpha ,\beta })\). Then, existence of the left-hand limit of f and \(f'\) at 0 together with l’Hospital’s Rule shows that the left-hand derivative of \(\widetilde{f_{\ell }}\) at this point exists and coincides with \(f'(0-).\) Moreover, writing out again the formula for the difference quotient and using l’Hospital’s Rule, we check that the right-hand derivative of \(\widetilde{f_{\ell }}\) at zero exists and equals \(-f'(0-)+2\alpha (f(0+) - f(0-))\). By assumption (5), it follows that the one-sided derivatives of \(\widetilde{f_{\ell }}\) coincide and equal \(\alpha (f(0+) - f(0-))\). Next, we note that for \(x>0\)

and argue similarly as above, using (5) and l’Hospital’s Rule, to see that one-sided second order derivatives of \(\widetilde{f_{\ell }}\) at 0 exist and coincide. The case of \(\widetilde{f_{\text {r}}}\) is analogous.

Step 2. Let now \(f\in D(G)=D(A_{\alpha ,\beta })\). By Step 1, \(E_{\alpha ,\beta }f=(\widetilde{f_{\ell }},\widetilde{f_{\text {r}}})\) is then a pair of twice continuously differentiable functions and thus we have

It follows that \(Gf = R((\widetilde{f_{\ell }})'',(\widetilde{f_{\text {r}}})'')= f''=A_{\alpha ,\beta }f\), completing the proof.
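Step 1 of the proof can be illustrated numerically. Take \(\alpha =1\) and \(\beta =2\) (arbitrary values), and let f equal \(\sin x\) for \(x<0\) and \(1+\sin 2x\) for \(x>0\). This f satisfies (5): \(f(0+)-f(0-)=1\), \(f'(0-)=1=\alpha \) and \(f'(0+)=2=\beta \). Replacing \(\sin 2x\) by \(\sin x\) violates the second condition. The extensions are computed from \(\widetilde{f_{\ell }}(x) = f(-x) + 2\alpha \int _0^x \textrm{e}^{-(\alpha +\beta )(x-y)}[f(y)-f(-y)]\,\textrm{d}y\) and \(\widetilde{f_{\text {r}}}(-x) = f(x) - 2\beta \int _0^x \textrm{e}^{-(\alpha +\beta )(x-y)}[f(y)-f(-y)]\,\textrm{d}y\), \(x>0\). This is our reading of (22). The minimal sketch below compares one-sided difference quotients at 0. Helper names are hypothetical.

```python
import math

def simpson(fun, lo, hi, n=40):
    """Composite Simpson rule for the (signed) integral of fun over [lo, hi] (hypothetical helper)."""
    n += n % 2
    h = (hi - lo) / n
    total = fun(lo) + fun(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * fun(lo + k * h)
    return total * h / 3

def extensions(f_left, f_right, alpha, beta):
    """Extensions of the left and right parts of f in C(R_sharp), f stored as two half-line functions."""
    s = alpha + beta
    def memory(x):  # int_0^x exp(-s (x - y)) [f(y) - f(-y)] dy for x >= 0
        return simpson(lambda y: math.exp(-s * (x - y)) * (f_right(y) - f_left(-y)), 0.0, x)
    def ext_left(x):
        return f_left(x) if x <= 0 else f_left(-x) + 2 * alpha * memory(x)
    def ext_right(x):
        return f_right(x) if x >= 0 else f_right(-x) - 2 * beta * memory(-x)
    return ext_left, ext_right

def derivative_mismatch(f_left, f_right, alpha, beta, h=1e-5):
    """Right minus left difference quotients at 0 for both extensions."""
    el, er = extensions(f_left, f_right, alpha, beta)
    dl = (el(h) - el(0.0)) / h - (el(0.0) - el(-h)) / h
    dr = (er(h) - er(0.0)) / h - (er(0.0) - er(-h)) / h
    return dl, dr

print(derivative_mismatch(math.sin, lambda x: 1.0 + math.sin(2.0 * x), 1.0, 2.0))  # both O(h): (5) holds
print(derivative_mismatch(math.sin, lambda x: 1.0 + math.sin(x), 1.0, 2.0))        # second entry about -2
```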

Remark 1

The extensions (22) are shaped so that (a) the image of \(E_{\alpha ,\beta }\) is invariant under \(\{C_{\text {D}}(t), t \in \mathbb {R}\}\) and (b) the right-hand side of (23) coincides with the cosine family generated by \(A_{\alpha ,\beta }\) in \(\mathfrak C(\mathbb {R}_\sharp )\). It can be argued, using the fact that the operators \(C_{\text {D}}(t), t \in \mathbb {R}\) map \(D(A_{\alpha ,\beta })\) into itself, that these two conditions characterize such extensions unequivocally (see [7, 12]). Hence, \(\mathcal {C}_{\alpha ,\beta }\) is the space of extensions shaped by transmission conditions (5). An analogous remark concerns the space \(\mathcal {D}_{\alpha ,\beta }\) composed of extensions (36), studied later in the paper, in their relation to condition (9).

3 Natural projections on the space \(\mathcal {C}_{\alpha ,\beta }\) and its complement

In Sect. 2 we introduced \(\mathcal {C}_{\alpha ,\beta }\subset \mathcal {C}\) as a subspace that is invariant under the basic Cartesian product cosine family. We then proved that it is the space of extensions of members of \(\mathfrak C(\mathbb {R}_\sharp )\), extensions that are unequivocally shaped by transmission conditions (5). In this section we find a natural projection on the space \(\mathcal {C}_{\alpha ,\beta }\).

First, in Sect. 3.1, we use a heuristic argument based on \(L^2\) intuitions to derive a candidate for such a projection. Then, in Sects. 3.2 and 3.3, we check that the formula guessed in Sect. 3.1 indeed defines a projection operator \(P_{\alpha ,\beta }: \mathcal C\rightarrow \mathcal {C}_{\alpha ,\beta }\). In the course of this analysis we also characterize the complement of \(\mathcal {C}_{\alpha ,\beta }\), that is, the space \(\mathcal {D}_{\alpha ,\beta }\) described in Sect. 3.3. The main result is summarized in Theorem 9.

Recall that, by Lemma 4, \(\mathcal {C}_{\alpha ,\beta }\) is the space of \((g_1,g_2) \in \mathcal C\) satisfying

3.1 Heuristic derivation of projection

Led by the conviction that the most natural projections are orthogonal projections in a Hilbert space, given \((f_1,f_2) \in \mathcal C\) we fix \(n \in \mathbb {N}\) and search for a pair \((g_1,g_2) \in \mathcal {C}_{\alpha ,\beta }\) that minimizes the functional

We integrate over a finite interval because the functions \(g_1\) and \(g_2\) need not belong to \(L^2(\mathbb {R})\). Using (24), we obtain that \(L(g_1,g_2)\) is equal to

In terms of functions \(h_1\) and \(h_2\) defined by

and related to \(g_1^\text {T}\) and \(g_2\) also by

the quantity \(L(g_1,g_2)\) can be written as

Therefore, the minimizing \((h_1,h_2)\) has to satisfy the Euler–Lagrange equations

where \(K(h_i,h_i',x)\), \(i=1,2\), is the integrand in (26). If we assume additionally that \(f_1\) and \(f_2\) are continuously differentiable, this leads to the following system of ODEs with constant coefficients for \((h_1,h_2)\):

with initial conditions \( h_i(0)=0, h_i'(0)=g_i(0),\) \(i=1,2\). Solving this system and using relations (25), we find the formula for \((g_1,g_2)\) that minimizes L. Namely, a somewhat lengthy calculation shows that on the interval [0, n],

where \(c=c(n)\) is a real constant, and

To summarize: the functions \(g_1\) and \(g_2\) defined by (27) and (28) form a candidate for a projection of \((f_1,f_2)\) on \(\mathcal {C}_{\alpha ,\beta }\). However, so far they are defined merely on [0, n] and, since c may depend on n, a priori we cannot assume that, as n increases, formula (27) still defines the same functions. In fact, our \(L^2\)-based argument has not yet determined \(c= c(n)\). Our next step, therefore, is a leap of faith: we assume that c does not depend on n, so that (27) is a consistent definition on the entire right half-axis. Furthermore, we note that, by (22), for \((g_1,g_2) \in \mathcal {C}_{\alpha ,\beta }\), \(g_1\vert _{[0,\infty )}\) is determined by \(g_1\vert _{(-\infty ,0]}\) and \(g_2\vert _{[0,\infty )}\), and a similar remark applies to \(g_2\vert _{(-\infty ,0]}\). In fact, as in [9, Proposition 2.1 (c)], we conjecture that (27) is valid on the entire \(\mathbb {R}\).

To complete our search for \(g_1\) and \(g_2\), we should define c. To this end, we recall that for a function \(\phi :[0,\infty )\rightarrow \mathbb {R}\) a finite limit \(\lim _{x\rightarrow \infty }\textrm{e}^{\gamma x} \phi (x)\) cannot exist unless \(\lim _{x\rightarrow \infty }\phi (x)=0\) (because, by assumption, \(\gamma >0\)). It follows that, for \(\lim _{x\rightarrow \infty }g_2(x) \) to be finite, it is necessary that c in (27) be given by (see Sect. 1.7 for the notation we use here)
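The way c is pinned down is an instance of a standard mechanism: a solution containing an unstable mode \(\textrm{e}^{\gamma x}\) has a finite limit at infinity for exactly one value of its free constant. The sketch below illustrates this on a hypothetical scalar example; the forcing term `h`, the value `GAMMA = 1.5` and the representation \(y(x)=\textrm{e}^{\gamma x}\big(c-\int _0^x \textrm{e}^{-\gamma s}h(s)\,ds\big)\) are our own illustrative assumptions, not formulas (27)–(29). For the critical constant \(c^*=\int _0^\infty \textrm{e}^{-\gamma s}h(s)\,ds\), y reduces to the tail integral \(\int _x^\infty \textrm{e}^{-\gamma (s-x)}h(s)\,ds\), which tends to \(h(\infty )/\gamma \); any other constant adds \((c-c^*)\textrm{e}^{\gamma x}\).

```python
import math

GAMMA = 1.5  # hypothetical growth rate gamma > 0


def h(s):
    """Hypothetical bounded continuous forcing term with limit 0.5 at +infinity."""
    return 0.5 + math.cos(s) / (1.0 + s * s)


def simpson(f, lo, hi, n=4000):
    """Composite Simpson rule for the integral of f over [lo, hi]; n must be even."""
    step = (hi - lo) / n
    total = f(lo) + f(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(lo + k * step)
    return total * step / 3


def bounded_part(x, span=40.0):
    """Tail integral int_x^inf e^{-gamma(s - x)} h(s) ds, truncated at x + span.

    This is y(x) for the critical constant c* = int_0^inf e^{-gamma s} h(s) ds,
    written so that no tiny difference gets multiplied by e^{gamma x}.
    """
    return simpson(lambda s: math.exp(-GAMMA * (s - x)) * h(s), x, x + span)


def y(x, delta=0.0):
    """Solution with c = c* + delta: the perturbation contributes delta * e^{gamma x}."""
    return bounded_part(x) + delta * math.exp(GAMMA * x)


for x in (0.0, 5.0, 20.0, 50.0):
    print(f"x={x:5.1f}  c=c*: {y(x):.6f}   c=c*+1e-6: {y(x, 1e-6):.3e}")
```

With the critical constant the values settle near \(0.5/\gamma = 1/3\), while a perturbation of size \(10^{-6}\) already produces values of order \(10^{7}\) at \(x=20\).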

In the following sections we show that formulae (27) and (28), complemented by this necessary condition for the existence of \(\lim _{x\rightarrow \infty }g_2(x) \), define a pair of members of \(\mathfrak C[-\infty ,\infty ]\), and that, in fact, the map \((f_1,f_2) \mapsto (g_1,g_2) \) is a projection on \(\mathcal {C}_{\alpha ,\beta }\).

3.2 Formal analysis

In this section we study the map

where \(g_1\) and \(g_2\) are given by (27) (on the entire \(\mathbb {R}\)), supplemented by (28)–(29). Our ultimate aim (achieved in Sect. 3.3) is to show that \(P_{\alpha ,\beta }\) is a projection on \(\mathcal {C}_{\alpha ,\beta }\), but here we content ourselves with proving that the range of \(P_{\alpha ,\beta }\) is contained in \(\mathcal {C}_{\alpha ,\beta }\).

Proposition 7

For \((f_1,f_2)\in \mathcal C\), \(P_{\alpha ,\beta }(f_1,f_2)\) belongs to \(\mathcal {C}_{\alpha ,\beta }\).

Proof

The proof is carried out in two steps.

Step 1. We start by proving that for \((g_1,g_2)\) defined by (27)–(28) condition (24) is satisfied (regardless of the choice of c). Combining the definition of \(P_{\alpha ,\beta }\) and identities (11), (13) with the facts that \( k_1\) is odd and \( k_2\) is even, we obtain

Thus \(\beta g_1^o =\alpha g_2^o\) and we are left with showing that

Again, by (27), (11) and (13),

Therefore, by the first relation in (15), it suffices to show that

(We stress that this argument works regardless of the choice of the constant c.) Since, by (15), both expressions in brackets vanish, the proof of the first step is complete.

Step 2. Here, we show that the limits \(\lim _{x\rightarrow \pm \infty } g_{i}( x)\), \(i=1,2\), exist and are finite. Starting with \(\lim _{x\rightarrow \infty }g_1(x)\), we write, by the definition of c given in (29),

Since \(f_2^e, k_1\) and \( k_2\) all belong to \(\mathfrak C[-\infty ,\infty ]\), the existence and finiteness of the limits \(\lim _{x\rightarrow \pm \infty } g_1(x)\) follow from Lemma 1.

Turning to \(\lim _{x\rightarrow -\infty } g_2(x)\), we observe that

and, as with the previous limit, we deduce the existence of \(\lim _{x\rightarrow \pm \infty } g_2(x)\) from Lemma 1.

It is tempting to use arguments similar to those presented above to show that for \((f_1,f_2)\in \mathcal {C}_{\alpha ,\beta }\), \(P_{\alpha ,\beta }(f_1,f_2) \) coincides with \((f_1,f_2)\); this would establish that \(P_{\alpha ,\beta }\) is a projection on \(\mathcal {C}_{\alpha ,\beta }\). We will, however, take a different route and obtain this result as a consequence of Proposition 8, presented in the next section (see Theorem 9 below).

3.3 A complementary subspace to \(\mathcal {C}_{\alpha ,\beta }\)

If \(\mathcal {C}_{\alpha ,\beta }\) is complemented and \(P_{\alpha ,\beta }\) is a projection on \(\mathcal {C}_{\alpha ,\beta }\), the range of the operator

is a complement to \(\mathcal {C}_{\alpha ,\beta }\). Our Proposition 8, presented below, says that this range is contained in the subspace \(\mathcal {D}_{\alpha ,\beta }\), composed of \((g_1,g_2) \in \mathcal C\) such that

In other words, the range of \(Q_{\alpha ,\beta }\) is contained in

This result, in turn, combined with Proposition 7, allows us to show that the range of \(Q_{\alpha ,\beta }\) indeed forms a complement to \(\mathcal {C}_{\alpha ,\beta }\) and that \(Q_{\alpha ,\beta }\) and \(P_{\alpha ,\beta }\) are projections on these subspaces (see Theorem 9).

Before continuing, for ease of reference, we note that for \((f_1,f_2) \in \mathcal C\), the pair \((g_1,g_2) :=Q_{\alpha ,\beta }(f_1,f_2)\) is defined by (see (27))

where the functions \( k_1, k_2\) and the constant c are given by (28) and (29), respectively.

Proposition 8

Let \((f_1,f_2)\in \mathcal C\). Then \((g_1,g_2)\) defined by (31) belongs to \(\mathcal {D}_{\alpha ,\beta }\).

Proof

The fact that \((g_1,g_2)= (f_1,f_2)- P_{\alpha ,\beta }(f_1,f_2) \) belongs to \( \mathcal C\) follows from Proposition 7 (it is only here that the value of c is of importance). Hence, we need to show that (30) holds. To this end, using (31), we find that

Thus, the second relation of (30) is satisfied whenever the first one is.

On the other hand, by (31), (11) and (13), we have

Since, by (31), \(g_2(0)=-c\), the second relation in (15) reduces our task to proving that

To complete the proof we observe that, by (15), both expressions in brackets are identically zero.

Theorem 9

The space \(\mathcal C\) is a direct sum of two subspaces:

Moreover, \(P_{\alpha ,\beta }\) is a projection on \(\mathcal {C}_{\alpha ,\beta }\) and \(Q_{\alpha ,\beta }\) is a projection on \(\mathcal {D}_{\alpha ,\beta }\).

Proof

Since, by Propositions 7 and 8,

to prove the first part we need only show that \(\mathcal {C}_{\alpha ,\beta }\cap \mathcal {D}_{\alpha ,\beta }= \{0\}.\)

To this end, we take \((g_1,g_2)\in \mathcal {C}_{\alpha ,\beta }\cap \mathcal {D}_{\alpha ,\beta }\). Writing \(g_1^\text {T}= g_1^e - g_1^o\) and \(g_2= g_2^e + g_2^o\), we see that relations (24) and (30) yield

We note that all functions appearing here grow at most exponentially. Therefore, their Laplace transforms are well defined, and in terms of Laplace transforms this system takes the form:

As long as \(\lambda >\gamma \), this system has a unique solution, given by

Therefore, \( g_2(x)=g_2(0)( \cosh _\gamma (x)+2\beta \gamma ^{-1}\sinh _\gamma (x))\) for \(x\ge 0.\) Since \(g_2 \in \mathfrak C[-\infty ,\infty ]\), we must have \(g_2(0)=0\). Thus \(g_2{\vert }_{[0,\infty )}=g_1^T\vert _{[0,\infty )}= 0\). Now, Lemma 4 says that for \((g_1,g_2)\in \mathcal {C}_{\alpha ,\beta }\), the restrictions \(g_1^T\vert _{[0,\infty )}\) and \(g_2\vert _{[0,\infty )}\) determine the entire pair (cf. the end of the proof of Proposition 5). In particular, \(g_2\vert _{[0,\infty )}=g_1^T\vert _{[0,\infty )}= 0\) implies \(g_1=g_2=0\), completing the proof of the first part.
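As a consistency check on this step (our own, not part of the original argument), assume, as the derivative identities in the proof of Lemma 10 indicate, that \(\cosh _\gamma (x)=\cosh (\gamma x)\) and \(\sinh _\gamma (x)=\sinh (\gamma x)\). The standard Laplace transforms of these functions, valid for \(\lambda >\gamma \), show that the formula for \(g_2\) on \([0,\infty )\) corresponds to the following transform:

```latex
\int_0^\infty e^{-\lambda x}\cosh(\gamma x)\,dx=\frac{\lambda}{\lambda^2-\gamma^2},
\qquad
\int_0^\infty e^{-\lambda x}\sinh(\gamma x)\,dx=\frac{\gamma}{\lambda^2-\gamma^2}
\qquad (\lambda>\gamma),

\int_0^\infty e^{-\lambda x}g_2(x)\,dx
  = g_2(0)\Big(\frac{\lambda}{\lambda^2-\gamma^2}
      +\frac{2\beta}{\gamma}\cdot\frac{\gamma}{\lambda^2-\gamma^2}\Big)
  = g_2(0)\,\frac{\lambda+2\beta}{(\lambda-\gamma)(\lambda+\gamma)} .
```

Provided \(\gamma +2\beta \ne 0\) (for instance, if \(\beta \ge 0\)), the right-hand side has a pole at \(\lambda =\gamma \) unless \(g_2(0)=0\). Equivalently, \(\cosh (\gamma x)+2\beta \gamma ^{-1}\sinh (\gamma x)=\tfrac{1}{2}(1+2\beta /\gamma )\textrm{e}^{\gamma x}+\tfrac{1}{2}(1-2\beta /\gamma )\textrm{e}^{-\gamma x}\) has a nonzero \(\textrm{e}^{\gamma x}\) component, which is incompatible with \(g_2\) having a finite limit at infinity.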

The second part now follows easily. Indeed, if \((f_1,f_2)\) belongs to \(\mathcal {C}_{\alpha ,\beta }\), then so does \((f_1,f_2) - P_{\alpha ,\beta }(f_1,f_2)\), by Proposition 7. On the other hand, by Proposition 8, \((f_1,f_2) - P_{\alpha ,\beta }(f_1,f_2)\) belongs to \(\mathcal {D}_{\alpha ,\beta }\). However, in the first part we proved that \(\mathcal {C}_{\alpha ,\beta }\cap \mathcal {D}_{\alpha ,\beta }=\{0\}\). Hence, \(P_{\alpha ,\beta }(f_1,f_2)=(f_1,f_2).\) Similarly, we show that \(Q_{\alpha ,\beta }(f_1,f_2) = (f_1,f_2)\) for \((f_1,f_2) \in \mathcal {D}_{\alpha ,\beta }.\) This, together with Propositions 7 and 8, completes the proof.
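The logic of the second part uses only three facts: the range of \(P_{\alpha ,\beta }\) lies in \(\mathcal {C}_{\alpha ,\beta }\), the range of \(Q_{\alpha ,\beta }=I-P_{\alpha ,\beta }\) lies in \(\mathcal {D}_{\alpha ,\beta }\), and the two subspaces intersect trivially. The hypothetical finite-dimensional toy model below (the subspaces `C`, `D` of \(\mathbb Q^3\) and the map `P` are our own choices, not the spaces of the paper) checks that these facts alone force P and I − P to be projections onto C and D, respectively.

```python
from fractions import Fraction

# Hypothetical toy model in Q^3 (NOT the spaces of the paper):
#   C = span{(1, 1, 0)}           stands in for C_{alpha,beta}
#   D = {v in Q^3 : v[1] == 0}    stands in for D_{alpha,beta};  C and D meet only in 0


def in_C(v):
    return v[0] == v[1] and v[2] == 0


def in_D(v):
    return v[1] == 0


def P(v):
    """Linear map with range in C (analogue of Proposition 7)."""
    return (v[1], v[1], Fraction(0))


def Q(v):
    """Q = I - P; its range lies in D (analogue of Proposition 8)."""
    return tuple(a - b for a, b in zip(v, P(v)))


samples = [tuple(Fraction(n) for n in v) for v in [(3, -2, 5), (1, 1, 0), (4, 0, -7), (0, 9, 2)]]

for v in samples:
    assert in_C(P(v)) and in_D(Q(v))              # the two range conditions
    assert P(P(v)) == P(v) and Q(Q(v)) == Q(v)    # idempotence, as in Theorem 9
    if in_C(v):
        assert P(v) == v                          # P fixes C
    if in_D(v):
        assert Q(v) == v                          # Q fixes D
```

In this toy model P is an oblique rather than orthogonal projection (for instance, \(Q(3,-2,5)=(5,0,5)\) is not orthogonal to C); the argument never uses orthogonality.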

4 \(\mathcal {D}_{\alpha ,\beta }\) as an invariant subspace related to conditions (9)

So far, our main result (Theorem 9) establishes that \(\mathcal C\) is a direct sum of two subspaces, one of which, namely \(\mathcal {C}_{\alpha ,\beta }\), is invariant under the basic Cartesian product cosine family. Moreover, by Theorem 6, the cosine family generated in \(\mathfrak C(\mathbb {R}_\sharp )\) by \(A_{\alpha ,\beta }\) is in fact isomorphic to the subspace cosine family in \(\mathcal {C}_{\alpha ,\beta }\). At this point, however, it is not yet clear whether \(\mathcal {D}_{\alpha ,\beta }\) has a similar property. The aim of this section is to show that \(\mathcal {D}_{\alpha ,\beta }\) is indeed an invariant subspace and that the related subspace cosine family is isomorphic to the cosine family generated by a Laplace operator with transmission conditions (9). Invariance of \(\mathcal {D}_{\alpha ,\beta }\) is established in Theorem 13; the connection with the transmission conditions is established in Theorem 16.

4.1 Further invariant subspaces for the Cartesian product cosine family

We take an approach similar to that of Sect. 2; that is, we start with some observations on the basic cosine family viewed as a family of linear operators acting in \(\mathfrak C(\mathbb R)\). Namely, we note that for any \(a>0\),

Therefore, by (16), we see that for all \(t,x\in \mathbb {R}\) and \(\phi \in \mathfrak C(\mathbb {R})\):

This counterpart of (16) shows that functions \(\phi \in \mathfrak C(\mathbb {R})\) such that \(\phi - a(e_a*\phi ) - \phi (0)e_a\) is odd are also of special importance for the basic cosine family. The following lemma summarizes their basic properties.

Lemma 10

For \(\phi \in \mathfrak C(\mathbb {R})\) the following conditions are equivalent.

(a) \(\phi ^e= a e_a *\phi +\phi (0)e_a\) on \(\mathbb {R}^+\);

(b) \(\phi ^e= a e_a *\phi +\phi (0)e_a\) on \(\mathbb {R}\);

(c) \(\phi - ae_a*\phi -\phi (0)e_a\) is odd;

(d) for all \(t\in \mathbb {R}\),

$$\begin{aligned} C(t)(a e_a * \phi +\phi (0)e_a)=a e_a *C(t)\phi +[C(t)\phi (0)]e_a \quad \text {on } \mathbb {R}. \end{aligned}$$

Proof

We proceed as in Lemma 2. To show that (a) implies (b), we need to check, by (13), that \(\phi ^e=- a e_a^\text {T}*\phi ^\text {T}+ \phi (0)e_a^\text {T}\) on \(\mathbb {R}^+\), that is, by (a), that

On the other hand, using assumption (a) again, we obtain that the left-hand side of (33) equals

and the last expression is equal to the right-hand side of (33) by the Hilbert equation (14). This completes the proof of the implication; the converse is trivial.

Next, (b) says that \(\phi - ae_a*\phi -\phi (0)e_a\) is the odd part of \(\phi \), and thus clearly implies (c). Conversely, (c) says that

When expanded, this reads \(\phi - a (e_a * \phi ) + \phi ^\text {T}+ a (e_{-a} *\phi ^\text {T}) = 2\phi (0) \cosh _a \). Since the left-hand side here is the derivative of \(e_a *\phi + e_{-a}*\phi ^\text {T}\), and the latter function equals \(2(e_a *\phi )^o\), we see that the derivatives of \((e_a *\phi )^o\) and \(a^{-1} \phi (0)\sinh _a\) coincide. It follows that \(a(e_a *\phi )^o = \phi (0)\sinh _a\), because both functions vanish at 0. This, together with (34), yields \(a e_a * \phi = a (e_a * \phi )^e + a (e_a * \phi )^o = \phi ^e - \phi (0)e_a \), and thus proves (b). Finally, (c) is equivalent to (d) by (32), completing the proof.
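Lemma 10 can be checked numerically on a concrete function. This sketch rests on two assumptions of ours: the convolution convention \((e_a*\phi )(x)=\int _0^x \textrm{e}^{-a(x-y)}\phi (y)\,dy\) with \(e_a(x)=\textrm{e}^{-ax}\) (which is consistent with the derivative identities used in the proof), and the hypothetical test function \(\phi (x)=1+\tfrac{a}{2}(1-\textrm{e}^{-x^2})+x\textrm{e}^{-x^2}\). Under this convention, differentiation shows that (b) holds whenever \(\phi ^e\) is continuously differentiable with \((\phi ^e)'=a\phi ^o\): the difference \(u=\phi ^e-ae_a*\phi -\phi (0)e_a\) satisfies \(u(0)=0\) and \(u'=-au\). The test function is built to have this property, so the code checks (b) at \(\pm x\), checks that \(\psi =\phi -ae_a*\phi -\phi (0)e_a\) is odd as in (c), and checks that in fact \(\psi =\phi ^o\).

```python
import math

A = 2.0  # the parameter a > 0 of Lemma 10 (arbitrary illustrative value)


def simpson(f, lo, hi, n=2000):
    """Composite Simpson rule for the oriented integral of f from lo to hi (n even)."""
    step = (hi - lo) / n
    total = f(lo) + f(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(lo + k * step)
    return total * step / 3


def conv(f, x, a=A):
    """ASSUMED convention: (e_a * f)(x) = int_0^x exp(-a (x - y)) f(y) dy, for x of either sign."""
    return simpson(lambda y: math.exp(-a * (x - y)) * f(y), 0.0, x)


def phi_odd(x):
    return x * math.exp(-x * x)


def phi_even(x):
    # Built so that phi_even(0) = 1 and phi_even' = A * phi_odd on the whole line.
    return 1.0 + 0.5 * A * (1.0 - math.exp(-x * x))


def phi(x):
    return phi_even(x) + phi_odd(x)


def psi(x):
    """phi - a e_a*phi - phi(0) e_a; condition (c) says this function is odd."""
    return phi(x) - A * conv(phi, x) - phi(0.0) * math.exp(-A * x)


for x in (0.5, 1.0, 2.0):
    gap_b = max(abs(phi_even(s) - (A * conv(phi, s) + phi(0.0) * math.exp(-A * s))) for s in (x, -x))
    print(f"x={x}: (b) residual {gap_b:.1e}, psi(x)+psi(-x) = {psi(x) + psi(-x):.1e}")
```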

As a direct consequence of the lemma we obtain the following information on invariant subspaces for \(\{C_{\text {D}}(t),\, t\in \mathbb {R}\}\).

Corollary 11

The subspaces

are invariant under the basic Cartesian product cosine family.

Proof

To show invariance of \(\mathsf D_{a}\), as in Corollary 3, we need to prove that \((C(t)\phi _2)^e = ae_a*C(t)\phi _2+[C(t)\phi _2(0)]e_a\) and \(({\textstyle \frac{a}{2}}C(t)\phi _1)^e =( C(t)\phi _2)^e\) for \((\phi _1,\phi _2) \in \mathsf D_a\) and \(t\in \mathbb {R}\). The second equality is an immediate consequence of the second relation in (12) combined with the defining condition of \(\mathsf D_a\). Turning to the first equality, we note that \(\phi _2\) satisfies condition (b) in Lemma 10, and thus also satisfies the lemma’s condition (d). Therefore, using (12) and the defining condition of \( \mathsf D_a\) again, we obtain

as desired. The proof of invariance of \( \mathsf D_{a}^\sharp \) is analogous.
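The step borrowed from Lemma 10, namely that a function satisfying condition (b) also satisfies (d), can be checked numerically. This is a sketch under stated assumptions: the basic cosine family is taken in d'Alembert form, \(C(t)f(x)=\tfrac12[f(x+t)+f(x-t)]\); convolution is assumed to be \((e_a*f)(x)=\int _0^x \textrm{e}^{-a(x-y)}f(y)\,dy\); and \(\phi (x)=1+\tfrac{a}{2}(1-\textrm{e}^{-x^2})+x\textrm{e}^{-x^2}\) is a hypothetical test function. Its even part has derivative \(a\phi ^o\), and under the assumed convention one checks by differentiation that this yields (b). The code compares both sides of (d) and also checks that they agree with \((C(t)\phi )^e=C(t)\phi ^e\), that is, that \(C(t)\phi \) again satisfies (b).

```python
import math

A = 2.0  # the parameter a > 0 (arbitrary illustrative value)


def simpson(f, lo, hi, n=2000):
    """Composite Simpson rule for the oriented integral of f from lo to hi (n even)."""
    step = (hi - lo) / n
    total = f(lo) + f(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(lo + k * step)
    return total * step / 3


def conv(f, x, a=A):
    """ASSUMED convention: (e_a * f)(x) = int_0^x exp(-a (x - y)) f(y) dy."""
    return simpson(lambda y: math.exp(-a * (x - y)) * f(y), 0.0, x)


def cosine(t, f):
    """ASSUMED d'Alembert form of the basic cosine family: (C(t)f)(x) = [f(x+t) + f(x-t)] / 2."""
    return lambda x: 0.5 * (f(x + t) + f(x - t))


def phi_even(x):
    return 1.0 + 0.5 * A * (1.0 - math.exp(-x * x))


def phi(x):
    # Hypothetical test function: its even part has derivative A * (odd part), giving (b).
    return phi_even(x) + x * math.exp(-x * x)


def lhs(t, x):
    """C(t) applied to a e_a*phi + phi(0) e_a, evaluated at x."""
    g = lambda y: A * conv(phi, y) + phi(0.0) * math.exp(-A * y)
    return cosine(t, g)(x)


def rhs(t, x):
    """a e_a*C(t)phi + [C(t)phi](0) e_a, evaluated at x."""
    c_phi = cosine(t, phi)
    return A * conv(c_phi, x) + c_phi(0.0) * math.exp(-A * x)


for t in (0.3, 1.0):
    for x in (-1.0, 0.5, 1.5):
        print(f"t={t}, x={x}: lhs-rhs = {lhs(t, x) - rhs(t, x):.1e}")
```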

Our next result establishes a key connection between the space \(\mathcal {D}_{\alpha ,\beta }\) defined in Sect. 3.3 and the spaces \(\mathsf D_{\alpha +\beta }\) and \(\mathsf D_{\alpha +\beta }^\sharp \).

Lemma 12

We have

where M is defined by (18), as long as \(\alpha \ne \beta \). If \(\alpha =\beta \), then

for \(M^\sharp \) introduced in (20).

Proof

We argue as in Lemma 4. Assume that \(\alpha \not =\beta \), that a pair \((\phi _1,\phi _2)\) belongs to \(\mathsf D_{\alpha +\beta }\), and that \((\psi _1,\psi _2)\) satisfies (21). Then, by the definition of \(\mathsf D_{\alpha +\beta }\),

Also, by the same definition, \(\frac{\alpha +\beta }{2} \phi _1(0) = \phi _2(0)\) and this implies that \(\psi _2(0)=(\alpha +\beta )^{-1} \phi _2(0)\). It follows that the lower entry in the last matrix is \( e_{\alpha +\beta } * (\beta \psi _2 - \alpha \psi _1^\text {T})+\psi _2(0) e_{\alpha +\beta }\). This shows that \((\psi _1,\psi _2)\) belongs to \(\mathcal {D}_{\alpha ,\beta }\) (see (30)), that is, that \(\mathcal {D}_{\alpha ,\beta }\supset M(\mathsf D_{\alpha +\beta })\). The inclusion \(\mathcal {D}_{\alpha ,\beta }\subset M(\mathsf D_{\alpha +\beta })\) is proved similarly.

We omit the details of the case \(\alpha =\beta \).

Since, as we have already remarked, the operators M and \(M^\sharp \) commute with the basic Cartesian product cosine family, Corollary 11 and Lemma 12 combined yield the following crucial result.

Theorem 13

The space \(\mathcal {D}_{\alpha ,\beta }\) is invariant under the basic Cartesian product cosine family.

We also note the following corollary to Lemma 12, which will be used in Sect. 4.2.

Corollary 14

For \((\psi _1,\psi _2)\in \mathcal C\) the following conditions are equivalent.

(a) \((\psi _1,\psi _2)\) belongs to \(\mathcal {D}_{\alpha ,\beta }\);

(b) \(\psi _2^e= e_{\alpha +\beta } *(\beta \psi _2 - \alpha \psi _1^\text {T})+\psi _2(0)e_{\alpha +\beta }=-\psi _1^e\) on \(\mathbb {R}^+\).

Proof

From the characterization (30) it follows that all we need to prove is that (b) implies (a). Let \(\alpha \not =\beta \). Assuming (b), we see that for \(\left( {\begin{array}{c}\phi _1\\ \phi _2\end{array}}\right) :=M^{-1} \left( {\begin{array}{c}\psi _1\\ \psi _2\end{array}}\right) = \left( {\begin{array}{c}\psi _2- \psi _1^\text {T}\\ \beta \psi _2-\alpha \psi _1^\text {T}\end{array}}\right) \) we have

on \(\mathbb {R}^+\). Since this means that \(\phi _2\) satisfies condition (a) in Lemma 10 (with \(a=\alpha +\beta \)), the lemma implies that \((\alpha +\beta ) e_{\alpha +\beta }*\phi _2+\phi _2(0)e_{\alpha +\beta }= \phi _2^e \) holds on \(\mathbb {R}\), and thus that (35) holds on \(\mathbb {R}\) as well. By Lemma 12, this shows that \(\left( {\begin{array}{c}\psi _1\\ \psi _2\end{array}}\right) \) belongs to \(\mathcal {D}_{\alpha ,\beta }\).

The case of \(\alpha =\beta \) is proved similarly.

4.2 Connection with transmission conditions (9)

We define the space \(\mathfrak C_{\text {ov}}(\mathbb {R}_\sharp )\) as the subspace of \(\mathfrak C(\mathbb {R}_\sharp )\) composed of functions satisfying \(f(0+)=-f(0-)\) (‘\(\text {ov}\)’ stands for ‘opposite values’).

Proposition 15

Let the map \( E^\perp _{\alpha ,\beta } :\mathfrak C_{\text {ov}}(\mathbb {R}_\sharp )\rightarrow \mathcal C\) be given by \(f\overset{ E^\perp _{\alpha ,\beta }}{\longmapsto }(\widetilde{f_{\ell }},\widetilde{f_{\text {r}}})\), where

and \(\widetilde{f_{\ell }}(0)=f(0-)\), \(\widetilde{f_{\text {r}}}(0)=f(0+)\). Then \( E^\perp _{\alpha ,\beta } \) is an isomorphism of \(\mathfrak C_{\text {ov}}(\mathbb {R}_\sharp )\) onto \(\mathcal {D}_{\alpha ,\beta }\).

Proof

To conclude that \((\widetilde{f_{\ell }},\widetilde{f_{\text {r}}})\) belongs to \(\mathcal C\), we use Lemma 1 and the existence of \(\widetilde{f_{\ell }}(-\infty )\) and \(\widetilde{f_{\text {r}}}(\infty )\), which is guaranteed by \(f\in \mathfrak C(\mathbb {R}_\sharp )\). The definition of \( E^\perp _{\alpha ,\beta } \) also implies that \((\widetilde{f_{\ell }},\widetilde{f_{\text {r}}})\) satisfies condition (b) in Corollary 14 and thus belongs to \(\mathcal {D}_{\alpha ,\beta }\).

Moreover, \( E^\perp _{\alpha ,\beta } \) is an injective bounded linear operator with

It is also surjective, since the operator R of Sect. 1.3, as restricted to \(\mathcal {D}_{\alpha ,\beta }\), is its right inverse.

To establish the link between the subspace \(\mathcal {D}_{\alpha ,\beta }\) and transmission conditions (9), we introduce the corresponding generator:

Definition 1

Let \(A^\perp _{\alpha ,\beta }\) be the operator in \(\mathfrak C_{\text {ov}}(\mathbb {R}_\sharp )\), defined by

on the domain \(D(A^\perp _{\alpha ,\beta })\) consisting of functions \(f \in \mathfrak C_{\text {ov}}(\mathbb {R}_\sharp )\) satisfying the following conditions:

(a) f is twice continuously differentiable in both \((-\infty ,0]\) and \([0,\infty )\), separately, with left-hand and right-hand derivatives at \(x=0\), respectively;

(b) both the limits \(\lim _{x\rightarrow \infty } f'' (x)\) and \(\lim _{x\rightarrow -\infty } f''(x) \) exist and are finite;

(c) \(f''(0+)=\alpha f'(0-) + \beta f'(0+)\) and \(f''(0+)=-f''(0-).\)

Theorem 16

The operator \(A^\perp _{\alpha ,\beta }\) generates the cosine family \(\{Cos_{\alpha ,\beta }^\perp (t),\,t \in \mathbb {R}\}\) given by

where \(C_{\text {D}}(t)\) is defined in (7).

Proof

This result may be proved in much the same way as Theorem 6. The crucial step of the argument is to show that, for \(f\in \mathfrak C_{\text {ov}}(\mathbb {R}_\sharp )\), the condition \(f\in D(A^\perp _{\alpha ,\beta })\) holds iff the extensions \(\widetilde{f_{\ell }}, \widetilde{f_{\text {r}}}\) defined in Proposition 15 are twice continuously differentiable, with \(\widetilde{f_{\ell }}''\), \(\widetilde{f_{\text {r}}}''\) belonging to \(\mathfrak C[-\infty ,\infty ]\). The transmission conditions in point (c) above are necessary and sufficient for the required differentiability of the extensions at \(x=0\).

Data availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

[1] Bobrowski, A.: Lord Kelvin’s method of images in the semigroup theory. Semigroup Forum 81, 435–445 (2010)

[2] Bobrowski, A., Mugnolo, D.: On moments-preserving cosine families and semigroups in \({C}[0, 1]\). J. Evol. Equ. 13(4), 715–735 (2013). https://doi.org/10.1007/s00028-013-0199-x

[3] Bobrowski, A., Gregosiewicz, A.: A general theorem on generation of moments-preserving cosine families by Laplace operators in \({C}[0,1]\). Semigroup Forum 88(3), 689–701 (2014). https://doi.org/10.1007/s00233-013-9561-0

[4] Bobrowski, A., Gregosiewicz, A., Murat, M.: Functionals-preserving cosine families generated by Laplace operators in \({C}[0,1]\). Discr. Cont. Dyn. Syst. B 20(7), 1877–1895 (2015). https://doi.org/10.3934/dcdsb.2015.20.1877

[5] Feller, W.: An Introduction to Probability Theory and Its Applications, vol. 2. Wiley, New York (1966). Second edition, 1971

[6] Bobrowski, A.: Functional Analysis for Probability and Stochastic Processes. An Introduction, p. 393. Cambridge University Press, Cambridge (2005). https://doi.org/10.1017/CBO9780511614583

[7] Bobrowski, A.: Generation of cosine families via Lord Kelvin’s method of images. J. Evol. Equ. 10(3), 663–675 (2010)

[8] Liggett, T.M.: Continuous Time Markov Processes. An Introduction. Amer. Math. Soc. (2010)

[9] Bobrowski, A., Ratajczyk, E.: On one-parameter continuous family of pairs of complementary boundary conditions. Studia Mathematica 266(1), 81–92 (2022). https://doi.org/10.4064/sm210618-3-11

[10] Revuz, D., Yor, M.: Continuous Martingales and Brownian Motion, 3rd edn. Springer (1999)

[11] Arendt, W., Batty, C.J.K., Hieber, M., Neubrander, F.: Vector-Valued Laplace Transforms and Cauchy Problems. Birkhäuser, Basel (2001)

[12] Bobrowski, A.: Families of operators describing diffusion through permeable membranes. In: Arendt, W., Chill, R., Tomilov, Y. (eds.) Operator Semigroups Meet Complex Analysis, Harmonic Analysis and Mathematical Physics. Operator Theory: Advances and Applications, vol. 250, pp. 87–105. Birkhäuser (2015)

[13] Bobrowski, A.: Emergence of Freidlin–Wentzell’s transmission conditions as a result of a singular perturbation of a semigroup. Semigroup Forum 92(1), 1–22 (2015). https://doi.org/10.1007/s00233-015-9729-x

[14] Bobrowski, A., Morawska, K.: From a PDE model to an ODE model of dynamics of synaptic depression. Discr. Cont. Dyn. Syst. B 17(7), 2313–2327 (2012). https://doi.org/10.3934/dcdsb.2012.17.2313

[15] Lejay, A.: The snapping out Brownian motion. Ann. Appl. Probab. 26(3), 1727–1742 (2016). https://doi.org/10.1214/15-AAP1131

[16] Bobrowski, A.: Convergence of One-parameter Operator Semigroups: In Models of Mathematical Biology and Elsewhere. New Mathematical Monographs, vol. 30, p. 438. Cambridge University Press, Cambridge (2016). https://doi.org/10.1017/CBO9781316480663

[17] Andrews, S.S.: Accurate particle-based simulation of adsorption, desorption and partial transmission. Phys. Biol. 6(4), 046015 (2010). https://doi.org/10.1088/1478-3975/6/4/046015

[18] Fieremans, E., Novikov, D.S., Jensen, J.H., Helpern, J.A.: Monte Carlo study of a two-compartment exchange model of diffusion. NMR in Biomedicine 23, 711–724 (2010). https://doi.org/10.1002/nbm.1577

[19] Tanner, J.E.: Transient diffusion in a system partitioned by permeable barriers. Application to NMR measurements with a pulsed field gradient. The Journal of Chemical Physics 69(4), 1748–1754 (1978). https://doi.org/10.1063/1.436751

[20] Powles, J.G., Mallett, M.J.D., Rickayzen, G., Evans, W.A.B.: Exact analytic solutions for diffusion impeded by an infinite array of partially permeable barriers. Proc. Roy. Soc. Lond. Ser. A 436(1897), 391–403 (1992). https://doi.org/10.1098/rspa.1992.0025

[21] Banasiak, J., Bloch, A.: Telegraph systems on networks and port-Hamiltonians. I. Boundary conditions and well-posedness. Evolution Equations and Control Theory 11(4), 1331–1355 (2022)

[22] Banasiak, J., Bloch, A.: Telegraph systems on networks and port-Hamiltonians. II. Network realizability. Networks and Heterogeneous Media 17(1), 73–99 (2022)

[23] Lejay, A.: On the constructions of the skew Brownian motion. Probab. Surv. 3, 413–466 (2006). https://doi.org/10.1214/154957807000000013

[24] Itô, K., McKean, H.P.: Diffusion Processes and Their Sample Paths. Springer, Berlin (1996). Repr. of the 1974 ed.

[25] Walsh, J.B.: A diffusion with a discontinuous local time. In: Temps Locaux. Astérisque, pp. 37–45. Société Mathématique de France (1978)

[26] Mansuy, R., Yor, M.: Aspects of Brownian Motion. Universitext, p. 195. Springer (2008). https://doi.org/10.1007/978-3-540-49966-4

[27] Yor, M.: Some Aspects of Brownian Motion. Part II: Some Recent Martingale Problems. Lectures in Mathematics ETH Zürich, p. 144. Birkhäuser Verlag, Basel (1997). https://doi.org/10.1007/978-3-0348-8954-4

[28] Freidlin, M.I., Wentzell, A.D.: Random Perturbations of Dynamical Systems, 3rd edn. Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences], vol. 260, p. 458. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-25847-3. Translated from the 1979 Russian original by Joseph Szücs

[29] Freidlin, M.I., Wentzell, A.D.: Diffusion processes on graphs and the averaging principle. Ann. Probab. 21, 2215–2245 (1993)

[30] Bobrowski, A., Komorowski, T.: Diffusion approximation for a simple kinetic model with asymmetric interface. J. Evol. Equ. 22, 42 (2022). https://doi.org/10.1007/s00028-022-00801-x

[31] Basile, G., Komorowski, T., Olla, S.: Diffusion limit for a kinetic equation with a thermostatted interface. Kinet. Relat. Models 12(5), 1185–1196 (2019). https://doi.org/10.3934/krm.2019045

[32] Komorowski, T., Olla, S.: Kinetic limit for a chain of harmonic oscillators with a point Langevin thermostat. J. Funct. Anal. 279(12), 108764 (2020). https://doi.org/10.1016/j.jfa.2020.108764

[33] Komorowski, T., Olla, S., Ryzhik, L.: Fractional diffusion limit for a kinetic equation with an interface. Ann. Probab. 48(5), 2290–2322 (2020). https://doi.org/10.1214/20-AOP1423

[34] Komorowski, T., Olla, S., Ryzhik, L., Spohn, H.: High frequency limit for a chain of harmonic oscillators with a point Langevin thermostat. Arch. Ration. Mech. Anal. 237(1), 497–543 (2020). https://doi.org/10.1007/s00205-020-01513-7

[35] Di Crescenzo, A., Martinucci, B., Paraggio, P., Zacks, S.: Some results on the telegraph process confined by two non-standard boundaries. Methodol. Comput. Appl. Probab. (2020). https://doi.org/10.1007/s11009-020-09782-1

[36] Di Crescenzo, A., Martinucci, B., Zacks, S.: Telegraph process with elastic boundary at the origin. Methodol. Comput. Appl. Probab. 20(4), 333–352 (2017)

[37] Kostrykin, V., Potthoff, J., Schrader, R.: Brownian motions on metric graphs. J. Math. Phys. 53(9), 095206 (2012). https://doi.org/10.1063/1.4714661

[38] Bobrowski, A.: Concatenation of dishonest Feller processes, exit laws, and limit theorems on graphs. To appear in SIAM J. Math. Anal.; see also arXiv (2022). https://doi.org/10.48550/ARXIV.2204.09354

Acknowledgements

We are very grateful to Wojciech Chojnacki for various remarks which improved the presentation of the paper.

Author information


Contributions

Authors contributed equally to this work.


Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bobrowski, A., Ratajczyk, E. Pairs of complementary transmission conditions for Brownian motion. Math. Ann. 388, 4317–4342 (2024). https://doi.org/10.1007/s00208-023-02613-x


DOI: https://doi.org/10.1007/s00208-023-02613-x