Abstract

We study the homogenization of the Dirichlet problem for the Stokes equations in \(\mathbb {R}^3\) perforated by m spherical particles. We assume the positions and velocities of the particles to be independent and identically distributed random variables. In the critical regime, when the radii of the particles are of order \(m^{-1}\), the homogenization limit u is given as the solution to the Brinkman equations. We provide optimal rates for the convergence \(u_m \rightarrow u\) in \(L^2\), namely \(m^{-\beta }\) for all \(\beta < 1/2\). Moreover, we consider the fluctuations. In the central limit scaling, we show that these converge to a Gaussian field, locally in \(L^2(\mathbb {R}^3)\), with an explicit covariance. Our analysis is based on explicit approximations for the solutions \(u_m\) in terms of u as well as the particle positions and their velocities. These are shown to be accurate in \(\dot{H}^1(\mathbb {R}^3)\) to order \(m^{-\beta }\) for all \(\beta < 1\). Our results also apply to the analogous problem regarding the homogenization of the Poisson equation.


1 Introduction

Numerous applications regarding the dynamics of suspensions and aerosols call for macro- and mesoscopic models which couple the particle evolution to the fluid. One of the best-known models is given by the so-called Vlasov–Navier–Stokes equations for spherical, non-Brownian inertial particles. If the fluid inertia is neglected, they reduce to the so-called Vlasov–Stokes equations, which take the dimensionless form

where f(t, x, v) is the particle density and h is some external force acting on the fluid. For questions regarding modeling and applications of this system, we refer the reader to [4] and the references therein.

The rigorous derivation of these equations from a microscopic system is a wide open problem. The main difficulty lies in the nature of the interaction of the particles, which is only given implicitly through the fluid. Moreover, this interaction is singular and long-range. A natural preliminary step towards the rigorous derivation of the Vlasov(–Navier)–Stokes equations consists in deriving the limit fluid equations in (1.1) without taking the particle evolution into account. These are the so-called Brinkman equations. The additional term \(\rho u - j\) describes the effective drag force that the particles exert on the fluid: the drag force of a single particle in a Stokes flow is given by

where R is the particle radius, \(V_i\) its velocity and \(u_i\) the unperturbed fluid velocity at the position of the particle. Therefore, the total drag is of order one if the number of particles m (in a finite volume) times their radius \(R_m\) is of order one. By making the convenient choice

$$\begin{aligned} R_m = \frac{1}{6 \pi m}, \end{aligned}$$(1.2)

the Brinkman equations in the form above arise based on a superposition principle for the drag forces.
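The superposition heuristic can be checked numerically in a deliberately crude toy model. Everything in the sketch below is a hypothetical stand-in: velocities are scalar, \(X_i \sim \textrm{Uniform}(0,1)\), \(V_i \sim \textrm{Normal}(1/2, 1)\) independent of \(X_i\), and the unperturbed fluid velocity is prescribed as \(u(x) = x^2\) rather than obtained from any PDE. We take the force that a particle exerts on the fluid to be \(6\pi R (V_i - u(X_i))\), the sign convention under which the superposition produces the source \(j - \rho u\). With \(R_m = 1/(6\pi m)\), the total force should settle near \(\int (v - u(x)) f(\textrm{d}x, \textrm{d}v) = 1/2 - 1/3 = 1/6\), whereas freezing the radius should make it grow linearly in m.

```python
import numpy as np


def total_drag(m, radius, rng):
    """Superpose the m single-particle forces 6*pi*radius*(V_i - u(X_i)).

    Hypothetical scalar toy: X_i ~ Uniform(0, 1), V_i ~ Normal(0.5, 1)
    independent of X_i, and a prescribed fluid velocity u(x) = x**2.
    """
    X = rng.uniform(0.0, 1.0, size=m)
    V = rng.normal(0.5, 1.0, size=m)
    return float(np.sum(6.0 * np.pi * radius * (V - X**2)))


# Superposition limit of the toy: E[V] - E[X**2] = 1/2 - 1/3 = 1/6.
limit = 1.0 / 6.0

rng = np.random.default_rng(0)
for m in (100, 10_000, 1_000_000):
    critical = total_drag(m, 1.0 / (6.0 * np.pi * m), rng)  # R_m = 1/(6 pi m)
    frozen = total_drag(m, 1.0 / (6.0 * np.pi * 100), rng)  # radius fixed at its m = 100 value
    print(m, round(critical, 3), round(frozen, 1))
```

The function name `total_drag` and all distributions are illustrative choices. They are not taken from the paper.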

The rigorous derivation of the Brinkman equations has attracted increasing attention in recent years, with results covering both zero and non-zero particle velocities; see e.g. [2, 5, 12, 17, 18, 22]. The most recent results focus on the derivation under very mild assumptions on (random) particle configurations. Such investigations seem indispensable in order to eventually accomplish the rigorous derivation of the Vlasov(–Navier)–Stokes equations. In this regard, it is also desirable to develop very accurate explicit approximations for the microscopic solution \(u_m\) and to characterize its convergence rate to the limit u as well as the associated fluctuations. In our paper, we focus on these aspects.

1.1 Statement of the Main Result

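We consider the perforated domain

$$\begin{aligned} \Omega _m := \mathbb {R}^3 \setminus \bigcup _{i=1}^m \overline{B_i}, \end{aligned}$$

where the particles are given by \(B_i = B_{R_m}(X_i)\) with \(R_m\) as in (1.2). The particle positions \(X_1,\ldots ,X_m\) as well as their velocities \(V_1,\ldots ,V_m\) are random variables in \(\mathbb {R}^3\). For \(h \in \dot{H}^{-1}(\mathbb {R}^3)\), we study the solution \(u_m\) to the Stokes equations

$$\begin{aligned} -\Delta u_m + \nabla p_m&= h \quad \text {in } \Omega _m, \\ \mathop {\textrm{div}}\limits u_m&= 0 \quad \text {in } \Omega _m, \\ u_m&= V_i \quad \text {in } B_i, \quad i = 1, \ldots , m. \end{aligned}$$(1.3)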

We consider the case when \(Z_i = (X_i,V_i)\) are i.i.d. according to \(f\in \mathcal {P}(\mathbb {R}^3\times \mathbb {R}^3)\). We impose the following hypotheses on f:

- (H1) \(\int _{\mathbb {R}^3\times \mathbb {R}^3} |v|^2 f(\textrm{d}x, \textrm{d}v) <\infty \);
- (H2) the distribution of the centers \(\rho (\cdot ):= \int _{\mathbb {R}^3} f(\cdot ,\textrm{d}v)\in W^{1,\infty }(\mathbb {R}^3)\) is compactly supported;
- (H3) \(j(\cdot ):= \int _{\mathbb {R}^3} v f(\cdot ,\textrm{d}v) \in {H}^1(\mathbb {R}^3)\).

We remark that, in particular, we allow the choice \(f(\textrm{d}x, \textrm{d}v) = \rho (x) \, \textrm{d}x \delta _{v=0}\), which means that all particle velocities are zero.

It is classical that the Stokes equations (1.3) are well-posed if the particles do not overlap, in the sense that there exists a unique weak solution \(u_m \in \dot{H}^{1}(\mathbb {R}^3)\). As stated in the following lemma, the probability that particles overlap tends to \(0\) as \(m\rightarrow \infty \). The lemma is a standard result that can, for example, be found in [21, Proposition A.3].

Lemma 1.1

For \(\nu \geqq 0 \), \(L > 0\) let

Then, for all \(0 \leqq \nu < 1/3\) and all \(L > 0\), there exists \(m_0 > 0\) such that for all \(m \geqq m_0\)

where C depends only on \(\rho \).
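The threshold \(\nu < 1/3\) can be made plausible by a union bound. Purely as an illustration, suppose that the complement of \(\mathcal {O}_{m,\nu ,L}\) is the event that two centres lie closer than \(L m^{\nu } R_m\). This is our own reading; the precise definition is not reproduced above. Using \(\int \rho = 1\), the boundedness of \(\rho \) from (H2) and \(R_m = 1/(6\pi m)\) from (1.2), one finds:

```latex
\begin{aligned}
\mathbb{P}\bigl(\exists\, i \neq j :\ |X_i - X_j| < L m^{\nu} R_m\bigr)
&\leq \binom{m}{2} \int_{\mathbb{R}^3} \rho(x) \int_{B_{L m^{\nu} R_m}(x)} \rho(y)\, \textrm{d}y\, \textrm{d}x \\
&\leq \frac{m^2}{2}\, \Vert \rho \Vert_{L^\infty}\, \frac{4\pi}{3} \bigl(L m^{\nu} R_m\bigr)^3
 = \frac{\Vert \rho \Vert_{L^\infty}\, L^3}{324\, \pi^2}\, m^{3\nu - 1}.
\end{aligned}
```

For fixed L, the right-hand side tends to zero exactly when \(\nu < 1/3\). For \(\nu = 0\) and \(L = 2\), it bounds the probability of an overlap by a multiple of \(m^{-1}\).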

To overcome the ill-posedness of (1.3) for overlapping particles, we could restrict ourselves to configurations of non-overlapping particles. However, this would destroy the independence of the particle positions. Thus, for technical reasons, we prefer to define \(u_m\) to be the solution to (1.3) for \((Z_i)_{i=1}^{m} \in \mathcal {O}_{m,0,2}\) and \(u_m = u\) for \((Z_i)_{i=1}^{m} \notin \mathcal {O}_{m,0,2}\).

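For the statement of our main result, we introduce \(u \in \dot{H}^1(\mathbb {R}^3)\) as the unique weak solution to the Brinkman equations

$$\begin{aligned} -\Delta u + \nabla p + \rho u - j&= h \quad \text {in } \mathbb {R}^3, \\ \mathop {\textrm{div}}\limits u&= 0 \quad \text {in } \mathbb {R}^3. \end{aligned}$$(1.4)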

We remark that this problem is well-posed due to the assumptions (H2) and (H3) (note that j also has compact support and hence \(j \in \dot{H}^{-1}(\mathbb {R}^3)\)).
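For completeness, the embedding behind this remark can be spelled out. The following is a standard argument, not quoted from the paper; \(C_S\) denotes the constant of the Sobolev embedding \(\dot{H}^1(\mathbb {R}^3) \subseteq L^6(\mathbb {R}^3)\) and \(\varphi \in \dot{H}^1(\mathbb {R}^3)\) is a test function:

```latex
\begin{aligned}
|\langle j, \varphi \rangle|
&\leq \Vert j\Vert_{L^{6/5}}\, \Vert \varphi\Vert_{L^6}
 \leq C_S\, \Vert j\Vert_{L^{6/5}}\, \Vert \nabla \varphi\Vert_{L^2}, \\
\Vert j\Vert_{L^{6/5}}
&\leq |\mathop{\textrm{supp}} j|^{1/3}\, \Vert j\Vert_{L^2}
 \qquad \bigl(\text{H\"older with } \tfrac{5}{6} = \tfrac{1}{3} + \tfrac{1}{2}\bigr).
\end{aligned}
```

Hence \(\Vert j\Vert _{\dot{H}^{-1}} \leq C_S |\mathop {\textrm{supp}} j|^{1/3} \Vert j\Vert _{L^2}\). The same Hölder argument with \(\tfrac{5}{6} = \tfrac{2}{3} + \tfrac{1}{6}\) gives \(\Vert \rho w\Vert _{L^{6/5}} \leq \Vert \rho \Vert _{L^{3/2}} \Vert w\Vert _{L^6}\) for \(w \in \dot{H}^1(\mathbb {R}^3)\), which makes \(\rho u\) an admissible source term as well.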

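Moreover, we introduce the solution operator A for the Brinkman equations with vanishing flux j. More precisely, A, which depends on \(\rho \), maps g to the solution w of the equation

$$\begin{aligned} -\Delta w + \nabla p + \rho w&= g \quad \text {in } \mathbb {R}^3, \\ \mathop {\textrm{div}}\limits w&= 0 \quad \text {in } \mathbb {R}^3. \end{aligned}$$(1.5)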

Theorem 1.2

Let \(h \in \dot{H}^{-1}(\mathbb {R}^3)\) and let \(u_m \in \dot{H}^1(\mathbb {R}^3)\) and \(u \in \dot{H}^1(\mathbb {R}^3)\) be the unique weak solutions to (1.3) and (1.4). Then,

(i) For any \(\beta < 1/2\) and any compact set \(K \subseteq \mathbb {R}^3\),

$$\begin{aligned} m^\beta \Vert u_m - u\Vert _{L^2(K)} \longrightarrow 0 \quad \text { in probability}; \end{aligned}$$

(ii) For every \(g\in L^2(\mathbb {R}^3)\) with compact support,

$$\begin{aligned} m^{1/2} (g, u_m - u) \longrightarrow \xi [g] \end{aligned}$$

in distribution, where \(\xi \) is a Gaussian field with mean zero and covariance

$$\begin{aligned} \begin{aligned} \mathbb {E}[\xi [g_1] \xi [g_2]]&= \int _{\mathbb {R}^3\times \mathbb {R}^3} \bigl ((u(x)-v) \cdot (A g_1)(x)\bigr ) \bigl ((u(x)-v) \cdot (A g_2)(x)\bigr ) f(\textrm{d}x,\textrm{d}v) \\&\quad - \left( \rho u -j,A g_1\right) _{L^2}\left( \rho u -j,Ag_2\right) _{L^2} \end{aligned} \end{aligned}$$(1.6)

for all \(g_1, g_2 \in L^2(\mathbb {R}^3)\) with compact support.
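A toy Monte Carlo experiment makes the structure of (1.6) concrete. Tested against g, each summand of the fluctuation field is \(m^{-1/2}(\mathbb {E}[Y] - Y_i)\) with \(Y_i = (u(X_i) - V_i)\cdot (Ag)(X_i)\) (cf. (1.8) in Remark 1.3 (ii)). Hence (1.6) with \(g_1 = g_2 = g\) is simply \(\textrm{Var}(Y_1)\): the first term is \(\mathbb {E}[Y_1^2]\), and the second is \((\mathbb {E} Y_1)^2 = (\rho u - j, Ag)^2\). The sketch replaces every ingredient by a hypothetical scalar stand-in: `u_toy(x) = sin(pi x)` in place of u, `a_toy(x) = x` in place of Ag, \(X \sim \textrm{Uniform}(0,1)\) and \(V \sim \textrm{Normal}(0,1)\) independent. No Brinkman equation is solved; the code only illustrates the CLT step.

```python
import numpy as np


# Hypothetical scalar stand-ins, NOT solutions of the Brinkman equations:
# u_toy plays the role of u, a_toy plays the role of A g for one fixed g.
def u_toy(x):
    return np.sin(np.pi * x)


def a_toy(x):
    return x


def predicted_variance(n_quad=200_000):
    """Toy analogue of (1.6) with g1 = g2 for X ~ U(0, 1), V ~ N(0, 1) independent.

    First term:  E[((u(X) - V) a(X))**2] = int_0^1 (u**2 + 1) a**2 dx  (E V = 0, E V**2 = 1).
    Second term: (E[(u(X) - V) a(X)])**2 = (int_0^1 u a dx)**2.
    """
    x = (np.arange(n_quad) + 0.5) / n_quad  # midpoint rule on (0, 1)
    first = np.mean((u_toy(x) ** 2 + 1.0) * a_toy(x) ** 2)
    second = np.mean(u_toy(x) * a_toy(x)) ** 2
    return float(first - second)


def empirical_variance(m, n_rep, rng):
    """Sample variance of m**(-1/2) * sum_i (E[Y] - Y_i), with Y_i = (u(X_i) - V_i) a(X_i)."""
    X = rng.uniform(0.0, 1.0, size=(n_rep, m))
    V = rng.normal(0.0, 1.0, size=(n_rep, m))
    Y = (u_toy(X) - V) * a_toy(X)
    mean_Y = 1.0 / np.pi  # exact value of int_0^1 x sin(pi x) dx
    tau = (mean_Y - Y).sum(axis=1) / np.sqrt(m)
    return float(tau.var())


rng = np.random.default_rng(0)
print(predicted_variance())  # 1/2 - 5/(4 pi^2), approximately 0.3733
print(empirical_variance(200, 4000, rng))
```

The two printed numbers should agree up to Monte Carlo error. Dropping the squared-mean term would overestimate the variance by \(1/\pi ^2\) in this toy.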

Remark 1.3

(i) The analogous result holds when the Stokes equations are replaced by the Poisson equation. The result is new also for the Poisson equation; see the discussion in Sect. 1.2. For the sake of conciseness, we do not state it in a separate theorem but only point out the necessary adaptations: instead of the Stokes equations (1.3), (1.4) and (1.5), we consider Poisson equations, and the quantities \(V_i\), \(u_m, u, h, j\), etc. become scalars. Moreover, reflecting that the capacity of a ball of radius R is \(4 \pi R\), one should replace (1.2) by

$$\begin{aligned} R_m = \frac{1}{4 \pi m}. \end{aligned}$$(1.7)

(ii) For the proof, we will show the following statement, which implies Theorem 1.2 (ii); see Theorem 3.1 and Propositions 3.2–3.3. Denoting by \(\Omega \) the underlying probability space, let \(\sigma _i \in L^2(\Omega ;L^2_{loc}(\mathbb {R}^3))\), \(i = 1, \ldots , m\), be the i.i.d. random fields (cf. (2.21)) given by

$$\begin{aligned} \sigma _i = m^{-1/2} \left( A(\rho u - j) - A\bigl ((u(X_i) - V_i) \delta _{X_i}\bigr )\right) \end{aligned}$$(1.8)

and \(\tau _m:= \sum _{i=1}^m \sigma _i \). Then, for all \(\beta < 1/2\) and all compact sets \(K \subseteq \mathbb {R}^3\),

$$\begin{aligned} m^{\beta } \Vert m^{1/2}(u_m - u) - \tau _m\Vert _{L^2(K)} \rightarrow 0 \quad \text { in probability}. \end{aligned}$$(1.9)

The assertion then follows from a standard CLT upon computing the covariance of \(\sigma _1\). In classical stochastic homogenization of elliptic PDEs with oscillating coefficients, the analogue of the convergence (1.9) is known as the pathwise structure of fluctuations (see e.g. [11]). A heuristic explanation for this pathwise structure will be given in Sect. 2.

(iii) Formally, we can write \(\xi = A \zeta \), where \(\zeta \) accounts for the fluctuations of the drag force \(j - \rho u\). The appearance of the second term on the right-hand side of (1.6) is classical for the fluctuations in m-particle systems, see e.g. [3], and is expected to disappear if the particles are modeled by a Poisson Point Process instead. In particular, one can expect in this case, at least formally, \(\xi = A \zeta \) with

$$\begin{aligned} \zeta = \left( \int (v-u)\otimes (v-u) f(\cdot , \textrm{d}v)\right) ^{\frac{1}{2} } W, \end{aligned}$$

where W is spatial white noise. This (and also the form of \(\sigma _i\) in (1.8)) means that the fluctuations are solely caused by the fluctuations of the effective particle drag forces \(V_i - u(X_i)\), which stem from the fluctuations of the positions and velocities of the particles. No other information on the Dirichlet boundary conditions is retained. The fluctuations of the drag force are then transferred to fluctuations of the fluid velocity via the long-range solution operator A of the Brinkman equations.

(iv) The rate of convergence in part (i) of Theorem 1.2 is optimal in view of part (ii). More precisely, since \(\xi \ne 0\) in general, part (i) cannot hold for \(\beta = 1/2\). By interpolating the estimate in part (i) with the energy bound, one nevertheless obtains convergence in \(H^s_\textrm{loc}\) for any \(s < 1\) with rate \(m^{-\beta +s/2}\) for any \(\beta < 1/2\). This rate might not be optimal, though. Indeed, we will show that the fluctuations \(m^{1/2}(u_m-u)\) are bounded in \(H^s_\textrm{loc}\) for \(s < 1/2\) (cf. Proposition 3.3).
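The interpolation step in (iv) can be spelled out as follows. We assume that K is a bounded Lipschitz domain, so that the standard inequality \(\Vert w\Vert _{H^s(K)} \leq C_K \Vert w\Vert _{L^2(K)}^{1-s} \Vert w\Vert _{H^1(K)}^{s}\) holds for \(0< s < 1\):

```latex
\begin{aligned}
m^{\beta - s/2}\, \Vert u_m - u\Vert_{H^s(K)}
&\leq C_K\, m^{\beta - s/2}\, \Vert u_m - u\Vert_{L^2(K)}^{1-s}\, \Vert u_m - u\Vert_{H^1(K)}^{s} \\
&= C_K \Bigl( m^{\beta'}\, \Vert u_m - u\Vert_{L^2(K)} \Bigr)^{1-s}\, \Vert u_m - u\Vert_{H^1(K)}^{s},
\qquad \beta' := \frac{\beta - s/2}{1-s}.
\end{aligned}
```

Since \(\beta' < 1/2\) is equivalent to \(\beta < 1/2\), part (i) of Theorem 1.2 (applied with \(\beta'\)) sends the bracket to zero in probability. The energy bound keeps \(\Vert u_m - u\Vert _{H^1(K)}\) bounded in probability, so \(m^{\beta - s/2}\Vert u_m - u\Vert _{H^s(K)} \rightarrow 0\) in probability.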

1.1.1 Possible Generalizations

We briefly comment on four aspects of possible generalizations and improvements of our main result. We address: (1) random radii of the particles; (2) more general distributions of particle positions; (3) space dimensions different from \(d = 3\); (4) different notions of probabilistic convergence in part (i) of the main theorem.

1. It is not difficult to extend the above result to the case where the radii of the particles are also random. More precisely, assume that the radius of each particle is \(R^m_i = r_i R_m\) with \(R_m\) as in (1.2). Assume that the radii \(r_i\) are independent bounded random variables, also independent of the positions, with expectation \(\mathbb {E}r = 1\). Then, the assertions of Theorem 1.2 still hold with an additional factor \(\mathbb {E}r^2\) in front of the first term on the right-hand side of the covariance (1.6). In order not to further burden the presentation, we restrict our attention to the case of identical radii.

2. For the sake of simplicity, we restrict our analysis to m i.i.d. particles. As mentioned in Remark 1.3 (iii), we expect the result to extend to (inhomogeneous) Poisson Point Processes. Moreover, we expect similar results for sufficiently mixing processes. For instance, assume that the m particles are identically distributed with \(V_i = 0\) for all \(1 \leqq i \leqq m\), i.e. \(f = \rho \otimes \delta _0\), and let \(\rho _2\) denote the 2-particle correlation function, i.e., \(\mathbb {E}(g(X_1,X_2)) = \int _{\mathbb {R}^3 \times \mathbb {R}^3} g(x_1,x_2) \, \textrm{d}\rho _2(x_1,x_2)\). Assume that the process is mixing in the sense that

$$\begin{aligned} \left| \rho _2\left( \frac{x_1}{m^{1/3}}, \frac{x_2}{m^{1/3}}\right) - \rho \left( \frac{x_1}{m^{1/3}}\right) \rho \left( \frac{x_2}{m^{1/3}}\right) \right| \leqq \left( 1+ |x_1-x_2|\right) ^{-\beta } \end{aligned}$$

for some \(\beta > 3\). Then, \(\tau _m = \sum _{i=1}^m \sigma _i\) with \(\sigma _i\) as in (1.8) still has bounded variance, which seems necessary for the fluctuations to be of order \(m^{-1/2}\). We point out that the condition \(\beta >3\) corresponds to the one under which the fluctuations have been shown to obey the central limit scaling in [7] in the case of oscillating coefficients. However, the probabilistic estimates in Sect. 5 involve expressions with up to 5 different particles. Hence, further assumptions on the particle correlations are likely to be necessary when the particles are not independently distributed.

3. Regarding the space dimension, our analysis is restricted to the physically most relevant three-dimensional case. Applying the same techniques in dimension \(d=2\) seems possible, with additional technicalities due to the usual issues regarding the capacity of a set in \(d=2\).

We emphasize, though, that for \(d \geqq 4\) we do not expect Theorem 1.2 to continue to hold without structural changes. More precisely, we expect that in higher dimensions the fluctuations are of larger order than \(m^{-1/2}\). Moreover, at leading order, we expect local effects to dominate rather than the long-range fluctuations caused by the fluctuations of the drag force in \(d=3\) (cf. Remark 1.3 (iii)). The reason for this is that the volume occupied by the particles becomes too large. Indeed, for the homogenized equation (1.4) to remain the Brinkman equations, the critical scaling of the radius of m spherical particles in dimension \(d \geqq 3\) is \(R_m \sim m^{-1/(d-2)}\). The results cited above ensure that under this scaling we still have \(u_m \rightharpoonup u\) weakly in \(\dot{H}^1(\mathbb {R}^d)\). However, when all particle velocities are zero, i.e. \(f=\rho \otimes \delta _0\), we obtain the following trivial lower bound on the error (and hence upper bound on the rate of convergence) in \(L^p_{\textrm{loc}}\):

$$\begin{aligned} \Vert u_m - u\Vert _{L^p_\textrm{loc}(\mathbb {R}^d)} \geqq&\Vert u_m - u\Vert _{L^p(\cup _{i=1}^m B_i)} = \Vert u\Vert _{L^p(\cup _{i=1}^m B_i)} \sim \left( \mathscr {L}^d\left( \bigcup _{i=1}^m B_i\right) \right) ^{\frac{1}{p}} \nonumber \\&\sim m^{-\frac{2}{p(d-2)}}. \end{aligned}$$

This shows that Theorem 1.2 cannot hold in this form for \(d \geqq 5\). Moreover, in dimension \(d=4\), this error is of critical order, which suggests that the analysis of the fluctuations is much more delicate. One might expect, though, that the result remains true for \(d \in \{4,5\}\) upon replacing \(L^2_{\textrm{loc}}\) by \(L^p_{\textrm{loc}}\) with p sufficiently small such that \(\Vert \textbf{1}_{\cup _{i=1}^m B_i}\Vert _{L^p} \ll m^{-1/2}\). However, taking into account more carefully that the effect of the Dirichlet condition at the particles decays like the inverse distance and that the typical interparticle distance is \(m^{-1/d}\) reveals that (with overwhelming probability)

$$\begin{aligned} \Vert u_m - u\Vert _{L^p_\textrm{loc}(\mathbb {R}^d)} \gtrsim m^{-\frac{2}{d}} \end{aligned}$$

for all \(p < \tfrac{d}{d-2}\) (such that the fundamental solution of the Stokes equations lies in \(L^p_{\textrm{loc}}\)). We believe that not only the scaling of the fluctuations changes but also their nature. Indeed, the long-range fluctuations caused by the fluctuations of the drag force in \(d=3\) (cf. (1.8)) are not suited to correct locally the failure of the Dirichlet boundary condition at the particles. Roughly speaking, in \(d=3\) the fluctuations at a given point are, to leading order, a collective long-range effect due to the fluctuations of all particle positions and velocities. In \(d \geqq 5\), however, we expect the fluctuations to be, to leading order, a short-range effect due to the fluctuation of the nearest particle position and its velocity. For \(d=4\), we expect both effects to be of the same order.

4. Instead of convergence in probability, one could aim for convergence in \(L^p\). Inspecting the proof of the theorem reveals that we actually prove

$$\begin{aligned} \mathbb {E}_m[\textbf{1}_{{\mathcal {O}}_{m,0,5}} \Vert u_m - u\Vert ^2_{L^2_\textrm{loc}}] \leqq C m^{-1}. \end{aligned}$$(1.10)

This implies \(\mathbb {E}_m[\Vert u_m - u\Vert _{L^2_\textrm{loc}}] \leqq C m^{-1/6}\) by Lemma 1.1, provided an a priori bound \(\mathbb {E}_m[\Vert u_m - u\Vert ^2_{L^2_\textrm{loc}}] \leqq C\) holds. Such a bound has been obtained in [5]. Although different particle distributions are considered in [5], one readily checks that [5, Lemma 3.4] also implies such an a priori estimate in our setting. Again, the power \(m^{-1/6}\) is presumably not optimal, and one could aim for an estimate \(\mathbb {E}_m[\Vert u_m - u\Vert ^2_{L^2_\textrm{loc}}] \leqq C m^{-1}\). Following our present approach, one would need to adapt the approximation that we use for \(u_m\) in the set \( {\mathcal {O}}_{m,0,5}\). The adaptation needs to take into account the geometry of the particle configuration in a more precise way, and one could take inspiration from the proof of [5, Lemma 3.4]. However, it seems unavoidable that this approach would drastically increase the technical part of our proof.
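A back-of-the-envelope computation indicates why a decay exponent \(\beta > 3\) in item 2 is the natural threshold for a bounded variance of \(\tau _m\). For this sketch only, assume that correlations decay on the scale \(m^{-1/3}\) of the interparticle distance. In macroscopic variables \(y_1, y_2\) ranging over a compact set K, this means \(|\rho _2(y_1,y_2) - \rho (y_1)\rho (y_2)| \leq (1 + m^{1/3}|y_1 - y_2|)^{-\beta }\). With \(V_i = 0\), testing (1.8) against g gives \(m^{-1/2}(\mathbb {E}Y - Y_i)\) with \(Y_i = \psi (X_i)\), where \(\psi := u \cdot Ag\) is bounded:

```latex
\begin{aligned}
\operatorname{Var}\bigl((g, \tau_m)\bigr)
&= \operatorname{Var}(Y_1) + (m-1)\, \operatorname{Cov}(Y_1, Y_2), \\
|\operatorname{Cov}(Y_1, Y_2)|
&\leq \Vert \psi\Vert_{L^\infty}^2 \int_K \int_K \bigl(1 + m^{1/3} |y_1 - y_2|\bigr)^{-\beta}\, \textrm{d}y_1\, \textrm{d}y_2
 \leq \Vert \psi\Vert_{L^\infty}^2\, |K|\, m^{-1} \int_{\mathbb{R}^3} (1 + |w|)^{-\beta}\, \textrm{d}w .
\end{aligned}
```

The last step substitutes \(w = m^{1/3}(y_1 - y_2)\). The factor \(m^{-1}\) compensates the \(m-1\) pairs exactly, and \(\int _{\mathbb {R}^3} (1+|w|)^{-\beta }\, \textrm{d}w = 4\pi \int _0^\infty r^2 (1+r)^{-\beta }\, \textrm{d}r\) is finite if and only if \(\beta > 3\).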

1.1.2 Comments on Assumptions (H1)–(H3)

The second moment bound in the first assumption, (H1), is very natural. It ensures that the solution \(u_m\) is bounded in \(L^2(\Omega ;\dot{H}^1(\mathbb {R}^3))\), where \(\Omega \) denotes the probability space. Moreover, the covariance of the fluctuations provided in Theorem 1.2 involves this second moment.

The regularity assumptions on \(\rho \) and j, (H2)–(H3), are of a more technical nature: they ensure that both j and \(\rho u\), which appear in the Brinkman equations (1.4), lie in \(\dot{H}^1(\mathbb {R}^3) \cap \dot{H}^{-1}(\mathbb {R}^3)\). The \(\dot{H}^{-1}\) property will be very useful for treating these terms as source terms of the Stokes equations. On the other hand, the \(H^1\)-regularity allows us to quantify the differences between these terms and certain discrete and averaged versions of them, which are involved in the construction of the approximations for \(u_m\) detailed in Sect. 2.

1.2 Discussion of Related Results

1.2.1 Previous Results on the Derivation of the Brinkman Equations

As indicated at the beginning of this introduction, there is an extensive literature on the derivation of the Brinkman equations and on corresponding results for the Poisson equation; see for instance [6, 9, 19, 26,27,28]. For a more complete list and discussion of this literature, we refer the reader to [18, 19].

In [5, 18], the Brinkman equations have been derived under very mild assumptions on the particle configurations. In [18], the authors considered zero particle velocities. The particle positions can be distributed according to rather general stationary processes, and the radii are i.i.d. with only a \((1+ \beta )\) moment bound. This allows for many clusters of overlapping particles. A corresponding result for the Poisson equation has been obtained in [19].

On the other hand, in [5], the particle radii are identical, but the particle velocities are not necessarily zero. The authors consider more general particle distributions than i.i.d. configurations. The Brinkman equations are derived in this setting under assumptions that include a 5th moment bound on the velocities. The result in [5] comes with an estimate of the convergence rate of \(u_m \rightarrow u\) in \(L^2_\textrm{loc}\). However, this estimate only yields convergence at rate \(m^{-\beta }\) with \(\beta < 1/95\).

1.2.2 Results About Explicit Approximations for \(u_m\)

A widespread approach to homogenization of the Poisson and Stokes equations in perforated domains with homogeneous Dirichlet boundary conditions is the so-called method of oscillating test functions, which is used for instance in [2, 6]. An oscillating test function \(w_m\) is constructed in such a way that it vanishes in the particles and converges to 1 weakly in \(H^1_\textrm{loc}\). This function \(w_m\) carries the information on the capacity (or resistance) of the particles. A natural question is then how well \(w_m u\) approximates \(u_m\). Since the function \(w_m\) is usually constructed explicitly, this yields an explicit approximation for \(u_m\). In [2, 25] it is shown that for periodic configurations \(\Vert u_m - w_m u\Vert _{\dot{H}^1} \leqq C m^{-1/3}\). This error is of the order of the particle distance and thus the optimal error that one can expect due to the discretization. Similar results have been obtained in [20] for the random configurations studied in [19], with a larger error due to particle clusters.

In the recent papers [13, 14], higher order approximations for the Poisson and the Stokes equations in periodically perforated domains are analyzed.

In the present paper, we do not work with oscillating test functions. However, we derive equally explicit approximations for \(u_m\), which we will denote by \({\tilde{u}}_m\) (see Sect. 2). As we will show in Theorem 3.1, we have \(\Vert u_m - {\tilde{u}}_m \Vert _{\dot{H}^1} \leqq C m^{-\beta }\) for all \(\beta < 1\). This error is much smaller than the one obtained in [2, 25]. The reason for that is twofold. First, we take into account the leading-order discretization error in terms of fluctuations. Second, we benefit from the randomness, which reduces the higher-order discretization errors on average. We believe that Theorem 3.1 could be of independent interest. In particular, for the rigorous derivation of the Vlasov–Stokes equations (1.1), such explicit and accurate approximations of \(u_m\) in good norms seem essential. Indeed, for the related derivation of the transport-Stokes system for inertialess suspensions in [23], corresponding approximations have been crucial.

1.2.3 Related Results Concerning Fluctuations and Preliminary Comments on Our Proof

In the classical theory of stochastic homogenization of elliptic equations with oscillating coefficients, the study of fluctuations has been a very active research field in recent years. Of the vast literature, one could mention for example [1, 7, 10, 11].

Regarding the homogenization in perforated domains, the literature is much more sparse. In the recent paper [8], the authors were able to adapt some of the techniques of quantitative stochastic homogenization of elliptic equations with oscillating coefficients to the Stokes equations in perforated domains with sedimentation boundary conditions which are different from the ones considered here.

Related results to Theorem 1.2 have been obtained in [15] for the Poisson equation and in [29] for the Stokes equations. However, in these papers, the authors were only able to treat the Poisson and the Stokes equations corresponding to (1.3) with an additional large massive term \(\lambda u_m\): they obtained a result corresponding to Theorem 1.2 provided that \(\lambda \) is sufficiently large (depending on \(\rho \)).

The approach in [15, 29] follows the approximation of the solution \(u_m\) by the so-called method of reflections. The idea behind this method is to express the solution operator of the problem in the perforated domain in terms of the solution operators when only one of the particles is present. More precisely, let \(v_0\) be the solution of the problem in the whole space without any particles. Then, define \(v_1 = v_0 + \sum _i v_{1,i}\) in such a way that \(v_0 + v_{1,i}\) solves the problem if \(B_i\) were the only particle. Since \(v_{1,i}\) induces an error in \(B_j\) for \(j \ne i\), one adds further functions \(v_{2,i}\), this time starting from \(v_1\). Iterating this procedure yields a sequence \(v_k\). In general, \(v_k\) does not converge. With the additional massive term, though, one can show that the method of reflections does converge, provided that \(\lambda \) is sufficiently large.
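The divergence of the unmodified method of reflections, and its repair by a large massive term, can be seen in a finite-dimensional caricature. The sketch below is our own simplification, not the scheme of [15, 29]. It treats the Poisson analogue (Remark 1.3 (i)) in the monopole approximation and places m = 512 particles on a small cubic grid, a hypothetical dense configuration. Each particle has capacity \(c = 4\pi R_m = 1/m\) as in (1.7), and the massive term \(\lambda u\) is modeled through the screened kernel \(e^{-\sqrt{\lambda } r}/(4\pi r)\), the fundamental solution of \(-\Delta + \lambda \). A reflection step updates the particle charges by \(q \mapsto b - Mq\). This iteration converges for every right-hand side if and only if the spectral radius of M is below one.

```python
import numpy as np


def interaction_matrix(n_side=8, side=0.02, lam=0.0):
    """Monopole caricature of particle-particle interactions (Poisson analogue).

    Hypothetical toy: m = n_side**3 particles on a cubic grid in a cube of side
    length `side`, each with capacity c = 4*pi*R_m = 1/m as in (1.7).
    Off-diagonal entries are c * G_lam(|X_i - X_j|), where
    G_lam(r) = exp(-sqrt(lam) * r) / (4 * pi * r) solves -Delta G + lam G = delta.
    """
    k = (np.arange(n_side) + 0.5) * side / n_side
    X = np.stack(np.meshgrid(k, k, k, indexing="ij"), axis=-1).reshape(-1, 3)
    m = X.shape[0]
    r = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(r, 1.0)  # placeholder, avoids division by zero
    M = np.exp(-np.sqrt(lam) * r) / (4.0 * np.pi * r) / m
    np.fill_diagonal(M, 0.0)  # a particle does not reflect off itself
    return M


def reflections(M, b, n_iter):
    """Reflection-type iteration q_{k+1} = b - M q_k, started from q_0 = b.

    q_0 = b is the isolated-particle response; each step corrects for the
    fields of all other particles. Fixed points solve (I + M) q = b.
    """
    q = b.copy()
    for _ in range(n_iter):
        q = b - M @ q
    return q


for lam in (0.0, 1e7):
    M = interaction_matrix(lam=lam)
    radius = np.max(np.abs(np.linalg.eigvalsh(M)))
    print(f"lam = {lam:g}: spectral radius of M = {radius:.3g}")
```

With \(b = c\,u_0(X_i) = 1/m\) (constant \(u_0\)), the unscreened iteration blows up. Already its Rayleigh quotient on constant vectors exceeds 2.6 for this dense grid. The choice \(\lambda = 10^7\) pushes the spectral radius far below one, and the iteration then reproduces the direct solution of \((I + M) q = b\).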

In [24], the first author and Velázquez showed how the method of reflections can be modified to ensure convergence without a massive term, and how this modified method can be used to obtain convergence results for the homogenization of the Poisson and Stokes equations. In order to study the fluctuations, a high accuracy of the approximation of \(u_m\) is needed. This would make it necessary to analyze many of the terms arising from the modified method of reflections, which we could disregard for the qualitative convergence result of \(u_m\) in [24]. It seems very hard to control these additional terms sufficiently well; they either do not arise or are of higher order for the (unmodified) method of reflections used in [15, 29].

Thus, in the present paper, we do not use the method of reflections but follow an alternative approach to obtain an approximation for \(u_m\). Again, we approximate \(u_m\) by \({\tilde{u}}_m = w_0 + \sum _i w_{i}\), where \(w_{i}\) solves the homogeneous Stokes equations outside of \({\overline{B}}_i\). However, we do not take \(w_{i}\) as in the method of reflections, where it is expressed in terms of \(w_0\). Instead \(w_{i}\) will depend on u, exploiting that we already know that \(u_m\) converges to u. In contrast to the approximation obtained from the method of reflections, we will be able to choose \(w_{i}\) in such a way that the approximation \({\tilde{u}}_m = w_0 + \sum _i w_{i}\) is sufficient to capture the fluctuations.

A related approach has recently been used in a parallel work by Gérard-Varet in [17] to give a very short proof of the homogenization result \(u_m \rightharpoonup u\) weakly in \(\dot{H}^1\) under rather mild assumptions on the positions of the particles. However, since we study the fluctuations in this paper, we need a more refined approximation than the one used in [17]. More precisely, to leading order, the function \(w_{i}\) will only depend on \(V_i\) and the value of u at \(B_i\). However, \(w_i\) will also include a lower-order term which is still relevant for the fluctuations. As we will see, this lower-order term will depend in some way on the fluctuations of the positions of all the other particles.

1.3 Organization of the Paper

The rest of the paper is devoted to the proof of the main result, Theorem 1.2.

In Sect. 2, we give a precise definition of the approximation \({\tilde{u}}_m = w_0 + \sum _i w_{i}\), outlined in the paragraph above, as well as a heuristic explanation for this choice.

In Sect. 3, we state three key estimates regarding this approximation and show how the proof of Theorem 1.2 follows from these estimates.

The proof of these key estimates contains a purely analytic part as well as a stochastic part which are given in Sects. 4 and 5, respectively.

2 The Approximation for the Microscopic Solution \(u_m\)

2.1 Notation

We introduce the following notation that is used throughout the paper.

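We denote by \(G :\dot{H}^{-1}(\mathbb {R}^3) \rightarrow \dot{H}^1(\mathbb {R}^3)\) the solution operator for the Stokes equations. This operator is explicitly given as the convolution operator with kernel g, the fundamental solution of the Stokes equations, i.e.,

$$\begin{aligned} G \varphi = g * \varphi , \qquad g(x) = \frac{1}{8 \pi } \left( \frac{\textrm{Id}}{|x|} + \frac{x \otimes x}{|x|^3}\right) . \end{aligned}$$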

We recall from Sect. 1.1 that \(A :\dot{H}^{-1}(\mathbb {R}^3) \rightarrow \dot{H}^1(\mathbb {R}^3)\) is the solution operator for the limit problem (1.5). We observe the identities

We remark that multiplication by \(\rho \) maps \(\dot{H}^1(\mathbb {R}^3)\) to \(H^1(\mathbb {R}^3) \cap \dot{H}^{-1}(\mathbb {R}^3)\). Indeed, this follows from \(\rho \in W^{1,\infty }(\mathbb {R}^3)\) having compact support and the fact that \(\dot{H}^1(\mathbb {R}^3) \subseteq L^6(\mathbb {R}^3)\), which implies \(L^{6/5}(\mathbb {R}^3) \subseteq \dot{H}^{-1} (\mathbb {R}^3)\). Furthermore, observe that A and G are bounded operators from \(L^2(\mathbb {R}^3) \cap H^{-1}(\mathbb {R}^3)\) to \(C^{0,\alpha }(\mathbb {R}^3)\) for all \(\alpha \leqq 1/2\), and from \(H^1(\mathbb {R}^3)\cap H^{-1}(\mathbb {R}^3)\) to \(W^{1,\infty }(\mathbb {R}^3)\). In particular, \(A \rho \) and \(G \rho \) are bounded operators from \(L^2(\mathop {\textrm{supp}}\limits \rho )\) (and hence from \(\dot{H}^1(\mathbb {R}^3)\)) to \(L^\infty (\mathbb {R}^3)\), and from \(\dot{H}^1(\mathbb {R}^3)\) to \(W^{1,\infty }(\mathbb {R}^3)\).
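For reference, the kernel g of G is the classical Oseen tensor (Stokeslet). With unit viscosity, it reads \(g(x) = (8\pi )^{-1}(\textrm{Id}/|x| + x\otimes x/|x|^3)\). The short sketch below evaluates it and checks numerically two properties that are used implicitly in this section: each column is divergence free, and g is homogeneous of degree \(-1\), which underlies the scaling arguments for the cut-off kernels. The function names are our own.

```python
import numpy as np


def stokeslet(x):
    """Oseen tensor g(x) = (I/|x| + x x^T/|x|^3) / (8 pi), unit viscosity."""
    r = np.linalg.norm(x)
    return (np.eye(3) / r + np.outer(x, x) / r**3) / (8.0 * np.pi)


def divergence_of_columns(x, h=1e-5):
    """Central-difference approximation of sum_k d_k g_{kj}(x) for j = 0, 1, 2."""
    div = np.zeros(3)
    for k in range(3):
        e = np.zeros(3)
        e[k] = h
        div += (stokeslet(x + e)[k, :] - stokeslet(x - e)[k, :]) / (2.0 * h)
    return div


x0 = np.array([0.3, -0.7, 0.5])
print(divergence_of_columns(x0))                          # close to zero
print(np.allclose(stokeslet(2 * x0), stokeslet(x0) / 2))  # homogeneity of degree -1
```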

We denote \(G^{-1}= -\Delta \). Then we have \(G G^{-1} = G^{-1} G = P_\sigma \), where \(P_\sigma \) is the projection onto divergence-free functions. In fact, we will use \(G^{-1}\) only in the expression \(A G^{-1}\). We observe that \(A = A P_\sigma \) and thus

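We write \(B^m(x) := B_{R_m}(x)\) and denote the normalized Hausdorff measure on the sphere \(\partial B^m(x)\) by

$$\begin{aligned} \delta ^m_x := \frac{1}{4 \pi R_m^2} \mathcal {H}^2|_{\partial B^m(x)}, \end{aligned}$$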

and write \(\delta ^m_i:=\delta ^m_{X_i}\).

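Moreover, for any function \(\varphi \in L^1(B^m(x))\), we denote the average on \(B^m(x)\) by \((\varphi )_x\), i.e.

$$\begin{aligned} (\varphi )_x := \frac{3}{4 \pi R_m^3} \int _{B^m(x)} \varphi (y) \, \textrm{d}y, \end{aligned}$$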

and we abbreviate \((\varphi )_i:= (\varphi )_{X_i}\).
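The averages \(\langle \delta ^m_x, \varphi \rangle \) and \((\varphi )_x\) differ from the point value \(\varphi (x)\) only at second order. For smooth \(\varphi \), \(\langle \delta ^m_x, \varphi \rangle = \varphi (x) + R_m^2 \Delta \varphi (x)/6 + O(R_m^4)\) and \((\varphi )_x = \varphi (x) + R_m^2 \Delta \varphi (x)/10 + O(R_m^4)\), and both equal \(\varphi (x)\) when \(\varphi \) is harmonic. This gives some intuition for the remark after (2.7) that \(u(X_i)\) could replace \((u)_i\). The Monte Carlo sketch below checks these identities for a harmonic polynomial and for \(|y - x|^2\), where the exact values are \(R^2\) and \(3R^2/5\). The helper names and the free radius R are our own choices.

```python
import numpy as np


def _unit_vectors(n, rng):
    """n independent directions, uniformly distributed on the unit sphere."""
    d = rng.normal(size=(n, 3))
    return d / np.linalg.norm(d, axis=1, keepdims=True)


def sphere_average(phi, x, R, n, rng):
    """Monte Carlo estimate of <delta_x, phi>: mean of phi over |y - x| = R."""
    return float(phi(x + R * _unit_vectors(n, rng)).mean())


def ball_average(phi, x, R, n, rng):
    """Monte Carlo estimate of (phi)_x: mean of phi over |y - x| < R."""
    radii = R * rng.uniform(size=(n, 1)) ** (1.0 / 3.0)  # uniform in volume
    return float(phi(x + radii * _unit_vectors(n, rng)).mean())


def harmonic(y):
    """Laplacian is 2 - 2 + 0 = 0, so both averages reproduce the centre value."""
    return y[:, 0] ** 2 - y[:, 1] ** 2 + y[:, 2]


x = np.array([0.2, 0.1, -0.3])  # harmonic(x) = 0.04 - 0.01 - 0.3 = -0.27
rng = np.random.default_rng(0)
print(sphere_average(harmonic, x, 1.0, 400_000, rng))  # close to -0.27
print(ball_average(harmonic, x, 1.0, 400_000, rng))    # close to -0.27
```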

We will need a cut-off version of the fundamental solution. To this end, let \(\eta \in C^{\infty }_c(B_3(0))\) with \(\textbf{1}_{B_2(0)} \leqq \eta \leqq \textbf{1}_{B_3 (0)}\) and \(\eta _m (x):= \eta (x/R_m)\). Now consider \(\tilde{g}_m:=(1-\eta _m)g\). We need an additional term in order to make \(\tilde{g}_m\) divergence free. This is obtained through the classical Bogovski operator (see e.g. [16, Theorem 3.1]), which provides a sequence \(\psi _m \in C_c^\infty (B_{3 R_m} {\setminus } B_{2 R_m})\) such that \(\mathop {\textrm{div}}\limits \psi _m = \mathop {\textrm{div}}\limits (\eta _m g)\) and

for all \(1< p < \infty \) and all \(k \geqq 1\). By scaling considerations, the constant C is independent of m. Then, we define \(G^m\) as the convolution operator with kernel

$$\begin{aligned} \tilde{g}_m + \psi _m = (1-\eta _m) g + \psi _m. \end{aligned}$$

2.2 Approximation of \(u_m\) Using Monopoles Induced by u

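To find a good approximation for \(u_m\), we observe that \(u_m\) satisfies

$$\begin{aligned} -\Delta u_m + \nabla p_m = h + \sum _{i=1}^m h_i, \quad \mathop {\textrm{div}}\limits u_m = 0 \quad \text {in } \mathbb {R}^3, \end{aligned}$$(2.5)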

for some functions \(h_i \in \dot{H}^{-1}(\mathbb {R}^3)\), each supported in \({\overline{B}}_i\), which are the force distributions induced in the particles due to the Dirichlet boundary conditions.

We begin by observing that, for most particle configurations, the particles are sufficiently separated, which allows us to determine good approximations for \(h_i\) by ignoring their direct interaction with other particles. As we will see, our approximation for \(h_i\) incorporates the effect of the other particles only through the limit u.

To be more precise, let \(0< \nu < 1/3\). Then, by Lemma 1.1, we know that, for most of the particles, \(B_{m^{\nu } R_m }(X_i)\) only contains the particle \(B_i\). In this case, \(h_i\) is uniquely determined by the problem

We simplify this problem to derive an approximation for \(h_i\). First, we drop the right-hand side h in (2.6). Its contribution is expected to be negligible, since the volume of \(B_{m^{\nu } R_m }(X_i) {\setminus } \overline{B_i}\) is small, whereas the difference of the boundary data at \(\partial B_i\) and \(\partial B_{m^{\nu } R_m }(X_i)\) is typically of order 1. Next, \(\partial B_{m^{\nu } R_m }(X_i)\) is typically very far from any other particle. Since \(u_m \rightharpoonup u\) in \(\dot{H}^1(\mathbb {R}^3)\), we therefore replace (2.6) by

Here, we could also have chosen \(u(X_i)\) instead of \((u)_i\). The precise choice that we make will turn out to be convenient later. By our choice of \(R_m\) in (1.2), the explicit solution of (2.7) is given by \(v_i\) which solves \(- \Delta v_i + \nabla p = h_i\) in \(\mathbb {R}^3\) with

Therefore, resorting to (2.5), we are led to approximate \(u_m\) by

We emphasize that, for this approximation, it is not necessary to know the function u in advance. We only used that \(u_m \rightharpoonup u\) in \(\dot{H}^1(\mathbb {R}^3)\), which always holds for a subsequence by standard energy estimates. Conversely, we can now identify the limit u. Indeed, if we believe that \({\tilde{u}}_m\) approximates \(u_m\) sufficiently well,

which shows that u indeed solves (1.4).
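The identification of the Brinkman drag term can be made concrete by a law of large numbers. As a sketch, assume the following, since these definitions are not reproduced here: the pairs \((X_i,V_i)\) are i.i.d. with law \(f(x,v)\,\textrm{d}x\,\textrm{d}v\), \(\rho = \int f \,\textrm{d}v\) and \(j = \int v f \,\textrm{d}v\), and the normalization (1.2) gives \(6\pi R_m = m^{-1}\), so that each particle exerts the Stokes drag \(m^{-1}(V_i - u)\) on the fluid. Then, for every smooth, compactly supported vector field \(\varphi \),

$$\begin{aligned} \mathbb {E}\Big [\frac{1}{m}\sum _{i=1}^m \big (u(X_i)-V_i\big )\cdot \varphi (X_i)\Big ] = \iint \big (u(x)-v\big )\cdot \varphi (x)\, f(x,v)\,\textrm{d}x\,\textrm{d}v = \int _{\mathbb {R}^3} \big (\rho u - j\big )\cdot \varphi \,\textrm{d}x , \end{aligned}$$

while the variance of the average on the left is \(m^{-1}\) times the variance of a single summand. Under these assumptions, the discrete drag converges to \(\rho u - j\), and its fluctuations are of order \(m^{-1/2}\).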

This approximation \({\tilde{u}}_m\) cannot fully capture the fluctuations, though. In the next subsection we thus show how to refine this approximation.

We end this subsection by comparing this approximation to the one used in [15, 29] through the method of reflections. The first order approximation of the method of reflections is given by \({\tilde{u}}_m\) as defined in (2.8) but with Gh instead of u on the right-hand side. Since this is a much cruder approximation, one needs to iterate the approximation scheme. This only yields a convergent series in [15, 29] due to the additional large massive term. On the other hand, this series then approximates \(u_m\) sufficiently well without the refinement that we introduce in the next subsection.

2.3 Refined Approximation to Capture the Fluctuations

We make the ansatz that, macroscopically,

where \(\xi _m\) is a random function which needs to be determined. We assume that the fluctuations \(\xi _m\) are in some sense macroscopic, just as u, such that we can follow the same approximation scheme as in the previous subsection.

More precisely, we adjust the Dirichlet problem (2.7) by adding \(m^{-\frac{1}{2}} (\xi _m)_i\) on the right-hand side of the third line. This leads to the definition

We have not defined \(\xi _m\) yet. To make a good choice for \(\xi _m\), the idea is to use a similar argument as in (2.9) but only to take the limit \(m \rightarrow \infty \) in terms which are of lower order. More precisely, we observe, again taking for granted that \({\tilde{u}}_m\) approximates \(u_m\) sufficiently well and using \(u = G(h + j - \rho u) \),

We expect

Inserting this into (2.12) leads to

This equation could be used as a definition of \(\xi _m\). Although this turns out to yield a good approximation on the level of equation (2.10), we will now argue that this is not the case for the definition of \({\tilde{u}}_m\) in (2.11). Indeed, the right-hand side of (2.14) is equal to \((u)_i - V_i\) in \(B_i\) to leading order. Hence, \((m^{-1/2}\xi _m)_i\) would be of the same order, which would yield a contribution to \({\tilde{u}}_m\) through \(\xi _m\) of order 1 instead of order \(m^{-1/2}\).

Therefore, we need to be more careful and go back to microscopic considerations: Since \(u_m = V_i\) in \(B_i\) and \({\tilde{u}}_m \approx u_m\), we want to define \(\xi _m\) in such a way that \(\tilde{u}_m \approx V_i\) in \(B_i\). Thus we want to compute \({\tilde{u}}_m\) in \(B_i\) in order to find a good definition of \(\xi _m\). Since we expect \({\tilde{u}}_m = {\tilde{u}}_m (X_i) + O(m^{-1})\) in \(B_i\) (at least on average), we only compute \({\tilde{u}}_m(X_i)\), and by the same reasoning, we replace any average \((\xi _m)_i\) by \(\xi _m(X_i)\) at will. Then, we find, using again \(u = G(h + j - \rho u)\),

Requiring \(\tilde{u}_m(X_i) = V_i\) yields

In order to define \(\xi _m\) from this equation, we want the sum on the right-hand side to include i such that the function is the same for every i. To this end, we notice that by Lemma 1.1, with high probability, we have for all i and all \(W \in \mathbb {R}^3\)

where \(G^m\) is the operator introduced at the end of Sect. 2.1. Hence, we replace the right-hand side of (2.16) by

We expect \(\Theta _m \sim 1\) since the right-hand side of (2.18) represents the fluctuations of the discrete approximation of \(G (\rho u - j)\). As before, we replace the sum on the left-hand side of (2.16) by \(\rho \xi _m\). Combining these approximations leads to

In view of (2.2), it holds \((1+G\rho )AG^{-1} = P_\sigma \). Since \(\Theta _m\) is divergence free, (2.19) leads us to define \(\xi _m\) as the solution of

Note that the only difference between this definition of \(\xi _m\) and (2.14) is the replacement of G by \(G^m\). As mentioned above, we expect that, on a macroscopic scale, the operators G and \(G^m\) are almost the same (we will make this argument rigorous in Lemma 5.4). Therefore, in equation (2.10), we expect that it does not matter (in \(L^2_\textrm{loc}(\mathbb {R}^3)\)) whether we take G or \(G^m\). Consequently, as an approximation for \(\xi _m\), we introduce

This function has the advantage of being a sum of i.i.d. random variables. Hence, it is straightforward to study the limit properties of \(\tau _m[g]:= (g,\tau _m)\). Notice that we replaced both the average \((u)_i\) by the value \(u(X_i)\) at the center of the ball and \(\delta ^m_i\) by \(\delta _{X_i}\). Since u is only known to belong to \(\dot{H}^1(\mathbb {R}^3)\), its point values \(u(X_i)\) need not be defined, so \(\tau _m\) is not defined for every realization of particles. However, as we will see, it is well-defined as an \(L^2\)-function on the probability space with values in \(L^2_{\textrm{loc}}(\mathbb {R}^3)\).

3 Proof of the Main Result

The first step of the proof is to rigorously justify the approximation of \(u_m\) by \({\tilde{u}}_m\), defined in (2.11) with \(\xi _m\) and \(\Theta _m\) as in (2.20) and (2.18).

Theorem 3.1

For all \(\varepsilon > 0\) and all \(\beta <1\),

The next step is to show that we actually have

which was the starting point of our heuristics, i.e. \(\xi _m\) indeed describes the fluctuations of \(\tilde{u}_m\) around \(u\). In contrast to Theorem 3.1, we can only expect local \(L^2\)-estimates since not even \(u_m-u\) is small in the strong topology of \(\dot{H}^1(\mathbb {R}^3)\).

Proposition 3.2

For all \(\varepsilon >0\), all bounded sets \(K'\subseteq \mathbb {R}^3\) and all \(\beta < 1\),

Combining Theorem 3.1 and Proposition 3.2, we observe that we only have to prove the statements of Theorem 1.2 with \(u_m - u\) replaced by \(m^{-1/2}\xi _m\). We postpone the proofs of Theorem 3.1 and Proposition 3.2 to Sect. 4.

The next proposition shows that, instead of \(\xi _m\), we can actually consider \(\tau _m\) introduced in the previous section.

Proposition 3.3

For any bounded set \(K'\subseteq \mathbb {R}^3\) and every \(0\leqq s < \frac{1}{2}\) there is a constant \(C_s(K')>0\) independent of \(m\) such that

Let \(\tau _m\) be defined by (2.21). Then,

We postpone the proof of Proposition 3.3 to Sect. 5.2.

Note that for \(s=0\), these estimates include the case \(L^2(K')\) which we will use now in order to prove Theorem 1.2. Indeed, Theorem 1.2 is a direct consequence of the above results together with the classical Central Limit Theorem.

Proof of Theorem 1.2

Due to the uniform bound on \(\mathbb {E}_m[\Vert \xi _m\Vert ^2_{L^2(K)}]\) from Proposition 3.3, assertion (i) of the main theorem follows immediately from Theorem 3.1 and Proposition 3.2, since \(\dot{H}^1(\mathbb {R}^3)\) embeds into \(L^2_\textrm{loc}(\mathbb {R}^3)\).

Since convergence in probability implies convergence in distribution, Theorem 3.1 and Propositions 3.2 and 3.3 imply that it suffices to prove assertion (ii) of Theorem 1.2 with \(\xi _m[g]\) replaced by \(\tau _m[g]:= (g, \tau _m)_{L^2(\mathbb {R}^3)}\), i.e. we need to prove that

in distribution for any \(g\in L^2(\mathbb {R}^3)\) with compact support. Since \(\tau _m[g]\) is a sum of independent random variables, this is a direct consequence of the Central Limit Theorem and the following computation of the covariances: for \(g_1,g_2\in L^2(\mathbb {R}^3)\) with compact support, we have

Here we used that \(A\delta _x\in L^2_{\textrm{loc}}(\mathbb {R}^3)\) (see Lemma 5.3) and that \(A\) is a symmetric operator on \(L^2(\mathbb {R}^3)\). This finishes the proof. \(\square \)
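The last step only uses the classical Central Limit Theorem for sums of i.i.d. random vectors. The toy sketch below is not the paper's \(\tau _m\). The scalar variable X, uniform on [0, 1], and the test functionals \(g_1(x)=x\), \(g_2(x)=x^2\) are hypothetical stand-ins, and the function names are illustrative only. It checks numerically that the \(m^{-1/2}\)-scaled centered sums have the covariance of a single summand. This holds exactly for every m, and the Central Limit Theorem upgrades it to a Gaussian limit. It is the same mechanism as in the covariance computation above.

```python
import math
import random

# Exact covariance of (g_1(X), g_2(X)) for X ~ U[0, 1], g_1(x) = x, g_2(x) = x^2:
# Var X = 1/12, Cov(X, X^2) = 1/4 - 1/6 = 1/12, Var X^2 = 1/5 - 1/9 = 4/45.
EXACT = [[1 / 12, 1 / 12], [1 / 12, 4 / 45]]


def scaled_fluctuations(m, trials, seed=0):
    """Return `trials` samples of the pair
    m^{-1/2} * sum_{i=1}^m (g_k(X_i) - E[g_k(X)]), k = 1, 2,
    with X_1, ..., X_m i.i.d. uniform on [0, 1] (hypothetical toy model)."""
    rng = random.Random(seed)
    mean1, mean2 = 1 / 2, 1 / 3  # E[X] and E[X^2]
    samples = []
    for _ in range(trials):
        s1 = s2 = 0.0
        for _ in range(m):
            x = rng.random()
            s1 += x - mean1
            s2 += x * x - mean2
        samples.append((s1 / math.sqrt(m), s2 / math.sqrt(m)))
    return samples


def empirical_covariance(samples):
    """Return the 2x2 unbiased empirical covariance matrix of a list of pairs."""
    n = len(samples)
    cols = [[s[0] for s in samples], [s[1] for s in samples]]
    means = [sum(c) / n for c in cols]
    return [
        [
            sum((a - means[r]) * (b - means[c]) for a, b in zip(cols[r], cols[c])) / (n - 1)
            for c in range(2)
        ]
        for r in range(2)
    ]
```

For instance, `empirical_covariance(scaled_fluctuations(200, 2000))` returns a 2×2 matrix whose entries are close to `EXACT`. The off-diagonal entry \(1/12\) plays the role of the covariance between \(\tau _m[g_1]\) and \(\tau _m[g_2]\).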

4 Proof of Theorem 3.1 and Proposition 3.2

In this section, we will reduce the proof of Theorem 3.1 and Proposition 3.2 to proving the following single probabilistic lemma. The proof of this lemma, which is given in Sect. 5.3, is the main technical part of this paper. It makes rigorous the heuristic equation (2.13).

As we discussed in the heuristic arguments, we will exploit in what follows that the probability of having very close particles is vanishing, as stated in Lemma 1.1. In the notation of this lemma, we abbreviate as follows:

Lemma 4.1

Let \(\Lambda _m\),\(\Gamma _m\), \(\Xi _m\) and \({\tilde{\Xi }}_m\) be defined by

Then,

The proof of this lemma is the main technical work of the present paper. We postpone it to Sect. 5.3.

Proof of Proposition 3.2

Recall the definition of \({\tilde{u}}_m\) from (2.11). We compute using \(u = G (h - \rho u + j)\) and \(\xi _m = A G^{-1} \Theta _m = \Theta _m - G \rho \xi _m\) (cf. (2.2)) and the definition of \(\Theta _m\) from (2.18)

Hence,

and we now conclude by Lemmas 1.1 and 4.1. \(\square \)

Proof of Theorem 3.1

We observe that the assertion follows from the following claim: There exists a universal constant C such that for all \((X_1,\ldots , X_m) \in \mathcal {O}_m\) and all m sufficiently large

Indeed, accepting the claim for the moment, let \(\beta < 1\) and \(\varepsilon >0\). Then, using again \(u = G (h - \rho u + j)\)

Thus, the assertion follows again from Lemmas 1.1 and 4.1.

It remains to prove the claim above. It follows from the fact that \(u_m - {\tilde{u}}_m\) solves the homogeneous Stokes equations outside of the particles.

Let \((X_1,\ldots , X_m) \in \mathcal {O}_m\). Then, by definition of this set, the balls \(B_{2R_m}(X_i)\) are disjoint for m sufficiently large, and we may assume in the following that this is satisfied.

By definition of \(u_m\) and \({\tilde{u}}_m\), we have \(-\Delta ({\tilde{u}}_m - u_m) + \nabla p = 0\) in \(\mathbb {R}^3 {\setminus } \cup _i \overline{B_i}\). By classical arguments which we include for convenience, this implies

Indeed, \({\tilde{u}}_m - u_m\) minimizes the \(\dot{H}^1(\mathbb {R}^3)\)-norm among all divergence free functions w with \(w = {\tilde{u}}_m - u_m = {\tilde{u}}_m -V_i\) in \(\cup _i B_i\). Thus, to show (4.2), it suffices to construct a divergence free function w with \(w = {\tilde{u}}_m -V_i\) in \(\cup _i B_i\) such that \(\Vert w\Vert _{\dot{H}^1(\mathbb {R}^3)}\) is bounded by the right-hand side of (4.2). Since the balls \(B_{2R_m}(X_i)\) are disjoint as \((X_1,\dots ,X_m) \in \mathcal {O}_m\), we only need to construct divergence free functions \(w_i\) such that \(w_i \in H^1_0(B_{2R_m}(X_i))\), \(w_i = {\tilde{u}}_m - V_i\) in \(B_i\) and

It is not difficult to see that such functions \(w_i\) exist. For the convenience of the reader, we state this result in Lemma 4.2 below. Thus, the estimate (4.2) holds.

It remains to prove that the right-hand side of (4.2) is bounded by the right-hand side of (4.1). To this end, let \(x \in B_i\) for some \(1 \leqq i \leqq m\). We resort to the definition of \({\tilde{u}}_m\) in (2.11) to deduce, analogously as in (2.15), that

Recalling the definitions of \(\xi _m\) and \(\Theta _m\) from (2.20) and (2.18), the identity \(\xi _m = \Theta _m - G \rho \xi _m\) implies that for all \(y \in B_i\)

where we used that \((X_1,\dots ,X_m) \in \mathcal {O}_m\) to replace \(G^m\) by G. Thus,

To conclude the proof, we again use \((X_1,\dots ,X_m) \in \mathcal {O}_m\) to replace G by \(G^m\) appropriately. Finally, we combine this identity with (4.2) and the estimate \((\Xi _m)^2_i \leqq C m^3 \Vert \Xi _m\Vert ^2_{L^2(B_i)}\). \(\square \)
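The estimate \((\Xi _m)^2_i \leqq C m^3 \Vert \Xi _m\Vert ^2_{L^2(B_i)}\) used in the last step is the Cauchy-Schwarz inequality for averages. As a sketch, assume that \((w)_i\) denotes the average of w over \(B_i\) and that \(R_m \geqq c\, m^{-1}\) for some \(c>0\), which is consistent with radii of order \(m^{-1}\). Then

$$\begin{aligned} |(w)_i|^2 = \Big | \frac{1}{|B_i|}\int _{B_i} w \,\textrm{d}x \Big |^2 \leqq \frac{1}{|B_i|} \int _{B_i} |w|^2 \,\textrm{d}x = \frac{3}{4\pi R_m^3}\, \Vert w\Vert ^2_{L^2(B_i)} \leqq \frac{3}{4\pi c^3}\, m^3 \Vert w\Vert ^2_{L^2(B_i)} . \end{aligned}$$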

Lemma 4.2

Let \(x \in \mathbb {R}^3\), \(R > 0\) and \(w \in H^1(B_R(x))\) be divergence free. Then, there exists a divergence free function \(\varphi \in H^1_0(B_{2 R}(x))\) with \(\varphi = w\) in \(B_R(x)\) and

where \((w)_{x,R}\) denotes the average of w over \(B_R(x)\) and C is a universal constant.

Proof

We write \(w = w - (w)_{x,R} + (w)_{x,R}\). By a classical extension result for Sobolev functions, there exists \(\varphi _1 \in H^1_0(B_{2R}(x))\) such that \(\varphi _1 = w - (w)_{x,R}\) in \(B_R(x)\) and

By scaling, the constant C does not depend on R.

Furthermore, we take \(\varphi _2 = (w)_{x,R} \theta _R\) where \(\theta _R \in C_c^\infty (B_{2R}(x))\) is a cut-off function with \(\theta _R = 1\) in \(B_R(x)\) and \(\Vert \nabla \theta _R\Vert _\infty \leqq C R^{-1}\). Then,

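Finally, since \(\varphi _1 + \varphi _2 = w\) is divergence free in \(B_R(x)\) and \(\varphi _1 + \varphi _2\) vanishes on \(\partial B_{2R}(x)\), the function \(\mathop {\textrm{div}}\limits (\varphi _1 + \varphi _2)\) vanishes in \(B_R(x)\) and has zero mean over \(B_{2R}(x)\). Hence, applying a standard Bogovskii operator, there exists a function \(\varphi _3 \in H^1_0(B_{2R}(x) {\setminus } B_R(x))\) such that \(\mathop {\textrm{div}}\limits \varphi _3 = - \mathop {\textrm{div}}\limits (\varphi _1 + \varphi _2)\) and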

Again, the constant C is independent of R by scaling considerations.

Choosing \(\varphi = \varphi _1 + \varphi _2 + \varphi _3\) finishes the proof. \(\square \)
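To make the scaling considerations concrete, here is a sketch of the estimate for the cut-off term \(\varphi _2\). It uses only \(\Vert \nabla \theta _R\Vert _\infty \leqq C R^{-1}\) and \(\textrm{supp}\, \theta _R \subseteq B_{2R}(x)\), and C denotes a universal constant that may change from line to line:

$$\begin{aligned} \Vert \nabla \varphi _2\Vert _{L^2(\mathbb {R}^3)} = |(w)_{x,R}|\, \Vert \nabla \theta _R\Vert _{L^2(B_{2R}(x))} \leqq |(w)_{x,R}|\, C R^{-1} |B_{2R}(x)|^{1/2} \leqq C R^{1/2}\, |(w)_{x,R}| . \end{aligned}$$

Thus, the constant part of w enters with the factor \(R^{1/2}\).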

5 Proof of Probabilistic Statements

This section contains the main technical part of the proof of our main result, the probabilistic estimates stated in Proposition 3.3 and Lemma 4.1. The strategy that we will use to estimate all these terms is to expand the square of sums over the particles and then to use independence of the positions of the particles to calculate the expectations, distinguishing between terms where different particles appear and where one or more particles appear more than once. Then, it will remain to observe that combinatorially relevant terms cancel and that the remaining terms can be bounded sufficiently well, uniformly in m. This proof is quite lengthy. Indeed, expanding the square will lead to terms with up to 5 indices, thus giving rise to a huge number of cases that need to be distinguished.
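The basic cancellation behind these expansions is already visible for a single sum. As a sketch, let \(Y_1,\dots ,Y_m\) be i.i.d. random variables with values in a Hilbert space H (for instance \(L^2(K')\)) and \(\mathbb {E}[\Vert Y_1\Vert _H^2] < \infty \). Expanding the square and using independence for \(i \ne j\), we obtain

$$\begin{aligned} \mathbb {E}\Big [\Big \Vert \sum _{i=1}^m \big (Y_i - \mathbb {E}[Y_1]\big )\Big \Vert _H^2\Big ]&= \sum _{i=1}^m \mathbb {E}\big [\Vert Y_i - \mathbb {E}[Y_1]\Vert _H^2\big ] + \sum _{i\ne j} \big (\mathbb {E}[Y_i] - \mathbb {E}[Y_1], \mathbb {E}[Y_j] - \mathbb {E}[Y_1]\big )_H \\&= m\, \mathbb {E}\big [\Vert Y_1 - \mathbb {E}[Y_1]\Vert _H^2\big ] . \end{aligned}$$

The \(m(m-1)\) off-diagonal terms vanish because the summands are centered, so after the normalization \(m^{-1/2}\) the expression is of order one. In the terms treated below, the summands are only approximately centered and up to five indices occur. The off-diagonal terms therefore cancel only up to error terms that must be estimated.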

However, the proof relies on only a few basic analytic tools to obtain these cancellations and estimates. These are collected in the following subsection; their proofs are postponed to the appendix.

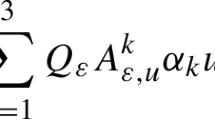

Some of those estimates concern expressions that will recurrently appear when we take expectations. Indeed, since many of the terms in Lemma 4.1 contain \(L^2\)-norms in the particles \(B_i\), we will often deal with terms of the form

Another term that recurrently appears due to the definitions of \({\tilde{u}}_m\) and \(\xi _m\) is

To justify this formal computation one tests the expression with a function \(\varphi \in C_c^\infty (\mathbb {R}^3)\) and performs some changes of variables.

For the sake of a more compact notation, we introduce

5.1 Some Analytic Estimates

In this subsection, we collect some auxiliary observations and estimates for future reference. All the proofs of the results in this subsection can be found in Sect. A of the appendix.

In what follows, we denote by K the bounded set defined by

Note that \(B_i \subseteq K\) almost surely for all \(1 \leqq i \leqq m\) and all \(m \geqq 1\).

Lemma 5.1

(i) For all \(1 \leqq p \leqq \infty \) and all \(w \in L^p(\mathbb {R}^3)\),

$$\begin{aligned} \Vert (w)_\cdot \Vert _{L^p(\mathbb {R}^3)} \leqq \Vert w\Vert _{L^p(\mathbb {R}^3)} . \end{aligned}\tag{5.6}$$

(ii) For all \(\alpha >0\), all \(1 \leqq p \leqq \infty \), and all \(w \in L^p(K)\), we have

$$\begin{aligned} \Vert \rho ^\alpha (w)_\cdot \Vert _{L^p(\mathbb {R}^3)}&\leqq C \Vert w\Vert _{L^p(K)} , \end{aligned}\tag{5.7}$$

where the constant C depends only on \(\rho \), p and \(\alpha \).

(iii) For all \(w\in \dot{H}^1(\mathbb {R}^3)\),

$$\begin{aligned} \Vert w-(w)\Vert _{L^2(\mathbb {R}^3)}\leqq m^{-1} \Vert w\Vert _{\dot{H}^1(\mathbb {R}^3)}. \end{aligned}\tag{5.8}$$

(iv) The operator \(\mathcal {R}\) defined in (5.1) is a bounded operator from \(L^2(K)\) to \(L^2(\mathbb {R}^3) \cap \dot{H}^{-1}(\mathbb {R}^3)\) and from \(H^1(K)\) to \(H^1(\mathbb {R}^3)\). Moreover, there is a constant \(C\) depending only on \(\rho \) such that

$$\begin{aligned} \left\| (\mathcal {R}- \rho ) w \right\| _{L^2(\mathbb {R}^3)}&\leqq C m^{-1} \Vert w\Vert _{H^1(K)} , \end{aligned}\tag{5.9}$$

$$\begin{aligned} \left\| (\mathcal {R}- \rho ) w\right\| _{\dot{H}^{-1}(\mathbb {R}^3)}&\leqq C m^{-1} \Vert w\Vert _{L^2(K)}. \end{aligned}\tag{5.10}$$

(v) We have

$$\begin{aligned} \sup _m \Big ( \Vert F\Vert _{\dot{H}^{-1}(\mathbb {R}^3)} + \Vert \mathcal {F}\Vert _{\dot{H}^{-1}(\mathbb {R}^3)} + \Vert F\Vert _{L^2(\mathbb {R}^3)} + \Vert \mathcal {F}\Vert _{L^2(\mathbb {R}^3)} + \mathbb {E}_m[W_1^2] \Big ) < \infty , \end{aligned}\tag{5.11}$$

and there is a constant \(C\) depending only on \(\rho \) and \(j\) such that

$$\begin{aligned} \left\| F-\mathcal {F}\right\| _{L^2(\mathbb {R}^3)} + \left\| F-\mathcal {F}\right\| _{\dot{H}^{-1}(\mathbb {R}^3)}&\leqq C m^{-1}\left( \Vert u\Vert _{H^1(K)}+\Vert j\Vert _{H^1(\mathbb {R}^3)}\right) . \end{aligned}\tag{5.12}$$

Lemma 5.2

There exists a constant C such that for all \(x,y \in \mathbb {R}^3\) and all \(m \geqq 1\), we have

In particular, for any bounded set \(K'\)

Moreover, for all \(m\geqq 1\) and \(y\in \mathbb {R}^3\), it holds

with a constant independent of y and m.

Lemma 5.3

For every \(0\leqq s < \frac{1}{2}\) and every bounded set \(K'\)

Furthermore, for every \(0<\varepsilon \leqq \frac{1}{2}\)

Lemma 5.4

For any \(k \in \mathbb {N}\), \(G^m\) is a bounded operator from \(\dot{H}^k(\mathbb {R}^3)\) to \(\dot{H}^{k+2}(\mathbb {R}^3)\). Moreover, there is a constant C that depends only on \(k\) such that

5.2 Proof of Proposition 3.3

For the proof of Proposition 3.3, we introduce another function, \(\sigma _m\), intermediate between \(\tau _m\) and \(\xi _m\). We first show in the following lemma that \(\xi _m\) is close to \(\sigma _m\); this lemma will also be used in the proof of Lemma 4.1.

From now on, we will use the notation \(A\lesssim B\) for scalar quantities \(A\) and \(B\) whenever there is a constant \(C>0\) such that \(A\leqq CB\) and where \(C\) depends neither directly nor indirectly on \(m\).

Lemma 5.5

Using the notation from (5.2) and (5.3), let \(\sigma _m\) be defined by

Then, for every bounded \(K' \subseteq \mathbb {R}^3\)

and

Proof

Let K be the set defined in (5.5). We argue that \(A G^{-1}\) satisfies

for any \(K' \supset K\) and any (divergence free) \(w \in L^2(K')\). Indeed, by (2.2), we observe that

and therefore (5.23) follows from the regularity of \(A\rho \) discussed after (2.2).

We recall that both G and \(G^m\) (cf. (2.4)) map to divergence free functions. Thus, by (5.23), we have for any bounded set \(K' \supset K\)

Recalling the notation (5.4) and using (5.20), we deduce

due to (5.11). It remains to bound \(I_2\). By combining (5.21) with (5.17), we obtain

Thus, by (5.11)

For the gradient estimate, we can argue similarly: Since \(AG^{-1}\) is bounded from \(\dot{H}^1(\mathbb {R}^3)\) to \(\dot{H}^1(\mathbb {R}^3)\)

Using (5.21), we deduce

It remains to bound \(I_2\).

Using that both \(G^m\) and G are bounded operators from \(\dot{H}^{-1}(\mathbb {R}^3)\) to \(\dot{H}^1(\mathbb {R}^3)\), we find with (5.17)

Thus,

This finishes the proof. \(\square \)

Corollary 5.6

For every \(0\leqq s < \frac{1}{2}\) and every \(K'\subseteq \mathbb {R}^3\) bounded, there is a constant \(C_s(K')>0\) independent of \(m\) such that

Proof

This follows from Lemma 5.5 and the interpolation inequality

This finishes the proof. \(\square \)
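The interpolation inequality is presumably the standard one between \(L^2\) and \(\dot{H}^1\). The displayed formula is not reproduced here, so this is our reading. For \(w \in L^2(\mathbb {R}^3) \cap \dot{H}^1(\mathbb {R}^3)\) and \(0<s<1\), Plancherel's theorem and Hölder's inequality with exponents \(1/s\) and \(1/(1-s)\) give

$$\begin{aligned} \Vert w\Vert ^2_{\dot{H}^s(\mathbb {R}^3)} = \int _{\mathbb {R}^3} \big (|\xi |^2 |\hat{w}(\xi )|^2\big )^{s} \big (|\hat{w}(\xi )|^2\big )^{1-s} \,\textrm{d}\xi \leqq \Vert w\Vert ^{2s}_{\dot{H}^1(\mathbb {R}^3)}\, \Vert w\Vert ^{2(1-s)}_{L^2(\mathbb {R}^3)} . \end{aligned}$$

In the corollary, the norms are local on \(K'\), which requires an additional localization that is not spelled out here.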

Proof of Proposition 3.3

By Lemma 5.5, it suffices to prove

for every \(0\leqq s < \frac{1}{2}\). We introduce \({\tilde{W}}_i:= u(X_i) - V_i\). It is easily seen that \(\mathbb {E}_m[\tilde{W}_1^2] \leqq C\) and \(\mathbb {E}_m[|W_1-\tilde{W}_1|] \lesssim \frac{1}{m}\) uniformly in m. Since \(\tilde{W}_i\delta _{X_i}\) are independent identically distributed random variables, we obtain

by (5.18).

Finally, we have to estimate \(\sigma _m-\tau _m\):

For \(I_1\), notice that by (5.12)

For \(I_2\), we estimate

by (5.18) and by combining (5.19) with the fact that \(A\) is a bounded operator from \(\dot{H}^{s-2}(K')\) to \(\dot{H}^s(K')\). Inserting this above, we find that

Combining the estimates for \(I_1\) and \(I_2\) yields (5.24) which finishes the proof. \(\square \)

5.3 Proof of Lemma 4.1

We begin the proof of Lemma 4.1 by observing that we have actually already proved the required estimate for \(\Lambda _m\). Indeed, \(\Lambda _m = m^{-1/2} (\Theta _m - {\hat{\Theta }}_m)\) with \({\hat{\Theta }}_m\) as in Lemma 5.5. Moreover, in the proof of Lemma 5.5, we showed \(\Vert \Theta _m - {\hat{\Theta }}_m\Vert _{L^2_\textrm{loc}(\mathbb {R}^3)}^2 \lesssim m^{-1}\).

We divide the rest of the proof of Lemma 4.1 into three steps corresponding to the three terms

where \(K'\) is a bounded set. We need to prove \(I_k \leqq C m^{-2}\) for \(k=1,2,3\), uniformly in m with a constant depending only on h, \(\rho \) and \(K'\).

5.3.1 Step 1: Estimate of \(\mathbf {I_1}\).

Since \(\nabla Gh \in L^2(\mathbb {R}^3)\) is deterministic and the positions of the particles \(B_i\) are independent, we estimate

Here we used (5.6) together with \(\rho \in L^{\infty }(\mathbb {R}^3)\).

5.3.2 Step 2: Estimate of \(\mathbf {I_2}\).

Since \(\Gamma _m\) depends on m, the computation is more involved. According to the definition of \(\Gamma _m\), we split \(I_2\) further. More precisely, it suffices to estimate

In the first term, we used that for \((Z_1,\dots ,Z_m) \in \mathcal {O}_m\) we can replace \(G^m\) by G according to (2.17).

We first consider \(I_{2,1}\). We expand the square to obtain for any fixed i

We distinguish the cases \(j\ne k\) and \(j=k\). In the case \(j \ne k\), we apply a similar reasoning as for \(I_1\): due to the independence of \(Z_i\), \(Z_j\), \(Z_k\), we have with \(\mathcal {F}\) as in (5.4)

where we used again (5.6). Since by (5.11), \(\mathcal {F}\) is bounded in \(\dot{H}^{-1}(\mathbb {R}^3)\), we therefore conclude that

It remains to estimate \(I_{2,1}^{jj}\). We compute

By (5.17)

Combining this with (5.7), we conclude

by assumption (H1).

We now turn to \(I_{2,2}\). We estimate

with \(\sigma _m\) from Lemma 5.5. Using this lemma and the fact that \(G\rho \) is a bounded operator from \(\dot{H}^1(\mathbb {R}^3)\) to \(W^{1,\infty }(\mathbb {R}^3)\), we find

Recalling the definition of \(\sigma _m\) from Lemma 5.5, we have

This is a very rough estimate, since we actually expect cancellations from the difference. However, these cancellations are not needed here for the desired bound. Indeed, since \(G \rho A\) is a bounded operator from \(\dot{H}^{-1}(\mathbb {R}^3)\) to \(\dot{H}^1 (\mathbb {R}^3)\), \(I_{2,2,1}\) is controlled analogously to \(I_1\).

It remains to estimate \(I_{2,2,2}\). We expand the square again and write

We have to distinguish the cases where all \(i,j,k\) are distinct, the case where \(j=k\) but \(j\ne i\), the case where \(i=j\) or \(i=k\) but \(j\ne k\), and, finally, the case where \(i=j=k\).

In the first case, we can proceed analogously to \(I^{j,k}_{2,1}\). In particular, we use the definition of \(\mathcal {F}\) to deduce

since \( G \rho A\) is also bounded from \(\dot{H}^{-1}(\mathbb {R}^3)\) to \(\dot{H}^1(\mathbb {R}^3)\).

Next, we estimate \(I_{2,2,2}^{i,j,j}\). Analogously to \(I_{2,1}^{j,j}\), we obtain

Since \(\nabla G V\) is a bounded operator from \(\dot{H}^1(\mathbb {R}^3)\) to \(L^2(\mathbb {R}^3)\), we obtain by (5.17) combined with (5.7) and using (H1)

The third estimate concerns \(I^{i,i,k}_{2,2,2}\). By symmetry, \(I^{i,j,i}_{2,2,2}\) is dealt with analogously. We have, using (5.17), (5.11), and (5.7) together with (5.6),

We also used that the operator \(\nabla G\rho A\) maps \(\dot{H}^{-1}(\mathbb {R}^3)\) into \(L^{\infty }(\mathbb {R}^3)\), as well as \(j\in L^2(\mathbb {R}^3)\) by assumption (H3).

Finally, we estimate \(I^{i,i,i}_{2,2,2}\). Using (5.17) and (5.7), we obtain

This finishes the estimate of \(I_{2,2,2}\). Therefore, the estimate of \(I_{2,2}\) is complete, which also finishes the estimate of \(I_2\).

5.3.3 Step 3: Estimate of \(\mathbf {I_3}\).

We recall from (5.25) that \(I_3\) consists of three terms, which we denote by \(J_1, J_2\) and \(J_3\). We will focus on the proof for \(J_1\), as this is the most difficult term, and comment along the way on the adjustments needed to treat \(J_2\) and \(J_3\). Roughly speaking, the main difference between \(J_1\) and \(J_2\) is that \(J_1\) involves the norm in \(L^2(\cup _i B_i)\), whereas \(J_2\) involves the norm in \(L^2_\textrm{loc}(\mathbb {R}^3)\). Naively, the estimate for \(J_1\) should therefore be better by a factor \(|\cup _i B_i| \sim m^{-2}\), which is exactly the estimate we obtain. Moreover, \(J_3\) concerns the gradient of the terms in \(J_1\). Since we may lose a factor \(m^{-2}\) going from \(J_1\) to \(J_3\), it will not be difficult to adapt the estimates for \(J_1\) to \(J_3\) using the gradient estimates in Sect. 5.1. For the sake of completeness, we detail the estimates for \(J_3\) in the appendix.

5.3.4 Step 3.1: Expansion of the Terms

As in the previous step, we first want to replace all occurrences of \(G^m\) by G. Note that \(G^m\) is present both explicitly in the definition of \(\Xi _m\) and implicitly through \(\xi _m\). By (2.17) and the independence of the positions of the particles, it holds

where on the right-hand side, i is any of the m identically distributed particles. We use that \(G\rho \) is a bounded operator from \(L^2(K)\) to \(L^{\infty }(B_i)\) and Lemma 5.5 to deduce

This implies that, for the estimate of \(J_1\), it suffices to show that

By the definitions of \(m^{-\frac{1}{2}}\xi _m\) and \(m^{-\frac{1}{2}}\sigma _m\) (cf. (2.20) and (5.22)) together with (2.17), we have in \(\mathcal {O}_m\)

(Strictly speaking \(\Psi _{j,k}\) depends on i, but we omit this dependence for the ease of notation.)

Thus,

Similarly, we have the estimate

with the same proof as before using that \(\nabla G\rho \) is a bounded operator from \(\dot{H}^1(\mathbb {R}^3)\) to \(W^{1,\infty }(\mathbb {R}^3)\) and the second part of Lemma 5.5.

Furthermore,

where \({\tilde{\Psi }}_{j,k}\) denotes the function that is obtained by omitting the factor \((1-\delta _{ij})\) in (5.26).

This structure allows us to make precise why the estimate for \(\mathfrak {J}_1\) is the most difficult one compared to \(\mathfrak {J}_2\) and \(\mathfrak {J}_3\). Indeed, for the estimate of \(\mathfrak {J}_3\), one just follows the same argument as for \(\mathfrak {J}_1\). The relevant estimates in Sect. 5.1 show that whenever \(\nabla G\) appears instead of G, we lose (at most) a factor \(m^{-1}\). For completeness, we provide the proof of the estimates regarding \(\mathfrak {J}_3\) in the appendix.

On the other hand, for \(\mathfrak {J}_2\), we can use the estimates that we will prove for the terms of \(\mathfrak {J}_1\) in the case when the index i is different from all the other indices. Indeed, in those cases, \(\Psi _{j,k} = {\tilde{\Psi }}_{j,k}\), and we will always estimate

Thus, the bound for \(\mathfrak {J}_2\) is a direct consequence of the estimates we will derive to bound \(\mathfrak {J}_1\).

Recall that we need to prove \(|\mathfrak {J}_1|\lesssim m^{-2}\). We will split the sum into the cases \(\#\{i,j,k,n,\ell \}=\alpha \), \(\alpha =1,\ldots ,5\). Then, since \(i\) is fixed, there will be \(m^{\alpha -1}\) summands for the case \(\#\{i,j,k,n,\ell \}=\alpha \). Thus, it is enough to show that in each of these cases

To prove this estimate, we have to rely on cancellations between the terms that \(\Psi _{j,k}\) is composed of. To this end, we denote the first part of \(\Psi _{j,k}\) by

and the second part by

We observe that

5.3.5 Step 3.2: The Cases in Which at Most \(\textbf{2}\) Indices are Equal

In many cases, we can rely on cancellations within \(\Psi _{k}^{(1)}\) and \(\Psi _{j,k}^{(2)}\). Indeed, we will prove the following lemma:

Lemma 5.7

Let \(K' \subseteq \mathbb {R}^3\) be bounded. Then,

There are only three cases (up to symmetry), where we have to rely on cancellations between \(\Psi _{k}^{(1)}\) and \(\Psi _{j,k}^{(2)}\) to estimate \(I_3^{i,j,k,n,\ell }\). These are the cross terms, when either \(j=n\), or \(k= \ell \), or \(j=\ell \), and all the other indices are different. In these cases, we will rely on the following lemma:

Lemma 5.8

Let \(K' \subseteq \mathbb {R}^3\) be bounded. Then,

Finally, we obtain the following estimates, useful in particular for the cases in which \(i=k\):

Lemma 5.9

Let \(K' \subseteq \mathbb {R}^3\) be bounded. Then, for any i, j, k,

Combining these lemmas allows us to estimate \(I_3^{i,j,k,n,\ell }\) in all the cases when \(\alpha = \#\{i,j,k,n,\ell \} \geqq 4\).
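The reduction to the cases \(\alpha = \#\{i,j,k,n,\ell \}\) rests on counting summands. For fixed i, the number of tuples \((j,k,n,\ell )\) with exactly \(\alpha \) distinct values among \(i,j,k,n,\ell \) is \(S(5,\alpha )\,(m-1)(m-2)\cdots (m-\alpha +1)\). Here \(S(5,\alpha )\) is a Stirling number of the second kind, which counts the ways the five index positions can be grouped into \(\alpha \) classes of equal indices. This count is of order \(m^{\alpha -1}\). The sketch below checks the formula against brute-force enumeration; the function names are illustrative only.

```python
from itertools import product
from math import perm

# Stirling numbers of the second kind S(5, alpha), alpha = 1, ..., 5;
# they sum to the Bell number B_5 = 52.
STIRLING_5 = {1: 1, 2: 15, 3: 25, 4: 10, 5: 1}


def count_by_distinct(m, i=0):
    """Brute force: for a fixed index i, count the tuples (j, k, n, l) in
    {0, ..., m-1}^4 according to alpha = #{i, j, k, n, l}."""
    counts = {alpha: 0 for alpha in range(1, 6)}
    for j, k, n, l in product(range(m), repeat=4):
        counts[len({i, j, k, n, l})] += 1
    return counts


def predicted(m):
    """Closed form S(5, alpha) * (m-1)(m-2)...(m-alpha+1), of order m^(alpha-1).

    The class containing i is pinned to the value i; the remaining alpha-1
    classes receive distinct values among the other m-1 indices."""
    return {alpha: STIRLING_5[alpha] * perm(m - 1, alpha - 1) for alpha in range(1, 6)}
```

Summing over \(\alpha \) recovers all \(m^4\) tuples, as the identity \(x^5 = \sum _\alpha S(5,\alpha )\, x(x-1)\cdots (x-\alpha +1)\) predicts after division by \(x = m\).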

Corollary 5.10

The following estimates hold true, where the implicit constants are independent of \(m\):

1. If \(\#\{i,j,k,n,\ell \} = 5\), then

$$\begin{aligned} \big |I_3^{i,j,k,n,\ell }\big | \lesssim m^{-5}. \end{aligned}$$

2. If \(\#\{i,j,k,n,\ell \} = 4\), then

$$\begin{aligned} \big |I_3^{i,j,k,n,\ell }\big | \lesssim m^{-4}. \end{aligned}$$

Proof

If \(\#\{i,j,k,n,\ell \} = 5\), then by independence, the Hölder inequality and Lemma 5.7

If \(\#\{i,j,k,n,\ell \} = 4\), we need to distinguish all the possible combinations of two indices being equal. Depending on which indices coincide, we split the product by independence of the other indices. If \(j=n\), \(k=\ell \) or \(j= \ell \) (or \(k=n\) which is the same), we rely on Lemma 5.8 and gain an additional factor \(m^{-3}\) from the expectation of \(\textbf{1}_{B^m_i}\).

If \(j=k\) (or analogously \(n= \ell \)), the expectation completely factorizes into \(\mathbb {E}_m[\textbf{1}_{B^m_i}] \mathbb {E}_m[ \Psi _{jj}] \mathbb {E}_m[\Psi _{n\ell }]\) and we can apply (5.34) for the second factor and Lemma 5.7 for the third factor.

Finally, in all the other cases we can, without loss of generality, split the expectation into \(\mathbb {E}_m[\textbf{1}_{B^m_i} \Psi _{jk}] \mathbb {E}_m[\Psi _{n\ell }]\) and apply (5.35) for the first factor and Lemma 5.7 for the second factor. \(\square \)
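The factor \(m^{-3}\) gained from the expectation of \(\textbf{1}_{B^m_i}\) is a volume effect. For illustration, assume that \(X_i\) has a bounded density \(\rho \) and that \(B^m_i\) is a ball of radius \(R\sim m^{-1}\) around \(X_i\). Then \(\mathbb {E}_m[\textbf{1}_{B^m_i}(x)] = \int _{B_R(x)}\rho \leqq \Vert \rho \Vert _\infty \frac{4\pi }{3} R^3\). The hypothetical Monte Carlo sketch below checks this for the uniform density on the unit cube and \(R = 1/m\). In that case, \(m^3\) times the hit probability should be close to \(4\pi /3\).

```python
import math
import random


def hit_probability(radius, samples=200_000, seed=1):
    """Monte Carlo estimate of P(|X - x0| < radius) for X uniform on [0, 1]^3
    and x0 the center of the cube; exact value 4*pi/3 * radius^3 if radius <= 1/2."""
    rng = random.Random(seed)
    x0 = (0.5, 0.5, 0.5)
    hits = 0
    for _ in range(samples):
        dist2 = sum((rng.random() - c) ** 2 for c in x0)
        if dist2 < radius * radius:
            hits += 1
    return hits / samples


def rescaled_hit_probability(m, samples=200_000):
    """m^3 * P(X in B_{1/m}(x0)); should stay close to 4*pi/3 as m grows."""
    return m ** 3 * hit_probability(1.0 / m, samples)
```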

We finish this step by giving the proofs of Lemmas 5.7, 5.8 and 5.9.

Proof of Lemma 5.7

By (5.28), we have

and using (5.12) yields (5.29). Similarly, for \(j \ne k\), \(i\ne j\),

Using again (5.12) and recalling from Lemma 5.1 that \({\mathcal {R}}\) is a bounded operator from \(L^2(K)\) to \(\dot{H}^{-1}(\mathbb {R}^3)\) yields (5.30). For \(i=j\), \(\Psi ^{(2)}_{j,k} = 0\) and there is nothing to prove. \(\square \)

Proof of Lemma 5.8

Regarding (5.31), we have

We obtain

where we used (5.12) for both terms and (5.16) and (5.7) for the second term.

Regarding (5.32), we compute

Thus, we obtain

where we used (5.16) for both terms and (5.7) and (H1) for the second term.

Finally, to prove (5.33), we just apply Young’s inequality to reduce to the previous two estimates. Indeed,

These two terms are exactly the ones we have estimated in the previous two steps. \(\square \)

Proof of Lemma 5.9

The first estimate, (5.34), follows directly from (5.28) and (5.11) together with the fact that the operators \(G \rho A\) and \(G \mathcal {R}A\) are bounded from \(\dot{H}^1(\mathbb {R}^3)\) to \(L^2_\textrm{loc}(\mathbb {R}^3)\).

Regarding (5.35), we first observe that these estimates follow directly from (5.34) in the cases when \(i \ne k\). Indeed, if i is different from both j and k, the expectation factorizes. Moreover, the case \(i=j\) is trivial, since the terms with index j vanish for \(i=j\).

If \(i=k\), we only need to consider those terms where k appears, i.e. \(\Psi ^{(1,2)}_k\) and \(\Psi ^{(2,2)}_{j,k}\). Again, we only need to consider the case \(j \ne k = i\).

We have for \(\Psi ^{(1,2)}_k\)

where we used (5.16), (5.6) and (5.7). Since for \(j \ne i\),

the estimate of this term is analogous. \(\square \)

5.3.6 Step 3.3: The Cases in Which the Number of Different Indices is \(\textbf{3}\) or Less

It remains to estimate \(|I_3^{i,j,k,n,\ell }|\) when \(\# \{i,j,k,n,\ell \} \leqq 3\). We will show that \(|I_3^{i,j,k,n,\ell }| \lesssim m^{-3}\) for \(\# \{i,j,k,n,\ell \} = 3\), and \(|I_3^{i,j,k,n,\ell }| \lesssim m^{-2}\) for \(\# \{i,j,k,n,\ell \} \leqq 2\). Formally, a factor \(m^{-3}\) can be expected to come from the term \(\textbf{1}_{B^m_i}\), so that cancellations are not needed for the estimates of these terms. We will see that this strategy works for all the terms except for \(I_3^{i,j,i,j,\ell }\) with \(i,j,\ell \) mutually distinct.

Thus, in all cases except \(I_3^{i,j,i,j,\ell }\) with \(i,j,\ell \) mutually distinct, we simply estimate the product \( \Psi _{j,k} \Psi _{n,\ell }\) crudely via the triangle inequality

with the convention that \(\Psi ^{(1,1)}_{j,k} = \Psi ^{(1,1)}\), and similarly for \(\Psi ^{(1,2)}_{j,k}\) and \(\Psi ^{(2,1)}_{j,k}\).

We now consider all possible cases of \((\alpha ,\beta ,\gamma ,\delta ) \in \{1,2\}^4\) and \(\# \{i,j,k,n,\ell \} \leqq 3\). Since \(\Psi ^{(1,1)}\) does not depend on any index and both \(\Psi ^{(1,2)}_{k}\) and \(\Psi ^{(2,1)}_{j}\) depend on only one index (disregarding the dependence on \(i\), since \(\Psi ^{(2,1)}_{i} = 0\) anyway), the number of cases to be considered is considerably reduced for these terms.

In order to exploit this in the sequel, we introduce the following slightly abusive notation. When considering the term \(\mathbb {E}_m[ \textbf{1}_{B^m_i} \Psi _{j,k}^{(\alpha ,\beta )} \Psi _{n,\ell }^{(\gamma ,\delta )}]\) for fixed \(\alpha , \beta , \gamma , \delta \), we call relevant indices those indices of \(\{i,j,k,n,\ell \}\) that still appear in this product after replacing \(\Psi ^{(1,1)}_{j,k}\) by \(\Psi ^{(1,1)}\), and similarly for \(\Psi ^{(1,2)}_{j,k}\), \(\Psi ^{(2,1)}_{j,k}\) and for the indices \(n,\ell \).

To further reduce the number of cases, we next argue that we do not have to consider the cases with \(J \cap \{j,k\} \cap \{n,\ell \} = \emptyset \), where \(J\) is the set of relevant indices. Indeed, in all these cases the expectation factorizes, and we conclude using the bounds provided by Lemma 5.9. In particular, we do not have to consider any case in which \(\Psi ^{(1,1)}\) appears.

Moreover, if j is a relevant index and \(i=j\), then \(\Psi ^{(2,2)}_{j,k} = \Psi ^{(2,1)}_{j} = 0\), and therefore, there is nothing to estimate. If \(j\) and \(k\) are both relevant indices and \(j=k\), then \(\Psi ^{(2,2)}_{j,j}=0\), and therefore, there is nothing to estimate either. The same reasoning applies to the cases where \(i=n\) and \(n=\ell \), respectively.
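The bookkeeping of relevant indices, of vanishing terms and of factorizing expectations can be mechanized. The following Python sketch is illustrative only and not part of the proof. It assumes that a superscript 2 in the first (second) slot of \(\Psi ^{(\alpha ,\beta )}_{j,k}\) means that the first (second) index is relevant, which is our reading of the notation, and it reads the condition \(J \cap \{j,k\} \cap \{n,\ell \} = \emptyset \) as "no relevant index of \(\Psi _{j,k}\) takes the same value as a relevant index of \(\Psi _{n,\ell }\)". The index \(\ell \) is spelled `l`, and all function names are hypothetical.

```python
# "i" comes from 1_{B^m_i}; ("j", "k") label Psi_{j,k} and ("n", "l") label Psi_{n,l}.
FACTORS = (("j", "k"), ("n", "l"))


def relevant(types):
    """Relevant indices for types = (alpha, beta, gamma, delta).

    Assumed reading: Psi^{(1,1)} carries no index, Psi^{(2,1)}_j only its
    first index, Psi^{(1,2)}_k only its second, Psi^{(2,2)}_{j,k} both.
    """
    names = {"i"}
    for (first, second), (a, b) in zip(FACTORS, (types[:2], types[2:])):
        if a == 2:
            names.add(first)
        if b == 2:
            names.add(second)
    return names


def classify(types, values):
    """Return 'vanishes', 'factorizes' or 'estimate' for one index configuration.

    values maps each of "i", "j", "k", "n", "l" to a particle label.
    """
    rel = relevant(types)
    for first, second in FACTORS:
        # Psi^{(2,2)} and Psi^{(2,1)} vanish if their first index equals i ...
        if first in rel and values[first] == values["i"]:
            return "vanishes"
        # ... and Psi^{(2,2)} vanishes on the diagonal.
        if {first, second} <= rel and values[first] == values[second]:
            return "vanishes"
    left = {values[x] for x in FACTORS[0] if x in rel}
    right = {values[x] for x in FACTORS[1] if x in rel}
    # No relevant index shared between the two factors: the expectation factorizes.
    return "estimate" if left & right else "factorizes"


if __name__ == "__main__":
    # case (1a): i = k, j = n
    print(classify((2, 2, 2, 2), {"i": 0, "k": 0, "j": 1, "n": 1, "l": 2}))
```

An empty set of relevant indices in one factor (the case \(\Psi ^{(1,1)}\)) always leads to "factorizes", in line with the remark that such cases need not be considered.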

We now list all the cases that are left to consider; cases that are equivalent by symmetry are listed only once. Here we use the convention that we only specify which relevant indices coincide; relevant indices which are not explicitly denoted as equal are assumed to be different. The indices which are not relevant may take any value; in particular, they may coincide with each other or with relevant indices.

1. \((\alpha ,\beta ,\gamma ,\delta ) = (2,2,2,2)\): Relevant indices: \(\{i,j,k,n,\ell \}\). Since all the indices are relevant, we only have to consider cases where at least two pairs of indices, or at least three indices, coincide. All the other cases were already covered when we estimated \(I_3^{i,j,k,n,\ell }\) with \(\#\{i,j,k,n,\ell \} \geqq 4\). The cases left to consider are
   (a) \(i=k\), \(j=n\),
   (b) \(i=k\), \(j=\ell \),
   (c) \(i=k=\ell \),
   (d) \(j=n\), \(k=\ell \),
   (e) \(j=\ell \), \(k=n\),
   (f) \(i=k=\ell \), \(j=n\).

2. \((\alpha ,\beta ,\gamma ,\delta ) = (2,1,2,2)\): Relevant indices: \(\{i,j,n,\ell \}\). Cases to consider:
   (a) \(j=n\),
   (b) \(j=\ell \),
   (c) \(i=\ell \), \(j=n\).

3. \((\alpha ,\beta ,\gamma ,\delta ) = (2,1,2,1)\): Relevant indices: \(\{i,j,n\}\). Only case to consider: \(j=n\).

4. \((\alpha ,\beta ,\gamma ,\delta ) = (1,2,2,2)\): Relevant indices: \(\{i,k,n,\ell \}\). Cases to consider:
   (a) \(i=k=\ell \),
   (b) \(i=\ell \), \(k=n\),
   (c) \(k=n\).

5. \((\alpha ,\beta ,\gamma ,\delta ) = (1,2,2,1)\): Relevant indices: \(\{i,k,n\}\). Only case to consider: \(k=n\).

6. \((\alpha ,\beta ,\gamma ,\delta ) = (1,2,1,2)\): Relevant indices: \(\{i,k,\ell \}\). Cases to consider:
   (a) \(k=\ell \),
   (b) \(i = k = \ell \).

In order to conclude the proof of the lemma, it now remains to give estimates for the cases listed above.

The case (1a): As mentioned at the beginning of Step 3.3, this is the case in which we rely on cancellations with the \(\Psi ^{(2,1)}\) term from case (2c). We estimate

Hence, since \(A\) maps \(L^2(\mathbb {R}^3)\cap \dot{H}^{-1}(\mathbb {R}^3)\) to \(L^{\infty }(\mathbb {R}^3)\) and by (5.12)

By (5.13)

Combining this with the pointwise estimate (5.14) yields

where we used (5.7) and (H1).

The case (1b) is similar but easier: since the singularity is subcritical, we do not need to take cancellations into account. Indeed,

Thus, since \(G\mathcal {R}\) maps \(L^2(K)\) to \(L^{\infty }(\mathbb {R}^3)\) and by (5.16)

Now we proceed as in the previous case to estimate

The case (1c): We have

Thus, the desired bound follows by using first that \(\Vert G\mathcal {R}A \delta _{y_1}^m\Vert _{L^{\infty }(\mathbb {R}^3)} \lesssim 1\) as above, and then (H1) and (5.6) together with (5.7).

The case (1d): We compute

Using (5.16) twice, and (5.7) together with (H2) and (H1), we can successively estimate the integrals in \(x\), \(y_2\) and \((y_1,v_1)\) to deduce

The case (1e): We just observe that by Young’s inequality

Thus, this case is reduced to case (1d).
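Written out for case (1e), where \(j=\ell \) and \(k=n\), the reduction reads as follows, assuming the product is taken pointwise (as a scalar or Euclidean inner product) and using only that \(\textbf{1}_{B^m_i} \geqq 0\) together with \(|a \cdot b| \leqq \frac{1}{2} |a|^2 + \frac{1}{2} |b|^2\):

\[
\bigl|\mathbb {E}_m[\textbf{1}_{B^m_i} \Psi ^{(2,2)}_{j,k} \Psi ^{(2,2)}_{k,j}]\bigr| \leqq \frac{1}{2}\,\mathbb {E}_m\bigl[\textbf{1}_{B^m_i} |\Psi ^{(2,2)}_{j,k}|^2\bigr] + \frac{1}{2}\,\mathbb {E}_m\bigl[\textbf{1}_{B^m_i} |\Psi ^{(2,2)}_{k,j}|^2\bigr].
\]

After renaming \(j \leftrightarrow k\) in the second term, both terms on the right-hand side are of the form \(\mathbb {E}_m[\textbf{1}_{B^m_i} \Psi ^{(2,2)}_{j,k} \Psi ^{(2,2)}_{n,\ell }]\) with \(j=n\) and \(k=\ell \), that is, of case (1d).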

The case (1f): Note that \(\# \{i,j,k,n,\ell \} = 2\). Hence, we only need a bound of order \(m^{-2}\). We have

We can estimate the integral in x using again (5.36)

Moreover, using (5.14), we find

where we used (5.7) and (H1) in the last estimate. Note that this estimate is sufficient, since the number of different indices in this case is only 2.

The cases (2a) and (2b) are reduced to the cases (3) and (1d) by Young's inequality, analogously to the case (1e).

If \(k\) is different from the other indices, the case (2c) was already estimated together with the case (1a).

If k coincides with one of the other indices, the number of different indices is 2 and we can reduce the case to the cases (3) and (1f) by Young’s inequality.

The case (3): In this case we get a factor \(m^{-3}\) from \(\textbf{1}_{B^m_i}\) and thus the desired estimate follows from

where we used (5.16) and (5.7).
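The heuristic that \(\textbf{1}_{B^m_i}\) supplies a factor \(m^{-3}\) can be made explicit in a simplified setting. Assume, for illustration only, that \(B^m_i = B_{r/m}(X_i)\) is the ball of radius \(r/m\) around the particle position \(X_i\) (with \(r\) a hypothetical fixed constant, consistent with radii of order \(m^{-1}\)), and that \(X_i\) has a bounded density \(\rho _0\) (hypothetical notation). Then, for every \(x \in \mathbb {R}^3\),

\[
\mathbb {E}_m\bigl[\textbf{1}_{B^m_i}(x)\bigr] = \mathbb {P}\bigl(|X_i - x| < r/m\bigr) = \int _{B_{r/m}(x)} \rho _0(y) \,\mathrm {d}y \leqq \frac{4\pi }{3}\, \Vert \rho _0\Vert _{L^\infty (\mathbb {R}^3)}\, r^3\, m^{-3}.
\]

If the remaining factors are bounded uniformly, integrating this bound in \(x\) over a bounded set therefore yields a contribution of order \(m^{-3}\).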

The case (4a) is estimated by a computation analogous to the one at the end of the proof of Lemma 5.9, relying on the fact that

which is a direct consequence of (5.16) and the fact that \(G\rho \) is bounded from \(L^2(K)\) to \(L^\infty (\mathbb {R}^3)\). Since the index n is free, a similar bound can be used for \(\Psi ^{(2,2)}_{n,\ell }\). More precisely,

since \( G\mathcal {R}\) and \(G\rho \) map \(L^2(K)\) to \(L^{\infty }(\mathbb {R}^3)\) and using again (5.16). As before, integrating in x yields a factor \(m^{-3}\).

The case (4b): Using (5.38) yields

which is the same as (5.37) and has therefore already been estimated.

The case (4c) is reduced to the cases (6a) and (1d) by Young’s inequality.

The case (5) is reduced to the cases (6a) and (3) by Young’s inequality.
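The Young's-inequality reductions used for the cases (1e), (2a), (2b), (2c) (with \(k\) coinciding with another index), (4c) and (5) follow a single pattern: for the nonnegative weight \(\textbf{1}_{B^m_i}\), \(|\mathbb {E}_m[\textbf{1}_{B^m_i} X Y]| \leqq \frac{1}{2}\mathbb {E}_m[\textbf{1}_{B^m_i} |X|^2] + \frac{1}{2}\mathbb {E}_m[\textbf{1}_{B^m_i} |Y|^2]\), and each square is again a product of two factors of the same type with coinciding indices. The Python sketch below is an illustration rather than part of the argument: it computes which listed case each square falls into. It assumes that a superscript 2 in a slot of \(\Psi ^{(\alpha ,\beta )}\) keeps the corresponding index (our reading of the notation), spells \(\ell \) as `l`, and uses hypothetical names. It reproduces the reductions stated in the text; it does not check any analytic estimate.

```python
FACTORS = (("j", "k"), ("n", "l"))


def relevant(types):
    """Relevant indices; a superscript 2 in a slot keeps that index (assumed reading)."""
    names = {"i"}
    for (first, second), (a, b) in zip(FACTORS, (types[:2], types[2:])):
        if a == 2:
            names.add(first)
        if b == 2:
            names.add(second)
    return names


def pattern(types, values):
    """Partition of the relevant indices according to which ones coincide."""
    blocks = {}
    for name in relevant(types):
        blocks.setdefault(values[name], set()).add(name)
    return frozenset(frozenset(b) for b in blocks.values())


def square(types, values, which):
    """Types and indices of E[1_{B_i} |X|^2], X being factor `which` (0 or 1)."""
    t = types[2 * which: 2 * which + 2]
    a, b = FACTORS[which]
    doubled = {"i": values["i"], "j": values[a], "k": values[b],
               "n": values[a], "l": values[b]}
    return t + t, doubled


def P(*blocks):
    return frozenset(frozenset(b) for b in blocks)


# Target cases of the list, keyed by types and coincidence pattern.
LABELS = {
    ((2, 1, 2, 1), P({"i"}, {"j", "n"})): "(3)",
    ((2, 2, 2, 2), P({"i"}, {"j", "n"}, {"k", "l"})): "(1d)",
    ((2, 2, 2, 2), P({"i", "k", "l"}, {"j", "n"})): "(1f)",
    ((1, 2, 1, 2), P({"i"}, {"k", "l"})): "(6a)",
    ((1, 2, 1, 2), P({"i", "k", "l"})): "(6b)",
}


def young_reduction(types, values):
    """Cases produced by |E[w X Y]| <= E[w |X|^2]/2 + E[w |Y|^2]/2 with w = 1_{B_i} >= 0."""
    result = []
    for which in (0, 1):
        t, v = square(types, values, which)
        result.append(LABELS.get((t, pattern(t, v)), "unlisted"))
    return tuple(result)


if __name__ == "__main__":
    # case (4c): types (1,2,2,2) with k = n
    print(young_reduction((1, 2, 2, 2), {"i": 0, "j": 5, "k": 1, "n": 1, "l": 2}))
```

For case (4c) this returns the pair of labels (6a) and (1d), matching the reduction stated above.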

The cases (6a) and (6b) are estimated by a computation analogous to the one at the end of the proof of Lemma 5.9, again relying on (5.38).

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

Armstrong, S., Kuusi, T., Mourrat, J.-C.: The additive structure of elliptic homogenization. Invent. Math. 208(3), 999–1154, 2017

Allaire, G.: Homogenization of the Navier–Stokes equations in open sets perforated with tiny holes. I. Abstract framework, a volume distribution of holes. Arch. Ration. Mech. Anal. 113(3), 209–259, 1990

Braun, W., Hepp, K.: The Vlasov dynamics and its fluctuations in the \(1/N\) limit of interacting classical particles. Commun. Math. Phys. 56(2), 101–113, 1977

Boudin, L., Grandmont, C., Lorz, A., Moussa, A.: Modelling and numerics for respiratory aerosols. Commun. Comput. Phys. 18(3), 723–756, 2015

Carrapatoso, K., Hillairet, M.: On the derivation of a Stokes–Brinkman problem from Stokes equations around a random array of moving spheres. Commun. Math. Phys. 373(1), 265–325, 2020

Cioranescu, D., Murat, F.: Un terme étrange venu d’ailleurs. Nonlinear Partial Differential Equations and Their Applications. Collège de France Seminar, Vol. II (Paris, 1979/1980). Vol. 60. Research Notes in Mathematics Pitman, Boston, Mass.-London, pp. 98–138, 389–390, 1982

Duerinckx, M., Fischer, J., Gloria, A.: Scaling limit of the homogenization commutator for Gaussian coefficient fields. Ann. Appl. Probab. 32(2), 1179–1209, 2022

Duerinckx, M., Gloria, A.: Quantitative homogenization theory for random suspensions in steady Stokes flow. J. l’École Polytech. Math. 9, 1183–1244, 2022

Dal Maso, G., Garroni, A.: New results on the asymptotic behavior of Dirichlet problems in perforated domains. Math. Models Methods Appl. Sci. 4(3), 373–407, 1994

Duerinckx, M., Gloria, A., Otto, F.: Robustness of the pathwise structure of fluctuations in stochastic homogenization. Probab. Theory Relat. Fields 178(1–2), 531–566, 2020

Duerinckx, M., Gloria, A., Otto, F.: The structure of fluctuations in stochastic homogenization. Commun. Math. Phys. 377, 259–306, 2020

Desvillettes, L., Golse, F., Ricci, V.: The mean-field limit for solid particles in a Navier–Stokes flow. J. Stat. Phys. 131(5), 941–967, 2008

Feppon, F.: High-order homogenization of the Poisson equation in a perforated periodic domain. Optimization and Control for Partial Differential Equations: Uncertainty Quantification, Open and Closed-Loop Control, and Shape Optimization (Eds. Herzog R. et al.) De Gruyter, pp. 237–284, 2022

Feppon, F., Jing, W.: High order homogenized Stokes models capture all three regimes. SIAM J. Math. Anal. 54(4), 5013–5040, 2022

Figari, R., Orlandi, E., Teta, S.: The Laplacian in regions with many small obstacles: fluctuations around the limit operator. J. Stat. Phys. 41(3–4), 465–487, 1985

Galdi, G.P.: An Introduction to the Mathematical Theory of the Navier–Stokes Equations, Steady-State Problems. Springer Monographs in Mathematics, 2nd edn. Springer, New York, p. xiv+1018, 2011

Gérard-Varet, D.: A simple justification of effective models for conducting or fluid media with dilute spherical inclusions. Asymptot. Anal. 128(1), 31–53, 2022

Giunti, A., Höfer, R.M.: Homogenisation for the Stokes equations in randomly perforated domains under almost minimal assumptions on the size of the holes. Ann. Inst. H. Poincaré Anal. Non Linéaire 36(7), 1829–1868, 2019

Giunti, A., Höfer, R., Velázquez, J.J.L.: Homogenization for the Poisson equation in randomly perforated domains under minimal assumptions on the size of the holes. Commun. Partial Differ. Equ. 43(9), 1377–1412, 2018

Giunti, A.: Convergence rates for the homogenization of the Poisson problem in randomly perforated domains. Netw. Heterog. Media 16(3), 341–375, 2021

Hauray, M.: Wasserstein distances for vortices approximation of Euler-type equations. Math. Models Methods Appl. Sci. 19(8), 1357–1384, 2009

Hillairet, M., Moussa, A., Sueur, F.: On the effect of polydispersity and rotation on the Brinkman force induced by a cloud of particles on a viscous incompressible flow. Kinet. Relat. Models 12(4), 681–701, 2019

Höfer, R.M.: Sedimentation of inertialess particles in Stokes flows. Commun. Math. Phys. 360(1), 55–101, 2018

Höfer, R.M., Velázquez, J.J.L.: The method of reflections, homogenization and screening for Poisson and Stokes equations in perforated domains. Arch. Ration. Mech. Anal. 227(3), 1165–1221, 2018

Kacimi, H., Murat, F.: Estimation de l’erreur dans des problèmes de Dirichlet où apparait un terme étrange. Partial Differential Equations and the Calculus of Variations, Vol. II. Vol. 2. Progr. Nonlinear Differential Equations Appl. Birkhäuser Boston, Boston, MA, pp. 661–696, 1989

Marchenko, V.A., Khruslov, E.Y.: Boundary value problems in domains with fine-grained boundary. Izdat. Naukova Dumka Kiev, p. 279, 1974 (in Russian)

Ozawa, S.: Point interaction potential approximation for \((-\Delta +U)^{-1}\) and eigenvalues of the Laplacian on wildly perturbed domain. Osaka J. Math. 20(4), 923–937, 1983

Papanicolaou, G.C., Varadhan, S.R.S.: Diffusion in regions with many small holes. Stochastic Differential Systems Filtering and Control: Proceedings of the IFIP-WG 7/1 Working Conference Vilnius, Lithuania, USSR, Aug. 28–Sept. 2, 1978. Springer, Berlin Heidelberg, pp. 190–206, 1980

Rubinstein, J.: On the macroscopic description of slow viscous flow past a random array of spheres. J. Stat. Phys. 44(5–6), 849–863, 1986

Acknowledgements

The authors thank Juan J.L. Velázquez for valuable discussions. The authors have been supported by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) through the collaborative research center “The Mathematics of Emerging Effects” (CRC 1060, Projekt-ID 211504053) and the Hausdorff Center for Mathematics (GZ 2047/1, Projekt-ID 390685813).

Funding

Open Access funding enabled and organized by Projekt DEAL.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by N. Masmoudi.


Appendix


1.1 Proofs of the Auxiliary Estimates from Sect. 5.1

Proof of Lemma 5.1

(i) Define

We observe that for \(w \in W^{1,p}(\mathbb {R}^3)\), \(1\leqq p <\infty \)