Abstract

In this article we propose a novel geometric model to study the motion of a physical flag. In our approach, a flag is viewed as an isometric immersion of the square into \(\mathbb {R}^3\) satisfying certain boundary conditions at the flag pole. Under additional regularity constraints we show that the space of all such flags carries the structure of an infinite dimensional manifold and can be viewed as a submanifold of the space of all immersions. In the second part of the article we equip the space of isometric immersions with its natural kinetic energy and derive the corresponding equations of motion. This approach is similar in spirit to Arnold’s geometric picture of the motion of an incompressible fluid.


1 Introduction

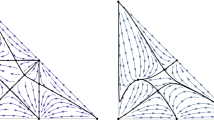

In this article we propose a geometric framework to model the motion of physical flags. Mathematically, a flag on a flagpole may be modeled as an isometric \(C^2\)-immersion of a square into \(\mathbb {R}^3\) subject to the constraint that one edge is mapped to the pole. To obtain the simplest possible model, we ignore external forces and assume that the flag follows a geodesic in the space of isometric immersions, with the Riemannian metric determined by the physical kinetic energy. Although the local deformability of isometric immersions is well understood (see, for example, [31] and the references therein), our setup poses additional difficulties: we need global coordinates, and we must handle the boundary conditions, namely matching the flag to the pole on the one hand and describing the edges of the square on the other. See Fig. 1 for three examples of flags (isometric immersions).

Fig. 1. Three examples of isometric immersions from the plane into \(\mathbb {R}^3\) (flags). The flag pole is added for illustrative purposes only. The immersions have been constructed using the explicit characterization of Theorem 9.

1.1 Modelling equations of motion as geodesic equations

Our approach follows similar geometric models for other situations, such as modeling the motion of ideal fluids as a geodesic evolution in the group of volume-preserving diffeomorphisms, as first done by Arnold [2] in 1966. The advantages of this formulation are that it allows us to relate the curvature of the manifold to the stability of the system, and that it reduces the system to the simplest set of assumptions (without incorporating the details of external forces or the physical composition of the system). Another advantage is that it can lead to rigorous proofs of existence and uniqueness theorems by turning a PDE into an ODE on an infinite-dimensional manifold, as in Ebin–Marsden [11] for the incompressible Euler equations. Many other PDEs have been recast as geodesic equations in various spaces, especially on diffeomorphism groups. See [19, 20, 34] for survey articles on the topic and Arnold-Khesin [3] for an introduction to the field and more examples.

Diffeomorphism groups arise in studying the motion of fluids that fill up their domain. In many other cases the system is a material moving in a higher-dimensional space, which leads one to work with spaces of embeddings and immersions; see [4] and the references therein. One example is the motion of inextensible threads in Euclidean space: assuming the geometric constraint that the curve \(\varvec{\eta }\) preserves arc length s, we have the equation

$$\varvec{\eta }_{tt} = \partial _s(\sigma \varvec{\eta }_s), \qquad \sigma _{ss} - |\varvec{\eta }_{ss}|^2\sigma = -|\varvec{\eta }_{st}|^2, \qquad |\varvec{\eta }_s| \equiv 1.$$

Here the function \(\sigma \), which is determined by the second-order ODE in the middle, can be interpreted as the tension of the curve.

This is a very old equation, but the existence theory is rather recent, as is the geometric treatment (see [27, 28] and references therein, along with the more recent [29] for weak solutions). A natural extension of this to higher dimensions is to consider the space of embeddings of surfaces into \(\mathbb {R}^3\) with some constraint: either preserving the area element or preserving the Riemannian metric; those that preserve the area element, which serve as a model for the motion of membranes in biological systems, were studied by several researchers including the first author [5, 15, 23]. In this article we study those that preserve the metric, which can serve as a model for unstretchable fabric or paper.
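To illustrate how the tension of an inextensible thread is recovered at a fixed time, here is a minimal Python sketch (not the method of [27]) that discretizes the linear ODE \(\sigma _{ss} - |\varvec{\eta }_{ss}|^2\sigma = -|\varvec{\eta }_{st}|^2\) with central differences and solves the resulting tridiagonal system. The function name `solve_tension` and the arguments `k2` (standing for \(|\varvec{\eta }_{ss}|^2\)) and `w2` (standing for \(|\varvec{\eta }_{st}|^2\)) are hypothetical, and the Dirichlet conditions \(\sigma (0)=\sigma (1)=0\) are an assumption made purely for illustration; the physically relevant boundary conditions depend on how the ends of the thread are held.

```python
def solve_tension(k2, w2, n=99):
    """Approximate sigma on the interior nodes s_i = i*h, i = 1..n, h = 1/(n+1).

    Discretizes sigma'' - k2(s)*sigma = -w2(s) with central differences and
    ASSUMED boundary values sigma(0) = sigma(1) = 0 (illustration only).
    """
    h = 1.0 / (n + 1)
    s = [(i + 1) * h for i in range(n)]
    # Row i: sigma[i-1] - (2 + h^2 k2) sigma[i] + sigma[i+1] = -h^2 w2
    lower = [1.0] * n
    diag = [-(2.0 + h * h * k2(si)) for si in s]
    upper = [1.0] * n
    rhs = [-h * h * w2(si) for si in s]
    # Thomas algorithm: forward elimination ...
    for i in range(1, n):
        m = lower[i] / diag[i - 1]
        diag[i] -= m * upper[i - 1]
        rhs[i] -= m * rhs[i - 1]
    # ... followed by back substitution.
    sigma = [0.0] * n
    sigma[-1] = rhs[-1] / diag[-1]
    for i in range(n - 2, -1, -1):
        sigma[i] = (rhs[i] - upper[i] * sigma[i + 1]) / diag[i]
    return s, sigma


# Usage: k2 = 0, w2 = 1 has the exact solution sigma(s) = s(1 - s)/2.
s, sigma = solve_tension(lambda x: 0.0, lambda x: 1.0)
print(round(sigma[49], 6))  # node s = 0.5; prints 0.125
```

Central differences are exact for quadratics, so this test case is reproduced up to rounding; for nonconstant data the scheme is second-order accurate.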

1.2 Contributions of the Article

For a general surface M, the study of the space of isometric immersions \(M\rightarrow \mathbb {R}^3\) comes with several difficulties. The main reason is that this space is relatively small and depends delicately on the geometry of the surface. For example, the Cohn-Vossen theorem says that if a closed surface has nonnegative Gaussian curvature and no open set where the curvature is zero, then it is rigid: the only deformations are isometries of \(\mathbb {R}^3\). For a recent survey of such results, see Han–Hong [17]. If the Gaussian curvature is zero, as in our case, then there is a nontrivial family of deformations, but it is not very large: in the space of all immersions (three functions of two variables), the isometric immersions are generically described by two functions of one variable. Even this result is only valid locally, and we derive our own version. Furthermore, to work with the geodesic equation in this space, one would like to have a manifold structure, which cannot be expected for general surfaces M: in [37] it has been shown that the space of isometric immersions from \(S^2\) to \(\mathbb {R}^3\) is not locally arcwise connected and hence cannot be a manifold, which gives a counterexample to an earlier result of [6]. For the situation of this article (isometric immersions from the flat square into \(\mathbb {R}^3\)) we are nevertheless able to overcome these difficulties and show a manifold result, as described below.

Our model for a flag is a function \(\textbf{r}:[0,1]^2 \rightarrow \mathbb {R}^3\) satisfying the isometry conditions \(|\textbf{r}_u|= |\textbf{r}_v|= 1\) and \(\textbf{r}_u\cdot \textbf{r}_v = 0\), along with the flagpole condition \(\textbf{r}(0,v) = (0,v,0) =: v\hat{\mathbf {\jmath }}\) and the horizontality condition \(\textbf{r}_u(0,v) = (1,0,0) =: \hat{\mathbf {\imath }}\); in other words, the flag is fastened to the pole at \(u=0\) with fasteners along the \(\hat{\mathbf {\imath }}\) direction. Here \(\hat{\mathbf {\imath }}\), \(\hat{\mathbf {\jmath }}\) and \(\hat{k}\) denote the standard orthonormal basis of \(\mathbb {R}^3\).
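To make these conditions concrete, the following Python sketch measures how far a given map \(\textbf{r}(u,v)\) is from satisfying the isometry, flagpole and horizontality conditions, using central differences on a grid. The helper names are hypothetical, and the finite-difference step assumes that \(\textbf{r}\) can be evaluated slightly outside \([0,1]^2\). The example map \((\sin u, v, 1-\cos u)\), a sheet bent around an axis parallel to the pole, satisfies all of the conditions, while a sheared planar map does not.

```python
import math


def _diff(r, u, v, du, dv, h):
    """Central difference of r in the direction (du, dv)."""
    p, m = r(u + du * h, v + dv * h), r(u - du * h, v - dv * h)
    return tuple((a - b) / (2 * h) for a, b in zip(p, m))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def flag_defect(r, n=10, h=1e-6):
    """Largest violation of |r_u| = |r_v| = 1, r_u . r_v = 0 on an (n+1)^2 grid,
    and of r(0, v) = (0, v, 0), r_u(0, v) = (1, 0, 0) along the pole.

    ASSUMPTION: r is defined (and smooth) slightly outside [0, 1]^2."""
    grid = [k / n for k in range(n + 1)]
    worst = 0.0
    for u in grid:
        for v in grid:
            ru, rv = _diff(r, u, v, 1, 0, h), _diff(r, u, v, 0, 1, h)
            worst = max(worst, abs(_dot(ru, ru) - 1), abs(_dot(rv, rv) - 1),
                        abs(_dot(ru, rv)))
    for v in grid:
        pole = [a - b for a, b in zip(r(0.0, v), (0.0, v, 0.0))]
        horiz = [a - b for a, b in zip(_diff(r, 0.0, v, 1, 0, h), (1.0, 0.0, 0.0))]
        worst = max([worst] + [abs(c) for c in pole + horiz])
    return worst


def cylinder(u, v):
    # Sheet bent around an axis parallel to the pole (the j direction).
    return (math.sin(u), v, 1.0 - math.cos(u))


def sheared(u, v):
    # Planar map that stretches the u-direction: not an isometry.
    return (u, v + 0.1 * u, 0.0)


print(flag_defect(cylinder) < 1e-8, flag_defect(sheared) > 1e-3)
```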

The first task is to classify maps satisfying these conditions. It is well known that locally such immersions are determined by two functions of one variable; see, for example, the classic textbook by do Carmo [10]. However, we need a global representation of these immersions to study the dynamics. In our situation it turns out that either the curve along the bottom edge of the flag or the curve along the top edge of the flag plays a special role, and we will distinguish these two cases by referring to them as upturned (resp. downturned) flags. In the case that both of these curves play this special role, we call it a balanced flag; see Section 2 for further details. Since the analysis for upturned and downturned flags is entirely analogous, we will only focus on the case of upturned flags and only comment on the minor differences in Section 2.4. Our first main result, Theorem 9, gives a full characterization of all upturned flags in terms of two functions of one variable. The main difference between this and the classical results is that we get a global characterization on the whole square, which requires an analysis of crossing characteristics.

In essence, an upturned flag is determined by the space curve that traces out its bottom edge, which is in turn determined by its torsion and curvature functions, as long as these functions satisfy a constraint that keeps the asymptotic lines from crossing. As the set of these functions is an open subset of a Banach space, this characterization provides us at the same time with a manifold structure for the space of upturned flags; see Theorem 11. It seems natural to consider the space of regular upturned flags as a submanifold of the space of all \(C^2\)-surfaces. Unfortunately, this does not seem to be true, because of a loss of derivatives similar to the one in Nash’s original investigations of the space of isometric immersions. This forces us to work in the smooth category, where we obtain a submanifold result using the inverse function theorem of Nash-Moser [16], cf. Theorem 15.

In the second part we study the natural kinetic energy metric on the space of flags, which allows us to model the motion of a flag as a geodesic curve with respect to this Riemannian structure. Towards this aim we then calculate the geodesic equation, which is obtained from the general principle that geodesics in a submanifold of a flat space satisfy the condition that the acceleration is normal to the submanifold. Deriving these equations turns out to be the most involved computation of this article. The complications illustrate the difficulty in modeling cloth or other unstretchable materials: isometric immersions are relatively rigid, but have some flexibility in special cases. This flexibility depends very much on the precise boundary conditions, however. In the final section, we present some preliminary numerical experiments for the geodesic boundary value problem that use the expression of the kinetic energy in terms of the two generating functions as derived in Section 3.

1.3 Future Directions

We plan in future work to continue this line of research in several directions. First, it would be of particular interest to obtain similar results for a more general class of isometric immersions. The difficulty with actual isometrically embedded surfaces in \(\mathbb {R}^3\) is that those without boundary must be fairly rigid, while those with boundary generate very complicated boundary conditions. As a first step toward understanding these spaces, it might be worth considering surfaces in \(\mathbb {R}^4\), as it is much easier to isometrically embed them in this higher-dimensional space; for example, the tangent space to the space of isometric embeddings at the standard Clifford torus can be written in terms of functions of two variables, not the single-variable functions that this quasi-rigidity gives us. Hence the theory should be closer to that for the motion of inextensible closed curves in \(\mathbb {R}^2\) or \(\mathbb {R}^3\). Although the practical applications are obviously fewer, it would be an interesting space to study and might reveal some information about the geometry of isometric immersions. Second, from an application-oriented point of view, we would like to use our geometric framework for the actual modeling of fabric; see, for example, [8, 12], and [36] and the references therein for discussions of current numerical methods for modeling fabric. This will require us to develop a comprehensive numerical framework for solving both the geodesic initial value problem and the geodesic boundary value problem.

Furthermore, to model the movement of a real flag, one would want to incorporate the external force of gravity and the effect of wind (say, a uniform breeze in a fixed direction for simplicity). Indeed, there is a rich literature on modeling the interaction of flags with the surrounding fluid (wind); see, e.g., [1, 30, 33, 38] or [13]. In our setup we would like to view this interaction with the external forces as an additional (potential) energy term that we could then add to the total Lagrangian. While it is clear how to describe the potential energy due to gravity, it is far less straightforward to incorporate even a simple model of wind. In the present article we describe these considerations briefly in Section 3.1, but a detailed study, including the derivation of the resulting evolution equations, is left open for future work.

Finally we would want a local existence theory for solutions of the geodesic equation in the space of flags. This already has major complications in the simpler case of inextensible curves (to which the flag equations reduce when nothing depends on the second spatial variable), as in the third author’s paper [27]. There we had a single wave equation with a tension determined by the solution of a second-order spatial ODE boundary value problem for each fixed time; here we have two coupled wave equations and a system of six first-order ODEs for each fixed time to determine the tensions. We have not attempted to address the local existence theory, since merely writing down the equations presents enough difficulty.

2 The Space of Flags

In this section we will introduce the basic notion of a flag (Definitions 1, 7 and 17) and show that any flag can be characterized uniquely by two functions of one variable (Theorem 9). This will allow us to equip the space of all flags with a manifold structure (Theorem 11) and characterize its tangent space (Proposition 18). In addition we will show that we can view the space of smooth, regular flags as a submanifold of all smooth surfaces, cf. Theorem 15. This will require us to use the Nash-Moser implicit function theorem.

We start by introducing the basic definition.

Definition 1

Let \(\textbf{r}\in C^2([0,1]\times [0,1],\mathbb {R}^3)\). We call \(\textbf{r}\) a flag if it is an isometric embedding of the square into \(\mathbb {R}^3\) such that \(\textbf{r}(0,v) = v\hat{\mathbf {\jmath }} \) and \(\textbf{r}_u(0,v) = \hat{\mathbf {\imath }}\) for \(v\in [0,1]\), where \(\hat{\mathbf {\imath }}, \hat{\mathbf {\jmath }}, \hat{k}\) is the standard basis of \(\mathbb {R}^3\). We then have

$$\textbf{r}_u\cdot \textbf{r}_u = 1, \qquad \textbf{r}_u\cdot \textbf{r}_v = 0, \qquad \textbf{r}_v\cdot \textbf{r}_v = 1 \qquad \text{on } [0,1]^2.$$

Remark 2

(Nonlinear bending theory vs constrained membrane theory). Note that one can make sense of the notion of a flag (resp. isometric immersion) even if the representing function \(\textbf{r}\) is only of class \(C^1\). In the above definition we nevertheless require \(\textbf{r}\) to be of class \(C^2\). This regularity assumption has significant effects on the corresponding model of a flag: by the celebrated results of Nash and Kuiper, the space of \(C^1\) isometric embeddings is dense in the space of short maps [7, 24], whereas a similar statement is clearly not true for the space of \(C^2\) isometric embeddings, cf. [9]. From the perspective of nonlinear elasticity theory, the \(C^2\) assumption puts us in the realm of nonlinear bending theory, whereas the \(C^1\) assumption can be viewed in the context of constrained membrane theory, cf. the seminal paper by Friesecke, James and Müller [14, Theorem 1, cases (ii) and (iii)]. To be more precise, the appropriate modeling space for nonlinear bending theory is the Sobolev space of all \(W^{2,2}\)-isometric immersions; by a result of Pakzad [26], the smooth (resp. \(C^2\)) isometric immersions are dense in the space of \(W^{2,2}\)-isometric immersions, which connects it to the regularity assumption of the present article. From a mathematical point of view, the \(C^2\)-assumption allows us to work with a continuous second fundamental form, which serves as the basis for our chart construction. With the exception of the submanifold result, we believe that all the constructions and results could be directly generalized to the \(W^{2,2}\) category; beyond that, however, we suspect that entirely different techniques would be necessary. From a modeling point of view, the space of \(C^1\) immersions (corresponding to constrained membrane theory) would allow for kink-like singularities that might appear while folding or crumpling a sheet of paper.
We believe that excluding such irregularities is a reasonable assumption in the context of a cloth-made flag, which justifies the regularity requirements of the present article.

Remark 3

(Rectangular flags). In this article we restrict ourselves to flags that have a square shape. In practice, most flags are rectangular. By adding additional parameters to the definition of the space of flags, one could easily extend the analysis of this article to this situation, that is, by considering isometric immersions of the form \(\textbf{r}:[0,U]\times [0,V]\rightarrow \mathbb {R}^3\) with \(U,V>0\). As the notation in the present article is already somewhat cumbersome, we have refrained from introducing these additional parameters. We want to emphasize that all the results of the article also hold in that situation; this has also been used in the illustrative example in Fig. 1.

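Letting \(\textbf{N}=\textbf{r}_u\times \textbf{r}_v\) denote the unit normal vector, the fields \(\{\textbf{r}_u, \textbf{r}_v, \textbf{N}\}\) form a convenient orthonormal frame. Differentiating the isometry conditions, we compute that

$$\textbf{r}_{uu} = e\textbf{N}, \qquad \textbf{r}_{uv} = f\textbf{N}, \qquad \textbf{r}_{vv} = g\textbf{N}, \qquad \textbf{N}_u = -e\textbf{r}_u - f\textbf{r}_v, \qquad \textbf{N}_v = -f\textbf{r}_u - g\textbf{r}_v, \tag{2}$$

where the functions e, f, g satisfy the Gauss-Codazzi and Codazzi-Mainardi equations

$$eg - f^2 = 0, \qquad e_v = f_u, \qquad f_v = g_u. \tag{3}$$

The second fundamental form \(\big ({\begin{matrix} e &{} f \\ f &{} g\end{matrix}}\big )\) always has 0 as an eigenvalue with eigenvector \(\big ({\begin{matrix} -f \\ e \end{matrix}}\big )\); the other eigenvalue is the mean curvature \(e+g\); see, for example, [31] or [10].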
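The eigenvector statement can be checked numerically. The Python sketch below uses a hypothetical test surface: a flat sheet, rotated by an angle \(\psi\) in the (u, v)-plane and then wrapped onto a unit cylinder. It is isometric but ignores the flagpole conditions. The sketch approximates e, f, g as the normal components of the second derivatives of \(\textbf{r}\), using central differences; for this surface one finds \(e=\cos ^2\psi \), \(f=\cos \psi \sin \psi \), \(g=\sin ^2\psi \), so \(eg=f^2\), the null direction \((-f,e)\) is proportional to \((-\sin \psi ,\cos \psi )\) (the direction of the rulings), and the other eigenvalue is \(e+g=1\).

```python
import math

PSI = 0.3  # hypothetical angle between the rulings and the v-axis


def r(u, v):
    """Isometric test surface: rotate (u, v) by PSI, then wrap onto a unit cylinder."""
    s = u * math.cos(PSI) + v * math.sin(PSI)
    w = -u * math.sin(PSI) + v * math.cos(PSI)
    return (math.sin(s), w, 1.0 - math.cos(s))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def stencil(terms):
    """Linear combination sum(c * p) of 3-vectors given as (c, p) pairs."""
    return tuple(sum(c * p[i] for c, p in terms) for i in range(3))


def second_fundamental_form(u, v, h=1e-4):
    """Return (e, f, g) = (r_uu . N, r_uv . N, r_vv . N) via central differences."""
    a, b = 1 / (2 * h), 1 / h**2
    ru = stencil([(a, r(u + h, v)), (-a, r(u - h, v))])
    rv = stencil([(a, r(u, v + h)), (-a, r(u, v - h))])
    n = cross(ru, rv)  # unit normal up to discretization error
    ruu = stencil([(b, r(u + h, v)), (-2 * b, r(u, v)), (b, r(u - h, v))])
    rvv = stencil([(b, r(u, v + h)), (-2 * b, r(u, v)), (b, r(u, v - h))])
    ruv = stencil([(b / 4, r(u + h, v + h)), (-b / 4, r(u + h, v - h)),
                   (-b / 4, r(u - h, v + h)), (b / 4, r(u - h, v - h))])
    return dot(ruu, n), dot(ruv, n), dot(rvv, n)


e, f, g = second_fundamental_form(0.4, 0.6)
gauss = e * g - f * f                     # ~0 for a flat surface
null = (-e * f + f * e, -f * f + g * e)   # second fundamental form applied to (-f, e)
print(round(e, 4), round(f, 4), round(g, 4), round(e + g, 4))
```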

We assume for simplicity that there is no open set of flat points where \(e=f=g=0\), although we allow such points to occur. By a theorem originally due to Pogorelov and Hartman-Wintner, as quoted in Ushakov [35], there exists, for each point of the square, a unique line segment (an asymptotic line) through the point that extends to the boundary of the flag. The flagpole is one of these lines, and thus no other line can be horizontal (or it would cross the flagpole). Consequently, we will consider the following three mutually exclusive cases:

- Upturned flag: the asymptotic line through the upper right corner (1, 1) passes through the bottom edge at a point \((x^*,0)\) for \(0< x^*<1\).
- Downturned flag: the asymptotic line through the lower right corner (1, 0) passes through the top edge at \((x^*,1)\) for \(0< x^*<1\).
- Balanced flag: a single asymptotic line passes through both right corners.

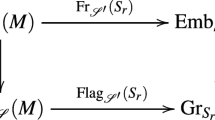

In the upturned case, asymptotic lines starting on the bottom edge will hit every point of both the top edge and the right side, so we parameterize everything along this bottom edge; the asymptotic line through the bottom right corner reduces to a point. Similarly, in the downturned case, asymptotic lines through points of the top edge will pass through both the bottom edge and the right side, and we parameterize along the top edge. In the balanced case, we could use either the parameterization along the top edge or the one along the bottom edge. We refer to Fig. 2 for an example of an upturned flag, which illustrates the above construction.

Fig. 2. On the left, we plot asymptotic lines on the unit square in the (u, v) space for the function \(\alpha _b(x) = -\tfrac{1}{2}x - 2x^2 + \tfrac{21}{4} x^3\), for which \(x^*=\frac{2}{3}\). On the right, we plot these same lines in the new coordinates (x, y) given by (4), for \(y\le \gamma _b(x)\) as in (5). The condition in Theorem 6 ensures that the right side of this shape is always the graph of a function that strictly decreases from \((x^*,1)\) to (1, 0).

We start by defining a coordinate transformation, which will be central in the remainder of the article. We use x as the parameter along the bottom or top edge (depending on whether the flag is upturned or downturned), and y as the parameter along the lines.

- For an upturned flag, let \(\alpha _b(x)\) be the reciprocal of the slope of the asymptotic line through (x, 0), so that the asymptotic line is given by

  $$\begin{aligned} u=x+\alpha _b(x)y, \qquad v=y \end{aligned}\tag{4}$$

  for \(0\le x\le x^*\), \(0\le y\le 1\) (to fill out the top edge) and \(x^*\le x\le 1\), \(0\le y\le \frac{1-x}{\alpha _b(x)}\) (to fill out the right edge). We denote the graph of y as a function of x by

  $$\begin{aligned} \gamma _b(x)= {\left\{ \begin{array}{ll} 1 &{} 0\le x\le x^* \\ \frac{1-x}{\alpha _b(x)} &{} x^*< x\le 1.\end{array}\right. } \end{aligned}\tag{5}$$

- For a downturned flag, let \(\alpha _t(x)\) be the reciprocal of the slope of the asymptotic line through (x, 1), so the line is given by

  $$\begin{aligned} u=x-\alpha _t(x)y, \qquad v=1-y \end{aligned}\tag{6}$$

  for \(0\le x\le x^*\) and \(0\le y\le 1\), or \(x^*\le x\le 1\) and \(0\le y\le -\frac{1-x}{\alpha _t(x)}\). Similarly we have a piecewise-defined function \(\gamma _t(x)\) which traces the bottom edge.

- For a balanced flag we may use either representation, where \(x^*=1\).
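To see the coordinate transformation (4) and the cut-off \(\gamma _b\) from (5) in action, here is a small Python sketch for the example function \(\alpha _b\) from Fig. 2, using the value \(x^*=\frac{2}{3}\) quoted in its caption. It samples points (u, v) along the asymptotic line through (x, 0); the function names are hypothetical. Lines with \(x\le x^*\) should end on the top edge \(v=1\), and lines with \(x>x^*\) on the right edge \(u=1\).

```python
def alpha_b(x):
    """Example from the caption of Fig. 2."""
    return -0.5 * x - 2.0 * x**2 + 21.0 / 4.0 * x**3


X_STAR = 2.0 / 3.0  # corner-bending parameter quoted in the caption of Fig. 2


def gamma_b(x):
    """Cut-off (5): the y-value at which the line through (x, 0) leaves the square."""
    return 1.0 if x <= X_STAR else (1.0 - x) / alpha_b(x)


def to_uv(x, y):
    """Coordinate transformation (4) for an upturned flag."""
    return x + alpha_b(x) * y, y


def asymptotic_line(x, samples=5):
    """Sample points (u, v) on the asymptotic line through (x, 0)."""
    top = gamma_b(x)
    return [to_uv(x, top * k / (samples - 1)) for k in range(samples)]


for x in (0.2, X_STAR, 0.9, 1.0):
    u, v = asymptotic_line(x)[-1]
    print(f"x = {x:.3f}: line ends at (u, v) = ({u:.3f}, {v:.3f})")
```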

Note that when the asymptotic line passes through both the top and bottom of the flag (rather than the right side), the line (4) through the bottom point (x, 0) passes through the point (z, 1) for some z, and we have \(z=x+\alpha _b(x)\). The reciprocal slope of this line is \(\alpha _b(x)\), but it is also equal to \(\alpha _t(z)\), and therefore we must have

$$\alpha _t\big (x+\alpha _b(x)\big ) = \alpha _b(x).$$

Similarly we have

$$\alpha _b\big (x-\alpha _t(x)\big ) = \alpha _t(x).$$

In the upturned case there is an \(x^*\) with \(\alpha _b(x^*)+x^*=1\), and here we will have \(\alpha _t(1) = \alpha _b(x^*)=1-x^*\); in particular \(\alpha _t(1)>0\). Similarly, in the downturned case there is an \(x_*\) with \(x_*-\alpha _t(x_*)=1\), and in this case we will have \(\alpha _b(1) = \alpha _t(x_*)<0\). In the balanced case we have \(\alpha _b(1)=\alpha _t(1)=0\). We can thus distinguish the three cases in terms of the single number \(\alpha _b(1)\): if positive the flag is upturned, if negative the flag is downturned, and if zero the flag is balanced. Similarly we could do the same with \(\alpha _t\).
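The relation \(\alpha _t(x+\alpha _b(x)) = \alpha _b(x)\) lets one compute \(\alpha _t\) from \(\alpha _b\) numerically. The following Python sketch (hypothetical helper names; bisection chosen only for simplicity) does this for the function \(\alpha _b\) of Fig. 2, finds \(x^*\) from \(\alpha _b(x^*)+x^*=1\), and classifies the flag by the sign of \(\alpha _b(1)\). It assumes that \(x\mapsto x+\alpha _b(x)\) has a single sign change relative to the target value on \([0,x^*]\), which holds for this example since that map is increasing there.

```python
def alpha_b(x):
    """Example from the caption of Fig. 2."""
    return -0.5 * x - 2.0 * x**2 + 21.0 / 4.0 * x**3


def bisect(fun, lo, hi, iters=100):
    """Root of fun on [lo, hi], assuming fun(lo) <= 0 <= fun(hi) and a single sign change."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if fun(mid) <= 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def flag_type(alpha_b):
    """Classify by the sign of alpha_b(1)."""
    a1 = alpha_b(1.0)
    if a1 > 0:
        return "upturned"
    return "downturned" if a1 < 0 else "balanced"


def alpha_t(z, alpha_b, x_star):
    """alpha_t(z) = alpha_b(x), where x in [0, x_star] solves x + alpha_b(x) = z."""
    x = bisect(lambda s: s + alpha_b(s) - z, 0.0, x_star)
    return alpha_b(x)


x_star = bisect(lambda s: s + alpha_b(s) - 1.0, 0.0, 1.0)
print(flag_type(alpha_b), round(x_star, 6), round(alpha_t(1.0, alpha_b, x_star), 6))
# upturned 0.666667 0.333333
```

The last value illustrates \(\alpha _t(1) = \alpha _b(x^*) = 1-x^* = \frac{1}{3}\).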

At points where \(e\ne 0\) we may define the ratio \(\phi (u,v) = f(u,v)/e(u,v)\). Since \(\big ({\begin{matrix} -f \\ e\end{matrix}}\big )\) spans the nullspace of the second fundamental form, the asymptotic direction is proportional to \((-\phi ,1)\), and the functions \(\alpha _b\) and \(\alpha _t\) are related to \(\phi \) by

$$\alpha _b(x) = -\phi (x,0), \qquad \alpha _t(x) = -\phi (x,1).$$

The following lemma explains the usefulness of our new coordinates (x, y):

Lemma 4

Let \(\textbf{r}\in C^2([0,1]\times [0,1],\mathbb {R}^3)\) and let e and f be given by (2). At points where \(e\ne 0\), the function \(\phi = f/e\) satisfies the inviscid Burgers’ equation \(\phi _v = \phi \phi _u\), and thus in (x, y) coordinates we have \(\phi _y=0\).

Proof

By the Gauss-Codazzi equation (3), the zero-curvature condition \(eg=f^2\) becomes \(f=\phi e\) and \(g=\phi ^2e\). The Codazzi-Mainardi equations then imply that

$$e_v = (\phi e)_u = \phi _u e + \phi e_u, \qquad \phi _v e + \phi e_v = (\phi e)_v = (\phi ^2 e)_u = 2\phi \phi _u e + \phi ^2 e_u.$$

Substituting the first equation into the second, this reduces to \(e \phi \phi _u = e\phi _v\), yielding the inviscid Burgers’ equation, which is easily solved by the method of characteristics (the characteristics being precisely the asymptotic lines).

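We conclude that if the initial data is given along the bottom edge (as in the upturned case), then \(\phi \) satisfies the implicit equation

$$\phi (u,v) = \phi \big (u+\phi (u,v)\,v,\ 0\big ), \tag{7}$$

while if the data is given along the top edge, then \(\phi \) satisfies

$$\phi (u,v) = \phi \big (u-\phi (u,v)\,(1-v),\ 1\big ). \tag{8}$$

\(\square \)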
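The implicit equation with bottom-edge data can be solved pointwise by following each characteristic back to the bottom edge. In the Python sketch below (hypothetical names; the data \(\phi (x,0)=-\alpha (x)\) use the example \(\alpha \) of Fig. 2), the foot point x of the asymptotic line through (u, v) is found by bisection on \(x+v\alpha (x)-u\), which is justified when the asymptotic lines do not cross, and the inviscid Burgers’ equation \(\phi _v=\phi \phi _u\) is then checked with central differences.

```python
def alpha(x):
    """Bottom-edge data from Fig. 2; phi(x, 0) = -alpha(x)."""
    return -0.5 * x - 2.0 * x**2 + 21.0 / 4.0 * x**3


def phi(u, v, iters=100):
    """Solve phi(u, v) = phi(u + phi(u, v) v, 0) by following the characteristic.

    With phi(x, 0) = -alpha(x), the foot point x of the asymptotic line through
    (u, v) solves x + v * alpha(x) = u.  Bisection assumes the lines do not
    cross, so that this equation has a single root in [0, 1]."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mid + v * alpha(mid) - u <= 0.0:
            lo = mid
        else:
            hi = mid
    return -alpha(0.5 * (lo + hi))


def burgers_residual(u, v, h=1e-5):
    """Central-difference approximation of phi_v - phi * phi_u."""
    phi_u = (phi(u + h, v) - phi(u - h, v)) / (2 * h)
    phi_v = (phi(u, v + h) - phi(u, v - h)) / (2 * h)
    return phi_v - phi(u, v) * phi_u


for u, v in [(0.3, 0.5), (0.9, 0.2), (0.5, 0.9)]:
    print(u, v, f"{burgers_residual(u, v):.1e}")
```

The residuals are at the level of the finite-difference error, consistent with \(\phi \) being constant along the asymptotic lines.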

The next corollary simply follows from the coordinate transformations (4)–(6) and the solution formulas (7)–(8).

Corollary 5

In the (x, y) coordinates given in the upturned case by (4), we may write \(\phi (u,v) = -\alpha _b(x)\). Similarly, in the downturned case with coordinates (6), we may write \(\phi (u,v) = -\alpha _t(x)\). In the balanced case we can use either.

From here on, we will focus solely on the upturned case; all the following results also hold in the two other cases, and we will comment on the small differences at the end of the section. In what follows we will drop the subscript b in functions such as \(\alpha \).

2.1 A Characterization for Upturned Flags

In this part we will show in the upturned case that the function \(\alpha \) determining the asymptotic lines, together with a curvature function \(\kappa (x):= e(x,0)\) specified along the bottom edge, completely determine the flag. In fact this is essentially a statement that the upturned flag is completely determined by the unit-speed curve \(\varvec{\eta }(x) = \textbf{r}(x,0)\) along the bottom of it.

We start by characterizing all functions \(\alpha \) that create an upturned flag. The central ingredient for this result is the observation that the asymptotic curves are characteristics of a homogeneous quasilinear PDE and that the solutions of this PDE can be differentiable iff the characteristics do not cross.

Theorem 6

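A function \(\alpha \in C^1([0,1],\mathbb {R})\) with \(\alpha (0)=0\) and \(\alpha (1)>0\) generates a family of nonintersecting asymptotic lines in the upturned flag case, with the coordinate transformation (4) forming a global diffeomorphism on the square, iff it satisfies the condition

$$\lambda (x)>0 \qquad \text{for all } x\in [0,1], \tag{9}$$

where

$$\gamma (x)= {\left\{ \begin{array}{ll} 1 &{} \alpha (x)\le 1-x, \\ \frac{1-x}{\alpha (x)} &{} \alpha (x)> 1-x,\end{array}\right. } \qquad \lambda (x) = 1+\alpha '(x)\gamma (x). \tag{10}$$

Proof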

First suppose \(\alpha :[0,1]\rightarrow \mathbb {R}\) generates a family of nonintersecting lines filling up the square. The lines are given in the square \((u,v)\in [0,1]^2\) by the parameterization \(u = x+\alpha (x)v\), for \(0\le x\le 1\). For the upturned flag, there is some \(x^*\) such that the line passes through the top right corner (1, 1), so that we have \(1-x^*=\alpha (x^*)\). We will refer to this special line as the corner-bending line. Again see Fig. 2 for the visual interpretation.

For all lines with \(x\le x^*\), to the left of the corner-bending line, the vertical parameter v goes from 0 to 1 as u goes from x to \(x+\alpha (x)\). In particular, we know this line does not reach the right corner, so that \(x+\alpha (x)<1\) for \(x<x^*\). On the other hand, to the right of the corner-bending line, the asymptotic line crosses the right side of the square \(u=1\) before v reaches 1; in fact at height \(v=\frac{1-x}{\alpha (x)}>0\). In particular we see that \(\alpha (x)>1-x\) for \(x>x^*\). Thus \(\gamma \) defined by (10), the largest value of v such that the line segment remains in the square, satisfies

$$\gamma (x)= {\left\{ \begin{array}{ll} 1 &{} 0\le x\le x^*, \\ \frac{1-x}{\alpha (x)} &{} x^*< x\le 1.\end{array}\right. }$$

Hence we have a parameterization of the unit square \((u,v)\in [0,1]^2\) by the map

$$(x,y)\mapsto (u,v) = \big (x+\alpha (x)y,\ y\big ), \qquad 0\le x\le 1, \quad 0\le y\le \gamma (x). \tag{11}$$

This parameterization is an invertible diffeomorphism on the entire square if and only if its Jacobian determinant is everywhere positive. That condition on the Jacobian is clearly

$$\frac{\partial (u,v)}{\partial (x,y)} = 1+y\alpha '(x) > 0 \qquad \text{for } 0\le x\le 1,\ 0\le y\le \gamma (x),$$

which, since this expression is affine in y and equals 1 at \(y=0\), is equivalent to \(\lambda (x)>0\) by the definition (10).

Conversely, suppose a function \(\alpha \) which generates \(\gamma \) and \(\lambda \) by formula (10) has \(\lambda (x)>0\) for all \(x\in [0,1]\). We want to show that the parameterization (11) fills up the square \((u,v)\in [0,1]^2\). For each fixed \((u,v)\in [0,1]^2\), define \(F_{(u,v)}:[0,1]\rightarrow \mathbb {R}\) by

$$F_{(u,v)}(x) = x+v\alpha (x)-u.$$

We want to show that for each (u, v) there is a unique x such that \(F_{(u,v)}(x)=0\), which will imply that (u, v) lies on exactly one of the parameterized lines. Clearly if \(u=0\), then \(x=0\) is such a point, since \(F_{(u,v)}(0) = 0+v\alpha (0)=0\); while if \(v=0\), then \(x=u\) is obviously the unique such point. So we will assume \(u>0\) and \(v>0\) in what follows.

Since \(\alpha (0)=0\) and \(\alpha (1)>0\), we have

$$F_{(u,v)}(0) = -u<0, \qquad F_{(u,v)}(1) = 1-u+v\alpha (1)>0,$$

so by the intermediate value theorem there is always at least one such point. We now want to show uniqueness, which we will do by showing that \(F_{(u,v)}'(x)>0\) whenever \(F_{(u,v)}(x)=0\). So suppose \(F_{(u,v)}(x)=0\), and compare \(\alpha (x)\) to \(1-x\).

-

If \(\alpha (x)\le 1-x\), then \(\gamma (x)=1\) and \(\lambda (x)=1+\alpha '(x)\) by (10). The inequality (9) implies that \(\alpha '(x)>-1\), so that \(F_{(u,v)}'(x) = 1+v\alpha '(x) > 1-v\ge 0\).

-

If \(\alpha (x)>1-x\), then \(\gamma (x)=\frac{1-x}{\alpha (x)}\), and \(\lambda (x)=1+\frac{\alpha '(x)(1-x)}{\alpha (x)}\) by (10). In addition, since \(F_{(u,v)}(x)=0\), we get

$$\begin{aligned} v\alpha (x) = u-x, \end{aligned}$$

so that

$$\begin{aligned} F_{(u,v)}'(x)&= 1 + v\alpha '(x) = 1 + \frac{v\alpha (x) (\lambda (x)-1)}{1-x} \\&> 1 - \frac{v\alpha (x)}{1-x} = 1 - \frac{u-x}{1-x} = \frac{1-u}{1-x} \ge 0. \end{aligned}$$

Either way, \(F_{(u,v)}'(x)>0\) whenever \(F_{(u,v)}(x)=0\), so there is exactly one x such that \(F_{(u,v)}(x)=0\) for each \(u>0\) and \(v>0\).

In particular, taking \((u,v)=(1,1)\), there is a unique \(x^*\) satisfying \(\alpha (x^*)=1-x^*\); since \(F_{(1,1)}(x) = x+\alpha (x)-1\) is negative at \(x=0\) and positive at \(x=1\), we must have \(\alpha (x)<1-x\) for \(x<x^*\), while \(\alpha (x)>1-x\) for \(x>x^*\). Hence the formula (10) defining \(\gamma \) becomes

$$\gamma (x)= {\left\{ \begin{array}{ll} 1 &{} 0\le x\le x^*, \\ \frac{1-x}{\alpha (x)} &{} x^*< x\le 1.\end{array}\right. }$$

The map (4) is thus a bijection for \(0\le y\le \gamma (x)\) and \(0\le x\le 1\) onto the square. Since its Jacobian determinant is given by \(J(x,y) = 1+y\alpha '(x)\), which for each fixed \(x\in [0,1]\) ranges from 1 to \(\lambda (x)\), we see this Jacobian determinant is positive, so the map (4) is a global diffeomorphism. \(\square \)
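The condition of Theorem 6 is easy to test numerically for a given \(\alpha \). The Python sketch below evaluates \(\gamma (x)=1\) if \(\alpha (x)\le 1-x\), \(\gamma (x)=\frac{1-x}{\alpha (x)}\) otherwise, and \(\lambda (x)=1+\alpha '(x)\gamma (x)\) for the example \(\alpha \) of Fig. 2 on a uniform grid; the function names are hypothetical. Sampling can only give evidence, not a proof, of positivity. For this particular \(\alpha \) one can check by hand that the minimum of \(\lambda \) is \(\frac{31}{126}\approx 0.246\), attained at \(x=\frac{8}{63}<x^*\), where \(\gamma =1\) and \(\alpha '\) is smallest.

```python
def alpha(x):
    """Example from the caption of Fig. 2."""
    return -0.5 * x - 2.0 * x**2 + 21.0 / 4.0 * x**3


def dalpha(x):
    """Exact derivative of alpha."""
    return -0.5 - 4.0 * x + 63.0 / 4.0 * x**2


def gamma(x):
    """1 while the line through (x, 0) reaches the top edge, else its exit height on u = 1."""
    return 1.0 if alpha(x) <= 1.0 - x else (1.0 - x) / alpha(x)


def lam(x):
    """Jacobian 1 + y alpha'(x) of (4), evaluated at the top of the line, y = gamma(x)."""
    return 1.0 + dalpha(x) * gamma(x)


def check_upturned(n=1000):
    """Grid test of alpha(0) = 0, alpha(1) > 0 and lambda > 0 (evidence, not proof)."""
    grid = [k / n for k in range(n + 1)]
    min_lam = min(lam(x) for x in grid)
    return alpha(0.0) == 0.0 and alpha(1.0) > 0.0 and min_lam > 0.0, min_lam


ok, min_lam = check_upturned()
print(ok, round(min_lam, 4))  # True 0.246
```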

Based on the discussion above, we make the following definition for an upturned flag affixed to a vertical flagpole (pointing in the \(\hat{\jmath }\) direction) along a horizontal edge (in the \(\hat{\imath }\) direction). We require that \(\textbf{r}\) is a \(C^2\) function, so that \(\textbf{r}_{uu}\), \(\textbf{r}_{uv}\), and \(\textbf{r}_{vv}\) are all \(C^0\), but in addition we require that \(\alpha \) is a \(C^1\) function (which is not automatic, since it is the ratio of functions which are only a priori continuous).

Definition 7

A regular upturned flag is a \(C^2\) isometric embedding \(\textbf{r}:[0,1]^2 \rightarrow \mathbb {R}^3\) satisfying the conditions

$$\textbf{r}(0,v) = v\hat{\jmath }, \qquad \textbf{r}_u(0,v) = \hat{\imath } \qquad \text{for } v\in [0,1],$$

and such that there is a \(C^1\) function \(\alpha :[0,1]\rightarrow \mathbb {R}\) satisfying \(\textbf{r}_{uv}(x,0) = -\alpha (x) \textbf{r}_{uu}(x,0)\) as well as the conditions of Theorem 6. That is, \(\alpha (0)=0\), \(\alpha (1)>0\), and

$$\lambda (x) = 1+\alpha '(x)\gamma (x) > 0 \qquad \text{for all } x\in [0,1], \text{ with } \gamma \text{ as in (10)}. \tag{13}$$

Above we saw that, given a nonsingular isometric immersion of the square, much of the geometry is characterized by the properties of a single function \(\alpha :[0,1]\rightarrow \mathbb {R}\). It remains to show that this function, together with a curvature function \(\kappa (x):= e(x,0)\) specified along the bottom edge, completely determines the flag. This is essentially a statement that the upturned flag is completely determined by the unit-speed curve \(\varvec{\eta }(x) = \textbf{r}(x,0)\) along its bottom edge, since that curve is uniquely determined by its curvature and torsion via the fundamental theorem of space curves. Here the curvature is the function \(\kappa (x)\), while the torsion is \(\tau (x) = -\alpha (x)\kappa (x)\).

We will not quite take the usual Frenet–Serret approach, since we want to allow the curvature to change sign, which allows us to reproduce the two-dimensional case where the curvature is signed. The ordinary Frenet–Serret theory assumes that the curvature is never zero, so that the normal is always well-defined; that is an issue for a general space curve, but not in this situation since we already have an orthonormal frame \(\{\textbf{r}_u, \textbf{r}_v, \textbf{N}\}\). This approach also has the advantage that it explicitly reconstructs both the curve and the flag from the curvature and torsion, via a system of ordinary differential equations for the spherical coordinates.

Lemma 8

If \(\alpha :[0,1]\rightarrow \mathbb {R}\) is \(C^1\) and \(\kappa :[0,1]\rightarrow \mathbb {R}\) is \(C^0\), then there is a unique \(C^2\) unit-speed curve \(\varvec{\eta }:[0,1]\rightarrow \mathbb {R}^3\) satisfying the Frenet–Serret equations

$$\textbf{t}' = \kappa \textbf{n}, \qquad \textbf{n}' = -\kappa \textbf{t} - \alpha \kappa \textbf{b}, \qquad \textbf{b}' = \alpha \kappa \textbf{n},$$

with \(\{\textbf{t}= \varvec{\eta }', \textbf{n},\textbf{b}\}\) forming a \(C^1\) oriented orthonormal basis for each x, and such that \(\varvec{\eta }(0)=0\), \(\varvec{\eta }'(0)=\hat{\imath }\), and \(\textbf{n}(0)=\hat{k}\).

Proof

Since \(\varvec{\eta }'\) is to be a unit vector field, we define spherical coordinates by

The condition \(\varvec{\eta }'(0)=(1,0,0)\) means that \(\phi (0)=\theta (0)=0\). We suppose \(\theta \in [0,\pi )\) and \(\phi \in [0,2\pi )\).

Set \(e_1(x) = \varvec{\eta }'(x)\), and complete to an orthonormal basis \(\{e_1,e_2,e_3\}\) via the formulas

Setting

for some function \(\beta :[0,1]\rightarrow \mathbb {R}\), we see that \(\varvec{\eta }'(x)\times \textbf{n}(x) = \textbf{b}(x)\) for all x. The condition \(\textbf{n}(0)=\hat{k}\) together with \(e_2(0)=-\hat{k}\) and \(e_3(0) = \hat{\jmath }\) implies that we must have \(\beta (0)=0\).

Now consider the system

There is a unique solution \(\{\theta ,\phi ,\beta \}\) for x close to zero, and the solutions are \(C^1\) functions.

We have

using equations (18) and (19). In addition these equations imply

Orthonormality of the frame \(\{\textbf{t}, \textbf{n}, \textbf{b}\}\) then implies the remainder of the Frenet–Serret equations, that

Furthermore since the frame \(\{\textbf{t}, \textbf{n}, \textbf{b}\}\) remains orthonormal, the components remain bounded, and the solution of the ODE system exists for all \(x\in [0,1]\), not just locally.

If \(\alpha \) and \(\kappa \) are at least \(C^0\) functions, then the solution of the system (18)–(20) must be \(C^1\). This implies in particular that \(\varvec{\eta }'\) is \(C^1\), so that \(\varvec{\eta }\) is \(C^2\). \(\square \)
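Since Lemma 8 is constructive, the bottom edge can also be computed numerically. The sketch below integrates the Frenet–Serret system directly in the frame vectors, rather than through the spherical coordinates (18)–(20), which are not reproduced here. It assumes the standard form \(\textbf{t}'=\kappa \textbf{n}\), \(\textbf{n}'=-\kappa \textbf{t}+\tau \textbf{b}\), \(\textbf{b}'=-\tau \textbf{n}\) with \(\tau =-\alpha \kappa \) as in Theorem 9. The function names are illustrative, and the classical fourth-order Runge–Kutta scheme is our own choice rather than the authors' method. The last helper measures how far the computed frame is from being oriented orthonormal, a property the proof guarantees for the exact solution.

```python
import math


def frenet_rhs(x, s, kappa, alpha):
    """Right-hand side for the state s = [eta, t, n, b] (12 floats)."""
    t, n, b = s[3:6], s[6:9], s[9:12]
    k = kappa(x)
    tau = -alpha(x) * k                                   # torsion, as in Theorem 9
    d_eta = list(t)                                       # eta' = t (unit speed)
    d_t = [k * ni for ni in n]                            # t' = kappa n
    d_n = [-k * ti + tau * bi for ti, bi in zip(t, b)]    # n' = -kappa t + tau b
    d_b = [-tau * ni for ni in n]                         # b' = -tau n
    return d_eta + d_t + d_n + d_b


def integrate_bottom_edge(kappa, alpha, n_steps=1000):
    """Classical RK4 on [0, 1]; returns the states at x = j / n_steps."""
    h = 1.0 / n_steps
    # eta(0) = 0, t(0) = i-hat, n(0) = k-hat, and therefore b(0) = t x n = -j-hat
    s = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, -1.0, 0.0]
    x, states = 0.0, [s]
    for _ in range(n_steps):
        k1 = frenet_rhs(x, s, kappa, alpha)
        k2 = frenet_rhs(x + h / 2, [a + h / 2 * d for a, d in zip(s, k1)], kappa, alpha)
        k3 = frenet_rhs(x + h / 2, [a + h / 2 * d for a, d in zip(s, k2)], kappa, alpha)
        k4 = frenet_rhs(x + h, [a + h * d for a, d in zip(s, k3)], kappa, alpha)
        s = [a + h / 6 * (p + 2 * q + 2 * r + w)
             for a, p, q, r, w in zip(s, k1, k2, k3, k4)]
        x += h
        states.append(s)
    return states


def frame_defect(s):
    """Largest deviation of {t, n, b} from an oriented orthonormal frame."""
    t, n, b = s[3:6], s[6:9], s[9:12]

    def dot(p, q):
        return sum(a * c for a, c in zip(p, q))

    t_cross_n = [t[1] * n[2] - t[2] * n[1],
                 t[2] * n[0] - t[0] * n[2],
                 t[0] * n[1] - t[1] * n[0]]
    errors = [dot(t, t) - 1.0, dot(n, n) - 1.0, dot(t, n)]
    errors += [c - bi for c, bi in zip(t_cross_n, b)]
    return max(abs(e) for e in errors)


# Example: a sign-changing curvature and a small alpha with alpha(0) = 0.
states = integrate_bottom_edge(lambda x: 2.0 * math.sin(5.0 * x), lambda x: 0.5 * x * x)
print(frame_defect(states[-1]))  # only RK4 and round-off error remain
```

For \(\alpha \equiv 0\) and \(\kappa \equiv 1\) the curve is the unit circle \(\varvec{\eta }(x) = (\sin x, 0, 1-\cos x)\) in the \(\hat{\imath }\)-\(\hat{k}\) plane, which gives a simple check of the integrator.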

In the next theorem we demonstrate that all regular upturned flags are completely characterized by the continuous curvature function \(\kappa \) and the continuously differentiable function \(\alpha \), both specified along the bottom edge. The surface given by formula (21) is called the rectifying developable of the bottom edge \(\varvec{\eta }\), that is, the envelope of its rectifying planes (which are the tangent planes of the flag along \(\varvec{\eta }\)). It is a well-known classical result (see, for example, Struik [32] or do Carmo [10]) that any such surface is developable, that is, locally the image of an isometric immersion of the plane. The reason for the somewhat more complicated presentation here is that we obtain a global description of the flag on the entire square, not merely a local representation of it. This also allows us to consider the set of all regular upturned flags as a topological space and a manifold, and to study geodesic motion in it, as we do later. The parameterization we give here ends up being close to that of Izumiya et al. [18], who coined the term “modified Darboux vector” for the vector \(\textbf{D}(x)\) below.

Theorem 9

For any regular upturned flag \(\textbf{r}:[0,1]^2\rightarrow \mathbb {R}^3\) as in Definition 7, the bottom edge defined by \(\varvec{\eta }(x) = \textbf{r}(x,0)\) is a unit-speed curve, with (signed) curvature

\[\kappa (x) = e(x,0)\]

and torsion given by \(\tau (x) = -\alpha (x) \kappa (x)\). The function \(\alpha \) satisfies the conditions \(\alpha (0)=0\), with \(\alpha (1)>0\) and the inequality (13). The Frenet–Serret frame \(\{\textbf{t}, \textbf{n}, \textbf{b}\}\) along \(\varvec{\eta }\) is given by

\[\textbf{t}(x) = \textbf{r}_u(x,0), \qquad \textbf{n}(x) = \textbf{N}(x,0), \qquad \textbf{b}(x) = -\textbf{r}_v(x,0).\]

Conversely, given any \(C^1\) function \(\alpha :[0,1]\rightarrow \mathbb {R}\) satisfying the conditions of Theorem 6 or equivalently Definition 7, and any \(C^0\) function \(\kappa :[0,1]\rightarrow \mathbb {R}\), there is a unique regular upturned flag \(\textbf{r}\) given by

where x is defined for each \((u,v)\in [0,1]^2\) to be the unique solution in [0, 1] of

\[x + \alpha (x)\, v = u, \tag{22}\]

and \(\textbf{D}(x)\) is the modified Darboux vector of \(\varvec{\eta }\).

Proof

Given the upturned flag \(\textbf{r}\), the fact that \(\textbf{r}\) is an isometry implies that \(\textbf{r}_u(x,0) = \varvec{\eta }'(x)\) is a unit vector for all \(x\in [0,1]\). We have \(\varvec{\eta }''(x) = \textbf{r}_{uu}(x,0) = e(x,0) \textbf{N}(x,0)\) by equations (2), and so if we define \(\textbf{n}(x) = \textbf{N}(x,0)\) to be the normal field along the curve, then \(\varvec{\eta }''(x) = \kappa (x) \textbf{n}(x)\) with \(\kappa (x)=e(x,0)\), which is the first of the Frenet–Serret equations (14). Since \(\textbf{r}_u \times \textbf{N} = -\textbf{r}_v\) by definition of \(\textbf{N}\), and \(\textbf{t}\times \textbf{n}=\textbf{b}\) by construction of the Frenet–Serret basis, we must have \(\textbf{b}(x) = -\textbf{r}_v(x,0)\). Furthermore, we compute that

using (2) and equation (7) from Lemma 4, which is the third of the Frenet–Serret equations (14). As in the proof of Lemma 8, the formula for the derivative of \(\textbf{n}\) is the second Frenet–Serret equation.

Now we consider the converse, supposing that \(\alpha \) is a \(C^1\) function satisfying \(\alpha (0)=0\), \(\alpha (1)>0\), and the inequality (13), and that \(\kappa \) is a given \(C^0\) function. Using Lemma 8, we construct the unique curve \(\varvec{\eta }:[0,1]\rightarrow \mathbb {R}^3\) along with its orthonormal Frenet–Serret frame \(\{\textbf{t}=\varvec{\eta }', \textbf{n},\textbf{b}\}\), subject to the conditions \(\varvec{\eta }(0)=0\), \(\varvec{\eta }'(0) = \hat{\imath }\), and \(\textbf{n}(0) = \hat{k}\). This curve \(\varvec{\eta }\) is \(C^2\), and its Frenet–Serret frame is \(C^1\). Then we define a surface by the parameterization (21). The fact that the function \((u,v)\mapsto x\) given by (22) is well-defined and continuously differentiable is a consequence of our regularity definition and Theorem 6.

We first show that this parameterized surface is a \(C^2\) isometric immersion, and to do this we compute \(\textbf{r}_u\) and \(\textbf{r}_v\) and show that these are \(C^1\) and orthonormal for all \((u,v)\in [0,1]^2\) as in (1).

First we compute the derivatives of x(u, v) implicitly from (22), which gives

\[x_u = \frac{1}{1+\alpha '(x)\,v}, \qquad x_v = -\frac{\alpha (x)}{1+\alpha '(x)\,v}, \tag{23}\]

and the fact that the denominators are always positive and well-defined for all \(v\in [0,\gamma (x)]\) is precisely the condition that \(\lambda (x)>0\) from Theorem 6. This shows that x is a \(C^1\) function of (u, v).

From the formulas (23) and the chain rule, we get

using the third Frenet–Serret equation (14) to eliminate the derivative of \(\textbf{b}\). Similarly we compute that

using the fact that \(x_v = -\alpha x_u\) and the definition of \(\textbf{D}\). Since \(\varvec{\eta }'=\textbf{t}\) and \(\textbf{b}\) are orthonormal at every x, we see that \(\textbf{r}_u\) and \(\textbf{r}_v\) are orthonormal at every (u, v) in the unit square.

Since \(\textbf{r}_u=\varvec{\eta }'(x)\) is a composition of the \(C^1\) function \(\varvec{\eta }'\) and the \(C^1\) function x, it is also \(C^1\). Similarly \(\textbf{r}_v = -\textbf{b}(x)\) is \(C^1\), and this implies that \(\textbf{r}\) is \(C^2\).

Finally we verify the boundary conditions. Since \(\alpha (0)=0\), we have \(x=0\) whenever \(u=0\) in equation (22), so that

since we constructed \(\varvec{\eta }\) with \(\varvec{\eta }'(0)=\hat{\imath }\) and \(\textbf{n}(0)=\hat{k}\), so that \(\textbf{b}(0)=\hat{\imath }\times \hat{k}=-\hat{\jmath }\). Because \(\textbf{r}_u(0,v) = \varvec{\eta }'(0) = \hat{\imath }\) for all v, the flag is indeed fastened in the horizontal direction all along the flagpole. \(\square \)
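The converse part of Theorem 9 requires solving (22) for x at each point (u, v). Reading (22) as \(u = x + \alpha (x)v\) (the relation also used in the proof of Proposition 22), a minimal sketch uses bisection: \(g(x)=x+\alpha (x)v-u\) satisfies \(g(0)=-u\le 0\) because \(\alpha (0)=0\), and \(g(1)=1-u+\alpha (1)v\ge 0\) because \(\alpha (1)>0\), so a root always exists, and it is unique when \(1+\alpha '(x)v>0\). The derivatives returned are those obtained by implicit differentiation, \(x_u = 1/(1+\alpha '(x)v)\) and \(x_v=-\alpha (x)x_u\). The helper names and the example \(\alpha (x)=x^2/2\) are hypothetical.

```python
def solve_x(u, v, alpha, tol=1e-13):
    """Unique root x in [0, 1] of g(x) = x + alpha(x) v - u, found by bisection.

    g(0) = -u <= 0 because alpha(0) = 0, and g(1) = 1 - u + alpha(1) v >= 0
    because alpha(1) > 0, so a root exists; uniqueness relies on g being
    increasing, i.e. on 1 + alpha'(x) v > 0 (the regularity assumption).
    """
    def g(x):
        return x + alpha(x) * v - u

    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def x_and_derivatives(u, v, alpha, dalpha):
    """Return x(u, v) and the implicit derivatives x_u = 1/(1 + alpha' v), x_v = -alpha x_u."""
    x = solve_x(u, v, alpha)
    x_u = 1.0 / (1.0 + dalpha(x) * v)
    return x, x_u, -alpha(x) * x_u


# Hypothetical example: alpha(x) = x^2 / 2, alpha'(x) = x.
x, x_u, x_v = x_and_derivatives(0.6, 0.7, lambda s: 0.5 * s * s, lambda s: s)
```

Bisection is chosen for robustness: monotonicity of g is exactly the regularity assumption, so no starting guess is needed, and \(u=0\) always returns \(x=0\), matching the boundary argument above.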

Remark 10

In the special case where \(\alpha \equiv 0\), the bottom curve \(\varvec{\eta }\) remains planar, in the \(\hat{\imath }\)-\(\hat{k}\) plane. In the spherical coordinates of Lemma 8, we have \(\phi \equiv \beta \equiv 0\), with \(\theta '(x) = \kappa (x)\) and \(\theta (0)=0\) determining the curve completely. Hence the binormal is constant and given by \(\textbf{b}(x) = -\hat{\jmath }\). In addition x(u, v) determined by (22) is given simply by \(x(u,v) = u\). Hence the parameterization (21) becomes \(\textbf{r}(u,v) = \varvec{\eta }(u) + v \hat{\jmath }\). In other words, the planar curve at the bottom is vertically translated to fill out the flag.
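The whip case of Remark 10 is simple enough to compute directly. The sketch below assumes that the planar bottom edge is written as \(\varvec{\eta }'(x) = (\cos \theta (x), 0, \sin \theta (x))\) with \(\theta ' = \kappa \) and \(\theta (0)=0\); this is one natural choice consistent with \(\varvec{\eta }'(0)=\hat{\imath }\) and \(\textbf{n}(0)=\hat{k}\), and we do not claim it matches the spherical coordinates (15) term by term. Both integrations use the trapezoidal rule, and the function names are hypothetical.

```python
import math


def whip_bottom_edge(kappa, n=2000):
    """Bottom edge of a whip (alpha = 0): theta' = kappa, eta' = (cos theta, 0, sin theta).

    Both integrals use the trapezoidal rule on a uniform grid of n steps.
    Returns the list of points eta(j / n), j = 0, ..., n.
    """
    h = 1.0 / n
    theta = [0.0]
    for j in range(n):
        theta.append(theta[-1] + 0.5 * h * (kappa(j * h) + kappa((j + 1) * h)))
    eta = [(0.0, 0.0, 0.0)]
    for j in range(n):
        ex, _, ez = eta[-1]
        eta.append((ex + 0.5 * h * (math.cos(theta[j]) + math.cos(theta[j + 1])),
                    0.0,
                    ez + 0.5 * h * (math.sin(theta[j]) + math.sin(theta[j + 1]))))
    return eta


def whip_point(eta_u, v):
    """r(u, v) = eta(u) + v j-hat: the planar curve translated up the pole."""
    return (eta_u[0], eta_u[1] + v, eta_u[2])


eta = whip_bottom_edge(lambda x: 3.0 * math.sin(4.0 * x))
top_left = whip_point(eta[0], 1.0)   # lies on the pole: (0, 1, 0)
```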

2.2 The Manifold Structure of the Space of Upturned Flags

By Theorem 9 a regular upturned flag is completely determined by the \(C^0\) function \(\kappa :[0,1]\rightarrow \mathbb {R}\) and the \(C^1\) function \(\alpha :[0,1]\rightarrow \mathbb {R}\) satisfying the conditions

Define our linear space containing the \(\alpha \) functions to be the space

We will prove that the conditions in (24) describe an open subset of this space, thereby obtaining the following result concerning the manifold structure of the space of upturned flags:

Theorem 11

(Manifold structure of regular, upturned flags). The space \(\mathcal {U}\) of functions satisfying the condition (24),

is an open subset of \(\mathcal {X}\) given by (25) and therefore a Banach manifold modeled on \(\mathcal {X}\).

Furthermore, the space \(\mathcal {F}\) of regular upturned flags is diffeomorphic to \(C([0,1])\times \mathcal {U}\), which is an open subset of the Banach space \(C([0,1])\times \mathcal {X}\) and thus a Banach manifold.

Proof

We have shown in Theorem 9 that regular upturned flags are uniquely determined by the functions \(\kappa \in C([0,1])\) and \(\alpha \in \mathcal {U}\), which establishes the identification with the set \(C([0,1])\times \mathcal {U}\). It remains to show that \(\mathcal {U}\) is an open subset of \(\mathcal {X}\). To this end, let \(\alpha \in \mathcal {U}\). By Theorem 6, there is a unique point \(x^*\in (0,1)\) such that \(\alpha (x)< 1-x\) for \(x< x^*\) and \(\alpha (x)> 1-x\) for \(x>x^*\). Consider a function \(f\in \mathcal {X}\); we will show that if \(\Vert f\Vert _{\mathcal {X}}\) is sufficiently small, then \(\alpha +f\in \mathcal {U}\).

For \(x\in [0,x^*]\) we have \(1+\alpha '(x)>0\), and since \(\alpha '\) is continuous on the compact interval \([0,x^*]\), there is an \(\varepsilon _1>0\) such that \(1+\alpha '(x)\ge \varepsilon _1\) for \(x\in [0,x^*]\). Thus we have

which remains positive on \([0,x^*]\) as long as \(\Vert f\Vert _{\mathcal {X}} < \varepsilon _1\).

For \(x\in [x^*,1]\) we similarly have \(\alpha (x) + (1-x) \alpha '(x)\ge \varepsilon _2\) for some \(\varepsilon _2>0\), and thus

which remains positive as long as \(\Vert f\Vert _{\mathcal {X}}< \varepsilon _2/2\).

Requiring that \(\Vert f\Vert _{\mathcal {X}} < \min \{\varepsilon _1, \varepsilon _2/2\}\) ensures that on each of the intervals \([0,x^*]\) and \([x^*,1]\) at least one of the two functions is positive, and hence so is their maximum. Therefore \(\alpha +f\in \mathcal {U}\). \(\square \)
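The openness estimate can be probed numerically. The sketch below assumes, as the last step of the proof suggests, that the relevant part of condition (24) is the pointwise positivity of \(\max \{1+\alpha '(x), \alpha (x)+(1-x)\alpha '(x)\}\), and it takes \(\Vert f\Vert _{\mathcal {X}} = \sup |f| + \sup |f'|\) as the norm on \(\mathcal {X}\). Both are our assumptions, since (24) and (25) are not reproduced here, and the conditions \(\alpha (0)=0\), \(\alpha (1)>0\) are not checked. Under \(\alpha \mapsto \alpha +f\), each of the two expressions moves pointwise by at most \(\sup |f| + \sup |f'|\), so the grid margin can drop by at most that amount. This is an illustration of the estimate, not a proof.

```python
import math


def regularity_margin(alpha, dalpha, n=2001):
    """Grid minimum of max{1 + alpha'(x), alpha(x) + (1 - x) alpha'(x)} over [0, 1]."""
    margin = math.inf
    for j in range(n):
        x = j / (n - 1)
        margin = min(margin, max(1.0 + dalpha(x), alpha(x) + (1.0 - x) * dalpha(x)))
    return margin


def c1_norm(f, df, n=2001):
    """Grid version of sup|f| + sup|f'| (assumed form of the norm on X)."""
    grid = [j / (n - 1) for j in range(n)]
    return max(abs(f(x)) for x in grid) + max(abs(df(x)) for x in grid)


# Hypothetical example: alpha(x) = x^2 / 2 and a perturbation f with f(0) = 0.
alpha, dalpha = (lambda x: 0.5 * x * x), (lambda x: x)
f, df = (lambda x: 0.05 * math.sin(5 * x)), (lambda x: 0.25 * math.cos(5 * x))
base = regularity_margin(alpha, dalpha)
perturbed = regularity_margin(lambda x: alpha(x) + f(x), lambda x: dalpha(x) + df(x))
print(base, perturbed, c1_norm(f, df))
```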

Remark 12

The special case where \(\alpha \equiv 0\) generates two-dimensional whips, as mentioned in Remark 10. This is obviously a submanifold of \(\mathcal {F}\), with tangent space consisting of arbitrary functions \(\dot{\kappa }\) with \(\dot{\alpha }\equiv 0\). We will show later that in the kinetic energy metric induced on flags, the space of whips is totally geodesic.

2.3 The Space of Smooth, Regular Upturned Flags as a Submanifold

Next we would like to consider the space of regular upturned flags as a submanifold of the space of all \(C^2\) surfaces. Unfortunately this does not seem to work, in part due to the complicated smoothness conditions on flags themselves (a \(C^0\) function \(\kappa \) and a \(C^1\) function \(\alpha \) generate a \(C^2\) curve \(\varvec{\eta }\), but not every \(C^2\) curve \(\varvec{\eta }\) automatically has a \(C^1\) function \(\alpha \), and the smoothness of the flag surface \(\textbf{r}\) is even more involved). Even without these difficulties, the fundamental problem is the same one that arose in Nash’s study of isometric immersions [25]: the loss of derivatives in the function that maps a parameterized surface to the induced metric. Here we would like to say that the metric map that takes a parameterized surface \(\textbf{r}:[0,1]^2\rightarrow \mathbb {R}^3\) to its Riemannian metric coefficients via

has \((\tfrac{1}{2}, \tfrac{1}{2},0)\) as a regular value. This requires that the derivative of \(\mathcal {I}\) be surjective for any flag, and in particular for the regular upturned ones. In the proposition below we will see that this works in the smooth category (\(k=\infty \)), but not for flags of finite regularity.
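Since the display (26) is not reproduced here, the following sketch assumes, from the regular value \((\tfrac{1}{2}, \tfrac{1}{2},0)\), that \(\mathcal {I}(\textbf{r}) = (\tfrac{1}{2}|\textbf{r}_u|^2, \tfrac{1}{2}|\textbf{r}_v|^2, \textbf{r}_u\cdot \textbf{r}_v)\). It approximates \(\mathcal {I}\) at an interior point by central differences and checks that a whip with constant curvature is mapped to \((\tfrac{1}{2}, \tfrac{1}{2},0)\), while a stretched square is not. The helpers `metric_map`, `circular_whip` and `stretched` are hypothetical; near the edges of the square one would switch to one-sided differences.

```python
import math


def metric_map(r, u, v, h=1e-5):
    """Central-difference approximation of (|r_u|^2 / 2, |r_v|^2 / 2, r_u . r_v) at (u, v)."""
    r_u = [(a - b) / (2 * h) for a, b in zip(r(u + h, v), r(u - h, v))]
    r_v = [(a - b) / (2 * h) for a, b in zip(r(u, v + h), r(u, v - h))]

    def dot(p, q):
        return sum(a * c for a, c in zip(p, q))

    return (0.5 * dot(r_u, r_u), 0.5 * dot(r_v, r_v), dot(r_u, r_v))


def circular_whip(u, v, k=2.0):
    """A whip flag (alpha = 0) with constant curvature k: r(u, v) = eta(u) + v j-hat."""
    return (math.sin(k * u) / k, v, (1.0 - math.cos(k * u)) / k)


def stretched(u, v):
    """Not isometric: the u-direction is stretched by a factor 2."""
    return (2.0 * u, v, 0.0)


print(metric_map(circular_whip, 0.3, 0.5))  # close to (0.5, 0.5, 0.0)
print(metric_map(stretched, 0.3, 0.5))      # close to (2.0, 0.5, 0.0)
```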

Proposition 13

The differential of the map \(\mathcal {I}\) defined in (26), at a regular upturned flag \(\textbf{r}\) as in Definition 7 and parameterized as in Theorem 9, is given in (x, y) coordinates by

This has a right-inverse given for functions \((p,r,q) = D\mathcal {I}_{\textbf{r}}(f,g,h)\) by

which exists for every (p, r, q) iff \(\kappa \) is nowhere zero.

Proof of Proposition 13

The derivative of \(\mathcal {I}\) is given by

where \(z = \frac{\partial \textbf{r}}{\partial t}\big |_{t=0}\).

Using the chain rule formulas

as in equation (23), along with the fact from Theorem 9 that \(\textbf{r}_u(u,v) = \textbf{t}(x)\) and \(\textbf{r}_v(u,v) = -\textbf{b}(x)\), the equation (30) has components

Writing z in the Frenet–Serret basis as in (27) and using (14), we obtain

and plugging these into (31) yields the equation (27) for the derivative.

Using formula (27), we find the right-inverse operator by solving the system

Multiplying (34) by \(\alpha (x)\) and using equations (32) and (33) to replace the x-derivatives in it, we obtain

which is equivalent to

We can solve (35) for h to get (29), up to an arbitrary function of x which we set to zero.

Then differentiating (29) with respect to x and using (32) gives

and inserting this into (34) gives a single equation for f alone:

This is an ordinary differential equation in y, which can be solved assuming \(f(x,0)=0\) as

Straightforward manipulations using the definition of F in (35) turn this into (28).

Having obtained f, we know h from (29). If \(\kappa \) is nowhere zero, we can solve equation (32) for g. \(\square \)

Remark 14

(Loss of derivative). Note the loss of derivatives in the formulas (28)–(29). If we want to show that \(D\mathcal {I}_{\textbf{r}}\) is surjective from \(C^{k+1}\) surfaces to \(C^k\) metric components, then given any \(C^k\) functions (p, r, q), we want the solution (f, g, h) to be \(C^{k+1}\). However, formula (28) shows that f is in fact only \(C^{k-1}\), while h is also \(C^{k-1}\) and g is \(C^{k-2}\). This prevents us from using the inverse function theorem for Banach spaces to show that \(C^k\) flags form a smooth submanifold of \(C^k\) surfaces. Next we show that this difficulty can be overcome in the smooth category: we will use the Nash–Moser inverse function theorem to prove that \(C^{\infty }\) flags for which the curvature \(\kappa \) is nowhere zero form a smooth submanifold of the space of \(C^{\infty }\) surfaces. These results parallel the situation for the group of volume-preserving diffeomorphisms, which is a Lie subgroup of the full diffeomorphism group, and for the space of regular, volume-preserving embeddings, which is a submanifold of the space of all regular embeddings [5, 23].

Theorem 15

(Submanifold structure for smooth regular flags). The space of smooth, regular flags with non-vanishing curvature function \(\kappa =e(x,0)\ne 0\) is a tame Fréchet submanifold of the space

which is an open subset of the space of all smooth immersions \(\text {Imm}([0,1]^2,\mathbb {R}^3)\).

Proof

Using the results of Proposition 13, the proof of this result follows along the same lines as in [5, 23], so we will be brief. Indeed, the situation studied here is much simpler, as the space \(C^{\infty }([0,1]^2, \mathbb {R}^3)\) is a tame Fréchet space; in [5, 23] the authors consider immersions from a general finite dimensional manifold M, which makes the presentation significantly more complicated, as it requires working in local coordinate charts.

We consider the map \(\mathcal I\) in the smooth category:

We first note that \(C^{\infty }([0,1]^2, \mathbb {R}^3)\) is a tame Fréchet space, and that \(\text {Imm}([0,1]^2,\mathbb {R}^3)^{\star } \) is an open subset of it. Thus, in order to apply the Nash–Moser inverse function theorem, it remains to show that there exists an open subset \(U\subset \text {Imm}([0,1]^2,\mathbb {R}^3)^{\star }\) such that

(1) \(\mathcal I\) is a smooth, tame map;

(2) \(d\mathcal I(\textbf{r})\) is surjective for all \(\textbf{r}\in U\);

(3) there is a right inverse \(d\mathcal I^{-1}\) of \(d\mathcal I\) such that the map \(d\mathcal I^{-1}: U\times C^{\infty }([0,1]^2, \mathbb {R}^3)\rightarrow C^{\infty }([0,1]^2, \mathbb {R}^3)\) is a smooth tame map.

Since every nonlinear differential operator is a smooth tame map (see, for example, [16, Cor. 2.2.7]), Property (1) follows directly from the definition of \(\mathcal I\). The remaining properties follow directly from the explicit formula for the right inverse \(d\mathcal I^{-1}\) given in Proposition 13, which is well defined because \(\kappa \) is nowhere zero. Since the space of smooth regular flags is characterized inside \(\text {Imm}([0,1]^2,\mathbb {R}^3)^{\star }\) by the condition \(\mathcal I(\textbf{r})=(1/2,1/2,0)\), the result then follows from the Nash–Moser version of the regular value theorem. \(\square \)

2.4 Downturned and Balanced Flags

Using exactly the same methods, analogous results also hold for the spaces of downturned and balanced flags. The main difference can be seen in the following result, which is the analogue of Theorem 6:

Corollary 16

A function \(\alpha _t:[0,1]\rightarrow \mathbb {R}\) with \(\alpha _t(0)=0\) and \(\alpha _t(1)<0\) generates a family of nonintersecting asymptotic curves in the downturned flag case with diffeomorphic coordinate transformation (6) iff it satisfies the conditions

where

In the balanced case, a function \(\alpha _b:[0,1]\rightarrow \mathbb {R}\) with \(\alpha _b(0)=\alpha _b(1)=0\) generates nonintersecting asymptotic curves, with (4) generating a diffeomorphism of the square, if and only if \(\lambda _b(x):= 1+\alpha _b'(x)>0\) for all \(x\in [0,1]\); here \(\gamma _b(x)\equiv 1\) for all \(x\in [0,1]\). Equivalently, a function \(\alpha _t:[0,1]\rightarrow \mathbb {R}\) with \(\alpha _t(0)=\alpha _t(1)=0\) generates a diffeomorphism via (6) if and only if \(\lambda _t(x):= 1-\alpha _t'(x)>0\) for all \(x\in [0,1]\).

This naturally leads to the following definition:

Definition 17

A regular downturned flag is a \(C^2\) isometric embedding \(\textbf{r}:[0,1]^2 \rightarrow \mathbb {R}^3\) satisfying the conditions

and such that there is a \(C^1\) function \(\alpha _t:[0,1]\rightarrow \mathbb {R}\) satisfying \(\textbf{r}_{uv}(x,1) = -\alpha _t(x) \textbf{r}_{uu}(x,1)\) for all \(x\in [0,1]\) as well as the conditions of Corollary 16. That is, \(\alpha _t(0)=0\), \(\alpha _t(1)<0\), and

For a regular balanced flag, we can use either this definition or the definition for upturned flags, with the only modifications being that \(\alpha _b(1)=\alpha _t(1)=0\) and \(1+\alpha _b'(x)>0\) for all \(x \in [0,1]\). From the above definition and corollary, it is clear that everything we did for upturned flags can be done in a similar way for both downturned and balanced flags. For the latter case, the analysis will be significantly easier.

2.5 The Tangent Space

From here on we return to the finite-regularity setting and disregard the submanifold result of Section 2.3. We now compute tangent vectors to the space of flags by considering a curve \(\textbf{r}(t,u,v)\) in the space of flags and differentiating with respect to t. By Theorem 9, this corresponds to a pair of time-dependent functions \(\kappa (t,x)\) and \(\alpha (t,x)\), which generate a time-dependent bottom edge \(\varvec{\eta }(t,x)\) through the coordinates \(\theta (t,x)\), \(\phi (t,x)\), and \(\beta (t,x)\) satisfying the spatial equations (18)–(20) for each fixed t. As this notation gets somewhat complicated, we will denote variations using the dot notation, for example,

In other words, to compute the tangent space at a given regular upturned flag generated by functions \(\kappa (x)\) and \(\alpha (x)\), we extend them to curves \(\tilde{\kappa }(t,x)\) and \(\tilde{\alpha }(t,x)\) in the space of functions passing through them at \(t=0\), and find equations for their velocities at time \(t=0\). An example, using the representation of Proposition 18, can be seen in Fig. 3. Note that we use subscript notation for derivatives, and the reader should not confuse the time derivative \(\alpha _t(t,x)\) of the bottom-edge function \(\alpha \) with \(\alpha _t(x)\), the top-edge function for a downturned flag. Here all flags are upturned, so from now on a subscript t always denotes a time derivative, and \(\alpha \) always denotes the bottom-edge function (what we called \(\alpha _b\) earlier).

Proposition 18

Suppose \(\kappa , \alpha :[0,1]\rightarrow \mathbb {R}\) are \(C^0\) and \(C^1\) functions respectively, satisfying the conditions of Definition 7 to generate a regular upturned flag through Lemma 8 and Theorem 9. Let \(\dot{\kappa }\) and \(\dot{\alpha }\) be \(C^0\) and \(C^1\) variations, with corresponding variations \(\dot{\theta }\), \(\dot{\phi }\), \(\dot{\beta }\) of the functions in Lemma 8. Then the tangent vector to the flag is given by

where \(x=x(u,v)\) is the function solving (22). Here the functions \(\omega \) and \(\psi \) are related to the variations \(\dot{\kappa }\) and \(\dot{\alpha }\) by solving the ODEs

In particular if \(\kappa \) and \(\dot{\kappa }\) are \(C^0\) and \(\alpha \) and \(\dot{\alpha }\) are \(C^1\), then \(\omega \), \(\psi \), and \(\chi \) are all \(C^1\).

Proof

By formulas (21) and (22) in Theorem 9, we can write

where x(t, u, v) is defined to be the unique solution in [0, 1] of

Differentiating (40) once with respect to t gives (omitting the dependent variables on the right side for brevity):

the simplification in the second line being due to the Frenet–Serret equation (14). To find \(\frac{\partial x}{\partial t}\), we differentiate (41) with respect to t and solve to obtain

Using (43) in formula (42) and simplifying yields

It remains to compute \(\textbf{b}_t\) more explicitly.

The formulas (36) and (37)–(38) are all intrinsic, and can be derived directly from variations of the Frenet–Serret equations (14). However we will derive them as a consequence of the variations of the coordinate equations for \(\theta \), \(\phi \), and \(\beta \) given in (18)–(20), since these are convenient for explicitly constructing the flag numerically.

Differentiating (18)–(20) with respect to time gives

Since \(\theta \), \(\phi \), and \(\beta \) are all zero at \(x=0\) regardless of time, we must have \(\dot{\theta }\), \(\dot{\phi }\), and \(\dot{\beta }\) also equal to zero at \(x=0\).

With \(\varvec{\eta }'\) given in terms of \(\theta \) and \(\phi \) by (15), differentiating with respect to time gives, in the \(\{e_2,e_3\}\) basis of (16), the formula

and in terms of the Frenet–Serret basis, we can write this using (17) as

Since \(\dot{\theta }\) and \(\dot{\phi }\) are both zero at \(x=0\), we find that \(\omega (0)=\psi (0)=0\) as well.

Differentiating (49)–(50) with respect to x, we obtain

Matching with (45)–(46), we get the system

Solving for \(\dot{\kappa }\) gives

using equations (18), (20), (49), and (50). This is (37).

Similarly solving the system for \(\dot{\beta }\), we get

again using (18), (20), (49), and (50). Defining the auxiliary function \(\chi \) by the formula (38), this becomes

and the fact that \(\chi (0)=0\) follows from the fact that \(\dot{\beta }(0)=0\) together with \(\theta (0)=0\).

Now differentiating equation (51) with respect to x gives

and matching equation (47) for \(\dot{\beta }'\) leaves an equation for \(\dot{\alpha }\). We eliminate \(\theta '\) from this using (18), \(\beta '\) using (20), \(\omega '\) using (37), \(\dot{\theta }\) using (49), and \(\dot{\beta }\) using (51). What remains after the cancellations is equation (39).

Finally we return to the computation of \(\textbf{b}_t(t,x)\). By formula (17), we have \(\textbf{b}(t,x) = -\sin {\beta } e_2 - \cos {\beta } e_3\), so that using formula (16), we obtain

and inserting this into formula (44) gives the result (36). \(\square \)
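The functions \(\omega \) and \(\psi \) can be cross-checked numerically through the relation \(\dot{\varvec{\eta }}' = \omega \textbf{n} + \psi \textbf{b}\) used in the proof of Proposition 22. The sketch below perturbs \(\kappa \) and \(\alpha \) by \(\pm \varepsilon \dot{\kappa }\) and \(\pm \varepsilon \dot{\alpha }\), integrates the Frenet–Serret system with a Runge–Kutta scheme (assuming the standard convention with \(\tau = -\alpha \kappa \), not the coordinates (18)–(20)), differentiates \(\textbf{t}=\varvec{\eta }'\) by a central difference in \(\varepsilon \), and projects onto the frame. All names are hypothetical. One expects a vanishing tangential component (the perturbed curves stay unit speed), \(\omega (0)=\psi (0)=0\), and, for whips (\(\alpha \equiv \dot{\alpha }\equiv 0\)), \(\psi \equiv 0\) and \(\omega = \dot{\theta } = \int _0^x \dot{\kappa }\), consistent with Remarks 10 and 24.

```python
import math


def frenet_rhs(x, s, kappa, alpha):
    """Frenet-Serret system for s = [eta, t, n, b] with torsion tau = -alpha * kappa."""
    t, n, b = s[3:6], s[6:9], s[9:12]
    k = kappa(x)
    tau = -alpha(x) * k
    return (list(t) + [k * ni for ni in n]
            + [-k * ti + tau * bi for ti, bi in zip(t, b)]
            + [-tau * ni for ni in n])


def integrate_bottom_edge(kappa, alpha, n_steps=400):
    """Classical RK4 on [0, 1], starting from eta = 0, t = i-hat, n = k-hat, b = -j-hat."""
    h = 1.0 / n_steps
    s = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, -1.0, 0.0]
    x, states = 0.0, [s]
    for _ in range(n_steps):
        k1 = frenet_rhs(x, s, kappa, alpha)
        k2 = frenet_rhs(x + h / 2, [a + h / 2 * d for a, d in zip(s, k1)], kappa, alpha)
        k3 = frenet_rhs(x + h / 2, [a + h / 2 * d for a, d in zip(s, k2)], kappa, alpha)
        k4 = frenet_rhs(x + h, [a + h * d for a, d in zip(s, k3)], kappa, alpha)
        s = [a + h / 6 * (p + 2 * q + 2 * r + w) for a, p, q, r, w in zip(s, k1, k2, k3, k4)]
        x += h
        states.append(s)
    return states


def variation_components(kappa, alpha, kappa_dot, alpha_dot, eps=1e-6, n_steps=400):
    """(tangential, omega, psi) at each grid point, from a central difference in eps."""
    plus = integrate_bottom_edge(lambda x: kappa(x) + eps * kappa_dot(x),
                                 lambda x: alpha(x) + eps * alpha_dot(x), n_steps)
    minus = integrate_bottom_edge(lambda x: kappa(x) - eps * kappa_dot(x),
                                  lambda x: alpha(x) - eps * alpha_dot(x), n_steps)
    base = integrate_bottom_edge(kappa, alpha, n_steps)
    out = []
    for sp, sm, s0 in zip(plus, minus, base):
        # variation of eta' = t, projected onto the unperturbed frame {t, n, b}
        d_eta_prime = [(a - c) / (2 * eps) for a, c in zip(sp[3:6], sm[3:6])]
        t, n, b = s0[3:6], s0[6:9], s0[9:12]
        out.append(tuple(sum(p * q for p, q in zip(d_eta_prime, e)) for e in (t, n, b)))
    return out


comps = variation_components(lambda x: 2.0 * math.sin(5.0 * x), lambda x: 0.5 * x * x,
                             lambda x: math.cos(x), lambda x: x)
print(max(abs(c[0]) for c in comps))  # tangential part, expected to be near zero
```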

Remark 19

Note that \(\dot{\kappa }\) is unconstrained since \(\kappa \) is thus far unconstrained, while \(\dot{\alpha }\) is unconstrained except that \(\dot{\alpha }(0)=0\), since the nondegeneracy condition (13) is an open condition in the \(C^1\) topology. However, the functions \(\omega \) and \(\psi \) are constrained: if we wish to solve the system (37)–(39) algebraically for \(\dot{\kappa }\) and \(\dot{\alpha }\) given \(\omega \) and \(\psi \), we must deal with any points where \(\kappa \) vanishes. First we need to solve (38) for \(\chi \), which is only possible if \(\psi '=0\) whenever \(\kappa =0\), and then we need to ensure that \((\chi ' + \alpha \dot{\kappa })=0\) whenever \(\kappa =0\). Furthermore, even if we could ensure these conditions, they would not necessarily lead to a \(C^1\) function \(\dot{\alpha }\) unless we knew higher-order derivative conditions on \(\kappa \). Later, when deriving the geodesic equation, we will work formally, assuming either that \(\kappa \) is nowhere zero or that the functions \(\omega \) and \(\psi \) can be specified somewhat arbitrarily; a rigorous analysis of this infinite-dimensional geodesic system would have to address this issue.

It will be convenient later, when deriving the geodesic equation, to specify extra smoothness conditions on the flag at the flagpole. Since the flag is constrained to have \(\textbf{r}(0,v)\) fixed at (0, v, 0) for all \(v\in [0,1]\), it is natural to demand that the \(C^2\) function \(\textbf{r}\) extend to an odd function in the u variable over \([-1,1]\), which imposes the additional condition that \(\textbf{r}_{uu}(0,v)=0\) for all v, and this is equivalent to requiring that \(\kappa (0)=0\). Since \(\alpha \) is a \(C^1\) function and we have already assumed that \(\alpha (0)=0\), there is no additional condition to impose on it.

Proposition 20

The space \(\mathcal {F}_o\) of odd regular upturned flags is defined to be those flags generated via Theorem 9 such that \(\kappa \) and \(\alpha \) extend to odd functions through \(x=0\). Its tangent space consists of \(C^0\) functions \(\dot{\kappa }\) and \(C^1\) functions \(\dot{\alpha }\) which extend to odd functions through \(x=0\), and \(\mathcal {F}_o\) is a submanifold of \( \mathcal {F}\).

For odd functions \(\kappa \) and \(\alpha \), the function \(\theta \) given by (18) is even, while \(\phi \) and \(\beta \) given by (19)–(20) are odd. Similarly for odd functions \(\dot{\kappa }\) and \(\dot{\alpha }\), the function \(\omega \) given by (37) is even, while the functions \(\psi \) and \(\chi \) given by (38)–(39) are odd.

Proof

Extend the solutions \(\theta \), \(\phi \), and \(\beta \) of (18)–(20) to the interval \([-1,1]\). Define \(\tilde{\theta }(x) = \theta (-x)\), \(\tilde{\phi }(x) = -\phi (-x)\), and \(\tilde{\beta }(x)=-\beta (-x)\). Then these new functions satisfy the ODEs

using the assumption that \(\kappa \) and \(\alpha \) are odd. This is the same system as (18)–(20).

Since the initial conditions \(\tilde{\theta }(0)=\tilde{\phi }(0)=\tilde{\beta }(0)=0\) do not change, uniqueness of solutions of ODEs implies that \(\theta =\tilde{\theta }\), \(\phi =\tilde{\phi }\), and \(\beta =\tilde{\beta }\). Thus \(\theta \) is even while \(\phi \) and \(\beta \) are odd. The statements about \(\omega \), \(\psi \), and \(\chi \) follow the same way from the system (37)–(39).

The submanifold result for \(\mathcal {F}_o\) is clear, since the only additional constraint on the space is \(\kappa (0)=0\), which defines a closed linear subspace of the first factor \(C([0,1])\).

\(\square \)

3 The Kinetic Energy

In this section we will consider a natural Riemannian metric on the space of regular upturned flags, which is induced by the kinetic energy metric on the space of general surfaces.

Definition 21

If \(\textbf{r}\) is a regular upturned flag as in Definition 7, and \(\dot{\textbf{r}}\) is a tangent vector as in Proposition 18, then the kinetic energy Riemannian metric is defined to be

The corresponding kinetic energy Lagrangian is then given by

where \(\textbf{r}\) is a path of flags subject to endpoint conditions \(\textbf{r}(0) = \textbf{r}_0\) and \(\textbf{r}(T) = \textbf{r}_1\) for two given regular upturned flags \(\textbf{r}_0\) and \(\textbf{r}_1\).

The kinetic energy metric (Lagrangian, resp.) is naturally expressed in the (u, v) coordinates, but more easily computed in the (x, y) coordinates of formula (11), since all the important functions depend only on the x variable.

Proposition 22

In terms of the functions \(\omega \) and \(\psi \) defined in Proposition 18, and the functions \(\gamma \) and \(\lambda \) defined in Theorem 6 by formula (10), the Riemannian metric (53) is given by

where

Remark 23

An alternative formula, circumventing the functions \(\omega \) and \(\psi \) and involving instead the time derivative of \(\textbf{b}\) and the space derivative of \(\varvec{\eta }\), is given by

Proof

Using formula (36), we have that

where \(\dot{\varvec{\eta }}'(x) = \omega (x) \textbf{n}(x) + \psi (x) \textbf{b}(x)\) and \(\textbf{E}(x) = \textbf{t}(x) + \alpha (x) \textbf{b}(x)\), and (x, y) are related to (u, v) by the formula \(v=y\), \(u=x+\alpha (x)y\). The area forms are related by the Jacobian (12):

and the right side is always positive for \(0\le y\le \gamma (x)\) by the assumption (9).

Applying the change of variables, we then get

This can easily be simplified using \(\lambda (x) = 1+\alpha '(x) \gamma (x)\) to the formula (55). \(\square \)
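The change of variables \(u = x+\alpha (x)y\), \(v=y\), with \(du\,dv = (1+\alpha '(x)y)\,dx\,dy\), is what reduces (53) to a formula in x alone. A quick numerical check integrates known functions over the square in (x, y) coordinates. Here we take \(\gamma (x)\) to be the v-extent of the segment \(\{(x+\alpha (x)y, y): y\ge 0\}\) inside the unit square; this is our reading of (10), which is not reproduced here. The helper names and the example \(\alpha (x)=x^2/2\), which satisfies \(\alpha (0)=0\), \(\alpha (1)>0\) and the positivity conditions used in Theorem 11, are hypothetical.

```python
def gamma_of(x, a):
    """Length in v of the segment u = x + a v (0 <= v <= 1) that stays in the unit square."""
    if a > 0.0:
        return min(1.0, (1.0 - x) / a)
    if a < 0.0:
        return min(1.0, -x / a)
    return 1.0


def integrate_in_xy(F, alpha, dalpha, nx=1000, ny=100):
    """Midpoint-rule approximation of the integral of F(u, v) over the unit square,
    computed in (x, y) coordinates: u = x + alpha(x) y, v = y,
    with du dv = (1 + alpha'(x) y) dx dy and 0 <= y <= gamma(x).
    """
    hx = 1.0 / nx
    total = 0.0
    for i in range(nx):
        x = (i + 0.5) * hx
        a, da = alpha(x), dalpha(x)
        hy = gamma_of(x, a) / ny
        inner = 0.0
        for j in range(ny):
            y = (j + 0.5) * hy
            inner += F(x + a * y, y) * (1.0 + da * y)   # integrand times Jacobian
        total += inner * hy
    return total * hx


area = integrate_in_xy(lambda u, v: 1.0, lambda x: 0.5 * x * x, lambda x: x)
print(area)  # approximately 1, the area of the square
```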

Although complicated, formula (55) has the advantage that it involves only functions of the x variable, and thus it represents a Riemannian metric directly on the space of unit-speed curves \(\varvec{\eta }\). More explicitly, since it is quadratic in the velocity components \(\omega \) and \(\psi \), and since these depend linearly (albeit very nonlocally) on the functions \(\dot{\kappa }\) and \(\dot{\alpha }\) through equations (37)–(39), we obtain a highly nonlocal Riemannian metric on the manifold \(\mathcal {F}\) defined by Theorem 11.

This is useful because we may then construct solutions of the boundary-value problem by minimizing the Lagrangian (54). Conceptually, one can consider an algorithm that chooses intermediate functions \(\kappa (t_i)\), \(\alpha (t_i)\), \(\dot{\kappa }(t_i)\), and \(\dot{\alpha }(t_i)\) for a partition \(\{t_0, \ldots , t_m\}\) of [0, T] so as to minimize the total action, although the actual computation involves numerically solving the ODEs (18)–(20) and (37)–(39) at each fixed time \(t_i\) in order to evaluate the action functional (54). We will follow this approach in Section 5, where we present selected numerical experiments.
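As a simplified illustration of evaluating the discrete action, the sketch below treats only whips (\(\alpha \equiv 0\)), for which \(\textbf{r}(u,v) = \varvec{\eta }(u) + v\hat{\jmath }\) (Remark 10), so that \(\dot{\textbf{r}} = \dot{\varvec{\eta }}(u)\) and the v-integral contributes a factor of one. Unlike the algorithm described above, which treats \(\dot{\kappa }(t_i)\) and \(\dot{\alpha }(t_i)\) as separate unknowns, it replaces velocities by finite differences of consecutive snapshots, and it ignores the normalization constant of (53). The minimization over the interior snapshots (for example with a generic optimizer) is omitted, and the planar parameterization \(\varvec{\eta }' = (\cos \theta , \sin \theta )\) in the \(\hat{\imath }\)-\(\hat{k}\) plane with \(\theta ' = \kappa \) is our assumption. All names are hypothetical.

```python
import math


def whip_curve(kappa, n=400):
    """Bottom edge of a whip in (i-hat, k-hat) coordinates.

    Assumes theta' = kappa, theta(0) = 0 and eta' = (cos theta, sin theta) in that
    plane; both integrals use the trapezoidal rule with n steps.
    """
    h = 1.0 / n
    theta, px, pz = 0.0, 0.0, 0.0
    points = [(px, pz)]
    for j in range(n):
        theta_next = theta + 0.5 * h * (kappa(j * h) + kappa((j + 1) * h))
        px += 0.5 * h * (math.cos(theta) + math.cos(theta_next))
        pz += 0.5 * h * (math.sin(theta) + math.sin(theta_next))
        theta = theta_next
        points.append((px, pz))
    return points


def discrete_action(kappas, T, n=400):
    """Finite-difference action for whips, up to the normalization constant of (53).

    kappas[i] is the curvature function at time t_i = i * T / m, m = len(kappas) - 1.
    """
    dt = T / (len(kappas) - 1)
    curves = [whip_curve(k, n) for k in kappas]
    h = 1.0 / n
    action = 0.0
    for c0, c1 in zip(curves, curves[1:]):
        # squared speed |(eta_{i+1} - eta_i) / dt|^2 at each grid point u_j
        sq = [((q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2) / dt ** 2 for p, q in zip(c0, c1)]
        action += dt * h * (sum(sq) - 0.5 * (sq[0] + sq[-1]))  # trapezoid rule in u
    return action


k0 = lambda x: 1.0
k1 = lambda x: 1.0 + math.sin(3.0 * x)
print(discrete_action([k0, k1], 1.0))
```

With a single time step the action scales like \(1/\Delta t\), so halving T doubles it; a constant path has zero action.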

Remark 24

In the special case of whips, as in Remark 10 and Remark 12, we have \(\alpha \equiv 0\) and \(\dot{\alpha }\equiv 0\). As a result we get \(\gamma (x)\equiv 1\) and \(\lambda (x)\equiv 1\), from the definitions (10). Furthermore by (38)–(39), we have that \(\psi \) and \(\chi \) satisfy the system

whose unique solution is \(\psi \equiv \chi \equiv 0\). The formula (55) thus simplifies to

which is the usual kinetic energy for the space of two-dimensional inextensible curves. This shows that the space of whips is an isometrically embedded submanifold of the space of regular upturned flags.

3.1 Including the Effects of Gravity and Wind

Next we will describe how one could include the external effects of gravity and wind by including extra terms in the Lagrangian.

Definition 25

Let \(\textbf{r}\) be a regular upturned flag as in Definition 7. Then the gravitational energy is defined to be

where, for simplicity, we set the gravitational acceleration equal to one. The corresponding gravitational energy Lagrangian is then given by

where \(\textbf{r}\) is again a path of flags subject to endpoint conditions \(\textbf{r}(0) = \textbf{r}_0\) and \(\textbf{r}(T) = \textbf{r}_1\) for two given regular upturned flags \(\textbf{r}_0\) and \(\textbf{r}_1\).

Remark 26

With this definition, the motion of a flag under the combined effects of its kinetic energy and gravity can be described as a critical point of the action defined by the total Lagrangian

subject to the same boundary conditions as above.

In the next proposition we calculate an expression for the gravitational energy in the (x, y) coordinates of formula (11):

Proposition 27

In terms of the curve \(\varvec{\eta }\) and the functions \(\alpha \) and \(\gamma \), the gravitational energy (59) of a flag \(\textbf{r}\) is given by

Proof

By formula (21) we have that

Using formula (57) for the Jacobian of the coordinate change, we thus have

Now the desired formula follows by integrating in the variable y. \(\square \)

Remark 28

(Modelling the effects of wind). The next step towards a physically realistic model would be to include the effects of wind, that is, the interaction of the flag with the surrounding fluid. Compared with the rather simple nature of the gravitational force, this is a much more challenging problem, and several approaches have been considered in the literature; see for example [1, 30, 33, 38] or [13]. For an ideal fluid with potential flow, one could presumably define a new energy term from the velocity field generated by the motion of the flag in the fluid, together with the kinetic energy of the fluid itself. In particular, this would require solving the interface boundary conditions between the flag and the fluid. It would be interesting to carry out such an analysis for the model of the present article in future work.

4 The Geodesic Equation

In this section we calculate the geodesic equation of the kinetic energy metric introduced in the previous section. These equations can be interpreted as the governing equations for the motion of a flag, ignoring the effects of gravity and wind. As the resulting formulas are already rather technically involved, we will not present the Euler–Lagrange equations for the total energy including gravity, or for a model including wind. For gravity the derivation would proceed similarly; as mentioned previously, we believe that adding the effects of wind to this model, while certainly interesting, would be significantly more difficult and is outside the scope of the present article.
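As a reading aid, the kinetic-energy action used below can be approximated by a Riemann sum. This is a minimal sketch under an assumption: we take the metric (53) to be the flat \(L^2\) metric \(\int _0^1\int _0^1 \langle h, k\rangle \, du\, dv\) in (u, v) coordinates (unit mass density, natural for an isometric immersion of the unit square) and the action (54) to be \(\tfrac{1}{2}\int _0^T\int _0^1\int _0^1 |\textbf{r}_t|^2\, du\, dv\, dt\). The function name `discrete_action` and the grid layout are hypothetical.

```python
def discrete_action(path, T):
    """Riemann-sum approximation of (1/2) * int_0^T int int |r_t|^2 du dv dt.

    Hypothetical layout: path[k][i][j] is r(t_k, u_i, v_j) in R^3 (a 3-tuple), with
    t_k = k * T / M for k = 0..M, and (u_i, v_j) the centers of an n x n grid of
    equal square cells in [0, 1]^2, so each sample carries area weight 1 / n^2.
    """
    M = len(path) - 1
    dt = T / M
    n = len(path[0])
    dA = 1.0 / (n * n)
    total = 0.0
    for k in range(M):
        for i in range(n):
            for j in range(n):
                p, q = path[k][i][j], path[k + 1][i][j]
                # Forward-difference velocity on the time interval [t_k, t_{k+1}].
                speed_sq = sum(((qc - pc) / dt) ** 2 for pc, qc in zip(p, q))
                total += 0.5 * speed_sq * dA * dt
    return total


# A flat sheet translated with unit speed in the z-direction for T = 2. This is not
# an admissible flag path (the pole edge moves); it only checks the normalization.
n, M, T = 3, 4, 2.0
cells = [(i + 0.5) / n for i in range(n)]
path = [[[(u, v, k * T / M) for v in cells] for u in cells] for k in range(M + 1)]
print(discrete_action(path, T))  # about 1.0 = (1/2) * 1^2 * T
```

The geodesic equation derived below is the condition that the first variation of this kind of action vanishes for all variations fixed at \(t=0\) and \(t=T\).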

The geodesic equation is obtained as the Euler–Lagrange equation of the action (54), that is, by requiring the action to be stationary. We consider a family of regular upturned flags depending on time and on some small parameter \(\zeta \), as \(\textbf{r}(\zeta , t, u,v)\) for \(\zeta \in (-\varepsilon , \varepsilon )\), \(t\in [0,T]\), \(u,v\in [0,1]\). Differentiating the action with respect to \(\zeta \), we obtain the requirement that

for every variation \(\textbf{r}(\zeta , t, u,v)\) fixed at the endpoints \(t=0\) and \(t=T\). Since the Riemannian metric (53) does not depend explicitly on the flag \(\textbf{r}\) when expressed in (u, v) coordinates, we can simply integrate by parts in time to obtain the condition

The time-integral formula above is zero for all time-dependent variations \(W(t,u,v)\) if and only if the integrand is zero at each time: that is,

Equation (60) must hold for every possible choice of \(W(u,v)\), which means that \(\ddot{\textbf{r}}\) must be orthogonal, with respect to the metric (53), to every tangent vector at the given flag; these tangent vectors are described by Proposition 18.
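In a discretization, the orthogonality condition (60) can only be tested against finitely many sampled variation fields. The sketch below assumes, hypothetically, that the metric is approximated by a quadrature-weighted \(L^2\) inner product of \(\mathbb {R}^3\)-valued samples with a single uniform weight. It computes the component of a sampled acceleration that lies in the span of a few sampled tangent vectors \(W_1,\dots ,W_m\) (for instance, fields built from Proposition 18) by modified Gram–Schmidt; the geodesic condition asks this component to vanish. The names `inner`, `tangential_part` and `satisfies_geodesic_condition` are illustrative.

```python
def inner(f, g, weight):
    """Quadrature L^2 inner product: weight * sum_k <f_k, g_k> over R^3 samples."""
    return weight * sum(a * b for fk, gk in zip(f, g) for a, b in zip(fk, gk))


def tangential_part(acc, tangents, weight, eps=1e-12):
    """Orthogonal projection of acc onto span(tangents) via modified Gram-Schmidt."""
    basis = []
    for w in tangents:
        v = [list(p) for p in w]
        for e in basis:
            c = inner(v, e, weight)
            v = [[a - c * b for a, b in zip(vp, ep)] for vp, ep in zip(v, e)]
        norm = inner(v, v, weight) ** 0.5
        if norm > eps:  # skip (numerically) dependent tangent vectors
            basis.append([[a / norm for a in vp] for vp in v])
    proj = [[0.0, 0.0, 0.0] for _ in acc]
    for e in basis:
        c = inner(acc, e, weight)
        proj = [[a + c * b for a, b in zip(pp, ep)] for pp, ep in zip(proj, e)]
    return proj


def satisfies_geodesic_condition(acc, tangents, weight, tol=1e-10):
    """True if acc is L^2-orthogonal (up to tol) to every sampled tangent vector."""
    proj = tangential_part(acc, tangents, weight)
    return inner(proj, proj, weight) ** 0.5 < tol


tangents = [[(1.0, 0.0, 0.0), (0.0, 0.0, 0.0)]]  # one sampled tangent field, two samples
print(satisfies_geodesic_condition([(0.0, 1.0, 0.0), (0.0, 0.0, 2.0)], tangents, 0.5))  # True
```

Passing this test for a finite family is of course only a necessary condition; the continuous statement requires orthogonality to the whole tangent space.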

In the following theorem we present these equations for a flag that is either balanced or upturned. In addition, we assume the oddness condition \(\kappa (0)=0\) to ensure compatibility at \(x=0\).

Theorem 29

(Geodesic equation on the space of odd, upturned flags). Given initial conditions \(\textbf{r}(0) \in \mathcal F_o\) and \(\dot{\textbf{r}}(0)\in T_{\textbf{r}(0)} \mathcal F_o\) that are described by their generating functions \(\alpha \) and \(\kappa \) (\(\dot{\alpha }\) and \(\dot{\kappa }\) resp.), the geodesic equation on the space of odd, regular, upturned flags is given by the second order equation

where \(\omega , \psi , \chi :[0,1]\rightarrow \mathbb {R}\) are defined by equations (37)–(39), and where the remaining coefficient functions \((\sigma , \rho , z, \varphi , g, q)\) are defined as solutions to the following ODE system with homogeneous boundary conditions on [0, 1]:

Here \(\mu \) and \(\xi \) are defined via

Because \(\lambda >0\) by assumption, the equations (63)–(70) have nonsingular coefficients except where \(\gamma =0\). In the balanced case this never happens, since \(\gamma \) is identically 1. In the upturned case \(\gamma \) vanishes only at \(x=1\), and near this point the equations can be rewritten in a nonsingular form, which is likely easier to work with numerically.

Proposition 30

In the case of an upturned flag, the following functions are nonsingular on [0, 1]:

Equations (63)–(70) can all be rewritten in terms of them to avoid singular coefficients.

Proof

Recall that

On the interval \([x^*,1]\), we compute that \(\gamma (x)\) is a decreasing function since

By assumption \(\alpha (1)>0\), and so \(\gamma \) vanishes only at \(x=1\), where \(\gamma '(1)<0\).

Since \(\sigma (1)=0\), equation (63) implies that \(\sigma '(1)=0\) as well, and thus

Similarly by (64) we have

Since \(\sigma \) and \(\rho \) behave like \((1-x)^2\) near \(x=1\), equation (65) implies that g behaves like \((1-x)^3\) near \(x=1\), and

This means that \(\mu \) defined by (69) has a finite limit as \(x\rightarrow 1\), and that \(\xi \) defined by (70) in fact approaches zero as \(x\rightarrow 1\), which means the terms appearing in (66)–(68) are continuous on all of [0, 1]. \(\square \)

Before proving Theorem 29, we first review the geodesic equation in the case of whips, which illustrates the equation in a simpler setting and shows how the oddness assumption of Proposition 20 arises.

4.1 The Space of Whips

The space of whips was analyzed in detail in the third author’s work [27, 28]. The only modification here is the condition \(\varvec{\eta }_x(t,0)=\hat{\imath }\) along with \(\varvec{\eta }(t,0)=0\), which corresponds to holding the handle of the whip at a fixed location and orientation, rather than just at a fixed location. In this section we derive the geodesic equation for this situation and show that the space of whips can be totally geodesically embedded into the space of regular upturned flags.

Proposition 31

(Geodesic equation for whips). The geodesic equation for the Riemannian metric (58) for curves \(\varvec{\eta }\) subject to \(\varvec{\eta }(t,0)=0\) and \(\varvec{\eta }_x(t,0)=\hat{\imath }\), with \(|\varvec{\eta }_x(t,x)|\equiv 1\), is given by

where \(\sigma (t,x)\) is a function determined by the spatial ODE

Here \(\varvec{\eta }\) is assumed to be odd through \(x=0\), while \(\sigma \) is even through \(x=0\).

Proof

The variation condition on the kinetic energy is that

Differentiating the equation \(\langle \varvec{\eta }'(x), \varvec{\eta }'(x)\rangle \equiv 1\) with respect to the variation parameter, we find that every variation field must satisfy \(\langle \varvec{\eta }'(x), W'(x)\rangle \equiv 0\). In addition we must have \(W(0)=0\) and \(W'(0)=0\). Since \(W'\) is orthogonal to \(\varvec{\eta }'\), and since \(\varvec{\eta }\) remains a planar curve by the whip assumption, we consider only variations that are also planar. Hence we may write \(W'(x) = \delta (x) \textbf{n}(x)\) for some function \(\delta \) satisfying \(\delta (0)=0\), and so the variation field itself is

Plugging this formula into (73), interchanging the order of integration, and then renaming the variables gives

which must be zero for every function \(\delta :[0,1]\rightarrow \mathbb {R}\).

We find therefore that

for some function \(\sigma \), which must satisfy \(\sigma (1)=0\). Differentiating with respect to x then gives

which is equation (71). The function \(\sigma \) is now a Lagrange multiplier for the condition \(|\varvec{\eta }'(x)|^2 \equiv 1\). Differentiating that condition twice in time gives

and plugging in (74) gives

Recalling again that \(|\varvec{\eta }'(x)|^2 = 1\), successive differentiations in x give \(\langle \varvec{\eta }'(x), \varvec{\eta }''(x)\rangle = 0\) and \(\langle \varvec{\eta }'(x), \varvec{\eta }'''(x)\rangle = -|\varvec{\eta }''(x)|^2\),

so that (75) becomes (72). The boundary condition \(\sigma (1)=0\) follows from the discussion above. The boundary condition at \(x=0\) follows from the fact that we want \(\ddot{\varvec{\eta }}(0)=0\), and the compatibility condition is thus

Taking the inner product of this condition with \(\varvec{\eta }'(0)\), and using \(|\varvec{\eta }'(0)|=1\) and \(\langle \varvec{\eta }'(0), \varvec{\eta }''(0)\rangle = 0\), shows that \(\sigma '(0)=0\). Although \(\sigma (0)\) need not be zero, if \(\varvec{\eta }\) is odd in x then \(\varvec{\eta }''(0)=0\), which ensures compatibility.

If \(\varvec{\eta }\) and \(\dot{\varvec{\eta }}\) are odd in x, then \(|\varvec{\eta }''|^2\) and \(|\dot{\varvec{\eta }}'|^2\) are both even in x, so that \(\sigma \) is even in x as well. And as long as \(\sigma \) remains even in x, equation (71) ensures that \(\varvec{\eta }\) will remain odd in x. These conditions make the boundary conditions \(\varvec{\eta }(t,0)=0\) and \(\sigma _x(t,0)=0\) redundant. \(\square \)

At \(x=0\), the condition \(\varvec{\eta }(t,0)=0\) implies \(\varvec{\eta }_{tt}(t,0)=0\). Since \(\varvec{\eta }_x\) and \(\varvec{\eta }_{xx}\) are orthogonal, by (71) this compatibility condition requires both \(\sigma _x(t,0)=0\) and \(\sigma (t,0) \varvec{\eta }_{xx}(t,0)=0\). The first condition, together with \(\sigma (t,1)=0\), uniquely determines the solution \(\sigma \) of the ODE (72), so the second condition cannot also be imposed. However, it is satisfied automatically if \(\varvec{\eta }\) is assumed to be the restriction of an odd \(C^2\) function. Ensuring this compatibility is the main reason the oddness condition is convenient.

Equation (71) is a nonlinear wave equation for \(\varvec{\eta }\), with tension determined nonlocally. Ordinarily one would specify two boundary conditions, one at \(x=0\) and one at \(x=1\). The fact that \(\sigma (t,1)=0\) means that the natural boundary condition at \(x=1\) for the symmetric differential operator \(f\mapsto \tfrac{\partial }{\partial x}(\sigma \tfrac{\partial f}{\partial x})\) is simply that f(1) is finite. Here the fact that \(\varvec{\eta }_x(t,1)\) must be a unit vector obviates any finiteness condition, and so it is more natural to impose two conditions at \(x=0\). The well-posedness theory needs to be constructed manually in any case, as no general theory applies to degenerate, nonlocal, nonlinear wave equations. See [27] and [29] for two approaches.
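To make the nonlocality concrete: every evaluation of the right side of (71) requires solving the boundary value problem (72) for \(\sigma \) with \(\sigma _x(0)=0\) and \(\sigma (1)=0\). The sketch below takes (71)–(72) in the form \(\varvec{\eta }_{tt} = (\sigma \varvec{\eta }_x)_x\) and \(\sigma _{xx} - |\varvec{\eta }_{xx}|^2\sigma = -|\varvec{\eta }_{tx}|^2\), which is what the differentiations described in the proof of Proposition 31 produce. It assumes a planar whip sampled on a uniform grid \(x_i=i/N\) and plain second-order finite differences with a tridiagonal (Thomas) solve; this discretization and the helper names `d_dx`, `solve_sigma`, `whip_acceleration` are illustrative choices, not the approaches of [27] or [29], and no stability claim is made.

```python
def d_dx(f, h):
    """Second-order finite-difference derivative on a uniform grid (needs len(f) >= 3).

    Central differences in the interior, one-sided three-point formulas at the ends.
    """
    n = len(f) - 1
    d = [0.0] * (n + 1)
    d[0] = (-3 * f[0] + 4 * f[1] - f[2]) / (2 * h)
    d[n] = (3 * f[n] - 4 * f[n - 1] + f[n - 2]) / (2 * h)
    for i in range(1, n):
        d[i] = (f[i + 1] - f[i - 1]) / (2 * h)
    return d


def solve_sigma(a, f):
    """Solve sigma'' - a * sigma = -f on [0, 1] with sigma'(0) = 0 and sigma(1) = 0.

    a and f are samples on x_i = i / N, i = 0..N; returns sigma at the same points.
    """
    n = len(a) - 1
    c = float(n * n)                      # 1 / h^2 with h = 1 / n
    lower = [c] * n                       # unknowns sigma_0 .. sigma_{n-1}
    diag = [-2 * c - a[i] for i in range(n)]
    upper = [c] * n
    rhs = [-f[i] for i in range(n)]
    upper[0] = 2 * c                      # ghost point sigma_{-1} = sigma_1 gives sigma'(0) = 0
    for i in range(1, n):                 # Thomas algorithm: forward elimination
        w = lower[i] / diag[i - 1]
        diag[i] -= w * upper[i - 1]
        rhs[i] -= w * rhs[i - 1]
    sigma = [0.0] * (n + 1)               # sigma[n] = 0 is the condition at x = 1
    sigma[n - 1] = rhs[n - 1] / diag[n - 1]
    for i in range(n - 2, -1, -1):        # back substitution
        sigma[i] = (rhs[i] - upper[i] * sigma[i + 1]) / diag[i]
    return sigma


def whip_acceleration(eta1, eta3, vel1, vel3):
    """Evaluate eta_tt = (sigma * eta_x)_x for a planar whip eta = (eta1, 0, eta3)."""
    h = 1.0 / (len(eta1) - 1)
    e1x, e3x = d_dx(eta1, h), d_dx(eta3, h)
    e1xx, e3xx = d_dx(e1x, h), d_dx(e3x, h)
    v1x, v3x = d_dx(vel1, h), d_dx(vel3, h)
    a = [p * p + q * q for p, q in zip(e1xx, e3xx)]   # |eta_xx|^2
    f = [p * p + q * q for p, q in zip(v1x, v3x)]     # |eta_tx|^2
    sigma = solve_sigma(a, f)                          # nonlocal tension from (72)
    acc1 = d_dx([s * p for s, p in zip(sigma, e1x)], h)
    acc3 = d_dx([s * p for s, p in zip(sigma, e3x)], h)
    return sigma, acc1, acc3


N = 20
xs = [i / N for i in range(N + 1)]
zeros = [0.0] * (N + 1)
# Straight whip eta = (x, 0, 0) with velocity (0, 0, x): rigid rotation at unit angular
# speed. It is not admissible (the handle orientation changes), but it is a useful
# check: sigma = (1 - x^2) / 2 and the acceleration is centripetal, (-x, 0, 0).
sigma, acc1, acc3 = whip_acceleration(xs, zeros, zeros, xs)
```

The check reflects the physics: the tension \(\sigma \) is largest at the handle and vanishes at the free end \(x=1\), and it supplies exactly the centripetal acceleration of the rotating whip.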

Corollary 32

In terms of the function \(\kappa \) defined by Lemma 8 and the function \(\omega \) defined by Proposition 18, the equations (71)–(72) take the form

Proof

Differentiating (71) with respect to x gives

Equation (15) gives \(\varvec{\eta }_x(t,x) = \big ( \cos {\theta (t,x)}, 0, \sin {\theta (t,x)}\big )\) in terms of a function \(\theta \) satisfying \(\theta (t,0)=0\), and if \(\varvec{\eta }\) is odd then additionally \(\theta _x(t,0)=0\). Plugging into equation (79) gives the components

and resolving these gives

By definition of \(\kappa \), we have \(\varvec{\eta }_{xx}(t,x) = \kappa (t,x) \textbf{n}(t,x)\) with

using Lemma 8 together with the fact from Remark 10 that \(\phi \) and \(\beta \) are both zero. Thus \(\kappa (t,x) = \theta _x(t,x)\). Similarly using Remark 24, we have \(\omega (t,x) = \theta _t(t,x)\). Since \(\theta \) is even by Proposition 20, we have \(\kappa (t,0)=0\) for all t, and since \(\theta (t,0)=0\) for all t, we must have \(\omega (t,0)=0\) for compatibility.

Thus equation (80) implies (76), while the compatibility condition \(\theta _{tx} = \theta _{xt}\) implies (77), and the equation (78) is simply (81) (which is the same as (72)) written in terms of \(\kappa \) and \(\omega \). \(\square \)
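For the reader's convenience, the component computation in this proof can be written out explicitly. Here \(\textbf{n} = (-\sin \theta , 0, \cos \theta )\) is the unit normal for which \(\varvec{\eta }_{xx} = \theta _x \textbf{n}\), consistent with \(\kappa = \theta _x\), and we use \(\textbf{n}_x = -\theta _x \varvec{\eta }_x\), \(\textbf{n}_t = -\theta _t \varvec{\eta }_x\). Matching the \(\varvec{\eta }_x\)- and \(\textbf{n}\)-components of (79) then gives the last two lines. These should agree with (80)–(81) and (76)–(78) up to the arrangement of terms.

```latex
\begin{aligned}
\varvec{\eta}_{xxx} &= \theta_{xx}\,\textbf{n} - \theta_x^2\,\varvec{\eta}_x, \qquad
\varvec{\eta}_{xtt} = \theta_{tt}\,\textbf{n} - \theta_t^2\,\varvec{\eta}_x,\\
(\sigma\varvec{\eta}_x)_{xx} &= \sigma_{xx}\varvec{\eta}_x + 2\sigma_x\varvec{\eta}_{xx} + \sigma\varvec{\eta}_{xxx}
  = (\sigma_{xx} - \theta_x^2\sigma)\,\varvec{\eta}_x + (\sigma\theta_{xx} + 2\sigma_x\theta_x)\,\textbf{n},\\
\theta_{tt} &= \sigma\theta_{xx} + 2\sigma_x\theta_x, \qquad
\sigma_{xx} - \theta_x^2\sigma = -\theta_t^2,\\
\omega_t &= \sigma\kappa_x + 2\sigma_x\kappa, \qquad
\kappa_t = \omega_x, \qquad
\sigma_{xx} - \kappa^2\sigma = -\omega^2 .
\end{aligned}
```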

We now connect this special case back to the general case by showing that the space of whips is totally geodesic in the space of all upturned/balanced flags. That is, suppose that at some time the flag has the shape of a whip (that is, \(\alpha \equiv 0\)) and that its velocity is tangent to the space of whips (that is, \(\dot{\alpha }\equiv 0\)). We want to prove that under these assumptions \(\ddot{\alpha }\equiv 0\) as well.

Proposition 33

The space of whips is totally geodesic in the space of upturned/balanced flags, that is, if \(\kappa (t,x)\) and \(\alpha (t,x)\) solve the system in Theorem 29, and if \(\alpha (0,x)\equiv 0\) and \(\alpha _t(0,x)\equiv 0\), then \(\alpha (t,x)\equiv 0\) for all t and x.

Proof

Recall from Remark 10 that the space of whips is embedded in the space of flags via the condition \(\alpha \equiv 0\). Furthermore, by Remark 12, the tangent space to the subspace of whips is characterized by \(\dot{\alpha }\equiv 0\). Finally, by Remark 24, the fact that \(\alpha \) and \(\dot{\alpha }\) are both zero implies that \(\psi \) and \(\chi \) are both identically zero. In addition we have \(\gamma \equiv 1\) and \(\lambda \equiv 1\) for all \(x\in [0,1]\).

Under these circumstances the system (63)–(70) simplifies to

where \(\mu = 12\,g - 6\sigma \) and \(\xi = 4\sigma - 6\,g\). Define \(G = g-\frac{1}{2} \sigma \); then the system becomes

with the other equations becoming

These latter equations form a homogeneous system of four ODEs with solution

The solution is unique since, for any solution, we have

using the boundary conditions. We conclude that G, q, and \(\varphi \) are all zero, and thus \(\rho \) must be zero as well. It follows that \(g=\tfrac{1}{2}\sigma \), so that \(\mu = 12\,g - 6\sigma = 0\) and \(\xi = 4\sigma - 6\,g = \sigma \) in (69)–(70).

As a result, equation (82) becomes

which is precisely (78). Then equations (61)–(62) become

The first is the spatial derivative of the equation

which is precisely equation (80). The second shows that \(\ddot{\alpha }\) will remain zero as long as \(\alpha \) and \(\dot{\alpha }\) are zero, as claimed. \(\square \)

4.2 Proof of Theorem 29

With this motivation in place, we now prove that the geodesic equation on the full space of flags is given by the equations in Theorem 29, using essentially the same method as for the space of whips. We first need an expression for \(\textbf{r}_{tt}(t,u,v)\). From now on we work formally, assuming that \(\kappa \) and \(\alpha \) have as many derivatives as any computation requires, since our goal is only to derive the equations.

Lemma 34

Suppose \(\textbf{r}:[0,T]\times [0,1]^2\rightarrow \mathbb {R}^3\) is a time-dependent family of regular upturned flags given by functions \(\kappa ,\alpha :[0,T]\times [0,1]\rightarrow \mathbb {R}\) such that for each t, the function \(\alpha \) satisfies the conditions of Definition 7.

Then the first time derivative at \(t=0\), denoted by \(\dot{\textbf{r}}(u,v) = \textbf{r}_t(0,u,v)\), is given by formula (36) from Proposition 18, while the second time derivative at \(t=0\), denoted by \(\ddot{\textbf{r}}(u,v) = \textbf{r}_{tt}(0,u,v)\), is given by

Here x is the function of (u, v) given by (22), while \(\ddot{\varvec{\eta }}\), \(\ddot{\textbf{b}}\), and \(\ddot{\textbf{t}}\) denote the second time derivatives at \(t=0\) of \(\varvec{\eta }\), \(\textbf{b}\), and \(\textbf{t}\) respectively, and \(\dot{\alpha }\) denotes the first time derivative as in Proposition 18.

Proof

We start with formula (44), derived in Proposition 18. Differentiating (44) again with respect to t, we obtain (suppressing the independent variables on the right side):

Taking the time derivative of the equation \(\textbf{b}_x = \alpha \varvec{\eta }_{xx}\) shows that

which gives (83). \(\square \)
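As a reading aid for this proof, applying the product rule to \(\textbf{b}_x = \alpha \varvec{\eta }_{xx}\) once and twice in time gives the following identities, where, as in the lemma, dots denote time derivatives at \(t=0\).

```latex
\dot{\textbf{b}}_x = \dot{\alpha}\,\varvec{\eta}_{xx} + \alpha\,\dot{\varvec{\eta}}_{xx},
\qquad
\ddot{\textbf{b}}_x = \ddot{\alpha}\,\varvec{\eta}_{xx} + 2\dot{\alpha}\,\dot{\varvec{\eta}}_{xx} + \alpha\,\ddot{\varvec{\eta}}_{xx}.
```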

To make these formulas more explicit, it is convenient to have expressions for the time derivatives of the Frenet–Serret basis. We have already derived part of this in Proposition 18.

Lemma 35