Abstract

Solar energy is one of the main renewable energy sources available for meeting global clean-energy targets. Like other renewables, its main drawback is its randomness and intermittency, which affect power grid stability. Energy storage units can mitigate this by storing surplus energy and releasing it during intervals of low solar generation. In addition, photovoltaic (PV) power forecasting is essential for maintaining a stable grid and for improving grid operation and energy storage management. In this paper, a new hybrid model based on deep learning techniques is proposed to predict short-term PV power generation. The proposed model combines a convolutional neural network (CNN) with a long short-term memory (LSTM) autoencoder network. It achieves high prediction accuracy by extracting spatial features of the time series through the CNN layers and temporal features through the LSTM. The model is tested on a dataset of power generation from a solar farm in the southern UK, together with weather data for the same location and time intervals, and its forecasting performance is evaluated using root-mean-square error (RMSE) and mean absolute error (MAE). The model is compared with models from the literature, both single-network models such as LSTM and gated recurrent unit (GRU) and hybrid combinations such as CNN-LSTM and CNN-GRU. The results show that the proposed model improves accuracy and significantly reduces training time compared with the competing models: RMSE and MAE improve by 5% to 25% on average, and training time is reduced by almost 70%.

1 Introduction

The future structure of modern power systems will depend heavily on renewable energy systems, which have become an essential part of the new power mix because of their low cost compared with conventional power generation [1]. Renewable sources are intermittent, random and hard to predict, which affects the stability of the power system, degrades the control performance of the grid and has an economic impact. As a result, new power grid configurations are emerging that aim for an optimized grid size and make use of the tools available in smart grids.

New hybrid grid configurations are starting to replace large power grids. These hybrid grids include different types of energy generation and storage units and use new energy management techniques to minimize the drawbacks of renewable energy resources, optimize grid performance and maximize economic benefit [2].

Accurate forecasting of PV power generation has become essential for the planning and operation of PV units, which can affect the stability and optimization of the entire power system as well as the economics of its operation [3].

Different techniques are used for accurate PV power generation forecasting, ranging from physical modeling to statistical methods and artificial intelligence methods, and from simple artificial neural networks (ANN) to more advanced networks such as LSTM and GRU and more complex hybrid networks and configurations. In [4], an ANN is used to predict the monthly average daily total solar irradiation. Adaptive feed-forward back-propagation ANN (AFFNN) models with different parameter configurations were introduced for short-term forecasting of PV production, and the proposed AFFNN shows better results than a classical ANN [5]. A combination of fuzzy logic tools with an RNN is used for long-term forecasting of PV generation in [6]. An LSTM-RNN model is used for PV generation forecasting in [7] and gives the best result compared with three other methods: multiple linear regression (MLR), bagged regression trees (BRT) and a basic NN. In [8], differently structured LSTM network models are used for hour-ahead solar power forecasting and achieve good results with reduced computation time. A hybrid model based on a modified CNN and Bi-GRU with good prediction performance is introduced for short-term PV power generation prediction in [9]. A combined CNN-LSTM model is proposed for solar energy production and improves performance compared with traditional models [10]. An LSTM autoencoder model for day-ahead PV power generation forecasting is introduced in [11], and an autoencoder with a GRU network is used for short-term PV power generation forecasting in [12]. A convolutional autoencoder combined with a generative adversarial network is proposed for day-ahead PV power forecasting in [13].

In this paper, a new hybrid prediction model integrating a CNN and an LSTM autoencoder network is suggested for short-term PV power generation forecasting at different time spans: 0.5 h, 1 h and 2 h. CNNs handle matrix-formatted inputs of different dimensions, which is why they are widely used in computer vision applications. In the proposed model, the CNN layer extracts the internal relationships between adjacent measured values in the same time sequence, and spatial features are extracted from them. LSTM networks, in turn, are highly capable of capturing temporal relationships in sequential data, including stationary and non-stationary dynamics, by using their recurrent architecture and memory units to extract date-time features together with the past history of the data, which is why they are widely used in time series analysis. Combining the CNN and LSTM with an autoencoder block provides better results, as shown in the results section.

Besides, the weather data of temperature and solar radiation are taken into consideration for accurate PV power forecasting. Rather than using the PV power data of the preceding days as a proxy for changes in weather conditions, as the state-of-the-art techniques in the literature do, the actual values of PV power generation are given directly as input variables, along with temperature and solar radiation data, to train the proposed model. The performance of the proposed hybrid model is evaluated and compared with that of CNN, LSTM and GRU models using different metrics. All models are developed and simulated in Python using the TensorFlow and Keras libraries.

The contributions of this work can be summarized as follows:

- The proposed hybrid model exploits both the spatial and the temporal features in time series data, unlike the competing single models.
- The architecture of the proposed model allows weather data to be incorporated with the PV power generation data, which contributes to accurate prediction results.
- The proposed model reduces the errors between the predicted and actual time sequences across different metrics, and it reduces the time elapsed in both the training and test phases.

The rest of this paper is organized as follows: Sect. 2 presents background on the deep networks and structures used in our proposal. Sect. 3 explains the proposed model for PV power generation forecasting, its construction, the dataset and the performance evaluation metrics. Sect. 4 presents the simulation results. The conclusion and future work are given in Sect. 5.

2 The proposed networks for PV power forecasting based on deep learning

Several deep learning models are used in this study for PV power generation forecasting: LSTM, CNN, GRU and LSTM autoencoder networks, as well as a model that combines CNN with LSTM and another that combines CNN with GRU. These networks are detailed in the following sections.

2.1 LSTM network

The LSTM network is an advanced version of the conventional recurrent neural network (RNN) that solves the RNN's long-range dependency problem. Since it was first introduced by Sepp Hochreiter and Jürgen Schmidhuber in 1997 [14], the LSTM network has been widely used in different applications. One is text recognition, as in [15], where an LSTM network with up to ten hidden layers is used for handwriting recognition and achieves a significant performance improvement. Another is natural language processing (NLP), as in [16], where an improved NLP method based on an LSTM structure is described. Time series forecasting is a further area, for example price and stock market forecasting as presented in [17], where an RNN-LSTM model is used to forecast future values of the GOOGL and NKE assets; an LSTM was also employed to predict microclimate data inside a greenhouse [18]. LSTM networks have also been used for PV generation forecasting, as in [8] and [19].

The basic LSTM network structure consists of connected memory blocks, each containing gates that determine the state of the memory block and its output. This architecture addresses the vanishing and exploding gradient problems faced by RNNs, so that long-term information can be captured. The LSTM structure is shown in Fig. 1.

The LSTM block includes the following gates and memory cells:

- Input gate (\({i}_{k}\)): controls which input values are used to update the memory cell.
- Forget gate (\({f}_{k}\)): determines which information and internal states are reset or discarded from the block.
- Memory cell (\({C}_{k}\)): the main component of the LSTM block. Its state is updated over time depending on the previous state (\({C}_{k-1}\)), which ensures that the gradient can pass across multiple time steps.
- Output gate (\({O}_{k}\)): generates the output based on the input values, the previous output values and the state of the memory cell.

The activation function of each gate is the sigmoid function \(\sigma \), and the hidden layers use the hyperbolic tangent function \({\text{tanh}}\).

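The mathematical formulas describing the gates' outputs in the LSTM model are as follows:

\[{i}_{k}=\sigma \left({w}_{i}\left[{h}_{k-1},{x}_{k}\right]+{b}_{i}\right)\]

\[{f}_{k}=\sigma \left({w}_{f}\left[{h}_{k-1},{x}_{k}\right]+{b}_{f}\right)\]

\[{O}_{k}=\sigma \left({w}_{o}\left[{h}_{k-1},{x}_{k}\right]+{b}_{o}\right)\]

\[{\widetilde{C}}_{k}={\text{tanh}}\left({w}_{c}\left[{h}_{k-1},{x}_{k}\right]+{b}_{c}\right)\]

\[{C}_{k}={f}_{k}\odot {C}_{k-1}+{i}_{k}\odot {\widetilde{C}}_{k}\]

\[{h}_{k}={O}_{k}\odot {\text{tanh}}\left({C}_{k}\right)\]

where \({x}_{k}\) is the input, \({h}_{k}\) is the hidden layer output, \({\widetilde{C}}_{k}\) is the candidate cell state, \(\odot \) denotes element-wise multiplication, the \(w\) s are the weights and the \(b\) s are the bias terms for the input, output, forget gate, and cell.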
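To make the role of each gate concrete, the following is a minimal NumPy sketch of a single LSTM time step. It assumes the widely used formulation in which each gate applies its own weight matrix to the concatenation of the previous hidden output and the current input; the function name `lstm_step`, the dictionary layout of `w` and `b`, and the sizes used in the example are illustrative assumptions and are not taken from the models trained in this study.

```python
import numpy as np


def sigmoid(z):
    """Logistic function used by the input, forget and output gates."""
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_k, h_prev, c_prev, w, b):
    """One LSTM time step (standard formulation, assumed here).

    w and b are dicts keyed by "i", "f", "o", "c" (hypothetical layout);
    each w[g] has shape (hidden, hidden + n_inputs), each b[g] shape (hidden,).
    """
    z = np.concatenate([h_prev, x_k])        # [h_{k-1}, x_k]
    i_k = sigmoid(w["i"] @ z + b["i"])       # input gate
    f_k = sigmoid(w["f"] @ z + b["f"])       # forget gate
    o_k = sigmoid(w["o"] @ z + b["o"])       # output gate
    c_tilde = np.tanh(w["c"] @ z + b["c"])   # candidate cell state
    c_k = f_k * c_prev + i_k * c_tilde       # updated memory cell
    h_k = o_k * np.tanh(c_k)                 # hidden layer output
    return h_k, c_k


# Run five time steps on random inputs with three features per step
# (for instance power, temperature and irradiance).
rng = np.random.default_rng(0)
n_inputs, hidden = 3, 4
w = {g: rng.normal(scale=0.1, size=(hidden, hidden + n_inputs)) for g in "ifoc"}
b = {g: np.zeros(hidden) for g in "ifoc"}
h, c = np.zeros(hidden), np.zeros(hidden)
for x_k in rng.normal(size=(5, n_inputs)):
    h, c = lstm_step(x_k, h, c, w, b)
```

Because the memory cell is updated additively (`f_k * c_prev + i_k * c_tilde`), information can be carried across many steps, which is the property the text attributes to the memory cell.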

2.2 GRU network

The gated recurrent unit (GRU) network was first introduced by Cho et al. [20]. Its architecture is similar to that of the LSTM, and both have input and output structures similar to those of an ordinary RNN. Although the GRU has a simpler structure than the LSTM, its internal structure is more complicated than that of a plain RNN. The GRU has one gate fewer than the LSTM, which reduces the number of matrix multiplications and can therefore save considerable time without degrading performance [21, 22]. The structure of the GRU block is shown in Fig. 2.

GRUs address the vanishing gradient problem by using an update gate and a reset gate. The update gate handles the information that flows into memory, and the reset gate controls the information that flows out of memory. Both gates are trained to keep information from the past or to remove information that is irrelevant to the prediction.

Here, the update gate \({Z}_{k}\), the reset gate \({r}_{k}\), the cell State \({C}_{k}\) and the new State \({h}_{k}\) are mathematically formulated as follows:

\({x}_{k}\) is the input, \({h}_{k}\) is the hidden layer output, \(w\) s are the cell state weights and \(U\) s are the bias terms.
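As an illustration of how the update and reset gates interact, the sketch below implements one GRU step in NumPy under a commonly used formulation. Several points are assumptions rather than statements about the model used in this study: the \(w\) matrices are applied to the input, the \(U\) matrices are treated as recurrent weights applied to the previous hidden output, separate bias vectors are omitted, and the new state is formed as \((1-{Z}_{k})\odot {h}_{k-1}+{Z}_{k}\odot {C}_{k}\) (some references swap the roles of \({Z}_{k}\) and \(1-{Z}_{k}\)). The function name `gru_step` and the dictionary layout are hypothetical.

```python
import numpy as np


def sigmoid(z):
    """Logistic function used by the update and reset gates."""
    return 1.0 / (1.0 + np.exp(-z))


def gru_step(x_k, h_prev, w, u):
    """One GRU time step under the assumptions stated in the lead-in.

    w[g] has shape (hidden, n_inputs) and u[g] shape (hidden, hidden),
    for g in "z" (update), "r" (reset) and "c" (candidate state).
    """
    z_k = sigmoid(w["z"] @ x_k + u["z"] @ h_prev)          # update gate
    r_k = sigmoid(w["r"] @ x_k + u["r"] @ h_prev)          # reset gate
    c_k = np.tanh(w["c"] @ x_k + u["c"] @ (r_k * h_prev))  # candidate state
    h_k = (1.0 - z_k) * h_prev + z_k * c_k                 # new state
    return h_k


# Run five time steps on random inputs with three features per step.
rng = np.random.default_rng(0)
n_inputs, hidden = 3, 4
w = {g: rng.normal(scale=0.1, size=(hidden, n_inputs)) for g in "zrc"}
u = {g: rng.normal(scale=0.1, size=(hidden, hidden)) for g in "zrc"}
h = np.zeros(hidden)
for x_k in rng.normal(size=(5, n_inputs)):
    h = gru_step(x_k, h, w, u)
```

Compared with an LSTM step, there is no separate memory cell and only two gates, so each step needs three matrix pairs instead of four, which is consistent with the reduced computation noted above.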

2.3 CNN network

The convolutional neural network (CNN) is another class of deep learning technique, introduced by Yann LeCun in the late 1980s [23]. CNNs have been applied successfully in domains such as image processing, recognition and classification [24, 25]. Natural language processing and speech recognition are other areas where CNNs are widely used with great success [26, 27]. CNNs have also attracted interest for industrial applications such as autonomous mobile robots and self-driving cars [28], and more recently they have been used extensively in computer vision applications [29, 30]. The architecture of a CNN is shown in Fig. 3.

As shown in Fig. 3, the basic CNN architecture consists of several types of layers, such as convolution, pooling and fully connected layers; these layers are repeated and connected in different sizes and forms depending on the application. The different CNN layers are described as follows:

- Convolution layer: a fundamental component of the CNN, containing several convolution kernels that generate new feature maps. The convolution operation performs well in local feature extraction, and the kernel weights are shared across all input maps.
- Pooling layer: usually used to reduce the in-plane dimensionality of the input, which decreases the number of learnable parameters and helps to avoid overfitting. Pooling operations can be of different types, such as max pooling and average pooling.
- Fully connected layer: often used for high-level inference; it maps the features processed by the convolution and pooling layers to the output layer.

In addition, both the convolution and pooling layers are equipped with a nonlinear activation function, such as the hyperbolic tangent function (tanh) or the rectified linear unit (ReLU).
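For time series input, the convolution and pooling operations described above act along the time axis. The following NumPy sketch shows a one-dimensional convolution without padding, a ReLU activation and max pooling applied to a short window. The function names and the toy kernel are illustrative assumptions; a trained network would learn the kernel values.

```python
import numpy as np


def conv1d_valid(x, kernels, bias):
    """1D convolution without padding.

    x: (steps, channels), kernels: (k, channels, filters), bias: (filters,).
    Returns an array of shape (steps - k + 1, filters).
    """
    k = kernels.shape[0]
    steps_out = x.shape[0] - k + 1
    out = np.empty((steps_out, kernels.shape[2]))
    for t in range(steps_out):
        # The same kernel weights are reused at every position t.
        out[t] = np.tensordot(x[t:t + k], kernels, axes=([0, 1], [0, 1])) + bias
    return out


def relu(x):
    """Rectified linear unit."""
    return np.maximum(x, 0.0)


def max_pool1d(x, size):
    """Non-overlapping max pooling along the time axis."""
    steps = (x.shape[0] // size) * size  # drop an incomplete trailing group
    return x[:steps].reshape(-1, size, x.shape[1]).max(axis=1)


# Toy example: six time steps, one channel, one summing kernel of size 3.
x = np.arange(6.0).reshape(6, 1)
kernels = np.ones((3, 1, 1))
features = relu(conv1d_valid(x, kernels, np.zeros(1)))  # [[3], [6], [9], [12]]
pooled = max_pool1d(features, size=2)                   # [[6], [12]]
```

The kernel of size 3 turns six time steps into four feature values, and pooling with size 2 halves that again, which illustrates how these layers reduce the dimensionality of the window before it reaches later layers.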

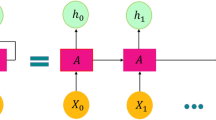

2.4 LSTM autoencoder network

The LSTM autoencoder is a recent approach to time sequence prediction in which both the encoder and the decoder are implemented with LSTM cells, which can learn temporal dependencies in sequence data. The input sequence is compressed by the encoder into a fixed-length vector, which forms a bottleneck at the midpoint of the model; from this vector, the decoder reconstructs the output data, which is taken as the prediction [31,32,33,34]. In short, the autoencoder consists of an encoder layer that compresses the input data into a code and a decoder layer that decodes this code. The architecture of the LSTM autoencoder is shown in Fig. 4.

3 Proposed hybrid CNN-LSTM autoencoder model

In this study, a new hybrid model using a CNN and an LSTM autoencoder network is proposed for short-term PV power generation forecasting. The hybrid model combines the advantages of the CNN in feature extraction with the ability of the LSTM autoencoder to learn patterns over long sequences: the CNN layers extract the main spatial features of the time series window, and the LSTM layers then learn the temporal variation and long-range dependencies of the time series. To our knowledge, the proposed hybrid model has not previously been applied to PV power generation forecasting.

The proposed CNN-LSTM autoencoder model structure consists of the following:

- Input layer
- CNN hidden layer with 24 filters and a kernel size of 3
- Max pooling layer
- LSTM encoder and decoder layers (three layers)
- Output layer

The total number of trained parameters is 75,844, as depicted in Table 1.

The activation function is ReLU, and the optimization method is adaptive moment estimation (Adam), which computes a learning rate for each parameter using the first and second moments of the gradient. Adam requires little memory and performs well on large datasets compared with other methods. The architecture of the proposed hybrid CNN-LSTM autoencoder model is shown in Fig. 5.
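The layer list above can be sketched in Keras as follows. This is only an outline under stated assumptions, not the exact network of Table 1: the number of LSTM units (`units`), the pooling size, the split into one encoder and one decoder LSTM layer, and the use of `RepeatVector` and `TimeDistributed` to connect them are illustrative choices, and the function names are hypothetical. Only the 24 filters, the kernel size of 3, the ReLU activation and the Adam optimizer come from the description in the text.

```python
def pooled_length(window, kernel_size=3, pool_size=2):
    """Time steps left after an unpadded Conv1D followed by max pooling."""
    return (window - kernel_size + 1) // pool_size


def build_cnn_lstm_autoencoder(window, n_features, n_outputs, units=32):
    """Assemble a CNN-LSTM autoencoder (layer sizes are assumptions)."""
    # Imported here so that pooled_length can be used without TensorFlow.
    from tensorflow import keras
    from tensorflow.keras import layers

    model = keras.Sequential([
        layers.Input(shape=(window, n_features)),
        # Feature extraction over the input window (24 filters, kernel size 3).
        layers.Conv1D(filters=24, kernel_size=3, activation="relu"),
        layers.MaxPooling1D(pool_size=2),
        # Encoder: compresses the sequence to a fixed-length code.
        layers.LSTM(units, activation="relu"),
        # Present the code to the decoder once per output step.
        layers.RepeatVector(n_outputs),
        # Decoder: unrolls the code into an output sequence.
        layers.LSTM(units, activation="relu", return_sequences=True),
        # Output layer: one power value per output step.
        layers.TimeDistributed(layers.Dense(1)),
    ])
    model.compile(optimizer="adam", loss="mse")
    return model
```

With these assumptions, a call such as `build_cnn_lstm_autoencoder(window=96, n_features=3, n_outputs=1)` would build a model for a two-day window of 30-min samples with power, temperature and irradiance as inputs; `pooled_length(96)` gives the sequence length seen by the encoder. The parameter count of such a sketch will generally differ from the 75,844 reported in the text.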

The following subsections present the dataset used to validate the proposed hybrid network and the metrics used to evaluate the performance of the proposed hybrid CNN-LSTM autoencoder model for PV power generation forecasting.

3.1 Dataset

The solar PV generation dataset used in this study was collected from a real 5 MW solar farm located in the southern UK. The data have a resolution of 30 min and cover the period from November 3, 2017, to December 17, 2019, for a total of 37,200 sample points. The PV power generation dataset is preprocessed and then normalized using min-max scaling:

\[{x}_{{\text{norm}}}=\frac{x-{x}_{{\text{min}}}}{{x}_{{\text{max}}}-{x}_{{\text{min}}}}\]

where \({x}_{{\text{min}}}\) is the minimum value in the dataset, \({x}_{{\text{max}}}\) is the maximum value and \({x}_{{\text{norm}}}\) is the normalized value of \(x\).
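A minimal sketch of this normalization, assuming the usual min-max form that maps the minimum to 0 and the maximum to 1, is given below together with the inverse mapping needed to express predictions in physical units again. The function names and the sample values are illustrative and are not taken from the dataset.

```python
import numpy as np


def minmax_fit(x):
    """Return (x_min, x_max) of the data."""
    return float(np.min(x)), float(np.max(x))


def minmax_transform(x, x_min, x_max):
    """Scale x so that x_min maps to 0 and x_max maps to 1."""
    return (np.asarray(x, dtype=float) - x_min) / (x_max - x_min)


def minmax_inverse(x_norm, x_min, x_max):
    """Map normalized values back to the original units."""
    return np.asarray(x_norm, dtype=float) * (x_max - x_min) + x_min


# Hypothetical power readings in MW.
power = np.array([0.0, 1.25, 2.5, 5.0])
x_min, x_max = minmax_fit(power)
power_norm = minmax_transform(power, x_min, x_max)    # 0, 0.25, 0.5, 1
restored = minmax_inverse(power_norm, x_min, x_max)   # original values
```

Keeping `x_min` and `x_max` as explicit values makes it possible to apply the same scaling to new data and to invert the model output after prediction.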

As weather is an important factor in energy systems, weather data are also used in this study. They include temperature and irradiance data extracted for the same period from several sites surrounding the solar farm using the MERRA-2 reanalysis data and then averaged to obtain the weather conditions at the solar farm.

The proposed model is applied to the PV power generation data both with and without weather data. A sliding window technique was used with a window of two days, and no classification by season was used. The datasets were divided so that 70% of the data were used for training the models (27,840 sample points) and 30% for testing (9360 sample points).
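The sliding window step can be sketched as follows. At a 30-min resolution, a two-day window corresponds to 96 samples, and horizons of 0.5 h, 1 h and 2 h correspond to 1, 2 and 4 steps ahead. The function names, the convention that the target is the first column of the series, and the use of a simple chronological cut controlled by `train_fraction` are assumptions made for illustration; the exact procedure used in the study is not detailed in the text.

```python
import numpy as np


def make_windows(series, window, horizon):
    """Build (input window, target) pairs from a multivariate series.

    series: (n_samples, n_features) with the forecast target in column 0.
    Each input holds `window` consecutive samples; its target is the value
    of column 0 located `horizon` steps after the end of the window.
    """
    series = np.asarray(series, dtype=float)
    n = series.shape[0] - window - horizon + 1
    X = np.stack([series[i:i + window] for i in range(n)])
    y = series[window + horizon - 1: window + horizon - 1 + n, 0]
    return X, y


def chronological_split(X, y, train_fraction=0.7):
    """Split without shuffling so that the test data follow the training data."""
    cut = int(len(X) * train_fraction)
    return (X[:cut], y[:cut]), (X[cut:], y[cut:])


# Toy series of ten samples with one feature, window of 4, 2 steps ahead.
series = np.arange(10.0).reshape(10, 1)
X, y = make_windows(series, window=4, horizon=2)
(train_X, train_y), (test_X, test_y) = chronological_split(X, y)
```

In this toy example the first input window holds the values 0 to 3 and its target is 5, the value two steps after the window ends. For the setting described in the text, the call would use `window=96` and `horizon` equal to 1, 2 or 4.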

The scatter plots of power generation data versus both the solar radiation data and temperature data are shown in Figs. 6 and 7, respectively.

3.2 Performance evaluation metrics

The forecasting performance of the suggested hybrid CNN-LSTM autoencoder model and of the other models used in the comparison (LSTM, GRU, CNN-LSTM and CNN-GRU) is evaluated using metrics common in the literature: root-mean-square error (RMSE) and mean absolute error (MAE). RMSE and MAE are calculated as follows:

\[{\text{RMSE}}=\sqrt{\frac{1}{n}\sum_{k=1}^{n}{\left({y}_{k}-{Y}_{k}\right)}^{2}}\]

\[{\text{MAE}}=\frac{1}{n}\sum_{k=1}^{n}\left|{y}_{k}-{Y}_{k}\right|\]

Here, \({y}_{k}\) is the actual value, \({Y}_{k}\) is the predicted value and \(n\) is the number of points.

The elapsed training time of each model was also recorded for the comparison.
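Both metrics are straightforward to compute from paired actual and predicted values. The sketch below uses the standard definitions of RMSE and MAE; the function names and the sample numbers are illustrative and do not come from the results of this study.

```python
import numpy as np


def rmse(actual, predicted):
    """Root-mean-square error between actual and predicted values."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.sqrt(np.mean((actual - predicted) ** 2)))


def mae(actual, predicted):
    """Mean absolute error between actual and predicted values."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.mean(np.abs(actual - predicted)))


# Hypothetical values: three exact predictions and one error of 4.
actual = [0.0, 2.0, 4.0, 6.0]
predicted = [0.0, 2.0, 4.0, 10.0]
print(rmse(actual, predicted))  # 2.0
print(mae(actual, predicted))   # 1.0
```

The example shows why both metrics are reported: a single large error raises the RMSE (2.0) more than the MAE (1.0), because the RMSE squares each error before averaging.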

4 Simulation results

The proposed hybrid CNN-LSTM autoencoder model for power generation forecasting is evaluated by comparison with the LSTM, GRU, CNN-LSTM and CNN-GRU models at different time horizons (half an hour, one hour and two hours ahead) using the RMSE and MAE metrics. Simulation results for the proposed hybrid network and the other models, obtained on PV generation data with and without the corresponding weather data in the training and test stages, are given in Tables 2, 3, 4, 5, 6, 7 and shown in Fig. 8. The predicted values versus the actual values are shown in Figs. 10, 11, 12, 13, 14, 15.

As Tables 2, 3, 4, 5, 6, 7 show, the proposed CNN-LSTM autoencoder model achieves the best forecasting performance, with the lowest RMSE and MAE values, at all time horizons, both with and without weather data, compared with the other models. Furthermore, for 0.5, 1 and 2 h ahead forecasting, the RMSE and MAE of the proposed model are better when weather data are taken into account (Tables 3, 5 and 7) than without weather data (Tables 2, 4 and 6); this indicates that the proposed model can handle larger data with better performance than the other models. The results also show that both RMSE and MAE increase for all models as the forecasting horizon increases.

Figure 8 shows the RMSE and MAE of the networks for each forecasting horizon. The proposed CNN-LSTM autoencoder gives the lowest error at all forecasting horizons, and the error is smallest at the shortest horizon. Using the weather data also improves the prediction, as it provides additional input information. The proposed model works well regardless of the dimensions of the input data.

Figure 9 shows the loss curve of the proposed model during the training and test phases versus the number of epochs, with and without weather data, for all the forecasting horizons.

Fig. 9 The loss curve over 100 epochs during the training and test phases of the proposed model at the different time horizons: (a) 0.5 h ahead forecasting without weather data, (b) 0.5 h ahead forecasting with weather data, (c) 1 h ahead forecasting without weather data, (d) 1 h ahead forecasting with weather data, (e) 2 h ahead forecasting without weather data and (f) 2 h ahead forecasting with weather data

The loss curve represents the mean square error (MSE) calculated over the epochs, first during the training phase, in which the parameters of the proposed CNN-LSTM autoencoder model are updated, and then during the test phase, in which its performance is assessed. As shown in Fig. 9, the MSE increases with the forecasting horizon in both phases.

Figures 10, 11, 12, 13, 14, 15 show the original and the predicted values of the proposed hybrid CNN-LSTM autoencoder and the comparative methods with and without weather data at different time horizons.

Figures 10, 11, 12, 13, 14, 15 show that PV power generation is close to zero at night, whereas all the prediction models tend to attain satisfactory forecasting accuracy during the rest of the day. The forecast power curves of the proposed hybrid CNN-LSTM autoencoder model are close to the actual PV power generation at all horizons, and its forecasting accuracy is higher than that of the other models for 0.5 h, one hour and two hours ahead forecasting, both with and without weather data. The results indicate that the proposed method was able to forecast the required horizon under different weather conditions. Prediction is also better for shorter horizons than for longer ones.

In addition, the proposed hybrid model is compared with a competitive approach from the literature. Table 8 displays the results of this work and of the recent study [19] for PV power generation forecasting from 0.5 h to 2 h ahead. The results given in [19] are rescaled to allow a fair comparison.

The results indicate the superiority of the suggested hybrid model over the state-of-the-art method at all time horizons in terms of RMSE and MAE, whose values are lower, as given in Table 8.

5 Conclusion

In this paper, a new hybrid model combining a CNN and an LSTM autoencoder is suggested for forecasting PV power generation at different times ahead. Instead of using prior information from adjacent days as a proxy for changes in weather conditions, as in [8] and [19], temperature and solar radiation data are given as input factors along with the actual values of PV power generation to train the proposed model for accurate forecasting. The results show that integrating a CNN block with either the LSTM or the GRU, together with the autoencoder blocks, significantly reduces the training time of the models, by almost 70%. The performance of the proposed model improves by 5–25% over the other models in the comparison, and the CNN-LSTM autoencoder model provides the best performance. In addition, when weather data are used, the training time of the models increases, but their performance is better than with PV power generation data alone. Besides, the suggested hybrid CNN-LSTM autoencoder model outperforms the state-of-the-art models in the literature in terms of RMSE and MAE, where the suggested hybrid model achieves low values, which are almost 40–80% compared to the other models in the literature, depending on the forecasting interval.

In future work, the proposed hybrid model is also recommended for forecasting power consumption, as it does not depend on the intrinsic properties of the sample data sequence.

Data availability

The datasets are publicly available at the Western Power Distribution Open Data Hub site (https://www.westernpower.co.uk/innovation/pod, accessed on August 6, 2021) upon login.

References

Ghafoor A, Munir A (2015) Design and economics analysis of an off-grid PV system for household electrification. Renew Sustain Energy Rev 42:496–502

Murty VVSN, Kumar A (2020) Multi-objective energy management in microgrids with hybrid energy sources and battery energy storage systems. Protect Control Mod Power Syst 5:2. https://doi.org/10.1186/s41601-019-0147-z

Antonanzas J, Osorio N, Escobar R, Urraca R, Martinez-de-Pison FJ, Antonanzas-Torres F (2016) Review of photovoltaic power forecasting. Sol Energy 136:78–111

Mubiru J (2008) Predicting total solar irradiation values using artificial neural networks. Renew Energy 33(10):2329–2332

Mellit A, Pavan AM, Lughi V (2014) Short-term forecasting of power production in a large-scale photovoltaic plant. Sol Energy 105:401–413

Yona A, Senjyu T, Funabashi T, Kim CH (2013) Determination method of insolation prediction with fuzzy and applying neural network for long-term ahead PV power output correction. IEEE Trans Sustain Energy 4(2):527–533

Abdel-Nasser M, Mahmoud K (2019) Accurate photovoltaic power forecasting models using deep LSTM-RNN. Neural Comput Appl 31:2727–2740

Zhang J, Chi Y, Xiao L (2018) Solar power generation forecast based on LSTM. In: International Conference on Software Engineering and Service Science (ICSESS)

Tian F, Fan X, Fan Y, Wang R, Lian C (2022) Ultra-short-term PV power generation prediction based on gated recurrent unit neural network. In: The proceedings of the 16th Annual Conference of China Electrotechnical Society, pp 60–76

Al-Ali EM, Hajji Y, Said Y, Hleili M, Alanzi AM, Laatar AH, Atri M (2023) Solar energy production forecasting based on a hybrid CNN-LSTM-transformer model. Mathematics. https://doi.org/10.3390/math11030676

Zhang Y, Qin C, Srivastava AK, Jin C, Sharma RK (2020) Data-driven day-ahead PV estimation using autoencoder-LSTM and persistence model. IEEE Trans Ind Appl. https://doi.org/10.1109/TIA.2020.3025742

Rai A, Shrivastava A, Jana KC (2022) A Robust auto encoder-gated recurrent unit (AE-GRU) based deep learning approach for short term solar power forecasting. Optik. https://doi.org/10.1016/j.ijleo.2021.168515

Pan X, Zhou J, Sun X, Cao Y, Cheng X, Farahmand H (2023) A hybrid method for day-ahead photovoltaic power forecasting based on generative adversarial network combined with convolutional autoencoder. IET Renew Power Gener. https://doi.org/10.1049/rpg2.12619

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput. https://doi.org/10.1162/neco.1997.9.8.1735

Voigtlaender P, Doetsch P, Ney H (2016) Handwriting recognition with large multidimensional long short-term memory recurrent neural networks. In: International Conference on Frontiers in Handwriting Recognition (ICFHR)

Yao L, Guan Y (2018) An improved LSTM structure for natural language processing. In: IEEE International Conference of Safety Produce Informatization (IICSPI)

Moghara A, Hamiche M (2020) Stock market prediction using LSTM recurrent neural network. Proc Comput Sci. https://doi.org/10.1016/j.procs.2020.03.049

Gharghory SM (2020) Deep network based on long short-term memory for time series prediction of microclimate data inside the greenhouse. Int J Comput Intell Appl (IJCIA) 19(2):2050013

Li G, Xie S, Wang B, Xin J, Li Y, Du S (2020) Photovoltaic power forecasting with a hybrid deep learning approach. IEEE Access 8:175871–175880

Cho K, van Merrienboer B, Gulcehre C, Bahdanau D, Bougares F, Schwenk H, Bengio Y (2014) Learning phrase representations using RNN encoder-decoder for statistical machine translation, arXiv:1406.1078

Yamak PT, Yujian L, Gadosey PK (2019) A comparison between ARIMA, LSTM, and GRU for time series forecasting. In: Proceedings of International Conference on Algorithms, Computing and Artificial Intelligence, Dec 2019, pp 49–55

Khan AT, Khan AR, Li S, Bakhsh S, Mehmood A, Zaib J (2021) Optimally configured gated recurrent unit using hyperband for the long-term forecasting of photovoltaic plant. Renew Energy Focus 39:49–58

LeCun Y, Boser B, Denker JS, Henderson D, Howard RE, Hubbard W, Jackel LD (1989) Backpropagation applied to handwritten zip code recognition. Neural Comput 1(4):541–551

Lawrence S, Tosi AC, Back AD (1997) Face recognition: a convolutional neural-network approach. IEEE Trans Neural Netw 8(1):98–113

Vaillant R, Monrocq C, Le Cun Y (1994) Original approach for the localisation of objects in images. IEE Proc-Vision Image Signal Process 141(4):245–250

Collobert R, Weston J, Bottou L, Karlen M, Kavukcuoglu K, Kuksa P (2011) Natural language processing (almost) from scratch. J Mach Learn Res 12:2493–2537

Sainath TN, Mohamed AR, Kingsbury B, Ramabhadran B (2013) Deep convolutional neural networks for LVCSR, Acoustics, Speech and Signal Processing (ICASSP). In: IEEE International Conference

Hadsell R, Sermanet P, Ben J, Erkan A, Scoffier M, Kavukcuoglu K, Muller U, LeCun Y (2009) Learning long-range vision for autonomous off-road driving. J Field Robot 26(2):120–144

Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. Adv Neural Inform Process Syst 60:6. https://doi.org/10.1145/3065386

Yamashita R, Nishio M, Do RKG, Togashi K (2018) Convolutional neural networks: an overview and application in radiology. Insights Imaging 9(4):611–629

Essien A, Giannetti C (2020) A deep learning model for smart manufacturing using convolutional LSTM neural network autoencoders. IEEE Trans Ind Inform. https://doi.org/10.1109/TII.2020.2967556

Park SH, Kim B, Kang CM (2018) Sequence-to-sequence prediction of vehicle trajectory via lstm encoder-decoder architecture. In: IEEE Intelligent Vehicles Symposium (IV), IEEE, pp 1672–1678

Longari S, Humberto D, Valcarcel N, Zago M, Carminati M, Zanero S (2020) Cannolo: an anomaly detection system based on lstm autoencoders for controller area network. IEEE Trans Netw Serv Manag. https://doi.org/10.1109/TNSM.2020.3038991

Tong X, Wang J, Zhang C, Wu T, Wang H, Wang Y (2021) LS-LSTM-AE: power load forecasting via long-short series features and LSTM-autoencoder. In: 8th International Conference on Power and Energy Systems Engineering (CPESE 2021)

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Contributions

MSI, SMG and HAK contributed to conceptualization; MSI and SMG provided software; MSI and SMG involved in writing (original draft preparation); MSI, SMG and HAK involved in writing (review and editing); all authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest

The authors did not receive support from any organization for the submitted work. The authors have no relevant financial or non-financial interests to disclose. The authors have no competing interests to declare that are relevant to the content of this article. All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript. The authors have no financial or proprietary interests in any material discussed in this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ibrahim, M.S., Gharghory, S.M. & Kamal, H.A. A hybrid model of CNN and LSTM autoencoder-based short-term PV power generation forecasting. Electr Eng (2024). https://doi.org/10.1007/s00202-023-02220-8

DOI: https://doi.org/10.1007/s00202-023-02220-8