Abstract

Efficient detection and classification of power quality disturbances are increasingly required with the growing penetration of multi-energy systems, such as microgrids, and of renewable energy resources. Machine learning approaches are popular because they learn useful features directly from data, which improves classification performance. This paper analyses the classification performance of a hybrid model that combines multi-resolution analysis with a long short-term memory network. The proposed model uses a four-level wavelet decomposition to represent each input signal as a set of multi-band signals. Spatial and temporal feature representations of the wavelet coefficients are highlighted using an attention mechanism before being fed into a long short-term memory network for sequence feature extraction. The sequence feature output is then passed into multiple dense layers for classification. Synthetic disturbance signals are used as training samples. The performance tests include signals with 20–50 dB signal-to-noise ratio, where additive white Gaussian noise is added to the test samples.

1 Introduction

The integration of multiple energy systems, especially renewable energy microgrids, has proved effective in reducing the carbon footprint [1]. However, the rapid growth of such integration also challenges utilities in providing a good quality of power supply [2, 3]. Power quality disturbances (PQDs) are among the major concerns in improving the functionality and efficiency of a microgrid [4]. A PQD is defined as a disruption of the magnitude or frequency of the sinusoidal supply waveform [5]. PQDs shorten the lifespan of electrical devices, cause sensitive electronic devices such as computers to malfunction, cause unwanted power tripping, and lead to financial losses and reduced productivity. PQD detection and classification is thus an important tool for monitoring, preventive action [6] and finding the root cause [7] of disturbances within the power system. The ability to identify the presence of PQDs in a system helps guide energy management operations.

PQD detection and classification mainly involves three stages: signal analysis, feature extraction, and classification. Frequency-domain signal analysis techniques include the fast Fourier transform [8] and the discrete Fourier transform (DFT) [9]. Time–frequency analysis methods such as the short-time Fourier transform (STFT) [10], wavelet transform (WT) [11, 12], wavelet packet transform [13] and S-transform [14] have been widely applied. The wavelet-based and S-transform methods in particular address the shortcomings of the DFT and STFT. These signal analysis methods have been studied and proven effective for the detection and classification of PQDs.

Statistical feature extraction is usually carried out after the signal analysis stage [15, 16]. Statistical features such as the mean, median, RMS, standard deviation, variance, and norm are extracted and passed to the next stage for higher-order feature extraction. Good feature extraction can both reduce the computational cost and improve the classification performance [17]. Traditional hand-engineered methods generate as many statistical features as possible to improve classification accuracy, but the choice of these features is never clearly justified [15]. Optimal feature selection methods have been proposed to select the most important of the generated features [18].

Most traditional three-stage PQD classification models use independent feature extraction, in which the features are extracted without regard to the classification performance. Yet the classification performance depends heavily on the feature extraction stage. Handcrafted statistical features may not be conducive to classification, and some may even reduce accuracy. Designing them often requires expert knowledge, and the best choice usually changes between scenarios. A closed-loop feedback between the feature extraction and classification stages, which these models lack, has been highlighted in [19] as an essential element for achieving automatic feature extraction and classification. Deep neural network (DNN) models [20,21,22,23] have been proposed to attain automatic feature extraction and classification without human intervention.

Hybrid methods combining the advantages of WT with artificial neural networks (ANNs) were first presented by Santoso et al. [24, 25]. The WT outputs are squared to form squared wavelet transform coefficients. The extracted features are processed using ANN layers, and a simple thresholded voting scheme makes the classification decision. In [26], statistical features extracted from the WT are used with a radial basis function neural network for classification. In [27], WT is used for signal processing, and the extracted statistical features are classified using wavelet networks. Khokhar et al. use WT as the feature extractor, pass the extracted statistical features to an artificial bee colony optimiser for feature selection, and finally classify using probabilistic neural networks [15]. Most of these hybrid models thus rely on statistical features for classification.

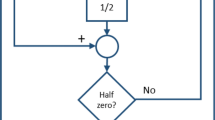

To solve the above-mentioned problems, a novel hybrid approach combining signal analysis with a DNN is proposed. Instead of extracting specific statistical features, the proposed multi-resolution attention long short-term memory (LSTM) model embeds and aligns the wavelet transform coefficients using a perceptron layer. An attention mechanism is applied to the embedded features to improve the generalisation capability of the network. The highlighted features are then passed into LSTM layers for feature extraction. Finally, the sequence features extracted by the LSTM layers are passed into fully connected layers for classification. An overview of the proposed method is depicted in Fig. 1.

2 Multi-resolution attention LSTM method

Multi-resolution signal decomposition (MSD) is well suited to transforming time-series input signals into multiple frequency-component signals, or time–frequency domain signals [28]. An attention mechanism is used along with MSD to achieve better classification performance, especially in noisy environments. The proposed method, shown in Fig. 1, uses MSD with an attention mechanism and LSTM layers to achieve automatic feature extraction, which in turn enables automatic PQD detection and classification. To obtain the best feature extraction and classification performance, a series of feature manipulations has been tested. Two attention mechanisms have been tested: temporal feature attention (TFA) and spatial feature attention (SFA). The output coefficients of the MSD are first passed through a feature align layer, which embeds the different bands into the same dimension. The TFA weights are multiplied element-wise with the temporally aligned outputs of the feature align layer to obtain the temporal attention vector, while the spatial attention vector is obtained by multiplying the spatially aligned features with the SFA weights. The spatially or temporally highlighted feature vectors are then arranged in temporal order before being passed into the LSTM layers for feature extraction. Two fully connected layers and a softmax activation function classify the features into their respective disturbance classes. The details of the proposed mechanism are explained in the following sections.

2.1 Multi-resolution signal decomposition

The wavelet transform [29] has proved efficient in detecting discontinuities in signals. Its varying window sizes allow it to achieve an optimal time–frequency resolution and to detect non-stationary signals, which most PQDs are. The wavelet expansion of a signal can be expressed as [29],
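A standard way of writing this expansion, consistent with the symbols defined in the next sentence (an assumed form, which may differ in notation from [29]), is

\[
f(x) = \sum_{i}\sum_{j} a_{i,j}\,\psi_{i,j}(x)
\]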

where \(a_{i,j}\) represents the discrete wavelet transform (DWT) expansion coefficients of the input f(x), with scaling and shifting parameters i and j, respectively, and \(\psi _{i,j}\) represents the wavelet expansion function. The DWT coefficients can be expressed as:
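Assuming a real-valued orthonormal wavelet basis, which is the usual setting for the DWT but is not stated explicitly here, the coefficients are the inner products of the input with the basis functions:

\[
a_{i,j} = \int_{-\infty}^{\infty} f(x)\,\psi_{i,j}(x)\,dx
\]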

The wavelet basis functions \(\psi _{i,j}\) can be generated from the mother wavelet \(\psi\) by tuning the scaling and shifting parameters:
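For dyadic scales, one common convention (assumed here; other texts use the opposite sign for the scale index) is

\[
\psi_{i,j}(x) = 2^{-i/2}\,\psi\!\left(2^{-i}x - j\right)
\]

With this convention, a larger i corresponds to a coarser scale, which matches the numbering of the decomposition levels D1 to D4 used later in this section.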

where i and j are the scaling and shifting parameters. MSD can be achieved with two-scale equations for the wavelet function \(\psi _{i,j}\) and the scaling function \(\phi _{i,j}\):
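In their textbook form (a sketch, assuming that h and g are the filter sequences referred to in the next sentence), the two-scale equations read

\[
\phi(x) = \sqrt{2}\sum_{j} h(j)\,\phi(2x - j), \qquad
\psi(x) = \sqrt{2}\sum_{j} g(j)\,\phi(2x - j)
\]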

where \(g(j) = (-1)^j h(1-j)\). h(j) and g(j) can be viewed as low-pass and high-pass filter coefficients, respectively. MSD with \(I\) decomposition levels is achieved as follows [29]:
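One standard way to write an I-level decomposition is given below. The symbol \(c_{I,j}\) for the approximation coefficients is an assumption introduced here for illustration; the detail coefficients reuse \(a_{i,j}\) from the wavelet expansion, and a larger i is taken to mean a coarser scale.

\[
f(x) = \sum_{j} c_{I,j}\,\phi_{I,j}(x) + \sum_{i=1}^{I}\sum_{j} a_{i,j}\,\psi_{i,j}(x)
\]

For I = 4, the first sum corresponds to the approximation band A4 and the inner sums for i = 1, ..., 4 correspond to the detail bands D1 to D4.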

MSD allows the signal to be band-filtered into levels of frequencies. At each level, a high-pass filter (HPF) extracts the high-frequency components, while a low-pass filter (LPF) extracts the low-frequency components. The low-pass output of each level is used as the input to the next level until the desired decomposition level is reached. Figure 2 shows MSD with four levels of decomposition. The detail coefficients, D1 to D4, are the high-pass filter outputs of the respective decomposition levels, while A4 is the low-pass filter output of the last decomposition level.
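The filter-bank cascade described above can be sketched in a few lines of plain Python. This is an illustration only: it assumes the Haar wavelet, because the mother wavelet is not named in this section, and a 50 Hz fundamental for the hypothetical test signal. The function names `haar_dwt_step` and `msd` are introduced here and are not taken from the paper.

```python
import math


def haar_dwt_step(signal):
    """One level of Haar analysis: returns (approximation, detail)."""
    s = 1.0 / math.sqrt(2.0)
    pairs = range(0, len(signal) - 1, 2)
    approx = [(signal[k] + signal[k + 1]) * s for k in pairs]  # low-pass branch
    detail = [(signal[k] - signal[k + 1]) * s for k in pairs]  # high-pass branch
    return approx, detail


def msd(signal, levels=4):
    """Cascade the low-pass output into the next level; keep every detail band."""
    bands = {}
    approx = list(signal)
    for level in range(1, levels + 1):
        approx, detail = haar_dwt_step(approx)
        bands[f"D{level}"] = detail
    bands[f"A{levels}"] = approx
    return bands


# Hypothetical input: 10 periods of a sine sampled at 3200 Hz.
# The 50 Hz fundamental is an assumption, giving 640 samples.
fs, f0, periods = 3200, 50, 10
n = fs * periods // f0
signal = [math.sin(2 * math.pi * f0 * k / fs) for k in range(n)]

bands = msd(signal, levels=4)
band_lengths = {name: len(coeffs) for name, coeffs in bands.items()}
```

Each level halves the number of coefficients, so the five bands have different lengths. This is the mismatch that a feature align layer has to resolve before the bands can be processed together.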

2.2 Attention mechanism

An attention mechanism is used to highlight the important characteristics of the input signal. The input signal \(x_t\), of dimension T, is passed through a dense layer of the same dimension to obtain the attention score \(y_d\) as follows:
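Assuming a bias-free dense layer with one output per input element (the text mentions only the weight kernel), the score can be written as

\[
y_d = \sum_{t=1}^{T} w_{d,t}\,x_t, \qquad d = 1,\dots,T
\]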

where \(x_t\) represents the input signal and \(w_{d,t}\) are the trainable kernel weights of the dense layer. A softmax layer normalises the attention scores into the range (0, 1), forming the attention weight \(a_{d}\),
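The standard softmax, which is assumed to be the normalisation meant here, is

\[
a_d = \frac{\exp(y_d)}{\sum_{k=1}^{T}\exp(y_k)}
\]

so that every \(a_d\) lies in (0, 1) and the weights sum to one.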

The attention weight \(a_{d}\) is then multiplied element-wise with the input signal, resulting in the highlighted feature vector \(a_d x_t\), which can be expressed as:
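Written with one weight per input element (an assumed indexing, since the dense layer has the same dimension T as the input), the highlighted feature is \(\hat{x}_d = a_d\,x_d\) for \(d = 1,\dots,T\). The three steps of this subsection (dense score, softmax, element-wise product) can be sketched in plain Python as follows. The weight matrix is a hypothetical placeholder, not a trained kernel, and the bias term is omitted by assumption.

```python
import math


def dense(x, weights):
    """Attention scores y_d = sum_t w[d][t] * x[t] (bias omitted by assumption)."""
    return [sum(w_dt * x_t for w_dt, x_t in zip(row, x)) for row in weights]


def softmax(scores):
    """Numerically stable softmax; outputs lie in (0, 1) and sum to one."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(x, weights):
    """Return the attention weights and the highlighted feature vector."""
    attn = softmax(dense(x, weights))
    highlighted = [a * v for a, v in zip(attn, x)]  # element-wise product
    return attn, highlighted


# Hypothetical input and an identity kernel standing in for trained weights.
x = [0.1, 0.9, -0.4, 0.2]
weights = [[1.0 if d == t else 0.0 for t in range(len(x))] for d in range(len(x))]
attn, highlighted = attend(x, weights)
```

With the identity kernel the scores equal the inputs, so the largest input receives the largest attention weight. A trained kernel would instead learn which positions to emphasise.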

2.3 Long short-term memory

The main operating mechanism of the LSTM is governed by three “gates” with the aid of activation functions [30]. The LSTM achieves its “memory” through the cell state \(c_t\) in its architecture. It takes in sequential inputs and processes them using the three gates: the input gate \(i_t\), the forget gate \(f_t\), and the output gate \(o_t\). The input gate updates the cell state with new information, the forget gate filters unwanted information out of the cell state, and the output gate outputs the hidden state, or temporal encoding, of each timestep. A new candidate \(\tilde{c}_t\), produced by the hyperbolic tangent (tanh) activation function, is scaled by the input gate to update the cell state. Each LSTM unit has one set of trainable weights, \(W_f\), \(W_i\), \(W_o\), \(W_c\), \(U_f\), \(U_i\), \(U_o\), \(U_c\), and biases, \(b_f\), \(b_i\), \(b_o\), \(b_c\). The dimensions of these weights vary with the number of units (neurons) used in the LSTM cell. Increasing the number of units increases the number of trainable weights, and hence the learning capacity, of the LSTM layer.
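In the commonly used LSTM formulation, with \(x_t\) the input and \(h_{t-1}\) the previous hidden state (symbols assumed here, as they are not defined in the text above), the forget gate, input gate and candidate cell state are usually written as

\[
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right)
\end{aligned}
\]

where \(\sigma\) is the logistic sigmoid.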

A new cell state \(c_t\) is produced at every timestep by forgetting irrelevant information while learning new information. The equation below represents the cell state update. The previous cell state \(c_{t-1}\) is multiplied element-wise with the forget gate to remove unwanted information, while the candidate cell state \(\tilde{c}_t\) is multiplied element-wise with the input gate to learn new information.
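With \(\odot\) denoting element-wise multiplication, the update described above is commonly written as

\[
c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t
\]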

The third gate in an LSTM cell is the output gate \(o_t\), which controls the information output from the cell. The information in the new cell state \(c_t\) is filtered by the output gate \(o_t\) to form the hidden state output \(h_t\). The output of the LSTM layer represents the encoded sequence feature of the input pattern. The output gate and hidden state output can be calculated as follows:
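In the commonly used formulation (assumed here, with \(x_t\) the input, \(h_{t-1}\) the previous hidden state, \(\sigma\) the logistic sigmoid and \(\odot\) the element-wise product), these are

\[
o_t = \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right), \qquad
h_t = o_t \odot \tanh(c_t)
\]

A minimal scalar sketch of one complete LSTM step, covering the three gates and the cell-state update described in this section, is given below. It uses a single unit with scalar weights for readability. The dictionary keys mirror the weight names in the text, while the function name `lstm_step` and the zero-valued parameters are hypothetical choices for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t, h_prev, c_prev, p):
    """One timestep of a single-unit LSTM with scalar weights in dict p."""
    f_t = sigmoid(p["W_f"] * x_t + p["U_f"] * h_prev + p["b_f"])  # forget gate
    i_t = sigmoid(p["W_i"] * x_t + p["U_i"] * h_prev + p["b_i"])  # input gate
    o_t = sigmoid(p["W_o"] * x_t + p["U_o"] * h_prev + p["b_o"])  # output gate
    c_tilde = math.tanh(p["W_c"] * x_t + p["U_c"] * h_prev + p["b_c"])  # candidate
    c_t = f_t * c_prev + i_t * c_tilde  # forget old content, add new content
    h_t = o_t * math.tanh(c_t)          # hidden state filtered by the output gate
    return h_t, c_t


# Hypothetical parameters: all zeros, so every gate evaluates to 0.5.
names = ["W_f", "U_f", "b_f", "W_i", "U_i", "b_i",
         "W_o", "U_o", "b_o", "W_c", "U_c", "b_c"]
zero_params = {name: 0.0 for name in names}
h1, c1 = lstm_step(x_t=0.3, h_prev=0.0, c_prev=1.0, p=zero_params)
```

With all parameters at zero, half of the previous cell state is kept and the candidate contributes nothing, which makes the gating arithmetic easy to check by hand.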

3 Experiment setup

The classification and generalisation capability of the proposed method is tested with 16 synthetic models of PQDs [19, 31], including the normal signal waveform, single-disturbance waveforms, and multiple-disturbance waveforms, as listed in Table 1. All experiments are carried out on an AMD Ryzen 7 3800X 8-core processor with an Nvidia P6000 graphics processing unit, using the PyTorch framework. The proposed method takes a 10-period waveform as input. A total of 76,800 10-period PQD samples are randomly generated, with 4800 samples for each disturbance class. The sampling frequency is 3200 Hz. As noise is always present in real-world data collection, additive white Gaussian noise (AWGN) at signal-to-noise ratio (SNR) levels of 20–50 dB is added randomly to the generated training samples. Tenfold cross-validation is carried out, with 90% of the samples used for training and the remaining 10% for validation. Five testing data sets are generated for benchmarking the models: one set of noiseless samples, and sets with AWGN added at 20 dB, 30 dB, 40 dB and 50 dB SNR. Each testing set consists of 1000 samples per PQD class. The SNR can be expressed as
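A common definition, assumed here, is

\[
\mathrm{SNR}_{\mathrm{dB}} = 10\log_{10}\!\left(\frac{P_{\mathrm{signal}}}{P_{\mathrm{noise}}}\right)
\]

where P denotes average power. The sketch below shows one way to add AWGN at a target SNR under this definition. The helper names `add_awgn` and `measured_snr_db`, the 50 Hz fundamental and the fixed random seed are all hypothetical choices for illustration, not details taken from the experiments.

```python
import math
import random


def signal_power(x):
    """Average power of a sampled signal."""
    return sum(v * v for v in x) / len(x)


def add_awgn(x, snr_db, rng):
    """Add zero-mean Gaussian noise so that the expected SNR equals snr_db."""
    noise_power = signal_power(x) / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [v + rng.gauss(0.0, sigma) for v in x]


def measured_snr_db(clean, noisy):
    """Empirical SNR in dB of a noisy signal against its clean version."""
    noise = [n - c for c, n in zip(clean, noisy)]
    return 10 * math.log10(signal_power(clean) / signal_power(noise))


rng = random.Random(0)  # fixed seed so the sketch is repeatable
fs, f0, periods = 3200, 50, 10  # f0 = 50 Hz is an assumption
clean = [math.sin(2 * math.pi * f0 * k / fs) for k in range(fs * periods // f0)]
noisy = add_awgn(clean, snr_db=20.0, rng=rng)
snr = measured_snr_db(clean, noisy)
```

Because the noise is random, the measured SNR of a single 640-sample waveform only approximates the 20 dB target.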

The main evaluation metric used in these experiments is the classification accuracy. The classification accuracy of an individual class, \(Acc_n\), is the number of true positives, \(TP_n\), over the total number of test samples, \(S_j\), for the m classes of PQD, as
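Under one reading of this definition (an assumption, since the displayed formula is not available here), the per-class accuracy is \(Acc_n = TP_n / S_n\) and the overall accuracy is \(\sum_n TP_n / \sum_{j=1}^{m} S_j\). Both can be computed from a confusion matrix of counts, as in the sketch below. The three-class matrix is hypothetical and is not taken from the reported results.

```python
def per_class_accuracy(confusion):
    """confusion[n][k] = number of class-n test samples predicted as class k."""
    return [row[n] / sum(row) for n, row in enumerate(confusion)]


def overall_accuracy(confusion):
    """Total true positives over total test samples across all classes."""
    true_positives = sum(row[n] for n, row in enumerate(confusion))
    total = sum(sum(row) for row in confusion)
    return true_positives / total


# Hypothetical counts for three classes with 1000 test samples per class.
confusion = [
    [900, 60, 40],
    [20, 950, 30],
    [10, 40, 950],
]
acc_n = per_class_accuracy(confusion)
acc = overall_accuracy(confusion)
```

Dividing each row of the count matrix by its row sum gives the normalised confusion matrix, whose diagonal is the per-class accuracy.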

In this paper, two types of input arrangement are used to evaluate the proposed multi-resolution attention LSTM model. The features are arranged for either TFA or SFA, as described in Sect. 2. As shown in Fig. 1, the feature align layer consists of a perceptron layer that encodes the band output coefficients, which have different dimensions, into the same dimension, and reshapes the output into either a spatial or a temporal arrangement before it is passed into the attention network. In the temporal setup, attention is applied over each band separately, whereas in the spatial setup, attention is applied across bands. The proposed method is also benchmarked against a multi-resolution LSTM model without attention mechanism, a deep LSTM model [31], and a deep convolutional neural network (DCNN) model [19, 31]. The details of each of the compared models are given in Table 2.
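The difference between the two arrangements can be illustrated by the axis along which the attention weights are normalised. The sketch below is one possible reading and a deliberate simplification: it assumes the bands have already been aligned to a common length, and it uses the features themselves as attention scores instead of passing them through a trained dense layer. The function names and the example values are hypothetical.

```python
import math


def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_weights(features):
    """features[b][t]: normalise over time steps t within each band b."""
    return [softmax(row) for row in features]


def spatial_weights(features):
    """Normalise across bands b at each time step t."""
    per_step = [softmax(list(col)) for col in zip(*features)]
    return [list(row) for row in zip(*per_step)]


def apply_weights(weights, features):
    """Element-wise product of attention weights and features."""
    return [[w * x for w, x in zip(w_row, x_row)]
            for w_row, x_row in zip(weights, features)]


# Hypothetical aligned features: 2 bands, 3 time steps each.
features = [
    [0.2, 1.0, -0.5],
    [0.7, 0.1, 0.3],
]
tfa_w = temporal_weights(features)
sfa_w = spatial_weights(features)
tfa_out = apply_weights(tfa_w, features)
sfa_out = apply_weights(sfa_w, features)
```

In the temporal case each band's weights sum to one over time, while in the spatial case the weights at each time step sum to one over the bands, so the bands compete with each other for emphasis.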

4 Performance analysis of the proposed method

The classification performance of the proposed method and the benchmark models is tabulated in Table 3. Comparing Deep LSTM with the WT LSTM models shows that the proposed MSD signal transformation increases the overall classification performance across the different noise levels. This shows that a hybrid model using MSD with a DNN improves on the classification performance of the DNN classifier alone. Comparing WT-TFA LSTM and WT-SFA LSTM against WT LSTM shows that the attention mechanism improves classification under noisy conditions, especially in the high-noise 20 dB SNR tests. Spatial feature attention in the WT-SFA LSTM model shows the larger improvement, with the highest classification accuracy of \(91.78\%\) on the high-noise 20 dB SNR AWGN test. The results show that the SFA mechanism can improve classification performance under high-noise conditions. The proposed WT-SFA LSTM is also compared with a model from the literature, the deep CNN model [19]. The results show that the proposed WT-SFA LSTM model performs better at the highest noise level, 20 dB SNR.

4.1 Classification accuracy analysis

The individual class accuracies of the proposed WT-SFA LSTM and deep CNN models are given in Tables 4 and 5, respectively. Table 4 shows that the proposed model classifies class P0-Normal and class P8-Notch less accurately under the 20 dB SNR condition, that is, it has difficulty identifying the normal and notch classes under high noise. As additive noise is introduced, the notching effect, which has negative magnitude, may easily be neutralised by the AWGN. High noise may also lead the classifier to confuse a signal with a harmonic disturbance. The deep CNN model in Table 5, on the other hand, shows weaker classification performance on class P8-Notch and class P13-Flicker with Harmonics under 20 dB SNR. The neutralised magnitude of the notching effect has a more serious impact on the deep CNN model, while the confusion with harmonics degrades its performance on class P13.

4.2 Confusion matrix analysis

The in-depth classification performance of a model can be visualised using a confusion matrix. Figure 3 shows that, for the deep CNN model, confusion occurs on class P0-Normal, P8-Notch and P13-Flicker with Harmonics. Class P0-Normal is confused with class P13-Sag with Harmonics. There is only a slight difference in the boundary between the normal class and the sag or swell disturbance classes, and a higher level of noise can easily disrupt the average signal magnitude. The confusion of the slow disturbance classes can be explained by the resemblance of high-noise signals to the harmonics classes. However, the fast transient class P8-Notch has a high confusion of 0.26 with the slow transient class P15-Sag with Harmonics. This shows that the performance of the deep CNN can be seriously affected by disturbances across different frequencies. The confusion of class P13-Flicker with Harmonics with classes P5-Spike and P6-Harmonics again shows the confusion of this classifier across fast and slow transient disturbances.

The confusion matrix of the WT-SFA LSTM model tested with 20 dB SNR AWGN is shown in Fig. 4. Classes P0-Normal, P8-Notch, and P9-Flicker show higher classification confusion with other classes. Classes P0 and P9 are most confused with class P10-Sag with Harmonics, at 0.23 and 0.12, respectively. Both classes (P9 and P13) are categorised as slow transient disturbances. Flicker can only be detected over multiple periods of the waveform. The confusion of classes P0 and P9 arises because a high-noise condition resembles a higher level of harmonics. The confusion of class P8-Notch with class P15-Flicker with Swell also occurs in the proposed method, but it is reduced by 38%, from 0.26 in the deep CNN model to 0.16 in the WT-SFA LSTM model. This shows that splitting the signal into multiple frequency bands with MSD contributes to generalisation when classifying PQDs in different frequency bands.
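The 38% figure is the relative reduction in the confusion value between the two models:

\[
\frac{0.26 - 0.16}{0.26} \approx 0.385
\]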

4.3 Model complexity analysis

The model complexity comparison is shown in Table 6. The proposed WT-SFA LSTM model has the highest classification accuracy, 91.78%, on the high-noise 20 dB SNR AWGN test. Although the model has 272 thousand parameters and its size of 1.069 MB is the largest among the models, each training epoch still takes only 34 s, which is comparatively low given the improved performance under high noise.

5 Conclusion

Automatic feature extraction is vital for accurate PQD detection and classification. In this paper, a novel model consisting of a wavelet transform, an attention mechanism and an LSTM is proposed. Multi-level signal decomposition using the wavelet transform decomposes signals into different frequency components. The results show that the wavelet transform improves overall classification accuracy across different noise levels: under the highest noise level, the classification accuracy improves from 88.48% to 89.77%. Two attention mechanisms have been examined, temporal feature attention (TFA) and spatial feature attention (SFA). The classification accuracies of TFA and SFA under 20 dB SNR AWGN are 89.87% and 91.78%, respectively, which demonstrates improved classification performance under high noise. The proposed model has also been benchmarked against the state-of-the-art deep CNN model and performs better under high noise. The model complexity has also been compared in the experiments. In future work, the model can be simplified further by simplifying the feature align layer, and the LSTM layer can be replaced with a transformer, which comes with its own attention mechanism.

References

[1] Yuping L, Ramzan M, Xincheng L, Murshed M, Awosusi AA, Bah SI, Adebayo TS (2021) Determinants of carbon emissions in Argentina: the roles of renewable energy consumption and globalization. Energy Rep 7:4747–4760

[2] Luo A, Xu Q, Ma F, Chen Y (2016) Overview of power quality analysis and control technology for the smart grid. J Modern Power Syst Clean Energy 4(1):1–9

[3] Hossain E, Tür MR, Padmanaban S, Ay S, Khan I (2018) Analysis and mitigation of power quality issues in distributed generation systems using custom power devices. IEEE Access 6:16816–16833

[4] Yazdi F, Hosseinian S (2019) A novel “smart branch” for power quality improvement in microgrids. Int J Electr Power Energy Syst 110:161–170

[5] Schipman K, Delincé F (2010) The importance of good power quality. ABB Power Qual. Prod, Charleroi, Belgium, ABB Review

[6] Beniwal RK, Saini MK, Nayyar A, Qureshi B, Aggarwal A (2021) A critical analysis of methodologies for detection and classification of power quality events in smart grid. IEEE Access 9:83507–83534

[7] Ma Y, Xiao X, Wang Y (2020) Identifying the root cause of power system disturbances based on waveform templates. Electric Power Syst Res 180:106107

[8] Heydt G, Fjeld P, Liu C, Pierce D, Tu L, Hensley G (1999) Applications of the windowed FFT to electric power quality assessment. IEEE Trans Power Deliv 14(4):1411–1416

[9] Szmajda M, Górecki K, Mroczka J (2007) DFT algorithm analysis in low-cost power quality measurement systems based on a DSP processor. In: 2007 9th international conference on electrical power quality and utilisation. IEEE, pp 1–6

[10] Jurado F, Saenz JR (2002) Comparison between discrete STFT and wavelets for the analysis of power quality events. Electric Power Syst Res 62(3):183–190

[11] De Yong D, Bhowmik S, Magnago F (2015) An effective power quality classifier using wavelet transform and support vector machines. Expert Syst Appl 42(15–16):6075–6081

[12] Xiao F, Lu T, Wu M, Ai Q (2019) Maximal overlap discrete wavelet transform and deep learning for robust denoising and detection of power quality disturbance. IET Gener Transm Distrib 14(1):140–147

[13] Panigrahi B, Pandi VR (2009) Optimal feature selection for classification of power quality disturbances using wavelet packet-based fuzzy k-nearest neighbour algorithm. IET Gener Transm Distrib 3(3):296–306

[14] Reddy MV, Sodhi R (2017) A modified s-transform and random forests-based power quality assessment framework. IEEE Trans Instrum Meas 67(1):78–89

[15] Khokhar S, Zin AAM, Memon AP, Mokhtar AS (2017) A new optimal feature selection algorithm for classification of power quality disturbances using discrete wavelet transform and probabilistic neural network. Measurement 95:246–259

[16] Bhavani R, Prabha NR (2017) A hybrid classifier for power quality (PQ) problems using wavelets packet transform (WPT) and artificial neural networks (ANN). In: 2017 IEEE international conference on intelligent techniques in control, optimization and signal processing (INCOS). IEEE, pp 1–7

[17] Samanta IS, Rout PK, Mishra S (2021) Feature extraction and power quality event classification using curvelet transform and optimized extreme learning machine. Electr Eng 1–16

[18] Chamchuen S, Siritaratiwat A, Fuangfoo P, Suthisopapan P, Khunkitti P (2021) High-accuracy power quality disturbance classification using the adaptive ABC-PSO as optimal feature selection algorithm. Energies 14(5):1238

[19] Wang S, Chen H (2019) A novel deep learning method for the classification of power quality disturbances using deep convolutional neural network. Appl Energy 235:1126–1140

[20] Junior WLR, Borges FAS, Rabelo RdAL, de Lima BVA, de Alencar JEA (2019) Classification of power quality disturbances using convolutional network and long short-term memory network. In: 2019 international joint conference on neural networks (IJCNN). IEEE, pp 1–6

[21] Garcia CI, Grasso F, Luchetta A, Piccirilli MC, Paolucci L, Talluri G (2020) A comparison of power quality disturbance detection and classification methods using CNN, LSTM and CNN-LSTM. Appl Sci 10(19):6755

[22] Liu J, Ni G (2020) An efficient optimal algorithm for high frequency in wavelet based image reconstruction. J Comput Anal Appl 28(5):865–878

[23] Sindi H, Nour M, Rawa M, Öztürk Ş, Polat K (2021) A novel hybrid deep learning approach including combination of 1d power signals and 2d signal images for power quality disturbance classification. Expert Syst Appl 174:114785

[24] Santoso S, Powers EJ, Grady WM, Parsons AC (2000) Power quality disturbance waveform recognition using wavelet-based neural classifier. I. Theoretical foundation. IEEE Trans Power Deliv 15(1):222–228

[25] Santoso S, Powers EJ, Grady WM, Parsons AC (2000) Power quality disturbance waveform recognition using wavelet-based neural classifier. II. Application. IEEE Trans Power Deliv 15(1):229–235

[26] Kanirajan P, Kumar VS (2015) Power quality disturbance detection and classification using wavelet and RBFNN. Appl Soft Comput 35:470–481

[27] Masoum M, Jamali S, Ghaffarzadeh N (2010) Detection and classification of power quality disturbances using discrete wavelet transform and wavelet networks. IET Sci Meas Technol 4(4):193–205

[28] Ku J, Kovoor BC (2021) A wavelet-based hybrid multi-step wind speed forecasting model using LSTM and SVR. Wind Eng 45(5):1123–1144

[29] Uyar M, Yildirim S, Gencoglu MT (2008) An effective wavelet-based feature extraction method for classification of power quality disturbance signals. Electr Power Syst Res 78(10):1747–1755

[30] Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

[31] Machlev R, Chachkes A, Belikov J, Beck Y, Levron Y (2021) Open source dataset generator for power quality disturbances with deep-learning reference classifiers. Electric Power Syst Res 195:107152

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chiam, D.H., Lim, K.H. & Law, K.H. LSTM power quality disturbance classification with wavelets and attention mechanism. Electr Eng 105, 259–266 (2023). https://doi.org/10.1007/s00202-022-01667-5

DOI: https://doi.org/10.1007/s00202-022-01667-5