Abstract

Discretization methods are commonly used for solving standard semi-infinite optimization (SIP) problems. Transferring these methods to general semi-infinite optimization (GSIP) problems is difficult due to the \(\mathbf {x}\)-dependence of the infinite index set. On the other hand, under suitable conditions, a GSIP problem can be transformed into a SIP problem. In this paper we assume that such a transformation exists globally. However, the transformation may destroy convexity in the lower level, which is very important for numerical methods. In this paper we present a solution approach for GSIP problems that combines the two techniques mentioned above. It is shown that the convergence results for discretization methods in the case of SIP problems can be transferred to our transformation-based discretization method under suitable assumptions on the transformation. Finally, we illustrate the operation of our approach as well as its performance on several examples, including a problem of volume-maximal inscription of multiple variable bodies into a larger fixed body, which has not previously been considered as a GSIP test problem.



1 Introduction

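In the present paper we consider general semi-infinite optimization problems of the following form:

$$\begin{aligned} \min _{\mathbf {x} \in X} \ f(\mathbf {x}) \quad \text {s.t.} \quad g_i(\mathbf {x}, \mathbf {y}) \le 0 \text { for all } \mathbf {y} \in Y(\mathbf {x}), \ i \in I, \end{aligned}$$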

where \(Y: {\mathbb {R}}^m \rightrightarrows {\mathbb {R}}^n\) is a set-valued mapping with \(|Y(\mathbf {x})| = \infty \) for at least some \(\mathbf {x} \in X\), \(I := \{ 1,\dots ,p \}\) is a finite index set, and f, \(g_i, i \in I,\) are real-valued, at least continuous functions. The set X collects the finitely many restrictions on the decision variables. Moreover, we assume that X is non-empty and closed and that for every \(\mathbf {x} \in X\) the so-called infinite index set \(Y(\mathbf {x})\) is compact.

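For example, the infinite index set can be given as the solution set of finitely many inequalities:

$$\begin{aligned} Y(\mathbf {x}) = \{ \mathbf {y} \in {\mathbb {R}}^n \mid u_j(\mathbf {x}, \mathbf {y}) \le 0, \ j \in J \}, \end{aligned}$$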

where \(J:=\{1, \dots , q \}\) is a finite index set and \(u_j, j \in J,\) are again real-valued, at least continuous functions. In the special case where \(Y(\mathbf {x})\) does not depend on \(\mathbf {x}\), the problem above is called a standard or ordinary semi-infinite optimization problem, abbreviated SIP.

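One of the keys to both the theoretical and the numerical treatment of semi-infinite optimization problems lies in their bi-level structure. The parametric lower level problems of a semi-infinite problem are given by

$$\begin{aligned} Q_i(\mathbf {x}): \quad \max _{\mathbf {y}} \ g_i(\mathbf {x}, \mathbf {y}) \quad \text {s.t.} \quad \mathbf {y} \in Y(\mathbf {x}), \quad i \in I. \end{aligned}$$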

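The decision variables \(\mathbf {x}\) of the semi-infinite problem are the parameters of the lower level problems, and the index variables \(\mathbf {y}\) of the semi-infinite problem are the decision variables of these problems. By

$$\begin{aligned} \varphi _i(\mathbf {x}) := \max _{\mathbf {y} \in Y(\mathbf {x})} g_i(\mathbf {x}, \mathbf {y}), \quad i \in I, \end{aligned}$$

we denote the so-called optimal value functions of the semi-infinite optimization problem. Obviously, the feasible set M of the GSIP problem can be written as

$$\begin{aligned} M = \{ \mathbf {x} \in X \mid \varphi _i(\mathbf {x}) \le 0, \ i \in I \}, \end{aligned}$$

but the functions \(\varphi _i, i \in I,\) are only given implicitly and may be non-differentiable even if the functions \(g_i, i \in I,\) and \(u_j, j \in J,\) are linear.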

A natural and widespread solution technique for standard semi-infinite optimization problems is to discretize the infinite index set Y, solve the induced finite optimization problem, and refine the discretization (see e.g. Blankenship and Falk 1976; Reemtsen 1991; Reemtsen and Goerner 1998; López and Still 2007). A direct transfer of this approach to GSIP problems is possible in principle, but problematic. One difficulty is the \(\mathbf {x}\)-dependence of the infinite index set: the discretization has to depend on \(\mathbf {x}\), too. To ensure that the feasible sets of the discretization-induced optimization problems are closed, the discretizations must even depend continuously on \(\mathbf {x}\). How such a discretization can be constructed is shown in Still (2001b). Because of additional conditions that must be met for convergence of these methods in the GSIP case (see Still 2001b), they are difficult to implement. To our knowledge, no numerical experience with these discretization methods has been reported so far.

On the other hand, under suitable assumptions, any general semi-infinite optimization problem can be converted, at least locally, into a standard one (see Weber 1999; Still 1999). Such a transformation is of practical value only if it is defined globally. In the following, we assume that such a global transformation is given. For problems with a geometric background, i.e., where the infinite index set is a geometrically simple body or arises from geometrically simple bodies via intersection or union, such globally defined transformations can often be specified (see Floudas and Stein 2007; Steuermann 2011). Furthermore, these examples often have convex lower level problems. Unfortunately, the transformation into a SIP can destroy the convexity of these problems. Then, computationally expensive global optimization methods must be applied to solve the resulting SIP (see Steuermann 2011).

In this paper we present a numerical method for solving general semi-infinite optimization problems that keeps the lower level problems convex whenever those of the GSIP problem are convex. It combines the transformation into a standard semi-infinite problem with discretization techniques for such problems. The algorithm switches between two problems: the original GSIP problem and the SIP problem induced by the transformation. Starting from a given initial point, an initial discretization of the infinite index set of the GSIP problem is calculated and transferred into a discretization of the infinite index set of the induced SIP problem. Subsequently, a sequence of discretized SIP problems is solved, in which the discretization is refined from step to step. For the refinement, however, we do not solve the lower level problems of the induced SIP problem but those of the GSIP problem. The global solutions of these lower level problems are then transferred into points of the index set of the induced SIP problem. We show that the transferred points are global solutions of the lower level problems of the induced SIP problem as well. This is necessary for the correctness of the discretization method.

Numerical examples show that one only needs to add relatively few points to achieve feasibility within a given tolerance. Therefore this method is also suited for complex problems like the inscription of multiple bodies into a fixed design.

Early convergence results for discretization methods solving SIP problems are given, for example, by Reemtsen (1991) and Reemtsen and Goerner (1998). These results focus on the convergence to feasible points and the convergence of global solutions. Results regarding convergence rates of local solutions were presented by Still (2001a). In Sect. 3 we show how these results carry over to the transformation-based discretization method. Moreover, we show that the main statement in Still (2001a) requires an additional assumption, which is missing there. We also present a counterexample for the case that this assumption is not fulfilled.

Mitsos and Tsoukalas (2015) follow a different approach of reformulation as a SIP problem and subsequent discretization. There, a GSIP is reformulated using disjunctive semi-infinite constraints. This reformulation is then used to construct better upper and lower bounds for the original problem iteratively, by a discretization strategy that adaptively adds discretization points. The disjunctive programs resulting from the discretization are solved globally with a solver for mixed-integer nonlinear problems. In Djelassi et al. (2019) the strategy is extended to problems with equality constraints in the lower level. Instead of reformulating the disjunctive problems as mixed-integer programs, Kirst and Stein (2019) develop tailored strategies to solve them. An early attempt to solve GSIP problems globally was made by Lemonidis (2008). In this work, an inner and an outer approximation of the variable index set are calculated that no longer depend on the optimization variables. Then, the same techniques as presented in Bhattacharjee et al. (2005) are applied to the resulting standard semi-infinite optimization problems. These techniques provide lower and upper bounds, which can be used for global optimization. Furthermore, Lemonidis (2008) shows for the SIP application kinetic model reduction (KMR) how GSIP problems with box-shaped infinite index sets can be transferred into an equivalent SIP problem by a linear coordinate transformation (convex combination of the lower and upper bounds).

Our focus differs from these approaches. We try to avoid a reformulation using disjunctive constraints. Our approach allows us to maintain helpful structure that comes with the GSIP problem, e.g., convexity of the lower level problems. Moreover, we try to avoid solving problems with global solvers.

The paper is organized as follows. In Sect. 2 we show how a GSIP problem can be reformulated as a SIP problem. We point out why it is beneficial to solve the lower level problems of the given GSIP and then introduce the algorithm. Section 3 contains results on convergence to feasible points and global solutions, along with rates of convergence. Section 4 discusses the conditions necessary to retain convexity of the induced SIP lower level problems and of the discretized SIP problems. In Sect. 5 we illustrate the application of our method and its performance on three classes of examples: some native GSIP problems, a design centering problem, and a problem of inscribing two (or more) variable bodies volume-maximal into a larger fixed container. Section 6 concludes the paper and points out future directions of research.

2 The transformation-based discretization method (TDM)

Transformation: In principle, under suitable assumptions, each GSIP problem can be transformed, at least locally, into an equivalent SIP problem (see Still 1999; Weber 1999 for details). However, such a transformation is only of practical value in cases where this transformation is defined globally. The ideal situation is the following:

Assumption 1

(Existence of transformation) There exist a nonempty, compact set \(Z \subseteq {\mathbb {R}}^{{\tilde{n}}}\) and a continuous function \(\mathbf {t} : X \times Z \rightarrow {\mathbb {R}}^{n}\), such that \(\mathbf {t}(\mathbf {x},Z) = Y(\mathbf {x})\) for all \(\mathbf {x} \in X\).

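Given Assumption 1, for every \(i \in I\), the feasibility condition

$$\begin{aligned} g_i(\mathbf {x}, \mathbf {y}) \le 0 \text { for all } \mathbf {y} \in Y(\mathbf {x}) \end{aligned}$$

can be equivalently written as

$$\begin{aligned} {\tilde{g}}_i(\mathbf {x}, \mathbf {z}) := g_i(\mathbf {x}, \mathbf {t}(\mathbf {x}, \mathbf {z})) \le 0 \text { for all } \mathbf {z} \in Z, \end{aligned}\tag{2}$$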

which is of ordinary semi-infinite type. Thus, the feasible sets of the GSIP problem and the induced SIP problem coincide. For the rest of the paper we assume that Assumption 1 is fulfilled.

For an interval or, more generally, a box-shaped index set \(Y(\mathbf {x}) = [a(\mathbf {x}), b(\mathbf {x})]\) with \(a(\cdot ) < b(\cdot )\) (componentwise), such a transformation can easily be constructed as a convex combination of the interval endpoints or of the box vertices, respectively. For higher-dimensional index sets, such a transformation exists, for example, if they are star-shaped (see Still 1999 for details). Often, however, the application itself already suggests such a transformation (see, for example, the design centering problems in Steuermann 2011).
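As an illustration of the box case, the following Python sketch implements the componentwise convex combination \(\mathbf {t}(\mathbf {x},\mathbf {z}) = a(\mathbf {x}) + \mathbf {z} \circ (b(\mathbf {x}) - a(\mathbf {x}))\) with \(Z = [0,1]^n\), together with its inverse. The bound functions in `bounds` are a hypothetical choice made only for this example. Any continuous \(a(\cdot ) < b(\cdot )\) would do.

```python
# Hypothetical helpers (not from the paper): affine map from Z = [0, 1]^n onto a box
# Y(x) = [a(x), b(x)] with a(x) < b(x) componentwise, and its inverse.


def box_transform(a, b, z):
    """t(x, z) = a(x) + z * (b(x) - a(x)), applied componentwise."""
    return [ai + zi * (bi - ai) for ai, bi, zi in zip(a, b, z)]


def box_preimage(a, b, y):
    """Inverse map z = (y - a(x)) / (b(x) - a(x)), applied componentwise."""
    return [(yi - ai) / (bi - ai) for ai, bi, yi in zip(a, b, y)]


def bounds(x):
    """Hypothetical x-dependent box bounds, chosen only for illustration."""
    a = [x[0] - 1.0, 0.0]
    b = [x[0] + 1.0, x[1] ** 2 + 1.0]
    return a, b


x = [0.5, 2.0]
a, b = bounds(x)
y = box_transform(a, b, [0.25, 1.0])
print(y)                       # [0.0, 5.0]
print(box_preimage(a, b, y))   # [0.25, 1.0]
```

Because the map is affine in \(\mathbf {z}\) for fixed \(\mathbf {x}\), it preserves convexity of the lower level problems. This is not the case for the nonlinear parametrizations discussed below.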

Remark 1

In general, it will not be possible to parametrize the set \(Y(\mathbf {x})\) by a single function. However, for the following considerations it is sufficient that the set \(Y(\mathbf {x})\) can be partitioned such that for every subset \(Y_l(\mathbf {x}), l \in \{1, \dots , s \},\) a set \(Z_l\) and a function \(\mathbf {t}_l\) according to Assumption 1 exist. The results and the algorithm presented in the following carry over directly to this case.

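We denote the transformation-induced standard semi-infinite optimization problem by

$$\begin{aligned} \widetilde{\text {SIP}}: \quad \min _{\mathbf {x} \in X} \ f(\mathbf {x}) \quad \text {s.t.} \quad {\tilde{g}}_i(\mathbf {x}, \mathbf {z}) \le 0 \text { for all } \mathbf {z} \in Z, \ i \in I, \end{aligned}$$

where the functions \({\tilde{g}}_i\) are defined as in (2). Its feasible set is denoted by \({\tilde{M}}\).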

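By

$$\begin{aligned} {\tilde{Q}}_i(\mathbf {x}): \quad \max _{\mathbf {z}} \ {\tilde{g}}_i(\mathbf {x}, \mathbf {z}) \quad \text {s.t.} \quad \mathbf {z} \in Z, \quad i \in I, \end{aligned}$$

and

$$\begin{aligned} {\tilde{\varphi }}_i(\mathbf {x}) := \max _{\mathbf {z} \in Z} {\tilde{g}}_i(\mathbf {x}, \mathbf {z}), \quad i \in I, \end{aligned}$$

we denote the lower level problems and the optimal value functions of the (transformation-)induced SIP problem, respectively. We show in the following that the optimal value functions \({\tilde{\varphi }}_i\) and \(\varphi _i\) coincide for every \(i \in I\).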

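Discretization: A widespread approach for solving SIPs is to discretize the infinite index set. To this end, for a subset \({\dot{Z}}\) of Z, we introduce the optimization problem

$$\begin{aligned} \widetilde{\text {SIP}}({\dot{Z}}): \quad \min _{\mathbf {x} \in X} \ f(\mathbf {x}) \quad \text {s.t.} \quad {\tilde{g}}_i(\mathbf {x}, \mathbf {z}) \le 0 \text { for all } \mathbf {z} \in {\dot{Z}}, \ i \in I, \end{aligned}$$

whose feasible set we denote by \({\tilde{M}}({\dot{Z}})\). If \({\dot{Z}} \subseteq Z\) is a finite set, \(\widetilde{\text {SIP}} ({\dot{Z}})\) is called a discretized SIP problem.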

Discretization methods which adaptively add points to the discretization are typically very successful. An algorithm for SIP problems using this methodology was first introduced by Blankenship and Falk (1976). The points which are added are the global solutions of the lower level problem. This is important for guaranteeing feasibility. Unfortunately, the transformation may destroy given convexity in the lower level problem (see Kaplan and Tichatschke 1997; Still 2001b). More precisely, if \(g_i(\mathbf {x}, \mathbf {y})\) is convex in \(\mathbf {y}\), \({\tilde{g}}_i(\mathbf {x}, \mathbf {z})\) can be non-convex in \(\mathbf {z}\). We illustrate this effect by the following example.

Example 1

We consider a so-called squircle as infinite index set, i.e.

with \(\mathbf {x} \in X := {\mathbb {R}}^{2} \times {\mathbb {R}}_{\ge 0}\). Furthermore, let \(g_1(\mathbf {x}, \mathbf {y}) := y_2 - y_1^2 \le 0\) be the semi-infinite constraint. Obviously, \(Y(\mathbf {x})\) is a convex set for all \(\mathbf {x}\), and \(g_1(\mathbf {x}, \mathbf {y})\) is concave in \(\mathbf {y}\) (for all \(\mathbf {x}\)). Hence, for all \(\mathbf {x}\) the (lower level) problem \(Q_1(\mathbf {x}): \max _{\mathbf {y} \in Y(\mathbf {x})} \ g_1(\mathbf {x}, \mathbf {y})\) is convex (see Fig. 1a for a graphical visualization). The representation of a circle as the image of the set \([0,1] \times [0,\pi ]\) can be generalized to this case (see, for example, Jaklič et al. 2000):

While \(Z = [0,1] \times [0,\pi ]\) is also a convex set, the function

is not concave in \(\mathbf {z}\) for every \(\mathbf {x}\). For example, it is not concave for \(\mathbf {x} = (0.8,0.5,1)^T\), as can be seen in Fig. 1b. For this \(\mathbf {x}\), the transformed lower level problem \({\tilde{Q}}_1(\mathbf {x}): \max _{\mathbf {z} \in Z} \ {\tilde{g}}_1(\mathbf {x}, \mathbf {z})\) has two different local solutions. Hence, the lower level problems of \(\widetilde{\text {SIP}}\) may be non-convex in general.

Fig. 1 Transformation destroys convexity in the lower level (dark gray: points with \(g_1(x,y) \le 0\) and \({\tilde{g}}_1(x,z) \le 0\), respectively; light gray: feasible set Y(x) and Z, respectively; circles: local solutions). a Original problem \(Q_1(x)\) with unique optimal solution. b Transformed problem \({\tilde{Q}}_1(x)\) with two local solutions.
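The loss of concavity can be checked numerically with a midpoint test. The squircle parametrization of Example 1 is not reproduced here, so the following sketch assumes a simpler stand-in: a disk with center \((x_1, x_2)\) and radius \(x_3\), parametrized by polar coordinates on \(Z = [0,1] \times [0, 2\pi ]\). It uses the same \(g_1(\mathbf {x},\mathbf {y}) = y_2 - y_1^2\) and the same \(\mathbf {x} = (0.8, 0.5, 1)^T\). The concave function \(g_1\) satisfies the midpoint inequality in \(\mathbf {y}\)-space. Its composition with \(\mathbf {t}(\mathbf {x}, \cdot )\) violates the inequality in \(\mathbf {z}\)-space.

```python
import math

# Hypothetical stand-in for Example 1: a disk with center (x1, x2) and radius x3
# (instead of the squircle), parametrized by polar coordinates on Z = [0, 1] x [0, 2*pi].


def g1(y):
    """g_1(x, y) = y_2 - y_1^2, concave in y (it does not depend on x)."""
    return y[1] - y[0] ** 2


def t(x, z):
    """Polar transformation mapping Z onto the disk Y(x)."""
    return (x[0] + x[2] * z[0] * math.cos(z[1]),
            x[1] + x[2] * z[0] * math.sin(z[1]))


def midpoint_gap(h, p, q):
    """Return h(mid) - (h(p) + h(q)) / 2; a negative value violates concavity of h."""
    mid = tuple(0.5 * (pk + qk) for pk, qk in zip(p, q))
    return h(mid) - 0.5 * (h(p) + h(q))


x = (0.8, 0.5, 1.0)
za, zb = (1.0, 1.5 * math.pi), (1.0, 2.0 * math.pi)

gap_z = midpoint_gap(lambda z: g1(t(x, z)), za, zb)   # test g~_1(x, .) on Z
gap_y = midpoint_gap(g1, t(x, za), t(x, zb))          # test g_1 on Y(x)
print(gap_z < 0, gap_y >= 0)  # True True
```

Note that the midpoint in \(\mathbf {y}\)-space is generally not the image of the midpoint in \(\mathbf {z}\)-space. This is exactly why concavity in \(\mathbf {y}\) does not carry over to \(\mathbf {z}\).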

The loss of convexity can cause numerical difficulties in solving the lower level problems. In the worst case, time-consuming global optimization routines have to be used to solve these problems. That is why the algorithm solves the original convex lower level problems instead. It then maps their solutions to solutions of the possibly non-convex lower level problems of \(\widetilde{\text {SIP}}\).

The following lemma shows that this approach is valid. It also implies that the transformation does not change the optimal value functions \(\varphi _i, i \in I,\) which define the feasible set, i.e., \({\tilde{\varphi }}_i = \varphi _i\).

Lemma 1

Let \(\mathbf {x} \in X\) and \(i \in I\). The point \(\mathbf {z}^* \in Z\) is a global solution of \({\tilde{Q}}_i(\mathbf {x})\) if and only if \(\mathbf {y}^* = \mathbf {t}(\mathbf {x},\mathbf {z}^*)\) is a global solution of \(Q_i(\mathbf {x})\).

Proof

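Let \(\mathbf {y}^*\) be a global solution of \(Q_i(\mathbf {x})\), i.e. \(g_i(\mathbf {x}, \mathbf {y}^*) \ge g_i(\mathbf {x}, \mathbf {y})\) for all \(\mathbf {y} \in Y(\mathbf {x})\). Since \(\mathbf {y}^* = \mathbf {t}(\mathbf {x},\mathbf {z}^*)\) and \(Y(\mathbf {x}) = \mathbf {t}(\mathbf {x},Z)\), this relation is equivalent to

$$\begin{aligned} {\tilde{g}}_i(\mathbf {x}, \mathbf {z}^*) = g_i(\mathbf {x}, \mathbf {t}(\mathbf {x},\mathbf {z}^*)) \ge g_i(\mathbf {x}, \mathbf {t}(\mathbf {x},\mathbf {z})) = {\tilde{g}}_i(\mathbf {x}, \mathbf {z}) \end{aligned}$$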

for all \(\mathbf {z} \in Z\). Thus, \(\mathbf {z}^*\) is a global solution of \({\tilde{Q}}_i(\mathbf {x})\). The reverse applies under analogous considerations and the surjectivity of \(\mathbf {t}\). \(\square \)
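Lemma 1 can be illustrated on a small hypothetical example with the following ingredients:

- the index set \(Y(x) = [0, x]\),
- the concave function \(g(x,y) = xy - y^2 - 1\),
- the nonlinear transformation \(t(x,z) = x z^3\) on \(Z = [0,1]\).

For \(x = 3\), \({\tilde{g}}(x,z) = 9z^3 - 9z^6 - 1\) is not concave; it is convex near \(z = 0\). The sketch proceeds in three steps:

1. It solves the lower level problem in \(y\) by ternary search, which is valid for concave maximization.
2. It computes the preimage \(z^*\).
3. It compares \({\tilde{g}}(x, z^*)\) with a brute-force maximum over a grid of Z.

```python
# Hypothetical example for Lemma 1: Y(x) = [0, x], g(x, y) = x*y - y*y - 1 (concave in y),
# nonlinear transformation t(x, z) = x * z**3 on Z = [0, 1].


def g(x, y):
    return x * y - y * y - 1.0


def t(x, z):
    return x * z ** 3


def argmax_concave(h, lo, hi, iters=200):
    """Ternary search for the maximizer of a concave function h on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if h(m1) < h(m2):
            lo = m1
        else:
            hi = m2
    return 0.5 * (lo + hi)


x = 3.0
y_star = argmax_concave(lambda y: g(x, y), 0.0, x)   # solve the convex problem Q(x) in y
z_star = (y_star / x) ** (1.0 / 3.0)                  # preimage: t(x, z_star) = y_star
grid_best = max(g(x, t(x, k / 10000)) for k in range(10001))  # brute force over Z
print(y_star, z_star, g(x, t(x, z_star)), grid_best)
```

The brute-force search over Z agrees with the value obtained via the convex problem in \(y\), as Lemma 1 predicts.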

By the above lemma, a global solution of the generally non-convex problem \({\tilde{Q}}_i(\mathbf {x})\) can be obtained in two steps. First, compute a global solution \(\mathbf {y}^*\) of the convex problem \(Q_i(\mathbf {x})\). Then, determine a preimage \(\mathbf {z}^* \in Z\) of it under the transformation \(\mathbf {t}(\mathbf {x}, \cdot )\), i.e., a point with \(\mathbf {t}(\mathbf {x}, \mathbf {z}^*) = \mathbf {y}^*\). Using an adaptive discretization as in Blankenship and Falk (1976), these considerations lead to the following algorithm:

If, in Step 2, an initial discretization \({\dot{Y}}_0 \left( \mathbf {x}^0 \right) \) of \(Y \left( \mathbf {x}^0 \right) \) is not at hand, one can be obtained by solving the lower level problems and transferring the solutions (see Steps 8 and 10). A feasible starting point for Step 8 can be calculated by applying the transformation \(\mathbf {t}(\mathbf {x}, \cdot )\) to any point of Z.

While Step 8 requires a global solution, Step 5 may compute either a global or a local solution. As we will see in the proof of Theorem 1, the global solution of the lower level problem is important to guarantee feasibility. The type of solution computed for the discretized problems determines the type of solution obtained for the GSIP problem.

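If the feasible set of \(\widetilde{\text {SIP}} \left( {\dot{Z}}_k \right) \) becomes empty, then the feasible set M of the GSIP problem is empty as well, because every feasible point of the GSIP problem is feasible for each discretized problem. In this case, Algorithm 1 can be stopped.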
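The following Python sketch runs a transformation-based adaptive discretization loop on a hypothetical one-dimensional toy problem. It makes concrete how the discretized SIP problems and the GSIP lower level problems interact. It is not a transcription of Algorithm 1. The following are all assumptions of this sketch:

- the toy problem itself;
- the grid search over X, used in place of an NLP solver for the discretized problems;
- the ternary search for the convex lower level problem;
- the stopping rule \(g(x^k, y^*) \le \alpha \).

```python
# Minimal sketch of a transformation-based adaptive discretization loop on a
# hypothetical toy problem (not from the paper):
#   min f(x) = -x over x in X = [1, 3]
#   s.t. g(x, y) = x*y - y*y - 1 <= 0 for all y in Y(x) = [0, x],
# whose solution is x* = 2. Transformation: t(x, z) = a(x) + z (b(x) - a(x)), Z = [0, 1].

X_LO, X_HI, N_GRID = 1.0, 3.0, 2001   # grid search as a crude stand-in for an NLP solver


def f(x):
    return -x


def g(x, y):
    return x * y - y * y - 1.0         # concave in y: the GSIP lower level is convex


def a(x):
    return 0.0


def b(x):
    return x


def t(x, z):
    return a(x) + z * (b(x) - a(x))


def t_preimage(x, y):
    return (y - a(x)) / (b(x) - a(x))  # requires b(x) > a(x), which holds on X


def solve_discretized_sip(z_dot):
    """Globally solve SIP~(z_dot) by brute force over a grid of X; None if infeasible."""
    best = None
    for k in range(N_GRID):
        x = X_LO + k * (X_HI - X_LO) / (N_GRID - 1)
        if all(g(x, t(x, z)) <= 0.0 for z in z_dot):
            if best is None or f(x) < f(best):
                best = x
    return best


def solve_gsip_lower_level(x, iters=200):
    """Maximize the concave function g(x, .) over Y(x) = [a(x), b(x)] by ternary search."""
    lo, hi = a(x), b(x)
    for _ in range(iters):
        m1, m2 = lo + (hi - lo) / 3.0, hi - (hi - lo) / 3.0
        if g(x, m1) < g(x, m2):
            lo = m1
        else:
            hi = m2
    return 0.5 * (lo + hi)


def tdm(z_dot, alpha=1e-6, max_iter=50):
    z_dot, history = list(z_dot), []
    for _ in range(max_iter):
        x = solve_discretized_sip(z_dot)
        if x is None:                        # empty discretized feasible set: M is empty
            return None, z_dot, history
        history.append(x)
        y_star = solve_gsip_lower_level(x)   # convex GSIP lower level, solved in y
        if g(x, y_star) <= alpha:            # maximal violation within tolerance: stop
            return x, z_dot, history
        z_dot.append(t_preimage(x, y_star))  # global solution of the transformed lower level
    return x, z_dot, history


x_opt, z_final, history = tdm([0.0, 1.0])
print(x_opt, history)  # 2.0 [3.0, 2.0]
```

Starting from \({\dot{Z}}_0 = \{0, 1\}\), the first iterate is \(x = 3\). The lower level solution \(y^* = 1.5\) is mapped to \(z \approx 0.5\). The next discretized problem then yields \(x = 2 = x^*\), and the loop stops. The objective values \(f(x^k)\) increase monotonically, in line with Theorem 1(iv).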

One of the main applications of semi-infinite optimization is the inscription of one or multiple designs. In this application, the infinite index sets are often simple geometric objects, such as (hyper)ellipsoids or (hyper)boxes, or objects composed of geometrically simple objects. For these, (re-)parameterizations, e.g., in the form of polar, cylindrical or spherical coordinates, are often well known. Moreover, infinite index sets, especially in this context, can often be written as a translation, rotation, or scaling of fixed objects, so the transformation is known, too. Finally, under suitable conditions, there are “generic” transformations. For example, any star-shaped set can be described by the star center and a radius function. Each point is then determined by its direction from the center and its distance to the center. In Still (1999) conditions are stated under which such a constructive transformation exists for infinite index sets.
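For example, consider an ellipse obtained from the unit disk by scaling, rotation and translation. It can be parametrized over \(Z = [0,1] \times [0, 2\pi ]\) by polar coordinates. The parameter layout \(\mathbf {x} = (c_1, c_2, s_1, s_2, \phi )\) in the following sketch is a hypothetical choice: center, semi-axes, and rotation angle. The sketch checks that sampled points \(\mathbf {t}(\mathbf {x}, \mathbf {z})\) lie in the ellipse and that \(z_1 = 1\) yields boundary points.

```python
import math

# Hypothetical parametrization: x = (c1, c2, s1, s2, phi) encodes center, semi-axes and
# rotation angle of an ellipse Y(x); Z = [0, 1] x [0, 2*pi] (radius fraction, angle).


def t_ellipse(x, z):
    """Scale, rotate and translate the polar point (z1 cos z2, z1 sin z2) of the unit disk."""
    c1, c2, s1, s2, phi = x
    u1, u2 = s1 * z[0] * math.cos(z[1]), s2 * z[0] * math.sin(z[1])   # scaling
    return (c1 + math.cos(phi) * u1 - math.sin(phi) * u2,              # rotation
            c2 + math.sin(phi) * u1 + math.cos(phi) * u2)              # + translation


def ellipse_level(x, y):
    """Value of the ellipse inequality after undoing translation and rotation (<= 1 inside)."""
    c1, c2, s1, s2, phi = x
    d1, d2 = y[0] - c1, y[1] - c2
    v1 = math.cos(phi) * d1 + math.sin(phi) * d2
    v2 = -math.sin(phi) * d1 + math.cos(phi) * d2
    return (v1 / s1) ** 2 + (v2 / s2) ** 2


x = (1.0, -2.0, 3.0, 0.5, math.pi / 6)
samples = [(i / 10, j * 2 * math.pi / 16) for i in range(11) for j in range(16)]
print(all(ellipse_level(x, t_ellipse(x, z)) <= 1.0 + 1e-12 for z in samples))  # True
```

Since \(\mathbf {t}(\mathbf {x}, \cdot )\) is nonlinear in the angle, a lower level problem that is convex in \(\mathbf {y}\) may again become non-convex in \(\mathbf {z}\).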

3 Convergence results

There is a huge variety of convergence results for adaptive discretization methods solving ordinary semi-infinite optimization problems. The goal of this section is to show that the results can be carried over to the transformation-based discretization method under suitable assumptions on the transformation.

We start by assuming that we can guarantee finding global solutions in Step 5 of Algorithm 1. Similar results regarding the convergence in the case of ordinary semi-infinite optimization problems can be found for example in Blankenship and Falk (1976), Reemtsen (1991) and Reemtsen and Goerner (1998). The next theorem summarizes the convergence properties for GSIP problems.

Theorem 1

Let the (initial) feasible set \({\tilde{M}}({\dot{Z}}_0)\) be compact and the feasible set M of GSIP be non-empty. Let the tolerance \(\alpha =0\). Then, either Algorithm 1 terminates after a finite number of steps, or it holds:

-

(i)

The problems GSIP and \(\widetilde{\mathsf {\text {SIP}}}({\dot{Z}}_k), k \in {\mathbb {N}}_0,\) have global solutions \(\mathbf {x}^* \in M\) and \(\mathbf {x}^{k+1} \in {\tilde{M}}({\dot{Z}}_k), k \in {\mathbb {N}}_0\).

-

(ii)

The sequence \(\{ \mathbf {x}^{k} \}_{k \in {\mathbb {N}}_0}\) has at least one accumulation point and each such accumulation point is a global solution of GSIP.

-

(iii)

If \(\mathbf {x}^* \in M\) is a unique global solution of GSIP, then it holds

$$\begin{aligned} \lim _{k \rightarrow \infty } \Vert \mathbf {x}^{k} - \mathbf {x}^* \Vert _2 = 0. \end{aligned}$$

-

(iv)

For a global solution \(\mathbf {x}^*\) of GSIP it holds \(f(\mathbf {x}^{k}) \le f(\mathbf {x}^{k+1}) \le f(\mathbf {x}^*), k \in {\mathbb {N}}_0,\) and \(\lim _{k \rightarrow \infty } f(\mathbf {x}^{k}) = f(\mathbf {x}^*)\).

Proof

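For the discretizations \({\dot{Z}}_k, k \in {\mathbb {N}}_0,\) of Z calculated by Algorithm 1, the following relation is valid:

$$\begin{aligned} {\dot{Z}}_0 \subseteq {\dot{Z}}_1 \subseteq \dots \subseteq {\dot{Z}}_k \subseteq \dots \subseteq Z. \end{aligned}$$

Hence, it holds for the feasible sets of the discretized SIP-problems \(\widetilde{\mathsf {\text {SIP}}}({\dot{Z}}_k), k \in {\mathbb {N}}_0,\) and the feasible set of \(\widetilde{\mathsf {\text {SIP}}}\):

$$\begin{aligned} {\tilde{M}}({\dot{Z}}_0) \supseteq {\tilde{M}}({\dot{Z}}_1) \supseteq \dots \supseteq {\tilde{M}}({\dot{Z}}_k) \supseteq \dots \supseteq {\tilde{M}} = M. \end{aligned}\tag{3}$$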

The functions \({\tilde{g}}_i, i \in I,\) are continuous as compositions of continuous functions. Since X is closed, the sets \({\tilde{M}}({\dot{Z}}_k), k \in {\mathbb {N}}_0,\) and \({\tilde{M}}\) are closed. Because of (3) they are bounded and, thus, compact. They are non-empty since they contain \(M \ne \emptyset \). Because of its continuity, the function f attains its minimum on the sets \(M={\tilde{M}}\) and \({\tilde{M}}({\dot{Z}}_k), k \in {\mathbb {N}}_0\). Hence, assertion (i) is shown.

For (ii) note that \(\mathbf {x}^k\) and \(\mathbf {z}_i^{k}, i\in I\), are sequences in \({\tilde{M}}({\dot{Z}}_0)\) and Z respectively. Both sets are compact by assumption. Hence, there is at least one accumulation point. For every converging subsequence \(\{ \mathbf {x}^{k_l} \}_{l \in {\mathbb {N}}}\) we can choose a subsequence such that \(\mathbf {z}_i^{k_l}, i\in I\) is convergent as well. Using continuity of \({\tilde{g}}_i\) and optimality of \({\mathbf {z}}_i^{k_l}\) for \({\tilde{Q}}_i({\mathbf {x}}^{k_l})\), we have for \(i\in I\) and \(\mathbf {z}\in Z\)

Thus, every accumulation point is feasible. Moreover, because \({\mathbf {x}} \in M \Rightarrow {\mathbf {x}} \in {\tilde{M}}({\dot{Z}}_{k_l})\) by (3), we also have for \({\mathbf {x}}\in M\),

which shows optimality.

The remaining assertions (iii) and (iv) follow easily by (ii) and relation (3). \(\square \)

If the \(\widetilde{\mathsf {\text {SIP}}}\)-defining functions f and \({\tilde{g}}_i, i \in I, \) are non-convex in \(\mathbf {x}\), it can be costly to guarantee the calculation of global solutions. The literature also contains results for the case that only local solutions can be calculated (see, e.g., Reemtsen 1994). The same techniques can be used here. To this end, assume that for every \(k\in {\mathbb {N}}_0\) the current iterate \(\mathbf {x}^k\) is a local minimizer of \(\widetilde{\mathsf {\text {SIP}}}({\dot{Z}}_k)\) with radius \(r_k>0\), i.e., for all \(\mathbf {x} \in {\tilde{M}}({\dot{Z}}_k)\) with \(\Vert \mathbf {x}-\mathbf {x}^k\Vert < r_k\) the following holds:

$$\begin{aligned} f(\mathbf {x}) \ge f(\mathbf {x}^k). \end{aligned}$$

If the radii do not converge towards zero, i.e.

then every accumulation point \(\mathbf {x}^*\) of the sequence \(\{\mathbf {x}^k\}_{k \in {\mathbb {N}}_0}\) is a local minimizer of GSIP. This can be proven by restricting the analysis to a ball of radius r/2 around \(\mathbf {x}^*\). For large k, the iterates are global solutions of the discretized problems restricted to this ball.

These results and the assertions of Theorem 1 only concern limit points. We have introduced a parameter \(\alpha \) as a finite termination criterion. The next lemma shows that this is a valid approach.

Lemma 2

Again let the (initial) feasible set \({\tilde{M}}({\dot{Z}}_0)\) be compact. For a tolerance \(\alpha >0\) Algorithm 1 terminates after finitely many steps.

Proof

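Assume the contrary. Then, in every iteration, the algorithm has to add at least one point to the discretization. This means that there are an \(i\in I\) and sequences \(\{{\mathbf {x}}^k\}_{k\in {\mathbb {N}}_0}\subseteq {\tilde{M}}({\dot{Z}}_0)\), \(\{{\mathbf {z}}^k\}_{k\in {\mathbb {N}}_0}\subseteq Z\) with

$$\begin{aligned} {\tilde{g}}_i({\mathbf {x}}^k, {\mathbf {z}}^k) > \alpha \text { for all } k \in {\mathbb {N}}_0 \end{aligned}\tag{4}$$

and, for all \({\hat{k}}>k\),

$$\begin{aligned} {\tilde{g}}_i({\mathbf {x}}^{{\hat{k}}}, {\mathbf {z}}^k) \le 0. \end{aligned}\tag{5}$$

As Z and \({\tilde{M}}({\dot{Z}}_0)\) are both compact, there are convergent subsequences with

$$\begin{aligned} \lim _{l \rightarrow \infty } {\mathbf {x}}^{k_l} = \bar{{\mathbf {x}}}, \quad \lim _{l \rightarrow \infty } {\mathbf {z}}^{k_l} = \bar{{\mathbf {z}}}. \end{aligned}$$

Because \(\mathbf {t}\) is continuous, \({\tilde{g}}_i\) is continuous as well. Together with (4) and (5) we have

$$\begin{aligned} \alpha \le \lim _{l \rightarrow \infty } {\tilde{g}}_i({\mathbf {x}}^{k_{l+1}}, {\mathbf {z}}^{k_{l+1}}) = {\tilde{g}}_i(\bar{{\mathbf {x}}}, \bar{{\mathbf {z}}}) = \lim _{l \rightarrow \infty } {\tilde{g}}_i({\mathbf {x}}^{k_{l+1}}, {\mathbf {z}}^{k_l}) \le 0, \end{aligned}$$

which contradicts \(\alpha > 0\). Hence, no such sequences can exist, and the algorithm terminates after a finite number of steps. \(\square \)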

The following question arises: after k steps, how far is the obtained solution from a local solution of the GSIP problem? In Still (2001a) this distance is bounded for a sequence of solutions of increasingly fine discretizations. Transferring these results is the topic of the remainder of this section.

To do so, we have to strengthen the initial assumptions. First, in Assumption 2 we assume that the iterates converge towards a strict local minimizer. Second, in Assumption 3 we assume some differentiability of the functions \(g_i, i \in I,\) and \({\mathbf {t}}\) as well as an extension of the Mangasarian-Fromovitz Constraint Qualification (MFCQ). Finally, we assume in Theorem 2 that the objective function is locally Lipschitz continuous.

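A point \({\mathbf {x}}^*\in M\) is called a strict local minimizer of order \(\rho =1\) or \(\rho =2\) if there are a \(q>0\) and a neighborhood U of \({\mathbf {x}}^*\) such that

$$\begin{aligned} f({\mathbf {x}}) - f({\mathbf {x}}^*) \ge q \Vert {\mathbf {x}} - {\mathbf {x}}^* \Vert _2^{\rho } \end{aligned}$$

holds for every \({\mathbf {x}} \in M\cap U\).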

We now assume that the sequence generated by Algorithm 1 converges to a local solution:

Assumption 2

Let \(\mathbf {x}^*\) be a strict local minimizer of order \(\rho = 1\) or \(\rho = 2\) of GSIP in the neighborhood U and \(\{{\mathbf {x}}^{k}\}_{k\in {\mathbb {N}}_0}\) a sequence of local minimizers with radius \(r_k\) calculated by the transformation-based discretization method (TDM). Furthermore, assume that \(\Vert \mathbf {x}^{k} - \mathbf {x}^* \Vert _2 \rightarrow 0\) for \(k \rightarrow \infty \) and

The assumption presented here is very similar to the main assumption made in Still (2001a). The difference is the assumption (6) of non-vanishing radii of the local minimizers. As we show in Sect. 3.1, this assumption cannot be dropped.

For the construction of a feasible point, an MFCQ-like condition for \({\widetilde{\text {SIP}}}\) is needed. We therefore first ask under which conditions the functions \(\mathrm {D}_1 {\tilde{g}}_i, i \in I,\) are continuous on \(U_{\mathbf {x}^*} \times Z\), where \(U_{\mathbf {x}^*}\) is a neighborhood of \(\mathbf {x}^*\).

Lemma 3

Let the functions \(g_i({\mathbf {x}},{\mathbf {y}}), i \in I,\) be differentiable w.r.t. \((\mathbf {x},\mathbf {y})\) and \(\mathbf {t}({\mathbf {x}} , \mathbf {z})\) be differentiable with respect to its first argument \({\mathbf {x}}\). Let \(U_{\mathbf {x}^*}\) be a neighborhood of \(\mathbf {x}^*\). We assume that

-

(i)

the functions \(\mathrm {D} g_i(\mathbf {x},\mathbf {y}), i \in I\), are continuous on \(U_{\mathbf {x}^*} \times {\mathbb {R}}^{n}\) and

-

(ii)

the function \(\mathrm {D}_1\mathbf {t}(\mathbf {x}, \mathbf {z})\) is continuous on \(U_{\mathbf {x}^*} \times Z\).

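Then the functions \({\tilde{g}}_i,i \in I,\) are differentiable w.r.t. \(\mathbf {x}\) around \(\mathbf {x}^*\), and this derivative is given by

$$\begin{aligned} \mathrm {D}_1 {\tilde{g}}_i(\mathbf {x}, \mathbf {z}) = \mathrm {D}_1 g_i(\mathbf {x}, \mathbf {t}(\mathbf {x},\mathbf {z})) + \mathrm {D}_2 g_i(\mathbf {x}, \mathbf {t}(\mathbf {x},\mathbf {z}))\, \mathrm {D}_1 \mathbf {t}(\mathbf {x},\mathbf {z}), \end{aligned}$$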

which is continuous on \(U_{\mathbf {x}^*} \times Z\).
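The derivative formula is the chain rule. The following sketch checks it against a central finite difference for two hypothetical scalar choices, \(g(x,y) = xy - y^2 - 1\) and \(t(x,z) = x z^3\). For these, the closed form is \(\mathrm {D}_1 {\tilde{g}}(x,z) = 2x(z^3 - z^6)\). The step size `h` is an arbitrary choice.

```python
# Hypothetical scalar check of D_1 g~(x, z) = D_1 g(x, t(x, z)) + D_2 g(x, t(x, z)) * D_1 t(x, z)
# for g(x, y) = x*y - y*y - 1 and t(x, z) = x * z**3.


def g(x, y):
    return x * y - y * y - 1.0


def D1_g(x, y):          # partial derivative of g w.r.t. x
    return y


def D2_g(x, y):          # partial derivative of g w.r.t. y
    return x - 2.0 * y


def t(x, z):
    return x * z ** 3


def D1_t(x, z):          # partial derivative of t w.r.t. x
    return z ** 3


def D1_g_tilde(x, z):
    """Chain rule applied to g~(x, z) = g(x, t(x, z))."""
    y = t(x, z)
    return D1_g(x, y) + D2_g(x, y) * D1_t(x, z)


def central_difference(x, z, h=1e-6):
    """Finite-difference approximation of D_1 g~(x, z)."""
    return (g(x + h, t(x + h, z)) - g(x - h, t(x - h, z))) / (2.0 * h)


x, z = 3.0, 0.6
print(D1_g_tilde(x, z), central_difference(x, z), 2 * x * (z ** 3 - z ** 6))
```

All three printed values should agree to roughly the accuracy of the finite difference.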

Before introducing Theorem 2, we collect the required conditions in an assumption.

Assumption 3

Let the functions \(g_i(\mathbf {x},\mathbf {y}), i \in I,\) be differentiable w.r.t. \((\mathbf {x},\mathbf {y})\) and \(\mathbf {t}(\mathbf {x} , \mathbf {z})\) be differentiable in its first argument \(\mathbf {x}\). Assume that there exists a neighborhood \(U_{\mathbf {x}^*}\) of \(\mathbf {x}^*\) such that:

-

(i)

The functions \( \mathrm {D} g_i(\mathbf {x},\mathbf {y}), i \in I,\) are continuous on \(U_{\mathbf {x}^*} \times {\mathbb {R}}^{n}\).

-

(ii)

The function \(\mathrm {D}_1 \mathbf {t}(\mathbf {x}, \mathbf {z})\) is continuous on \(U_{\mathbf {x}^*} \times Z\).

-

(iii)

There exists a vector \(\varvec{\xi } \in {\mathbb {R}}^{m}\) such that for all \(i \in I\):

$$\begin{aligned} \mathrm {D}_1{\tilde{g}}_i(\mathbf {x}^*, \mathbf {z}) \cdot \varvec{\xi } \le -1 \text { for all } \mathbf {z} \in Z_0^i(\mathbf {x}^*), \end{aligned}$$where \(Z_0^i(\mathbf {x}^*)=\{\mathbf {z} \in Z\mid {\tilde{g}}_i(\mathbf {x}^*,\mathbf {z})=0\}\) denotes the set of active indices.

For a sequence \(\{{\mathbf {x}}^{k}\}_{k\in {\mathbb {N}}_0}\) calculated by Algorithm 1 we denote the current violation for \(k\in {\mathbb {N}}_0\) by:

Now we can bound the distance between the current iterate and the limit point.

Theorem 2

Let Assumptions 2 and 3 hold and the objective function f of GSIP be Lipschitz-continuous near \(\mathbf {x}^*\).

-

(i)

There is a constant \(c_1 > 0\) and a \(k' \in {\mathbb {N}}_0\) such that

$$\begin{aligned} 0 \le f(\mathbf {x}^*) - f(\mathbf {x}^{k}) \le c_1 \alpha _k \text { for all } k \ge k'. \end{aligned}$$

-

(ii)

There is a constant \(c_2 > 0\) and a \(k'' \in {\mathbb {N}}_0\) such that

$$\begin{aligned} \Vert \mathbf {x}^* - \mathbf {x}^{k} \Vert _2 \le c_2\alpha _k^{{1}/{\rho }} \text { for all } k \ge k''. \end{aligned}$$

We have added condition (6) to the assumptions. In Sect. 3.1 we show that this additional assumption is needed and that the statement is false without it. Therefore, we give a complete proof instead of reducing the statement to the statements in Still (2001a).

Proof

Construction of a feasible point: In a first step we construct a feasible point \(\bar{\mathbf {x}}^{k}\). To do so, we move the current iterate towards feasibility: For \(k\in {\mathbb {N}}_0\) and \(t\in [0,1]\) let

where \(\varvec{\xi }\) is chosen according to Assumption 3(iii). We next show that the point \(\bar{\mathbf {x}}^{k}(1)\) is feasible. First, we consider indices \(\mathbf {z}\in Z\) that are close to an active index.

For every \(i\in I\) the function \(\mathrm {D}_1{\tilde{g}}_i(\cdot , \cdot )\) is continuous by Assumption 3 (see Lemma 3), and Z is compact. Thus, there is some \(\varepsilon > 0\) such that for every \(i\in I\) it holds

for \({\mathbf {z}} \in Z^{i,\varepsilon }_0(\mathbf {x}^*)\) and \(\Vert {\mathbf {x}}-{\mathbf {x}}^*\Vert _2 < \varepsilon \), where

Choose \(k_1\) large enough such that

for \(k\ge k_1\). By the mean value theorem there is for every \(i\in I\), \({\mathbf {z}}\in Z_0^{i,\varepsilon }(\mathbf {x}^*)\) and \(k \ge k_1\) an \(s \in [0,1]\) such that

It remains to consider the indices that have at least a given distance from the active indices. For \(i\in I\), the set \(Z\backslash Z_0^{i,\varepsilon }(\mathbf {x}^*)\) is compact and the functions \({\tilde{g}}_i(\cdot ,\cdot )\) are continuous. Hence, there is an \(\varepsilon _2 > 0\) such that

for \(\mathbf {z}\in Z\backslash Z_0^{i,\varepsilon }(\mathbf {x}^*)\) and \(\Vert \mathbf {x}-\mathbf {x}^*\Vert _{2} < \varepsilon _2\). Choose \(k_2\) large enough such that

for \(k\ge k_2\). Thus, for \(k \ge \max (k_1,k_2)\) the point \(\bar{\mathbf {x}}^k:=\bar{\mathbf {x}}^k(1)\) is feasible.

Now the two claims follow easily:

Proof of i): By Assumption 2 the radii \(r_k\) of the local minimizers \(\mathbf {x}^{k}\) don’t converge to 0. Thus for large k the distance of the local solution \(\mathbf {x}^*\) to \(\mathbf {x}^{k}\) is smaller than \(r_k\) and we have

Again, for large k, the feasible point \(\bar{\mathbf {x}}^k\) is contained in U. By the Lipschitz-continuity of f, there is a constant L such that for large k

which shows the first assertion.

Proof of ii): By Assumption 2 the limit point \({\mathbf {x}}^*\) is a local solution of order \(\rho \). Thus for large k:

where we’ve used Eqs. (7) and (8) for the third and fourth inequality. \(\square \)

3.1 Counterexample for rate of convergence

We have added the assumption (6) of non-vanishing radii to the above convergence result. The following example shows that without this assumption the convergence rate may be arbitrarily bad.

We construct the example in two steps. First, we present a Lipschitz continuous function on \(X=[-1,1]^2\) with infinitely many local solutions.

Therefore, let \(\{{\mathbf {c}}^{(i)}\}_{i\in {\mathbb {N}}}\) with

Around every \({\mathbf {c}}^{(i)}\) we choose a circle \(U_i\) with radius \(r_i :=\frac{1}{10^{i+1}}\):

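Note that the circles \(U_i\) are disjoint. We define the objective function \(f:X \rightarrow {\mathbb {R}}\) by

$$\begin{aligned} f(\mathbf {x}) := {\left\{ \begin{array}{ll} \Vert \mathbf {x}\Vert _2+2\Vert \mathbf {c}^{(i)}-\mathbf {x}\Vert _2-2r_i, & \text {if } \mathbf {x} \in U_i \text { for some } i\in {\mathbb {N}},\\ \Vert \mathbf {x}\Vert _2, & \text {otherwise}. \end{array}\right. } \end{aligned}$$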

We summarize the properties of the objective function in the following lemma:

Lemma 4

For the above construction, the following is true:

(i) The function f is Lipschitz continuous.

(ii) For every \(i\in {\mathbb {N}}\) the center point \(\mathbf {c}^{(i)}\) is a strict local minimizer of f with radius \(r_i\).

Proof

(i) It is easy to see that, for every \(\mathbf {x}\in X\), f can be written as

$$\begin{aligned} f(\mathbf {x})=\min \Big \{\Vert \mathbf {x}\Vert _2,\ \min _{i\in {\mathbb {N}}}\big (\Vert \mathbf {x} \Vert _2+2\Vert \mathbf {c}^{(i)}-\mathbf {x}\Vert _2-2r_i\big )\Big \}. \end{aligned}$$

Each of these functions is Lipschitz continuous with Lipschitz constant at most 3, so their pointwise minimum is again Lipschitz continuous with this constant.

(ii) Consider, for \(i\in {\mathbb {N}}\), a point \(\mathbf {x}\in U_i\) with \(\mathbf {x} \ne \mathbf {c}^{(i)}\). On \(U_i\) the minimum in (i) is attained by the i-th term, and the triangle inequality yields

$$\begin{aligned} f(\mathbf {x}) =&\ \Vert \mathbf {x}\Vert _2+2\Vert \mathbf {c}^{(i)}-\mathbf {x}\Vert _2-2r_i\\ \ge&\ \Vert \mathbf {c}^{(i)}\Vert _2+\Vert \mathbf {c}^{(i)}-\mathbf {x}\Vert _2-2r_i\\ >&\ \Vert \mathbf {c}^{(i)}\Vert _2 -2r_i\\ =&\ f(\mathbf {c}^{(i)}). \end{aligned}$$

\(\square \)
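The construction can be checked numerically with a short Python sketch. Because the centers \(\mathbf {c}^{(i)}\) are not reproduced in this section, the code uses the hypothetical centers \(\mathbf {c}^{(i)}=(\frac{1}{2i},\frac{1}{2i})\), which lie in X and keep the disks of radius \(r_i\) disjoint, and it truncates the infinite family to finitely many terms. It evaluates the closed form from part (i) of the proof, samples points of each \(U_i\) to confirm \(f(\mathbf {x})>f(\mathbf {c}^{(i)})\), and estimates the Lipschitz ratio on random pairs; such sampling illustrates Lemma 4 but of course does not prove it.

```python
import math
import random

# Hypothetical centers c^(i) = (1/(2i), 1/(2i)); the paper's centers are not
# reproduced here. The disks of radius r_i = 10^-(i+1) around them are disjoint.
N_TERMS = 8  # truncate the infinite family so that f is computable

centers = [(1.0 / (2 * i), 1.0 / (2 * i)) for i in range(1, N_TERMS + 1)]
radii = [10.0 ** (-(i + 1)) for i in range(1, N_TERMS + 1)]


def dist(p, q):
    return math.hypot(p[0] - q[0], p[1] - q[1])


def f(x):
    """Closed form from part (i) of the proof, truncated to N_TERMS disks."""
    nx = math.hypot(x[0], x[1])
    terms = [nx + 2 * dist(c, x) - 2 * r for c, r in zip(centers, radii)]
    return min([nx] + terms)


def check_strict_minima(samples=200, seed=0):
    """Sample points of U_i other than c^(i) and compare f with f(c^(i))."""
    rng = random.Random(seed)
    for c, r in zip(centers, radii):
        fc = f(c)
        for _ in range(samples):
            rho = rng.uniform(0.1, 0.9) * r  # strictly inside U_i, away from c
            phi = rng.uniform(0.0, 2 * math.pi)
            x = (c[0] + rho * math.cos(phi), c[1] + rho * math.sin(phi))
            if not f(x) > fc:
                return False
    return True


def max_lipschitz_ratio(samples=5000, seed=1):
    """Largest observed |f(x) - f(y)| / ||x - y|| over random pairs in X."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(samples):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        y = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        d = dist(x, y)
        if d > 0:
            worst = max(worst, abs(f(x) - f(y)) / d)
    return worst


print(check_strict_minima())       # True
print(max_lipschitz_ratio() <= 3)  # True
```

The sampling radius is kept between \(0.1r_i\) and \(0.9r_i\) so that the margin \(f(\mathbf {x})-f(\mathbf {c}^{(i)})\ge \Vert \mathbf {c}^{(i)}-\mathbf {x}\Vert _2\) from part (ii) stays well above rounding error even for the smallest disks.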

We consider the following standard semi-infinite problem:

As the transformation we choose the identity, so that \(Z=Y\). We investigate the structure of the problem in the next lemma.

Lemma 5

Problem \(\text {SIP}_{\text {ex}}\) has the following properties:

(i) The feasible set is given by

$$\begin{aligned} M=\{\mathbf {x} \in X \mid x_1 \le 0\}. \end{aligned}$$

(ii) The origin \(\mathbf {x}^*=(0,0)\) is a local minimizer of order 1.

(iii) There exists a vector \(\varvec{\xi }\in {\mathbb {R}}^2\) such that

$$\begin{aligned} \mathrm {D}_1g(\mathbf {x}^*,y_0)\cdot \varvec{\xi } \le -1 \end{aligned}$$

for every \(y_0\in Y\) with \(g(\mathbf {x}^*,y_0)=0\).

Proof

(i) Let \(\mathbf {x}\in X\). The solution of the lower level problem is given by \(y=x_2\). Thus,

$$\begin{aligned} \max _{y \in Y } g(\mathbf {x},y)=x_1, \end{aligned}$$

so \(\mathbf {x}\) is feasible if and only if \(x_1 \le 0\).

(ii) On the feasible set M the objective function coincides with the Euclidean norm, which clearly has a local minimum of order 1 at \(\mathbf {x}^*=(0,0)\).

(iii) Since, by part (i), the only solution of the lower level problem at \(\mathbf {x}^*\) is \(y_0=0\), we have with \(\varvec{\xi }:=(-1,0)^{\top }\)

$$\begin{aligned} \mathrm {D}_1g(\mathbf {x}^*,y_0) \cdot \varvec{\xi } = \big (1, -2x_2\big ) \cdot \begin{pmatrix} -1\\ 0 \end{pmatrix}=1\cdot (-1) =-1. \end{aligned}$$

\(\square \)

If we take the strict local solution \({\mathbf {c}}^{(1)}\) as the initial point and an empty initial discretization for Algorithm 1, the first point added to the discretization will be \(\frac{1}{1}\). The next element of the sequence of strict local minimizers still lies in \(M({\dot{Z}}_1)\); thus we can choose \({\mathbf {c}}^{(2)}\) as the next iterate. Proceeding in this way, the k-th iteration yields the local minimizer \(\mathbf {c}^{(k+1)}\) as the iterate \(\mathbf {x}^{k+1}\) and the discretization \({\dot{Z}}_{k+1} = \{\frac{1}{i} \mid 1\le i \le k+1\}\). The constraint violation after the k-th step is

whereas the distance to the limit solution \(\mathbf {x}^*\) is greater than \(\frac{1}{k+1}\), which violates the convergence rate given in Theorem 2.

Remark 2

(i) The second components \(\frac{1}{i}\) of the local solutions can be replaced by an arbitrarily slowly converging sequence. Hence, the rate of convergence can be arbitrarily bad.

(ii) We presented this example in the context of an adaptively chosen discretization. The same example can also be used to construct a counterexample for uniformly chosen discretization points. Thus, this example shows that the assumption of non-vanishing radii (6) is missing in the corresponding convergence-rate result of Still (2001a).

4 Convexity preserving transformations

Of course, not all transformations destroy the convexity of the lower level problems. In this section we outline conditions under which convexity properties are preserved. We begin with the lower level problems.

The lower level problems \(\tilde{\text {Q}}_i(\mathbf {x}), i \in I,\) of the transformation-induced SIP problem are convex for all \(\mathbf {x} \in X\), if the transformation-induced functions \({\tilde{g}}_i(\mathbf {x}, \mathbf {z}), i \in I,\) are concave in \(\mathbf {z}\) for all \(\mathbf {x}\) and the set Z is convex.

Affine-linear mappings map convex sets onto convex sets, and composing a concave function with an affine-linear mapping yields a concave function. Therefore, the following holds:

Lemma 6

For all \(\mathbf {x} \in X\) let the functions \(g_i(\mathbf {x}, \cdot ), i \in I,\) be concave and the set Z convex. Furthermore, let the transformation \(\mathbf {t}\) be affine-linear in its second argument, i.e.

where the mappings \(\mathbf {A} : X \rightarrow {\mathbb {R}}^{ n \times {\tilde{n}}}\) and \(\mathbf {b} : X \rightarrow {\mathbb {R}}^{n}\) are continuous on X. Then, the problems \({\tilde{Q}}_i(\mathbf {x}), i \in I,\) are convex for all \(\mathbf {x} \in X\).

Proof

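As the set Z is convex by assumption, it only remains to prove the concavity of the objective function of the lower level problem. We show concavity by checking the definition. To this end, fix \(\mathbf {x} \in X\) and consider \(\mathbf {z}_1, \mathbf {z}_2 \in Z, \lambda \in [0,1]\). Since \(\mathbf {t}(\mathbf {x},\cdot )\) is affine-linear and \(g_i(\mathbf {x}, \cdot )\) is concave, it follows for \(i\in I\):

$$\begin{aligned} {\tilde{g}}_i(\mathbf {x}, \lambda \mathbf {z}_1+(1-\lambda )\mathbf {z}_2) =&\ g_i\big (\mathbf {x}, \lambda \mathbf {t}(\mathbf {x},\mathbf {z}_1)+(1-\lambda )\mathbf {t}(\mathbf {x},\mathbf {z}_2)\big )\\ \ge&\ \lambda g_i(\mathbf {x}, \mathbf {t}(\mathbf {x},\mathbf {z}_1))+(1-\lambda ) g_i(\mathbf {x}, \mathbf {t}(\mathbf {x},\mathbf {z}_2))\\ =&\ \lambda {\tilde{g}}_i(\mathbf {x}, \mathbf {z}_1)+(1-\lambda ){\tilde{g}}_i(\mathbf {x}, \mathbf {z}_2). \end{aligned}$$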

\(\square \)
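A quick numerical sanity check of Lemma 6 can be written as a Python sketch. The concave function \(g(\mathbf {x},\mathbf {y})=-\Vert \mathbf {y}-\mathbf {x}\Vert _2^2\) and the affine-linear transformation with the \(\mathbf {A}(\mathbf {x})\) and \(\mathbf {b}(\mathbf {x})\) given in the comments are hypothetical choices, not taken from the paper. For this quadratic choice the concavity gap equals \(\lambda (1-\lambda )\Vert \mathbf {A}(\mathbf {x})(\mathbf {z}_1-\mathbf {z}_2)\Vert _2^2\ge 0\), so the sampled minimum should be non-negative up to rounding.

```python
import random


def g(x, y):
    # Hypothetical lower level objective, concave in y for every fixed x.
    return -sum((yi - xi) ** 2 for xi, yi in zip(x, y))


def t(x, z):
    # Hypothetical transformation, affine-linear in z: t(x, z) = A(x) z + b(x)
    # with A(x) = [[x1, 1], [0, x2]] and b(x) = (x2, -x1).
    a = [[x[0], 1.0], [0.0, x[1]]]
    b = [x[1], -x[0]]
    return [a[r][0] * z[0] + a[r][1] * z[1] + b[r] for r in range(2)]


def g_tilde(x, z):
    # Transformation-induced function g~(x, z) = g(x, t(x, z)).
    return g(x, t(x, z))


def concavity_gap(x, z1, z2, lam):
    """g~(x, lam z1 + (1-lam) z2) - [lam g~(x, z1) + (1-lam) g~(x, z2)]."""
    z = [lam * u + (1 - lam) * v for u, v in zip(z1, z2)]
    return g_tilde(x, z) - (lam * g_tilde(x, z1) + (1 - lam) * g_tilde(x, z2))


rng = random.Random(0)
gaps = []
for _ in range(10_000):
    x = [rng.uniform(-2, 2), rng.uniform(-2, 2)]
    z1 = [rng.uniform(0, 1), rng.uniform(0, 1)]  # Z = [0, 1]^2 is convex
    z2 = [rng.uniform(0, 1), rng.uniform(0, 1)]
    gaps.append(concavity_gap(x, z1, z2, rng.uniform(0, 1)))

print(min(gaps) >= -1e-9)  # True: no sampled violation of concavity in z
```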

Having treated the lower level problems, we now consider the discretized problems solved in Step 5. For the computation of global solutions it is of great value to have convex discretized problems, i.e., the functions \({\tilde{g}}_i(\mathbf {x}, \mathbf {z}), i \in I,\) (and the function f) should be convex in \({\mathbf {x}}\) (for all \(\mathbf {z}\)). The following example illustrates that the transformation can destroy this property as well.

Example 2

Let the infinite index set be a semi-circle in arbitrary position with variable radius, which can be modeled as follows:

with \(\mathbf {x} \in X := {\mathbb {R}}^{2} \times {\mathbb {R}}^{3}_{>0} \times {\mathbb {R}}\). The semi-circle can be represented as the image of the set \(Z = [0,1] \times \left[ -{1}/{2},{1}/{2} \right] \) under the mapping

with \({\text {atan2}}\) being the bivariate arctangent

Obviously, the sets \(Y(\mathbf {x})\), \(\mathbf {x} \in X\), as well as the set Z are convex. We consider the function \(g(\mathbf {x}, \mathbf {y}) = x_6^2-y_1+y_2\), which is convex in \(\mathbf {x}\) and \(\mathbf {y}\). However, the function

is not convex in \(\mathbf {x}\) for some \(\mathbf {z} \in Z\). For example, with \(\mathbf {x}_1=(0,0,1,1,-1,0)^{T}\), \(\mathbf {x}_2=(0,0,1,1,0,0)^{T}\) and \({\mathbf {z}}=(1,0)^T\) one obtains:

The next lemma states conditions on the functions \(g_i, i \in I,\) and the transformation \(\mathbf {t}\) under which convexity in \(\mathbf {x}\) is preserved. It follows from the fact that the composition of a convex function with an affine-linear mapping is again convex.

Lemma 7

Let the functions \(g_i({\mathbf {x}}, \mathbf {y}), i \in I,\) be convex in \(({\mathbf {x}},{\mathbf {y}})\). Furthermore, let the transformation \(\mathbf {t}\) be affine-linear in its first argument, i.e.

where \(\mathbf {A} : {\mathbb {R}}^{{\tilde{n}}} \rightarrow {\mathbb {R}}^{n \times m}\) and \(\mathbf {b} : {\mathbb {R}}^{{\tilde{n}}} \rightarrow {\mathbb {R}}^{n}\). Then the functions \({\tilde{g}}_i(\mathbf {x}, \mathbf {z}), i \in I,\) are convex in \(\mathbf {x}\) for any \(\mathbf {z} \in Z\).

The rotation used in Example 2 obviously does not satisfy condition (10). Translations, scalings, and shearings, however, do satisfy condition (10).
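The contrast between condition (10) and a rotation can be illustrated with a small Python sketch. Both transformations and the convex function \(g(\mathbf {x},\mathbf {y})=\Vert \mathbf {y}\Vert _2^2\) are hypothetical, and the rotation is parametrized directly by an angle \(x_3\) rather than by the atan2-based mapping of Example 2. Translation plus scaling is affine-linear in \(\mathbf {x}\), so random midpoint tests should never violate convexity; for the rotation, fixing \(x_1=1\), \(x_2=0\) and \(\mathbf {z}=(1,0)\) gives \({\tilde{g}}=2+2\cos x_3\), which is concave near \(x_3=0\).

```python
import math
import random


def g(y):
    # Hypothetical constraint function g(x, y) = ||y||^2, convex in (x, y).
    return y[0] ** 2 + y[1] ** 2


def t_affine(x, z):
    # Translation by (x1, x2) and scaling by x3: affine-linear in x for fixed z.
    return (x[0] + x[2] * z[0], x[1] + x[2] * z[1])


def t_rotation(x, z):
    # Translation by (x1, x2) and rotation by the angle x3: not affine in x.
    c, s = math.cos(x[2]), math.sin(x[2])
    return (x[0] + c * z[0] - s * z[1], x[1] + s * z[0] + c * z[1])


def convexity_gap(t, xa, xb, z, lam=0.5):
    """lam g~(xa) + (1-lam) g~(xb) - g~(lam xa + (1-lam) xb); < 0 breaks convexity."""
    xm = [lam * a + (1 - lam) * b for a, b in zip(xa, xb)]
    return lam * g(t(xa, z)) + (1 - lam) * g(t(xb, z)) - g(t(xm, z))


rng = random.Random(0)
worst_affine = min(
    convexity_gap(
        t_affine,
        [rng.uniform(-2, 2) for _ in range(3)],
        [rng.uniform(-2, 2) for _ in range(3)],
        [rng.uniform(0, 1), rng.uniform(0, 1)],
        rng.uniform(0, 1),
    )
    for _ in range(10_000)
)
print(worst_affine >= -1e-9)  # True: convex in x, as Lemma 7 predicts

# Rotation with x1 = 1, x2 = 0, z = (1, 0): g~ = 2 + 2 cos(x3).
gap_rot = convexity_gap(
    t_rotation, [1.0, 0.0, -math.pi / 2], [1.0, 0.0, math.pi / 2], (1.0, 0.0)
)
print(round(gap_rot, 6))  # -2.0: convexity in x fails
```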

5 Numerical examples

In this section we apply Algorithm 1 to some native GSIP problems and to two GSIP problems stemming from an important application of semi-infinite optimization, namely design centering. We illustrate that the arising problems are solved efficiently by the proposed algorithm. A more detailed numerical analysis of the transformation-based discretization method, as well as its application to a real-world problem, the volume-maximal utilization of gemstones, can be found in Schwientek (2013). Note that the following numerical examples are not intended as a full comparison with previously developed algorithms. Instead, they illustrate that even complicated problems, such as inscribing two designs in a container, can be solved in a straightforward way.

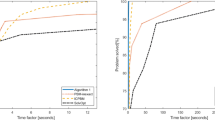

We implemented Algorithm 1 in Matlab R2016a. In Step 1 we chose \(\alpha = 10^{-6}\) as the termination tolerance. For the solution of the discretized SIP problems \(\widetilde{\mathrm {SIP}}({\dot{Z}}_k), k \in {\mathbb {N}}_0,\) (Step 5) as well as of the convex lower level problems \(Q_i(\mathbf {x}^k), i \in I, k \in {\mathbb {N}}_0,\) (Step 8) we used the SQP algorithm of the routine fmincon of the Optimization Toolbox V7.4 (R2016a) with default settings and first derivatives. The computations were performed on a 64-bit Windows machine with an Intel® Core™ i7-5600U processor and 8 GB RAM. Computation times were measured using the Matlab function timeit.

5.1 Some native examples

In the first numerical example we consider the general semi-infinite optimization problem from Lemonidis (2008), Example 1 (see also Jongen et al. 1998, Example 4-2). We added the constraint \(x_1 \ge 0\), since otherwise the infinite index set \(Y(\mathbf {x})\) could be empty.

with

The infinite index set \(Y({\mathbf {x}})\) can be computed explicitly and equals \([-\sqrt{x_1},\sqrt{x_1}]\). The (only) lower level problem

is convex (in y), but the corresponding optimal value function \(\varphi (\mathbf {x}) = \sqrt{x_1} - x_2\) is not convex (in \(\mathbf {x}\)). The feasible set of \(\text {GSIP}_{\mathrm{1}}\) is

and its optimal solution is \(\mathbf {x}^* = (0,0)\). By the simple transformation

\(\text {GSIP}_{\mathrm{1}}\) can be transformed into a standard semi-infinite optimization problem whose discretized problems are convex.

We chose \(\mathbf {x}^0 = (1,1)\) as the starting point (\(f^0 = {25}/{16}\)) and the point 0.5 as the initial discretization. After 2 iterations (0.12 s) Algorithm 1 stopped with \(\mathbf {x}^* = (0, 0)\) and \(f^* = 0.0625\). The number of finite constraints induced by the discretization grew from 1 to 2.

The global solution of the lower level problem is attained at the lower end of the interval \([-\sqrt{x_1},\sqrt{x_1}]\). In the first iteration the discretization point \(z=1\) is added, and the chosen transformation maps this point to the global solution of the lower level problem. Consequently, after the first iteration the discretized problem \(\widetilde{\mathrm {SIP}}({\dot{Z}}_1)\) and the GSIP problem have the same feasible set.
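The refinement step described above can be sketched in Python for \(\text {GSIP}_{\mathrm{1}}\). Since the semi-infinite constraint and the transformation are not reproduced in this section, both are hypothetical choices made to match the description: \(g(\mathbf {x},y)=-y-x_2\) has the optimal value function \(\varphi (\mathbf {x})=\sqrt{x_1}-x_2\) with its maximizer at the lower end of \(Y(\mathbf {x})\), and \(t(\mathbf {x},z)=(1-z)\sqrt{x_1}-z\sqrt{x_1}\) on \(Z=[0,1]\) maps the initial point 0.5 to 0 and \(z=1\) to the lower end. The objective \(f(\mathbf {x})=(x_1-1/4)^2+x_2^2\) is that of Example 1 in Appendix 1. The sketch solves the convex lower level problem over \(Y(\mathbf {x})\) and maps its maximizer back to Z; Algorithm 1 itself may differ in details.

```python
import math

# Hypothetical data for GSIP_1 (the paper's constraint is not reproduced here):
# g(x, y) = -y - x2 has optimal value sqrt(x1) - x2 over Y(x) = [-sqrt(x1), sqrt(x1)],
# attained at the lower end of the interval.


def g(x, y):
    return -y - x[1]


def interval(x):
    r = math.sqrt(x[0])  # requires x1 >= 0, the constraint added to GSIP_1
    return -r, r


def t(x, z):
    # Assumed transformation on Z = [0, 1]: a convex combination of the interval
    # ends, ordered so that z = 0.5 maps to 0 and z = 1 to the lower end.
    lo, hi = interval(x)
    return (1 - z) * hi + z * lo


def refine(x, alpha=1e-6):
    """Refinement sketch: solve the convex lower level over Y(x), then map the
    maximizer back to Z by inverting t. Returns None if x is alpha-feasible."""
    lo, hi = interval(x)
    # g is linear in y, so its maximum over the interval is attained at an end.
    y_star = max((lo, hi), key=lambda y: g(x, y))
    if g(x, y_star) <= alpha:
        return None
    if hi == lo:  # degenerate interval: every z in Z maps to the same point
        return 0.0
    return (hi - y_star) / (hi - lo)  # z* with t(x, z*) = y*


# With Z_0 = {0.5} the discretized constraint reads x2 >= 0, so minimizing
# f(x) = (x1 - 1/4)^2 + x2^2 gives the first iterate x^1 = (1/4, 0).
x_first = (0.25, 0.0)
z_new = refine(x_first)
print(z_new)              # 1.0
print(t(x_first, z_new))  # -0.5, the lower end of Y(x^1)

# The new discretized constraint coincides with the exact one, sqrt(x1) - x2 <= 0.
samples = [(0.0, 0.0), (0.49, 0.3), (4.0, 1.0)]
print(all(g(x, t(x, 1.0)) == math.sqrt(x[0]) - x[1] for x in samples))  # True
```

Under these assumptions the sketch reproduces the behavior reported above: the point \(z=1\) is added, and the second discretized problem already has the feasible set of the GSIP, whose minimizer is \(\mathbf {x}^*=(0,0)\).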

We also tested our new algorithm on the other 15 examples collected by Lemonidis (2008). This test set was also used in the recent publications (Mitsos and Tsoukalas 2015; Kirst and Stein 2019). However, to apply Algorithm 1 we need to ensure that the central Assumption 1 is satisfied. In particular, the infinite index set \(Y(\mathbf {x})\) must be non-empty for every \(\mathbf {x} \in X\). Unfortunately, most of the examples collected in Lemonidis (2008) do not satisfy this condition, namely Examples 1, 2, 4-9, 11, 13, and 16. In most of these examples (1, 2, 6-8, 11, and 13), however, the condition can be met by adding a single constraint. For the remaining examples (4, 5, 9, and 16) the situation is as follows. As shown by Lemonidis (2008), the infinite index set in Example 4 is either empty or the semi-infinite constraint cannot be satisfied. For Examples 5, 9, and 16 we could not easily derive a transformation. We therefore excluded these four examples from our numerical evaluations. The original and modified examples considered, together with their transformations, are listed in Appendix 1.

In most of the selected examples the infinite index set is an interval, in which case a transformation can easily be constructed as a convex combination of the interval ends. In Example 3 the infinite index set is obtained by scaling the unit ball by the radius. A possible transformation for Example 6 is given by

In Example 14 the infinite index set is a two-dimensional box. Thus, the Cartesian product of the convex combination of the lower and upper bounds is a suitable transformation.
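The constructions mentioned for intervals, boxes, and balls can be sketched in a few lines of Python. The bounds, center, and radius used below are hypothetical constants; in the examples they are functions of \(\mathbf {x}\). All three maps are affine-linear in \(\mathbf {z}\) and defined on convex sets Z, so by Lemma 6 they preserve concavity of the lower level objectives; whether they also satisfy condition (10) depends on whether the bounds, center, and radius depend affinely on \(\mathbf {x}\).

```python
import math


def interval_map(lo, hi, z):
    """Convex combination of the interval ends: maps Z = [0, 1] onto [lo, hi]."""
    return (1 - z) * lo + z * hi


def box_map(lows, highs, z):
    """Cartesian product of interval maps: maps [0, 1]^d onto the box."""
    return tuple(interval_map(a, b, s) for a, b, s in zip(lows, highs, z))


def ball_map(center, radius, z):
    """Scales (and shifts) a point z of the closed unit ball Z by the radius."""
    return tuple(c + radius * s for c, s in zip(center, z))


# Hypothetical bounds and radius; in the examples they depend on x.
print(box_map((-1.0, 2.0), (1.0, 3.0), (0.5, 1.0)))  # (0.0, 3.0)
y = ball_map((0.0, 0.0), 2.0, (0.6, 0.8))
print(math.isclose(math.dist(y, (0.0, 0.0)), 2.0))   # True: ||z|| = 1 maps to the sphere
```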

For all 12 examples to which the transformation-based discretization method is applicable, the algorithm stopped after two iterations, with computation times between 0.08 and 0.14 s. The reasons for the small number of iterations are the same as for the first example above.

In all examples except Example 13, Algorithm 1 found the optimal solution reported in the literature. In Example 13 this solution cannot be attained, because it is excluded by the constraint we added to avoid an empty index set. There, the transformation-based discretization method found the point \(\mathbf{x }^*=(0,0.25,0.25)\) with objective value \(f^*=3.5681\).

To further demonstrate our new method, we now turn to more complex examples with multiple semi-infinite constraints.

5.2 Design centering

One important application of (general) semi-infinite programming is design centering (DC). The task is the following: given a set \(C \subseteq {\mathbb {R}}^{n}\), the so-called container, and a second, parametrized set \(D(\mathbf {x}) \subseteq {\mathbb {R}}^{n}\), \(\mathbf {x} \in X \subseteq {\mathbb {R}}^{m}\), the so-called design, inscribe \(D(\mathbf {x})\) into C such that some functional of \(D(\mathbf {x})\), commonly its volume, is maximized:

Under the assumption that the sets C and \(D(\mathbf {x})\) are given as solution sets of systems of inequalities

Problem DC can be rewritten as a general semi-infinite optimization problem

For a detailed discussion of the reformulation of design centering problems as semi-infinite ones we refer to Stein (2006) and the references therein. Different solution techniques are discussed in Harwood and Barton (2017).

One interesting application of design centering in the context of semi-infinite optimization is maximizing the material yield in gemstone cutting. There, the task is to cut a set of precious gems from an irregularly shaped rough stone in such a way that their total value is as high as possible. If only one gem is to be produced, the problem is a design centering problem: the gem corresponds to the design, the rough stone to the container, and the volume of the gem is to be maximized. For a detailed introduction to the modeling and solution of this problem we refer to Winterfeld (2008); Küfer et al. (2008, 2015).

As a concrete case we consider a two-dimensional design centering problem, i.e., \(n = 2\). First, we introduce the container, called the concavified unit square (see Fig. 3a for a graphical illustration),

with

The last two constraints are not visible in Fig. 3, but they are necessary to keep the container bounded.

Now we present the design. Recall that, in addition to a functional description of the design (12) and a formula for its area, the transformation-based discretization method requires a representation of the design as the image of a compact set Z under a continuously differentiable mapping \(\mathbf {t}: X \times Z \rightarrow {\mathbb {R}}^{2}\).

As the design we consider the intersection of two variable but equally sized disks, where the center of each disk lies on the boundary of the other (see Fig. 2). We call this design the boat. Thus, \(X = {\mathbb {R}}^{2} \times {\mathbb {R}}_{\ge 0} \times ({\mathbb {R}}^{2} {\setminus } \{ \mathbf {0} \})\),

The line connecting the two intersection points divides the boat into two equally sized circular segments with central angle \({2\pi }/{3}\) and radius \(x_3\) (see Fig. 2). Consequently,

Moreover, we have,

and,

where \(\mathrm {atan2}\) is the bivariate arctangent.

We chose \(\mathbf {x}^0 = (0.25,0.5,0.5,1,0)\) as the starting point with \(f^0 = -0.3071\) (see Fig. 3a for a graphical illustration of this configuration). The initial discretization of the design consists of the four points \(\{(1,-0.5), (1, 0.5), (1, 0), (1,1)\}\), yielding 24 constraints (4 points for each of the 6 container constraints) in the initial discretized SIP problem. After 5 iterations/refinements (0.69 s) Algorithm 1 stopped with \(\mathbf {x}^* = (0.5367,0.0325,0.4685,0.4997,1.2712)\) and \(f^* = -0.2696\) (see Fig. 3c for a visualization of the final solution). The final discretization consisted of 14 points and thus 84 discretization-induced constraints. An extensive numerical study of the solution of DC problems as semi-infinite ones with different design-container combinations can be found in Schwientek (2013).

Fig. 3 Area-maximal inscribing of the design \(D_{\mathrm{boat}}\) in the container \(C_{\mathrm{cus}}\) [dark gray: container; light gray: design; circles: points of the current discretization that do not violate the container restrictions; diamonds: points of the current discretization that violate container restrictions and will be added in the next iteration]: (a) start solution with given discretization, (b) after solution of the first discretized SIP problem \({\widetilde{\mathrm {SIP}}}({\dot{Z}}_0)\), and (c) final solution (after 5 refinements) with final discretization

The transformation-induced discretized SIP problems as well as the underlying GSIP problem stemming from a design centering problem are in general non-convex. For this reason, Algorithm 1 terminates with either a local solution or a stationary point, not necessarily with a global solution.
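From the decomposition into two circular segments, the boat area is \(2\cdot \frac{x_3^2}{2}\big (\frac{2\pi }{3}-\sin \frac{2\pi }{3}\big ) = \big (\frac{2\pi }{3}-\frac{\sqrt{3}}{2}\big )x_3^2 \approx 1.2284\,x_3^2\). The short Python check below assumes, as the reported values suggest, that the objective is the negative boat area and that \(x_3\) is the radius; under this assumption it reproduces \(f^0=-0.3071\) and \(f^*=-0.2696\) from the start and final points given above.

```python
import math


def boat_area(r):
    """Area of the boat with radius r: two circular segments with central angle
    2*pi/3, each of area r^2 / 2 * (theta - sin(theta))."""
    theta = 2 * math.pi / 3
    return 2 * (r ** 2 / 2) * (theta - math.sin(theta))


def objective(x):
    # Assumption: f is the negative boat area and x[2] (x3 in the text) is the radius.
    return -boat_area(x[2])


x_start = (0.25, 0.5, 0.5, 1.0, 0.0)
x_final = (0.5367, 0.0325, 0.4685, 0.4997, 1.2712)
print(round(objective(x_start), 4))  # -0.3071
print(round(objective(x_final), 4))  # -0.2696
```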

5.3 Inscribing multiple designs

A natural extension of the design centering task is to arrange two or more designs in a container such that the total (design) volume is maximal. In practice, such problems arise as cutting or packing problems. In addition to being completely contained in the container, the designs are not allowed to overlap. In the following we reformulate such a problem as a GSIP and evaluate it numerically for two designs; the extension of the approach to more than two designs is straightforward. For details we refer to Schwientek (2013), Küfer et al. (2015).

Let the container C be as given in (11) and \(D_1, D_2\) two (parametrized) designs as given in (12) with common parameter vector \(\mathbf {x} \in {\mathbb {R}}^{m}\). Then, the task in multi-body design centering (in the case of two designs) is the following:

If the designs \(D_1\) and \(D_2\) are convex sets for each \(\mathbf {x}\), a separation theorem can be applied, where \(\varvec{\eta } \in {\mathbb {R}}^{n} {\setminus } \{\mathbf {0}\}\) and \(\beta \in {\mathbb {R}}\) are the parameters of the separating hyperplane \(H(\varvec{\eta }, \beta ) = \{ \mathbf {y} \in {\mathbb {R}}^{n} \mid \varvec{\eta }^T \mathbf {y} = \beta \}\), \(\tilde{\mathbf {x}} = (\mathbf {x}, \varvec{\eta }, \beta ),\) and \({\tilde{X}} = \{ \tilde{\mathbf {x}} \mid \varvec{\eta } \ne \mathbf {0} \}\). Then, \(\text {MBDC}_2\) can be rewritten as a GSIP in the following way:

The condition \(\varvec{\eta } \ne \mathbf {0}\) can be ensured, e.g., by requiring \(\Vert \varvec{\eta } \Vert _2^2 = 1\). Note that we have two infinite index sets here, but extending Algorithm 1 to this situation is straightforward.

With this approach it is also possible to take forbidden areas in the container into account when placing the designs, i.e., to prevent the designs from overlapping them. If the forbidden areas are modeled like the designs (but independently of \(\mathbf {x}\)), the separation approach above carries over directly. For more detailed explanations in this regard and further separation techniques we refer to Schwientek (2013).
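A separation constraint can be checked pointwise on a discretization, much as in the refinement step. The Python sketch below assumes that \(D_1\) lies in the half-space \(\varvec{\eta }^T\mathbf {y}\le \beta \) and \(D_2\) in \(\varvec{\eta }^T\mathbf {y}\ge \beta \), and that the first two design parameters are the centers; both are assumptions, since the GSIP formulation is not reproduced here. With the starting values \(\varvec{\eta }^0\) and \(\beta ^0\) of the numerical example below, the normalization \(\Vert \varvec{\eta }\Vert _2^2=1\) holds and the separating line passes between the two centers.

```python
import math


def separation_violations(eta, beta, pts1, pts2, tol=0.0):
    """Return the points of design 1 with eta^T y > beta and the points of
    design 2 with eta^T y < beta (up to tol)."""
    def dot(y):
        return eta[0] * y[0] + eta[1] * y[1]

    bad1 = [y for y in pts1 if dot(y) - beta > tol]
    bad2 = [y for y in pts2 if beta - dot(y) > tol]
    return bad1, bad2


s = math.sqrt(2) / 2
eta0, beta0 = (-s, -s), -0.625
print(math.isclose(eta0[0] ** 2 + eta0[1] ** 2, 1.0))  # True: ||eta^0||_2^2 = 1

# Assumption: the first two components of x_boat^0 and x_ellipse^0 are the centers.
boat_center, ellipse_center = (0.75, 0.25), (0.25, 0.25)
print(separation_violations(eta0, beta0, [boat_center], [ellipse_center]))  # ([], [])
```

Applied to discretization points on the design boundaries instead of the centers, the same check would flag the points to be added in the next iteration.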

For a numerical example of problem \(\text {GSIP}_{\text {MBDC}_2}\) we consider the container from (13) and take as the first design, \(D_1\), the boat from (14). As our second design, \(D_2\), we consider an ellipse with variable semi-axis lengths in arbitrary position. For such an ellipse, we have \(m_2 = 5\),

with

and

This constellation results in 13 decision variables, 13 semi-infinite and 2 finite constraints. As the starting point we chose the (infeasible) point \(\tilde{\mathbf {x}}^0=(\mathbf {x}_{\mathrm{boat}}^0, \mathbf {x}_{\mathrm{ellipse}}^0, \varvec{\eta }^0, \beta ^0)\) with \(\mathbf {x}_{\mathrm{boat}}^0=(0.75, 0.25, 0.25, 1, 0)\), \(\mathbf {x}_{\mathrm{ellipse}}^0=(0.25, 0.25, 0.25, 0, 0.25)\), \(\varvec{\eta }^0=(-\frac{\sqrt{2}}{2},-\frac{\sqrt{2}}{2})\), and \(\beta ^0 =-0.625\) (see Fig. 4a). As initial discretizations we chose \({\dot{Z}}_0^{\mathrm{boat}}=\{(1,-0.5), (1, 0.5), (1, 0), (1,1)\}\) and \({\dot{Z}}_0^{\mathrm{ellipse}}=\{(1,0), (1, 0.5), (1, 1), (1,-0.5)\}\). These discretizations result in 56 finite constraints. After 12 iterations (simultaneous refinements of both infinite index sets), which took 3.75 s, Algorithm 1 terminated with the solution \(\tilde{\mathbf {x}}^* = (\mathbf {x}_{\mathrm{boat}}^*, \mathbf {x}_{\mathrm{ellipse}}^*, \varvec{\eta }^*, \beta ^*)\) with

and \(f^* = -0.3487\). The final discretization of the boat consists of 37 points and that of the ellipse of 30 points (see Fig. 4c).

Fig. 4 Area-maximal inscribing of the designs \(D_{\mathrm{boat}}\) and \(D_{\mathrm{ell}}\) in the container \(C_{\mathrm{cus}}\) [dark gray: container; light gray: designs; circles: points of the current discretization that do not violate the container and separation restrictions; diamonds: points of the current discretization that violate container or separation restrictions and will be added in the next iteration; black straight line: line that separates both designs]: (a) start solution with given discretization, (b) after solution of the first discretized SIP problem \({\widetilde{\text {SIP}}}({\dot{Z}}_0)\), and (c) final solution (after 12 refinements) with final discretization

Further numerical evaluations, also with respect to other separation approaches and regarding a minimal distance between the designs, can be found in Schwientek (2013). Concerning the modeling and solution of the gemstone utilization task as semi-infinite optimization problem by means of the transformation-based discretization we refer to Schwientek (2013) and Küfer et al. (2015).

6 Conclusions and future research

In the present paper we considered general semi-infinite optimization problems (GSIP) that have convex lower level problems and can be globally transformed into a standard semi-infinite optimization problem (SIP). For the numerical solution of such GSIPs we applied a discretization method to their SIP reformulation. Because the convexity structure of the lower level can be lost through the transformation, but is essential for refining the discretization, in the refinement step we solve the (convex) lower level problems of the underlying GSIP and transfer their global solutions via the transformation into global solutions of the lower level problems of the induced SIP. The convergence results for discretization methods for solving SIPs carry over directly to global and local solutions. The convergence of stationary points remains an open issue for future research. Finally, we demonstrated the operation and performance of our method on three numerical examples.

Two interesting aspects for future investigations are the following:

(1) As known from Still (2001a), the convergence rates of discretization methods for solving SIPs can be improved when boundary points of the infinite index set are added to its discretization in a consistent manner and the index set satisfies additional conditions. Since the transformation is only required to be surjective, the boundary of the infinite index set Z of the induced SIP is in general not mapped to the boundary of the infinite index set \(Y(\mathbf {x})\), and vice versa. This raises the question whether it suffices to add boundary points of \(Y(\mathbf {x})\) in the mentioned consistent manner to obtain improved convergence rates for the transformation-based discretization method.

(2) To keep the dimensions of the discretized SIP problems moderate, strategies have been developed that retain only \(\alpha \)-active points in the discretization and remove the others during the process. Such algorithms are called exchange methods rather than discretization methods. It could prove interesting to explore these deletion strategies for the transformation-based discretization method, in particular their impact on the convergence of the method.

References

Bhattacharjee B, Lemonidis P, Green WH Jr, Barton PI (2005) Global solution of semi-infinite programs. Math Program 103(2):283–307

Blankenship JW, Falk JE (1976) Infinitely constrained optimization problems. J Optim Theory Appl 19(2):261–281

Djelassi H, Glass M, Mitsos A (2019) Discretization-based algorithms for generalized semi-infinite and bilevel programs with coupling equality constraints. J Global Optim 75(2):341–392

Floudas CA, Stein O (2007) The adaptive convexification algorithm: A feasible point method for semi-infinite programming. SIAM J Optim 18(4):1187–1208

Guerra Vázquez F, Rückmann JJ (2005) Extensions of the Kuhn-Tucker constraint qualification to generalized semi-infinite programming. SIAM J Optim 15(3):926–937

Harwood SM, Barton PI (2017) How to solve a design centering problem. Math Methods Oper Res 86(1):215–254

Jaklič A, Leonardis A, Solina F (2000) Segmentation and recovery of superquadrics, computational imaging and vision, vol 20. Kluwer Academic Publishers, Dordrecht

Jongen HT, Rückmann JJ, Stein O (1998) Generalized semi-infinite optimization: a first order optimality condition and examples. Math Program 83:145–158

Kaplan A, Tichatschke R (1997) On the numerical treatment of a class of terminal problems. Optimization 41:1–36

Kirst P, Stein O (2019) Global optimization of generalized semi-infinite programs using disjunctive programming. J Global Optim 73(1):1–25

Küfer KH, Maag V, Schwientek J (2015) Maximal material yield in gemstone cutting. In: Neunzert H, Prätzel-Wolters D (eds) Currents in industrial mathematics: from concepts to research to education. Springer, Berlin, pp 229–290

Küfer KH, Stein O, Winterfeld A (2008) Semi-infinite optimization meets industry: a deterministic approach to gemstone cutting. SIAM News 41(8):

Lemonidis P (2008) Global optimization algorithms for semi-infinite and generalized semi-infinite programs. Ph.D. thesis, Massachusetts Institute of Technology

López M, Still G (2007) Semi-infinite programming. Eur J Oper Res 180(2):491–518

Mitsos A, Tsoukalas A (2015) Global optimization of generalized semi-infinite programs via restriction of the right hand side. J Global Optim 61(1):1–17

Reemtsen R (1991) Discretization methods for the solution of semi-infinite programming problems. J Optim Theory Appl 71(1):85–103

Reemtsen R (1994) Some outer approximation methods for semi-infinite optimization problems. J Comput Appl Math 53(1):87–108

Reemtsen R, Goerner S (1998) Numerical methods for semi-infinite programming: a survey. In: Reemtsen R, Rückmann JJ (eds) Semi-infinite programming, nonconvex optimization and its applications, vol 25. Kluwer Academic Publishers, Boston, pp 195–275

Rückmann JJ, Shapiro A (2001) Second-order optimality conditions in generalized semi-infinite programming. Set-Valued Anal 9(1–2):169–186

Schwientek J (2013) Modellierung und Lösung parametrischer Packungsprobleme mittels semi-infiniter Optimierung–Angewandt auf die Verwertung von Edelsteinen. Ph.D. thesis, TU Kaiserslautern

Stein O (2006) A semi-infinite approach to design centering. In: Dempe S, Kalashnikov V (eds) Optimization with multivalued mappings: theory, applications and algorithms, springer optimization and its applications, vol 2. Springer, New York, pp 209–228

Steuermann HP (2011) Adaptive algorithms for semi-infinite programming with arbitrary index sets. Ph.D. thesis, Karlsruher Institut für Technologie (KIT)

Still G (1999) Generalized semi-infinite programming: theory and methods. Eur J Oper Res 119:301–313

Still G (2001a) Discretization in semi-infinite programming: the rate of convergence. Math Program 91:53–69

Still G (2001b) Generalized semi-infinite programming: numerical aspects. Optimization 49:223–242

Weber GW (1999) Generalized semi-infinite optimization: on some foundations. J Comput Sci Technol 4:41–61

Winterfeld A (2008) Application of general semi-infinite programming to lapidary cutting problems. Eur J Oper Res 191:838–854

Acknowledgements

Open Access funding provided by Projekt DEAL. We would like to thank the two anonymous reviewers and the Associate Editor for their many valuable comments, which have contributed to a significant improvement of the article.


Appendix 1: Considered examples from Lemonidis’ collection

The following examples were collected by Lemonidis (2008). We modified some of the examples such that a transformation as required by Assumption 1 exists. We use the original numbering of the examples as in Lemonidis (2008).

Example 1

(modified): \(\quad \min \limits _{\mathbf {x} \in [-1,1]^2} (x_1 - {1}/{4})^2 + x_2^2 \quad \mathrm {s.t.}\)

with

The constraint (18) is added to the original example. Then, a transformation of a fixed index set Z to the variable index set \(Y(\mathbf {x})\) is

Note that in the collection in Lemonidis (2008) the semi-infinite constraint is different. We use the version presented in the original source (Jongen et al. 1998).

Example 2

(modified): \(\quad \min \limits _{\mathbf {x} \in [-1,1]^2} x_2 \quad \mathrm {s.t.}\)

with

The constraint (19) is added to the original example. Then, a transformation of a fixed index set Z to the variable index set \(Y(\mathbf {x})\) is

Example 3

(original): \(\quad \min \limits _{\mathbf {x} \in [0,1]^2} {1}/{2} \, x_1^4 + 2x_1x_2 -2x_1^2 \quad \mathrm {s.t.}\)

with

A transformation of a fixed index set Z to the variable index set \(Y(\mathbf {x})\) is

Example 6

(modified): \(\quad \min \limits _{\mathbf {x} \in [-3,2]^2} 4x_1^2 - x_2 - x_2^2 \quad \mathrm {s.t.}\)

with

The constraint (20) is added to the original example. Then, a transformation of a fixed index set Z to the variable index set \(Y(\mathbf {x})\) is

Note that the semi-infinite constraint in the collection in Lemonidis (2008) contains a typing error. We use the version presented in the original source (Rückmann and Shapiro 2001).

Example 7

(modified): \(\quad \min \limits _{\mathbf {x} \in [0,1]^2} -x_1 \quad \mathrm {s.t.}\)

with

The constraint (21) is added to the original example. Then, a transformation of a fixed index set Z to the variable index set \(Y(\mathbf {x})\) is

Example 8

(modified): \(\quad \min \limits _{\mathbf {x} \in [-1,1]^2} -x_1 \quad \mathrm {s.t.}\)

with

The constraint (22) is added to the original example. Then, a transformation of a fixed index set Z to the variable index set \(Y(\mathbf {x})\) is

Note that the constraint describing the infinite index set is different in Lemonidis (2008). We use the version given in the original source (Guerra Vázquez and Rückmann 2005).

Example 10

(original): \(\quad \min \limits _{\mathbf {x} \in [-1,1]^2} x_1 + x_2 \quad \mathrm {s.t.}\)

with

A transformation of a fixed index set Z to the variable index set \(Y(\mathbf {x})\) is

Example 11

(modified): \(\quad \min \limits _{\mathbf {x} \in [-5,5]^3} x_1^2 + x_2^2 + x_3^2 \quad \mathrm {s.t.}\)

with

The constraint (23) is added to the original example. Then, a transformation of a fixed index set Z to the variable index set \(Y(\mathbf {x})\) is

Example 12

(original): \(\quad \min \limits _{\mathbf {x} \in [-1,1]} x^2 \quad \mathrm {s.t.}\)

with

A transformation of a fixed index set Z to the variable index set \(Y(\mathbf {x})\) is

Example 13

(modified): \(\quad \min \limits _{\mathbf {x} \in [-1,1]^3} \exp (x_1) + \exp (x_2) + \exp (x_3) \quad \mathrm {s.t.}\)

with

The constraint (24) is added to the original example. Then, a transformation of a fixed index set Z to the variable index set \(Y(\mathbf {x})\) is

Example 14

(original): \(\quad \min \limits _{\mathbf {x} \in [-1,0]^3} x_1^2 + x_2^2 + x_3^2 \quad \mathrm {s.t.}\)

with

A transformation of a fixed index set Z to the variable index set \(Y(\mathbf {x})\) is

Example 15

(original): \(\quad \min \limits _{\mathbf {x} \in [0,2]^2} x_2^2-4x_2 \quad \mathrm {s.t.}\)

with

A transformation of a fixed index set Z to the variable index set \(Y(\mathbf {x})\) is

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schwientek, J., Seidel, T. & Küfer, KH. A transformation-based discretization method for solving general semi-infinite optimization problems. Math Meth Oper Res 93, 83–114 (2021). https://doi.org/10.1007/s00186-020-00724-8

