Abstract

In this paper, we analyse a wide class of discrete time one-dimensional dynamic optimization problems—with strictly concave current payoffs and linear state dependent constraints on the control parameter as well as non-negativity constraint on the state variable and control. This model suits well economic problems like extraction of a renewable resource (e.g. a fishery or forest harvesting). The class of sub-problems considered encompasses a linear quadratic optimal control problem as well as models with maximal carrying capacity of the environment (saturation). This problem is also interesting from theoretical point of view—although it seems simple in its linear quadratic form, calculation of the optimal control is nontrivial because of constraints and the solutions has a complicated form. We consider both the infinite time horizon problem and its finite horizon truncations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

As a motivating example, let us consider the simplest possible discrete time linear-quadratic model of extraction of a single renewable resource, e.g. a fishery, by a monopolist facing a linear demand and having a strictly convex quadratic cost and focus on the infinite horizon. To make such a model realistic, constraints have to appear: an obvious constraint that it is impossible to extract more than the current biomass or number of fish, or, equivalently, that the number of fish is always nonnegative. This model is very simple and inherent to the problem, and the problem of renewable resources is crucial for contemporary economics, which makes it is difficult to imagine, that, to the best of our knowledge, the problem has not been solved by now besides a simpler sub-problem in which the interest rate is by assumption identical to the rate of growth of the resource, examined in Singh and Wiszniewska-Matyszkiel (2018) and even that simple problem has quite surprising properties, although with level of compexity uncomparable to the results of this paper. This motivating example was the starting point to the analysis of this paper. The class of optimization problems examined in this paper is the largest class that suits the above description with the assumption that the decision maker is more impatient than in the model of Singh and Wiszniewska-Matyszkiel (2018), which is equivalent to higher interest rate, and this class of problems has been further generalized to encompass one more thing important in renewable resource extraction problems—presence of a saturation point of the resource.

Linear quadratic dynamic optimization problems seem to be the most extensively examined class of dynamic optimization problems and their solutions are regarded as a standard textbook material [although continuous time models are more common, there are also extensive studies of the discrete time case in e.g. Anderson and Moore (2007)]. However, this is mainly true for the unconstrained linear quadratic regulator problem in its simplest form.

The most general form of the discrete time infinite horizon linear quadratic regulator problem with discounting (for consistency with our paper, we write it as a maximization problem) is to

MAXIMIZE \(\sum \limits _{t=0}^{\infty }-\beta ^t[S(t)^T RS(t)+X(t)^T QX(t)+X(t)^T W S(t)]\),

for the state trajectory X defined by \(X(t+1)=AX(t)+BS(t)\) with \(X(0)=x_0\), if we consider the open loop information structure (the control S is dependent of time only and the initial condition \(x_0\) is known) or

MAXIMIZE \(\sum \limits _{t=0}^{\infty }-\beta ^t[S(X(t))^T RS(X(t))+X(t)^T QX(t)+X(t)^T W S(X(t))]\),

for the state trajectory X defined by \(X(t+1)=AX(t)+BS(X(t))\) with \(X(0)=x_0\), if we consider the feedback information structure (the control S is dependent on current state only).

In some papers, especially with finite time horizon, feedback controls are functions of both current state and time.

The square matrices R and Q are assumed to be nonnegative definite, usually positive definite, while the discount factor \(\beta \in (0,1]\), usually \(\beta =1\). The most common simplest form of this problem is without the mixed term, i.e. the matrix \(W=\mathbf {0}\).

When the infinite horizon linear regulator problem with constraints for states or controls is considered, the theoretical papers in this stream analyse the simplest form of it: undiscounted, with R and Q positive definite and \(W=\mathbf {0}\) with fixed constraints of the form \(Hx\le x\) and \(Gs\le g\), without any state dependent constraints on controls [e.g., Zhao and Lin (2008); Bemporad et al. (2002); Scokaert and Rawlings (1998); Thomas (1975); Chmielewski and Manousiouthakis (1996); Grieder et al. (2004); Sznaier and Damborg (1987); Scokaert and Rawlings (1998)]. To the best of our knowledge, no theoretical analysis of linear quadratic optimization problems with nontrivial discounting, non-zero mixed term and state dependent constraints on controls has been published.

In current papers related to automatic control in linear quadratic regulator problems with constraints, Model Predictive Control (MPC) method is frequently used in which at each stage a fixed finite horizon T (moving horizon or receeding horizon) dynamic optimization problem is solved, and it is usually assumed that after T, the control is equal to the optimal control for the unconstrained problem [e.g., Zhao and Lin (2008); Bemporad et al. (2002); Scokaert and Rawlings (1998); Thomas (1975); Chmielewski and Manousiouthakis (1996); Grieder et al. (2004)]. The MPC method in its simplest and computationally fastest form results in an open loop approximation of the open loop optimal control.

Another form of obtaining an optimal control in the open loop form is by a discrete time equivalent of the infinite horizon Pontryagin Maximum Principle [extensively studied in Blot and Hayek (2014); Aseev et al. (2016) or Brunovský and Holecyová (2018)].

On the contrary, the approach based on solving the Bellman equation, first stated by Bellman (1957) (often called Hamilton–Jacobi–Bellman equation as its counterpart in the continuous time), for problems with discounting introduced by Blackwell (1965), returns an optimal control in the feedback form (called sometimes also closed loop or closed loop no memory). This is the approach which we are going to use in this paper.

There are at least two reasons why feedback solutions are desired. Firstly, solutions in the class of feedback controls, although equivalent in simple dynamic optimization problems to solutions in the class of open loop controls, are usually not equivalent if we consider extension of a dynamic optimization problem to a dynamic game—a set of coupled dynamic optimization problems. In dynamic games, the feedback results are more plausible, because of the property of being strongly subgame perfect (see e.g., Başar et al. 2018). Another disadvantage of open loop solutions, especially important in the infinite time horizon case, is the fact that even a small error in estimation of the initial state may result in a substantial change of the optimal control. This may even happen in much simpler finite horizon problems.

A technique based on receding horizon is used also for feedback controls. The receding horizon approach in such papers means either that, as in our paper, a sequence of finite horizon truncation of the initial problem is considered or the unconstrained solution is used as the continuation. However, usually such papers concern convergence results for looking for a control stabilizing the system at \(\mathbf {0}\) in feedback form [e.g. Keerthi and Gilbert (1988) for a nonlinear problem, Sznaier and Damborg (1987); Scokaert and Rawlings (1998) and Bemporad et al. (2002) for linear quadratic regulator without the mixed term]. Zhang et al. (2009) develop an algorithm for finding an approximate feedback optimal control for a class of discrete-time constrained systems with non-linear dynamics in which constraints were related to saturation.

Classes of dynamic optimization problems closer to the problem considered in our paper are examined in eg., Le Van and Morhaim (2002), in which instead of stabilizing the system at \(\mathbf {0}\), optimization of a feedback law on consumption over time is considered in economy with a productive asset.

Constraints, including state constraints and state dependent constraints on controls are inherent in a vast majority of economic problems: there may be some borrowing constraints, so the current spendings cannot exceed accumulated wealth by more than some fixed amount; nonnegativity of consumption or production is often implicitly assumed; in a renewable or non-renewable natural resource extraction problem, the decision maker cannot extract more than currently available biomass in their area.

Because of high level of complexity caused by introducing constraints active at the optimum, problems in which there are constraints but the solutions are always interior are very popular in applications. One class of such problems are resource extraction problems with logarithmic payoffs [mainly so called Fish Wars e.g., Levhari and Mirman (1980); Fischer and Mirman (1992); Wiszniewska-Matyszkiel (2005); Mazalov and Rettieva (2010); Breton and Keoula (2014); Doyen et al. (2018)]. Another class are problems with quadratic payoffs in which constraints are deliberately large and therefore, not active at the optimum (more often in continuous time models e.g., Ehtamo and Hämäläinen (1993)). The fact that the inherent constraint about nonnegativity of controls like production, advertising effort or extraction has been noticed in some papers on sticky prices and the fact that the nonnegativity constraint is active at the optimum or equilibrium led to a non-quadratic value function (Fershtman and Kamien 1987; Wiszniewska-Matyszkiel et al. 2015), while in the majority of the other papers on the subject, only the steady state solutions are considered, which are interior and, therefore, the solutions are equal to the solutions of the unconstrained problem (at least whenever the initial state equals the steady state). Similarly, a problem of international environmental agreements concerning pollution of Rubio and Ulph (2007) with constraints results with a non-quadratic value function.

Usually, in papers on problems with constraints in which boundary solutions are also possible, authors often concentrate on the interior solutions (e.g., Santos (1991) considers a discrete time Ramsey-type model of capital accumulation and he proves that if the objective function is strictly concave and twice continuously differentiable, then any interior optimal path is continuously differentiable with respect to the initial state). To some extent, this is also the case in our problem. Although there is no solution which is interior for all nonzero initial conditions, there are solutions which are interior for some open set of initial conditions. Nevertheless, such solutions are something which we are the least interested in, since they are not realistic—in the interpretation as a fishery extraction problem, they correspond to a set of initial conditions which describe abundance of fish, whose biomass cannot be decreased even by the greedy myopic fishing.

It is worth mentioning that linear quadratic problems with state dependent constraints has been theoretically considered in more compound environment of linear quadratic dynamic games: theory of such games has been studied in Reddy and Zaccour (2015, 2017), where the linear quadratic dynamic games with linear constraints in discrete time with finite time horizon is considered, both in the open loop (Reddy and Zaccour 2015) and feedback form of strategies (Reddy and Zaccour 2017).

Although generally, continuous time models are better examined, discrete time models are more inherent for many problems related to ecological issues like water consumption (see e.g., Yakowitz 1982 or Krawczyk and Tidball 2006) with natural seasonality, exploitation of fisheries or some forests with natural subsequent harvesting seasons (like the interpretation of this model and the model of Singh and Wiszniewska-Matyszkiel (2018) and papers on Fish Wars mentioned above) or pollution problems with international environmental agreements with variable participation (e.g., Rubio and Ulph 2007; Breton et al. 2010) or river pollution (e.g. Krawczyk and Zaccour 1999).

In this paper, we solve analytically a class of one-dimensional discrete time linear quadratic dynamic optimization problems with state dependent constraints on control and a nonnegativity state constraint, using a sufficient condition based on the Bellman or Hamilton–Jacobi–Bellman equation with appropriate terminal condition (Stokey et al. 1989 or Wiszniewska-Matyszkiel 2011). Our problem does not belong to the class of constrained linear quadratic dynamic optimization problems solved in theoretical papers on constrained linear quadratic dynamic optimization mentioned above.

Our class of problems has an obvious application in resource extraction problems: either a single dynamic optimization mentioned in the motivating example in the case of lack of externalities and single well defined owner of the resource, but it also can be extended to Fish Wars type of problems—dynamic games of common resource extraction.

Because of that application, we extend the results for the basic constrained linear quadratic model to a problem in which the carrying capacity of the environment, i.e. a saturation point of the state variable, is introduced.

The theoretical part is a brick in the theory of linear quadratic dynamic optimization problems with constraints, especially state-dependent constraints on controls. Although it is simple and all its constituents can be easily interpreted by a non-mathematician—this problem is extremely complicated to solve. Moreover, this model constitutes an example in which a methodological approach often used in applications papers on optimal control or dynamic games does not work and some often used simplifications, if numerical methods are used to verify the Bellman equation, may lead to substantial errors.

The model is related to a linear quadratic multistage game that has been considered in Singh and Wiszniewska-Matyszkiel (2018, 2019) in which it also has properties contrary to expectations of readers familiar with linear quadratic dynamic games.

The paper is organized as follows. The problem in its simplest linear quadratic form is formulated in Sect. 2. Finite horizon truncations of the problem are solved in Sect. 3 with the limiting behaviour described in Sect. 3.1, while the basic infinite horizon problem is solved in Sect. 4. Section 5 is devoted to modification of the dynamics taking into account carrying capacity of the environment. Section 6 is devoted to a potential trap related to using the undetermined coefficients method to solve the infinite horizon problem. Technical lemmata are in the “Appendix”.

2 Formulation of the basic problem

We consider a discrete time linear quadratic dynamic optimization problem with linear constraints and its slight modification taking into account a saturation point of the state variable. Because it is important for applications, we want to restrict our attention to non-negative values of the state variable and the control parameters, which are both in \(\mathbb {R}_+\). Without loss of generality, the time set will be either \(\mathbb {N}\) or \(t=\{0,\ldots , N\}\) (this case is called a truncation of the problem with horizon N).

We consider the strictly concave quadratic current payoff function\(P:\mathbb {R}_+\rightarrow \mathbb {R}\)

for \(A>0\) and \(B>0\).

Nevertheless, the optimal control which we are going to define in the sequel, remains unchanged for any strictly concave quadratic payoff function which attains the maximum at a positive s, since for such optimization problems, the payoff has the form \(P(s)+c\) for a constant c, so the optimal control does not change.

There are linear state dependent constraints on available control parameters i.e., \(s \in [0,(1+\xi )x]\) for some \(\xi >0\), whose interpretation becomes obvious after introducing the dynamics. This results in the set of admissible state and control parameter pairs\(\varOmega =\left\{ (x,s)\in \mathbb {R}_+^2: s\le (1+\xi )x \right\} \).

We consider the optimization problem in the class of feedback controls: in the infinite horizon case, they are functions \(S:\mathbb {R}_+\rightarrow \mathbb {R}_+\), with the argument representing the state variable x, while in the case with the finite horizon N, they are functions \(S:\mathbb {R}_+\times \{0,\dots , N\}\rightarrow \mathbb {R}_+\). A control S is admissible in the infinite horizon case if \(S(x)\le (1+\xi )x\) for all x, while in the finite horizon case if \(S(x,t)\le (1+\xi )x\) for all x and t.

The set of admissible feedback controls is denoted by \(\mathfrak {S}\) in the infinite horizon case, while \(\mathfrak {S}^N\) in the case with horizon N.

The behaviour equation of the dynamical system, given a control \(S\in \mathfrak {S}\) is

with \( X(0)=x_0\ge 0\) as the initial state of the system. For \(S\in \mathfrak {S}^N\), analogously.

Initially, the function \(\phi : \varOmega \rightarrow \mathbb {R}_+\) is

where \(\xi >0\) denotes the rate of regeneration. However, in the sequel, we shall also consider its modification on some subset of arguments.

Note that for this dynamics, given nonnegative initial state, due to the state dependent constraint on control, the state remains always nonnegative, so we do not have to impose additional state constraints for positive t.

Remark 1

The set of admissible trajectories as well as the set of admissible controls remain unchanged if, instead of or beside the state dependent constraint on available control parameters, we consider the state space \(\mathbb {R}\), the state transition equation \(\phi : \mathbb {R} \times \mathbb {R}_+\rightarrow \mathbb {R}\), nonnegative initial states only and we impose the nonnegativity state constraint \(X(t)\ge 0\) for all t.

The optimal control remains unchanged for such an equivalent statement of the problem.

The payofffor the dynamic optimization problem in the infinite horizon \(J:\mathbb {R}_+\times \mathfrak {S}\rightarrow \mathbb {R}\) is defined by

with a discount factor\(\beta =\frac{1}{1+\xi }-\epsilon \) for some \(0< \epsilon <\frac{1}{1+\xi }\).

Whenever we want to emphasize the dependence on the initial condition, we write J(x, S) for arbitrary \(x \ge 0\).

In the finite horizon truncations with the horizon N, the payoff is \(J:\mathbb {R}_+ \times \{0,\dots ,N\} \times \mathfrak {S}\rightarrow \mathbb {R}\) (the second argument corresponds to time moment from which we start calculating the payoff)

for X being the solution of (2) (rewritten in the form suitable for \(S\in \mathfrak {S}^N\)) with the initial condition \(X(t_0)=x_0\). Again, if we want to emphasize dependence on the initial condition, we write \(J^N(x,t,S)\) for arbitrary \(x\ge 0\) and \(t\in \{0,\dots , N+1\}\).

Example 1

An example of application

Consider a problem of extraction of a renewable resource e.g., a fishery with quadratic cost\(c_1 s+ c_2 s^2\), with the controller being a monopolist selling the catch at a market with linear inverse demand, i.e., the price per unit if the firm catches/sells s is \(A_1-A_2s\). The state variable denotes the biomass of fish calculated at the beginning of the year (just after spawning; the year is calculated from the beginning of the spawning time) while the control parameter (the catch during the whole year besides a closed season around the spawning time). The nonnegativity constraint becomes obvious both for the state variable (the biomass of fish) and the control parameter (the catch). The state dependent constraint on control translates as ”the firm cannot fish out more than available—all the fish including current year’s offspring”. In presence of a closed season, using discrete time becomes well justified.

This example is a modification of the dynamic game problem with at least two players studied in Singh and Wiszniewska-Matyszkiel (2018, 2019) for specific value of B and for \(\epsilon =0\) and for the related social optimum problem.

2.1 The value function, optimal control and the sufficient condition for the infinite horizon

Definition 1

A function \(\bar{V}: \mathbb {R}_+\rightarrow \mathbb {R}\) is called the value function if for all \(x \in \mathbb {R}_+\),

Definition 2

A control variable \(\bar{S} \in \mathfrak {S}\) is an optimal control if for all \(x \in \mathbb {R}_+\), it fulfils

Theorem 1

A sufficient condition for \( V:\mathbb {R}_+\rightarrow \mathbb {R} \) to be the value function and \(S: \mathbb {R}_+\rightarrow \mathbb {R}_+\) to be an optimal control of the dynamic optimization problem given by Eqs. (1)–(4) consists of the Bellman Equation (6) and terminal condition given by Eq. (7), together with the fact that S(x) maximizes the right hand side of the Bellman equation given in Eq. (8).

with the terminal condition

while the optimal control S is any profile which fulfils

Under (6) and (7), V is the value function of the dynamic optimization problem considered, the maximal sum of payoffs is equal to \(V(x_0),\) and the optimal profile of strategies can be found by inclusion (8) for every x (for simpler reference, from now on, we call it the Bellman inclusion).

Generally, (7) is not a necessary condition [see e.g. an example from Wiszniewska-Matyszkiel (2011)] and the Bellman equation (6), even with the Bellman inclusion (8) fulfilled, may have multiple solutions [see e.g. Singh and Wiszniewska-Matyszkiel (2018); Wiszniewska-Matyszkiel and Singh (2018) for examples and uniqueness results].

This version of sufficient condition is often related to Stokey et al. (1989), Theorem 4.3. However, the problem considered by Stokey, Lucas and Prescott has a slightly different formulation. Theorem 1 is also consequence of a weaker sufficient condition—the main result of Wiszniewska-Matyszkiel (2011).

Eq. (6) and (8) constitute also a necessary condition whenever the rhs. of the Bellman equation is well defined [Stokey et al. (1989), Theorems 4.2 and 4.4 or Wiszniewska-Matyszkiel and Singh (2018), Theorem 4].

In our initial optimization problem, the Bellman equation (6) becomes

while the Bellman inclusion (8) becomes

2.2 The value function, optimal control and the necessary and sufficient condition for the finite horizon N

The value function \(V^N:\mathbb {X}\times \{0,\dots ,N+1\}\rightarrow \mathbb {R}\) obviously depends also directly on time, it is defined by

while the optimal control \(S^N:\mathbb {R_+}\times \{0,\dots , N\}\rightarrow \mathbb {R_+}\)

The sufficient condition given in Theorem 1 changes slightly: the Bellman equation (6) and inclusion (8) change to

while the terminal condition changes to

Remark 2

Equations (11)–(13), are not only sufficient but also necessary for \(V^N\) to be the value function and \(S^N\) to be the optimal control in the finite truncations of the initial problem.

2.3 The finite horizon solutions versus the infinite horizon solution

Although in finite horizon problems, the dynamic programming method based on the Bellman equation determines the optimal solution explicitly by backwards induction, in the infinite horizon case with a terminal condition at infinity, it cannot be done. So, we are going to solve it in a different way—as a limit of finite horizon solutions. However, it has to be emphasized that the convergence of the finite horizon feedback optimal controls for the truncated problems does not imply that the limit is the optimal control for the infinite horizon problem, and the sufficient condition [a simple one from Stokey et al. (1989) or more general from Wiszniewska-Matyszkiel (2011)] has to be checked for the limit [see counterexamples: Ex. 7 and 8 from Wiszniewska-Matyszkiel and Singh (2018)]. Moreover, generally, even the value functions of the truncated problems may converge to a limit which is not the infinite horizon value function [Ex. 5 from Wiszniewska-Matyszkiel and Singh (2018)]. So, we use the limits of the finite horizon value functions and optimal controls only as candidates, for which we check the sufficient condition.

We also show that the frequently used undetermined coefficient/Ansatz method is not of much use in the case of our class of problems.

Our method is based on finding a candidate for the value function and optimal control as limits of values, at the initial time moment, of value functions and optimal controls, correspondingly, of finite horizon truncations of the problem and verification whether the limits are indeed the value function and optimal control, using sufficient condition Eqs. (6)–(8).

3 Solution of the dynamic optimization problem for finite horizon truncations of the initial problem

Before we solve the more interesting infinite horizon problem, we are first going to solve its finite horizon truncations with the horizon N.

We can prove the following form of the value function and optimal control.

Theorem 2

The following function \(V^N: \mathbb {R_+}\times \{0,\dots , N+1\}\rightarrow \mathbb {R_+}\) is the value function while the following function \(S^N:\mathbb {R_+}\times \{0,\dots , N\}\rightarrow \mathbb {R_+}\) is the optimal control for the truncation of the initial problem with time horizon N.

with \(V^N(x,t)=V^{N-t}(x,0)\) for \(t\le N\) and \(V^N(x,N+1)=0\) and

with \(S^N(x,t)=S^{N-t}(x,0)\) for \(t\le {N}\), where the constants are

The number i corresponds to time to resource exhaustion for x in the interval \((\hat{x}_{i-1},\hat{x}_{i})\): for \(\hat{x}_{i-1}<x<\hat{x}_{i}\), the resource will be depleted in i stages. So, \(V_i\) and \(S_i\) correspond to time to resource exhaustion \(i+1\), \(\hat{x}_i\) is the highest state such that if \(x_0=\hat{x}_i\), then the optimal trajectory fulfils \(X(i)=0\), while \(\hat{y}_N\) is the lowest state such that if \(x_0=\hat{y}_N\), then \(\hat{s}\) is available in each of \(N+1\) stages. Equivalently, \(\hat{x}_i \) is the lowest state at which a control S with \(S(X(0),0)=a_i X(0)+b_i\), \(S(X(1),1)=a_{i-1} X(1)+b_{i-1}\), \(\dots \), \(S(X(i),i)=a_0 X(0)+b_0\) and \(S(X(k),k)=0\) for \(k>i\) can be admissible.

Equivalently \(a_i\), \(b_i\) and \(\hat{x}_{i+1}\) can be rewritten as

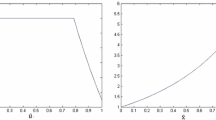

We present the results of Theorem 2—the optimal control \(S^N\) and the value function \(V^N\)—in Fig. 1. The graphs are drawn for the values \(A=1000, \, B=1,\ \epsilon =0.01, \ \xi =0.02\) and \(N=100\). Small diamonds correspond to subsequent \(\hat{x}_i\) and \(\hat{y}_{100}\), which are points of non-differentiability of \(S^N\). Both functions are continuous and non-decreasing in x. The consecutive \(\hat{x}_i\) correspond to consecutive number of time moments to depletion of the state variable, while over \(\hat{y}_{100}\) the resource is not depleted in the problem with time horizon 100.

To show how the optimal control and value function at the initial time change as the time horizon increases, we illustrate them for the same values of parameters \(A=1000, \, B=1,\ \epsilon =0.01\) and \(\xi =0.02\) and for four values of \(N=0,1,10,100\) in Fig. 2 (the optimal control) and Fig. 3 (the value function). Small diamonds correspond to subsequent \(\hat{x}_i\) and \(\hat{y}_i\); \(\hat{y}_{100}\) is out of the range. The non-differentiability of \(S^N\) is well visible, so is differentiability of \(V^N\). As we can see, the optimal control is non-increasing while the value function non-decreasing in N.

To prove Theorem 2, we need the following sequence of Lemmata. The part of proofs which are less interesting while elaborate are moved to the Appendix.

Lemma 1

-

(a)

\(\phi (x,S_N(x))\) is non-decreasing in x for all N and strictly increasing in x for \(N\ge 1\).

-

(b)

If \(x \ge \hat{x}_{N-1}\) then \(\phi (x,S_N(x))\ge \hat{x}_{N-2}\) and if \(x \le \hat{x}_N\) then \(\phi (x,S_N(x))\le \hat{x}_{N-1}\).

Proof

(a) Since, \(\phi (x,S_N(x))= ((1+\xi )-a_N)x-b_N\), it is true by the fact that \(a_N\le (1+\xi )\) and \(a_N<(1+\xi )\) for \(N>1\) resulting from Lemma 4(b) from the Appendix.

(b) By the fact that \(\phi (\hat{x}_N,S_N(x))= \hat{x}_{N-1}\) (Lemma 7(a) from the Appendix), continuity and monotonicity of \(S_N(x,0)\) in x (Lemma 8(a) from the Appendix). \(\square \)

Lemma 2

For all x, \(V^N\) is concave in x and it is strictly concave for \(x<\hat{y}_N\) and it is differentiable for all x.

Proof

This proof is based on basic properties of strictly concave functions and their derivatives or superdifferentials [an analogue of properties of convex functions and their subdifferentials, see. e.g., Rockafellar (2015)].

Since by Lemma 4 from the Appendix, \(H_i<0\), \(V_i\) are strictly concave and they are differentiable. Note that \(U_i\) is constant for every i, so, it is also concave.

Since \(V_i\) is strictly concave and by Lemma 9(a) from the Appendix, \(V^N\) is continuous, \({\frac{\partial V_i}{\partial x}}\) is strictly decreasing and since by Lemma 10 from the Appendix, \(V^\prime _i(\hat{x}_i)=V^\prime _{i+1}(\hat{x}_i)\), so, \(V^N(\cdot ,0)\) is differentiable for \(x<\hat{y}_N\) and its derivative is strictly decreasing on \((0,\hat{y}_N)\).

Since \(\frac{\partial V^N(\cdot ,0)}{\partial x}\) is strictly decreasing for \(x \le \hat{y}_N\), \(V^N(\cdot ,0)\) is strictly concave on the interval \([0, \hat{y}_N)\).

Since by Lemma 10(b) from the Appendix, \({\frac{\partial V_N(\hat{y}_N,0)}{\partial x}}=0={\frac{\partial U_N(\hat{y}_N)}{\partial x}}\), \({\frac{\partial V^N(x,0)}{\partial x}}=0\) for \(x\ge y_N\).

Since \(\frac{\partial V^N(\cdot ,0)}{\partial x}\) is non-increasing \(V^N(\cdot ,0)\) is concave on the whole domain. \(\square \)

Lemma 3

-

(a)

For any N, \(P(s)+\beta V^{N}\left( (1+\xi )x-s, 0\right) \) is strictly concave and differentiable in s and the supremum in the r.h.s. of the Bellman equation (9) is attained.

-

(b)

If for some \(s\in [0,(1+\xi )x]\), \(\frac{\partial (P(s)+\beta V^{N}(\phi (x,s(x)),0))}{\partial s}=0\), then s is the unique optimum of the right hand side of Bellman equation.

Proof

(a) Immediately by Lemma 2 and boundedness of P and \(V^N\) from above.

(b) If a point fulfils the first order condition for optimization of a strictly concave function then it is the unique optimum. \(\square \)

Proof of Theorem 2

We prove it inductively in two ways: by forward induction with respect to the horizon N and within a fixed horizon N, by backward induction corresponding to the dynamic programming techniques, which we rewrite to forward induction with respect to time to resource exhaustion.

For \(N=0\) it can be easily verified that the value function

fulfils the Bellman equation (11) and there is a unique optimal control

which fulfils the Bellman inclusion (12).

Assume that the value function and the optimal control are given by Eq. (14) and (15) for N and prove it for \(N+1\).

The Bellman equation (11) has the form

while the Bellman inclusion — necessary and sufficient condition for a control to be optimal is

By the Bellman optimality principle (Bellman 1957), at time \(t+1\), the solution has to coincide with the optimal solution of the N horizon problem with the state resulting from the first decision. Since the only dependence on time in the functions of the model is by discounting, so, \(V^{N+1}(x,1)=V^{N}(x,0)\) and \(S^{N+1}(x,1)=S^{N}(x,0)\). By analogous reasoning, we have \(V^{N+1}(x,t+1)=V^{N}(x,t)\) and \(S^{N+1}(x,t+1)=S^{N}(x,t)\) for all \(t\le N\). Thus, we only have to check the Eq. (20) and (21) for \(t=0\).

So, Eqs. (20) and (21) can be rewritten as

The maximum of the r.h.s. of Eq. (22) exists, it is unique by Lemma 3 and whenever there exists a point in \([0,(1+\xi )x]\) at which the derivative of the r.h.s. of Eq. (22) is 0, it is the maximum, while if this zero derivative point is greater than \((1+\xi )x\), the maximum is attained at \((1+\xi )x\).

We are going to locate the maximum. It depends on the interval to which x belongs. If \(x \in [x_0, x_1]\), then \(\phi (x,S_0(x))=0\), so, \(V^N\left( \phi (x,S_0(x))\right) =0\).

By Lemma 1, if \(x\in [\hat{x}_{k+1},\hat{x}_{k+2})\), then \(V^N\left( \phi (x,S^N(x,0)),0\right) =V_{k}\left( \phi (x,S_{k+1}(x))\right) \).

So, if \(S_{k+1}(x)\) maximizes the r.h.s. of

then for this x, Eq. (22) reduces to Eq. (24). So, what remains to be proven is the fact that \(S_{k+1}\) is really the maximizer of the r.h.s. of Eq. (24) and that this equation is fulfilled. We do it by induction with respect to k.

By substitution, we get it for \(k=0\) if we use auxiliary \(V_{-1}\equiv 0\).

Now we assume that it is fulfilled for k and prove it for \(k+1\).

The first order condition for s to be optimal is

By solving this equation for s, we get the optimal \(S_{k+1}\)

with the constants \(a_{k+1}=\frac{\beta H_{k}(1+\xi )}{\beta H_{k}-B}\) and \(b_{k+1}=\frac{\beta G_{k}-A}{\beta H_{k}-B}\).

Substituting the value from Eq. (25) into Eq. (24), we obtain \(V_{k+1}(x)=K_{k+1}+G_{k+1}x+\frac{H_{k+1}}{2} x^2\), with the recurrence equation for the constants as in Eq. (17).

So, what remains to be proven are two cases: \(x\in [\hat{x}_{N+1},\hat{y}_{N+1})\) and \(x\ge \hat{y}_{N+1}\).

In the latter case, obviously, \(\phi (x,\hat{s})\ge \hat{y}_N\), the Bellman equation (22) reduces to \(U_{N+1}(x)=\sup \nolimits _{s\in [0,(1+\xi )x]}P(s)+\beta V^{N}(\phi (x,s),0)=\sup \nolimits _{s\in [0,(1+\xi )x]}P(s)+\beta U_{N}(\phi (x,s))\) and it is fulfilled with \(s=\hat{s}\).

In the former case we have two sub-cases:

- (i)

If \(\phi (x,S_{N+1}(x))\in [\hat{x}_{N},\hat{y}_{N})\), then the Bellman equation (22) reduces to

\(V_{N+1}(x)=\sup \nolimits _{s\in [0,(1+\xi )x]}P(s)+\beta V_{N}(\phi (x,s))\), then the reasoning is the same as for \(x\in [\hat{x}_{k},\hat{x}_{k+1})\) for \(k< N\).

- (ii)

If \(\phi (x,S_{N+1}(x))\ge \hat{y}_N\), then the Bellman Eq. (22) reduces to

\(V_{N+1}(x)=\sup \nolimits _{s\in [0,(1+\xi )x]}P(s)+\beta U_{N}(\phi (x,s))\) and it is fulfilled with \(s=\hat{s}\).

Since \(S_{N+1}(\hat{y}_{N+1})=\hat{s}\), all the case has been taken into account (by Lemma 8(b)).

All formulae in (19) are immediate by (17) and (18). \(\square \)

3.1 Limit properties of the finite horizon truncations of the problem

In this subsection, we examine the limit properties of the finite horizon truncations of the problem.

Let us introduce

The first interesting property are the limits of \(V^N\) and \(S^N\), which for \(x<\tilde{x}\) is attained in finitely many steps.

Proposition 1

-

(a)

If \(x < \tilde{x}\) then \(\exists N_x\)\(\forall N_1, N_2>N_x\), \(\forall y \le x\), \(V^{N_1}(y,0)=V^{N_2}(y,0)\) and \(S^{N_1}(y,0)=S^{N_2}(y,0)\).

-

(b)

If \(x \ge \tilde{x}\) then \(\forall t\), \(\lim \nolimits _{N\rightarrow \infty } V^N(x,t)=\tilde{k}\) and \(\forall N, t\), \(S^N(x,t)=\hat{s}\) .

Proof

Immediate \(\square \)

Another interesting issue is the limit of \(V^N(x_N,0)\) and \(S^N(x_N,0)\) for a sequence \(x_N \nearrow \tilde{x}\). To calculate it, first, we need to check convergence of the sequences \(H_N \), \(G_N\), \(K_N\), \(a_N\) and \(b_N\).

Proposition 2

-

(a)

The limit of \(H_i\) is given by

$$\begin{aligned} \lim \limits _{i \rightarrow \infty } H_i={\left\{ \begin{array}{ll} \frac{B\left( 1-\beta {(1+\xi )}^2\right) }{\beta } &{} \text { for } \beta {(1+\xi )}^2> 1,\\ 0 &{} \text { for } \beta {(1+\xi )}^2\le 1. \end{array}\right. } \end{aligned}$$ -

(b)

The limit of \(a_i\) is given by

$$\begin{aligned} \lim \limits _{i \rightarrow \infty } a_i={\left\{ \begin{array}{ll} \frac{\beta {(1+\xi )}^2-1}{\beta (1+\xi )} &{} \text { for } \beta {(1+\xi )}^2> 1,\\ 0 &{} \text { for } \beta {(1+\xi )}^2\le 1. \end{array}\right. } \end{aligned}$$

Proof

(a) Consider the recurrence relation for \(H_i\) given by (17).

By calculating the fixed point, we obtain two values: 0 and \(\frac{B(1-\beta {(1+\xi )}^2)}{\beta }\).

By Lemma 4, we know that \(H_i\) is increasing and bounded from above by 0. So the limit exists and it is non-positive. Consider the following cases.

Case 1 If \(\beta {(1+\xi )}^2>1\), then \(H_1<\frac{B \left( 1-\beta {(1+\xi )}^2\right) }{\beta }\).

Consider any sequence given by Eq. (17) without predetermined initial condition.

Let us denote it by \(\{h_i\}\). Such \(h_i\) is increasing for \(h_1<\frac{B\left( 1-\beta {(1+\xi )}^2\right) }{\beta }\) and decreasing for \(\frac{B\left( 1-\beta {(1+\xi )}^2\right) }{\beta }<h_1<0\). So, 0 cannot be the limit of \(H_i\).

Therefore, in this case \(\lim \nolimits _{i \rightarrow \infty } H_i=\frac{B(1-\beta {(1+\xi )}^2)}{\beta }\).

Case 2 If \(\beta {(1+\xi )}^2\le 1\), then \(\frac{B\left( 1-\beta {(1+\xi )}^2\right) }{\beta }\ge 0\).

Either the limit is positive and hence it cannot be the limit of \(H_i\), or it is 0.

Therefore, in this case \(\lim \nolimits _{i \rightarrow \infty } H_i=0\).

(b) Immediate by substituting the limit of \(H_i\) obtained in (a) into \(a_i=\frac{-H_i}{B(1+\xi )}\). \(\square \)

Proposition 3

Consider \(F_i:=\frac{G_i}{H_i}\).

- (a)

The limit of \(F_i\) is given by \(\lim \limits _{i \rightarrow \infty } F_i=-\frac{A}{B\xi }\).

- (b)

The limit of \(G_i\) is given by

$$\begin{aligned}\lim \limits _{i \rightarrow \infty } G_i={\left\{ \begin{array}{ll} \frac{A(\beta {(1+\xi )}^2-1)}{\beta \xi } &{} \text { for } \beta {(1+\xi )}^2> 1,\\ 0 &{} \text { for } \beta {(1+\xi )}^2\le 1. \end{array}\right. } \end{aligned}$$ - (c)

The limit of \(b_i\) is given by

$$\begin{aligned}\lim \limits _{i \rightarrow \infty } b_i={\left\{ \begin{array}{ll} \hat{s} -\frac{A(\beta {(1+\xi )}^2-1)}{B \beta \xi (1+\xi )} &{} \text { for } \beta {(1+\xi )}^2> 1,\\ \hat{s} &{} \text { for } \beta {(1+\xi )}^2\le 1. \end{array}\right. } \end{aligned}$$

Proof

(a) We calculate the fixed point of \(F_i\) which is \(\frac{-\hat{s}}{\xi }\).

By Lemma (5) from the Appendix, \(F_i\) is decreasing.

Let us consider any sequence given by Eq. (33) without predetermined initial condition and denote it by \(\{f_i\}\).

If \(f_1>\frac{-\hat{s}}{\xi }\), then \(f_i\) is decreasing, while if \(f_1<\frac{-\hat{s}}{\xi }\), then \(f_i\) is increasing.

Therefore \(\lim \nolimits _{i \rightarrow \infty } F_i=\frac{-\hat{s}}{\xi }\).

(b) \(\lim \nolimits _{i \rightarrow \infty } G_i=\left( \lim \nolimits _{i \rightarrow \infty } H_i\right) \cdot \left( \lim \nolimits _{i \rightarrow \infty } F_i\right) \) since both \(H_i\) and \(F_i\) are convergent.

It is immediate by Proposition 3(a) and 2(a).

(c) Immediate by (b) and Eq. (19). \(\square \)

Proposition 4

\(\lim \nolimits _{i \rightarrow \infty } \hat{y}_i=\lim \nolimits _{i \rightarrow \infty } \hat{x}_i=\tilde{x}\).

Proof

Immediate by the definition of \(\hat{y}_i \), \(\hat{x}_i\) given in Eq. (16) and limits of \(a_i\) and \(b_i\).\(\square \)

Proposition 5

Consider a sequence \(x_N \nearrow \tilde{x}\).

Then \(\lim \nolimits _{N\rightarrow \infty }V^N(x_N,0)=\tilde{k}\) and \(\lim \nolimits _{N\rightarrow \infty }S^N(x_N,0)=\hat{s}\) and \(\lim \nolimits _{N\rightarrow \infty }\frac{\partial V^N(x_N,0)}{\partial x}=0\).

Proof

We know the limits of the sequences \(H_i\), \(G_i\), \(a_i\) and \(b_i\). To prove the result, we need to prove the convergence and to find the limit of \(K_i\).

By Lemma 9(b), \(V^i\) is continuous at \(\hat{y}_i\), so \(K_i=\tilde{k}-\frac{H_i}{2}(\hat{y}_i)^2 - G_i\hat{y}_i\). By taking the limit, we obtain that \(\lim \nolimits _{i \rightarrow \infty }K_i=\tilde{k}-\lim \nolimits _{i \rightarrow \infty }\frac{H_i}{2}(\tilde{x})^2 - \lim \nolimits _{i \rightarrow \infty }G_i\tilde{x}\).

Since \(\lim \nolimits _{N \rightarrow \infty } x_N=\tilde{x}\), there exists an increasing sequence of integers \(n_N\) such that for N large enough \(\hat{x}_{n_N}\le x_N <\tilde{x}\). By monotonicity of the functions \(V^i\), \(V^{n_N}(\hat{x}_{n_N},0)\le V^{n_N}(x_N, 0) \le V^{n_N}(\tilde{x},0)\). Taking the limit ends the proof for V. The proof for S is analogous.

Similarly, for \(\frac{\partial V^N(x_N,0)}{\partial x}\), with opposite side monotonicity of derivative resulting from concavity of \(V^N\). \(\square \)

4 The infinite time horizon

In this subsection we return to the infinite horizon problem.

Note that the optimal controls of the truncated problems converge. In such a case, in many papers, the limit is automatically treated as the optimal control for the infinite horizon problem. As we shall prove, the limit in our case is, indeed, the optimal control.

To make sure that the limit of finite horizon solutions is really the optimal control, we check the sufficient condition given by Theorem 1.

Theorem 3

The value function is,

for \(\tilde{k}, \tilde{x}\) defined by Eq. (26), \(\hat{x}_0=0\) and \(\hat{x}_N\) defined by Eq. (18) and \(V_N\) is given by Eq. (14) while the optimal control is

where \(S_N\) is given by Eq. (15).

We present results of Theorem 3 for values of parameters \(A=1000, \, B=1,\ \epsilon =0.01\) and \(\xi =0.02\) in Fig. 4 (approximation reduced to 1000 intervals below \(\tilde{x}\)). Small diamonds correspond to subsequent \(\hat{x}_i\) and \(\tilde{x}\). The consecutive \(\hat{x}_i\) correspond to consecutive number of time moments to depletion of the state variable below \(\tilde{x}\), while over \(\tilde{x}\) the resource is not depleted.

Proof

By Propositions 4 and 1, Lemma 9 and properties of concave functions [an analogue of properties of convex functions in e.g., Rockafellar (2015)], \(\bar{V}\) is continuous, differentiable and concave for all x. So, the r.h.s. of the Bellman equation (9) has a unique solution, either given by the zero derivative point or equal to \(s=(1+\xi )x\). We have checked where the zero derivative point is when \(x\in [\hat{x}_N,\hat{x}_{N+1})\), while proving Theorem 2. If \(x\ge \tilde{x}\), then the optimal control is attained at \(\hat{s}\).

So, \(\bar{V}\) fulfils the Bellman equation (9), while \(\bar{S}\) fulfils the Bellman inclusion (10) with \(\bar{V}\).

The terminal condition (7) is obviously fulfilled, since \(\bar{V}\) is bounded. So, \(\bar{V}\) is the value function while \(\bar{S}\) is the optimal control. \(\square \)

Corollary 1

-

(a)

\(\forall x<\tilde{x}\), \(\exists N\) such that \(\bar{V}(x)={V}^N(x,0)\).

-

(b)

\(\forall x<\tilde{x}\), \(\exists N_x\) such that \(\forall N> N_x, \, \bar{V}\vert _{[0, x]}={V}^N(\cdot ,0)\vert _{[0, x]}\).

Corollary 2

For all N, \(\bar{V} \vert _{[0, \hat{x}_N]}=V^N(\cdot ,0)\vert _{[0, \hat{x}_N]}\), \( \bar{S} \vert _{[0, \hat{x}_N]}=S^N(\cdot ,0)\vert _{[0, \hat{x}_N]}\) and for all N, \( \bar{S} \vert _{[\tilde{x},+\infty ]}=S^N(\cdot ,0)\vert _{[\tilde{x},+\infty ]}\).

So, the optimal control of the infinite horizon problem coincides with the optimal control at the initial time of its truncation with finite horizon N and longer for \(x\in \mathbb {R}_+ \setminus (\hat{x}_N,\tilde{x})\) , while the value function with the value function at the initial time of its truncation with finite horizon N and longer for \(x\in [0,\hat{x}_N]\).

5 The modified problem—introduction of the carrying capacity in ecological applications

Since, as we state in the introduction, we are interested in applications to exploitation of renewable resources, the linear dynamics is reasonable in real life applications only for low states and a saturation point should exist.

Therefore, we consider a modification of the problem introduced in Sect. 2—we modify the dynamics of the resource by considering Eq. (2) with \(\phi :\varOmega \rightarrow \mathbb {R}_+\) being any function such that

Theorem 4

Theorems 2 and 3 remain unchanged if we consider the modified dynamics.

Proof

Immediately by repeating the procedures obtained to prove Theorems 2 and 3 with the new \(\phi \): since \(\hat{s}\) remains the global unconstrained maximum of the current payoff, its availability does not change and the set \([\tilde{x},+\infty )\) is invariant for the new dynamics given \(s=\hat{s}\). \(\square \)

The saturation point may be introduced in many different ways. The simplest one is as follows.

Example 2

Consider Example 1 with \(\phi (x,s)= \min \{(1+\xi )x-s,M\tilde{x}\}\) for a constant \(M\ge 1\). Then we obtain the fishery model with the saturation point \(M\tilde{x}\). Theorems 2 and 3 hold for this model.

Recall that \(\tilde{x}\) is a state which is so large that the greedy myopic fishing does not reduce the population. Obviously, in current days real life situations, such a large biomass of fish is unrealistic. So, if modification of the state equation over such unrealistic level in a general way described by (29) does not influence the optimal behaviour, there is no need to work on more realistic approximation of the behaviour around the saturation point.

6 An important methodological issue

This subsection is devoted to a potential trap resulting from using the standard undetermined coefficient/Ansatz method for solving the infinite horizon problem, especially if the solution is not analytic, but only numerical.

Theorem 3 states that the actual value function is piecewise quadratic with infinitely many pieces while the optimal control piecewise linear with infinitely many pieces. This fact implies that obtaining such solutions by the Ansatz method is at least difficult, if not impossible. We show that it may be also misleading.

If we try to solve the infinite horizon problem by the undetermined coefficient method, we can find a function \(V^{\text {False}}\) for which the Bellman equation (9) is fulfilled besides a small interval \([0,x_{\min }(\epsilon )]\) with \(\lim \nolimits _{\epsilon \rightarrow 0^+}x_{\min }(\epsilon )\rightarrow 0\), and a function \(S^{\text {False}}\) for which the Bellman inclusion (10) with \(V^{\text {False}}\) is fulfilled everywhere (for details see Proposition 6).

So, for arbitrary small \(\eta >0\), there exists an \(\epsilon >0\) such that in the problem with this \(\epsilon \), the sufficient condition is fulfilled besides an interval of length less than \(\eta \).

The consequence of this error on such a small interval is the fact that the value function and the optimal control are incorrectly calculated on the whole interval \(\left( 0,\tilde{x}\right) \).

Proposition 6

Consider the function

for \( a^{\text {F}}=\frac{\left( {(1+\xi )}^2\epsilon -\xi \right) }{(1+\xi )\epsilon -1}, \ b^{\text {F}}=\frac{-A\epsilon \left( 1+\xi \right) }{B\xi \left( (1+\xi )\epsilon -1\right) }\) and \(x_{\min }=\tilde{x}\epsilon (1+\xi )\) and the function

for \( h^{\text {F}}=\frac{-B(1+\xi )\left( {(1+\xi )}^2 \epsilon -\xi \right) }{((1+\xi )\epsilon -1)}, \ g^{\text {F}}=\frac{A(1+\xi )\left( {(1+\xi )}^2 \epsilon -\xi \right) }{\xi \left( (1+\xi )\epsilon -1\right) }, \ k^{\text {F}}=\frac{-A^2 \epsilon ^2 {(1+\xi )}^4}{2B\xi ^2((1+\xi )\epsilon -1)((1+\xi )\epsilon +\xi )} \).

If \(\epsilon < \frac{\xi }{(1+\xi )^2}\), then \(S^{\text {False}}\) fulfils the Bellman inclusion (10) with \(V^{\text {False}}\), \(V^{\text {False}}\) fulfils the Bellman equation (9) on the set \([x_{\min }(\epsilon ), +\infty )\) and the terminal condition (7) is also fulfilled.

The functions \(V^{\text {False}}\) and \(S^{\text {False}}\) has been derived in quite a common way the undetermined coefficient method is used in the case some constraints appear, which we reflect in the proof.

Assume that the fact whether the Bellman equation is fulfilled is checked only numerically, and that \(\epsilon \) is small enough, then the length of the interval on which the Bellman equation does not hold may be below the level of accuracy of the floating-point arithmetic used. The remaining two parts of the sufficient condition do hold. So, in a class of problems similar to ours (obviously, 0 is well represented in each arithmetic, but a slight modification of the model may result in the critical point being nonzero), the sufficient condition may be treated as numerically proven.

We return to the reason of this trap after the proof.

Before the proof, we compare graphically the actual optimal control and value function for the infinite horizon (multicolour thin transparent line) to \(S^{\text {False}}\) and \(V^{\text {False}}\) (red thick line) in Fig. 5 and Fig. 6, respectively. The graphs are drawn for the values \(A=1000, \, B=1,\ \epsilon =0.01\) and \(\xi =0.02\). Small diamonds correspond to subsequent \(\hat{x}_i\), \(x_{\min }(\epsilon )\) and \(\tilde{x}\).

Note that the point \(x_{\min }(\epsilon )\), corresponding to the interval \([0,x_{\min }(\epsilon )]\) at which the Bellman equation is not fulfilled is so small that in the graph it is almost indistinguishable from 0 (the first diamond on the red thick line corresponds to \(x_{\min }(\epsilon )\)). Nevertheless, there is a substantial difference between the actual optimal control and value function for the infinite horizon and \(S^{\text {False}}\) and \(V^{\text {False}}\), correspondingly, and there is a perceivable difference on the whole interval \([0,\tilde{x})\).

Next, we proceed to the proof of Proposition 6. Generally, it is enough to substitute the functions \(S^{\text {False}}\) and \(V^{\text {False}}\) to Eqs. (6)–(8) and meticulously check they hold as stated in Proposition 6: Eq. (6) besides \([0,x_{\min }(\epsilon ))\), the remaining ones everywhere.

Conversely, we derive those function to illustrate an analogue of the process of looking for the optimal control by the undetermined coefficient methods for a problem with constraints. This proof follows similar lines as the proof of Theorem 2 from Singh and Wiszniewska-Matyszkiel (2018), although the function is different and here, the Bellman equation (9) is fulfilled for \(x \ge x_{\min }(\epsilon )\) only. The terminal condition (7) cannot be neglected in this proof—since, e.g. the dynamic optimization problem from Singh and Wiszniewska-Matyszkiel (2018) has two solutions and the ”most obvious” quadratic solution is not the value function, which is another potential trap that can appear while solving a linear quadratic problem with constraints by the undetermined coefficient method.

Proof of Proposition 6

By using the Ansatz method, let us assume that the value function is of quadratic form: \(\bar{V}(x)=k+g x+\frac{hx^2}{2}\). We look for a solution of the Bellman equation (9) in this class of functions.

Afterwards, we find s maximizing the right hand side of the Bellman equation (9) over the set of available decisions.

We check the first order condition for the internal s from the Bellman inclusion (10) and get the value of s as follows

Next, we substitute the value of s from Eq. (30) to the Bellman equation (9), which allows us to calculate the constants for which this equation is fulfilled, assuming that the supremum is attained at the zero-derivative point. In this way, we obtain three sets of values of unknowns as follows.

Nevertheless, since \( h\le 0 \) for all such sets of constants, s defined by Eq. (30), if \(s\in [0,(1+\xi )x] \), is the global maximizer and it is unique. We consider the following cases.

Case 1. The values of unknowns k, g and h in V are as in (31a), which yields \(\bar{V}_1 (x) :=\frac{h^{\text {F}} x^2}{2}+ g^{\text {F}}x+k^{\text {F}} \).

The candidate for the social optimum in this case is equal to \(\bar{S}_1(x):=a^{\text {F}}x+b^{\text {F}}\), which is in \([0,(1+\xi )x]\) iff \(x\ge x_{\min }(\epsilon )\), otherwise it exceeds \((1+\xi )x\). So, we change \(\bar{S}_1\) to the exceeded limit \((1+\xi )x\) on \([0,x_{\min }(\epsilon )]\) and obtain \(\bar{S}_1^{\text {corr}}(x)\). Since the maximized function is strictly concave, \(\bar{S}_1^{\text {corr}}(x)\) defines the unique maximizer for case 1.

However, the function \(\bar{V}_1(x)\) does not fulfil the terminal condition (7), since \(\lim \nolimits _{t\rightarrow +\infty } \bar{V}_1(X^0(t))\beta ^t=-\infty \) for \(X^0\) being the trajectory corresponding to the profile \(S\equiv 0\). So we have to continue looking for solutions.

Here, we want to note that in the normal procedure of looking for a value function assuming that we found a solution of the Bellman equation, the necessary terminal condition has to be checked. A part of it has the form ”\( \text {limsup}_{t\rightarrow +\infty } \bar{V}_1(X(t))\beta ^t< 0 \) implies that \(J(x_0,t_0, S)=-\infty \) for every S for which X is the corresponding trajectory (Theorem 4(b) of Wiszniewska-Matyszkiel and Singh (2018))”. In our case it is not fulfilled.

Case 2. The values of unknowns k, g and h are as in (31b), which yields:

Hence, the terminal condition (7) is obviously fulfilled, since \( \bar{V}_2\) is constant. The Bellman equation (9) has the form \( \tilde{k}=\sup \nolimits _{s\in {{[0,(1+\xi )x]}}}{P(s)}+\beta \tilde{k}\).

Therefore, the candidate for solution of the Bellman inclusion is independent of x and equal to \(\hat{s}\).

Since for \(x<\tilde{x}\), \(\hat{s}>(1+\xi )x\), so, in this case the Bellman equation is not fulfilled on a large interval \([0,\tilde{x})\).

Case 3. Consider a combination of case 1 and case 2.

Let us try the continuous, piecewice defined function with two pieces \(\bar{V}_1\) and \(\bar{V}_2\)—it is unique and it equals \(V^{\text {False}}\).

First, note that \(V^{\text {False}}\) is not only continuous, but also differentiable, since \(\bar{V}_1'(\tilde{x})=\bar{V}_2'(\tilde{x})\), and concave.

The terminal condition given by Eq. (7) is obvious, since \( V^{\text {False}}\) is bounded.

The corresponding zero-derivative point is

We correct \(\bar{S}_3\) on the interval \([0,x_{\min }(\epsilon )]\) by the exceeded limit \((1+\xi )x\) and we obtain \(S^{\text {False}}\) as the unique solution of the Bellman inclusion with \(V^{\text {False}}\).

So, what remains to be proven is checking that the Bellman equation is really fulfilled on \([x_{\min }(\epsilon ), +\infty )\) and Bellman inclusion on the whole \(\mathbb {R}_+\) by the piecewise defined functions.

We denote the set of s for which \( \phi (x,s)\le \tilde{x}\) by \( S_{\text {I}} \), while the set of the remaining s by \(S_{\text {II}}\). \( S_{\text {I}} \) is always nonempty.

If for some x, \( S_{\text {II}} \) is empty, which may hold only for \( x\le \tilde{x} \), then for this x, the Bellman equation (9) reduces to \( \bar{V}_1(x)=\sup \nolimits _{s\in {[0,(1+\xi ) x]}} P(s)+\beta \bar{V}_1(\phi (x,s))\), and the supremum in this case is attained at \(\bar{S}_1^{\text {corr}}(x)\), so the Bellman inclusion (10) is fulfilled, so is the Bellman equation for \([x_{\min }(\epsilon ), +\infty )\) (which we have already solved during calculation of coefficients in case 1).

So, let us consider the case when both \( S_{\text {I}} \) and \( S_{\text {II}} \) are nonempty. This situation can be decomposed into two cases.

(I) For \(x\le \tilde{x}\), the Bellman equation (9) can be rewritten as \(\bar{V}_1(x)=\text {max}\{\sup \nolimits _{s\in { S_{\text {I}}}} P(s)+\beta \bar{V}_1(\phi (x,s)), \sup \nolimits _{s\in { S_{\text {II}}}} P(s) \)\(+\beta \bar{V}_2(\phi (x,s))\}\).

Note that \(\sup \nolimits _{s}P(s)+\beta \bar{V}_1(\phi (x,s))\) is attained at \( \bar{S}_1^{\text {corr}} \), which is at least \(\xi x\), so it obviously belongs to \( S_{\text {I}} \). Since the right hand side of the Bellman equation is strictly concave, \( \bar{S}_1^{\text {corr}} \) defines the unique maximizer. The remaining calculations in this case reduce to case 1.

(II) If \(x>\tilde{x}\), then the Bellman equation (9) can be rewritten as

\( \bar{V}_2(x)=\text {max}\{\sup \nolimits _{s\in { S_{\text {I}} }}P(s)+\beta \bar{V}_1(\phi (x,s)), \sup \nolimits _{s\in { S_{\text {II}}}}P(s)+\beta \bar{V}_2(\phi (x,s))\}\). First, let us consider optimization over \( S_{\text {II}} \). As we have checked in Case 2, \(P(s)+\beta \bar{V}_2(\phi (x,s)) \) attains maximum at \( \hat{s} \), which is within the constraints, since \(x>\tilde{x}\). Since the right hand side of the Bellman equation is strictly concave, \(\hat{s}\) is its unique maximizer. The remaining calculations in this case reduce to case 2. \(\square \)

In the above proof we have copied, up to maximal applicable in this case level, the typical way of solving the optimal control problem by the Ansatz method in problems with constraints.

We can add that in such proofs sometimes (e.g. Fershtman and Kamien 1987 and Wiszniewska-Matyszkiel et al. 2015) at the last stage of the proof, the value function on the set where the constraint is exceeded by the zero-derivative solution, is replaced by some obvious value resulting from the guess implied by the fact that the control is made equal to the constraint. In Fershtman and Kamien (1987) and Wiszniewska-Matyszkiel et al. (2015) it was related to nonnegativity constraint, so an obvious guess was assuming waiting for the state variable to increase to level at which the zero-derivative point is nonnegative (which resulted in a non-quadratic value function, in those papers the procedure ended successfully). In our case, such an obvious guess related to the control value \((1+\xi )x\) at \([0,x_{\min }(\epsilon ))\) is correction of \(V^{\text {False}}\) on this set to \(P((1+\xi )x)\), because nothing remains to the next stage. Since it is less than \(V^{\text {False}}\), the Bellman equation as well as the Bellman inclusion are still fulfilled on \([x_{\min }(\epsilon ),\infty )\).

An obvious question is why an error in the Bellman equation on a small set only resulted in a substantial error in the value function and optimal control on a large set \([0,\tilde{x})\). And, consequently, what kind of errors should be avoided.

What is specific in our case, is the fact that the error in the Bellman equation appears in arbitrarily small neighbourhood of a stable steady state (if we consider the dynamics corresponding to the optimal control) and the resulting error in the value function is propagated on the whole basin of attraction of this steady state and influences the optimal control on it.

Thus, the Bellman equation around a stable steady state has to be checked especially carefully.

7 Conclusions and further research

In this paper, we have analysed a wide class of discrete time discounted dynamic optimization problems—with strictly concave quadratic current payoffs and linear state dependent constraints on the control parameter as well as non-negativity constraint on the one-dimensional state variable and control. This model suits well economic problems like extraction of renewable resources (e.g. a fishery harvesting). The class of sub-problems considered encompasses a linear quadratic optimal control problem as well as models with saturation level of the state variable.

The optimal control we have obtained is piecewice linear, with even infinitely many pieces in the infinite time horizon case, with consecutive pieces corresponding to consecutive number of time moments to depletion of the state variable, only at the last interval the state variable is not depleted.

From theoretical point of view, we derive a solution for a class of linear quadratic dynamic optimization problems, both the infinite time horizon problem and its finite horizon truncations, with applications in economy and environmental economics and modifications of this problem with nonlinear dynamics reflecting that there exists a saturation point of the state variable. The proof, besides inductive methods and calculus was nontrivially based on properties on concave functions and their derivatives. The standard undetermined coefficient method turned out to be ineffective for this class of problems.

This paper has four obvious extensions.

The first one is related to examining other relations between the discount rate and the rate of growth of the state variable, i.e. the case when \(\epsilon \) is negative, related to high rate of growth of the resource compared to the interest rate in the economic interpretation. Currently, we can only state that the solution is not, as often suggested in elementary solutions, ”wait until the last stage before the resource grows to the abundance level, to have exactly this value of the state variable from the next stage on”.

The second one is extension of the problem to more dimensional (in the economic fishery interpretation, this may be interpreted as introducing more than one species of interacting fish or nonuniform spatial allocation). This may result in essential difficulties related to the technique of the proof: proving that all the finite and infinite horizon value functions are concave and strictly concave below a certain level (related to abundance of fish) is related to complicated, highly nonlinear formulae on the coefficients.

The third one is returning to examining this problem as an infinite horizon dynamic game, currently studied only with vestigial results, with complete results either in the case of continuum of players in Singh and Wiszniewska-Matyszkiel (2018) or two stage truncations for \(\epsilon =0\) in Singh and Wiszniewska-Matyszkiel (2019).

The forth one is a deep theoretical study what kinds of errors in value functions do not propagate on optima and equilibria given some set of initial conditions.

References

Anderson B, Moore J (2007) Optimal control: linear quadratic methods. Courier Corporation, Chelmsford

Aseev S, Krastanov M, Veliov V (2016) Optimality conditions for discrete-time optimal control on infinite horizon. Research Paper 2016–09, ORCOS, Vienna University of Technology

Başar T, Haurie A, Zaccour G (2018) Nonzero-sum differential games. In: Başar T, Zaccour G (eds) Handbook of dynamic game theory. Springer International Publishing, pp 61–110

Bellman R (1957) Dynamic programming, vol 89. Princeton University Press, Princeton, p 92

Bemporad A, Morari M, Dua V, Pistikopoulos E (2002) The explicit linear quadratic regulator for constrained systems. Automatica 38(1):3–20

Blackwell D (1965) Discounted dynamic programming. Ann Math Stat 36(1):226–235

Blot J, Hayek N (2014) Infinite-horizon optimal control in the discrete-time framework. Springer, Berlin

Breton M, Keoula MY (2014) A great fish war model with asymmetric players. Ecol Econ 97:209–223

Breton M, Sbragia L, Zaccour G (2010) A dynamic model for international environmental agreements. Environ Resour Econ 45(1):25–48

Brunovský P, Holecyová M (2018) Optimal control, dichotomy, and closed range. SIAM J Control Optim 56(2):633–648

Chmielewski D, Manousiouthakis V (1996) On constrained infinite-time linear quadratic optimal control. In: Proceedings of the 35th IEEE conference on decision and control, vol 2, pp 1319–1324

Doyen L, Cissé A, Sanz N, Blanchard F, Pereau J-C (2018) The tragedy of open ecosystems. Dyn Games Appl 8:117–140

Ehtamo H, Hämäläinen RP (1993) A cooperative incentive equilibrium for a resource management problem. J Econ Dyn Control 17(4):659–678

Fershtman C, Kamien MI (1987) Dynamic duopolistic competition with sticky prices. Econometrica 55:1151–1164

Fischer RD, Mirman LJ (1992) Strategic dynamic interaction: fish wars. J Econ Dyn Control 16(2):267–287

Grieder P, Borrelli F, Torrisi F, Morari M (2004) Computation of the constrained infinite time linear quadratic regulator. Automatica 40(4):701–708

Keerthi S, Gilbert E (1988) Optimal infinite-horizon feedback laws for a general class of constrained discrete-time systems: stability and moving-horizon approximations. J Optim Theory Appl 57(2):265–293

Krawczyk J, Tidball M (2006) A discrete-time dynamic game of seasonal water allocation. J Optim Theory Appl 128(2):411–429

Krawczyk JB, Zaccour G (1999) Management of pollution from decentralised agents by local government. Int J Environ Poll 12(2–3):343–357

Le Van C, Morhaim L (2002) Optimal growth models with bounded or unbounded returns: a unifying approach. J Econ Theory 105(1):158–187

Levhari D, Mirman LJ (1980) The great fish war: an example using a dynamic Cournot–Nash solution. Bell J Econ 11:322–334

Mazalov VV, Rettieva AN (2010) Fish wars with many players. Int Game Theory Rev 12(04):385–405

Reddy P, Zaccour G (2015) Open-loop Nash equilibria in a class of linear-quadratic difference games with constraints. IEEE Trans Autom Control 60(9):2559–2564

Reddy PV, Zaccour G (2017) Feedback Nash equilibria in linear-quadratic difference games with constraints. IEEE Trans Autom Control 62(2):590–604

Rockafellar RT (2015) Convex analysis. Princeton University Press, Princeton

Rubio SJ, Ulph A (2007) An infinite-horizon model of dynamic membership of international environmental agreements. J Environ Econ Manag 54(3):296–310

Santos M (1991) Smoothness of the policy function in discrete time economic models. Econometrica 59:1365–1382

Scokaert P, Rawlings J (1998) Constrained linear quadratic regulation. IEEE Trans Autom Control 43(8):1163–1169

Singh R, Wiszniewska-Matyszkiel A (2018) Linear quadratic game of exploitation of common renewable resources with inherent constraints. Topol Methods Nonlinear Anal 51:23–54

Singh R, Wiszniewska-Matyszkiel A (2019) Discontinuous Nash equilibria in a two stage linear-quadratic dynamic game with linear constraints. IEEE Trans Autom Control 62:3074–3079

Stokey N, Lucas R, Prescott E (1989) Recursive methods in economic dynamics. Harvard University Press, Harvard

Sznaier M, Damborg M (1987) Suboptimal control of linear systems with state and control inequality constraints. In: 26th IEEE conference on decision and control, vol 26, pp 761–762

Thomas Y (1975) Linear quadratic optimal estimation and control with receding horizon. Electron Lett 11(1):19–21

Wiszniewska-Matyszkiel A (2005) A dynamic game with continuum of players and its counterpart with finitely many players. In: Nowak AS, Szajowski K (eds) Advances in dynamic games. Springer, Basel, pp 455–469

Wiszniewska-Matyszkiel A (2011) On the terminal condition for the Bellman equation for dynamic optimization with an infinite horizon. Appl Math Lett 24(6):943–949

Wiszniewska-Matyszkiel A, Bodnar M, Mirota F (2015) Dynamic oligopoly with sticky prices: off-steady-state analysis. Dyn Games Appl 5(4):568–598

Wiszniewska-Matyszkiel A, Singh R (2018) Infinite horizon dynamic optimization with unbounded returns—necessity, sufficiency, existence, uniqueness, convergence and a sequence of counterexamples, ssrn preprint, https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3295058

Yakowitz S (1982) Dynamic programming applications in water resources. Water Resour Res 18(4):673–696

Zhang H, Luo Y, Liu D (2009) Neural-network-based near-optimal control for a class of discrete-time affine nonlinear systems with control constraints. IEEE Trans Neural Netw 20(9):1490–1503

Zhao X, Lin Z (2008) On the horizons in constrained linear quadratic regulation. Automatica 44(12):3107–3112

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The research was financed by Grant 2016/21/B/HS4/00695 of National Science Centre, Poland.

Appendix

Appendix

In this Appendix we group technical lemmata used in the proofs of the main results of the paper.

Lemma 4

-

(a)

For every i, the constants \(H_{i}<0\) and \(H_{i+1}>H_{i}\).

-

(b)

For every i, the constant \((1+\xi )x\ge a_{i}>0\), \(a_{i+1}<a_{i}\) and \(a_i<(1+\xi ) x\) for \(i\ge 1\).

-

(c)

For every i, the constant \(G_i>0\).

Proof

(a) It can be easily verified that \(|H_1|>|H_2|\) with \(H_0, \ H_1<0\).

Next, we assume that \(H_{i}<0, \text { and } H_{i-1}<0\) with \(|H_i|<|H_{i-1}|\).

It is immediate by the definition that \(H_{i+1}<0\).

We consider \(\frac{|H_{i+1}|}{|H_{i}|}=\frac{|H_{i}|}{|H_{i-1}|} \frac{|B-\beta H_{i-1}|}{|B-\beta H_{i}|}<1\).

Since both the numerator and denominator are positive, we have

\(\left( \frac{B-\beta H_{i-1}}{B-\beta H_{i}}\right) <1 \left( \frac{B-\beta H_{i}}{B-\beta H_{i}}\right) =1\), which gives \(|H_{i+1}|<|H_{i}|\).

(b) By Eq. (19), \(a_i=\frac{-H_i}{B(1+\xi )}\).

It is immediate by (a) as the sign of \(a_i\) is opposite to \(H_i\).

(c) It can be easily verified that \(G_0, \ G_1>0\).

Next, we assume that \(G_{i}>0\) and we know by (a) that \(H_i<0\) for all i.

So, it is immediate from Eq. 17 that \(G_{i+1}>0\). \(\square \)

Lemma 5

For \(F_i=\frac{G_i}{H_i}\), \(F_i\) is given by the recurrence relation

and for every i, \({F}_{i}<0\). Moreover, \(F_{i+1}<F_{i}\).

Proof

By the definition, \(F_{i+1}=\frac{G_{i+1}}{H_{i+1}}\).

\(F_i<0\) for all i is an immediate consequence of Lemma (4).

It can be easily verified that \(F_0>F_1\).

Next, we assume that \(F_{i-1}>F_i\) and check for \(i+1\).

Consider \(F_{i+1}-F_{i}=\frac{F_i-F_{i-1}}{(1+\xi )}\), which gives \(F_{i}>F_{i+1}\). \(\square \)

Lemma 6

For every i: (a) \(\hat{y}_{i+1}> \hat{y}_i\);

(b) \(\hat{y}_{i+1}=- F_{i+1}\);

(c) the recursive formula for \(\hat{x}_i\) is

Proof

(a) It can be easily checked that \(\hat{y}_1>\hat{y}_0\).

Next, we assume that \(\hat{y}_i>\hat{y}_{i-1}\).

To check for \(i+1\), let us consider \(\hat{y}_{i+1}-\hat{y}_i=\frac{y_i+\hat{s}}{1+\xi }\)\(-\frac{y_{i-1}+\hat{s}}{1+\xi }\).

After simplifying and using the fact that \(\hat{y}_i>\hat{y}_{i-1}\), we get \(\hat{y}_{i+1}-\hat{y}_{i}>0\).

(b) It can be easily checked that \(\hat{y}_0=-F_0\).

Next, we assume that \(\hat{y}_i=- F_{i}\).

We check for \(i+1\), i.e., we consider \(\hat{y}_{i+1}=\frac{y_i+\hat{s}}{1+\xi }\).

By substituting \(\hat{y}_i=-F_{i}\), the recurrence Eq. (33), \(\hat{s}\) and simplifying, we get \(\hat{y}_{i+1}=- F_{i+1}\).

(c) For \(i=0\), we prove (34) by just substituting and simplifying.

Next, we consider \(i>0\) and in the part of formula (19) \(\hat{x}_{i+1}=\frac{G_{i+1}-G_i}{H_i-H_{i+1}}\), we substitute all the four constants by their values from the recursive formulae (17). We do it only once (we do not substitute for \(G_i\) and \(H_i\) which appear after substitution for \(G_{i+1}\) and \(H_{i+1}\)). We simplify the formula to get

We rewrite Eq. (19) to get \(G_{i-1}=\hat{x}_i(H_i-H_{i-1})+G_i\) and we substitute it into (35). After simplification, we get Eq. (34). \(\square \)

Lemma 7

-

(a)

For all i, \(\hat{x}_{i}\) fulfils

$$\begin{aligned} \hat{x}_{i+1}=\frac{\hat{x}_{i}+b_{i+1}}{(1+\xi )-a_{i+1}} \end{aligned}$$(36)or, equivalently,

$$\begin{aligned} \hat{x}_i=\phi \left( \hat{x}_{i+1},S_{i+1}(\hat{x}_{i+1})\right) . \end{aligned}$$(37) -

(b)

For all i, \(\hat{x}_{i}<\hat{y}_i\).

Proof

(a) It can be easily verified that \(\hat{x}_1=\frac{\hat{x}_{0}+b_1}{(1+\xi )-a_{1}}\)

To prove for another i, we rewrite the right hand side of Eq. (36) and we get \(\frac{\hat{x}_i+b_{i+1}}{(1+\xi )-a_{i+1}}:=\frac{(B-\beta H_{i})\hat{x}_{i}+A-\beta G_{i}}{B(1+\xi )}\). This is the right hand side of Eq. (34), so it equals \(\hat{x}_{i+1}\).

(b) It is immediate that \(\hat{x}_{0}<\hat{y}_0\).

Next, we assume that \(\hat{x}_i<\hat{y}_{i}\).

To check for \(i+1\), we rewrite into \(\hat{x}_{i+1}-\hat{y}_{i+1}\) using the formulae from Lemma 6(b)–(c) and Eq. (33).

After simplifying, we get \(\hat{x}_{i+1}-\hat{y}_{i+1}=(\hat{x_i}-\hat{y}_i)\frac{B(1+\xi )-\beta H_i}{B(1+\xi )}\).

By Lemma 4(a), \(H_i<0\) and since \(\hat{x}_i<\hat{y}_{i}\), we have, \(\hat{x}_{i+1}-\hat{y}_{i+1}<0\). \(\square \)

Lemma 8

The function \(S^N(x,0)\) is continuous, i.e., its components fulfil

- (a)

for all \(1 \le i \le N\),

\(S_{i-1}(\hat{x}_i)=S_{i}(\hat{x}_i)\).

- (b)

\(S_{N}(\hat{y}_N)=\hat{s}\).

Proof

(a) Since \(S_0(\hat{x}_1)=S_1(\hat{x}_1)\), so, \(S^N(x)\) is continuous in x at \(\hat{x}_1\).

Next, we assume that \(S^N(x)\) is continuous in x at \(\hat{x}_i\).

So, \(\hat{x}_i\) is a root of \(S_{i}(x)-S_{i-1}(x)=0\) i.e.,

We want to check that this holds for \(i+1\).

Consider the left hand side: \(S_{i+1}(\hat{x}_{i+1})-S_i(\hat{x}_{i+1})\)\(=(a_{i+1}-a_{i})\hat{x}_{i+1}+(b_{i+1}-b_{i})\).

By substituting \(\hat{x}_{i+1}=\frac{b_i-b_{i+1}}{a_{i+1}-a_i}\) and simplifying we get

\((a_{i+1}-a_{i})\hat{x}_{i+1}+(b_{i+1}-b_{i})=0\), equivalently \(S_{i+1}(\hat{x}_{i+1})-S_{i}(\hat{x}_{i+1})=0\).

Therefore, \(S^N(x)\) is continuous in x at \(\hat{x}_{i+1}\).

(b) It can be easily verified that \(S_0(\hat{y}_0)=\hat{s}\).

Now, we assume that \(S_{i}(\hat{y}_i)=\hat{s}\) and check for \(i+1\).

Consider the left hand side: \(S_{i+1}(\hat{y}_{i+1})=a_{i+1}\hat{y}_{i+1}+b_{i+1}\) .

By Lemma 6(b), \(\hat{y}_{i+1}=-F_{i+1}\). Substituting \({a}_{i+1}\), \({b}_{i+1}\) from Eq. (19)

and simplifying, we have \(a_{i+1}\hat{y}_{i+1}+b_{i+1}=\hat{s}\). \(\square \)

Lemma 9

The function \(V^N(0,x)\) is continuous, i.e.,

- (a)

For all \(2\le i \le N\), \(V_{i-1}(\hat{x}_i)=V_{i}(\hat{x}_i)\).

- (b)

\(V_N(\hat{y}_N)=U_N(\hat{y}_N)\).

Proof

(a) Since \(V_0(\hat{x}_1)=V_1(\hat{x}_1)\), so, \(V^N(x,0)\) is continuous in x at \(\hat{x}_1\).

Next, we assume that \(V^N(x,0)\) is continuous in x at \(\hat{x}_i\) i.e.,

and we check whether \(V_{i}(\hat{x}_{i+1})=V_{i+1}(\hat{x}_{i+1})\).

By Lemma 1, if \(x\in [\hat{x}_{i+1},\hat{x}_{i+2}]\), then \(\phi (x,S^N(x,0))\in [\hat{x}_{i},\hat{x}_{i+1}]\).

Consider the left hand side limit \(\lim \nolimits _{ \delta \rightarrow 0^-} V^N(\hat{x}_{i+1}+\delta ,0)\).

By the Bellman equation, \(\lim \nolimits _{ \delta \rightarrow 0^-} V^N(\hat{x}_{i+1}+\delta ,0)=\lim \nolimits _{ \delta \rightarrow 0^-}\)\(V_{i}(\hat{x}_{i+1}+\delta )=\lim \nolimits _{\delta \rightarrow 0^-}P(S_{i}(\hat{x}_{i+1}+\delta ))\)\(+\beta V_{i-1}\left( \phi (\hat{x}_{i+1},S_{i}(\hat{x}_{i+1}+\delta ))\right) \).

By Lemma 8 and continuity of \(V^{N}(\cdot , 0)\) and \(S^N(\cdot ,0)\) at \(\hat{x}_i\), \(\lim \nolimits _{ \delta \rightarrow 0^-} V_{i}(\hat{x}_{i+1}+\delta )=P(S_{i}(\hat{x}_{i+1}))+\beta V_{i-1}(\hat{x}_i)\).

Next, consider the right hand side limit \(\lim \nolimits _{ \delta \rightarrow 0^+} V^N(\hat{x}_{i+1}+\delta ,0)\).

\(\lim \nolimits _{ \delta \rightarrow 0^+} V^N(\hat{x}_{i+1}+\delta ,0)=\lim \nolimits _{ \delta \rightarrow 0^+} V_{i+1}\)\((\hat{x}_{i+1}+\delta )=\lim \nolimits _{ \delta \rightarrow 0^+}P(S_{i+1}(\hat{x}_{i+1}+\delta ))\)\(+\beta V_{i}\left( \phi (\hat{x}_{i+1},S_{i+1}(\hat{x}_{i+1}+\delta ))\right) \).

By Lemmata 7 and 8 and by using Eq. (39), we have

\(\lim \nolimits _{ \delta \rightarrow 0^+} V_{i+1}(\hat{x}_{i+1}+\delta )=P\)\(\left( S_{i+1}(\hat{x}_{i+1})\right) +\beta V_{i}\left( \hat{x}_{i}\right) \)\(=\lim \nolimits _{ \delta \rightarrow 0^-} V_i(\hat{x}_{i+1}+\delta )\), which shows that \(V^N(x,0)\) is continuous in x at \(\hat{x}_{i+1}\).

Therefore, \(V^N(x,0)\) is continuous in x at \(\hat{x}_i\) for all \(i\le N\).

(b) It can be easily verified that \(V_0(\hat{y}_0)=U_0(\hat{y}_0)\).

Next, we assume that \(V_i(\hat{y}_i)=U_i(\hat{y}_i)\).

To check for \(i+1\), we first consider the left hand side limit

\(\lim \nolimits _{ \delta \rightarrow 0^-} V_{i+1}(\hat{y}_{i+1}+\delta ){\,=\,}\lim \nolimits _{\delta \rightarrow 0^-}P\)\((S_{i+1}(\hat{y}_{i+1}+\delta ))+\beta V_{i}\big (\phi (\hat{y}_{i+1}\)\(+\delta , S_i(\hat{y}_{i+1}+\delta ))\big )\).

By Lemma 8(b), \(\lim \nolimits _{ \delta \rightarrow 0^-} V_{i+1}(\hat{y}_{i+1}+\delta )=P(S_{i+1}(\hat{y}_{i+1}))+\beta V_{i}(\hat{y}_{i})\).

By using Eq. (18) and the fact that \(V_{i}(\hat{y}_{i})=U_{i}(\hat{y}_{i})\), we have

\(\lim \nolimits _{ \delta \rightarrow 0^-} V_{i+1}(\hat{y}_{i+1}+\delta )=P(\hat{s})\)\(+\beta U_{i}(\hat{y}_i)=U_{i+1}(\hat{y}_{i+1})\), which equals the right hand side limit since \(U_{i+1}\) is constant. \(\square \)

Lemma 10

-

(a)

The function \(V^N(x,0)\) is differentiable in x for \(x<\hat{y}_N\) and for all i, its derivative is continuous at \(x=\hat{x}_i\) i.e., \(V'_{i-1}(\hat{x}_i)=V'_{i}(\hat{x}_i)\).

-

(b)

\(V^N(x,0)\) is differentiable at \(\hat{y}_N\) and \(V'_N(\hat{y}_N)=0\).

Proof

(a) Since \(V'_0(\hat{x}_1)=V'_1(\hat{x}_1)\), \(V^N(x,0)\) is differentiable in x at \(x=\hat{x}_1\) and the derivative is continuous.

Next, assume that \(V^N(x,0)\) is differentiable in x at \(\hat{x}_i\) and the derivative is continuous i.e. \(V'_{i-1}(\hat{x}_i)=V'_i(\hat{x}_i)\) which gives

We will show it for \(i+1\) i.e. \(\hat{x}_{i+1}=-\frac{b_{i+1}-b_{i+1}}{a_{i+1}-a_i}\) is a root of the equation \(V'_{i+1}(\hat{x}_{i+1})-V'_{i}(\hat{x}_{i+1})=0\) or equivalently, \((G_{i+1}-G_{i})+(H_{i+1}-H_{i})\hat{x}_{i+1}=0\).

By rewriting both equations in Eq. (19) for \(i+1\) and i and subtracting we get,

\(G_{i+1}-G_i=-B(1+\xi )\left( b_{i+1}-b_{i}\right) \) and \(H_{i+1}-H_i=-B(1+\xi )(a_{i+1}-a_i)\).

Notice that \(\frac{G_{i+1}-G_i}{H_{i+1}-H_i}=\frac{b_{i+1}-b_{i}}{a_{i+1}-a_i} =-\hat{x}_{i+1}\), implies that \(\hat{x}_{i+1}\) is a root of the equation \(V'_{i+1}(x)-V'_{i}(x)=0\).

So, \(V^N(x,0)\) is differentiable in x at \(\hat{x}_{i+1}\).

(b) It can be easily verified that \(V'_0(\hat{y}_0)=0\),

Now we assume that \(V'_i(\hat{y}_i)=0\).

To check for \(i+1\), consider \(V'_{i+1}(\hat{y}_{i+1})=G_{i+1}+\hat{y}_{i+1}H_{i+1}\).