Abstract

We consider a full information best-choice problem where an administrator who has only one on-line choice in m consecutive searches has to choose the best candidate in one of them.


1 Introduction and notation

In the full information best-choice problem [see Gilbert and Mosteller (1966)] we deal with a discrete-time stochastic process \((X_1, \ldots , X_n)\), where \(X_1, \ldots , X_n\) are i.i.d. random variables with a known continuous cumulative distribution function F. We observe the elements of \((X_1, \ldots , X_n)\) one by one, and our goal is to choose on-line the largest element of \(X_1, \ldots , X_n\), which is not known a priori. Stopping the process at a given moment means choosing the object observed at this moment on the basis of the observations made so far. The best-choice problem consists of finding a stopping rule that maximizes the probability \(\mathbf P \left[ X_{\tau }=\max \left\{ X_1,\ldots ,X_n\right\} \right] \) over all stopping times \(\tau \le n\) [see Gnedin (1996)].

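Let us recall the basic results for this case.

Numbers \(d_k\), called decision numbers, are implicitly defined by the following equalities: \(d_0=0\) and, for \(k=1, 2,\ldots \),

\[ \sum_{j=1}^{k}\binom{k}{j}\frac{1}{j}\left(\frac{1-d_k}{d_k}\right)^{j}=1, \]

or, equivalently,

\[ \sum_{j=1}^{k}\frac{1}{j}\left(d_k^{-j}-1\right)=1. \]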
The optimal stopping time is given by the following formula:

\[ \tau^*_n=\min\left\{1\le t\le n: X_t=\max\{X_1,\ldots,X_t\},\ X_t\ge d_{n-t}\right\} \]

if the set under the minimum is nonempty; otherwise \(\tau ^*_n=n\).

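It is known [see Samuels (1991)] that the sequence \((d_k)\) is increasing in k, \(\lim \limits _{k\rightarrow \infty }d_k=1\) and \(\lim \limits _{k\rightarrow \infty }k(1-d_k)=c\), where \(c=0.804352\ldots \) is the solution of the equation

\[ \int_0^{c}\frac{e^{x}-1}{x}\,dx=1. \]

The maximal probability of success (using the optimal stopping time)

\[ v_n=\mathbf P\left[X_{\tau^*_n}=\max\left\{X_1,\ldots,X_n\right\}\right] \]

does not depend on F, is strictly decreasing in n and

\[ \lim_{n\rightarrow\infty}v_n=v_{\infty}=e^{-c}+\left(e^{c}-c-1\right)\int_1^{\infty}x^{-1}e^{-cx}\,dx \tag{1} \]

(\(v_{\infty }=0.580164\ldots \)) [see Samuels (1991)].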
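The recalled quantities are easy to check numerically. The Python sketch below is our own illustration, not part of the original analysis: it finds \(d_k\) by bisection as the root in (0,1) of \(\sum_{j=1}^{k}\frac1j(d^{-j}-1)=1\) (the left-hand side decreases in d), finds c from \(\int_0^c\frac{e^x-1}{x}dx=\sum_{j\ge1}\frac{c^j}{j\,j!}=1\), and evaluates \(v_\infty=e^{-c}+(e^c-c-1)\int_1^\infty x^{-1}e^{-cx}dx\), computing the last integral (the exponential integral \(E_1(c)\)) through its standard power series. The helper names `decision_number`, `ein` and `e1` and the iteration counts are hypothetical choices.

```python
import math

EULER_GAMMA = 0.5772156649015329


def decision_number(k, iters=100):
    """Classical decision number d_k (k >= 1): the root in (0, 1) of
    sum_{j=1}^k (d^{-j} - 1) / j = 1. The left-hand side decreases in d,
    so plain bisection on (0, 1) finds the unique root."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        s, inv_pow = 0.0, 1.0
        for j in range(1, k + 1):
            inv_pow /= mid                      # inv_pow = mid ** (-j)
            s += (inv_pow - 1.0) / j
        if s > 1.0:                             # mid lies below d_k
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def ein(x, terms=60):
    """int_0^x (e^u - 1)/u du = sum_{j>=1} x^j / (j * j!)."""
    s, term = 0.0, 1.0
    for j in range(1, terms + 1):
        term *= x / j                           # term = x^j / j!
        s += term / j
    return s


def e1(x, terms=60):
    """E_1(x) = int_1^inf u^{-1} e^{-x u} du, via its power series (x > 0)."""
    s, term = 0.0, 1.0
    for j in range(1, terms + 1):
        term *= -x / j                          # term = (-x)^j / j!
        s -= term / j
    return -EULER_GAMMA - math.log(x) + s


# c solves ein(c) = 1; ein is increasing with ein(1) > 1, so bisect on [0, 1].
lo, hi = 0.0, 1.0
for _ in range(100):
    mid = 0.5 * (lo + hi)
    lo, hi = (mid, hi) if ein(mid) < 1.0 else (lo, mid)
c = 0.5 * (lo + hi)
v_inf = math.exp(-c) + (math.exp(c) - c - 1.0) * e1(c)

for k in (1, 2, 10, 100, 1000):
    d = decision_number(k)
    print(k, d, k * (1.0 - d))                  # k(1 - d_k) should approach c
print("c =", c, " v_inf =", v_inf)
```

For instance, \(d_1=1/2\) and \(d_2=1/(\sqrt6-1)\) follow in closed form from the defining equation, which gives a quick check of the bisection.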

In this paper we consider a modification of the classical full information best-choice problem. Namely, we consider m consecutive classical full information searches. We have only one choice, and our aim is to choose the largest element of one of the searches. Our goal is to find a strategy that maximizes the probability of achieving this aim.

The problem considered here is related to real-life situations in which a contest may be repeated several times, but the procedure ends as soon as a choice is made in one of them. Usually the selectors know how many times the contest can be repeated and, intuitively, the more contests lie ahead, the more selective they can be.

The solution of the no information version of the repeated contest problem was presented in Kuchta and Morayne (2014a).

Here is a formal description of the problem considered.

Let \(n_1,\ldots , n_m \in \mathbb {N}\) and let \(\left( X^{(m)}, X^{(m-1)}, \dots , X^{(1)}\right) \) be a sequence of m consecutive searches: for \(i=0,\ldots ,m-1,\)

\[ X^{(m-i)}=\left(X_{1+\sum_{k=0}^{i-1}n_{m-k}},\,X_{2+\sum_{k=0}^{i-1}n_{m-k}},\,\ldots,\,X_{\sum_{k=0}^{i}n_{m-k}}\right), \]

where \(X_{1+\sum _{k=0}^{i-1}n_{m-k}},X_{2+\sum _{k=0}^{i-1}n_{m-k}}, \ldots , X_{\sum _{k=0}^{i}n_{m-k}}\) are independent random variables with a known continuous cumulative distribution function \(F_{m-i}\). (The inverse numbering simplifies some technicalities in the proof and is adjusted to the recursion we will use.) Since the largest observation in a sample remains the largest under all increasing transformations of its variable, we lose no generality by assuming that every \(F_{m-i}\) is the standard uniform distribution: \(F(x)=x\) on \(0\le x \le 1\).

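Let, for \(1\le i\le m\),

\[ Y^{(i)}=\left(X^{(i)},X^{(i-1)},\ldots,X^{(1)}\right) \]

and

\[ Max\left(Y^{(i)}\right)=\left\{\max\left(X^{(i)}\right),\max\left(X^{(i-1)}\right),\ldots,\max\left(X^{(1)}\right)\right\}, \]

where \(\max (X^{(i)})\) is the largest element of the search \(X^{(i)}\), for \(i=1,\ldots ,m\).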

Let t be an integer, \(1\le t \le \sum ^m_{i=1}n_i\). For the time \(t=\sum ^{i-1}_{k=0}n_{m-k}+j\), where \(0\le i \le m-1\), \(1\le j\le n_{m-i}\), the selector sees the whole sequences \(X^{(m)}, X^{(m-1)}, \dots , X^{(m-i+1)}\) and the first j values of the search \(X^{(m-i)}\). The goal of the selector is to stop the search at a time t maximizing the probability that \(X_t\in Max \left( Y^{(m)}\right) .\) Formally, for \(t\ge 1\), let \(\mathcal {F}_t\) be the \(\sigma \)-algebra generated by \(X_1,\ldots ,X_t\), \(\mathcal {F}_t=\sigma (X_1,\ldots ,X_t)\). Our aim is to find a stopping time \(\tau _m\) with respect to the filtration \((\mathcal {F}_t)\) maximizing the probability \(\mathbf P \left[ X_{\tau _m}\in Max\left( Y^{(m)}\right) \right] .\)

2 Optimal stopping time

Let us recall the Monotone Case Theorem [see Chow et al. (1971)], which is often a very useful tool when looking for an optimal stopping time.

Theorem 2.1

If \(\left\{ (Z_i, \mathcal {F}_i):i\le n\right\} \) is a stochastic process such that the inequality \(Z_i\ge E(Z_{i+1}|\mathcal {F}_i)\) implies the inequality \(Z_{i+1}\ge E(Z_{i+2}|\mathcal {F}_{i+1})\) for each \(i\le n-2\), then the stopping time

\[ \tau^*=\min\left\{i\le n-1: Z_i\ge E(Z_{i+1}|\mathcal F_i)\right\}\quad(\tau^*=n \text{ if this set is empty}) \]

is optimal for maximizing \(E(Z_{\tau })\) over all \((\mathcal {F}_{i})\)-stopping times \(\tau \).

We apply this theorem to determine an optimal stopping time for m searches, i.e. for \(Y^{(m)}\).

Let \(\gamma _{m-1}\) be the probability of success using an optimal stopping time for \(Y^{(m-1)}\).

For \(1\le k\le n_m\) we define the sequence of multiple search decision numbers \(\hat{d}_k\) in the following way:

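and if \(1\le k\le n_m-1\),

\[ \sum_{i=1}^{k}\binom{k}{i}\frac{1}{i}\left(\frac{1-\hat d_k}{\hat d_k}\right)^{i}=1-\gamma_{m-1}, \tag{2} \]

or, equivalently,

\[ \sum_{i=1}^{k}\frac{1}{i}\left(\hat d_k^{-i}-1\right)=1-\gamma_{m-1}. \]

Notice that the numbers \(\hat{d}_k\) are used only in the first search \(X^{(m)}\). For \(m=1\), \(\hat{d}_k=d_k\).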
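For illustration, the multiple search decision numbers can be computed like the classical ones: for \(1\le k\le n_m-1\), \(\hat d_k\) is the root in (0,1] of \(\sum_{i=1}^{k}\frac1i(\hat d_k^{-i}-1)=1-\gamma_{m-1}\), whose left-hand side decreases in \(\hat d_k\) and increases in k, so the roots increase with k. The Python sketch below is ours, not the author's code; it assumes \(\hat d_0=0\) (at the last position of the first search a relative record is the maximum of that search and wins with probability \(1\ge\gamma_{m-1}\)), and the function name and iteration count are hypothetical.

```python
def multi_decision_numbers(n, gamma_prev, iters=100):
    """Return [hd_0, hd_1, ..., hd_{n-1}] for a first search of length n.

    hd_k (1 <= k <= n-1) is the root in (0, 1] of
        sum_{i=1}^k (hd^{-i} - 1) / i = 1 - gamma_prev.
    hd_0 = 0 is an assumption for the last position of the search, where a
    relative record wins with probability 1 >= gamma_prev.
    """
    target = 1.0 - gamma_prev
    hd = [0.0]
    for k in range(1, n):
        # The left-hand side decreases in hd and increases in k, so the
        # roots increase with k and hd_{k-1} is a valid lower bracket.
        lo, hi = hd[-1], 1.0
        for _ in range(iters):
            mid = 0.5 * (lo + hi)
            s, inv_pow = 0.0, 1.0
            for i in range(1, k + 1):
                inv_pow /= mid                  # inv_pow = mid ** (-i)
                s += (inv_pow - 1.0) / i
            if s > target:                      # mid lies below hd_k
                lo = mid
            else:
                hi = mid
        hd.append(0.5 * (lo + hi))
    return hd


# gamma_{m-1} = 0 recovers the classical d_k; with gamma_1 = 3/4 (one
# remaining search of length 2) the equation for k = 1 gives hd_1 = 0.8.
print(multi_decision_numbers(3, 0.0))
print(multi_decision_numbers(2, 0.75))
```

Using the previous root as the lower end of the bracket keeps the bisection away from 0, where \(\hat d^{-i}\) would become very large for long searches.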

Now let us define the following stopping times \(\tau _m\) for \(Y^{(m)}\).

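For \(m=1\):

\[ \tau_1=\min\left\{1\le t\le n_1: X_t=\max\{X_1,\ldots,X_t\},\ X_t\ge d_{n_1-t}\right\} \]

if the set under the minimum is nonempty, otherwise \(\tau _1 = n_1\),

and, for \(m>1\):

\[ \tau_m=\begin{cases}\min\left\{1\le t\le n_m: X_t=\max\{X_1,\ldots,X_t\},\ X_t\ge \hat d_{n_m-t}\right\} & \text{if this set is nonempty},\\ n_m+\tau_{m-1} & \text{otherwise},\end{cases} \]

where in the second case \(\tau_{m-1}\) is the stopping time for \(Y^{(m-1)}\), counted from the first element \(X_{n_m+1}\) of this sequence.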
In the first case of the definition above we choose from the first search \(X^{(m)}=\left( X_1,\ldots , X_{n_m}\right) \). In the second case we choose from among the remaining \(m-1\) searches: \(Y^{(m-1)}\), so the recursion is used.
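The rule \(\tau_m\) can also be simulated directly. The Monte Carlo sketch below is an illustration under our own assumptions, not the author's code: for each search, taken in the order \(X^{(m)},\ldots,X^{(1)}\), it receives a list of thresholds indexed by the number k of observations still to come, stops at the first relative record whose value reaches the threshold, and otherwise moves on to the next search. The threshold values in the usage lines are for searches of length 2: \(d_1=1/2\) for the last search and, since then \(\gamma_1=3/4\), \(\hat d_1=1/(2-3/4)=0.8\) for the first one. A direct computation gives the exact success probabilities 0.75 for one search and 0.9 for two.

```python
import random


def simulate(thresholds, trials=100_000, seed=1):
    """Monte Carlo estimate of the success probability of the rule tau_m.

    thresholds[s] lists the decision numbers of search s, where s = 0 is the
    first search X^(m) and s = m-1 is the last search X^(1); thresholds[s][k]
    is used when k observations of that search are still to come (so
    thresholds[s][0] = 0 accepts a relative record at the last position).
    Observations are i.i.d. uniform on [0, 1].
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        for d in thresholds:                  # X^(m), X^(m-1), ..., X^(1)
            n = len(d)
            xs = [rng.random() for _ in range(n)]
            running_max, chosen = -1.0, None
            for t, x in enumerate(xs, start=1):
                if x > running_max:           # x is a relative record
                    running_max = x
                    if x >= d[n - t]:         # threshold with n - t items left
                        chosen = x
                        break
            if chosen is not None:            # the only choice is used here
                if chosen == max(xs):
                    wins += 1
                break
        # If no search produced a choice, the trial counts as a loss.
    return wins / trials


print(simulate([[0.0, 0.5]]))                 # one search; exact value 0.75
print(simulate([[0.0, 0.8], [0.0, 0.5]]))     # two searches; exact value 0.9
```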

Theorem 2.2

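The stopping time \(\tau _m\) is optimal for \(Y^{(m)}\). When using \(\tau _m\) the probability of success equals

\[ \gamma_m=\frac{1-\hat d_{n_m-1}^{\,n_m}}{n_m}+\sum_{t=1}^{n_m-1}\left[\frac{1}{n_m-t}\sum_{i=1}^{t}\left(\frac{\hat d_{n_m-i}^{\,t}}{t}-\frac{\hat d_{n_m-i}^{\,n_m}}{n_m}\right)-\frac{\hat d_{n_m-t-1}^{\,n_m}}{n_m}\right]+\frac{\gamma_{m-1}}{n_m}\sum_{k=1}^{n_m-1}\hat d_k^{\,n_m}, \tag{3} \]

where we set \(\gamma _0=0\).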

Proof

In the proof we use recursion with respect to m. If \(\tau _{m-1}\) is an optimal stopping time for the case \(Y^{(m-1)}\), then when looking for an optimal stopping time \(\tau _m\) for m searches, i.e. for \(Y^{(m)}\), the only stopping times that should be considered are the times of relative records for \(X^{(m)}\) and the optimal stopping time \(\tau _{m-1}\) in the remaining \(m-1\) searches \(Y^{(m-1)}\). Namely,

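let \(\rho _1=1\) and, for \(2\le i\le \sum _{j=1}^m n_j\), if \(\rho _{i-1}\le n_m\), let

\[ \rho_i=\begin{cases}\min\left\{t: \rho_{i-1}<t\le n_m,\ X_t=\max\{X_1,\ldots,X_t\}\right\} & \text{if this set is nonempty},\\ n_m+\tau_{m-1} & \text{otherwise}.\end{cases} \]

(Note that if \(\rho _{i}\le n_m\), then \(\rho _{i}\) is the time of the i-th relative record. If \(\rho _{i-1}>n_m\), then \(\rho _i=\rho _{i-1}\).)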

Let

and

Let us notice that in our notation the probability of stopping on a maximal element is equal to

Thus, our aim is to maximize \(E(Z_{\tau })\) over all \( \left( \mathcal {F}_{\rho _i}\right) _i\)-stopping times \({\tau }\). By Theorems 2.3 and 4.1 and Proposition 5.2 of Kuchta and Morayne (2014b), the process Z satisfies the hypothesis of the Monotone Case Theorem.

Suppose we have seen the t-th element x of the first search and this element is maximal so far. Thus \(t=\rho _j\) for some j. There are still \(n_m-t\) elements to come in \(X^{(m)}\). The probability that none of the next \(n_m-t\) elements is bigger than x is equal to \(x^{n_m-t}\), and this is the probability of winning if we stop now. The probability of winning at the time of the next relative record in \(X^{(m)}\) is equal to

\[ \sum_{i=1}^{n_m-t}\binom{n_m-t}{i}\frac{1}{i}\,x^{n_m-t-i}(1-x)^{i}, \]
where the i-th summand is the probability that exactly i of the remaining \(n_m-t\) elements are larger than x and the maximum of these i elements appears first. Choosing the times at which they come gives the factor \({n_m-t \atopwithdelims ()i}\), the probability that exactly these elements are bigger than x is equal to \(x^{n_m-t-i}(1-x)^i\), and the probability that the largest element of this group comes before the others is equal to \(\frac{1}{i}\).

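If there is no relative record after x until time \(n_m\), i.e., within the first search \(X^{(m)}\), we use the optimal strategy for the remaining part, which consists of the \(m-1\) searches \(X^{(m-1)},\ldots , X^{(1)}\). The probability that this happens and that we succeed is equal to \(x^{n_m-t}\gamma _{m-1}.\) Thus, by the Monotone Case Theorem, we stop at the t-th moment if it is the first moment of a relative record at which

\[ x^{n_m-t}\ge\sum_{i=1}^{n_m-t}\binom{n_m-t}{i}\frac{1}{i}\,x^{n_m-t-i}(1-x)^{i}+x^{n_m-t}\gamma_{m-1}. \tag{4} \]

Since, for \(0<x<1\) and \(k\ge 1\),

\[ \sum_{i=1}^{k}\binom{k}{i}\frac{1}{i}\,x^{k-i}(1-x)^{i}=x^{k}\sum_{i=1}^{k}\frac{1}{i}\left(x^{-i}-1\right) \]

(after division by \(x^k\), both sides, viewed as functions of \(y=(1-x)/x\), vanish at \(y=0\) and have the same derivative \(((1+y)^k-1)/y\)), (4) is equivalent to

\[ \sum_{i=1}^{n_m-t}\frac{1}{i}\left(x^{-i}-1\right)\le 1-\gamma_{m-1}. \tag{5} \]

The function \(f(x)=\sum _{i=1}^{n_m-t}\frac{1}{i}\left( x^{-i}-1\right) \) is decreasing in x for \(0<x<1\) and \(1\le t\le n_m-1\). Thus the smallest x satisfying (5) is the solution of the equation

\[ \sum_{i=1}^{n_m-t}\frac{1}{i}\left(x^{-i}-1\right)=1-\gamma_{m-1}, \]

that is, \(x=\hat d_{n_m-t}\) by the definition of the multiple search decision numbers.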
Hence \(\tau _m\) is an optimal stopping time.
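The passage from the stopping condition \(x^{k}\ge\sum_{i=1}^{k}\binom{k}{i}\frac1i x^{k-i}(1-x)^i+x^{k}\gamma_{m-1}\) (with \(k=n_m-t\)) to \(\sum_{i=1}^{k}\frac1i(x^{-i}-1)\le 1-\gamma_{m-1}\) rests on the identity \(\sum_{i=1}^{k}\binom{k}{i}\frac1i x^{k-i}(1-x)^i=x^k\sum_{i=1}^k\frac1i(x^{-i}-1)\). The Python sketch below checks both statements in exact rational arithmetic on a grid of x values and a few values of \(\gamma_{m-1}\); it is a sanity check of the algebra, not a proof, and the function names are ours.

```python
from fractions import Fraction
from math import comb


def continue_to_next_record(x, k):
    """Win probability at the next relative record when the current record
    is x and k observations of the first search remain."""
    return sum(comb(k, i) * x ** (k - i) * (1 - x) ** i / Fraction(i)
               for i in range(1, k + 1))


def power_sum_form(x, k):
    """x^k * sum_{i=1}^k (x^{-i} - 1) / i."""
    return x ** k * sum((x ** -i - 1) / Fraction(i) for i in range(1, k + 1))


def stop_original(x, k, gamma_prev):
    """Stop now iff x^k >= (win at next record) + x^k * gamma_prev."""
    return x ** k >= continue_to_next_record(x, k) + x ** k * gamma_prev


def stop_reduced(x, k, gamma_prev):
    """Stop now iff sum_{i=1}^k (x^{-i} - 1)/i <= 1 - gamma_prev."""
    return sum((x ** -i - 1) / Fraction(i) for i in range(1, k + 1)) <= 1 - gamma_prev


# Exact rational arithmetic, so the comparisons involve no rounding.
grid = [Fraction(j, 20) for j in range(1, 20)]
for k in range(1, 9):
    for x in grid:
        assert continue_to_next_record(x, k) == power_sum_form(x, k)
        for gamma_prev in (Fraction(0), Fraction(3, 4), Fraction(9, 10)):
            assert stop_original(x, k, gamma_prev) == stop_reduced(x, k, gamma_prev)
print("identity and equivalence of the two stopping conditions hold on the grid")
```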

The probability that we choose from \(X^{(m)}\) and we are successful is equal to

\[ \sum_{t=1}^{n_m}p_t, \tag{6} \]

where \(p_t\) is the probability that we stop at time t and it is successful, i.e. \(X_t=\max \left\{ X_1,\ldots , X_{n_m}\right\} \).

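At \(t=1\) we stop if and only if \(X_1\ge \hat d_{n_m-1}\), and we succeed if no later element of \(X^{(m)}\) is bigger. Thus

\[ p_1=\int_{\hat d_{n_m-1}}^{1}x^{n_m-1}\,dx=\frac{1-\hat d_{n_m-1}^{\,n_m}}{n_m}. \tag{7} \]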
Let \(1\le t\le n_m-1\). The probability that no element among the first t is chosen and that the absolute largest is \(X_{t+1}\) is equal to (see the explanation below)

\[ \frac{1}{n_m-t}\sum_{i=1}^{t}\left(\int_0^{\hat d_{n_m-i}}x^{t-1}\,dx-\int_0^{\hat d_{n_m-i}}x^{n_m-1}\,dx\right), \tag{8} \]

where, in the i-th summand, the first integral is the probability that the i-th element is below \(\hat{d}_{n_m-i}\) and is the biggest among the first t elements, the second integral is the probability that the i-th element is below \(\hat{d}_{n_m-i}\) and is the absolute maximum, and the factor \(\frac{1}{n_m-t}\) is the probability that the best element among the remaining \({n_m-t}\) elements is exactly the \((t+1)\)-th one.

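The probability that \(X_{t+1}\) is the largest in \(X^{(m)}\) but does not pass the threshold is equal to

\[ \int_0^{\hat d_{n_m-t-1}}x^{n_m-1}\,dx=\frac{\hat d_{n_m-t-1}^{\,n_m}}{n_m}. \tag{9} \]

Note that if the last event (whose probability is given by (9)) happens, then so does the previous one (whose probability is given by (8)), because the thresholds \(\hat{d}_{n_m-i}\) are decreasing in i. Thus by (8) and (9), for \(1\le t\le n_m-1\),

\[ p_{t+1}=\frac{1}{n_m-t}\sum_{i=1}^{t}\left(\frac{\hat d_{n_m-i}^{\,t}}{t}-\frac{\hat d_{n_m-i}^{\,n_m}}{n_m}\right)-\frac{\hat d_{n_m-t-1}^{\,n_m}}{n_m}. \tag{10} \]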
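We do not stop at the first search \(X^{(m)}\) if and only if, for every \(1\le t\le n_m-1\), \(X_t< \hat{d}_{n_m-t}\) whenever \(X_t=\max \lbrace X_1,\ldots ,X_t\rbrace \), and \(X_{n_m}\) is not a relative record. Since the thresholds decrease and the running maximum increases in t, this happens if and only if the largest element of \(X^{(m)}\) arrives at some time \(t\le n_m-1\) and lies below \(\hat d_{n_m-t}\). Thus the probability that using \(\tau _m\) we do not stop at the first search \(X^{(m)}\) is equal to

\[ \sum_{t=1}^{n_m-1}\int_0^{\hat d_{n_m-t}}x^{n_m-1}\,dx=\frac{1}{n_m}\sum_{k=1}^{n_m-1}\hat d_k^{\,n_m}. \]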
The above equality, (7), (10) and (6) yield (3). \(\square \)
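The exact success probability is straightforward to evaluate: \(\gamma_m\) is the sum of the probabilities \(p_t\) of stopping successfully at time t in the first search plus \(\gamma_{m-1}\) times the probability \(\frac{1}{n_m}\sum_{k=1}^{n_m-1}\hat d_k^{\,n_m}\) of making no choice there, with \(p_1=(1-\hat d_{n_m-1}^{\,n_m})/n_m\) and \(p_{t+1}=\frac{1}{n_m-t}\sum_{i=1}^{t}\big(\frac{\hat d_{n_m-i}^{\,t}}{t}-\frac{\hat d_{n_m-i}^{\,n_m}}{n_m}\big)-\frac{\hat d_{n_m-t-1}^{\,n_m}}{n_m}\). The Python sketch below is an illustration, not the author's code; it starts from \(\gamma_0=0\), assumes \(\hat d_0=0\), and uses hypothetical function names. For a single search it reproduces the classical values \(v_1=1\), \(v_2=3/4\) and \(v_3\approx 0.6843\).

```python
def multi_decision_numbers(n, gamma_prev, iters=100):
    """[hd_0, ..., hd_{n-1}]: hd_0 = 0 (assumed) and, for 1 <= k <= n-1,
    hd_k is the root in (0, 1] of sum_{i=1}^k (hd^{-i} - 1)/i = 1 - gamma_prev."""
    target = 1.0 - gamma_prev
    hd = [0.0]
    for k in range(1, n):
        lo, hi = hd[-1], 1.0                  # the roots increase with k
        for _ in range(iters):
            mid = 0.5 * (lo + hi)
            s, inv_pow = 0.0, 1.0
            for i in range(1, k + 1):
                inv_pow /= mid                # mid ** (-i)
                s += (inv_pow - 1.0) / i
            if s > target:
                lo = mid
            else:
                hi = mid
        hd.append(0.5 * (lo + hi))
    return hd


def gamma_next(n, gamma_prev):
    """Success probability for a first search of length n followed by
    searches played optimally with success probability gamma_prev."""
    hd = multi_decision_numbers(n, gamma_prev)
    win = (1.0 - hd[n - 1] ** n) / n                      # p_1
    for t in range(1, n):                                 # p_{t+1}
        s = sum(hd[n - i] ** t / t - hd[n - i] ** n / n for i in range(1, t + 1))
        win += s / (n - t) - hd[n - t - 1] ** n / n
    no_choice = sum(hd[k] ** n for k in range(1, n)) / n  # no choice in X^(m)
    return win + no_choice * gamma_prev


def gamma(lengths):
    """gamma_m for lengths = [n_1, ..., n_m]; n_1 belongs to the LAST search X^(1)."""
    g = 0.0                                               # gamma_0 = 0
    for n in lengths:
        g = gamma_next(n, g)
    return g


print(gamma([2]), gamma([3]), gamma([2, 2]), gamma([10, 10, 10]))
```

Note the order of `lengths`: because of the inverse numbering, the recursion starts from the last search \(X^{(1)}\) and works back to the first one.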

3 Asymptotics

In this section we examine the asymptotic behavior of the probability of success \(\gamma _m\) and the multiple search decision numbers \(\hat{d}_k\) as \(n_i\longrightarrow \infty \) for every \(i\in \left\{ 1,\dots , m\right\} \).

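Let us define recursively the following sequence: \(r_0=0,\) and for \(i\ge 1\):

\[ r_i=e^{-c_i}+\left(e^{c_i}-c_i-1\right)\int_1^{\infty}x^{-1}e^{-c_ix}\,dx, \tag{11} \]

where \(c_i\) satisfies the following equation (\(i\ge 1\)):

\[ \int_0^{c_i}\frac{e^{x}-1}{x}\,dx=1-r_{i-1}, \tag{12} \]

or, equivalently,

\[ \sum_{j=1}^{\infty}\frac{c_i^{\,j}}{j!\,j}=1-r_{i-1}. \]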
Let \(n_i^*=\min \lbrace n_1,\ldots , n_i\rbrace \) for \(i=1,\ldots , m\) and let, for \(1\le k \le n_m-1\),

\[ \alpha_k=k\,\frac{1-\hat d_k}{\hat d_k}. \tag{13} \]
Theorem 3.1

\(\gamma _m\longrightarrow r_m\) as \(n_m^*\longrightarrow \infty \).

Proof

We prove this theorem by induction with respect to m.

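For \(m=1\) we have only one search, \(\gamma_1=v_{n_1}\), and \(c_1=c\) by (12) with \(r_0=0\), so

\[ \lim_{n_1\rightarrow\infty}\gamma_1=v_\infty=e^{-c_1}+\left(e^{c_1}-c_1-1\right)\int_1^{\infty}x^{-1}e^{-c_1x}\,dx=r_1. \]

Of course, this is the asymptotic solution (1) of the classical full information best-choice problem.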

Let \(m\ge 2\) and assume that \(\lim \limits _{n_{m-1}^*\rightarrow \infty }\gamma _{m-1}=r_{m-1}\).

Note that \(\alpha _k\) is, in fact, a function of m variables: \(k,n_{m-1},\ldots ,n_1\); \(\alpha _k=\alpha _k(n_{m-1},\ldots ,n_1)\).

Claim 1

\(\alpha _k\longrightarrow c_m\) as \((k,n_m^*)\longrightarrow (\infty , \infty )\) and \(k \le n_m-1\).

Proof of Claim 1

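For \(1\le k \le n_m-1\), (2) and (13) yield

\[ \sum_{i=1}^{k}\binom{k}{i}\frac{1}{i}\left(\frac{\alpha_k}{k}\right)^{i}=1-\gamma_{m-1}. \]

Thus

\[ \int_0^{\alpha_k}\frac{1}{x}\left(\left(1+\frac{x}{k}\right)^{k}-1\right)dx=1-\gamma_{m-1}. \]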
Since the function \(\frac{1}{x}\left( \left( 1+\frac{x}{k}\right) ^k-1\right) \) is increasing in k for \(x>0\) and, for \(k=1\), \(\int ^{\alpha _1}_0 1dx=\alpha _1=1-\gamma _{m-1}\le 1\), we always have \(0\le \alpha _k<1\) for \(1< k \le n_m-1\).

Let \(U=\limsup _{(k,n_m^*)\rightarrow (\infty ,\infty )}\alpha _k\) and \(L=\liminf _{(k,n_m^*)\rightarrow (\infty ,\infty )}\alpha _k\).

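Passing to the limit along subsequences on which \(\alpha_k\) tends to U and to L, respectively (note that \((1+x/k)^k\uparrow e^x\)), by Lebesgue’s bounded convergence theorem and the induction hypothesis we obtain

\[ \int_0^{U}\frac{e^{x}-1}{x}\,dx=1-r_{m-1} \]

and

\[ \int_0^{L}\frac{e^{x}-1}{x}\,dx=1-r_{m-1}, \]

which implies \(L=U\), since the integrand is positive. Hence the limit from the statement of the claim exists. In view of the definition (12) of \(c_m\) we also obtain \(\lim _{(k,n_m^*)\rightarrow (\infty ,\infty )}\alpha _k=c_m.\) \(\square \)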
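Claim 1 can be illustrated numerically. Through \(\hat d_k=(\alpha_k/k+1)^{-1}\), the equation defining \(\hat d_k\) becomes \(\sum_{i=1}^{k}\frac1i\big((1+\alpha_k/k)^i-1\big)=1-\gamma_{m-1}\), and as \(k\to\infty\) its root should approach the root of \(\int_0^{c}\frac{e^x-1}{x}dx=1-\gamma_{m-1}\). The Python sketch below fixes a hypothetical value \(\gamma_{m-1}=0.6\) purely for illustration (in the claim it tends to \(r_{m-1}\)); the helper names are ours. Since \((1+x/k)^k<e^x\) and \((1+x/k)^k\) increases in k, the computed \(\alpha_k\) decrease in k and stay above the limit.

```python
def ein(x, terms=60):
    """int_0^x (e^u - 1)/u du = sum_{j>=1} x^j / (j * j!)."""
    s, term = 0.0, 1.0
    for j in range(1, terms + 1):
        term *= x / j                         # term = x^j / j!
        s += term / j
    return s


def bisect_increasing(f, target, lo=0.0, hi=1.0, iters=60):
    """Root of f(a) = target for f increasing on [lo, hi], f(lo) <= target <= f(hi)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def finite_k_lhs(a, k):
    """sum_{i=1}^k ((1 + a/k)^i - 1) / i, i.e. the defining sum with hd = (a/k + 1)^(-1)."""
    s, p = 0.0, 1.0
    for i in range(1, k + 1):
        p *= 1.0 + a / k                      # p = (1 + a/k)^i
        s += (p - 1.0) / i
    return s


gamma_prev = 0.6        # hypothetical value of gamma_{m-1}, for illustration only
target = 1.0 - gamma_prev
c_lim = bisect_increasing(ein, target)
alphas = {k: bisect_increasing(lambda a: finite_k_lhs(a, k), target)
          for k in (1, 10, 100, 1000, 4000)}
for k, a in alphas.items():
    print(k, a, a - c_lim)
```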

In what follows we use the method of Samuels (1982) [see also Samuels (1991)].

Consider the first search \(X^{(m)}\). Let \(M_t=\max \left\{ X_1,\ldots , X_t\right\} \), \(1\le t \le n_m\), and let \(M_0=0\). Let \(\sigma _{n_m}\) be the arrival time of the largest element in \(X^{(m)}\) and \(\hat{\sigma }_{n_m}\) be the arrival time of the largest element in \(X^{(m)}\) before the time \(\sigma _{n_m}\), i.e.

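Because \(\hat{d}_{n_m-t}\) is decreasing and \(M_t\) is increasing in t for \(1\le t\le n_m\), the probability that we choose from \(X^{(m)}\) and we are successful is equal to

\[ \mathbf P\left[M_{\sigma_{n_m}}\ge\hat d_{n_m-\sigma_{n_m}},\ M_{\hat\sigma_{n_m}}<\hat d_{n_m-\hat\sigma_{n_m}}\right] \tag{14} \]

and the probability that we do not choose from \(X^{(m)}\) is equal to

\[ \mathbf P\left[M_{\sigma_{n_m}}<\hat d_{n_m-\sigma_{n_m}}\right]. \tag{15} \]

Thus,

\[ \gamma_m=\mathbf P\left[M_{\sigma_{n_m}}\ge\hat d_{n_m-\sigma_{n_m}},\ M_{\hat\sigma_{n_m}}<\hat d_{n_m-\hat\sigma_{n_m}}\right]+\mathbf P\left[M_{\sigma_{n_m}}<\hat d_{n_m-\sigma_{n_m}}\right]\gamma_{m-1}. \tag{16} \]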
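Claim 2

\[ \lim_{n_m^*\rightarrow\infty}\mathbf P\left[M_{\sigma_{n_m}}\ge\hat d_{n_m-\sigma_{n_m}},\ M_{\hat\sigma_{n_m}}<\hat d_{n_m-\hat\sigma_{n_m}}\right]=e^{-c_m}+\left(e^{c_m}-c_m-1\right)\int_1^{\infty}x^{-1}e^{-c_mx}\,dx-r_{m-1}\left(e^{-c_m}-c_m\int_1^{\infty}x^{-1}e^{-c_mx}\,dx\right) \]

and

\[ \lim_{n_m^*\rightarrow\infty}\mathbf P\left[M_{\sigma_{n_m}}<\hat d_{n_m-\sigma_{n_m}}\right]=e^{-c_m}-c_m\int_1^{\infty}x^{-1}e^{-c_mx}\,dx. \]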
Proof of Claim 2

We change variables:

Then \(n_m-\sigma _{n_m}=n_m\left( 1-T_{n_m}\right) \) and \(n_m-\hat{\sigma }_{n_m}=n_m\left( 1-T_{n_m}\hat{T}_{n_m}\right) +\hat{T}_{n_m}\). Thus, applying (13),

and

where

Let us define the following events (depending on \(n_m\)):

where

Then

and

By the properties of the uniform distribution,

weakly as \(n_m^*\longrightarrow \infty \), where \(S, \hat{S}, T, \hat{T}\) are mutually independent random variables, \(S\) and \(\hat{S}\) are exponentially distributed with parameter 1, and \(T\) and \(\hat{T}\) are uniformly distributed on \(\left[ 0,1\right] \).

We have

and

The conditional probability

is equal to

Now we integrate this probability multiplied by the exponential density of S and we obtain the conditional probability for given \(T=t\) and \(\hat{T}=\hat{t}\):

In the next step we integrate this expression over the unit square:

It is easy to see that

Making the following change of variables in the first integral of (17)

we obtain

We set \(w=v-u\). Interchanging the order of integration we obtain

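The conditional probability of \(\left\{ S\ge c_m/\left( 1-t\right) \right\} \) for \(T=t\) and \(\hat{T}=\hat{t}\) is equal to \(e^{-c_m/(1-t)}\); hence, integrating over the unit square and substituting \(x=1/(1-t)\),

\[ \int_0^1\!\!\int_0^1 e^{-c_m/(1-t)}\,d\hat t\,dt=\int_1^{\infty}x^{-2}e^{-c_mx}\,dx=e^{-c_m}-c_m\int_1^{\infty}x^{-1}e^{-c_mx}\,dx. \]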
Analogously,

This completes the proof of the claim. \(\square \)
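The closed form of the limiting no-choice probability is easy to confirm numerically. Since S is exponential with parameter 1 and T is uniform on [0,1], this probability is \(\int_0^1 e^{-c/(1-t)}dt\), which the substitution \(x=1/(1-t)\) and an integration by parts turn into \(e^{-c}-c\int_1^\infty x^{-1}e^{-cx}dx\). The Python sketch below is a sanity check of ours, not part of the proof: it compares a midpoint-rule value of the integral with the closed form, evaluating \(\int_1^\infty x^{-1}e^{-cx}dx=E_1(c)\) through its power series; the step count and the sample values of c are arbitrary choices.

```python
import math

EULER_GAMMA = 0.5772156649015329


def e1(x, terms=60):
    """E_1(x) = int_1^inf u^{-1} e^{-x u} du via its power series (x > 0)."""
    s, term = 0.0, 1.0
    for j in range(1, terms + 1):
        term *= -x / j                        # term = (-x)^j / j!
        s -= term / j
    return -EULER_GAMMA - math.log(x) + s


def no_choice_limit_numeric(c, steps=200_000):
    """Midpoint rule for int_0^1 exp(-c / (1 - t)) dt."""
    h = 1.0 / steps
    return h * sum(math.exp(-c / (1.0 - (j + 0.5) * h)) for j in range(steps))


def no_choice_limit_closed(c):
    """e^{-c} - c * int_1^inf x^{-1} e^{-c x} dx."""
    return math.exp(-c) - c * e1(c)


for c in (0.1, 0.38, 0.804352):
    print(c, no_choice_limit_numeric(c), no_choice_limit_closed(c))
```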

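By (14), (15) and (16), Claim 2 and the induction hypothesis, we get

\[ \lim_{n_m^*\rightarrow\infty}\gamma_m=e^{-c_m}+\left(e^{c_m}-c_m-1\right)\int_1^{\infty}x^{-1}e^{-c_mx}\,dx=r_m. \]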
This completes the proof. \(\square \)

The following proposition describes the asymptotic behavior of the sequences \((c_m)\) and \((r_m)\) as \(m\longrightarrow \infty \).

Proposition 3.2

The sequence \((c_m)\) is decreasing and \(\lim \limits _{m\rightarrow \infty }c_m=0\). The sequence \((r_m)\) is increasing and \(\lim \limits _{m\rightarrow \infty }r_m = 1\).

Proof

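The function

\[ g(y)=e^{-y}+\left(e^{y}-y-1\right)\int_y^{\infty}e^{-x}x^{-1}\,dx \]

is decreasing in y: its derivative is negative, i.e. \(\frac{dg}{dy}=(e^y-1)\left( \int ^{\infty }_ye^{-x}x^{-1}dx -e^{-y}y^{-1}\right) <0\), because \(\int_y^\infty e^{-x}x^{-1}dx<y^{-1}\int_y^\infty e^{-x}dx=e^{-y}y^{-1}\). Since \(\int_1^\infty x^{-1}e^{-c_ix}dx=\int_{c_i}^\infty e^{-x}x^{-1}dx\), (11) reads \(r_i=g(c_i)\). By (11), (12) and \(r_0=0\), \(c_1\approx 0.804\). It is easy to see that the sequence \((r_m)\) is increasing, the sequence \((c_m)\) is decreasing, and both sequences are bounded by 0 and 1: by (12) a larger \(r_{i-1}\) gives a smaller \(c_i\), and since g is decreasing, a smaller \(c_i\) gives a larger \(r_i\). Thus, both sequences are convergent. Let \(\beta =\lim \limits _{m\rightarrow \infty }c_m\). By (11) and (12) the sequence \((c_m)\) satisfies the following recurrence

\[ \int_0^{c_{m+1}}\frac{e^{x}-1}{x}\,dx=1-g(c_m). \tag{18} \]

Thus, \(\beta \) is the solution of the following equation

\[ \int_0^{\beta}\frac{e^{x}-1}{x}\,dx=1-g(\beta), \]

where \(g(0)=\lim_{y\rightarrow 0^+}g(y)=1\). It is easy to check that the only \(\beta \) satisfying this equation is \(\beta =0\).

By (18), it is now easy to check that \(\lim \limits _{m\rightarrow \infty }r_m=1\).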

This completes the proof. \(\square \)

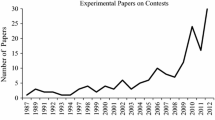

Approximations of the first ten elements of the sequences \((r_m)\) and \((c_m)\) are given in Table 1, see also Fig. 1. For comparison, the first column gives the corresponding probability of success \((a_m)\) for the iterated no information version (the classical secretary problem) [see also Kuchta and Morayne (2014a)].
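The sequences \((c_m)\) and \((r_m)\) are easy to recompute. The Python sketch below is our illustration, not the author's code: it solves \(\int_0^{c_m}\frac{e^x-1}{x}dx=1-r_{m-1}\) by bisection (the integral written as \(\sum_{j\ge1}c^j/(j\,j!)\)) and sets \(r_m=g(c_m)=e^{-c_m}+(e^{c_m}-c_m-1)\int_{c_m}^\infty e^{-x}x^{-1}dx\), evaluating the exponential integral through its power series. It gives \(r_1\approx0.580164\) and \(c_1\approx0.804352\) (the classical constants) and \(r_2\approx0.7443\), the limit of \(\gamma_2\) quoted in the Example; the helper names are hypothetical.

```python
import math

EULER_GAMMA = 0.5772156649015329


def ein(x, terms=60):
    """int_0^x (e^u - 1)/u du = sum_{j>=1} x^j / (j * j!)."""
    s, term = 0.0, 1.0
    for j in range(1, terms + 1):
        term *= x / j
        s += term / j
    return s


def e1(x, terms=60):
    """E_1(x) = int_x^inf e^{-u} u^{-1} du via its power series (x > 0)."""
    s, term = 0.0, 1.0
    for j in range(1, terms + 1):
        term *= -x / j
        s -= term / j
    return -EULER_GAMMA - math.log(x) + s


def g(y):
    """g(y) = e^{-y} + (e^y - y - 1) * int_y^inf e^{-x} x^{-1} dx."""
    return math.exp(-y) + (math.exp(y) - y - 1.0) * e1(y)


def solve_c(target, iters=100):
    """Root of ein(c) = target on [0, 1] (ein is increasing and ein(1) > 1)."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if ein(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def table(m_max=10):
    r_prev, rows = 0.0, []                    # r_0 = 0
    for m in range(1, m_max + 1):
        c_m = solve_c(1.0 - r_prev)
        r_m = g(c_m)
        rows.append((m, r_m, c_m))
        r_prev = r_m
    return rows


rows = table()
for m, r_m, c_m in rows:
    print(f"{m:2d}  r_m = {r_m:.6f}  c_m = {c_m:.6f}")
```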

The following proposition describes the asymptotic behavior of the decision numbers \(\hat{d}_k\) when \(k\longrightarrow \infty \) and \(n_m^*\longrightarrow \infty \).

Proposition 3.3

\(\hat{d}_k \longrightarrow 1\) as \((k, n_m^*)\longrightarrow (\infty ,\infty )\) and \(k \le n_m-1\).

Proof

By (13) we have \(\hat{d}_k= \left( \frac{\alpha _k}{k}+1\right) ^{-1}\). By Claim 1, \(\lim \alpha _k= c_m\) as \((k, n_m^*)\longrightarrow (\infty ,\infty )\) and \(k \le n_m-1\). Since \(0<c_m<1\), we get \(\alpha_k/k\longrightarrow 0\) and hence \(\hat{d}_k \longrightarrow 1\) as \((k, n_m^*)\longrightarrow (\infty ,\infty )\) and \(k \le n_m-1\). \(\square \)

Example

Let us consider the case of two searches of the same length n. Applying the optimal strategy with the multiple search decision numbers \(\hat{d}_k\) obtained from (13) for \(n=2,3,4,5,6,7,8,9,10,11,12,20\), we obtain the approximations of \(\gamma _2\) given in Table 2 (see Fig. 2). (\(\gamma _2\longrightarrow 0.7443\ldots \) as \(n\longrightarrow \infty \).)
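A computation of this kind can be sketched in Python as follows (our illustration; the values of Table 2 are not restated here). It applies the exact recursion twice: first a single search of length n, whose success probability is the classical \(v_n\), then a first search of length n whose thresholds solve \(\sum_{i=1}^{k}\frac1i(\hat d_k^{-i}-1)=1-\gamma_1\). It assumes \(\hat d_0=0\), and the function names are hypothetical. For \(n=2\) it gives exactly 0.9 (\(d_1=1/2\), \(\gamma_1=3/4\), \(\hat d_1=0.8\)); for larger n the values approach the limit \(0.7443\ldots\) from Theorem 3.1.

```python
def multi_decision_numbers(n, gamma_prev, iters=100):
    """hd_0 = 0 (assumed) and, for 1 <= k <= n-1, the root in (0, 1] of
    sum_{i=1}^k (hd^{-i} - 1)/i = 1 - gamma_prev, found by bisection."""
    target = 1.0 - gamma_prev
    hd = [0.0]
    for k in range(1, n):
        lo, hi = hd[-1], 1.0                  # the roots increase with k
        for _ in range(iters):
            mid = 0.5 * (lo + hi)
            s, inv_pow = 0.0, 1.0
            for i in range(1, k + 1):
                inv_pow /= mid                # mid ** (-i)
                s += (inv_pow - 1.0) / i
            lo, hi = (mid, hi) if s > target else (lo, mid)
        hd.append(0.5 * (lo + hi))
    return hd


def gamma_next(n, gamma_prev):
    """Success probability of a first search of length n followed by
    searches played optimally with success probability gamma_prev."""
    hd = multi_decision_numbers(n, gamma_prev)
    win = (1.0 - hd[n - 1] ** n) / n                      # stop at t = 1
    for t in range(1, n):                                 # stop at t + 1
        s = sum(hd[n - i] ** t / t - hd[n - i] ** n / n for i in range(1, t + 1))
        win += s / (n - t) - hd[n - t - 1] ** n / n
    no_choice = sum(hd[k] ** n for k in range(1, n)) / n
    return win + no_choice * gamma_prev


lengths = [2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 20]
gamma2 = {}
for n in lengths:
    gamma1 = gamma_next(n, 0.0)               # one search of length n (classical v_n)
    gamma2[n] = gamma_next(n, gamma1)         # first of two searches of length n
    print(f"n = {n:2d}   gamma_1 = {gamma1:.4f}   gamma_2 = {gamma2[n]:.4f}")
```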

References

Chow YS, Robbins H, Siegmund D (1971) Great expectations: the theory of optimal stopping. Houghton Mifflin Co., Boston

Gilbert JP, Mosteller F (1966) Recognizing the maximum of a sequence. J Am Stat Assoc 61:35–73

Gnedin A (1996) On the full information best-choice problem. J Appl Probab 33:678–687

Kuchta M, Morayne M (2014a) A secretary problem with many lives. Commun Stat Theory Methods 43(1):210–218

Kuchta M, Morayne M (2014b) Monotone case for extended process. Adv Appl Probab 46:1106–1125

Samuels SM (1982) Exact solutions for the full information best choice problem. Technical Report #82–17, Department of Statistics, Purdue University

Samuels SM (1991) Secretary problems. In: Ghosh BK, Sen PK (eds) Handbook of sequential analysis. Marcel Dekker, New York, pp 381–405

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kuchta, M. Iterated full information secretary problem. Math Meth Oper Res 86, 277–292 (2017). https://doi.org/10.1007/s00186-017-0594-0
