Abstract

To capture bivariate count time series that exhibit piecewise phenomena, we introduce a first-order bivariate threshold Poisson integer-valued autoregressive process. Basic probabilistic and statistical properties of the model are discussed. Conditional least squares and conditional maximum likelihood estimators, together with their asymptotic properties, are obtained both when the threshold parameter is known and when it is unknown. A new algorithm for estimating the threshold parameter of the model is also provided. Moreover, the nonlinearity test and forecasting problems are addressed. Finally, numerical results for the estimators and a real data example are presented.

Notes

Here \((X_1,X_2)\) larger than \((M_1,M_2)\) means that there is at least one component of \((X_1,X_2)\) larger than the corresponding component of \((M_1,M_2)\).

References

Aleksandrov B, Weiß CH (2020) Testing the dispersion structure of count time series using Pearson residuals. AStA Adv Stat Anal 104:325–361

Billingsley P (1961) Statistical inference for Markov processes. The University of Chicago Press, Chicago

Box GEP, Pierce DA (1970) Distribution of residual correlations in autoregressive-integrated moving average time series models. J Am Stat Assoc 65:1509–1526

Brännäs K, Quoreshi AMMS (2010) Integer-valued moving average modelling of the number of transactions in stocks. Appl Financ Econ 22:1429–1440

Bu R, McCabe B (2008) Model selection, estimation and forecasting in INAR(\(p\)) models: a likelihood-based Markov Chain approach. Int J Forecast 24:151–162

Chen CWS, Lee S (2016) Generalized Poisson autoregressive models for time series of counts. Comput Stat Data Anal 99:51–67

Darolles S, Fol GL, Lu Y, Sun R (2019) Bivariate integer-autoregressive process with an application to mutual fund flows. J Multivar Anal 173:181–203

Fokianos K, Rahbek A, Tjøstheim D (2009) Poisson autoregression. J Am Stat Assoc 104:1430–1439

Franke J, Rao TS (1993) Multivariate first-order integer-valued autoregressions. Technical report. Universität Kaiserslautern

Freeland RK, McCabe BPM (2004) Forecasting discrete valued low count time series. Int J Forecast 20:427–434

He Z, Wang Z, Tsung F, Shang Y (2016) A control scheme for autocorrelated bivariate binomial data. Comput Ind Eng 98:350–359

Hall P, Heyde CC (1980) Martingale limit theory and its application. Academic Press, New York

Johnson N, Kotz S, Balakrishnan N (1997) Multivariate discrete distributions. Wiley, New York

Karlis D, Pedeli X (2013) Flexible bivariate INAR(1) processes using copulas. Commun Stat - Theory Methods 42:723–740

Karlsen H, Tjøstheim D (1988) Consistent estimates for the NEAR(2) and NLAR time series models. J Roy Stat Soc B 50:313–320

Kocherlakota S, Kocherlakota K (1992) Bivariate discrete distributions. Statistics: textbooks and monographs, vol 132. Marcel Dekker, New York

Latour A (1997) The multivariate GINAR(\(p\)) process. Adv Appl Probab 29:228–248

Li H, Yang K, Wang D (2017) Quasi-likelihood inference for self-exciting threshold integer-valued autoregressive processes. Comput Stat 32:1597–1620

Li H, Yang K, Zhao S, Wang D (2018) First-order random coefficients integer-valued threshold autoregressive processes. AStA Adv Stat Anal 102:305–331

Liu M, Li Q, Zhu F (2020) Self-excited hysteretic negative binomial autoregression. AStA Adv Stat Anal 104:385–415

Liu Y, Wang D, Zhang H, Shi N (2016) Bivariate zero truncated Poisson INAR(1) process. J Kor Stat Soc 45:260–275

Monteiro M, Scotto MG, Pereira I (2012) Integer-valued self-exciting threshold autoregressive processes. Commun Stat - Theory Methods 41:2717–2737

Möller TA, Silva ME, Weiß CH et al (2016) Self-exciting threshold binomial autoregressive processes. AStA Adv Stat Anal 100:369–400

Pedeli X, Karlis D (2011) A bivariate INAR(1) process with application. Stat Model 11:325–349

Pedeli X, Karlis D (2012) On composite likelihood estimation of a multivariate INAR (1) model. J Time Ser Anal 34:206–220

Pedeli X, Karlis D (2013) On estimation of the bivariate Poisson INAR process. Commun Stat - Simul Comput 42:514–533

Pedeli X, Karlis D (2013) Some properties of multivariate INAR(1) processes. Comput Stat Data Anal 67:213–225

Pedeli X, Davison AC, Fokianos K (2015) Likelihood estimation for the INAR(\(p\)) model by saddlepoint approximation. J Am Stat Assoc 110:1229–1238

Popović PM (2016) A bivariate INAR(1) model with different thinning parameters. Stat Pap 57:517–538

Popović PM, Ristić MM, Nastić AS (2016) A geometric bivariate time series with different marginal parameters. Stat Pap 57:731–753

Quoreshi AMMS (2017) A bivariate integer-valued long-memory model for high-frequency financial count data. Commun Stat - Theory Methods 46:1080–1089

Ristić MM, Nastić AS, Jayakumar K, Bakouch HS (2012) A bivariate INAR(1) time series model with geometric marginals. Appl Math Lett 25:481–485

Rosenblatt M (1971) Markov processes: structure and asymptotic behavior. Springer, Berlin

Ross SM (1996) Stochastic processes, 2nd edn. Wiley, New York

Schmidt AM, Pereira JBM (2011) Modelling time series of counts in epidemiology. Int Stat Rev 79:48–69

Scotto MG, Weiß CH, Silva ME, Pereira I (2014) Bivariate binomial autoregressive models. J Multivar Anal 125:233–251

Steutel F, van Harn K (1979) Discrete analogues of self-decomposability and stability. Ann Probab 7:893–899

Sunecher Y, Khan NM, Jowaheer V (2017) A GQL estimation approach for analysing nonstationary over-dispersed BINAR(1) time series. J Stat Comput Simul 87:1911–1924

Tong H (1978) On a threshold model. In: Chen CH (ed) Pattern recognition and signal processing. Sijthoff and Noordhoff, Amsterdam, pp 575–586

Tong H, Lim KS (1980) Threshold autoregressive, limit cycles and cyclical data. J Roy Stat Soc B 42:245–292

van der Vaart AW (1998) Asymptotic statistics. Cambridge University Press, Cambridge

Wang C, Liu H, Yao J, Davis RA, Li WK (2014) Self-excited threshold Poisson autoregression. J Am Stat Assoc 109:776–787

Wang X, Wang D, Yang K, Xu D (2021) Estimation and testing for the integer valued threshold autoregressive models based on negative binomial thinning. Commun Stat - Simul Comput 50:1622–1644

Weiß CH (2008) Thinning operations for modeling time series of counts—a survey. Adv Stat Anal 92:319–343

Yang K, Li H, Wang D (2018) Estimation of parameters in the self-exciting threshold autoregressive processes for nonlinear time series of counts. Appl Math Model 54:226–247

Yang K, Wang D, Li H (2018) Threshold autoregression analysis for finite-range time series of counts with an application on measles data. J Stat Comput Simul 88:597–614

Yang K, Wang D, Jia B, Li H (2018) An integer-valued threshold autoregressive process based on negative binomial thinning. Stat Pap 59:1131–1160

Yang K, Yu X, Zhang Q, Dong X (2022) On MCMC sampling in self-exciting integer-valued threshold time series models. Comput Stat Data Anal 169:107410

Yang K, Li A, Li H, Dong X (2023) High-order self-excited threshold integer-valued autoregressive model: estimation and testing. Commun Math Stat (forthcoming). https://doi.org/10.1007/s40304-022-00325-3

Yu M, Wang D, Yang K, Liu Y (2020) Bivariate first-order random coefficient integer-valued autoregressive processes. J Stat Plan Inference 204:153–176

Zhang Q, Wang D, Fan X (2020) A new bivariate INAR(1) process based on negative binomial thinning operators. Stat Neerl 74:517–537

Acknowledgements

We gratefully acknowledge the anonymous reviewers for their careful work and thoughtful suggestions for this work that have helped improve this article substantially.

Funding

This work is supported by the National Natural Science Foundation of China (No. 11901053), the Natural Science Foundation of Jilin Province (No. 20220101038JC), and the Scientific Research Project of Jilin Provincial Department of Education (Nos. JJKH20220671KJ, JJKH20230665KJ).

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Appendices

Appendix A: Proofs

We begin with some lemmas.

Lemma A1

Let \({\varvec{X}}\) be a bivariate non-negative integer-valued random vector. Let “\({\varvec{A}}\circ \)” and “\({\varvec{B}}\circ \)” be the \(2\times 2\) matricial thinning operations defined in Definition 2.1. Then

(i) \({\varvec{I}}\circ {\varvec{X}}={\varvec{X}}\), where \({\varvec{I}}\) denotes the unit matrix.

(ii) \({\varvec{A}}\circ ({\varvec{B}}\circ {\varvec{X}})\overset{d}{=}({\varvec{A}}{\varvec{B}})\circ {\varvec{X}}\), where “\(\overset{d}{=}\)” stands for equality in distribution.

(iii) \(E({\varvec{A}}\circ {\varvec{X}})={\varvec{A}}E({\varvec{X}})\).

Proof

The above statements are straightforward to verify. See, for example, Franke and Rao (1993) and Latour (1997). \(\square \)
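Statement (iii) can be checked componentwise. The display below is only a sketch, under the assumption that Definition 2.1 is the usual matricial binomial thinning of Franke and Rao (1993) and Latour (1997): the \(i\)th component of \({\varvec{A}}\circ {\varvec{X}}\) is \(\sum _{j=1}^2 a_{ij}\circ X_j\), and \(a\circ X=\sum _{k=1}^{X}B_k\), where \(\{B_k\}\) are i.i.d. Bernoulli(\(a\)) variables independent of \(X\). The symbols \(a_{ij}\) and \(B_k\) are notation introduced here for illustration.

\[
E(a_{ij}\circ X_j)=E\Big[E\Big(\sum _{k=1}^{X_j}B_k\,\Big|\,X_j\Big)\Big]=E(a_{ij}X_j)=a_{ij}E(X_j),
\qquad
E\big[({\varvec{A}}\circ {\varvec{X}})_i\big]=\sum _{j=1}^{2}a_{ij}E(X_j)=\big({\varvec{A}}E({\varvec{X}})\big)_i,\quad i=1,2.
\]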

Lemma A2

Let \({\varvec{\varepsilon }}= (\varepsilon _1,\varepsilon _2)^{\textsf {T}} \in {\mathbb {N}}_0^2\) be a bivariate random vector and \({\varvec{A}}=diag(\alpha _1,\alpha _2)\) with \(\alpha _i \in (0,1)\), \(i=1,2\). Denote \(h(\alpha _1,\alpha _2):=P({\varvec{A}}\circ {\varvec{\varepsilon }}={\varvec{0}})\), where “\({\varvec{A}}\circ \)” stands for the matricial thinning operation defined in Definition 2.1. Then \(h(\alpha _1,\alpha _2)\) is a monotonically decreasing function of \(\alpha _i\) for fixed \(\alpha _j\), \(i,j \in \{1,2\}\) and \(i\ne j\).

Proof

Calculate to see that

Note that \(P({\varvec{\varepsilon }}=(k,s)^{\textsf {T}})\) is always nonnegative. This implies the conclusion is correct. \(\square \)
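For reference, the calculation can be sketched as follows, assuming that for a diagonal \({\varvec{A}}\) the operation in Definition 2.1 applies independent binomial thinning to each component, so that, given \({\varvec{\varepsilon }}=(k,s)^{\textsf {T}}\), the thinned vector equals \({\varvec{0}}\) with probability \((1-\alpha _1)^{k}(1-\alpha _2)^{s}\):

\[
h(\alpha _1,\alpha _2)=\sum _{k=0}^{\infty }\sum _{s=0}^{\infty }(1-\alpha _1)^{k}(1-\alpha _2)^{s}\,P({\varvec{\varepsilon }}=(k,s)^{\textsf {T}}).
\]

For fixed \(\alpha _2\), each factor \((1-\alpha _1)^{k}\) is non-increasing in \(\alpha _1\) on \((0,1)\) and the weights are nonnegative, so every term of the series is non-increasing in \(\alpha _1\). The same argument applies to \(\alpha _2\) for fixed \(\alpha _1\).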

The proof of Proposition 2.1 (i) Note that \(\{{\varvec{X}}_t\}_{t \in {\mathbb {Z}}}\) is a Markov chain on \({\mathbb {N}}_0^2\). The irreducibility and aperiodicity of \(\{{\varvec{X}}_t\}_{t \in {\mathbb {Z}}}\) are obvious, since the transition probabilities given in (2.4) are positive for all states.

Next we show that \(\{{\varvec{X}}_t\}_{t \in {\mathbb {Z}}}\) is positive recurrent. As a first step we establish recurrence, for which it suffices to prove that \(\sum _{t=1}^\infty P^t({\varvec{0}}|{\varvec{0}})=+\infty \), where \(P^t({\varvec{y}}|{\varvec{x}}):=P({\varvec{X}}_t={\varvec{y}}|{\varvec{X}}_{0}={\varvec{x}})\) denotes the \(t\)-step transition probabilities of \(\{{\varvec{X}}_t\}_{t \in {\mathbb {Z}}}\). Let \(s_t:=I\{Z_t > r\}\), i.e., \(s_t\) takes the value 1 when \(Z_t > r\) and 0 otherwise. Then, (2.3) can be written as

By iterating (A.1) \(t-1\) times, we have

implying

Denote \({\varvec{A}}_{\max }=diag(\max (\alpha _{1,1},\alpha _{2,1}),\max (\alpha _{1,2},\alpha _{2,2}))\). It follows by Lemmas A1 and A2 that

By the proof of Theorem 1 in Franke and Rao (1993), we know that the right-hand side of (A.2) is bounded from below by a positive number as \(t \rightarrow \infty \), which implies that \(\lim _{t \rightarrow \infty } P^t({\varvec{0}}|{\varvec{0}})\ne 0\). Therefore, we conclude that \(\sum _{t=1}^{+\infty }P^t({\varvec{0}}|{\varvec{0}})=+\infty \). Thus, it follows by Proposition 4.2.3 and Corollary 4.2.4 in Ross (1996) that \(\{{\varvec{X}}_t\}_{t \in {\mathbb {Z}}}\) is a recurrent Markov chain. We go on to show that state \({\varvec{0}}\) is positive recurrent. Since \({\varvec{0}}\) is a recurrent state and \(\lim _{t \rightarrow \infty } P^t({\varvec{0}}|{\varvec{0}})\ne 0\), it follows by Theorem 4.3.3 in Ross (1996) that state \({\varvec{0}}\) is positive recurrent. Since \(\{{\varvec{X}}_t\}_{t \in {\mathbb {Z}}}\) is irreducible, it follows that all states of \(\{{\varvec{X}}_t\}\) are positive recurrent. This proves that \(\{{\varvec{X}}_t\}_{t \in {\mathbb {Z}}}\) is a positive recurrent Markov chain and hence ergodic.

(ii) The existence of a strictly stationary solution of (2.3) follows immediately from (i), since this is a classical result. For completeness and the reader’s convenience, we refer to Theorem 1.2.1 in Rosenblatt (1971) for a proof. \(\Box \)

The proof of Proposition 2.2 The proofs of (i)–(iii) are simple and the proof of (iv) involves only tedious calculations, so we omit the details here. The full proof is available upon request. \(\square \)

The proof of Theorem 3.1 For the sake of simplicity, we only prove that (3.8) holds for \(\hat{{\varvec{\theta }}}_{1,CLS}\). Denote \(S_{i,0}=0\), \(S_{i,n}=-\frac{1}{2}\frac{\partial Q({\varvec{\theta }}_1,{\varvec{\theta }}_2)}{\partial \alpha _{i,1}}=\sum _{t=1}^n(X_{1,t}-\alpha _{1,1}X_{1,t-1}I_{1,t}-\alpha _{2,1}X_{1,t-1}I_{2,t}-\lambda _1)X_{1,t-1}I_{i,t}\), \(i=1,2\). Then we have

Thus, \(\{S_{i,n},{\mathcal {F}}_{n},n\ge 0\}\) (\(i=1,2\)) is a martingale. Note that \(E\Vert {\varvec{\varepsilon }}_t\Vert ^4<\infty \) implies \(E\Vert {\varvec{X}}_t\Vert ^4<\infty \). Thus, the sequence \(\{(X_{1,n}-\alpha _{1,1}X_{1,n-1}I_{1,n}-\alpha _{2,1}X_{1,n-1}I_{2,n}-\lambda _1)^2X_{1,n-1}^2I_{i,n},n\ge 1\}\) is uniformly integrable. By Theorem 1.1 of Billingsley (1961), as \(n\rightarrow \infty \),

where \(\sigma _{ii}:=E[(X_{1,1}-\alpha _{1,1}X_{1,0}I_{1,1}-\alpha _{2,1}X_{1,0}I_{2,1}-\lambda _1)^2X_{1,0}^2I_{i,1}]\). Thus, by Corollary 3.2 in Hall and Heyde (1980) and the martingale central limit theorem, we have, as \(n\rightarrow \infty ,\)

Let \(S_{3,n}=-\frac{1}{2}\frac{\partial Q({\varvec{\theta }}_1,{\varvec{\theta }}_2)}{\partial \lambda _1} =\sum _{t=1}^n(X_{1,t}-\alpha _{1,1}X_{1,t-1}I_{1,t}-\alpha _{2,1}X_{1,t-1}I_{2,t}-\lambda _1)\). Using arguments similar to those in the proof of (A.6), we can prove that \(\{S_{3,n},{\mathcal {F}}_{n},n\ge 0\}\) is a martingale. Then, as \(n\rightarrow \infty ,\) we have \(\frac{1}{n}\sum _{t=1}^n(X_{1,t}-\alpha _{1,1}X_{1,t-1}I_{1,t}-\alpha _{2,1}X_{1,t-1}I_{2,t}-\lambda _1)^2\overset{a.s.}{\longrightarrow } \sigma _{33}\) and \(\frac{1}{\sqrt{n}}S_{3,n}\overset{L}{\longrightarrow }N(0,\sigma _{33})\), where \(\sigma _{33}:=E[(X_{1,1}-\alpha _{1,1}X_{1,0}I_{1,1}-\alpha _{2,1}X_{1,0}I_{2,1}-\lambda _1)^2]\). In the same way, for any \({\varvec{c}}=(c_1,c_2,c_3)^{\textsf {T}}\in {\mathbb {R}}^3\backslash \{(0,0,0)^{\textsf {T}}\}\), we have that \(\{{\varvec{c}}^{\textsf {T}}{\varvec{S}}_n\), \({\mathcal {F}}_{n},n\ge 1\}\) is a martingale, where \({\varvec{S}}_n=(S_{1,n},S_{2,n},S_{3,n})^{\textsf {T}}\). By the ergodicity and stationarity of \(\{{\varvec{X}}_t\}\), we have that as \(n\rightarrow \infty \),

where \(\sigma ^2:=E[(X_{1,1}-\alpha _{1,1}X_{1,0}I_{1,1}-\alpha _{2,1}X_{1,0}I_{2,1}-\lambda _1)^2 (c_1X_{1,0}I_{1,1}+c_2X_{1,0}I_{2,1}+c_3)^2]\). Then, we have \( \frac{1}{\sqrt{n}}{\varvec{c}}^{\textsf {T}}{\varvec{S}}_n \overset{L}{\longrightarrow }N(0,\sigma ^2). \) Thus, by the Cramér-Wold device (see Chapter 2.3 in van der Vaart (1998)), we obtain

Denote \({\varvec{U}}_{t}=(X_{1,t-1}I_{1,t},X_{1,t-1}I_{2,t},1)^{\textsf {T}}\). Thus, we have \({\varvec{M}}_1=\sum _{t=1}^n {\varvec{U}}_{t}{\varvec{U}}_{t}^{\textsf {T}}\) and the CLS estimator \(\hat{{\varvec{\theta }}}_{1,CLS}=(\sum _{t=1}^n {\varvec{U}}_{t}{\varvec{U}}_{t}^{\textsf {T}})^{-1}(\sum _{t=1}^n X_{1,t}{\varvec{U}}_{t})\). Since \(\{{\varvec{X}}_{t}\}\) is a stationary and ergodic process, by the ergodic theorem, we have

After some algebra, we have \( \hat{{\varvec{\theta }}}_{1,CLS}-{\varvec{\theta }}_{1,0}={\varvec{M}}_1^{-1}{\varvec{S}}_n. \) Therefore,

This ends the proof. \(\square \)
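The closed form \(\hat{{\varvec{\theta }}}_{1,CLS}=(\sum _{t} {\varvec{U}}_{t}{\varvec{U}}_{t}^{\textsf {T}})^{-1}(\sum _{t} X_{1,t}{\varvec{U}}_{t})\) used in the proof can be illustrated numerically. The following is a minimal sketch, not the authors' implementation: the function names `solve_linear` and `cls_estimate` are hypothetical, the regime indicators are passed in as data because the threshold rule is not restated here, and it is assumed that \(I_{2,t}=1-I_{1,t}\).

```python
def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("normal equations are singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def cls_estimate(x1, in_regime1):
    """Return estimates of (alpha_{1,1}, alpha_{2,1}, lambda_1).

    x1         : observations x_0, ..., x_n of the first component
    in_regime1 : in_regime1[t-1] is True when I_{1,t} = 1, for t = 1, ..., n
                 (assumption: I_{2,t} = 1 - I_{1,t})
    """
    m1 = [[0.0] * 3 for _ in range(3)]  # sum of U_t U_t^T
    rhs = [0.0] * 3                     # sum of X_{1,t} U_t
    for t in range(1, len(x1)):
        i1 = 1.0 if in_regime1[t - 1] else 0.0
        u = [x1[t - 1] * i1, x1[t - 1] * (1.0 - i1), 1.0]
        for r in range(3):
            rhs[r] += x1[t] * u[r]
            for c in range(3):
                m1[r][c] += u[r] * u[c]
    return solve_linear(m1, rhs)


# Noise-free check: the recursion below satisfies the regression exactly,
# so the estimator should recover the coefficients (0.5, 0.2, 3.0).
regimes = [True, True, False, False, True, False, True, True, False, False]
series = [10.0]
for flag in regimes:
    series.append((0.5 if flag else 0.2) * series[-1] + 3.0)
estimate = cls_estimate(series, regimes)
```

The noise-free series is used only because the exact answer is then known; with simulated or real counts the same call returns the CLS estimate for the first component.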

The proof of Theorem 3.2 The proof of Theorem 3.2 is similar to that of Theorem 3.1 and is therefore omitted. \(\square \)

The proof of Theorem 3.3 To prove Theorem 3.3, we apply Theorems 2.1 and 2.2 in Billingsley (1961) on estimates for the parameters of Markov processes. For this purpose, we need to check that conditions (C1)–(C6) in Sect. 3.2 hold, which imply that the regularity conditions of Theorems 2.1 and 2.2 in Billingsley (1961) hold.

Conditions (C1)–(C3) hold by the properties of \(BP(\lambda _1,\lambda _2,\phi )\) distribution. We go on to prove (C4) holds. Note that for any \({\varvec{\vartheta }}' \in {\mathcal {B}}\) and the neighborhood U of \({\varvec{\vartheta }}'\), \(\sum _{{\varvec{k}}\ge {\varvec{0}}} \sup _{{\varvec{\vartheta }} \in U} f({\varvec{k}},{\varvec{\vartheta }})<\infty \) holds trivially. Furthermore, we can calculate to see that

and

where \(k_j\) is the jth component of \({\varvec{k}}\). Thus, we have \(\sum _{{\varvec{k}}\ge {\varvec{0}}} \sup _{{\varvec{\vartheta }} \in U}|f_u({\varvec{k}},{\varvec{\vartheta }})|\le C_1\cdot E\Vert {\varvec{\varepsilon }}_1\Vert <\infty \), for some suitable constant \(C_1\), \(u=1,2,3\). Thus, by using similar arguments, we can see that \(\sum _{{\varvec{k}}\ge {\varvec{0}}} \sup _{{\varvec{\vartheta }} \in U}|f_{uv}({\varvec{k}},{\varvec{\vartheta }})|\le C_2\cdot E\Vert {\varvec{\varepsilon }}_1\Vert ^2<\infty \), for some suitable constant \(C_2\), \(u,v=1,2,3\). Therefore, condition (C4) holds true.
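The summability statements above concern the probability mass function \(f({\varvec{k}},{\varvec{\vartheta }})\) of the \(BP(\lambda _1,\lambda _2,\phi )\) innovations. As a hedged illustration, the sketch below evaluates that function under the assumption that \(BP(\lambda _1,\lambda _2,\phi )\) is the standard bivariate Poisson law obtained by trivariate reduction, with marginal means \(\lambda _1,\lambda _2\) and covariance \(\phi \); the function name `bivariate_poisson_pmf` is hypothetical.

```python
import math


def bivariate_poisson_pmf(k1, k2, lam1, lam2, phi):
    """P(eps_1 = k1, eps_2 = k2) for eps_j = Y_j + Y_0 with independent
    Y_0 ~ Poisson(phi) and Y_j ~ Poisson(lam_j - phi) (assumed parameterization)."""
    if k1 < 0 or k2 < 0:
        return 0.0
    total = 0.0
    for i in range(min(k1, k2) + 1):  # i = value of the common component Y_0
        total += ((lam1 - phi) ** (k1 - i) / math.factorial(k1 - i)
                  * (lam2 - phi) ** (k2 - i) / math.factorial(k2 - i)
                  * phi ** i / math.factorial(i))
    return math.exp(-(lam1 + lam2 - phi)) * total


# Numerical check on a truncated grid: total mass, marginal means, covariance.
lam1, lam2, phi = 4.0, 2.0, 1.0
grid = range(41)
probs = {(a, b): bivariate_poisson_pmf(a, b, lam1, lam2, phi) for a in grid for b in grid}
mass = sum(probs.values())
mean1 = sum(a * p for (a, b), p in probs.items())
mean2 = sum(b * p for (a, b), p in probs.items())
cov = sum(a * b * p for (a, b), p in probs.items()) - mean1 * mean2
```

On this grid the truncated tail is negligible, so `mass`, `mean1`, `mean2`, and `cov` should agree with 1, \(\lambda _1\), \(\lambda _2\), and \(\phi \) up to floating-point error.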

To prove (C5), we denote \({\varvec{1}}=(1,1)^{\textsf {T}}\), \(\delta =\min (\phi ,\lambda _1-\phi ,\lambda _2-\phi )/3\). Thus, we have

Therefore, let

then we have that \(\left| f_u({\varvec{k}},{\varvec{\vartheta }})\right| \le {\psi }_{u}({\varvec{n}})f({\varvec{k}},{\varvec{\vartheta }})\), \(\left| f_{uv}({\varvec{k}},{\varvec{\vartheta }})\right| \le {\psi }_{uv}({\varvec{n}})f({\varvec{k}},{\varvec{\vartheta }})\), and \(\left| f_{uvw}({\varvec{k}},{\varvec{\vartheta }})\right| \le {\psi }_{uvw}({\varvec{n}})f({\varvec{k}},{\varvec{\vartheta }})\). Furthermore, note that \(E\Vert {\varvec{\varepsilon }}_t\Vert ^3<\infty \) implies \(E\Vert {\varvec{X}}_t\Vert ^3<\infty \). Hence (C5) holds.

Now we verify that the last condition, (C6), also holds. To this end, we check that the following statements are all true.

(S1) \(E\left| \frac{\partial }{\partial \alpha _{i,j}}\log p({\varvec{X}}_2|{\varvec{X}}_1,{\varvec{\theta }})\frac{\partial }{\partial \alpha _{u,v}}\log p({\varvec{X}}_2|{\varvec{X}}_1,{\varvec{\theta }})\right| < \infty ,~i,j,u,v=1,2\);

(S2) \(E\left| \frac{\partial }{\partial \phi } \log p({\varvec{X}}_2|{\varvec{X}}_1,{\varvec{\theta }})\right| ^2 < \infty \), \(E\left| \frac{\partial }{\partial \lambda _{i}} \log p({\varvec{X}}_2|{\varvec{X}}_1,{\varvec{\theta }})\right| ^2 < \infty ,~i=1,2\);

(S3) \(E\left| \frac{\partial }{\partial \alpha _{i,j}}\log p({\varvec{X}}_2|{\varvec{X}}_1,{\varvec{\theta }})\frac{\partial }{\partial \lambda _{u}}\log p({\varvec{X}}_2|{\varvec{X}}_1,{\varvec{\theta }})\right| < \infty ,~i,j,u=1,2\);

(S4) \(E\left| \frac{\partial }{\partial \alpha _{i,j}}\log p({\varvec{X}}_2|{\varvec{X}}_1,{\varvec{\theta }})\frac{\partial }{\partial \phi }\log p({\varvec{X}}_2|{\varvec{X}}_1,{\varvec{\theta }})\right| < \infty ,~i,j=1,2\);

(S5) \(E\left| \frac{\partial }{\partial \lambda _j}\log p({\varvec{X}}_2|{\varvec{X}}_1,{\varvec{\theta }})\frac{\partial }{\partial \phi }\log p({\varvec{X}}_2|{\varvec{X}}_1,{\varvec{\theta }})\right| < \infty ,~j=1,2\).

We shall first prove statement (S1). Recall that \(p({\varvec{X}}_t|{\varvec{X}}_{t-1},{\varvec{\theta }})=P({\varvec{X}}_t={\varvec{x}}_t|{\varvec{X}}_{t-1}={\varvec{x}}_{t-1})\) is the transition probability, i.e.,

where \(p_j(x) =\sum _{i=1}^2 I_{i,t} \left( {\begin{array}{c}x_{j,t-1}\\ x\end{array}}\right) \alpha _{i,j}^{x}(1-\alpha _{i,j})^{x_{j,t-1}-x}\), \(j=1,2\). Denote \(\alpha _{\max }=\max (\alpha _{1,1},\alpha _{1,2},\alpha _{2,1},\alpha _{2,2})\) and \(\alpha _{\min }=\min (\alpha _{1,1},\alpha _{1,2},\alpha _{2,1},\alpha _{2,2})\). Thus, a direct calculation with appropriate bounding gives

which implies \( E\left| \frac{\partial }{\partial \alpha _{i,j}}\log P({\varvec{X}}_1,{\varvec{X}}_2)\right| ^2< C_3\cdot E\Vert {\varvec{X}}_1\Vert ^2 < \infty , \) for some suitable constant \(C_3\).

Next we will prove statement (S2). Recall that by (C4), we have \(\left| f_u({\varvec{k}},{\varvec{\vartheta }})\right| \le {\psi }_{u}({\varvec{n}})f({\varvec{k}},{\varvec{\vartheta }})\) (\(u=1,2,3\)), which implies \( E\left| \frac{\partial }{\partial \lambda _{i}} \log P({\varvec{X}}_1,{\varvec{X}}_2)\right| ^2 \le E \psi _i^2({\varvec{X}}_1) < \infty ,~i=1,2, \) and

Therefore, (S2) holds.

Lastly, by (A.9) and (C4) we can verify that statements (S3)–(S5) hold. Therefore, the Fisher information matrix \({\varvec{I}}({\varvec{\theta }})\) is well-defined. Finally, some elementary but tedious calculation shows that (C6) is satisfied, too.

The proof is complete. \(\square \)

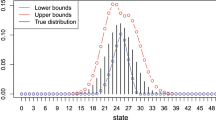

Appendix B: Figures

The time series plots of the Poisson BINAR(1) model (Pedeli and Karlis 2011) with the following parameter settings are given in Fig. 9.

Scenario D. \((\alpha _{1},\alpha _{2},\lambda _1,\lambda _2,\phi )=(0.5,0.25,4,2,1)\).

Scenario E. \((\alpha _{1},\alpha _{2},\lambda _1,\lambda _2,\phi )=(0.2,0.4,2,3,1)\).

Scenario F. \((\alpha _{1},\alpha _{2},\lambda _1,\lambda _2,\phi )=(0.75,0.4,2,3,1)\).

As a comparison, the time series plots of Scenarios A–C are also drawn in Fig. 9. We can see from Fig. 9 that there are no piecewise characteristics in the time series of Scenarios D, E, and F, while there are obvious piecewise characteristics for Scenarios A, B, and C. Therefore, it is clear that the BTINAR(1) models can capture time series with piecewise components.
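A path like those of Scenarios D–F can be generated with a few lines of code. The sketch below is illustrative only and rests on stated assumptions: the Poisson BINAR(1) recursion is taken to be \(X_{j,t}=\alpha _j\circ X_{j,t-1}+\varepsilon _{j,t}\) with binomial thinning, and the \(BP(\lambda _1,\lambda _2,\phi )\) innovations are drawn by trivariate reduction (marginal means \(\lambda _j\), covariance \(\phi \)). The function names, the zero starting value, and the seed are hypothetical choices.

```python
import math
import random


def poisson_draw(rng, mean):
    """Draw a Poisson variate by Knuth's multiplication method (fine for small means)."""
    limit = math.exp(-mean)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def thin(rng, alpha, count):
    """Binomial thinning: keep each of `count` units independently with probability alpha."""
    return sum(1 for _ in range(count) if rng.random() < alpha)


def simulate_binar1(n, alpha1, alpha2, lam1, lam2, phi, seed=0):
    """Simulate n steps of a Poisson BINAR(1) path started at (0, 0)."""
    rng = random.Random(seed)
    x1, x2 = 0, 0
    path = []
    for _ in range(n):
        common = poisson_draw(rng, phi)              # shared innovation part
        e1 = poisson_draw(rng, lam1 - phi) + common
        e2 = poisson_draw(rng, lam2 - phi) + common
        x1 = thin(rng, alpha1, x1) + e1
        x2 = thin(rng, alpha2, x2) + e2
        path.append((x1, x2))
    return path


# Scenario D: (alpha_1, alpha_2, lambda_1, lambda_2, phi) = (0.5, 0.25, 4, 2, 1)
path = simulate_binar1(20000, 0.5, 0.25, 4, 2, 1)
mean1 = sum(p[0] for p in path) / len(path)
mean2 = sum(p[1] for p in path) / len(path)
```

Under these assumptions the stationary means are \(\lambda _j/(1-\alpha _j)\), i.e. 8 and about 2.67 for Scenario D, which gives a quick sanity check on `mean1` and `mean2`.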

About this article

Cite this article

Yang, K., Zhao, Y., Li, H. et al. On bivariate threshold Poisson integer-valued autoregressive processes. Metrika 86, 931–963 (2023). https://doi.org/10.1007/s00184-023-00899-0

DOI: https://doi.org/10.1007/s00184-023-00899-0