Abstract

The \(\gamma \)-divergence is well-known for having strong robustness against heavy contamination. By virtue of this property, many applications via the \(\gamma \)-divergence have been proposed. There are two types of \(\gamma \)-divergence for the regression problem, in which the base measures are handled differently. In this study, these two \(\gamma \)-divergences are compared, and a large difference is found between them under heterogeneous contamination, where the outlier ratio depends on the explanatory variable. One \(\gamma \)-divergence has the strong robustness even under heterogeneous contamination. The other does not have in general; however, it has under homogeneous contamination, where the outlier ratio does not depend on the explanatory variable, or when the parametric model of the response variable belongs to a location-scale family in which the scale does not depend on the explanatory variables. Hung et al. (Biometrics 74(1):145–154, 2018) discussed the strong robustness in a logistic regression model with an additional assumption that the tuning parameter \(\gamma \) is sufficiently large. The results obtained in this study hold for any parametric model without such an additional assumption.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The maximum likelihood estimation and least squares method have been widely used. However, these approaches are not robust against outliers. To overcome this problem, several robust methods have been proposed, primarily using M-estimation (Hampel et al. 2005; Maronna et al. 2006; Huber and Ronchetti 2009). The maximum likelihood estimation can be regarded as the minimization of the empirical estimator of the Kullback-Leibler divergence. As an extension of this concept, some robust estimators have been proposed as the minimization of the empirical estimators of the modified divergences, e.g., density power divergence (Basu et al. 1998), \(L_2\)-divergence (Scott 2001), \(\gamma \)-divergence (or the Type-0 divergence) (Jones et al. 2001; Fujisawa and Eguchi 2008).

Recently, some robust regression methods have been proposed based on divergences, using \(L_2\)-divergence (Chi and Scott 2014; Lozano et al. 2016), density power divergence (Ghosh and Basu 2016; Riani et al. 2020; Ghosh and Majumdar 2020), and \(\gamma \)-divergence (Kawashima and Fujisawa 2017; Hung et al. 2018; Ren et al. 2020). In these methods, the robust properties are generally investigated under the contaminated model. The difference between the i.i.d. problem and the regression problem lies in whether or not the outlier ratio in the contaminated model depends on the explanatory variable. They are called heterogeneous and homogeneous contaminations, respectively. Recently, Hung et al. (2018) showed that a logistic regression model with mislabeled data can be regarded as a logistic regression model with heterogeneous contamination. Then, they applied the \(\gamma \)-divergence to a usual logistic regression model, which enables estimation of the parameter of the model without modeling the mislabeled scheme, even if mislabeled data exist. They discussed the strong robustness that the latent bias can be sufficiently small against heavy contamination. However, this was under assumption that the tuning parameter \(\gamma \) was sufficiently large.

There are two types of \(\gamma \)-divergence for regression problems in which the treatments of the base measure are different (Fujisawa and Eguchi 2008; Kawashima and Fujisawa 2017). Kawashima and Fujisawa (2017) proposed another type of the \(\gamma \)-divergence proposed by Fujisawa and Eguchi (2008), and showed a Pythagorean relation, which does not hold for the previous \(\gamma \)-divergence. Hung et al. (2018) adopted the \(\gamma \)-divergence for the regression problem proposed by Fujisawa and Eguchi (2008) and investigated robust properties of the logistic regression model, as mentioned above. In addition, Ren et al. (2020) also adopted it and investigated theoretical properties of the variable consistency and estimation bound under a high-dimensional regression setting. In particular, its application to the generalized linear model (McCullagh and Nelder 1989) has been well studied. However, these studies focused on only one divergence proposed by Fujisawa and Eguchi (2008), and no comparison between two types has been studied.

In this study, two types of \(\gamma \)-divergence are compared in detail. Their differences in terms of the strong robustness are illustrated through numerical experiments. Comparing the obtained results with those of Hung et al. (2018), ours hold for any parametric model, including a logistic regression model, without the assumption that \(\gamma \) is sufficiently large, although Hung et al. (2018) makes this assumption.

The remainder of this paper is organized as follows. In Sect. 2, we show that existing robust regression methods may not work well under heavy heterogeneous contamination in the simple case of a univariate logistic regression model. In Sect. 3, two types of \(\gamma \)-divergence for the regression problem are reviewed. In Sect. 4, we elucidate a large difference between two types of \(\gamma \)-divergence from the viewpoint of robustness. In Sect. 5, the parameter estimation algorithm is proposed. In Sect. 6, numerical experiments are illustrated to verify the differences discussed in Sect. 4.

2 Illustrative example

Before getting into details, here we show an illustrative example that existing robust regression methods may not work well under heavy heterogeneous contamination, where the outlier ratio depends on the explanatory variable.

Here, a univariate logistic regression model was used as a simulation model. Outliers were incorporated in a similar setting to that described in Sect. 6. A weighted maximum likelihood estimator (WMLE), M-estimator (Mest), redescending weighted M-estimator (WMest), conditional unbiased bounded influence estimator (CUBIF), and robust quasi-likelihood estimator (MQLE) were adopted as existing robust logistic regression methods (see Chapter 7 in Maronna et al. (2018) for details).

Figure 1 shows the mean squared errors (MSEs) of existing methods, type I, and type II at each outlier ratio. All existing methods showed the almost identical results at each outlier ratio, and their performance worsened as the contamination became heavier. Type I, which is a robust regression method based on the \(\gamma \)-divergence proposed by Fujisawa and Eguchi (2008), performed well. In contrast, a similar method, type II, which is based on the \(\gamma \)-divergence proposed by Kawashima and Fujisawa (2017), presented different behaviors from type I. In the subsequent sections, we discuss a difference in robustness between the two types of \(\gamma \)-divergence for the regression problem. Specifically, why type I is better than type II is explored.

3 Regression based on \(\gamma \)-Divergence

The \(\gamma \)-divergence for regression was first proposed by Fujisawa and Eguchi (2008). It measures the difference between two conditional probability density functions. The other type of \(\gamma \)-divergence for regression was proposed by Kawashima and Fujisawa (2017), in which the treatment of the base measure on the explanatory variable was changed. For simplicity, the former is referred to as type I and the latter as type II. This section presents a brief review of both types of \(\gamma \)-divergence for regression alongside the corresponding parameter estimation.

3.1 Two types of \(\gamma \)-Divergence for regression

First, the \(\gamma \)-divergence for the i.i.d. problem is reviewed. Let g(u) and f(u) be two probability density functions. The \(\gamma \)-cross entropy and \(\gamma \)-divergence are defined by

This satisfies the following two basic properties of divergence:

Let us consider the \(\gamma \)-divergence for the regression problem. Suppose that g(x, y), g(y|x), and g(x) are the underlying probability density functions of (x, y), y given x, and x, respectively. Let f(y|x) be another conditional probability density function of y given x. Let \(\gamma \) be the positive tuning parameter which controls the trade-off between efficiency and robustness.

For the regression problem, Fujisawa and Eguchi (2008) proposed the following cross entropy and divergence:

-

Type I \(\gamma \)-cross entropy for regression:

$$\begin{aligned} d_{\gamma ,1} (g(y|x),f(y|x);g(x)) = -\frac{1}{\gamma } \log \int \int \left\{ \frac{ f(y|x)^{\gamma } }{ \left( \int f(y|x)^{1+\gamma }dy\right) ^{\frac{\gamma }{1+\gamma }} } \right\} g(x,y)dxdy . \end{aligned}$$(3.1) -

Type I \(\gamma \)-divergence for regression:

$$\begin{aligned}&D_{\gamma ,1} (g(y|x),f(y|x);g(x)) \\&\quad = - d_{\gamma ,1}(g(y|x),g(y|x);g(x)) + d_{\gamma ,1}(g(y|x),f(y|x);g(x)). \end{aligned}$$

The cross entropy is empirically estimable, as will be seen in Sect. 3.2, and the parameter estimation is easily defined. On the other hand, Kawashima and Fujisawa (2017) proposed the following cross entropy and divergence:

-

Type II \(\gamma \)-cross entropy for regression:

$$\begin{aligned}&d_{\gamma ,2} (g(y|x),f(y|x);g(x)) \nonumber \\&\quad = -\frac{1}{\gamma } \log \int \int f(y|x)^{\gamma } g(x,y) dxdy + \frac{1}{1+\gamma } \log \int \left( \int f(y|x)^{1+\gamma } dy \right) g(x) dx. \end{aligned}$$(3.2) -

Type II \(\gamma \)-divergence for regression:

$$\begin{aligned}&D_{\gamma ,2} (g(y|x),f(y|x);g(x)) \\&\quad = -d_{\gamma , 2}(g(y|x),g(y|x);g(x)) +d_{\gamma , 2}(g(y|x),f(y|x);g(x)). \end{aligned}$$

The base measures on the explanatory variable are taken twice on each term of the \(\gamma \)-divergence for the i.i.d. problem. This extension from the i.i.d. problem to the regression problem appears more natural than (3.1). The cross entropy is also empirically estimable. Both types of \(\gamma \)-divergence satisfy the following two basic properties of divergence for \(j=1,2\):

The equality holds for the conditional probability density function instead of usual probability density function.

Theoretical properties of the \(\gamma \)-divergence for the i.i.d. problem were deeply investigated by Fujisawa and Eguchi (2008). There have been several studies on theoretical properties for the regression problem (Kanamori and Fujisawa 2015; Kawashima and Fujisawa 2017; Hung et al. 2018; Ren et al. 2020). However, there is a lack of comprehensive studies, such as comparison between properties under heterogeneous contamination. Heterogeneous contamination appears as a specific case in the regression problem and does not appear in the i.i.d. problem. Hung et al. (2018) pointed out that a logistic regression model with mislabeled data can be regarded as a logistic regression model with heterogeneous contamination and then they applied type I to a usual logistic regression model, which enables us to estimate the parameter of the logistic regression model without modeling mislabeled scheme even if mislabeled data exist. They also investigated theoretical properties on robustness, but they assumed that \(\gamma \) is sufficiently large. In Sect. 4 of this paper, it is shown that type I is superior to type II under heterogeneous contamination in the sense of the strong robustness without assuming that \(\gamma \) is sufficiently large.

Finally, it is shown that the density power divergence (Basu et al. 1998) is another candidate for divergence which provides robustness; however, this does not have strong robustness (Hung et al. 2018). For the completeness of this paper, details of the robustness of the density power divergence under homogeneous and heterogeneous contaminations are provided, although some parts have been investigated by Hung et al. (2018). See Appendix G for details.

3.2 Estimation for \(\gamma \)-regression

Let \(f(y|x;\theta )\) be a conditional probability density function of y given x with parameter \(\theta \). Let \((x_i,y_i) \ (i=1 , \ldots , n)\) be the observations randomly drawn from the underlying distribution g(x, y). Using (3.1) and (3.2), both types of \(\gamma \)-cross entropy for regression can be empirically estimated by

The estimator is defined as the minimizer as follows:

Using an similar approach to that in Fujisawa and Eguchi (2008), we can show that \({\hat{\theta }}_{\gamma ,j}\) converges to \(\theta ^*_{\gamma ,j}\), where

Suppose that \(f(y|x;\theta ^*)\) is the target conditional probability density function. The latent bias is expressed as \(\theta ^*_{\gamma ,j}-\theta ^*\). This is zero when the underlying model belongs to a parametric model, in other words, \(g(y|x)=f(y|x;\theta ^*)\), but is not always zero when the underlying model is contaminated by outliers. This issue is discussed in Sect. 4.

3.3 Case of location-scale family

Here, it is shown that both types of \(\gamma \)-divergence give the same parameter estimation when the parametric conditional probability density function \(f(y|x;\theta )\) belongs to a location-scale family in which the scale does not depend on the explanatory variables. This is given by

where s(y) is a probability density function, \(\sigma \) is a scale parameter, and \(q(x;\zeta )\) is a location function with a regression parameter \(\zeta \), e.g., \(q(x;\zeta )=x^T \zeta \). Then, we can obtain

This does not depend on the explanatory variables x. Using this property, we can show that both types of \(\gamma \)-cross entropy are the same.

Proposition 1

Consider the location-scale family (3.3). We see

The proof is in Appendix A. As a result, both types of \(\gamma \)-divergence give the same parameter estimation, because the estimator is defined by the empirical estimation of the \(\gamma \)-cross entropy. However, it should be noted that both types of \(\gamma \)-divergence are not the same, because \(d_{\gamma ,1} (g(y|x),g(y|x);g(x)) \ne d_{\gamma ,2} (g(y|x),g(y|x);g(x)) \).

4 Robust properties

In this section, we show a large difference between the two types of \(\gamma \)-divergence.

4.1 Contamination model and basic condition

Let \(\delta (y|x)\) be the contaminated conditional probability density function related to outliers. Let \(\varepsilon (x)\) and \(\varepsilon \) denote the outlier ratios which depends on x and does not, respectively. Suppose that the underlying conditional probability density functions under heterogeneous and homogeneous contaminations are given by

-

Heterogeneous contamination:

$$\begin{aligned} g(y|x)&= (1-\varepsilon (x))f(y|x;\theta ^*) + \varepsilon (x) \delta (y|x), \end{aligned}$$ -

Homogeneous contamination:

$$\begin{aligned} g(y|x)&= (1-\varepsilon )f(y|x;\theta ^*) + \varepsilon \delta (y|x). \end{aligned}$$

Let

Here we assume that

This is an extended assumption used for the i.i.d. problem (Fujisawa and Eguchi 2008) to the regression problem. This assumption implies that

and illustrates that the contaminated conditional probability density function \(\delta (y|x)\) lies on the tail of the target conditional probability density function \(f(y|x;\theta ^*)\). For example, if \(\delta (y|x)\) is the Dirac delta function at the outlier \(y_{\dag }(x)\) given x, then we have \(\nu _{f_{\theta ^*},\gamma }(x) = f(y_{\dag }(x)|x;\theta ^*) \approx 0\), which is reasonable because \(y_{\dag }(x)\) is an outlier.

Here we also consider the condition \(\nu _{f_{\theta },\gamma } \approx 0\), as used in Hung et al. (2018). This will be true in the neighborhood of \(\theta =\theta ^*\). In addition, even when \(\theta \) is not close to \(\theta ^*\), if \(\delta (y|x)\) lies on the tail of \(f(y|x;\theta )\), we can see \(\nu _{f_{\theta },\gamma } \approx 0\).

To simplify the discussion, the monotone transformation of both types of \(\gamma \)-cross entropy for regression is prepared as follows:

4.2 Robustness of type I

Following some calculations, we have

where \({\tilde{g}}(x) = (1-\varepsilon (x))g(x)\). A detailed derivation is in Appendix B. From this relation, we can easily show the following theorem.

Theorem 1

Consider the case of heterogeneous contamination. Under the condition \(\nu _{f_{\theta },\gamma } \approx 0\), we have

Using this theorem, we can expect the strong robustness that the latent bias \(\theta ^*_{\gamma ,1}-\theta ^*\) is close to zero even when \(\varepsilon (x)\) is not small, because

The last equality holds even when g(x) is replaced by \({\tilde{g}}(x) = (1-\varepsilon (x))g(x)\).

In addition, we can have the modified Pythagorean relation approximately.

Theorem 2

Consider the case of heterogeneous contamination. Under the condition \(\nu _{f_{\theta },\gamma } \approx 0\), the modified Pythagorean relation among g(y|x), \(f(y|x;\theta ^*)\), \(f(y|x;\theta )\) approximately holds:

The modified Pythagorean relation also implies strong robustness in a similar manner to that in the subsequent discussion of Theorem 1.

Finally, the case of homogeneous contamination is discussed. Under homogeneous contamination, we have the following relation.

Theorem 3

Consider the case of homogeneous contamination. We see

The proof is in Appendix C. Then, the modified Pythagorean relation in Theorem 2 is changed to the usual Pythagorean relation as follows:

4.3 Robustness of type II

First, it is illustrated that in the case of type II, the strong robustness does not hold generally hold under heterogeneous contamination, unlike for type I. We see

A detailed derivation is in Appendix D. This cannot be expressed using

with an appropriate base measure b(x), unlike for type I, because the base measure of the numerator on the explanatory variables is different from that of the denominator. As mentioned in Sect. 3.3, when the parametric conditional probability density function belongs to a location-scale family (3.3), the cross entropy for type II is the same as that for type I and, thus type II can have the strong robustness.

Under homogeneous contamination, we have

and then the latent bias \(\theta ^*_{\gamma ,2}-\theta ^*\) can be expected to be sufficiently small, i.e., type II can have the strong robustness.

5 Parameter estimation algorithm

In this section, the parameter estimation algorithm of type I is proposed. Kawashima and Fujisawa (2017) proposed the iterative estimation algorithm for type II by Majorization-Minimization (MM) algorithm (Hunter and Lange 2004). This algorithm has a monotone decreasing property, i.e., the objective function monotonically decreases at each iterative step, which enables numerical stability and efficiency. The present study also utilizes the MM algorithm.

5.1 MM algorithm

Here, the principle of the MM algorithm is explained in brief. Let \(h(\eta )\) be the objective function. Let us prepare the majorization function \(h_{MM}\) satisfying

where \(\eta ^{(m)}\) is the parameter of the m-th iterative step for \(m=0,1,2,\ldots \). The MM algorithm optimizes the majorization function instead of the objective function as follows:

Then, it can be shown that the objective function \(h(\eta )\) monotonically decreases at each iterative step, because

Note that \(\eta ^{(m+1)}\) is not necessary to be the minimizer of \(h_{MM}(\eta |\eta ^{(m)})\). We only need

The problem with the MM algorithm is how to make a majorization function \(h_{MM}\).

5.2 MM algorithm for type I

Let us recall the objective function \(h(\theta )\) in type I:

The majorization function can be derived in a similar manner to that in Kawashima and Fujisawa (2017). We show the following theorem.

Theorem 4

Consider the function

where

and const is a term which does not depend on the parameter \(\theta \). It can be seen that it is a majorization function of \(h(\theta )\). Consequently, the sequence of iterates given by \(\theta ^{(m+1)} = \mathop {{\mathrm{argmin}}}\limits \nolimits _{\theta } h_{MM}(\theta |\theta ^{(m)})\) decreases the objective function \(h(\theta )\) at each iterative step.

The proof is in Appendix E. As mentioned in Sect. 3.3, when the parametric conditional probability density function \(f(y|x;\theta )\) belongs to a location-scale family (3.3), the cross entropy for type I is the same as that for type II, and the above majorization function is also the same as that for type II.

5.3 MM algorithm for logistic regression model

Here, the case of the logistic regression model is thoroughly investigated. This model does not belong to a location-scale family as the parametric conditional probability density function \(f(y|x;\theta )\). Let \(f(y|x;\beta )\) be the Bernoulli distribution given by

where \(\pi (x;\beta )= \{1+\exp (- x^{\top } \beta )\}^{-1}\). For simplicity, the intercept term \(\beta _0\) is omitted in the linear predictor. Following simple calculation, we have

Here, the constant term is ignored. By applying the idea of the quadratic approximation (the second-order Taylor polynomial) (Böhning and Lindsay 1988) to \(h_{MM}\), the new majorization function is obtained as follows:

where

Then, we provide the following theorem.

Theorem 5

Consider the function \({\tilde{h}}_{MM}(\beta | \beta ^{(m)})\). It can be seen that it is a majorization function of \(h(\beta )\). Consequently, the sequence of iterates given by \( \beta ^{(m+1)} = (X^{\top } X)^{-1} X^{\top } z^{(m)}\) decreases the objective function \(h(\beta ) \) at each iterative step.

The proof is in Appendix F. Therefore, to guarantee the monotone decreasing property, the proposed algorithm does not require a line search method (Armijo 1966; Wolfe 1969). On the other hand, existing estimation algorithms for logistic regression based on type I (Hung et al. 2018; Ren et al. 2020) require a line search method for the monotone decreasing property.

Finally, the pseudo-code of the proposed parameter estimation algorithm for the logistic regression model is presented.

6 Numerical experiments

In this section, using a simulation model, we compare type I with type II and the density power divergence (DPD).

As shown in Sect. 4, the large difference occurs under heterogeneous contamination when the parametric conditional probability density function \(f(y|x;\theta )\) does not belong to a location-scale family. Therefore, the logistic regression model is used as a simulation model, given by

where \(\pi (x;\beta )= \{1+\exp (- \beta _{0} - x_1 \beta _1- \cdots - x_p \beta _p )\}^{-1}\). All experiments were performed using R (http://www.r-project.org/index.html). The code used to implement the proposed method is available at https://sites.google.com/site/takayukikawashimaspage/software.

6.1 Synthetic data

We consider the following data generating scheme. The sample size and the number of explanatory variables were set to be \(n=200\) and \(p=10,20,40\), respectively. The true regression coefficients were given by \(\beta _{0}^*=0, \ \varvec{\beta }^* =(1,-1.5,2,-2.5,3, {\mathbf {0}}_{p-5}^{\top })^{\top }\). The explanatory variables were generated from a normal distribution \(N(0,{\varSigma })\) with \( {\varSigma }_{ij}=(0.2^{ | i-j | })_{1 \le i,j \le p }\). We generated 30 random samples.

Outliers were incorporated into simulations. The outliers were generated around the edge of the explanatory variables, where the explanatory variables were generated from \(N(\varvec{\mu }_{\mathrm{out}}, 0.5^{2} {\mathbf {I}})\) where \({\varvec{\mu }_{\mathrm{out}}}=(2,0,2,0,2, {\mathbf {0}}_{p-5}^{\top })^{\top }\), and the response variable y was set to 0. The outlier ratio was set to \(\varepsilon =0.1, 0.2 , 0.3 , 0.4\).

To verify the fitness of the regression coefficient, the mean squared error (MSE) was used as the performance measure, given by.

where \(\beta _j^*\)’s are the true coefficients. The tuning parameter \(\gamma \) in the \(\gamma \)-divergence was set to 1.0, 2.0, and 3.0. The tuning parameter \(\alpha \) in the DPD was set to 1.0, 2.0, and 3.0.

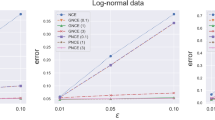

Figure 2 shows the MSE in the case \(\varepsilon =0.1\), 0.2, 0.3, and 0.4. Type I presented smaller MSEs than type II. The difference between the two types became more significant as the outlier ratio \(\varepsilon \) was increased. For types I and II, we can see similar results to those presented in Sect. 2 can be seen, even in the multivariate case. The DPD presented worse MSEs as the outlier ratio \(\varepsilon \) or p was larger.

The DPD seems to be better than the \(\gamma \)-divergence at the same value of the \(\gamma \) and \(\alpha \). However, the different divergences should not be compared at the same tuning parameter, because they have different meanings.

6.2 Application to real data

We compare type I with type II and the DPD in real data. The following datasets, which are available at the UCI Machine Learning Repository (Dua and Graff 2017), were used: Absenteeism at work (Work) (Martiniano et al. 2012), banknote authentication (Bank), Heart, Haberman’s Survival (Survival), and Libras Movement (Libras).

First, we applied the ordinal logistic regression to real data and supposed that estimated regression coefficients were true coefficients \(\beta _0^*\) and \(\varvec{\beta }^*\). Then, outliers were incorporated into the data as follows. The magnitudes of the explanatory variables were sorted in descending order \(\Vert x \Vert _{(1)} \ge \Vert x \Vert _{(2)} \ge \cdots \ge \Vert x \Vert _{(n)} \), where (i) denotes the i-th order. We selected \(\lceil \varepsilon \times n \rceil \) samples from the largest variables, and the corresponding each response variable was flipped, e.g., \(y=0 \rightarrow y=1\). The outlier ratio \(\varepsilon \) was set to 0.4.

In order to verify the fitness of the regression coefficient, we used the MSE as the performance measure. The tuning parameter \(\gamma \) in the \(\gamma \)-divergence was set to 1.0, 2.0, and 3.0. The tuning parameter \(\alpha \) in the DPD was set to 1.0, 2.0, and 3.0.

Table 1 shows the MSE in real data sets with outliers. Type I presented smaller MSEs than type II and showed better results than the DPD in most cases.

7 Conclusion

In shit study, the difference between two types of \(\gamma \)-divergence for the regression problem, referred to as type I and type II, were investigated. We showed that type I has the strong robustness unlike type II under heterogeneous contamination even when the parametric conditional probability density function does not belong to a location-scale family. In addition, we denoted difference on the robustness with an existing robust divergence, density power divergence for the regression problem. Further, an efficient estimation algorithm was proposed based on the principle of the MM algorithm. Numerical experiments supported the obtained theoretical results under various settings and the application to real data. In the experiments performed herein, the robust tuning parameter \(\gamma \) was fixed. However, in some cases, it may be better to select an appropriate tuning parameter from among the \(\gamma \) candidates than to fix the value. The monitoring approach (Riani et al. 2020) and robust cross-validation (Kawashima and Fujisawa 2017) can be utilized as a method of selecting \(\gamma \).

References

Armijo L (1966) Minimization of functions having lipschitz continuous first partial derivatives. Pacific J Math 16(1):1–3

Basu A, Harris IR, Hjort NL, Jones MC (1998) Robust and efficient estimation by minimising a density power divergence. Biometrika 85(3):549–559

Böhning D, Lindsay B (1988) Monotonicity of quadratic-approximation algorithms. Ann Inst Stat Math 40:641–663

Chi EC, Scott DW (2014) Robust parametric classification and variable selection by a minimum distance criterion. J Comput Graph Stat 23(1):111–128

Dua D, Graff C (2017) UCI machine learning repository

Fujisawa H, Eguchi S (2008) Robust parameter estimation with a small bias against heavy contamination. J Multivar Anal 99(9):2053–2081

Ghosh A, Basu A (2016) Robust estimation in generalized linear models: the density power divergence approach. TEST 25(2):269–290

Ghosh A, Majumdar S (2020) Ultrahigh-dimensional robust and efficient sparse regression using non-concave penalized density power divergence. IEEE Transactions on Information Theory pp 1–1

Hampel FR, Ronchetti EM, Rousseeuw PJ, Stahel WA (2005) Robust statistics: the approach based on influence functions. John Wiley & Sons

Huber PJ, Ronchetti EM (2009) Robust statistics. John Wiley & Sons

Hung H, Jou ZY, Huang SY (2018) Robust mislabel logistic regression without modeling mislabel probabilities. Biometrics 74(1):145–154

Hunter DR, Lange K (2004) A tutorial on mm algorithms. Am Stat 58(1):30–37

Jones MC, Hjort NL, Harris IR, Basu A (2001) A comparison of related density-based minimum divergence estimators. Biometrika 88:865–873

Kanamori T, Fujisawa H (2015) Robust estimation under heavy contamination using unnormalized models. Biometrika 102(3):559–572

Kawashima T, Fujisawa H (2017) Robust and sparse regression via \(\gamma \)-divergence. Entropy 19(608)

Lozano AC, Meinshausen N, Yang E (2016) Minimum distance lasso for robust high-dimensional regression. Electron J Statist 10(1):1296–1340. https://doi.org/10.1214/16-EJS1136

Maronna RA, Martin DR, Yohai VJ (2006) Robust Statistics: Theory and Methods. John Wiley and Sons

Maronna RA, Martin DR, Yohai VJ, Salibian-Barrera M (2018) Robust Statistics: Theory and Methods (with R), 2nd edn. Wiley Series in Probability and Statistics, John Wiley & Sons Ltd, New York https://doi.org/10.1002/9781119214656

Martiniano A, Ferreira RP, Sassi RJ, Affonso C (2012) Application of a neuro fuzzy network in prediction of absenteeism at work. In: 7th Iberian Conference on Information Systems and Technologies (CISTI 2012), pp 1–4

McCullagh P, Nelder J (1989) Generalized Linear Models, Second Edition. Chapman and Hall/CRC Monographs on Statistics and Applied Probability Series, Chapman & Hall

Ren M, Zhang S, Zhang Q (2020) Robust high-dimensional regression for data with anomalous responses. Annals of the Institute of Statistical Mathematics pp 1–34

Riani M, Atkinson AC, Corbellini A, Perrotta D (2020) Robust regression with density power divergence: Theory, comparisons, and data analysis. Entropy 22(4):399

Scott DW (2001) Parametric statistical modeling by minimum integrated square error. Technometrics 43(3):274–285

Wolfe P (1969) Convergence conditions for ascent methods. SIAM Rev 11(2):226–235

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Takayuki Kawashima was partially supported by JSPS KAKENHI Grant Numbers 19K24340 and 22K17859. Hironori Fujisawa was partially supported by JSPS KAKENHI Grant Number 17K00065.

Appendices

Proof of Proposition 1

We use the following relation:

This dose not depend on the explanatory variables x. Then, we have

Detailed derivation on robustness of type I in Sect. 4.2

We see

Proof of Theorem 3

Detailed derivation on robustness of type II in Sect. 4.3

The formulation of Type II under heterogeneous contamination is provided to show that the strong robustness does not hold in general.

Proof of Theorem 4

The majorization function \(h_{MM} (\theta | \theta ^{(m)} )\) is constructed from the following inequality:

where \(\kappa (u)\) is a convex function, \(a=(a_1, \ldots , a_n)^{\top }\), \(b=(b_1, \ldots , b_n)^{\top }\), \(b^{(m)} = (b_1^{(m)} , \ldots , b_n^{(m)})^{\top }\), and \(a_i\), \(b_i\) and \(b_i^{(m)}\) are positive. This inequality holds from Jensen’s inequality. Here we take \(a_i = \frac{1}{n} \), \(b_i = \displaystyle { \frac{ f(y_i|x_i;\theta )^{\gamma } }{ \left( \int f(y|x_i;\theta )^{1+\gamma }dy\right) ^{\frac{\gamma }{1+\gamma }} } } \), \( b_i^{(m)} = \displaystyle { \frac{ f(y_i|x_i;\theta ^{(m)})^{\gamma } }{ \left( \int f(y|x_i;\theta ^{(m)})^{1+\gamma }dy\right) ^{\frac{\gamma }{1+\gamma }} } } \), and \(\kappa (u) = - \frac{1}{\gamma } \log (u)\) in the above inequality. Then, the majorization function is obtained as follows:

where

This majorization function satisfies

and

By virtue of the property of the MM algorithm, \(\theta ^{(m+1)} = \mathop {{\mathrm{argmin}}}\limits _{\theta } h_{MM}(\theta |\theta ^{(m)})\) decreases the objective function, that is,

Consequently, the sequence of iterates \( \left\{ \theta ^{(m)} \right\} \) decreases the objective function \(h(\theta )\) at each iterative step.

Proof of Theorem 5

By applying the idea of the quadratic approximation (the second-order Taylor polynomial) (Böhning and Lindsay 1988) to \(h_{MM}\), we have

where \(\beta ^{\dagger }= \rho \beta +(1-\rho )\beta ^{(m)} \text{ for } \text{ some } \rho \in (0,1) \),

and

By \(w_{i}\left( \beta ^{(m)} \right) \le 1\) and \(0< \pi (x_i ; (1+\gamma )\beta ^{ \dagger }) < 1\),

From this relation, the following majorization function is obtained.

where

The majorization function \({\tilde{h}}_{MM}\) satisfies

and

The formula of the majorization function \({\tilde{h}}_{MM}\) is equal to that of the ordinary least squares regression, and it can be solved explicitly with respect to \(\beta \):

where \(X^{\top }=(x_1, \ldots , x_n)\) and \(z^{(m)} = (z_i^{(m)} , \ldots , z_n^{(m)})^{\top }\).

Robustness of density power divergence

For comparison with an existing robust divergence, the robustness of the density power divergence (DPD) for regression is considered under the same contamination models and conditions. The cross entropy of the DPD for regression is defined by:

where \(\alpha \) is the positive tuning parameter which controls the trade-off between efficiency and robustness.

We consider the homogeneous contamination. Hung et al. (2018) showed that the robust property does not hold whether or not \(f(y|x;\theta )\) belongs to a location-scale family, as follows:

This relation implies that the target conditional probability density function is affected by the outlier ratio \(\varepsilon \). Thus, we cannot expect that the latent bias is close to zero.

Next, we consider the heterogeneous contamination. We show the non-strong robustness for the DPD as follows:

The last term on the right-hand side is not negligible in general. Let us consider the following linear regression model as a specific example:

We have

This depends on the value of \(\sigma ^2\), and it generally cannot be close to zero, even though \(\alpha \rightarrow \infty \). Therefore, the latent bias cannot be expected to be close to zero under heterogeneous contamination.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kawashima, T., Fujisawa, H. Robust regression against heavy heterogeneous contamination. Metrika 86, 421–442 (2023). https://doi.org/10.1007/s00184-022-00874-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00184-022-00874-1