Abstract

We rank the quality of German macroeconomic forecasts with various methods, using 17 regular annual forecasts from 14 institutions for the period from 1993 to 2019. For a single year, rankings based on different methods correlate only weakly with each other. For a given method, the correlation between the rankings of two consecutive years is often low and statistically insignificant. For the total sample, by contrast, the rank correlations between the criteria are generally high. We report substantial long-run differences in forecasting quality, which are mostly due to differences in average forecast horizons. In the long run, the choice of the ranking criterion is of minor importance. Rankings based on recession years and normal periods are similar, and the same holds for rankings based on real-time versus revised data.



1 Introduction

Ranking forecasters has a long tradition in economics and finance (see, e.g., Cowles 1933). Forecast competitions are popular, perhaps increasingly so, in the media: several newspapers and database providers (Footnote 1) evaluate forecasters more or less regularly (see Silvia and Iqbal (2012), and Döhrn (2015) for an overview of recent German rankings).

A broad range of motivations may lie behind these efforts. First, there is the interest of the audience and, thus, an element of entertainment. Second, some authors compare the forecasts of a particular institution with those of others as a benchmark for their quality (see, among others, Fritsche and Tarassow (2017) for the German IMK Institute or Pagan (2003) for the Bank of England). Third, it might be relevant how policy-makers rank within the forecasting industry: Lehmann and Wollmershäuser (2021), for example, consider the possibility that governments’ projections have different properties from forecasts of private institutions. In a similar vein, Gamber et al. (2014) analyze the quality of central bank forecasts. From a monetary policy perspective, it may also be of interest which institutions rank high among forecasters, since both the Fed and the ECB conduct surveys of professional forecasters (see, for example, Meyler 2020; Rich and Tracy 2021, for the ECB). Finally, comparing forecast accuracy across countries (Heilemann and Müller 2018; Heilemann and Stekler 2013) may give valuable insights, for example, into possible lower bounds of accuracy.

From a methodological perspective, several approaches have been proposed to rank forecasters (see the literature cited in Sinclair et al. 2012, 2016). Forecast quality can be assessed with absolute accuracy measures or with relative ones, which use another prediction as a benchmark. The criteria may rely on linear or quadratic loss functions. The evaluation may use numerical forecast errors or an analysis of directional change. One-dimensional rankings, which refer to a single variable, may lead to different results than multi-dimensional ones based on a vector of variables (Sinclair et al. 2015; Fortin et al. 2020). Our paper considers numerical forecasts only, but it is noteworthy that Rybinski (2021) has recently suggested a ranking based on sentiment indices obtained from the texts of the forecast reports.

Other factors can influence the rankings as well. Do the forecasters predict all variables equally well, or do different rankings emerge for growth, inflation, unemployment, or other variables? In other words, are there specialists among the institutions that are particularly good at predicting a specific variable, a possibility considered by Timmermann and Zhu (2019)? Relatedly, given the finding that forecast errors strongly depend on the phase of the business cycle (Dovern and Jannsen 2017), one might also ask whether some forecasters are specialists for specific periods, say, recessions.

From the perspective of economic policy, we also take some other aspects into account. First, not all relevant forecasters publish their forecasts at the same time. This raises the question of how a longer forecast horizon affects accuracy in the case of fixed-event forecasts (Knüppel and Vladu 2016). Is the horizon more important for accuracy than the institute, as found by, for example, Döhrn and Schmidt (2011)? Second, does it matter whether we use the most recently available data or real-time data? Döhrn (2019) argues, using German data, that the magnitude of forecast errors depends to a substantial extent on data revisions that occur after the forecast has been made and evaluated. Does the same hold for rankings? Finally, a ranking of the institutes may rely not on one year only but on a longer period. Evaluating forecasting institutions over a longer time ensures that a superior performance in a particular year is not the result of sheer luck. Consequently, several authors ask whether any forecaster is consistently better than all others (see, among others, Stekler 1987; Batchelor 1990; Qu et al. 2019).

All in all, these different aspects raise the question of whether the criteria proposed by the media, practitioners, and the academic literature lead to similar results. Do conclusions such as “all forecasters are equal” (Batchelor 1990) depend crucially on the criterion used to rank them? Are forecasting competitions meaningful beyond pure entertainment? Or, as Döhrn (2015) puts it, do such efforts merely produce “random results”?

The primary purpose of this paper is to address these questions empirically by comparing the forecast quality of institutions that predict macroeconomic developments in Germany. To this end, we use 17 annual forecasts of growth, inflation, the unemployment rate, and export and import growth from 14 institutions for the period from 1993 to 2019. The choice of these institutes and organizations is motivated by their importance for economic policy and the attention they receive from the media. Our sample covers all years for which the institutions made forecasts explicitly for unified Germany rather than for West Germany only or separately for both parts (Footnote 2). Furthermore, we tried to include forecasts that comprise at least predictions for growth, inflation, unemployment, exports, and imports. The choice of these variables is also motivated by their relevance for economic policy, since they roughly correspond to the so-called magic square of German economic policy (Footnote 3).

We apply to these data various ranking methods that differ along the lines mentioned above. If one looks at only one particular year, the rankings vary widely: an institution that makes, say, good growth forecasts is not necessarily equally good at predicting inflation. Moreover, the rankings of forecasters for a given variable vary considerably between two consecutive years: the institute with the best growth forecast in one year might easily end up at the bottom of the ranking in the following year.

From a longer-term perspective, the rankings show a more stable picture. In the total sample, the rankings of the institutions according to different criteria are fairly highly correlated. Also, some institutes are superior or inferior to others in this longer perspective. This over- or underperformance, though, is almost exclusively due to a shorter or longer average forecast horizon.

We organize the paper as follows: Sect. 2 introduces several criteria to rank forecasters. Section 3 describes the dataset. Section 4 presents rankings of the forecasting performance of the respective institutions and compares the rankings based on different criteria. Furthermore, we discuss whether these rankings are stable over time and whether any institution outperforms the others in the long run. Section 5 concludes.

2 Criteria to rank forecasters

As mentioned above, forecasters can be evaluated along various dimensions. More specifically, (at least) the following dimensions should be considered: (i) the number of forecasters to be ranked, (ii) the number of predictions for each institution, (iii) the number of variables considered, (iv) the length of the forecast horizon, and, last but not least, (v) the statistical method used to measure forecast quality.

By combining these dimensions, one can create numerous rankings. For example, a ranking may include three forecasters who each forecast growth and inflation in October 2017 for 2018. Another ranking may rely on growth forecasts only, but cover 20 years and include 20 institutions. These examples show that there is a huge number of possibilities to create a leaderboard for forecasters. This number increases even further if one takes into account the degrees of freedom within a specific statistical evaluation method; in multi-dimensional methods, for example, the individual variables can be weighted differently.

Among this large number of possible rankings, we opt, first, for fixed-event forecasts with a forecast horizon of between 8 and 16 months (Footnote 4). This choice allows us to include all arguably policy-relevant institutions that have forecast regularly for many years, which yields 17 different predictions (Footnote 5).

As mentioned above, we first analyze these data for one year only, because this is standard practice in most forecasting competitions. We then turn to the stability of yearly rankings before considering the total sample. As regards the target variables, we chose five (real GDP growth, inflation, unemployment, and real export and import growth), again motivated by their relevance to economic policy.

Figure 1 gives a bird’s-eye view of the statistical methods to rank forecasters considered in this paper. On the one hand, we consider measures that are one-dimensional in the sense that they evaluate forecasts for only one target. On the other hand, we examine multi-dimensional measures, which assess several forecasts jointly. Within the group of one-dimensional measures, we distinguish evaluations based on numerical forecast errors from those relying on the analysis of directional change. Some readers might miss relative measures like Theil’s Inequality Coefficient or the Mean Absolute Scaled Error (Hyndman and Koehler 2006). Note, however, that both measures divide an error statistic by a denominator that is the same for all forecasters of a given variable and sample period. Therefore, a ranking based on Theil’s inequality coefficient would be identical to a ranking based on the Root Mean Squared Error. In a similar vein, the Mean Absolute Error and the Mean Absolute Scaled Error would result in the same hierarchy of forecasters.
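The rank-invariance argument can be checked numerically. The Python sketch below uses hypothetical forecast errors (not the paper's data) and a hypothetical naive benchmark shared by all forecasters. It divides each forecaster's RMSE and MAE by the benchmark's RMSE and MAE; this is a simplified Theil-U2-type and MASE-type scaling, not the exact definition of either measure, but it shows why a common positive denominator cannot change the ordering.

```python
def rmse(errors):
    """Root mean squared error of a list of forecast errors."""
    return (sum(e * e for e in errors) / len(errors)) ** 0.5

def mae(errors):
    """Mean absolute error of a list of forecast errors."""
    return sum(abs(e) for e in errors) / len(errors)

def ranking(scores):
    """Forecaster names ordered from best (lowest score) to worst."""
    return sorted(scores, key=scores.get)

# Hypothetical errors of three forecasters for the same four target years.
errors = {
    "A": [0.5, -0.3, 1.2, 0.1],
    "B": [0.2, -0.8, 0.4, -0.6],
    "C": [1.0, 0.9, -1.1, 0.3],
}
# Hypothetical naive (no-change) errors for the same years: one benchmark for all.
naive = [1.1, -0.7, 1.5, 0.9]

rmse_scores = {k: rmse(e) for k, e in errors.items()}
mae_scores = {k: mae(e) for k, e in errors.items()}
# Dividing every score by the same positive number preserves the ordering.
theil_like = {k: s / rmse(naive) for k, s in rmse_scores.items()}
mase_like = {k: s / mae(naive) for k, s in mae_scores.items()}

print(ranking(rmse_scores), ranking(theil_like))
print(ranking(mae_scores), ranking(mase_like))
```

The invariance relies on all forecasters being evaluated over the same target years; in an unbalanced panel, the benchmark denominators would differ across forecasters and the scaled rankings could diverge.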

2.1 One-dimensional evaluation of business cycle forecasts

The forecast error is defined as \(e_{t} = A_{t} - F_{t}\), where \(A_{t}\) is the actual value in period t and \(F_{t}\) is the forecast for period t made in period \(t-1\). Hence, a negative forecast error corresponds to overestimating the variable at hand, whereas a positive value represents underestimating it. We consider the following statistics:

Simple accuracy measures

- The Mean Absolute Error (MAE) indicates the average absolute distance between the forecast and the value that actually occurred. A smaller value indicates a better forecast; the underlying loss function is linear.

$$\begin{aligned} \text {MAE} = \frac{1}{T}\sum _{t=1}^T \left| e_{t} \right| \end{aligned}$$(1)

- The Root Mean Squared Error (RMSE) is the square root of the average squared forecast error. Squaring gives large forecast errors more weight, which corresponds to a quadratic loss function. Again, a smaller value corresponds to a better forecast.

$$\begin{aligned} \text {RMSE} = \sqrt{\frac{1}{T} \sum _{t=1}^T e_{t}^2} \end{aligned}$$(2)
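A minimal Python sketch of the error definition and Equations (1) and (2), using hypothetical numbers rather than the paper's data. The single large miss in the last (recession) year raises the RMSE far more than the MAE, which is the practical difference between the linear and the quadratic loss function.

```python
import math

def forecast_errors(actuals, forecasts):
    """e_t = A_t - F_t; a negative error means the variable was overestimated."""
    return [a - f for a, f in zip(actuals, forecasts)]

def mae(errors):
    """Eq. (1): mean absolute error, linear loss."""
    return sum(abs(e) for e in errors) / len(errors)

def rmse(errors):
    """Eq. (2): root mean squared error, quadratic loss."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))

# Hypothetical GDP growth outcomes and forecasts in percent; the last year is a deep recession.
actuals = [1.5, 0.6, -5.0]
forecasts = [1.8, 1.0, 1.2]
e = forecast_errors(actuals, forecasts)
print(mae(e), rmse(e))  # roughly 2.3 and 3.59
```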

Measures of relative accuracy

One weakness of rankings based on simple accuracy measures is that these numbers have no natural scale. Therefore, they need to be compared with a competing prediction. For forecast competitions, this shortcoming is of limited relevance since, in most cases, the benchmark is identical for all institutions.

- We follow Timmermann and Zhu (2019), who use the value of the Diebold and Mariano (1995) test statistic (relative to an AR(1) benchmark in their case and to a naive forecast in ours) to classify the quality of a prediction. Based on the forecast errors of a naive (no-change) forecast (\(e_{\text {Naive}}\)) and of the respective institution (\(e_{\text {Institution}}\)), we calculate the squared errors (\(\text {SE}_{\text {Naive}}\) and \(\text {SE}_{\text {Institution}}\), respectively). The difference between these two time series, the loss differential \(d_t= \text {SE}_{\text {Institution}} - \text {SE}_{\text {Naive}}\), is used to obtain the test statistic:

$$\begin{aligned} \frac{\bar{d}}{\hat{\sigma }_{\bar{d}}} = \frac{\frac{1}{T}\sum _{t=1}^T d_t}{\hat{\sigma }_{\bar{d}}}\rightarrow N(0,1) \end{aligned}$$(3)

with \(\hat{\sigma }_{\bar{d}}\) representing a consistent estimate of the standard deviation of the mean loss differential \(\bar{d}\). It should be estimated robustly, since loss differentials are likely to be serially correlated (Diebold 2015). Equation (3) allows testing the hypothesis of equal accuracy of both predictions. Since \(d_t\) is the institution's loss minus that of the benchmark, the lower the test statistic, the better the forecast at hand compared to the naive benchmark.
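A minimal Python sketch of the statistic in Equation (3), assuming squared-error loss and a Newey–West (Bartlett-kernel) estimate of the long-run variance of the loss differential. The robust variance estimator, the lag-length rule, and the function name are illustrative assumptions; the text does not specify which estimator is used. The function raises a division error if the loss differential is constant.

```python
import math

def diebold_mariano(e_inst, e_naive, lags=None):
    """DM-type statistic for squared-error loss, d_t = e_inst_t**2 - e_naive_t**2.

    Negative values favour the institution. The variance of the mean of d is
    estimated with Bartlett (Newey-West) weights to allow for serial correlation.
    """
    d = [a * a - b * b for a, b in zip(e_inst, e_naive)]
    T = len(d)
    d_bar = sum(d) / T
    if lags is None:
        lags = int(T ** (1 / 3))  # rule-of-thumb lag length (an assumption)
    dev = [x - d_bar for x in d]
    lrv = sum(x * x for x in dev) / T  # autocovariance at lag 0
    for k in range(1, lags + 1):
        gamma_k = sum(dev[t] * dev[t - k] for t in range(k, T)) / T
        lrv += 2 * (1 - k / (lags + 1)) * gamma_k  # Bartlett weight keeps lrv >= 0
    return d_bar / math.sqrt(lrv / T)

# Hypothetical errors: the institution's errors are half the naive ones every year.
e_naive = [1.0, -1.5, 2.0, 0.5, -1.2, 1.8, -0.9, 1.4]
e_inst = [0.5 * e for e in e_naive]
print(diebold_mariano(e_inst, e_naive))  # negative: the institution beats the benchmark
```

Swapping the two error series flips the sign of the statistic, so ranking institutions by this value (lowest first) matches the rule stated above.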

Measures of directional change

The underlying notation for measures of the accuracy of directional change is taken from Diebold and Lopez (1996) and depicted in Table 1:

A forecast and an actual outcome can each take the state ‘i’ (acceleration) or ‘j’ (deceleration). An accurate acceleration forecast, for example, falls into cell \(O_{ii}\). If acceleration is predicted but a deceleration occurs, the case falls into cell \(O_{ij}\), and so forth; that is, the first index denotes the forecast state and the second the actual state. In particular, we calculate:

- The Area under the Receiver Operating Characteristic curve (AUROC). The ROC curve plots the true positive rate (TPR) against the false positive rate (FPR) at different classification thresholds. The two rates are defined as:

$$\begin{aligned}&\text {TPR}=\frac{O_{ii}}{O_{ii}+O_{ji}} \end{aligned}$$(4)

$$\begin{aligned}&\text {FPR}=\frac{O_{ij}}{O_{ij}+O_{jj}} \end{aligned}$$(5)

The AUROC ranges from 0 to 1, where 1 means that all forecasts are correct, 0 means that all forecasts are wrong, and 0.5 corresponds to an uninformative forecast.

- Some authors suggest comparing forecasts by their specificity (Bailey et al. 2018), i.e., the share of correctly predicted decelerations in all actual decelerations:

$$\begin{aligned} \text {SPE}=\frac{O_{jj}}{O_{ij}+O_{jj}} \end{aligned}$$(6)
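A minimal Python sketch of the direction-of-change measures, following the cell convention described in the text (first index = forecast state, second = actual state), so that TPR = O_ii/(O_ii+O_ji), FPR = O_ij/(O_ij+O_jj), and specificity as in Equation (6). It assumes binary directional forecasts, for which the ROC curve has a single interior point; the AUROC is then the area under the polygon (0,0), (FPR, TPR), (1,1). The paper does not state how its AUROC was computed, so this is one plausible reading. The function names and data are hypothetical.

```python
def contingency(forecast_up, actual_up):
    """Count cells O_xy: x = forecast state, y = actual state ('i' acceleration, 'j' deceleration)."""
    cells = {"ii": 0, "ij": 0, "ji": 0, "jj": 0}
    for f, a in zip(forecast_up, actual_up):
        cells[("i" if f else "j") + ("i" if a else "j")] += 1
    return cells

def direction_measures(forecast_up, actual_up):
    """TPR, FPR, specificity and single-threshold AUROC; both states must occur in the actuals."""
    o = contingency(forecast_up, actual_up)
    tpr = o["ii"] / (o["ii"] + o["ji"])  # predicted accelerations among actual accelerations
    fpr = o["ij"] / (o["ij"] + o["jj"])  # false acceleration signals among actual decelerations
    spe = o["jj"] / (o["ij"] + o["jj"])  # Eq. (6); equals 1 - FPR
    auroc = (1 + tpr - fpr) / 2          # area under (0,0) -> (FPR, TPR) -> (1,1)
    return {"TPR": tpr, "FPR": fpr, "SPE": spe, "AUROC": auroc}

# Hypothetical directional forecasts and outcomes (True = acceleration).
forecast_up = [True, True, False, False, True, False]
actual_up = [True, False, False, True, True, False]
print(direction_measures(forecast_up, actual_up))
```

Under this reading, the AUROC of a binary forecast equals (TPR + SPE)/2, so the two direction-of-change measures are mechanically related and will tend to be close.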

2.2 Multi-dimensional evaluation of business cycle forecasts

The measures sketched above refer to one dimension only. As Sinclair et al. (2016) and Döhrn (2015) argue, an evaluation can rest on more than one variable, in which case it has to refer to a vector of predicted variables. Döhrn (2015) discusses three possible criteria to judge a vector of forecasts:

- The City-Block Metric:

$$\begin{aligned} D_{\text {CB}}=\sum _{m=1}^{M}|A_m-F_m| \end{aligned}$$(7)

where M is the number of predicted series included; for example, M equals five if we include the five variables mentioned above. The City-Block Metric sums the absolute forecast errors across all variables. A lower value of \(D_{\text {CB}}\) indicates a better forecast. The measure rests on the assumption of an underlying linear loss function.

- The Euclidean Distance:

$$\begin{aligned} D_{\text {EU}}=\sqrt{\sum _{m=1}^{M}(A_m-F_m)^2} \end{aligned}$$(8)

The Euclidean Distance assumes an underlying quadratic loss function. Similar to the Root Mean Squared Error, it weights larger forecast errors more heavily. Again, a smaller value signals a better forecast.

- The Mahalanobis (1936) Distance:

$$\begin{aligned} D_{\text {MA}} = (F-A)'W(F-A) \end{aligned}$$(9)

with F and A as vectors of predictions and actuals, respectively, and W as the inverse of the variance–covariance matrix, which must be estimated from historical data of the forecasted time series. The primary motivation for this modification is that the forecast errors of the different variables might be correlated with each other. If the variables are uncorrelated and have unit variance, the covariance matrix is the identity matrix, and the Mahalanobis (1936) Distance reduces to the (squared) Euclidean Distance. We estimate the covariance matrix from the last 10 years of actual outcomes (Sinclair et al. (2016) use 20 years instead).
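The three distances in Equations (7) to (9) can be sketched in Python with NumPy. W is estimated here as the inverse sample covariance of a window of past outcomes (rows = years, columns = variables), mirroring the rolling window described above; the function names and example numbers are hypothetical. Because Equation (9) has no square root, the sketch compares it with the squared Euclidean distance.

```python
import numpy as np

def city_block(actual, forecast):
    """Eq. (7): sum of absolute errors across variables (linear loss)."""
    return float(np.sum(np.abs(actual - forecast)))

def euclidean(actual, forecast):
    """Eq. (8): square root of the sum of squared errors (quadratic loss)."""
    return float(np.sqrt(np.sum((actual - forecast) ** 2)))

def mahalanobis_sq(actual, forecast, history):
    """Eq. (9): (F - A)' W (F - A), with W the inverse covariance of past outcomes.

    history: array of shape (years, variables). It needs at least one more year
    than variables (and non-degenerate data) for the covariance to be invertible.
    """
    W = np.linalg.inv(np.cov(history, rowvar=False))
    diff = forecast - actual
    return float(diff @ W @ diff)

# Hypothetical outcomes and forecasts for (growth, inflation), not the paper's data.
actual = np.array([1.0, 2.0])
forecast = np.array([2.0, 1.5])
# Hypothetical past outcomes: uncorrelated variables with equal variance (2/3 each).
history = np.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]])
print(city_block(actual, forecast), euclidean(actual, forecast),
      mahalanobis_sq(actual, forecast, history))
```

With uncorrelated variables of equal variance, W is a scaled identity matrix (here 1.5 times the identity), so the Mahalanobis and Euclidean rankings coincide; they can diverge once the historical outcomes are correlated.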

2.3 Testing for long-run superiority

To check for long-run superiority in an institution’s forecasting accuracy, we refer to a simple measure of long-run relative performance suggested by Stekler (1987) (see also D’Agostino et al. (2012); Meyler (2020); Rich and Tracy (2021)). In the first step, a score \(R_{it}\) is assigned to every forecast of institution i in year t; it equals the forecast’s rank according to the respective criterion, for example, the Absolute Forecast Error. In the second step, the cumulated rank-sum of these scores is calculated:

$$\begin{aligned} S_{i} = \sum _{t=1}^{T} R_{it} \end{aligned}$$(10)

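Under the null hypothesis that each institution has the same predictive ability, each institution should have an expected cumulative sum of scores of

$$\begin{aligned} \frac{T(N+1)}{2}, \end{aligned}$$

where T is the number of years and N the number of forecasts ranked in each year.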

In our case, we have 27 years and 17 forecasts per year and thus an expected value of \(\frac{27(17+1)}{2}=243\). To calculate the test statistic, we use the corrected standard deviation proposed by Batchelor (1990):

Additionally, we follow the approach suggested by Bürgi and Sinclair (2017). For each institution, they calculate a dummy variable “that takes value 1 in a given period if that forecaster has a lower squared error in that period than the simple average and 0 otherwise.” (Bürgi and Sinclair 2017, p. 106). Over time, the average of the dummy equals the share of periods in which the institution has beaten the simple average. With this number, it is possible to check whether a specific forecaster has been better than the average over a particular period, say, five years. Only the forecasters that meet this criterion are taken into account in the next period.
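The selection rule can be sketched in Python as follows. “Simple average” is read here as the equal-weighted average of all forecasts in a period (an interpretation), and periods in which a forecaster is missing are simply skipped (an assumption). The function names and data are hypothetical; the defaults of a five-period window and a 50 % threshold follow the application in Sect. 4.3.3.

```python
def beats_average(forecasts, actual):
    """Dummy per forecaster: 1 if its squared error is below that of the simple average forecast.

    forecasts: {forecaster: forecast} for one period; actual: realised value.
    """
    avg = sum(forecasts.values()) / len(forecasts)
    avg_se = (actual - avg) ** 2
    return {name: int((actual - f) ** 2 < avg_se) for name, f in forecasts.items()}

def select_forecasters(dummy_history, window=5, threshold=0.5):
    """Forecasters whose share of 1s over the last `window` periods is at least `threshold`.

    dummy_history: list of {forecaster: dummy} dicts, oldest first. Periods in which
    a forecaster is missing are skipped (an assumption of this sketch).
    """
    recent = dummy_history[-window:]
    selected = []
    for name in sorted(set().union(*recent)):
        obs = [period[name] for period in recent if name in period]
        if sum(obs) / len(obs) >= threshold:
            selected.append(name)
    return selected

# Hypothetical one-period example: the average forecast is 2.0, the outcome 1.2.
print(beats_average({"A": 1.0, "B": 2.0, "C": 3.0}, 1.2))  # {'A': 1, 'B': 0, 'C': 0}

# Hypothetical dummy history; only the last five periods count.
history = [{"A": 1, "B": 0}, {"A": 1, "B": 1}, {"A": 0, "B": 0},
           {"A": 1, "B": 0}, {"A": 0, "B": 1}, {"A": 1, "B": 0}]
print(select_forecasters(history))  # ['A']
```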

3 Data

We use forecasts of growth, inflation, unemployment, and export and import growth by 14 forecasting institutions that have long dominated German macroeconomic forecasting. Some institutions regularly publish more than one major report for a given year (usually in “spring” and “autumn”); in these cases, we take both forecasts into account. All in all, we use 17 forecasting reports. The sample runs from 1993 to 2019. For more details on the dataset, see the Data appendix.

Source: Own compilation from the forecasting reports. Legend: GDH: Joint diagnosis, autumn; GDF: Joint diagnosis, spring; SVR: Council of Economic Experts; IfW: Kiel Institute; DIW: Berlin Institute; HWWI: Hamburg Institute; ifo: Munich Institute; RWI: Essen Institute; IW: Cologne Institute; IMK: Düsseldorf Institute; EUH: European Commission, autumn; EUF: European Commission, spring; JWB: Government’s Economic Report; IMFF: International Monetary Fund, spring; IMFH: International Monetary Fund, autumn

The “Forecasting season” for 2019 in Germany

In this paper, we refer to the “forecasting season” that is customary in Germany (see Fig. 2). Accordingly, we also treat some forecasts published early in the target year itself as “predictions”: forecasts made in period t between January and April are labelled in the following as made in \(t-1\).

The growth forecast is the predicted rate of change of real GDP. For inflation, we use the predicted change in the consumer price index or, if it is not available, the deflator of private consumption. The other forecast variables are the unemployment rate and the rates of change of real exports and imports. In the case of interval forecasts, we use the midpoint, i.e., the simple average of the upper and lower bounds of the interval.

4 Empirical results

We organize our results (Footnote 6) by the length of the analyzed period: we start with a single year as an example, turn to the correlation of rankings for two subsequent years, and finally discuss a possible long-run superiority of an institution in the full sample.

4.1 Rankings for just one year: 2019 as an example

We start with one year, 2019, as an example. This procedure mirrors most forecasting competitions in the media, which have been analyzed, for example, by Döhrn (2015). Table 2 starts with arguably the most popular measure, the Mean Absolute Error, which for a single year reduces to the absolute forecast error. For each of the five variables under investigation, we report a ranking. These rankings differ substantially across the variables: the institution with, say, the best growth forecast is not necessarily the one with the best inflation or unemployment prediction. This result comes as a slight surprise, since several papers (see, for example, Casey 2020) suggest that forecasters rely on prominent relationships between variables, like Okun’s law or the Phillips curve, when making their forecasts. Furthermore, this finding calls for a ranking that considers all variables to identify the best forecaster.

Therefore, the table also comprises the multi-dimensional methods, namely the City-Block Metric, the Euclidean Distance, and the Mahalanobis Distance. While the rankings based on the City-Block Metric and the Euclidean Distance are fairly similar, the ranking based on the Mahalanobis Distance differs in some respects. Nevertheless, as regards the winner for 2019, all multi-dimensional methods point to the same institution: the spring forecast of the European Commission ranks on top.

Table 3 further underlines the difficulties in creating a unique leaderboard for a given year. It reports the Spearman rank correlation coefficients and Kendall’s tau between the 2019 rankings based on the different criteria. The coefficients differ considerably in some cases. The correlation between two rankings is often low and not significantly different from zero. In one case (remarkably, unemployment and inflation), it is even slightly negative.
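For two rankings without ties, both coefficients have closed forms. The following pure-Python sketch assumes untied ranks; tied ranks would require the tie-corrected variants (for example, Kendall’s tau-b), which it does not implement. The example ranks are hypothetical.

```python
from itertools import combinations

def spearman(rank_x, rank_y):
    """Spearman's rho for untied ranks: 1 - 6 * sum(d^2) / (n (n^2 - 1))."""
    n = len(rank_x)
    d2 = sum((a - b) ** 2 for a, b in zip(rank_x, rank_y))
    return 1 - 6 * d2 / (n * (n * n - 1))

def kendall_tau(rank_x, rank_y):
    """Kendall's tau-a: (concordant - discordant pairs) / number of pairs."""
    concordant = discordant = 0
    for i, j in combinations(range(len(rank_x)), 2):
        s = (rank_x[i] - rank_x[j]) * (rank_y[i] - rank_y[j])
        if s > 0:
            concordant += 1
        elif s < 0:
            discordant += 1
    n_pairs = len(rank_x) * (len(rank_x) - 1) / 2
    return (concordant - discordant) / n_pairs

# Hypothetical ranks of four forecasters by growth and by inflation errors.
growth_rank = [1, 2, 3, 4]
inflation_rank = [2, 1, 3, 4]
print(spearman(growth_rank, inflation_rank), kendall_tau(growth_rank, inflation_rank))
```

Swapping the top two forecasters already lowers Kendall's tau more than Spearman's rho (about 0.67 versus 0.8), since tau counts each discordant pair with the same weight, regardless of how far apart the ranks are.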

4.2 Are the rankings stable over time?

Based on a similar exercise for one year only, Döhrn (2015) considered the possibility that the results of forecasting competitions are “purely random.” Therefore, we look at yearly rankings and how they change from one year to the next. For this purpose, we have calculated rankings as in the previous section for each year from 1993 to 2019, as well as the Spearman rank correlation between the rankings of two consecutive years. Figure 3 shows the results.

If a particular group of forecasters were regularly better than their competitors, the rank correlation coefficient should be (significantly) positive in most years. For none of the rankings based on a single variable does this appear to be the case. Even for the multi-dimensional methods, the Spearman rank correlation coefficient is significantly positive in only a few years.

Notes: Source: Our own calculation. Shaded areas represent a 95% confidence interval calculated as \(\tanh (\operatorname{artanh} r \pm 1.96 / \sqrt{n-3})\), with r as the empirical Spearman rank correlation coefficient and n as the number of observations

Rank correlation coefficients for two subsequent years by ranking method, 1993 to 2019

Instead, the correlations are about as often positive as negative, and the confidence bands regularly include zero. This suggests that the ranking for a specific year cannot be used to identify the forecasters one should listen to in the following year.
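The confidence bands in Fig. 3 rely on the Fisher z-transform. A minimal Python sketch of the interval formula given in the figure notes, which, as there, applies the Pearson-type standard error \(1/\sqrt{n-3}\) to a Spearman coefficient as an approximation. The function name and the example values are hypothetical.

```python
import math

def fisher_ci(r, n, z=1.96):
    """Approximate CI for a rank correlation r from n observations.

    Applies the Fisher z-transform: tanh(artanh(r) +/- z / sqrt(n - 3)).
    Requires -1 < r < 1 and n > 3.
    """
    half_width = z / math.sqrt(n - 3)
    centre = math.atanh(r)
    return math.tanh(centre - half_width), math.tanh(centre + half_width)

# Hypothetical example: 17 forecasts ranked in two consecutive years, r = 0.3.
low, high = fisher_ci(0.3, 17)
print(f"[{low:.2f}, {high:.2f}]")  # the interval contains zero
```

With only 17 ranked forecasts, even a moderately positive correlation of 0.3 yields an interval that includes zero, which illustrates why the yearly correlations are rarely significant.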

4.3 Does any institution have superior forecasting skills in the long run?

4.3.1 Rankings based on the total sample

For the following rankings, we consider the entire sample of 27 observations covering the period from 1993 to 2019 and calculate the measures already used in the previous sections. Additionally, we now include the Root Mean Squared Error since, with more than one observation, its ranking may differ from the one according to the Mean Absolute Error. It is now also possible to include direction-of-change measures: we use the specificity and the Area under the Receiver Operating Characteristic Curve (AUROC). Furthermore, following Timmermann and Zhu (2017), we add the Diebold and Mariano (1995) statistic to rank the forecasts.

Table 4 reports our results for the analysis of numerical forecast errors. For the sake of brevity, we only report the findings for growth and inflation and relegate the statistics for the other variables to the appendix (Tables 9 to 12 report the rankings, while Tables 13 and 14 show the numerical results for the respective criteria).

As becomes apparent, in contrast to the results based on the rather limited information of a single year, the rankings are closer to each other. Still, there are some notable exceptions. For example, the Essen Institute ranks relatively well for growth, inflation, and unemployment, but closer to the bottom for exports and imports. The rankings resulting from the three multi-dimensional criteria are also very similar.

Table 4 also reveals that the rankings based on specificity and AUROC differ in some cases. However, as Appendix Table 13 shows, the values of the two measures are quite close to each other. The rankings for the Mean Absolute Error, the Root Mean Squared Error, and the Diebold and Mariano (1995) statistic are very alike. However, when comparing the rankings based on numerical forecast errors with those based on directional change, a few differences become apparent, because the direction-of-change measures vary less across institutions, i.e., several institutions share the same rank.

Table 5 contains the Spearman correlation coefficients and Kendall’s tau for the ranking methods based on the total sample (Footnote 7). We find that the correlations among numerical criteria are generally stronger than those among direction-of-change measures. However, the correlations are always positive, generally much higher than in the case of a single year, and in almost every case significantly different from zero at standard significance levels.

4.3.2 Measures of long-run superiority

Although Sect. 4.2 shows that rankings are not stable over two consecutive years, certain institutions may still be better than average significantly more often in the long run. To analyze this question, we calculate the statistics proposed by Stekler (1987) and Sinclair et al. (2016) for the full sample.

Figure 4 shows the development of the cumulative rank-sum based on the Absolute Forecast Errors for each variable and for the multi-dimensional methods. The last value of each time series is the test statistic proposed by Stekler (Equation 10). The black diagonal line shows the rank-sum expected in each year for a forecaster that always achieves the median rank. The vertical black line represents two standard deviations. The exhibit reveals some institutions that perform significantly better or worse than average, most visibly for the multi-dimensional criteria. For the one-dimensional criteria, in particular for exports and imports, the long-run performance appears relatively similar across institutions. Appendix Table 15 also confirms this finding, since it shows the rank correlation coefficients between the methods to be positive, relatively high, and statistically different from zero.

Source: Our own calculation. The black line represents the expected development for a forecaster with a median rank in each period. The vertical black line represents two standard deviations. Legend: GDH: Joint diagnosis, autumn; GDF: Joint diagnosis, spring; SVR: Council of Economic Experts; IfW: Kiel Institute; DIW: Berlin Institute; HWWI: Hamburg Institute; ifo: Munich Institute; RWI: Essen Institute; IW: Cologne Institute; IMK: Düsseldorf Institute; EUH: European Commission, autumn; EUF: European Commission, spring; JWB: Government’s Economic Report; IMFF: International Monetary Fund, spring; IMFH: International Monetary Fund, autumn

The evolution of cumulated rank-sums based on the Absolute Forecast Errors and multi-dimensional criteria, 1993 to 2019

Table 6 contains the Stekler statistic, i.e., the final (2019) cumulative rank-sum for each institution and method. Compared with the rankings for 2019 in Table 2, the results suggest more pronounced differences in forecasting quality, since many institutions show values outside the two-standard-error bands. Hence, in the long run, some institutions seem to be superior to the average forecaster.
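A minimal Python sketch of the cumulative rank-sums and their null moments. It assumes rank 1 for the lowest loss in each year and, for the standard deviation, independent ranks that are uniformly distributed on 1..N, giving a variance of \(T(N^2-1)/12\). This is a simple baseline, not the corrected standard deviation of Batchelor (1990) used in the paper. Function names and data are hypothetical.

```python
import math

def rank_sums(losses_by_year):
    """Cumulative rank-sums: losses_by_year is a list of {forecaster: loss} dicts.

    In each year, rank 1 goes to the lowest loss. Ties are broken by dict order
    (a simplification of this sketch).
    """
    totals = {}
    for losses in losses_by_year:
        for rank, name in enumerate(sorted(losses, key=losses.get), start=1):
            totals[name] = totals.get(name, 0) + rank
    return totals

def null_moments(T, N):
    """Expected rank-sum T(N+1)/2 and its standard deviation under the null,
    assuming independent, uniformly distributed ranks (not Batchelor's correction)."""
    return T * (N + 1) / 2, math.sqrt(T * (N * N - 1) / 12)

def flag_outliers(totals, T, N, k=2.0):
    """Forecasters whose rank-sum lies more than k standard deviations from its mean."""
    expected, sd = null_moments(T, N)
    return {name: (s - expected) / sd for name, s in totals.items()
            if abs(s - expected) > k * sd}

expected, sd = null_moments(27, 17)
print(expected, round(sd, 2))  # 243.0 25.46

# Hypothetical absolute errors of three forecasters in two years.
years = [{"A": 0.1, "B": 0.5, "C": 0.3}, {"A": 0.2, "B": 0.1, "C": 0.4}]
print(rank_sums(years))  # {'A': 3, 'C': 5, 'B': 4}
```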

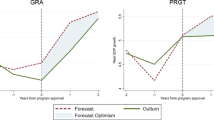

As mentioned above, however, the forecasters under investigation typically publish their predictions on different dates. Appendix Table 16 provides an overview of all forecasters and shows the average forecast horizon in days and its standard deviation. Since the ranking deals with fixed-event rather than fixed-horizon forecasts (see Knüppel and Vladu 2016, for these concepts), the differing forecast horizons will influence accuracy. Figure 5 shows the relation between the average forecast horizon and the rank-sums based on the four selected measures. The ranks decline, signalling a better long-run relative performance with a shorter forecast horizon. The correlation is fairly strongly negative, and only a few deviations from the estimated trend are visible.

4.3.3 Selecting successful forecasters

Finally, we adopt the Bürgi and Sinclair (2017) approach outlined above to select successful forecasters for a given year. To this end, we consider the last five years and require that an institution has been better than the average at least half of the time, i.e., the threshold in our case is 50 %. Because the advantage of forecasting late is significant, for example, in the case of a pronounced downturn, we restrict the sample to institutions with an average forecast horizon of more than 300 days. Figure 6 shows the results. In some years, only five of the 14 possible forecasts are selected by the procedure. Remarkably, the group of forecasters selected by this rule changes quite often over time, and no institution belongs to it over the entire sample.

Source: Own calculation. Legend: GDH: Joint diagnosis, autumn; GDF: Joint diagnosis, spring; SVR: Council of Economic Experts; IfW: Kiel Institute; DIW: Berlin Institute; HWWI: Hamburg Institute; ifo: Munich Institute; RWI: Essen Institute; IW: Cologne Institute; IMK: Düsseldorf Institute; EUH: European Commission, autumn; EUF: European Commission, spring; JWB: Government’s Economic Report; IMFF: International Monetary Fund, spring; IMFH: International Monetary Fund, autumn

Forecast horizon and forecast performance

Source: Own calculation. Legend: GDH: Joint diagnosis, autumn; GDF: Joint diagnosis, spring; SVR: Council of Economic Experts; IfW: Kiel Institute; DIW: Berlin Institute; HWWI: Hamburg Institute; ifo: Munich Institute; RWI: Essen Institute; IW: Cologne Institute; IMK: Düsseldorf Institute; EUH: European Commission, autumn; EUF: European Commission, spring; JWB: Government’s Economic Report; IMFF: International Monetary Fund, spring; IMFH: International Monetary Fund, autumn

Selecting successful forecasters by different criteria

4.4 Forecast horizons and real-time data

Forecasts can be evaluated against either the most recently available revised data or the first published data (“real-time data”). It is even possible that forecasters aim at different targets, i.e., one institution tries to predict the latest data vintage, while another seeks to anticipate real-time data (Clements 2019). To assess whether the choice between these databases affects the ranking, Table 7 compares rankings based on the Mean Absolute Error for both databases. The rank order hardly changes, suggesting that the data vintage does not matter much for ranking German forecasters.

4.5 Rankings and recessions

Some papers (for example, Dovern and Jannsen 2017) have shown that the magnitude of forecast errors crucially depends on the phase of the business cycle in which the forecasts are made. This raises the question of whether the relative position of an institute also depends on the cycle. For example, a certain institute may be a “recession specialist,” while others may perform better in normal times. To check this idea with our dataset, we restrict ourselves to institutes with a forecast horizon of at least 300 days and calculate the average rank for recession and non-recession years. In this context, we define a recession year as one in which real GDP shrank, i.e., the growth rate of real GDP was negative. Table 8 shows the results. For the sake of brevity, we consider just one one-dimensional measure of accuracy (the Mean Absolute Forecast Error) and one multi-dimensional measure (the Mahalanobis (1936) distance). The differences between the business cycle phases are generally rather small and do not appear to be systematic. Again, the forecast horizon is the prime suspect in explaining the differences between the forecasters.
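The split by business-cycle phase can be sketched as follows. The sketch assumes that yearly ranks, for example by Mean Absolute Error, have already been computed. It classifies a year as a recession year when real GDP growth is negative, as defined above, and averages each institution's ranks within each regime. The function name and the example numbers are hypothetical.

```python
from statistics import mean


def average_rank_by_regime(ranks_by_year, gdp_growth):
    """Average rank per institution in recession vs. normal years.

    ranks_by_year: {year: {institution: rank}} (e.g., ranks by MAE)
    gdp_growth:    {year: real GDP growth rate in percent}
    A year counts as a recession year if real GDP growth is negative.
    """
    pooled = {"recession": {}, "normal": {}}
    for year, ranks in ranks_by_year.items():
        regime = "recession" if gdp_growth[year] < 0 else "normal"
        for name, r in ranks.items():
            pooled[regime].setdefault(name, []).append(r)
    return {regime: {name: mean(rs) for name, rs in by_name.items()}
            for regime, by_name in pooled.items()}


# Hypothetical ranks and growth rates, for illustration only.
ranks = {2008: {"A": 1, "B": 2}, 2009: {"A": 2, "B": 1}, 2010: {"A": 1, "B": 2}}
growth = {2008: 1.0, 2009: -5.0, 2010: 4.0}
print(average_rank_by_regime(ranks, growth))
```

With so few recession years in a 1993 to 2019 sample, the recession averages rest on very few observations, so small differences between regimes should be read with caution.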

5 Conclusion

We have turned over quite a few stones, if not literally all of them, to rank the quality of German macroeconomic forecasts. To this end, we refer to the arguably “leading” institutions that provide predictions for the German economy. Using annual data from 1993 to 2019 for predictions of growth, inflation, the unemployment rate, and changes in exports and imports, we base our analyses on 17 different forecasts from 13 separate institutions. To these data, we apply a variety of criteria to rank the institutions according to their predictive ability. We consider different horizons for the comparisons, ranging from a single year through several consecutive years to the full sample.

To rank the forecasts, we use, first, simple accuracy measures such as the Mean Absolute Error, the Root Mean Squared Error, and the Diebold and Mariano (1995) statistic. Second, we apply directional change statistics: the Area Under the Receiver Operating Characteristic (ROC) Curve and the specificity of the forecasts. Third, we consider rankings based on multi-dimensional criteria: the City-Block Metric, the Euclidean Distance, and the Mahalanobis Distance.
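The three multi-dimensional criteria treat a forecaster's errors across variables in a given year as one vector `e`. A minimal pure-Python sketch follows, assuming `e` and an error covariance matrix `S` are supplied by the caller. How `S` is estimated, for example pooled over forecasters and years, is an assumption and is not taken from the paper. The Mahalanobis distance sqrt(e' S^-1 e) is computed by solving S z = e with Gaussian elimination instead of inverting S explicitly.

```python
import math


def city_block(e):
    """City-block (L1) distance of an error vector from zero."""
    return sum(abs(x) for x in e)


def euclidean(e):
    """Euclidean (L2) distance of an error vector from zero."""
    return math.sqrt(sum(x * x for x in e))


def solve(a, b):
    """Solve a z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (m[r][n] - sum(m[r][c] * z[c] for c in range(r + 1, n))) / m[r][r]
    return z


def mahalanobis(e, cov):
    """sqrt(e' S^-1 e): errors weighted by their (co)variances."""
    z = solve(cov, e)
    return math.sqrt(sum(ei * zi for ei, zi in zip(e, z)))


e = [3.0, 4.0]  # hypothetical errors for two variables
print(city_block(e), euclidean(e))  # 7.0 5.0
print(mahalanobis(e, [[1.0, 0.0], [0.0, 1.0]]))  # 5.0 (identity covariance = Euclidean)
```

With an identity covariance matrix, the Mahalanobis distance collapses to the Euclidean distance. Correlated or highly volatile variables receive less weight otherwise.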

Our main findings suggest that, for just one year, rankings based on different methods vary widely and, in some cases, correlate only weakly with each other. In other words, rankings for growth, inflation, unemployment, and so forth do not point to the same winner. Hence, we see little value in year-by-year forecasting competitions beyond pure entertainment.

Furthermore, correlations of rankings calculated for two consecutive years and a given method are often relatively low and statistically insignificant. Therefore, an interested audience, in particular policymakers, cannot infer from one year's results whom they should listen to in the following year.
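Year-to-year stability can be checked with Kendall's (1938) rank correlation between the rankings of two consecutive years. The sketch below computes tau-b, which adjusts for tied ranks, in pure Python for the institutions ranked in both years. Whether the paper uses tau-a, tau-b, or Spearman's rho for this comparison is not stated here. The significance test mentioned in the text would need an extra step, such as a normal approximation or a permutation test, which is not shown.

```python
import math
from itertools import combinations


def kendall_tau_b(r1, r2):
    """Kendall's tau-b between two rankings {institution: rank}."""
    names = sorted(set(r1) & set(r2))  # institutions ranked in both years
    concordant = discordant = ties_x = ties_y = 0
    for a, b in combinations(names, 2):
        dx = r1[a] - r1[b]
        dy = r2[a] - r2[b]
        if dx == 0 and dy == 0:
            continue  # tied in both rankings: ignored by tau-b
        if dx == 0:
            ties_x += 1  # tied only in the first ranking
        elif dy == 0:
            ties_y += 1  # tied only in the second ranking
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    denom = math.sqrt((concordant + discordant + ties_x)
                      * (concordant + discordant + ties_y))
    return (concordant - discordant) / denom if denom else float("nan")


# Hypothetical rankings of four institutions in two consecutive years.
year_1 = {"A": 1, "B": 2, "C": 3, "D": 4}
year_2 = {"A": 2, "B": 1, "C": 3, "D": 4}
print(kendall_tau_b(year_1, year_2))
```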

Analyses based on the entire sample, however, show a much more stable pattern. First, rank correlations between institutions are generally relatively high among different criteria. Second, we find substantial long-run differences in forecasting quality, as reflected, for example, by the cumulative rank-sums; further inspection, however, suggests that these differences are mostly due to distinct average forecast horizons. Third, the rank correlations across the several ranking methods are, in the long run, quite high and statistically different from zero, which implies that the choice of the criterion to rank the forecasters is of minor importance. The same holds for using real-time vs finally revised datasets. We also find no large differences between rankings based on recession years and those based on normal periods.
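A naive sketch of cumulative rank-sums follows, assuming per-year ranks are already available. This version simply adds up the ranks each institution received. The paper's treatment of institutions that are missing in some years is not described here, and in an unbalanced panel this naive sum makes institutions with fewer observations look better. The function name is hypothetical.

```python
def cumulative_rank_sums(ranks_by_year):
    """Return {institution: [(year, running rank sum), ...]} in chronological order.

    ranks_by_year: {year: {institution: rank}}. Years in which an institution
    is missing add nothing, so in an unbalanced panel institutions with fewer
    observations get smaller (seemingly better) sums.
    """
    totals, paths = {}, {}
    for year in sorted(ranks_by_year):
        for name, r in ranks_by_year[year].items():
            totals[name] = totals.get(name, 0) + r
            paths.setdefault(name, []).append((year, totals[name]))
    return paths


# Hypothetical yearly ranks of two institutions.
ranks = {2018: {"A": 1, "B": 2}, 2019: {"A": 2, "B": 1}, 2020: {"A": 1, "B": 2}}
print(cumulative_rank_sums(ranks))
```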

All in all, on the substantive side, we are not able to single out an institution that is superior in predicting the German economy. On the methodological side, we find only minor differences across the ranking methods.

Further research may broaden the database of the investigation. In particular, privately financed institutions may behave differently from the forecasters included in this study. Also, a higher data frequency may make it possible to detect differences across forecasters in how they adjust to new information.

Notes

Regarding some exceptions for the year 1993, see the data appendix.

Some forecasts, particularly older ones, referred only to growth and/or inflation, which limits our sample in the time dimension.

See Appendix Table 16 for details.

This choice is also motivated by data availability: We could not consider more forecasters and observation years due to missing forecasts.

For the sake of brevity, we omit some variables that we considered for the rankings based on just one year. These numbers are available from the authors upon request.

References

Bailey DH, Borwein JM, Salehipour A, López de Prado M (2018) Evaluation and ranking of market forecasters. J Invest Manag 16(2):47–64

Batchelor RA (1990) All forecasters are equal. J Bus Econ Stat 8(1):143–144

Bürgi C, Sinclair TM (2017) A nonparametric approach to identifying a subset of forecasters that outperforms the simple average. Empir Econ 53(1):101–115

Casey E (2020) Do macroeconomic forecasters use macroeconomics to forecast? Int J Forecast 36(4):1439–1453

Clements MP (2019) Do forecasters target first or later releases of national accounts data? Int J Forecast 35(4):1240–1249

Conigrave J (2020) corx: create and format correlation matrices. https://CRAN.R-project.org/package=corx, R package version 1.0.6.1, last access: 2/24/2022

Consensus Forecast (ed) (2020) G7 & Western Europe 2020 Forecast Accuracy Award winners. https://www.consensuseconomics.com/cf-2020-forecast-accuracy-award-winners, last access: 8/9/2021

Cowles A (1933) Can stock market forecasters forecast? Econometrica 1(3):309–324

Diebold FX (2015) Comparing predictive accuracy, twenty years later: a personal perspective on the use and abuse of Diebold-Mariano tests. J Bus Econ Stat 33(1):1

Diebold FX, Lopez JA (1996) Forecast evaluation and combination. In: Maddala G, Rao C (eds) Handbook of Statistics, vol 14, Elsevier, chap 8, pp 241–268

Diebold FX, Mariano RS (1995) Comparing predictive accuracy. J Bus Econ Stat 13(3):253–263

Döhrn R (2015) Der Prognostiker des Jahres: Ein Zufallsergebnis? Möglichkeiten einer mehrdimensionalen Evaluierung von Konjunkturprognosen. Diskussionsbeitrag 208, University of Duisburg-Essen, Institute of Business and Economic Studies (IBES)

Döhrn R (2019) Revisionen der Volkswirtschaftlichen Gesamtrechnungen und ihre Auswirkungen auf Prognosen. AStA Wirtschafts- und Sozialstatistisches Archiv 13(2):99–123

Döhrn R, Schmidt CM (2011) Information or institution?: on the determinants of forecast accuracy. Jahrbücher für Nationalökonomie und Statistik 231(1):9–27

Dovern J, Jannsen N (2017) Systematic errors in growth expectations over the business cycle. Int J Forecast 33(4):760–769

Dowle M, Srinivasan A (2021) data.table: Extension of ‘data.frame’. https://CRAN.R-project.org/package=data.table, R package version 1.14.2, last access: 2/24/2022

D’Agostino A, McQuinn K, Whelan K (2012) Are some forecasters really better than others? J Money Credit Bank 44(4):715–732

Fortin I, Koch SP, Weyerstrass K (2020) Evaluation of economic forecasts for Austria. Empir Econ 58(1):107–137

Fricke T (2018) Langzeitwertung der besten Prognostiker. Blog entry "Neue Wirtschaftswunder", https://neuewirtschaftswunder.de/2018/12/20/langzeitwertung-der-besten-prognostiker/, last access: 8/9/2021

Fritsche U, Tarassow A (2017) Vergleichende Evaluation der Konjunkturprognosen des Instituts für Makroökonomie und Konjunkturforschung an der Hans-Böckler-Stiftung für den Zeitraum 2005-2014. IMK Study 54, Institut für Makroökonomie und Konjunkturforschung

Gamber EN, Smith JK, McNamara DC (2014) Where is the Fed in the distribution of forecasters? J Policy Model 36(2):296–312

Handelsblatt, NN (2014) Die besten Prognostiker im Land. Article "Handelsblatt", https://www.handelsblatt.com/infografiken/ranking-die-besten-prognostiker-im-land/9584620.html?ticket=ST-3358778-WEPGzZpcA4Hsif0udum7-ap1, last access: 8/9/2021

Heilemann U, Müller K (2018) Wenig Unterschiede – Zur Treffsicherheit internationaler Prognosen und Prognostiker. AStA Wirtschafts- und Sozialstatistisches Archiv 12(3–4):195–233

Heilemann U, Stekler HO (2013) Has the accuracy of macroeconomic forecasts for Germany improved? German Econ Rev 14(2):235–253

Hyndman RJ, Khandakar Y (2008) Automatic time series forecasting: the forecast package for R. J Stat Softw 26(3):1–22

Hyndman RJ, Koehler AB (2006) Another look at measures of forecast accuracy. Int J Forecast 22(4):679–688

Kendall MG (1938) A new measure of rank correlation. Biometrika 30(1/2):81–93

Knüppel M, Vladu A (2016) Approximating fixed-horizon forecasts using fixed-event forecasts. Discussion Paper 28/2016, Deutsche Bundesbank

Lehmann R, Wollmershäuser T (2021) The macroeconomic projections of the German government: a comparison to an independent forecasting institution. German Econ Rev 21(2):235–270

Mahalanobis PC (1936) On the generalised distance in statistics. Proceedings of the National Institute of Sciences of India 2(1):49–55

Meyler A (2020) Forecast performance in the ECB SPF: ability or chance? Working Paper 2371, European Central Bank, https://www.ecb.europa.eu/pub/pdf/scpwps/ecb.wp2371~4edce8ed72.en.pdf?cd8f1ebfee28d30cca20ae6d4ecc6aee, last access: 8/17/2021

Pagan A (2003) Report on modelling and forecasting at the Bank of England/Bank’s response to the Pagan report. Bank Engl Q Bull 43(1):60

Qu R, Timmermann A, Zhu Y (2019) Do any economists have superior forecasting skills? Discussion Paper 14112, Centre for Economic Policy Research (CEPR), https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3496601, last access: 2/19/2021

R Core Team (2021) R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria, https://www.R-project.org/

Rich RW, Tracy J (2021) All forecasters are not the same: time-varying predictive ability across forecast environments. Working Paper 21/06, Federal Reserve Bank of Cleveland, https://www.clevelandfed.org/en/newsroom-and-events/publications/working-papers/2021-working-papers/wp-2106-all-forecasters-are-not-the-same.aspx, last access: 8/3/2021

Robin X, Turck N, Hainard A, Tiberti N, Lisacek F, Sanchez JC, Müller M (2011) pROC: an open-source package for R and S+ to analyze and compare ROC curves. BMC Bioinform 12:77

Rybinski K (2021) Ranking professional forecasters by the predictive power of their narratives. Int J Forecast 37(1):186–204

Silvia J, Iqbal A (2012) A new approach to rank forecasters in an unbalanced panel. Int J Econ Financ 4(9)

Sinclair T, Stekler HO, Müller-Dröge HC (2016) Evaluating forecasts of a vector of variables: a German forecasting competition. J Forecast 35(6):493–503

Sinclair TM, Stekler HO, Carnow W, Hall M (2012) A new approach for evaluating economic forecasts. Econ Bull 32(3):2332–2342

Sinclair TM, Stekler HO, Carnow W (2015) Evaluating a vector of the Fed’s forecasts. Int J Forecast 31(1):157–164

Spearman C (1906) Footrule for measuring correlation. Br J Psychol 2(1):89

Stekler HO (1987) Who forecasts better? J Bus Econ Stat 5(1):155–158

Timmermann A, Zhu Y (2017) Monitoring forecasting performance. Working paper, Rady School of Management, https://rady.ucsd.edu/docs/faculty/timmermann/Monitoring_performance_August_29__2017.pdf, last access: 2/19/2021

Timmermann A, Zhu Y (2019) Comparing forecasting performance with panel data. Discussion Paper 13746, Centre for Economic Policy Research (CEPR), https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3395183, last access: 2/4/2022

Acknowledgements

The authors thank Lars Tegtmeier, Karsten Müller, Ulrich Fritsche, seminar participants at the 40th International Symposium on Forecasting, and an anonymous referee for helpful comments on a previous version of this paper. We thank the Deutsche Forschungsgemeinschaft (DFG) for financial support (projects FR 2677-4-1 and FR 2677-4-2 within the DFG Priority Program 1859 Experience and Expectation: Historical Foundations of Economic Behavior).

Funding

Open Access funding enabled and organized by Projekt DEAL.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


Appendix

To compile our dataset, we had to make several additional assumptions, which we list below:

- For interval forecasts, we take the average of the upper and lower bounds of the interval.

- The growth forecast is the predicted rate of change in real GNP (1983–1989) and in real GDP (for all other years). In doing so, we follow the headline figure of the Statistical Office for the respective year. Note that the forecasts frequently refer to "growth" rather than explicitly to either GDP or GNP. In these cases, we assume that the forecasters made no distinction between the concepts and had the same forecast for both figures.

- The forecasts of the Essen and the Düsseldorf Institute refer to West Germany in 1993.

- In one case, the trade-union-financed institute, we have combined two institutes into one: up to 2004, the macroeconomic forecasts were provided by the WSI; since then, the IMK has been responsible for the forecasts on behalf of the trade unions. This is motivated by the fact that both institutes are formally departments of the Hans-Böckler-Stiftung.

- In a similar vein, we treat the (privately financed) HWWI Institute in Hamburg as the successor of the former HWWA Institute (financed by public money).

- For the inflation forecast, we use the predicted change in the deflator of private consumption when this figure was available. In some cases, however, the forecast report included no explicit reference to whether a mentioned inflation forecast refers to the consumption deflator or the CPI. In such cases, we assume that the forecaster intended no distinction between the two concepts and use the available inflation forecast.

- The subset still contained isolated missing forecast values. For these, we impute the average absolute forecast error calculated from the other forecast errors of the same report. Missing forecast values concern the number of unemployed for the Cologne Institute for the years 1994 to 1996 and the unemployment, export, and import forecasts of the Düsseldorf Institute for 2005.

- For the European Commission, we also observe a pause in publishing forecasts in 1997. In this case, we use the forecasts with a two-year forecast horizon made in 1996.

- Some forecasters predict the unemployment rate according to the ILO definition, others according to the national definition. Accordingly, for the OECD, the forecast error is calculated based on ILO data. See Tables 9–16.
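Two of the data rules listed above can be sketched together in Python: interval forecasts are replaced by their midpoint, and a missing forecast receives the average absolute error of the other variables in the same report. This is one reading of the imputation rule, not the authors' code. The function names, the `(low, high)` tuple convention, and the use of `None` for a missing value are hypothetical.

```python
from statistics import mean


def point_forecast(value):
    """Interval forecasts, given as (low, high), are replaced by their midpoint."""
    if isinstance(value, tuple):
        low, high = value
        return (low + high) / 2
    return value


def absolute_errors(report, actuals):
    """Absolute errors for one forecast report.

    report:  {variable: number, (low, high) tuple, or None if missing}
    actuals: {variable: realized value}
    A missing forecast is assigned the mean absolute error of the other
    variables in the same report.
    """
    errors = {v: abs(point_forecast(f) - actuals[v])
              for v, f in report.items() if f is not None}
    fill = mean(errors.values())
    return {v: errors.get(v, fill) for v in report}


# Hypothetical report with an interval growth forecast and a missing value.
report = {"growth": (1.0, 2.0), "inflation": 2.5, "unemployment": None}
actuals = {"growth": 1.0, "inflation": 1.5, "unemployment": 7.0}
print(absolute_errors(report, actuals))
```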

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Köhler, T., Döpke, J. Will the last be the first? Ranking German macroeconomic forecasters based on different criteria. Empir Econ 64, 797–832 (2023). https://doi.org/10.1007/s00181-022-02267-9
